Law of Effect Skinner and R Herrnstein noted


Law of Effect • Skinner and R. Herrnstein noted several assumptions about the Law of Effect: • Animals improve their performance: • Optimize their behavior • Do not just repeat the same behavior, but make it better and more efficient • Animals adapt responding with experience

Law of Effect • Adaptation to the environment occurs via learning • Herrnstein considers this behavior change/adaptation a question • Not an answer • E.g., what are animals adapting to; how are they adapting; how do they know to adapt, etc. • Wants to know whether animals optimize in a similar way, and whether that can be described by a unified paradigm.

Reinforcement as strength: • Reinforcement = making a stronger link between responding and reward • Uses relative frequency: • Measure of response-reinforcer strength • What is the strength of responding relative to time, or relative to other responding?

Reinforcement as strength: • Absolute rates of responding: P1/time and Sr/time • How many responses per unit of time • How many reinforcers per unit of time • Response rate relative to time • Relative rate of responding = P1/P2 and Sr1/Sr2 • How many responses to alternative 1 compared to responses to alternative 2 • Is a comparison measure • Response rate as a function of reinforcer rate

Reinforcement as strength: • Plot proportion of responses as a function of proportion of reward • Should be a linear relationship • As the relative rate of reinforcement increases, the relative rate of responding should increase • Note: This is a continuous measure, not a discrete-trial one: the animal has more “choice” • Discrete trial: trial by trial • Free operant: animal controls how many responses it makes

Reinforcement as strength: • Differences when the organism controls the rate vs. when time controls the rate • Get exclusive choice on FR or VR schedules • Faster responding = more reinforcers • On time-based schedules, faster responding does not necessarily get you more • But: should alter rate of one response alternative in comparison to another • BUT: VI schedules allow examination of changes in response rate as a function of a predetermined rate of reinforcement • With VI schedules, can use reinforcer rate as the independent variable! • This becomes the basis of the matching law • P1/(P1 + P2) = R1/(R1 + R2) • The proportion of responding should approximate the proportion of reinforcement
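A minimal Python sketch of the strict matching prediction. It assumes the scheduled reinforcement rate on a VI schedule is roughly 3600 divided by the mean interval in seconds, which holds when the subject responds often enough to collect every reinforcer. The function names `matching_proportion` and `vi_rate_per_hour` are illustrative and do not come from any library.

```python
def matching_proportion(r1: float, r2: float) -> float:
    """Strict matching: predicted P1 / (P1 + P2) equals R1 / (R1 + R2)."""
    if r1 < 0 or r2 < 0 or r1 + r2 == 0:
        raise ValueError("reinforcer rates must be non-negative and not both zero")
    return r1 / (r1 + r2)


def vi_rate_per_hour(mean_interval_s: float) -> float:
    """Approximate scheduled reinforcers per hour on a VI schedule (assumption: 3600 / mean interval)."""
    return 3600.0 / mean_interval_s


# Example: concurrent VI 30-s VI 60-s
r1 = vi_rate_per_hour(30)   # 120 reinforcers/hour
r2 = vi_rate_per_hour(60)   # 60 reinforcers/hour
print(round(matching_proportion(r1, r2), 3))  # 0.667
```

With twice as many reinforcers available on the left, strict matching predicts about two-thirds of responses on the left.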


A side bar: The Use of CODs • Most matching law studies use a COD (changeover delay) • Use of a COD affects response strength and choice: • Shull and Pliskoff (1967): compared conditions with and without a COD • Got a better approximation of matching with a COD • Why important: • The COD is not the controlling factor • Controlling factor = response ratio • COD increases discriminability between the two reinforcer schedules • Increased discriminability = better “matching” • Why?
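A COD can be sketched as a simple gate on reinforcer delivery. This sketch assumes the common arrangement in which a reinforcer already set up on the newly chosen alternative cannot be delivered until a fixed time has passed since the switch. The helper name `cod_allows_reinforcer` and the 2-s value are hypothetical, and real procedures differ in their details.

```python
def cod_allows_reinforcer(t_response: float, t_last_changeover: float, cod_s: float) -> bool:
    """A set-up reinforcer can be delivered only if at least `cod_s` seconds
    have passed since the animal last switched alternatives (hypothetical rule)."""
    return (t_response - t_last_changeover) >= cod_s


# Example with a hypothetical 2-s COD: the animal switches keys at t = 10.0 s
print(cod_allows_reinforcer(11.0, 10.0, 2.0))  # False: only 1 s since the switch
print(cod_allows_reinforcer(12.5, 10.0, 2.0))  # True: 2.5 s since the switch
```

Rapid back-and-forth switching therefore goes unreinforced. This is one reason a COD can make the two schedules more discriminable.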

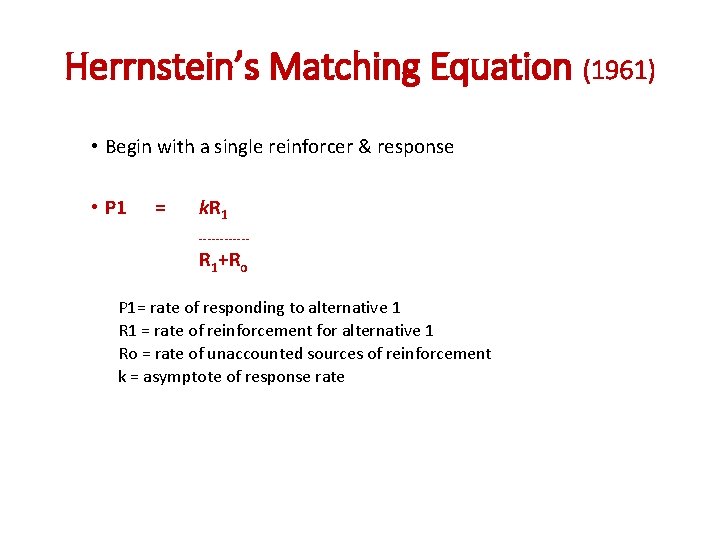

Herrnstein’s Matching Equation (1961) • Begin with a single reinforcer & response:
$$P_1 = \frac{k R_1}{R_1 + R_o}$$
• P1 = rate of responding to alternative 1 • R1 = rate of reinforcement for alternative 1 • Ro = rate of unaccounted sources of reinforcement • k = asymptote of response rate
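This minimal sketch evaluates the equation at a few reinforcement rates to show its decelerating, asymptotic shape. The values of k and Ro are hypothetical. R1 and Ro must be in the same units.

```python
def herrnstein_rate(r1: float, k: float, ro: float) -> float:
    """Herrnstein's hyperbola: P1 = k * R1 / (R1 + Ro)."""
    return k * r1 / (r1 + ro)


k, ro = 100.0, 20.0  # hypothetical: asymptote 100 responses/min, Ro = 20 reinforcers/hour
for r1 in (0, 20, 60, 180, 1000):
    print(r1, round(herrnstein_rate(r1, k, ro), 1))
```

Each step up in R1 adds less responding than the one before, and P1 approaches k but never exceeds it.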

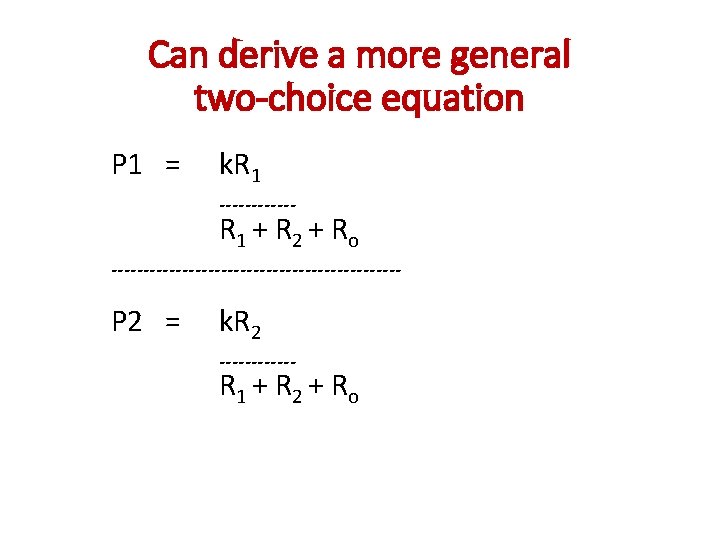

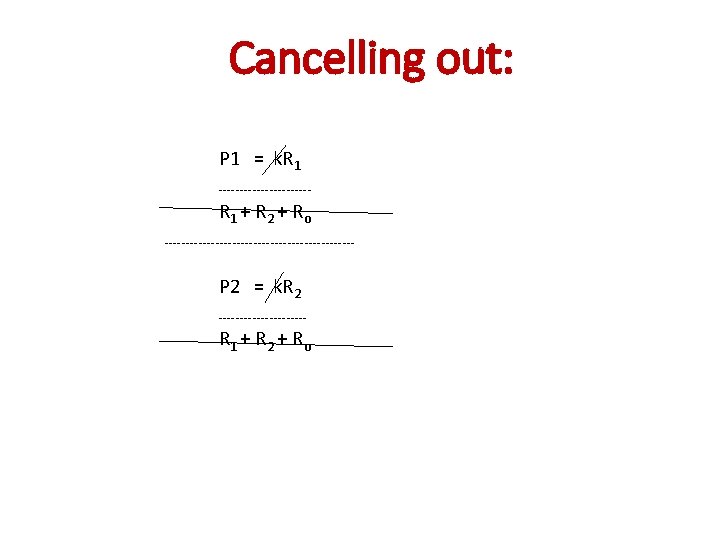

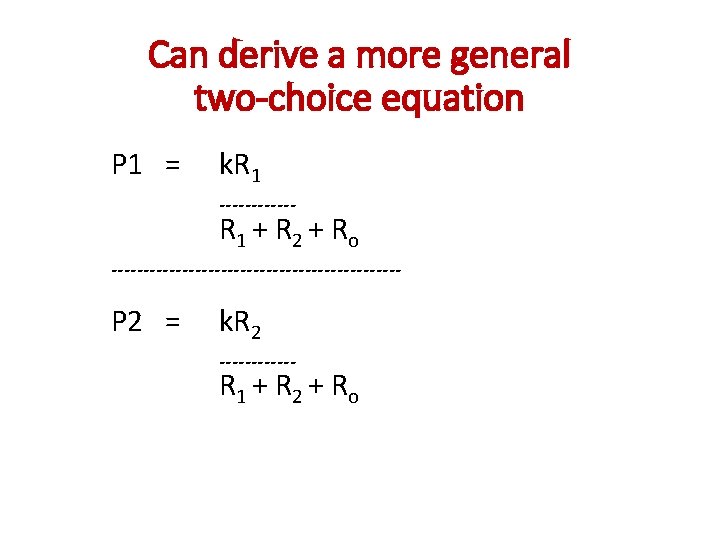

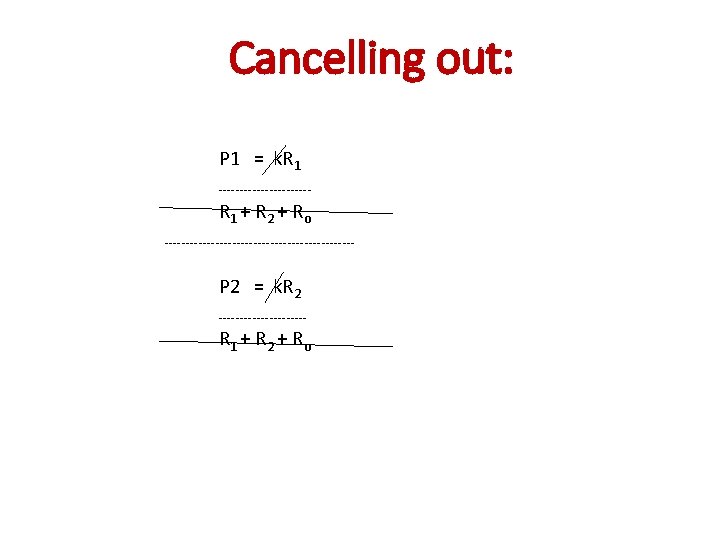

Can derive a more general two-choice equation:
$$P_1 = \frac{k R_1}{R_1 + R_2 + R_o}$$
$$P_2 = \frac{k R_2}{R_1 + R_2 + R_o}$$

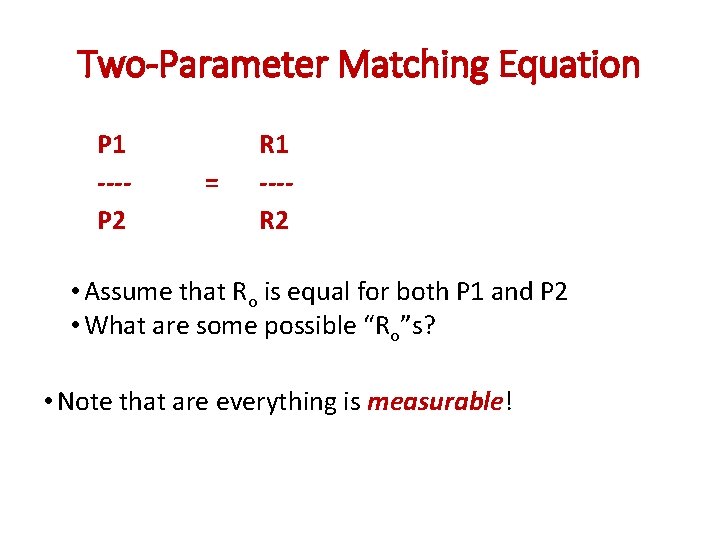

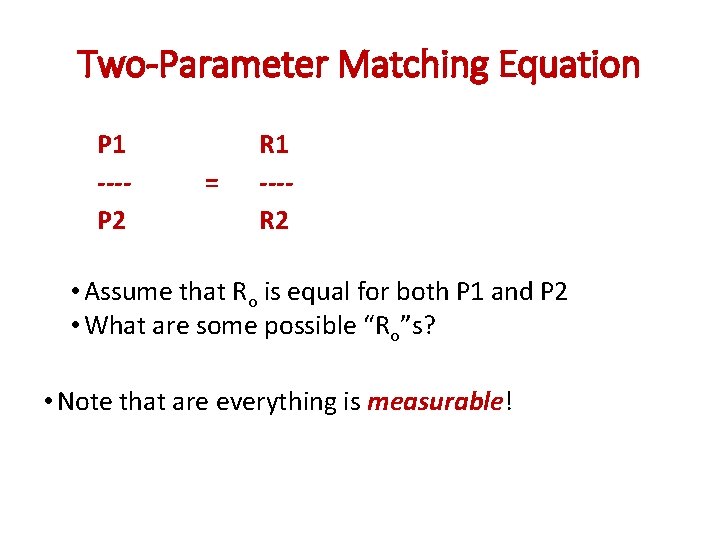

Two-Parameter Matching Equation
$$\frac{P_1}{P_2} = \frac{R_1}{R_2}$$
• Assume that Ro is equal for both P1 and P2 • What are some possible “Ro”s? • Note that everything here is measurable!
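The step from the two-choice equations to this ratio can be written out. When P1 is divided by P2, the shared k and the shared denominator R1 + R2 + Ro cancel, assuming (as above) that both alternatives have the same Ro:

$$\frac{P_1}{P_2} = \frac{k R_1 / (R_1 + R_2 + R_o)}{k R_2 / (R_1 + R_2 + R_o)} = \frac{R_1}{R_2}$$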

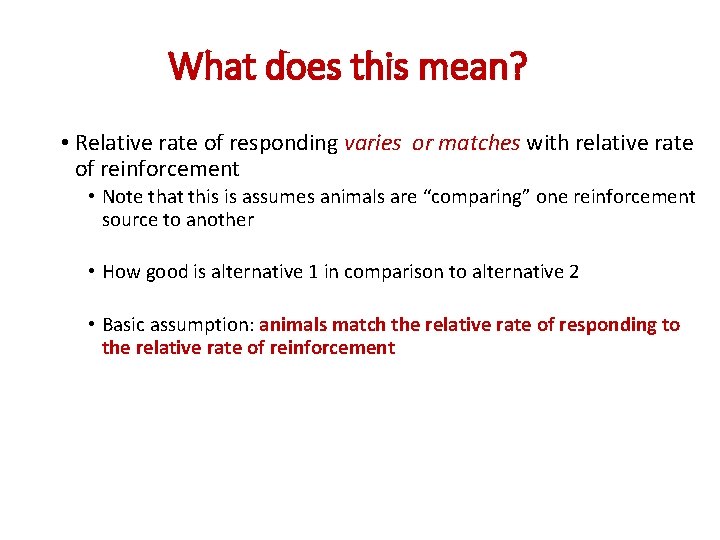

What does this mean? • Relative rate of responding varies with, or matches, the relative rate of reinforcement • Note that this assumes animals are “comparing” one reinforcement source to another • How good is alternative 1 in comparison to alternative 2? • Basic assumption: animals match the relative rate of responding to the relative rate of reinforcement

What does this mean? • Must have some effect on absolute rates of responding as well • Simple matching law: P1 = kR1/(R1 + Ro) • Makes a hyperbolic function • Some maximum rate of responding (k) • Compares reinforcement for alternative 1 to reinforcement for that choice plus unknown or other reinforcers

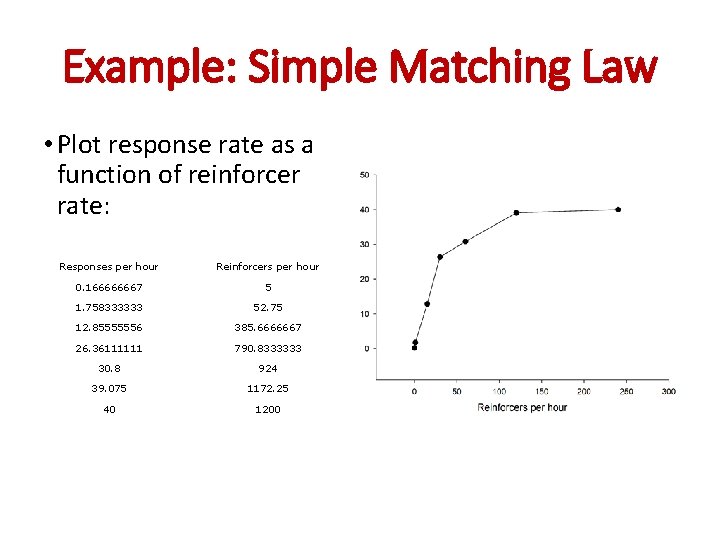

How to plot? Simple Matching Law • Plot response rate (R/min) as a function of reinforcement rate (Sr/min) • Makes a hyperbola or asymptotic curve • Decelerating ascending curve • Why decelerating? Why reach an asymptote? • Note: this is a STEADY STATE theory, not an acquisition model • Law of performance or adaptation • NOT a law of learning!

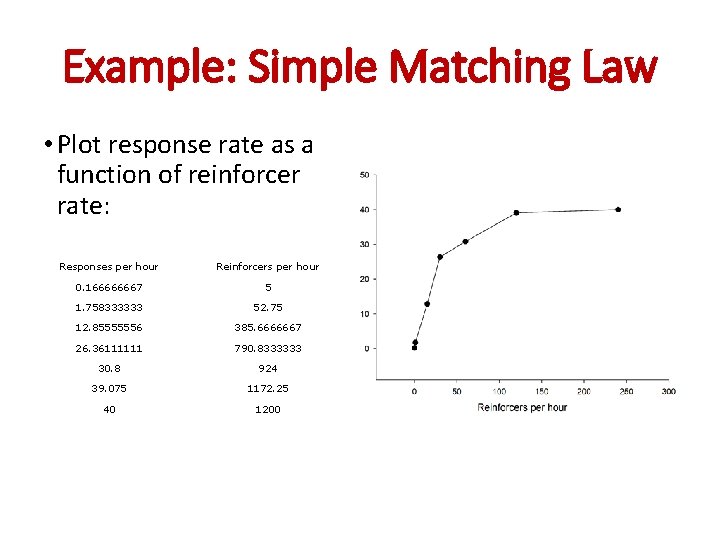

Example: Simple Matching Law • Plot response rate as a function of reinforcer rate:

| Responses per hour | Reinforcers per hour |
|---|---|
| 0.166666667 | 5 |
| 1.758333333 | 52.75 |
| 12.85555556 | 385.6666667 |
| 26.36111111 | 790.8333333 |
| 30.8 | 924 |
| 39.075 | 1172.25 |
| 40 | 1200 |

Factors affecting the hyperbola • Absolute rates are affected by reinforcement rates • Higher the reinforcement rate the higher the rate of responding • True up to some point (asymptote)- why? • What would be the effect of changes in Ro? • Steeper or shallower climb? • Why?
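One way to see the effect of Ro: in the simple equation, responding reaches half of its maximum (k/2) exactly when R1 = Ro. A larger Ro therefore pushes the curve's rise further to the right. This minimal sketch uses hypothetical values of k, R1, and Ro:

```python
def herrnstein_rate(r1: float, k: float, ro: float) -> float:
    """Herrnstein's hyperbola: P1 = k * R1 / (R1 + Ro)."""
    return k * r1 / (r1 + ro)


k = 100.0  # hypothetical asymptote (responses/min)
r1 = 30.0  # hypothetical scheduled reinforcement (reinforcers/hour)
for ro in (10.0, 30.0, 90.0):  # hypothetical levels of "other" reinforcement
    print(f"Ro = {ro:.0f}: P1 = {herrnstein_rate(r1, k, ro):.1f}")
```

At the same R1, responding falls from 75% of k to 50% to 25% as Ro grows. In this sense, a richer background of other reinforcement produces a shallower climb.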

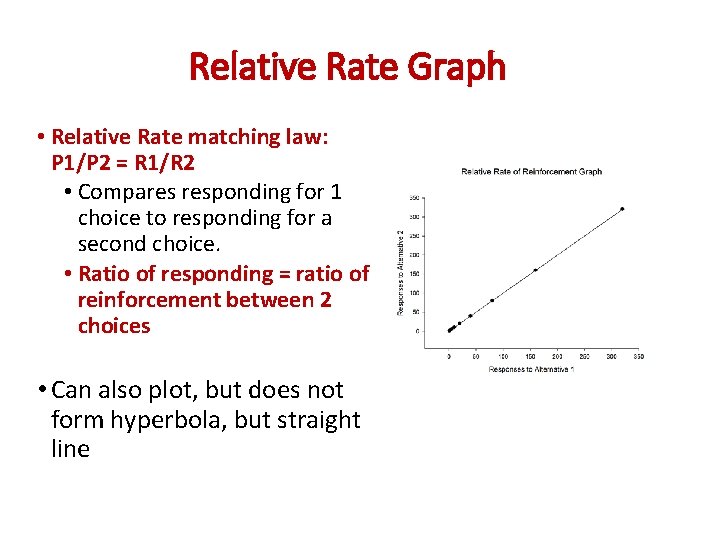

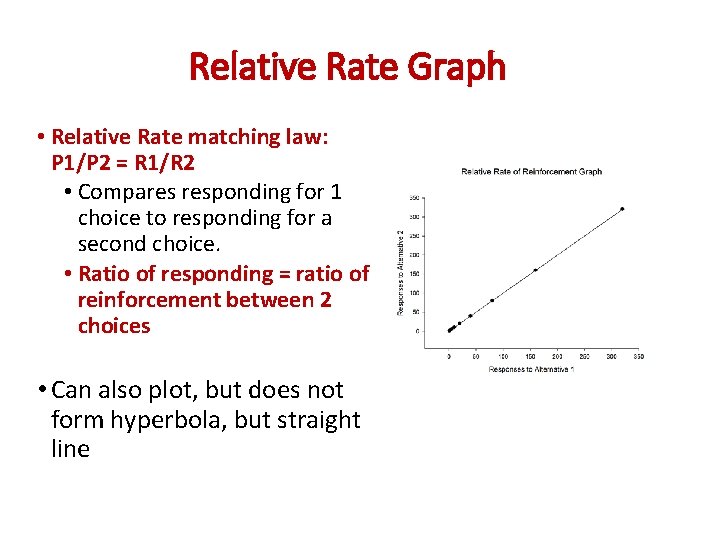

Relative Rate Graph • Relative rate matching law: P1/P2 = R1/R2 • Compares responding for one choice to responding for a second choice • Ratio of responding = ratio of reinforcement between the 2 choices • Can also be plotted, but forms a straight line rather than a hyperbola

Baum, 1974 Generalized Matching Law

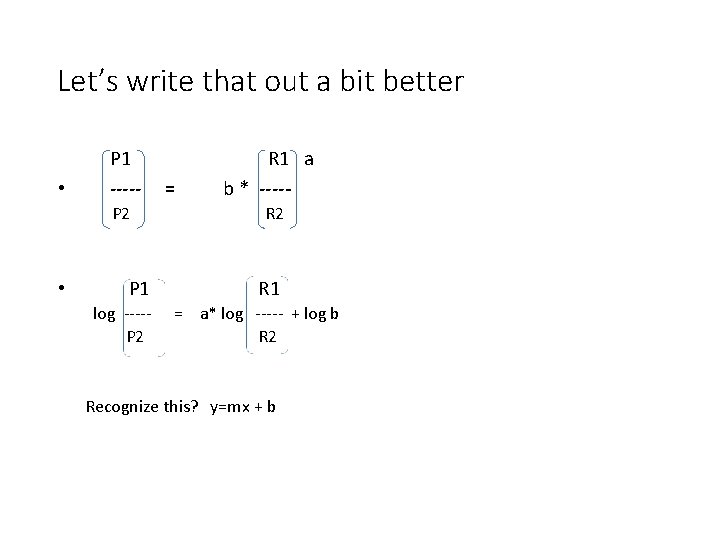

Describes basic matching law: • Uses Herrnstein’s model: P1/(P1 + P2) = R1/(R1 + R2) • Revises to: P1/P2 = R1/R2 (okay, we already did that) • ADDS two parameters: • b (bias) • a (reward sensitivity or matching) • New version:
$$\log\frac{P_1}{P_2} = a\,\log\frac{R_1}{R_2} + \log b$$
$$\frac{P_1}{P_2} = b\left(\frac{R_1}{R_2}\right)^{a}$$
• a = the undermatching or sensitivity-to-reward parameter • b = bias
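This minimal sketch implements the generalized matching law in both forms. It uses base-10 logs, which is a common convention; any base works if used consistently. The values of a and b below are hypothetical.

```python
import math


def gml_ratio(r1: float, r2: float, a: float, b: float) -> float:
    """Generalized matching law (Baum, 1974): P1/P2 = b * (R1/R2) ** a."""
    return b * (r1 / r2) ** a


def gml_log_ratio(r1: float, r2: float, a: float, b: float) -> float:
    """Same law in log form: log(P1/P2) = a * log(R1/R2) + log(b)."""
    return a * math.log10(r1 / r2) + math.log10(b)


# Reinforcer ratio of 4:1 under perfect matching vs. hypothetical undermatching
print(gml_ratio(4, 1, a=1.0, b=1.0))   # 4.0
print(gml_ratio(4, 1, a=0.5, b=1.0))   # 2.0
```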

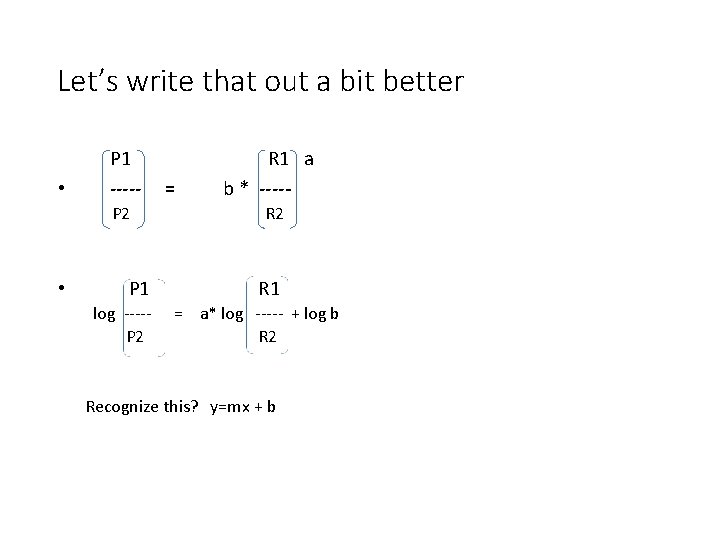

Let’s write that out a bit better:
$$\frac{P_1}{P_2} = b\left(\frac{R_1}{R_2}\right)^{a}$$
$$\log\frac{P_1}{P_2} = a\,\log\frac{R_1}{R_2} + \log b$$
Recognize this? y = mx + b
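The log form is a straight line: y = log(P1/P2), x = log(R1/R2), slope m = a, and intercept = log b. This means a and b can be estimated with ordinary least-squares regression on the log ratios. The sketch below is pure Python and assumes every ratio is positive. Its data are synthetic, generated from a = 0.8 and b = 1.2 with no noise, so the fit should recover those values.

```python
import math


def fit_gml(r_ratios, p_ratios):
    """Least-squares fit of log10(P1/P2) = a * log10(R1/R2) + log10(b).

    Returns (a, b): the slope is the sensitivity a; 10 ** intercept is the bias b.
    """
    xs = [math.log10(r) for r in r_ratios]
    ys = [math.log10(p) for p in p_ratios]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    log_b = mean_y - a * mean_x
    return a, 10 ** log_b


# Hypothetical data generated from a = 0.8, b = 1.2 (no noise), five reinforcer ratios
r_ratios = [1 / 4, 1 / 2, 1, 2, 4]
p_ratios = [1.2 * r ** 0.8 for r in r_ratios]
a, b = fit_gml(r_ratios, p_ratios)
print(round(a, 3), round(b, 3))  # 0.8 1.2
```

Note that the "b" in y = mx + b corresponds to log b here, not to the bias b itself.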

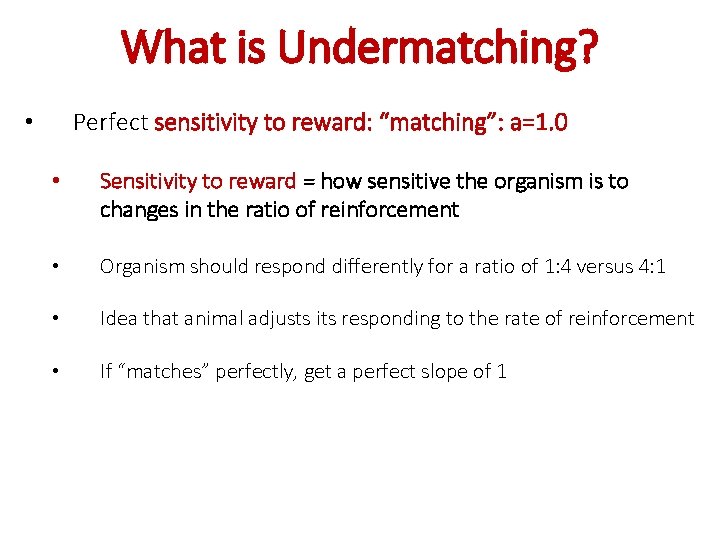

What is Undermatching? Perfect sensitivity to reward: “matching”: a = 1.0 • Sensitivity to reward = how sensitive the organism is to changes in the ratio of reinforcement • Organism should respond differently for a ratio of 1:4 versus 4:1 • Idea that the animal adjusts its responding to the rate of reinforcement • If it “matches” perfectly, get a slope of exactly 1

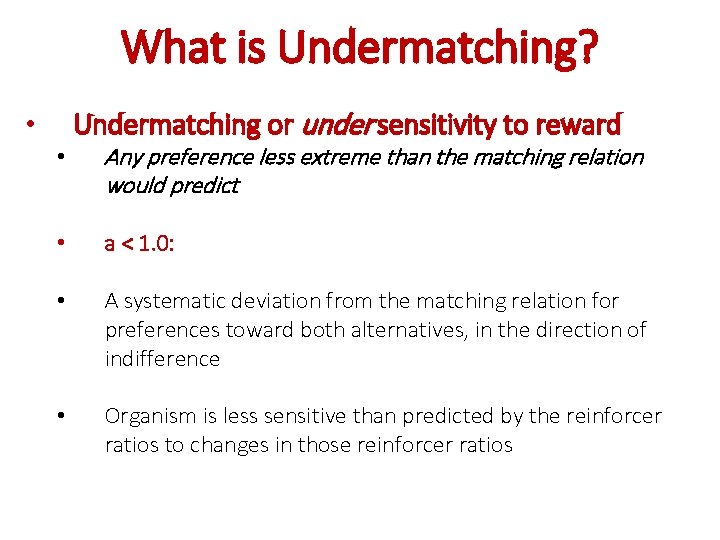

What is Undermatching? • Undermatching, or undersensitivity to reward: any preference less extreme than the matching relation would predict • a < 1.0: • A systematic deviation from the matching relation for preferences toward both alternatives, in the direction of indifference • Organism is less sensitive to changes in the reinforcer ratios than the matching relation predicts

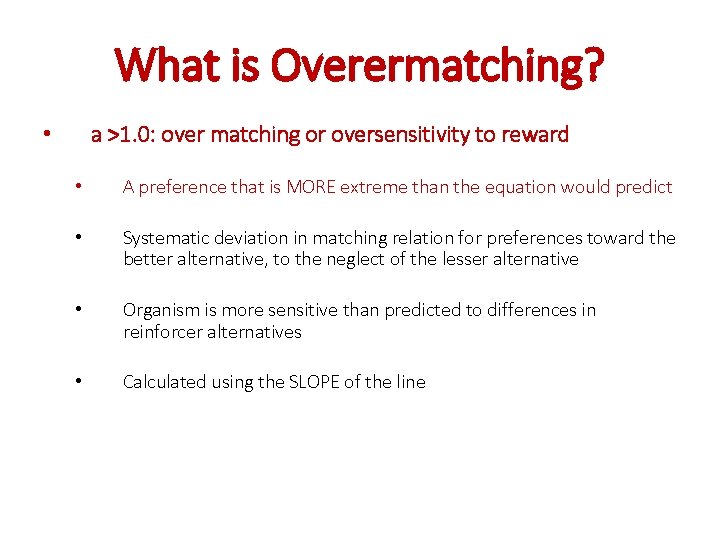

What is Overmatching? a > 1.0: overmatching, or oversensitivity to reward • A preference that is MORE extreme than the equation would predict • Systematic deviation from the matching relation for preferences toward the better alternative, to the neglect of the lesser alternative • Organism is more sensitive than predicted to differences in reinforcer alternatives • Calculated using the SLOPE of the line
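This small sketch labels a fitted slope and shows what that slope implies for a 4:1 reinforcer ratio (with b = 1). The ±0.05 band around 1.0 is a hypothetical cutoff for illustration. In practice, this judgment would come from the slope's confidence interval.

```python
def classify_sensitivity(a: float, tolerance: float = 0.05) -> str:
    """Label a fitted GML slope; `tolerance` is a hypothetical band around 1.0."""
    if a < 1.0 - tolerance:
        return "undermatching"
    if a > 1.0 + tolerance:
        return "overmatching"
    return "approximately matching"


for a in (0.6, 1.0, 1.3):
    predicted = 4.0 ** a  # P1/P2 predicted for a 4:1 reinforcer ratio with b = 1
    print(f"a = {a}: {classify_sensitivity(a)}, P1/P2 = {predicted:.2f}")
```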

Why Undermatching? • Reward sensitivity = discrimination or sensitivity model: • Tells us how sensitive the animal is to changes in the (rate) of reward • Can the animal tell the difference between changing reinforcement ratios • Can the animal adapt its behavior as the reinforcement situation changes • We can study different circumstances and observe how the animal adjusts between the two alternatives

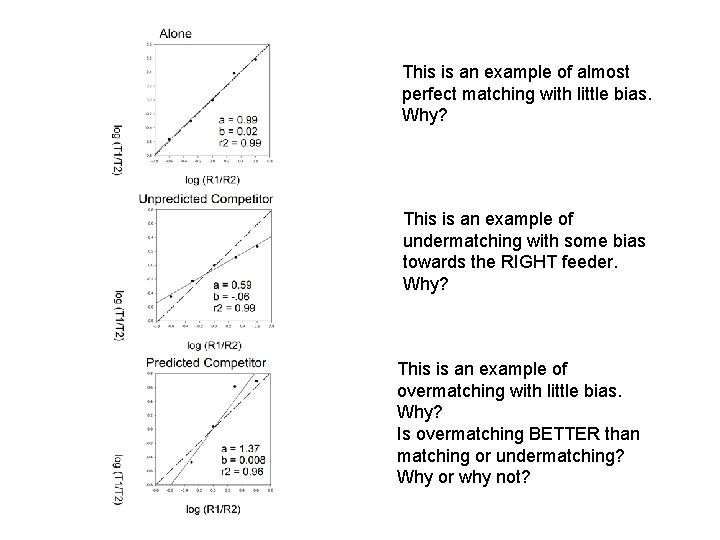

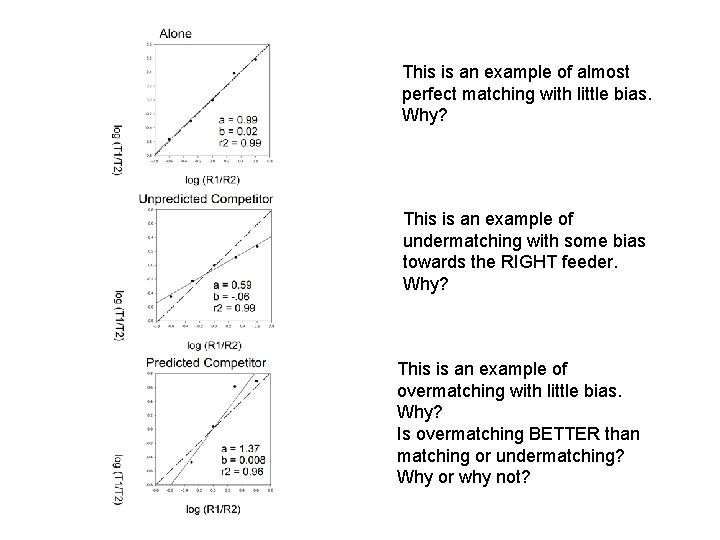

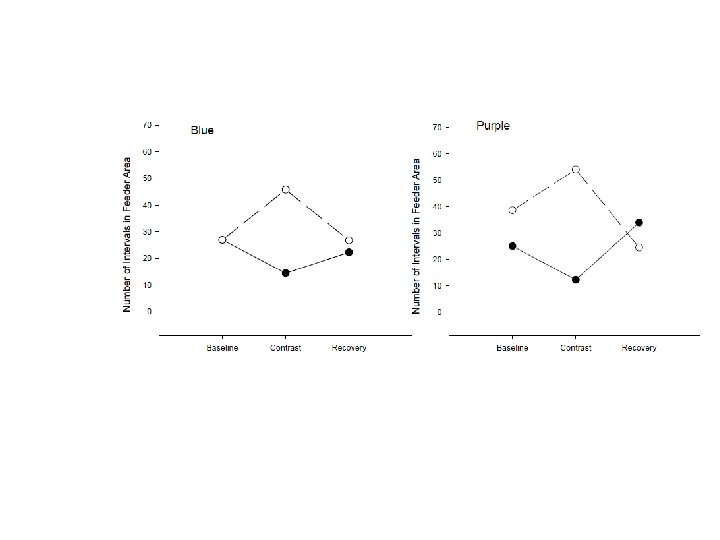

This is an example of almost perfect matching with little bias. Why?

This is an example of undermatching with some bias toward the RIGHT feeder. Why?

This is an example of overmatching with little bias. Why?

Is overmatching BETTER than matching or undermatching? Why or why not?

Factors affecting the a or undermatching parameter: • Discriminability between the stimuli signaling the two schedules • Discriminability between the two rates of reinforcers • Component duration • COD and COD duration • Deprivation level • Social interactions during the experiment • Others?

Bias • Definition: magnitude of preference shifted toward one reinforcer when the rewards are apparently equal • Unaccounted-for preference • Reflects the experimenter’s failure to make both alternative responses equal! • Calculated using the intercept of the line (log b): • Positive bias (log b > 0) is a preference for R1 • Negative bias (log b < 0) is a preference for R2
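With equal reinforcer rates, log(R1/R2) = 0, so the log form reduces to log(P1/P2) = log b. Any preference that remains is bias. This minimal sketch reads the sign of the intercept. The tolerance and the example value b = 1.5 are hypothetical.

```python
import math


def bias_direction(log_b: float, tolerance: float = 0.05) -> str:
    """Interpret the GML intercept log10(b); `tolerance` is a hypothetical cutoff."""
    if log_b > tolerance:
        return "bias toward alternative 1"
    if log_b < -tolerance:
        return "bias toward alternative 2"
    return "little or no bias"


b = 1.5  # hypothetical bias parameter
print(round(math.log10(b), 3), bias_direction(math.log10(b)))  # 0.176 bias toward alternative 1
```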

Four Sources of Bias • Response bias: difference in effort between the 2 response choices • Discrepancy between scheduled and obtained reinforcement • Qualitatively different reinforcers • Qualitatively different reinforcement schedules • Examples: • Difficulty of making the response: one response key harder to push than the other • Qualitatively different reinforcers: Spam vs. steak • Color preference, preference for a side of the box, etc.

Qualitatively Different Rewards • The matching law only takes into consideration the rate of reward • If rewards are qualitatively different, must add this in • Must add an additional factor for qualitative differences • So replace the bias parameter with a new ratio parameter:
$$\frac{P_1}{P_2} = \frac{V_1}{V_2}\left(\frac{R_1}{R_2}\right)^{a}$$
$$\log\frac{P_1}{P_2} = a\,\log\frac{R_1}{R_2} + \log\frac{V_1}{V_2}$$
• Assumes value stays constant regardless of the richness of a reinforcement schedule • Interestingly, can get U-shaped functions rather than hyperbolas • Has to do with changing value of reward ratios when dealing with qualitatively different reinforcers • Different satiation/habituation points for each type of reward • Move to economic models that allow for U-shaped rather than hyperbolic functions.
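This minimal sketch implements the value-weighted version, P1/P2 = (V1/V2)(R1/R2)^a. The value ratio V1/V2 = 3 for steak over Spam is purely hypothetical. In practice, value has to be estimated from data.

```python
def value_weighted_ratio(r1, r2, v1, v2, a=1.0):
    """P1/P2 = (V1/V2) * (R1/R2) ** a, with V1/V2 in place of the bias term b."""
    return (v1 / v2) * (r1 / r2) ** a


# Hypothetical: equal VI schedules, but reinforcer 1 (steak) valued 3x reinforcer 2 (Spam)
print(value_weighted_ratio(60, 60, v1=3.0, v2=1.0))  # 3.0
```

With equal reinforcement rates, the whole predicted preference comes from the value ratio.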

Qualitatively different reinforcement schedules • Type of schedule can make a difference in predictions of the matching law: • Use of VI versus VR: animal should show exclusive choice for VR, or minimal responding to VI • Animal can control its response rate • It cannot control time • Herrnstein was correct about “adaptation”: the animal does not “match” in the typical sense, but is still optimizing

So, does the matching law work? • It is a really OLD model! • Matching holds up well under mathematical and data tests • Some limitations for model • Tells us about sensitivity to reward and bias

Applications of the Matching Law: SIMPLE Matching law • McDowell, 1984: Wanted to apply Herrnstein's equation to clinical settings, so uses Herrnstein's equation: P1 = kR1/(R1 + Ro) • Makes an important point about Herrnstein's equation: • Ro governs how rapidly the hyperbola reaches its asymptote • What? How much “other” reinforcement there is controls how fast the organism increases responding • Thus: extraneous reinforcement can affect response strength (rate)

Shows Importance of Equation • Contingent reinforcement supports a higher rate of responding in barren environments than in rich environments • Reinforcement is more effective when there is no competition from other sources of reinforcement • What? High rates of Ro can reduce the effectiveness of your reinforcer! • When few other Sr's are available, scheduled Sr's matter more • When many other Sr's are available, scheduled Sr's matter less!

Applications: McDowell, 1982 • Law of diminishing returns: given an increment in reinforcement rate, • “(delta-Sr) produces a larger increment in the response rate (delta-r) when the prevailing rate of contingent reinforcement is low (r1) than high (r2)” • The change in the reinforcer rate produces a larger change in the response rate when the reinforcement rate is low versus high • Response rate increases hyperbolically with increases in reinforcement
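The law of diminishing returns follows directly from the hyperbola. This minimal sketch uses hypothetical values of k, Ro, and the increment. It compares the gain in response rate from the same added reinforcement at a low versus a high prevailing rate.

```python
def herrnstein_rate(r, k, ro):
    """Herrnstein's hyperbola: response rate = k * R / (R + Ro)."""
    return k * r / (r + ro)


def gain_from_increment(r, delta, k, ro):
    """Increase in response rate when reinforcement rises from r to r + delta."""
    return herrnstein_rate(r + delta, k, ro) - herrnstein_rate(r, k, ro)


k, ro, delta = 100.0, 20.0, 10.0  # hypothetical parameters
low_gain = gain_from_increment(10.0, delta, k, ro)    # low prevailing rate
high_gain = gain_from_increment(100.0, delta, k, ro)  # high prevailing rate
print(f"low: +{low_gain:.1f}, high: +{high_gain:.1f}")  # low: +16.7, high: +1.3
```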

Applications: McDowell, 1982 • Okay, too much theory: Let’s look at some applications!! • Reinforcement by experimenter/therapist DOES NOT OCCUR in isolation: must deal with Ro • What else and where else is your client getting reward? • What are they comparing YOUR reward to?

Demonstrates with several human studies • Bradshaw, Szabadi, & Bevan (1976; 1977; 1978) • Button pressing for money • Would “human” organisms match responses to reinforcement rates? • Yes, with excellent fits to the equation (r² = .9 or higher!) • Bradshaw et al. (1981): bipolar vs. typical subjects • Response rate was hyperbolic regardless of mood state • k was larger and Ro smaller when manic • k was smaller and Ro larger when depressed

Applications of Herrnstein’s Equation • McDowell: Self-Injurious Behavior (SIB) in a young boy • Young boy scratched himself to the point of bleeding • Used punishment (obtained reprimands) for scratching • Found a large value of Ro, but the boy's rate of scratching did match the rate of reprimands • Amount of inappropriate behavior changed according to the punishment schedule • Alternative reinforcement for scratching still dominated • Ro (the itch) was so pervasive that scratching remained the dominant response • Ayllon & Roberts (1974): 5th-grade boys and studying • Reading test performance was rewarded (R1) • Disruptive behavior/attention = Ro • When reinforcement for reading (R1) increased, responding increased and the disruptive behavior decreased (reduced values of Ro)

Three ways to Deal with Noncontingent Reinforcement (Ro) • To increase responding, 2 alternatives: 1. Increase the rate of responding (P1) by increasing the rate of contingent reinforcement (R1) • Get more for “being good” than for “being bad” 2. Decrease the rate of free, noncontingent reinforcement (Ro)
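This minimal sketch compares the two strategies using Herrnstein's simple equation, P1 = kR1/(R1 + Ro). All numbers are hypothetical. With these particular values, raising R1 from 10 to 40 and cutting Ro from 40 to 10 happen to produce the same response rate.

```python
def herrnstein_rate(r1, k, ro):
    """Herrnstein's hyperbola: P1 = k * R1 / (R1 + Ro)."""
    return k * r1 / (r1 + ro)


k = 100.0  # hypothetical maximum response rate
baseline = herrnstein_rate(10.0, k, ro=40.0)  # R1 = 10, Ro = 40
more_r1 = herrnstein_rate(40.0, k, ro=40.0)   # option 1: raise R1 to 40
less_ro = herrnstein_rate(10.0, k, ro=10.0)   # option 2: cut Ro to 10
print(baseline, more_r1, less_ro)  # 20.0 50.0 50.0
```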

What happens if we increase the reinforcement rate? • Value of staying in seat: 10 • Value of out of seat: 50 • Ratio of 10/50, or 1/5 • How much time should the child stay in his seat? About 17% of the time: 10/(10 + 50) = 1/6 • Intervention: increase value of staying in seat to 100 • Value of out of seat remains at 50 • Ratio is now 100/50, or 2/1 • How much time should the child stay in his seat?
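Under the matching law, the predicted share of time is the value of staying in the seat divided by the total value, 10/(10 + 50), rather than the ratio 10/50 itself. This minimal sketch covers both cases. It treats the slide's values as relative reinforcement rates, which is an assumption.

```python
def proportion_of_time(v_target: float, v_other: float) -> float:
    """Matching prediction for the share of time on the target behavior."""
    return v_target / (v_target + v_other)


before = proportion_of_time(10, 50)   # in seat vs. out of seat, baseline
after = proportion_of_time(100, 50)   # after the intervention
print(f"{before:.1%} -> {after:.1%}")  # 16.7% -> 66.7%
```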

Dealing with noncontingent reinforcement (Ro) • Another example: Unconditional Positive Regard = free, noncontingent reinforcement • Will reduce frequency of undesired responding • BUT, will also reduce behaviors that we may want!!! • BUT: Remember, using Ro can be problematic because it does not target a specific response • Like a DRO schedule • May end up reinforcing another unwanted behavior.

The Good Behavior Game • One kid is always ‘bad’ and the other kids pick on her • Reduce “bad” behavior by catching her being good • Reinforce other kids for catching her being good • Decreasing reinforcement for attending to “bad” behavior • Other kids ignore bad behavior • Reinforcement for bad behavior decreases • Increasing reinforcement for attending to good behavior • Other kids reinforced for attending to good behavior • Reinforcement for good behavior INCREASES • Good Behavior Game works well in rich environments where there is more opportunity to alter reinforcement rates.

Dealing with Noncontingent Reinforcement (Ro) • Alternative: Don’t increase your reinforcer, but decrease the other reinforcers! • No need to add reinforcers • Ignore or extinguish the Ro • DECREASE reinforcement for the alternative situation; also avoids satiation/habituation for YOUR reinforcer • Allows for contextual changes in reinforcement

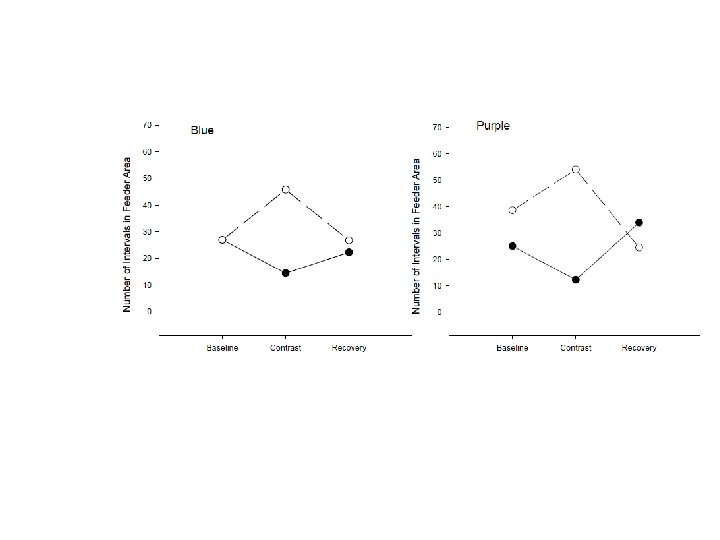

Behavioral Contrast • Behavioral contrast: an often-found “side effect” in applied situations • Original study: Reynolds (1961), a basic laboratory study • Pigeons on MULT (multiple) schedules of reinforcement, with equal schedules at first • Then, extinguish reinforcement in one component • Got a HUGE change in responding in the non-EXT component

Behavioral Contrast • Why? Behavioral contrast: changed value of the schedule • Remember what you are asking: how does the rate of reinforcement for one alternative compare to the rate for the other alternative? • In baseline: value of VI 60 compared to VI 60 • 60/60 = 1 • Value of P1 is the same as value of P2

Behavioral Contrast • During the next condition: value of VI 60 compared to EXTINCTION • 60/0 = ? • Value of P1 is extraordinarily higher than the value of nothing! • Did absolute value change? • Did RELATIVE value change?
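Herrnstein's two-alternative equation offers one way to see why responding rises even though the absolute rate on alternative 1 never changes: removing R2 shrinks the denominator. This minimal sketch assumes hypothetical values of k and Ro and treats VI 60 s as about 60 reinforcers per hour.

```python
def herrnstein_two_choice(r1, r2, k, ro):
    """P1 = k * R1 / (R1 + R2 + Ro), the two-alternative form of Herrnstein's equation."""
    return k * r1 / (r1 + r2 + ro)


k, ro = 100.0, 10.0  # hypothetical parameters
r_vi60 = 60.0        # VI 60-s schedule: about 60 reinforcers/hour
baseline = herrnstein_two_choice(r_vi60, r_vi60, k, ro)  # VI 60 vs VI 60
contrast = herrnstein_two_choice(r_vi60, 0.0, k, ro)     # VI 60 vs EXT
print(round(baseline, 1), round(contrast, 1))  # 46.2 85.7
```

The absolute reinforcement for alternative 1 is identical in both conditions. Only the relative context changes, and predicted responding nearly doubles.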

Behavioral Contrast • Helps explain "side effects" of reinforcement: • Child talks out, shouts answers to teacher during class • Also does some small amount of talking to peers • Teacher puts “shout outs” on EXT • Result: decrease in talking to teacher during class, but then kid talks more to peers • Kids are providing the reinforcers; this becomes more valuable because teacher is ignoring!

Behavioral Contrast • Example: • Boy talking to teacher during class • Teacher puts the talking on EXT • But then the kid talks more to peers • Look at ratios:
$$\frac{P_1}{P_2} = \frac{R_1}{R_2}$$

Change Behavior using the Matching Law • Take home message: • It is the disparity between 2 relative rates of reinforcement that is important, not the incompatibility of the 2 responses • CONTEXT matters!

Conclusions: Clinical applications: • MUST consider broader environmental conceptualizations of problem behavior • Must account for sources of reinforcement other than those provided by the therapist • Again, Herrnstein's idea of the context of reinforcement • If not, shoot yourself in the old therapeutic foot