Lattice Segmentation and Minimum Bayes Risk Discriminative Training

Lattice Segmentation and Minimum Bayes Risk Discriminative Training for Large Vocabulary Continuous Speech Recognition

Vlasios Doumpiotis, William Byrne (Johns Hopkins University)
Speech Communication (accepted, in revision)

Speech Lab NTNU. Presented by shih-hung, 2005/10/31

References

- Discriminative training for segmental minimum Bayes risk decoding (ICASSP 2003) (Alphadigits)
- Lattice segmentation and minimum Bayes risk discriminative training (Eurospeech 2003) (Alphadigits)
- Pinched lattice minimum Bayes risk discriminative training for large vocabulary continuous speech recognition (ICSLP 2004)
- Minimum Bayes risk estimation and decoding in large vocabulary continuous speech recognition (ATR workshop 2004)

Outline

- Introduction
- Minimum Bayes risk discriminative training
  - Update parameters via the Extended Baum-Welch algorithm
  - Efficient computation of risk
  - Risk-based pruning of the evidence space
- Pinched lattice minimum Bayes risk discriminative training
- Pinched lattice MMIE for whole-word acoustic models
- One-worst pinched lattice MBRDT
- Small vocabulary ASR performance and analysis (Alphadigits)
- MBRDT for LVCSR results (Switchboard, MALACH-CZ)
- Conclusion

Introduction

- Discriminative acoustic modeling procedures, such as MMIE, are powerful techniques that can improve the performance of ASR systems.
- MMI is often motivated as an estimation procedure by observing that it increases the a posteriori probability of the correct transcription of the speech in the training set.

Figure labels: MMI (MP), ML.

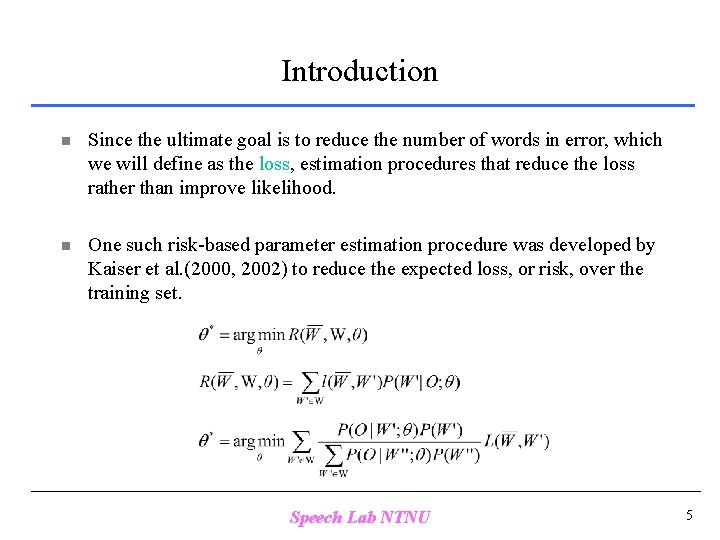

Introduction

- Since the ultimate goal is to reduce the number of words in error, which we define as the loss, it is natural to seek estimation procedures that reduce the loss rather than improve likelihood.
- One such risk-based parameter estimation procedure was developed by Kaiser et al. (2000, 2002) to reduce the expected loss, or risk, over the training set.

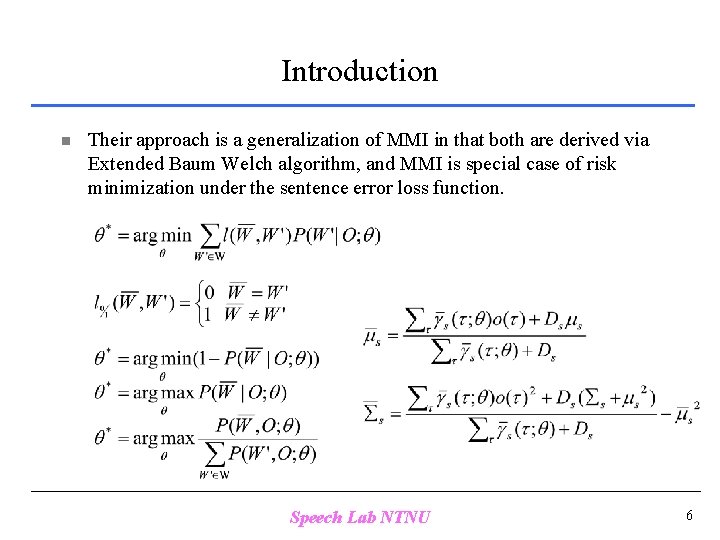

Introduction

- Their approach is a generalization of MMI: both are derived via the Extended Baum-Welch algorithm, and MMI is a special case of risk minimization under the sentence error loss function.

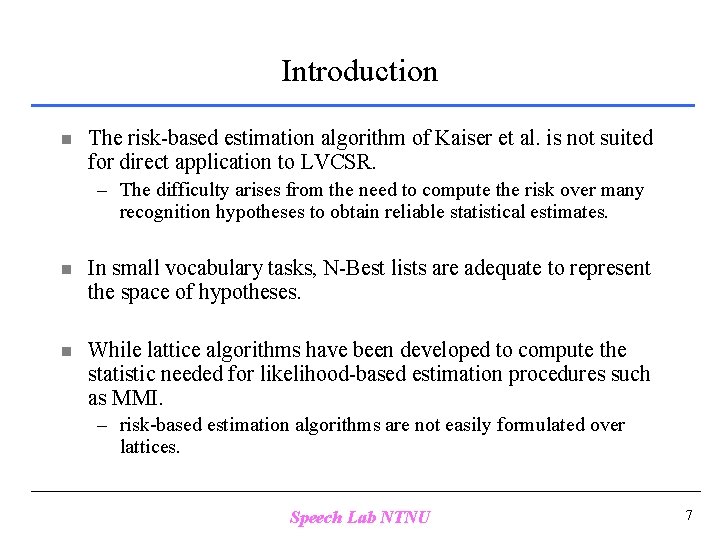
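The special-case claim can be checked in one line. As a sketch, assume the risk for one training utterance with reference $W$ and acoustics $O$ is the posterior-weighted loss over hypotheses $W'$; the notation here is chosen for illustration and may differ from the figure on the slide.

```latex
\begin{align*}
R(\theta) &= \sum_{W'} l(W, W')\, P(W' \mid O; \theta),
\qquad
l(W, W') =
\begin{cases}
0 & \text{if } W' = W \\
1 & \text{if } W' \neq W
\end{cases} \\
&= \sum_{W' \neq W} P(W' \mid O; \theta)
 = 1 - P(W \mid O; \theta).
\end{align*}
```

Under this 0/1 sentence error loss, lowering the risk of an utterance is the same as raising the posterior probability of its reference transcription, which is the quantity MMI seeks to increase.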

Introduction

- The risk-based estimation algorithm of Kaiser et al. is not suited for direct application to LVCSR.
  - The difficulty arises from the need to compute the risk over many recognition hypotheses to obtain reliable statistical estimates.
- In small vocabulary tasks, N-best lists are adequate to represent the space of hypotheses.
- Lattice algorithms have been developed to compute the statistics needed for likelihood-based estimation procedures such as MMI, but risk-based estimation algorithms are not easily formulated over lattices.

Introduction

- The focus of this paper is the efficient computation of loss and likelihood in risk-based parameter estimation for LVCSR.
- We use lattice-cutting techniques developed for Minimum Bayes Risk decoding (Goel 2001) to efficiently compute the statistics needed by the algorithm of Kaiser et al.

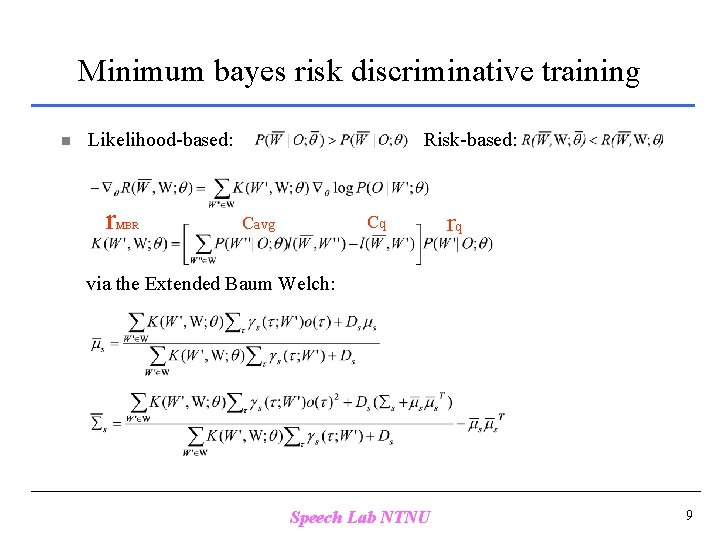

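Minimum Bayes risk discriminative training

- The likelihood-based and the risk-based (MBR) estimation criteria are both optimized via the Extended Baum-Welch algorithm; the equations, with the quantities Cq, Cavg and rq, appear in the figure.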

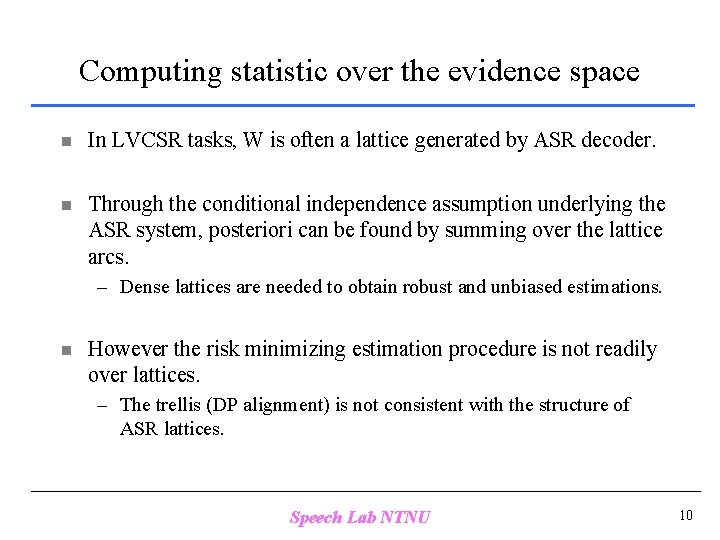

Computing statistics over the evidence space

- In LVCSR tasks, W is often a lattice generated by the ASR decoder.
- Through the conditional independence assumptions underlying the ASR system, posteriors can be found by summing over the lattice arcs.
  - Dense lattices are needed to obtain robust and unbiased estimates.
- However, the risk-minimizing estimation procedure is not readily formulated over lattices.
  - The trellis (DP alignment) is not consistent with the structure of ASR lattices.

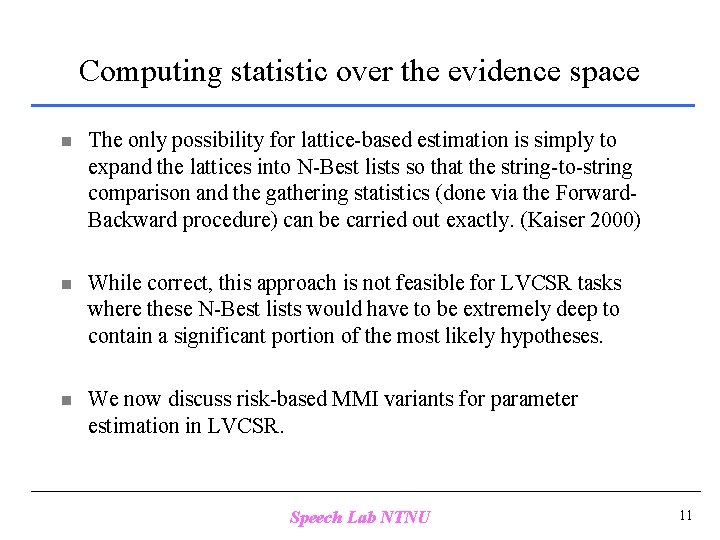

Computing statistics over the evidence space

- The only option for exact lattice-based estimation is to expand the lattices into N-best lists, so that the string-to-string comparison and the gathering of statistics (done via the Forward-Backward procedure) can be carried out exactly (Kaiser 2000).
- While correct, this approach is not feasible for LVCSR tasks, where these N-best lists would have to be extremely deep to contain a significant portion of the most likely hypotheses.
- We now discuss risk-based MMI variants for parameter estimation in LVCSR.

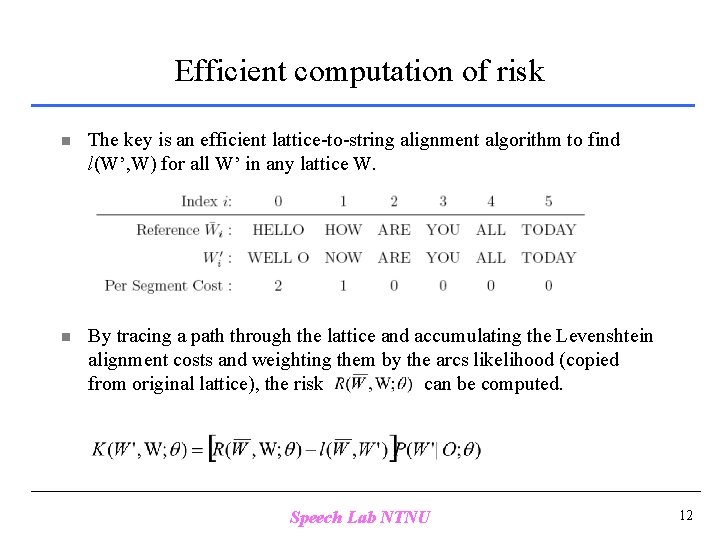

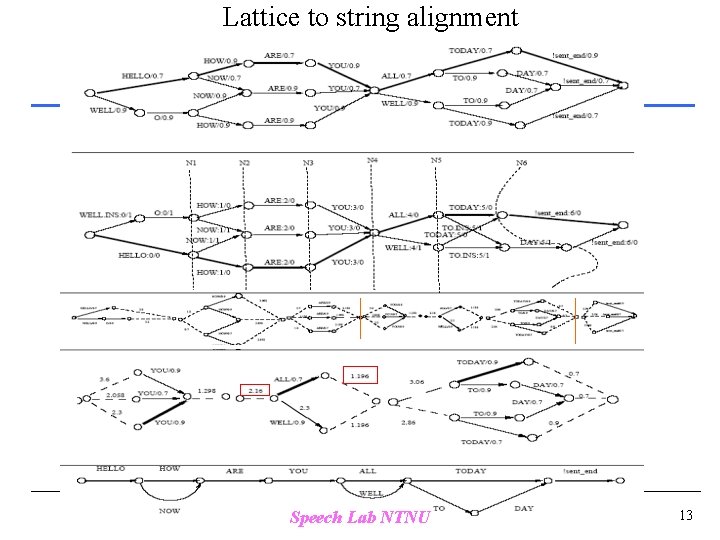

Efficient computation of risk

- The key is an efficient lattice-to-string alignment algorithm that finds the loss l(W', W) between the reference W and every path W' in a lattice.
- By tracing each path through the lattice, accumulating the Levenshtein alignment costs, and weighting them by the arc likelihoods (copied from the original lattice), the risk can be computed.
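The quantity being computed can be illustrated on a toy lattice. The sketch below is not the efficient alignment algorithm from the slides: it enumerates every path, which only works for tiny lattices, and the lattice encoding and the function names (`lattice_paths`, `lattice_risk`) are assumptions made for this example.

```python
from math import exp, log

def levenshtein(ref, hyp):
    """Word-level edit distance between two lists of words."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        cur = [i]
        for j, h in enumerate(hyp, start=1):
            cur.append(min(prev[j] + 1,               # delete r
                           cur[j - 1] + 1,            # insert h
                           prev[j - 1] + (r != h)))   # match or substitute
        prev = cur
    return prev[-1]

def lattice_paths(arcs, start, end):
    """Yield (words, log_likelihood) for every path from start to end.

    arcs maps a node to a list of (next_node, word, log_likelihood).
    """
    stack = [(start, [], 0.0)]
    while stack:
        node, words, score = stack.pop()
        if node == end:
            yield words, score
            continue
        for nxt, word, loglik in arcs.get(node, []):
            stack.append((nxt, words + [word], score + loglik))

def lattice_risk(arcs, start, end, reference):
    """Posterior-weighted Levenshtein loss over all lattice paths."""
    paths = list(lattice_paths(arcs, start, end))
    total = sum(exp(score) for _, score in paths)
    return sum(exp(score) / total * levenshtein(reference, words)
               for words, score in paths)

# Toy lattice (hypothetical data): two confusable words per position.
arcs = {
    0: [(1, "A", log(0.75)), (1, "K", log(0.25))],
    1: [(2, "B", log(0.5)), (2, "D", log(0.5))],
}
risk = lattice_risk(arcs, 0, 2, ["A", "B"])
print(risk)
```

The four paths have losses 0, 1, 1 and 2, so the risk is the posterior-weighted average of those values. The number of paths grows exponentially with lattice depth, which is the reason an alignment over the lattice itself is needed for LVCSR.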

Lattice to string alignment

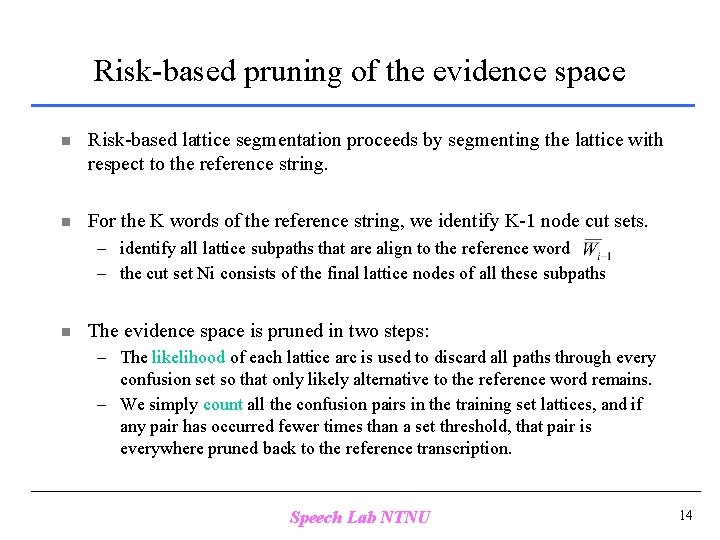

Risk-based pruning of the evidence space

- Risk-based lattice segmentation proceeds by segmenting the lattice with respect to the reference string.
- For the K words of the reference string, we identify K-1 node cut sets.
  - Identify all lattice subpaths that are aligned to the reference word.
  - The cut set Ni consists of the final lattice nodes of all these subpaths.
- The evidence space is pruned in two steps:
  - The likelihood of each lattice arc is used to discard paths through every confusion set, so that only the most likely alternative to the reference word remains.
  - We count all the confusion pairs in the training set lattices; if any pair has occurred fewer times than a set threshold, that pair is pruned back to the reference transcription everywhere.

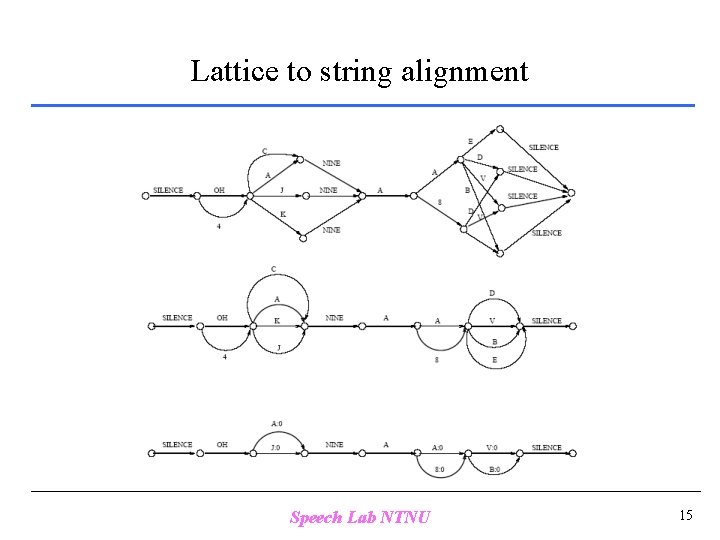
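The second pruning step amounts to a count-and-threshold pass over the training set. The following is a minimal sketch, assuming each pinched lattice has already been reduced to a list of `(reference_word, alternative)` segments; this representation, the ordered treatment of pairs, and the name `prune_rare_confusion_pairs` are assumptions for illustration, not the authors' data structures.

```python
from collections import Counter

def prune_rare_confusion_pairs(pinched_lattices, min_count):
    """Collapse rare confusion pairs back to the reference word.

    Each lattice is a list of (reference_word, alternative) segments;
    alternative is None when the segment has no competing hypothesis.
    """
    # Count every confusion pair over the whole training set.
    counts = Counter(
        (ref, alt)
        for lattice in pinched_lattices
        for ref, alt in lattice
        if alt is not None
    )
    # Keep an alternative only if its pair is frequent enough.
    return [
        [(ref, alt if alt is not None and counts[(ref, alt)] >= min_count else None)
         for ref, alt in lattice]
        for lattice in pinched_lattices
    ]

# Hypothetical training set of three pinched lattices.
training = [
    [("B", "D"), ("A", "K")],
    [("B", "D"), ("C", None)],
    [("F", "S"), ("B", "D")],
]
pruned = prune_rare_confusion_pairs(training, min_count=2)
print(pruned)
```

With a threshold of 2, the pair (B, D) survives because it occurs three times, while (A, K) and (F, S) occur once each and are pruned back to the reference word.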

Lattice to string alignment

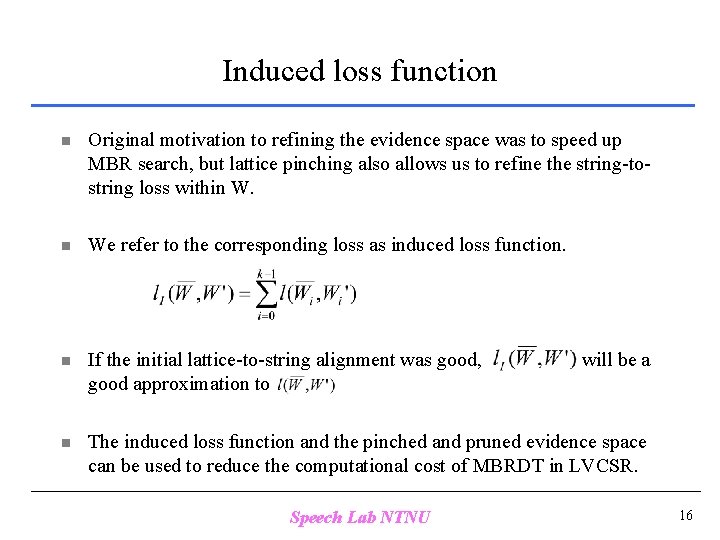

Induced loss function

- The original motivation for refining the evidence space was to speed up MBR search, but lattice pinching also allows us to refine the string-to-string loss within W.
- We refer to the corresponding loss as the induced loss function.
- If the initial lattice-to-string alignment was good, the induced loss will be a good approximation to the original string-to-string loss.
- The induced loss function and the pinched and pruned evidence space can be used to reduce the computational cost of MBRDT in LVCSR.

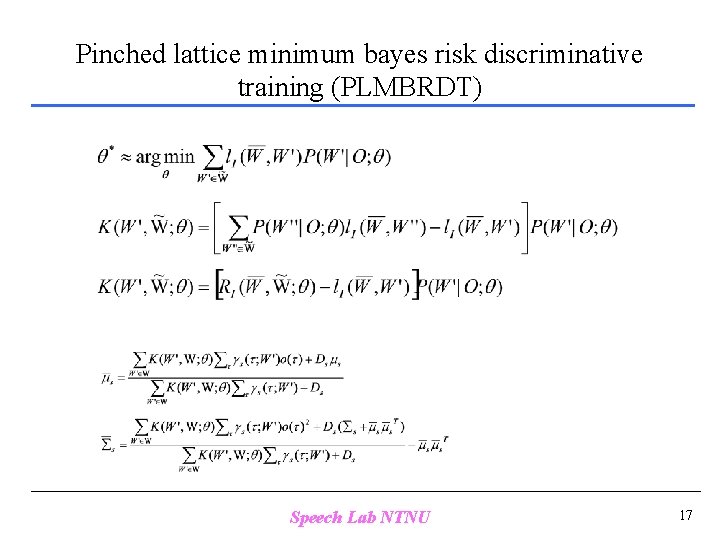
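The dependence on alignment quality can be seen in a small example. The sketch below assumes the induced loss is the sum of Levenshtein losses computed segment by segment; that definition is an assumption taken from the segmental view of the lattice, since the slide's own formula is only in the figure. The segmentations are made up for illustration.

```python
def levenshtein(ref, hyp):
    """Word-level edit distance between two lists of words."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        cur = [i]
        for j, h in enumerate(hyp, start=1):
            cur.append(min(prev[j] + 1,               # delete r
                           cur[j - 1] + 1,            # insert h
                           prev[j - 1] + (r != h)))   # match or substitute
        prev = cur
    return prev[-1]

def induced_loss(ref_segments, hyp_segments):
    """Sum of per-segment losses (assumed form of the induced loss)."""
    return sum(levenshtein(r, h) for r, h in zip(ref_segments, hyp_segments))

def full_loss(ref_segments, hyp_segments):
    """Levenshtein loss on the unsegmented word strings."""
    flatten = lambda segments: [w for seg in segments for w in seg]
    return levenshtein(flatten(ref_segments), flatten(hyp_segments))

ref = [["a", "b"], ["c"]]

# Segment boundaries agree with the true alignment: both losses match.
good = [["a", "x"], ["c"]]
print(induced_loss(ref, good), full_loss(ref, good))

# Same words as the reference, but cut at the wrong place: the induced
# loss overestimates the true loss.
bad = [["a"], ["b", "c"]]
print(induced_loss(ref, bad), full_loss(ref, bad))
```

Because concatenating the per-segment alignments always gives a valid alignment of the full strings, a loss defined this way can never fall below the full Levenshtein loss; it only overestimates it when segment boundaries are misplaced.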

Pinched lattice minimum bayes risk discriminative training (PLMBRDT)

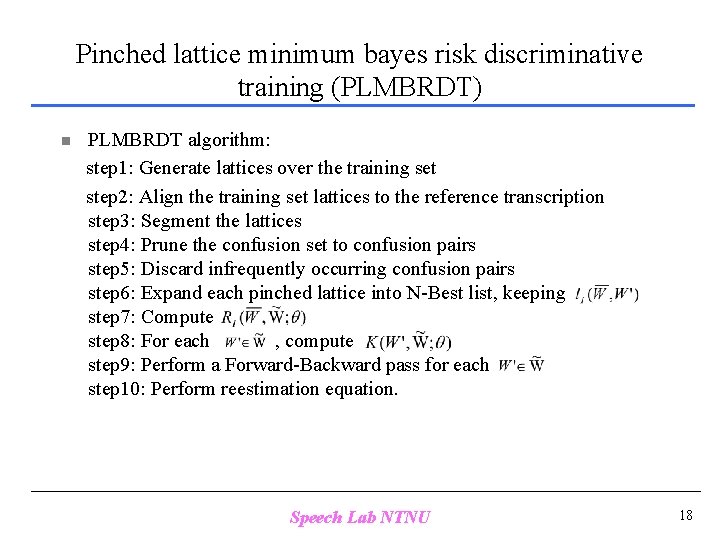

Pinched lattice minimum Bayes risk discriminative training (PLMBRDT)

PLMBRDT algorithm:

- Step 1: Generate lattices over the training set
- Step 2: Align the training set lattices to the reference transcription
- Step 3: Segment the lattices
- Step 4: Prune the confusion sets to confusion pairs
- Step 5: Discard infrequently occurring confusion pairs
- Step 6: Expand each pinched lattice into an N-best list, keeping …
- Step 7: Compute …
- Step 8: For each …, compute …
- Step 9: Perform a Forward-Backward pass for each …
- Step 10: Apply the reestimation equation

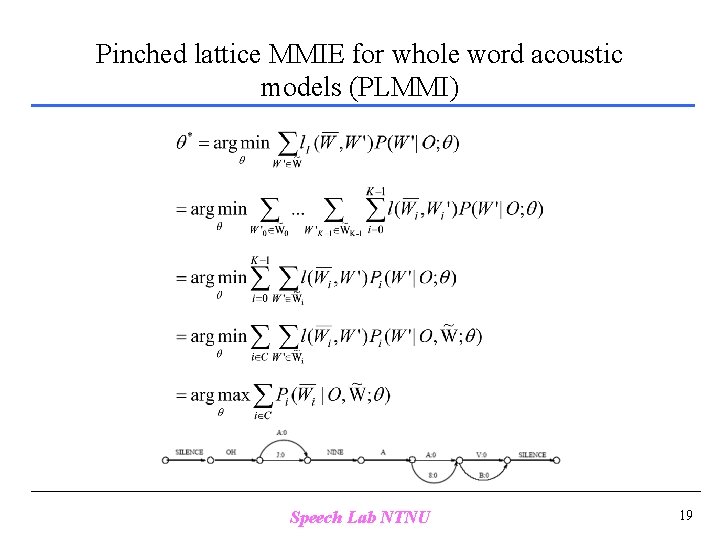
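Expanding a pinched lattice into an N-best list is cheap because each segment holds at most a few alternatives. The sketch below assumes a pinched lattice is a list of segments, each a list of `(word, probability)` alternatives, and that a path score is the product of its segment probabilities; the representation and the name `expand_to_nbest` are hypothetical.

```python
import heapq
from itertools import product
from math import prod

def expand_to_nbest(pinched, n):
    """Return the n most probable paths as (words, posterior) pairs.

    pinched is a list of segments; each segment is a list of
    (word, probability) alternatives. Posteriors are renormalized
    over the paths that are kept.
    """
    paths = (
        ([word for word, _ in choice], prod(p for _, p in choice))
        for choice in product(*pinched)   # one alternative per segment
    )
    best = heapq.nlargest(n, paths, key=lambda item: item[1])
    total = sum(p for _, p in best)
    return [(words, p / total) for words, p in best]

# Hypothetical pinched lattice: two confusion pairs around a fixed word.
pinched = [
    [("A", 0.8), ("K", 0.2)],
    [("B", 1.0)],
    [("C", 0.6), ("Z", 0.4)],
]
nbest = expand_to_nbest(pinched, n=3)
for words, posterior in nbest:
    print(" ".join(words), round(posterior, 3))
```

A lattice with m confusion pairs expands to at most 2^m paths, so the frequency-based pruning in step 5 directly limits how deep the N-best list has to be.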

Pinched lattice MMIE for whole word acoustic models (PLMMI)

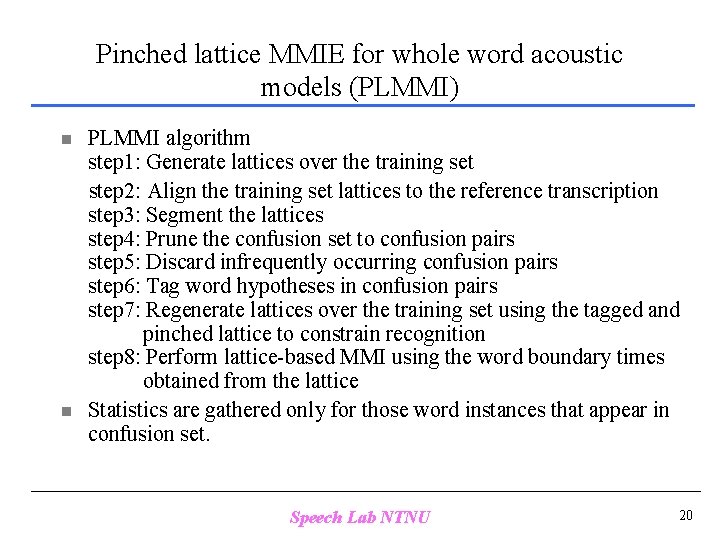

Pinched lattice MMIE for whole-word acoustic models (PLMMI)

PLMMI algorithm:

- Step 1: Generate lattices over the training set
- Step 2: Align the training set lattices to the reference transcription
- Step 3: Segment the lattices
- Step 4: Prune the confusion sets to confusion pairs
- Step 5: Discard infrequently occurring confusion pairs
- Step 6: Tag word hypotheses in confusion pairs
- Step 7: Regenerate lattices over the training set, using the tagged and pinched lattices to constrain recognition
- Step 8: Perform lattice-based MMI using the word boundary times obtained from the lattice

Statistics are gathered only for those word instances that appear in a confusion set.

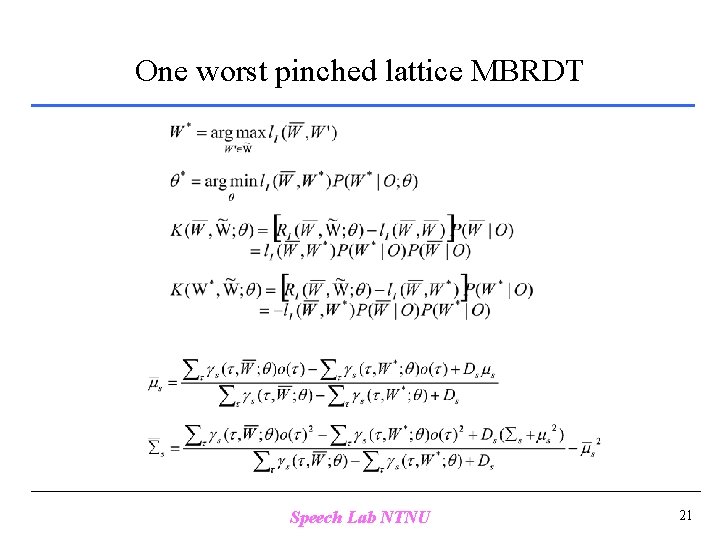

One worst pinched lattice MBRDT

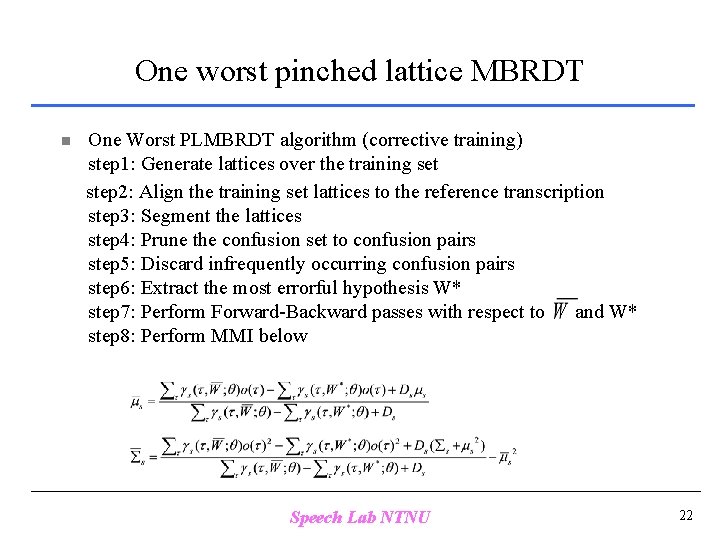

One-worst pinched lattice MBRDT

One-worst PLMBRDT algorithm (corrective training):

- Step 1: Generate lattices over the training set
- Step 2: Align the training set lattices to the reference transcription
- Step 3: Segment the lattices
- Step 4: Prune the confusion sets to confusion pairs
- Step 5: Discard infrequently occurring confusion pairs
- Step 6: Extract the most errorful hypothesis W*
- Step 7: Perform Forward-Backward passes with respect to … and W*
- Step 8: Perform the MMI update shown below

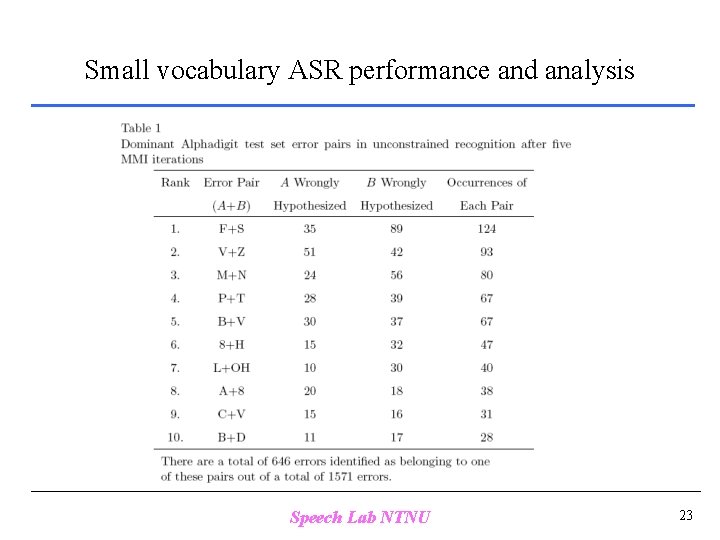
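One plausible reading of step 6 is that, once the lattice is pinched, the most errorful hypothesis can be built segment by segment. The sketch below assumes the loss is additive over segments and picks the highest-loss alternative in each one; the segment representation and the name `most_errorful_hypothesis` are assumptions, and the source does not confirm that W* is extracted this way.

```python
def levenshtein(ref, hyp):
    """Word-level edit distance between two lists of words."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        cur = [i]
        for j, h in enumerate(hyp, start=1):
            cur.append(min(prev[j] + 1,               # delete r
                           cur[j - 1] + 1,            # insert h
                           prev[j - 1] + (r != h)))   # match or substitute
        prev = cur
    return prev[-1]

def most_errorful_hypothesis(segments):
    """Pick the highest-loss alternative in every segment.

    segments is a list of (reference_words, alternatives), where
    alternatives is a list of word lists that includes the reference.
    Ties go to the alternative listed first.
    """
    worst = []
    for ref, alternatives in segments:
        worst.extend(max(alternatives, key=lambda alt: levenshtein(ref, alt)))
    return worst

# Hypothetical pinched lattice: a substitution pair, an unambiguous
# word, and a pair whose alternative is a deletion (empty word list).
segments = [
    (["A"], [["A"], ["K"]]),
    (["B"], [["B"]]),
    (["C"], [["C"], []]),
]
w_star = most_errorful_hypothesis(segments)
print(w_star)
```

Here the substitution A to K and the deletion of C each cost one error, so the extracted hypothesis contains only K and B.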

Small vocabulary ASR performance and analysis

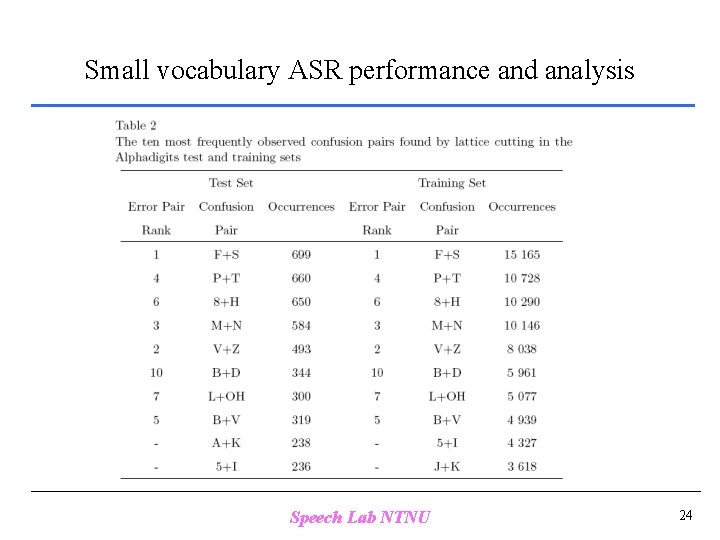

Small vocabulary ASR performance and analysis

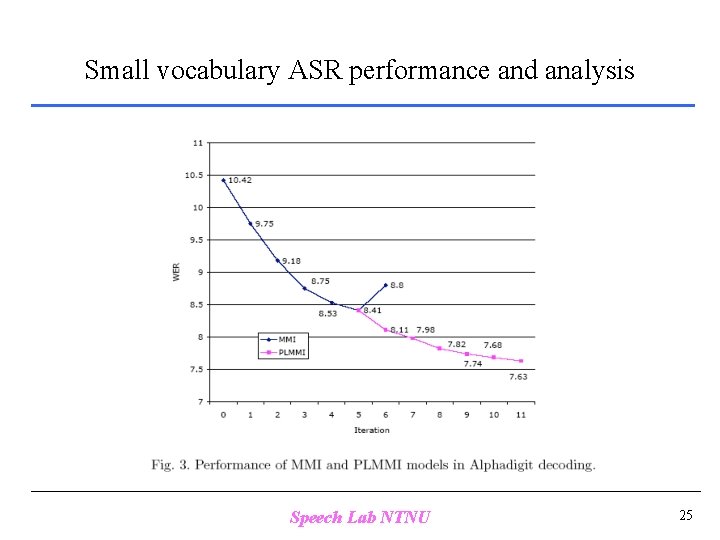

Small vocabulary ASR performance and analysis

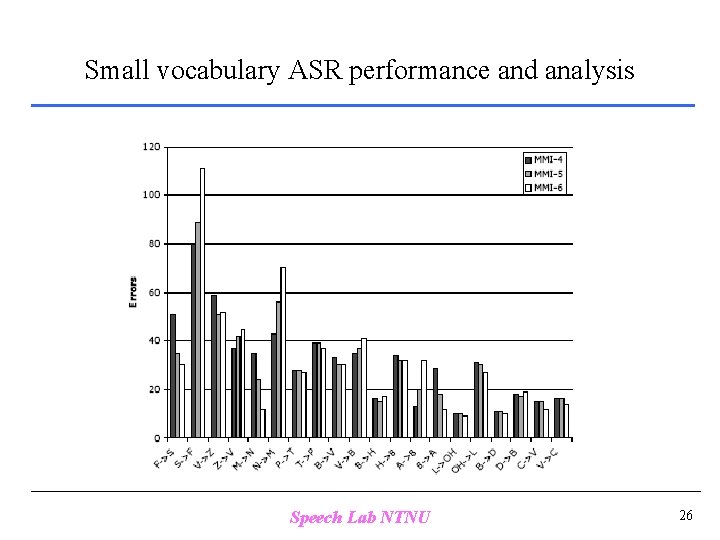

Small vocabulary ASR performance and analysis

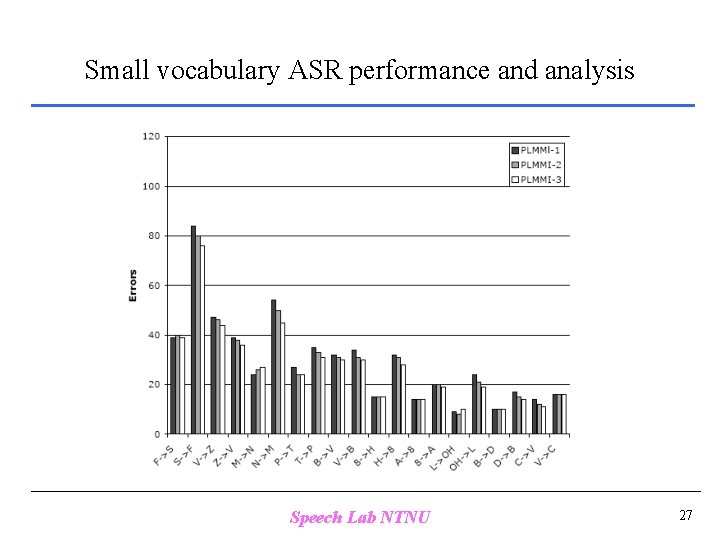

Small vocabulary ASR performance and analysis

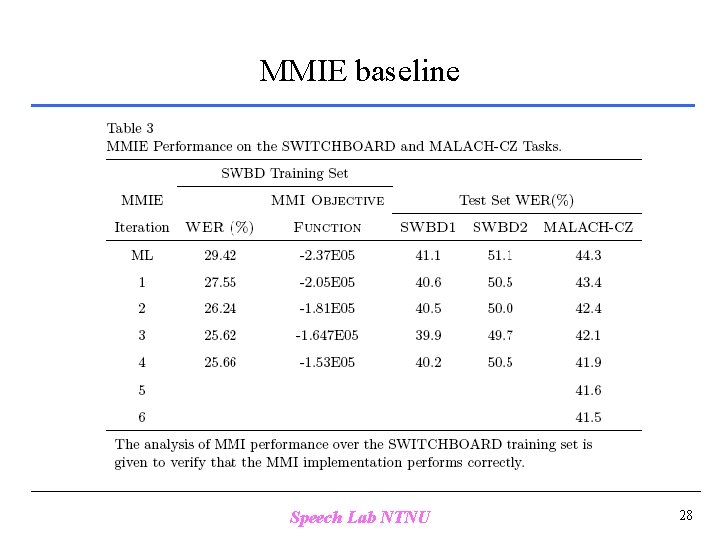

MMIE baseline

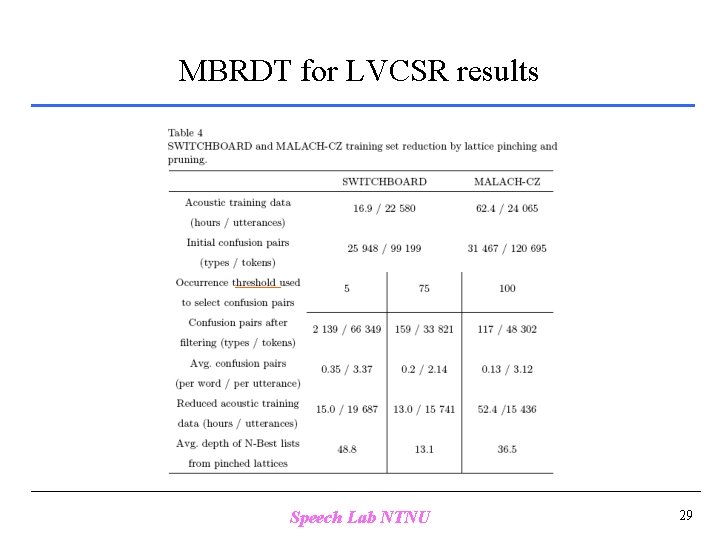

MBRDT for LVCSR results

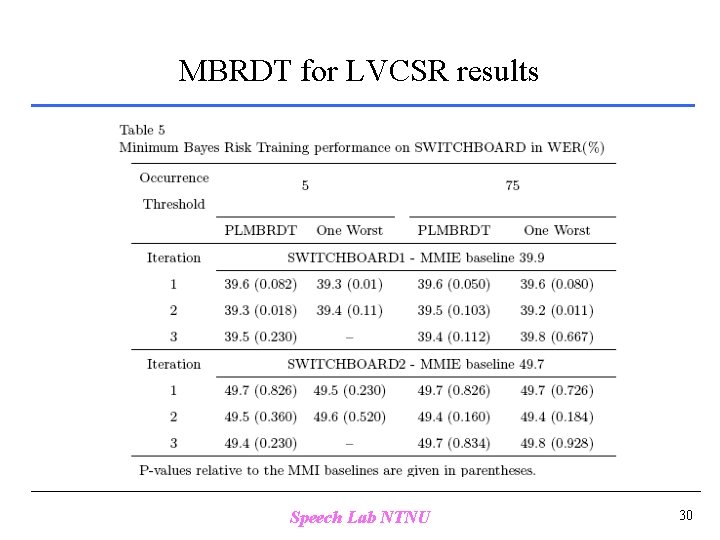

MBRDT for LVCSR results

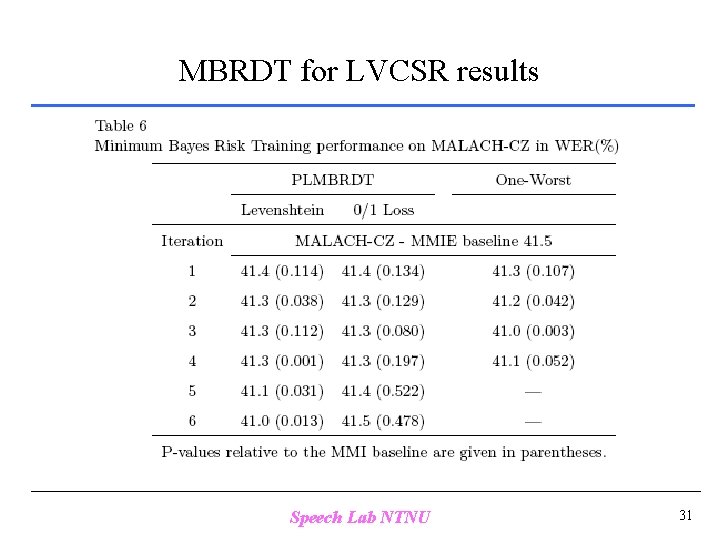


Conclusion

- We have demonstrated how techniques developed for MBR decoding make it possible to apply risk-based parameter estimation algorithms to LVCSR.
  - PLMBRDT, PLMMI, One-worst PLMBRDT
- Our approach starts with the original derivations of Kaiser et al., which show that the Extended Baum-Welch algorithm can be used to derive a parameter estimation procedure that reduces the expected loss over the training data.

- Slides: 32