Latent Semantic Analysis: A Gentle Tutorial Introduction

- Slides: 33

Latent Semantic Analysis: A Gentle Tutorial Introduction

Tutorial resources: http://cis.paisley.ac.uk/giro-ci0/GU_LSA_TUT

M. A. Girolami, University of Glasgow DCS Tutorial, 11/21/2020

Contents
- Latent Semantic Analysis
  - Motivation
  - Singular Value Decomposition
  - Term Document Matrix Structure
  - Query and Document Similarity in Latent Space
- Probabilistic Views on LSA
  - Factor Analytic Model
  - Generative Model Representation
  - Alternate Basis to the Principal Directions
- Latent Semantic & Document Clustering (In the Bar later)
  - Principal Direction Clustering
  - Hierarchic Clustering with LSA

Latent Semantic Analysis
- Motivation
  - Lexical matching at the term level is inaccurate (claimed)
  - Polysemy: words with a number of 'meanings'; term matching returns irrelevant documents, which impacts precision
  - Synonymy: a number of words with the same 'meaning'; term matching misses relevant documents, which impacts recall
- LSA assumes that there exists a LATENT structure in word usage, obscured by variability in word choice
- Analogous to the signal + additive noise model in signal processing

Latent Semantic Analysis
- Word usage is defined by term and document co-occurrence: a matrix structure
- Latent structure / semantics in word usage
- Clustering documents or words separately gives no shared space
- Two-mode factor analysis: a dyadic decomposition into a 'latent semantic' factor space, employing the Singular Value Decomposition
- Cubic computational scaling: reasonable!

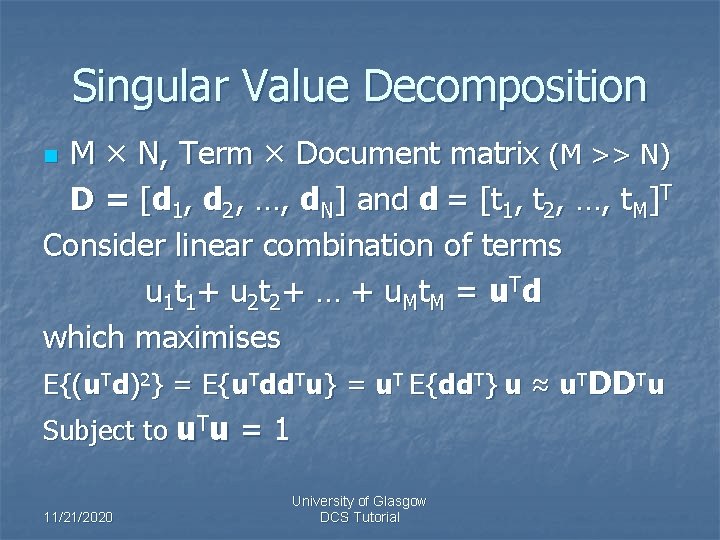

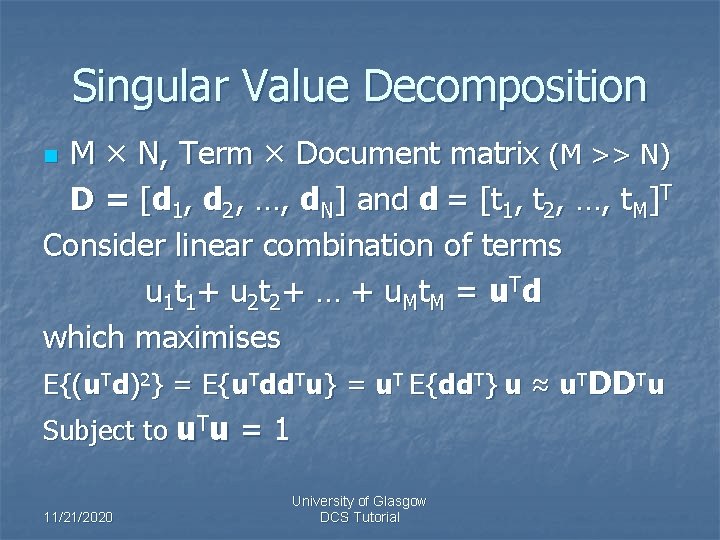

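Singular Value Decomposition
- Take an M × N Term × Document matrix (M >> N), $D = [\mathbf{d}_1, \mathbf{d}_2, \ldots, \mathbf{d}_N]$, where each document is $\mathbf{d} = [t_1, t_2, \ldots, t_M]^T$
- Consider the linear combination of terms $u_1 t_1 + u_2 t_2 + \cdots + u_M t_M = \mathbf{u}^T\mathbf{d}$ which maximises

$$E\{(\mathbf{u}^T\mathbf{d})^2\} = E\{\mathbf{u}^T\mathbf{d}\mathbf{d}^T\mathbf{u}\} = \mathbf{u}^T E\{\mathbf{d}\mathbf{d}^T\}\mathbf{u} \approx \tfrac{1}{N}\mathbf{u}^T DD^T\mathbf{u}$$

- subject to $\mathbf{u}^T\mathbf{u} = 1$ (the constant $1/N$ does not change the maximising $\mathbf{u}$, so it is dropped below)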
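As a sanity check on the objective above, the sketch below uses a hypothetical toy matrix with only M = 2 terms, so that unit vectors can be swept as u = (cos θ, sin θ). It confirms numerically that the maximum of $\mathbf{u}^T DD^T\mathbf{u}$ over unit vectors matches the largest eigenvalue of $DD^T$, computed in closed form for the 2 × 2 case. The matrix values are made up for illustration.

```python
import math

# Hypothetical toy term-document matrix: M = 2 terms (rows), N = 3 documents (columns).
D = [[3.0, 1.0, 2.0],
     [1.0, 2.0, 0.5]]

# A = D D^T, a symmetric 2 x 2 matrix.
A = [[sum(D[i][k] * D[j][k] for k in range(3)) for j in range(2)] for i in range(2)]

def quad_form(u):
    """Return u^T A u for a 2-vector u."""
    return sum(u[i] * A[i][j] * u[j] for i in range(2) for j in range(2))

# Sweep unit vectors u = (cos t, sin t); t in [0, pi) suffices because u and -u give the same value.
steps = 20000
best_val, best_u = -math.inf, None
for s in range(steps):
    t = math.pi * s / steps
    u = (math.cos(t), math.sin(t))
    val = quad_form(u)
    if val > best_val:
        best_val, best_u = val, u

# Closed-form largest eigenvalue of the symmetric matrix [[a, b], [b, c]].
a, b, c = A[0][0], A[0][1], A[1][1]
lam1 = (a + c) / 2 + math.sqrt(((a - c) / 2) ** 2 + b ** 2)

print(f"max of u^T DD^T u over the grid: {best_val:.4f}")
print(f"largest eigenvalue of DD^T:      {lam1:.4f}")
```

The grid search is only there to make the variational statement visible; the Lagrangian argument on the next slide shows why the maximiser is exactly the principal eigenvector.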

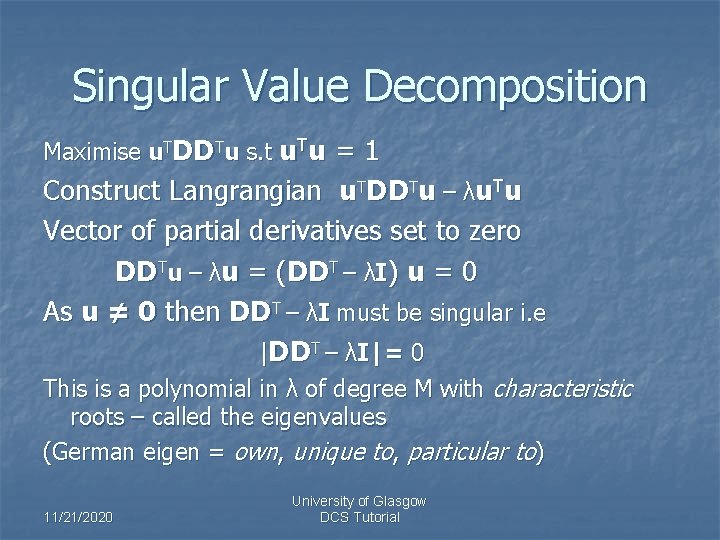

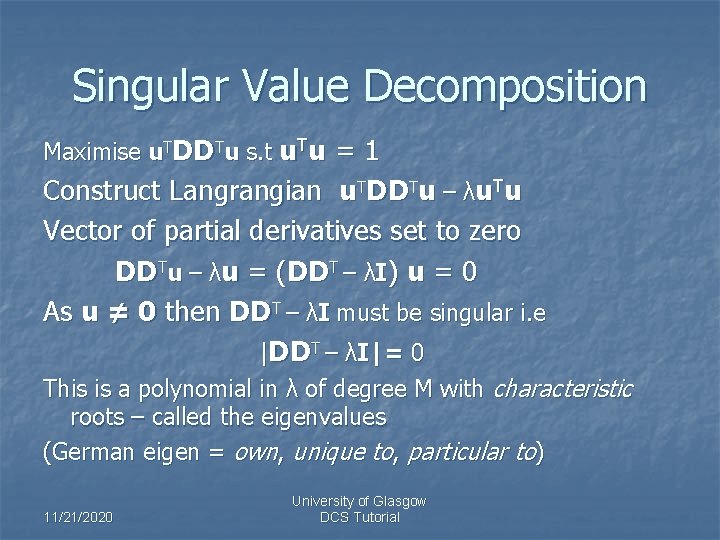

Singular Value Decomposition
- Maximise $\mathbf{u}^T DD^T\mathbf{u}$ s.t. $\mathbf{u}^T\mathbf{u} = 1$
- Construct the Lagrangian $\mathbf{u}^T DD^T\mathbf{u} - \lambda(\mathbf{u}^T\mathbf{u} - 1)$
- Setting the vector of partial derivatives to zero gives $DD^T\mathbf{u} - \lambda\mathbf{u} = (DD^T - \lambda I)\mathbf{u} = \mathbf{0}$
- As $\mathbf{u} \neq \mathbf{0}$, $DD^T - \lambda I$ must be singular, i.e. $|DD^T - \lambda I| = 0$
- This is a polynomial in λ of degree M whose characteristic roots are called the eigenvalues (German eigen = own, unique to, particular to)
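For a concrete instance of the characteristic polynomial, take the smallest non-trivial case M = 2 with a generic symmetric matrix $DD^T = \begin{pmatrix} a & b \\ b & c \end{pmatrix}$ (an illustration, not data from the tutorial). The determinant condition becomes a quadratic in λ whose two roots are the eigenvalues:

```latex
\left|DD^T - \lambda I\right|
  = \begin{vmatrix} a - \lambda & b \\ b & c - \lambda \end{vmatrix}
  = \lambda^2 - (a + c)\,\lambda + (ac - b^2) = 0
\quad\Longrightarrow\quad
\lambda_{1,2} = \frac{a + c}{2} \pm \sqrt{\left(\frac{a - c}{2}\right)^2 + b^2}
```

Because the quantity under the square root is a sum of squares, both roots are real, as expected for a symmetric matrix. For example, a = 14, b = 6, c = 5.25 gives λ1 ≈ 17.05 and λ2 ≈ 2.20.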

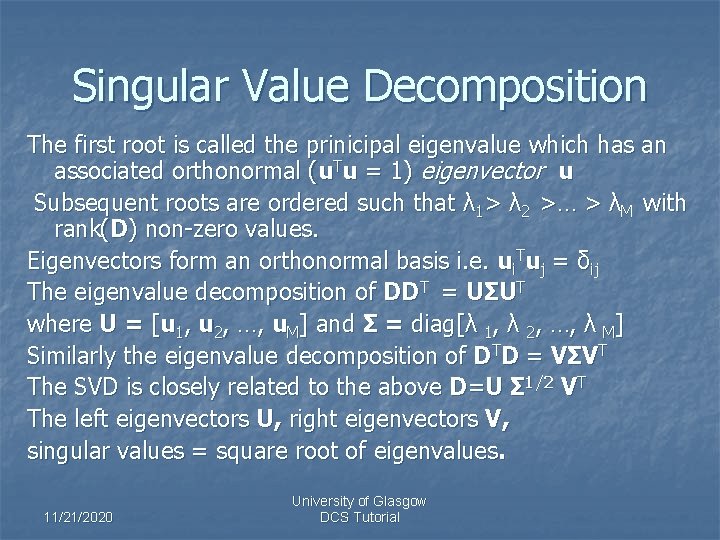

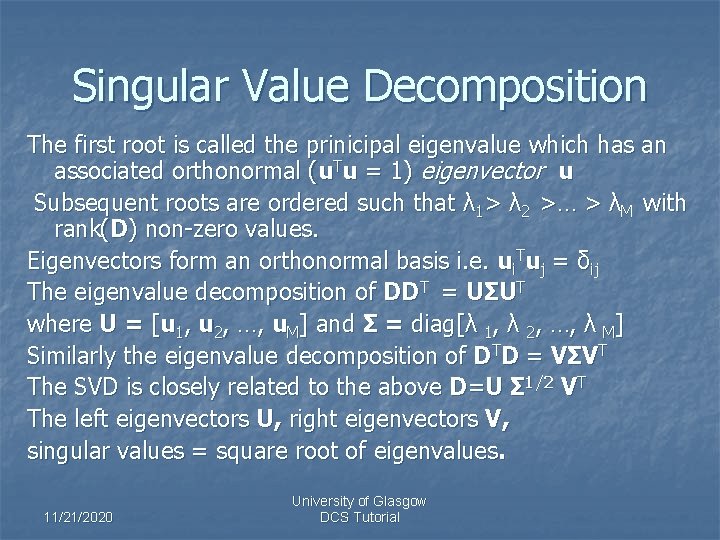

Singular Value Decomposition
- The first (largest) root is called the principal eigenvalue; it has an associated orthonormal ($\mathbf{u}^T\mathbf{u} = 1$) eigenvector $\mathbf{u}$
- Subsequent roots are ordered such that $\lambda_1 \geq \lambda_2 \geq \cdots \geq \lambda_M$, with rank(D) of them non-zero
- The eigenvectors form an orthonormal basis, i.e. $\mathbf{u}_i^T\mathbf{u}_j = \delta_{ij}$
- The eigenvalue decomposition is $DD^T = U\Sigma U^T$, where $U = [\mathbf{u}_1, \mathbf{u}_2, \ldots, \mathbf{u}_M]$ and $\Sigma = \mathrm{diag}[\lambda_1, \lambda_2, \ldots, \lambda_M]$
- Similarly, the eigenvalue decomposition of $D^TD$ is $V\Sigma V^T$ (with the same non-zero eigenvalues)
- The SVD is closely related to the above: $D = U\Sigma^{1/2}V^T$
- U holds the left singular vectors (eigenvectors of $DD^T$), V the right singular vectors (eigenvectors of $D^TD$), and the singular values are the square roots of the eigenvalues.

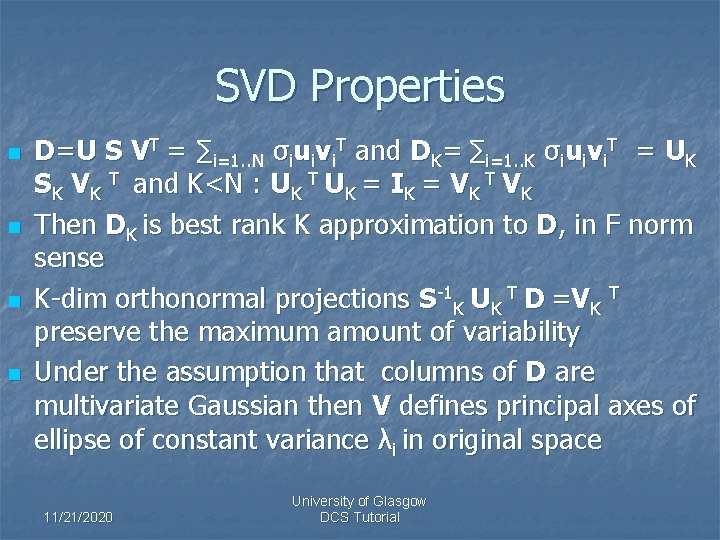

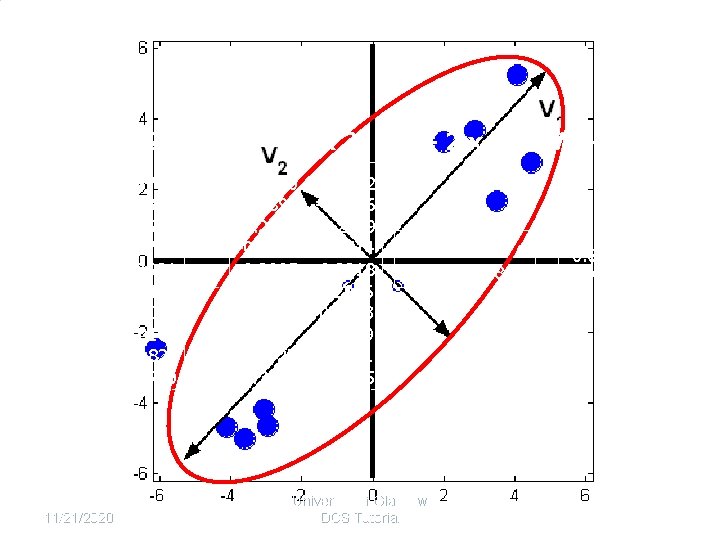

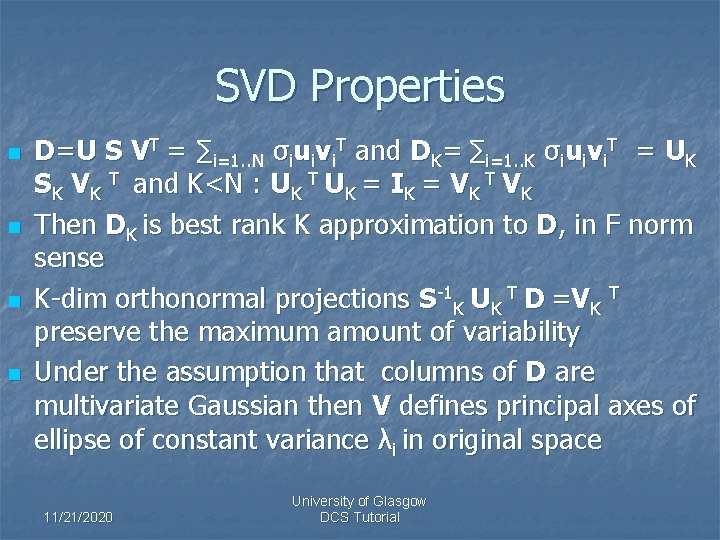

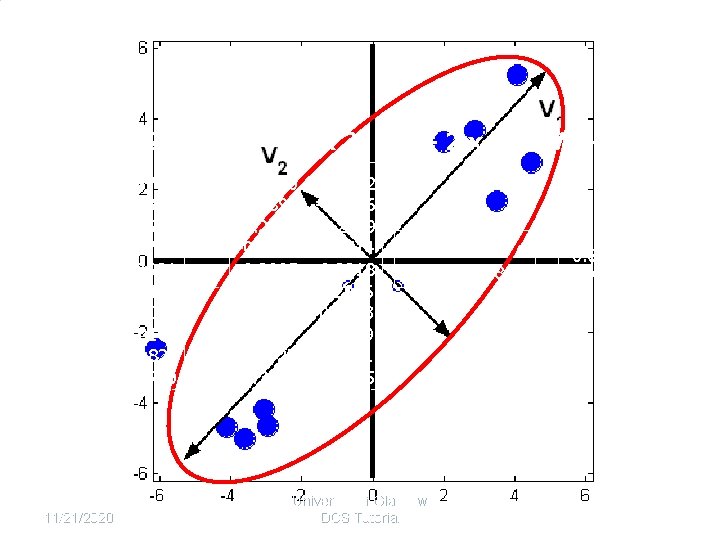

SVD Properties
- $D = USV^T = \sum_{i=1}^{N}\sigma_i\mathbf{u}_i\mathbf{v}_i^T$ and $D_K = \sum_{i=1}^{K}\sigma_i\mathbf{u}_i\mathbf{v}_i^T = U_K S_K V_K^T$, with $K < N$ and $U_K^TU_K = I_K = V_K^TV_K$
- Then $D_K$ is the best rank-K approximation to D in the Frobenius-norm sense
- The K-dimensional orthonormal projections $S_K^{-1}U_K^TD = V_K^T$ preserve the maximum amount of variability
- Under the assumption that the columns of D are multivariate Gaussian, U defines the principal axes of the ellipse of constant variance $\lambda_i$ in the original space
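The sketch below illustrates the rank-K truncation numerically. It assumes a hypothetical D built directly from hand-picked orthonormal vectors and singular values, so its SVD is known by construction and no SVD routine is needed. It forms $D_K$ for each K and checks that the squared Frobenius error equals the sum of the discarded $\sigma_i^2$, which is the error the best rank-K approximation attains.

```python
import math

def outer(u, v):
    return [[ui * vj for vj in v] for ui in u]

def add(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def scale(s, A):
    return [[s * a for a in row] for row in A]

def frob_sq(A):
    """Squared Frobenius norm: sum of squared entries."""
    return sum(a * a for row in A for a in row)

# Hypothetical SVD factors chosen by hand (M = 4 terms, N = 3 documents).
# U[i] is the i-th left singular vector, V[i] the i-th right singular vector.
U = [[0.5, 0.5, 0.5, 0.5],
     [0.5, -0.5, 0.5, -0.5],
     [0.5, 0.5, -0.5, -0.5]]
r3, r2, r6 = math.sqrt(3), math.sqrt(2), math.sqrt(6)
V = [[1 / r3, 1 / r3, 1 / r3],
     [1 / r2, -1 / r2, 0.0],
     [1 / r6, 1 / r6, -2 / r6]]
sigma = [5.0, 2.0, 0.5]  # decreasing singular values

def rank_k(k):
    """D_K = sum over the first k components of sigma_i u_i v_i^T (a 4 x 3 matrix)."""
    Dk = [[0.0] * 3 for _ in range(4)]
    for i in range(k):
        Dk = add(Dk, scale(sigma[i], outer(U[i], V[i])))
    return Dk

D = rank_k(3)  # the full matrix, since all three components are kept
errors = {}
for k in range(1, 4):
    diff = add(D, scale(-1.0, rank_k(k)))
    errors[k] = frob_sq(diff)
    print(f"K={k}: ||D - D_K||_F^2 = {errors[k]:.4f}, "
          f"sum of discarded sigma^2 = {sum(s * s for s in sigma[k:]):.4f}")
```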

SVD Example

| $D$ (10 × 2), column 1 | $D$, column 2 | $U$ (10 × 2), column 1 | $U$, column 2 |
|---:|---:|---:|---:|
| 2.9002 | 3.6790 | -0.2750 | -0.1242 |
| 4.0860 | 5.2366 | -0.3896 | -0.1846 |
| 1.9954 | 3.3687 | -0.2247 | -0.2369 |
| 3.5069 | 1.6748 | -0.2150 | 0.3514 |
| 4.4620 | 2.7684 | -0.3005 | 0.3318 |
| -2.9444 | -4.6447 | 0.3177 | 0.2906 |
| -4.1132 | -4.7043 | 0.3682 | 0.0833 |
| -3.6208 | -5.0181 | 0.3613 | 0.2319 |
| -3.0558 | -4.1821 | 0.3027 | 0.1861 |
| -6.1204 | -2.4790 | 0.3563 | -0.6935 |

$S$ (2 × 2) $= \mathrm{diag}(16.9491,\ 3.8491)$

$V^T$ (2 × 2): -0.6960 -0.7181 -0.6960
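The example's numbers can be checked with the relation $D^TD = V\Sigma V^T$ from the earlier slide. For a two-column D, the eigenvalues of the 2 × 2 matrix $D^TD$ have a closed form, and their square roots should reproduce the diagonal of S. The sketch uses the D values above; the closed-form route is a shortcut that only works because N = 2.

```python
import math

# The 10 x 2 matrix D from the example, one (column 1, column 2) pair per row.
D = [(2.9002, 3.6790), (4.0860, 5.2366), (1.9954, 3.3687), (3.5069, 1.6748),
     (4.4620, 2.7684), (-2.9444, -4.6447), (-4.1132, -4.7043), (-3.6208, -5.0181),
     (-3.0558, -4.1821), (-6.1204, -2.4790)]

# Entries of the symmetric 2 x 2 matrix D^T D = [[a, b], [b, c]].
a = sum(x * x for x, _ in D)
b = sum(x * y for x, y in D)
c = sum(y * y for _, y in D)

# Closed-form eigenvalues of a symmetric 2 x 2 matrix, largest first.
mid = (a + c) / 2
rad = math.sqrt(((a - c) / 2) ** 2 + b ** 2)
eigvals = [mid + rad, mid - rad]

# Singular values are the square roots of the eigenvalues of D^T D.
singular_values = [math.sqrt(lam) for lam in eigvals]

# Leading right singular vector: an eigenvector of [[a, b], [b, c]] for eigvals[0].
v1 = (b, eigvals[0] - a)
norm = math.hypot(*v1)
v1 = (v1[0] / norm, v1[1] / norm)

print("singular values:", [round(s, 4) for s in singular_values])
print("v1 (up to sign):", [round(x, 4) for x in v1])
```

The leading right singular vector comes out as roughly (0.6960, 0.7181), matching the first row of $V^T$ above up to an overall sign; singular vectors are only defined up to sign.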

SVD Properties
- There is an implicit assumption that the observed data distribution is multivariate Gaussian
- The SVD can be considered as a probabilistic generative model whose latent variables are Gaussian, which is sub-optimal in likelihood terms for non-Gaussian distributions
- Employed in signal processing for noise filtering: the dominant subspace contains the majority of the information-bearing part of the signal
- A similar rationale applies when applying SVD to LSI

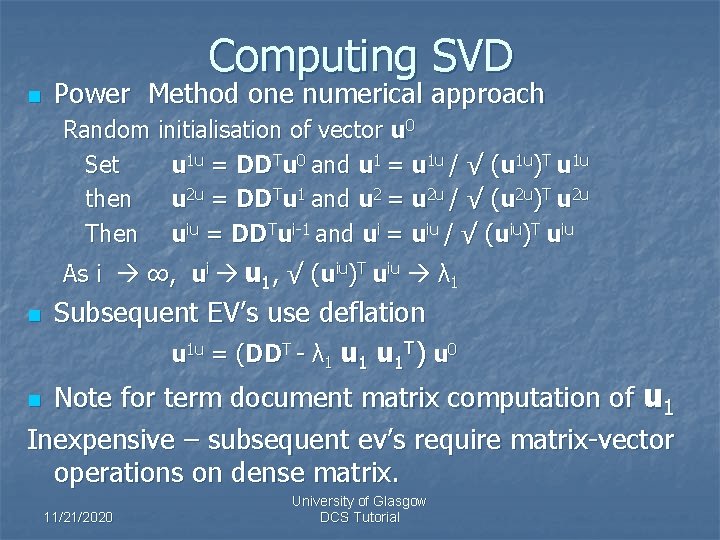

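Computing SVD
- The power method is one numerical approach (iterates are written $\mathbf{u}^{(i)}$ to distinguish them from the eigenvectors $\mathbf{u}_k$):
  - Randomly initialise the vector $\mathbf{u}^{(0)}$
  - Set $\tilde{\mathbf{u}}^{(1)} = DD^T\mathbf{u}^{(0)}$ and $\mathbf{u}^{(1)} = \tilde{\mathbf{u}}^{(1)} / \sqrt{(\tilde{\mathbf{u}}^{(1)})^T\tilde{\mathbf{u}}^{(1)}}$, then $\tilde{\mathbf{u}}^{(2)} = DD^T\mathbf{u}^{(1)}$ and $\mathbf{u}^{(2)} = \tilde{\mathbf{u}}^{(2)} / \sqrt{(\tilde{\mathbf{u}}^{(2)})^T\tilde{\mathbf{u}}^{(2)}}$
  - In general $\tilde{\mathbf{u}}^{(i)} = DD^T\mathbf{u}^{(i-1)}$ and $\mathbf{u}^{(i)} = \tilde{\mathbf{u}}^{(i)} / \sqrt{(\tilde{\mathbf{u}}^{(i)})^T\tilde{\mathbf{u}}^{(i)}}$
  - As $i \to \infty$, $\mathbf{u}^{(i)} \to \mathbf{u}_1$ and $\sqrt{(\tilde{\mathbf{u}}^{(i)})^T\tilde{\mathbf{u}}^{(i)}} \to \lambda_1$, provided $\lambda_1 > \lambda_2$ and $\mathbf{u}^{(0)}$ is not orthogonal to $\mathbf{u}_1$
- Subsequent eigenvectors use deflation: $\tilde{\mathbf{u}}^{(1)} = (DD^T - \lambda_1\mathbf{u}_1\mathbf{u}_1^T)\mathbf{u}^{(0)}$
- Note that for a (sparse) term-document matrix the computation of $\mathbf{u}_1$ is inexpensive; subsequent eigenvectors require matrix-vector operations on a dense (deflated) matrix.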
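A minimal pure-Python sketch of the power method with deflation as described above. It works on a small hypothetical symmetric matrix A standing in for $DD^T$ (its eigenvalues are 4, 2 and 1), uses a fixed iteration count rather than a convergence test, and assumes the leading eigenvalues are well separated; power iteration converges slowly, or not at all, when they are close or equal.

```python
import math
import random

def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def norm(x):
    return math.sqrt(sum(xi * xi for xi in x))

def power_method(A, iters=500, seed=0):
    """Return (lambda, u) for the dominant eigenpair of a symmetric PSD matrix A."""
    rng = random.Random(seed)
    u = [rng.gauss(0.0, 1.0) for _ in range(len(A))]  # random initialisation u^(0)
    u = [x / norm(u) for x in u]
    lam = 0.0
    for _ in range(iters):
        w = matvec(A, u)  # unnormalised iterate A u^(i-1)
        lam = norm(w)     # sqrt(w^T w), which tends to lambda_1
        u = [x / lam for x in w]
    return lam, u

def deflate(A, lam, u):
    """A - lam * u u^T removes the found eigenpair, exposing the next one."""
    n = len(A)
    return [[A[i][j] - lam * u[i] * u[j] for j in range(n)] for i in range(n)]

# Hypothetical symmetric matrix standing in for D D^T (eigenvalues 4, 2 and 1).
A = [[2.0, -1.0, 0.0],
     [-1.0, 3.0, -1.0],
     [0.0, -1.0, 2.0]]

eigenpairs = []
B = A
for _ in range(3):
    lam, u = power_method(B)
    eigenpairs.append((lam, u))
    B = deflate(B, lam, u)  # explicit deflation yields a dense matrix

for lam, u in eigenpairs:
    print(f"lambda = {lam:.6f}, u = {[round(x, 4) for x in u]}")
```

Deflating explicitly, as done here, turns a sparse matrix into a dense one; that is the cost the slide points to for eigenvectors after the first.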

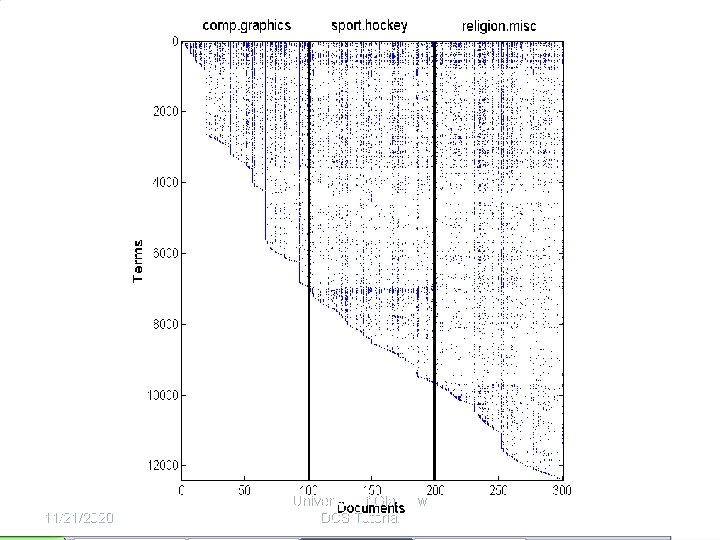

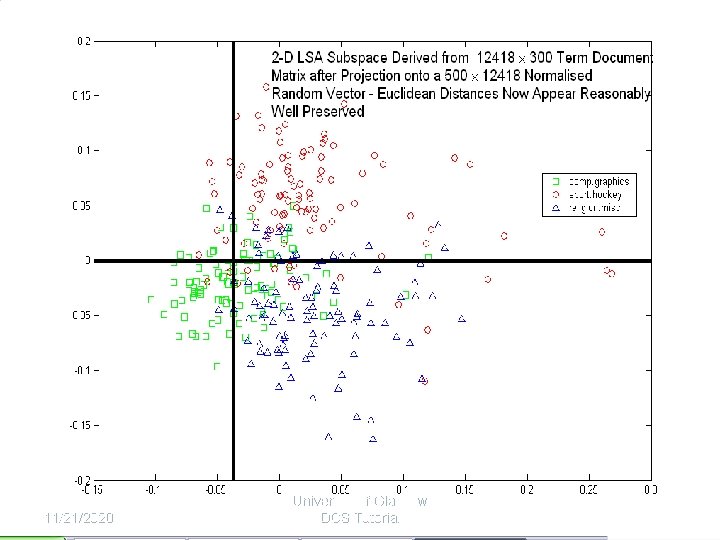

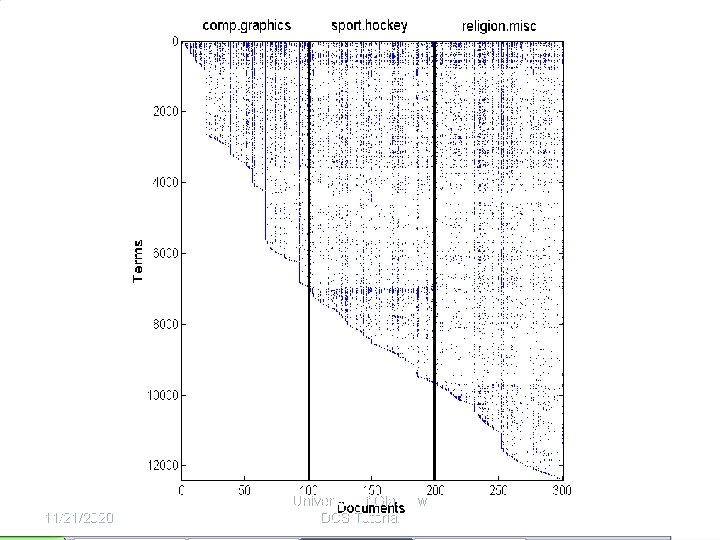

Term Document Matrix Structure
- Create an artificially heterogeneous collection: 100 documents from each of 3 distinct newsgroups
- Indexed using a standard stop word list
- 12418 distinct terms
- Term × Document matrix (12418 × 300) with 8% fill of the sparse matrix
- Sort terms by rank: structure apparent
- Matrix of cosine similarity between documents: clear structure apparent
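To make the construction concrete at toy scale, the sketch below builds a term × document count matrix from four hypothetical one-line 'documents', removes words from a tiny illustrative stop list (not the standard list the slide refers to), and computes the document-by-document cosine similarity matrix. Documents on the same topic come out more similar, giving the kind of block structure described above.

```python
import math

# Hypothetical one-line documents: two about hockey, two about graphics.
docs = [
    "the team won the hockey game",
    "hockey game tonight the team",
    "ftp the image files in graphics format",
    "graphics image format files",
]
stop_words = {"the", "in", "a", "of"}  # tiny illustrative stop list

tokenised = [[w for w in d.split() if w not in stop_words] for d in docs]
terms = sorted({w for doc in tokenised for w in doc})

# Term x document matrix of raw counts: rows are terms, columns are documents.
D = [[doc.count(t) for doc in tokenised] for t in terms]

def column(j):
    return [D[i][j] for i in range(len(terms))]

def cosine(x, y):
    dot = sum(a * b for a, b in zip(x, y))
    return dot / (math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in y)))

n_docs = len(docs)
S = [[cosine(column(i), column(j)) for j in range(n_docs)] for i in range(n_docs)]
for row in S:
    print(" ".join(f"{s:.2f}" for s in row))
```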

Term Document Matrix Structure

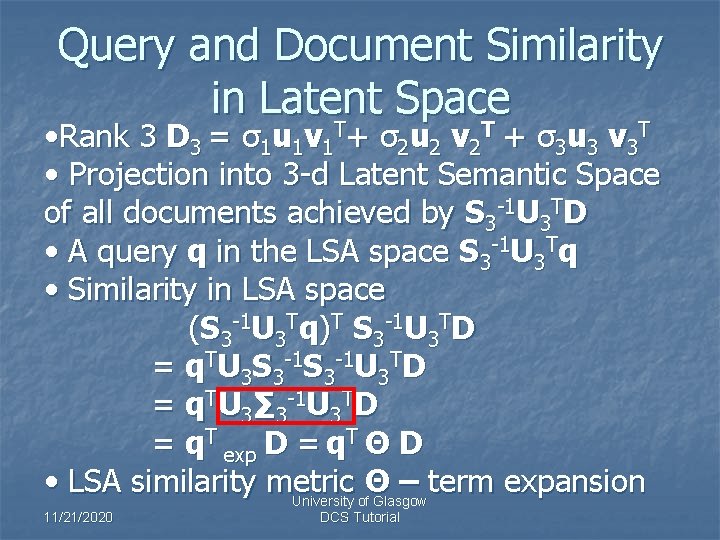

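Query and Document Similarity in Latent Space
- Rank 3: $D_3 = \sigma_1\mathbf{u}_1\mathbf{v}_1^T + \sigma_2\mathbf{u}_2\mathbf{v}_2^T + \sigma_3\mathbf{u}_3\mathbf{v}_3^T$
- Projection of all documents into the 3-d latent semantic space is achieved by $S_3^{-1}U_3^TD$
- A query $\mathbf{q}$ in the LSA space is $S_3^{-1}U_3^T\mathbf{q}$
- Similarity in LSA space (using $\Sigma_3 = S_3^2$):

$$(S_3^{-1}U_3^T\mathbf{q})^T S_3^{-1}U_3^TD = \mathbf{q}^TU_3S_3^{-2}U_3^TD = \mathbf{q}^TU_3\Sigma_3^{-1}U_3^TD = \mathbf{q}_{\mathrm{exp}}^TD = \mathbf{q}^T\Theta D$$

- LSA similarity metric $\Theta = U_3\Sigma_3^{-1}U_3^T$: term expansion, with expanded query $\mathbf{q}_{\mathrm{exp}} = \Theta\mathbf{q}$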
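The chain of equalities above can be checked numerically. The sketch assumes hypothetical rank-3 factors (orthonormal vectors $\mathbf{u}_k$ in a 4-term space and singular values $\sigma_k$), an arbitrary query q and a single document column d. It compares the inner product of the projected query and document with $\mathbf{q}^T\Theta\mathbf{d}$, where $\Theta = U_3\Sigma_3^{-1}U_3^T$ and $\Sigma_3 = S_3^2$. The identity is pure algebra, so it holds for any such factors.

```python
def dot(x, y):
    return sum(a * b for a, b in zip(x, y))

# Hypothetical rank-3 factors: U3[k] is the k-th left singular vector (orthonormal in R^4).
U3 = [[0.5, 0.5, 0.5, 0.5],
      [0.5, -0.5, 0.5, -0.5],
      [0.5, 0.5, -0.5, -0.5]]
s = [4.0, 2.0, 1.0]         # sigma_1..sigma_3, the diagonal of S3

q = [1.0, 0.0, 2.0, 0.0]    # query term vector
d = [0.0, 3.0, 1.0, 1.0]    # one document column of D

def project(x):
    """S3^{-1} U3^T x: coordinates of x in the 3-d latent space."""
    return [dot(u, x) / sk for u, sk in zip(U3, s)]

# Similarity computed in the latent space.
sim_latent = dot(project(q), project(d))

# Theta = U3 Sigma3^{-1} U3^T with Sigma3 = S3^2: a 4 x 4 term-expansion matrix.
Theta = [[sum(U3[k][i] * U3[k][j] / s[k] ** 2 for k in range(3)) for j in range(4)]
         for i in range(4)]
q_exp = [dot(row, q) for row in Theta]  # expanded query Theta q (Theta is symmetric)
sim_expanded = dot(q_exp, d)

print(sim_latent, sim_expanded)
```

Because $\Theta$ depends only on the term space, it can be applied once to expand a query, after which matching is an ordinary inner product against the original term vectors.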

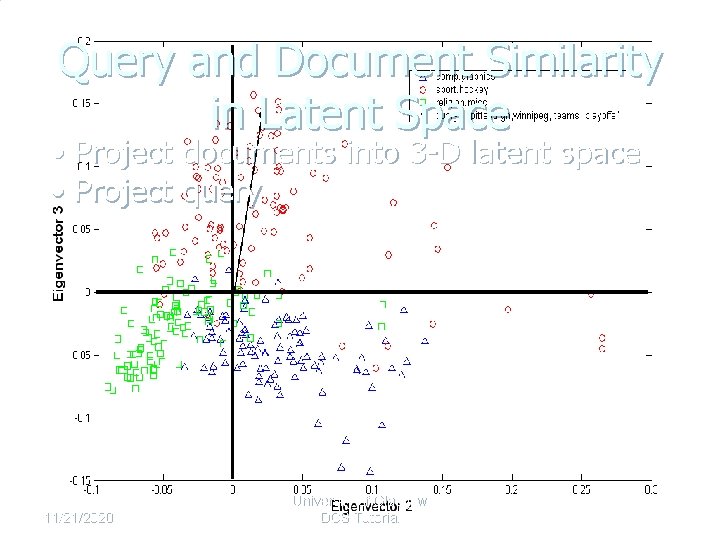

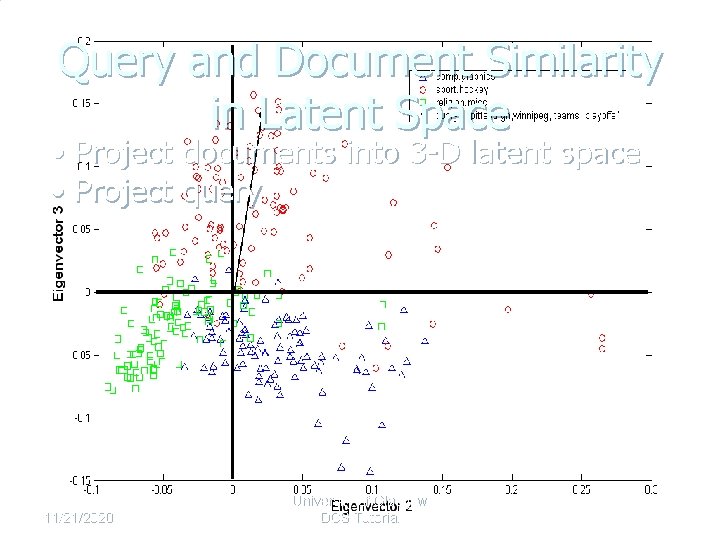

Query and Document Similarity in Latent Space
- Project documents into the 3-D latent space
- Project the query

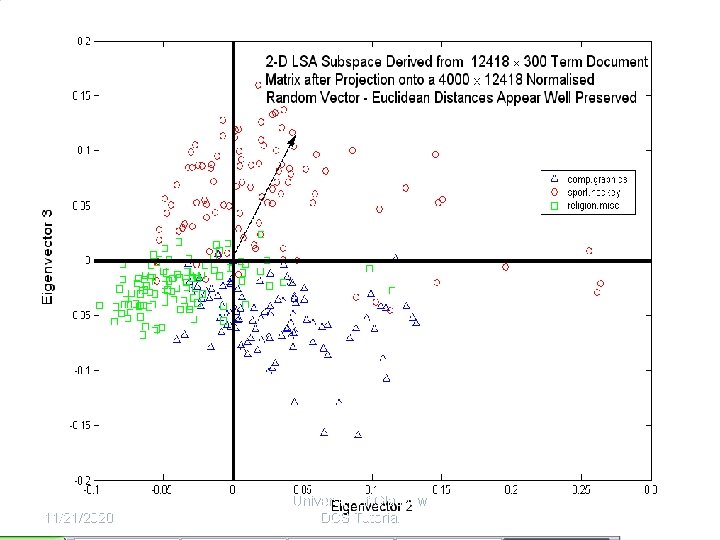

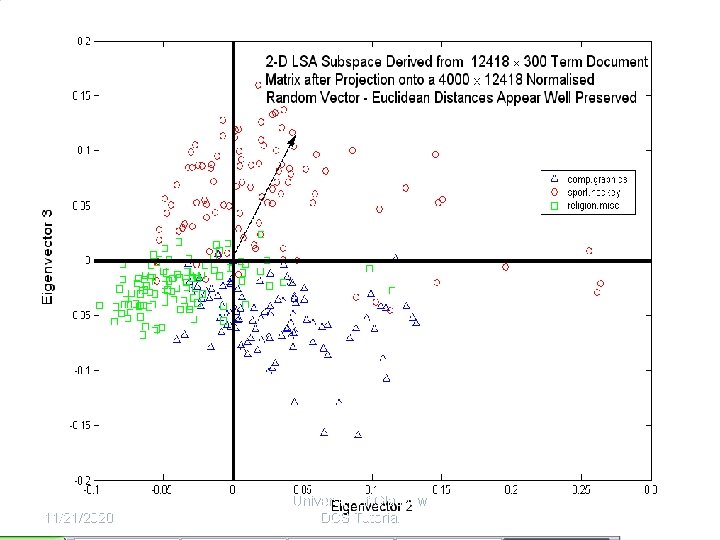

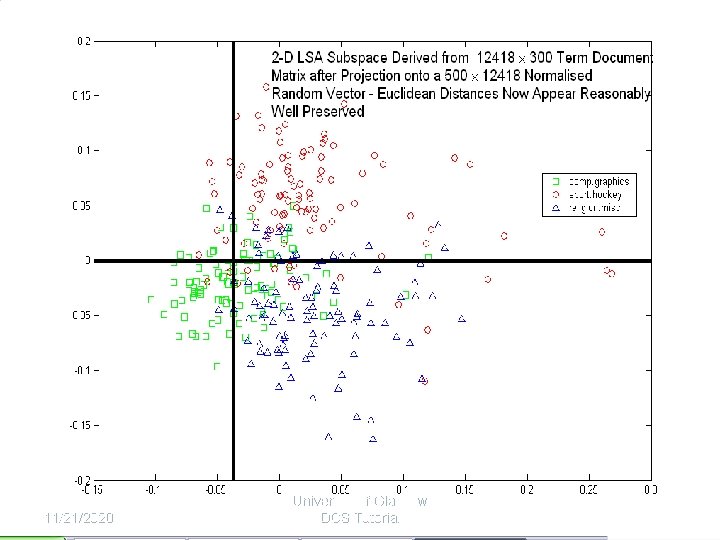

Random Projections
- Important theoretical result:
  - A random projection from an M-dim to an L-dim space, where L << M, preserves Euclidean distances and angles (norms and inner products) with high probability
  - LSA can then be performed using SVD on the reduced-dimensional L × N matrix (less costly)
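A small experiment illustrating the random-projection result. It assumes a Gaussian random matrix R with i.i.d. N(0, 1/L) entries, which is one common construction; the slide does not specify which random projection is meant. It compares the Euclidean distance between two hypothetical M-dimensional vectors before and after projection to L dimensions.

```python
import math
import random

rng = random.Random(42)
M, L = 1000, 300

# Two hypothetical high-dimensional (e.g. term-space) vectors.
x = [rng.random() for _ in range(M)]
y = [rng.random() for _ in range(M)]

# L x M projection matrix with entries ~ N(0, 1/L), so squared norms are preserved in expectation.
R = [[rng.gauss(0.0, 1.0 / math.sqrt(L)) for _ in range(M)] for _ in range(L)]

def project(v):
    return [sum(r * vi for r, vi in zip(row, v)) for row in R]

def dist(a, b):
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

original = dist(x, y)
projected = dist(project(x), project(y))
ratio = projected / original
print(f"original distance {original:.3f}, projected {projected:.3f}, ratio {ratio:.3f}")
```

With L much smaller than M, an SVD of the L × N projected matrix RD is cheaper than one of the M × N original, which is the saving the slide has in mind.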


LSA Performance
- LSA consistently improves recall on standard test collections (precision/recall generally improved)
- Variable performance on larger TREC collections
- The dimensionality of the latent space is a magic number: 300 to 1000 seems to work fine, but there is no satisfactory way of assessing its value
- Computational cost is, at present, prohibitive

Probabilistic Views on LSA
- Factor Analytic Model
- Generative Model Representation
- Alternate Basis to the Principal Directions

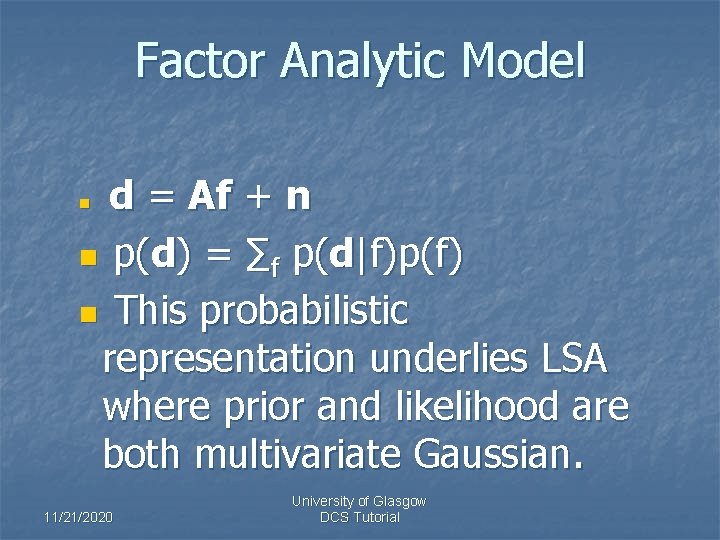

Factor Analytic Model
- $\mathbf{d} = A\mathbf{f} + \mathbf{n}$
- $p(\mathbf{d}) = \int p(\mathbf{d}|\mathbf{f})\,p(\mathbf{f})\,d\mathbf{f}$
- This probabilistic representation underlies LSA, where the prior and likelihood are both multivariate Gaussian.
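To see what the Gaussian factor model implies, the sketch samples $\mathbf{d} = A\mathbf{f} + \mathbf{n}$ with one latent factor in a two-term space. It assumes, for illustration, $\mathbf{f} \sim N(0, 1)$, isotropic noise $\mathbf{n} \sim N(0, \sigma^2 I)$ and a hand-picked loading vector A. Under these assumptions $p(\mathbf{d})$ is Gaussian with covariance $AA^T + \sigma^2 I$, which the empirical covariance of the samples should approach.

```python
import random

rng = random.Random(0)
A = [2.0, 1.0]      # hypothetical loadings: M = 2 terms, K = 1 latent factor
noise_sd = 0.5      # sigma of the isotropic Gaussian noise n
n_samples = 50000

samples = []
for _ in range(n_samples):
    f = rng.gauss(0.0, 1.0)                                # latent factor f ~ N(0, 1)
    d = [a * f + rng.gauss(0.0, noise_sd) for a in A]      # d = A f + n
    samples.append(d)

# The model has zero mean, so the second moment about zero estimates the covariance.
emp = [[sum(d[i] * d[j] for d in samples) / n_samples for j in range(2)] for i in range(2)]

# Model covariance A A^T + sigma^2 I.
model = [[A[i] * A[j] + (noise_sd ** 2 if i == j else 0.0) for j in range(2)] for i in range(2)]

print("empirical:", [[round(v, 3) for v in row] for row in emp])
print("model:    ", model)
```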

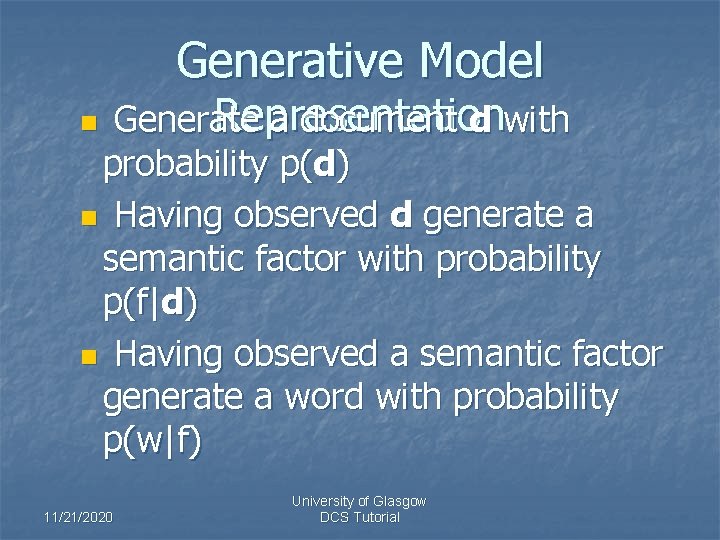

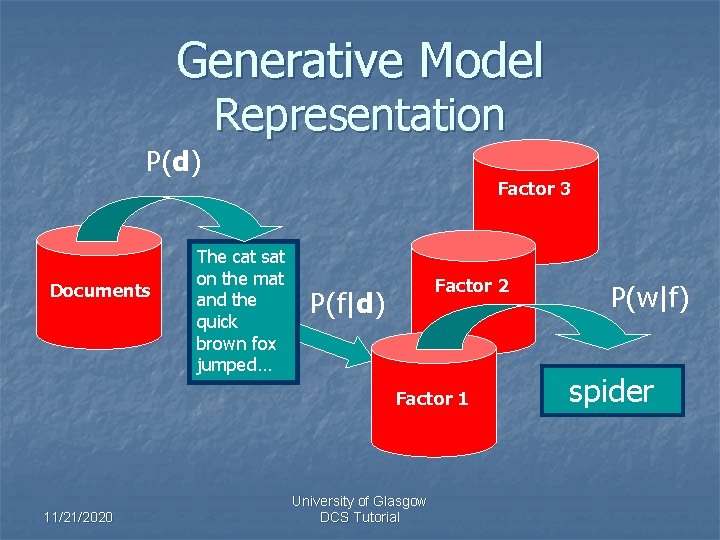

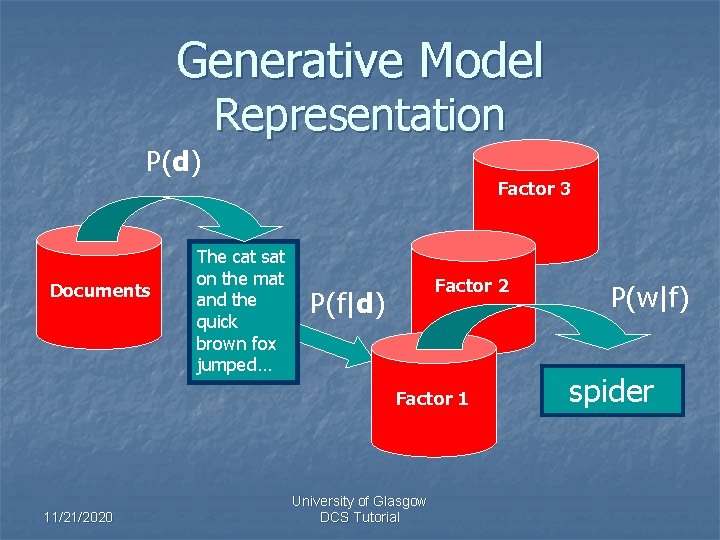

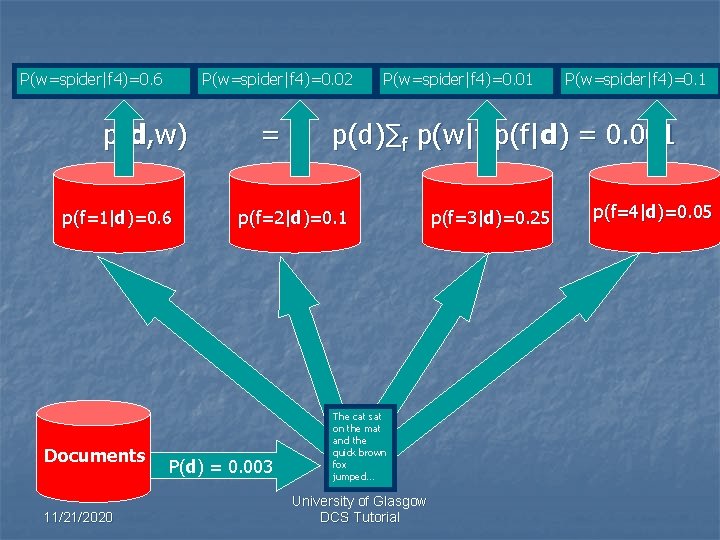

Generative Model Representation
- Generate a document d with probability p(d)
- Having observed d, generate a semantic factor with probability p(f|d)
- Having observed a semantic factor, generate a word with probability p(w|f)

Generative Model Representation

[Figure: documents (e.g. "The cat sat on the mat and the quick brown fox jumped…") are generated with P(d); semantic factors (Factor 1, Factor 2, Factor 3) with P(f|d); words (e.g. "spider") with P(w|f).]

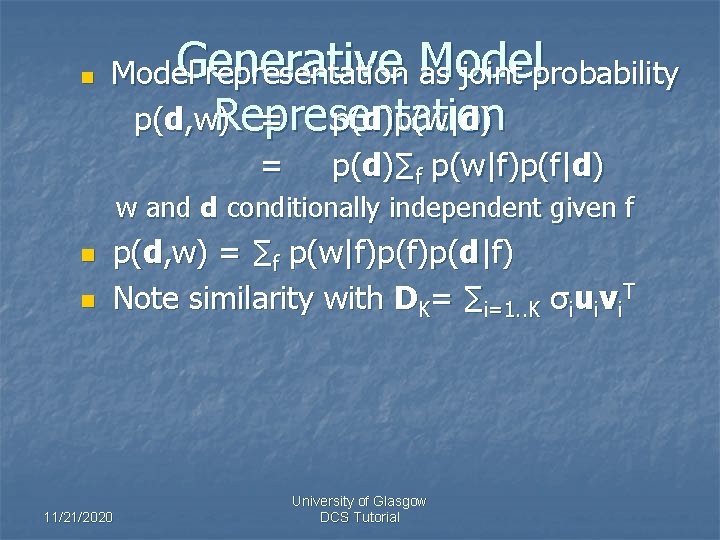

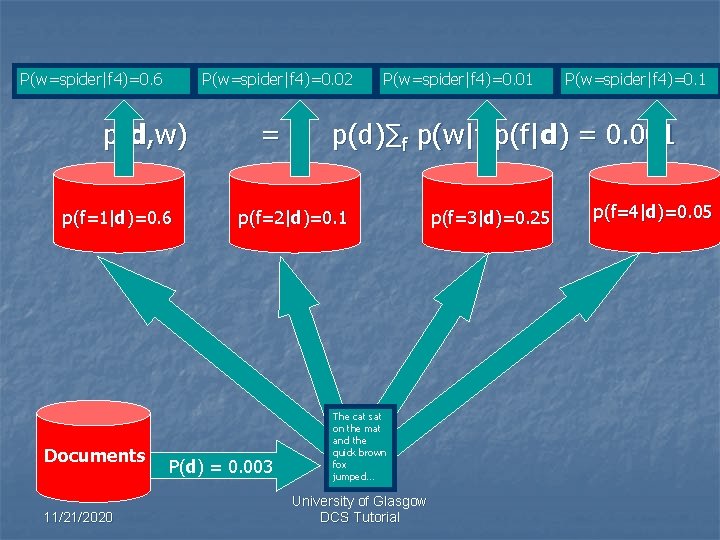

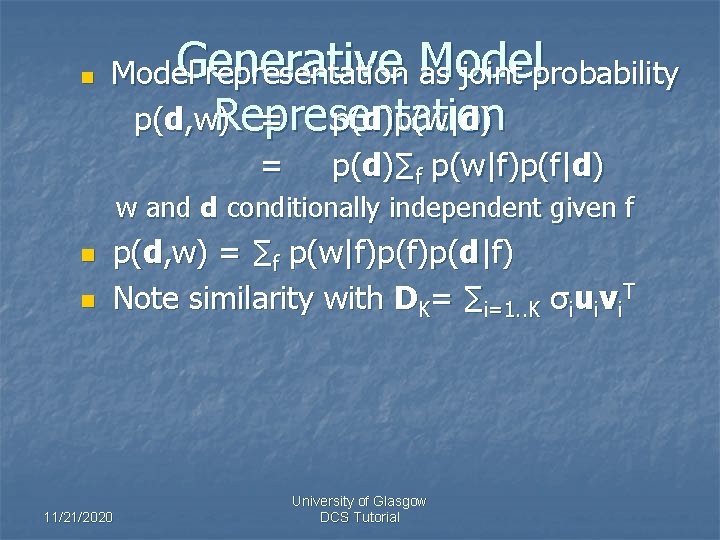

Generative Model Representation
- Model as the joint probability $p(d, w) = p(d)p(w|d) = p(d)\sum_f p(w|f)p(f|d)$
- w and d are conditionally independent given f, so equivalently $p(d, w) = \sum_f p(f)p(w|f)p(d|f)$
- Note the similarity with $D_K = \sum_{i=1}^{K}\sigma_i\mathbf{u}_i\mathbf{v}_i^T$
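The two forms of the joint probability are related by Bayes' rule: given the symmetric parameters $p(f)$, $p(d|f)$ and $p(w|f)$, one recovers $p(d) = \sum_f p(f)p(d|f)$ and $p(f|d) = p(f)p(d|f)/p(d)$. The sketch checks numerically, with small hypothetical distributions (2 factors, 3 documents, 3 words), that both forms give the same $p(d, w)$ table.

```python
# Hypothetical symmetric-form parameters: 2 factors, 3 documents, 3 words.
p_f = [0.4, 0.6]
p_d_given_f = [[0.5, 0.3, 0.2],   # p(d | f=0)
               [0.1, 0.3, 0.6]]   # p(d | f=1)
p_w_given_f = [[0.7, 0.2, 0.1],   # p(w | f=0)
               [0.1, 0.3, 0.6]]   # p(w | f=1)
F, N, W = 2, 3, 3

# Symmetric form: p(d, w) = sum_f p(f) p(d|f) p(w|f).
joint_sym = [[sum(p_f[f] * p_d_given_f[f][d] * p_w_given_f[f][w] for f in range(F))
              for w in range(W)] for d in range(N)]

# Bayes' rule gives the asymmetric parameters p(d) and p(f|d).
p_d = [sum(p_f[f] * p_d_given_f[f][d] for f in range(F)) for d in range(N)]
p_f_given_d = [[p_f[f] * p_d_given_f[f][d] / p_d[d] for f in range(F)] for d in range(N)]

# Asymmetric form: p(d, w) = p(d) sum_f p(w|f) p(f|d).
joint_asym = [[p_d[d] * sum(p_w_given_f[f][w] * p_f_given_d[d][f] for f in range(F))
               for w in range(W)] for d in range(N)]

for d in range(N):
    print([round(x, 4) for x in joint_sym[d]], [round(x, 4) for x in joint_asym[d]])
```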

[Figure: worked example for one document ("The cat sat on the mat and the quick brown fox jumped…") with P(d) = 0.003; p(f=1|d) = 0.6, p(f=2|d) = 0.1, p(f=3|d) = 0.25, p(f=4|d) = 0.05; P(w=spider|f) = 0.6, 0.02, 0.01 and 0.1 for factors 1 to 4; giving $p(d, w) = p(d)\sum_f p(w|f)p(f|d) \approx 0.001$.]
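The arithmetic behind the worked example, as a few lines of Python. Pairing the four P(w=spider|f) values with factors 1 to 4 follows the order in which they appear in the figure; that ordering is an assumption, and with it the sum reproduces the figure's p(d, w) ≈ 0.001.

```python
p_d = 0.003
p_f_given_d = [0.6, 0.1, 0.25, 0.05]        # p(f=1..4 | d); sums to 1
p_spider_given_f = [0.6, 0.02, 0.01, 0.1]   # P(w=spider | f=1..4), order assumed

# p(w|d) = sum_f p(w|f) p(f|d), then p(d, w) = p(d) p(w|d).
p_w_given_d = sum(pw * pf for pw, pf in zip(p_spider_given_f, p_f_given_d))
p_dw = p_d * p_w_given_d
print(f"p(spider|d) = {p_w_given_d:.4f}, p(d, spider) = {p_dw:.7f}")
```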

Generative Model Representation
- Multinomial: counts in successive trials, more appropriate than Gaussian
- Note that the Term × Document matrix is a sample from the true distribution $p_t(d, w)$
- $\sum_{ij} D(i, j)\log p(d_j, w_i)$ is, up to sign and scale, the cross-entropy between model and realisation: maximise the likelihood that the model $p(d_j, w_i)$ generated the realisation D, subject to normalisation conditions on $p(f|d)$ and $p(w|f)$

Generative Model Representation
- Estimation of p(f|d) and p(w|f) requires the use of a standard EM algorithm
- Expectation Maximisation:
  - General iterative method for ML parameter estimation
  - Ideal for 'missing variable' problems
- Estimate p(f|d, w) using the current estimates of p(w|f) and p(f|d)
- Estimate new values of p(w|f) and p(f|d) using the current estimate of p(f|d, w)
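A compact sketch of the EM iterations just described, for the asymmetric model $p(w|d) = \sum_f p(w|f)p(f|d)$ fitted to a hypothetical term × document count matrix. The random initialisation, fixed iteration count and absence of any tempering or smoothing are simplifying assumptions; the E- and M-step updates are the standard ones for this model. EM guarantees that the log-likelihood $\sum_{ij} D(i,j)\log p(w_i|d_j)$ never decreases from one iteration to the next.

```python
import math
import random

def plsa_em(D, n_factors, iters=50, seed=0):
    """Fit p(w|f) and p(f|d) to a term x document count matrix D (list of rows) by EM.

    Assumes every document contains at least one word. Returns (p_w_f, p_f_d, lls)
    with p_w_f[f][i] = p(w_i|f), p_f_d[j][f] = p(f|d_j), lls = log-likelihood per iteration.
    """
    rng = random.Random(seed)
    M, N, K = len(D), len(D[0]), n_factors

    def normalise(v):
        s = sum(v)
        return [x / s for x in v]

    # Random positive initialisation of both conditional distributions.
    p_w_f = [normalise([rng.random() + 0.1 for _ in range(M)]) for _ in range(K)]
    p_f_d = [normalise([rng.random() + 0.1 for _ in range(K)]) for _ in range(N)]
    lls = []

    for _ in range(iters):
        new_w_f = [[0.0] * M for _ in range(K)]
        new_f_d = [[0.0] * K for _ in range(N)]
        ll = 0.0
        for j in range(N):
            for i in range(M):
                n = D[i][j]
                if n == 0:
                    continue
                # E-step: p(f|d_j, w_i) is proportional to p(w_i|f) p(f|d_j).
                joint = [p_w_f[f][i] * p_f_d[j][f] for f in range(K)]
                total = sum(joint)              # p(w_i|d_j) under current parameters
                ll += n * math.log(total)
                for f in range(K):
                    r = n * joint[f] / total    # expected count assigned to factor f
                    new_w_f[f][i] += r
                    new_f_d[j][f] += r
        lls.append(ll)
        # M-step: renormalise the expected counts into new distributions.
        p_w_f = [normalise(row) for row in new_w_f]
        p_f_d = [normalise(row) for row in new_f_d]
    return p_w_f, p_f_d, lls

# Hypothetical 6-term x 4-document counts with two fairly obvious topics.
D = [[4, 3, 0, 0],
     [2, 3, 0, 1],
     [3, 2, 1, 0],
     [0, 0, 5, 3],
     [0, 1, 2, 4],
     [1, 0, 3, 3]]
p_w_f, p_f_d, lls = plsa_em(D, n_factors=2)
print("log-likelihood, first and last:", round(lls[0], 3), round(lls[-1], 3))
for j, post in enumerate(p_f_d):
    print(f"p(f|d{j}) =", [round(p, 3) for p in post])
```

Different seeds can converge to different local maxima, which is one source of the result variability noted later in the tutorial.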

Generative Model Representation
Once the parameters are estimated:
- p(f|d) gives the posterior probability that semantic factor 'f' is associated with d
- p(w|f) gives the probability of word 'w' being generated from semantic factor 'f'
- Nice clear interpretation, unlike the U and V terms in the SVD
- 'Sparse' representation, unlike the SVD

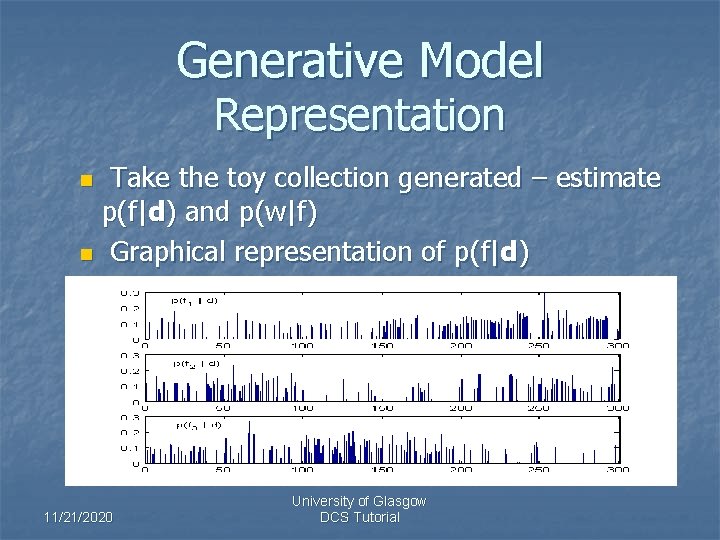

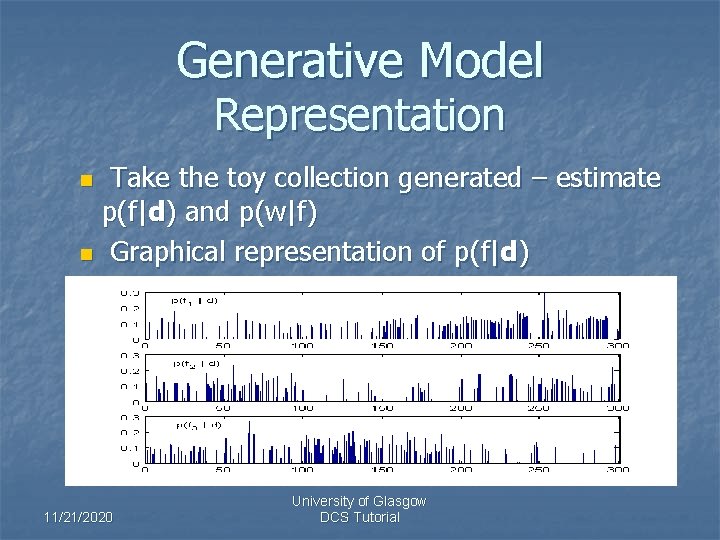

Generative Model Representation
- Take the toy collection generated earlier and estimate p(f|d) and p(w|f)
- Graphical representation of p(f|d)

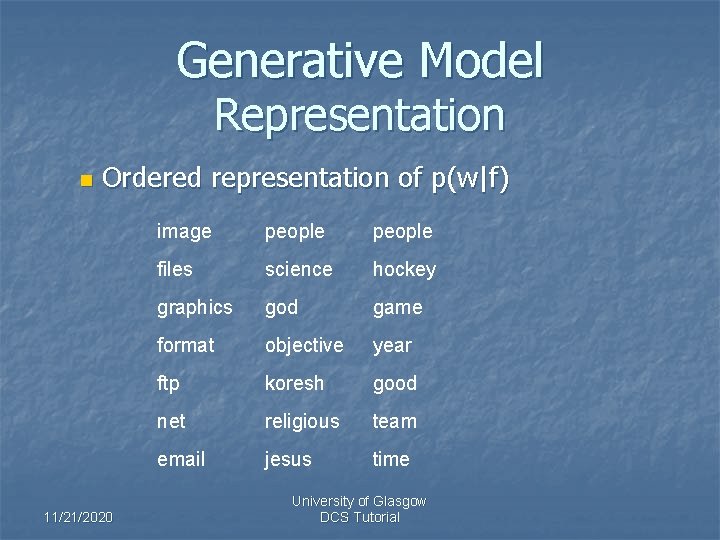

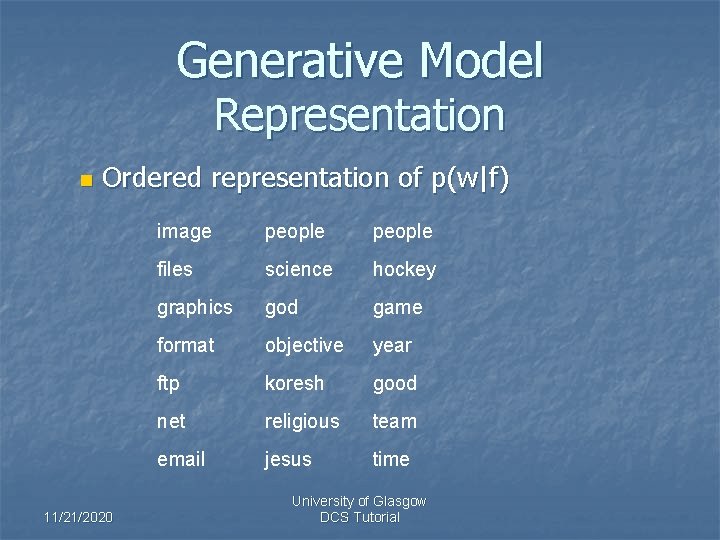

Generative Model Representation
- Ordered representation of p(w|f)

[Figure: top-ranked words across the factors: image, people, files, science, hockey, graphics, god, game, format, objective, year, ftp, koresh, good, net, religious, team, email, jesus, time]

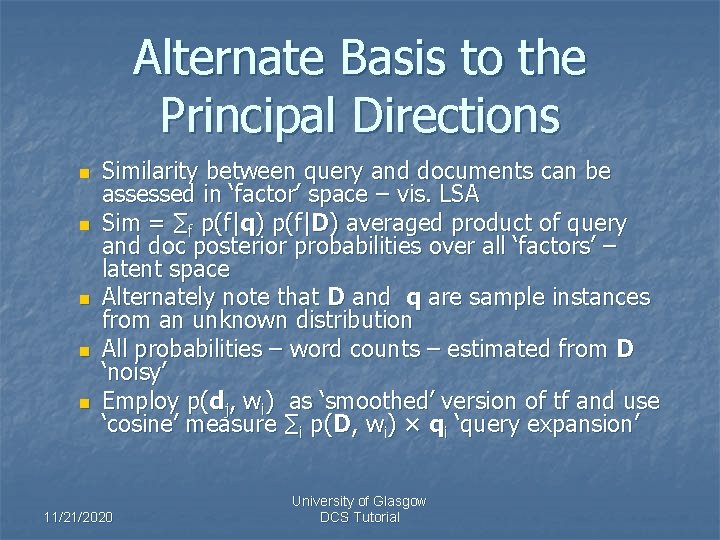

Alternate Basis to the Principal Directions
- Similarity between query and documents can be assessed in 'factor' space, viz. as in LSA: $\mathrm{Sim} = \sum_f p(f|q)\,p(f|D)$, the product of query and document posterior probabilities summed over all 'factors' (the latent space)
- Alternatively, note that D and q are sample instances from an unknown distribution
  - All probabilities (word counts) estimated from D are 'noisy'
  - Employ $p(d_j, w_i)$ as a 'smoothed' version of tf and use the 'cosine' measure $\sum_i p(D, w_i) \times q_i$: 'query expansion'
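Both matching schemes reduce to short inner products once the model parameters are available. The sketch uses small hypothetical fitted values: $p(f|q)$ for a query (in practice this would itself have to be estimated, for instance by running EM on the query with $p(w|f)$ held fixed, which is an assumption rather than something stated here), $p(f|d)$ for one document, and $p(w|f)$ and $p(d)$ for the smoothed joint $p(d, w_i)$. It computes both scores as written, without any length normalisation.

```python
def dot(x, y):
    return sum(a * b for a, b in zip(x, y))

# Hypothetical fitted model: 2 factors, 4-word vocabulary.
p_w_given_f = [[0.5, 0.3, 0.1, 0.1],   # p(w | f=0)
               [0.1, 0.1, 0.4, 0.4]]   # p(w | f=1)
p_f_given_d = [0.8, 0.2]               # p(f | d) for one document d
p_d = 0.25                             # p(d)
p_f_given_q = [0.9, 0.1]               # p(f | q), assumed already estimated
q = [1, 0, 0, 1]                       # raw query term counts q_i

# Factor-space similarity: sum_f p(f|q) p(f|d).
sim_factor = dot(p_f_given_q, p_f_given_d)

# Smoothed tf: p(d, w_i) = p(d) sum_f p(w_i|f) p(f|d), then sum_i p(d, w_i) q_i.
p_dw = [p_d * sum(p_w_given_f[f][i] * p_f_given_d[f] for f in range(2)) for i in range(4)]
sim_smoothed = dot(p_dw, q)

print(f"factor-space score: {sim_factor:.4f}, smoothed-tf score: {sim_smoothed:.4f}")
```

Every vocabulary word receives non-zero $p(d, w_i)$ through the factors, even a word that never occurs in d, which is the sense in which the smoothed score acts as query expansion.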

Alternate Basis to the Principal Directions
- Both forms of matching have been shown to improve on LSA (MED, CRAN, CACM)
- Elegant, statistically principled approach: can employ (in theory) Bayesian model assessment techniques
- The likelihood is a nonlinear function of the parameters p(f|d) and p(w|f)
- Huge parameter space with a relatively small number of samples: high bias and variance expected
- Assessment of the correlation between likelihood and P/R has yet to be studied in depth

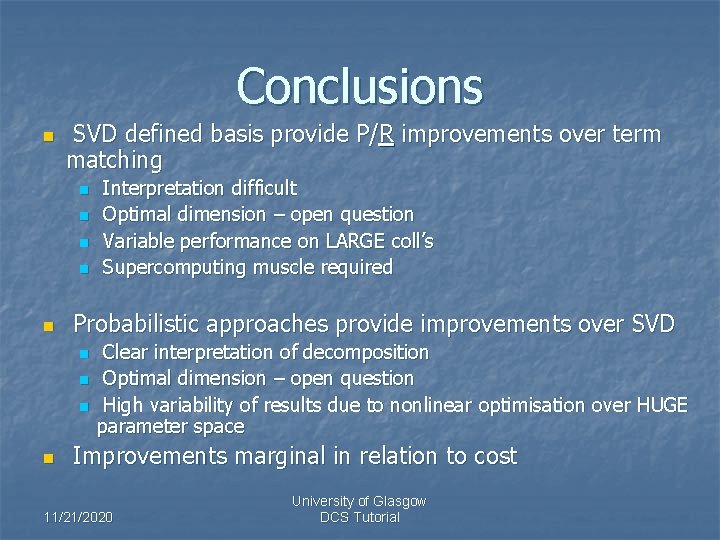

Conclusions
- An SVD-defined basis provides P/R improvements over term matching
  - Interpretation difficult
  - Optimal dimension: open question
  - Variable performance on LARGE collections
  - Supercomputing muscle required
- Probabilistic approaches provide improvements over SVD
  - Clear interpretation of the decomposition
  - Optimal dimension: open question
  - High variability of results due to nonlinear optimisation over a HUGE parameter space
  - Improvements marginal in relation to cost

Latent Semantic & Hierarchic Document Clustering
- Had enough? … To the Bar…