
Latent Problem Solving Analysis (LPSA): A computational theory of representation in complex, dynamic problem solving tasks

Complex problem solving (CPS): definition
• dynamic, because early actions determine the environment in which subsequent decisions must be made, and features of the task environment may change independently of the solver’s actions;
• time-dependent, because decisions must be made at the correct moment in relation to environmental demands; and
• complex, in the sense that most variables are not related to each other in a one-to-one manner

‘Despite 10 years of research in the area, there is neither a clearly formulated specific theory nor is there an agreement on how to proceed with respect to the research philosophy. Even worse, no stable phenomena have been observed’ (Funke, 1992, p. 25)

"How similar are two participants' solutions?"
• For CPS there is no common, explicit theory that explains why one complex, dynamic situation is similar to another, or how two slices of performance taken from a problem solving task can be compared quantitatively.
• This lack of formalized, analytical models is slowing down the development of theory in the field.

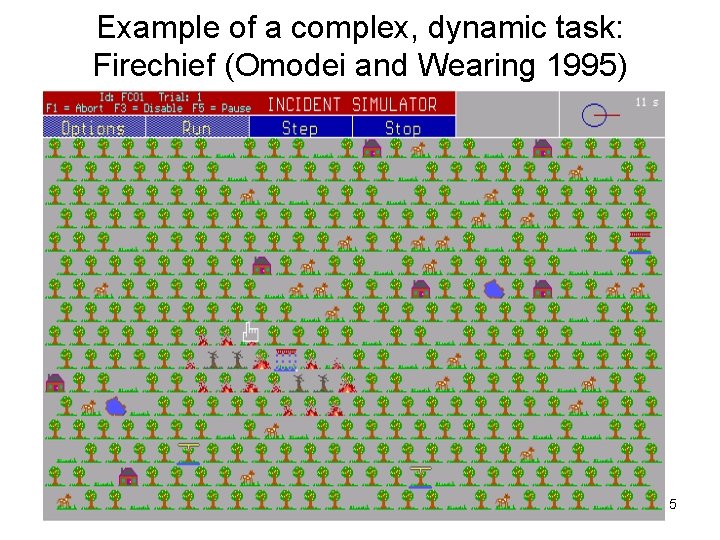

Example of a complex, dynamic task: Firechief (Omodei and Wearing, 1995)

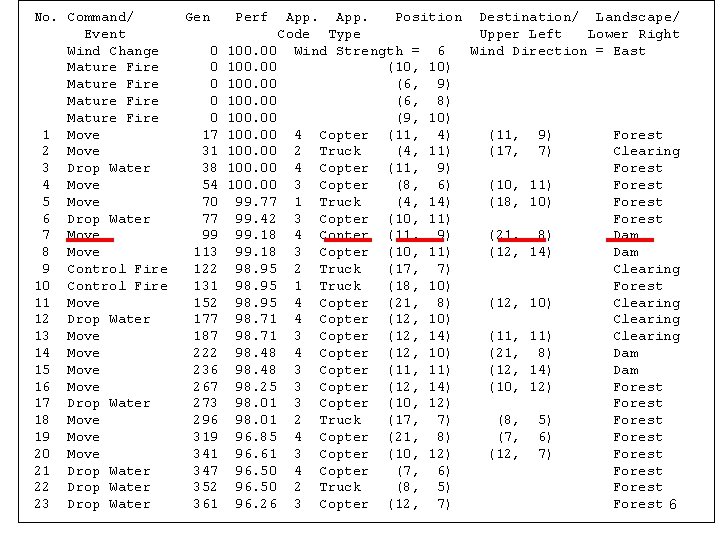

Events:
• Wind Change (Perf 100.00): Wind Strength = 6, Wind Direction = East
• Mature Fire (Perf 100.00) at positions (10, 10), (6, 9), (6, 8), (9, 10)

| No. | Command | Perf | App. Code | App. Type | Position | Destination | Landscape |
|---|---|---|---|---|---|---|---|
| 1 | Move | 100.00 | 4 | Copter | (11, 4) | (11, 9) | Forest |
| 2 | Move | 100.00 | 2 | Truck | (4, 11) | (17, 7) | Clearing |
| 3 | Drop Water | 100.00 | 4 | Copter | (11, 9) | | Forest |
| 4 | Move | 100.00 | 3 | Copter | (8, 6) | (10, 11) | Forest |
| 5 | Move | 99.77 | 1 | Truck | (4, 14) | (18, 10) | Forest |
| 6 | Drop Water | 99.42 | 3 | Copter | (10, 11) | | Forest |
| 7 | Move | 99.18 | 4 | Copter | (11, 9) | (21, 8) | Dam |
| 8 | Move | 99.18 | 3 | Copter | (10, 11) | (12, 14) | Dam |
| 9 | Control Fire | 98.95 | 2 | Truck | (17, 7) | | Clearing |
| 10 | Control Fire | 98.95 | 1 | Truck | (18, 10) | | Forest |
| 11 | Move | 98.95 | 4 | Copter | (21, 8) | (12, 10) | Clearing |
| 12 | Drop Water | 98.71 | 4 | Copter | (12, 10) | | Clearing |
| 13 | Move | 98.71 | 3 | Copter | (12, 14) | (11, 11) | Clearing |
| 14 | Move | 98.48 | 4 | Copter | (12, 10) | (21, 8) | Dam |
| 15 | Move | 98.48 | 3 | Copter | (11, 11) | (12, 14) | Dam |
| 16 | Move | 98.25 | 3 | Copter | (12, 14) | (10, 12) | Forest |
| 17 | Drop Water | 98.01 | 3 | Copter | (10, 12) | | Forest |
| 18 | Move | 98.01 | 2 | Truck | (17, 7) | (8, 5) | Forest |
| 19 | Move | 96.85 | 4 | Copter | (21, 8) | (7, 6) | Forest |
| 20 | Move | 96.61 | 3 | Copter | (10, 12) | (12, 7) | Forest |
| 21 | Drop Water | 96.50 | 4 | Copter | (7, 6) | | Forest |
| 22 | Drop Water | 96.50 | 2 | Truck | (8, 5) | | Forest |
| 23 | Drop Water | 96.26 | 3 | Copter | (12, 7) | | Forest |

Gen column (values in order): 0, 0, 0, 17, 31, 38, 54, 70, 77, 99, 113, 122, 131, 152, 177, 187, 222, 236, 267, 273, 296, 319, 341, 347, 352, 361

Problems with the classic ‘problem space’ approach
Most theories of cognitive skill acquisition and procedural learning are based on two principles:
– The problem space hypothesis
– Representation of procedures as productions

Problems with the classic ‘problem space’ approach
1. The problem of the ‘generation’ of the problem space.
2. The utility of the state space representation is reduced for tasks with inner dynamics, because in most CPS environments it is not possible to undo actions and prepare a different strategy.

Problems with the classic ‘problem space’ approach
3. Classic problem solving theory used mainly verbal protocols as data. However, talking aloud interferes with performance in complex dynamic tasks (Dickson, McLennan & Omodei, 2000).
4. Some of the methods for representing performance assume independence (or only very short-term dependences) between actions/states. That is, the features that represent performance are local.

What LPSA is and how it relates to these problems and to other theories

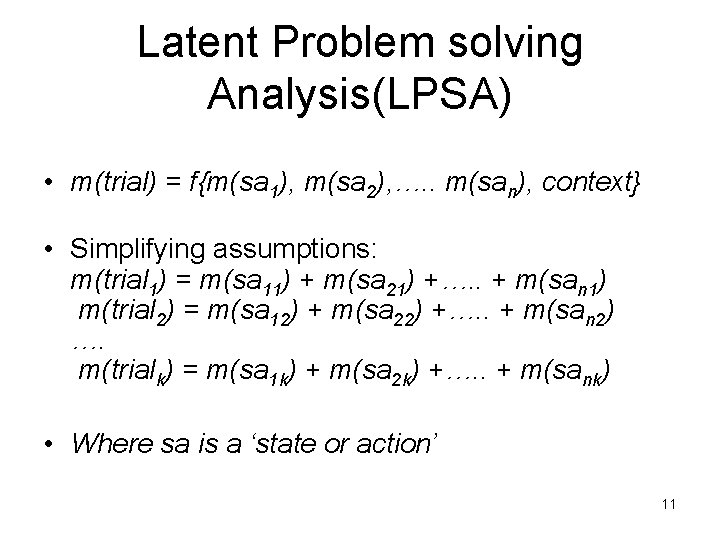

Latent Problem Solving Analysis (LPSA)
• General form:
$$m(\text{trial}) = f\{m(sa_1), m(sa_2), \ldots, m(sa_n), \text{context}\}$$
• Simplifying assumptions:
$$\begin{aligned}
m(\text{trial}_1) &= m(sa_{11}) + m(sa_{21}) + \cdots + m(sa_{n1}) \\
m(\text{trial}_2) &= m(sa_{12}) + m(sa_{22}) + \cdots + m(sa_{n2}) \\
&\;\;\vdots \\
m(\text{trial}_k) &= m(sa_{1k}) + m(sa_{2k}) + \cdots + m(sa_{nk})
\end{aligned}$$
• where $sa$ is a ‘state or action’
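The additive simplification above can be sketched in a few lines of Python. This is only an illustration: the function name `trial_vector`, the dictionary `toy_space`, and the two-dimensional vectors are hypothetical and chosen for readability, not taken from the LPSA corpus.

```python
from typing import Dict, List

Vector = List[float]


def trial_vector(states_or_actions: List[str], space: Dict[str, Vector]) -> Vector:
    """Compose a trial vector as the sum of its state/action vectors."""
    dims = len(next(iter(space.values())))
    total = [0.0] * dims
    for sa in states_or_actions:
        for i, value in enumerate(space[sa]):
            total[i] += value
    return total


# Hypothetical 2-dimensional vectors for three actions (illustrative values only).
toy_space = {
    "Move": [1.0, 0.0],
    "Drop_Water": [0.0, 1.0],
    "Control_Fire": [0.5, 0.5],
}

trial = trial_vector(["Move", "Move", "Drop_Water"], toy_space)
```

Under this assumption the order of the states or actions inside a trial does not change the resulting vector, since addition is commutative.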

Latent Problem Solving Analysis (LPSA)
• Complexity reduction: reducing the number of dimensions in the space reduces the noise

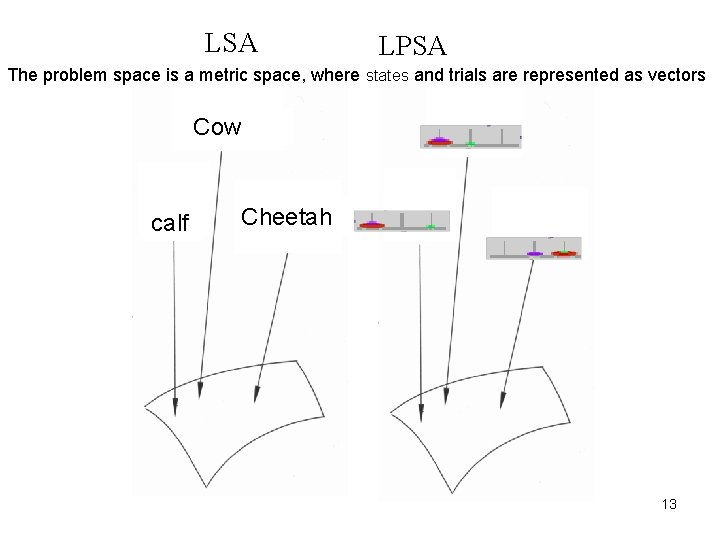

LSA and LPSA: the problem space is a metric space, where states and trials are represented as vectors (LSA example words shown in the figure: cow, calf, cheetah)

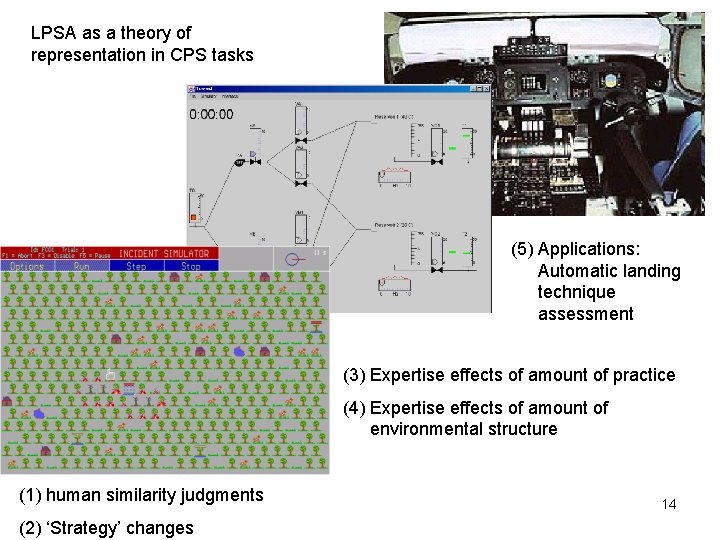

LPSA as a theory of representation in CPS tasks
(1) Human similarity judgments
(2) ‘Strategy’ changes
(3) Expertise effects of amount of practice
(4) Expertise effects of amount of environmental structure
(5) Applications: automatic landing technique assessment

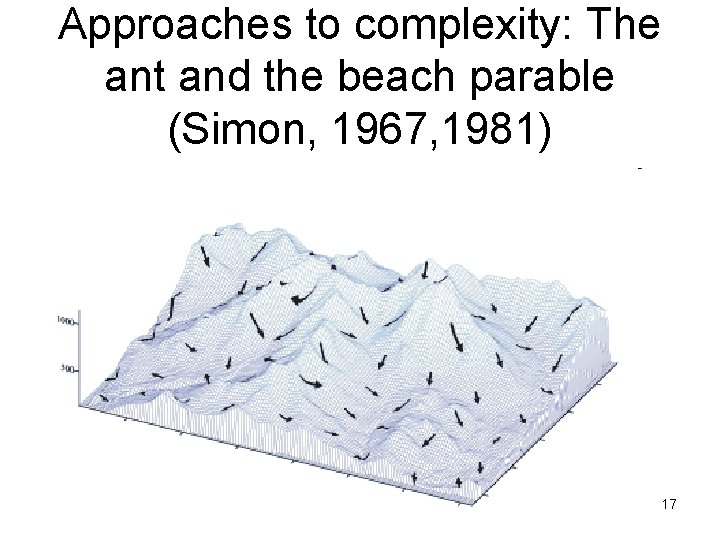

Approaches to complexity: The ant and the beach parable (Simon, 1967, 1981)


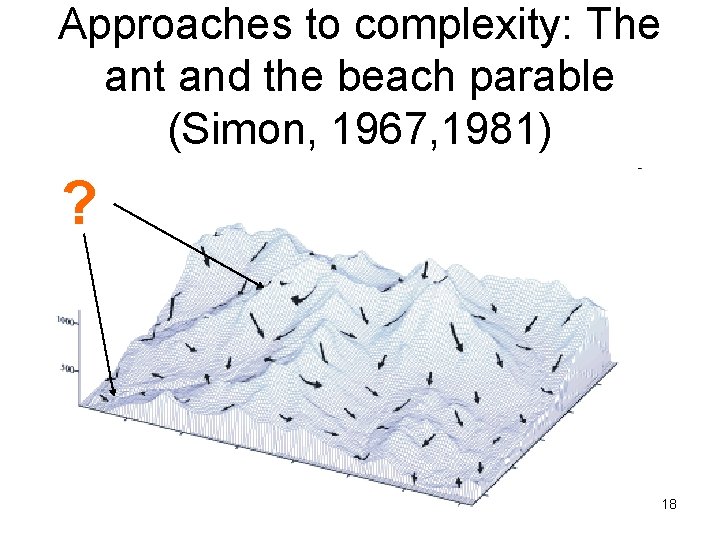


• Unsupervised learning
• Empirical adjustment of a problem space
• Definition of a productivity mechanism and a similarity measure
• LPSA: addition and cosine
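A minimal sketch of the two operations named above, addition as the productivity mechanism and cosine as the similarity measure. The helper names `add_vectors` and `cosine` and the toy vectors are assumptions made for illustration.

```python
import math
from typing import List, Sequence


def add_vectors(vectors: Sequence[Sequence[float]]) -> List[float]:
    """Productivity mechanism: compose vectors by element-wise addition."""
    return [sum(component) for component in zip(*vectors)]


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Similarity measure: cosine of the angle between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # convention chosen here for empty or all-zero vectors
    return dot / (norm_u * norm_v)


# Two toy trials, each composed from hypothetical action vectors.
trial_a = add_vectors([[1.0, 0.0], [0.0, 2.0]])
trial_b = add_vectors([[2.0, 0.0], [0.0, 4.0]])
similarity = cosine(trial_a, trial_b)
```

Because the cosine ignores vector length, a long trial and a short trial that point in the same direction are treated as highly similar.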

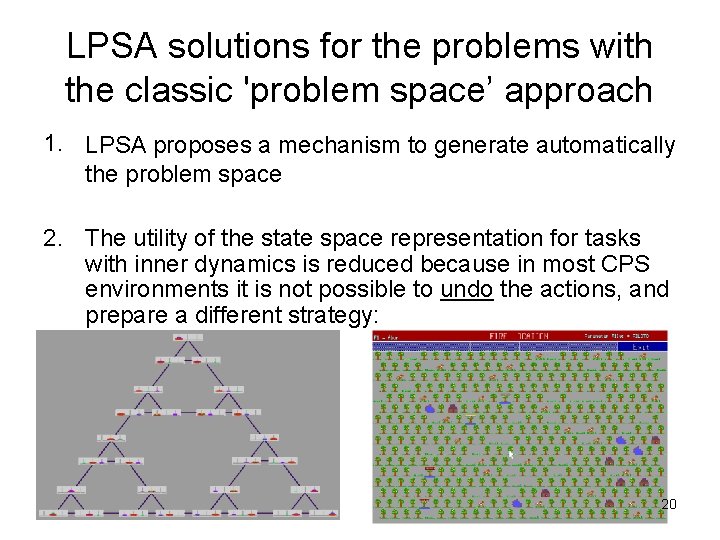

LPSA solutions for the problems with the classic ‘problem space’ approach
1. Problem: the ‘generation’ of the problem space. LPSA proposes a mechanism to generate it automatically.
2. Problem: the utility of the state space representation is reduced for tasks with inner dynamics, because in most CPS environments it is not possible to undo actions and prepare a different strategy.

LPSA solutions for the problems with the classic ‘problem space’ approach
• Problem: classic problem solving theory used mainly verbal protocols as data. However, talking aloud interferes with performance in complex dynamic tasks (Dickson, McLennan & Omodei, 2000). LPSA uses log files and human judgments as data, but not concurrent verbal protocols.
• Problem: independence (or very short-term dependences) of actions/states is assumed in some of the methods for representing performance. That is, the features that represent performance are local. LPSA does not assume independence or short dependences between states/actions. Indeed, it uses the dependences of all of them simultaneously to derive the problem space. The features that represent performance are global.

Theoretical surroundings of Latent Problem Solving Analysis

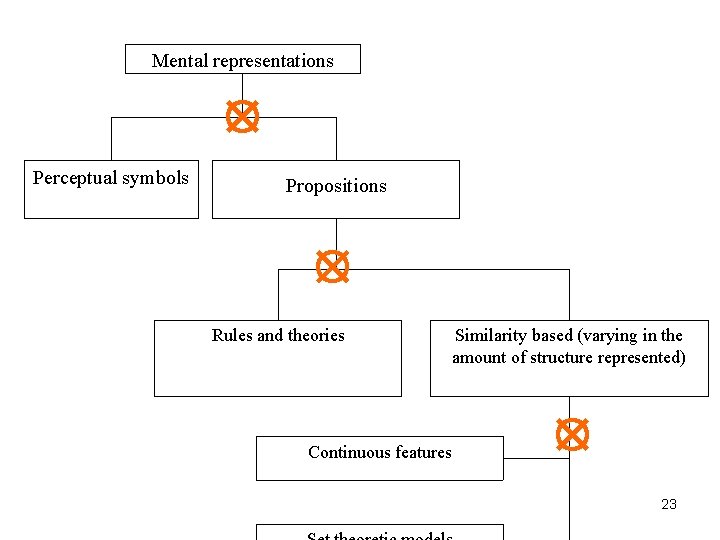

Mental representations
• Perceptual symbols
• Propositions
• Rules and theories
• Similarity based (varying in the amount of structure represented)
• Continuous features

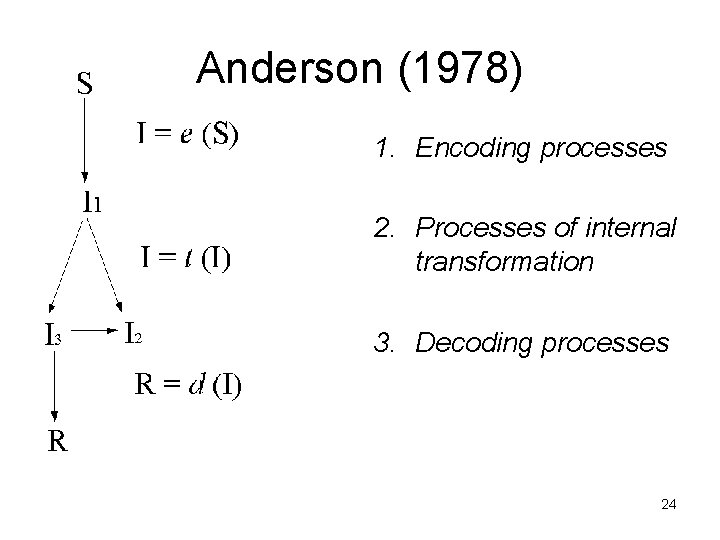

Anderson (1978)
1. Encoding processes
2. Processes of internal transformation
3. Decoding processes

LPSA applied to model human judgments

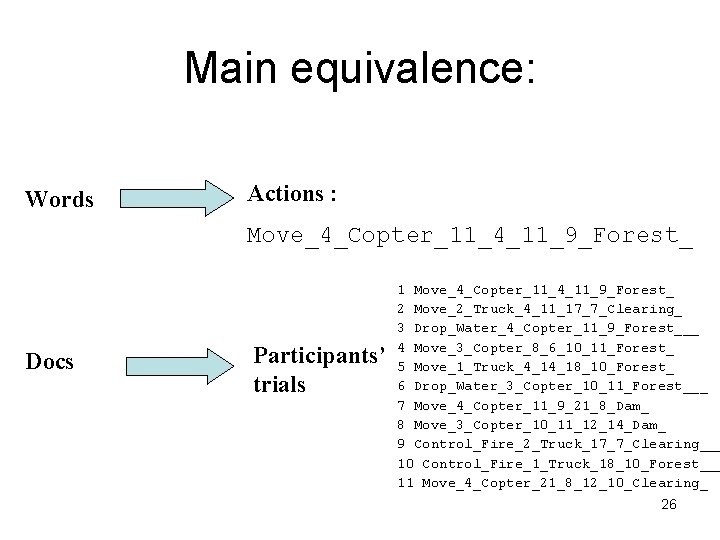

Main equivalence:
• Words → Actions, e.g. Move_4_Copter_11_4_11_9_Forest_
• Docs → Participants’ trials, e.g.
1 Move_4_Copter_11_4_11_9_Forest_
2 Move_2_Truck_4_11_17_7_Clearing_
3 Drop_Water_4_Copter_11_9_Forest___
4 Move_3_Copter_8_6_10_11_Forest_
5 Move_1_Truck_4_14_18_10_Forest_
6 Drop_Water_3_Copter_10_11_Forest___
7 Move_4_Copter_11_9_21_8_Dam_
8 Move_3_Copter_10_11_12_14_Dam_
9 Control_Fire_2_Truck_17_7_Clearing___
10 Control_Fire_1_Truck_18_10_Forest___
11 Move_4_Copter_21_8_12_10_Clearing_
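A hedged sketch of how a log row might be turned into one of the action tokens listed above. The function `action_token`, its parameters, and the eight-slot padding rule are assumptions inferred from the example tokens; the slides do not describe the actual encoding procedure.

```python
from collections import Counter
from typing import Optional, Tuple

SLOTS = 8  # assumed: every token has eight underscore-terminated fields


def action_token(command: str, appliance: int, kind: str,
                 position: Tuple[int, int], landscape: str,
                 destination: Optional[Tuple[int, int]] = None) -> str:
    """Join the fields of one log row into a single 'word'."""
    fields = [command.replace(" ", "_"), str(appliance), kind,
              str(position[0]), str(position[1])]
    if destination is not None:
        fields += [str(destination[0]), str(destination[1])]
    fields.append(landscape)
    fields += [""] * (SLOTS - len(fields))  # empty slots leave trailing underscores
    return "".join(field + "_" for field in fields)


# A trial is then a bag of such tokens, as a document is a bag of words.
trial_tokens = [
    action_token("Move", 4, "Copter", (11, 4), "Forest", destination=(11, 9)),
    action_token("Move", 2, "Truck", (4, 11), "Clearing", destination=(17, 7)),
    action_token("Drop Water", 4, "Copter", (11, 9), "Forest"),
]
trial_counts = Counter(trial_tokens)
```

Each distinct token plays the role of a word type, so two actions that differ in any field (for example, the same move by a different appliance) count as different words.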

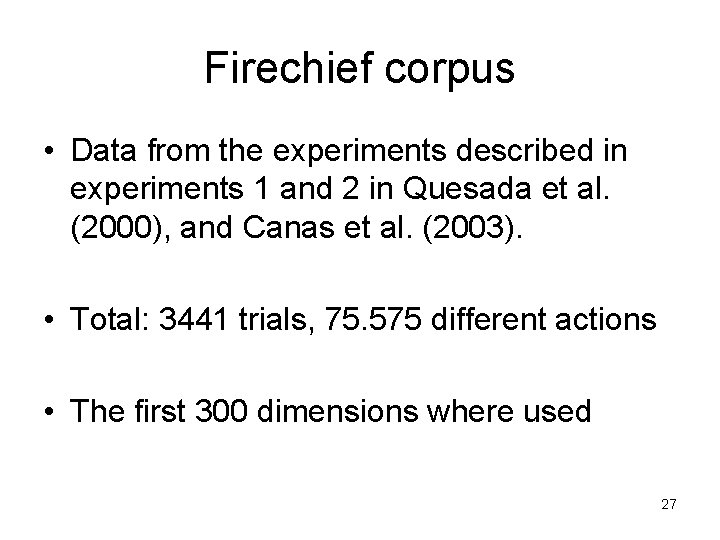

Firechief corpus
• Data from experiments 1 and 2 in Quesada et al. (2000) and Canas et al. (2003).
• Total: 3441 trials, 75,575 different actions
• The first 300 dimensions were used
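The dimension reduction behind "the first 300 dimensions" can be illustrated with a truncated singular value decomposition. The sketch below uses NumPy on a tiny made-up count matrix; the function name `reduce_space`, the toy counts, and the absence of any term weighting are assumptions, and the real corpus would use `k=300`.

```python
import numpy as np


def reduce_space(counts: np.ndarray, k: int):
    """Truncated SVD of an actions-by-trials count matrix.

    Returns (action_vectors, trial_vectors), each with k columns.
    """
    u, s, vt = np.linalg.svd(counts, full_matrices=False)
    k = min(k, len(s))
    action_vectors = u[:, :k] * s[:k]    # one row per action
    trial_vectors = vt[:k, :].T * s[:k]  # one row per trial
    return action_vectors, trial_vectors


# Toy matrix: 4 actions (rows) by 3 trials (columns); values are illustrative.
counts = np.array([
    [2.0, 1.0, 0.0],
    [1.0, 1.0, 0.0],
    [0.0, 0.0, 3.0],
    [0.0, 1.0, 2.0],
])
action_vectors, trial_vectors = reduce_space(counts, k=2)
```

Keeping only the largest singular values discards the directions that carry the least variance, which is the sense in which reducing dimensions is said to reduce noise.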

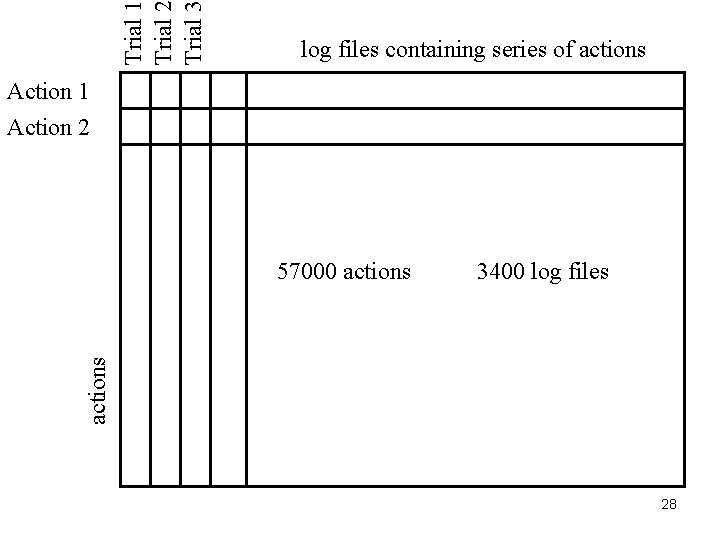

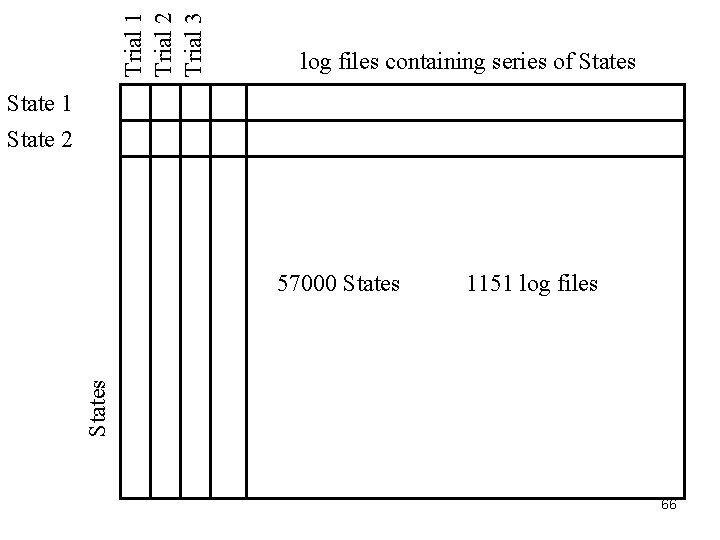

[Figure: matrix of 57000 actions (Action 1, Action 2, …) by 3400 log files (Trial 1, Trial 2, Trial 3, …), each log file containing a series of actions]

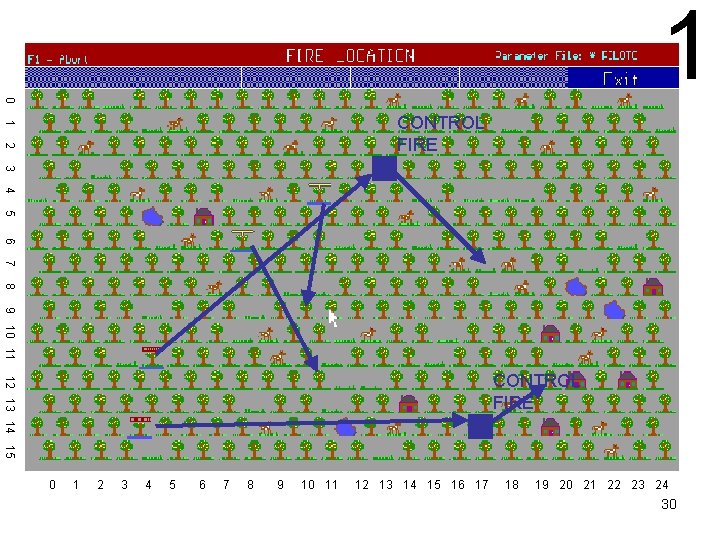

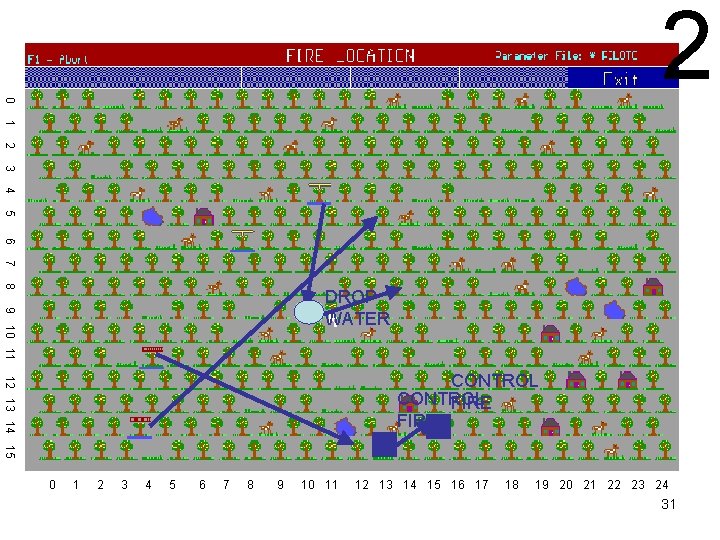

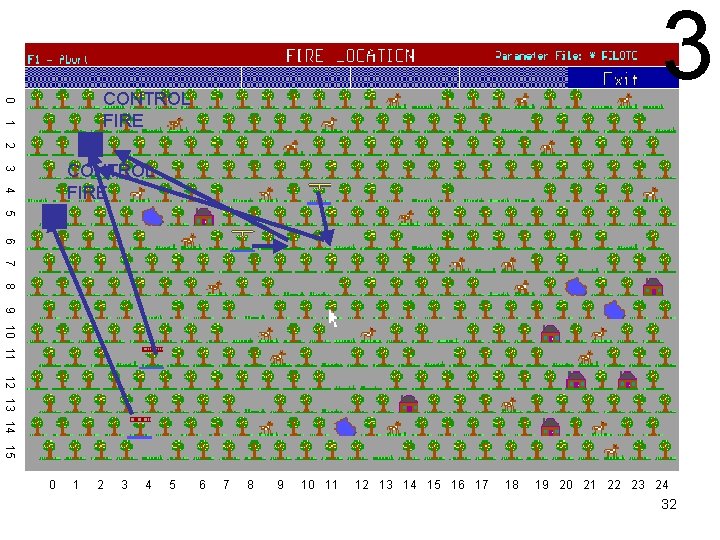

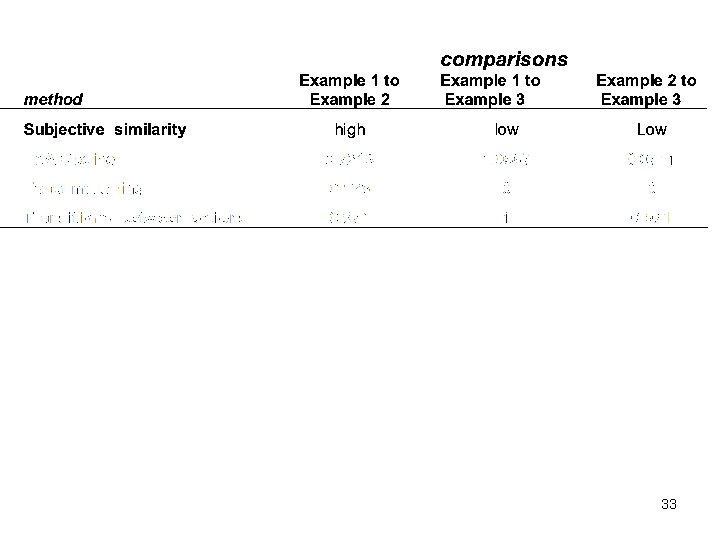

Three examples of performance
• First 8 actions in a trial
[Figure: three trials (1, 2, 3) with the labels RELATED and NON RELATED]

[Figures: grid plots of trials 1, 2 and 3 (horizontal axis 0 to 24, vertical axis 0 to 15), with CONTROL FIRE and DROP WATER actions marked]

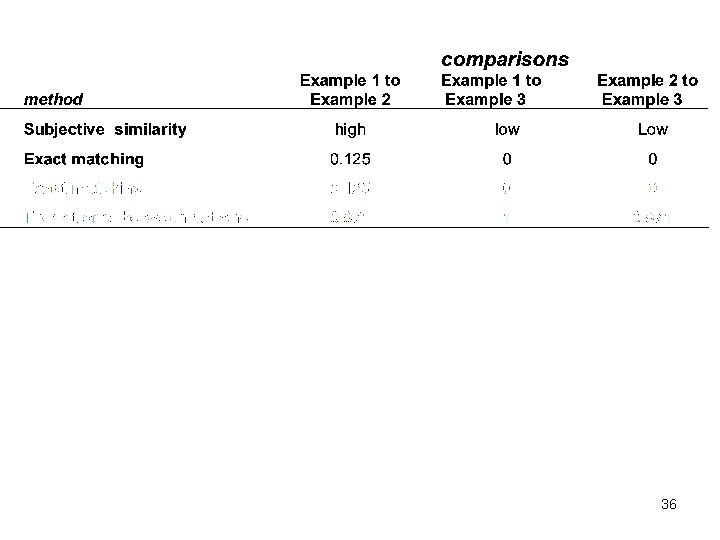


Possible way of comparison: Exact matching of actions
• Exact matching: count the number of common actions in two files. The higher this number, the more similar they are
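Exact matching as described above could be sketched as follows. The function name `exact_match_count` is hypothetical, and counting repeated actions as a multiset intersection is an assumption, since the slide does not say how repeats are handled.

```python
from collections import Counter
from typing import Sequence


def exact_match_count(trial_a: Sequence[str], trial_b: Sequence[str]) -> int:
    """Number of action tokens that two log files have in common."""
    common = Counter(trial_a) & Counter(trial_b)  # multiset intersection
    return sum(common.values())


file_a = ["Move_4_Copter_11_4_11_9_Forest_",
          "Move_2_Truck_4_11_17_7_Clearing_",
          "Drop_Water_4_Copter_11_9_Forest___"]
file_b = ["Move_4_Copter_11_4_11_9_Forest_",
          "Drop_Water_4_Copter_11_9_Forest___",
          "Control_Fire_1_Truck_18_10_Forest___"]
shared = exact_match_count(file_a, file_b)
```

Two actions that differ in a single coordinate contribute nothing to the count, which is why this criterion is very strict.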


Possible way of comparison: Transitions between actions
• Count the number of transitions between actions in two files. Create matrices, and correlate them
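The transition-based comparison can be sketched as below. It assumes that transitions are counted between command types only (Move, Drop Water, Control Fire) and that the flattened matrices are compared with a Pearson correlation; both choices, and all function names, are illustrative assumptions.

```python
import math
from typing import List, Sequence

LABELS = ["Move", "Drop Water", "Control Fire"]


def transition_counts(commands: Sequence[str], labels: Sequence[str]) -> List[List[int]]:
    """Matrix whose cell [i][j] counts how often label i is followed by label j."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for previous, following in zip(commands, commands[1:]):
        matrix[index[previous]][index[following]] += 1
    return matrix


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation; returns 0.0 when either input has no variance."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


def transition_similarity(a: Sequence[str], b: Sequence[str],
                          labels: Sequence[str] = LABELS) -> float:
    """Correlate the flattened transition matrices of two command sequences."""
    flat_a = [c for row in transition_counts(a, labels) for c in row]
    flat_b = [c for row in transition_counts(b, labels) for c in row]
    return pearson(flat_a, flat_b)


first_eight = ["Move", "Move", "Drop Water", "Move",
               "Move", "Drop Water", "Move", "Move"]
matrix = transition_counts(first_eight, LABELS)
```

Note that this representation discards which appliance acted and where, so two trials with the same rhythm of commands look identical even if they took place in different parts of the map.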


• Exact matching is not sensitive to differences in similarity (it is too demanding a criterion).
• Transitions between actions is blind to most of the information in the logs, so it fails: it declares as similar performances that are not.
• LSA has correctly inferred that the remaining actions, although different, are functionally related

LPSA - Human Judgments correlation

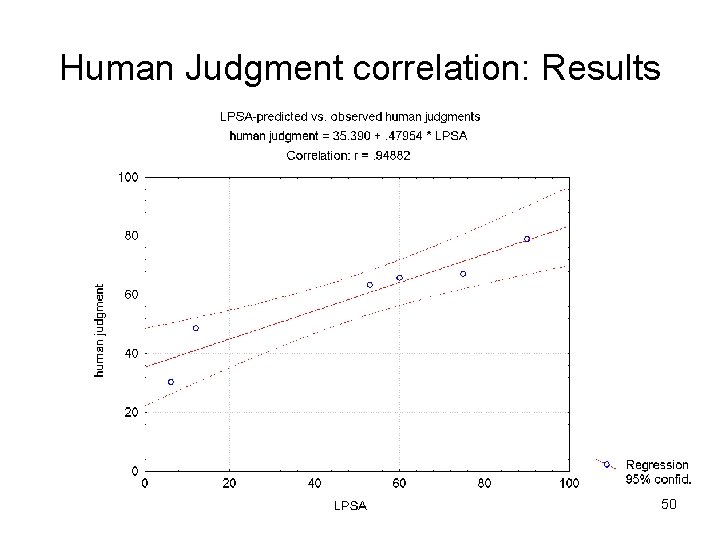

Human Judgment correlation
• If LSA captures similarity between complex problem solving performances in a meaningful way, any person with experience on the task could be used for validation.
• To test our assertions about LSA, we recruited 15 people and exposed them to the same amount of practice as our experimental participants, so they could learn the constraints of the task.

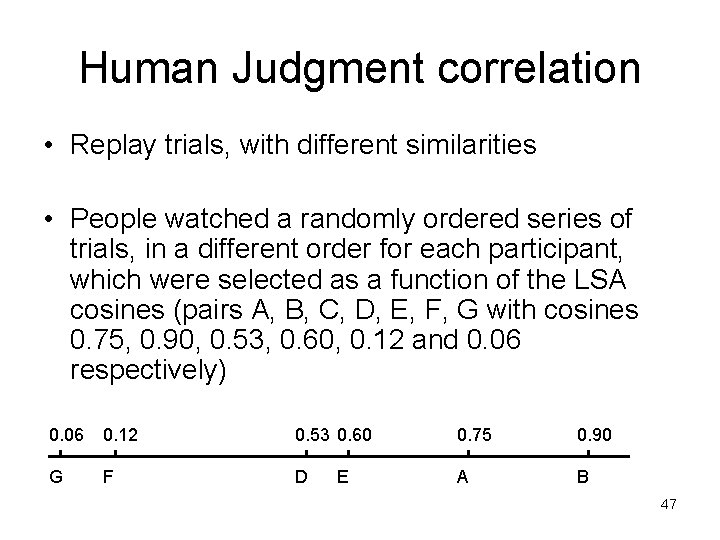

Human Judgment correlation
• Replay trials, with different similarities
• People watched a randomly ordered series of trials, in a different order for each participant, which were selected as a function of the LSA cosines (pairs A, B, C, D, E, F, G with cosines 0.75, 0.90, 0.53, 0.60, 0.12 and 0.06 respectively)
[Figure: cosine scale 0.06, 0.12, 0.53, 0.60, 0.75, 0.90 with pair labels G, F, D, A, B, E]

Human Judgment correlation
One of the pairs was presented twice to measure test-retest reliability. That is, for example, pair G was exactly the same as pair A for one participant, the same as pair F for another participant, etc. Participants filled out a form that presented all the possible pairings of ‘stimulus pairs’.

Human Judgment correlation
Full-screen replay of the selected trial, 8 times faster than normal speed

Human Judgment correlation: Results

Conclusions
• Applied: LSA is an automatic way of generating a problem space and comparing slices of performance in complex tasks. It scales up very well and does not depend on a priori task analyses.
• Theoretical: LSA proposes that problem spaces are metric spaces that are derived from experience. Actions or states that are functionally related are represented in similar regions of the space. In this sense, problem solving is unified with theories of object recognition and semantics.

LPSA as a theory of expertise in problem solving

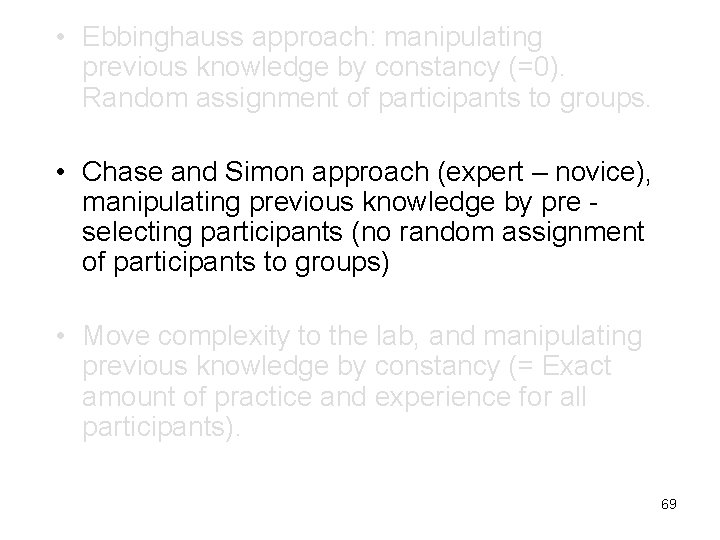

• Ebbinghaus approach: manipulating previous knowledge by eliminating it. Random assignment of participants to groups.
• Chase and Simon approach (expert – novice): manipulating previous knowledge by pre-selecting participants (no random assignment of participants to groups).
• Move complexity to the lab, and manipulate previous knowledge (exactly the same amount of practice and experience for all participants).


Move complexity to the lab
• To simulate expertise environments in labs, we need tasks more complex than the standard ones:
– More representative
– Long learning curve
– Interesting enough to keep the motivation for a long period of time

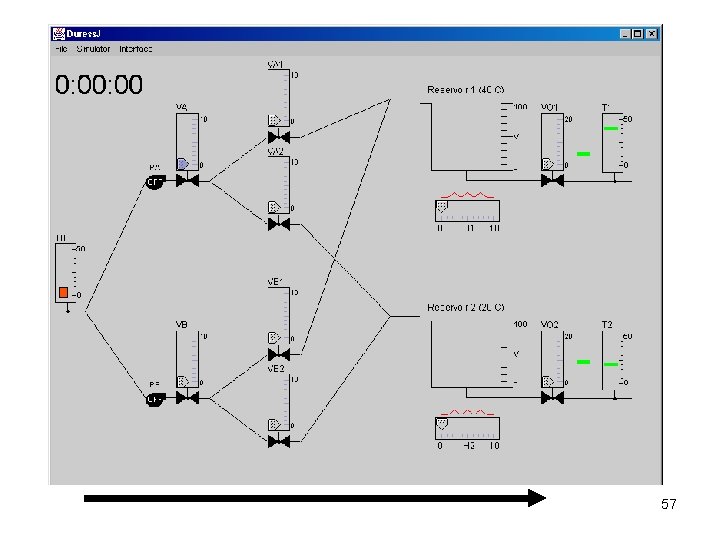

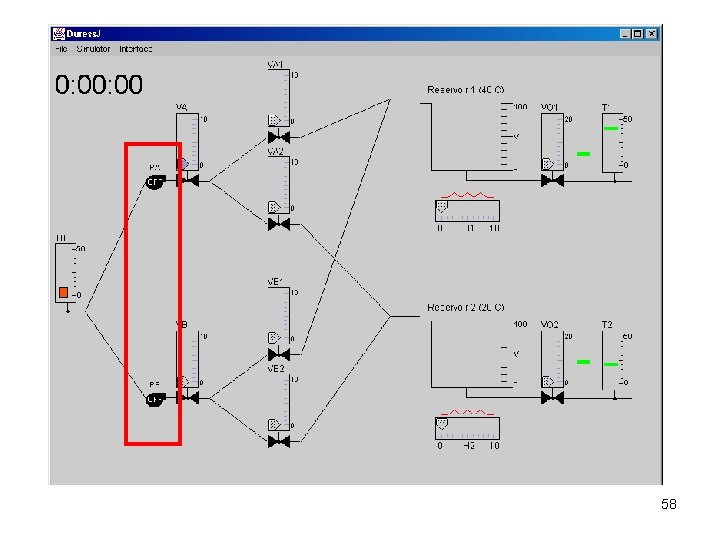

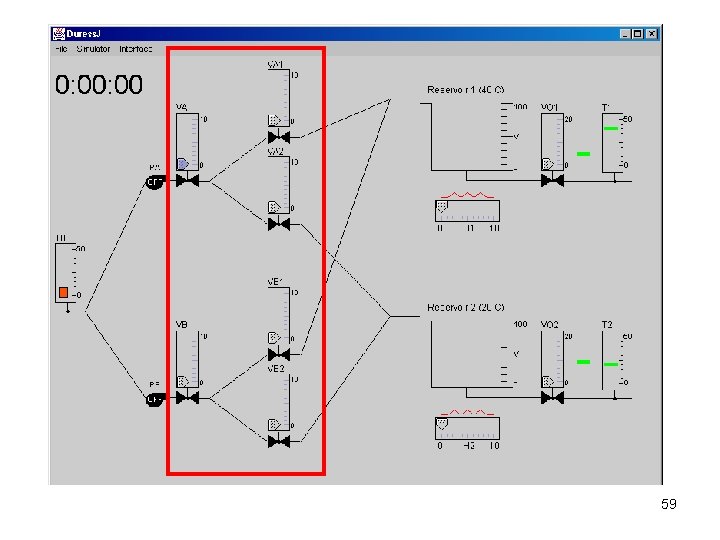

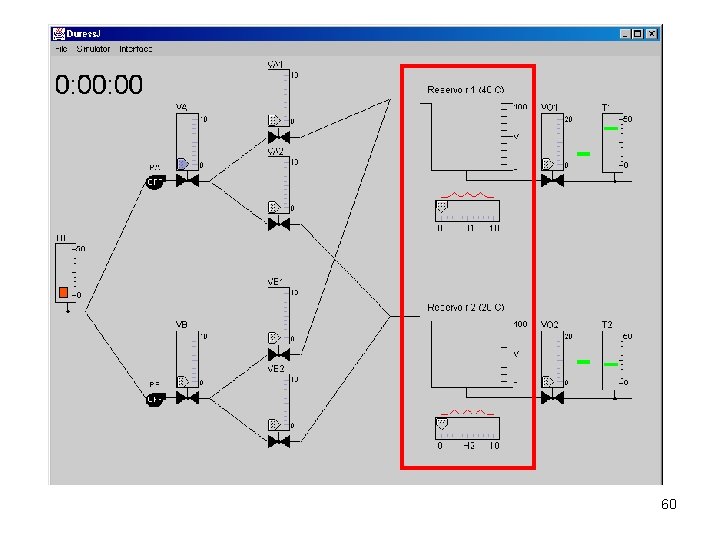

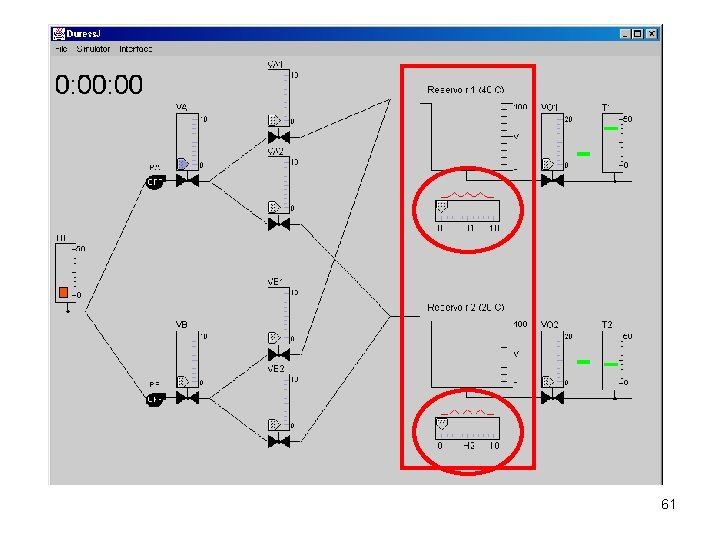

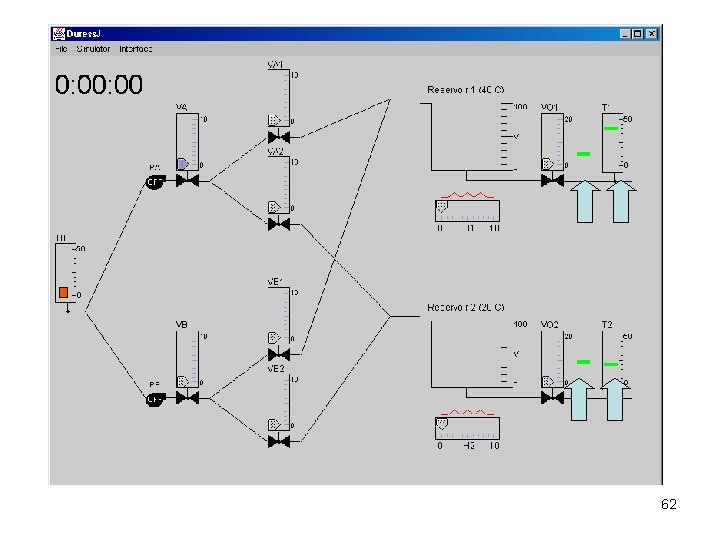

The DURESS Microworld
• Goals:
– To keep each of the reservoir temperatures (T1 and T2) at a prescribed temperature (e.g., 40 °C and 20 °C, respectively)
– To satisfy the current mass (water) output demand (5 liters per second and 7 liters per second, respectively)


DURESS
• Christoffersen, Hunter, & Vicente (1996, 1997, 1998): 6-month longitudinal experiment using DURESS II. 225 trials, with different goal values. Every participant received exactly the same kind of trials.
• However, the analysis was mostly qualitative. Not without a good reason…

Example DURESS II protocol: 34 variables, governed by mass and energy conservation laws

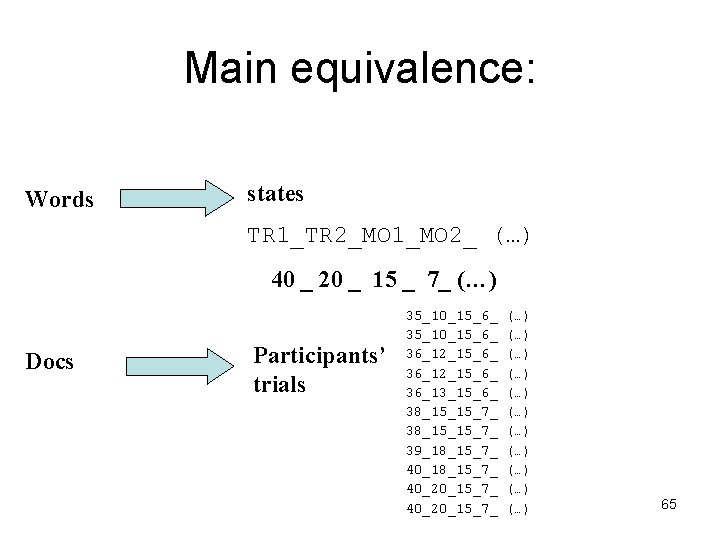

Main equivalence:
• Words → States: TR1_TR2_MO1_MO2_(…), e.g. 40_20_15_7_(…)
• Docs → Participants’ trials, e.g.
35_10_15_6_
36_12_15_6_
36_13_15_6_
38_15_15_7_
39_18_15_7_
40_20_15_7_
(…) (…) (…)

[Figure: matrix of 57000 states (State 1, State 2, …) by 1151 log files (Trial 1, Trial 2, Trial 3, …), each log file containing a series of states]

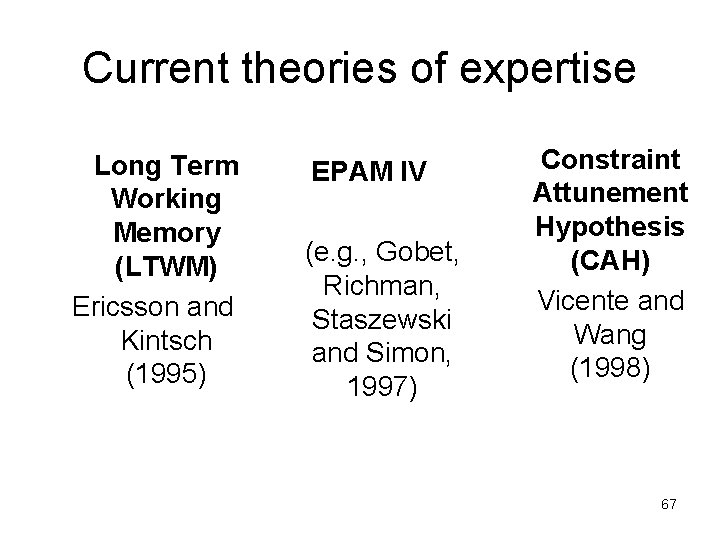

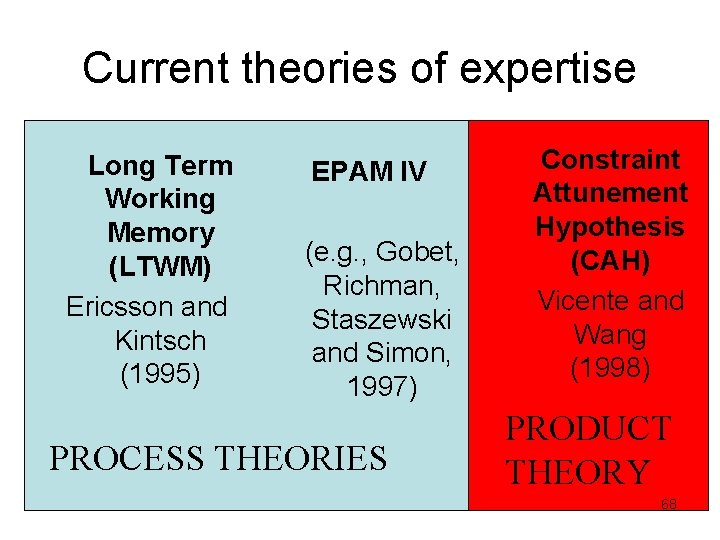


Current theories of expertise
• Process theories: Long Term Working Memory (LTWM), Ericsson and Kintsch (1995); EPAM IV (e.g., Gobet, Richman, Staszewski and Simon, 1997)
• Product theory: Constraint Attunement Hypothesis (CAH), Vicente and Wang (1998)

• Ebbinghaus approach: manipulating previous knowledge by constancy (= 0). Random assignment of participants to groups.
• Chase and Simon approach (expert – novice): manipulating previous knowledge by pre-selecting participants (no random assignment of participants to groups).
• Move complexity to the lab, and manipulate previous knowledge by constancy (= exactly the same amount of practice and experience for all participants).

LTWM (Ericsson and Kintsch, 1995)
• STM accounts for working memory in unfamiliar activities but does not appear to provide sufficient storage capacity for working memory in skilled complex activities (p. 220)
• LTWM is acquired in particular domains to meet specific demands imposed by a given activity on storage and retrieval. LTWM is task specific.

LTWM (Ericsson and Kintsch, 1995)
• Intense practice in a domain creates retrieval structures: associations between the current context and some parts of LTM that can be retrieved almost immediately without effort (example: SF and digits).
• LTWM permits rapid and reliable reinstatement of a context after interruption without a decrease in performance.

LTWM (Ericsson and Kintsch, 1995)
• LTWM theory proposes that LTWM is generated dynamically by the cues that are present in short term memory.
• During text comprehension, where the average human adult is an expert, retrieval structures retrieve propositions from LTM and merge them with the ones derived from the text.

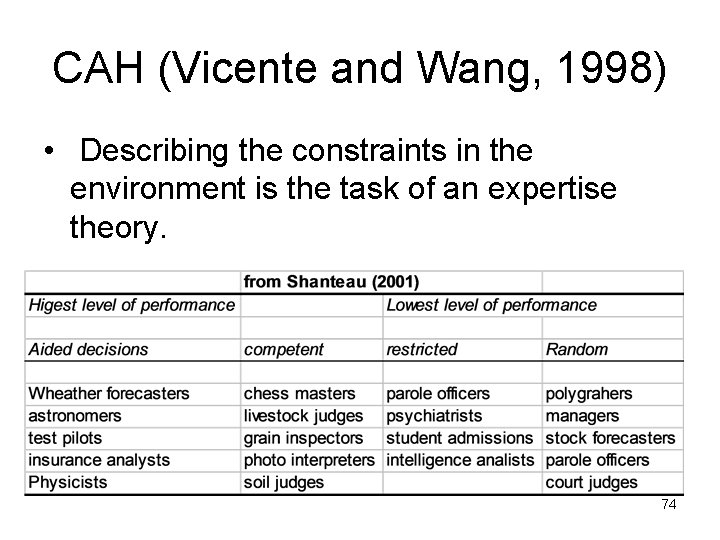

CAH (Vicente and Wang, 1998)
• Contrary to what process theories maintain, the Constraint Attunement Hypothesis (CAH) does not commit to a particular psychological mechanism to explain the phenomenon of expertise. Instead, it asks:
1. How should one represent the constraints that the environment (i.e., the problem domain) places on expertise?
2. Under what conditions will there be an expertise advantage?
3. What factors determine how large the advantage can be?

CAH (Vicente and Wang, 1998)
• Describing the constraints in the environment is the task of an expertise theory.

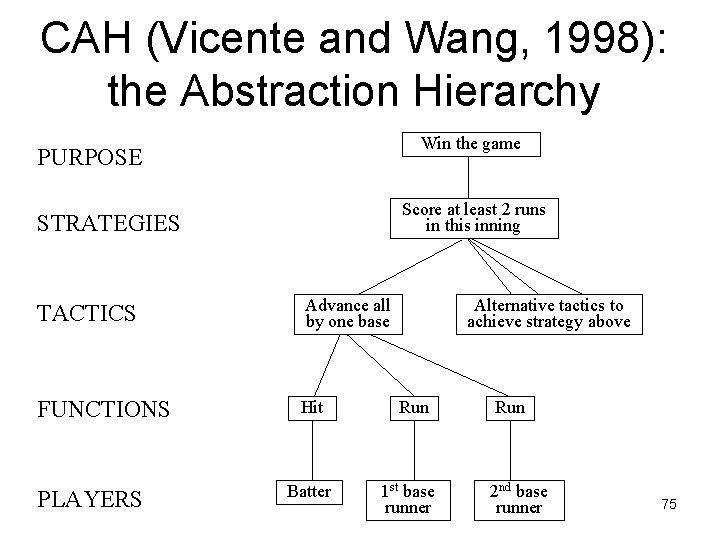

CAH (Vicente and Wang, 1998): the Abstraction Hierarchy
• PURPOSE: Win the game
• STRATEGIES: Score at least 2 runs in this inning
• TACTICS: Advance all by one base (alternative tactics to achieve the strategy above)
• FUNCTIONS: Hit; Run; Run
• PLAYERS: Batter; 1st base runner; 2nd base runner

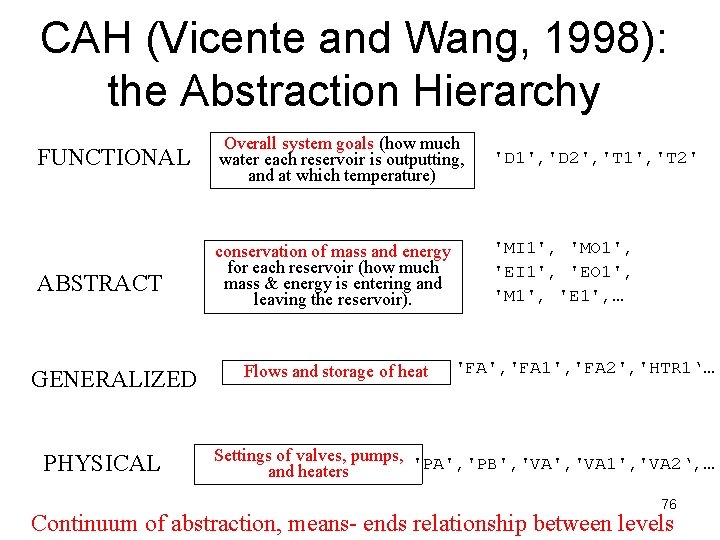

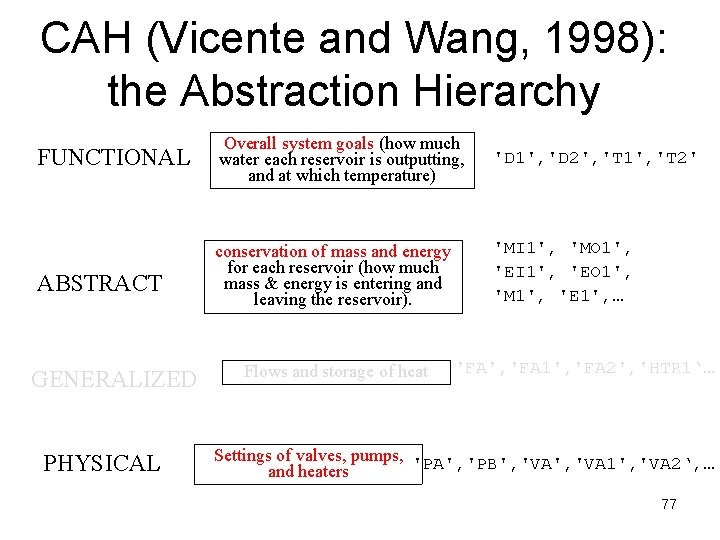

CAH (Vicente and Wang, 1998): the Abstraction Hierarchy
• FUNCTIONAL: overall system goals (how much water each reservoir is outputting, and at which temperature): 'D1', 'D2', 'T1', 'T2'
• ABSTRACT: conservation of mass and energy for each reservoir (how much mass & energy is entering and leaving the reservoir): 'MI1', 'MO1', 'EI1', 'EO1', 'M1', 'E1', …
• GENERALIZED: flows and storage of heat: 'FA', 'FA1', 'FA2', 'HTR1', …
• PHYSICAL: settings of valves, pumps, and heaters: 'PA', 'PB', 'VA1', 'VA2', …
Continuum of abstraction; means-ends relationship between levels


LTWM vs. CAH
• LTWM claims that the magnitude of expertise effects is “related to the level of attained skill and to the amount of relevant prior experience”
• CAH argues that this claim is incomplete. Expertise effects in memory recall are also determined by the amount of structure in the domain (and by active attunement to that structure)
• LPSA is sensitive both to ‘relevant previous practice’ and to ‘amount of structure in the domain’

Design and predictions

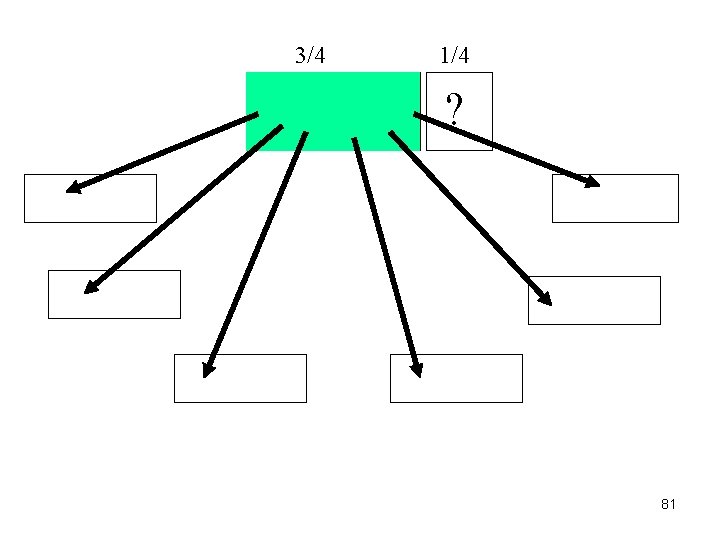

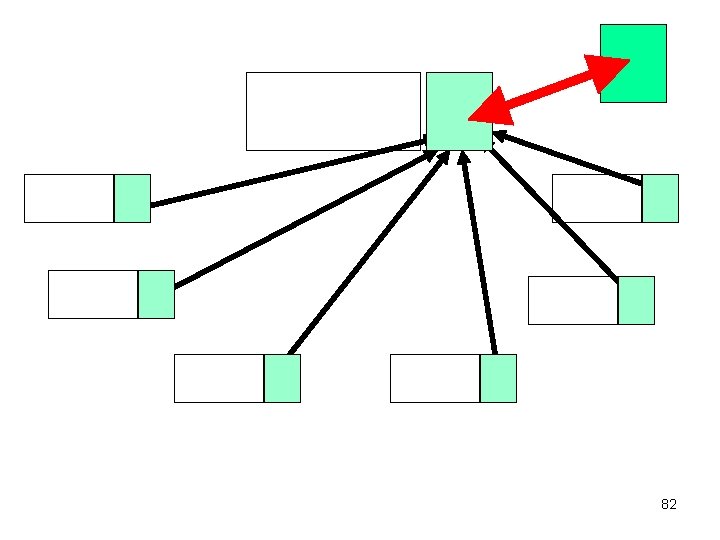

[Figure: the first three quarters (3/4) of a trial are given; the last quarter (1/4) is to be predicted]

Predictions
• Only huge amounts of experience with the system would enable the actor (human or model) to make accurate predictions of the last quarter of the trial
• Sparse practice should clearly lead to poor prediction
• Only structured environments should show the expertise advantage. Following CAH, the expert (human or model) should not do well in a completely unstructured environment

Results

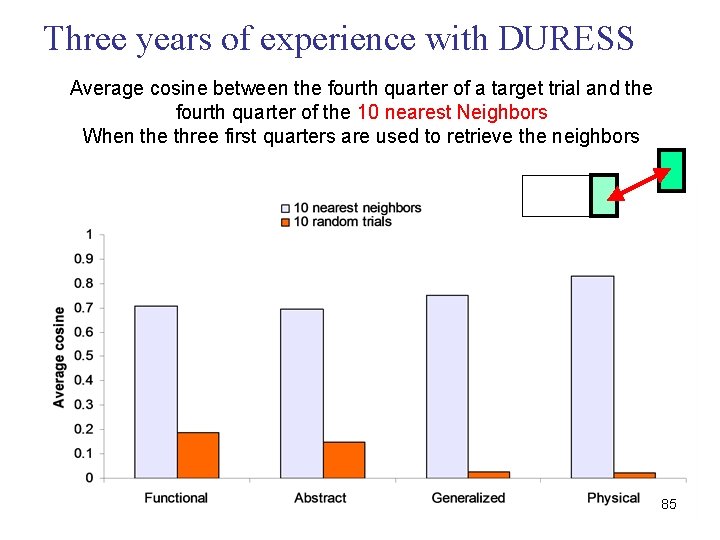

Three years of experience with DURESS
Average cosine between the fourth quarter of a target trial and the fourth quarter of its 10 nearest neighbors, when the first three quarters are used to retrieve the neighbors

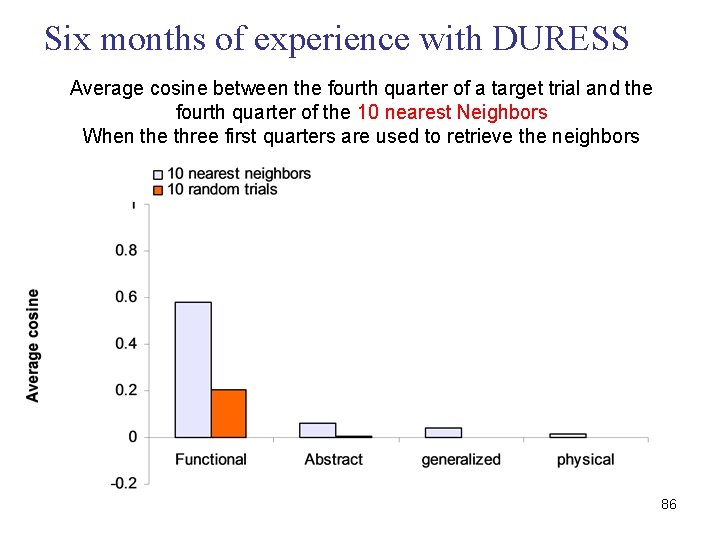

Six months of experience with DURESS
Average cosine between the fourth quarter of a target trial and the fourth quarter of its 10 nearest neighbors, when the first three quarters are used to retrieve the neighbors

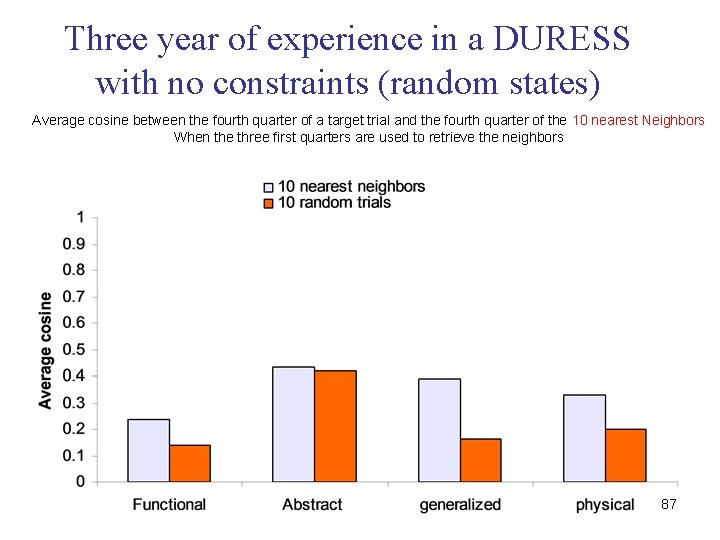

Three years of experience with a DURESS with no constraints (random states)
Average cosine between the fourth quarter of a target trial and the fourth quarter of its 10 nearest neighbors, when the first three quarters are used to retrieve the neighbors
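The measure reported on these results slides can be sketched as follows. Each trial is assumed to be stored as a pair of vectors, one for its first three quarters and one for its fourth quarter; that data layout, the function names, and the toy numbers are illustrative assumptions rather than the original analysis code.

```python
import math
from typing import List, Sequence, Tuple

Trial = Tuple[Sequence[float], Sequence[float]]  # (first three quarters, fourth quarter)


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def mean_neighbor_cosine(target: Trial, corpus: List[Trial], k: int = 10) -> float:
    """Retrieve the k nearest neighbors using the first three quarters, then
    average the cosine between the target's fourth quarter and theirs."""
    ranked = sorted(corpus, key=lambda trial: cosine(target[0], trial[0]), reverse=True)
    neighbors = ranked[:k]
    if not neighbors:
        return 0.0
    return sum(cosine(target[1], trial[1]) for trial in neighbors) / len(neighbors)


# Toy example with 2-dimensional vectors and k = 2 (values are made up).
target_trial = ([1.0, 0.0], [0.0, 1.0])
past_trials = [
    ([1.0, 0.1], [0.0, 1.0]),
    ([0.0, 1.0], [1.0, 0.0]),
    ([0.9, 0.0], [0.0, 2.0]),
]
score = mean_neighbor_cosine(target_trial, past_trials, k=2)
```

A high score means that trials which started like the target also ended like it, which is what a structured environment with enough past experience should produce.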

Conclusions

Conclusions
• In LTWM’s original formulation the retrieval structures were under-specified. In LPSA, the basic mechanisms postulated are defined computationally.
• In CAH’s original formulation, the representation of the environmental constraints (its most central assertion) was under-specified too. LPSA proposes an automatic mechanism to represent the statistical regularities of the environment.

Conclusions
• LPSA can explain the main assertions of both LTWM and CAH:
– LTWM claims that the magnitude of expertise effects is related to the level of attained skill and to the amount of relevant prior experience
– CAH claims that expertise effects in memory recall are also determined by the amount of structure in the domain (and by active attunement to that structure)
• Better yet, LPSA proposes both processes and representational structures

Conclusions
• What does this mean for theorizing about problem solving?
– As in LTWM for text comprehension, we propose that in expert problem solving the current context automatically and effortlessly retrieves past knowledge and adapts it to the current situation.
– This retrieval is specific to the domain of expertise, and requires a long period of practice. A short period will not do.
– This retrieval is only possible in domains that show constraints that the expert can use (attune to).

Conclusions
• Generality: the fact that the same mechanism, with the very same underlying assumptions, can be used for language and problem solving is interesting per se. In LTWM, the retrieval structures for chess are different from the ones proposed for text comprehension. In CAH, two AHs for two different tasks are different too. In LPSA, any space for any task is a vector space.

Automatic Landing Technique Assessment using Latent Problem Solving Analysis (LPSA)

The problem
• There is currently no methodology to automatically assess landing technique in a commercial aircraft or a flight simulator. Instructors are a significant cost for training and evaluation of pilots, and the use of instructors also incorporates a subjective component that may vary from pilot to pilot.
• The advantages of automatic landing technique evaluation are many: (1) Reduced cost of the evaluation. (2) Increased objectivity in the evaluation. (3) Decreased influence of the instructor. (4) Perfect test-retest reliability. (5) It is always available and can be triggered by the trainee at will. (6) The model can rate as many landings as time enables, etc.

A solution: Latent Problem Solving Analysis (LPSA)
• Latent Problem Solving Analysis (LPSA, Quesada, Kintsch and Gomez, 2002) is based on Latent Semantic Analysis (LSA, Landauer and Dumais, 1997). Instead of using word occurrence statistics and huge samples of text, LPSA uses a representative amount of activity in controlling dynamic systems (actions or states).
• Like words, states and actions appear in particular contexts but not in others. Some states and actions are interchangeable, being ‘functional synonyms’. Given the right algorithms and sufficient amounts of logged trials, a problem space can be derived in a similar way as semantic spaces are.
• In this application of LPSA to landing technique evaluation, we assume that an expert uses her past knowledge to emit landing ratings by comparing the current situation to the past ones, and generates an expanded representation of the environment by composing the past situations that are most similar to the current one.
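One way to read the last point is as a nearest-neighbor rating scheme: a new landing is rated from the ratings of the most similar past landings. The sketch below is an assumption about how such a rater could look, not the authors' implementation; `rate_landing`, the unweighted mean, and the toy vectors are hypothetical, and `k=5` simply echoes the neighbor count mentioned in the results.

```python
import math
from typing import List, Sequence, Tuple


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rate_landing(new_landing: Sequence[float],
                 rated_landings: List[Tuple[Sequence[float], float]],
                 k: int = 5) -> float:
    """Average the expert ratings of the k past landings most similar to the new one."""
    if not rated_landings:
        raise ValueError("at least one rated landing is required")
    ranked = sorted(rated_landings,
                    key=lambda item: cosine(new_landing, item[0]),
                    reverse=True)
    nearest = ranked[:k]
    return sum(rating for _, rating in nearest) / len(nearest)


# Toy past landings: (vector in the reduced space, overall score on a 1 to 5 scale).
past = [([1.0, 0.0], 4.0), ([0.9, 0.1], 5.0), ([0.0, 1.0], 1.0)]
predicted = rate_landing([1.0, 0.0], past, k=2)
```

The same function could be applied once per criterion (flare initiation altitude, thrust reduction, pitch angle, overall score) by swapping the ratings attached to the past landings.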

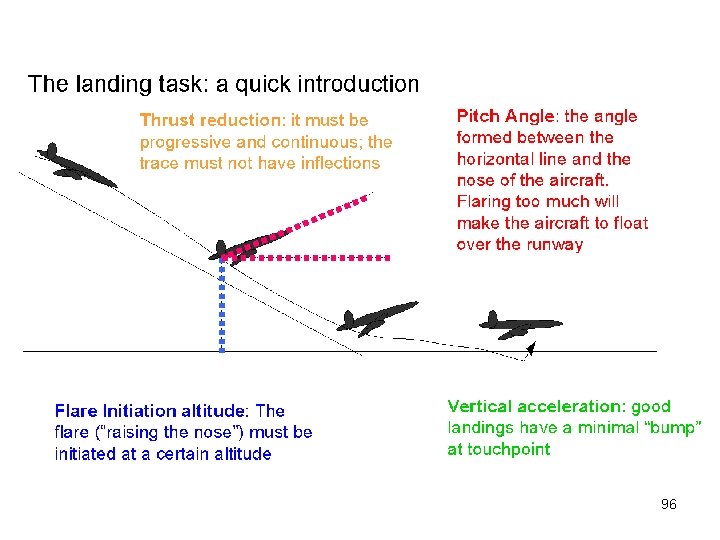


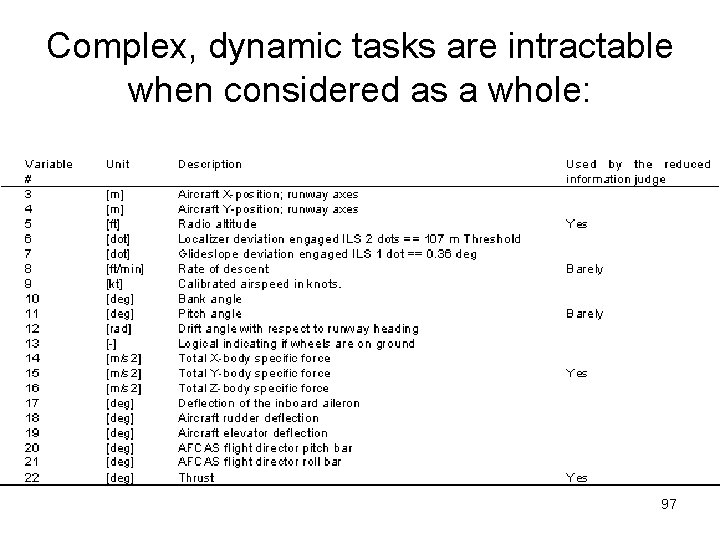


Complex, dynamic tasks are intractable when considered as a whole:
• We need to perform complexity reduction, in a mostly automatic way
– The triangulation technique
– Dimensionality reduction (LPSA)

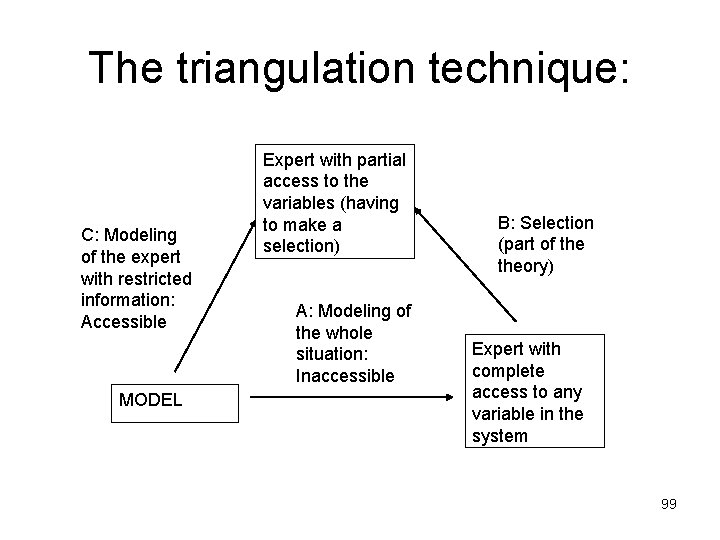

The triangulation technique:
• A: Modeling of the whole situation: inaccessible (expert with complete access to any variable in the system)
• B: Selection (part of theory): expert with partial access to the variables (having to make a selection)
• C: Modeling of the expert with restricted information: accessible (MODEL)

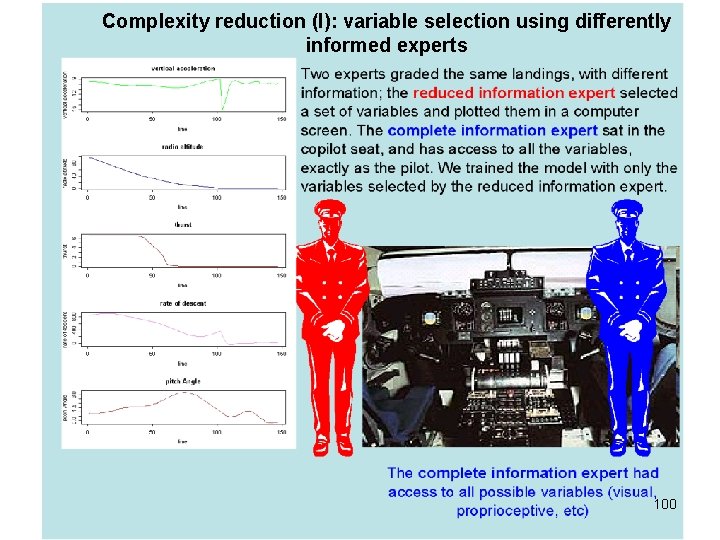

Complexity reduction (I): variable selection using differently informed experts

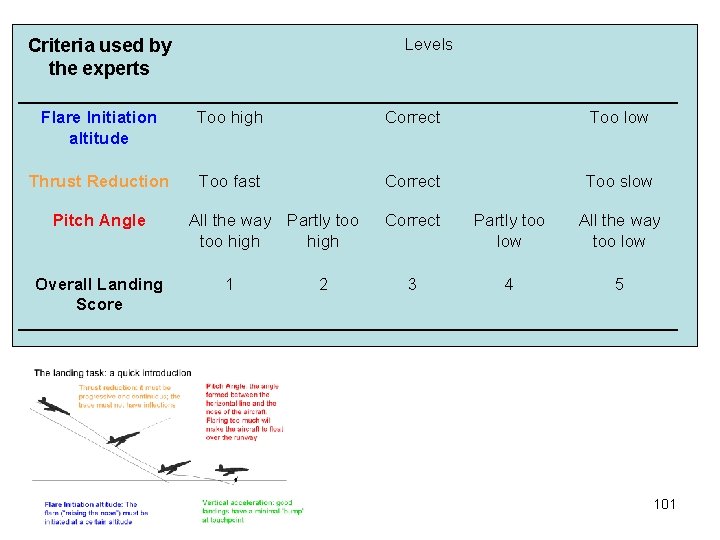

| Criteria used by the experts | Levels |
|---|---|
| Flare initiation altitude | Too high; Correct; Too low |
| Thrust reduction | Too fast; Correct; Too slow |
| Pitch angle | All the way too high; Partly too high; Correct; Partly too low; All the way too low |
| Overall landing score | 1; 2; 3; 4; 5 |

Complexity reduction (II): Using SVD, the problem space is a vector space
• A state is a string of text consisting of the values of each variable (those of the reduced information expert) joined by underscores, to make it a single token, like: “time tag_vertical acceleration_Radio altitude_Thrust”
• A landing is a collection of these states. The variables were sampled ten times per second, and the landing time was approximately 15 seconds, so each landing contained about 150 states
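The state encoding and the arithmetic behind "about 150 states" can be sketched as follows. The variable order follows the example token above; the numeric values, the function name `state_token`, and the constants are made up for illustration.

```python
from typing import List, Sequence

SAMPLES_PER_SECOND = 10   # sampling rate stated on the slide
LANDING_SECONDS = 15      # approximate landing duration stated on the slide


def state_token(values: Sequence[object]) -> str:
    """Join one sample of the selected variables into a single token."""
    return "_".join(str(value) for value in values)


def encode_landing(samples: Sequence[Sequence[object]]) -> List[str]:
    """A landing is the collection of its state tokens."""
    return [state_token(sample) for sample in samples]


# Hypothetical samples: (time tag, vertical acceleration, radio altitude, thrust).
samples = [(i, 1.0, 50 - i // 3, 60)
           for i in range(SAMPLES_PER_SECOND * LANDING_SECONDS)]
landing = encode_landing(samples)
```

With continuous variables, some rounding or binning before joining would presumably be needed for the same token to recur across landings; the slides do not say how this was handled.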

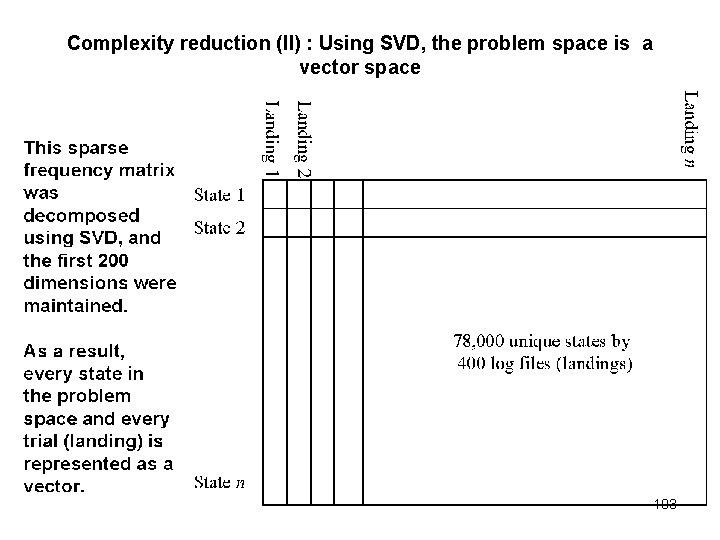


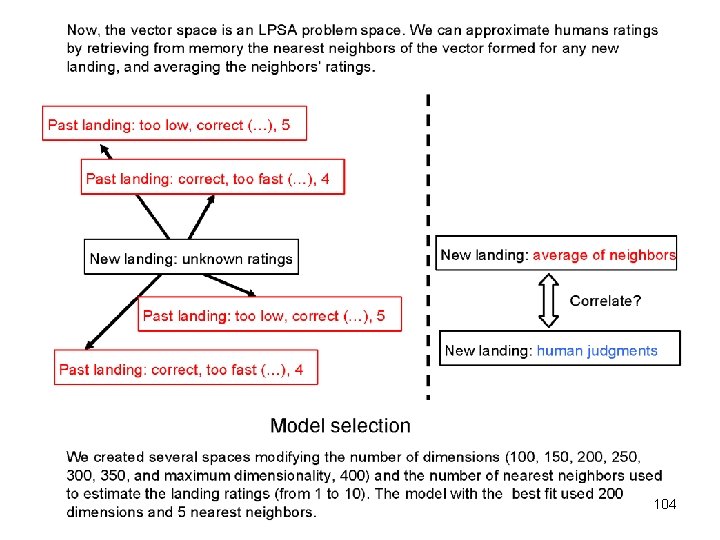


Results

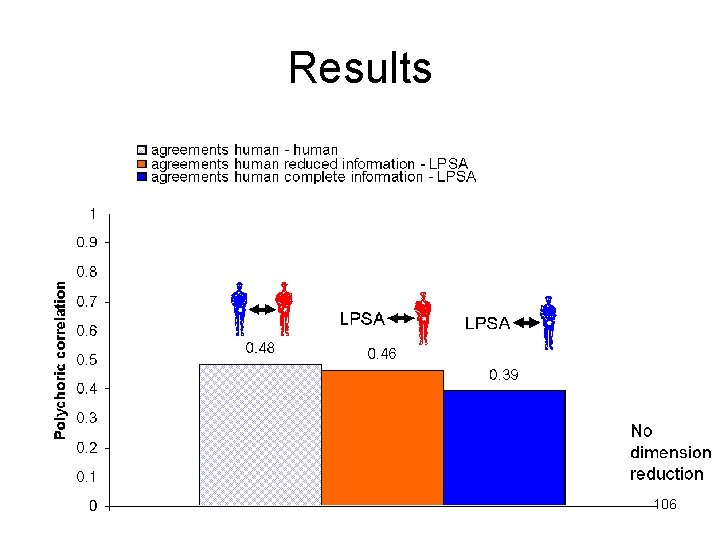


Results
• The two landing raters’ agreement is not too high; however, it is similar to other experts’ agreement, such as Clinical Psychologists (0.40), Stockbrokers (<0.32), Polygraphers (0.32) and Livestock Judges (0.50). Their agreement is lower than the ones reported for Weather Forecasters (0.95), Pathologists (0.55), Auditors (0.76) and Grain Inspectors (0.60) (Shanteau, 2001).
• The correlation between the model and the reduced information expert is about the same as the correlation between the two humans (0.48 vs. 0.46). Note that the ceiling for the model is the correlation between two humans doing the task: a model that correlates with one human better than the between-human correlation is under suspicion. The correlation for the complete information expert was 0.39, even though the model was not trained to mimic him.


Results
Note that the only criterion where the model correlates with any of the experts more than they correlate with each other is thrust reduction. Thrust reduction seems to be a very difficult feature to judge, since the agreement between human experts is the lowest (0.27) and it is also the one in which the reduced information expert obtains the lowest test-retest reliability (0.538, see Table 1 4 on page 119). All the polychoric correlations between the reduced information expert and the model were significant (p = .002), as were the correlations between the complete information expert and the model. The equivalent model without dimensionality reduction (400 dimensions, 5 neighbors, no weighting, no timestamp) produced correlation values of 0.37, 0.08, 0.57 and 0.50 for the above criteria, respectively.

Results: no-constraints corpus

Conclusions
Previously, LPSA has proved to be a powerful theory for modeling behavior in complex, dynamic problem-solving tasks, and has been proposed as a theory of expertise (see Quesada, unpublished). However, this is the first time that LPSA has been used to develop technology for industrial applications. In previous work, we have presented an experience-based approach to problem solving. Problem solving is viewed as the extraction of useful representations from a corpus of situations. The creation of the representations is a primarily bottom-up, unsupervised process. It is proposed that the problem space can be viewed as a vector space. People use their past knowledge to perform complex, dynamic tasks by comparing the current situation to past ones, and generate an expanded representation of the environment by composing the past situations that are most similar to the current one. In complex dynamic situations, this intuitive, pattern-based system can have a very important role.

Conclusions
It is possible to construct systems that grade landing technique automatically as well as humans do, if we consider that the limit of performance for such a model is the human-human agreement. The human-human correlation was low (0.46) but within the range of some other areas reported (Shanteau, 2001). In a large-scale application of the model (for a training and evaluation department, for example), we can imagine that 500 pilots need to be evaluated. In that situation, only a small proportion of randomly sampled landings (which can be kept from previous sessions) must be evaluated by humans; the rest is performed by the system. Since the model has different landing criteria, it could emit recommendations such as: ‘In this landing, you initiated the flare too high, and reduced the thrust too late. Keep that in mind for the next one’.

Conclusions
A direct consequence of the availability of a system like LPSA for the development of psychological theory is that some experiments that were prohibitive before could now be planned within the budget. Since instructors are a scarce resource, an experimenter may decide that she cannot afford to run a particular, very promising experiment because of the expenses associated with performance assessment. With an automatic and reliable method to perform the evaluation, more complex experiments could be feasible.
