
Latent Dirichlet Allocation
David M. Blei, Andrew Y. Ng & Michael I. Jordan
presented by Tilaye Alemu & Anand Ramkissoon

Motivation for LDA
- In lay terms: document modelling, text classification, collaborative filtering, ...
- All in the context of Information Retrieval
- The principal focus in this paper is on document classification within a corpus

Structure of this talk
- Part 1: Theory, background, (some) other approaches
- Part 2: Experimental results, some details of usage, wider applications

LDA: conceptual features
- Generative
- Probabilistic
- Collections of discrete data
- 3-level hierarchical Bayesian model
- Mixture models
- Efficient approximate inference techniques: variational methods
- EM algorithm for empirical Bayes parameter estimation

How to classify text documents
- Word (term) frequency: tf-idf
  - term-by-document matrix
  - discriminative sets of words
  - fixed-length lists of numbers
  - little statistical structure
- Dimensionality reduction techniques: Latent Semantic Indexing
  - singular value decomposition
  - not generative
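The tf-idf representation mentioned on this slide can be sketched in a few lines. This is a minimal illustration, not the weighting used in the paper: it assumes whitespace tokenization and the common `count * log(M / df)` variant, and the function name `tf_idf` and the toy corpus are hypothetical. It builds a document-by-term matrix, which is the transpose of the term-by-document matrix named on the slide.

```python
import math
from collections import Counter

def tf_idf(docs):
    """Return (vocab, matrix) with one tf-idf row per document and one column per term."""
    tokenized = [doc.lower().split() for doc in docs]
    vocab = sorted({w for doc in tokenized for w in doc})
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term occurs.
    df = Counter(w for doc in tokenized for w in set(doc))
    matrix = []
    for doc in tokenized:
        counts = Counter(doc)
        # Raw term count scaled by the log inverse document frequency.
        matrix.append([counts[w] * math.log(n_docs / df[w]) for w in vocab])
    return vocab, matrix

# Toy corpus (hypothetical): every document becomes a fixed-length list of numbers.
docs = ["the cat sat", "the dog sat", "the cat chased the dog"]
vocab, X = tf_idf(docs)
```

A term that occurs in every document (here "the") gets weight zero, which is how tf-idf singles out words that discriminate between documents.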

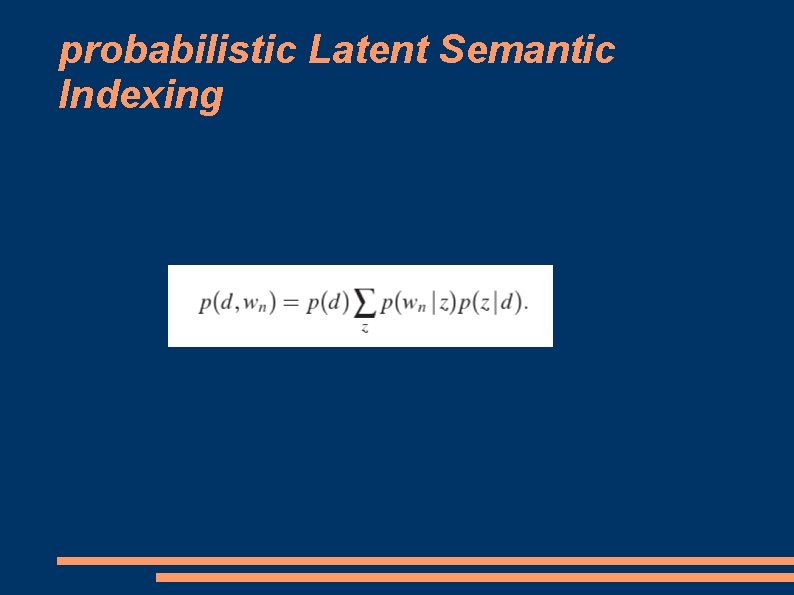

How to classify text documents (cont'd)
- probabilistic LSI (pLSI)
  - each word is generated by one topic
  - each document is generated by a mixture of topics
  - a document is represented as a list of mixing proportions for topics
- No generative model for these numbers
- Number of parameters grows linearly with the corpus
- Overfitting
- How to classify documents outside the training set?

A major simplifying assumption
- A document is a “bag of words”
- A corpus is a “bag of documents”
- Order is unimportant: exchangeability
- de Finetti representation theorem: any collection of exchangeable random variables has a representation as a (generally infinite) mixture distribution

A note about exchangeability
- Exchangeability does not mean that the random variables are iid
- They are iid only when conditioned on an underlying latent parameter of a probability distribution
- Conditionally, the joint distribution is simple and factored

Notation
- word: unit of discrete data, an item from a vocabulary indexed $\{1, \ldots, V\}$
- each word is a unit-basis V-vector
- document: a sequence of N words, $\mathbf{w} = (w_1, \ldots, w_N)$
- corpus: a collection of M documents, $D = (\mathbf{w}_1, \ldots, \mathbf{w}_M)$
- Each document is considered a random mixture over latent topics
- Each topic is considered a distribution over words

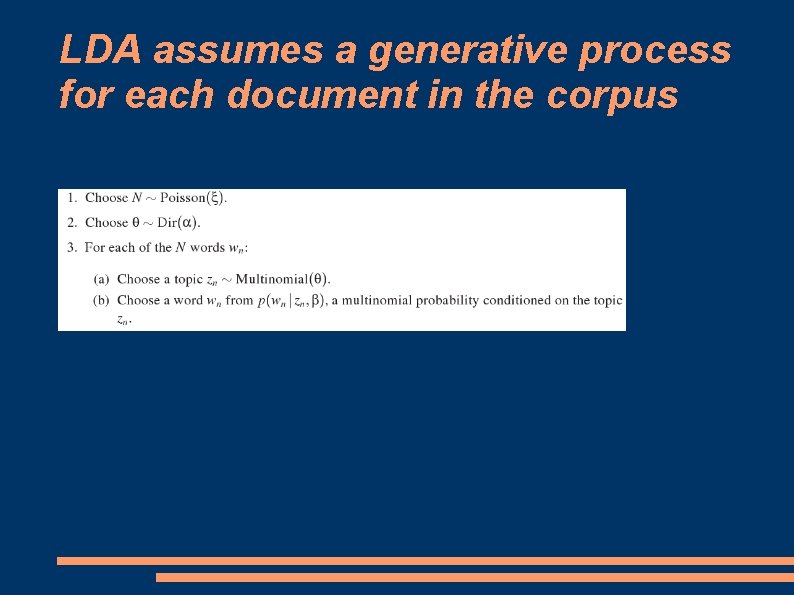

LDA assumes a generative process for each document in the corpus

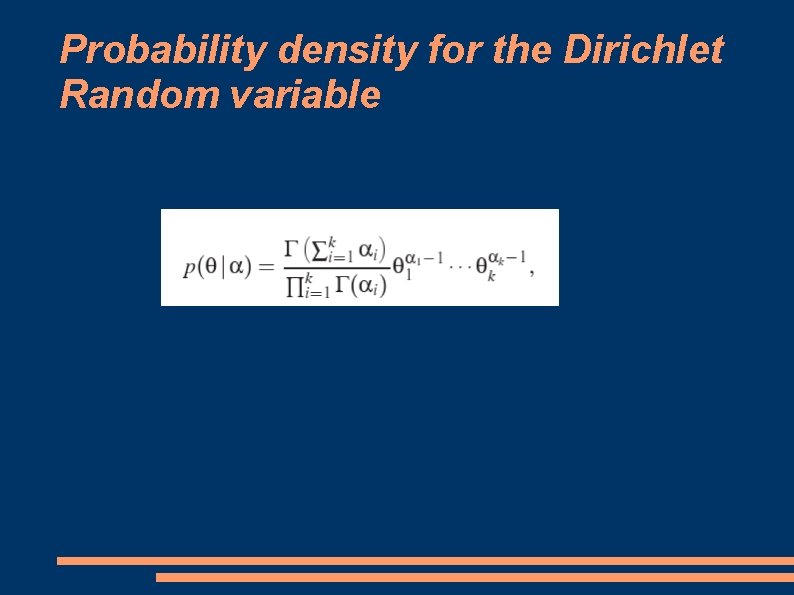
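The generative process can be sketched as sampling code: draw a topic mixture θ from a Dirichlet, then for each word draw a topic from θ and a word from that topic's word distribution. This is a minimal sketch under stated assumptions: the paper draws the document length from a Poisson distribution, whereas here it is passed in as `n_words`; the names `sample_dirichlet` and `generate_document` and the toy parameters are hypothetical.

```python
import random

def sample_dirichlet(alpha, rng):
    # Independent Gamma(alpha_i, 1) draws, normalised, follow Dirichlet(alpha).
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]

def generate_document(alpha, beta, n_words, rng):
    """Sample one document; beta[i][j] is p(word j | topic i)."""
    theta = sample_dirichlet(alpha, rng)  # per-document topic mixture
    topics, words = [], []
    for _ in range(n_words):
        # Choose a topic for this word from the document's mixture.
        z = rng.choices(range(len(alpha)), weights=theta)[0]
        # Choose the word from the selected topic's distribution over the vocabulary.
        w = rng.choices(range(len(beta[z])), weights=beta[z])[0]
        topics.append(z)
        words.append(w)
    return theta, topics, words

# Toy setup (assumed): 2 topics over a 4-word vocabulary with disjoint support.
rng = random.Random(0)
beta = [[0.5, 0.5, 0.0, 0.0],
        [0.0, 0.0, 0.5, 0.5]]
theta, topics, words = generate_document([0.5, 0.5], beta, 20, rng)
```

Because the two toy topics use disjoint words, each sampled word reveals which topic produced it; with overlapping topics that assignment is latent and has to be inferred.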

Probability density for the Dirichlet random variable

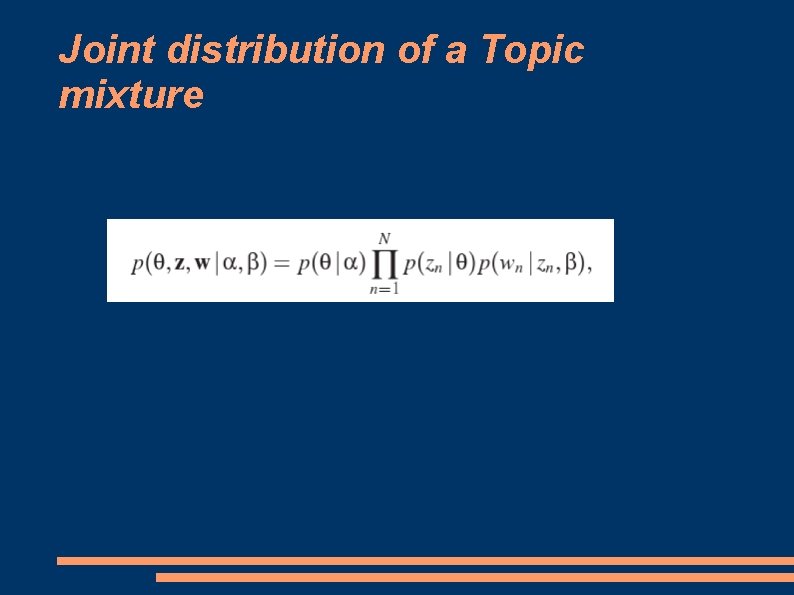
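For readers of this text version, where the slide image is not reproduced, the density of a k-dimensional Dirichlet random variable θ on the simplex, as given in the Blei, Ng and Jordan paper, is:

```latex
p(\theta \mid \alpha)
  = \frac{\Gamma\!\left(\sum_{i=1}^{k} \alpha_i\right)}{\prod_{i=1}^{k} \Gamma(\alpha_i)}
    \, \theta_1^{\alpha_1 - 1} \cdots \theta_k^{\alpha_k - 1},
\qquad \theta_i \ge 0, \quad \sum_{i=1}^{k} \theta_i = 1 .
```

Here α is a k-vector with positive components and Γ is the Gamma function.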

Joint distribution of a topic mixture, a set of topics and a set of words

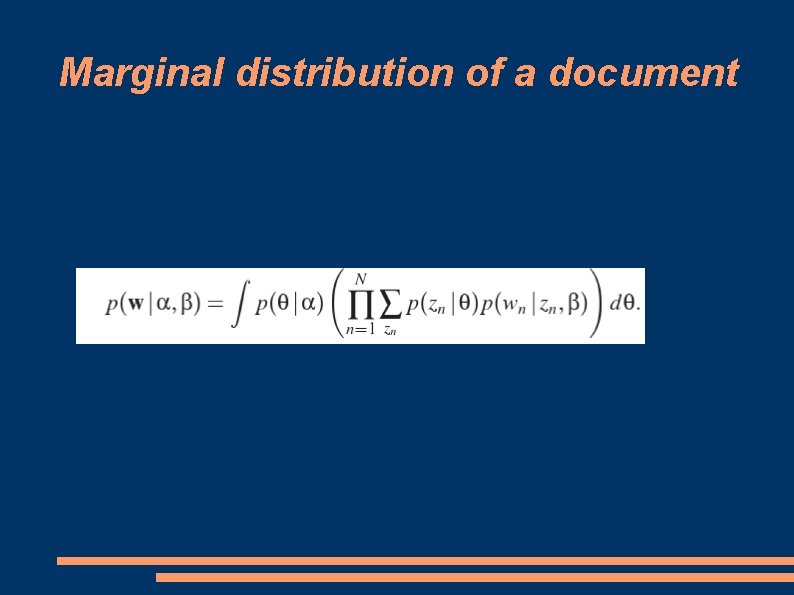
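In the paper's notation, with θ the topic mixture, z the N topic assignments and w the N words of one document, the joint distribution factorises as follows (restated here because the slide image is not reproduced in text):

```latex
p(\theta, \mathbf{z}, \mathbf{w} \mid \alpha, \beta)
  = p(\theta \mid \alpha) \prod_{n=1}^{N} p(z_n \mid \theta)\, p(w_n \mid z_n, \beta) .
```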

Marginal distribution of a document

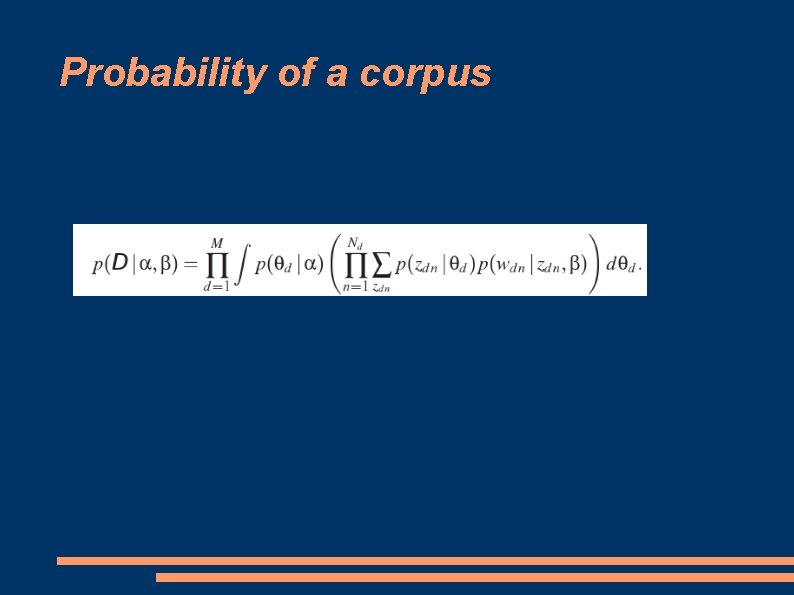
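Integrating over θ and summing over the topic assignments z in the joint distribution gives the marginal distribution of a document. In the paper's notation:

```latex
p(\mathbf{w} \mid \alpha, \beta)
  = \int p(\theta \mid \alpha)
    \left( \prod_{n=1}^{N} \sum_{z_n} p(z_n \mid \theta)\, p(w_n \mid z_n, \beta) \right) d\theta .
```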

Probability of a corpus

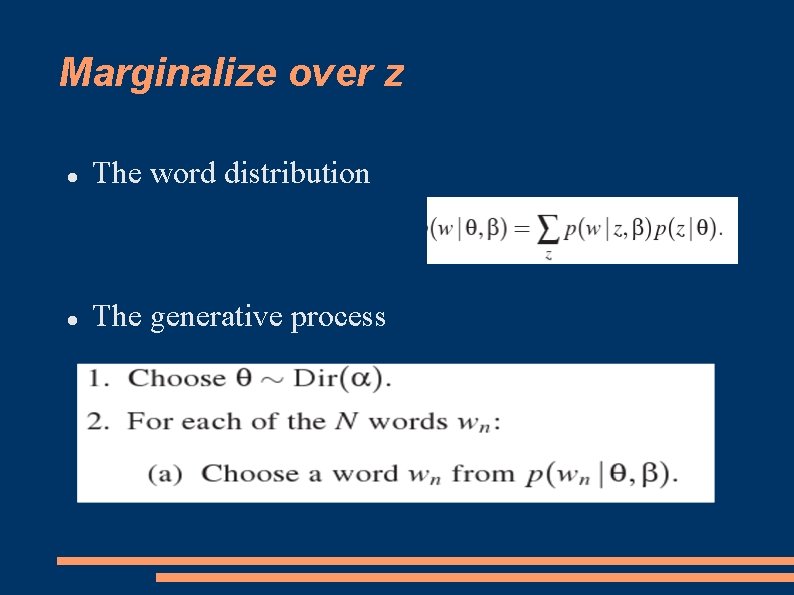
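The probability of a corpus is the product of the marginal probabilities of its M documents. In the paper's notation, with a subscript d indexing documents:

```latex
p(D \mid \alpha, \beta)
  = \prod_{d=1}^{M} \int p(\theta_d \mid \alpha)
    \left( \prod_{n=1}^{N_d} \sum_{z_{dn}} p(z_{dn} \mid \theta_d)\, p(w_{dn} \mid z_{dn}, \beta) \right) d\theta_d .
```

The parameters α and β are corpus-level, θ_d is sampled once per document, and z_dn and w_dn are sampled once per word: these are the three levels of the model.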

Marginalize over z
- The word distribution
- The generative process

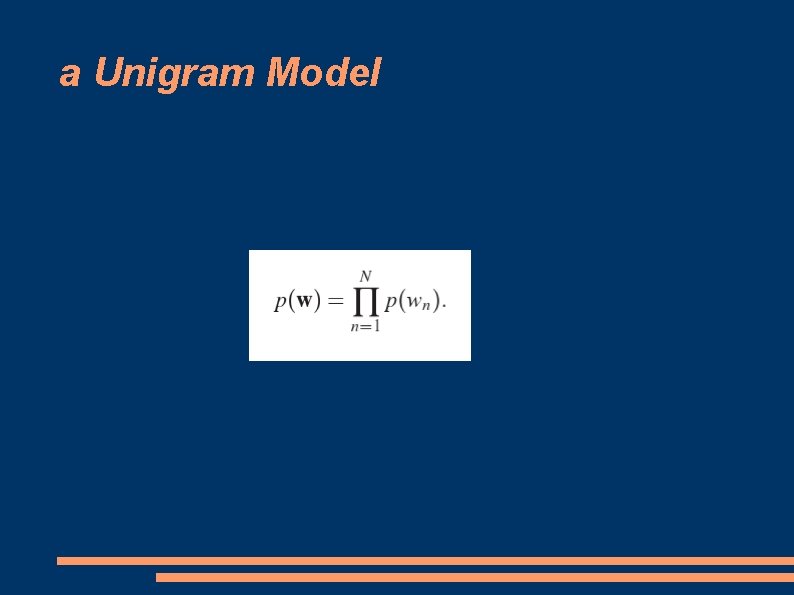

A unigram model
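For comparison with LDA, the unigram model draws every word of every document independently from a single multinomial, so a document's probability is a plain product:

```latex
p(\mathbf{w}) = \prod_{n=1}^{N} p(w_n) .
```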

Probabilistic Latent Semantic Indexing

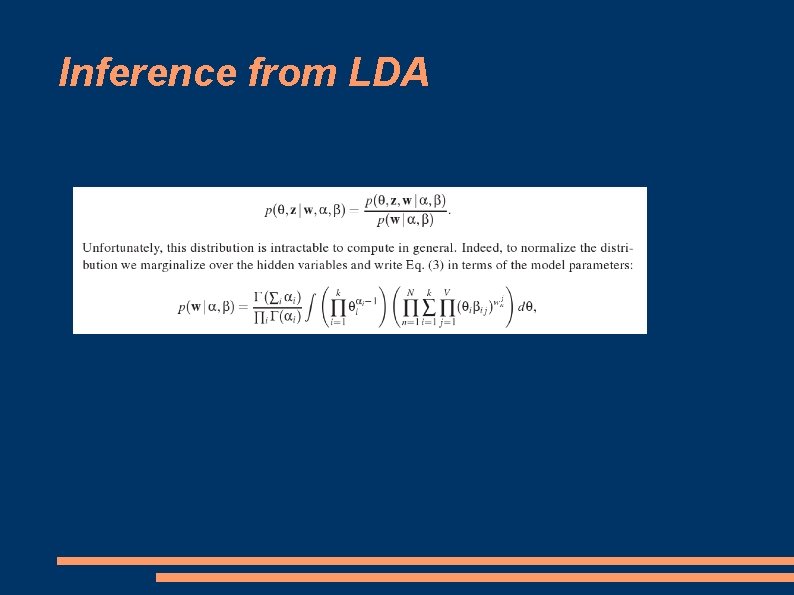
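In the paper's description of pLSI, a document label d and a word w_n are conditionally independent given an unobserved topic z:

```latex
p(d, w_n) = p(d) \sum_{z} p(w_n \mid z)\, p(z \mid d) .
```

The mixing proportions p(z | d) are defined only for documents in the training set, which is why the model has no natural way to assign probability to an unseen document.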

Inference from LDA

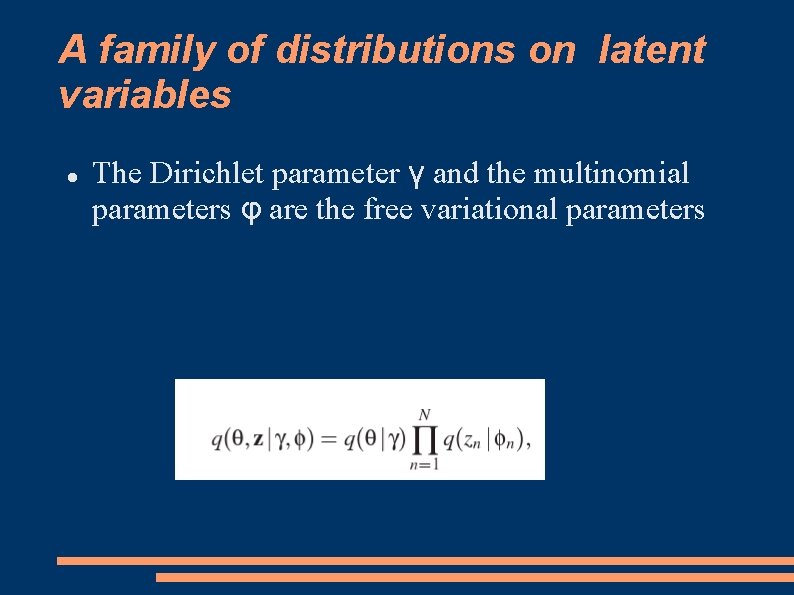
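The inference problem is to compute the posterior over the hidden variables of a document. In the paper's notation:

```latex
p(\theta, \mathbf{z} \mid \mathbf{w}, \alpha, \beta)
  = \frac{p(\theta, \mathbf{z}, \mathbf{w} \mid \alpha, \beta)}{p(\mathbf{w} \mid \alpha, \beta)} .
```

The denominator is the marginal distribution of a document, which is intractable to compute exactly because θ and β are coupled inside the sum over latent topics. This motivates approximate inference.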

Variational Inference

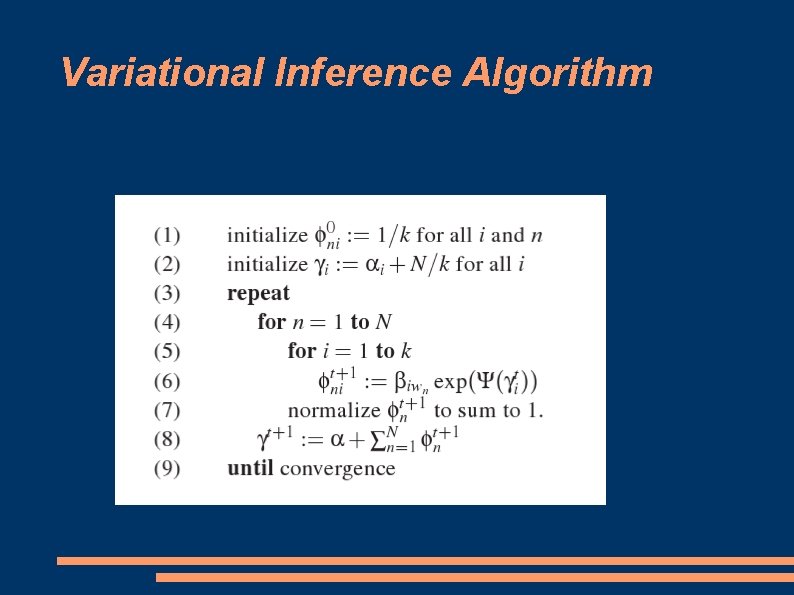

A family of distributions on latent variables
- The Dirichlet parameter γ and the multinomial parameters φ are the free variational parameters

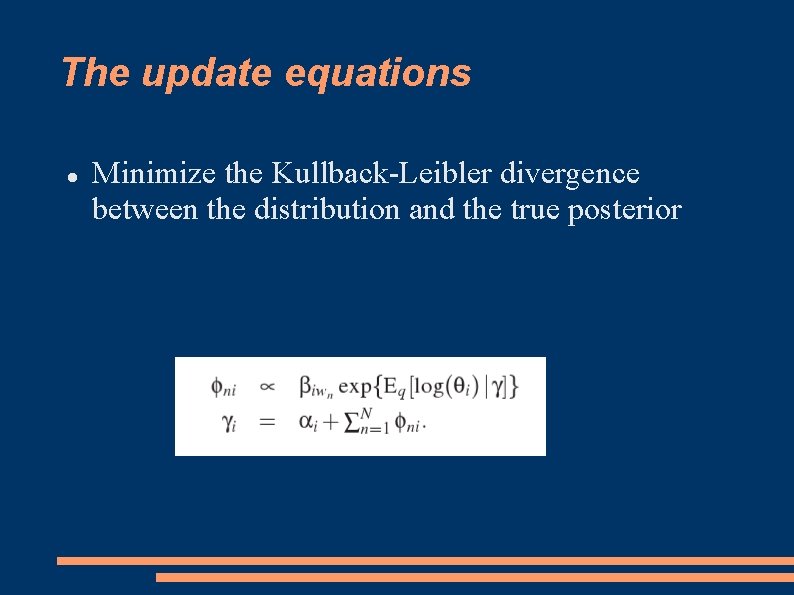
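The variational family used in the paper drops the coupling between θ and z, so the approximating distribution factorises fully:

```latex
q(\theta, \mathbf{z} \mid \gamma, \phi)
  = q(\theta \mid \gamma) \prod_{n=1}^{N} q(z_n \mid \phi_n) ,
```

where q(θ | γ) is a Dirichlet with parameter γ and each q(z_n | φ_n) is a multinomial with parameter φ_n.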

The update equations
- Minimize the Kullback-Leibler divergence between the variational distribution and the true posterior
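Written out in the paper's notation, the optimisation problem and the coordinate updates it leads to are as follows, with Ψ denoting the digamma function:

```latex
(\gamma^{*}, \phi^{*})
  = \arg\min_{(\gamma, \phi)}
    D\!\left( q(\theta, \mathbf{z} \mid \gamma, \phi) \,\|\, p(\theta, \mathbf{z} \mid \mathbf{w}, \alpha, \beta) \right)

\phi_{ni} \propto \beta_{i w_n} \exp\!\left\{ \mathrm{E}_q[\log \theta_i \mid \gamma] \right\},
\qquad
\gamma_i = \alpha_i + \sum_{n=1}^{N} \phi_{ni},
\qquad
\mathrm{E}_q[\log \theta_i \mid \gamma] = \Psi(\gamma_i) - \Psi\!\Big(\sum_{j=1}^{k} \gamma_j\Big) .
```

The term Ψ(Σ_j γ_j) is the same for every topic i, so it cancels when φ_n is normalised to sum to one.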

Variational Inference Algorithm
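The per-document algorithm alternates the φ and γ updates until γ stops changing. The sketch below follows the paper's initialisation (φ_ni = 1/k, γ_i = α_i + N/k) but is an illustration rather than the authors' implementation: the function names, the convergence test on γ, and the hand-rolled `digamma` (recurrence plus asymptotic series, used to avoid external dependencies) are assumptions.

```python
import math

def digamma(x):
    # Shift x above 6 with psi(x) = psi(x + 1) - 1/x, then use the asymptotic series.
    result = 0.0
    while x < 6.0:
        result -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    series = inv2 * (1.0 / 12 - inv2 * (1.0 / 120 - inv2 / 252))
    return result + math.log(x) - 0.5 / x - series

def variational_inference(words, alpha, beta, max_iter=100, tol=1e-6):
    """Return (gamma, phi) for one document; beta[i][w] is p(word w | topic i)."""
    k, n = len(alpha), len(words)
    phi = [[1.0 / k] * k for _ in range(n)]
    gamma = [a + n / k for a in alpha]
    for _ in range(max_iter):
        new_gamma = list(alpha)
        for pos, w in enumerate(words):
            # phi_ni is proportional to beta_{i,w_n} * exp(digamma(gamma_i));
            # the digamma(sum(gamma)) term is constant in i and cancels on normalising.
            weights = [beta[i][w] * math.exp(digamma(gamma[i])) for i in range(k)]
            total = sum(weights)
            phi[pos] = [x / total for x in weights]
            # gamma_i = alpha_i + sum over words of phi_ni
            for i in range(k):
                new_gamma[i] += phi[pos][i]
        change = max(abs(g - h) for g, h in zip(gamma, new_gamma))
        gamma = new_gamma
        if change < tol:
            break
    return gamma, phi

# Toy document (assumed): three occurrences of word 0 and one of word 1.
alpha = [0.1, 0.1]
beta = [[0.9, 0.1], [0.1, 0.9]]
gamma, phi = variational_inference([0, 0, 0, 1], alpha, beta)
```

In this toy setup topic 0 favours word 0, so the fitted γ puts more mass on topic 0. In the full method this routine is the E-step, run per document inside the EM loop that estimates α and β.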
