Last Time Local Lighting model Diffuse illumination Specular

- Slides: 28

Last Time • Local Lighting model – Diffuse illumination – Specular illumination – Ambient illumination • Light sources – Point – Directional – Spotlights 11/09/04 © University of Wisconsin, CS 559 Fall 2004

Today • Shading Interpolation • Mapping Techniques

Shading so Far • So far, we have discussed illuminating a single point • We have assumed that we know: – The point – The surface normal – The viewer location (or direction) – The light location (or direction) • But commonly, normal vectors are only given at the vertices • It is also expensive to compute lighting for every point

Shading Interpolation • Take information specified or computed at the vertices, and somehow propagate it across the polygon (triangle) • Several options: – Flat shading – Gouraud interpolation – Phong interpolation

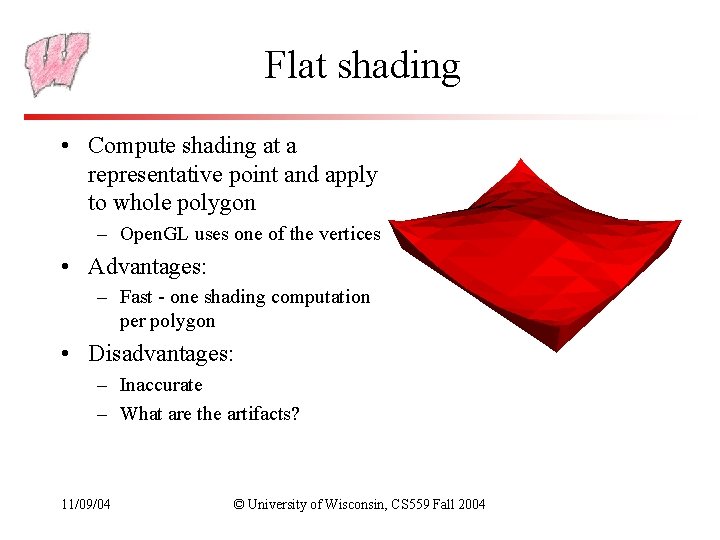

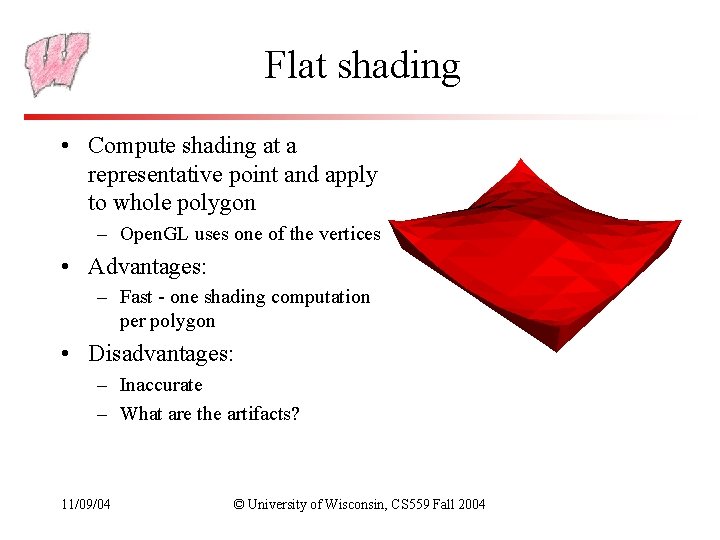

Flat shading • Compute shading at a representative point and apply to whole polygon – OpenGL uses one of the vertices • Advantages: – Fast - one shading computation per polygon • Disadvantages: – Inaccurate – What are the artifacts?
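
A minimal sketch of flat shading, assuming a Lambertian diffuse term and a directional light. The helper names (`normalize`, `lambert`, `flat_shade`) and the sample triangle are illustrative and not part of OpenGL.

```python
import math

def normalize(v):
    """Scale a 3-vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def lambert(normal, light_dir):
    """Diffuse intensity: cosine between normal and light direction, clamped at 0."""
    n, l = normalize(normal), normalize(light_dir)
    return max(0.0, sum(a * b for a, b in zip(n, l)))

def flat_shade(vertex_normals, light_dir, representative=0):
    """Shade one representative vertex and reuse the result for the whole polygon."""
    return lambert(vertex_normals[representative], light_dir)

# Hypothetical triangle approximating a curved surface: each vertex has its own normal.
normals = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (0.0, 1.0, 1.0)]
light = (0.0, 0.0, 1.0)  # direction toward the light

# The result depends entirely on which vertex is chosen as the representative.
intensity_v0 = flat_shade(normals, light, representative=0)
intensity_v1 = flat_shade(normals, light, representative=1)
```

Because the whole polygon gets a single value, the two choices above give visibly different polygons, which hints at why neighboring faces show abrupt intensity steps.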

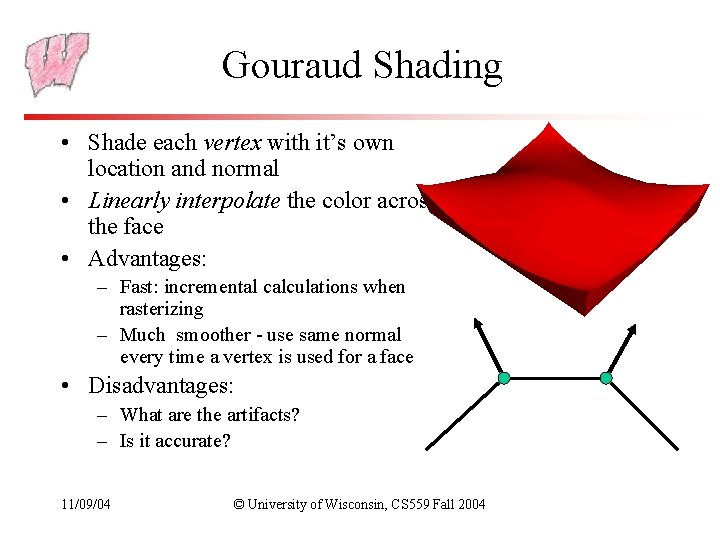

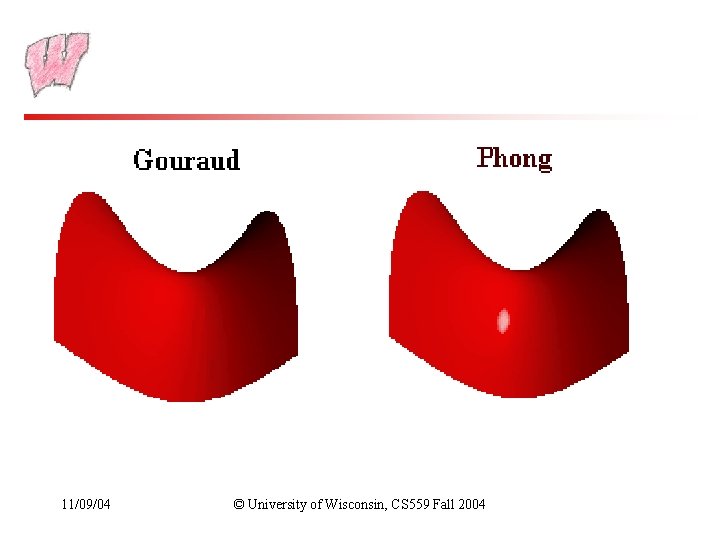

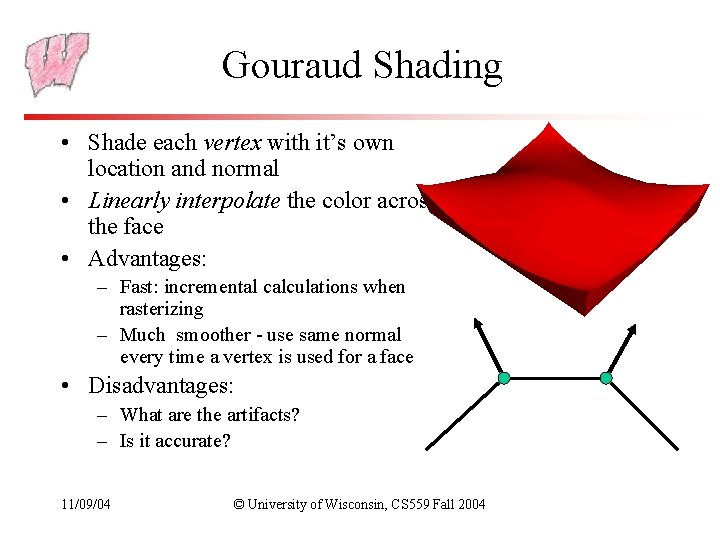

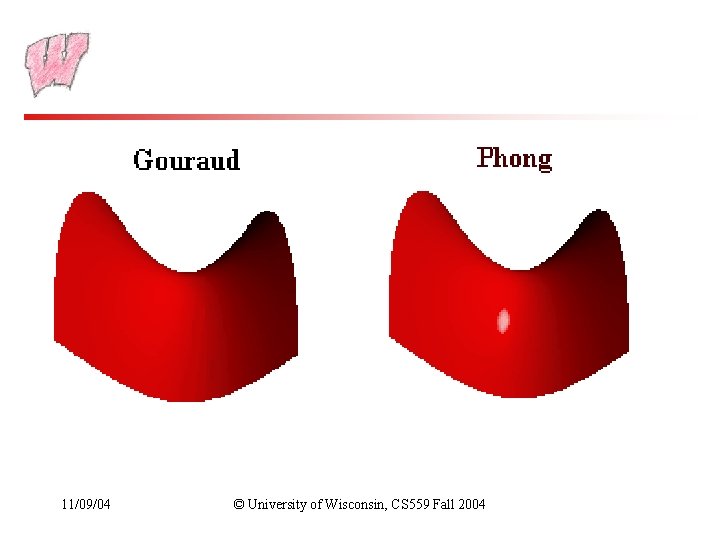

Gouraud Shading • Shade each vertex with its own location and normal • Linearly interpolate the color across the face • Advantages: – Fast: incremental calculations when rasterizing – Much smoother - use same normal every time a vertex is used for a face • Disadvantages: – What are the artifacts? – Is it accurate?
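
The incremental calculation can be sketched for one scanline span as follows. This assumes the two end colors have already been computed by lighting the vertices and interpolating down the edges; `gouraud_span` is a hypothetical helper, not an OpenGL call.

```python
def gouraud_span(color_left, color_right, num_pixels):
    """Interpolate an RGB color across a span using one addition per channel per pixel."""
    if num_pixels < 2:
        return [tuple(color_left)]
    # Constant per-pixel increment for each channel.
    step = [(r - l) / (num_pixels - 1) for l, r in zip(color_left, color_right)]
    color = list(color_left)
    span = []
    for _ in range(num_pixels):
        span.append(tuple(color))
        color = [c + d for c, d in zip(color, step)]
    return span

# Red at the left end of the span, blue at the right end.
span = gouraud_span((1.0, 0.0, 0.0), (0.0, 0.0, 1.0), 5)
```

The middle pixel of this span is an even mix of the two end colors, and no lighting calculation is repeated inside the span.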

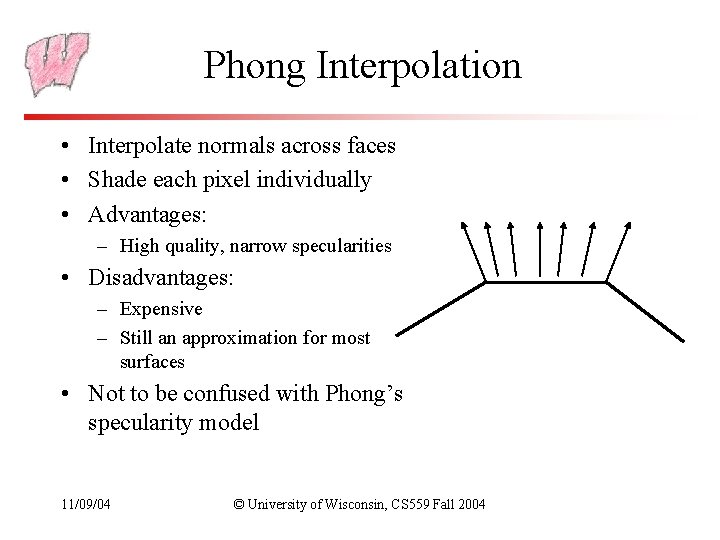

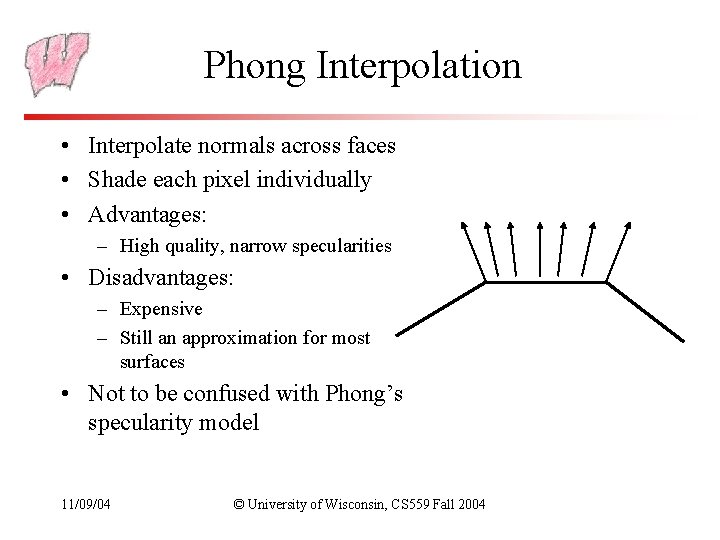

Phong Interpolation • Interpolate normals across faces • Shade each pixel individually • Advantages: – High quality, narrow specularities • Disadvantages: – Expensive – Still an approximation for most surfaces • Not to be confused with Phong’s specularity model
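
A small sketch of why interpolating normals captures narrow specularities that color interpolation misses. It assumes a half-vector specular term with exponent 50 (an illustrative choice, not something these slides specify), and compares the midpoint of an edge under both schemes.

```python
import math

def normalize(v):
    """Scale a 3-vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def specular(normal, half_vector, shininess=50):
    """Specular intensity (n . h)^shininess, clamped at 0 (assumed model)."""
    n, h = normalize(normal), normalize(half_vector)
    n_dot_h = max(0.0, sum(a * b for a, b in zip(n, h)))
    return n_dot_h ** shininess

def gouraud_midpoint(n0, n1, h):
    """Gouraud: shade the two vertices, then average the resulting intensities."""
    return 0.5 * (specular(n0, h) + specular(n1, h))

def phong_midpoint(n0, n1, h):
    """Phong interpolation: average the normals, then shade the midpoint itself."""
    mid_normal = tuple(0.5 * (a + b) for a, b in zip(n0, n1))
    return specular(mid_normal, h)

# Hypothetical edge whose vertex normals tilt away from each other.
n_left, n_right = (-1.0, 0.0, 1.0), (1.0, 0.0, 1.0)
half = (0.0, 0.0, 1.0)  # light and viewer both straight ahead

gouraud_value = gouraud_midpoint(n_left, n_right, half)
phong_value = phong_midpoint(n_left, n_right, half)
```

In this setup the highlight falls in the middle of the edge: `phong_value` is at full strength, while `gouraud_value` is close to zero because neither vertex sees the highlight.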


Shading and OpenGL • OpenGL defines two particular shading models – Controls how colors are assigned to pixels – glShadeModel(GL_SMOOTH) interpolates between the colors at the vertices (the default, Gouraud shading) – glShadeModel(GL_FLAT) uses a constant color across the polygon • Phong shading requires a significantly greater programming effort – beyond the scope of this class – Also requires fragment shaders on programmable graphics hardware

The Current Generation • Current hardware allows you to break from the standard illumination model • Programmable Vertex Shaders and Fragment Shaders allow you to write a small program that determines how the color of a vertex or pixel is computed – Your program has access to the surface normal and position, plus anything else you care to give it (like the light) – You can add, subtract, take dot products, and so on • Fragment shaders are most useful for lighting because they operate on every pixel

The Full Story • We have only touched on the complexities of illuminating surfaces – The common model is hopelessly inadequate for accurate lighting (but it’s fast and simple) • Consider two sub-problems of illumination – Where does the light go? Light transport – What happens at surfaces? Reflectance models • Other algorithms address the transport or the reflectance problem, or both – Much later in class, or a separate course (CS 779)

Mapping Techniques • Consider the problem of rendering a soup can – The geometry is very simple - a cylinder – But the color changes rapidly, with sharp edges – With the local shading model, so far, the only place to specify color is at the vertices – To do a soup can, would need thousands of polygons for a simple shape – Same thing for an orange: simple shape but complex normal vectors • Solution: Mapping techniques use simple geometry modified by a detail map of some type

Texture Mapping • The soup tin is easily described by pasting a label on the plain cylinder • Texture mapping associates the color of a point with the color in an image: the texture – Soup tin: Each point on the cylinder gets the label’s color • Question to address: Which point of the texture do we use for a given point on the surface? • Establish a mapping from surface points to image points – Different mappings are common for different shapes – We will, for now, just look at triangles (polygons)
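
For the soup tin, one plausible mapping wraps the label around the cylinder by angle and height. The sketch below assumes a cylinder centered on the z-axis with its base at z = 0; the function name and conventions are illustrative only.

```python
import math

def cylinder_to_st(x, y, z, height):
    """Map a point on a z-axis cylinder to label coordinates (s, t) in [0, 1]."""
    angle = math.atan2(y, x) % (2.0 * math.pi)  # angle around the axis, in [0, 2*pi)
    s = angle / (2.0 * math.pi)                 # fraction of the way around the can
    t = z / height                              # fraction of the way up the can
    return s, t

# A point a quarter of the way around and halfway up a can of height 2.
s, t = cylinder_to_st(0.0, 1.0, 1.0, height=2.0)
```

The radius does not appear in the mapping, so the same label coordinates work for any can width.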

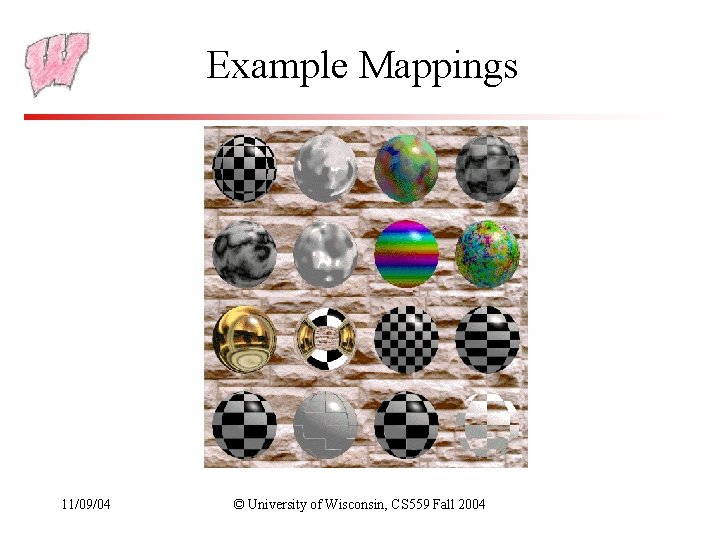

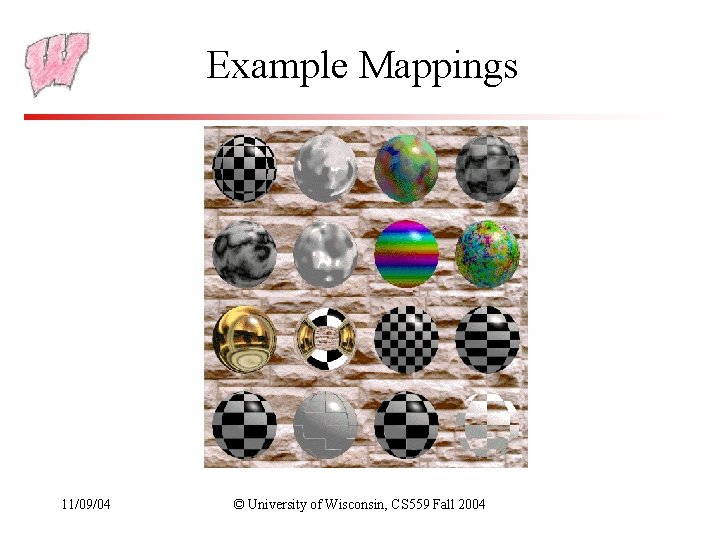

Example Mappings

Basic Mapping • The texture lives in a 2D space – Parameterize points in the texture with 2 coordinates: (s, t) – These are just what we would call (x, y) if we were talking about an image, but we wish to avoid confusion with the world (x, y, z) • Define the mapping from (x, y, z) in world space to (s, t) in texture space – To find the color in the texture, take an (x, y, z) point on the surface, map it into texture space, and use it to look up the color of the texture – Samples in a texture are called texels, to distinguish them from pixels in the final image • With polygons: – Specify (s, t) coordinates at vertices – Interpolate (s, t) for other points based on given vertices

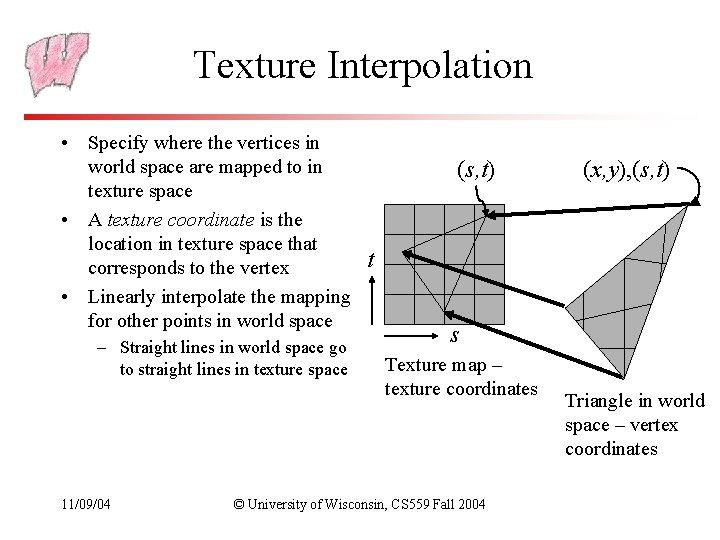

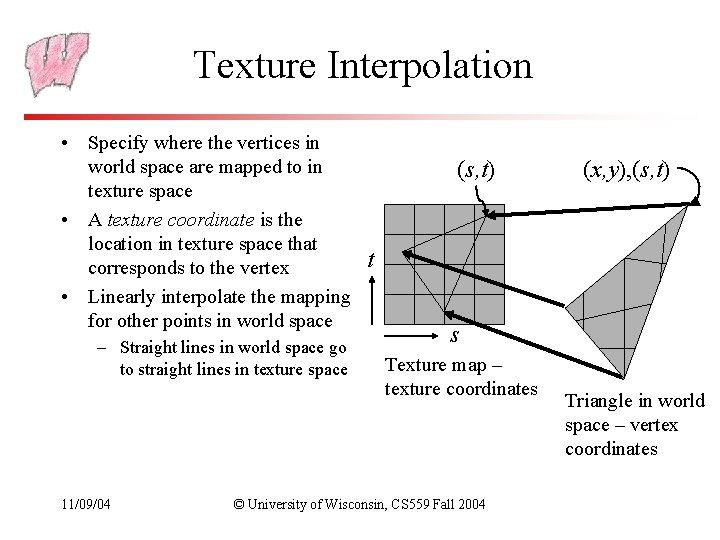

Texture Interpolation • Specify where the vertices in world space are mapped to in texture space • A texture coordinate is the location in texture space that corresponds to the vertex • Linearly interpolate the mapping for other points in world space – Straight lines in world space go to straight lines in texture space [Figure: a texture map with axes s and t, showing texture coordinates (s, t), next to a triangle in world space whose vertices carry vertex coordinates (x, y) and texture coordinates (s, t)]

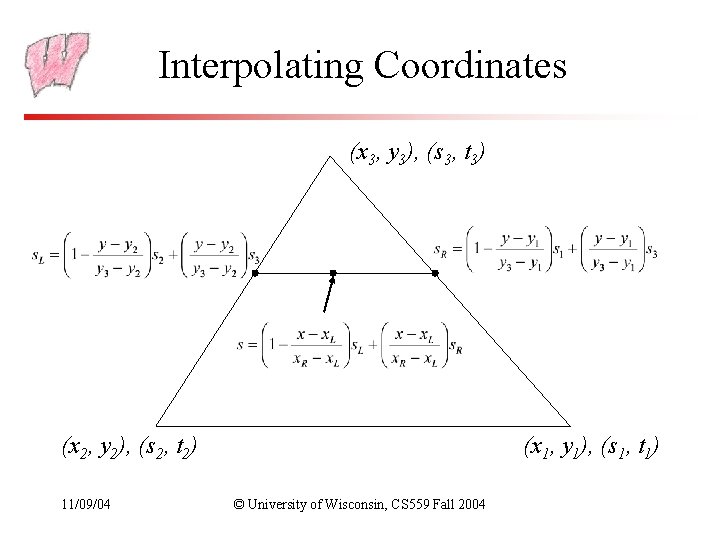

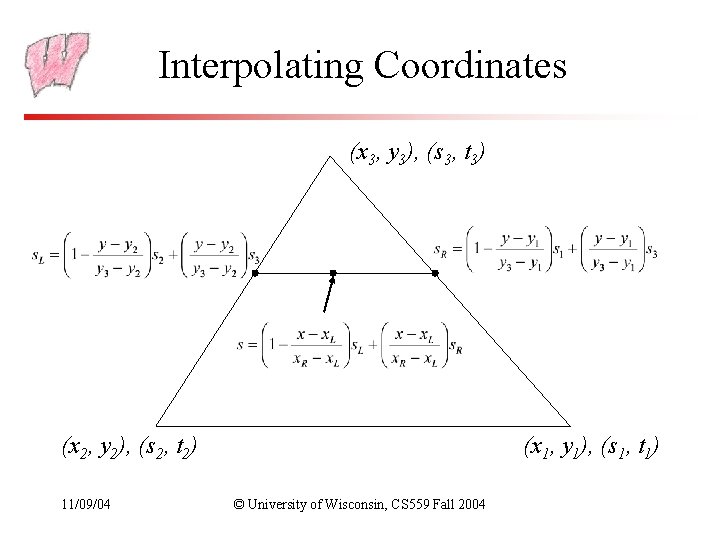

Interpolating Coordinates [Figure: a triangle with vertices labeled (x1, y1), (s1, t1); (x2, y2), (s2, t2); and (x3, y3), (s3, t3)]

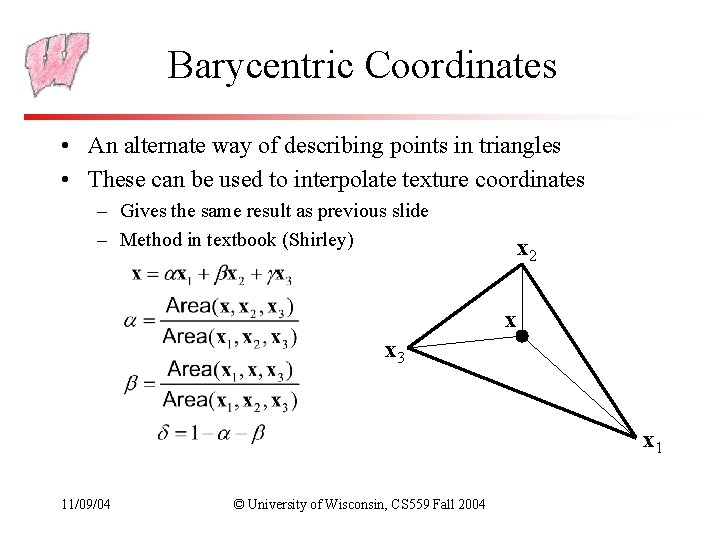

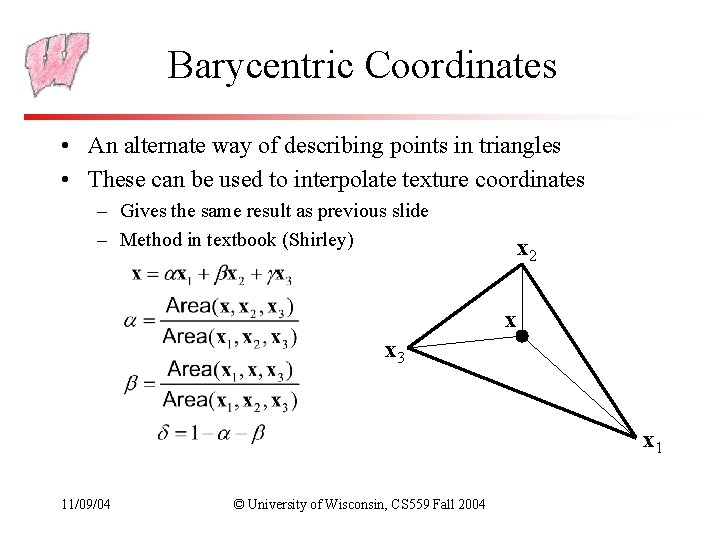

Barycentric Coordinates • An alternate way of describing points in triangles • These can be used to interpolate texture coordinates – Gives the same result as previous slide – Method in textbook (Shirley) [Figure: a triangle with vertices x1, x2, x3 and an interior point x]
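
A sketch of barycentric interpolation of texture coordinates for a 2D triangle. The function names are illustrative; the weights are computed as ratios of signed areas, which is one common formulation and may differ in notation from the textbook's.

```python
def barycentric(p, a, b, c):
    """Return weights (alpha, beta, gamma) with p = alpha*a + beta*b + gamma*c."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    # Twice the signed area of the full triangle.
    area = (bx - ax) * (cy - ay) - (cx - ax) * (by - ay)
    if area == 0:
        raise ValueError("degenerate triangle")
    beta = ((px - ax) * (cy - ay) - (cx - ax) * (py - ay)) / area
    gamma = ((bx - ax) * (py - ay) - (px - ax) * (by - ay)) / area
    return 1.0 - beta - gamma, beta, gamma

def interpolate_st(p, vertices, tex_coords):
    """Blend the per-vertex (s, t) values using the barycentric weights of p."""
    weights = barycentric(p, *vertices)
    s = sum(w * st[0] for w, st in zip(weights, tex_coords))
    t = sum(w * st[1] for w, st in zip(weights, tex_coords))
    return s, t

# Hypothetical triangle with texture coordinates assigned at its vertices.
vertices = [(0.0, 0.0), (4.0, 0.0), (0.0, 4.0)]
tex_coords = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
s, t = interpolate_st((1.0, 1.0), vertices, tex_coords)
```

At a vertex, one weight is 1 and the others are 0, so the interpolated value reproduces that vertex's texture coordinate exactly.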

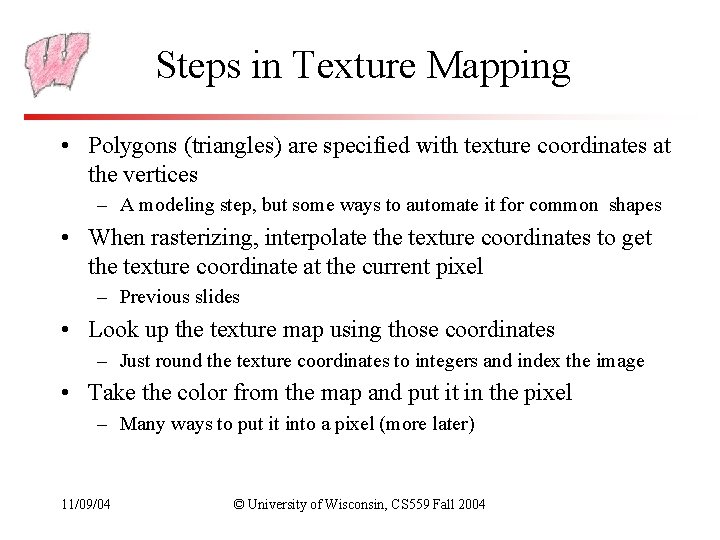

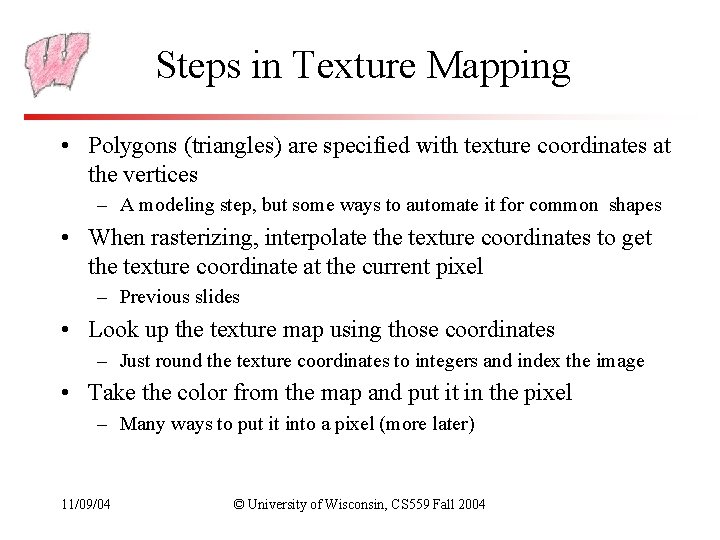

Steps in Texture Mapping • Polygons (triangles) are specified with texture coordinates at the vertices – A modeling step, but some ways to automate it for common shapes • When rasterizing, interpolate the texture coordinates to get the texture coordinate at the current pixel – Previous slides • Look up the texture map using those coordinates – Just round the texture coordinates to integers and index the image • Take the color from the map and put it in the pixel – Many ways to put it into a pixel (more later)
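
The lookup step can be sketched as a nearest-texel fetch. This assumes (s, t) in [0, 1], a texture stored as a list of rows with row 0 at t = 0, and truncation to an integer index; these conventions are assumptions for illustration.

```python
def lookup_texel(texture, s, t):
    """Convert (s, t) in [0, 1] to integer indices and fetch that texel."""
    height = len(texture)
    width = len(texture[0])
    # Clamp so that out-of-range coordinates still index a valid texel.
    s = min(max(s, 0.0), 1.0)
    t = min(max(t, 0.0), 1.0)
    col = min(int(s * width), width - 1)
    row = min(int(t * height), height - 1)
    return texture[row][col]

# Hypothetical 2 x 2 texture; color names stand in for RGB values.
texture = [["red", "green"],
           ["blue", "white"]]
texel = lookup_texel(texture, 0.9, 0.1)
```

Every (s, t) that falls inside the same texel returns the same color, so a magnified texture looks blocky with this kind of lookup.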

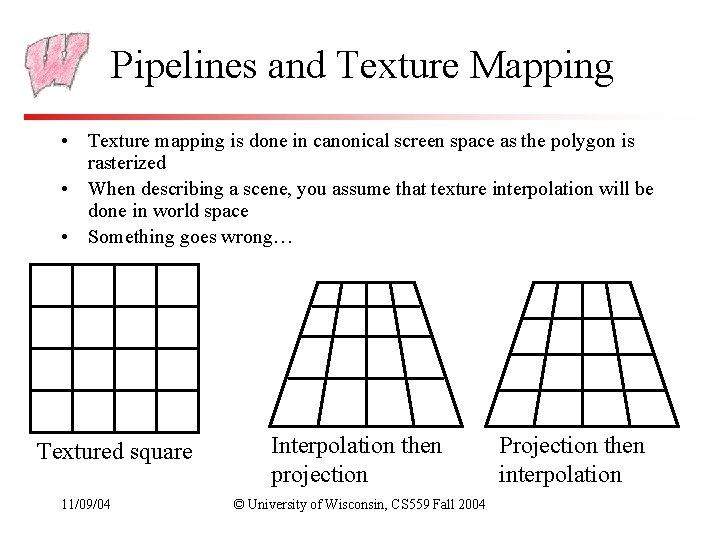

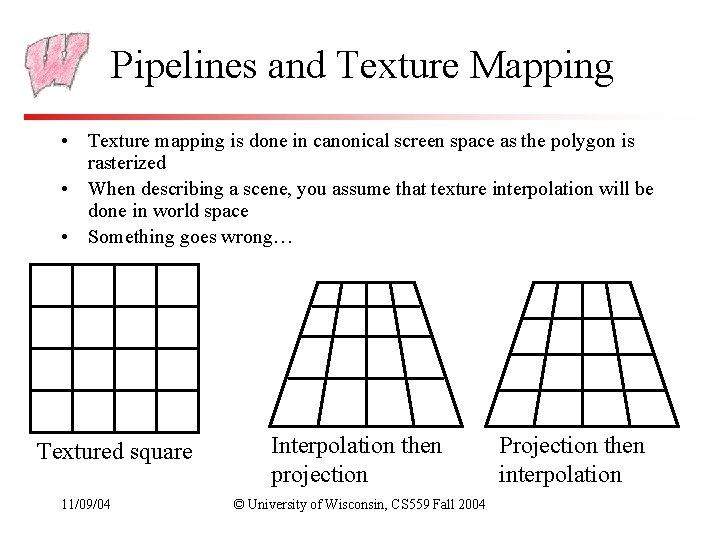

Pipelines and Texture Mapping • Texture mapping is done in canonical screen space as the polygon is rasterized • When describing a scene, you assume that texture interpolation will be done in world space • Something goes wrong… [Figure: three panels: textured square; interpolation then projection; projection then interpolation]

Perspective Correct Mapping • Which property of perspective projection means that the “wrong thing” will happen if we apply our simple interpolations from the previous slide? • Perspective correct texture mapping does the right thing, but at a cost – Interpolate homogeneous coordinate w and divide it out just before indexing texture • Is it a problem with orthographic viewing?
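
A sketch of the w-divide along one edge, under the usual formulation: interpolate s/w and 1/w linearly in screen space, then divide. The function names and the sample w values are illustrative.

```python
def screen_space_interp(s0, s1, fraction):
    """Naive interpolation of a texture coordinate directly in screen space."""
    return s0 + (s1 - s0) * fraction

def perspective_correct_interp(s0, w0, s1, w1, fraction):
    """Interpolate s/w and 1/w in screen space, then divide w back out."""
    s_over_w = screen_space_interp(s0 / w0, s1 / w1, fraction)
    one_over_w = screen_space_interp(1.0 / w0, 1.0 / w1, fraction)
    return s_over_w / one_over_w

# Edge from a near vertex (s = 0, w = 1) to a far vertex (s = 1, w = 3),
# sampled halfway along the edge on screen.
naive = screen_space_interp(0.0, 1.0, 0.5)
correct = perspective_correct_interp(0.0, 1.0, 1.0, 3.0, 0.5)
```

Here the naive value is 0.5 while the corrected value is 0.25: the near half of the edge covers more of the screen, so the screen midpoint lies less than halfway along the texture. When both vertices have the same w, the two functions agree.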

Basic OpenGL Texturing • Specify texture coordinates for the polygon: – Use glTexCoord2f(s, t) before each vertex: • E.g.: glTexCoord2f(0, 0); glVertex3f(x, y, z); • Create a texture object and fill it with texture data: – glGenTextures(num, &indices) to get identifiers for the objects – glBindTexture(GL_TEXTURE_2D, identifier) to bind the texture • Following texture commands refer to the bound texture – glTexParameteri(GL_TEXTURE_2D, …, …) to specify parameters for use when applying the texture – glTexImage2D(GL_TEXTURE_2D, …) to specify the texture data (the image itself)

Basic OpenGL Texturing (cont) • Enable texturing: glEnable(GL_TEXTURE_2D) • State how the texture will be used: – glTexEnvf(…) • Texturing is done after lighting • You’re ready to go…

Nasty Details • There is a large range of functions that control the layout of texture data: – You must state how the data in your image is arranged – E.g.: glPixelStorei(GL_UNPACK_ALIGNMENT, 1) tells OpenGL not to skip bytes at the end of a row – You must state how you want the texture to be put in memory: how many bits per “pixel”, which channels, … • Texture width and height must each be a power of 2 (square textures are common but not required) – Common sizes are 32 x 32, 64 x 64, 256 x 256 – Smaller uses less memory, and there is a finite amount of texture memory on graphics cards – Some extensions to OpenGL allow arbitrary textures
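
Two of these details reduce to small calculations, sketched below: how many bytes a row occupies when each row is padded to the unpack alignment (OpenGL's default alignment is 4), and whether a dimension is a power of 2. The helper names are hypothetical.

```python
def row_stride(width, bytes_per_pixel, alignment):
    """Bytes from the start of one row to the next, padded up to the alignment."""
    unpadded = width * bytes_per_pixel
    return (unpadded + alignment - 1) // alignment * alignment

def is_power_of_two(n):
    """True for 1, 2, 4, 8, ... (exactly one bit set)."""
    return n > 0 and (n & (n - 1)) == 0

# A 3-pixel-wide RGB image: 9 bytes of data per row.
padded = row_stride(3, 3, alignment=4)  # rows assumed to start on 4-byte boundaries
packed = row_stride(3, 3, alignment=1)  # rows assumed to be tightly packed
```

If the image data is tightly packed but the alignment is left at 4, every row after the first is read from the wrong offset, which typically shows up as a sheared or discolored texture.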

Controlling Different Parameters • The “pixels” in the texture map may be interpreted as many different things. For example: – As colors in RGB or RGBA format – As grayscale intensity – As alpha values only • The data can be applied to the polygon in many different ways: – Replace: Replace the polygon color with the texture color – Modulate: Multiply the polygon color with the texture color or intensity – Similar to compositing: Composite texture with base color using operator
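
The replace and modulate modes can be sketched per fragment for RGB values in [0, 1]. This is a simplified model that ignores alpha; the function names are illustrative rather than OpenGL calls.

```python
def apply_replace(fragment_rgb, texel_rgb):
    """Replace: the texture color overwrites the polygon (fragment) color."""
    return tuple(texel_rgb)

def apply_modulate(fragment_rgb, texel_rgb):
    """Modulate: multiply polygon color and texture color channel by channel."""
    return tuple(f * t for f, t in zip(fragment_rgb, texel_rgb))

# A white polygon lit to half brightness, textured with an orange texel.
lit_fragment = (0.5, 0.5, 0.5)
texel = (1.0, 0.5, 0.0)
replaced = apply_replace(lit_fragment, texel)
modulated = apply_modulate(lit_fragment, texel)
```

With replace, the lighting result is discarded. With modulate, a white lit polygon acts as a brightness scale for the texture.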

Example: Diffuse shading and texture • Say you want to have an object textured and have the texture appear to be diffusely lit • Problem: Texture is applied after lighting, so how do you adjust the texture’s brightness? • Solution: – Make the polygon white and light it normally – Use glTexEnvi(GL_TEXTURE_ENV, GL_TEXTURE_ENV_MODE, GL_MODULATE) – Use GL_RGB for internal format – Then, texture color is multiplied by surface (fragment) color, and alpha is taken from fragment

Specular Color • Typically, texture mapping happens after lighting – More useful in general • Recall plastic surfaces and specularities: the highlight should be the color of the light • But if texturing happens after the lighting, the color of the specularity will be modified by the texture – the wrong thing • OpenGL lets you do the specular lighting after the texture
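
The difference between the two orderings can be sketched numerically. In OpenGL 1.2 and later the separate path is selected with glLightModeli(GL_LIGHT_MODEL_COLOR_CONTROL, GL_SEPARATE_SPECULAR_COLOR); the Python below is only a simplified per-fragment model with hypothetical function names.

```python
def texture_after_all_lighting(diffuse_rgb, specular_rgb, texel_rgb):
    """Sum diffuse and specular first, then modulate the total by the texture."""
    return tuple(min(1.0, d + s) * t
                 for d, s, t in zip(diffuse_rgb, specular_rgb, texel_rgb))

def specular_after_texture(diffuse_rgb, specular_rgb, texel_rgb):
    """Modulate only the diffuse part by the texture, then add the specular part."""
    return tuple(min(1.0, d * t + s)
                 for d, s, t in zip(diffuse_rgb, specular_rgb, texel_rgb))

# A white light on a red-textured surface, at a pixel inside the highlight.
diffuse = (0.4, 0.4, 0.4)
highlight = (0.5, 0.5, 0.5)
red_texel = (1.0, 0.0, 0.0)
tinted = texture_after_all_lighting(diffuse, highlight, red_texel)
separate = specular_after_texture(diffuse, highlight, red_texel)
```

In the first result the highlight comes out pure red, while in the second it keeps the white light's contribution in all three channels.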

Some Other Uses • There is a “decal” mode for textures, which replaces the surface color with the texture color, as if you stick on a decal – But texture happens after lighting, so the light info is lost – BUT, you can use the texture to store lighting info, and generate better looking lighting • You can put the color information in the polygon, and use the texture for the brightness information – Called “light maps” – Normally, use multiple texture layers, one for color, one for light