Large Vocabulary Continuous Speech Recognition Subword Speech Units

Large Vocabulary Continuous Speech Recognition

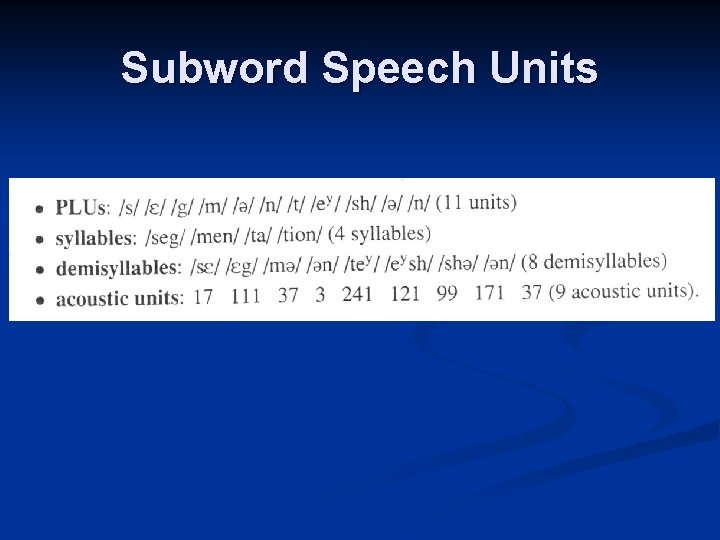

Subword Speech Units

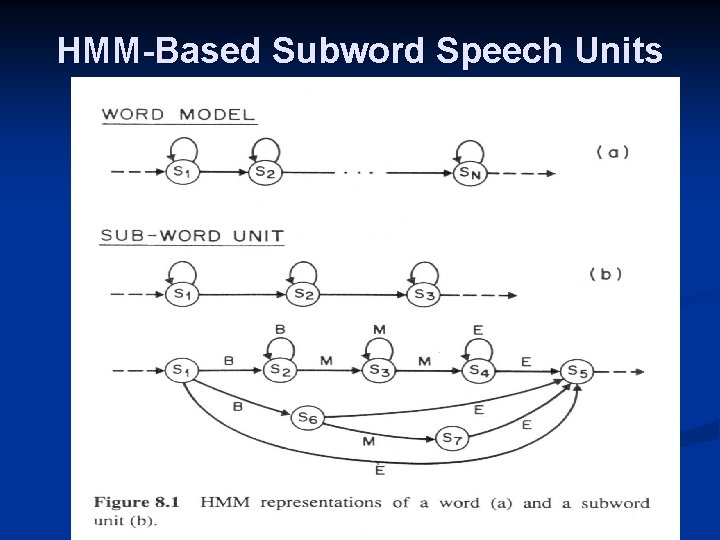

HMM-Based Subword Speech Units

Training of Subword Units

Training of Subword Units

Training Procedure

Errors and performance evaluation in PLU recognition n n Substitution error (s) Deletion error (d) Insertion error (i) Performance evaluation: If the total number of PLUs is N, we define: n n Correctness rate: N – s – d /N Accuracy rate: N – s – d – i / N

Language Models for LVCSR Word Pair Model: Specify which word pairs are valid

Statistical Language Modeling

Perplexity of the Language Model Entropy of the Source: First order entropy of the source: If the source is ergodic, meaning its statistical properties can be completely characterized in a sufficiently long sequence that the Source puts out,

We often compute H based on a finite but sufficiently large Q: H is the degree of difficulty that the recognizer encounters, on average, When it is to determine a word from the same source. Using language model, if the N-gram language model PN(W) is used, An estimate of H is: In general: Perplexity is defined as:

Overall recognition system based on subword units

Naval Resource (Battleship) Management Task: 991 -word vocabulary NG (no grammar): perplexity = 991

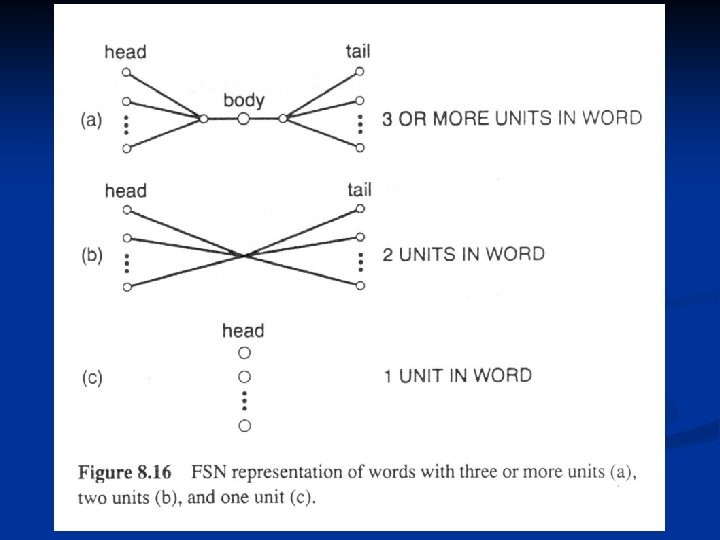

Word pair grammar We can partition the vocabulary into four nonoverlapping sets of words: The overall FSN allows recognition of sentences of the form:

WP (word pair) grammar: Perplexity=60 FSN based on Partitioning Scheme: 995 real arcs and 18 null arcs WB (word bigram) Grammar: Perplexity =20

Control of word insertion/word deletion rate In the discussed structure, there is no control on the sentence length n We introduce a word insertion penalty into the Viterbi decoding n For this, a fixed negative quantity is added to the likelihood score at the end of each word arc n

Context-dependent subword units Creation of context-dependent diphones and triphones

If c(. ) is the occurrence count for a given unit, we can use a unit reduction rule such as: CD units using only intraword units for “show all ships”: CD units using both intraword and itnerword units:

Smoothing and interpolation of CD PLU models

Implementation issues using CD units

Word junction effects To handle known phonological changes, a set of phonological rules are Superimposed on both the training and recognition networks. Some typical phonological rules include:

Recognition results using CD units

Position dependent units

Unit splitting and clustering

A key source of difficulty in continuous speech recognition is the So-called function words, which include words like a, and, for, in, is. The function words have the following properties:

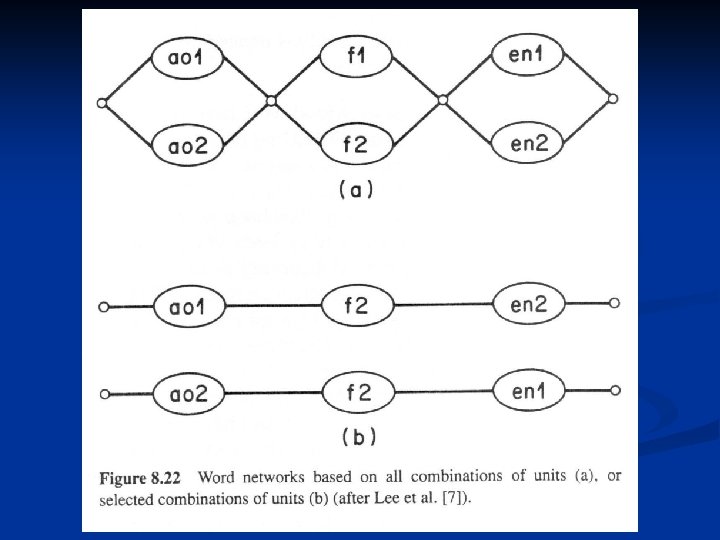

Creation of vocabulary-independent units

Semantic Postprocessor For Recognition

- Slides: 47