Large Models for Large Corpora: preliminary findings



Large Models for Large Corpora: preliminary findings

Patrick Nguyen, Ambroise Mutel, Jean-Claude Junqua

Panasonic Speech Technology Laboratory (PSTL)

… from RT-03S workshop

- Lots of data helps
- Standard training can be done in reasonable time with current resources
- 10 kh: it’s coming soon
- Promises:
  - Change the paradigm
  - Use data more efficiently
  - Keep it simple

The Dawn of a New Era?

- Merely increasing the model size is insufficient
- HMMs will live on
- Layering above HMM classifiers will do
- Change topology of training
- Large models with no impact on decoding
- Data-greedy algorithms
- Only meaningful with large amounts

Two approaches: changing topology

- Greedy models
  - Syllable units
  - Same model size, but consume more info
  - Increase data / parameter ratio
  - Add linguistic info
- Factorize training: increase model size
  - Bubble splitting (generalized SAT)
  - Almost no penalty in decoding
  - Split according to acoustics

Syllable units

- Supra-segmental info
- Pronunciation modeling (subword units)
- Literature blames lack of data
- TDT+ coverage is limited by construction (all words are in the decoding lexicon)
- Better alternative to n-phones

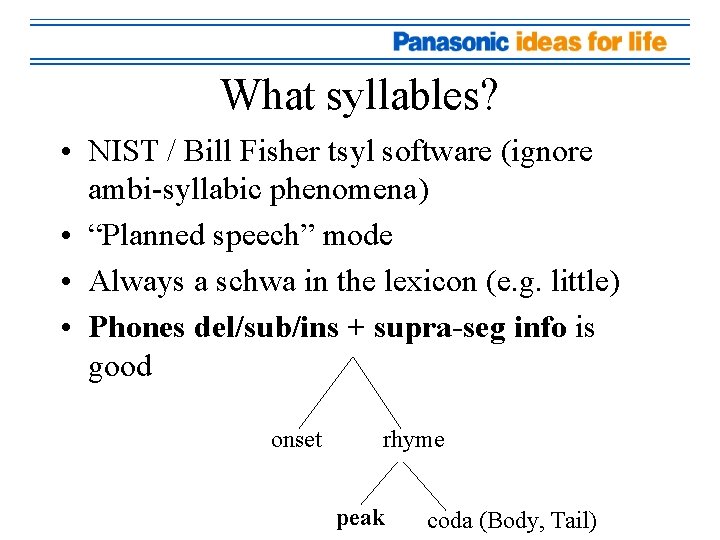

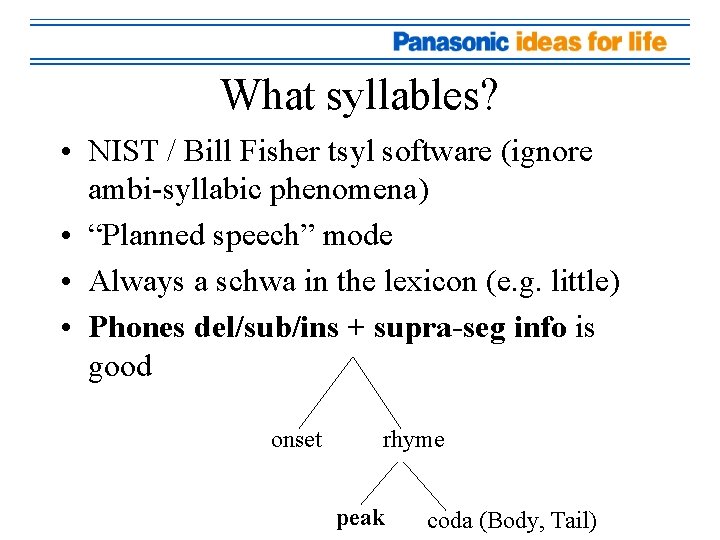

What syllables?

- NIST / Bill Fisher tsyl software (ignore ambi-syllabic phenomena)
- “Planned speech” mode
- Always a schwa in the lexicon (e.g. little)
- Phones del/sub/ins + supra-seg info is good
- Syllable structure (diagram labels): onset, rhyme, peak, coda (Body, Tail)

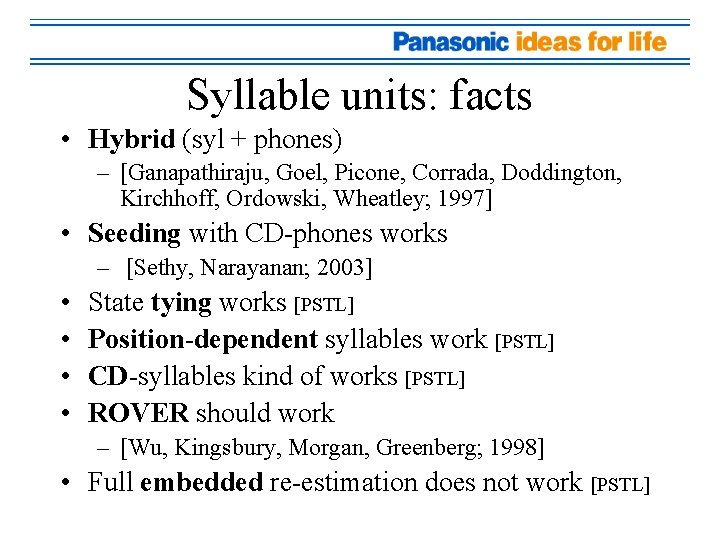

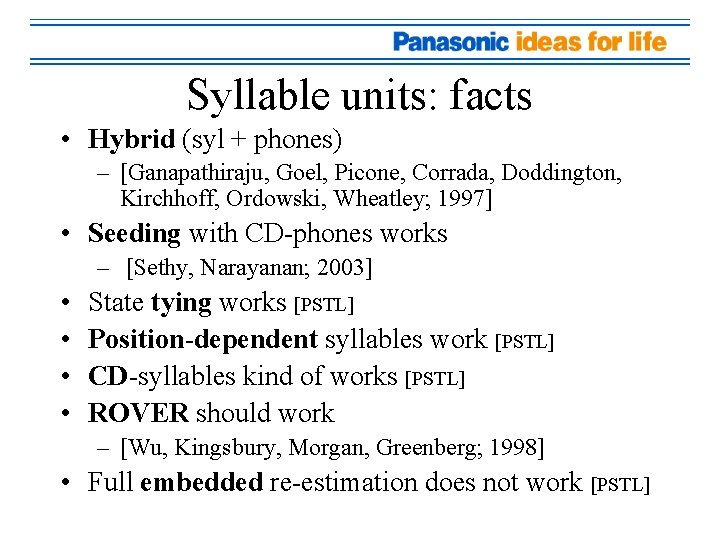

Syllable units: facts

- Hybrid (syl + phones) [Ganapathiraju, Goel, Picone, Corrada, Doddington, Kirchhoff, Ordowski, Wheatley; 1997]
- Seeding with CD-phones works [Sethy, Narayanan; 2003]
- State tying works [PSTL]
- Position-dependent syllables work [PSTL]
- CD-syllables kind of works [PSTL]
- ROVER should work [Wu, Kingsbury, Morgan, Greenberg; 1998]
- Full embedded re-estimation does not work [PSTL]

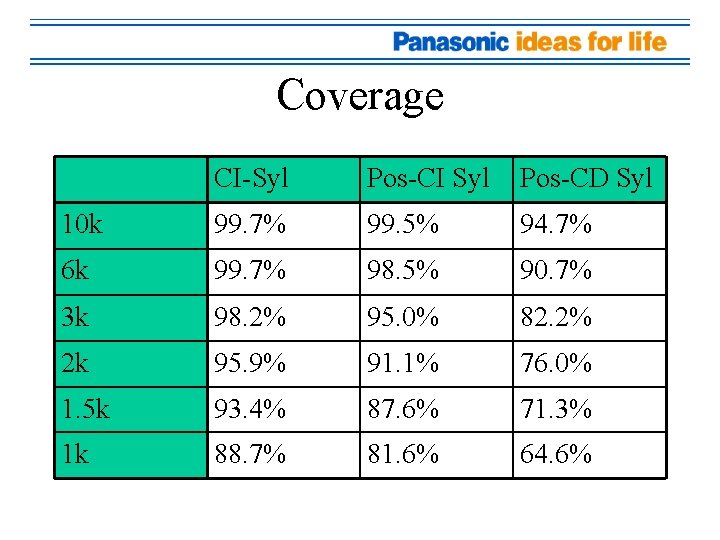

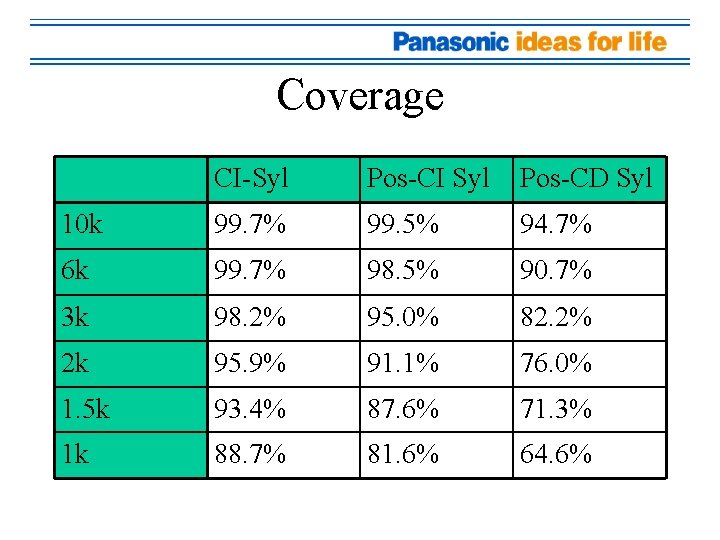

Coverage and large corpus

- Warning: biased by construction
- In the lexicon: 15k syllables (127 examples, 1 example, 14 examples)
- About 15M syllables total (10M words)
- Total: 1600 h / 950 h filtered

Coverage

| # syllables | CI-Syl | Pos-CI Syl | Pos-CD Syl |
|---|---|---|---|
| 10k | 99.7% | 99.5% | 94.7% |
| 6k | 99.7% | 98.5% | 90.7% |
| 3k | 98.2% | 95.0% | 82.2% |
| 2k | 95.9% | 91.1% | 76.0% |
| 1.5k | 93.4% | 87.6% | 71.3% |
| 1k | 88.7% | 81.6% | 64.6% |
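A minimal sketch of how such a coverage figure can be computed, assuming coverage here means the share of running syllable tokens accounted for by the N most frequent syllable types. The function name and the toy token list are hypothetical and are not corpus data.

```python
from collections import Counter

def coverage(tokens, top_n):
    """Fraction of tokens covered by the top_n most frequent types."""
    counts = Counter(tokens)
    covered = sum(count for _, count in counts.most_common(top_n))
    return covered / len(tokens)

# Toy, made-up syllable stream (illustration only).
tokens = ["dh_iy"] * 6 + ["ae_b"] * 3 + ["sh_ih_n"]
print(coverage(tokens, 1))  # 0.6
print(coverage(tokens, 2))  # 0.9
```

With a skewed distribution like this one, a small inventory already covers most tokens, which is the pattern the table shows for CI syllables.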

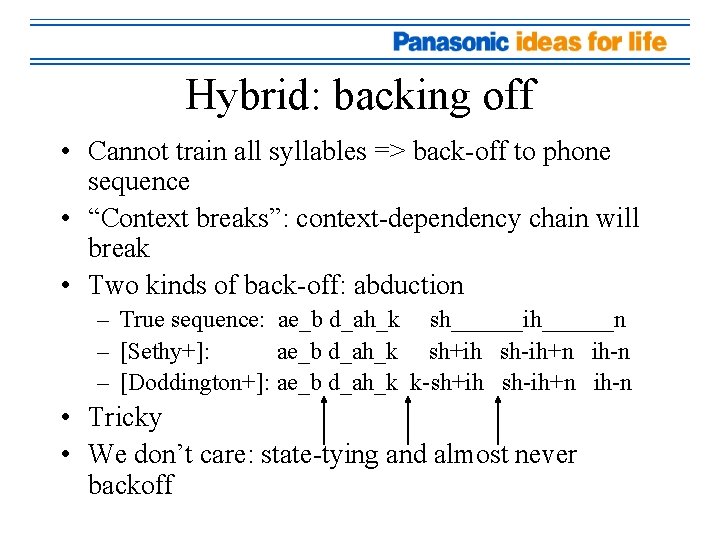

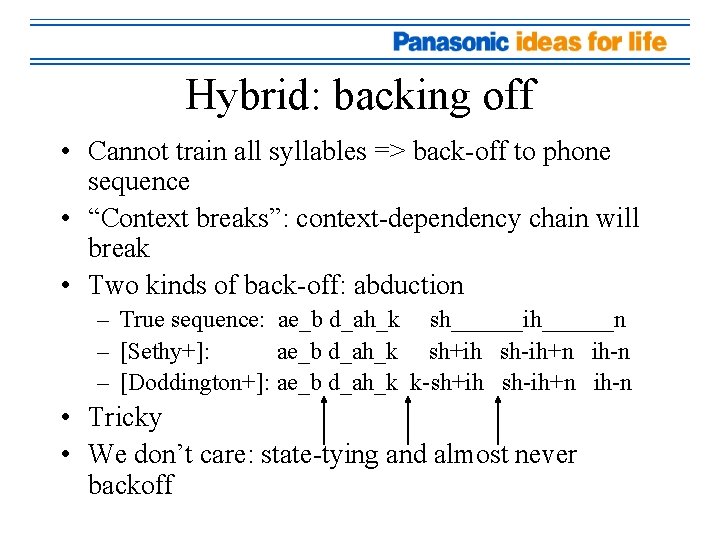

Hybrid: backing off

- Cannot train all syllables => back off to phone sequence
- “Context breaks”: context-dependency chain will break
- Two kinds of back-off (example word: abduction)
  - True sequence: `ae_b d_ah_k sh_ih_n`
  - [Sethy+]: `ae_b d_ah_k sh+ih sh-ih+n ih-n`
  - [Doddington+]: `ae_b d_ah_k k-sh+ih sh-ih+n ih-n`
- Tricky
- We don’t care: state-tying and almost never backoff
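The two back-off variants can be sketched as follows. This is an illustration under assumptions, not the cited authors' implementations: the function names, the `trained` set, and the `cross_context` flag are hypothetical, and applying the flag to the right-hand neighbour as well is a guess, since the slide only shows the left-hand case.

```python
def triphone(left, phone, right):
    """Format an HTK-style triphone label, omitting missing contexts."""
    label = phone
    if left:
        label = f"{left}-{label}"
    if right:
        label = f"{label}+{right}"
    return label

def back_off(syllables, trained, cross_context):
    """Replace untrained syllables by context-dependent phone sequences.

    syllables: list of phone lists, e.g. [["ae", "b"], ["d", "ah", "k"]].
    trained: set of syllable labels that have their own model.
    cross_context: if True, borrow context phones from neighbouring
        syllables; if False, the context chain breaks at the boundary.
    """
    units = []
    for i, phones in enumerate(syllables):
        label = "_".join(phones)
        if label in trained:
            units.append(label)
            continue
        prev_phone = syllables[i - 1][-1] if cross_context and i > 0 else None
        next_phone = (syllables[i + 1][0]
                      if cross_context and i + 1 < len(syllables) else None)
        for j, phone in enumerate(phones):
            left = phones[j - 1] if j > 0 else prev_phone
            right = phones[j + 1] if j + 1 < len(phones) else next_phone
            units.append(triphone(left, phone, right))
    return units

abduction = [["ae", "b"], ["d", "ah", "k"], ["sh", "ih", "n"]]
trained = {"ae_b", "d_ah_k"}  # assume the last syllable has no model
print(back_off(abduction, trained, cross_context=False))
# ['ae_b', 'd_ah_k', 'sh+ih', 'sh-ih+n', 'ih-n']
print(back_off(abduction, trained, cross_context=True))
# ['ae_b', 'd_ah_k', 'k-sh+ih', 'sh-ih+n', 'ih-n']
```

The first call reproduces the sequence attributed to [Sethy+] and the second the one attributed to [Doddington+].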

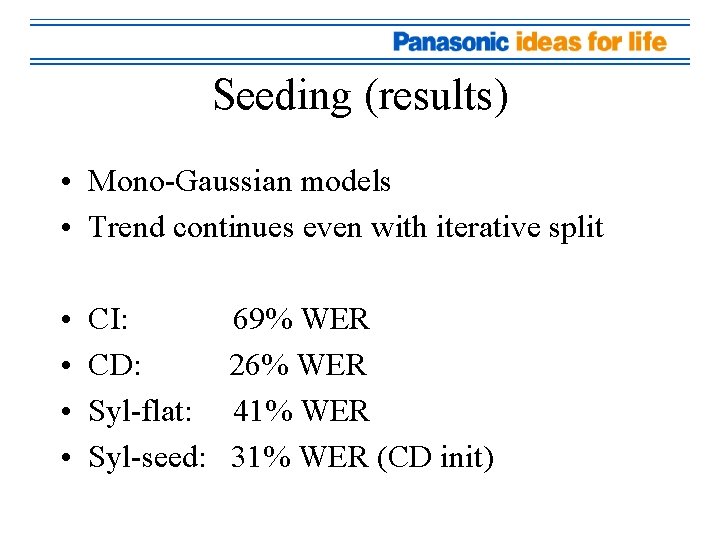

Seeding

- Copy CD models instead of flat-starting
- Problem at syllable boundary (context break)
- CI < Seed syl < CD
- Imposes constraints on topology: `??-sh+ih sh-ih+n ih-n+??`

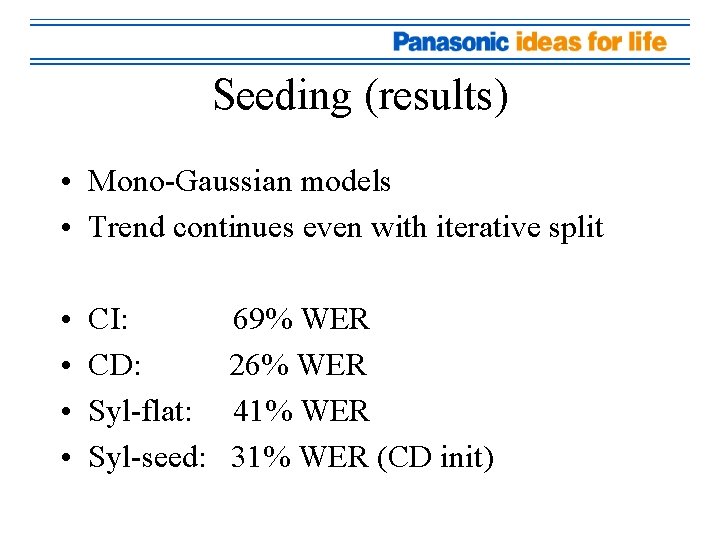

Seeding (results)

- Mono-Gaussian models
- Trend continues even with iterative split
- Results:
  - CI: 69% WER
  - CD: 26% WER
  - Syl-flat: 41% WER
  - Syl-seed: 31% WER (CD init)

State-tying

- Data-driven approach
- Backing-off is a problem => train all syllables
  - too many states/distributions
  - too little data (skewed distribution)
- Same strategy as CD-phone: entropy merge
- Can add info (pos, CD) w/o worrying about explosion in # of states
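A minimal sketch of a bottom-up entropy merge, under simplifying assumptions that are not from the slides: scalar Gaussians stored as hypothetical `(count, mean, variance)` tuples, moment-matched merging, and an exhaustive search over all pairs. The cost is the count-weighted entropy increase, which for Gaussians equals the log-likelihood lost by replacing two distributions with one.

```python
import math

def merge(a, b):
    """Moment-match two 1-D Gaussians given as (count, mean, var)."""
    n = a[0] + b[0]
    mean = (a[0] * a[1] + b[0] * b[1]) / n
    second = (a[0] * (a[2] + a[1] ** 2) + b[0] * (b[2] + b[1] ** 2)) / n
    return (n, mean, second - mean ** 2)

def merge_cost(a, b):
    """Count-weighted entropy increase (log-likelihood loss) of merging."""
    m = merge(a, b)
    return 0.5 * (m[0] * math.log(m[2])
                  - a[0] * math.log(a[2]) - b[0] * math.log(b[2]))

def tie_states(gaussians, target):
    """Greedily merge the cheapest pair until `target` Gaussians remain."""
    pool = list(gaussians)
    while len(pool) > target:
        pairs = ((i, j) for i in range(len(pool))
                 for j in range(i + 1, len(pool)))
        i, j = min(pairs, key=lambda ij: merge_cost(pool[ij[0]], pool[ij[1]]))
        merged = merge(pool[i], pool[j])
        pool = [g for k, g in enumerate(pool) if k not in (i, j)] + [merged]
    return pool

# Toy example: the two nearby Gaussians are tied, the distant one is kept.
tied = tie_states([(100, 0.0, 1.0), (100, 0.2, 1.0), (100, 5.0, 1.0)], 2)
print(tied)
```

A real system would restrict the candidate pairs to keep the search tractable; the all-pairs search here is only practical for toy inputs.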

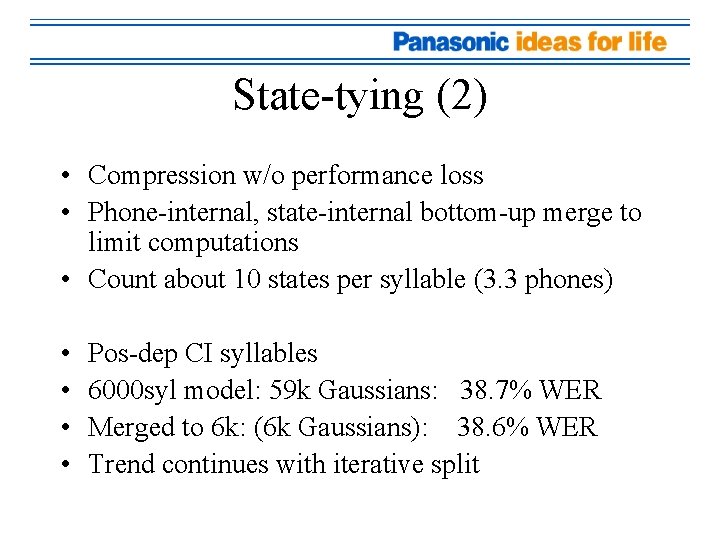

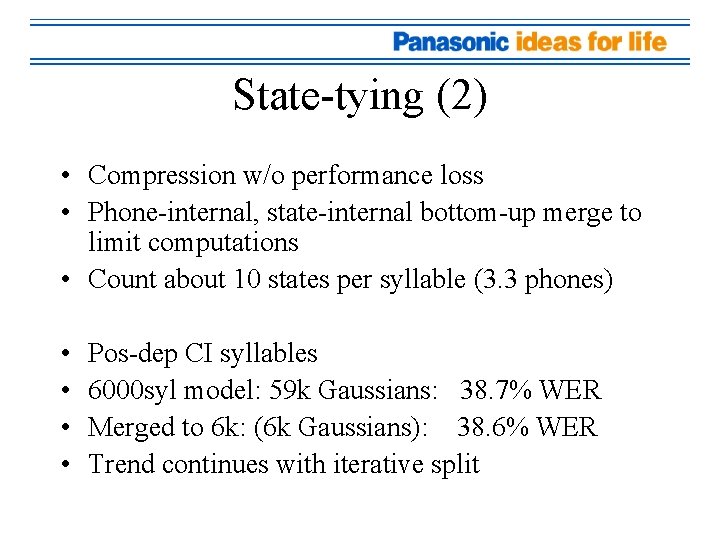

State-tying (2)

- Compression w/o performance loss
- Phone-internal, state-internal bottom-up merge to limit computations
- Count about 10 states per syllable (3.3 phones)
- Pos-dep CI syllables
  - 6000-syl model (59k Gaussians): 38.7% WER
  - Merged to 6k (6k Gaussians): 38.6% WER
- Trend continues with iterative split

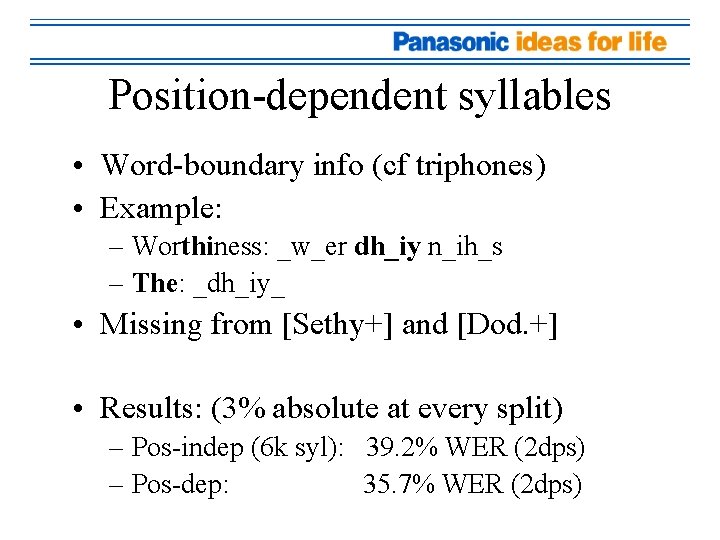

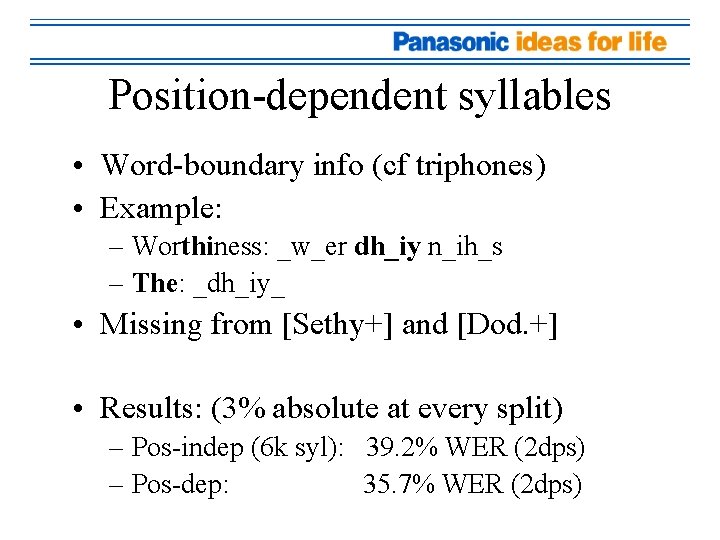

Position-dependent syllables

- Word-boundary info (cf. triphones)
- Example:
  - Worthiness: `_w_er dh_iy n_ih_s`
  - The: `_dh_iy_`
- Missing from [Sethy+] and [Dod.+]
- Results (3% absolute at every split):
  - Pos-indep (6k syl): 39.2% WER (2 dps)
  - Pos-dep: 35.7% WER (2 dps)

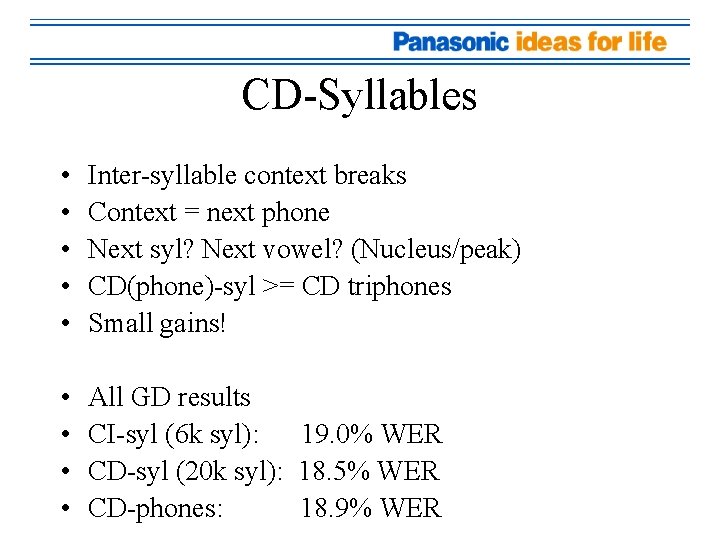

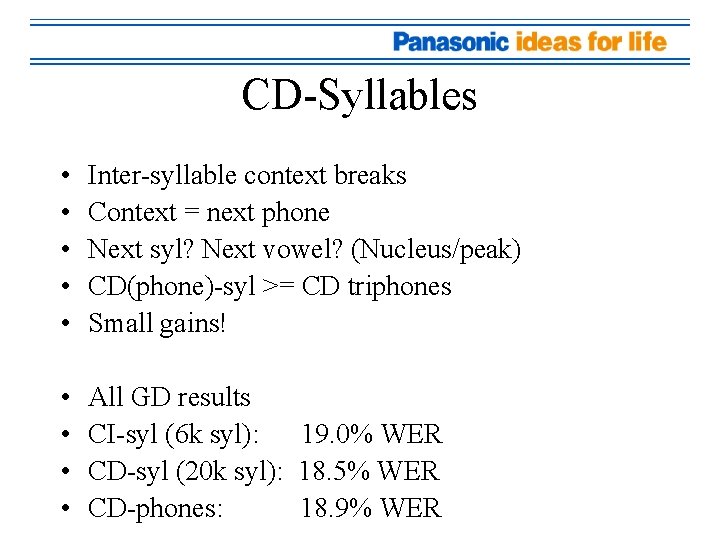

CD-Syllables

- Inter-syllable context breaks
- Context = next phone
- Next syl? Next vowel? (Nucleus/peak)
- CD(phone)-syl >= CD triphones
- Small gains!
- All GD results
  - CI-syl (6k syl): 19.0% WER
  - CD-syl (20k syl): 18.5% WER
  - CD-phones: 18.9% WER

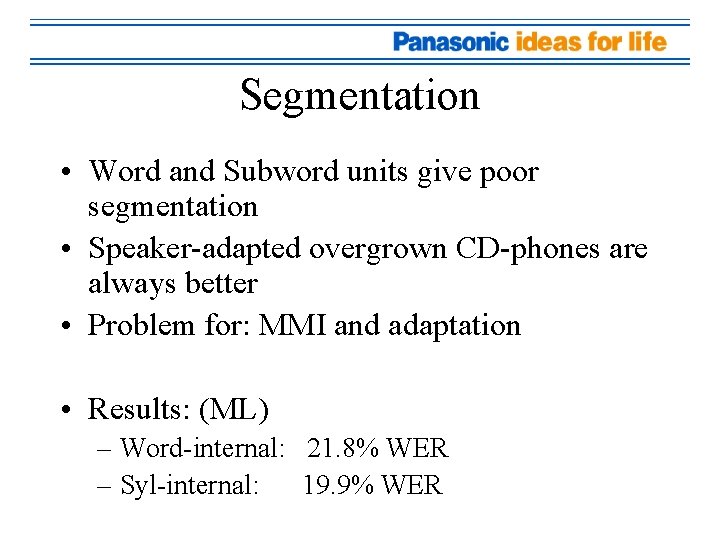

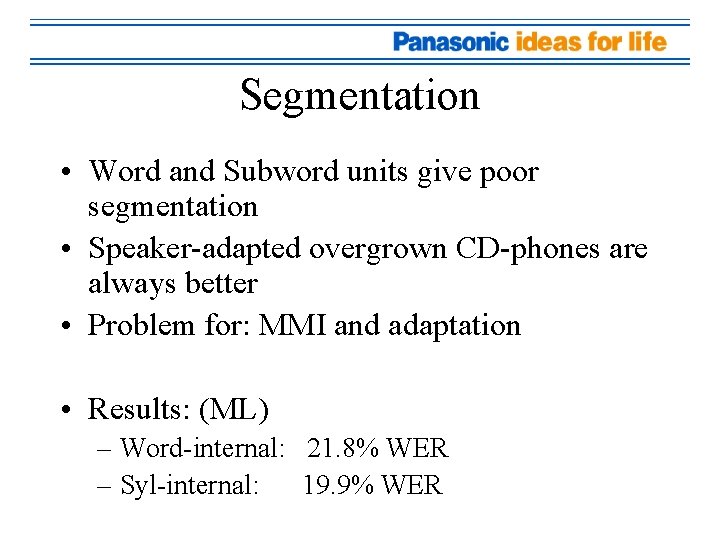

Segmentation

- Word and subword units give poor segmentation
- Speaker-adapted overgrown CD-phones are always better
- Problem for: MMI and adaptation
- Results (ML):
  - Word-internal: 21.8% WER
  - Syl-internal: 19.9% WER

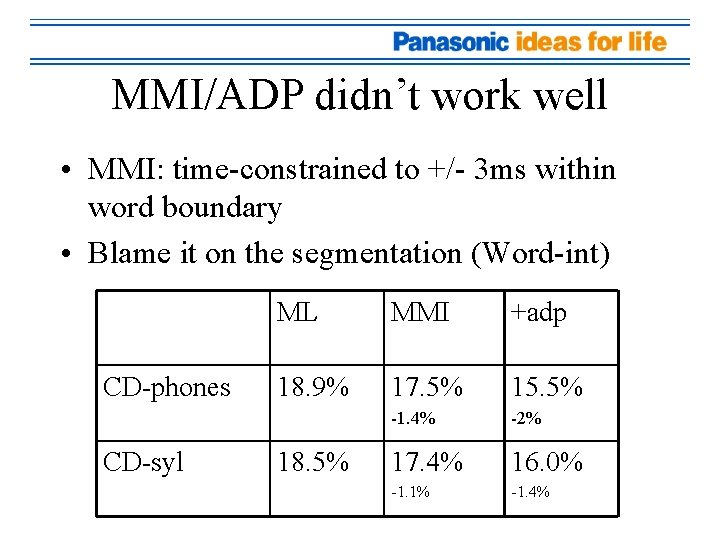

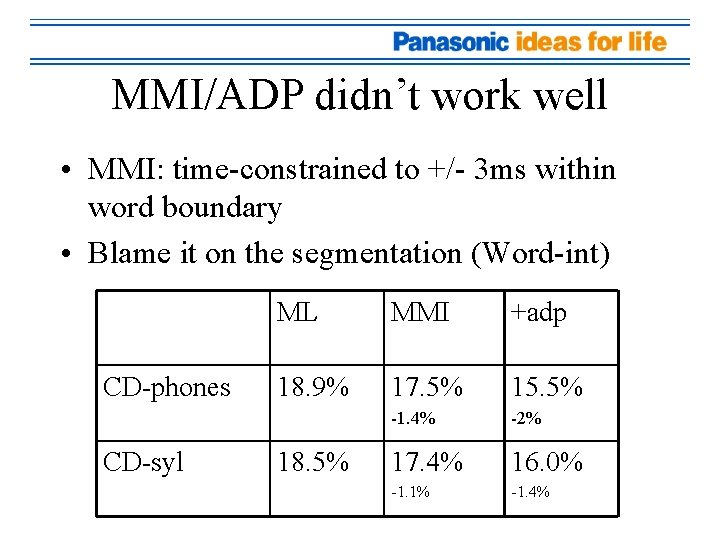

MMI/ADP didn’t work well

- MMI: time-constrained to +/- 3 ms within word boundary
- Blame it on the segmentation (Word-int)

| | ML | MMI | +adp |
|---|---|---|---|
| CD-phones | 18.9% | 17.5% (-1.4%) | 15.5% (-2%) |
| CD-syl | 18.5% | 17.4% (-1.1%) | 16.0% (-1.4%) |

ROVER

- Two different systems can be combined
- Two-pass “transatlantic” ROVER architecture
- CD-phones align, phonetic classes
- No gain (broken confidence), deletions
  - MMI+adp: 15.5% (CDp) and 16.0% (SY)
  - Best ROVER: 15.5% WER (4-pass, 2-way)

[Diagram labels: Syllable models, Adapted CD-phones]

Summary: architecture

[Diagram labels: CD-phones; POS-CI syllables; Merged (6k); POS-CD syllables; GD / MMI; Decode; Adapt+decode; ROVER; Merged (3k)]

Conclusion (Syllable)

- Observed similar effects as literature
- Added some observations (state tying, CD, pos, ADP/MMI)
- Performance does not beat CD-phones yet
  - CD-phones: 15.5% WER; syl: 16.0% WER
- Some assumptions might cancel the benefit of syllable modeling

Open questions

- Is syllabification (grouping) better than random? Syllable?
- Planned vs spontaneous speech?
- Did we oversimplify?
- Why do subword units resist auto-segmentation?
- Why didn’t CD-syl work better?
- Language-dependent effects

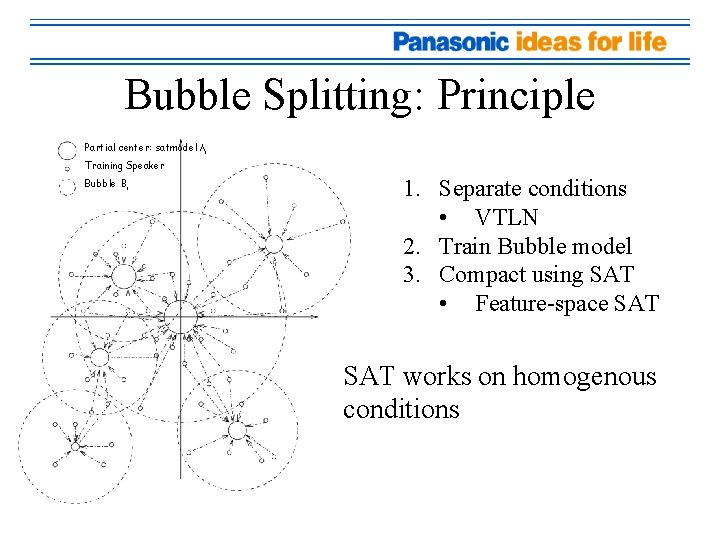

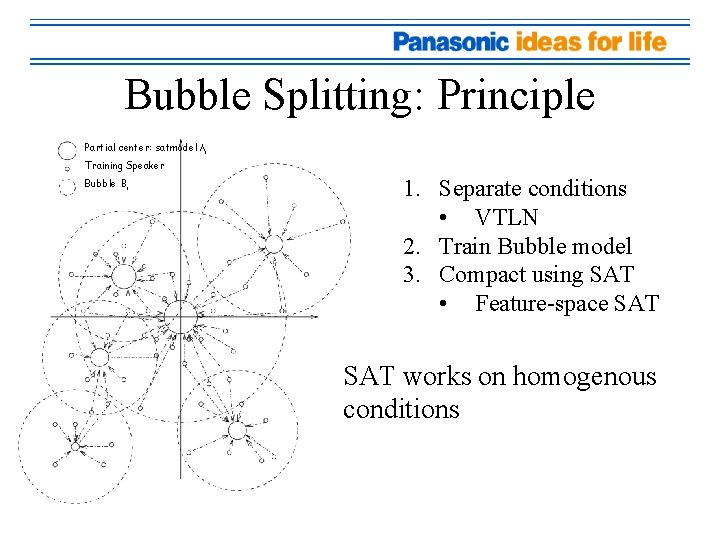

Bubble Splitting

- Outgrowth of SAT
- Increase model size 15-fold w/o computational penalty in train/decode
- Also covers VTLN implementation
- Basic idea:
  - Split training into locally homogeneous regions (bubbles), and then apply SAT

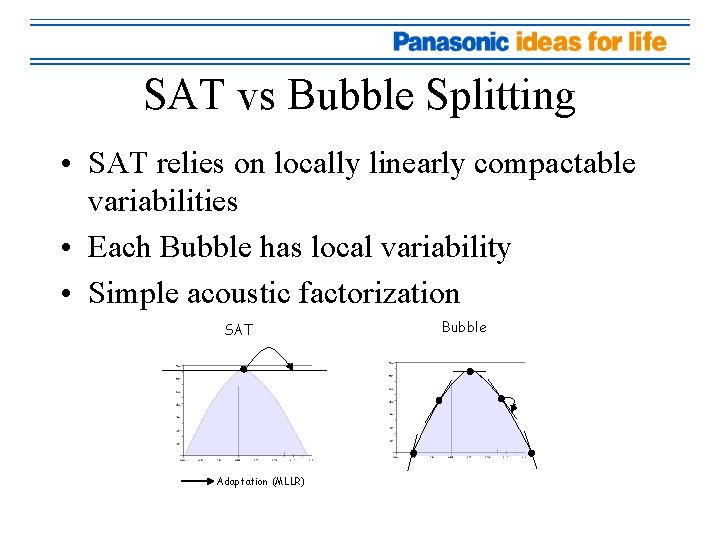

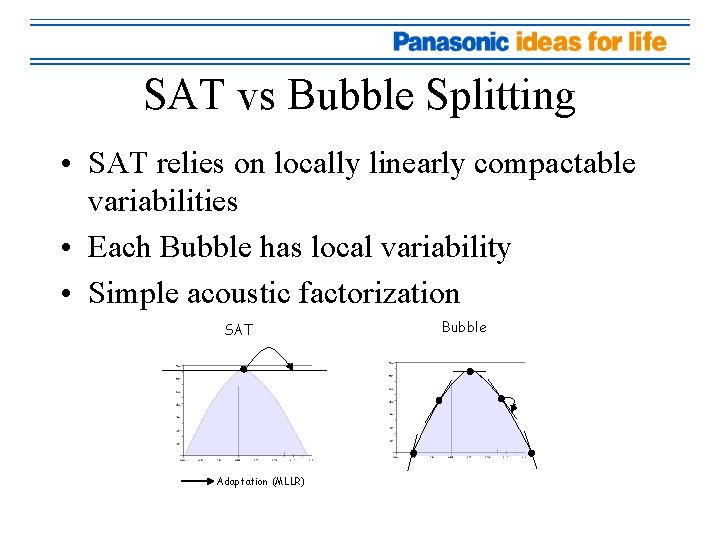

SAT vs Bubble Splitting

- SAT relies on locally linearly compactable variabilities
- Each Bubble has local variability
- Simple acoustic factorization

[Diagram labels: SAT, Adaptation (MLLR), Bubble]

TDT and speaker labels

- TDT is not speaker-labeled
- Hub4 has 2400 nominative speakers
- Use decoding clustering (show-internal clusters)
- Males: 33k speakers
- Females: 18k speakers
- Shows: 2137 (TDT) + 288 (Hub4)

Bubble-Splitting: Overview

[Diagram labels: Input Speech; Maximum Likelihood; Multiplex; TDT; MALE and FEMALE branches, each with SPLIT, ADAPT, NORMALIZE; Compact Bubble Models (CBM); Decoded Words]

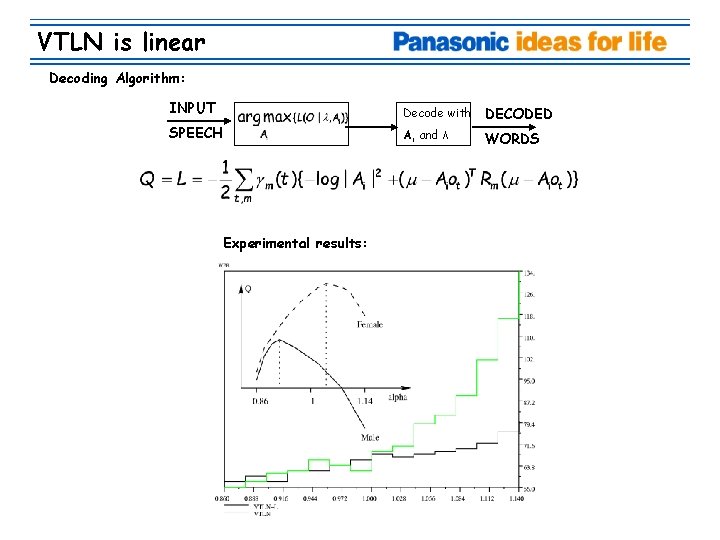

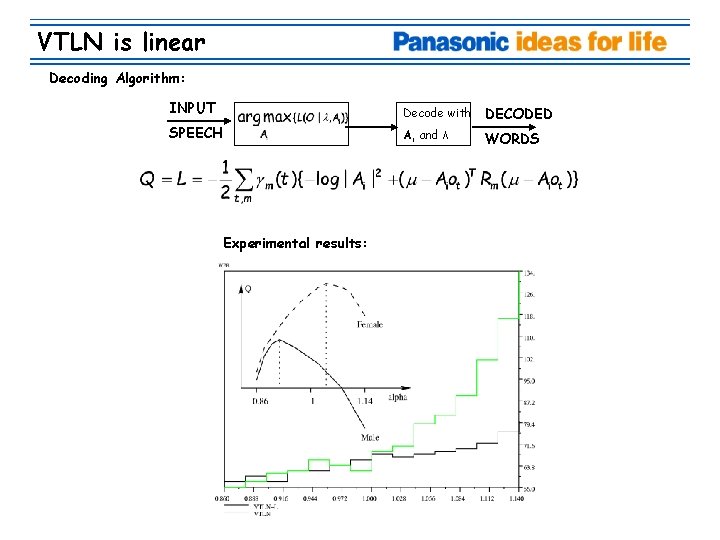

VTLN implementation

- VTLN is used for clustering
- VTLN is a linear feature transformation (almost)
- Finding the best warp

![VTLN: Linear equivalence (slide image)](https://slidetodoc.com/presentation_image/0d2dd53f72f291b6396793fba3570424/image-28.jpg)

VTLN: Linear equivalence

- According to [Pitz, ICSLP 2000], VTLN is equivalent to a linear transformation in the cepstral domain:
- The relationship between a cepstral coefficient c_k and a warped one (stretched or compressed) is as follows:
- The authors did not take the Mel scale into account. No closed-form solution in that case:
- Energy, filter-banks, and cepstral liftering imply non-linear effects
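As a reminder of the general shape of this result only, linear equivalence means that each warped cepstral coefficient is a weighted sum of the unwarped ones, with weights that depend on the warping factor alone. The symbols below (warping factor α, matrix entries A_kn, warped coefficient written with a tilde) are notation assumed here for illustration; the exact expressions for the entries are not reproduced.

$$
\tilde{c}_k(\alpha) = \sum_{n} A_{kn}(\alpha)\, c_n
$$

Under this form, applying a warp amounts to multiplying the cepstral vector by a matrix A(α), so a small set of candidate warps corresponds to a small set of fixed matrices.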

VTLN is linear

- Decoding algorithm: INPUT SPEECH → decode with A_i and λ → DECODED WORDS
- Experimental results:

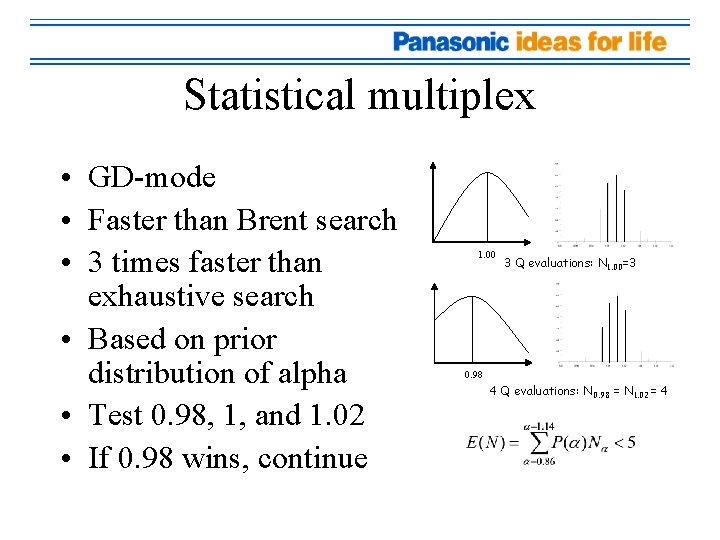

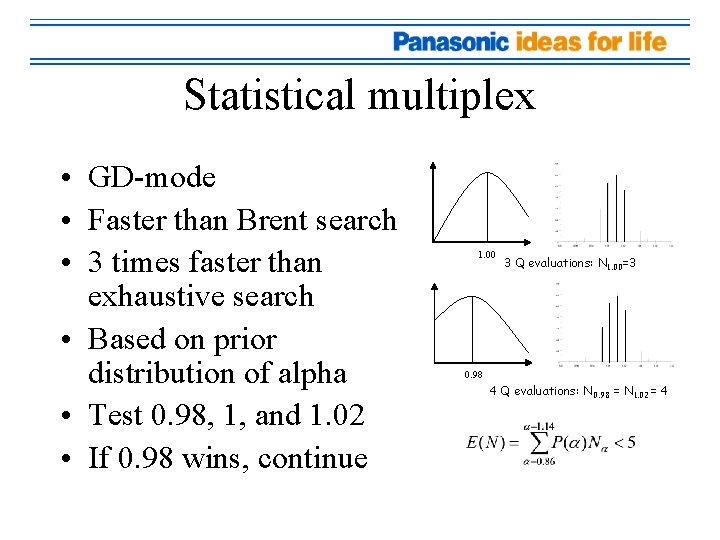

Statistical multiplex

- GD-mode
- Faster than Brent search
- 3 times faster than exhaustive search
- Based on prior distribution of alpha
- Test 0.98, 1, and 1.02
- If 0.98 wins, continue
- Cost in Q evaluations:
  - 1.00: 3 Q evaluations (`N_1.00 = 3`)
  - 0.98: 4 Q evaluations (`N_0.98 = N_1.02 = 4`)
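The search described above can be sketched as a hill-climb over a warp grid that starts at the a priori most likely warp. This is an illustration under assumptions: the function name, the grid step of 0.02, the search limits, and the toy objective are hypothetical, and `q` stands in for whatever score (for example the auxiliary function Q) is evaluated per warp.

```python
def search_warp(q, start=1.00, step=0.02, lo=0.88, hi=1.12):
    """Hill-climb over a warp grid; returns (best_warp, n_evaluations).

    q: callable giving the score of a warp factor (higher is better).
    lo, hi: assumed limits of the warp grid.
    """
    cache = {}

    def score(alpha):
        # Each warp is scored at most once.
        if alpha not in cache:
            cache[alpha] = q(alpha)
        return cache[alpha]

    best = start
    while True:
        neighbours = [round(best + d, 2) for d in (-step, step)]
        neighbours = [a for a in neighbours if lo <= a <= hi]
        # Ties go to the current best because it is listed first.
        winner = max([best] + neighbours, key=score)
        if winner == best:
            return best, len(cache)
        best = winner

def toy_q(peak):
    """Made-up concave objective with its maximum at `peak`."""
    return lambda alpha: -(alpha - peak) ** 2

print(search_warp(toy_q(1.00)))  # (1.0, 3)
print(search_warp(toy_q(0.98)))  # (0.98, 4)
```

The evaluation counts match the slide: three when 1.00 wins and four when 0.98 (or, by symmetry, 1.02) wins. Because most speakers fall near the centre of the warp distribution, the expected cost stays close to the minimum.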

Bubble Splitting: Principle

[Diagram labels: Partial center: SAT model λi; Training Speaker; Bubble Bi]

1. Separate conditions
   - VTLN
2. Train Bubble model
3. Compact using SAT
   - Feature-space SAT works on homogeneous conditions
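Step 1 can be sketched as a simple partition of the training speakers. The sketch assumes one bubble per gender and VTLN warp factor, which is only one possible splitting strategy; the function name, the tuple layout, and the speaker IDs are hypothetical.

```python
from collections import defaultdict

def split_into_bubbles(speakers):
    """Group speakers into bubbles keyed by (gender, warp factor).

    speakers: iterable of (speaker_id, gender, warp) tuples.
    """
    bubbles = defaultdict(list)
    for speaker_id, gender, warp in speakers:
        bubbles[(gender, round(warp, 2))].append(speaker_id)
    return dict(bubbles)

# Made-up speakers, for illustration only.
speakers = [("spk1", "M", 0.98), ("spk2", "M", 0.98), ("spk3", "F", 1.02)]
bubbles = split_into_bubbles(speakers)
print(bubbles)
# {('M', 0.98): ['spk1', 'spk2'], ('F', 1.02): ['spk3']}
```

Each resulting group would then get its own bubble model (step 2) before compaction with SAT (step 3); those steps are not shown here.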

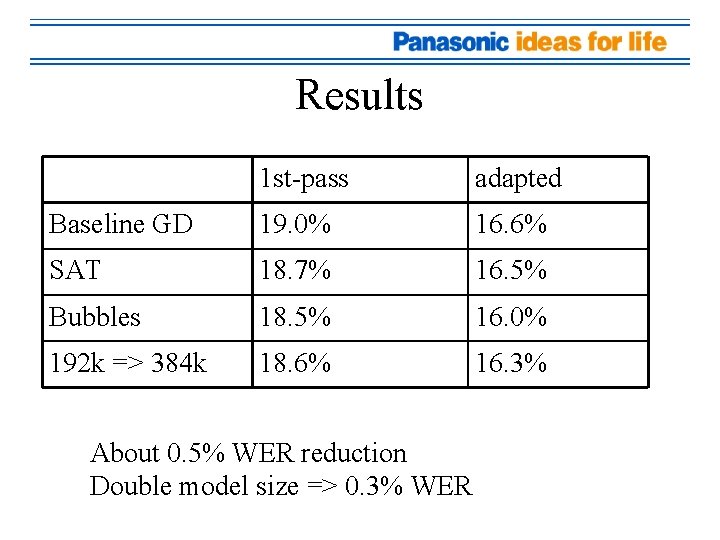

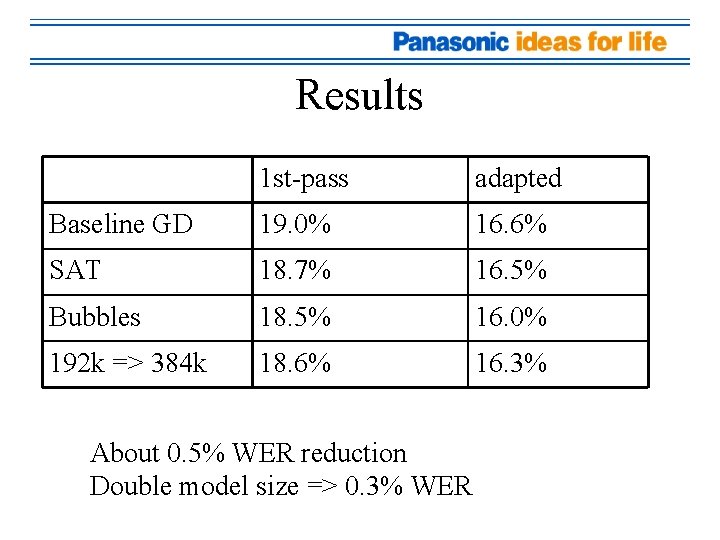

Results

| | 1st-pass | adapted |
|---|---|---|
| Baseline GD | 19.0% | 16.6% |
| SAT | 18.7% | 16.5% |
| Bubbles | 18.5% | 16.0% |
| 192k => 384k | 18.6% | 16.3% |

- About 0.5% WER reduction
- Double model size => 0.3% WER
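The quoted gains can be checked against the table with a few lines of arithmetic. The dictionary simply restates the numbers above; the function name is hypothetical.

```python
def gains(wer, baseline="Baseline GD"):
    """Absolute WER reduction of each system relative to the baseline."""
    base_first, base_adapted = wer[baseline]
    return {name: (round(base_first - first, 1),
                   round(base_adapted - adapted, 1))
            for name, (first, adapted) in wer.items()}

# (1st-pass WER %, adapted WER %), copied from the results table.
wer = {
    "Baseline GD": (19.0, 16.6),
    "SAT": (18.7, 16.5),
    "Bubbles": (18.5, 16.0),
    "192k => 384k": (18.6, 16.3),
}
for name, (first, adapted) in gains(wer).items():
    print(f"{name}: {first} / {adapted}")
```

Bubbles gain 0.5 points in the first pass and 0.6 after adaptation, while doubling the model size gains 0.4 and 0.3, so the slide's 0.5% and 0.3% correspond to the first-pass and adapted columns respectively.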

Conclusion (Bubble)

- Gain: 0.5% WER
- Extension of SAT model compaction
- VTLN implementation more efficient

Open questions

- Baseline SAT does not work?
- Speaker definition?
- Best splitting strategy? (One per warp)
- Best decoding strategy? (Closest warp)
- Best bubble training? (MAP/MLLR)
- MMIE

Conclusion

- What do we do with all of these data?
- Syllable + bubble splitting
- Two narrowly explored paths among many
- Promising results but nothing breathtaking
- Not ambitious enough?

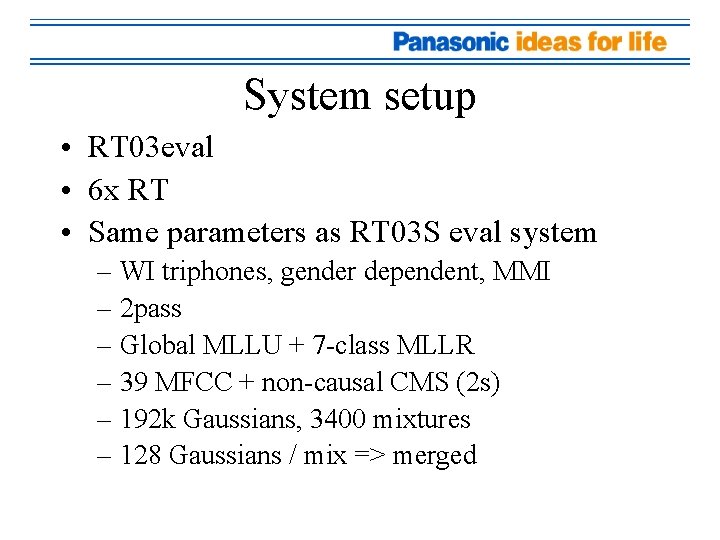

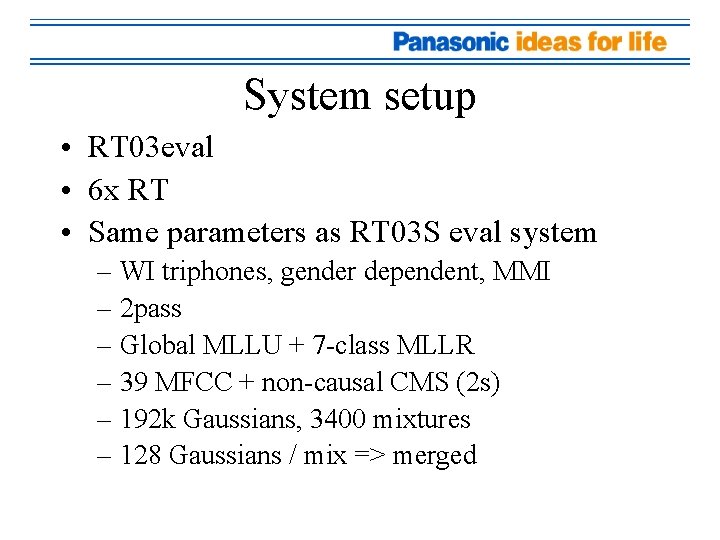

System setup

- RT-03 eval
- 6 x RT
- Same parameters as RT-03S eval system
  - WI triphones, gender dependent, MMI
  - 2 pass
  - Global MLLU + 7-class MLLR
  - 39 MFCC + non-causal CMS (2 s)
  - 192k Gaussians, 3400 mixtures
  - 128 Gaussians / mix => merged