Large Margin Hidden Markov Models for Speech Recognition


- Slides: 40

Large Margin Hidden Markov Models for Speech Recognition • Hui Jiang, Xinwei Li, Chaojun Liu • Presented by Fang-Hui Chu

Outline • Introduction • Large margin HMMs for ASR • Formulation of large margin estimation for CDHMM • Optimization based on the penalized gradient descent • Experiments • Conclusions

Introduction • The most successful modeling approach to automatic speech recognition (ASR) is to use a set of hidden Markov models (HMMs) as the acoustic models • For HMM-based acoustic models, the dominant estimation method is the Baum-Welch algorithm, which is based on the maximum likelihood (ML) criterion • As an alternative to ML estimation, discriminative training (DT) has also been studied extensively for HMMs in ASR

Introduction • DT methods use the minimization (or reduction) of classification errors on the training data as the model estimation criterion • Although both the MMI and MCE criteria have been shown to asymptotically upper-bound the Bayes error when an infinite amount of training data is available, a low classification error rate on a finite training set does not necessarily guarantee a low error rate on a new test set – Several techniques have been used to improve the generalization of DT methods • Smoothing the sigmoid function in MCE • Acoustic scaling and a weakened language model in MMI

Introduction • On the other hand, the generalization problem of learning algorithms has been studied theoretically in the field of machine learning – where the Bayes error is shown to be bounded by the classification error rate on a finite training set plus a quantity related to the so-called VC dimension – and where it is the margin in classification, rather than the raw training error, that essentially determines generalization • The concept of large margin has been identified as a unifying principle for analyzing many different approaches in pattern recognition

Introduction • Generally speaking, the original formulation of the SVM is not well suited to speech recognition (SR) tasks in several respects – First, the standard SVM expects a fixed-length feature vector as input, while speech patterns are dynamic and naturally lead to variable-length features – Second, the standard SVM is originally formulated for binary pattern classification – Third, the SVM is a static classifier by nature, so it is not straightforward to apply it to sequence recognition problems, such as continuous SR, where the boundary of each potential pattern is unknown

Introduction

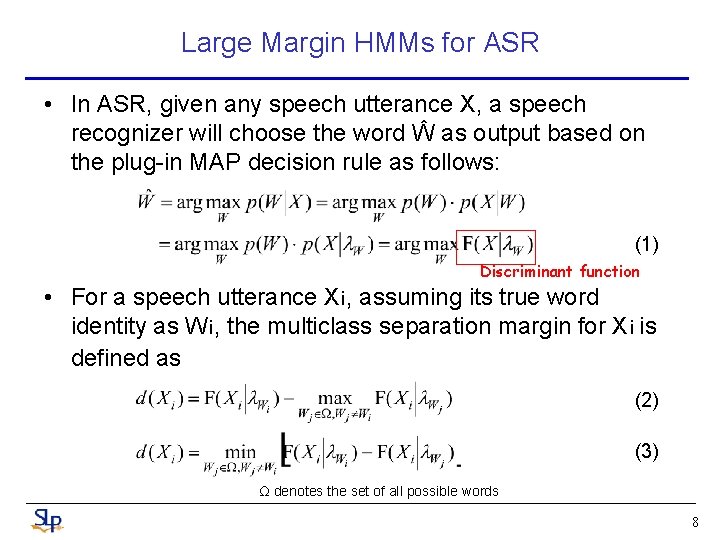

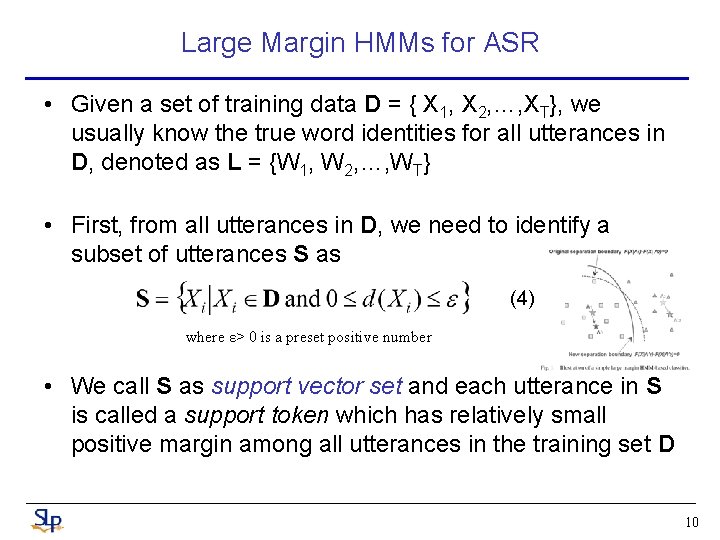

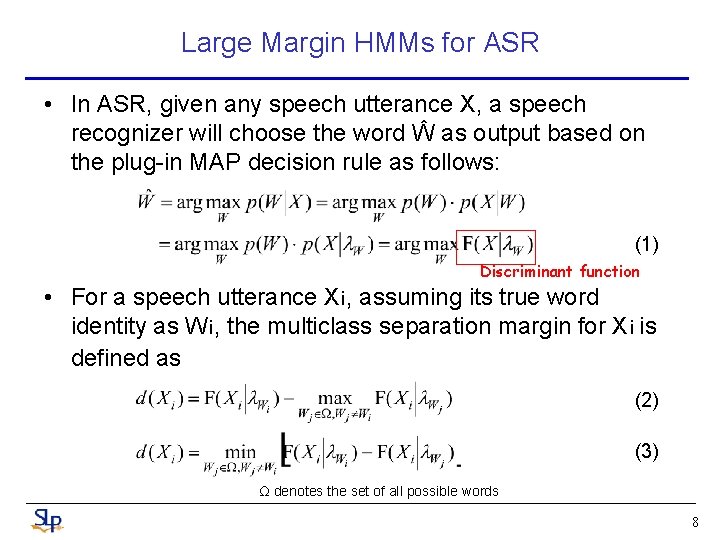

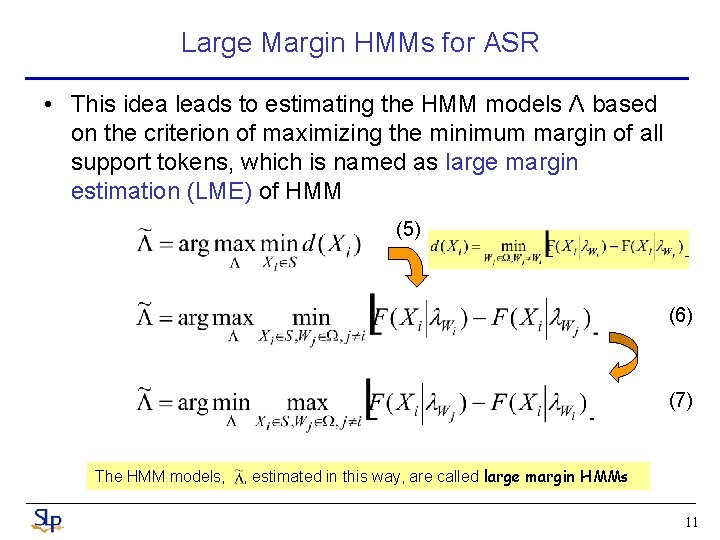

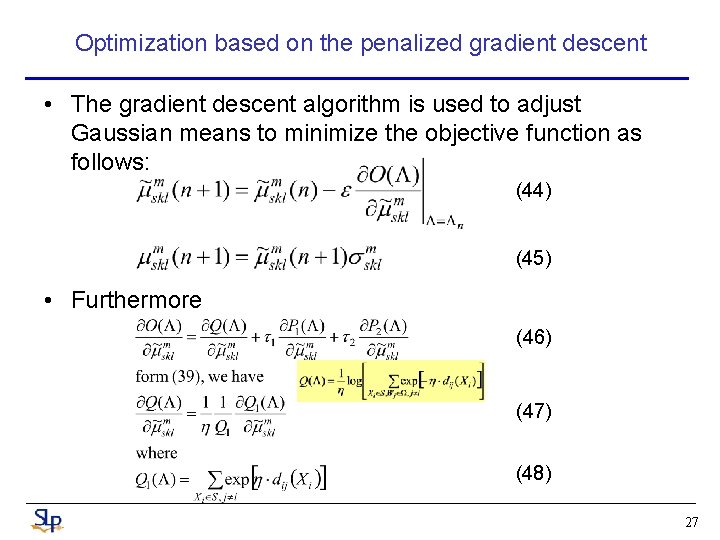

Large Margin HMMs for ASR • In ASR, given a speech utterance X, a speech recognizer chooses the word Ŵ as output based on the plug-in MAP decision rule in Eq. (1), which selects the word with the largest discriminant function value • For a speech utterance X_i whose true word identity is W_i, the multiclass separation margin of X_i is defined in Eqs. (2) and (3), where Ω denotes the set of all possible words
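As an illustration of the decision rule and the margin, here is a minimal Python sketch. It assumes the discriminant scores F(X|λ_W) (for example, log p(W) + log p(X|λ_W)) have already been computed for every candidate word; the function names and score values are hypothetical. A positive margin means the true word wins the MAP decision.

```python
def map_decision(scores):
    """Plug-in MAP rule: return the word whose discriminant score is largest.

    scores maps each candidate word W to F(X | lambda_W), assumed precomputed,
    e.g. log p(W) + log p(X | lambda_W).
    """
    return max(scores, key=scores.get)


def separation_margin(scores, true_word):
    """Multiclass separation margin: F(X | W_true) minus the best competing score."""
    best_competitor = max(s for w, s in scores.items() if w != true_word)
    return scores[true_word] - best_competitor


# Made-up scores for three E-set words
scores = {"b": -120.5, "d": -123.0, "e": -121.0}
print(map_decision(scores))            # b
print(separation_margin(scores, "b"))  # 0.5
print(separation_margin(scores, "d"))  # -2.5
```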

![Large Margin HMMs for ASR](https://slidetodoc.com/presentation_image/40a293d433fa00d30c8ebb0b70201e4c/image-9.jpg)

Large Margin HMMs for ASR • According to statistical learning theory [Vapnik], the generalization error rate of a classifier on new test sets is theoretically bounded by a quantity related to its margin • Motivated by the large margin principle, even when all utterances in the training set already have positive margins (i.e., are correctly recognized), we may still want to maximize the minimum margin to build an HMM-based large margin classifier for ASR

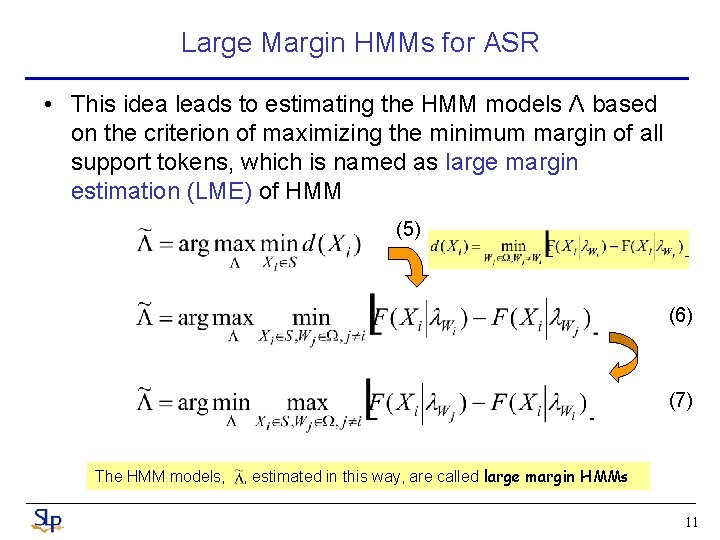

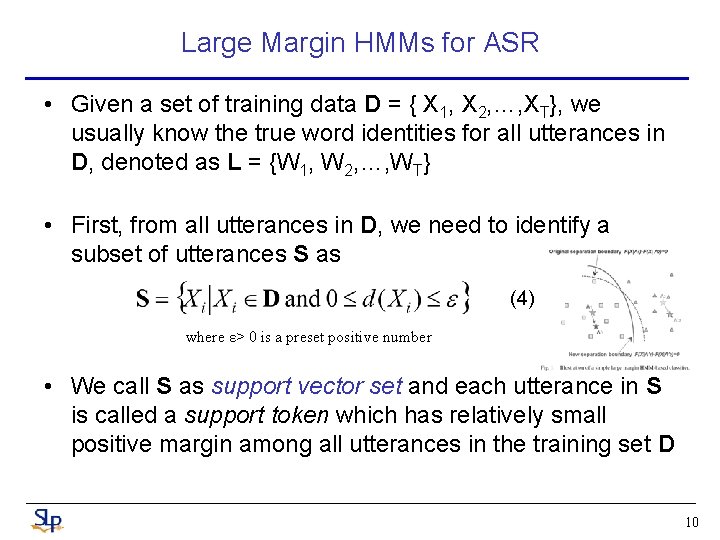

Large Margin HMMs for ASR • Given a set of training data D = {X_1, X_2, …, X_T}, we usually know the true word identities of all utterances in D, denoted as L = {W_1, W_2, …, W_T} • First, from all utterances in D, we identify a subset of utterances S as in Eq. (4), where ε > 0 is a preset positive number • We call S the support vector set, and each utterance in S is called a support token; support tokens have relatively small positive margins among all utterances in the training set D
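A sketch of the support-set selection in (4), assuming, as the description of support tokens suggests, that a support token is an utterance whose margin lies in the interval [0, ε]. Margins are taken as precomputed, and the function name is hypothetical.

```python
def select_support_tokens(margins, epsilon):
    """Return indices i of utterances whose margin satisfies 0 <= d(X_i) <= epsilon.

    Misrecognized utterances (negative margin) are excluded, and utterances with
    margin above epsilon are treated as already safely classified.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be a positive number")
    return [i for i, d in enumerate(margins) if 0.0 <= d <= epsilon]


margins = [0.5, -2.5, 3.0, 1.2, 0.0]  # made-up margins d(X_i)
print(select_support_tokens(margins, epsilon=1.5))  # [0, 3, 4]
```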

Large Margin HMMs for ASR • This idea leads to estimating the HMM models Λ based on the criterion of maximizing the minimum margin of all support tokens, which is named large margin estimation (LME) of HMMs, Eqs. (5) to (7) • The HMM models estimated in this way are called large margin HMMs
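For reference, one way to write the LME criterion, assuming the margin of X_i is d(X_i) = F(X_i|λ_{W_i}) − max_{W∈Ω, W≠W_i} F(X_i|λ_W), with F the discriminant function and Λ̃ the estimated models. This is a sketch whose notation may differ from the paper's (5) to (7):

```latex
\begin{aligned}
\tilde{\Lambda}
  &= \arg\max_{\Lambda} \, \min_{X_i \in S} \, d(X_i) \\
  &= \arg\max_{\Lambda} \, \min_{X_i \in S} \Big[ F(X_i \mid \lambda_{W_i}) - \max_{W \in \Omega,\, W \neq W_i} F(X_i \mid \lambda_W) \Big] \\
  &= \arg\max_{\Lambda} \, \min_{X_i \in S,\; W \in \Omega,\; W \neq W_i} \Big[ F(X_i \mid \lambda_{W_i}) - F(X_i \mid \lambda_W) \Big]
\end{aligned}
```

The last step uses min_i [a_i − max_W b_{iW}] = min_{i,W} [a_i − b_{iW}], which turns the criterion into a single minimum over (support token, competing word) pairs.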

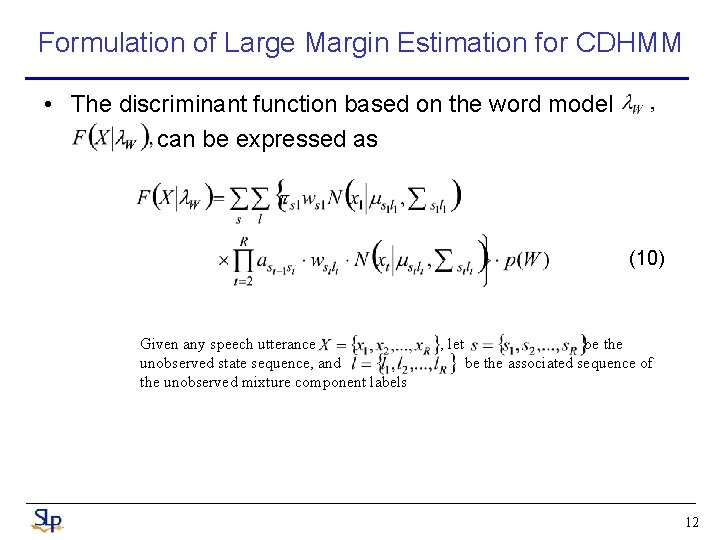

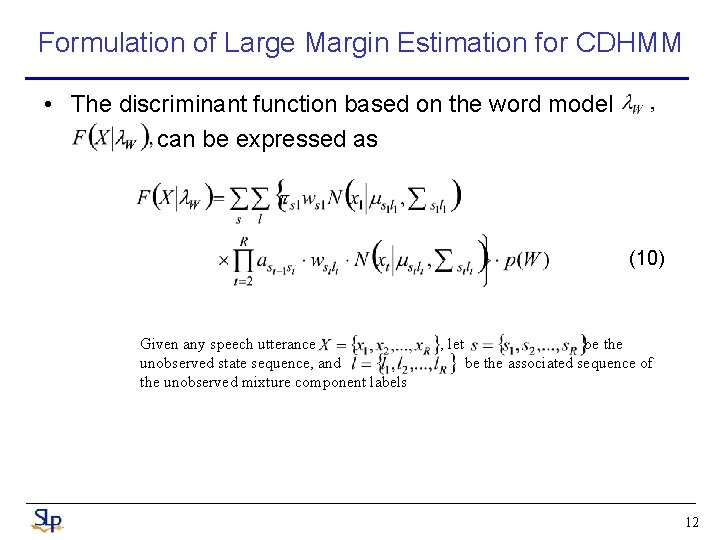

Formulation of Large Margin Estimation for CDHMM • The discriminant function based on the word model can be expressed as in Eq. (10), which sums over the unobserved state sequence and the unobserved mixture component labels associated with a given speech utterance

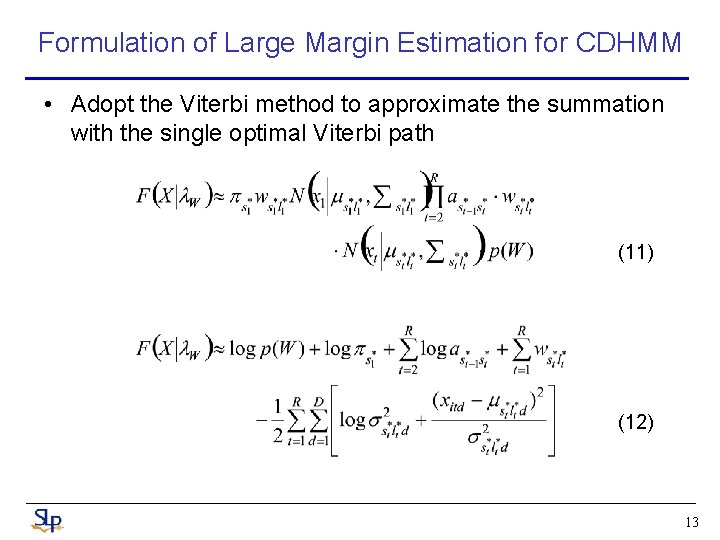

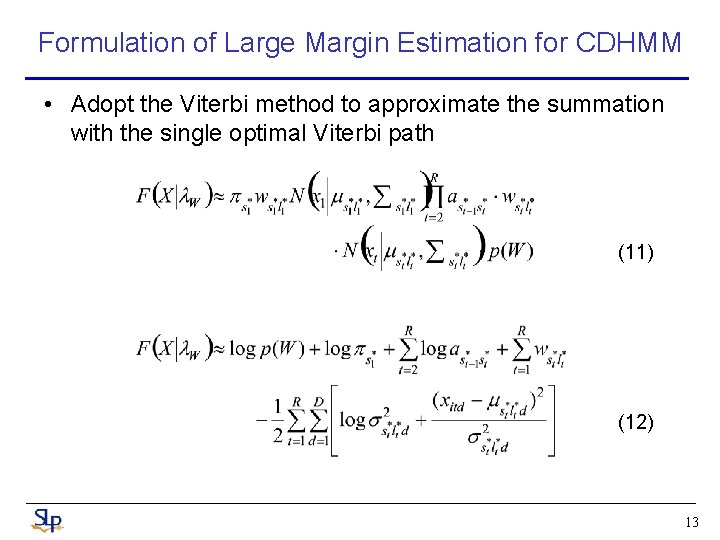

Formulation of Large Margin Estimation for CDHMM • The Viterbi method is adopted to approximate the summation by the single optimal Viterbi path, as in Eqs. (11) and (12)
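The Viterbi approximation replaces the sum over hidden paths with the score of the single best path. The following is a generic log-domain Viterbi sketch, not the paper's code. It assumes the per-frame log-likelihoods log_emit[t][s] are precomputed; taking each one as the maximum over a state's weighted mixture components would make the path also pick the best mixture label per frame. All names and numbers are illustrative.

```python
import math

NEG_INF = float("-inf")  # log(0), used for forbidden starts and transitions


def viterbi(log_init, log_trans, log_emit):
    """Return (best log score, best state path) for one utterance.

    log_init[s]     : log probability of starting in state s
    log_trans[s][r] : log probability of moving from state s to state r
    log_emit[t][s]  : log-likelihood of frame t under state s
    """
    n_states = len(log_init)
    # delta[s]: best log score over all partial paths that end in state s
    delta = [log_init[s] + log_emit[0][s] for s in range(n_states)]
    backptr = []
    for t in range(1, len(log_emit)):
        prev, delta, ptr = delta, [], []
        for r in range(n_states):
            best_s = max(range(n_states), key=lambda s: prev[s] + log_trans[s][r])
            delta.append(prev[best_s] + log_trans[best_s][r] + log_emit[t][r])
            ptr.append(best_s)
        backptr.append(ptr)
    # Trace back from the best final state to recover the optimal path
    state = max(range(n_states), key=lambda s: delta[s])
    best_score = delta[state]
    path = [state]
    for ptr in reversed(backptr):
        state = ptr[state]
        path.append(state)
    return best_score, path[::-1]


# Toy 2-state left-to-right model over 3 frames (made-up numbers)
log_init = [0.0, NEG_INF]
log_trans = [[math.log(0.5), math.log(0.5)], [NEG_INF, 0.0]]
log_emit = [[-1.0, -5.0], [-2.0, -1.0], [-4.0, -0.5]]
score, path = viterbi(log_init, log_trans, log_emit)
print(path)  # [0, 1, 1]
```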

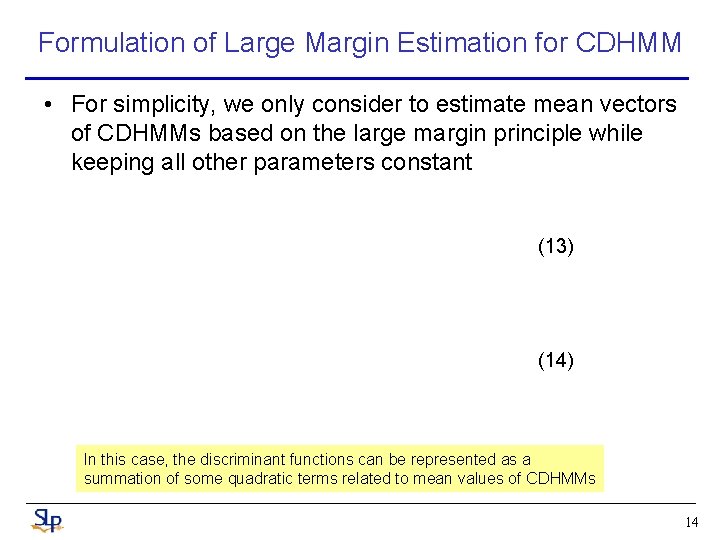

Formulation of Large Margin Estimation for CDHMM • For simplicity, we only estimate the mean vectors of the CDHMMs based on the large margin principle, while keeping all other parameters constant, Eqs. (13) and (14) • In this case, the discriminant functions can be represented as a sum of quadratic terms in the CDHMM mean values
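A sketch of why only quadratic terms in the means remain, assuming diagonal-covariance Gaussians and a fixed Viterbi path. Write k_t for the Gaussian (state and mixture component) aligned to frame t, T_i for the number of frames, D for the feature dimension, and C_W for every term that does not involve the means (language model, transition and mixture weight terms, and Gaussian normalizers along the path). Since the log-density of a diagonal Gaussian is −½ Σ_d log(2πσ_d²) − Σ_d (x_d − μ_d)²/(2σ_d²), the discriminant function becomes the following; this notation is ours, not necessarily the paper's (13) and (14):

```latex
F(X_i \mid \lambda_W) \;\approx\; C_W \;-\; \sum_{t=1}^{T_i} \sum_{d=1}^{D} \frac{\left(x_{itd} - \mu_{k_t d}\right)^2}{2\,\sigma_{k_t d}^2}
```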

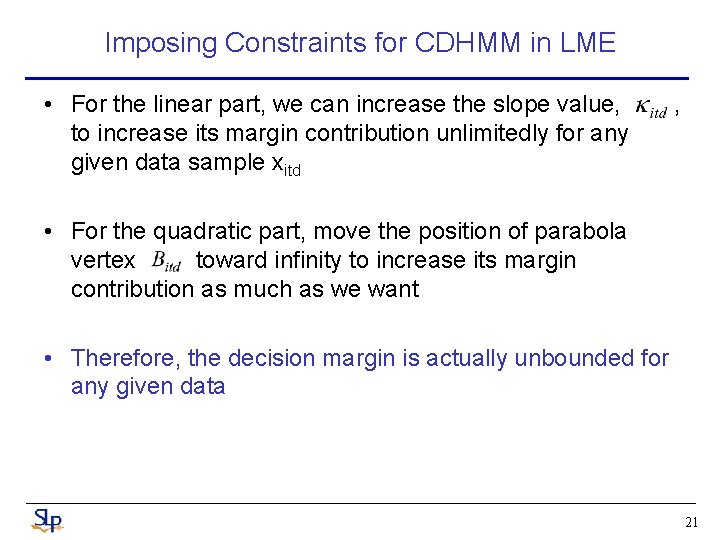

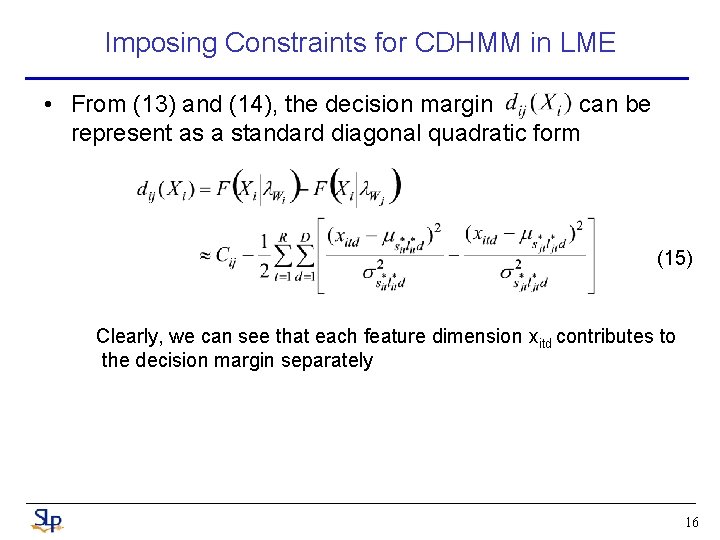

Imposing Constraints for CDHMM in LME • The decision margins are actually unbounded for CDHMMs – In other words, the CDHMM parameters can be adjusted so that the margin increases without limit, which means the minimax optimization in (7) has no finite solution • In this paper, we mathematically analyze the definition of the margin and introduce theoretically sound constraints for the minimax optimization in LME of CDHMMs for speech recognition

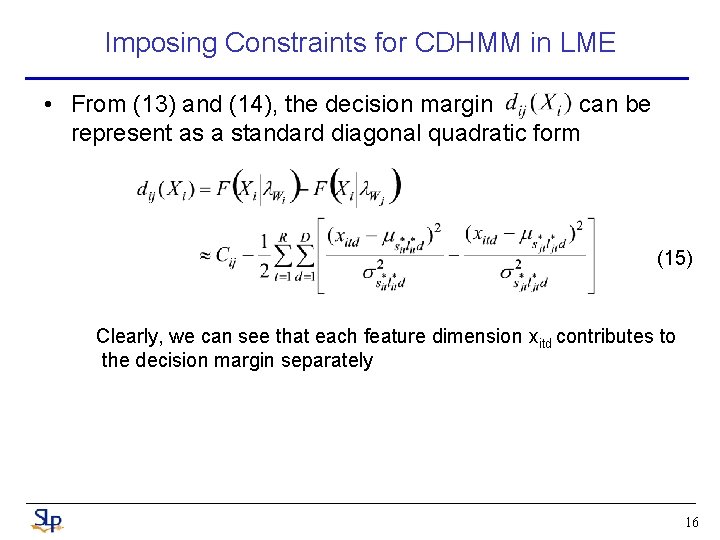

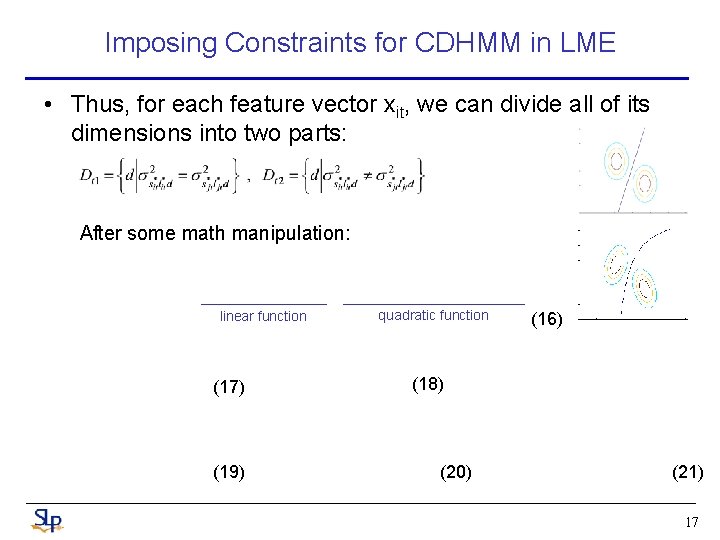

Imposing Constraints for CDHMM in LME • From (13) and (14), the decision margin can be represented as a standard diagonal quadratic form, Eq. (15) • Clearly, each feature dimension x_itd contributes to the decision margin separately

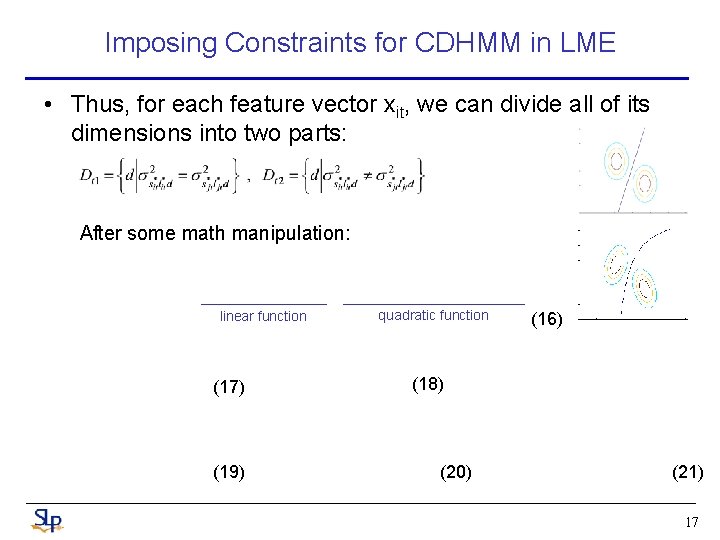

Imposing Constraints for CDHMM in LME • Thus, for each feature vector x_it, all of its dimensions can be divided into two parts • After some mathematical manipulation (Eqs. (16) to (21)), the margin contribution of one part is a linear function and that of the other part is a quadratic function
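A sketch of the per-dimension split, assuming the two Gaussians aligned to frame t on the correct and competing paths have means μ_a, μ_b and variances σ_a², σ_b² in dimension d. Expanding −(x−μ_a)²/(2σ_a²) + (x−μ_b)²/(2σ_b²) shows that the x² terms cancel exactly when σ_a² = σ_b², leaving a linear function of x_itd with slope (μ_a − μ_b)/σ²; otherwise the contribution is quadratic. Splitting by equal versus unequal variances is our reading of the two parts, and the function names are hypothetical.

```python
def margin_term(x, mu_a, var_a, mu_b, var_b):
    """Margin contribution of one feature value x = x_itd, up to a constant
    from the Gaussian normalizers.

    (mu_a, var_a): Gaussian aligned to frame t on the correct model's path.
    (mu_b, var_b): Gaussian aligned to frame t on the competing model's path.
    Returns the coefficients (a2, a1, a0) of a2*x**2 + a1*x + a0 and its value at x.
    """
    a2 = 0.5 * (1.0 / var_b - 1.0 / var_a)  # zero when the variances are equal
    a1 = mu_a / var_a - mu_b / var_b  # equals (mu_a - mu_b) / var when tied
    a0 = mu_b ** 2 / (2.0 * var_b) - mu_a ** 2 / (2.0 * var_a)
    return (a2, a1, a0), a2 * x * x + a1 * x + a0


def split_dimensions(var_a, var_b):
    """Split dimension indices into a linear part (equal variances) and a quadratic part."""
    linear = [d for d, (va, vb) in enumerate(zip(var_a, var_b)) if va == vb]
    quadratic = [d for d, (va, vb) in enumerate(zip(var_a, var_b)) if va != vb]
    return linear, quadratic


# Example: dimension 0 is linear, dimension 1 is quadratic (made-up values)
print(split_dimensions([1.0, 2.0], [1.0, 1.0]))  # ([0], [1])
```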

Derivation of decision margin

Imposing Constraints for CDHMM in LME • For the linear part, we can increase the slope to increase its margin contribution without limit for any given data sample x_itd • For the quadratic part, we can move the position of the parabola vertex toward infinity to increase its margin contribution as much as we want • Therefore, the decision margin is actually unbounded for any given data
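A numeric illustration of the two ways the margin grows without bound, using one fixed, made-up feature value x_itd. The function name and all numbers are hypothetical; the contribution is computed up to the constant from the Gaussian normalizers.

```python
def margin_contribution(x, mu_a, var_a, mu_b, var_b):
    """Margin contribution of one feature value x (correct minus competing Gaussian),
    up to a constant from the Gaussian normalizers."""
    return (x - mu_b) ** 2 / (2 * var_b) - (x - mu_a) ** 2 / (2 * var_a)


x = 0.5  # a fixed feature value x_itd (made-up)
for c in (1.0, 10.0, 100.0):
    # Linear case (equal variances): pushing the means apart raises the slope,
    # so the contribution here equals c and grows without limit.
    linear = margin_contribution(x, mu_a=c, var_a=1.0, mu_b=-c, var_b=1.0)
    # Quadratic case (unequal variances): moving the competing mean away
    # drives the parabola's vertex toward infinity.
    quadratic = margin_contribution(x, mu_a=x, var_a=1.0, mu_b=c, var_b=2.0)
    print(c, linear, quadratic)
```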

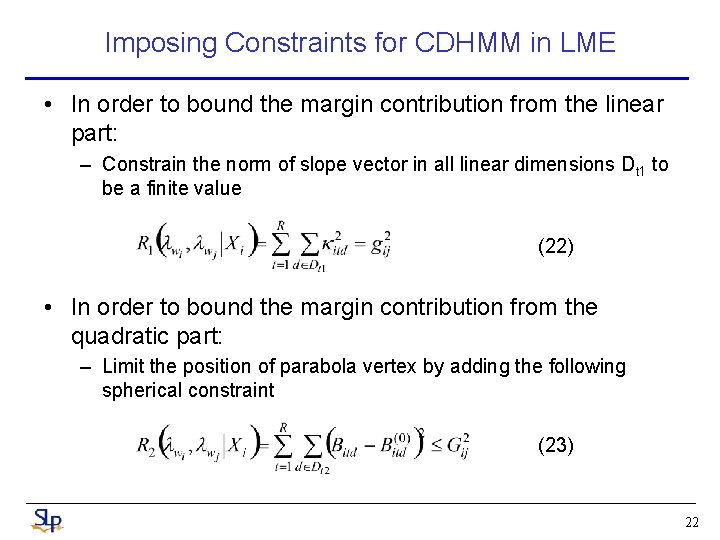

Imposing Constraints for CDHMM in LME • To bound the margin contribution from the linear part: – Constrain the norm of the slope vector over all linear dimensions D_t1 to a finite value, Eq. (22) • To bound the margin contribution from the quadratic part: – Limit the position of the parabola vertex by adding the spherical constraint in Eq. (23)
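A sketch of checking the two kinds of constraints. The specific forms are assumptions for illustration only: the slope vector is taken as (μ_a,d − μ_b,d)/σ_d² over the linear dimensions (the slope that appears when both Gaussians share variance σ_d²), and the spherical constraint is taken as a variance-normalized ball of radius r around the seed means. The paper's (22) and (23) may be defined differently, and rho, r and the function names are hypothetical.

```python
import math


def slope_norm(mu_a, mu_b, var, linear_dims):
    """Euclidean norm of the slope vector (mu_a[d] - mu_b[d]) / var[d] over linear dims."""
    return math.sqrt(sum(((mu_a[d] - mu_b[d]) / var[d]) ** 2 for d in linear_dims))


def within_sphere(mu, mu_seed, var, radius):
    """Assumed spherical constraint: variance-normalized distance to the seed means <= radius."""
    dist2 = sum((m - m0) ** 2 / v for m, m0, v in zip(mu, mu_seed, var))
    return dist2 <= radius ** 2


# Hypothetical bounds: rho for the slope norm, r for the sphere
rho, r = 2.0, 1.5
print(slope_norm([3.0, 1.0], [0.0, 1.0], [1.0, 1.0], [0, 1]) <= rho)  # False
print(within_sphere([1.0, 1.0], [0.0, 0.0], [1.0, 1.0], r))  # True
```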

Imposing Constraints for CDHMM in LME • We reformulate large margin estimation as the constrained minimax optimization problem in Eqs. (34) to (37)

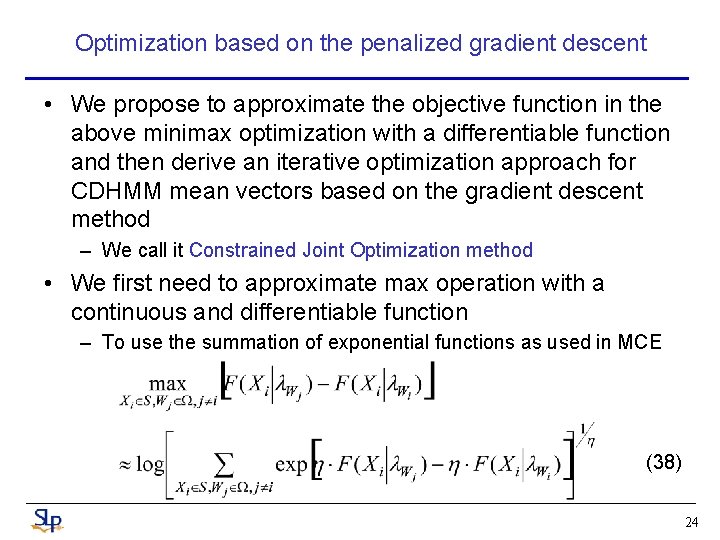

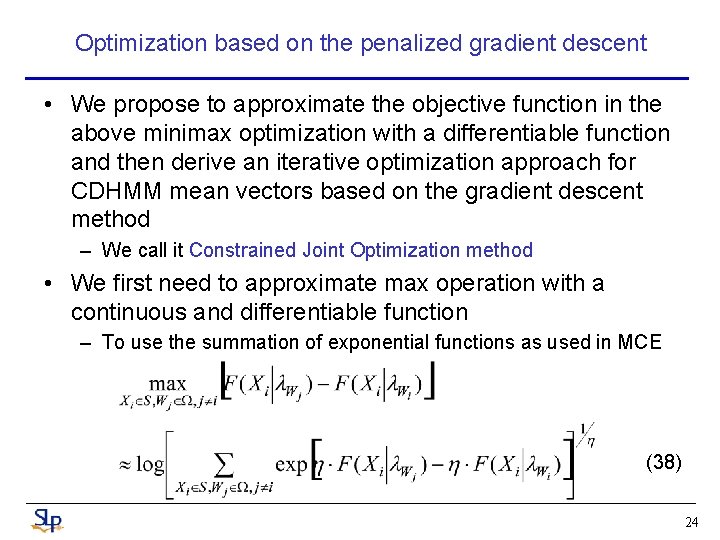

Optimization based on the penalized gradient descent • We propose to approximate the objective function in the above minimax optimization with a differentiable function and then derive an iterative optimization approach for the CDHMM mean vectors based on the gradient descent method – We call it the Constrained Joint Optimization method • We first need to approximate the max operation with a continuous and differentiable function – We use a summation of exponential functions, as in MCE, Eq. (38)
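In MCE, a max over competitors is commonly smoothed with a log-sum-exp, (1/η) log Σ_j exp(η a_j), which tends to the max as η grows. The sketch below implements that common form; whether (38) uses exactly this normalization is an assumption, and η (eta) is a hypothetical smoothing parameter.

```python
import math


def soft_max(values, eta):
    """Smooth, differentiable approximation of max(values).

    Computes (1/eta) * log(sum(exp(eta * v))) stably by factoring out the true
    maximum m. The result lies in [m, m + log(len(values)) / eta], so it tends
    to max(values) as eta grows.
    """
    if eta <= 0:
        raise ValueError("eta must be positive")
    m = max(values)
    return m + math.log(sum(math.exp(eta * (v - m)) for v in values)) / eta


competitor_scores = [-120.5, -121.0, -123.0]  # made-up F(X | lambda_W) values
for eta in (1.0, 10.0, 100.0):
    print(eta, soft_max(competitor_scores, eta))
```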

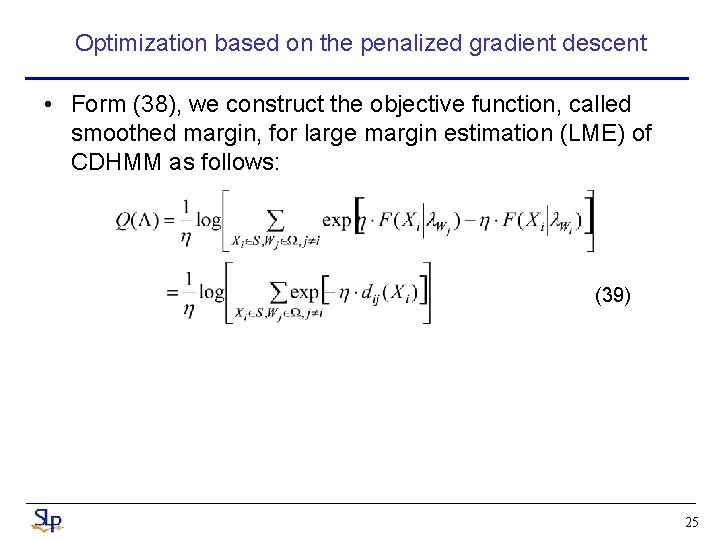

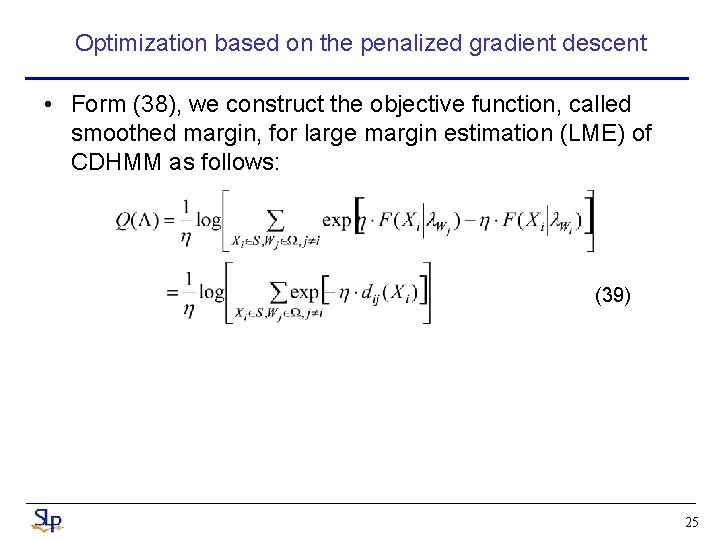

Optimization based on the penalized gradient descent • From (38), we construct the objective function for large margin estimation (LME) of CDHMMs, called the smoothed margin, as in Eq. (39)
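Applying the same smoothing idea to the minimum over support tokens and competitors gives a differentiable soft-min of the pairwise margins d_ij. This is a sketch under that assumption, not necessarily the exact form of (39); maximizing it pushes up the smallest margins. The name smoothed_min_margin is hypothetical.

```python
import math


def smoothed_min_margin(pair_margins, eta):
    """Soft-min of pairwise margins d_ij = F(X_i | W_i) - F(X_i | W_j).

    pair_margins is a flat list over all support tokens i and competitors j.
    Returns -(1/eta) * log(sum(exp(-eta * d))), computed stably. It never exceeds
    min(pair_margins) and is at least min - log(len(pair_margins)) / eta.
    """
    if eta <= 0:
        raise ValueError("eta must be positive")
    m = min(pair_margins)
    total = sum(math.exp(-eta * (d - m)) for d in pair_margins)
    return m - math.log(total) / eta


margins = [0.5, 2.0, 1.0]  # made-up pairwise margins
print(smoothed_min_margin(margins, 1.0), smoothed_min_margin(margins, 50.0))
```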

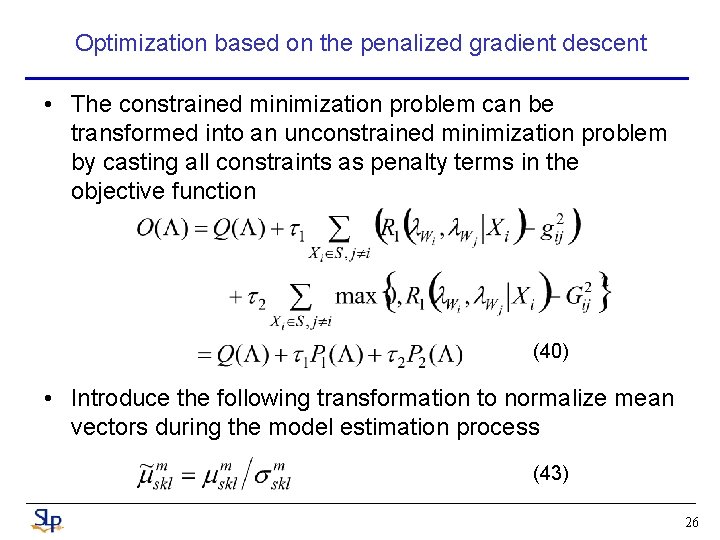

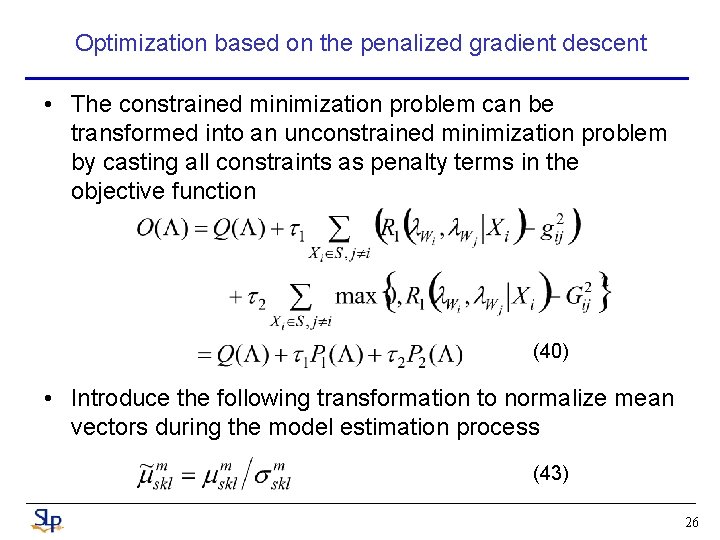

Optimization based on the penalized gradient descent • The constrained minimization problem can be transformed into an unconstrained one by casting all constraints as penalty terms in the objective function, Eq. (40) • We introduce the transformation in Eq. (43) to normalize the mean vectors during model estimation
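A minimal sketch of the penalty idea, assuming constraints written as g(Λ) ≤ 0 and a quadratic exterior penalty. For LME, the objective to minimize would be the negative smoothed margin. The paper's (40) may use a different penalty form or weights, and all names here are hypothetical.

```python
def penalized_objective(objective, constraints, weight):
    """Turn 'minimize objective subject to g(params) <= 0 for all g' into an
    unconstrained objective with a quadratic penalty on violated constraints."""
    def penalized(params):
        violation = sum(max(0.0, g(params)) ** 2 for g in constraints)
        return objective(params) + weight * violation
    return penalized


# Toy example: minimize -p[0] (i.e. push p[0] up) subject to p[0]**2 <= 1
def neg_first(p):
    return -p[0]


def unit_bound(p):
    return p[0] ** 2 - 1.0


f = penalized_objective(neg_first, [unit_bound], weight=10.0)
print(f([0.5]), f([2.0]))  # -0.5 88.0
```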

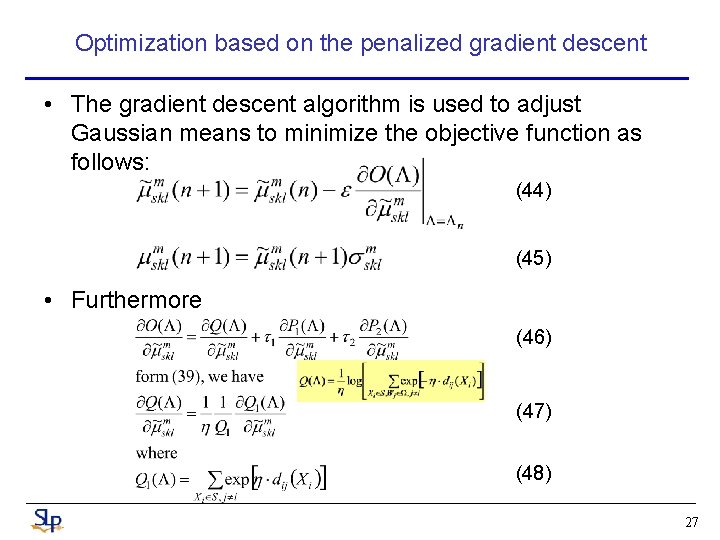

Optimization based on the penalized gradient descent • The gradient descent algorithm adjusts the Gaussian means to minimize the objective function, as in Eqs. (44) and (45) • The gradient is further expanded in Eqs. (46) to (48)
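The paper derives analytic gradients for the means in (46) to (51). As a stand-in, the sketch below runs plain gradient descent with a central finite-difference gradient, which is handy for prototyping or for checking analytic derivatives on small problems but is not the method in the slides. The function names, step size and toy objective are assumptions.

```python
def numerical_gradient(f, params, h=1e-5):
    """Central finite-difference estimate of the gradient of f at params."""
    grad = []
    for k in range(len(params)):
        up = list(params)
        up[k] += h
        down = list(params)
        down[k] -= h
        grad.append((f(up) - f(down)) / (2.0 * h))
    return grad


def gradient_descent(f, params, step=0.1, n_iter=100):
    """Plain gradient descent: params <- params - step * grad f(params)."""
    params = list(params)
    for _ in range(n_iter):
        grad = numerical_gradient(f, params)
        params = [p - step * g for p, g in zip(params, grad)]
    return params


# Toy quadratic standing in for a penalized objective over two "means"
def bowl(p):
    return (p[0] - 3.0) ** 2 + (p[1] + 1.0) ** 2


print(gradient_descent(bowl, [0.0, 0.0], step=0.1, n_iter=200))  # close to [3.0, -1.0]
```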

Derivation of part 1: Eqs. (49) to (51)

Derivation of part 2

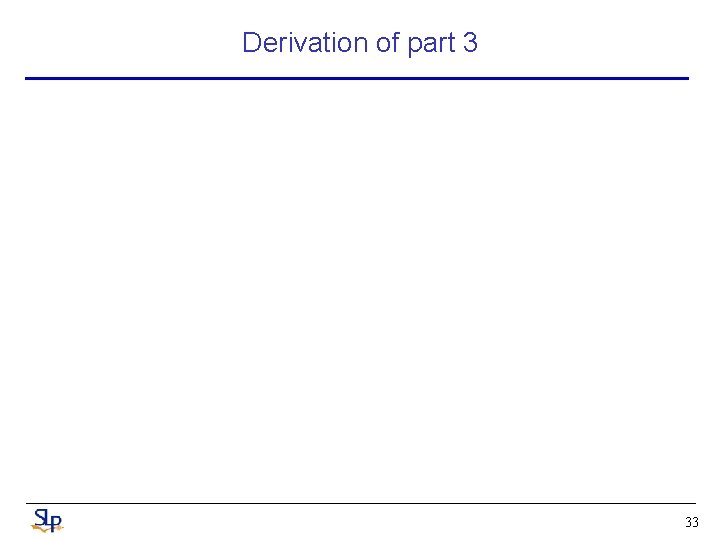

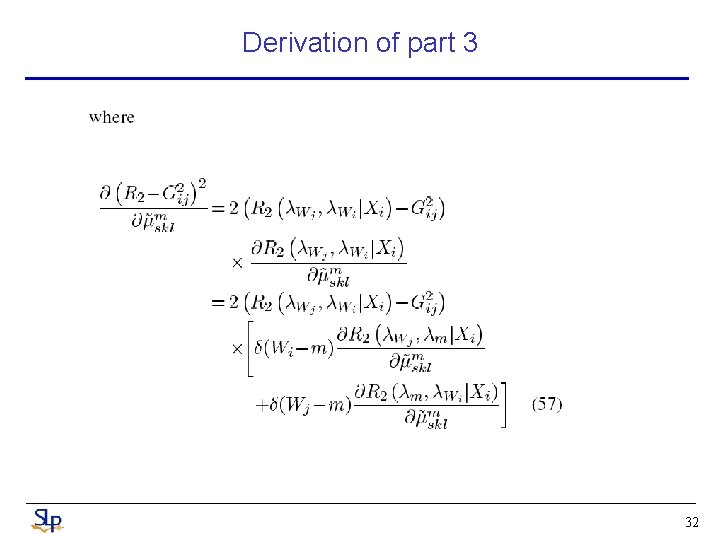

Derivation of part 3

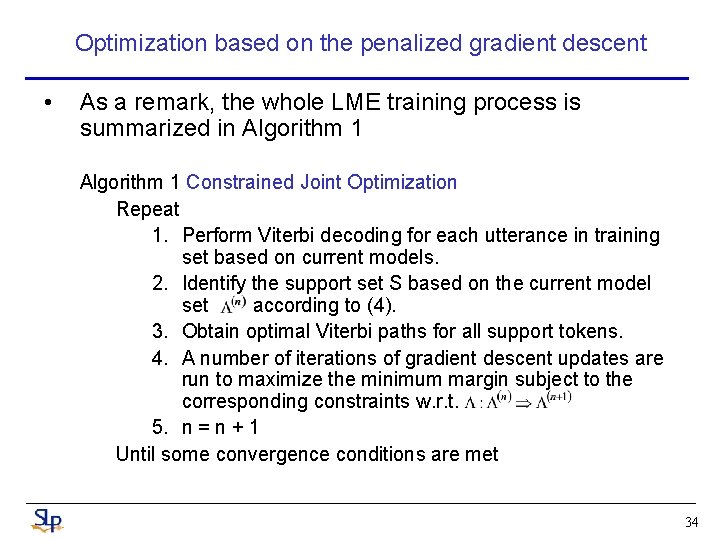

Optimization based on the penalized gradient descent • As a remark, the whole LME training process is summarized in Algorithm 1:

Algorithm 1: Constrained Joint Optimization

Repeat

1. Perform Viterbi decoding for each utterance in the training set based on the current models.
2. Identify the support set S based on the current model set according to (4).
3. Obtain the optimal Viterbi paths for all support tokens.
4. Run a number of gradient descent iterations on the Gaussian mean vectors to maximize the minimum margin subject to the corresponding constraints.
5. n = n + 1

Until some convergence conditions are met
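The outer loop of Algorithm 1 can be sketched as follows. The callbacks (score_margin, align, update_means), the support interval [0, ε], and the stopping rule (the minimum support-token margin changing by less than tol, or a round limit) are hypothetical stand-ins for the convergence conditions the slide leaves unspecified.

```python
def large_margin_training(models, utterances, labels, score_margin, align,
                          update_means, epsilon, max_rounds=20, tol=1e-4):
    """Outer loop of LME training, sketched after Algorithm 1 (hypothetical callbacks).

    score_margin(models, x, w)          -> margin d(x) from Viterbi decoding (step 1)
    align(models, x, w)                 -> optimal Viterbi paths of a support token (step 3)
    update_means(models, tokens, paths) -> models after some constrained gradient
                                           descent updates of the Gaussian means (step 4)
    """
    previous = None
    for _ in range(max_rounds):  # each pass is one outer iteration (n = n + 1)
        # Step 1: margins of all training utterances under the current models
        margins = [score_margin(models, x, w) for x, w in zip(utterances, labels)]
        # Step 2: support set, assumed to be the tokens with 0 <= d <= epsilon
        support = [i for i, d in enumerate(margins) if 0.0 <= d <= epsilon]
        if not support:
            break
        # Assumed convergence test: the minimum support margin stopped changing
        min_margin = min(margins[i] for i in support)
        if previous is not None and abs(min_margin - previous) < tol:
            break
        previous = min_margin
        # Steps 3 and 4: align the support tokens, then update the means
        tokens = [(utterances[i], labels[i]) for i in support]
        paths = [align(models, x, w) for x, w in tokens]
        models = update_means(models, tokens, paths)
    return models
```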

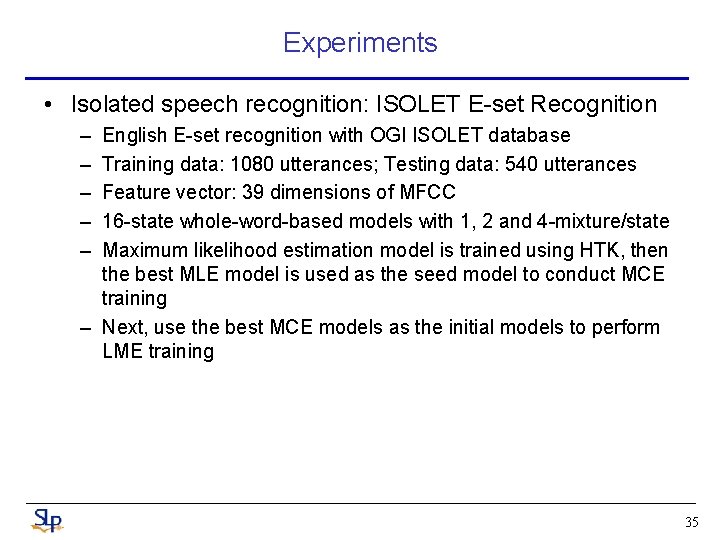

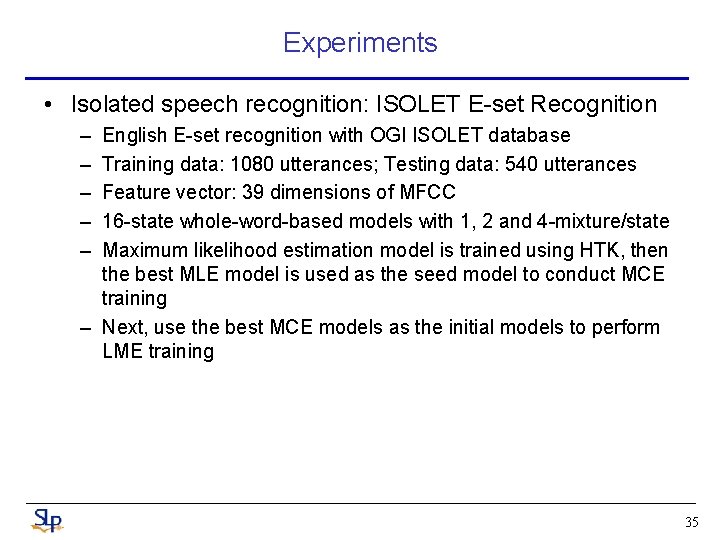

Experiments • Isolated speech recognition: ISOLET E-set Recognition – English E-set recognition with the OGI ISOLET database – Training data: 1080 utterances; testing data: 540 utterances – Feature vector: 39-dimensional MFCC – 16-state whole-word models with 1, 2 and 4 mixtures per state – A maximum likelihood estimation (MLE) model is trained using HTK, and the best MLE model is then used as the seed model for MCE training – Next, the best MCE models are used as the initial models for LME training

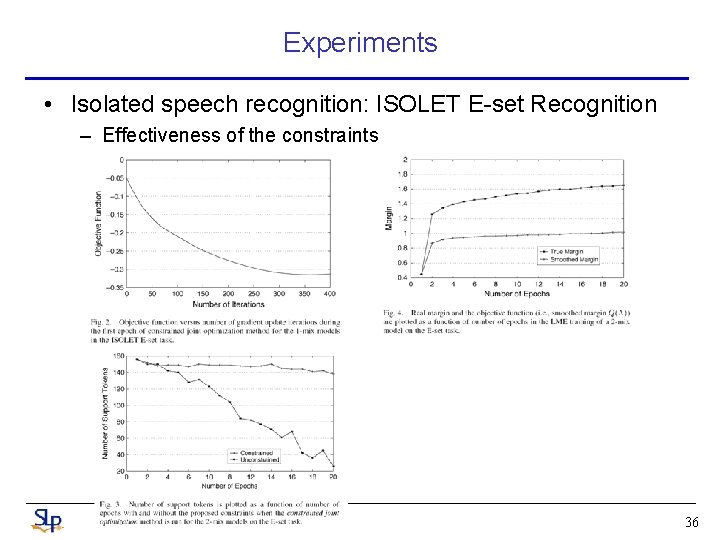

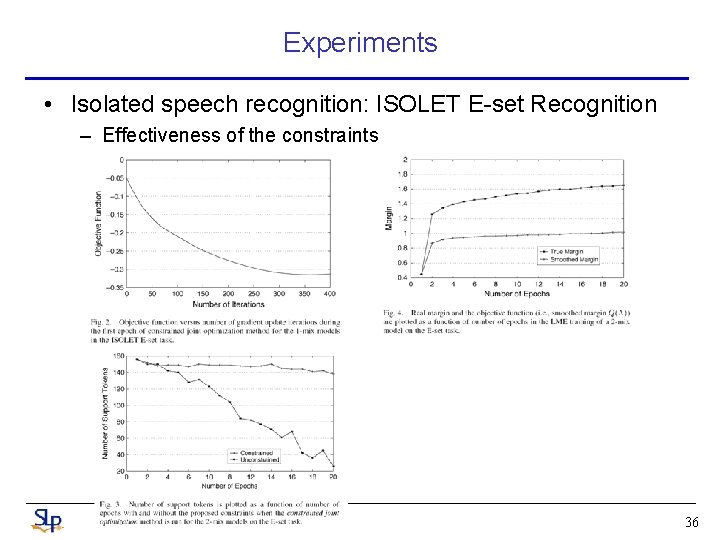

Experiments • Isolated speech recognition: ISOLET E-set Recognition – Effectiveness of the constraints

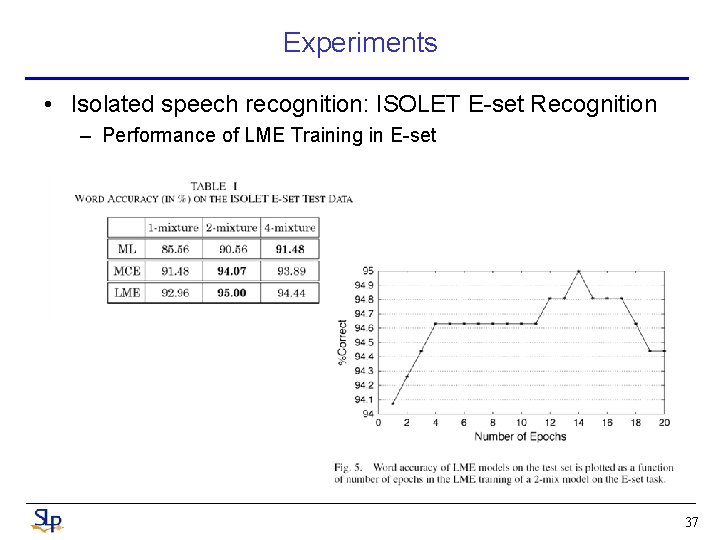

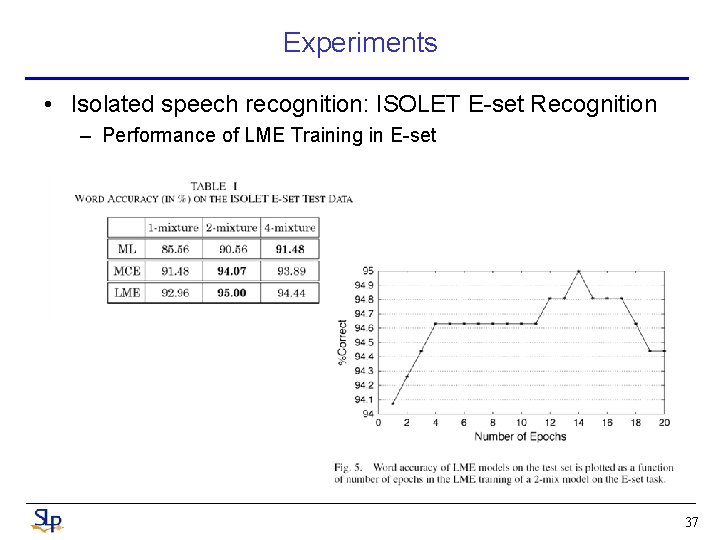

Experiments • Isolated speech recognition: ISOLET E-set Recognition – Performance of LME training on the E-set

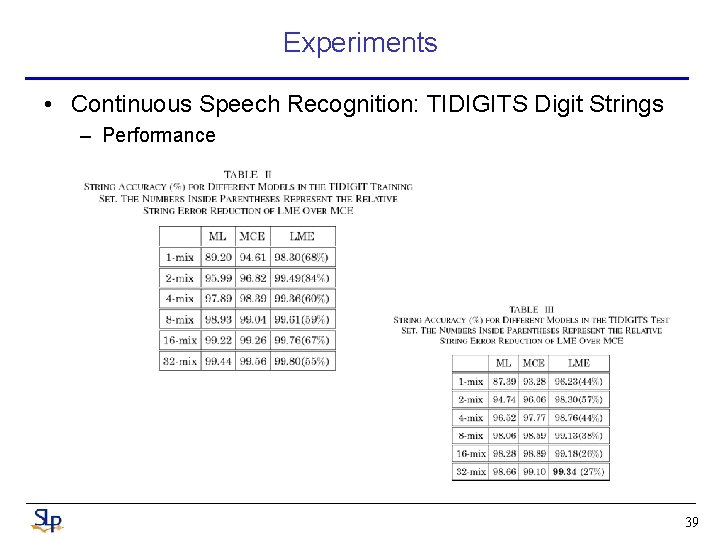

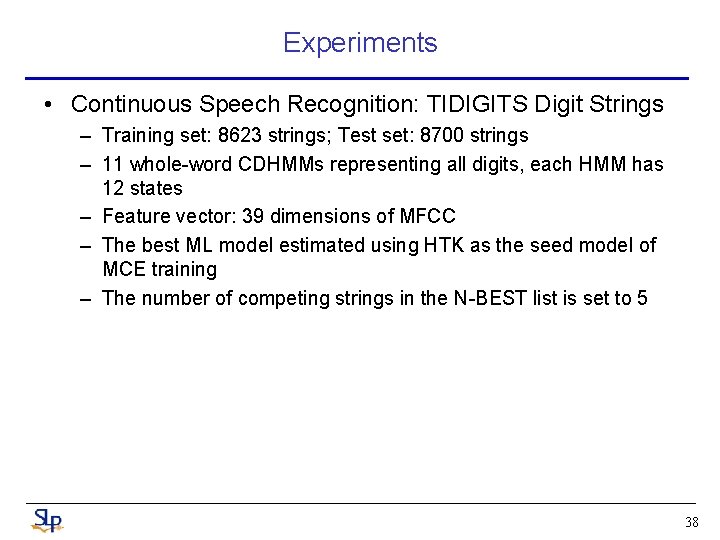

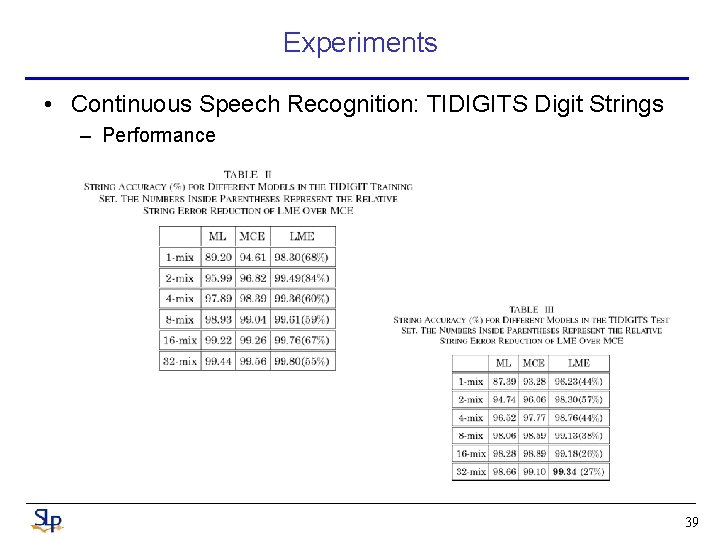

Experiments • Continuous Speech Recognition: TIDIGITS Digit Strings – Training set: 8623 strings; test set: 8700 strings – 11 whole-word CDHMMs representing all digits, each with 12 states – Feature vector: 39-dimensional MFCC – The best ML model estimated using HTK is used as the seed model for MCE training – The number of competing strings in the N-best list is set to 5

Experiments • Continuous Speech Recognition: TIDIGITS Digit Strings – Performance

Conclusions • This paper shows that the proposed framework of so-called large margin HMMs is superior to other early efforts to combine SVMs with HMMs in speech recognition • More importantly, the new framework appears promising for solving larger-scale speech recognition tasks as well • Further research is under way to extend the large margin training method to subword-based large vocabulary continuous speech recognition tasks and to investigate how to handle misrecognized utterances in the training set