Language Identification from Text Using Cumulative Frequency Addition


Bashir Ahmed
Student/Faculty Research Day, Pace University
May 07, 2004

Problem Statement
• Existing Text-to-Speech (TTS) systems fail to recognize foreign words in written text. As a result, they pronounce foreign words embedded in native text using the native lexicon. This yields poor TTS conversion: the foreign words sound unpleasant and their meaning is garbled. To be useful in a production environment, current TTS technology needs substantial improvement, in particular in recognizing foreign words and pronouncing them with the correct lexicon. This thesis proposes to investigate a way to detect language shifts in written text, so that TTS modules can switch to the proper lexicon.
http://www.naturalvoices.att.com/demos/

What Do We Need?
• To detect a language shift in a written document, we must first be able to detect the major language. So, we need a Language Identifier.
• The Language Identifier must be efficient; this is my focus at this early stage of my research.
• Existing language identifiers, such as Ngram based rank-order statistics and Naïve Bayesian classifiers, work well, but each has its pros and cons.

Approaches to Language Identification
• Dictionary Based Approach
• Machine Learning (ML) Approach

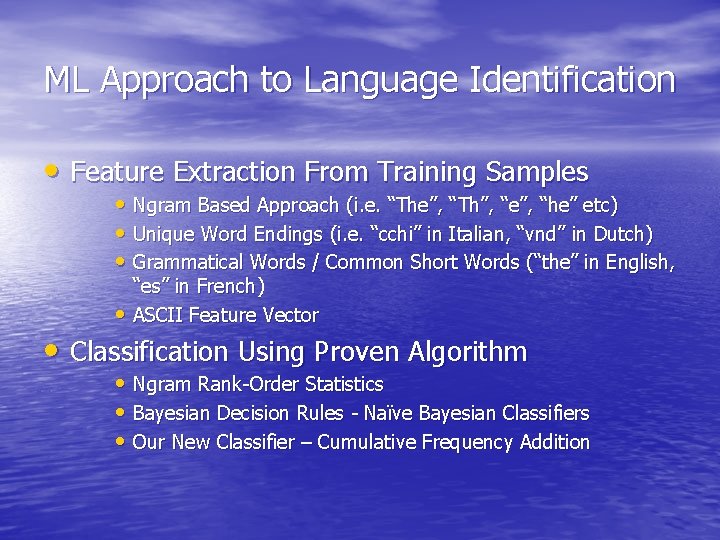

ML Approach to Language Identification
• Feature Extraction From Training Samples
  – Ngram Based Approach (e.g. “The”, “Th”, “e”, “he”)
  – Unique Word Endings (e.g. “cchi” in Italian, “vnd” in Dutch)
  – Grammatical Words / Common Short Words (“the” in English, “es” in French)
  – ASCII Feature Vector
• Classification Using Proven Algorithm
  – Ngram Rank-Order Statistics
  – Bayesian Decision Rules: Naïve Bayesian Classifiers
  – Our New Classifier: Cumulative Frequency Addition
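The Ngram based feature extraction listed above can be sketched as follows. This is a minimal illustration, not the implementation behind these slides: the function name `extract_ngrams`, the lowercasing step, the per-word windowing, and the 1-to-3 character range are all assumptions made for the example.

```python
from collections import Counter


def extract_ngrams(text, n_min=1, n_max=3):
    """Count character n-grams of length n_min..n_max inside each word.

    Assumptions (not from the slides): text is lowercased, words are
    split on whitespace, and n-grams never span a word boundary.
    """
    counts = Counter()
    for word in text.lower().split():
        for n in range(n_min, n_max + 1):
            # Slide a window of width n across the word.
            for i in range(len(word) - n + 1):
                counts[word[i:i + n]] += 1
    return counts


# The word "The" yields the 1-grams t, h, e, the 2-grams th, he,
# and the 3-gram the.
profile = extract_ngrams("The")
print(sorted(profile))
```

Counting over a large training sample per language, then ordering or normalizing the counts, gives the per-language profile that the classifiers compare against.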

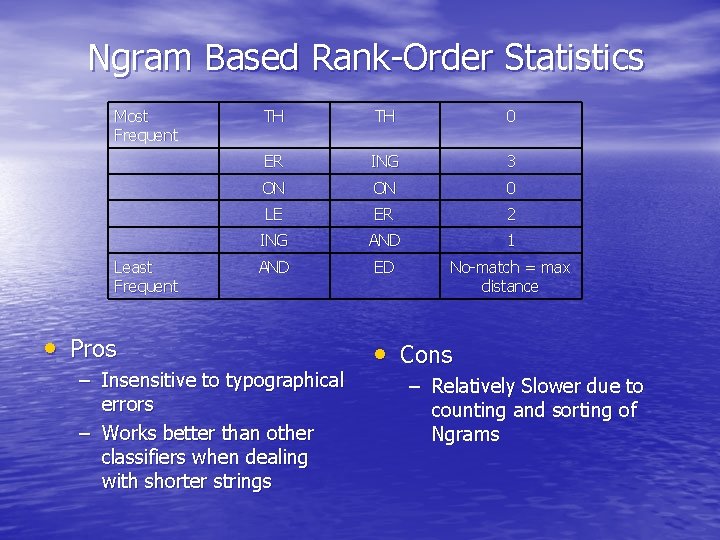

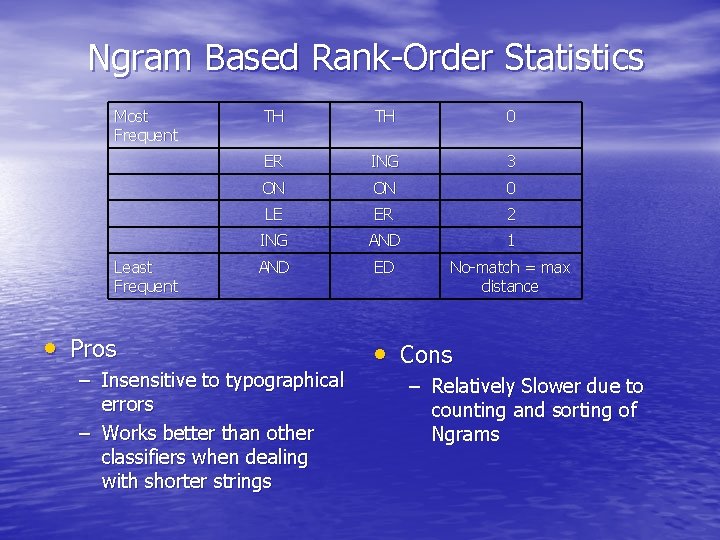

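Ngram Based Rank-Order Statistics

Two Ngram profiles, each ordered from most frequent (top) to least frequent (bottom). The distance column is the rank difference for the Ngram in the second profile.

| First profile | Second profile | Rank distance |
|---|---|---|
| TH | TH | 0 |
| ER | ING | 3 |
| ON | ON | 0 |
| LE | ER | 2 |
| ING | AND | 1 |
| AND | ED | No-match = max distance |

• Pros
  – Insensitive to typographical errors
  – Works better than other classifiers when dealing with shorter strings
• Cons
  – Relatively Slower due to counting and sorting of Ngrams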
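The rank comparison in the example above can be sketched in a few lines. The function name `rank_distance`, the labels `reference` and `sample` for the two profiles, and the choice of the reference profile's length as the "max distance" penalty are assumptions for illustration; the slide does not state the penalty value.

```python
def rank_distance(reference, sample, max_distance=None):
    """Sum the rank differences between two frequency-ordered n-gram lists.

    Both lists are ordered from most frequent to least frequent.
    An n-gram missing from `reference` costs `max_distance`
    (assumed here to default to len(reference)).
    """
    if max_distance is None:
        max_distance = len(reference)
    ref_rank = {gram: rank for rank, gram in enumerate(reference)}
    total = 0
    for rank, gram in enumerate(sample):
        if gram in ref_rank:
            total += abs(rank - ref_rank[gram])
        else:
            total += max_distance  # no match
    return total


reference = ["TH", "ER", "ON", "LE", "ING", "AND"]
sample = ["TH", "ING", "ON", "ER", "AND", "ED"]

# 0 + 3 + 0 + 2 + 1 + 6 (no match for "ED")
print(rank_distance(reference, sample))  # -> 12
```

To classify a text, this distance would be computed against each language's profile and the language with the smallest total selected. Building the sorted profile for every input is the counting and sorting cost noted under Cons.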

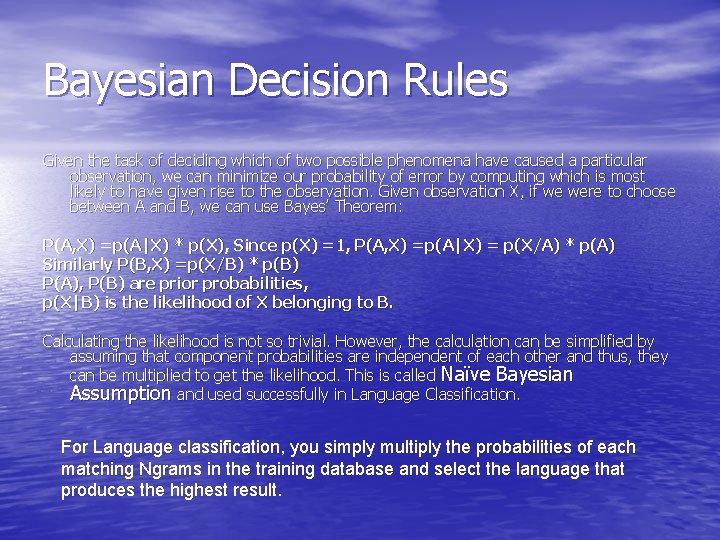

Bayesian Decision Rules

Given the task of deciding which of two possible phenomena has caused a particular observation, we can minimize our probability of error by choosing the one most likely to have given rise to the observation. Given observation X, to choose between A and B we can use Bayes' Theorem:

$$P(A, X) = p(A \mid X)\, p(X)$$

Since X has been observed, p(X) = 1, so

$$P(A, X) = p(A \mid X) = p(X \mid A)\, p(A)$$

Similarly,

$$P(B, X) = p(X \mid B)\, p(B)$$

Here p(A) and p(B) are the prior probabilities, and p(X|B) is the likelihood of X given B. Calculating the likelihood is not trivial. However, the calculation can be simplified by assuming that the component probabilities are independent of each other, so that they can be multiplied to get the likelihood. This is called the Naïve Bayesian assumption, and it has been used successfully in language classification. For language classification, you simply multiply the probabilities of all matching Ngrams in the training database and select the language that produces the highest result.
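A minimal sketch of the Naïve Bayesian decision for language classification follows. Several details are assumptions rather than part of the slides: the function name `naive_bayes_classify`, the toy probability tables, equal priors by default, the small `floor` probability given to Ngrams absent from a language's table, and the use of summed logarithms in place of a direct product (the ranking is the same, but long texts do not underflow).

```python
import math


def naive_bayes_classify(ngrams, models, priors=None, floor=1e-6):
    """Pick the language maximizing p(language) * product of p(ngram | language).

    `models` maps language -> {ngram: probability}.
    Log-probabilities are summed instead of multiplying raw values.
    `floor` is an assumed probability for n-grams missing from a model.
    """
    scores = {}
    for lang, probs in models.items():
        prior = priors[lang] if priors else 1.0 / len(models)
        score = math.log(prior)
        for gram in ngrams:
            score += math.log(probs.get(gram, floor))
        scores[lang] = score
    best = max(scores, key=scores.get)
    return best, scores


# Hypothetical probability tables, for illustration only.
models = {
    "english": {"th": 0.05, "he": 0.04, "es": 0.01},
    "french": {"es": 0.05, "le": 0.04, "th": 0.002},
}

best, scores = naive_bayes_classify(["th", "he"], models)
print(best)
```

Because every additional Ngram multiplies the score by a number below one, a single unmatched or rare Ngram can dominate the result for short inputs, which is one reason the choice of `floor` matters in practice.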

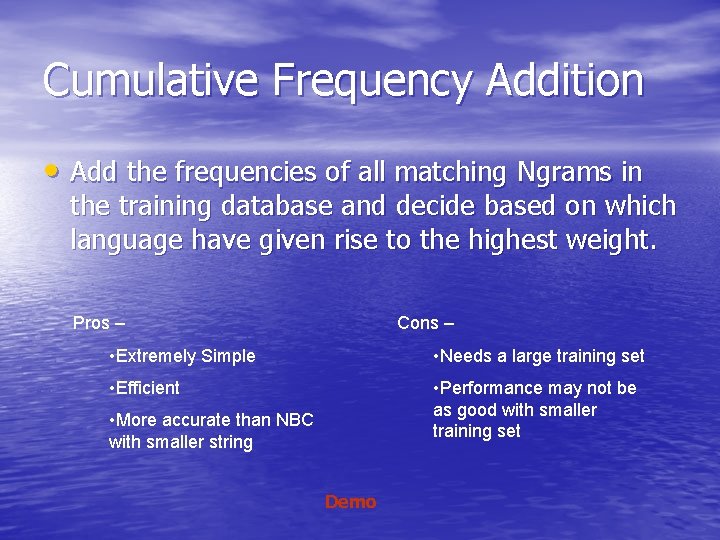

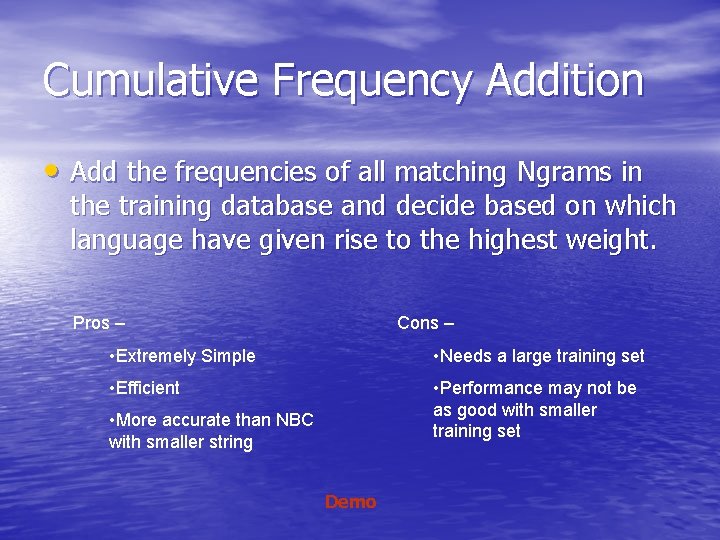

Cumulative Frequency Addition
• Add the frequencies of all matching Ngrams in the training database and decide based on which language has given rise to the highest weight.
• Pros
  – Extremely Simple
  – Efficient
  – More accurate than NBC with smaller strings
• Cons
  – Needs a large training set
  – Performance may not be as good with a smaller training set

Demo
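The addition rule described above can be sketched as follows. The function name `cfa_classify` and the toy frequency tables are hypothetical, and the sketch assumes each language's Ngram frequencies have already been normalized during training; the slides do not specify the normalization.

```python
def cfa_classify(ngrams, models):
    """Sum the stored frequency of every matching n-gram per language
    and return the language with the highest total.

    `models` maps language -> {ngram: normalized frequency}.
    N-grams absent from a language's table contribute nothing.
    """
    totals = {
        lang: sum(freqs.get(gram, 0.0) for gram in ngrams)
        for lang, freqs in models.items()
    }
    best = max(totals, key=totals.get)
    return best, totals


# Hypothetical frequency tables, for illustration only.
models = {
    "english": {"th": 0.05, "he": 0.04, "es": 0.01},
    "french": {"es": 0.05, "le": 0.04, "th": 0.002},
}

best, totals = cfa_classify(["th", "he", "es"], models)
print(best, totals)
```

Unlike the multiplicative rule, an unmatched Ngram simply adds zero here, so no smoothing constant is needed and no sorting of the input's Ngrams is required.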

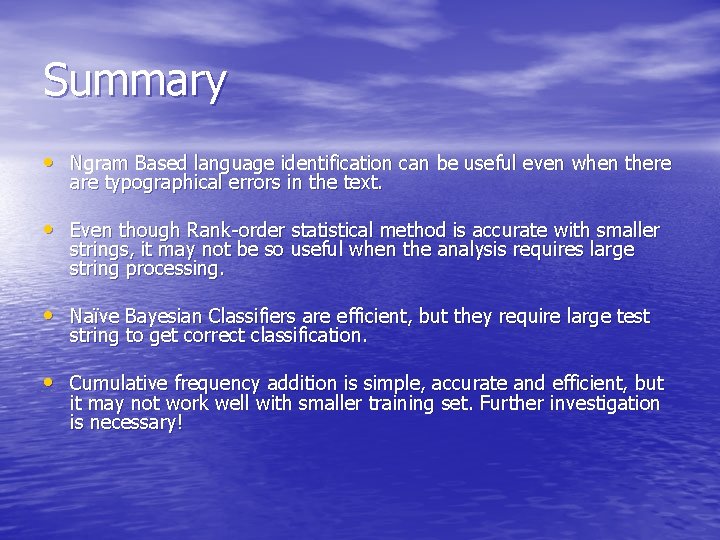

Summary
• Ngram based language identification can be useful even when there are typographical errors in the text.
• Even though the rank-order statistical method is accurate with smaller strings, it may not be so useful when the analysis requires processing large strings.
• Naïve Bayesian classifiers are efficient, but they require a large test string to classify correctly.
• Cumulative frequency addition is simple, accurate and efficient, but it may not work well with a smaller training set. Further investigation is necessary!