L2 CPUs and DAQ Interface Progress and

- Slides: 24

L2 CPUs and DAQ Interface: Progress and Timeline

Kristian Hahn, Paul Keener, Joe Kroll, Chris Neu, Fritz Stabenau, Rick Van Berg, Daniel Whiteson, Peter Wittich (UPenn)

9/13/2021, Daniel Whiteson/Penn

Requirements

- Data flow
  - Keep the data processing path simple; make rejection very fast
  - Add no slow controls to event processing
- Control
  - Configuration (load trigger exe, prescales, etc.)
  - System reset (HRR)
- Monitoring
  - Trigger rates, algorithm times, resource usage, etc.
  - Vital for commissioning of the system
- Commissioning
  - Phase I is Trigger Evaluation Director + single box
  - Phase II is Trigger Evaluation Director + 4 boxes

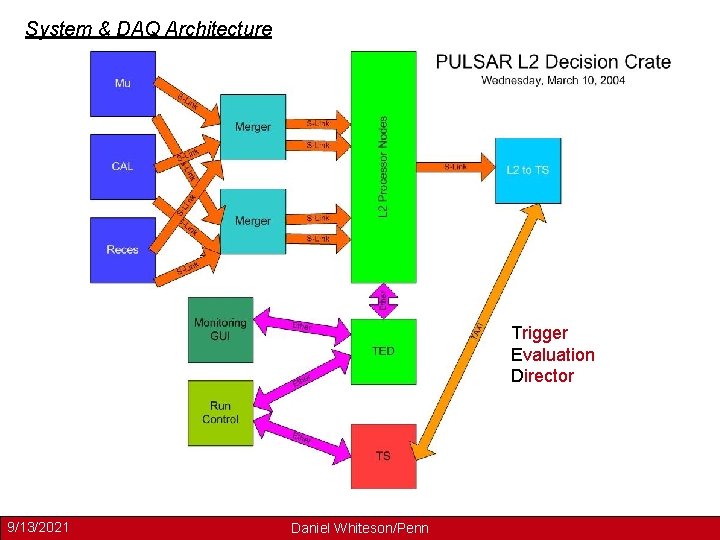

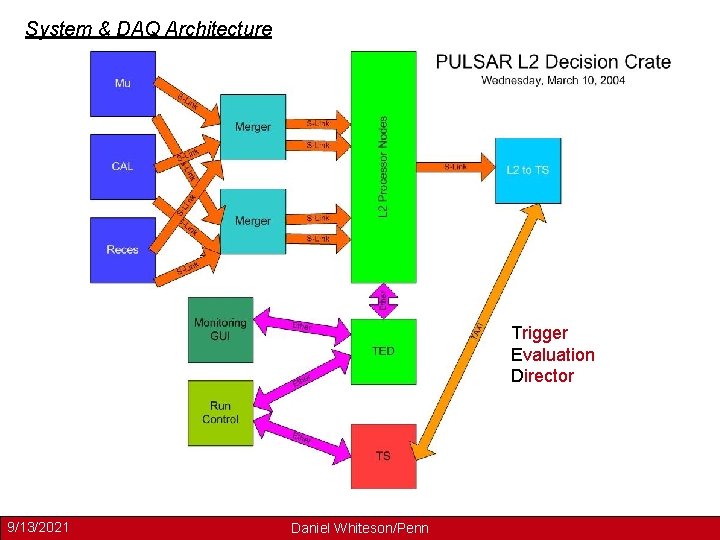

System & DAQ Architecture

Diagram label: Trigger Evaluation Director

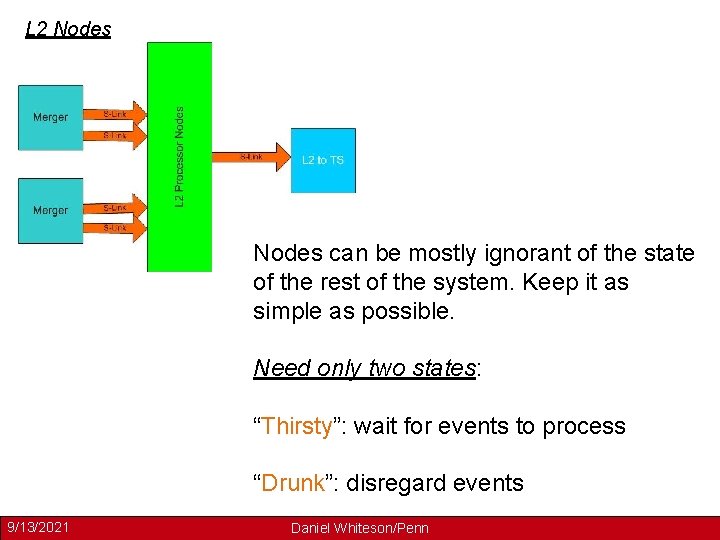

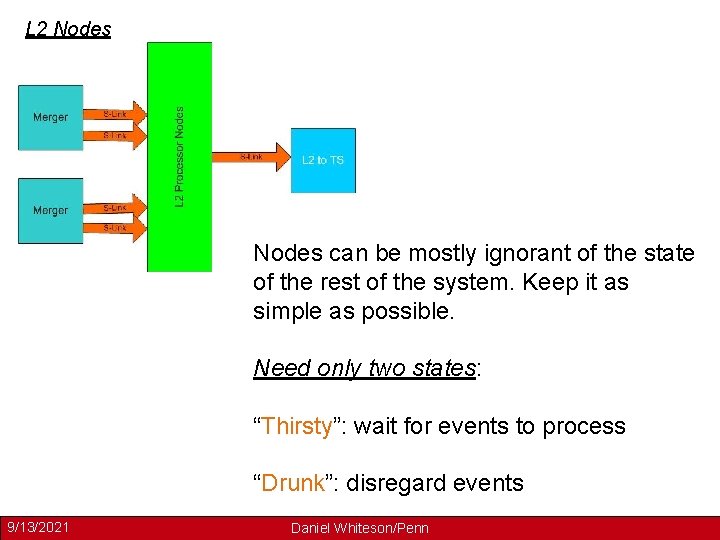

L2 nodes can be mostly ignorant of the state of the rest of the system. Keep it as simple as possible. Only two states are needed:

- “Thirsty”: wait for events to process
- “Drunk”: disregard events
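The two-state idea can be sketched in a few lines of Python. This is only an illustration: the class name `L2Node`, the `algorithm` callable, and the choice of “drunk” as the starting state are assumptions, not part of the design described in the slides.

```python
from enum import Enum


class NodeState(Enum):
    THIRSTY = "thirsty"  # wait for events to process
    DRUNK = "drunk"      # disregard events


class L2Node:
    """Hypothetical node that knows nothing about the rest of the system."""

    def __init__(self, algorithm):
        self.state = NodeState.DRUNK  # assumed starting state
        self.algorithm = algorithm    # callable: event -> bool (accept/reject)
        self.discarded = 0

    def on_event(self, event):
        # A drunk node drops the event without running the trigger algorithm.
        if self.state is NodeState.DRUNK:
            self.discarded += 1
            return None
        return self.algorithm(event)
```

Anything that changes `state` lives outside the event path, so event processing itself carries no control logic.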

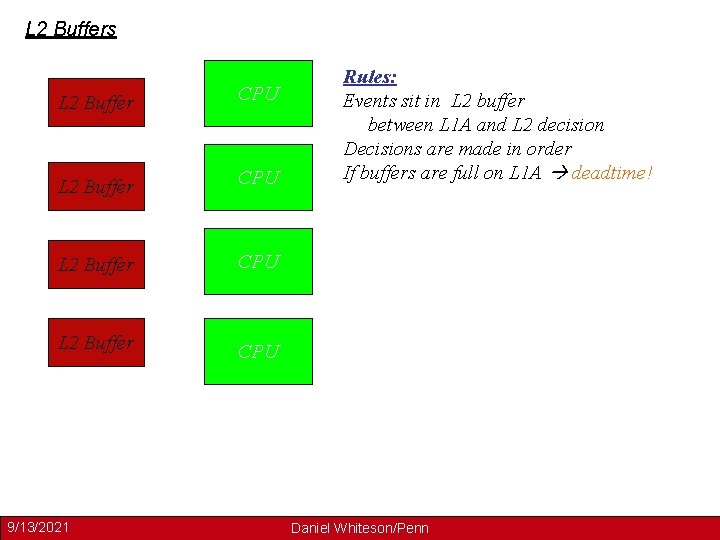

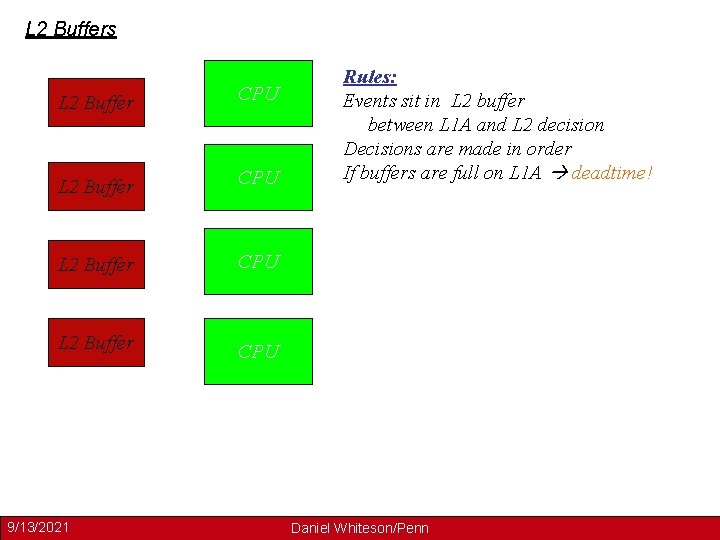

L2 Buffers

Diagram labels: L2 Buffer, CPU

Rules:

- Events sit in an L2 buffer between the L1A and the L2 decision
- Decisions are made in order
- If the buffers are full on an L1A: deadtime!

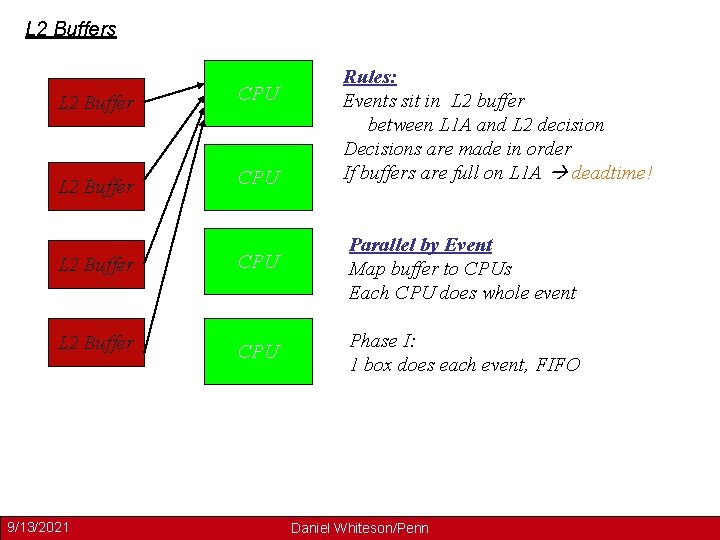

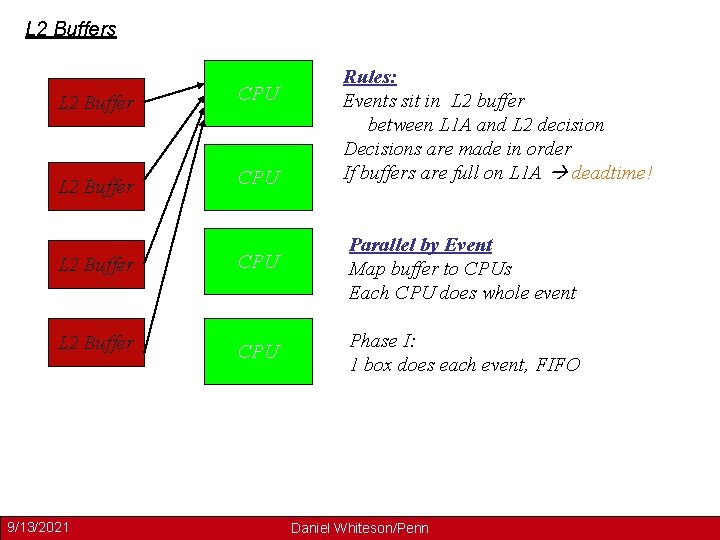

L2 Buffers

Parallel by event:

- Map buffers to CPUs
- Each CPU does a whole event
- Phase I: 1 box does each event, FIFO

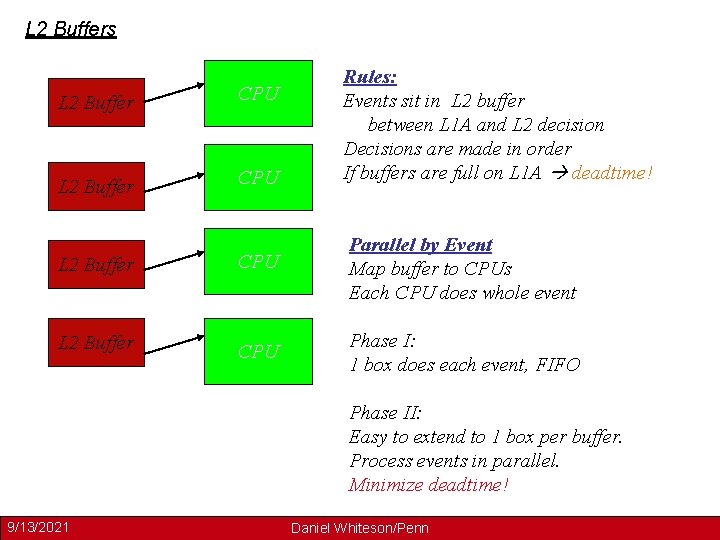

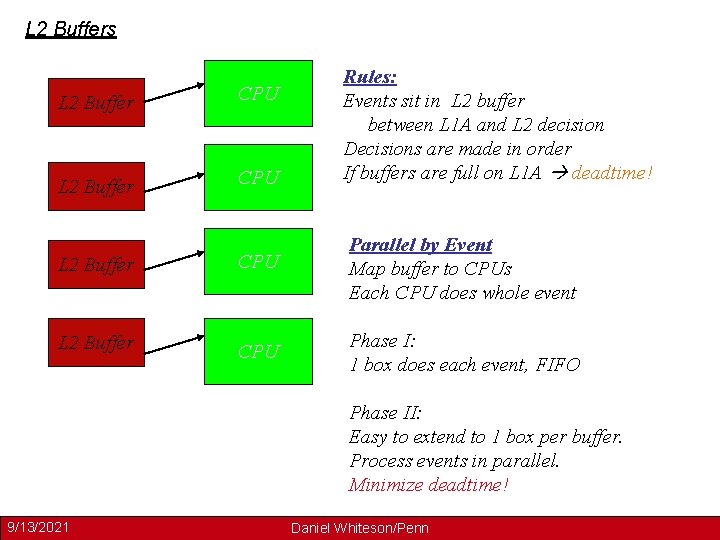

L2 Buffers

Parallel by event:

- Phase II: easy to extend to 1 box per buffer. Process events in parallel. Minimize deadtime!
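A toy model of the buffer rules and the buffer-to-box mapping, as a sketch only. The class and function names are hypothetical, and the default of 4 buffers is an assumption suggested by "1 box per buffer" with 4 boxes.

```python
from collections import deque


class L2BufferPool:
    """Toy model: events wait in a buffer between L1A and L2 decision."""

    def __init__(self, n_buffers=4):  # 4 is an assumed buffer count
        self.free = deque(range(n_buffers))
        self.pending = deque()        # (event_id, buffer) in L1A order
        self.deadtime_count = 0

    def on_l1a(self, event_id):
        # No free buffer on an L1A means deadtime.
        if not self.free:
            self.deadtime_count += 1
            return None
        buf = self.free.popleft()
        self.pending.append((event_id, buf))
        return buf

    def on_l2_decision(self):
        # Decisions are made in order: the oldest pending event goes first.
        event_id, buf = self.pending.popleft()
        self.free.append(buf)
        return event_id, buf


def box_for_buffer(buf, n_boxes):
    """Phase I: n_boxes=1, every buffer maps to box 0.
    Phase II: n_boxes equal to the buffer count gives one box per buffer."""
    return buf % n_boxes
```

With `n_boxes=1` every buffer is served by the same box in FIFO order; raising `n_boxes` is the only change needed to spread buffers across boxes in this model.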

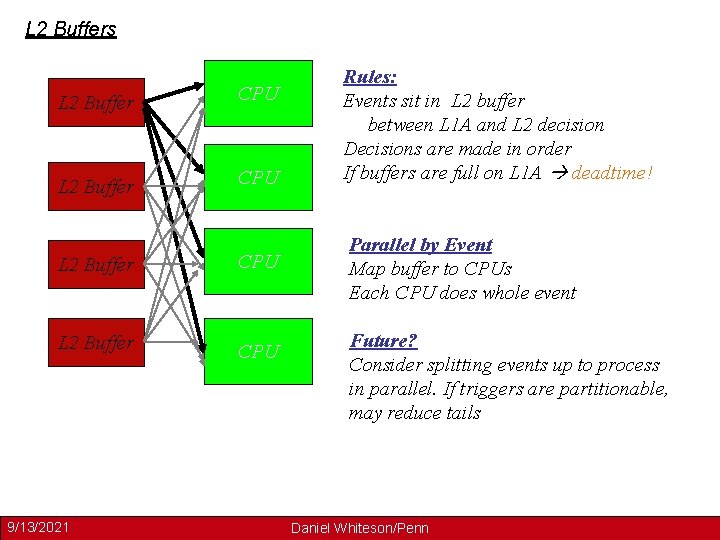

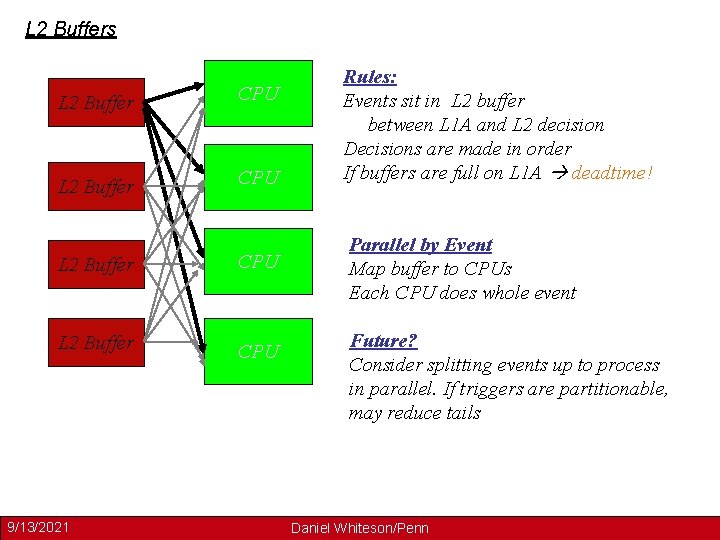

L2 Buffers

Future? Consider splitting events up to process in parallel. If triggers are partitionable, this may reduce tails.

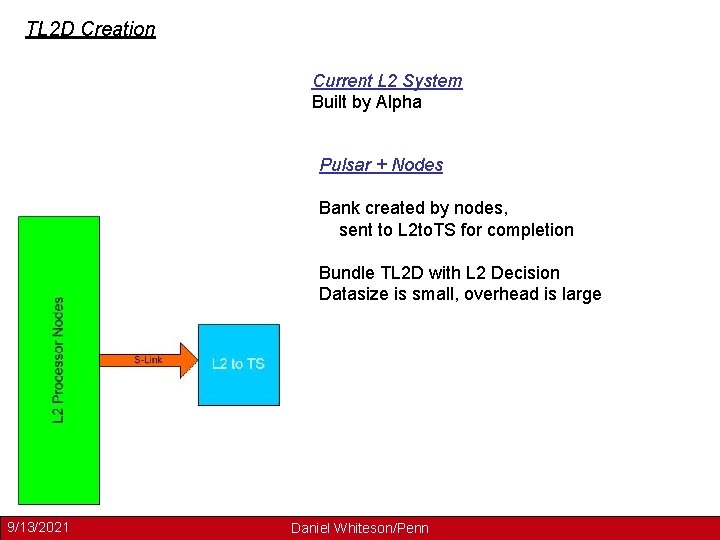

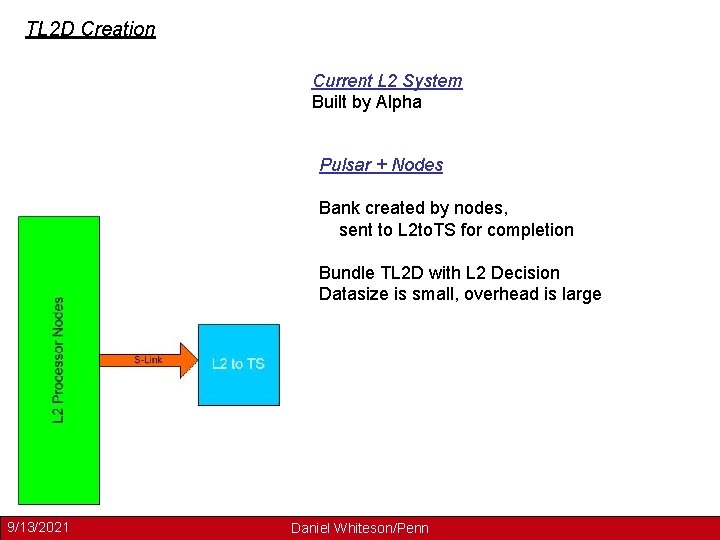

TL2D Creation

- Current L2 system: built by the alpha
- Pulsar + nodes:
  - Bank created by nodes, sent to L2toTS for completion
  - Bundle the TL2D with the L2 decision
  - Data size is small, overhead is large

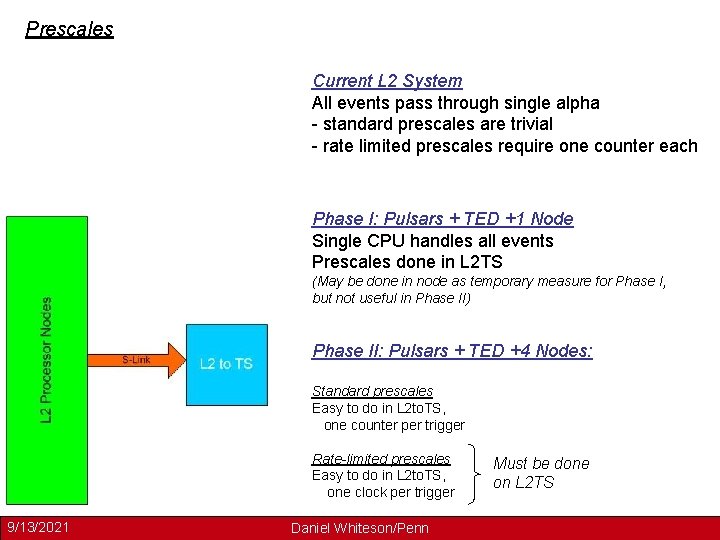

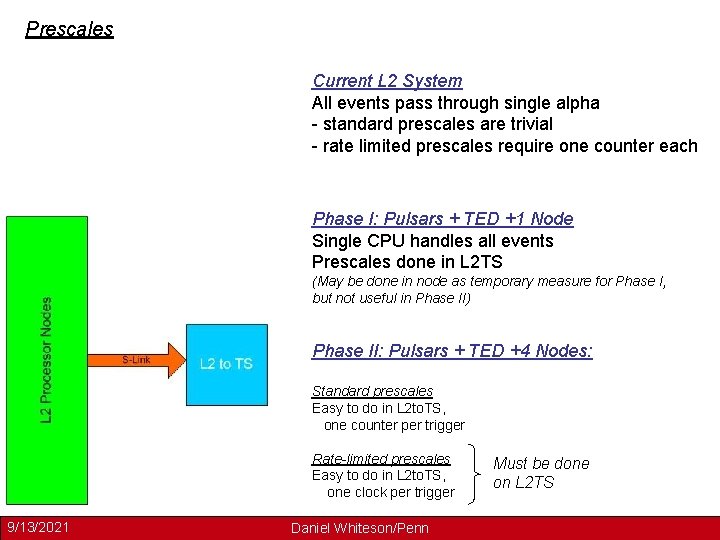

Prescales

Current L2 system: all events pass through a single alpha

- Standard prescales are trivial
- Rate-limited prescales require one counter each

Phase I: Pulsars + TED + 1 node

- Single CPU handles all events
- Prescales done in L2 TS (may be done in the node as a temporary measure for Phase I, but not useful in Phase II)

Phase II: Pulsars + TED + 4 nodes (must be done on L2 TS)

- Standard prescales: easy to do in L2toTS, one counter per trigger
- Rate-limited prescales: easy to do in L2toTS, one clock per trigger
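The "one counter per trigger" and "one clock per trigger" ideas can be sketched as below. This is an illustration in Python, not the L2toTS firmware logic: the class names are hypothetical, and the rate-limited rule shown (accept at most one event per minimum interval) is an assumed interpretation.

```python
class StandardPrescale:
    """Accept 1 out of every n events firing this trigger (one counter)."""

    def __init__(self, n):
        if n < 1:
            raise ValueError("prescale factor must be >= 1")
        self.n = n
        self.count = 0

    def accept(self):
        self.count += 1
        if self.count >= self.n:
            self.count = 0
            return True
        return False


class RateLimitedPrescale:
    """Accept an event only if enough time has passed since the last
    accepted one (one clock per trigger). Assumed semantics."""

    def __init__(self, max_rate_hz):
        self.min_interval = 1.0 / max_rate_hz
        self.last_accept = None

    def accept(self, now):
        # `now` is a timestamp in seconds supplied by the caller.
        if self.last_accept is None or now - self.last_accept >= self.min_interval:
            self.last_accept = now
            return True
        return False
```

Both objects hold state that depends on every event for that trigger, which is why a single place that sees all events is the natural home for them once events are spread over several nodes.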

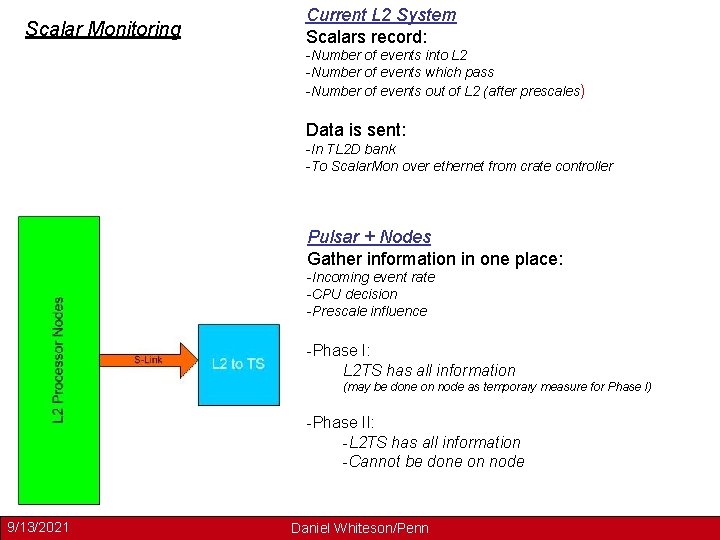

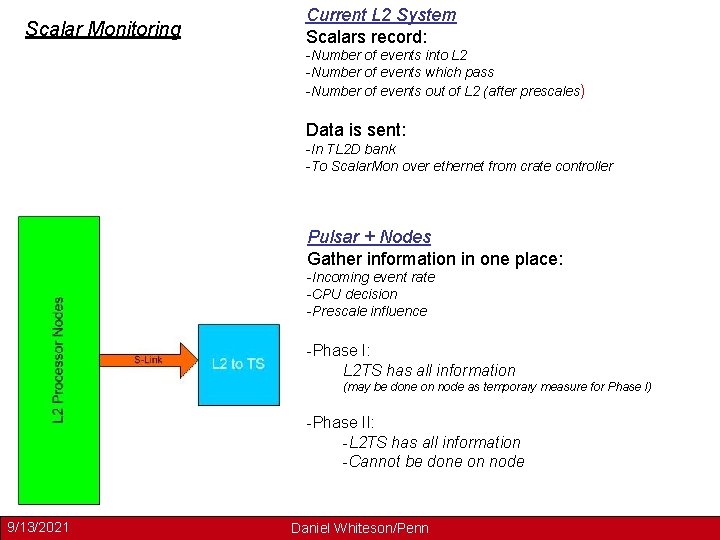

Scalar Monitoring

Current L2 system

Scalars record:

- Number of events into L2
- Number of events which pass
- Number of events out of L2 (after prescales)

Data is sent:

- In the TL2D bank
- To ScalarMon over ethernet from the crate controller

“In alpha, scalar incrementation takes 1-3 ms” (Greg Feild)

Pulsar + nodes

Gather information in one place:

- Incoming event rate
- CPU decision
- Prescale influence

Where it is done:

- Phase I: L2 TS has all information (may be done on the node as a temporary measure for Phase I)
- Phase II: L2 TS has all information; cannot be done on the node

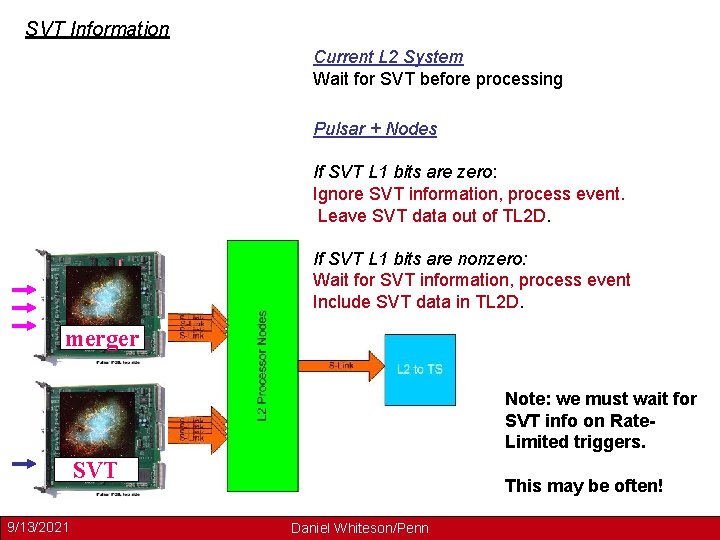

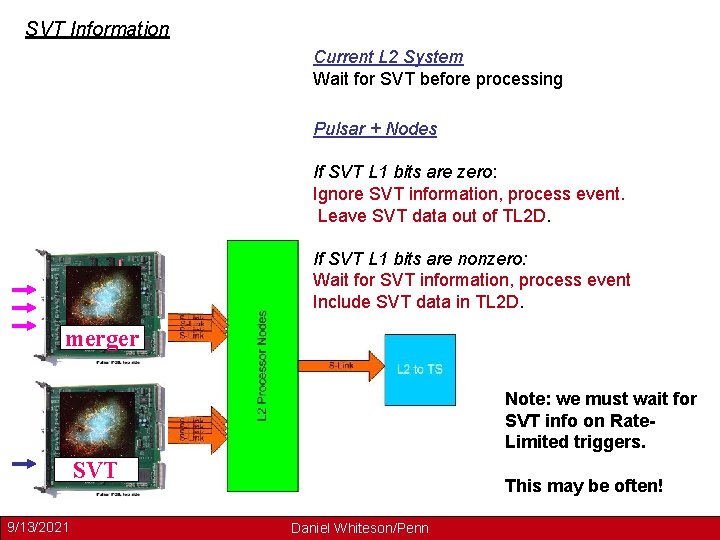

SVT Information

Current L2 system: wait for SVT before processing.

Pulsar + nodes:

- If the SVT L1 bits are zero: ignore SVT information and process the event. Leave SVT data out of the TL2D.
- If the SVT L1 bits are nonzero: wait for SVT information, then process the event. Include SVT data in the TL2D.

Note: we must wait for SVT info on rate-limited triggers. This may be often!

Diagram labels: merger, SVT
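The SVT rule amounts to a bit test before deciding whether to block. A minimal sketch, assuming L1 bits arrive as an integer mask; `SVT_L1_MASK`, the function names, and the dictionary layout of the bank are all hypothetical. Whether SVT data is included in the TL2D for the rate-limited case is also an assumption.

```python
SVT_L1_MASK = 0b1100  # hypothetical positions of the SVT-related L1 bits


def must_wait_for_svt(l1_bits, rate_limited_fired=False):
    """True if the node has to wait for SVT data before processing."""
    return (l1_bits & SVT_L1_MASK) != 0 or rate_limited_fired


def build_tl2d(l1_bits, other_data, fetch_svt, rate_limited_fired=False):
    """Assemble a toy TL2D bank; SVT data is fetched only when required.

    fetch_svt is a callable that blocks until SVT data is available.
    """
    bank = {"l1_bits": l1_bits, "data": other_data}
    if must_wait_for_svt(l1_bits, rate_limited_fired):
        bank["svt"] = fetch_svt()
    return bank
```

Because `fetch_svt` is never called when the SVT bits are zero, events without SVT triggers do not pay the SVT latency in this model.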

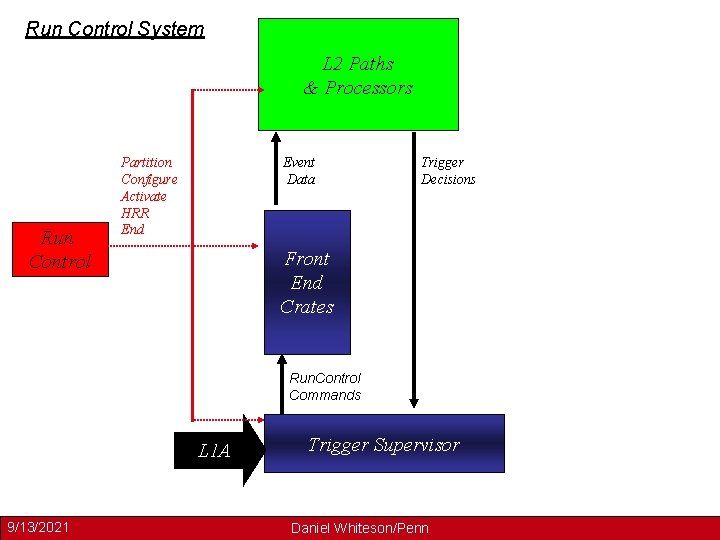

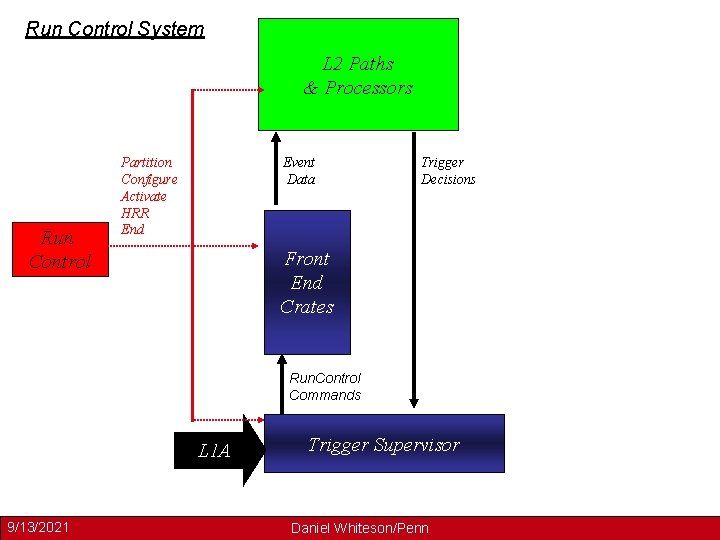

Run Control System

Diagram labels: Run Control, L2 Paths & Processors, Front End Crates, Trigger Supervisor

Signals shown: Event Data, RunControl Commands (Partition, Configure, Activate, HRR, End), L1A, Trigger Decisions

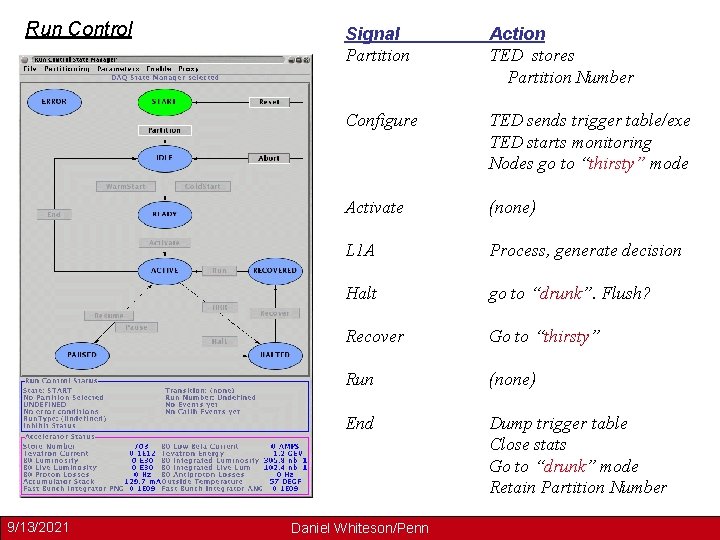

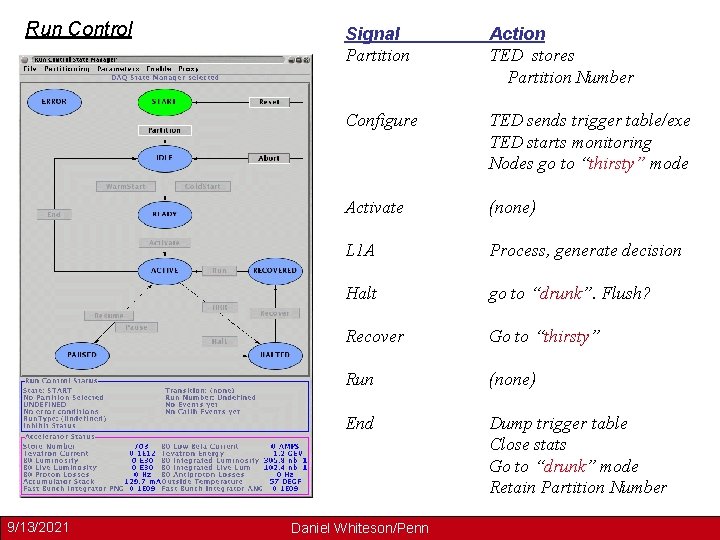

Run Control

| Signal | Action |
| --- | --- |
| Partition | TED stores Partition Number |
| Configure | TED sends trigger table/exe; TED starts monitoring; nodes go to “thirsty” mode |
| Activate | (none) |
| L1A | Process, generate decision |
| Halt | Go to “drunk”. Flush? |
| Recover | Go to “thirsty” |
| Run | (none) |
| End | Dump trigger table; close stats; go to “drunk” mode; retain Partition Number |
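The node-state side of the signal list can be written as a lookup table. A sketch under stated assumptions: the dictionary and function names are hypothetical, nodes are assumed to start "drunk", and the TED-side actions (storing the partition number, sending the trigger table, closing stats) are not modeled.

```python
THIRSTY, DRUNK = "thirsty", "drunk"

# Run Control signal -> node state afterwards (None means unchanged).
NODE_STATE_AFTER = {
    "Partition": None,
    "Configure": THIRSTY,
    "Activate": None,
    "Halt": DRUNK,
    "Recover": THIRSTY,
    "Run": None,
    "End": DRUNK,
}


def replay(signals, state=DRUNK):
    """Return the node state after a sequence of Run Control signals."""
    for signal in signals:
        new_state = NODE_STATE_AFTER[signal]  # KeyError on unknown signal
        if new_state is not None:
            state = new_state
    return state
```

For example, `replay(["Partition", "Configure", "Activate", "Halt"])` leaves the node drunk, and a following `"Recover"` makes it thirsty again.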

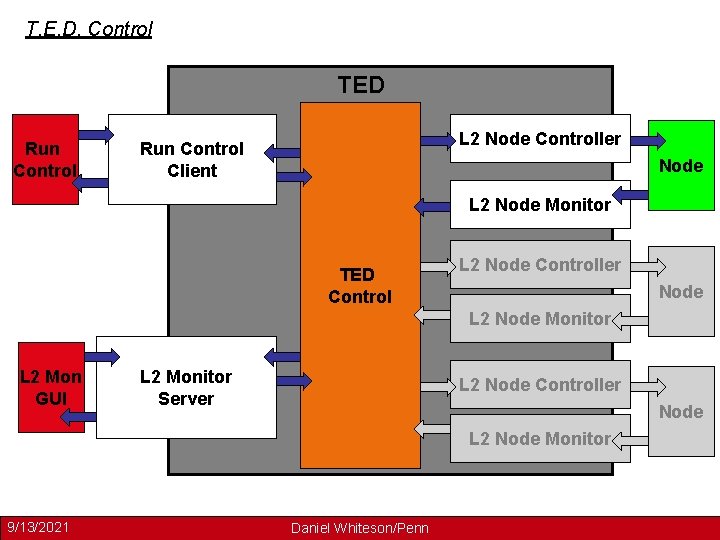

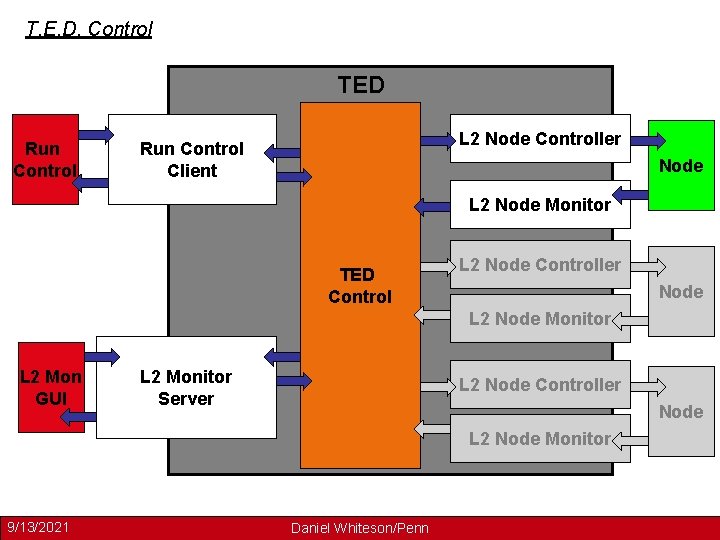

T.E.D. Control

Diagram labels: Run Control, TED, Run Control Client, TED Control, L2 Monitor Server, L2 Mon GUI, and three Nodes, each shown with an L2 Node Controller and an L2 Node Monitor

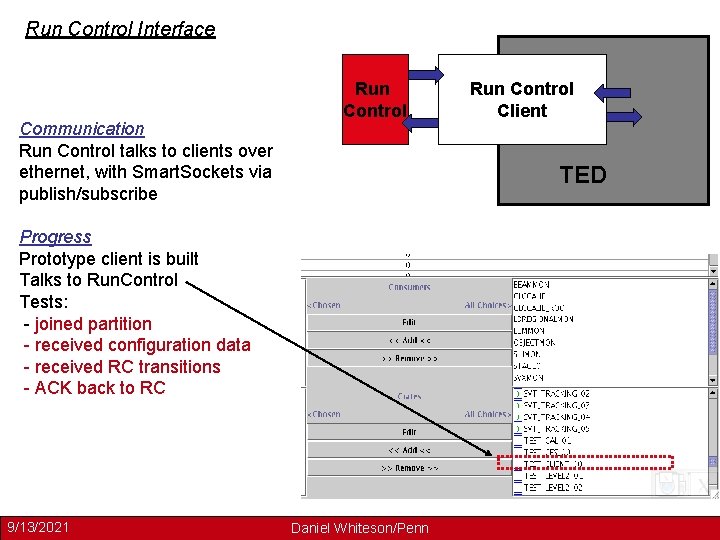

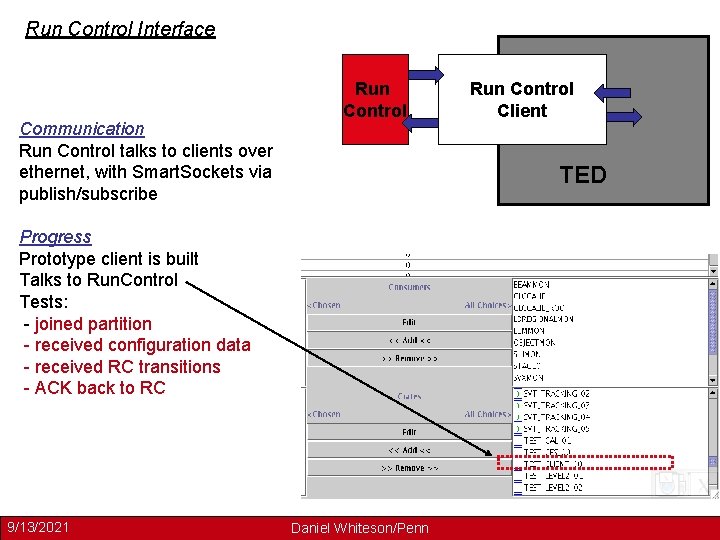

Run Control Interface

Communication

- Run Control talks to clients over ethernet, with SmartSockets via publish/subscribe

Progress

- Prototype client is built
- Talks to RunControl
- Tests:
  - joined partition
  - received configuration data
  - received RC transitions
  - ACK back to RC

Diagram labels: Run Control, TED, Run Control Client

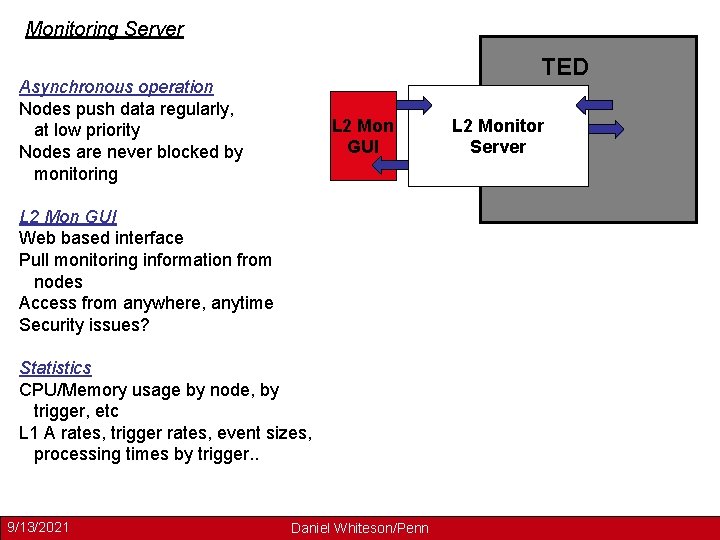

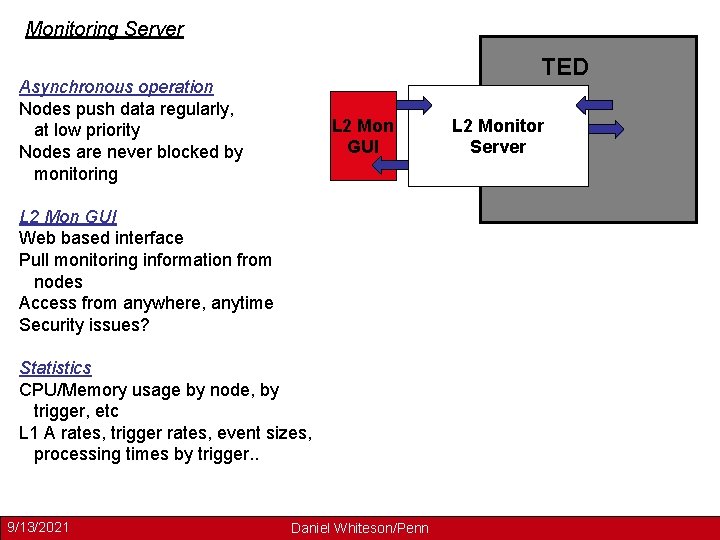

Monitoring Server

Asynchronous operation

- Nodes push data regularly, at low priority
- Nodes are never blocked by monitoring

L2 Mon GUI

- Web-based interface
- Pull monitoring information from nodes
- Access from anywhere, anytime
- Security issues?

Statistics

- CPU/memory usage by node, by trigger, etc.
- L1A rates, trigger rates, event sizes, processing times by trigger...

Diagram labels: TED, L2 Monitor Server
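One way to guarantee that a node is never blocked by monitoring is a bounded queue with a non-blocking put that drops data when full. The sketch below uses Python's standard `queue` module; the class name `MonitorChannel` and the drop-on-full policy are assumptions, not the design chosen in the slides.

```python
import queue


class MonitorChannel:
    """Bounded, non-blocking hand-off of monitoring records."""

    def __init__(self, maxsize=100):
        self._q = queue.Queue(maxsize=maxsize)
        self.dropped = 0

    def push(self, stats):
        # Called by the node: never waits, drops the record if full.
        try:
            self._q.put_nowait(stats)
            return True
        except queue.Full:
            self.dropped += 1
            return False

    def drain(self):
        # Called by the monitor side: collect everything queued so far.
        records = []
        while True:
            try:
                records.append(self._q.get_nowait())
            except queue.Empty:
                return records
```

Losing an occasional monitoring record is the price paid here for keeping the event path free of waits.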

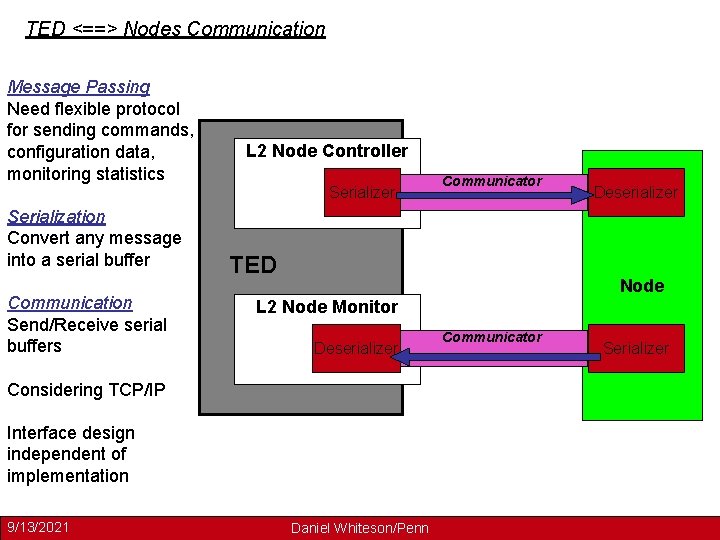

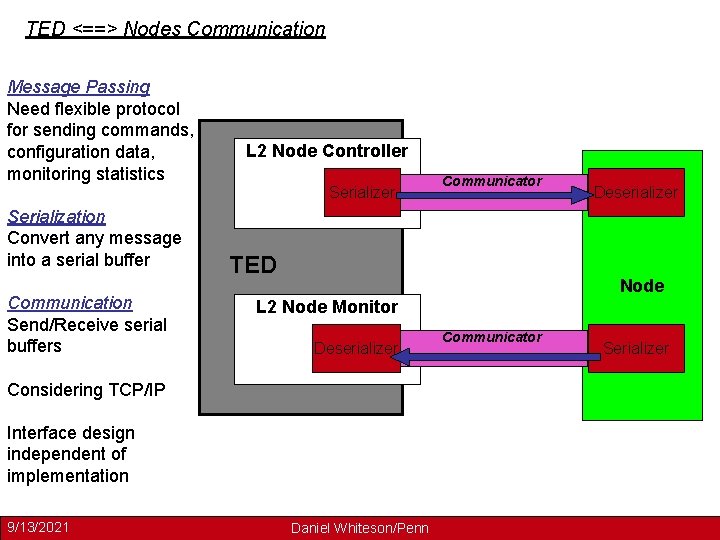

TED <==> Nodes Communication

Message passing

- Need a flexible protocol for sending commands, configuration data, and monitoring statistics

Serialization

- Convert any message into a serial buffer

Communication

- Send/receive serial buffers
- Considering TCP/IP

Interface design is independent of implementation.

Diagram labels: TED, Node, L2 Node Controller, L2 Node Monitor, Serializer, Deserializer, Communicator
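A sketch of the serializer/deserializer split, kept independent of the transport. The wire format here (4-byte big-endian length prefix followed by a JSON body) is an assumption chosen for illustration, and the function names are hypothetical; the slides do not specify a format.

```python
import json
import struct

HEADER = struct.Struct("!I")  # 4-byte big-endian body length


def serialize(msg_type, payload):
    """Convert a message into a serial buffer (bytes)."""
    body = json.dumps({"type": msg_type, "payload": payload}).encode("utf-8")
    return HEADER.pack(len(body)) + body


def deserialize(buffer):
    """Return (message, remaining_bytes), or (None, buffer) if the
    buffer does not yet hold one complete message."""
    if len(buffer) < HEADER.size:
        return None, buffer
    (length,) = HEADER.unpack_from(buffer)
    end = HEADER.size + length
    if len(buffer) < end:
        return None, buffer
    message = json.loads(buffer[HEADER.size:end].decode("utf-8"))
    return message, buffer[end:]
```

The length prefix matters on a stream transport such as TCP, where one receive call may return part of a message or several messages at once. A communicator layer only needs to move bytes; commands, configuration data, and monitoring statistics all pass through the same two functions.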

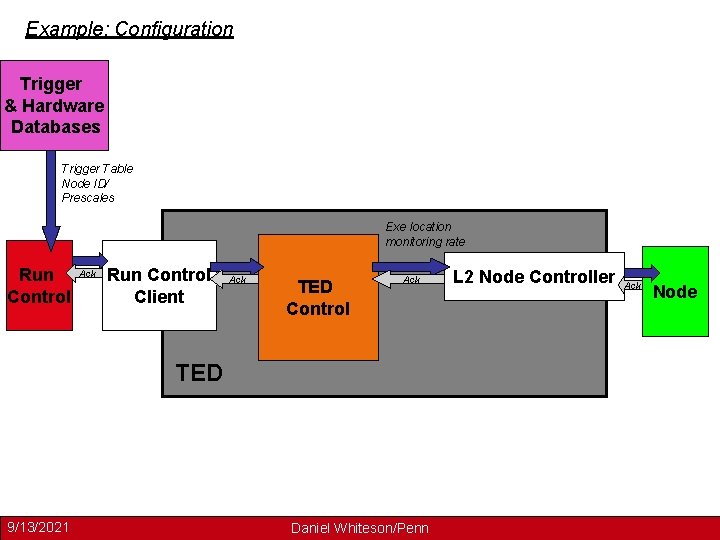

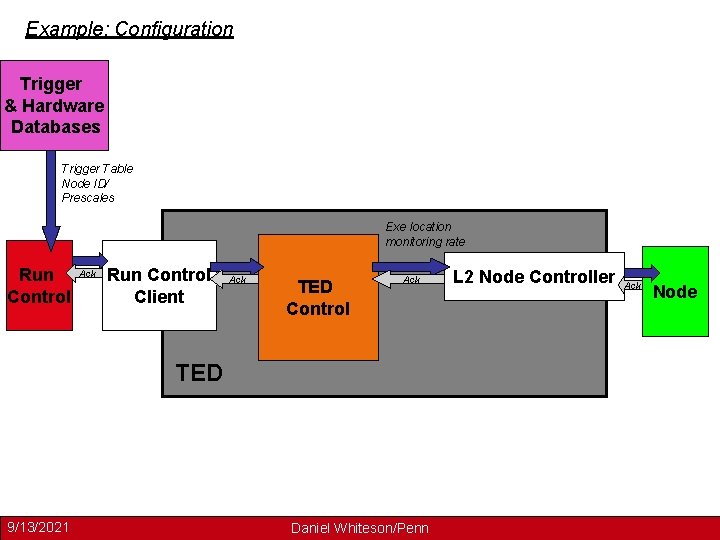

Example: Configuration

- Source: Trigger & Hardware Databases
- Configuration data: trigger table, node ID/prescales, exe location, monitoring rate
- Path: Run Control => Run Control Client => TED Control => L2 Node Controller => Node, with an Ack returned at each step

Diagram label: TED

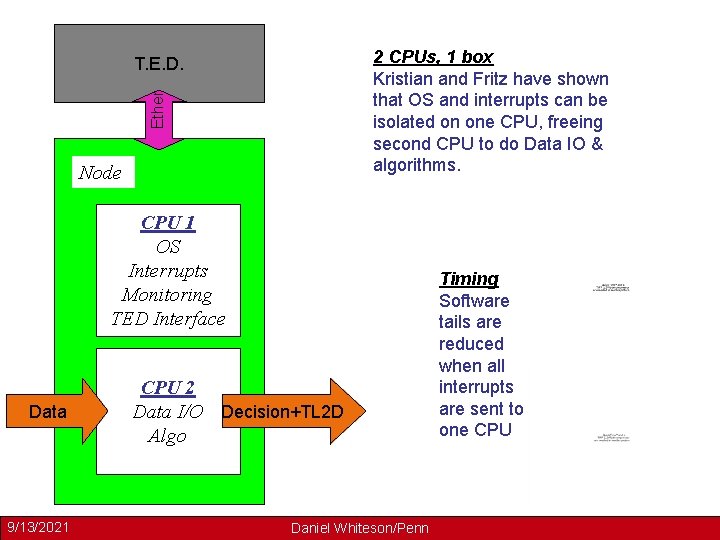

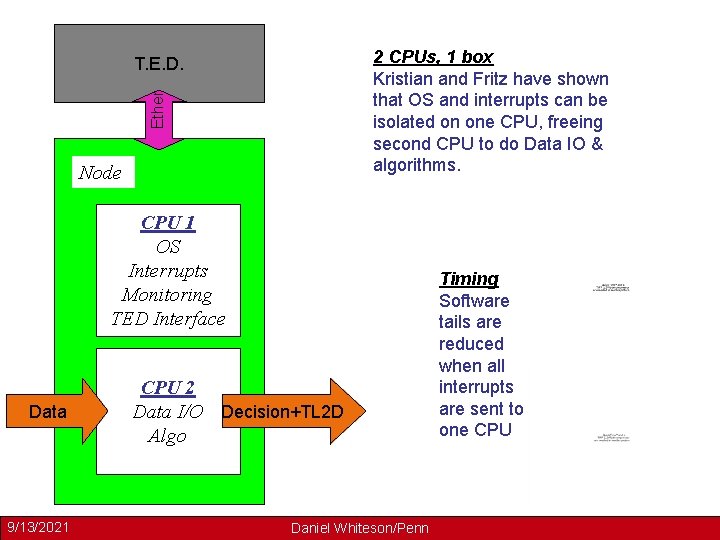

2 CPUs, 1 box

Kristian and Fritz have shown that the OS and interrupts can be isolated on one CPU, freeing the second CPU to do data I/O and algorithms.

- CPU 1: OS, interrupts, monitoring, TED interface
- CPU 2: data I/O, decision + TL2D, algorithm

Timing: software tails are reduced when all interrupts are sent to one CPU.

Diagram labels: Ether, T.E.D., Node, Data
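One piece of such a setup is pinning the algorithm process to its own CPU. The sketch below uses Python's `os.sched_setaffinity`, which is available on Linux only; it is not the method Kristian and Fritz used, the function name is hypothetical, and routing interrupts to the other CPU is a separate kernel-level step not shown here.

```python
import os


def pin_current_process(cpu_index):
    """Restrict the calling process to a single CPU.

    Returns False on platforms without sched_setaffinity
    (for example macOS or Windows), True otherwise.
    """
    if not hasattr(os, "sched_setaffinity"):
        return False
    os.sched_setaffinity(0, {cpu_index})  # pid 0 means the calling process
    return True
```

On a two-CPU box the algorithm process would call something like `pin_current_process(1)` at start-up, with the CPU index being whichever core is kept free of interrupts.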

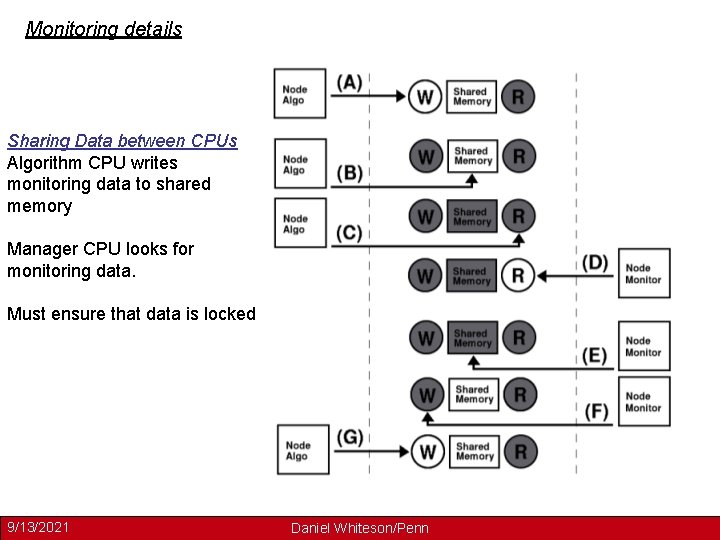

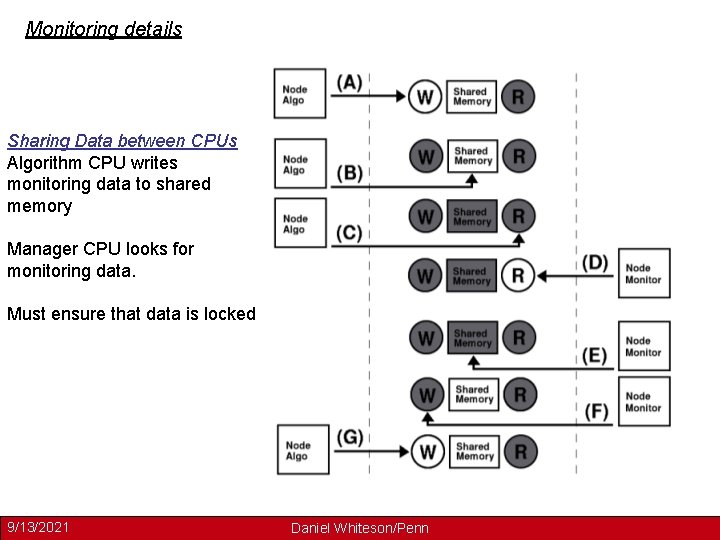

Monitoring details

Sharing data between CPUs:

- Algorithm CPU writes monitoring data to shared memory
- Manager CPU looks for monitoring data
- Must ensure that data is locked
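A minimal sketch of locked shared memory, using Python's `multiprocessing.Array`, which pairs a shared buffer with a lock. The class name `SharedStats` and the two-slot layout (event count, summed processing time) are assumptions made for illustration.

```python
import multiprocessing as mp


class SharedStats:
    """Monitoring counters in shared memory, guarded by a lock."""

    def __init__(self):
        # Slot 0: events processed. Slot 1: total processing time (s).
        # mp.Array creates the buffer together with an associated lock.
        self._buf = mp.Array("d", 2)

    def record(self, processing_time):
        # Writer side (algorithm CPU): update both slots atomically.
        with self._buf.get_lock():
            self._buf[0] += 1
            self._buf[1] += processing_time

    def snapshot(self):
        # Reader side (manager CPU): read a consistent pair of values.
        with self._buf.get_lock():
            return self._buf[0], self._buf[1]
```

Holding the lock for both slots prevents the reader from seeing a new event count paired with an old time total.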

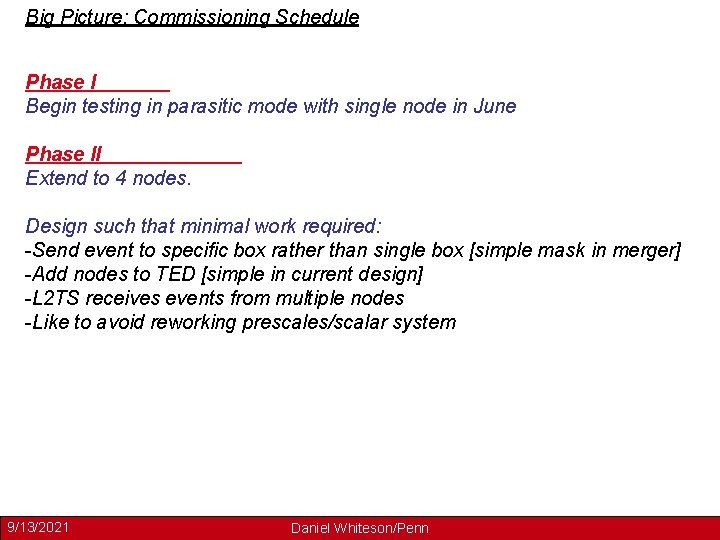

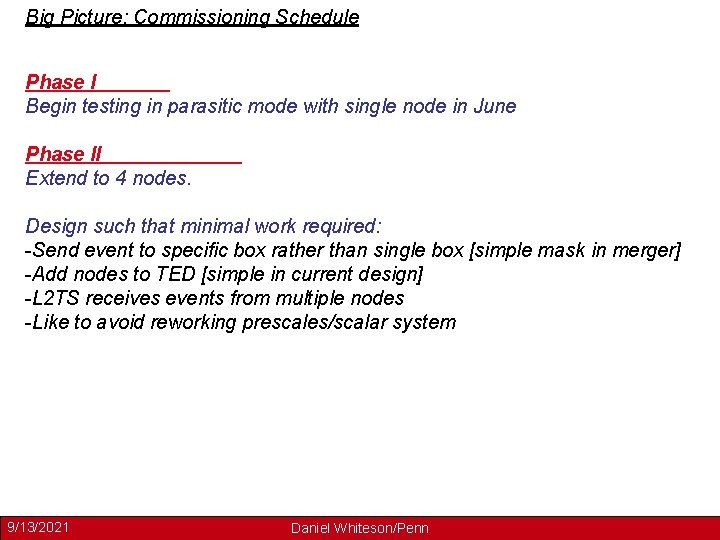

Big Picture: Commissioning Schedule

Phase I

- Begin testing in parasitic mode with a single node in June

Phase II

- Extend to 4 nodes. Design such that minimal work is required:
  - Send each event to a specific box rather than a single box [simple mask in merger]
  - Add nodes to TED [simple in current design]
  - L2 TS receives events from multiple nodes
  - Would like to avoid reworking the prescale/scalar system

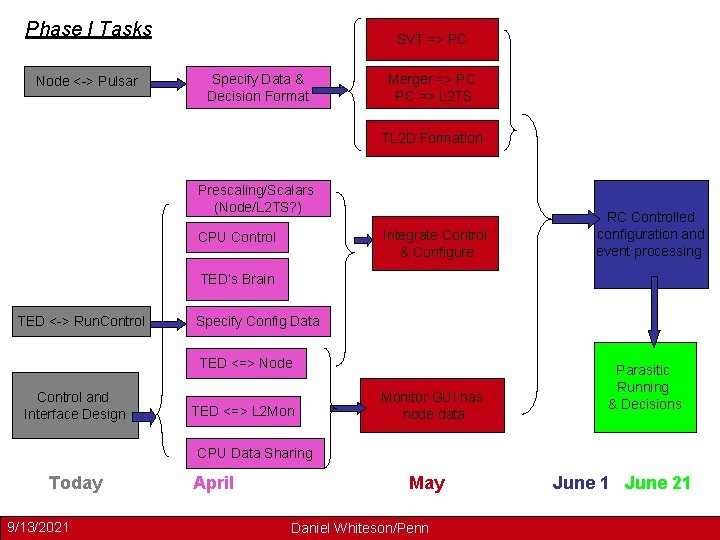

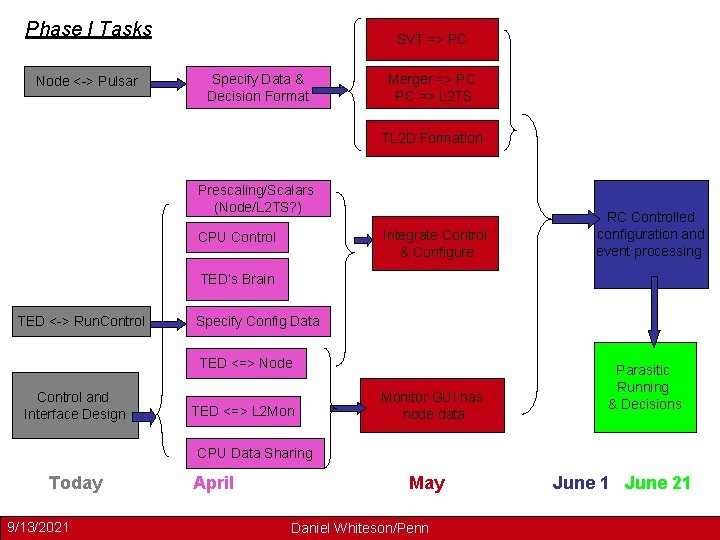

Phase I Tasks

Timeline chart (time axis: Today, April, May, June 1, June 21). Tasks shown:

- Node <-> Pulsar
- SVT => PC
- Specify Data & Decision Format
- Merger => PC
- PC => L2 TS
- TL2D Formation
- Prescaling/Scalars (Node/L2 TS?)
- Integrate Control & Configure
- CPU Control
- RC-controlled configuration and event processing
- TED’s Brain
- TED <-> RunControl
- Specify Config Data
- TED <=> Node
- Control and Interface Design
- TED <=> L2 Mon
- Monitor GUI has node data
- Parasitic Running & Decisions
- CPU Data Sharing

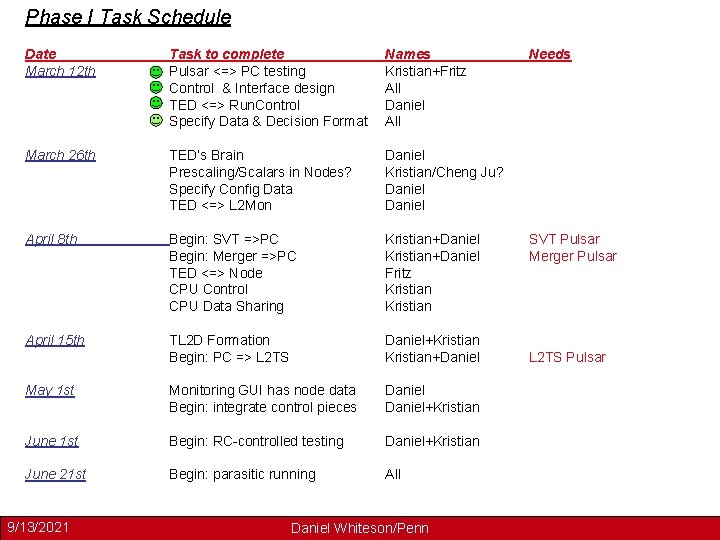

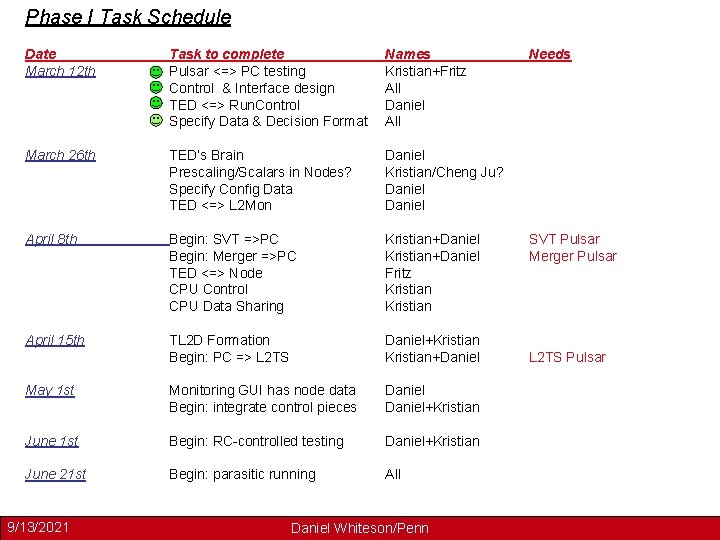

Phase I Task Schedule

| Date | Tasks to complete | Names (in the order listed) |
| --- | --- | --- |
| March 12th | Pulsar <=> PC testing; Control & Interface design; TED <=> RunControl; Specify Data & Decision Format | Kristian+Fritz; All; Daniel; All |
| March 26th | TED’s Brain; Prescaling/Scalars in Nodes?; Specify Config Data; TED <=> L2 Mon | Daniel; Kristian/Cheng Ju?; Daniel |
| April 8th | Begin: SVT => PC; Begin: Merger => PC; TED <=> Node; CPU Control; CPU Data Sharing | Kristian+Daniel; Fritz; Kristian |
| April 15th | TL2D Formation; Begin: PC => L2 TS | Daniel+Kristian+Daniel |
| May 1st | Monitoring GUI has node data; Begin: integrate control pieces | Daniel+Kristian |
| June 1st | Begin: RC-controlled testing | Daniel+Kristian |
| June 21st | Begin: parasitic running | All |

Needs: SVT Pulsar, Merger Pulsar, L2 TS Pulsar