Knowledge in Learning Chapter 21 CS 471/598 by H. Liu Copyright, 1996 © Dale Carnegie & Associates, Inc.

Using prior knowledge
- Decision tree (DT) and logical description learning assume no prior knowledge.
- In practice we do have some prior knowledge, so how can we use it?
- We need a logical formulation of learning, as opposed to function learning.

Inductive learning in the logical setting
- The objective is to find a hypothesis that explains the classifications of the examples, given their descriptions.
  - Hypothesis ∧ Descriptions ⊨ Classifications
  - Descriptions: the conjunction of all the example descriptions
  - Classifications: the conjunction of all the example classifications
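As a rough illustration of the constraint Hypothesis ∧ Descriptions ⊨ Classifications, the sketch below treats a hypothesis as a Python predicate over an example description and checks that it reproduces every classification. The attribute names, the example data, and the helper `consistent` are assumptions made up for this illustration; they do not come from the slides.

```python
from typing import Callable, Dict, List, Tuple

# An example is (description, classification). The attributes are hypothetical.
Example = Tuple[Dict[str, str], bool]

def consistent(hypothesis: Callable[[Dict[str, str]], bool],
               examples: List[Example]) -> bool:
    """True if the hypothesis predicts the classification of every example."""
    return all(hypothesis(desc) == label for desc, label in examples)

examples: List[Example] = [
    ({"patrons": "full", "hungry": "yes"}, True),
    ({"patrons": "none", "hungry": "yes"}, False),
    ({"patrons": "some", "hungry": "no"}, True),
]

def h_good(desc: Dict[str, str]) -> bool:
    # Candidate hypothesis: positive unless there are no patrons.
    return desc["patrons"] != "none"

def h_bad(desc: Dict[str, str]) -> bool:
    # Candidate hypothesis: positive whenever the customer is hungry.
    return desc["hungry"] == "yes"

print(consistent(h_good, examples))
print(consistent(h_bad, examples))
```

Only `h_good` agrees with all three examples; `h_bad` misclassifies the second one, so it would be ruled out.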

A cumulative learning process
- Fig 21.1
- The new approach is to design agents that already know something and are trying to learn some more.
- Intuitively, this should be faster and better than learning without knowledge, assuming what is known is always correct.
- How can this cumulative learning be implemented as knowledge increases?

Some examples of using knowledge
- One can leap to general conclusions after only one observation.
  - Have you had such an experience?
- Traveling to Brazil: language and name
  - ?
- A pharmacologically ignorant but diagnostically sophisticated medical student …
  - ?

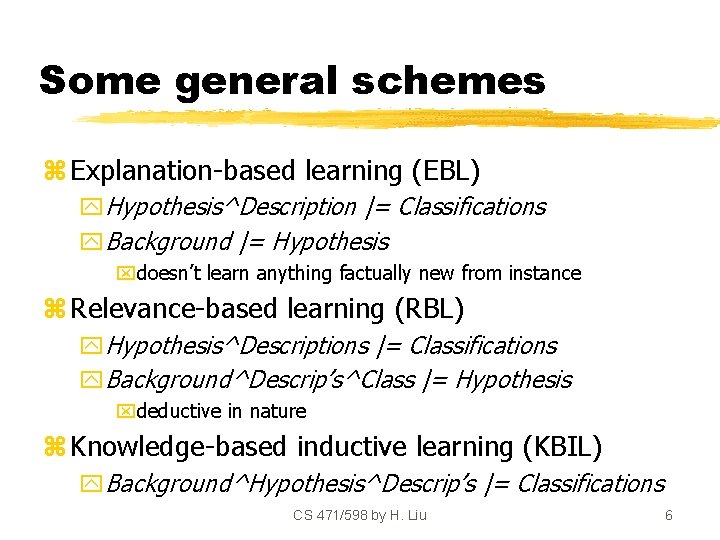

Some general schemes
- Explanation-based learning (EBL)
  - Hypothesis ∧ Descriptions ⊨ Classifications
  - Background ⊨ Hypothesis
    - doesn't learn anything factually new from the instance
- Relevance-based learning (RBL)
  - Hypothesis ∧ Descriptions ⊨ Classifications
  - Background ∧ Descriptions ∧ Classifications ⊨ Hypothesis
    - deductive in nature
- Knowledge-based inductive learning (KBIL)
  - Background ∧ Hypothesis ∧ Descriptions ⊨ Classifications

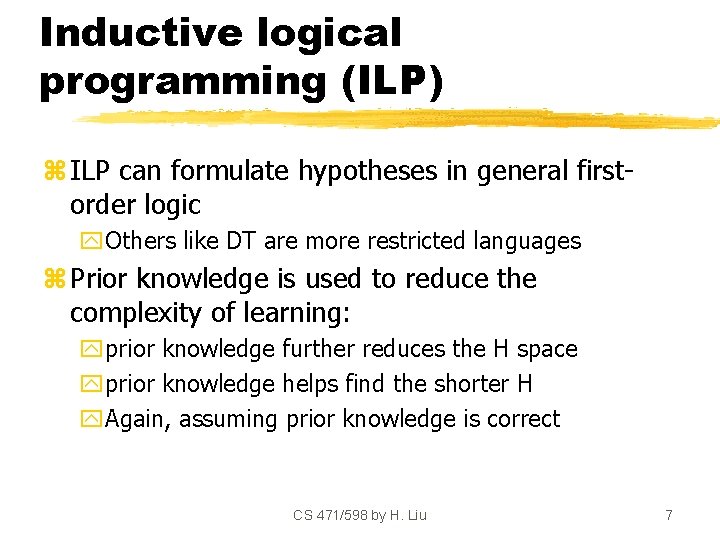

Inductive logic programming (ILP)
- ILP can formulate hypotheses in general first-order logic.
  - Other methods, such as DT, use more restricted languages.
- Prior knowledge is used to reduce the complexity of learning:
  - prior knowledge further reduces the hypothesis (H) space
  - prior knowledge helps find a shorter H
  - again, assuming the prior knowledge is correct

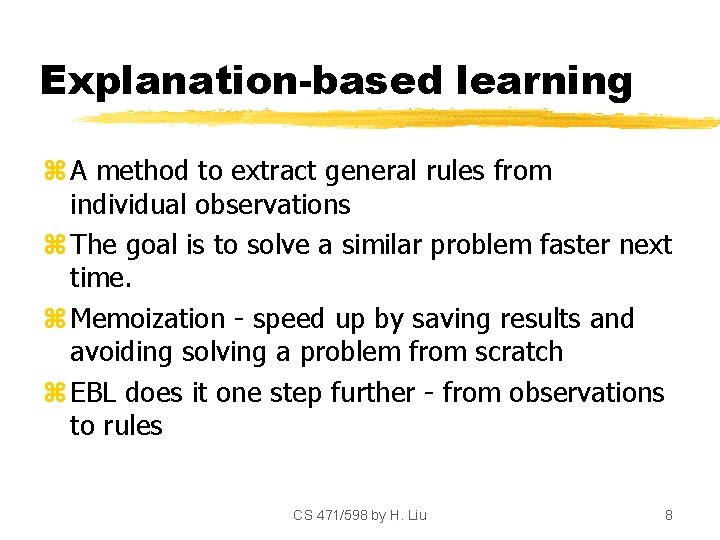

Explanation-based learning
- A method to extract general rules from individual observations
- The goal is to solve a similar problem faster next time.
- Memoization: speed up by saving results and avoiding solving a problem from scratch
- EBL goes one step further: from observations to rules
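The memoization idea can be sketched with Python's standard `functools.lru_cache`, which stores the result of each call so that a repeated subproblem is looked up rather than solved again. The Fibonacci function and the `calls` counter are just an illustration chosen here. Note that this only caches results for specific inputs; it does not produce general rules the way EBL does.

```python
from functools import lru_cache

calls = 0  # counts how many times the function body actually runs

@lru_cache(maxsize=None)
def fib(n: int) -> int:
    """Fibonacci number; each distinct n is computed once, then cached."""
    global calls
    calls += 1
    return n if n < 2 else fib(n - 1) + fib(n - 2)

result = fib(30)
print(result, calls)
```

With the cache, the body runs once per distinct argument (0 through 30) instead of more than a million times for the naive recursion.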

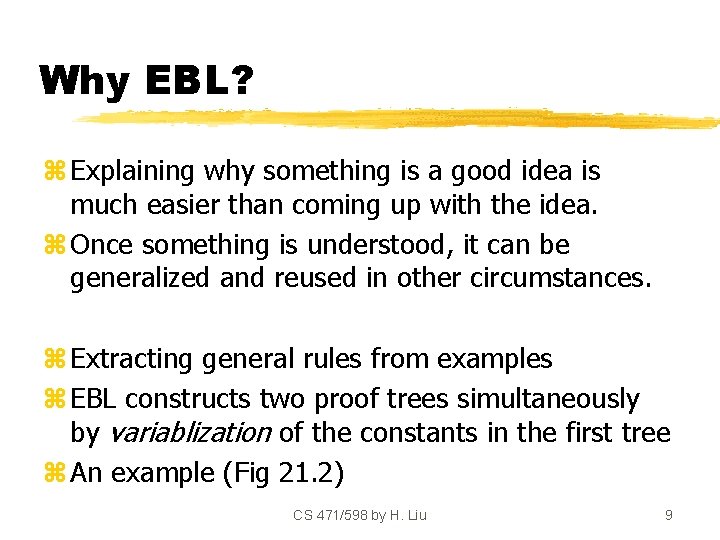

Why EBL?
- Explaining why something is a good idea is much easier than coming up with the idea.
- Once something is understood, it can be generalized and reused in other circumstances.
- Extracting general rules from examples
- EBL constructs two proof trees simultaneously; the second is obtained by variabilizing the constants in the first.
- An example (Fig 21.2)

Basic EBL
- Given an example, construct a proof tree using the background knowledge
- In parallel, construct a generalized proof tree for the variabilized goal
- Construct a new rule (leaves => the root)
- Drop any conditions that are true regardless of the variables in the goal

Efficiency of EBL
- Choosing a general rule
  - too many rules -> slow inference
  - aim for gain: a significant increase in speed
  - as general as possible
- Operationality: a subgoal is operational if it is easy to solve
  - Trade-off between operationality and generality
- The efficiency of EBL is studied through empirical analysis

Learning using relevant information
- Prior knowledge: people in a country usually speak the same language
- Observation: Fernando is Brazilian and speaks Portuguese
- We can logically conclude, via resolution, that Brazilians speak Portuguese

Functional dependencies
- We have seen a form of relevance: determination. Language (Portuguese) is a function of nationality (Brazil).
- Determination is really a relationship between the predicates.
- The corresponding generalization follows logically from the determinations and descriptions.

- We can generalize from Fernando to all Brazilians, but not to all nations. So, determinations can limit the H space to be considered.
- Determinations specify a sufficient basis vocabulary from which to construct hypotheses concerning the target predicate.
- A reduction in the H space size should make it easier to learn the target predicate.
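The Fernando example can be sketched as follows: given the determination "nationality determines language" and a single observed example, the observed language is carried over to everyone with the same nationality, and to nobody else. The function `generalize` and the people other than Fernando are hypothetical names introduced for this sketch.

```python
from typing import Dict, Tuple

def generalize(determination: Tuple[str, str],
               example: Dict[str, str],
               others: Dict[str, Dict[str, str]]) -> Dict[str, str]:
    """Apply 'P determines Q': anyone matching the example on P gets its Q value."""
    p, q = determination
    return {name: example[q]
            for name, desc in others.items()
            if desc.get(p) == example[p]}

# Single observation (from the slide): Fernando is Brazilian and speaks Portuguese.
fernando = {"nationality": "Brazil", "language": "Portuguese"}

# Hypothetical individuals whose language has not been observed.
others = {
    "ana": {"nationality": "Brazil"},
    "kenji": {"nationality": "Japan"},
}

predicted = generalize(("nationality", "language"), fernando, others)
print(predicted)
```

The determination licenses a conclusion about the other Brazilian only; it says nothing about which language goes with any other nationality.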

Learning using relevant information
- A determination P ≻ Q says that if any examples match on P, they must also match on Q
- Find the simplest determination consistent with the observations
  - Search through the space of determinations: one predicate, then two predicates, and so on
  - Algorithm: Fig 21.3 (page 635)
  - Time complexity grows as n choose p (subsets of p predicates drawn from n)
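A minimal sketch of this search, assuming attribute-based examples stored as dictionaries: it tries attribute subsets in order of increasing size and returns the first subset on which no two examples agree while disagreeing on the target. The function names and the conductance data are illustrative assumptions, not a transcription of Fig 21.3.

```python
from itertools import combinations
from typing import Dict, List, Optional, Sequence, Set

def consistent_det(attrs: Sequence[str], examples: List[Dict], target: str) -> bool:
    """True if examples that match on all of attrs also match on the target."""
    seen = {}
    for ex in examples:
        key = tuple(ex[a] for a in attrs)
        if key in seen and seen[key] != ex[target]:
            return False
        seen[key] = ex[target]
    return True

def minimal_consistent_det(examples: List[Dict], attributes: List[str],
                           target: str) -> Optional[Set[str]]:
    """Smallest attribute subset that determines the target on these examples."""
    for size in range(len(attributes) + 1):
        for subset in combinations(attributes, size):  # n choose size subsets
            if consistent_det(subset, examples, target):
                return set(subset)
    return None

# Illustrative measurements of metal samples (made-up values).
examples = [
    {"mass": 12, "temp": 26, "material": "copper", "size": 3, "conductance": 0.59},
    {"mass": 12, "temp": 100, "material": "copper", "size": 3, "conductance": 0.57},
    {"mass": 24, "temp": 26, "material": "copper", "size": 6, "conductance": 0.59},
    {"mass": 12, "temp": 26, "material": "lead", "size": 2, "conductance": 0.05},
    {"mass": 12, "temp": 100, "material": "lead", "size": 2, "conductance": 0.04},
    {"mass": 24, "temp": 26, "material": "lead", "size": 4, "conductance": 0.05},
]
attributes = ["mass", "temp", "material", "size"]

det = minimal_consistent_det(examples, attributes, "conductance")
print(det)
```

Because subsets are enumerated by size, the cost is dominated by the subsets of size p, where p is the size of the smallest consistent determination. Ties between equally small subsets are broken by the order of `attributes`.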

- Combining relevance-based learning with decision tree learning -> RBDTL
- Its learning performance improves (Fig 21.4).
- Other issues
  - noise handling
  - using other prior knowledge
  - from attribute-based to FOL

Inductive logic programming
- It combines inductive methods with FOL.
- ILP represents theories as logic programs.
- ILP offers complete algorithms for inducing general, first-order theories from examples.
- It can learn successfully in domains where attribute-based algorithms fail completely.
- An example: a typical family tree (Fig 21.5)

Inverse resolution
- If Classifications follow from Background ∧ Hypothesis ∧ Descriptions, then we can prove this by resolution refutation, because resolution is complete.
- If we run the proof backwards, we can find an H such that the proof goes through.
- Generating inverse proofs
  - A family tree example (Fig 21.6)

- Inverse resolution involves search
  - Each inverse resolution step is nondeterministic
    - For any C and C1, there can be many C2
- Discovering new knowledge with IR
  - It's not easy: a monkey and a typewriter
- Discovering new predicates with IR
  - Fig 21.7
- The ability to use background knowledge provides significant advantages
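The nondeterminism of a single inverse step can be seen even in a propositional simplification, sketched below. Clauses are sets of string literals with `~` marking negation; `inverse_resolve` enumerates clauses C2 that resolve with a given C1 to yield the resolvent C. This representation and these function names are assumptions for illustration; the first-order case additionally requires choosing inverse substitutions, which enlarges the search further.

```python
from itertools import combinations
from typing import FrozenSet, Set

Clause = FrozenSet[str]

def negate(lit: str) -> str:
    """Complement of a propositional literal ('p' <-> '~p')."""
    return lit[1:] if lit.startswith("~") else "~" + lit

def resolve(c1: Clause, c2: Clause, lit: str) -> Clause:
    """Resolvent of c1 (containing lit) and c2 (containing its complement)."""
    return (c1 - {lit}) | (c2 - {negate(lit)})

def inverse_resolve(c: Clause, c1: Clause) -> Set[Clause]:
    """All clauses c2 such that resolving c1 with c2 on some literal gives c."""
    candidates: Set[Clause] = set()
    for lit in c1:
        rest = c1 - {lit}
        if not rest <= c or negate(lit) in c:
            continue  # c1 could not have produced c by resolving on lit
        required = (c - rest) | {negate(lit)}
        optional = sorted(rest)  # literals c2 may or may not share with c1
        for k in range(len(optional) + 1):
            for extra in combinations(optional, k):
                candidates.add(frozenset(required | set(extra)))
    return candidates

c = frozenset({"q", "r"})    # resolvent C
c1 = frozenset({"p", "q"})   # known parent clause C1
c2_options = inverse_resolve(c, c1)
for c2 in sorted(c2_options, key=len):
    print(sorted(c2))
```

Even in this tiny case there is more than one valid C2, which is why inverse resolution has to be organized as a search.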

Top-down learning (FOIL)
- A generalization of DT induction to the first-order case, by the author of C4.5
  - Start with a general rule and specialize it to fit the data
  - Now we use first-order literals instead of attributes, and H is a set of clauses instead of a decision tree.
- Example: => Grandfather(x, y) (page 642)
  - positive and negative examples
  - adding literals one at a time to the left-hand side
  - e.g., Father(x, y) => Grandfather(x, y)
  - How to choose a literal? (Algorithm on page 643)
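One way to choose the next literal is an information-based gain in the style of Quinlan's FOIL, sketched below on a made-up family. This is a simplification under stated assumptions: the slide does not specify the heuristic, the people and the `father` relation are hypothetical, Grandfather is defined through fathers only, and coverage is counted over (x, y) examples rather than over variable bindings as full FOIL does.

```python
import math
from itertools import product

# Hypothetical family: (a, b) means a is the father of b.
people = ["abe", "bob", "cal", "dee", "eve"]
father = {("abe", "bob"), ("bob", "cal"), ("bob", "dee"), ("cal", "eve")}

# Target relation for this toy: grandfather through the father relation only.
positives = {(x, y) for x, z in father for z2, y in father if z == z2}
examples = list(product(people, repeat=2))  # all (x, y) pairs; non-positives are negative

# Candidate literals to add to the body of "=> Grandfather(x, y)".
candidates = {
    "Father(x, y)": lambda x, y: (x, y) in father,
    "Father(x, z)": lambda x, y: any((x, z) in father for z in people),
    "Father(z, y)": lambda x, y: any((z, y) in father for z in people),
}

def counts(covered):
    """Number of positive and negative examples in a covered set."""
    p = sum(1 for e in covered if e in positives)
    return p, len(covered) - p

def foil_gain(p0, n0, p1, n1):
    """Simplified FOIL-style gain; a literal covering no positives scores 0."""
    if p1 == 0:
        return 0.0
    return p1 * (math.log2(p1 / (p1 + n1)) - math.log2(p0 / (p0 + n0)))

p0, n0 = counts(examples)
gains = {}
for name, literal in candidates.items():
    covered = [e for e in examples if literal(*e)]
    gains[name] = foil_gain(p0, n0, *counts(covered))

best = max(gains, key=gains.get)
print(best)
```

Father(x, y) covers no positive example, so it gains nothing. Father(x, z) keeps every positive while excluding more negatives than Father(z, y), so it is selected, and the clause would then be specialized further with another literal.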

Summary
- Using prior knowledge in cumulative learning
- Prior knowledge allows for shorter H's.
- Prior knowledge plays different logical roles, as expressed by the entailment constraints.
- EBL, RBL, KBIL
- ILP can generate new predicates so that concise new theories can be expressed.
