Knowledge Enhanced Clustering

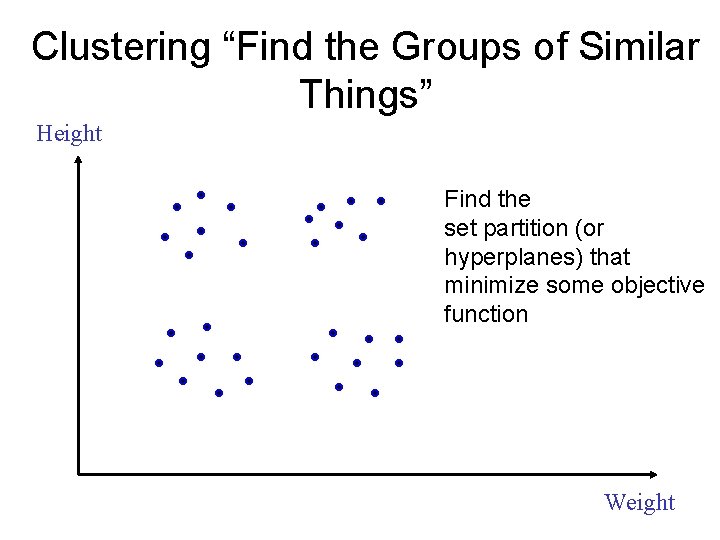

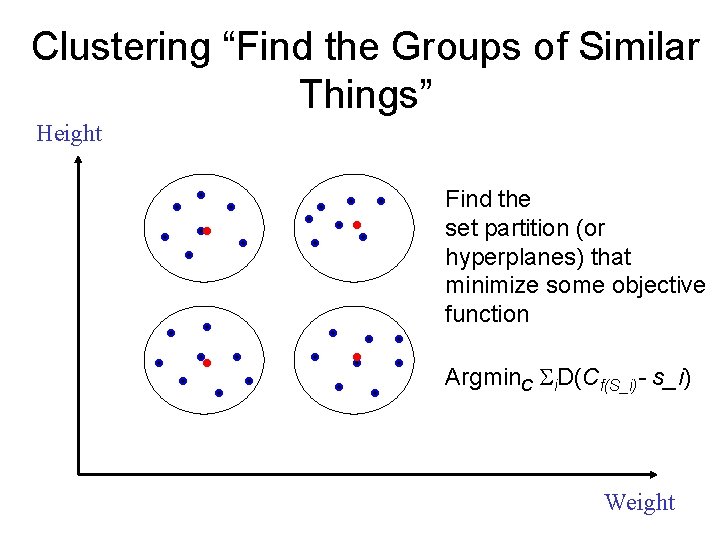

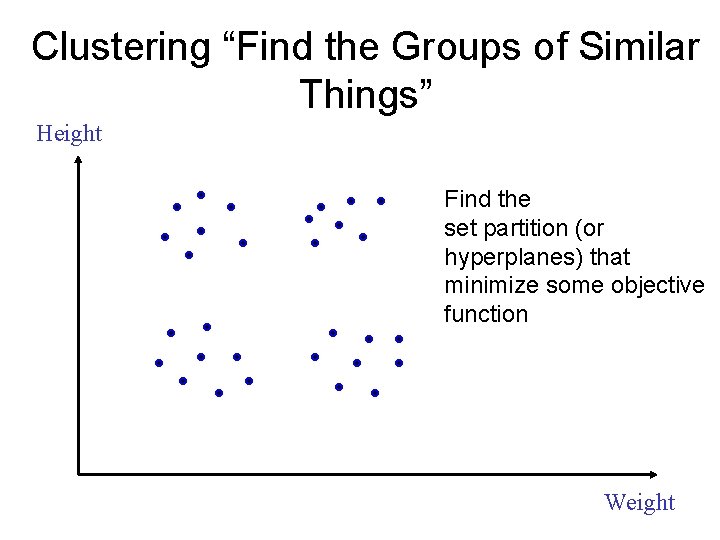

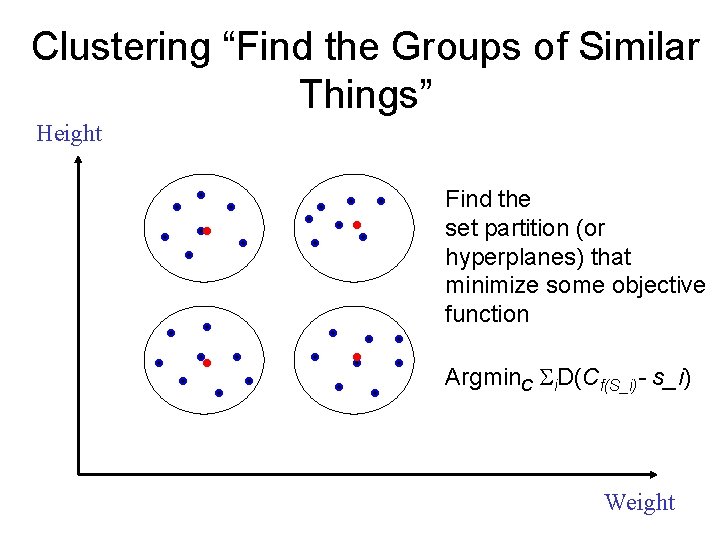

Clustering: “Find the Groups of Similar Things”

Find the set partition (or hyperplanes) that minimize some objective function: $\operatorname*{argmin}_{C} \sum_{i} D(C_{f(s_i)} - s_i)$

[Figure: scatter plot with axes Height and Weight.]

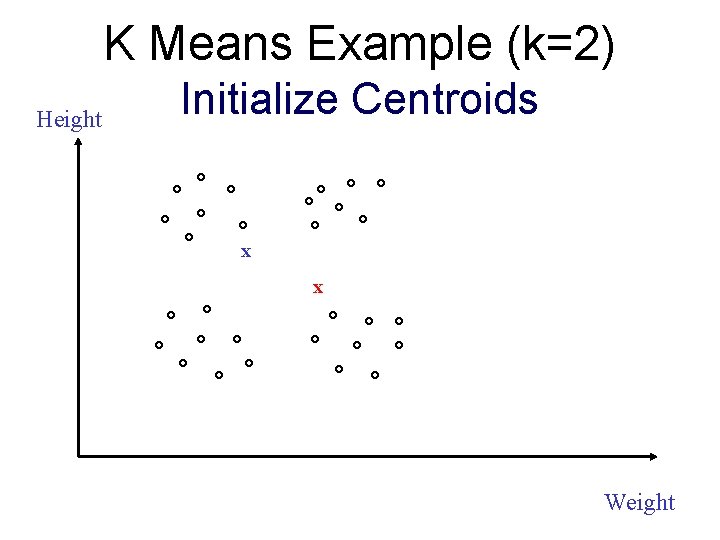

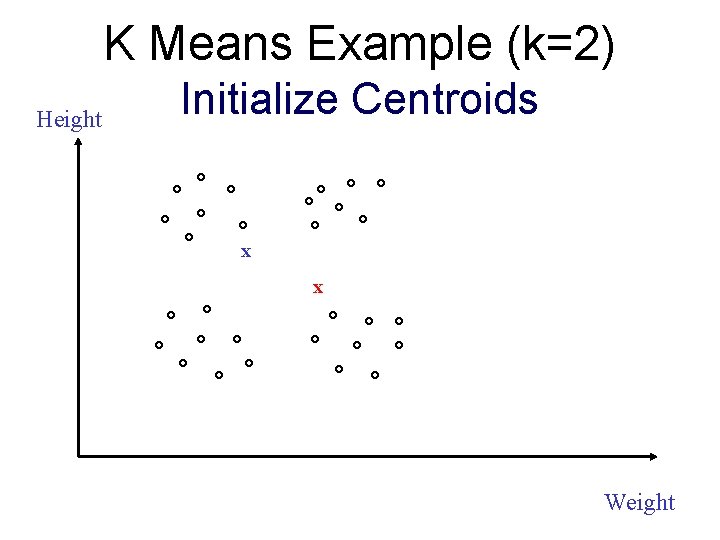

K Means Example (k=2): Initialize centroids. [Figure: scatter plot with axes Height and Weight; centroids marked x.]

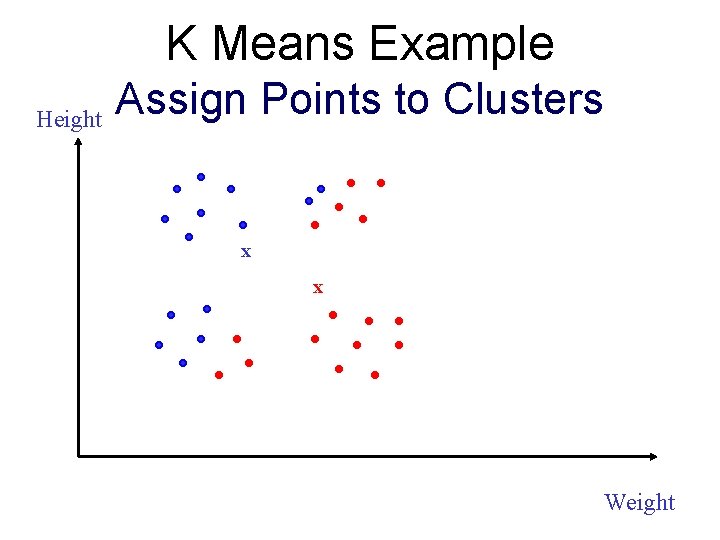

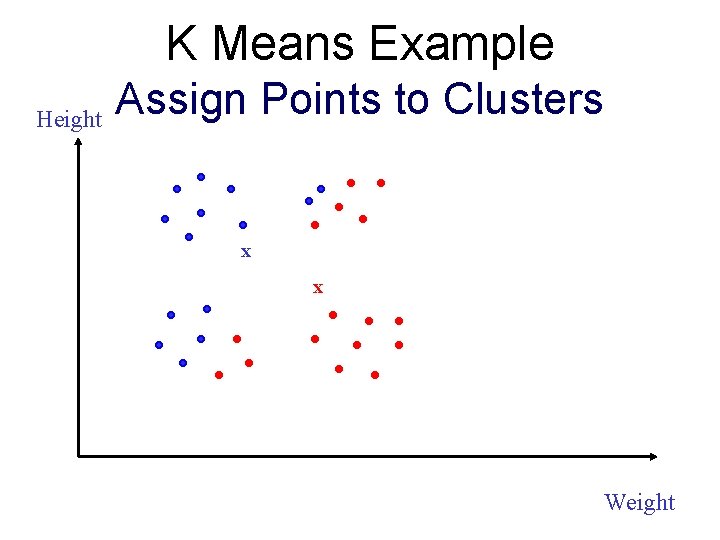

K Means Example: Assign points to clusters. [Figure: scatter plot with axes Height and Weight; centroids marked x.]

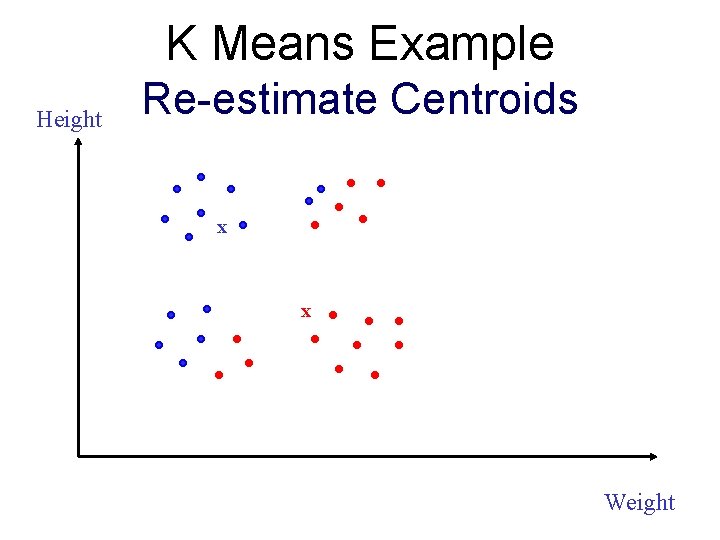

K Means Example: Re-estimate centroids. [Figure: scatter plot with axes Height and Weight; centroids marked x.]

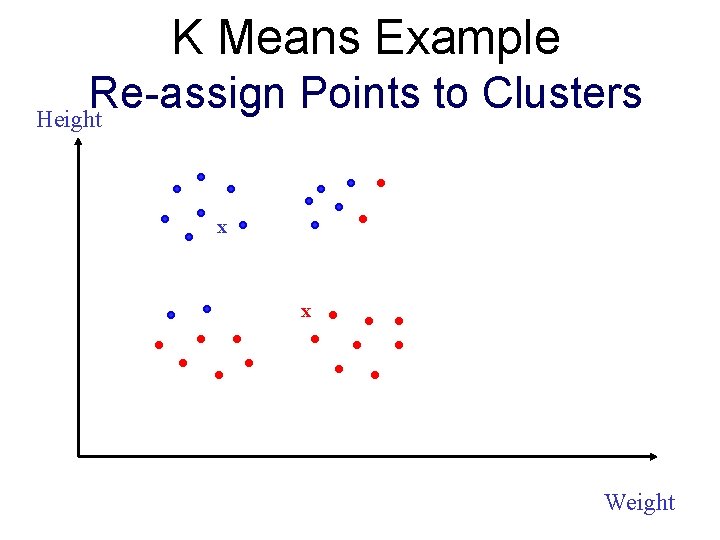

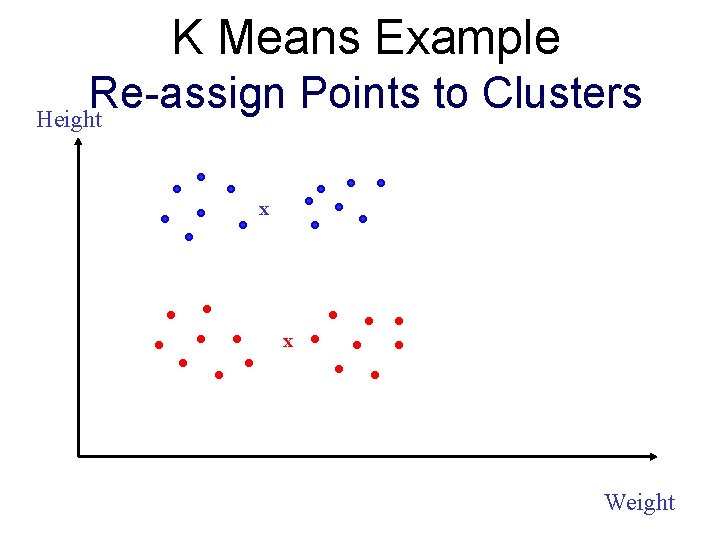

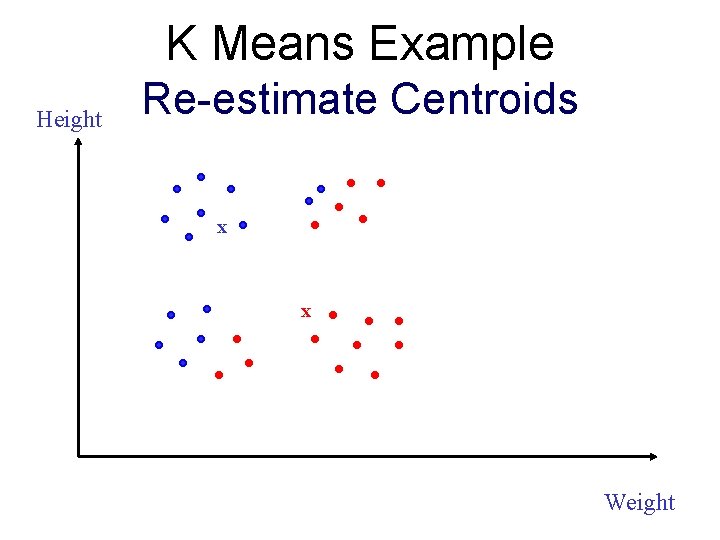

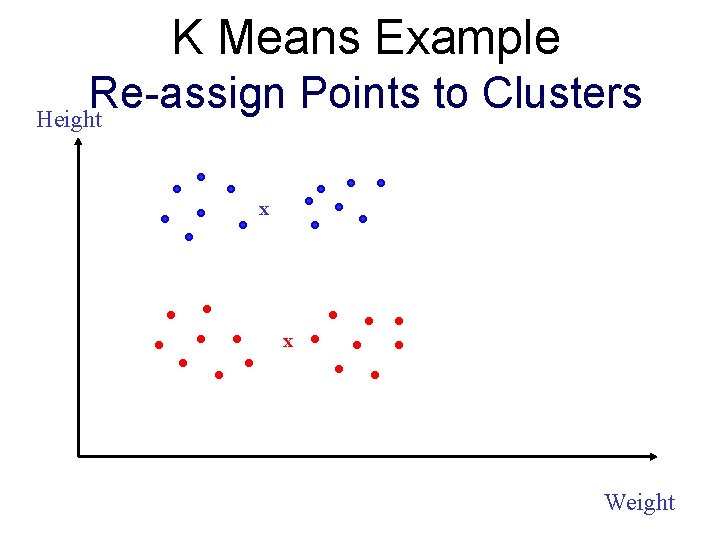

K Means Example: Re-assign points to clusters. [Figure: scatter plot with axes Height and Weight; centroids marked x.]

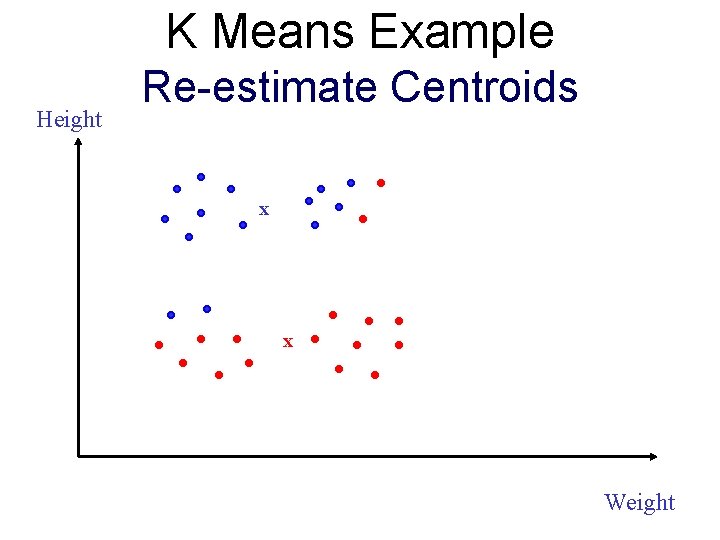

K Means Example: Re-estimate centroids. [Figure: scatter plot with axes Height and Weight; centroids marked x.]

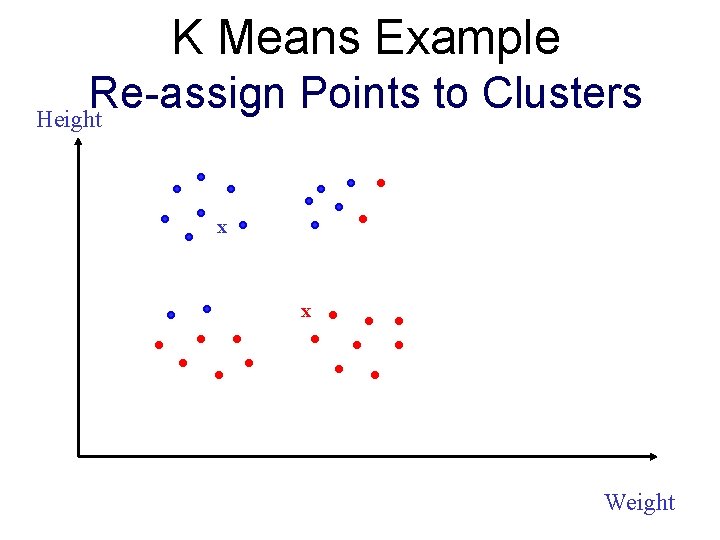

K Means Example: Re-assign points to clusters. [Figure: scatter plot with axes Height and Weight; centroids marked x.]

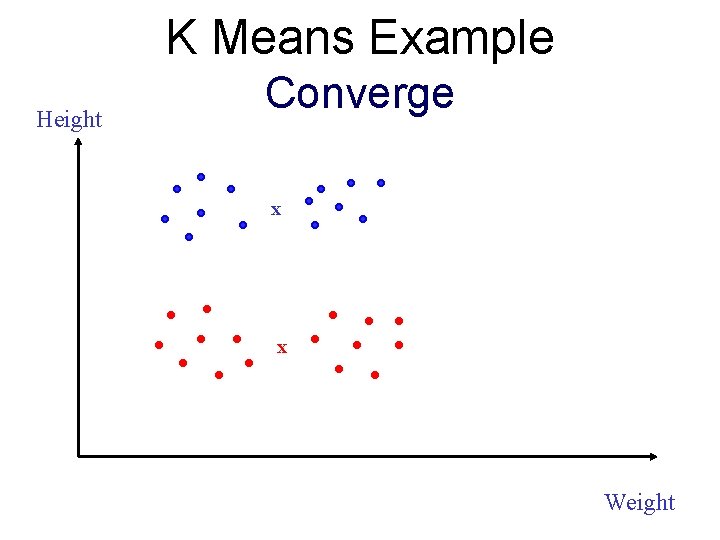

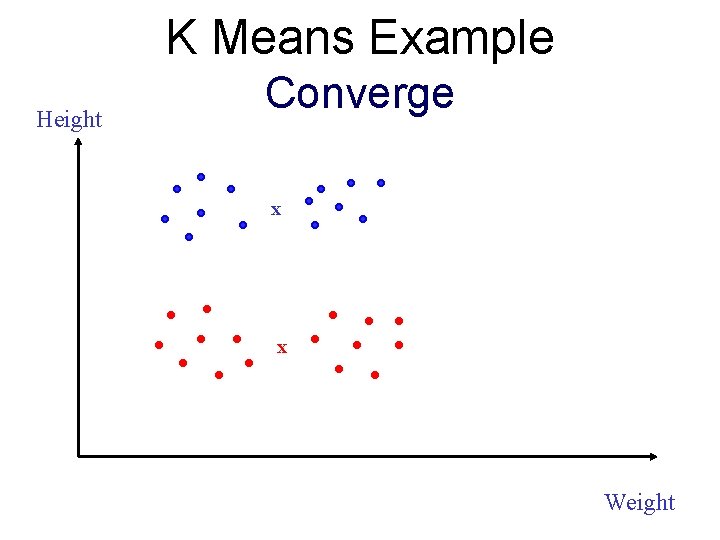

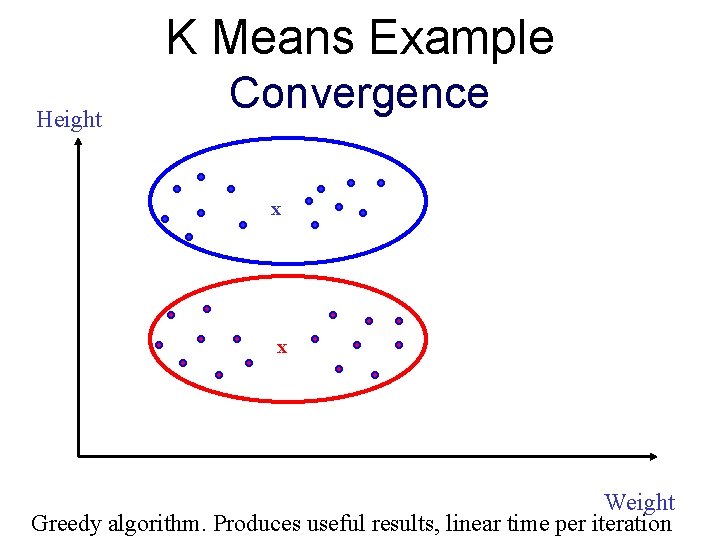

K Means Example: Converge. [Figure: scatter plot with axes Height and Weight; centroids marked x.]

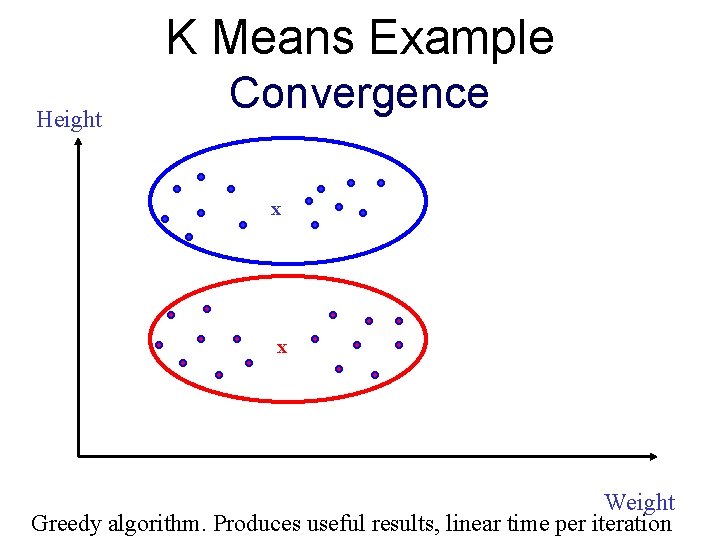

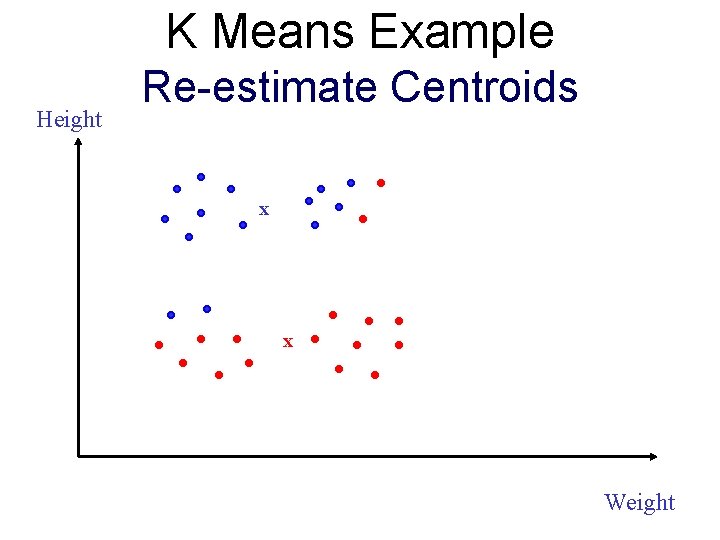

K Means Example: Convergence

K-means is a greedy algorithm. It produces useful results and takes linear time per iteration.

[Figure: scatter plot with axes Height and Weight; centroids marked x.]
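The assign and re-estimate steps walked through in the K Means Example slides can be sketched in a few lines of Python. This is a minimal illustration and not the presenter's code: the function names `sq_dist` and `kmeans`, the toy points, and the stopping rule (stop when no assignment changes) are all assumptions made for the sketch.

```python
import random

def sq_dist(p, q):
    """Squared Euclidean distance between two points given as tuples."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def kmeans(points, k, iters=100, seed=0):
    """Plain k-means; returns (centroids, labels). Hypothetical helper."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialize centroids from the data
    labels = None
    for _ in range(iters):
        # Assign each point to its nearest centroid.
        new_labels = [min(range(k), key=lambda c: sq_dist(p, centroids[c]))
                      for p in points]
        if new_labels == labels:  # converged: assignments stopped changing
            break
        labels = new_labels
        # Re-estimate each centroid as the mean of its members.
        for c in range(k):
            members = [p for p, l in zip(points, labels) if l == c]
            if members:
                centroids[c] = tuple(sum(axis) / len(members)
                                     for axis in zip(*members))
    return centroids, labels

# Toy data: two well-separated groups (made up for illustration).
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centroids, labels = kmeans(points, k=2)
```

Each iteration touches every point once per centroid, which is where the linear time per iteration comes from.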

![Where Data Driven Clustering Fails: a) Pandemic Preparation [Davidson and Ravi 2007a]](https://slidetodoc.com/presentation_image_h/957ec7643fd1e3edf67b33537ece6ed4/image-12.jpg)

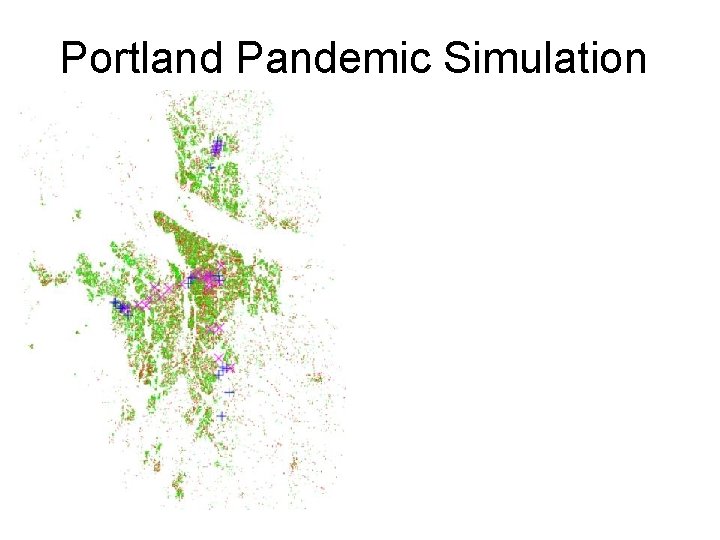

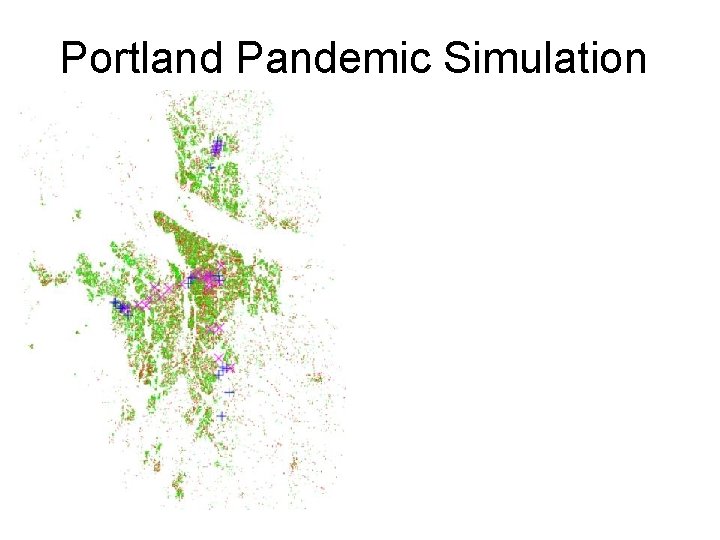

Where Data Driven Clustering Fails: a) Pandemic Preparation [Davidson and Ravi 2007a]

- In collaboration with Los-Alamos/Virginia Tech Bio-informatics Institute
  - VBI micro-simulator based on census data, road network, buildings, etc.
  - Ideal for modeling pandemics due to bird flu or bioterrorism.
  - Problem: find spatial clusters of households that have a high propensity to be infected or not infected.
  - Currently at city level (a million households), but soon the eastern seaboard and the entire country.

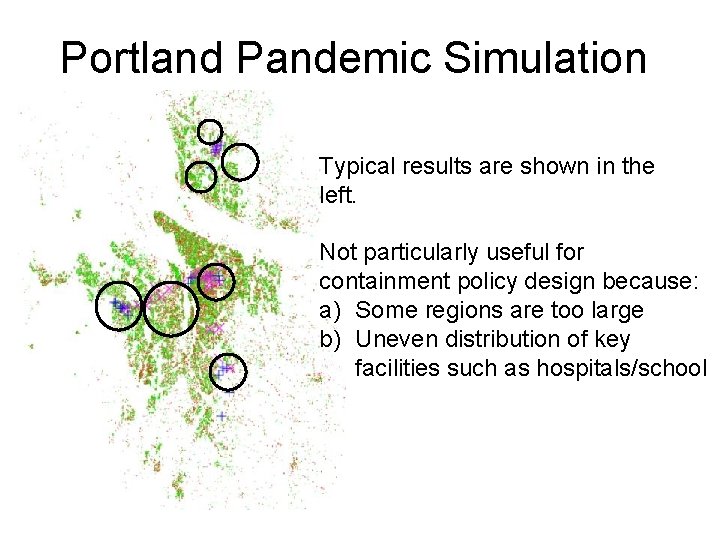

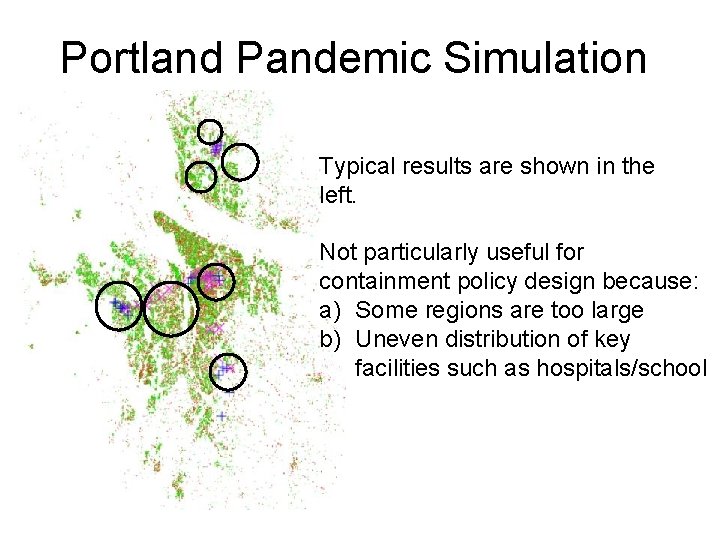

Portland Pandemic Simulation

Typical results are shown on the left. They are not particularly useful for containment policy design because:

a) Some regions are too large.
b) Key facilities such as hospitals and schools are unevenly distributed.

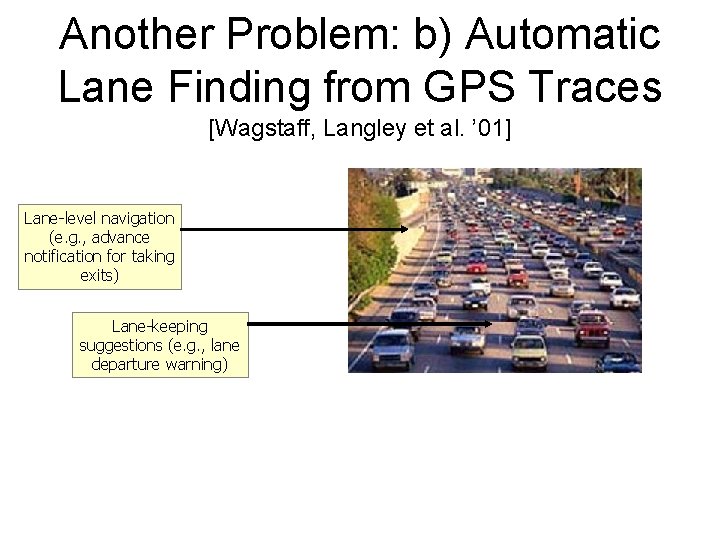

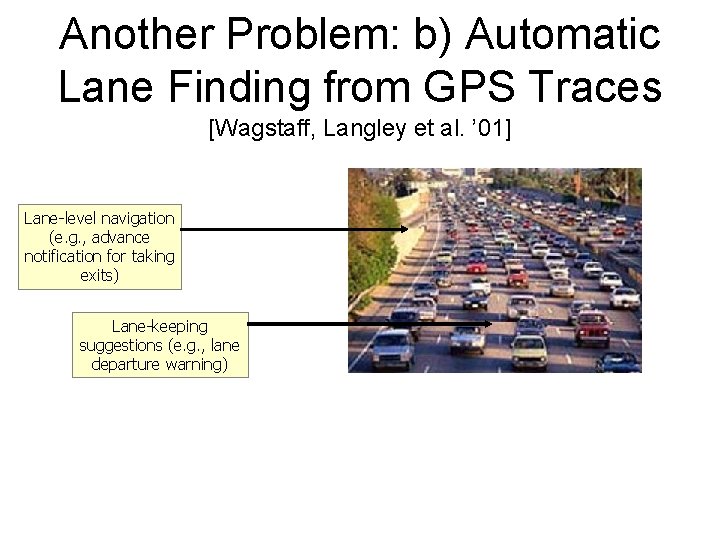

Another Problem: b) Automatic Lane Finding from GPS Traces [Wagstaff, Langley et al. ’01]

- Lane-level navigation (e.g., advance notification for taking exits)
- Lane-keeping suggestions (e.g., lane departure warning)

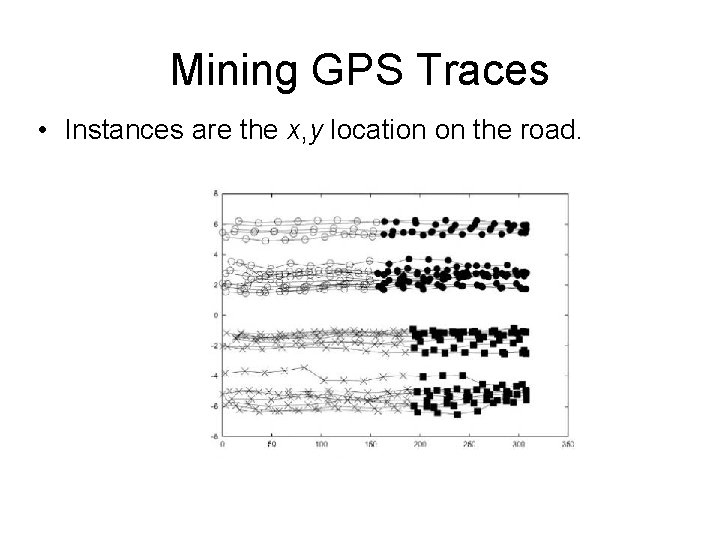

Mining GPS Traces

- Instances are the x, y locations on the road.
- This is a very good local minimum of the algorithm’s objective function.

![Another Example: c) CMU Faces Database [Davidson, Wagstaff, Basu, ECML 06]](https://slidetodoc.com/presentation_image_h/957ec7643fd1e3edf67b33537ece6ed4/image-18.jpg)

Another Example: c) CMU Faces Database [Davidson, Wagstaff, Basu, ECML 06] Useful for biometric applications such as face recognition etc.

Typical But Not Useful Clusters For Our Purpose

Limitations of Data Driven Clustering at a High Level

- Objective functions were reasonably minimized.
- Hoping patterns are “novel and actionable” is a long shot.
- Problem: find a general-purpose and principled way to encode knowledge into the many data mining algorithms.
  - Bayesian approach?

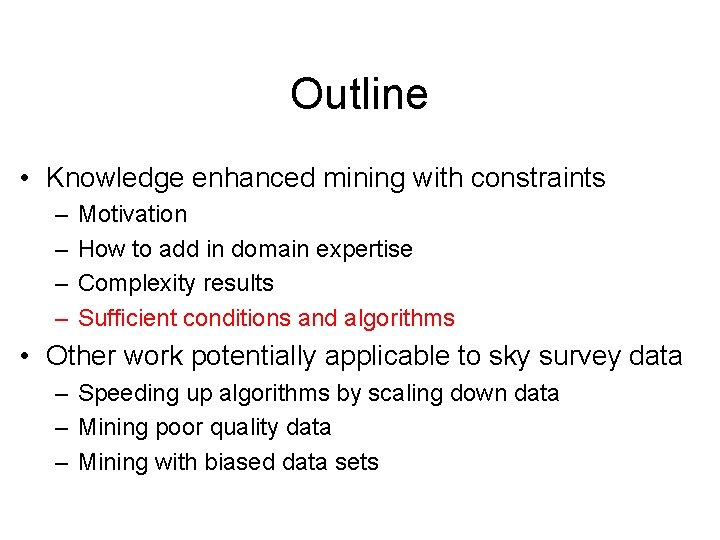

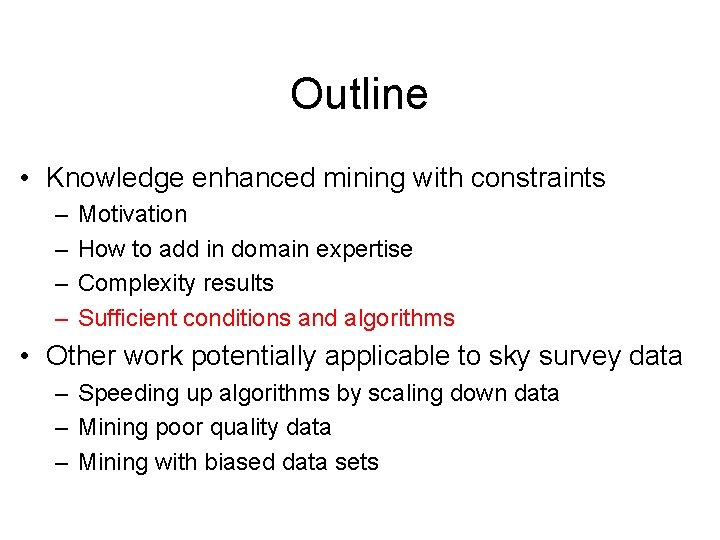

Outline

- Knowledge enhanced mining with constraints
  - Motivation
  - How to add in domain expertise
  - Complexity results
  - Sufficient conditions and algorithms
- Other work potentially applicable to sky survey data
  - Speeding up algorithms by scaling down data
  - Mining poor quality data
  - Mining with biased data sets

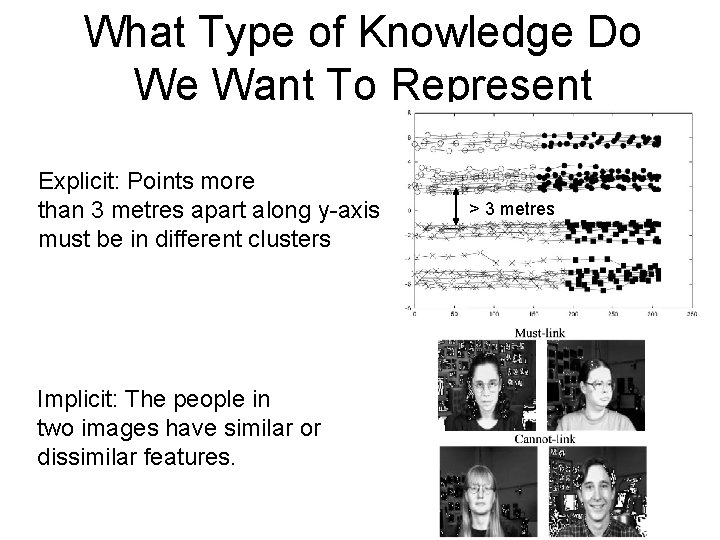

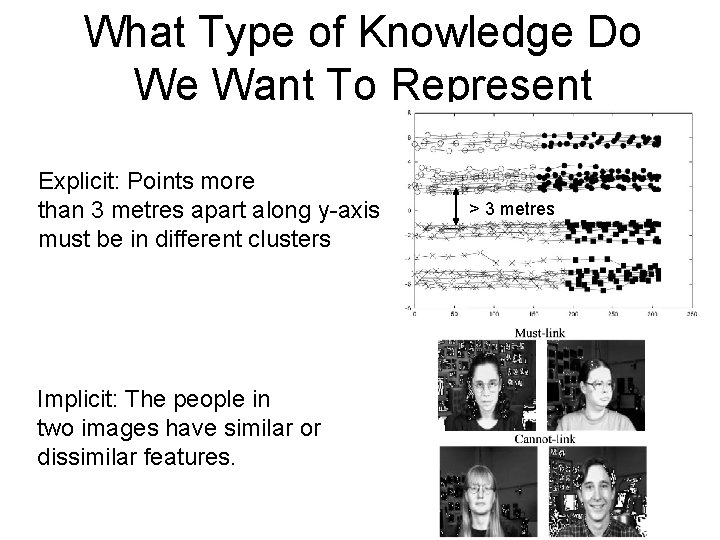

What Type of Knowledge Do We Want To Represent?

- Explicit: Points more than 3 metres apart along the y-axis must be in different clusters.
- Implicit: The people in two images have similar or dissimilar features.

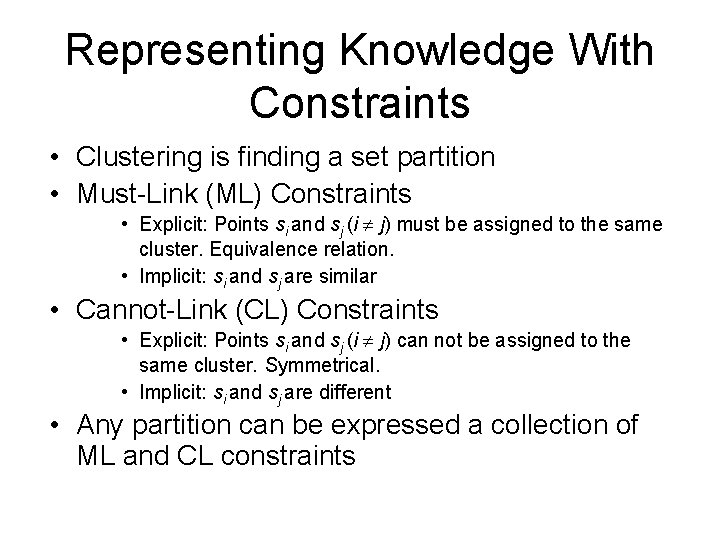

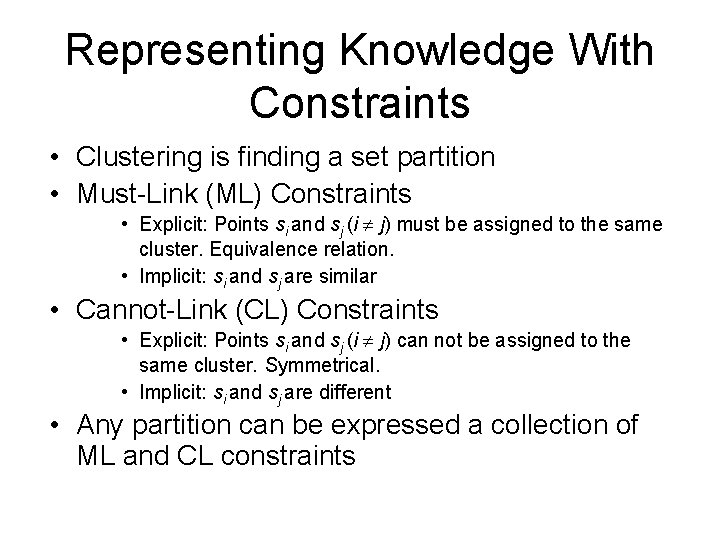

Representing Knowledge With Constraints

- Clustering is finding a set partition.
- Must-Link (ML) constraints
  - Explicit: points $s_i$ and $s_j$ ($i \neq j$) must be assigned to the same cluster. Equivalence relation.
  - Implicit: $s_i$ and $s_j$ are similar.
- Cannot-Link (CL) constraints
  - Explicit: points $s_i$ and $s_j$ ($i \neq j$) cannot be assigned to the same cluster. Symmetrical.
  - Implicit: $s_i$ and $s_j$ are different.
- Any partition can be expressed as a collection of ML and CL constraints.
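To make the last point concrete, here is a small Python sketch, assuming points are referred to by integer index and a clustering is a list of cluster ids. The helper names `satisfies` and `partition_to_constraints` are hypothetical and exist only for this illustration.

```python
from itertools import combinations

def satisfies(labels, must_link, cannot_link):
    """True if the labelling (point index -> cluster id) meets every constraint."""
    return (all(labels[a] == labels[b] for a, b in must_link)
            and all(labels[a] != labels[b] for a, b in cannot_link))

def partition_to_constraints(labels):
    """Encode a partition as ML pairs (same cluster) and CL pairs (different clusters)."""
    must_link, cannot_link = [], []
    for a, b in combinations(range(len(labels)), 2):
        (must_link if labels[a] == labels[b] else cannot_link).append((a, b))
    return must_link, cannot_link

# Toy partition of four points into two clusters (made up for illustration).
labels = [0, 0, 1, 1]
ml, cl = partition_to_constraints(labels)
```

Only the grouping matters, not the cluster ids: the relabelled clustering `[1, 1, 0, 0]` satisfies the same constraints.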

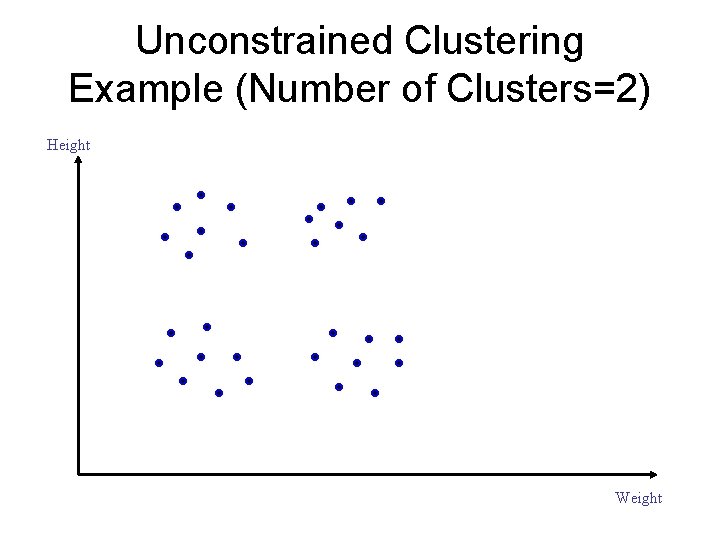

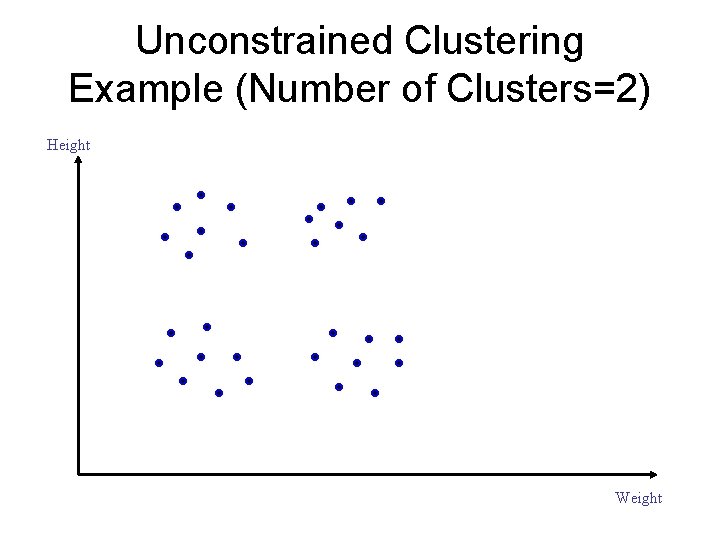

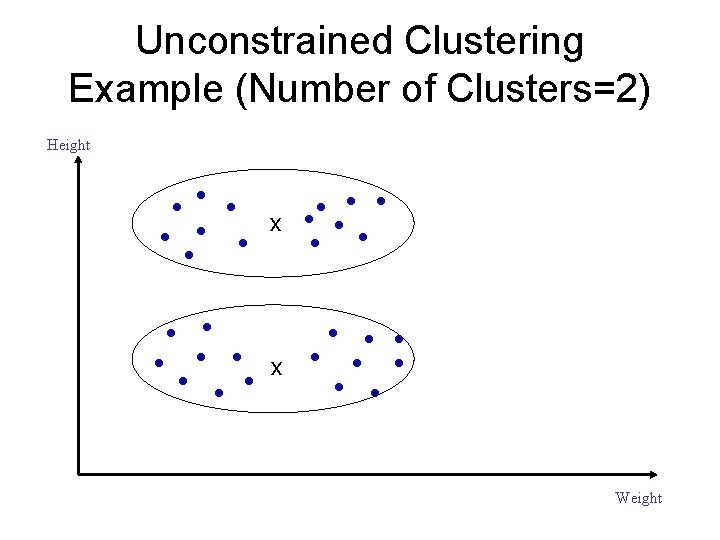

Unconstrained Clustering Example (Number of Clusters=2)

[Figure: scatter plot with axes Height and Weight; centroids marked x.]

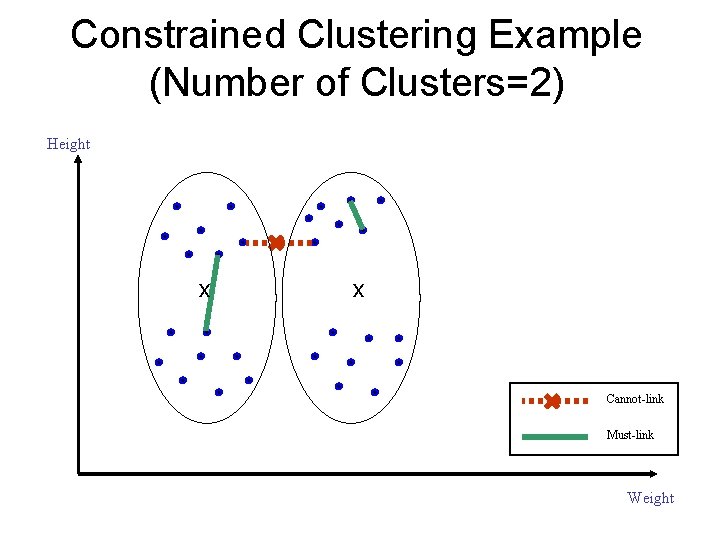

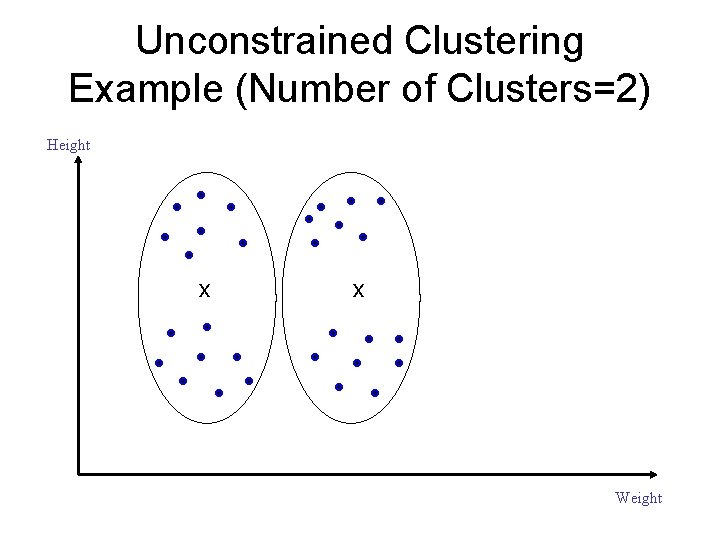

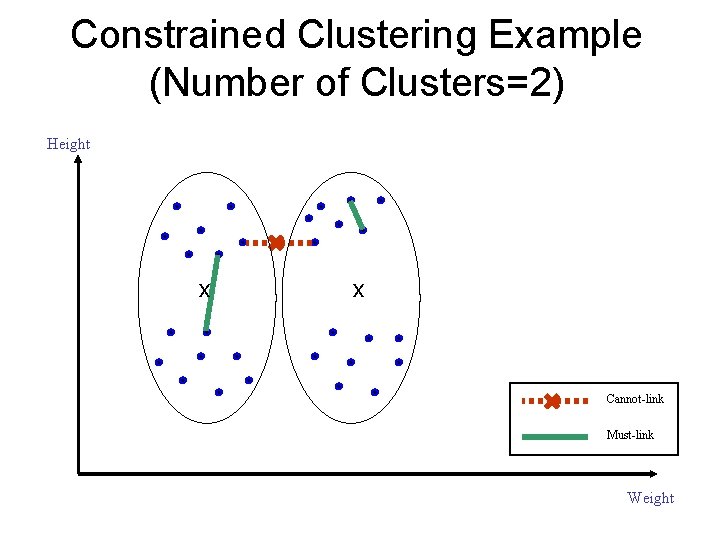

Constrained Clustering Example (Number of Clusters=2)

[Figure: scatter plot with axes Height and Weight; centroids marked x; cannot-link and must-link constraints drawn between points.]

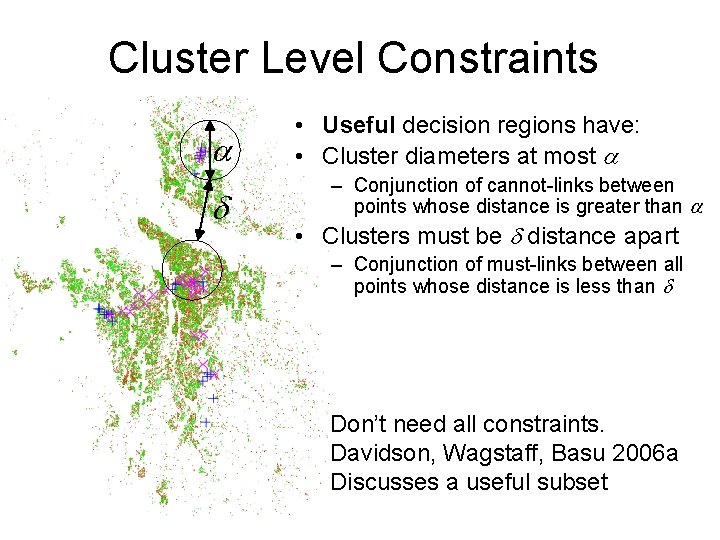

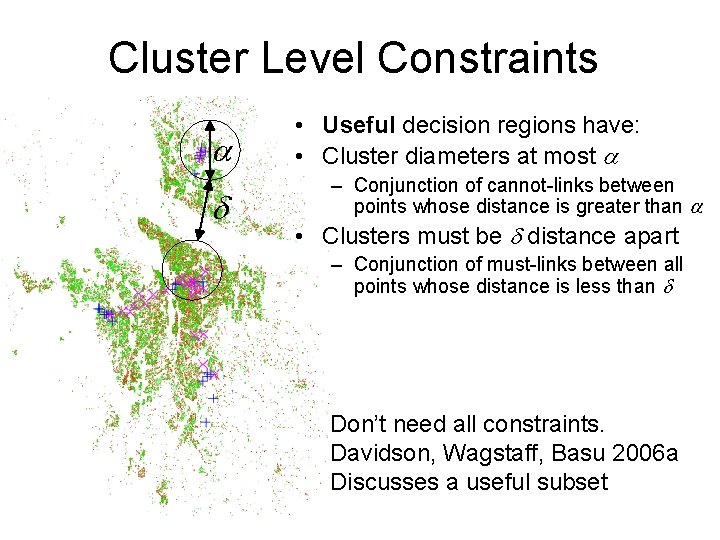

Cluster Level Constraints

- Useful decision regions have:
  - Cluster diameters at most γ: a conjunction of cannot-links between points whose distance is greater than γ.
  - Clusters at least δ apart: a conjunction of must-links between all points whose distance is less than δ.
- Not all of these constraints are needed. Davidson, Wagstaff, Basu 2006a discusses a useful subset.
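A minimal sketch of how the two cluster-level requirements expand into pairwise constraints. The threshold names `gamma` (maximum diameter) and `delta` (minimum separation), the function names, and the toy points are assumptions of this sketch. It generates every implied pair, whereas the slide notes that a subset suffices.

```python
from itertools import combinations
from math import dist

def diameter_cannot_links(points, gamma):
    """CL pairs: points farther apart than gamma cannot share a cluster."""
    return [(i, j) for i, j in combinations(range(len(points)), 2)
            if dist(points[i], points[j]) > gamma]

def separation_must_links(points, delta):
    """ML pairs: points closer than delta must share a cluster."""
    return [(i, j) for i, j in combinations(range(len(points)), 2)
            if dist(points[i], points[j]) < delta]

# Three points on a line (made up for illustration).
points = [(0.0, 0.0), (1.0, 0.0), (5.0, 0.0)]
cl = diameter_cannot_links(points, gamma=3.0)
ml = separation_must_links(points, delta=2.0)
```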

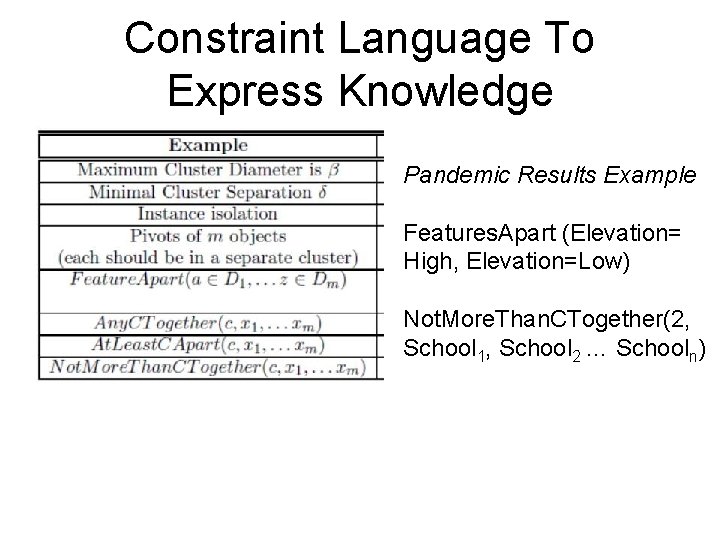

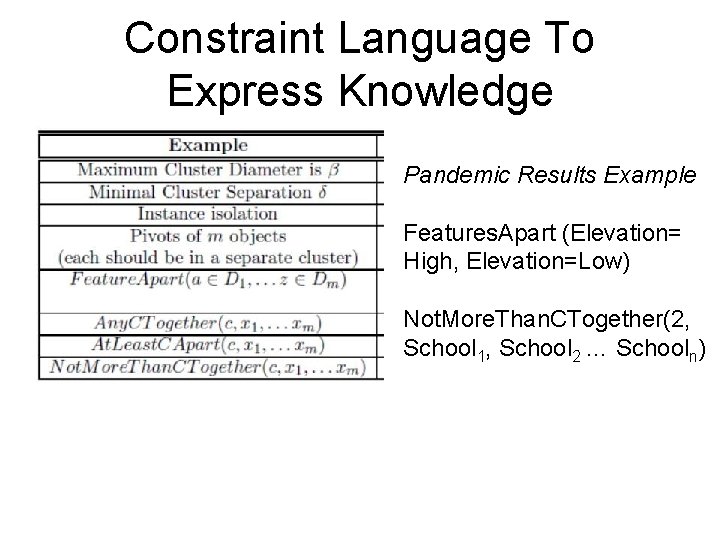

Constraint Language To Express Knowledge

Pandemic results example:

- FeaturesApart(Elevation=High, Elevation=Low)
- NotMoreThanCTogether(2, School1, School2, …, Schooln)

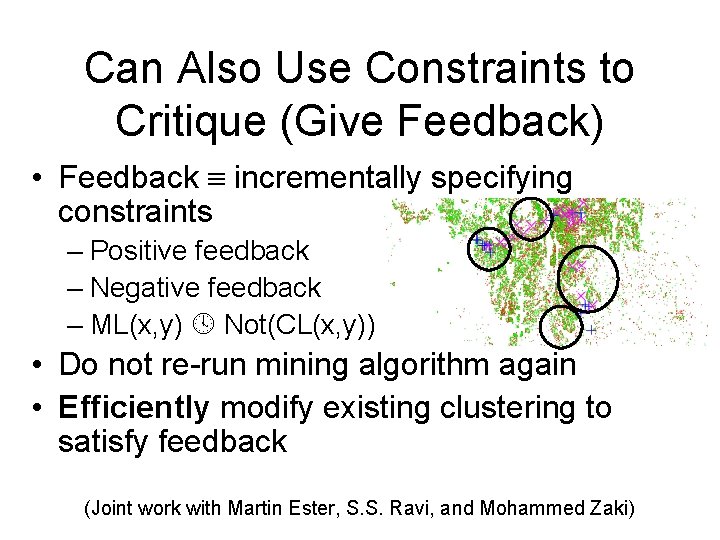

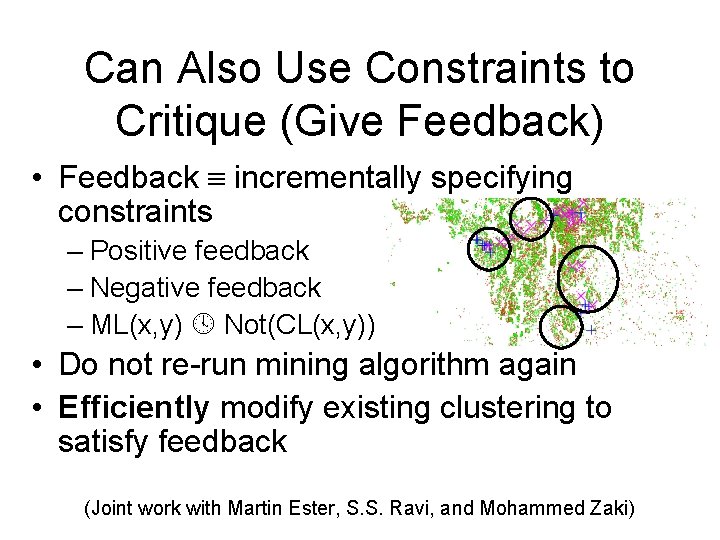

Can Also Use Constraints to Critique (Give Feedback)

- Feedback: incrementally specifying constraints
  - Positive feedback
  - Negative feedback
  - ML(x, y), Not(CL(x, y))
- Do not re-run the mining algorithm.
- Efficiently modify the existing clustering to satisfy the feedback.

(Joint work with Martin Ester, S. S. Ravi, and Mohammed Zaki)


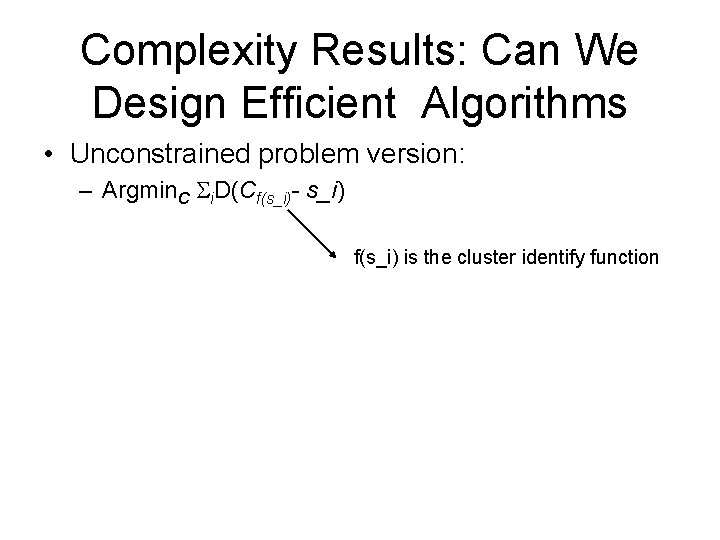

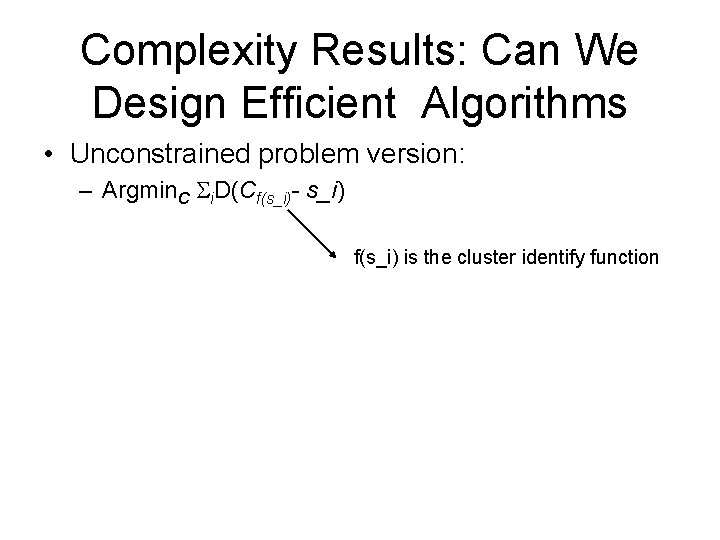

Complexity Results: Can We Design Efficient Algorithms?

- Unconstrained problem version:
  - $\operatorname*{argmin}_{C} \sum_{i} D(C_{f(s_i)} - s_i)$, where $f(s_i)$ is the cluster identity function.

Complexity Results: Can We Design Efficient Algorithms?

- Constrained problem version:
  - $\operatorname*{argmin}_{C} \sum_{i} D(C_{f(s_i)} - s_i)$
  - s.t. $\forall (i, j) \in ML : f(s_i) = f(s_j)$ and $\forall (i, j) \in CL : f(s_i) \neq f(s_j)$
- Feasibility sub-problem
  - Example: there is no solution for k=2 under CL(x, y), CL(x, z), CL(y, z).
  - Important: relates to generating a feasible clustering.

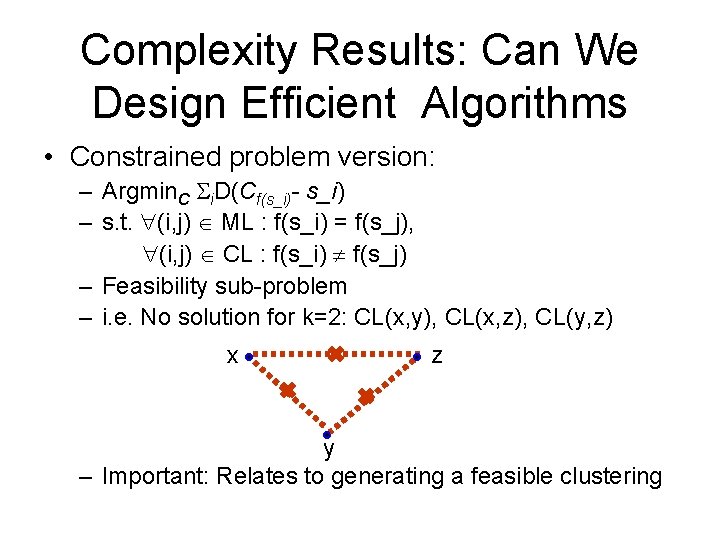

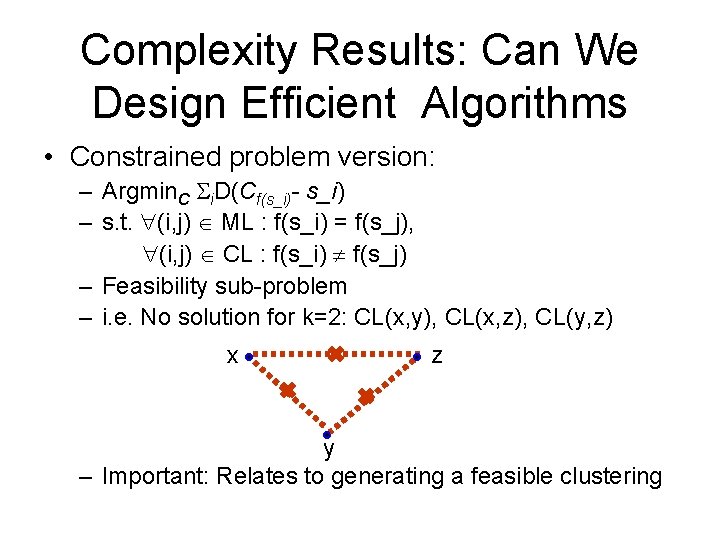

Clustering Under Cannot-Link Constraints is Graph Coloring

- Instances: a through z
- Constraints: ML(g, h); CL(a, c), CL(d, e), CL(f, g), CL(c, f)
- This is the graph k-coloring problem.

[Figure: constraint graph over nodes a, c, d, e, f and the merged node {g, h}.]
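The correspondence with graph coloring can be sketched as a small backtracking search: points (with must-linked points already merged into one node) are vertices, cannot-links are edges, and cluster ids are colors. The function name `k_colour` and the merged node label `"gh"` are assumptions of this sketch. The search is exponential in the worst case, which is what the NP-completeness of the problem leads one to expect of an exact method.

```python
def k_colour(nodes, cl_edges, k):
    """Return {node: cluster id} using at most k clusters, or None if infeasible."""
    adj = {v: set() for v in nodes}
    for a, b in cl_edges:
        adj[a].add(b)
        adj[b].add(a)
    order = list(nodes)
    label = {}

    def extend(i):
        if i == len(order):
            return True
        v = order[i]
        for c in range(k):
            # A cluster is allowed if no cannot-link neighbour already uses it.
            if all(label.get(u) != c for u in adj[v]):
                label[v] = c
                if extend(i + 1):
                    return True
                del label[v]  # backtrack
        return False

    return dict(label) if extend(0) else None

# Constraints from the slide, with g and h merged by ML(g, h).
nodes = ["a", "c", "d", "e", "f", "gh"]
edges = [("a", "c"), ("d", "e"), ("f", "gh"), ("c", "f")]
slide_result = k_colour(nodes, edges, k=2)

# Three mutually cannot-linked points have no feasible clustering for k=2.
triangle = [("x", "y"), ("x", "z"), ("y", "z")]
triangle_k2 = k_colour(["x", "y", "z"], triangle, k=2)
triangle_k3 = k_colour(["x", "y", "z"], triangle, k=3)
```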

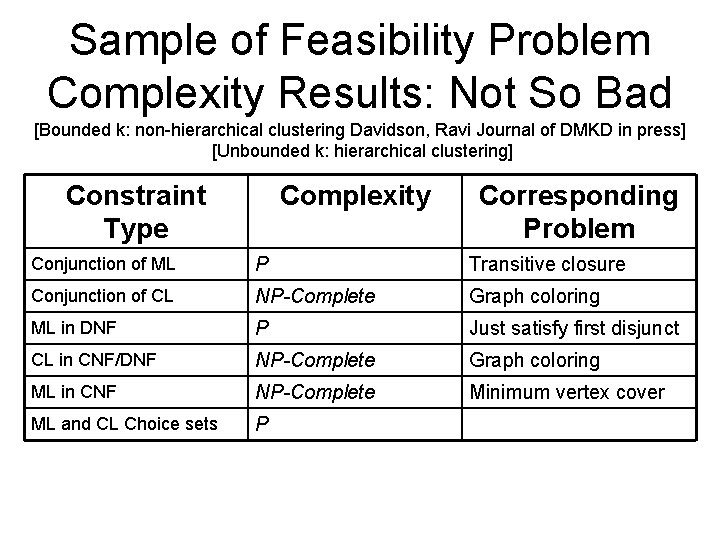

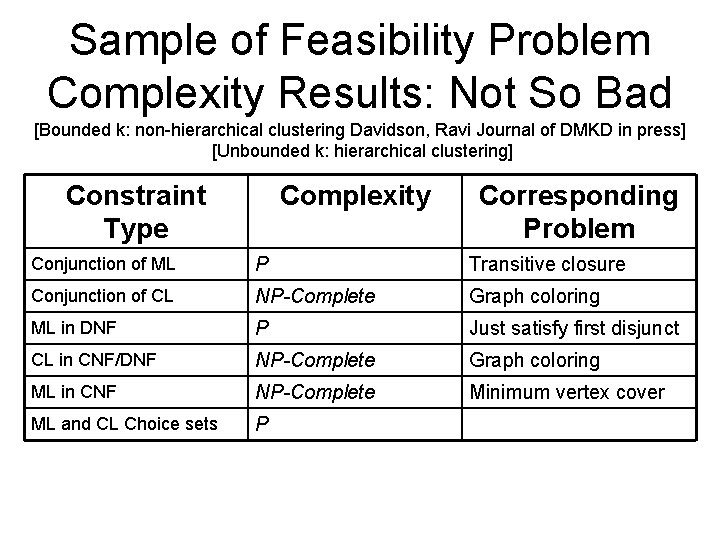

Sample of Feasibility Problem Complexity Results: Not So Bad

[Bounded k: non-hierarchical clustering, Davidson, Ravi, Journal of DMKD, in press] [Unbounded k: hierarchical clustering]

| Constraint Type | Complexity | Corresponding Problem |
| --- | --- | --- |
| Conjunction of ML | P | Transitive closure |
| Conjunction of CL | NP-Complete | Graph coloring |
| ML in DNF | P | Just satisfy first disjunct |
| CL in CNF/DNF | NP-Complete | Graph coloring |
| ML in CNF | NP-Complete | Minimum vertex cover |
| ML and CL choice sets | P | |
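The polynomial-time case for a conjunction of ML constraints rests on transitive closure, which can be computed with a union-find structure. The following is a sketch under the assumption that points are integer indices; `ml_components` is a hypothetical helper name.

```python
def ml_components(n, must_link):
    """Group points 0..n-1 into the connected components induced by ML pairs."""
    parent = list(range(n))

    def find(i):
        # Follow parent pointers to the root, compressing the path as we go.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for a, b in must_link:
        parent[find(a)] = find(b)  # union the two components

    groups = {}
    for i in range(n):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())

# ML(0,1) and ML(1,2) imply ML(0,2) by transitivity.
components = ml_components(5, [(0, 1), (1, 2), (3, 4)])
```

Every point in a component must land in the same cluster, so each component can be treated as a single unit by later steps.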

![Other Implications of Results For Algorithm Design: Getting Worse [Davidson and Ravi 2007b]](https://slidetodoc.com/presentation_image_h/957ec7643fd1e3edf67b33537ece6ed4/image-36.jpg)

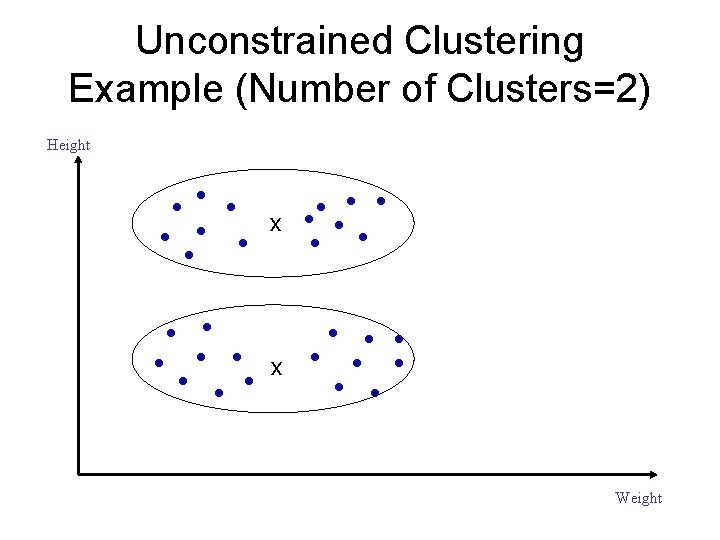

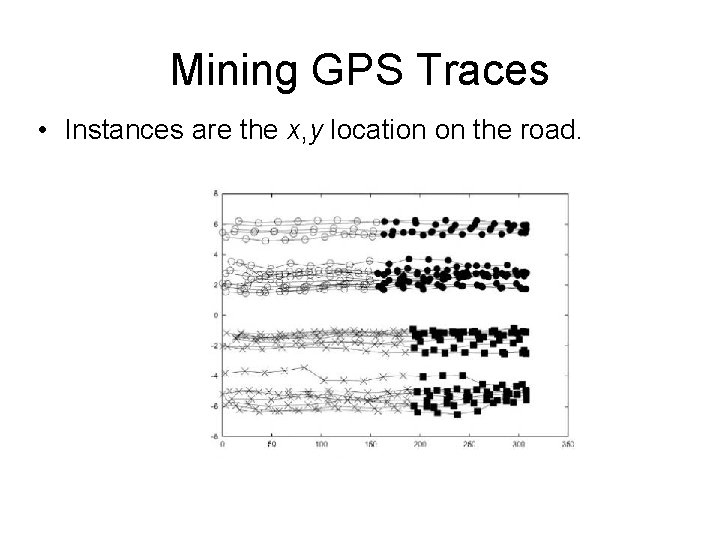

Other Implications of Results For Algorithm Design: Getting Worse [Davidson and Ravi 2007b]

- Algorithm design idea: find the best clustering that satisfies most constraints in C.
- Can’t be done efficiently:
  - Repair to satisfy C.
  - Minimally prune C to satisfy

![Incrementally Adding In Constraints: Quite Bad [Davidson, Ester and Ravi 2007c]](https://slidetodoc.com/presentation_image_h/957ec7643fd1e3edf67b33537ece6ed4/image-37.jpg)

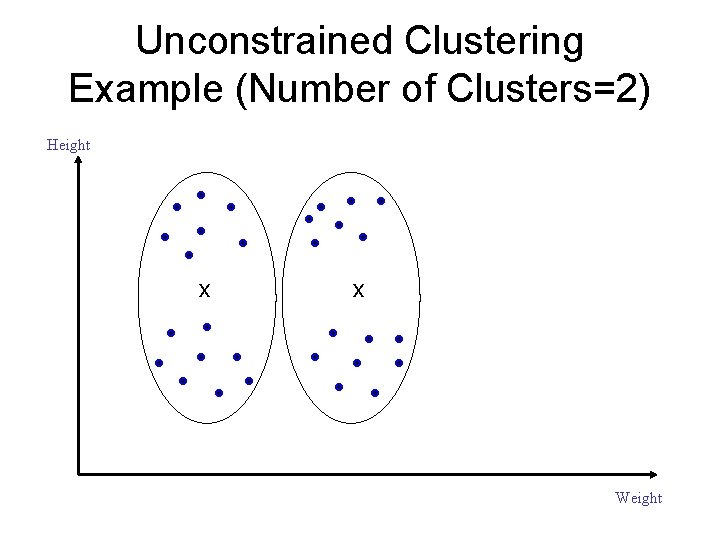

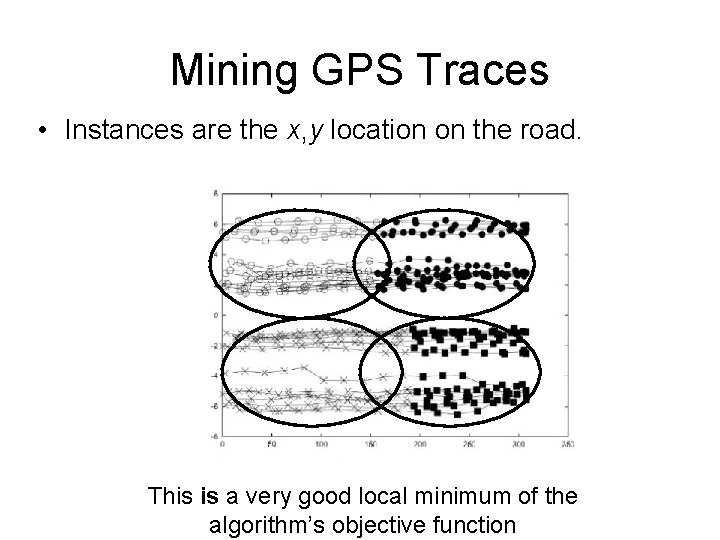

Incrementally Adding In Constraints: Quite Bad [Davidson, Ester and Ravi 2007c]

- User-centric mining
- Given a clustering that satisfies a set of constraints C, minimally modifying it to satisfy C and just one more ML or CL constraint is intractable.


![Interesting Phenomena – CL Only [Davidson et al., DMKD Journal, AAAI 06]](https://slidetodoc.com/presentation_image_h/957ec7643fd1e3edf67b33537ece6ed4/image-39.jpg)

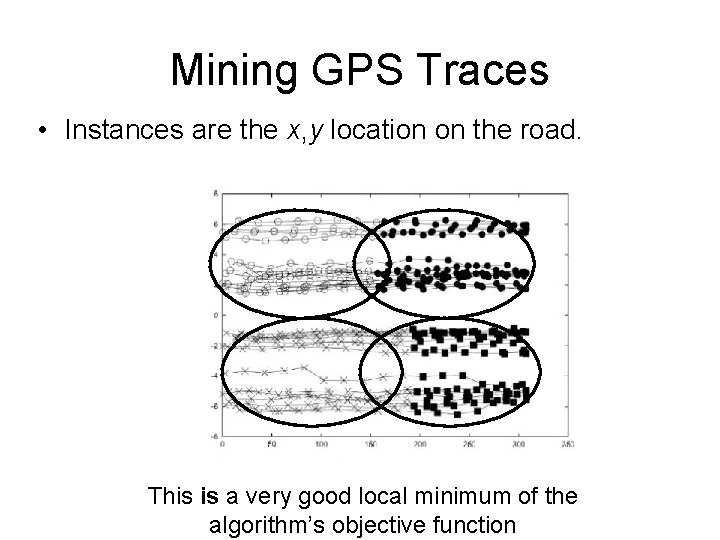

Interesting Phenomena – CL Only [Davidson et al., DMKD Journal, AAAI 06]

- Cancer
- No feasibility issues
- Phase transitions? [Wagstaff, Cardie 2002]

![Satisfying All Constraints (Cop-k-Means) [Wagstaff, Thesis 2000]](https://slidetodoc.com/presentation_image_h/957ec7643fd1e3edf67b33537ece6ed4/image-40.jpg)

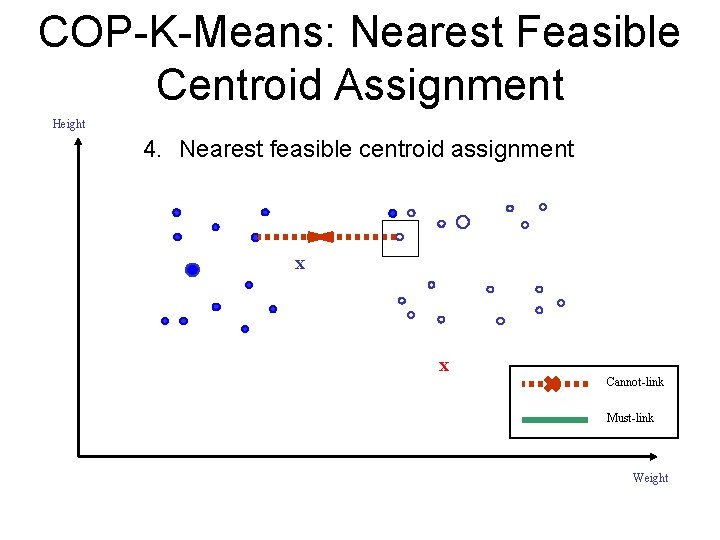

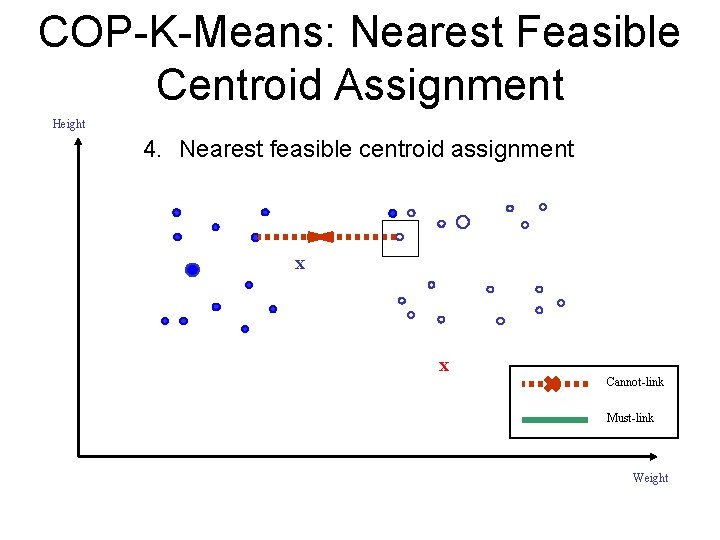

Satisfying All Constraints (Cop-k-Means) [Wagstaff, Thesis 2000]

Algorithm aims to minimize VQE (vector quantization error) while satisfying all constraints.

1. Calculate the transitive closure over ML points.
2. Replace each connected component with a single weighted point.
3. Randomly generate cluster centroids.
4. Begin
   - Nearest feasible centroid assignment
   - Calculate centroids
5. Loop until VQE is small
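The five steps can be sketched as follows. This is an illustrative reading of the slide, not Wagstaff's implementation: the name `cop_kmeans`, the fixed processing order, seeding centroids from the merged points, and stopping when assignments no longer change (rather than thresholding VQE) are all assumptions. The sketch returns `None` as soon as some point has no feasible centroid.

```python
import random

def sq_dist(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))

def cop_kmeans(points, k, must_link, cannot_link, iters=50, seed=0):
    n, dim = len(points), len(points[0])
    # Step 1: transitive closure over ML points (union-find).
    parent = list(range(n))
    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i
    for a, b in must_link:
        parent[find(a)] = find(b)
    roots = sorted({find(i) for i in range(n)})
    index = {r: g for g, r in enumerate(roots)}
    group = [index[find(i)] for i in range(n)]  # point -> merged point
    m = len(roots)
    # Step 2: replace each component with one weighted point (its mean).
    weight = [group.count(g) for g in range(m)]
    merged = [tuple(sum(points[i][d] for i in range(n) if group[i] == g) / weight[g]
                    for d in range(dim)) for g in range(m)]
    cl = {g: set() for g in range(m)}
    for a, b in cannot_link:
        if group[a] == group[b]:
            return None  # a CL pair inside one ML component is unsatisfiable
        cl[group[a]].add(group[b])
        cl[group[b]].add(group[a])
    # Step 3: random initial centroids (assumes k <= number of merged points).
    rng = random.Random(seed)
    centroids = [merged[g] for g in rng.sample(range(m), k)]
    labels = None
    for _ in range(iters):
        # Step 4a: nearest feasible centroid assignment, in index order.
        new = [None] * m
        for g in range(m):
            feasible = [c for c in range(k) if all(new[h] != c for h in cl[g])]
            if not feasible:
                return None  # this ordering got stuck
            new[g] = min(feasible, key=lambda c: sq_dist(merged[g], centroids[c]))
        if new == labels:
            break  # Step 5 (assumed stopping rule): assignments are stable
        labels = new
        # Step 4b: recalculate centroids as weighted means.
        for c in range(k):
            members = [g for g in range(m) if labels[g] == c]
            if members:
                w = sum(weight[g] for g in members)
                centroids[c] = tuple(sum(weight[g] * merged[g][d] for g in members) / w
                                     for d in range(dim))
    return [labels[group[i]] for i in range(n)]

# Toy data (made up): ML(0,1) and CL(0,2).
result = cop_kmeans([(0, 0), (0, 1), (5, 5), (5, 6)], 2, [(0, 1)], [(0, 2)])
```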

COP-K-Means: Nearest Feasible Centroid Assignment

Step 4: nearest feasible centroid assignment.

[Figure: scatter plot with axes Height and Weight; centroids marked x; cannot-link and must-link constraints drawn between points.]

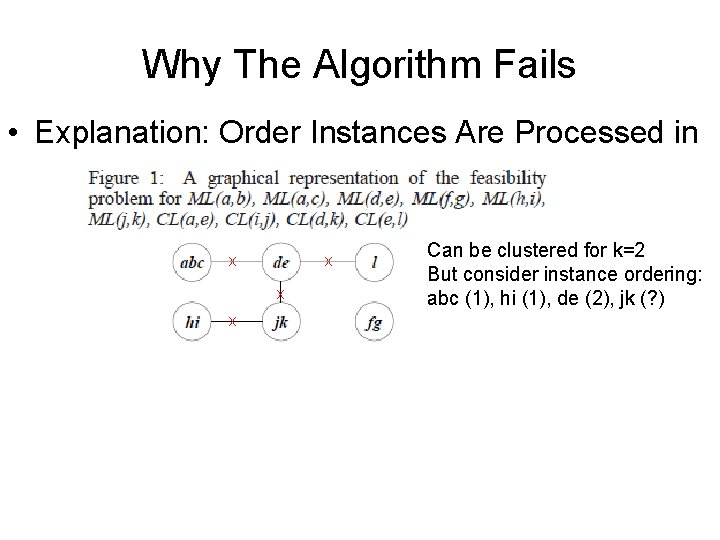

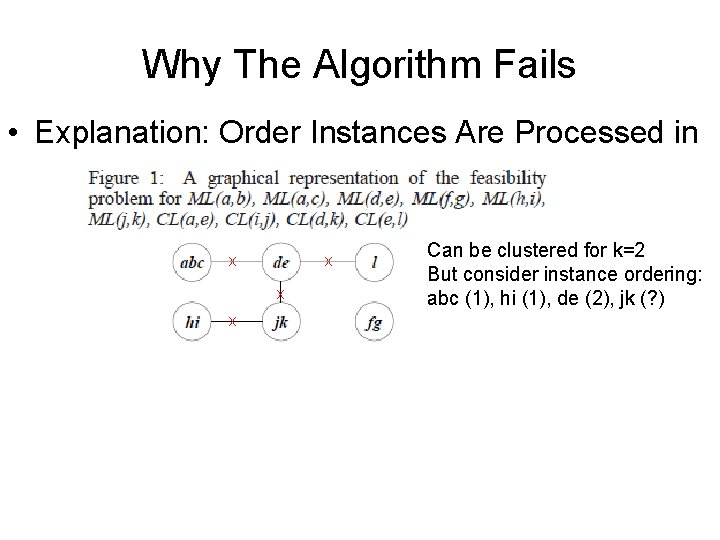

Why The Algorithm Fails

- Explanation: the order in which instances are processed.
- The instances can be clustered for k=2.
- But consider the instance ordering: abc (1), hi (1), de (2), jk (?)

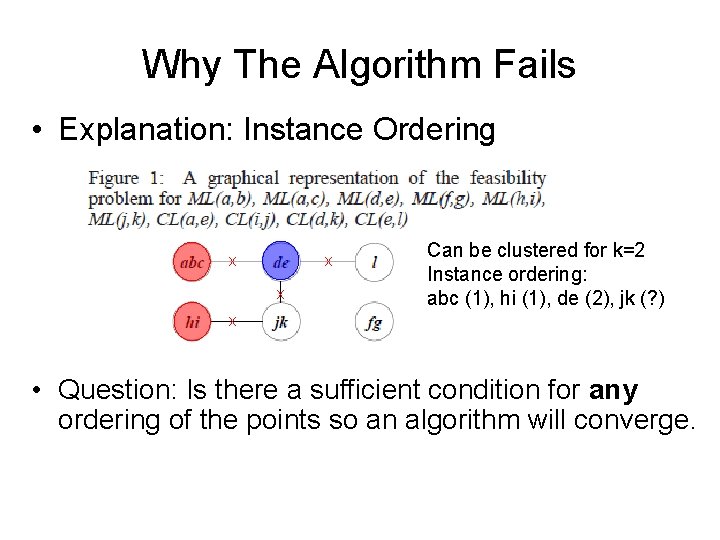

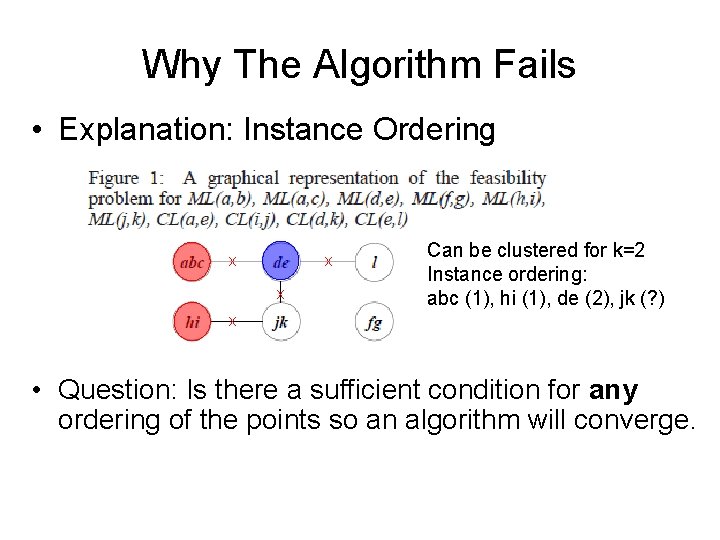

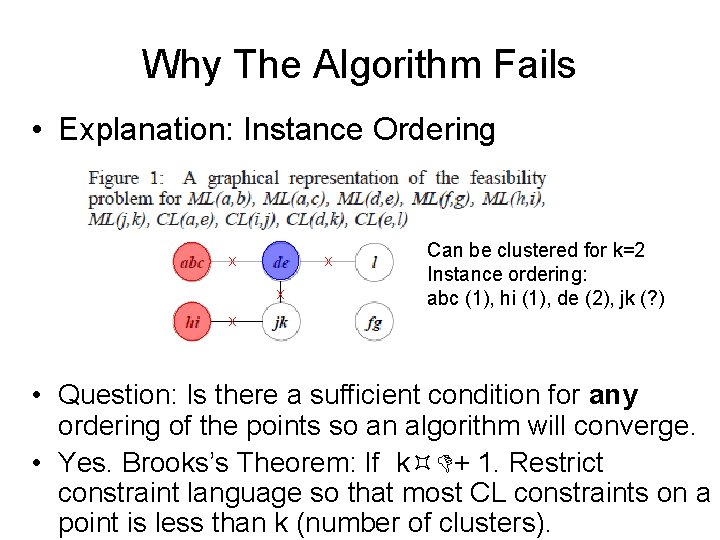


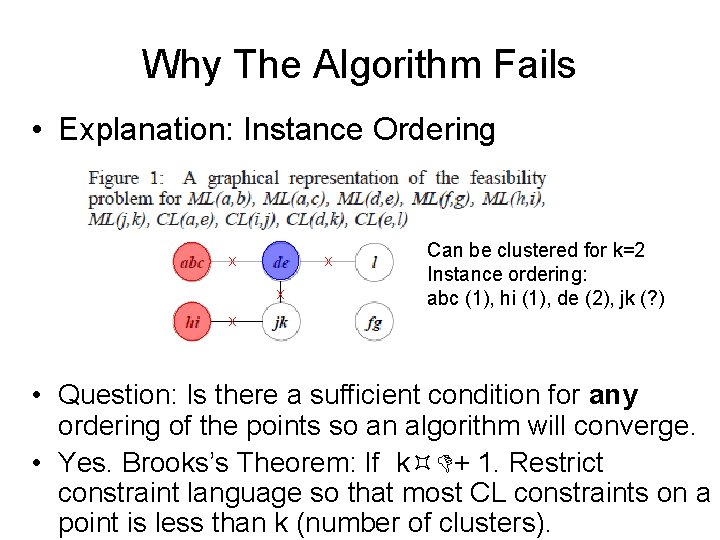

Why The Algorithm Fails

- Explanation: instance ordering.
- The instances can be clustered for k=2.
- Instance ordering: abc (1), hi (1), de (2), jk (?)
- Question: Is there a sufficient condition under which an algorithm will converge for any ordering of the points?
- Yes. Brooks’s Theorem: if k ≥ Δ + 1, where Δ is the largest number of CL constraints on any single point. Restrict the constraint language so that the maximum number of CL constraints on a point is less than k (the number of clusters).
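The sufficient condition can be checked empirically on a small example. In this sketch the cannot-link graph is a path on four points, so no point has more than two CL constraints. The helper names are hypothetical, and the greedy rule picks the lowest-numbered feasible cluster instead of the nearest feasible centroid. That swap does not affect the argument, because a point with fewer than k cannot-link neighbours always has some cluster left.

```python
from itertools import permutations

def max_cl_degree(n, cannot_link):
    """Largest number of CL constraints touching any single point."""
    degree = [0] * n
    for a, b in cannot_link:
        degree[a] += 1
        degree[b] += 1
    return max(degree, default=0)

def greedy_assign(order, cannot_link, k):
    """Assign points in the given order; None if some point has no feasible cluster."""
    neighbours = {}
    for a, b in cannot_link:
        neighbours.setdefault(a, set()).add(b)
        neighbours.setdefault(b, set()).add(a)
    label = {}
    for v in order:
        used = {label[u] for u in neighbours.get(v, ()) if u in label}
        free = [c for c in range(k) if c not in used]
        if not free:
            return None
        label[v] = free[0]
    return label

# Path 0 - 1 - 2 - 3 of cannot-links (made up for illustration).
cl = [(0, 1), (1, 2), (2, 3)]
delta = max_cl_degree(4, cl)

# With k = delta + 1 clusters, every one of the 24 orderings succeeds.
always_ok = all(greedy_assign(p, cl, delta + 1) is not None
                for p in permutations(range(4)))

# With k = 2 the instance is feasible, yet one ordering gets stuck.
good_order = greedy_assign([0, 1, 2, 3], cl, 2)
bad_order = greedy_assign([0, 3, 1, 2], cl, 2)
```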

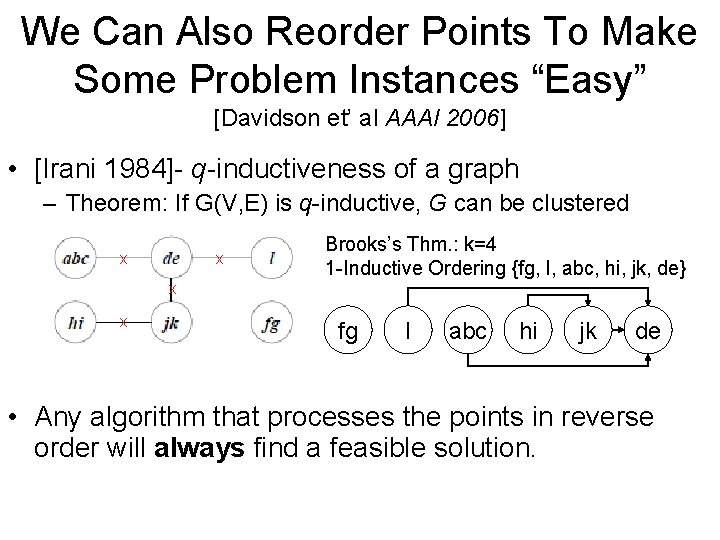

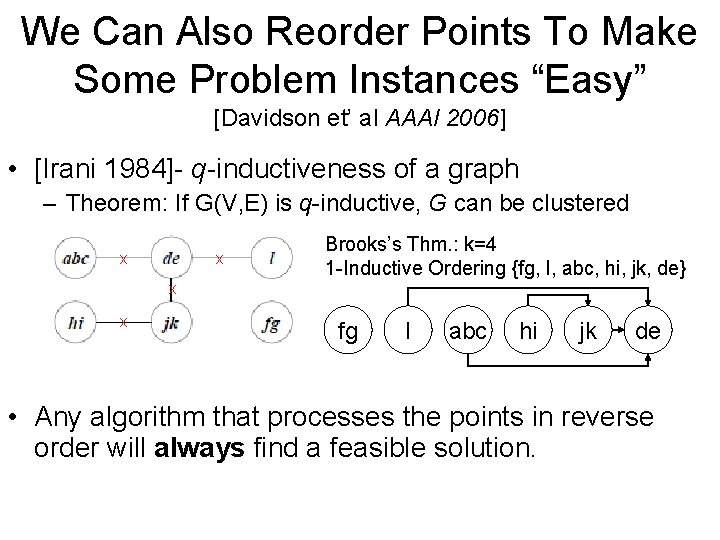

We Can Also Reorder Points To Make Some Problem Instances “Easy” [Davidson et al., AAAI 2006]

- [Irani 1984]: q-inductiveness of a graph
  - Theorem: If G(V, E) is q-inductive, G can be clustered with q + 1 clusters.
- Brooks’s Theorem: k=4
- 1-inductive ordering: {fg, l, abc, hi, jk, de}
- Any algorithm that processes the points in reverse order will always find a feasible solution.
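One way to obtain such an ordering is to repeatedly remove a vertex of minimum remaining degree from the cannot-link graph; q is then the largest degree seen at removal time, and each vertex has at most q neighbours later in the ordering. The sketch below assumes that construction (the slides do not say how the ordering is built) and uses hypothetical helper names and a made-up path graph.

```python
def inductive_ordering(nodes, edges):
    """Return (ordering, q): each node has at most q neighbours later in the ordering."""
    remaining = {v: set() for v in nodes}
    for a, b in edges:
        remaining[a].add(b)
        remaining[b].add(a)
    order, q = [], 0
    while remaining:
        v = min(remaining, key=lambda u: len(remaining[u]))  # min remaining degree
        q = max(q, len(remaining[v]))
        order.append(v)
        for u in remaining.pop(v):
            remaining[u].discard(v)
    return order, q

def assign_in_reverse(order, edges, k):
    """Process points in reverse order, giving each the first feasible cluster."""
    adj = {v: set() for v in order}
    for a, b in edges:
        adj[a].add(b)
        adj[b].add(a)
    label = {}
    for v in reversed(order):
        used = {label[u] for u in adj[v] if u in label}
        free = [c for c in range(k) if c not in used]
        if not free:
            return None
        label[v] = free[0]
    return label

# Path 0 - 1 - 2 - 3 of cannot-links (made up for illustration).
cl = [(0, 1), (1, 2), (2, 3)]
order, q = inductive_ordering(range(4), cl)
clusters = assign_in_reverse(order, cl, q + 1)
```

When a point is processed in reverse order, only its later neighbours are already assigned, and there are at most q of them, so q + 1 clusters always leave one free.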

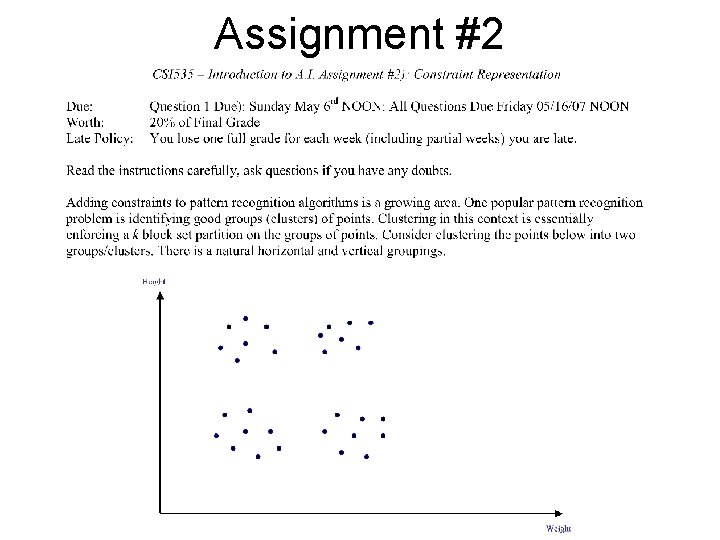

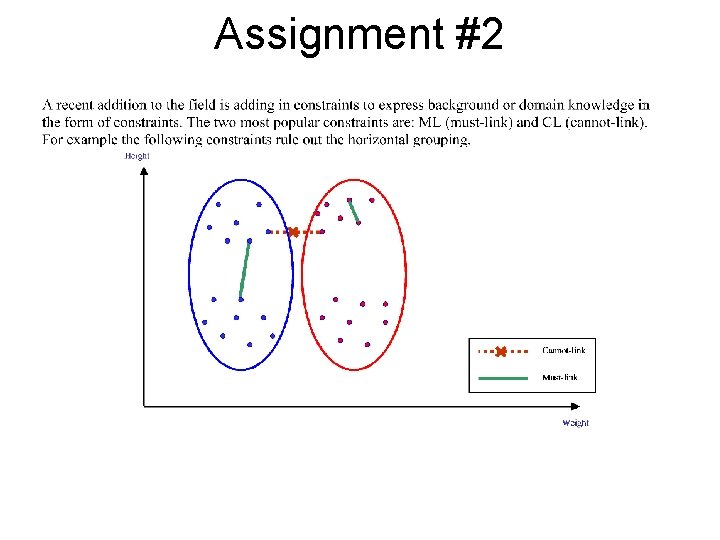

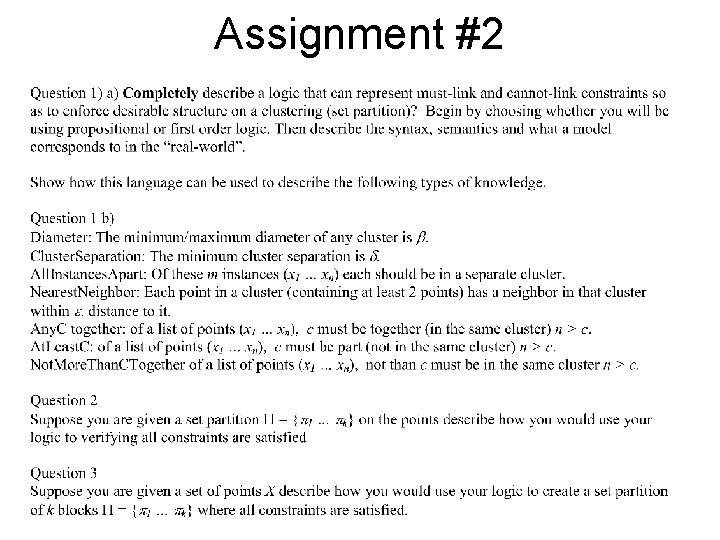

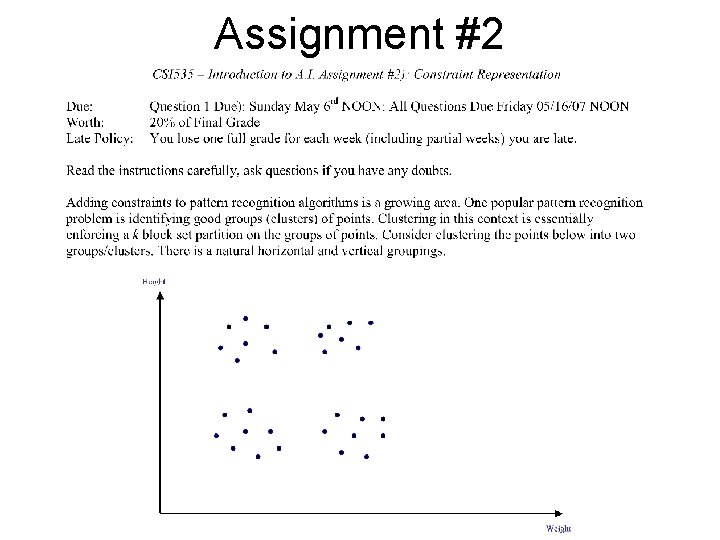

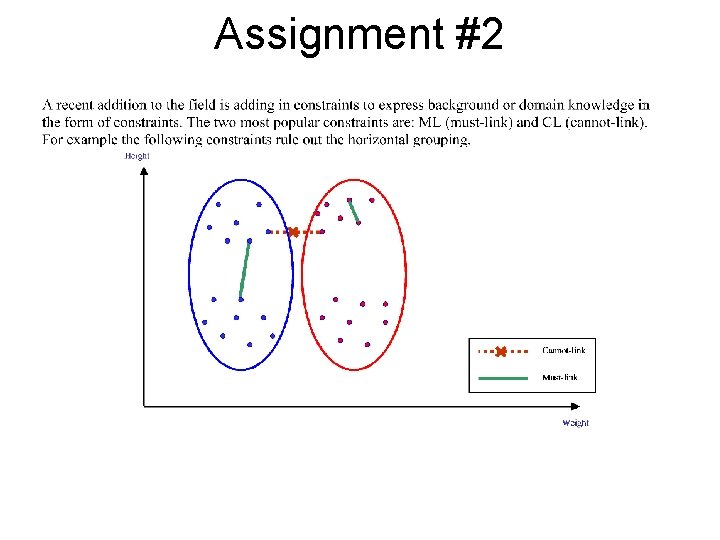

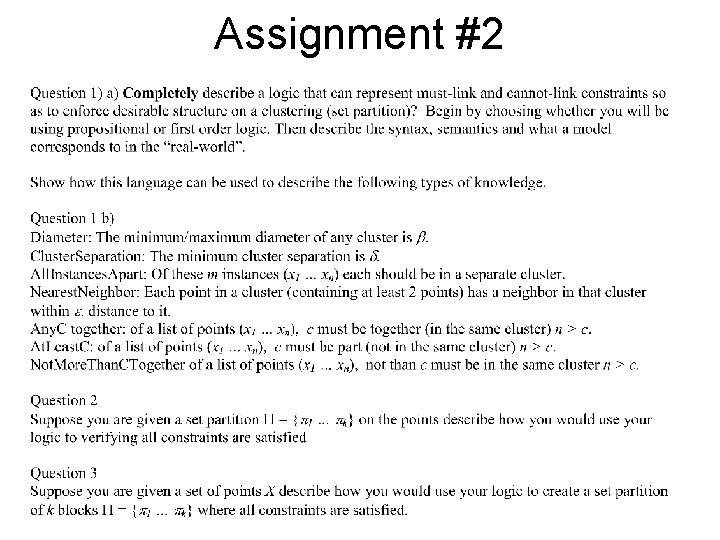
