Knowledge and Information Retrieval Session 6 Dictionary Retrieval

![K-NN >>> X = [[0], [1], [2], [3]] >>> y = [0, 0, 1, K-NN >>> X = [[0], [1], [2], [3]] >>> y = [0, 0, 1,](https://slidetodoc.com/presentation_image_h/091d965a37662e2788f1cc8c343c0165/image-56.jpg)

- Slides: 93

Knowledge and Information Retrieval Session 6 Dictionary, Retrieval Evaluation and Text Classification

Agenda • • Dictionary compression and Retrievaluation Machine Learning in Text Classification Unsupervised vs Supervised learning Deep Learning Methods in Text Classification Features selection Evaluation and Metrics Bina Nusantara University 2

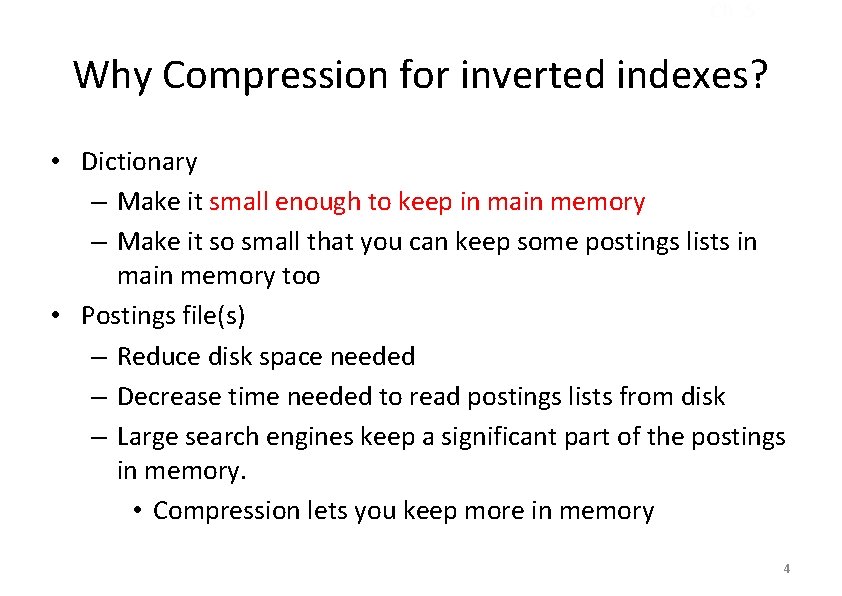

Sec. 5. 2 Why compress the dictionary? • Search begins with the dictionary • We want to keep it in main memory to support high query throughput. • Embedded/mobile devices may have very little memory • Even if the dictionary isn’t in memory, we want it to be small for a fast search startup time • So, compressing the dictionary is important 3

Ch. 5 Why Compression for inverted indexes? • Dictionary – Make it small enough to keep in main memory – Make it so small that you can keep some postings lists in main memory too • Postings file(s) – Reduce disk space needed – Decrease time needed to read postings lists from disk – Large search engines keep a significant part of the postings in memory. • Compression lets you keep more in memory 4

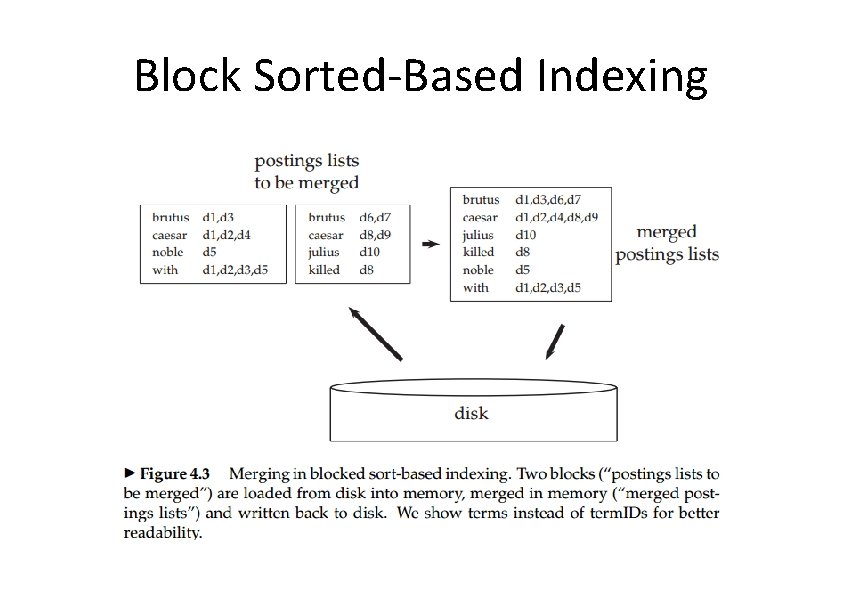

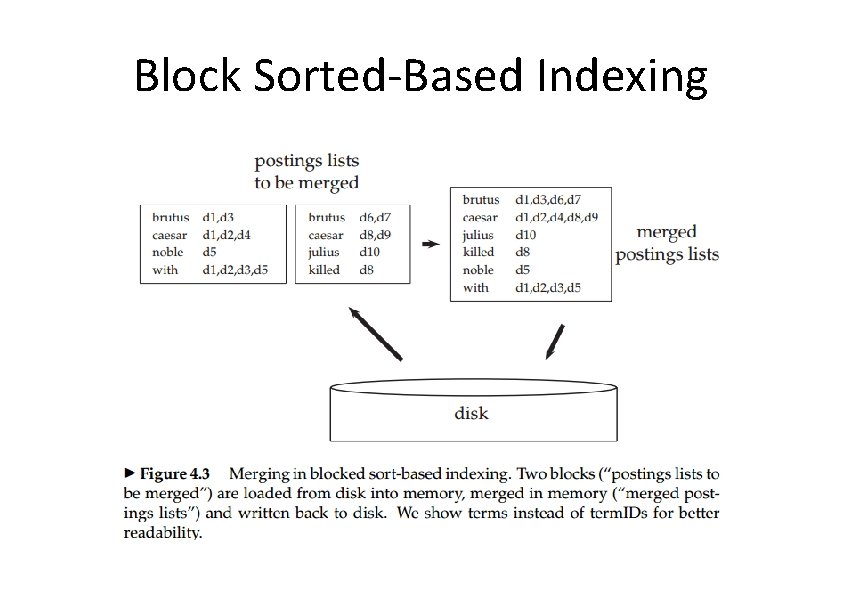

Block Sorted-Based Indexing

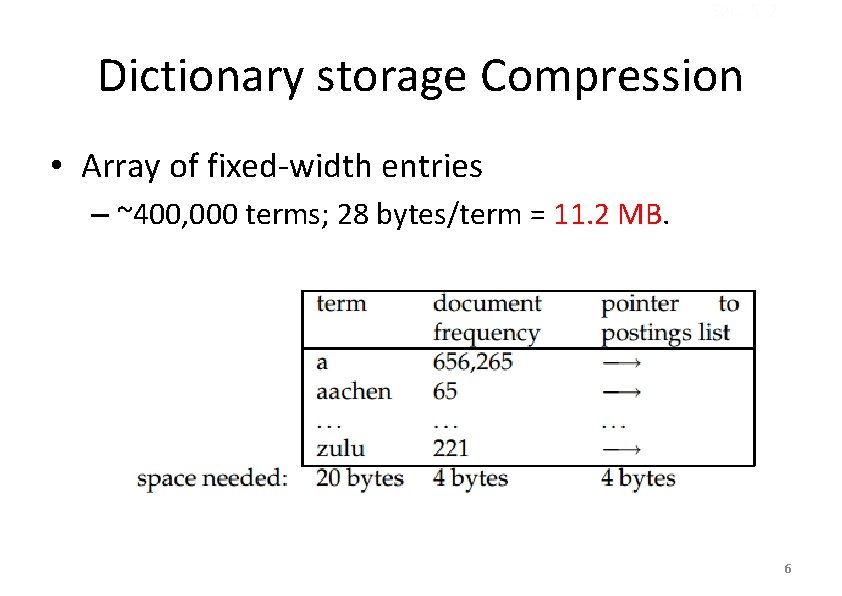

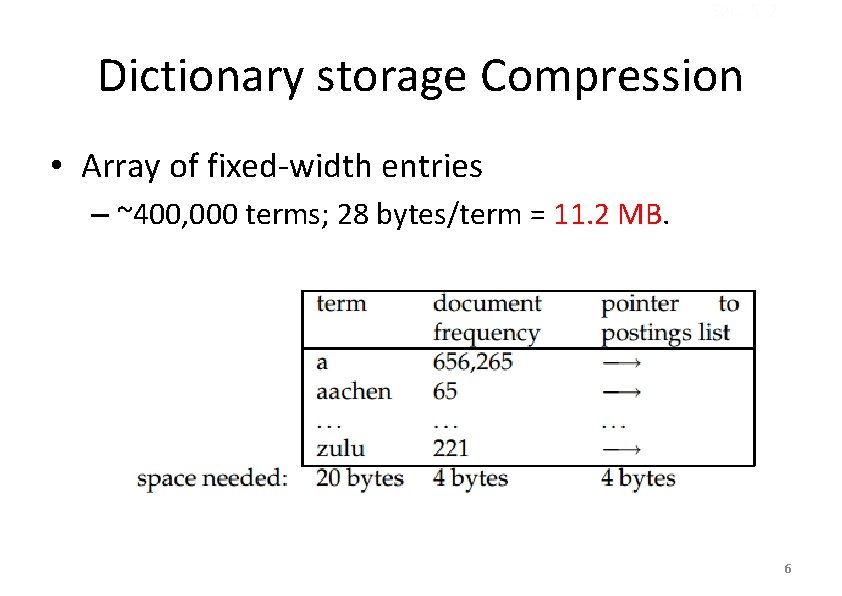

Sec. 5. 2 Dictionary storage Compression • Array of fixed-width entries – ~400, 000 terms; 28 bytes/term = 11. 2 MB. 6

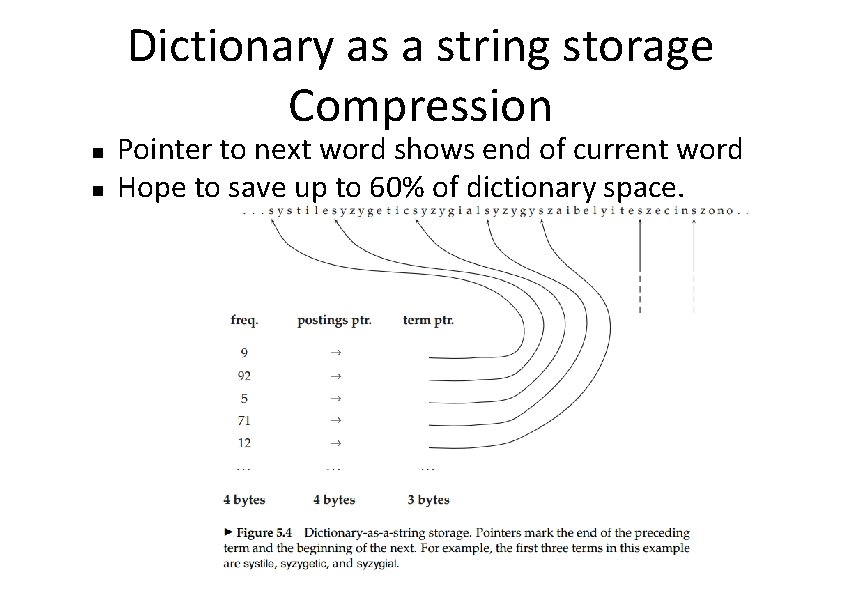

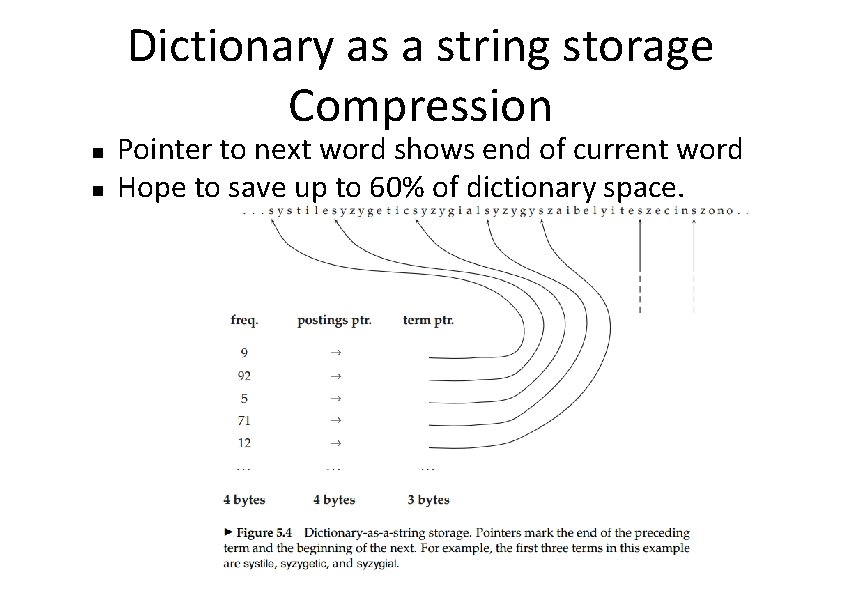

Dictionary as a string storage Compression n n Pointer to next word shows end of current word Hope to save up to 60% of dictionary space.

Sec. 5. 2 Fixed-width terms are wasteful • Most of the bytes in the Term column are wasted – we allot 20 bytes for 1 letter terms. – And we still can’t handle supercalifragilisticexpialidocious or hydrochlorofluorocarbons. • Written English averages ~4. 5 characters/word. – Exercise: Why is/isn’t this the number to use for estimating the dictionary size? • Ave. dictionary word in English: ~8 characters – How do we use ~8 characters per dictionary term? • Short words dominate token counts but not type average. 8

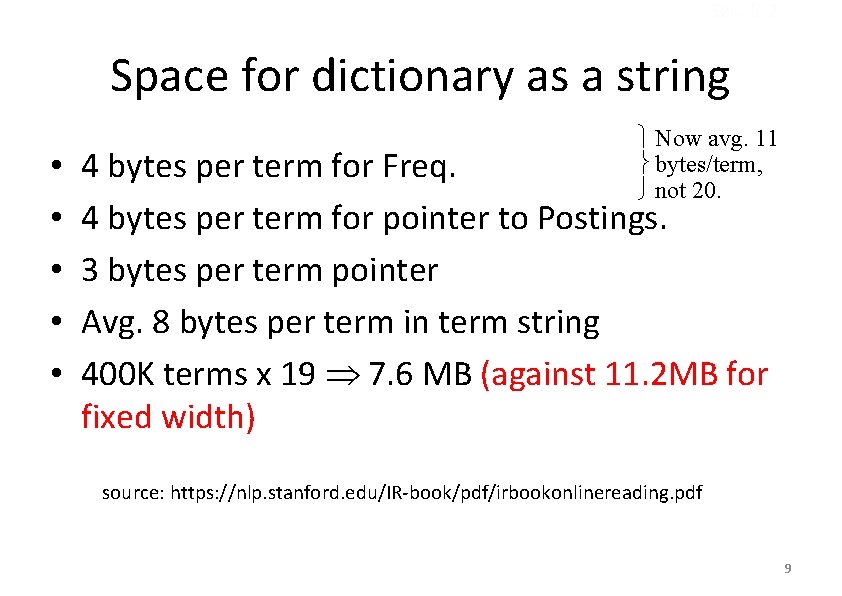

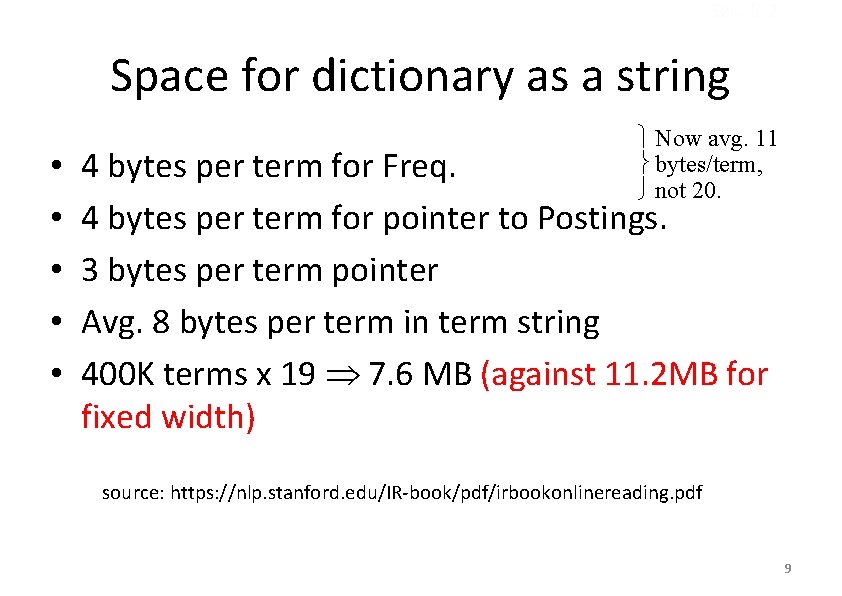

Sec. 5. 2 Space for dictionary as a string • • • Now avg. 11 bytes/term, not 20. 4 bytes per term for Freq. 4 bytes per term for pointer to Postings. 3 bytes per term pointer Avg. 8 bytes per term in term string 400 K terms x 19 7. 6 MB (against 11. 2 MB for fixed width) source: https: //nlp. stanford. edu/IR-book/pdf/irbookonlinereading. pdf 9

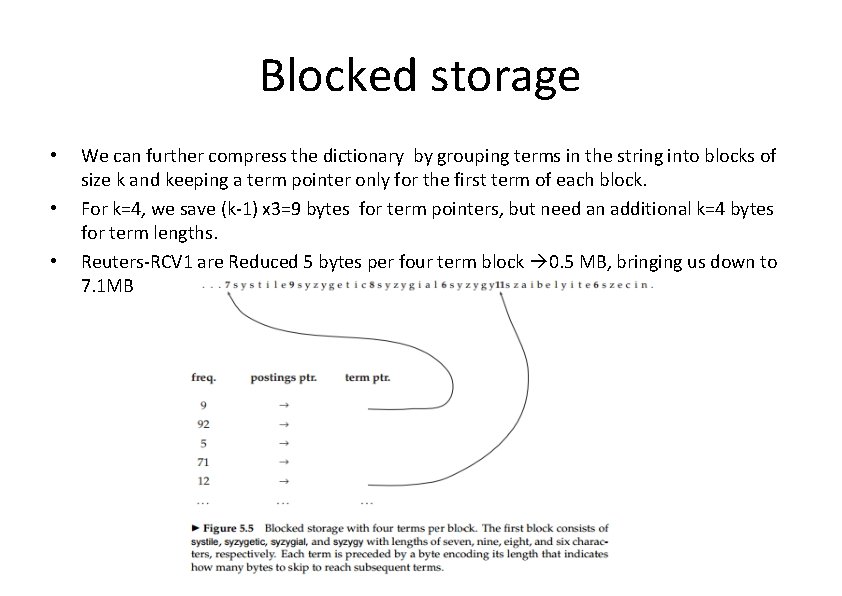

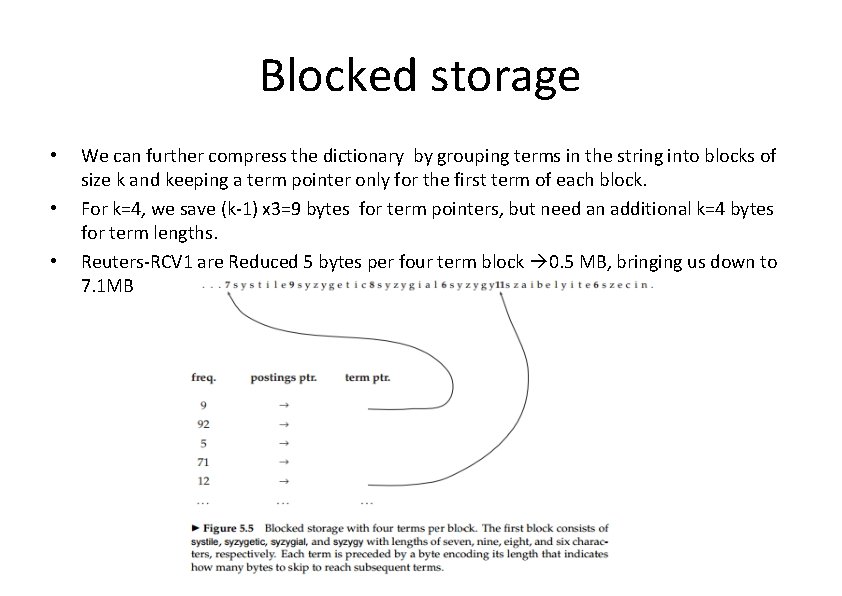

Blocked storage • • • We can further compress the dictionary by grouping terms in the string into blocks of size k and keeping a term pointer only for the first term of each block. For k=4, we save (k-1) x 3=9 bytes for term pointers, but need an additional k=4 bytes for term lengths. Reuters-RCV 1 are Reduced 5 bytes per four term block 0. 5 MB, bringing us down to 7. 1 MB

Sec. 5. 2 Front coding • Front-coding: – Sorted words commonly have long common prefix – store differences only – (for last k-1 in a block of k) 11

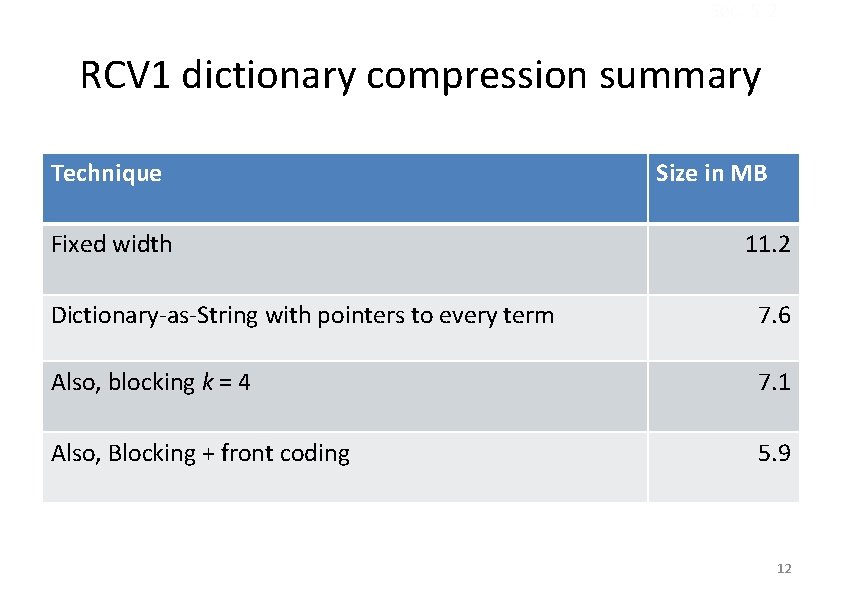

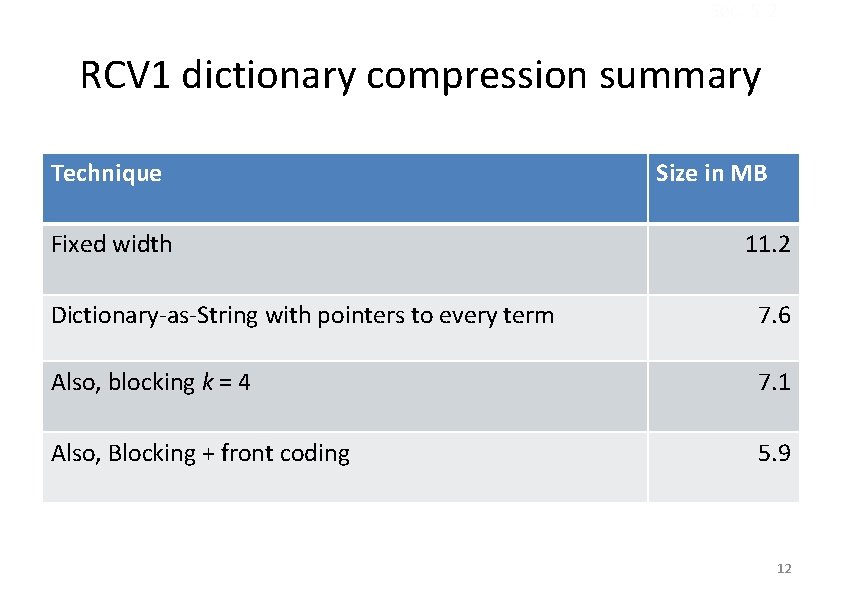

Sec. 5. 2 RCV 1 dictionary compression summary Technique Fixed width Size in MB 11. 2 Dictionary-as-String with pointers to every term 7. 6 Also, blocking k = 4 7. 1 Also, Blocking + front coding 5. 9 12

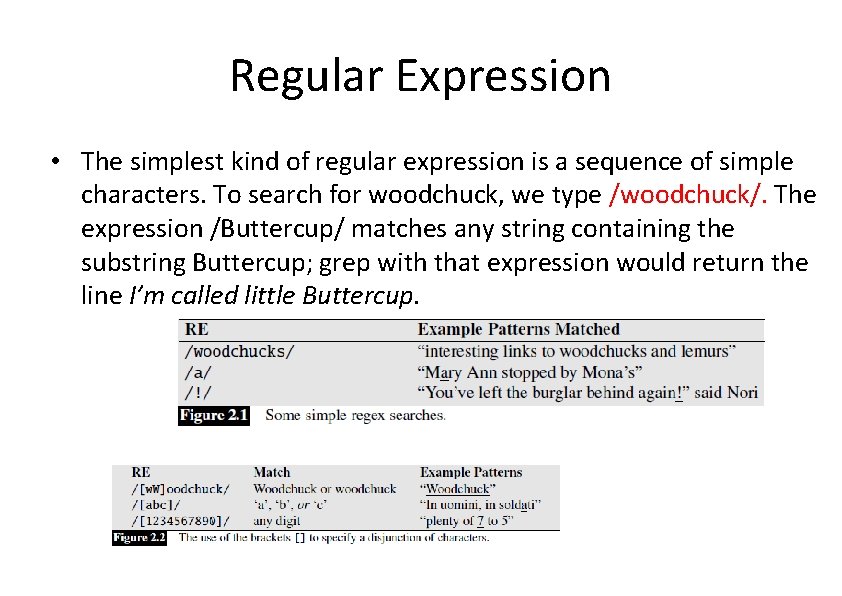

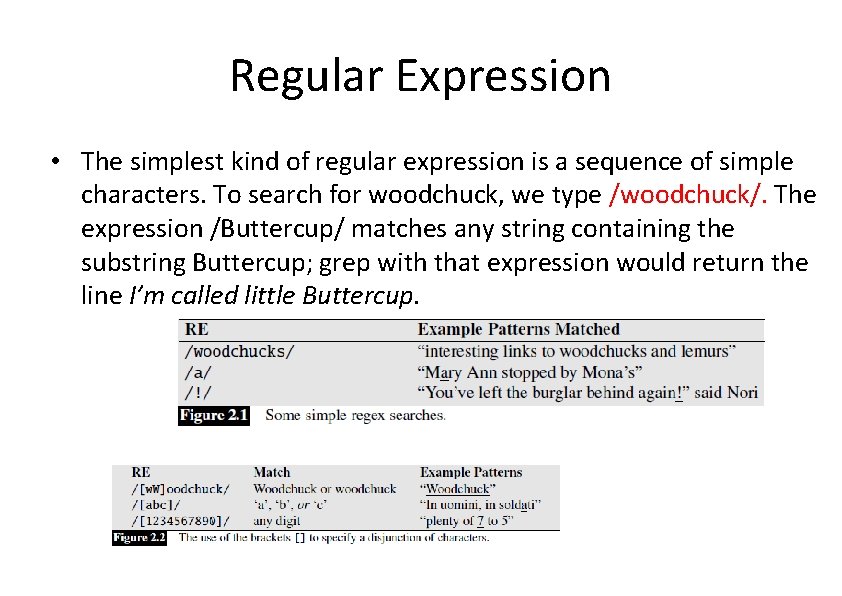

Regular Expression • The simplest kind of regular expression is a sequence of simple characters. To search for woodchuck, we type /woodchuck/. The expression /Buttercup/ matches any string containing the substring Buttercup; grep with that expression would return the line I’m called little Buttercup.

N-gram Language Models • Probabilities are essential in any task in which we have to identify words in noisy, ambiguous input, like speech recognition or handwriting recognition. • In the movie Take the Money and Run, Woody Allen tries to rob a bank with a sloppily written hold-up note that the teller incorrectly reads as “I have a gub”. As Russell and Norvig (2002) point out, a language processing system could avoid making this mistake by using the knowledge that the sequence “I have a gun” is far more probable than the non-word “I have a gub” or even “I have a gull”.

N-gram • In spelling correction, we need to find and correct spelling errors like Their are two midterms in this class, in which There was mistyped as Their. A sentence starting with the phrase There are will be much more probable than one starting with Their are, allowing a spellchecker to both detect and correct these errors. • Models that assign probabilities to sequences of words are called language model or LMs. We introduce the simplest model that assigns probabilities LM to sentences and sequences of words, the n-gram.

N-gram • An n-gram is a sequence of N gram words: a 2 -gram (or bigram) is a two-word sequence of words like “please turn”, “turn your”, or ”your homework”, and a 3 -gram (or trigram) is a three-word sequence of words like “please turn your”, or “turn your homework”. • Let’s begin with the task of computing P(w|h), the probability of a word w given some history h. Suppose the history h is “its water is so transparent that” and we want to know the probability that the next word is the: P(the|its water is so transparent that)

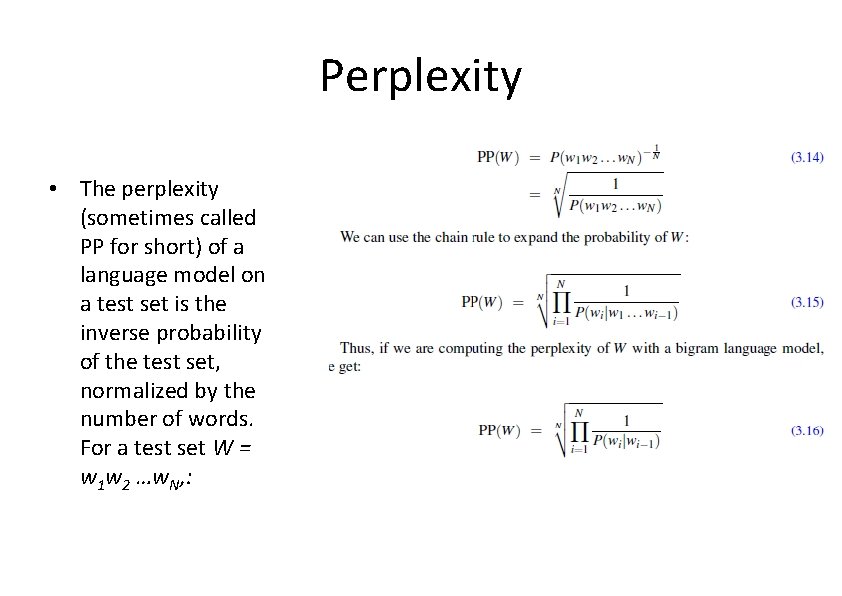

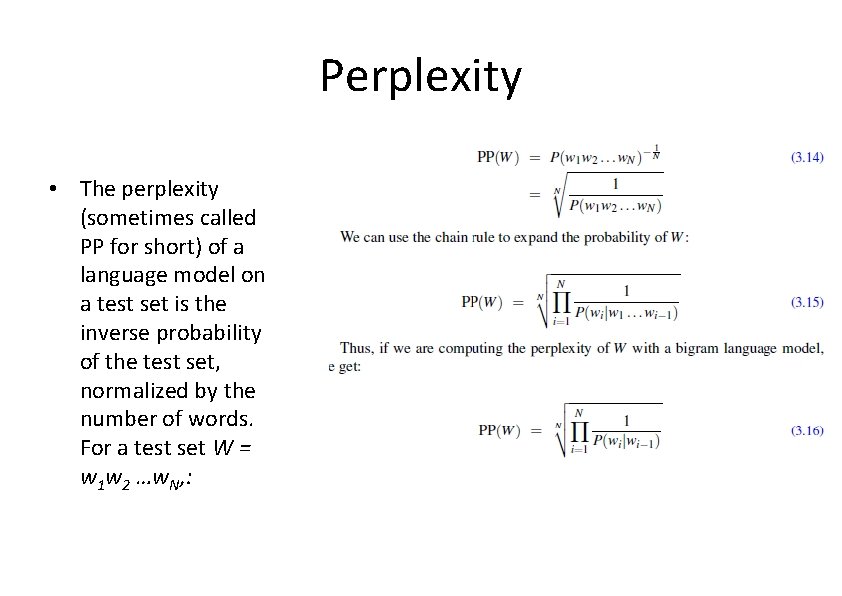

Perplexity • The perplexity (sometimes called PP for short) of a language model on a test set is the inverse probability of the test set, normalized by the number of words. For a test set W = w 1 w 2 …w. N, :

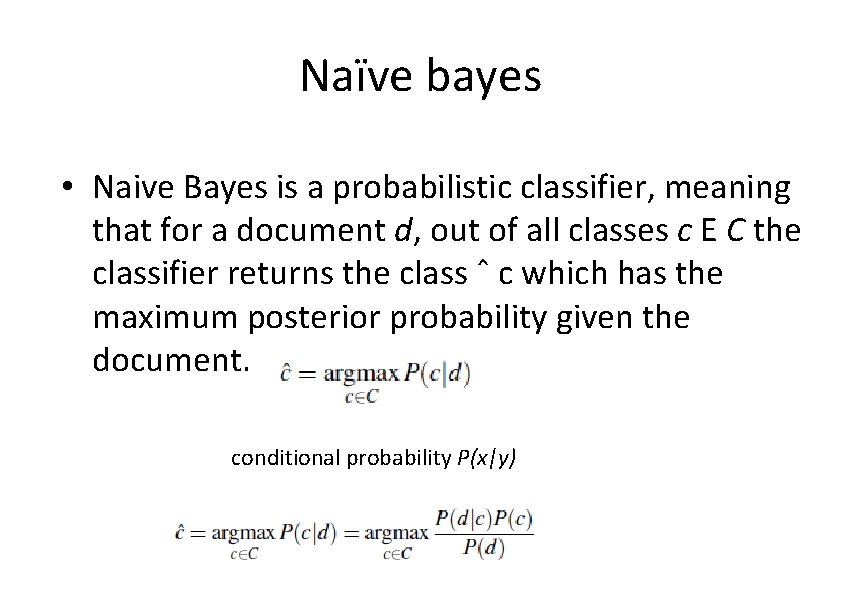

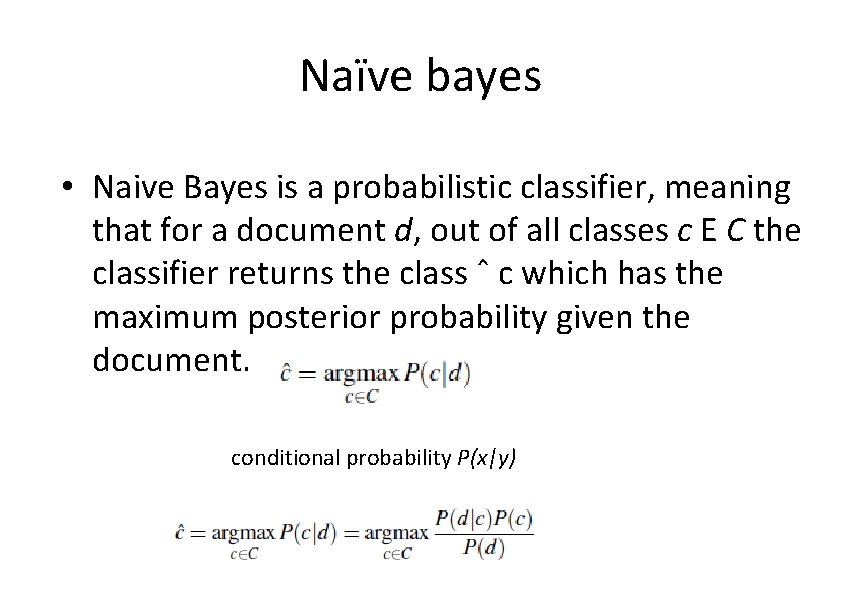

Naïve bayes • Naive Bayes is a probabilistic classifier, meaning that for a document d, out of all classes c E C the classifier returns the class ˆ c which has the maximum posterior probability given the document. conditional probability P(x|y)

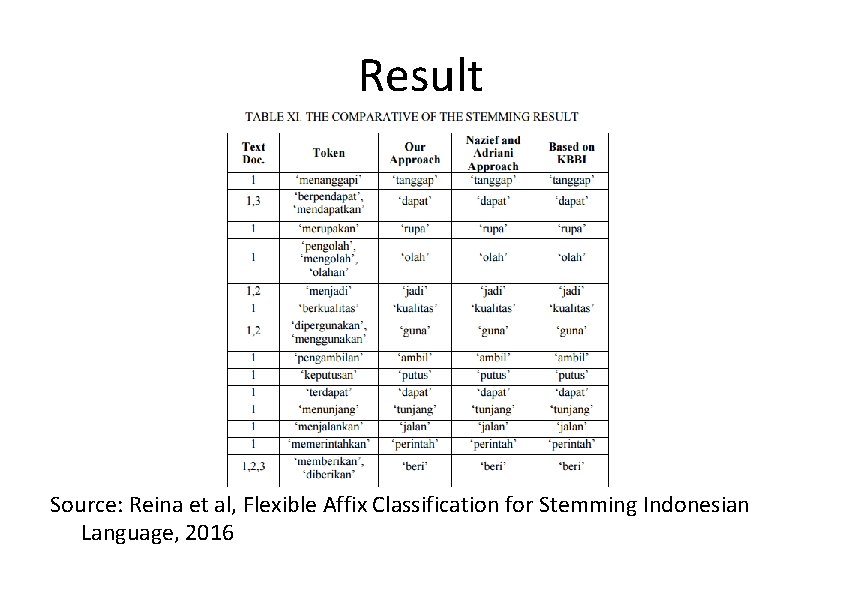

Affix Concept in Bahasa • Indonesian language (Bahasa) affix consists of prefix, infix, suffix, and confix Source: Reina et al, Flexible Affix Classification for Stemming Indonesian Language, 2016

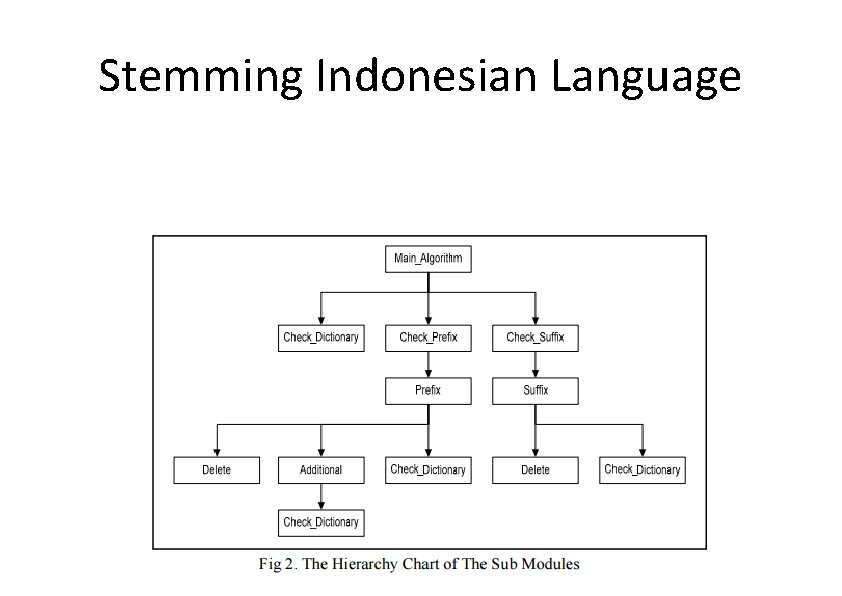

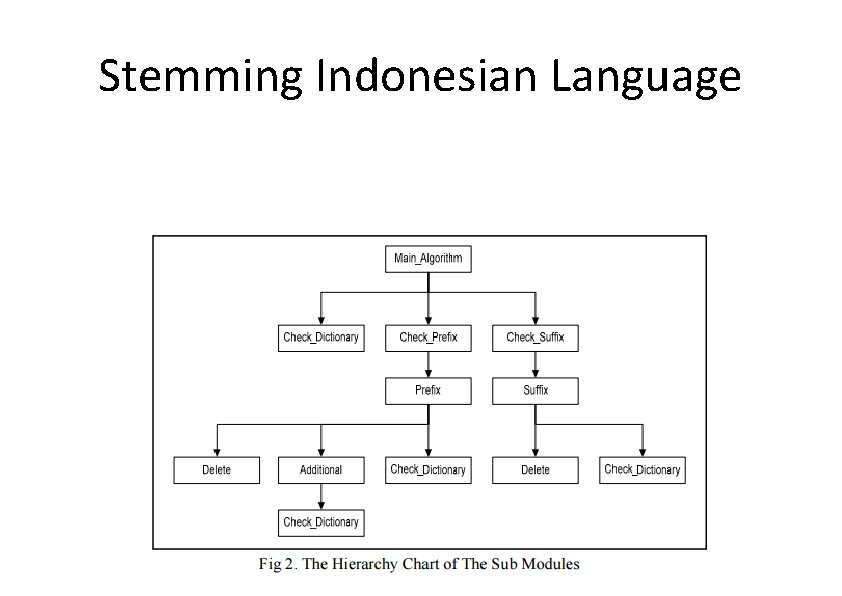

Stemming Indonesian Language

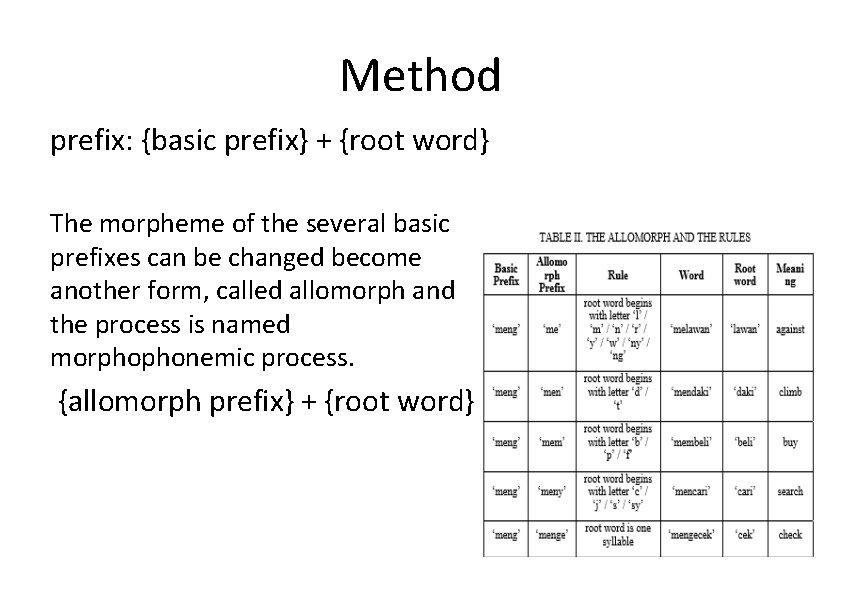

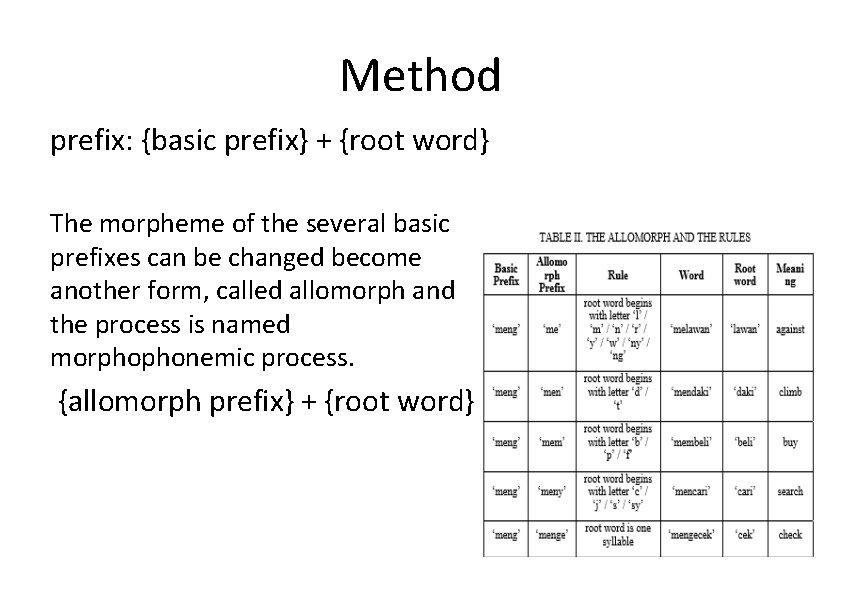

Method prefix: {basic prefix} + {root word} The morpheme of the several basic prefixes can be changed become another form, called allomorph and the process is named morphophonemic process. {allomorph prefix} + {root word}

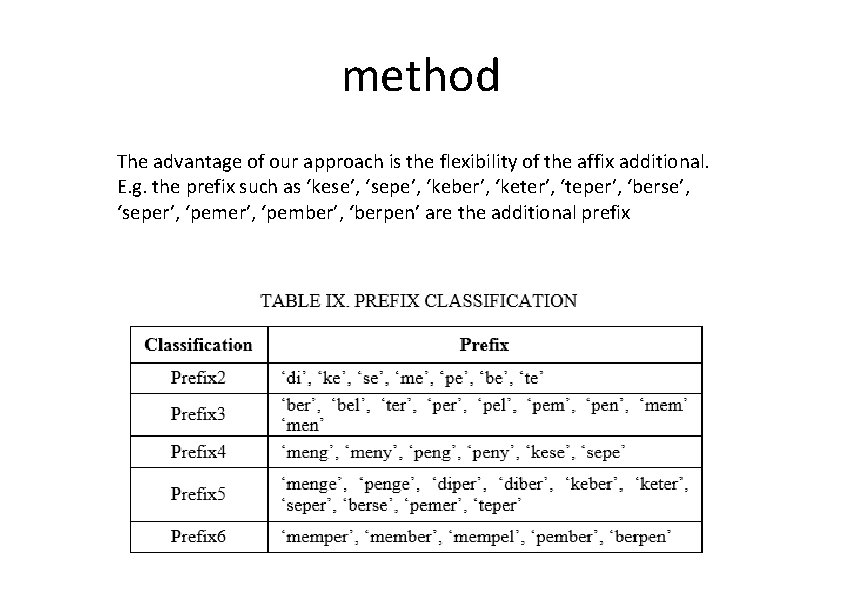

Method • The wide variety of affixes, especially in prefix and suffix encourage our approach to create the classification of the basic prefixes including the allomorph prefixes and suffixes based on number of letter of the prefix and number of letter of the suffix. Therefore we use the number of letter to name the classification. E. g. the prefix 2 classification consists of prefixes with two letters: ‘ke’, ‘di’, ‘se’, ‘me’, ‘pe’, ’be’, ’te’.

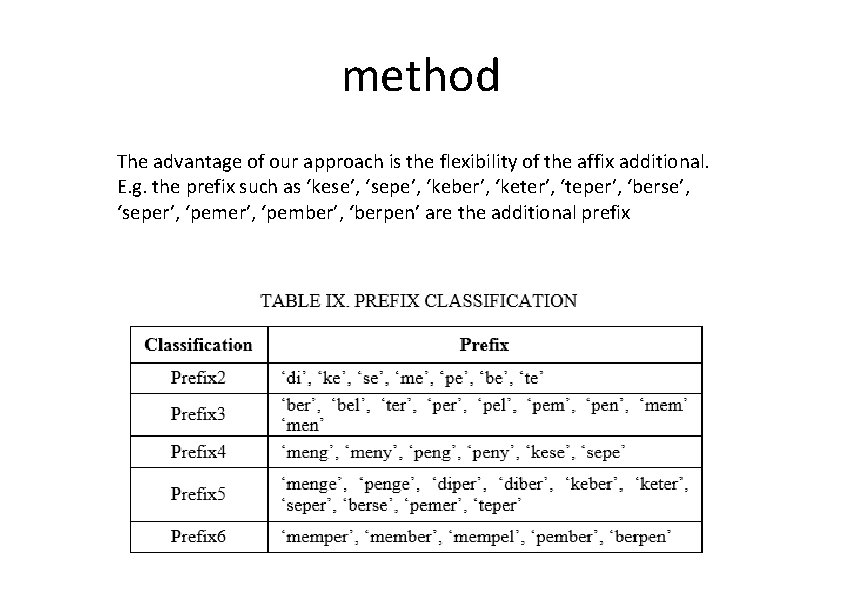

method The advantage of our approach is the flexibility of the affix additional. E. g. the prefix such as ‘kese’, ‘sepe’, ‘keber’, ‘keter’, ‘teper’, ‘berse’, ‘seper’, ‘pember’, ‘berpen’ are the additional prefix

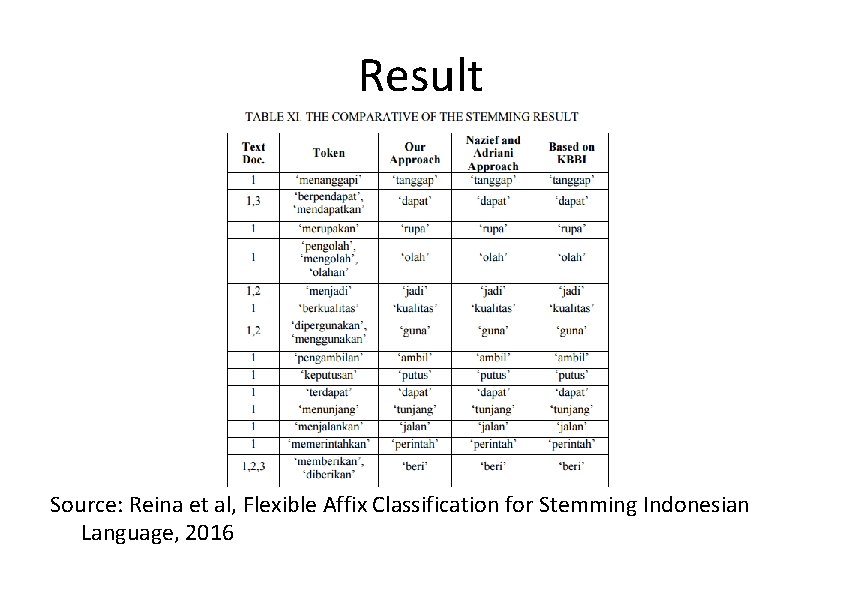

Result Source: Reina et al, Flexible Affix Classification for Stemming Indonesian Language, 2016

Retrieval Evaluation • Performance of IR Systems measured by efficiency(query time, space, response time) and effectivity(relevance, provides by reviewers)

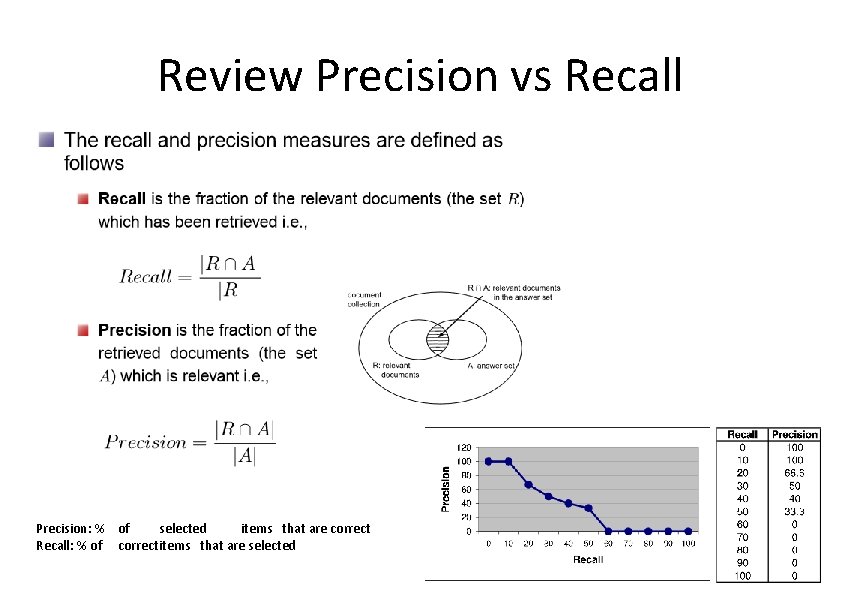

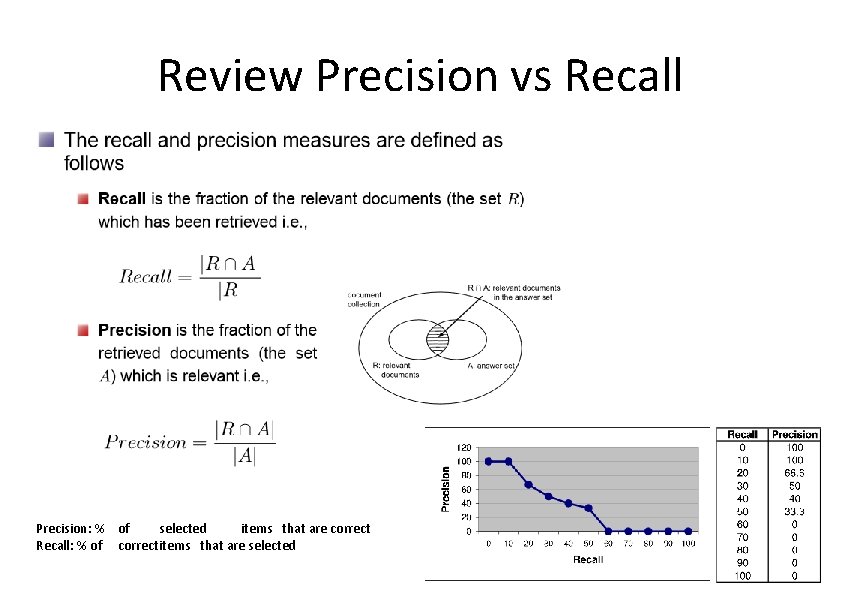

Review Precision vs Recall Precision: % of selected items that are correct Recall: % of correctitems that are selected

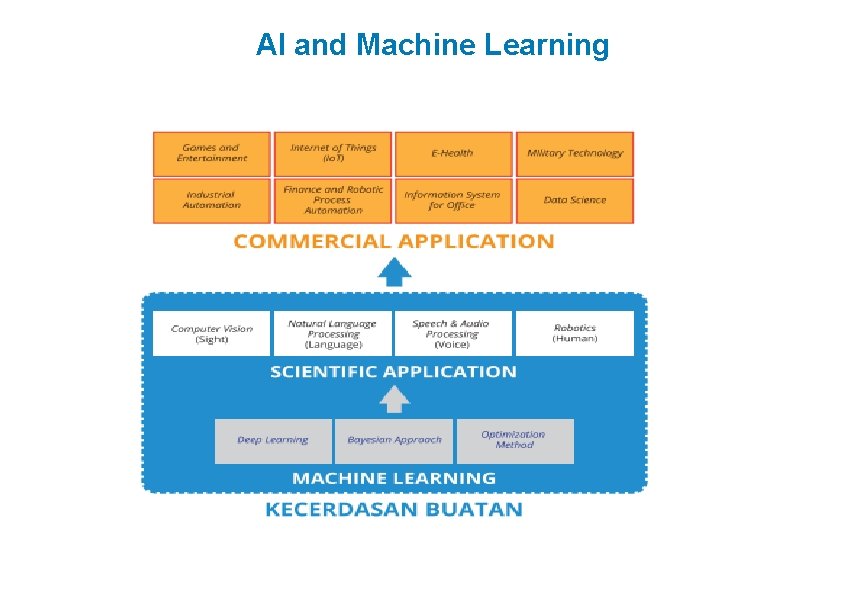

Machine Learning • Machine learning is an application of Artificial Intelligence (AI) that: • Provides systems the ability to automatically learn from training data and improve from experience without being explicitly programmed. • For example, machine learning system can be trained in an email message to learn to distinguish between spam and non-spam messages.

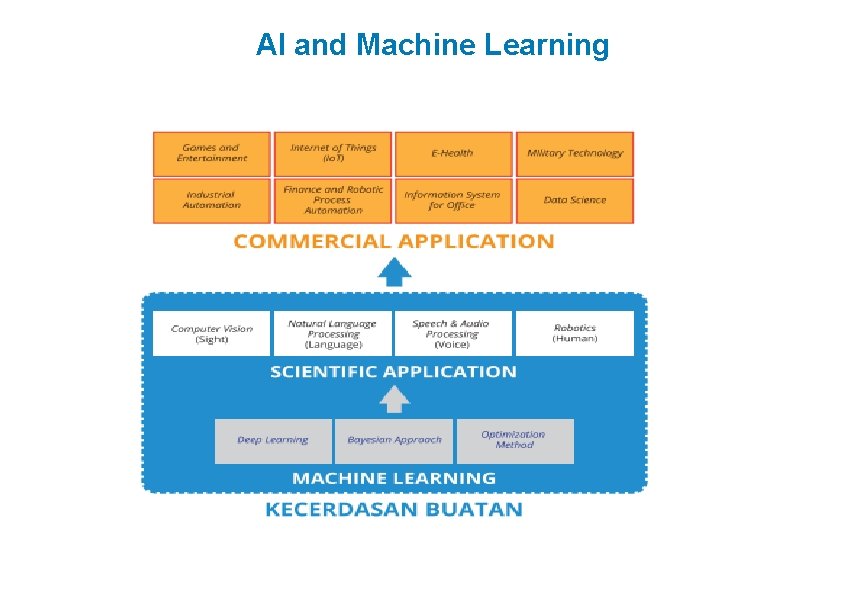

AI and Machine Learning

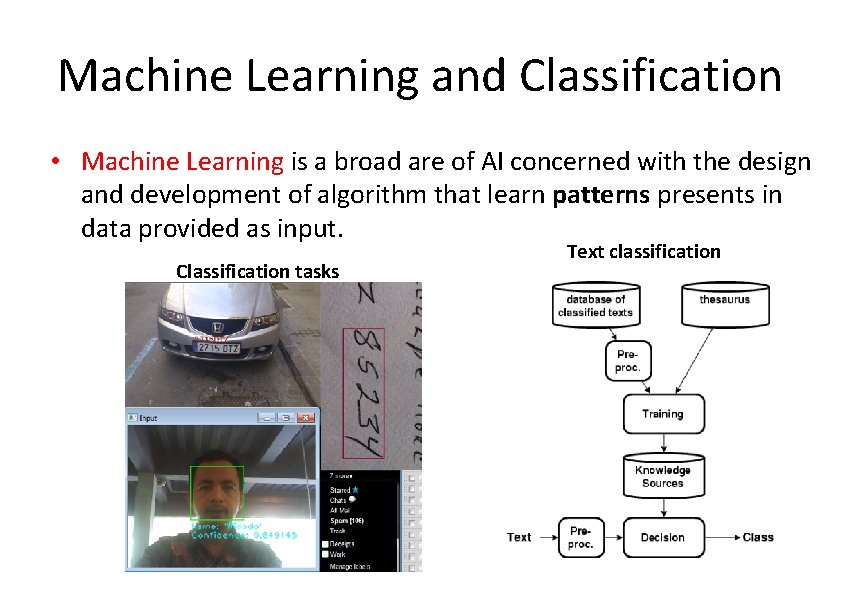

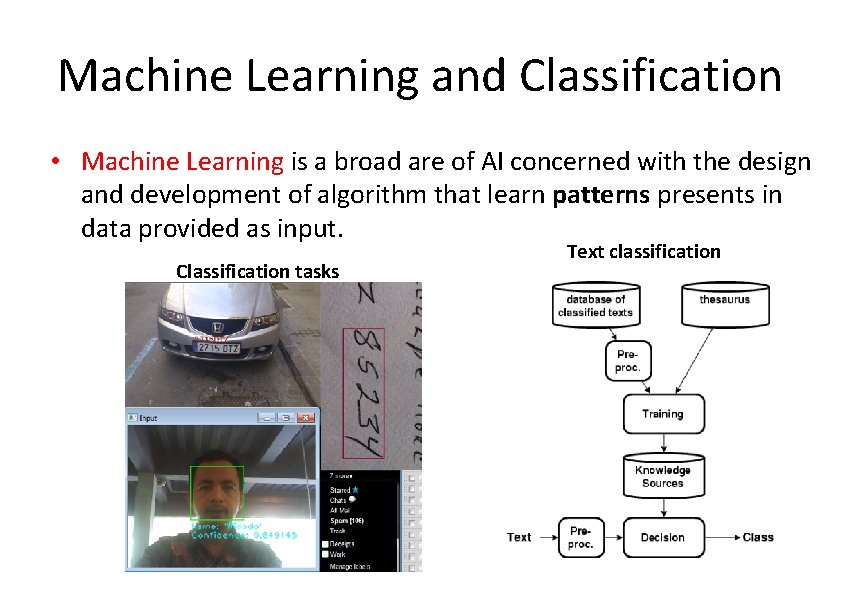

Machine Learning and Classification • Machine Learning is a broad are of AI concerned with the design and development of algorithm that learn patterns presents in data provided as input. Classification tasks Text classification

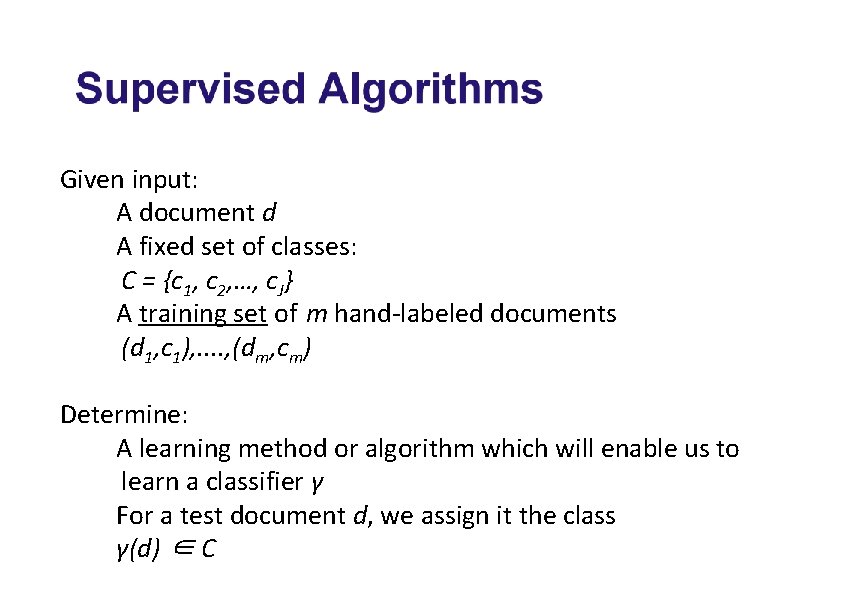

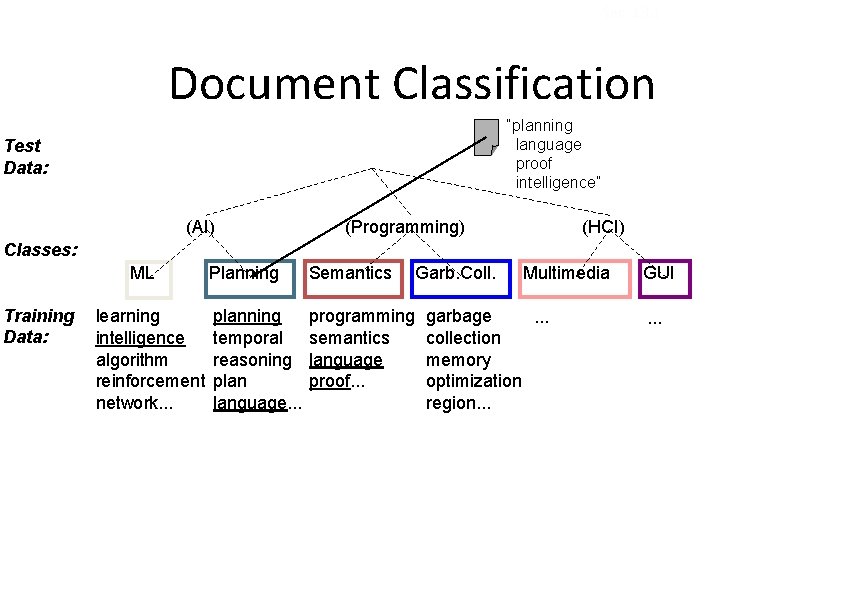

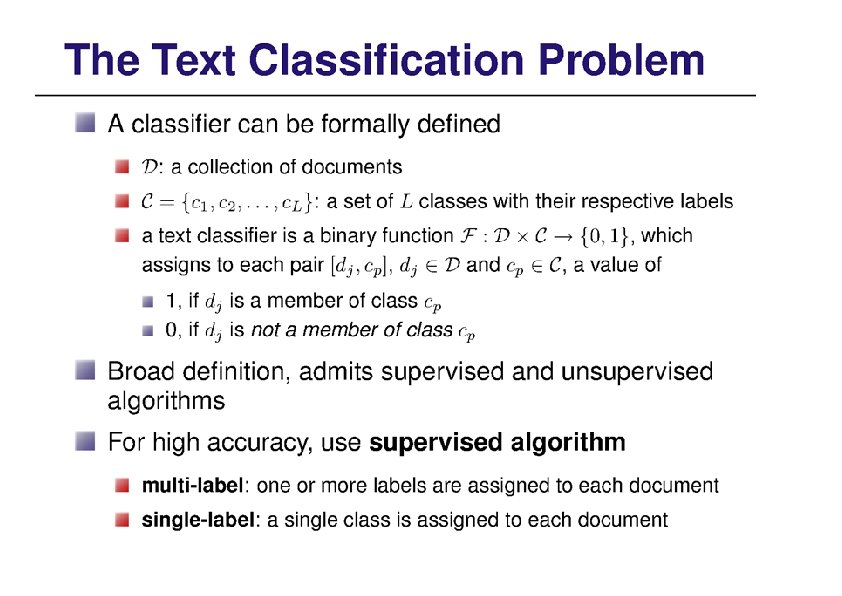

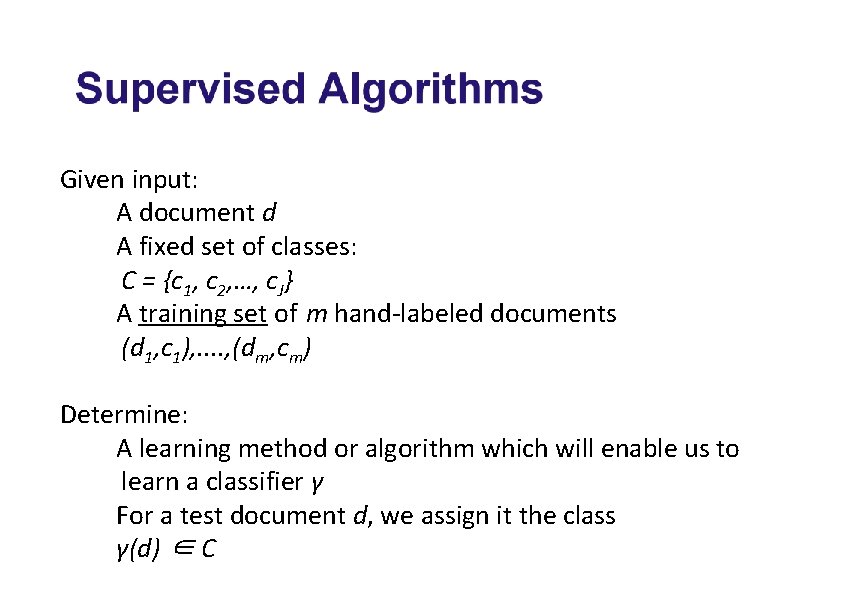

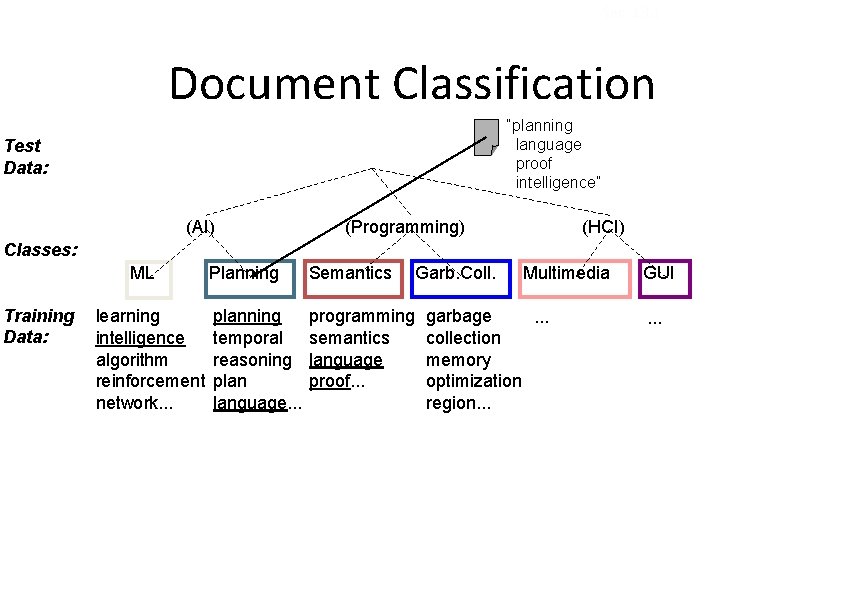

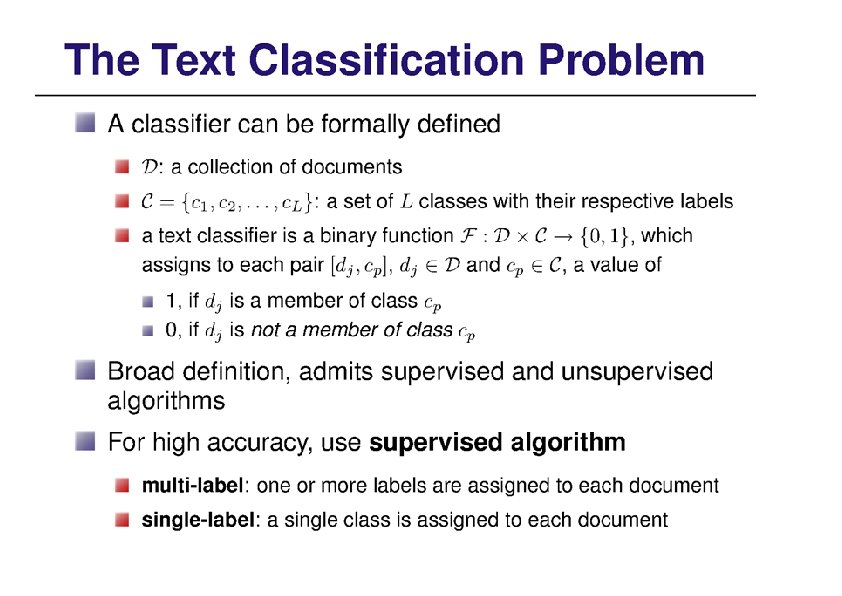

Given input: A document d A fixed set of classes: C = {c 1, c 2, …, c. J} A training set of m hand-labeled documents (d 1, c 1), . . , (dm, cm) Determine: A learning method or algorithm which will enable us to learn a classifier γ For a test document d, we assign it the class γ(d) ∈ C

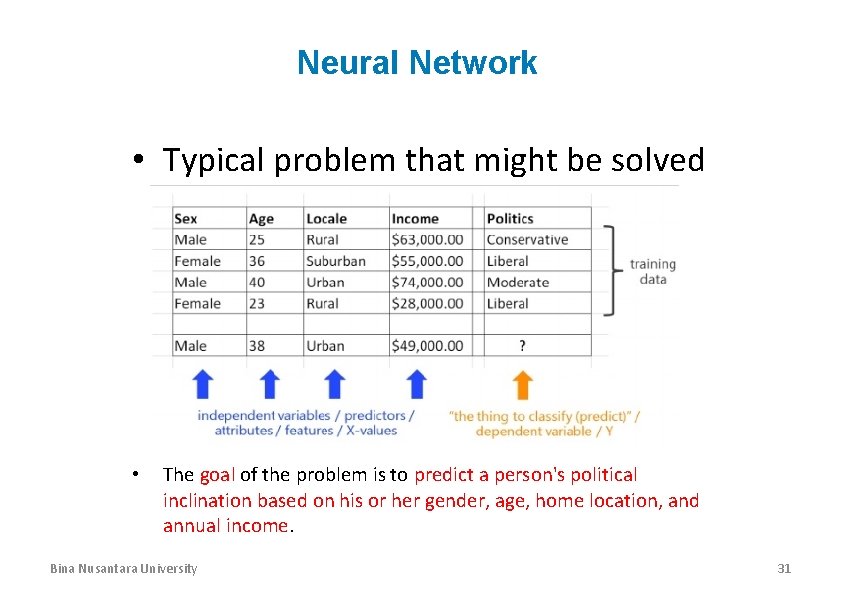

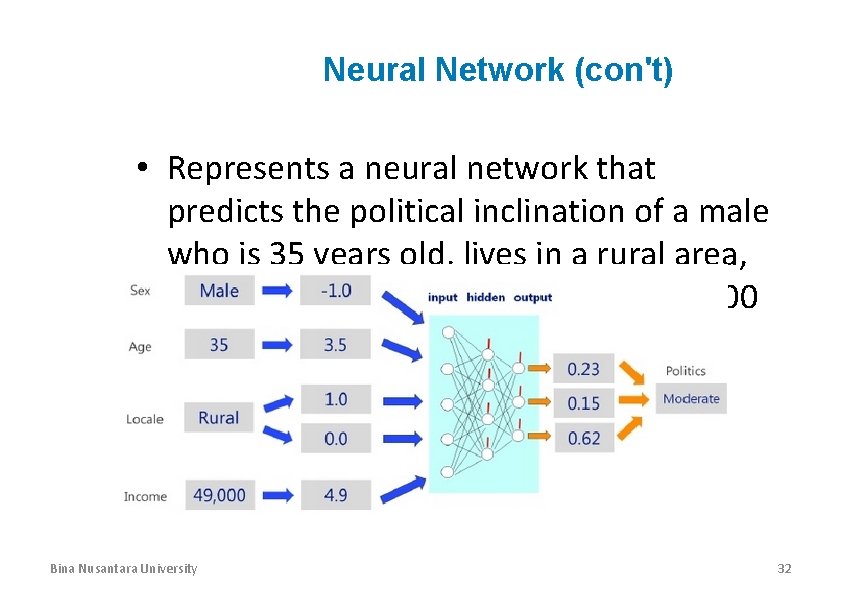

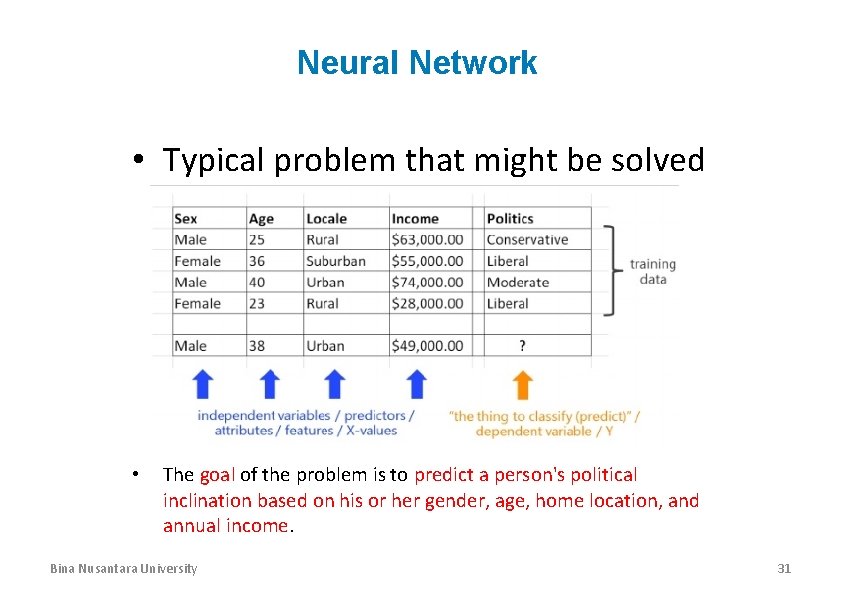

Example Problem Neural Network • Typical problem that might be solved by NN • The goal of the problem is to predict a person's political inclination based on his or her gender, age, home location, and annual income. Bina Nusantara University 31

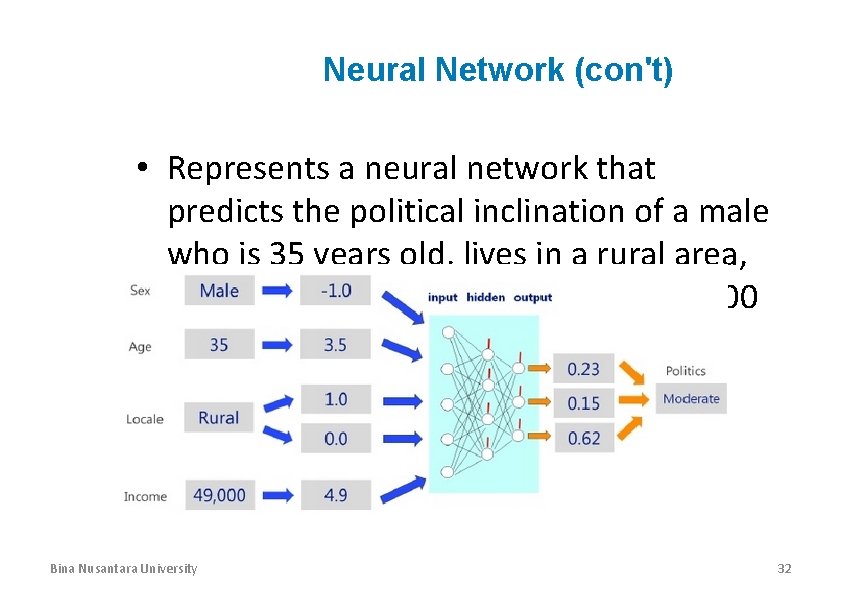

Solution Neural Network (con't) • Represents a neural network that predicts the political inclination of a male who is 35 years old, lives in a rural area, and has an annual income of $49, 000. 00 Bina Nusantara University 32

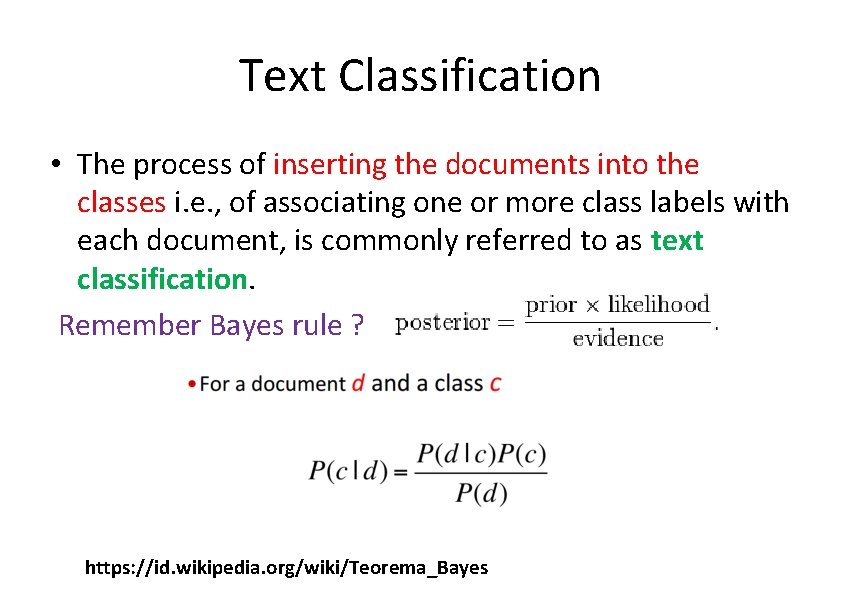

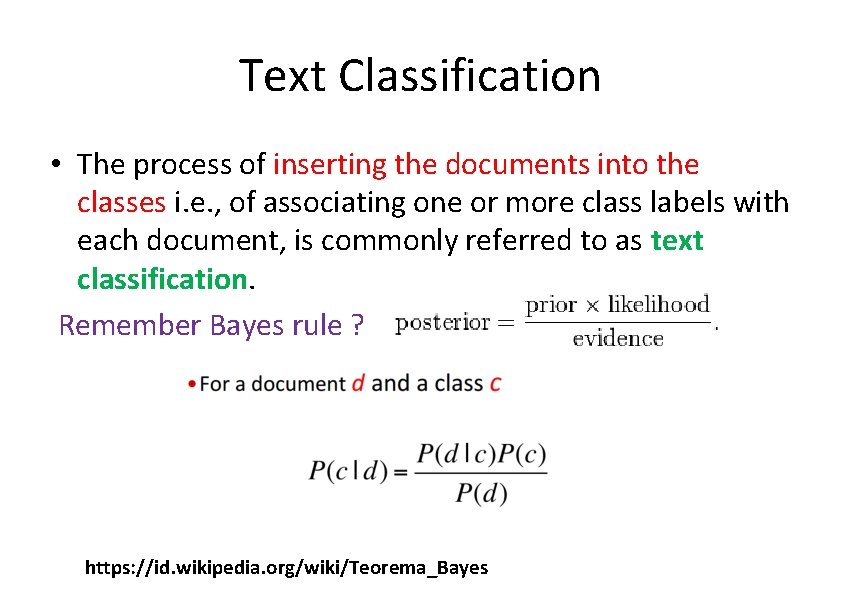

Text Classification • The process of inserting the documents into the classes i. e. , of associating one or more class labels with each document, is commonly referred to as text classification. Remember Bayes rule ? https: //id. wikipedia. org/wiki/Teorema_Bayes

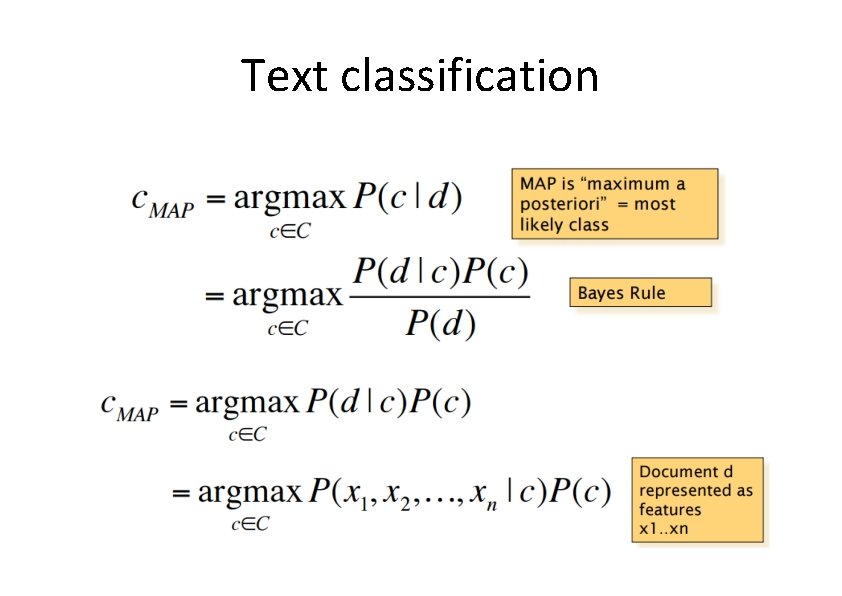

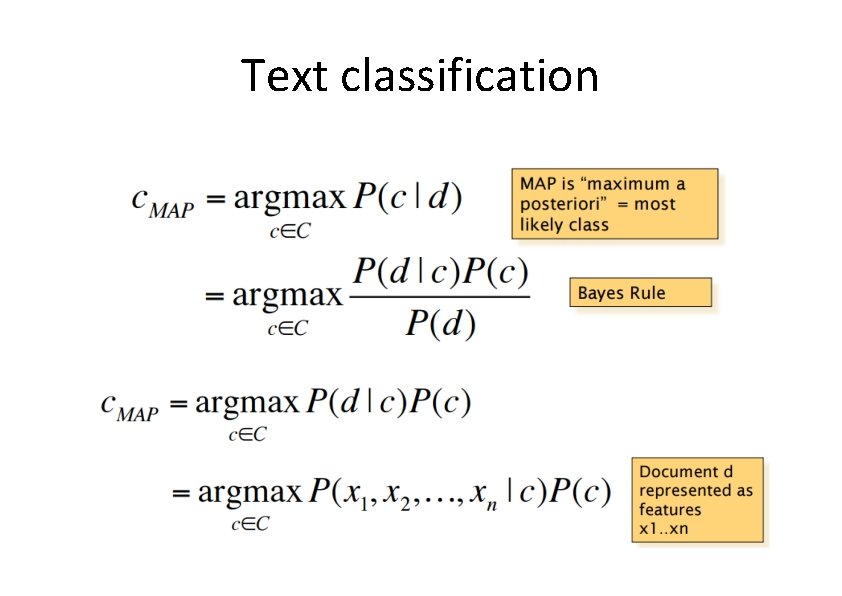

Text classification

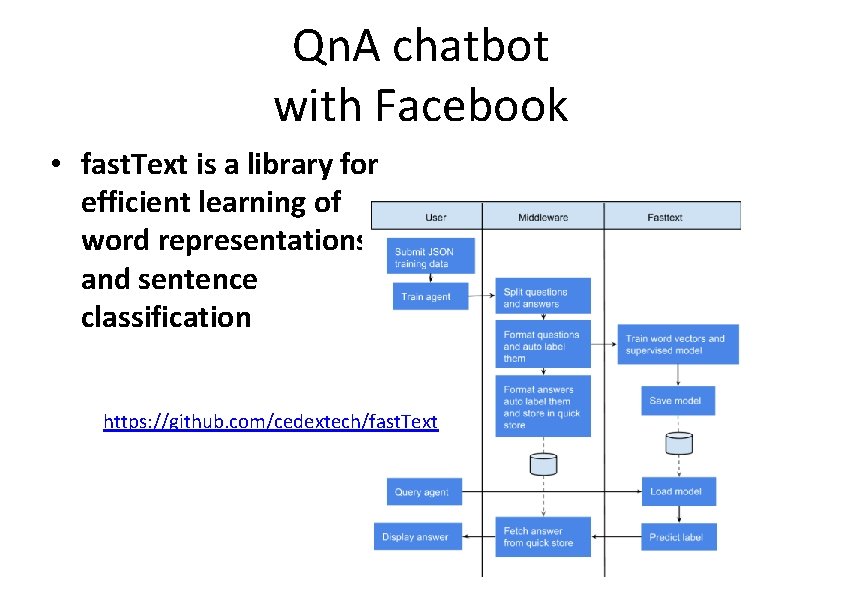

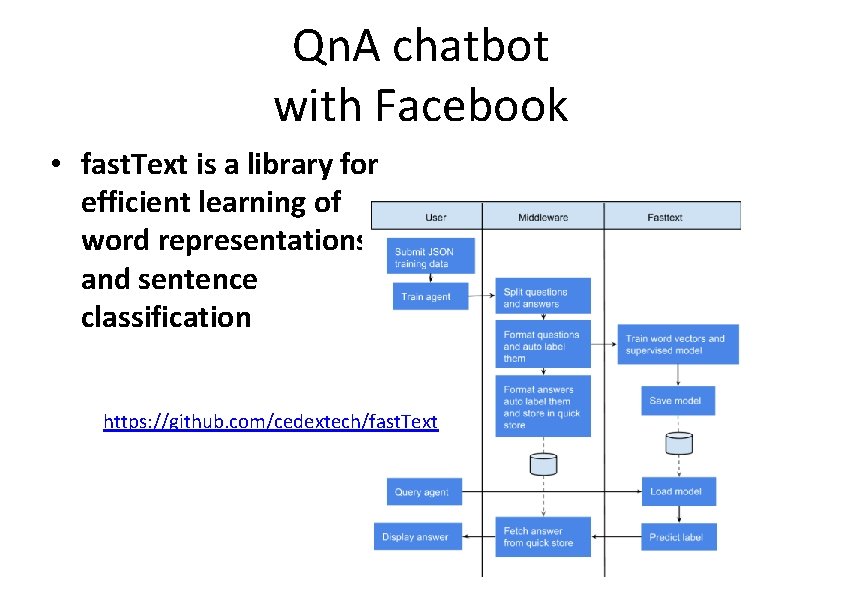

Qn. A chatbot with Facebook • fast. Text is a library for efficient learning of word representations and sentence classification https: //github. com/cedextech/fast. Text

Spam filtering Another text classification task From: "" <takworlld@hotmail. com> Subject: real estate is the only way. . . gem oalvgkay Anyone can buy real estate with no money down Stop paying rent TODAY ! There is no need to spend hundreds or even thousands for similar courses I am 22 years old and I have already purchased 6 properties using the methods outlined in this truly INCREDIBLE ebook. Change your life NOW ! ========================= Click Below to order: http: //www. wholesaledaily. com/sales/nmd. htm =========================

Sec. 13. 1 Document Classification “planning language proof intelligence” Test Data: (AI) (Programming) (HCI) Classes: ML Training Data: learning intelligence algorithm reinforcement network. . . Planning Semantics Garb. Coll. planning temporal reasoning plan language. . . programming semantics language proof. . . Multimedia garbage. . . collection memory optimization region. . . GUI. . .

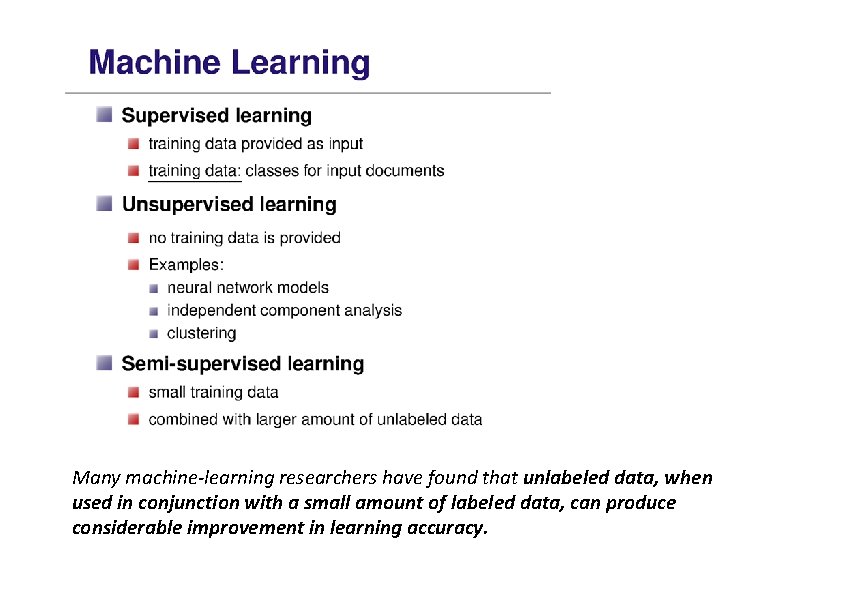

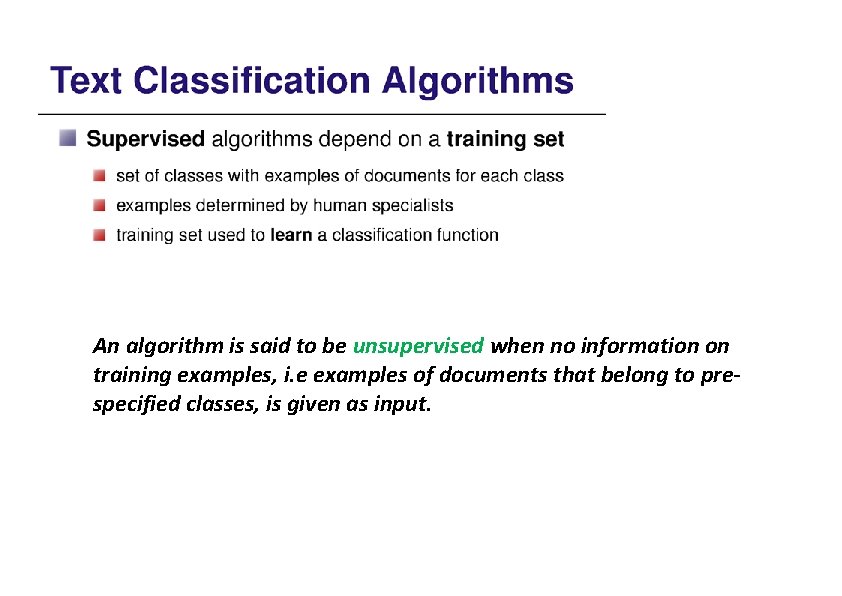

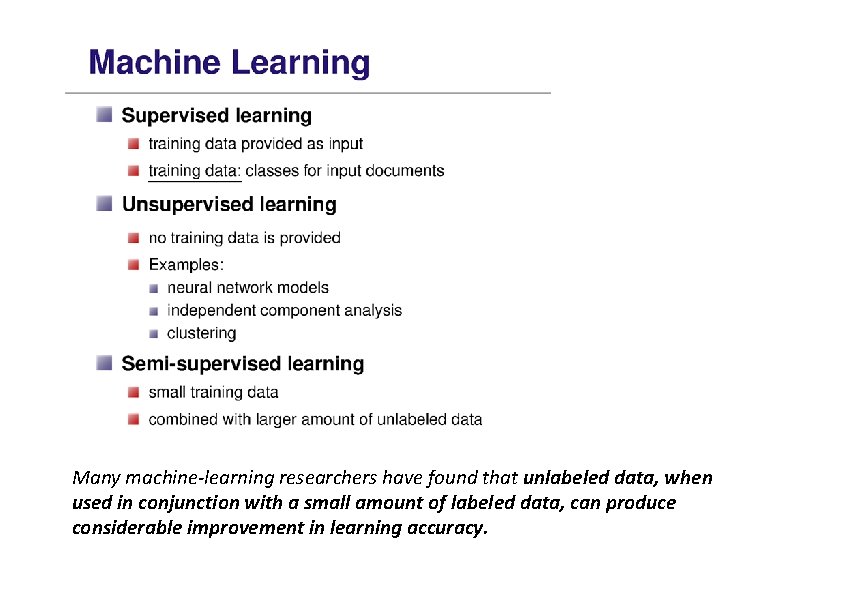

Many machine-learning researchers have found that unlabeled data, when used in conjunction with a small amount of labeled data, can produce considerable improvement in learning accuracy.

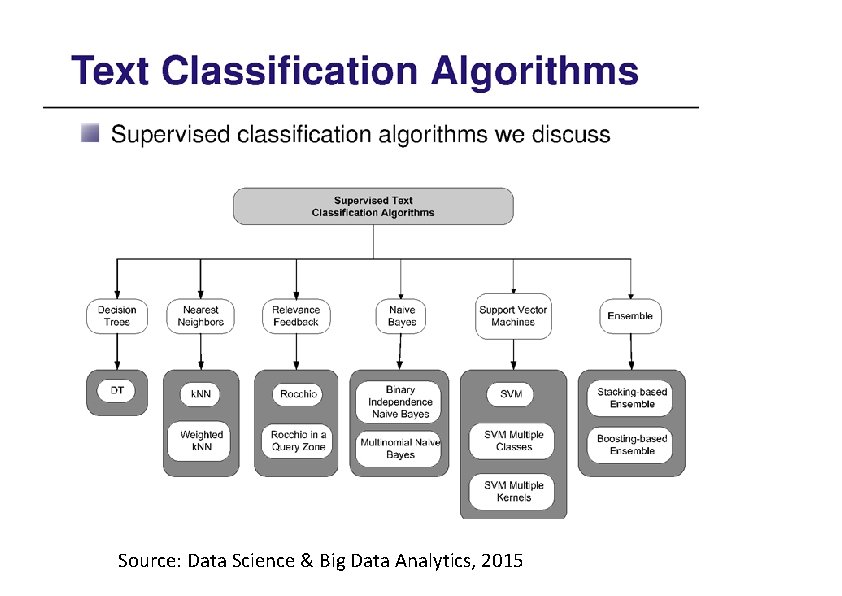

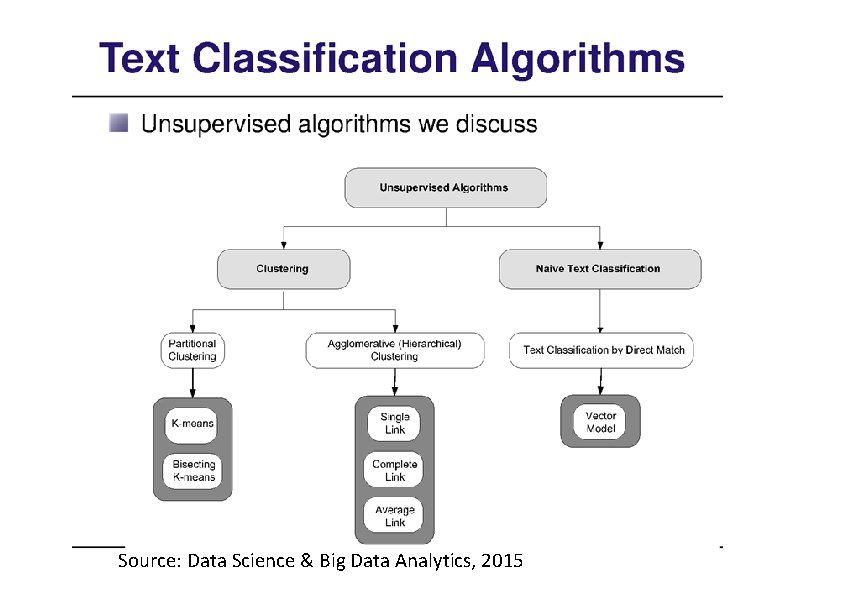

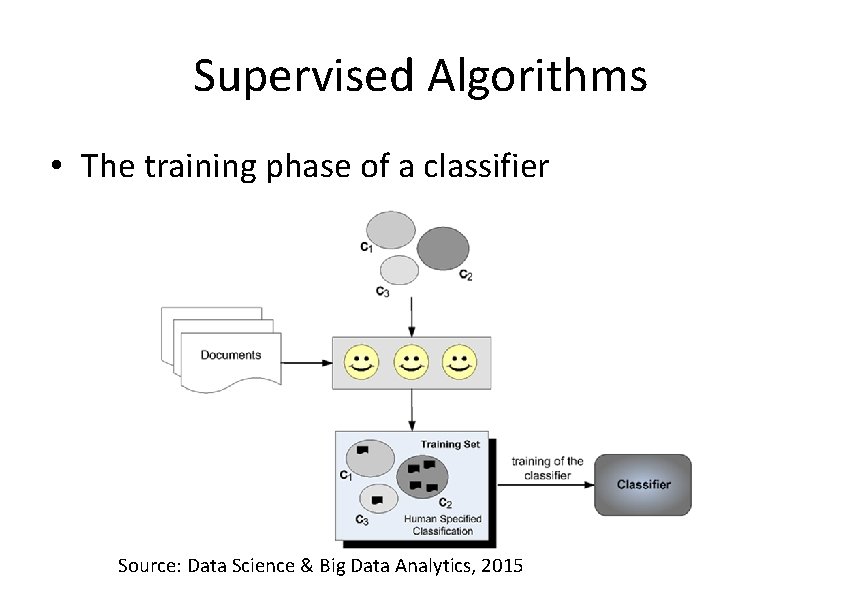

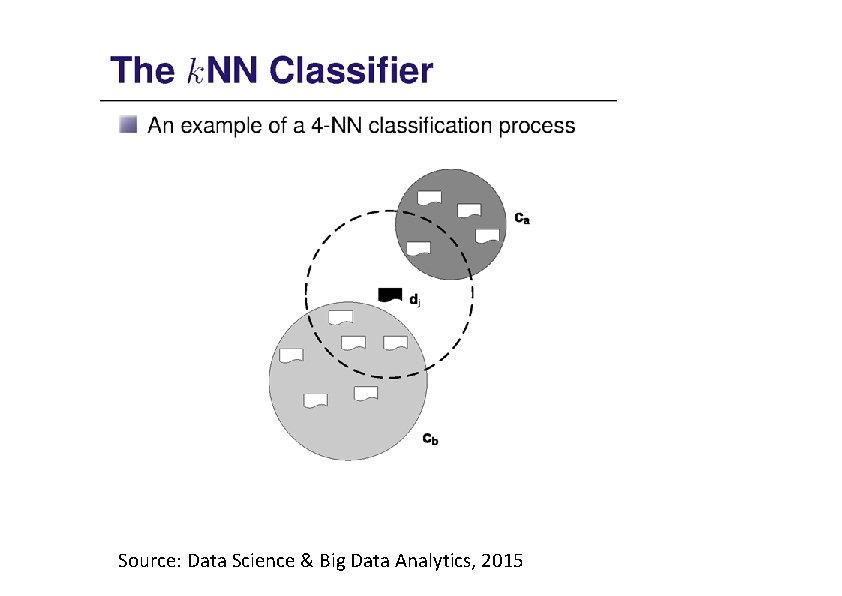

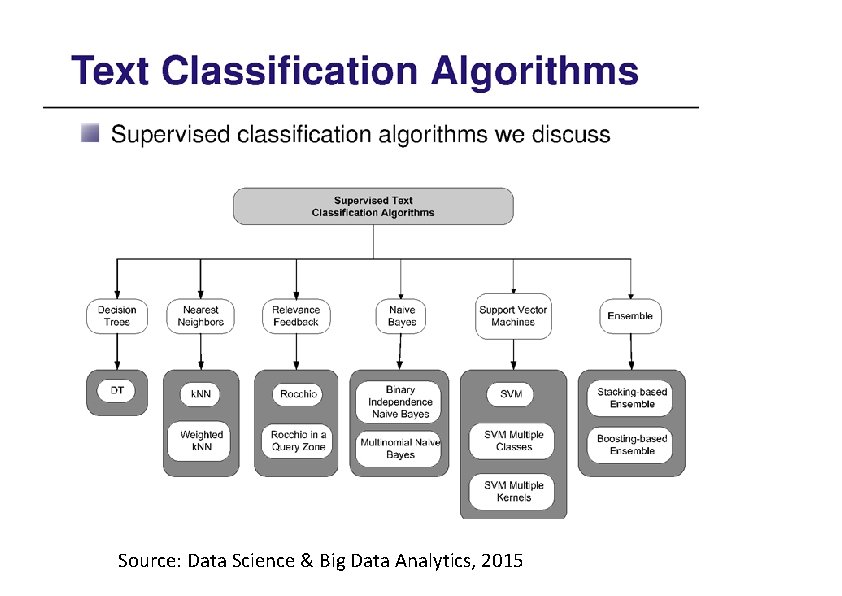

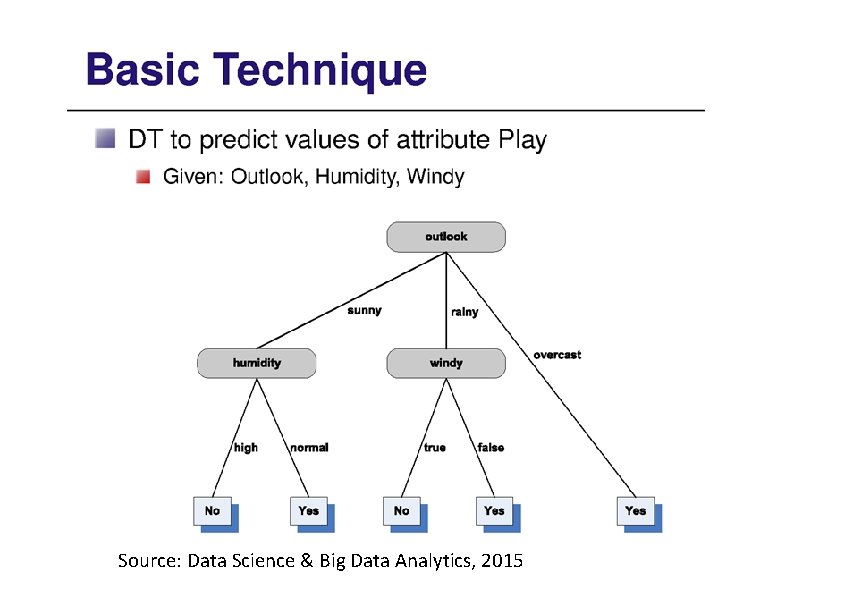

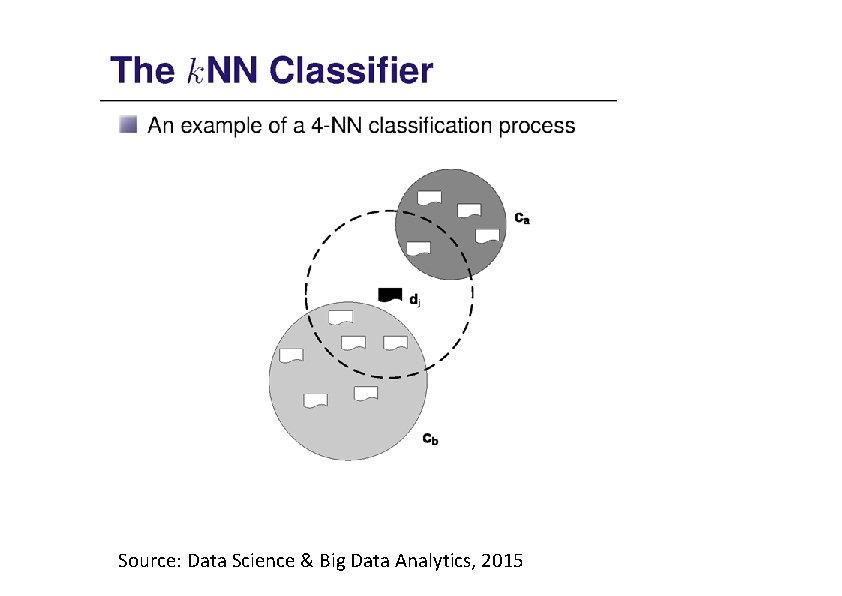

Source: Data Science & Big Data Analytics, 2015

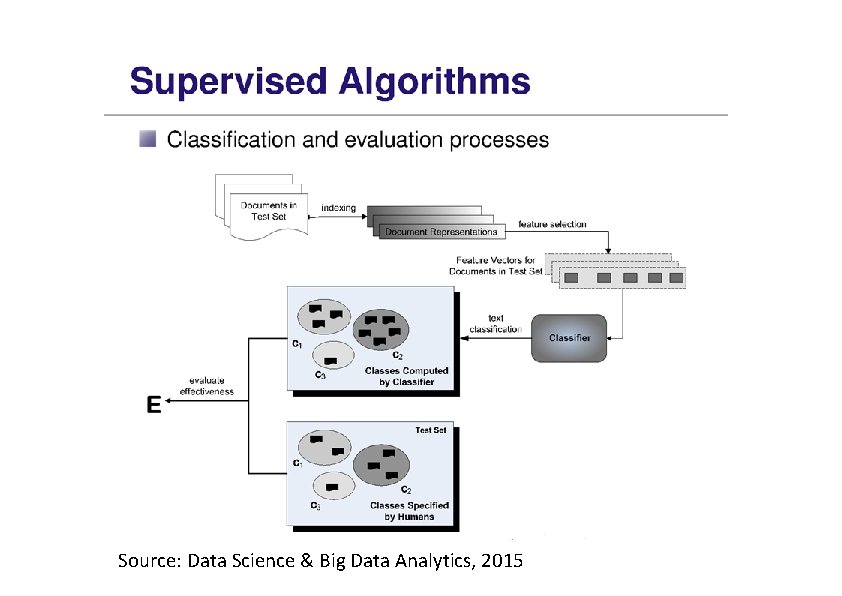

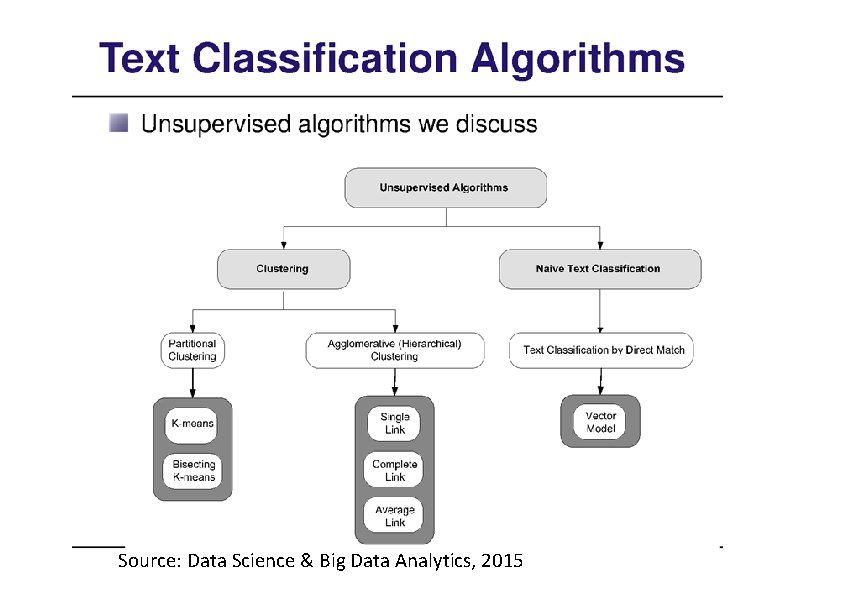

Source: Data Science & Big Data Analytics, 2015

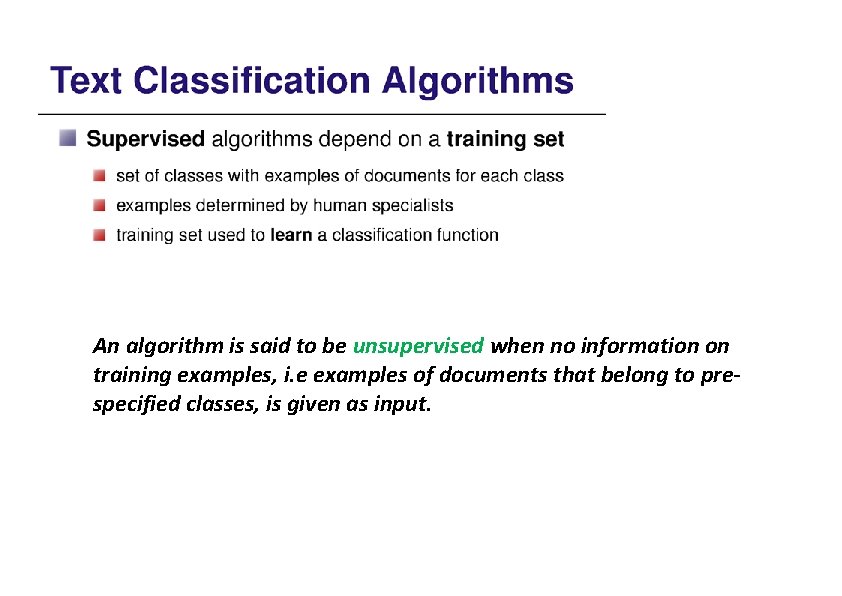

An algorithm is said to be unsupervised when no information on training examples, i. e examples of documents that belong to prespecified classes, is given as input.

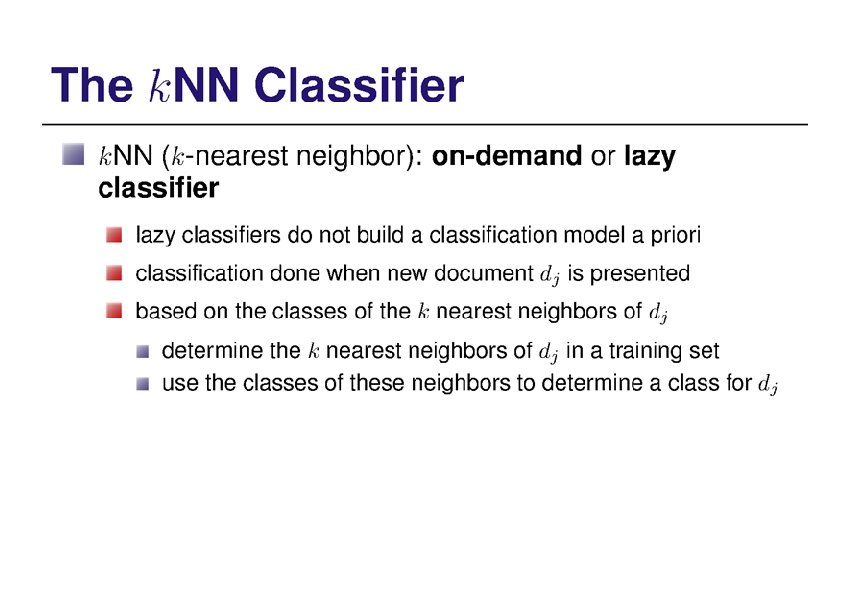

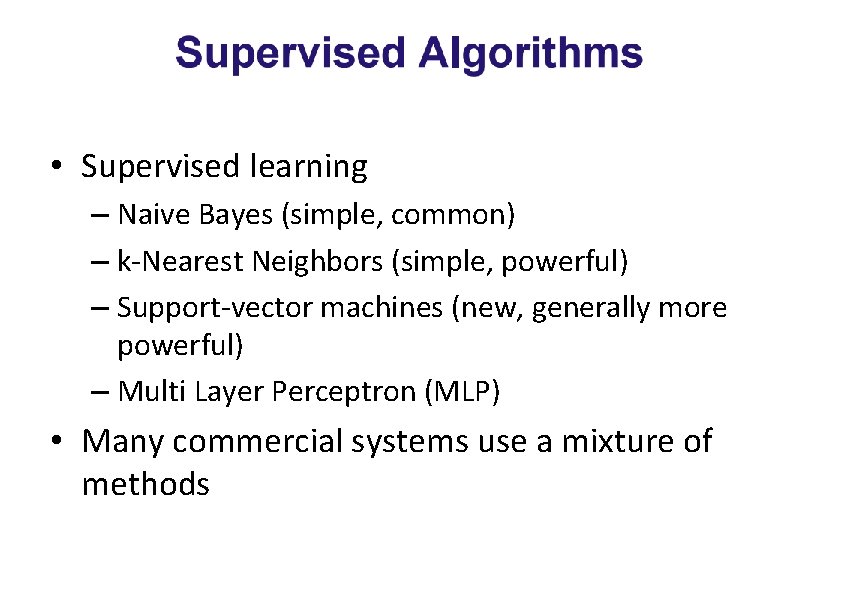

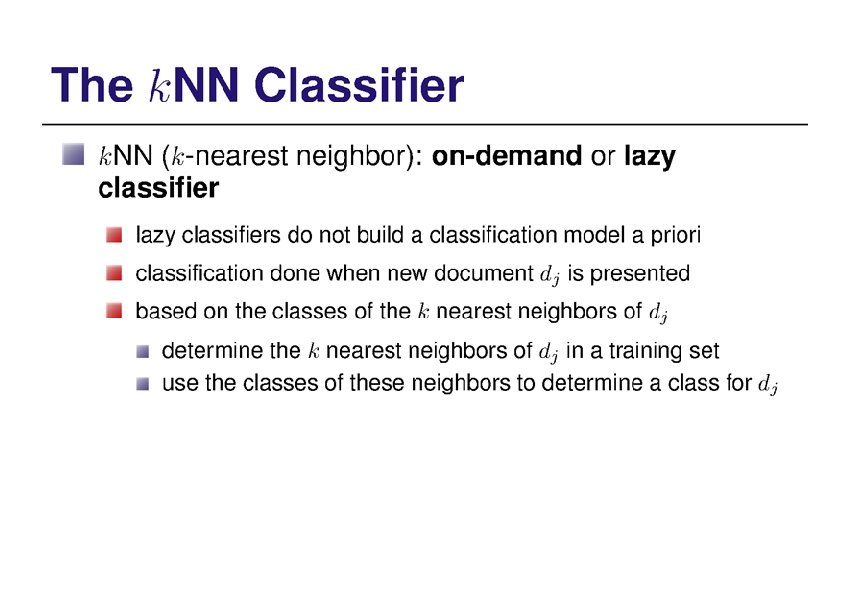

• Supervised learning – Naive Bayes (simple, common) – k-Nearest Neighbors (simple, powerful) – Support-vector machines (new, generally more powerful) – Multi Layer Perceptron (MLP) • Many commercial systems use a mixture of methods

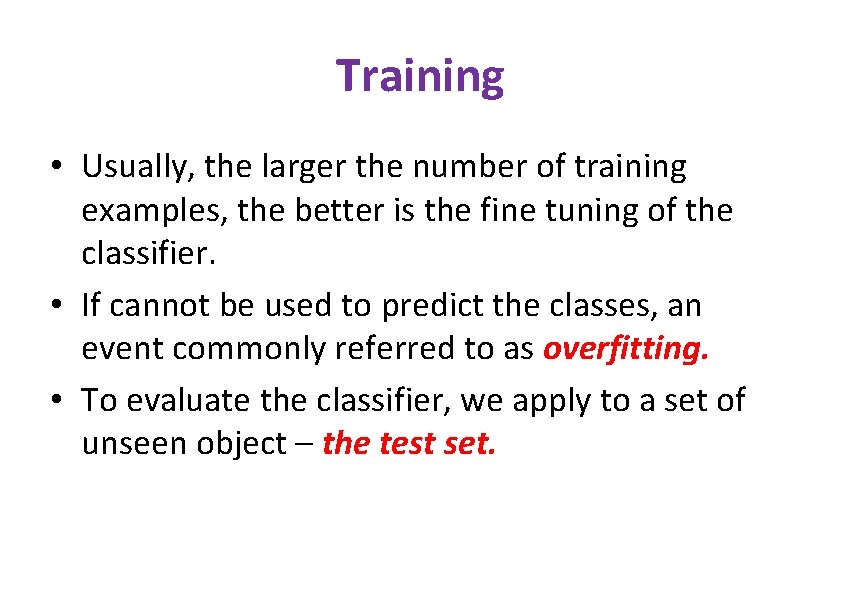

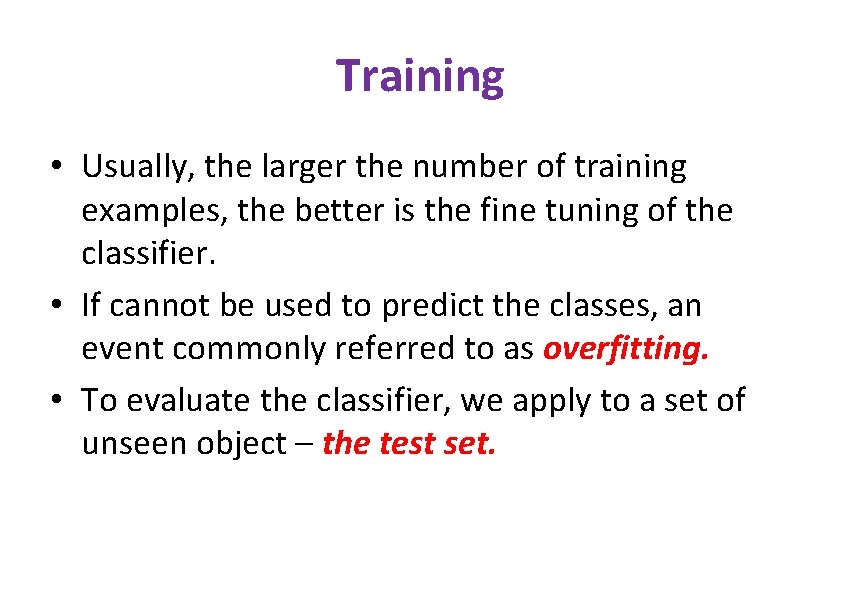

Training • Usually, the larger the number of training examples, the better is the fine tuning of the classifier. • If cannot be used to predict the classes, an event commonly referred to as overfitting. • To evaluate the classifier, we apply to a set of unseen object – the test set.

Naive Bayes • Naive Bayes learners and classifiers can be extremely fast compared to more sophisticated methods.

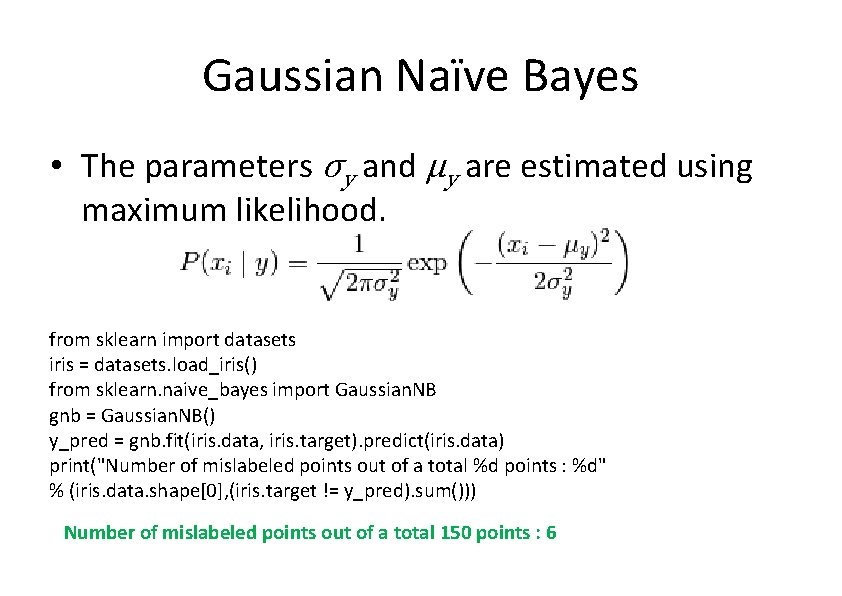

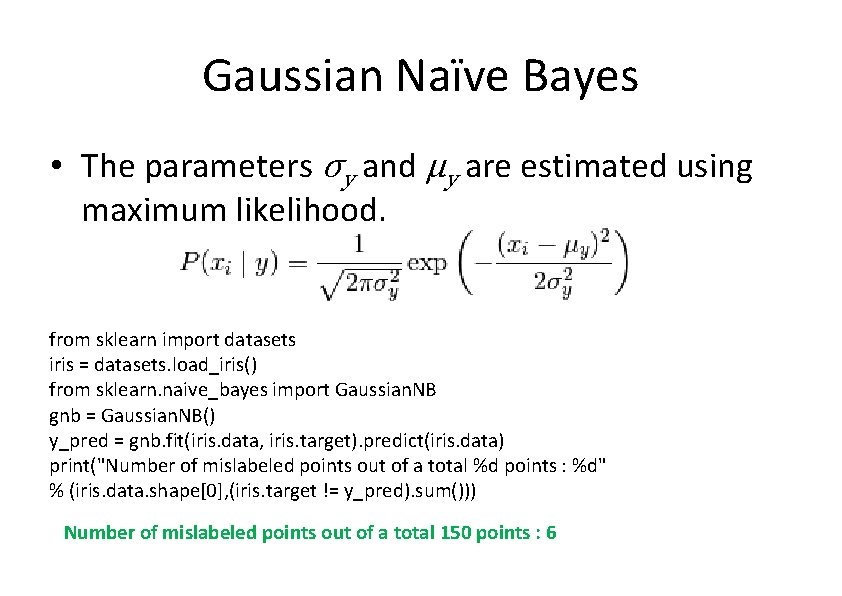

Gaussian Naïve Bayes • The parameters σy and μy are estimated using maximum likelihood. from sklearn import datasets iris = datasets. load_iris() from sklearn. naive_bayes import Gaussian. NB gnb = Gaussian. NB() y_pred = gnb. fit(iris. data, iris. target). predict(iris. data) print("Number of mislabeled points out of a total %d points : %d" % (iris. data. shape[0], (iris. target != y_pred). sum())) Number of mislabeled points out of a total 150 points : 6

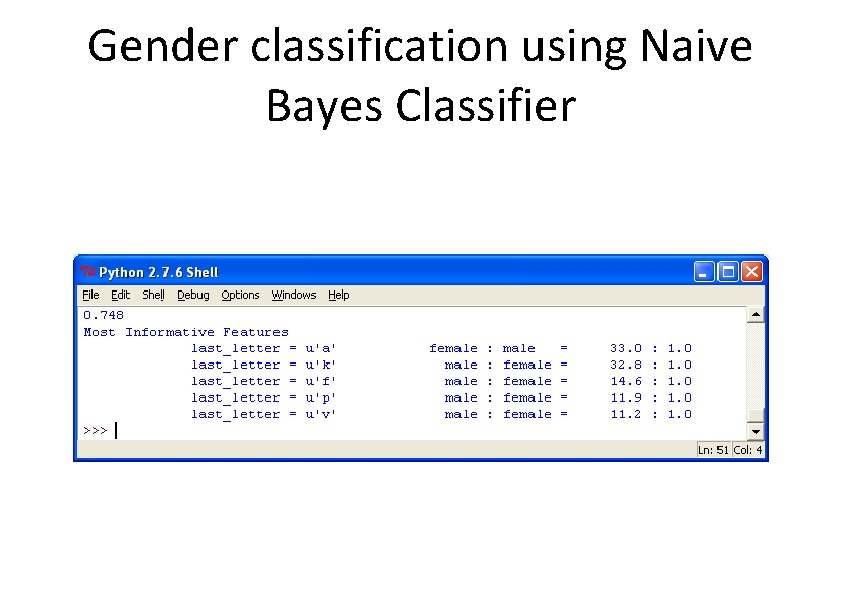

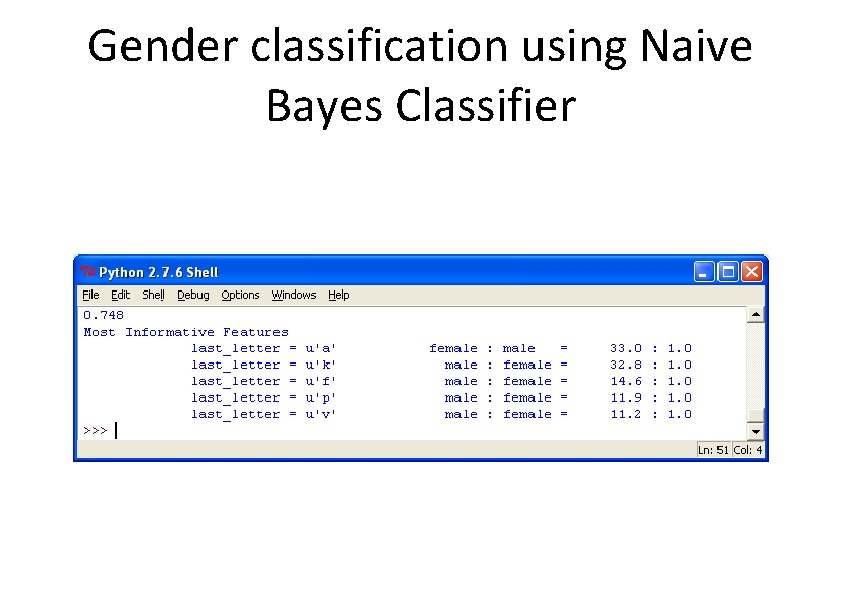

Gender classification using Naive Bayes Classifier

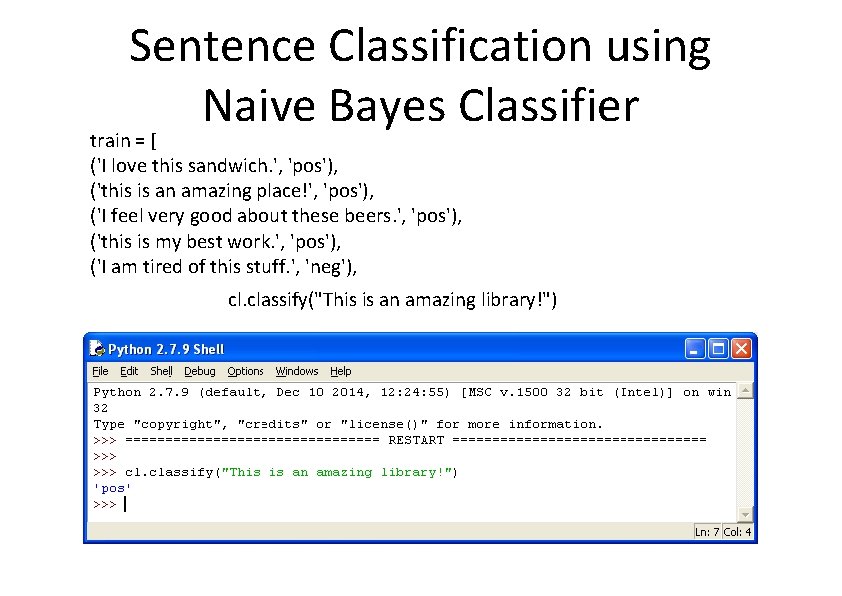

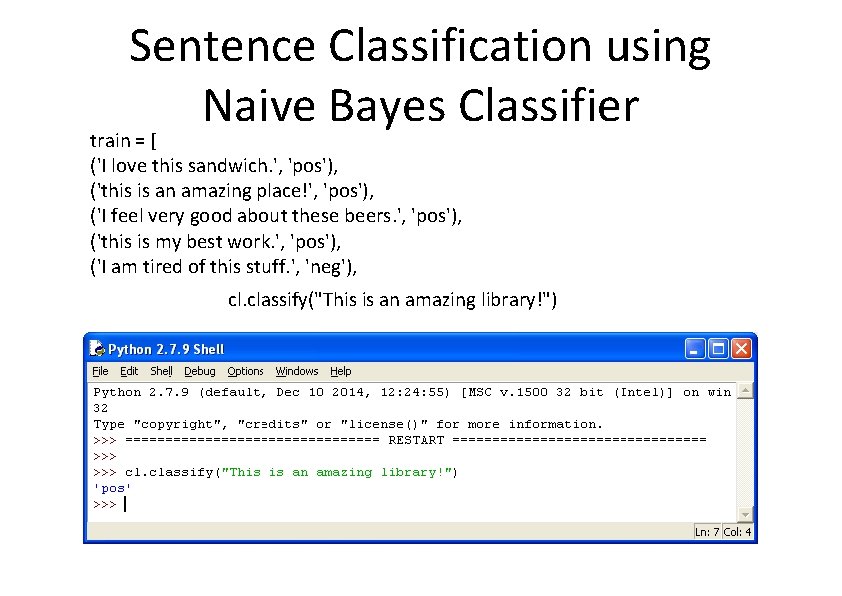

Sentence Classification using Naive Bayes Classifier train = [ ('I love this sandwich. ', 'pos'), ('this is an amazing place!', 'pos'), ('I feel very good about these beers. ', 'pos'), ('this is my best work. ', 'pos'), ('I am tired of this stuff. ', 'neg'), cl. classify("This is an amazing library!")

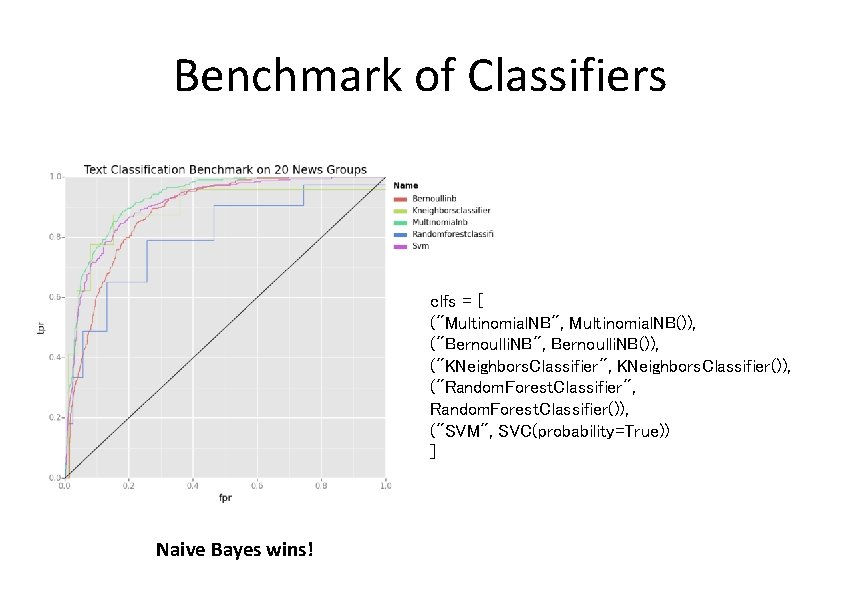

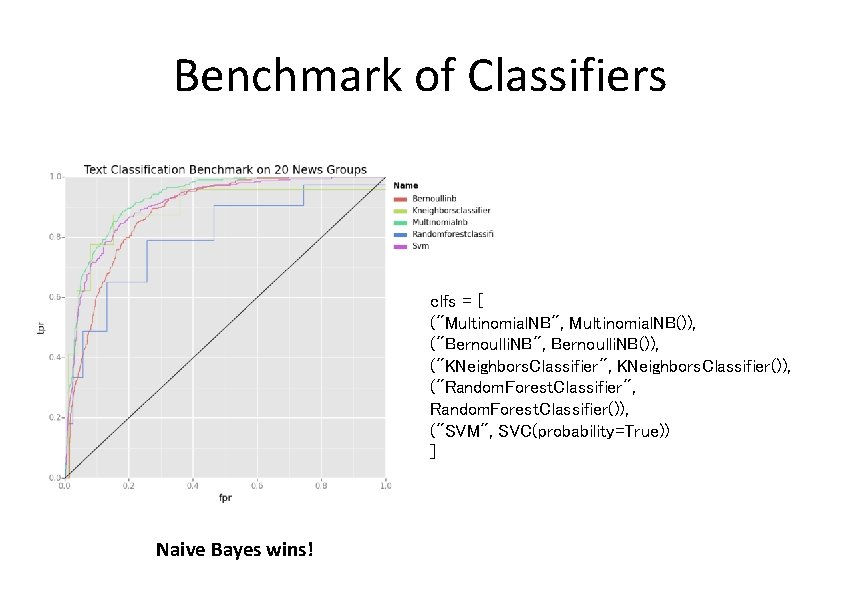

Benchmark of Classifiers clfs = [ ("Multinomial. NB", Multinomial. NB()), ("Bernoulli. NB", Bernoulli. NB()), ("KNeighbors. Classifier", KNeighbors. Classifier()), ("Random. Forest. Classifier", Random. Forest. Classifier()), ("SVM", SVC(probability=True)) ] Naive Bayes wins!

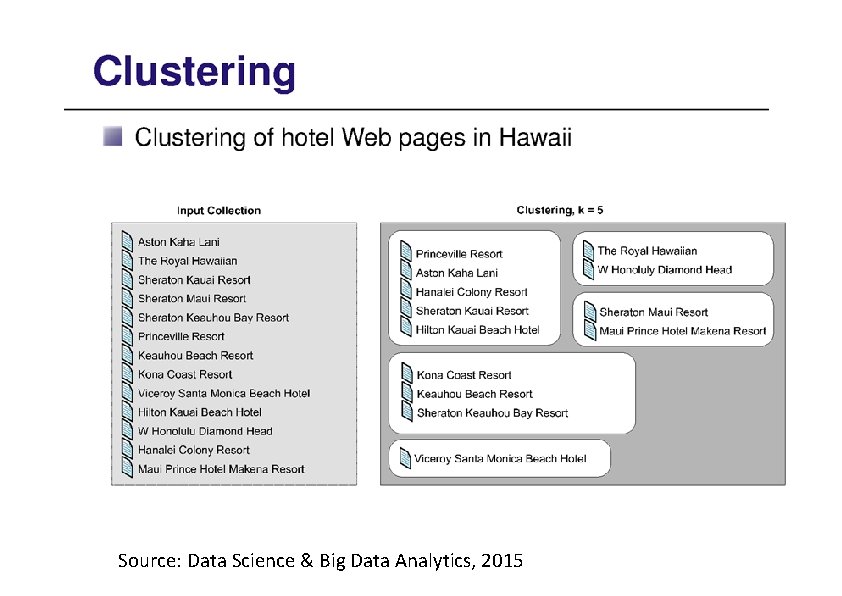

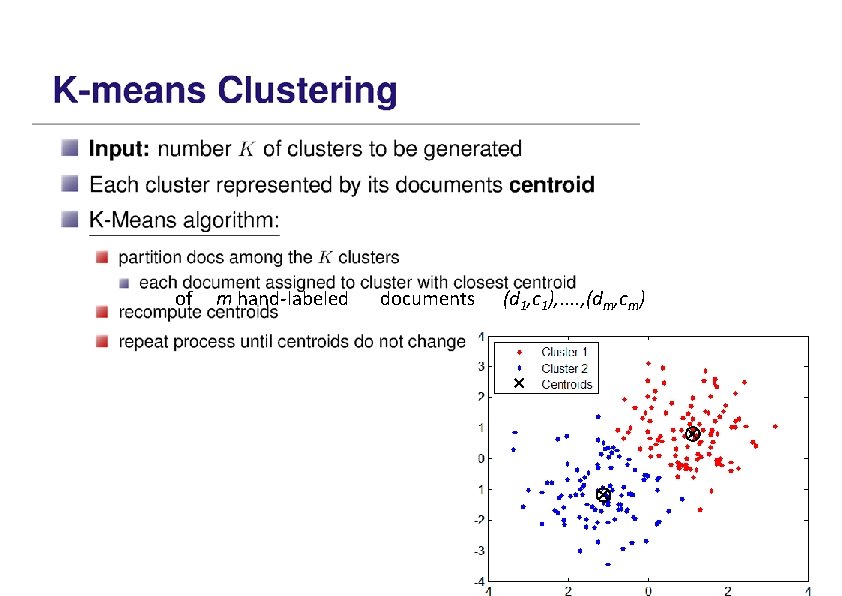

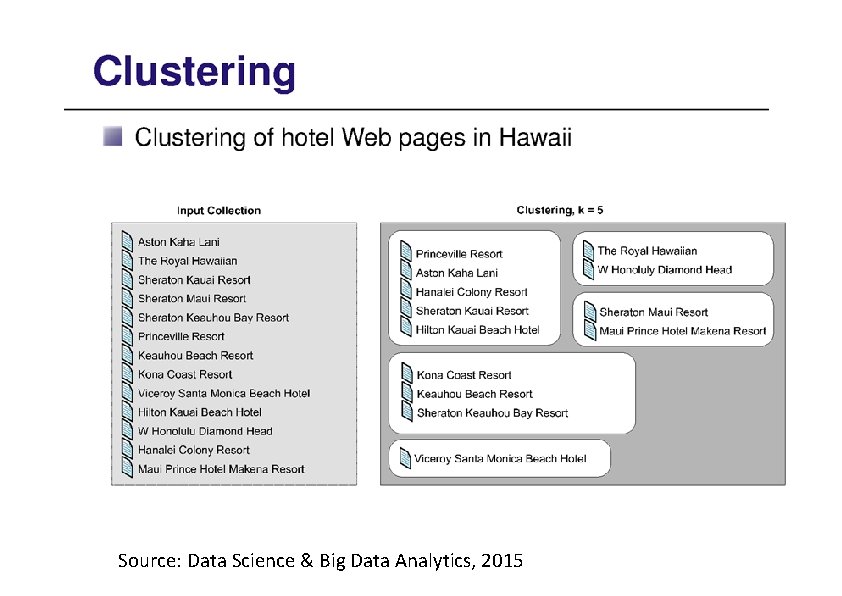

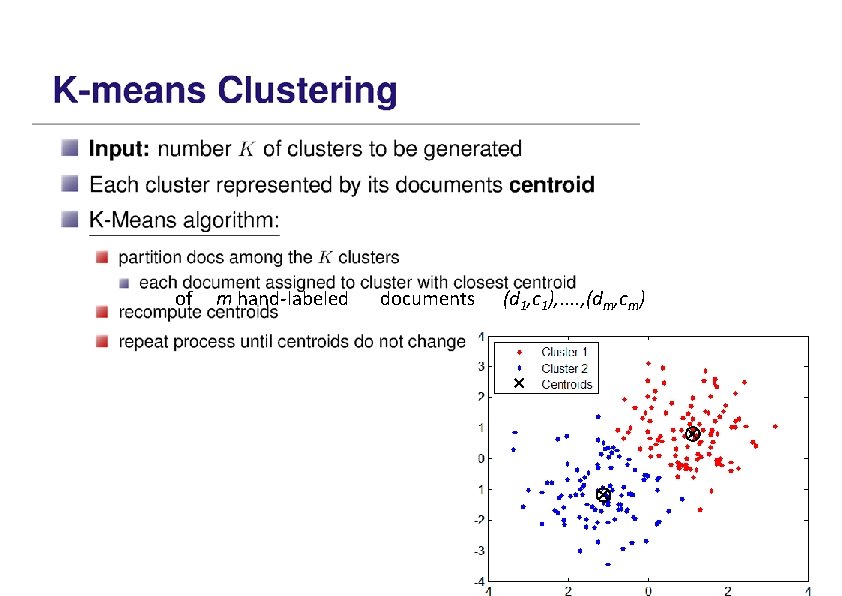

Clustering • The task of the classifier is to separate the documents into groups or clusters – clustering Text clustering: given a collection D of documents, a text clustering method automatically separates these documents into K clusters according to some predefined criteria. In K-means clustering, the number K of clusters to be generated is provided as input.

Source: Data Science & Big Data Analytics, 2015

of m hand-labeled documents (d 1, c 1), . . , (dm, cm)

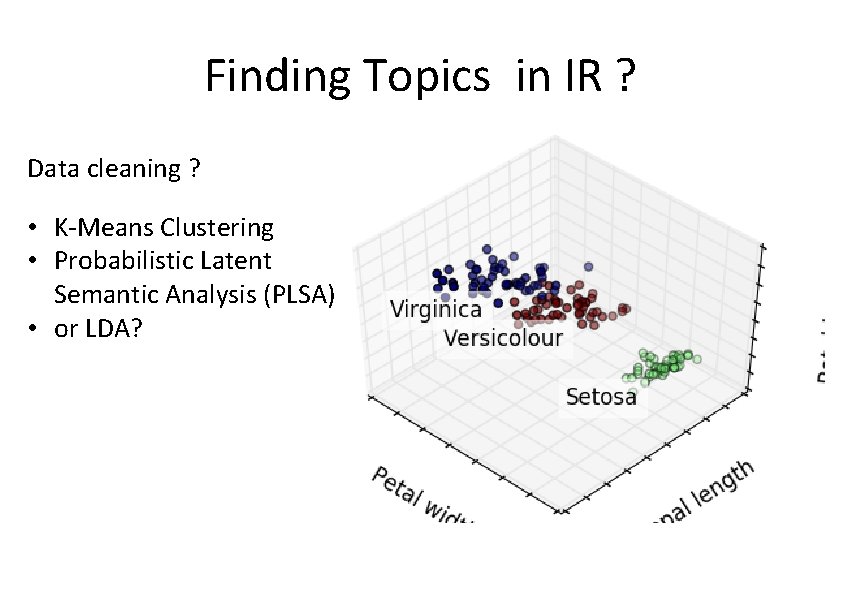

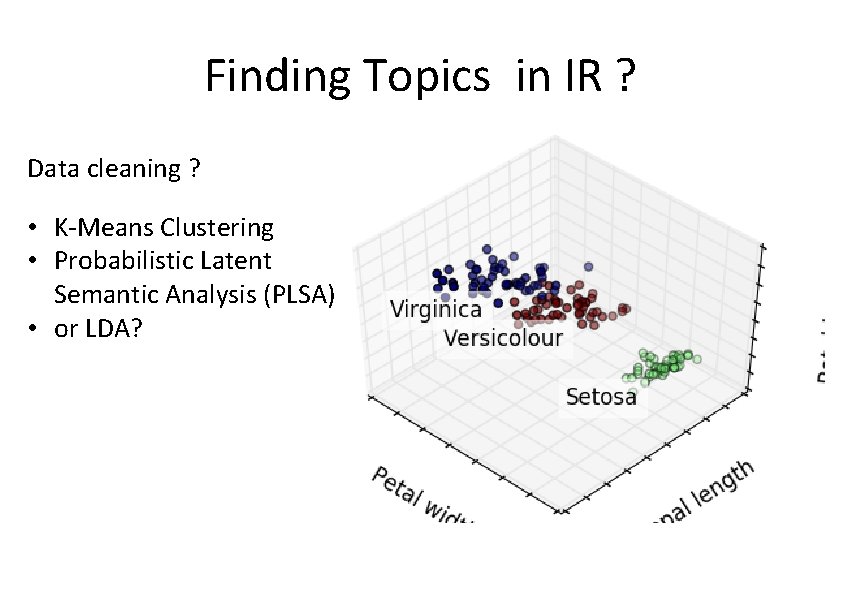

Finding Topics in IR ? Data cleaning ? • K-Means Clustering • Probabilistic Latent Semantic Analysis (PLSA) • or LDA?

![KNN X 0 1 2 3 y 0 0 1 K-NN >>> X = [[0], [1], [2], [3]] >>> y = [0, 0, 1,](https://slidetodoc.com/presentation_image_h/091d965a37662e2788f1cc8c343c0165/image-56.jpg)

K-NN >>> X = [[0], [1], [2], [3]] >>> y = [0, 0, 1, 1] >>> from sklearn. neighbors import KNeighbors. Classifier >>> neigh = KNeighbors. Classifier(n_neighbors=3) >>> neigh. fit(X, y) >>> print(neigh. predict([[1. 1]])) [0] >>> print (neigh. predict([[3. 1]])) [1]

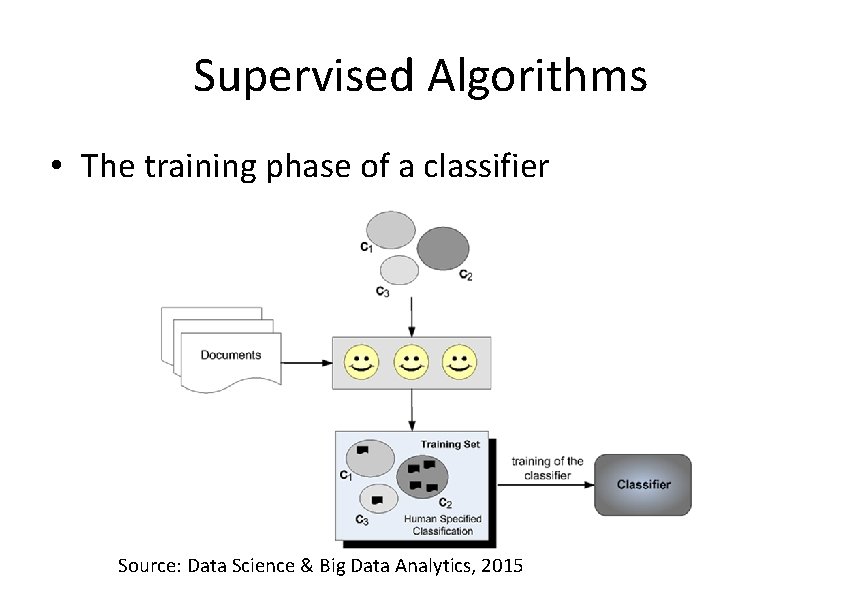

Supervised Algorithms • The training phase of a classifier Source: Data Science & Big Data Analytics, 2015

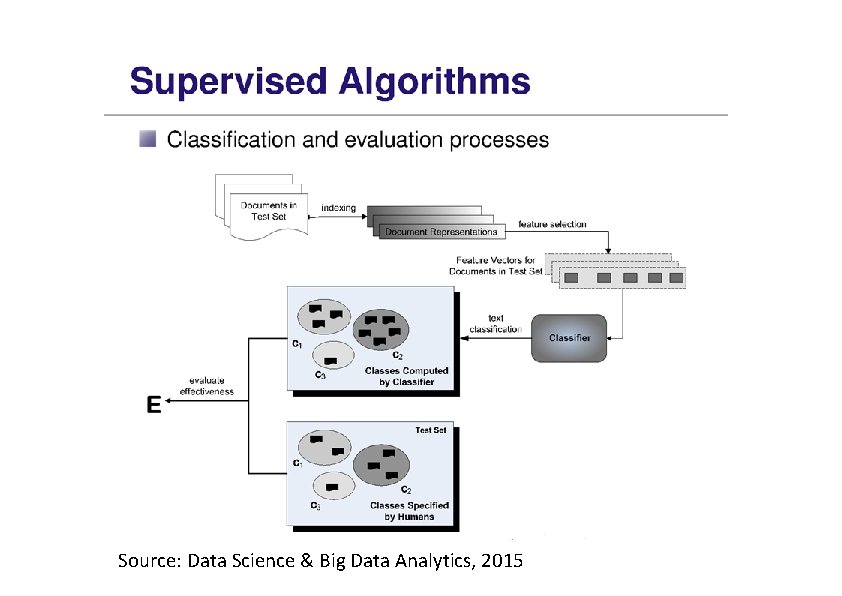

Source: Data Science & Big Data Analytics, 2015

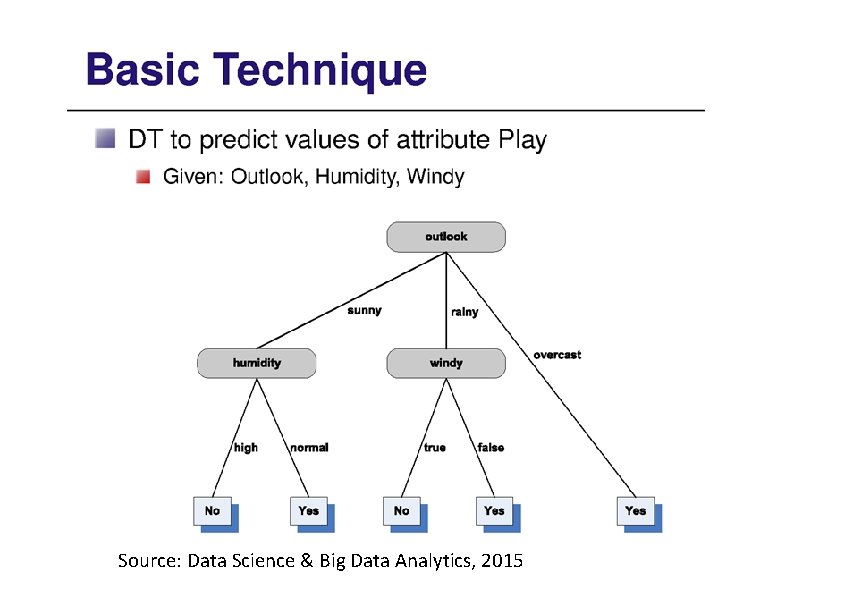

Source: Data Science & Big Data Analytics, 2015

Source: Data Science & Big Data Analytics, 2015

Latent Semantic Analysis (LSA) • LSA aims to discover something about the meaning behind the words; about the topics in the documents. • What is the difference between topics and words? – Words are observable – Topics are not. They are latent. • How to find out topics from the words in an automatic way? – We can imagine them as a compression of words – A combination of words

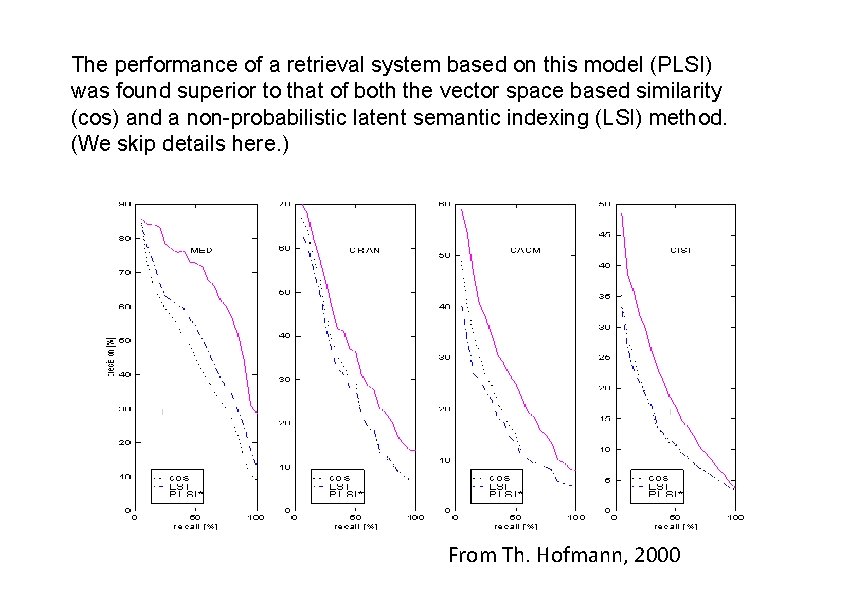

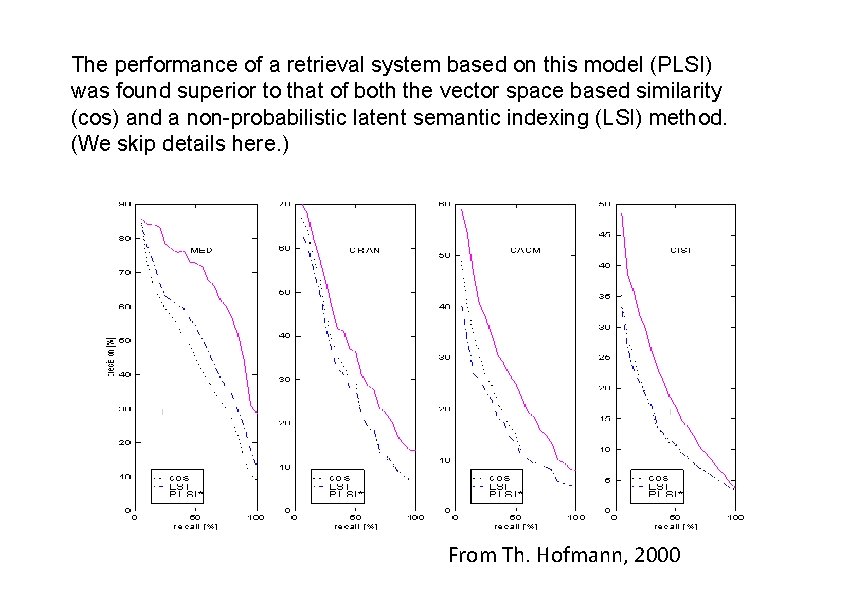

The performance of a retrieval system based on this model (PLSI) was found superior to that of both the vector space based similarity (cos) and a non-probabilistic latent semantic indexing (LSI) method. (We skip details here. ) From Th. Hofmann, 2000

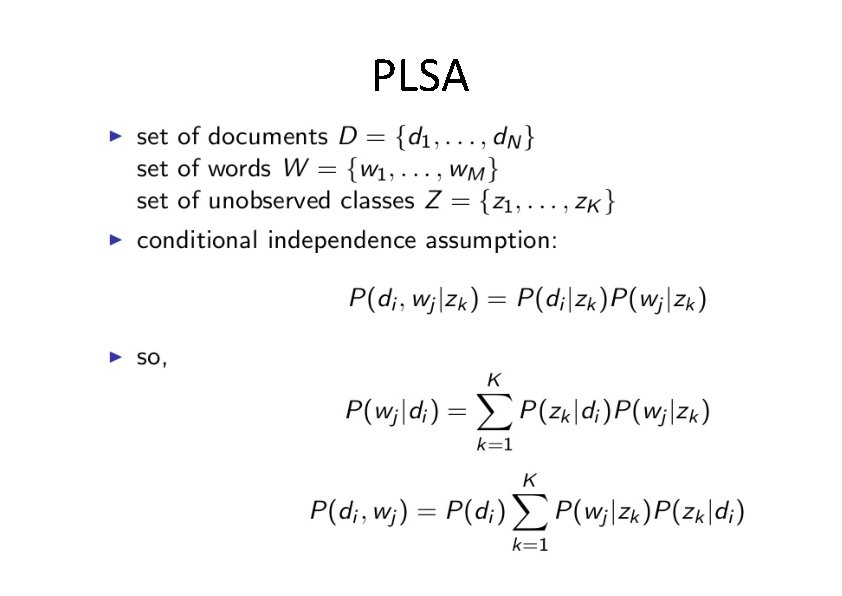

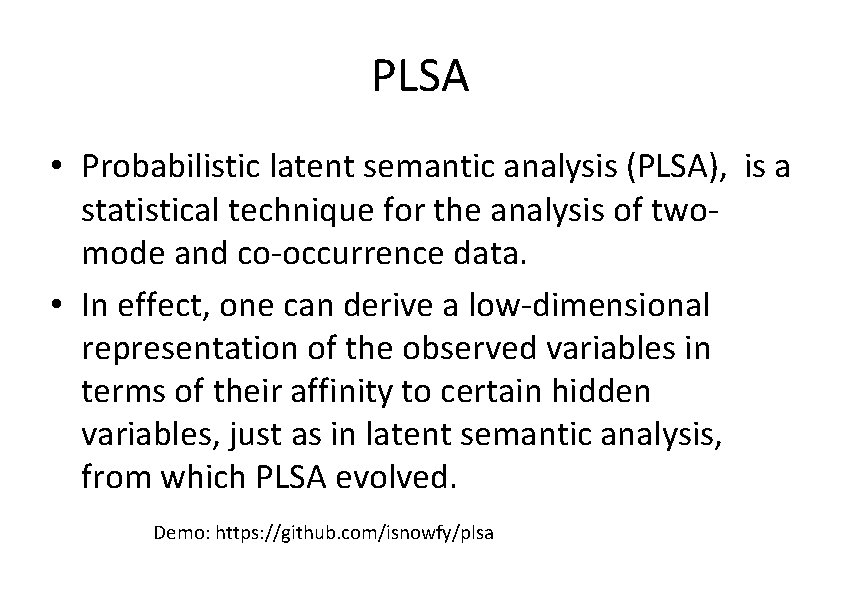

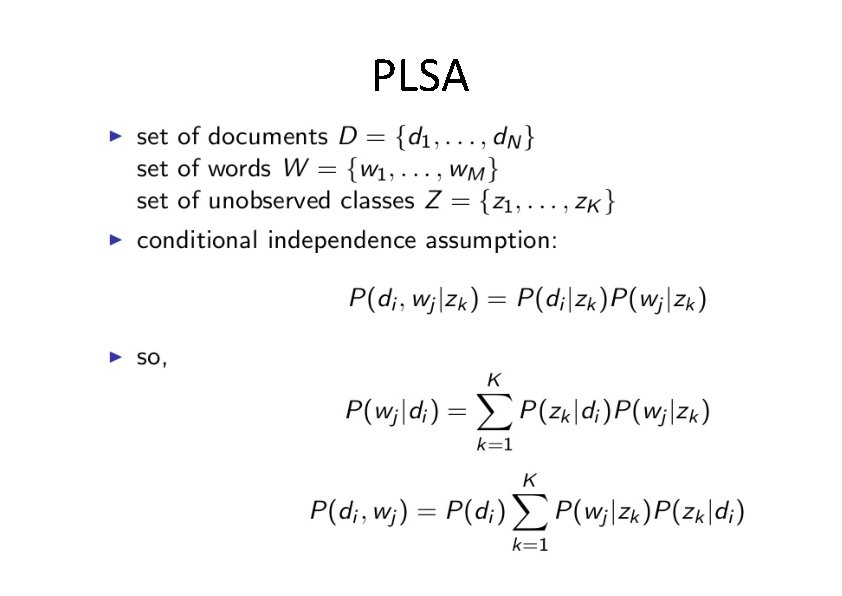

PLSA • Probabilistic latent semantic analysis (PLSA), is a statistical technique for the analysis of twomode and co-occurrence data. • In effect, one can derive a low-dimensional representation of the observed variables in terms of their affinity to certain hidden variables, just as in latent semantic analysis, from which PLSA evolved. Demo: https: //github. com/isnowfy/plsa

PLSA

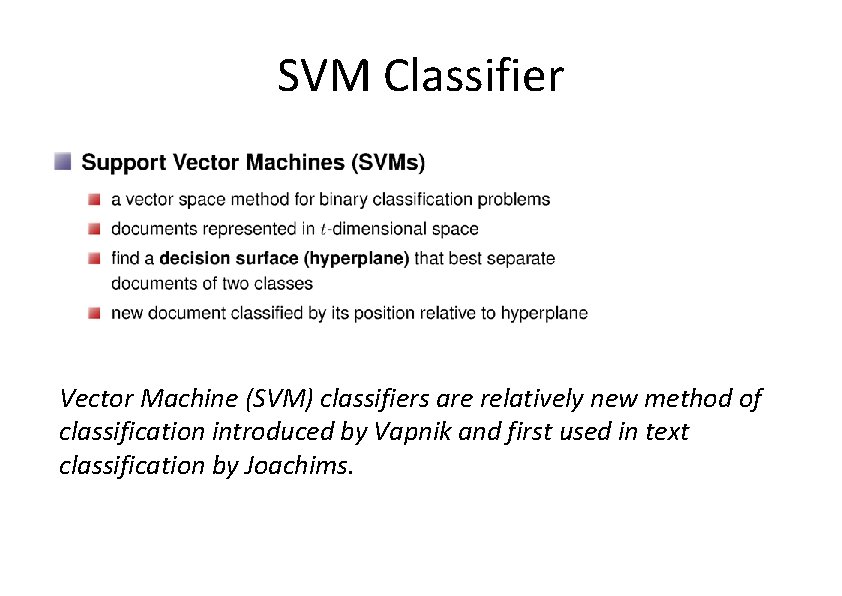

SVM Classifier Vector Machine (SVM) classifiers are relatively new method of classification introduced by Vapnik and first used in text classification by Joachims.

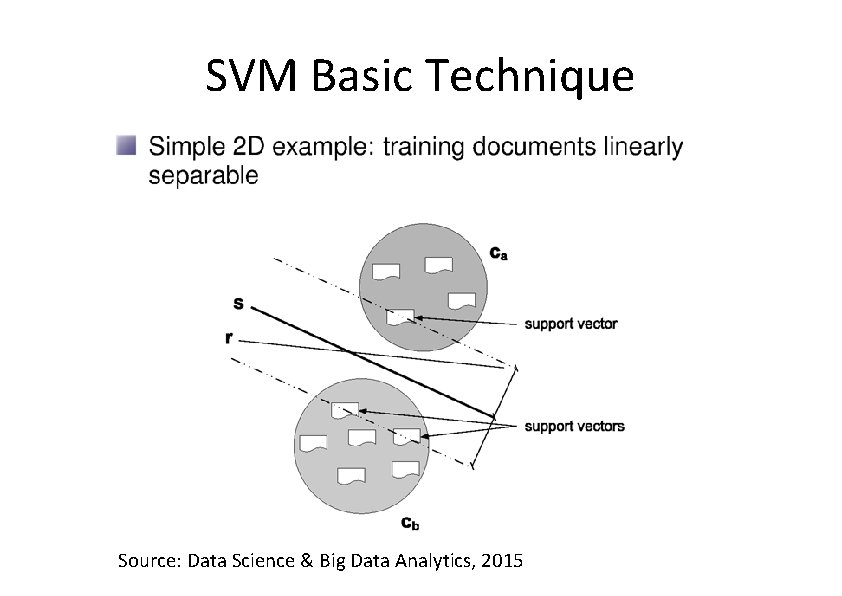

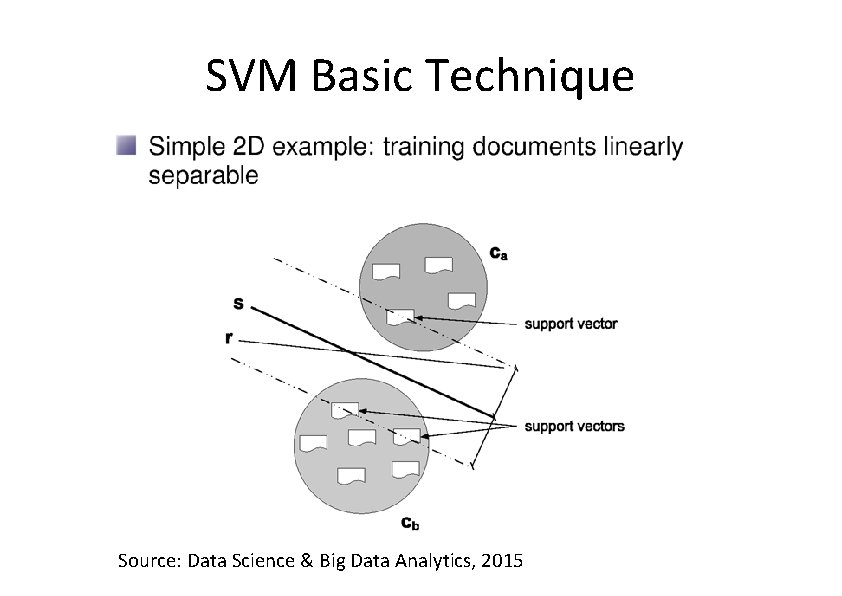

SVM Basic Technique Source: Data Science & Big Data Analytics, 2015

Source: Data Science & Big Data Analytics, 2015

Boosting-based Ensemble Classifier • The training set used for each member of the series is chosen based on the performance of the earlier classifier(s) in the series. • In Boosting, examples that are incorrectly predicted by previous classifiers in the series are chosen more often than examples that were correctly predicted.

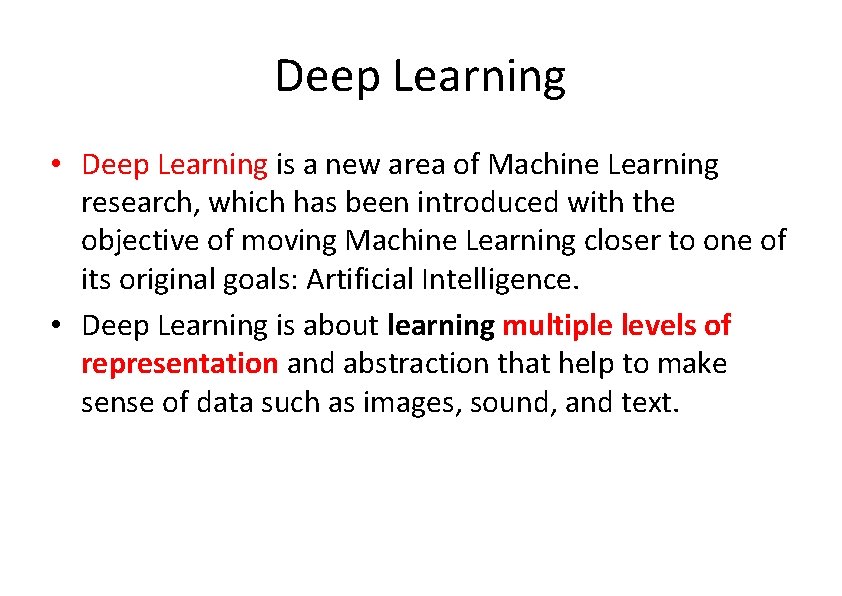

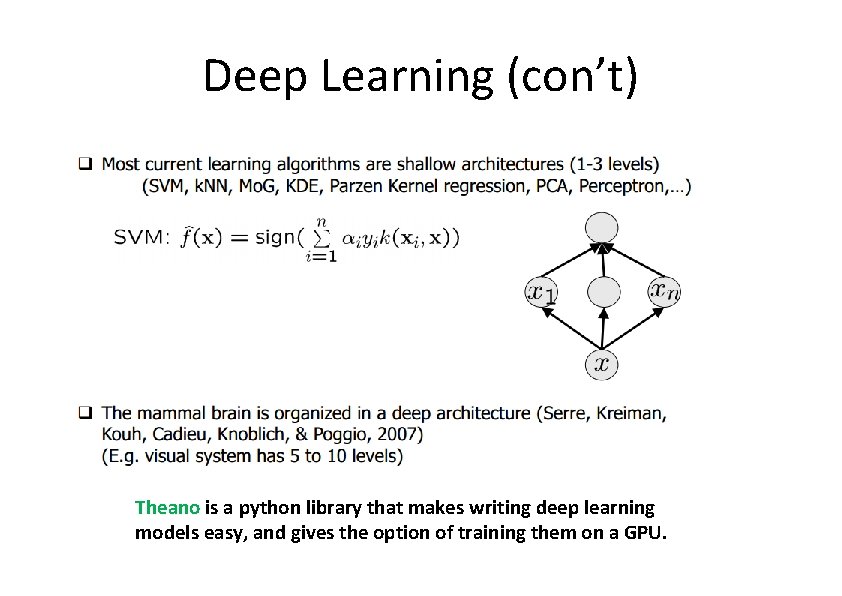

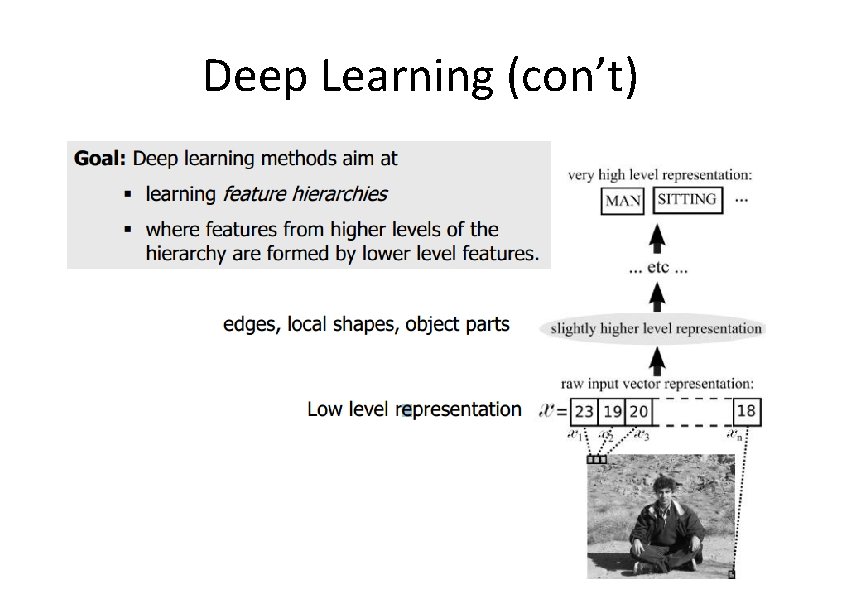

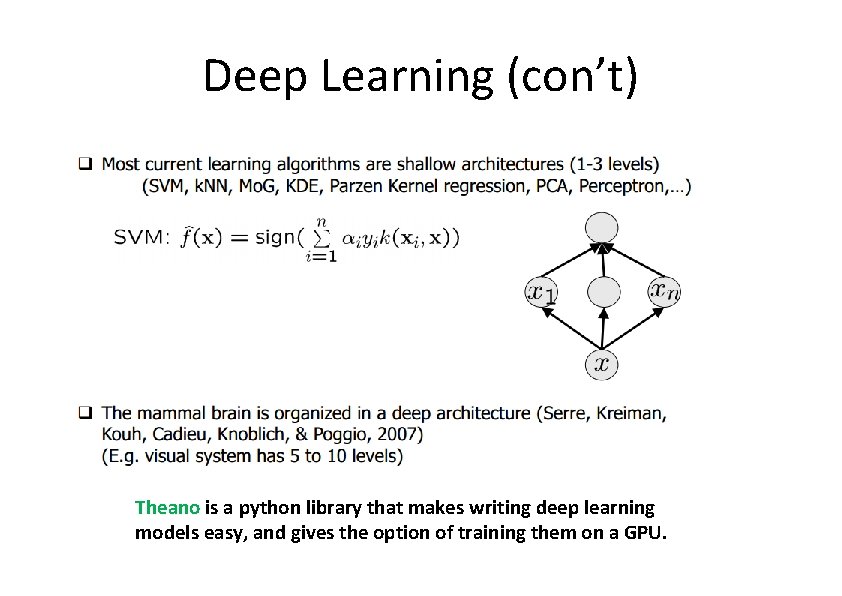

Deep Learning • Deep Learning is a new area of Machine Learning research, which has been introduced with the objective of moving Machine Learning closer to one of its original goals: Artificial Intelligence. • Deep Learning is about learning multiple levels of representation and abstraction that help to make sense of data such as images, sound, and text.

Deep Learning (con’t)

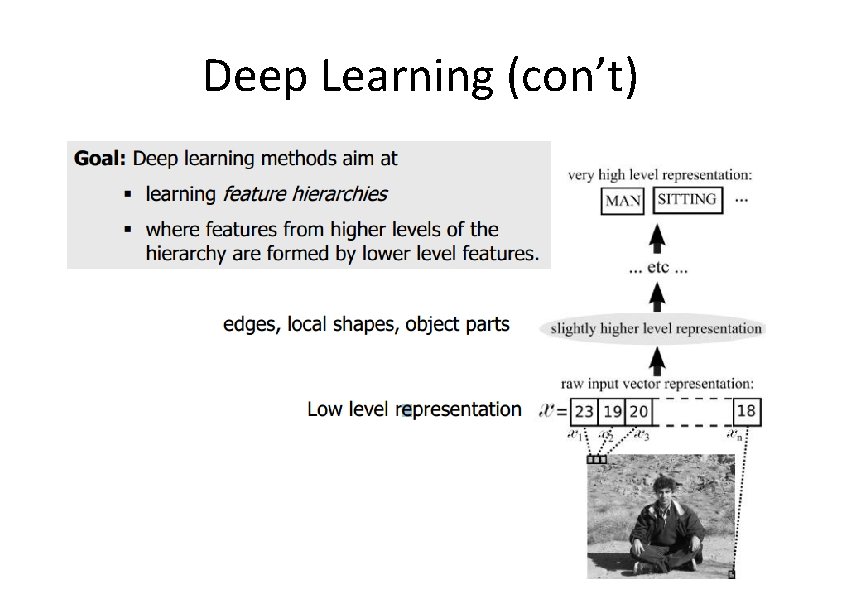

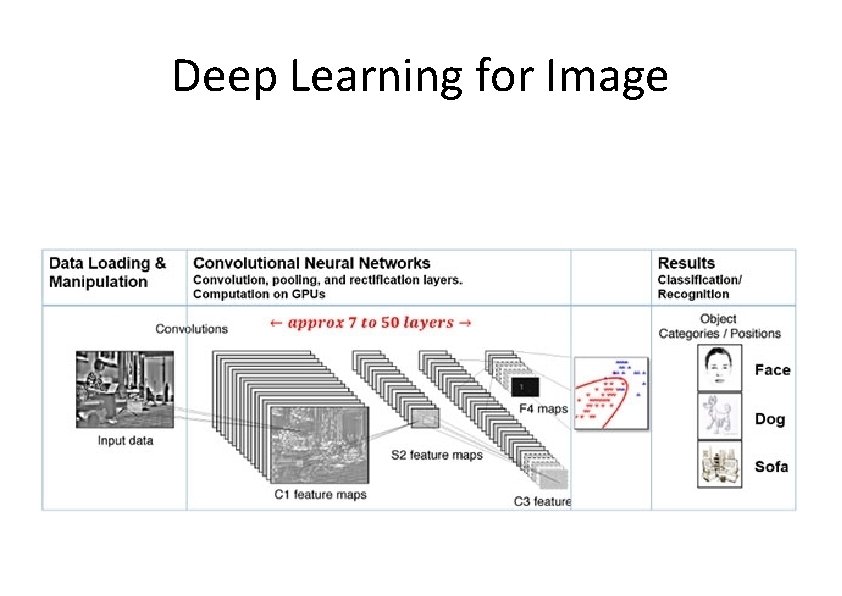

Deep Learning for Image

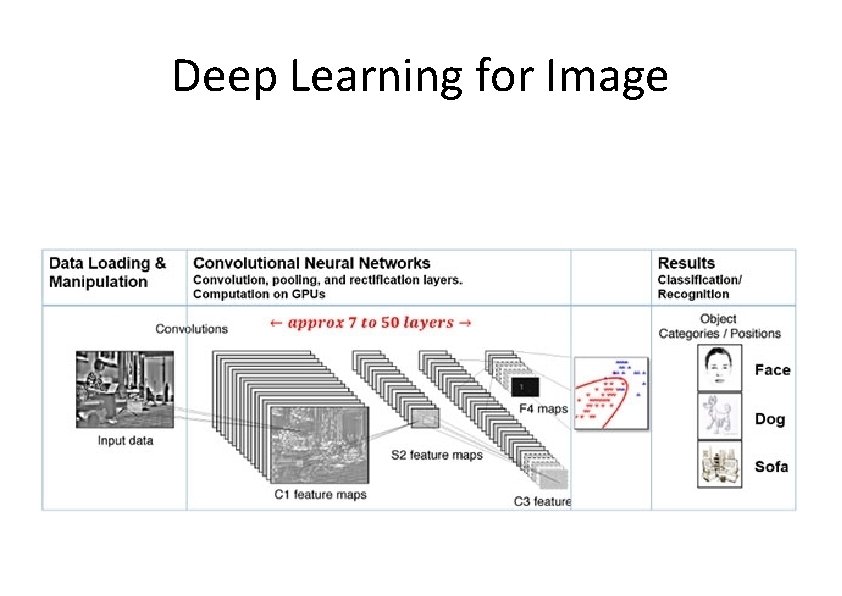

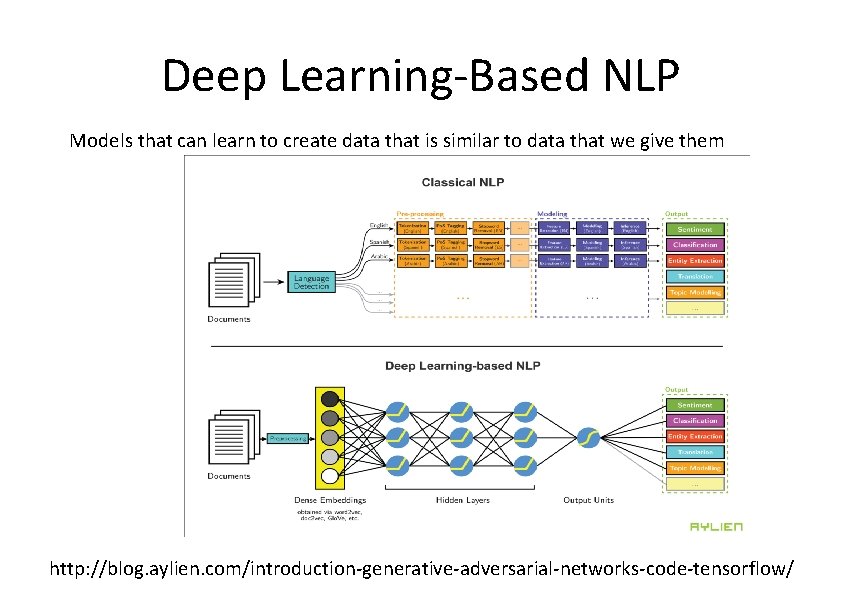

Deep Learning-Based NLP Models that can learn to create data that is similar to data that we give them http: //blog. aylien. com/introduction-generative-adversarial-networks-code-tensorflow/

Deep Learning (con’t) Theano is a python library that makes writing deep learning models easy, and gives the option of training them on a GPU.

Source: Data Science & Big Data Analytics, 2015

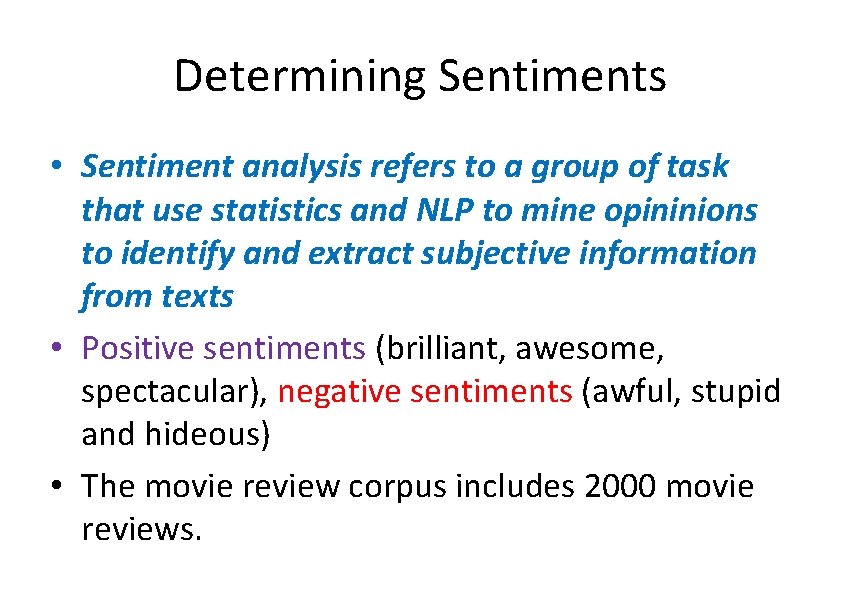

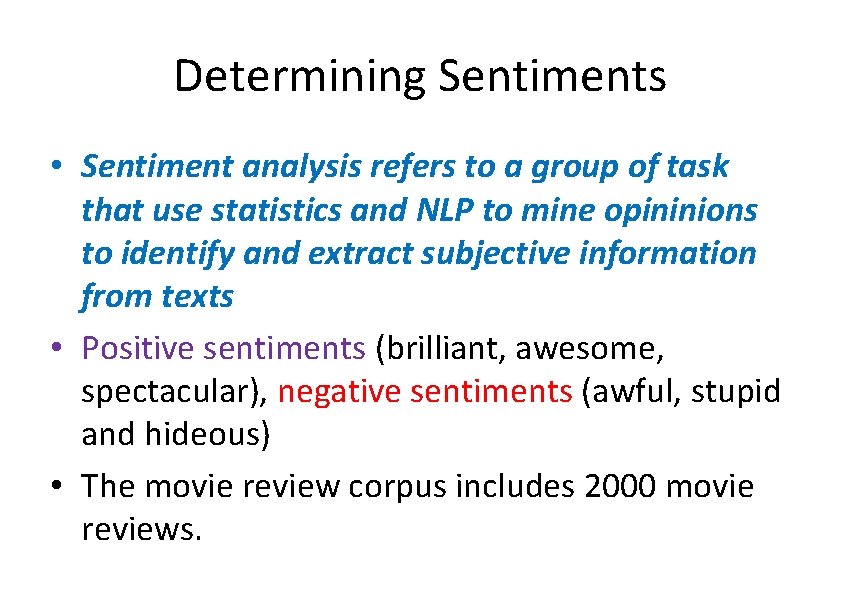

Determining Sentiments • Sentiment analysis refers to a group of task that use statistics and NLP to mine opininions to identify and extract subjective information from texts • Positive sentiments (brilliant, awesome, spectacular), negative sentiments (awful, stupid and hideous) • The movie review corpus includes 2000 movie reviews.

Sentiment analysis (sentiment 140. com) • Go et al use classification methods includeng naïve Bayes and SVM over the training and testing dataset to perform sentiment classifications.

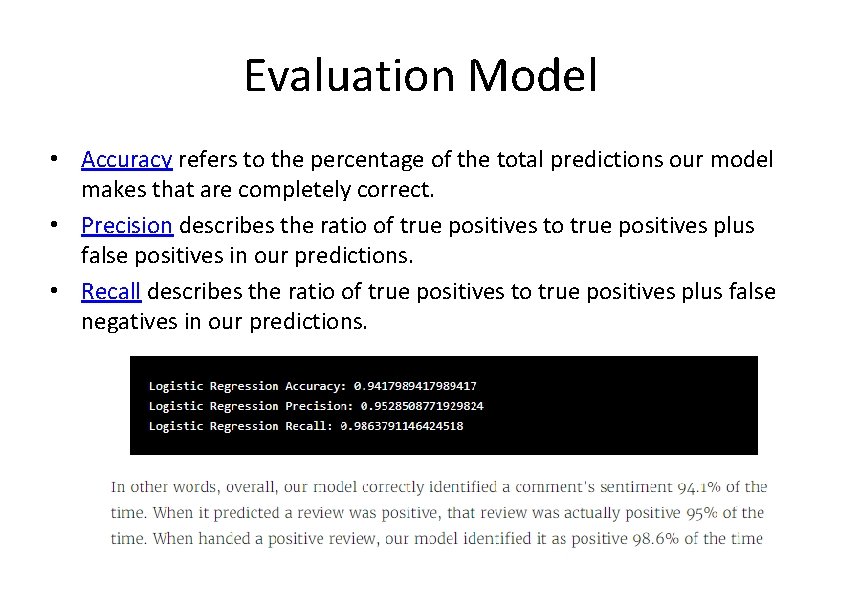

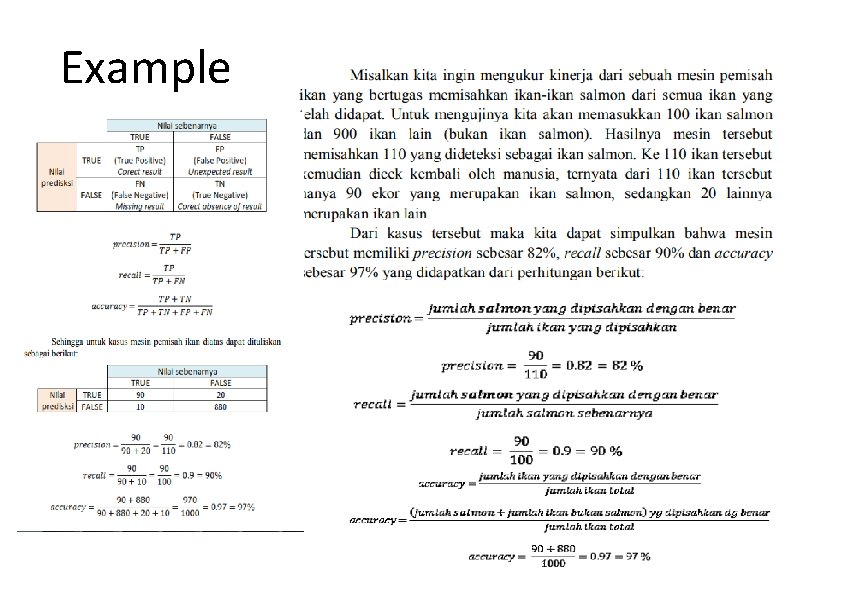

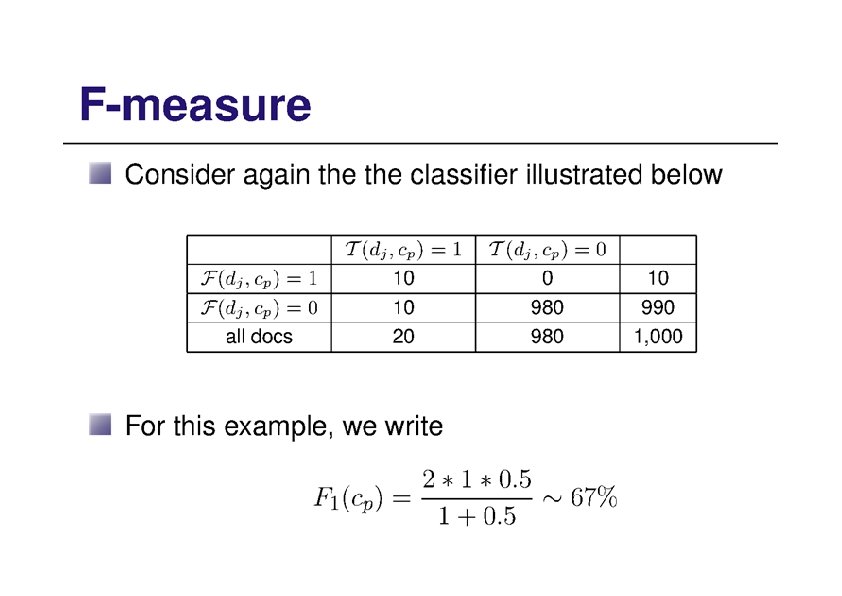

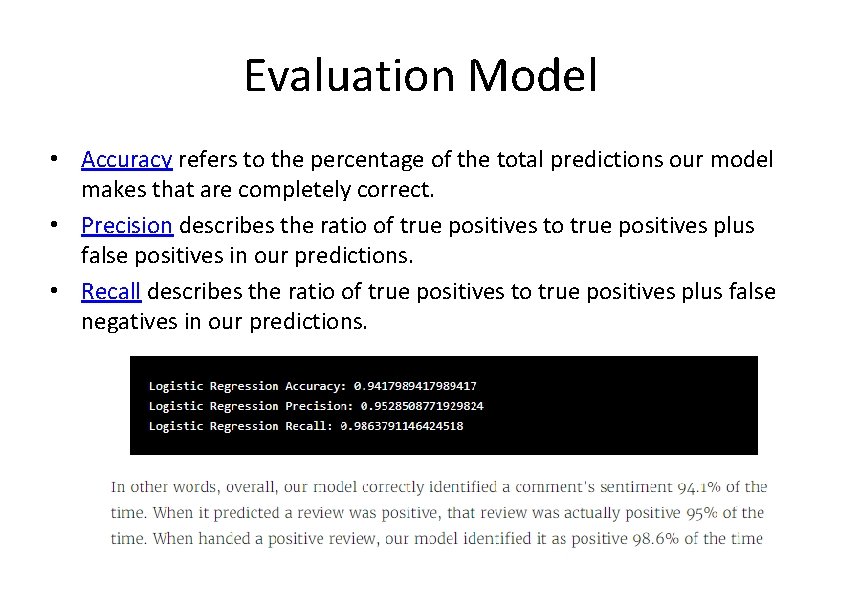

Evaluation Model • Accuracy refers to the percentage of the total predictions our model makes that are completely correct. • Precision describes the ratio of true positives to true positives plus false positives in our predictions. • Recall describes the ratio of true positives to true positives plus false negatives in our predictions.

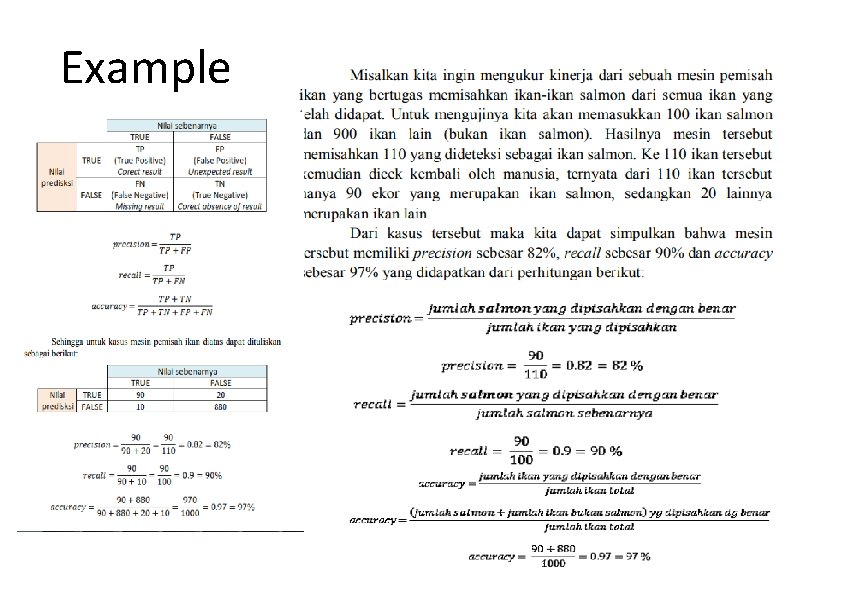

Example

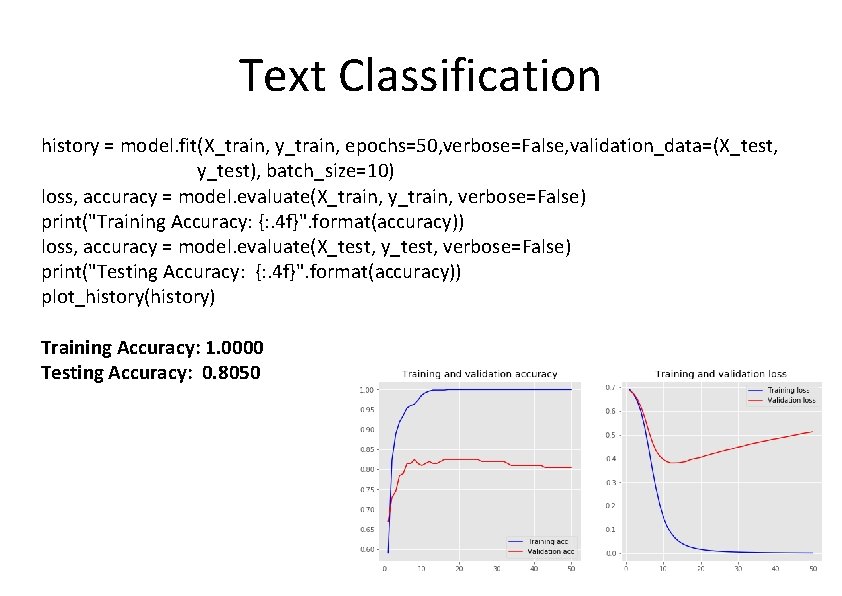

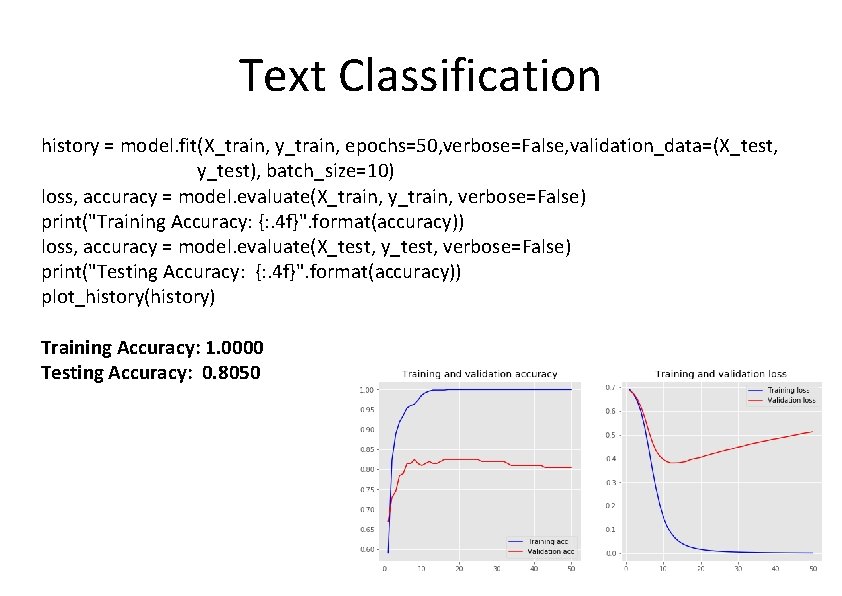

Text Classification history = model. fit(X_train, y_train, epochs=50, verbose=False, validation_data=(X_test, y_test), batch_size=10) loss, accuracy = model. evaluate(X_train, y_train, verbose=False) print("Training Accuracy: {: . 4 f}". format(accuracy)) loss, accuracy = model. evaluate(X_test, y_test, verbose=False) print("Testing Accuracy: {: . 4 f}". format(accuracy)) plot_history(history) Training Accuracy: 1. 0000 Testing Accuracy: 0. 8050

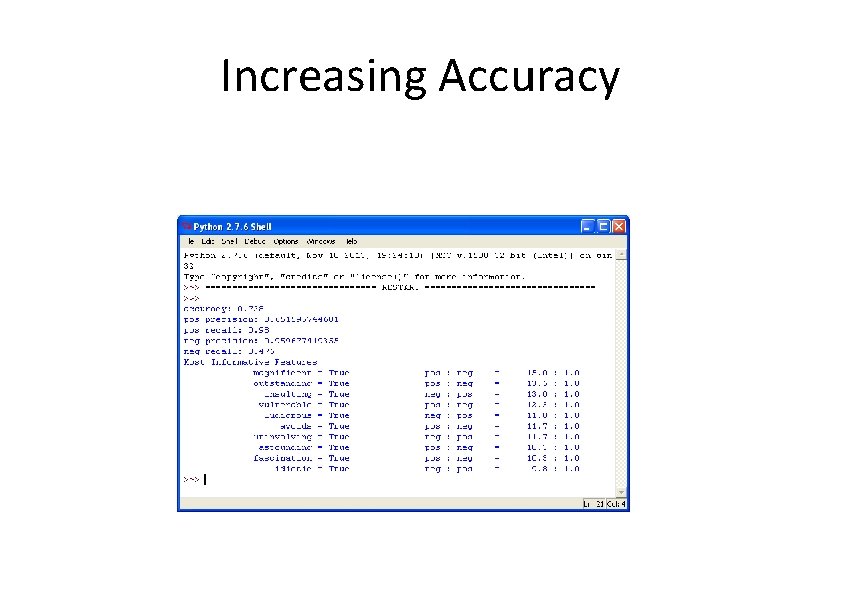

experiment • 2000 reviews split into 1600 reviews as the training set and 400 reviews as the testing set.

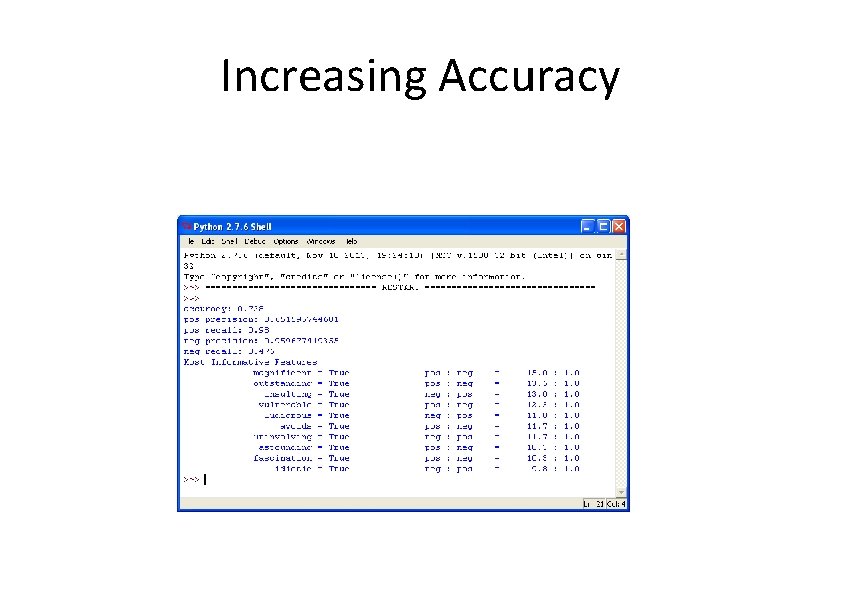

Increasing Accuracy

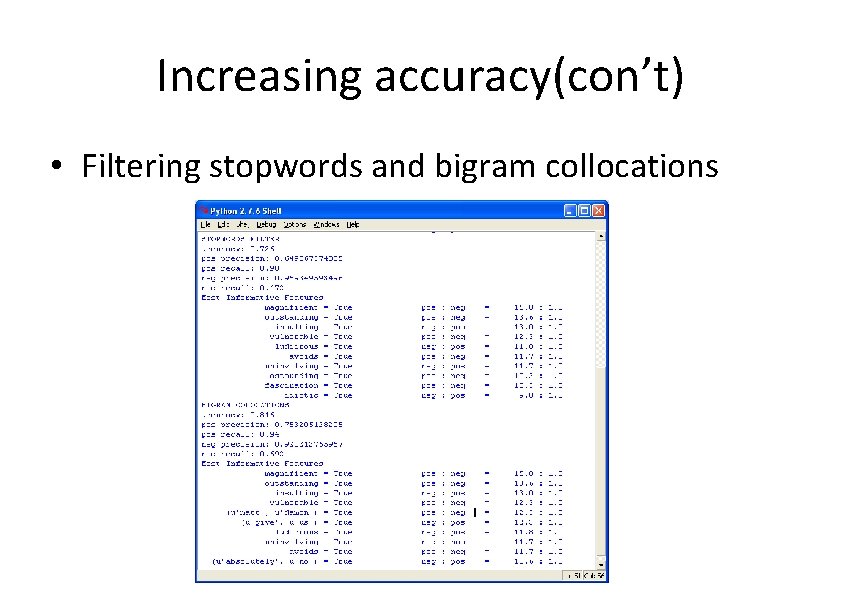

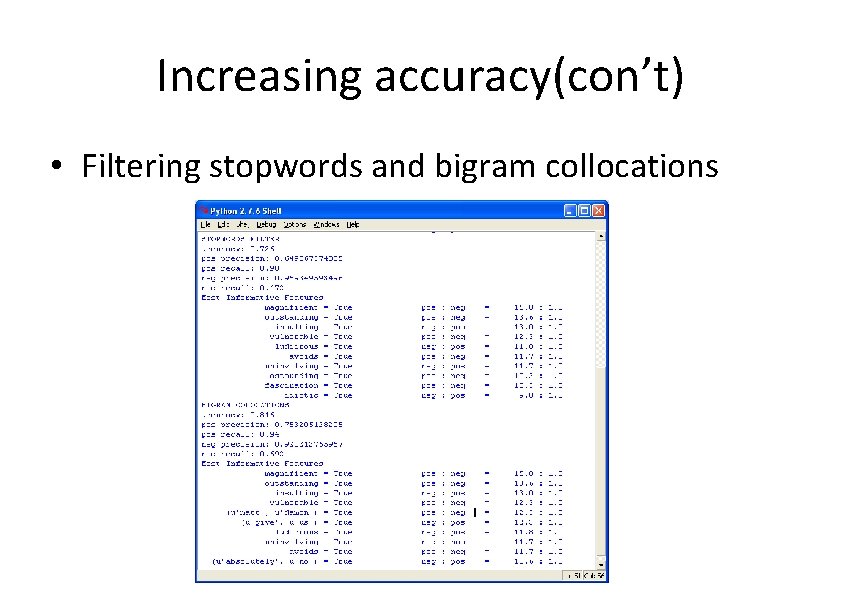

Increasing accuracy(con’t) • Filtering stopwords and bigram collocations

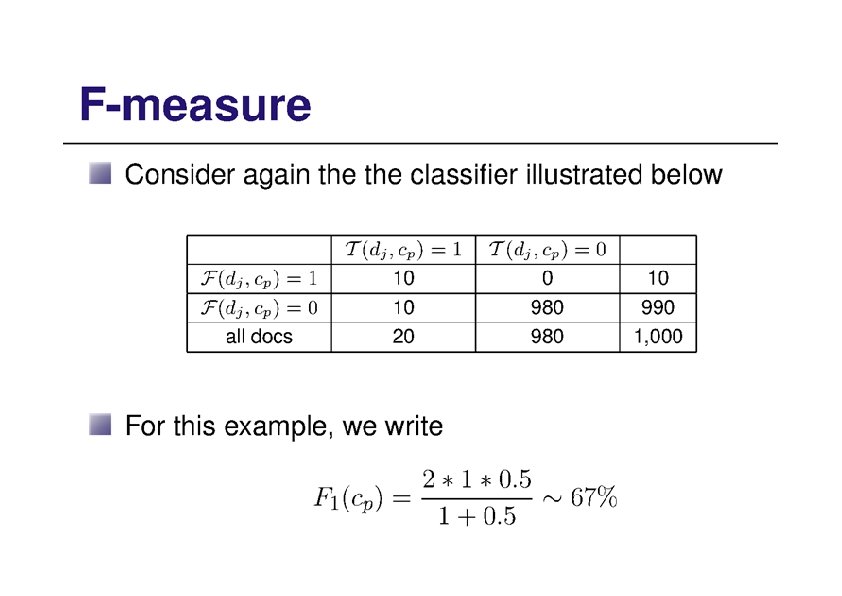

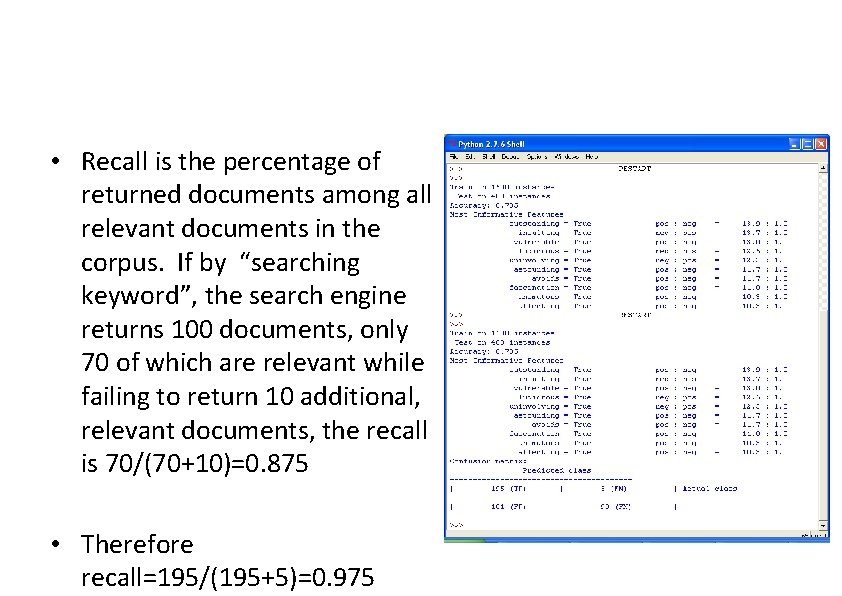

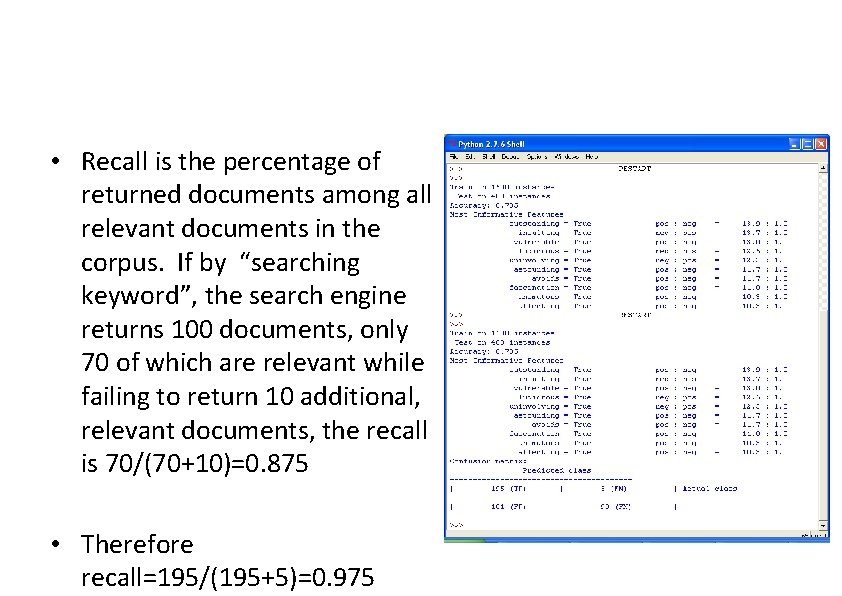

example Precision: percentage of documents in the results that are relevant. The precision of the search engine result: 195/(195+101)=0. 659

• Recall is the percentage of returned documents among all relevant documents in the corpus. If by “searching keyword”, the search engine returns 100 documents, only 70 of which are relevant while failing to return 10 additional, relevant documents, the recall is 70/(70+10)=0. 875 • Therefore recall=195/(195+5)=0. 975

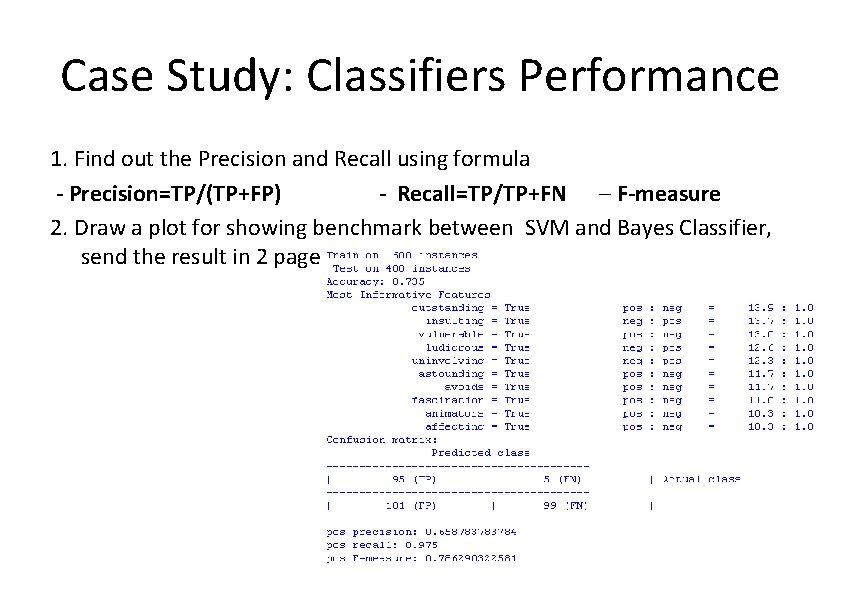

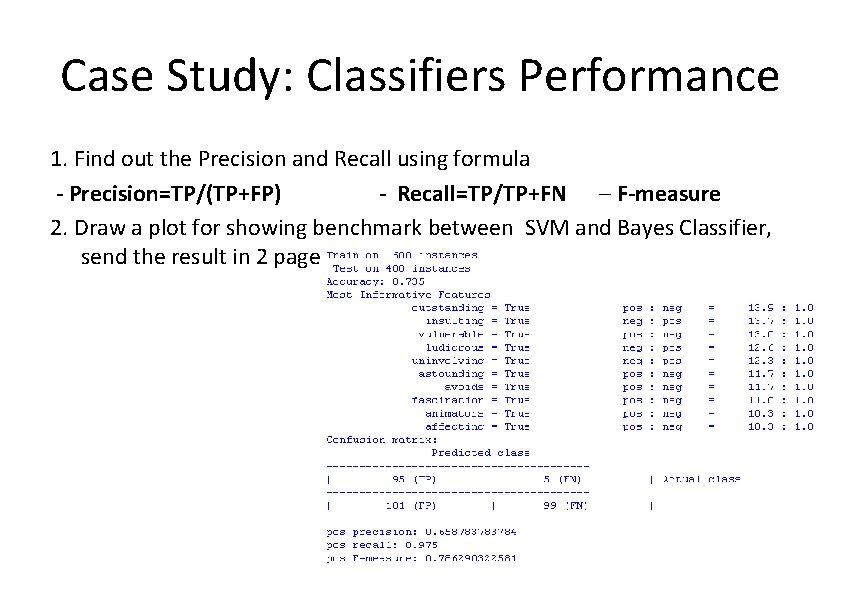

Case Study: Classifiers Performance 1. Find out the Precision and Recall using formula - Precision=TP/(TP+FP) - Recall=TP/TP+FN – F-measure 2. Draw a plot for showing benchmark between SVM and Bayes Classifier, send the result in 2 page

Homework • Please propose a. Multimedia Retrieval Systems using Deep Learning b. Object recognition using Deep Learning (create our own dataset) • Framework: Keras or Tensorflow You need Python and libraries

References • EMC Education (2015), Science and Big Data Analytics, Wiley Publisher. • https: //nlp. stanford. edu/IR-book/pdf/irbookonlinereading. pdf • http: //deeplearning. net/tutorial/deeplearning. pdf • http: //blog. aylien. com/introduction-generative-adversarialnetworks-code-tensorflow/ • Christoper D. Manning (2008), Introduction to Information Retrieval. 2 nd ed. John Wiley & Sons. USA. • Jungi Kim et al (2012), Learning Semantics with Deep Belief Network for Cross-Language Information Retrieval , Proceeding of COLING Conferences. , pp. 578 -588. • Reina, Adit Kurniawan, Iman HK, Widodo Budiharto and Harjanto P, Flexible affix classification for stemming Indonesian Language, 13 th ECTI-CON 2016. 92

References http: //www. albertauyeung. com/post/generatingngrams-python/ https: //stackabuse. com/python-for-nlp-developing-anautomatic-text-filler-using-n-grams/