Knowing What Audiences Learn: Outcomes and Program Planning

- Slides: 41

Knowing What Audiences Learn: Outcomes and Program Planning

Association of Children’s Museums 2003

Institute of Museum and Library Services, Washington, DC, www.imls.gov

Overview

We will
- Distinguish Outcome-based Planning and Evaluation (“OBE”) from other kinds of evaluation
- Talk about choosing outcomes
- Talk about the basic elements of a Logic Model (a project or program plan)
- Talk about measuring outcomes
- Review and summarize

What are Outcomes?

Outcomes are achievements or changes in
- Skill: painting, basketball
- Knowledge: zoology, state capitals
- Behavior: visits museums, reads daily
- Attitude: “I like science,” “I love animals”

What are Outcomes?

Outcomes can be achievements or changes in
- Status: in school, citizen
- Life condition: overweight, healthy

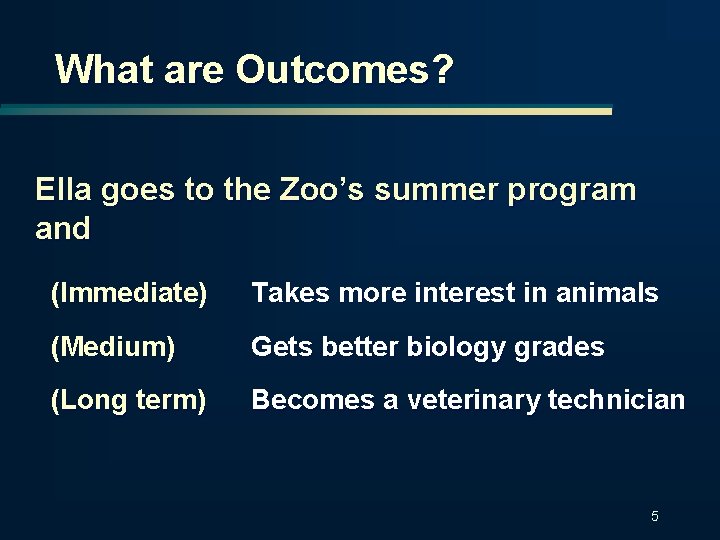

What are Outcomes?

Ella goes to the Zoo’s summer program and
- (Immediate) Takes more interest in animals
- (Medium) Gets better biology grades
- (Long term) Becomes a veterinary technician

Outcomes: Where do they come from?

- Social Services and United Way
- OMB: GPRA (1993) and PART
- Trends in funding
- The need to focus on audience
- The need to communicate museum value
- IMLS

Forms of Evaluation: Formative Evaluation

You want to know which brochure works best to bring school tours to the zoo. Develop two or three prototypes and test them on a sample of your target audience; decide which worked best.

Forms of Evaluation: Process Evaluation

You want to see how efficiently your conservation education program is run. You count the number of participants in program components and examine how the components are delivered, how long they take, and what they cost.

Forms of Evaluation: Summative Evaluation

You want to know if your exhibit program made visitors aware of how they can protect their environment. You run the program, then interview visitors to learn how many might use “green” products more in future.

Forms of Evaluation: Impact Evaluation (aggregated outcomes create impact)

You want to know if your programs helped increase recycling in your area. You find out the rate of recycling, run the program, then see if the recycling rate has increased.

Forms of Evaluation: Outcome-Based Program Evaluation

You want to know if your conservation education program changes participants’ behaviors. You identify audience needs, plan services to provide participant-oriented outcomes, and assess program results on a regular basis.

What can OBE achieve?

- Increase program effectiveness
- Provide a logical, focused framework to guide program design
- Inform decision-making
- Document successes
- Communicate program value

What are its limitations?

- This is a management tool
- OBE may suggest cause and effect; it doesn't try to prove it
- OBE settles for contribution, not attribution
- OBE uses some of the same methods as research, but …

What are its limitations?

OBE is not the same as RESEARCH:
- Doesn’t try to compare your program with another, similar program
- Doesn’t try to compare your methods with different methods to create a similar result
- Accepts “good enough” data

How to develop an outcome-based program: Example

What need do you see?
- Many kids don’t understand basic science principles; many girls are intimidated by or uninterested in science
- Head Start is an opportunity for early science experience, but many teachers have no science education and don’t know how to make it fun
- Parents in at-risk families often have little science knowledge, limited child-development skills, and limited reading skills

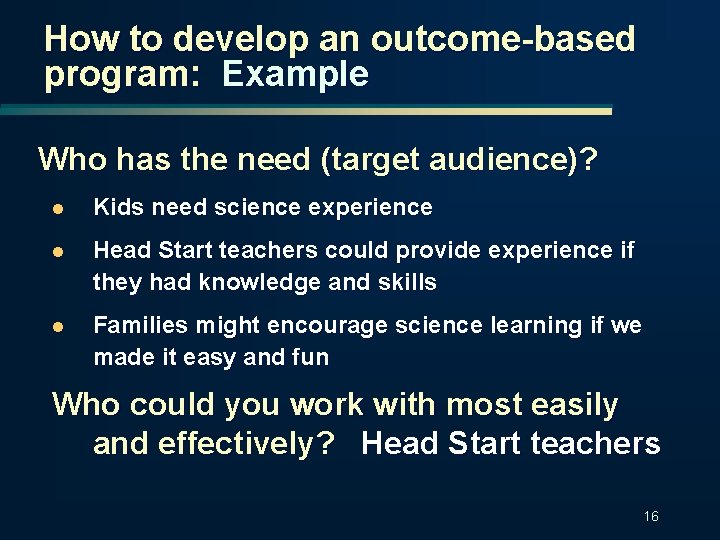

How to develop an outcome-based program: Example

Who has the need (target audience)?
- Kids need science experience
- Head Start teachers could provide experience if they had knowledge and skills
- Families might encourage science learning if we made it easy and fun

Who could you work with most easily and effectively? Head Start teachers

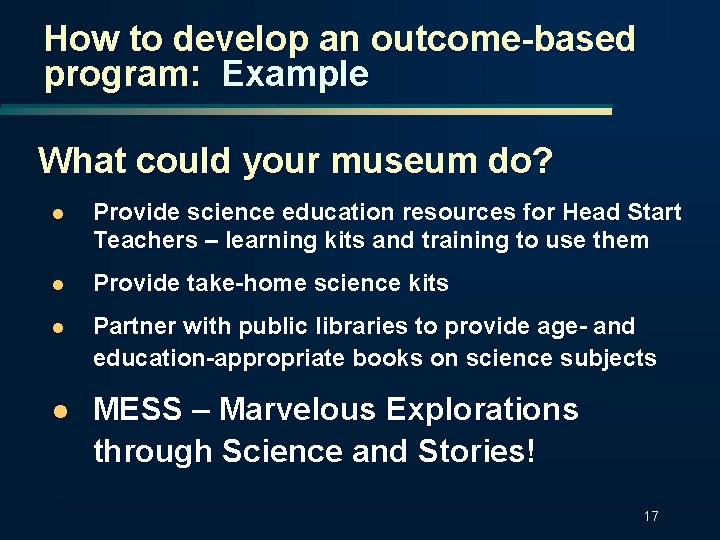

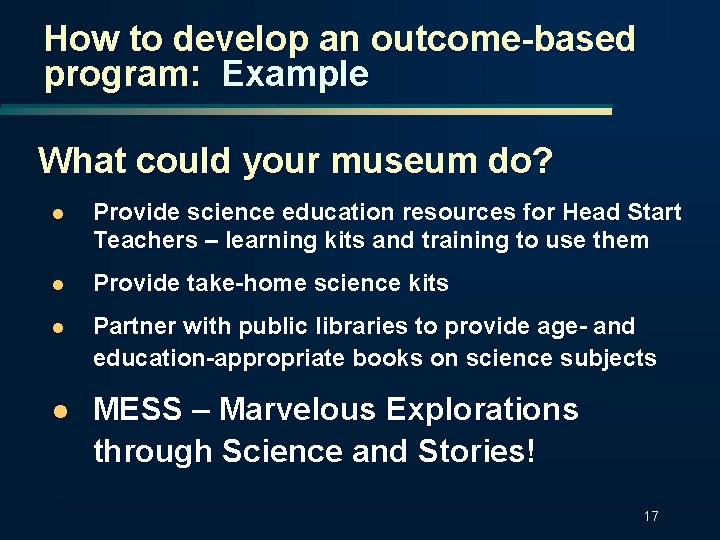

How to develop an outcome-based program: Example

What could your museum do?
- Provide science education resources for Head Start teachers: learning kits and training to use them
- Provide take-home science kits
- Partner with public libraries to provide age- and education-appropriate books on science subjects
- MESS: Marvelous Explorations through Science and Stories!

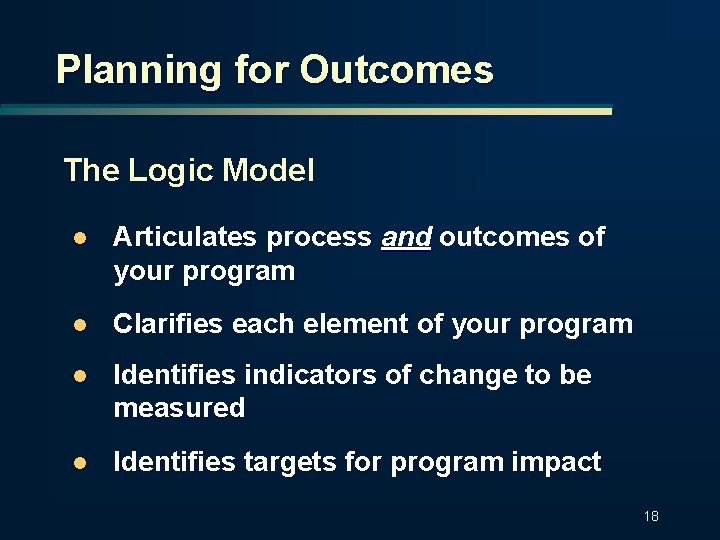

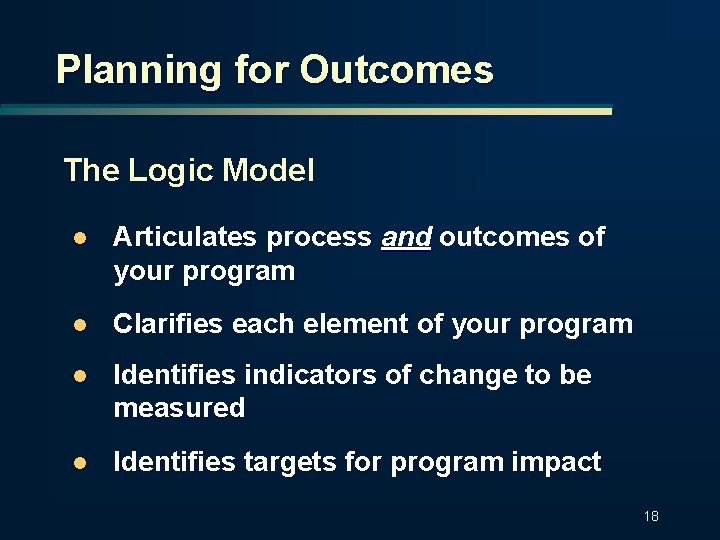

Planning for Outcomes: The Logic Model

- Articulates process and outcomes of your program
- Clarifies each element of your program
- Identifies indicators of change to be measured
- Identifies targets for program impact

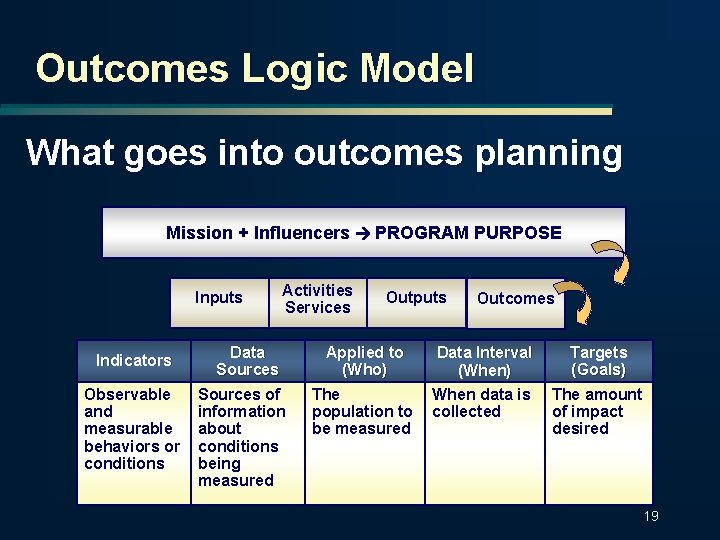

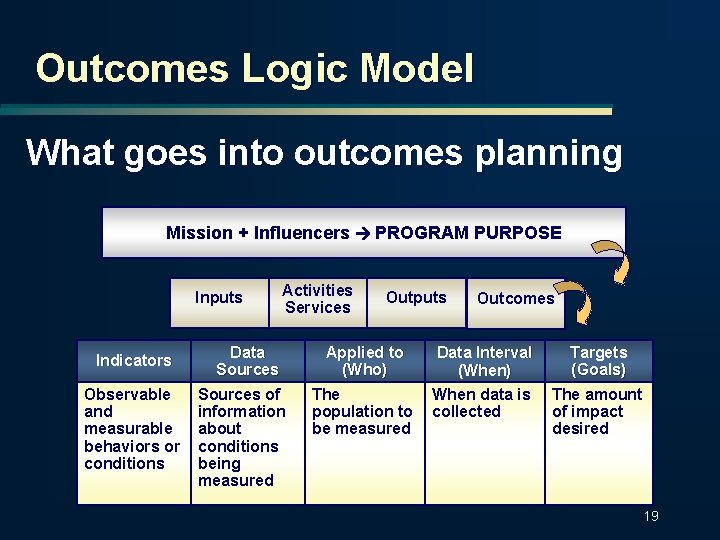

Outcomes Logic Model: What goes into outcomes planning

Mission + Influencers lead to the PROGRAM PURPOSE

Program elements: Inputs, Activities, Services, Outputs, Outcomes

Measurement elements for each outcome:
- Indicators: Observable and measurable behaviors or conditions
- Data Sources: Sources of information about conditions being measured
- Applied to (Who): The population to be measured
- Data Interval (When): When data is collected
- Targets (Goals): The amount of impact desired

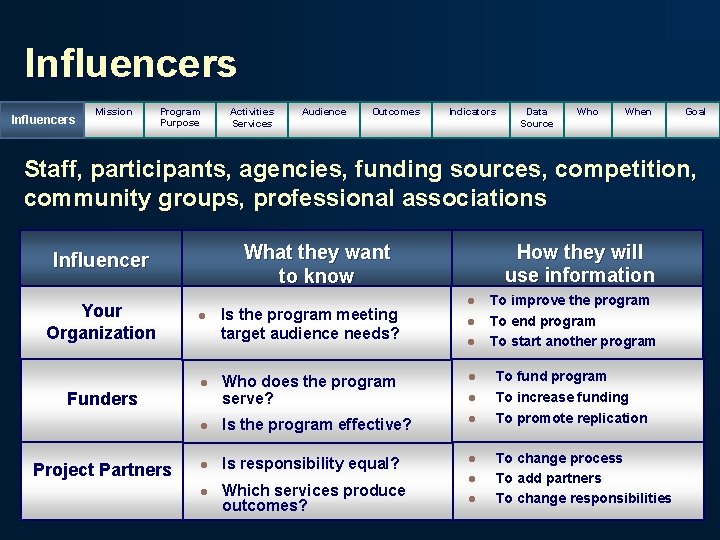

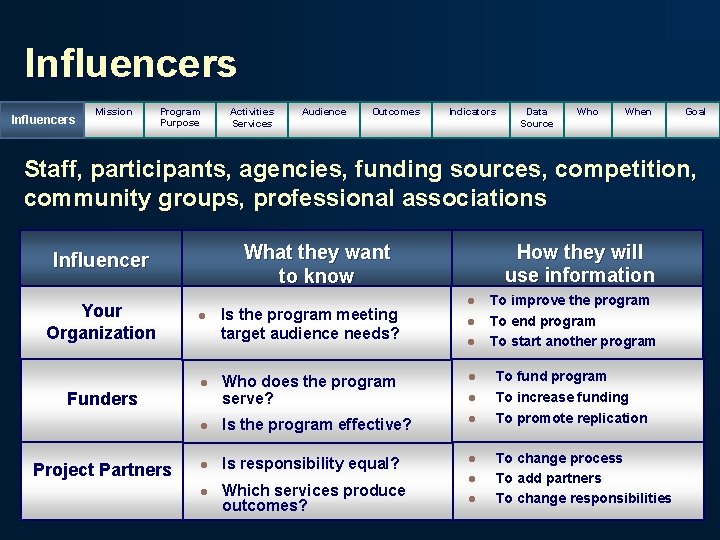

Logic Model elements: Influencers, Mission, Program Purpose, Activities, Services, Audience, Outcomes, Indicators, Data Source, Who, When, Goal

Influencers: staff, participants, agencies, funding sources, competition, community groups, professional associations

| Influencer | What they want to know | How they will use information |
| --- | --- | --- |
| Your Organization | Is the program meeting target audience needs? | To improve the program; to end program; to start another program |
| Funders | Who does the program serve? Is the program effective? | To fund program; to increase funding; to promote replication |
| Project Partners | Is responsibility equal? Which services produce outcomes? | To change process; to add partners; to change responsibilities |

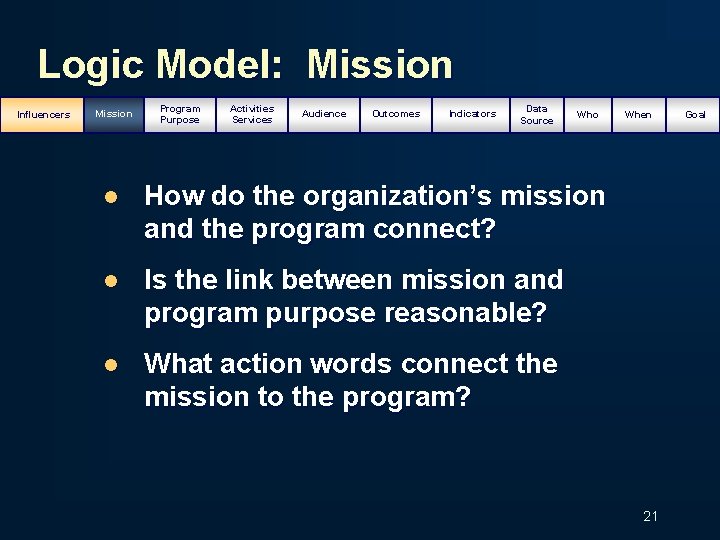

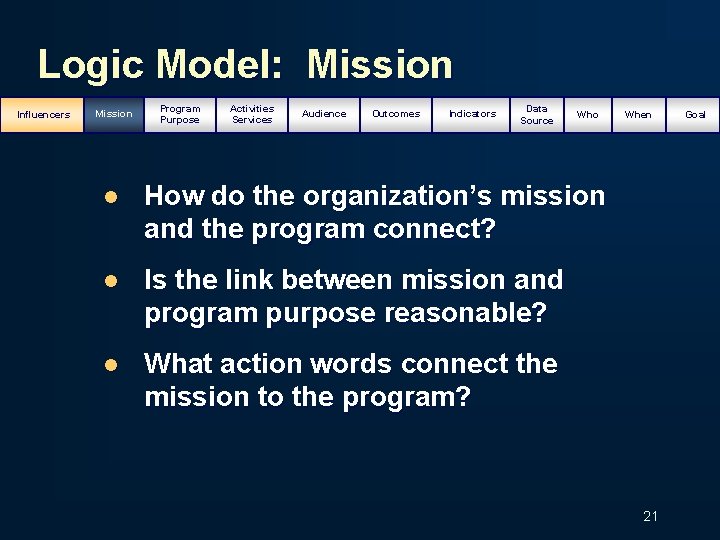

Logic Model: Mission

- How do the organization’s mission and the program connect?
- Is the link between mission and program purpose reasonable?
- What action words connect the mission to the program?

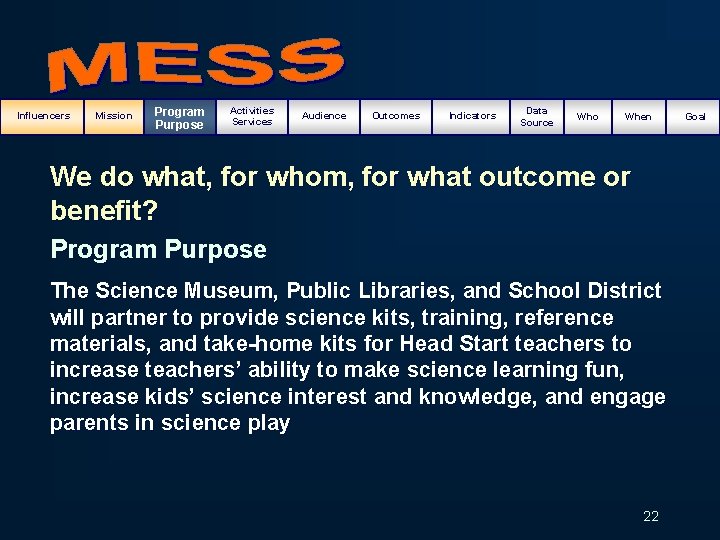

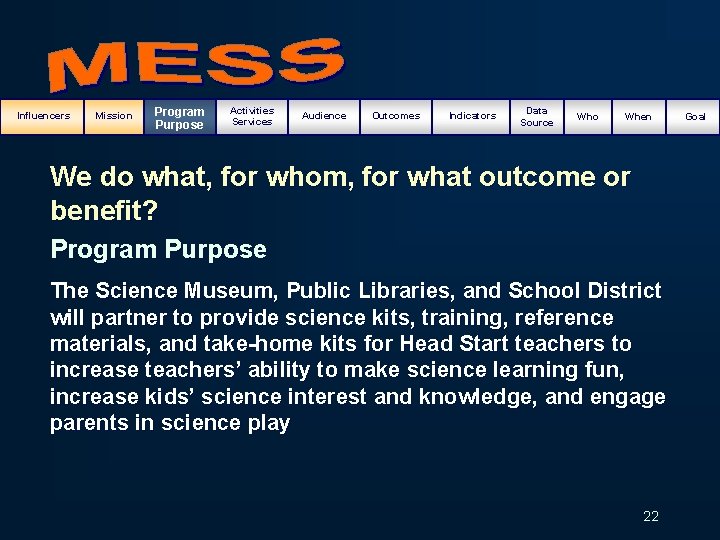

Program Purpose

We do what, for whom, for what outcome or benefit?

The Science Museum, Public Libraries, and School District will partner to provide science kits, training, reference materials, and take-home kits for Head Start teachers to increase teachers’ ability to make science learning fun, increase kids’ science interest and knowledge, and engage parents in science play.

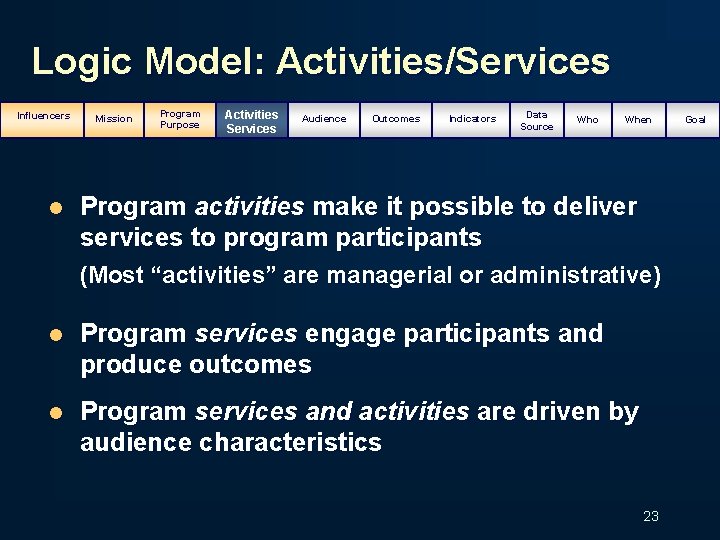

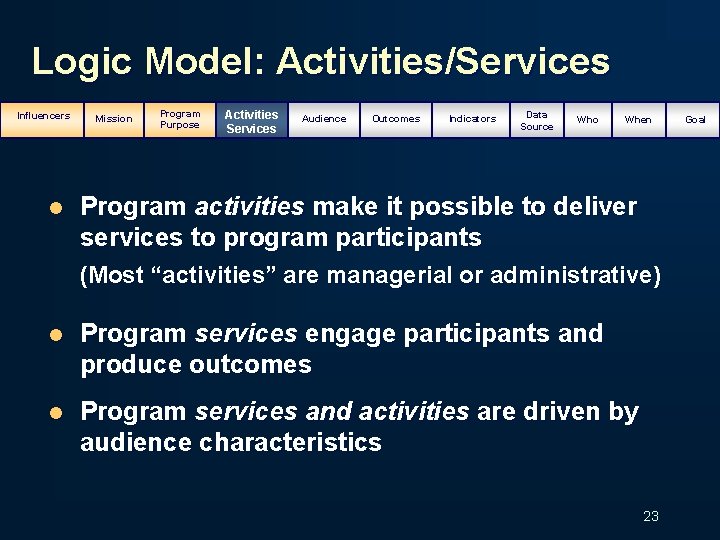

Logic Model: Activities/Services

- Program activities make it possible to deliver services to program participants (most “activities” are managerial or administrative)
- Program services engage participants and produce outcomes
- Program services and activities are driven by audience characteristics

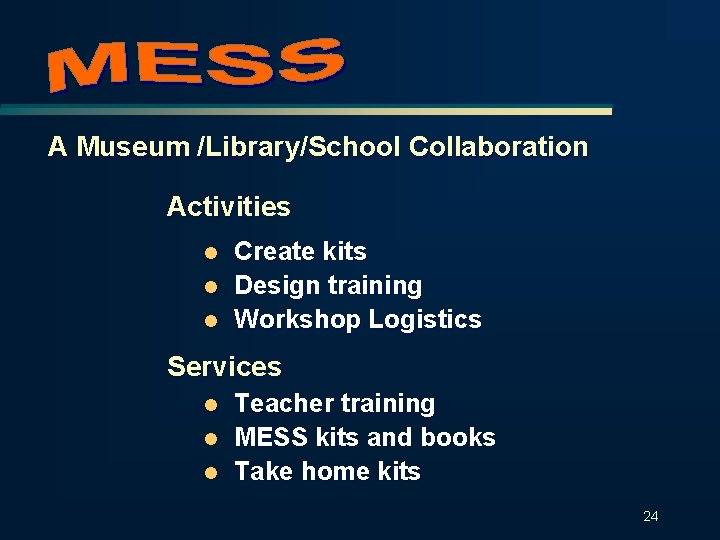

A Museum/Library/School Collaboration

Activities
- Create kits
- Design training
- Workshop logistics

Services
- Teacher training
- MESS kits and books
- Take-home kits

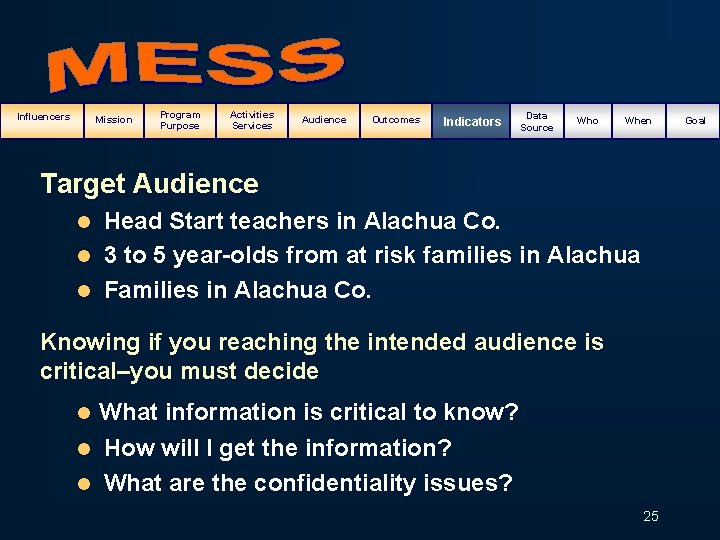

Target Audience

- Head Start teachers in Alachua Co.
- 3- to 5-year-olds from at-risk families in Alachua Co.
- Families in Alachua Co.

Knowing whether you are reaching the intended audience is critical. You must decide:
- What information is critical to know?
- How will I get the information?
- What are the confidentiality issues?

Logic Model: Outcomes

Outcomes
- State how you expect people to benefit from your program
- State the intended results of your services
- Describe changes in skills, attitudes, behaviors, knowledge

Sample Outcomes

- Outcome 1: Teachers will be more confident helping students learn about science
- Outcome 2: Teachers will include science experiences in their classrooms

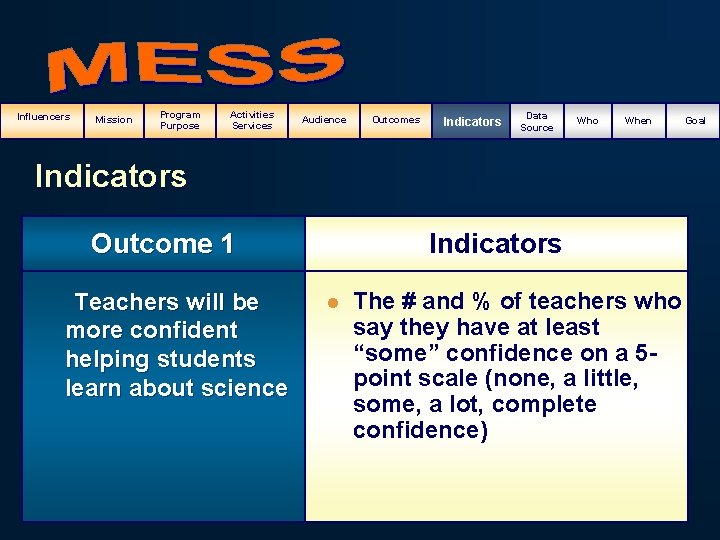

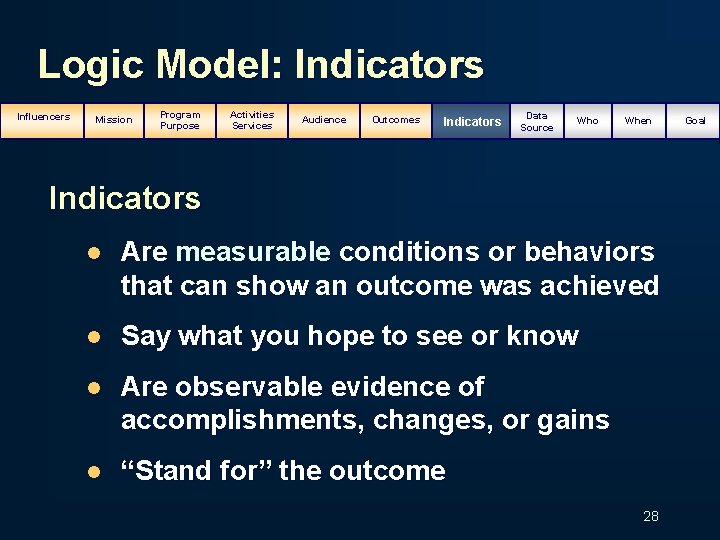

Logic Model: Indicators

Indicators
- Are measurable conditions or behaviors that can show an outcome was achieved
- Say what you hope to see or know
- Are observable evidence of accomplishments, changes, or gains
- “Stand for” the outcome

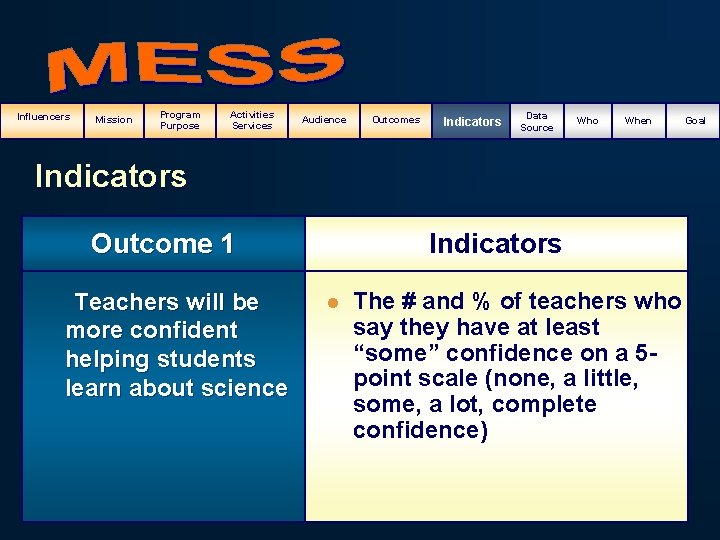

Indicators

- Outcome 1: Teachers will be more confident helping students learn about science
- Indicator: The # and % of teachers who say they have at least “some” confidence on a 5-point scale (none, a little, some, a lot, complete confidence)
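The indicator for Outcome 1 can be tallied directly from survey answers. The following is a minimal sketch and not part of the original presentation: the function name `confidence_indicator` and the sample answers are hypothetical, and it assumes each teacher's answer is recorded as one of the five scale labels.

```python
# Scale labels come from the slide; everything else here is illustrative.
SCALE = ["none", "a little", "some", "a lot", "complete confidence"]

def confidence_indicator(responses, threshold="some"):
    """Return (count, percent) of responses at or above `threshold` on SCALE."""
    cutoff = SCALE.index(threshold)
    count = sum(1 for r in responses if SCALE.index(r) >= cutoff)
    percent = 100.0 * count / len(responses) if responses else 0.0
    return count, percent

# Made-up answers from eight teachers (not real program data).
sample = ["none", "some", "a lot", "a little", "some",
          "complete confidence", "a little", "a lot"]

count, percent = confidence_indicator(sample)
print(f"{count} of {len(sample)} teachers ({percent:.1f}%) report at least 'some' confidence")
```

Reporting both the number and the percentage, as the indicator asks, keeps small groups from looking more impressive than they are.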

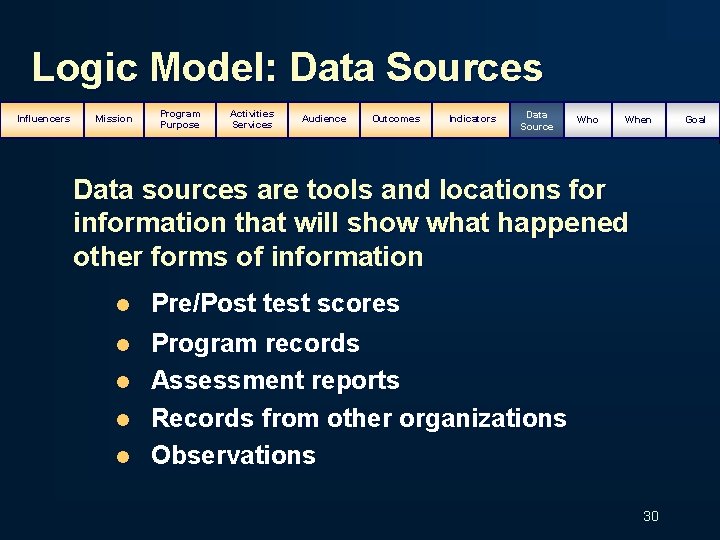

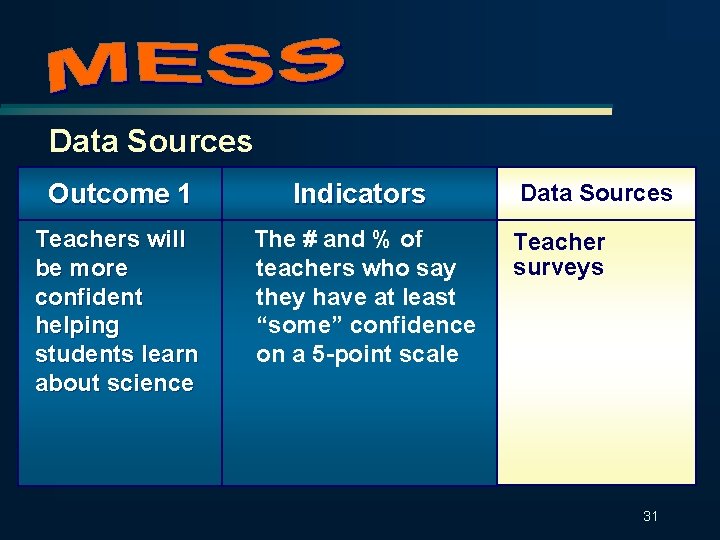

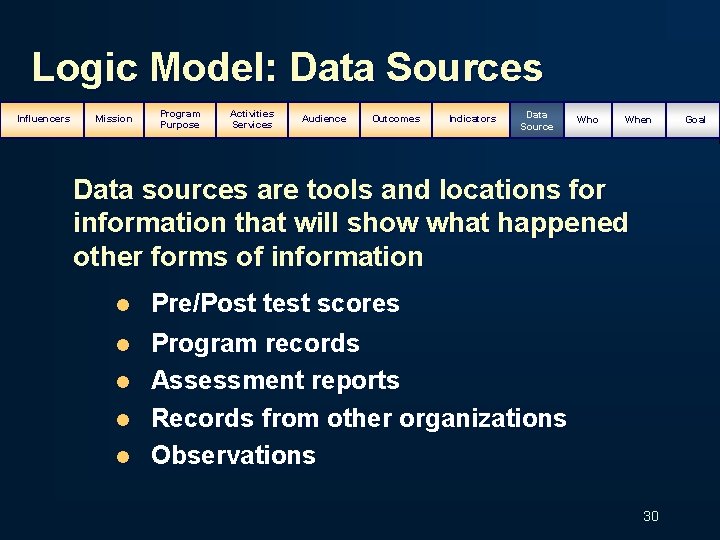

Logic Model: Data Sources

Data sources are tools and locations for information that will show what happened:
- Pre/post test scores
- Program records
- Assessment reports
- Records from other organizations
- Observations
- Other forms of information

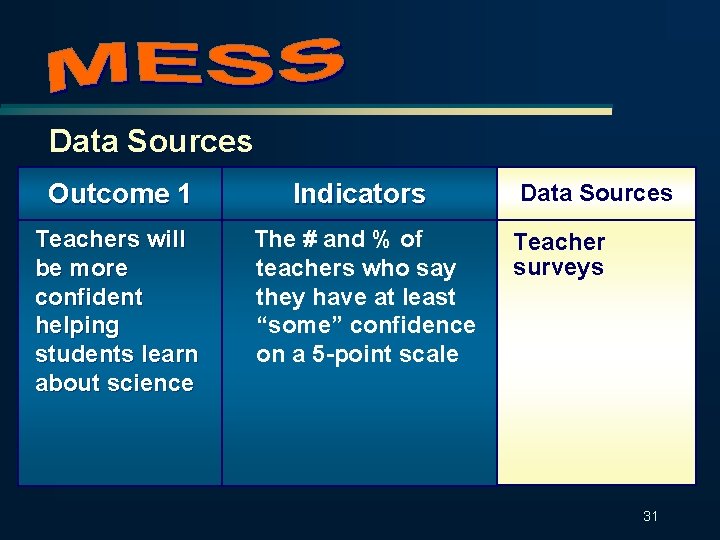

Data Sources

- Outcome 1: Teachers will be more confident helping students learn about science
- Indicators: The # and % of teachers who say they have at least “some” confidence on a 5-point scale
- Data Sources: Teacher surveys

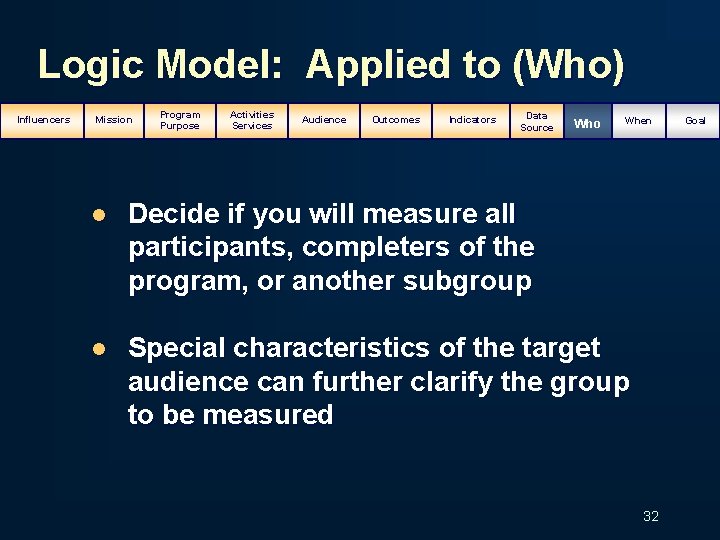

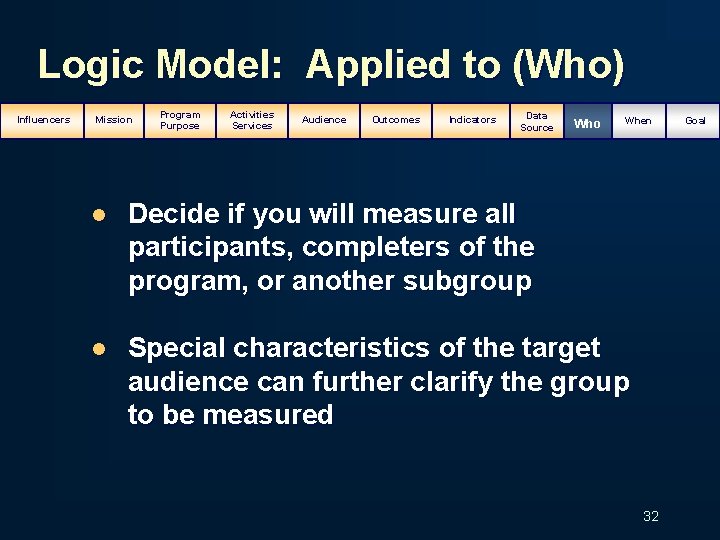

Logic Model: Applied to (Who)

- Decide if you will measure all participants, completers of the program, or another subgroup
- Special characteristics of the target audience can further clarify the group to be measured

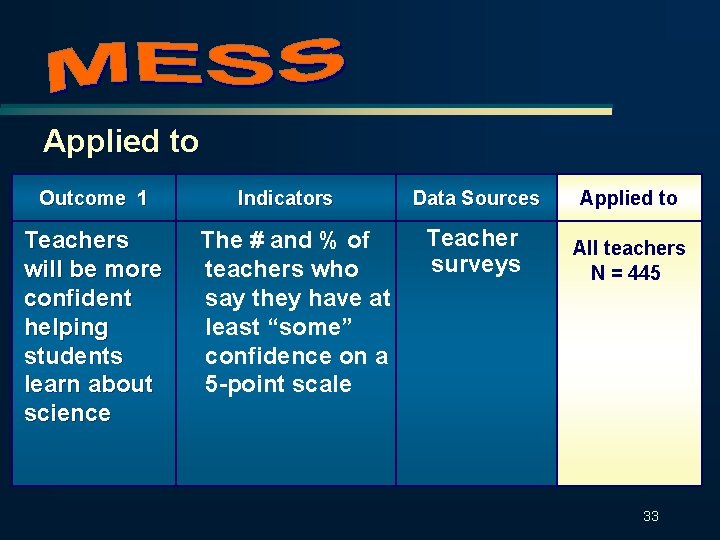

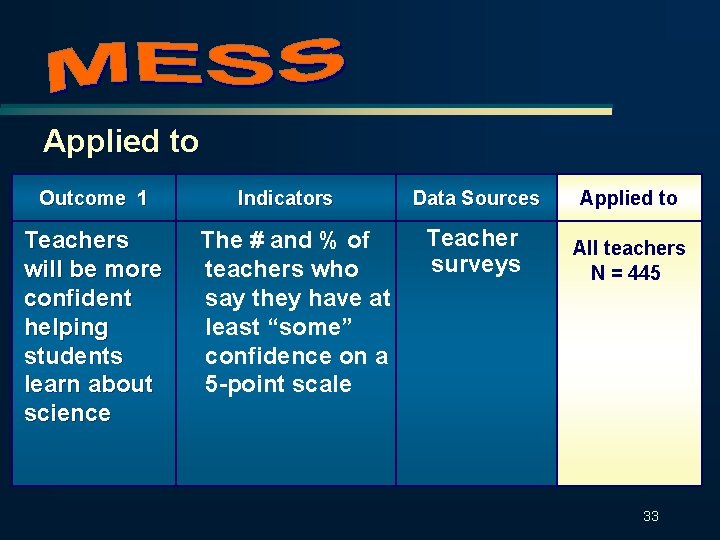

Applied to

- Outcome 1: Teachers will be more confident helping students learn about science
- Indicators: The # and % of teachers who say they have at least “some” confidence on a 5-point scale
- Data Sources: Teacher surveys
- Applied to: All teachers (N = 445)

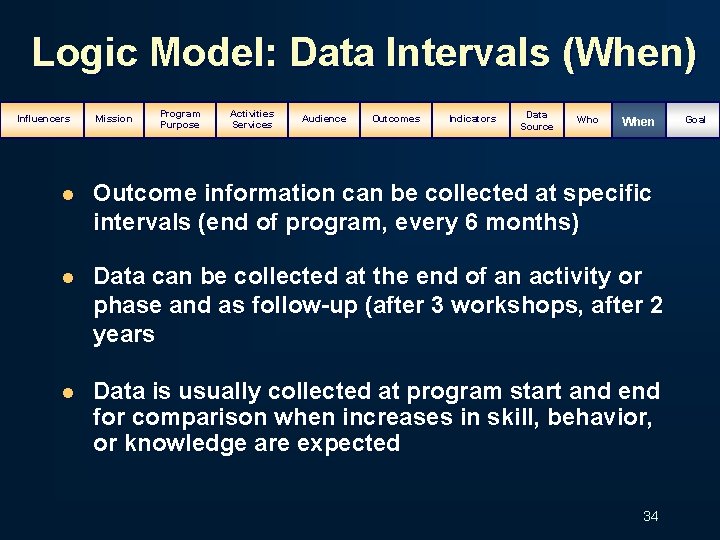

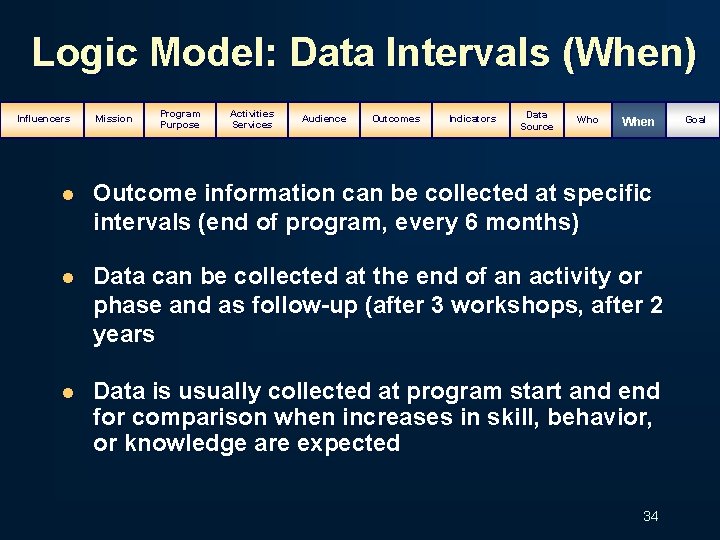

Logic Model: Data Intervals (When)

- Outcome information can be collected at specific intervals (end of program, every 6 months)
- Data can be collected at the end of an activity or phase and as follow-up (after 3 workshops, after 2 years)
- Data is usually collected at program start and end for comparison when increases in skill, behavior, or knowledge are expected
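A start-and-end comparison of this kind can be sketched in a few lines of Python. This is an illustrative assumption, not a method from the presentation: the function name `pre_post_change` and the scores are hypothetical, and the sketch assumes the two lists hold scores for the same participants in the same order.

```python
def pre_post_change(pre_scores, post_scores):
    """Compare matched start-of-program and end-of-program scores.

    Returns (number of participants who improved, mean change per participant).
    """
    if len(pre_scores) != len(post_scores):
        raise ValueError("pre and post lists must describe the same participants")
    changes = [post - pre for pre, post in zip(pre_scores, post_scores)]
    improved = sum(1 for c in changes if c > 0)
    mean_change = sum(changes) / len(changes) if changes else 0.0
    return improved, mean_change

# Made-up scores for five participants (not real program data).
pre = [2, 3, 1, 4, 2]
post = [3, 3, 3, 5, 2]

improved, mean_change = pre_post_change(pre, post)
print(f"{improved} of {len(pre)} participants improved; mean change = {mean_change}")
```

A comparison like this shows change over the program period; in keeping with OBE, it suggests contribution rather than proving attribution.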

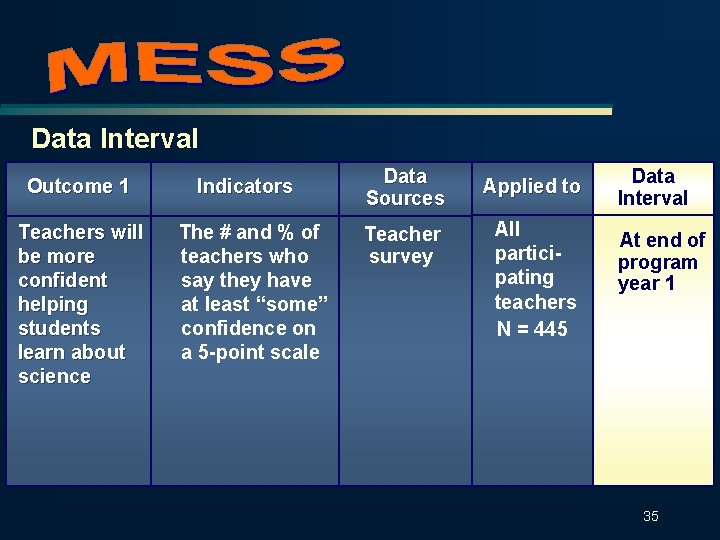

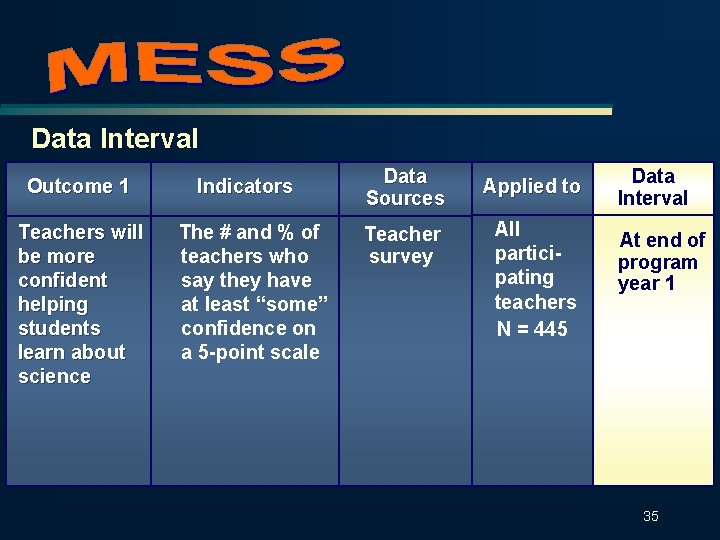

Data Interval

- Outcome 1: Teachers will be more confident helping students learn about science
- Indicators: The # and % of teachers who say they have at least “some” confidence on a 5-point scale
- Data Sources: Teacher survey
- Applied to: All participating teachers (N = 445)
- Data Interval: At end of program year 1

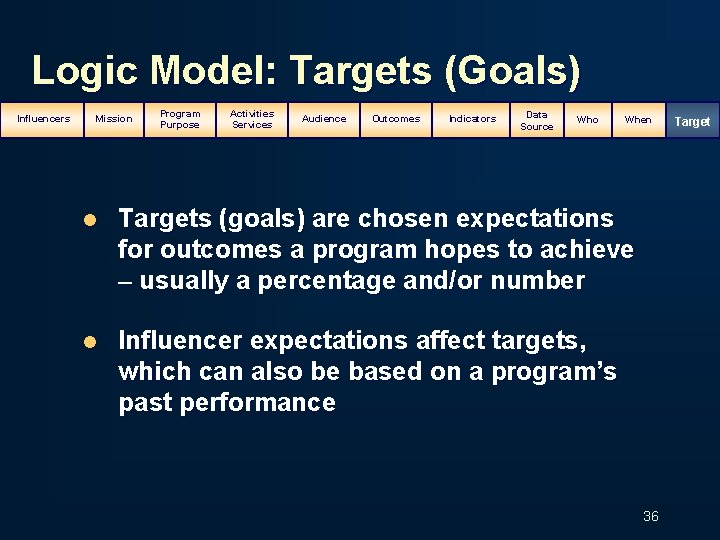

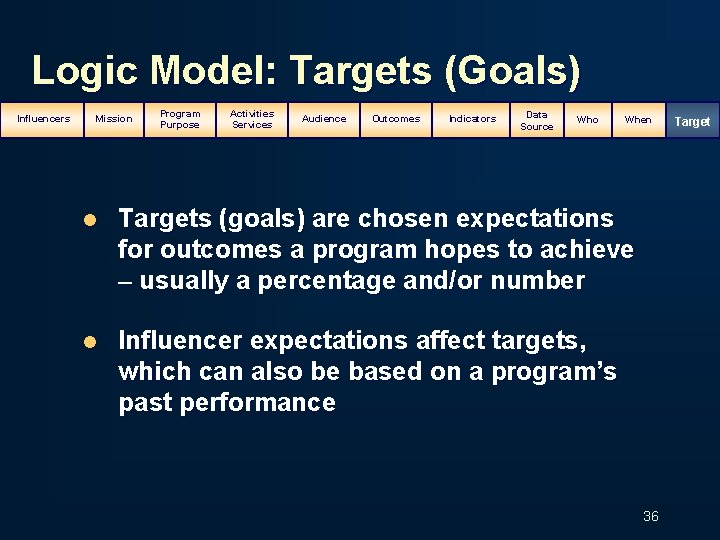

Logic Model: Targets (Goals)

- Targets (goals) are chosen expectations for outcomes a program hopes to achieve, usually a percentage and/or number
- Influencer expectations affect targets, which can also be based on a program’s past performance

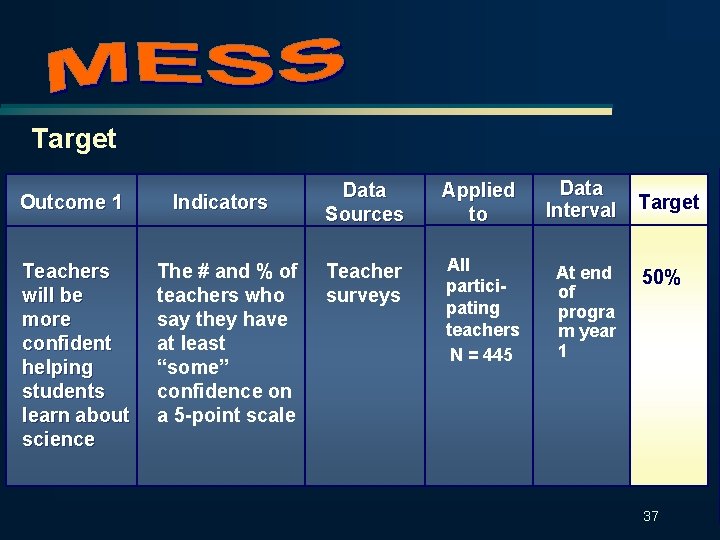

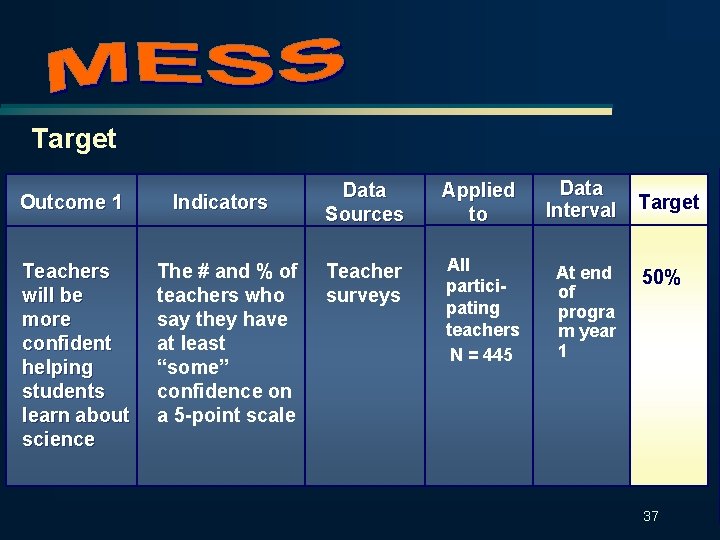

Target

- Outcome 1: Teachers will be more confident helping students learn about science
- Indicators: The # and % of teachers who say they have at least “some” confidence on a 5-point scale
- Data Sources: Teacher surveys
- Applied to: All participating teachers (N = 445)
- Data Interval: At end of program year 1
- Target: 50%
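To make the target concrete, the 50% figure can be translated into a head count for the 445 participating teachers. The sketch below is a hedged illustration: the helper names `teachers_needed` and `target_met` are hypothetical, and it assumes the target is a whole-number percentage and that a fractional teacher rounds up.

```python
def teachers_needed(population, target_percent):
    """Smallest whole number of teachers that reaches `target_percent` of `population`.

    Integer arithmetic (ceiling division) avoids floating-point rounding surprises.
    Assumes `target_percent` is a whole number.
    """
    return (population * target_percent + 99) // 100

def target_met(count, population, target_percent):
    """True if `count` teachers meets or exceeds the target share."""
    return count >= teachers_needed(population, target_percent)

N = 445          # all participating teachers, from the slide
TARGET = 50      # target percentage, from the slide

needed = teachers_needed(N, TARGET)
print(f"At least {needed} of {N} teachers must report 'some' confidence or more")
```

With the slide's numbers, half of 445 is 222.5, so the target is reached only when 223 or more teachers report at least “some” confidence.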

What should reports say?

- We wanted to do what?
- We did what?
- So what?

Above all
- Reports should meet influencer needs for information and program results
- Reports should guide program staff to improve outcomes for program participants

What will it cost?

Assume 7-10% of program costs for program evaluation (non-research)

What will you get?
- Low cost: Know numbers, audience characteristics, and customer satisfaction
- Low to moderate cost: Know changes in audience skills, knowledge, behaviors, and attitudes

What will you get?

- Moderate to high cost: Comparison groups can show attribution of short-term changes to the program
- High cost: Long-term follow-up can attribute long-term changes in the audience to program services (research)
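The 7 to 10% rule of thumb for non-research evaluation is easy to turn into a budget range. This is a minimal sketch under stated assumptions: the function name `evaluation_budget_range` and the $50,000 program cost are hypothetical and are not figures from the presentation.

```python
def evaluation_budget_range(program_cost, low_percent=7, high_percent=10):
    """Return (low, high) evaluation budget as a share of total program cost.

    The 7% and 10% defaults come from the slide; percentages are kept as
    whole numbers and divided last to limit floating-point error.
    """
    low = program_cost * low_percent / 100
    high = program_cost * high_percent / 100
    return low, high

# Hypothetical program cost, for illustration only.
program_cost = 50_000

low, high = evaluation_budget_range(program_cost)
print(f"Plan roughly ${low:,.0f} to ${high:,.0f} for program evaluation")
```

Comparison-group and long-term follow-up studies fall in the moderate-to-high cost tiers and may need a larger budget than this range.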

For more information

Karen Motylewski, Institute of Museum and Library Services, 1100 Pennsylvania Avenue, NW, Washington, DC 20506

202-606-5551, http://www.imls.gov, kmotylewski@imls.gov