Keyword Search over XML

- Slides: 91


Inexact Querying
- Until now, our queries have been complex patterns, represented by trees or graphs.
- Such query languages are not appropriate for the naive user:
  - If XML "replaces" HTML as the web standard, users can't be expected to write graph queries.
- Allow keyword search over XML!

Keyword Search
- A keyword search is a list of search terms.
- There can be different ways to define legal search terms. Examples:
  - label:keyword, e.g., author:Smith
  - keyword only, e.g., :Smith
  - label only, e.g., author:
  - value (without distinguishing between keywords and labels)
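The first three term forms can be parsed mechanically. The sketch below is only an illustration: the names `SearchTerm`, `parse_term` and `parse_query` are hypothetical, and the comma-separated query syntax is assumed from the example queries on the following slides. The fourth form (a bare value) is not handled.

```python
from typing import NamedTuple, Optional


class SearchTerm(NamedTuple):
    label: Optional[str]    # element label, or None if unconstrained
    keyword: Optional[str]  # value keyword, or None if unconstrained


def parse_term(text: str) -> SearchTerm:
    """Parse 'label:keyword', ':keyword' or 'label:' into a SearchTerm."""
    if ":" not in text:
        raise ValueError(f"expected 'label:keyword', got {text!r}")
    label, keyword = (part.strip() for part in text.split(":", 1))
    return SearchTerm(label or None, keyword or None)


def parse_query(text: str) -> list[SearchTerm]:
    """Split a comma-separated query (assumed syntax) into search terms."""
    return [parse_term(term) for term in text.split(",") if term.strip()]
```

For instance, `parse_query(":ACID, :Kempster")` yields two terms with no label constraint.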

Challenges (1)
- Determining which part of the XML document corresponds to an answer
  - When searching HTML, the result units are usually documents.
  - When searching XML, a finer granularity should be returned, e.g., a subtree.

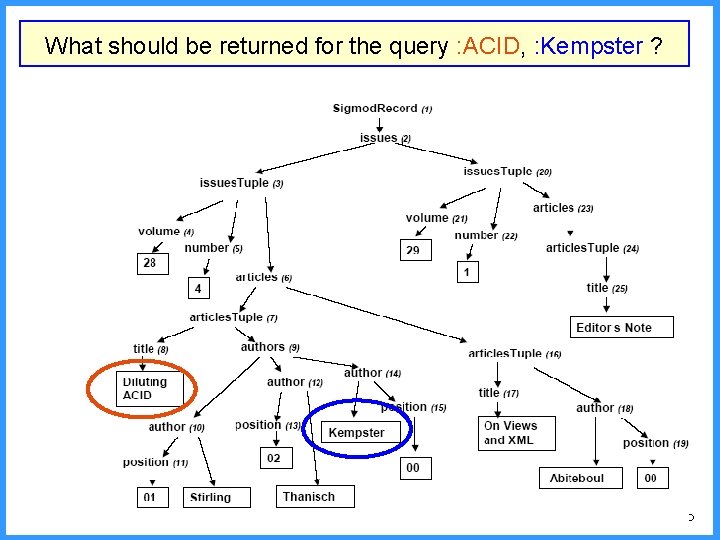

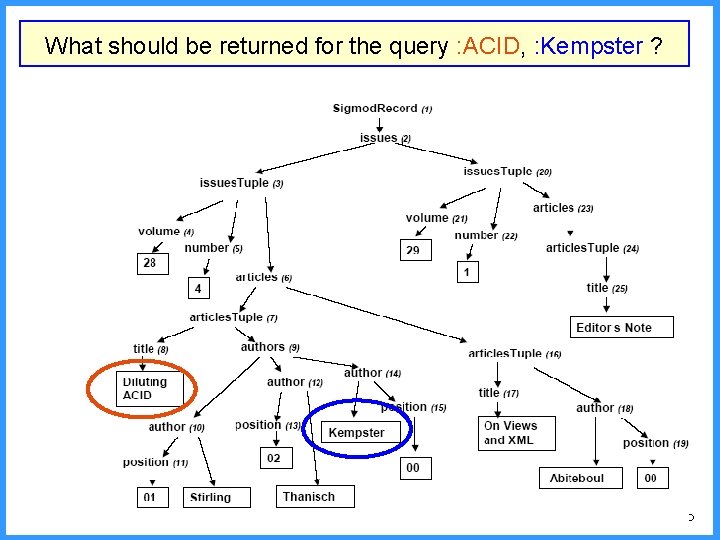

What should be returned for the query :ACID, :Kempster?

Challenges (2)
- Avoiding the return of elements that are not meaningfully related
  - XML documents often contain many unrelated fragments of information. Can these information units be recognized?

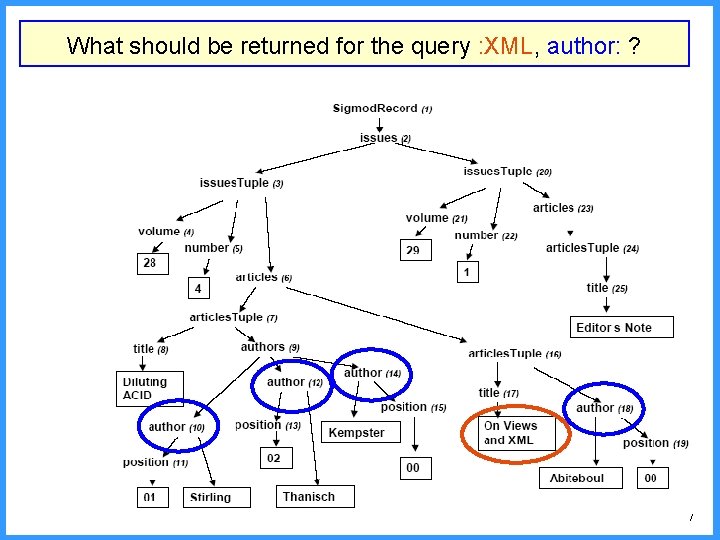

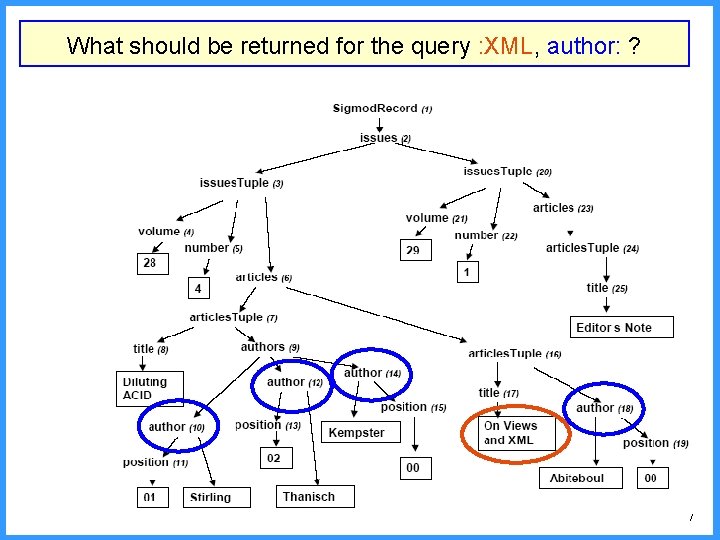

What should be returned for the query :XML, author:?

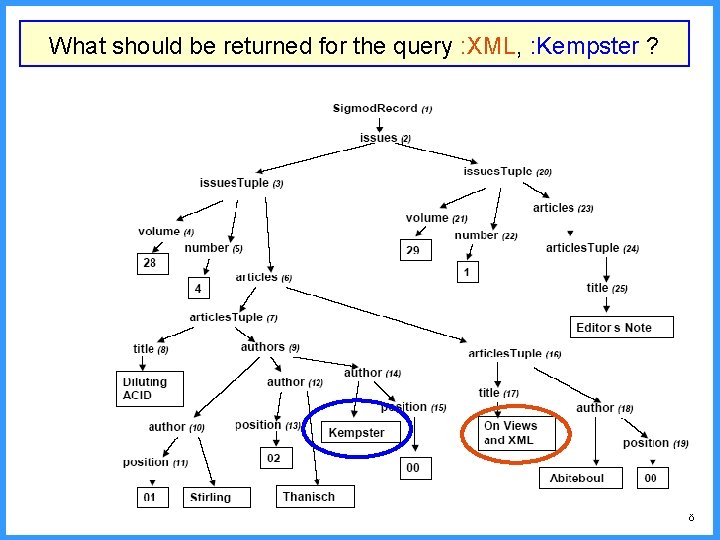

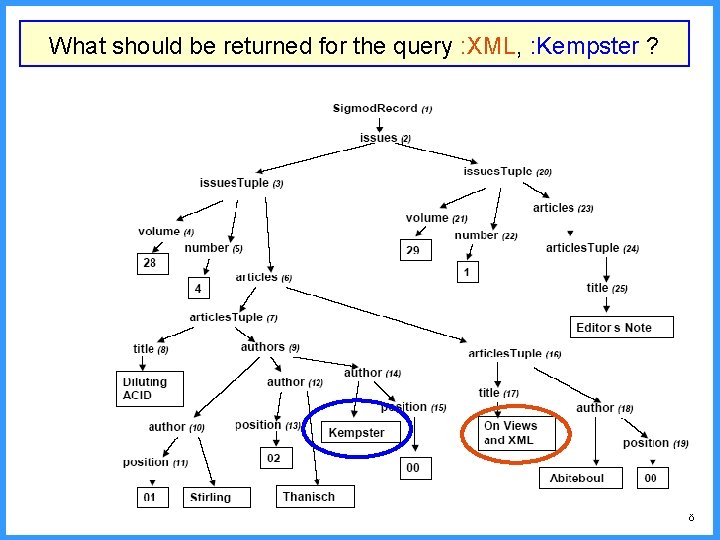

What should be returned for the query :XML, :Kempster?

Challenges (3)
- Ranking mechanisms
  - How should document fragments/XML elements be ranked?
- Ideas?

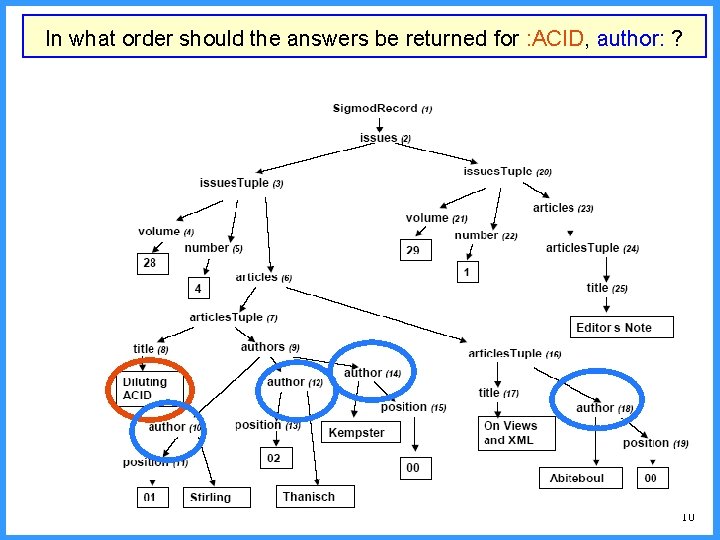

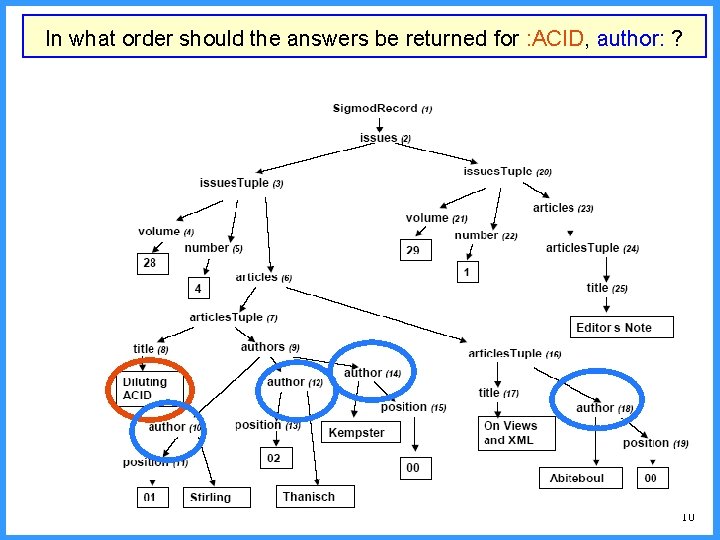

In what order should the answers be returned for :ACID, author:?

Defining a Search Semantics
- When defining a search over XML, all previous challenges must be considered.
- We must decide:
  - What portions of a document are a search result?
  - Should any results be filtered out because they are not meaningful?
  - How should ranking be performed?
- Typically, research focuses on one of these problems and provides simple solutions for the other problems.

XRank: Ranked Keyword Search over XML Documents
Guo, Shao, Botev, Shanmugasundaram, SIGMOD 2003

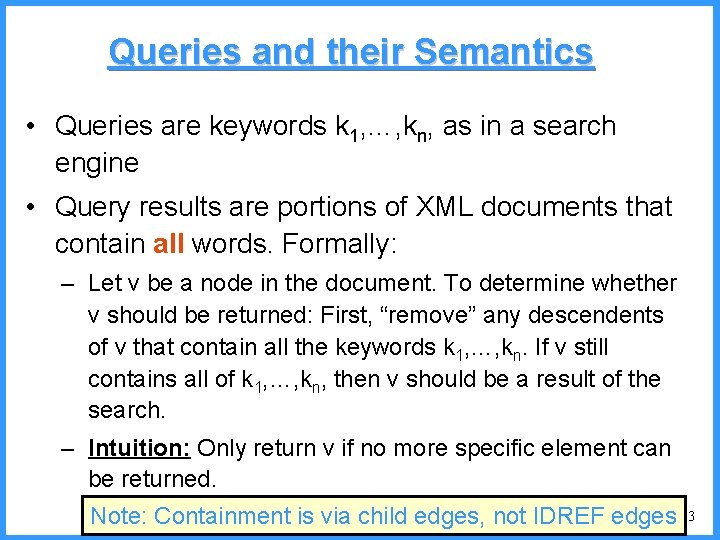

Queries and their Semantics
- Queries are keywords k1, …, kn, as in a search engine.
- Query results are portions of XML documents that contain all the keywords. Formally:
  - Let v be a node in the document. To determine whether v should be returned: first, "remove" any descendants of v that contain all the keywords k1, …, kn. If v still contains all of k1, …, kn, then v is a result of the search.
  - Intuition: only return v if no more specific element can be returned.
- Note: containment is via child edges, not IDREF edges.
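The definition above can be checked directly on a small tree. The following is a minimal sketch, not the XRank implementation: it assumes whitespace tokenization, ignores attribute values, and uses a hypothetical document `DOC` loosely modelled on the workshop example. A child that does not contain all keywords cannot have a descendant that does, so only children that contain everything need to be "removed".

```python
import xml.etree.ElementTree as ET


def own_words(elem):
    """Words in the text directly inside elem (not inside its children)."""
    chunks = [elem.text or ""] + [child.tail or "" for child in elem]
    return set(" ".join(chunks).lower().split())


def xrank_results(root, keywords):
    """Return the elements that still contain all keywords after
    removing every descendant that itself contains all keywords."""
    keywords = {k.lower() for k in keywords}
    results = []

    def visit(elem):
        kept = own_words(elem)      # words that survive the "removal"
        everything = set(kept)      # all words in the subtree
        for child in elem:
            child_words = visit(child)
            everything |= child_words
            if not keywords <= child_words:
                kept |= child_words  # child is not removed
        if keywords <= kept:
            results.append(elem)
        return everything

    visit(root)
    return results


# Hypothetical document, assumed for illustration only.
DOC = """<paper>
  <title>XQL and Proximal Nodes</title>
  <abstract>We consider the language</abstract>
  <section>XQL operations
    <subsection>the XQL language looks</subsection>
  </section>
</paper>"""

hits = [e.tag for e in xrank_results(ET.fromstring(DOC), ["XQL", "language"])]
```

Here the subsection is returned because it contains both words, the section is not (once the subsection is removed it only contains XQL), and the paper is returned because its title and abstract together still cover both keywords.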

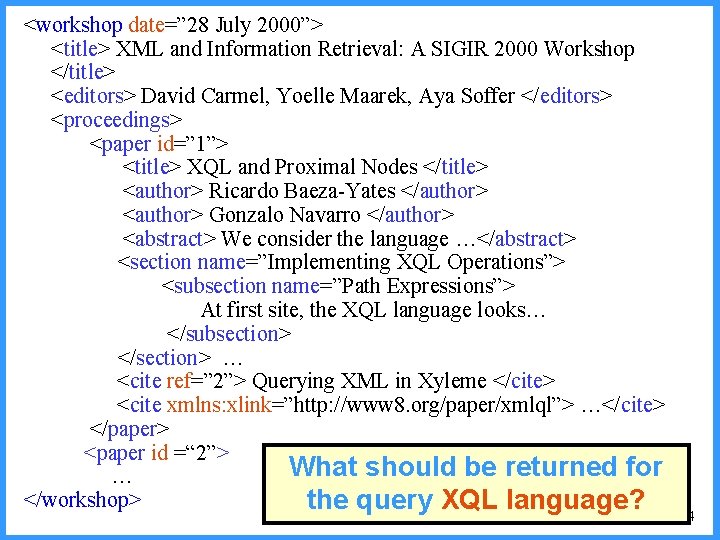

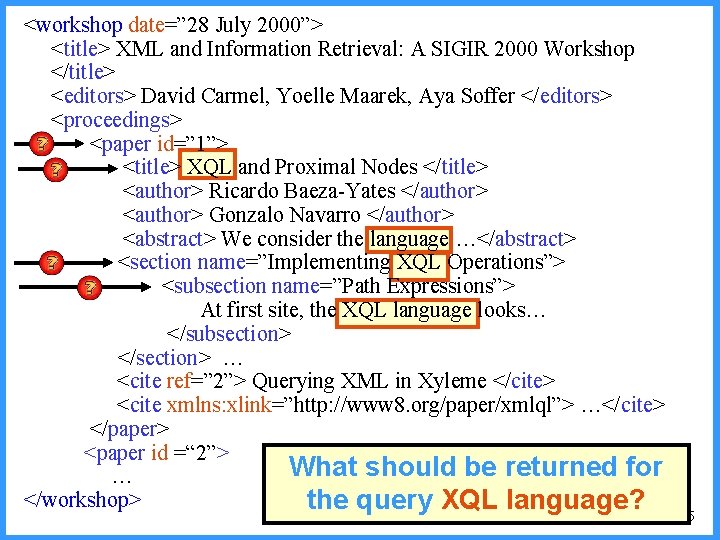

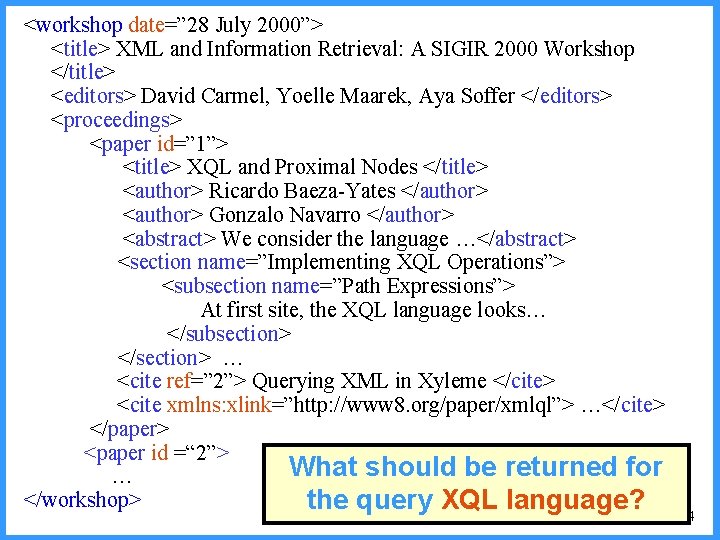

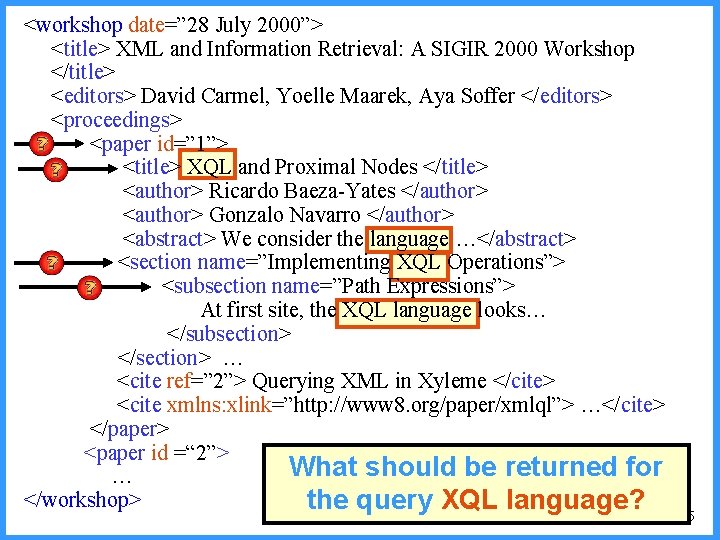

What should be returned for the query XQL language?

    <workshop date="28 July 2000">
      <title> XML and Information Retrieval: A SIGIR 2000 Workshop </title>
      <editors> David Carmel, Yoelle Maarek, Aya Soffer </editors>
      <proceedings>
        <paper id="1">
          <title> XQL and Proximal Nodes </title>
          <author> Ricardo Baeza-Yates </author>
          <author> Gonzalo Navarro </author>
          <abstract> We consider the language … </abstract>
          <section name="Implementing XQL Operations">
            <subsection name="Path Expressions"> At first site, the XQL language looks… </subsection>
          </section>
          …
          <cite ref="2"> Querying XML in Xyleme </cite>
          <cite xmlns:xlink="http://www8.org/paper/xmlql"> … </cite>
        </paper>
        <paper id="2"> …
    </workshop>


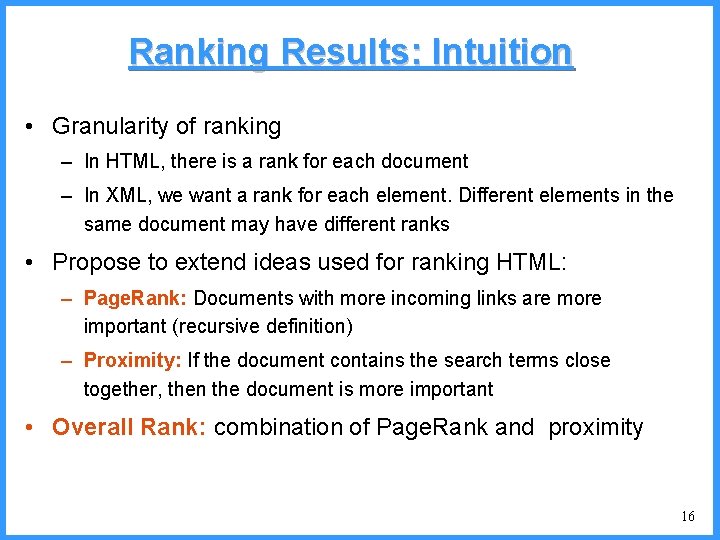

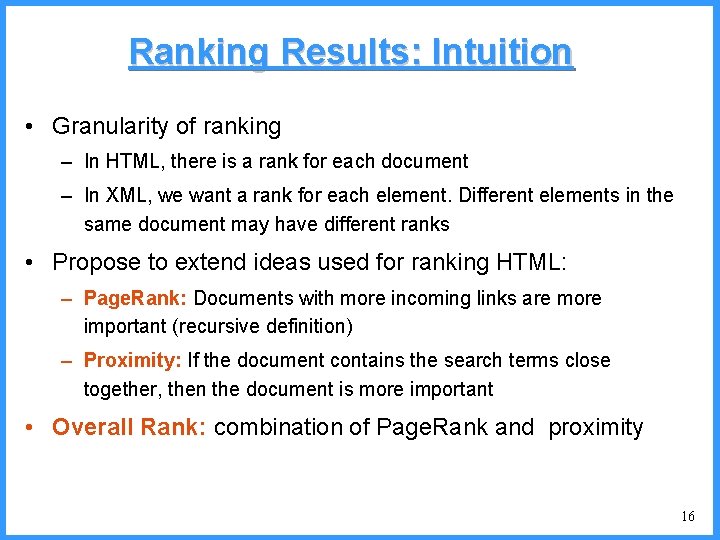

Ranking Results: Intuition
- Granularity of ranking
  - In HTML, there is a rank for each document.
  - In XML, we want a rank for each element. Different elements in the same document may have different ranks.
- Propose to extend ideas used for ranking HTML:
  - PageRank: documents with more incoming links are more important (recursive definition).
  - Proximity: if the document contains the search terms close together, then the document is more important.
- Overall rank: a combination of PageRank and proximity

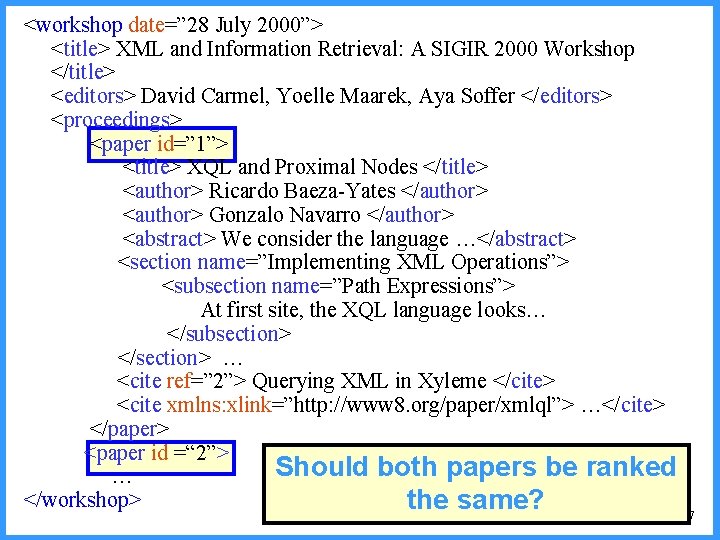

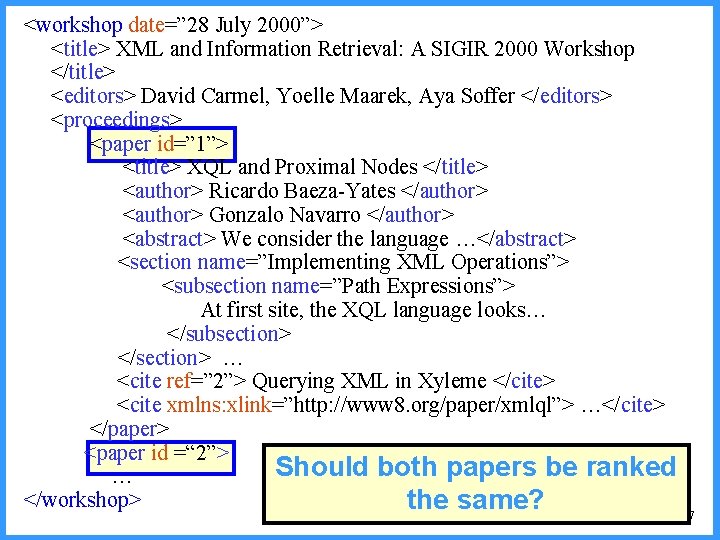

Should both papers be ranked the same?

    <workshop date="28 July 2000">
      <title> XML and Information Retrieval: A SIGIR 2000 Workshop </title>
      <editors> David Carmel, Yoelle Maarek, Aya Soffer </editors>
      <proceedings>
        <paper id="1">
          <title> XQL and Proximal Nodes </title>
          <author> Ricardo Baeza-Yates </author>
          <author> Gonzalo Navarro </author>
          <abstract> We consider the language … </abstract>
          <section name="Implementing XML Operations">
            <subsection name="Path Expressions"> At first site, the XQL language looks… </subsection>
          </section>
          …
          <cite ref="2"> Querying XML in Xyleme </cite>
          <cite xmlns:xlink="http://www8.org/paper/xmlql"> … </cite>
        </paper>
        <paper id="2"> …
    </workshop>

Topics
- We discuss:
  - Ranking
  - The Index Structure
  - Query Processing

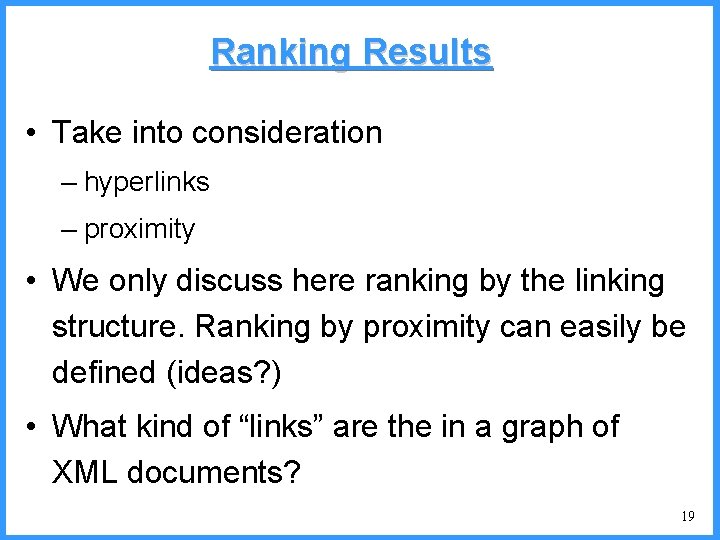

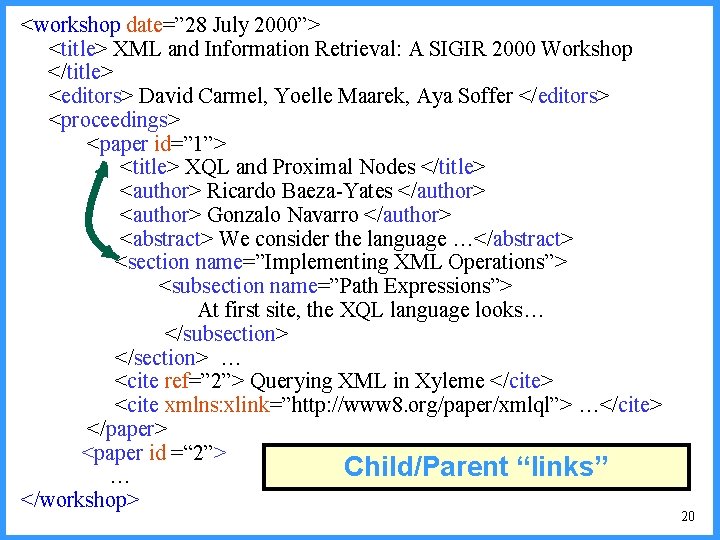

Ranking Results
- Take into consideration:
  - hyperlinks
  - proximity
- Here we only discuss ranking by the linking structure. Ranking by proximity can easily be defined (ideas?).
- What kinds of "links" are there in a graph of XML documents?

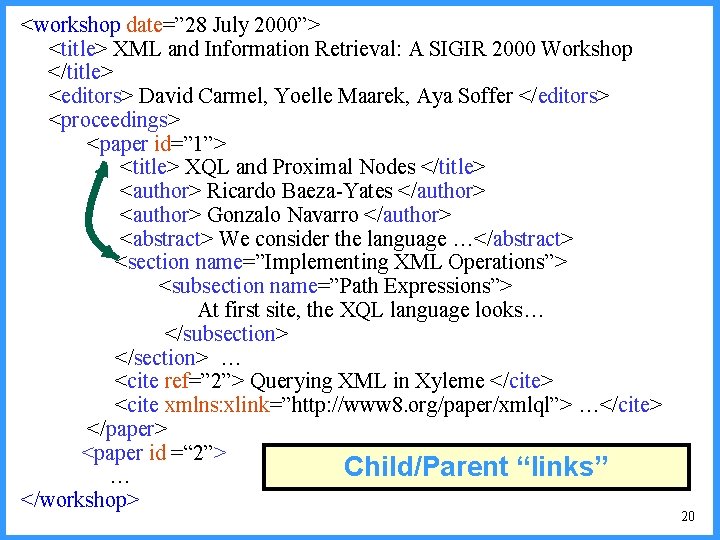

Child/parent "links" in the workshop document: each element is linked to its sub-elements and to its parent, e.g., the paper element with id="1" and its title.

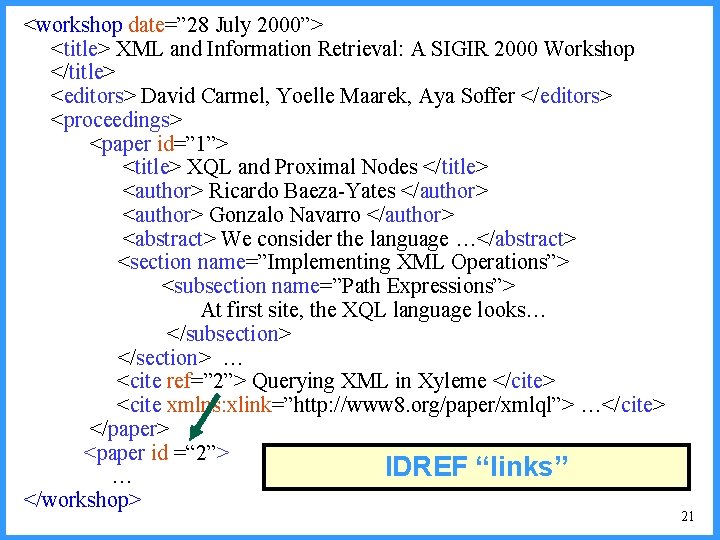

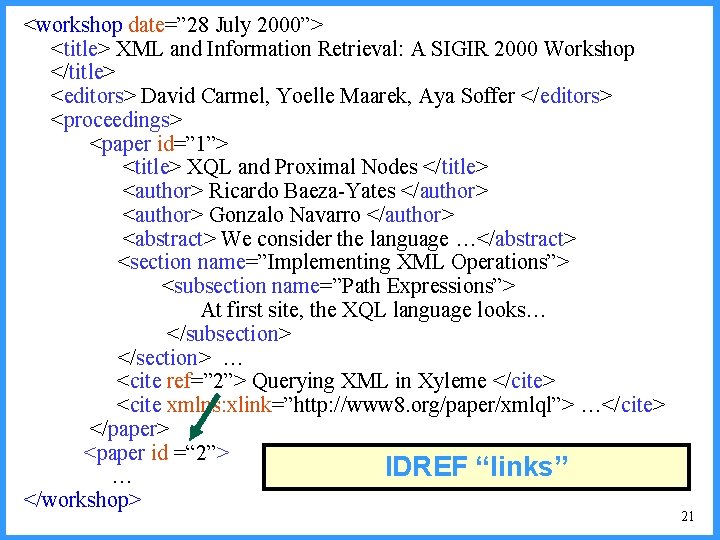

IDREF "links" in the workshop document: e.g., the cite element with ref="2" refers to the paper element with id="2".

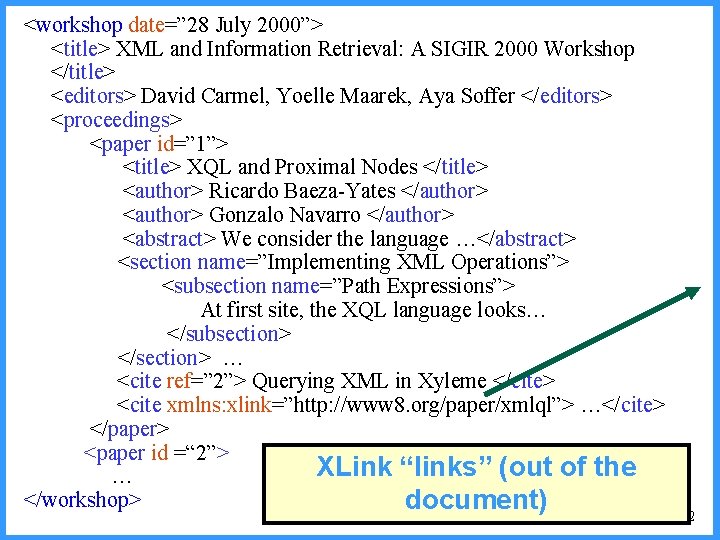

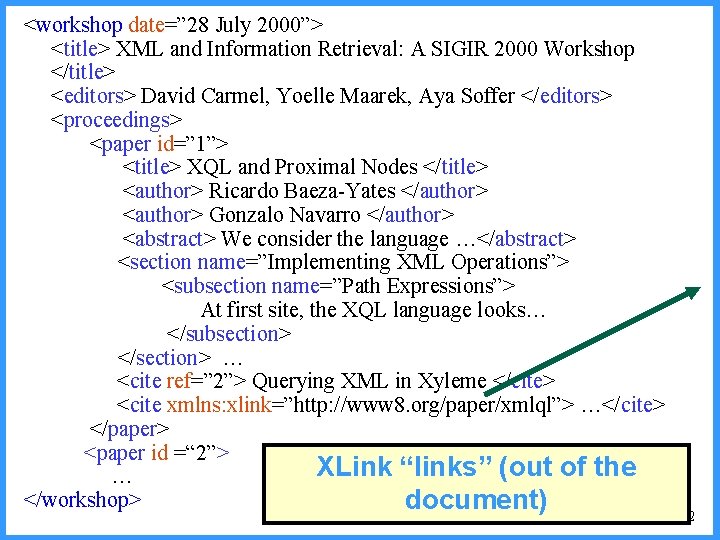

XLink "links" (out of the document) in the workshop document: e.g., the cite element that carries the URL http://www8.org/paper/xmlql.

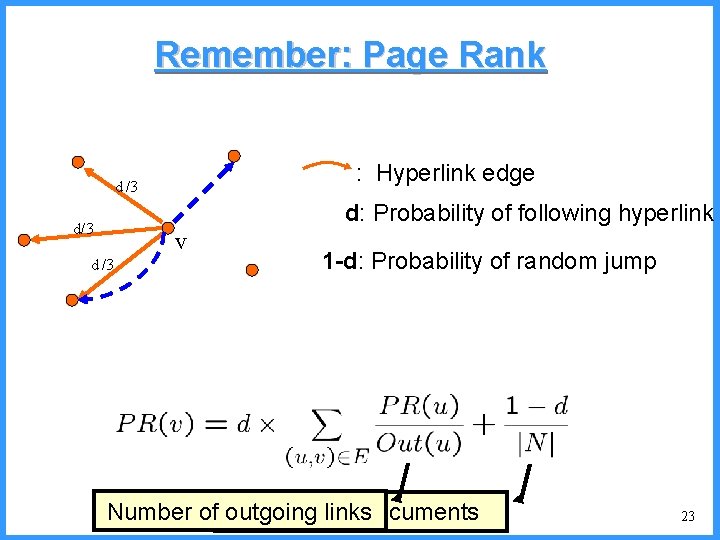

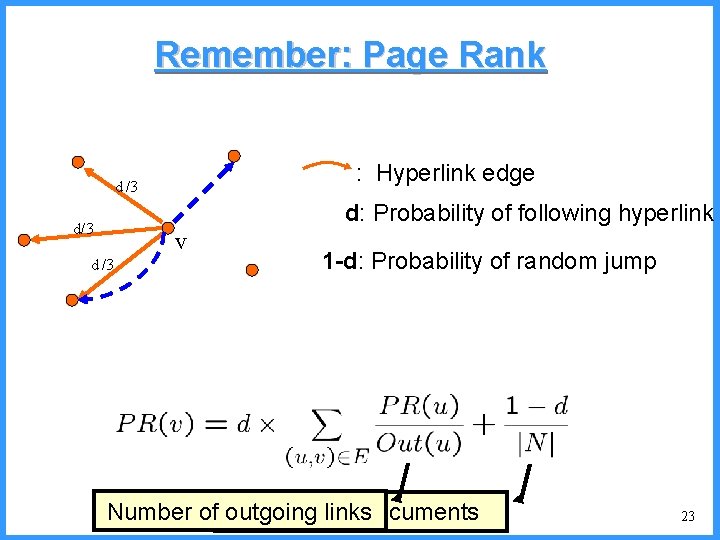

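Remember: PageRank
- Figure legend: arrows are hyperlink edges; node v has three outgoing hyperlinks, each followed with probability d/3.
- d: probability of following a hyperlink
- 1 - d: probability of a random jump
- Formula annotations: number of outgoing links; number of documents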
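The formula on this slide did not survive extraction. For reference, the standard PageRank definition that matches the surviving annotations can be written as below. The symbol names are assumptions: $N_d$ stands for the number of documents, $N_h(u)$ for the number of outgoing hyperlinks of $u$, and $HE$ for the set of hyperlink edges.

```latex
p(v) = \frac{1-d}{N_d} + d \sum_{(u,v) \in HE} \frac{p(u)}{N_h(u)}
```

With three outgoing links, each neighbour of $v$ receives the share $d \cdot p(v)/3$, which is the $d/3$ label in the figure.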

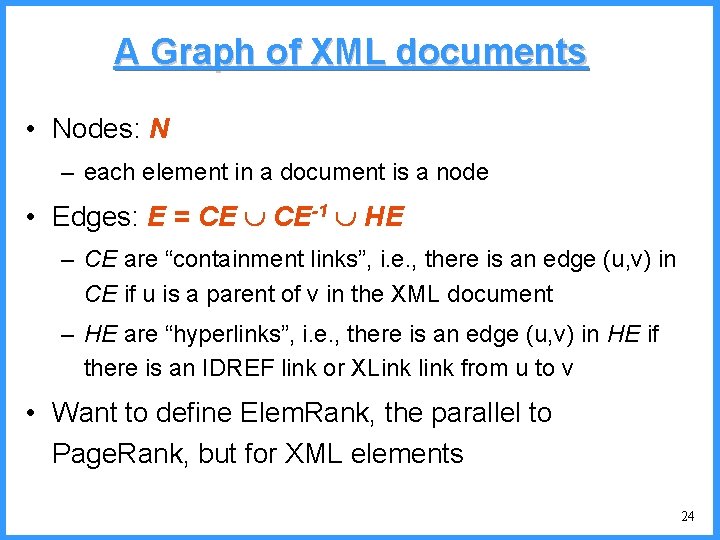

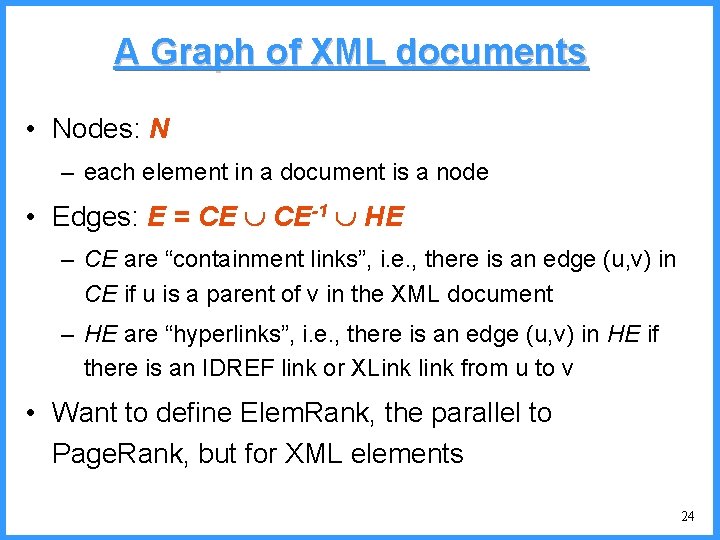

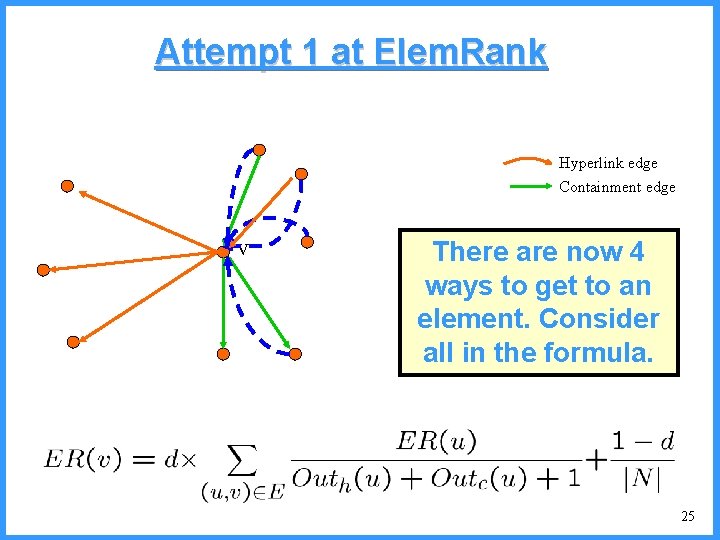

A Graph of XML Documents
- Nodes: N
  - Each element in a document is a node.
- Edges: E = CE ∪ CE⁻¹ ∪ HE
  - CE are "containment links", i.e., there is an edge (u, v) in CE if u is the parent of v in the XML document.
  - HE are "hyperlinks", i.e., there is an edge (u, v) in HE if there is an IDREF link or XLink link from u to v.
- We want to define ElemRank, the parallel to PageRank, but for XML elements.

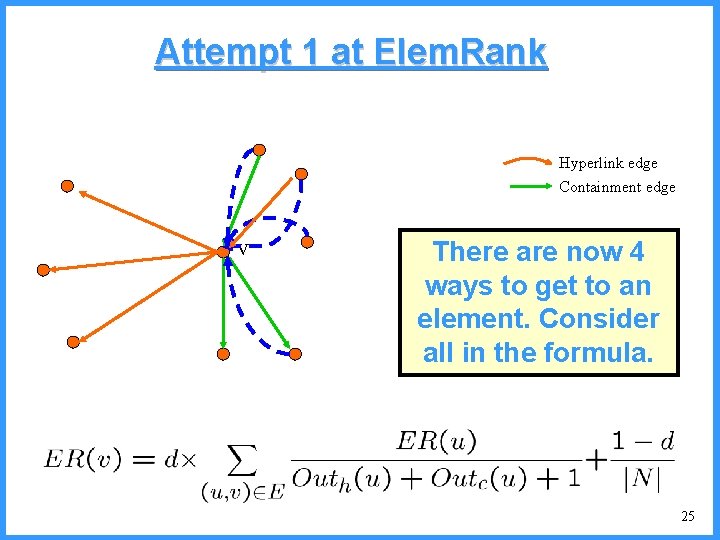

Attempt 1 at ElemRank
- (Figure: a node v with hyperlink edges and containment edges.)
- There are now 4 ways to get to an element. Consider all of them in the formula.

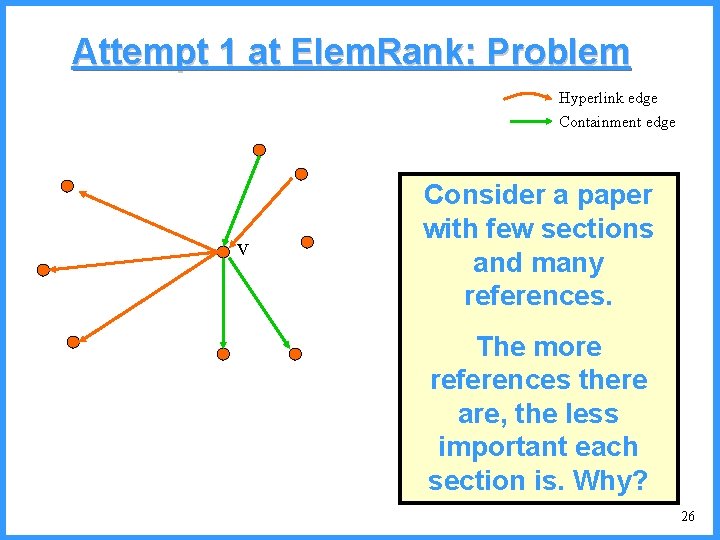

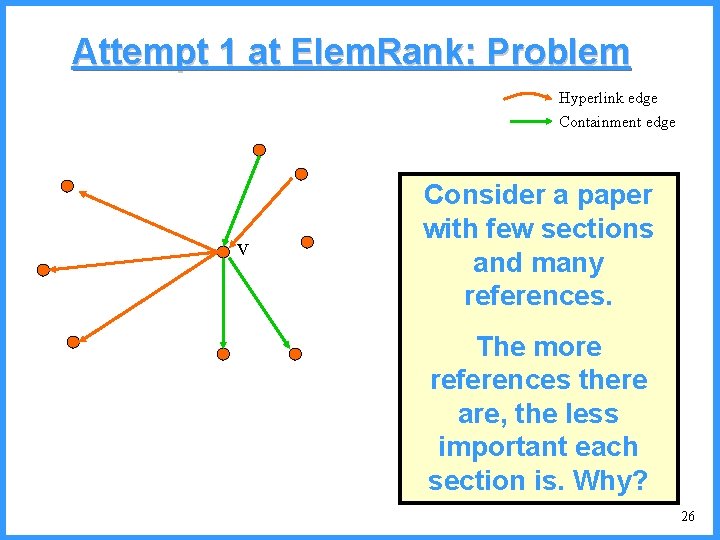

Attempt 1 at ElemRank: Problem
- (Figure: a node v with hyperlink edges and containment edges.)
- Consider a paper with few sections and many references. The more references there are, the less important each section is. Why?

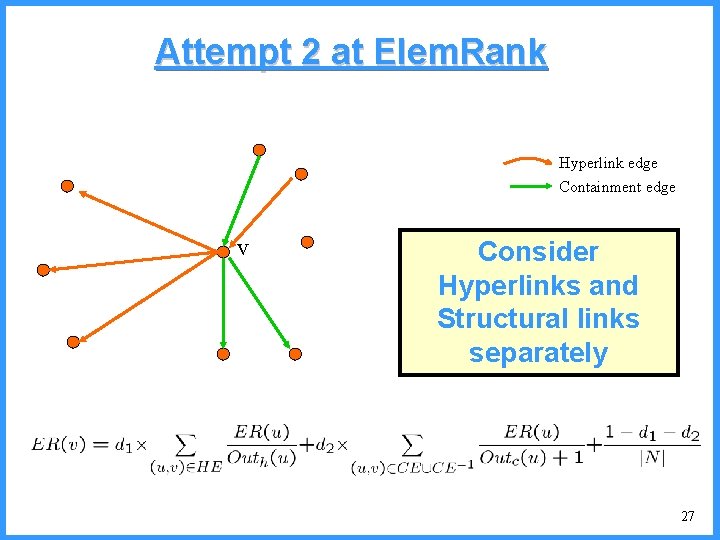

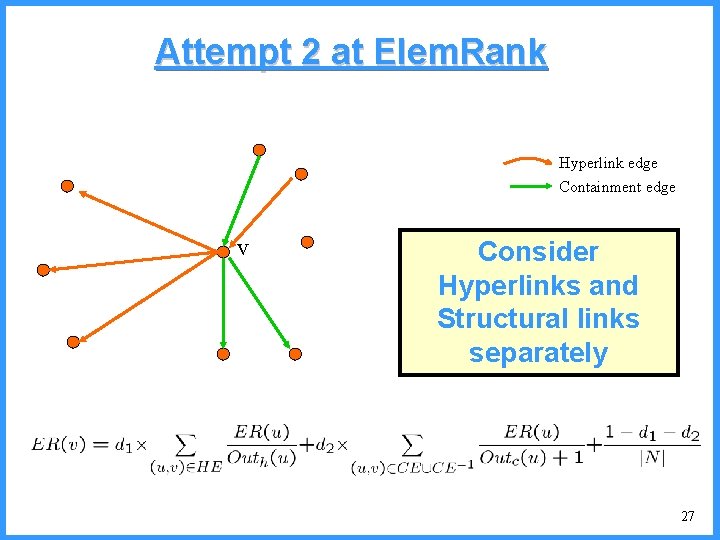

Attempt 2 at ElemRank
- (Figure: a node v with hyperlink edges and containment edges.)
- Consider hyperlinks and structural links separately.

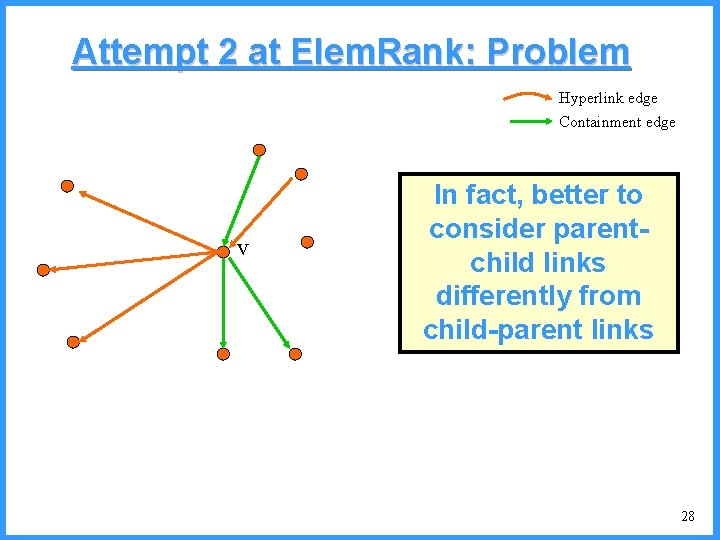

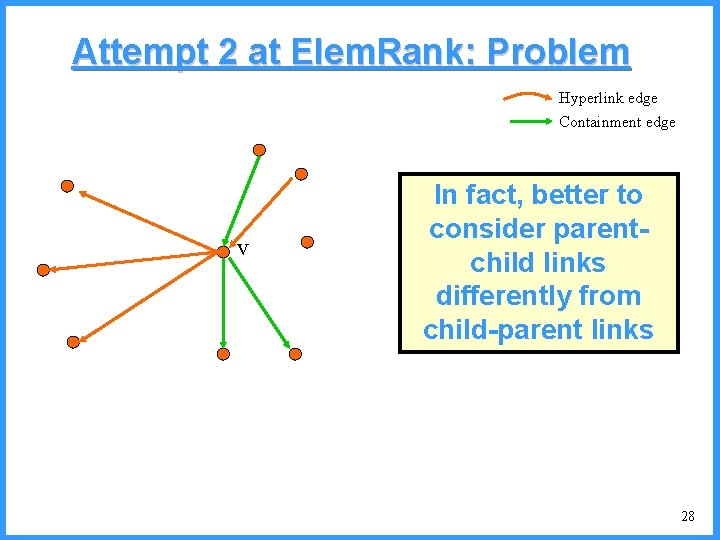

Attempt 2 at ElemRank: Problem
- (Figure: a node v with hyperlink edges and containment edges.)
- In fact, it is better to treat parent-child links differently from child-parent links.

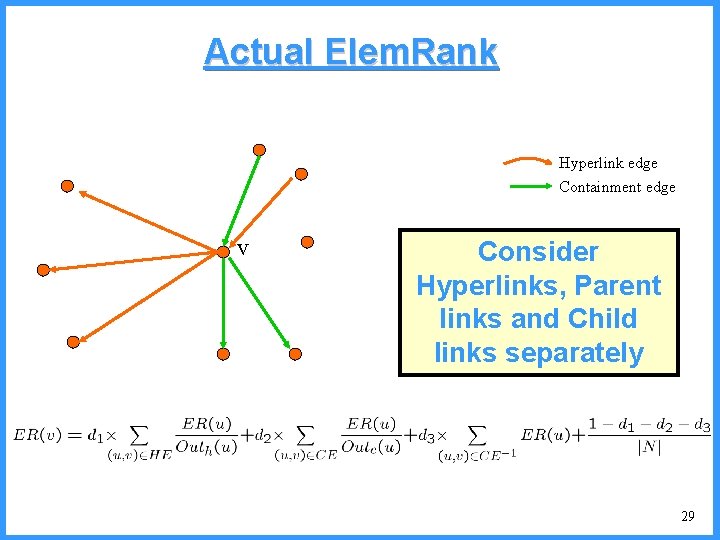

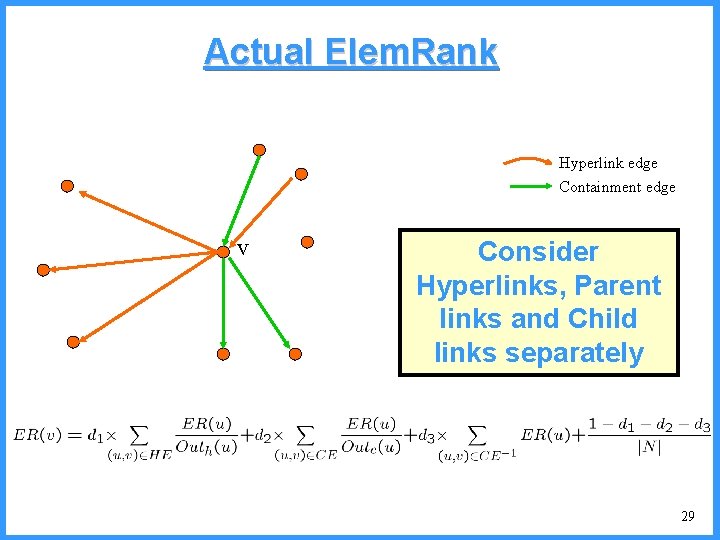

Actual ElemRank
- (Figure: a node v with hyperlink edges and containment edges.)
- Consider hyperlinks, parent links and child links separately.

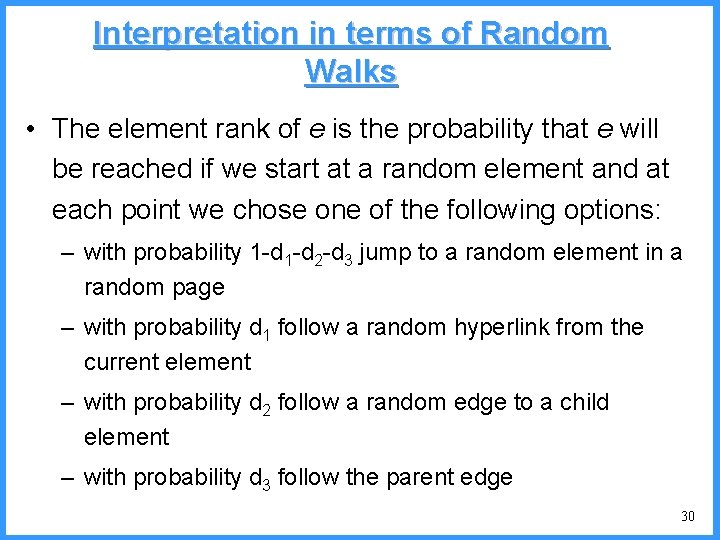

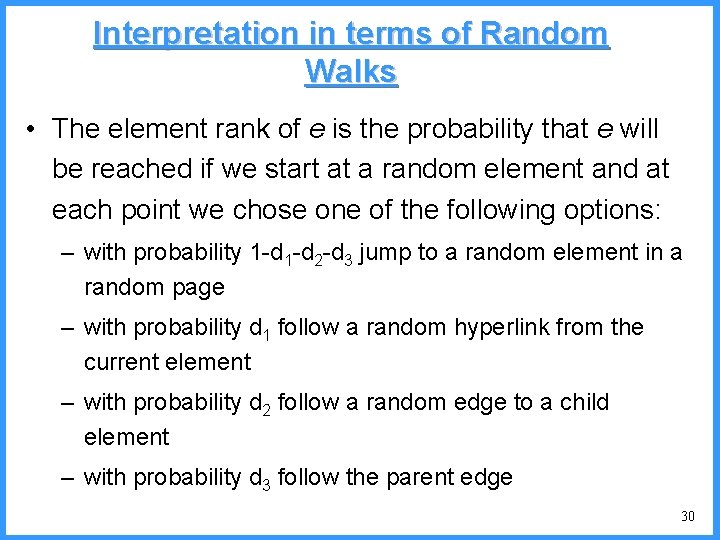

Interpretation in Terms of Random Walks
- The element rank of e is the probability that e will be reached if we start at a random element and at each point choose one of the following options:
  - with probability 1 - d1 - d2 - d3, jump to a random element in a random page
  - with probability d1, follow a random hyperlink from the current element
  - with probability d2, follow a random edge to a child element
  - with probability d3, follow the parent edge
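The four options translate term by term into a recursive formula. The version below is our transcription of the random-walk description, written as we recall it from the XRank paper, so treat the notation as an assumption: $N_d$ is the number of documents, $N_{de}(v)$ the number of elements in the document of $v$, $N_h(u)$ the number of outgoing hyperlinks of $u$, and $N_c(u)$ the number of children of $u$.

```latex
e(v) = \frac{1 - d_1 - d_2 - d_3}{N_d \cdot N_{de}(v)}
     + d_1 \sum_{(u,v) \in HE} \frac{e(u)}{N_h(u)}
     + d_2 \sum_{(u,v) \in CE} \frac{e(u)}{N_c(u)}
     + d_3 \sum_{(u,v) \in CE^{-1}} e(u)
```

The last sum is not divided by a count because an element has only one parent edge to follow.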

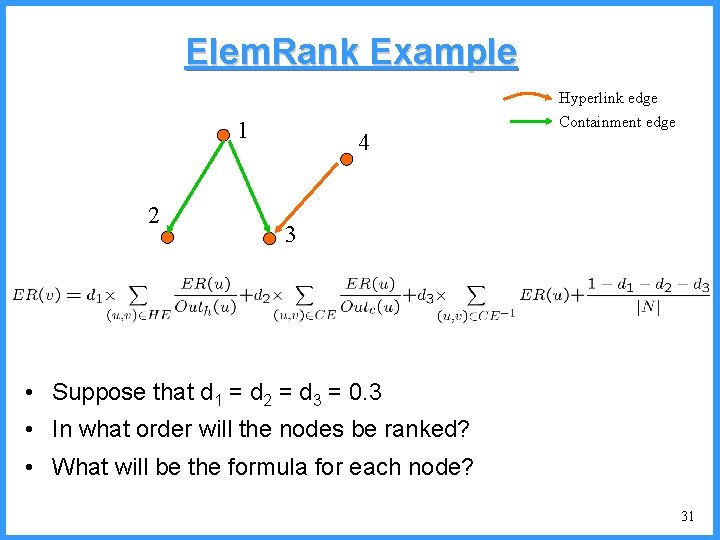

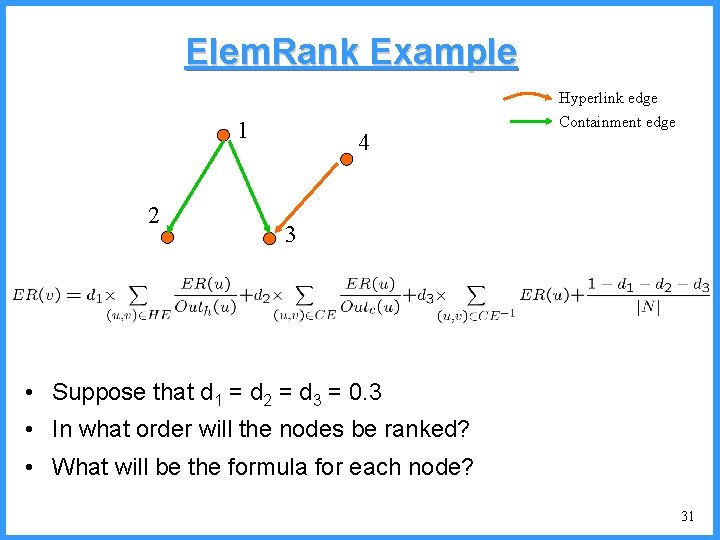

ElemRank Example
- (Figure: a graph with nodes 1, 2, 3 and 4, connected by hyperlink edges and containment edges.)
- Suppose that d1 = d2 = d3 = 0.3.
- In what order will the nodes be ranked?
- What will be the formula for each node?
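To experiment with questions like these, the random-walk definition can be iterated numerically. This is a rough sketch under stated assumptions: a single document, a fixed number of iterations instead of a convergence test, and a hypothetical three-node graph (not the graph in the slide's figure). The function name `elem_rank` and its parameters are illustrative.

```python
def elem_rank(children, hyperlinks, d1=0.3, d2=0.3, d3=0.3, iterations=50):
    """Iterate the random-walk definition on one document.

    children:   dict element -> list of child elements (containment edges)
    hyperlinks: dict element -> list of hyperlink targets (IDREF/XLink)
    """
    nodes = set(children) | set(hyperlinks)
    for targets in list(children.values()) + list(hyperlinks.values()):
        nodes |= set(targets)
    parent = {c: p for p, cs in children.items() for c in cs}

    rank = {v: 1.0 / len(nodes) for v in nodes}
    for _ in range(iterations):
        # Random jump: one document, so the share is spread over its elements.
        new = {v: (1 - d1 - d2 - d3) / len(nodes) for v in nodes}
        for u in nodes:
            for v in hyperlinks.get(u, []):
                new[v] += d1 * rank[u] / len(hyperlinks[u])
            for v in children.get(u, []):
                new[v] += d2 * rank[u] / len(children[u])
            if u in parent:
                new[parent[u]] += d3 * rank[u]
        rank = new
    return rank


# Hypothetical graph: r contains a and b, and a has a hyperlink to b.
ranks = elem_rank({"r": ["a", "b"]}, {"a": ["b"]})
```

In this toy graph `a` and `b` receive the same share from their parent, so the hyperlink from `a` is the only reason `b` ends up ranked above `a`.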

Think About It
- Very nice definition of ElemRank
- Does it make sense? Would ElemRank give good results in the following scenarios:
  - IDREFs connect articles with the articles that they cite
  - IDREFs connect managers with their departments
  - IDREFs connect cleaning staff with the departments in which they work
  - IDREFs connect countries with bordering countries (as in the CIA factbook)

Topics
- We discuss:
  - Ranking
  - The Index Structure
  - Query Processing

Indexing
- We now discuss the index structure.
- Recall that we will be ranking according to ElemRank.
- Recall that we want to return "most specific elements".
- How should the data be stored in an index?

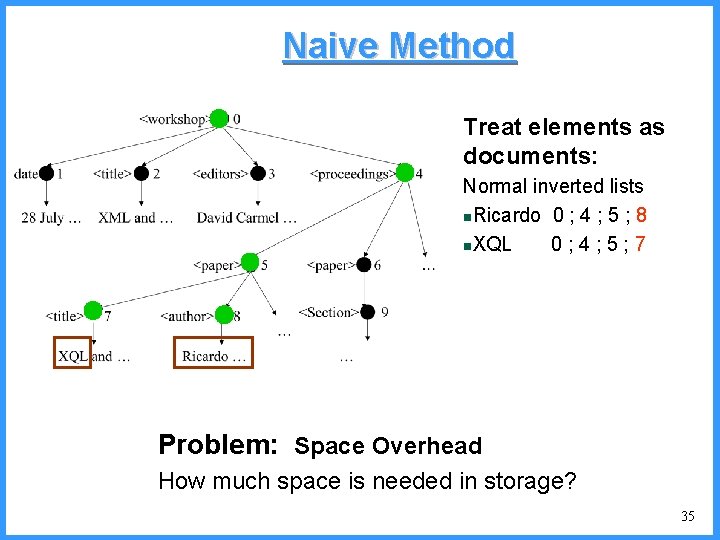

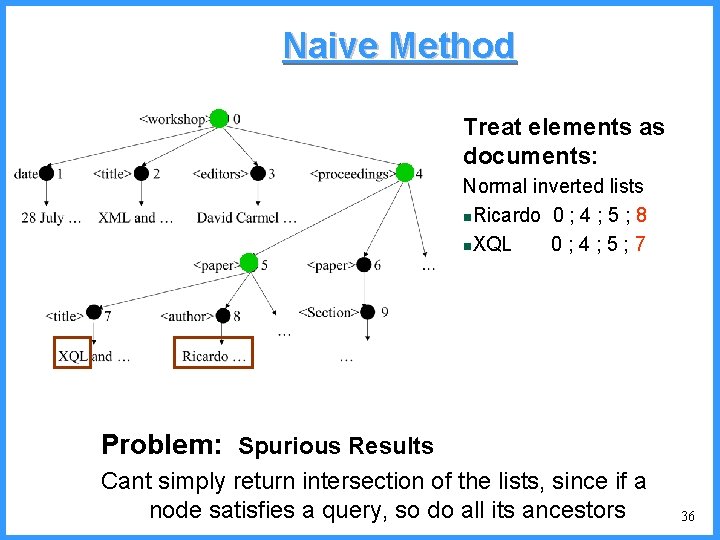

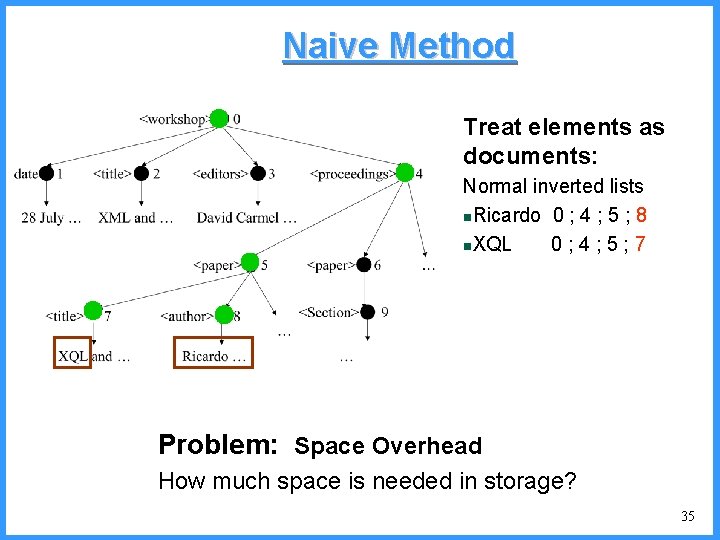

Naive Method
- Treat elements as documents: normal inverted lists
  - Ricardo: 0; 4; 5; 8
  - XQL: 0; 4; 5; 7
- Problem: space overhead. How much space is needed in storage?

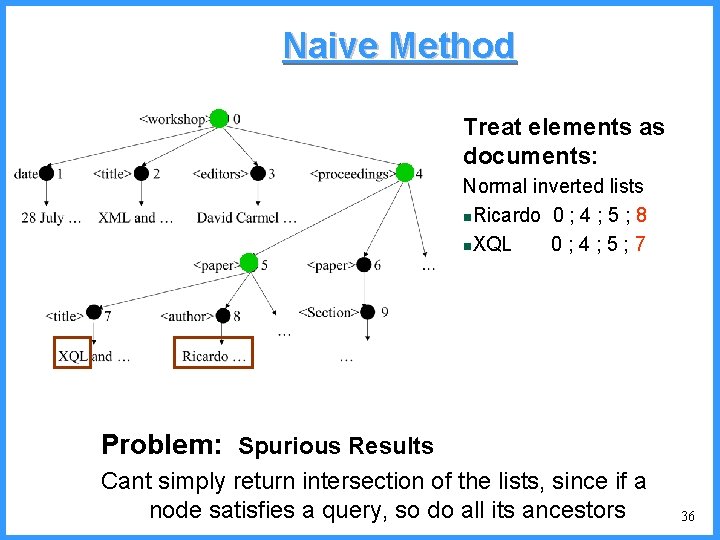

Naive Method
- Treat elements as documents: normal inverted lists
  - Ricardo: 0; 4; 5; 8
  - XQL: 0; 4; 5; 7
- Problem: spurious results. We can't simply return the intersection of the lists, since if a node satisfies a query, so do all its ancestors.
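The spurious-results problem is easy to reproduce with the two lists on this slide. In the sketch below the `parent` map is an assumption (the slide's figure with the element numbering is not available), and dropping every ancestor of a common element is a simplification of the full XRank result semantics.

```python
# Naive inverted lists from the slide: element ids that contain the keyword,
# directly or through a descendant.
ricardo = [0, 4, 5, 8]
xql = [0, 4, 5, 7]

# Assumed containment structure: 0 > 4 > 5, and 5 is the parent of 7 and 8.
parent = {4: 0, 5: 4, 7: 5, 8: 5}


def ancestors(node):
    """Yield all proper ancestors of node, nearest first."""
    while node in parent:
        node = parent[node]
        yield node


common = sorted(set(ricardo) & set(xql))
redundant = {a for node in common for a in ancestors(node)}
most_specific = [node for node in common if node not in redundant]
```

The plain intersection returns elements 0, 4 and 5, although only element 5 is the most specific one that contains both keywords.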

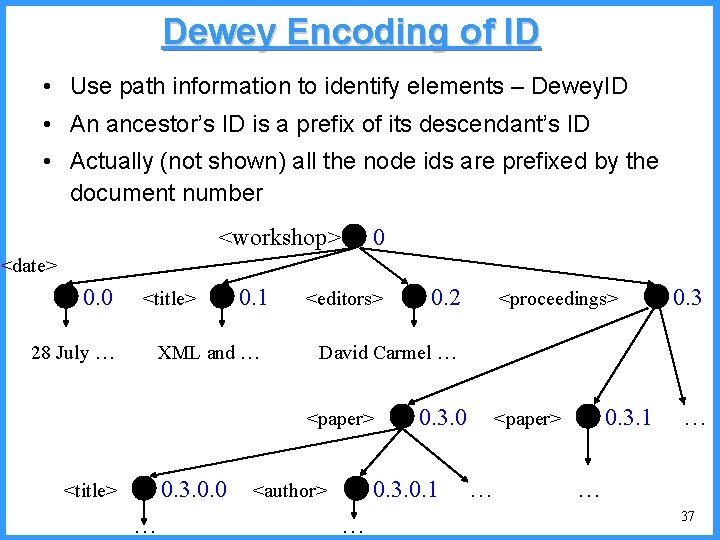

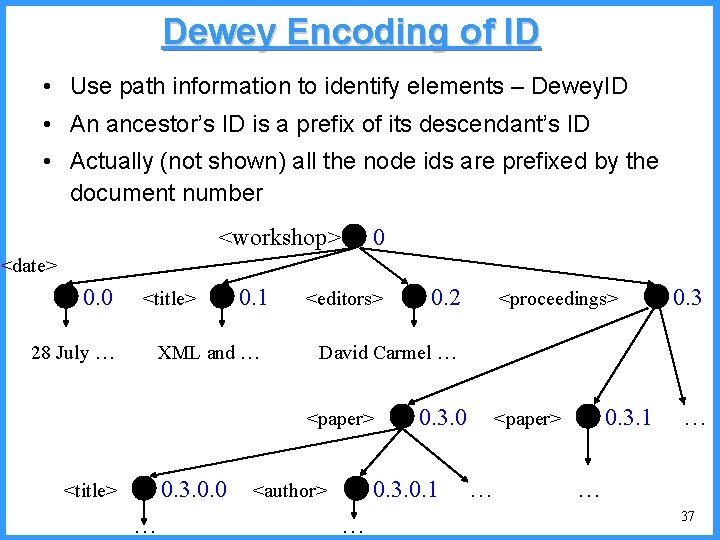

Dewey Encoding of ID
- Use path information to identify elements: DeweyID
- An ancestor's ID is a prefix of its descendant's ID.
- Actually (not shown), all the node ids are prefixed by the document number.
- Example (figure):
  - workshop: 0
    - date (28 July …): 0.0
    - title (XML and …): 0.1
    - editors (David Carmel …): 0.2
    - proceedings: 0.3
      - paper: 0.3.0
        - title: 0.3.0.0
        - author: 0.3.0.1
        - …
      - paper: 0.3.1
      - …
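A small sketch of how such ids could be assigned and compared. It is illustrative only: `assign_dewey` and `is_ancestor` are hypothetical names, ids are represented as tuples of integers, and for simplicity the sample document models `date` as a child element rather than an attribute.

```python
import xml.etree.ElementTree as ET


def assign_dewey(root, doc_id=None):
    """Yield (dewey_id, element) pairs in document order."""
    start = (0,) if doc_id is None else (doc_id, 0)
    stack = [(start, root)]
    while stack:
        dewey, elem = stack.pop()
        yield dewey, elem
        # Push children in reverse so that the first child is visited first.
        for i, child in reversed(list(enumerate(elem))):
            stack.append((dewey + (i,), child))


def is_ancestor(a, b):
    """An ancestor's id is a proper prefix of its descendant's id."""
    return len(a) < len(b) and b[:len(a)] == a


SAMPLE = ET.fromstring(
    "<workshop><date/><title/><editors/>"
    "<proceedings><paper><title/><author/></paper><paper/></proceedings>"
    "</workshop>"
)
ids = {".".join(map(str, d)): e.tag for d, e in assign_dewey(SAMPLE)}
```

Because tuples compare component by component, sorting the ids gives document order, which is what the sorted inverted lists rely on.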

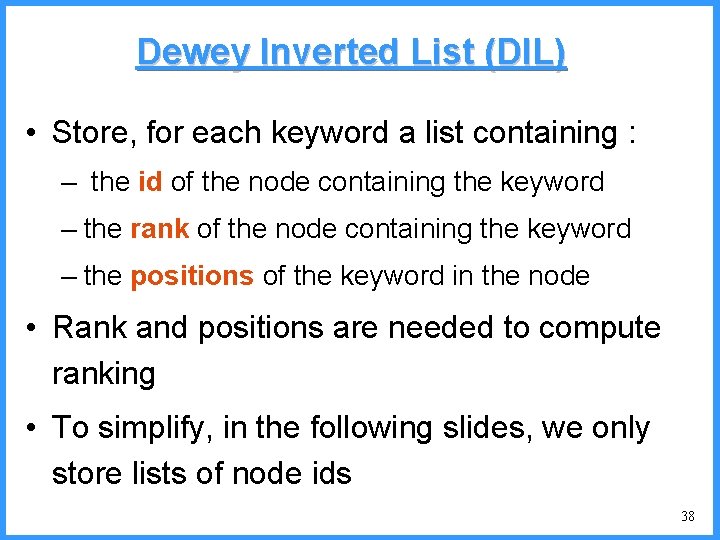

Dewey Inverted List (DIL)
- Store, for each keyword, a list containing:
  - the id of the node containing the keyword
  - the rank of the node containing the keyword
  - the positions of the keyword in the node
- Rank and positions are needed to compute the ranking.
- To simplify, in the following slides we only store lists of node ids.

Topics
- We discuss:
  - Ranking
  - The Index Structure
  - Query Processing

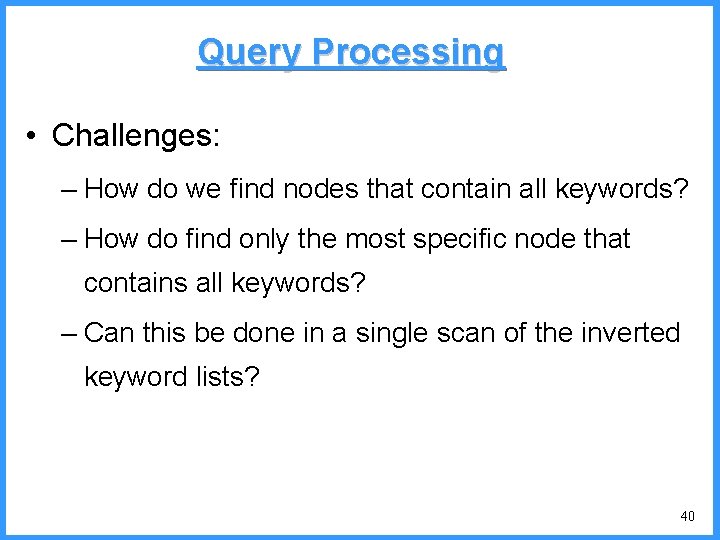

Query Processing
- Challenges:
  - How do we find nodes that contain all keywords?
  - How do we find only the most specific nodes that contain all keywords?
  - Can this be done in a single scan of the inverted keyword lists?

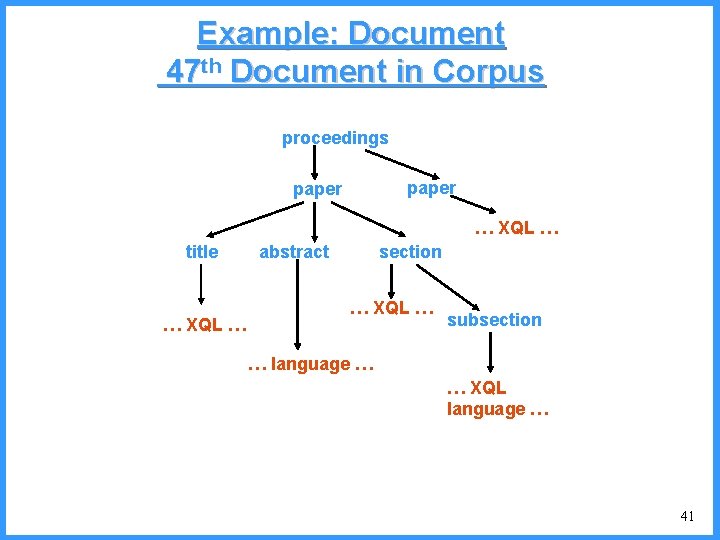

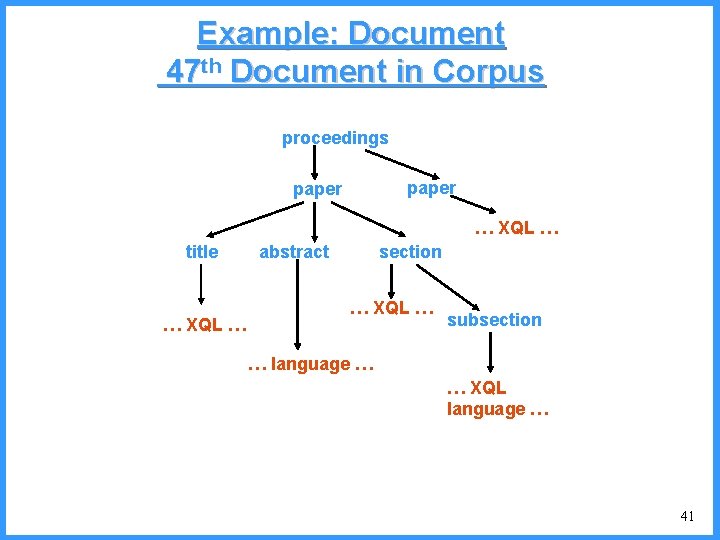

Example: Document (the 47th document in the corpus)
- proceedings
  - paper
    - title: … XQL …
    - abstract: … language …
    - section: … XQL …
      - subsection: … XQL language …
  - paper: … XQL …

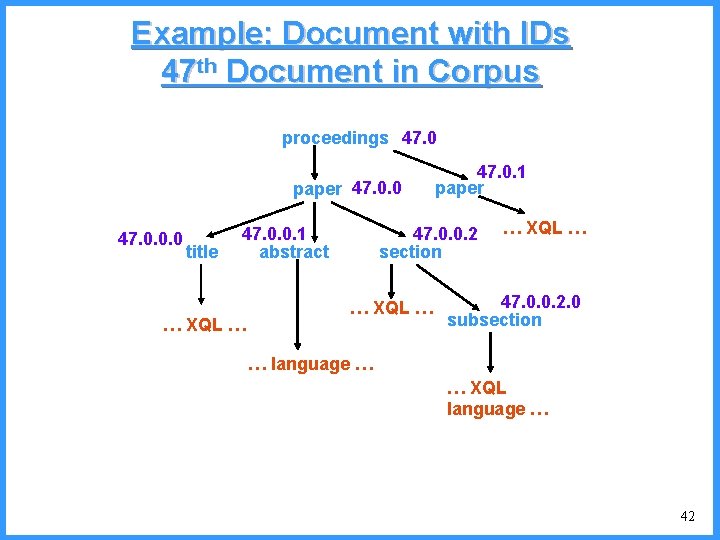

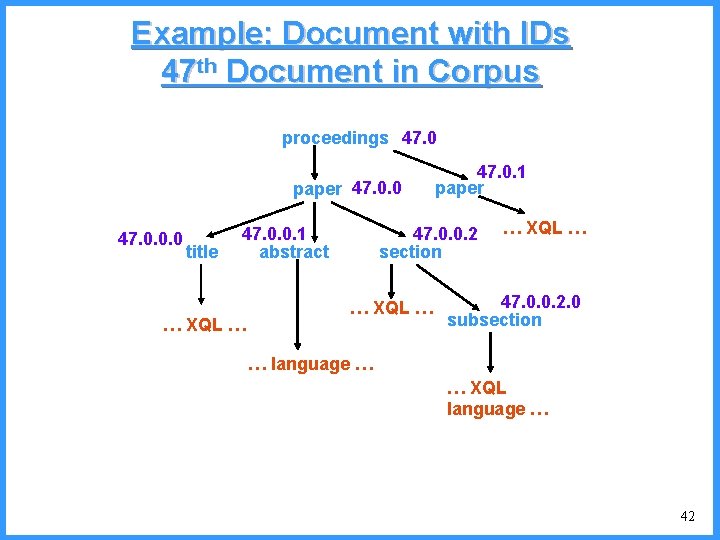

Example: Document with IDs (the 47th document in the corpus)
- proceedings: 47.0
  - paper: 47.0.0
    - title: 47.0.0.0 (… XQL …)
    - abstract: 47.0.0.1 (… language …)
    - section: 47.0.0.2 (… XQL …)
      - subsection: 47.0.0.2.0 (… XQL language …)
  - paper: 47.0.1 (… XQL …)

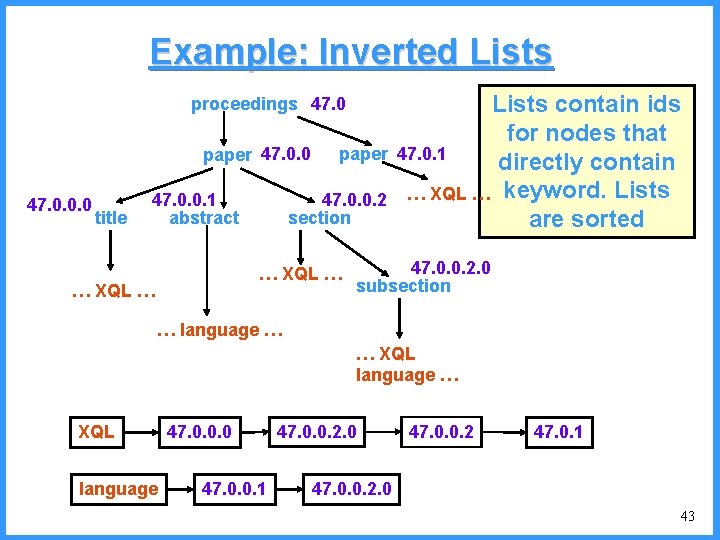

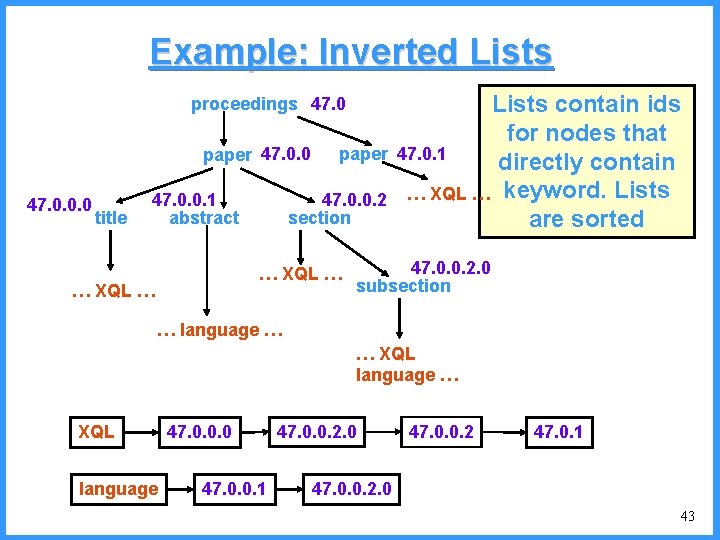

Example: Inverted Lists
- Lists contain the ids of nodes that directly contain the keyword. Lists are sorted.
- XQL: 47.0.0.0, 47.0.0.2, 47.0.0.2.0, 47.0.1
- language: 47.0.0.1, 47.0.0.2.0

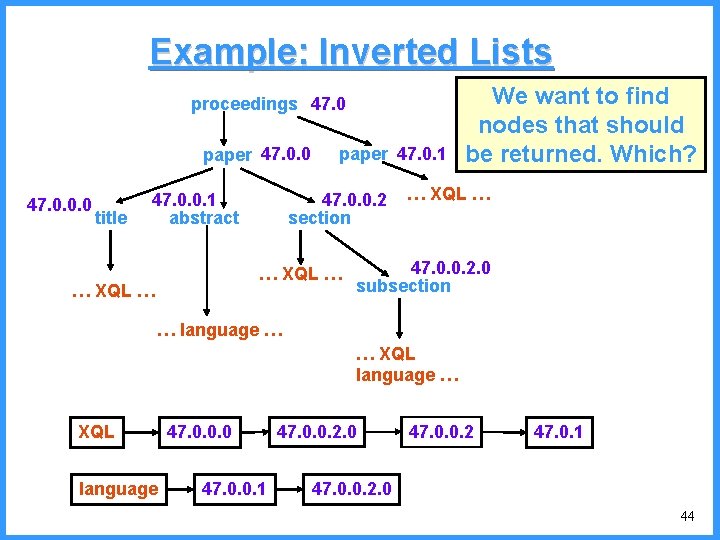

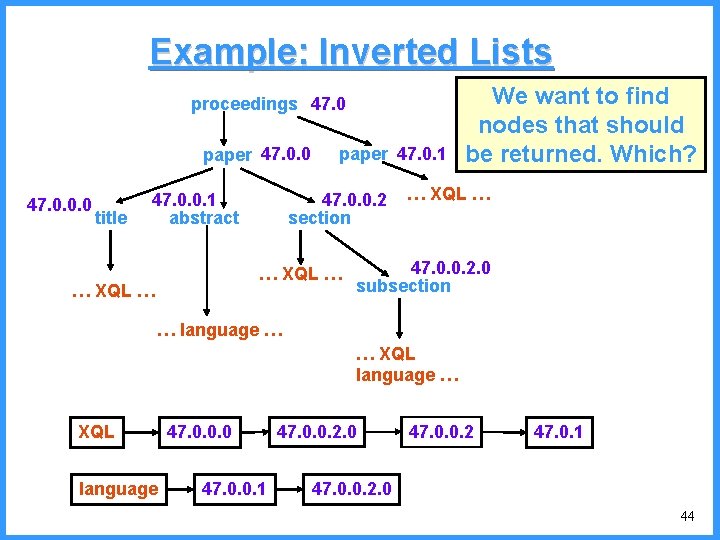

Example: Inverted Lists
- (Figure: the same document and inverted lists as on the previous slide.)
- We want to find the nodes that should be returned. Which?

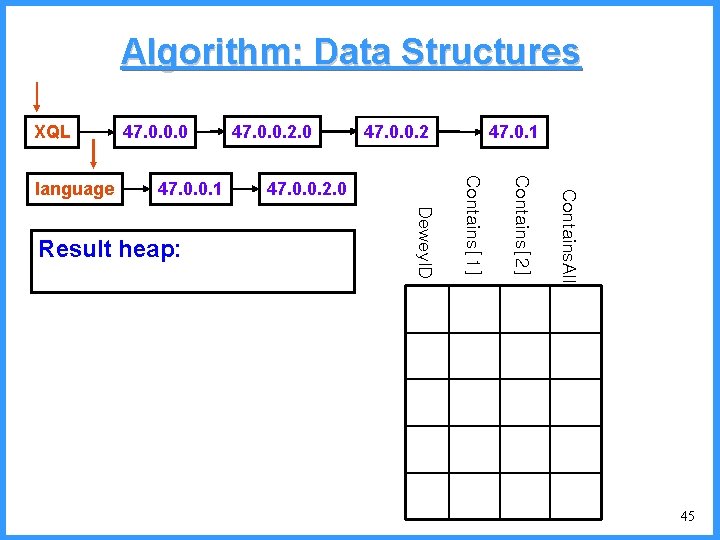

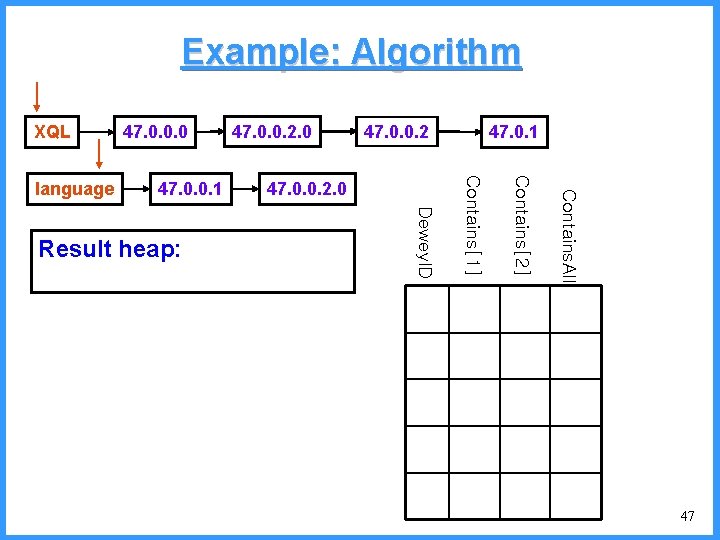

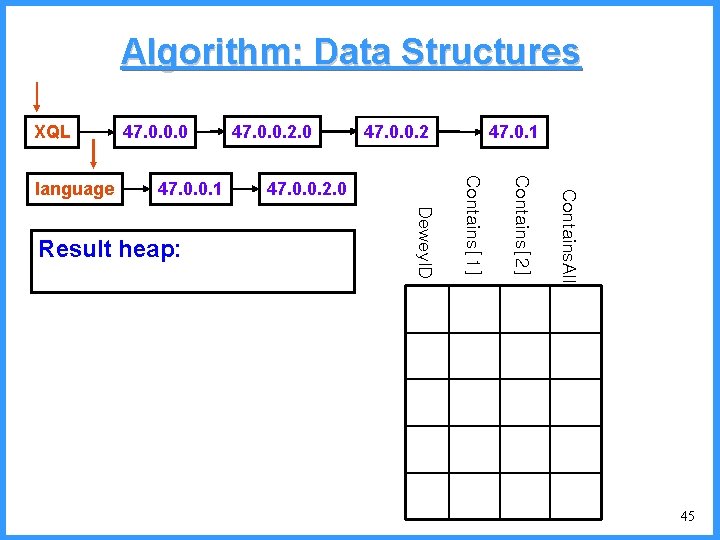

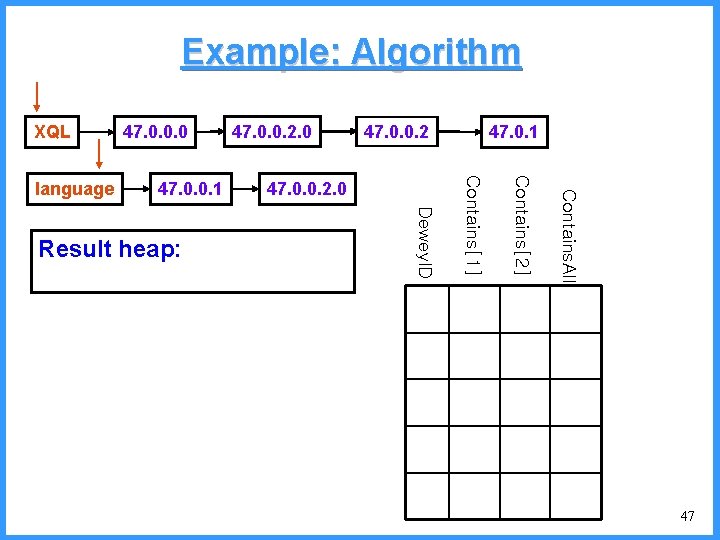

Algorithm: Data Structures
- Inverted lists for the query keywords (keyword 1: XQL, keyword 2: language)
- Dewey stack: entries for the components of the current DeweyID, each with the flags Contains[1], Contains[2] and ContainsAll
- Result heap

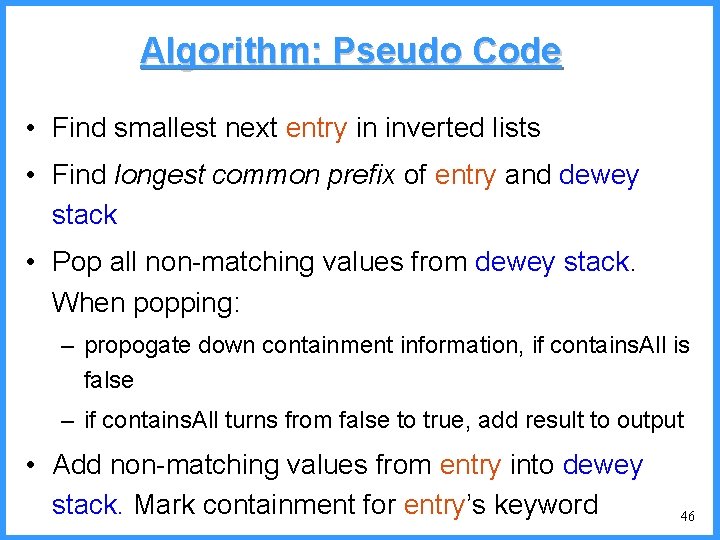

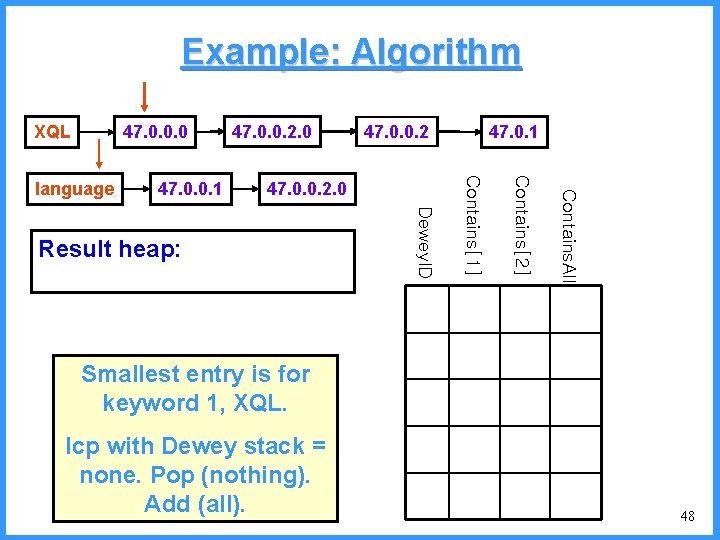

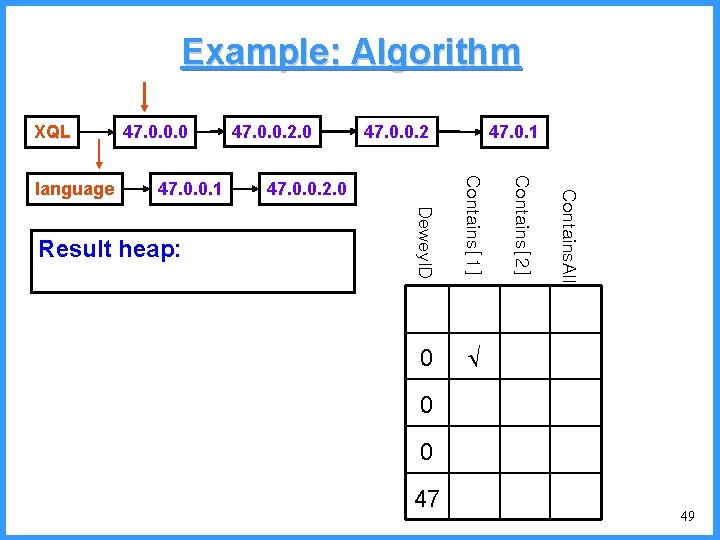

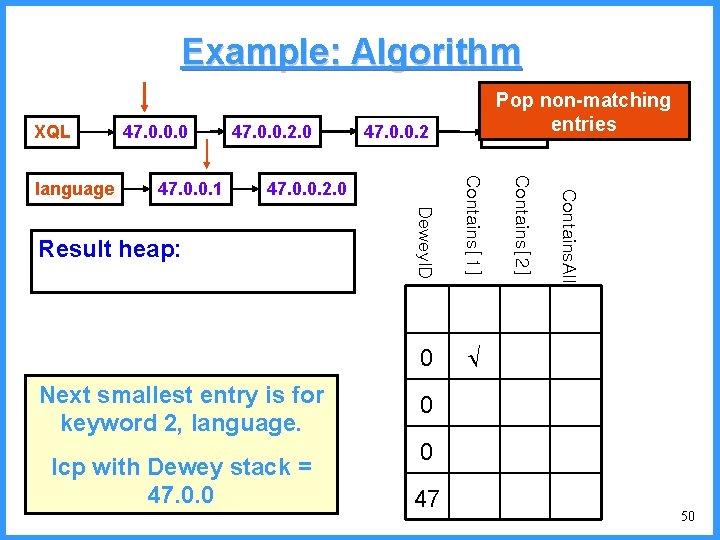

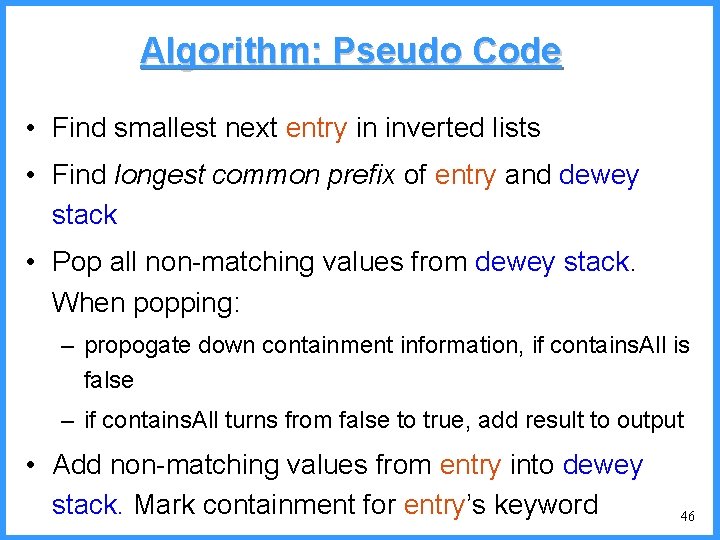

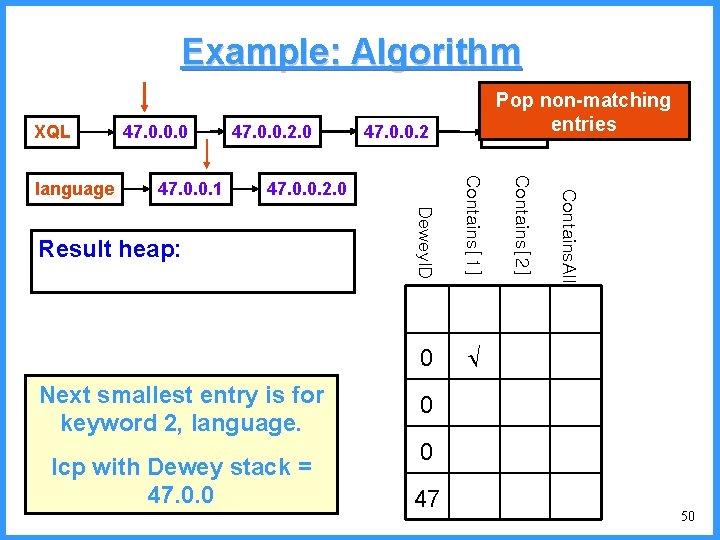

Algorithm: Pseudo Code
- Find the smallest next entry in the inverted lists.
- Find the longest common prefix (lcp) of the entry and the Dewey stack.
- Pop all non-matching values from the Dewey stack. When popping:
  - propagate containment information down the stack, if containsAll is false
  - if containsAll turns from false to true, add the result to the output
- Add the non-matching values from the entry to the Dewey stack. Mark containment for the entry's keyword.
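The steps above can be sketched in Python as follows. This is an illustrative reading of the pseudo code, not the authors' implementation: ranks and keyword positions are omitted, results go to a plain list rather than a heap, and the two sample lists are our reading of the running example (document 47), whose figure is flattened in this transcript.

```python
import heapq


def dil_query(lists):
    """lists[k]: sorted Dewey ids (tuples) of nodes directly containing keyword k."""
    n = len(lists)
    stream = heapq.merge(*[[(dewey, k) for dewey in lst]
                           for k, lst in enumerate(lists)])
    path = []      # Dewey stack: components of the current id
    contains = []  # contains[i][k]: keyword k seen under path[:i+1]
    complete = []  # complete[i]: path[:i+1] or a descendant contains all keywords
    results = []

    def pop():
        node = tuple(path)
        flags, done = contains.pop(), complete.pop()
        path.pop()
        if all(flags):              # containsAll turns true: output the node
            results.append(node)
            done = True
        if path:
            if done:                # parent must not count this subtree's keywords
                complete[-1] = True
            else:                   # propagate containment down the stack
                contains[-1] = [a or b for a, b in zip(contains[-1], flags)]

    for dewey, k in stream:         # smallest next entry over all lists
        lcp = 0
        while lcp < min(len(path), len(dewey)) and path[lcp] == dewey[lcp]:
            lcp += 1
        while len(path) > lcp:      # pop non-matching values
            pop()
        for component in dewey[lcp:]:   # add non-matching values
            path.append(component)
            contains.append([False] * n)
            complete.append(False)
        contains[-1][k] = True      # mark containment for the entry's keyword
    while path:
        pop()
    return results


XQL = [(47, 0, 0, 0), (47, 0, 0, 2), (47, 0, 0, 2, 0), (47, 0, 1)]
LANGUAGE = [(47, 0, 0, 1), (47, 0, 0, 2, 0)]
answers = dil_query([XQL, LANGUAGE])
```

On these lists the sketch reports node 47.0.0.2.0 first and then node 47.0.0, each found in a single pass over the merged lists.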

Example: Algorithm
- (Figure: initial state of the inverted lists for XQL and language, the Dewey stack with its Contains[1], Contains[2] and ContainsAll flags, and the result heap.)

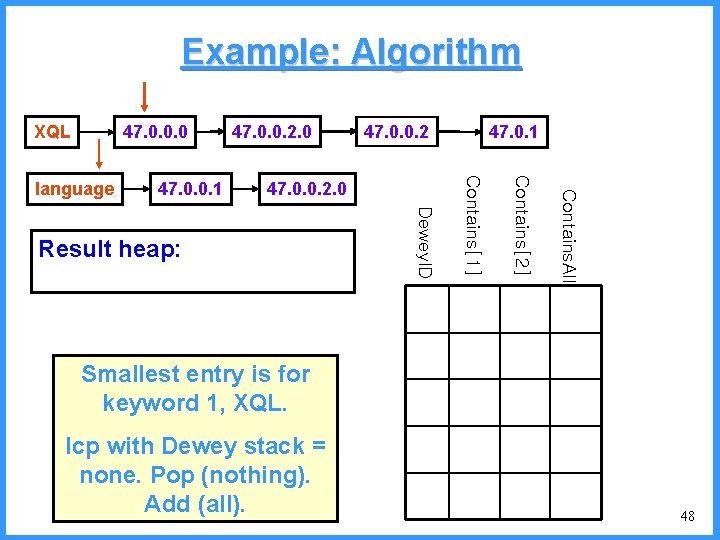

Example: Algorithm
- Smallest entry is for keyword 1, XQL.
- lcp with Dewey stack = none.
- Pop (nothing). Add (all).

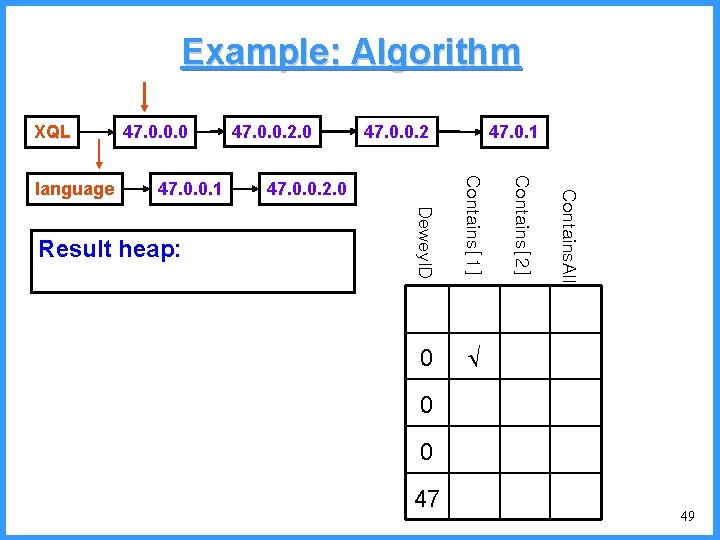

Example: Algorithm
- (Figure: state of the Dewey stack and its containment flags after the entry's components have been added.)

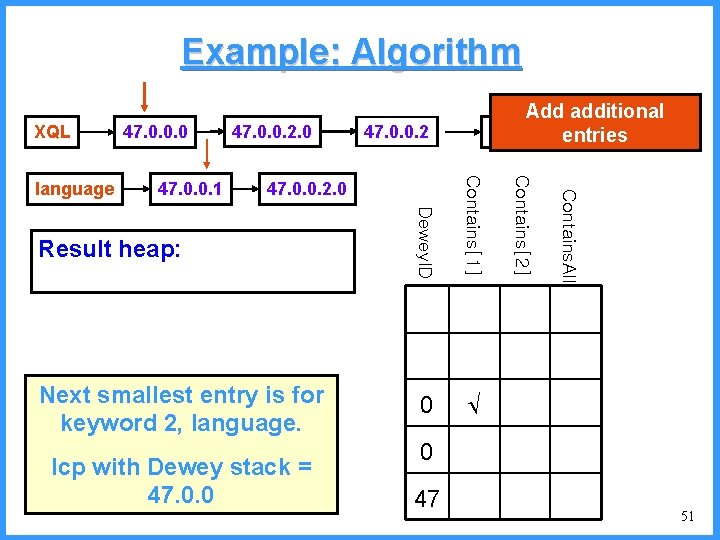

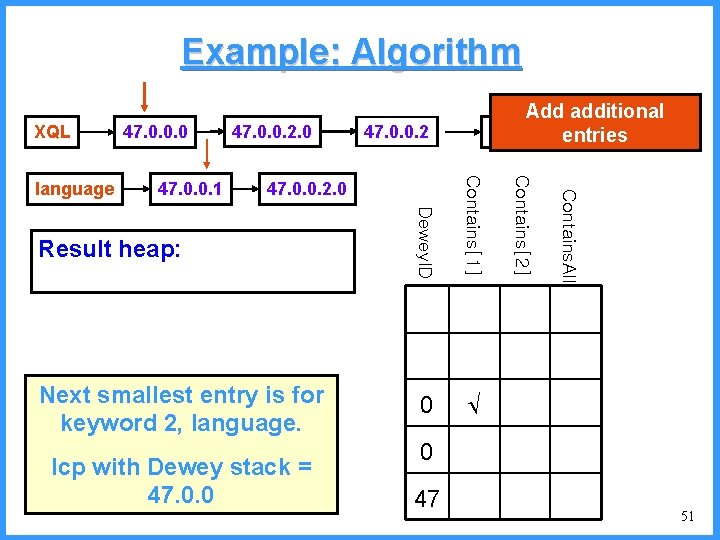

Example: Algorithm
- Next smallest entry is for keyword 2, language.
- lcp with Dewey stack = 47.0.0
- Pop non-matching entries.

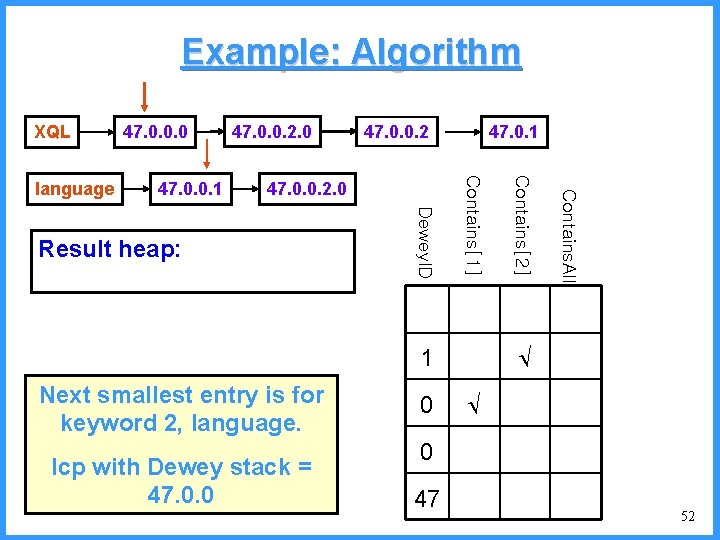

Example: Algorithm
- Next smallest entry is for keyword 2, language.
- lcp with Dewey stack = 47.0.0
- Add additional entries.

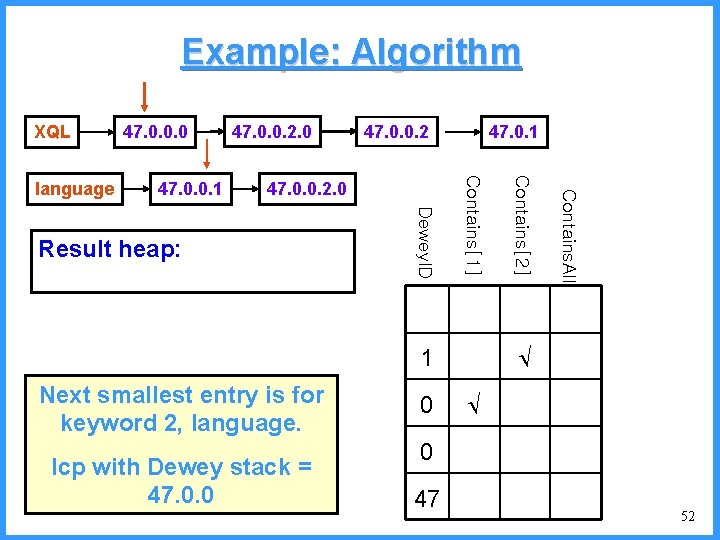

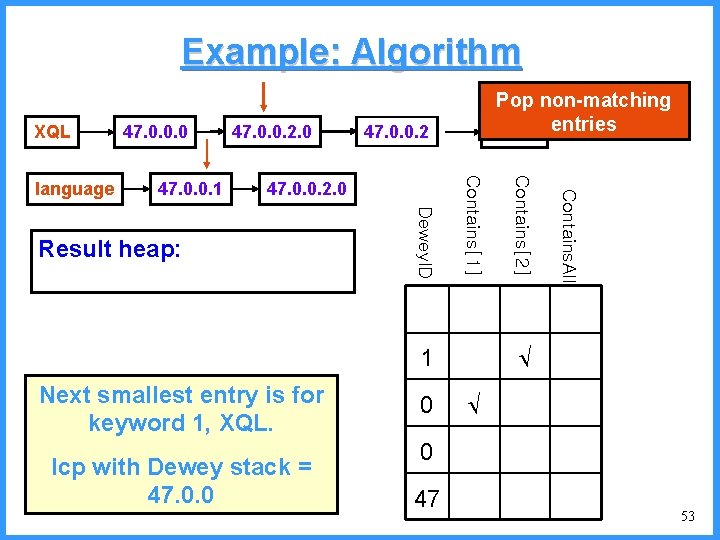

Example: Algorithm
- Next smallest entry is for keyword 2, language.
- lcp with Dewey stack = 47.0.0
- (Figure: state of the Dewey stack and its containment flags after the entry has been added.)

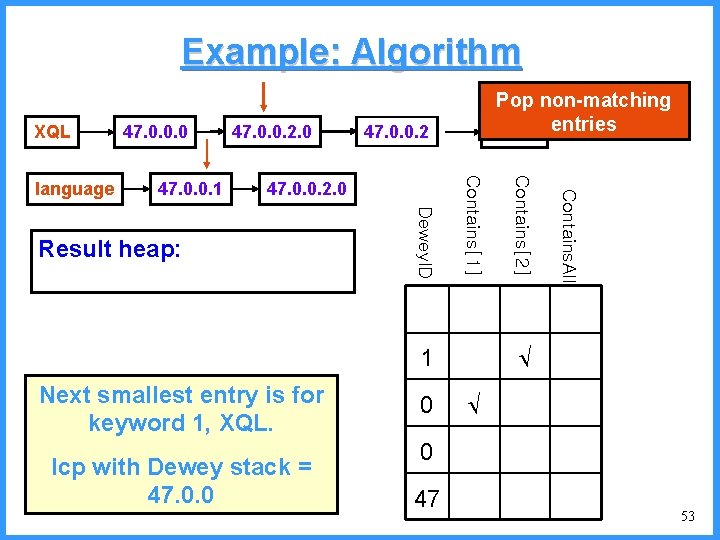

Example: Algorithm
- Next smallest entry is for keyword 1, XQL.
- lcp with Dewey stack = 47.0.0
- Pop non-matching entries.

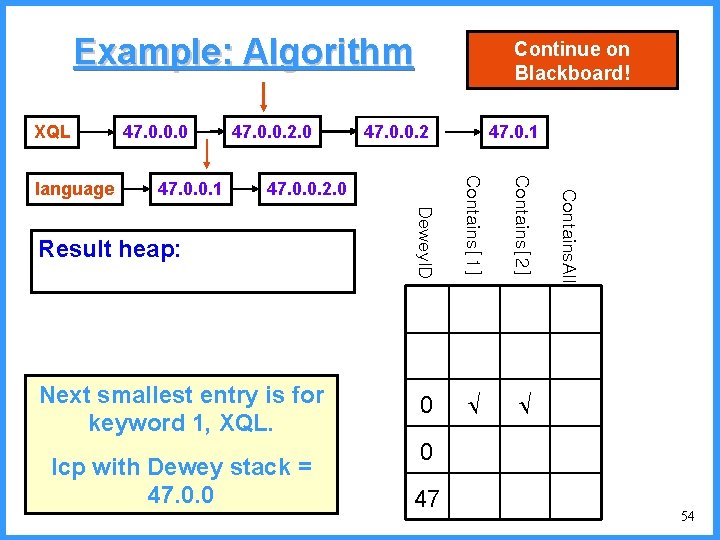

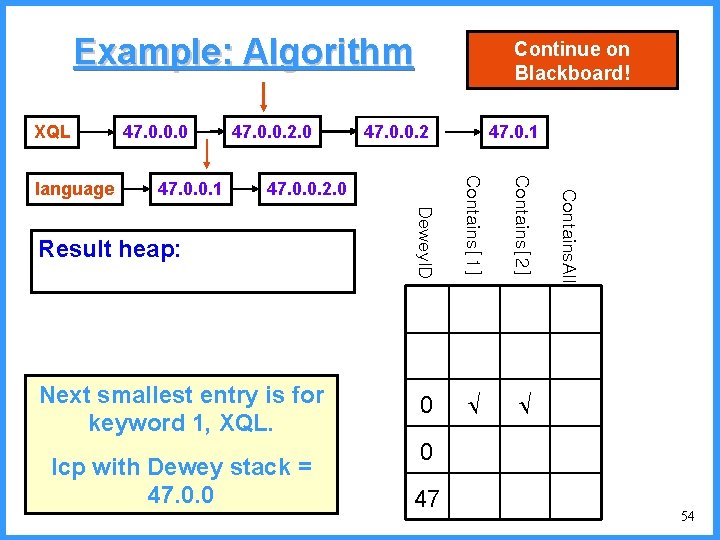

Example: Algorithm
- Next smallest entry is for keyword 1, XQL.
- lcp with Dewey stack = 47.0.0
- Continue on the blackboard!

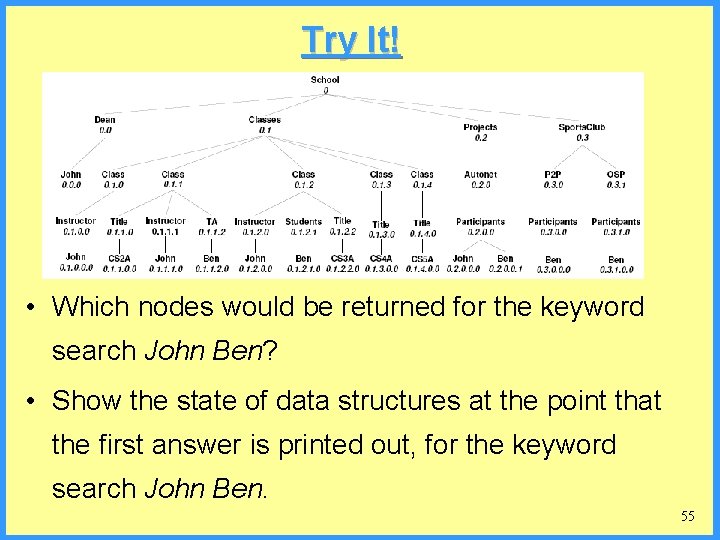

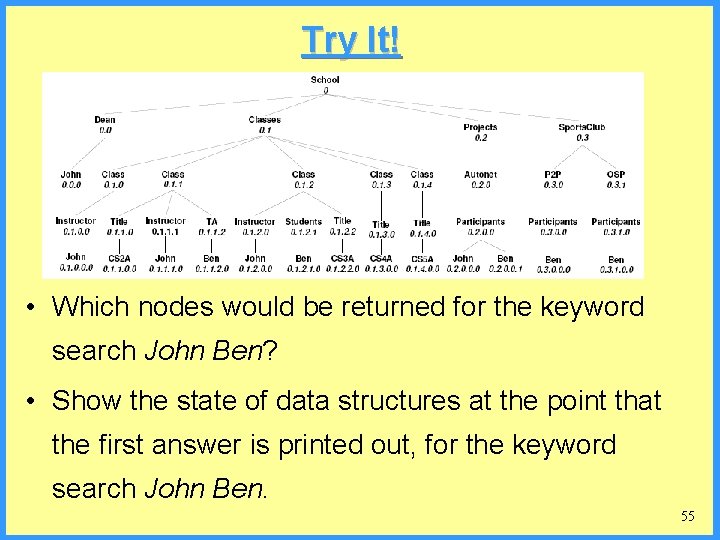

Try It!
- Which nodes would be returned for the keyword search John Ben?
- Show the state of the data structures at the point when the first answer is printed out, for the keyword search John Ben.

Inexact Querying of XML

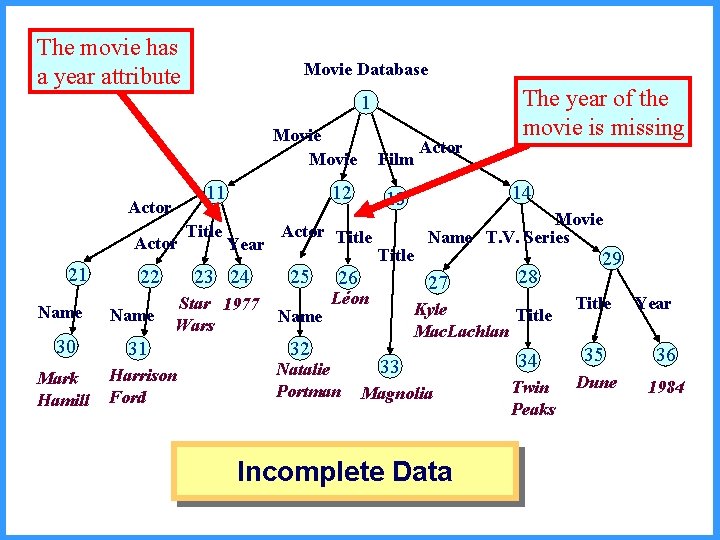

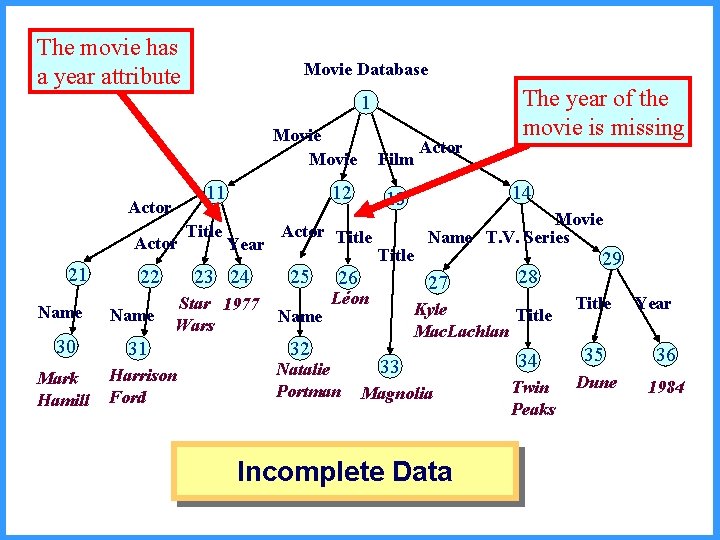

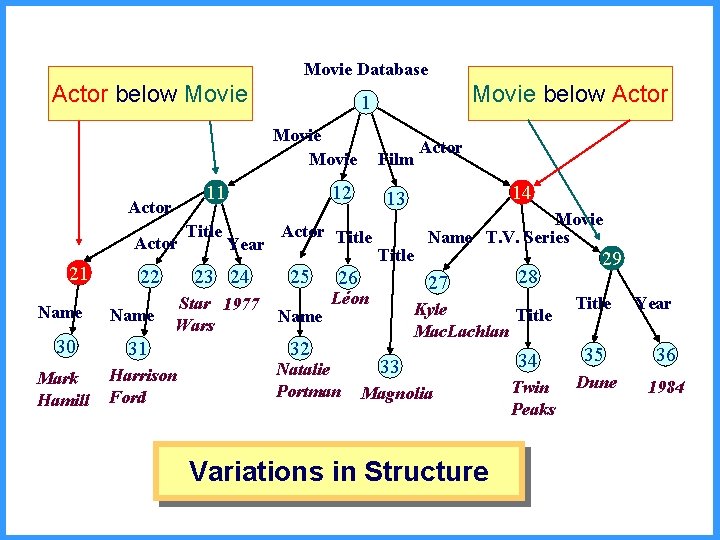

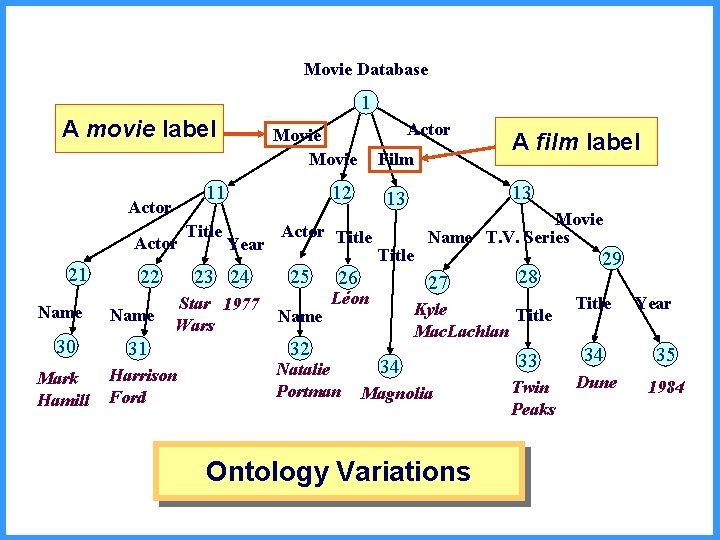

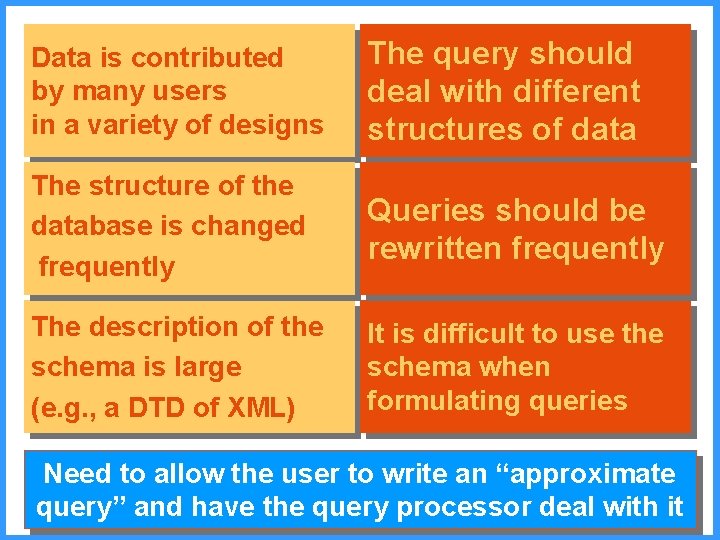

XML Data May Be Irregular
- Relational data is regular and organized. XML may be very different.
  - Data is incomplete: missing values of attributes in elements
  - Data has structural variations: relationships between elements are represented differently in different parts of the document
  - Data has ontology variations: different labels are used to describe nodes of the same type
- (Note: in some of the upcoming slides, we have labels on edges instead of on nodes.)

Incomplete Data
- (Figure: a movie database graph. Its nodes include the actors Mark Hamill, Harrison Ford, Natalie Portman and Kyle MacLachlan, and the titles Star Wars (1977), Léon, Magnolia, Twin Peaks and Dune (1984).)
- Annotation: the movie has a year attribute.
- Annotation: the year of the movie is missing.

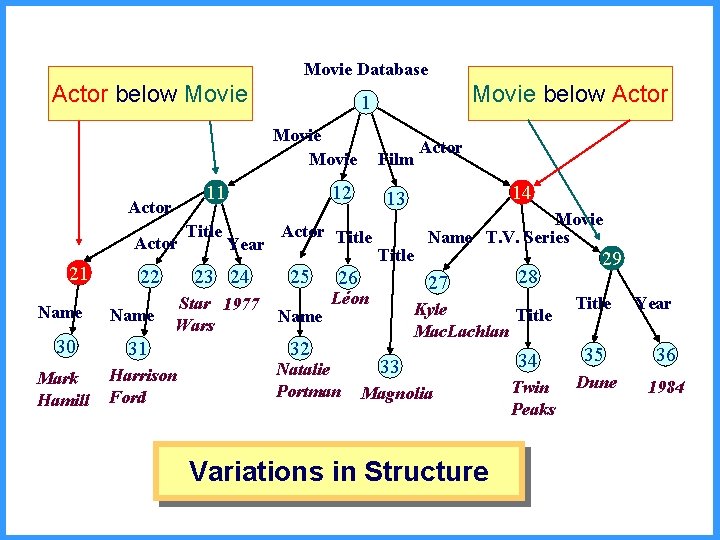

Variations in Structure
- (Figure: the movie database graph.)
- Annotation: Actor below Movie.
- Annotation: Movie below Actor.

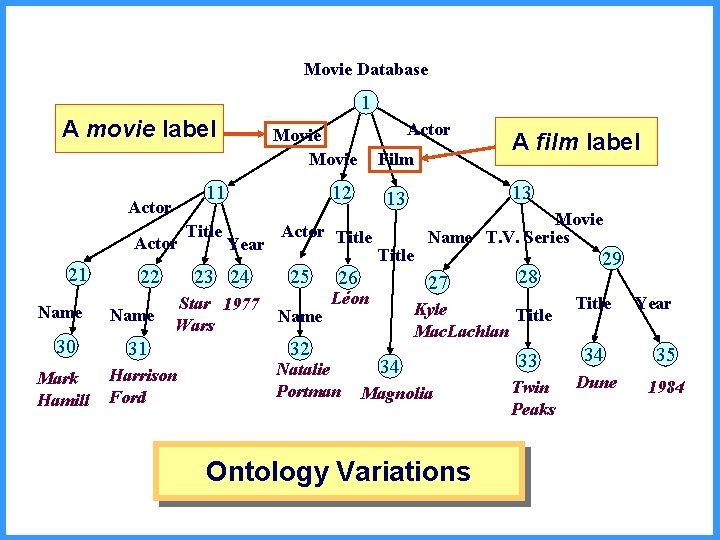

Ontology Variations
- (Figure: the movie database graph.)
- Annotation: a Movie label.
- Annotation: a Film label.

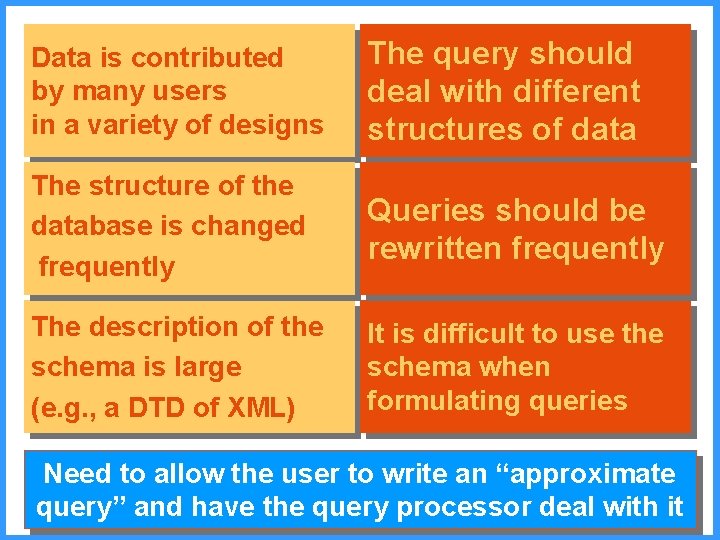

- Data is contributed by many users in a variety of designs, so the query should deal with different structures of data.
- The structure of the database is changed frequently, so queries would have to be rewritten frequently.
- The description of the schema is large (e.g., a DTD of XML), so it is difficult to use the schema when formulating queries.
- We need to allow the user to write an "approximate query" and have the query processor deal with it.

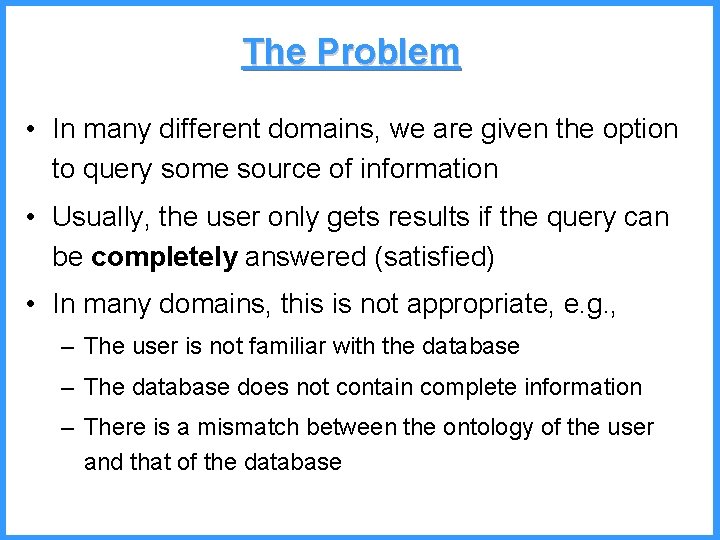

The Problem
- In many different domains, we are given the option to query some source of information.
- Usually, the user only gets results if the query can be completely answered (satisfied).
- In many domains, this is not appropriate, e.g.:
  - The user is not familiar with the database.
  - The database does not contain complete information.
  - There is a mismatch between the ontology of the user and that of the database.

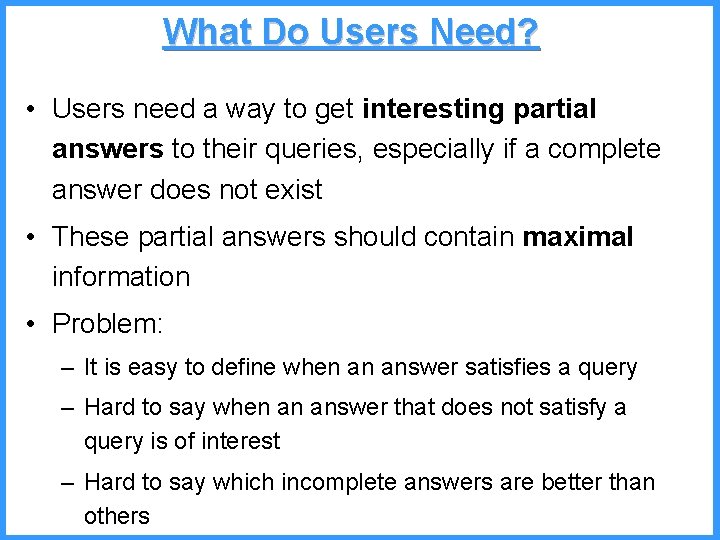

What Do Users Need?
- Users need a way to get interesting partial answers to their queries, especially if a complete answer does not exist.
- These partial answers should contain maximal information.
- Problem:
  - It is easy to define when an answer satisfies a query.
  - It is hard to say when an answer that does not satisfy a query is of interest.
  - It is hard to say which incomplete answers are better than others.

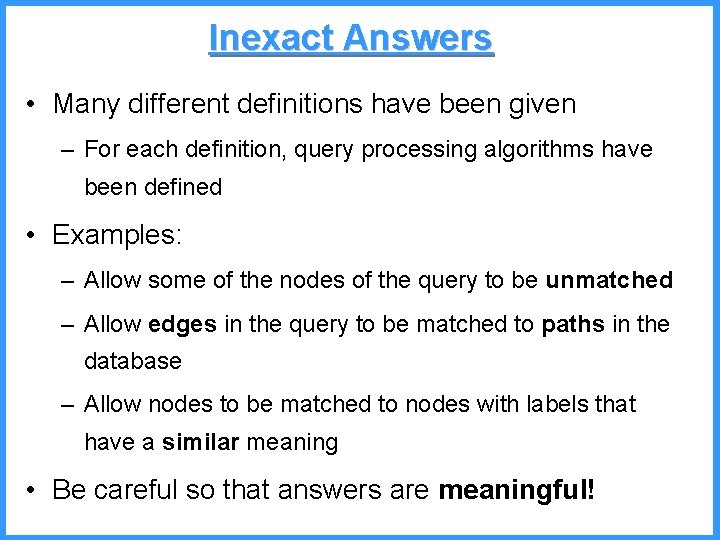

Inexact Answers
- Many different definitions have been given.
  - For each definition, query processing algorithms have been defined.
- Examples:
  - Allow some of the nodes of the query to be unmatched.
  - Allow edges in the query to be matched to paths in the database.
  - Allow nodes to be matched to nodes with labels that have a similar meaning.
- Be careful so that answers are meaningful!

Tree Pattern Relaxation
Amer-Yahia, Cho, Srivastava, EDBT 2002

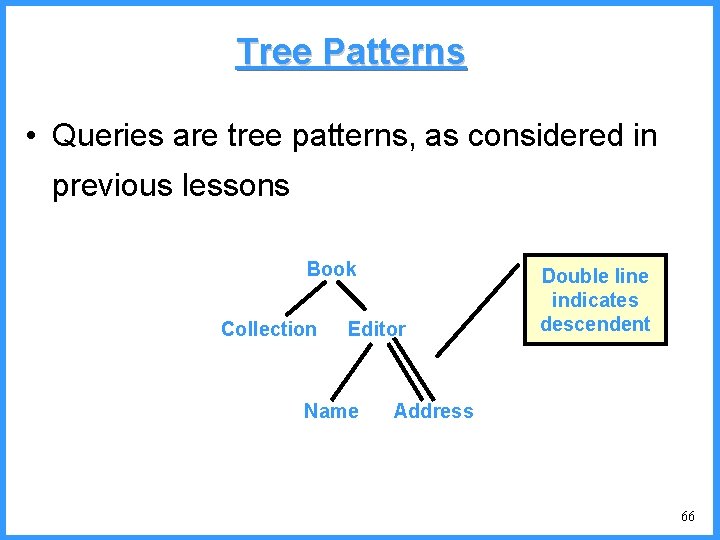

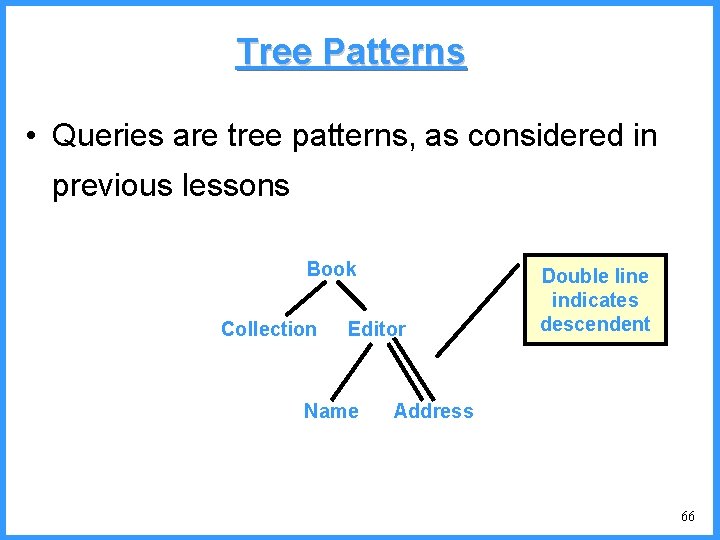

Tree Patterns
- Queries are tree patterns, as considered in previous lessons.
- (Figure: a tree pattern over the labels Book, Collection, Editor, Name and Address.)
- A double line indicates a descendant edge.

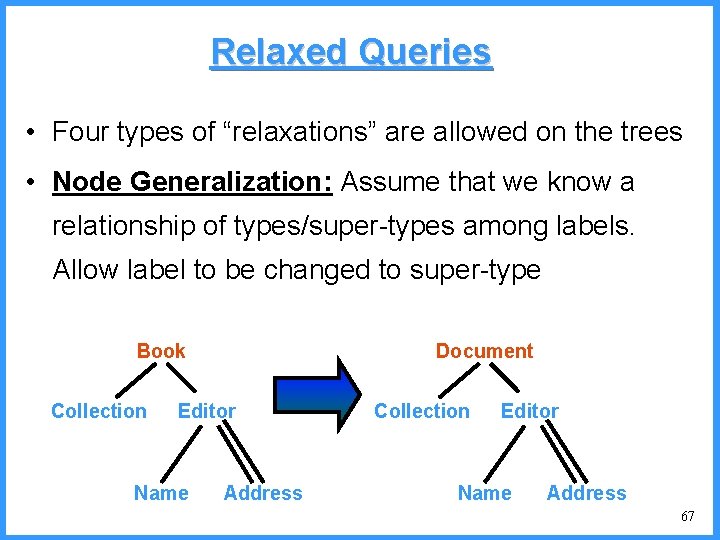

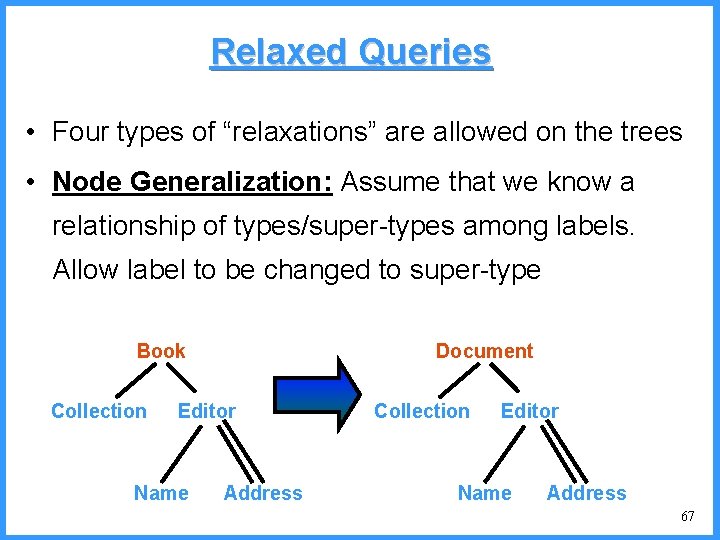

Relaxed Queries
- Four types of "relaxations" are allowed on the trees.
- Node Generalization: assume that we know a type/super-type relationship among labels. Allow a label to be changed to its super-type.
- (Figure: the query before and after generalizing Book to Document.)

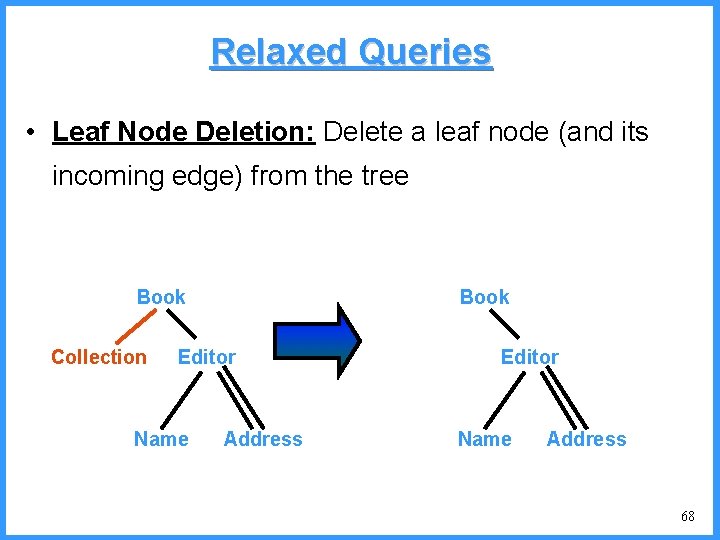

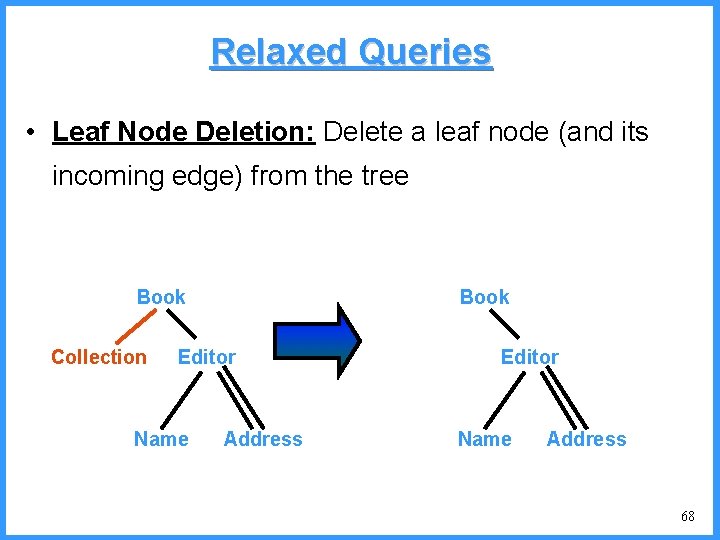

Relaxed Queries
- Leaf Node Deletion: delete a leaf node (and its incoming edge) from the tree.
- (Figure: the query before and after deleting a leaf.)

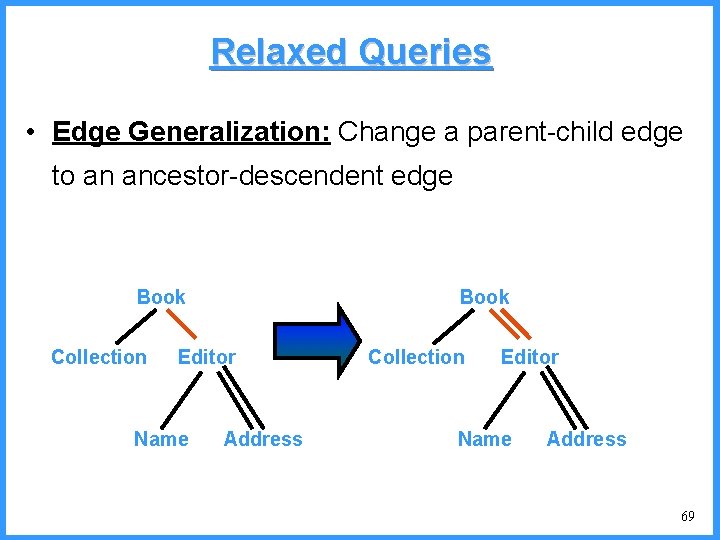

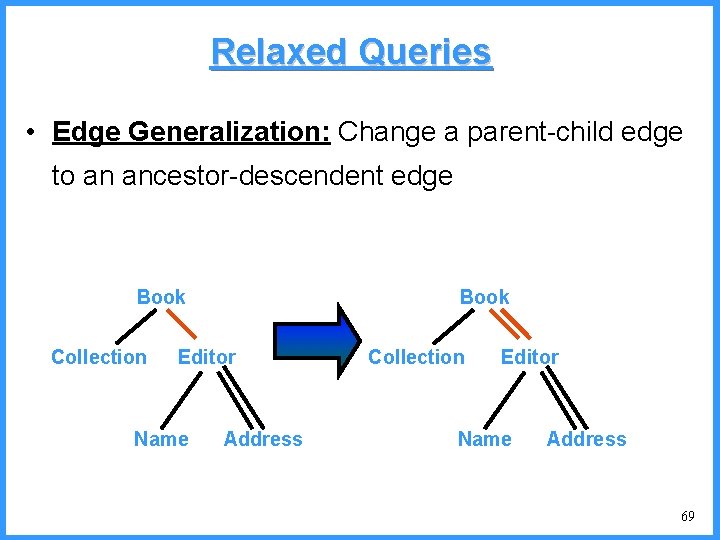

Relaxed Queries
- Edge Generalization: change a parent-child edge to an ancestor-descendant edge.
- (Figure: the query before and after generalizing an edge.)

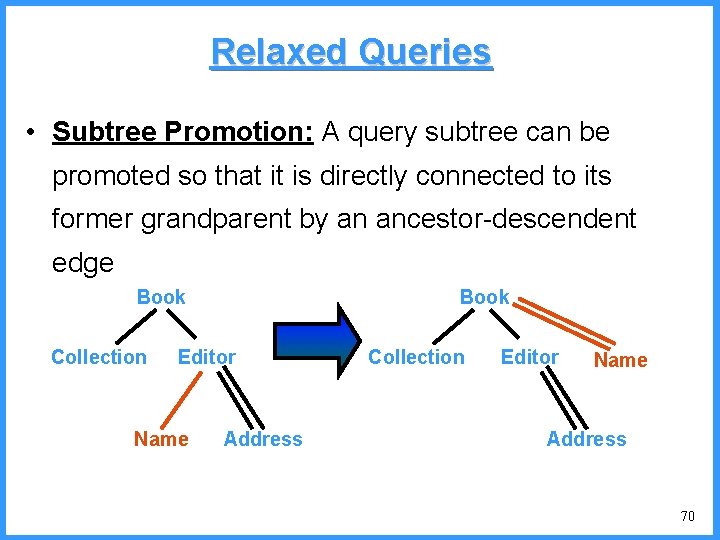

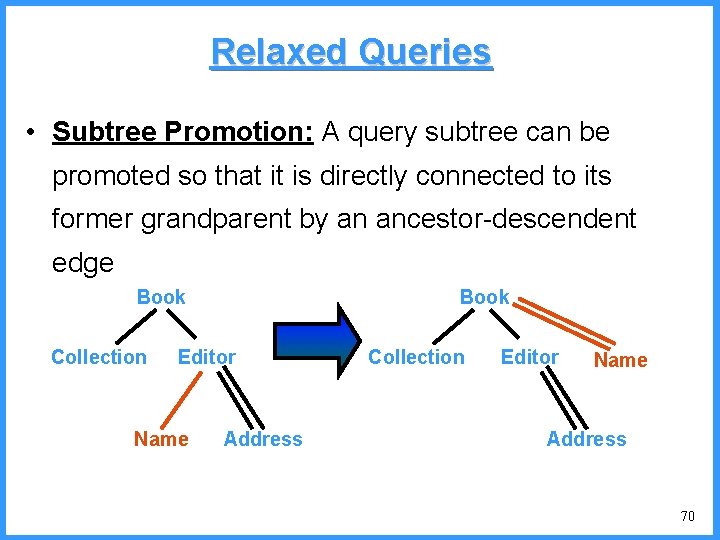

Relaxed Queries
- Subtree Promotion: a query subtree can be promoted so that it is directly connected to its former grandparent by an ancestor-descendant edge.
- (Figure: the query before and after promoting a subtree.)
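The four relaxations can be written as small tree transformations. The sketch below is a hypothetical encoding, not taken from the paper: the `Node` class, the function names and the assumption that labels are unique within a query are ours. The shape of the sample query `Q` follows the join conditions used in the query-plan slides.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class Node:
    label: str
    edge: str = "child"  # edge from the parent: "child" or "descendant"
    children: list = field(default_factory=list)


def find(node, label, parent=None):
    """Return (node, parent) for the first node with this label (pre-order)."""
    if node.label == label:
        return node, parent
    for child in node.children:
        hit = find(child, label, node)
        if hit is not None:
            return hit
    return None


def generalize_node(query, label, supertype):
    q = copy.deepcopy(query)
    node, _ = find(q, label)
    node.label = supertype
    return q


def delete_leaf(query, label):
    q = copy.deepcopy(query)
    node, parent = find(q, label)
    if node.children or parent is None:
        raise ValueError("only non-root leaves can be deleted")
    parent.children.remove(node)
    return q


def generalize_edge(query, label):
    q = copy.deepcopy(query)
    node, _ = find(q, label)
    node.edge = "descendant"
    return q


def promote_subtree(query, label):
    q = copy.deepcopy(query)
    node, parent = find(q, label)
    if parent is None or find(q, parent.label)[1] is None:
        raise ValueError("node has no grandparent")
    grandparent = find(q, parent.label)[1]
    parent.children.remove(node)
    node.edge = "descendant"
    grandparent.children.append(node)
    return q


Q = Node("Book", children=[
    Node("Collection"),
    Node("Editor", children=[Node("Name"), Node("Address", edge="descendant")]),
])
```

Each function returns a relaxed copy and leaves the original query untouched, so relaxations can be composed by nesting calls, e.g. `promote_subtree(generalize_node(Q, "Book", "Document"), "Name")`.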

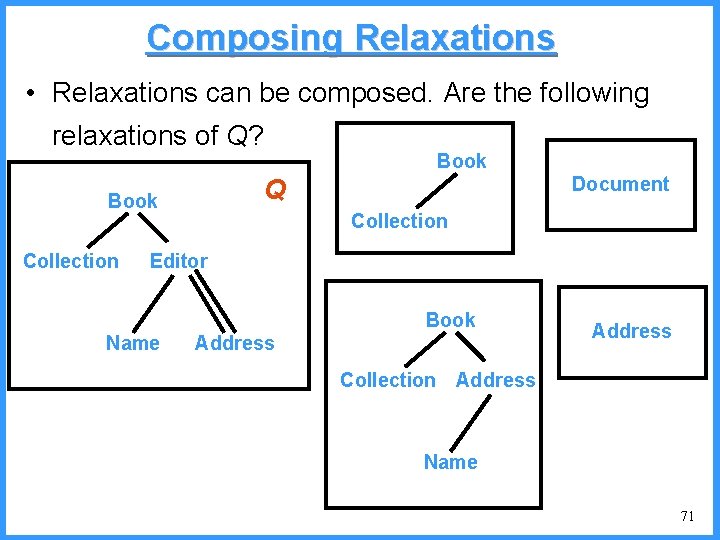

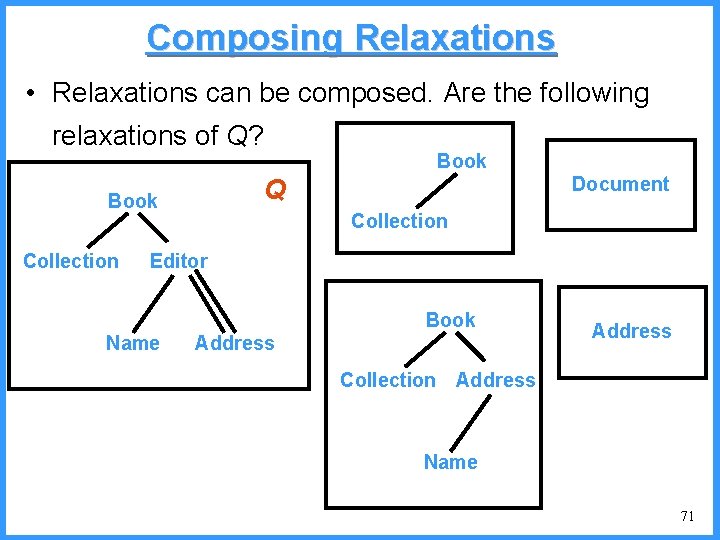

Composing Relaxations
- Relaxations can be composed. Are the following relaxations of Q?
- (Figure: the query Q over Book, Collection, Editor, Name and Address, and candidate relaxed queries, one of them rooted at Document.)

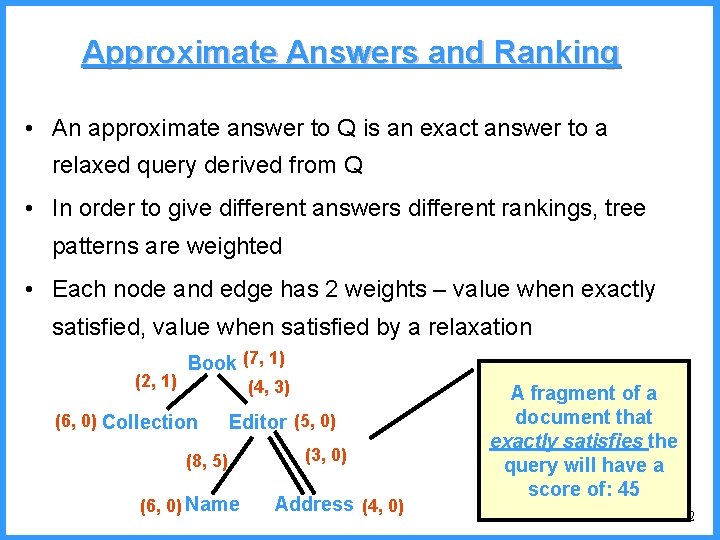

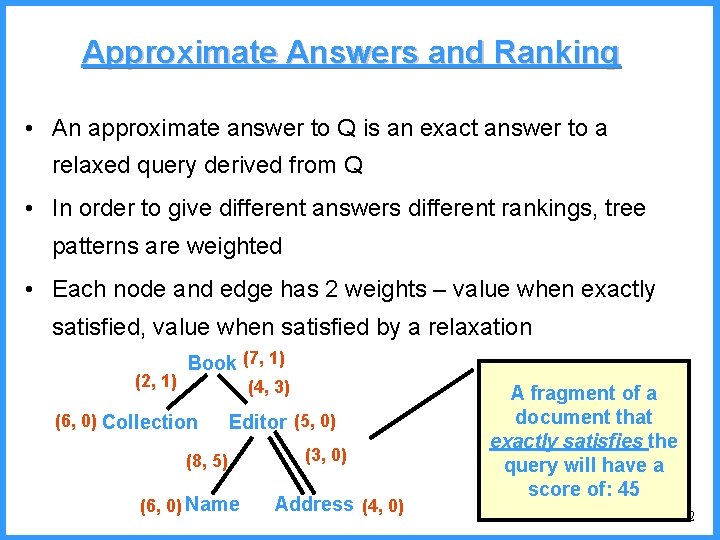

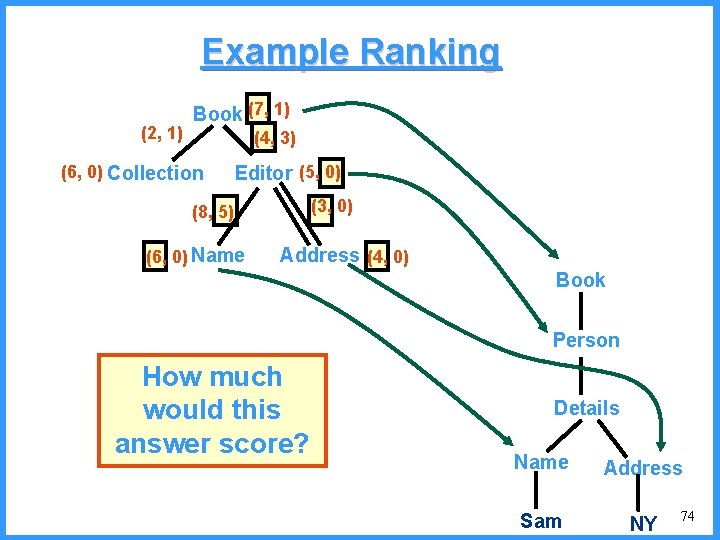

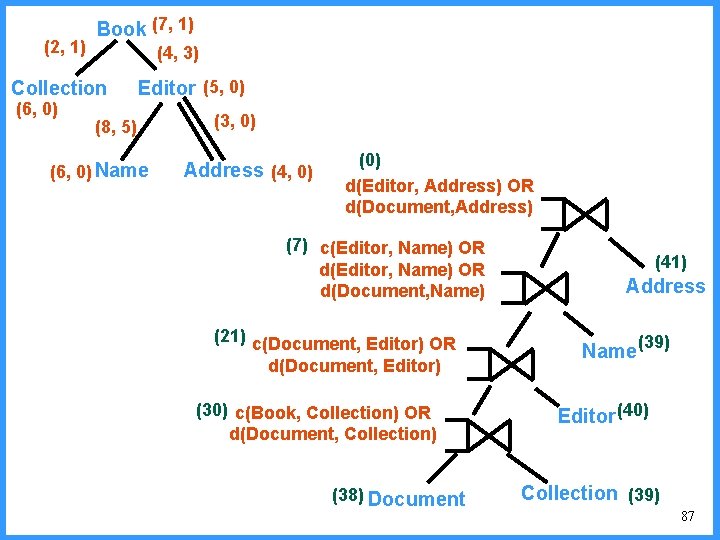

Approximate Answers and Ranking
- An approximate answer to Q is an exact answer to a relaxed query derived from Q.
- In order to give different answers different rankings, tree patterns are weighted.
- Each node and edge has 2 weights: the value when exactly satisfied, and the value when satisfied by a relaxation.
- Weighted query (figure):
  - Nodes: Book (7, 1), Collection (6, 0), Editor (5, 0), Name (6, 0), Address (4, 0)
  - Edges: Book-Collection (2, 1), Book-Editor (4, 3), Editor-Name (8, 5), Editor-Address (3, 0)
- A fragment of a document that exactly satisfies the query will have a score of 45.
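The scoring rule is a plain sum of weights. In the sketch below, the assignment of weight pairs to nodes and edges is an assumption: the figure is flattened in this transcript, and the mapping was chosen so that it agrees with the exact score of 45 and with the maximal weights shown on the later slides. The `score` function and the treatment of deleted parts (they contribute 0) are also illustrative.

```python
# (exact weight, relaxed weight); the mapping to nodes and edges is inferred.
NODE_WEIGHTS = {
    "Book": (7, 1), "Collection": (6, 0), "Editor": (5, 0),
    "Name": (6, 0), "Address": (4, 0),
}
EDGE_WEIGHTS = {
    ("Book", "Collection"): (2, 1), ("Book", "Editor"): (4, 3),
    ("Editor", "Name"): (8, 5), ("Editor", "Address"): (3, 0),
}
WEIGHTS = {**NODE_WEIGHTS, **EDGE_WEIGHTS}


def score(match):
    """match maps a node label or (parent, child) edge to 'exact' or 'relaxed'.
    Parts of the query that are left out were deleted and add nothing."""
    index = {"exact": 0, "relaxed": 1}
    return sum(WEIGHTS[part][index[kind]] for part, kind in match.items())


EXACT = {part: "exact" for part in WEIGHTS}

# Collection is missing and Name is only a descendant (not a child) of Editor.
PARTIAL = {
    "Book": "exact", "Editor": "exact", ("Book", "Editor"): "exact",
    "Name": "exact", ("Editor", "Name"): "relaxed",
    "Address": "exact", ("Editor", "Address"): "exact",
}
```

Under these assumptions `score(EXACT)` reproduces the 45 stated on the slide, and the partial match scores 34.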

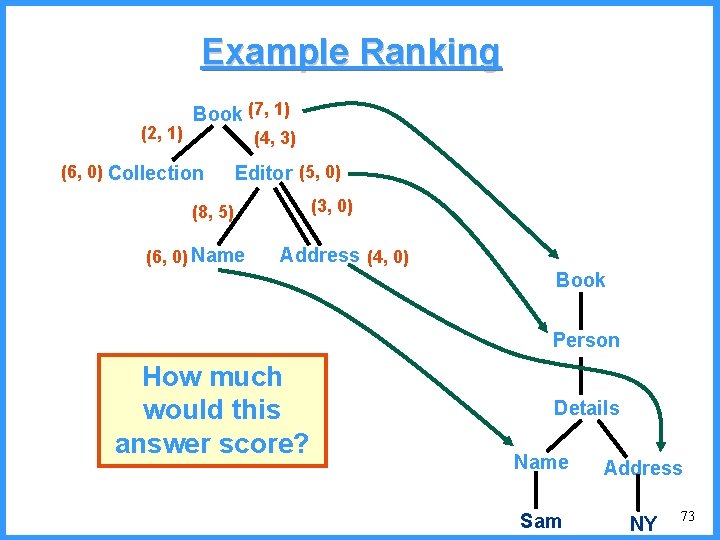

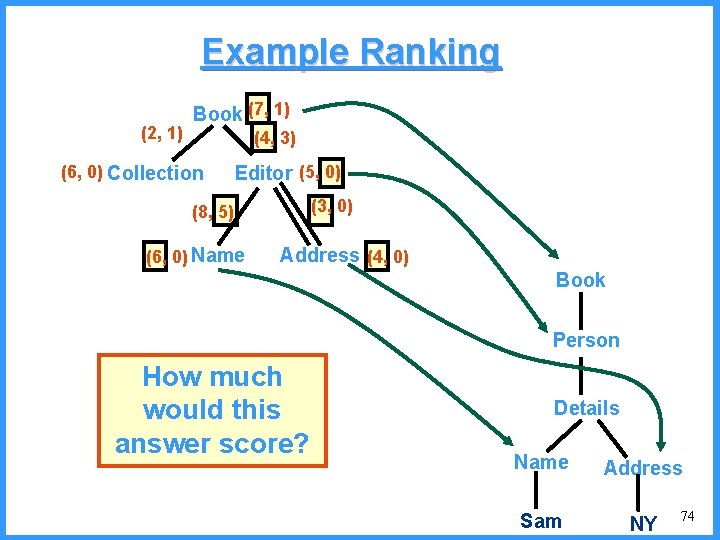

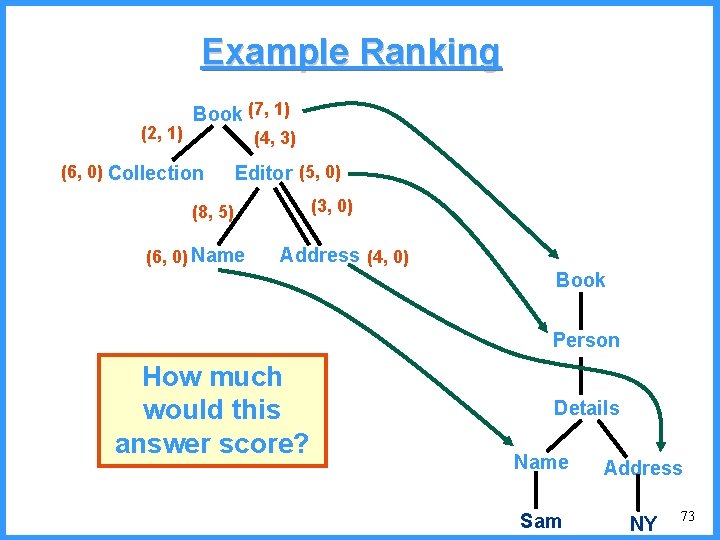

Example Ranking
- Query: the weighted tree pattern over Book, Collection, Editor, Name and Address.
- Candidate answer (figure): a fragment with the nodes Book, Person, Details, Name (Sam) and Address (NY).
- How much would this answer score?


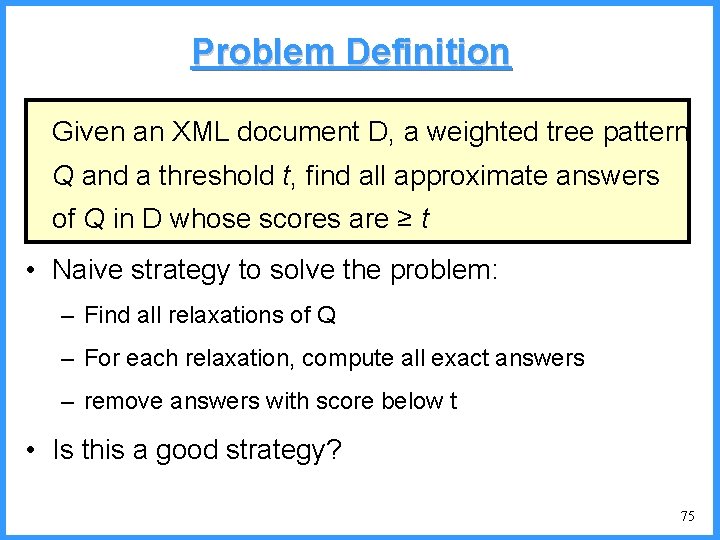

Problem Definition
- Given an XML document D, a weighted tree pattern Q and a threshold t, find all approximate answers of Q in D whose scores are ≥ t.
- Naive strategy to solve the problem:
  - Find all relaxations of Q.
  - For each relaxation, compute all exact answers.
  - Remove answers with a score below t.
- Is this a good strategy?

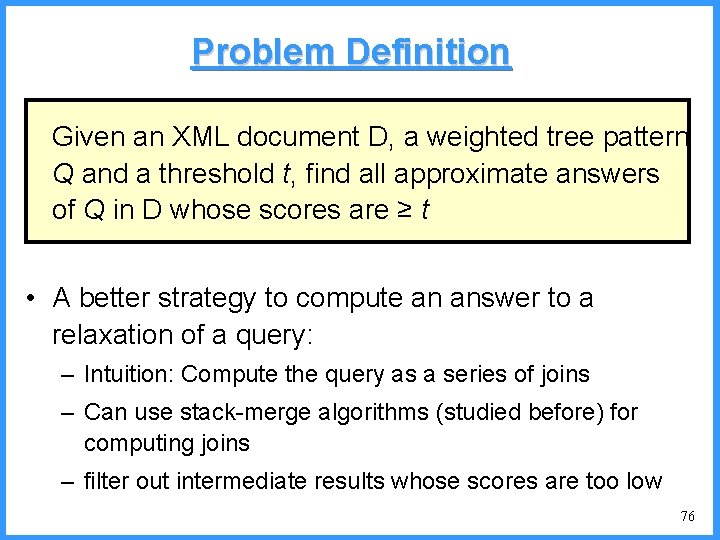

Problem Definition
- Given an XML document D, a weighted tree pattern Q and a threshold t, find all approximate answers of Q in D whose scores are ≥ t.
- A better strategy to compute an answer to a relaxation of a query:
  - Intuition: compute the query as a series of joins.
  - Can use stack-merge algorithms (studied before) for computing joins.
  - Filter out intermediate results whose scores are too low.

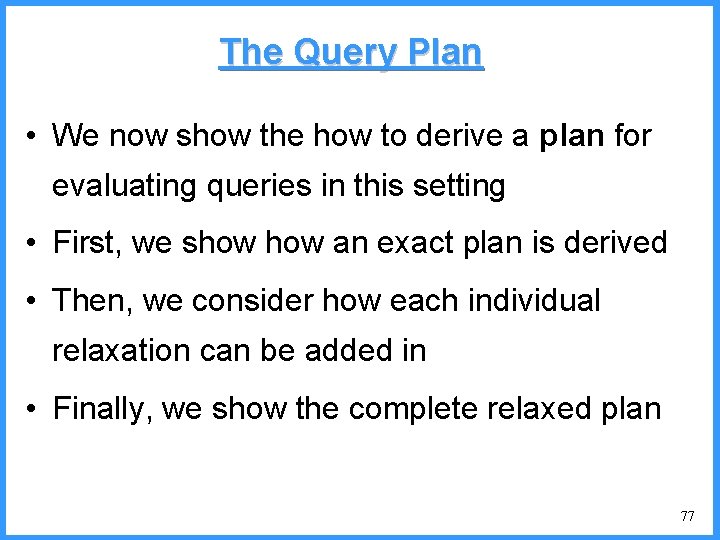

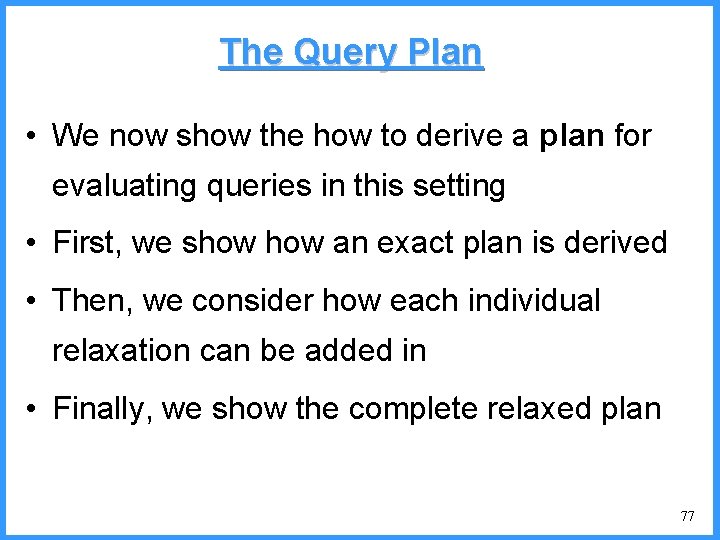

The Query Plan
- We now show how to derive a plan for evaluating queries in this setting.
- First, we show how an exact plan is derived.
- Then, we consider how each individual relaxation can be added in.
- Finally, we show the complete relaxed plan.

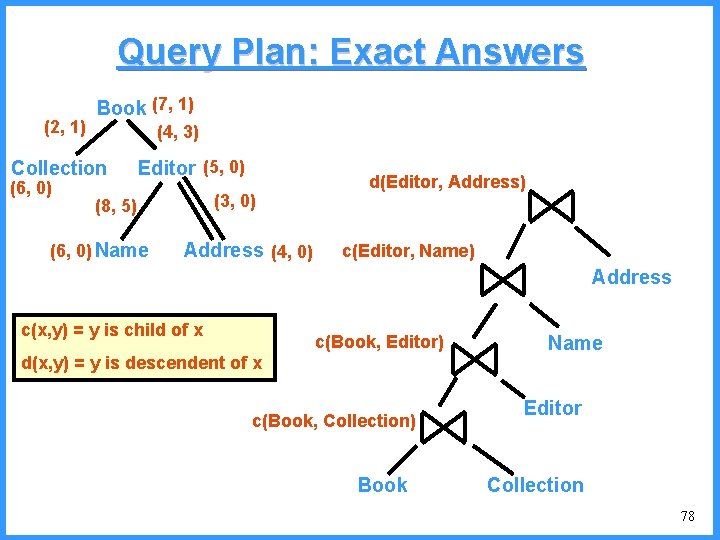

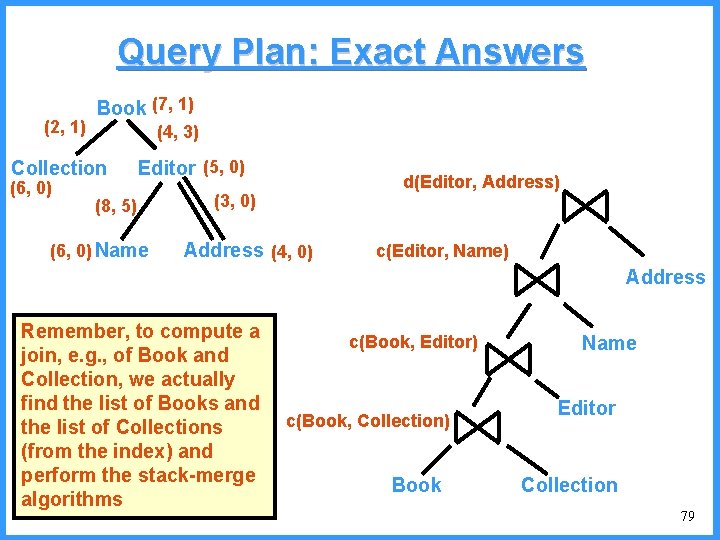

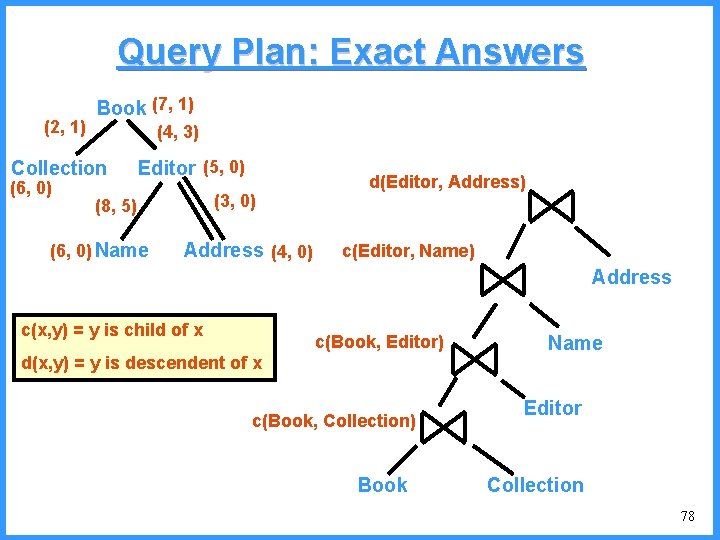

Query Plan: Exact Answers
- Notation: c(x, y) = y is a child of x; d(x, y) = y is a descendant of x.
- Plan for the weighted query (a sequence of joins, bottom-up):
  1. c(Book, Collection): join Book with Collection
  2. c(Book, Editor): join the result with Editor
  3. c(Editor, Name): join the result with Name
  4. d(Editor, Address): join the result with Address

Query Plan: Exact Answers
- (Figure: the exact plan with the joins c(Book, Collection), c(Book, Editor), c(Editor, Name) and d(Editor, Address).)
- Remember: to compute a join, e.g., of Book and Collection, we actually find the list of Books and the list of Collections (from the index) and perform the stack-merge algorithm.

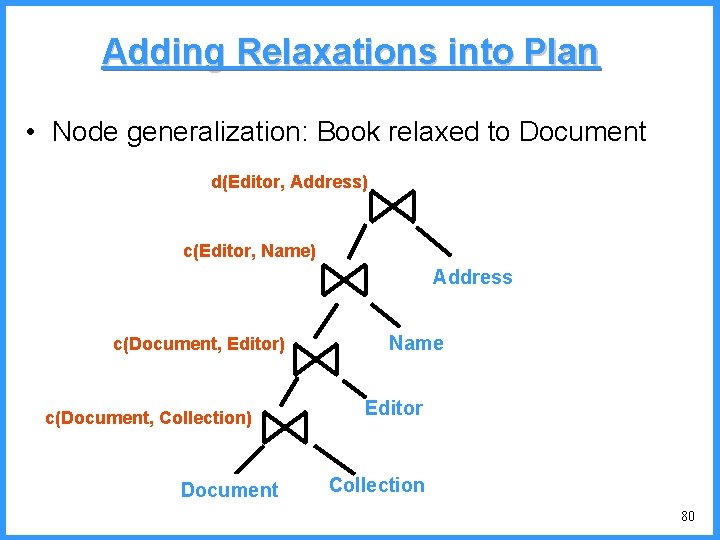

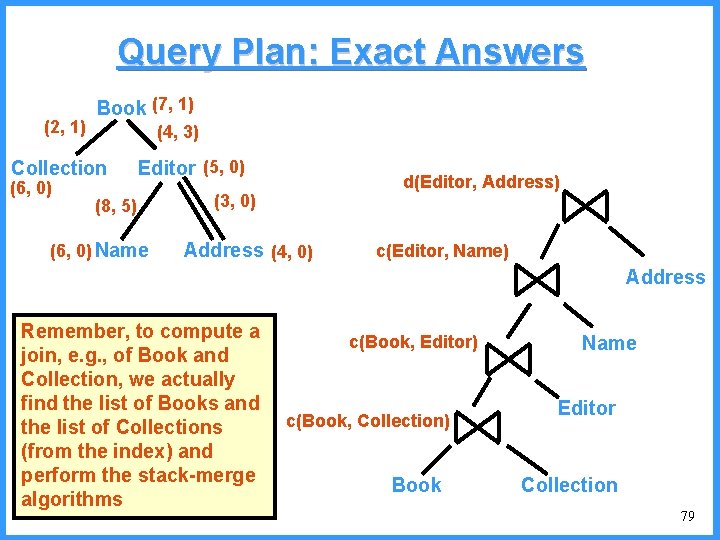

Adding Relaxations into Plan
- Node generalization: Book relaxed to Document
- (Figure: the plan with the leaf Document in place of Book; the join conditions on Book, c(Book, Editor) and c(Book, Collection), are paired with conditions on Document, c(Document, …).)

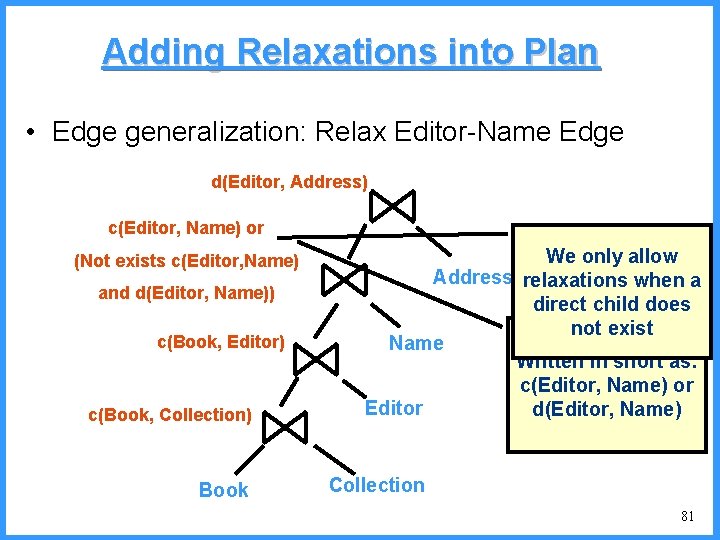

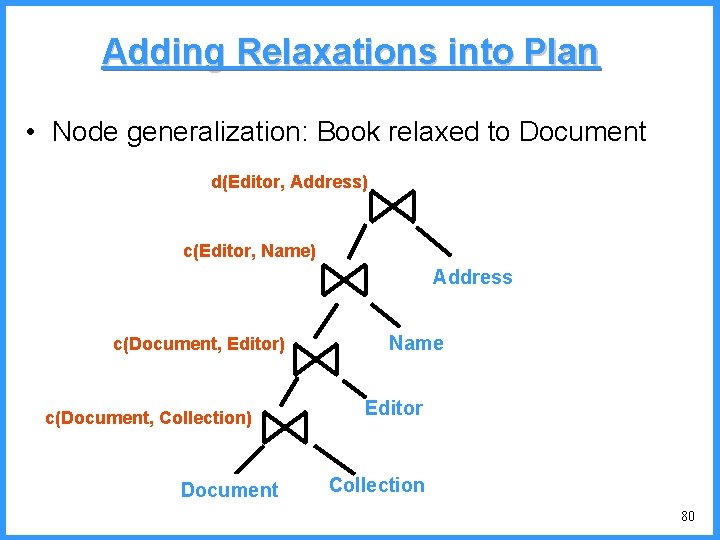

Adding Relaxations into Plan
- Edge generalization: relax the Editor-Name edge
- The join condition c(Editor, Name) becomes: c(Editor, Name) or (not exists c(Editor, Name) and d(Editor, Name))
- We only allow relaxations when a direct child does not exist.
- Written in short as: c(Editor, Name) or d(Editor, Name)
- The other joins are unchanged: d(Editor, Address), c(Book, Editor), c(Book, Collection).

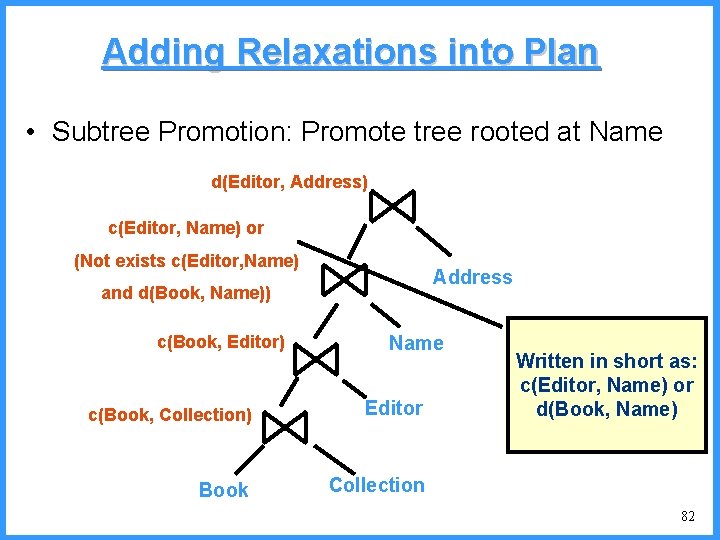

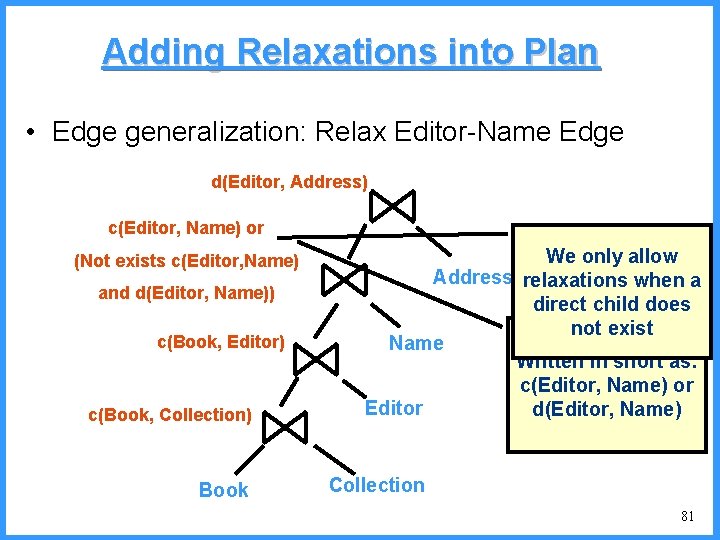

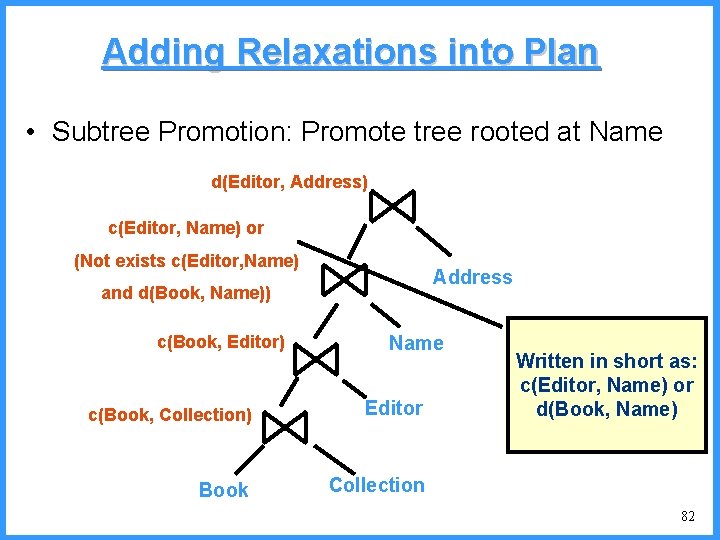

Adding Relaxations into Plan
- Subtree promotion: promote the tree rooted at Name
- The join condition c(Editor, Name) becomes: c(Editor, Name) or (not exists c(Editor, Name) and d(Book, Name))
- Written in short as: c(Editor, Name) or d(Book, Name)
- The other joins are unchanged: d(Editor, Address), c(Book, Editor), c(Book, Collection).

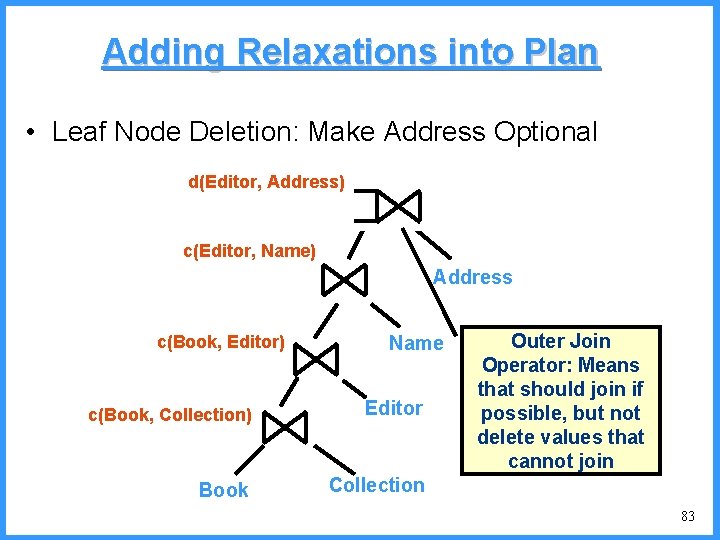

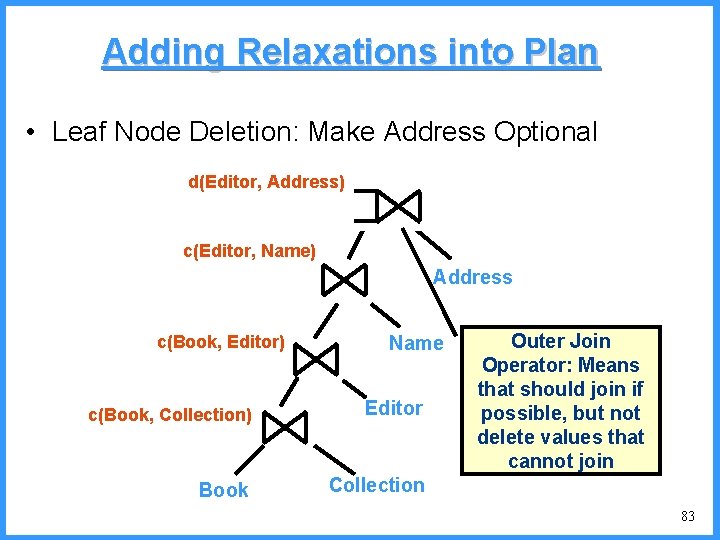

Adding Relaxations into Plan
- Leaf node deletion: make Address optional
- The join d(Editor, Address) becomes an outer join. Outer join operator: join if possible, but do not delete values that cannot join.
- The other joins are unchanged: c(Editor, Name), c(Book, Editor), c(Book, Collection).

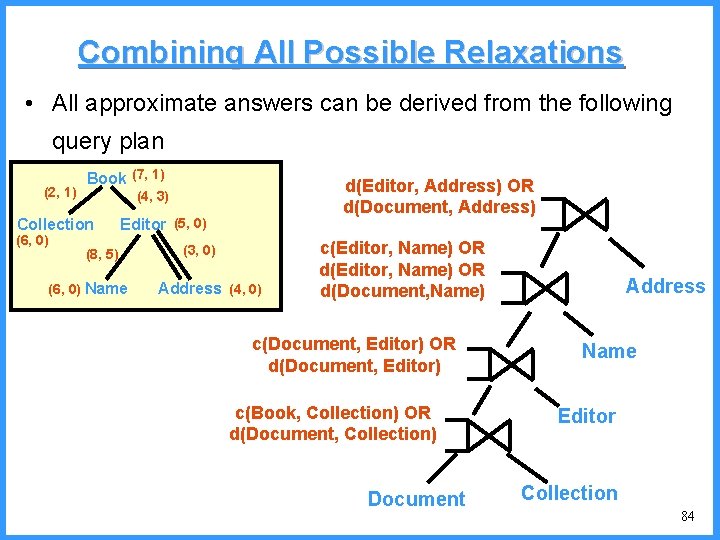

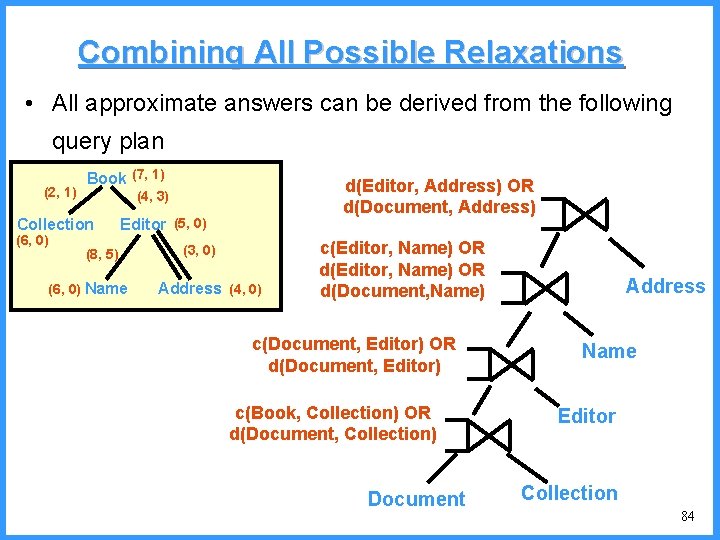

Combining All Possible Relaxations
- All approximate answers of the weighted query can be derived from the following query plan (joins listed top-down):
  - d(Editor, Address) OR d(Document, Address)
  - c(Editor, Name) OR d(Document, Name)
  - c(Document, Editor) OR d(Document, Editor)
  - c(Book, Collection) OR d(Document, Collection)
  - Leaves: Document, Address, Name, Editor, Collection

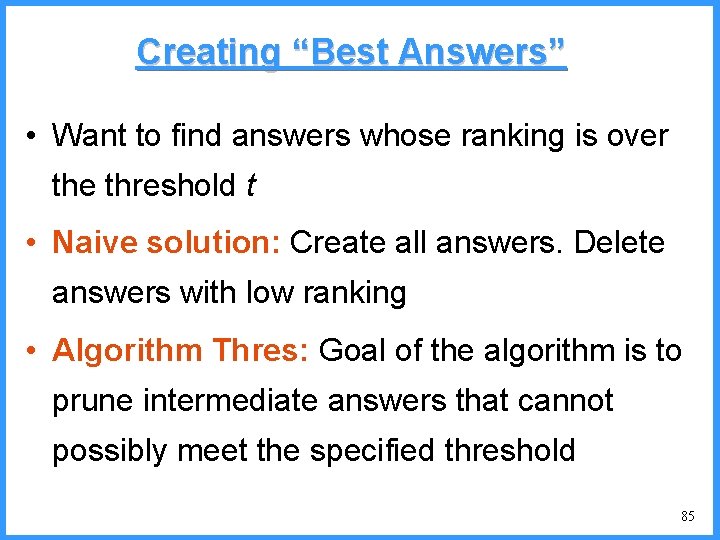

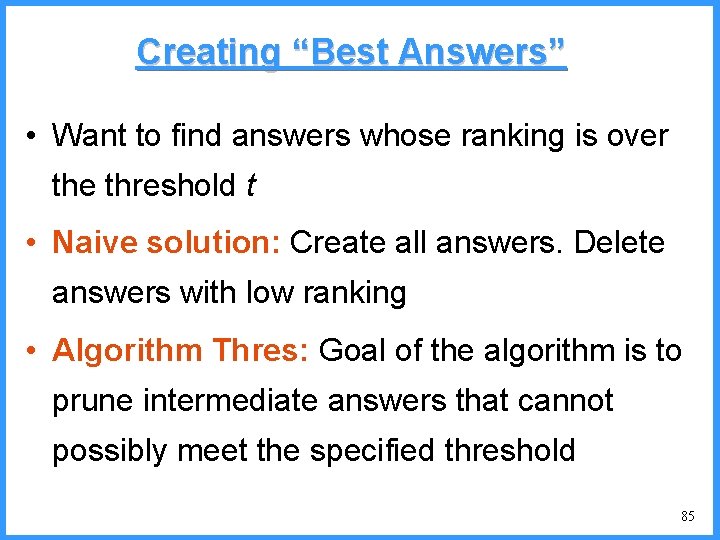

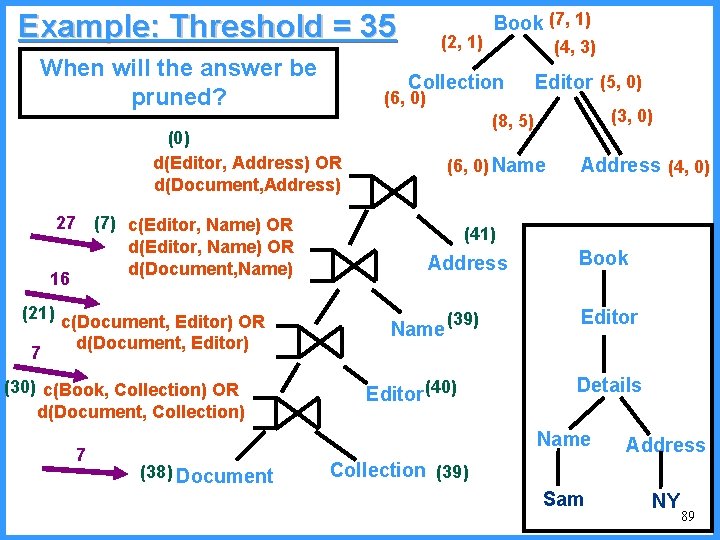

Creating "Best Answers"
- We want to find answers whose ranking is over the threshold t.
- Naive solution: create all answers, then delete answers with a low ranking.
- Algorithm Thres: the goal of the algorithm is to prune intermediate answers that cannot possibly meet the specified threshold.

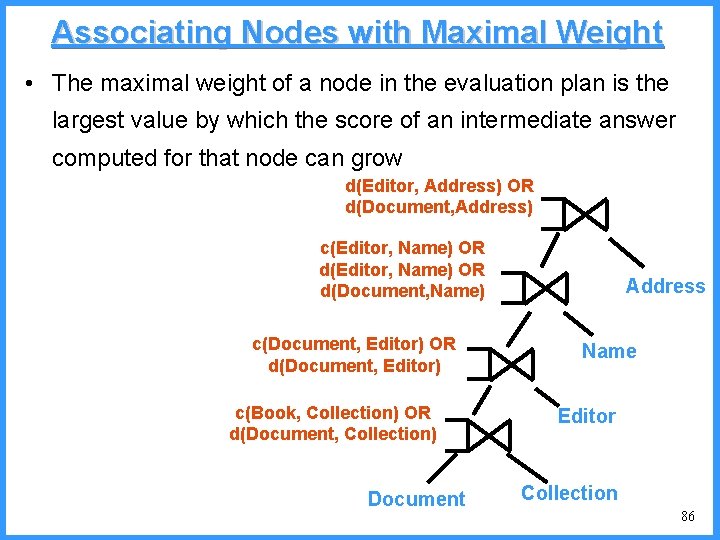

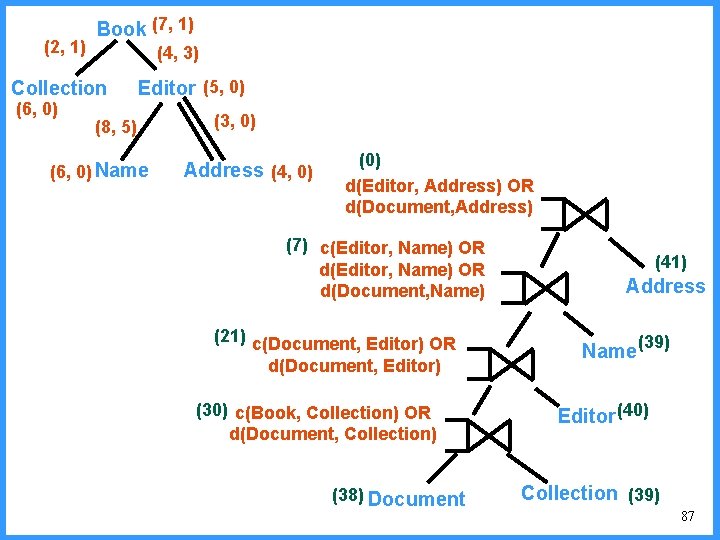

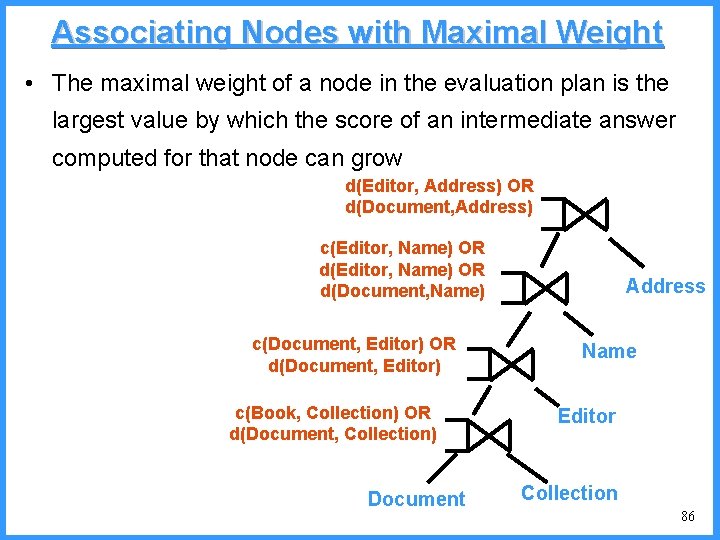

Associating Nodes with Maximal Weight
- The maximal weight of a node in the evaluation plan is the largest value by which the score of an intermediate answer computed for that node can grow.
- Plan (joins listed top-down):
  - d(Editor, Address) OR d(Document, Address)
  - c(Editor, Name) OR d(Document, Name)
  - c(Document, Editor) OR d(Document, Editor)
  - c(Book, Collection) OR d(Document, Collection)
  - Leaves: Document, Address, Name, Editor, Collection

Maximal weights (in parentheses) in the relaxed plan for the weighted query:
- d(Editor, Address) OR d(Document, Address): (0)
- c(Editor, Name) OR d(Document, Name): (7)
- c(Document, Editor) OR d(Document, Editor): (21)
- c(Book, Collection) OR d(Document, Collection): (30)
- Leaves: Document (38), Address (41), Name (39), Editor (40), Collection (39)

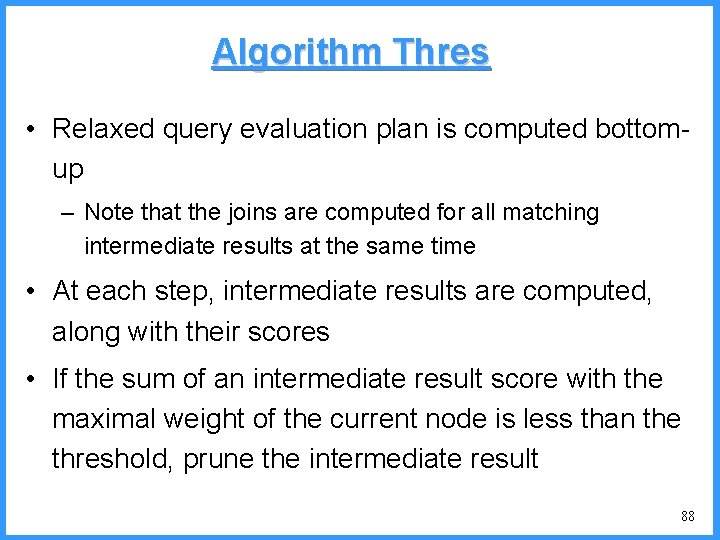

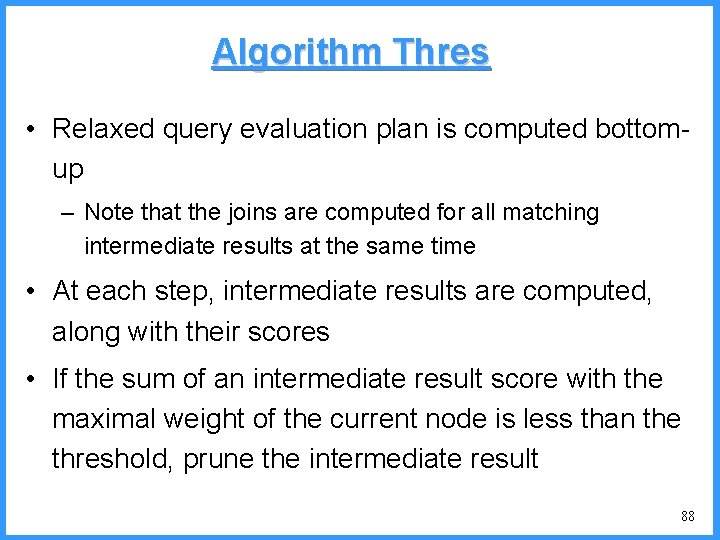

Algorithm Thres
- The relaxed query evaluation plan is computed bottom-up.
  - Note that the joins are computed for all matching intermediate results at the same time.
- At each step, intermediate results are computed, along with their scores.
- If the sum of an intermediate result's score and the maximal weight of the current node is less than the threshold, prune the intermediate result.

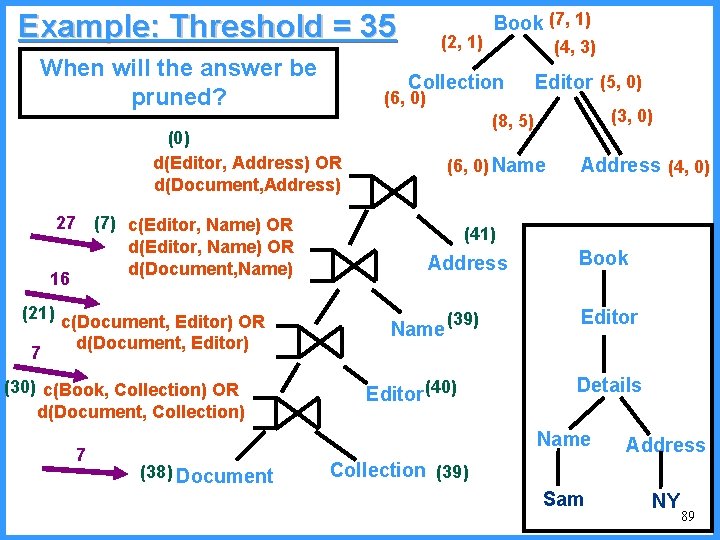

Example: Threshold = 35
- Candidate answer (figure): a fragment with the nodes Book, Editor, Details, Name (Sam) and Address (NY).
- Plan nodes with their maximal weights (in parentheses) and the intermediate scores of the answer, bottom-up:
  - Document (38): score 7
  - c(Book, Collection) OR d(Document, Collection) (30): score 7
  - c(Document, Editor) OR d(Document, Editor) (21): score 16
  - c(Editor, Name) OR d(Document, Name) (7): score 27
  - d(Editor, Address) OR d(Document, Address) (0)
- Other leaves: Address (41), Name (39), Editor (40), Collection (39)
- When will the answer be pruned?
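The pruning test of Algorithm Thres is a one-line comparison, sketched below. The pairing of intermediate scores (7, 7, 16, 27) with plan nodes is our reading of the flattened figure and should be treated as an assumption; `first_pruned` and `STEPS` are hypothetical names.

```python
def first_pruned(steps, threshold):
    """steps: (plan node, intermediate score, maximal weight), bottom-up.
    Return the first node at which score + maximal weight < threshold."""
    for name, score, max_weight in steps:
        if score + max_weight < threshold:
            return name
    return None


STEPS = [
    ("Document", 7, 38),
    ("c(Book, Collection) OR d(Document, Collection)", 7, 30),
    ("c(Document, Editor) OR d(Document, Editor)", 16, 21),
    ("c(Editor, Name) OR d(Document, Name)", 27, 7),
]

pruned_at = first_pruned(STEPS, threshold=35)
```

With these numbers the sums are 45, 37, 37 and 34, so the answer survives until the join with Name, where 27 + 7 = 34 falls below the threshold of 35.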

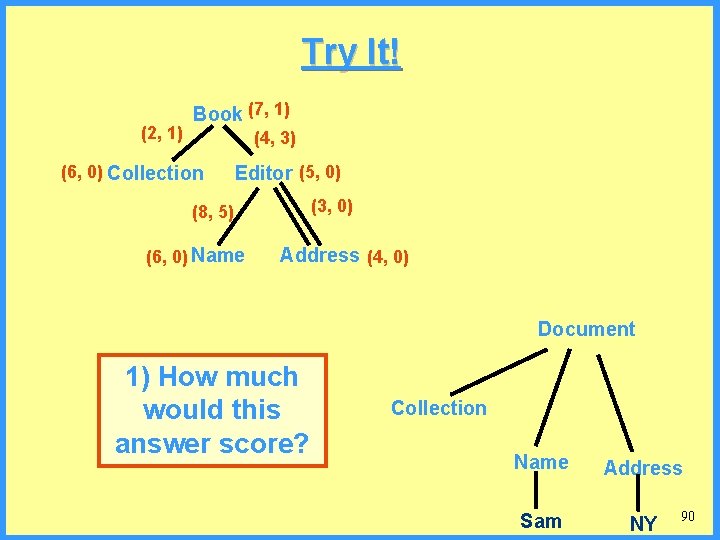

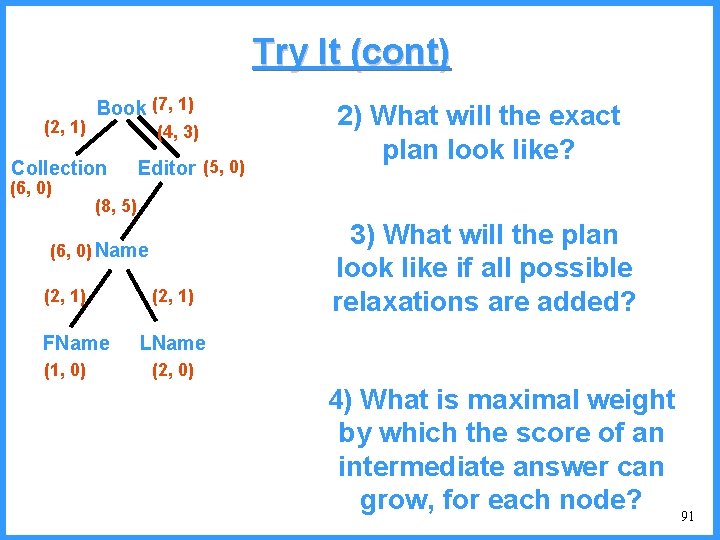

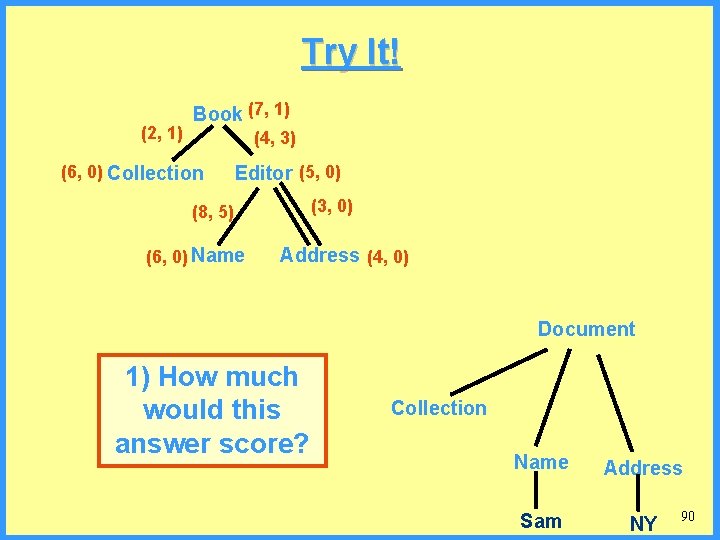

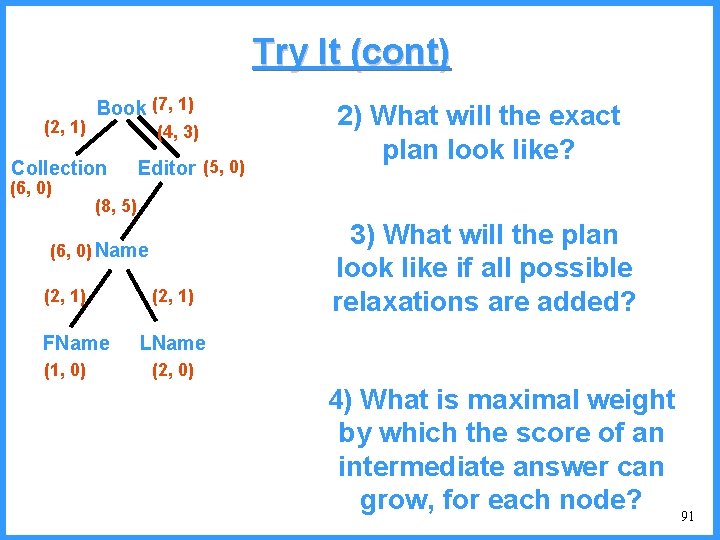

Try It!
- Query: the weighted tree pattern over Book, Collection, Editor, Name and Address.
- Candidate answer (figure): a fragment with the nodes Document, Collection, Name (Sam) and Address (NY).
- 1) How much would this answer score?

Try It (cont.)
- Query (figure): a weighted tree pattern over Book, Collection, Editor, Name, FName and LName, with the weights (2, 1), (7, 1), (4, 3), (6, 0), (5, 0), (8, 5), (6, 0), (2, 1), (1, 0), (2, 1), (2, 0).
- 2) What will the exact plan look like?
- 3) What will the plan look like if all possible relaxations are added?
- 4) What is the maximal weight by which the score of an intermediate answer can grow, for each node?