Kernels Usman Roshan CS 675 Machine Learning

- Slides: 21


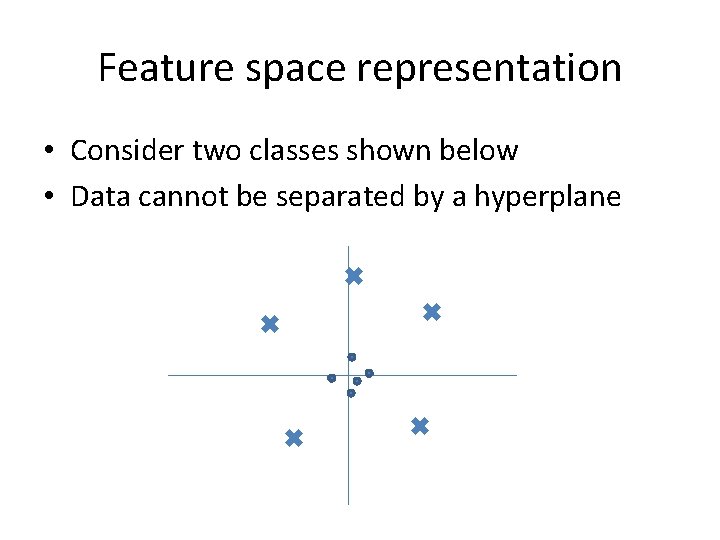

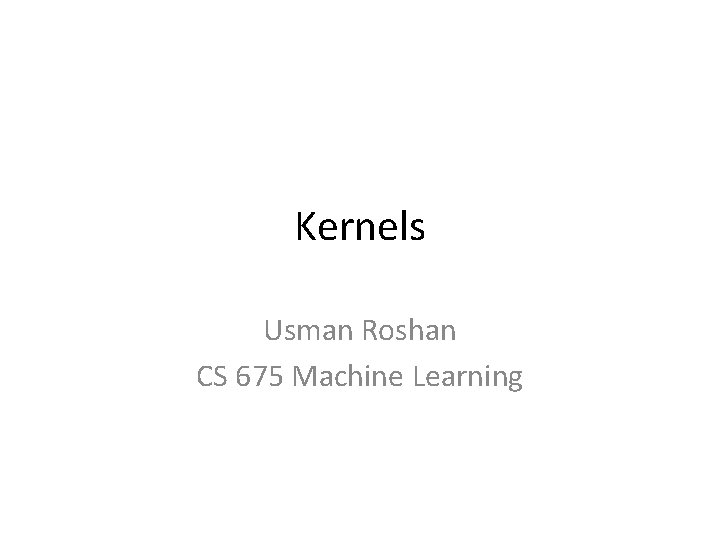

Feature space representation • Consider two classes shown below • Data cannot be separated by a hyperplane

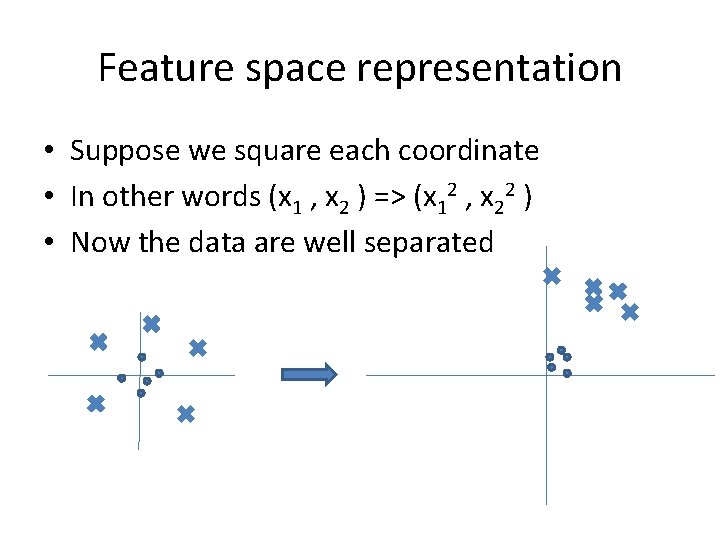

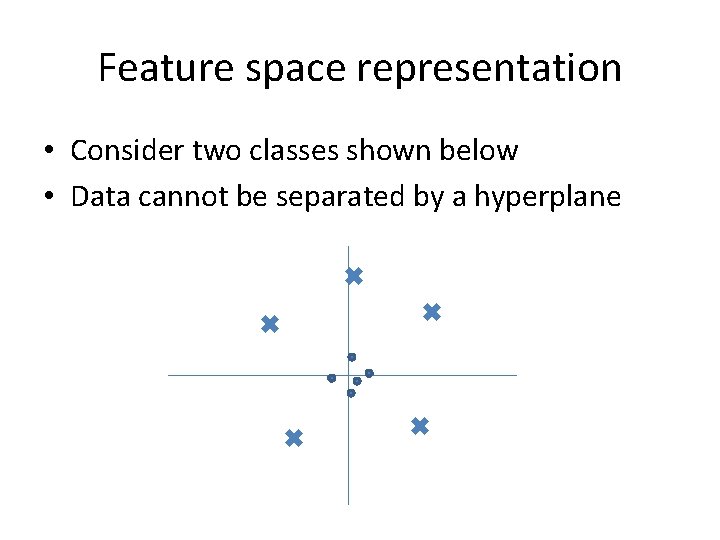

Feature space representation • Suppose we square each coordinate • In other words $(x_1, x_2) \mapsto (x_1^2, x_2^2)$ • Now the data are well separated
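
The effect of squaring each coordinate can be checked on a toy dataset. The sketch below assumes the two classes are concentric rings, which is a guess at the figure rather than the original data; `ring`, `square_map`, and `linear_rule` are illustrative names. After the map, the sum of the new features equals the squared radius, so a single hyperplane separates the classes.

```python
import math

def ring(radius, n=8):
    """n evenly spaced points on a circle of the given radius (toy data, assumed)."""
    return [(radius * math.cos(2 * math.pi * k / n),
             radius * math.sin(2 * math.pi * k / n)) for k in range(n)]

def square_map(point):
    """Feature map (x1, x2) -> (x1^2, x2^2)."""
    x1, x2 = point
    return (x1 * x1, x2 * x2)

def linear_rule(z, w=(1.0, 1.0), w0=-5.0):
    """Linear classifier sign(w . z + w0) applied in the squared feature space."""
    return 1 if w[0] * z[0] + w[1] * z[1] + w0 > 0 else -1

inner = ring(1.0)  # class -1: no hyperplane in the original space isolates it
outer = ring(3.0)  # class +1: surrounds the inner class

# In the new space z1 + z2 = radius^2, so the line z1 + z2 = 5 separates 1 from 9.
inner_pred = [linear_rule(square_map(p)) for p in inner]
outer_pred = [linear_rule(square_map(p)) for p in outer]
```

The weights `(1, 1)` and offset `-5` are picked by hand for this toy data; a nearest means classifier or an SVM trained on the mapped points would find a comparable separator.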

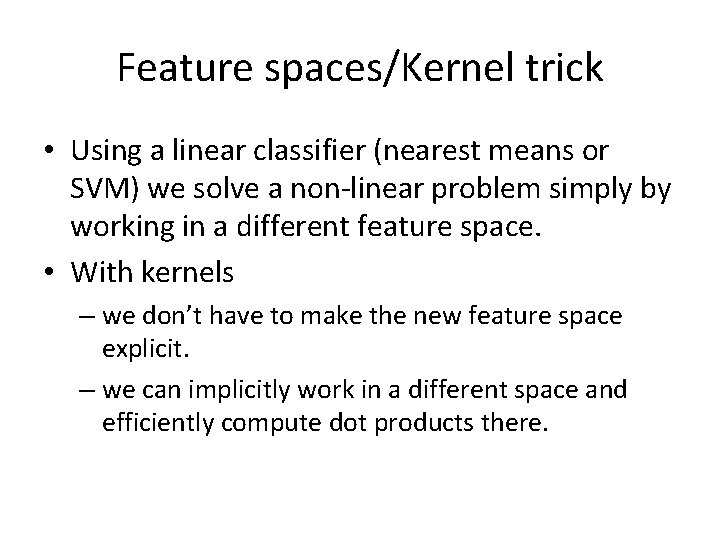

Feature spaces/Kernel trick • Using a linear classifier (nearest means or SVM) we solve a non-linear problem simply by working in a different feature space. • With kernels – we don’t have to make the new feature space explicit. – we can implicitly work in a different space and efficiently compute dot products there.
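
A small check of the "implicit dot product" idea, using the homogeneous quadratic kernel as an assumed example (the slide does not name a specific kernel here). For 2-D inputs, the explicit map `phi` below has the property that `phi(x) . phi(z)` equals `(x . z)^2`, so the kernel gives the feature-space dot product without building the features.

```python
import math

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def phi(x):
    """Explicit degree-2 feature map for 2-D input: (x1^2, sqrt(2) x1 x2, x2^2)."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2.0) * x1 * x2, x2 * x2)

def quadratic_kernel(x, z):
    """Same dot product computed implicitly, in the original 2-D space."""
    return dot(x, z) ** 2

x, z = (1.0, 2.0), (3.0, 4.0)
explicit = dot(phi(x), phi(z))     # works in the 3-D feature space
implicit = quadratic_kernel(x, z)  # never leaves the 2-D input space
```

Both values agree up to floating point rounding, which is the property a kernel-based classifier relies on.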

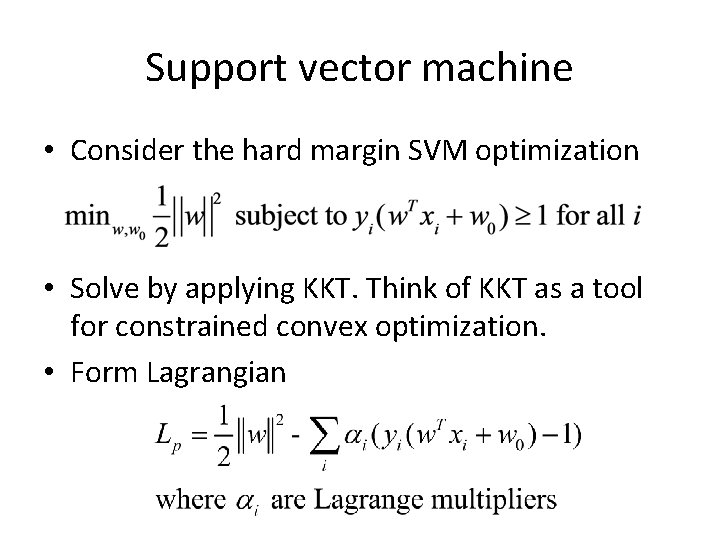

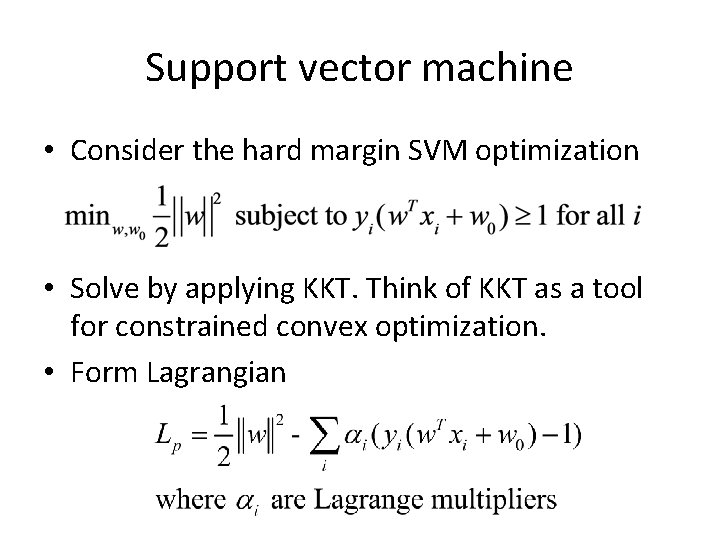

Support vector machine • Consider the hard margin SVM optimization • Solve by applying KKT. Think of KKT as a tool for constrained convex optimization. • Form Lagrangian
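
For reference, the hard margin problem and its Lagrangian can be written as below. This is the standard textbook form and is assumed to match the slide's equations; the training pairs $(x_i, y_i)$ with $y_i \in \{-1, +1\}$, the count $n$, and the multipliers $\alpha_i$ are notation chosen here.

```latex
\begin{aligned}
\min_{w,\,w_0}\quad & \tfrac{1}{2}\lVert w \rVert^2 \\
\text{subject to}\quad & y_i\,(w^\top x_i + w_0) \ge 1, \qquad i = 1,\dots,n \\[6pt]
L(w, w_0, \alpha) \;=\;& \tfrac{1}{2}\lVert w \rVert^2
  \;-\; \sum_{i=1}^{n} \alpha_i \bigl[\, y_i\,(w^\top x_i + w_0) - 1 \,\bigr],
  \qquad \alpha_i \ge 0
\end{aligned}
```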

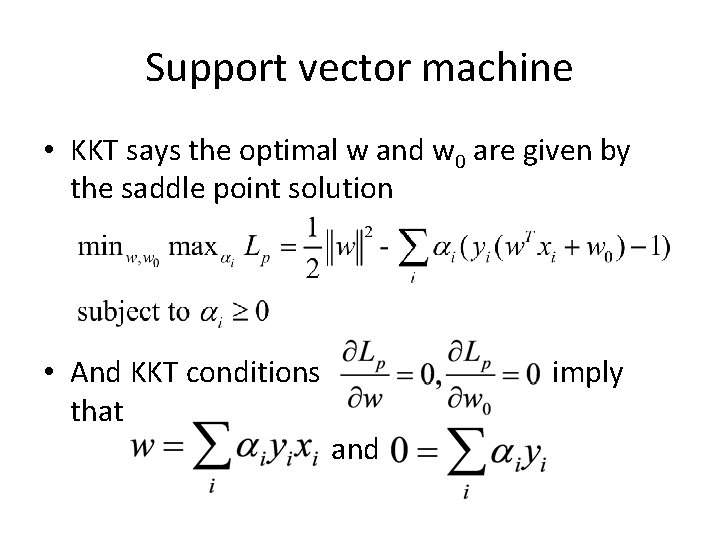

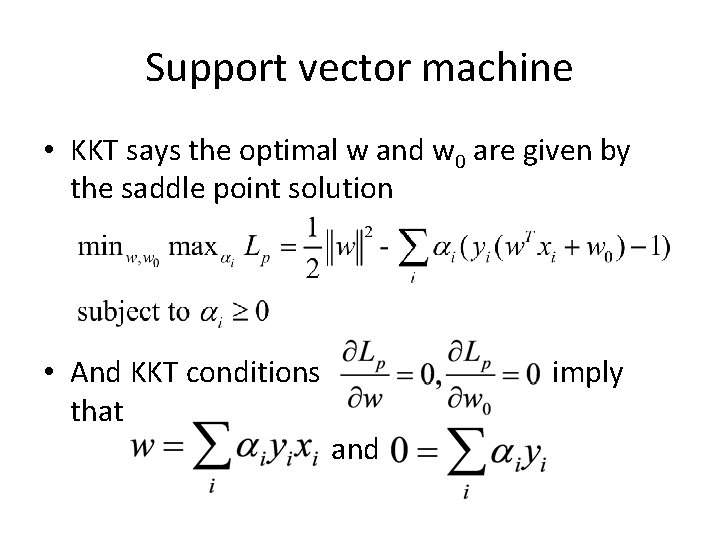

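Support vector machine • KKT says the optimal $w$ and $w_0$ are given by the saddle point solution of the Lagrangian • The KKT conditions imply $w = \sum_i \alpha_i y_i x_i$ and $\sum_i \alpha_i y_i = 0$, where $\alpha_i \ge 0$ are the Lagrange multipliers and $(x_i, y_i)$ are the training points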

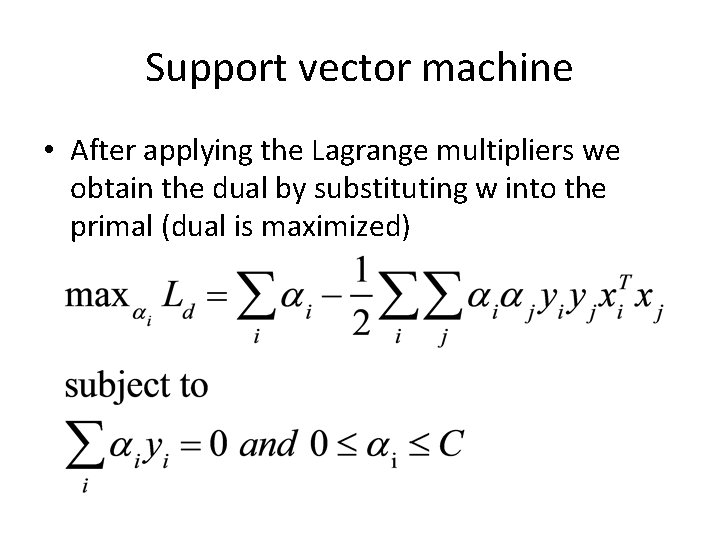

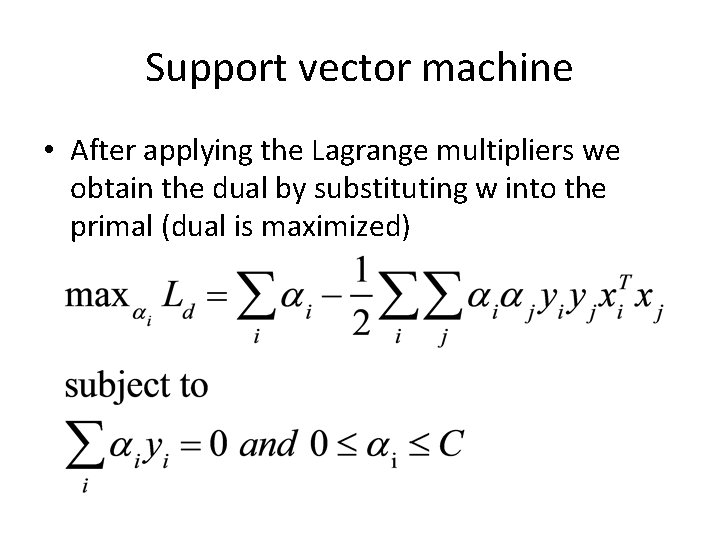

Support vector machine • Substituting the optimal $w$ back into the Lagrangian eliminates $w$ and $w_0$ and gives the dual, which is maximized over the Lagrange multipliers
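
The substitution step can be spelled out as follows. This is a sketch in standard notation, assumed to agree with the slide's equations: $(x_i, y_i)$ are the training points, $\alpha_i \ge 0$ the multipliers, and the stationarity conditions are $w = \sum_i \alpha_i y_i x_i$ and $\sum_i \alpha_i y_i = 0$.

```latex
\begin{aligned}
L(w, w_0, \alpha)
 &= \tfrac{1}{2}\lVert w \rVert^2
    - \sum_{i} \alpha_i y_i\, w^\top x_i
    - w_0 \sum_{i} \alpha_i y_i
    + \sum_{i} \alpha_i \\
 &= \tfrac{1}{2}\sum_{i}\sum_{j} \alpha_i \alpha_j y_i y_j\, x_i^\top x_j
    - \sum_{i}\sum_{j} \alpha_i \alpha_j y_i y_j\, x_i^\top x_j
    - 0
    + \sum_{i} \alpha_i \\
 &= \sum_{i} \alpha_i
    - \tfrac{1}{2}\sum_{i}\sum_{j} \alpha_i \alpha_j y_i y_j\, x_i^\top x_j \\[6pt]
\text{Dual:}\quad
 & \max_{\alpha}\; \sum_{i} \alpha_i
    - \tfrac{1}{2}\sum_{i}\sum_{j} \alpha_i \alpha_j y_i y_j\, x_i^\top x_j
 \quad \text{subject to}\quad \alpha_i \ge 0,\;\; \sum_{i} \alpha_i y_i = 0
\end{aligned}
```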

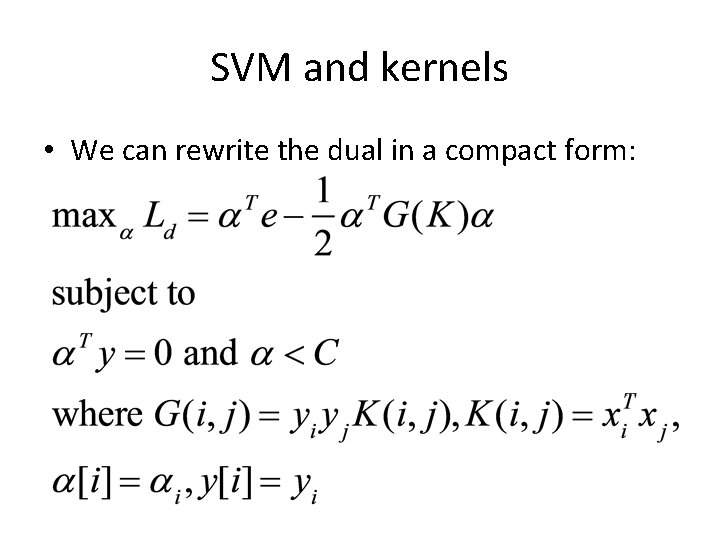

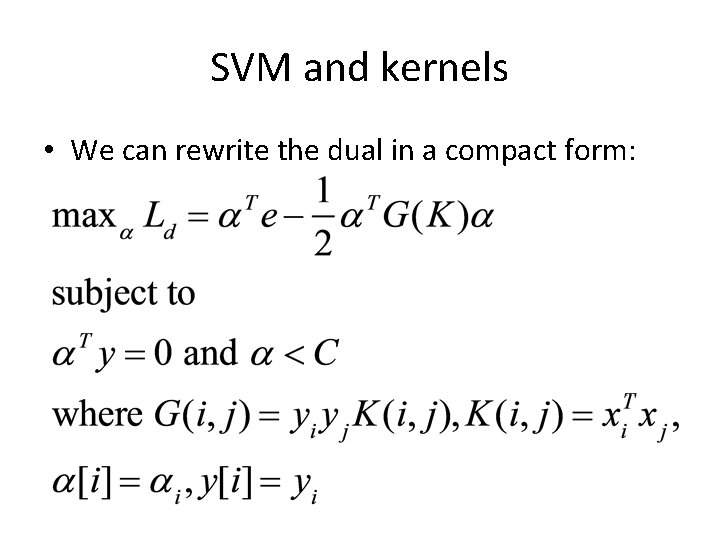

SVM and kernels • We can rewrite the dual in a compact form:
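
A common way to write the compact form is shown below; it is the standard matrix version of the hard margin dual and is assumed, not confirmed, to be the one on the slide. The data enter only through the kernel matrix $K$, which is where a kernel $k$ can replace the plain dot product. The symbols $Y$, $\mathbf{1}$, and $\Phi$ are notation introduced here.

```latex
\begin{aligned}
\max_{\alpha}\quad & \mathbf{1}^\top \alpha \;-\; \tfrac{1}{2}\, \alpha^\top Y K\, Y \alpha \\
\text{subject to}\quad & \alpha \ge 0, \qquad y^\top \alpha = 0 \\[6pt]
\text{where}\quad & Y = \operatorname{diag}(y_1,\dots,y_n), \qquad
K_{ij} = k(x_i, x_j) = \Phi(x_i)^\top \Phi(x_j)
\end{aligned}
```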

Optimization • The SVM is thus a quadratic program that can be solved by any quadratic program solver. • Platt’s Sequential Minimal Optimization (SMO) algorithm offers a simple, specialized solver for the SVM dual • The idea is to perform coordinate ascent by selecting two variables at a time to optimize • Let’s look at some kernels.
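
The two-variables-at-a-time idea can be sketched as below. This is a simplified illustration, not Platt's full algorithm: it sweeps over all pairs in a fixed order instead of using SMO's selection heuristics and error cache. The function name `pairwise_dual_ascent`, the parameter `C` (infinite for the hard margin case), and the toy data are assumptions made for this example.

```python
def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def pairwise_dual_ascent(X, y, kernel=dot, C=float("inf"), tol=1e-8, max_sweeps=1000):
    """Coordinate ascent on the SVM dual, two multipliers per step (SMO-style sketch)."""
    n = len(X)
    K = [[kernel(X[i], X[j]) for j in range(n)] for i in range(n)]
    alpha = [0.0] * n
    for _ in range(max_sweeps):
        largest_change = 0.0
        for i in range(n):
            for j in range(i + 1, n):
                # g_k = sum_m alpha_m y_m K[m][k]; the offset w_0 cancels in E_i - E_j.
                g_i = sum(alpha[m] * y[m] * K[m][i] for m in range(n))
                g_j = sum(alpha[m] * y[m] * K[m][j] for m in range(n))
                eta = K[i][i] + K[j][j] - 2.0 * K[i][j]
                if eta <= 1e-12:
                    continue  # degenerate pair, e.g. duplicate points
                # Best alpha_j along the line that keeps sum_m alpha_m y_m fixed.
                new_j = alpha[j] + y[j] * ((g_i - y[i]) - (g_j - y[j])) / eta
                # Limits that keep both multipliers inside [0, C].
                if y[i] != y[j]:
                    low = max(0.0, alpha[j] - alpha[i])
                    high = min(C, C + alpha[j] - alpha[i])
                else:
                    low = max(0.0, alpha[i] + alpha[j] - C)
                    high = min(C, alpha[i] + alpha[j])
                new_j = min(max(new_j, low), high)
                delta = new_j - alpha[j]
                alpha[i] -= y[i] * y[j] * delta  # preserves the equality constraint
                alpha[j] = new_j
                largest_change = max(largest_change, abs(delta))
        if largest_change < tol:
            break
    return alpha

# Toy linearly separable data: the two points nearest the boundary should be
# the only ones with nonzero multipliers.
X = [(1.0, 0.0), (2.0, 1.0), (-1.0, 0.0), (-2.0, -1.0)]
y = [1, 1, -1, -1]
alpha = pairwise_dual_ascent(X, y)
w = [sum(alpha[i] * y[i] * X[i][d] for i in range(len(X))) for d in range(2)]
```

Each pair update is solved in closed form, which is why no general quadratic program solver is needed. With the linear kernel, `w` is recovered from the multipliers through the KKT condition.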

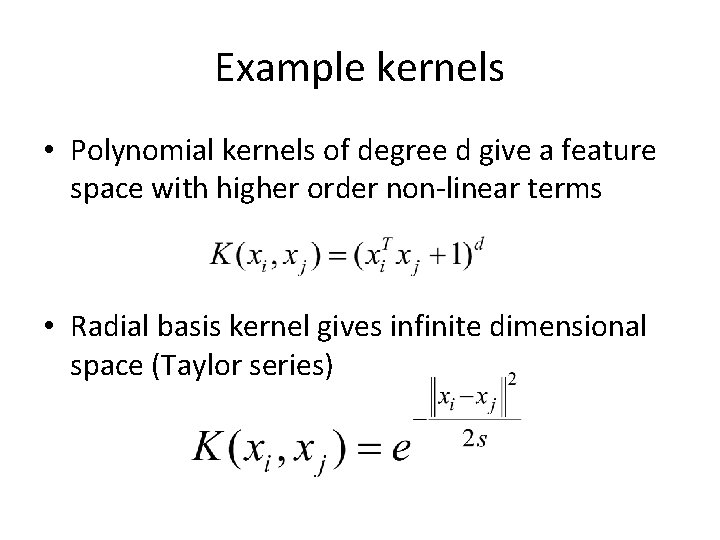

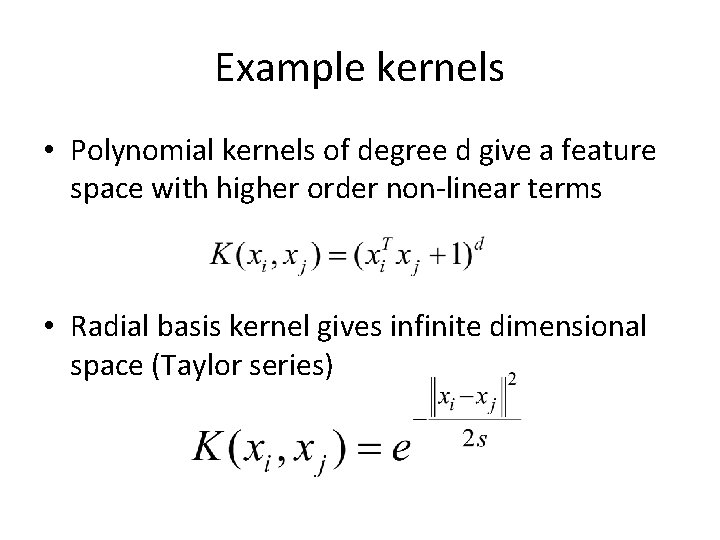

Example kernels • Polynomial kernels of degree d give a feature space with higher order non-linear terms • Radial basis kernel gives infinite dimensional space (Taylor series)
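
Minimal versions of the two kernels, using common parameterizations that are assumed here because the slide's formulas are not recoverable: the polynomial kernel as `(x . z + c)^d` and the radial basis kernel as `exp(-gamma * ||x - z||^2)`. The parameter names `degree`, `c`, and `gamma` are illustrative.

```python
import math

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def polynomial_kernel(x, z, degree=2, c=1.0):
    """(x . z + c)^degree: feature space of all monomials up to the given degree."""
    return (dot(x, z) + c) ** degree

def rbf_kernel(x, z, gamma=1.0):
    """exp(-gamma * ||x - z||^2): corresponds to an infinite dimensional feature space."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * squared_distance)

x, z = (1.0, 2.0), (3.0, 4.0)
poly_value = polynomial_kernel(x, z)              # (11 + 1)^2
rbf_same = rbf_kernel(x, x)                       # identical points
rbf_unit = rbf_kernel((0.0, 0.0), (1.0, 0.0))     # points one unit apart
```

The radial basis kernel equals 1 for identical points and decays toward 0 as the points move apart, with `gamma` controlling how quickly.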

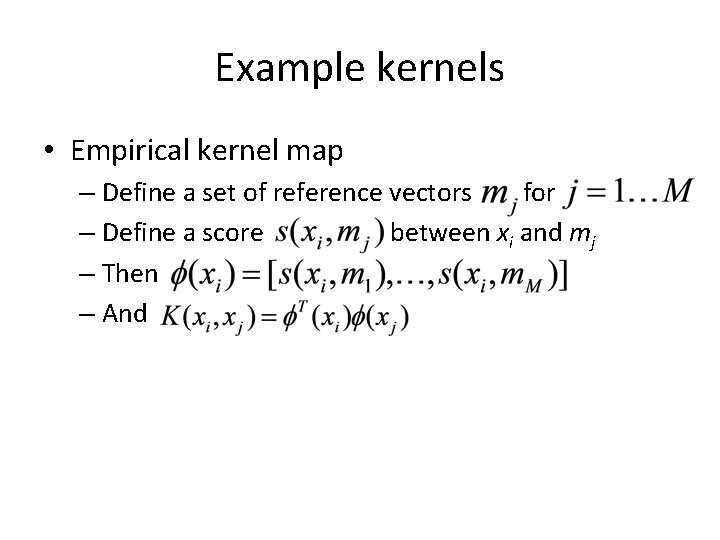

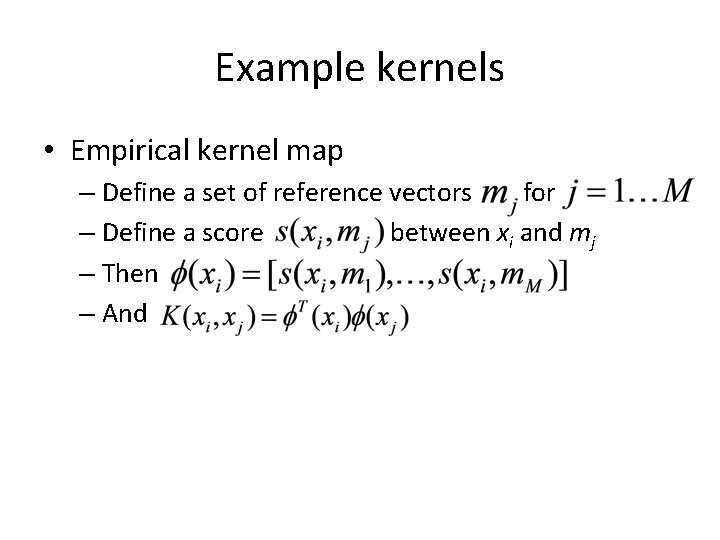

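Example kernels • Empirical kernel map – Define a set of reference vectors $m_j$ for $j = 1, \dots, M$ – Define a score $s(x_i, m_j)$ between $x_i$ and $m_j$ – Then $\Phi(x_i) = [s(x_i, m_1), \dots, s(x_i, m_M)]$ – And $K(x_i, x_j) = \Phi(x_i)^\top \Phi(x_j)$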
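
One way to read the empirical kernel map: each point is represented by its vector of scores against the reference vectors, and the kernel is the dot product of two such score vectors. The sketch below follows that reading; the Gaussian similarity used as the score and the two reference vectors are assumptions chosen for illustration.

```python
import math

def score(x, m):
    """Hypothetical score between a point and a reference vector (Gaussian similarity)."""
    return math.exp(-sum((a - b) ** 2 for a, b in zip(x, m)))

def empirical_map(x, references):
    """Represent x by its scores against every reference vector."""
    return [score(x, m) for m in references]

def empirical_kernel(x, z, references):
    """Dot product of the two score vectors."""
    phi_x = empirical_map(x, references)
    phi_z = empirical_map(z, references)
    return sum(a * b for a, b in zip(phi_x, phi_z))

references = [(0.0, 0.0), (1.0, 0.0)]
x, z = (0.0, 0.0), (1.0, 0.0)
k_xz = empirical_kernel(x, z, references)
k_zx = empirical_kernel(z, x, references)
k_xx = empirical_kernel(x, x, references)
```

Because the result is an explicit dot product, it is a valid kernel whatever score function is used, even one that is not itself a kernel.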

Example kernels • Bag of words – Given two documents $D_1$ and $D_2$, we define the kernel $K(D_1, D_2)$ as the number of words they have in common – To prove this is a kernel, first create a large set of words $W_i$. Define the mapping $\Phi(D_1)$ as a high dimensional vector where $\Phi(D_1)[i]$ is 1 if the word $W_i$ is present in the document and 0 otherwise. Then $K(D_1, D_2) = \Phi(D_1)^\top \Phi(D_2)$.
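
The bag of words argument can be checked directly: counting shared words gives the same number as the dot product of the binary indicator vectors. The tokenization below (lowercasing and splitting on whitespace) and the two sample documents are assumptions made for the example.

```python
def words(document):
    """Set of distinct words, using naive whitespace tokenization (an assumption)."""
    return set(document.lower().split())

def bow_kernel(d1, d2):
    """Number of distinct words the two documents have in common."""
    return len(words(d1) & words(d2))

def indicator_vector(document, vocabulary):
    """Phi(D)[i] = 1 if vocabulary word i occurs in the document, else 0."""
    present = words(document)
    return [1 if w in present else 0 for w in vocabulary]

d1 = "the cat sat on the mat"
d2 = "the dog sat on the log"
vocabulary = sorted(words(d1) | words(d2))

phi1 = indicator_vector(d1, vocabulary)
phi2 = indicator_vector(d2, vocabulary)
explicit = sum(a * b for a, b in zip(phi1, phi2))  # dot product in feature space
implicit = bow_kernel(d1, d2)                      # set intersection, no vectors built
```

The set intersection never materializes the high dimensional vector, which is the practical benefit when the vocabulary is large.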

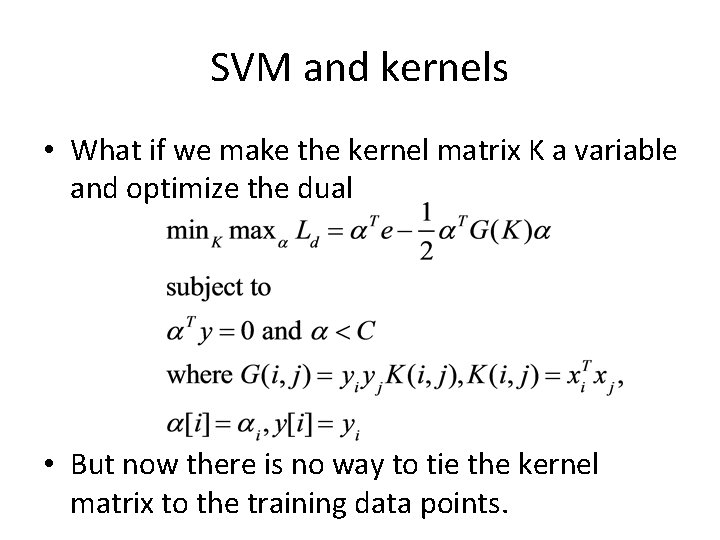

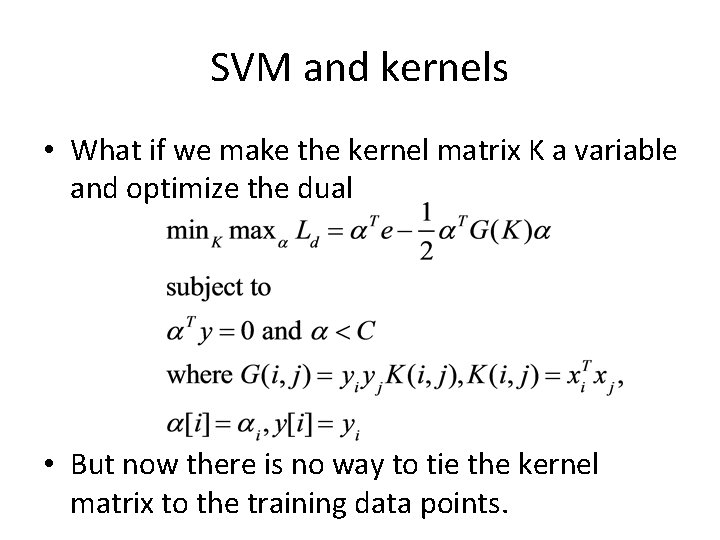

SVM and kernels • What if we make the kernel matrix K a variable and optimize the dual • But now there is no way to tie the kernel matrix to the training data points.

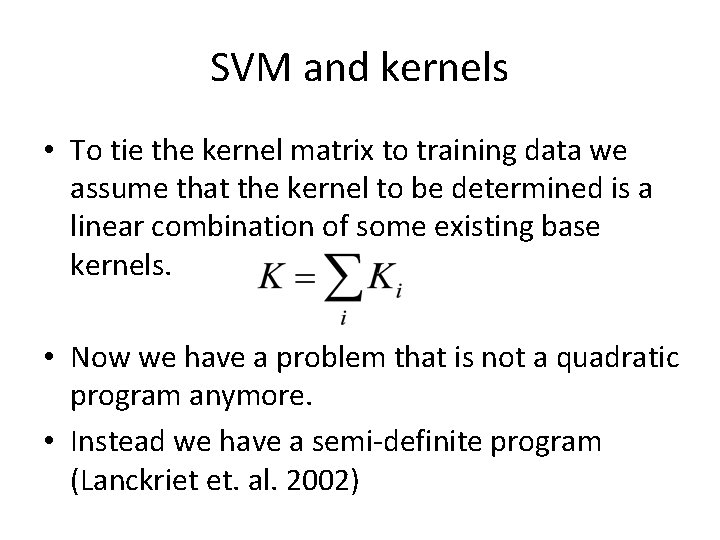

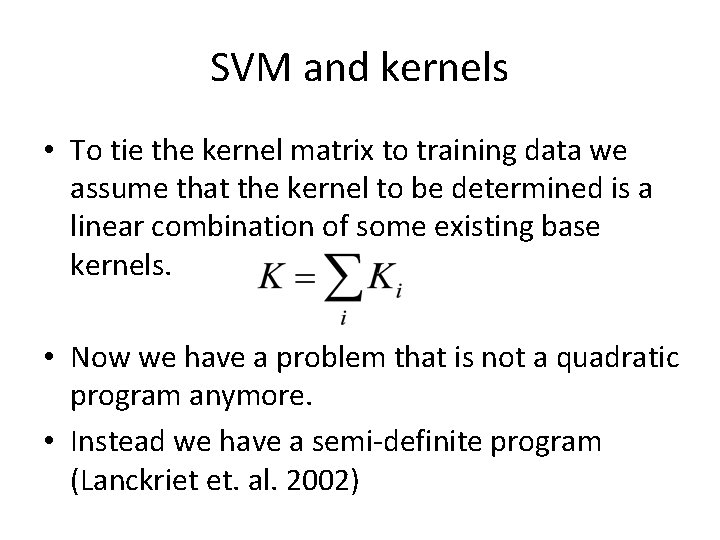

SVM and kernels • To tie the kernel matrix to the training data, we assume that the kernel to be determined is a linear combination of some existing base kernels. • Now we have a problem that is not a quadratic program anymore. • Instead we have a semi-definite program (Lanckriet et al. 2002)
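
The combination step alone can be sketched as below; learning the weights through the semi-definite program is not shown. The base kernels (linear and quadratic), the weights, and the helper names `gram` and `combine` are illustrative assumptions. Uniform weights give the mean kernel.

```python
def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def gram(kernel, X):
    """Kernel (Gram) matrix of a kernel function on the training points."""
    return [[kernel(a, b) for b in X] for a in X]

def combine(grams, weights):
    """Weighted sum of base kernel matrices: K = sum_k weights[k] * K_k."""
    n = len(grams[0])
    return [[sum(w * G[i][j] for w, G in zip(weights, grams)) for j in range(n)]
            for i in range(n)]

X = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
linear = gram(dot, X)
quadratic = gram(lambda a, b: dot(a, b) ** 2, X)

# Fixed nonnegative weights keep the combination a valid kernel matrix.
mean_kernel = combine([linear, quadratic], [0.5, 0.5])
weighted_kernel = combine([linear, quadratic], [0.25, 0.75])
```

Any such combined matrix can be passed to an SVM dual solver in place of a single kernel matrix; a kernel learning method would choose the weights instead of fixing them by hand.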

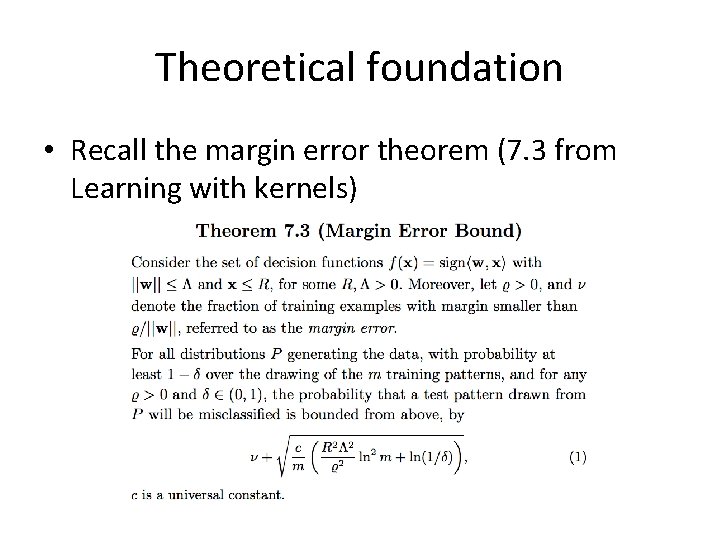

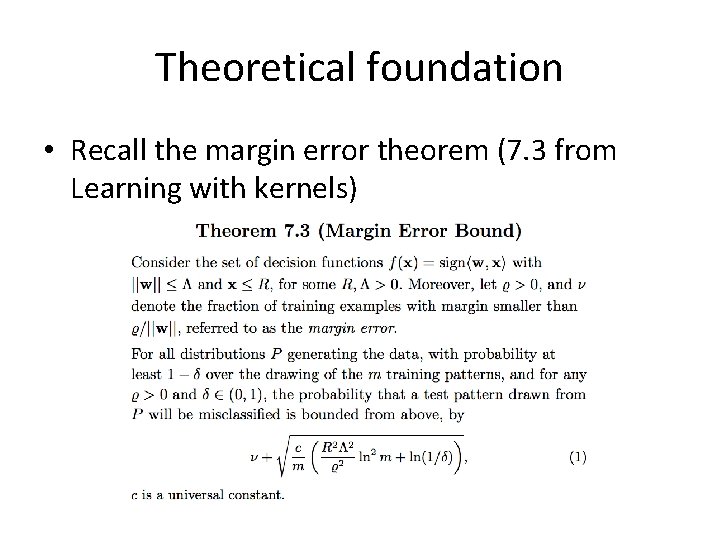

Theoretical foundation • Recall the margin error theorem (Theorem 7.3 from Learning with Kernels)

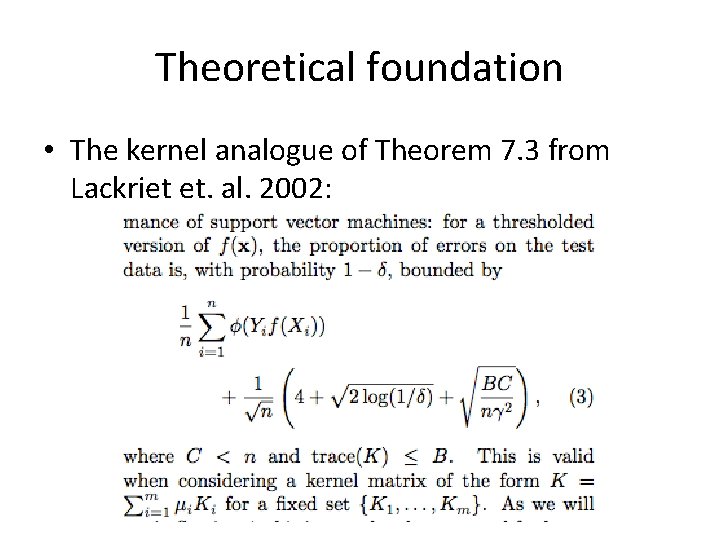

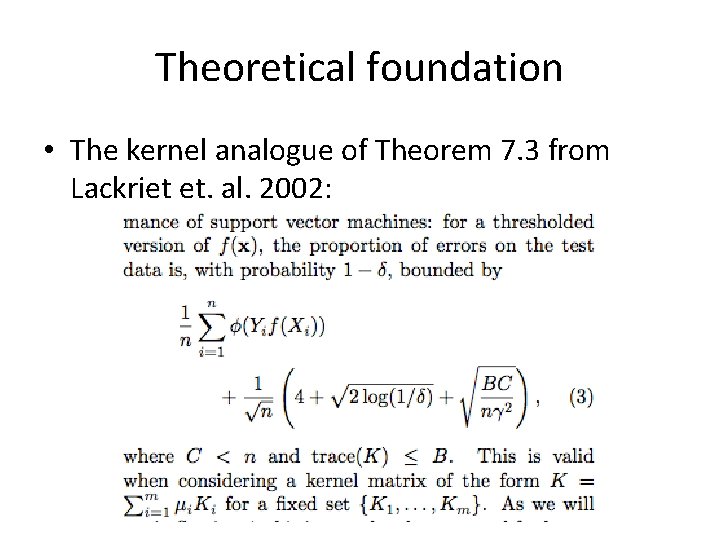

Theoretical foundation • The kernel analogue of Theorem 7.3, from Lanckriet et al. 2002:

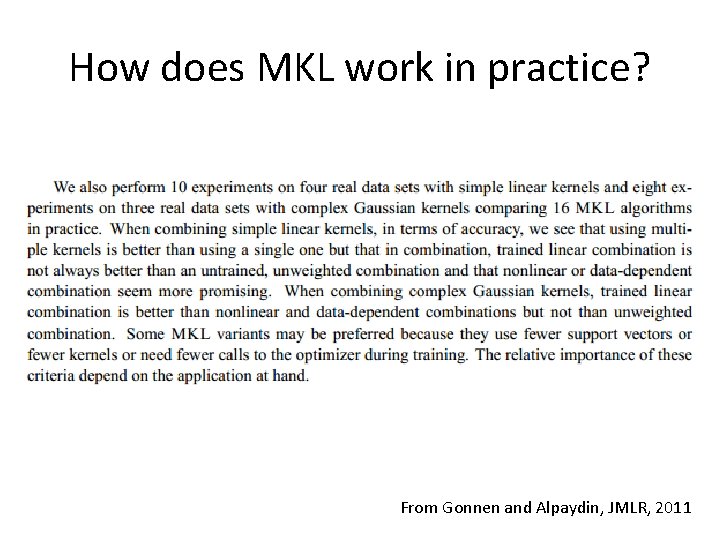

How does MKL work in practice? • Gönen and Alpaydın, JMLR, 2011 • Datasets: – Digit recognition – Internet advertisements – Protein folding • Form kernels with different sets of features • Apply SVM with various kernel learning algorithms.

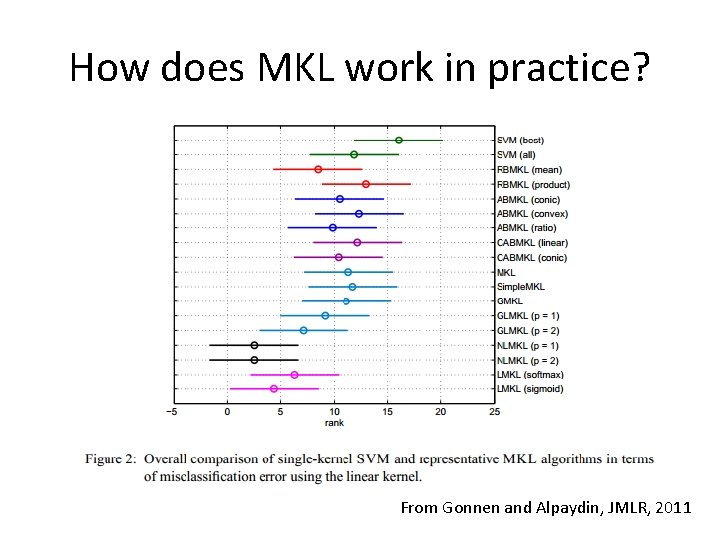

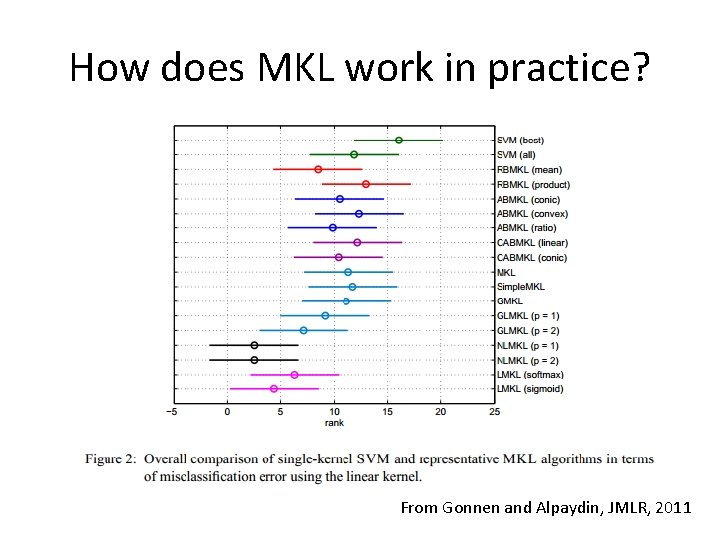

How does MKL work in practice? From Gönen and Alpaydın, JMLR, 2011

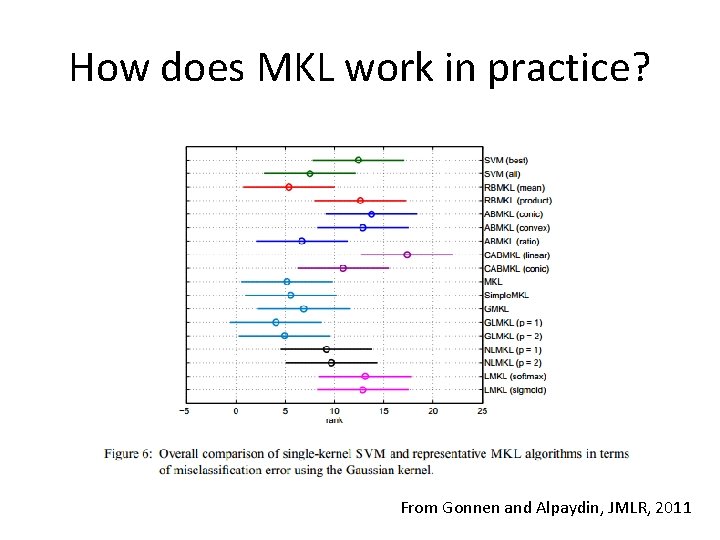

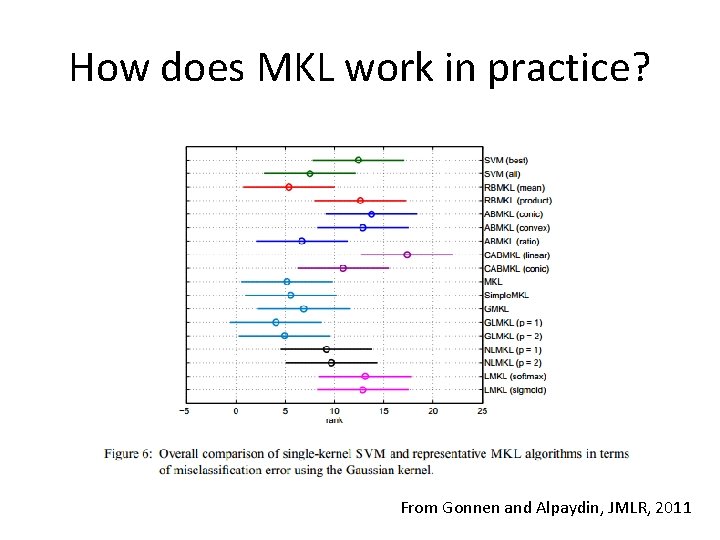

How does MKL work in practice? From Gönen and Alpaydın, JMLR, 2011

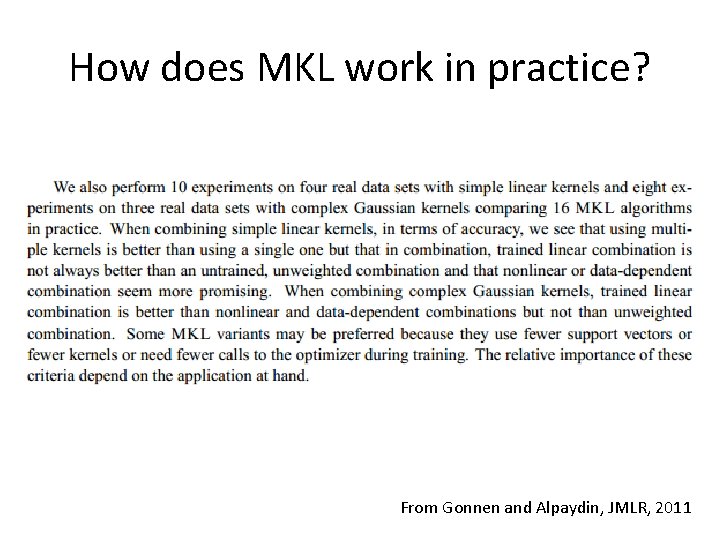

How does MKL work in practice? • MKL better than single kernel • Mean kernel hard to beat • Non-linear MKL looks promising