Kernel Memory Allocation Direct Memory Access Simon Jackson


- Slides: 52

Kernel Memory, Allocation & Direct Memory Access Simon Jackson James Sleeman Pete Hemery

Simon Jackson Kernel Memory

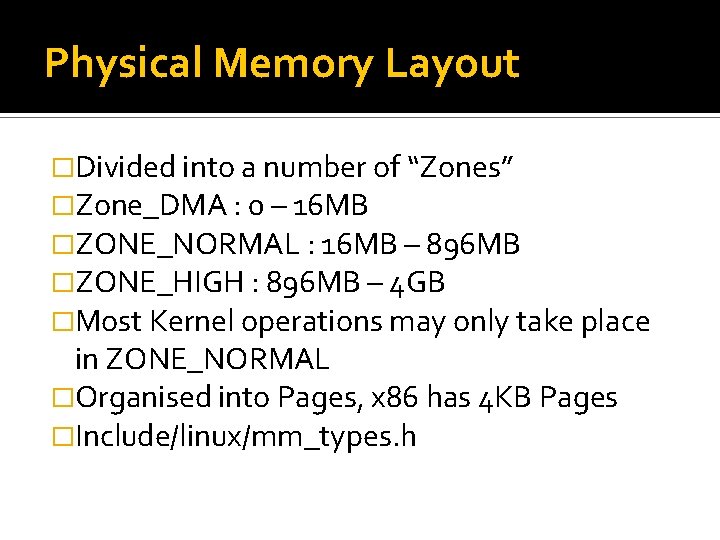

Physical Memory Layout

- Divided into a number of “zones”
- ZONE_DMA: 0 – 16 MB
- ZONE_NORMAL: 16 MB – 896 MB
- ZONE_HIGHMEM: 896 MB – 4 GB
- Most kernel operations may only take place in ZONE_NORMAL
- Organised into pages; x86 has 4 KB pages
- include/linux/mm_types.h
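As a rough illustration of the zone boundaries listed above, the following userspace sketch classifies a physical address by zone and computes its page frame number. The boundaries are the 32-bit x86 values from the slide; the names `zone_of`, `pfn_of` and the `Z_*` constants are hypothetical and are not kernel identifiers.

```c
#include <stdint.h>

/* Zone boundaries as given on the slide (32-bit x86). Illustrative names only. */
#define MB_SKETCH            (1024ULL * 1024ULL)
#define DMA_LIMIT_SKETCH     (16ULL * MB_SKETCH)
#define NORMAL_LIMIT_SKETCH  (896ULL * MB_SKETCH)
#define PAGE_SHIFT_SKETCH    12   /* 4 KB pages */

enum zone_sketch { Z_DMA, Z_NORMAL, Z_HIGHMEM };

/* Return the zone that a physical address falls into. */
enum zone_sketch zone_of(uint64_t phys)
{
    if (phys < DMA_LIMIT_SKETCH)
        return Z_DMA;
    if (phys < NORMAL_LIMIT_SKETCH)
        return Z_NORMAL;
    return Z_HIGHMEM;
}

/* Page frame number: the physical address divided by the page size. */
uint64_t pfn_of(uint64_t phys)
{
    return phys >> PAGE_SHIFT_SKETCH;
}
```

For example, `zone_of(0x100000)` (1 MB) falls in the DMA zone, and `pfn_of(0x3000)` is page frame 3.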

Pages

- Each page has a struct page associated with it
- The kernel maintains one or more arrays of these that track all of the physical memory on the system
- Functions and macros are defined for translating between struct page pointers and virtual addresses
- `struct page *virt_to_page(void *kaddr);`
- `struct page *pfn_to_page(int pfn);`
- `void *page_address(struct page *page);`

ZONE_DMA

- 0 – 16 MB
- Used for Direct Memory Access
- Legacy ISA devices can only access the first 16 MB of memory, so the kernel tries to dedicate this area to them

ZONE_NORMAL

- 16 MB – 896 MB
- AKA low memory
- Normally addressable region for the kernel
- Kernel addresses that map it are called logical addresses and have a constant offset from their physical addresses
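A minimal sketch of the constant-offset relationship, assuming the common 32-bit x86 layout in which low memory is mapped starting at 0xC0000000 (a 3 GB/1 GB user/kernel split). The function names here are hypothetical; in the kernel the equivalent helpers are `__pa()` and `__va()`.

```c
#include <assert.h>
#include <stdint.h>

/* Assumption: 3 GB/1 GB split, so logical addresses start at 0xC0000000. */
#define PAGE_OFFSET_SKETCH 0xC0000000u

/* Logical (kernel virtual) address -> physical address: subtract the offset. */
uint32_t logical_to_phys(uint32_t vaddr)
{
    assert(vaddr >= PAGE_OFFSET_SKETCH); /* only valid for logical addresses */
    return vaddr - PAGE_OFFSET_SKETCH;
}

/* Physical address in low memory -> logical address: add the offset. */
uint32_t phys_to_logical(uint32_t paddr)
{
    return paddr + PAGE_OFFSET_SKETCH;
}
```

Because the mapping is a fixed offset, no page table lookup is needed to translate in either direction, which is what distinguishes logical addresses from the virtual addresses produced by mapping high memory.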

ZONE_HIGHMEM

- 896 MB – 4 GB
- The kernel can only access it by mapping its pages into the kernel's address space
- This results in a virtual address, not a logical one
- kmap() first checks whether the page is already in low memory
- kmap() tracks mapped memory in a page table called pkmap_page_table, which is located at PKMAP_BASE and set up during system initialisation

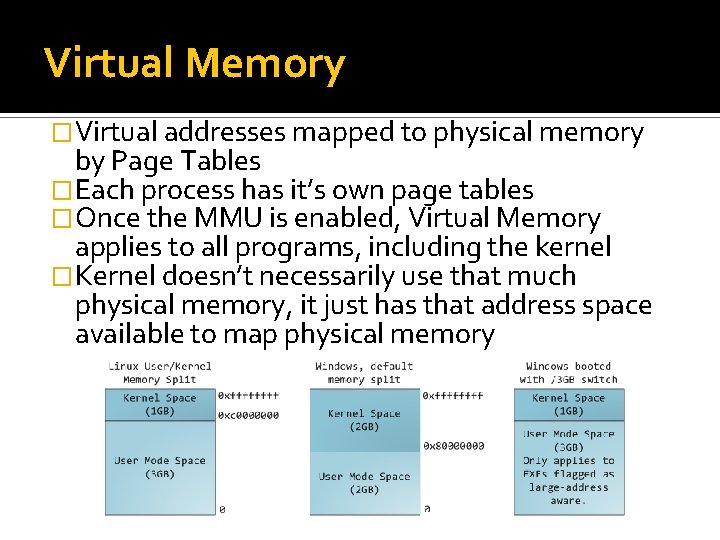

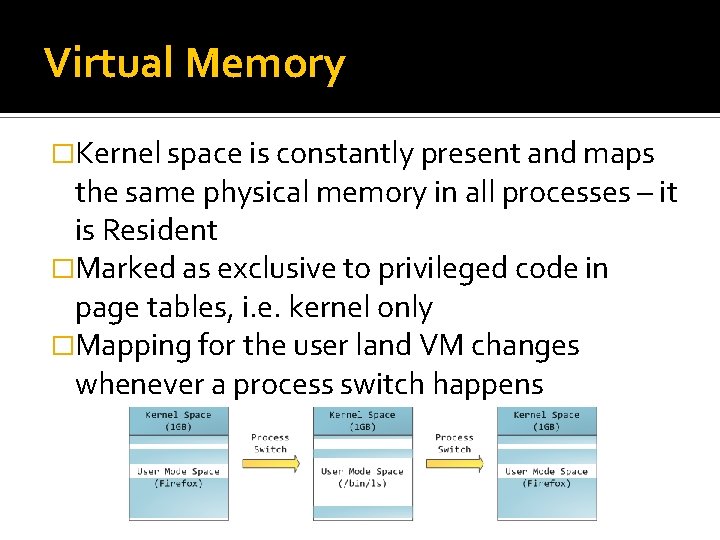

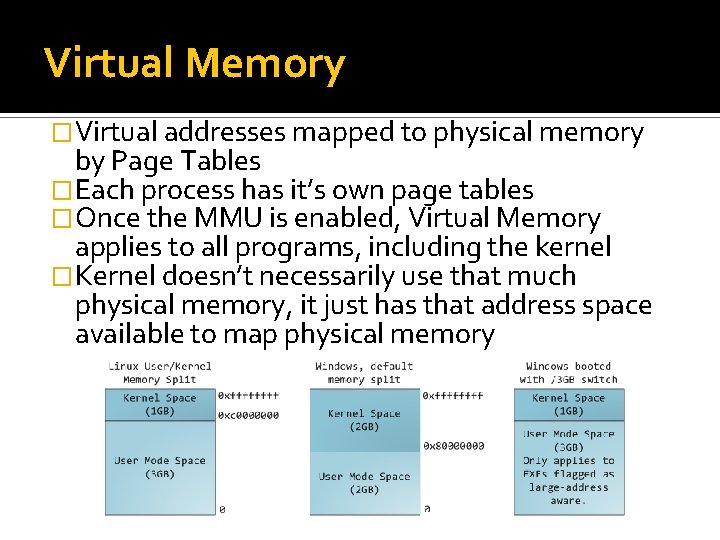

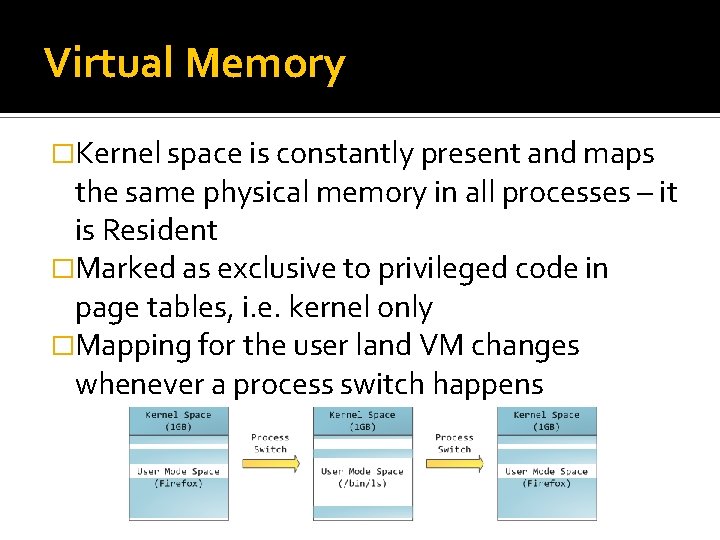

Virtual Memory

- Virtual addresses are mapped to physical memory by page tables
- Each process has its own page tables
- Once the MMU is enabled, virtual memory applies to all programs, including the kernel
- The kernel doesn't necessarily use that much physical memory; it just has that address space available to map physical memory

Virtual Memory

- Kernel space is constantly present and maps the same physical memory in all processes: it is resident
- Marked as exclusive to privileged code in the page tables, i.e. kernel only
- The mapping for the userland VM changes whenever a process switch happens

Bounce Buffers

- For devices that cannot access the full address range, such as 32-bit devices on 64-bit systems
- Allocated in memory low enough for the device to address
- Data is copied between the bounce buffer and the desired page in high memory
- Used as buffer pages for DMA to and from the device
- Data is copied via the bounce buffer differently depending on whether it is a read or a write buffer
- The buffer can be reclaimed once the I/O is done
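The read/write difference can be sketched in userspace as follows. This is only an illustration of the copy direction, not the kernel's bounce buffer code: the struct, the enum and both function names are hypothetical, and plain arrays stand in for the low and high pages.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

enum io_dir_sketch { IO_WRITE_TO_DEVICE, IO_READ_FROM_DEVICE };

struct bounce_sketch {
    unsigned char *low;   /* buffer in memory the device can address */
    unsigned char *high;  /* page the caller actually wants to use */
    size_t len;
    enum io_dir_sketch dir;
};

/* Before the transfer: a write must first stage its data in the low buffer. */
void bounce_prepare(struct bounce_sketch *b)
{
    assert(b && b->low && b->high);
    if (b->dir == IO_WRITE_TO_DEVICE)
        memcpy(b->low, b->high, b->len);
}

/* After the transfer: a read copies what the device delivered up to the high page. */
void bounce_complete(struct bounce_sketch *b)
{
    assert(b && b->low && b->high);
    if (b->dir == IO_READ_FROM_DEVICE)
        memcpy(b->high, b->low, b->len);
}
```

In both directions the device only ever touches `low`; the copy happens before the transfer for a write and after it for a read.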

Memory Pools

- In 2.4, the high memory manager was the only subsystem that maintained emergency pools of pages
- In 2.6, memory pools are implemented as a generic concept for cases where a minimum amount of memory is needed even when memory is low
- Two emergency pools are maintained for the express use of bounce buffers

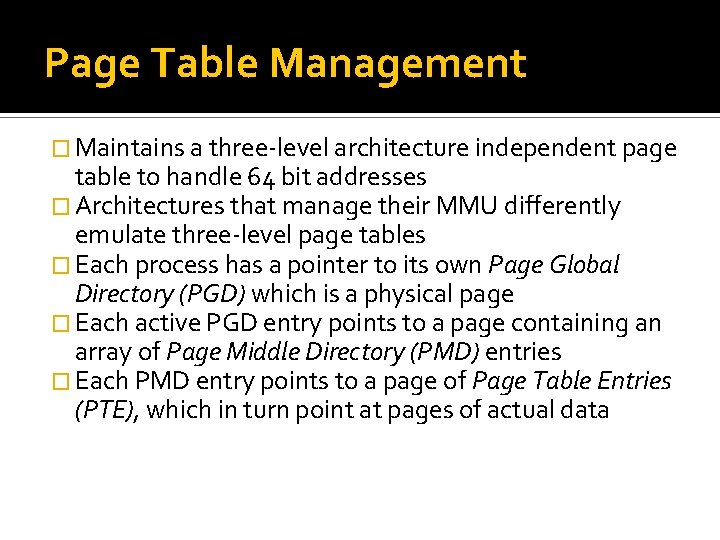

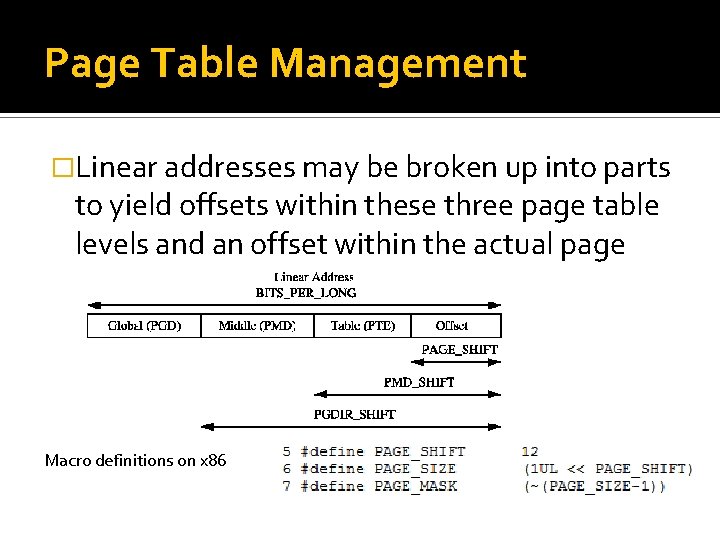

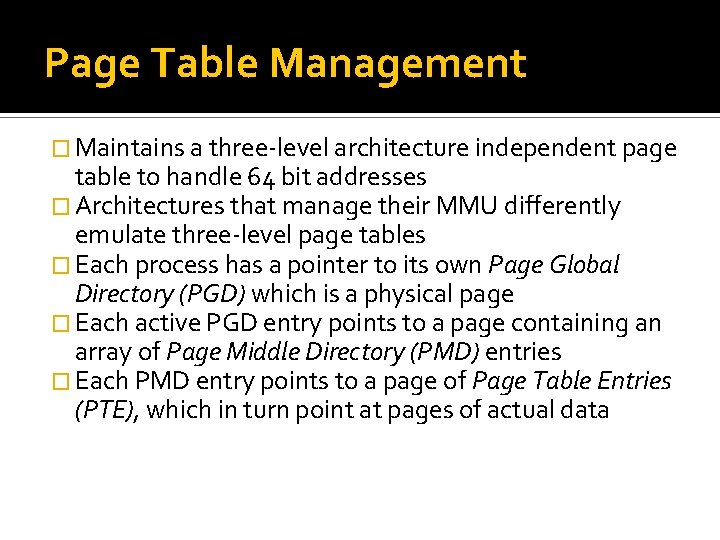

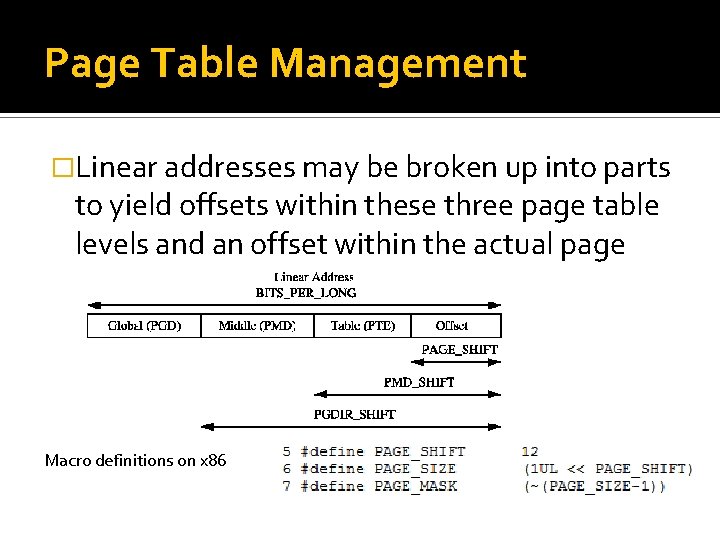

Page Table Management

- Maintains a three-level, architecture-independent page table to handle 64-bit addresses
- Architectures that manage their MMU differently emulate three-level page tables
- Each process has a pointer to its own Page Global Directory (PGD), which is a physical page
- Each active PGD entry points to a page containing an array of Page Middle Directory (PMD) entries
- Each PMD entry points to a page of Page Table Entries (PTE), which in turn point at pages of actual data

Page Table Management

- Linear addresses may be broken up into parts to yield offsets within these three page table levels and an offset within the actual page

Macro definitions on x86
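To make the split concrete, here is a small sketch that breaks a 32-bit linear address into its PGD, PMD and PTE indices and the page offset. It assumes the 32-bit x86 layout with PAE enabled (2 + 9 + 9 + 12 bits), which is the x86 configuration that uses all three levels; without PAE the PMD level is folded away. The macro names and values mirror the kernel's, but `split_linear` and `struct va_parts` are hypothetical.

```c
#include <stdint.h>

/* Assumed layout: 32-bit x86 with PAE (4 PGD entries, 512 PMD, 512 PTE). */
#define PAGE_SHIFT    12
#define PMD_SHIFT     21
#define PGDIR_SHIFT   30
#define PTRS_PER_PTE  512
#define PTRS_PER_PMD  512
#define PTRS_PER_PGD  4

struct va_parts {
    unsigned pgd;     /* index into the Page Global Directory */
    unsigned pmd;     /* index into the Page Middle Directory */
    unsigned pte;     /* index into the page of PTEs */
    unsigned offset;  /* byte offset within the 4 KB page */
};

/* Break a linear address into the three table indices and the page offset. */
struct va_parts split_linear(uint32_t addr)
{
    struct va_parts p;

    p.pgd = (addr >> PGDIR_SHIFT) & (PTRS_PER_PGD - 1);
    p.pmd = (addr >> PMD_SHIFT) & (PTRS_PER_PMD - 1);
    p.pte = (addr >> PAGE_SHIFT) & (PTRS_PER_PTE - 1);
    p.offset = addr & ((1u << PAGE_SHIFT) - 1);
    return p;
}
```

With these values, the address 0xC0101234 splits into PGD index 3, PMD index 0, PTE index 0x101 and offset 0x234.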

James Sleeman Kernel memory allocation and a bit of DMA

Kernel Memory Allocation

- Slab allocation
- Buddy allocation
- Mempools
- Lookaside buffers

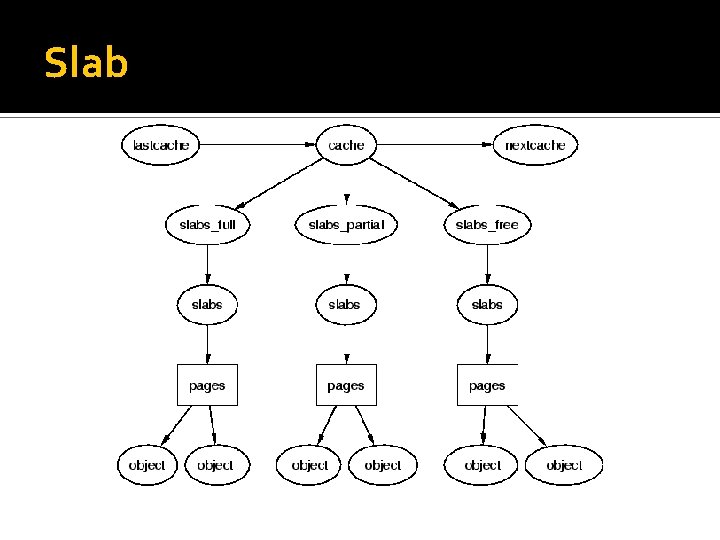

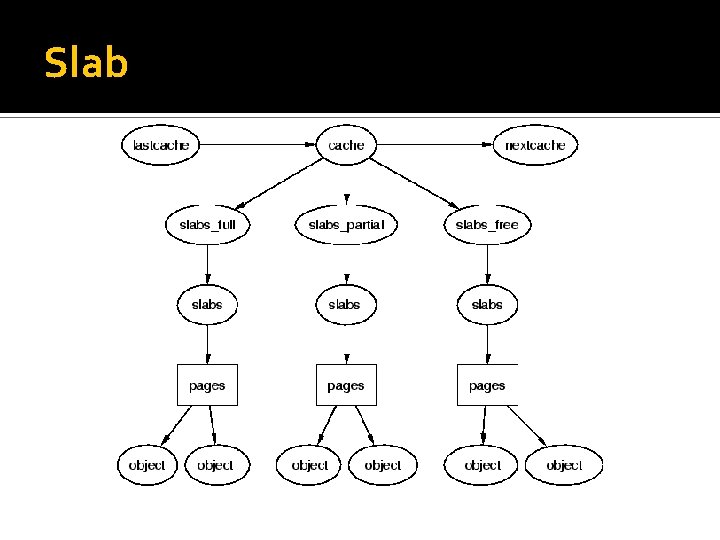

Slab Memory Allocation

- The main motivation for slab allocation is that the cost of initialising and destroying kernel data objects can outweigh the cost of allocating memory for them.
- With slab allocation, memory chunks sized to fit data objects of a certain type or size are preallocated.

Slab

Buddy Memory Allocation

- A fast memory allocation technique that divides memory into power-of-2 sized blocks and tries to satisfy each request with the best-fitting block
- When a block is freed, its buddy (the adjacent block of the same size) is checked to see whether it is also free. If so, the two blocks are combined to minimise fragmentation
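The buddy relationship can be computed with a single XOR, which is what makes the merge check cheap. The sketch below assumes blocks are identified by the index of their first page and that a block of order n spans 2^n pages; the function names are hypothetical and this is not the kernel's allocator.

```c
#include <assert.h>
#include <stddef.h>

/*
 * Index of the buddy of the block that starts at page_idx and spans
 * 2^order pages. The two buddies differ only in bit 'order'.
 */
size_t buddy_index(size_t page_idx, unsigned order)
{
    /* A block of a given order is always aligned to its own size. */
    assert((page_idx & (((size_t)1 << order) - 1)) == 0);
    return page_idx ^ ((size_t)1 << order);
}

/* Start of the order+1 block formed when a block merges with its buddy. */
size_t merged_index(size_t page_idx, unsigned order)
{
    return page_idx & ~((size_t)1 << order);
}
```

For instance, the order-2 block at page 8 has its buddy at page 12; if both are free they merge into one order-3 block starting at page 8.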

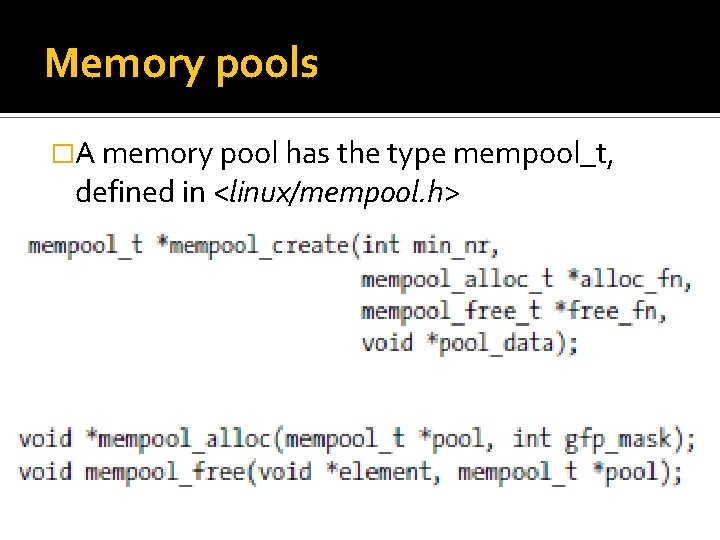

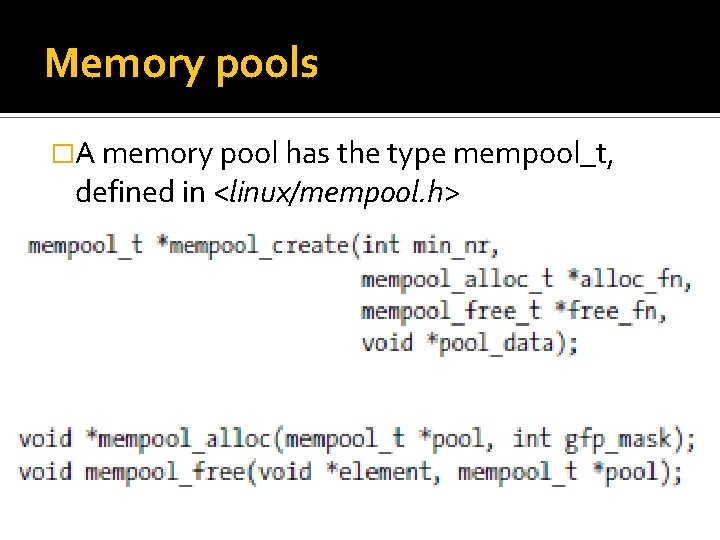

Memory pools

- A memory pool has the type mempool_t, defined in `<linux/mempool.h>`

kmalloc and kfree

- kmalloc is a memory allocation function that returns physically contiguous memory from kernel space.
- `void *kmalloc(size_t size, int flags)`
- `buf = kmalloc(BUF_SIZE, GFP_DMA | GFP_KERNEL);`
- `void kfree(const void *ptr)`
- `kfree(buf);`
- `<linux/slab.h>` and `<linux/gfp.h>`

![Preferred method of memory allocation](https://slidetodoc.com/presentation_image/2175c1340c7e8bb84d2b52d2cfaab8ca/image-21.jpg)

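Preferred method of memory allocation

A large buffer on the stack (best avoided in the kernel, where the stack is small):

    #define BUF_LEN 2048

    void function(void)
    {
        char buf[BUF_LEN];
        /* Do stuff with buf */
    }

Dynamic allocation with kmalloc:

    #define BUF_LEN 2048

    void function(void)
    {
        char *buf;

        buf = kmalloc(BUF_LEN, GFP_KERNEL);
        if (!buf)
            return; /* error! */
        /* Do stuff with buf */
        kfree(buf);
    }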

kmalloc flags

- All flags are listed in include/linux/gfp.h
- Type flags: GFP_ATOMIC, GFP_NOIO, GFP_NOFS, GFP_KERNEL, GFP_USER, GFP_HIGHUSER, GFP_DMA

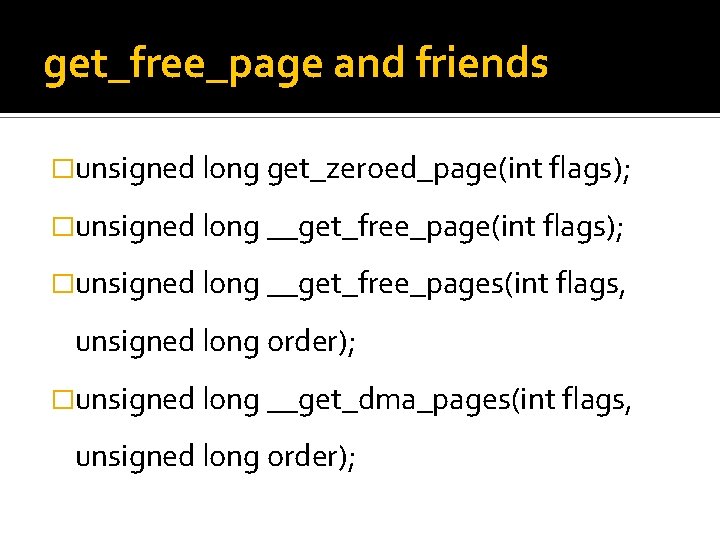

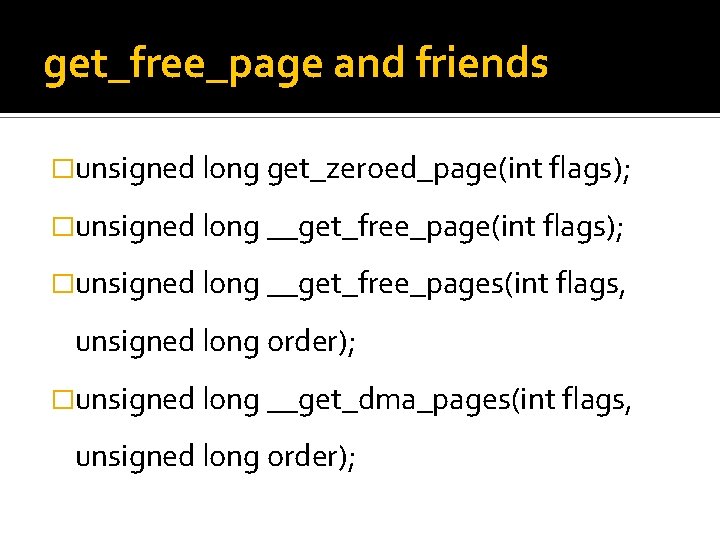

get_free_page and friends

- `unsigned long get_zeroed_page(int flags);`
- `unsigned long __get_free_pages(int flags, unsigned long order);`
- `unsigned long __get_dma_pages(int flags, unsigned long order);`
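The `order` argument is the base-2 logarithm of the number of pages requested, so order 0 is one page and order 3 is eight pages. The following userspace sketch shows how a byte count maps to an order, assuming 4 KB pages. The function names are hypothetical; the kernel offers `get_order()` for the same purpose.

```c
#include <stddef.h>

#define PAGE_SHIFT_SKETCH 12  /* assumption: 4 KB pages */

/* Smallest order whose block (2^order pages) can hold 'size' bytes. */
unsigned order_for_size(size_t size)
{
    size_t page_size = (size_t)1 << PAGE_SHIFT_SKETCH;
    size_t pages = (size + page_size - 1) >> PAGE_SHIFT_SKETCH; /* round up */
    unsigned order = 0;

    while (((size_t)1 << order) < pages)
        order++;
    return order;
}

/* Number of bytes actually handed out for a given order. */
size_t bytes_for_order(unsigned order)
{
    return ((size_t)1 << PAGE_SHIFT_SKETCH) << order;
}
```

A 20000-byte request needs 5 pages, which rounds up to order 3 (8 pages, 32768 bytes), so page-level allocation can waste a good deal of memory for sizes just above a power of two.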

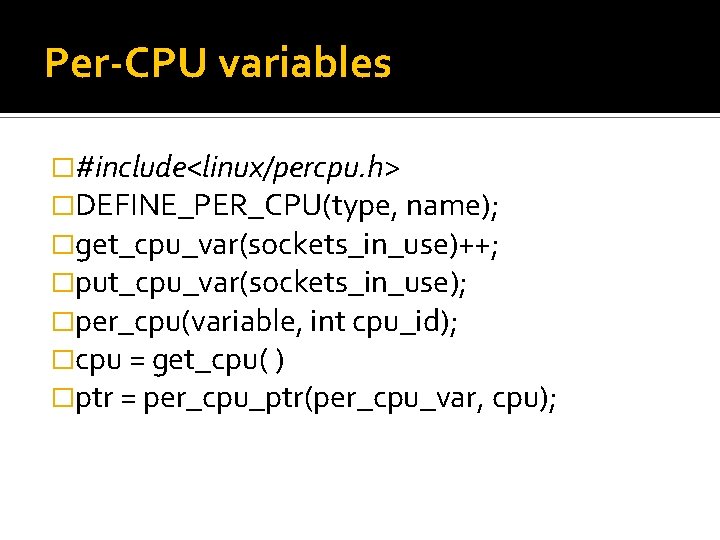

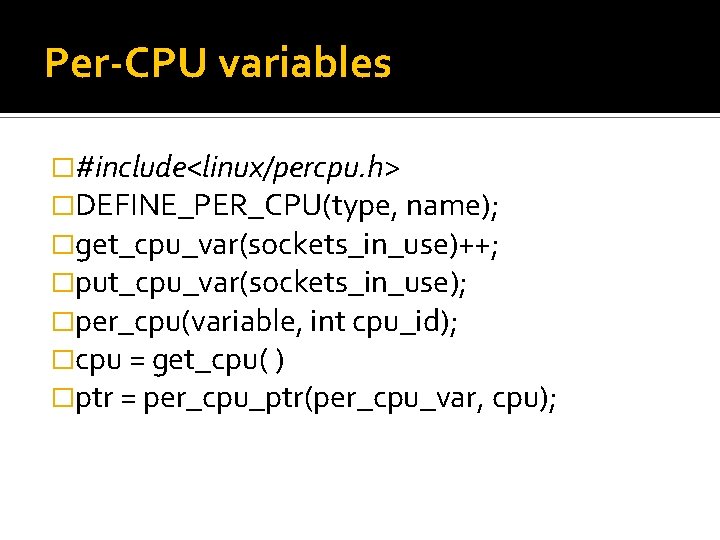

Per-CPU variables

- `#include <linux/percpu.h>`
- `DEFINE_PER_CPU(type, name);`
- `get_cpu_var(sockets_in_use)++;`
- `put_cpu_var(sockets_in_use);`
- `per_cpu(variable, int cpu_id);`
- `cpu = get_cpu();`
- `ptr = per_cpu_ptr(per_cpu_var, cpu);`

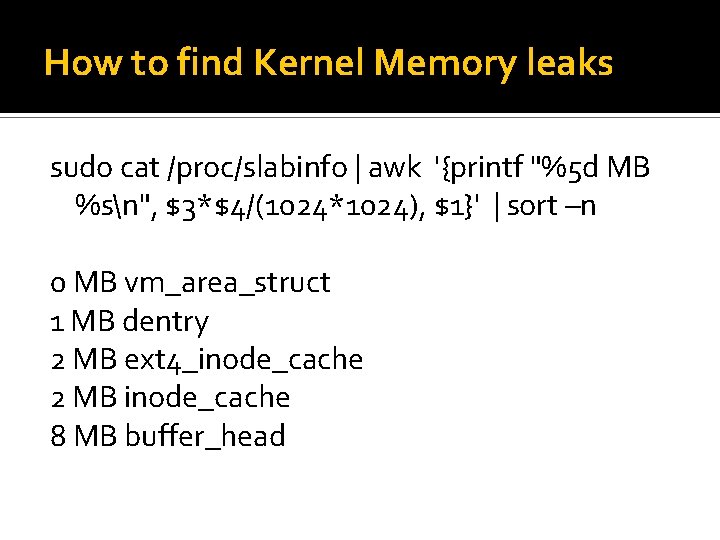

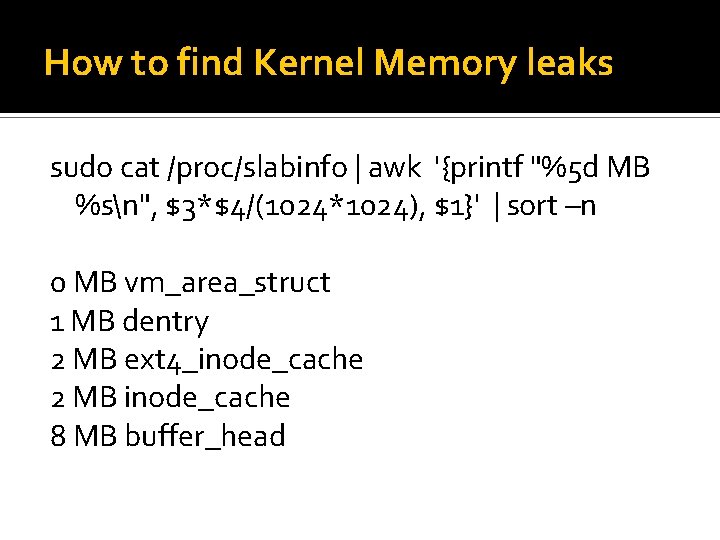

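How to find kernel memory leaks

Multiply the object count by the object size (columns 3 and 4 of /proc/slabinfo) for each cache and sort by the result:

    sudo cat /proc/slabinfo | awk '{printf "%5d MB %s\n", $3*$4/(1024*1024), $1}' | sort -n

        0 MB vm_area_struct
        1 MB dentry
        2 MB ext4_inode_cache
        2 MB inode_cache
        8 MB buffer_head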
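The same per-cache arithmetic can be sketched in C for one line of /proc/slabinfo. This assumes the usual column order (name, active objects, total objects, object size); the struct and function names are hypothetical, and header lines are simply rejected because they do not parse as three numbers after the name.

```c
#include <stdio.h>

struct slab_usage {
    char name[64];             /* cache name, first column */
    unsigned long long bytes;  /* total objects * object size */
};

/*
 * Parse one /proc/slabinfo line. Returns 0 on success and -1 if the line
 * does not start with "name active_objs num_objs objsize".
 */
int parse_slab_line(const char *line, struct slab_usage *out)
{
    unsigned long long active, num, objsize;

    if (sscanf(line, "%63s %llu %llu %llu",
               out->name, &active, &num, &objsize) != 4)
        return -1;
    out->bytes = num * objsize; /* same product as $3*$4 in the awk command */
    return 0;
}
```

A cache whose byte total keeps growing across repeated samples is a candidate for a leak.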

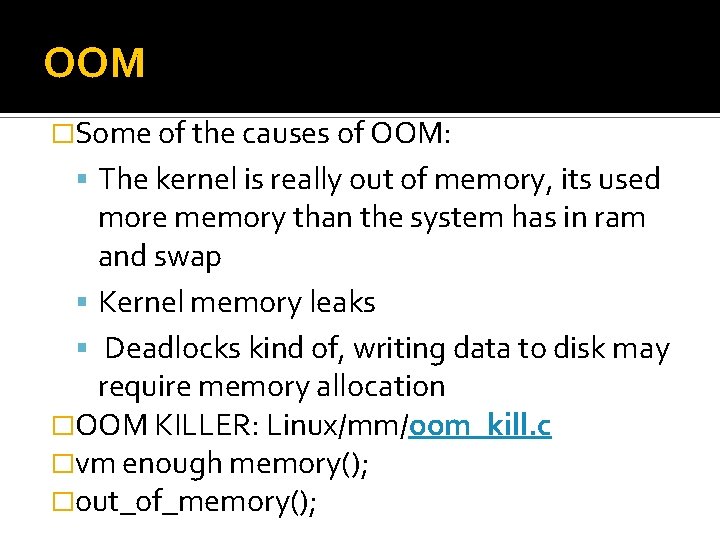

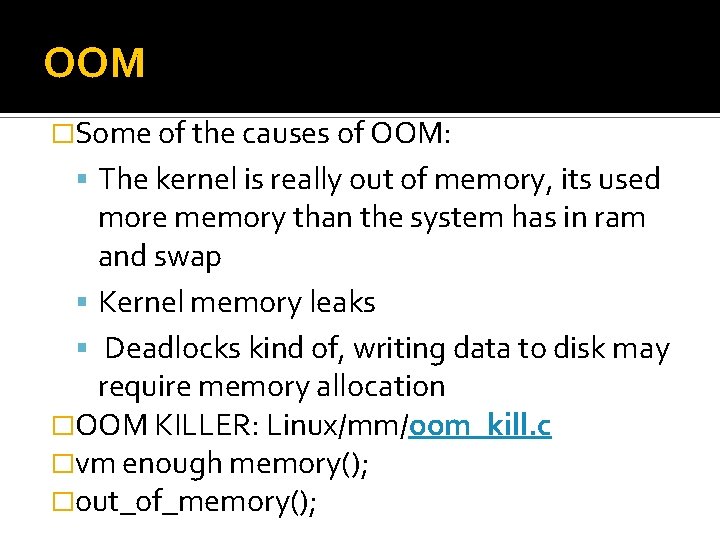

OOM

- Some of the causes of OOM:
  - The kernel really is out of memory: it has used more memory than the system has in RAM and swap
  - Kernel memory leaks
  - Deadlocks, of a kind: writing data to disk may itself require memory allocation
- OOM killer: linux/mm/oom_kill.c
- `vm_enough_memory();`
- `out_of_memory();`

Story about OOM Killer �Thomas Habets had an unfortunate experience recently. His Linux system ran out of memory, and the dreaded "OOM killer" was loosed upon the system's unsuspecting processes. One of its victims turned out to be his screen locking program.

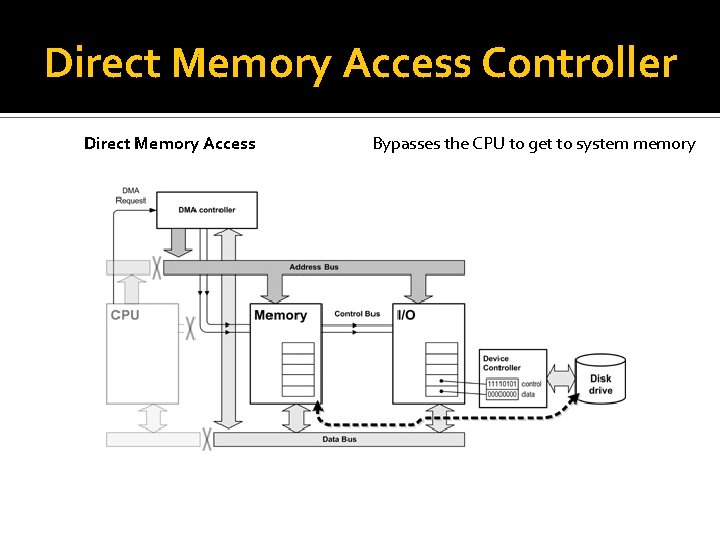

What is DMA?

- DMA is a feature of modern microcontrollers that allows other hardware subsystems to access system memory independently of the CPU.
- Without DMA, transfers use programmed I/O (PIO): a large number of CPU cycles are taken up, and the CPU can be tied up for the entire duration of the read or write.

Any Questions?

Useful websites:

- Kmalloc and more: lwn.net/images/pdf/LDD3/ch08.pdf
- http://www.ibm.com/developerworks/linux/library/l-linuxslab-allocator/
- http://www.makelinux.net/books/lkd2/ch11lev1sec4

Pete Hemery Direct Memory Access DMA

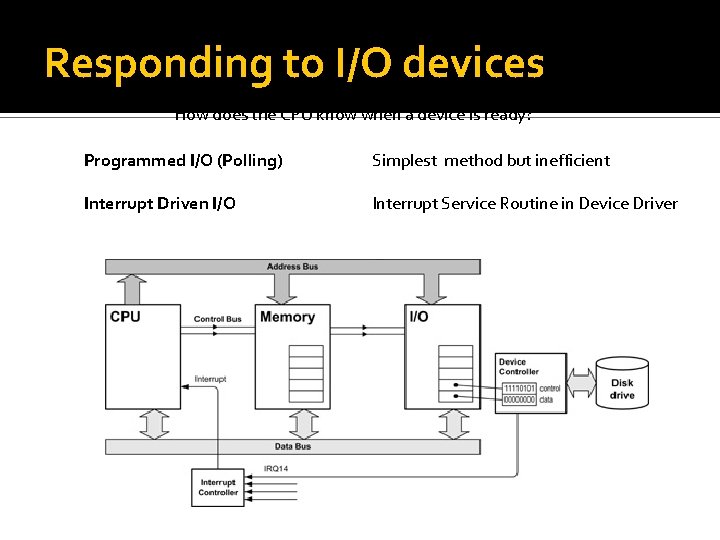

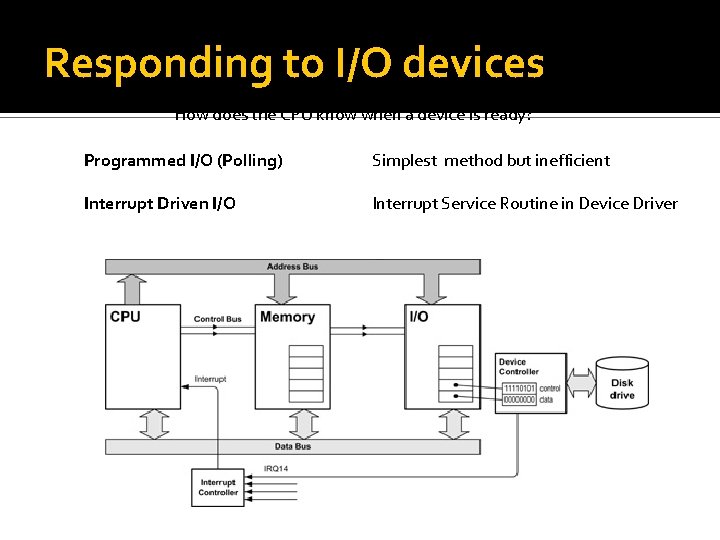

Responding to I/O devices

How does the CPU know when a device is ready?

- Programmed I/O (polling): the simplest method, but inefficient
- Interrupt-driven I/O: Interrupt Service Routine in the device driver

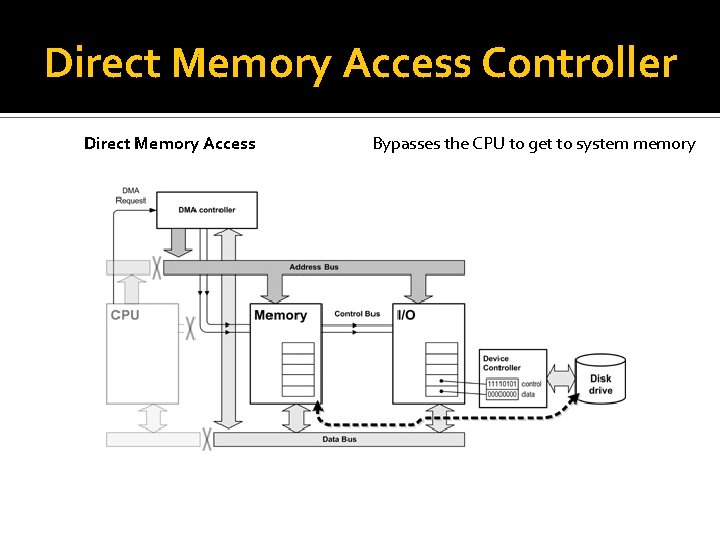

Direct Memory Access Controller

- Direct Memory Access bypasses the CPU to get to system memory

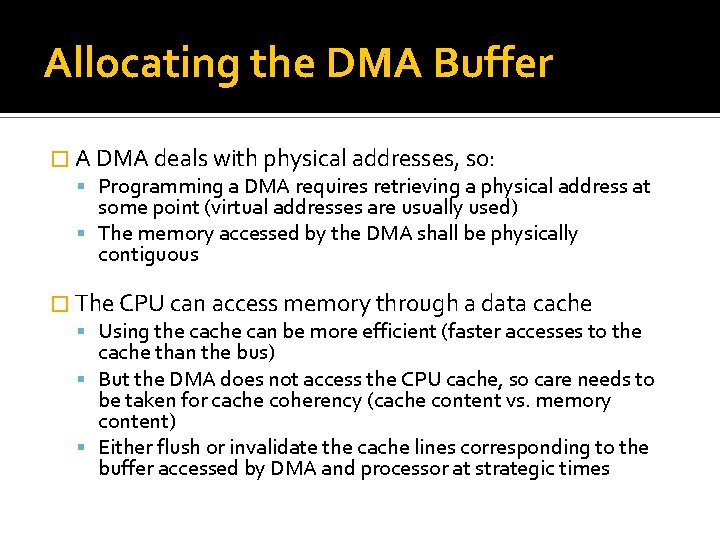

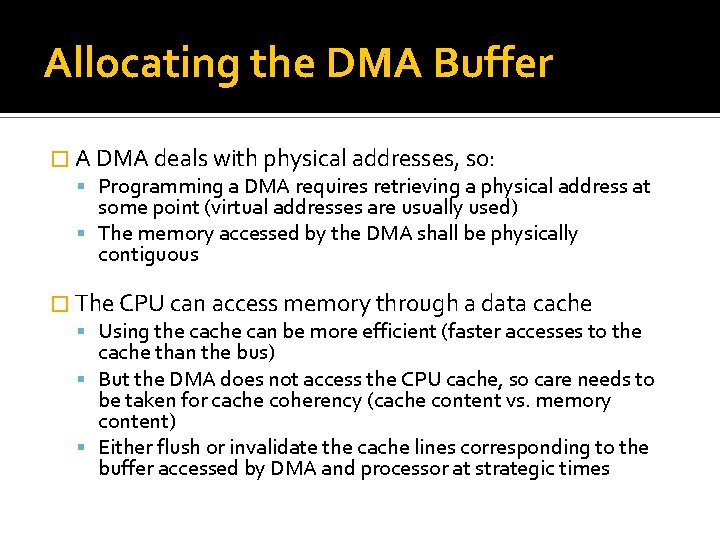

Allocating the DMA Buffer

- A DMA controller deals with physical addresses, so:
  - Programming a DMA transfer requires retrieving a physical address at some point (virtual addresses are usually used)
  - The memory accessed by the DMA controller must be physically contiguous
- The CPU can access memory through a data cache
  - Using the cache can be more efficient (accesses to the cache are faster than accesses over the bus)
  - But the DMA controller does not access the CPU cache, so care needs to be taken over cache coherency (cache content vs. memory content)
  - Either flush or invalidate the cache lines corresponding to the buffer accessed by DMA and the processor at strategic times

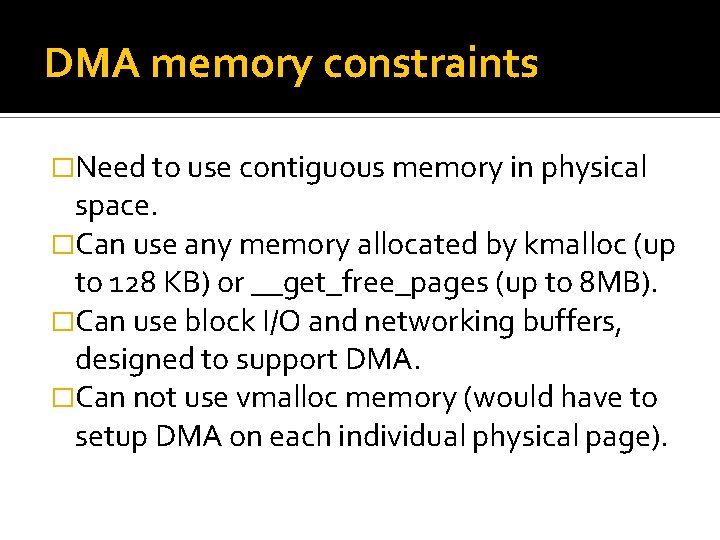

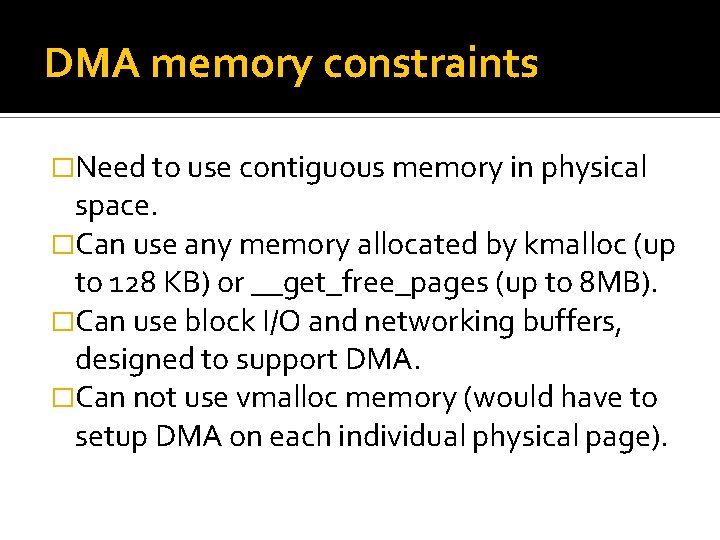

DMA memory constraints

- Need to use memory that is contiguous in physical space.
- Can use any memory allocated by kmalloc (up to 128 KB) or __get_free_pages (up to 8 MB).
- Can use block I/O and networking buffers, which are designed to support DMA.
- Cannot use vmalloc memory (DMA would have to be set up on each individual physical page).

Memory synchronization issues

Memory caching could interfere with DMA.

- Before DMA to device: need to make sure that all writes to the DMA buffer are committed.
- After DMA from device: before drivers read from the DMA buffer, need to make sure that memory caches are flushed.
- Bidirectional DMA: need to flush caches before and after the DMA transfer.

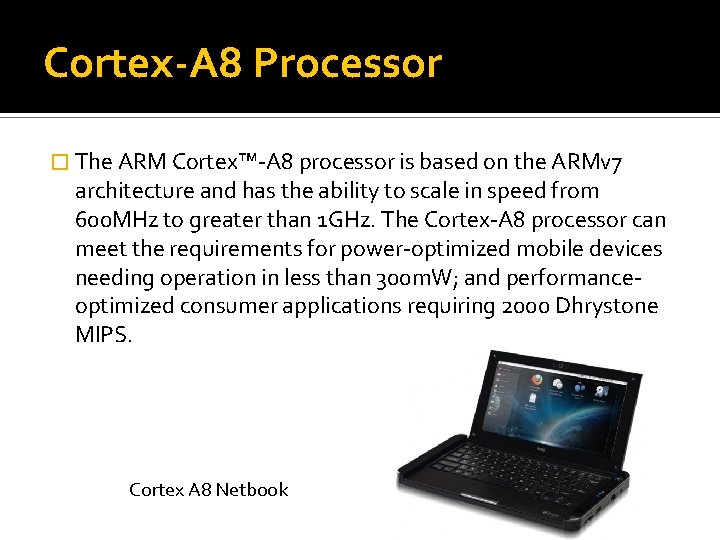

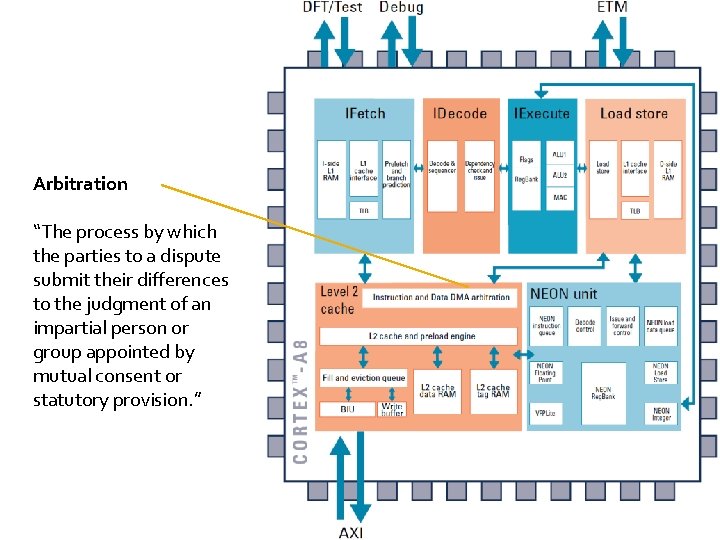

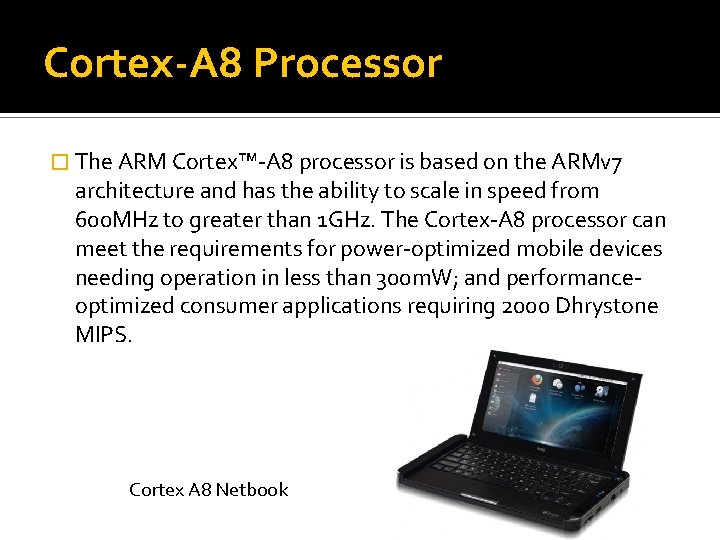

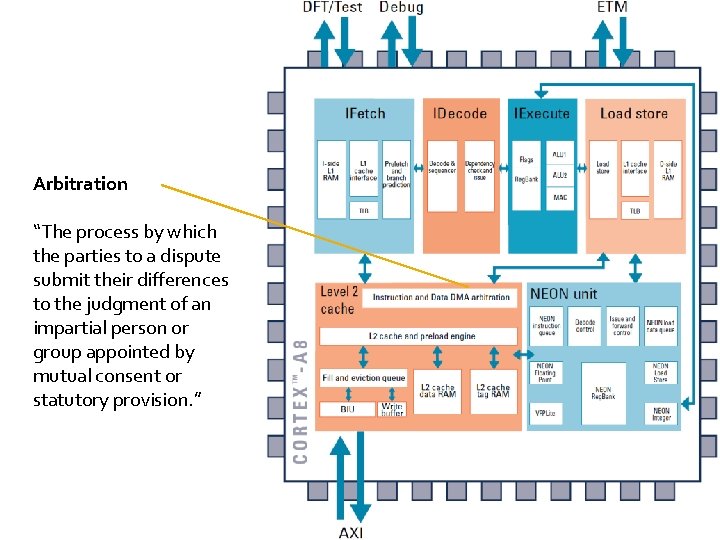

Cortex-A8 Processor

- The ARM Cortex™-A8 processor is based on the ARMv7 architecture and has the ability to scale in speed from 600 MHz to greater than 1 GHz. The Cortex-A8 processor can meet the requirements for power-optimized mobile devices needing operation in less than 300 mW, and performance-optimized consumer applications requiring 2000 Dhrystone MIPS.

Cortex-A8 Netbook

Arbitration “The process by which the parties to a dispute submit their differences to the judgment of an impartial person or group appointed by mutual consent or statutory provision. ”

Sitara ARM® Cortex™-A8 and ARM9™ Microprocessors

TI E2E™ Community

- Sitara™ ARM® Microprocessors
- Welcome to the Sitara™ ARM® Microprocessors Section of the TI E2E Support Community. Ask questions, share knowledge, explore ideas, and help solve problems with fellow engineers. To post a question, click on the forum tab then "New Post".
- This group contains forums for discussion on Cortex-A8 based AM35x, AM37x and AM335x processors and ARM9 based AM1x processors. For faster response please be sure to tag your post.

http://e2e.ti.com/support/dsp/sitara_arm174_microprocessors/f/416/t/159602.aspx

http://forum.meego.com/archive/index.php/t-117.html

I am currently working on getting WLAN up and running. It seems that the SDIO driver is broken for libertas_sdio: probe of mmc1:0001:1 failed with error -16

A second problem is the USB Host interface. It seems to be completely broken.

Hotplugging USB mouse:

    [ 156.736999] drivers/hid/usbhid/hid-core.c: can't reset device, ehci-omap.0-2.3/input0, status -71

Adding a webcam:

    [ 25.468078] Linux video capture interface: v2.00
    [ 25.566772] gspca: main v2.9.0 registered
    [ 25.703247] gspca: probing 046d:08da
    [ 25.725189] twl_rtc: rtc core: registered twl_rtc as rtc0
    [ 25.964385] lib80211: common routines for IEEE802.11 drivers
    [ 25.964416] lib80211_crypt: registered algorithm 'NULL'
    [ 26.142181] ads7846 spi1.0: touchscreen, irq 274
    [ 26.143157] input: ADS7846 Touchscreen as /devices/platform/omap2_mcspi.1/spi1.0/input1
    [ 26.617645] cfg80211: Calling CRDA to update world regulatory domain
    [ 26.829406] libertas_sdio: Libertas SDIO driver
    [ 26.829437] libertas_sdio: Copyright Pierre Ossman
    [ 26.835327] zc3xx: probe 2wr ov vga 0x0000
    [ 26.865203] zc3xx: probe sensor -> 0011
    [ 26.865234] zc3xx: Find Sensor HV7131R(c)
    [ 26.865936] input: zc3xx as /devices/platform/ehci-omap.0/usb1/1-2.3/input2
    [ 26.866424] gspca: video0 created
    [ 26.866455] gspca: found int in endpoint: 0x82, buffer_len=8, interval=10
    [ 26.866516] kernel BUG at arch/arm/mm/dma-mapping.c:409!
    [ 26.871887] Unable to handle kernel NULL pointer dereference at virtual address 0000
    [ 26.885864] libertas_sdio: probe of mmc1:0001:1 failed with error -16
    [ 26.915069] cfg80211: World regulatory domain updated:
    [ 26.915100] (start_freq - end_freq @ bandwidth), (max_antenna_gain, max_eirp)
    [ 26.915130] (2402000 KHz - 2472000 KHz @ 40000 KHz), (300 mBi, 2000 mBm)
    [ 26.915161] (2457000 KHz - 2482000 KHz @ 20000 KHz), (300 mBi, 2000 mBm)
    [ 26.915161] (2474000 KHz - 2494000 KHz @ 20000 KHz), (300 mBi, 2000 mBm)
    [ 26.915191] (5170000 KHz - 5250000 KHz @ 40000 KHz), (300 mBi, 2000 mBm)
    [ 26.915222] (5735000 KHz - 5835000 KHz @ 40000 KHz), (300 mBi, 2000 mBm)
    [ 26.938995] pgd = cff58000
    [ 26.942321] [0000] *pgd=8ff36031, *pte=0000, *ppte=0000
    [ 26.994537] Internal error: Oops: 817 [#1] PREEMPT
    [ 26.999359] last sysfs file: /sys/devices/platform/ehci-omap.0/usb1/1-2.3/bcdDevice
    [ 27.007415] Modules linked in: libertas_sdio libertas cfg80211 joydev rfkill ads7846 mailbox_mach lib80211 mailbox rtc_twl gspca_zc3xx(+) rtc_core gspca_main videodev v4l1_compat
    [ 27.023590] CPU: 0 Not tainted (2.6.35.3 #1)

Troubleshooting DMA

This is a case where a thorough knowledge of the hardware is essential to making the software work. DMA is almost impossible to troubleshoot without using a logic analyzer.

No matter what mode the transfers will ultimately use, and no matter what the source and destination devices are, I always first write a routine to do a memory to memory DMA transfer. This is much easier to troubleshoot than DMA to a complex I/O port. You can use your ICE to see if the transfer happened (by looking at the destination block), and to see if exactly the right number of bytes were transferred. At some point you'll have to recode to direct the transfer to your device. Hook up a logic analyzer to the DMA signals on the chip to be sure that the addresses and byte count are correct. Check this even if things seem to work - a slight mistake might trash part of your stack or data space.

Some high integration CPUs with internal DMA controllers do not produce any sort of cycle that you can flag as being associated with DMA. This drives me nuts - one lousy extra pin would greatly ease debugging. The only way to track these transfers is to trigger the logic analyzer on address ranges associated with the transfer, but unfortunately these ranges may also have non-DMA activity in them.

Be aware that DMA will destroy your timing calculations. Bit banging UARTs will not be reliable; carefully crafted timing loops will run slower than expected. In the old days we all counted T-states to figure how long a loop ran, but DMA, prefetchers, cache, and all sorts of modern exoticness makes it almost impossible to calculate real execution time.

http://www.ganssle.com/articles/adma.htm

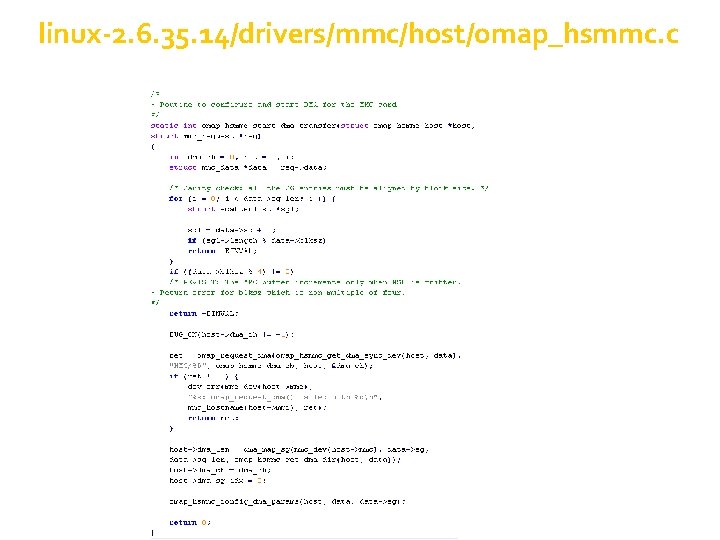

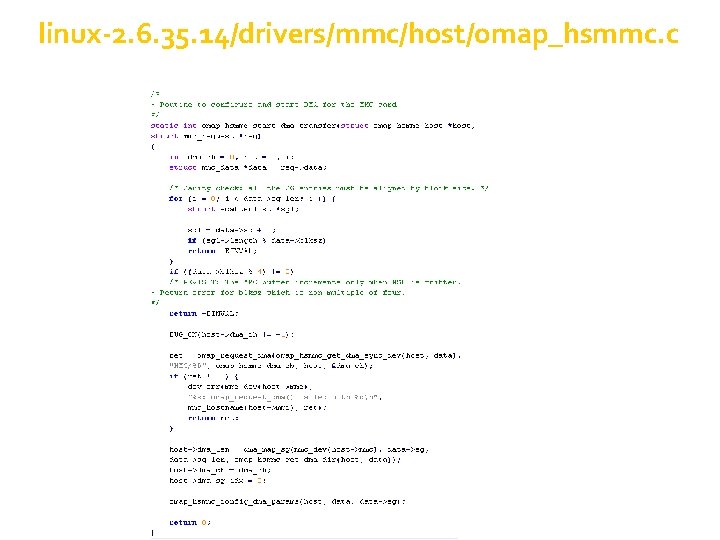

linux-2.6.35.14/drivers/mmc/host/omap_hsmmc.c

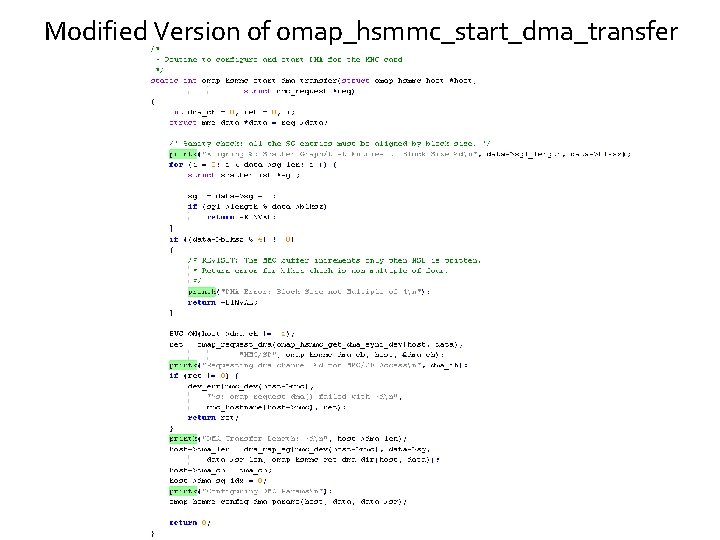

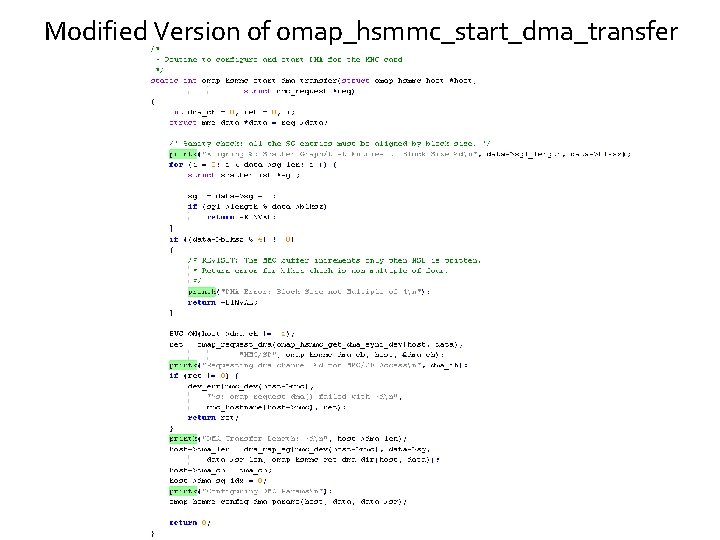

Modified Version of omap_hsmmc_start_dma_transfer

References

- http://www.talktoanit.com/A+/aplus-website/lessons-io-principles.html
- http://www.ti.com/lit/ug/spru234c.pdf
- http://www.ti.com/lsds/ti/dsp/platform/sitara/whats_new.page?DCMP=AM33x_Announcement&HQS=am335x
- http://www.arm.com/products/processors/cortex-a8.php
- http://www.ti.com/lit/ds/symlink/omap3530.pdf
- http://e2e.ti.com/support/dsp/omap_applications_processors/f/447/t/96365.aspx
- http://www.ganssle.com/articles/adma.htm