KDD 2004 Adversarial Classification Dalvi Domingos Mausam Sanghai

- Slides: 23

KDD 2004: Adversarial Classification Dalvi, Domingos, Mausam, Sanghai, Verma University of Washington

Introduction n Paper views classification as a game between classifier and the adversary ¨ Data is actively manipulated by the adversary to make classifier produce false negatives n Proposes a (Naïve Bayes) classifier that is optimal given adversary’s optimal strategy

Motivation (1) n n Many (all) data-mining algorithms assume that data-generating process is independent of classifier’s activities This is not true in domains like ¨ Spam detection ¨ Intrusion detection ¨ Fraud detection ¨ Surveillance Where data is actively manipulated by an adversary seeking to make classifier produce false negatives

Motivation (2) In real world performance of classifier can degrade rapidly after deployment as adversary learns how to defeat it n Solution: repeated, manual, ad hoc reconstruction of the classifier n This problem is different from a concept drift, since data is actively manipulated – is a function of the classifier itself n

Outline Problem definition n For Naïve Bayes: n ¨ Optimal strategy for adversary against adversary-unaware classifier ¨ Optimal strategy for classifier playing against adversary n Experimental results on 2 email spam datasets

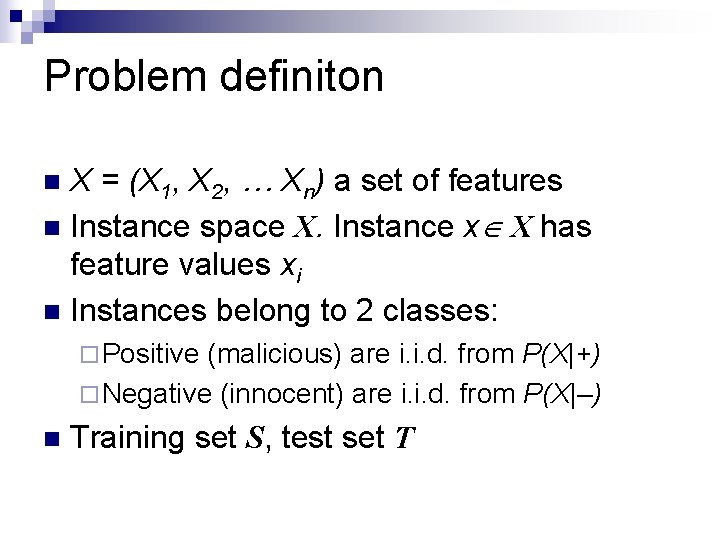

Problem definiton X = (X 1, X 2, … Xn) a set of features n Instance space X. Instance x X has feature values xi n Instances belong to 2 classes: n ¨ Positive (malicious) are i. i. d. from P(X|+) ¨ Negative (innocent) are i. i. d. from P(X|–) n Training set S, test set T

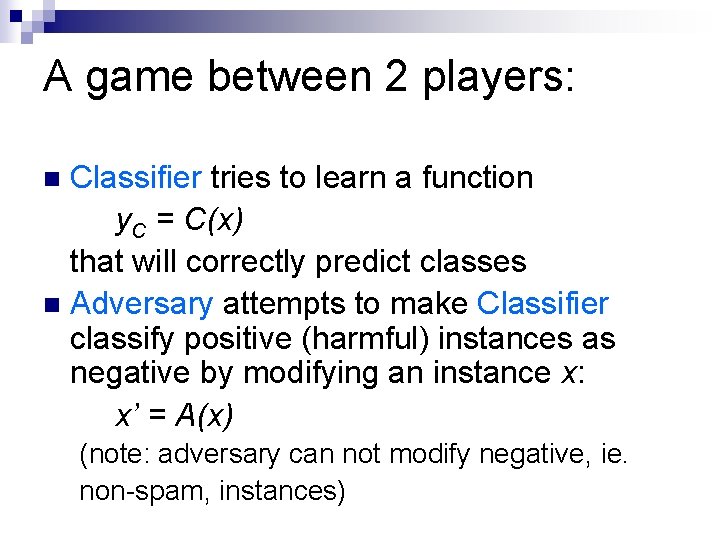

A game between 2 players: Classifier tries to learn a function y. C = C(x) that will correctly predict classes n Adversary attempts to make Classifier classify positive (harmful) instances as negative by modifying an instance x: x’ = A(x) n (note: adversary can not modify negative, ie. non-spam, instances)

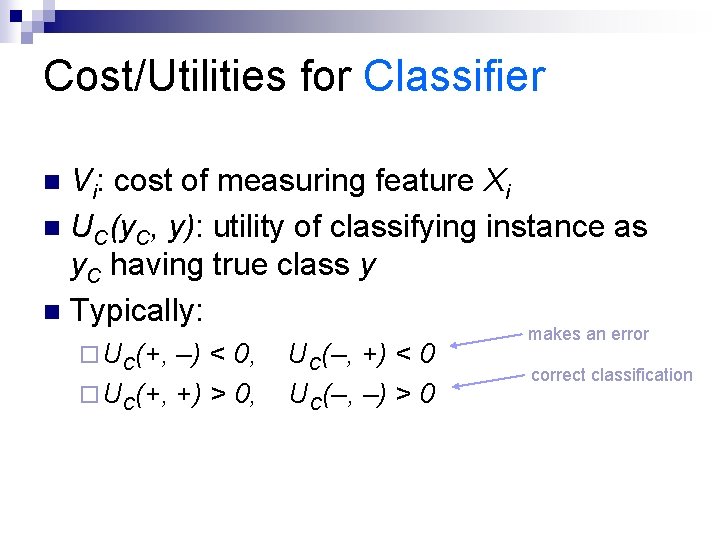

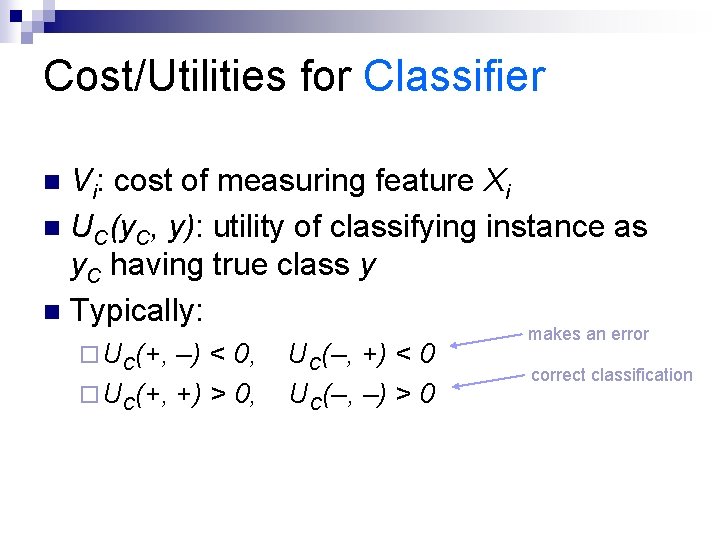

Cost/Utilities for Classifier Vi: cost of measuring feature Xi n UC(y. C, y): utility of classifying instance as y. C having true class y n Typically: n ¨ UC(+, –) < 0, ¨ UC(+, +) > 0, UC(–, +) < 0 UC(–, –) > 0 makes an error correct classification

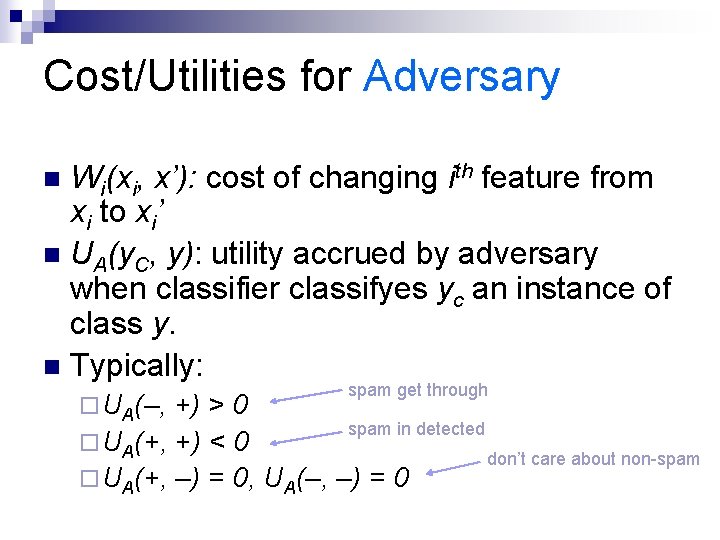

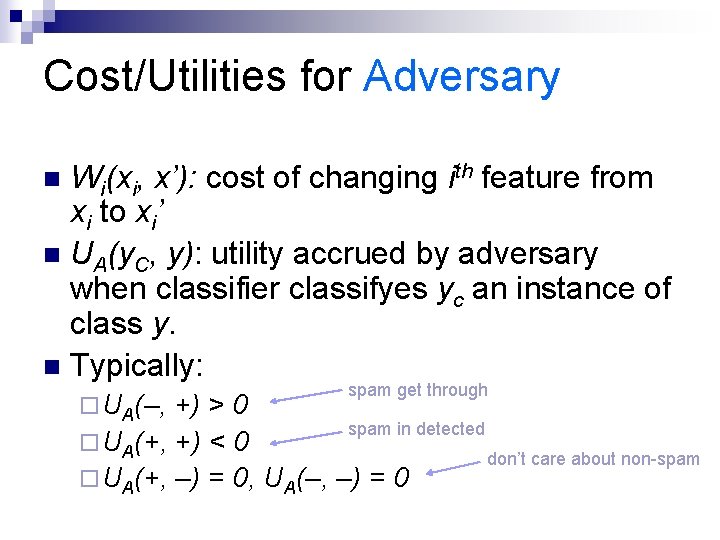

Cost/Utilities for Adversary Wi(xi, x’): cost of changing ith feature from xi to xi’ n UA(y. C, y): utility accrued by adversary when classifier classifyes yc an instance of class y. n Typically: n ¨ UA(–, spam get through +) > 0 spam in detected ¨ UA(+, +) < 0 don’t care about non-spam ¨ UA(+, –) = 0, UA(–, –) = 0

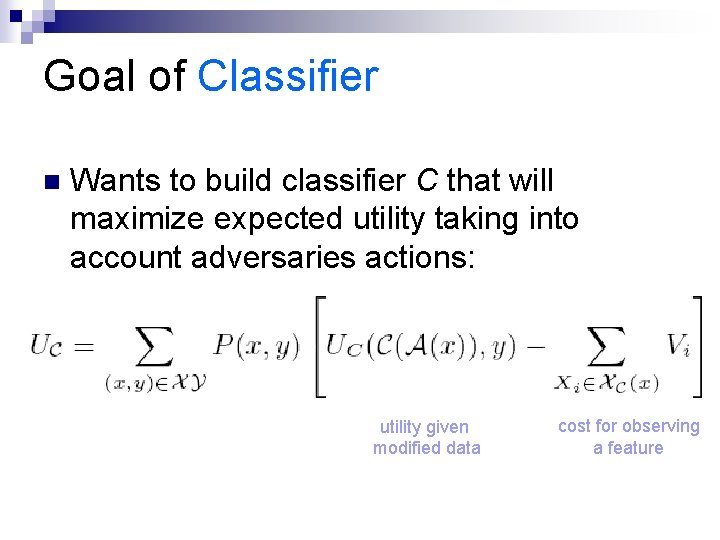

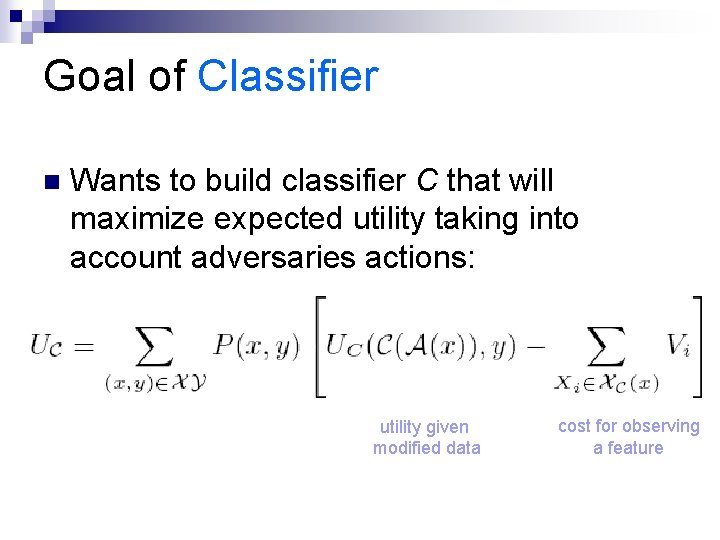

Goal of Classifier n Wants to build classifier C that will maximize expected utility taking into account adversaries actions: utility given modified data cost for observing a feature

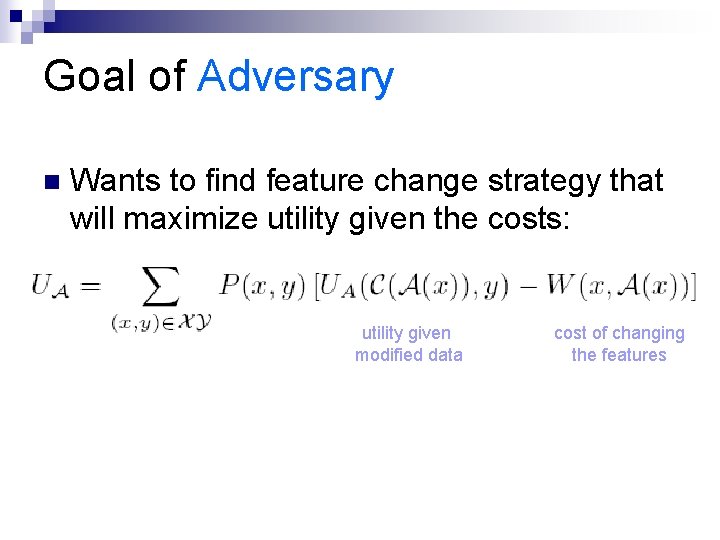

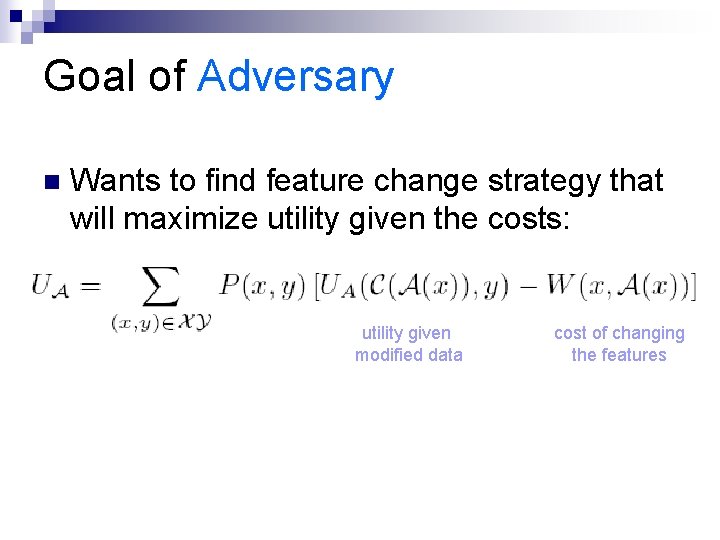

Goal of Adversary n Wants to find feature change strategy that will maximize utility given the costs: utility given modified data cost of changing the features

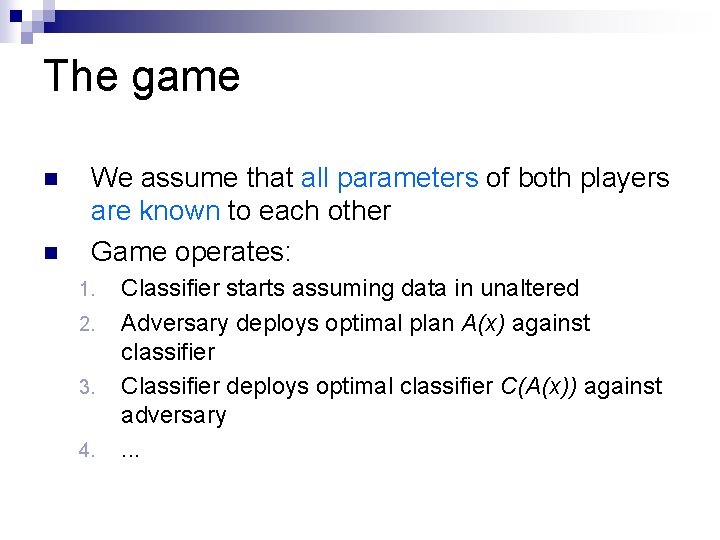

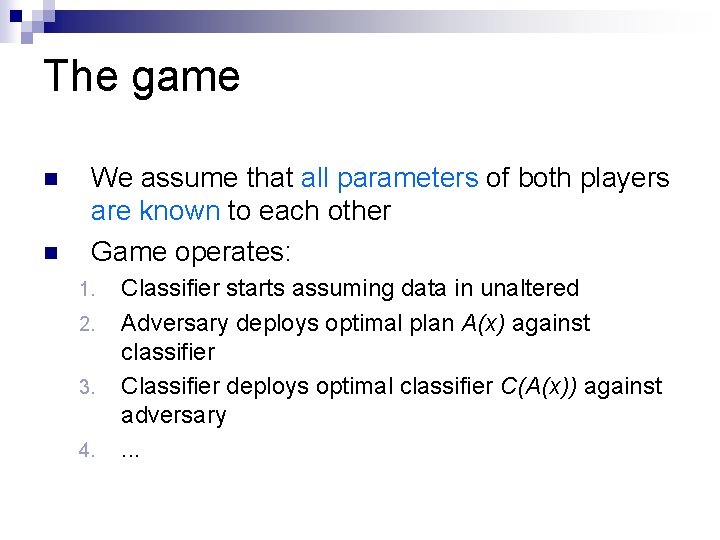

The game n n We assume that all parameters of both players are known to each other Game operates: 1. 2. 3. 4. Classifier starts assuming data in unaltered Adversary deploys optimal plan A(x) against classifier Classifier deploys optimal classifier C(A(x)) against adversary. . .

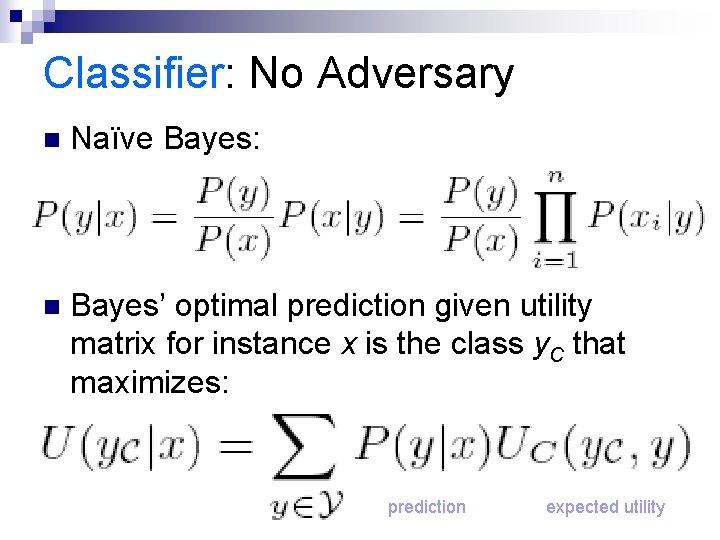

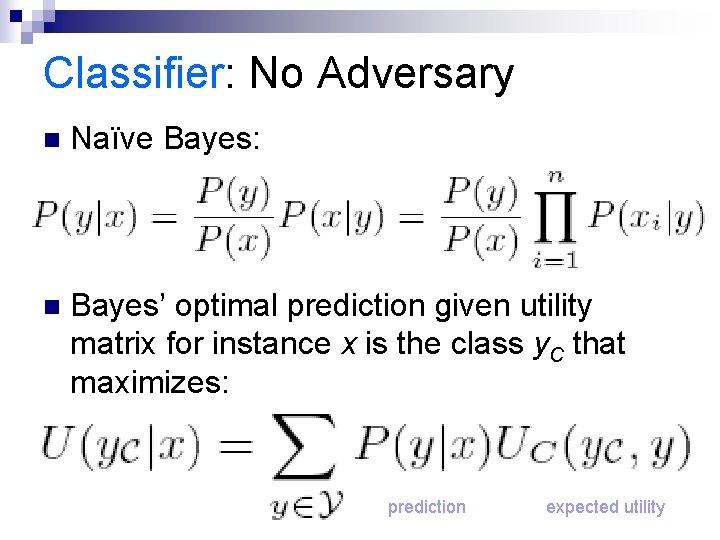

Classifier: No Adversary n Naïve Bayes: n Bayes’ optimal prediction given utility matrix for instance x is the class y. C that maximizes: prediction expected utility

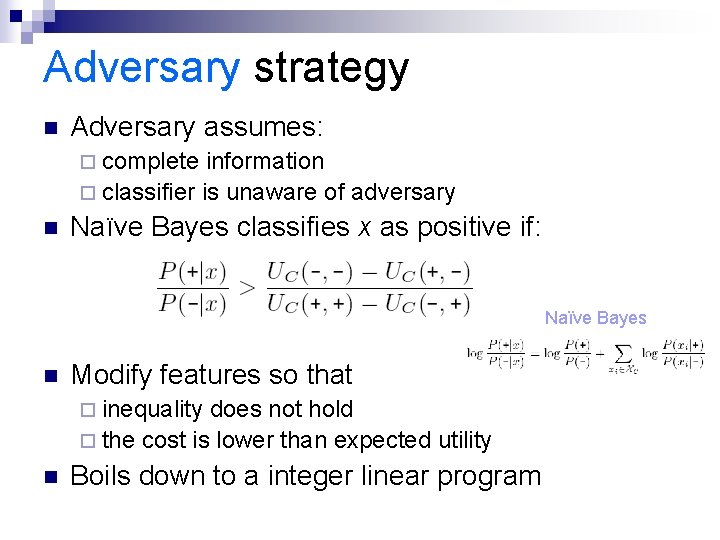

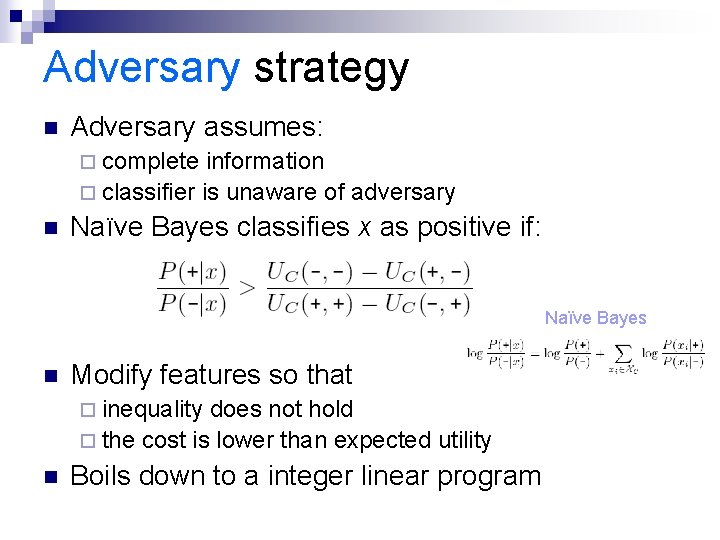

Adversary strategy n Adversary assumes: ¨ complete information ¨ classifier is unaware of adversary n Naïve Bayes classifies x as positive if: Naïve Bayes n Modify features so that ¨ inequality does not hold ¨ the cost is lower than expected utility n Boils down to a integer linear program

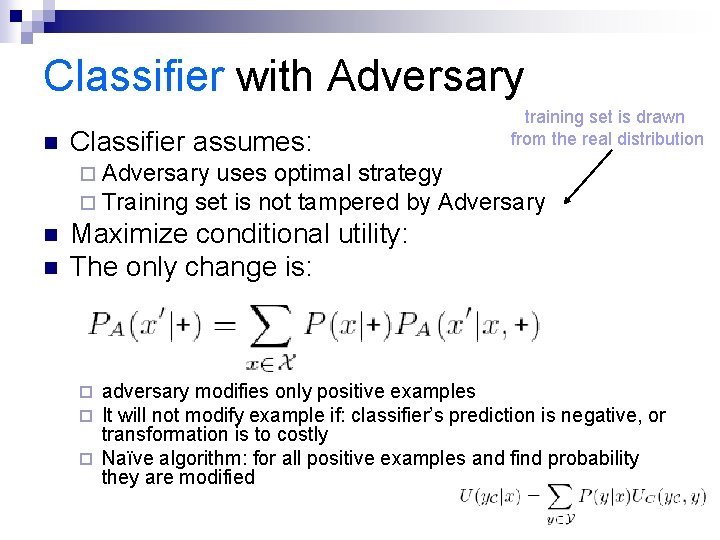

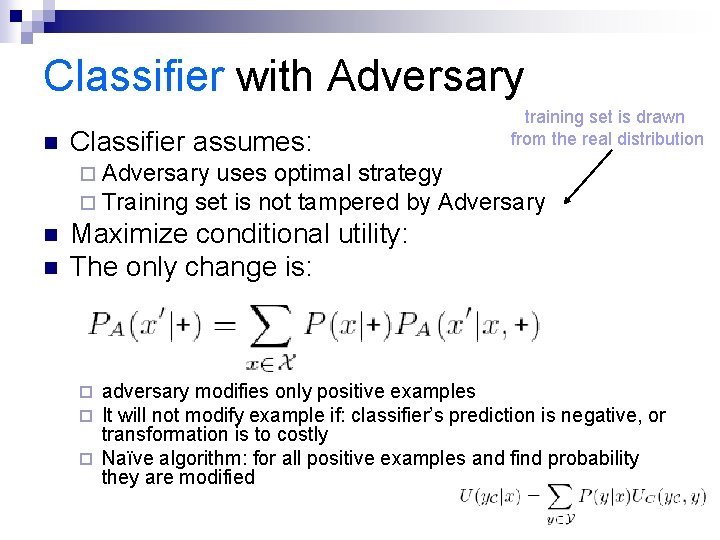

Classifier with Adversary n Classifier assumes: training set is drawn from the real distribution ¨ Adversary uses optimal strategy ¨ Training set is not tampered by Adversary n n Maximize conditional utility: The only change is: adversary modifies only positive examples It will not modify example if: classifier’s prediction is negative, or transformation is to costly ¨ Naïve algorithm: for all positive examples and find probability they are modified ¨ ¨

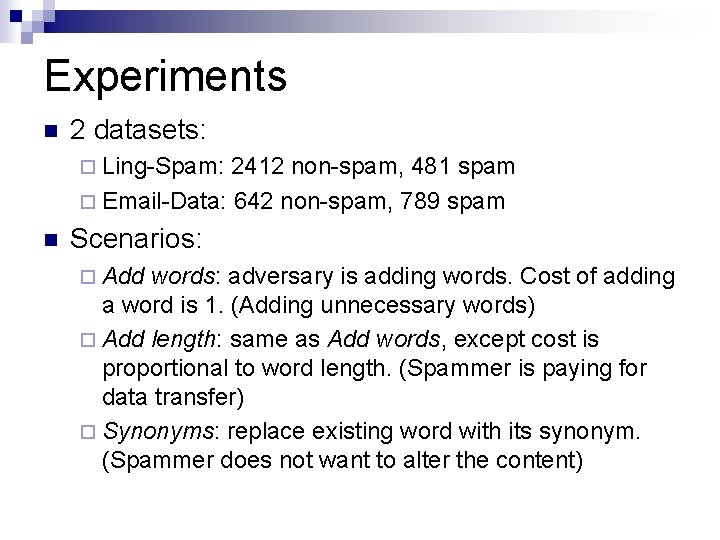

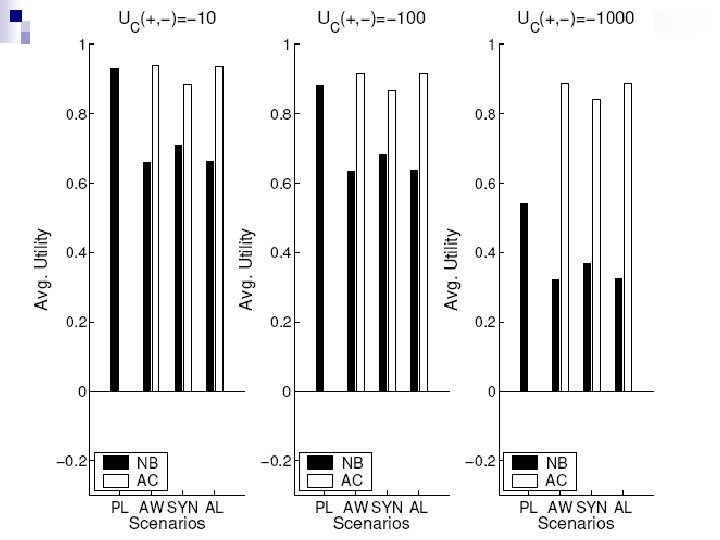

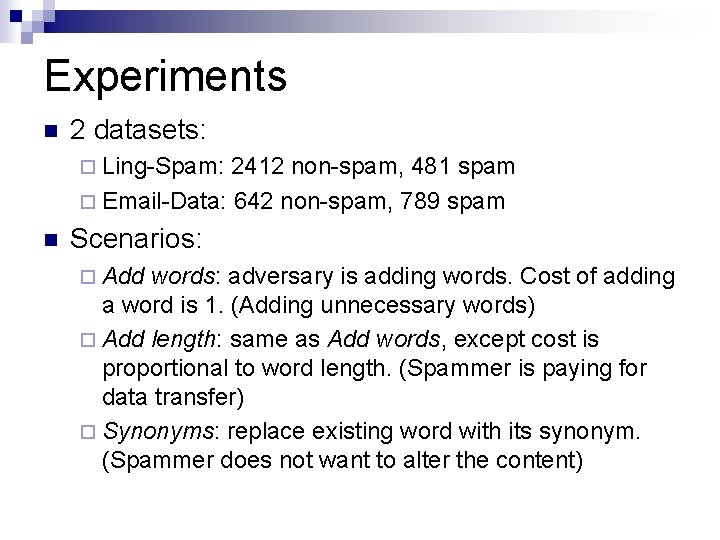

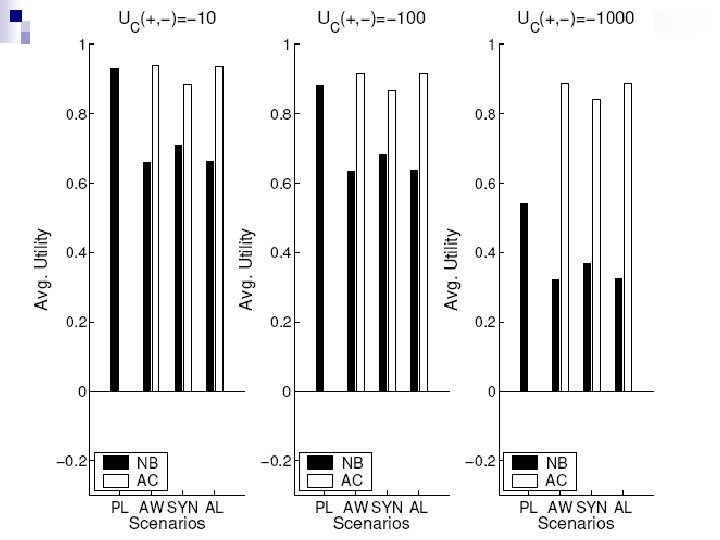

Experiments n 2 datasets: ¨ Ling-Spam: 2412 non-spam, 481 spam ¨ Email-Data: 642 non-spam, 789 spam n Scenarios: ¨ Add words: adversary is adding words. Cost of adding a word is 1. (Adding unnecessary words) ¨ Add length: same as Add words, except cost is proportional to word length. (Spammer is paying for data transfer) ¨ Synonyms: replace existing word with its synonym. (Spammer does not want to alter the content)

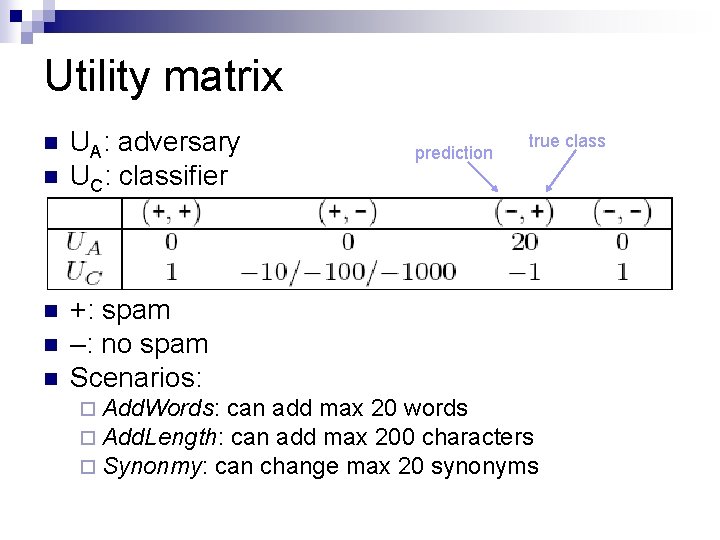

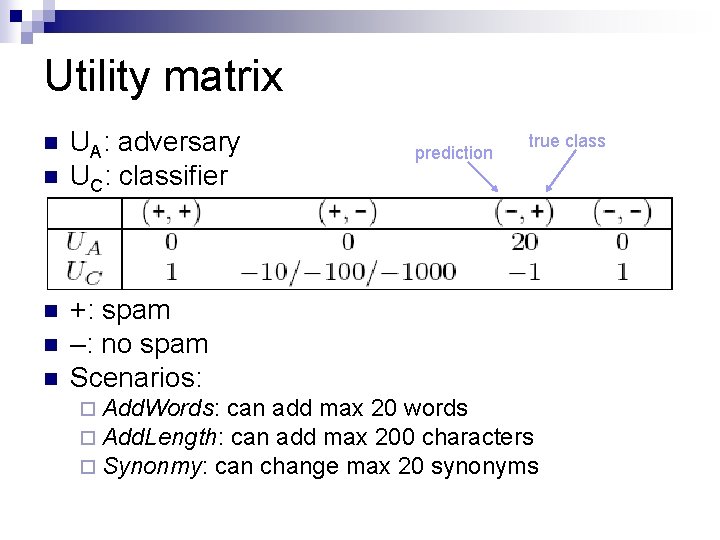

Utility matrix n n n UA: adversary UC: classifier prediction true class +: spam –: no spam Scenarios: ¨ Add. Words: can add max 20 words ¨ Add. Length: can add max 200 characters ¨ Synonmy: can change max 20 synonyms

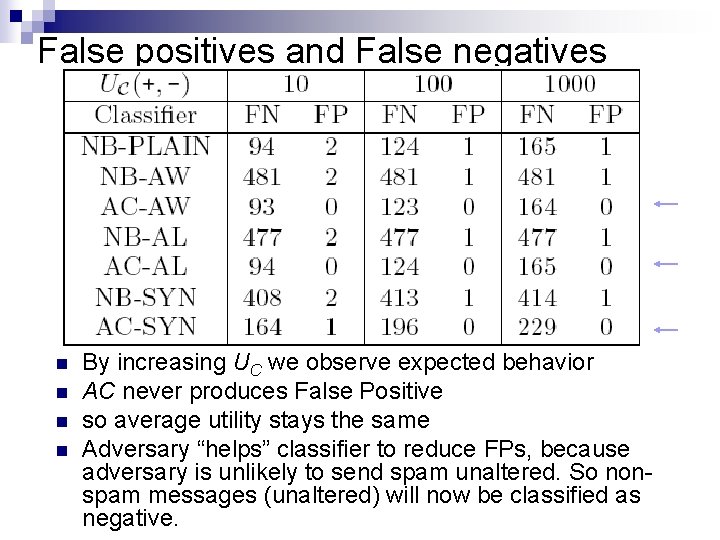

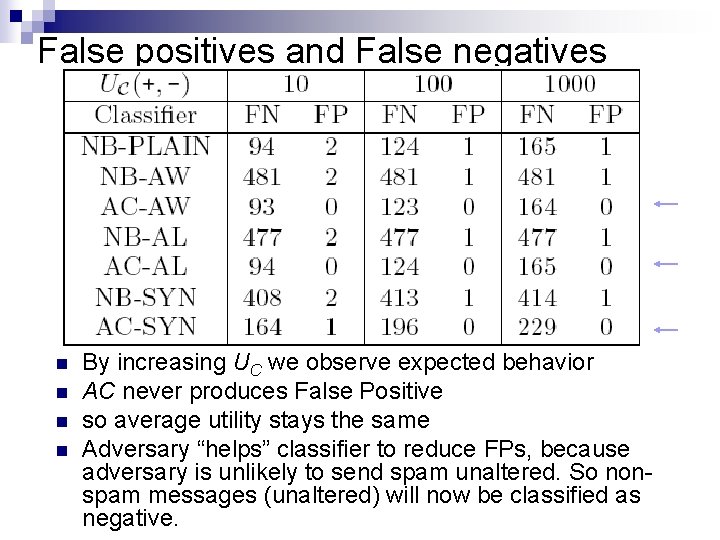

False positives and False negatives n n By increasing UC we observe expected behavior AC never produces False Positive so average utility stays the same Adversary “helps” classifier to reduce FPs, because adversary is unlikely to send spam unaltered. So nonspam messages (unaltered) will now be classified as negative.

Further work / Conclusions n Further work: ¨ Repeated game ¨ Incomplete information ¨ Approximately optimal strategies ¨ Generalization to other classifiers n Conclusions: ¨ Formalized the problem of adversarial manipulation ¨ Extended Naïve Bayes classifier ¨ Outperforms standard technique

Other interesting papers (1) n Best Paper: Probabilistic Framework for Semi-Supervised Clustering by Basu, Bilenko, and Mooney: ¨ gives a probabilistic model to find clusters that respect "must-link" and "cannot-link" constraints ¨ the EM-type algorithm is an incremental improvement over previous methods

Other interesting papers (2) n Data Mining in Metric Space: An Empirical Analysis of Supervised Learning Performance Criteria by Caruana and Niculescu-Mizil: ¨a massive comparison of different learning methods with different binary datasets, on different measure of performance: accuracy given a threshold, area under the ROC curve, squared error, etc. ¨ measures that depend on ranking only, and measures that depend on scores being calibrated probabilities, form two clusters. ¨ maximum margin methods (SVMs and boosted trees) give excellent ranking but scores that are far from well -calibrated probabilities. ¨ squared error may be the best general-purpose measure.

Other interesting papers (3) n Cyclic Pattern Kernels for Predictive Graph Mining by Horvath, Gaertner, and Wrobel: ¨ kernel for classifying graphs based on cyclic and tree patterns ¨ Computationally efficient (does not suffer frequent sub-graphs limitations) ¨ they use it with SVM for classifying molecules