Jointly Generating Captions to Aid Visual Question Answering

- Slides: 33

Jointly Generating Captions to Aid Visual Question Answering • Raymond Mooney, Department of Computer Science, University of Texas at Austin • with Jialin Wu

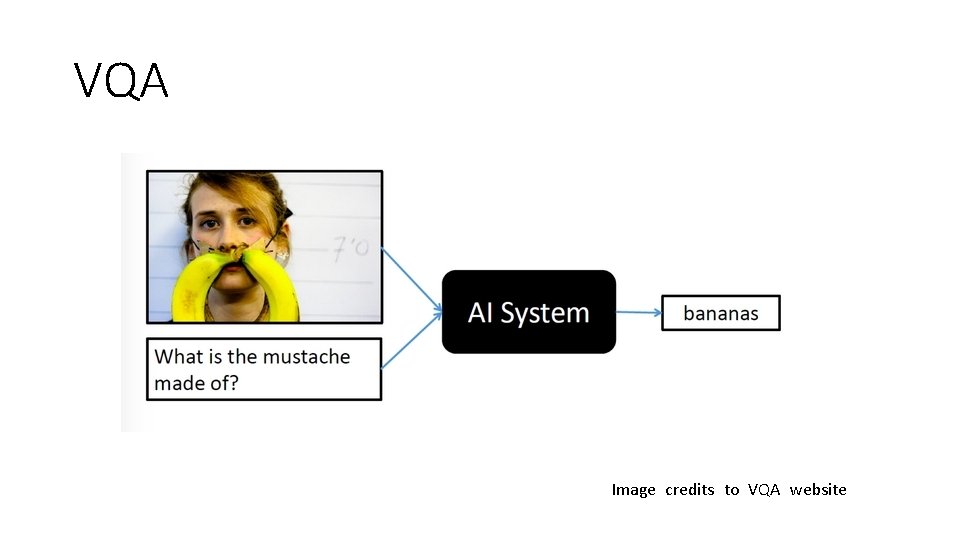

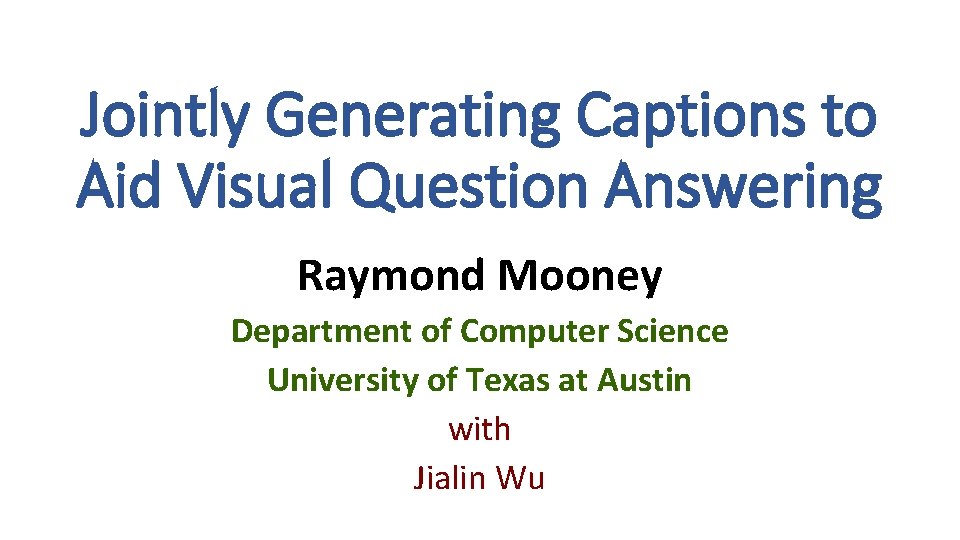

VQA • Image credits: VQA website

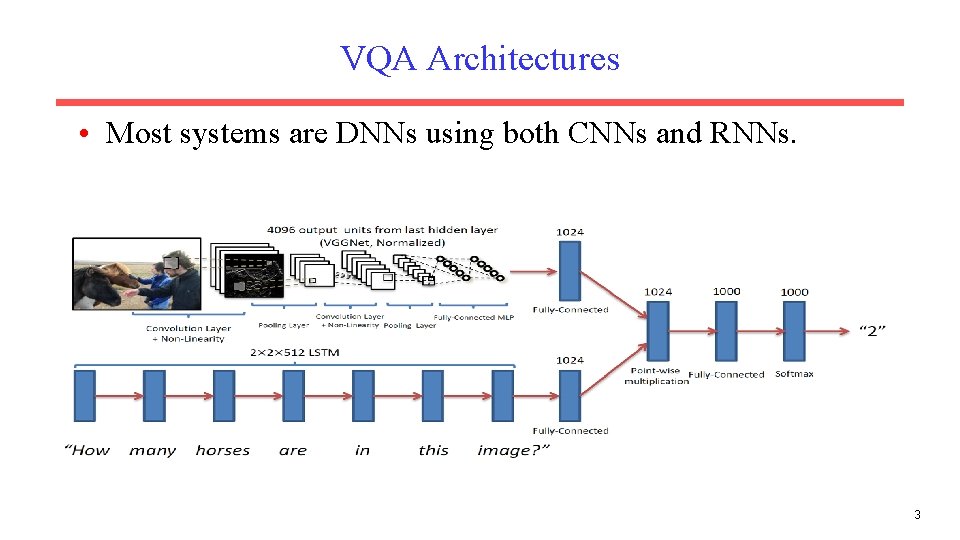

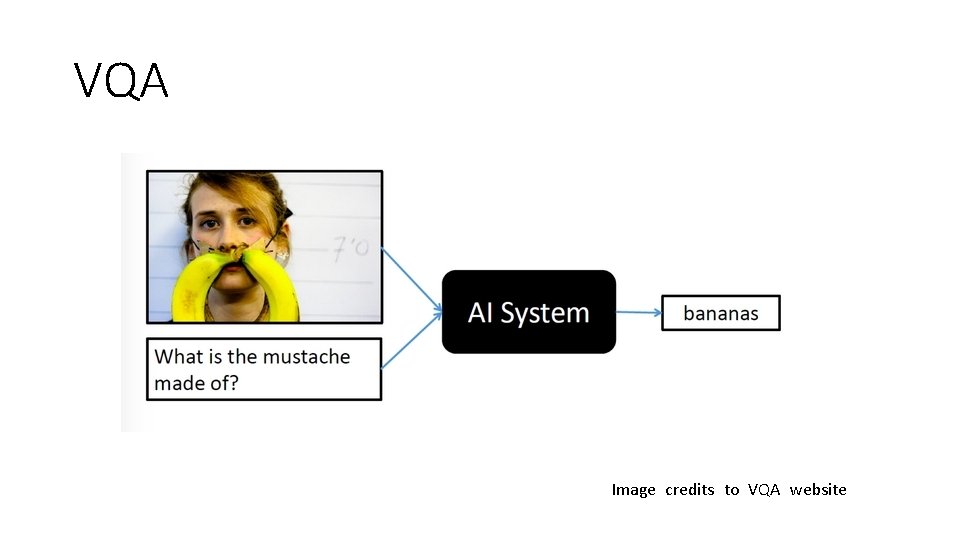

VQA Architectures • Most systems are DNNs using both CNNs and RNNs.

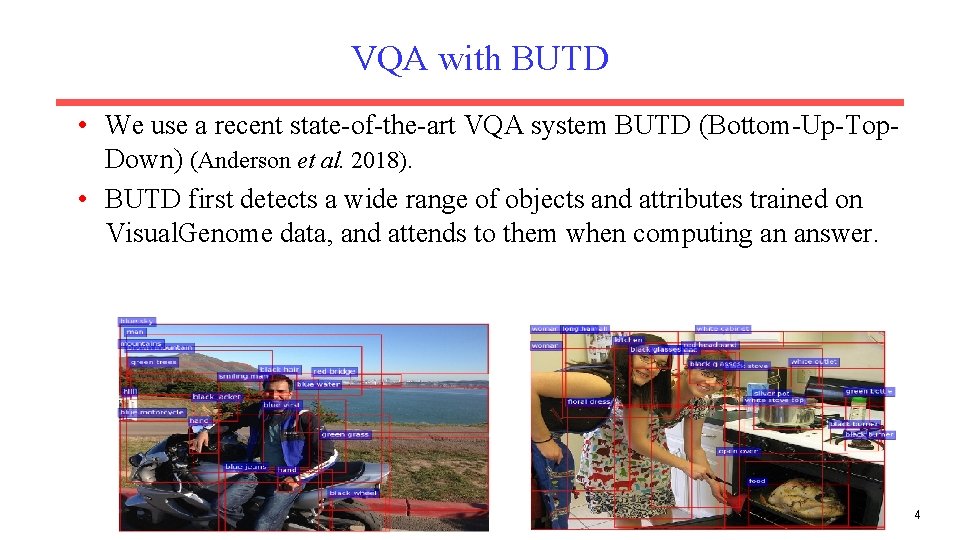

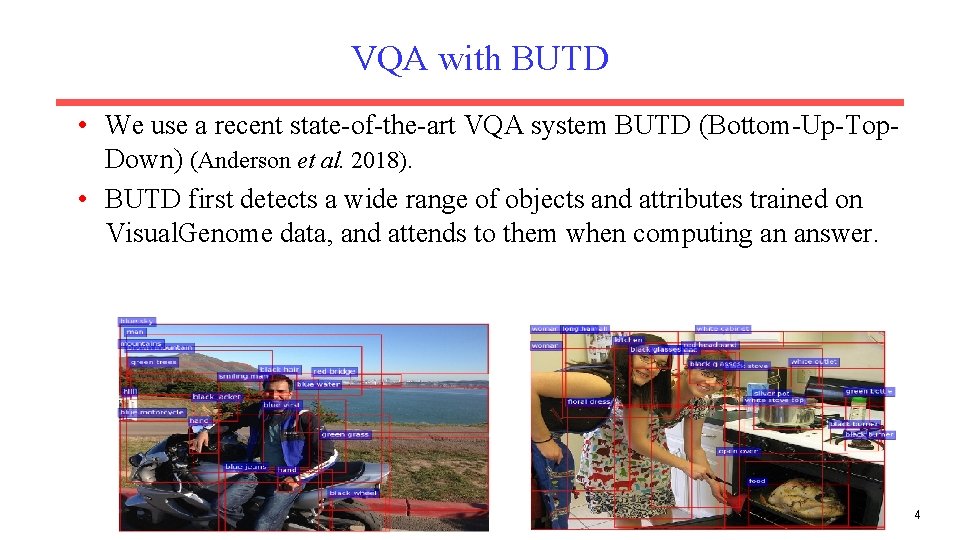

VQA with BUTD • We use a recent state-of-the-art VQA system, BUTD (Bottom-Up Top-Down) (Anderson et al., 2018). • BUTD first detects a wide range of objects and attributes, using a detector trained on Visual Genome data, and attends to them when computing an answer.

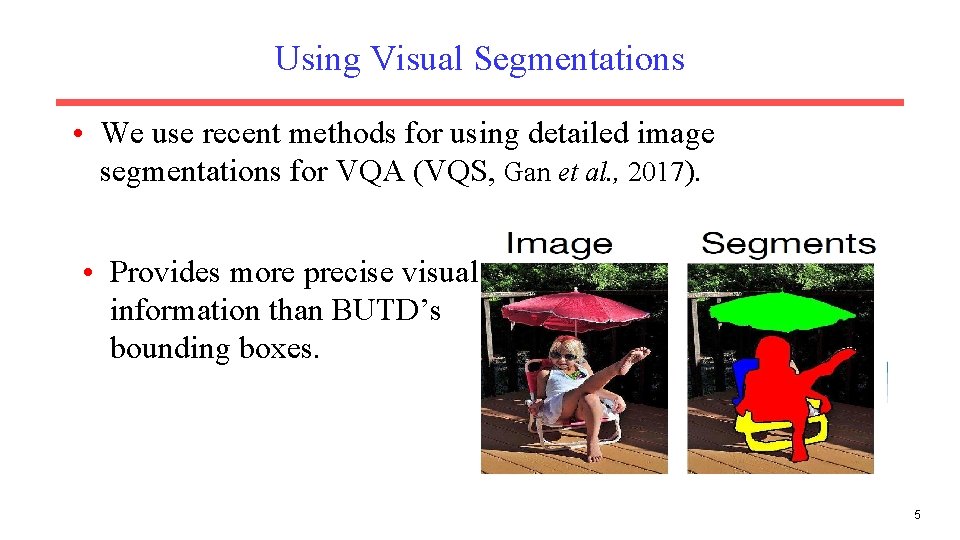

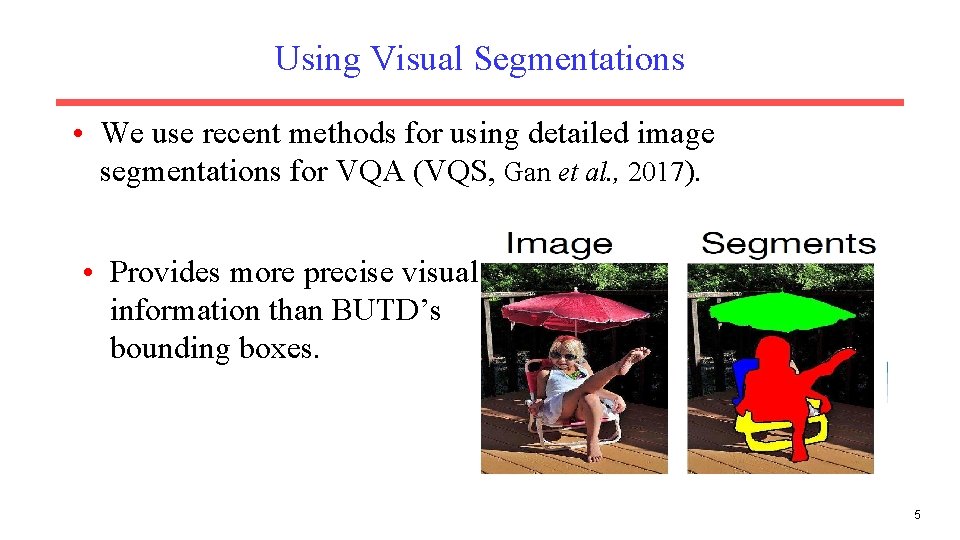

Using Visual Segmentations • We use recent methods for using detailed image segmentations for VQA (VQS, Gan et al., 2017). • Segmentations provide more precise visual information than BUTD’s bounding boxes.

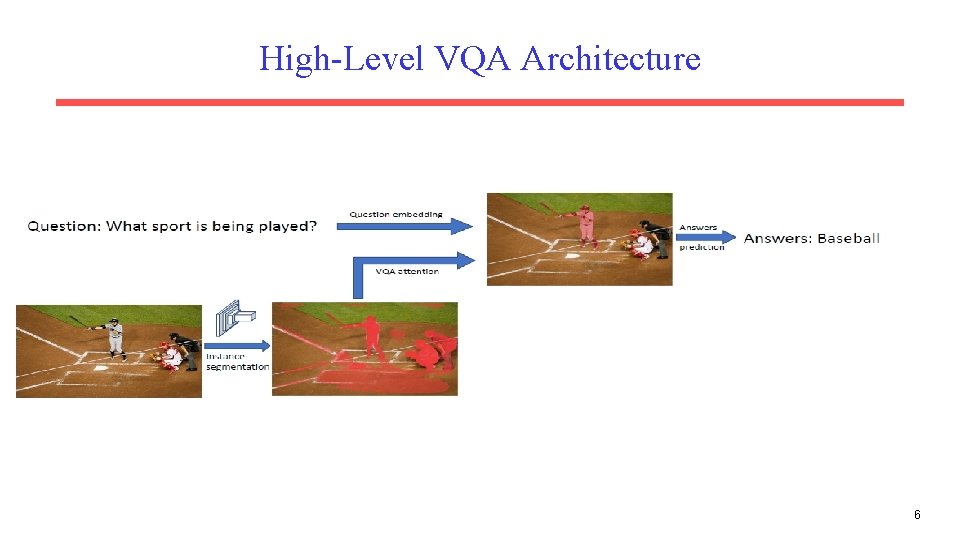

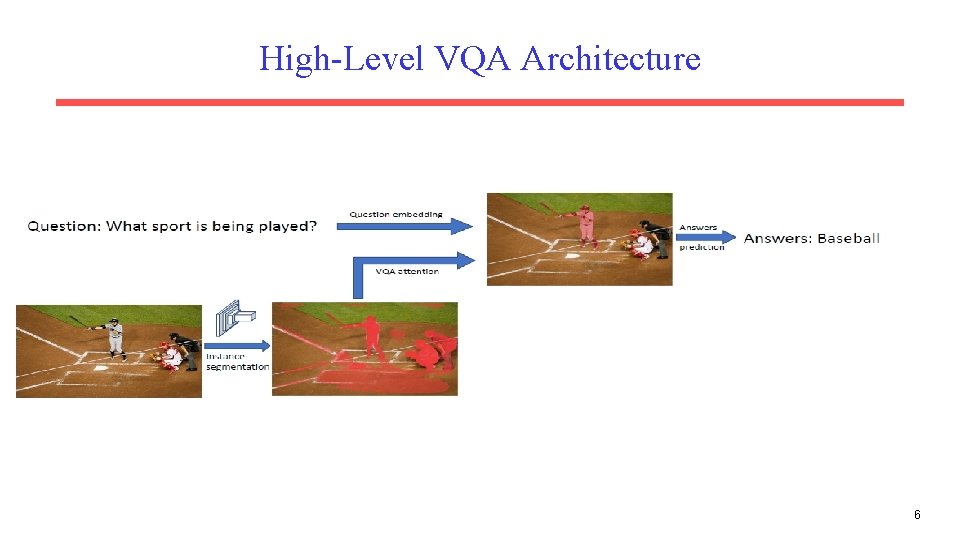

High-Level VQA Architecture

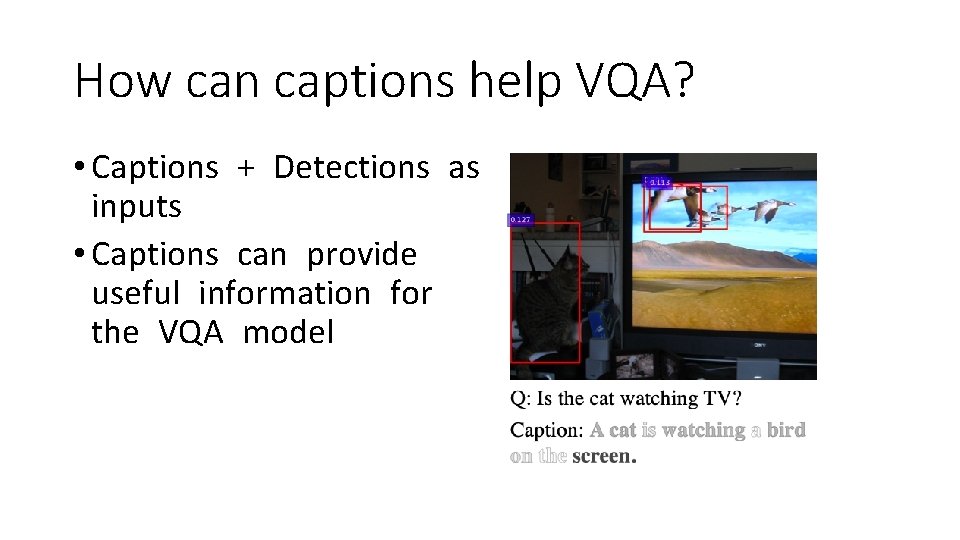

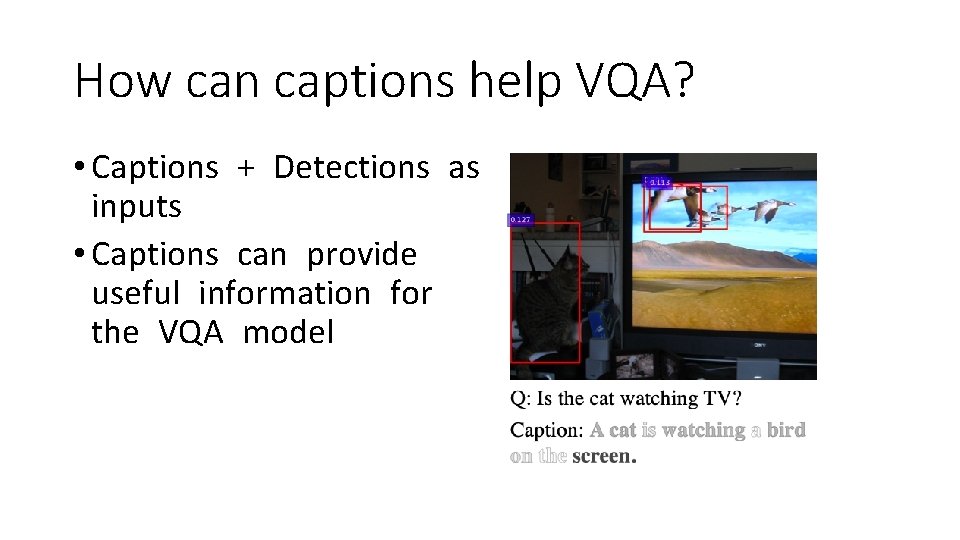

How can captions help VQA? • Captions + Detections as inputs • Captions can provide useful information for the VQA model

Multitask VQA and Image Captioning • There are lots of datasets with image captions. • COCO data used in VQA comes with captions • Captioning and VQA both need knowledge of image content and language. • Should benefit from multitask learning (Caruana, 1997).

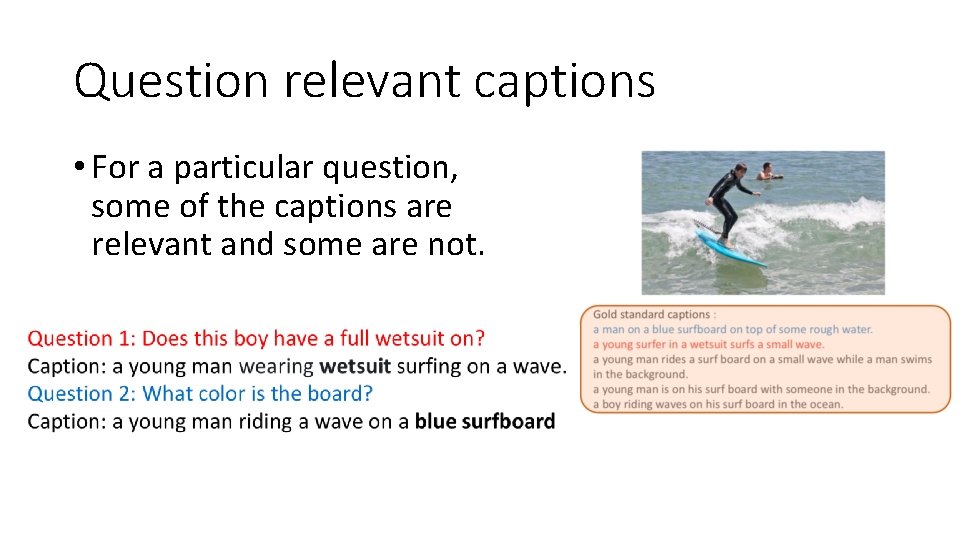

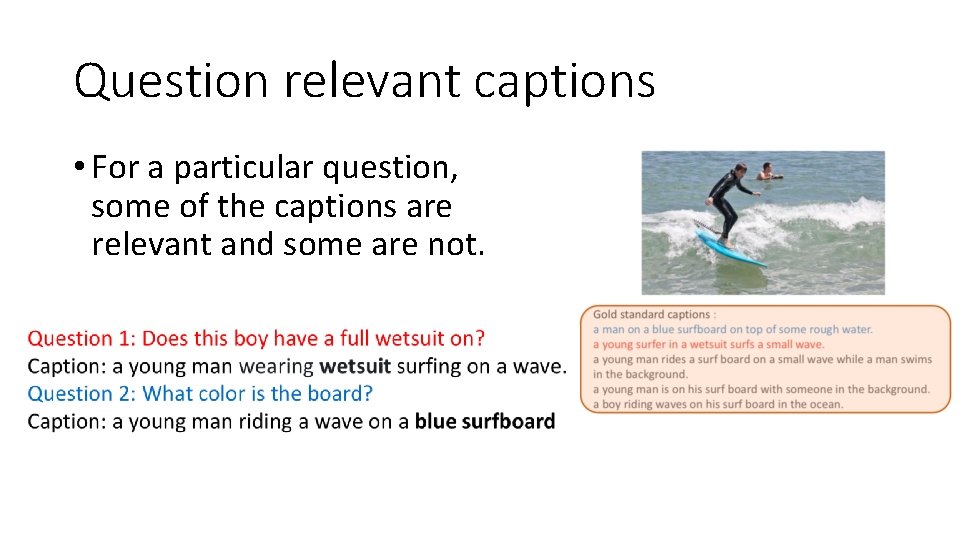

Question relevant captions • For a particular question, some of the captions are relevant and some are not.

How to generate question-relevant captions • Input feature side • We need to bias the features to encode the necessary information for the questions. • We used the VQA joint representation for simplicity. • Supervision side • We need the relevant captions to train the model to generate the relevant captions.

How to obtain relevant training captions • Directly collecting captions for each question? • Over 1.1 million questions in the dataset (not scalable). • The caption has to be in line with the VQA reasoning process. • Choosing the most relevant caption from the existing dataset? • How to measure relevance? • What if there is no relevant caption for an image-question pair?

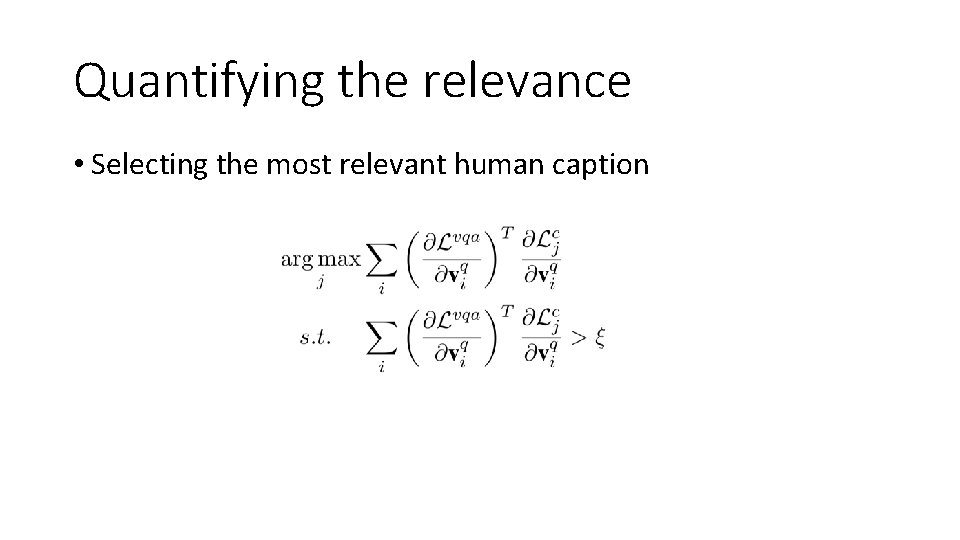

Quantifying the relevance • Intuition • Generating relevant captions should share the optimization goal with answering the visual question. • The two objectives should share some descent directions. • Relevance is measured using the inner product of the gradients from the caption generation loss and the VQA answer prediction loss. • A positive inner product means the two objective functions share some descent directions in the optimization process, and therefore indicates that the corresponding caption helps the VQA process.
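The relevance measure described above can be sketched in a few lines. This is only an illustration, not the authors' implementation: the gradient vectors are hypothetical toy values, and the sketch assumes both gradients are taken with respect to the same shared quantity (for example, the joint representation) so that their inner product is defined.

```python
def dot(u, v):
    """Inner product of two equal-length gradient vectors."""
    if len(u) != len(v):
        raise ValueError("gradient vectors must have the same length")
    return sum(a * b for a, b in zip(u, v))


# Hypothetical gradients (toy values, not taken from the slides).
grad_vqa = [0.5, -1.0, 0.25]        # gradient of the VQA answer loss
grad_caption_a = [0.4, -0.6, 0.0]   # gradient of the loss for caption A
grad_caption_b = [-0.3, 0.8, 0.1]   # gradient of the loss for caption B

score_a = dot(grad_vqa, grad_caption_a)  # positive: shared descent direction
score_b = dot(grad_vqa, grad_caption_b)  # negative: conflicting objectives

print(score_a > 0, score_b > 0)
```

Under this reading, caption A would count as relevant to the question and caption B would not.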

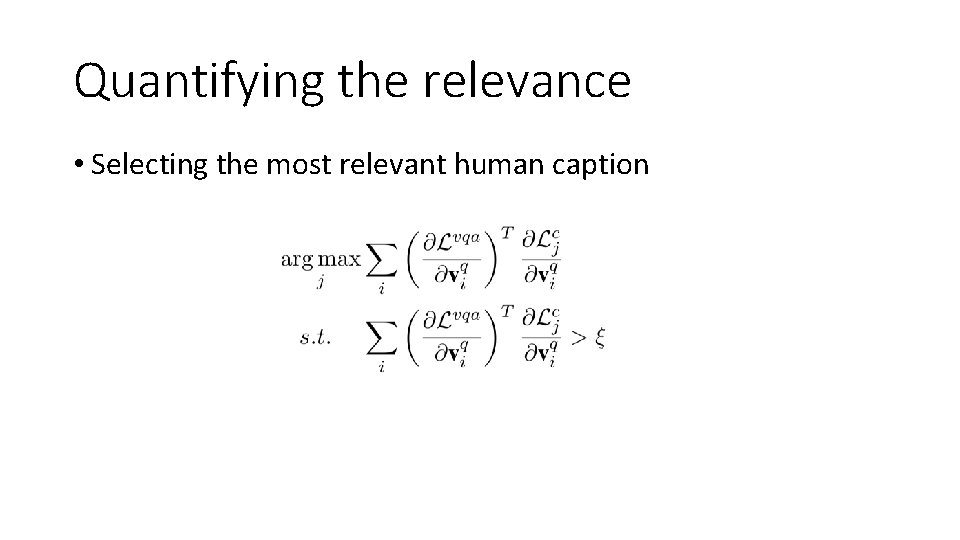

Quantifying the relevance • Selecting the most relevant human caption
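One plausible way to turn relevance scores into a training target is to keep the highest-scoring human caption, and to keep none when no score is positive. The sketch below assumes that rule; the function name `select_relevant_caption`, the `threshold` parameter, and the scores are all hypothetical, and the exact selection criterion used in the talk is shown only in the slide's figure.

```python
def select_relevant_caption(scores, threshold=0.0):
    """Return the caption with the largest score above `threshold`.

    Returns None when no caption scores above the threshold, which
    covers the case of an image-question pair with no relevant caption.
    """
    best_caption, best_score = None, threshold
    for caption, score in scores.items():
        if score > best_score:
            best_caption, best_score = caption, score
    return best_caption


# Hypothetical relevance scores for three human captions of one image.
scores = {
    "several doughnuts are in a cardboard box.": 0.1,
    "a box holds four pairs of mini doughnuts.": 0.8,
    "a variety of doughnuts sit in a box.": -0.4,
}
chosen = select_relevant_caption(scores)
print(chosen)
```

Returning `None` leaves it to the caller to decide what to do with questions that have no relevant caption, for example skipping the caption loss for that example.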

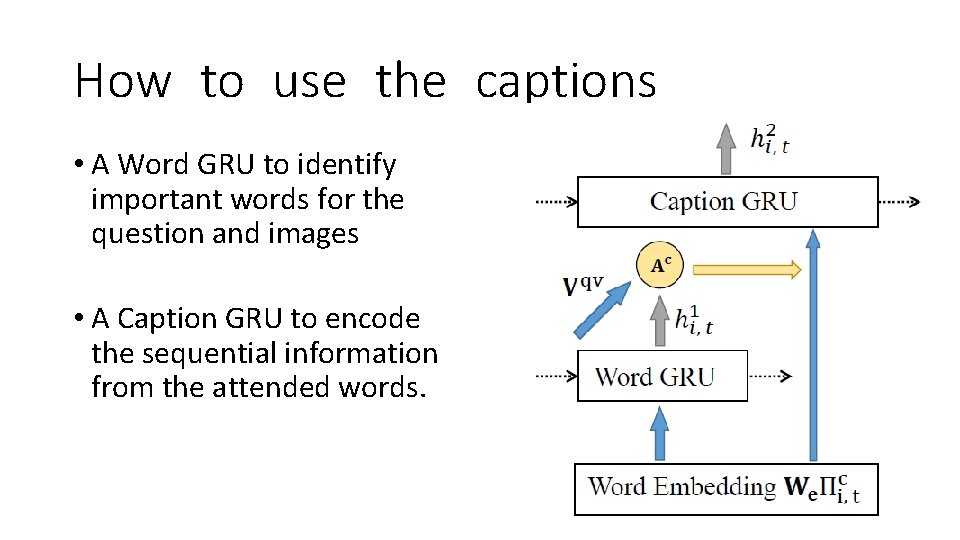

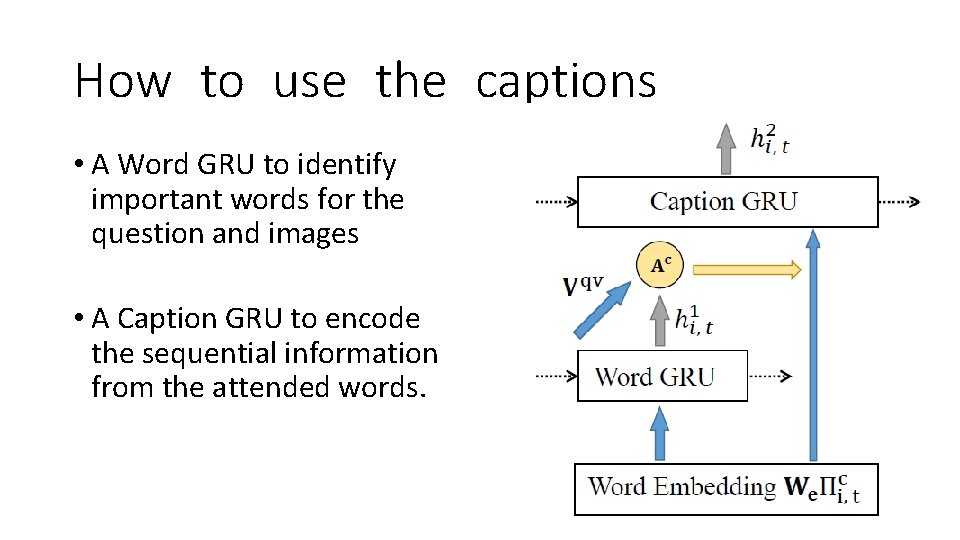

How to use the captions • A Word GRU to identify important words for the question and images • A Caption GRU to encode the sequential information from the attended words.

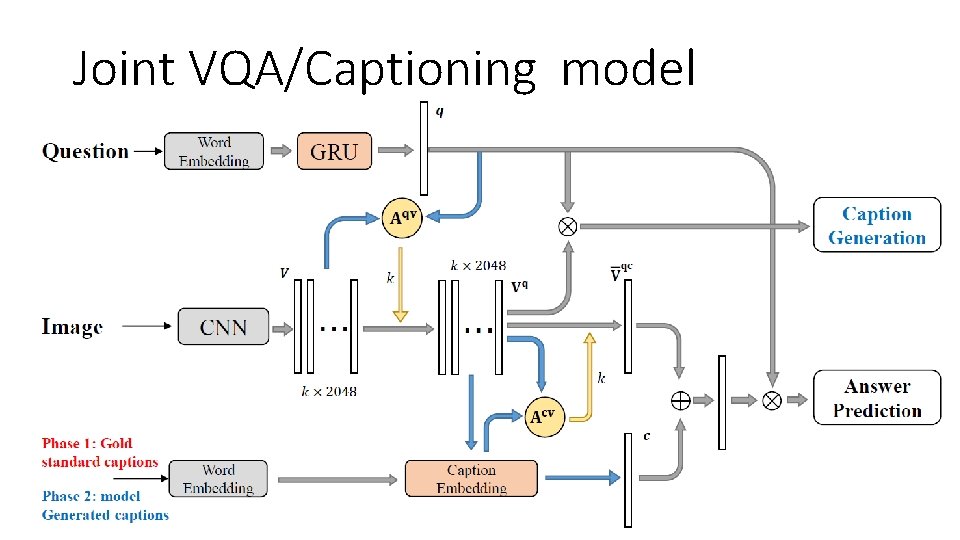

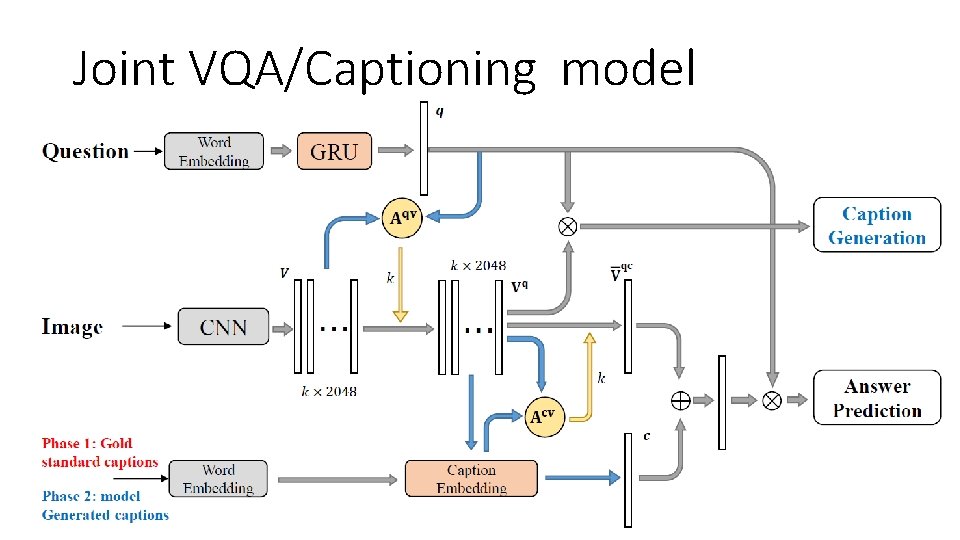

Joint VQA/Captioning model

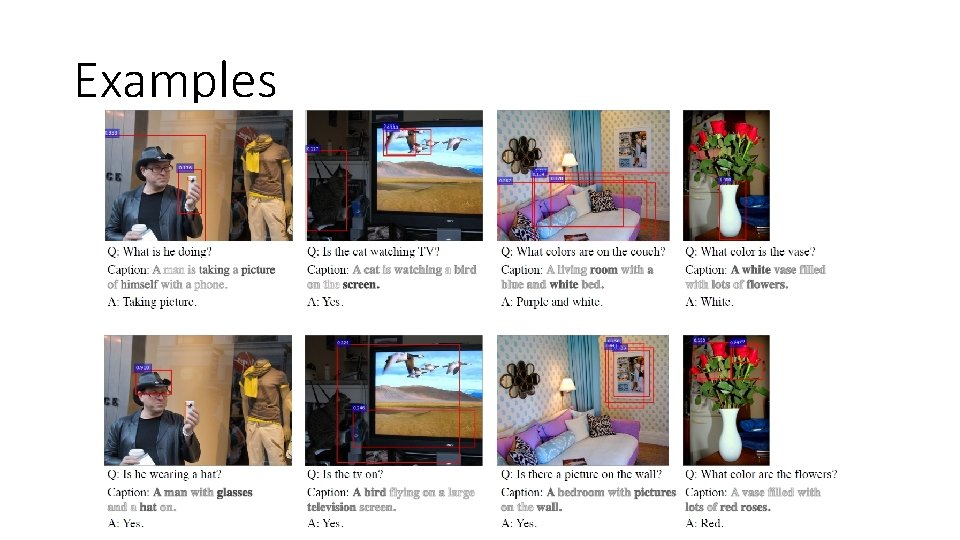

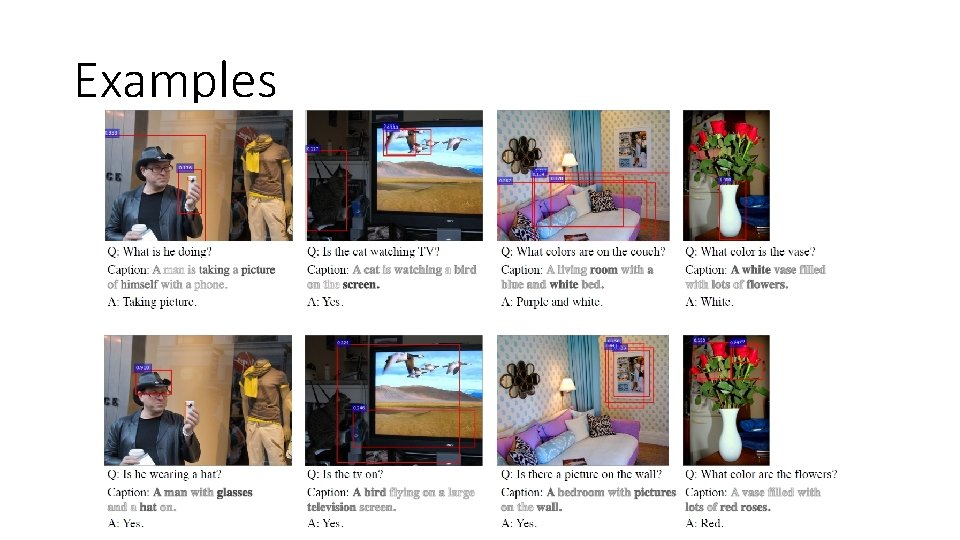

Examples

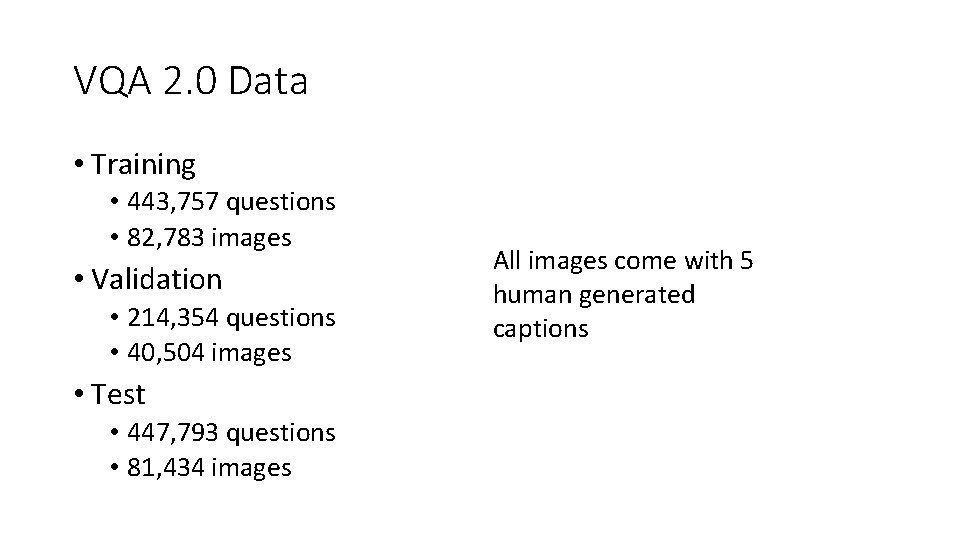

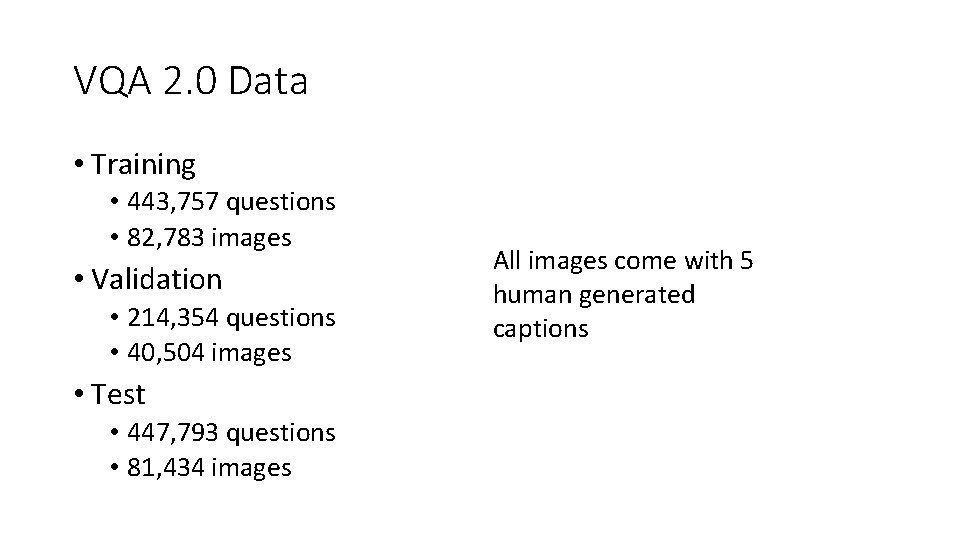

VQA 2.0 Data • Training • 443,757 questions • 82,783 images • Validation • 214,354 questions • 40,504 images • Test • 447,793 questions • 81,434 images • All images come with 5 human-generated captions

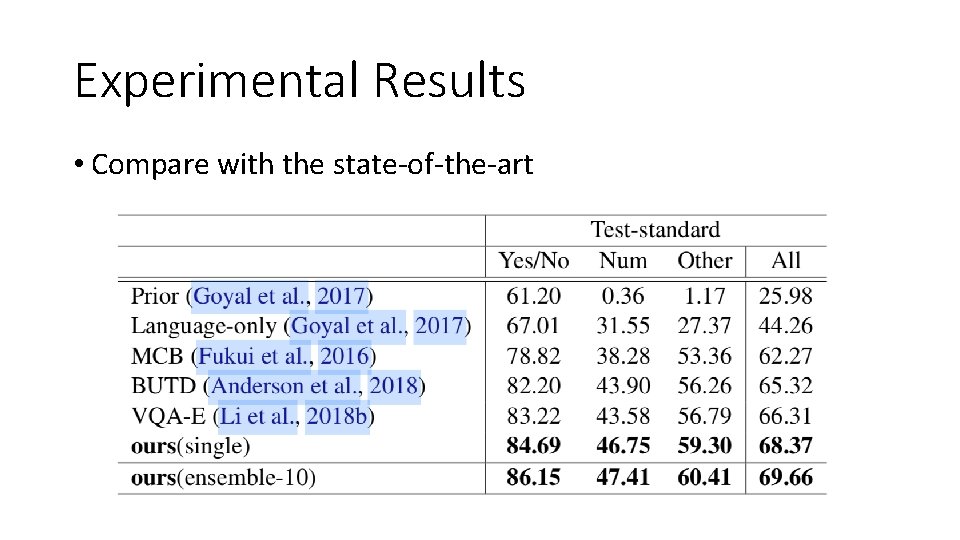

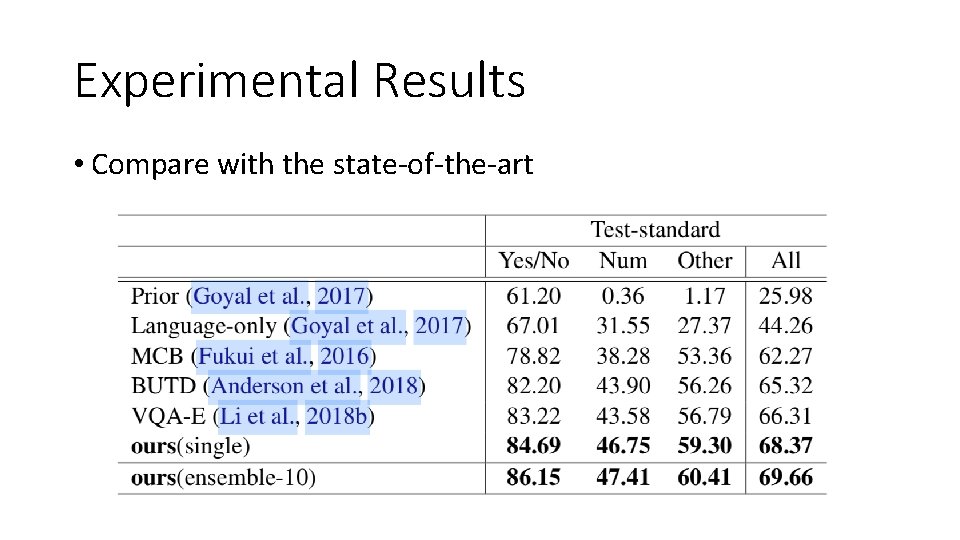

Experimental Results • Compare with the state-of-the-art

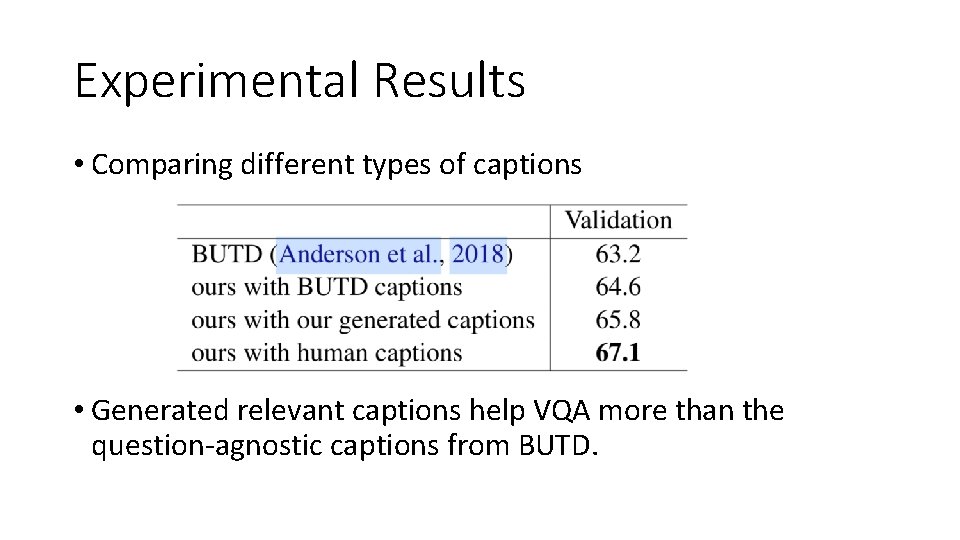

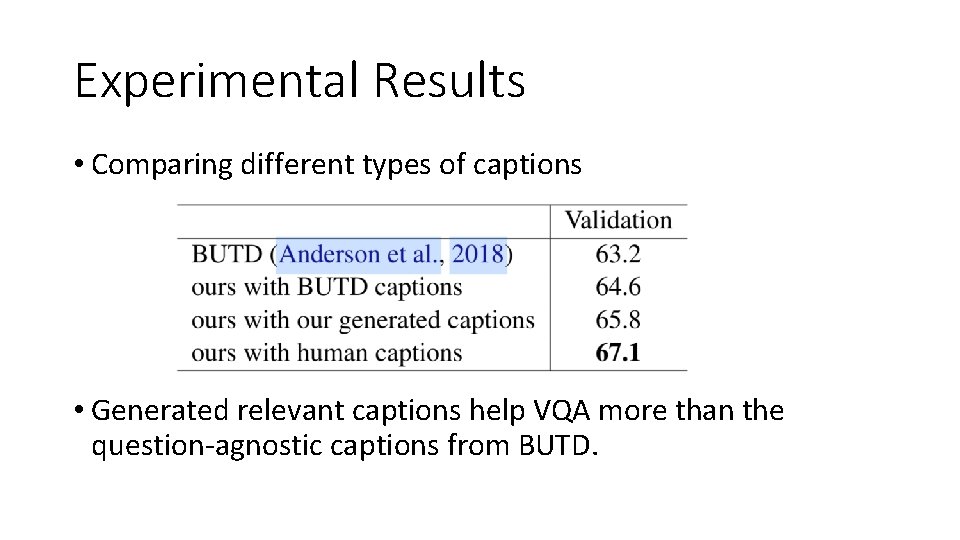

Experimental Results • Comparing different types of captions • Generated relevant captions help VQA more than the question-agnostic captions from BUTD.

Improving Image Captioning Using an Image-Conditioned Autoencoder

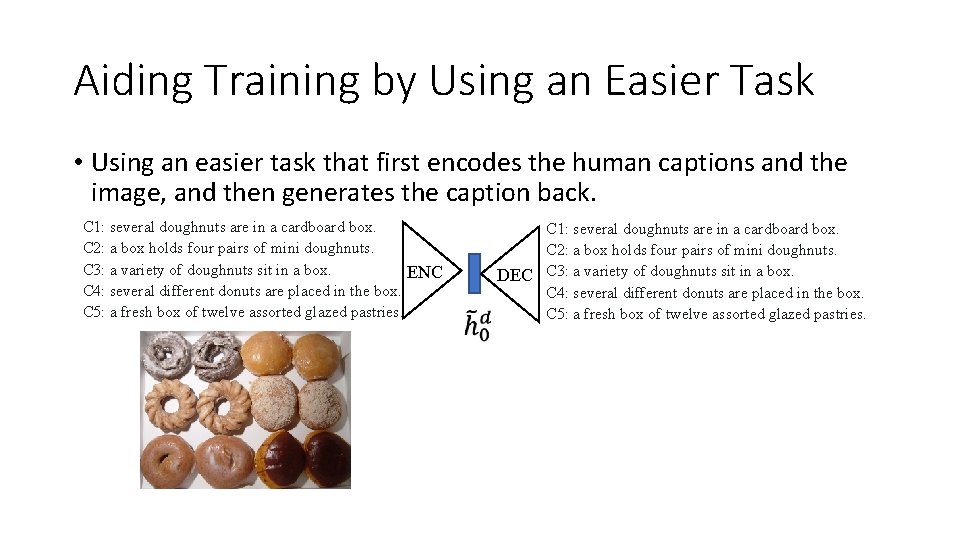

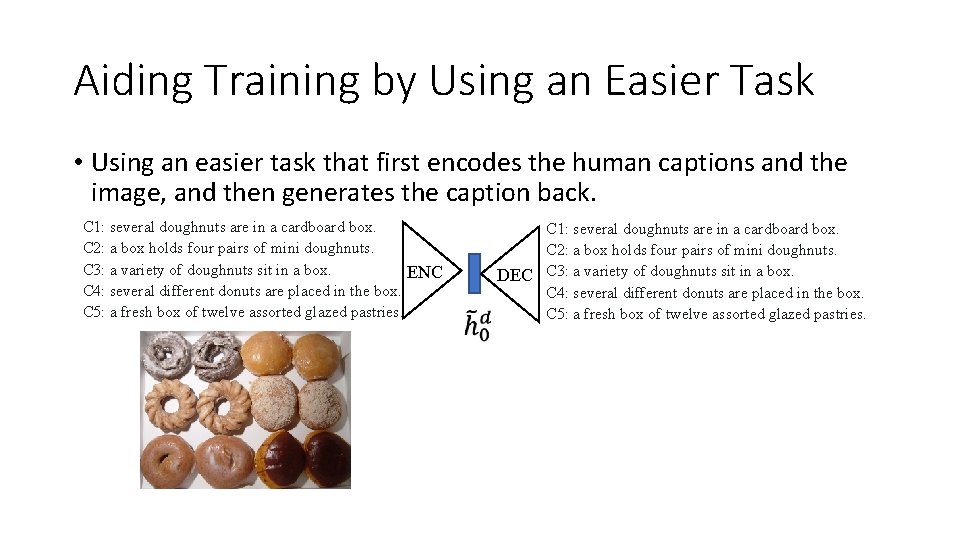

Aiding Training by Using an Easier Task • Use an easier task that first encodes the human captions and the image, and then generates the captions back. • Encoder (ENC) input: C1: several doughnuts are in a cardboard box. C2: a box holds four pairs of mini doughnuts. C3: a variety of doughnuts sit in a box. C4: several different donuts are placed in the box. C5: a fresh box of twelve assorted glazed pastries. • Decoder (DEC) output: the same five captions, C1 to C5.

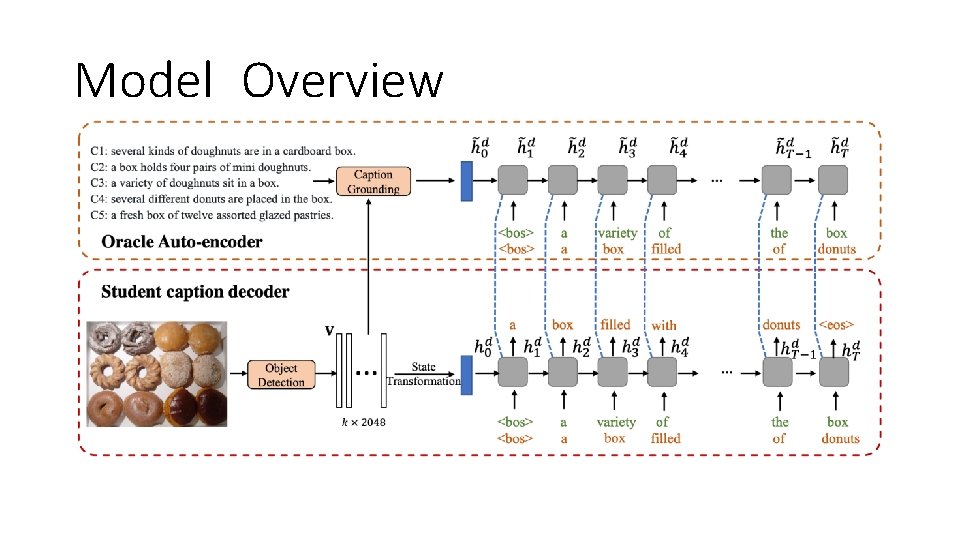

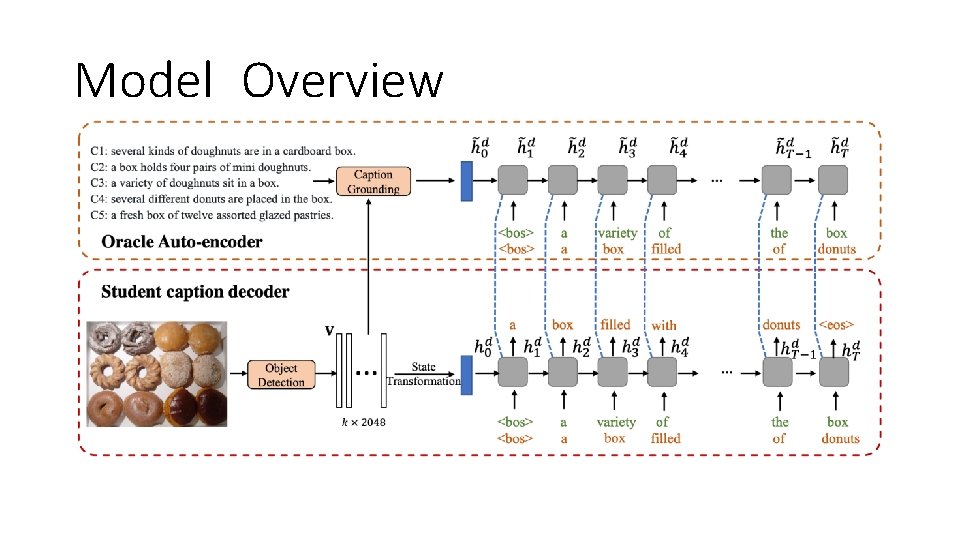

Model Overview

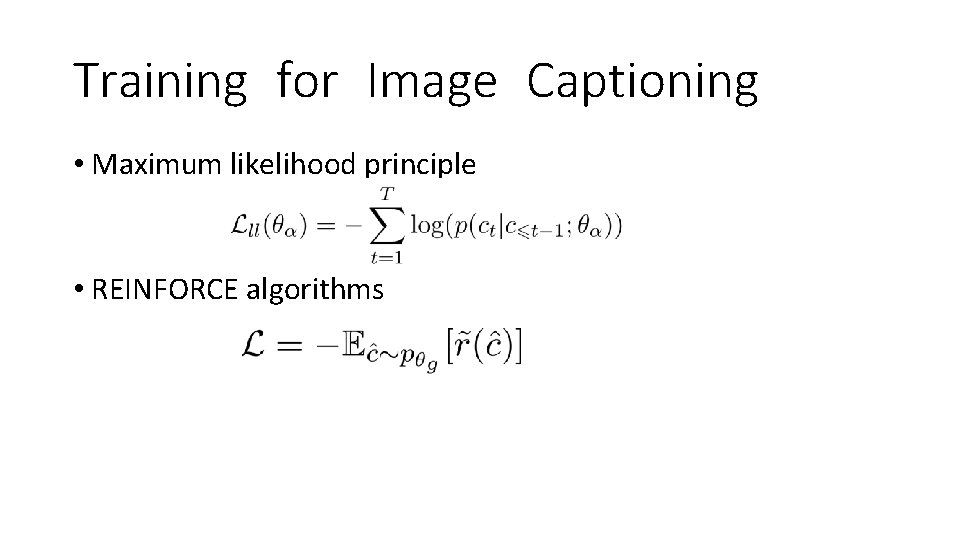

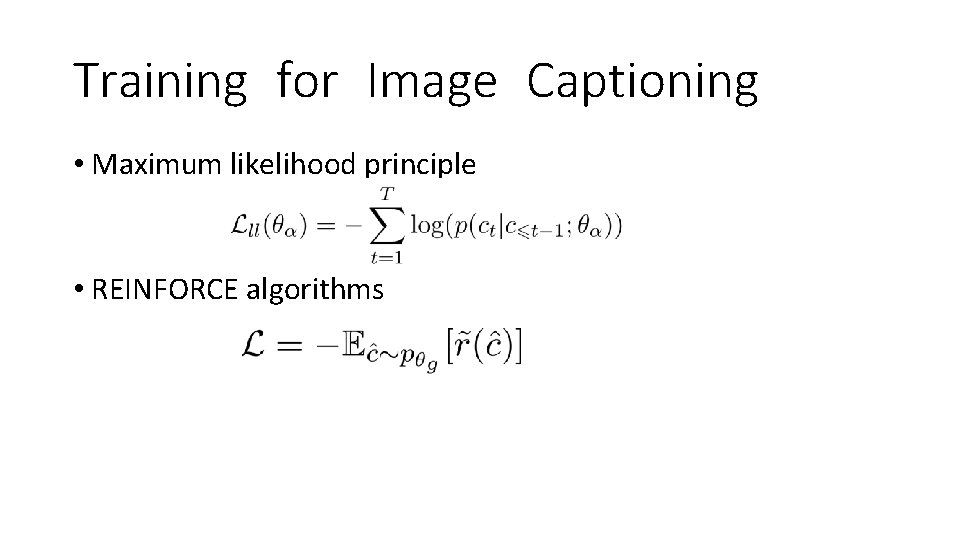

Training for Image Captioning • Maximum likelihood principle • REINFORCE algorithms

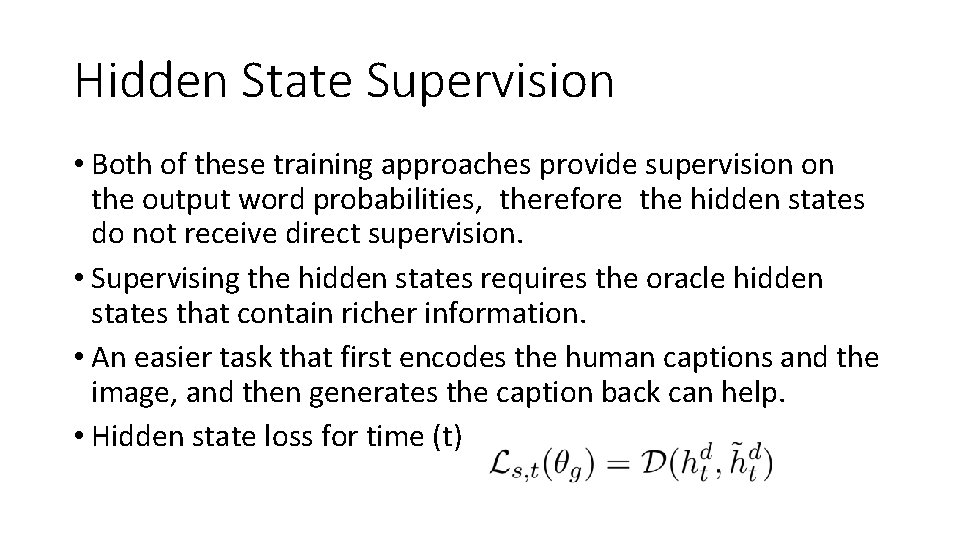

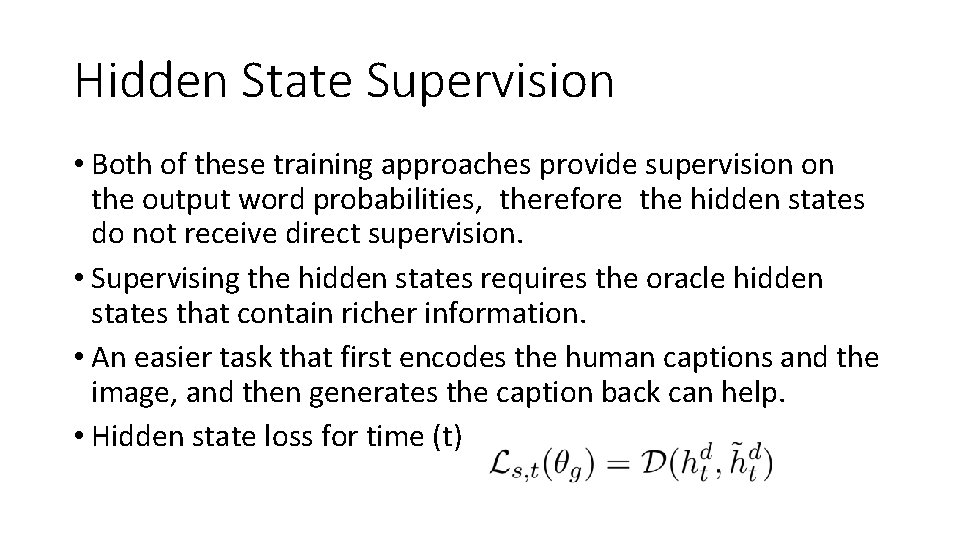

Hidden State Supervision • Both of these training approaches provide supervision on the output word probabilities, therefore the hidden states do not receive direct supervision. • Supervising the hidden states requires the oracle hidden states that contain richer information. • An easier task that first encodes the human captions and the image, and then generates the caption back can help. • Hidden state loss for time (t)
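A per-time-step hidden state loss can be sketched as a distance between the captioner's hidden state and the oracle (autoencoder) hidden state. The slide's own formula is in the figure and is not reproduced here; the squared Euclidean distance below, the function name `hidden_state_loss`, and the vectors are assumptions for illustration.

```python
def hidden_state_loss(h_captioner, h_oracle):
    """Squared Euclidean distance between two hidden-state vectors.

    Assumed form of the loss; the distance actually used may differ.
    """
    if len(h_captioner) != len(h_oracle):
        raise ValueError("hidden states must have the same dimension")
    return sum((a - b) ** 2 for a, b in zip(h_captioner, h_oracle))


# Hypothetical hidden states at one time step t.
h_cap = [0.2, -0.1, 0.7]   # captioner hidden state
h_orc = [0.0, -0.1, 1.0]   # oracle hidden state from the autoencoder

loss_t = hidden_state_loss(h_cap, h_orc)
print(loss_t)
```

The loss is zero exactly when the captioner reproduces the oracle's hidden state, and grows as the two drift apart.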

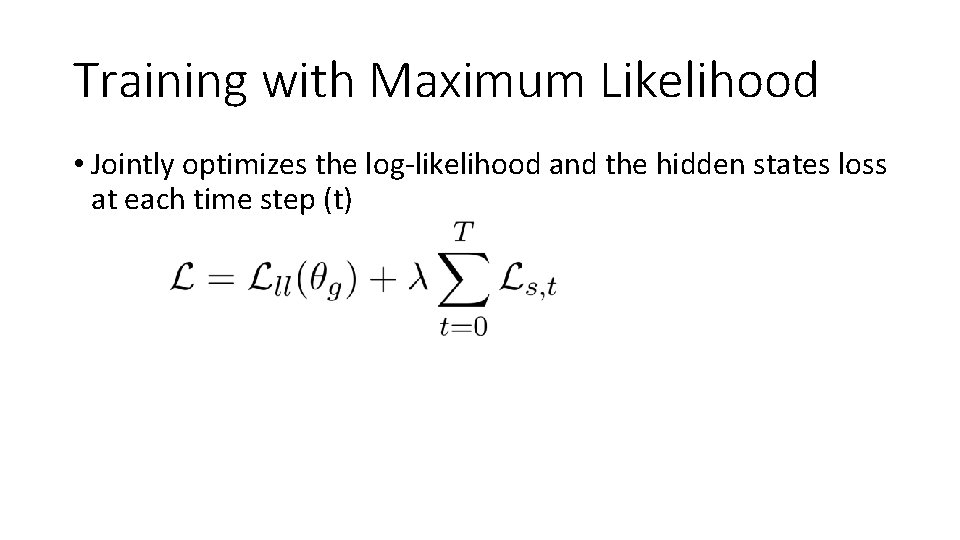

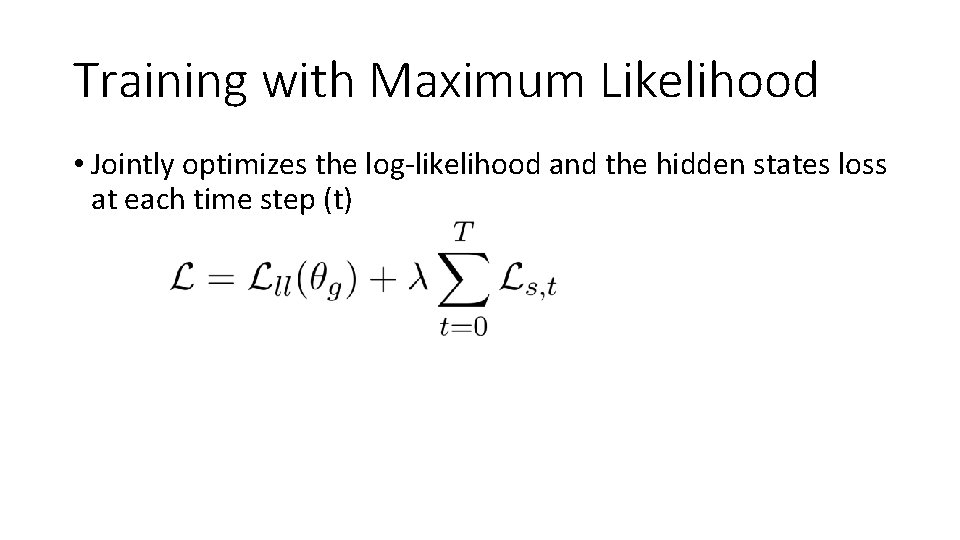

Training with Maximum Likelihood • Jointly optimizes the log-likelihood and the hidden states loss at each time step (t)
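As a hedged sketch of what such a joint objective could look like, the per-step loss below adds a weighted hidden state term to the negative log-likelihood. All notation is assumed rather than taken from the slides: $\theta$ are the captioner parameters, $w_t$ the ground-truth word at step $t$, $I$ the image, $h_t$ the captioner hidden state, $h_t^{*}$ the oracle hidden state, and $\lambda$ a weighting hyperparameter; the squared-distance form of the hidden state term is also an assumption.

$$
\mathcal{L}_t(\theta) = -\log p_\theta\left(w_t \mid w_{<t}, I\right) + \lambda \, \lVert h_t - h_t^{*} \rVert_2^2
$$

Summing $\mathcal{L}_t$ over the time steps of a caption would give the loss for the whole sequence.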

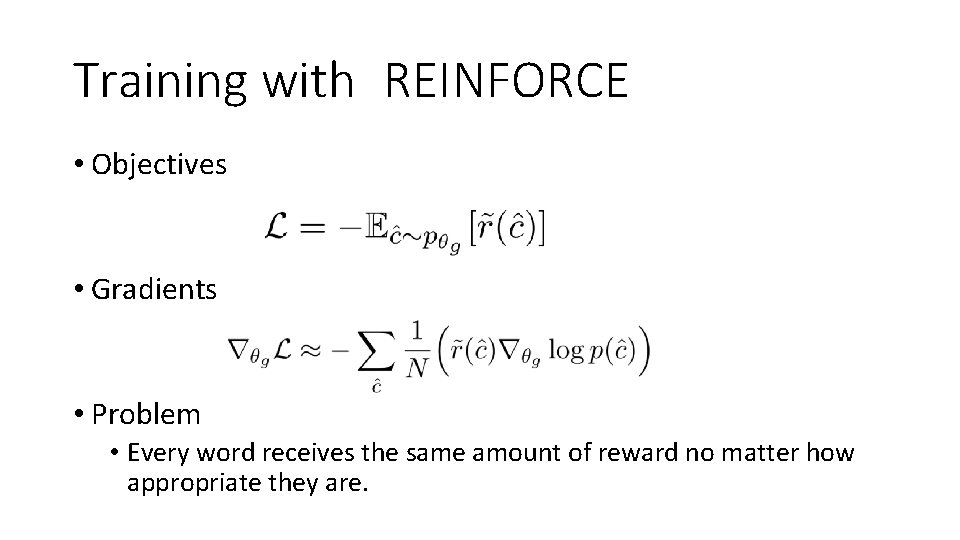

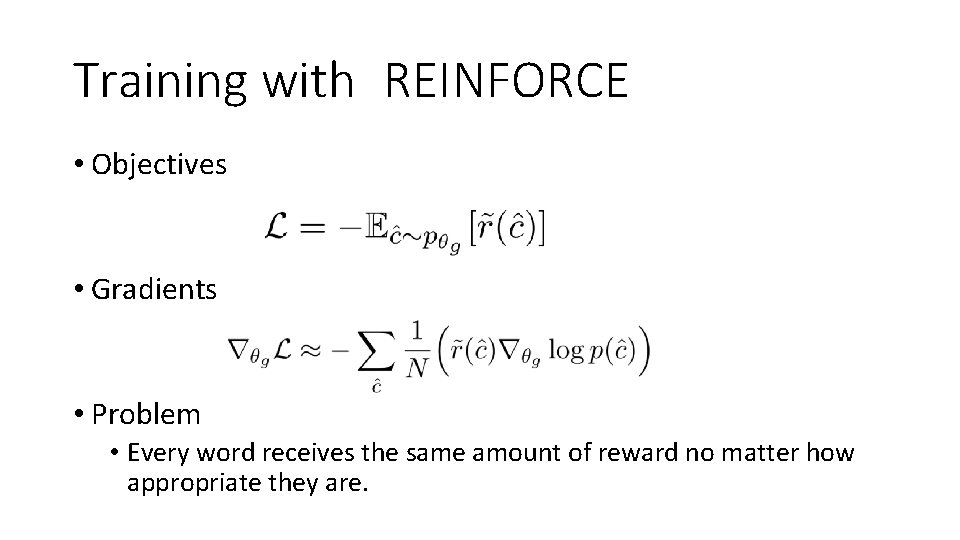

Training with REINFORCE • Objectives • Gradients • Problem • Every word receives the same amount of reward, no matter how appropriate it is.
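The problem noted above can be made concrete with a small sketch. With a sequence-level reward, the weight multiplying each word's log-probability gradient is the same scalar for every position. The function name `sequence_level_weights`, the use of a baseline (as in self-critical training), and the numbers are assumptions for illustration.

```python
def sequence_level_weights(num_words, reward, baseline=0.0):
    """Weight applied to each word's log-probability gradient.

    With a single reward for the whole caption, every word shares
    the same advantage (reward minus baseline).
    """
    advantage = reward - baseline
    return [advantage] * num_words


# Hypothetical caption of 4 words, sampled reward 1.2, baseline reward 0.9.
weights = sequence_level_weights(num_words=4, reward=1.2, baseline=0.9)
print(weights)
```

Every entry in `weights` is identical, so a well-chosen word and a poorly chosen word in the same caption receive the same credit.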

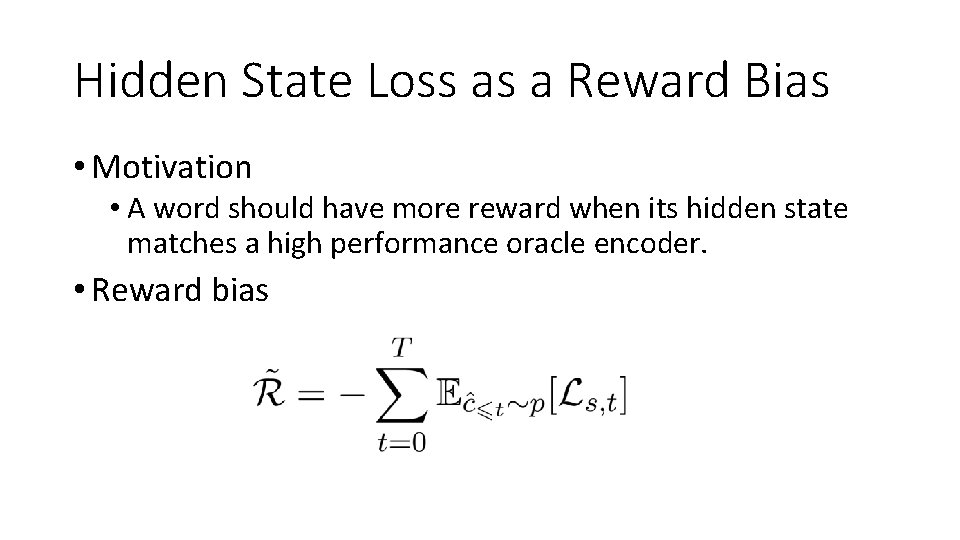

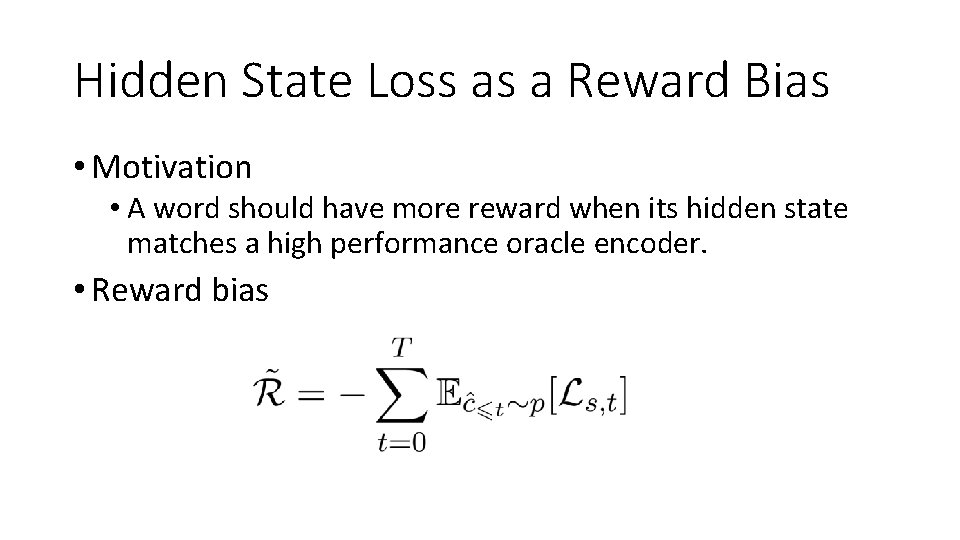

Hidden State Loss as a Reward Bias • Motivation • A word should receive more reward when its hidden state matches that of a high-performance oracle encoder. • Reward bias
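A minimal sketch of a per-word reward bias follows, assuming the bias subtracts a scaled hidden state loss from the shared sequence-level reward. The exact formula is in the slide's figure; the function name `biased_word_rewards`, the `scale` parameter, and the numbers here are hypothetical.

```python
def biased_word_rewards(reward, hidden_losses, scale=1.0):
    """Per-word rewards: the sequence reward minus a scaled hidden state loss.

    Words whose hidden states are close to the oracle's (small loss)
    keep more of the reward than words whose hidden states are far off.
    """
    return [reward - scale * loss for loss in hidden_losses]


# Hypothetical sequence reward and per-step hidden state losses.
rewards = biased_word_rewards(1.0, [0.0, 0.5, 0.1], scale=0.5)
print(rewards)
```

Unlike a single shared reward, the resulting values differ by position, which gives the policy gradient a word-level signal.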

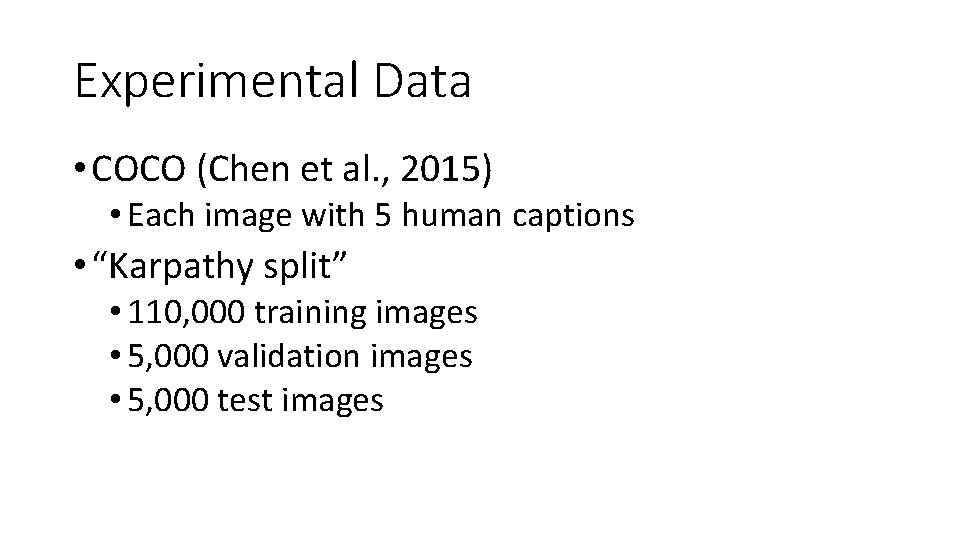

Experimental Data • COCO (Chen et al., 2015) • Each image with 5 human captions • “Karpathy split” • 110,000 training images • 5,000 validation images • 5,000 test images

Baseline Systems • FC (Rennie et al., 2017) • With and without “self-critical sequence training” • Up-Down (aka BUTD) (Anderson et al., 2018) • With and without “self-critical sequence training”

Evaluation Metrics • BLEU-4 (B-4) • METEOR (M) • ROUGE-L (R-L) • CIDEr (C) • SPICE (S)

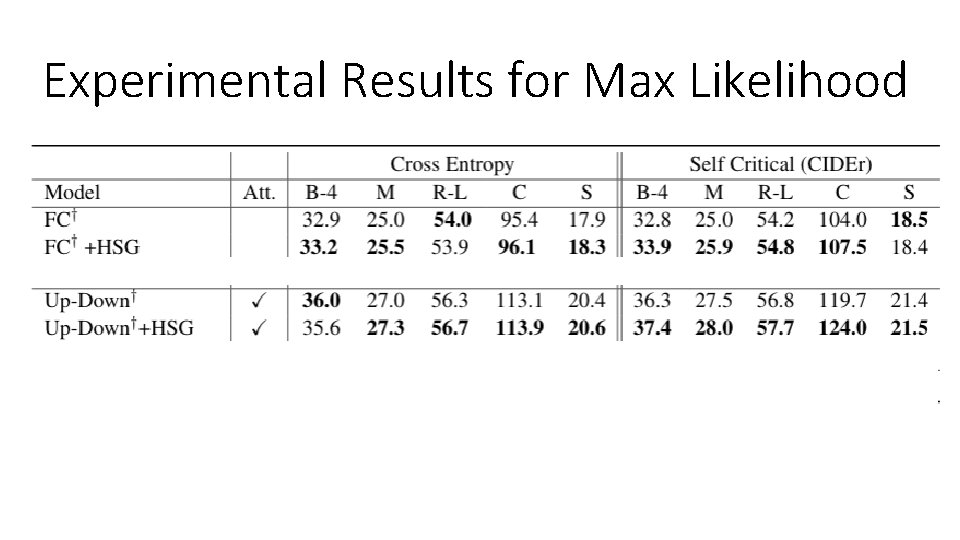

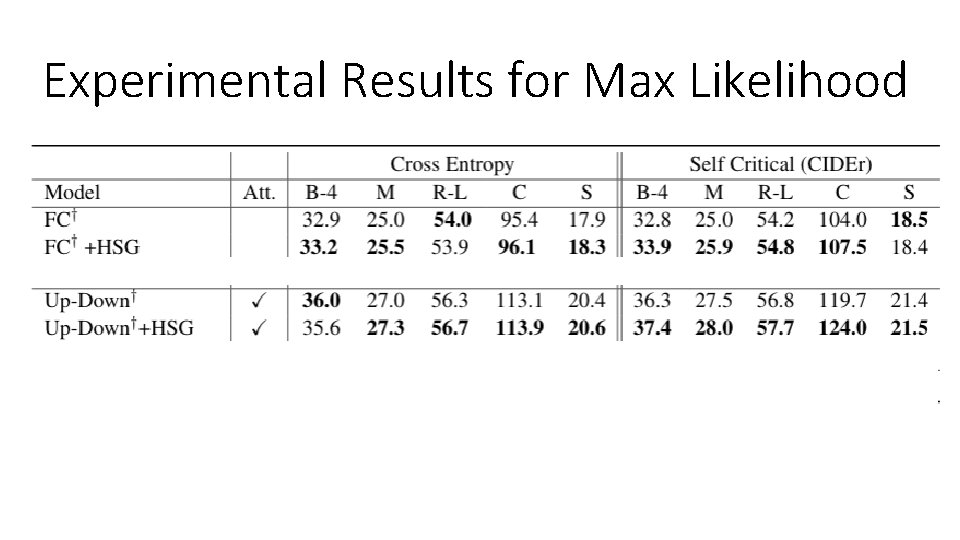

Experimental Results for Max Likelihood

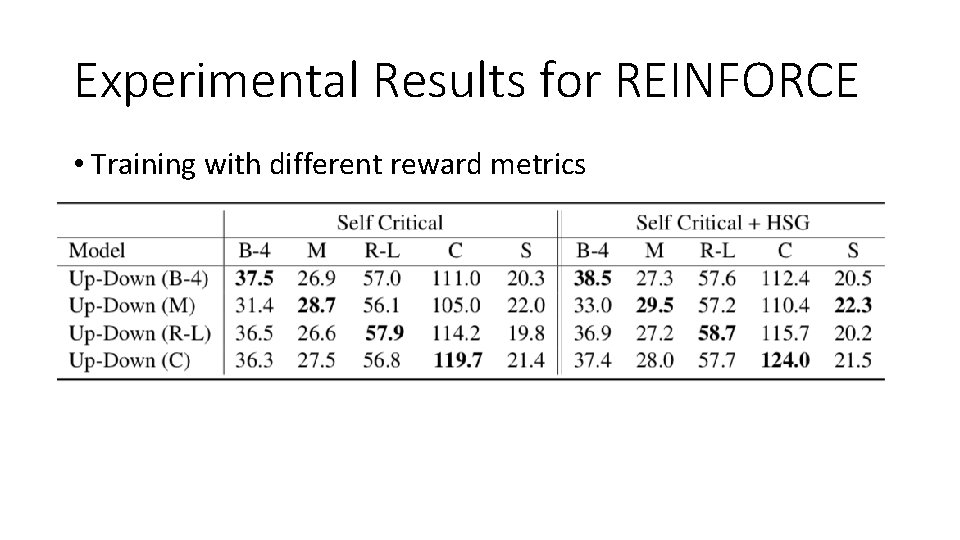

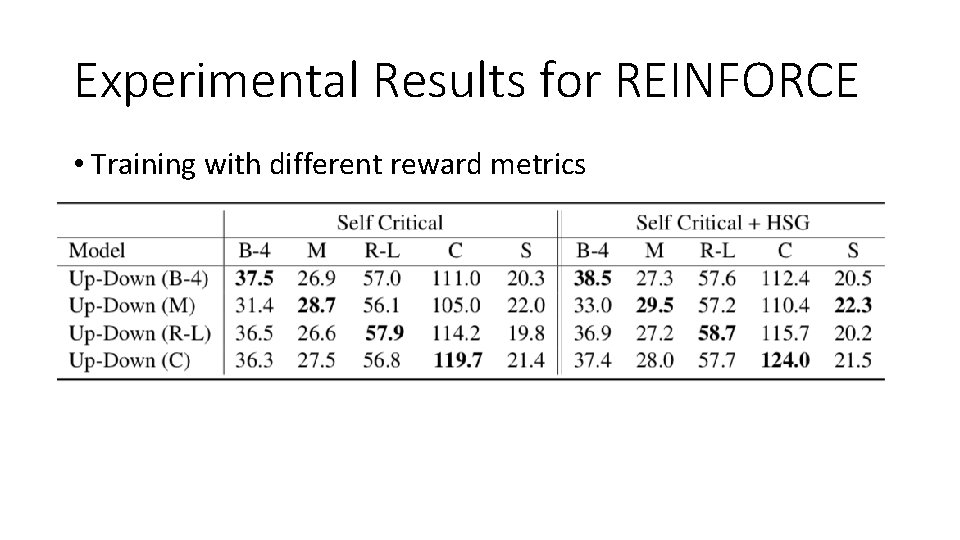

Experimental Results for REINFORCE • Training with different reward metrics

Conclusions • Jointly generating “question relevant” captions can improve Visual Question Answering. • First training an image-conditioned caption autoencoder can help supervise a captioner to create better hidden state representations that improve final captioning performance.