

- Slides: 22

JAVA AND MATRIX COMPUTATION

Geir Gundersen, Department of Informatics, University of Bergen, Norway. Joint work with Trond Steihaug.

Has Java something to offer in the field of Sparse Matrix Computations?

- Java Grande: several extensions to the Java language have been proposed, but they have NOT been integrated or considered by Sun Microsystems.
- Our vision: what does the Java language, as is, have to offer in the field of numerical computation?

Objectives

- Java and Scientific Computing:
  - Java will be used for (limited) numerical computations.
- Jagged Arrays:
  - Static and dynamic operations.
- Challenges:
  - Dense matrix computations.
- C#.
- Future topics.

Benchmarking Java against C and FORTRAN for Scientific Applications

- Java will in general lose on most kernels by a factor of 2-4, but some benchmarks show that Java can compete and even win on other kernels (on some platforms).
- JVMs and optimizing compilers are still improving. The gap between FORTRAN/C and Java will get smaller in the future(?).
- Benchmarking results are important but not in the scope of this work.

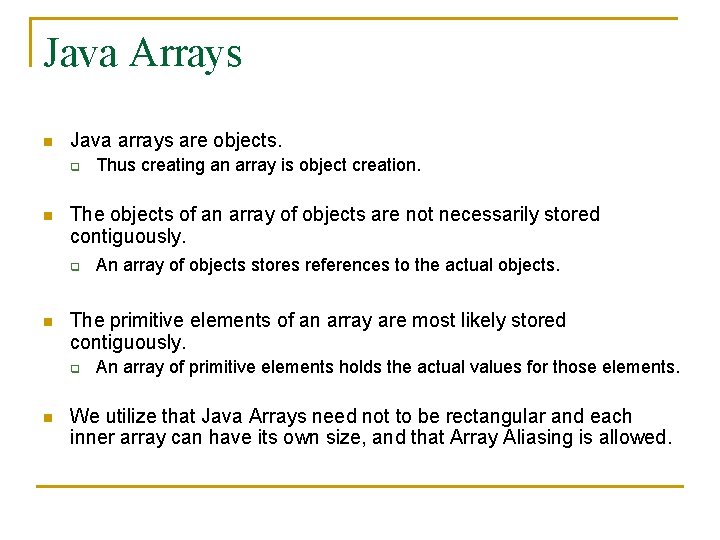

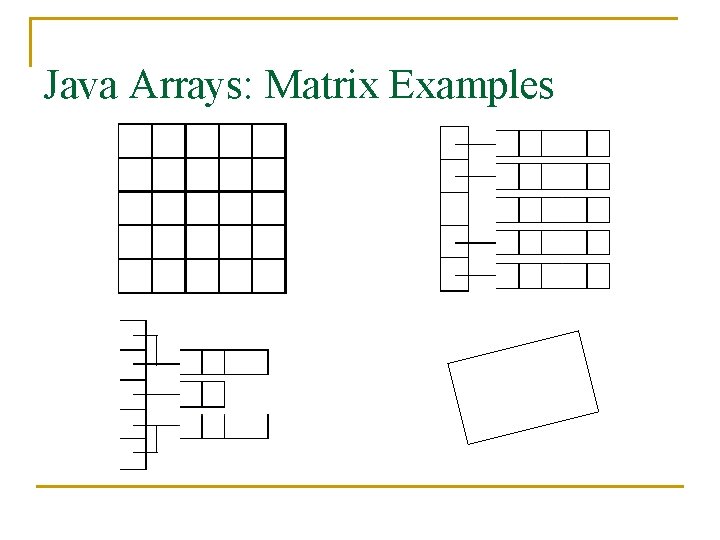

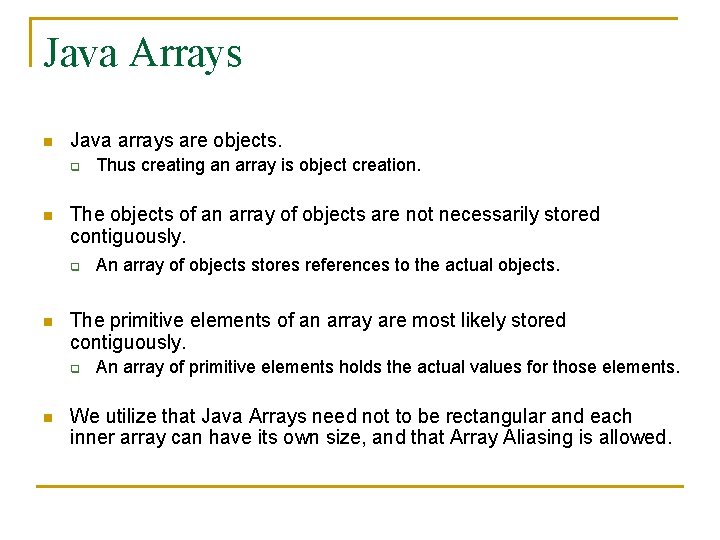

Java Arrays

- Java arrays are objects.
  - Thus creating an array is object creation.
- The objects of an array of objects are not necessarily stored contiguously.
  - An array of objects stores references to the actual objects.
- The primitive elements of an array are most likely stored contiguously.
  - An array of primitive elements holds the actual values for those elements.
- We utilize that Java arrays need not be rectangular, that each inner array can have its own size, and that array aliasing is allowed.
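The two properties used throughout the talk, non-rectangular rows and array aliasing, can be sketched as follows. This is a minimal illustration only; the class name `JaggedArrayDemo` and the method `lowerTriangular` are hypothetical and do not come from the slides.

```java
import java.util.Arrays;

// Illustrative sketch; names are assumptions, not taken from the slides.
final class JaggedArrayDemo {

    // Builds a lower-triangular jagged array: row i holds i + 1 elements.
    static double[][] lowerTriangular(int n) {
        double[][] a = new double[n][];   // n row references, all null so far
        for (int i = 0; i < n; i++) {
            a[i] = new double[i + 1];     // each row is its own array object
            Arrays.fill(a[i], 1.0);
        }
        return a;
    }

    public static void main(String[] args) {
        double[][] a = lowerTriangular(4);
        System.out.println("row lengths: " + a[0].length + " and " + a[3].length);

        // Array aliasing: two row slots now refer to the same array object,
        // so a write through a[1] is visible through a[3].
        a[1] = a[3];
        a[1][0] = 42.0;
        System.out.println("a[3][0] = " + a[3][0]);
    }
}
```

Because `a[1]` and `a[3]` hold references rather than copies, replacing or sharing a row costs one reference assignment, which is what the later slides rely on.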

Java Arrays: Matrix Examples

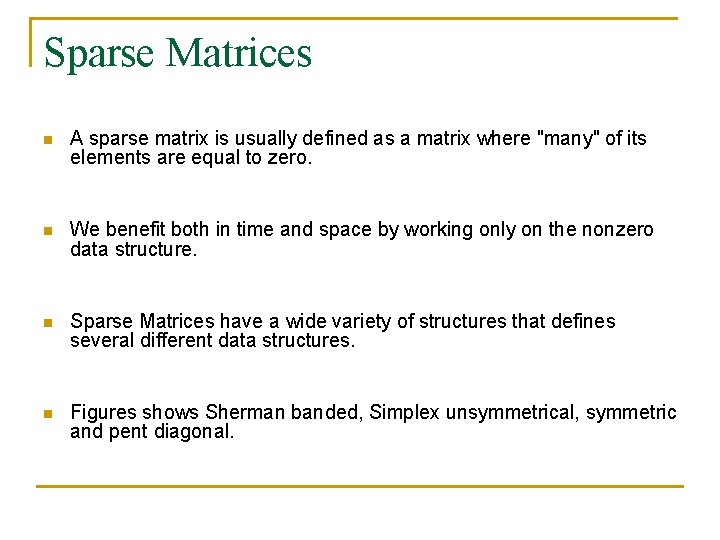

Sparse Matrices

- A sparse matrix is usually defined as a matrix where "many" of its elements are equal to zero.
- We benefit in both time and space by working only on the nonzero data structure.
- Sparse matrices have a wide variety of structures, which give rise to several different data structures.
- The figures show Sherman (banded), Simplex (unsymmetric), symmetric, and pentadiagonal matrices.

Sparse Matrices: Examples

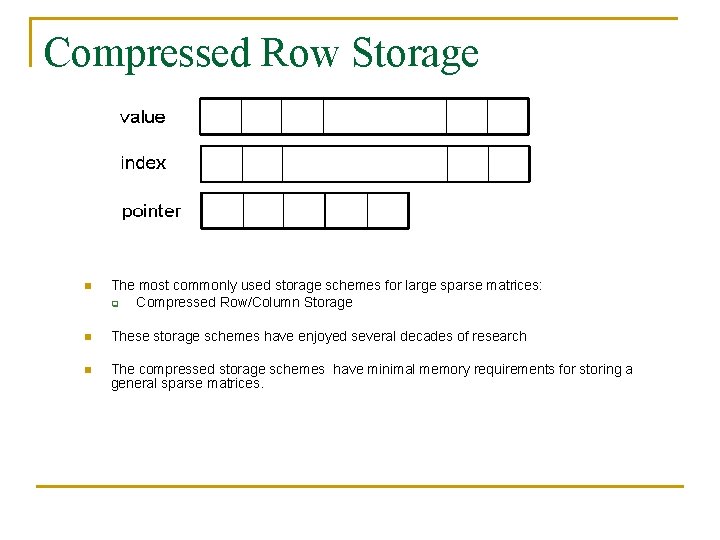

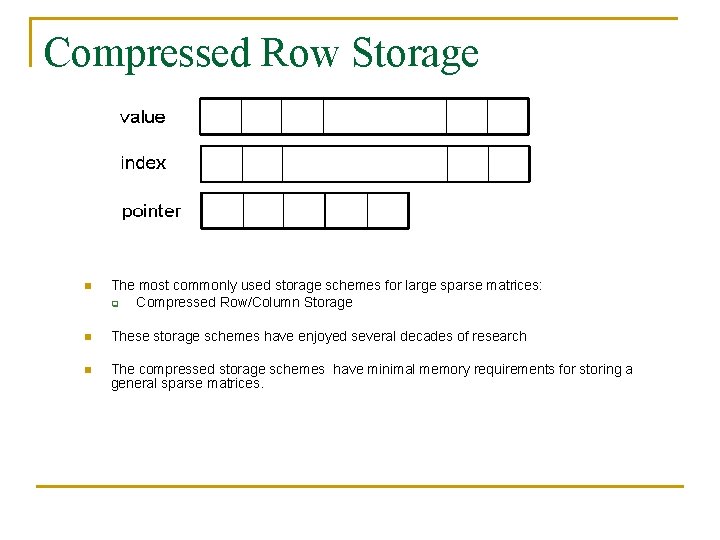

Compressed Row Storage

- The most commonly used storage schemes for large sparse matrices:
  - Compressed Row/Column Storage
- These storage schemes have enjoyed several decades of research.
- The compressed storage schemes have minimal memory requirements for storing general sparse matrices.
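For reference, the usual three-array layout of Compressed Row Storage can be sketched as below. The class name `CrsMatrix` and the field names `value`, `colIndex` and `rowPtr` are illustrative assumptions, and the constructor from a dense array exists only to keep the example self-contained.

```java
// A minimal CRS sketch; class, field and method names are assumptions.
final class CrsMatrix {
    final double[] value;   // nonzero values, stored row by row
    final int[] colIndex;   // column index of each stored value
    final int[] rowPtr;     // entries of row i are rowPtr[i] .. rowPtr[i + 1] - 1

    CrsMatrix(double[][] dense) {
        int m = dense.length;
        int nnz = 0;
        for (double[] row : dense) {
            for (double v : row) {
                if (v != 0.0) nnz++;
            }
        }
        value = new double[nnz];
        colIndex = new int[nnz];
        rowPtr = new int[m + 1];
        int k = 0;
        for (int i = 0; i < m; i++) {
            rowPtr[i] = k;
            for (int j = 0; j < dense[i].length; j++) {
                if (dense[i][j] != 0.0) {
                    value[k] = dense[i][j];
                    colIndex[k] = j;
                    k++;
                }
            }
        }
        rowPtr[m] = k;
    }

    // Computes c = A * b by walking the three arrays.
    double[] times(double[] b) {
        double[] c = new double[rowPtr.length - 1];
        for (int i = 0; i < c.length; i++) {
            double sum = 0.0;
            for (int k = rowPtr[i]; k < rowPtr[i + 1]; k++) {
                sum += value[k] * b[colIndex[k]];
            }
            c[i] = sum;
        }
        return c;
    }
}
```

All nonzeros live in one contiguous `value` array, so inserting a new nonzero into a row means shifting everything stored after it.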

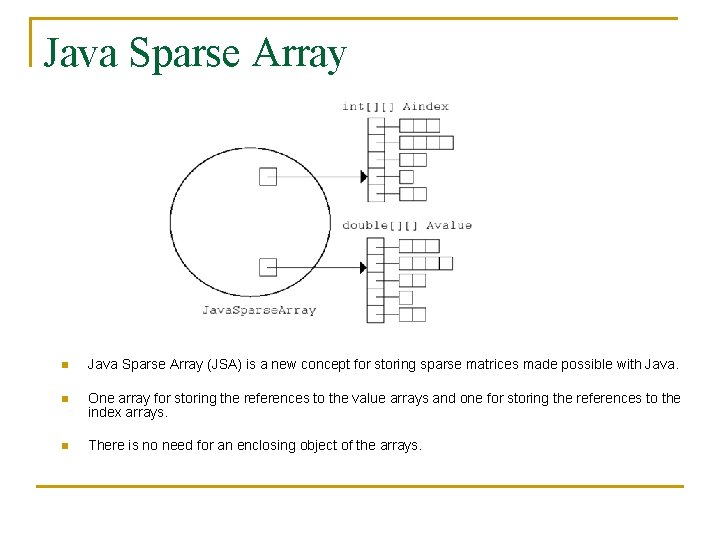

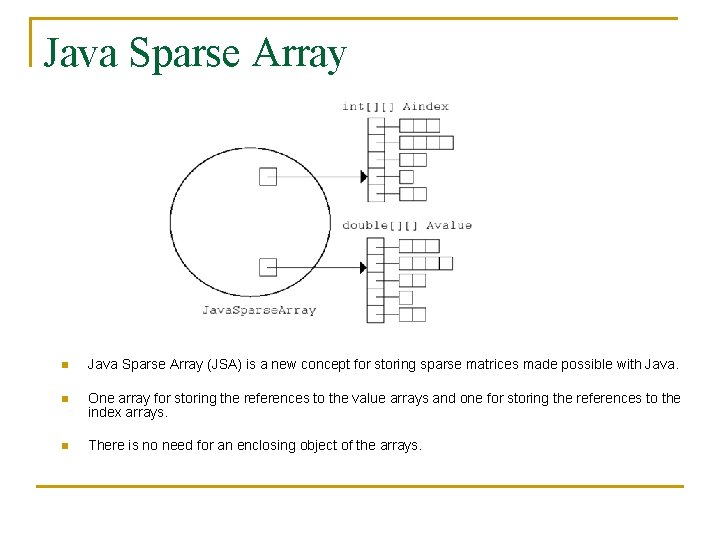

Java Sparse Array

- Java Sparse Array (JSA) is a new concept for storing sparse matrices, made possible by Java.
- One array stores the references to the value arrays and one stores the references to the index arrays.
- There is no need for an object enclosing the arrays.
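A possible reading of this layout is two jagged arrays, `double[][]` for the values and `int[][]` for the column indices, with one inner array per row. The sketch below builds both from a dense array; the class `JsaBuild` and its method names are hypothetical and are used here only as a namespace for the example.

```java
// Sketch of the JSA layout under the assumptions stated above.
final class JsaBuild {

    // index[i] holds the column indices of the nonzeros in row i.
    static int[][] indexArrays(double[][] dense) {
        int[][] index = new int[dense.length][];
        for (int i = 0; i < dense.length; i++) {
            int nnz = 0;
            for (double v : dense[i]) {
                if (v != 0.0) nnz++;
            }
            index[i] = new int[nnz];
            int k = 0;
            for (int j = 0; j < dense[i].length; j++) {
                if (dense[i][j] != 0.0) index[i][k++] = j;
            }
        }
        return index;
    }

    // value[i][k] is the matrix element in row i, column index[i][k].
    static double[][] valueArrays(double[][] dense, int[][] index) {
        double[][] value = new double[dense.length][];
        for (int i = 0; i < dense.length; i++) {
            value[i] = new double[index[i].length];
            for (int k = 0; k < index[i].length; k++) {
                value[i][k] = dense[i][index[i][k]];
            }
        }
        return value;
    }
}
```

Each row is an independent pair of arrays, so a row can be replaced by assigning two new references without touching the rest of the matrix.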

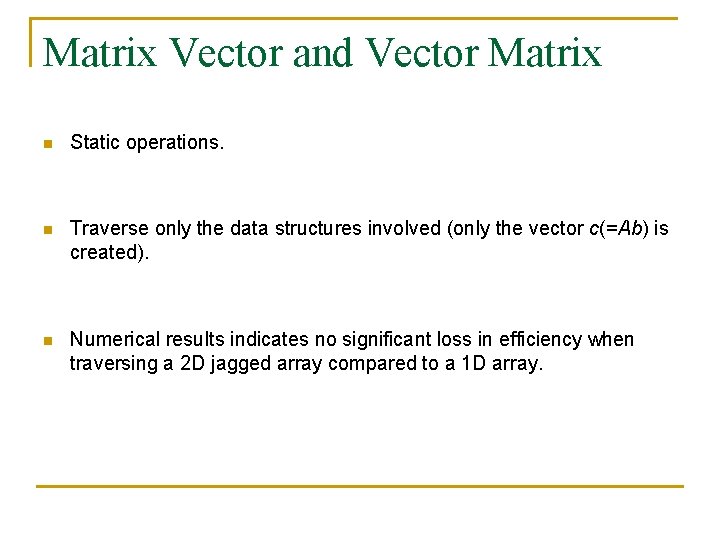

Matrix Vector and Vector Matrix

- Static operations.
- Traverse only the data structures involved (only the vector c (= Ab) is created).
- Numerical results indicate no significant loss in efficiency when traversing a 2D jagged array compared to a 1D array.
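Both static products can be sketched on a JSA-style layout, assuming `value[i]` and `index[i]` hold the nonzeros and column indices of row i. The class and method names are illustrative, not the authors' code.

```java
// Sketch of the two static products on jagged value/index arrays.
final class JsaProducts {

    // c = A * b: one pass over the rows, only c is allocated.
    static double[] matVec(double[][] value, int[][] index, double[] b) {
        double[] c = new double[value.length];
        for (int i = 0; i < value.length; i++) {
            double[] v = value[i];    // local references avoid repeated
            int[] idx = index[i];     // outer-array lookups in the inner loop
            double sum = 0.0;
            for (int k = 0; k < v.length; k++) {
                sum += v[k] * b[idx[k]];
            }
            c[i] = sum;
        }
        return c;
    }

    // c^T = b^T * A, where n is the number of columns of A.
    static double[] vecMat(double[] b, double[][] value, int[][] index, int n) {
        double[] c = new double[n];
        for (int i = 0; i < value.length; i++) {
            double[] v = value[i];
            int[] idx = index[i];
            double bi = b[i];
            for (int k = 0; k < v.length; k++) {
                c[idx[k]] += bi * v[k];   // scatter row i scaled by b[i]
            }
        }
        return c;
    }
}
```

Hoisting `value[i]` and `index[i]` into local variables turns the inner loop into a traversal of two 1D arrays, which is consistent with the slide's observation about 2D versus 1D traversal.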

Sparse Matrix Multiplication

- Dynamic operations.
- Creates each row of the resulting matrix C (= AB).
- Numerical results indicate no significant loss in efficiency when using jagged arrays in dynamic operations.
- Symbolic phase and numerical phase.
- Jagged arrays: one phase, which leads to locality.
- CRS: two separate phases.
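One way to picture the single-phase approach is a row-by-row product with a dense accumulator: the pattern and the values of each row of C are found in the same pass, and the finished row is attached to the jagged result. This is a sketch under that assumption, not the authors' implementation; `JsaMultiply` and its parameter names are hypothetical, and the caller is assumed to pass outer arrays `cValue` and `cIndex` with one slot per row of A.

```java
import java.util.Arrays;

// Sketch of C = A * B on jagged value/index arrays, one row of C at a time.
final class JsaMultiply {

    // n is the number of columns of B; cValue and cIndex receive the rows of C.
    static void multiply(double[][] aValue, int[][] aIndex,
                         double[][] bValue, int[][] bIndex, int n,
                         double[][] cValue, int[][] cIndex) {
        double[] acc = new double[n];       // dense accumulator for one row of C
        boolean[] used = new boolean[n];    // marks columns touched in this row
        int[] cols = new int[n];            // touched columns, in order of discovery
        for (int i = 0; i < aValue.length; i++) {
            int count = 0;
            for (int ka = 0; ka < aValue[i].length; ka++) {
                int j = aIndex[i][ka];      // A(i, j) selects row j of B
                double a = aValue[i][ka];
                for (int kb = 0; kb < bValue[j].length; kb++) {
                    int col = bIndex[j][kb];
                    if (!used[col]) {
                        used[col] = true;
                        cols[count++] = col;
                    }
                    acc[col] += a * bValue[j][kb];
                }
            }
            int[] idx = Arrays.copyOf(cols, count);
            Arrays.sort(idx);               // keep column indices ordered
            double[] val = new double[count];
            for (int k = 0; k < count; k++) {
                val[k] = acc[idx[k]];
                acc[idx[k]] = 0.0;          // reset work arrays for the next row
                used[idx[k]] = false;
            }
            cIndex[i] = idx;                // attach the finished row by reference
            cValue[i] = val;
        }
    }
}
```

Entries that cancel to exactly zero are still stored in this sketch. With CRS the same product typically needs a symbolic pass first, because the sizes of the contiguous result arrays must be known before any values are written.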

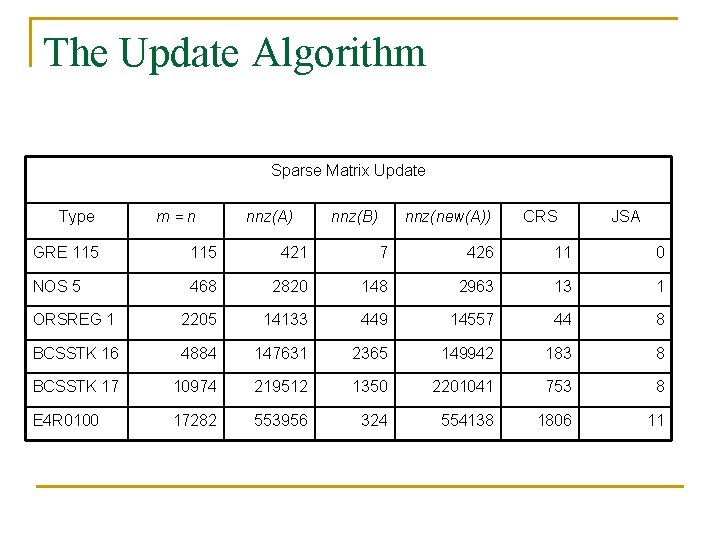

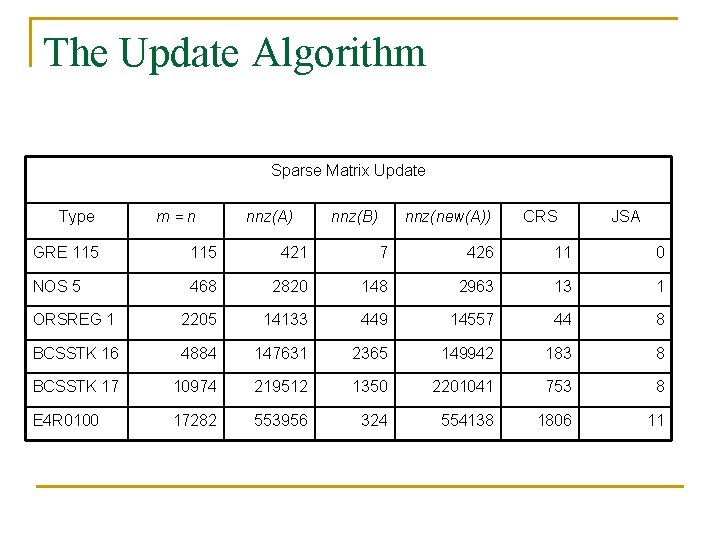

The Update Algorithm

Sparse Matrix Update

| Type | m=n | nnz(A) | nnz(B) | nnz(new(A)) | CRS | JSA |
|---|---|---|---|---|---|---|
| GRE | 115 | 421 | 7 | 426 | 11 | 0 |
| NOS 5 | 468 | 2820 | 148 | 2963 | 13 | 1 |
| ORSREG 1 | 2205 | 14133 | 449 | 14557 | 44 | 8 |
| BCSSTK 16 | 4884 | 147631 | 2365 | 149942 | 183 | 8 |
| BCSSTK 17 | 10974 | 219512 | 1350 | 2201041 | 753 | 8 |
| E 4 R 0100 | 17282 | 553956 | 324 | 554138 | 1806 | 11 |

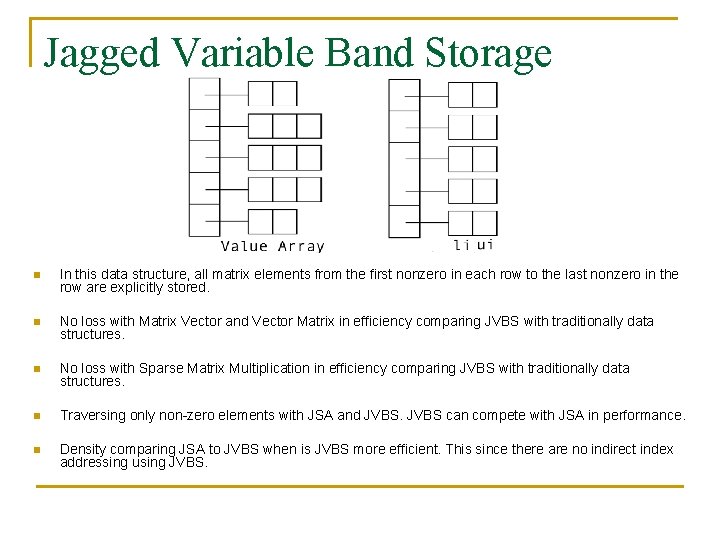

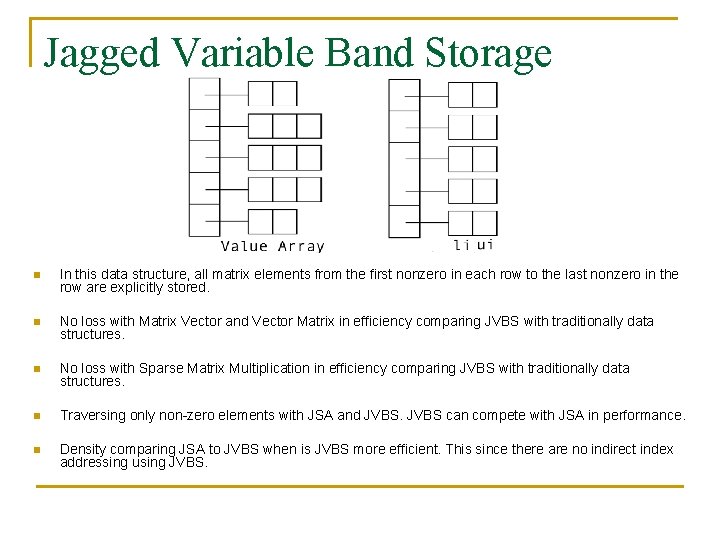

Jagged Variable Band Storage

- In this data structure (JVBS), all matrix elements from the first nonzero in each row to the last nonzero in the row are explicitly stored.
- For Matrix Vector and Vector Matrix products, JVBS shows no loss in efficiency compared with traditional data structures.
- For Sparse Matrix Multiplication, JVBS shows no loss in efficiency compared with traditional data structures.
- Although JSA traverses only nonzero elements, JVBS can compete with JSA in performance.
- Comparing JSA to JVBS: at what density is JVBS more efficient? JVBS has an advantage because it uses no indirect index addressing.
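The row-wise band layout can be sketched as a jagged `double[][]` plus one starting column per row. The helper array `first` and the class name `Jvbs` are assumptions made for this illustration; the slides do not show how the starting column is recorded.

```java
import java.util.Arrays;

// Sketch of a jagged variable band layout under the assumptions stated above.
final class Jvbs {

    // first[i] is the column of the first nonzero in row i
    // (equal to the row length when the row is entirely zero).
    static int[] firstColumns(double[][] dense) {
        int[] first = new int[dense.length];
        for (int i = 0; i < dense.length; i++) {
            int j = 0;
            while (j < dense[i].length && dense[i][j] == 0.0) j++;
            first[i] = j;
        }
        return first;
    }

    // band[i] stores every element from the first to the last nonzero of row i,
    // including any zeros in between.
    static double[][] bands(double[][] dense, int[] first) {
        double[][] band = new double[dense.length][];
        for (int i = 0; i < dense.length; i++) {
            int last = dense[i].length - 1;
            while (last >= first[i] && dense[i][last] == 0.0) last--;
            band[i] = Arrays.copyOfRange(dense[i], first[i], last + 1);
        }
        return band;
    }

    // c = A * b with direct addressing: column = first[i] + k.
    static double[] matVec(double[][] band, int[] first, double[] b) {
        double[] c = new double[band.length];
        for (int i = 0; i < band.length; i++) {
            double[] row = band[i];
            int offset = first[i];
            double sum = 0.0;
            for (int k = 0; k < row.length; k++) {
                sum += row[k] * b[offset + k];
            }
            c[i] = sum;
        }
        return c;
    }
}
```

The inner loop reads `b[offset + k]` instead of `b[index[i][k]]`, which is the absence of indirect addressing mentioned in the last bullet; the price is the explicitly stored zeros inside each band.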

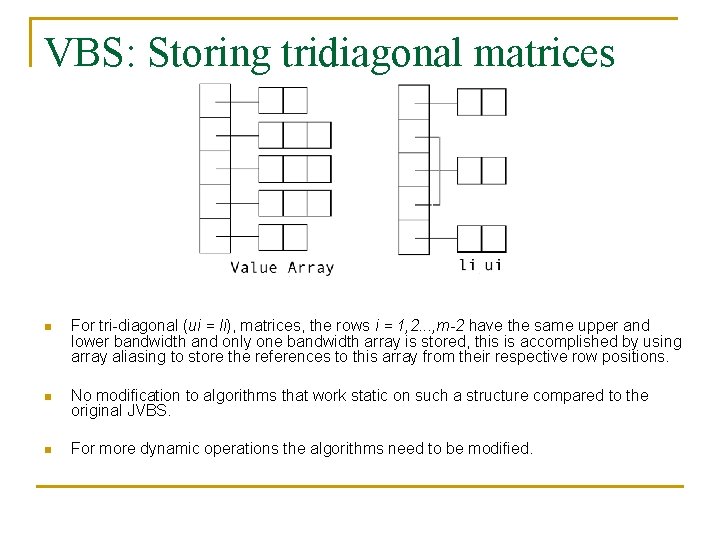

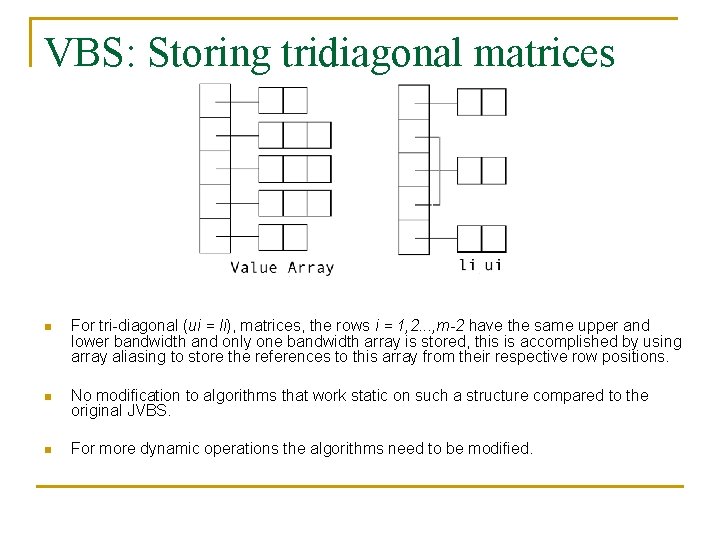

VBS: Storing tridiagonal matrices

- For tridiagonal matrices (u_i = l_i), the rows i = 1, 2, ..., m-2 have the same upper and lower bandwidth, so only one bandwidth array is stored. This is accomplished by using array aliasing to store references to this array at the respective row positions.
- Algorithms that operate statically on such a structure need no modification compared to the original JVBS.
- For more dynamic operations, the algorithms need to be modified.
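The aliasing idea can be illustrated as follows, assuming that each row carries a small descriptor `{lower bandwidth, upper bandwidth}` and that rows are numbered from 0. The slides do not specify the contents of the bandwidth array, so this representation, the class name `TridiagonalAliasing`, and the requirement m >= 2 are assumptions of the sketch.

```java
// Sketch: interior rows of a tridiagonal matrix share one bandwidth descriptor.
final class TridiagonalAliasing {

    // bw[i] = {lower bandwidth, upper bandwidth} of row i; requires m >= 2.
    static int[][] bandwidths(int m) {
        int[][] bw = new int[m][];
        int[] interior = {1, 1};             // one array object for all interior rows
        for (int i = 1; i <= m - 2; i++) {
            bw[i] = interior;                // aliasing: store the reference only
        }
        bw[0] = new int[] {0, 1};            // first row has no lower neighbour
        bw[m - 1] = new int[] {1, 0};        // last row has no upper neighbour
        return bw;
    }

    // c = A * b; band[i] holds row i from column i - bw[i][0] to i + bw[i][1].
    // A static operation only reads bw, so the aliasing is invisible to it.
    static double[] matVec(double[][] band, int[][] bw, double[] b) {
        double[] c = new double[band.length];
        for (int i = 0; i < band.length; i++) {
            int firstCol = i - bw[i][0];
            double sum = 0.0;
            for (int k = 0; k < band[i].length; k++) {
                sum += band[i][k] * b[firstCol + k];
            }
            c[i] = sum;
        }
        return c;
    }
}
```

A dynamic operation that changes the bandwidth of one interior row would first have to give that row its own descriptor, otherwise the write would show up in every aliased row; this is one reason the algorithms need modification in the dynamic case.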

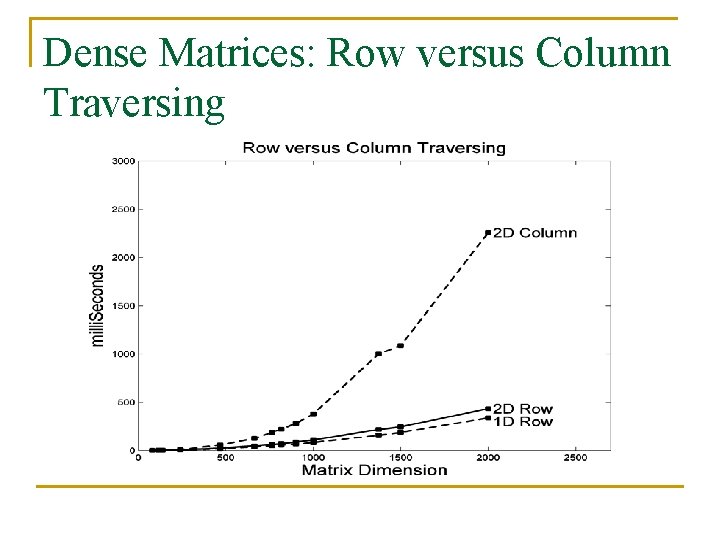

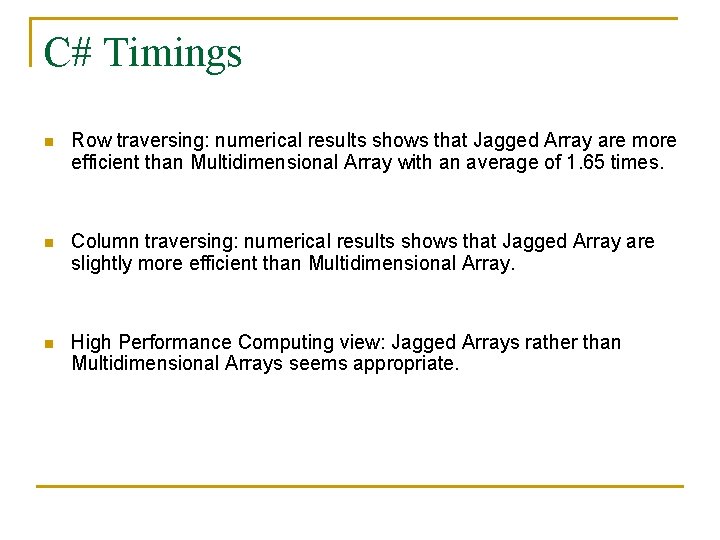

Dense Matrices: Row versus Column Traversing


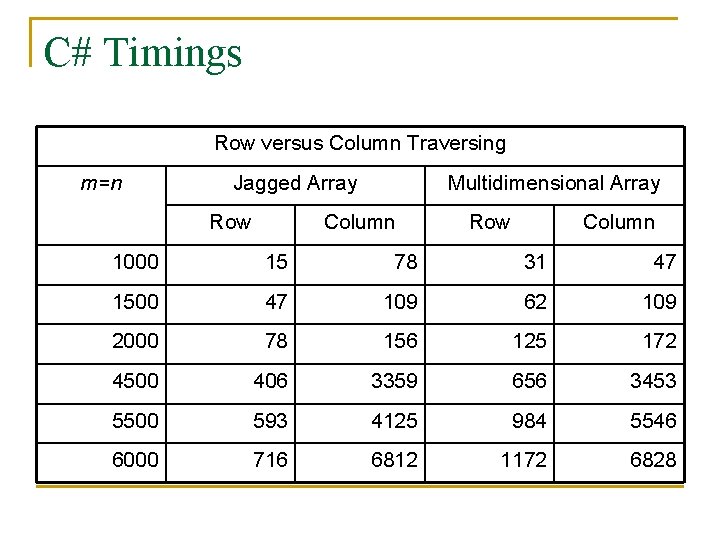

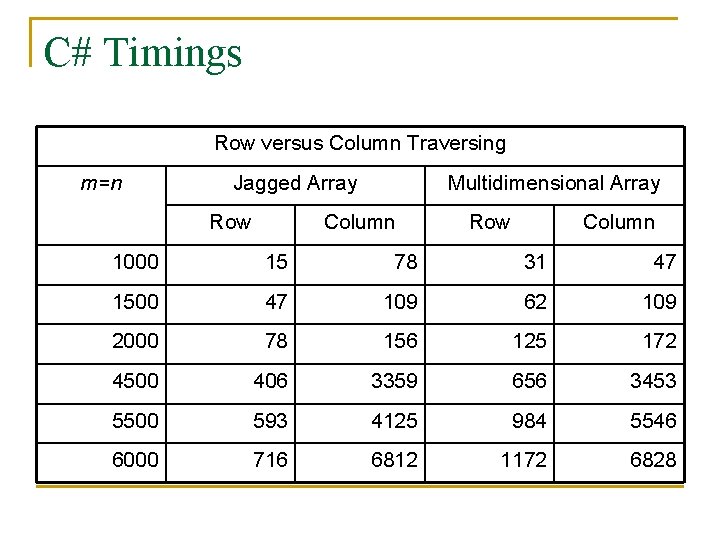

C# Timings

- Jagged arrays (Java-like), for example double[m][n]. Not necessarily stored contiguously in memory.
- Multidimensional arrays (double[m, n]). Stored as a contiguous block in memory.
- Row-oriented.
- Column traversal of a multidimensional array (m = n) is on average 3.54 times slower than row traversal.
- Column traversal of a jagged array (m = n) is on average 5.71 times slower than row traversal.

C# Timings

- Row traversal: numerical results show that jagged arrays are more efficient than multidimensional arrays, by a factor of 1.65 on average.
- Column traversal: numerical results show that jagged arrays are slightly more efficient than multidimensional arrays.
- High Performance Computing view: jagged arrays rather than multidimensional arrays seem appropriate.

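C# Timings

Row versus Column Traversing

| m=n | Jagged Array, Row | Jagged Array, Column | Multidimensional Array, Row | Multidimensional Array, Column |
|---|---|---|---|---|
| 1000 | 15 | 78 | 31 | 47 |
| 1500 | 47 | 109 | 62 | 109 |
| 2000 | 78 | 156 | 125 | 172 |
| 4500 | 406 | 3359 | 656 | 3453 |
| 5500 | 593 | 4125 | 984 | 5546 |
| 6000 | 716 | 6812 | 1172 | 6828 |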
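The two loop orders being compared can be sketched in Java on a jagged `double[m][n]`. The timings reported on the slides are for C#, so this sketch only illustrates the traversals and makes no claim about reproducing those numbers; the class name `Traversal` and the matrix size used in `main` are arbitrary choices.

```java
// Sketch of row-wise versus column-wise traversal of a jagged 2D array.
final class Traversal {

    // Inner loop walks one row array, element after element.
    static double sumByRows(double[][] a) {
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            double[] row = a[i];
            for (int j = 0; j < row.length; j++) {
                sum += row[j];
            }
        }
        return sum;
    }

    // Inner loop jumps to a different row array on every step.
    // Assumes a rectangular array (all rows as long as a[0]).
    static double sumByColumns(double[][] a) {
        double sum = 0.0;
        int n = a.length == 0 ? 0 : a[0].length;
        for (int j = 0; j < n; j++) {
            for (int i = 0; i < a.length; i++) {
                sum += a[i][j];
            }
        }
        return sum;
    }

    public static void main(String[] args) {
        int m = 2000;
        double[][] a = new double[m][m];
        for (double[] row : a) java.util.Arrays.fill(row, 1.0);

        long t0 = System.nanoTime();
        double s1 = sumByRows(a);
        long t1 = System.nanoTime();
        double s2 = sumByColumns(a);
        long t2 = System.nanoTime();

        System.out.println("rows:    " + (t1 - t0) / 1_000_000 + " ms, sum " + s1);
        System.out.println("columns: " + (t2 - t1) / 1_000_000 + " ms, sum " + s2);
    }
}
```

A single run like this is not a careful benchmark, since JIT warm-up and cache state affect the result; repeating the measurement several times gives a fairer comparison.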

The Impact of Java and C#

- The future will be Java and C# for commercial and educational use.
- Commercial applications written in Java and C# will include scientific applications.
- Java: portability is especially important for high-performance applications, where the hardware architecture has a shorter lifespan than the application software.
- C#/.NET: a wide range of features is promised, but it is unfortunately still a one-platform show.
- C# versus Java: is C# a better alternative than Java?
- Java Grande Forum: C# might be the answer for some operations, like parallel programming.

Future Topics

- Solving Large Sparse Linear Systems of Equations:
  - Sparse Gaussian elimination with partial pivoting.
- Multidimensional Matrices for Tensor Methods:
  - Tensor methods give 3D structures where sparsity is an important issue.
- Parallel Java: Threads
  - Threads allow multiple activities to proceed concurrently in the same program.
  - Parallel programming can only be achieved on a multiple-processor platform.
  - Suggested extensions to the Java language are OpenMP and MPI.

Concluding Remarks

- We have shown that, for basic data structures, there is a lot to gain from utilizing the flexibility (independent row updating).
- Challenges remain, such as row versus column traversal of 2D square matrices.
- This is just the beginning:
  - Java threads lead to parallel computing, but only on the premises of Java.
  - Other data structures must be investigated.
  - Graphs.
- Applications:
  - Optimization and numerical solution of PDEs.
- People will use Java (and C#) for numerical computations; therefore it may be useful to invest time and resources in finding out how to use Java for numerical computation.