It’s not a data deluge – it’s worse than that

- Slides: 42

It’s not a data deluge – it’s worse than that

Craig Stewart (stewart@iu.edu)
Executive Director, Indiana University Pervasive Technology Institute; Associate Dean, Research Technologies

Keynote address for the Third International Workshop on “Data Intensive Distributed Computing (DIDC'10)”, held in conjunction with HPDC'10 in Chicago, IL, on June 22nd, 2010, organized by Tevfik Kosar, DIDC'10 Workshop Chair and Assistant Professor, Department of Computer Science & Center for Computational Theory, Louisiana State University. http://www.cct.lsu.edu/~kosar/didc10/

License Terms

- Except where otherwise noted, contents of this presentation are Copyright 2011 by the Trustees of Indiana University.
- Stewart, C. A. 2010. It’s not a data deluge – it’s worse than that. Keynote presentation. Third International Workshop on “Data Intensive Distributed Computing (DIDC'10)”, held in conjunction with HPDC'10. June 22nd, 2010, Chicago, IL. http://hdl.handle.net/2022/13195
- This document is released under the Creative Commons Attribution 3.0 Unported license (http://creativecommons.org/licenses/by/3.0/). This license includes the following terms: You are free to share (to copy, distribute and transmit the work) and to remix (to adapt the work) under the following conditions: attribution (you must attribute the work in the manner specified by the author or licensor, but not in any way that suggests that they endorse you or your use of the work). For any reuse or distribution, you must make clear to others the license terms of this work.

Graphic from Gantz, J. F. and D. Reinsel. IDC Digital Universe Study, sponsored by EMC, "The Digital Universe Decade - Are You Ready?" May 2010. Used by permission of IDC. May not be reused without permission from IDC (www.idc.com).

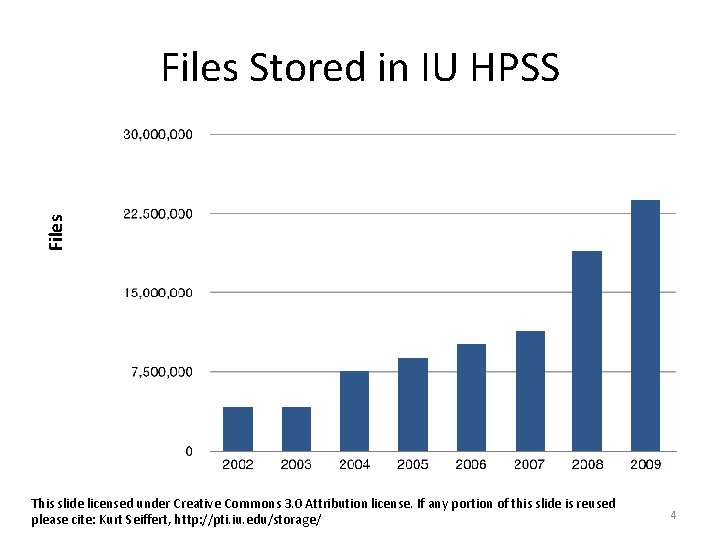

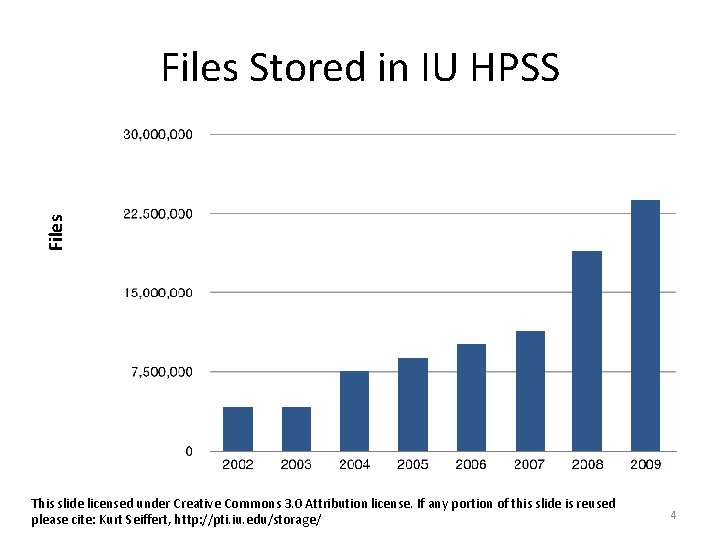

Files Stored in IU HPSS

This slide licensed under Creative Commons 3.0 Attribution license. If any portion of this slide is reused please cite: Kurt Seiffert, http://pti.iu.edu/storage/

TB Aggregate Data Stored in IU HPSS

This slide licensed under Creative Commons 3.0 Attribution license. If any portion of this slide is reused please cite: Kurt Seiffert, http://pti.iu.edu/storage/

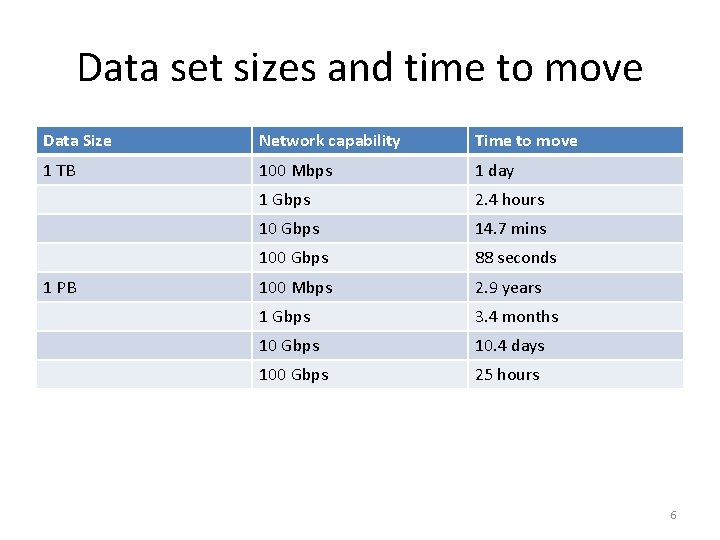

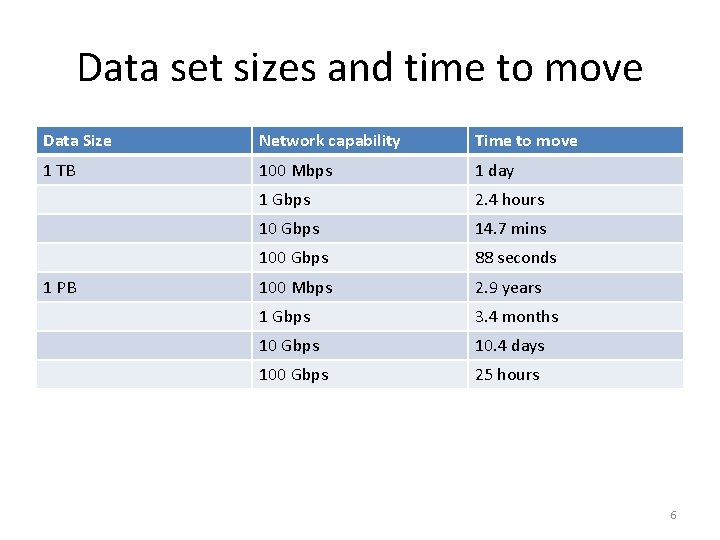

Data set sizes and time to move

| Data size | Network capability | Time to move |
|---|---|---|
| 1 TB | 100 Mbps | 1 day |
| 1 TB | 1 Gbps | 2.4 hours |
| 1 TB | 10 Gbps | 14.7 mins |
| 1 TB | 100 Gbps | 88 seconds |
| 1 PB | 100 Mbps | 2.9 years |
| 1 PB | 1 Gbps | 3.4 months |
| 1 PB | 10 Gbps | 10.4 days |
| 1 PB | 100 Gbps | 25 hours |

Why it’s worse than just a deluge

- Not all data are equal #1: sensitive data
- Campus networks and proliferation of digital instruments
- Need for complexity hiding interfaces (Science gateways and others)
- Metadata to enable later use and use in other disciplines
- Where are the definitive repositories?
- Not all data are equal #2: Who decides what is worth storing, and where do you store it?

Cyberinfrastructure

- What is cyberinfrastructure?
  - Cyberinfrastructure consists of computing systems, data storage systems, advanced instruments and data repositories, visualization environments, and people, all linked together by software and high performance networks to improve research productivity and enable breakthroughs not otherwise possible.
- Much of the discussion here is about cyberinfrastructure for handling data rather than basic computer science

Handling sensitive data

- Two established approaches
  - Protect the data really well
  - Collect only the data that are essential

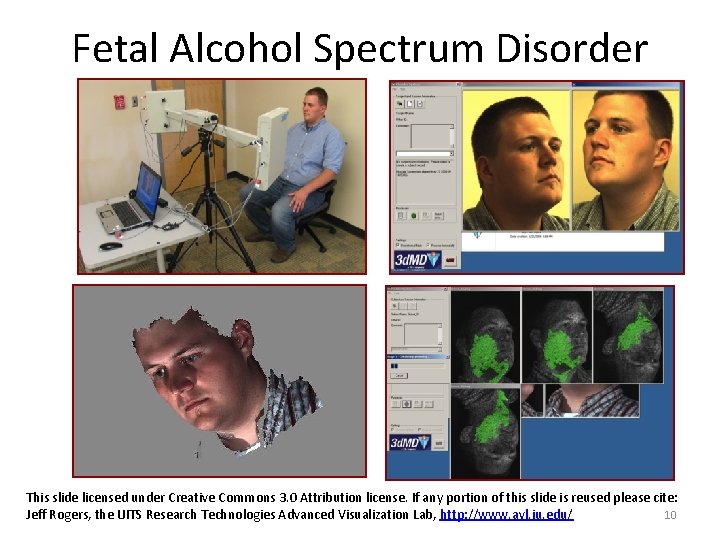

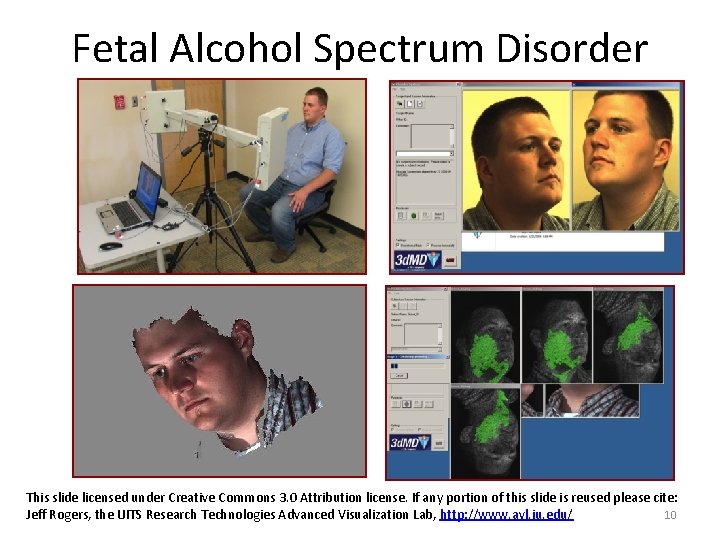

Fetal Alcohol Spectrum Disorder

This slide licensed under Creative Commons 3.0 Attribution license. If any portion of this slide is reused please cite: Jeff Rogers, the UITS Research Technologies Advanced Visualization Lab, http://www.avl.iu.edu/

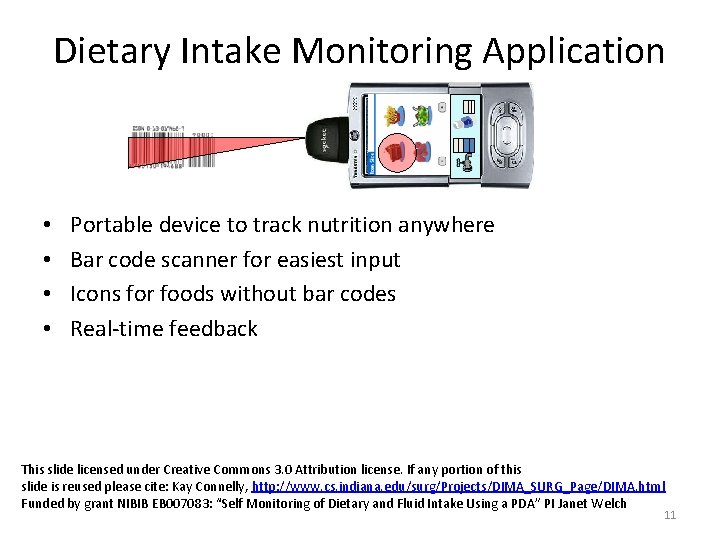

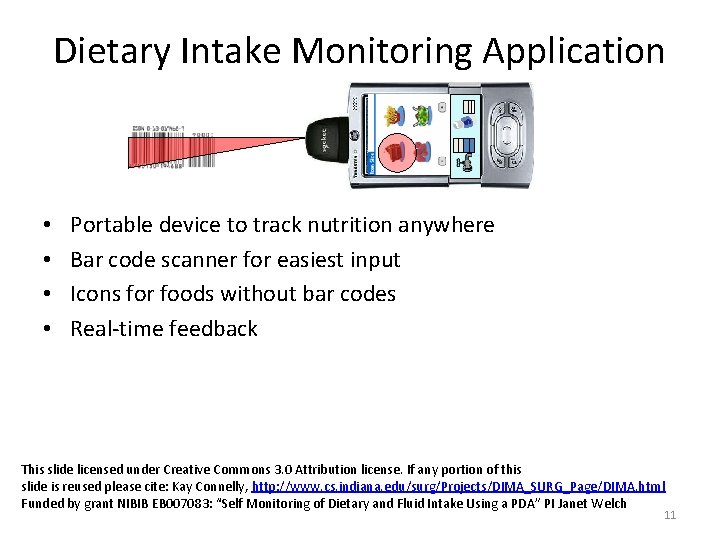

Dietary Intake Monitoring Application

- Portable device to track nutrition anywhere
- Bar code scanner for easiest input
- Icons for foods without bar codes
- Real-time feedback

This slide licensed under Creative Commons 3.0 Attribution license. If any portion of this slide is reused please cite: Kay Connelly, http://www.cs.indiana.edu/surg/Projects/DIMA_SURG_Page/DIMA.html

Funded by grant NIBIB EB007083: “Self Monitoring of Dietary and Fluid Intake Using a PDA”, PI Janet Welch

Ability to deal with sensitive data

- Increasingly important
- What are you doing about H1N1?
- What are you doing about Haiti?
- What are you doing about the oil spill?
- What are you doing about _______?
- Bayer and Baycol
- Someday we will all be ex-human subjects
- Someday we (generally) hope to be old, and applications that help us do that well are interesting
- HIPAA: how to secure data is a reasonably well solved problem; it is just hard and a matter of doing it.

Data creation and transport

- Unite data and compute
- Network bottleneck issues
- A few example custom-crafted solutions

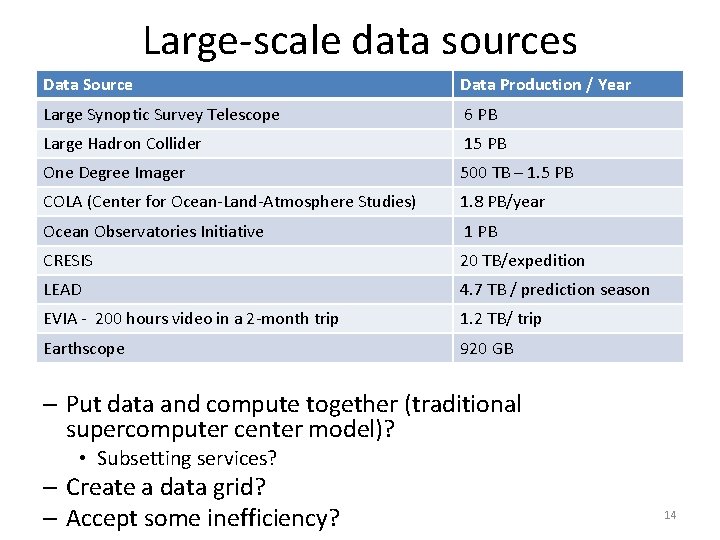

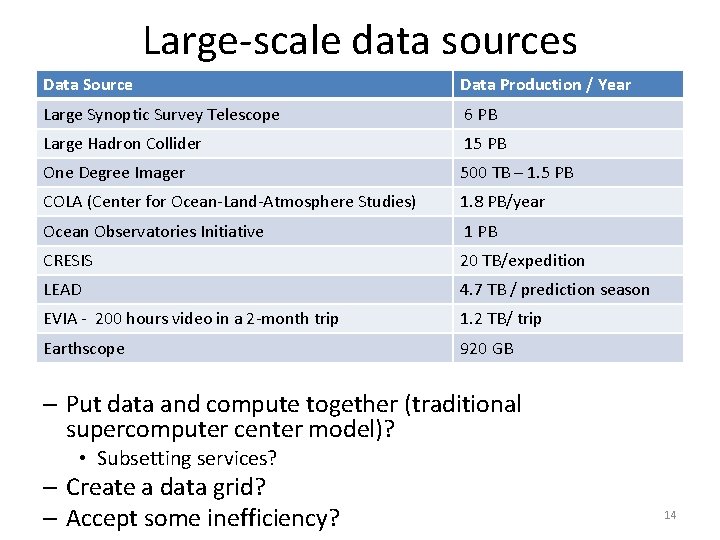

Large-scale data sources

| Data Source | Data Production / Year |
|---|---|
| Large Synoptic Survey Telescope | 6 PB |
| Large Hadron Collider | 15 PB |
| One Degree Imager | 500 TB – 1.5 PB |
| COLA (Center for Ocean-Land-Atmosphere Studies) | 1.8 PB/year |
| Ocean Observatories Initiative | 1 PB |
| CRESIS | 20 TB/expedition |
| LEAD | 4.7 TB/prediction season |
| EVIA - 200 hours video in a 2-month trip | 1.2 TB/trip |
| Earthscope | 920 GB |

- Put data and compute together (traditional supercomputer center model)?
- Subsetting services?
- Create a data grid?
- Accept some inefficiency?
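To relate these annual volumes to the network rates discussed earlier, the average link rate needed just to keep pace with a source can be estimated. This is a rough Python sketch, assuming 1 PB = 2^50 bytes, a 365-day year, and perfectly even data production, which real instruments do not have. The function name is illustrative.

```python
# Average link rate needed to move one year's output off-site as it is produced.
# Assumptions: 1 PB = 2**50 bytes, a 365-day year, perfectly even production.
PB = 2**50
SECONDS_PER_YEAR = 365 * 24 * 3600

# Annual volumes in PB, taken from the slide's table of data sources.
ANNUAL_PB = {
    "Large Synoptic Survey Telescope": 6,
    "Large Hadron Collider": 15,
    "COLA": 1.8,
    "Ocean Observatories Initiative": 1,
}


def sustained_gbps(petabytes_per_year):
    """Average Gbps required to keep pace with a given annual data volume."""
    return petabytes_per_year * PB * 8 / SECONDS_PER_YEAR / 1e9


if __name__ == "__main__":
    for source, volume in ANNUAL_PB.items():
        print(f"{source}: {sustained_gbps(volume):.2f} Gbps sustained")
```

Under these assumptions the 15 PB/year source needs a little over 4 Gbps around the clock, before any allowance for bursts, retransmission, or sending copies to more than one site.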

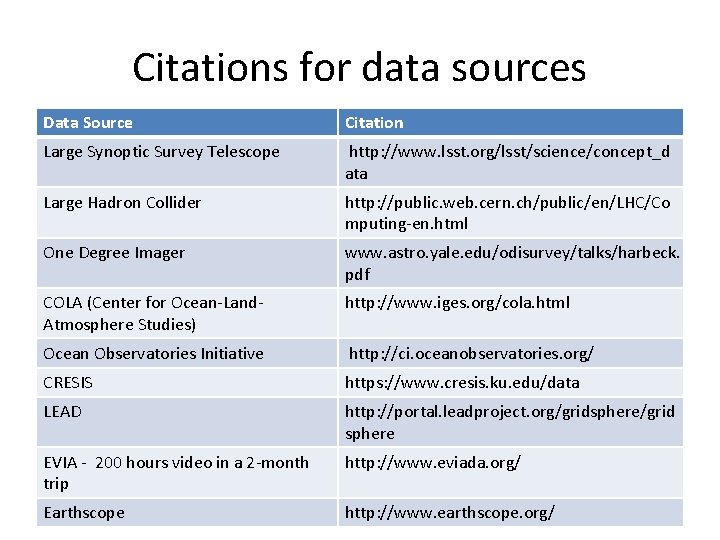

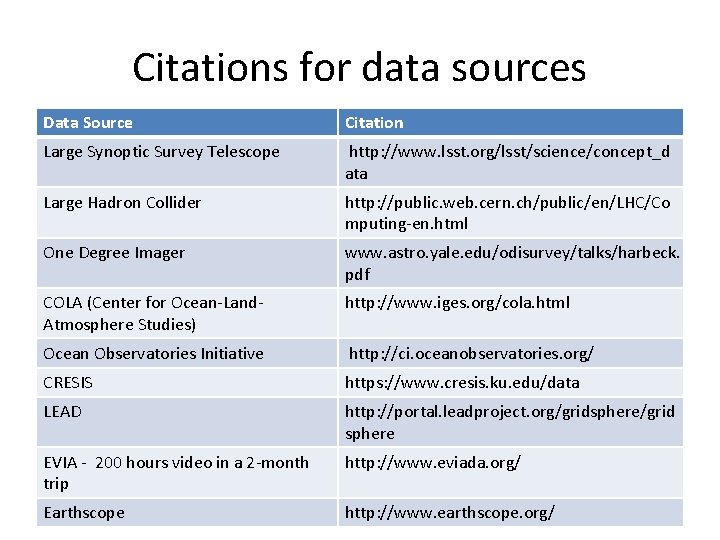

Citations for data sources

| Data Source | Citation |
|---|---|
| Large Synoptic Survey Telescope | http://www.lsst.org/lsst/science/concept_data |
| Large Hadron Collider | http://public.web.cern.ch/public/en/LHC/Computing-en.html |
| One Degree Imager | www.astro.yale.edu/odisurvey/talks/harbeck.pdf |
| COLA (Center for Ocean-Land-Atmosphere Studies) | http://www.iges.org/cola.html |
| Ocean Observatories Initiative | http://ci.oceanobservatories.org/ |
| CRESIS | https://www.cresis.ku.edu/data |
| LEAD | http://portal.leadproject.org/gridsphere/gridsphere |
| EVIA - 200 hours video in a 2-month trip | http://www.eviada.org/ |
| Earthscope | http://www.earthscope.org/ |

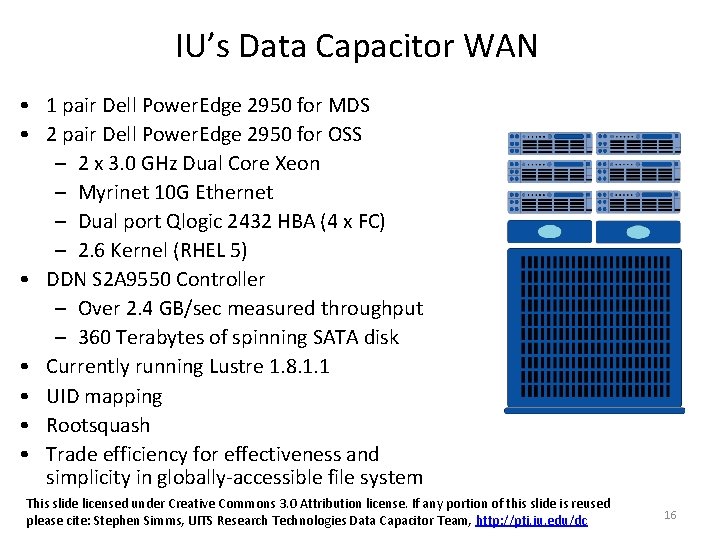

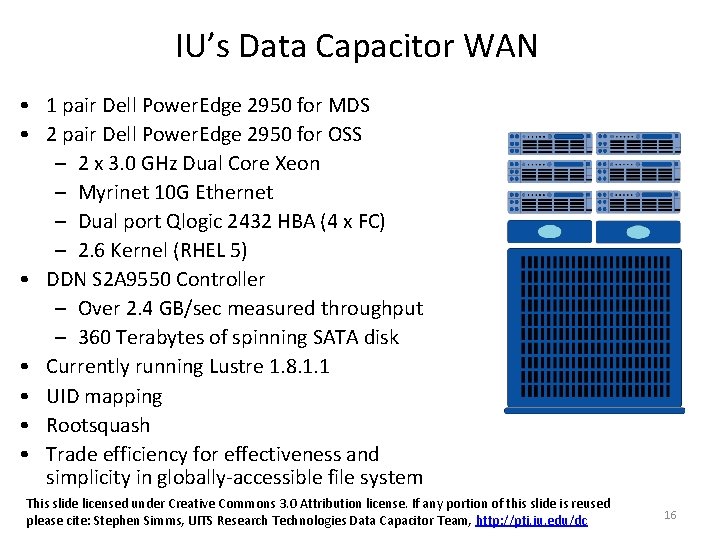

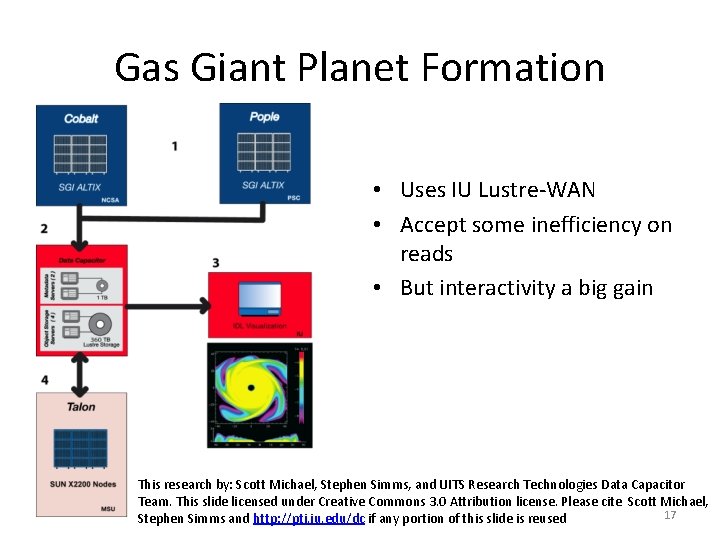

IU’s Data Capacitor WAN

- 1 pair Dell PowerEdge 2950 for MDS
- 2 pair Dell PowerEdge 2950 for OSS
  - 2 x 3.0 GHz Dual Core Xeon
  - Myrinet 10G Ethernet
  - Dual port Qlogic 2432 HBA (4 x FC)
  - 2.6 Kernel (RHEL 5)
- DDN S2A9550 Controller
  - Over 2.4 GB/sec measured throughput
  - 360 Terabytes of spinning SATA disk
- Currently running Lustre 1.8.1.1
- UID mapping
- Rootsquash
- Trade efficiency for effectiveness and simplicity in globally-accessible file system

This slide licensed under Creative Commons 3.0 Attribution license. If any portion of this slide is reused please cite: Stephen Simms, UITS Research Technologies Data Capacitor Team, http://pti.iu.edu/dc

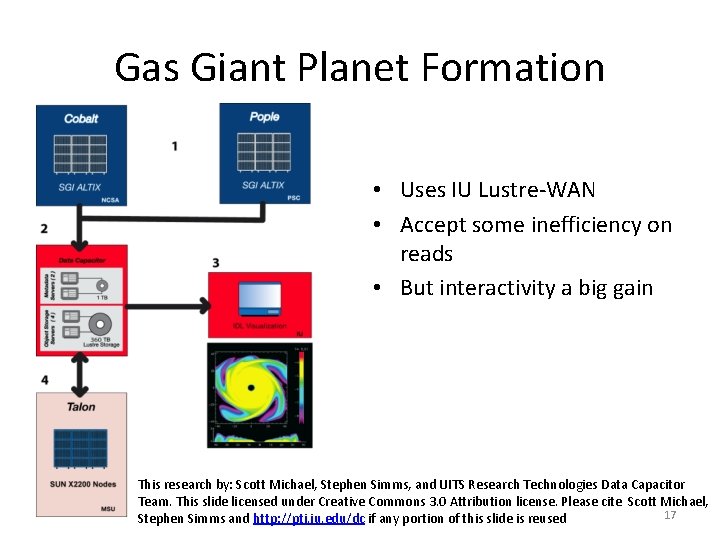

Gas Giant Planet Formation

- Uses IU Lustre-WAN
- Accept some inefficiency on reads
- But interactivity a big gain

This research by: Scott Michael, Stephen Simms, and UITS Research Technologies Data Capacitor Team. This slide licensed under Creative Commons 3.0 Attribution license. Please cite Scott Michael, Stephen Simms and http://pti.iu.edu/dc if any portion of this slide is reused.

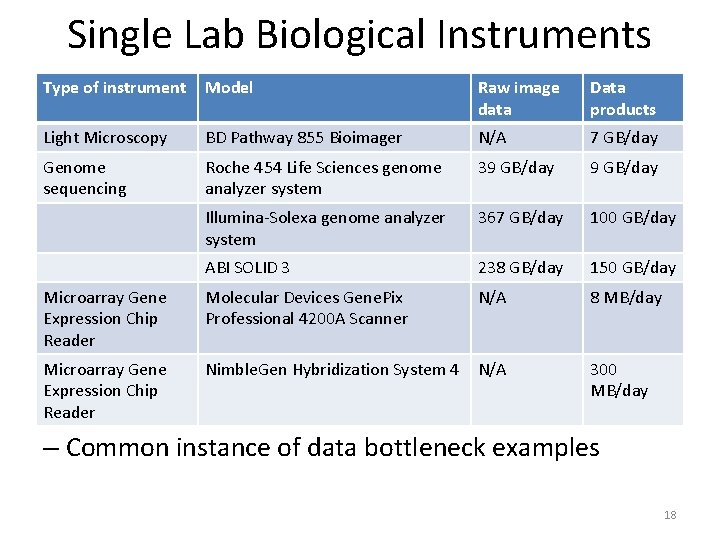

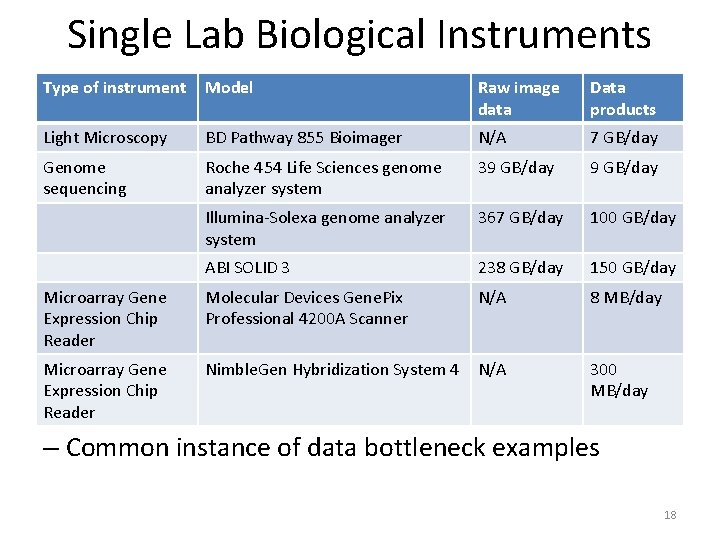

Single Lab Biological Instruments

| Type of instrument | Model | Raw image data | Data products |
|---|---|---|---|
| Light Microscopy | BD Pathway 855 Bioimager | N/A | 7 GB/day |
| Genome sequencing | Roche 454 Life Sciences genome analyzer system | 39 GB/day | |
| Genome sequencing | Illumina-Solexa genome analyzer system | 367 GB/day | 100 GB/day |
| Genome sequencing | ABI SOLID 3 | 238 GB/day | 150 GB/day |
| Microarray Gene Expression Chip Reader | Molecular Devices GenePix Professional 4200A Scanner | N/A | 8 MB/day |
| Microarray Gene Expression Chip Reader | NimbleGen Hybridization System 4 | N/A | 300 MB/day |

- Common instance of data bottleneck examples

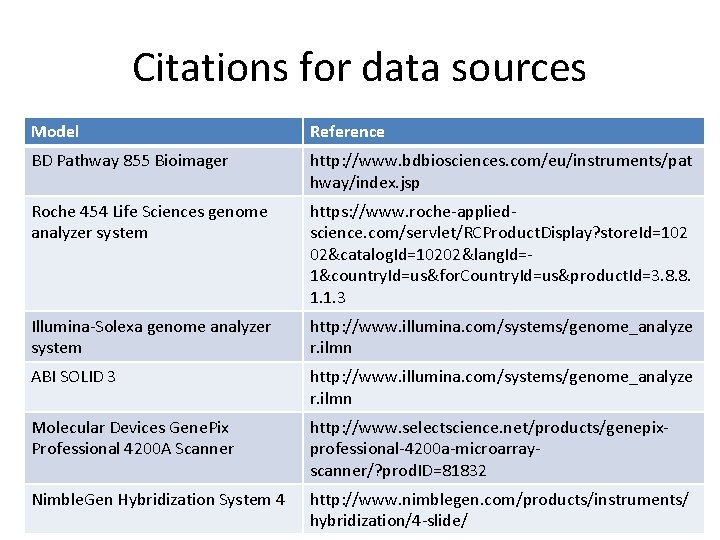

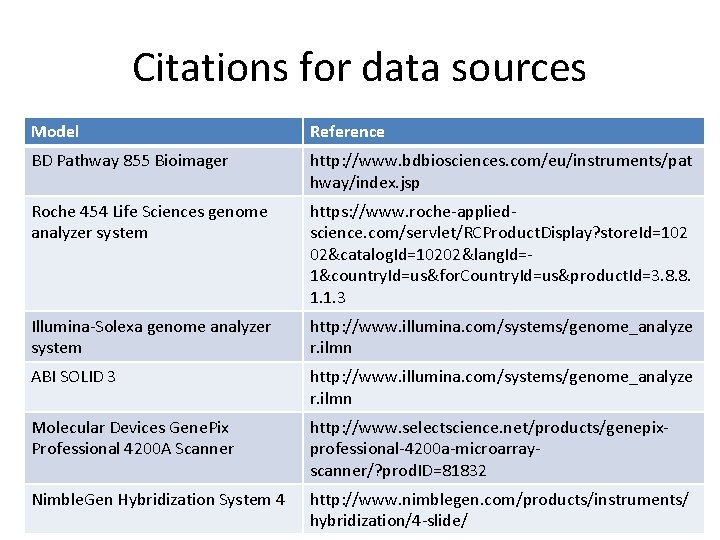

Citations for data sources

| Model | Reference |
|---|---|
| BD Pathway 855 Bioimager | http://www.bdbiosciences.com/eu/instruments/pathway/index.jsp |
| Roche 454 Life Sciences genome analyzer system | https://www.roche-appliedscience.com/servlet/RCProductDisplay?storeId=10202&catalogId=10202&langId=1&countryId=us&forCountryId=us&productId=3.8.8.1.1.3 |
| Illumina-Solexa genome analyzer system | http://www.illumina.com/systems/genome_analyzer.ilmn |
| ABI SOLID 3 | http://www.illumina.com/systems/genome_analyzer.ilmn |
| Molecular Devices GenePix Professional 4200A Scanner | http://www.selectscience.net/products/genepixprofessional-4200a-microarrayscanner/?prodID=81832 |
| NimbleGen Hybridization System 4 | http://www.nimblegen.com/products/instruments/hybridization/4-slide/ |

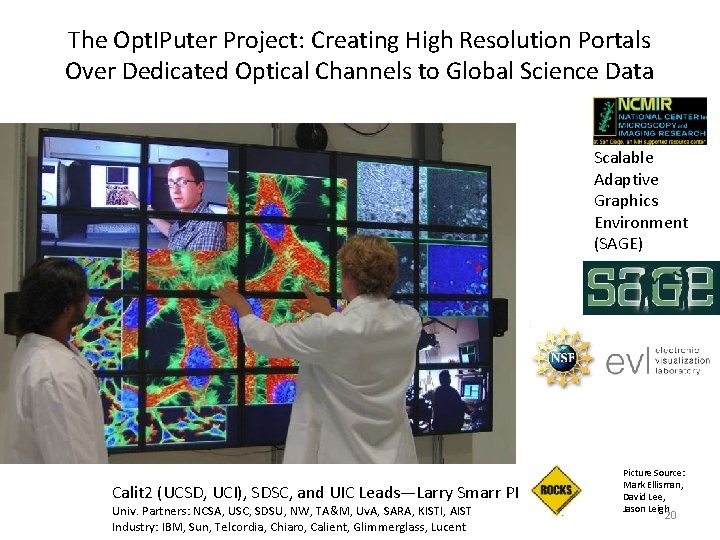

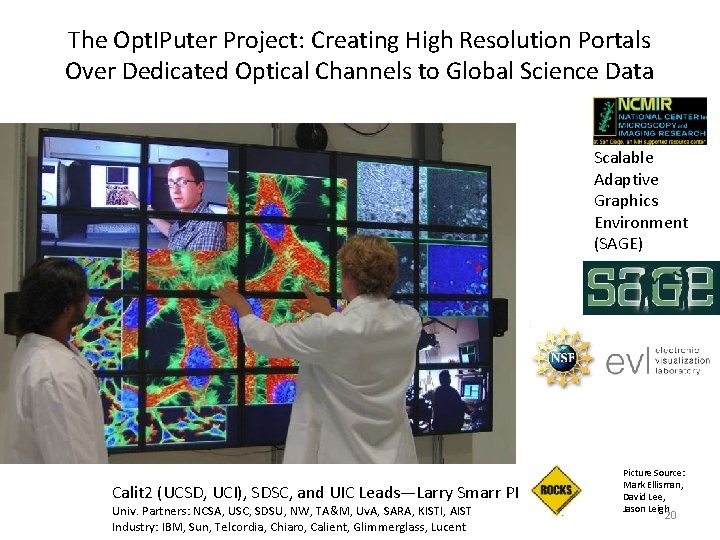

The OptIPuter Project: Creating High Resolution Portals Over Dedicated Optical Channels to Global Science Data

Scalable Adaptive Graphics Environment (SAGE)

- Leads: Calit2 (UCSD, UCI), SDSC, and UIC; Larry Smarr, PI
- Univ. Partners: NCSA, USC, SDSU, NW, TA&M, UvA, SARA, KISTI, AIST
- Industry: IBM, Sun, Telcordia, Chiaro, Calient, Glimmerglass, Lucent

Picture Source: Mark Ellisman, David Lee, Jason Leigh

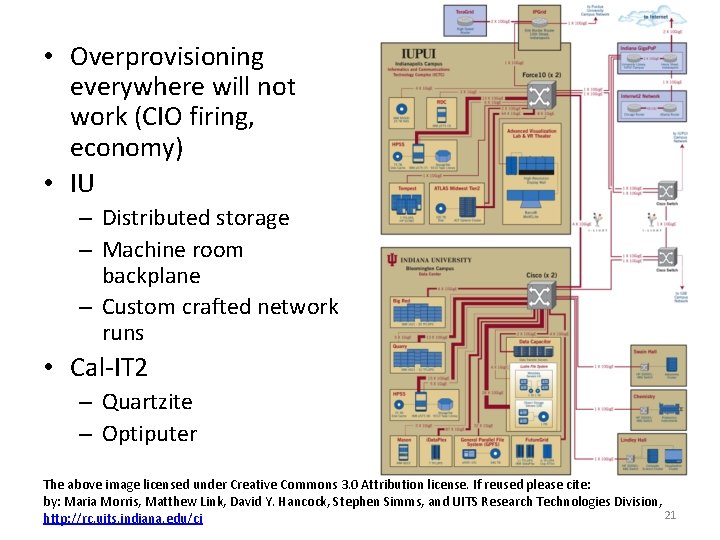

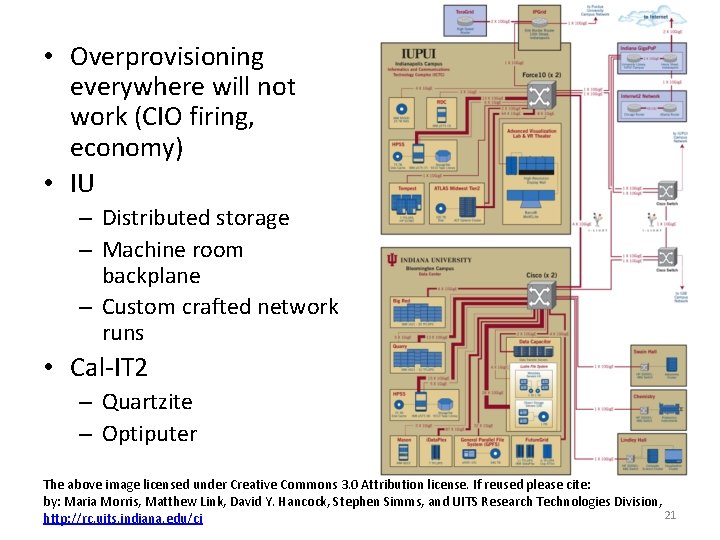

- Overprovisioning everywhere will not work (CIO firing, economy)
- IU
  - Distributed storage
  - Machine room backplane
  - Custom crafted network runs
- Cal-IT2
  - Quartzite
  - Optiputer

The above image licensed under Creative Commons 3.0 Attribution license. If reused please cite: Maria Morris, Matthew Link, David Y. Hancock, Stephen Simms, and UITS Research Technologies Division, http://rc.uits.indiana.edu/ci

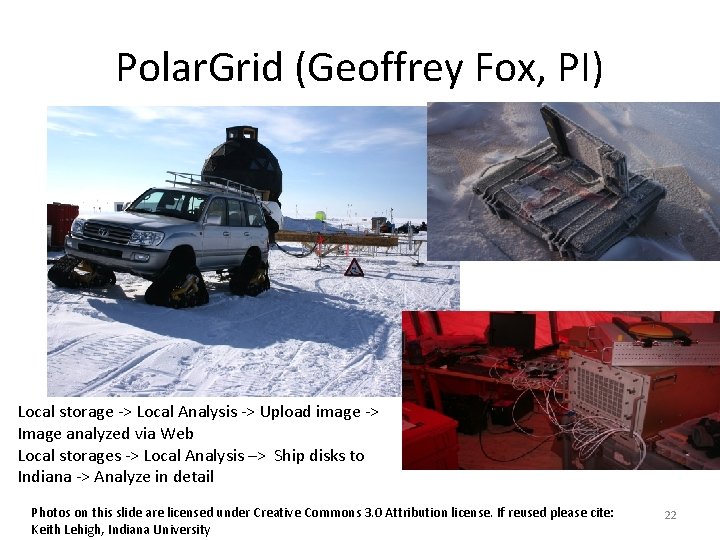

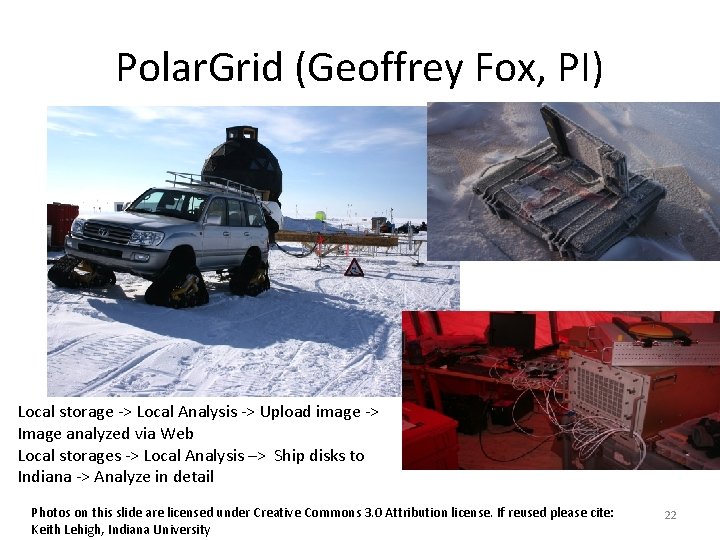

PolarGrid (Geoffrey Fox, PI)

- Local storage -> Local analysis -> Upload image -> Image analyzed via Web
- Local storage -> Local analysis -> Ship disks to Indiana -> Analyze in detail

Photos on this slide are licensed under Creative Commons 3.0 Attribution license. If reused please cite: Keith Lehigh, Indiana University

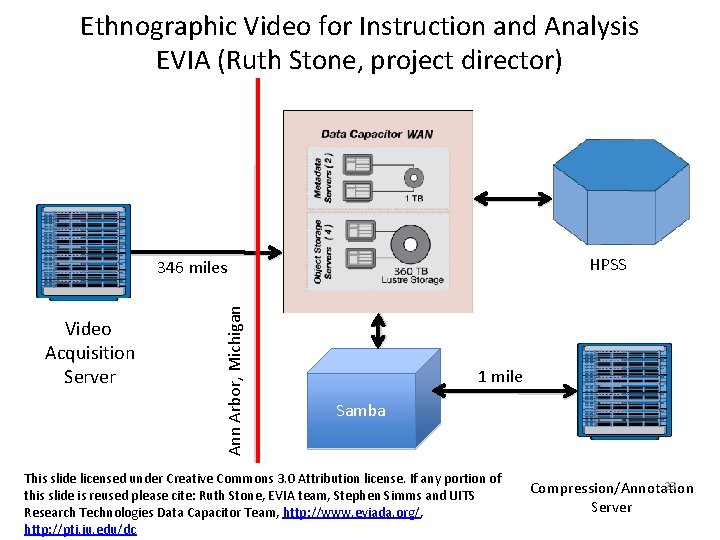

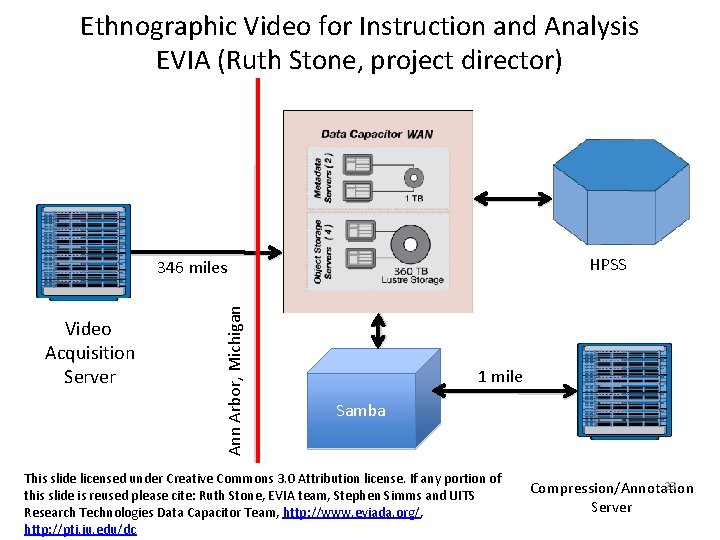

Ethnographic Video for Instruction and Analysis (EVIA) (Ruth Stone, project director)

Diagram labels: HPSS; Video Acquisition Server; Ann Arbor, Michigan; 346 miles; 1 mile; Samba; Compression/Annotation Server

This slide licensed under Creative Commons 3.0 Attribution license. If any portion of this slide is reused please cite: Ruth Stone, EVIA team, Stephen Simms and UITS Research Technologies Data Capacitor Team, http://www.eviada.org/, http://pti.iu.edu/dc

Summing up data movement

- Some proven approaches
  - Just put everything together
  - Build a data grid
- Newer approaches
  - Handcraft solutions
  - Distributed file systems
  - Accept some inefficiencies
- Network advances will continue to help
  - 100 Gbps
  - Dynamic networking services
  - Don’t underestimate bandwidth of a FedEx shipment
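The remark about shipping can be put in numbers: the effective throughput of a box of disks is its total capacity in bits divided by the door-to-door time. A small Python sketch follows. The shipment figures (20 disks of 2 TB, 24 hours in transit, 24 hours of handling) are hypothetical values chosen only for illustration, not from the talk, and 1 TB is assumed to be 2^40 bytes.

```python
# Effective throughput of shipping disks: total bits divided by door-to-door time.
# All shipment figures below are hypothetical, chosen only for illustration.
TB = 2**40  # binary terabyte, an assumption


def shipment_gbps(disk_count, disk_tb, transit_hours, handling_hours=0.0):
    """Average Gbps achieved by a shipment, including time to load and unload disks."""
    total_bits = disk_count * disk_tb * TB * 8
    total_seconds = (transit_hours + handling_hours) * 3600
    return total_bits / total_seconds / 1e9


if __name__ == "__main__":
    # Hypothetical box: 20 disks of 2 TB each, 24 hours in transit.
    print(f"transit only:  {shipment_gbps(20, 2, 24):.2f} Gbps")
    # Same box with a hypothetical 24 hours spent copying data on and off the disks.
    print(f"with handling: {shipment_gbps(20, 2, 24, handling_hours=24):.2f} Gbps")
```

Latency is a full day or more, but the average rate of such a shipment is in the range of a multi-gigabit link, which is the sense in which shipped disks remain competitive for bulk transfers.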

Complexity Hiding Interfaces

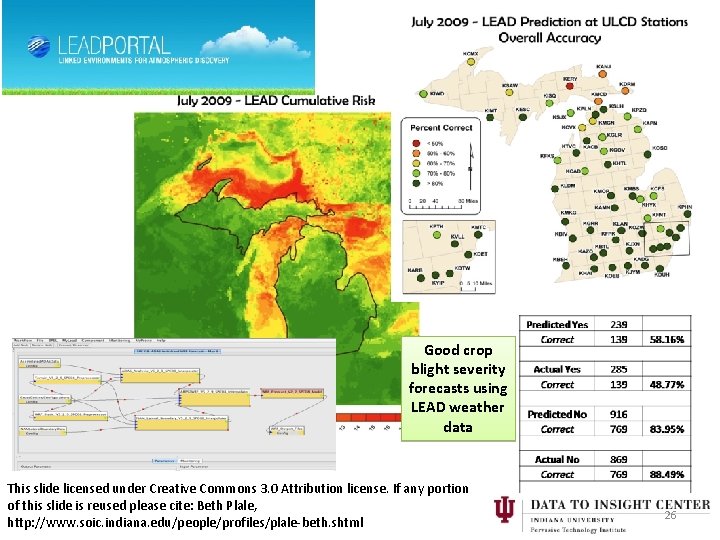

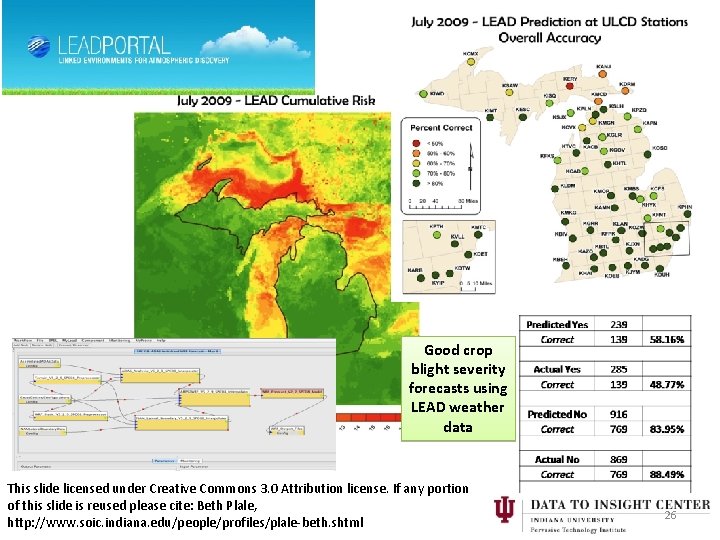

Good crop blight severity forecasts using LEAD weather data

This slide licensed under Creative Commons 3.0 Attribution license. If any portion of this slide is reused please cite: Beth Plale, http://www.soic.indiana.edu/people/profiles/plale-beth.shtml

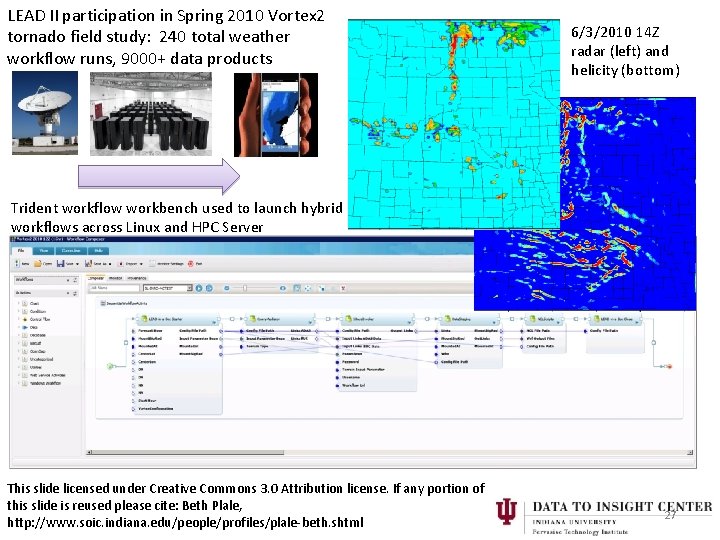

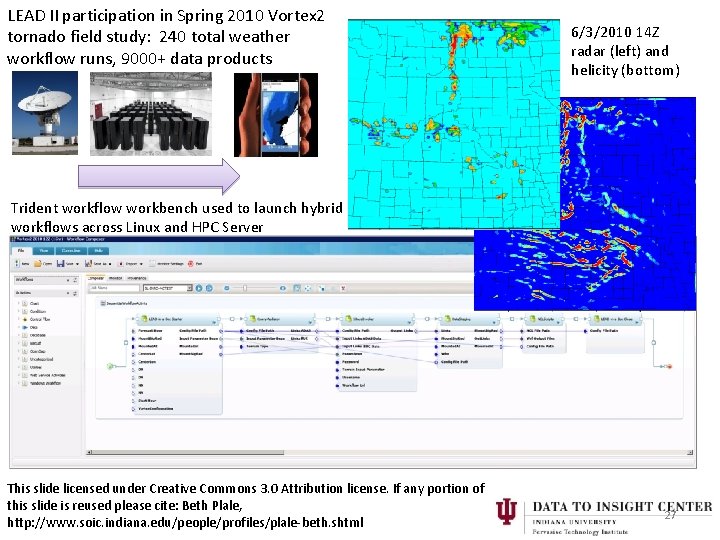

LEAD II participation in Spring 2010 Vortex2 tornado field study: 240 total weather workflow runs, 9000+ data products

6/3/2010 14Z radar (left) and helicity (bottom)

Trident workflow workbench used to launch hybrid workflows across Linux and HPC Server

This slide licensed under Creative Commons 3.0 Attribution license. If any portion of this slide is reused please cite: Beth Plale, http://www.soic.indiana.edu/people/profiles/plale-beth.shtml

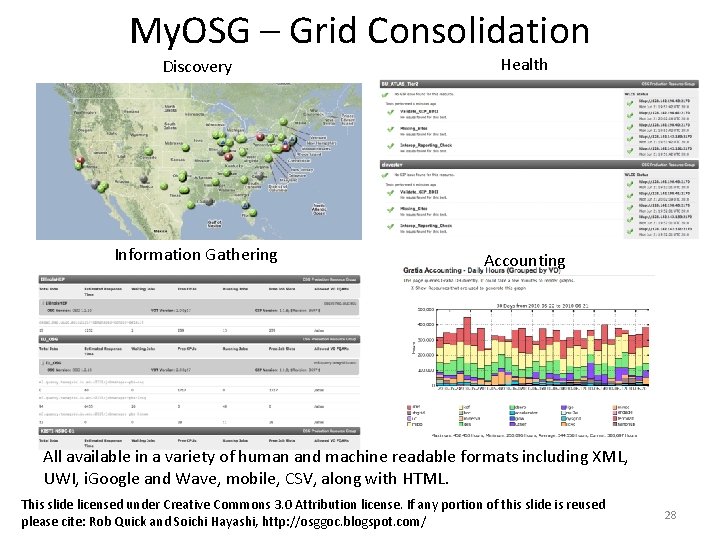

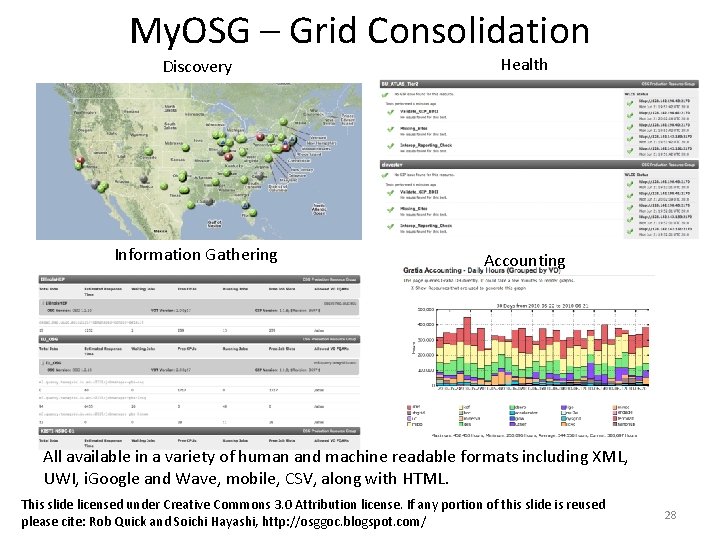

MyOSG – Grid Consolidation Discovery Health Information Gathering Accounting

All available in a variety of human and machine readable formats including XML, UWI, iGoogle and Wave, mobile, CSV, along with HTML.

This slide licensed under Creative Commons 3.0 Attribution license. If any portion of this slide is reused please cite: Rob Quick and Soichi Hayashi, http://osggoc.blogspot.com/

Complexity Hiding Interfaces

- Allow creation of complex workflows on the fly
- Good demonstration of the “not otherwise possible” part of the CI definition
- Outreach and Engagement
- Bridge gap between creation of sophisticated data products and information in one domain and their application in another (Plale)

Metadata & Provenance

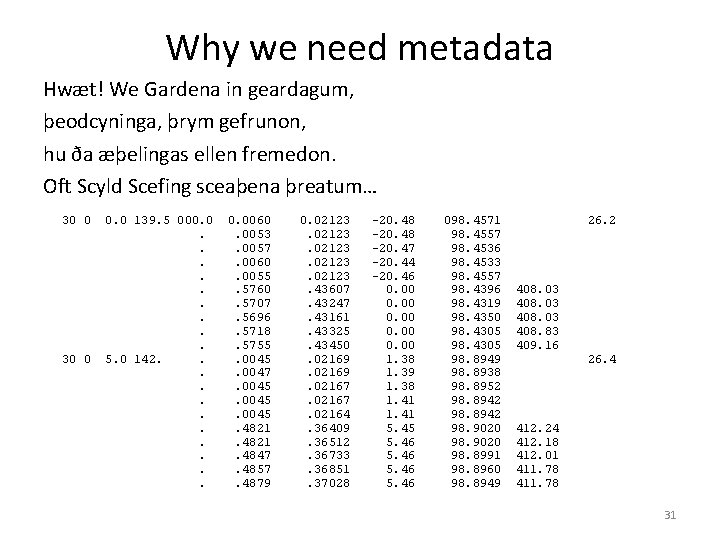

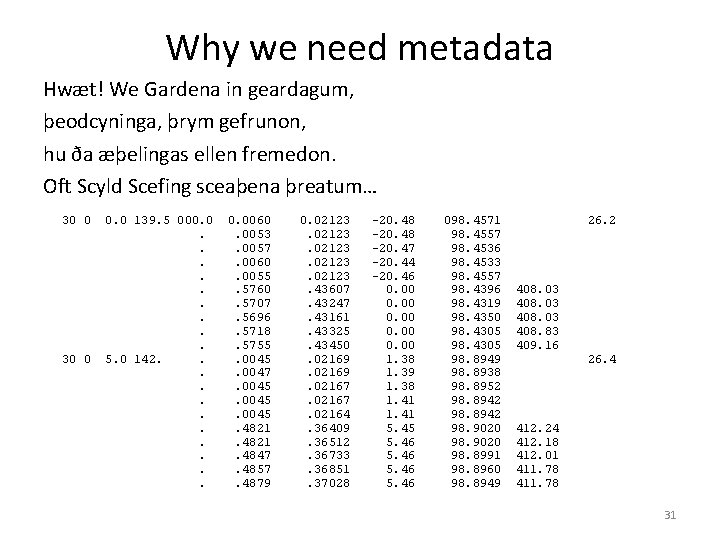

Why we need metadata

Hwæt! We Gardena in geardagum, þeodcyninga, þrym gefrunon, hu ða æþelingas ellen fremedon. Oft Scyld Scefing sceaþena þreatum…

[Slide shows a block of unlabeled numeric values, such as 98.4557, 98.4536, 98.4533, 412.24, 412.18, 412.01, with nothing to indicate what they measure.]

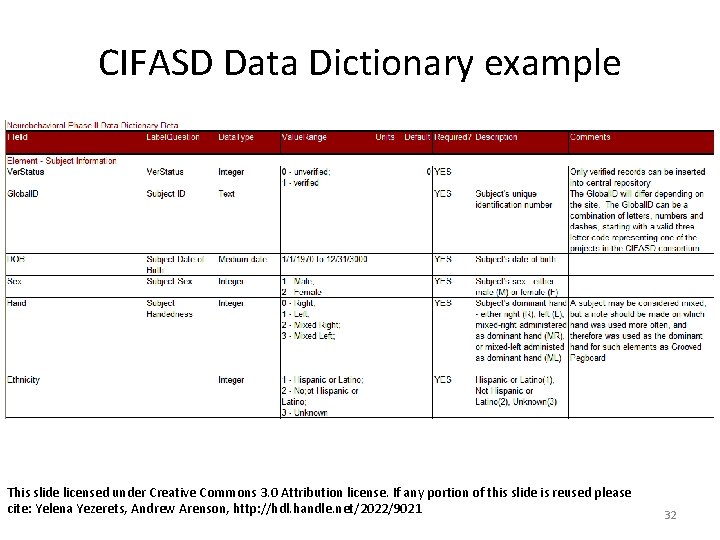

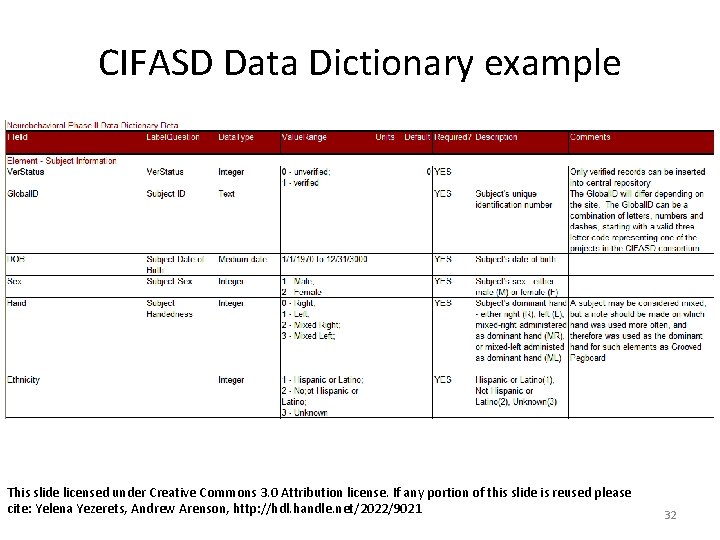

CIFASD Data Dictionary example

This slide licensed under Creative Commons 3.0 Attribution license. If any portion of this slide is reused please cite: Yelena Yezerets, Andrew Arenson, http://hdl.handle.net/2022/9021

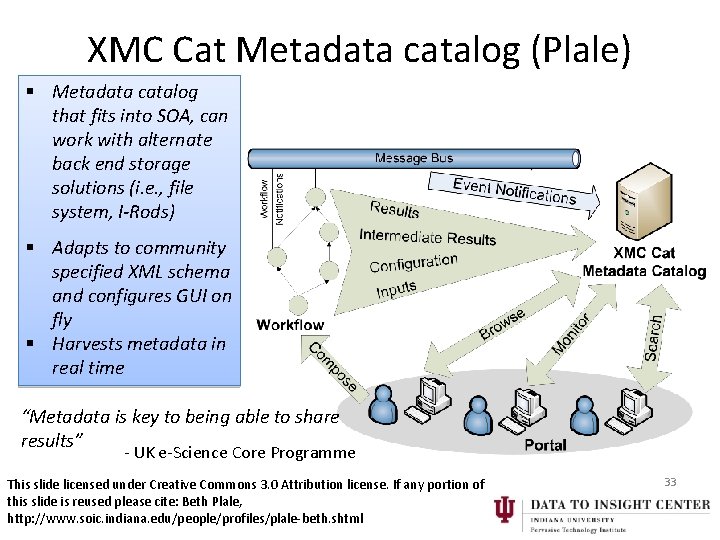

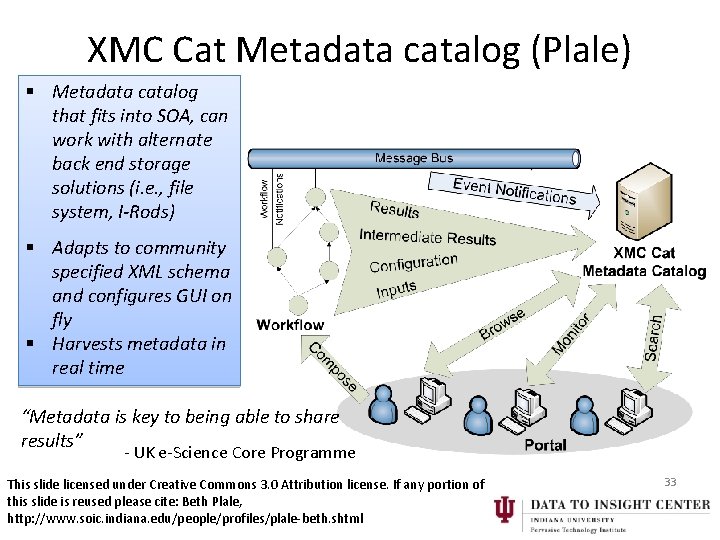

XMC Cat Metadata catalog (Plale)

- Metadata catalog that fits into SOA and can work with alternate back end storage solutions (i.e., file system, iRODS)
- Adapts to community specified XML schema and configures GUI on the fly
- Harvests metadata in real time

“Metadata is key to being able to share results” - UK e-Science Core Programme

This slide licensed under Creative Commons 3.0 Attribution license. If any portion of this slide is reused please cite: Beth Plale, http://www.soic.indiana.edu/people/profiles/plale-beth.shtml

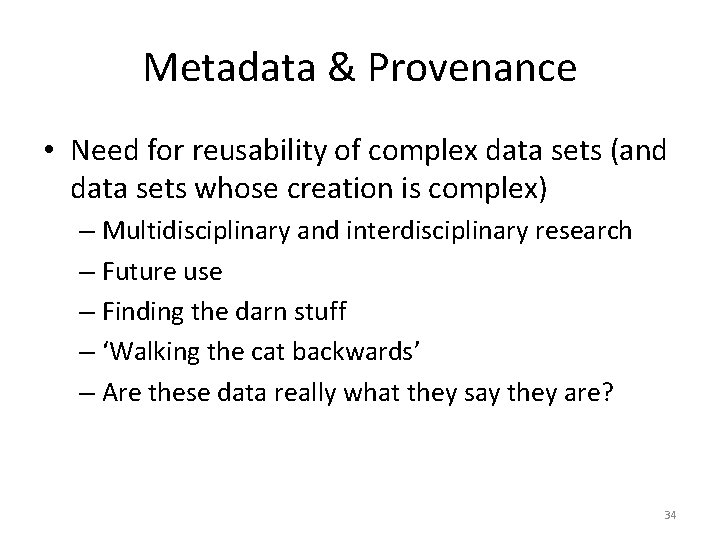

Metadata & Provenance

- Need for reusability of complex data sets (and data sets whose creation is complex)
  - Multidisciplinary and interdisciplinary research
  - Future use
  - Finding the darn stuff
  - ‘Walking the cat backwards’
  - Are these data really what they say they are?

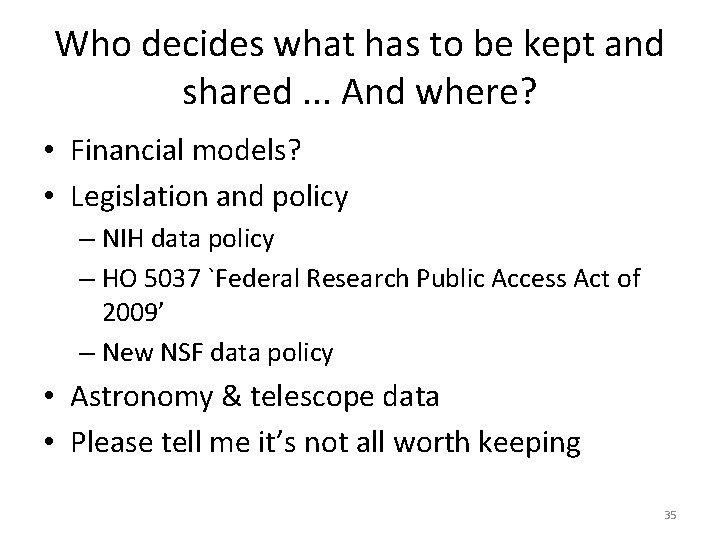

Who decides what has to be kept and shared... and where?

- Financial models?
- Legislation and policy
  - NIH data policy
  - HR 5037 ‘Federal Research Public Access Act of 2009’
  - New NSF data policy
- Astronomy & telescope data
- Please tell me it’s not all worth keeping

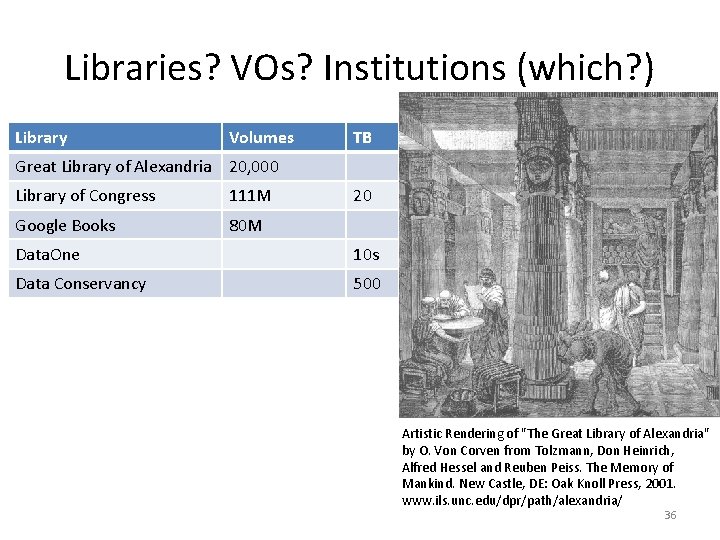

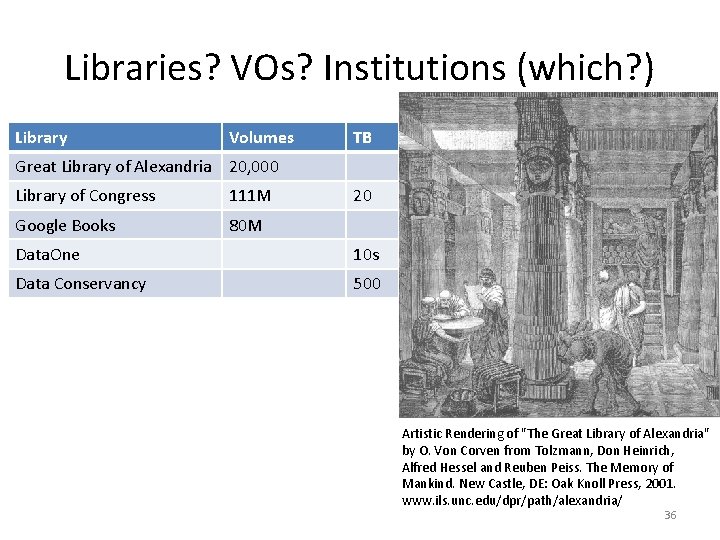

Libraries? VOs? Institutions (which?)

Library: Volumes, TB

- Great Library of Alexandria: 20,000
- Library of Congress: 111 M
- Google Books: 80 M, 20
- DataOne: 10s
- Data Conservancy: 500

Artistic Rendering of "The Great Library of Alexandria" by O. Von Corven from Tolzmann, Don Heinrich, Alfred Hessel and Reuben Peiss. The Memory of Mankind. New Castle, DE: Oak Knoll Press, 2001. www.ils.unc.edu/dpr/path/alexandria/

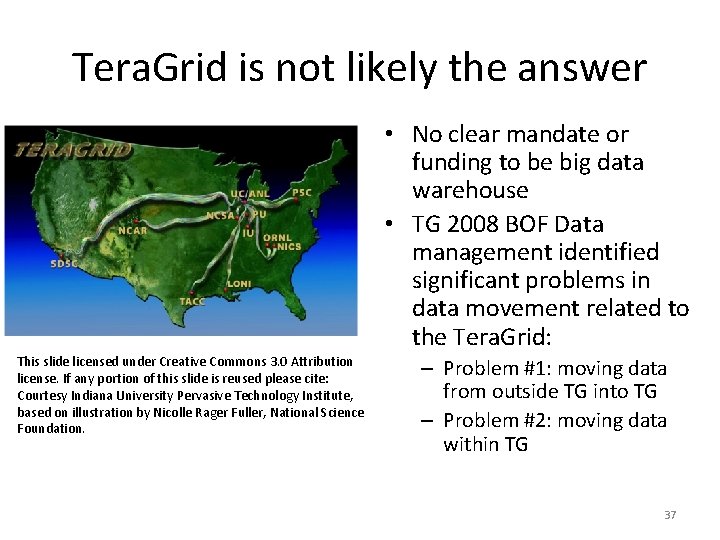

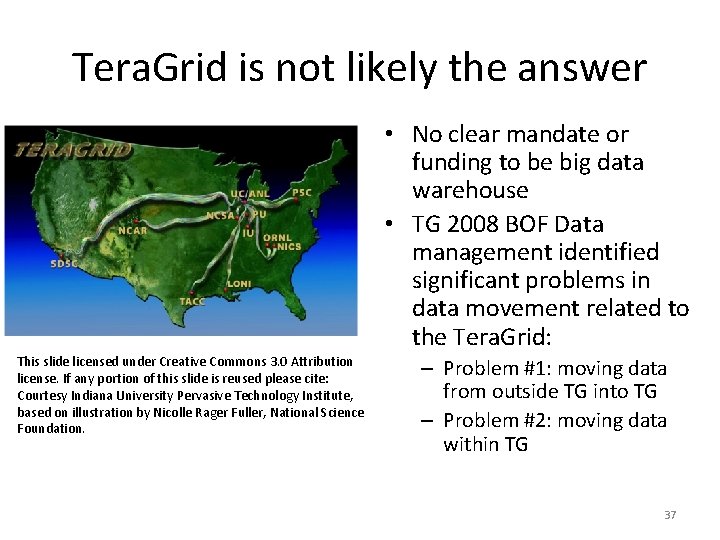

TeraGrid is not likely the answer

- No clear mandate or funding to be big data warehouse
- TG 2008 BOF Data management identified significant problems in data movement related to the TeraGrid:
  - Problem #1: moving data from outside TG into TG
  - Problem #2: moving data within TG

This slide licensed under Creative Commons 3.0 Attribution license. If any portion of this slide is reused please cite: Courtesy Indiana University Pervasive Technology Institute, based on illustration by Nicolle Rager Fuller, National Science Foundation.

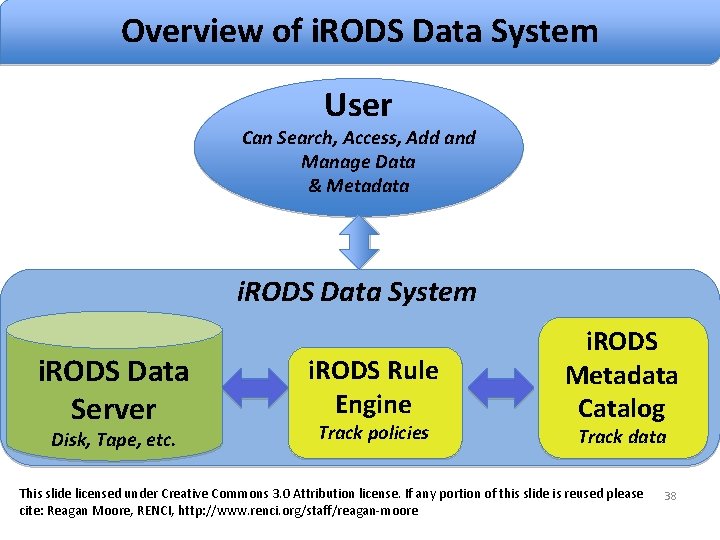

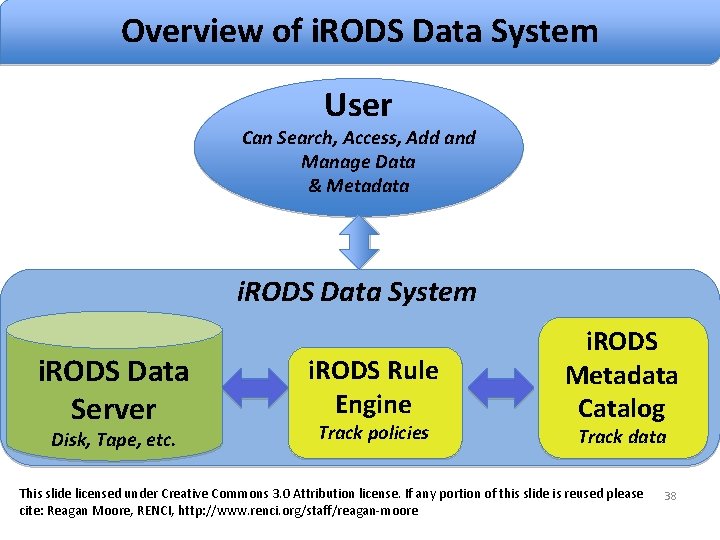

Architecture Overview of iRODS Data System

- User: can search, access, add and manage data & metadata
- iRODS Data System
  - iRODS Data Server: disk, tape, etc.
  - iRODS Rule Engine: track policies
  - iRODS Metadata Catalog: track data

This slide licensed under Creative Commons 3.0 Attribution license. If any portion of this slide is reused please cite: Reagan Moore, RENCI, http://www.renci.org/staff/reagan-moore

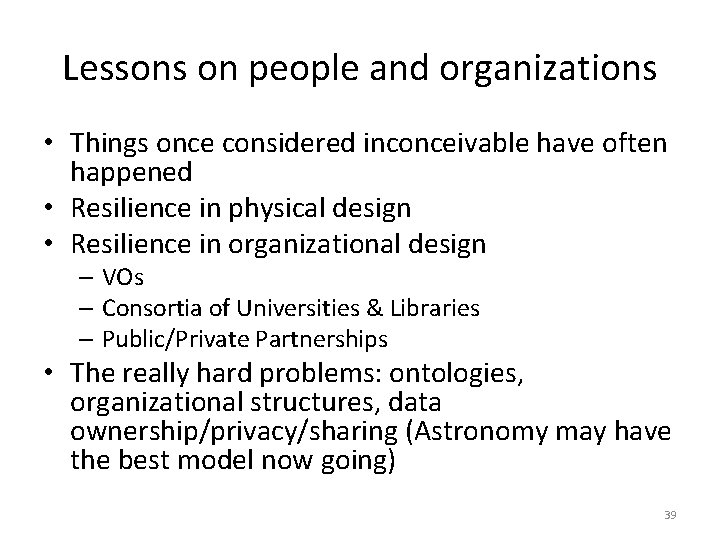

Lessons on people and organizations

- Things once considered inconceivable have often happened
- Resilience in physical design
- Resilience in organizational design
  - VOs
  - Consortia of Universities & Libraries
  - Public/Private Partnerships
- The really hard problems: ontologies, organizational structures, data ownership/privacy/sharing (Astronomy may have the best model now going)

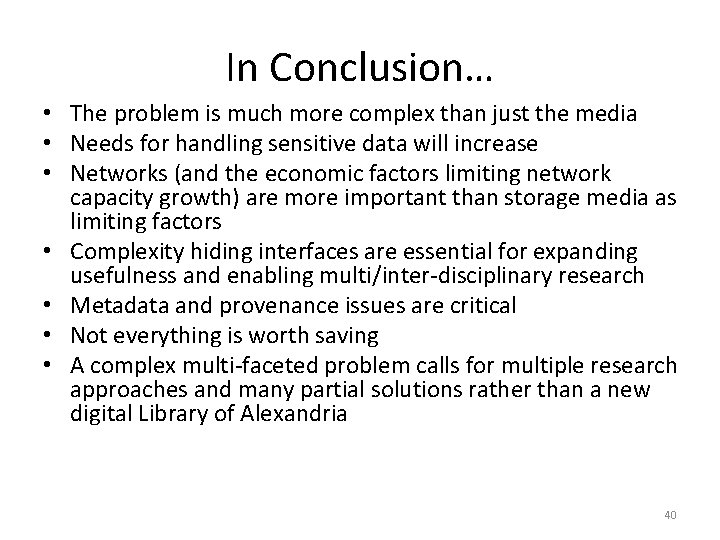

In Conclusion…

- The problem is much more complex than just the media
- Needs for handling sensitive data will increase
- Networks (and the economic factors limiting network capacity growth) are more important than storage media as limiting factors
- Complexity hiding interfaces are essential for expanding usefulness and enabling multi/inter-disciplinary research
- Metadata and provenance issues are critical
- Not everything is worth saving
- A complex multi-faceted problem calls for multiple research approaches and many partial solutions rather than a new digital Library of Alexandria

Acknowledgments and Thanks

- CIFASD (Stewart/Barnett): Part of this work was done in conjunction with the Collaborative Initiative on Fetal Alcohol Spectrum Disorders funded by NIH NIAAA incl. grant 1U24AA014818-01
- DIMA (Connelly): Lilly Endowment; NIBIB EB007083 (PI Janet Welch)
- AVIDD (McRobbie/Stewart): NSF 0116050
- Data Capacitor, TeraGrid (Stewart/Simms/Plale): NSF CNS-0521433, ACI-0338618l, OCI-0451237, OCI-0535258, and OCI-0504075
- Optiputer, Quartzite (Smarr, Papadopoulos, Cal-IT2 and UCSD): NSF 0225642, 0421555
- LEAD, XMCCat, Karma (Plale): 0721674, 0720580, 0630322, 0331480, Microsoft
- PolarGrid (Fox): CNS-0723054
- iRODS (Moore): NSF 1032732, 0910431, 0721400
- Pervasive Technology Institute: Lilly Endowment

Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation, National Institutes of Health, Lilly Endowment, or other funding agencies.

Thanks to many colleagues locally at IU and globally.
