ITEC 325 Lecture 28: Memory (5)

- Slides: 21


Review

- P2 coming on Friday
- Exam 2 next Friday
- Operating system / processes
- Logical address / physical address
- Pages / frames
- Main memory / HD

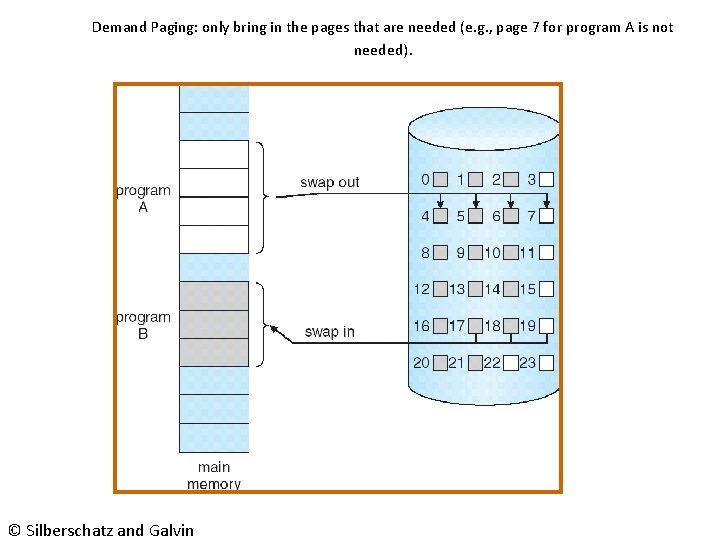

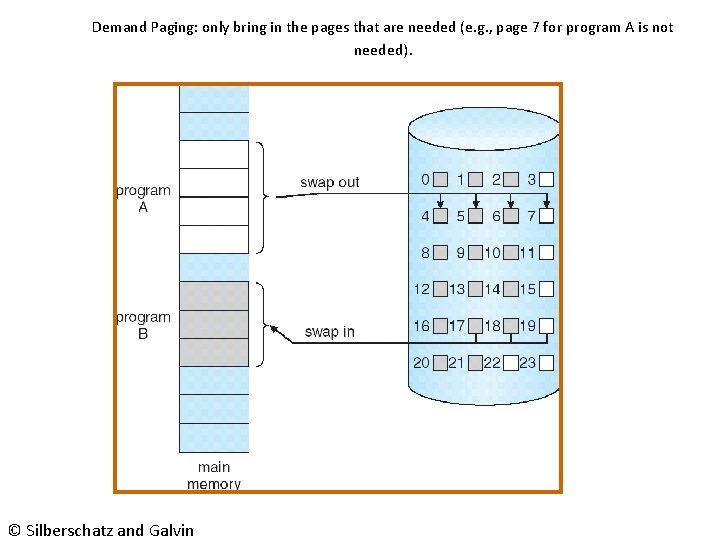

Demand Paging

- Some programs hog memory resources: they are huge and eat up too much physical memory.
- Most systems attack this problem by bringing into physical memory only the portions of the process that are actually needed.
- In other words, some pages of the process are not loaded into physical memory as long as they are not needed. This is called demand paging.

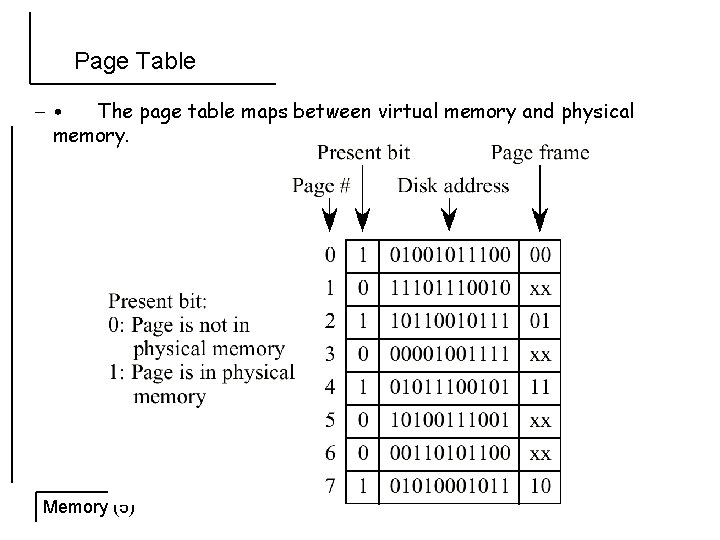

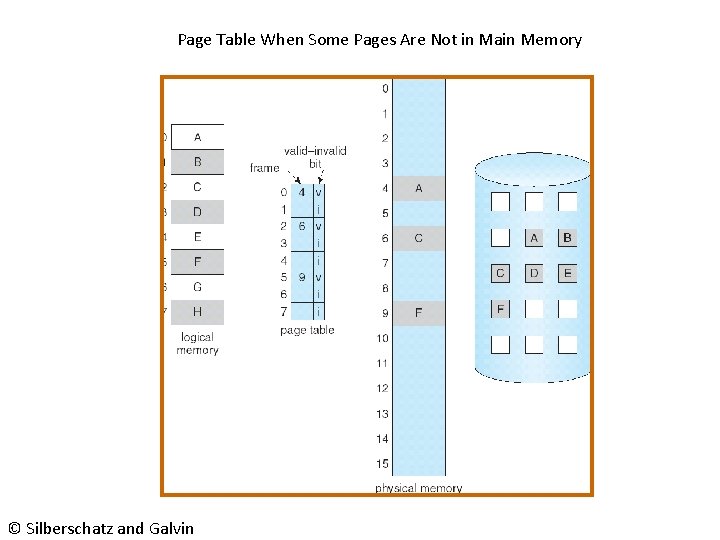

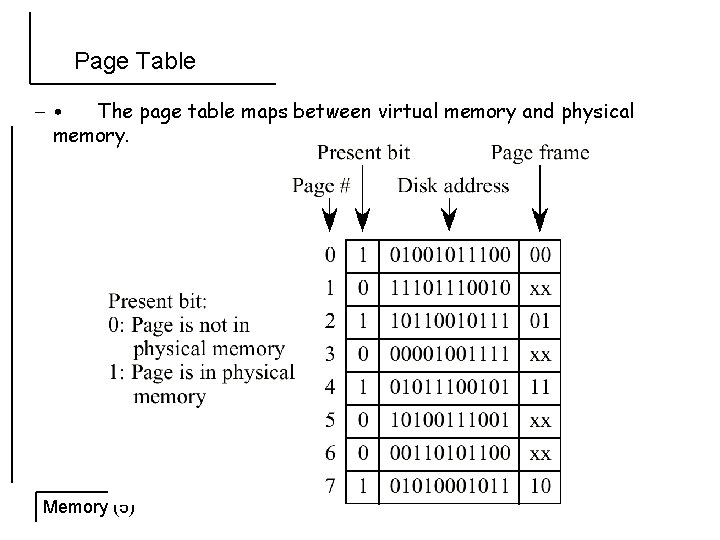

Page Table

- The page table maps pages in virtual memory to frames in physical memory.

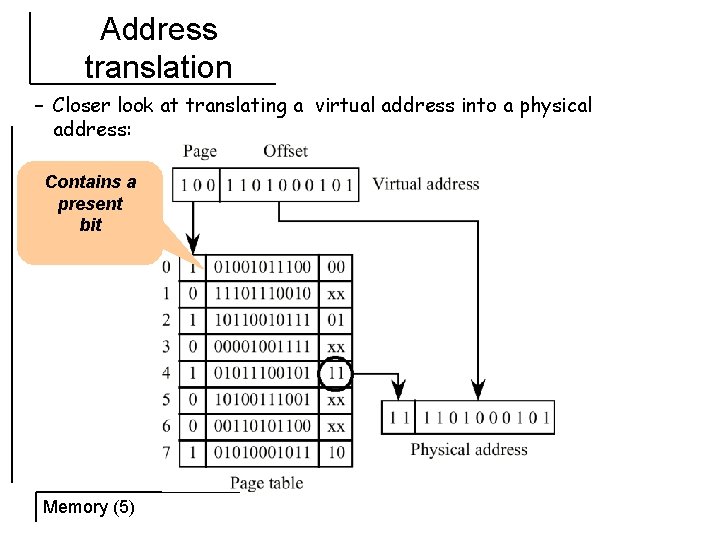

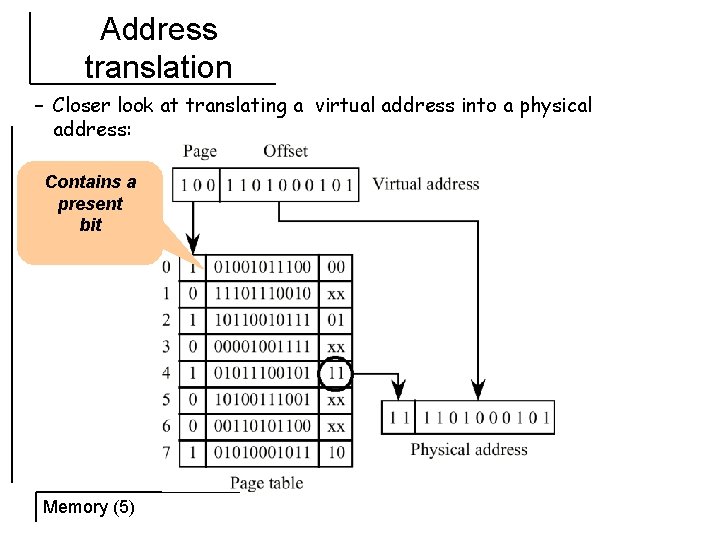

Address translation

- A closer look at translating a virtual address into a physical address.
- Each page table entry contains a present bit.
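The translation step can be sketched in Python. This is only an illustration under stated assumptions: the 4 KiB page size, the dictionary-based page table, and the names `PAGE_SIZE`, `PageFault`, and `translate` are hypothetical and do not come from the slides.

```python
PAGE_SIZE = 4096  # assumed page size in bytes (not given in the slides)


class PageFault(Exception):
    """Raised when the referenced page has present bit = 0."""


def translate(virtual_address, page_table):
    """Map a virtual address to a physical address.

    page_table maps page number -> (frame number, present bit).
    """
    # Split the virtual address into a page number and an offset in the page.
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    frame_number, present = page_table[page_number]
    if not present:
        # The page is not in main memory: the hardware would trap to the OS.
        raise PageFault(page_number)
    # The offset is unchanged; only the page number is replaced by the frame number.
    return frame_number * PAGE_SIZE + offset


# Hypothetical page table: page 0 is in frame 5, page 1 is not in memory.
page_table = {0: (5, 1), 1: (None, 0)}
physical_address = translate(100, page_table)
```

Here address 100 lies in page 0 at offset 100, so it maps to offset 100 inside frame 5. Any address in page 1 would raise `PageFault` because its present bit is 0.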

Demand Paging: only bring in the pages that are needed (e.g., page 7 for program A is not needed). © Silberschatz and Galvin

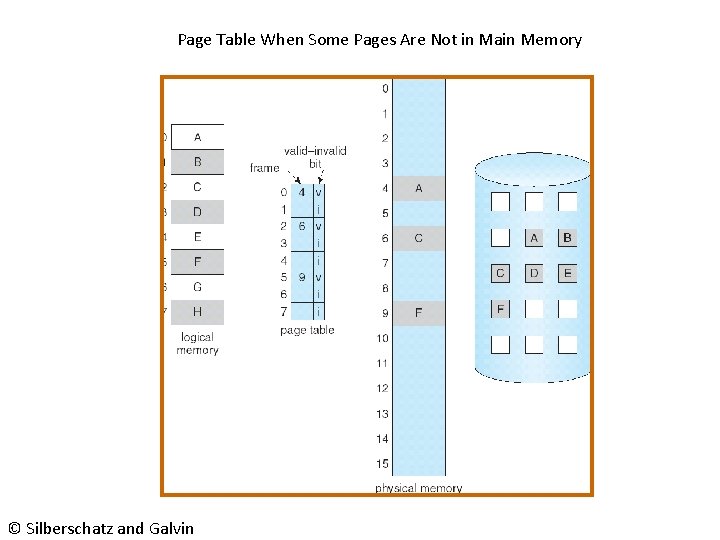

Page Table When Some Pages Are Not in Main Memory © Silberschatz and Galvin

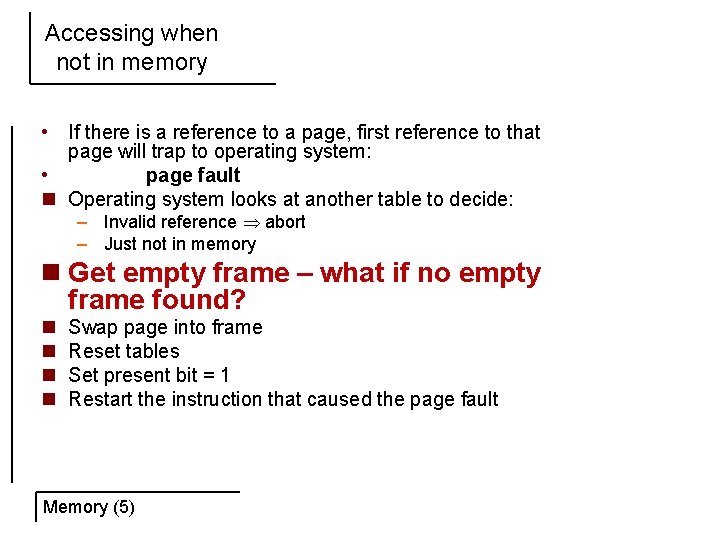

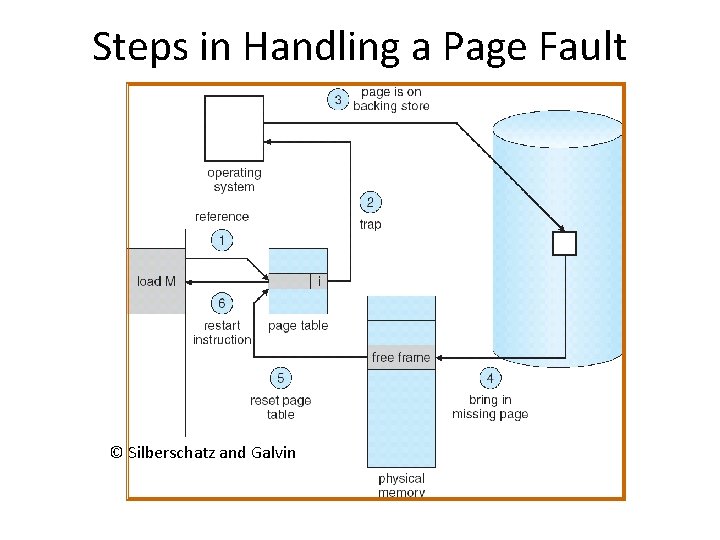

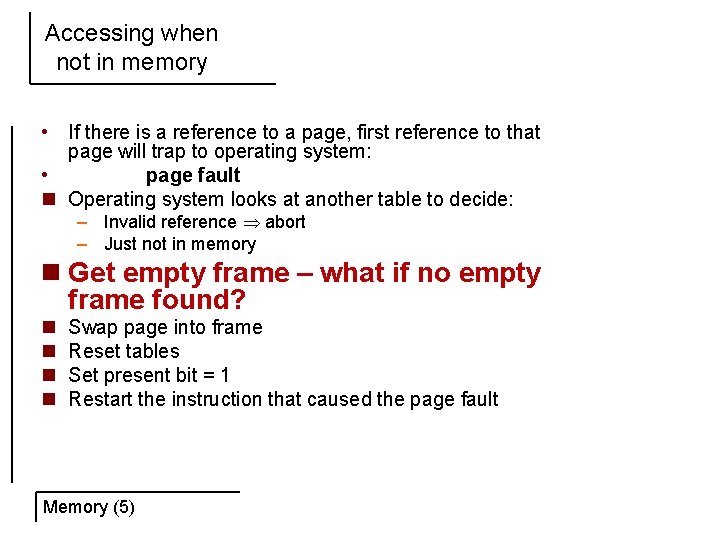

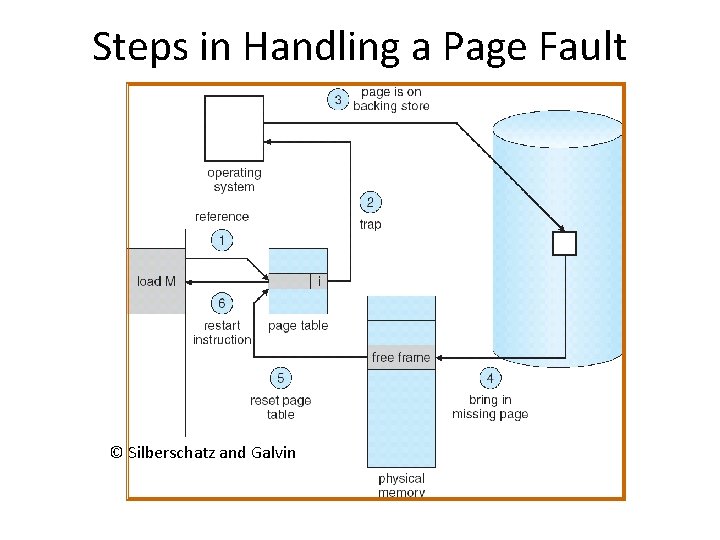

Accessing a page that is not in memory

- The first reference to a page that is not in memory traps to the operating system: a page fault.
- The operating system looks at another table to decide:
  - Invalid reference: abort.
  - Just not in memory: bring the page in.
- Get an empty frame (what if no empty frame is found?).
- Swap the page into the frame.
- Reset the tables and set present bit = 1.
- Restart the instruction that caused the page fault.
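The steps above can be sketched as a toy Python routine. It is a simplified model, not an operating system implementation: the names `access`, `InvalidReference`, `free_frames`, and `valid_pages` are hypothetical, the disk transfer is only a comment, and the "no empty frame" case is left as an error because the slide poses it as an open question.

```python
class InvalidReference(Exception):
    """The process referenced a page outside its address space."""


def access(page, page_table, free_frames, valid_pages):
    """Return the frame holding `page`, servicing a page fault if needed."""
    entry = page_table.get(page)
    if entry is not None and entry["present"]:
        return entry["frame"]  # no fault: the page is already in memory

    # Page fault: the reference traps to the (simulated) operating system.
    if page not in valid_pages:
        raise InvalidReference(page)  # invalid reference: abort
    if not free_frames:
        # A page replacement policy would choose a victim here.
        raise RuntimeError("no empty frame available")

    frame = free_frames.pop()  # get an empty frame
    # (swapping the page in from disk would happen here)
    page_table[page] = {"frame": frame, "present": 1}  # reset tables
    # Restart the access that caused the fault; it now succeeds.
    return access(page, page_table, free_frames, valid_pages)
```

The second call in the fault path models restarting the faulting instruction: the same reference is retried and finds the present bit set.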

Steps in Handling a Page Fault © Silberschatz and Galvin

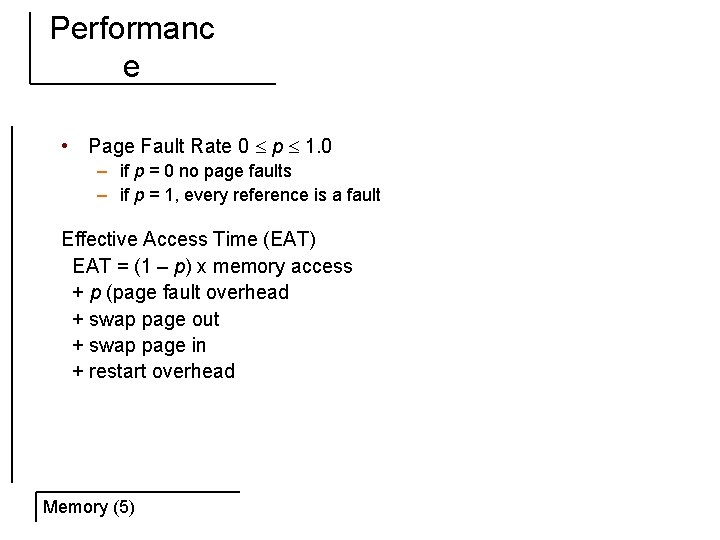

Performance

- Page fault rate p: 0 ≤ p ≤ 1.0
  - if p = 0, there are no page faults
  - if p = 1, every reference is a fault
- Effective Access Time (EAT):

  EAT = (1 - p) x memory access + p x (page fault overhead + swap page out + swap page in + restart overhead)

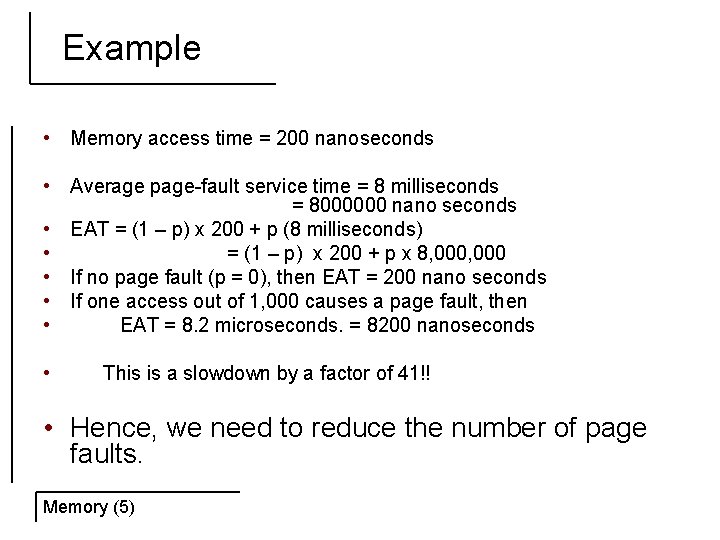

Example

- Memory access time = 200 nanoseconds
- Average page-fault service time = 8 milliseconds = 8,000,000 nanoseconds
- EAT = (1 - p) x 200 + p x (8 milliseconds) = (1 - p) x 200 + p x 8,000,000 nanoseconds
- If there are no page faults (p = 0), then EAT = 200 nanoseconds.
- If one access out of 1,000 causes a page fault (p = 0.001), then EAT ≈ 8,200 nanoseconds = 8.2 microseconds.
- This is a slowdown by a factor of 41!
- Hence, we need to reduce the number of page faults.
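The arithmetic in this example can be checked with a few lines of Python. The function name `effective_access_time` and its parameter names are illustrative; the default values are the numbers from the example (200 ns memory access, 8 ms page-fault service time).

```python
def effective_access_time(p, memory_access_ns=200, fault_service_ns=8_000_000):
    """EAT in nanoseconds for page fault rate p (0 <= p <= 1)."""
    return (1 - p) * memory_access_ns + p * fault_service_ns


no_faults = effective_access_time(0)          # every access costs 200 ns
one_in_thousand = effective_access_time(1 / 1000)
slowdown = one_in_thousand / no_faults

print(f"EAT with p = 0.001: {one_in_thousand:.1f} ns")
print(f"Slowdown factor: {slowdown:.1f}")
```

With p = 0.001 the exact value is 199.8 + 8,000 = 8,199.8 ns, which rounds to the 8.2 microseconds and the factor of about 41 quoted above.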

Performance of paging

- Two factors affect performance:
  - (a) Number of page faults
  - (b) Fast access to the page table
- Next: decreasing the number of page faults.

Decreasing page faults

- To reduce the number of page faults, a key factor is which page to replace when a page fault occurs.
- Two common algorithms:
  - Least recently used (LRU)
  - First in, first out (FIFO)
- Optimal page replacement: replace the page that will not be used for the longest period of time.

Example

- When running a process, its virtual memory was divided into 9 pages. However, there is only free space for 4 pages in physical memory.
- Here is the order in which the pages are accessed as the process executes:
  - 7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2, 1, 2, 0, 1, 7, 0, 1
- How many page faults occur with:
  - the optimal page replacement algorithm?
  - least recently used?
- Exercise in class.

© Silberschatz and Galvin
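One way to check a hand-worked answer is a short simulation. The sketch below counts page faults for FIFO, LRU, and optimal replacement. It assumes all frames start empty, so the first reference to each page counts as a fault. The function names are illustrative.

```python
from collections import OrderedDict, deque


def fifo_faults(refs, num_frames):
    """Count page faults when the oldest loaded page is evicted first."""
    frames, faults = deque(), 0
    for page in refs:
        if page in frames:
            continue
        faults += 1
        if len(frames) == num_frames:
            frames.popleft()  # evict the page that was loaded earliest
        frames.append(page)
    return faults


def lru_faults(refs, num_frames):
    """Count page faults when the least recently used page is evicted."""
    frames, faults = OrderedDict(), 0
    for page in refs:
        if page in frames:
            frames.move_to_end(page)  # mark as most recently used
            continue
        faults += 1
        if len(frames) == num_frames:
            frames.popitem(last=False)  # evict the least recently used page
        frames[page] = True
    return faults


def opt_faults(refs, num_frames):
    """Count page faults when the page used farthest in the future is evicted."""
    frames, faults = set(), 0
    for i, page in enumerate(refs):
        if page in frames:
            continue
        faults += 1
        if len(frames) == num_frames:
            future = refs[i + 1:]

            def next_use(p):
                # Pages never used again sort as "infinitely far away".
                return future.index(p) if p in future else float("inf")

            frames.remove(max(frames, key=next_use))
        frames.add(page)
    return faults


refs = [7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2, 1, 2, 0, 1, 7, 0, 1]
for name, count in [("FIFO", fifo_faults), ("LRU", lru_faults), ("OPT", opt_faults)]:
    print(name, count(refs, 4))
```

The optimal algorithm needs the future reference string, so it serves only as a lower bound for comparison. A real system cannot implement it.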

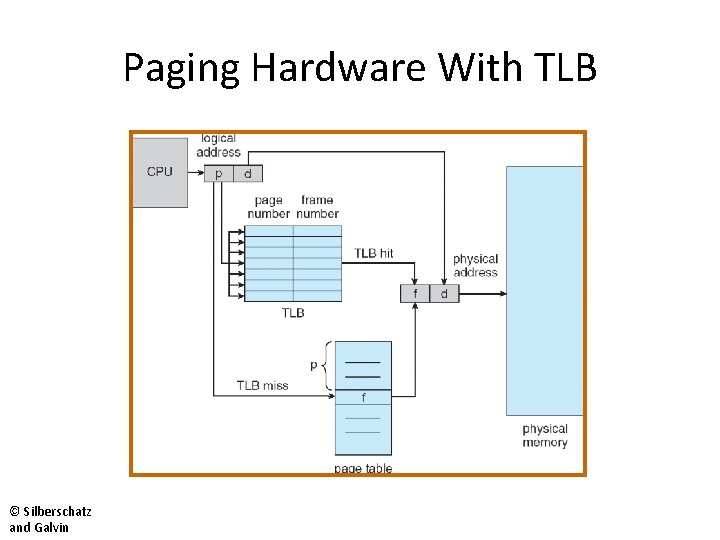

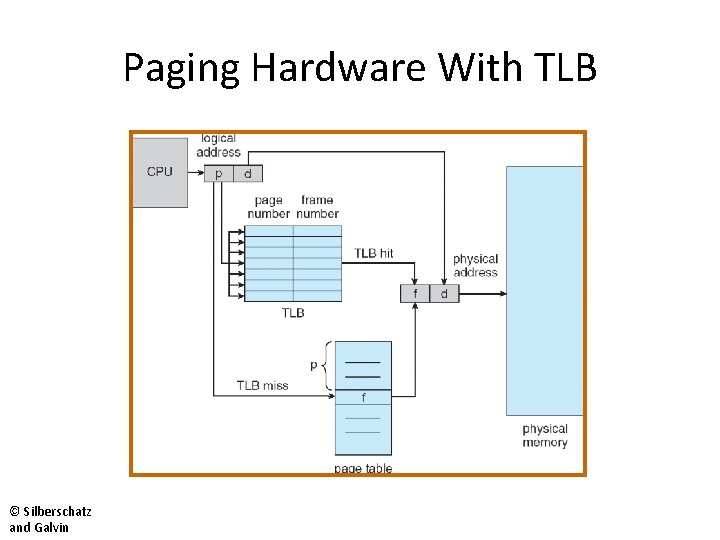

Faster implementation

- Use a separate cache (other than L1, L2) to store part of the page table, called the translation lookaside buffer (TLB).
- The TLB is hardware that implements associative memory.
  - Associative memory: similar to a hash table.
- Working of a TLB:
  - First, look up the page number in the TLB.
  - If the page number is found (TLB hit), read the associated frame number. This is faster than a memory lookup since the TLB is implemented in hardware.
  - If the page number is not found (TLB miss), go to the page table in main memory.
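The lookup logic can be sketched with a Python dictionary standing in for the associative memory. This is a software analogy only: a real TLB is hardware with a fixed number of entries, and this sketch assumes no eviction. The name `lookup_frame` is hypothetical.

```python
def lookup_frame(page_number, tlb, page_table):
    """Return (frame number, "hit" or "miss") for a page number."""
    if page_number in tlb:
        # TLB hit: the frame number is read directly from the TLB.
        return tlb[page_number], "hit"
    # TLB miss: fall back to the page table in main memory.
    frame = page_table[page_number]
    # Cache the translation so the next reference to this page hits.
    tlb[page_number] = frame
    return frame, "miss"


tlb = {}
page_table = {3: 9}  # hypothetical mapping: page 3 lives in frame 9
first = lookup_frame(3, tlb, page_table)   # miss, then the entry is cached
second = lookup_frame(3, tlb, page_table)  # hit
```

The first reference to a page misses and pays for the page table access. Repeated references to the same page then hit in the TLB.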

Paging Hardware With TLB © Silberschatz and Galvin

Advantage of TLB

- When does the TLB offer an advantage?
  - When most of the page numbers the process refers to are in the TLB.
  - This is measured by the hit ratio: 0 <= hit ratio <= 1.
  - The higher the hit ratio, the greater the chance of finding a page in the TLB.
- Example: let the memory access time be 100 ns, the TLB access time be 20 ns, and the hit ratio be 0.8.
  - What is the overhead of a lookup?
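A possible way to work this example is shown below, under assumptions the slide does not state: a single-level page table held in main memory, so a TLB miss costs one extra memory access, and a TLB that is always consulted first. The function name `tlb_effective_access_time` is illustrative.

```python
def tlb_effective_access_time(hit_ratio, tlb_ns=20, memory_ns=100):
    """Average time in ns to read data, including address translation."""
    hit_time = tlb_ns + memory_ns       # TLB lookup + data access
    miss_time = tlb_ns + 2 * memory_ns  # TLB lookup + page table access + data access
    return hit_ratio * hit_time + (1 - hit_ratio) * miss_time


eat = tlb_effective_access_time(0.8)
overhead = eat - 100  # extra time compared with a bare 100 ns memory access
print(f"EAT = {eat:.0f} ns, overhead = {overhead:.0f} ns")
```

Under these assumptions the result is 0.8 x 120 + 0.2 x 220 = 140 ns, an overhead of 40 ns per access. Without a TLB every access would need two memory accesses, or 200 ns.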

Thrashing

- Consider this scenario:
  - The CPU is idle, and lots of processes are waiting to execute.
  - The OS (to increase multiprogramming) loads the processes into memory.
  - Now let us say a process starts using more memory.
    - Then it demands free frames in main memory.
    - What if there are no free frames? Solution: steal from another process.
    - What if the other process needs more frames?
  - Processes start page faulting. This causes the OS to think that the CPU is idle, so it loads even more processes, which causes more thrashing.
- Example: the .NET runtime optimization service.

© Silberschatz and Galvin

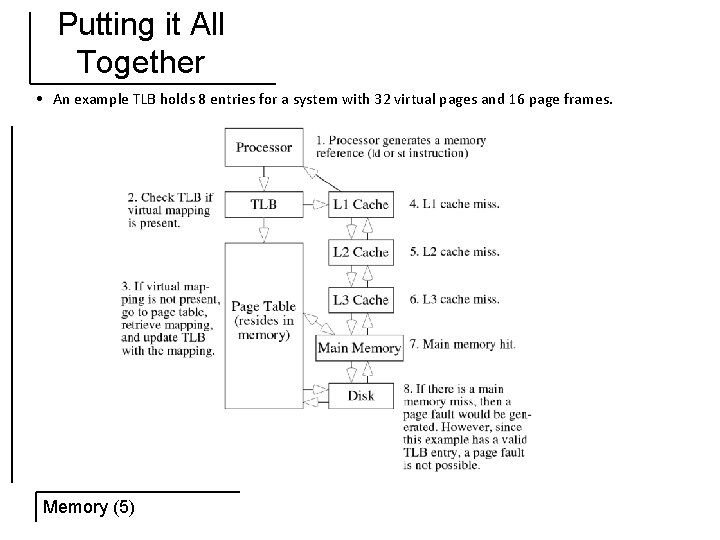

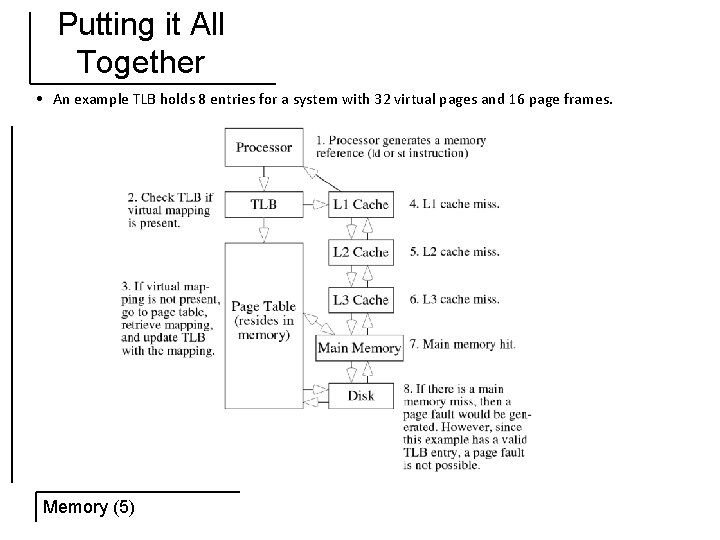

Putting it All Together

- An example TLB holds 8 entries for a system with 32 virtual pages and 16 page frames.

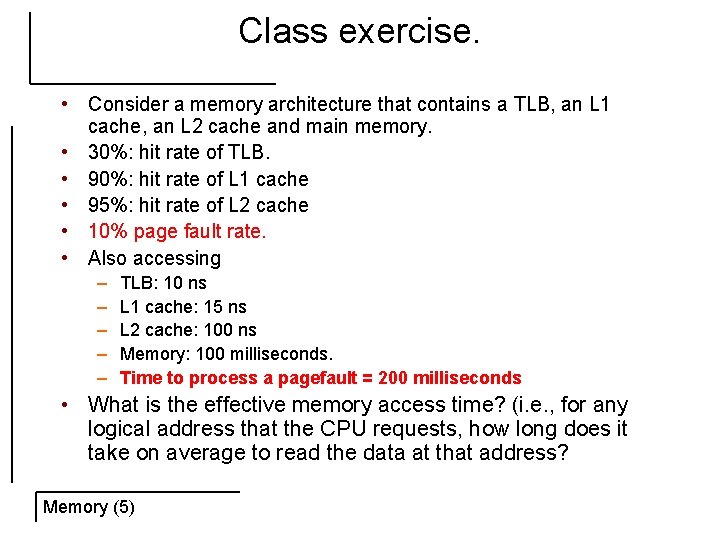

Class exercise

- Consider a memory architecture that contains a TLB, an L1 cache, an L2 cache, and main memory.
- Hit rate of TLB: 30%
- Hit rate of L1 cache: 90%
- Hit rate of L2 cache: 95%
- Page fault rate: 10%
- Access times:
  - TLB: 10 ns
  - L1 cache: 15 ns
  - L2 cache: 100 ns
  - Memory: 100 milliseconds
- Time to process a page fault = 200 milliseconds
- What is the effective memory access time? (I.e., for any logical address that the CPU requests, how long does it take on average to read the data at that address?)

Summary

- Paging
- Faults
- TLB