ISAAC: A Convolutional Neural Network Accelerator with In-Situ Analog Arithmetic in Crossbars

- Slides: 21

ISAAC: A Convolutional Neural Network Accelerator with In-Situ Analog Arithmetic in Crossbars

Ali Shafiee*, Anirban Nag*, Naveen Muralimanohar†, Rajeev Balasubramonian*, John Paul Strachan†, Miao Hu†, R. Stanley Williams†, Vivek Srikumar*

*University of Utah, †Hewlett Packard Labs

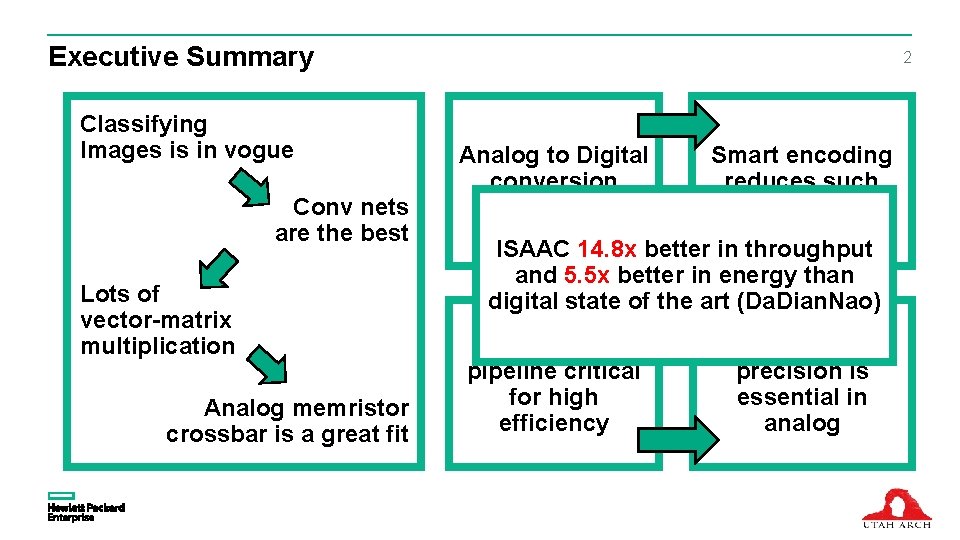

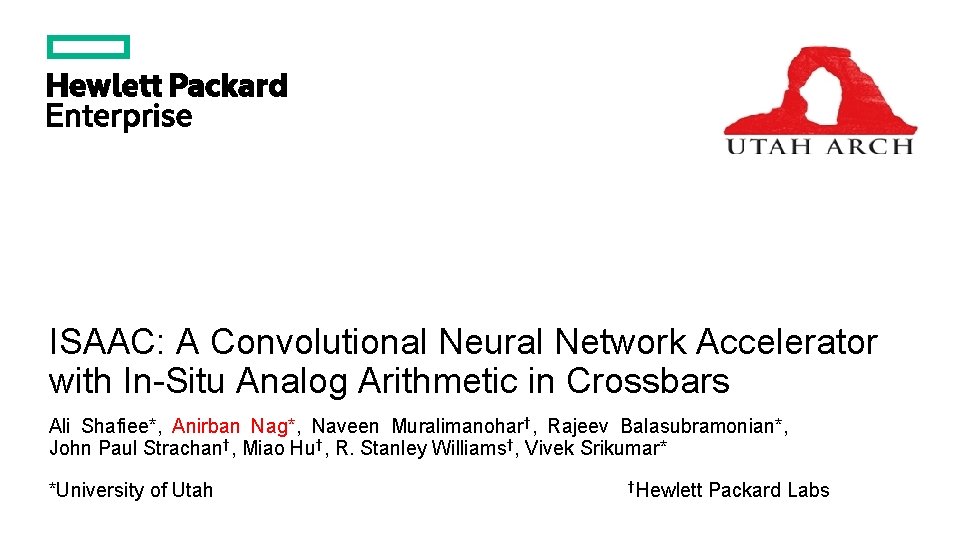

Executive Summary

- Classifying images is in vogue, and convolutional networks (conv nets) are the best-performing approach.
- Conv nets perform lots of vector-matrix multiplication, and an analog memristor crossbar is a great fit for it.
- The catch: analog-to-digital conversion overheads! Smart encoding reduces these overheads.
- ISAAC is 14.8× better in throughput and 5.5× better in energy than the digital state of the art (DaDianNao).
- A balanced pipeline is critical for high efficiency.
- Preserving high precision is essential in analog.

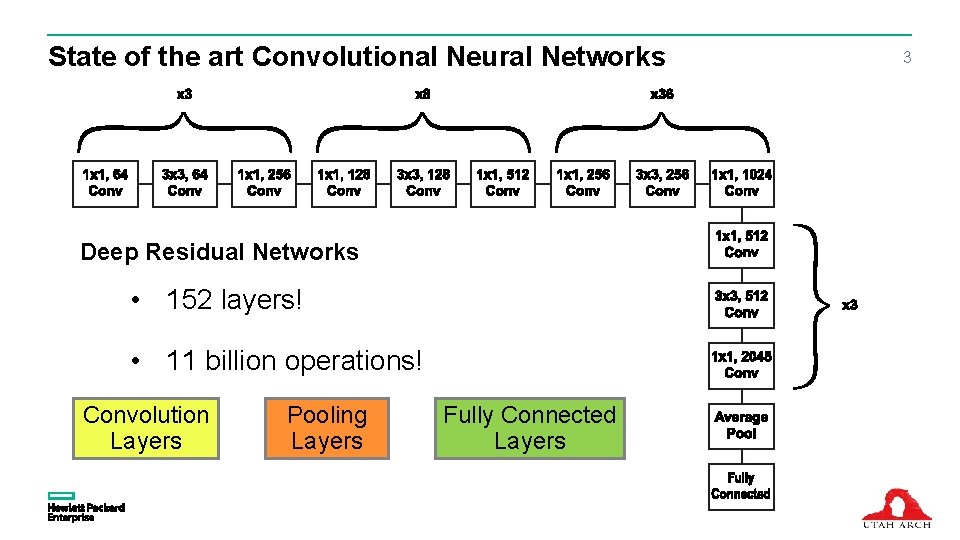

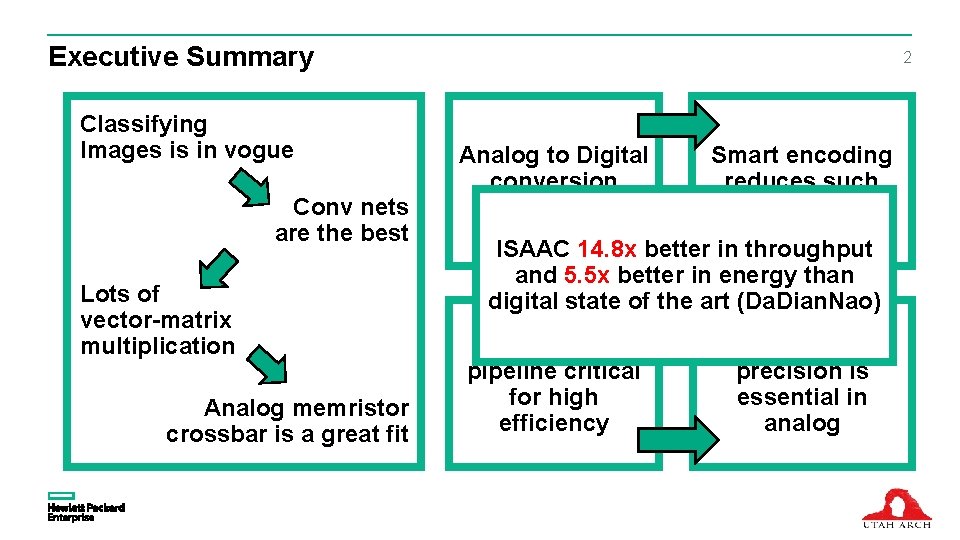

State-of-the-Art Convolutional Neural Networks

- Deep Residual Networks: 152 layers! 11 billion operations!
- Building blocks: convolution layers, pooling layers, and fully connected layers.

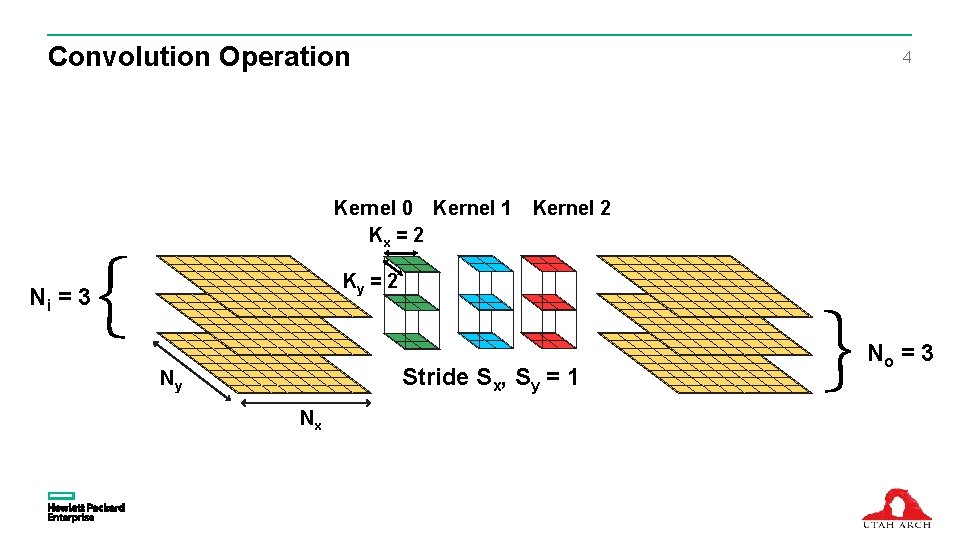

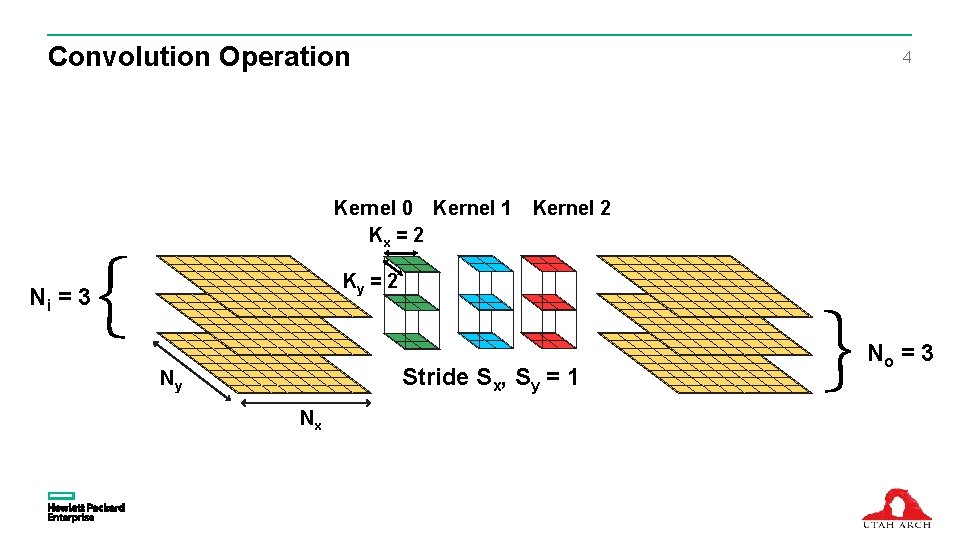

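Convolution Operation (figure: Ni = 3 input feature maps of size Nx × Ny are convolved with No = 3 kernels, Kernel 0 to Kernel 2; each kernel is Kx × Ky = 2 × 2, spans all Ni input maps, and slides with stride Sx = Sy = 1, producing No = 3 output feature maps)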
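The convolution on this slide can be written as a direct loop nest. The sketch below is a minimal illustration under assumptions not taken from the slides: feature maps are plain nested Python lists indexed `[channel][y][x]`, there is no padding, the 4 × 4 input size in the example is chosen for illustration, and the name `conv2d` is hypothetical.

```python
def conv2d(inputs, kernels, sx=1, sy=1):
    """Direct (naive) convolution without padding.

    inputs:  Ni feature maps, each Ny x Nx, indexed [channel][y][x]
    kernels: No kernels, each Ni x Ky x Kx
    returns: No output maps, each ((Ny-Ky)//sy + 1) x ((Nx-Kx)//sx + 1)
    """
    ni = len(inputs)
    ny, nx = len(inputs[0]), len(inputs[0][0])
    ky, kx = len(kernels[0][0]), len(kernels[0][0][0])
    out_y = (ny - ky) // sy + 1
    out_x = (nx - kx) // sx + 1
    outputs = []
    for kernel in kernels:                      # one output map per kernel (No maps)
        fmap = [[0] * out_x for _ in range(out_y)]
        for oy in range(out_y):
            for ox in range(out_x):
                acc = 0
                for c in range(ni):             # each kernel spans all Ni input maps
                    for dy in range(ky):
                        for dx in range(kx):
                            acc += kernel[c][dy][dx] * inputs[c][oy * sy + dy][ox * sx + dx]
                fmap[oy][ox] = acc
        outputs.append(fmap)
    return outputs


# Example shaped like the slide: Ni = 3 maps (4 x 4 here), No = 3 kernels of 2 x 2, stride 1.
maps = [[[1] * 4 for _ in range(4)] for _ in range(3)]
kernels = [[[[1] * 2 for _ in range(2)] for _ in range(3)] for _ in range(3)]
out = conv2d(maps, kernels)
print(len(out), len(out[0]), len(out[0][0]))  # 3 3 3
```

Every output neuron is a Kx × Ky × Ni = 12-term dot product, which is what makes the operation a good fit for a crossbar.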

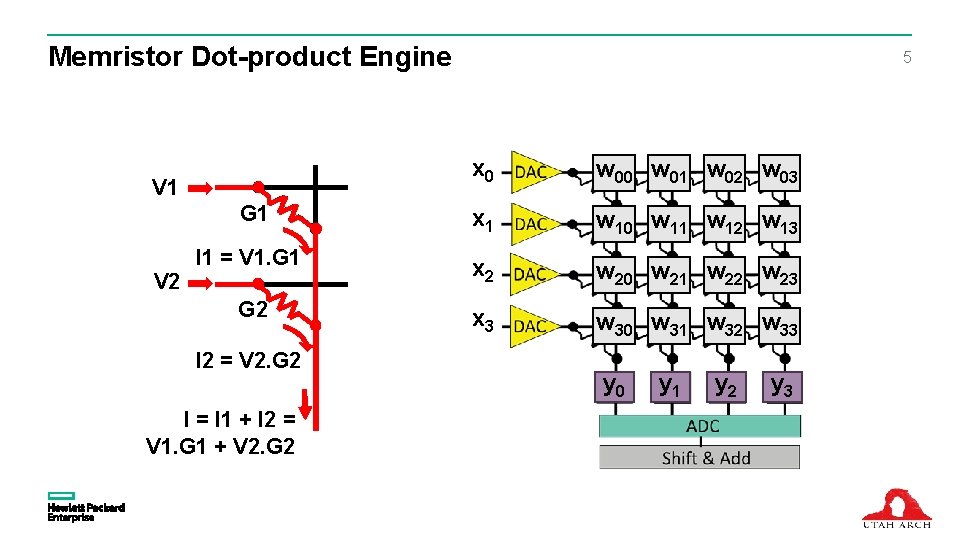

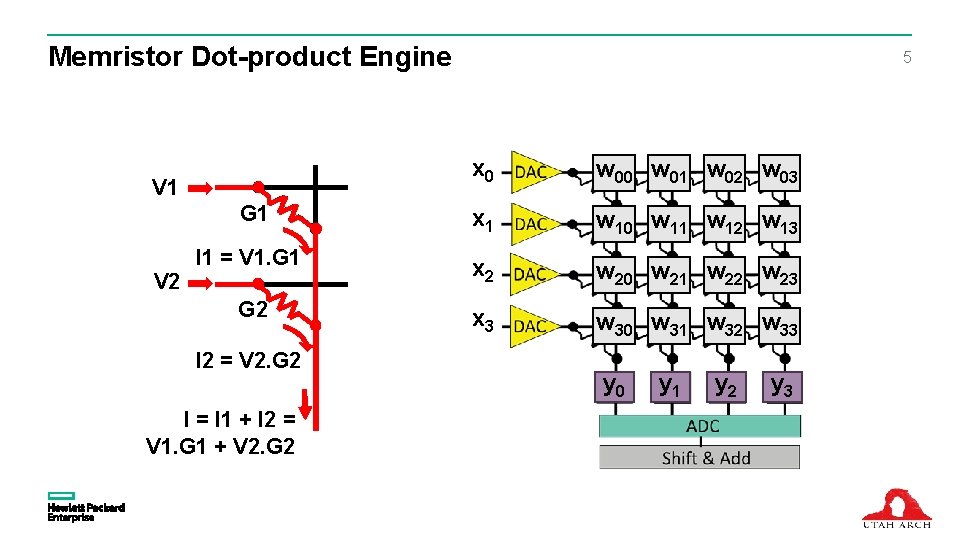

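Memristor Dot-Product Engine (figure: inputs x0 to x3 drive the crossbar rows as voltages, and each weight w_ij is stored as the conductance of the cell at row i, column j). By Ohm's law, a cell with conductance G1 under voltage V1 passes current I1 = V1·G1, and likewise I2 = V2·G2. By Kirchhoff's current law, the currents on a shared column add:

$$I = I_1 + I_2 = V_1 G_1 + V_2 G_2$$

so each column j outputs the dot product $y_j = \sum_i x_i w_{ij}$, producing outputs y0 to y3 in a single step.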
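A minimal numerical sketch of the ideal crossbar, assuming perfectly linear cells and no wire resistance or noise (real devices deviate from this). The name `crossbar_mvm` is hypothetical, not an API from the paper.

```python
def crossbar_mvm(voltages, conductances):
    """Ideal analog crossbar read.

    voltages:     length-R list, one input voltage V_i per row
    conductances: R x C matrix, G[i][j] = conductance of the cell at row i, column j
    returns:      length-C list of column currents I_j = sum_i V_i * G[i][j]
    """
    rows, cols = len(conductances), len(conductances[0])
    currents = [0.0] * cols
    for j in range(cols):
        for i in range(rows):
            # Ohm's law gives each cell's current; Kirchhoff's law sums them on column j.
            currents[j] += voltages[i] * conductances[i][j]
    return currents


print(crossbar_mvm([1.0, 2.0], [[0.5, 1.0], [0.25, 2.0]]))  # [1.0, 5.0]
```

In hardware all columns settle in parallel, so the nested loop here stands in for a single analog step.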

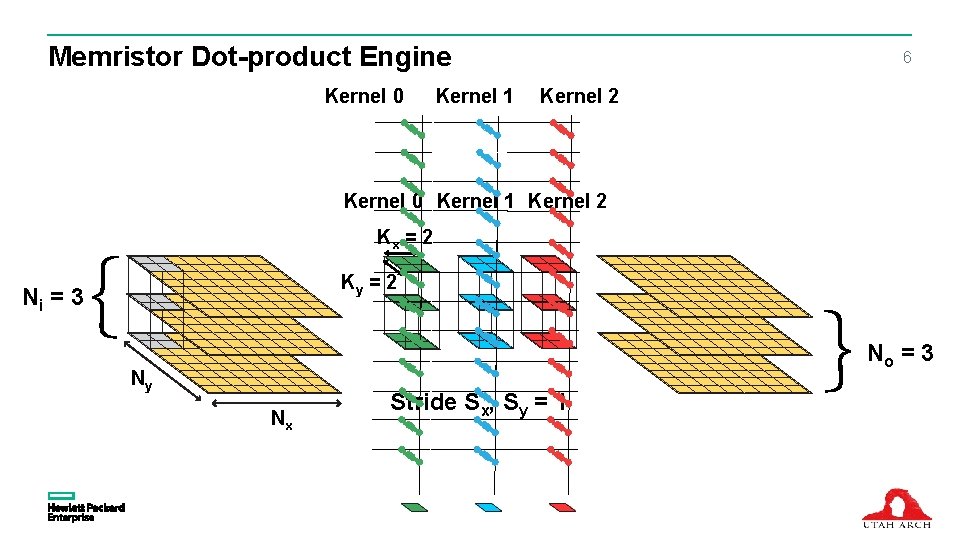

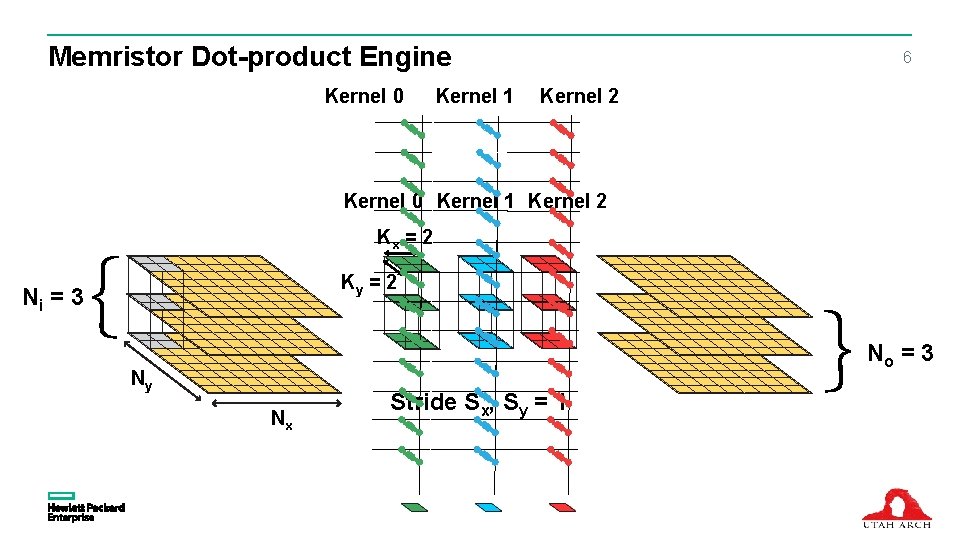

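Memristor Dot-Product Engine: mapping a convolution layer (figure: Kernel 0, Kernel 1, and Kernel 2, each Kx × Ky = 2 × 2 over Ni = 3 input maps with stride Sx = Sy = 1, and No = 3). Each kernel is unrolled into one crossbar column of Kx × Ky × Ni = 12 weights, so the No = 3 kernels occupy three columns. Each 2 × 2 × 3 input window is applied to the rows, and one crossbar read produces one output neuron per kernel.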
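The mapping can be sketched as follows, assuming a `[channel][y][x]` layout and a flattening order of channel, then row, then column (any order works as long as kernels and input windows agree). All function names are hypothetical; the inner sum stands in for one analog crossbar read.

```python
def kernels_to_columns(kernels):
    """Unroll each Ni x Ky x Kx kernel into one crossbar column.

    Returns an R x No matrix (R = Ni * Ky * Kx); entry [i][j] is row i of column j."""
    flat = [[w for channel in k for row in channel for w in row] for k in kernels]
    return [list(col) for col in zip(*flat)]  # transpose: kernels become columns


def window_to_rows(inputs, oy, ox, ky, kx, sy=1, sx=1):
    """Flatten the input window for output position (oy, ox) in the same order."""
    return [inputs[c][oy * sy + dy][ox * sx + dx]
            for c in range(len(inputs)) for dy in range(ky) for dx in range(kx)]


def crossbar_conv(inputs, kernels, sy=1, sx=1):
    """Convolution computed as one crossbar read per output position."""
    weights = kernels_to_columns(kernels)
    ky, kx = len(kernels[0][0]), len(kernels[0][0][0])
    ny, nx = len(inputs[0]), len(inputs[0][0])
    out_y, out_x = (ny - ky) // sy + 1, (nx - kx) // sx + 1
    outputs = [[[0] * out_x for _ in range(out_y)] for _ in kernels]
    for oy in range(out_y):
        for ox in range(out_x):
            x = window_to_rows(inputs, oy, ox, ky, kx, sy, sx)
            # one read: every column (kernel) yields one output neuron at (oy, ox)
            for j in range(len(kernels)):
                outputs[j][oy][ox] = sum(x[i] * weights[i][j] for i in range(len(x)))
    return outputs


k_ones = [[[1, 1], [1, 1]] for _ in range(3)]
print(len(kernels_to_columns([k_ones, k_ones, k_ones])))  # 12 rows, one per weight
```

The design choice is that weights stay in place and only the input window moves, which is why ISAAC needs to fetch just the input neurons.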

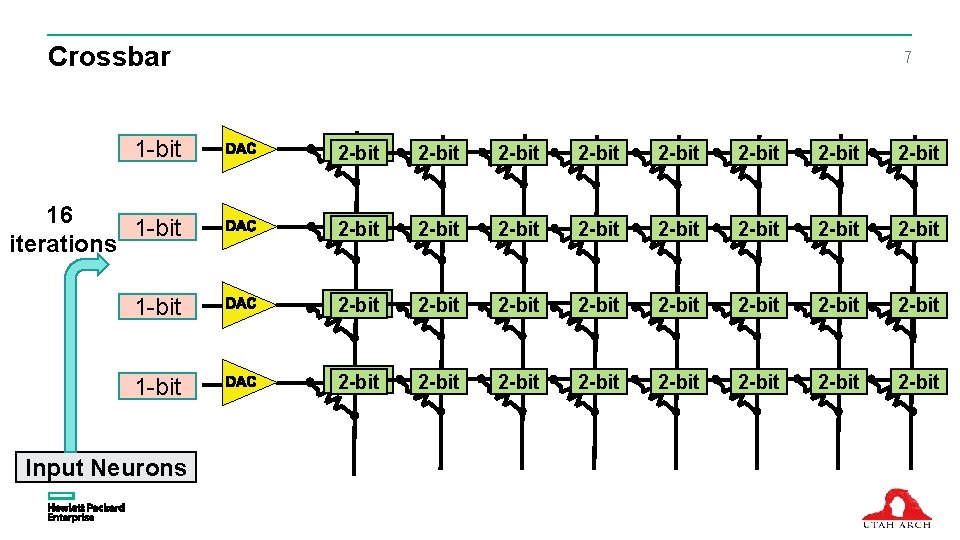

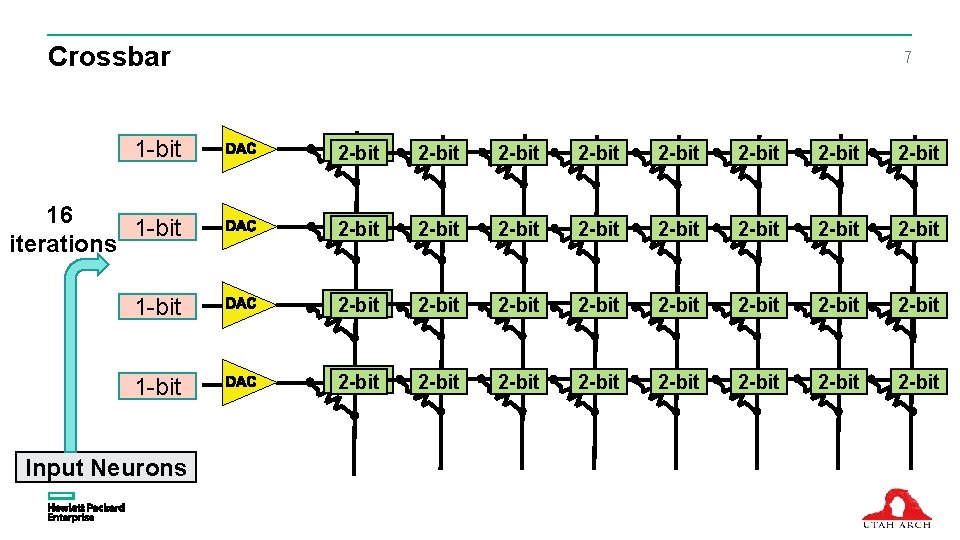

Crossbar (figure): each 16-bit input neuron is fed to the crossbar 1 bit at a time through a 1-bit DAC, so one input takes 16 iterations. Each 16-bit weight is spread across eight memristor cells that store 2 bits each.
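The bit-level bookkeeping can be checked in software. This sketch assumes unsigned 16-bit inputs and weights (signed values are handled separately) and an ideal ADC. It slices each weight into eight 2-bit cells, feeds one input bit per iteration, and recombines the digitized column sums with shifts. Names are hypothetical.

```python
WEIGHT_BITS, CELL_BITS, INPUT_BITS = 16, 2, 16
CELLS_PER_WEIGHT = WEIGHT_BITS // CELL_BITS  # 8 cells of 2 bits each


def slice_weight(w):
    """Split an unsigned 16-bit weight into 2-bit cell values, least significant first."""
    mask = (1 << CELL_BITS) - 1
    return [(w >> (CELL_BITS * k)) & mask for k in range(CELLS_PER_WEIGHT)]


def bit_serial_dot(inputs, weights):
    """Dot product of unsigned 16-bit values using 1-bit inputs and 2-bit cells."""
    cells = [slice_weight(w) for w in weights]   # cells[i][k]: row i, cell column k
    total = 0
    for t in range(INPUT_BITS):                  # one iteration per input bit
        bits = [(x >> t) & 1 for x in inputs]    # 1-bit DAC value on each row
        for k in range(CELLS_PER_WEIGHT):
            # analog sum on cell column k, as digitized by the ADC
            column_sum = sum(b * c[k] for b, c in zip(bits, cells))
            # shift-and-add: input bit t and cell k together carry weight 2^(t + 2k)
            total += column_sum << (t + CELL_BITS * k)
    return total


print(bit_serial_dot([3, 5], [7, 11]))  # 76
```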

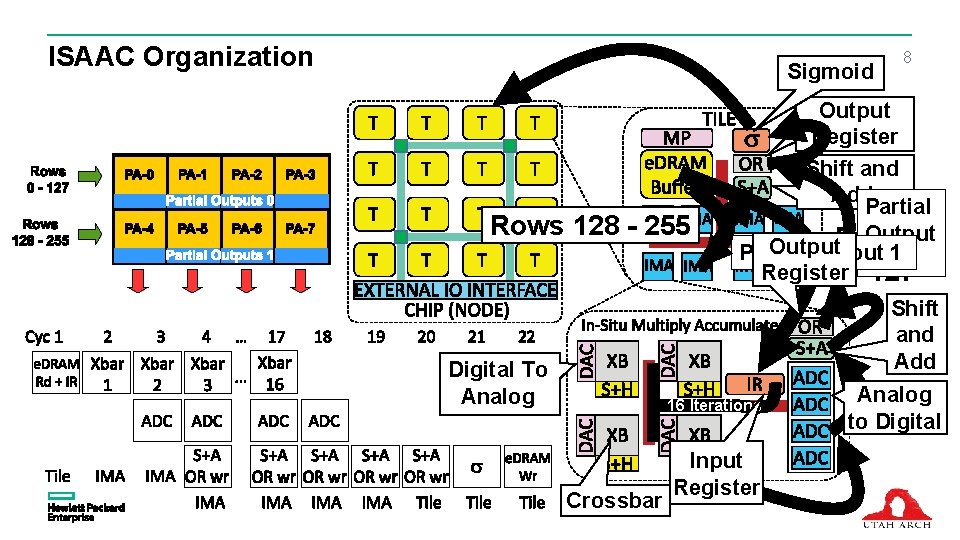

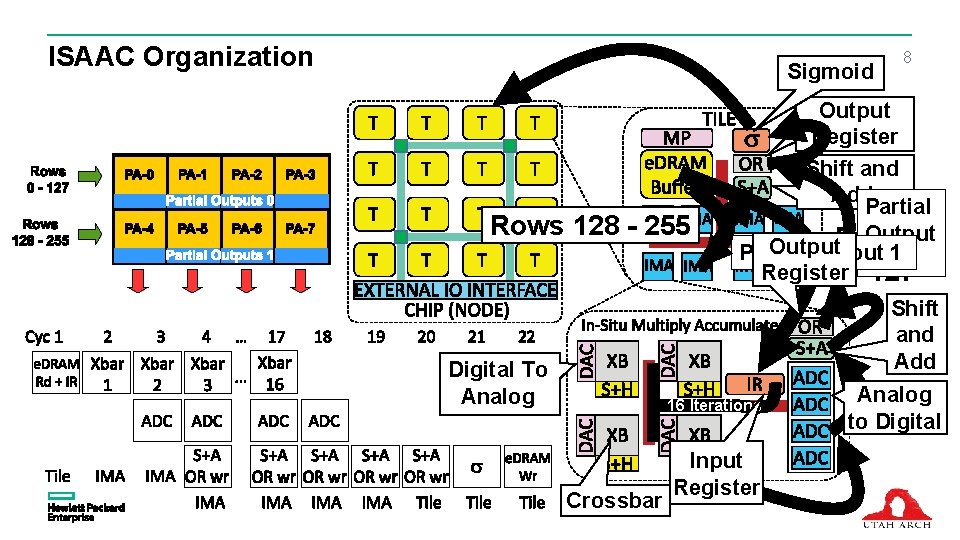

ISAAC Organization (figure: Input Register → Digital to Analog → Crossbar → Analog to Digital → Shift and Add → Output Register → Sigmoid. Inputs are processed over 16 iterations; crossbar rows 0–127 and rows 128–255 each yield a partial output, Partial Output 0 and Partial Output 1, which are combined by shift-and-add.)
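As a small illustration of combining partial outputs, the sketch below assumes a neuron with 256 inputs split across two hypothetical 128-row crossbars, matching the row ranges 0–127 and 128–255 in the figure. It ignores the bit-serial iterations (each partial output is treated as already shifted and added) and applies a logistic sigmoid to the combined sum. Names are hypothetical.

```python
import math

CROSSBAR_ROWS = 128  # rows per crossbar, as in the figure


def partial_outputs(x, w_col):
    """Dot product of one weight column, split into chunks of CROSSBAR_ROWS rows."""
    return [sum(a * b for a, b in zip(x[s:s + CROSSBAR_ROWS], w_col[s:s + CROSSBAR_ROWS]))
            for s in range(0, len(x), CROSSBAR_ROWS)]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def neuron_output(x, w_col):
    partials = partial_outputs(x, w_col)  # e.g. rows 0-127 and rows 128-255
    return sigmoid(sum(partials))         # combine partial outputs, then activate


print(partial_outputs([1] * 256, [1] * 128 + [-1] * 128))  # [128, -128]
```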

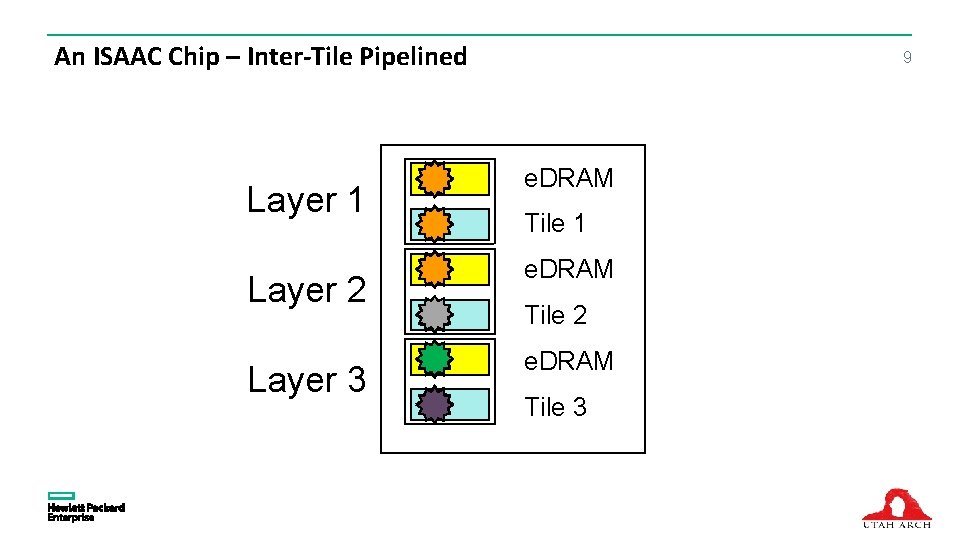

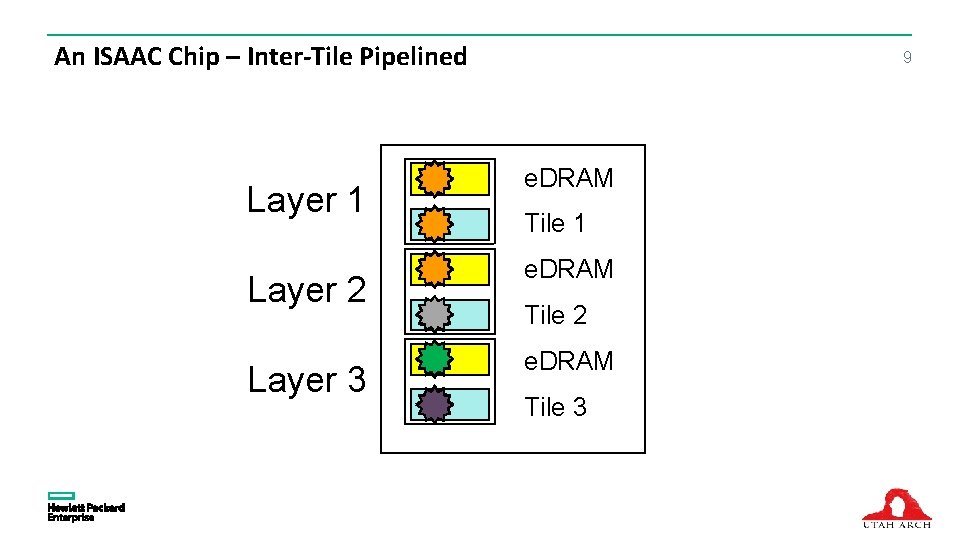

An ISAAC Chip: Inter-Tile Pipelining (figure: Layer 1, Layer 2, and Layer 3 are mapped to Tile 1, Tile 2, and Tile 3, each with its own eDRAM buffer)

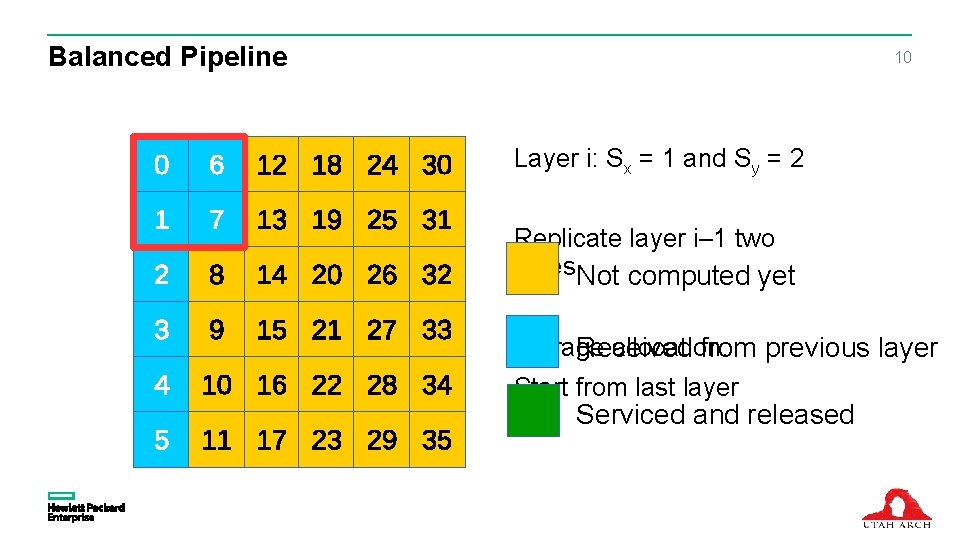

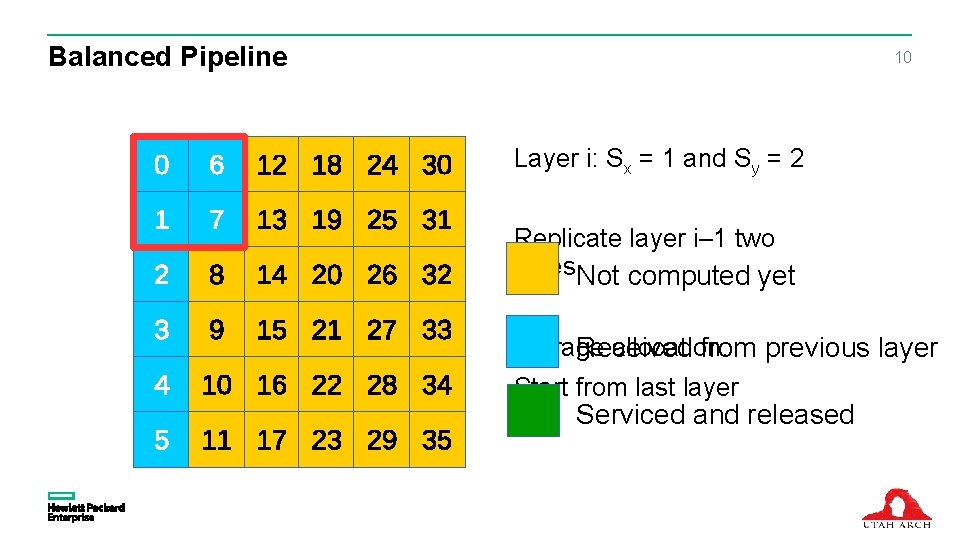

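Balanced Pipeline

- If layer i has strides Sx = 1 and Sy = 2, it needs Sx × Sy = 2 new outputs from layer i-1 for each output it produces, so layer i-1 is replicated two times to keep pace.
- Replication is decided starting from the last layer and working backward.
- Storage allocation (figure): each buffer entry is either not computed yet, received from the previous layer, or serviced and released.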
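The replication rule can be computed with one backward pass over the layers. This is a sketch assuming each layer is described only by its strides (Sx, Sy) and that replication compounds from layer to layer; the function name and the example strides are hypothetical.

```python
def replication_factors(strides):
    """strides[i] = (Sx, Sy) of layer i.

    Returns how many copies of each layer's weights keep every layer
    producing outputs as fast as the next layer consumes them."""
    n = len(strides)
    reps = [1] * n                   # the last layer is not replicated
    for i in range(n - 1, 0, -1):    # start from the last layer and walk back
        sx, sy = strides[i]
        # layer i consumes Sx * Sy new inputs per output it produces,
        # so layer i-1 must run Sx * Sy times faster than layer i
        reps[i - 1] = reps[i] * sx * sy
    return reps


print(replication_factors([(1, 1), (1, 2)]))  # [2, 1]
```

For the slide's case (layer i with Sx = 1, Sy = 2), the previous layer gets a factor of 2; in deeper networks the factors multiply, so early layers can need many crossbar copies.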

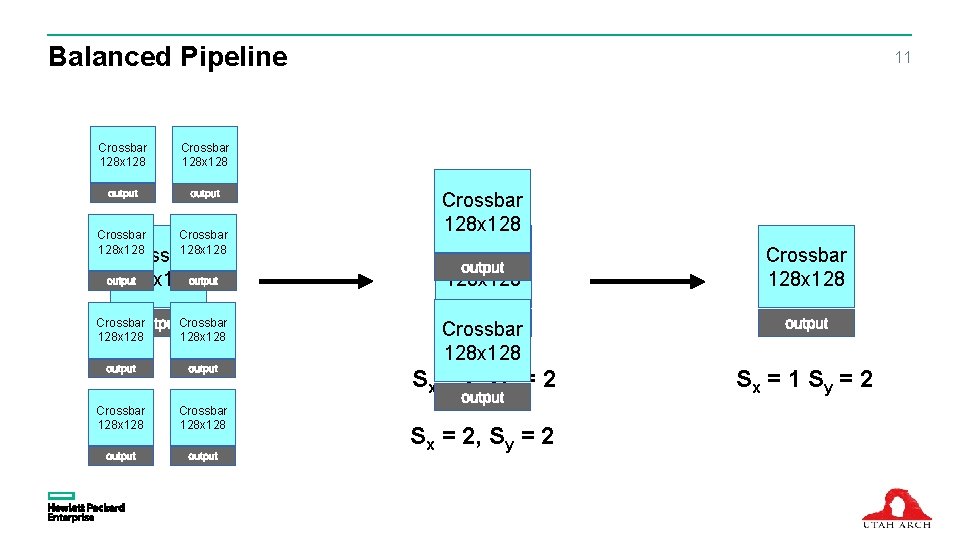

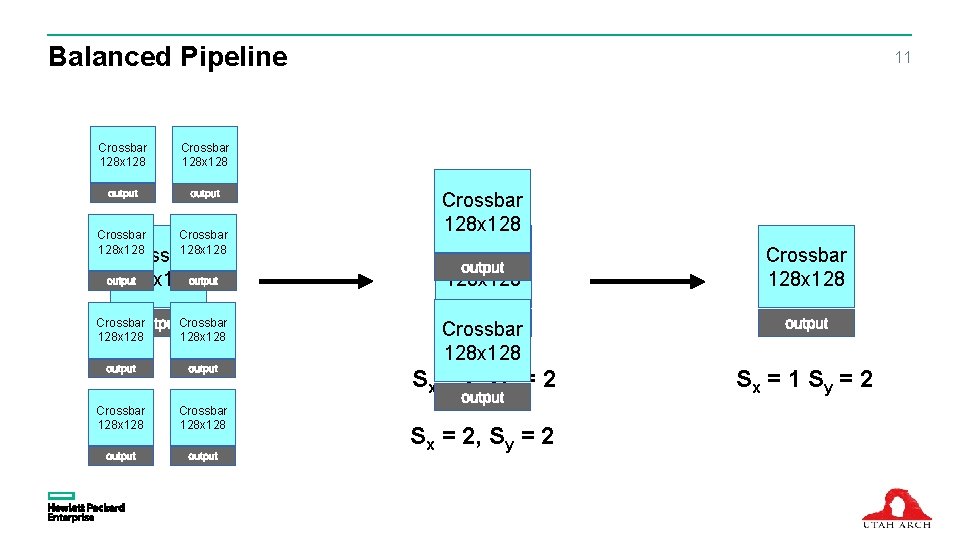

Balanced Pipeline (figure: successive layers mapped onto 128 × 128 crossbars, annotated with strides Sx = 2, Sy = 2 (twice) and Sx = 1, Sy = 2)

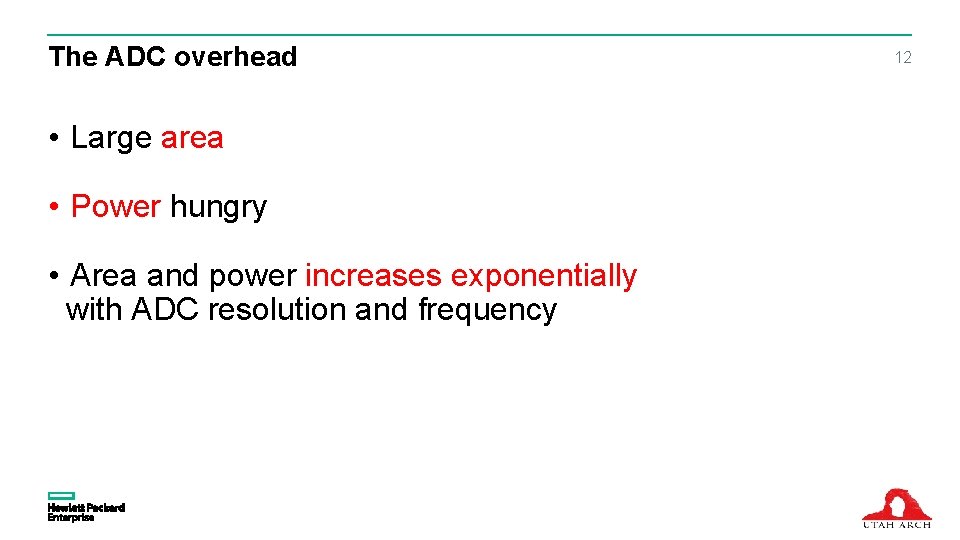

The ADC Overhead

- Large area
- Power hungry
- Area and power grow exponentially with ADC resolution and also increase with sampling frequency

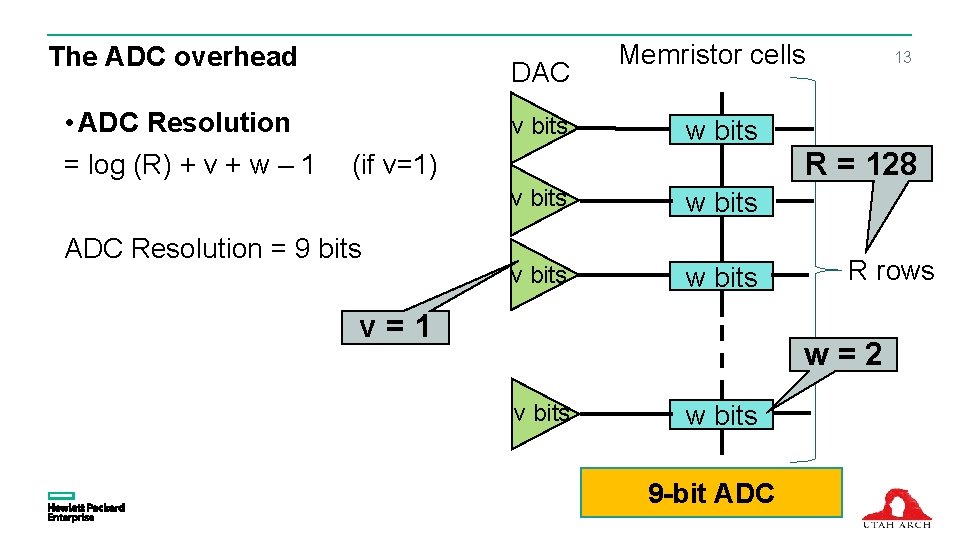

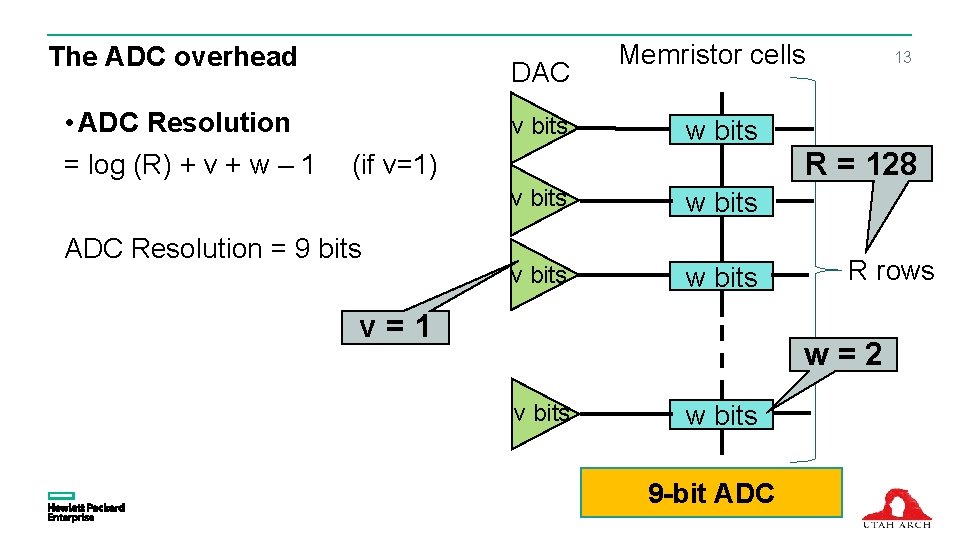

The ADC Overhead (continued): with v-bit DAC inputs, w-bit memristor cells, and R rows per crossbar, the ADC must resolve

$$\text{ADC resolution} = \log_2 R + v + w - 1$$

bits (the case shown, v = 1). For R = 128, v = 1, and w = 2, this is 7 + 1 + 2 - 1 = 9 bits.
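The 9-bit figure can be cross-checked by computing the largest possible column sum directly: each of the R rows contributes at most (2^v - 1)(2^w - 1). The sketch below compares that exact bit count with the slide's formula; both helper names are hypothetical, and R is assumed to be a power of two.

```python
def adc_bits_exact(rows, v, w):
    """Bits needed for the largest possible column sum."""
    max_sum = rows * ((1 << v) - 1) * ((1 << w) - 1)
    return max_sum.bit_length()


def adc_bits_formula(rows, v, w):
    """Slide formula log2(R) + v + w - 1, with R a power of two."""
    return (rows.bit_length() - 1) + v + w - 1


print(adc_bits_exact(128, 1, 2), adc_bits_formula(128, 1, 2))  # 9 9
```

For v = 1 the two always agree. For v = w = 2 the exact count is 11 bits while the formula gives 10, consistent with the slide stating the formula for v = 1.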

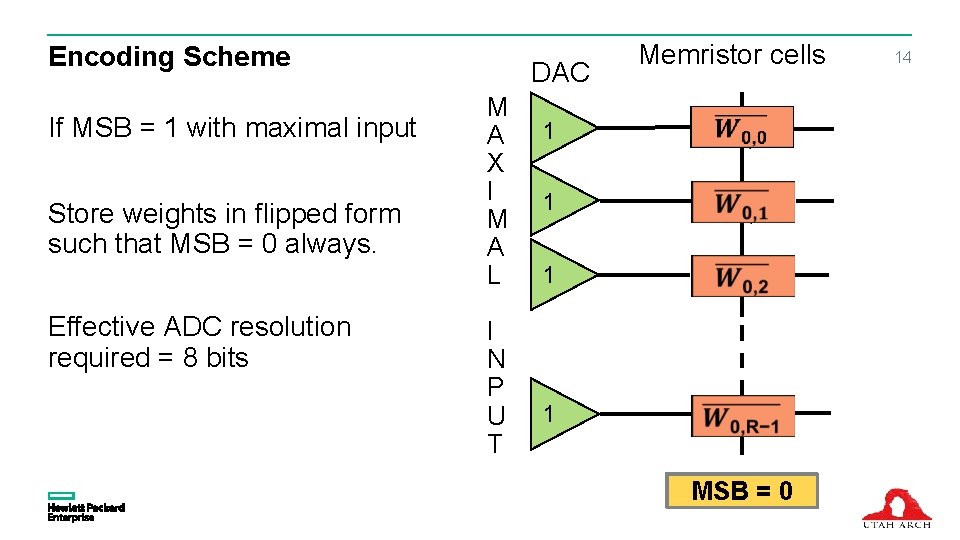

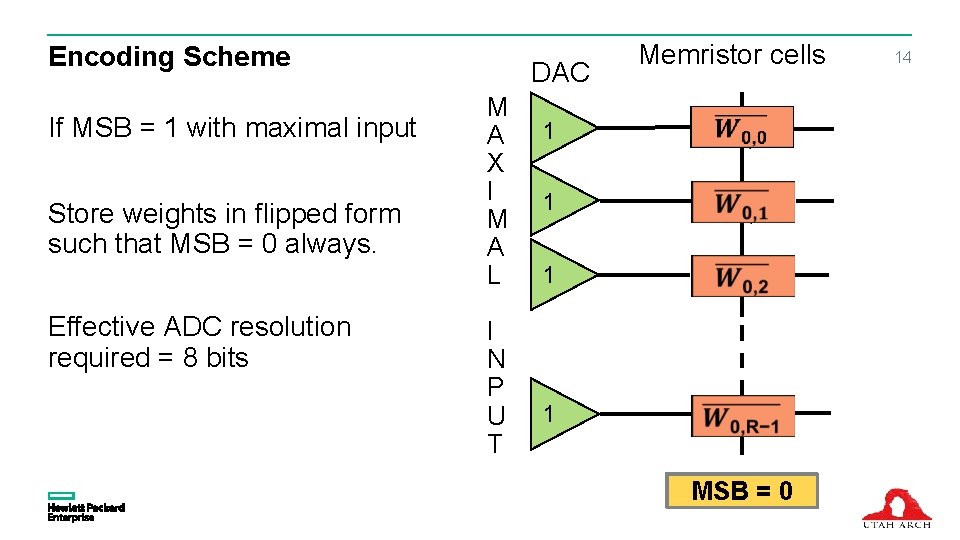

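Encoding Scheme

- Consider the column output under maximal input (every input bit is 1). If the MSB of that 9-bit sum would be 1, store the column's weights in flipped form (each 2-bit cell value c stored as 3 - c) so that the MSB is always 0.
- Any other input produces a smaller sum, so the effective ADC resolution required drops to 8 bits. For a flipped column, the true sum is recovered digitally as 3 × (number of 1 input bits) minus the ADC reading.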
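The flipping trick can be simulated for one column. This sketch assumes 128 rows, 2-bit cells, 1-bit inputs, and an ideal 8-bit ADC. The flip test (all-ones-input sum of at least 256, i.e. the 9th bit set) follows the slide; the digital correction step and all names are illustrative assumptions.

```python
ROWS, CELL_MAX = 128, 3   # 128 rows of 2-bit cells (values 0..3)
ADC_LIMIT = 1 << 8        # an 8-bit ADC reads values 0..255


def encode_column(cells):
    """Store the column flipped if its all-ones-input sum has the MSB (9th bit) set."""
    flipped = sum(cells) >= ADC_LIMIT
    stored = [CELL_MAX - c for c in cells] if flipped else list(cells)
    return stored, flipped


def read_column(stored, flipped, bits):
    """Simulate one read with 1-bit inputs and return the original column sum."""
    adc_value = sum(b * c for b, c in zip(bits, stored))
    assert adc_value < ADC_LIMIT  # invariant: 8 ADC bits always suffice
    if flipped:
        # sum(b * (3 - c)) = 3 * popcount - sum(b * c), so undo the flip digitally
        return CELL_MAX * sum(bits) - adc_value
    return adc_value


cells = [3] * 100 + [0] * (ROWS - 100)  # all-ones sum is 300, so the column is flipped
stored, flipped = encode_column(cells)
print(flipped, read_column(stored, flipped, [1] * ROWS))  # True 300
```

The invariant holds because a flipped column's all-ones sum is at most 384 - 256 = 128, and no input pattern can exceed the all-ones sum.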

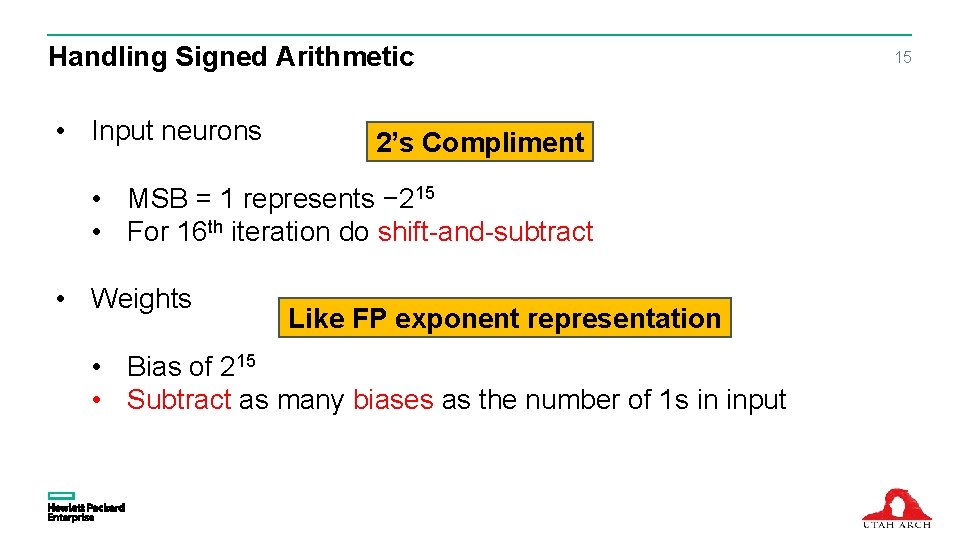

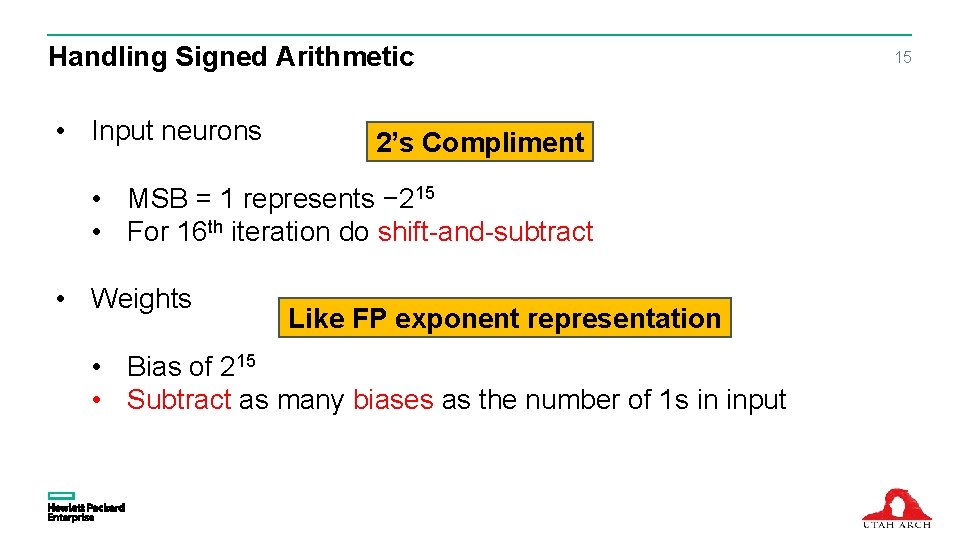

Handling Signed Arithmetic

- Input neurons: 2's complement
  - MSB = 1 represents -2^15
  - For the 16th iteration (the MSB), do shift-and-subtract instead of shift-and-add
- Weights: like the floating-point exponent representation
  - Stored with a bias of 2^15
  - Subtract as many biases as the number of 1s in the input
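The signed bookkeeping can be verified end to end. This sketch assumes 16-bit two's complement inputs fed one bit per iteration and signed 16-bit weights stored with a bias of 2^15. For brevity it keeps each biased weight as a single integer instead of slicing it into 2-bit cells. Names are hypothetical.

```python
BITS = 16
BIAS = 1 << (BITS - 1)  # 2^15, like a floating-point exponent bias


def to_bits(x):
    """Two's complement bits of a signed 16-bit integer, least significant first."""
    u = x & ((1 << BITS) - 1)
    return [(u >> t) & 1 for t in range(BITS)]


def signed_dot(inputs, weights):
    stored = [w + BIAS for w in weights]           # biased weights are non-negative
    input_bits = [to_bits(x) for x in inputs]
    total = 0
    for t in range(BITS):
        bits = [xb[t] for xb in input_bits]
        column_sum = sum(b * s for b, s in zip(bits, stored))  # what the crossbar sees
        # remove one bias for every input bit that is 1 in this iteration
        partial = column_sum - BIAS * sum(bits)
        if t == BITS - 1:
            total -= partial << t  # MSB weighs -2^15: shift-and-subtract
        else:
            total += partial << t  # other bits: shift-and-add
    return total


print(signed_dot([-3, 4], [5, -6]))  # -39
```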

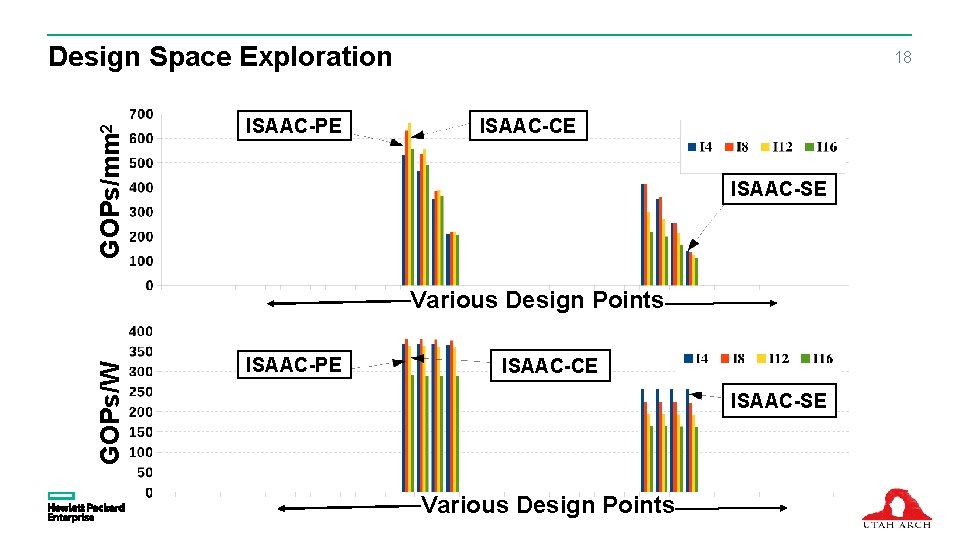

Analysis Metrics

1) CE: Computational Efficiency, in GOPS/mm² (giga-operations per second per mm²)
2) PE: Power Efficiency, in GOPS/W
3) SE: Storage Efficiency, in MB/mm²

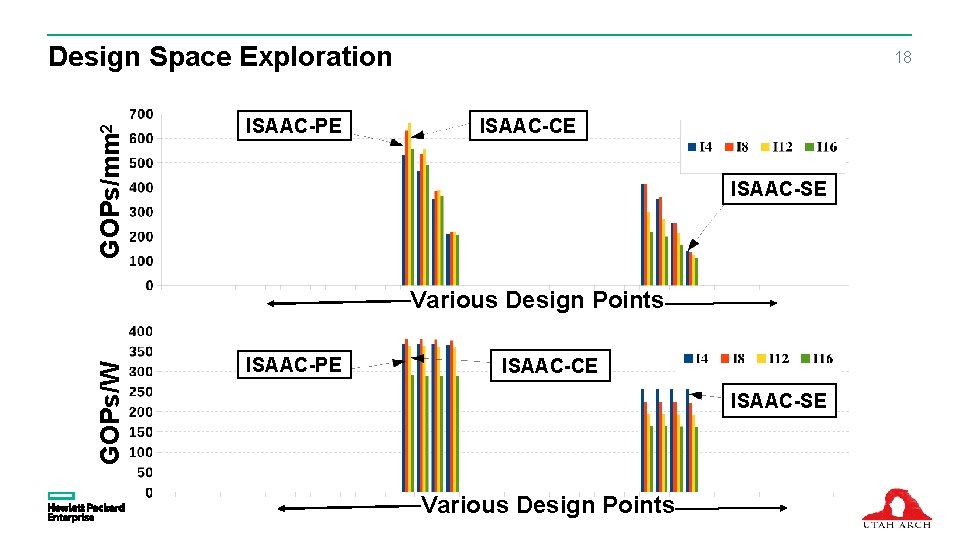

Design Space Exploration: parameters varied

1) rows per crossbar
2) ADCs per IMA (in-situ multiply-accumulate unit)
3) crossbars per IMA
4) IMAs per tile

Design Space Exploration (figure: computational efficiency in GOPS/mm² and power efficiency in GOPS/W across various design points, with the ISAAC-PE, ISAAC-CE, and ISAAC-SE configurations marked)

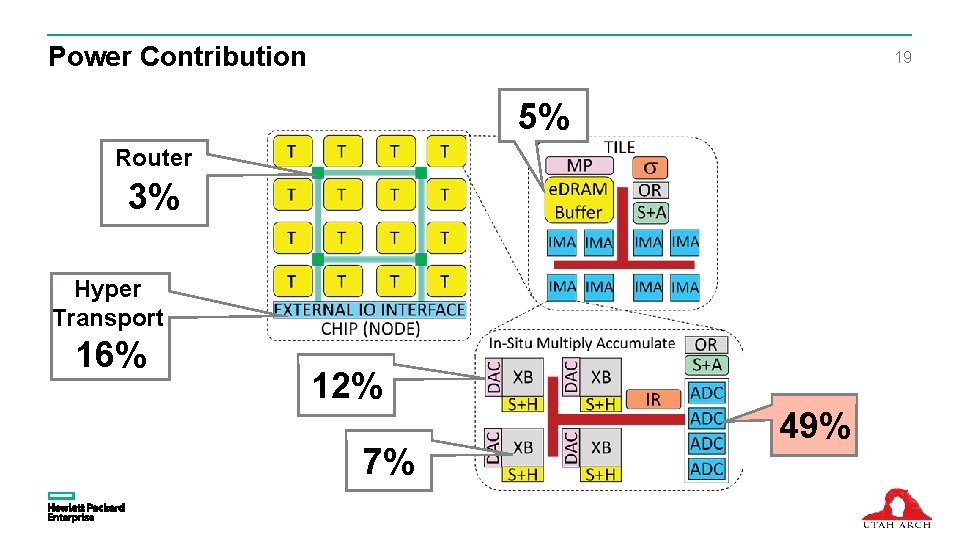

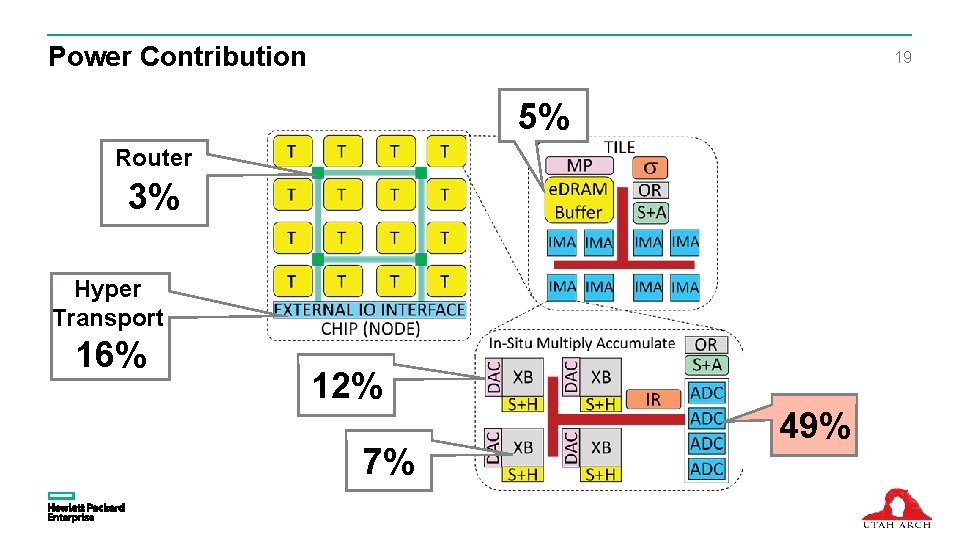

Power Contribution (figure: power breakdown charts; the recoverable component labels include the router and HyperTransport links)

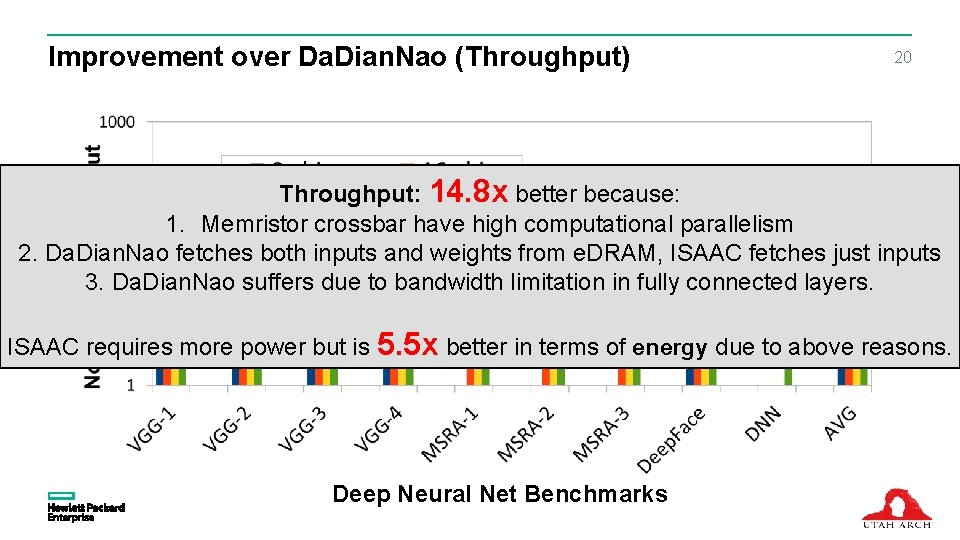

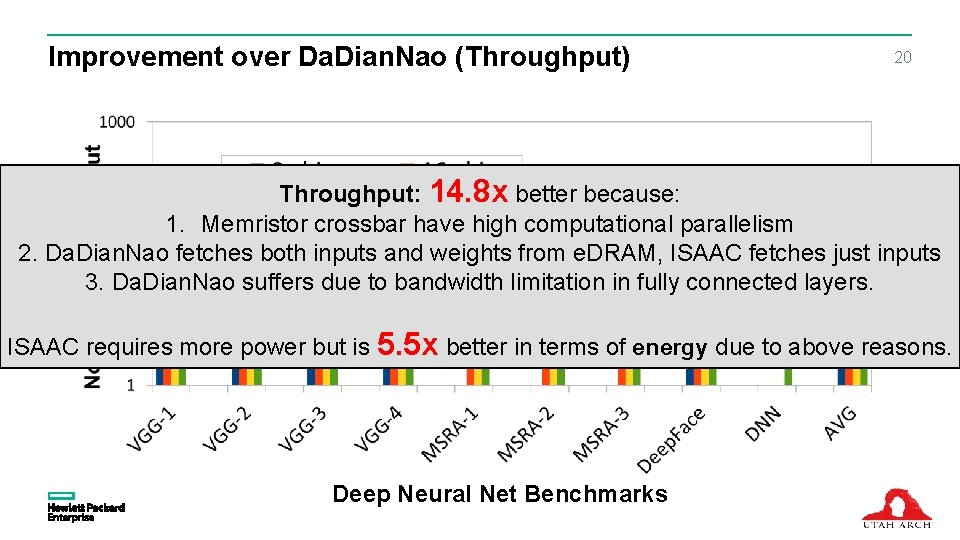

Improvement over DaDianNao (Throughput)

Throughput is 14.8× better because:
1. Memristor crossbars have high computational parallelism.
2. DaDianNao fetches both inputs and weights from eDRAM, whereas ISAAC fetches just inputs.
3. DaDianNao suffers from bandwidth limitations in fully connected layers.

ISAAC requires more power but, for the same reasons, is 5.5× better in energy. (Figure: results across deep neural net benchmarks.)

Conclusion: ISAAC

- Takes advantage of analog in-situ computing and fetches just the input neurons.
- Handles ADC overheads with smart encoding.
- Does not compromise on output precision.
- Is faster than DaDianNao thanks to 8× better computational efficiency and a balanced pipeline that keeps all units busy.
- A few questions still remain, such as whether online training can be integrated.