
IR Theory: Web Information Retrieval

Fusion Web IR IR Search Engine

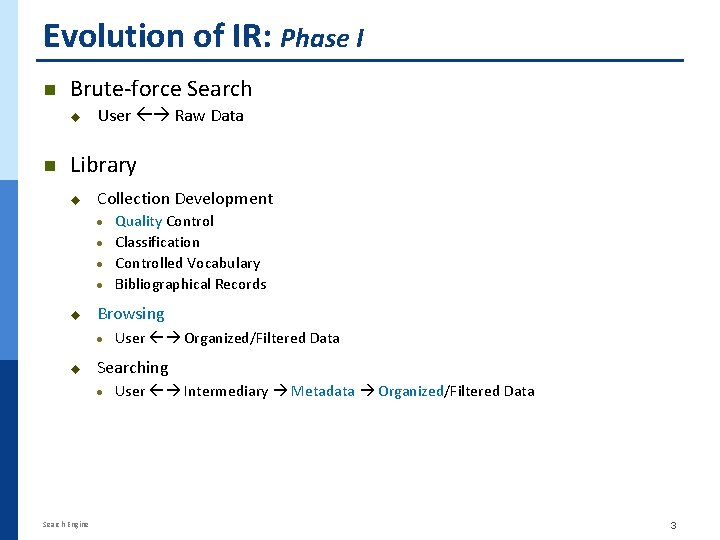

Evolution of IR: Phase I

- Brute-force Search
  - User → Raw Data
- Library
  - Collection Development
    - Quality Control
    - Classification
    - Controlled Vocabulary
    - Bibliographical Records
  - Browsing
    - User → Organized/Filtered Data
  - Searching
    - User → Intermediary → Metadata → Organized/Filtered Data

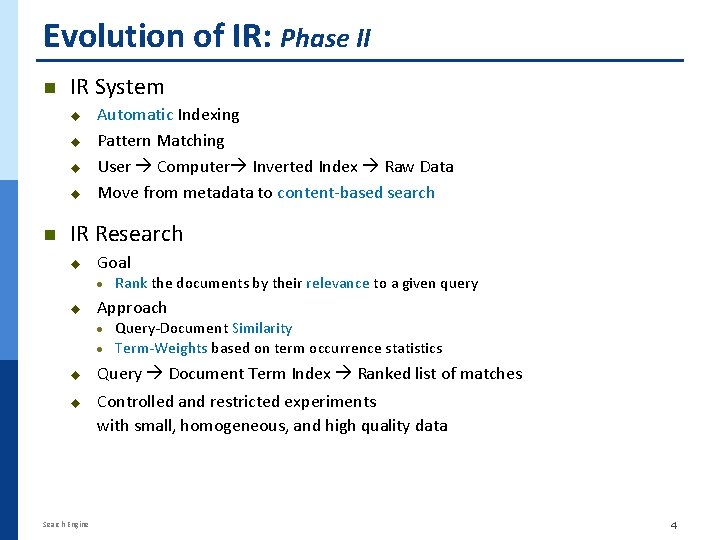

Evolution of IR: Phase II

- IR System
  - Automatic Indexing
  - Pattern Matching
  - Diagram labels: User, Computer, Inverted Index, Raw Data
  - Move from metadata to content-based search
- IR Research
  - Goal
    - Rank the documents by their relevance to a given query
  - Approach
    - Query-document similarity
    - Term weights based on term occurrence statistics
  - Diagram labels: Query, Document, Term Index, Ranked list of matches
  - Controlled and restricted experiments with small, homogeneous, and high-quality data
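
The Phase II ideas above (inverted index, term weights from occurrence statistics, ranked list of matches) can be sketched in a few lines. This is a minimal illustration, assuming whitespace tokenization and a plain tf × idf weight; the function names and sample documents are hypothetical and not taken from the slides.

```python
import math
from collections import Counter, defaultdict

def build_inverted_index(docs):
    """Map each term to {doc_id: term frequency}."""
    index = defaultdict(dict)
    for doc_id, text in docs.items():
        for term, tf in Counter(text.lower().split()).items():
            index[term][doc_id] = tf
    return index

def rank(query, index, num_docs):
    """Score documents by summed tf * idf over the query terms (assumed weighting)."""
    scores = defaultdict(float)
    for term in query.lower().split():
        postings = index.get(term, {})
        if not postings:
            continue
        idf = math.log(num_docs / len(postings))
        for doc_id, tf in postings.items():
            scores[doc_id] += tf * idf
    # ranked list of matches, best first
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

# Hypothetical toy collection
docs = {
    "d1": "web search engines crawl the web",
    "d2": "library catalogs use controlled vocabulary",
    "d3": "search engines rank documents",
}
index = build_inverted_index(docs)
ranking = rank("web search", index, len(docs))
```

Only documents that share at least one term with the query appear in the ranked list, which is the pattern-matching behavior the slide describes.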

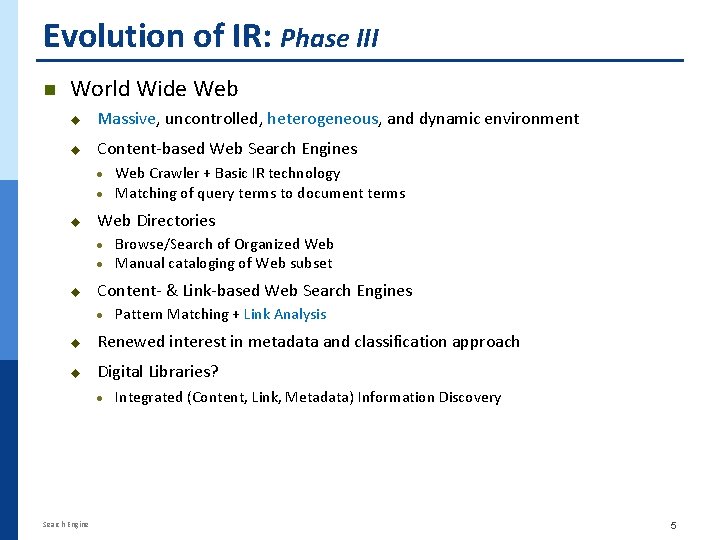

Evolution of IR: Phase III

- World Wide Web
  - Massive, uncontrolled, heterogeneous, and dynamic environment
  - Content-based Web Search Engines
    - Web Crawler + Basic IR technology
    - Matching of query terms to document terms
  - Web Directories
    - Browse/Search of Organized Web
    - Manual cataloging of Web subset
  - Content- & Link-based Web Search Engines
    - Pattern Matching + Link Analysis
  - Renewed interest in metadata and classification approach
  - Digital Libraries?
    - Integrated (Content, Link, Metadata) Information Discovery

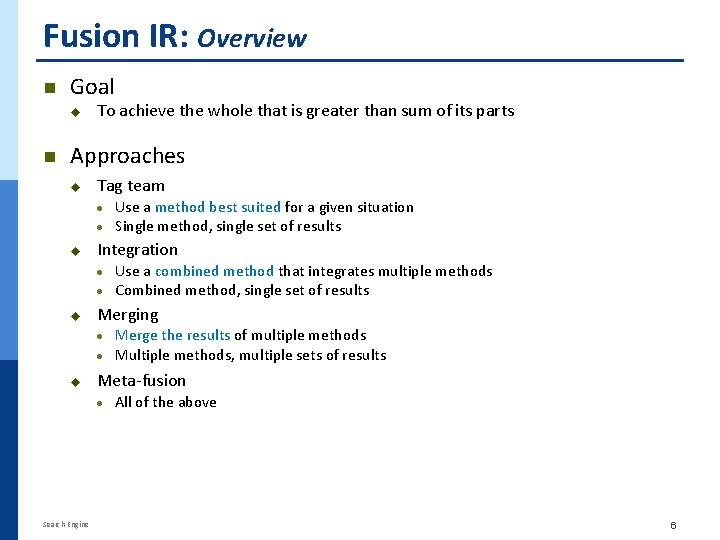

Fusion IR: Overview

- Goal
  - To achieve a whole that is greater than the sum of its parts
- Approaches
  - Tag team
    - Use the method best suited for a given situation
    - Single method, single set of results
  - Integration
    - Use a combined method that integrates multiple methods
    - Combined method, single set of results
  - Merging
    - Merge the results of multiple methods
    - Multiple methods, multiple sets of results
  - Meta-fusion
    - All of the above

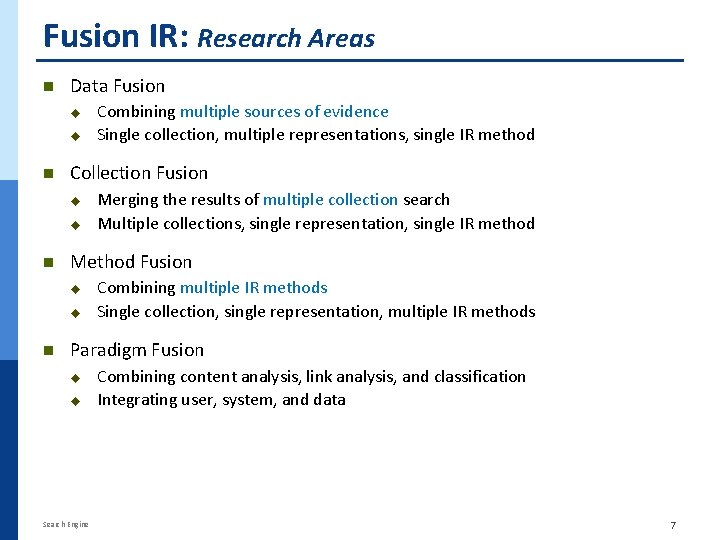

Fusion IR: Research Areas

- Data Fusion
  - Combining multiple sources of evidence
  - Single collection, multiple representations, single IR method
- Collection Fusion
  - Merging the results of multiple collection search
  - Multiple collections, single representation, single IR method
- Method Fusion
  - Combining multiple IR methods
  - Single collection, single representation, multiple IR methods
- Paradigm Fusion
  - Combining content analysis, link analysis, and classification
  - Integrating user, system, and data

Fusion IR: Research Findings

- Findings from content-based IR experiments with small, homogeneous document collections
  - Different IR systems retrieve → different sets of documents
  - Documents retrieved by multiple systems → are more likely to be relevant
  - Combining different systems → is likely to be more beneficial than combining similar systems
  - Fusion is good for IR
- Is fusion a viable approach for Web IR?
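
The finding that documents retrieved by multiple systems are more likely to be relevant suggests rewarding overlap when result sets are merged. The sketch below is one simple way to do that, assuming min-max score normalization followed by a plain sum. Both choices are illustrative assumptions rather than methods named in the slides, and the function names and sample scores are hypothetical.

```python
from collections import defaultdict

def min_max_normalize(results):
    """Rescale one system's scores to [0, 1] so systems are comparable."""
    lo, hi = min(results.values()), max(results.values())
    if hi == lo:
        return {doc: 1.0 for doc in results}
    return {doc: (s - lo) / (hi - lo) for doc, s in results.items()}

def fuse(result_sets):
    """Sum normalized scores and count how many systems retrieved each document."""
    fused = defaultdict(float)
    overlap = defaultdict(int)
    for results in result_sets:
        for doc, score in min_max_normalize(results).items():
            fused[doc] += score
            overlap[doc] += 1
    ranking = sorted(fused, key=lambda d: fused[d], reverse=True)
    return ranking, dict(overlap)

# Hypothetical outputs of two different IR systems (doc_id -> raw score)
system_a = {"d1": 12.0, "d2": 9.0, "d3": 3.0}
system_b = {"d2": 0.9, "d4": 0.8, "d1": 0.1}
ranking, overlap = fuse([system_a, system_b])
```

Because scores are summed, a document found by both systems (such as `d2`) can outrank a document that only one system scored highly.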

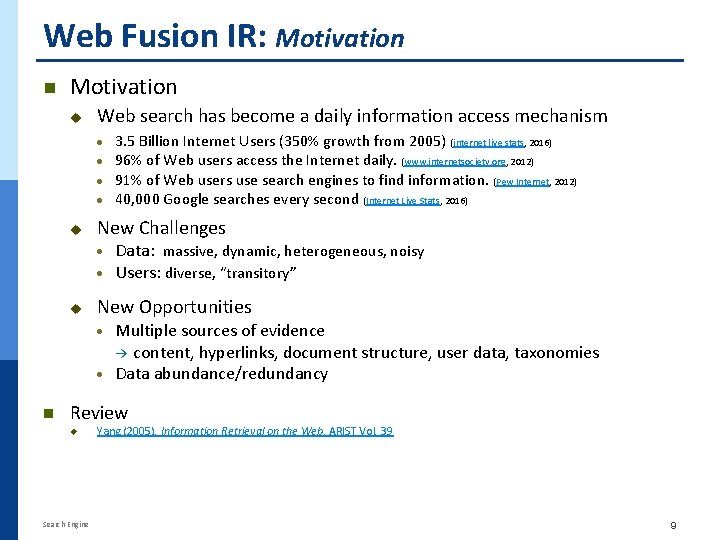

Web Fusion IR: Motivation

- Motivation
  - Web search has become a daily information access mechanism
    - 3.5 billion Internet users (350% growth from 2005) (Internet Live Stats, 2016)
    - 96% of Web users access the Internet daily (www.internetsociety.org, 2012)
    - 91% of Web users use search engines to find information (Pew Internet, 2012)
    - 40,000 Google searches every second (Internet Live Stats, 2016)
  - New Challenges
    - Data: massive, dynamic, heterogeneous, noisy
    - Users: diverse, "transitory"
  - New Opportunities
    - Multiple sources of evidence → content, hyperlinks, document structure, user data, taxonomies
    - Data abundance/redundancy
- Review
  - Yang (2005). Information Retrieval on the Web, ARIST Vol. 39

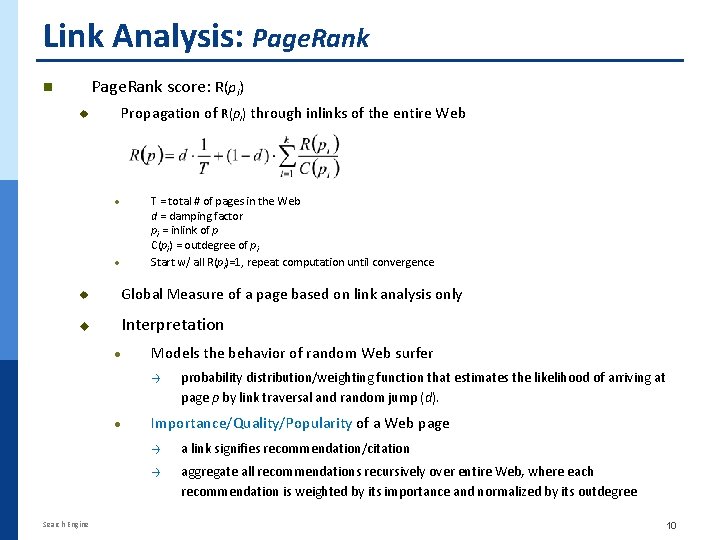

Link Analysis: PageRank

- PageRank score: $R(p_i)$
  - Propagation of $R(p_i)$ through inlinks of the entire Web, where:
    - $T$ = total number of pages in the Web
    - $d$ = damping factor
    - $p_i$ = inlink of $p$
    - $C(p_i)$ = outdegree of $p_i$
  - Start with all $R(p_i) = 1$, repeat computation until convergence
- Global measure of a page based on link analysis only
- Interpretation
  - Models the behavior of a random Web surfer → probability distribution/weighting function that estimates the likelihood of arriving at page $p$ by link traversal and random jump ($d$)
  - Importance/Quality/Popularity of a Web page → a link signifies recommendation/citation → aggregate all recommendations recursively over the entire Web, where each recommendation is weighted by its importance and normalized by its outdegree
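
The iterative computation described above can be sketched as follows. The slide's formula is not reproduced in the text, so this sketch assumes the commonly used form R(p) = d/T + (1 - d) · Σ R(p_i)/C(p_i), with d treated as the random-jump probability; that form, the default `d=0.15`, the function name, and the toy graph are all assumptions.

```python
def pagerank(outlinks, d=0.15, tol=1e-10, max_iter=200):
    """Iterate R(p) = d/T + (1 - d) * sum(R(q) / C(q)) over the inlinks q of p.

    outlinks maps every page to the list of pages it links to.
    Assumes every link target also appears as a key of outlinks.
    """
    pages = list(outlinks)
    T = len(pages)
    rank = {p: 1.0 for p in pages}           # start with all R(p) = 1
    for _ in range(max_iter):
        new_rank = {p: d / T for p in pages}  # random-jump share
        for q, targets in outlinks.items():
            for p in targets:
                # each recommendation is normalized by the outdegree C(q)
                new_rank[p] += (1 - d) * rank[q] / len(targets)
        delta = sum(abs(new_rank[p] - rank[p]) for p in pages)
        rank = new_rank
        if delta < tol:                       # scores have stabilized
            break
    return rank

# Hypothetical three-page Web: a -> b, a -> c, b -> c, c -> a
graph = {"a": ["b", "c"], "b": ["c"], "c": ["a"]}
scores = pagerank(graph)
```

In this toy graph page `c` receives links from both other pages and ends up with the highest score, while `b`, which is recommended only by half of `a`'s outlinks, ends up lowest.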

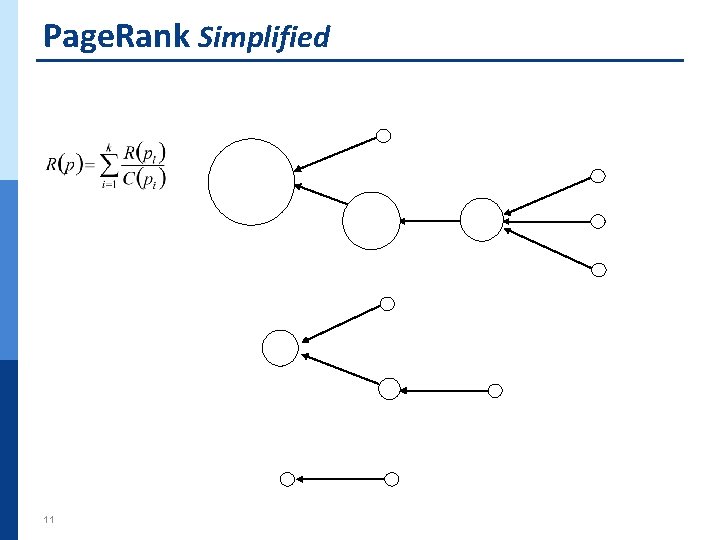

PageRank Simplified

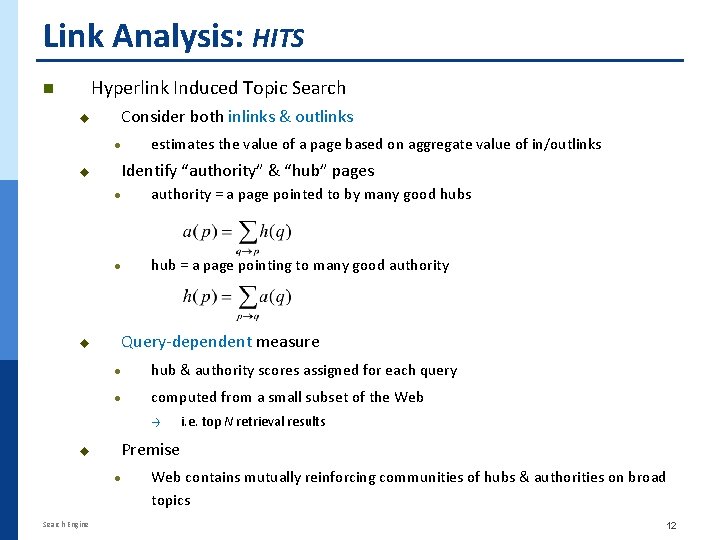

Link Analysis: HITS

- Hyperlink Induced Topic Search
  - Consider both inlinks & outlinks
    - estimates the value of a page based on the aggregate value of its in/outlinks
  - Identify "authority" & "hub" pages
    - authority = a page pointed to by many good hubs
    - hub = a page pointing to many good authorities
  - Query-dependent measure
    - hub & authority scores assigned for each query
    - computed from a small subset of the Web → i.e. top N retrieval results
  - Premise
    - Web contains mutually reinforcing communities of hubs & authorities on broad topics

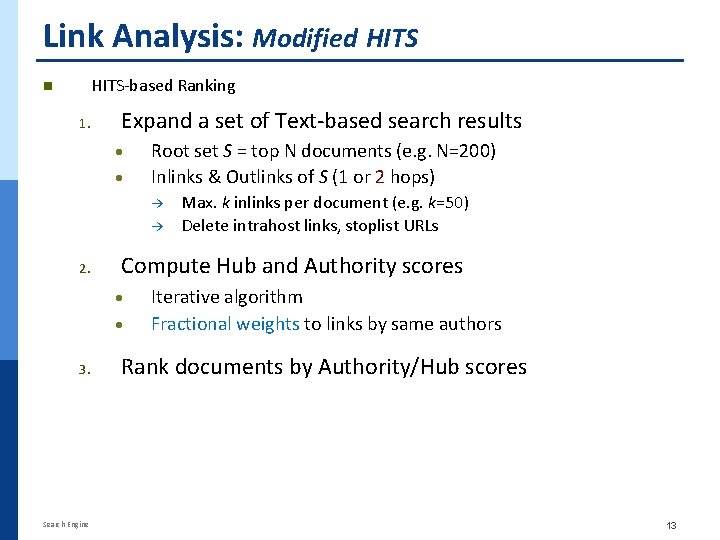

Link Analysis: Modified HITS-based Ranking

1. Expand a set of text-based search results
   - Root set S = top N documents (e.g. N=200)
   - Inlinks & outlinks of S (1 or 2 hops)
     - → Max. k inlinks per document (e.g. k=50)
     - → Delete intrahost links, stoplist URLs
2. Compute Hub and Authority scores
   - Iterative algorithm
   - Fractional weights to links by same authors
3. Rank documents by Authority/Hub scores
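
Step 1 above (expanding the root set) might look like the sketch below. It covers a single hop only, treats the URL host as the unit for "intrahost", and applies the inlink cap before filtering; the slide does not specify that order, so it is an assumption, as are the function names, the `inlinks`/`outlinks` lookup tables, and the sample URLs.

```python
from urllib.parse import urlparse

def host(url):
    """Host part of a URL, used to detect intrahost links."""
    return urlparse(url).netloc

def expand_root_set(root_set, inlinks, outlinks, k=50, stoplist=frozenset()):
    """Return (pages, edges) for the root set plus its one-hop neighborhood."""
    edges = set()
    for page in root_set:
        # keep at most k inlinks per root document
        for src in inlinks.get(page, [])[:k]:
            edges.add((src, page))
        for dst in outlinks.get(page, []):
            edges.add((page, dst))
    # delete intrahost links and links that touch stoplisted URLs
    edges = {
        (src, dst) for src, dst in edges
        if host(src) != host(dst) and src not in stoplist and dst not in stoplist
    }
    pages = set(root_set) | {p for edge in edges for p in edge}
    return pages, edges

# Hypothetical link tables for a one-document root set
inlinks = {"http://a.org/1": ["http://b.org/x", "http://a.org/2", "http://c.org/y"]}
outlinks = {"http://a.org/1": ["http://d.org/z", "http://ads.example/"]}
pages, edges = expand_root_set(
    ["http://a.org/1"], inlinks, outlinks, k=2, stoplist={"http://ads.example/"}
)
```

With `k=2` the third inlink is never considered, the link from `http://a.org/2` is dropped as intrahost, and the stoplisted target is removed.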

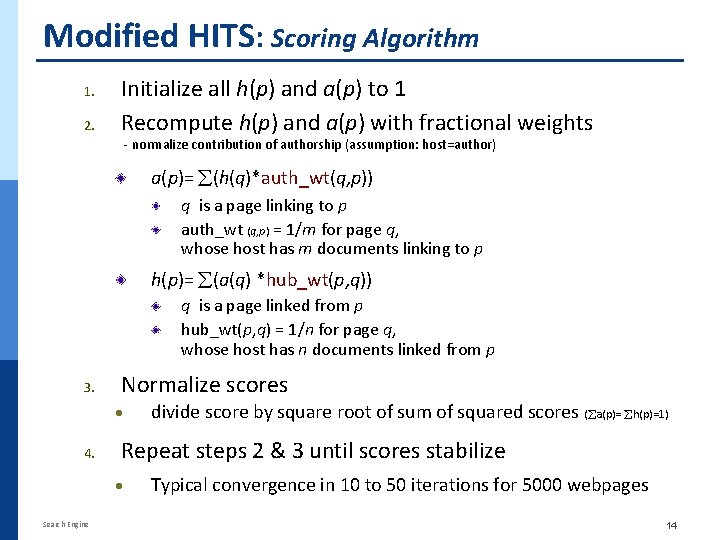

Modified HITS: Scoring Algorithm

1. Initialize all $h(p)$ and $a(p)$ to 1
2. Recompute $h(p)$ and $a(p)$ with fractional weights
   - normalize contribution of authorship (assumption: host = author)
   - $a(p) = \sum_q h(q) \cdot \mathrm{auth\_wt}(q, p)$, where $q$ is a page linking to $p$
     - $\mathrm{auth\_wt}(q, p) = 1/m$ for page $q$, whose host has $m$ documents linking to $p$
   - $h(p) = \sum_q a(q) \cdot \mathrm{hub\_wt}(p, q)$, where $q$ is a page linked from $p$
     - $\mathrm{hub\_wt}(p, q) = 1/n$ for page $q$, whose host has $n$ documents linked from $p$
3. Normalize scores
   - divide each score by the square root of the sum of squared scores ($\sum a(p)^2 = \sum h(p)^2 = 1$)
4. Repeat steps 2 & 3 until scores stabilize
   - Typical convergence in 10 to 50 iterations for 5000 webpages
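
The four steps above can be sketched as follows, with host = author as the slide assumes. Both scores are recomputed from the previous iteration's values, which is one possible reading of step 2; the function names, the convergence tolerance, and the sample URLs are hypothetical.

```python
import math
from collections import defaultdict
from urllib.parse import urlparse

def host(url):
    return urlparse(url).netloc

def modified_hits(edges, max_iter=50, tol=1e-6):
    """edges: iterable of (q, p) pairs meaning page q links to page p."""
    edges = list(edges)
    pages = {u for e in edges for u in e}
    linking_to = defaultdict(set)    # (source host, target page) -> source pages (m)
    linked_from = defaultdict(set)   # (source page, target host) -> target pages (n)
    for q, p in edges:
        linking_to[(host(q), p)].add(q)
        linked_from[(q, host(p))].add(p)
    a = {p: 1.0 for p in pages}      # step 1: initialize all scores to 1
    h = {p: 1.0 for p in pages}
    for _ in range(max_iter):
        new_a = dict.fromkeys(pages, 0.0)
        new_h = dict.fromkeys(pages, 0.0)
        for q, p in edges:           # step 2: fractional link weights
            new_a[p] += h[q] / len(linking_to[(host(q), p)])   # auth_wt = 1/m
            new_h[q] += a[p] / len(linked_from[(q, host(p))])  # hub_wt = 1/n
        for scores in (new_a, new_h):  # step 3: normalize to unit length
            norm = math.sqrt(sum(s * s for s in scores.values())) or 1.0
            for page in scores:
                scores[page] /= norm
        delta = max(abs(new_a[p] - a[p]) + abs(new_h[p] - h[p]) for p in pages)
        a, h = new_a, new_h
        if delta < tol:              # step 4: stop once scores stabilize
            break
    return a, h

# Hypothetical graph: two pages on x.org and one on y.org link to t.org
edges = [
    ("http://x.org/1", "http://t.org/page"),
    ("http://x.org/2", "http://t.org/page"),
    ("http://y.org/1", "http://t.org/page"),
    ("http://y.org/1", "http://u.org/page"),
]
authority, hub = modified_hits(edges)
```

The two links from `x.org` each count for 1/2, so a single host cannot inflate the authority of `http://t.org/page` just by linking to it from many of its own pages.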

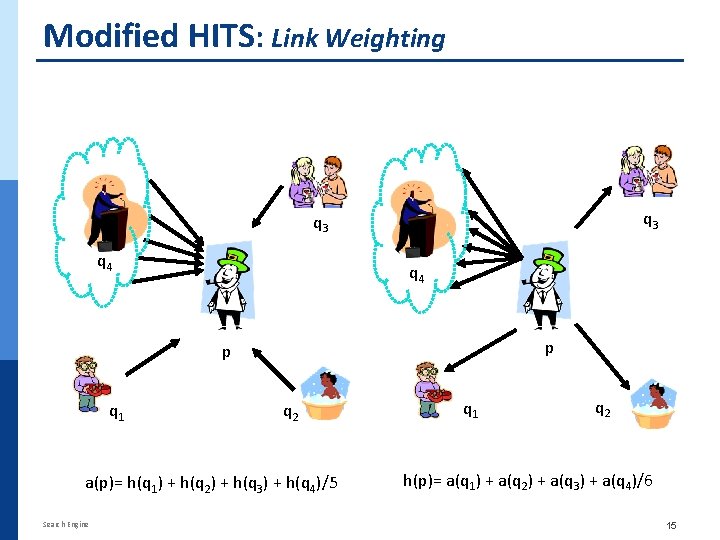

Modified HITS: Link Weighting

- Authority example (diagram: pages $q_1$ to $q_4$ and page $p$): $a(p) = h(q_1) + h(q_2) + h(q_3) + h(q_4)/5$
- Hub example (diagram: page $p$ and pages $q_1$ to $q_4$): $h(p) = a(q_1) + a(q_2) + a(q_3) + a(q_4)/6$

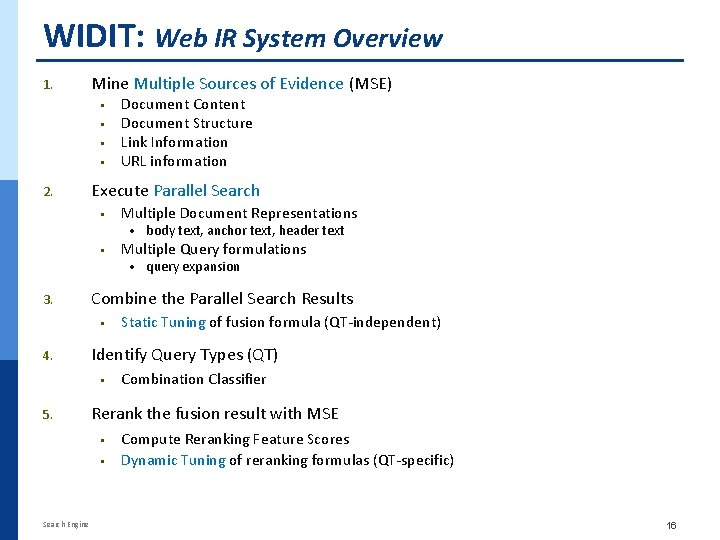

WIDIT: Web IR System Overview

1. Mine Multiple Sources of Evidence (MSE)
   - Document Content
   - Document Structure
   - Link Information
   - URL Information
2. Execute Parallel Search
   - Multiple Document Representations
     - body text, anchor text, header text
   - Multiple Query Formulations
     - query expansion
3. Combine the Parallel Search Results
   - Static Tuning of fusion formula (QT-independent)
4. Identify Query Types (QT)
   - Combination Classifier
5. Rerank the fusion result with MSE
   - Compute Reranking Feature Scores
   - Dynamic Tuning of reranking formulas (QT-specific)

WIDIT: Web IR System Architecture

(Architecture diagram. Components: Documents, Indexing Module, Sub-indexes (Anchor Index, Body Index, Header Index), Topics, Queries (Simple Queries, Expanded Queries), Query Classification Module, Query Types, Retrieval Module, Search Results, Fusion Module (Static Tuning), Fusion Result, Re-ranking Module (Dynamic Tuning), Final Result.)

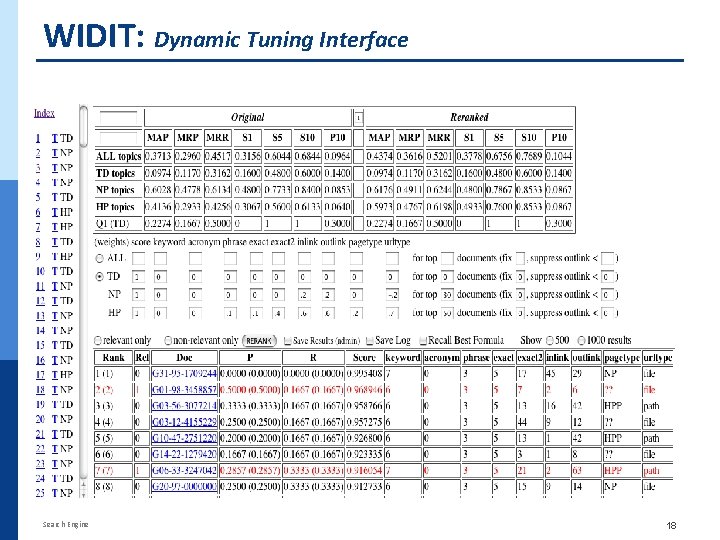

WIDIT: Dynamic Tuning Interface

Term Weight: SMART System

- Length-Normalized Term Weights
  - SMART lnu weight for document terms
  - SMART ltc weight for query terms
  - where:
    - $f_{ik}$ = number of times term $k$ appears in document $i$
    - $idf_k$ = inverse document frequency of term $k$
    - $t$ = number of terms in document/query
- Document Score
  - inner product of document and query vectors
  - where:
    - $q_k$ = weight of term $k$ in the query
    - $d_{ik}$ = weight of term $k$ in document $i$
    - $t$ = number of terms common to query & document
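
The document score above, an inner product of length-normalized document and query vectors, can be sketched as follows. The slide's weight formulas are not reproduced in the text, so the forms used here are assumptions: a log-scaled term frequency with cosine length normalization for document terms, and the same multiplied by idf for query terms. Function names and sample values are hypothetical.

```python
import math
from collections import Counter

def doc_weights(tokens):
    """Assumed document weight: (log f + 1), cosine-normalized over the document."""
    raw = {t: math.log(f) + 1.0 for t, f in Counter(tokens).items()}
    norm = math.sqrt(sum(w * w for w in raw.values())) or 1.0
    return {t: w / norm for t, w in raw.items()}

def query_weights(tokens, idf):
    """Assumed query weight: (log f + 1) * idf, cosine-normalized over the query."""
    raw = {t: (math.log(f) + 1.0) * idf.get(t, 0.0) for t, f in Counter(tokens).items()}
    norm = math.sqrt(sum(w * w for w in raw.values())) or 1.0
    return {t: w / norm for t, w in raw.items()}

def score(query_vec, doc_vec):
    """Inner product over the terms common to query and document."""
    return sum(q * doc_vec[t] for t, q in query_vec.items() if t in doc_vec)

# Hypothetical idf values and texts
idf = {"web": 1.0, "search": 2.0}
d = doc_weights("web web search engine".split())
q = query_weights("web search".split(), idf)
s = score(q, d)
```

Because both vectors are normalized to unit length, the score stays between 0 and 1 regardless of document length.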

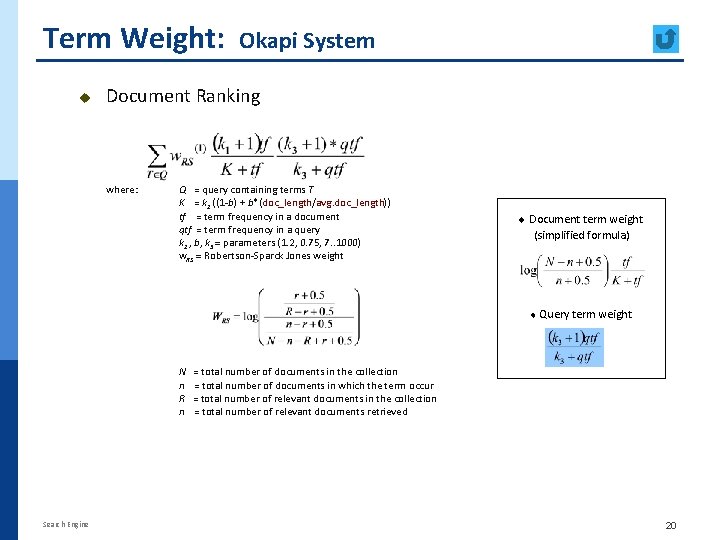

Term Weight: Okapi System

- Document Ranking
  - where:
    - $Q$ = query containing terms $T$
    - $K = k_1\left((1 - b) + b \cdot \frac{\text{doc length}}{\text{avg. doc length}}\right)$
    - $tf$ = term frequency in a document
    - $qtf$ = term frequency in a query
    - $k_1$, $b$, $k_3$ = parameters (1.2, 0.75, 7..1000)
    - $w_{RS}$ = Robertson-Sparck Jones weight
  - Document term weight (simplified formula)
  - Query term weight
  - where:
    - $N$ = total number of documents in the collection
    - $n$ = total number of documents in which the term occurs
    - $R$ = total number of relevant documents in the collection
    - $r$ = total number of relevant documents retrieved
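
The symbols defined above fit the standard Okapi BM25 ranking function, sketched below. The slide's own formula is not reproduced in the text, so the standard published form is assumed here, with the Robertson-Sparck Jones weight reducing to an idf-like weight when no relevance information is available (R = r = 0). Function names, the choice `k3=7.0`, and the sample numbers are illustrative assumptions.

```python
import math

def rsj_weight(N, n, R=0, r=0):
    """Robertson-Sparck Jones weight; with R = r = 0 it is log((N-n+0.5)/(n+0.5))."""
    return math.log(((r + 0.5) / (R - r + 0.5)) / ((n - r + 0.5) / (N - n - R + r + 0.5)))

def bm25_score(query_tf, doc_tf, doc_length, avg_doc_length, N, doc_freq,
               k1=1.2, b=0.75, k3=7.0):
    """Sum over query terms of w_RS * document term factor * query term factor."""
    K = k1 * ((1 - b) + b * (doc_length / avg_doc_length))
    total = 0.0
    for term, qtf in query_tf.items():
        tf = doc_tf.get(term, 0)
        if tf == 0:
            continue  # term absent from the document contributes nothing
        w_rs = rsj_weight(N, doc_freq[term])
        doc_part = (k1 + 1) * tf / (K + tf)
        query_part = (k3 + 1) * qtf / (k3 + qtf)
        total += w_rs * doc_part * query_part
    return total

# Hypothetical collection statistics: 1000 documents, "fusion" occurs in 10 of them
s = bm25_score(
    query_tf={"fusion": 1},
    doc_tf={"fusion": 2, "web": 5},
    doc_length=100, avg_doc_length=100,
    N=1000, doc_freq={"fusion": 10},
)
```

The parameter `b` controls how strongly document length affects `K`: a document longer than average gets a larger `K`, which dampens the contribution of its term frequencies.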

- Slides: 20