
IR Theory: Relevance Feedback

Relevance Feedback: Example (Initial Results)

Relevance Feedback: Example (Relevance Feedback)

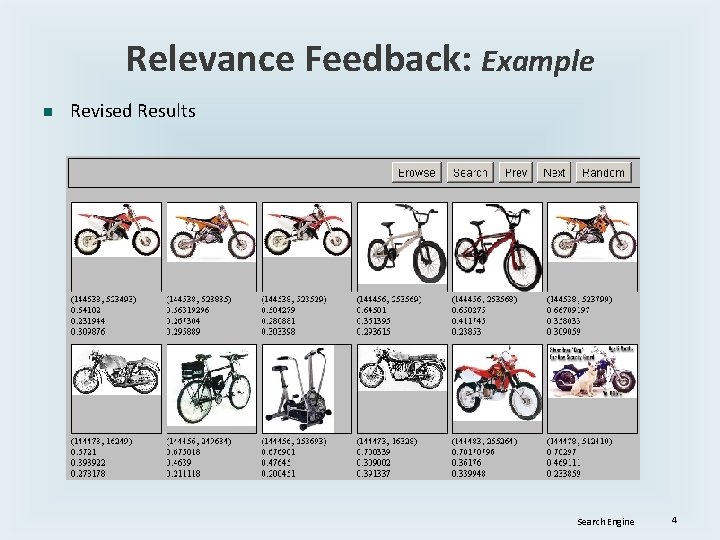

Relevance Feedback: Example (Revised Results)

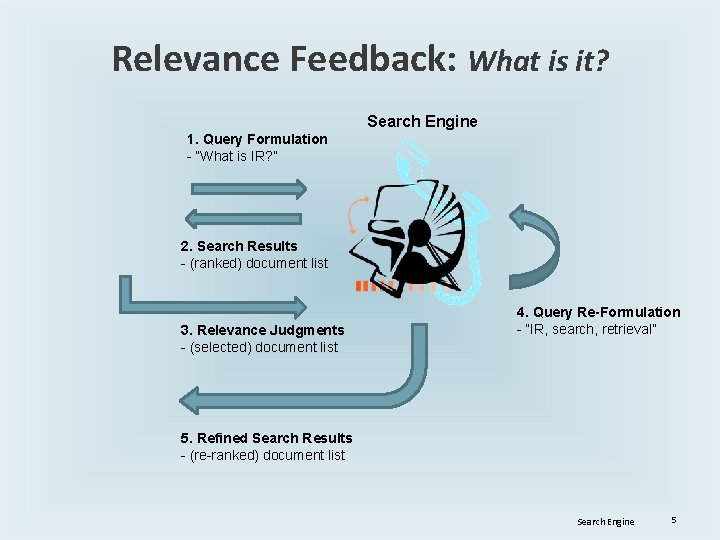

Relevance Feedback: What is it?

1. Query formulation: "What is IR?"
2. Search results: (ranked) document list
3. Relevance judgments: (selected) document list
4. Query reformulation: "IR, search, retrieval"
5. Refined search results: (re-ranked) document list

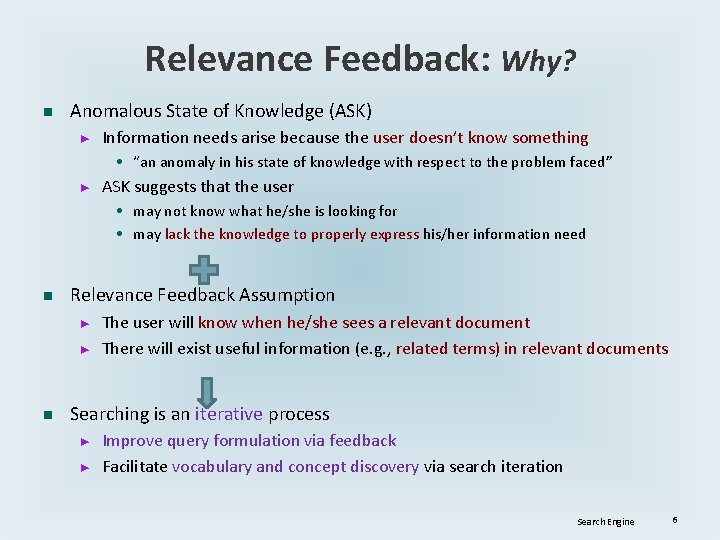

Relevance Feedback: Why?

- Anomalous State of Knowledge (ASK)
  - Information needs arise because the user doesn't know something: "an anomaly in his state of knowledge with respect to the problem faced"
  - ASK suggests that the user
    - may not know what he/she is looking for
    - may lack the knowledge to properly express his/her information need
- Relevance feedback assumptions
  - The user will know when he/she sees a relevant document
  - Relevant documents will contain useful information (e.g., related terms)
- Searching is an iterative process
  - Improve query formulation via feedback
  - Facilitate vocabulary and concept discovery via search iteration

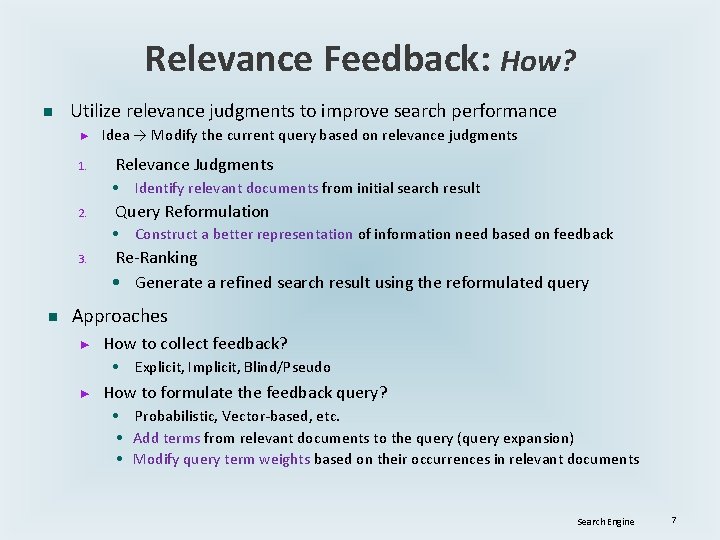

Relevance Feedback: How?

- Utilize relevance judgments to improve search performance
  - Idea: modify the current query based on relevance judgments
    1. Relevance judgments: identify relevant documents in the initial search result
    2. Query reformulation: construct a better representation of the information need based on the feedback
    3. Re-ranking: generate a refined search result using the reformulated query
- Approaches
  - How to collect feedback?
    - Explicit, implicit, blind/pseudo
  - How to formulate the feedback query?
    - Probabilistic, vector-based, etc.
    - Add terms from relevant documents to the query (query expansion)
    - Modify query term weights based on their occurrences in relevant documents

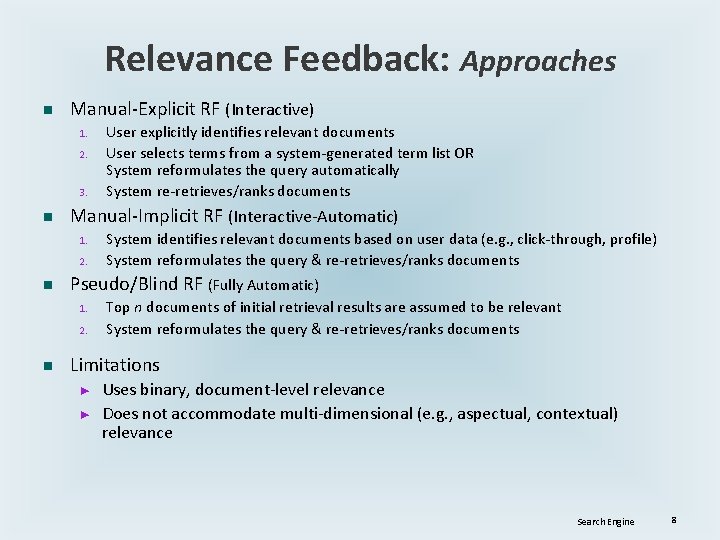
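The three steps can be sketched with query expansion, the first formulation strategy listed above. This is a minimal sketch under stated assumptions: documents are bags of lowercase terms, scoring is a toy count of shared query terms, and expansion appends the k terms that occur in the most judged-relevant documents. The function names, the parameter `k`, and the sample documents are all hypothetical.

```python
from collections import Counter


def overlap_score(query_terms, doc_terms):
    """Toy scorer: number of distinct query terms that occur in the document."""
    return len(set(query_terms) & set(doc_terms))


def rank(query_terms, docs):
    """Document ids sorted by descending overlap score (ties broken by id)."""
    return sorted(docs, key=lambda d: (-overlap_score(query_terms, docs[d]), d))


def expand_query(query_terms, relevant_docs, k=3):
    """Query expansion: append the k terms that occur in the most judged-relevant
    documents and are not already in the query (ties broken alphabetically)."""
    doc_freq = Counter()
    for doc_terms in relevant_docs:
        doc_freq.update(set(doc_terms))  # count each term once per document
    candidates = sorted((t for t in doc_freq if t not in query_terms),
                        key=lambda t: (-doc_freq[t], t))
    return list(query_terms) + candidates[:k]


# Hypothetical toy collection
docs = {
    "d1": ["ir", "search", "retrieval"],
    "d2": ["ir", "theory"],
    "d3": ["search", "retrieval", "engine"],
    "d4": ["cooking", "recipes"],
}
query = ["ir"]
print(rank(query, docs))                       # initial search: ['d1', 'd2', 'd3', 'd4']
relevant = [docs["d1"]]                        # relevance judgment: user marks d1
expanded = expand_query(query, relevant, k=2)  # query reformulation
print(expanded)                                # ['ir', 'retrieval', 'search']
print(rank(expanded, docs))                    # re-ranking: ['d1', 'd3', 'd2', 'd4']
```

Here d3 contains no original query term, but once "search" and "retrieval" are taken from the judged-relevant d1 it moves ahead of d2, much like the slide's expansion of "What is IR?" into "IR, search, retrieval".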

Relevance Feedback: Approaches

- Manual-Explicit RF (Interactive)
  1. User explicitly identifies relevant documents
  2. User selects terms from a system-generated term list, OR the system reformulates the query automatically
  3. System re-retrieves/re-ranks documents
- Manual-Implicit RF (Interactive-Automatic)
  1. System identifies relevant documents based on user data (e.g., click-through, profile)
  2. System reformulates the query and re-retrieves/re-ranks documents
- Pseudo/Blind RF (Fully Automatic)
  1. The top n documents of the initial retrieval results are assumed to be relevant
  2. System reformulates the query and re-retrieves/re-ranks documents
- Limitations
  - Uses binary, document-level relevance
  - Does not accommodate multi-dimensional (e.g., aspectual, contextual) relevance

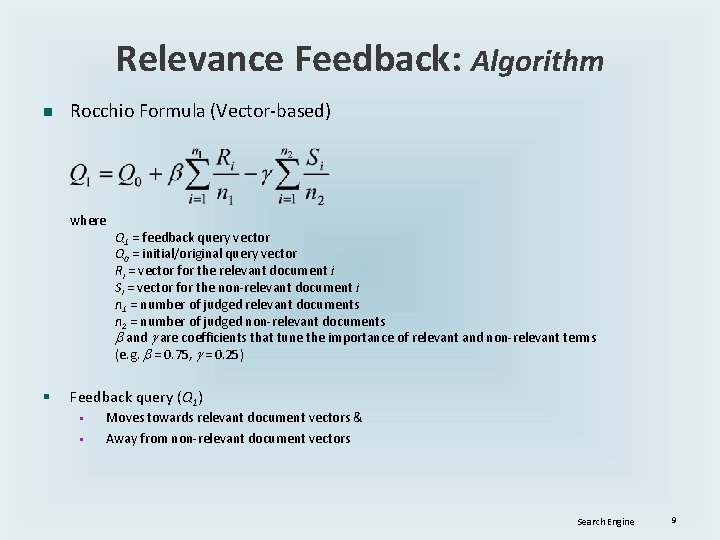
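Pseudo/blind RF can be sketched in a few lines. The slide does not fix the reformulation step, so this sketch assumes a vector space with cosine ranking and a Rocchio-style update that keeps only the positive term (the system has no judged non-relevant documents to push away from). The names `pseudo_relevance_feedback`, `n`, `beta` and the toy vectors are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def rank(query, docs):
    """Document ids sorted by descending cosine score (ties broken by id)."""
    return sorted(docs, key=lambda d: (-cosine(query, docs[d]), d))


def pseudo_relevance_feedback(query, docs, n=2, beta=0.75):
    """Blind RF: assume the top-n documents are relevant, add beta times their
    centroid to the query, and re-rank with the new query."""
    top = rank(query, docs)[:n]
    if not top:
        return list(query), []
    centroid = [sum(docs[d][i] for d in top) / len(top) for i in range(len(query))]
    q1 = [q + beta * c for q, c in zip(query, centroid)]
    return q1, rank(q1, docs)


# Hypothetical term-weight vectors over three terms (t1, t2, t3)
docs = {
    "d1": [1.0, 1.0, 0.0],
    "d2": [0.3, 0.0, 1.0],
    "d3": [0.0, 1.0, 0.0],
}
q0 = [1.0, 0.0, 0.0]
print(rank(q0, docs))        # ['d1', 'd2', 'd3']
q1, reranked = pseudo_relevance_feedback(q0, docs, n=1)
print(q1, reranked)          # [1.75, 0.75, 0.0] ['d1', 'd3', 'd2']
```

Because d1 is assumed relevant without any user input, its t2 weight is pulled into the query and d3 (which shares only t2 with d1) overtakes d2. If the top-n documents are in fact off-topic, the same mechanism drifts the query in the wrong direction.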

Relevance Feedback: Algorithm

- Rocchio formula (vector-based):

  $$Q_1 = Q_0 + \frac{\beta}{n_1}\sum_{i=1}^{n_1} R_i - \frac{\gamma}{n_2}\sum_{i=1}^{n_2} S_i$$

  where
  - $Q_1$ = feedback query vector
  - $Q_0$ = initial/original query vector
  - $R_i$ = vector for relevant document $i$
  - $S_i$ = vector for non-relevant document $i$
  - $n_1$ = number of judged relevant documents
  - $n_2$ = number of judged non-relevant documents
  - $\beta$ and $\gamma$ are coefficients that tune the importance of relevant and non-relevant terms (e.g., $\beta = 0.75$, $\gamma = 0.25$)
- The feedback query $Q_1$ moves towards the relevant document vectors and away from the non-relevant document vectors.

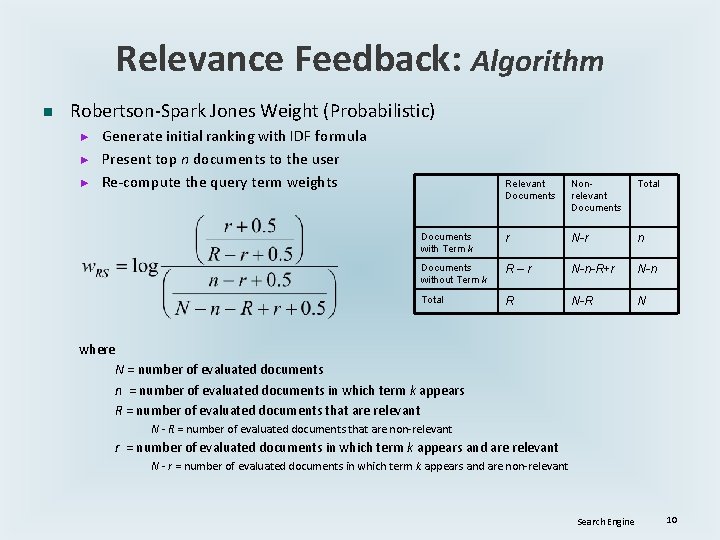
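The Rocchio update translates directly into code. Below is a minimal sketch on dense term-weight vectors stored as plain Python lists; `rocchio` is a hypothetical helper name, the defaults follow the slide's example values ($\beta = 0.75$, $\gamma = 0.25$), and each centroid term is skipped when its document set is empty so that nothing is divided by zero.

```python
def rocchio(q0, relevant, nonrelevant, beta=0.75, gamma=0.25):
    """Return Q1 = Q0 + (beta/n1)*sum(R_i) - (gamma/n2)*sum(S_i).

    q0: initial query weights; relevant / nonrelevant: lists of document
    vectors, each the same length as q0.
    """
    n1, n2 = len(relevant), len(nonrelevant)
    q1 = list(q0)
    for i in range(len(q0)):
        if n1:  # move towards the centroid of the relevant documents
            q1[i] += beta / n1 * sum(r[i] for r in relevant)
        if n2:  # move away from the centroid of the non-relevant documents
            q1[i] -= gamma / n2 * sum(s[i] for s in nonrelevant)
    return q1


# Toy two-term example (hypothetical vectors)
print(rocchio([1.0, 0.0], relevant=[[0.0, 1.0]], nonrelevant=[[1.0, 1.0]]))
# [0.75, 0.5]
```

In the toy run the query gains weight on the second term (present only in the relevant document) and loses weight on the first (present only in the non-relevant one), which is the "towards relevant, away from non-relevant" movement described above.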

Relevance Feedback: Algorithm

- Robertson-Sparck Jones weight (probabilistic)
  - Generate an initial ranking with an IDF formula
  - Present the top n documents to the user
  - Re-compute the query term weights

| | Relevant documents | Non-relevant documents | Total |
|---|---|---|---|
| Documents with term $k$ | $r$ | $n - r$ | $n$ |
| Documents without term $k$ | $R - r$ | $N - n - R + r$ | $N - n$ |
| Total | $R$ | $N - R$ | $N$ |

where
- $N$ = number of evaluated documents
- $n$ = number of evaluated documents in which term $k$ appears
- $R$ = number of evaluated documents that are relevant
- $N - R$ = number of evaluated documents that are non-relevant
- $r$ = number of evaluated documents in which term $k$ appears and that are relevant
- $n - r$ = number of evaluated documents in which term $k$ appears and that are non-relevant

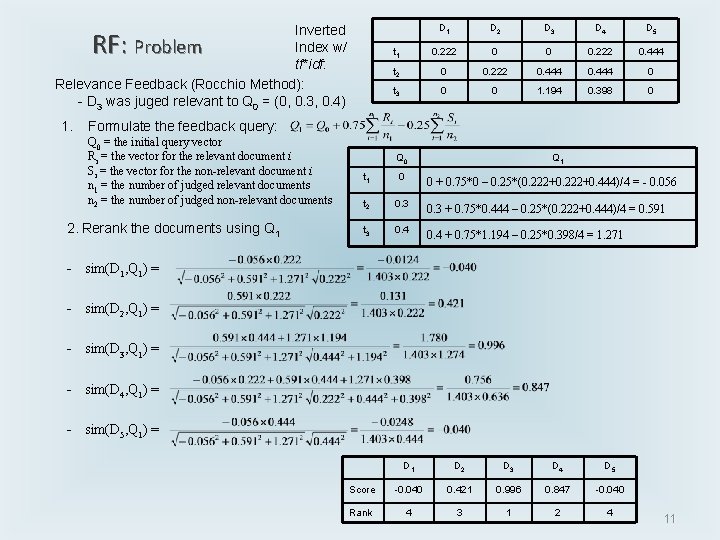
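The weight formula itself does not survive in the extracted text. One widely used form of the Robertson-Sparck Jones weight, written with the contingency-table counts above, is sketched below; adding 0.5 to each cell (so that zero counts cause neither division by zero nor log(0)) and the name `rsj_weight` are assumptions, not taken from the slide.

```python
import math


def rsj_weight(N, R, n, r):
    """Robertson-Sparck Jones relevance weight for term k, with 0.5 added to
    each contingency-table cell:

        w = log( ((r + 0.5) / (R - r + 0.5)) /
                 ((n - r + 0.5) / (N - n - R + r + 0.5)) )
    """
    # Odds that a relevant document contains term k
    odds_relevant = (r + 0.5) / (R - r + 0.5)
    # Odds that a non-relevant document contains term k
    odds_nonrelevant = (n - r + 0.5) / (N - n - R + r + 0.5)
    return math.log(odds_relevant / odds_nonrelevant)


# 10 judged documents, 2 relevant; term k occurs only in those 2
print(rsj_weight(N=10, R=2, n=2, r=2))    # log(85), about 4.44
# Term k occurs in 3 non-relevant documents and in no relevant one
print(rsj_weight(N=10, R=2, n=3, r=0))    # negative weight
# No relevance information yet (R = r = 0)
print(rsj_weight(N=100, R=0, n=10, r=0))  # log(90.5 / 10.5)
```

With no relevance information ($R = r = 0$) the expression reduces to $\log\frac{N - n + 0.5}{n + 0.5}$, an IDF-style weight, which fits the first step of generating the initial ranking with an IDF formula before any judgments are available.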

Problem: Relevance Feedback (Rocchio Method)

Inverted index with tf*idf weights:

| | D1 | D2 | D3 | D4 | D5 |
|---|---|---|---|---|---|
| t1 | 0.222 | 0 | 0 | 0.222 | 0.444 |
| t2 | 0 | 0.222 | 0.444 | 0.444 | 0 |
| t3 | 0 | 0 | 1.194 | 0.398 | 0 |

D3 was judged relevant to Q0 = (0, 0.3, 0.4); the other four documents are treated as non-relevant, so n1 = 1 and n2 = 4.

1. Formulate the feedback query Q1 with the Rocchio formula (β = 0.75, γ = 0.25), where Q0 = the initial query vector, Ri = the vector for relevant document i, Si = the vector for non-relevant document i, n1 = the number of judged relevant documents, and n2 = the number of judged non-relevant documents:

   | | Q0 | Q1 |
   |---|---|---|
   | t1 | 0 | 0 + 0.75*0 - 0.25*(0.222+0.222+0.444)/4 = -0.056 |
   | t2 | 0.3 | 0.3 + 0.75*0.444 - 0.25*(0.222+0.444)/4 = 0.591 |
   | t3 | 0.4 | 0.4 + 0.75*1.194 - 0.25*0.398/4 = 1.271 |

2. Rerank the documents using Q1, scoring each document by cosine similarity sim(Di, Q1):

   | | D1 | D2 | D3 | D4 | D5 |
   |---|---|---|---|---|---|
   | Score | -0.040 | 0.421 | 0.996 | 0.847 | -0.040 |
   | Rank | 4 | 3 | 1 | 2 | 4 |
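The worked example can be re-run in a few lines as a check. This sketch assumes sim(D, Q1) is cosine similarity (the slide does not name the measure, but cosine reproduces its scores) and that D1, D2, D4 and D5 form the non-relevant set, as the division by 4 implies. The slide appears to score against Q1 rounded to three decimals, so the unrounded values printed here can differ in the third decimal (D2 comes out near 0.4216 rather than 0.421); the ranking is unchanged. The helper names are hypothetical.

```python
import math

# tf*idf weights from the problem's inverted index: [t1, t2, t3] per document
DOCS = {
    "D1": [0.222, 0.0, 0.0],
    "D2": [0.0, 0.222, 0.0],
    "D3": [0.0, 0.444, 1.194],
    "D4": [0.222, 0.444, 0.398],
    "D5": [0.444, 0.0, 0.0],
}
Q0 = [0.0, 0.3, 0.4]
BETA, GAMMA = 0.75, 0.25


def rocchio(q0, relevant, nonrelevant, beta, gamma):
    """Q1 = Q0 + (beta/n1)*sum(R_i) - (gamma/n2)*sum(S_i), term by term."""
    n1, n2 = len(relevant), len(nonrelevant)
    return [
        q + beta / n1 * sum(r[i] for r in relevant)
        - gamma / n2 * sum(s[i] for s in nonrelevant)
        for i, q in enumerate(q0)
    ]


def cosine(u, v):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


relevant = [DOCS["D3"]]
nonrelevant = [DOCS[d] for d in ("D1", "D2", "D4", "D5")]
q1 = rocchio(Q0, relevant, nonrelevant, BETA, GAMMA)

# Score every document against Q1 and sort from most to least similar
scores = {d: cosine(vec, q1) for d, vec in DOCS.items()}
ranking = sorted(scores, key=scores.get, reverse=True)

print("Q1 =", [round(w, 4) for w in q1])
for d in ranking:
    print(d, f"{scores[d]:.3f}")
```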
