IR into Context of the User: IR models

- Slides: 61

IR into Context of the User: IR models, Interaction & Relevance
Peter Ingwersen, Royal School of LIS, University of Copenhagen, Denmark
peter.ingwersen@hum.ku.dk – http://research.ku.dk/search/?pure=en%2Fpersons%2Fpeteringwersen%28b7bb0323-445e-4fdf-88c833e399df2b46%29%2Fcv.html
IVA 2017, Ingwersen

Agenda - 1
- Introduction
  - Research Frameworks vs. Models
  - Central components of Lab. IR and Interactive IR (IIR)
  - The Integrated Cognitive Research Framework for IR
- From Simulation to ‘Ultra-light’ IIR
  - Short-term IR interaction experiments
  - Sample study: Diane Kelly (2005/2007)

Agenda - 2
- Experimental Research Designs with Test Persons
  - Interactive-light session-based IR studies
  - Request types
  - Test persons
  - Design of task-based simulated search situations
  - Relevance and evaluation measures in IIR
  - Sample study: Pia Borlund (2000; 2003b)

Agenda - 3
- Naturalistic Field Investigations of IIR (20 min)
  - Integrating context variables
  - Live systems & (simulated) work tasks
  - Sample Study: Marianne Lykke (Nielsen) (2001; 2004)
- Wrapping up (5 min)

Questions are welcome during the sessions.

Source: Advanced Information Retrieval / Ricardo Baeza-Yates & Massimo Melucci (eds.). Springer, 2011, pp. 91-118.

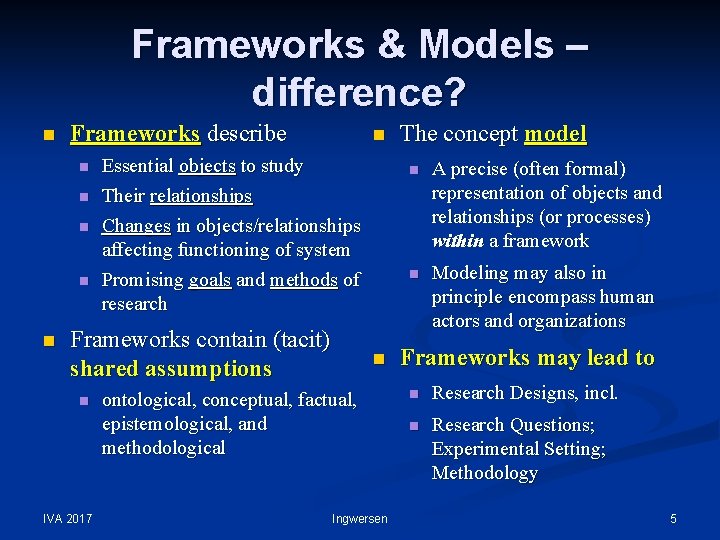

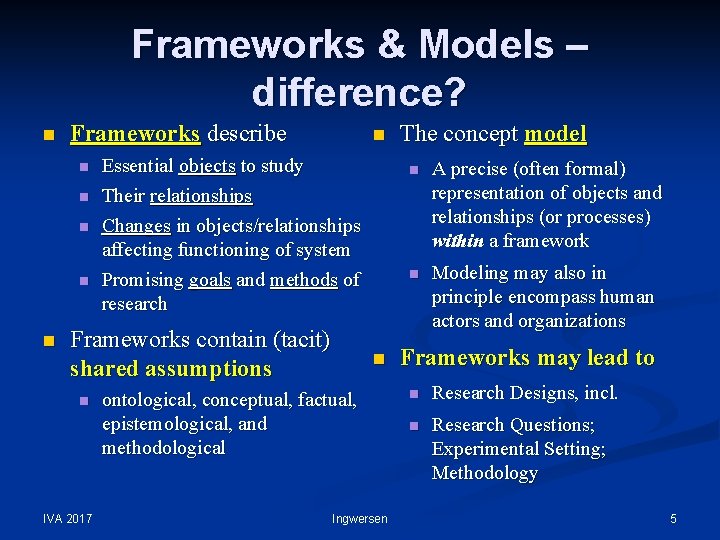

Frameworks & Models: what is the difference?
Frameworks describe:
- essential objects to study;
- their relationships;
- changes in objects/relationships affecting the functioning of the system;
- promising goals and methods of research.

Frameworks contain (tacit) shared assumptions:
- ontological, conceptual, factual, epistemological, and methodological.

The concept model:
- a precise (often formal) representation of objects and relationships (or processes) within a framework;
- modeling may also, in principle, encompass human actors and organizations.

Frameworks may lead to research designs, including:
- research questions; experimental setting; methodology.

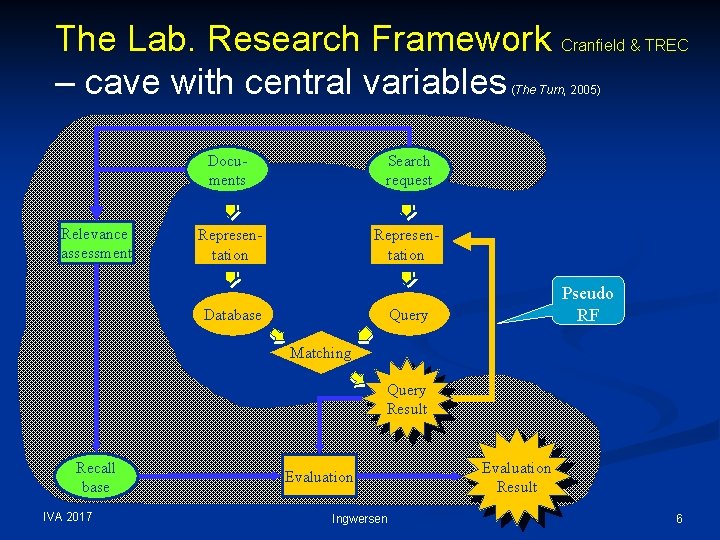

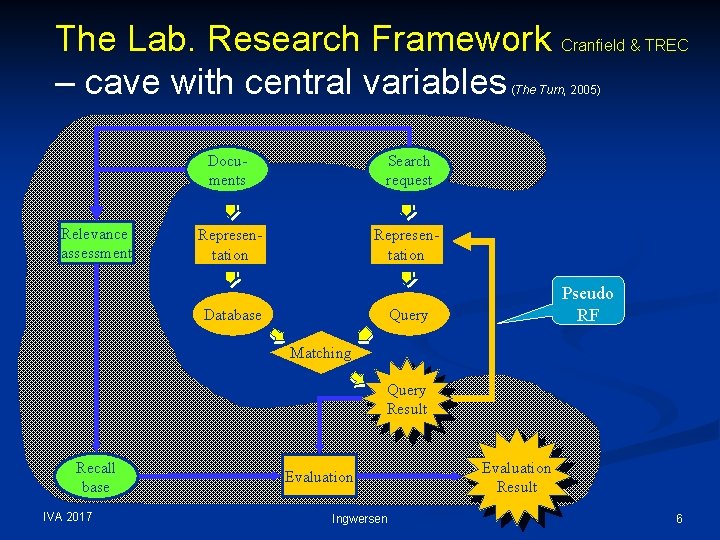

The Lab. Research Framework: Cranfield & TREC, a ‘cave’ with central variables (The Turn, 2005)
(Diagram: documents and search request → representation → database and query → matching → query result; pseudo RF; relevance assessments forming the recall base; evaluation → evaluation result.)

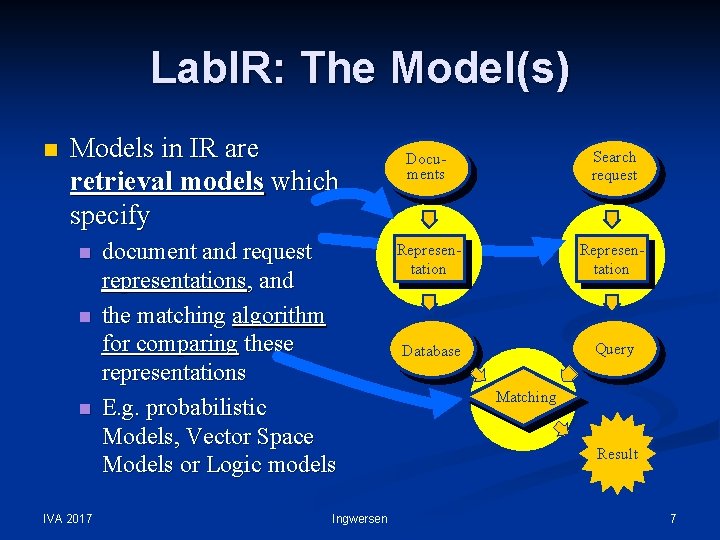

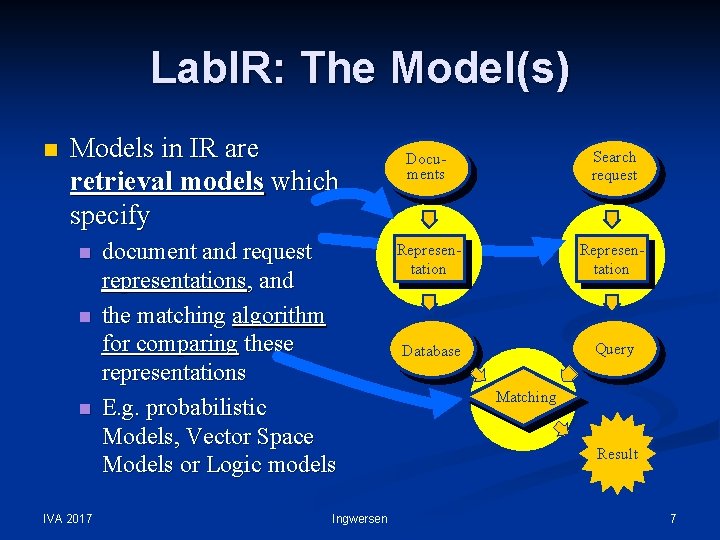

Lab. IR: The Model(s)
- Models in IR are retrieval models, which specify:
  - document and request representations, and
  - the matching algorithm for comparing these representations.
- E.g., probabilistic models, vector space models or logic models.

(Diagram: documents and search request → representation → database and query → matching → result.)
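To make the three ingredients of a retrieval model concrete (document representation, request representation, matching), here is a minimal sketch of a vector space model. It assumes documents and requests are already tokenized lists of terms and uses plain TF-IDF weights with cosine matching; this is one textbook variant chosen for illustration, not a model taken from the slides.

```python
import math
from collections import Counter


def tf_idf_vectors(docs):
    """Document representation: term -> tf * idf for each tokenized document."""
    n = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    idf = {term: math.log(n / df[term]) for term in df}
    vectors = [{t: tf * idf[t] for t, tf in Counter(doc).items()} for doc in docs]
    return vectors, idf


def request_vector(terms, idf):
    """Request representation; terms unseen in the collection get weight 0."""
    return {t: tf * idf.get(t, 0.0) for t, tf in Counter(terms).items()}


def cosine(u, v):
    """Matching function: cosine of the angle between two sparse vectors."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def rank(request, docs):
    """Return (doc index, score) pairs, best match first."""
    doc_vectors, idf = tf_idf_vectors(docs)
    q = request_vector(request, idf)
    scores = [(i, cosine(q, d)) for i, d in enumerate(doc_vectors)]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


docs = [
    ["thesaurus", "design", "work", "task"],
    ["olympic", "games", "beijing"],
    ["query", "expansion", "thesaurus"],
]
print(rank(["thesaurus", "query"], docs))  # document 2 ranks first
```

Swapping `cosine` for a probabilistic scoring function changes the matching algorithm while leaving both representations untouched, which is exactly the separation the slide describes.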

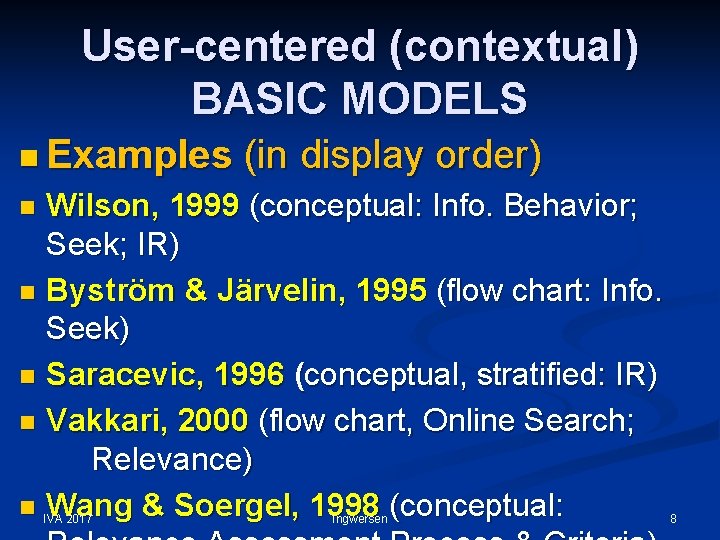

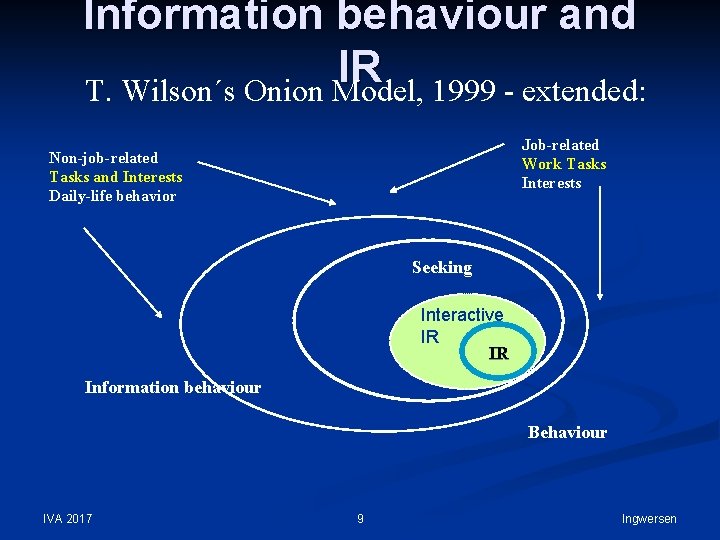

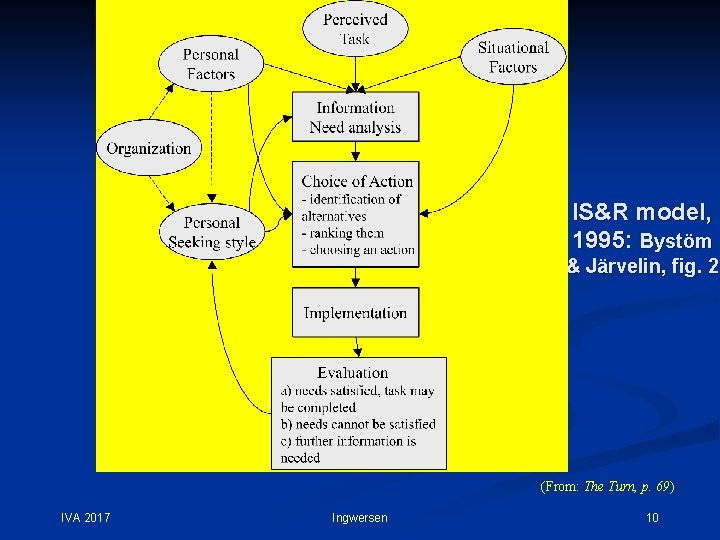

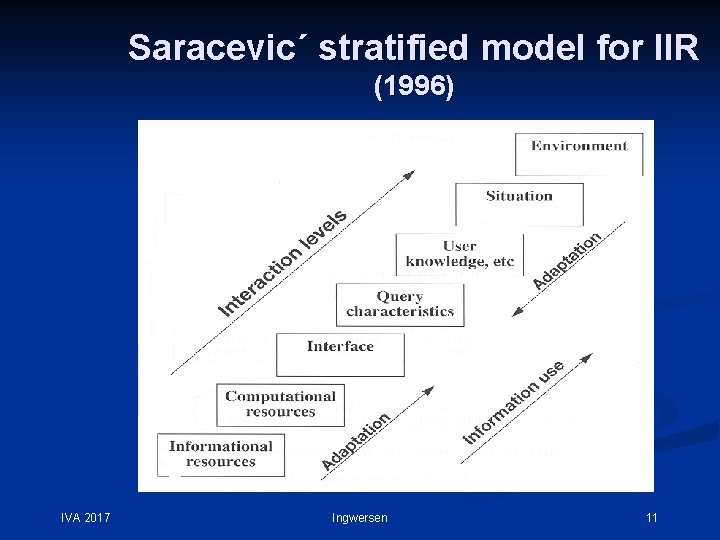

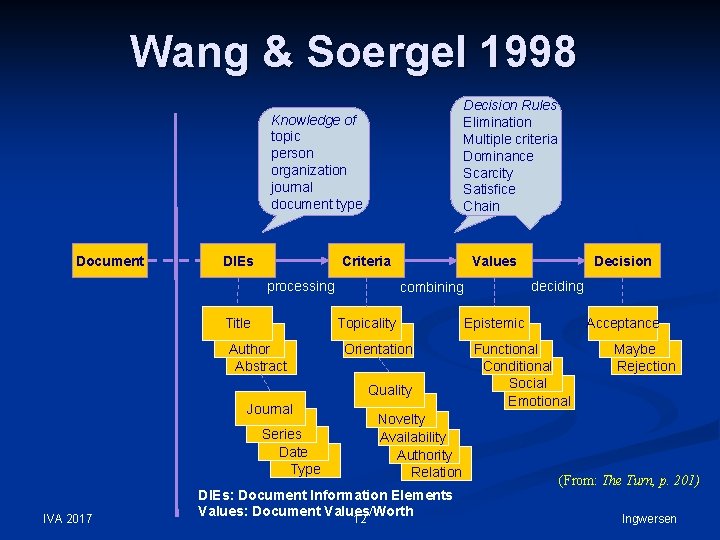

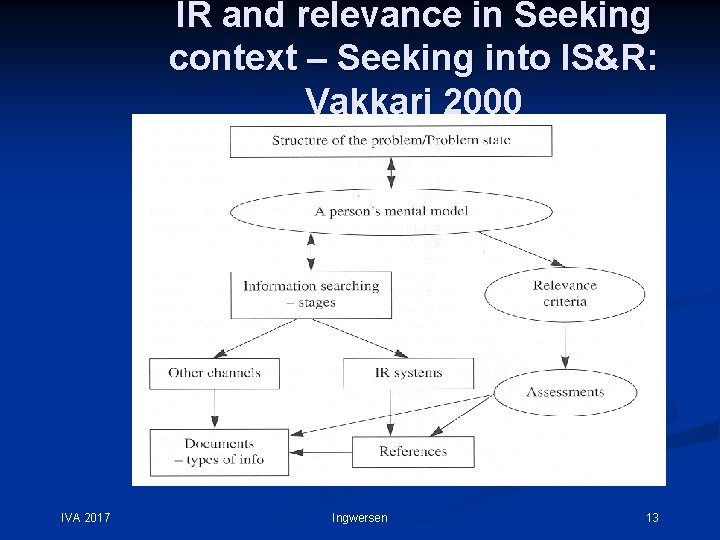

User-centered (contextual) BASIC MODELS
Examples (in display order):
- Wilson, 1999 (conceptual: Info. Behavior; Seek; IR)
- Byström & Järvelin, 1995 (flow chart: Info. Seek)
- Saracevic, 1996 (conceptual, stratified: IR)
- Vakkari, 2000 (flow chart: Online Search; Relevance)
- Wang & Soergel, 1998 (conceptual: …)

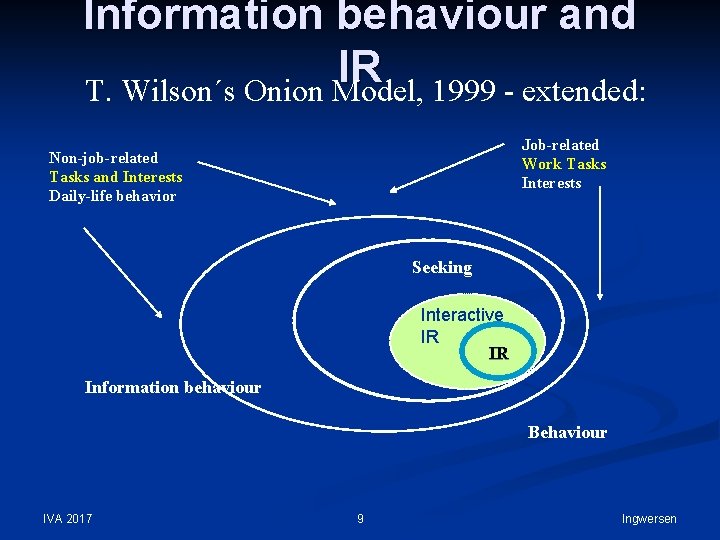

Information behaviour and IR: T. Wilson’s onion model (1999), extended
(Diagram layers: job-related work tasks and interests; non-job-related tasks and interests (daily-life behaviour); information behaviour; seeking behaviour; interactive IR; IR.)

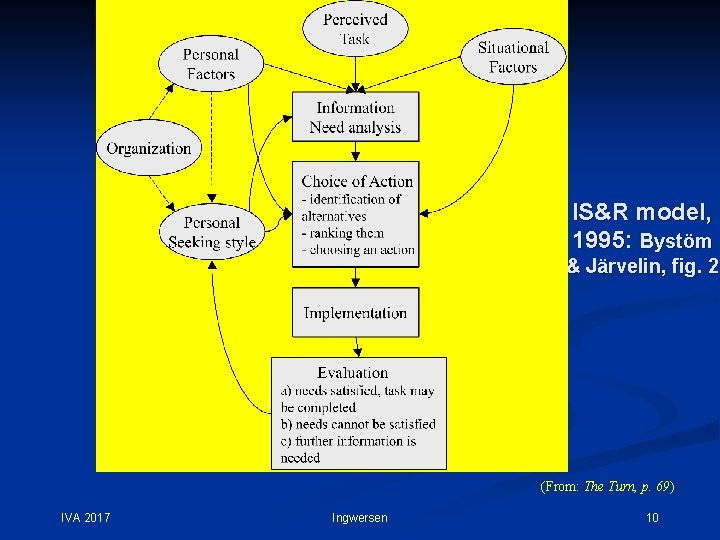

IS&R model, 1995: Byström & Järvelin, fig. 2 (from: The Turn, p. 69)

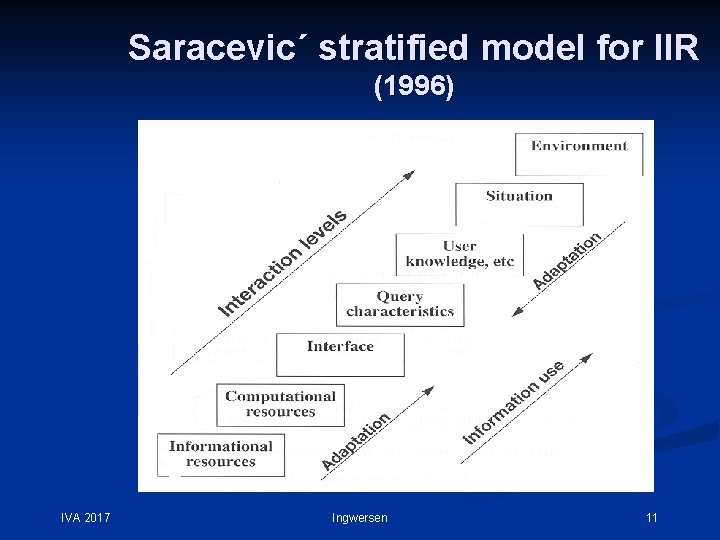

Saracevic’s stratified model for IIR (1996)

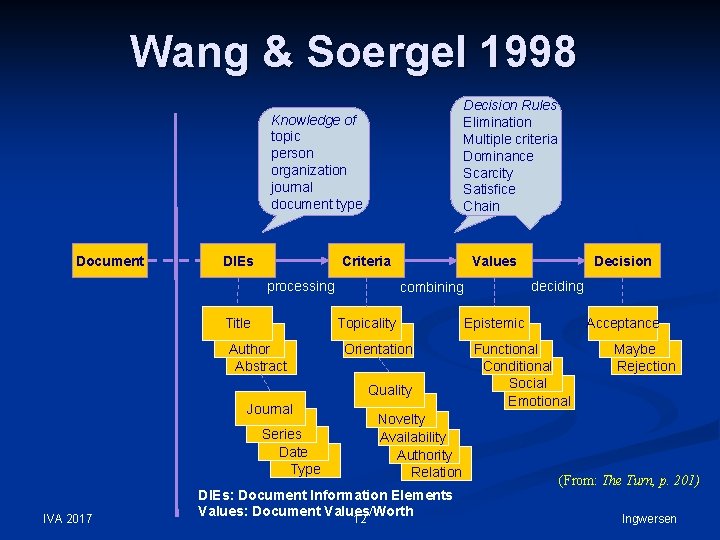

Wang & Soergel 1998: document selection and relevance decision model (from: The Turn, p. 201)
- Knowledge of: topic, person, organization, journal, document type
- Document Information Elements (DIEs): title, author, abstract, journal, series, date, type
- Criteria: topicality, orientation, quality, novelty, availability, authority, relation
- Values (document values/worth): epistemic, functional, conditional, social, emotional
- Decision rules: elimination, multiple criteria, dominance, scarcity, satisfice, chain
- Processing: deciding, combining
- Decision: acceptance, maybe, rejection

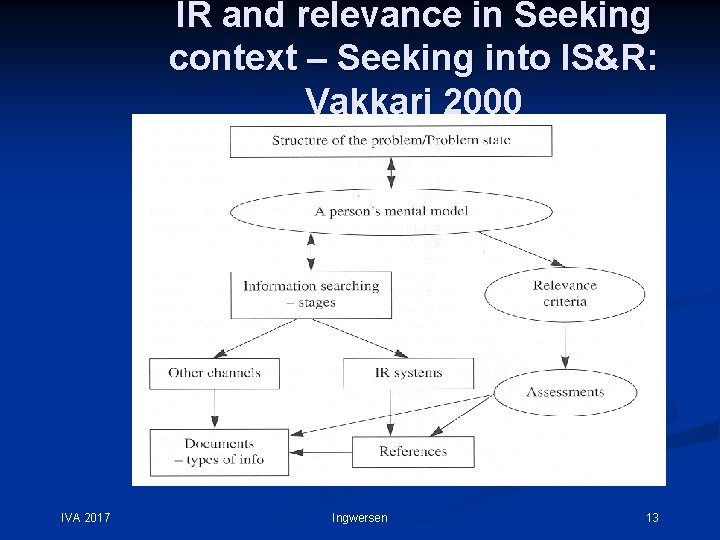

IR and relevance in seeking context (seeking into IS&R): Vakkari, 2000

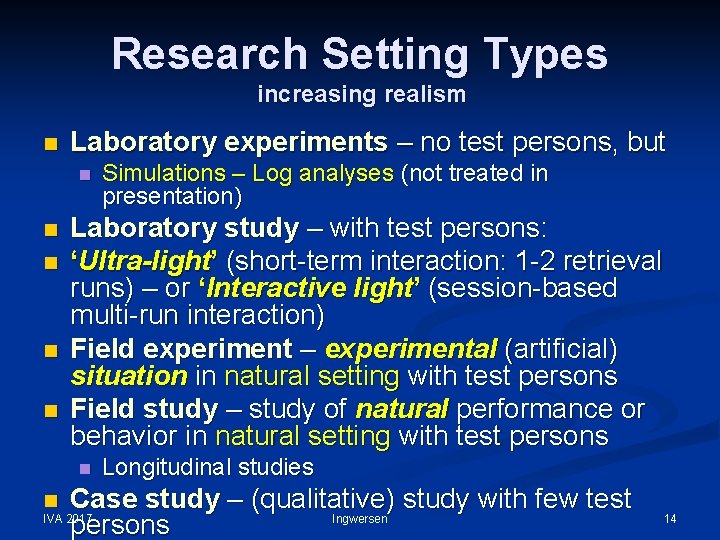

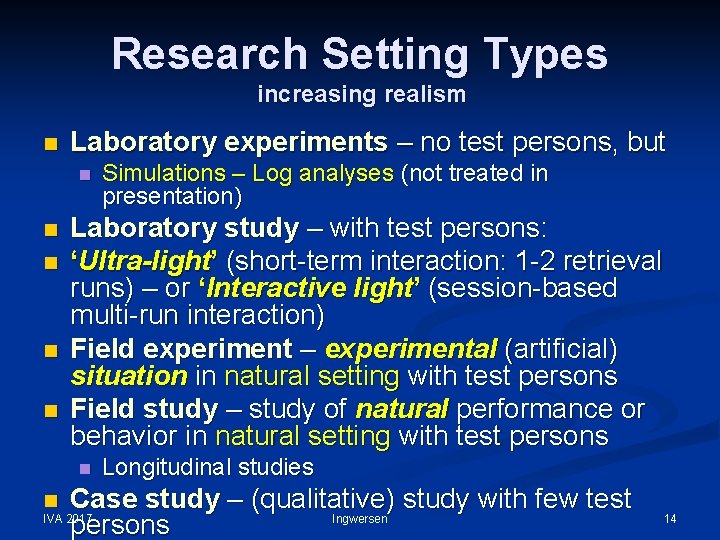

Research Setting Types, by increasing realism
- Laboratory experiments: no test persons, but
  - simulations
  - log analyses (not treated in this presentation)
- Laboratory study with test persons:
  - ‘Ultra-light’ (short-term interaction: 1-2 retrieval runs), or
  - ‘Interactive light’ (session-based multi-run interaction)
- Field experiment: experimental (artificial) situation in a natural setting with test persons
- Field study: study of natural performance or behavior in a natural setting with test persons
  - Longitudinal studies
- Case study: (qualitative) study with few test persons

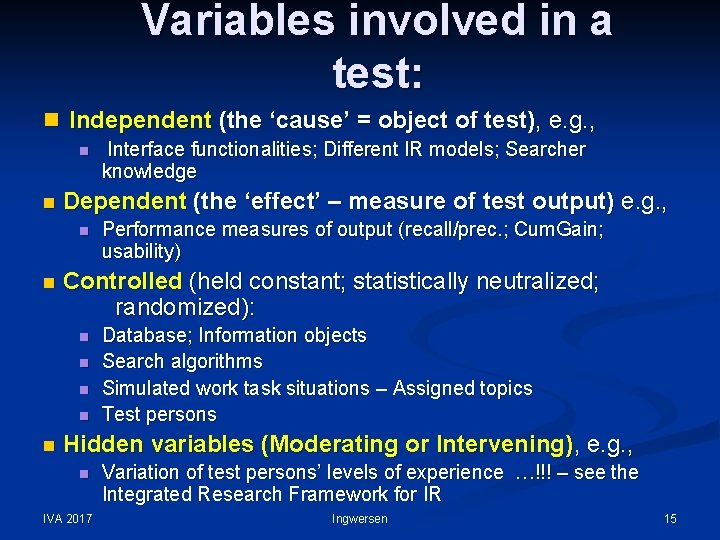

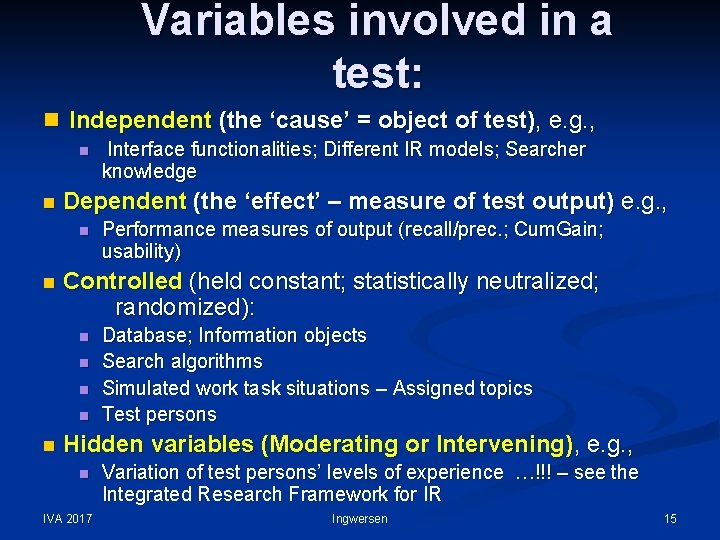

Variables involved in a test:
- Independent (the ‘cause’ = object of test), e.g.,
  - interface functionalities; different IR models; searcher knowledge
- Dependent (the ‘effect’ = measure of test output), e.g.,
  - performance measures of output (recall/precision; cumulated gain; usability)
- Controlled (held constant; statistically neutralized; randomized):
  - database; information objects
  - search algorithms
  - simulated work task situations; assigned topics
  - test persons
- Hidden variables (moderating or intervening), e.g.,
  - variation of test persons’ levels of experience!!! (see the Integrated Research Framework for IR)

Agenda - 1
- ✓ Introduction to Tutorial
- ✓ Research Frameworks vs. Models
- Central components of Lab. IR and Interactive IR (IIR)
- The Integrated Cognitive Research Framework for IR
- From Simulation to ‘Ultra-light’ IIR (25 min)
  - Short-term IR interaction experiments
  - Sample study: Diane Kelly (2005/2007)

Essir 2009

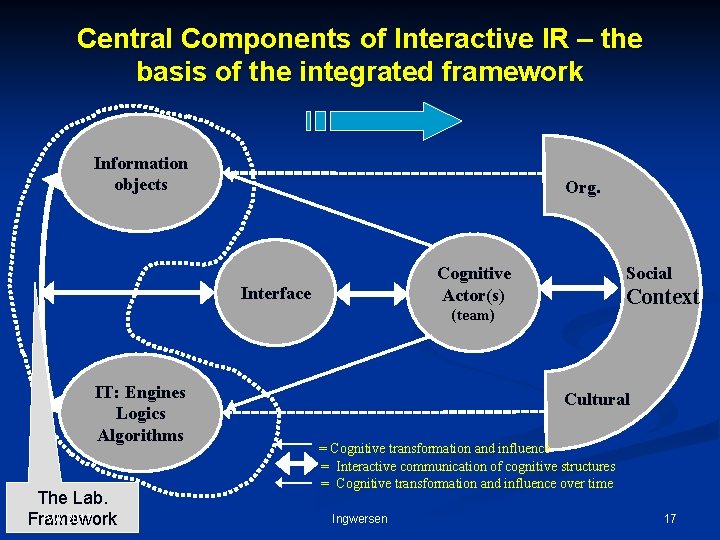

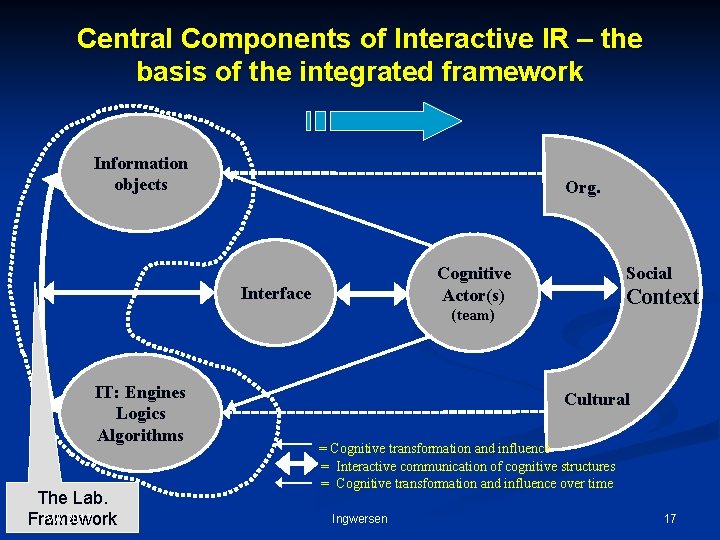

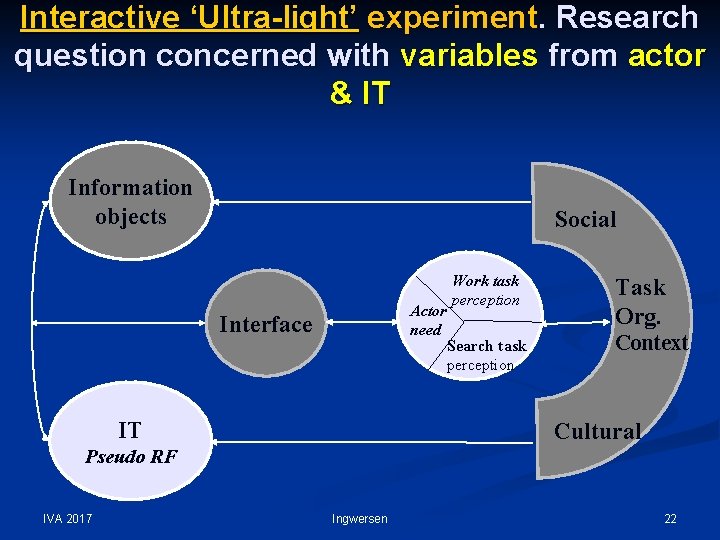

Central Components of Interactive IR: the basis of the integrated framework
(Diagram: information objects; interface; IT (engines, logics, algorithms); cognitive actor(s) (team); organizational, social and cultural context; ‘The Lab. Framework’ marked as a sub-area.)
Legend: arrows denote cognitive transformation and influence; interactive communication of cognitive structures; cognitive transformation and influence over time.

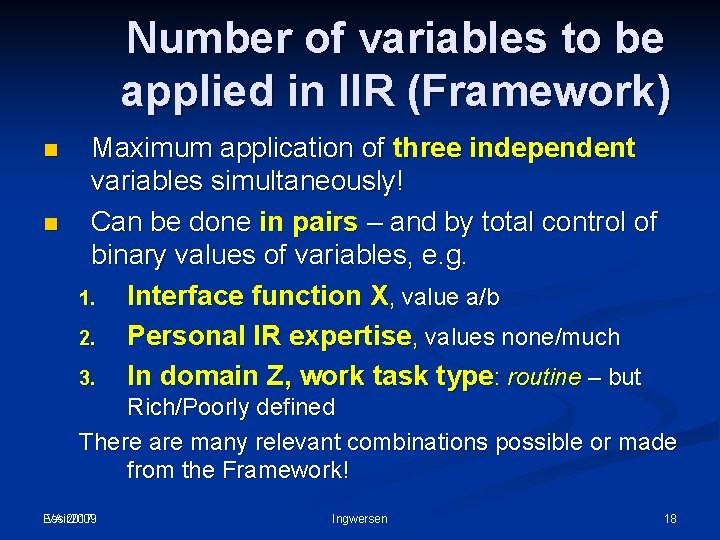

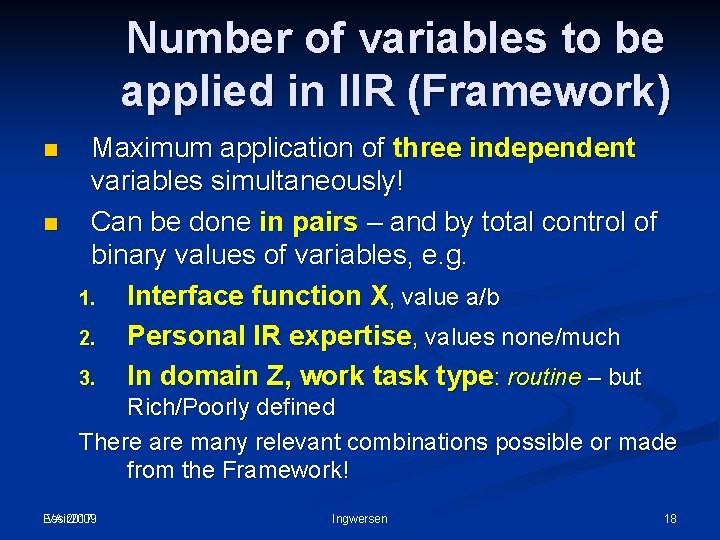

Number of variables to be applied in IIR (Framework)
- Maximum application of three independent variables simultaneously!
- Can be done in pairs, and by total control of binary values of the variables, e.g.:
  1. Interface function X, values a/b
  2. Personal IR expertise, values none/much
  3. In domain Z, work task type: routine, but rich/poorly defined
- There are many relevant combinations possible, drawn from the Framework!
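A small sketch of how the binary values of three independent variables span the cells of a full factorial design, and which pairs arise if the variables are studied two at a time. The variable names and value labels below are hypothetical stand-ins for the slide’s examples.

```python
from itertools import combinations, product

# Hypothetical labels for the three binary independent variables on the slide
VARIABLES = {
    "interface_function_X": ("a", "b"),
    "ir_expertise": ("none", "much"),
    "work_task_definition": ("rich", "poor"),
}


def design_cells(variables):
    """All value combinations (result cells) of a full factorial design."""
    names = list(variables)
    return [dict(zip(names, values)) for values in product(*variables.values())]


def variable_pairs(variables):
    """All pairs of independent variables, if they are studied two at a time."""
    return list(combinations(variables, 2))


cells = design_cells(VARIABLES)
print(len(cells))  # 8 cells = 2 x 2 x 2
print(variable_pairs(VARIABLES))
```

Each added binary variable doubles the number of cells, which is why the slide caps the design at three independent variables.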

Agenda - 1
- ✓ Introduction to Tutorial (20 min)
- ✓ Research Frameworks vs. Models
- ✓ Central components of Lab. IR and Interactive IR (IIR)
- ✓ The Integrated Cognitive Research Framework for IR
- From Simulation to ‘Ultra-light’ IIR (25 min)
  - Short-term IR interaction experiments
  - Sample study: Diane Kelly (2005/2007)

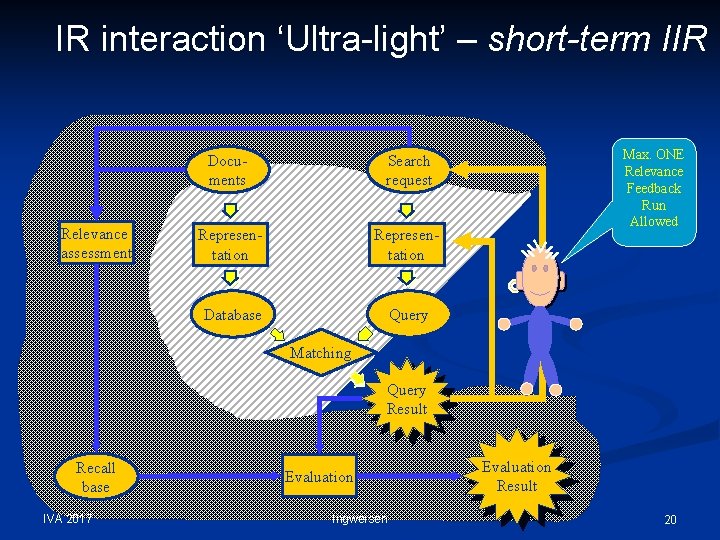

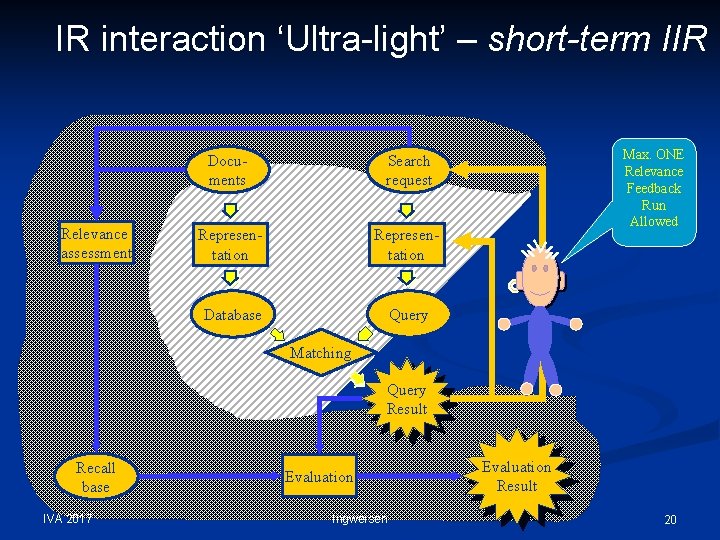

IR interaction ‘Ultra-light’: short-term IIR
(Diagram: documents and search request → representation → database and query → matching → query result, evaluated against a recall base of relevance assessments; max. ONE relevance feedback run allowed.)

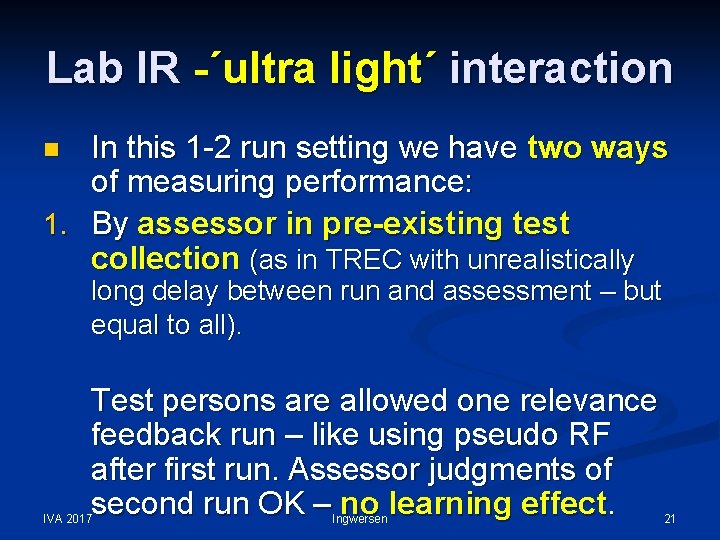

Lab IR: ‘ultra-light’ interaction
In this 1-2 run setting we have two ways of measuring performance:
1. By an assessor in a pre-existing test collection (as in TREC, with an unrealistically long delay between run and assessment, but equal for all).
- Test persons are allowed one relevance feedback run, like using pseudo RF after the first run.
- Assessor judgments of the second run are OK: there is no learning effect.
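Pseudo relevance feedback, the automatic counterpart of the single feedback run allowed here, can be sketched as follows. The sketch assumes the top-k documents of the first run are relevant and appends their most frequent new terms to the query; real systems usually weight expansion terms (e.g. Rocchio-style), so this plain frequency count is a simplifying assumption, and the function name is hypothetical.

```python
from collections import Counter


def pseudo_rf_expand(query, ranked_docs, k=5, n_terms=3):
    """Assume the top-k documents are relevant; add their most frequent new terms."""
    counts = Counter()
    for doc in ranked_docs[:k]:
        counts.update(term for term in doc if term not in query)
    # most_common keeps first-seen order among terms with equal counts
    expansion = [term for term, _ in counts.most_common(n_terms)]
    return list(query) + expansion


first_run = [
    ["thesaurus", "associative", "design"],
    ["thesaurus", "associative", "company"],
    ["olympic", "games"],
]
print(pseudo_rf_expand(["thesaurus"], first_run, k=2, n_terms=2))
# ['thesaurus', 'associative', 'design']
```

The expanded query is then run once more; because no human judges the first run, there is no learning effect on the test person.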

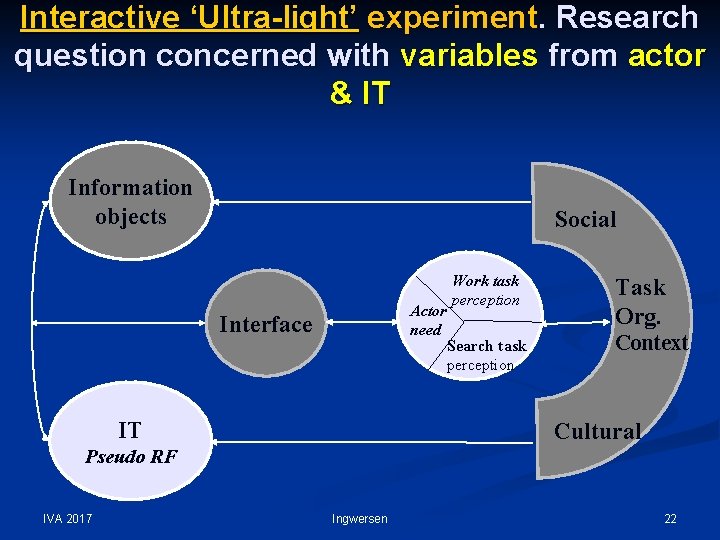

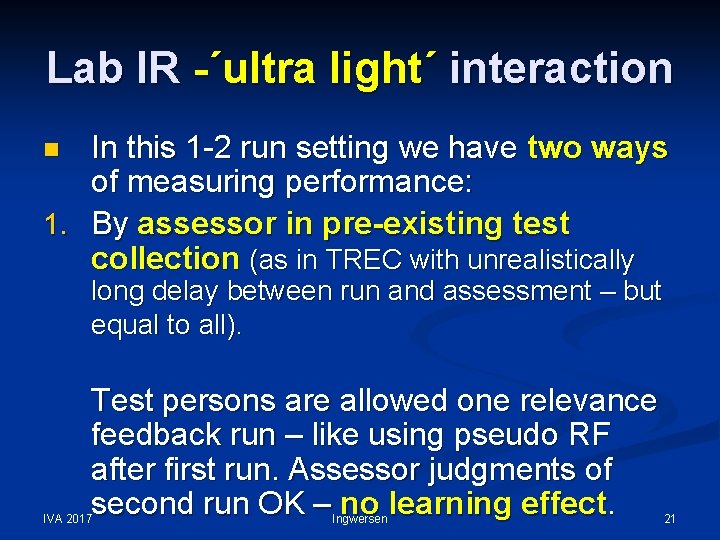

Interactive ‘Ultra-light’ experiment: research question concerned with variables from the actor & IT
(Diagram: information objects; interface; IT with pseudo RF; actor (need, work task perception, search task perception); task; organizational, social and cultural context.)

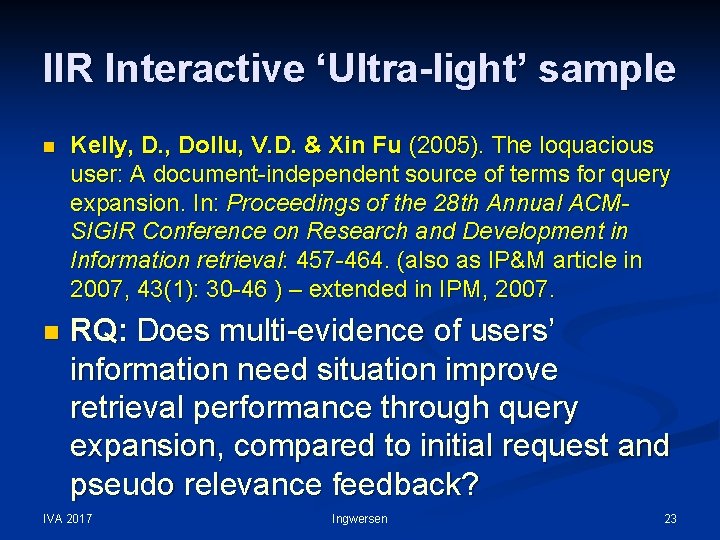

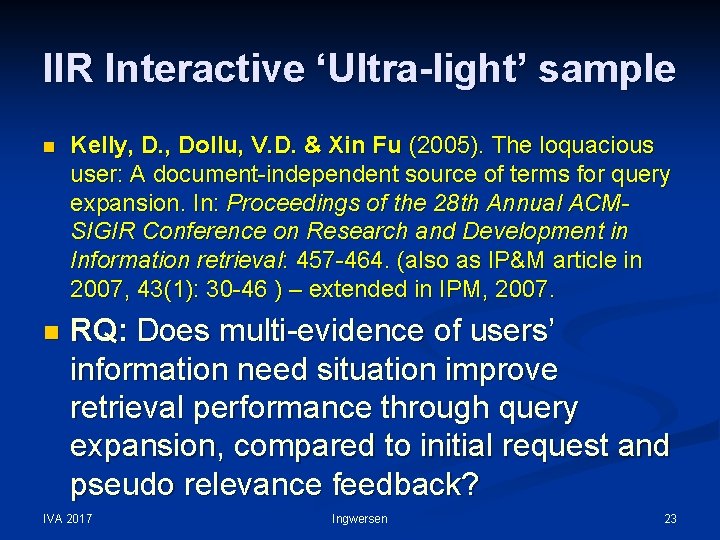

IIR Interactive ‘Ultra-light’ sample
- Kelly, D., Dollu, V. D. & Fu, X. (2005). The loquacious user: A document-independent source of terms for query expansion. In: Proceedings of the 28th Annual ACM SIGIR Conference on Research and Development in Information Retrieval: 457-464. Extended as an IP&M article in 2007, 43(1): 30-46.
- RQ: Does multi-evidence of users’ information need situation improve retrieval performance through query expansion, compared to the initial request and pseudo relevance feedback?

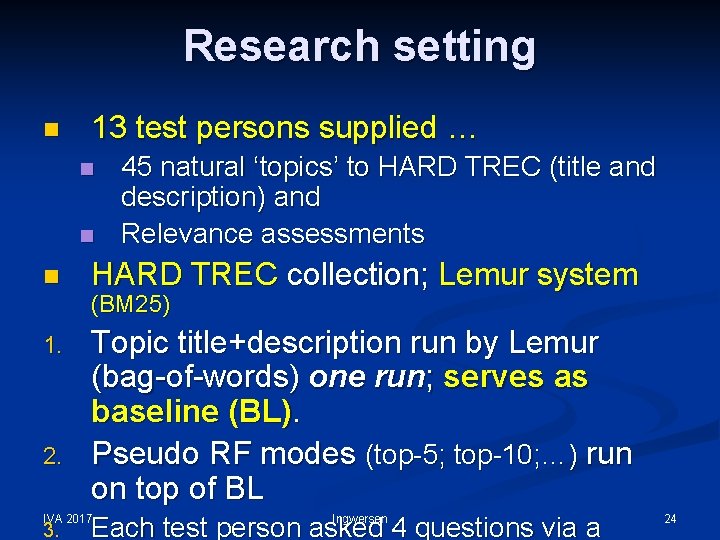

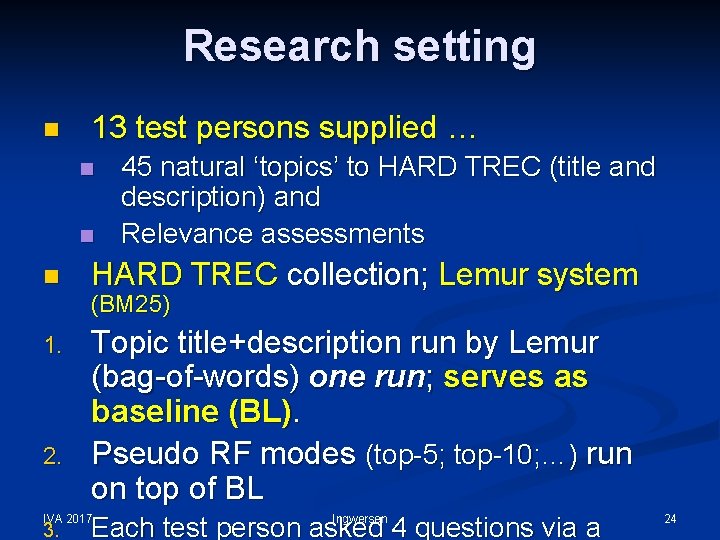

Research setting
- 13 test persons supplied:
  - 45 natural ‘topics’ to HARD TREC (title and description), and
  - relevance assessments
- HARD TREC collection; Lemur system (BM25)

1. Topic title+description run by Lemur (bag-of-words), one run; serves as baseline (BL).
2. Pseudo RF modes (top-5; top-10; …) run on top of BL.
3. Each test person was asked 4 questions via a …
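The baseline in this study ranks documents with BM25 in Lemur. For readers unfamiliar with the formula, a minimal sketch of Okapi BM25 over tokenized documents follows; the defaults k1 = 1.2 and b = 0.75 and the non-negative IDF variant are common choices assumed here, not settings reported for the study, and Lemur’s own implementation may differ.

```python
import math
from collections import Counter


def bm25_scores(query, docs, k1=1.2, b=0.75):
    """Okapi BM25 score of each tokenized doc for a bag-of-words query."""
    n = len(docs)
    avgdl = sum(len(d) for d in docs) / n
    df = Counter(t for d in docs for t in set(d))
    scores = []
    for doc in docs:
        tf = Counter(doc)
        score = 0.0
        for term in query:
            if tf[term] == 0:
                continue  # term absent: contributes nothing
            idf = math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))
            # term frequency saturation (k1) and length normalization (b)
            norm = tf[term] + k1 * (1 - b + b * len(doc) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


docs = [
    ["bipolar", "lithium", "therapy"],
    ["olympic", "games"],
    ["lithium", "lithium", "battery"],
]
print(bm25_scores(["lithium"], docs))  # doc 1 scores 0; doc 2 outscores doc 0
```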

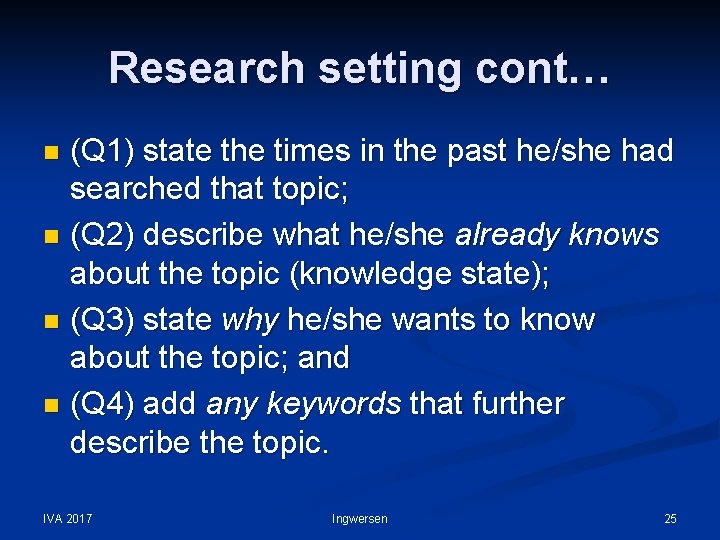

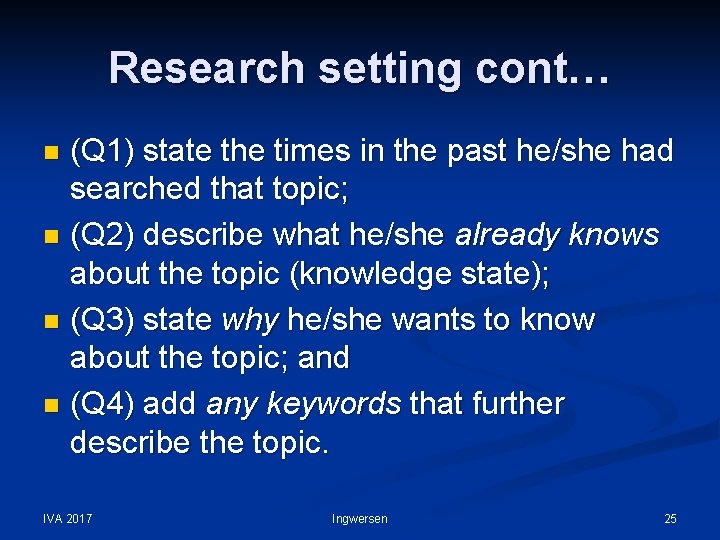

Research setting cont…
- (Q1) state how many times in the past he/she had searched that topic;
- (Q2) describe what he/she already knows about the topic (knowledge state);
- (Q3) state why he/she wants to know about the topic; and
- (Q4) add any keywords that further describe the topic.

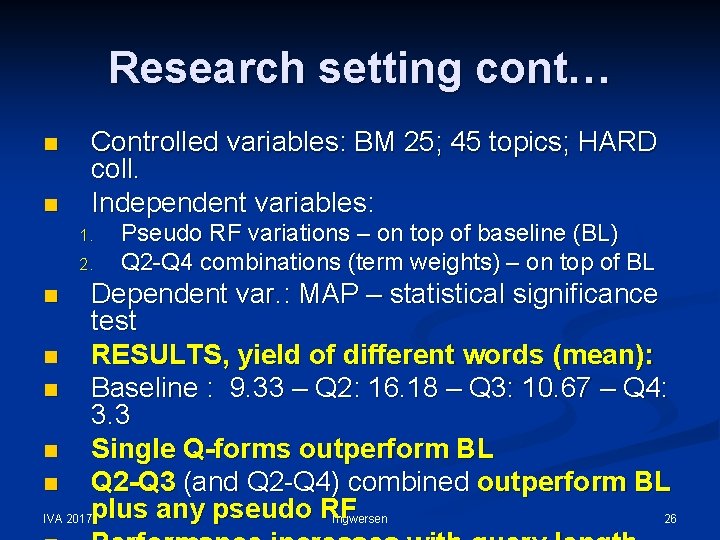

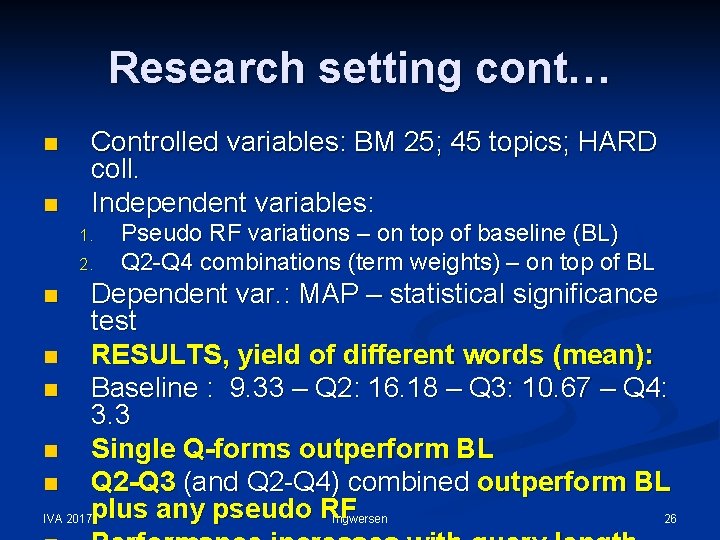

Research setting cont…
- Controlled variables: BM25; 45 topics; HARD collection
- Independent variables:
  1. Pseudo RF variations, on top of baseline (BL)
  2. Q2-Q4 combinations (term weights), on top of BL
- Dependent variable: MAP, with statistical significance test
- RESULTS, yield of different words (mean):
  - Baseline: 9.33; Q2: 16.18; Q3: 10.67; Q4: 3.3
  - Single Q-forms outperform BL
  - Q2-Q3 (and Q2-Q4) combined outperform BL plus any pseudo RF
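MAP, the dependent variable here, averages per-topic average precision (AP) over all topics. A minimal sketch, assuming rankings as lists of document ids and relevance judgments as sets of relevant ids per topic (hypothetical data structures):

```python
def average_precision(ranking, relevant):
    """AP for one topic: precision at each relevant rank, averaged over all relevant docs."""
    hits, precision_sum = 0, 0.0
    for rank, doc_id in enumerate(ranking, start=1):
        if doc_id in relevant:
            hits += 1
            precision_sum += hits / rank
    # relevant docs never retrieved contribute precision 0
    return precision_sum / len(relevant) if relevant else 0.0


def mean_average_precision(runs, qrels):
    """MAP over topics; runs and qrels map topic id -> ranking / relevant set."""
    return sum(average_precision(runs[t], qrels[t]) for t in qrels) / len(qrels)


runs = {"t1": ["d1", "d2", "d3", "d4"], "t2": ["d5", "d6"]}
qrels = {"t1": {"d1", "d3"}, "t2": {"d6", "d9"}}
print(mean_average_precision(runs, qrels))  # (0.8333 + 0.25) / 2 ≈ 0.5417
```

Comparing BL with each expansion condition then means computing MAP per condition over the same 45 topics and testing the per-topic AP differences for significance.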

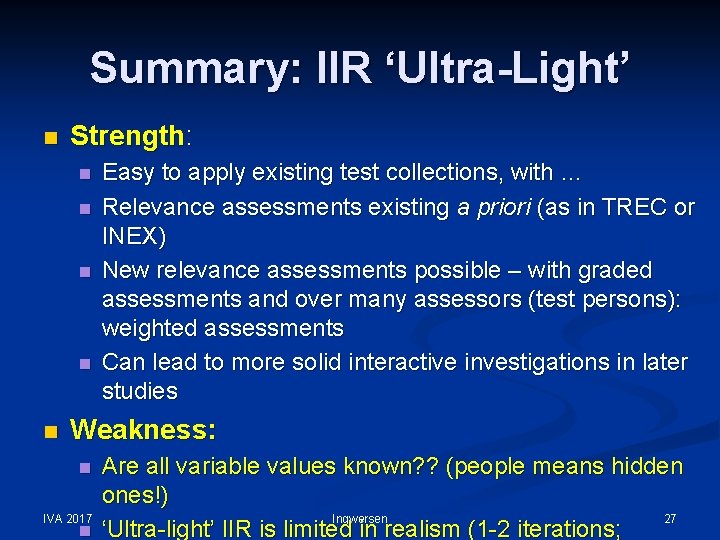

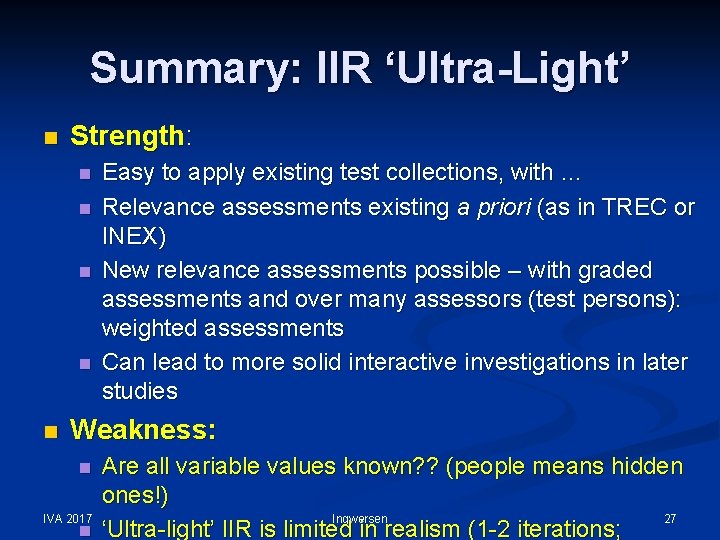

Summary: IIR ‘Ultra-Light’
- Strength:
  - Easy to apply existing test collections, with relevance assessments existing a priori (as in TREC or INEX)
  - New relevance assessments possible, with graded assessments and over many assessors (test persons): weighted assessments
  - Can lead to more solid interactive investigations in later studies
- Weakness:
  - ‘Ultra-light’ IIR is limited in realism (1-2 iterations; …)
  - Are all variable values known?? (people mean hidden variables!)

Agenda - 1
- ✓ Introduction to Tutorial
- ✓ Research Frameworks vs. Models
- ✓ Central components of Interactive IR (IIR)
- ✓ The Integrated Cognitive Research Framework for IR
- ✓ From Simulation to ‘Ultra-light’ IIR
- ✓ Short-term IR interaction experiments
- ✓ Sample study: Diane Kelly (2005/2007)

Agenda - 2
- Experimental Set-ups with Test Persons
  - Interactive-light session-based IR studies
  - Request types
  - Test persons
  - Design of task-based simulated search situations
  - Relevance and evaluation measures in IIR
  - Sample study: Pia Borlund (2000; 2003b)

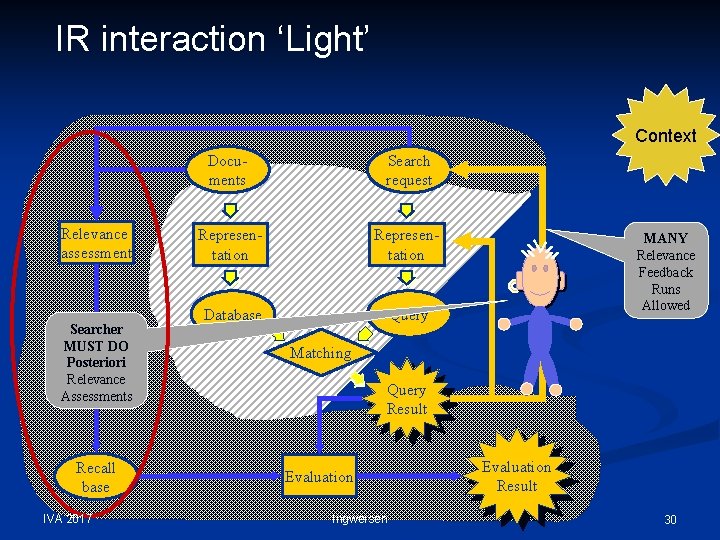

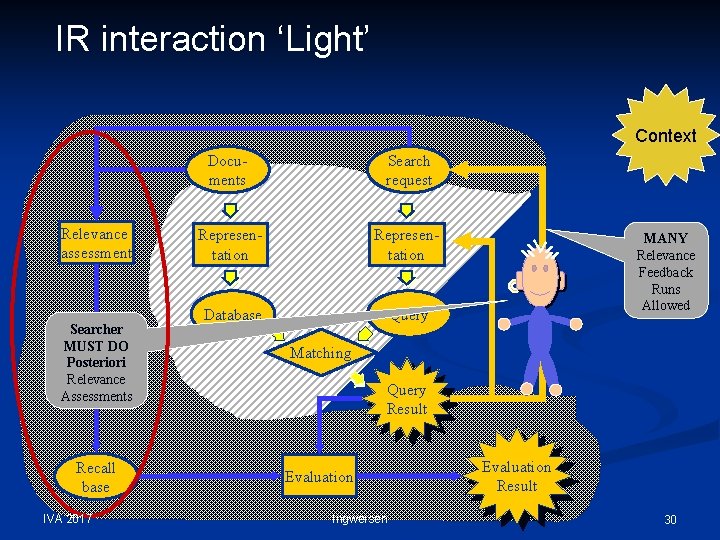

IR interaction ‘Light’
(Diagram: the lab framework placed in context: documents and search request → representation → database and query → matching → query result → evaluation → evaluation result; MANY relevance feedback runs allowed; the searcher MUST make a posteriori relevance assessments; recall base.)

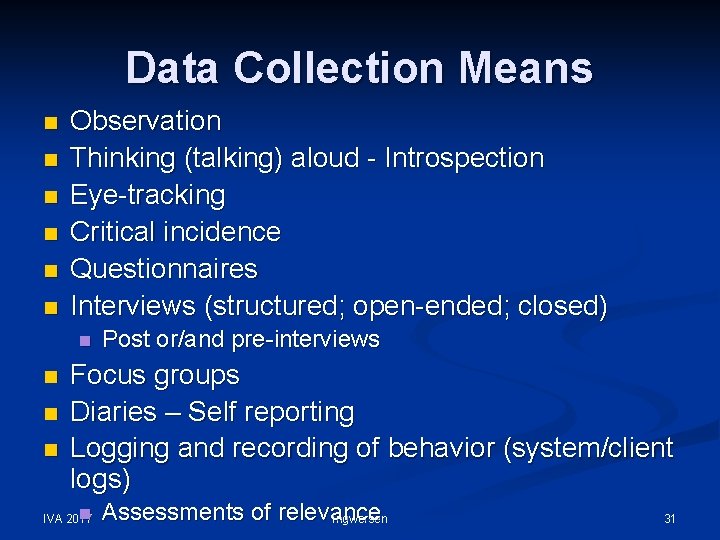

Data Collection Means
- Observation
- Thinking (talking) aloud; introspection
- Eye-tracking
- Critical incident technique
- Questionnaires
- Interviews (structured; open-ended; closed)
  - Post- and/or pre-interviews
- Focus groups
- Diaries; self-reporting
- Logging and recording of behavior (system/client logs)
- Assessments of relevance

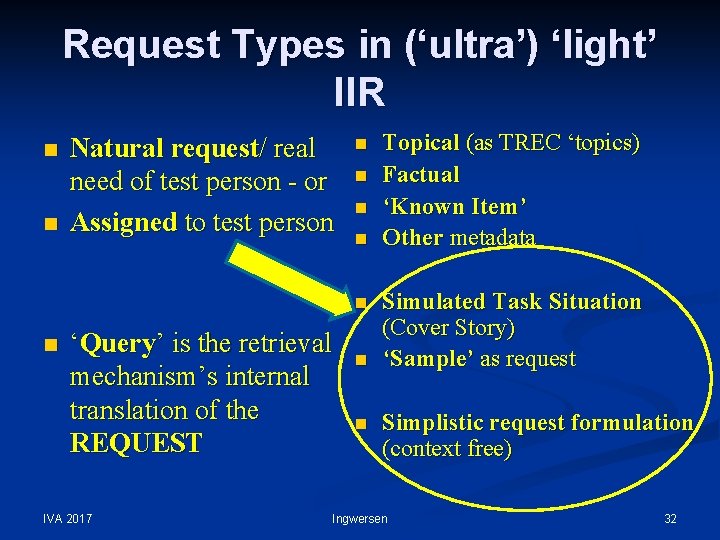

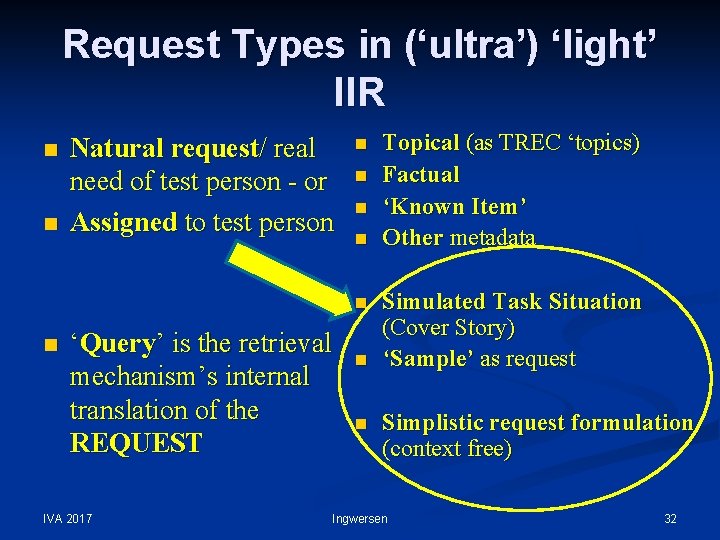

Request Types in (‘ultra’) ‘light’ IIR
- Natural request / real need of the test person, or
- Assigned to the test person:
  - Topical (as TREC ‘topics’)
  - Factual
  - ‘Known item’
  - Other metadata
  - Simulated task situation (cover story)
  - ‘Sample’ as request
  - Simplistic request formulation (context free)
- The ‘query’ is the retrieval mechanism’s internal translation of the REQUEST

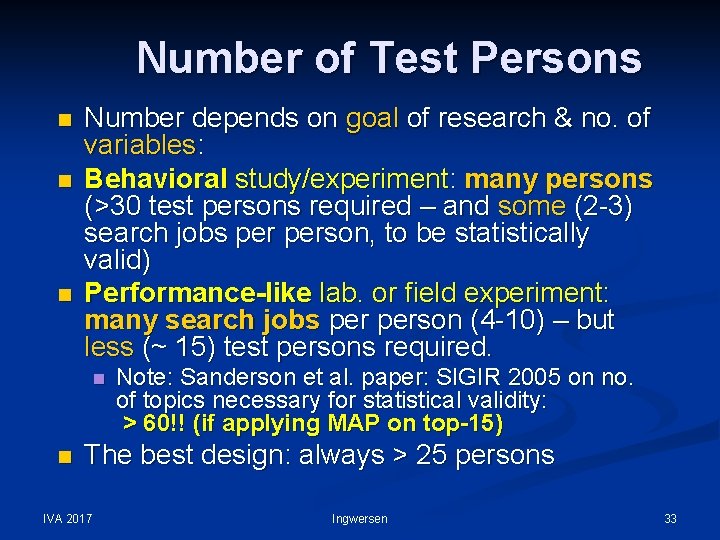

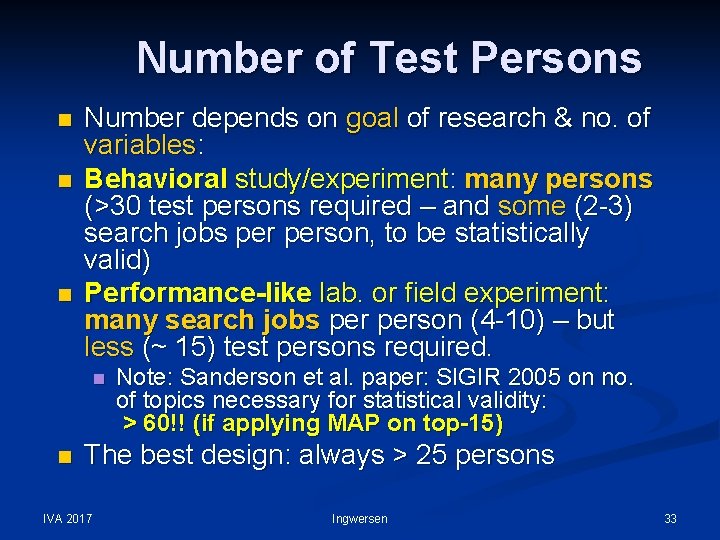

Number of Test Persons
- The number depends on the goal of research & the no. of variables:
  - Behavioral study/experiment: many persons (>30 test persons required, and some (2-3) search jobs per person, to be statistically valid)
  - Performance-like lab. or field experiment: many search jobs per person (4-10), but fewer (~15) test persons required
- Note: Sanderson et al. paper (SIGIR 2005) on the no. of topics necessary for statistical validity: > 60!! (if applying MAP on top-15)
- The best design: always > 25 persons

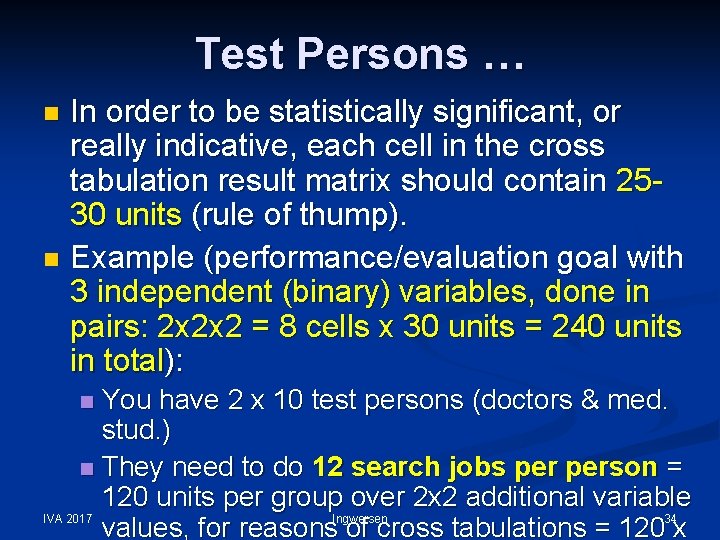

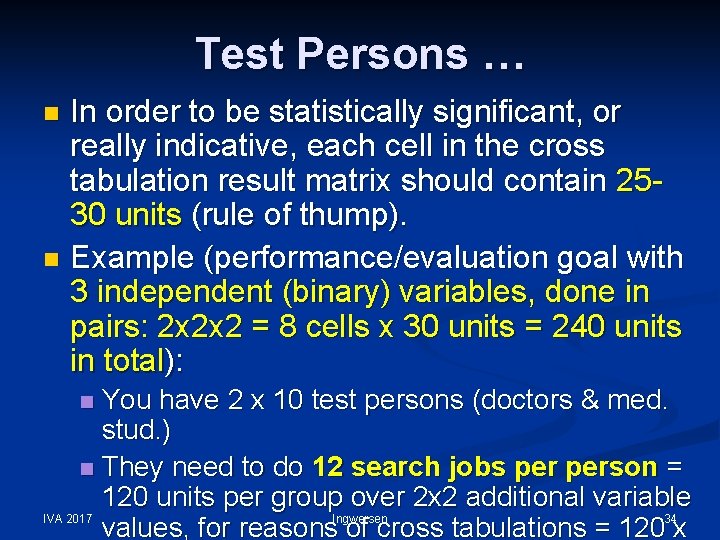

Test Persons …
In order to be statistically significant, or really indicative, each cell in the cross-tabulation result matrix should contain 25-30 units (rule of thumb).

Example (performance/evaluation goal with 3 independent (binary) variables, done in pairs: 2 x 2 x 2 = 8 cells x 30 units = 240 units in total):
- You have 2 x 10 test persons (doctors & medical students)
- They need to do 12 search jobs per person = 120 units per group over 2 x 2 additional variable values, for reasons of cross tabulations = 120 x …
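The arithmetic of the example can be checked with a few lines of Python. The sketch assumes a balanced design, i.e. that search jobs are spread evenly over the result cells; with unbalanced data some cells will fall below the average. Both function names are hypothetical.

```python
import math


def units_per_cell(persons, jobs_per_person, cells):
    """Total units (search jobs) and the average number per result cell."""
    total = persons * jobs_per_person
    return total, total / cells


def jobs_per_person_needed(cells, min_units_per_cell, persons):
    """Search jobs each test person must do so every cell reaches the minimum."""
    return math.ceil(cells * min_units_per_cell / persons)


# Slide example: 2 x 10 test persons, 2 x 2 x 2 = 8 cells, 30 units per cell
print(units_per_cell(persons=20, jobs_per_person=12, cells=8))  # (240, 30.0)
print(jobs_per_person_needed(cells=8, min_units_per_cell=30, persons=20))  # 12
```

Lowering the target to the 25-unit end of the rule of thumb drops the requirement to 10 search jobs per person with the same 20 test persons.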

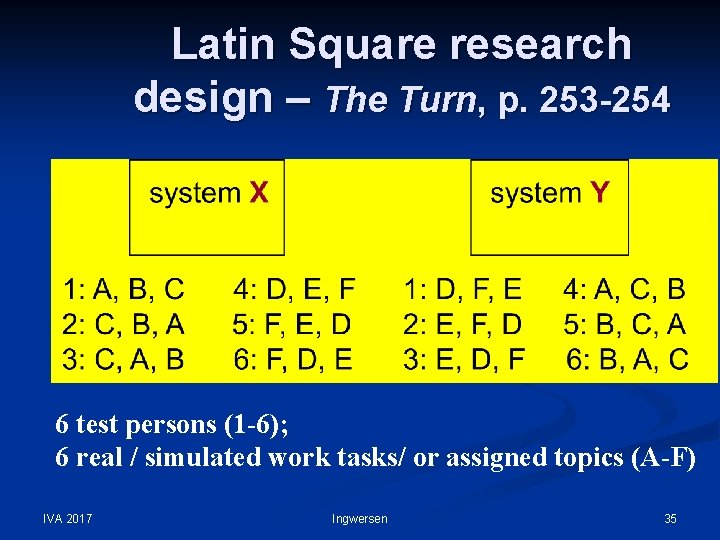

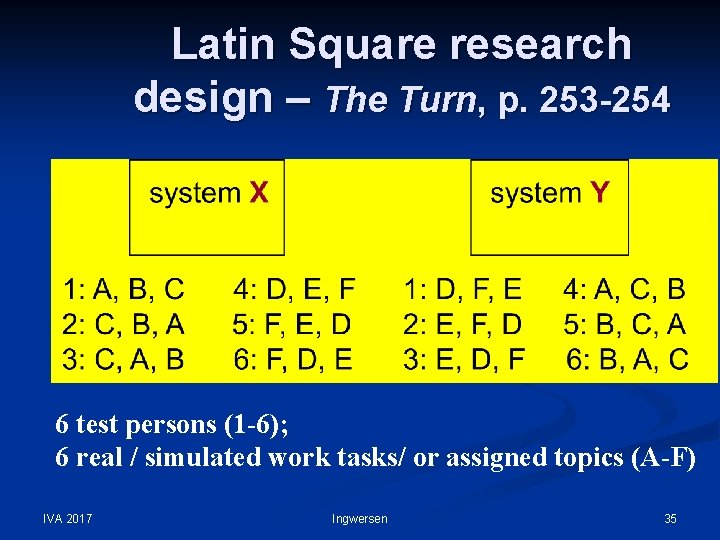

Latin Square research design (The Turn, pp. 253-254)
6 test persons (1-6); 6 real/simulated work tasks or assigned topics (A-F)
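A cyclic Latin square is one simple way to fill such a design: each test person (row) meets every task (A-F) exactly once, and every task occupies every position exactly once, so order effects are spread across tasks. A minimal sketch; note that a cyclic square does not balance which task immediately precedes which (a Williams design would be needed for that), and the function names are hypothetical.

```python
import string


def cyclic_latin_square(n):
    """Row i is the task order for test person i + 1; tasks are labelled A, B, ..."""
    tasks = string.ascii_uppercase[:n]
    return [[tasks[(row + col) % n] for col in range(n)] for row in range(n)]


def is_latin_square(square):
    """True if every symbol appears exactly once in each row and each column."""
    n = len(square)
    symbols = set(square[0])
    rows_ok = all(len(set(r)) == n and set(r) == symbols for r in square)
    cols_ok = all(len({square[r][c] for r in range(n)}) == n for c in range(n))
    return rows_ok and cols_ok


for person, order in enumerate(cyclic_latin_square(6), start=1):
    print(person, " ".join(order))
# 1 A B C D E F
# 2 B C D E F A
# ...
```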

Agenda - 2
- ✓ Experimental Set-ups with Test Persons (25 min)
- ✓ Interactive-light session-based IR studies
- ✓ Request types
- ✓ Test persons
- Design of task-based simulated search situations
- Relevance and evaluation measures in IIR
- Sample study: Pia Borlund (2000; 2003b)

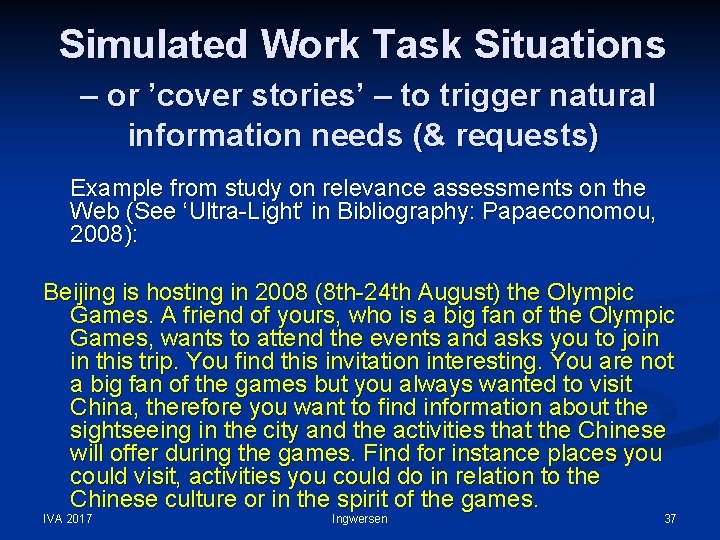

Simulated Work Task Situations, or ‘cover stories’, to trigger natural information needs (& requests)
Example from a study on relevance assessments on the Web (see ‘Ultra-Light’ in Bibliography: Papaeconomou, 2008):

“Beijing is hosting the Olympic Games in 2008 (8th-24th August). A friend of yours, who is a big fan of the Olympic Games, wants to attend the events and asks you to join this trip. You find this invitation interesting. You are not a big fan of the games but you always wanted to visit China, therefore you want to find information about sightseeing in the city and the activities that the Chinese will offer during the games. Find for instance places you could visit, activities you could do in relation to the Chinese culture or in the spirit of the games.”

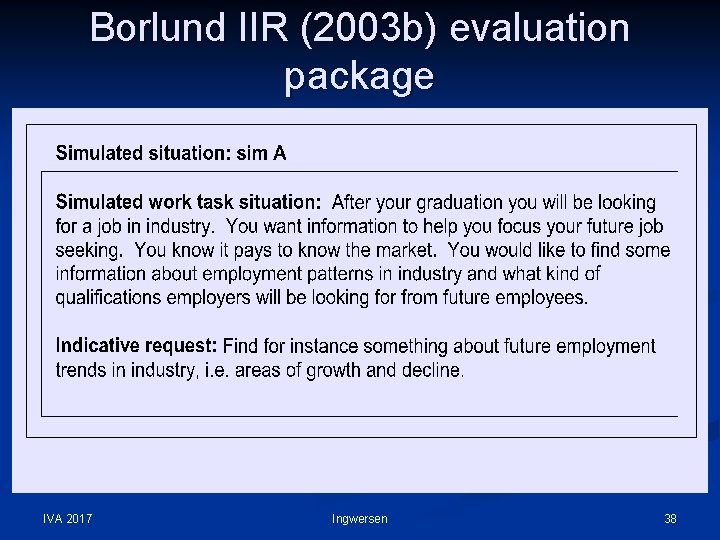

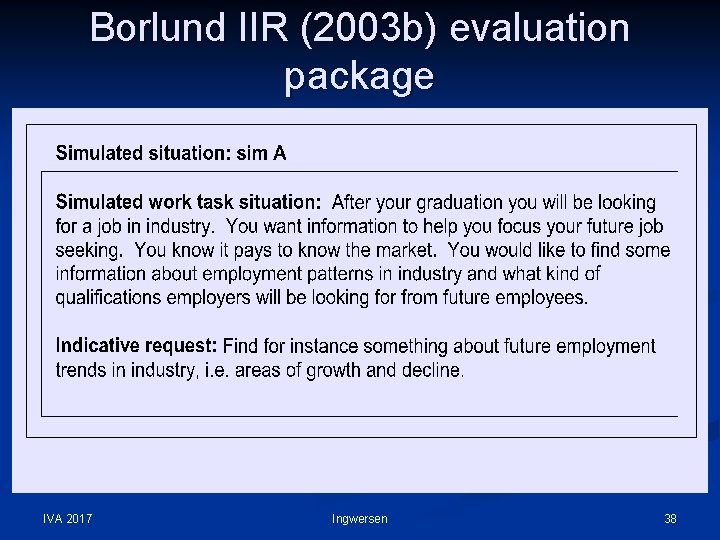

Borlund IIR (2003b) evaluation package

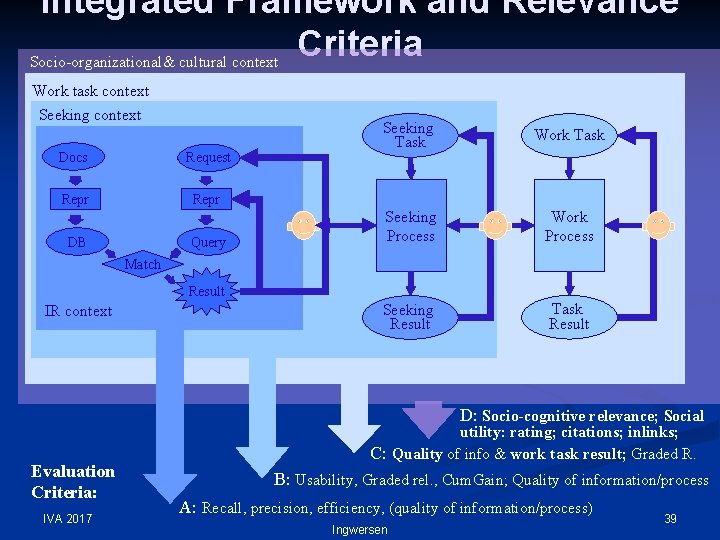

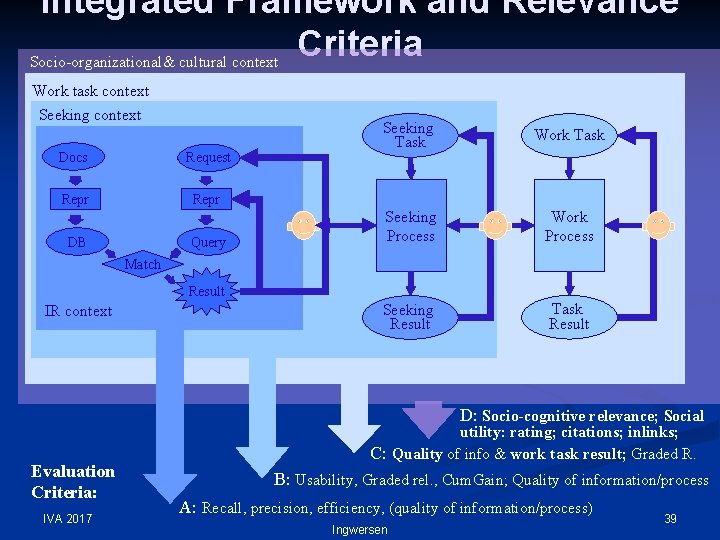

Integrated Framework and Relevance Criteria
(Diagram: nested contexts, from the IR context (docs, request, representation, DB, query, match, result) through the seeking context (seeking task, seeking process, seeking result) and the work task context (work task, work process, task result) to the socio-organizational & cultural context.)

Evaluation criteria:
- A: recall, precision, efficiency, (quality of information/process)
- B: usability, graded relevance, cumulated gain; quality of information/process
- C: quality of information & work task result; graded relevance
- D: socio-cognitive relevance; social utility: rating, citations, inlinks

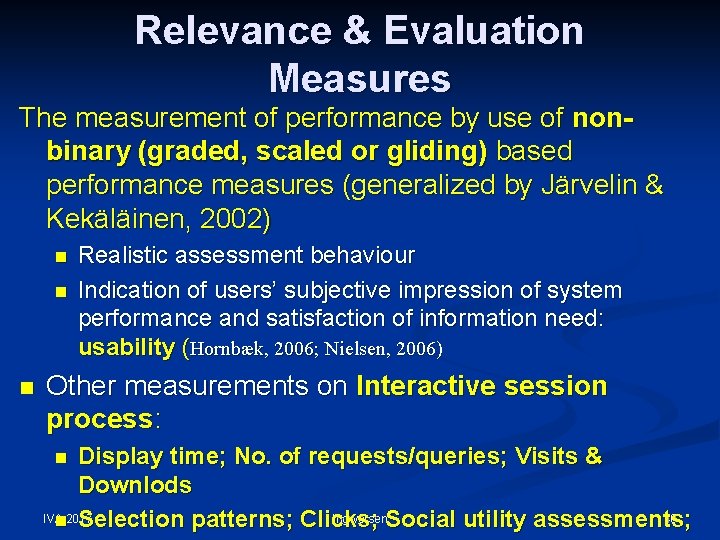

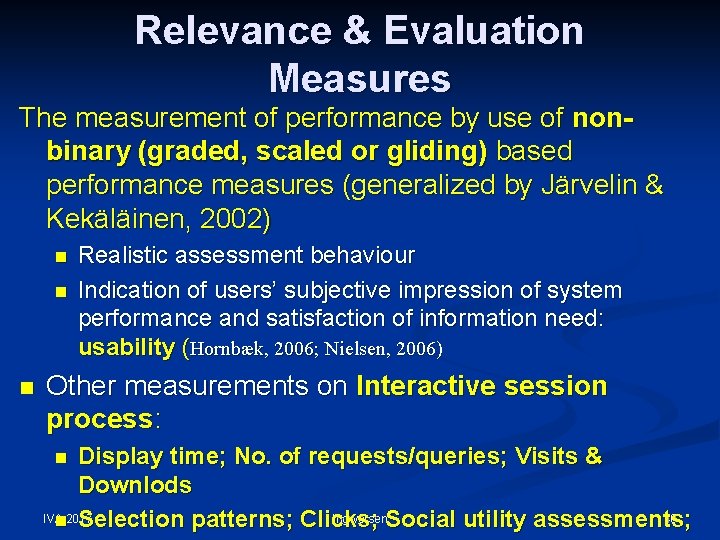

Relevance & Evaluation Measures
The measurement of performance by use of non-binary (graded, scaled or gliding) relevance-based performance measures (generalized by Järvelin & Kekäläinen, 2002):
- Realistic assessment behaviour
- Indication of users’ subjective impression of system performance and satisfaction of information need: usability (Hornbæk, 2006; Nielsen, 2006)
- Other measurements of the interactive session process: display time; no. of requests/queries; visits & downloads; selection patterns; clicks; social utility assessments
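Cumulated gain and its discounted variant (Järvelin & Kekäläinen, 2002) can be sketched as below. Assumptions: graded scores such as 0-3 are given per rank; the discount uses log base b = 2 with no discount before rank b, following the formulation in the 2002 paper; and the ‘ideal’ vector is built only from the retrieved grades, whereas a full evaluation would take it from all known relevant documents in the recall base.

```python
import math


def cumulated_gain(gains):
    """CG[i]: running sum of graded relevance scores down the ranked list."""
    cg, total = [], 0.0
    for g in gains:
        total += g
        cg.append(total)
    return cg


def discounted_cumulated_gain(gains, base=2):
    """DCG: no discount before rank `base`; gain at rank i >= base divided by log_base(i)."""
    dcg, total = [], 0.0
    for i, g in enumerate(gains, start=1):
        total += g if i < base else g / math.log(i, base)
        dcg.append(total)
    return dcg


def normalized_dcg(gains, base=2):
    """nDCG: DCG divided rank by rank by the DCG of the ideal (sorted) ranking.
    Simplification: the ideal ranking uses only the retrieved grades."""
    ideal = discounted_cumulated_gain(sorted(gains, reverse=True), base)
    actual = discounted_cumulated_gain(gains, base)
    return [a / i if i else 0.0 for a, i in zip(actual, ideal)]


gains = [3, 2, 3, 0, 1]  # hypothetical 0-3 grades for ranks 1-5
print(cumulated_gain(gains))  # [3.0, 5.0, 8.0, 8.0, 9.0]
print(normalized_dcg(gains))
```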

Agenda - 2
- ✓ Experimental Set-ups with Test Persons (40 min)
- ✓ Interactive-light session-based IR studies
- ✓ Request types
- ✓ Test persons
- ✓ Design of task-based simulated search situations
- ✓ Relevance and evaluation measures in IIR
- Sample study: Pia Borlund (2000; 2003b)

The Borlund Case (2000; 2003b)
Research questions:
1. Can simulated information needs substitute real information needs?
   - Hypothesis: YES!
2. What makes a ‘good’ simulated situation with reference to semantic openness and types of topics of the simulated situations?

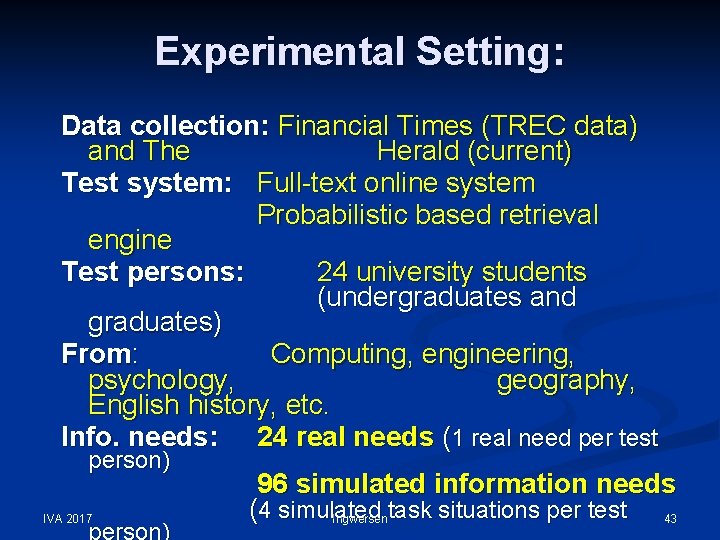

Experimental Setting:
- Data collection: Financial Times (TREC data) and The Herald (current)
- Test system: full-text online system; probabilistic-based retrieval engine
- Test persons: 24 university students (undergraduates and graduates) from computing, engineering, psychology, geography, English history, etc.
- Info. needs: 24 real needs (1 real need per test person); 96 simulated information needs (4 simulated task situations per test person)

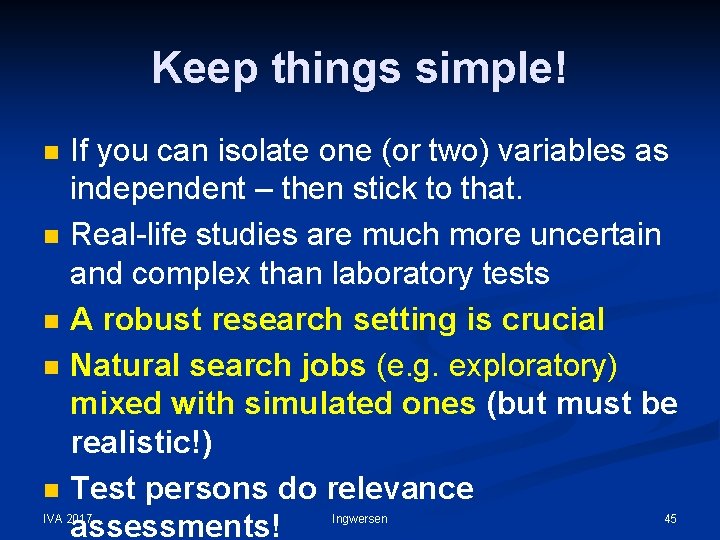

Agenda - 3
- Naturalistic Field Investigations of IIR
  - Integrating context variables
  - Live systems & (simulated/real) work tasks
  - Sample Study: Marianne Lykke Nielsen (2001; 2004)
- Wrapping up of Tutorial

Questions are welcome during the tutorial sessions.

Keep things simple!
- If you can isolate one (or two) variables as independent, then stick to that.
- Real-life studies are much more uncertain and complex than laboratory tests.
- A robust research setting is crucial.
- Natural search jobs (e.g. exploratory) mixed with simulated ones (but these must be realistic!)
- Test persons do relevance assessments!

Agenda - 3
- ✓ Naturalistic Field Investigations of IIR (20 min)
- ✓ Integrating context variables
- ✓ Live systems & (simulated) work tasks
- Sample Study: Marianne Lykke Nielsen (2001; 2004)
- Wrapping up (5 min)

Questions are welcome during the tutorial sessions.

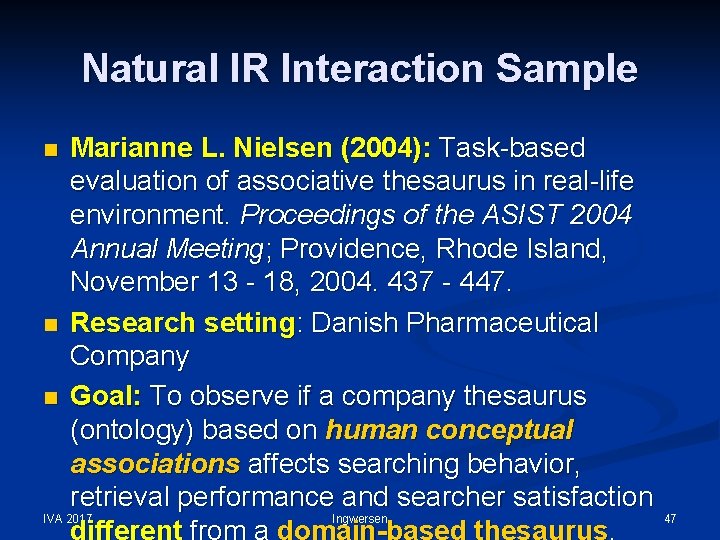

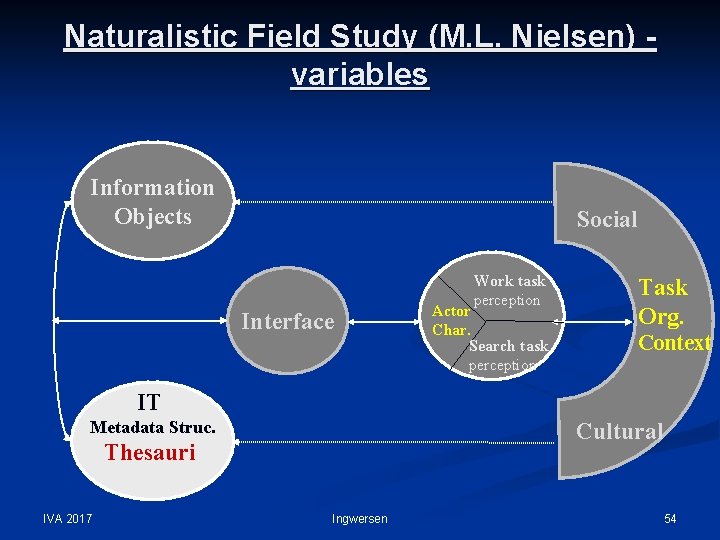

Natural IR Interaction Sample
Marianne L. Nielsen (2004): Task-based evaluation of associative thesaurus in real-life environment. Proceedings of the ASIST 2004 Annual Meeting; Providence, Rhode Island, November 13-18, 2004: 437-447.
- Research setting: Danish pharmaceutical company
- Goal: to observe whether a company thesaurus (ontology) based on human conceptual associations affects searching behavior, retrieval performance and searcher satisfaction differently from a domain-based thesaurus.

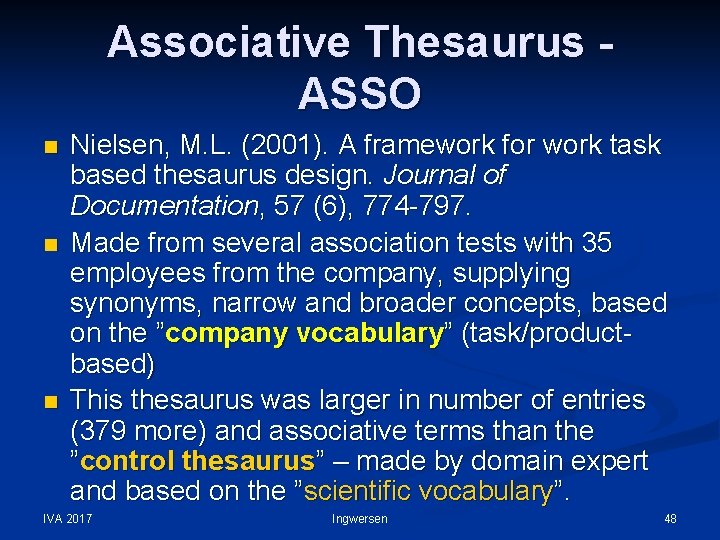

Associative Thesaurus ASSO
- Nielsen, M. L. (2001). A framework for work task based thesaurus design. Journal of Documentation, 57(6), 774-797.
- Made from several association tests with 35 employees from the company, supplying synonyms and narrower and broader concepts, based on the “company vocabulary” (task/product-based).
- This thesaurus was larger in number of entries (379 more) and associative terms than the “control thesaurus”, which was made by a domain expert and based on the “scientific vocabulary”.

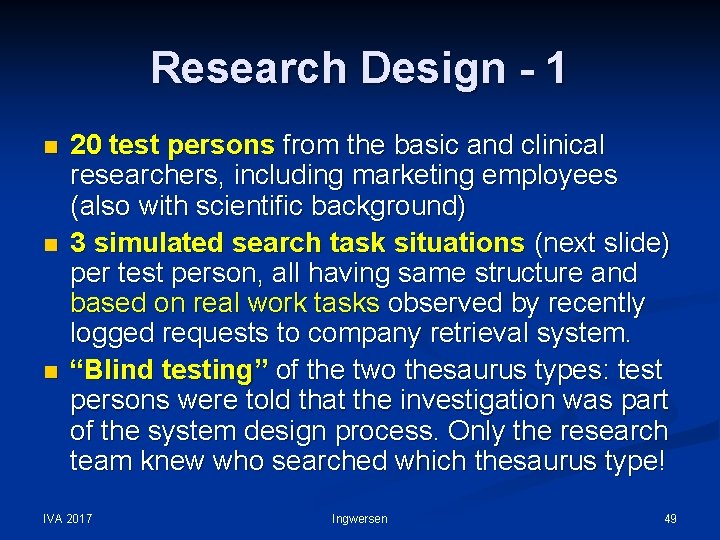

Research Design - 1
- 20 test persons from the basic and clinical researchers, including marketing employees (also with scientific background)
- 3 simulated search task situations (next slide) per test person, all having the same structure and based on real work tasks observed in recently logged requests to the company retrieval system
- “Blind testing” of the two thesaurus types: test persons were told that the investigation was part of the system design process. Only the research team knew who searched which thesaurus type!

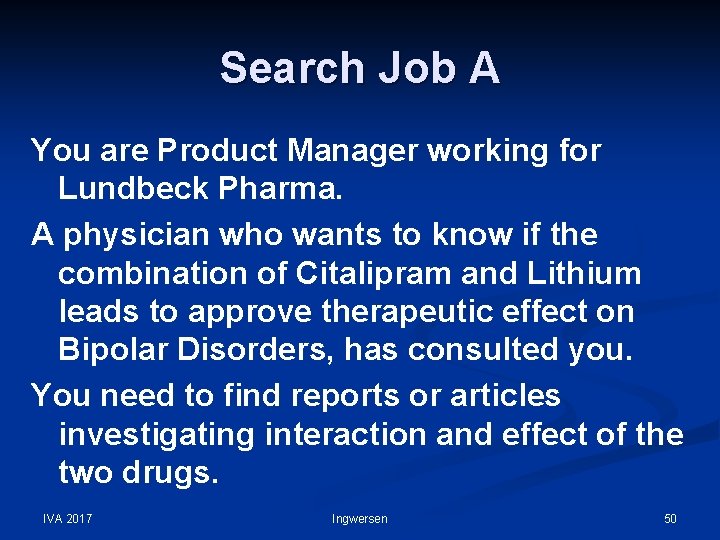

Search Job A
You are Product Manager working for Lundbeck Pharma. A physician who wants to know if the combination of Citalopram and Lithium leads to an improved therapeutic effect on Bipolar Disorders has consulted you. You need to find reports or articles investigating the interaction and effect of the two drugs.

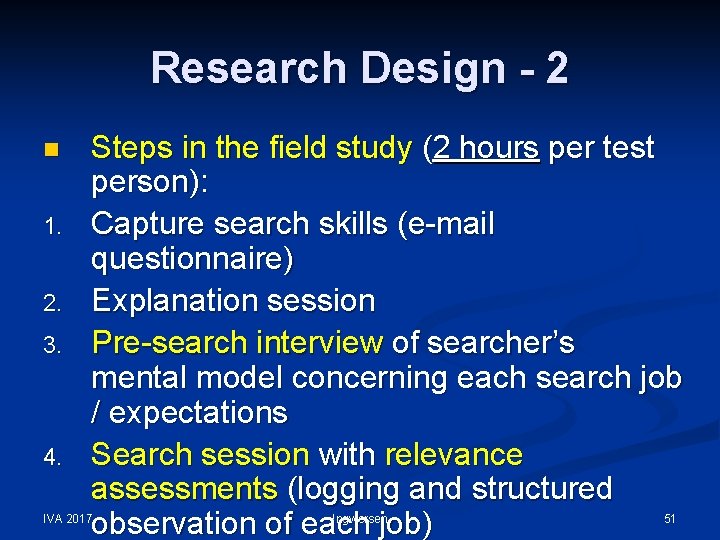

Research Design - 2
Steps in the field study (2 hours per test person):
1. Capture search skills (e-mail questionnaire)
2. Explanation session
3. Pre-search interview of the searcher’s mental model concerning each search job / expectations
4. Search session with relevance assessments (logging and structured observation of each job)

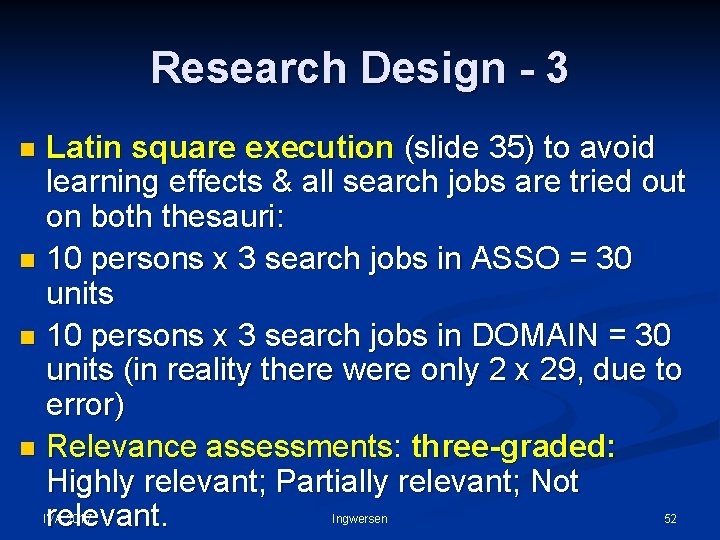

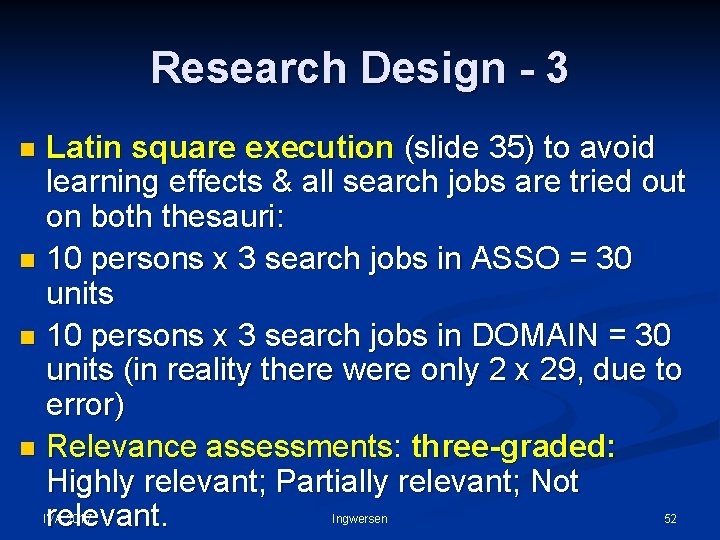

Research Design - 3
Latin square execution (see the Latin Square research design above) to avoid learning effects; all search jobs are tried out on both thesauri:
- 10 persons x 3 search jobs in ASSO = 30 units
- 10 persons x 3 search jobs in DOMAIN = 30 units (in reality there were only 2 x 29, due to an error)
- Relevance assessments, three-graded: highly relevant; partially relevant; not relevant
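With three-graded assessments, recall and precision require a decision about which grades count as relevant. A minimal sketch, assuming a hypothetical numeric coding of the three grades and a threshold parameter; the slides do not state which cut-off was used for the recall/precision figures reported for ASSO and DOMAIN.

```python
# Hypothetical numeric coding of the three grades used in the study
GRADES = {"highly": 2, "partially": 1, "not": 0}


def recall_precision(retrieved, assessments, threshold=1):
    """Recall and precision after binarizing graded assessments.

    threshold=1: partially or highly relevant count as relevant (liberal);
    threshold=2: only highly relevant documents count (strict).
    The recall base is every assessed document at or above the threshold.
    """
    relevant = {doc for doc, grade in assessments.items() if GRADES[grade] >= threshold}
    hits = sum(1 for doc in retrieved if doc in relevant)
    recall = hits / len(relevant) if relevant else 0.0
    precision = hits / len(retrieved) if retrieved else 0.0
    return recall, precision


assessments = {"d1": "highly", "d2": "partially", "d3": "not",
               "d4": "highly", "d5": "partially"}
retrieved = ["d1", "d2", "d3"]
print(recall_precision(retrieved, assessments, threshold=1))  # liberal
print(recall_precision(retrieved, assessments, threshold=2))  # strict
```

Reporting both cut-offs side by side shows how much a thesaurus comparison depends on the grade threshold.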

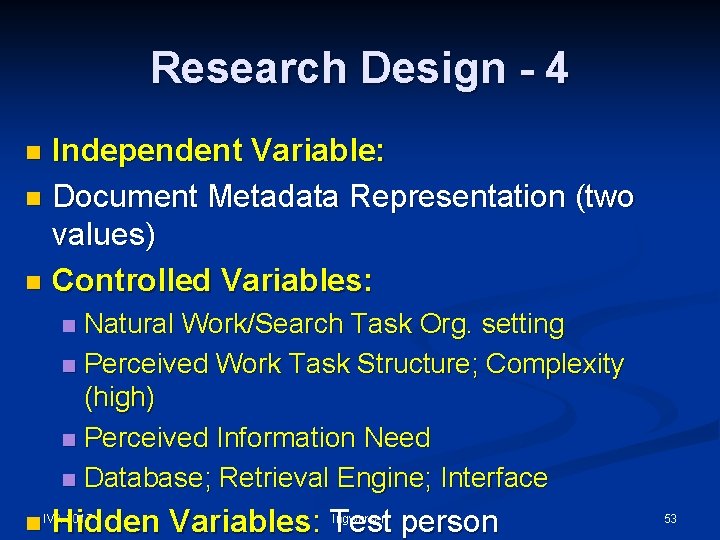

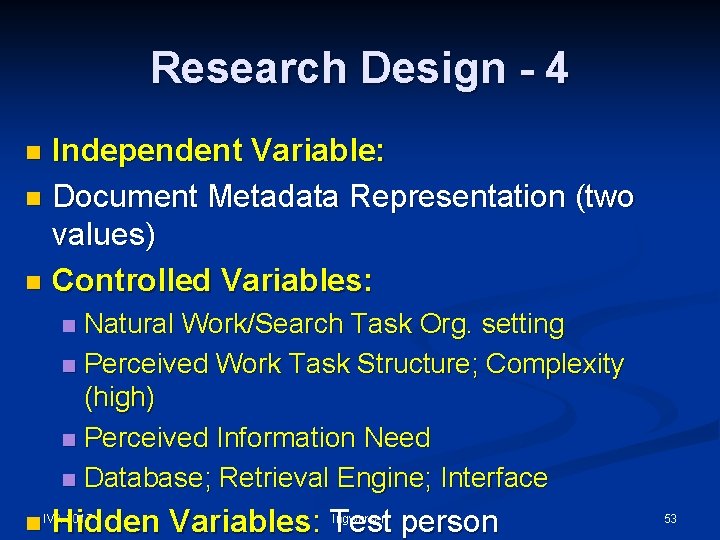

Research Design - 4
- Independent variable:
  - Document metadata representation (two values)
- Controlled variables:
  - Natural work/search task; organizational setting
  - Perceived work task structure; complexity (high)
  - Perceived information need
  - Database; retrieval engine; interface
- Hidden variables:
  - Test person

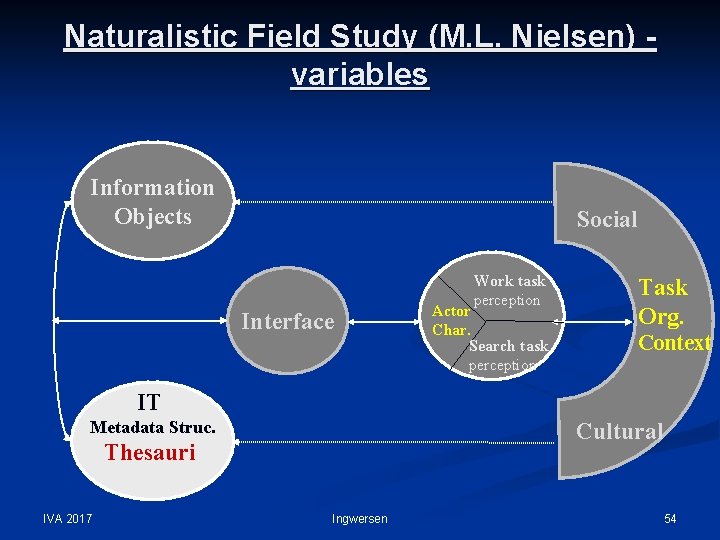

Naturalistic Field Study (M. L. Nielsen): variables
(Diagram labels: information objects; interface; IT; metadata structure; thesauri; actor characteristics; work task perception; search task perception; task; organizational, social and cultural context.)

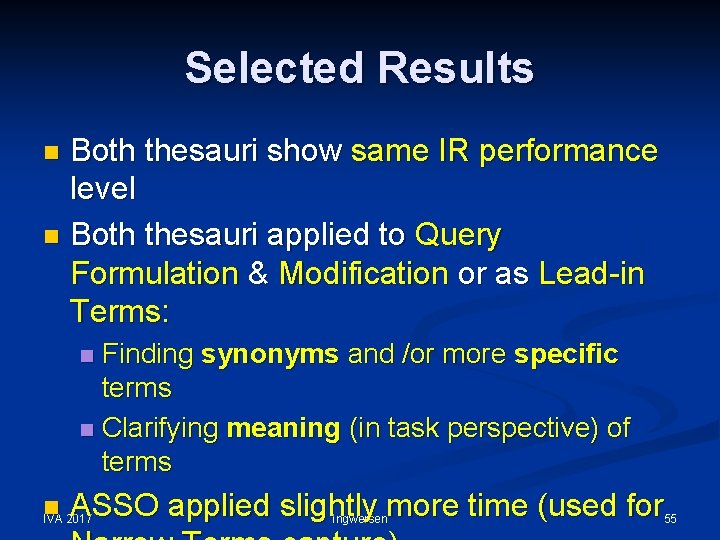

Selected Results
- Both thesauri show the same IR performance level
- Both thesauri were applied to query formulation & modification, or as lead-in terms:
  - Finding synonyms and/or more specific terms
  - Clarifying the meaning (in task perspective) of terms
- ASSO applied slightly more time (used for …)

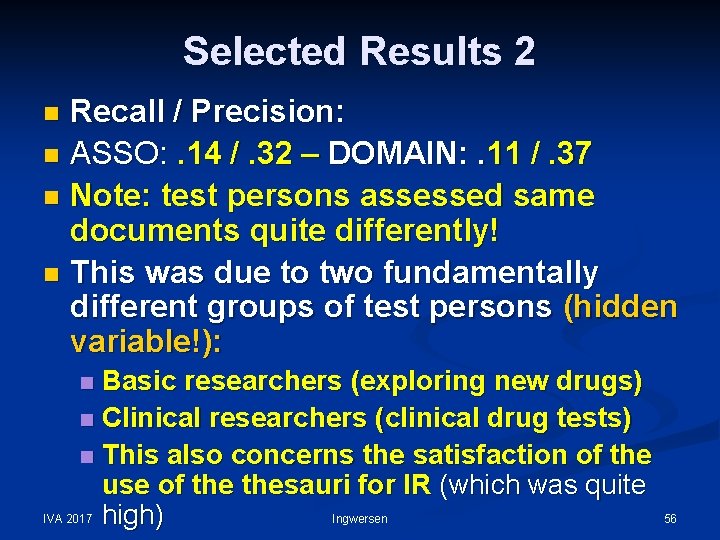

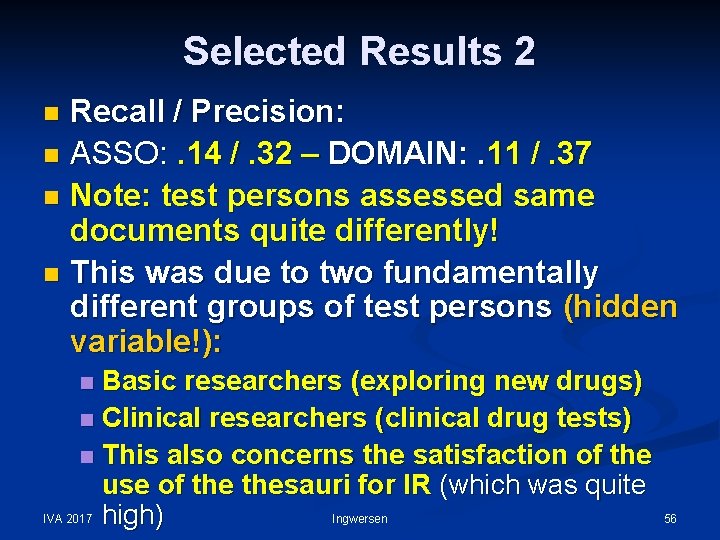

Selected Results 2
- Recall / Precision:
  - ASSO: .14 / .32; DOMAIN: .11 / .37
- Note: test persons assessed the same documents quite differently!
- This was due to two fundamentally different groups of test persons (hidden variable!):
  - Basic researchers (exploring new drugs)
  - Clinical researchers (clinical drug tests)
- This also concerns the satisfaction with the use of thesauri for IR (which was quite high)

Agenda - 3
- ✓ Naturalistic Field Investigations of IIR (20 min)
- ✓ Integrating context variables
- ✓ Live systems & (simulated) work tasks
- ✓ Sample Study: Marianne Lykke Nielsen (2001; 2004)
- Wrapping up of Tutorial (5 min)

Questions are welcome during the tutorial sessions.

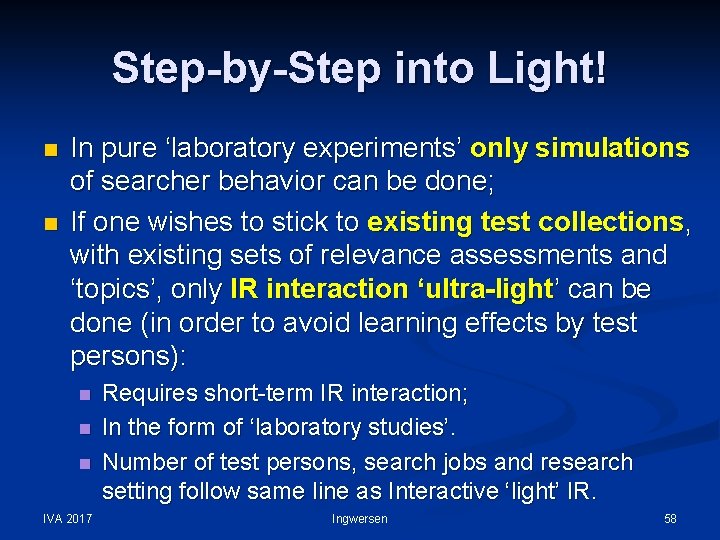

Step-by-Step into Light!
- In pure ‘laboratory experiments’ only simulations of searcher behavior can be done.
- If one wishes to stick to existing test collections, with existing sets of relevance assessments and ‘topics’, only IR interaction ‘ultra-light’ can be done (in order to avoid learning effects by test persons):
  - requires short-term IR interaction;
  - in the form of ‘laboratory studies’;
  - the number of test persons, search jobs and research setting follow the same line as in interactive ‘light’ IR.

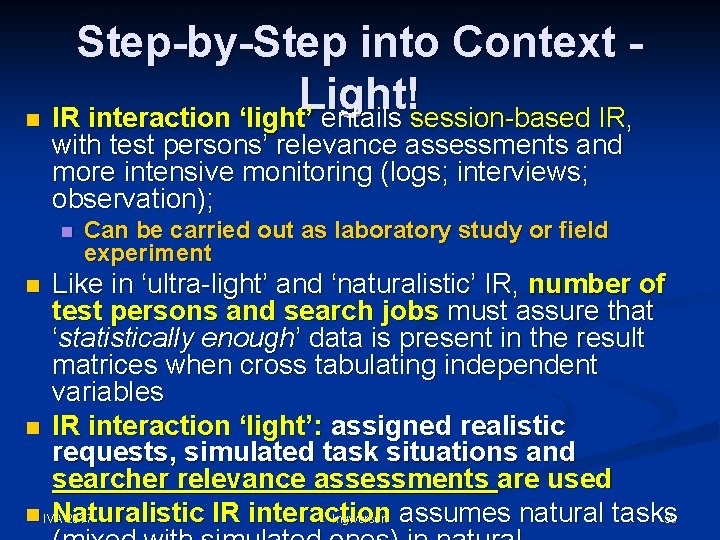

Step-by-Step into Context Light!
- IR interaction ‘light’ entails session-based IR, with test persons’ relevance assessments and more intensive monitoring (logs; interviews; observation)
  - Can be carried out as a laboratory study or a field experiment
- As in ‘ultra-light’ and ‘naturalistic’ IR, the number of test persons and search jobs must ensure that ‘statistically enough’ data is present in the result matrices when cross-tabulating independent variables
- IR interaction ‘light’: assigned realistic requests, simulated task situations and searcher relevance assessments are used
- Naturalistic IR interaction assumes natural tasks

‘Ultra-light’ and ‘Light’ IIR
- Several conceptual models of IIR are available
- Assessments can be 4-graded (Vakkari & Sormunen, 2004):
  - realistic, but few relevance assessments per person;
  - assessments can be pooled for the same search job over all test persons: weighted document assessments
- Common recall/precision, MAP, Cum. Gain, P@n, etc. are feasible
- You require min. 30 responses per result cell
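Pooling graded assessments of the same search job over all test persons, and then computing P@n on the pooled weights, might look like this. The 0-3 coding of the four grades, the normalization to 0..1, the 0.5 relevance threshold and both function names are assumptions for illustration.

```python
from collections import defaultdict


def pooled_weights(assessments, max_grade=3):
    """Average each document's grades over the test persons who judged it, scaled to 0..1.

    assessments: list of dicts (one per test person) mapping doc id -> grade 0..max_grade.
    """
    sums, counts = defaultdict(float), defaultdict(int)
    for person in assessments:
        for doc, grade in person.items():
            sums[doc] += grade
            counts[doc] += 1
    return {doc: sums[doc] / (counts[doc] * max_grade) for doc in sums}


def precision_at_n(ranking, weights, n, threshold=0.5):
    """P@n: share of the top-n documents whose pooled weight reaches `threshold`."""
    return sum(1 for doc in ranking[:n] if weights.get(doc, 0.0) >= threshold) / n


persons = [{"d1": 3, "d2": 1, "d3": 0}, {"d1": 2, "d2": 2}, {"d1": 3, "d3": 1}]
weights = pooled_weights(persons)
print(weights)  # d1 ≈ 0.889, d2 = 0.5, d3 ≈ 0.167
print(precision_at_n(["d1", "d3", "d2", "d4"], weights, n=3))  # 2 of 3 pass the threshold
```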

New Literature
- Kelly, D., & Sugimoto, C. R. (2013). A systematic review of interactive information retrieval evaluation studies, 1967-2006. Journal of the American Society for Information Science and Technology, 64(4), 745-770.

THANK YOU!!