IOOS DMAC Standards Process and Lessons Learned. Anne Ball, DMAC Steering Team, Ocean.US

Topics • Brief overview – IOOS – DMAC • DMAC standards process • Lessons learned • Links to more information • Discussion

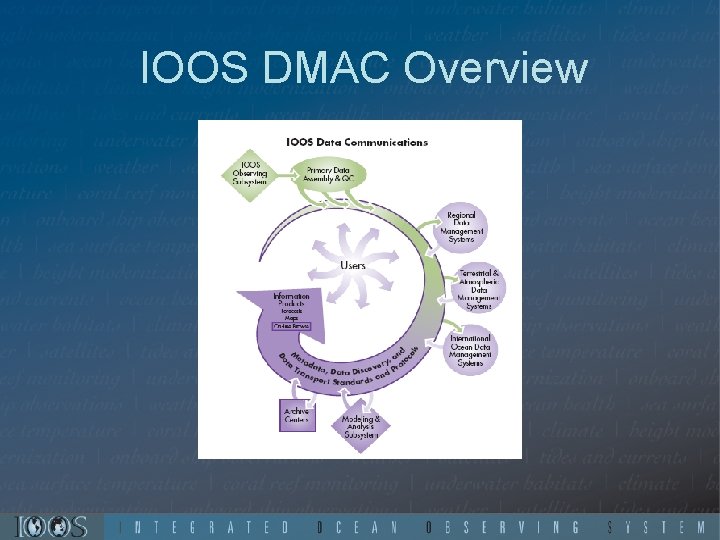

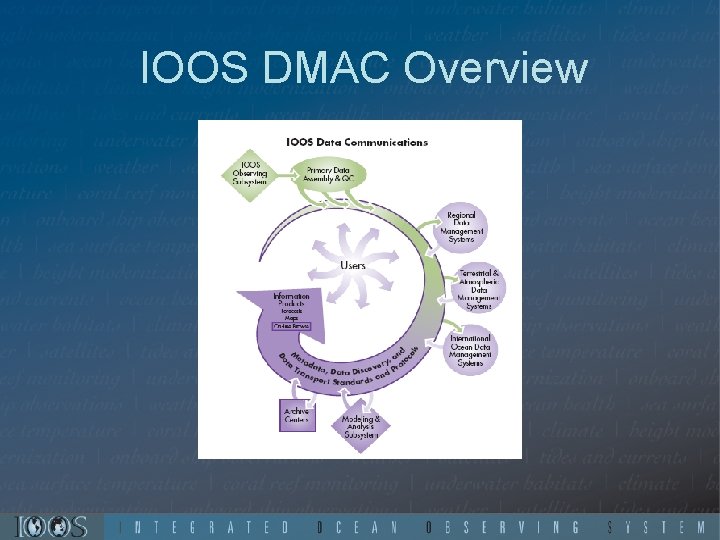

IOOS DMAC Overview

Integrated Ocean Observing System IOOS Tracking, predicting, managing, and adapting to changes in our coastal and ocean environment

Integrated Ocean Observing System SEE Safety Oceans Economy Environment Coasts Great Lakes

Integrated Ocean Observing System • Safety – Earlier and better predictions of severe weather – Search and rescue – Protect water quality – Homeland security

Integrated Ocean Observing System • Economy – Optimized shipping routes based on improved forecasts – More accurate, longer-term forecasts for agriculture – Better planning for coastal construction and zoning – Potential sources for medicines and new technologies – Alternative energy sources – Improvements in offshore drilling practices

Integrated Ocean Observing System • Environment – More timely and accurate predictions for oil spills and other pollutants – Reduced vessel groundings – Better ecological protection – Fisheries – Protection and restoration of marine ecosystems

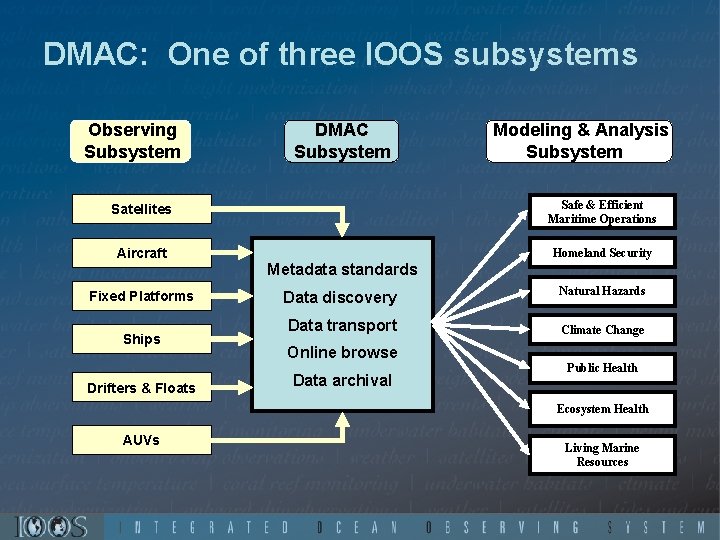

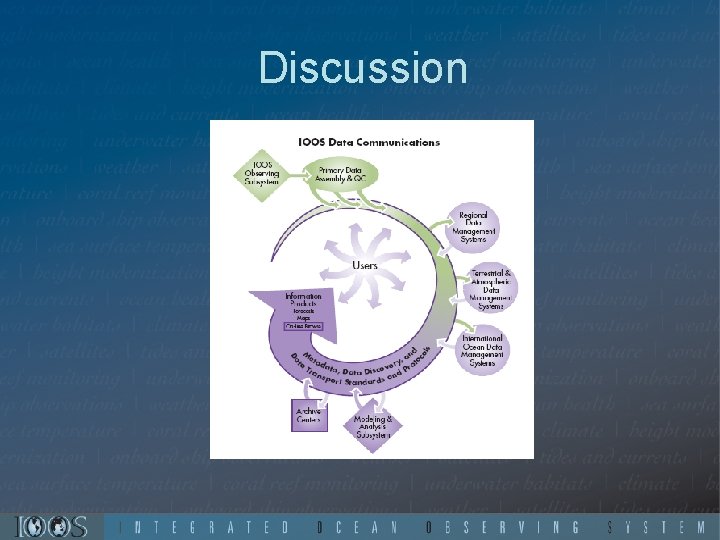

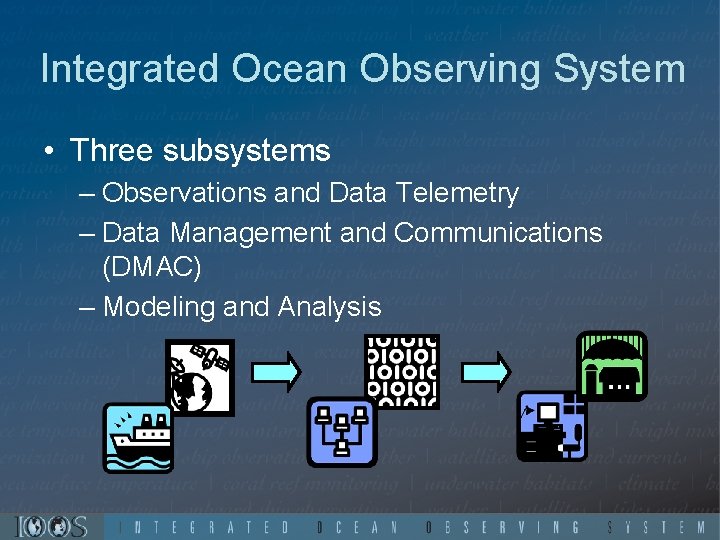

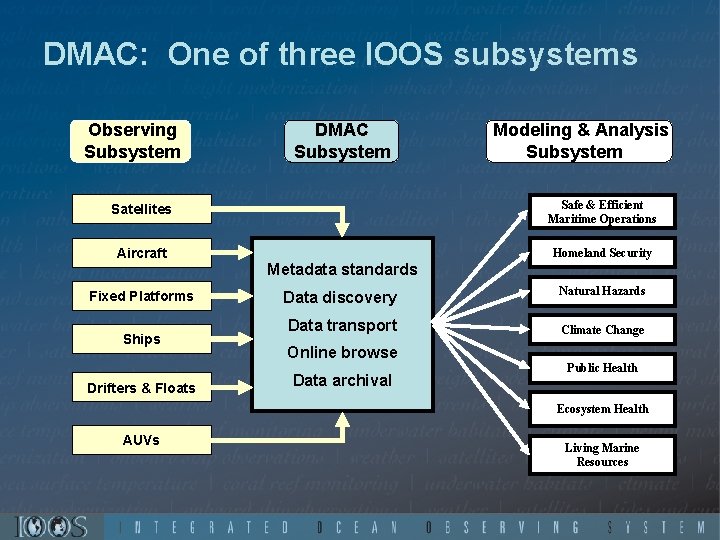

Integrated Ocean Observing System • Three subsystems – Observations and Data Telemetry – Data Management and Communications (DMAC) – Modeling and Analysis

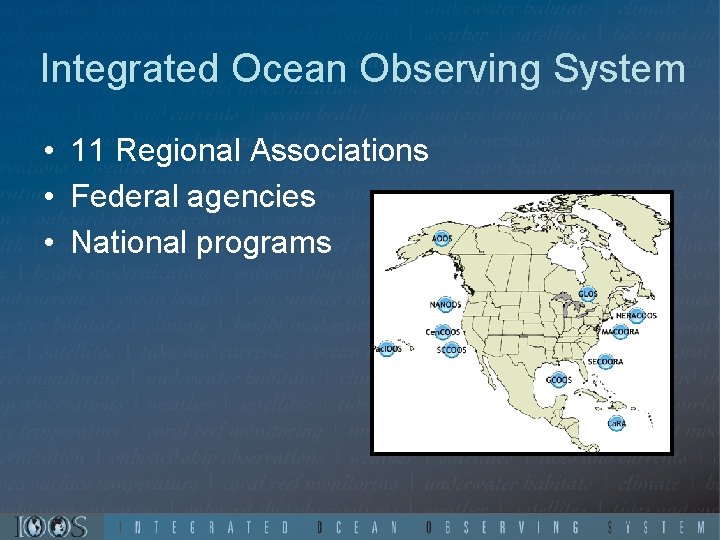

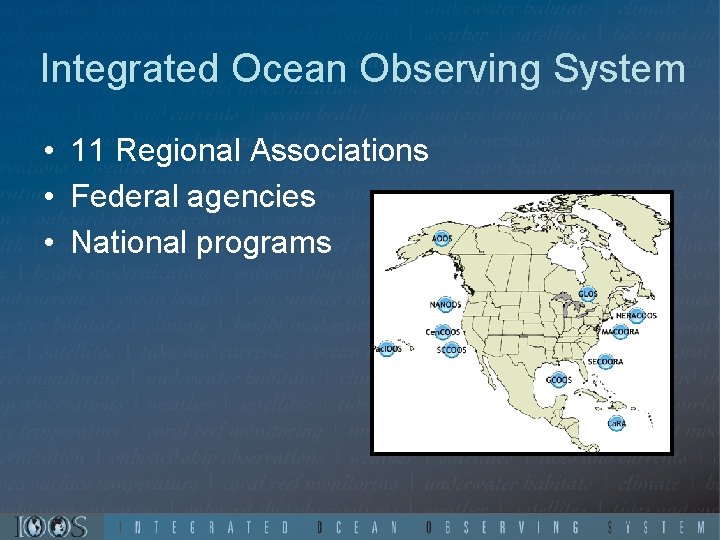

Integrated Ocean Observing System • 11 Regional Associations • Federal agencies • National programs

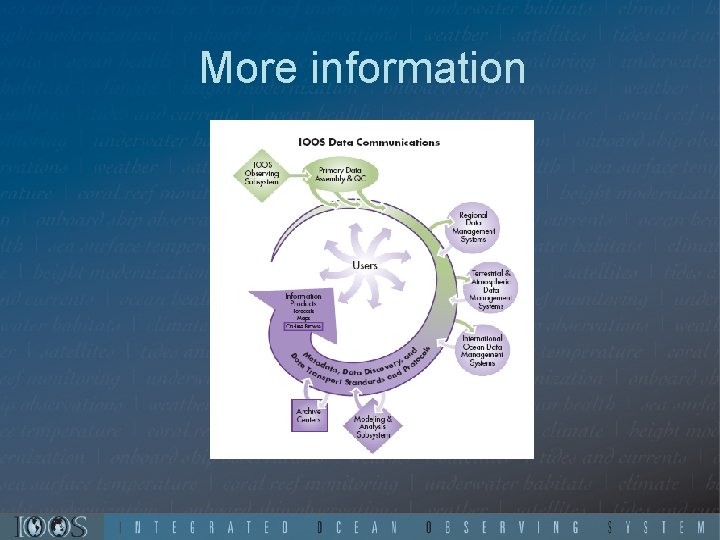

Data Management and Communications • DMAC Goal – design the system that efficiently and effectively links observations to applications by enabling rapid access to diverse data from multiple sources as needed

Data Management and Communications • Infrastructure – Data discovery, access, transfer, metadata, archive – DMAC identifies the technologies, formats, and protocols that support the infrastructure needed to meet its goal. – DMAC must interact with regional, national, and international systems.

Data Management and Communications • Usability – Quality assurance, quality control, information requirements – Data must be accompanied by the information that supports its proper use and sound results when combined with additional data. – This information may vary between different types of observations and collection methods. – DMAC will adopt quality assurance, quality control, and content requirements identified by expert communities and ensure this information is carried within the DMAC infrastructure.

DMAC: One of three IOOS subsystems • Observing Subsystem – Satellites – Aircraft – Fixed Platforms – Ships – Drifters & Floats – AUVs • DMAC Subsystem – Metadata standards – Data discovery – Data transport – Online browse – Data archival • Modeling & Analysis Subsystem – Safe & Efficient Maritime Operations – Homeland Security – Natural Hazards – Climate Change – Public Health – Ecosystem Health – Living Marine Resources

DMAC Implementation Team approach • Steering Team • Expert Teams • Caucuses • Working groups • Interagency Oversight Working Group

DMAC Steering Team • Representatives from government, industry, academia, public, and non-profits • Coordinate and oversee DMAC standards evolution • Identify and provide recommendations on gaps • Conduct process in an open, objective, and balanced manner

DMAC Teams • Expert teams – Archive – Metadata and data discovery – Transport and Access • Working group – Systems engineering • Address key issues as defined in DMAC plan • Review and make recommendations on standards

DMAC Caucuses • Outreach and community engagement – International – Private sector – Education • K-12 • Professional development – Modeling – Regional

Interagency Coordination Interagency Oversight Working Group (IOWG) • Representatives of federal agencies • Oversight of DMAC implementation within agencies

Roles • Teams: – Review standards – Make recommendations – Identify gaps • Organizations: – Implement standards and recommendations – Fill in gaps

Standards and standards process

Standards are key to the success of DMAC • Identify standards needed • Coordinate with other standards processes • DMAC standards mantra: – Adopt – Adapt – Develop only as a last resort

DMAC Standards Process • Identifying existing standards is a first step • Often need to “standardize” the standard – Example: FGDC metadata alone is not sufficient to describe waves, so a waves profile must be developed • Data providers may be required to use different standards – Crosswalks and interoperability are critical

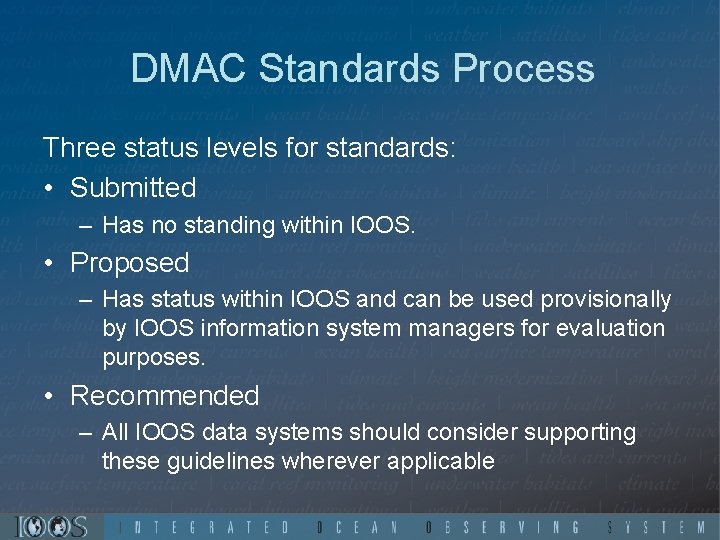

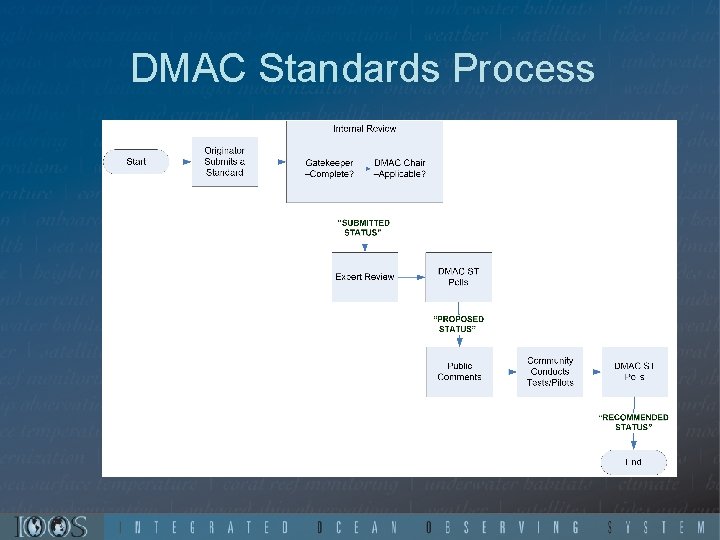

DMAC Standards Process Three status levels for standards: • Submitted – Has no standing within IOOS. • Proposed – Has status within IOOS and can be used provisionally by IOOS information system managers for evaluation purposes. • Recommended – All IOOS data systems should consider supporting these guidelines wherever applicable

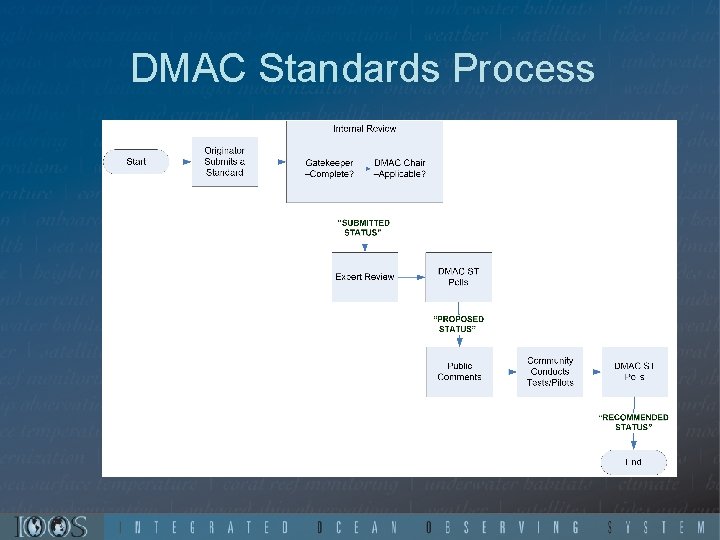

DMAC Standards Process

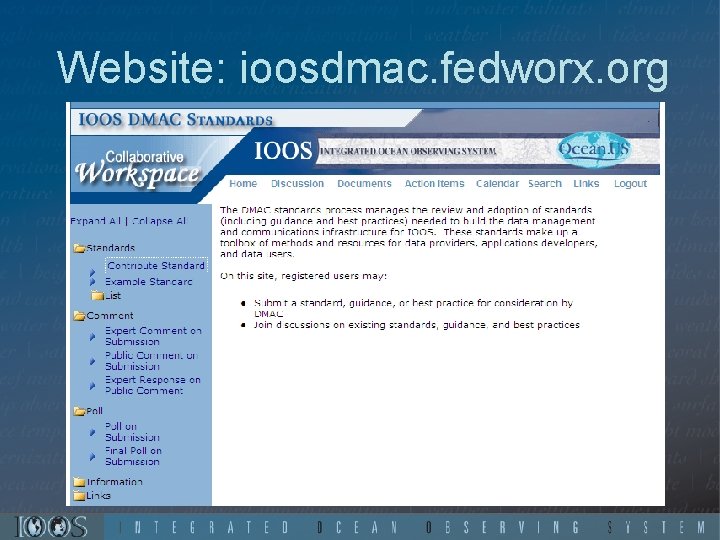

Website: ioosdmac.fedworx.org

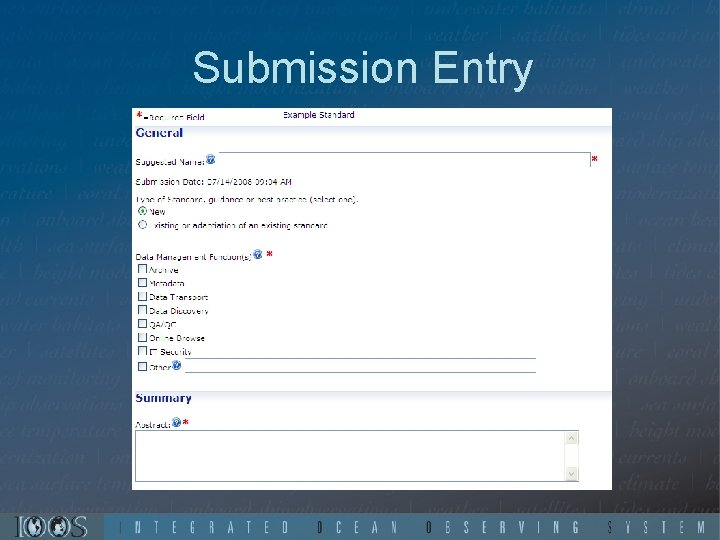

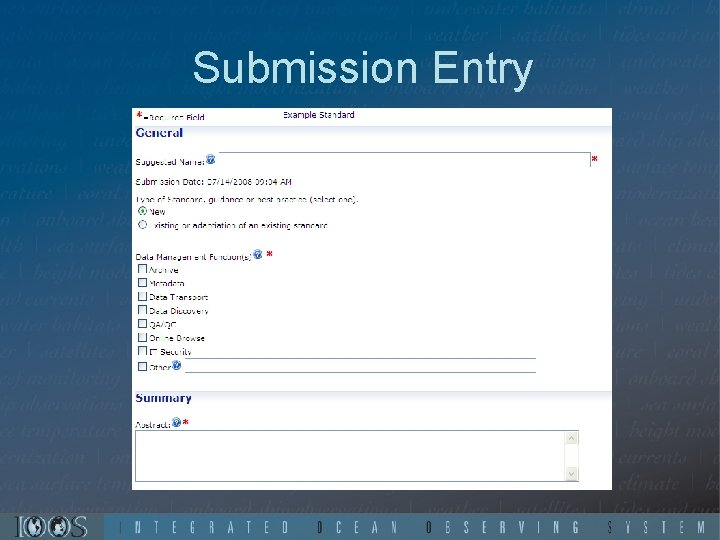

Submission Entry

DMAC Standards Process • Entry fields: – Name – Type (new, existing, adaption) – Data mgmt function (metadata, transport, …) – Abstract – Purpose – Technical description – Statutory requirement or international agreement?

DMAC Standards Process • Entry fields (continued) – Relationships, dependencies, conflicts – Current usage – Justification – References – Acronyms – Contact information – Supporting parties/members
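The entry fields on these two slides can be pictured as a single record. The sketch below is only an illustration: the class name, attribute names, and the helper `blank_fields` are hypothetical, and which fields are mandatory is an assumption rather than something the slides state.

```python
from dataclasses import dataclass, field, fields
from typing import List, Optional

# Allowed values for "Type", spelled as on the slide.
ALLOWED_TYPES = {"new", "existing", "adaption"}


@dataclass
class StandardSubmission:
    """Hypothetical record mirroring the submission entry fields."""
    name: str
    submission_type: str          # "new", "existing", or "adaption"
    data_mgmt_function: str       # e.g. "metadata", "transport"
    abstract: str = ""
    purpose: str = ""
    technical_description: str = ""
    statutory_or_international: Optional[bool] = None  # None = not answered
    relationships: str = ""       # relationships, dependencies, conflicts
    current_usage: str = ""
    justification: str = ""
    references: List[str] = field(default_factory=list)
    acronyms: List[str] = field(default_factory=list)
    contact_information: str = ""
    supporting_parties: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.submission_type not in ALLOWED_TYPES:
            raise ValueError(f"unknown submission type: {self.submission_type!r}")


def blank_fields(sub: StandardSubmission) -> List[str]:
    """Names of fields still left empty (assumed helper for submitters)."""
    return [f.name for f in fields(sub) if getattr(sub, f.name) in ("", None, [])]
```

A submitter-side check such as `blank_fields(sub)` is one way to provide the kind of help for submitters that the first-round findings call for.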

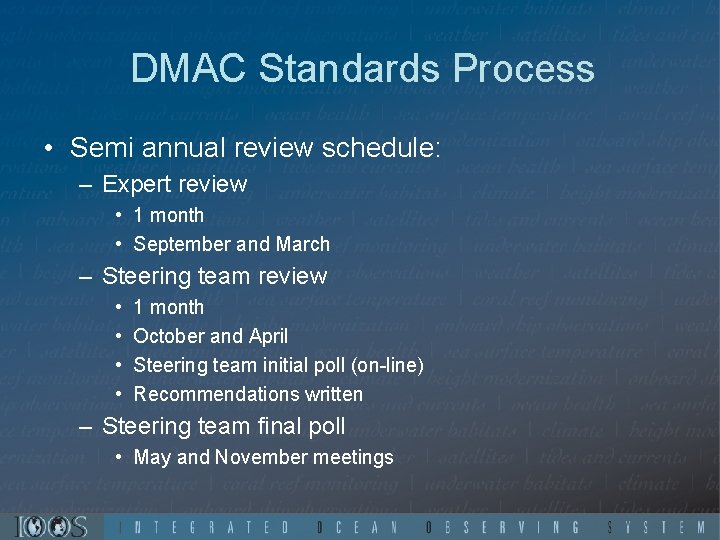

DMAC Standards Process • Semiannual review schedule: – Expert review • 1 month • September and March – Steering team review • 1 month • October and April • Steering team initial poll (on-line) • Recommendations written – Steering team final poll • May and November meetings
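The semiannual cycle above amounts to a lookup from calendar month to review phase. A minimal sketch follows; the function name `review_phase` and the phase labels are hypothetical, and it assumes each phase occupies exactly the calendar months named on the slide.

```python
from typing import Optional

# Month number -> review phase, taken from the schedule on the slide.
REVIEW_PHASES = {
    3: "expert review",
    9: "expert review",
    4: "steering team review",
    10: "steering team review",
    5: "steering team final poll",
    11: "steering team final poll",
}


def review_phase(month: int) -> Optional[str]:
    """Return the review phase active in a month (1-12), or None if idle."""
    if not 1 <= month <= 12:
        raise ValueError("month must be in 1..12")
    return REVIEW_PHASES.get(month)
```

Read this way, a submission entered before September works through expert review, steering team review, and the final poll by the November meeting; the March to May cycle mirrors it.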

DMAC Standards Process • Chair drafts recommendations based on polling and distributes them to the Steering Team • Steering Team discusses recommendations at semiannual meeting – Try to reach consensus – If consensus is not possible (per Terms of Reference): • Vote is carried by 75% of those members voting “approve” or “disapprove.” • Quorum consists of 60% of members. • Support of multiple strategies may be the most prudent approach. As long as the consideration of multiple options does not violate the spirit of IOOS interoperability, the IOOS-ST may move forward without consensus.
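The fallback voting rule can be worked through as a small calculation. This is a sketch under stated assumptions, not the Terms of Reference themselves: it assumes both thresholds mean "at least", that abstentions count toward quorum but not toward the 75% tally, and the function names are hypothetical.

```python
def quorum_met(votes_cast: int, members: int) -> bool:
    """Quorum: at least 60% of members (integer check of votes/members >= 3/5)."""
    return members > 0 and 5 * votes_cast >= 3 * members


def vote_carries(approve: int, disapprove: int, abstain: int, members: int) -> bool:
    """Apply the 75% rule to approve/disapprove votes once quorum is met."""
    votes_cast = approve + disapprove + abstain
    if min(approve, disapprove, abstain) < 0 or votes_cast > members:
        raise ValueError("invalid vote counts")
    if not quorum_met(votes_cast, members):
        return False
    decisive = approve + disapprove  # abstentions are excluded (assumption)
    # approve / decisive >= 3/4, written without floating point
    return decisive > 0 and 4 * approve >= 3 * decisive
```

For example, with 15 members and 12 votes cast, 9 approvals against 3 disapprovals is exactly 75% and carries under the "at least" reading, while 8 against 4 does not.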

DMAC Standards Process • Steering team may decide: – Move forward (“submitted” to “proposed”) – Request additions or changes – Table and revisit in next round – Reject
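Together with the three status levels described earlier, these four outcomes behave like a small state machine. The sketch below is illustrative only: the names `Status`, `Decision`, and `apply_decision` are hypothetical, and it assumes that "move forward" also takes a proposed standard to recommended, which the slides do not state explicitly (they name only the step from submitted to proposed).

```python
from enum import Enum
from typing import Tuple


class Status(Enum):
    SUBMITTED = 1     # no standing within IOOS
    PROPOSED = 2      # may be used provisionally for evaluation
    RECOMMENDED = 3   # IOOS data systems should consider supporting it


class Decision(Enum):
    MOVE_FORWARD = "move forward"
    REQUEST_CHANGES = "request additions or changes"
    TABLE = "table and revisit in next round"
    REJECT = "reject"


def apply_decision(status: Status, decision: Decision) -> Tuple[Status, bool]:
    """Return (new_status, still_under_consideration)."""
    if decision is Decision.REJECT:
        return status, False
    if decision is Decision.MOVE_FORWARD and status is not Status.RECOMMENDED:
        # Assumption: each "move forward" advances exactly one level.
        return Status(status.value + 1), True
    # Requested changes and tabling leave the status as it was.
    return status, True
```

In the first round, for instance, four submissions took the `MOVE_FORWARD` path to proposed and four were handled as `TABLE`.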

Lessons Learned

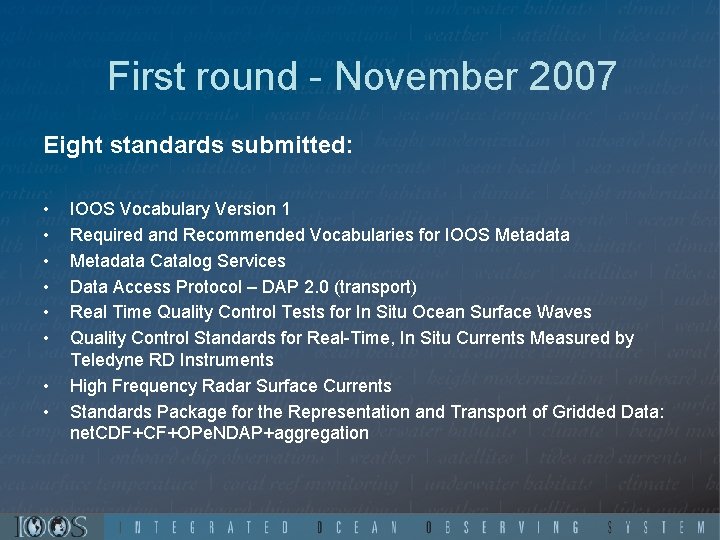

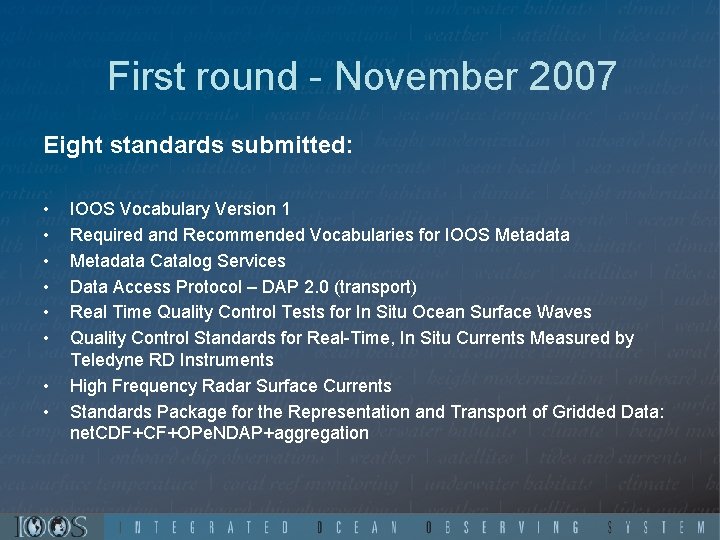

First round - November 2007 Eight standards submitted: • IOOS Vocabulary Version 1 • Required and Recommended Vocabularies for IOOS Metadata • Catalog Services • Data Access Protocol – DAP 2.0 (transport) • Real Time Quality Control Tests for In Situ Ocean Surface Waves • Quality Control Standards for Real-Time, In Situ Currents Measured by Teledyne RD Instruments • High Frequency Radar Surface Currents • Standards Package for the Representation and Transport of Gridded Data: netCDF+CF+OPeNDAP+aggregation

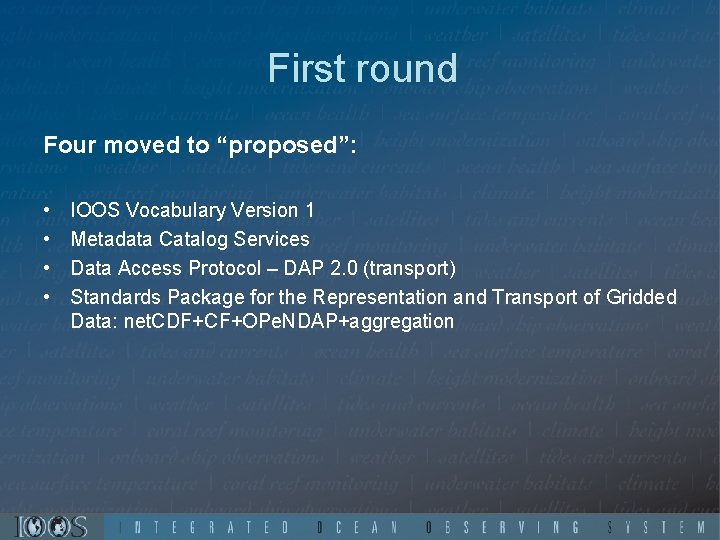

First round Four moved to “proposed”: • IOOS Vocabulary Version 1 • Metadata Catalog Services • Data Access Protocol – DAP 2.0 (transport) • Standards Package for the Representation and Transport of Gridded Data: netCDF+CF+OPeNDAP+aggregation

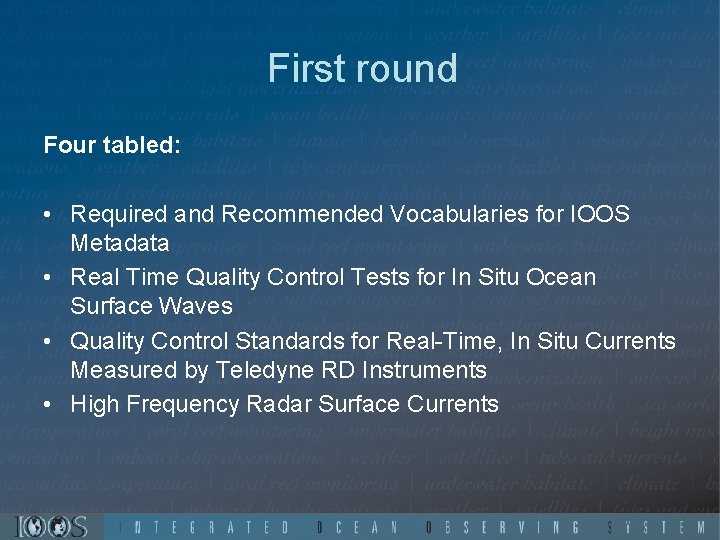

First round Four tabled: • Required and Recommended Vocabularies for IOOS Metadata • Real Time Quality Control Tests for In Situ Ocean Surface Waves • Quality Control Standards for Real-Time, In Situ Currents Measured by Teledyne RD Instruments • High Frequency Radar Surface Currents

First round • Tabled submissions: – Real Time Quality Control Tests for In Situ Ocean Surface Waves – Quality Control Standards for Real-Time, In Situ Currents Measured by Teledyne RD Instruments – High Frequency Radar Surface Currents • Should DMAC be involved in QA/QC? • If so, how?

First round Tabled submission: • Required and Recommended Vocabularies for IOOS Metadata • Adopt multiple vocabularies? • Use technology/ontologies to map multiple vocabularies?

First round • Findings – Wide variety of “standards” submitted – No clear definition of “standards” vs “best practices” – How do we handle QA/QC standards? – Need better instructions and/or help for submitters

Additional thoughts • May need to better define what we’re looking for • May need to focus more on data/metadata content

May 2008 • Addressed tabled submissions – Additional fields – Tried to refine definitions of fields – Tried to define “standards” vs “best practices”

QA/QC • “Lessons Learned” discussion: – ST lacks technical expertise to confidently evaluate QA/QC – Better criteria needed • To guide ST in evaluation • To assist Originators in preparing “Work Packages” – Draw on expertise of QA/QC-experienced organizations • As Originators • During Expert review – Devote more effort (time) to submission ‘Work Packages’ at front-end

QA/QC • Is QA/QC a DMAC issue? – Regardless, DMAC must deal with QA/QC to move forward – Develop supplemental criteria for reviewing QA/QC submissions

QA/QC Applicability • Questions about the submission: – Does it address an IOOS data type or parameter? – Is it significant to multiple applications or users? – If specific to a particular instrument, can the approach be extended to similar instruments? – Would it benefit IOOS if implemented more widely?

QA/QC • Originating organization – Does Originator have recognized expertise in the topic area? – Will the standard (including, if applicable, all components of the submission) be maintained over time by a recognized organization? • Supporting organizations – Are recognized organizations or programs supporting the adoption of this as a standard?

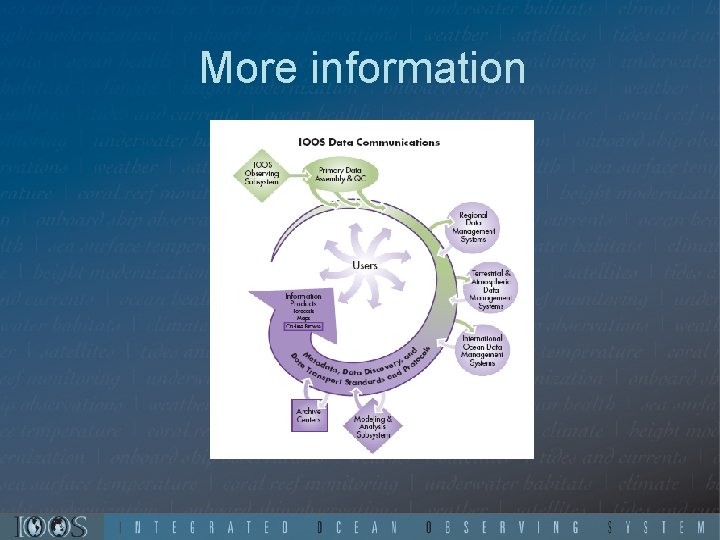

More information

DMAC Documents DMAC Plan • Detailed plans for implementing DMAC • Created with input from federal and state governments, academia, non-profits, and private industry http://dmac.ocean.us

DMAC Documents Guide for IOOS Data Providers • Draft of the IOOS Data Policy • Guidelines for data and metadata interoperability standards and best practices http://dmac.ocean.us

DMAC Standards Website • Submit standards • Provide feedback on standards (public comments)

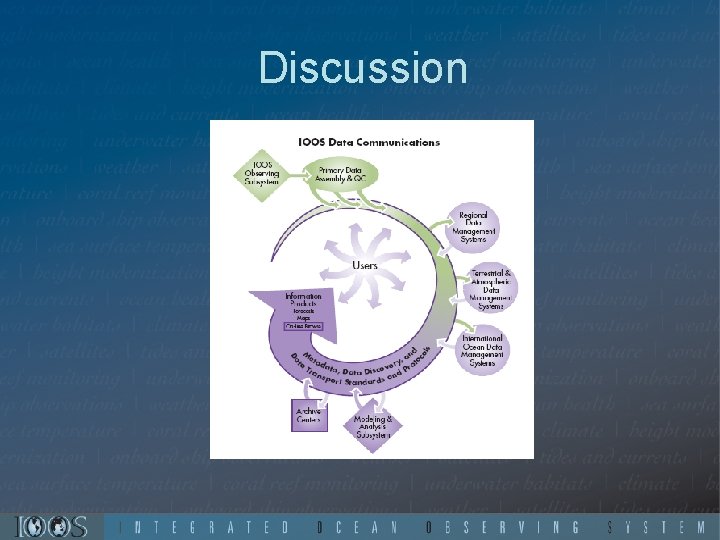

Discussion