Introduction to Voice Conversion, Hsin-Te Hwang


Introduction to Voice Conversion. Hsin-Te Hwang (max0219.cm94g@nctu.edu.tw), Department of Communication Engineering, Chiao Tung University, Hsinchu.

Outline
- Introduction
- VC baseline (GMM-based VC)
- Problems
- Summary
- References

What is voice conversion (VC)?
- Definition: modifying the speech signal of one speaker (the source) so that it sounds like another speaker (the target).
- More general definition: modifying (transforming) the characteristics of a speech signal, e.g., emotional voice conversion [1, 2].

Applications of VC
- In TTS:
  - Building a new voice for a current state-of-the-art TTS system, such as a corpus-based TTS system, is hard. The same problem arises when building an emotional TTS system [1, 2].
  - With VC, one can take an existing recorded database and convert it to a target voice using as few as 10 to 20 sentences [3].
- Others:
  - Converting narrowband speech to wideband speech for telecommunication [4].
  - Modeling speech production [5].

What to convert?
- Spectrum: convert the spectrum only; prosody remains unchanged or is converted with a simple method.
- Prosody: convert prosody only.
- Spectrum + prosody: convert both the spectrum and prosody.
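A common simple way to convert prosody alongside spectral conversion is a linear transformation of log F0 that matches the target speaker's log-F0 mean and standard deviation. The sketch below illustrates that baseline under stated assumptions: it is not taken from the slides, the function names and toy contours are hypothetical, and unvoiced frames are assumed to be marked with F0 = 0.

```python
import math
from statistics import mean, pstdev


def log_f0_stats(f0_values):
    """Return (mean, std) of log F0 over voiced frames (F0 > 0)."""
    logs = [math.log(f) for f in f0_values if f > 0]
    return mean(logs), pstdev(logs)


def convert_f0(f0_source, src_stats, tgt_stats):
    """Shift and scale log F0 from source statistics to target statistics.

    Unvoiced frames (F0 == 0) are passed through as 0.0.
    """
    mu_x, sigma_x = src_stats
    mu_y, sigma_y = tgt_stats
    converted = []
    for f in f0_source:
        if f <= 0:
            converted.append(0.0)
        else:
            z = (math.log(f) - mu_x) / sigma_x  # normalize w.r.t. the source speaker
            converted.append(math.exp(mu_y + sigma_y * z))  # denormalize w.r.t. the target
    return converted


# Hypothetical training contours in Hz; 0 marks unvoiced frames.
source_train = [100.0, 110.0, 0.0, 120.0, 105.0]
target_train = [200.0, 230.0, 250.0, 0.0, 210.0]
src_stats = log_f0_stats(source_train)
tgt_stats = log_f0_stats(target_train)
converted = convert_f0(source_train, src_stats, tgt_stats)
```

Because the transform is affine in the log domain, converting the source training contour reproduces the target's log-F0 mean and standard deviation exactly; the shape of the contour (e.g., tone or intonation patterns) is left untouched.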

![Overview of Techniques](https://slidetodoc.com/presentation_image_h/2952b2a773fcb27ab15baae6f5f98255/image-6.jpg)

Overview of Techniques
- Abe et al. (1988) [6]: VQ mapping
- Valbret et al. (1992) [7]: Linear Multivariate Regression (LMR), Dynamic Frequency Warping (DFW)
- Kuwabara et al. (1995) [8]: fuzzy VQ
- M. Narendranath et al. (1995) [9]: ANN-based
- Stylianou et al. (1995) [10]: GMM-based
- Kain et al. (1998) [11]: GMM-based
- Toda et al. (2001) [12]: GMM and DFW
- Toda et al. (2005) [13]: GMM considering global variance
- Mouchtaris et al. (2006) [14]: GMM and speaker adaptation
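To make the first entry in this list concrete, here is a minimal sketch in the spirit of VQ mapping [6]: each source codeword is mapped to a weighted average of target codewords, with weights taken from a co-occurrence histogram over time-aligned parallel frames. It assumes both codebooks are already trained (for example by k-means) and the frames already aligned; the function names and the fallback for empty cells are hypothetical, and the details of [6] are simplified.

```python
def nearest(codebook, v):
    """Index of the codeword closest to v (squared Euclidean distance)."""
    return min(range(len(codebook)),
               key=lambda k: sum((a - b) ** 2 for a, b in zip(codebook[k], v)))


def build_mapping_codebook(src_cb, tgt_cb, aligned_pairs):
    """Map each source codeword to a histogram-weighted average of target codewords.

    aligned_pairs holds (source_frame, target_frame) pairs from time-aligned
    parallel data; the histogram counts how often source codeword i co-occurs
    with target codeword j.
    """
    counts = [[0] * len(tgt_cb) for _ in src_cb]
    for x, y in aligned_pairs:
        counts[nearest(src_cb, x)][nearest(tgt_cb, y)] += 1
    dim = len(tgt_cb[0])
    mapping = []
    for i, row in enumerate(counts):
        total = sum(row)
        if total == 0:
            # Hypothetical fallback for unseen cells: reuse the source codeword.
            mapping.append(list(src_cb[i]))
        else:
            mapping.append([sum(row[j] * tgt_cb[j][d] for j in range(len(tgt_cb))) / total
                            for d in range(dim)])
    return mapping


def vq_convert(frames, src_cb, mapping):
    """Quantize each source frame and emit the mapped target vector."""
    return [mapping[nearest(src_cb, x)] for x in frames]


# Toy 2-D example; real codebooks would come from k-means or LBG training.
src_cb = [[0.0, 0.0], [10.0, 10.0]]
tgt_cb = [[1.0, 1.0], [5.0, 5.0], [20.0, 20.0]]
pairs = [([0.1, 0.0], [1.0, 1.0]), ([0.2, 0.1], [5.0, 5.0]), ([9.0, 10.0], [20.0, 20.0])]
mapping = build_mapping_codebook(src_cb, tgt_cb, pairs)
```

The output can only take as many distinct values as there are source codewords, which is one reason later methods moved to continuous mappings such as the GMM-based ones.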

Outline
- Introduction
- VC baseline (GMM-based VC)
- Problems
- Summary
- References

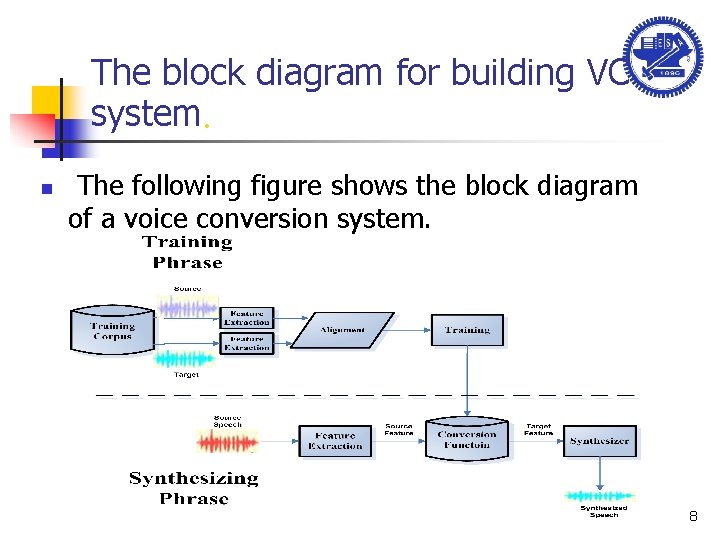

Block diagram for building a VC system
- The following figure shows the block diagram of a voice conversion system.

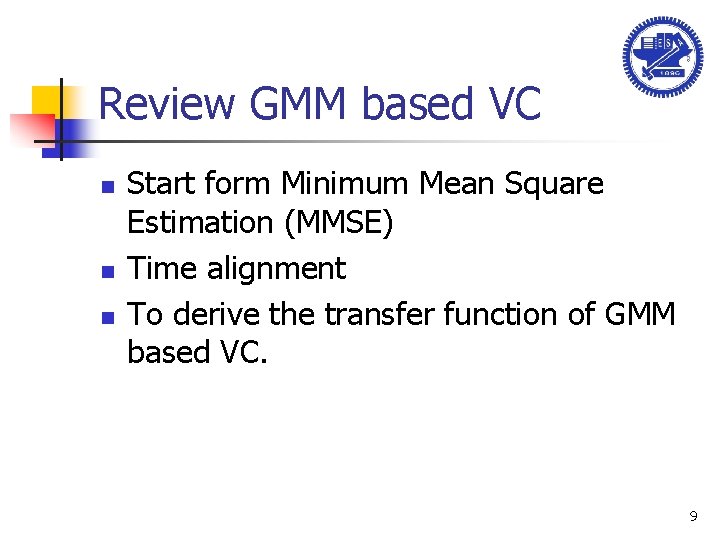

Review of GMM-based VC
- Start from minimum mean-square error (MMSE) estimation.
- Time alignment.
- Derive the transfer function of GMM-based VC.

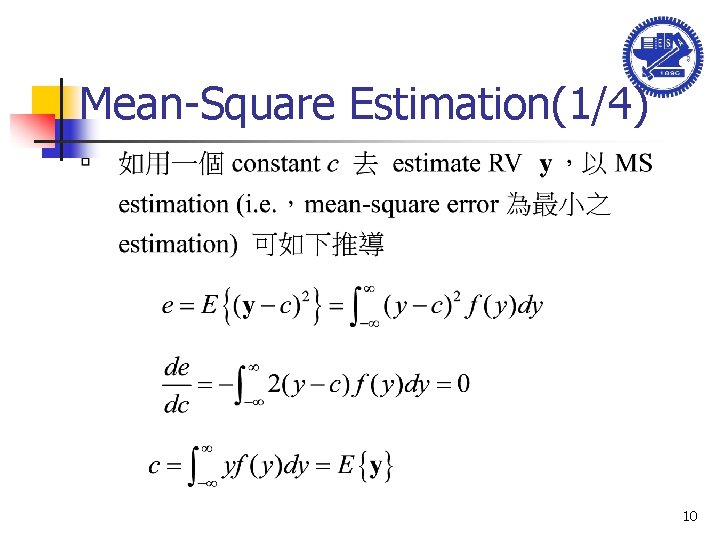

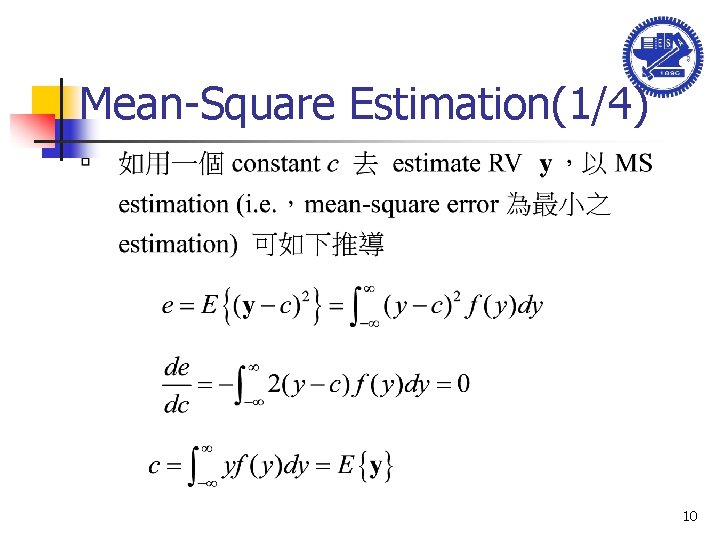

Mean-Square Estimation (1/4)

Mean-Square Estimation (2/4)

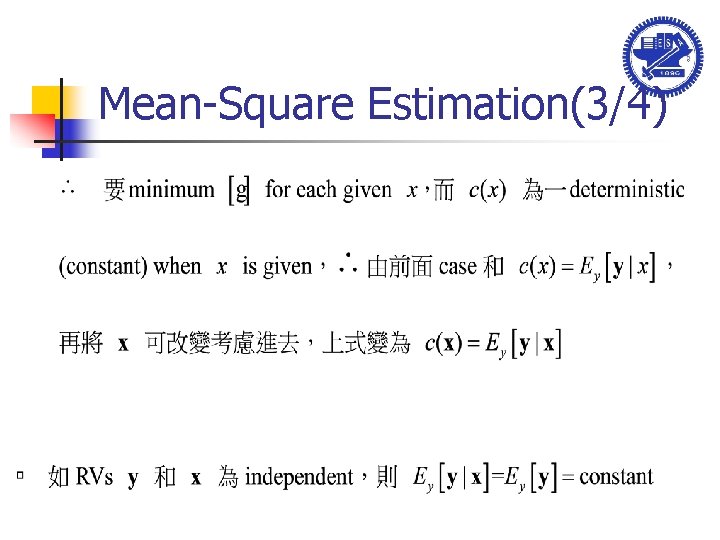

Mean-Square Estimation (3/4)

Mean-Square Estimation (4/4)
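The standard estimation-theory results that motivate GMM-based mapping can be summarized as follows (a summary, not a transcription of the slide equations): the mapping that minimizes the expected squared error is the conditional mean, and for jointly Gaussian source and target vectors that conditional mean is linear in x.

```latex
F^{\ast}(\mathbf{x}) = \arg\min_{F}\, E\!\left[\lVert \mathbf{y} - F(\mathbf{x}) \rVert^{2}\right] = E[\mathbf{y} \mid \mathbf{x}]

\begin{bmatrix}\mathbf{x}\\ \mathbf{y}\end{bmatrix} \sim
\mathcal{N}\!\left(
\begin{bmatrix}\boldsymbol{\mu}^{x}\\ \boldsymbol{\mu}^{y}\end{bmatrix},
\begin{bmatrix}\boldsymbol{\Sigma}^{xx} & \boldsymbol{\Sigma}^{xy}\\ \boldsymbol{\Sigma}^{yx} & \boldsymbol{\Sigma}^{yy}\end{bmatrix}
\right)
\;\Longrightarrow\;
E[\mathbf{y} \mid \mathbf{x}] = \boldsymbol{\mu}^{y} + \boldsymbol{\Sigma}^{yx}\left(\boldsymbol{\Sigma}^{xx}\right)^{-1}\left(\mathbf{x} - \boldsymbol{\mu}^{x}\right)
```

Replacing the single Gaussian with a GMM turns the conditional mean into a sum of such linear terms weighted by the component posteriors p(i | x), which is the form used by the joint-density mapping of Kain [11].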

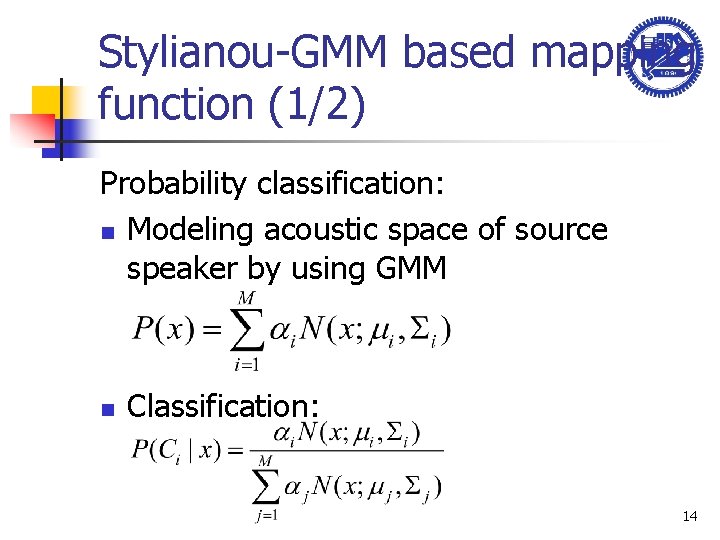

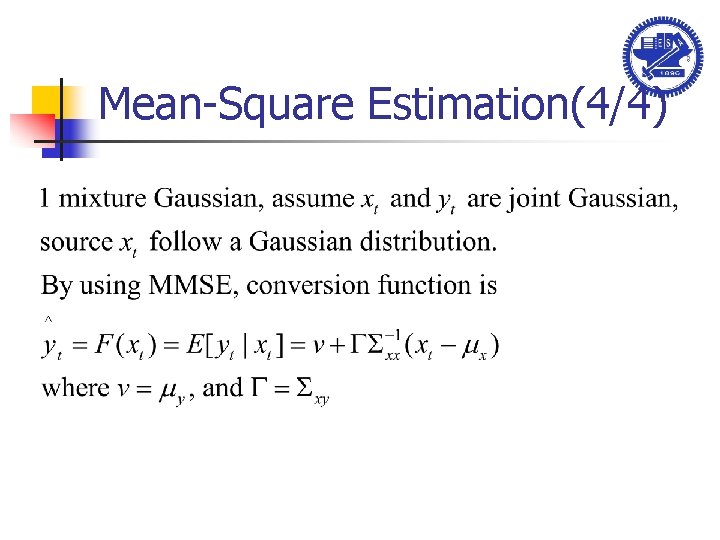

Stylianou-GMM based mapping function (1/2)
- Probabilistic classification:
  - Model the acoustic space of the source speaker with a GMM.
  - Classification:
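The classification step assigns each source frame a posterior probability for every mixture component, h_i(x) = α_i N(x; μ_i, Σ_i) / Σ_j α_j N(x; μ_j, Σ_j). A minimal sketch, assuming diagonal covariances and an already trained source GMM (the toy parameters and function names are hypothetical):

```python
import math


def log_gaussian_diag(x, mean, var):
    """Log density of a Gaussian with diagonal covariance (per-dimension variances)."""
    return -0.5 * sum(math.log(2 * math.pi * v) + (xi - m) ** 2 / v
                      for xi, m, v in zip(x, mean, var))


def posteriors(x, weights, means, variances):
    """h_i(x) = w_i N(x; mu_i, S_i) / sum_j w_j N(x; mu_j, S_j).

    Computed in the log domain with the log-sum-exp trick to avoid underflow.
    """
    logs = [math.log(w) + log_gaussian_diag(x, m, v)
            for w, m, v in zip(weights, means, variances)]
    top = max(logs)
    exps = [math.exp(lg - top) for lg in logs]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical two-component source GMM over 1-D features.
weights = [0.5, 0.5]
means = [[0.0], [4.0]]
variances = [[1.0], [1.0]]
```

Unlike hard VQ classification, every component contributes to every frame in proportion to h_i(x), which keeps the resulting mapping continuous.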

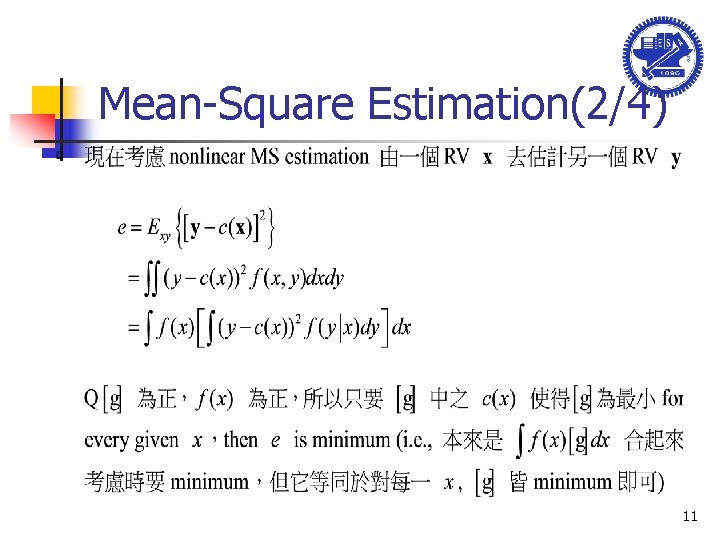

![Stylianou-GMM based mapping function (2/2)](https://slidetodoc.com/presentation_image_h/2952b2a773fcb27ab15baae6f5f98255/image-15.jpg)

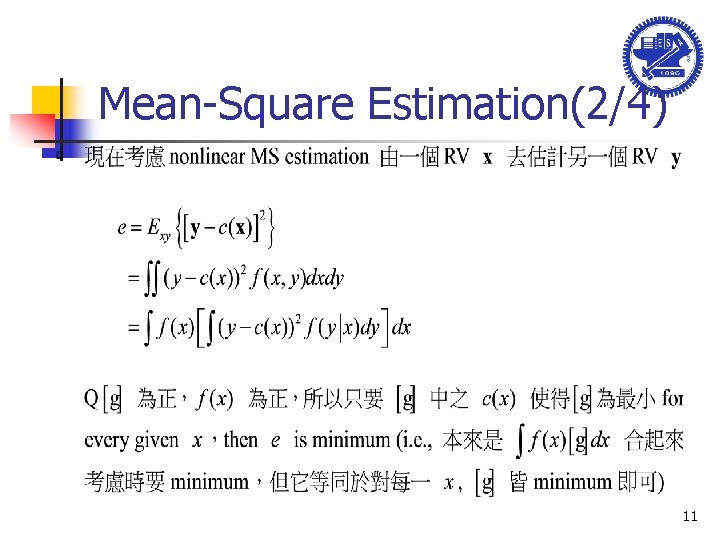

Stylianou-GMM based mapping function (2/2)
- Mapping function [10]:
- Motivation:
- Estimation of the mapping function:
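Stylianou's mapping function [10] has the form F(x) = Σ_i h_i(x)[ν_i + Γ_i Σ_i^{-1}(x - μ_i)], where h_i(x) is the posterior probability of component i given x, and ν_i, Γ_i are fitted by least squares on time-aligned source/target pairs. The sketch below shows that estimation for scalar features only (so Γ_i and Σ_i are scalars); it assumes the source GMM has already been trained by EM, and all names and toy data are hypothetical.

```python
import math


def gaussian_pdf(x, mean, var):
    """Density of a 1-D Gaussian."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2 * math.pi * var)


def posteriors(x, weights, means, variances):
    """Posterior probability h_i(x) of each component of the source GMM."""
    p = [w * gaussian_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
    total = sum(p)
    return [pi / total for pi in p]


def regressors(x, gmm):
    """Basis for F(x) = sum_i h_i(x) [nu_i + gamma_i (x - mu_i) / var_i]."""
    weights, means, variances = gmm
    h = posteriors(x, weights, means, variances)
    return h + [hi * (x - m) / v for hi, m, v in zip(h, means, variances)]


def solve(a, b):
    """Solve the square linear system a z = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (m[r][n] - sum(m[r][c] * z[c] for c in range(r + 1, n))) / m[r][r]
    return z


def fit_mapping(xs, ys, gmm):
    """Least-squares estimate of [nu_1..nu_M, gamma_1..gamma_M] via the normal equations."""
    rows = [regressors(x, gmm) for x in xs]
    k = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    aty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(k)]
    return solve(ata, aty)


def stylianou_convert(x, gmm, params):
    """Apply the fitted mapping function to one source value."""
    return sum(f * p for f, p in zip(regressors(x, gmm), params))


# Hypothetical source GMM (weights, means, variances) and aligned 1-D training
# pairs whose target happens to be y = 2x + 1.
gmm = ([0.5, 0.5], [0.0, 2.0], [1.0, 1.0])
xs = [-1.0 + 0.25 * k for k in range(17)]
ys = [2.0 * x + 1.0 for x in xs]
params = fit_mapping(xs, ys, gmm)
```

Only the source density is modeled here; the target enters solely through the regression, which is the key difference from the joint-density approach of Kain [11].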

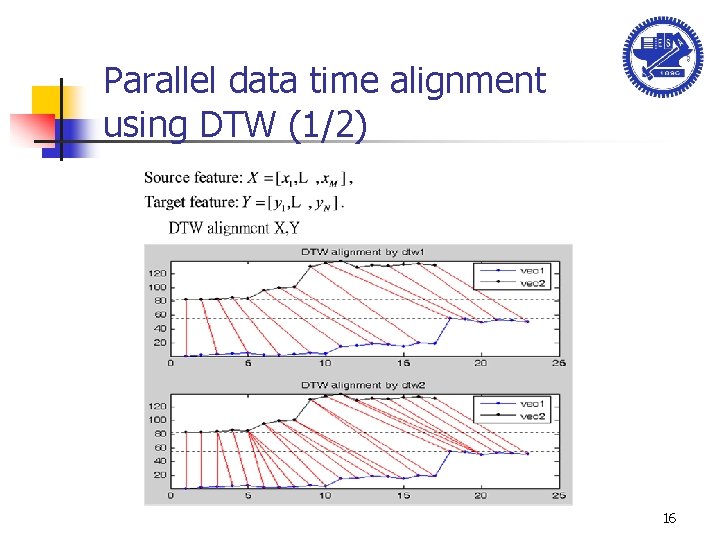

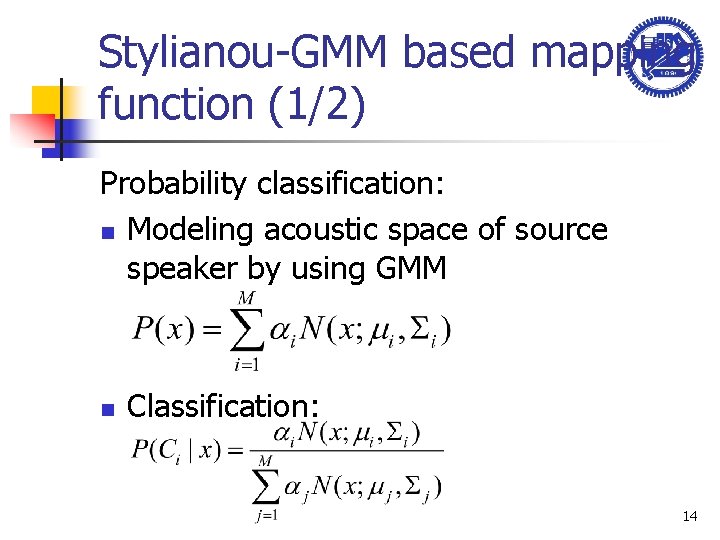

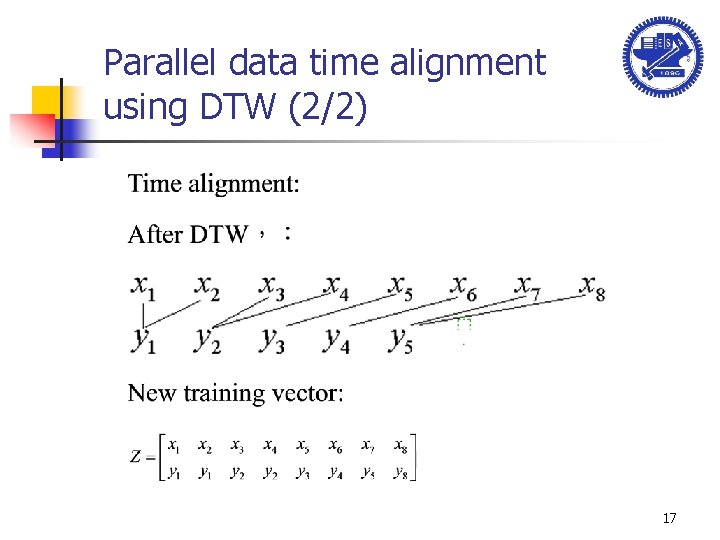

Parallel data time alignment using DTW (1/2)

Parallel data time alignment using DTW (2/2)
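Parallel training needs source and target frames paired up in time. A minimal DTW sketch, assuming Euclidean frame distance and the basic three-step pattern (real systems often add slope constraints or iterate alignment and conversion); the function and variable names are hypothetical:

```python
import math


def dtw_align(src, tgt):
    """Align two feature sequences with dynamic time warping (DTW).

    Returns (path, total_cost), where path is the list of (i, j) frame-index
    pairs on the minimum-cost warping path. Uses Euclidean frame distance and
    the basic step pattern {(i-1, j), (i, j-1), (i-1, j-1)}.
    """
    n, m = len(src), len(tgt)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(src[i - 1], tgt[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    # Backtrack from the end, preferring the diagonal step on ties.
    i, j = n, m
    path = []
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        candidates = [(i - 1, j - 1), (i - 1, j), (i, j - 1)]
        i, j = min(candidates, key=lambda ij: cost[ij[0]][ij[1]])
    return path[::-1], cost[n][m]


# Toy 1-D sequences: the target holds its first value one frame longer.
source = [[0.0], [1.0], [2.0]]
target = [[0.0], [0.0], [1.0], [2.0]]
path, total = dtw_align(source, target)
aligned_pairs = [(source[i], target[j]) for i, j in path]
```

The resulting `aligned_pairs` are the (x, y) training pairs that the Stylianou and Kain mapping functions are estimated from.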

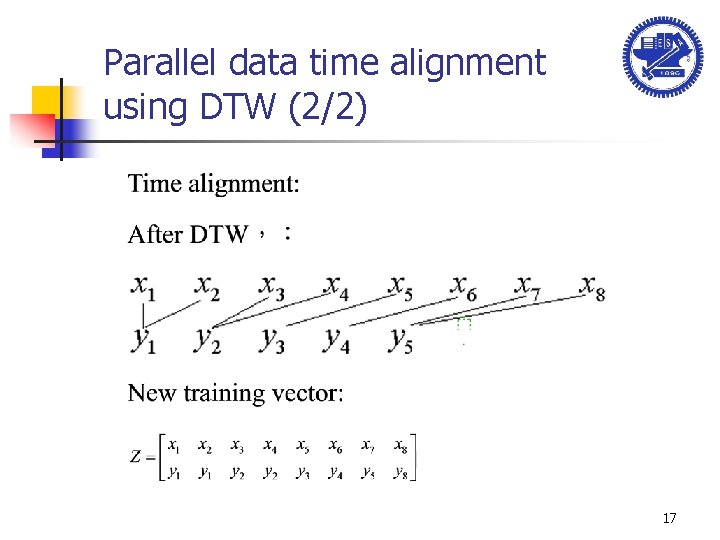

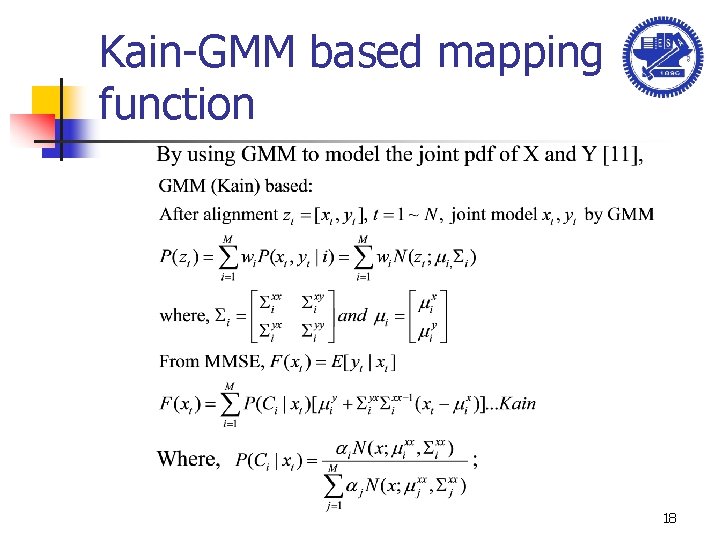

Kain-GMM based mapping function
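Kain's method [11] trains a GMM on joint source/target vectors and converts with the conditional mean F(x) = Σ_i p(i|x)[μ_i^y + Σ_i^{yx}(Σ_i^{xx})^{-1}(x - μ_i^x)]. The sketch below applies that formula to one frame, assuming the joint GMM is already trained and its covariance blocks are diagonal; the dictionary keys and toy parameters are hypothetical.

```python
import math


def kain_convert(x, comps):
    """Joint-density GMM conversion for one source frame x.

    F(x) = sum_i p(i|x) [mu_i^y + S_i^yx (S_i^xx)^-1 (x - mu_i^x)]

    Each component is a dict with hypothetical keys 'w', 'mu_x', 'mu_y',
    'var_xx' and 'cov_yx'; the covariance blocks are assumed diagonal, so
    every value except 'w' is a per-dimension list.
    """
    # p(i|x) depends only on the source marginal N(x; mu_i^x, S_i^xx).
    logp = []
    for c in comps:
        lp = math.log(c["w"])
        for xd, m, v in zip(x, c["mu_x"], c["var_xx"]):
            lp -= 0.5 * (math.log(2 * math.pi * v) + (xd - m) ** 2 / v)
        logp.append(lp)
    top = max(logp)
    post = [math.exp(lp - top) for lp in logp]
    total = sum(post)
    post = [p / total for p in post]
    # Posterior-weighted sum of per-component linear regressions.
    y = [0.0] * len(x)
    for p, c in zip(post, comps):
        for d in range(len(x)):
            slope = c["cov_yx"][d] / c["var_xx"][d]
            y[d] += p * (c["mu_y"][d] + slope * (x[d] - c["mu_x"][d]))
    return y


# Hypothetical 1-D joint GMM with two components, e.g. trained by EM on
# DTW-aligned joint vectors [x; y].
comps = [
    {"w": 0.5, "mu_x": [0.0], "mu_y": [10.0], "var_xx": [1.0], "cov_yx": [0.0]},
    {"w": 0.5, "mu_x": [20.0], "mu_y": [-10.0], "var_xx": [1.0], "cov_yx": [0.0]},
]
```

Compared with the Stylianou sketch, no separate least-squares step is needed: the regression parameters are read directly from the joint means and covariances.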

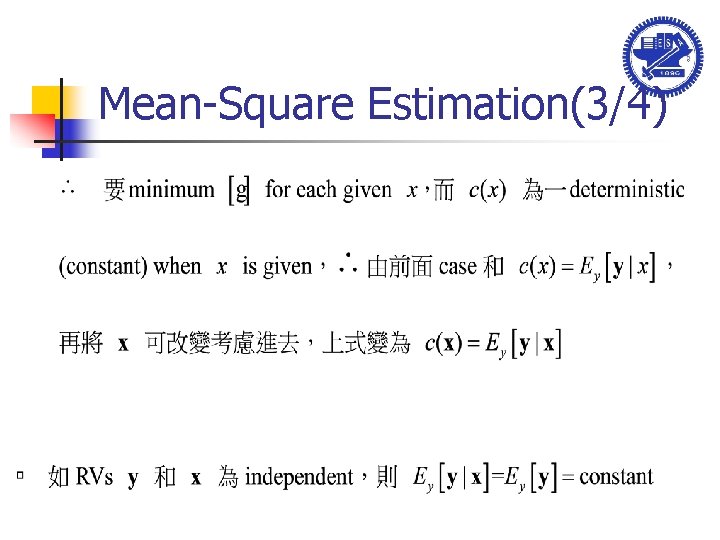

![Stylianou based vs Kain based VC](https://slidetodoc.com/presentation_image_h/2952b2a773fcb27ab15baae6f5f98255/image-19.jpg)

Stylianou based vs Kain based VC
- The Kain-based method [11] makes no assumptions about the target distributions: clustering takes place on the source and target vectors jointly.
- In theory, modeling the joint density rather than the source density should lead to a more judicious allocation of mixture components for the regression problem.
- The Kain-based method is computationally more expensive during the EM step than the Stylianou-based method [10].

Outline
- Introduction
- VC baseline (GMM-based VC)
- Problems
- Summary
- References

Problems
- Making the training more flexible (nonparallel training)
- Improving the quality and similarity of converted speech
- Prosody conversion
- Other issues

Problems of parallel training for VC
- To derive the conversion function, a speech corpus containing the same utterances from both the source and target speakers is needed. Such a corpus is called a parallel corpus.
- The disadvantage of this approach is that such a corpus is difficult or even impossible to collect:
  - Cross-lingual voice conversion.
  - Most databases are nonparallel.

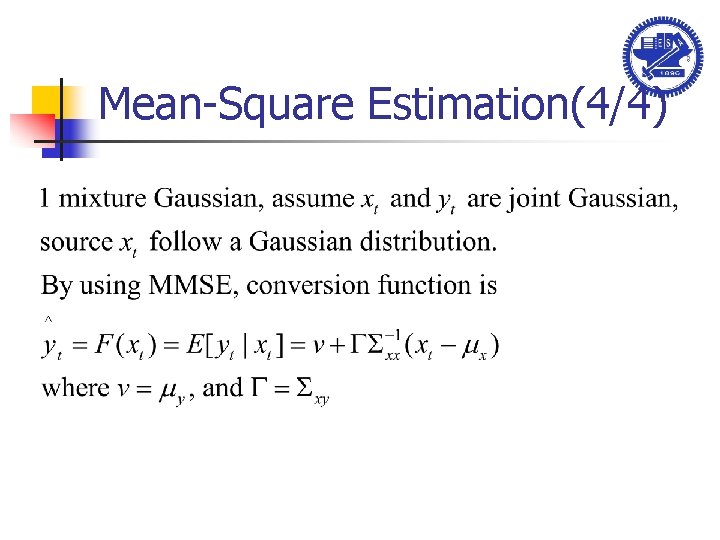

![Nonparallel training for VC](https://slidetodoc.com/presentation_image_h/2952b2a773fcb27ab15baae6f5f98255/image-23.jpg)

Nonparallel training for VC
- Mouchtaris et al. (2004, 2006) [14, 15]: GMM and speaker adaptation
- D. Sündermann et al. (2003) [16]: VTLN-based
- H. Ye et al. (2004) [17]: VC for unknown speakers
- M. Mashimo et al. (2001) [18]: cross-language VC

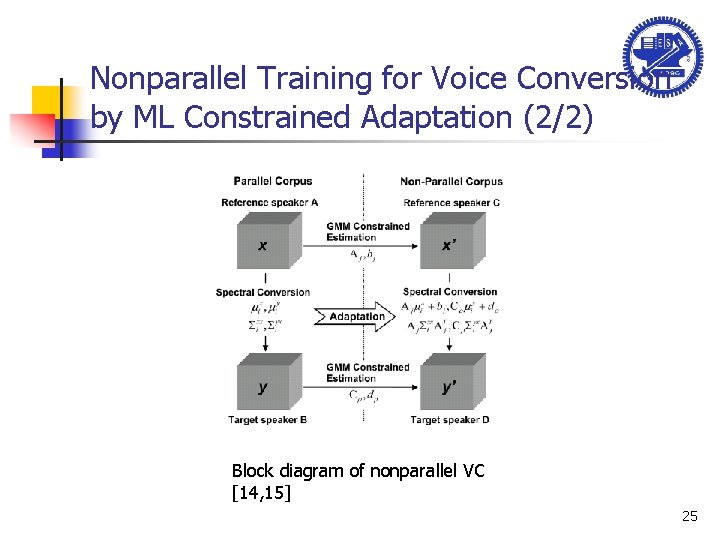

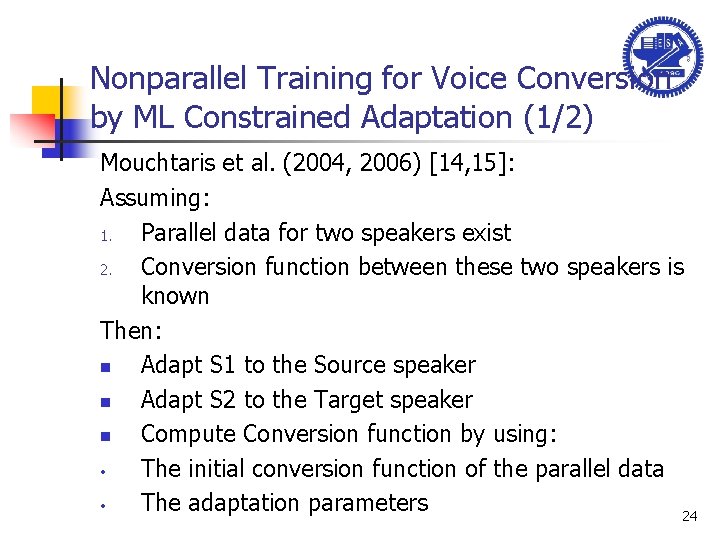

Nonparallel Training for Voice Conversion by ML Constrained Adaptation (1/2)
- Mouchtaris et al. (2004, 2006) [14, 15], assuming:
  1. Parallel data exist for two speakers, S1 and S2.
  2. The conversion function between these two speakers is known.
- Then:
  - Adapt S1 to the source speaker.
  - Adapt S2 to the target speaker.
  - Compute the conversion function using:
    - the initial conversion function of the parallel data;
    - the adaptation parameters.

Nonparallel Training for Voice Conversion by ML Constrained Adaptation (2/2)
- Block diagram of nonparallel VC [14, 15]

Quality improvement
- Two major problems of GMM-based VC:
  - Time-independent assumption
  - Over-smoothing

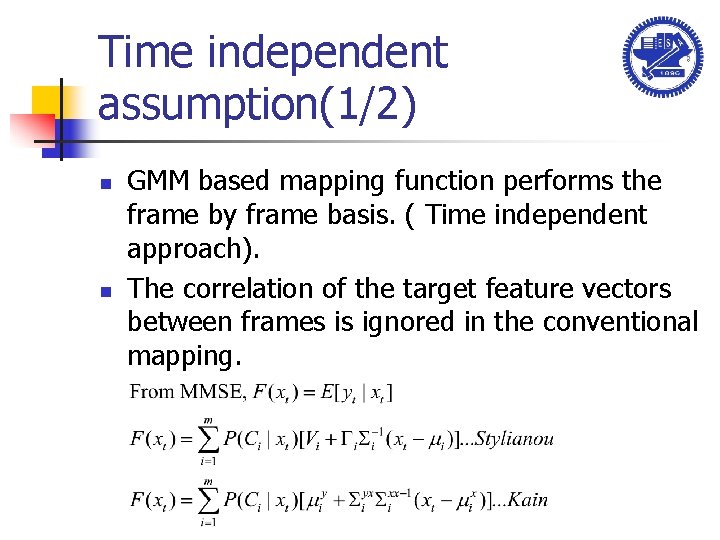

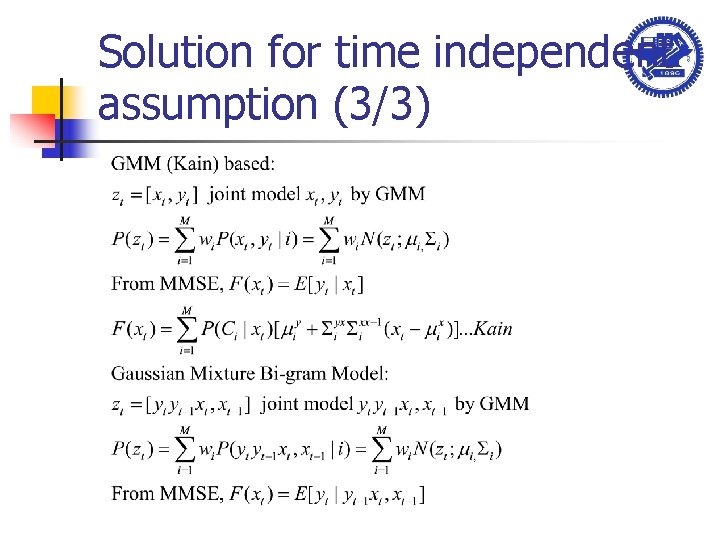

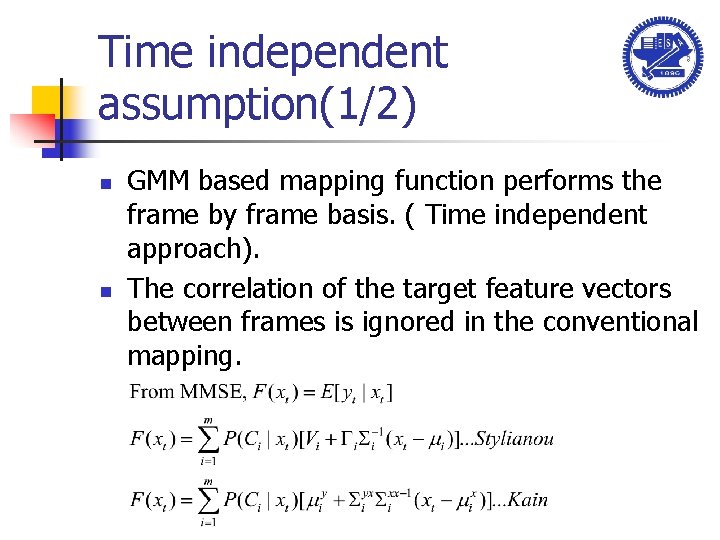

Time-independent assumption (1/2)
- The GMM-based mapping function operates on a frame-by-frame basis (a time-independent approach).
- The correlation of the target feature vectors between frames is ignored in the conventional mapping.

![Time-independent assumption (2/2): example of converted and natural target parameter trajectories [24]](https://slidetodoc.com/presentation_image_h/2952b2a773fcb27ab15baae6f5f98255/image-28.jpg)

Time-independent assumption (2/2)
- Example of converted and natural target parameter trajectories [24].

![Solution for time-independent assumption (1/3): Duxans et al. [23], HMM-based voice conversion](https://slidetodoc.com/presentation_image_h/2952b2a773fcb27ab15baae6f5f98255/image-29.jpg)

Solution for time-independent assumption (1/3)
- Duxans et al. [23] (HMM-based voice conversion):
  - HMMs are well-known models that capture the dynamics of the training data using states.
  - They model the dynamics of vector sequences through transition probabilities between states.
- HMM-based VC system block diagram [23]

![Solution for time-independent assumption (2/3): Chi-Chun Hsia et al. [21], Gaussian mixture bi-gram model](https://slidetodoc.com/presentation_image_h/2952b2a773fcb27ab15baae6f5f98255/image-30.jpg)

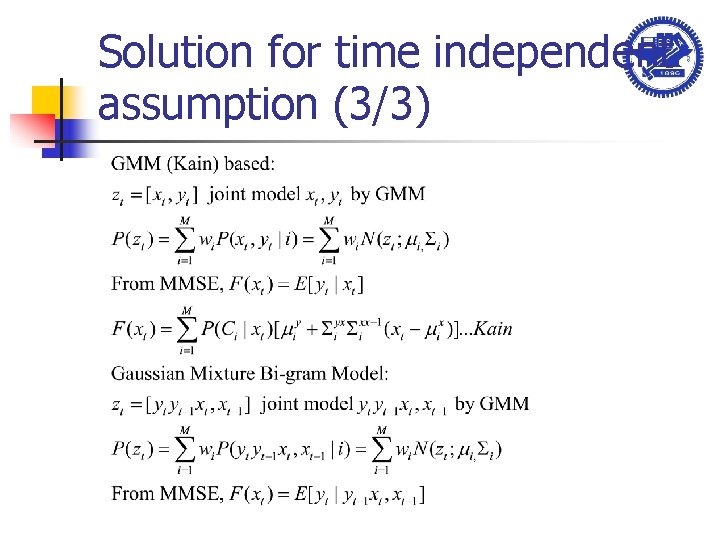

Solution for time-independent assumption (2/3)
- Chi-Chun Hsia et al. [21] (Gaussian mixture bi-gram model):
  - Adopt the Gaussian mixture bi-gram model to characterize the temporal and spectral evolution in the conversion function.

Solution for time-independent assumption (3/3)

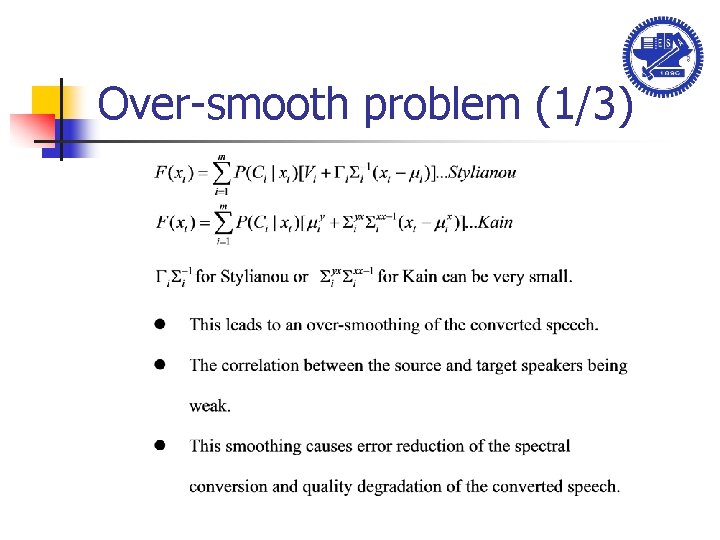

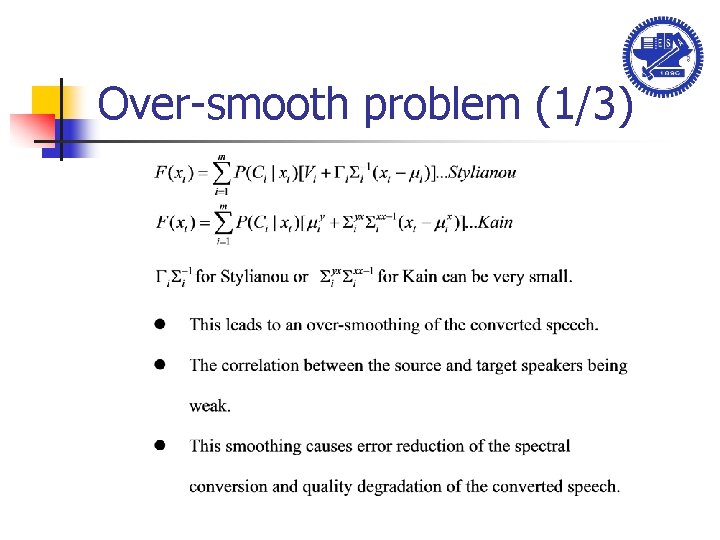

Over-smooth problem (1/3)

Over-smooth problem (2/3)

![Over-smooth problem (3/3): example of converted and natural target spectra [24]](https://slidetodoc.com/presentation_image_h/2952b2a773fcb27ab15baae6f5f98255/image-34.jpg)

Over-smooth problem (3/3)
- Example of converted and natural target spectra [24].

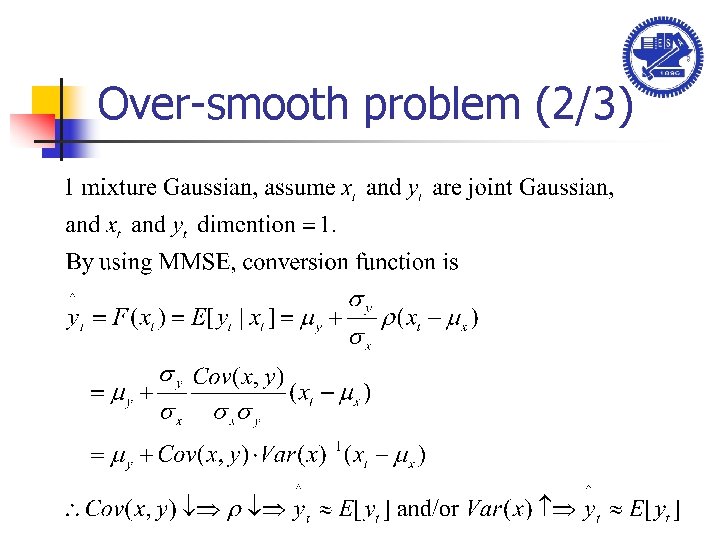

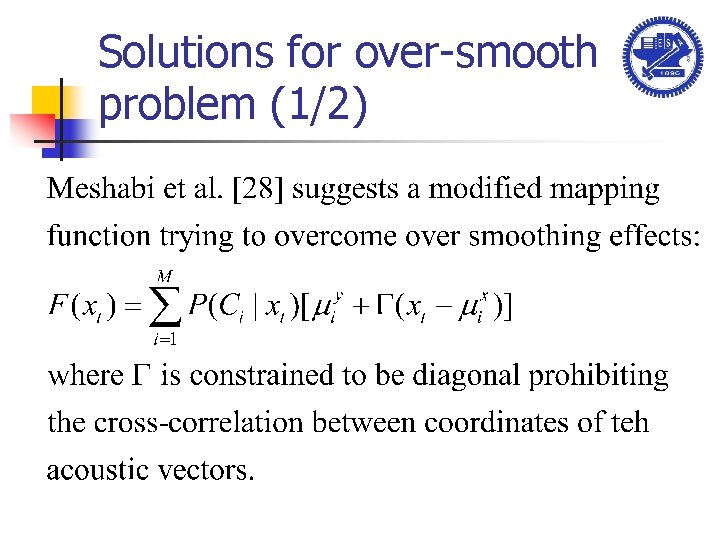

Solutions for over-smooth problem (1/2)

![Solutions for over-smooth problem (2/2): Toda et al. [11, 29]](https://slidetodoc.com/presentation_image_h/2952b2a773fcb27ab15baae6f5f98255/image-36.jpg)

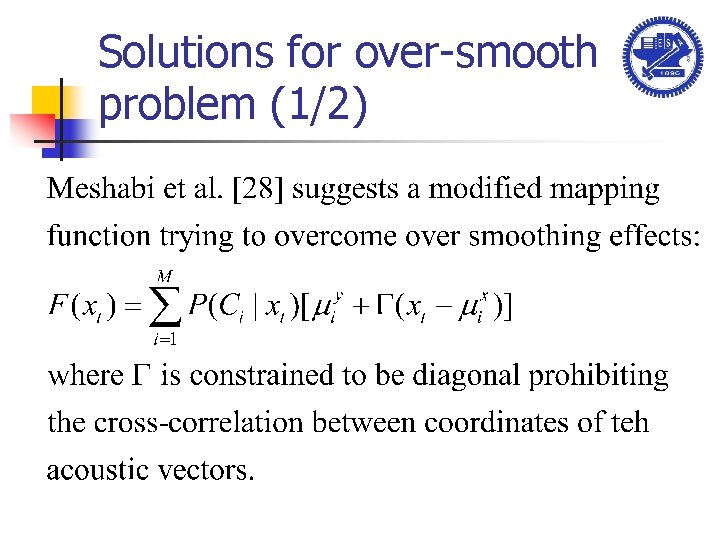

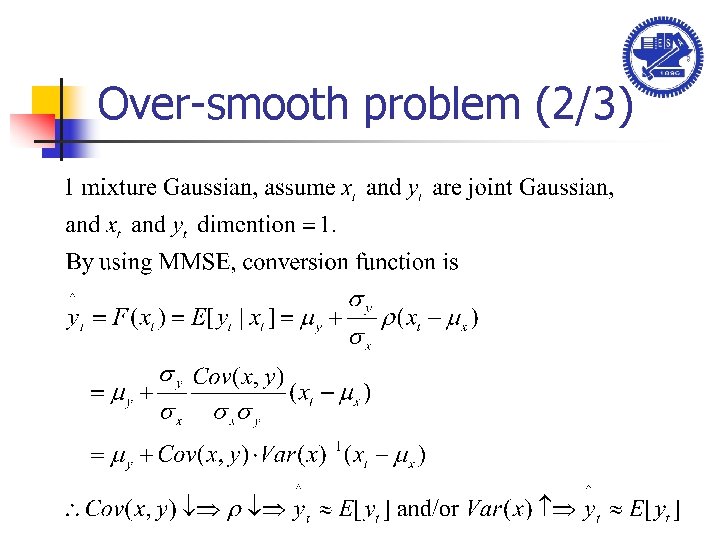

Solutions for over-smooth problem (2/2)
- Toda et al. [11, 29]:
  - Combine the joint GMM with the global variance (GV) of the converted spectra in each utterance to cope with oversmoothing.
  - Use delta (dynamic) features to alleviate spectral discontinuities.
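Two ingredients mentioned above can be illustrated briefly: delta features computed with the window Δc_t = (c_{t+1} - c_{t-1}) / 2, and the global variance (GV), i.e., the per-dimension variance of a feature trajectory over one utterance. The last function is a simplified variance-scaling postfilter that stretches a converted trajectory to a given GV; it is a swapped-in simplification, not the maximum-likelihood parameter generation with GV of [13, 29]. The delta window, the edge padding and all names are assumptions.

```python
from statistics import mean, pvariance


def deltas(traj):
    """First-order dynamic features: 0.5 * (c[t+1] - c[t-1]).

    traj is one feature dimension over time; edge frames are padded by
    repeating the first and last values.
    """
    padded = [traj[0]] + list(traj) + [traj[-1]]
    return [0.5 * (padded[t + 2] - padded[t]) for t in range(len(traj))]


def global_variance(traj):
    """GV of one feature dimension: its variance over all frames of an utterance."""
    return pvariance(traj)


def scale_to_gv(traj, target_gv):
    """Simplified GV postfilter: stretch deviations around the utterance mean
    so that the trajectory's variance equals target_gv."""
    mu = mean(traj)
    gv = pvariance(traj, mu)
    if gv == 0:
        return list(traj)  # a flat trajectory cannot be rescaled
    k = (target_gv / gv) ** 0.5
    return [mu + k * (c - mu) for c in traj]


# Hypothetical over-smoothed converted trajectory and a larger target GV; in
# practice the target GV would be estimated from the target speaker's data.
converted = [1.0, 2.0, 3.0]
enhanced = scale_to_gv(converted, 4 * global_variance(converted))
```

Over-smoothing shows up as a converted GV that is smaller than the natural one; rescaling restores the variance but, unlike the ML approach, ignores how well the result fits the conversion model.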

![CART based voice conversion (1/2): Duxans et al. [23]](https://slidetodoc.com/presentation_image_h/2952b2a773fcb27ab15baae6f5f98255/image-37.jpg)

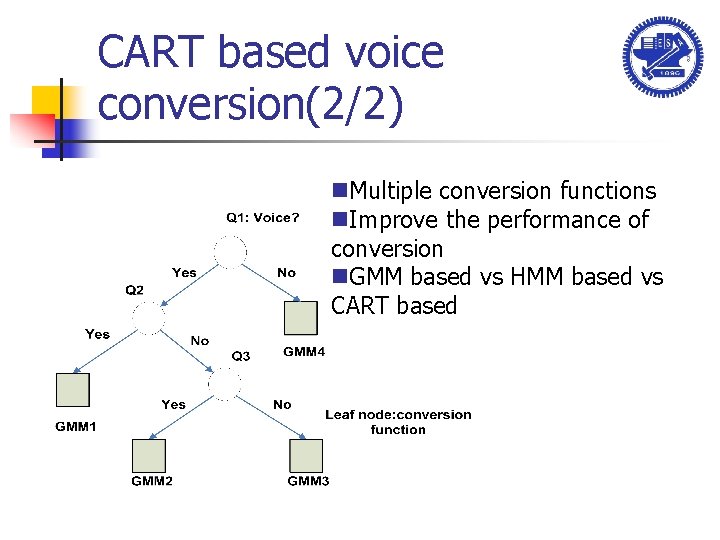

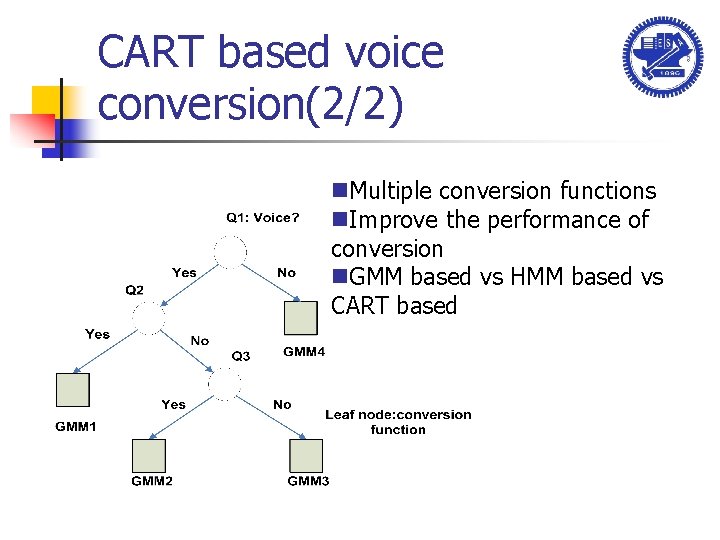

CART based voice conversion (1/2)
- Duxans et al. [23]:
  - With a GMM or HMM, only spectral information is available to identify the classes. With decision trees, phonetic information can also be used.
  - Phonetic information for each frame includes the phone, a vowel/consonant flag, point of articulation, manner, and voicing.

CART based voice conversion (2/2)
- Multiple conversion functions
- Improved conversion performance
- GMM-based vs. HMM-based vs. CART-based

![Prosody conversion](https://slidetodoc.com/presentation_image_h/2952b2a773fcb27ab15baae6f5f98255/image-39.jpg)

Prosody conversion
- Chi-Chun Hsia, Chung-Hsien Wu (2007) [21]: "A Study on Synthesis Unit Selection and Voice Conversion for Text-to-Speech Synthesis"
- Zdeněk Hanzlíček et al. (2007) [22]: "F0 transformation within the voice conversion framework"
- Guoyu Zuo et al. (2005) [19]: "Mandarin Voice Conversion Using Tone Codebook Mapping"
- E. E. Helander et al. (2007) [20]: "A Novel Method for Prosody Prediction in Voice Conversion"

![Other issues](https://slidetodoc.com/presentation_image_h/2952b2a773fcb27ab15baae6f5f98255/image-40.jpg)

Other issues
- Subjective and objective evaluation
- Cross-lingual voice conversion [25]
- Time alignment
- A novel VC framework [26]
- Residual prediction [27]
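For objective evaluation, a widely used measure in the VC literature is mel-cepstral distortion, MCD = (10 / ln 10) · sqrt(2 Σ_d (c_d - ĉ_d)^2), averaged over time-aligned frames. The slides do not specify a measure, so this is an assumed example; skipping the 0th (energy) coefficient is a common but not universal convention, and the function name is hypothetical.

```python
import math


def mel_cepstral_distortion(converted, target):
    """Frame-averaged mel-cepstral distortion (MCD) in dB.

    Both arguments are time-aligned lists of mel-cepstral frames. The 0th
    coefficient (energy) is skipped, which is a common convention.
    """
    if not converted or len(converted) != len(target):
        raise ValueError("expected non-empty, time-aligned sequences of equal length")
    scale = 10.0 / math.log(10.0) * math.sqrt(2.0)
    total = 0.0
    for c, t in zip(converted, target):
        sq = sum((a - b) ** 2 for a, b in zip(c[1:], t[1:]))
        total += scale * math.sqrt(sq)
    return total / len(converted)
```

Lower values mean the converted spectra are closer to the target; the frames must be aligned first (for example with DTW), since MCD compares frame by frame.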

Summary
- To increase the usefulness of a voice conversion system, practical aspects should be considered:
  - Flexible training framework
  - Quality and similarity
  - Objective evaluation

![References (1/5)](https://slidetodoc.com/presentation_image_h/2952b2a773fcb27ab15baae6f5f98255/image-42.jpg)

References (1/5)
- [1] Chung-Hsien Wu, Chi-Chun Hsia, Te-Hsien Liu, and Jhing-Fa Wang, "Voice Conversion Using Duration-Embedded Bi-HMMs for Expressive Speech Synthesis," IEEE Trans. Audio, Speech and Language Processing, vol. 14, no. 4, pp. 1109-1116, July 2006.
- [2] Chi-Chun Hsia, Chung-Hsien Wu, and Jian-Qi Wu, "Conversion Function Clustering and Selection Using Linguistic and Spectral Information for Emotional Voice Conversion," IEEE Trans. Computers (Special Issue on Emergent Systems, Algorithms and Architectures for Speech-based Human-Machine Interaction), vol. 56, no. 9, pp. 1225-1233, September 2007.
- [3] http://festvox.org/transform.html
- [4] K. Y. Park and H. S. Kim, "Narrowband to wideband conversion of speech using GMM based transformation," in Proc. ICASSP, Istanbul, Turkey, pp. 1847-1850, Jun. 2000.
- [5] K. Richmond, S. King, and P. Taylor, "Modelling the uncertainty in recovering articulation from acoustics," Comput. Speech Lang., vol. 17, pp. 153-172, 2003.
- [6] M. Abe, S. Nakamura, K. Shikano, and H. Kuwabara, "Voice conversion through vector quantization," in Proc. ICASSP, New York, NY, USA, pp. 655-658, Apr. 1988.
- [7] N. Iwahashi and Y. Sagisaka, "Speech spectrum transformation based on speaker interpolation," in Proc. ICASSP 94, 1994.

![References (2/5)](https://slidetodoc.com/presentation_image_h/2952b2a773fcb27ab15baae6f5f98255/image-43.jpg)

References (2/5)
- [8] H. Kuwabara and Y. Sagisaka, "Acoustic characteristics of speaker individuality: Control and conversion," Speech Communication, vol. 16, no. 2, pp. 165-173, 1995.
- [9] M. Narendranath, H. A. Murthy, S. Rajendran, and B. Yegnanarayana, "Transformation of formants for voice conversion using artificial neural networks," Speech Communication, vol. 16, no. 2, pp. 207-216, 1995.
- [10] Y. Stylianou, "Continuous probabilistic transform for voice conversion," IEEE Trans. Speech and Audio Processing, vol. 6, no. 2, pp. 131-142, Mar. 1998.
- [11] A. Kain and M. W. Macon, "Spectral Voice Conversion for Text-to-Speech Synthesis," in Proc. ICASSP, vol. 1, pp. 285-288, Seattle, WA, USA, May 1998.
- [12] T. Toda, H. Saruwatari, and K. Shikano, "Voice Conversion Algorithm based on Gaussian Mixture Model with Dynamic Frequency Warping of STRAIGHT spectrum," in Proc. ICASSP, Salt Lake City, USA, pp. 841-844, 2001.
- [13] T. Toda, A. Black, and K. Tokuda, "Spectral Conversion Based on Maximum Likelihood Estimation considering Global Variance of Converted Parameter," in Proc. ICASSP, Philadelphia, USA, pp. 9-12, 2005.
- [14] A. Mouchtaris, J. Van der Spiegel, and P. Mueller, "Non-Parallel Training for Voice Conversion Based on a Parameter Adaptation Approach," IEEE Trans. Audio, Speech and Language Processing, vol. 14, no. 3, pp. 952-963, May 2006.

![References (3/5)](https://slidetodoc.com/presentation_image_h/2952b2a773fcb27ab15baae6f5f98255/image-44.jpg)

References (3/5)
- [15] A. Mouchtaris, J. Van der Spiegel, and P. Mueller, "Non-Parallel Training for Voice Conversion by Maximum Likelihood Constrained Adaptation," in Proc. ICASSP'04, Montreal, Canada, 2004.
- [16] D. Sündermann, H. Ney, and H. Höge, "VTLN-Based Cross-Language Voice Conversion," in Proc. ASRU'03, St. Thomas, USA, 2003.
- [17] H. Ye and S. J. Young, "Voice Conversion for Unknown Speakers," in Proc. ICSLP'04, Jeju, South Korea, 2004.
- [18] M. Mashimo, T. Toda, K. Shikano, and N. Campbell, "Evaluation of Cross-Language Voice Conversion Based on GMM and STRAIGHT," in Proc. Eurospeech'01, Aalborg, Denmark, 2001.
- [19] Guoyu Zuo, Yao Chen, Xiaogang Ruan, and Wenju Liu, "Mandarin Voice Conversion Using Tone Codebook Mapping," in Proc. ICMLC 2005, pp. 965-973.
- [20] E. E. Helander and J. Nurminen, "A Novel Method for Prosody Prediction in Voice Conversion," in Proc. ICASSP 2007, vol. 4, pp. 509-512, 2007.
- [22] Zdeněk Hanzlíček and Jindřich Matoušek, "F0 transformation within the voice conversion framework," in Proc. INTERSPEECH 2007, pp. 1961-1964.

![References (4/5)](https://slidetodoc.com/presentation_image_h/2952b2a773fcb27ab15baae6f5f98255/image-45.jpg)

References (4/5)
- [21] Chi-Chun Hsia, Chung-Hsien Wu, "A Study on Synthesis Unit Selection and Voice Conversion for Text-to-Speech Synthesis," Ph.D. dissertation, Department of Computer Science and Information Engineering, NCKU, December 2007.
- [23] H. Duxans, A. Bonafonte, A. Kain, and J. van Santen, "Including Dynamic and Phonetic Information in Voice Conversion Systems," in Proc. ICSLP 2004, pp. 5-8, Jeju Island, South Korea, 2004.
- [24] T. Toda, A. W. Black, and K. Tokuda, "Voice Conversion Based on Maximum Likelihood Estimation of Spectral Parameter Trajectory," IEEE Trans. Audio, Speech and Language Processing, vol. 15, no. 8, pp. 2222-2235, Nov. 2007.
- [25] D. Sündermann, H. Ney, and H. Höge, "VTLN-Based Cross-Language Voice Conversion," in Proc. ASRU'03, Virgin Islands, USA, 2003.
- [26] T. Toda, Y. Ohtani, and K. Shikano, "One-to-many and many-to-one voice conversion based on eigenvoices," in Proc. ICASSP, Honolulu, HI, vol. 4, pp. 1249-1252, Apr. 2007.
- [27] A. Kain and M. W. Macon, "Design and evaluation of a voice conversion algorithm based on spectral envelope mapping and residual prediction," in Proc. ICASSP, Salt Lake City, UT, pp. 813-816, May 2001.

![References (5/5)](https://slidetodoc.com/presentation_image_h/2952b2a773fcb27ab15baae6f5f98255/image-46.jpg)

References (5/5)
- [28] L. Mesbahi, V. Barreaud, and O. Boeffard, "GMM-based Speech Transformation Systems under Data Reduction," in Proc. 6th ISCA Workshop on Speech Synthesis, pp. 119-124, August 22-24, 2007.
- [29] T. Toda, A. W. Black, and K. Tokuda, "Voice Conversion Based on Maximum Likelihood Estimation of Spectral Parameter Trajectory," IEEE Trans. Audio, Speech and Language Processing, vol. 15, no. 8, pp. 2222-2235, Nov. 2007.

Q&A. Thank you for listening!