Introduction to the LLVM Compilation Infrastructure

Fernando Magno Quintão Pereira (fernando@dcc.ufmg.br)

Why all this?

- Why should we study compilers?
- What is LLVM?
- Why should we study LLVM?
- What will we learn about LLVM?

Why Learn Compilers?

"We do not need that many compiler guys. But those that we need, we need them badly." François Bodin, CEO of CAPS

A lot of amazing people in computer science have worked with compilers and programming languages. Who do you know in these photos? And many of the amazing things that we have today only exist because of compilers and programming languages!

The Mission of the Compiler Writer

The goal of a compiler writer is to bridge the gap between programming languages and the hardware, hence making programmers more productive. A compiler writer builds bridges between people and machines, and this task becomes more challenging every day. Software engineers want abstractions that let them stay closer to the specification of the problems that they need to solve. Hardware engineers want efficiency: to obtain every little nanosecond of speed, they build ever more (beautifully) complex machines.

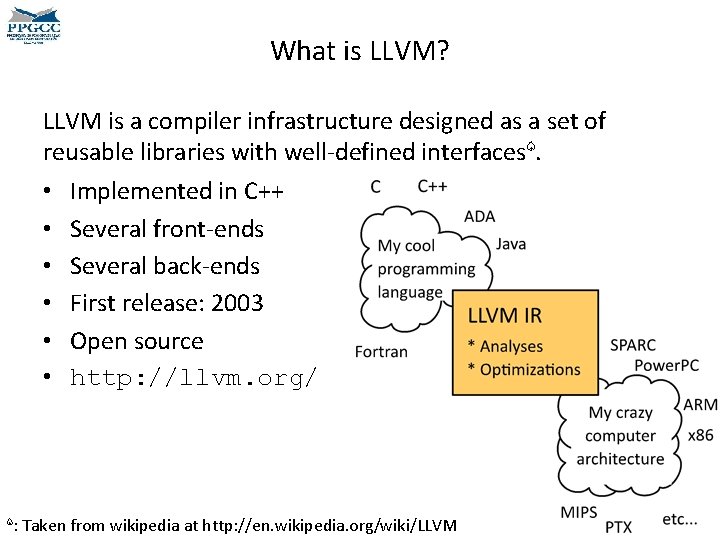

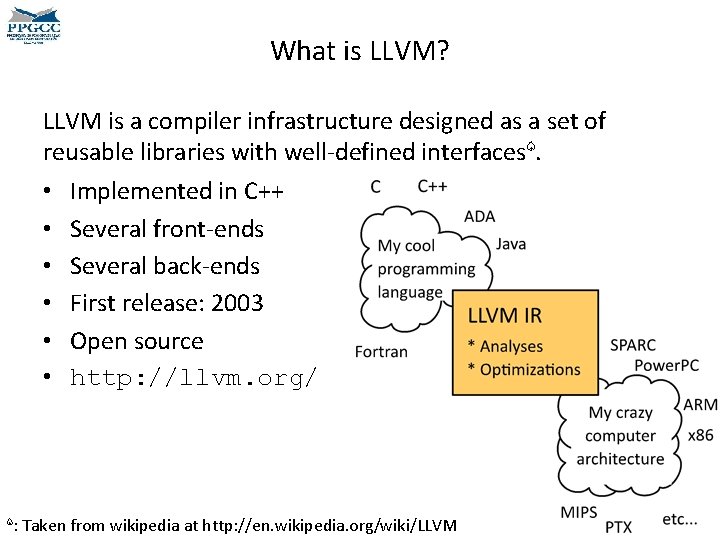

What is LLVM?

LLVM is a compiler infrastructure designed as a set of reusable libraries with well-defined interfaces♤.

- Implemented in C++
- Several front-ends
- Several back-ends
- First release: 2003
- Open source
- http://llvm.org/

♤: Taken from Wikipedia at http://en.wikipedia.org/wiki/LLVM

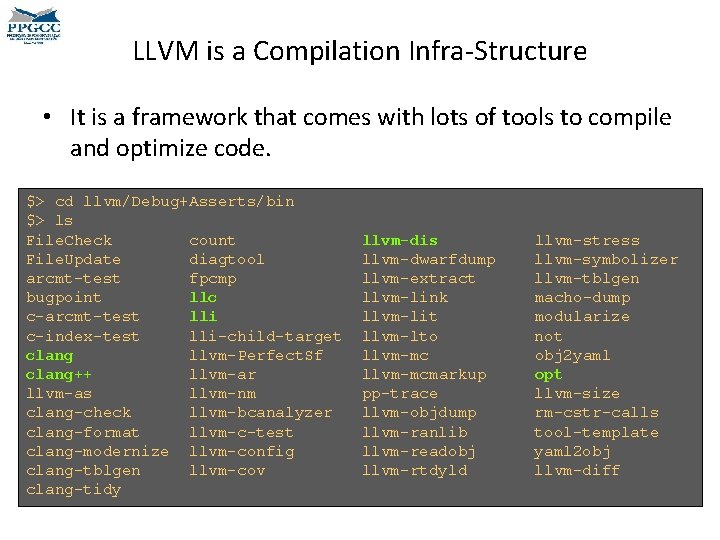

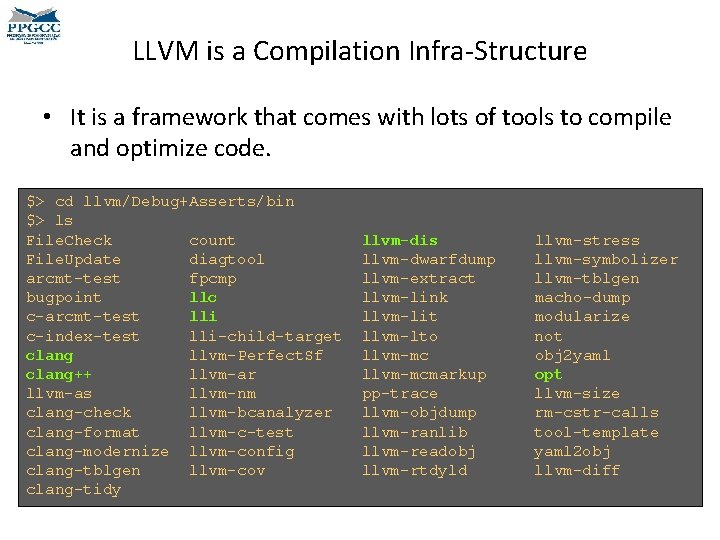

LLVM is a Compilation Infrastructure

- It is a framework that comes with lots of tools to compile and optimize code.

```
$> cd llvm/Debug+Asserts/bin
$> ls
FileCheck         count             FileUpdate        diagtool
arcmt-test        fpcmp             bugpoint          llc
c-arcmt-test      lli               c-index-test      lli-child-target
clang             llvm-PerfectSf    clang++           llvm-ar
llvm-as           llvm-nm           clang-check       llvm-bcanalyzer
clang-format      llvm-c-test       clang-modernize   llvm-config
clang-tblgen      llvm-cov          clang-tidy        llvm-dis
llvm-dwarfdump    llvm-extract      llvm-link         llvm-lit
llvm-lto          llvm-mcmarkup     pp-trace          llvm-objdump
llvm-ranlib       llvm-readobj      llvm-rtdyld       llvm-stress
llvm-symbolizer   llvm-tblgen       macho-dump        modularize
not               obj2yaml          opt               llvm-size
rm-cstr-calls     tool-template     yaml2obj          llvm-diff
```

LLVM can also compile C/C++ programs:

```
$> echo "int main() {return 42;}" > test.c
$> clang test.c
$> ./a.out
$> echo $?
42
```

Which compiler do you think generates faster code: LLVM or gcc?

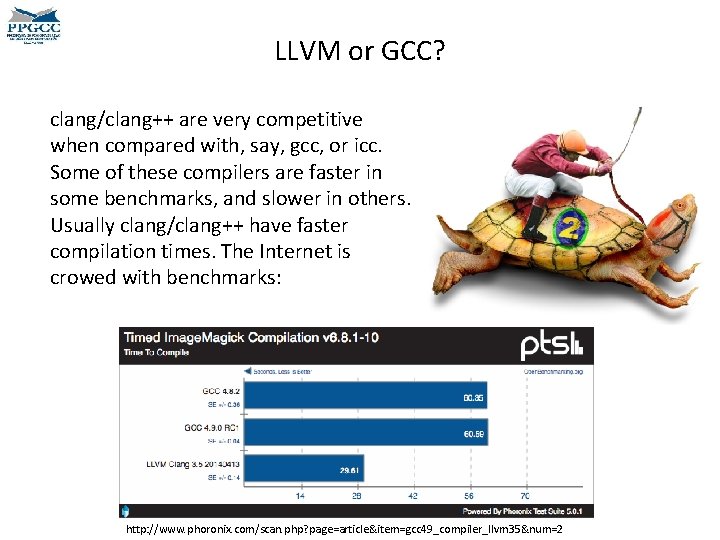

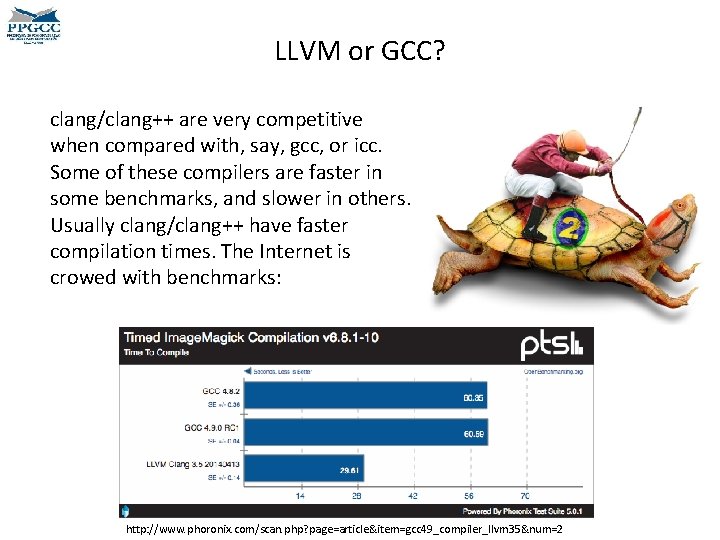

LLVM or GCC?

clang/clang++ are very competitive when compared with, say, gcc or icc. Some of these compilers are faster in some benchmarks, and slower in others. Usually clang/clang++ have faster compilation times. The Internet is crowded with benchmarks: http://www.phoronix.com/scan.php?page=article&item=gcc49_compiler_llvm35&num=2

Why Learn LLVM?

- Intensively used in academia♧.
- Used by many companies:
  - LLVM is maintained by Apple.
  - ARM, NVIDIA, Mozilla, Cray, etc.
- Clean and modular interfaces.
- Important awards:
  - Most cited CGO paper; ACM Software System Award 2012.

♧: 2,831 citations as of February 13th, 2017.

Many Languages Use LLVM

Do you know these programming languages?

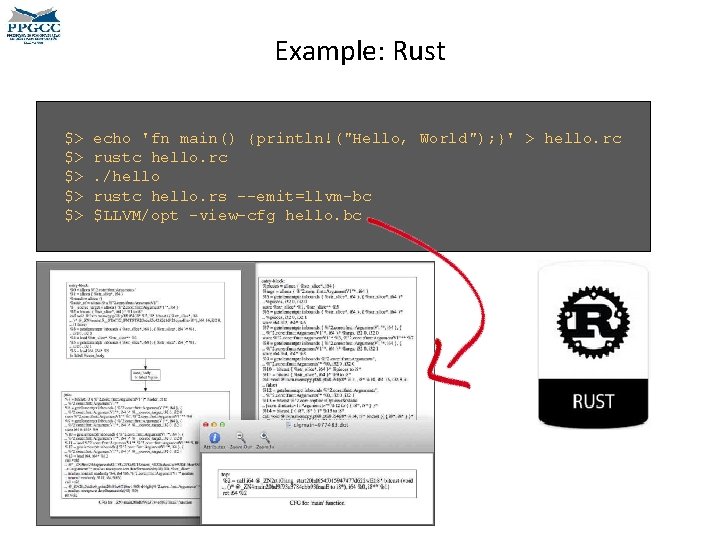

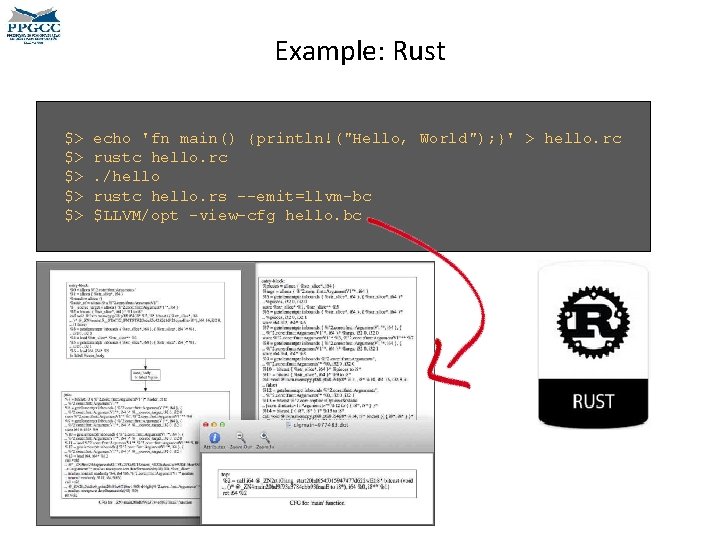

Example: Rust

```
$> echo 'fn main() {println!("Hello, World");}' > hello.rs
$> rustc hello.rs
$> ./hello
$> rustc hello.rs --emit=llvm-bc
$> $LLVM/opt -view-cfg hello.bc
```

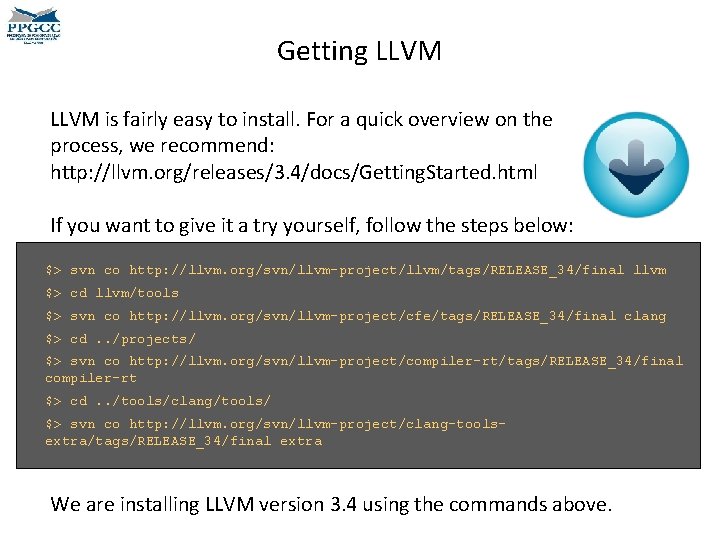

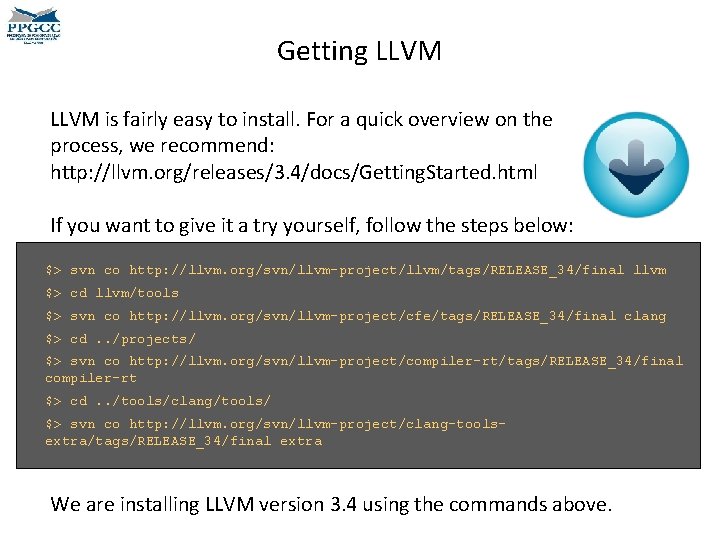

Getting LLVM

LLVM is fairly easy to install. For a quick overview of the process, we recommend http://llvm.org/releases/3.4/docs/GettingStarted.html. If you want to give it a try yourself, follow the steps below:

```
$> svn co http://llvm.org/svn/llvm-project/llvm/tags/RELEASE_34/final llvm
$> cd llvm/tools
$> svn co http://llvm.org/svn/llvm-project/cfe/tags/RELEASE_34/final clang
$> cd ../projects/
$> svn co http://llvm.org/svn/llvm-project/compiler-rt/tags/RELEASE_34/final compiler-rt
$> cd ../tools/clang/tools/
$> svn co http://llvm.org/svn/llvm-project/clang-tools-extra/tags/RELEASE_34/final extra
```

We are installing LLVM version 3.4 using the commands above. (The Subversion repository has since been retired; current LLVM sources live at https://github.com/llvm/llvm-project.)

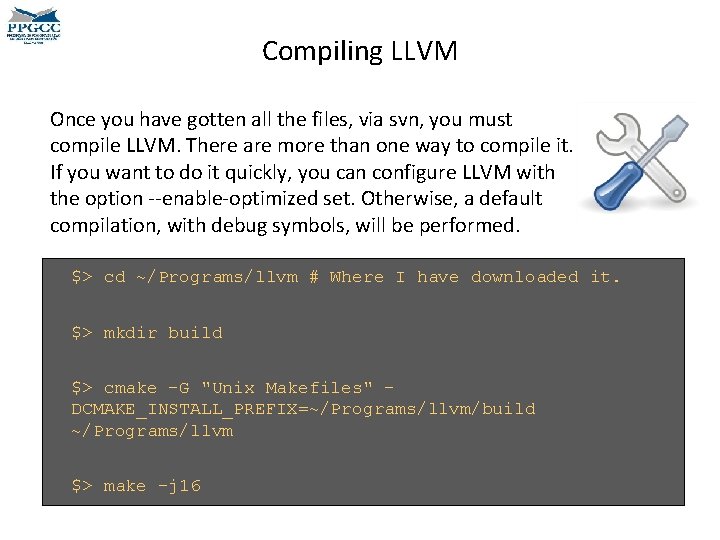

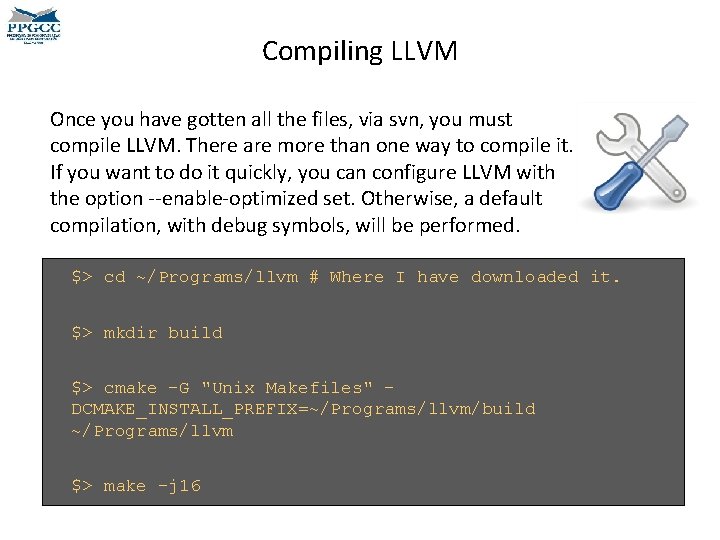

Compiling LLVM

Once you have gotten all the files via svn, you must compile LLVM. There is more than one way to compile it. If you want to do it quickly, build LLVM with optimizations enabled: with the old configure script this was the --enable-optimized option, and with CMake it is -DCMAKE_BUILD_TYPE=Release. Otherwise, a default compilation, with debug symbols, will be performed. LLVM does not allow in-source builds, so CMake must run inside the build directory:

```
$> cd ~/Programs/llvm   # Where I have downloaded it.
$> mkdir build
$> cd build
$> cmake -G "Unix Makefiles" -DCMAKE_INSTALL_PREFIX=$HOME/Programs/llvm/build ~/Programs/llvm
$> make -j 16
```

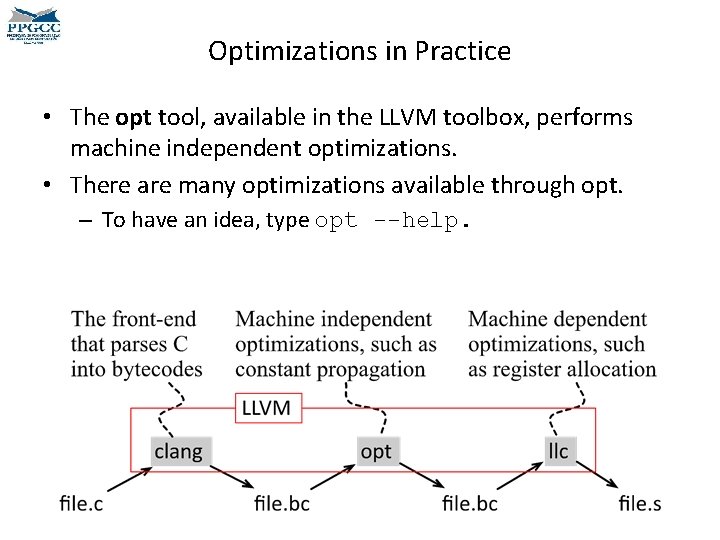

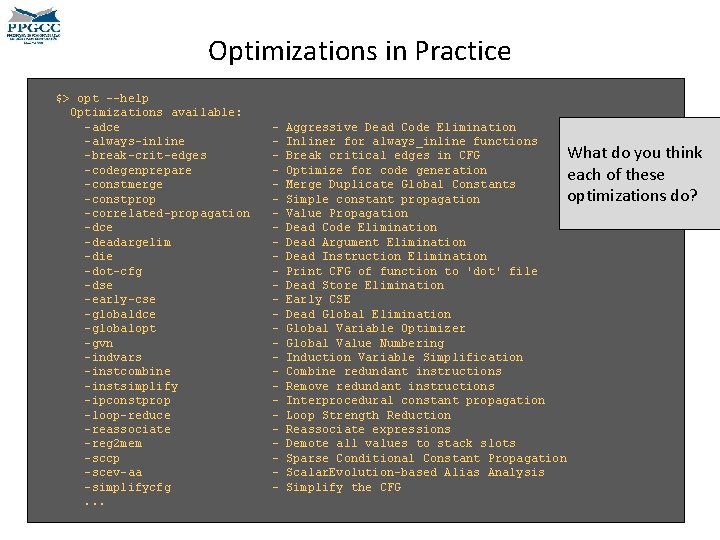

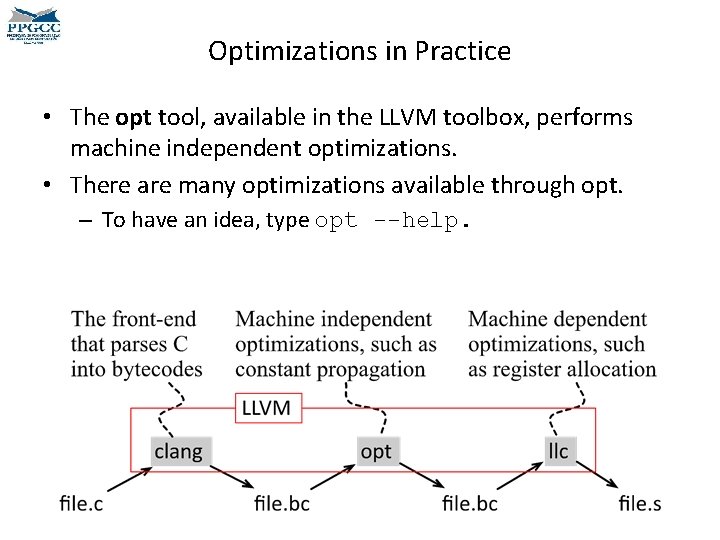

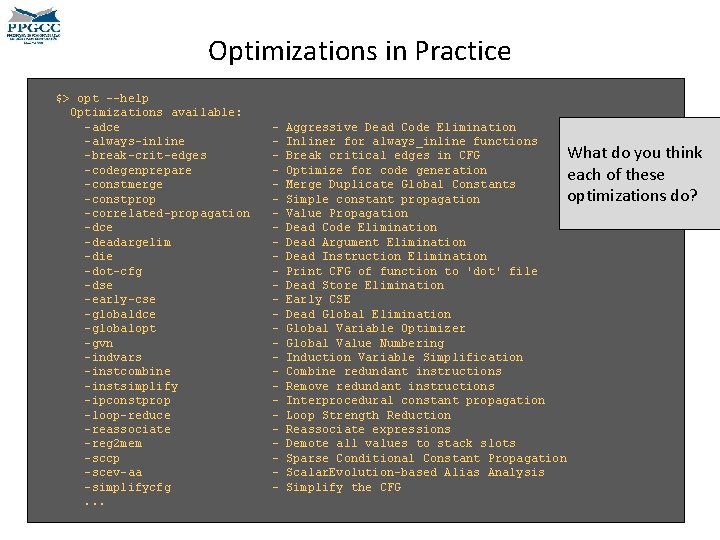

Optimizations in Practice

- The opt tool, available in the LLVM toolbox, performs machine-independent optimizations.
- There are many optimizations available through opt.
  - To get an idea, type opt --help.

```
$> opt --help
...
  Optimizations available:
    -adce                    - Aggressive Dead Code Elimination
    -always-inline           - Inliner for always_inline functions
    -break-crit-edges        - Break critical edges in CFG
    -codegenprepare          - Optimize for code generation
    -constmerge              - Merge Duplicate Global Constants
    -constprop               - Simple constant propagation
    -correlated-propagation  - Value Propagation
    -dce                     - Dead Code Elimination
    -deadargelim             - Dead Argument Elimination
    -die                     - Dead Instruction Elimination
    -dot-cfg                 - Print CFG of function to 'dot' file
    -dse                     - Dead Store Elimination
    -early-cse               - Early CSE
    -globaldce               - Dead Global Elimination
    -globalopt               - Global Variable Optimizer
    -gvn                     - Global Value Numbering
    -indvars                 - Induction Variable Simplification
    -instcombine             - Combine redundant instructions
    -instsimplify            - Remove redundant instructions
    -ipconstprop             - Interprocedural constant propagation
    -loop-reduce             - Loop Strength Reduction
    -reassociate             - Reassociate expressions
    -reg2mem                 - Demote all values to stack slots
    -sccp                    - Sparse Conditional Constant Propagation
    -scev-aa                 - ScalarEvolution-based Alias Analysis
    -simplifycfg             - Simplify the CFG
    ...
```

What do you think each of these optimizations does?

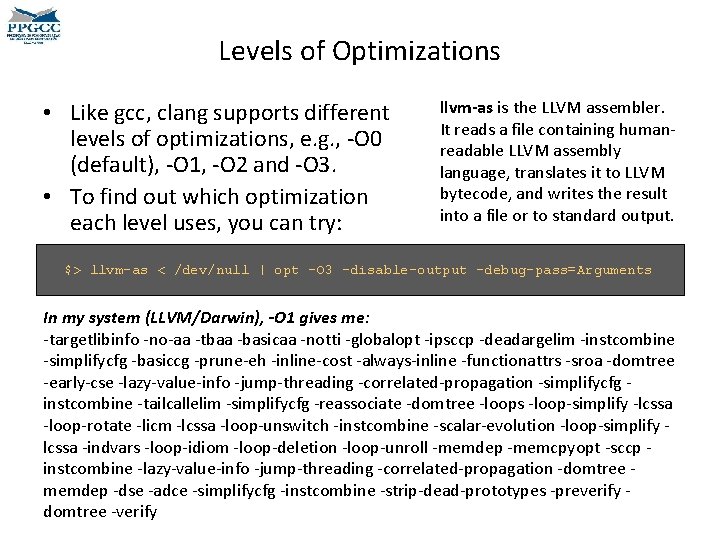

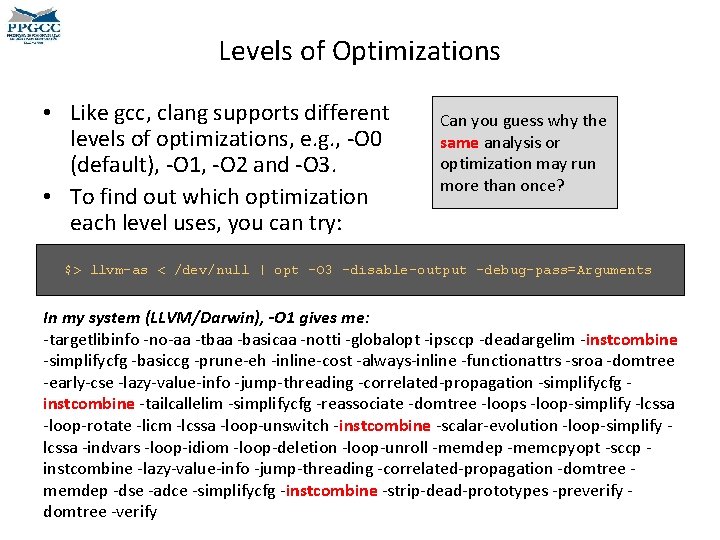

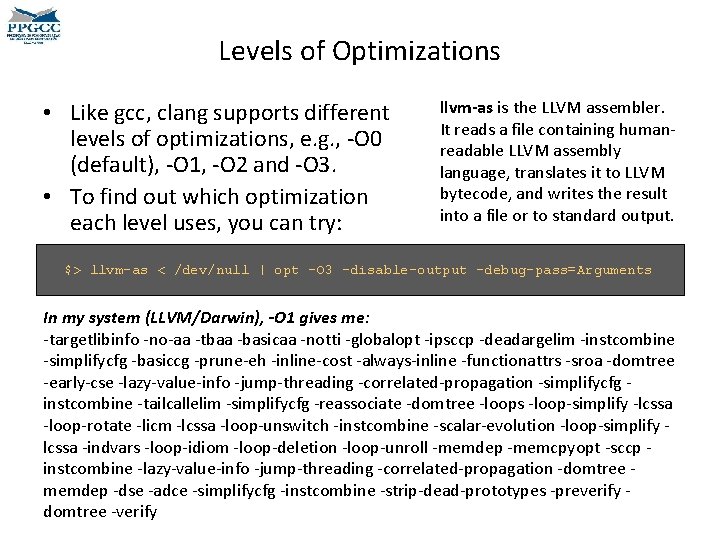

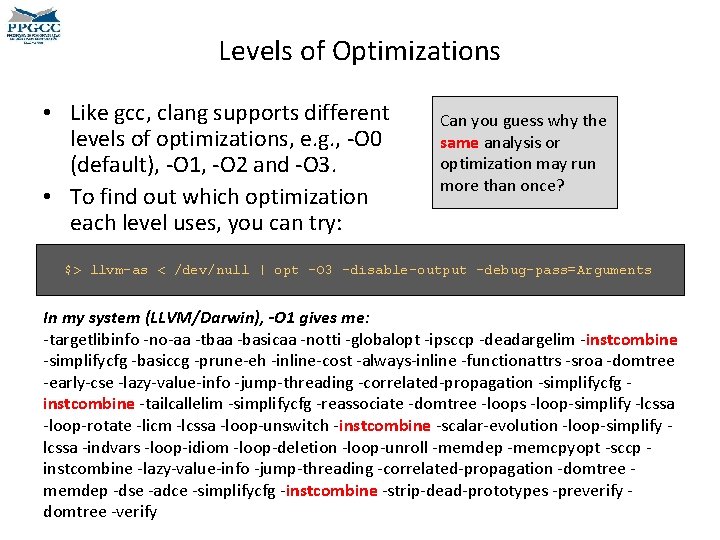

Levels of Optimizations

- Like gcc, clang supports different levels of optimizations, e.g., -O0 (default), -O1, -O2 and -O3.
- To find out which optimizations each level uses, you can try:

```
$> llvm-as < /dev/null | opt -O3 -disable-output -debug-pass=Arguments
```

llvm-as is the LLVM assembler. It reads a file containing human-readable LLVM assembly language, translates it to LLVM bitcode, and writes the result into a file or to standard output.

On my system (LLVM/Darwin), running the command with -O1 instead of -O3 gives me:

```
-targetlibinfo -no-aa -tbaa -basicaa -notti -globalopt -ipsccp -deadargelim
-instcombine -simplifycfg -basiccg -prune-eh -inline-cost -always-inline
-functionattrs -sroa -domtree -early-cse -lazy-value-info -jump-threading
-correlated-propagation -simplifycfg -instcombine -tailcallelim -simplifycfg
-reassociate -domtree -loops -loop-simplify -lcssa -loop-rotate -licm -lcssa
-loop-unswitch -instcombine -scalar-evolution -loop-simplify -lcssa -indvars
-loop-idiom -loop-deletion -loop-unroll -memdep -memcpyopt -sccp -instcombine
-lazy-value-info -jump-threading -correlated-propagation -domtree -memdep -dse
-adce -simplifycfg -instcombine -strip-dead-prototypes -preverify -domtree -verify
```

Can you guess why the same analysis or optimization may run more than once?

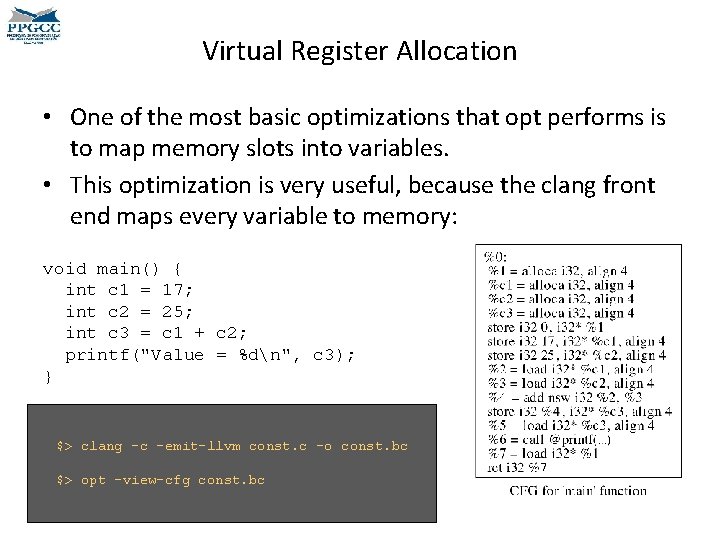

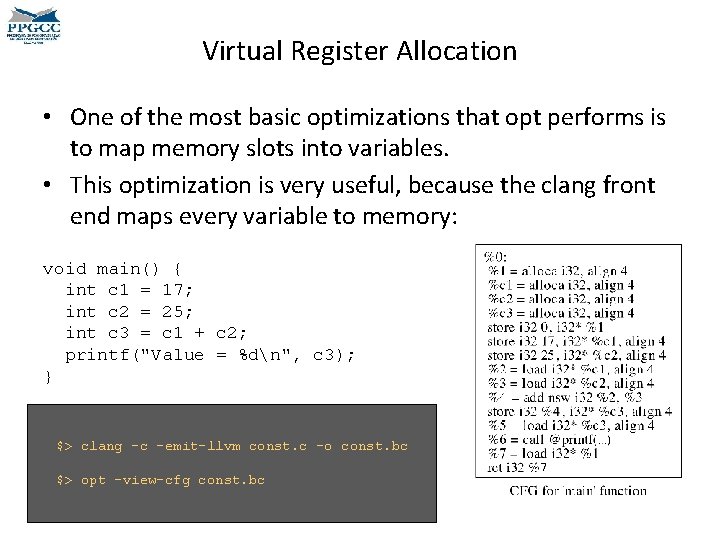

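Virtual Register Allocation

- One of the most basic optimizations that opt performs is to map memory slots into variables.
- This optimization is very useful, because the clang front end maps every variable to memory:

```c
#include <stdio.h>

int main() {
  int c1 = 17;
  int c2 = 25;
  int c3 = c1 + c2;
  printf("Value = %d\n", c3);
  return 0;
}
```

```
$> clang -c -emit-llvm const.c -o const.bc
$> opt -view-cfg const.bc
```

Recent versions of clang mark every function compiled at -O0 with the optnone attribute, and opt then leaves those functions untouched; if the passes in these examples seem to have no effect, add -Xclang -disable-O0-optnone to the clang command.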

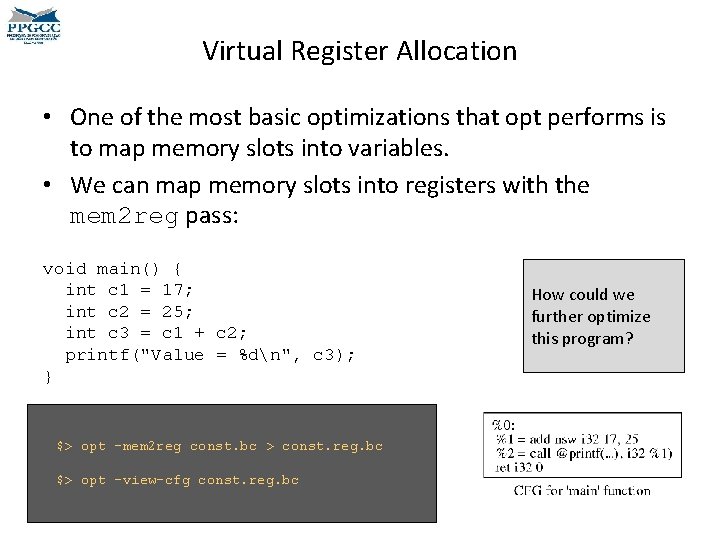

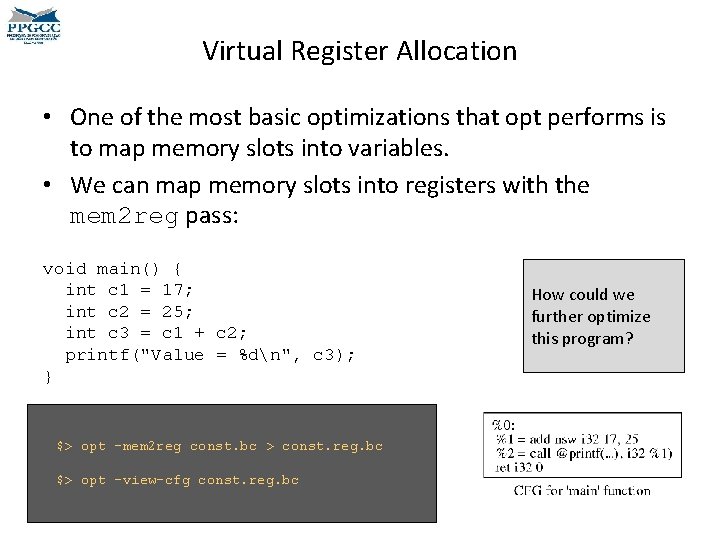

We can map memory slots into registers with the mem2reg pass:

```
$> opt -mem2reg const.bc > const.reg.bc
$> opt -view-cfg const.reg.bc
```

How could we further optimize this program?
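To make the idea behind mem2reg concrete, here is a minimal C++ sketch over a hypothetical toy IR (the Inst struct and promoteSlots are illustrative names, not LLVM APIs). It only handles straight-line code in one basic block: a slot is promoted when its address is used solely by loads and stores, and each load is replaced by the value most recently stored. LLVM's actual pass also works across blocks, which requires inserting phi-functions; this sketch does not attempt that.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <set>
#include <string>
#include <vector>

// Hypothetical toy IR (not LLVM's classes). Values are strings such as
// "%c1"; integer constants are written as literals such as "17".
//   "%s = alloca"          -> {"alloca", "%s", {}}
//   "store <val>, <slot>"  -> {"store", "", {val, slot}}
//   "%v = load <slot>"     -> {"load", "%v", {slot}}
struct Inst {
    std::string op;
    std::string dst;                 // defined value, "" if none
    std::vector<std::string> args;   // operands
};

// Single-block sketch of mem2reg: promote allocas whose address is used
// only by loads and stores, forwarding each stored value to later loads.
std::vector<Inst> promoteSlots(const std::vector<Inst>& block) {
    // 1. Find promotable slots: any use other than "load <slot>" or the
    //    address operand of a store means the address escapes.
    std::set<std::string> promotable;
    for (const Inst& i : block)
        if (i.op == "alloca") promotable.insert(i.dst);
    for (const Inst& i : block) {
        if (i.op == "load") continue;
        for (std::size_t k = 0; k < i.args.size(); ++k)
            if (!(i.op == "store" && k == 1)) promotable.erase(i.args[k]);
    }

    // 2. Rewrite the block, dropping the promoted memory operations.
    std::map<std::string, std::string> current;   // slot -> last stored value
    std::map<std::string, std::string> replaced;  // loaded value -> replacement
    auto resolve = [&](const std::string& v) {
        auto it = replaced.find(v);
        return it == replaced.end() ? v : it->second;
    };
    std::vector<Inst> out;
    for (const Inst& i : block) {
        if (i.op == "alloca" && promotable.count(i.dst)) {
            current[i.dst] = "undef";              // nothing stored yet
        } else if (i.op == "store" && promotable.count(i.args[1])) {
            current[i.args[1]] = resolve(i.args[0]);
        } else if (i.op == "load" && promotable.count(i.args[0])) {
            replaced[i.dst] = current[i.args[0]];
        } else {
            Inst copy = i;                         // keep, with renamed operands
            for (std::string& a : copy.args) a = resolve(a);
            out.push_back(copy);
        }
    }
    return out;
}
```

Fed a toy version of const.c (three allocas, stores of 17 and 25, loads, an add and a call), the sketch keeps only the add, now reading 17 and 25 directly, and the call. A slot whose address is passed to a call, like &T in a later example, stays in memory.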

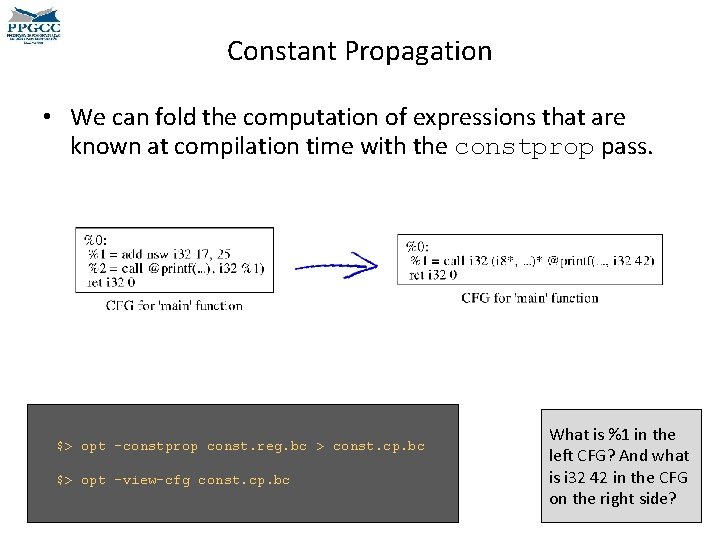

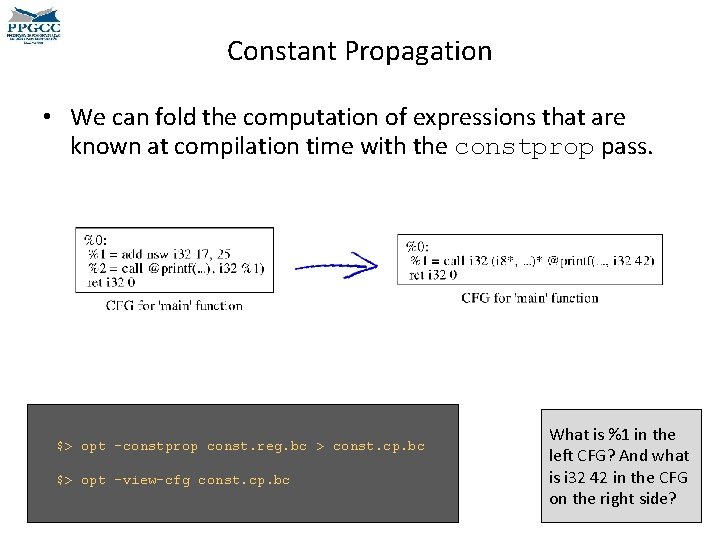

Constant Propagation

- We can fold the computation of expressions that are known at compilation time with the constprop pass.

```
$> opt -constprop const.reg.bc > const.cp.bc
$> opt -view-cfg const.cp.bc
```

What is %1 in the left CFG? And what is i32 42 in the CFG on the right side?
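Constant propagation can be sketched in the same toy style. The snippet below is an illustration under assumptions (the BinOp struct, constantValue and foldConstants are hypothetical names, and overflow semantics are ignored): it walks straight-line code in order and folds every instruction whose operands are literals or names that were already folded.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// Hypothetical toy instruction "dst = op lhs, rhs"; operands are either
// value names such as "%x" or decimal literals such as "17".
struct BinOp { std::string dst, op, lhs, rhs; };

using Known = std::map<std::string, long long>;  // value name -> constant

// True (and sets out) if the operand is a literal or an already-folded name.
bool constantValue(const std::string& s, const Known& known, long long& out) {
    auto it = known.find(s);
    if (it != known.end()) { out = it->second; return true; }
    std::size_t first = (!s.empty() && s[0] == '-') ? 1 : 0;
    if (first == s.size()) return false;             // "" or a lone "-"
    for (std::size_t k = first; k < s.size(); ++k)
        if (s[k] < '0' || s[k] > '9') return false;  // a name like "%argc"
    out = std::stoll(s);
    return true;
}

// Folds, in program order, every instruction whose operands are constants.
// (LLVM's wrap/nsw overflow rules are not modeled in this sketch.)
Known foldConstants(const std::vector<BinOp>& code) {
    Known known;
    for (const BinOp& i : code) {
        long long a, b;
        if (!constantValue(i.lhs, known, a) || !constantValue(i.rhs, known, b))
            continue;                                // not known at compile time
        if (i.op == "add") known[i.dst] = a + b;
        else if (i.op == "sub") known[i.dst] = a - b;
        else if (i.op == "mul") known[i.dst] = a * b;
    }
    return known;
}
```

For %add = add 17, 25 the sketch records %add = 42, which corresponds to the i32 42 in the folded CFG; an instruction that reads %argc stays unfolded because its value is only known at run time.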

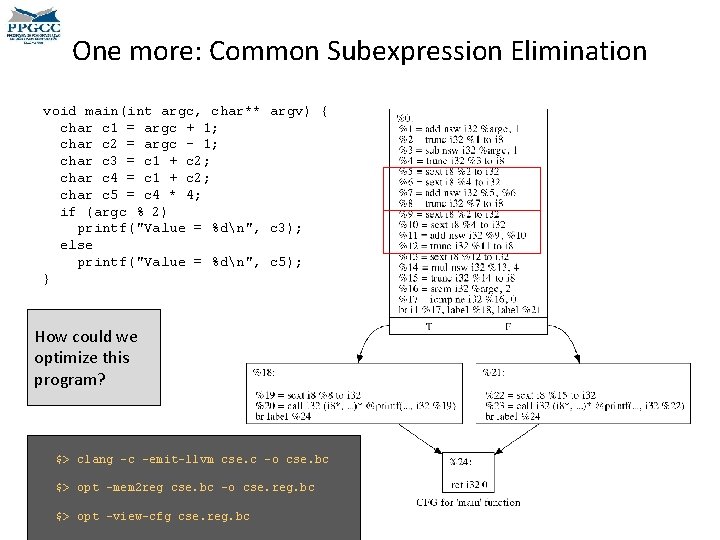

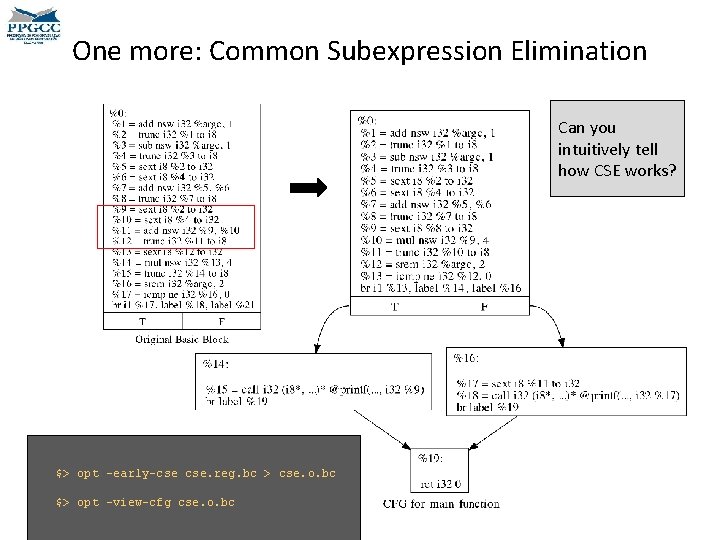

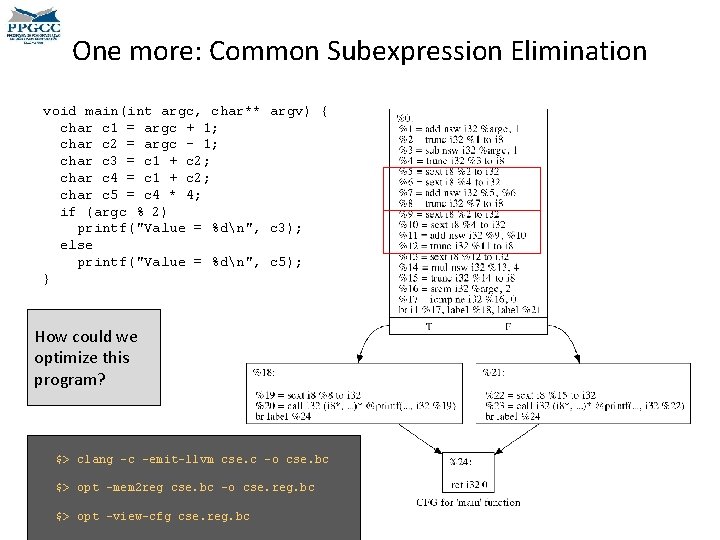

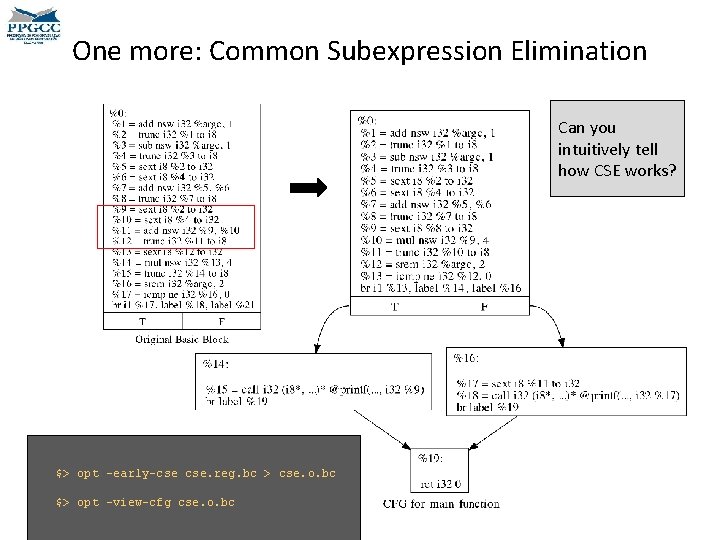

One more: Common Subexpression Elimination

```c
#include <stdio.h>

int main(int argc, char** argv) {
  char c1 = argc + 1;
  char c2 = argc - 1;
  char c3 = c1 + c2;
  char c4 = c1 + c2;
  char c5 = c4 * 4;
  if (argc % 2)
    printf("Value = %d\n", c3);
  else
    printf("Value = %d\n", c5);
  return 0;
}
```

How could we optimize this program?

```
$> clang -c -emit-llvm cse.c -o cse.bc
$> opt -mem2reg cse.bc -o cse.reg.bc
$> opt -view-cfg cse.reg.bc
```

Can you intuitively tell how CSE works?

```
$> opt -early-cse cse.reg.bc > cse.o.bc
$> opt -view-cfg cse.o.bc
```
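One way to build intuition for CSE is local value numbering, sketched below under assumptions: a single basic block, side-effect-free instructions, and a hypothetical Inst struct. It illustrates the idea and is not how -early-cse is implemented. Each expression is keyed by its operator and its (already renamed) operands, with add and mul operands put in a canonical order because they are commutative; when a key shows up again, the later name is mapped to the earlier one.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <tuple>
#include <utility>
#include <vector>

// Hypothetical toy instruction "dst = op lhs, rhs" with no side effects.
struct Inst { std::string dst, op, lhs, rhs; };

// Local CSE by value numbering over one basic block: an expression already
// computed with the same operator and operands is reused, not recomputed.
std::vector<Inst> localCSE(const std::vector<Inst>& block) {
    std::map<std::tuple<std::string, std::string, std::string>, std::string> available;
    std::map<std::string, std::string> replacedBy;  // eliminated name -> survivor
    auto resolve = [&](const std::string& v) {
        auto it = replacedBy.find(v);
        return it == replacedBy.end() ? v : it->second;
    };
    std::vector<Inst> out;
    for (const Inst& i : block) {
        std::string a = resolve(i.lhs), b = resolve(i.rhs);
        if ((i.op == "add" || i.op == "mul") && b < a)
            std::swap(a, b);                          // canonical order, commutative ops
        auto key = std::make_tuple(i.op, a, b);
        auto it = available.find(key);
        if (it != available.end()) {
            replacedBy[i.dst] = it->second;           // redundant: reuse earlier value
        } else {
            available[key] = i.dst;
            out.push_back(Inst{i.dst, i.op, a, b});
        }
    }
    return out;
}
```

On a toy version of cse.c, c4 is recognized as a recomputation of c3 (even when written with its operands swapped), so the multiplication that defines c5 ends up reading c3.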

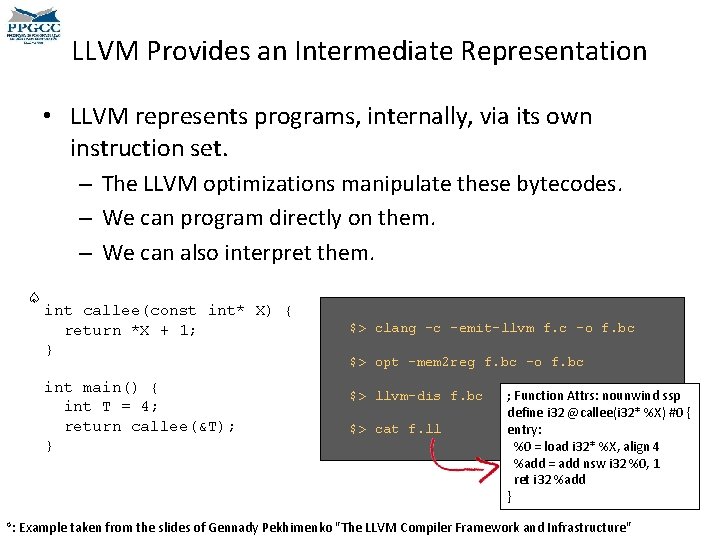

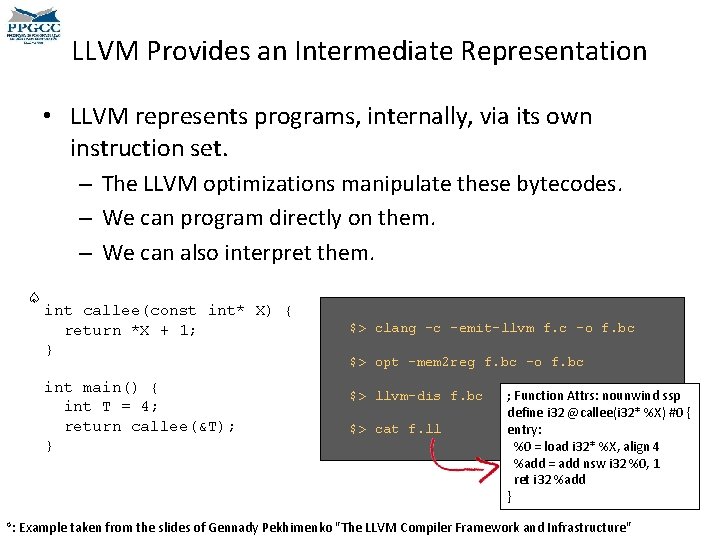

LLVM Provides an Intermediate Representation

- LLVM represents programs, internally, via its own instruction set.
  - The LLVM optimizations manipulate these bytecodes.
  - We can program directly on them.
  - We can also interpret them.

For example♤:

```c
int callee(const int* X) {
  return *X + 1;
}

int main() {
  int T = 4;
  return callee(&T);
}
```

```
$> clang -c -emit-llvm f.c -o f.bc
$> opt -mem2reg f.bc -o f.bc
$> llvm-dis f.bc
$> cat f.ll
; Function Attrs: nounwind ssp
define i32 @callee(i32* %X) #0 {
entry:
  %0 = load i32* %X, align 4
  %add = add nsw i32 %0, 1
  ret i32 %add
}
```

♤: Example taken from the slides of Gennady Pekhimenko, "The LLVM Compiler Framework and Infrastructure".

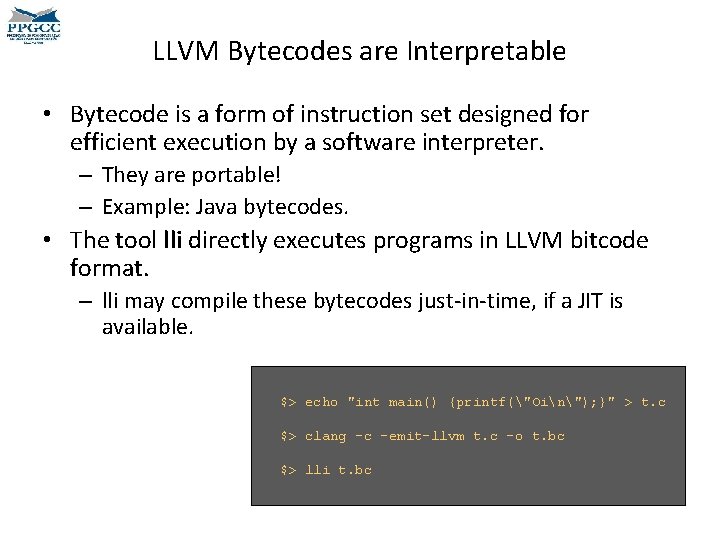

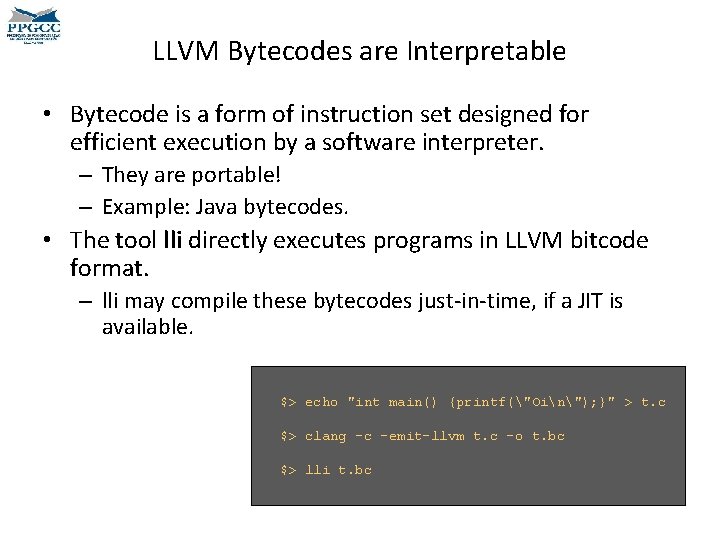

LLVM Bytecodes are Interpretable

- Bytecode is a form of instruction set designed for efficient execution by a software interpreter.
  - They are portable!
  - Example: Java bytecodes.
- The tool lli directly executes programs in LLVM bitcode format.
  - lli may compile these bytecodes just-in-time, if a JIT is available.

```
$> cat > t.c <<'EOF'
#include <stdio.h>
int main() { printf("Oi\n"); return 0; }
EOF
$> clang -c -emit-llvm t.c -o t.bc
$> lli t.bc
Oi
```
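To illustrate what "designed for efficient execution by a software interpreter" means, here is a toy stack-based bytecode interpreter. It is a hypothetical example (the Op and Instr types and run are invented for illustration); LLVM bitcode is register-based and lli works very differently, but this fetch, decode and execute loop is the core of any bytecode interpreter.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Hypothetical toy bytecode: a stack machine with four opcodes.
enum class Op : std::uint8_t { Push, Add, Mul, Ret };

struct Instr {
    Op op;
    std::int64_t imm;  // operand of Push; ignored by the other opcodes
};

// The classic interpreter loop: fetch an instruction, decode its opcode,
// execute it, and move on to the next one.
std::int64_t run(const std::vector<Instr>& code) {
    std::vector<std::int64_t> stack;
    for (std::size_t pc = 0; pc < code.size(); ++pc) {
        const Instr& in = code[pc];
        switch (in.op) {
        case Op::Push:
            stack.push_back(in.imm);
            break;
        case Op::Add:
        case Op::Mul: {
            if (stack.size() < 2) throw std::runtime_error("stack underflow");
            std::int64_t rhs = stack.back(); stack.pop_back();
            std::int64_t lhs = stack.back(); stack.pop_back();
            stack.push_back(in.op == Op::Add ? lhs + rhs : lhs * rhs);
            break;
        }
        case Op::Ret:
            if (stack.empty()) throw std::runtime_error("nothing to return");
            return stack.back();
        }
    }
    throw std::runtime_error("program ended without Ret");
}
```

For instance, the program Push 4, Push 1, Add, Ret computes 5, the same value that callee(&T) returns in the C example above.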

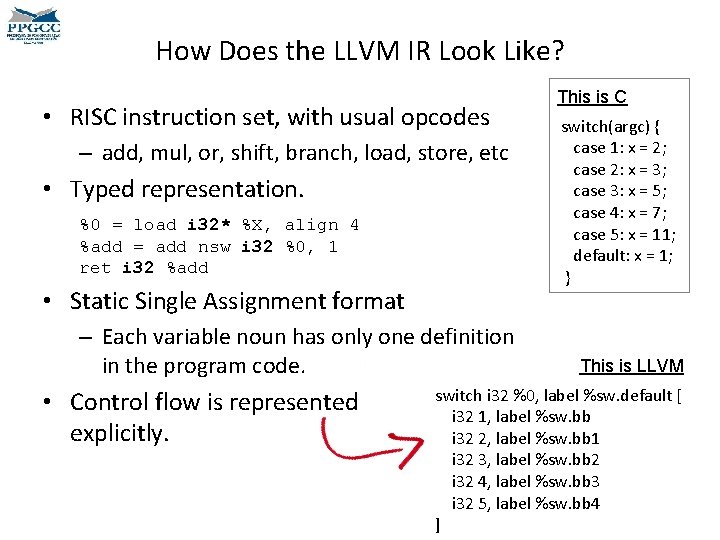

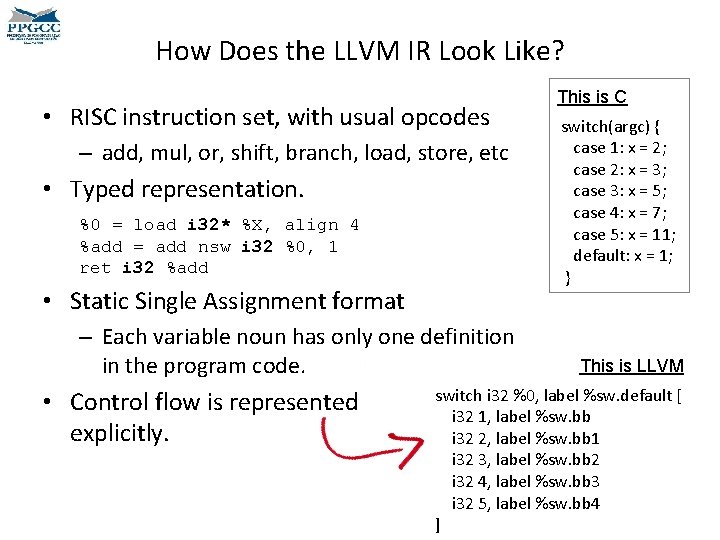

What Does the LLVM IR Look Like?

- RISC instruction set, with the usual opcodes:
  - add, mul, or, shift, branch, load, store, etc.
- Typed representation:

```
%0 = load i32* %X, align 4
%add = add nsw i32 %0, 1
ret i32 %add
```

- Static Single Assignment format:
  - Each variable name has only one definition in the program code.
- Control flow is represented explicitly.

This is C:

```c
switch(argc) {
  case 1: x = 2; break;
  case 2: x = 3; break;
  case 3: x = 5; break;
  case 4: x = 7; break;
  case 5: x = 11; break;
  default: x = 1;
}
```

This is LLVM:

```
switch i32 %0, label %sw.default [
  i32 1, label %sw.bb
  i32 2, label %sw.bb1
  i32 3, label %sw.bb2
  i32 4, label %sw.bb3
  i32 5, label %sw.bb4
]
```

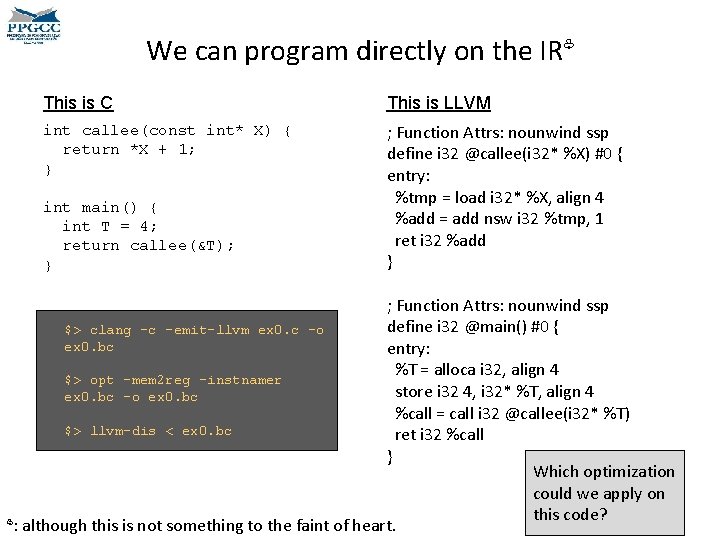

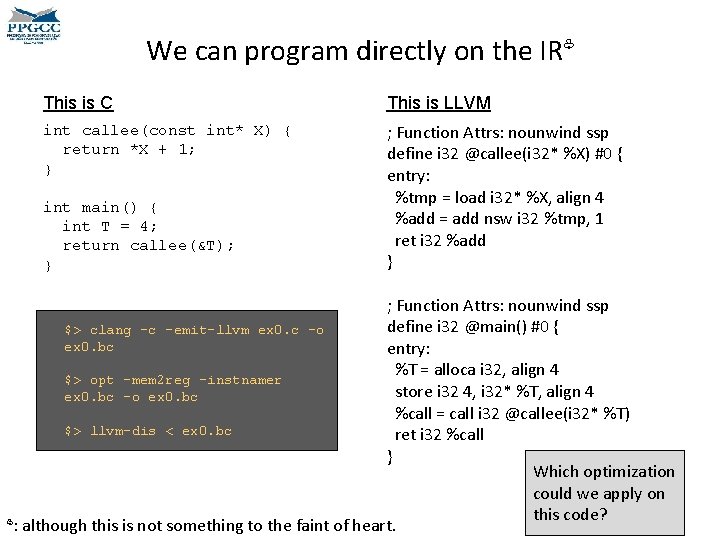

We can program directly on the IR♧

This is C:

```c
int callee(const int* X) {
  return *X + 1;
}

int main() {
  int T = 4;
  return callee(&T);
}
```

This is LLVM:

```
$> clang -c -emit-llvm ex0.c -o ex0.bc
$> opt -mem2reg -instnamer ex0.bc -o ex0.bc
$> llvm-dis < ex0.bc
; Function Attrs: nounwind ssp
define i32 @callee(i32* %X) #0 {
entry:
  %tmp = load i32* %X, align 4
  %add = add nsw i32 %tmp, 1
  ret i32 %add
}

; Function Attrs: nounwind ssp
define i32 @main() #0 {
entry:
  %T = alloca i32, align 4
  store i32 4, i32* %T, align 4
  %call = call i32 @callee(i32* %T)
  ret i32 %call
}
```

Which optimization could we apply on this code?

♧: although this is not something for the faint of heart.

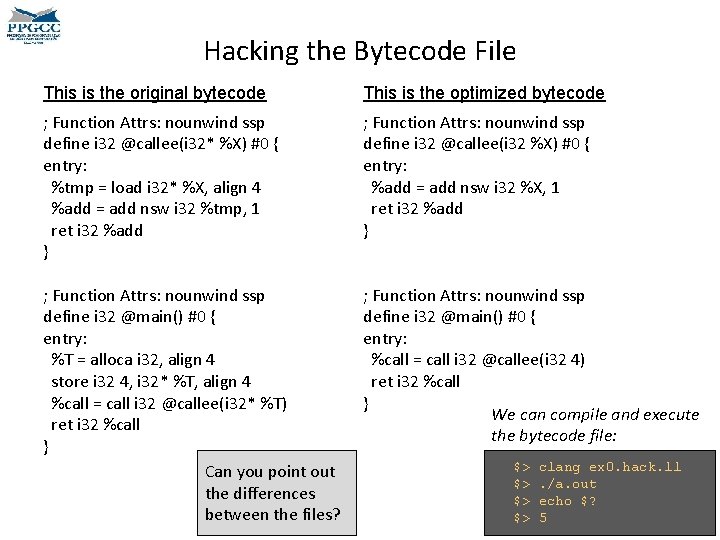

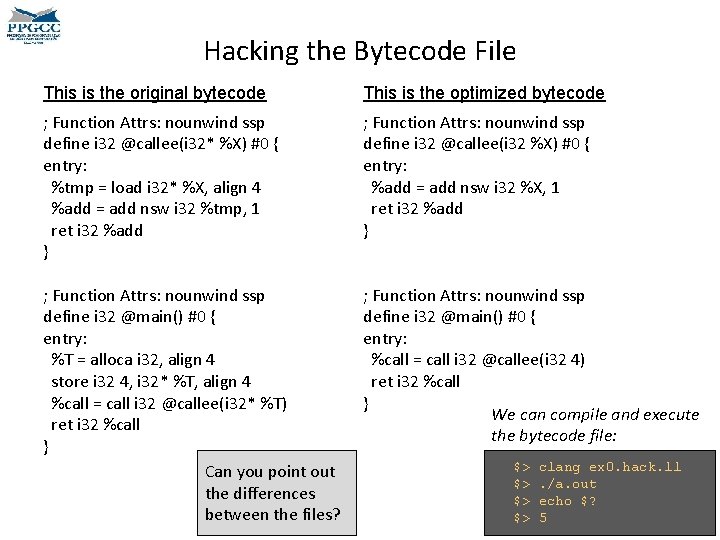

Hacking the Bytecode File

This is the original bytecode:

```
; Function Attrs: nounwind ssp
define i32 @callee(i32* %X) #0 {
entry:
  %tmp = load i32* %X, align 4
  %add = add nsw i32 %tmp, 1
  ret i32 %add
}

; Function Attrs: nounwind ssp
define i32 @main() #0 {
entry:
  %T = alloca i32, align 4
  store i32 4, i32* %T, align 4
  %call = call i32 @callee(i32* %T)
  ret i32 %call
}
```

This is the optimized bytecode:

```
; Function Attrs: nounwind ssp
define i32 @callee(i32 %X) #0 {
entry:
  %add = add nsw i32 %X, 1
  ret i32 %add
}

; Function Attrs: nounwind ssp
define i32 @main() #0 {
entry:
  %call = call i32 @callee(i32 4)
  ret i32 %call
}
```

Can you point out the differences between the files?

We can compile and execute the bytecode file:

```
$> clang ex0.hack.ll
$> ./a.out
$> echo $?
5
```

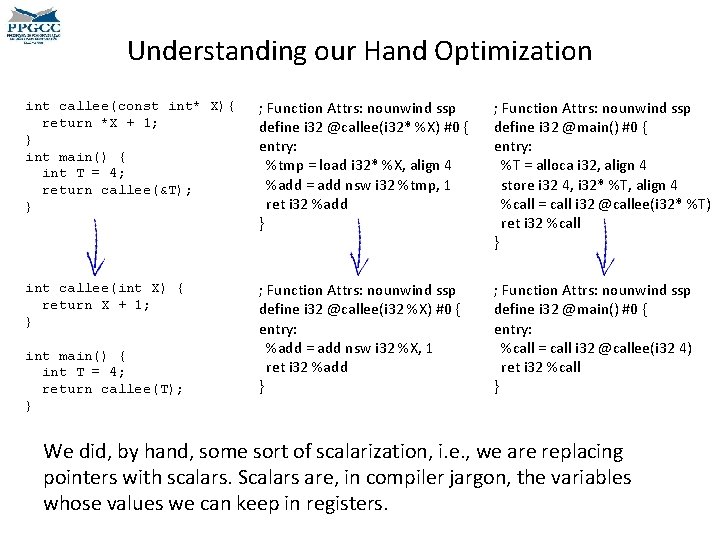

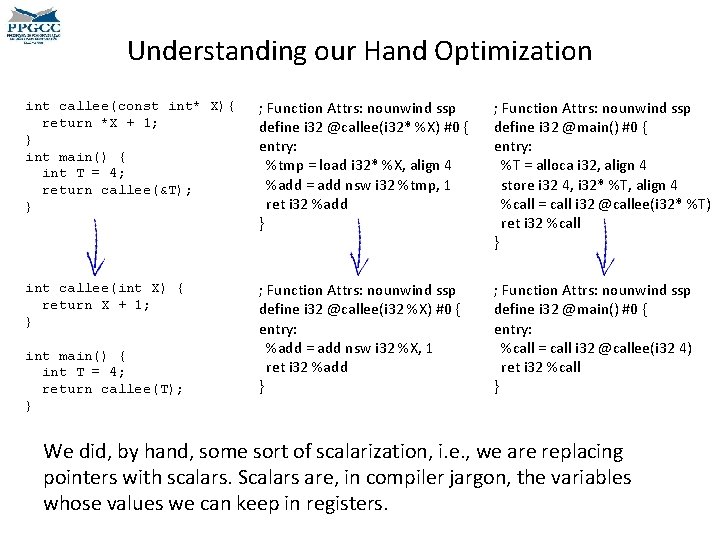

Understanding our Hand Optimization

The original program:

```c
int callee(const int* X) {
  return *X + 1;
}

int main() {
  int T = 4;
  return callee(&T);
}
```

The C program that corresponds to the optimized bytecode:

```c
int callee(int X) {
  return X + 1;
}

int main() {
  int T = 4;
  return callee(T);
}
```

We did, by hand, some sort of scalarization, i.e., we replaced pointers with scalars. Scalars are, in compiler jargon, the variables whose values we can keep in registers.

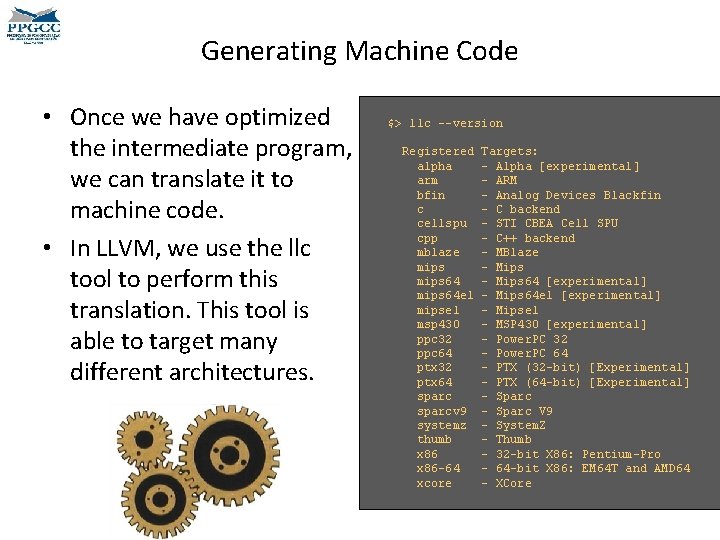

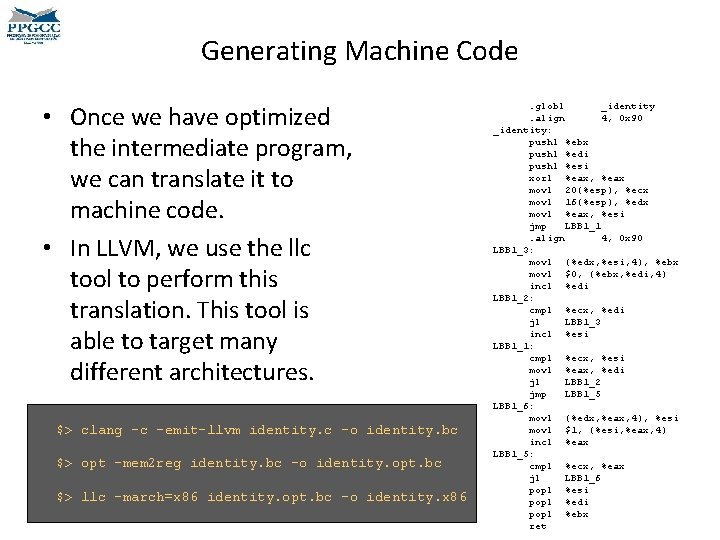

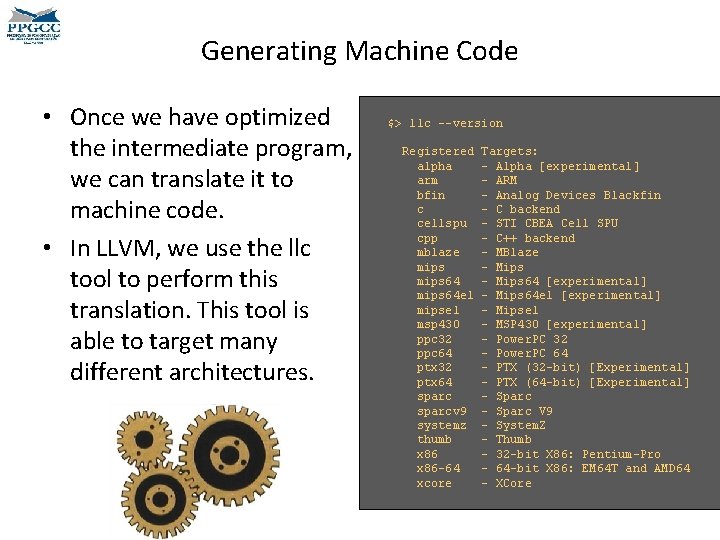

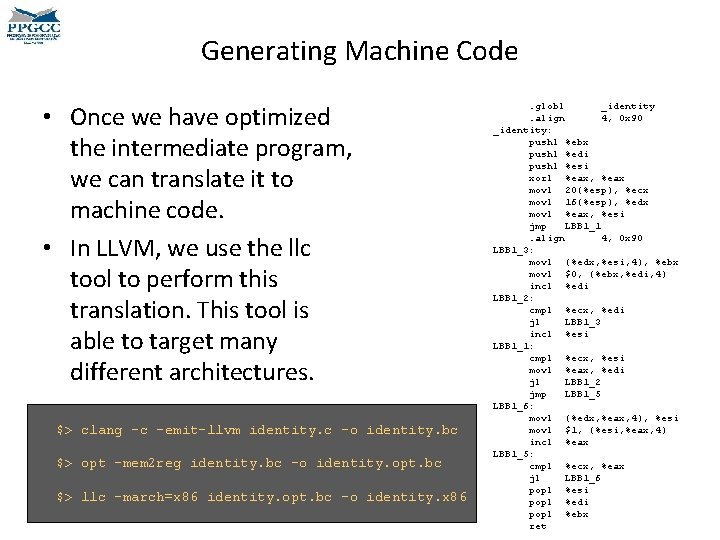

Generating Machine Code

- Once we have optimized the intermediate program, we can translate it to machine code.
- In LLVM, we use the llc tool to perform this translation. This tool is able to target many different architectures.

```
$> llc --version
  Registered Targets:
    alpha    - Alpha [experimental]
    arm      - ARM
    bfin     - Analog Devices Blackfin
    c        - C backend
    cellspu  - STI CBEA Cell SPU
    cpp      - C++ backend
    mblaze   - MBlaze
    mips64   - Mips64 [experimental]
    mips64el - Mips64el [experimental]
    mipsel   - Mipsel
    msp430   - MSP430 [experimental]
    ppc32    - PowerPC 32
    ppc64    - PowerPC 64
    ptx32    - PTX (32-bit) [Experimental]
    ptx64    - PTX (64-bit) [Experimental]
    sparcv9  - Sparc V9
    systemz  - SystemZ
    thumb    - Thumb
    x86      - 32-bit X86: Pentium-Pro
    x86-64   - 64-bit X86: EM64T and AMD64
    xcore    - XCore
```

For instance, we can translate a function called identity to 32-bit x86 code:

```
$> clang -c -emit-llvm identity.c -o identity.bc
$> opt -mem2reg identity.bc -o identity.opt.bc
$> llc -march=x86 identity.opt.bc -o identity.x86
        .globl  _identity
        .align  4, 0x90
_identity:
        pushl   %ebx
        pushl   %edi
        pushl   %esi
        xorl    %eax, %eax
        movl    20(%esp), %ecx
        movl    16(%esp), %edx
        movl    %eax, %esi
        jmp     LBB1_1
        .align  4, 0x90
LBB1_3:
        movl    (%edx,%esi,4), %ebx
        movl    $0, (%ebx,%edi,4)
        incl    %edi
LBB1_2:
        cmpl    %ecx, %edi
        jl      LBB1_3
        incl    %esi
LBB1_1:
        cmpl    %ecx, %esi
        movl    %eax, %edi
        jl      LBB1_2
        jmp     LBB1_5
LBB1_6:
        movl    (%edx,%eax,4), %esi
        movl    $1, (%esi,%eax,4)
        incl    %eax
LBB1_5:
        cmpl    %ecx, %eax
        jl      LBB1_6
        popl    %esi
        popl    %edi
        popl    %ebx
        ret
```
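The slides do not show identity.c. As a hypothetical reconstruction, the function below has the same loop structure as the assembly: it zeroes an n-by-n matrix given as an array of row pointers (m is read from 16(%esp), n from 20(%esp)), then writes 1 on the diagonal. Treat it as a reading aid for the assembly, not as the original source.

```cpp
#include <cassert>

// Hypothetical reconstruction of identity.c; the comments map each line to
// the x86 code above.
void identity(int** m, int n) {
    for (int i = 0; i < n; i++)        // outer loop counter: %esi
        for (int j = 0; j < n; j++)    // inner loop counter: %edi
            m[i][j] = 0;               // movl $0, (%ebx,%edi,4)
    for (int i = 0; i < n; i++)        // diagonal loop counter: %eax
        m[i][i] = 1;                   // movl $1, (%esi,%eax,4)
}
```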

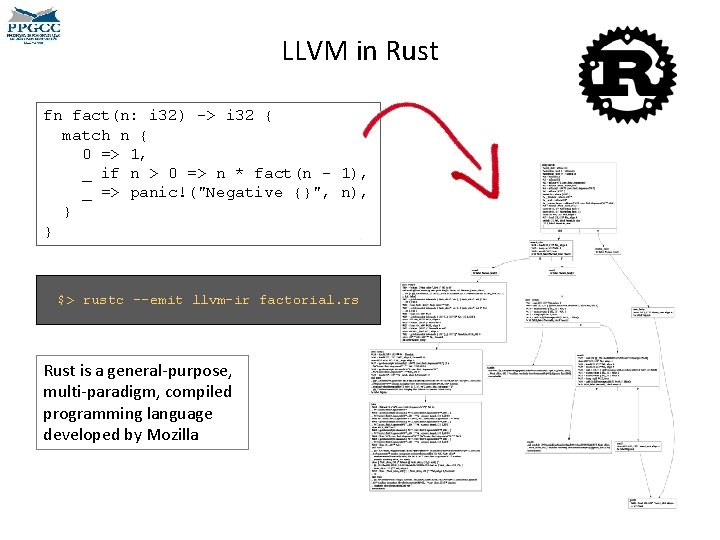

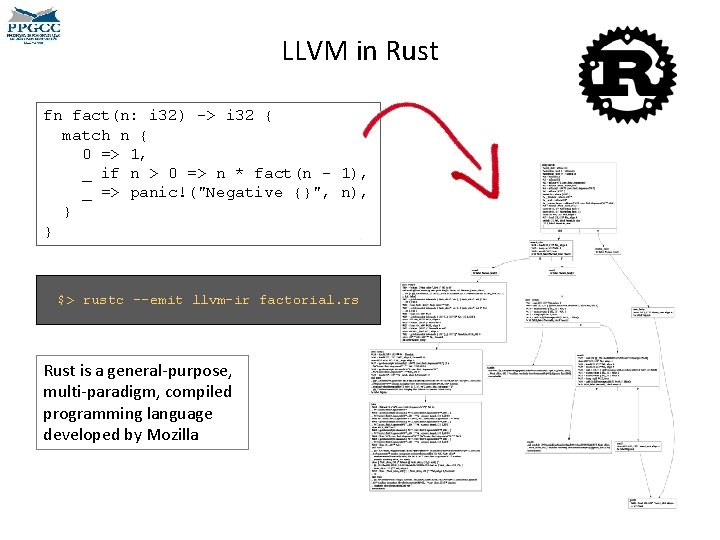

LLVM in Rust

Rust is a general-purpose, multi-paradigm, compiled programming language originally developed by Mozilla.

```rust
fn fact(n: i32) -> i32 {
    match n {
        0 => 1,
        _ if n > 0 => n * fact(n - 1),
        _ => panic!("Negative {}", n),
    }
}

fn main() {
    println!("{}", fact(5));
}
```

```
$> rustc --emit llvm-ir factorial.rs
```

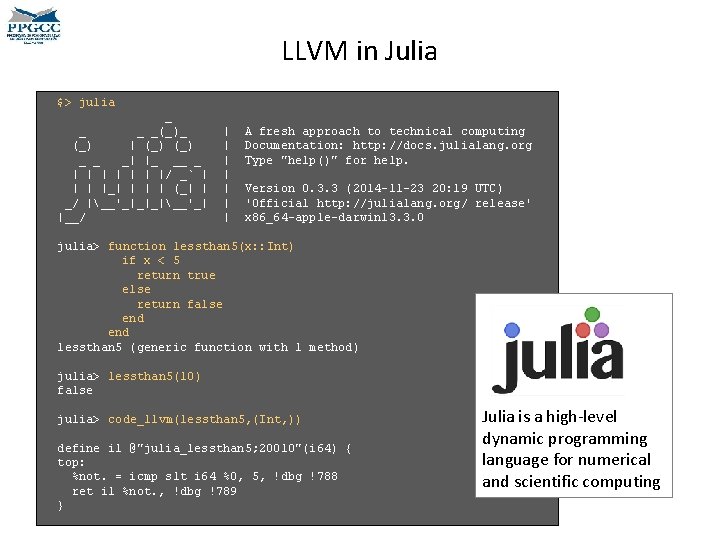

LLVM in Julia

Julia is a high-level dynamic programming language for numerical and scientific computing.

```
$> julia
A fresh approach to technical computing
Documentation: http://docs.julialang.org
Type "help()" for help.
Version 0.3.3 (2014-11-23 20:19 UTC)
Official http://julialang.org/ release
x86_64-apple-darwin13.3.0

julia> function lessthan5(x::Int)
         if x < 5
           return true
         else
           return false
         end
       end
lessthan5 (generic function with 1 method)

julia> lessthan5(10)
false

julia> code_llvm(lessthan5, (Int,))

define i1 @"julia_lessthan5;20010"(i64) {
top:
  %not. = icmp slt i64 %0, 5, !dbg !788
  ret i1 %not., !dbg !789
}
```

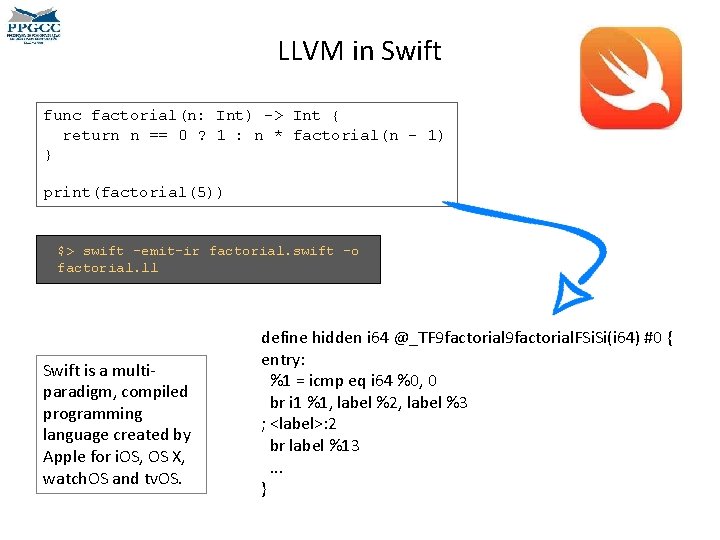

LLVM in Swift

Swift is a multiparadigm, compiled programming language created by Apple for iOS, OS X, watchOS and tvOS.

```swift
func factorial(n: Int) -> Int {
  return n == 0 ? 1 : n * factorial(n - 1)
}
print(factorial(5))
```

```
$> swift -emit-ir factorial.swift -o factorial.ll
define hidden i64 @_TF9factorialFSiSi(i64) #0 {
entry:
  %1 = icmp eq i64 %0, 0
  br i1 %1, label %2, label %3

; <label>:2
  br label %13
...
}
```

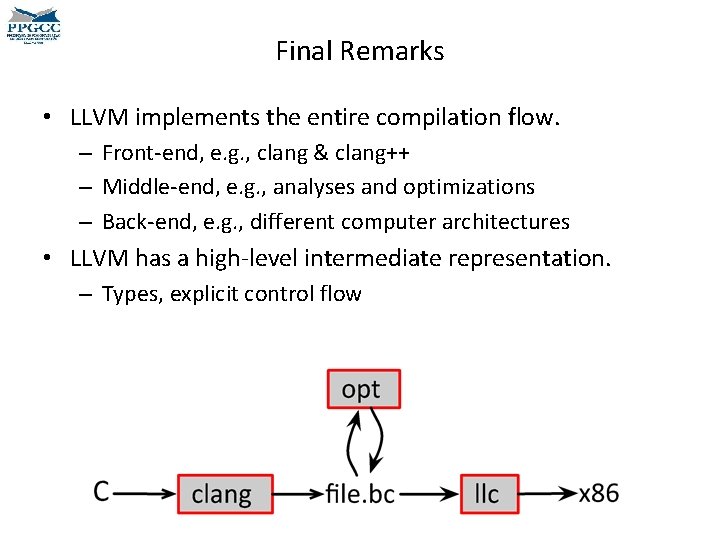

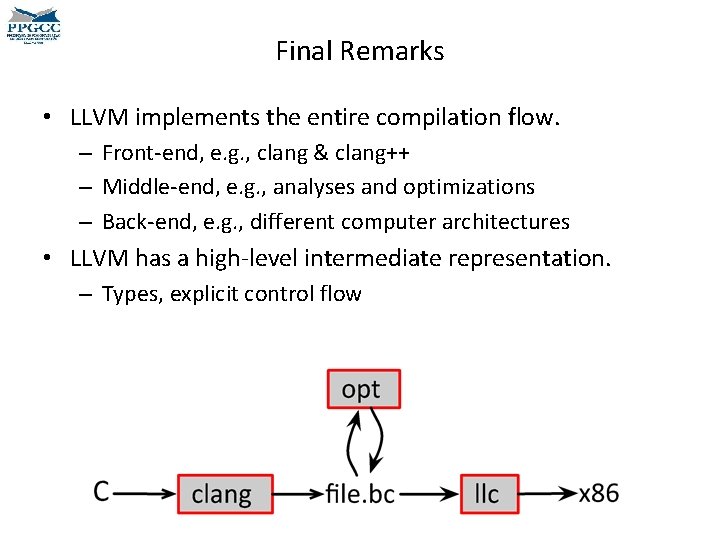

Final Remarks

- LLVM implements the entire compilation flow:
  - Front-end, e.g., clang & clang++
  - Middle-end, e.g., analyses and optimizations
  - Back-end, e.g., different computer architectures
- LLVM has a high-level intermediate representation:
  - Types, explicit control flow

Department of Computer Science, Universidade Federal de Minas Gerais (Federal University of Minas Gerais, Brazil)

Writing an LLVM Pass

Passes

- LLVM applies a chain of analyses and transformations on the target program.
- Each of these analyses or transformations is called a pass.
- We have seen a few passes already: mem2reg, early-cse and constprop, for instance.
- Some passes, which are machine-independent, are invoked by opt.
- Other passes, which are machine-dependent, are invoked by llc.
- A pass may require information provided by other passes. Such dependencies must be explicitly stated.
  - For instance, a common pattern is a transformation pass requiring an analysis pass.

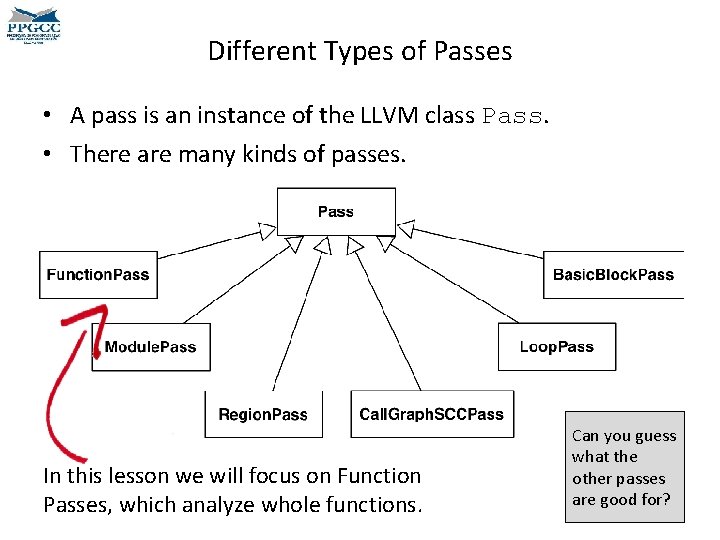

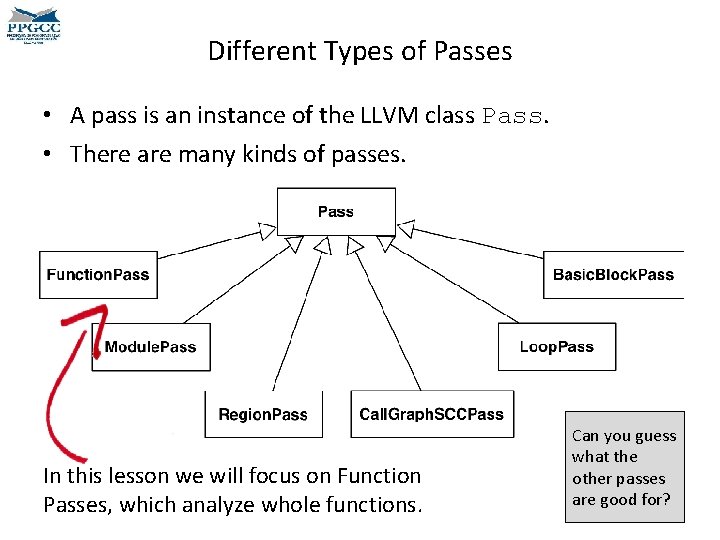

Different Types of Passes

- A pass is an instance of the LLVM class Pass.
- There are many kinds of passes. In this lesson we will focus on function passes, which analyze whole functions.

Can you guess what the other passes are good for?

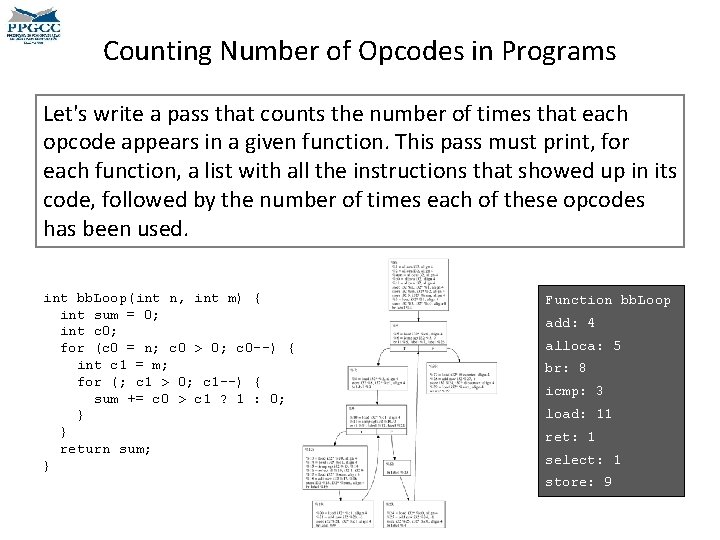

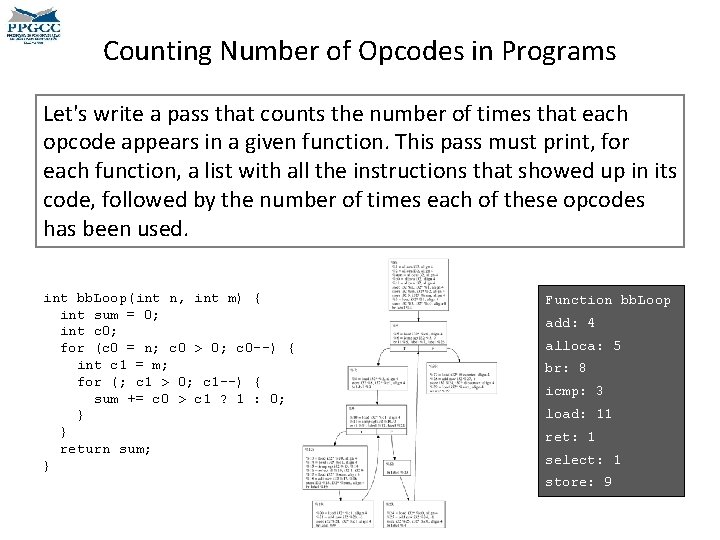

Counting Number of Opcodes in Programs

Let's write a pass that counts the number of times that each opcode appears in a given function. This pass must print, for each function, a list with all the instructions that showed up in its code, followed by the number of times each of these opcodes has been used.

```c
int bbLoop(int n, int m) {
  int sum = 0;
  int c0;
  for (c0 = n; c0 > 0; c0--) {
    int c1 = m;
    for (; c1 > 0; c1--) {
      sum += c0 > c1 ? 1 : 0;
    }
  }
  return sum;
}
```

```
Function bbLoop
add: 4
alloca: 5
br: 8
icmp: 3
load: 11
ret: 1
select: 1
store: 9
```
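The counting logic itself can be sketched without LLVM. In this hypothetical model (Block, Func, countOpcodes and report are illustrative names), a function is a list of basic blocks and each block is a list of opcode names. std::map keeps its keys sorted, which is why the report lists opcodes alphabetically, and ++counter[opcode] works because operator[] value-initializes a missing entry to 0.

```cpp
#include <cassert>
#include <map>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical stand-in for an LLVM function: a list of basic blocks, each
// holding the opcode names of its instructions in order.
using Block = std::vector<std::string>;
using Func = std::vector<Block>;

std::map<std::string, int> countOpcodes(const Func& f) {
    std::map<std::string, int> counter;   // std::map keeps opcodes sorted
    for (const Block& bb : f)
        for (const std::string& opcode : bb)
            ++counter[opcode];            // a missing key starts at 0
    return counter;
}

// Formats the counts in the same layout as the pass output above.
std::string report(const std::string& name, const std::map<std::string, int>& counter) {
    std::ostringstream out;
    out << "Function " << name << '\n';
    for (const auto& entry : counter)
        out << entry.first << ": " << entry.second << '\n';
    return out.str();
}
```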

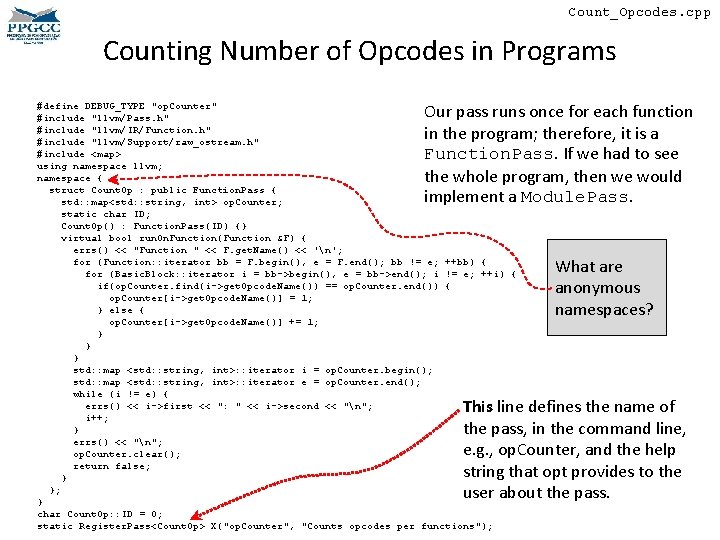

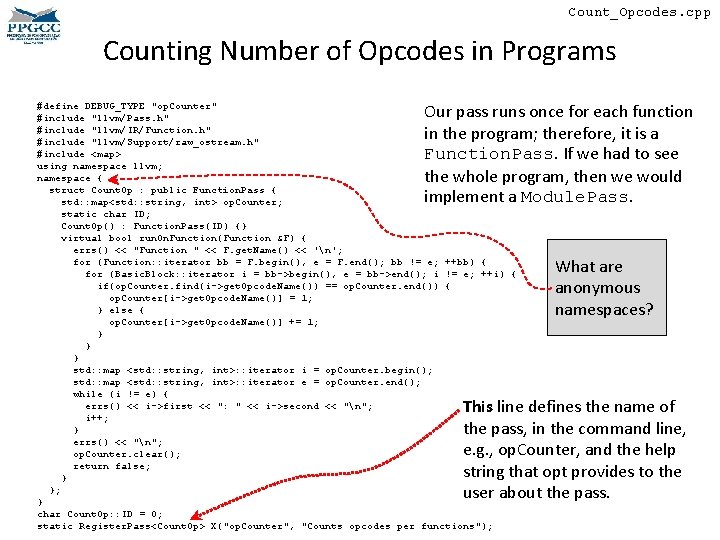

Count_Opcodes.cpp

Our pass runs once for each function in the program; therefore, it is a FunctionPass. If we had to see the whole program, then we would implement a ModulePass.

```cpp
#define DEBUG_TYPE "opCounter"
#include "llvm/Pass.h"
#include "llvm/IR/Function.h"
#include "llvm/Support/raw_ostream.h"
#include <map>
#include <string>

using namespace llvm;

namespace {
struct CountOp : public FunctionPass {
  std::map<std::string, int> opCounter;  // opcode name -> occurrences
  static char ID;
  CountOp() : FunctionPass(ID) {}

  // Called once per function; returns false because the IR is not changed.
  virtual bool runOnFunction(Function &F) {
    errs() << "Function " << F.getName() << '\n';
    for (Function::iterator bb = F.begin(), e = F.end(); bb != e; ++bb) {
      for (BasicBlock::iterator i = bb->begin(), e = bb->end(); i != e; ++i) {
        if (opCounter.find(i->getOpcodeName()) == opCounter.end()) {
          opCounter[i->getOpcodeName()] = 1;
        } else {
          opCounter[i->getOpcodeName()] += 1;
        }
      }
    }
    // Print the counts, then reset the map for the next function.
    std::map<std::string, int>::iterator i = opCounter.begin();
    std::map<std::string, int>::iterator e = opCounter.end();
    while (i != e) {
      errs() << i->first << ": " << i->second << "\n";
      i++;
    }
    errs() << "\n";
    opCounter.clear();
    return false;
  }
};
}

char CountOp::ID = 0;
static RegisterPass<CountOp> X("opCounter", "Counts opcodes per functions");
```

What are anonymous namespaces?

The RegisterPass line defines the name of the pass on the command line (here, opCounter) and the help string that opt provides to the user about the pass.

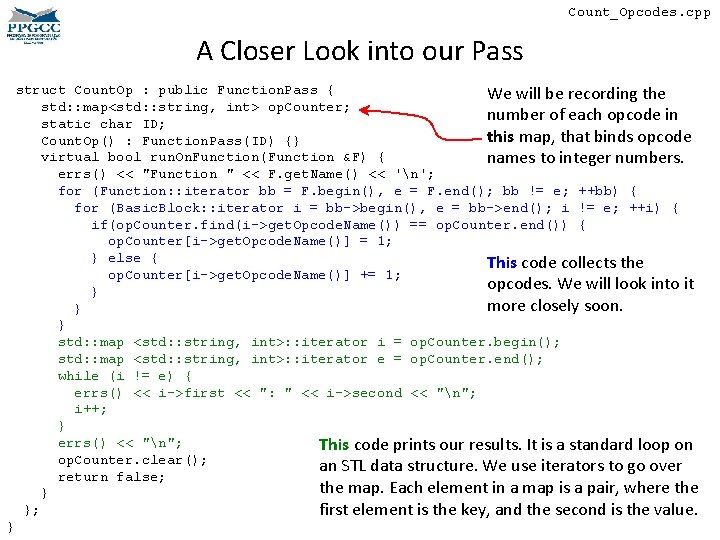

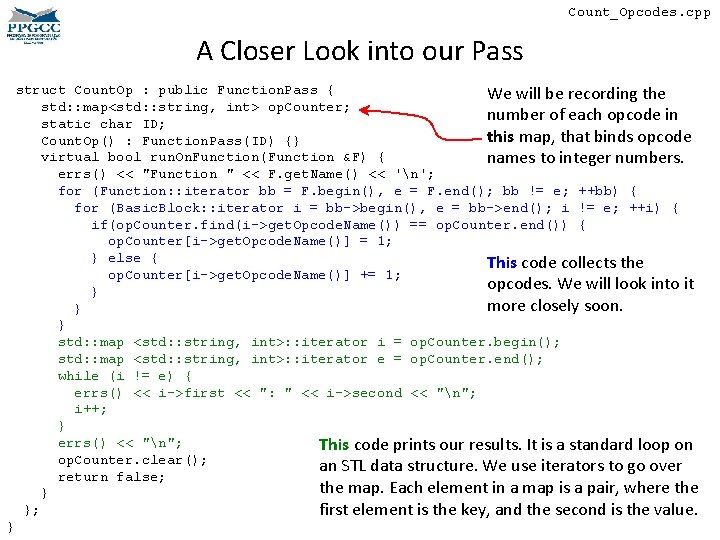

A Closer Look into our Pass

- The member opCounter is a map that binds opcode names to integer numbers; we record in it the number of occurrences of each opcode.
- The two nested for loops in runOnFunction collect the opcodes. We will look into them more closely soon.
- The while loop prints our results. It is a standard loop on an STL data structure: we use iterators to go over the map. Each element in a map is a pair, where the first element is the key, and the second is the value.

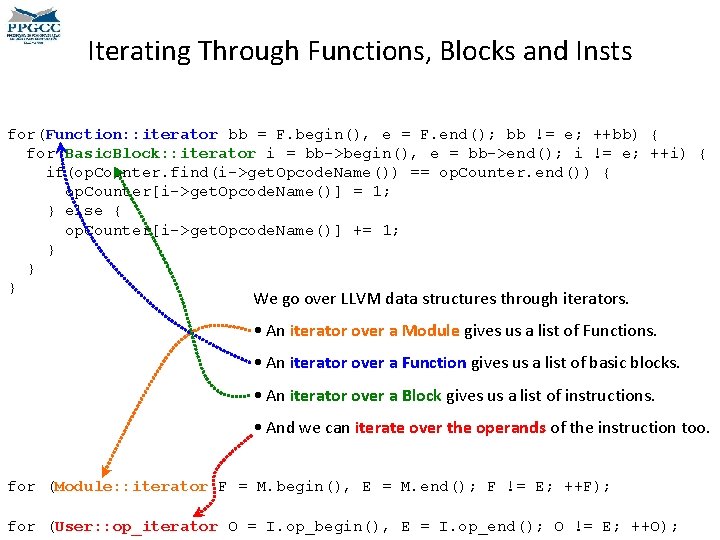

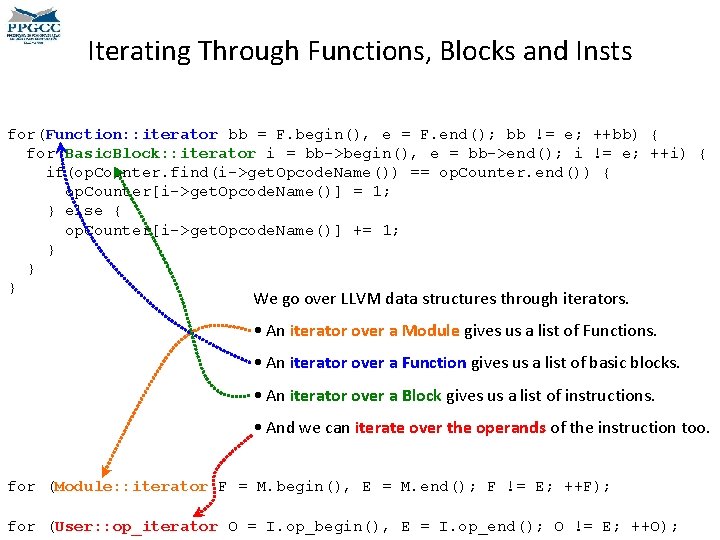

Iterating Through Functions, Blocks and Insts

```cpp
for (Function::iterator bb = F.begin(), e = F.end(); bb != e; ++bb) {
  for (BasicBlock::iterator i = bb->begin(), e = bb->end(); i != e; ++i) {
    if (opCounter.find(i->getOpcodeName()) == opCounter.end()) {
      opCounter[i->getOpcodeName()] = 1;
    } else {
      opCounter[i->getOpcodeName()] += 1;
    }
  }
}
```

We go over LLVM data structures through iterators.

- An iterator over a Module gives us a list of Functions.
- An iterator over a Function gives us a list of basic blocks.
- An iterator over a Block gives us a list of instructions.
- And we can iterate over the operands of the instruction too.

```cpp
for (Module::iterator F = M.begin(), E = M.end(); F != E; ++F) { /* ... */ }
for (User::op_iterator O = I.op_begin(), E = I.op_end(); O != E; ++O) { /* ... */ }
```
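The nesting of these iterators can be mirrored with hypothetical stand-in types (toy::Module, toy::Function, toy::BasicBlock and toy::Instruction are illustrative, not LLVM's classes). The sketch walks module, functions, blocks, instructions and operands, in the same order as the LLVM iterators listed above, and counts how many times each value is used as an operand.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

namespace toy {  // hypothetical stand-ins, not LLVM's classes
struct Instruction { std::string name; std::vector<std::string> operands; };
struct BasicBlock  { std::vector<Instruction> insts; };
struct Function    { std::string name; std::vector<BasicBlock> blocks; };
struct Module      { std::vector<Function> functions; };

// Counts how many times each value appears as an operand in the module.
std::map<std::string, int> countUses(const Module& m) {
    std::map<std::string, int> uses;
    for (const Function& f : m.functions)                 // Module -> Functions
        for (const BasicBlock& bb : f.blocks)             // Function -> blocks
            for (const Instruction& i : bb.insts)         // block -> instructions
                for (const std::string& op : i.operands)  // instruction -> operands
                    ++uses[op];
    return uses;
}
}  // namespace toy
```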

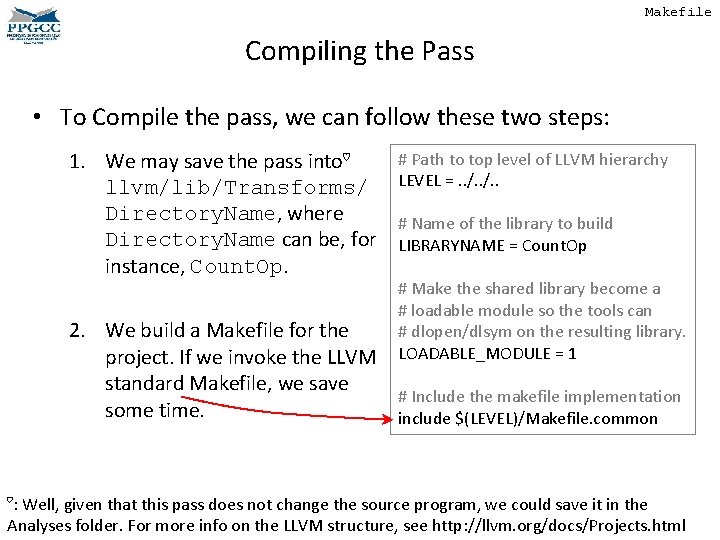

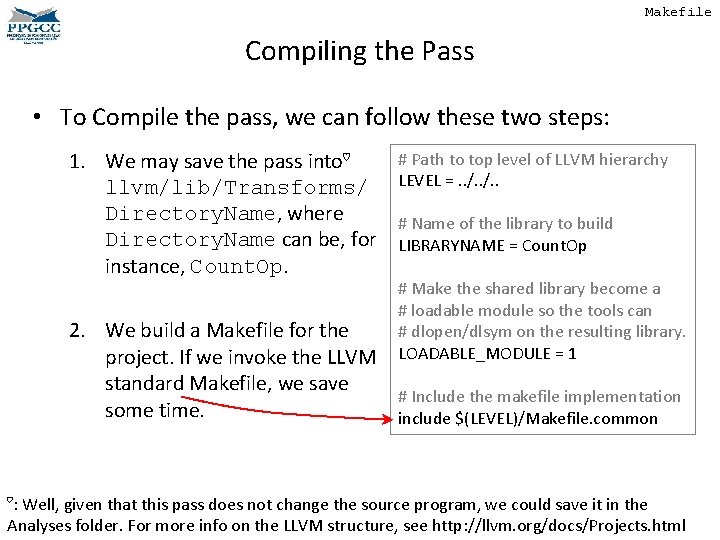

Compiling the Pass

To compile the pass, we can follow these two steps:

1. We may save the pass into♡ llvm/lib/Transforms/DirectoryName, where DirectoryName can be, for instance, CountOp.
2. We build a Makefile for the project. If we invoke the LLVM standard Makefile, we save some time.

Makefile:

```make
# Path to top level of LLVM hierarchy
LEVEL = ../..

# Name of the library to build
LIBRARYNAME = CountOp

# Make the shared library become a
# loadable module so the tools can
# dlopen/dlsym on the resulting library.
LOADABLE_MODULE = 1

# Include the makefile implementation
include $(LEVEL)/Makefile.common
```

♡: Well, given that this pass does not change the source program, we could save it in the Analysis folder. For more info on the LLVM structure, see http://llvm.org/docs/Projects.html

This Makefile relies on LLVM's old autoconf-based build system, which was removed in LLVM 3.9; in a CMake build, the pass needs a CMakeLists.txt instead.

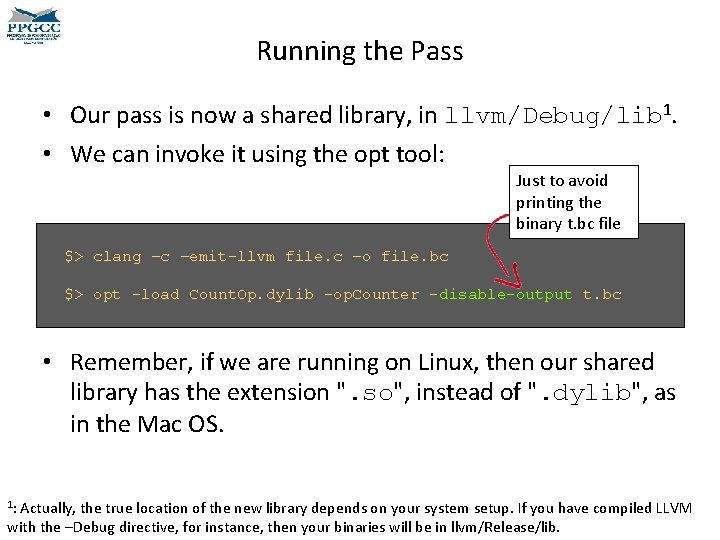

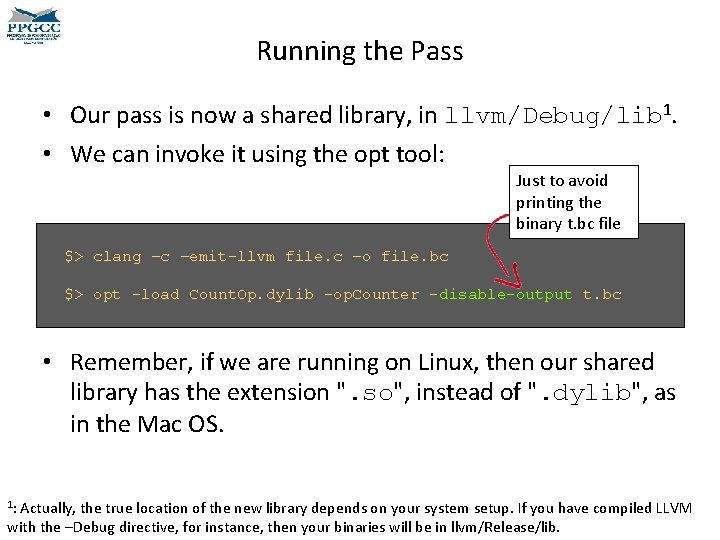

Running the Pass

- Our pass is now a shared library, in llvm/Debug/lib¹.
- We can invoke it using the opt tool (the -disable-output flag just avoids printing the binary bitcode file):

```
$> clang -c -emit-llvm file.c -o file.bc
$> opt -load CountOp.dylib -opCounter -disable-output file.bc
```

- Remember, if we are running on Linux, then our shared library has the extension ".so", instead of ".dylib", as in Mac OS.

¹: Actually, the true location of the new library depends on your system setup. If you have compiled LLVM with optimizations (for instance, with --enable-optimized), then your binaries will be in llvm/Release/lib.

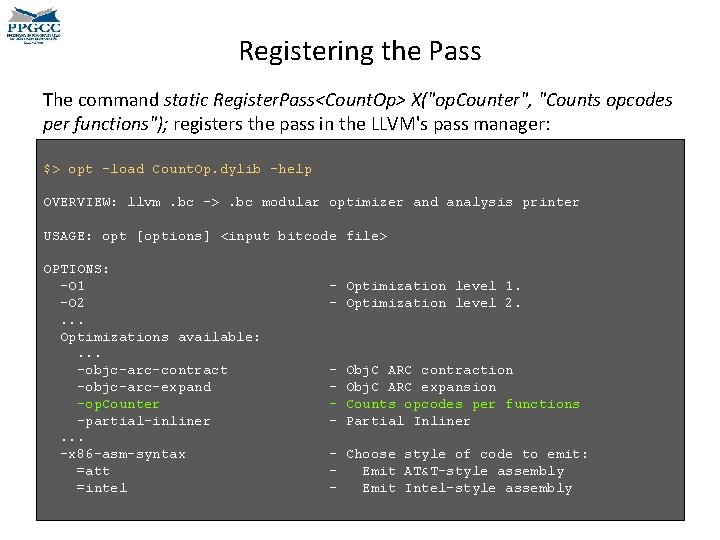

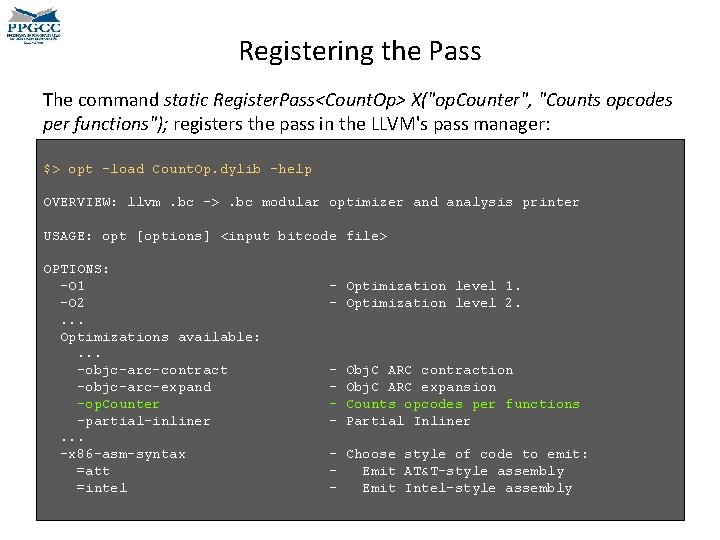

Registering the Pass

The command

```cpp
static RegisterPass<CountOp> X("opCounter", "Counts opcodes per functions");
```

registers the pass in LLVM's pass manager:

```
$> opt -load CountOp.dylib -help
OVERVIEW: llvm .bc -> .bc modular optimizer and analysis printer

USAGE: opt [options] <input bitcode file>

OPTIONS:
  -O1                      - Optimization level 1.
  -O2                      - Optimization level 2.
  ...
  Optimizations available:
    ...
    -objc-arc-contract     - ObjC ARC contraction
    -objc-arc-expand       - ObjC ARC expansion
    -opCounter             - Counts opcodes per functions
    -partial-inliner       - Partial Inliner
    ...
  -x86-asm-syntax          - Choose style of code to emit:
    =att                   -   Emit AT&T-style assembly
    =intel                 -   Emit Intel-style assembly
```

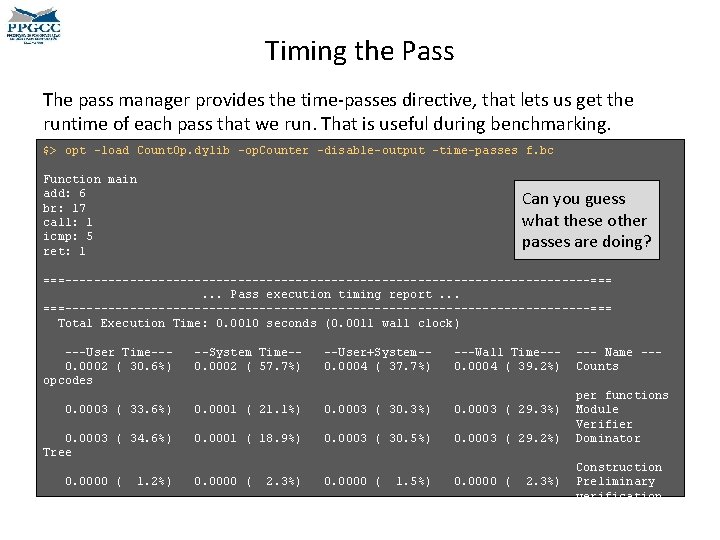

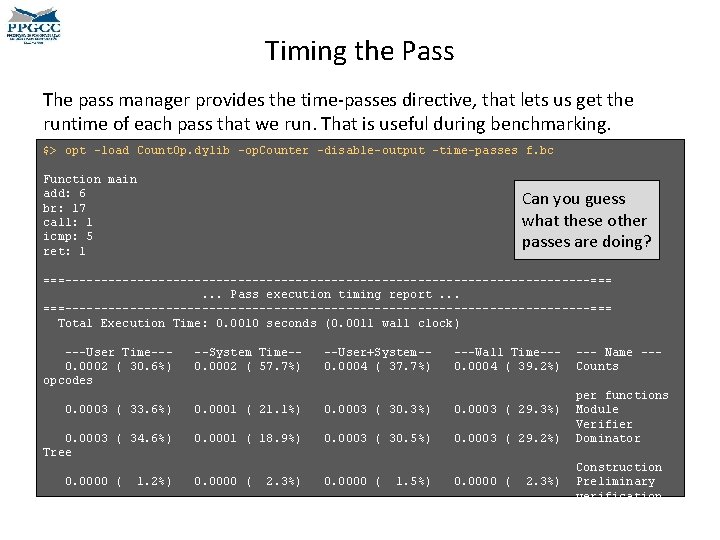

Timing the Pass

The pass manager provides the -time-passes option, which lets us get the runtime of each pass that we run. That is useful during benchmarking.

```
$> opt -load CountOp.dylib -opCounter -disable-output -time-passes f.bc
Function main
add: 6
br: 17
call: 1
icmp: 5
ret: 1

===-------------------------------------------------------------------------===
                      ... Pass execution timing report ...
===-------------------------------------------------------------------------===
  Total Execution Time: 0.0010 seconds (0.0011 wall clock)

   ---User Time---   --System Time--   --User+System--   ---Wall Time---  --- Name ---
   0.0002 ( 30.6%)   0.0002 ( 57.7%)   0.0004 ( 37.7%)   0.0004 ( 39.2%)  Counts opcodes per functions
   0.0003 ( 33.6%)   0.0001 ( 21.1%)   0.0003 ( 30.3%)   0.0003 ( 29.3%)  Module Verifier
   0.0003 ( 34.6%)   0.0001 ( 18.9%)   0.0003 ( 30.5%)   0.0003 ( 29.2%)  Dominator Tree Construction
   0.0000 (  1.2%)   0.0000 (  2.3%)   0.0000 (  1.5%)   0.0000 (  2.3%)  Preliminary verification
   0.0008 (100.0%)   0.0003 (100.0%)   0.0010 (100.0%)   0.0011 (100.0%)  Total
```

Can you guess what these other passes are doing?

Chaining Passes

- A pass may invoke another:
  - to transform the program, e.g., BreakCriticalEdges;
  - to obtain information about the program, e.g., AliasAnalysis.
- If a pass invokes another, then it must say so explicitly, through the getAnalysisUsage method, in the class FunctionPass.
- To recover the data structures created by the pass, we can use the getAnalysis method.

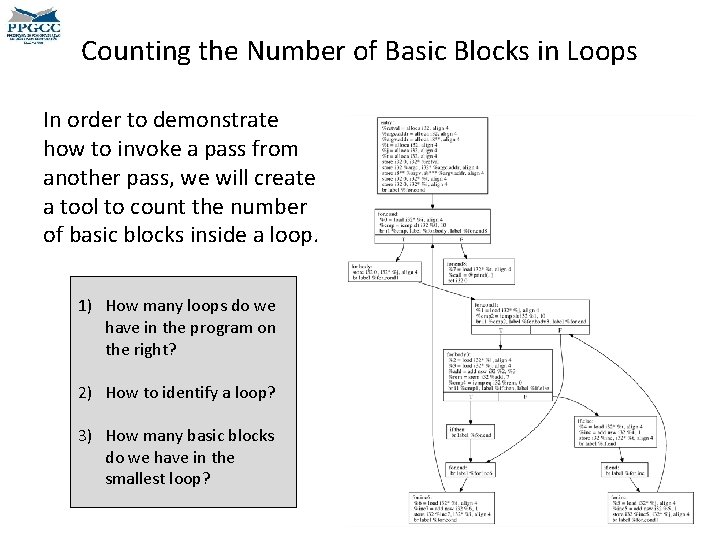

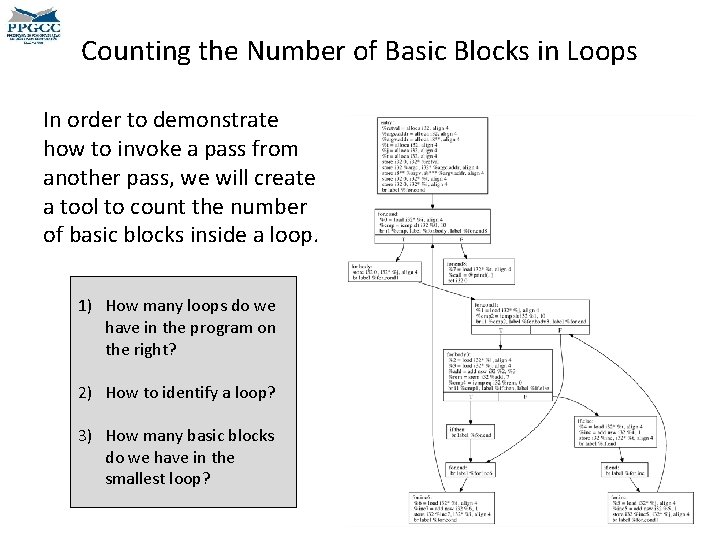

Counting the Number of Basic Blocks in Loops

In order to demonstrate how to invoke a pass from another pass, we will create a tool to count the number of basic blocks inside a loop.

1) How many loops do we have in the program shown in the figure?
2) How can we identify a loop?
3) How many basic blocks do we have in the smallest loop?

Dealing with Loops

- We could implement some functionality to deal with all the questions above.
- However, LLVM already has a pass that handles loops.
- We can use this pass to obtain the number of basic blocks per loop.
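For question 2, a common textbook answer is: compute dominators, find back edges (an edge b -> h where h dominates b), and collect the natural loop of each back edge. The sketch below implements that recipe on a hypothetical CFG encoding (blocks numbered from 0, with block 0 as the entry, all blocks reachable). It illustrates the standard technique and is not the code of LLVM's loop analysis, which, for instance, merges back edges that share a header.

```cpp
#include <algorithm>
#include <cassert>
#include <iterator>
#include <set>
#include <vector>

// Hypothetical CFG encoding: succs[b] lists the successors of block b.
using CFG = std::vector<std::vector<int>>;

std::vector<std::vector<int>> predecessors(const CFG& succs) {
    std::vector<std::vector<int>> preds(succs.size());
    for (int b = 0; b < static_cast<int>(succs.size()); ++b)
        for (int s : succs[b]) preds[s].push_back(b);
    return preds;
}

// Iterative data-flow: dom[b] = {b} plus the intersection of dom[p] over preds p.
std::vector<std::set<int>> dominators(const CFG& succs) {
    const int n = static_cast<int>(succs.size());
    std::vector<std::vector<int>> preds = predecessors(succs);
    std::set<int> all;
    for (int b = 0; b < n; ++b) all.insert(b);
    std::vector<std::set<int>> dom(n, all);
    dom[0] = {0};
    for (bool changed = true; changed;) {
        changed = false;
        for (int b = 1; b < n; ++b) {
            std::set<int> meet = all;
            for (int p : preds[b]) {
                std::set<int> tmp;
                std::set_intersection(meet.begin(), meet.end(), dom[p].begin(), dom[p].end(),
                                      std::inserter(tmp, tmp.begin()));
                meet.swap(tmp);
            }
            meet.insert(b);
            if (meet != dom[b]) { dom[b] = meet; changed = true; }
        }
    }
    return dom;
}

struct NaturalLoop { int header; std::set<int> body; };

// The natural loop of a back edge b -> h is h plus every block that can
// reach b without passing through h.
std::vector<NaturalLoop> naturalLoops(const CFG& succs) {
    std::vector<std::set<int>> dom = dominators(succs);
    std::vector<std::vector<int>> preds = predecessors(succs);
    std::vector<NaturalLoop> loops;
    for (int b = 0; b < static_cast<int>(succs.size()); ++b)
        for (int h : succs[b]) {
            if (!dom[b].count(h)) continue;         // not a back edge
            NaturalLoop loop{h, {h}};
            std::vector<int> work;
            if (loop.body.insert(b).second) work.push_back(b);
            while (!work.empty()) {                 // walk predecessors backwards
                int x = work.back();
                work.pop_back();
                for (int p : preds[x])
                    if (loop.body.insert(p).second) work.push_back(p);
            }
            loops.push_back(loop);
        }
    return loops;
}
```

In the test, a two-level nest (outer header 1, inner header 2) yields two loops, and the outer loop's body also contains the inner loop's blocks.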

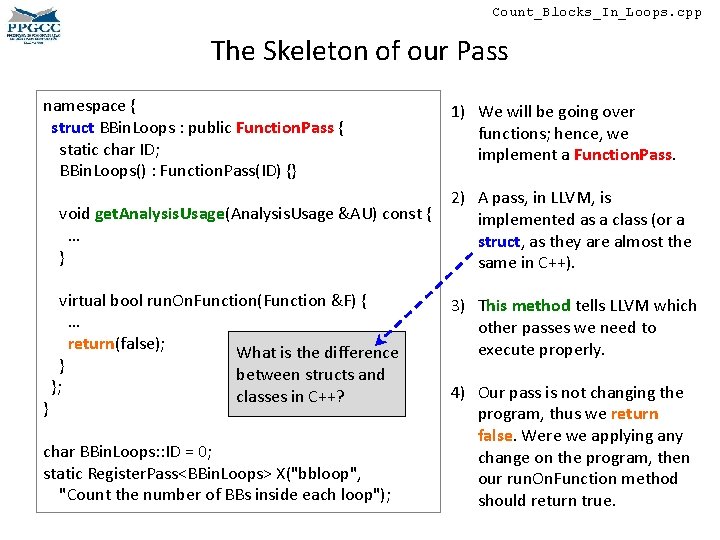

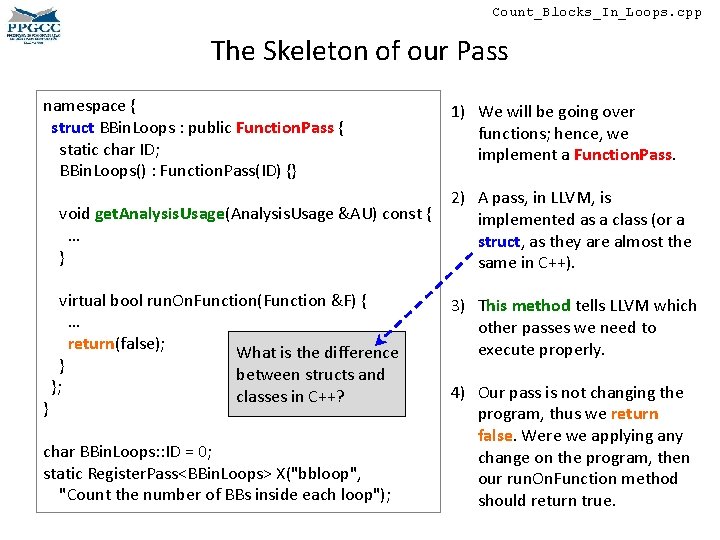

Count_Blocks_In_Loops.cpp

The Skeleton of our Pass

```cpp
namespace {
struct BBinLoops : public FunctionPass {
  static char ID;
  BBinLoops() : FunctionPass(ID) {}
  void getAnalysisUsage(AnalysisUsage &AU) const {
    …
  }
  virtual bool runOnFunction(Function &F) {
    …
    return(false);
  }
};
}
char BBinLoops::ID = 0;
static RegisterPass<BBinLoops> X("bbloop", "Count the number of BBs inside each loop");
```

What is the difference between structs and classes in C++?

1) We will be going over functions; hence, we implement a FunctionPass.
2) A pass, in LLVM, is implemented as a class (or a struct, as they are almost the same in C++).
3) The getAnalysisUsage method tells LLVM which other passes we need to execute properly.
4) Our pass is not changing the program, thus we return false. Were we applying any change to the program, our runOnFunction method would have to return true.

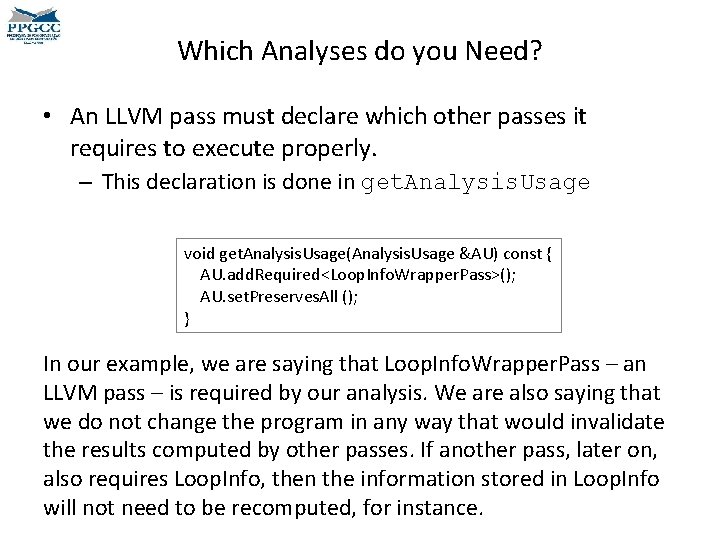

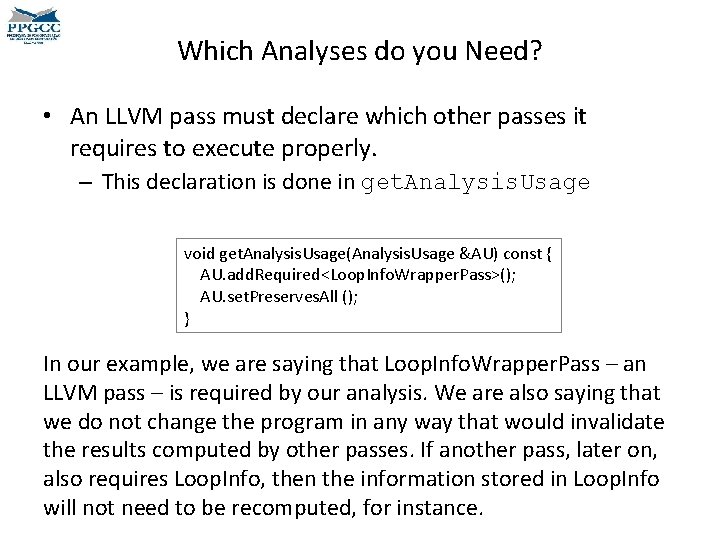

Which Analyses do you Need?

- An LLVM pass must declare which other passes it requires to execute properly.
  - This declaration is done in getAnalysisUsage:

```cpp
void getAnalysisUsage(AnalysisUsage &AU) const {
  AU.addRequired<LoopInfoWrapperPass>();
  AU.setPreservesAll();
}
```

In our example, we are saying that LoopInfoWrapperPass, an LLVM pass, is required by our analysis. We are also saying that we do not change the program in any way that would invalidate the results computed by other passes. If another pass, later on, also requires LoopInfo, then the information stored in LoopInfo will not need to be recomputed, for instance.
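The bookkeeping behind addRequired and setPreservesAll can be modeled in a few lines. This is a hypothetical simplification (PassInfo and schedule are invented names, and "someTransform" is a made-up pass): real analyses have their own dependencies, and a pass may preserve some analyses but not others. Here a required analysis runs only when no valid result is cached, and a pass that does not preserve everything invalidates the cache.

```cpp
#include <cassert>
#include <set>
#include <string>
#include <vector>

// Hypothetical model of the pass manager's bookkeeping, not LLVM classes.
struct PassInfo {
    std::string name;
    std::vector<std::string> required;  // what getAnalysisUsage would addRequired
    bool preservesAll;                  // what setPreservesAll would declare
};

// Returns the order in which passes and analyses would actually run.
std::vector<std::string> schedule(const std::vector<PassInfo>& pipeline) {
    std::set<std::string> valid;        // analyses whose results are up to date
    std::vector<std::string> order;
    for (const PassInfo& p : pipeline) {
        for (const std::string& a : p.required)
            if (valid.insert(a).second)  // not cached: run the analysis first
                order.push_back(a);
        order.push_back(p.name);
        if (!p.preservesAll) valid.clear();  // the pass may have changed the IR
    }
    return order;
}
```

With the pipeline in the test, the loop analysis (named loops, as in the -O1 list shown earlier) runs once for the first two bbloop runs, and runs again only after the transformation that preserves nothing.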

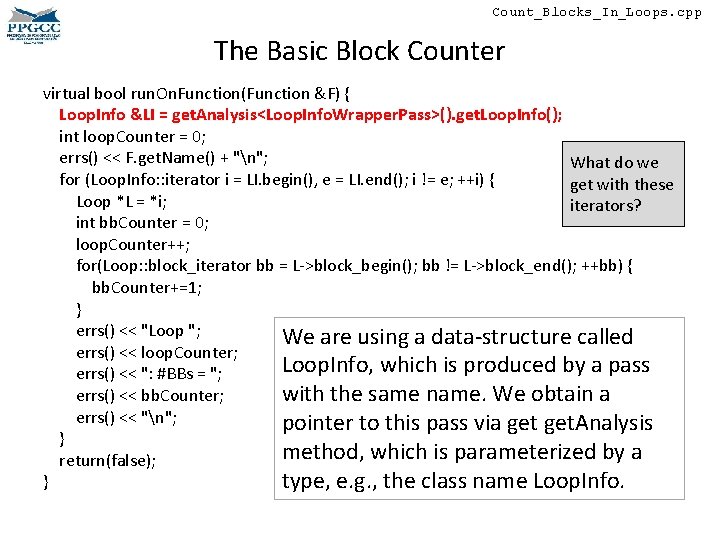

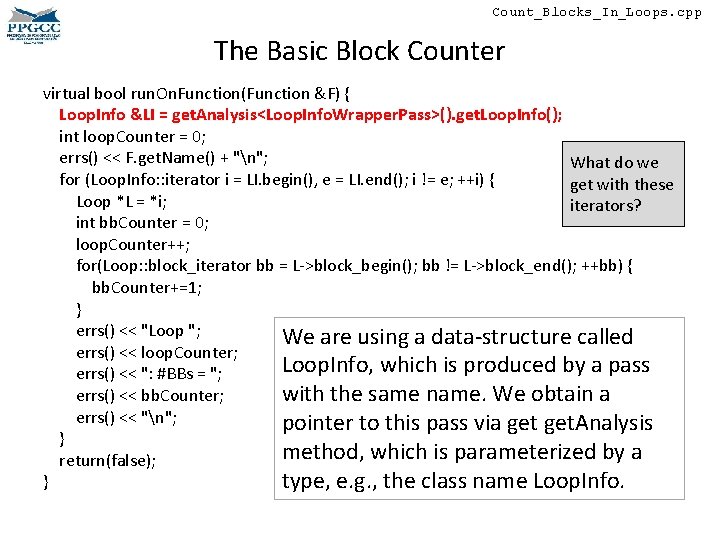

The Basic Block Counter

```cpp
virtual bool runOnFunction(Function &F) {
  LoopInfo &LI = getAnalysis<LoopInfoWrapperPass>().getLoopInfo();
  int loopCounter = 0;
  errs() << F.getName() + "\n";
  for (LoopInfo::iterator i = LI.begin(), e = LI.end(); i != e; ++i) {
    Loop *L = *i;
    int bbCounter = 0;
    loopCounter++;
    for (Loop::block_iterator bb = L->block_begin(); bb != L->block_end(); ++bb) {
      bbCounter += 1;
    }
    errs() << "Loop ";
    errs() << loopCounter;
    errs() << ": #BBs = ";
    errs() << bbCounter;
    errs() << "\n";
  }
  return(false);
}
```

What do we get with these iterators?

We are using a data structure called LoopInfo, which is produced by the pass LoopInfoWrapperPass. We obtain a reference to this pass via the getAnalysis method, which is parameterized by a type, here the class name LoopInfoWrapperPass, and then call getLoopInfo() on it.

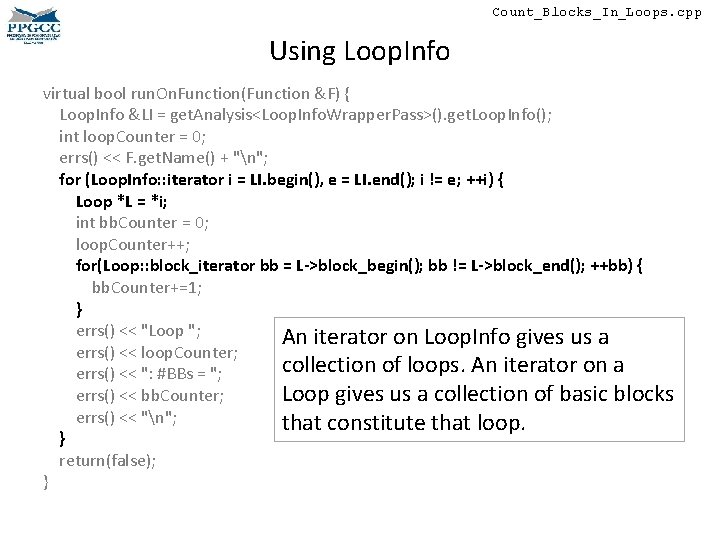

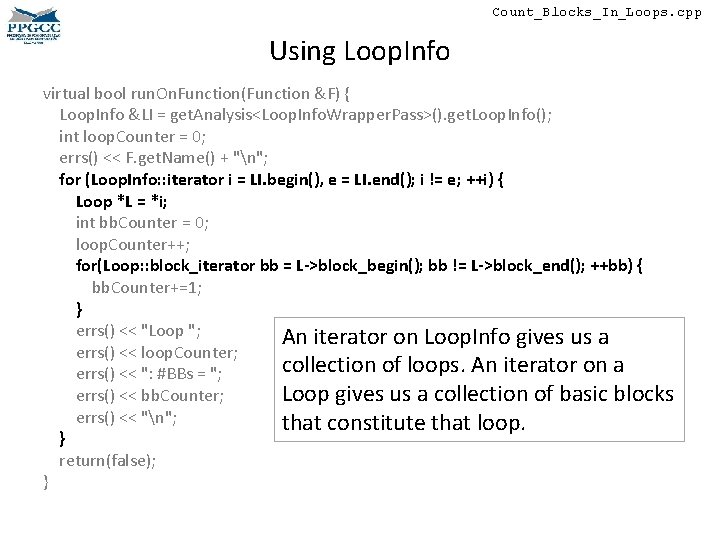

Using LoopInfo

An iterator on LoopInfo gives us a collection of loops. An iterator on a Loop gives us a collection of basic blocks that constitute that loop.

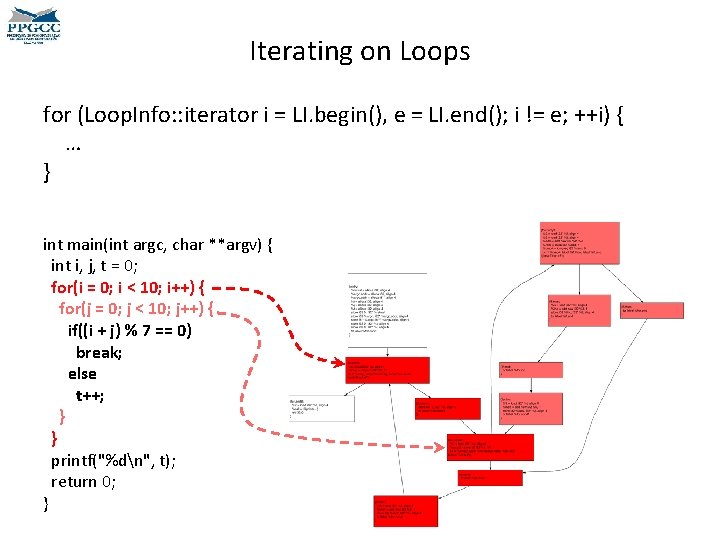

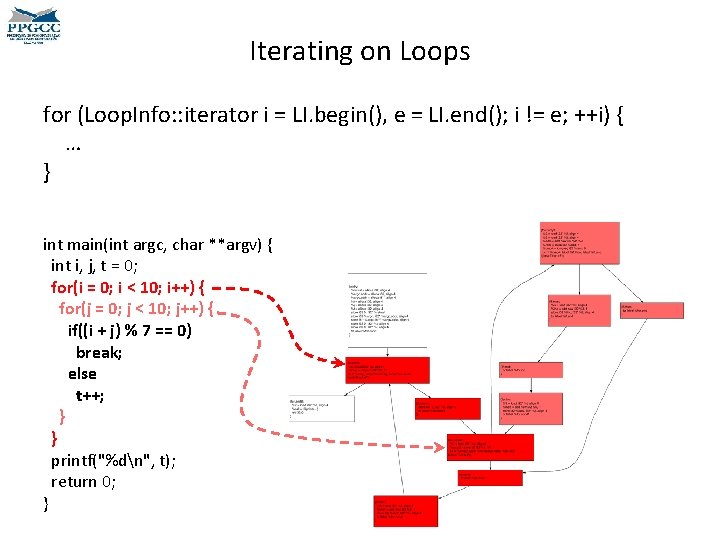
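The two levels of iteration can be mimicked on a toy loop nest. In this sketch, `ToyLoop` and `countTopLevel` are hypothetical stand-ins for `Loop` and the pass's runOnFunction. As in LLVM, the block list of a loop also contains the blocks of its sub-loops, and only the loops handed in as top-level loops are visited.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical toy loop, NOT llvm::Loop.
struct ToyLoop {
  std::vector<int> blocks;          // ids of this loop's blocks, sub-loops included
  std::vector<ToyLoop *> subLoops;  // loops nested directly inside this one
};

// Mirrors the slide's runOnFunction: iterate over the top-level loops and
// count the blocks of each one.
std::string countTopLevel(const std::vector<ToyLoop *> &topLevel) {
  std::string out;
  int loopCounter = 0;
  for (const ToyLoop *L : topLevel) {  // like the iterator on LoopInfo
    int bbCounter = 0;
    ++loopCounter;
    for (auto it = L->blocks.begin(); it != L->blocks.end(); ++it)
      ++bbCounter;                     // like the iterator on a Loop
    out += "Loop " + std::to_string(loopCounter) + ": #BBs = " +
           std::to_string(bbCounter) + "\n";
  }
  return out;
}
```

With an outer loop of six blocks that contains a three-block inner loop, the result is a single line, "Loop 1: #BBs = 6": the inner loop's blocks are counted as part of the outer loop, but the inner loop itself is never reported.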

Iterating on Loops

```cpp
for (LoopInfo::iterator i = LI.begin(), e = LI.end(); i != e; ++i) { … }
```

```c
#include <stdio.h>

int main(int argc, char **argv) {
  int i, j, t = 0;
  for (i = 0; i < 10; i++) {
    for (j = 0; j < 10; j++) {
      if ((i + j) % 7 == 0)
        break;
      else
        t++;
    }
  }
  printf("%d\n", t);
  return 0;
}
```

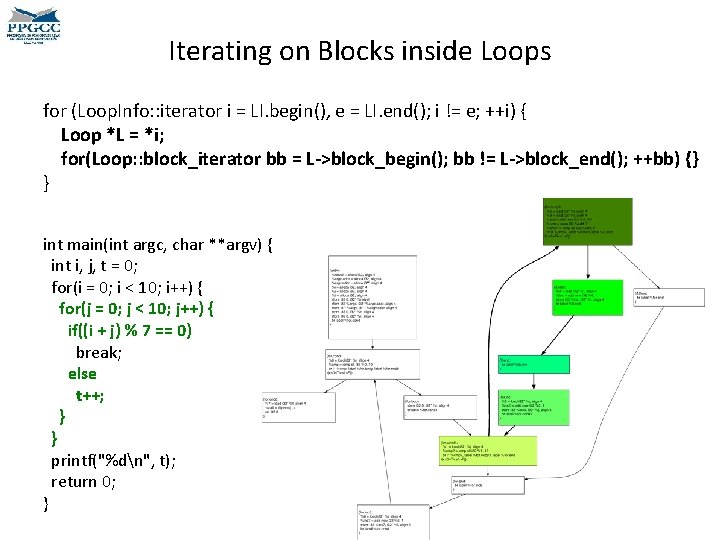

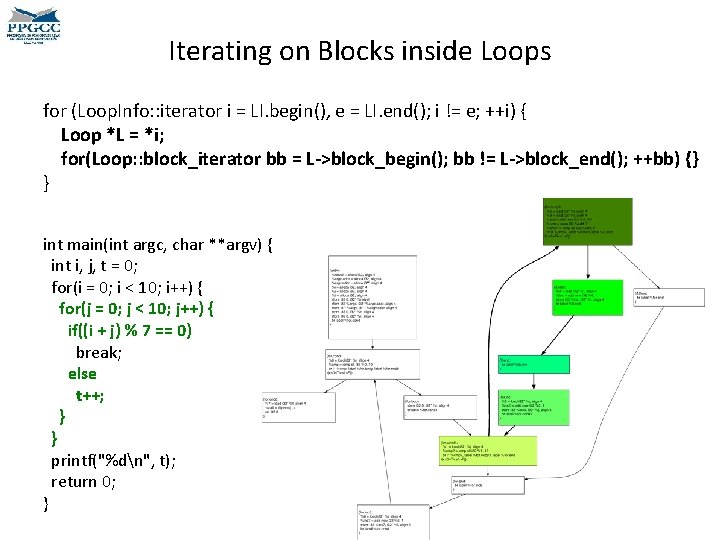

Iterating on Blocks inside Loops

```cpp
for (LoopInfo::iterator i = LI.begin(), e = LI.end(); i != e; ++i) {
  Loop *L = *i;
  for (Loop::block_iterator bb = L->block_begin(); bb != L->block_end(); ++bb) {}
}
```

(Applied to the same example program as on the previous slide.)

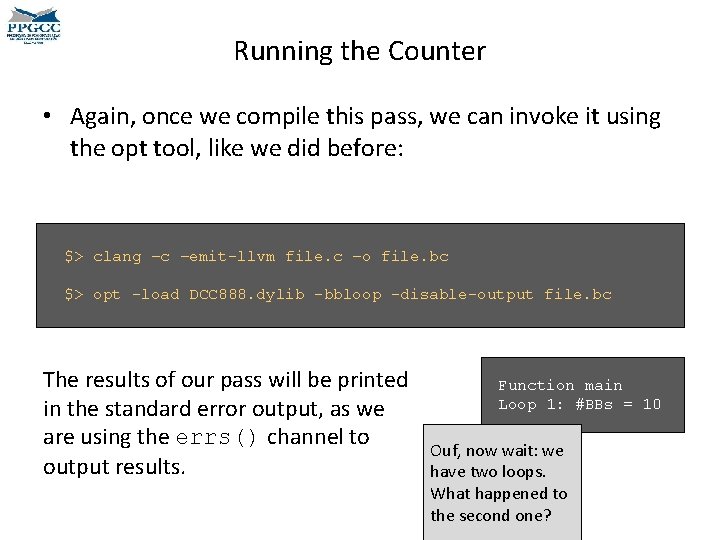

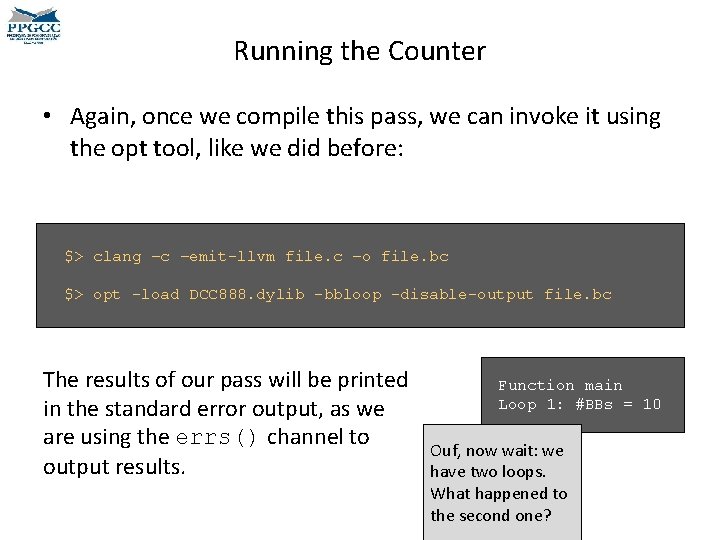

Running the Counter • Again, once we compile this pass, we can invoke it using the opt tool, as we did before:

```
$> clang -c -emit-llvm file.c -o file.bc
$> opt -load DCC888.dylib -bbloop -disable-output file.bc
```

The results of our pass will be printed on the standard error output, as we are using the errs() channel to output results:

```
Function main
Loop 1: #BBs = 10
```

Now wait: the program has two loops. What happened to the second one?

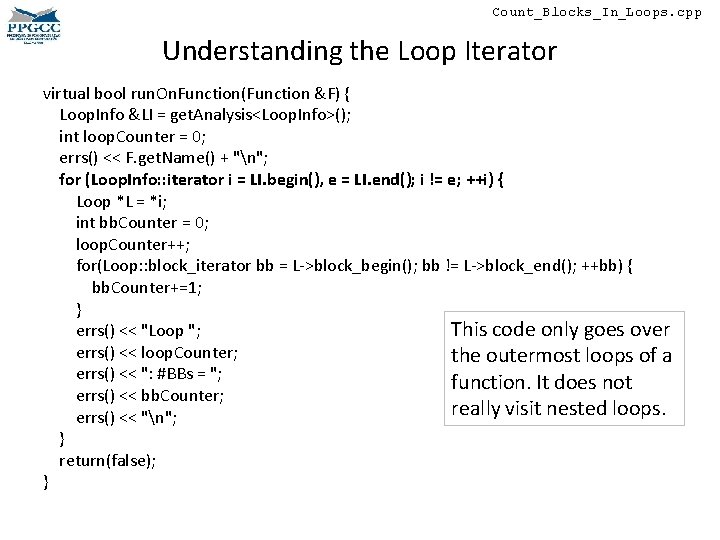

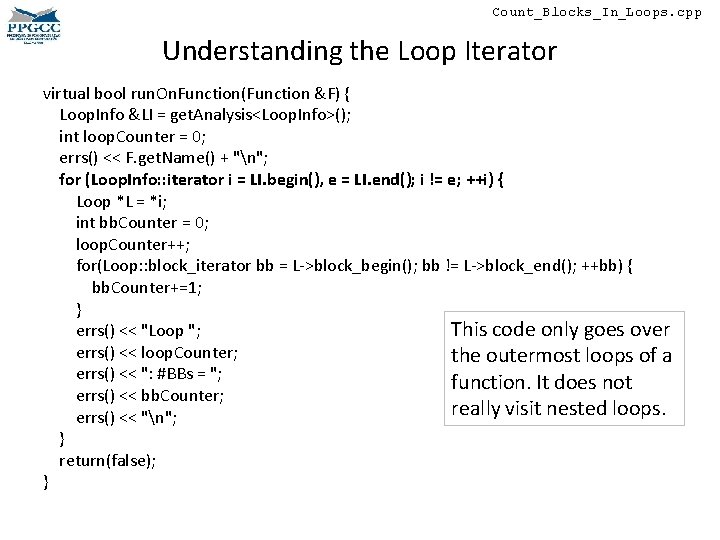

Count_Blocks_In_Loops.cpp: Understanding the Loop Iterator. The counter's runOnFunction only goes over the outermost loops of a function: iterating over LoopInfo yields only the top-level loops, so the code does not really visit nested loops.

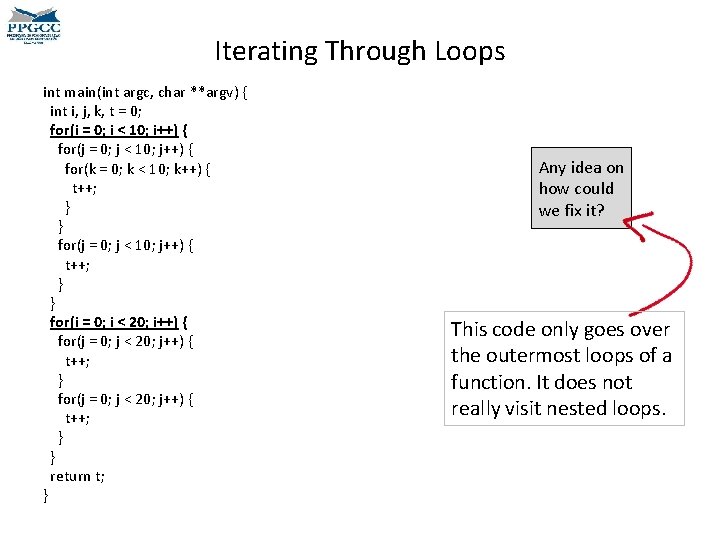

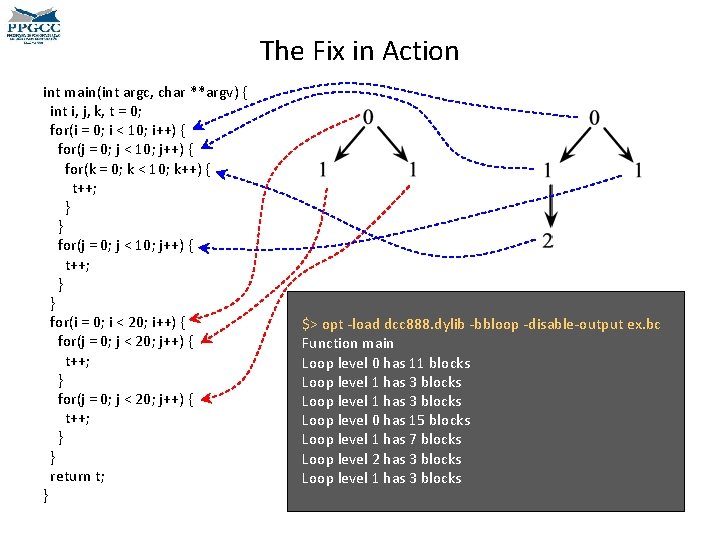

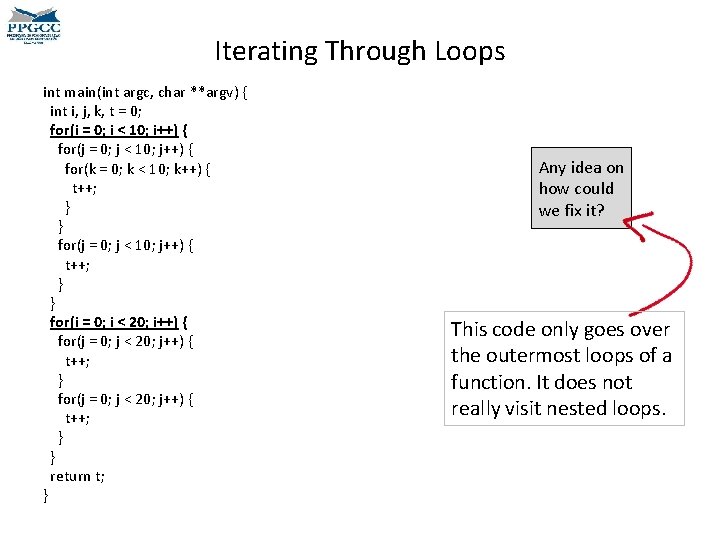

Iterating Through Loops

```c
int main(int argc, char **argv) {
  int i, j, k, t = 0;
  for (i = 0; i < 10; i++) {
    for (j = 0; j < 10; j++) {
      for (k = 0; k < 10; k++) {
        t++;
      }
    }
    for (j = 0; j < 10; j++) {
      t++;
    }
  }
  for (i = 0; i < 20; i++) {
    for (j = 0; j < 20; j++) {
      t++;
    }
  }
  return t;
}
```

Any idea how we could fix it?

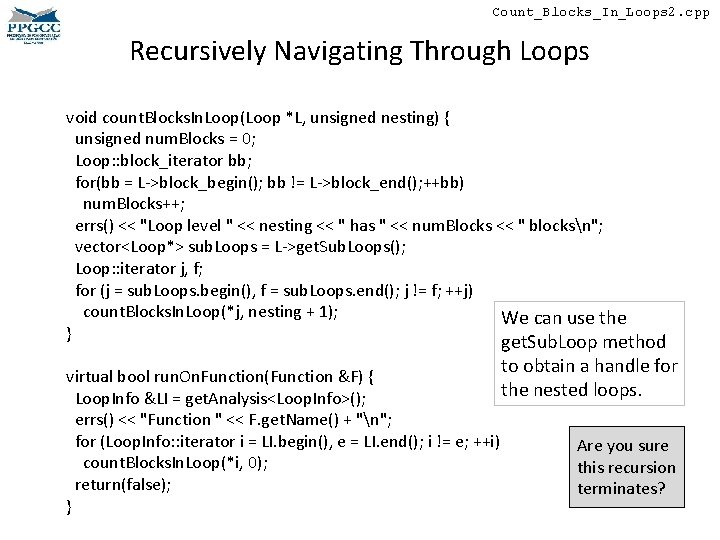

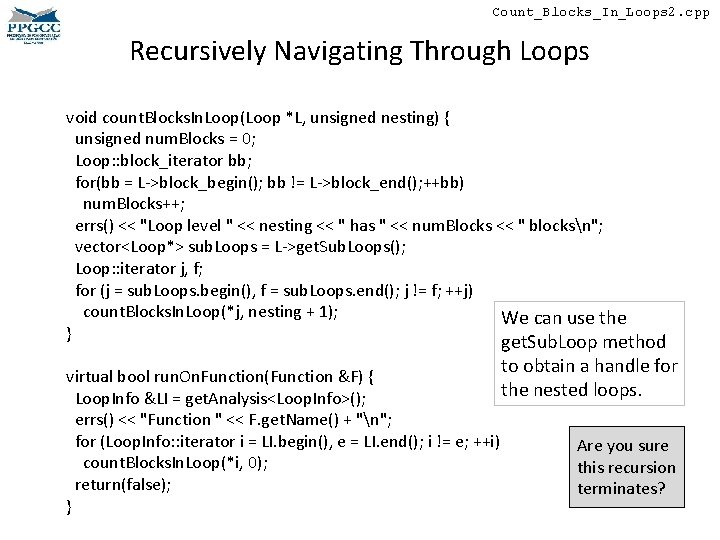

Count_Blocks_In_Loops2.cpp: Recursively Navigating Through Loops

```cpp
void countBlocksInLoop(Loop *L, unsigned nesting) {
  unsigned numBlocks = 0;
  Loop::block_iterator bb;
  for (bb = L->block_begin(); bb != L->block_end(); ++bb)
    numBlocks++;
  errs() << "Loop level " << nesting << " has " << numBlocks << " blocks\n";
  // getSubLoops returns the loops nested directly inside L.
  const std::vector<Loop *> &subLoops = L->getSubLoops();
  for (Loop::iterator j = subLoops.begin(), f = subLoops.end(); j != f; ++j)
    countBlocksInLoop(*j, nesting + 1);
}

bool runOnFunction(Function &F) override {
  LoopInfo &LI = getAnalysis<LoopInfoWrapperPass>().getLoopInfo();
  errs() << "Function " << F.getName() << "\n";
  for (LoopInfo::iterator i = LI.begin(), e = LI.end(); i != e; ++i)
    countBlocksInLoop(*i, 0);
  return false;
}
```

We can use the getSubLoops method to obtain a handle for the nested loops. Are you sure this recursion terminates?

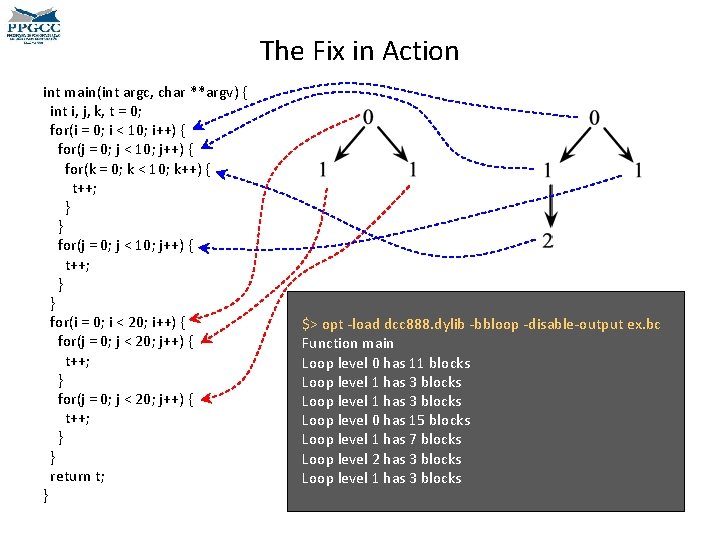
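On termination: the loops of a function form a finite forest in which each loop has at most one parent, so every call of countBlocksInLoop descends one nesting level, and innermost loops, which have no sub-loops, make no further calls. The same preorder walk can also be written without recursion, using an explicit stack. This is a sketch on a hypothetical `ToyLoop` type, not on LLVM's `Loop`; the function name `countAllLoops` is likewise an assumption.

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// Hypothetical toy loop, NOT llvm::Loop.
struct ToyLoop {
  std::vector<int> blocks;          // blocks of this loop, sub-loops included
  std::vector<ToyLoop *> subLoops;  // loops nested directly inside this one
};

// Non-recursive version of countBlocksInLoop: an explicit stack of
// (loop, nesting level) pairs replaces the call stack.
std::string countAllLoops(const std::vector<ToyLoop *> &topLevel) {
  std::string out;
  std::vector<std::pair<const ToyLoop *, unsigned>> stack;
  // Push in reverse so that loops are popped in their original order.
  for (auto it = topLevel.rbegin(); it != topLevel.rend(); ++it)
    stack.push_back({*it, 0u});
  while (!stack.empty()) {
    const ToyLoop *L = stack.back().first;
    unsigned nesting = stack.back().second;
    stack.pop_back();
    out += "Loop level " + std::to_string(nesting) + " has " +
           std::to_string(L->blocks.size()) + " blocks\n";
    for (auto it = L->subLoops.rbegin(); it != L->subLoops.rend(); ++it)
      stack.push_back({*it, nesting + 1});
  }
  return out;
}
```

The stack never holds more entries than there are loops, and each loop is pushed exactly once, so the walk ends after visiting every loop in the nest.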

The Fix in Action • Running the recursive counter on the program from the "Iterating Through Loops" slide produces:

```
$> opt -load dcc888.dylib -bbloop -disable-output ex.bc
Function main
Loop level 0 has 11 blocks
Loop level 1 has 3 blocks
Loop level 0 has 15 blocks
Loop level 1 has 7 blocks
Loop level 2 has 3 blocks
Loop level 1 has 3 blocks
```

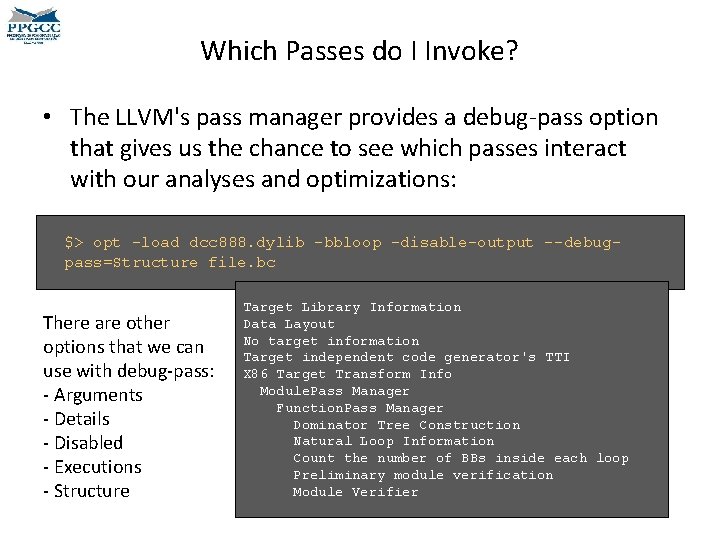

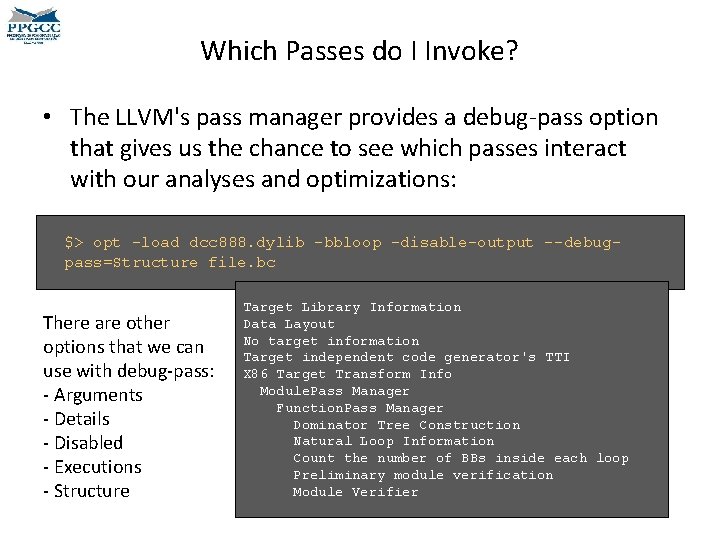
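The output can be checked against the recursion on a toy loop tree built from the block counts printed above. This is a sketch with hypothetical `ToyLoop` and `runOnToyFunction` names; since the slide gives only counts, each toy loop records a count rather than its blocks. Matching the output to the program, the 11-block nest with a single inner loop appears to be the second nest (i < 20), so LoopInfo seems to have listed the two top-level loops in reverse source order in this run; the toy input follows that order.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical toy loop: only the block count reported by the pass is kept.
struct ToyLoop {
  unsigned numBlocks;
  std::vector<const ToyLoop *> subLoops;
};

// Same recursion as countBlocksInLoop, appending to a string instead of errs().
void countBlocksInLoop(const ToyLoop *L, unsigned nesting, std::string &out) {
  assert(L != nullptr);
  out += "Loop level " + std::to_string(nesting) + " has " +
         std::to_string(L->numBlocks) + " blocks\n";
  for (const ToyLoop *sub : L->subLoops)
    countBlocksInLoop(sub, nesting + 1, out);  // one nesting level deeper
}

std::string runOnToyFunction(const std::vector<const ToyLoop *> &topLevel) {
  std::string out = "Function main\n";
  for (const ToyLoop *L : topLevel)
    countBlocksInLoop(L, 0, out);
  return out;
}
```

Because each loop is printed before its sub-loops, the nesting levels in the output read as a preorder traversal of the loop tree.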

Which Passes do I Invoke? • LLVM's pass manager provides a debug-pass option that lets us see which passes interact with our analyses and optimizations:

```
$> opt -load dcc888.dylib -bbloop -disable-output -debug-pass=Structure file.bc
```

There are other options that we can use with debug-pass: Arguments, Details, Disabled, Executions, Structure. With Structure, the output is:

```
Target Library Information
Data Layout
No target information
Target independent code generator's TTI
X86 Target Transform Info
ModulePass Manager
FunctionPass Manager
Dominator Tree Construction
Natural Loop Information
Count the number of BBs inside each loop
Preliminary module verification
Module Verifier
```

Final Remarks • LLVM provides users with a collection of analyses and optimizations, which are called passes. • Users can chain new passes into this pipeline. • The pass manager orders the passes in such a way as to satisfy their dependencies. • Passes are organized according to their granularity, e.g., module, function, loop, basic block, etc.
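The ordering job of the pass manager can be illustrated with a topological sort. This is a minimal sketch, not LLVM's scheduler: `schedule` is a hypothetical function that applies Kahn's algorithm to a map from each pass to the passes it requires, so that every pass comes after its requirements. The usage mirrors the -debug-pass=Structure listing, where Dominator Tree Construction precedes Natural Loop Information (natural loops are identified with the help of the dominator tree), which in turn precedes the block counter.

```cpp
#include <cassert>
#include <map>
#include <queue>
#include <string>
#include <vector>

// Hypothetical scheduler: returns the passes in an order in which every
// pass appears after all the passes it requires (Kahn's algorithm).
std::vector<std::string> schedule(
    const std::map<std::string, std::vector<std::string>> &requirements) {
  std::map<std::string, int> unmet;                       // requirements not yet run
  std::map<std::string, std::vector<std::string>> users;  // reverse edges
  for (const auto &entry : requirements) {
    unmet[entry.first];  // make sure every pass has an entry (0 by default)
    for (const std::string &req : entry.second) {
      unmet[req];        // a requirement is a pass too
      ++unmet[entry.first];
      users[req].push_back(entry.first);
    }
  }
  std::queue<std::string> ready;
  for (const auto &entry : unmet)
    if (entry.second == 0) ready.push(entry.first);
  std::vector<std::string> order;
  while (!ready.empty()) {
    std::string p = ready.front();
    ready.pop();
    order.push_back(p);
    for (const std::string &u : users[p])
      if (--unmet[u] == 0) ready.push(u);  // all of u's requirements have run
  }
  assert(order.size() == unmet.size() && "cyclic requirements");
  return order;
}
```

A cycle of requirements would leave some passes with unmet requirements forever, which the final assertion reports.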

DEPARTMENT OF COMPUTER SCIENCE, UNIVERSIDADE FEDERAL DE MINAS GERAIS (FEDERAL UNIVERSITY OF MINAS GERAIS), BRAZIL: COMPILER RESEARCH AT UFMG

How can you make your computer system more efficient and more secure?

Without buying new hardware? Without rewriting your code?

Improve your compiler! The compiler is the software that translates programming languages into binary code.

And why work with us?

Have You Used Firefox? • We implemented the constant propagation used by the JavaScript compiler in Firefox – https://bugzilla.mozilla.org/show_bug.cgi?id=536641 To learn more: • Igor Rafael de Assis Costa, Péricles Rafael Oliveira Alves, Henrique Nazaré Santos, Fernando Magno Quintão Pereira: Just-in-time value specialization. CGO 2013: 29:1-29:11 • Igor Rafael de Assis Costa, Henrique Nazaré Santos, Péricles Rafael Oliveira Alves, Fernando Magno Quintão Pereira: Just-in-time value specialization. Computer Languages, Systems & Structures 40(2): 37-52 (2014)

Do You Play Video Games? • More than 10,000 lines of code used in Ocelot, a compiler for graphics cards, were written at UFMG – http://gpuocelot.gatech.edu/ • Now available in LLVM – http://reviews.llvm.org/D8576 – Used by Mentor Graphics and by Nvidia To learn more: • Diogo Sampaio, Rafael Martins de Souza, Sylvain Collange, Fernando Magno Quintão Pereira: Divergence analysis. ACM Trans. Program. Lang. Syst. 35(4): 13 (2013)

Do You Program in PHP? • We implemented the tainted flow analysis now used by the PHP compiler called Phc – Several security flaws were found in programs used in industry To learn more: • Andrei Rimsa, Marcelo d'Amorim, Fernando Magno Quintão Pereira: Efficient static checker for tainted variable attacks. Sci. Comput. Program. 80: 91-105 (2014) • Andrei Rimsa, Marcelo d'Amorim, Fernando Magno Quintão Pereira: Tainted Flow Analysis on e-SSA-Form Programs. CC 2011: 124-143

Awards (Last 5 Years)

- Best paper, SBLP 2010: "Removing Overflow Tests via Run-Time Partial Evaluation".
- Best paper, SBAC-PAD 2010: "Performance Debugging of GPGPU Applications with the Divergence Map".
- Best paper, SBQS 2011: "Software Evolution Characterization - A Complex Network Approach".
- Best paper, SBLP 2012: "Spill Code Placement for SIMD Machines".
- Best undergraduate research work, SIC UFMG 2012, Exact and Earth Sciences: Péricles Alves, "Runtime Value Specialization".
- 2013: Second-best master's thesis of the Sociedade Brasileira de Computação (Brazilian Computer Society): "Divergence Analysis with Affine Constraints".
- 2014: Best paper of the XIX Simpósio Brasileiro de Linguagens de Programação (Brazilian Symposium on Programming Languages): "Restritificação", Sociedade Brasileira de Computação.
- 2015: Third-best paper of the XIX Simpósio Brasileiro de Linguagens de Programação: "Automatic Inference of Loop Complexity through Polynomial Interpolation", Sociedade Brasileira de Computação.
- 2015: Best Tool of the Congresso Brasileiro de Software: Teoria e Prática (CBSoft): "Restrictifier: a tool to disambiguate pointers at function call sites", Sociedade Brasileira de Computação.
- 2015: Second-best Tool of the Congresso Brasileiro de Software: Teoria e Prática (CBSoft): "FlowTracker - Detecção de Código Não Isócrono via Análise Estática de Fluxo" (FlowTracker: Detection of Non-Isochronous Code via Static Flow Analysis), Sociedade Brasileira de Computação.
- 2015: Second-best paper at SBSeg 2015: "Uma Técnica de Análise Estática para Detecção de Canais Laterais Baseados em Tempo" (A Static Analysis Technique for Detecting Timing-Based Side Channels).

Infrastructure • Process for measuring energy consumption on embedded hardware • New laboratory inaugurated on December 18, built with funding from Intel and LG Electronics

Collaborations with Industry • Intel: secure compilation of programs written in C/C++ for embedded devices. • Maxtrack: automatic testing of software implemented on microcontrollers. • LG Electronics: automatic code parallelization for smartphones. • Nvidia: divergence analysis. • Google: six "Summer of Code" projects, in partnership with Mozilla, Apple, and GHC.

And, at the end of the day... Hi Prof Pereira, Long time don't see. I trust you are well. Here at Apple, we are investing heavily in our compiler technologies. I was wondering if you might have recommendations for students who are interested in pursuing a career in industrial compiler work. I'd be interested in talking to them about opportunities here. Regards, Evan Cheng Sr Manager, Compiler Technologies, Apple (March '13)

And, at the end of the day, there is even more... Dear Fernando, I have read your paper in JIT specialization in JavaScript and particularly your work in the context of IonMonkey has caught my eye. I am working as a Research Scientist at Intel Labs on the River Trail project. We are collaborating with Mozilla on a Firefox implementation. Given your recent work on IonMonkey, maybe one of your students would be interested to join us in Santa Clara to work on this topic? Regards, Stephan (May'13)

Conclusion • We want to do research that matters to people. – Products – Innovations – Knowledge generation