
Introduction to the Data Predictive Analytics Methods with RapidMiner and R using Institutional Data

Mark Leany and Evelyn Ho-Wisniewski, AIR Forum 2018, Orlando, Florida

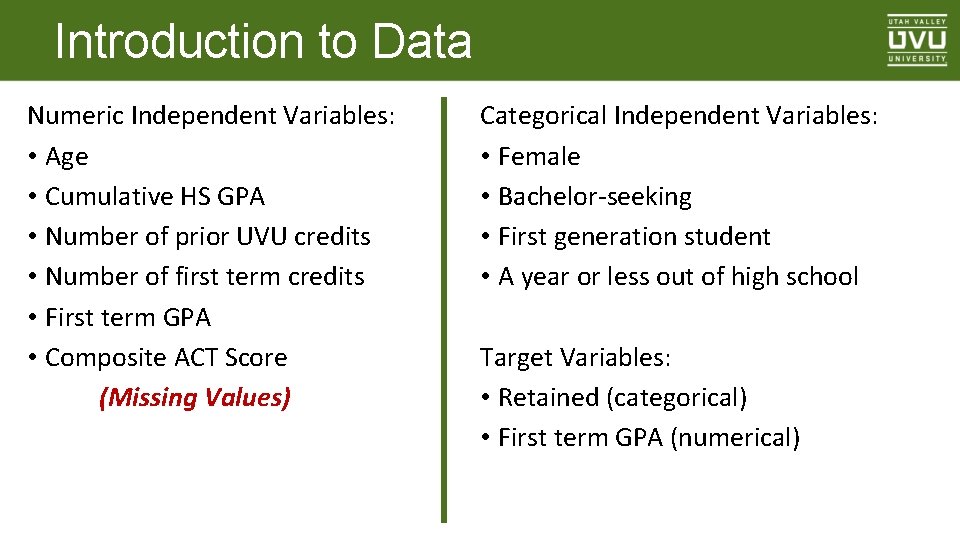

Tools: RapidMiner (https://rapidminer.com/)

Strengths:
- Easy GUI
- It does the work behind the scenes
- It has multiple predefined techniques and options

Limitations:
- Need to manually update each parameter
- Don't always know what it did behind the scenes
- Not the best documentation (sometimes have to use other tools)

Tools: R (https://cran.r-project.org/) and RStudio (https://www.rstudio.com/)

Strengths:
- Open source (and free!)
- Very powerful, with lots of options
- Very easy to modify for a change in parameters

Limitations:
- No formal vendor support, so help can be inconsistent
- Don't always know which commands exist
- Commands, especially non-standard ones, must be entered exactly (R is case-sensitive)

Introduction to Data

Numeric independent variables:
- Age
- Cumulative HS GPA
- Number of prior UVU credits
- Number of first-term credits
- First-term GPA
- Composite ACT score (has missing values)

Categorical independent variables:
- Female
- Bachelor-seeking
- First-generation student
- A year or less out of high school

Target variables:
- Retained (categorical)
- First-term GPA (numeric)

Data Cleaning
- Age <= 40: after 40, fewer than 10 students per group (0.32% of total)
- Prior credits <= 64: 64 credits is an associate's degree (0.37% of total)
- Term credits <= 20: students need specific permission to take more (0.03% of total)
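The three cleaning rules amount to a single row filter. Here is a minimal sketch. It assumes that the columns `AGE_TW`, `PRIOR_CREDITS`, and `TERM_CREDITS` (names taken from the R model code later in the deck) hold age, prior UVU credits, and first-term credits. The `students` data frame is hypothetical toy data.

```r
# Hypothetical toy data; AGE_TW, PRIOR_CREDITS and TERM_CREDITS are assumed
# to be the age, prior-credit and first-term-credit columns
students <- data.frame(
  AGE_TW        = c(18, 19, 45, 22, 30),
  PRIOR_CREDITS = c(0, 12, 30, 70, 15),
  TERM_CREDITS  = c(15, 12, 9, 14, 21)
)

# Keep age <= 40, prior credits <= 64 and term credits <= 20
cleaned <- subset(students, AGE_TW <= 40 & PRIOR_CREDITS <= 64 & TERM_CREDITS <= 20)

# Overall share of records removed (the slide lists the share for each rule separately)
removed_pct <- 100 * (1 - nrow(cleaned) / nrow(students))
cleaned
removed_pct
```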

Datasets - Train / Test / Score
- Train: 2/3 of Fall 2012–2015; cross-validate
- Test: 1/3 of Fall 2012–2015; test (and modify or select an alternate model as necessary)
- Score: all of Fall 2016; you would not normally have results yet (to calculate % success)
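The deck does not show the code that produced the 2/3 and 1/3 split. The sketch below is one way to do it in R, stratified on `RETAINED` so that both sets keep the same retention rate. It is an illustration under that assumption, not the authors' procedure. The data frame `fall_2012_2015` is a hypothetical stand-in.

```r
set.seed(2018)  # arbitrary seed so the split is reproducible

# Hypothetical stand-in for the Fall 2012-2015 records
fall_2012_2015 <- data.frame(
  id       = 1:300,
  RETAINED = rep(c(1, 0), times = c(195, 105))
)

# Stratified split: draw 2/3 of the row numbers within each RETAINED group
row_groups <- split(seq_len(nrow(fall_2012_2015)), fall_2012_2015$RETAINED)
train_rows <- unlist(lapply(row_groups, function(rows) {
  rows[sample.int(length(rows), round(2 / 3 * length(rows)))]
}))

train <- fall_2012_2015[train_rows, ]   # about 2/3 of the rows
test  <- fall_2012_2015[-train_rows, ]  # the remaining 1/3
```

Because the split is drawn within each outcome group, both sets have a 65% retention rate here. A simple `sample()` over all rows would only match that rate approximately.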

Techniques
- Cluster Analysis: placing elements into natural groups
- Linear Discriminant Analysis (LDA): predicting a categorical result based on continuous independent variables
- Linear Regression: predicting a continuous result based on continuous independent variables
- Logistic Regression: predicting the probability of a categorical result based on continuous independent variables
- Decision Trees: predicting a categorical result based on continuous or categorical independent variables

Clustering - Definition
- Type: Classification
- Input: Numeric
- Output: Centroids and assignment to a cluster
- Unique characteristics:
  - Unsupervised method
  - k-Means, k-Medoids, k-Modes
  - Scale your data
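Scaling matters because k-means assigns points by Euclidean distance. Without scaling, a variable measured in large units, such as credits, would dominate one measured in small units, such as GPA. The sketch below uses hypothetical toy data. It standardizes each column with base R's `scale()` and then runs `kmeans()`.

```r
set.seed(42)  # kmeans() uses random starting centroids

# Hypothetical toy data on very different scales: age (years) and credits
toy <- data.frame(
  age     = c(18, 19, 20, 35, 36, 38),
  credits = c(0, 3, 6, 60, 55, 62)
)

# scale() centers each column to mean 0 and rescales it to standard deviation 1
toy_scaled <- scale(toy)

# nstart = 25 tries 25 random starts and keeps the best solution
km <- kmeans(toy_scaled, centers = 2, nstart = 25)
km$cluster   # cluster assignment for each row
km$centers   # centroids, in scaled units
```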

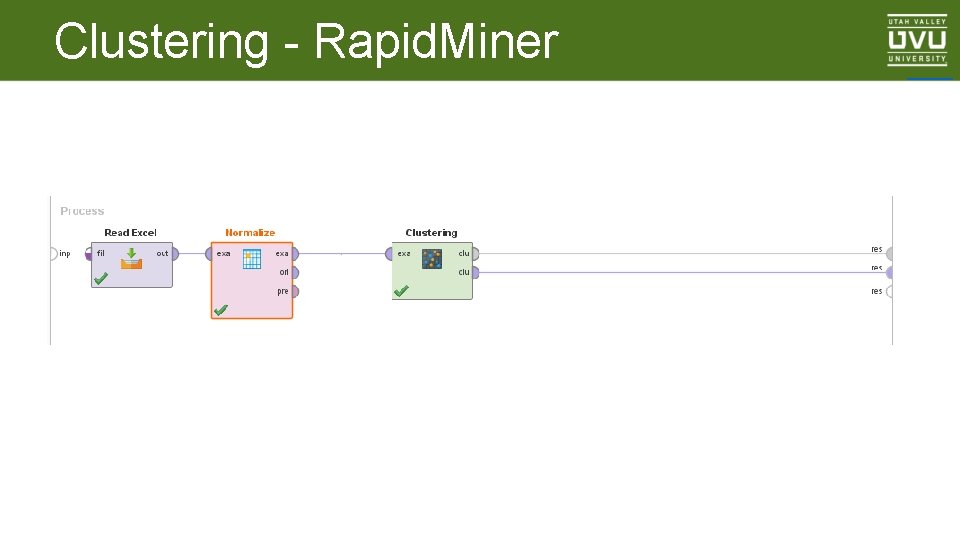

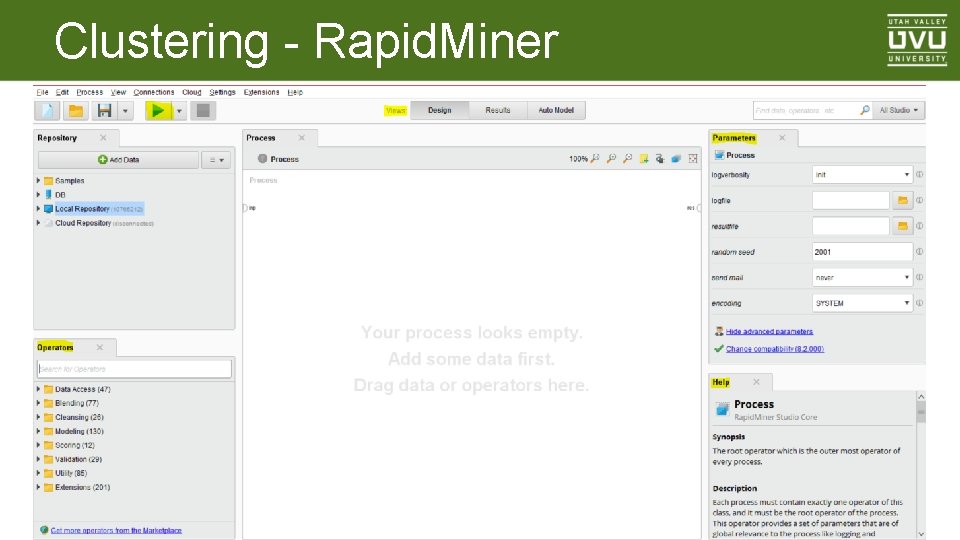

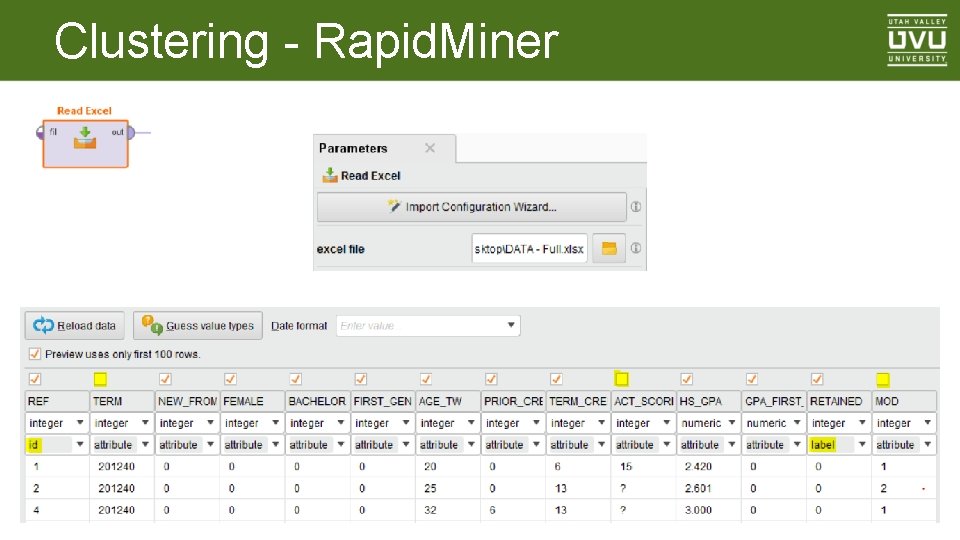

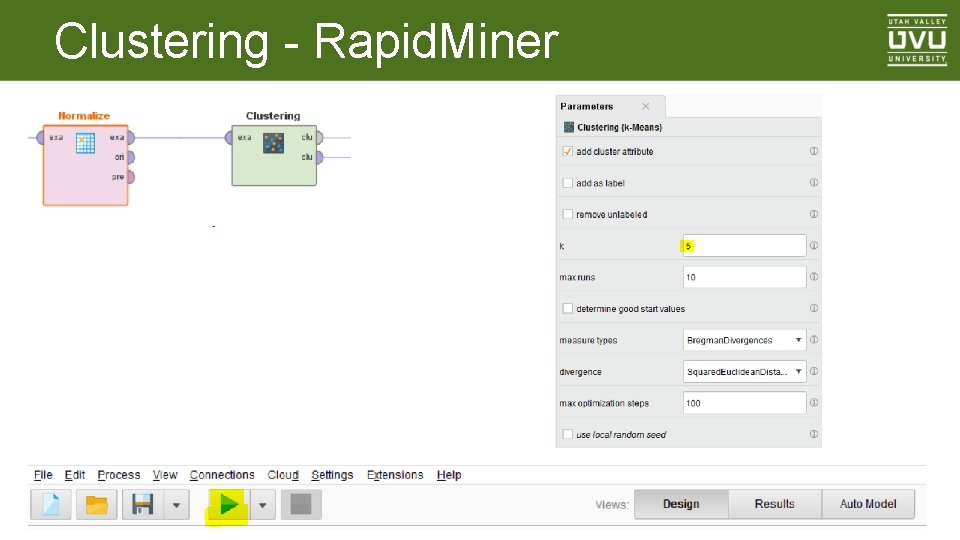

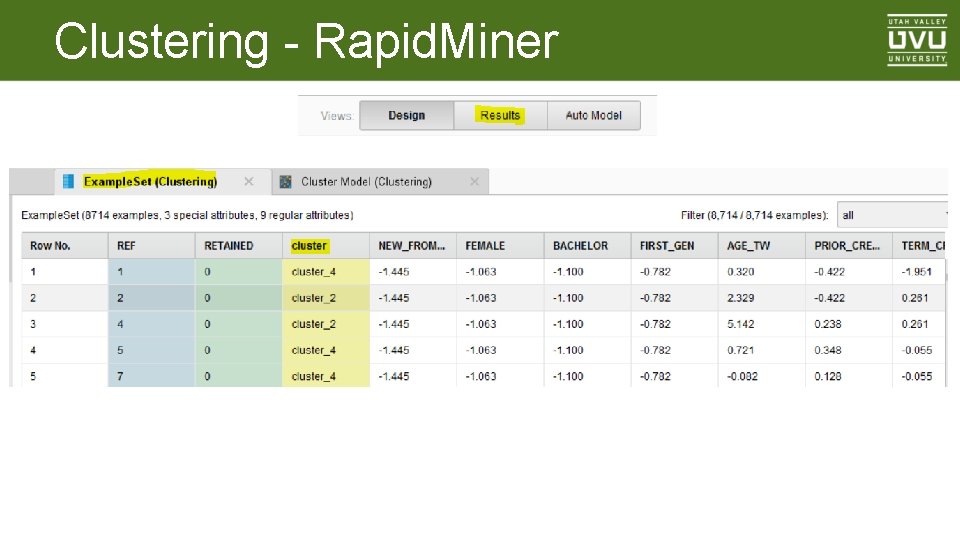

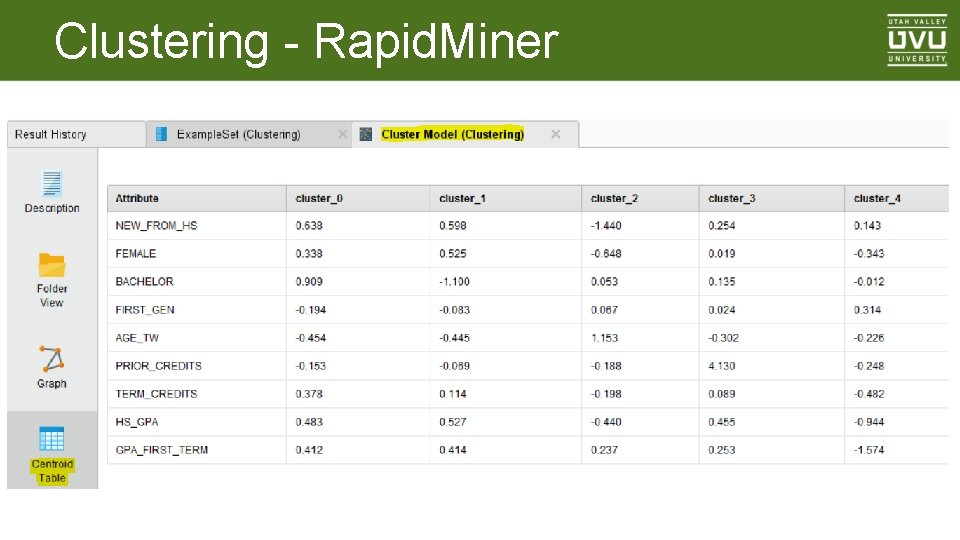

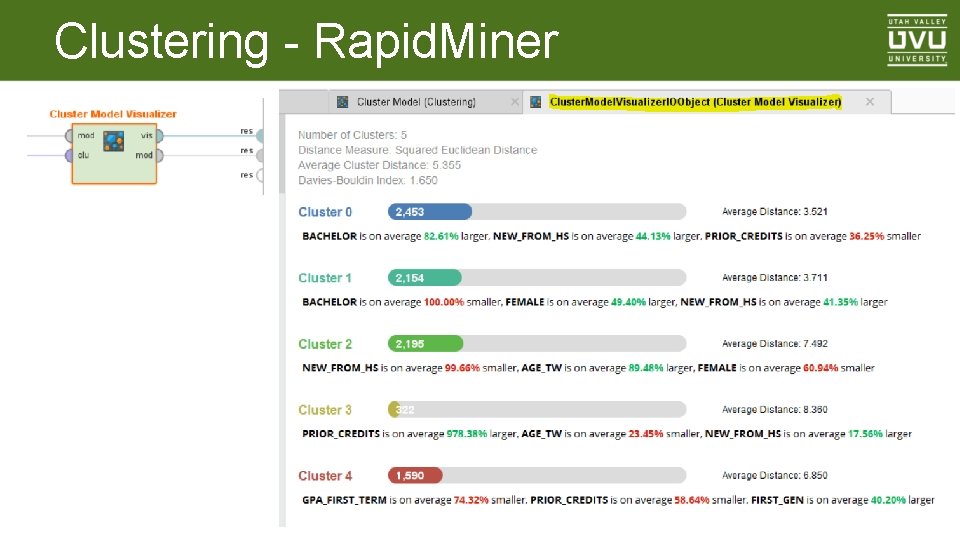

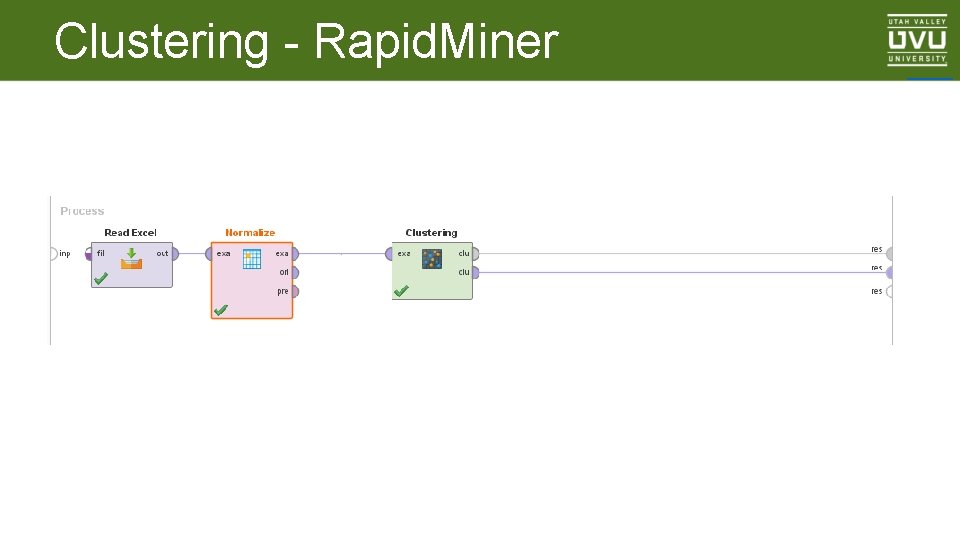

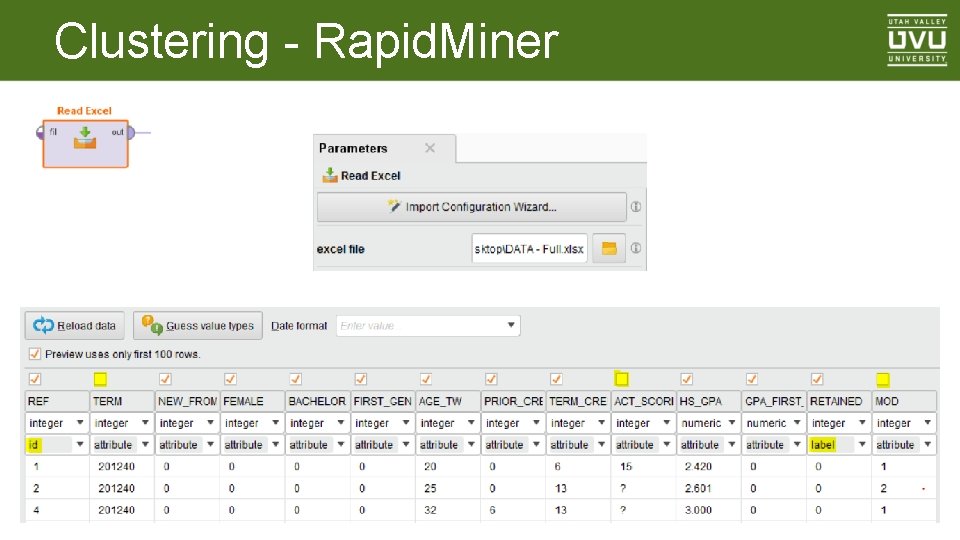

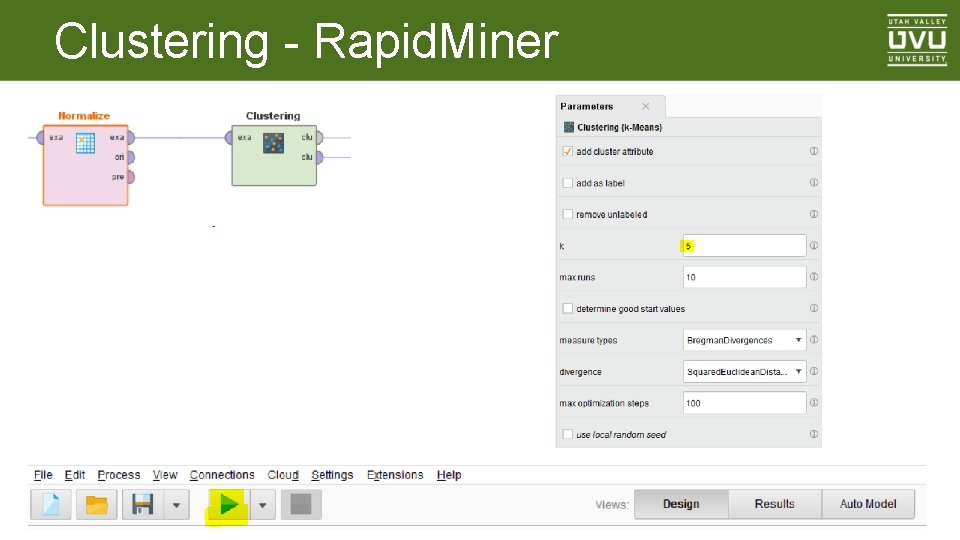

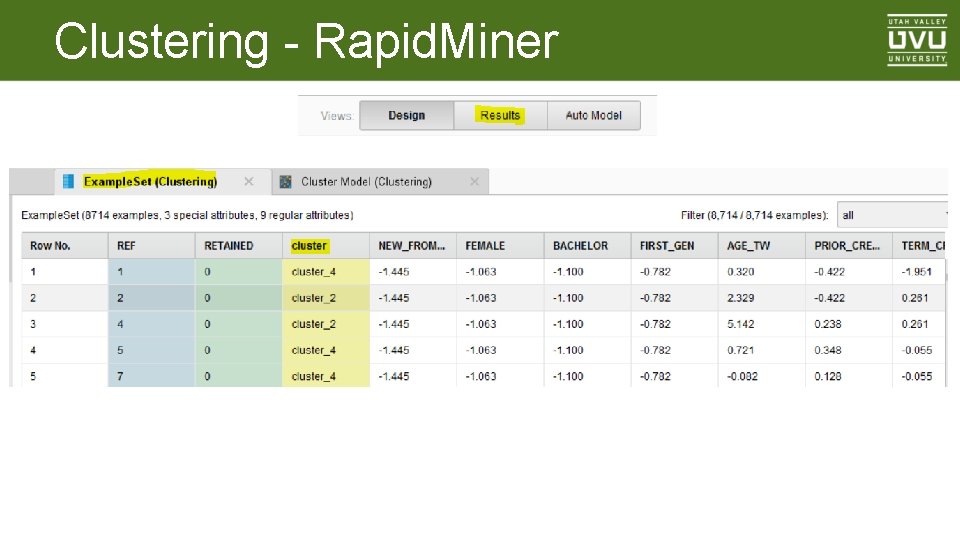

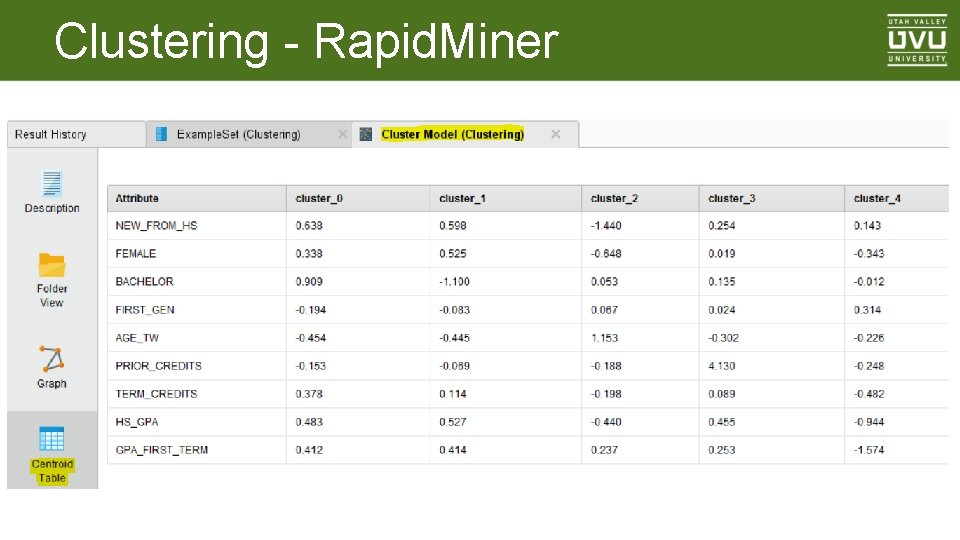

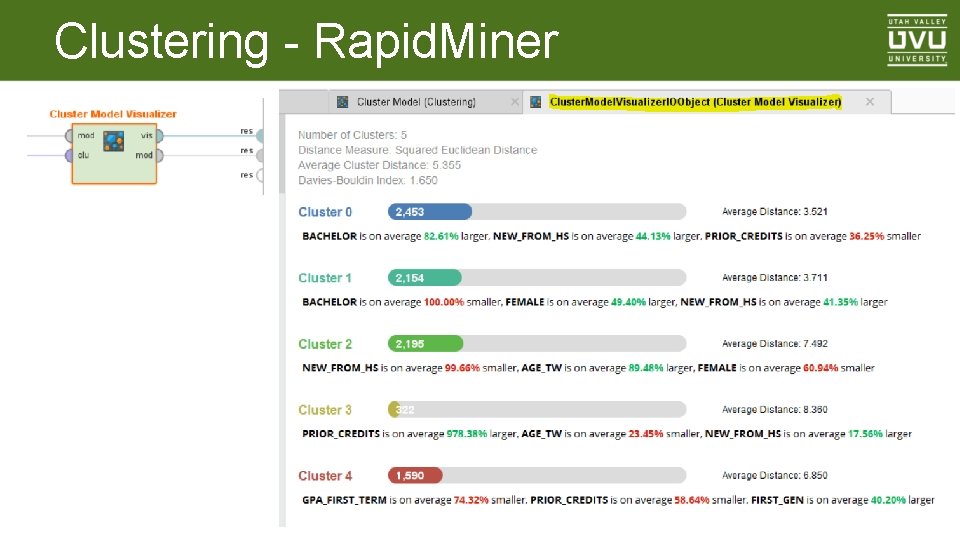

Clustering - RapidMiner


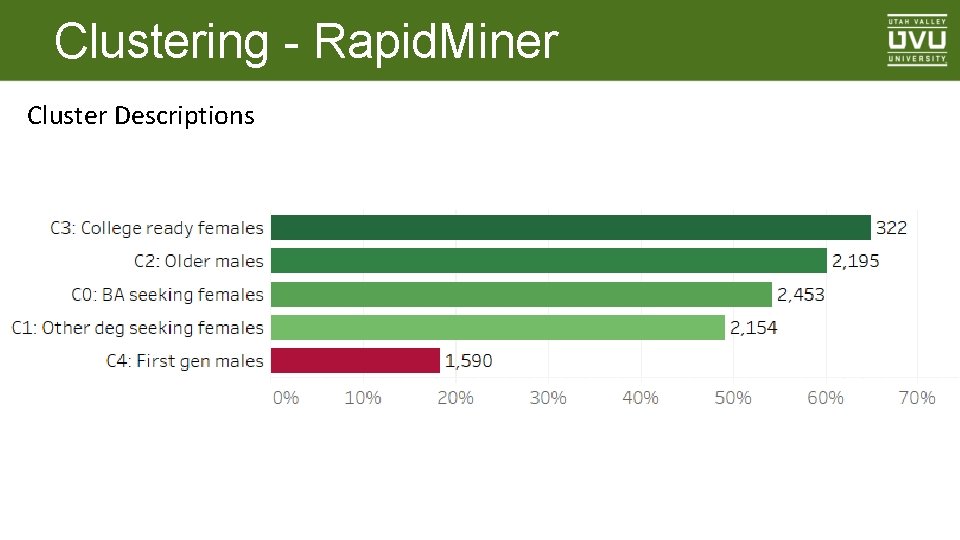

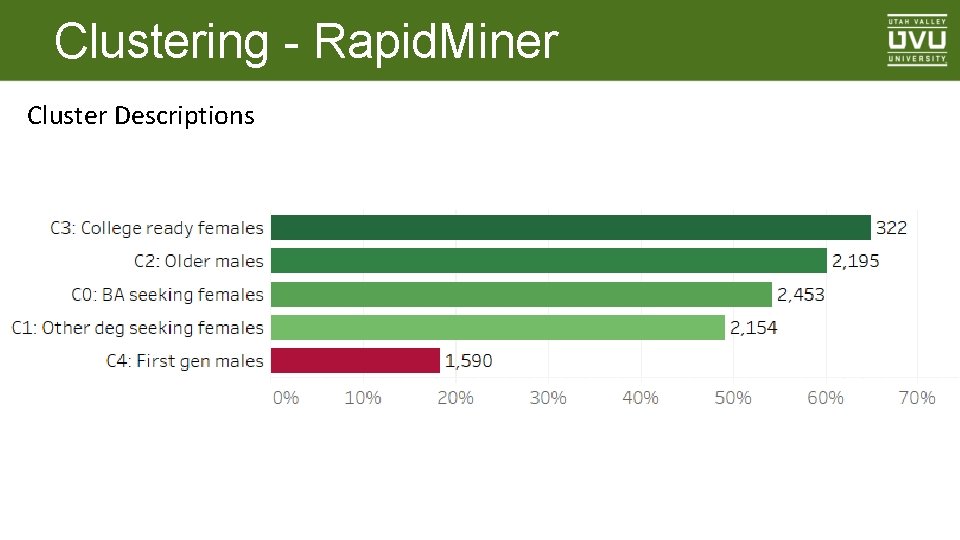

Clustering - RapidMiner: Cluster Descriptions

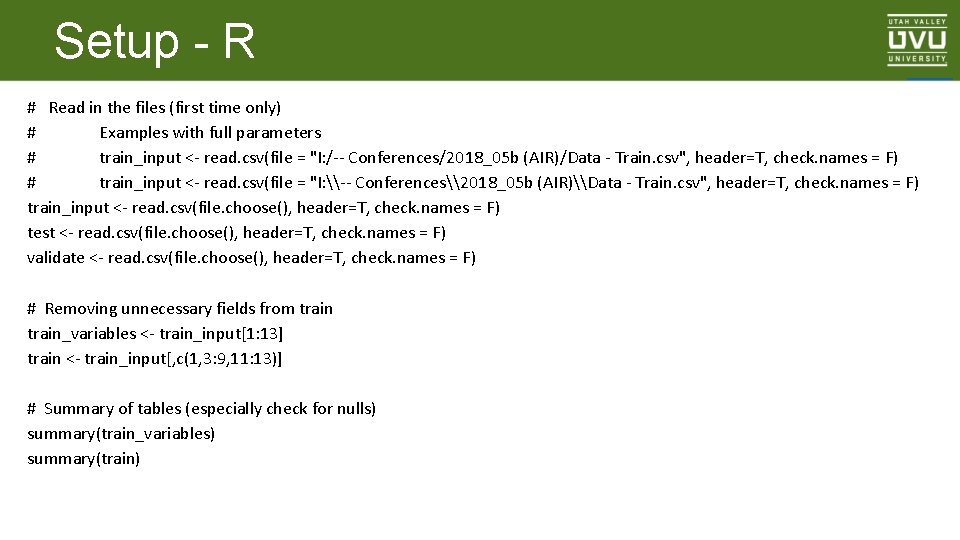

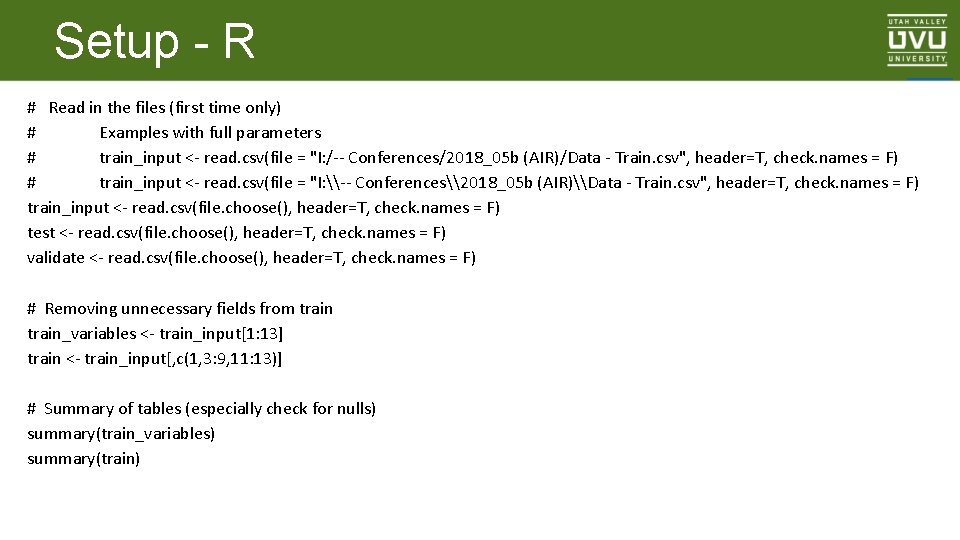

Setup - R

```r
# Read in the files (first time only)
# Examples with full parameters; in R strings, Windows paths need forward slashes or doubled backslashes
# train_input <- read.csv(file = "I:/-- Conferences/2018_05b (AIR)/Data - Train.csv", header = TRUE, check.names = FALSE)
# train_input <- read.csv(file = "I:\\-- Conferences\\2018_05b (AIR)\\Data - Train.csv", header = TRUE, check.names = FALSE)
train_input <- read.csv(file.choose(), header = TRUE, check.names = FALSE)
test        <- read.csv(file.choose(), header = TRUE, check.names = FALSE)
score       <- read.csv(file.choose(), header = TRUE, check.names = FALSE)  # Fall 2016; the model code refers to it as `score`

# Remove unnecessary fields from the training data
train_variables <- train_input[1:13]
train <- train_input[, c(1, 3:9, 11:13)]

# Summary of tables (especially check for nulls)
summary(train_variables)
summary(train)
```

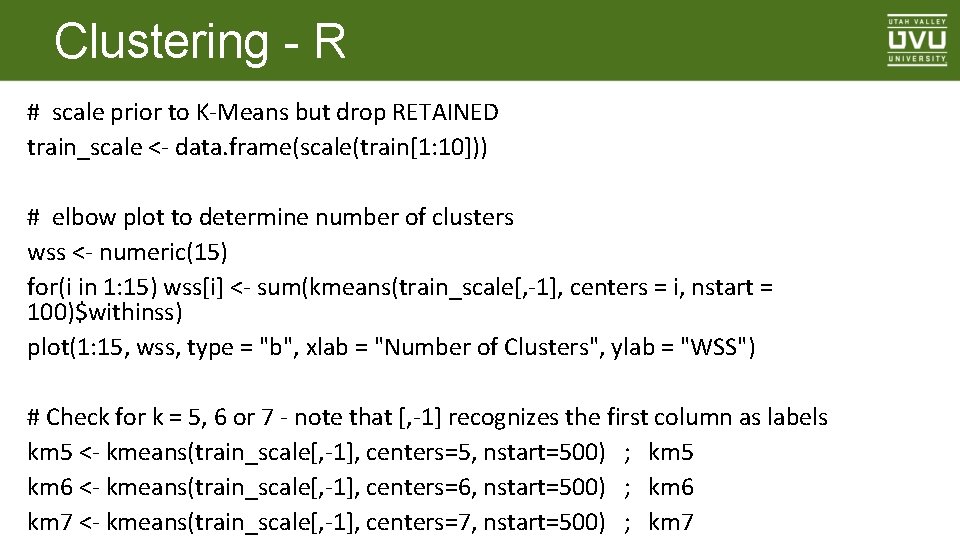

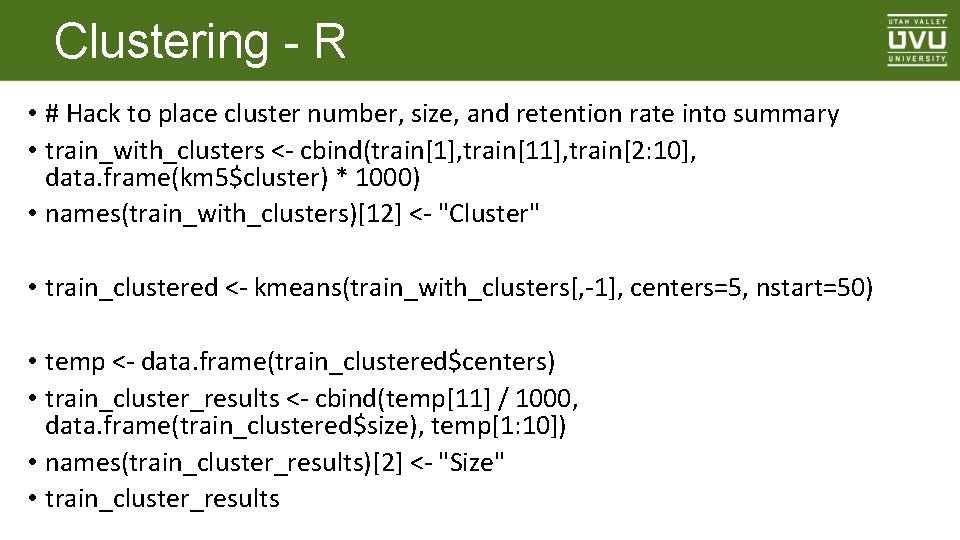

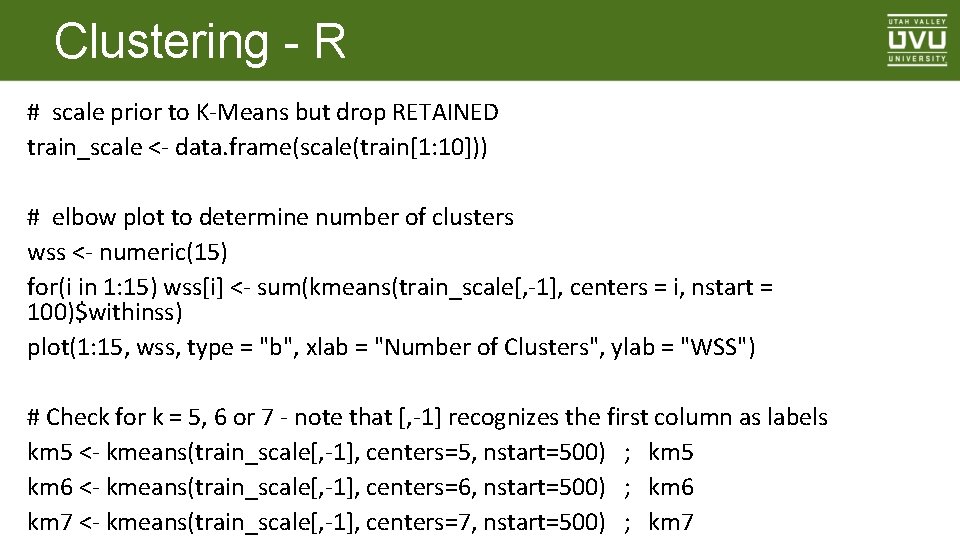

Clustering - R

```r
# Scale prior to k-means but drop RETAINED (train[1:10] leaves it out)
train_scale <- data.frame(scale(train[1:10]))

# Elbow plot to determine the number of clusters
wss <- numeric(15)
for (i in 1:15) {
  wss[i] <- sum(kmeans(train_scale[, -1], centers = i, nstart = 100)$withinss)
}
plot(1:15, wss, type = "b", xlab = "Number of Clusters", ylab = "WSS")

# Check for k = 5, 6, or 7; [, -1] drops the first column, which holds labels
km5 <- kmeans(train_scale[, -1], centers = 5, nstart = 500); km5
km6 <- kmeans(train_scale[, -1], centers = 6, nstart = 500); km6
km7 <- kmeans(train_scale[, -1], centers = 7, nstart = 500); km7
```

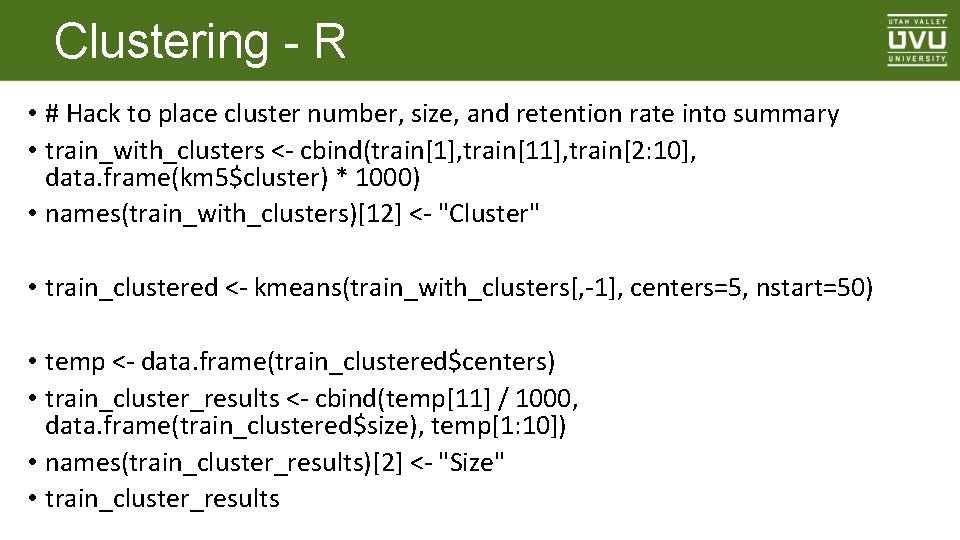

Clustering - R

```r
# Hack to place cluster number, size, and retention rate into summary:
# multiplying the cluster number by 1000 forces k-means to regroup exactly by cluster
train_with_clusters <- cbind(train[1], train[11], train[2:10], data.frame(km5$cluster) * 1000)
names(train_with_clusters)[12] <- "Cluster"
train_clustered <- kmeans(train_with_clusters[, -1], centers = 5, nstart = 50)
temp <- data.frame(train_clustered$centers)
train_cluster_results <- cbind(temp[11] / 1000, data.frame(train_clustered$size), temp[1:10])
names(train_cluster_results)[2] <- "Size"
train_cluster_results
```
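The same summary (cluster size plus the mean of every column, including the retention rate) can probably be produced more directly with base R's `aggregate()` and `table()`, without re-running k-means on a rescaled cluster column. This is a sketch on hypothetical toy data. It is not the authors' code.

```r
set.seed(1)  # kmeans() uses random starting centroids

# Hypothetical toy data: RETAINED plus two predictors
toy <- data.frame(
  RETAINED = c(1, 1, 0, 0, 1, 0),
  HS_GPA   = c(3.9, 3.8, 2.1, 2.0, 3.7, 2.2),
  AGE_TW   = c(18, 18, 25, 27, 19, 26)
)

# Cluster on the scaled predictors only (RETAINED is left out)
km <- kmeans(scale(toy[, -1]), centers = 2, nstart = 25)

# Mean of every column per cluster; the RETAINED mean is the retention rate
cluster_means <- aggregate(toy, by = list(Cluster = km$cluster), FUN = mean)

# Number of students in each cluster (both are ordered by cluster number)
cluster_means$Size <- as.vector(table(km$cluster))
cluster_means
```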


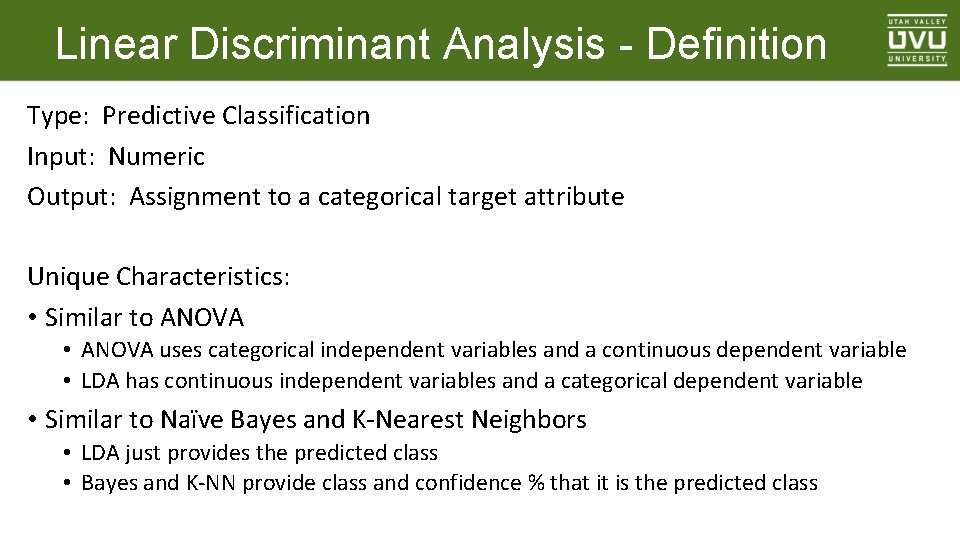

Linear Discriminant Analysis - Definition
- Type: Predictive Classification
- Input: Numeric
- Output: Assignment to a categorical target attribute
- Unique characteristics:
  - Similar to ANOVA
    - ANOVA uses categorical independent variables and a continuous dependent variable
    - LDA has continuous independent variables and a categorical dependent variable
  - Similar to Naïve Bayes and K-Nearest Neighbors
    - LDA just provides the predicted class
    - Bayes and K-NN provide the class and a confidence % that it is the predicted class

Linear Discriminant Analysis - R

```r
# Run the LDA model (lda() is in the MASS library)
library(MASS)

# model_LDA <- lda(RETAINED ~ ., data = train)   # "." can pull in unintended variables
model_LDA <- lda(RETAINED ~ NEW_FROM_HS + FEMALE + BACHELOR + FIRST_GEN + AGE_TW +
                   PRIOR_CREDITS + TERM_CREDITS + HS_GPA + GPA_FIRST_TERM, data = train)
model_LDA

# Column 1 of each data frame is the predicted class
predict_LDA_train <- data.frame(predict(model_LDA, train))
predict_LDA_test  <- data.frame(predict(model_LDA, test))
predict_LDA_score <- data.frame(predict(model_LDA, score))
```

![Linear Discriminant Analysis - R: accuracy calculation](https://slidetodoc.com/presentation_image_h/14afd4de8cfc8b133aae673d32bbf841/image-23.jpg)

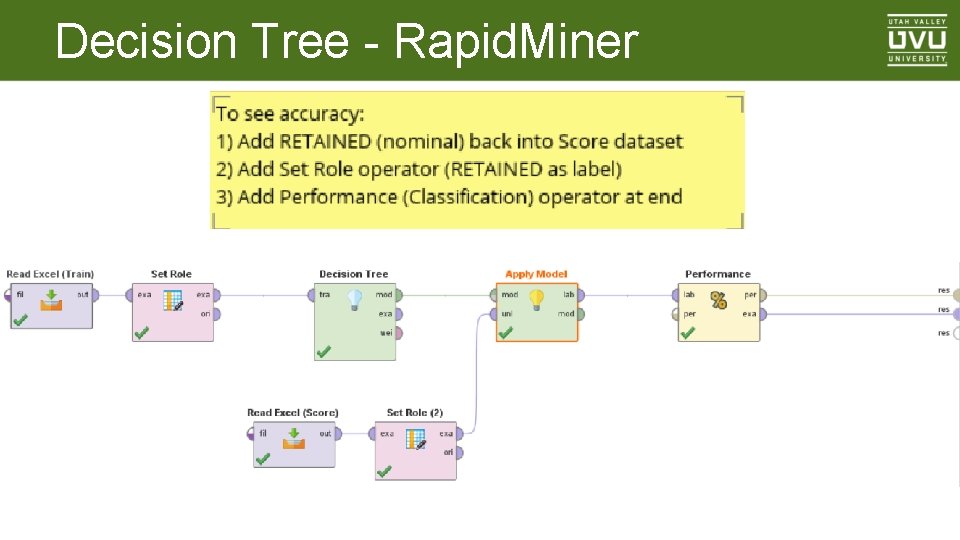

Linear Discriminant Analysis - R

```r
# 1 where the predicted class matches the actual RETAINED value, else 0
accuracy_LDA_train <- data.frame(ifelse(predict_LDA_train[1] == train$RETAINED, 1, 0))
accuracy_LDA_test  <- data.frame(ifelse(predict_LDA_test[1]  == test$RETAINED,  1, 0))
accuracy_LDA_score <- data.frame(ifelse(predict_LDA_score[1] == score$RETAINED, 1, 0))

# Share of correct predictions
sum(accuracy_LDA_train) / nrow(accuracy_LDA_train)  # 65.5%
sum(accuracy_LDA_test)  / nrow(accuracy_LDA_test)   # 66.4%
sum(accuracy_LDA_score) / nrow(accuracy_LDA_score)  # 63.6%
```
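Accuracy is the share of rows where the prediction matches the actual outcome. In R that reduces to `mean()` of a logical comparison. A confusion matrix from `table()` shows which kinds of errors make up the rest. The sketch below uses hypothetical toy vectors in place of the model output.

```r
# Hypothetical actual outcomes and predicted classes (0 = not retained, 1 = retained)
actual    <- c(1, 1, 0, 1, 0, 1, 0, 1)
predicted <- factor(c(1, 0, 0, 1, 1, 1, 0, 1), levels = c(0, 1))

# Convert both to character so the factor levels and the numeric codes compare cleanly
accuracy <- mean(as.character(predicted) == as.character(actual))

# Confusion matrix: rows are predictions, columns are actual outcomes
confusion <- table(Predicted = predicted, Actual = actual)
accuracy
confusion
```

The diagonal of `confusion` holds the correct predictions, so `sum(diag(confusion)) / sum(confusion)` gives the same accuracy.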

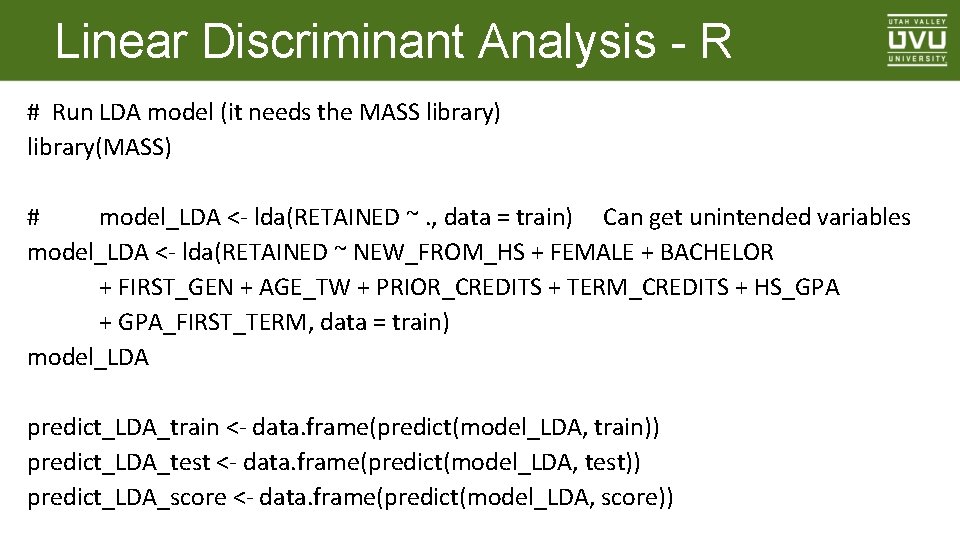

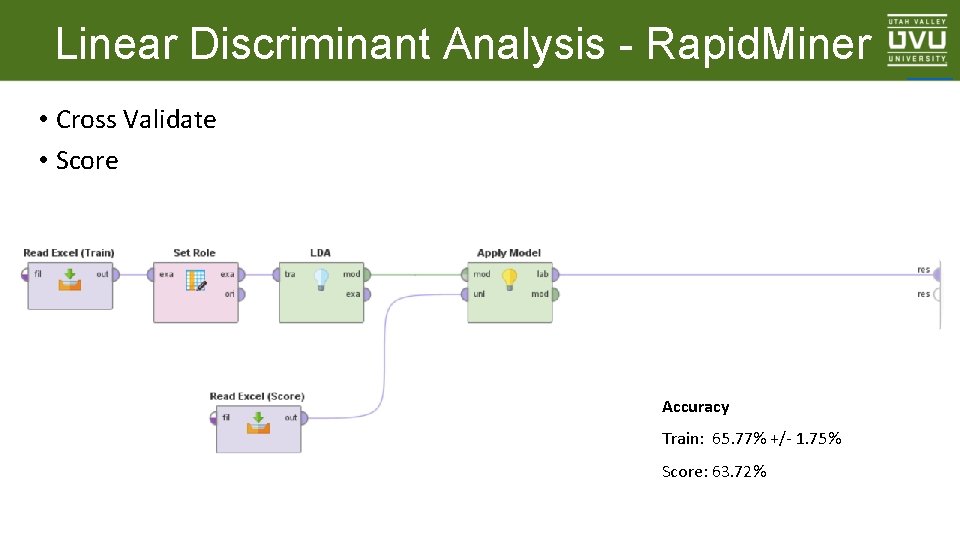

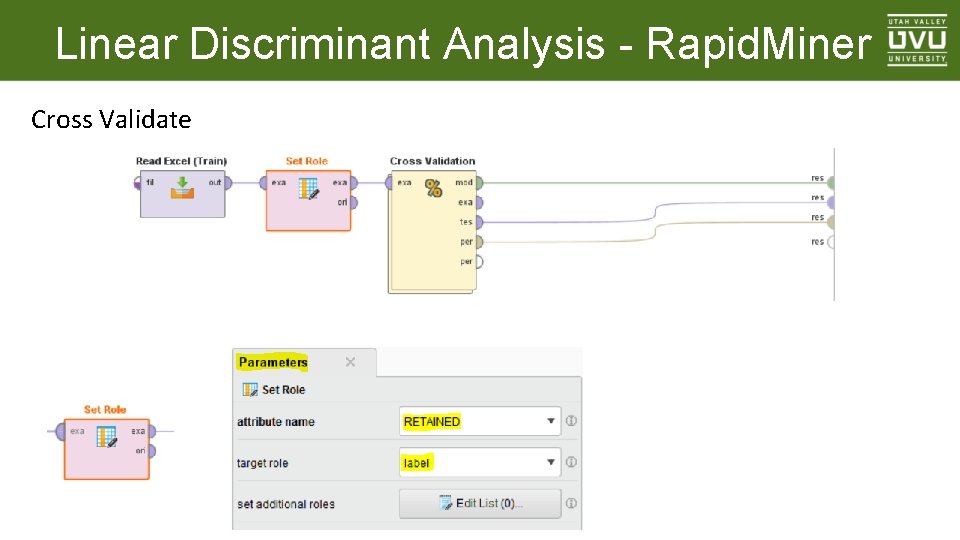

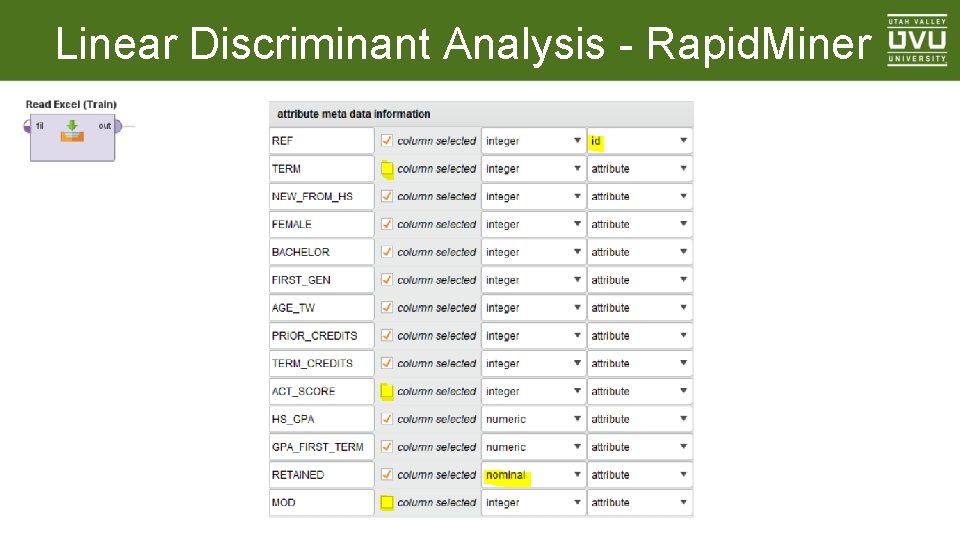

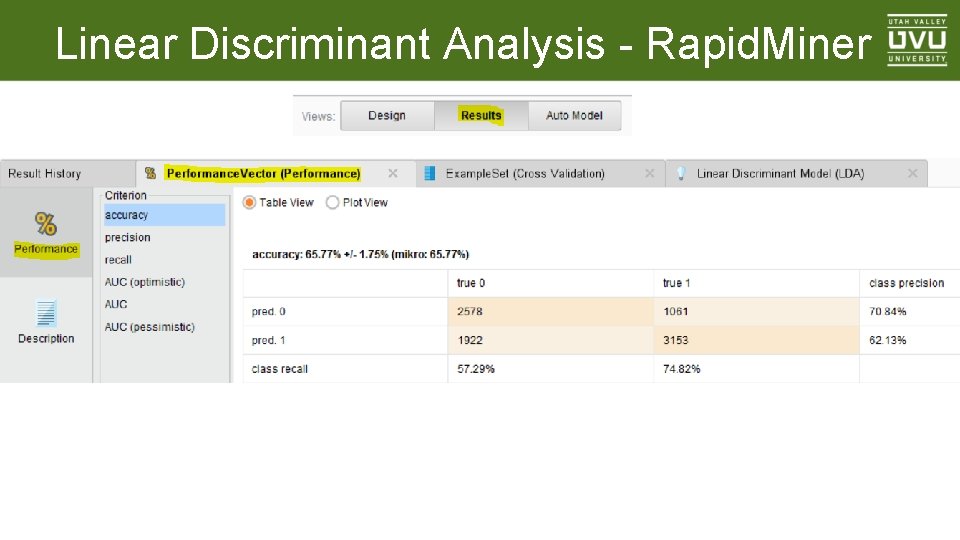

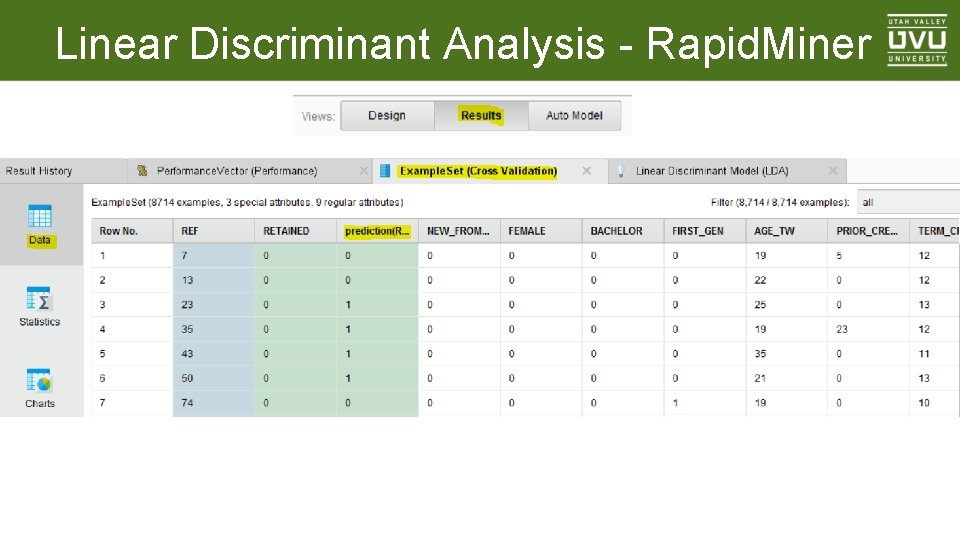

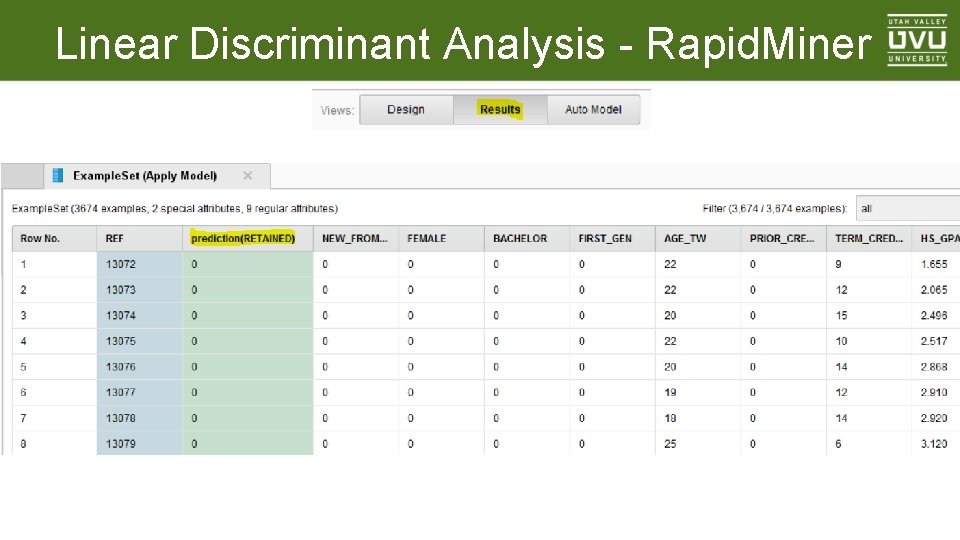

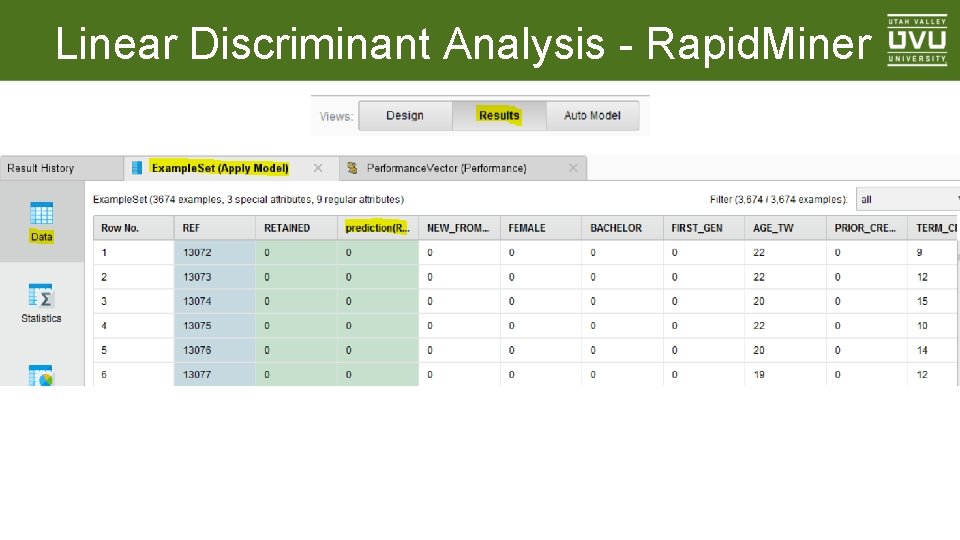

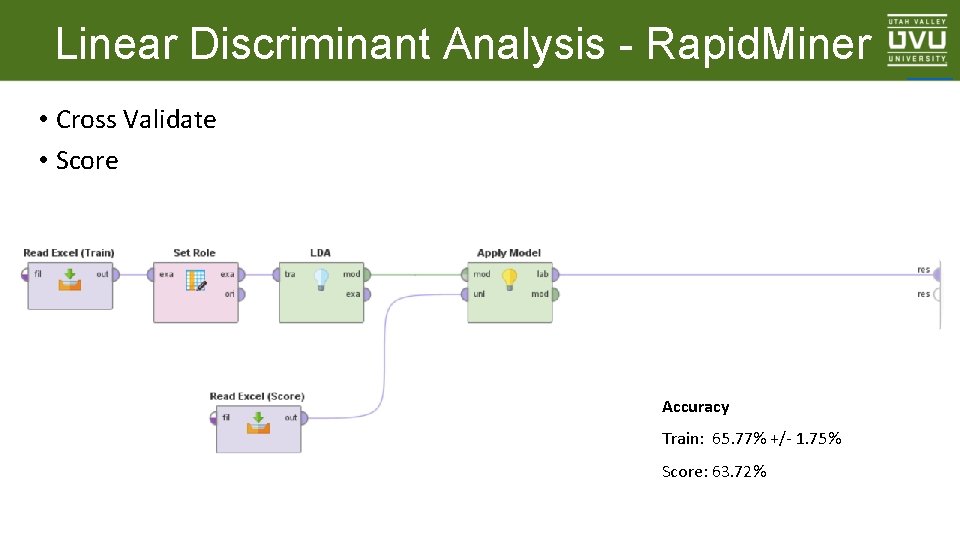

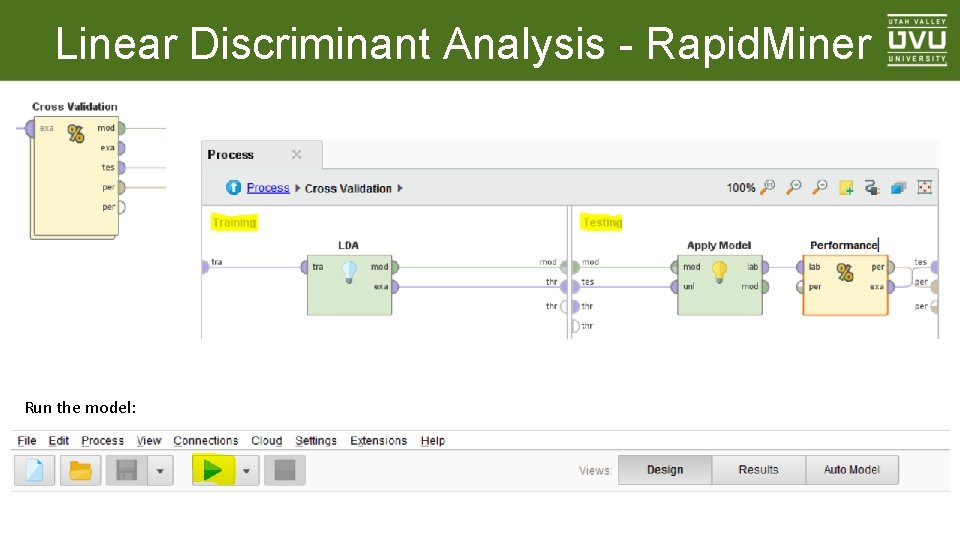

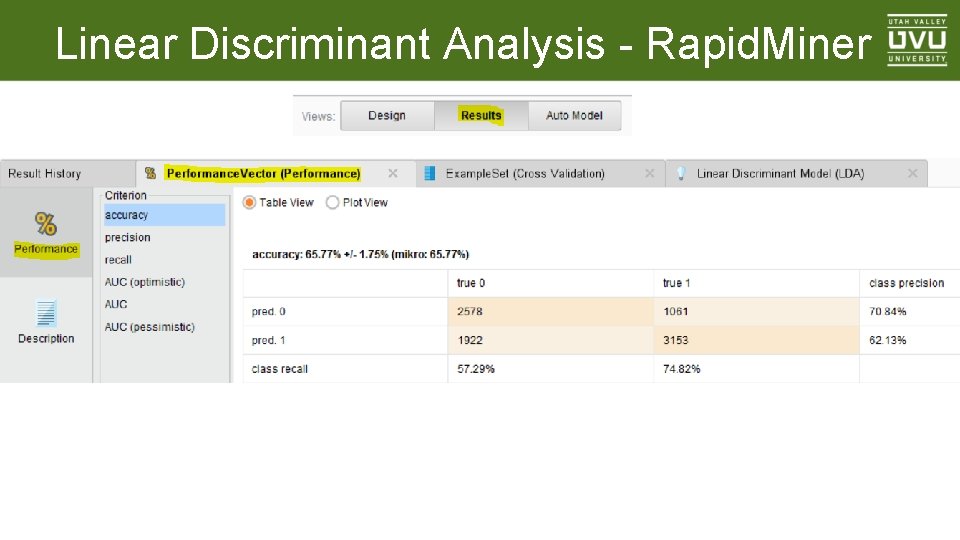

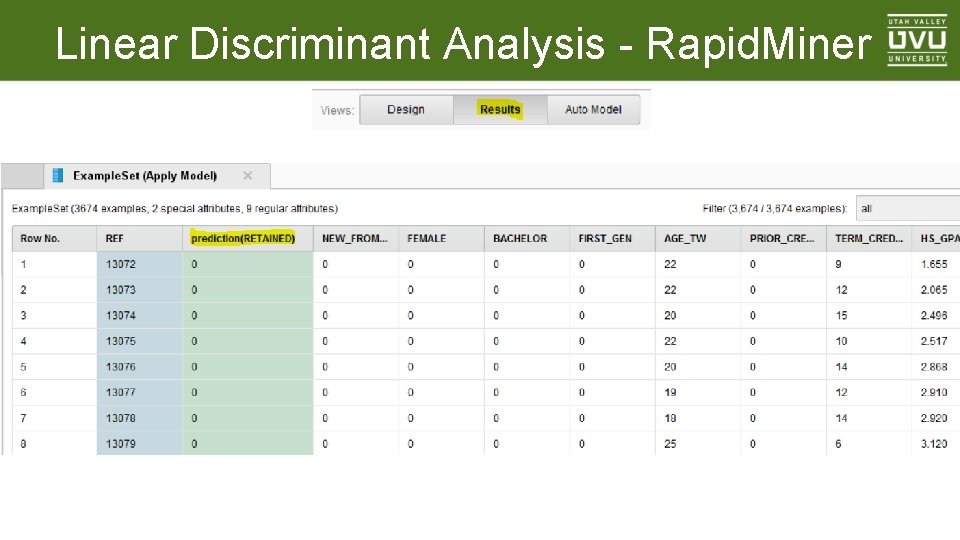

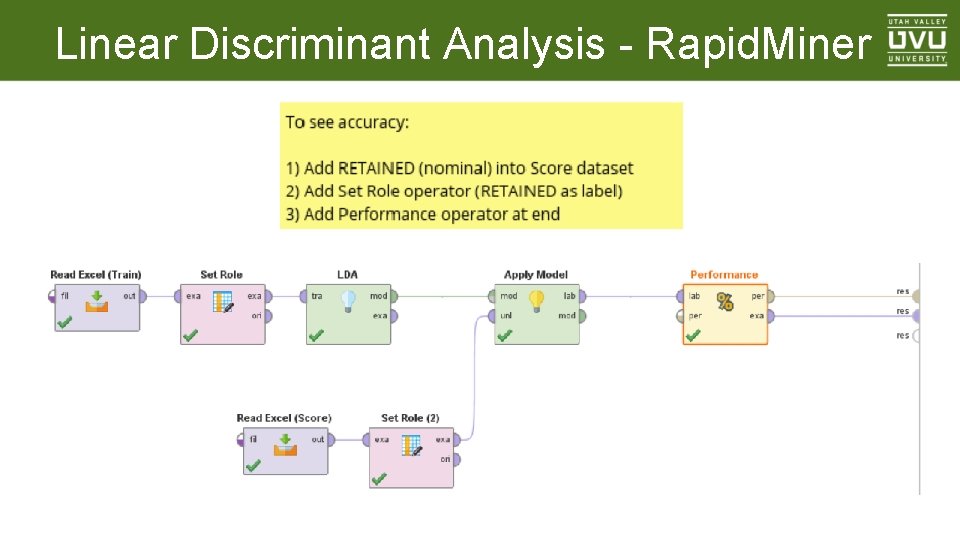

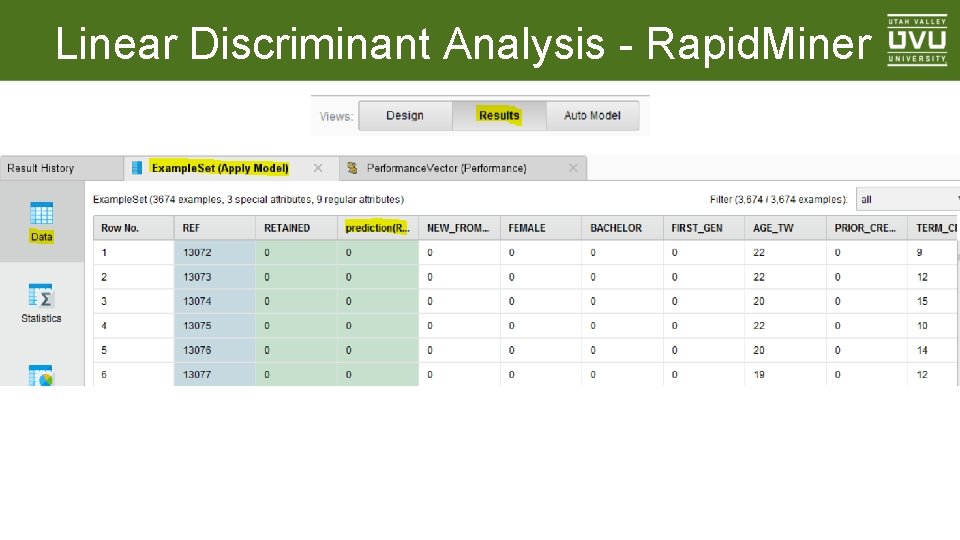

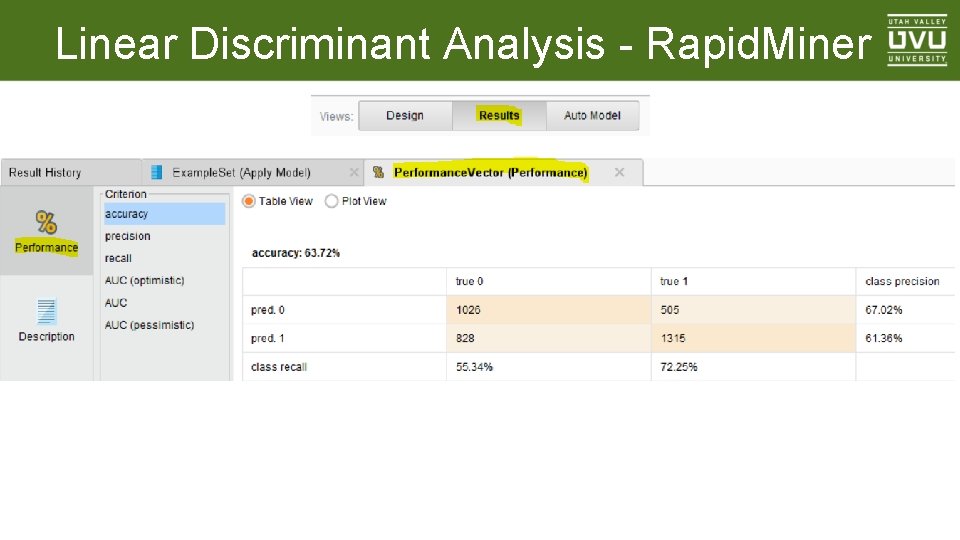

Linear Discriminant Analysis - RapidMiner
- Cross Validate
- Score

Accuracy:
- Train: 65.77% +/- 1.75%
- Score: 63.72%
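The "+/-" in the training accuracy comes from cross-validation. The model is fit k times, each time holding out one fold, so there are k accuracies. We assume RapidMiner reports their mean and standard deviation. The sketch below reproduces that kind of summary in R with 10-fold cross-validation of `MASS::lda()`. The data are hypothetical toy data.

```r
library(MASS)  # for lda()
set.seed(7)

# Hypothetical toy data: two predictors and a binary outcome
n   <- 200
toy <- data.frame(x1 = rnorm(n), x2 = rnorm(n))
toy$RETAINED <- factor(ifelse(toy$x1 + 0.5 * toy$x2 + rnorm(n) > 0, 1, 0))

k     <- 10
folds <- sample(rep(1:k, length.out = n))  # random fold label for each row

# Fit on k - 1 folds and measure accuracy on the held-out fold
fold_accuracy <- sapply(1:k, function(f) {
  fit  <- lda(RETAINED ~ x1 + x2, data = toy[folds != f, ])
  pred <- predict(fit, toy[folds == f, ])$class
  mean(pred == toy$RETAINED[folds == f])
})

# Mean +/- standard deviation across the folds
cv_mean <- mean(fold_accuracy)
cv_sd   <- sd(fold_accuracy)
sprintf("%.2f%% +/- %.2f%%", 100 * cv_mean, 100 * cv_sd)
```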

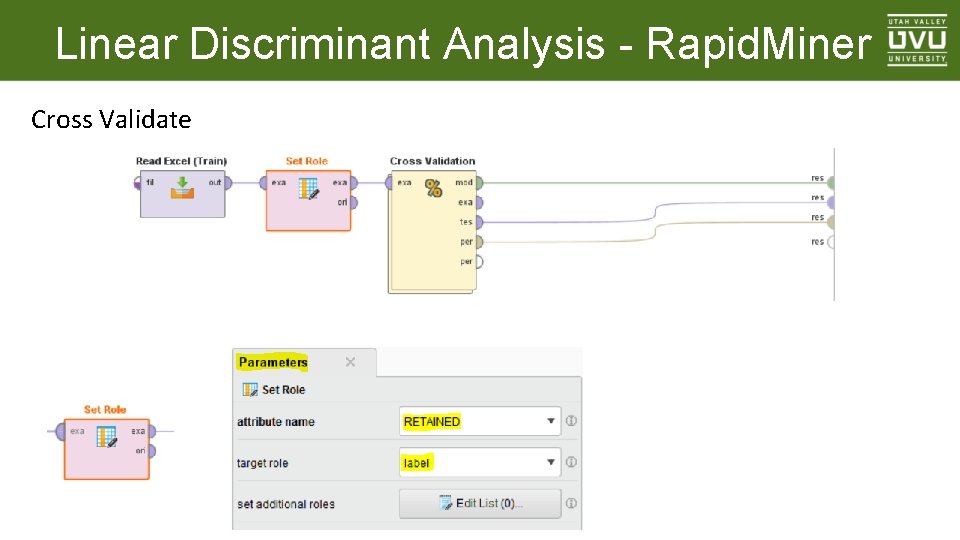

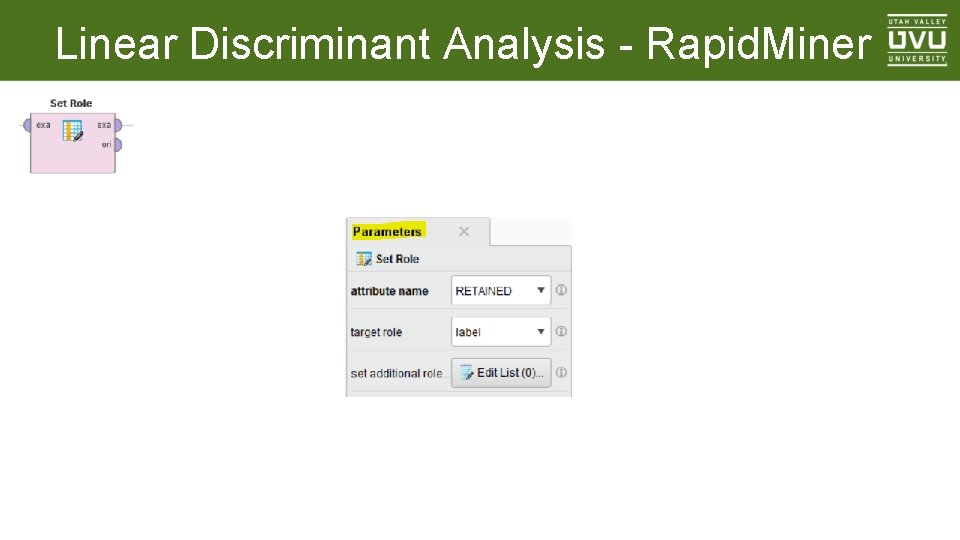

Linear Discriminant Analysis - RapidMiner: Cross Validate

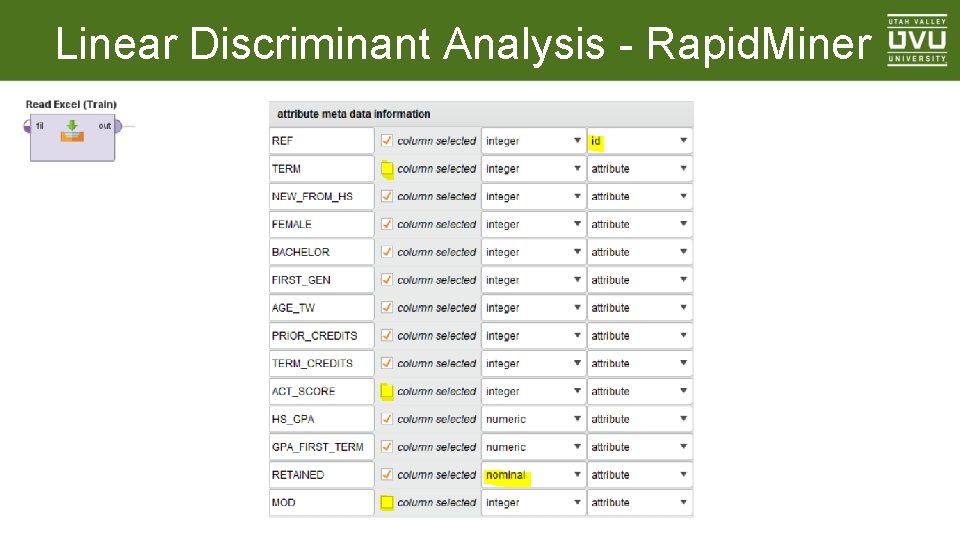


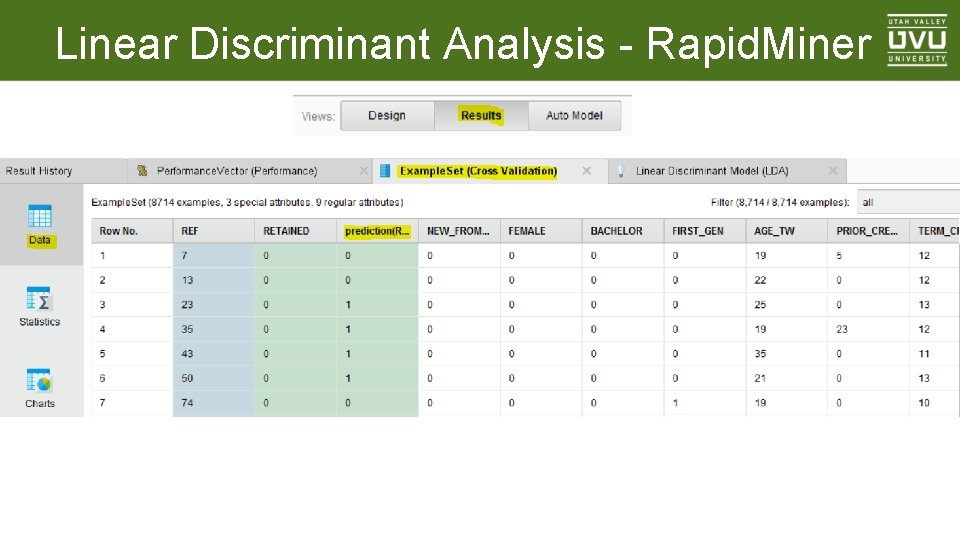


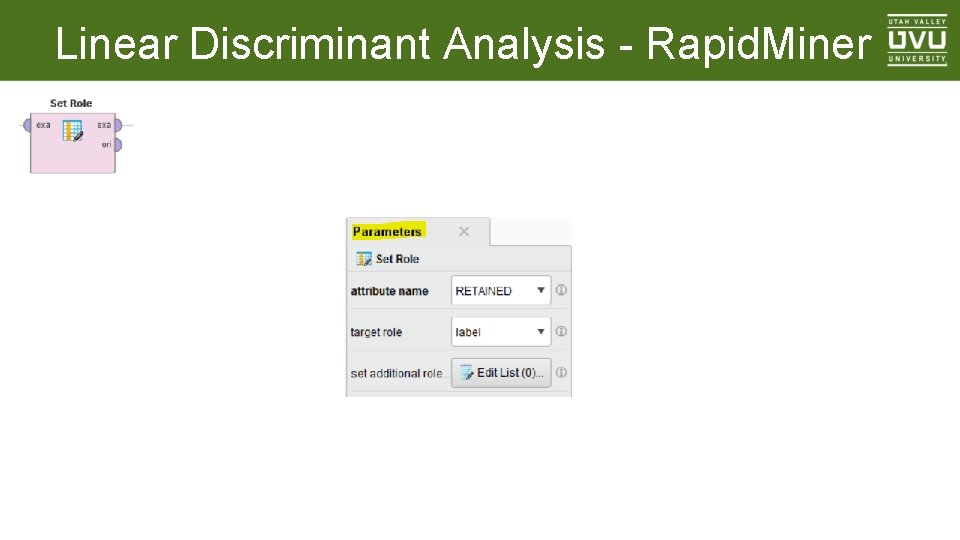

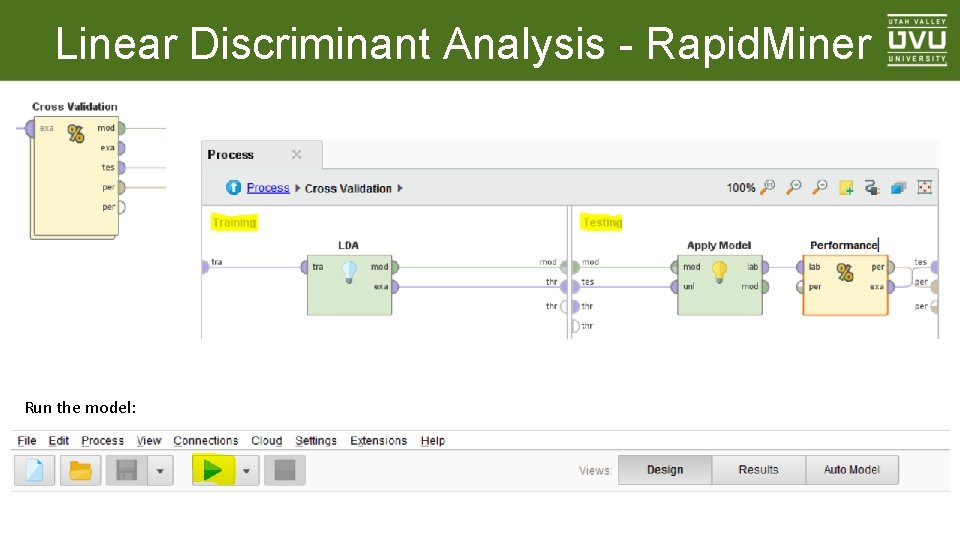

Linear Discriminant Analysis - RapidMiner: Run the model


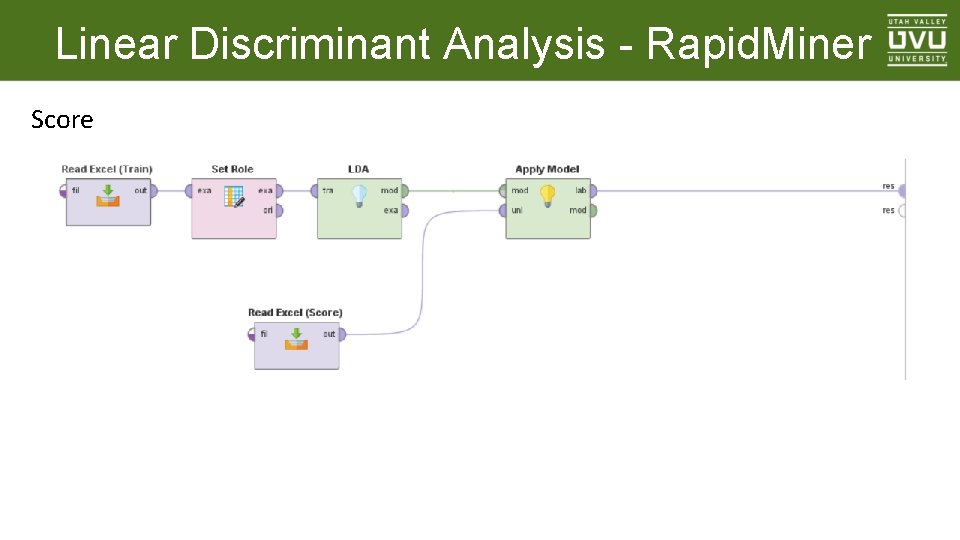

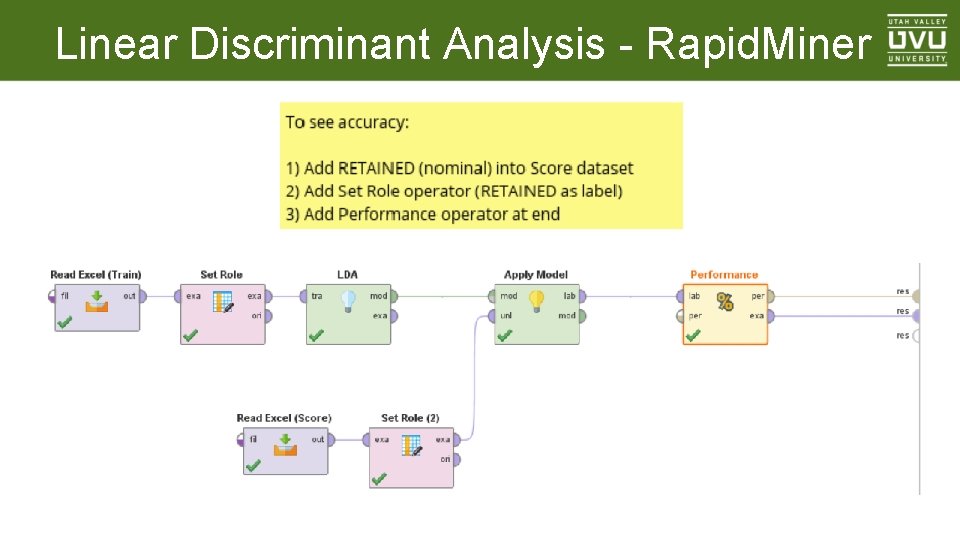

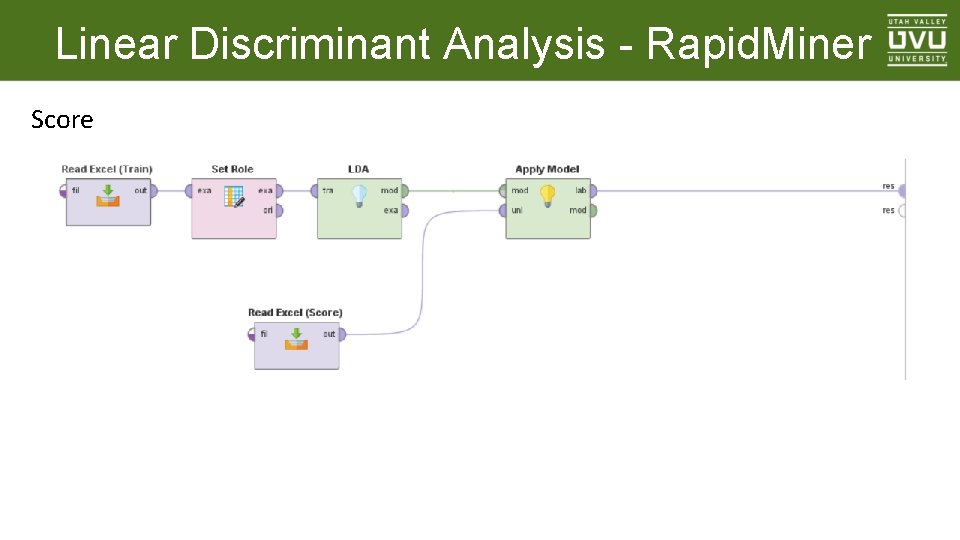

Linear Discriminant Analysis - RapidMiner: Score


Linear Regression - Definition
- Type: Prediction
- Input: Numeric
- Output: Numeric predicted value
- Unique characteristics:
  - The answer is an equation: $y = a + b_1 x_1 + b_2 x_2 + \dots$
  - Cannot reliably predict for values outside the range of the training dataset
  - P-values for the significance of individual variables
  - R-squared for overall usefulness
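The "answer is an equation" point is easiest to see on toy data. The sketch below builds data from the known equation $y = 1 + 2x_1 + 3x_2$ plus a small noise pattern, chosen so that it is uncorrelated with the predictors. `lm()` then recovers $a$, $b_1$, and $b_2$. All data here are hypothetical.

```r
# Hypothetical toy data generated from y = 1 + 2*x1 + 3*x2 plus small noise
toy <- data.frame(x1 = c(1, 2, 3, 4, 5, 6, 7, 8),
                  x2 = c(2, 1, 4, 3, 6, 5, 8, 7))
noise <- c(0.1, 0.1, -0.1, -0.1, -0.1, -0.1, 0.1, 0.1)
toy$y <- 1 + 2 * toy$x1 + 3 * toy$x2 + noise

model <- lm(y ~ x1 + x2, data = toy)

coef(model)                                # a (intercept), b1, b2
summary(model)$coefficients[, "Pr(>|t|)"]  # p-value for each term
summary(model)$r.squared                   # overall usefulness

# A prediction just evaluates the equation: 1 + 2*4.5 + 3*4.5 = 23.5
pred <- predict(model, newdata = data.frame(x1 = 4.5, x2 = 4.5))
pred
```

The new point (4.5, 4.5) lies inside the range of the training data. That is the situation where the fitted equation can be trusted.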

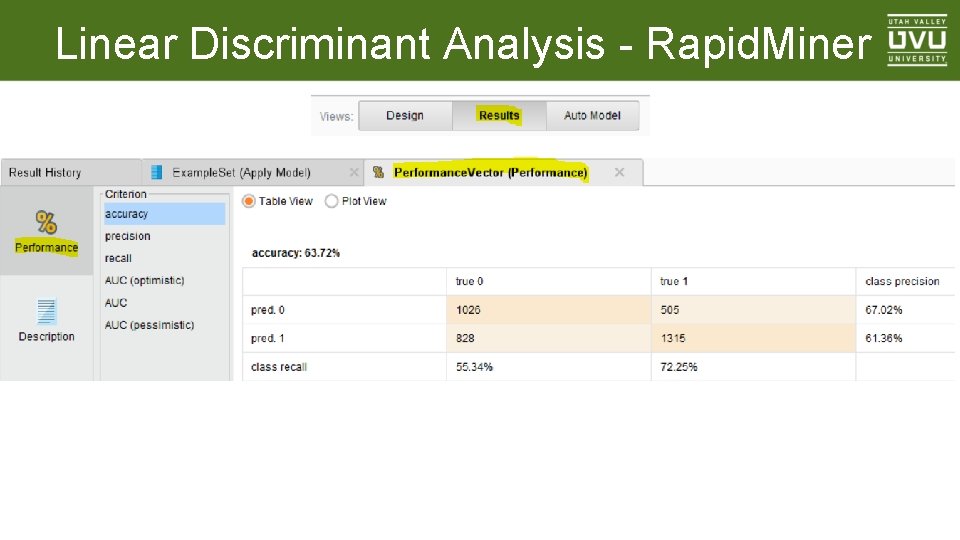

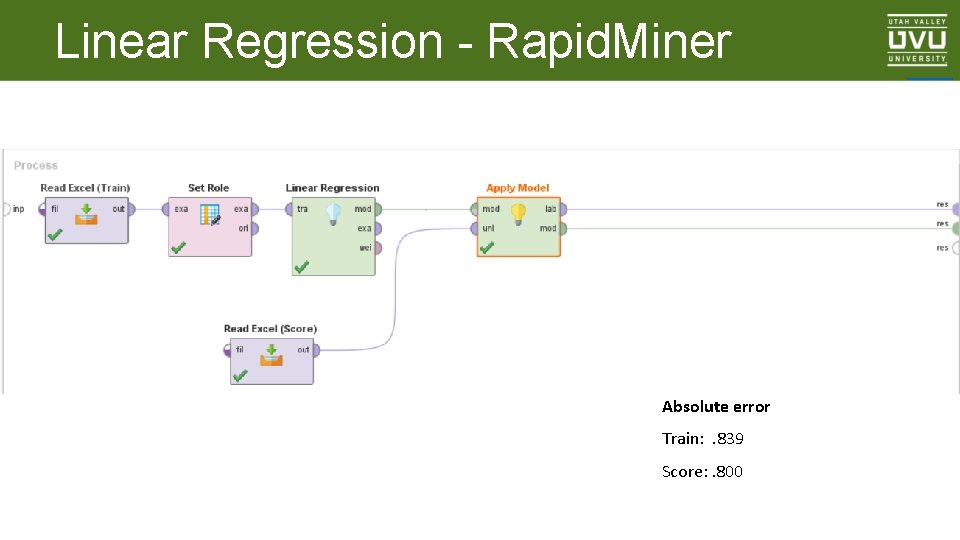

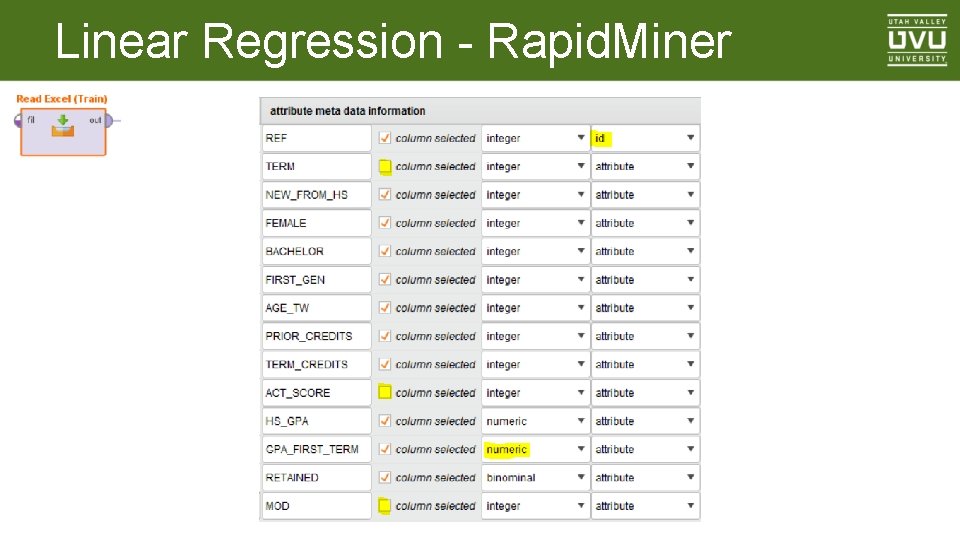

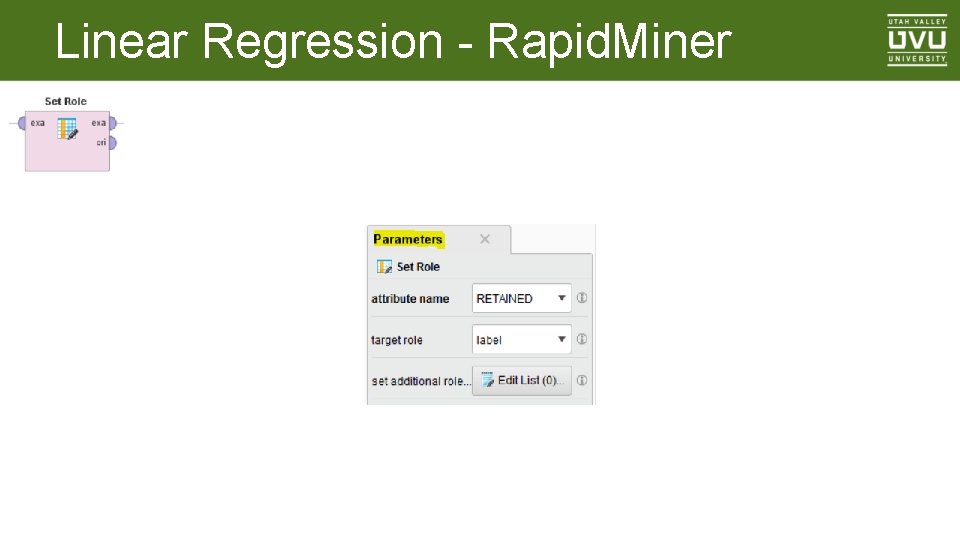

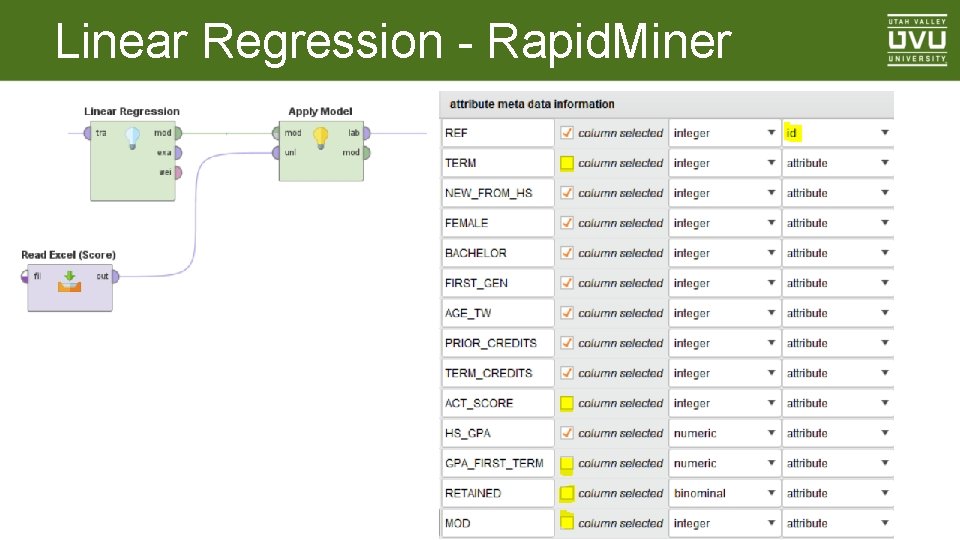

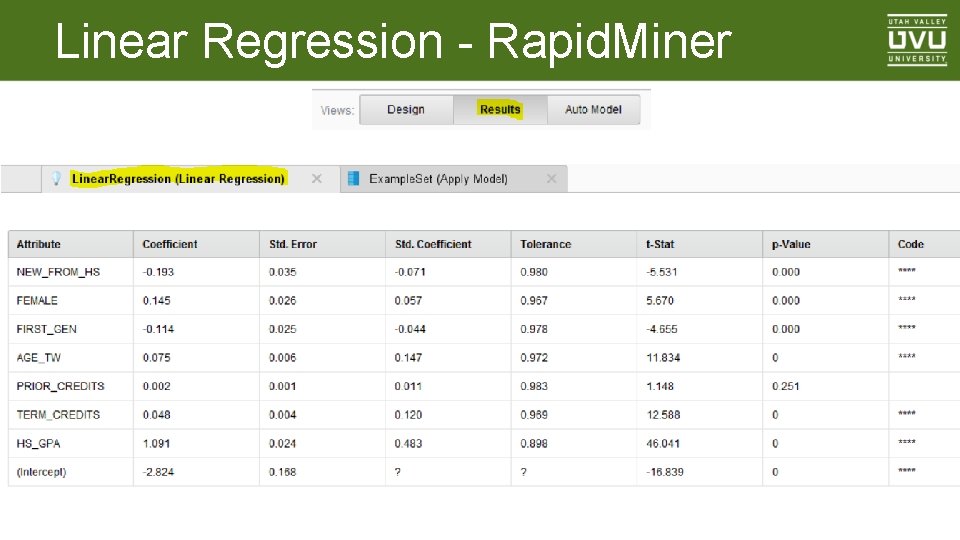

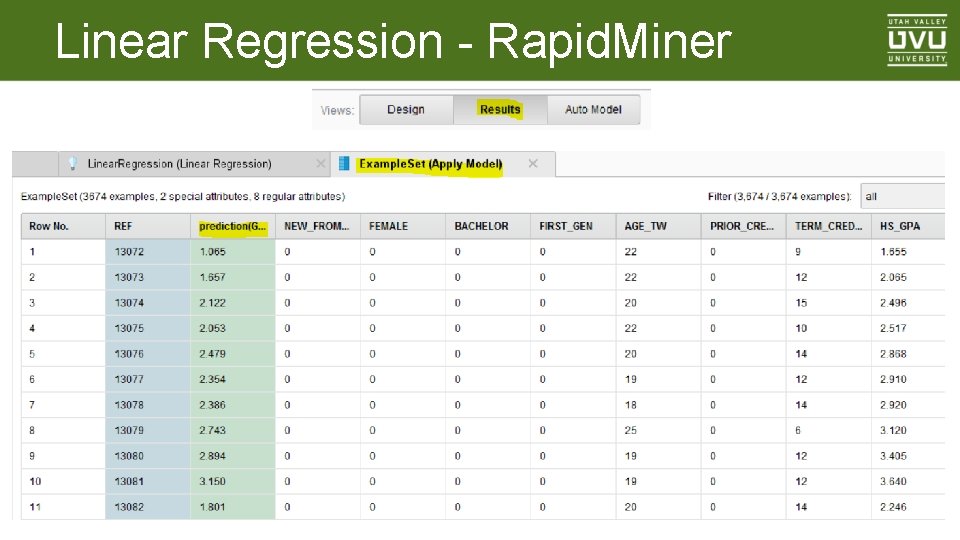

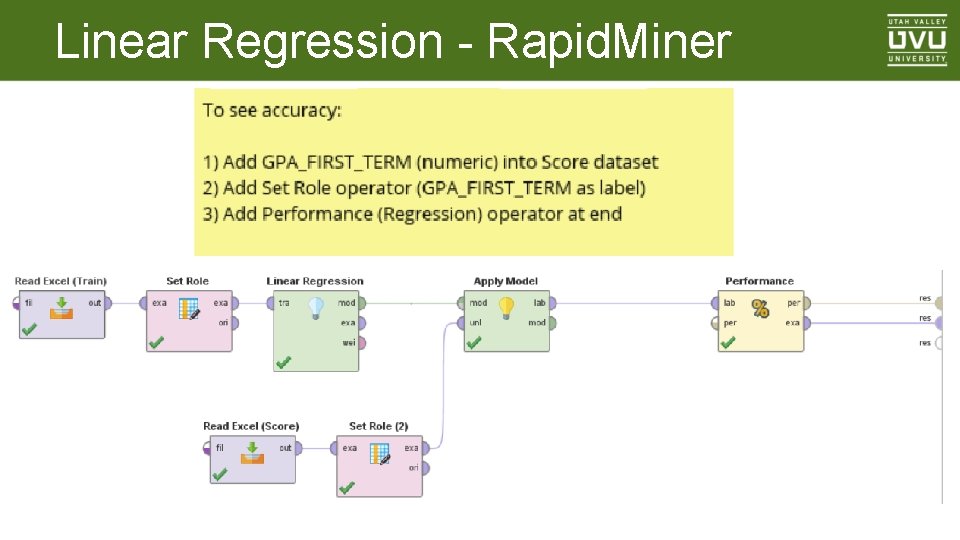

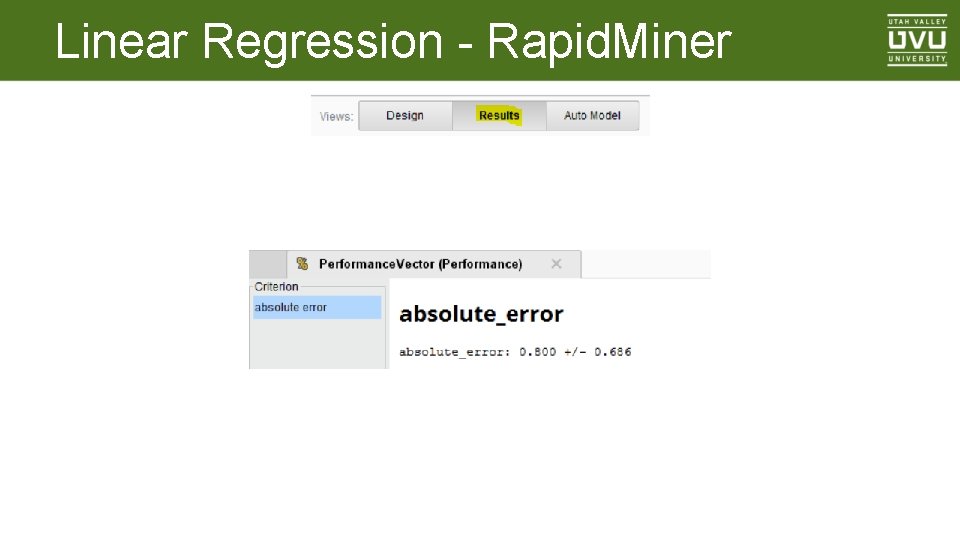

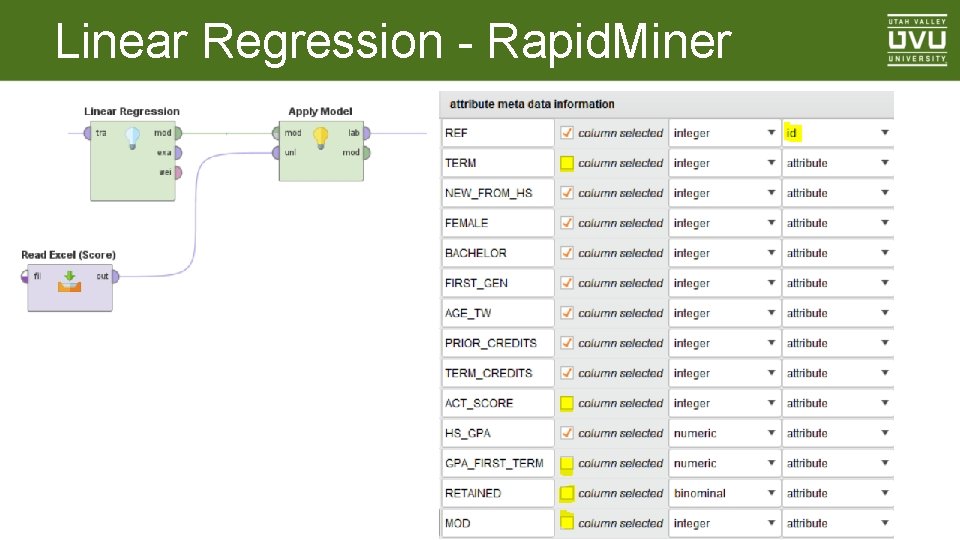

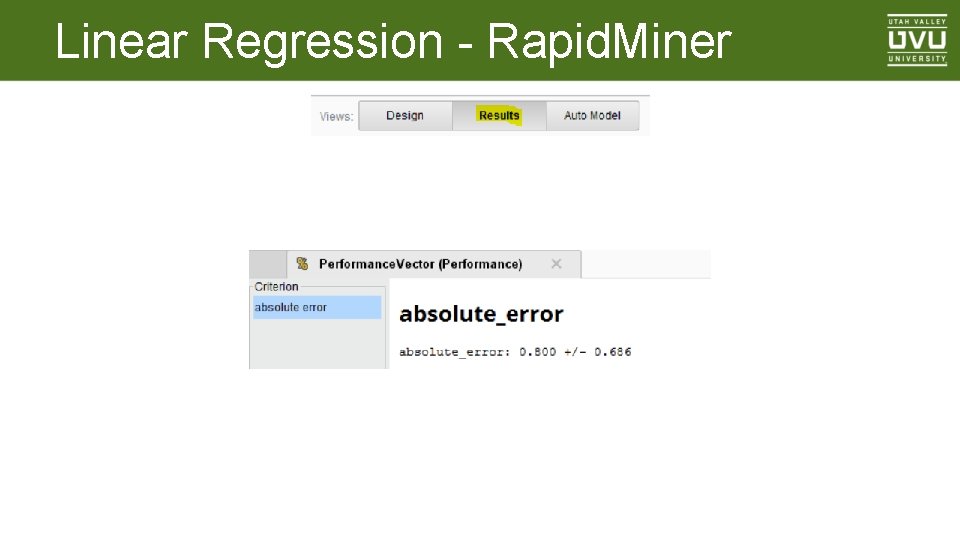

Linear Regression - RapidMiner

Absolute error:
- Train: 0.839
- Score: 0.800


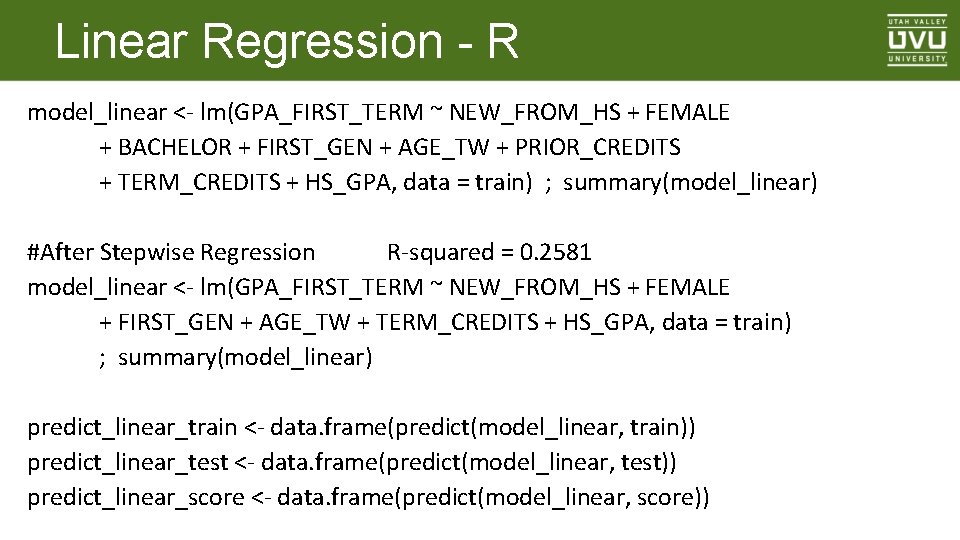

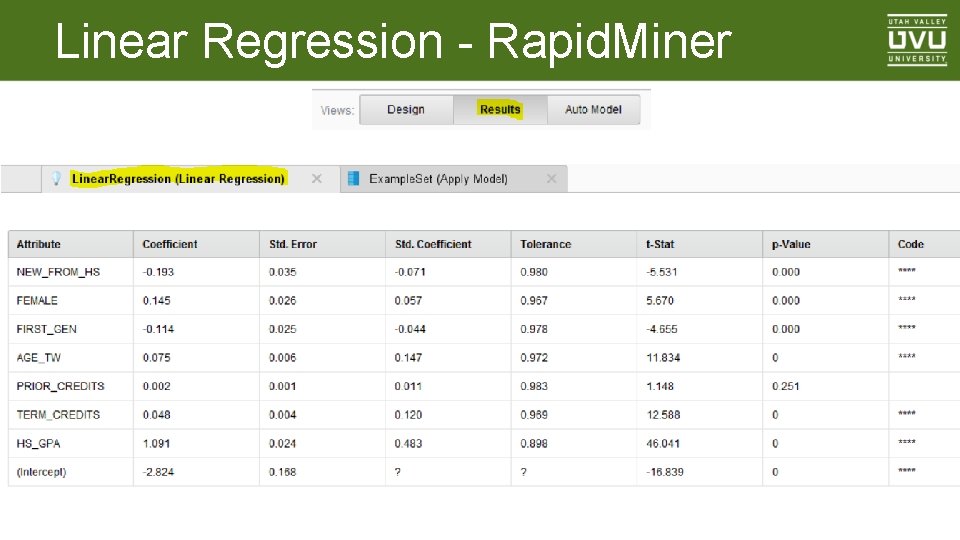

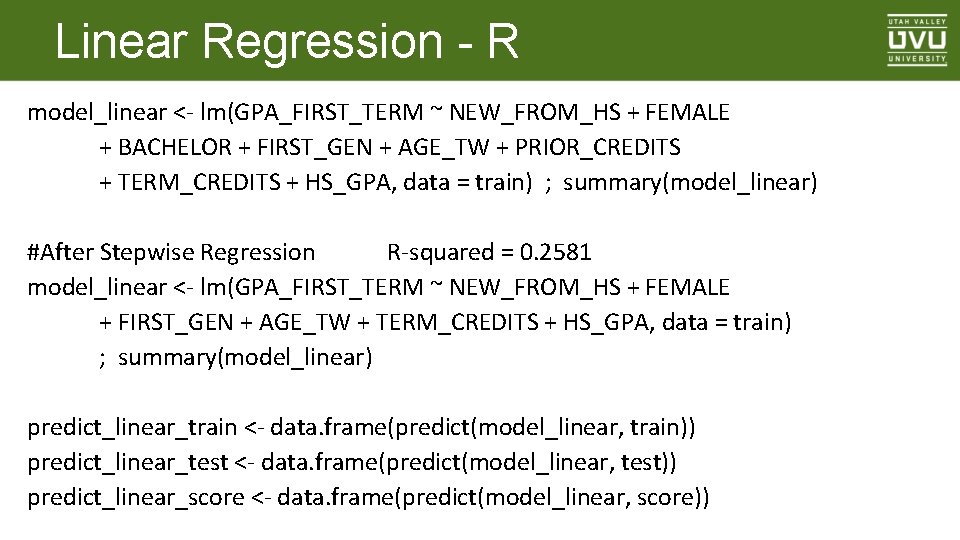

Linear Regression - R

```r
# Full model
model_linear <- lm(GPA_FIRST_TERM ~ NEW_FROM_HS + FEMALE + BACHELOR + FIRST_GEN + AGE_TW +
                     PRIOR_CREDITS + TERM_CREDITS + HS_GPA, data = train)
summary(model_linear)

# After stepwise regression: R-squared = 0.2581
model_linear <- lm(GPA_FIRST_TERM ~ NEW_FROM_HS + FEMALE + FIRST_GEN + AGE_TW +
                     TERM_CREDITS + HS_GPA, data = train)
summary(model_linear)

predict_linear_train <- data.frame(predict(model_linear, train))
predict_linear_test  <- data.frame(predict(model_linear, test))
predict_linear_score <- data.frame(predict(model_linear, score))
```
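The slide does not say which stepwise procedure produced the reduced model. One option in base R is `step()`, which drops terms one at a time for as long as doing so lowers the AIC. The sketch below runs that backward elimination on hypothetical toy data where `x3` is pure noise. It may not match the criterion the authors used.

```r
set.seed(3)

# Hypothetical toy data: y depends on x1 and x2; x3 is pure noise
n   <- 100
toy <- data.frame(x1 = rnorm(n), x2 = rnorm(n), x3 = rnorm(n))
toy$y <- 2 + 1.5 * toy$x1 - 1 * toy$x2 + rnorm(n)

full <- lm(y ~ x1 + x2 + x3, data = toy)

# Backward elimination by AIC; trace = 0 suppresses the step-by-step printout
reduced <- step(full, direction = "backward", trace = 0)

formula(reduced)            # terms that survived
summary(reduced)$r.squared
```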

![Linear Regression - R: accuracy calculation](https://slidetodoc.com/presentation_image_h/14afd4de8cfc8b133aae673d32bbf841/image-47.jpg)

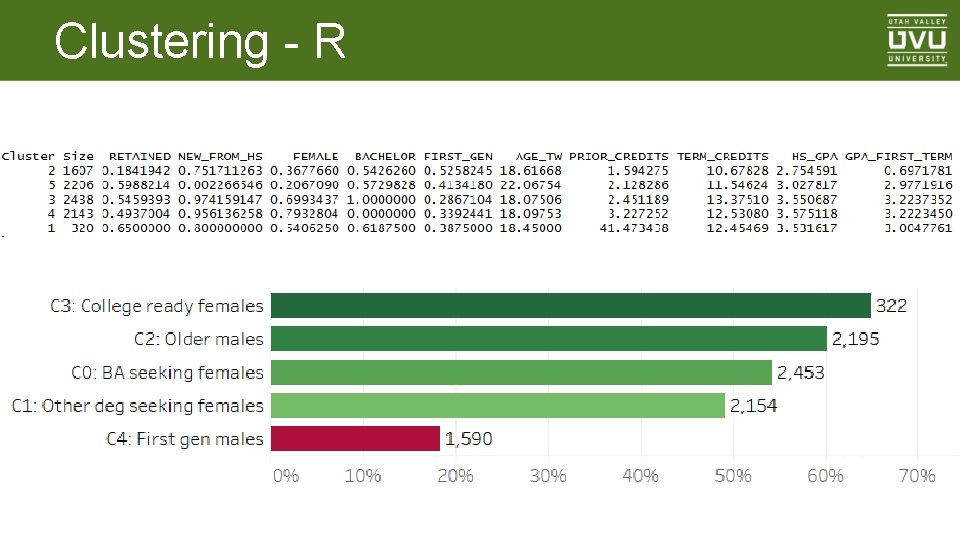

Linear Regression - R

```r
# Absolute error of each prediction
accuracy_linear_train <- data.frame(abs(predict_linear_train[1] - train$GPA_FIRST_TERM))
accuracy_linear_test  <- data.frame(abs(predict_linear_test[1]  - test$GPA_FIRST_TERM))
accuracy_linear_score <- data.frame(abs(predict_linear_score[1] - score$GPA_FIRST_TERM))

# Mean absolute error
sum(accuracy_linear_train) / nrow(accuracy_linear_train)
sum(accuracy_linear_test)  / nrow(accuracy_linear_test)
sum(accuracy_linear_score) / nrow(accuracy_linear_score)
# 0.838
# 0.799
```

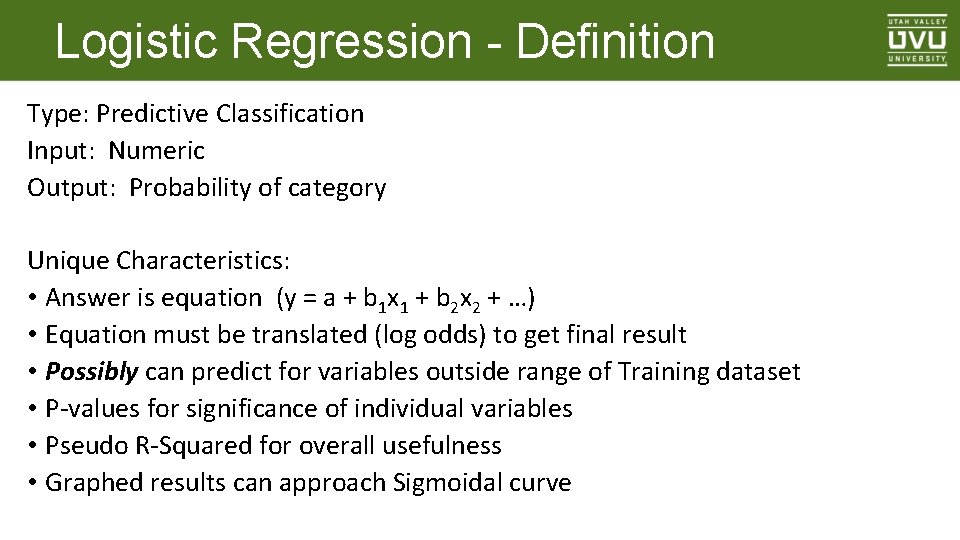

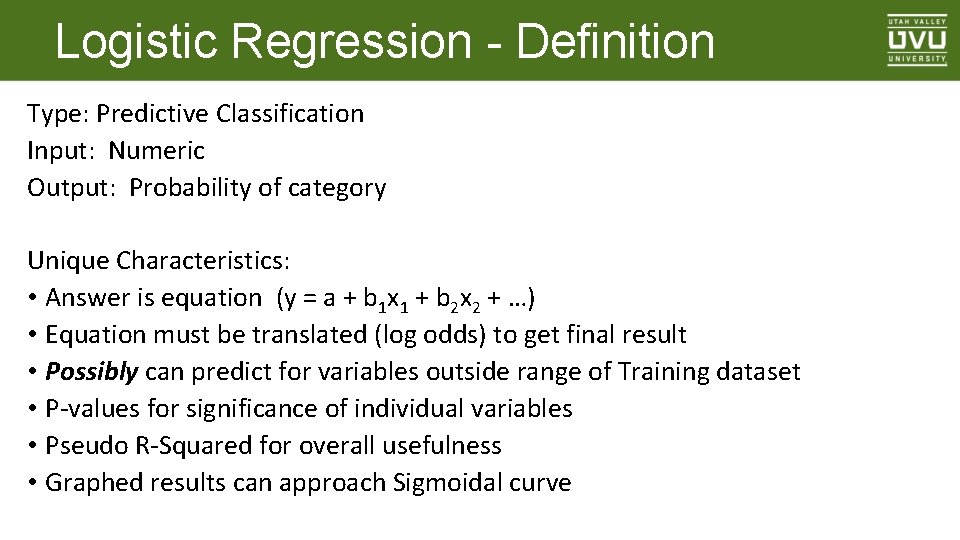

Logistic Regression - Definition
- Type: Predictive Classification
- Input: Numeric
- Output: Probability of a category
- Unique characteristics:
  - The answer is an equation: $y = a + b_1 x_1 + b_2 x_2 + \dots$
  - The equation gives the log odds, $y = \ln\left(\frac{p}{1 - p}\right)$, which must be translated to get the final probability, $p = \frac{1}{1 + e^{-y}}$
  - Possibly can predict for values outside the range of the training dataset
  - P-values for the significance of individual variables
  - Pseudo R-squared for overall usefulness
  - Graphed results can approach a sigmoidal (S-shaped) curve
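The translation from log odds to probability can be checked directly in R. `predict()` on a `glm` returns the log odds by default and the probability with `type = "response"`. Applying $p = 1/(1 + e^{-y})$ by hand, or calling `plogis()`, gives the same number. The data below are hypothetical toy data.

```r
set.seed(11)

# Hypothetical toy data: retention becomes more likely as HS GPA rises
n   <- 500
toy <- data.frame(HS_GPA = runif(n, 2, 4))
toy$RETAINED <- rbinom(n, 1, plogis(-6 + 2 * toy$HS_GPA))

model <- glm(RETAINED ~ HS_GPA, data = toy, family = binomial(link = "logit"))

new_student <- data.frame(HS_GPA = 3.5)
log_odds    <- predict(model, new_student)                     # default type = "link"
probability <- predict(model, new_student, type = "response")  # probability of RETAINED = 1

# Translating by hand: p = 1 / (1 + exp(-y)), which is what plogis() computes
manual <- 1 / (1 + exp(-log_odds))
```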

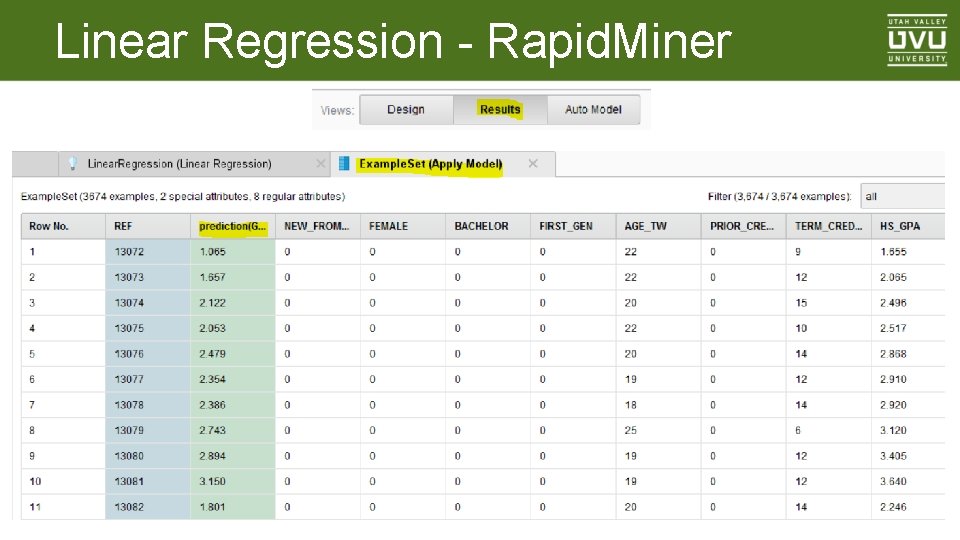

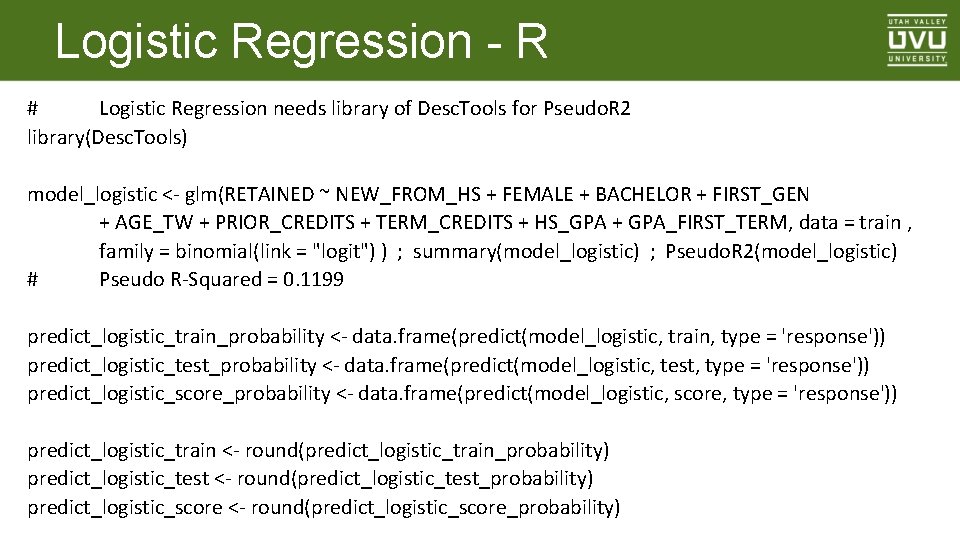

Logistic Regression - R

```r
# PseudoR2() comes from the DescTools library
library(DescTools)

model_logistic <- glm(RETAINED ~ NEW_FROM_HS + FEMALE + BACHELOR + FIRST_GEN + AGE_TW +
                        PRIOR_CREDITS + TERM_CREDITS + HS_GPA + GPA_FIRST_TERM,
                      data = train, family = binomial(link = "logit"))
summary(model_logistic)
PseudoR2(model_logistic)  # Pseudo R-squared = 0.1199

# type = "response" returns probabilities rather than log odds
predict_logistic_train_probability <- data.frame(predict(model_logistic, train, type = "response"))
predict_logistic_test_probability  <- data.frame(predict(model_logistic, test,  type = "response"))
predict_logistic_score_probability <- data.frame(predict(model_logistic, score, type = "response"))

# Round the probabilities to 0/1 class predictions (cutoff of 0.5)
predict_logistic_train <- round(predict_logistic_train_probability)
predict_logistic_test  <- round(predict_logistic_test_probability)
predict_logistic_score <- round(predict_logistic_score_probability)
```
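The code above gets its pseudo R-squared from `DescTools::PseudoR2()`. To our understanding, its default is McFadden's measure, but check the package documentation. McFadden's value can also be computed in base R by comparing the fitted model's log-likelihood with that of an intercept-only model. The sketch below uses hypothetical toy data.

```r
set.seed(5)

# Hypothetical toy data with a binary outcome
n   <- 300
toy <- data.frame(x = rnorm(n))
toy$RETAINED <- rbinom(n, 1, plogis(0.5 + 1.2 * toy$x))

model <- glm(RETAINED ~ x, data = toy, family = binomial(link = "logit"))
null  <- glm(RETAINED ~ 1, data = toy, family = binomial(link = "logit"))

# McFadden's pseudo R-squared: 1 - logLik(model) / logLik(intercept-only model)
mcfadden <- 1 - as.numeric(logLik(model)) / as.numeric(logLik(null))

# For 0/1 outcomes this equals 1 - residual deviance / null deviance
mcfadden_dev <- 1 - model$deviance / model$null.deviance
```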

![Logistic Regression - R: accuracy calculation](https://slidetodoc.com/presentation_image_h/14afd4de8cfc8b133aae673d32bbf841/image-50.jpg)

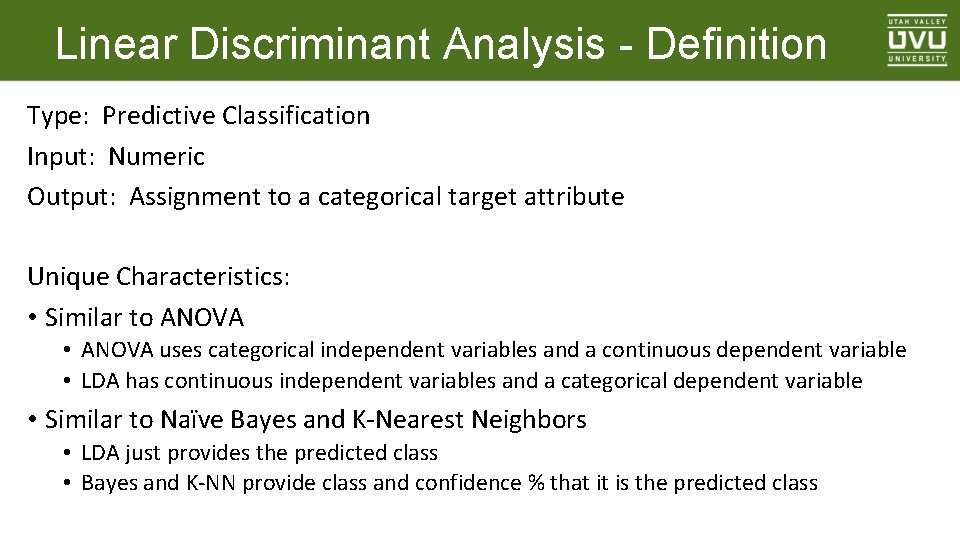

Logistic Regression - R

```r
# 1 where the rounded prediction matches the actual RETAINED value, else 0
accuracy_logistic_train <- data.frame(ifelse(predict_logistic_train[1] == train$RETAINED, 1, 0))
accuracy_logistic_test  <- data.frame(ifelse(predict_logistic_test[1]  == test$RETAINED,  1, 0))
accuracy_logistic_score <- data.frame(ifelse(predict_logistic_score[1] == score$RETAINED, 1, 0))

# Share of correct predictions
sum(accuracy_logistic_train) / nrow(accuracy_logistic_train)  # 65.7%
sum(accuracy_logistic_test)  / nrow(accuracy_logistic_test)   # 66.2%
sum(accuracy_logistic_score) / nrow(accuracy_logistic_score)  # 64.0%
```

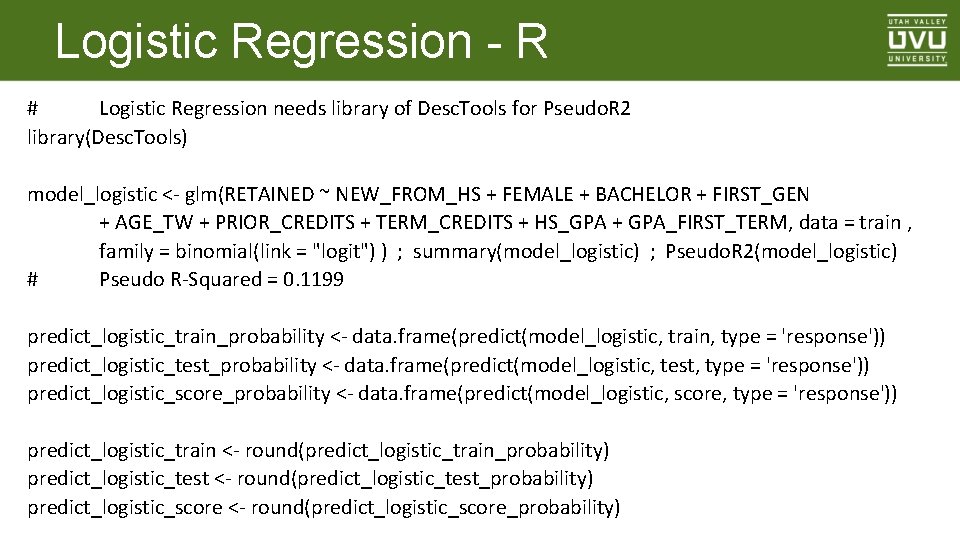

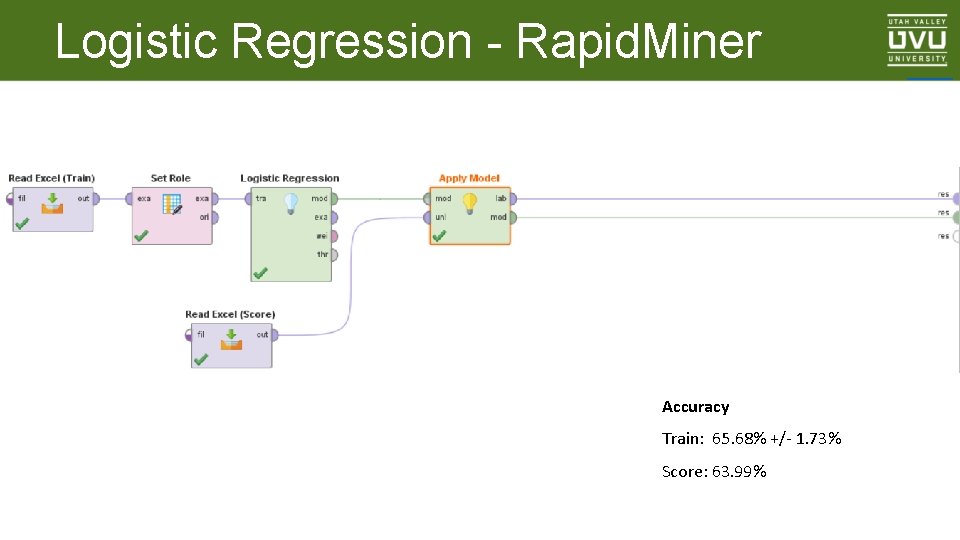

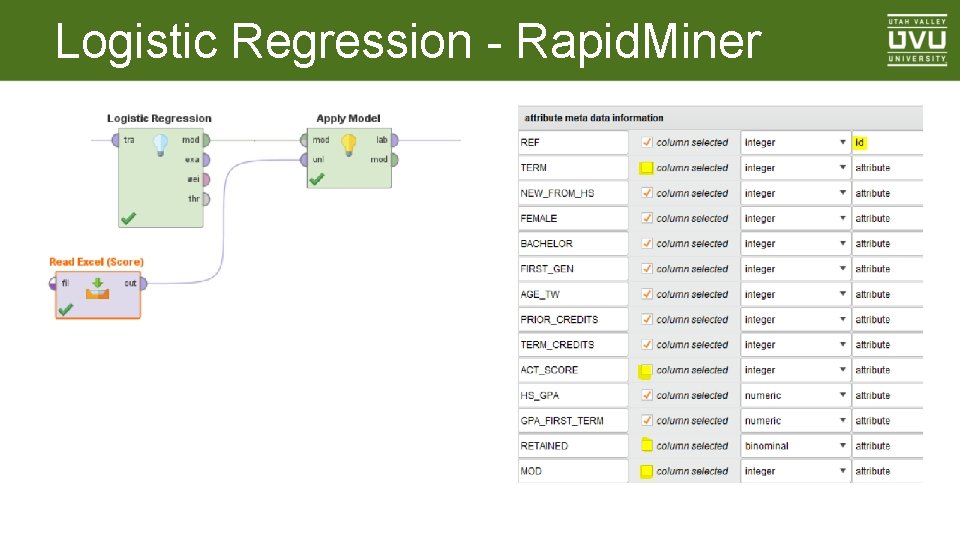

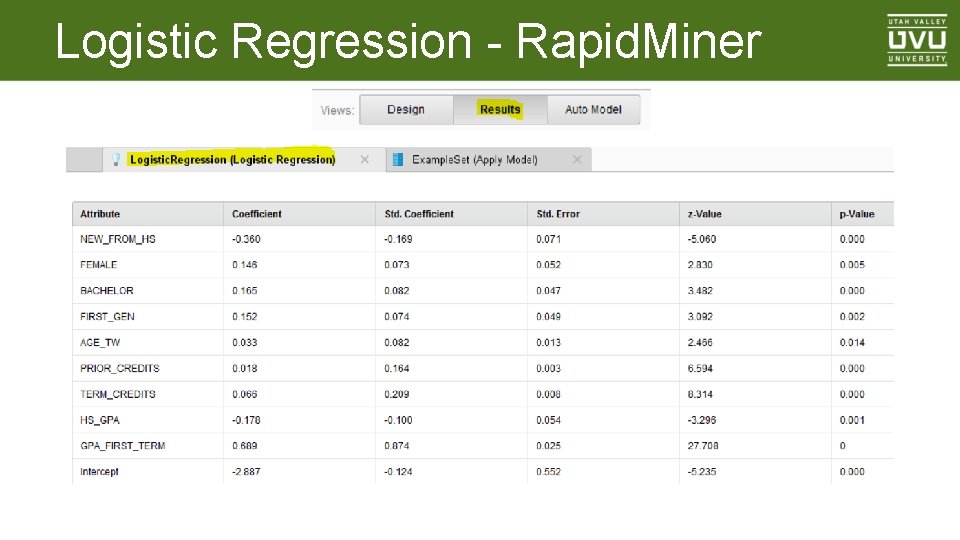

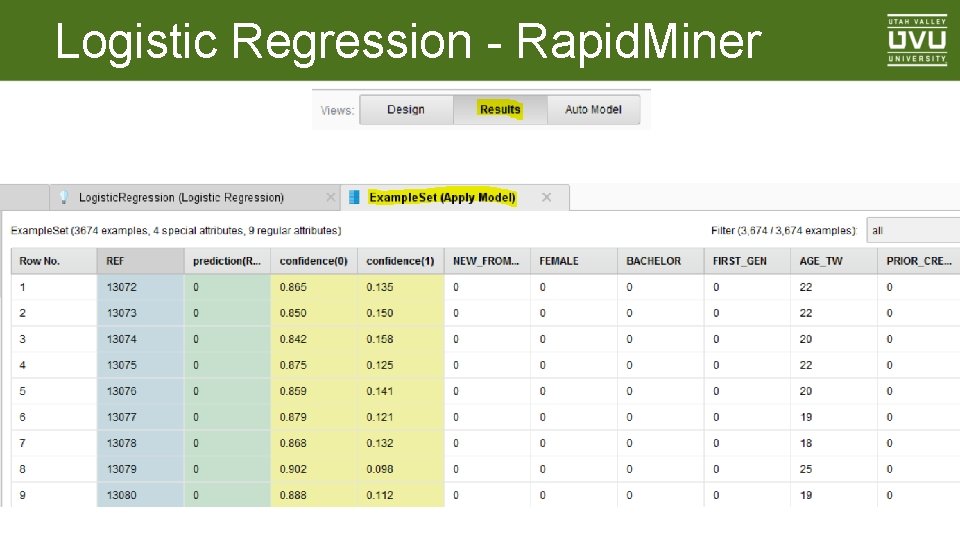

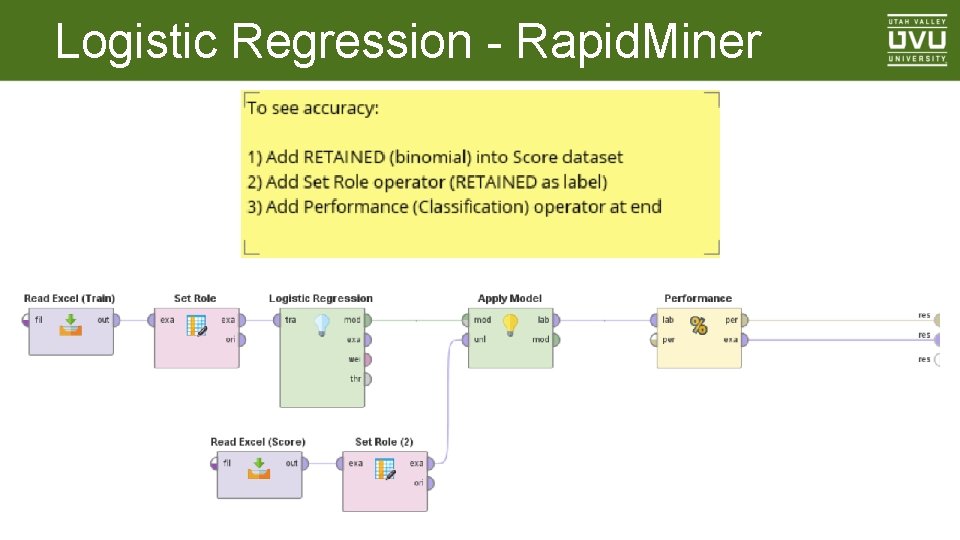

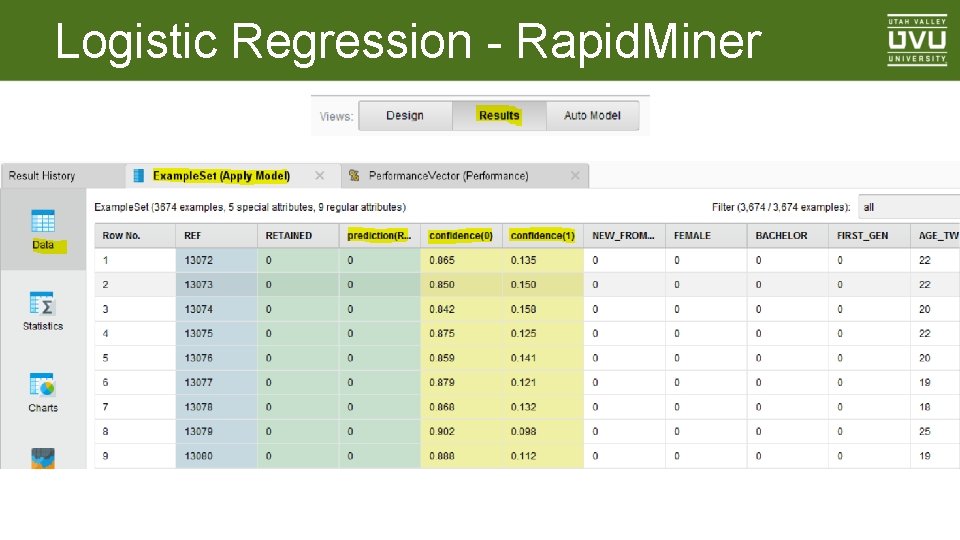

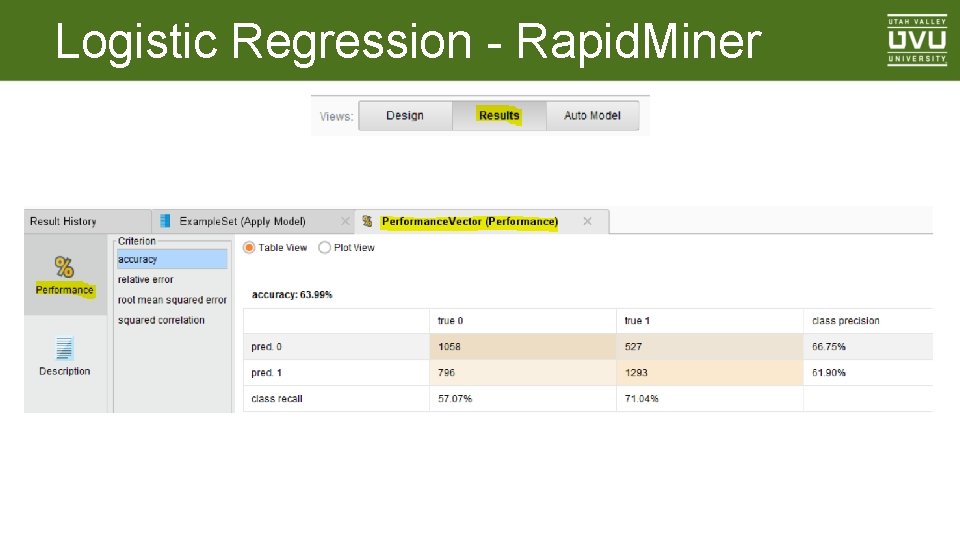

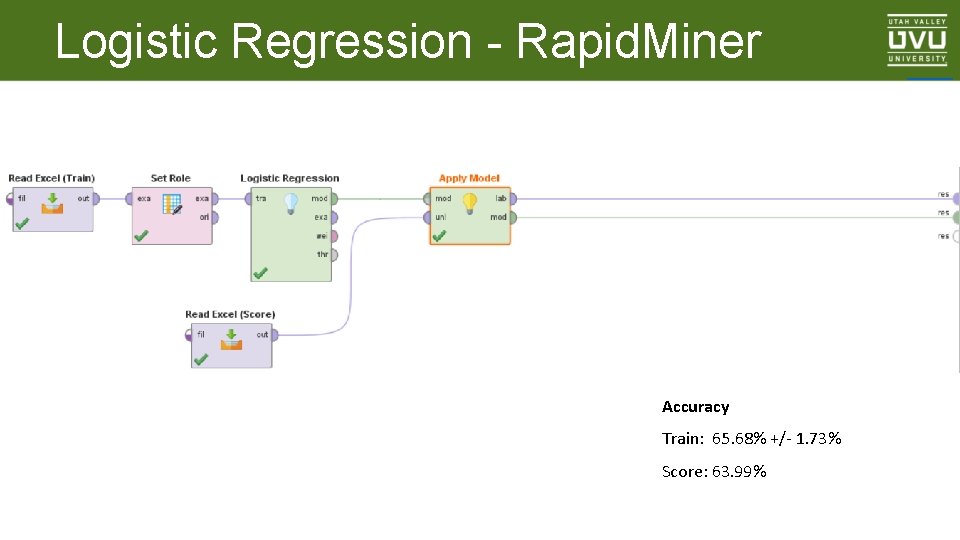

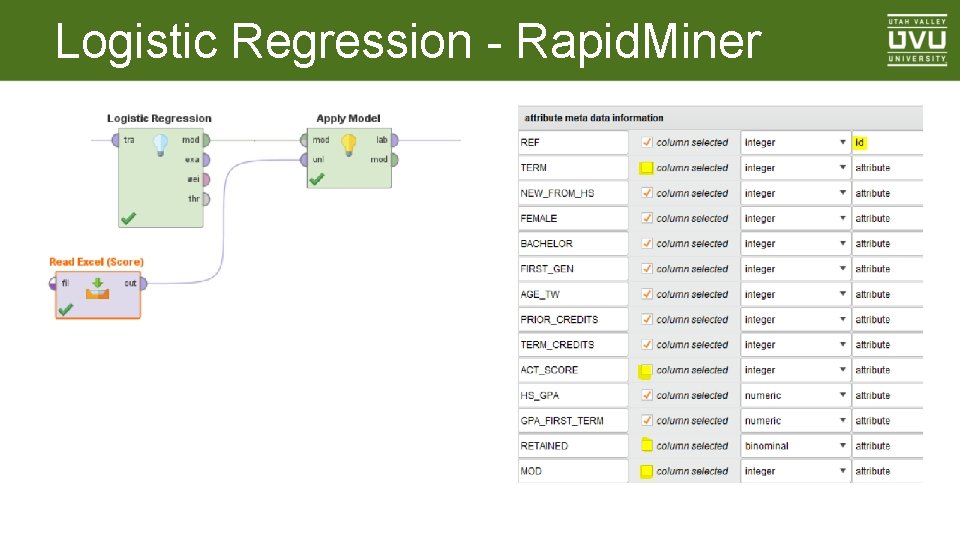

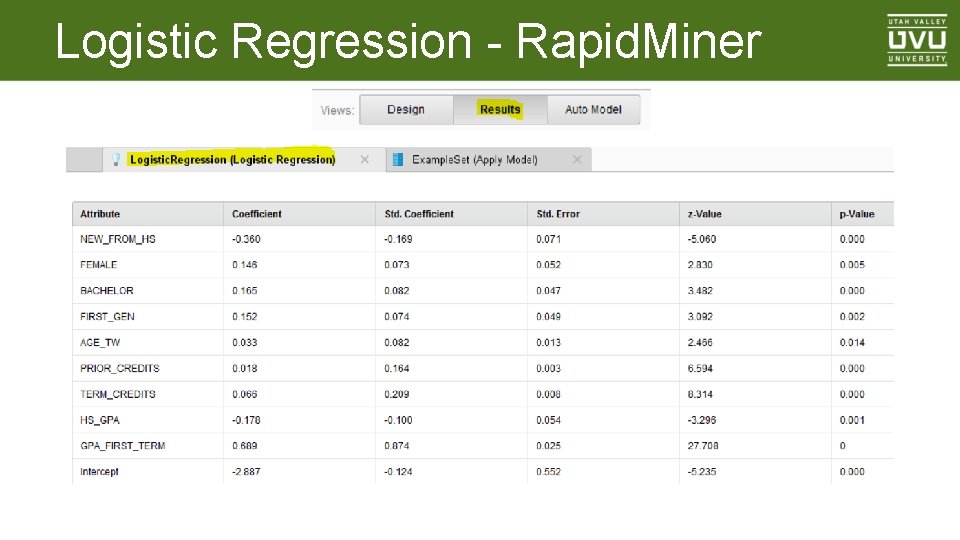

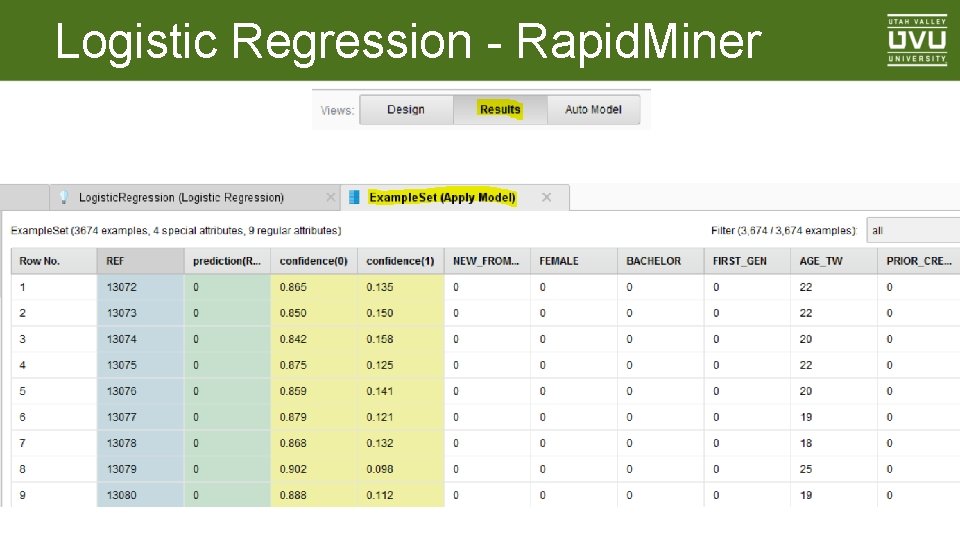

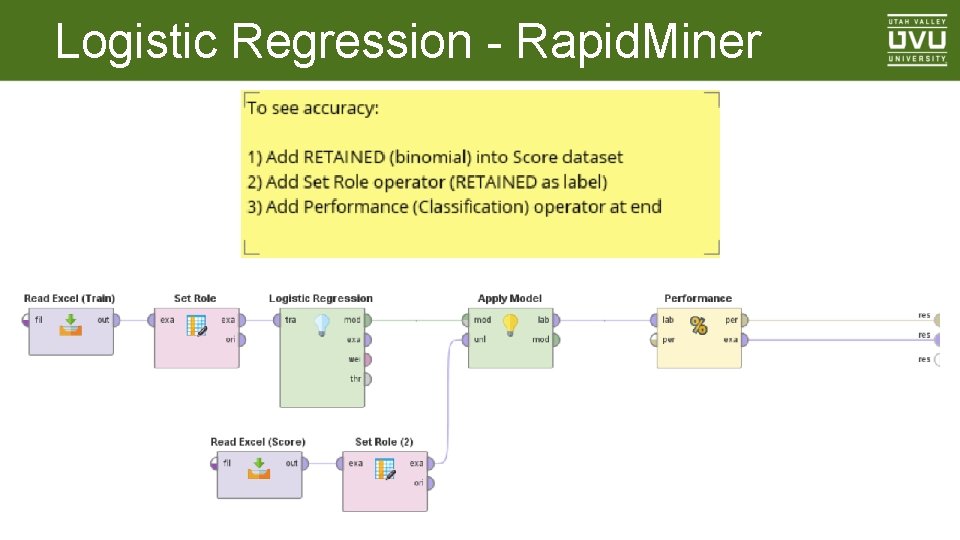

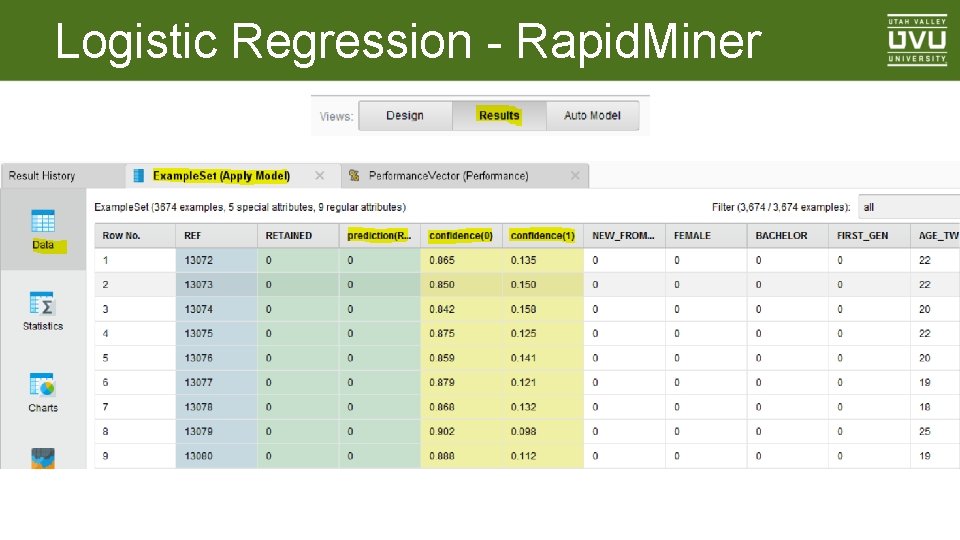

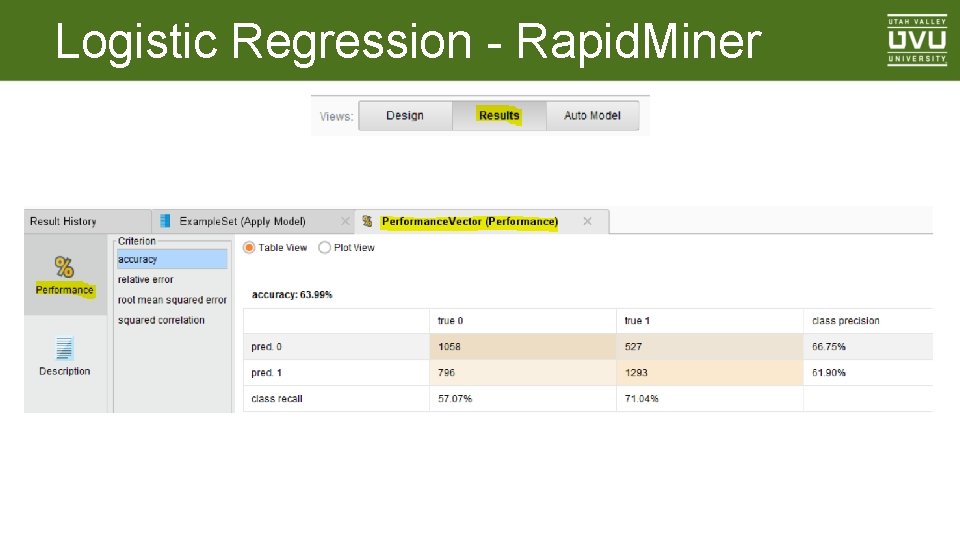

Logistic Regression - RapidMiner

Accuracy:
- Train: 65.68% +/- 1.73%
- Score: 63.99%

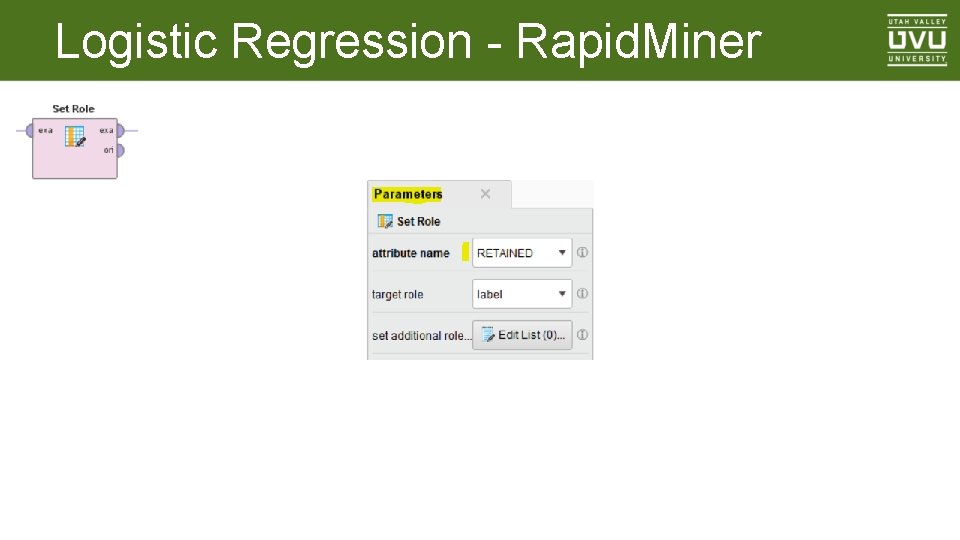

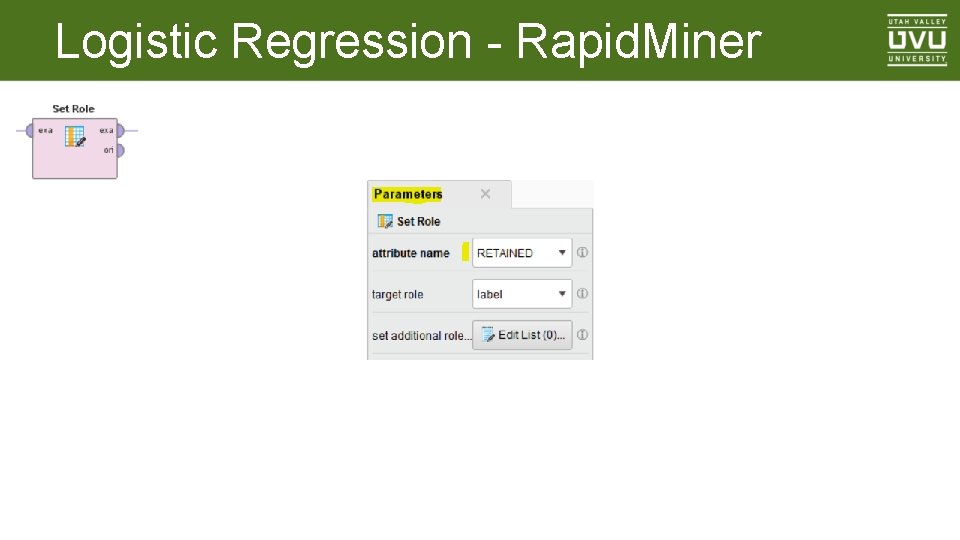


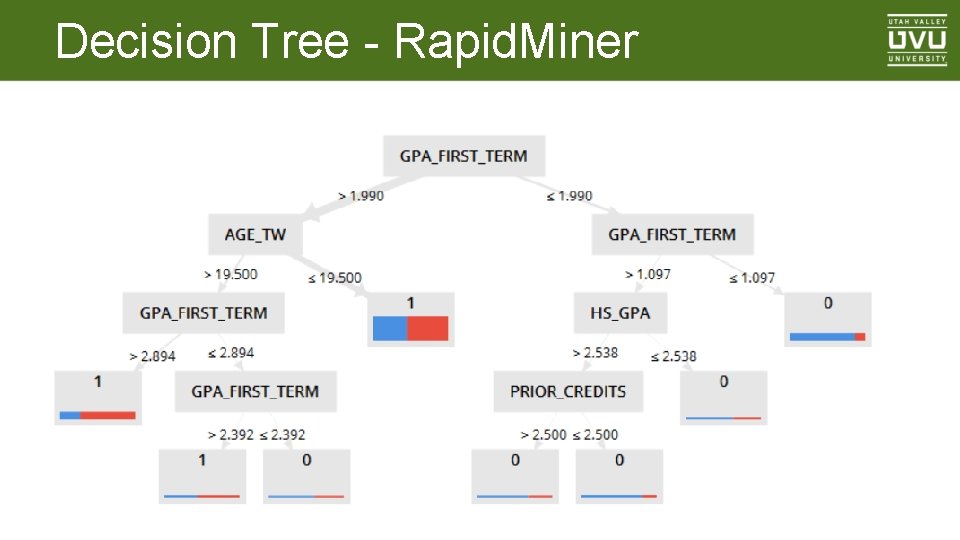

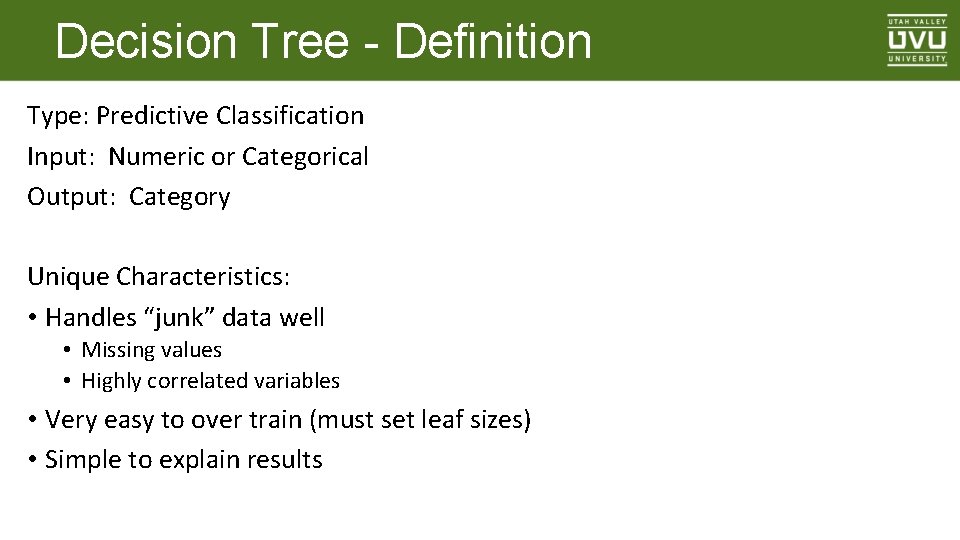

Decision Tree - Definition
- Type: Predictive Classification
- Input: Numeric or Categorical
- Output: Category
- Unique characteristics:
  - Handles “junk” data well
    - Missing values
    - Highly correlated variables
  - Very easy to overtrain (must set leaf sizes)
  - Simple to explain results
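Setting leaf sizes is how overtraining is kept in check. In R's `rpart`, the `minbucket` setting in `rpart.control()` is the minimum number of rows allowed in any leaf. `cp` and `maxdepth` limit growth in other ways. The sketch below uses hypothetical toy data. The specific values (30, 0.001, 4) are illustrative, not tuned.

```r
library(rpart)  # recursive partitioning trees (ships with R)
set.seed(9)

# Hypothetical toy data
n   <- 400
toy <- data.frame(HS_GPA = runif(n, 2, 4),
                  TERM_CREDITS = sample(6:18, n, replace = TRUE))
toy$RETAINED <- factor(rbinom(n, 1, plogis(-5 + 1.5 * toy$HS_GPA + 0.05 * toy$TERM_CREDITS)))

# minbucket: minimum rows per leaf; cp and maxdepth also limit how far the tree grows
model_tree <- rpart(RETAINED ~ HS_GPA + TERM_CREDITS, data = toy, method = "class",
                    control = rpart.control(minbucket = 30, cp = 0.001, maxdepth = 4))

leaf_sizes <- table(model_tree$where)               # rows that fall in each leaf
predicted  <- predict(model_tree, toy, type = "class")
```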

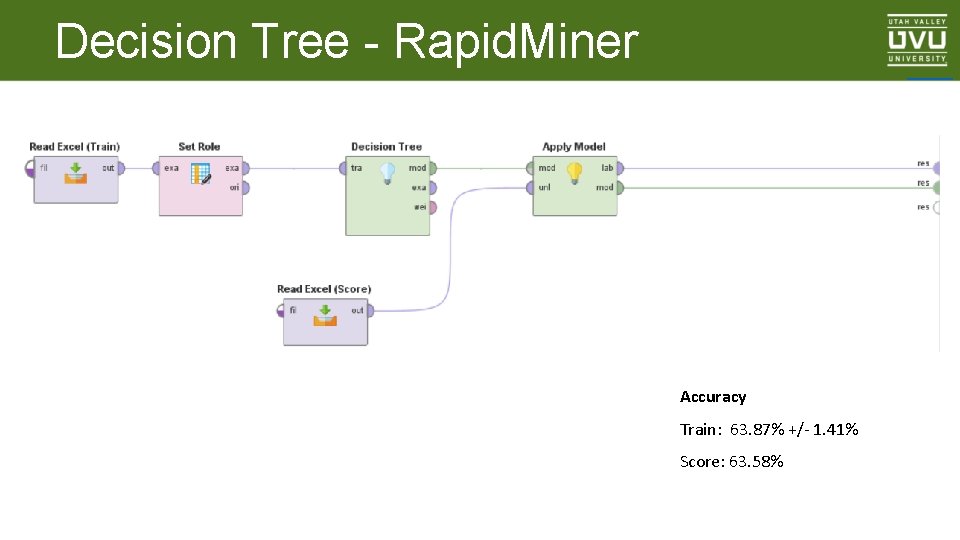

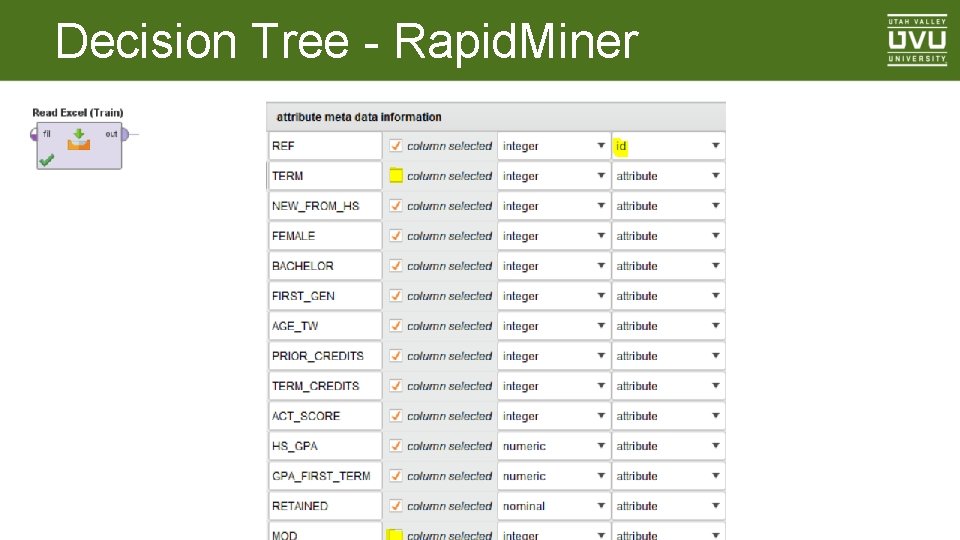

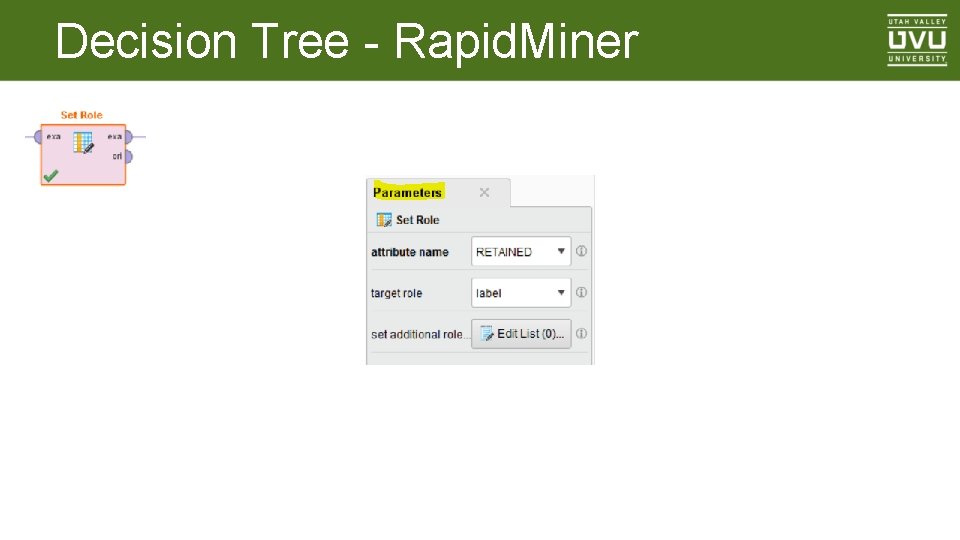

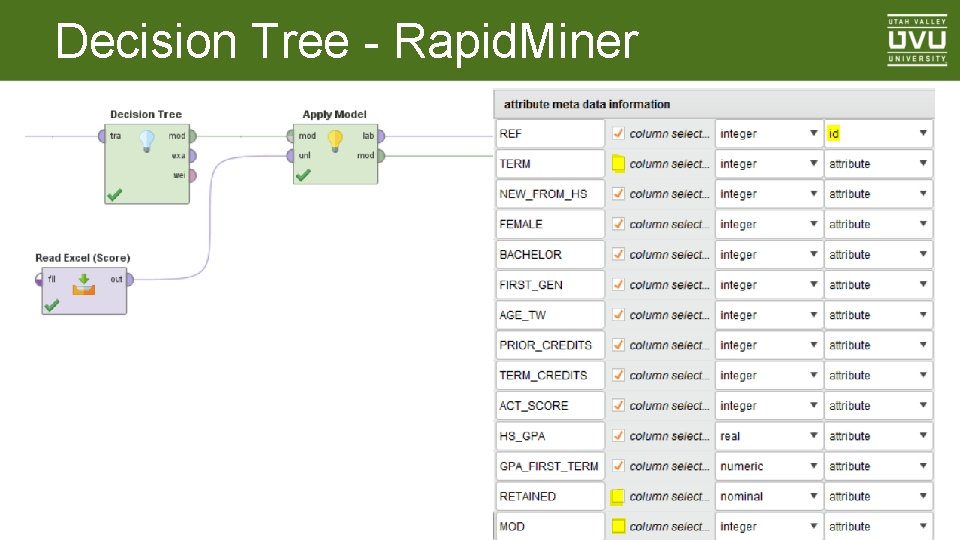

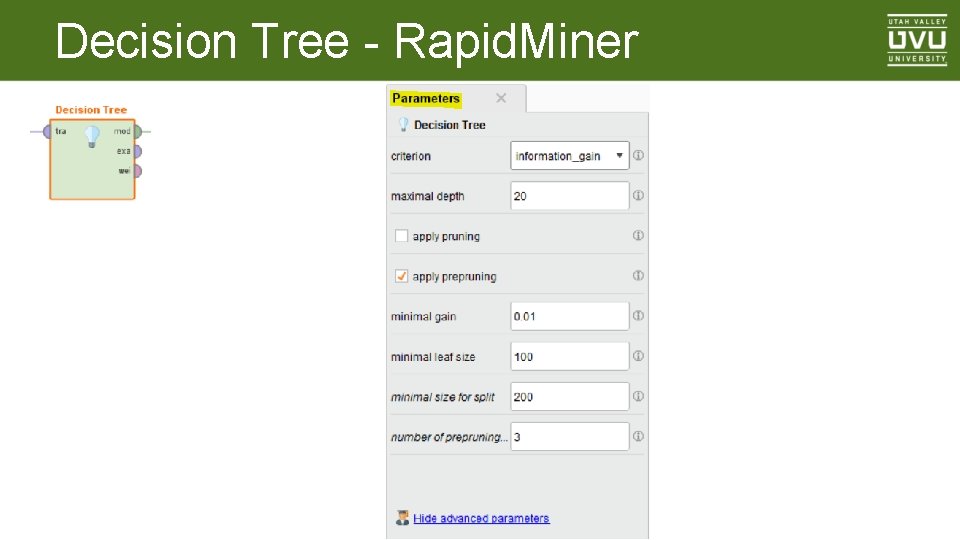

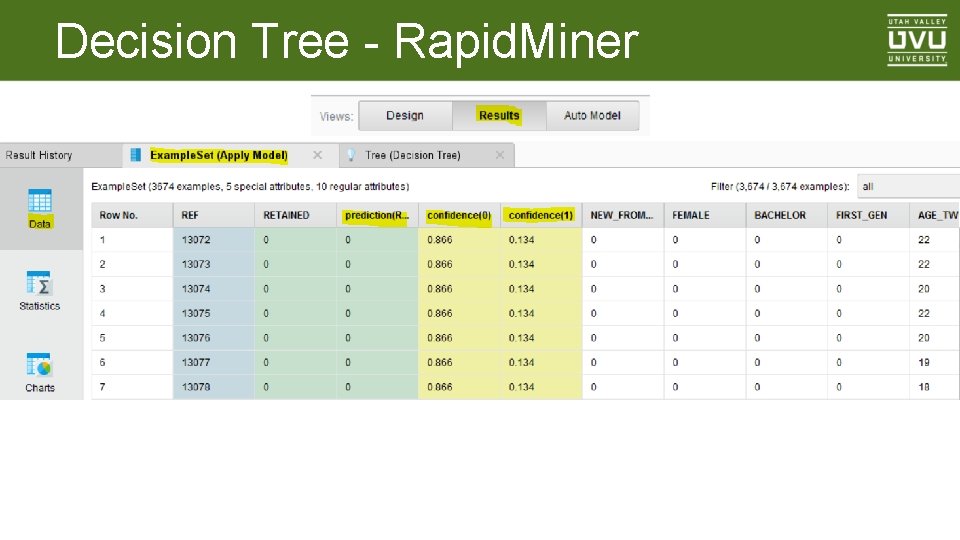

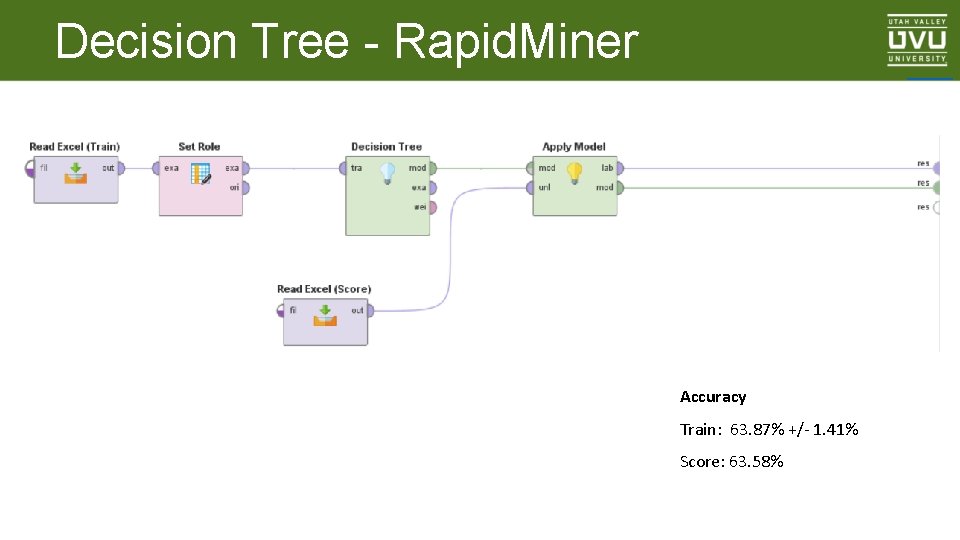

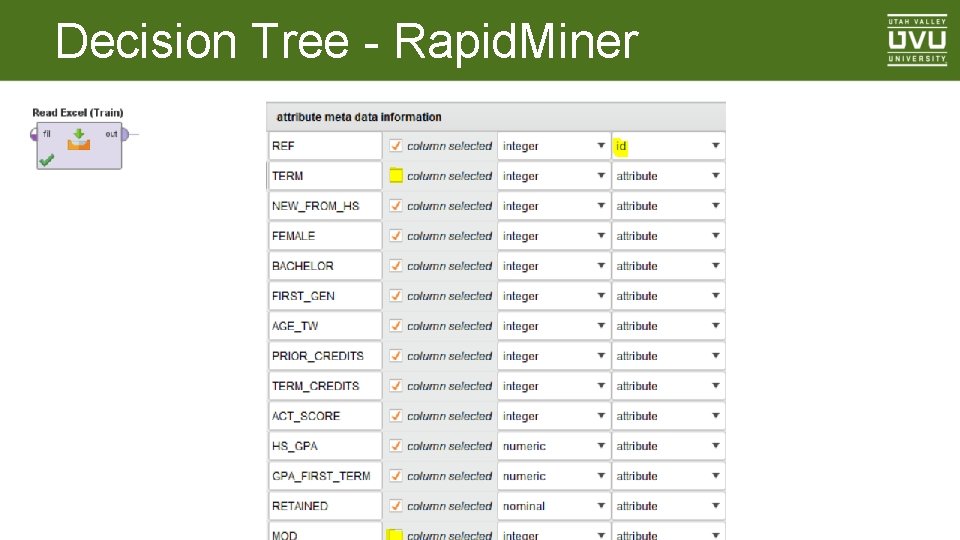

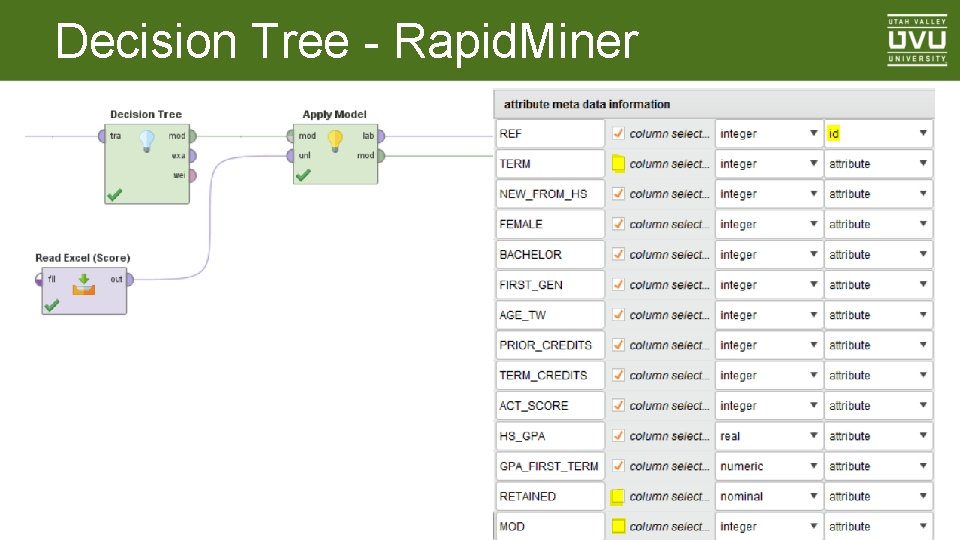

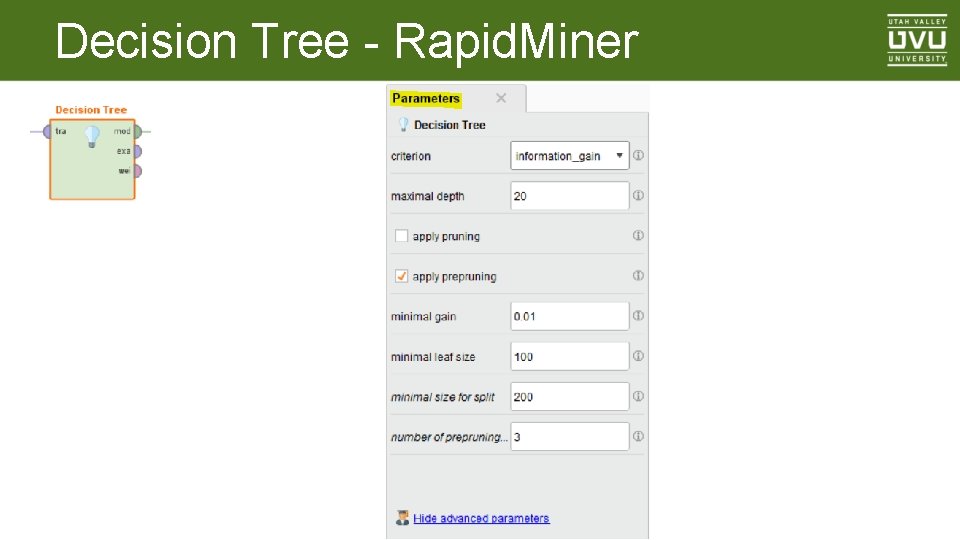

Decision Tree - RapidMiner

Accuracy:
- Train: 63.87% +/- 1.41%
- Score: 63.58%


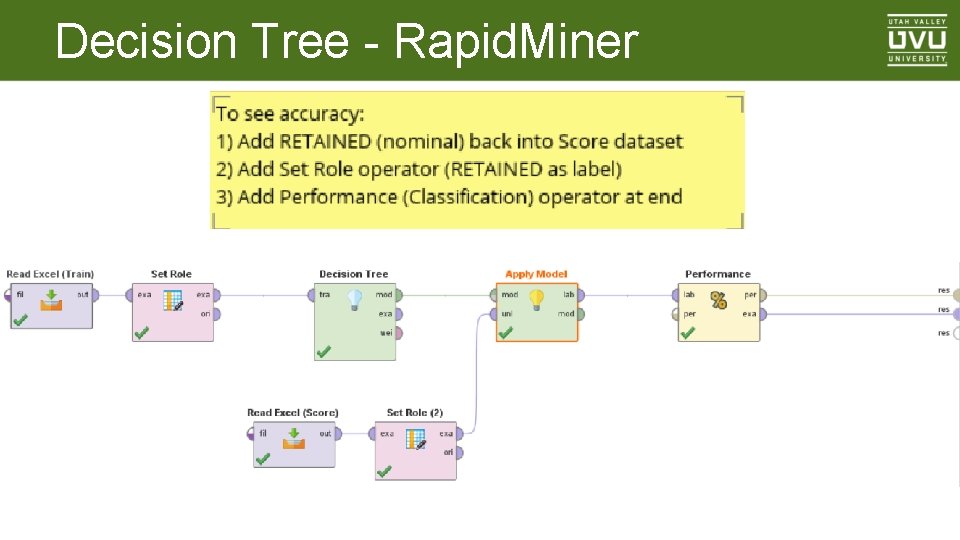

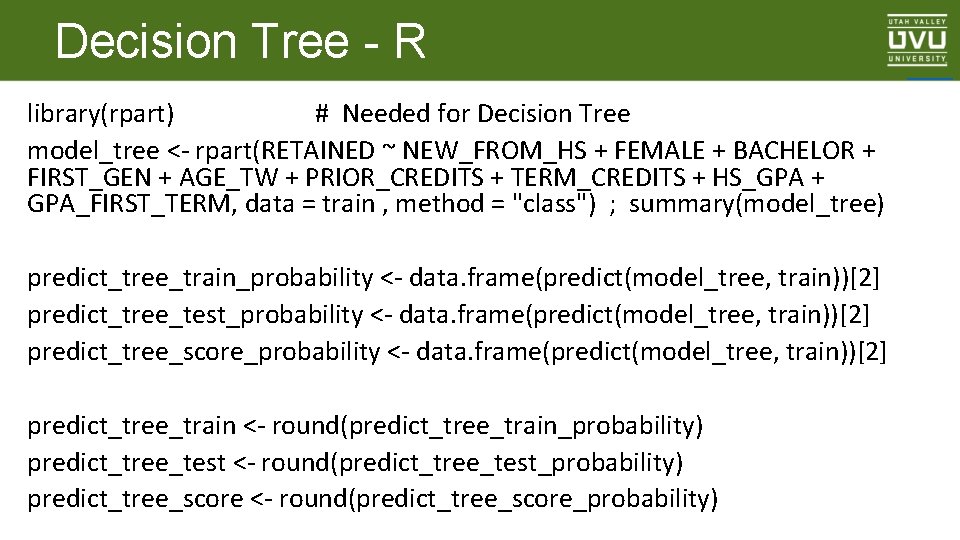

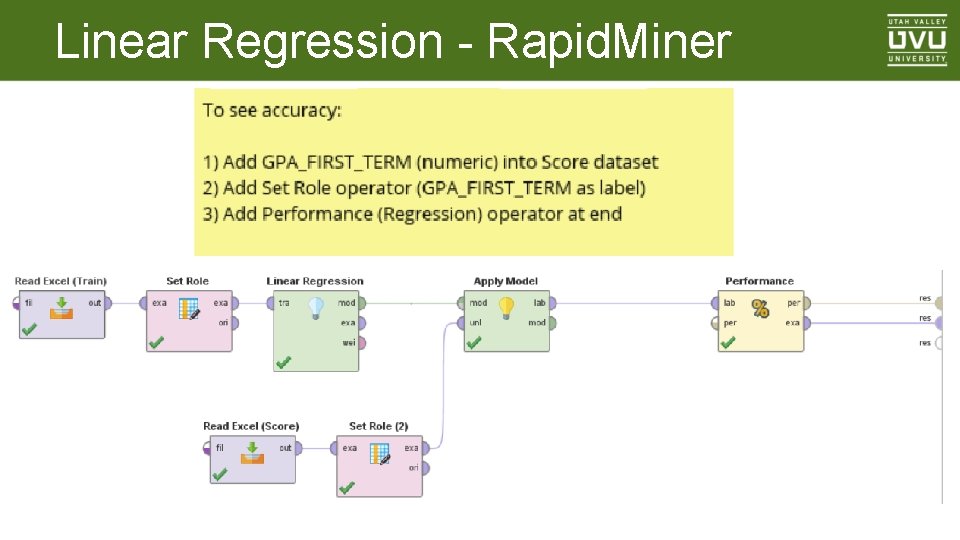

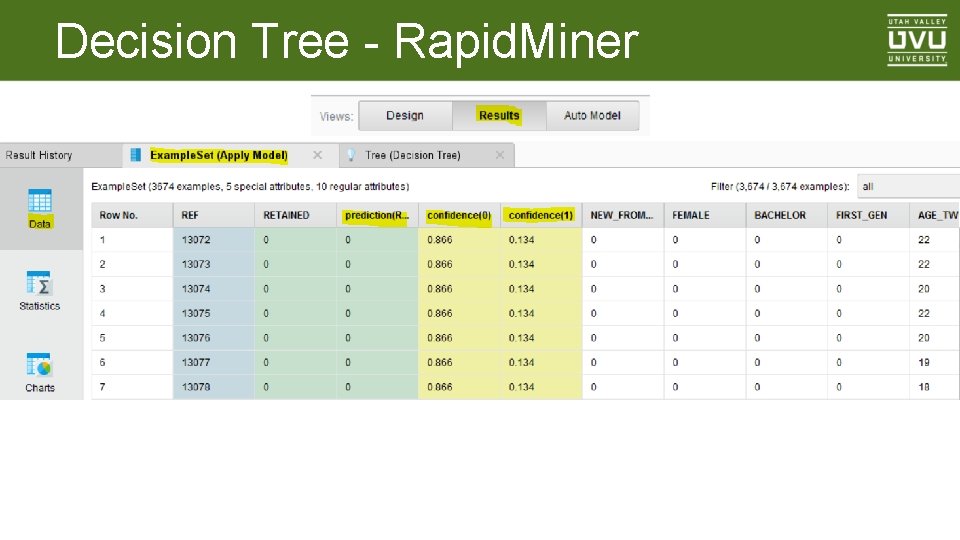

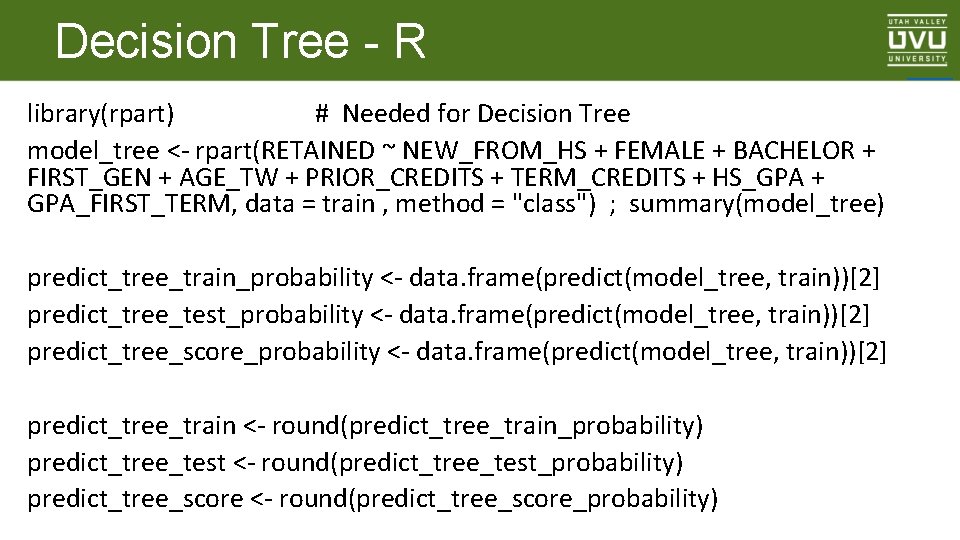

Decision Tree - R

```r
library(rpart)  # Needed for Decision Tree

model_tree <- rpart(RETAINED ~ NEW_FROM_HS + FEMALE + BACHELOR + FIRST_GEN + AGE_TW +
                      PRIOR_CREDITS + TERM_CREDITS + HS_GPA + GPA_FIRST_TERM,
                    data = train, method = "class")
summary(model_tree)

# predict() returns one probability column per class; [2] keeps P(RETAINED = 1)
predict_tree_train_probability <- data.frame(predict(model_tree, train))[2]
predict_tree_test_probability  <- data.frame(predict(model_tree, test))[2]
predict_tree_score_probability <- data.frame(predict(model_tree, score))[2]

# Round the probabilities to 0/1 class predictions
predict_tree_train <- round(predict_tree_train_probability)
predict_tree_test  <- round(predict_tree_test_probability)
predict_tree_score <- round(predict_tree_score_probability)
```

![Decision Tree - R: accuracy calculation](https://slidetodoc.com/presentation_image_h/14afd4de8cfc8b133aae673d32bbf841/image-70.jpg)

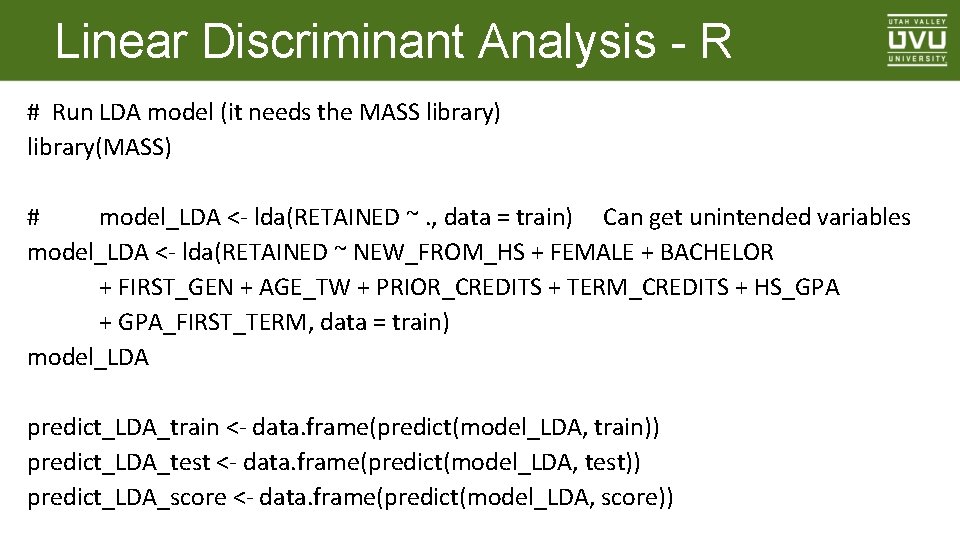

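Decision Tree - R

```r
# 1 where the rounded prediction matches the actual RETAINED value, else 0
accuracy_tree_train <- data.frame(ifelse(predict_tree_train[1] == train$RETAINED, 1, 0))
accuracy_tree_test  <- data.frame(ifelse(predict_tree_test[1]  == test$RETAINED,  1, 0))
accuracy_tree_score <- data.frame(ifelse(predict_tree_score[1] == score$RETAINED, 1, 0))

# Share of correct predictions
sum(accuracy_tree_train) / nrow(accuracy_tree_train)  # 64.8%
sum(accuracy_tree_test)  / nrow(accuracy_tree_test)   # 51.2%
sum(accuracy_tree_score) / nrow(accuracy_tree_score)  # 43.3%
# The 51.2% and 43.3% results were likely produced when the test and score
# predictions were computed on `train` instead of on `test` and `score`
```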

![Ensemble - R: combining model predictions](https://slidetodoc.com/presentation_image_h/14afd4de8cfc8b133aae673d32bbf841/image-71.jpg)

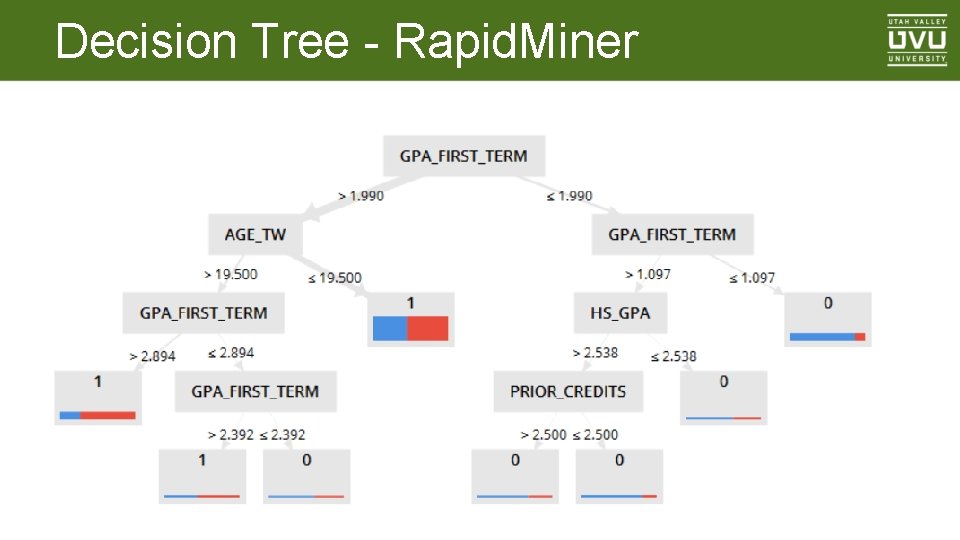

Ensemble - R

```r
# Collect each model's training predictions next to the actual outcome
ensemble_RETAINED <- data.frame(train$RETAINED)
ensemble_RETAINED[2] <- predict_LDA_train[1]
ensemble_RETAINED[3] <- predict_logistic_train
ensemble_RETAINED[4] <- predict_tree_train
ensemble_RETAINED[5] <- predict_logistic_train_probability
ensemble_RETAINED[6] <- predict_tree_train_probability

names(ensemble_RETAINED) <- c("Actual", "LDA", "logistic", "tree",
                              "logistic_probability", "tree_probability")
```
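The ensemble table lines up the three models' predictions, but the slides stop before combining them. A common choice, and our assumption here rather than the authors' stated method, is a majority vote: predict retention when at least two of the three models do. The sketch below uses hypothetical 0/1 predictions. Averaging the probability columns would be another option.

```r
# Hypothetical 0/1 predictions from the three classifiers for five students
ensemble <- data.frame(
  Actual   = c(1, 0, 1, 1, 0),
  LDA      = c(1, 0, 0, 1, 1),
  logistic = c(1, 0, 1, 0, 1),
  tree     = c(0, 0, 1, 1, 0)
)

# Majority vote: predict 1 when at least two of the three models predict 1
votes <- rowSums(ensemble[, c("LDA", "logistic", "tree")])
ensemble$vote <- as.integer(votes >= 2)

# Accuracy of the combined prediction
vote_accuracy <- mean(ensemble$vote == ensemble$Actual)
```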

Questions?
- Mark Leany: leanyma@uvu.edu
- Evelyn Ho-Wisniewski: eho-wisniewski@uvu.edu

Datasets - Train / Test / Score

Fall terms 2012–2015, sorted (for stratification) by:
- Term
- Retained
- Female
- A year or less out of high school
- Bachelor-seeking
- First-generation student
- First-term GPA
- Cumulative HS GPA
- Others (it never got this far)