Introduction to Reinforcement Learning and Q-Learning

- Slides: 24

Introduction to Reinforcement Learning and Q-Learning

Andrew L. Nelson, Visiting Research Faculty, University of South Florida, 3/8/2021

Overview

- References
- Introduction
- Learning an optimal policy in a known environment
- Learning an approximate optimal policy in an unknown environment
- Example
- Generalization and representation
- Knowledge-based vs. general function approximation methods

References

- C. Watkins and P. Dayan, "Q-learning," Machine Learning, vol. 8, pp. 279-292, 1992.
- T. M. Mitchell, Machine Learning, WCB/McGraw-Hill, 1997.

Introduction

- Situated learning agents
- The goal of a learning agent is to learn to choose actions (a) so that the net reward over a sequence of actions is maximized
- Supervised learning methods make use of knowledge of the world and of known reward functions
- Reinforcement learning methods use rewards to learn an optimal policy in a given (unknown) environment

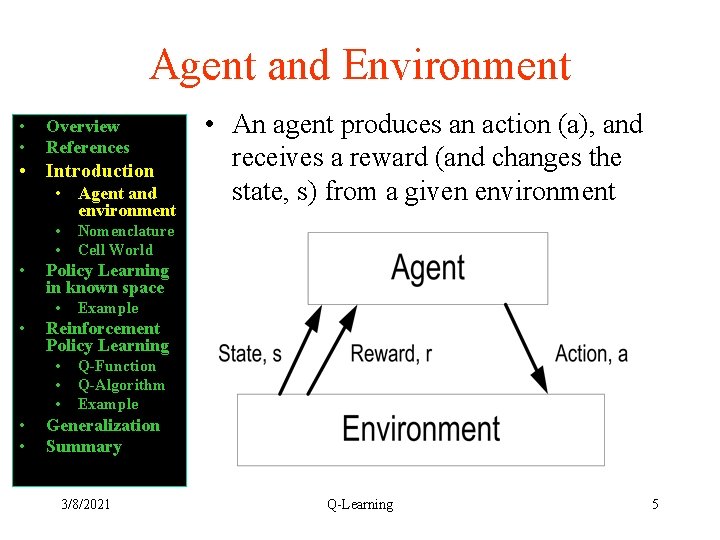

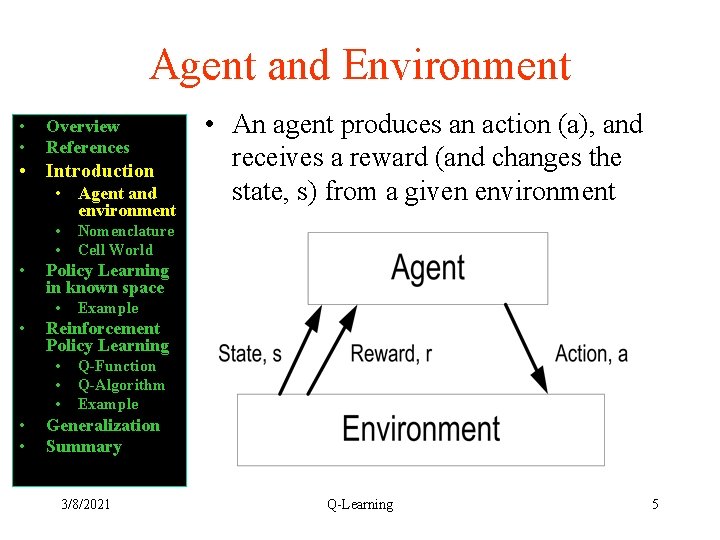

Agent and Environment

- An agent produces an action (a) and, from a given environment, receives a reward and a change of state (s)

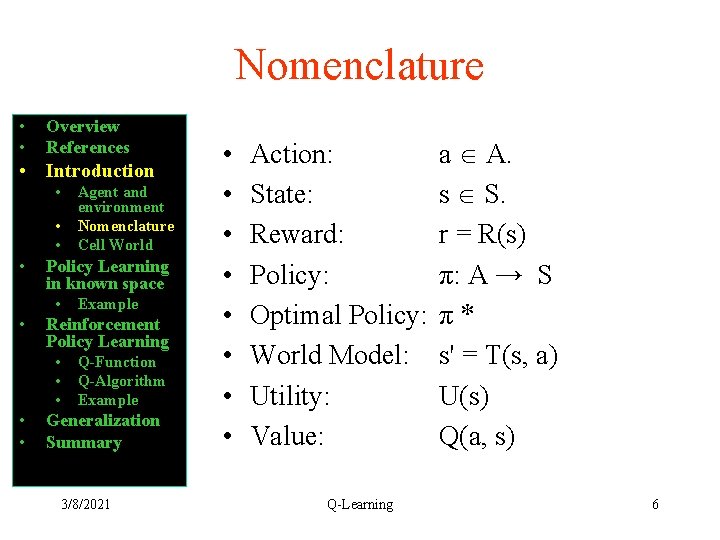

Nomenclature

- Action: a ∈ A
- State: s ∈ S
- Reward: r = R(s)
- Policy: π: S → A
- Optimal policy: π*
- World model: s' = T(s, a)
- Utility: U(s)
- Value: Q(s, a)

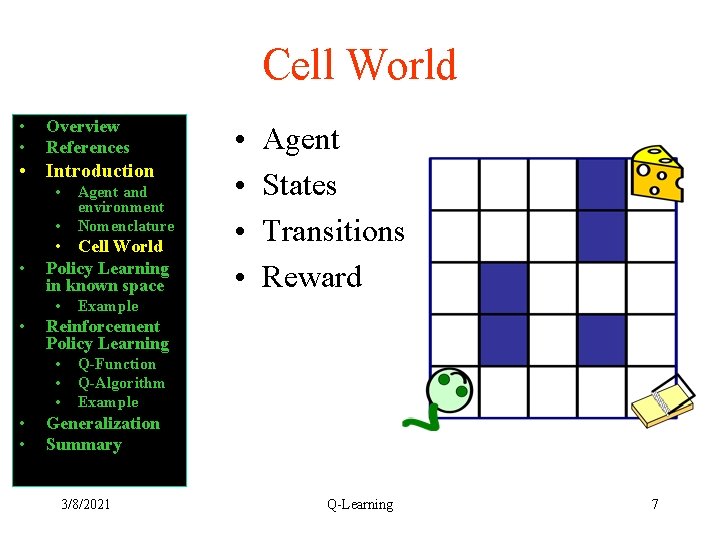

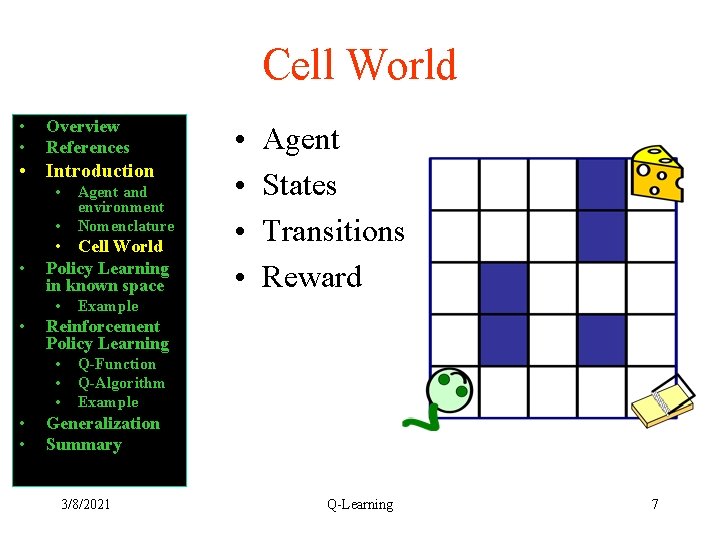

Cell World

- Agent
- States
- Transitions
- Reward

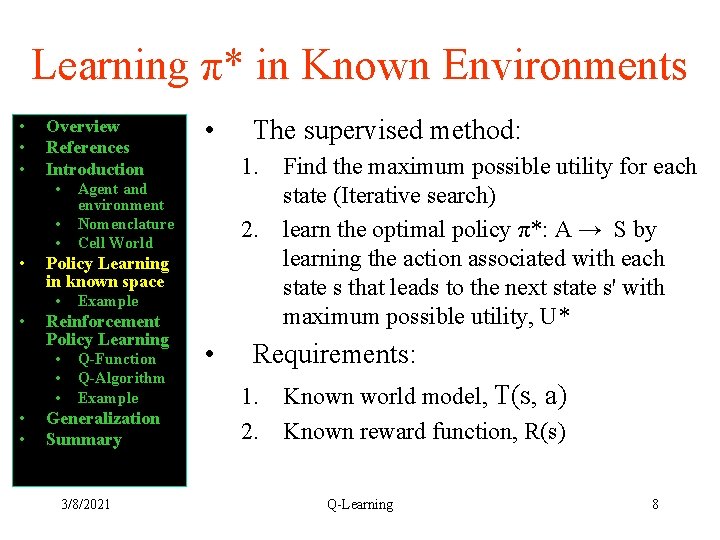

Learning π* in Known Environments

The supervised method:

1. Find the maximum possible utility for each state (iterative search)
2. Learn the optimal policy π*: S → A by learning, for each state s, the action that leads to the next state s' with the maximum possible utility, U*

Requirements:

1. Known world model, T(s, a)
2. Known reward function, R(s)
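The two steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the five-cell corridor, the reward of 100 at the goal, the discount factor `GAMMA`, and the helper names `utilities` and `greedy_policy` are all hypothetical and do not come from the slides.

```python
# Hypothetical 1-D cell world: states 0..4, state 4 is the terminal goal.
STATES = range(5)
ACTIONS = (-1, +1)   # move left / move right
GOAL = 4
GAMMA = 0.9          # discount factor: an assumption, not given in the slides


def R(s):
    """Known reward function: 100 in the goal state, 0 elsewhere."""
    return 100.0 if s == GOAL else 0.0


def T(s, a):
    """Known world model: deterministic move, clipped at the walls."""
    return min(max(s + a, 0), GOAL)


def utilities(sweeps=50):
    """Step 1: iterate U(s) = R(s) + GAMMA * max_a U(T(s, a)) until it settles."""
    U = {s: 0.0 for s in STATES}
    for _ in range(sweeps):
        for s in STATES:
            if s == GOAL:
                U[s] = R(s)  # terminal state: no successor to look at
            else:
                U[s] = R(s) + GAMMA * max(U[T(s, a)] for a in ACTIONS)
    return U


def greedy_policy(U):
    """Step 2: in each non-terminal state, pick the action whose successor has the highest utility."""
    return {s: max(ACTIONS, key=lambda a: U[T(s, a)]) for s in STATES if s != GOAL}


U_star = utilities()
pi_star = greedy_policy(U_star)
```

In this toy world the resulting policy moves right in every non-terminal state, because utility grows toward the goal. Both functions call `T` and `R` directly, which is exactly the requirement listed above: the world model and reward function must be known.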

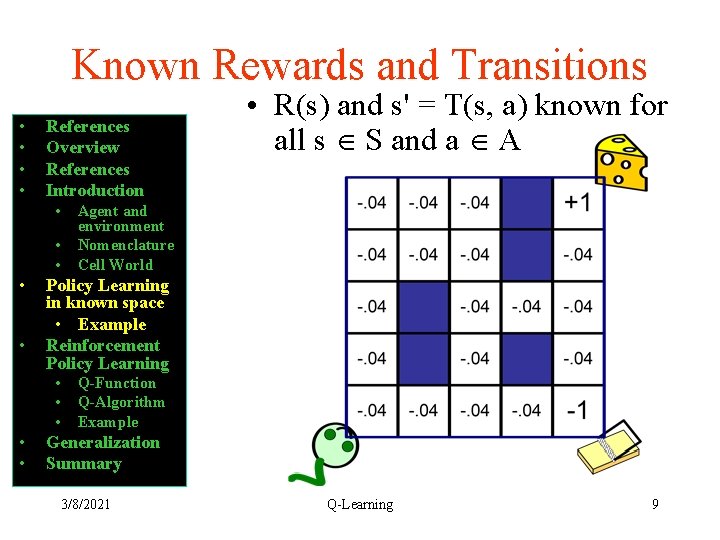

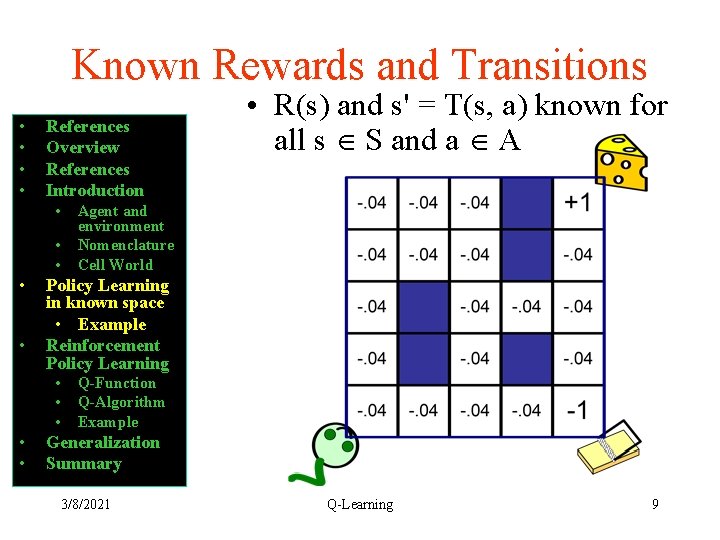

Known Rewards and Transitions

- R(s) and s' = T(s, a) known for all s ∈ S and a ∈ A

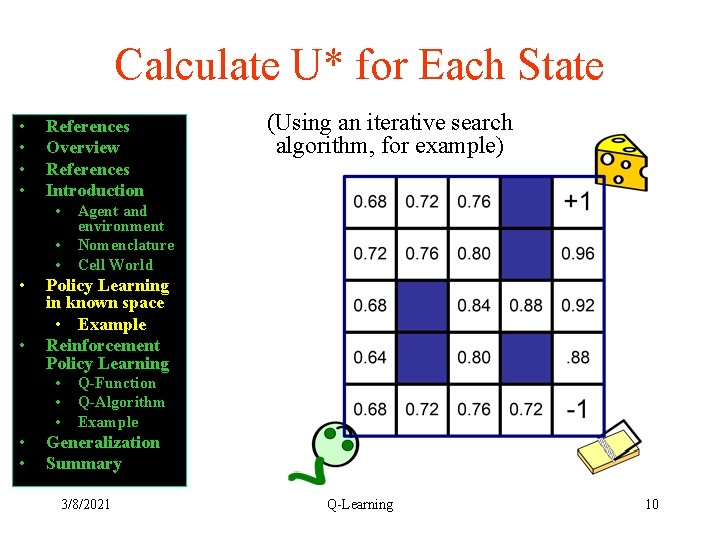

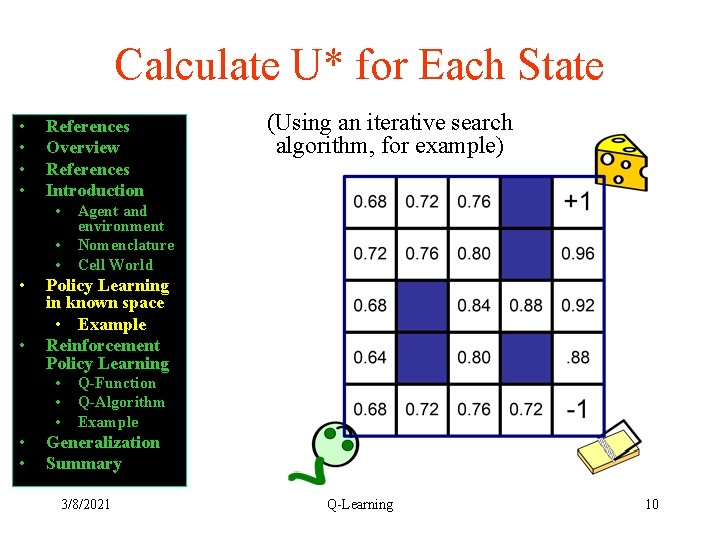

Calculate U* for Each State

- (Using an iterative search algorithm, for example)

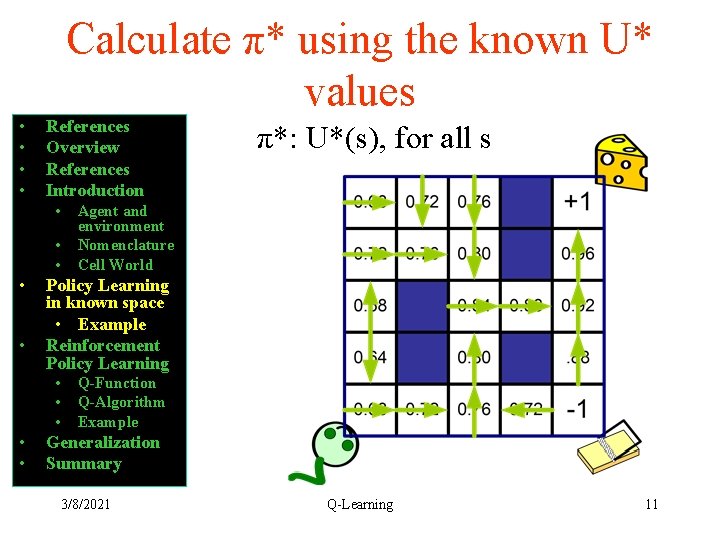

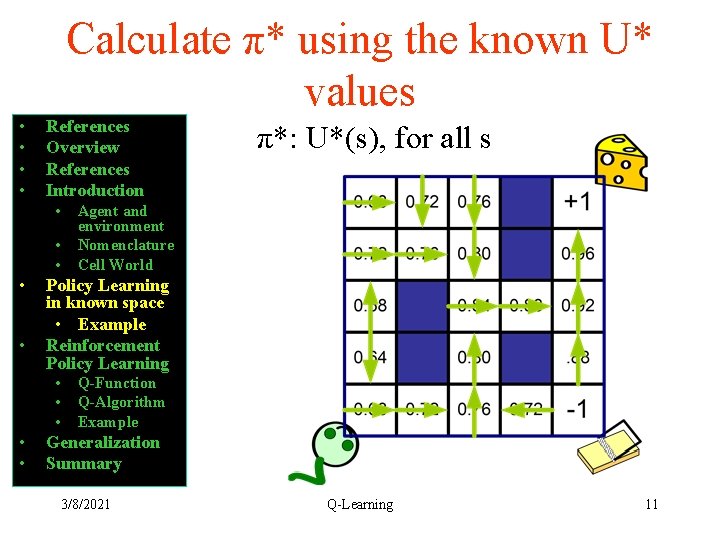

Calculate π* using the known U* values

- π*: U*(s), for all s

Notes

- Supervised learning methods work well when a complete model of the environment and the reward function are known
- Since R(s) and T(s, a) are known, we can reduce learning to a standard iterative learning process

Unknown Environments

- What if the environment is unknown?

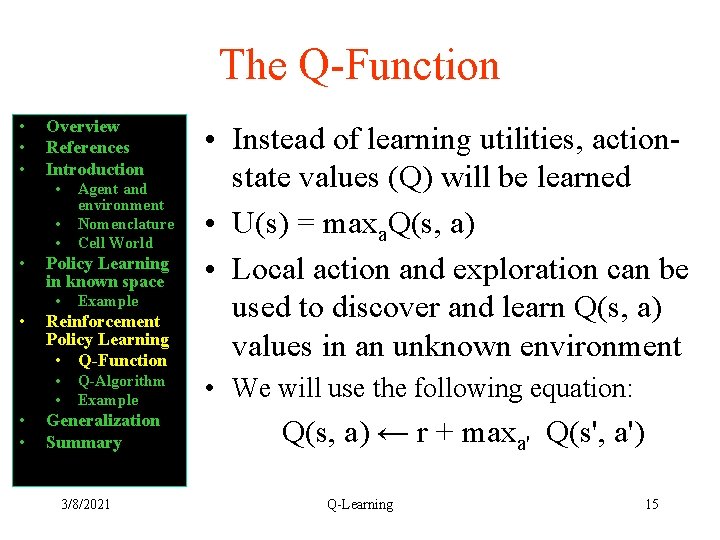

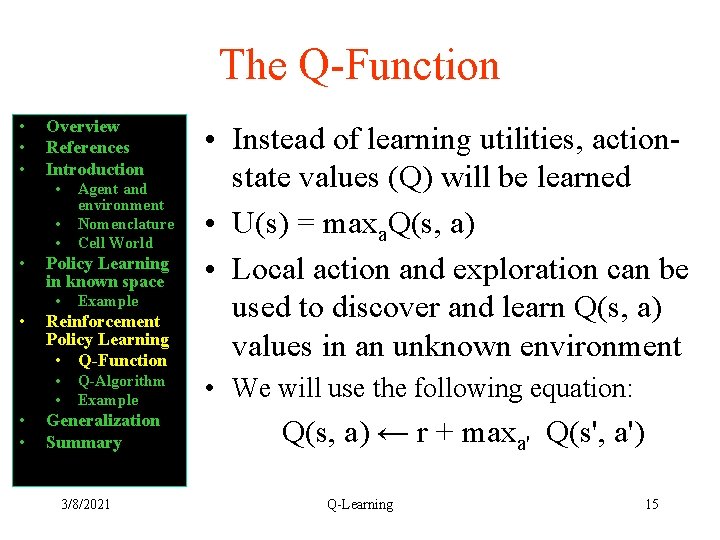

The Q-Function

- Instead of learning utilities, action-state values (Q) will be learned
- U(s) = max_a Q(s, a)
- Local action and exploration can be used to discover and learn Q(s, a) values in an unknown environment
- We will use the following equation: Q(s, a) ← r + max_{a'} Q(s', a')
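As a small worked example of these two relations, the sketch below reads a utility off a Q table and applies one update. The state names, action names, and table values are made up for illustration; the update is the undiscounted form written on the slide.

```python
# Hypothetical Q table for two states and two actions (values are invented).
ACTIONS = ("left", "right")
Q = {
    ("s1", "left"): 0.0, ("s1", "right"): 0.0,
    ("s2", "left"): 81.0, ("s2", "right"): 100.0,
}


def utility(Q, s):
    """U(s) = max_a Q(s, a)."""
    return max(Q[(s, a)] for a in ACTIONS)


def greedy_action(Q, s):
    """The action that attains U(s)."""
    return max(ACTIONS, key=lambda a: Q[(s, a)])


def backup(Q, s, a, r, s_next):
    """One application of Q(s, a) <- r + max_a' Q(s', a')."""
    Q[(s, a)] = r + utility(Q, s_next)


# The agent moves right from s1, receives reward 0, and lands in s2.
backup(Q, "s1", "right", 0.0, "s2")
```

After the call, `Q[("s1", "right")]` equals 0 + max(81, 100) = 100, so the value of the best action in s2 has been propagated one step back to s1. Only the observed reward and the observed next state were needed, not T or R.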

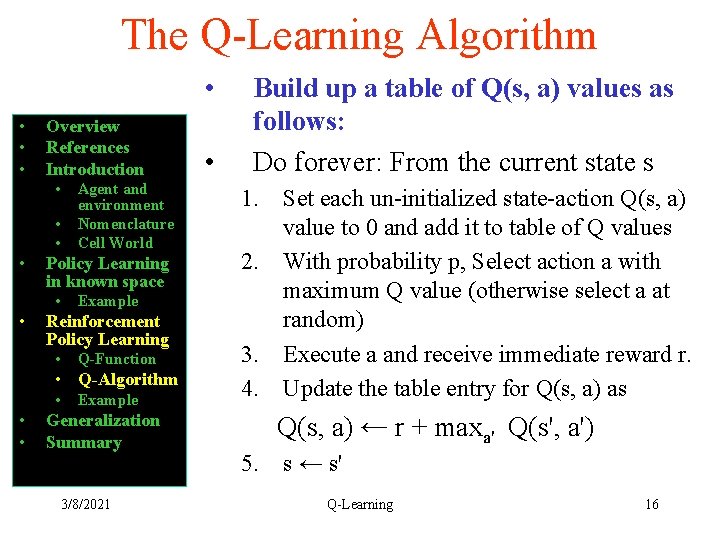

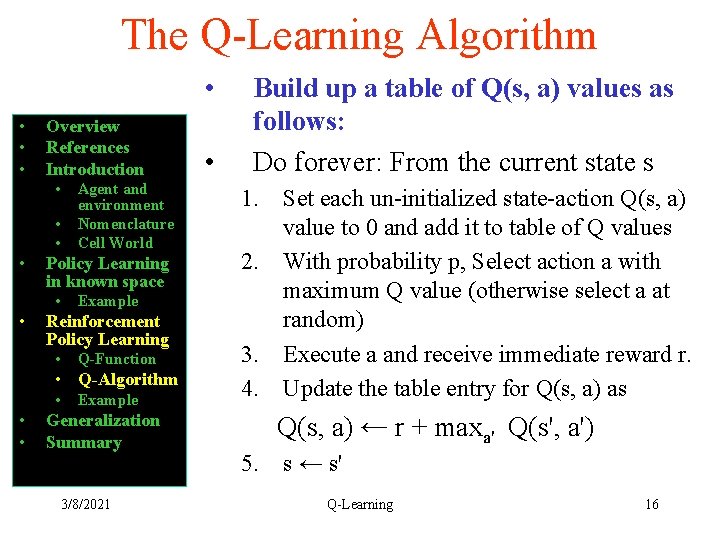

The Q-Learning Algorithm

- Build up a table of Q(s, a) values as follows:

Do forever, from the current state s:

1. Set each uninitialized state-action Q(s, a) value to 0 and add it to the table of Q values
2. With probability p, select the action a with the maximum Q value (otherwise select a at random)
3. Execute a and receive the immediate reward r
4. Update the table entry for Q(s, a) as Q(s, a) ← r + max_{a'} Q(s', a')
5. s ← s'
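A minimal tabular sketch of the five steps follows. The corridor environment hidden inside `step`, the episode structure, the default `p`, the seed, and the discount factor `gamma` are all assumptions added for illustration; setting `gamma=1.0` reproduces the update exactly as written in step 4. A discount below 1 is used here only so that actions leading toward the goal end up with strictly larger values than actions leading away.

```python
import random
from collections import defaultdict

ACTIONS = (-1, +1)   # move left / move right
GOAL = 4             # terminal cell of a hypothetical 5-cell corridor


def step(s, a):
    """Environment, hidden from the learner: returns (reward, next state)."""
    s_next = min(max(s + a, 0), GOAL)
    return (100.0 if s_next == GOAL else 0.0), s_next


def q_learning(episodes=200, p=0.8, gamma=0.9, seed=0):
    rng = random.Random(seed)
    Q = defaultdict(float)            # step 1: unseen (s, a) entries start at 0
    for _ in range(episodes):
        s = 0                         # terminal state reached: start over
        while s != GOAL:
            # Step 2: exploit with probability p, otherwise act at random.
            if rng.random() < p:
                best = max(Q[(s, b)] for b in ACTIONS)
                a = rng.choice([b for b in ACTIONS if Q[(s, b)] == best])
            else:
                a = rng.choice(ACTIONS)
            r, s_next = step(s, a)    # step 3: execute a, observe r and s'
            # Step 4: Q(s, a) <- r + gamma * max_a' Q(s', a')
            Q[(s, a)] = r + gamma * max(Q[(s_next, b)] for b in ACTIONS)
            s = s_next                # step 5
    return Q


Q = q_learning()
policy = {s: max(ACTIONS, key=lambda a: Q[(s, a)]) for s in range(GOAL)}
```

The learner never reads the transition or reward rules directly; it only sees what `step` returns. Ties in step 2 are broken at random so that the all-zero initial table does not lock the agent into a single action.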

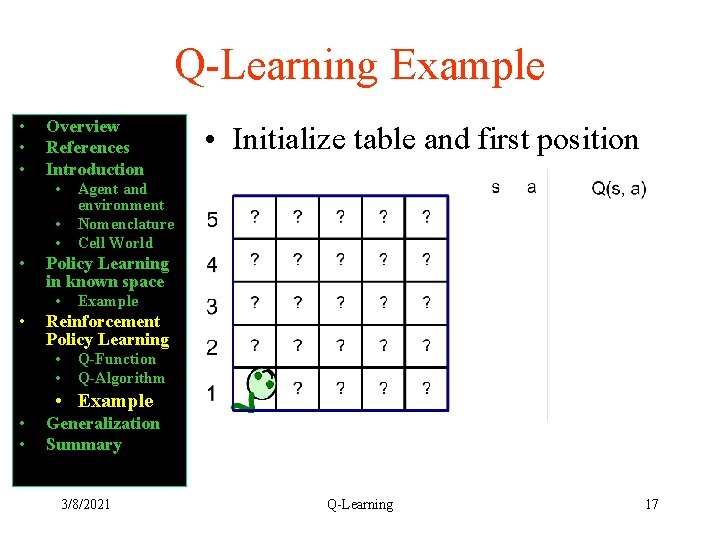

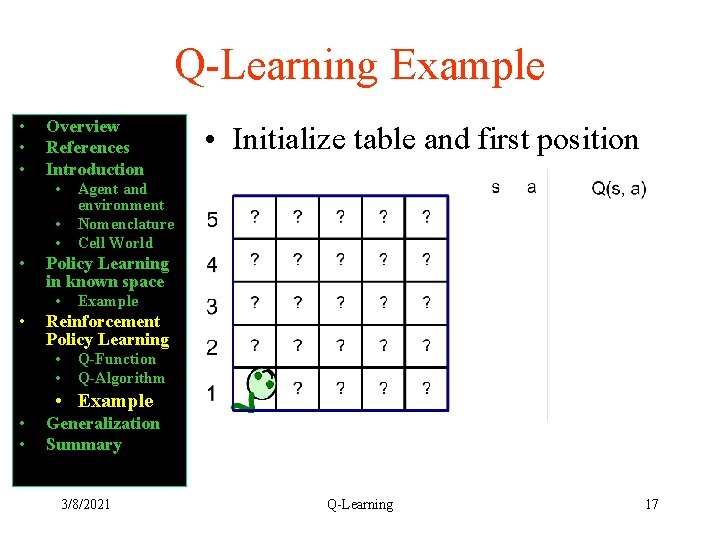

Q-Learning Example

- Initialize table and first position

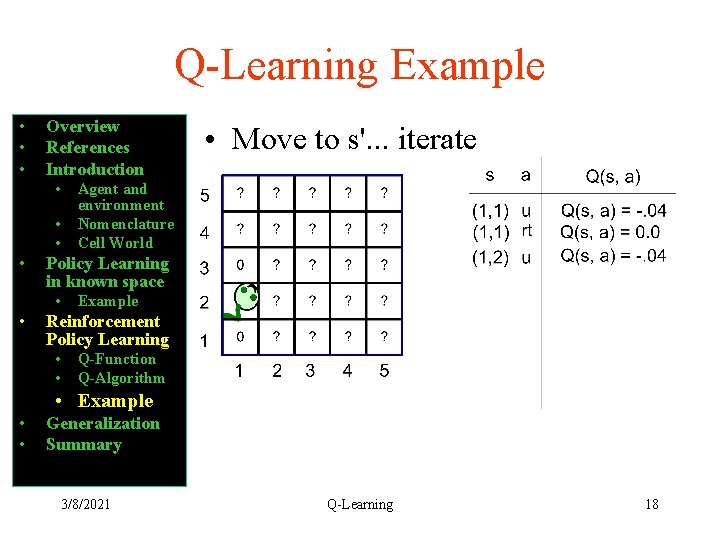

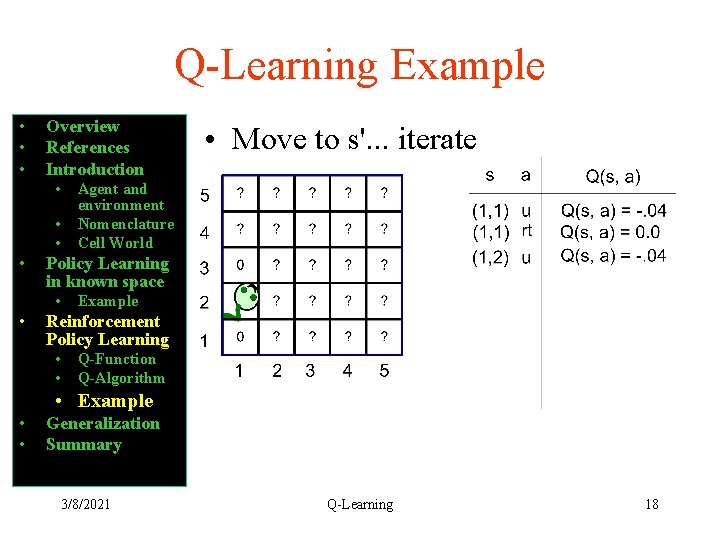

Q-Learning Example

- Move to s'... iterate

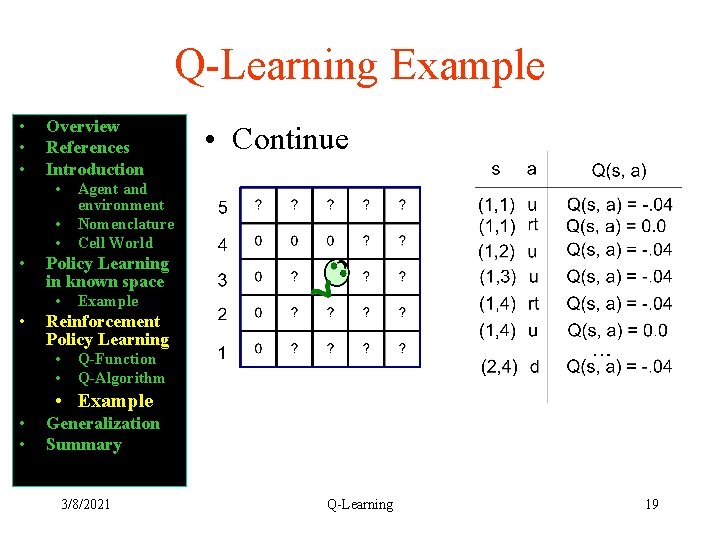

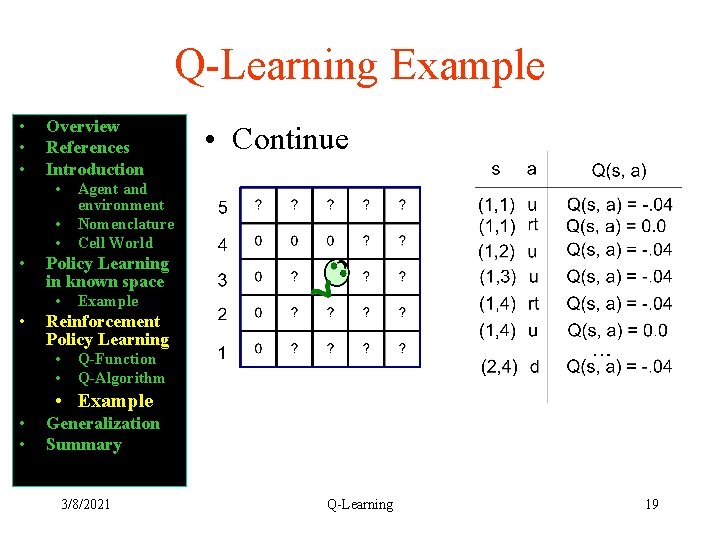

Q-Learning Example

- Continue

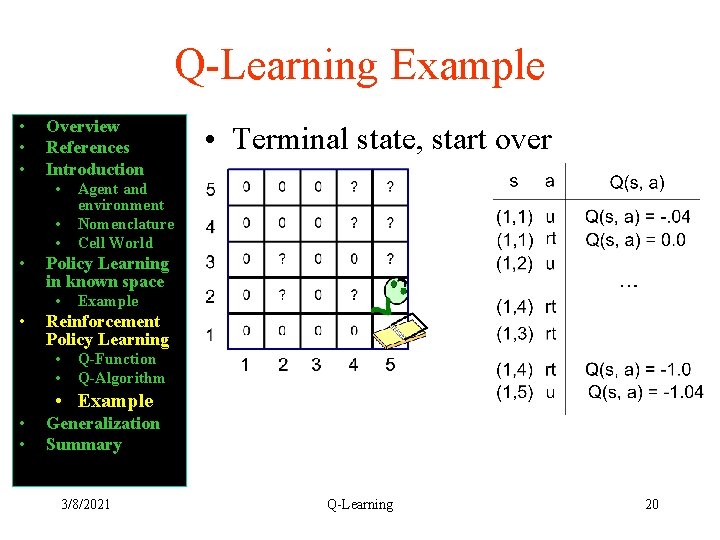

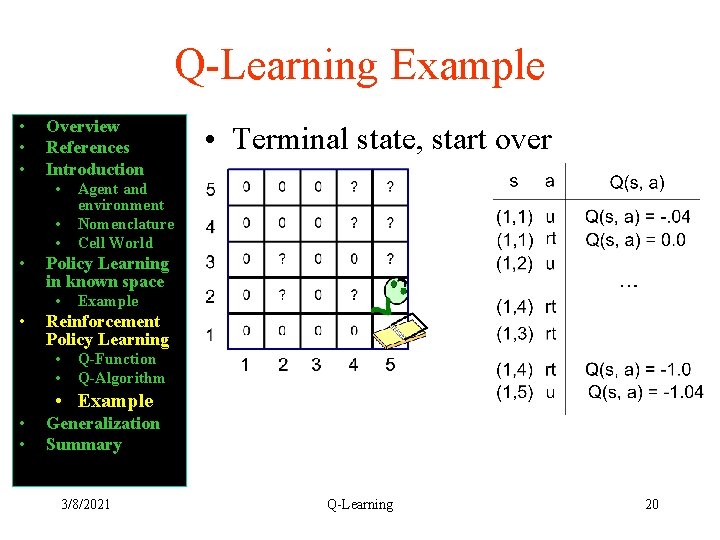

Q-Learning Example

- Terminal state, start over

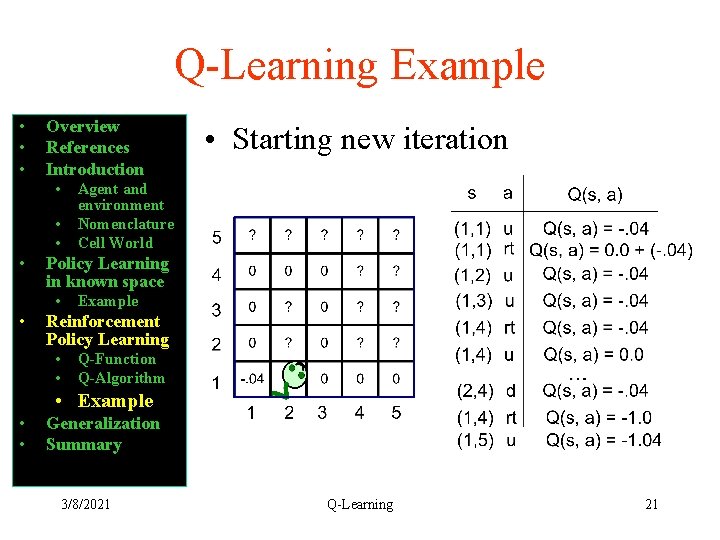

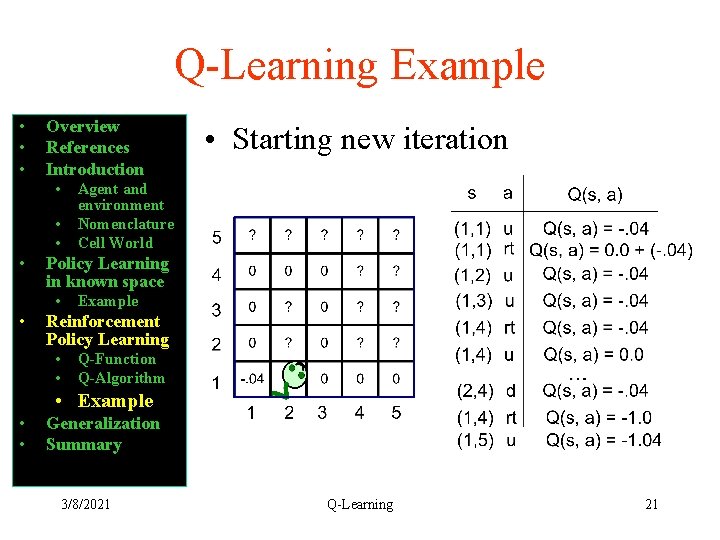

Q-Learning Example

- Starting new iteration

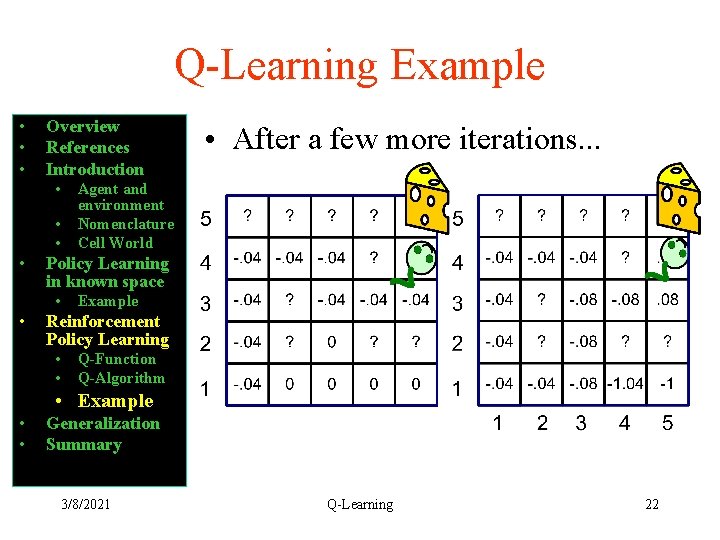

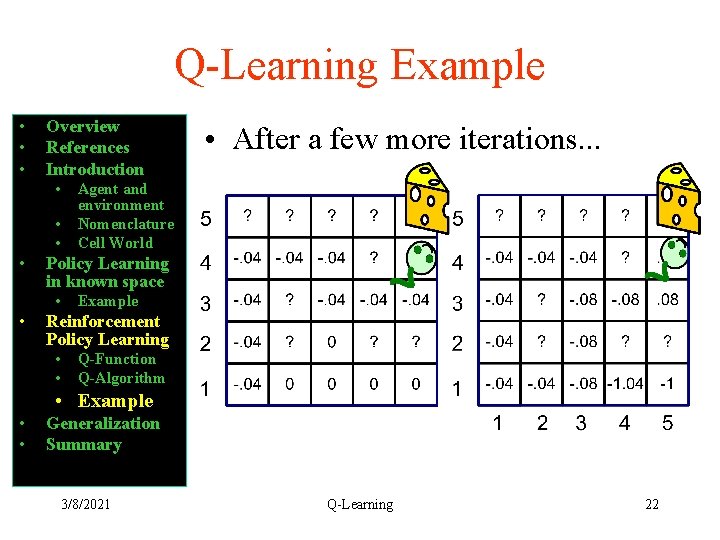

Q-Learning Example

- After a few more iterations...

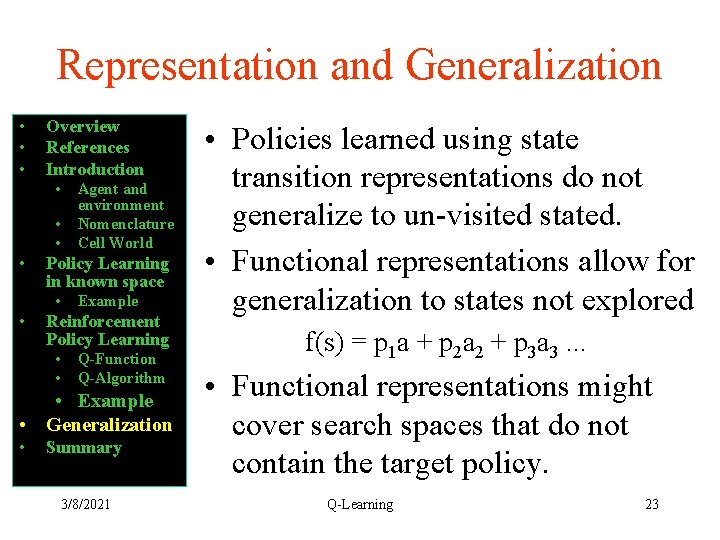

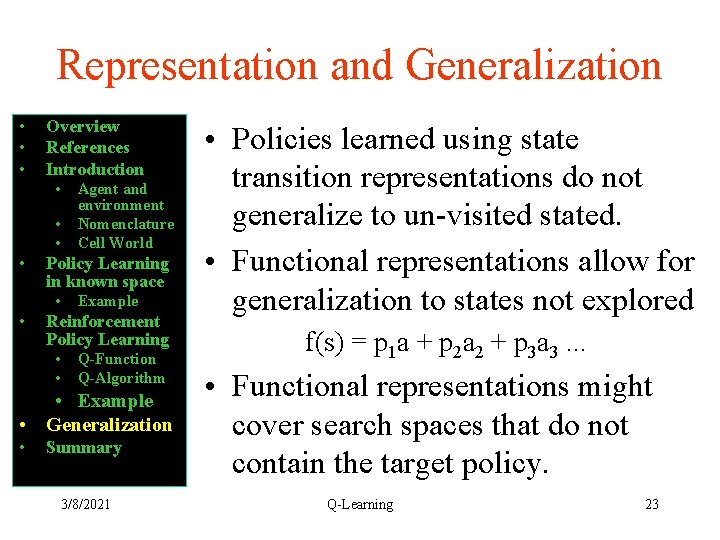

Representation and Generalization

- Policies learned using state transition representations do not generalize to unvisited states.
- Functional representations allow for generalization to states not explored: f(s) = p₁a + p₂a² + p₃a³ + ...
- Functional representations might cover search spaces that do not contain the target policy.
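The contrast between a table and a functional representation can be sketched as follows. The visited states, their utilities, and the choice of a two-term polynomial in the state index (p1·s + p2·s²) are hypothetical, and the least-squares fit is one possible way to set the parameters, not necessarily the method intended in the slides.

```python
# Hypothetical utilities learned for the visited states only (state 3 was never visited).
table = {1: 15.0, 2: 40.0, 4: 120.0}


def fit_two_term_polynomial(samples):
    """Least-squares fit of f(s) = p1*s + p2*s**2 via the 2x2 normal equations."""
    A = sum(s ** 2 for s in samples)
    B = sum(s ** 3 for s in samples)
    C = sum(s ** 4 for s in samples)
    D = sum(s * u for s, u in samples.items())
    E = sum(s ** 2 * u for s, u in samples.items())
    det = A * C - B * B
    p1 = (D * C - B * E) / det
    p2 = (A * E - B * D) / det
    return p1, p2


p1, p2 = fit_two_term_polynomial(table)


def f(s):
    """Functional representation: defined for every state, visited or not."""
    return p1 * s + p2 * s ** 2


estimate_for_unvisited = f(3)    # the table has no entry for state 3
```

The table can only answer for states 1, 2, and 4, while `f` also returns an estimate for state 3. The third bullet applies here as well: if the true utilities did not follow a two-term polynomial, no choice of `p1` and `p2` would represent them exactly.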

Summary

- Reinforcement learning (RL) is useful for learning policies in uncharacterized environments
- RL uses reward from actions taken during exploration
- RL is useful on small state transition spaces
- Functional representations increase the power of RL in terms of both generalization and representation