Introduction to Recurrent Neural Networks (RNN)

- Slides: 18

J.-S. Roger Jang (張智星), jang@mirlab.org, http://mirlab.org/jang, MIR Lab, CSIE Dept., National Taiwan University

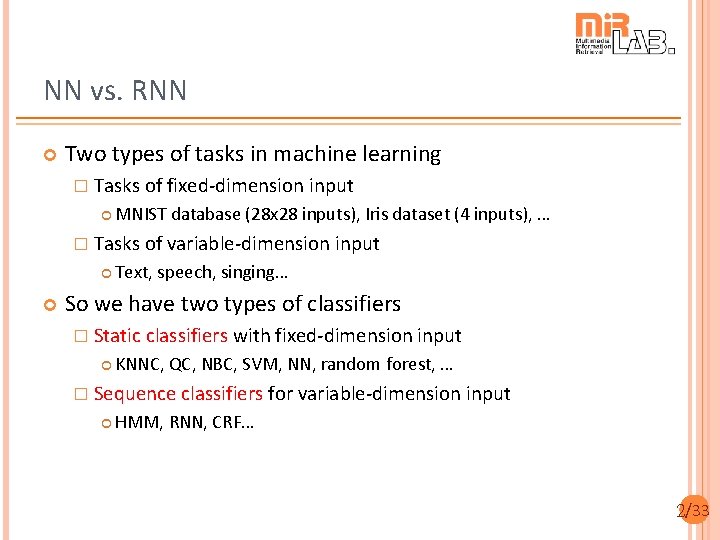

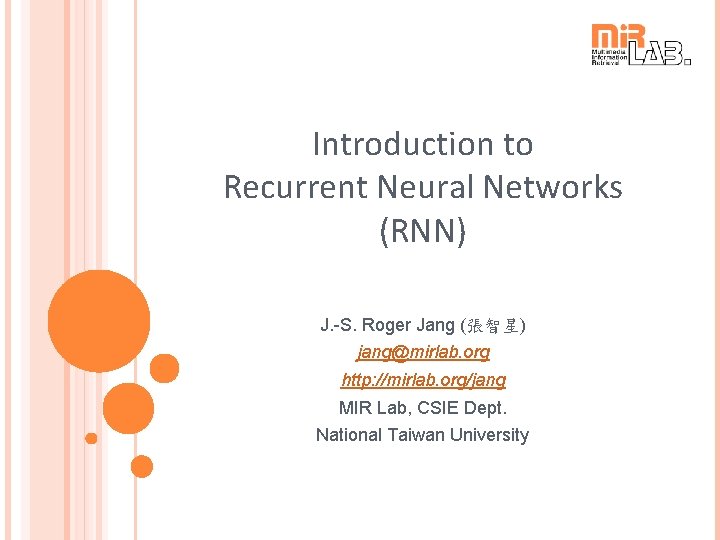

NN vs. RNN

Two types of tasks in machine learning:

- Tasks with fixed-dimension input: MNIST database (28 x 28 inputs), Iris dataset (4 inputs), …
- Tasks with variable-dimension input: text, speech, singing, …

So we have two types of classifiers:

- Static classifiers for fixed-dimension input: KNNC, QC, NBC, SVM, NN, random forest, …
- Sequence classifiers for variable-dimension input: HMM, RNN, CRF, …

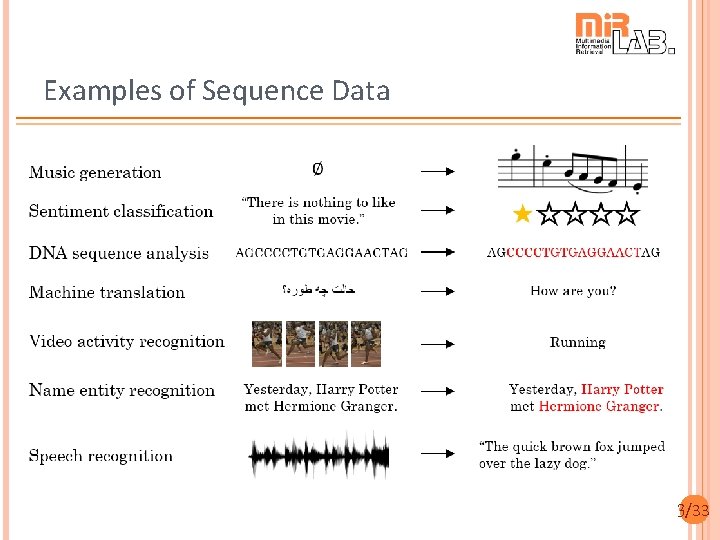

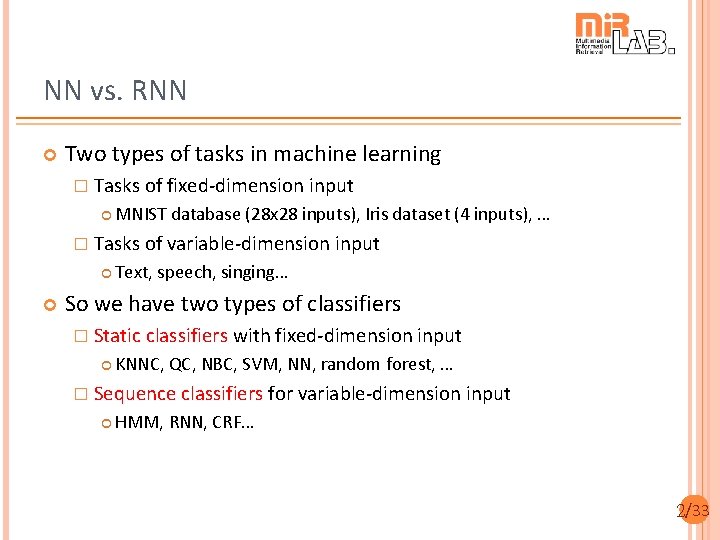

Examples of Sequence Data

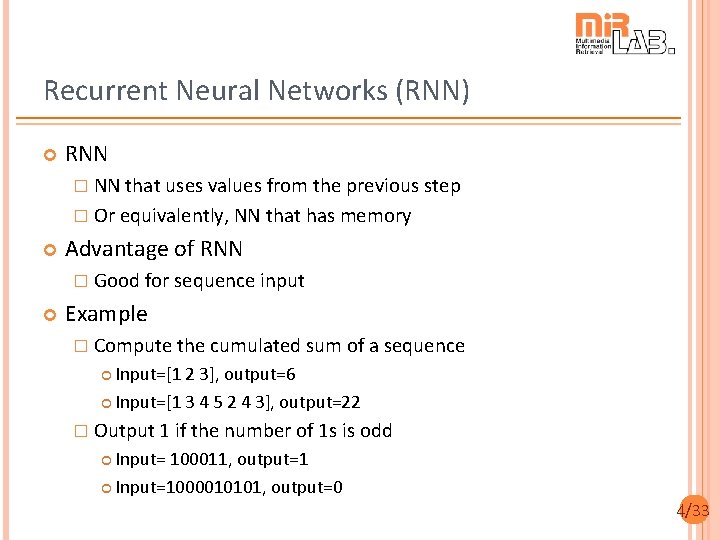

Recurrent Neural Networks (RNN)

RNN

- An NN that uses values from the previous step
- Or equivalently, an NN that has memory

Advantage of RNN

- Good for sequence input

Examples

- Compute the cumulative sum of a sequence
  - Input = [1 2 3], output = 6
  - Input = [1 3 4 5 2 4 3], output = 22
- Output 1 if the number of 1s is odd
  - Input = 100011, output = 1
  - Input = 1000010101, output = 0

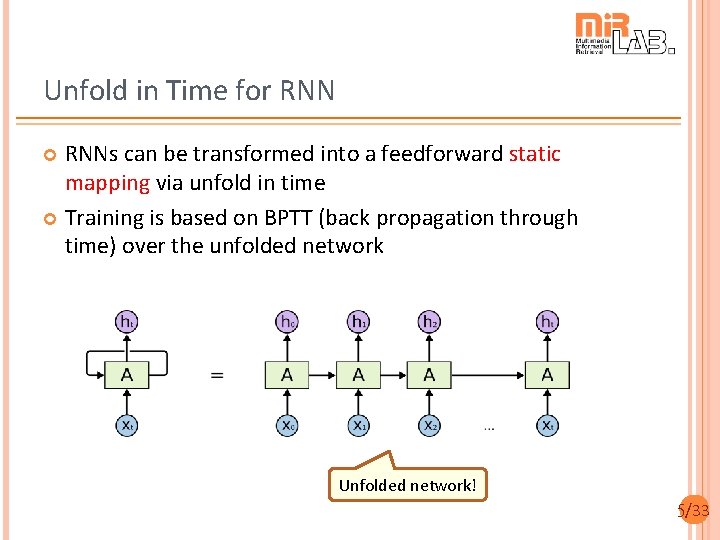

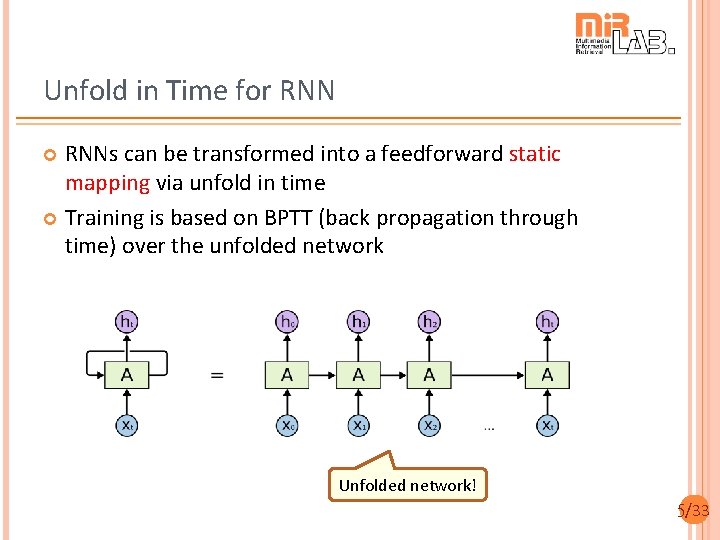
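Both examples on this slide can be written as a recurrence that carries one value from step to step, which is the "memory" an RNN provides. The sketch below hand-codes the update rule instead of learning it; the helper names (`run_recurrence`, `cumulative_sum`, `odd_ones`) are illustrative and not from the slides.

```python
def run_recurrence(step, inputs, h0=0):
    """Apply h_t = step(h_{t-1}, x_t) over the sequence and return the final state."""
    h = h0
    for x in inputs:
        h = step(h, x)
    return h


def cumulative_sum(seq):
    # The state carries the running total: h_t = h_{t-1} + x_t
    return run_recurrence(lambda h, x: h + x, seq)


def odd_ones(bits):
    # The state carries the parity so far: h_t = h_{t-1} XOR x_t
    return run_recurrence(lambda h, x: h ^ x, [int(b) for b in bits])


print(cumulative_sum([1, 2, 3]))              # 6
print(cumulative_sum([1, 3, 4, 5, 2, 4, 3]))  # 22
print(odd_ones("100011"))                     # 1
print(odd_ones("1000010101"))                 # 0
```

A trained RNN would have to learn an update rule of this kind from data; a static classifier has no state in which to keep the running value.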

Unfold in Time for RNN

- RNNs can be transformed into a feedforward static mapping by unfolding in time
- Training is based on BPTT (backpropagation through time) over the unfolded network

[Figure: the unfolded network]

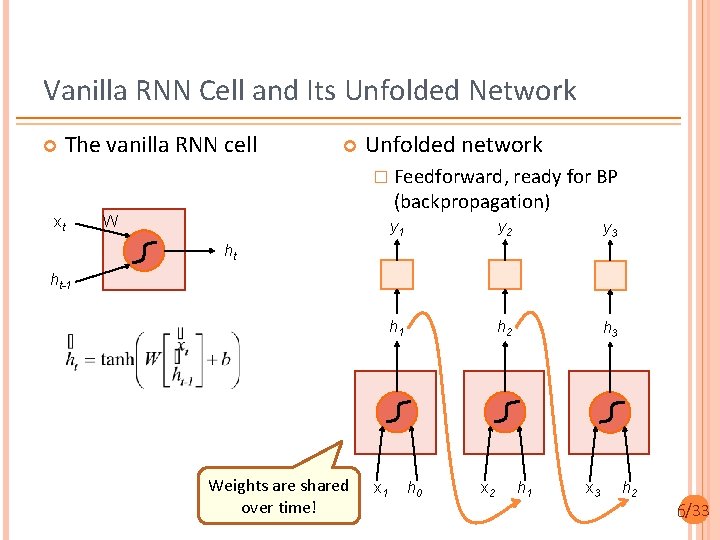

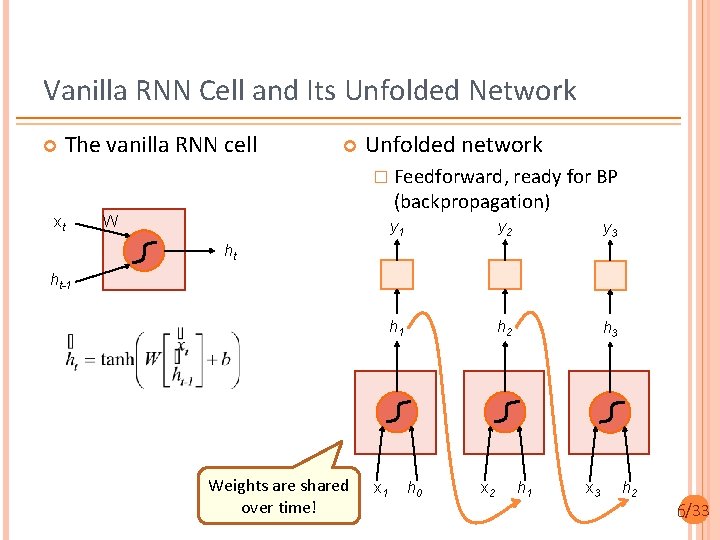

Vanilla RNN Cell and Its Unfolded Network

- The vanilla RNN cell: xt and ht-1 pass through the weights W to produce ht
- Unfolded network: feedforward, ready for BP (backpropagation)
- Weights are shared over time!

[Diagram: unfolded network with inputs x1, x2, x3, hidden states h0, h1, h2, h3, and outputs y1, y2, y3]

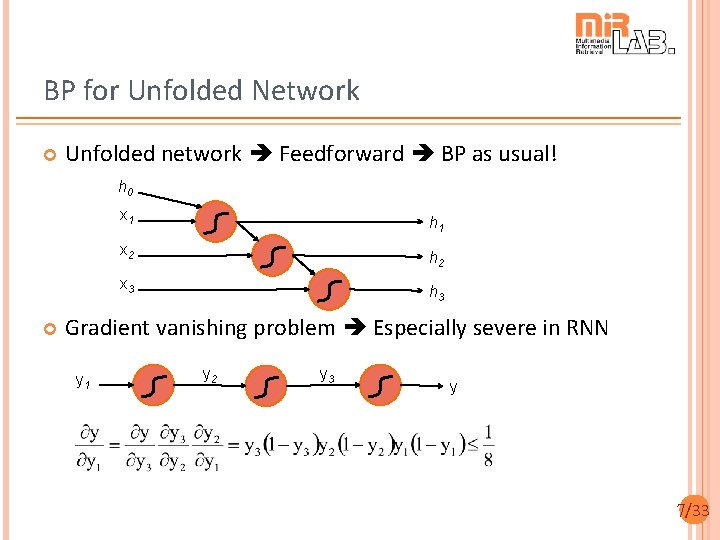

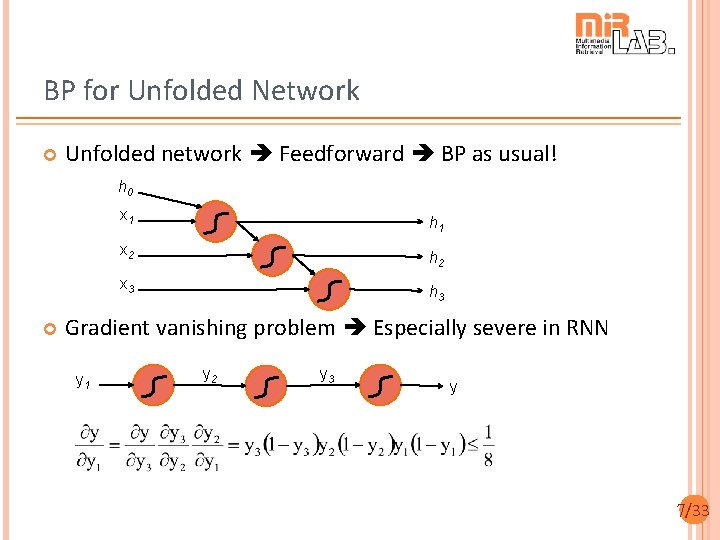
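The unfolded network can be sketched as a plain loop that reuses the same weights at every time step. This is a minimal NumPy illustration, not the slides' implementation: the `tanh` nonlinearity, the concatenation `[h_{t-1}; x_t]`, the omitted bias, and the read-out matrix `W_y` are all assumptions, since the slide only names `W`.

```python
import numpy as np


def rnn_forward(xs, h0, W, W_y):
    """Unfold a vanilla RNN over xs, reusing W and W_y at every time step."""
    h = h0
    hs, ys = [], []
    for x in xs:
        # Assumed cell form: h_t = tanh(W [h_{t-1}; x_t]), bias omitted
        h = np.tanh(W @ np.concatenate([h, x]))
        hs.append(h)
        # Assumed read-out: y_t = W_y h_t (W_y is a hypothetical name)
        ys.append(W_y @ h)
    return np.stack(hs), np.stack(ys)


rng = np.random.default_rng(0)
input_dim, hidden_dim, output_dim, steps = 2, 4, 1, 3
W = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim + input_dim))
W_y = rng.normal(scale=0.5, size=(output_dim, hidden_dim))
xs = rng.normal(size=(steps, input_dim))

hs, ys = rnn_forward(xs, np.zeros(hidden_dim), W, W_y)
print(hs.shape, ys.shape)  # (3, 4) (3, 1)
```

Because `W` appears in every iteration, its gradient under BPTT is the sum of the contributions from all time steps.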

BP for Unfolded Network

- Unfolded network: feedforward, so BP as usual!
- Gradient vanishing problem: especially severe in RNNs

[Diagram: unfolded network with inputs x1, x2, x3, hidden states h0, h1, h2, h3, and outputs y1, y2, y3]

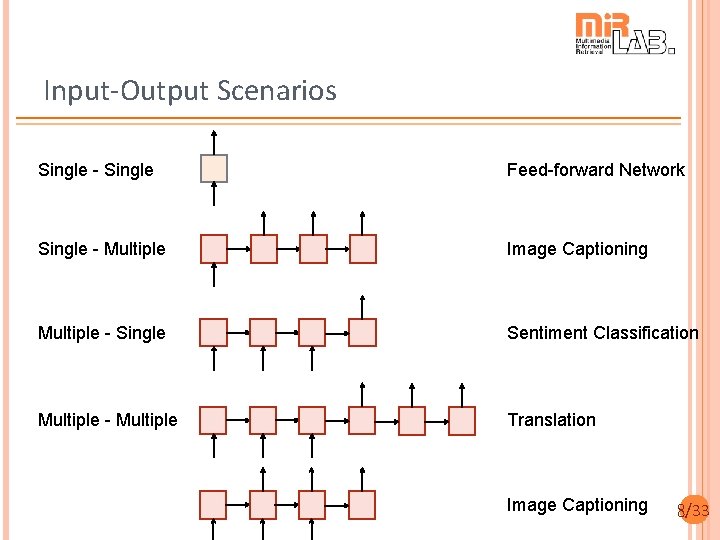

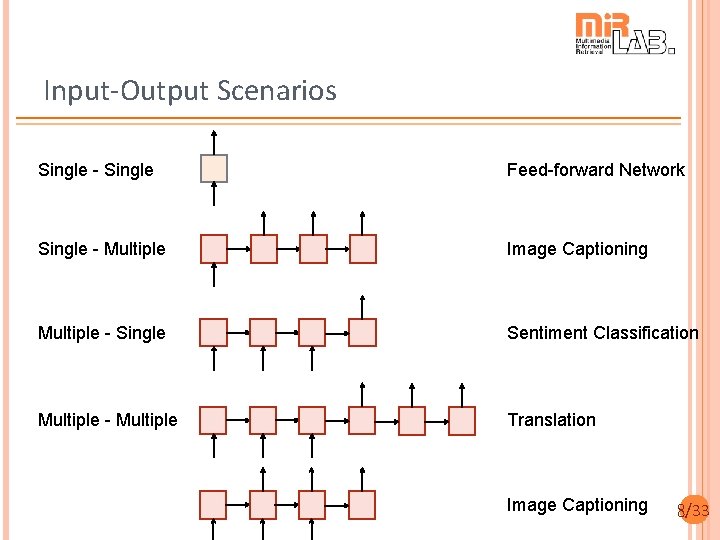
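The vanishing-gradient effect can be seen numerically by multiplying the per-step Jacobians of the unfolded network. The sketch below assumes a cell of the form h_t = tanh(W_h h_{t-1} + x_t) and picks `W_h` as 0.9 times an orthogonal matrix, so each step shrinks the gradient by at least a factor of 0.9; both choices are illustrative assumptions, not taken from the slides.

```python
import numpy as np


def grad_norm_through_time(W_h, steps, seed=0):
    """Spectral norm of d h_T / d h_0 for h_t = tanh(W_h h_{t-1} + x_t)."""
    rng = np.random.default_rng(seed)
    n = W_h.shape[0]
    h = np.zeros(n)
    J = np.eye(n)
    for _ in range(steps):
        x = rng.normal(size=n)
        h = np.tanh(W_h @ h + x)
        # One-step Jacobian is diag(1 - h_t^2) @ W_h; the chain rule multiplies them
        J = (np.diag(1.0 - h**2) @ W_h) @ J
    return np.linalg.norm(J, 2)


rng = np.random.default_rng(1)
Q, _ = np.linalg.qr(rng.normal(size=(8, 8)))  # random orthogonal matrix
W_h = 0.9 * Q                                 # every singular value equals 0.9

for T in (1, 5, 20, 50):
    print(T, grad_norm_through_time(W_h, T))
```

The printed norm is bounded by 0.9**T, so the influence of h_0 on h_T decays at least geometrically with the sequence length. With singular values above 1 the same product can instead grow, which is the exploding-gradient counterpart.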

Input-Output Scenarios

- Single - Single: feed-forward network
- Single - Multiple: image captioning
- Multiple - Single: sentiment classification
- Multiple - Multiple: translation, image captioning

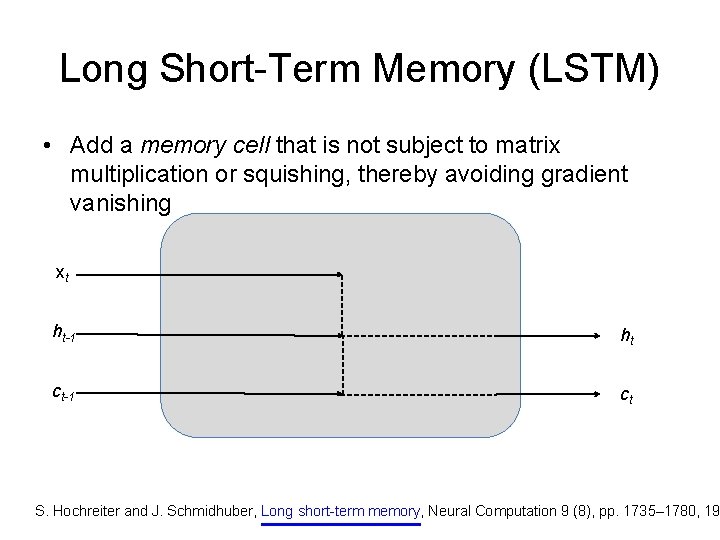

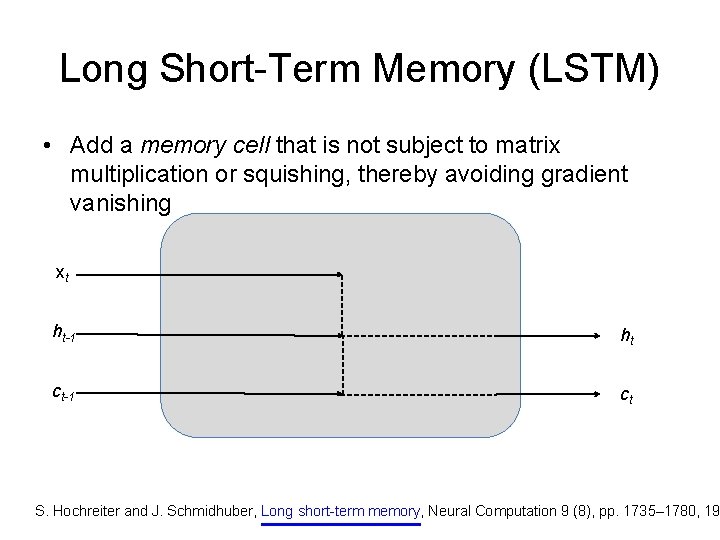

Long Short-Term Memory (LSTM)

- Add a memory cell that is not subject to matrix multiplication or squashing, thereby avoiding gradient vanishing

[Diagram: LSTM cell with inputs xt, ht-1, ct-1 and outputs ht, ct]

S. Hochreiter and J. Schmidhuber, Long short-term memory, Neural Computation 9 (8), pp. 1735–1780, 19

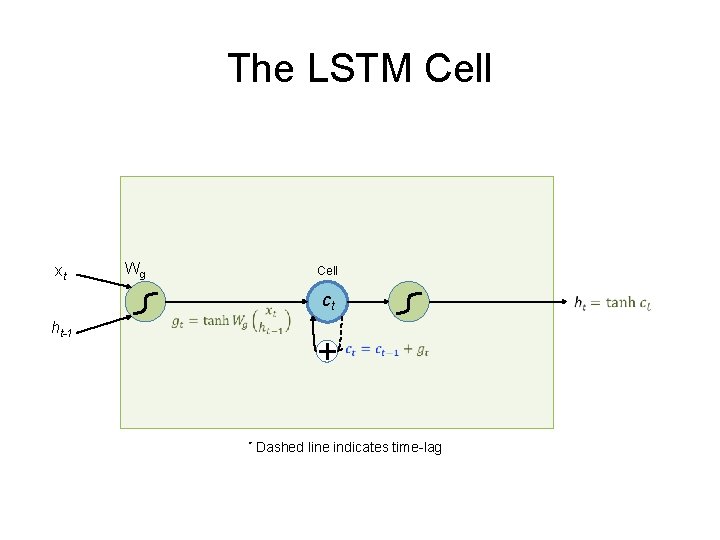

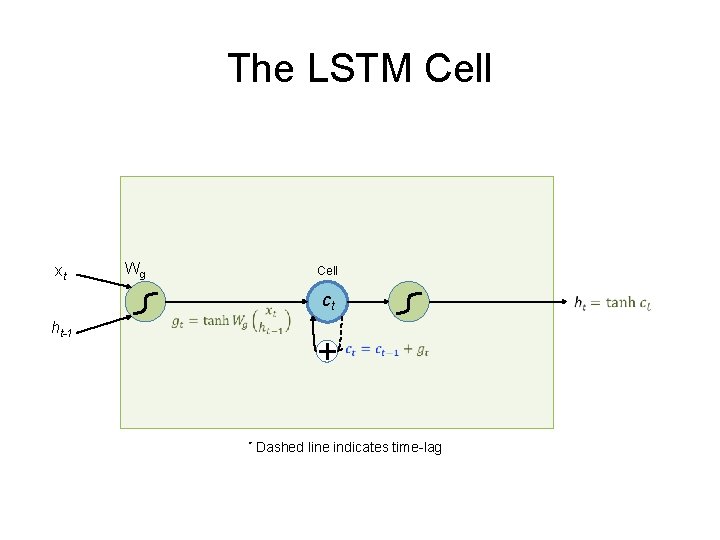

The LSTM Cell

[Diagram: xt and ht-1 pass through the weights Wg into the cell ct]

* Dashed line indicates time-lag

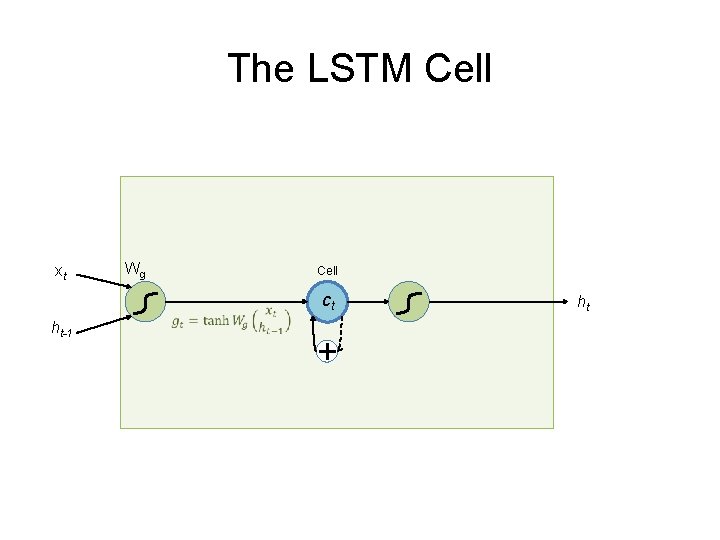

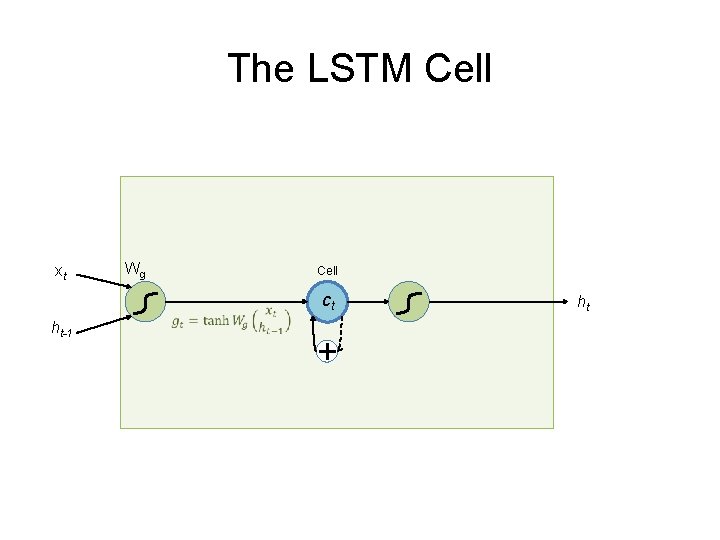

The LSTM Cell

[Diagram: xt and ht-1 pass through the weights Wg into the cell ct, which produces the output ht]

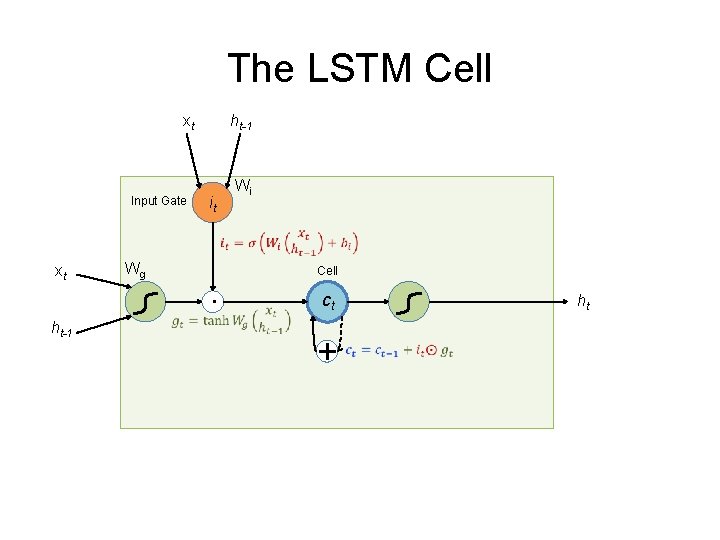

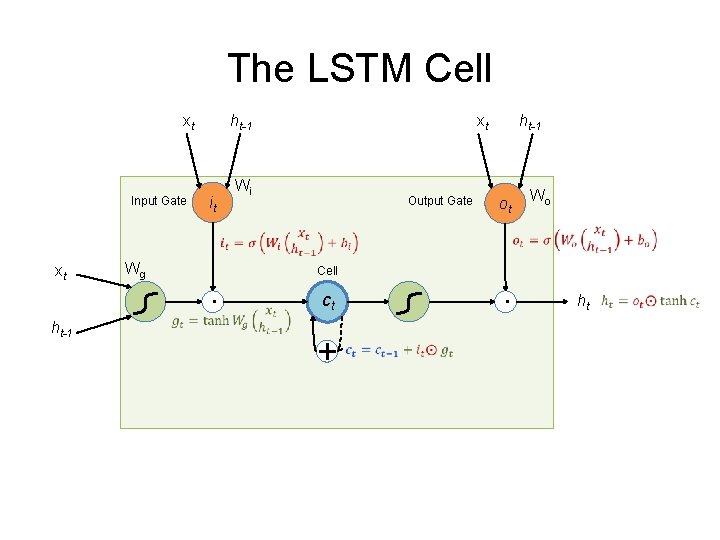

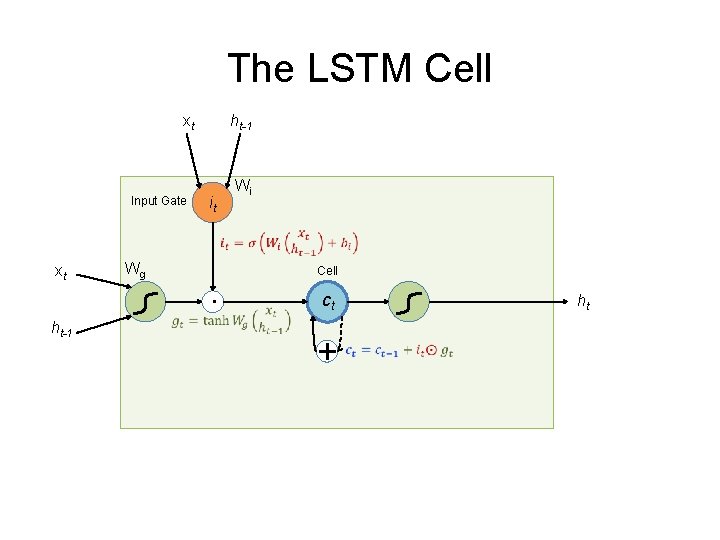

The LSTM Cell

[Diagram: adds the input gate it, computed from xt and ht-1 through the weights Wi, which multiplies the signal entering the cell ct]

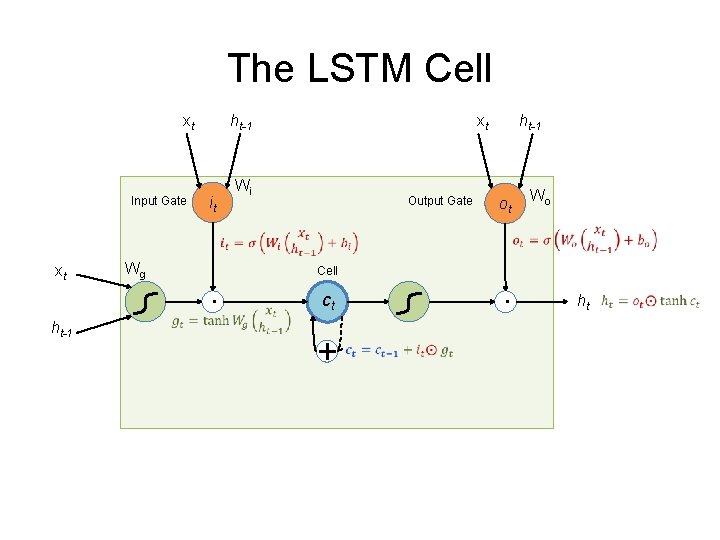

The LSTM Cell

[Diagram: adds the output gate ot, computed from xt and ht-1 through the weights Wo, which multiplies the signal leaving the cell to form ht]

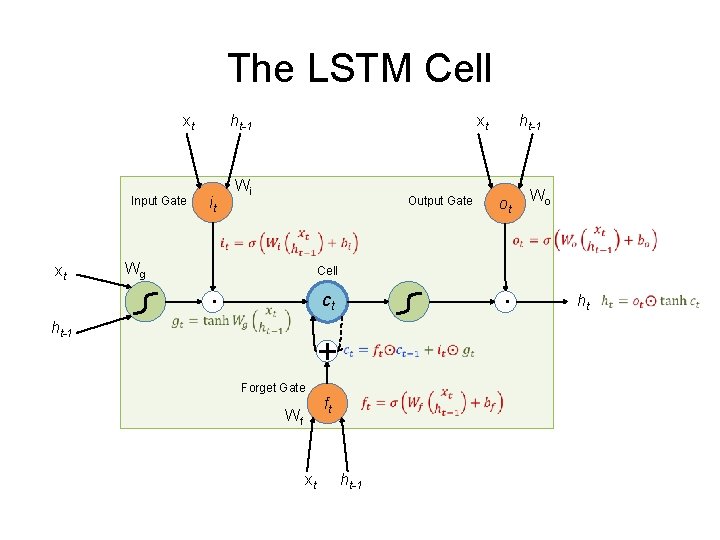

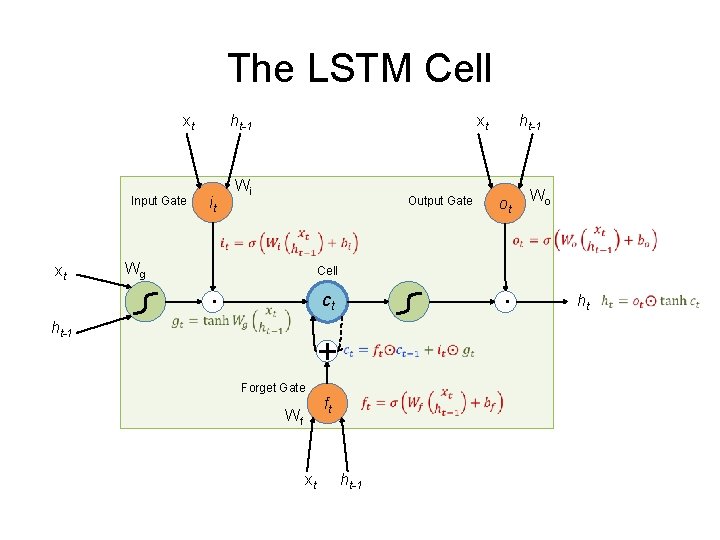

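The LSTM Cell

[Diagram: adds the forget gate ft, computed from xt and ht-1 through the weights Wf, which multiplies the previous cell state; the full cell now has the input gate it (Wi), the forget gate ft (Wf), the output gate ot (Wo), the candidate weights Wg, the cell state ct, and the output ht]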

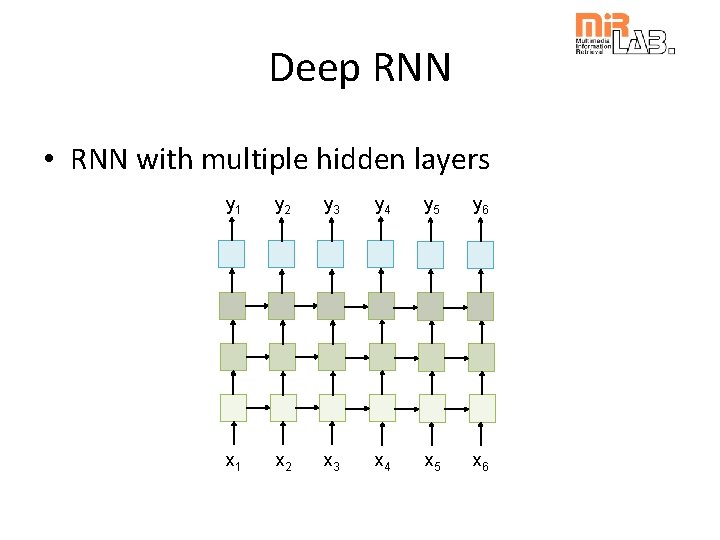

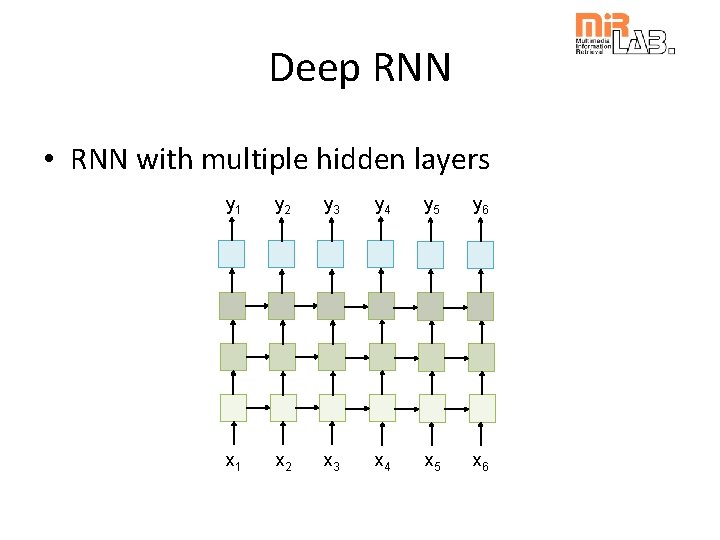
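The complete cell from the build-up above can be sketched as a single step function. This follows the commonly used LSTM formulation and reuses the slide's weight names `Wg`, `Wi`, `Wf`, `Wo`; the omitted biases, the concatenation order `[h_{t-1}; x_t]`, and the function names are assumptions made for illustration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, Wg, Wi, Wf, Wo):
    """One LSTM step; every weight matrix acts on [h_{t-1}; x_t] (biases omitted)."""
    z = np.concatenate([h_prev, x_t])
    g = np.tanh(Wg @ z)         # candidate values to write into the cell
    i = sigmoid(Wi @ z)         # input gate: how much of g to let in
    f = sigmoid(Wf @ z)         # forget gate: how much of c_{t-1} to keep
    o = sigmoid(Wo @ z)         # output gate: how much of the cell to expose
    c_t = f * c_prev + i * g    # elementwise update, no matrix multiply on c
    h_t = o * np.tanh(c_t)
    return h_t, c_t


rng = np.random.default_rng(0)
input_dim, hidden_dim = 3, 5
Wg, Wi, Wf, Wo = (
    rng.normal(scale=0.5, size=(hidden_dim, hidden_dim + input_dim)) for _ in range(4)
)

h, c = np.zeros(hidden_dim), np.zeros(hidden_dim)
for x in rng.normal(size=(6, input_dim)):
    h, c = lstm_step(x, h, c, Wg, Wi, Wf, Wo)
print(h.shape, c.shape)  # (5,) (5,)
```

The path from c_{t-1} to c_t only goes through the elementwise product with the forget gate, which is why the memory cell is less prone to vanishing gradients than the hidden state of a vanilla RNN.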

Deep RNN

- RNN with multiple hidden layers

[Diagram: inputs x1 to x6, stacked hidden layers, outputs y1 to y6]

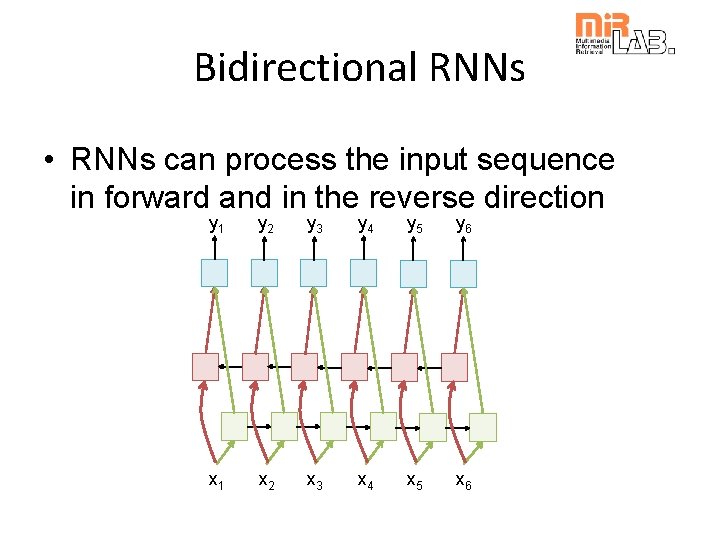

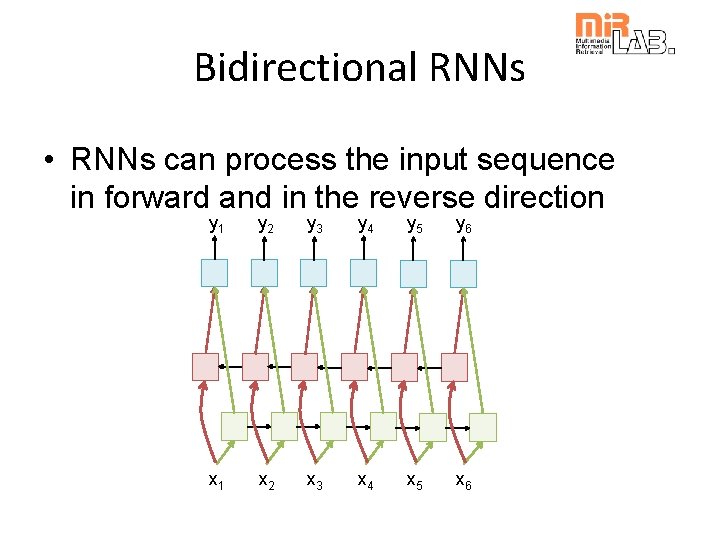
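Stacking can be sketched by feeding the hidden-state sequence of one recurrent layer into the next as its input sequence. The cell form (`tanh`, concatenated input, no bias) and the names `rnn_layer` and `deep_rnn` are assumptions for illustration.

```python
import numpy as np


def rnn_layer(xs, W):
    """Run one vanilla RNN layer and return its hidden state at every time step."""
    h = np.zeros(W.shape[0])
    hs = []
    for x in xs:
        h = np.tanh(W @ np.concatenate([h, x]))  # assumed cell form, bias omitted
        hs.append(h)
    return np.stack(hs)


def deep_rnn(xs, weights):
    """Stack layers: the hidden sequence of layer k is the input sequence of layer k+1."""
    seq = xs
    for W in weights:
        seq = rnn_layer(seq, W)
    return seq


rng = np.random.default_rng(0)
steps, input_dim, hidden1, hidden2 = 6, 3, 4, 2
weights = [
    rng.normal(scale=0.5, size=(hidden1, hidden1 + input_dim)),
    rng.normal(scale=0.5, size=(hidden2, hidden2 + hidden1)),
]
xs = rng.normal(size=(steps, input_dim))

top = deep_rnn(xs, weights)
print(top.shape)  # (6, 2)
```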

Bidirectional RNNs

- RNNs can process the input sequence in both the forward and the reverse direction

[Diagram: inputs x1 to x6, forward and backward hidden layers, outputs y1 to y6]

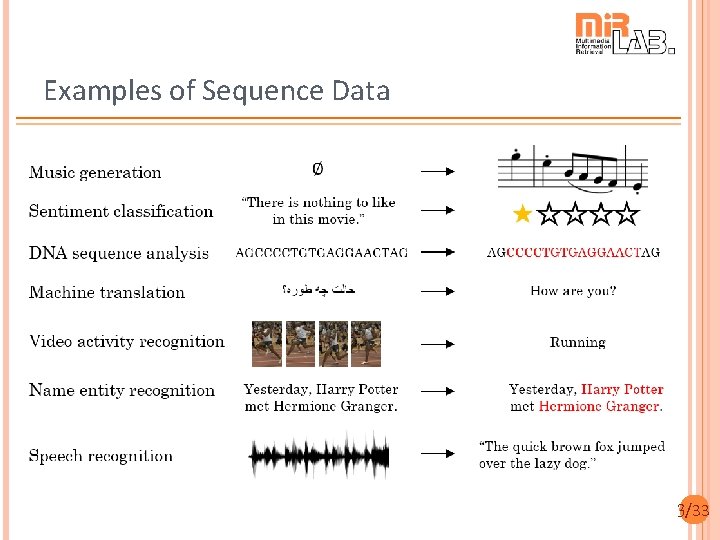

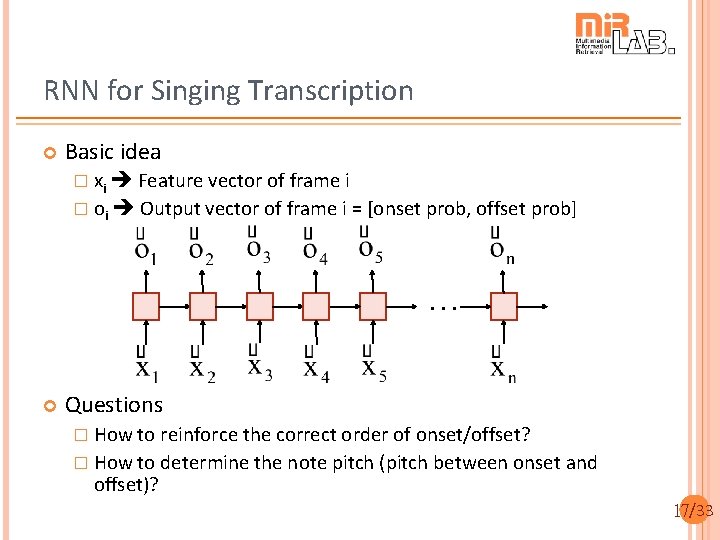
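One way to sketch a bidirectional layer is to run two independent RNNs, one over the sequence and one over its reversal, and concatenate their states at each time step. The cell form, the concatenation of the two directions, and the names `rnn_layer`, `bidirectional_rnn`, `W_fwd`, and `W_bwd` are assumptions for illustration.

```python
import numpy as np


def rnn_layer(xs, W):
    """Run one vanilla RNN layer and return its hidden state at every time step."""
    h = np.zeros(W.shape[0])
    hs = []
    for x in xs:
        h = np.tanh(W @ np.concatenate([h, x]))  # assumed cell form, bias omitted
        hs.append(h)
    return np.stack(hs)


def bidirectional_rnn(xs, W_fwd, W_bwd):
    """Concatenate a left-to-right pass with a right-to-left pass at each step."""
    fwd = rnn_layer(xs, W_fwd)
    # Process the reversed sequence, then flip back so index t lines up with x_t
    bwd = rnn_layer(xs[::-1], W_bwd)[::-1]
    return np.concatenate([fwd, bwd], axis=1)


rng = np.random.default_rng(0)
steps, input_dim, hidden_dim = 6, 3, 4
W_fwd = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim + input_dim))
W_bwd = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim + input_dim))
xs = rng.normal(size=(steps, input_dim))

states = bidirectional_rnn(xs, W_fwd, W_bwd)
print(states.shape)  # (6, 8)
```

Each row of `states` summarizes both the past (forward half) and the future (backward half) of the sequence at that position, which requires the whole input to be available before processing starts.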

RNN for Singing Transcription

Basic idea

- xi: feature vector of frame i
- oi: output vector of frame i = [onset prob, offset prob]
- …

Questions

- How to enforce the correct order of onset/offset?
- How to determine the note pitch (pitch between onset and offset)?

References

Some course material taken from the following slides:

- http://slazebni.cs.illinois.edu/spring17/lec02_rnn.pptx by Arun Mallya
- http://fall97.class.vision/slides/5.pptx
- https://cs.uwaterloo.ca/~mli/Deep-Learning-2017Lecture6RNN.ppt

Videos:

- https://www.youtube.com/watch?v=xCGidAeyS4M by Hung-yi Lee (李宏毅)
- https://www.youtube.com/watch?v=rTqmWlnwz_0 by Hung-yi Lee (李宏毅)
- https://www.youtube.com/watch?v=lycKqccytfU