Introduction to Pattern Recognition
Kama Jambi
kjambi@kau.edu.sa

Course Information
- Assessment
  - Project (Group)
  - Exams (Mid + Final)
  - Paper + Presentation

What is pattern recognition?
- Pattern recognition is the assignment of a label to a given input value -- Wikipedia
- The assignment of a physical object or event to one of several predefined categories -- Duda & Hart

Related terminology: Machine Learning
- Construction and study of systems that can learn from data -- Wikipedia
- Field of study that gives computers the ability to learn without being explicitly programmed -- Arthur Samuel

The two terms usually refer to the same concept.

Learning and Generalization
- Learning => improvement
  - A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E -- Tom Mitchell
- Generalization
  - Recognize new patterns

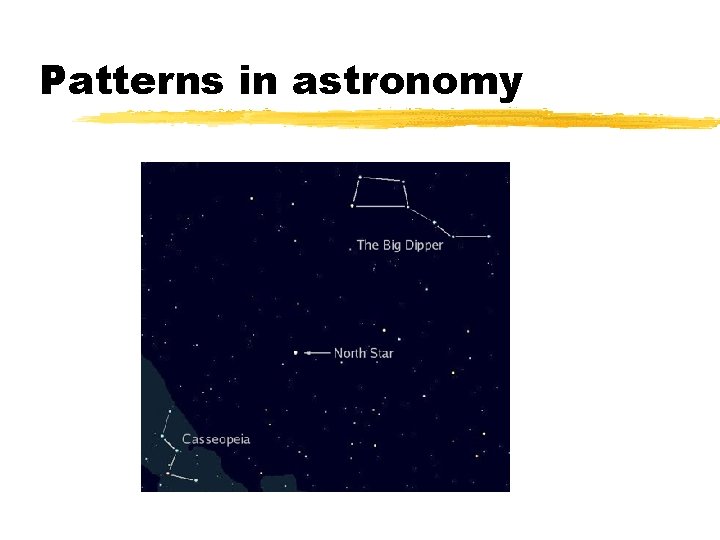

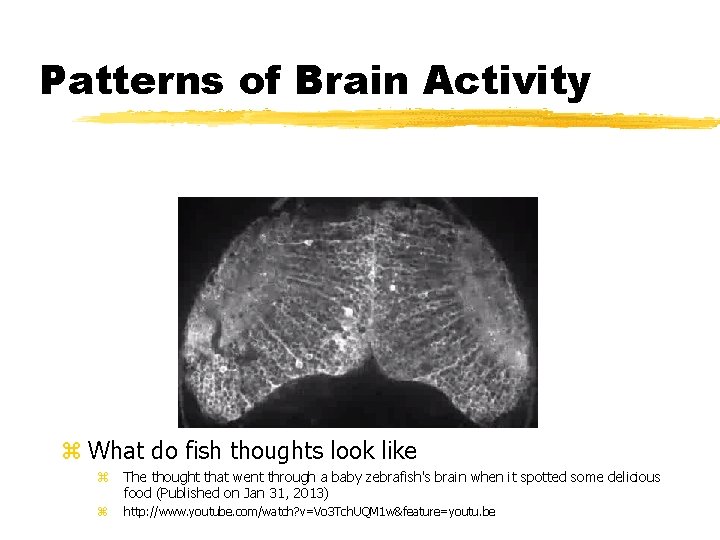

What is a pattern?
- A pattern is an object, process or event that can be given a name
- A pattern is the opposite of chaos; it is an entity, vaguely defined, that could be given a name -- Watanabe
- For example, a pattern could be:
  - Arrangement of stars
  - Fingerprint image
  - Handwritten word
  - Brain activity

Patterns in astronomy

Patterns in fingerprints

Patterns in handwriting

Patterns of Brain Activity
- What do fish thoughts look like?
- The thought that went through a baby zebrafish's brain when it spotted some delicious food (published on Jan 31, 2013)
- http://www.youtube.com/watch?v=Vo3TchUQM1w&feature=youtu.be

What are Class/Classification/Classifier?
- A pattern class (or category) is a set of patterns sharing common attributes and usually originating from the same source
- During recognition (or classification), given objects are assigned to prescribed classes
- A classifier is a machine which performs classification

Goal of Pattern Recognition
- Recognize patterns: make decisions about patterns.
  - Visual example: is this person happy or sad?
  - Speech example: did the speaker say “Yes” or “No”?
  - Textual example: is this word the name of a person?
  - Physics example: is this an atom or a molecule?

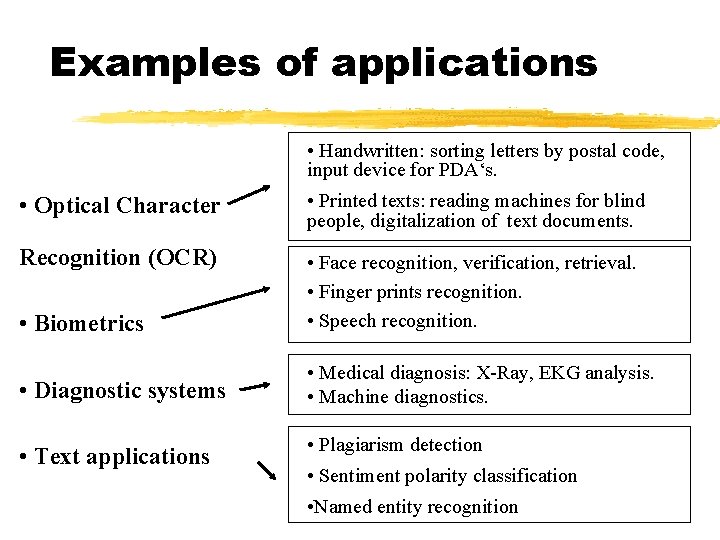

Examples of applications
- Optical Character Recognition (OCR)
  - Handwritten: sorting letters by postal code, input device for PDAs.
  - Printed texts: reading machines for blind people, digitization of text documents.
- Biometrics
  - Face recognition, verification, retrieval.
  - Fingerprint recognition.
  - Speech recognition.
- Diagnostic systems
  - Medical diagnosis: X-ray, EKG analysis.
  - Machine diagnostics.
- Text applications
  - Plagiarism detection
  - Sentiment polarity classification
  - Named entity recognition

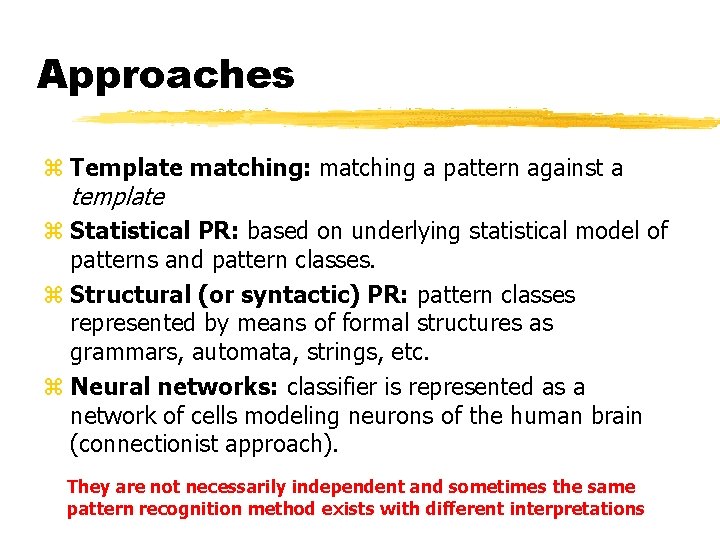

Approaches
- Template matching: matching a pattern against a template.
- Statistical PR: based on an underlying statistical model of patterns and pattern classes.
- Structural (or syntactic) PR: pattern classes are represented by means of formal structures such as grammars, automata, strings, etc.
- Neural networks: the classifier is represented as a network of cells modeling neurons of the human brain (connectionist approach).

These approaches are not necessarily independent, and sometimes the same pattern recognition method exists with different interpretations.

Template Matching
- Determine the similarity between two entities
- The pattern to be recognized is matched against a stored template
- Pattern variations are taken into account
- The template is learned from the data
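
A minimal Python sketch of template matching, assuming patterns are tiny binary grids and similarity is the fraction of agreeing pixels. The grids, the `similarity` measure, and the names `TEMPLATES` and `match` are illustrative assumptions, not part of the course material; the templates here are written by hand rather than learned from data.

```python
from typing import Dict, List

Grid = List[str]  # each string is one row of '#' (ink) and '.' (background)

def similarity(pattern: Grid, template: Grid) -> float:
    """Fraction of pixels on which the pattern and the template agree."""
    total = 0
    agree = 0
    for p_row, t_row in zip(pattern, template):
        for p, t in zip(p_row, t_row):
            total += 1
            agree += (p == t)
    return agree / total

def match(pattern: Grid, templates: Dict[str, Grid]) -> str:
    """Name of the stored template most similar to the pattern."""
    return max(templates, key=lambda name: similarity(pattern, templates[name]))

# Hypothetical 3x3 templates for three letters.
TEMPLATES = {
    "I": [".#.", ".#.", ".#."],
    "L": ["#..", "#..", "###"],
    "T": ["###", ".#.", ".#."],
}

noisy_l = ["#..", "#..", "##."]  # an "L" with one pixel missing
print(match(noisy_l, TEMPLATES))
```

Because the score tolerates a few disagreeing pixels, a slightly distorted pattern can still be matched to the right template.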

Statistical Approach
- Each pattern is represented in terms of d features and is viewed as a point in d-dimensional space
- Given a set of training patterns from each class, the objective is to establish decision boundaries in the feature space which separate patterns belonging to different classes
- Boundaries are determined
  - By the probability distribution of patterns belonging to each class
  - Directly, by defining a parametric form and finding the best boundary based upon classification of data

Syntactic Approach
- A pattern is viewed as being constructed hierarchically of sub-patterns, the simplest of which are called primitives
- Patterns are like sentences, while primitives are like the alphabet of a language
- Sentences are generated according to a grammar that is inferred from the data
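
A small Python sketch of the syntactic view, assuming two hypothetical primitives, `u` (an upward stroke) and `d` (a downward stroke), and a hand-written grammar `S -> u S d | u d` describing a symmetric peak. In practice the grammar would be inferred from data; here it is fixed only to illustrate how a pattern is recognized as a sentence of a language.

```python
def derives(s: str) -> bool:
    """True if the string of primitives can be generated by S -> 'u' S 'd' | 'u' 'd'."""
    if s == "ud":
        return True  # base production: S -> u d
    # recursive production: S -> u S d
    return len(s) > 2 and s[0] == "u" and s[-1] == "d" and derives(s[1:-1])

for candidate in ["ud", "uuuddd", "udud", "uud"]:
    label = "peak" if derives(candidate) else "not a peak"
    print(candidate, "->", label)
```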

Neural Networks
- Massively parallel computing systems consisting of an extremely large number of simple processors (neurons) with many interconnections
- Can learn complex nonlinear relationships

Statistical Pattern Recognition
- A given pattern is to be assigned to one of c categories (ω1, ω2, …, ωc) based on a vector of d feature values X = (x1, x2, …, xd)
- Features have a probability density or mass function
- A pattern vector X belonging to class ωi is viewed as an observation drawn randomly from the class-conditional probability function P(X | ωi)
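
One common way to use the class-conditional function is to combine it with class priors through Bayes' rule and pick the class with the largest posterior. The Python sketch below assumes a single feature (d = 1), Gaussian class-conditional densities, and made-up priors, means, and standard deviations; none of these values come from the slides.

```python
import math

def gaussian_pdf(x: float, mean: float, std: float) -> float:
    """Density of a normal distribution at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))

# Hypothetical classes: name -> (prior, mean, standard deviation).
CLASSES = {"w1": (0.5, 2.0, 1.0), "w2": (0.5, 6.0, 1.0)}

def posteriors(x: float) -> dict:
    """P(class | x) for every class, via Bayes' rule."""
    joint = {name: prior * gaussian_pdf(x, mean, std)
             for name, (prior, mean, std) in CLASSES.items()}
    evidence = sum(joint.values())  # P(x), the normalizing constant
    return {name: value / evidence for name, value in joint.items()}

def classify(x: float) -> str:
    """Class with the largest posterior probability."""
    post = posteriors(x)
    return max(post, key=post.get)

print(classify(3.0), classify(5.0))
```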

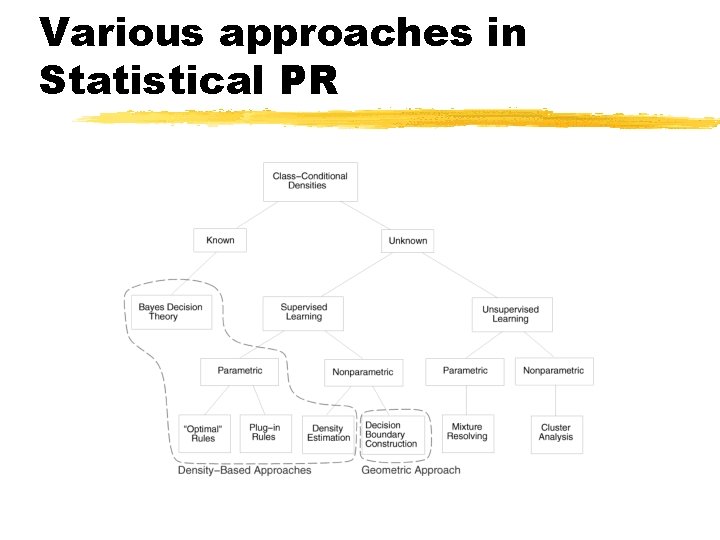

Various approaches in Statistical PR

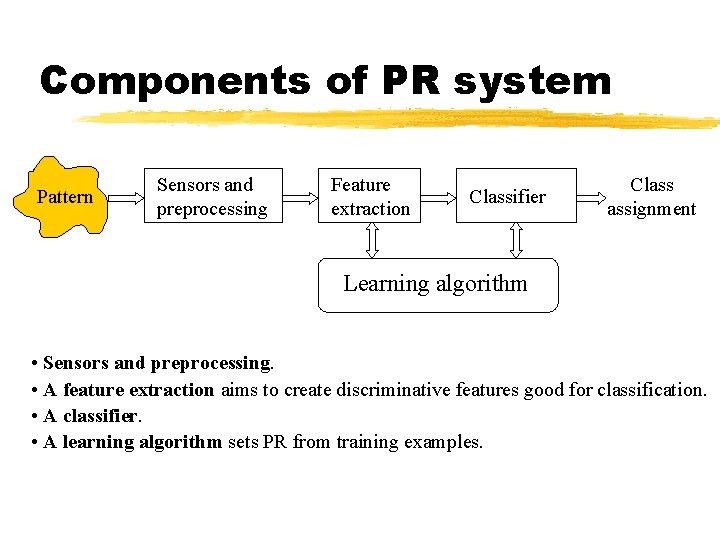

Components of PR system

Pipeline: Pattern → Sensors and preprocessing → Feature extraction → Classifier → Class assignment, supported by a learning algorithm
- Sensors and preprocessing.
- Feature extraction aims to create discriminative features that are good for classification.
- A classifier.
- A learning algorithm sets up the PR system from training examples.

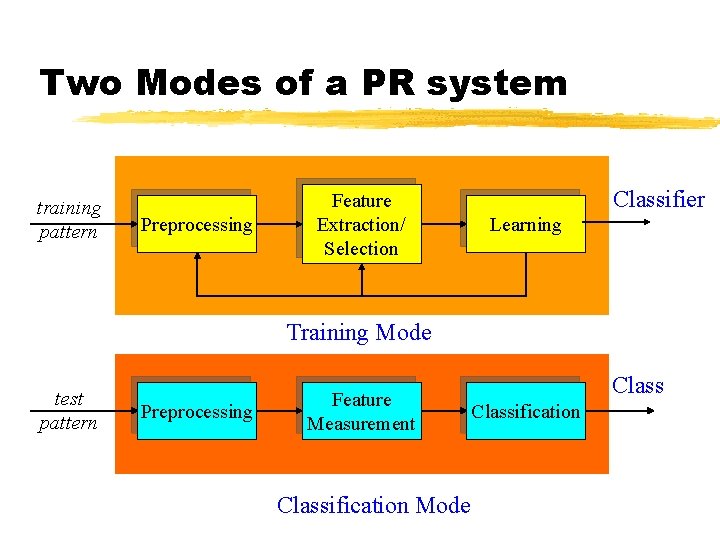

Two Modes of a PR system
- Training mode: training pattern → Preprocessing → Feature extraction/selection → Learning
- Classification mode: test pattern → Preprocessing → Feature measurement → Classification
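
The two modes can be sketched in Python as two methods that share the same preprocessing and feature steps. Everything specific here is an assumption made for illustration: the raw patterns are short lists of numbers, `preprocess` removes the offset, `extract_features` computes two hand-picked features, and the learner is a simple nearest-mean classifier.

```python
from statistics import mean
from typing import List, Tuple

def preprocess(signal: List[float]) -> List[float]:
    """Remove the signal's offset so only its shape matters."""
    m = mean(signal)
    return [v - m for v in signal]

def extract_features(signal: List[float]) -> Tuple[float, float]:
    """Two features: peak-to-peak range and mean absolute value."""
    return (max(signal) - min(signal), mean(abs(v) for v in signal))

class NearestMeanClassifier:
    def __init__(self) -> None:
        self.class_means = {}

    def train(self, examples) -> None:
        """Training mode: store the mean feature vector of each class."""
        by_label = {}
        for signal, label in examples:
            by_label.setdefault(label, []).append(extract_features(preprocess(signal)))
        self.class_means = {label: tuple(mean(col) for col in zip(*feats))
                            for label, feats in by_label.items()}

    def classify(self, signal: List[float]) -> str:
        """Classification mode: pick the class with the closest mean."""
        x = extract_features(preprocess(signal))
        def sq_dist(label: str) -> float:
            return sum((a - b) ** 2 for a, b in zip(x, self.class_means[label]))
        return min(self.class_means, key=sq_dist)

# Hypothetical labeled training patterns.
training = [([5.0, 5.1, 4.9, 5.0], "flat"), ([2.0, 2.2, 1.9, 2.1], "flat"),
            ([0.0, 4.0, 0.0, 4.0], "spiky"), ([1.0, 6.0, 1.0, 6.0], "spiky")]
clf = NearestMeanClassifier()
clf.train(training)
print(clf.classify([10.0, 10.1, 10.0, 9.9]), clf.classify([3.0, 7.5, 3.0, 7.5]))
```

Note that the test pattern goes through exactly the same preprocessing and feature measurement as the training patterns; only the final step differs.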

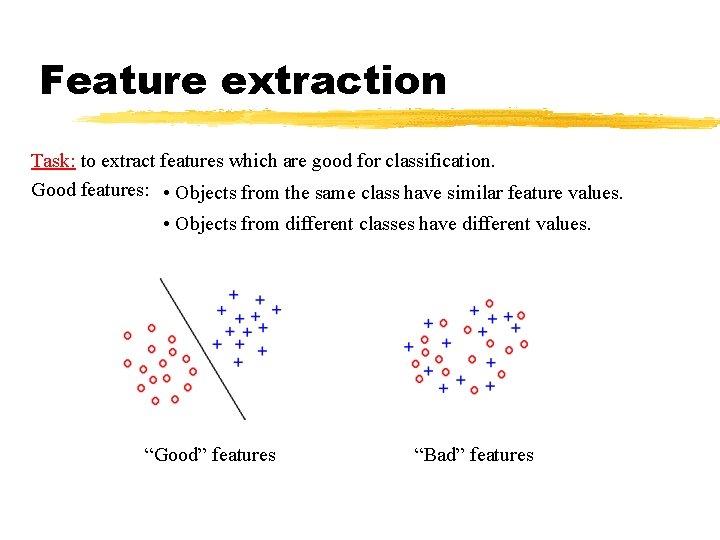

Feature extraction

Task: to extract features which are good for classification.

Good features:
- Objects from the same class have similar feature values.
- Objects from different classes have different values.

Figure panels: “Good” features, “Bad” features
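
One way to put a number on "good" versus "bad" is Fisher's discriminant ratio for a single feature: the squared distance between the class means divided by the sum of the class variances. This criterion is brought in here for illustration and is not named on the slide; the measurements are made up.

```python
from statistics import mean, pvariance
from typing import List

def fisher_score(class_a: List[float], class_b: List[float]) -> float:
    """Between-class separation relative to within-class spread.

    Assumes at least one class has non-zero variance.
    """
    between = (mean(class_a) - mean(class_b)) ** 2
    within = pvariance(class_a) + pvariance(class_b)
    return between / within

# Hypothetical values of two candidate features for two classes.
good_a, good_b = [1.0, 1.2, 0.9, 1.1], [3.0, 3.1, 2.9, 3.2]  # tight, far apart
bad_a, bad_b = [1.0, 3.0, 2.0, 4.0], [1.5, 3.5, 2.5, 3.0]    # wide, overlapping

print(fisher_score(good_a, good_b) > fisher_score(bad_a, bad_b))
```

A high score means values within a class are similar and values across classes differ, which is exactly the two properties listed above.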

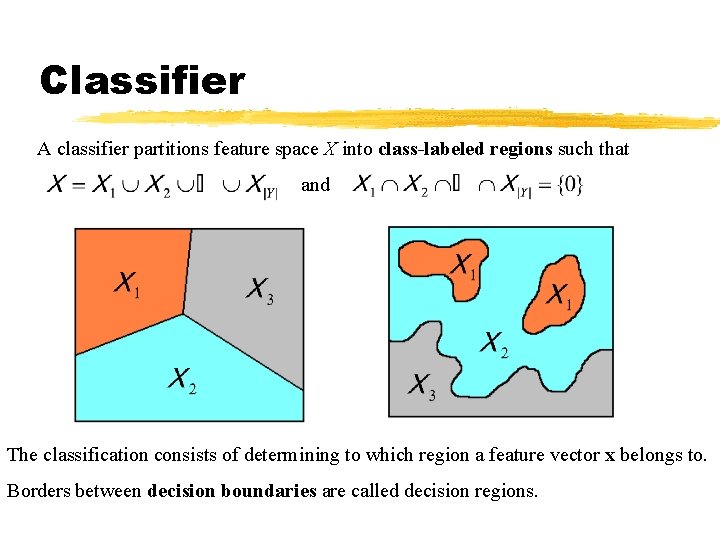

Classifier

A classifier partitions the feature space X into class-labeled regions such that the regions together cover X and do not overlap. Classification consists of determining which region a feature vector x belongs to. Borders between decision regions are called decision boundaries.

An Example
- “Sorting incoming fish on a conveyor according to species using optical sensing”
- Species: sea bass, salmon

Pattern Classification, Chapter 1

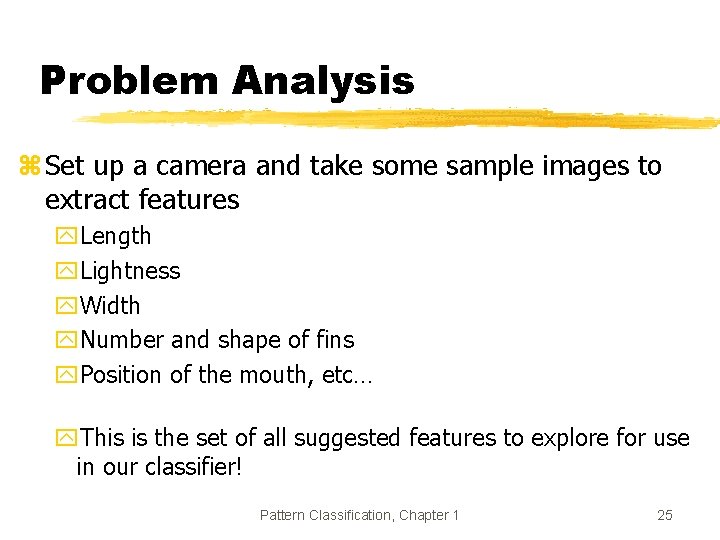

Problem Analysis
- Set up a camera and take some sample images to extract features
  - Length
  - Lightness
  - Width
  - Number and shape of fins
  - Position of the mouth, etc.
  - This is the set of all suggested features to explore for use in our classifier!

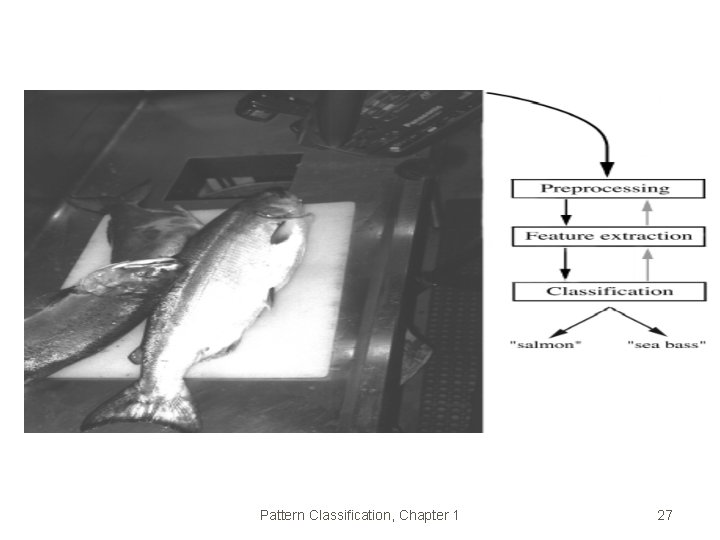

Problem Analysis
- Preprocessing
  - Use a segmentation operation to isolate fish from one another and from the background
- Information from a single fish is sent to a feature extractor whose purpose is to reduce the data by measuring certain features
- The features are passed to a classifier

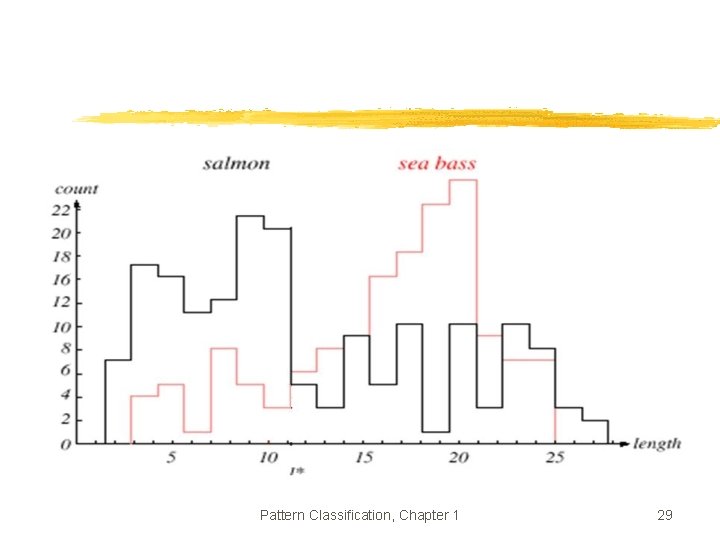

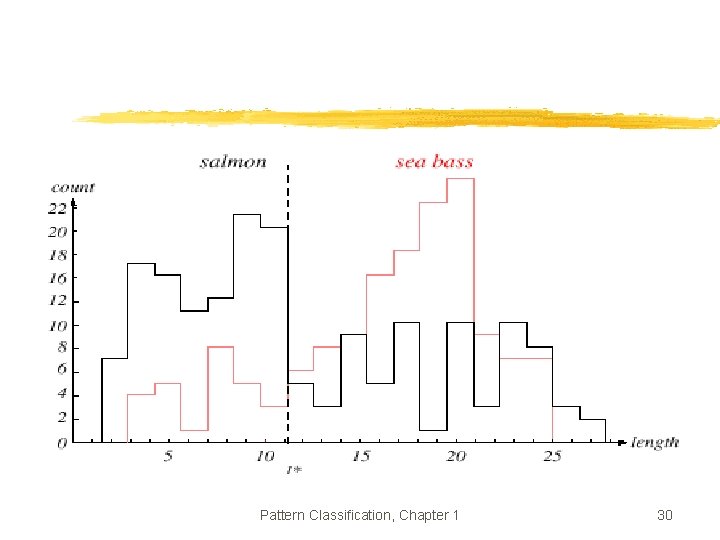

Classification
- Select the length of the fish as a possible feature for discrimination
- Classify the fish merely by seeing whether or not the length l of a fish exceeds some critical value l*
- To choose l* we need training samples of the different types of fish, on which we make length measurements
- Obtain a histogram of lengths
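
A minimal Python sketch of choosing l* from training samples, assuming the rule "longer than l* means sea bass" and picking the candidate threshold with the fewest training errors. The length values are hypothetical (arbitrary units) and the function names are illustrative.

```python
from typing import List

def error_count(threshold: float, salmon: List[float], sea_bass: List[float]) -> int:
    """Training errors when fish longer than the threshold are called sea bass."""
    salmon_errors = sum(length > threshold for length in salmon)
    bass_errors = sum(length <= threshold for length in sea_bass)
    return salmon_errors + bass_errors

def best_threshold(salmon: List[float], sea_bass: List[float]) -> float:
    """Try the midpoints between neighbouring measurements; keep the best one."""
    values = sorted(set(salmon) | set(sea_bass))
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]
    return min(candidates, key=lambda t: error_count(t, salmon, sea_bass))

# Hypothetical training lengths.
salmon_lengths = [4.0, 5.0, 6.0, 7.0, 9.0]
bass_lengths = [6.5, 8.0, 10.0, 11.0, 12.0]

l_star = best_threshold(salmon_lengths, bass_lengths)
print(l_star, error_count(l_star, salmon_lengths, bass_lengths))
```

With these overlapping samples even the best threshold still misclassifies two of the ten training fish, which is the kind of overlap a histogram of lengths would reveal.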

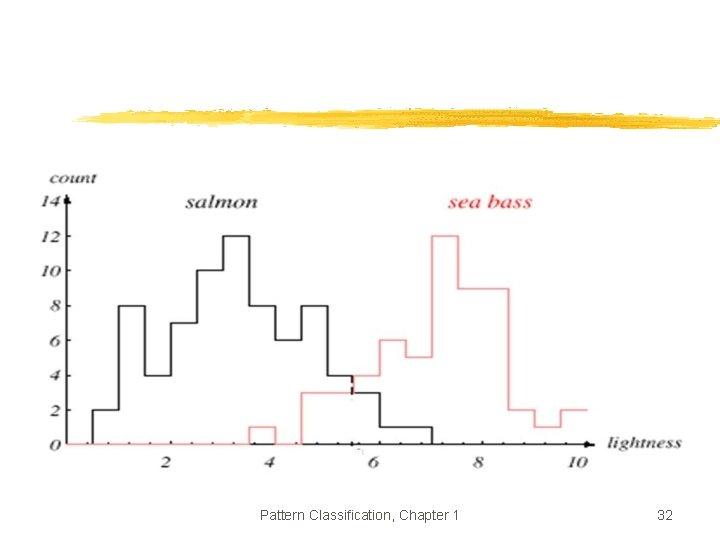

The length is a poor feature alone!

Select the lightness as a possible feature.

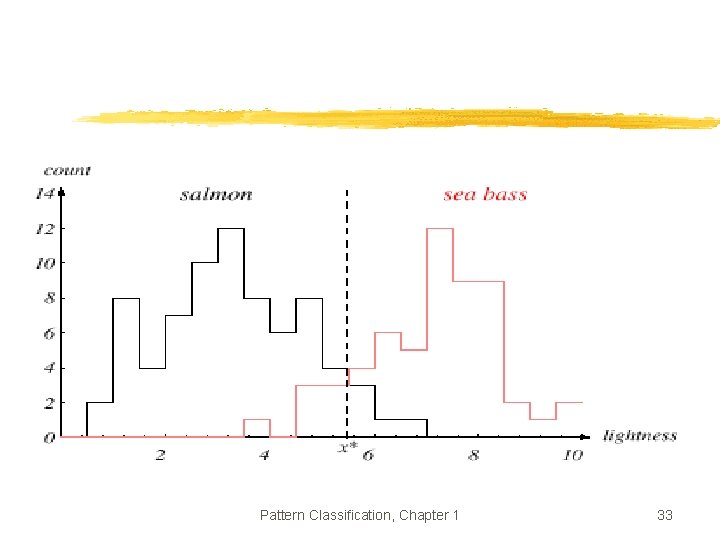

Threshold decision boundary and cost relationship
- Move our decision boundary toward smaller values of lightness in order to minimize the cost (reduce the number of sea bass that are classified as salmon!)

Task of decision theory
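
The effect of cost on the threshold can be sketched in Python as follows, assuming the rule "lightness above the threshold means sea bass" and a cost for each kind of error. The lightness values and the cost figures are made up for illustration.

```python
from typing import List

def total_cost(threshold: float, salmon: List[float], sea_bass: List[float],
               cost_bass_as_salmon: float, cost_salmon_as_bass: float) -> float:
    """Cost of all training errors for a given lightness threshold."""
    bass_as_salmon = sum(x <= threshold for x in sea_bass)
    salmon_as_bass = sum(x > threshold for x in salmon)
    return cost_bass_as_salmon * bass_as_salmon + cost_salmon_as_bass * salmon_as_bass

def min_cost_threshold(salmon: List[float], sea_bass: List[float],
                       cost_bass_as_salmon: float, cost_salmon_as_bass: float) -> float:
    """Midpoint between neighbouring measurements with the lowest total cost."""
    values = sorted(set(salmon) | set(sea_bass))
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]
    return min(candidates, key=lambda t: total_cost(
        t, salmon, sea_bass, cost_bass_as_salmon, cost_salmon_as_bass))

# Hypothetical lightness measurements.
salmon_light = [1.0, 2.0, 3.0, 4.0, 5.0]
bass_light = [3.5, 6.0, 7.0, 8.0, 9.0]

equal_costs = min_cost_threshold(salmon_light, bass_light, 1.0, 1.0)
bass_error_costly = min_cost_threshold(salmon_light, bass_light, 5.0, 1.0)
print(equal_costs, bass_error_costly)
```

When calling a sea bass "salmon" is made five times as expensive as the opposite error, the chosen threshold drops from 5.5 to 3.25 on this data, so fewer sea bass fall on the salmon side.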

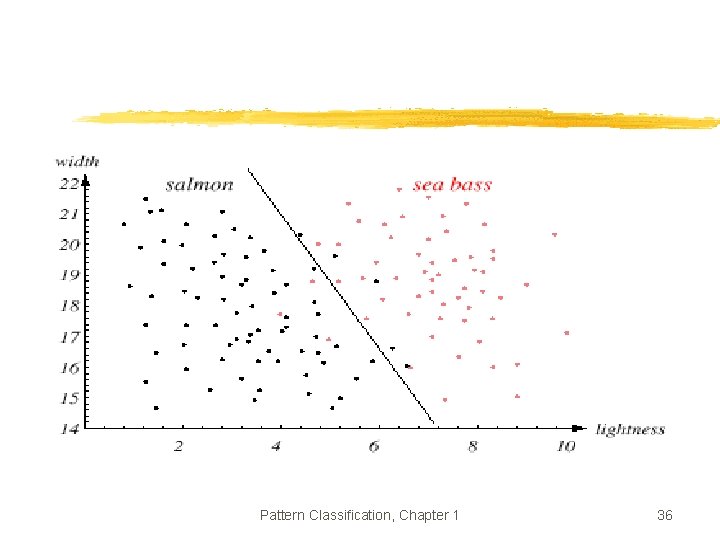

- Can we improve our model?
- Add one more feature, i.e. the width of the fish
- Adopt the lightness and add the width of the fish
- Each fish is represented by a point or a feature vector x in a two-dimensional feature space

Fish: x^T = [x1, x2], where x1 is the lightness and x2 is the width
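
A short Python sketch of the two-dimensional representation, assuming a straight-line decision boundary g(x) = w1*x1 + w2*x2 + b. The weights, the bias, and the sample fish are hypothetical; in practice the line would be fitted to training data.

```python
from typing import NamedTuple

class Fish(NamedTuple):
    lightness: float  # x1
    width: float      # x2

# Hypothetical parameters of the line w1*x1 + w2*x2 + b = 0.
W1, W2, B = 1.0, 1.0, -10.0

def classify(fish: Fish) -> str:
    """Sea bass on the positive side of the line, salmon otherwise."""
    g = W1 * fish.lightness + W2 * fish.width + B
    return "sea bass" if g > 0 else "salmon"

# Two fish with the same lightness are separated by their width.
print(classify(Fish(lightness=5.0, width=4.0)))
print(classify(Fish(lightness=5.0, width=6.0)))
```

The second feature lets the classifier tell apart fish that a lightness threshold alone would treat identically.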

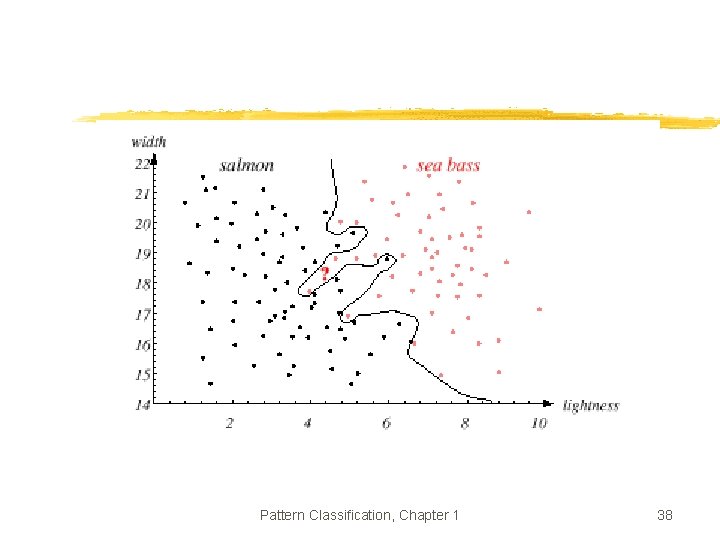

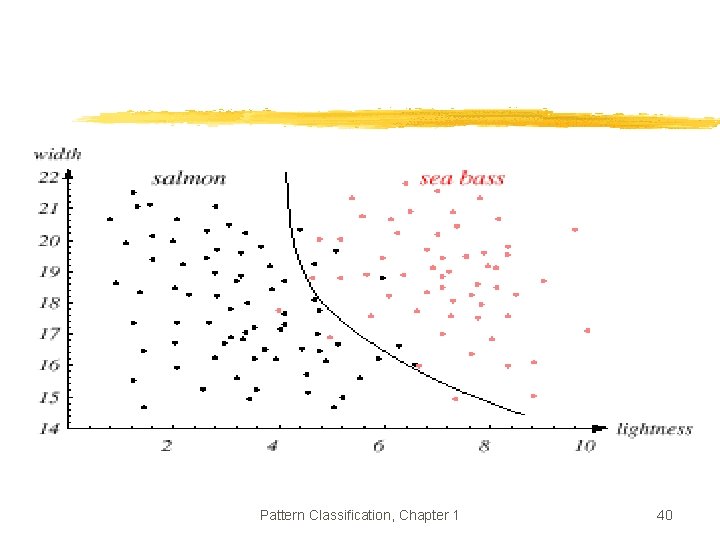

- We might add other features that are not correlated with the ones we already have. Care should be taken that adding such “noisy features” does not reduce performance
- Ideally, the best decision boundary should be the one which provides optimal performance, such as in the following figure:

- However, our satisfaction is premature because the central aim of designing a classifier is to correctly classify novel input

Issue of generalization!
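
The gap between training performance and performance on novel input can be illustrated with a small Python sketch. It compares a nearest-neighbour rule, which memorizes the training set, with a plain threshold rule. The lightness values, the threshold of 4.0, and both classifiers are assumptions chosen for illustration.

```python
SALMON, SEA_BASS = "salmon", "sea bass"

# Hypothetical lightness measurements; 6.5 is an unusually light salmon.
train_set = [(1.0, SALMON), (2.0, SALMON), (3.0, SALMON), (6.5, SALMON),
             (4.5, SEA_BASS), (6.0, SEA_BASS), (7.0, SEA_BASS), (8.0, SEA_BASS)]
test_set = [(1.5, SALMON), (2.5, SALMON), (3.5, SALMON),
            (5.5, SEA_BASS), (6.4, SEA_BASS), (7.5, SEA_BASS)]

def threshold_rule(x: float, threshold: float = 4.0) -> str:
    """Simple boundary: lighter than the threshold means sea bass."""
    return SEA_BASS if x > threshold else SALMON

def nearest_neighbour(x: float) -> str:
    """Complex boundary: copy the label of the closest training fish."""
    _, label = min(train_set, key=lambda pair: abs(pair[0] - x))
    return label

def error_rate(classifier, data) -> float:
    """Fraction of examples the classifier labels incorrectly."""
    return sum(classifier(x) != label for x, label in data) / len(data)

for name, clf in [("threshold", threshold_rule), ("nearest neighbour", nearest_neighbour)]:
    print(name, error_rate(clf, train_set), error_rate(clf, test_set))
```

The nearest-neighbour rule makes no errors on the training set but misclassifies a novel sea bass that lies next to the outlier, while the simpler threshold accepts one training error and classifies all the novel fish correctly.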

Learning and Adaptation
- Supervised: class label is known
- Unsupervised: class label is unknown
- Semi-supervised: class label is known for some (seed) examples
- Reinforcement: assessment feedback is available
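
As an illustration of the unsupervised case, the Python sketch below groups unlabeled one-dimensional measurements into two clusters with a basic two-means procedure. The technique is chosen here as an example and is not named on the slide; the data and the function name `two_means` are hypothetical, and the code assumes at least two distinct values.

```python
from typing import List, Tuple

def two_means(values: List[float], iterations: int = 10) -> Tuple[List[float], Tuple[List[float], List[float]]]:
    """Split unlabeled values into two groups around two moving centers."""
    centers = [min(values), max(values)]  # start from the extremes
    for _ in range(iterations):
        groups = ([], [])
        for v in values:
            # assign each value to its nearest center
            nearest = 0 if abs(v - centers[0]) <= abs(v - centers[1]) else 1
            groups[nearest].append(v)
        # move each center to the mean of its group
        centers = [sum(g) / len(g) for g in groups]
    return centers, groups

# Hypothetical measurements with no class labels attached.
measurements = [1.0, 1.5, 2.0, 8.0, 8.5, 9.0]
centers, groups = two_means(measurements)
print(centers, groups)
```

No label is ever consulted: the two groups emerge from the data alone, whereas a supervised learner would be given the class of each training measurement.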

References
- Jain, A. K.; Duin, R. P. W.; Mao, J.: "Statistical pattern recognition: a review," IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 22, no. 1, pp. 4-37, Jan 2000.
- Duda, Hart, Stork: Pattern Classification. Wiley & Sons, 2nd edition, 2000.
- Fukunaga: Introduction to Statistical Pattern Recognition. Academic Press, 1990.
