
Introduction to Pattern Recognition, Chapter 1 (Duda et al.)

CS 479/679 Pattern Recognition

Dr. George Bebis

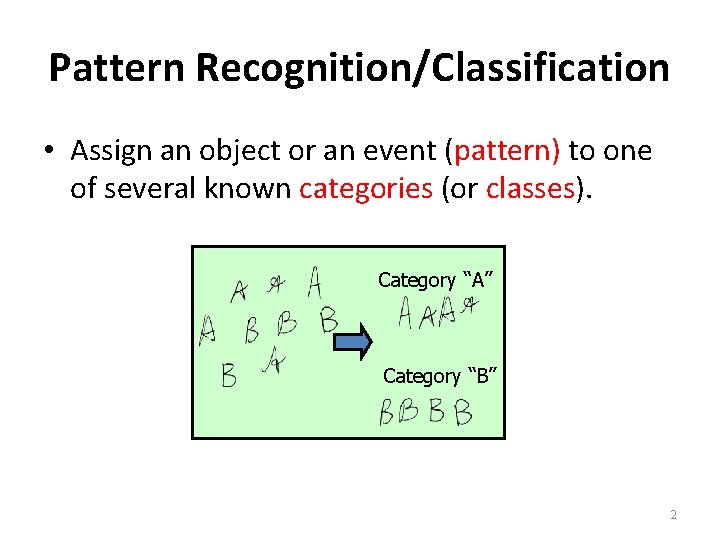

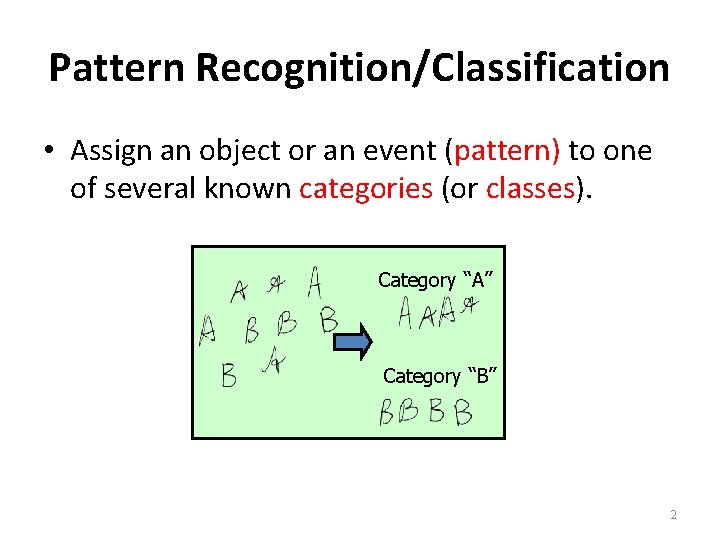

Pattern Recognition/Classification

- Assign an object or an event (pattern) to one of several known categories (or classes).

(Figure: examples from Category “A” and Category “B”.)

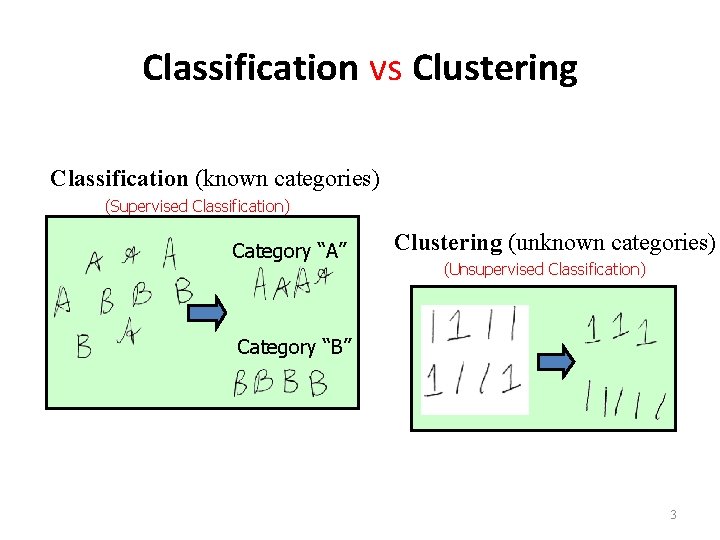

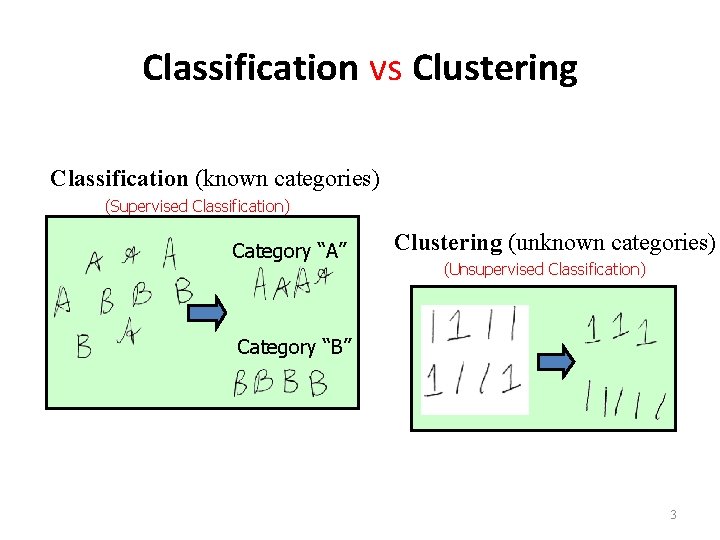

Classification vs. Clustering

- Classification: the categories are known (supervised classification).
- Clustering: the categories are unknown (unsupervised classification).

(Figure: Category “A” and Category “B”.)
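Here is a minimal clustering sketch that makes the contrast concrete. It uses k-means (Lloyd's algorithm), which is one common clustering method and is chosen here only for illustration. The slides do not prescribe an algorithm. The toy data and the helper name `kmeans_1d` are hypothetical. No category labels are given; the algorithm only groups values that lie close to each other.

```python
from typing import List, Tuple

def kmeans_1d(values: List[float], k: int = 2, iters: int = 20) -> Tuple[List[float], List[int]]:
    """Cluster 1-D values into k groups with Lloyd's k-means algorithm (no labels used)."""
    # Initialize centers at k evenly spaced positions of the sorted data (a simple deterministic choice).
    ordered = sorted(values)
    centers = [ordered[i * (len(ordered) - 1) // max(k - 1, 1)] for i in range(k)]
    assignments = [0] * len(values)
    for _ in range(iters):
        # Assignment step: each value joins its nearest center.
        assignments = [min(range(k), key=lambda j: abs(v - centers[j])) for v in values]
        # Update step: move each center to the mean of its members.
        for j in range(k):
            members = [v for v, a in zip(values, assignments) if a == j]
            if members:
                centers[j] = sum(members) / len(members)
    return centers, assignments

data = [1.0, 1.2, 0.8, 5.0, 5.3, 4.9]  # hypothetical unlabeled toy data
centers, groups = kmeans_1d(data)
print(centers, groups)
```

On this toy data the groups come out as [0, 0, 0, 1, 1, 1]. The cluster indices are arbitrary names, not the known categories a classifier would predict.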

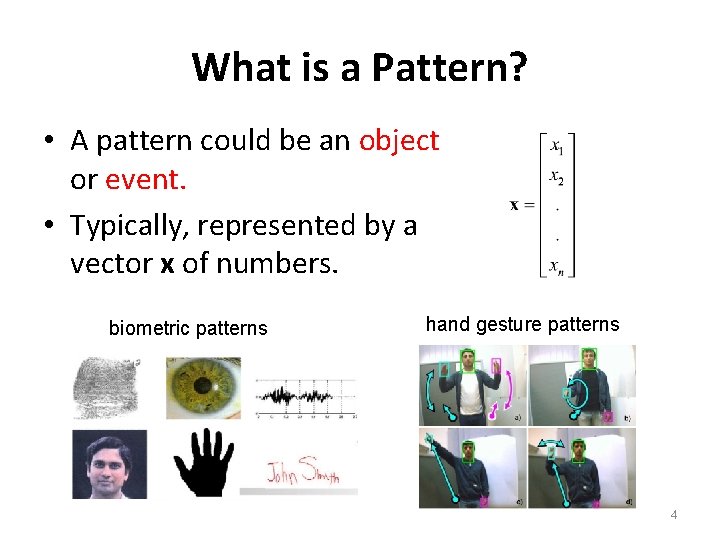

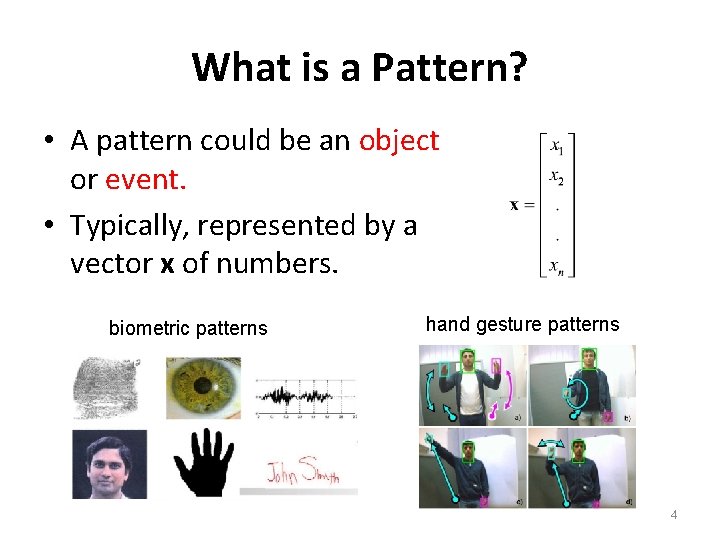

What is a Pattern?

- A pattern could be an object or an event.
- Typically, a pattern is represented by a vector x of numbers.

(Figure: biometric patterns and hand gesture patterns.)

What is a Pattern? (cont’d)

- Loan/credit card applications
  - Features: income, number of dependents, mortgage amount
  - Task: credit-worthiness classification
- Dating services
  - Features: age, hobbies, income
  - Task: “desirability” classification
- Web documents
  - Features: keyword-based descriptions (e.g., documents containing “football” or “NFL”)
  - Task: document classification
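The keyword-based description of web documents can be written as a binary feature vector x. This minimal sketch assumes a hypothetical four-word vocabulary and plain whitespace tokenization, so punctuation is not stripped. `keyword_vector` is an illustrative name, not a standard API.

```python
from typing import List

# Hypothetical keyword vocabulary; a real system would choose these for its task.
KEYWORDS = ["football", "nfl", "election", "senate"]

def keyword_vector(document: str, keywords: List[str] = KEYWORDS) -> List[int]:
    """Represent a document as a binary vector x: x[i] = 1 if keywords[i] occurs in it."""
    words = set(document.lower().split())  # simple whitespace tokenization, case-insensitive
    return [1 if kw in words else 0 for kw in keywords]

doc_vector = keyword_vector("The NFL season opens with a football classic")
print(doc_vector)  # [1, 1, 0, 0]
```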

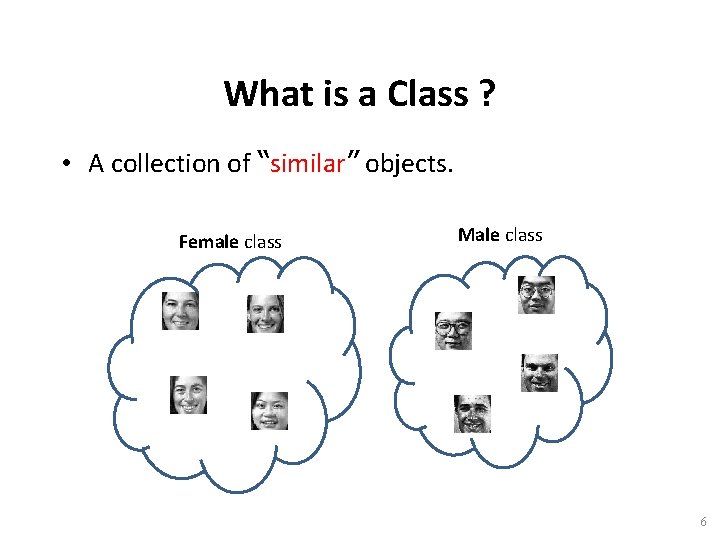

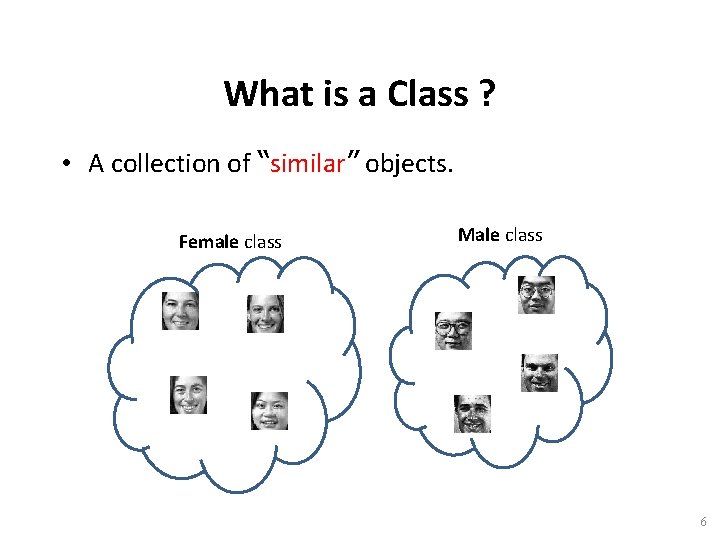

What is a Class?

- A collection of “similar” objects.

(Figure: female class and male class.)

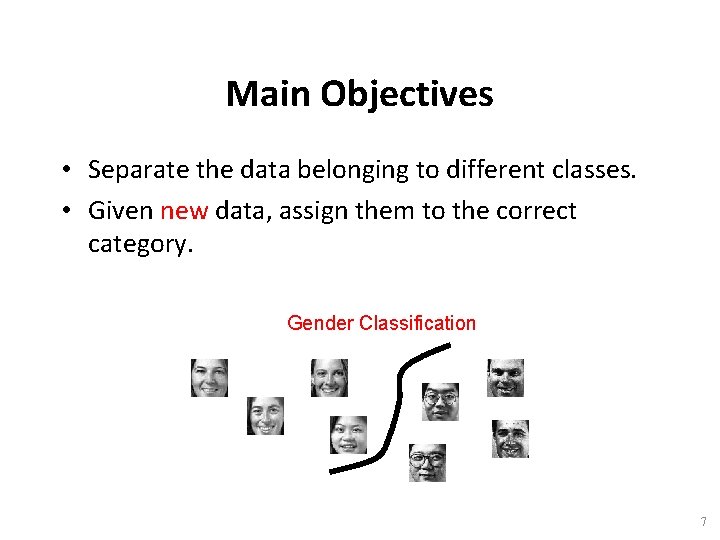

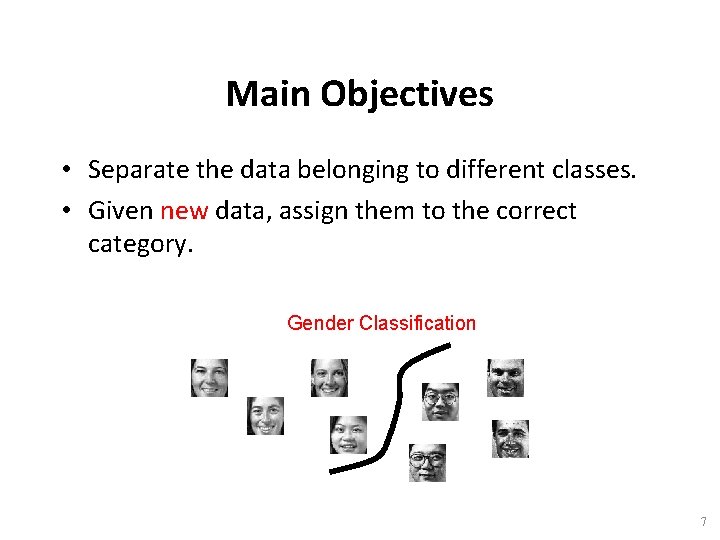

Main Objectives

- Separate the data belonging to different classes.
- Given new data, assign them to the correct category.

(Figure: gender classification.)

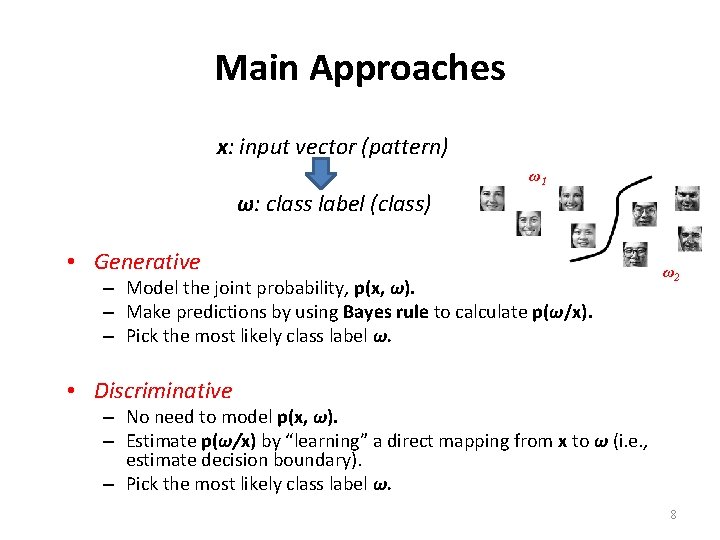

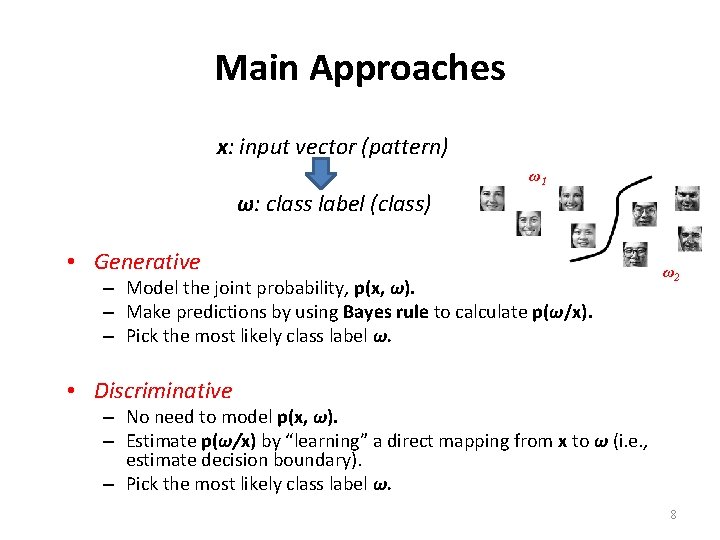

Main Approaches

x: input vector (pattern); ω: class label (class). (Figure: two classes, ω1 and ω2.)

- Generative
  - Model the joint probability, p(x, ω).
  - Make predictions by using Bayes' rule to calculate p(ω|x).
  - Pick the most likely class label ω.
- Discriminative
  - No need to model p(x, ω).
  - Estimate p(ω|x) by “learning” a direct mapping from x to ω (i.e., estimate the decision boundary).
  - Pick the most likely class label ω.
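Assume c classes ω_1, …, ω_c, and factor the joint probability as p(x, ω) = p(x|ω) p(ω). Under these assumptions, the generative recipe becomes Bayes' rule followed by picking the largest posterior:

$$
p(\omega_i \mid \mathbf{x}) = \frac{p(\mathbf{x}, \omega_i)}{p(\mathbf{x})}
  = \frac{p(\mathbf{x} \mid \omega_i)\, p(\omega_i)}{\sum_{j=1}^{c} p(\mathbf{x} \mid \omega_j)\, p(\omega_j)},
\qquad
\hat{\omega} = \arg\max_{i}\; p(\omega_i \mid \mathbf{x})
$$

The denominator p(x) is the same for every class, so the decision only needs the numerator p(x|ω_i) p(ω_i).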

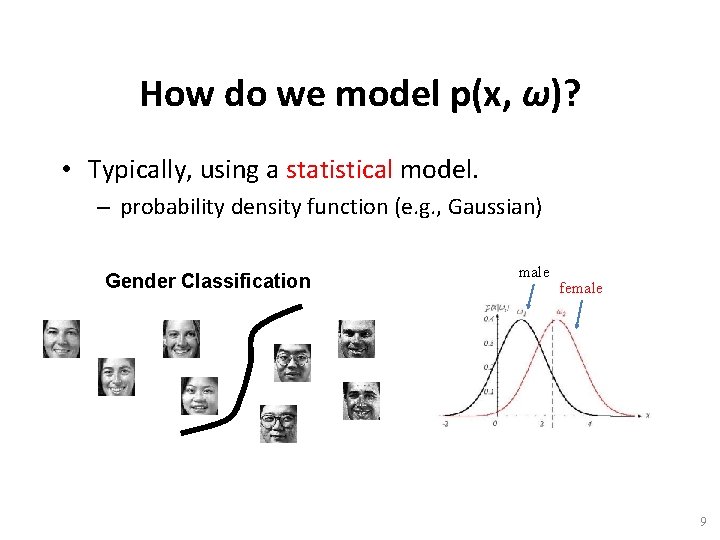

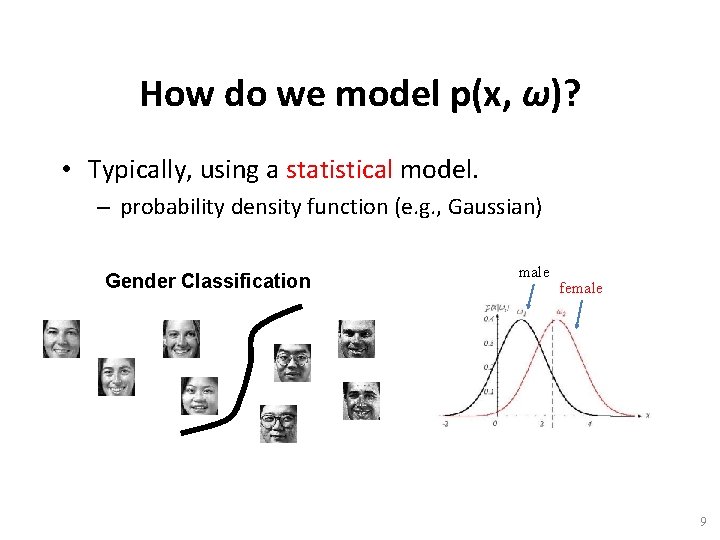

How do we model p(x, ω)?

- Typically, using a statistical model:
  - a probability density function (e.g., a Gaussian).

(Figure: gender classification, with male and female densities.)
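This is a minimal sketch of the generative approach with one Gaussian per class. It assumes a single scalar feature and made-up training values; the slides do not specify which feature the gender example uses. The code estimates each class prior p(ω) and Gaussian p(x|ω) from the data, then applies Bayes' rule to obtain p(ω|x). The function names are hypothetical.

```python
import math
from statistics import mean, pstdev
from typing import Dict, List, Tuple

def fit_gaussian_classes(samples: Dict[str, List[float]]) -> Dict[str, Tuple[float, float, float]]:
    """Estimate (prior p(w), mean, std of p(x|w)) for each class from 1-D training data."""
    total = sum(len(v) for v in samples.values())
    return {label: (len(v) / total, mean(v), pstdev(v)) for label, v in samples.items()}

def gaussian_pdf(x: float, mu: float, sigma: float) -> float:
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

def posteriors(x: float, model: Dict[str, Tuple[float, float, float]]) -> Dict[str, float]:
    """Bayes' rule: p(w|x) = p(x|w) p(w) / sum_j p(x|w_j) p(w_j)."""
    joint = {label: prior * gaussian_pdf(x, mu, sigma) for label, (prior, mu, sigma) in model.items()}
    evidence = sum(joint.values())
    return {label: j / evidence for label, j in joint.items()}

# Hypothetical 1-D feature values per class; not real data.
training_data = {"male": [175.0, 180.0, 185.0, 178.0], "female": [160.0, 165.0, 170.0, 162.0]}
model = fit_gaussian_classes(training_data)
post_178 = posteriors(178.0, model)
print(max(post_178, key=post_178.get), post_178)  # most likely class, then all posteriors
```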

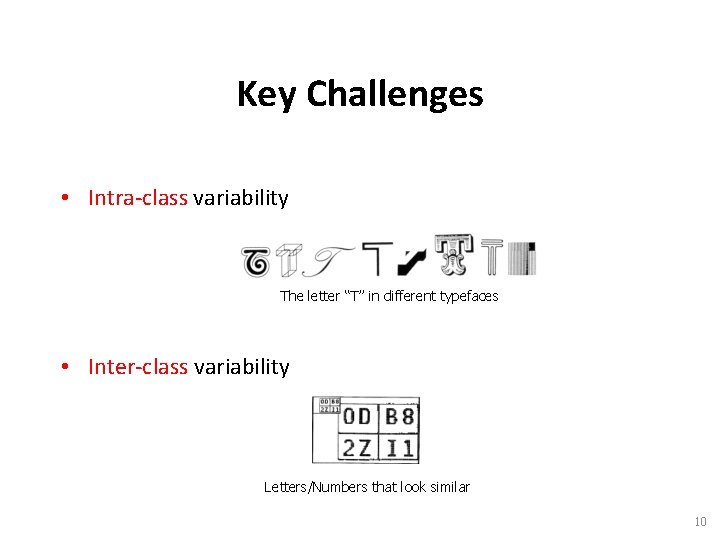

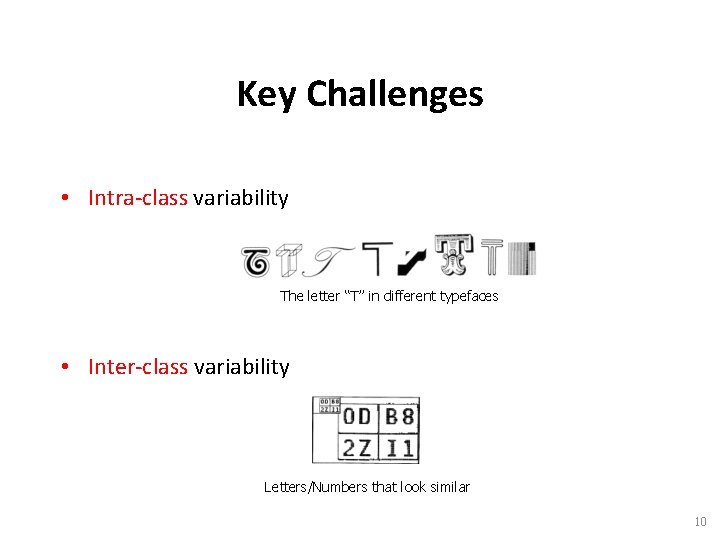

Key Challenges

- Intra-class variability: e.g., the letter “T” in different typefaces.
- Low inter-class variability: e.g., letters/numbers from different classes that look similar.

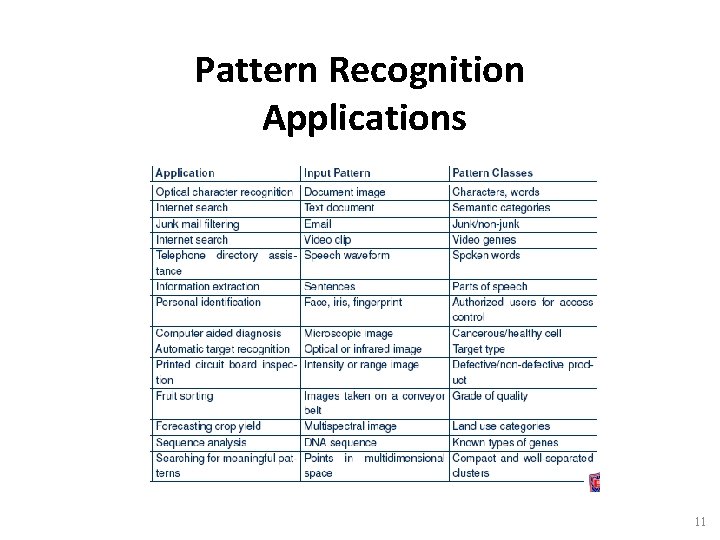

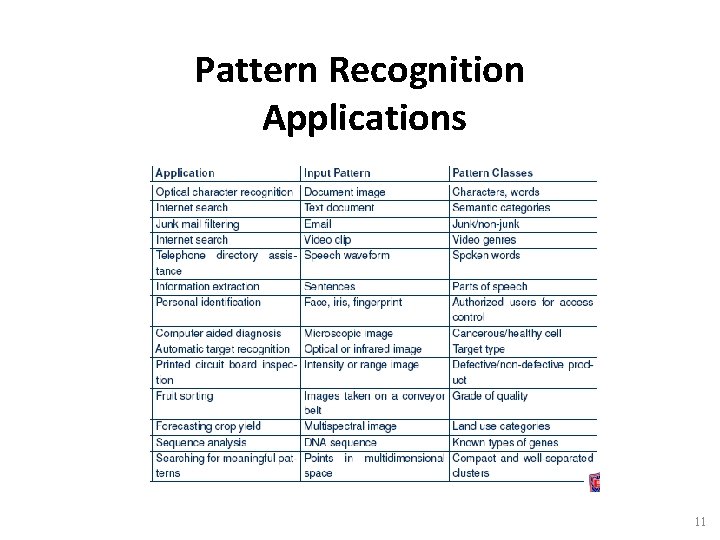

Pattern Recognition Applications

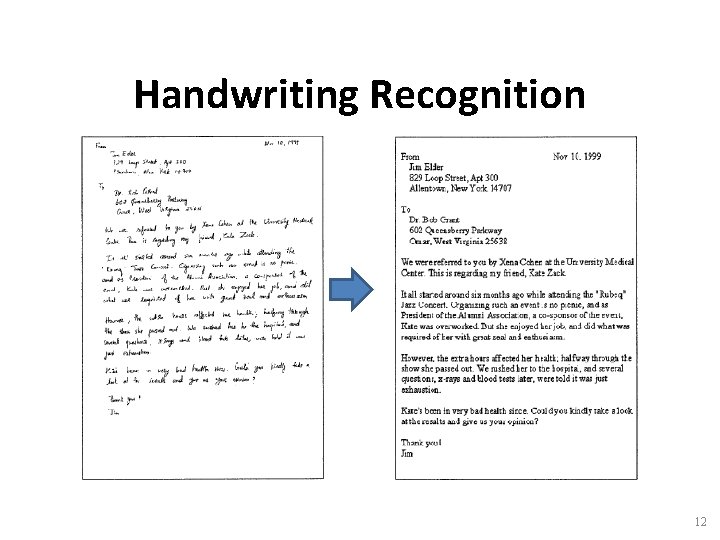

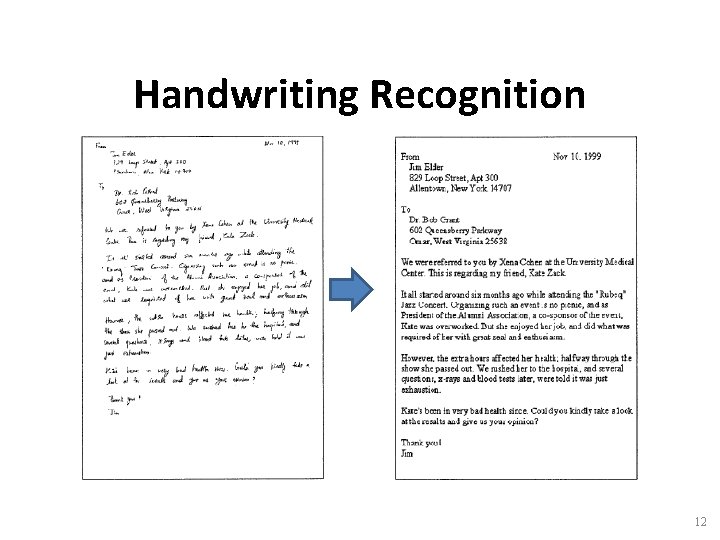

Handwriting Recognition

License Plate Recognition

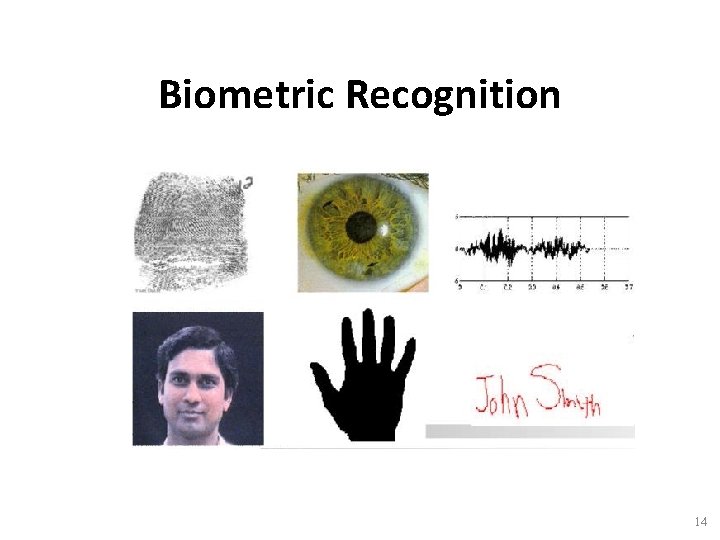

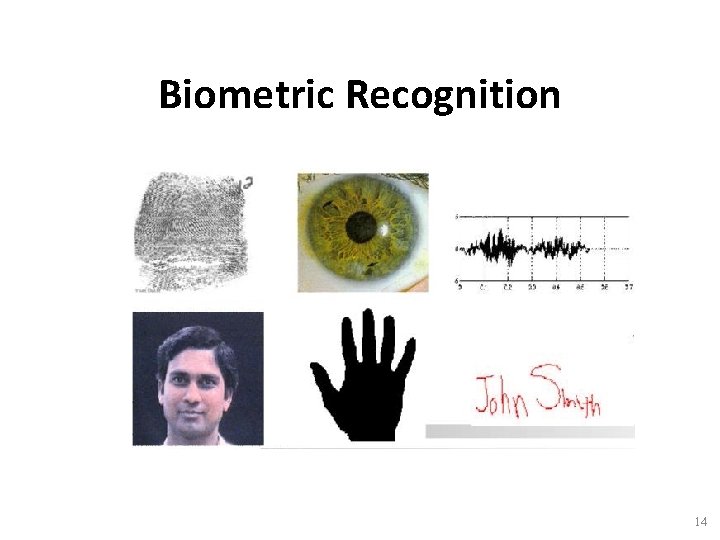

Biometric Recognition

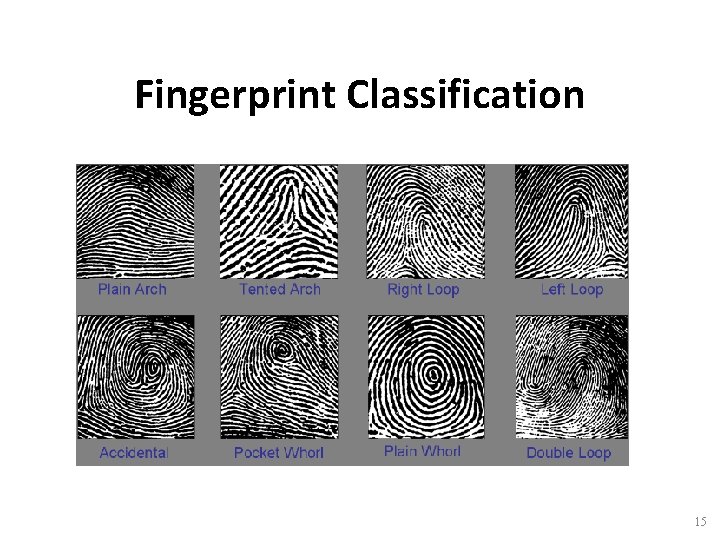

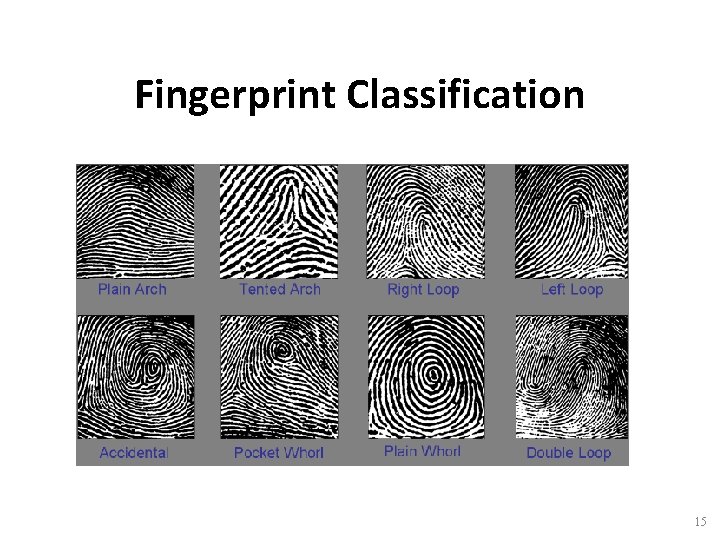

Fingerprint Classification

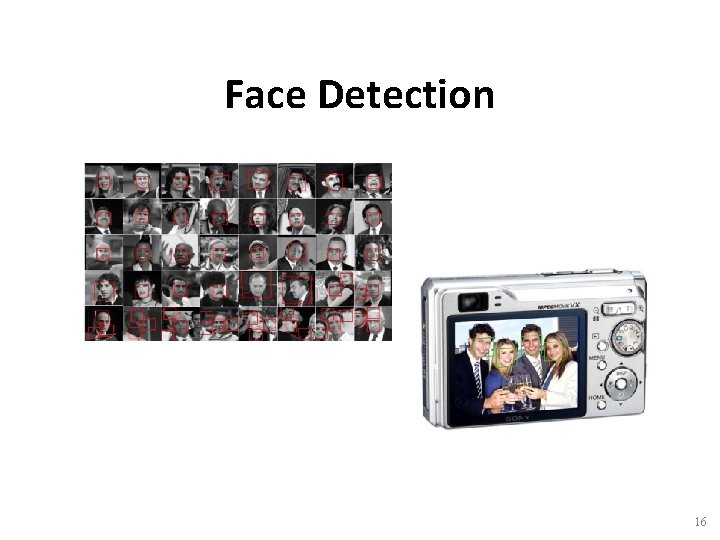

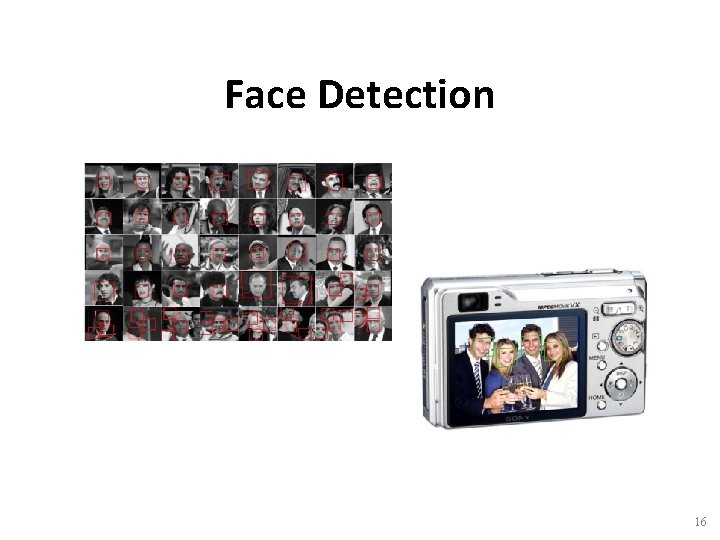

Face Detection

Autonomous Systems

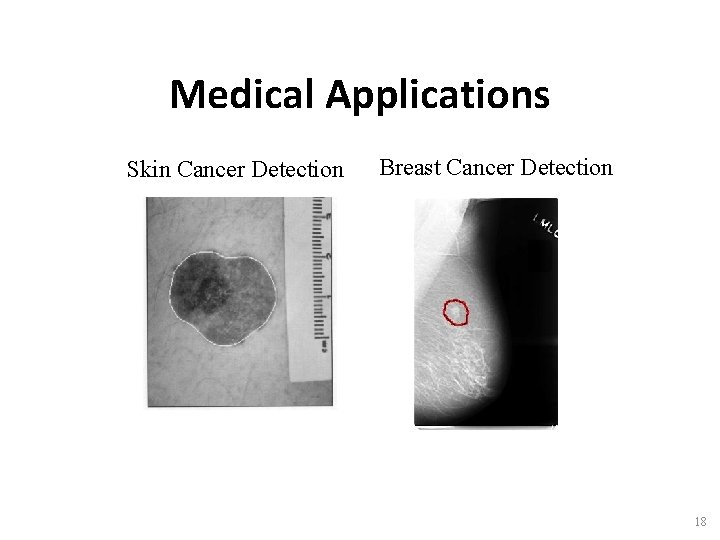

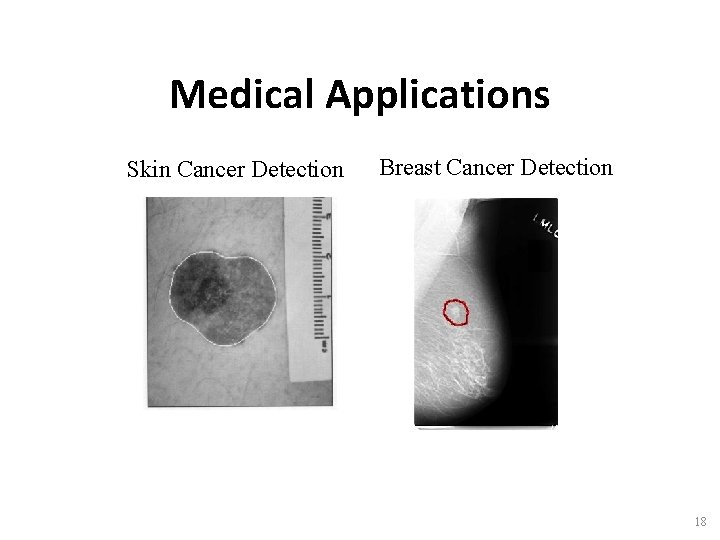

Medical Applications

- Skin cancer detection
- Breast cancer detection

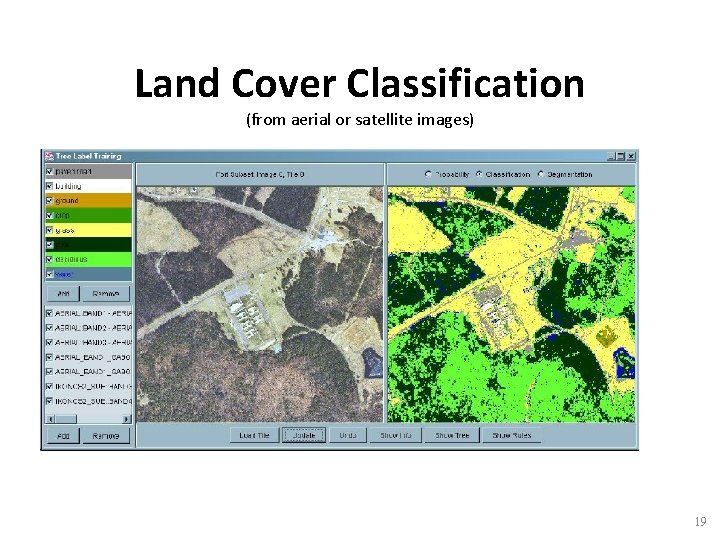

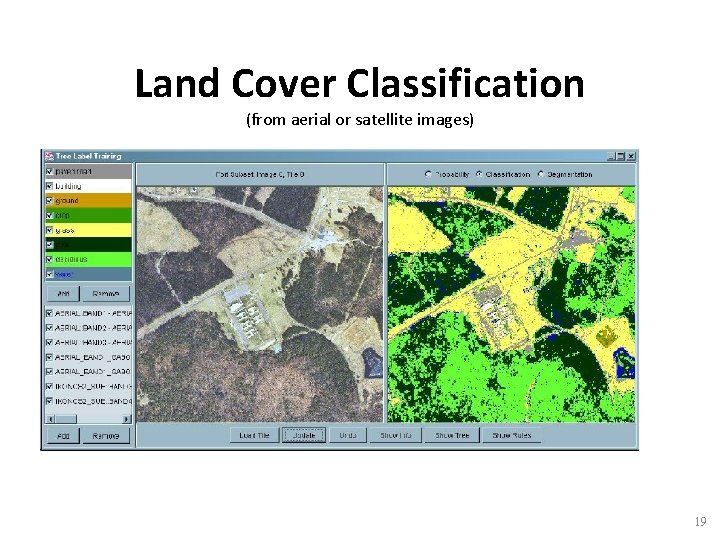

Land Cover Classification (from aerial or satellite images)

“Hot” Applications

- Recommendation systems (e.g., Amazon, Netflix)
- Targeted advertising
- Spam filters
- Loan/credit card applications
- Malicious website detection

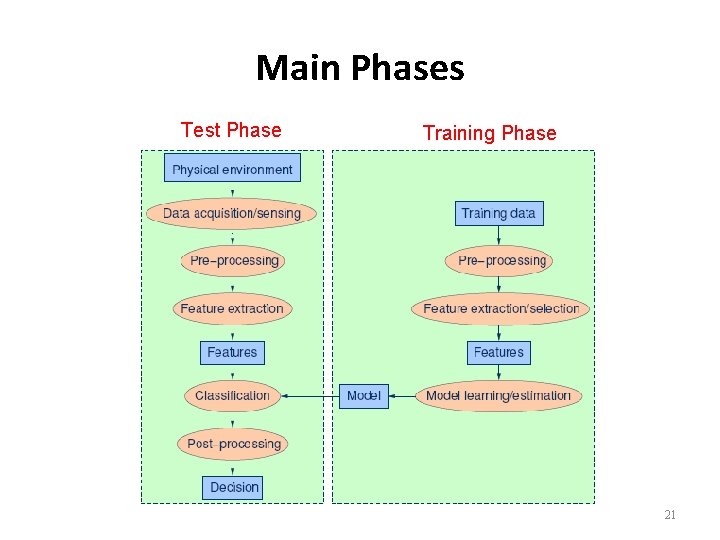

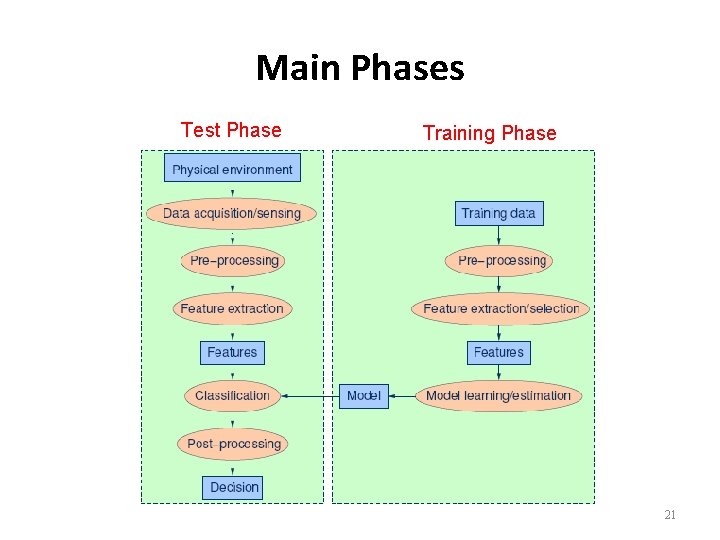

Main Phases

- Training phase
- Test phase

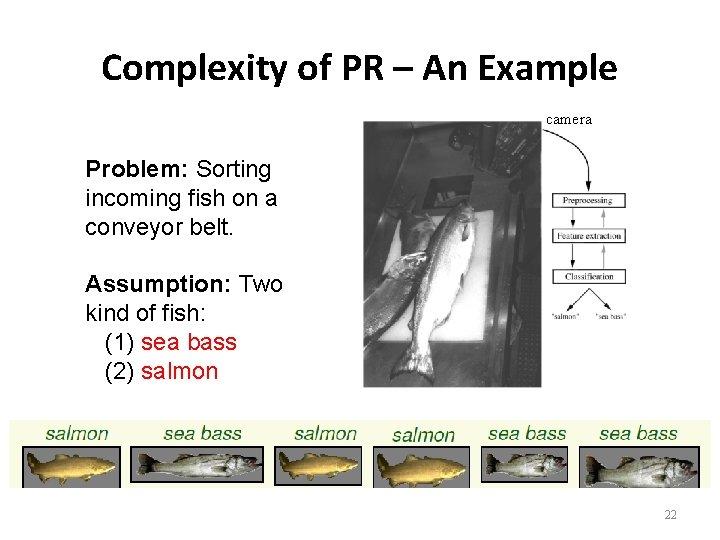

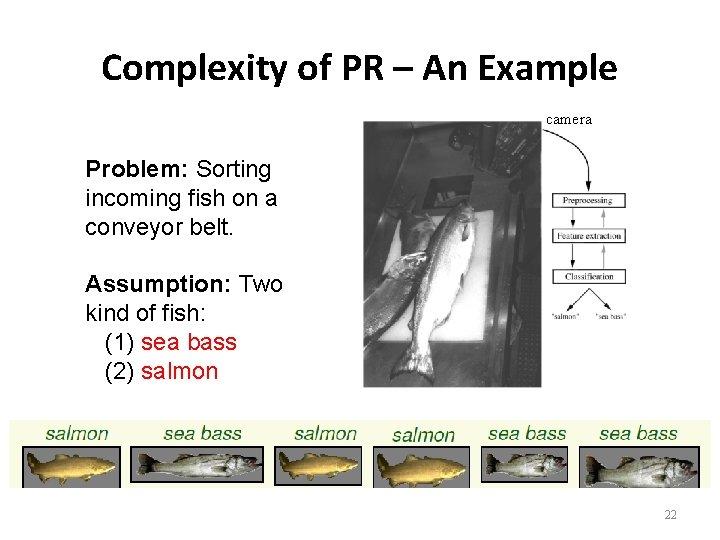

Complexity of PR – An Example

- Problem: sorting incoming fish on a conveyor belt.
- Assumption: there are two kinds of fish: (1) sea bass and (2) salmon.

(Figure: fish on a conveyor belt, imaged by a camera.)

Sensors

- Sensing:
  - Use a sensor (e.g., a camera or microphone) for data capture.
  - PR performance depends on the bandwidth, resolution, sensitivity, and distortion of the sensor.

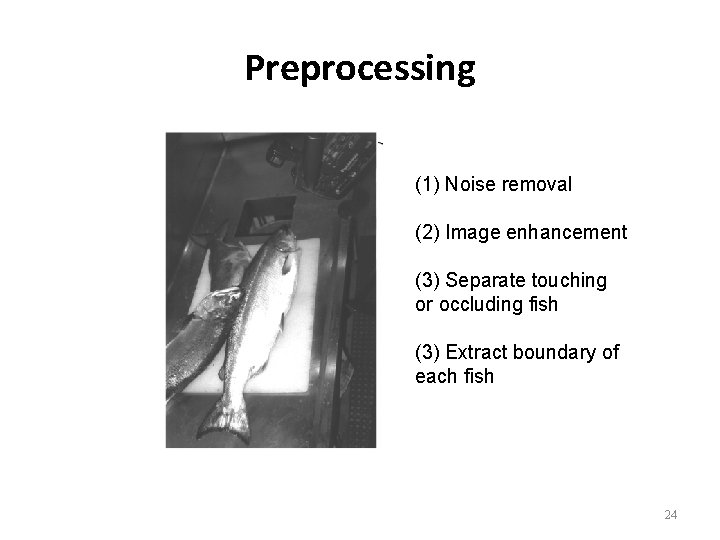

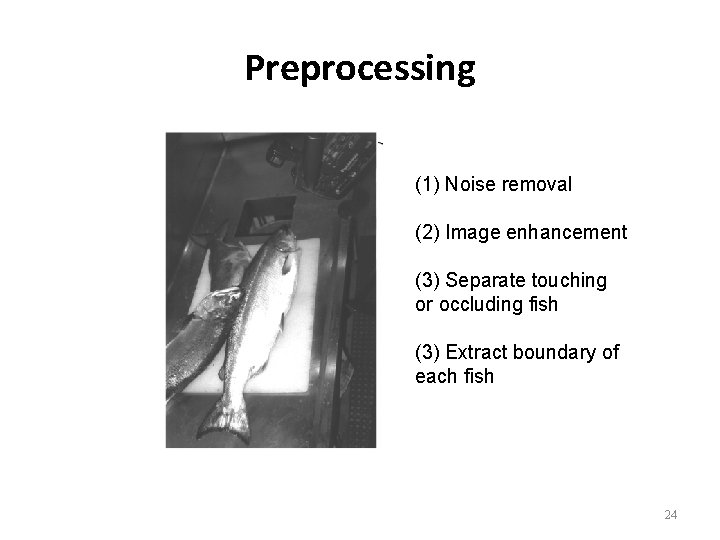

Preprocessing

1. Noise removal
2. Image enhancement
3. Separate touching or occluding fish
4. Extract the boundary of each fish

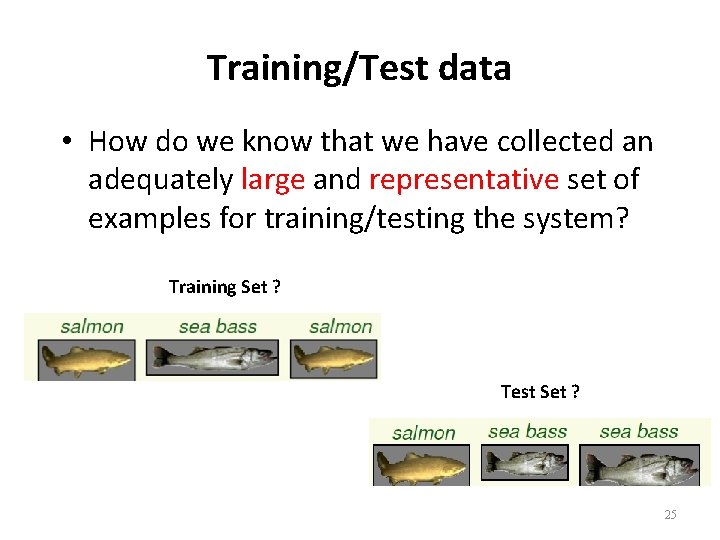

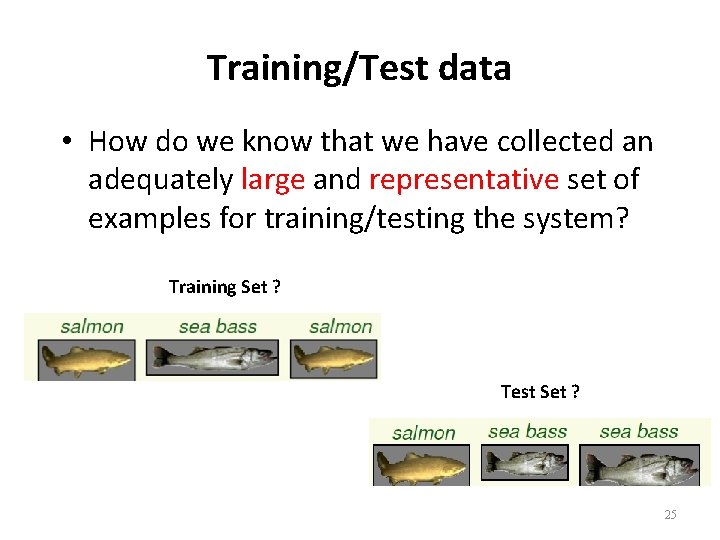

Training/Test Data

- How do we know that we have collected an adequately large and representative set of examples for training/testing the system?

(Figure: Training Set? Test Set?)
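A random hold-out split is one common way to obtain separate training and test sets. This sketch assumes a 25% test fraction and hypothetical (length, label) fish data. A random split keeps the test set unseen during training. It does not, by itself, guarantee that the data are large or representative enough. `train_test_split` here is a hypothetical helper, not a library call.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")

def train_test_split(examples: Sequence[T], test_fraction: float = 0.25, seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle labeled examples and hold out a fraction of them for testing only."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # fixed seed for a reproducible split
    n_test = int(round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]  # (training set, test set)

# Hypothetical (length, label) pairs for the fish example.
fish = [(float(i), "salmon" if i < 10 else "sea bass") for i in range(20)]
train_set, test_set = train_test_split(fish)
print(len(train_set), len(test_set))  # 15 5
```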

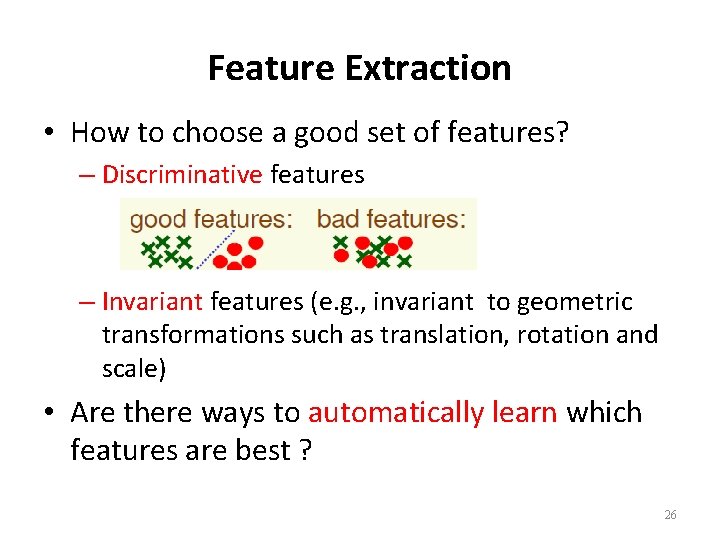

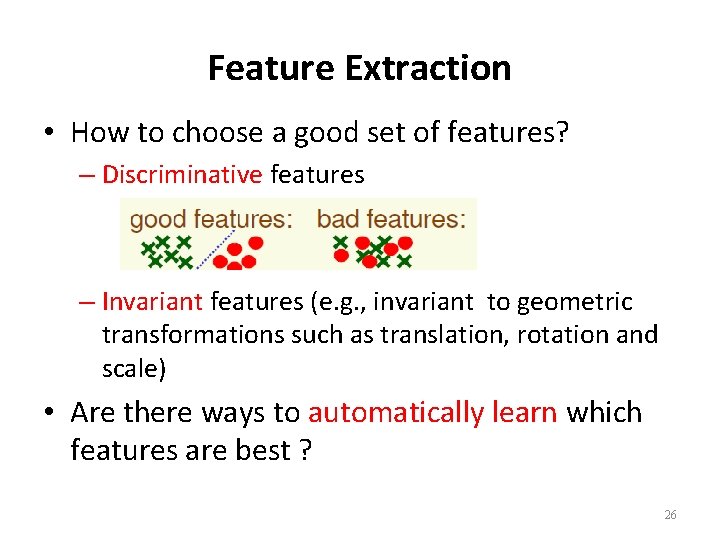

Feature Extraction

- How do we choose a good set of features?
  - Discriminative features
  - Invariant features (e.g., invariant to geometric transformations such as translation, rotation, and scale)
- Are there ways to automatically learn which features are best?

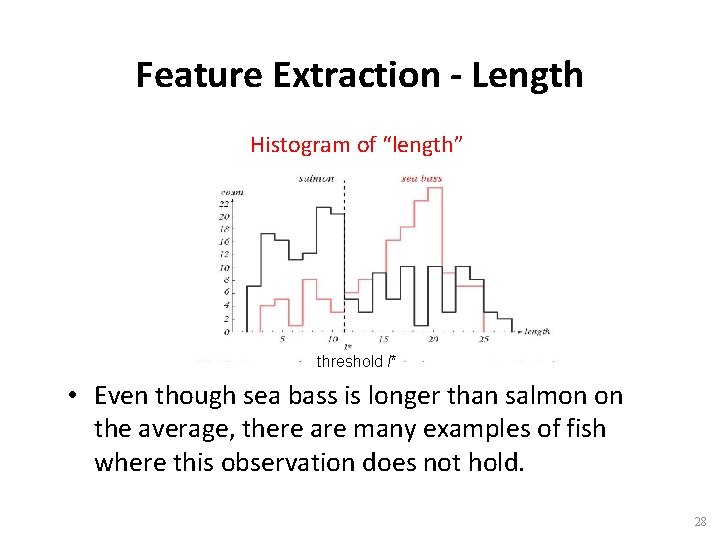

Feature Extraction - Example

- Let’s consider the fish classification example:
  - Assume a fisherman told us that a sea bass is generally longer than a salmon.
  - We can use length as a feature and decide between sea bass and salmon according to a threshold on length.
  - How should we choose the threshold?
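One simple way to choose the threshold is to try every candidate and keep the one with the fewest errors on the training data. The sketch below assumes the rule “length > l* means sea bass”. It places candidate thresholds at the midpoints between observed lengths and uses hypothetical training lengths. `best_threshold` is an illustrative name.

```python
from typing import List, Tuple

def best_threshold(lengths: List[float], labels: List[str]) -> Tuple[float, int]:
    """Pick the threshold l* that minimizes training errors for the rule:
    length > l* -> "sea bass", otherwise -> "salmon"."""
    values = sorted(set(lengths))
    # Candidates: one below all values, midpoints between consecutive distinct values, one above all.
    candidates = [values[0] - 1.0] + [(a + b) / 2 for a, b in zip(values, values[1:])] + [values[-1] + 1.0]
    best = (candidates[0], len(lengths) + 1)
    for t in candidates:
        errors = sum(
            1 for x, y in zip(lengths, labels)
            if ("sea bass" if x > t else "salmon") != y
        )
        if errors < best[1]:  # keep the first threshold reaching the minimum
            best = (t, errors)
    return best

# Hypothetical training lengths; note that the two classes overlap.
lengths = [10, 12, 13, 15, 14, 16, 18, 20]
labels = ["salmon", "salmon", "salmon", "salmon", "sea bass", "sea bass", "sea bass", "sea bass"]
threshold, errors = best_threshold(lengths, labels)
print(threshold, errors)  # 13.5 1
```

The best threshold, 13.5, still misclassifies one fish because the two classes overlap.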

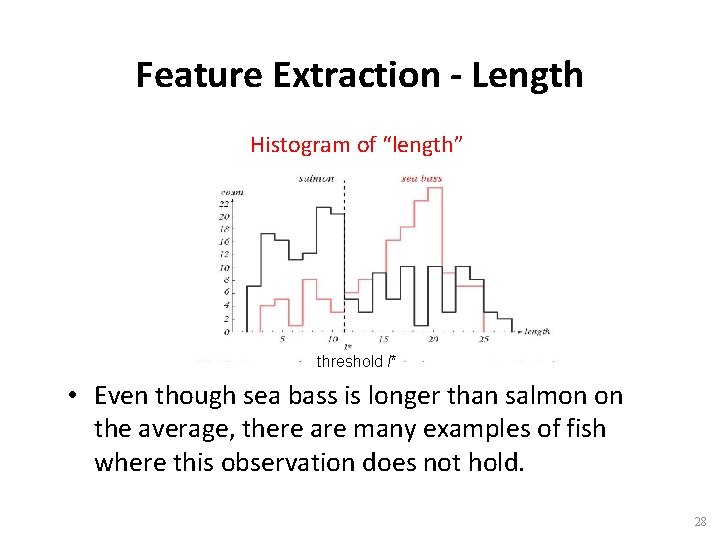

Feature Extraction - Length

(Figure: histogram of “length” with threshold l*.)

- Even though sea bass are longer than salmon on average, there are many examples of fish for which this observation does not hold.

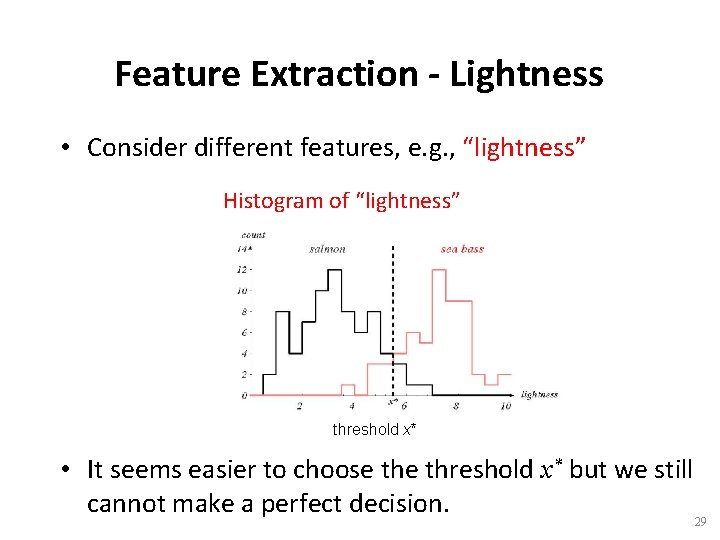

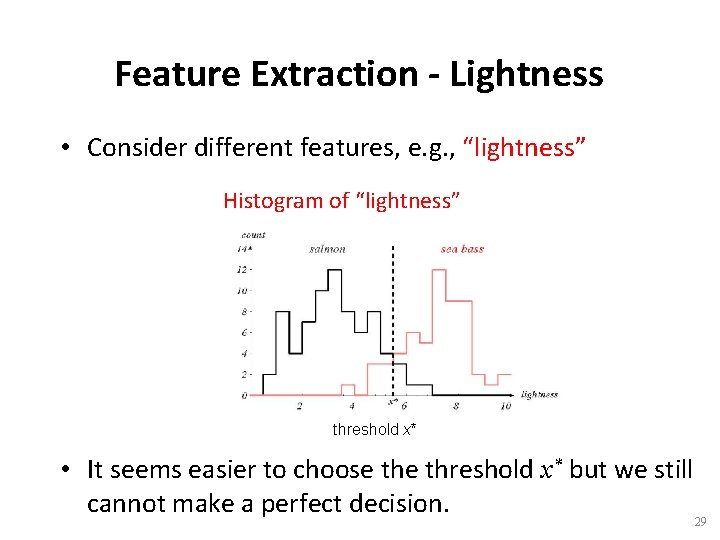

Feature Extraction - Lightness

- Consider a different feature, e.g., “lightness”.

(Figure: histogram of “lightness” with threshold x*.)

- It seems easier to choose the threshold x*, but we still cannot make a perfect decision.

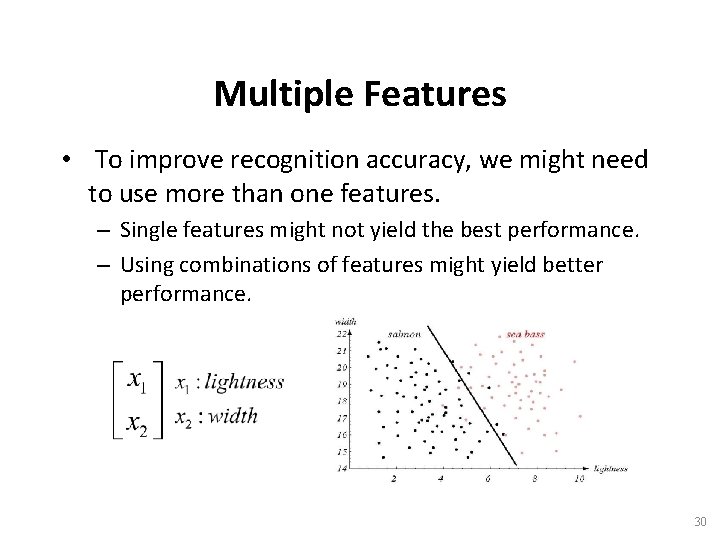

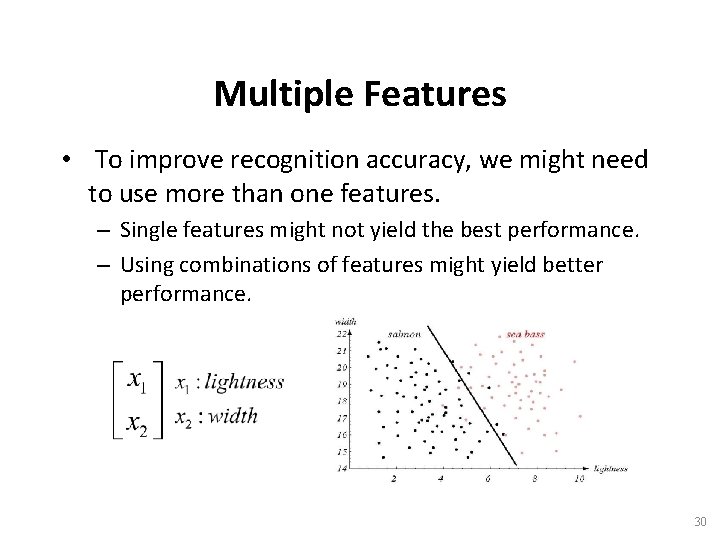

Multiple Features

- To improve recognition accuracy, we might need to use more than one feature.
  - A single feature might not yield the best performance.
  - Using combinations of features might yield better performance.

How Many Features?

- Does adding more features always improve performance?
  - It might be difficult and computationally expensive to extract certain features.
  - Correlated features might not improve performance (i.e., redundancy).
  - The “curse” of dimensionality.

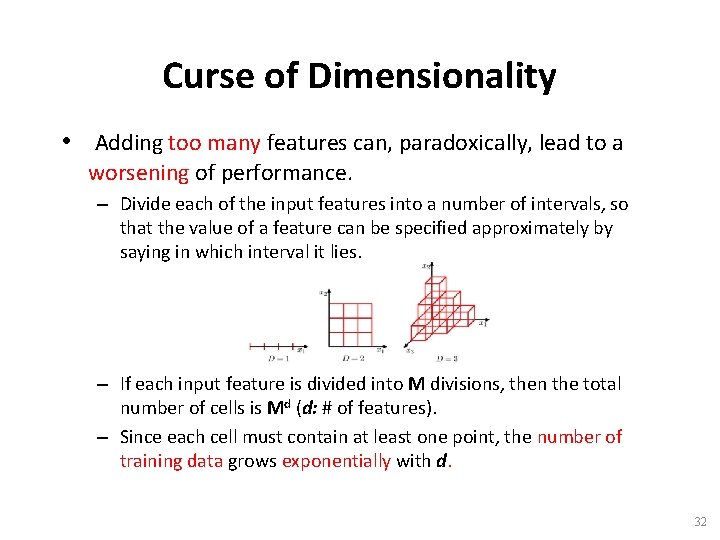

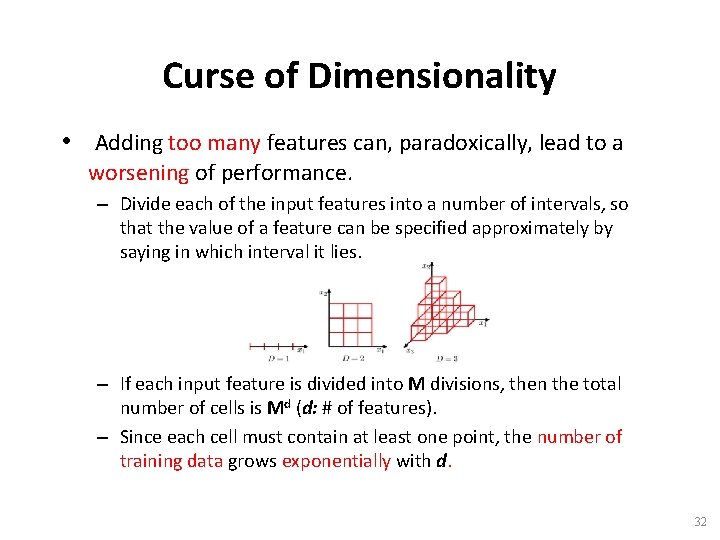

Curse of Dimensionality

- Adding too many features can, paradoxically, lead to worse performance.
  - Divide each input feature into a number of intervals, so that the value of a feature can be specified approximately by the interval in which it lies.
  - If each input feature is divided into M intervals, then the total number of cells is M^d (d: number of features).
  - Since each cell must contain at least one training point, the amount of training data needed grows exponentially with d.
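The exponential growth can be checked numerically. This sketch assumes uniformly distributed points in [0, 1)^d and M = 10 intervals per feature. It counts how many of the M^d cells receive at least one point from a fixed budget of 1,000 training points. `occupied_fraction` is a hypothetical helper.

```python
import random

def occupied_fraction(n_points: int, d: int, m: int = 10, seed: int = 0) -> float:
    """Fraction of the m**d grid cells containing at least one of n uniform random points in [0, 1)^d."""
    rng = random.Random(seed)
    occupied = set()
    for _ in range(n_points):
        # Cell index along each axis: which of the m intervals the coordinate falls into.
        cell = tuple(int(rng.random() * m) for _ in range(d))
        occupied.add(cell)
    return len(occupied) / m ** d

for d in (1, 2, 3, 6):
    print(d, 10 ** d, occupied_fraction(1000, d))  # d, number of cells, fraction occupied
```

With d = 1, every cell is filled. With d = 6, at most 1,000 of the 10^6 cells (0.1%) can contain any data.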

Missing Features

- Certain features might be missing (e.g., due to occlusion).
- How should we train the classifier with missing features?
- How should the classifier make the best decision with missing features?

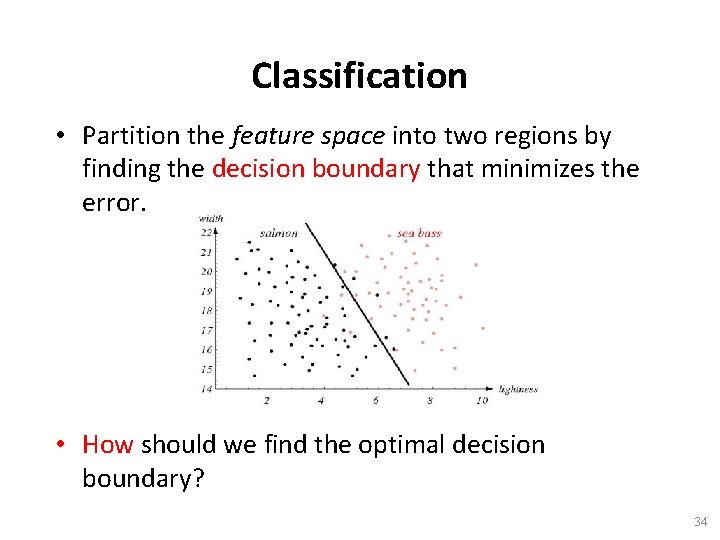

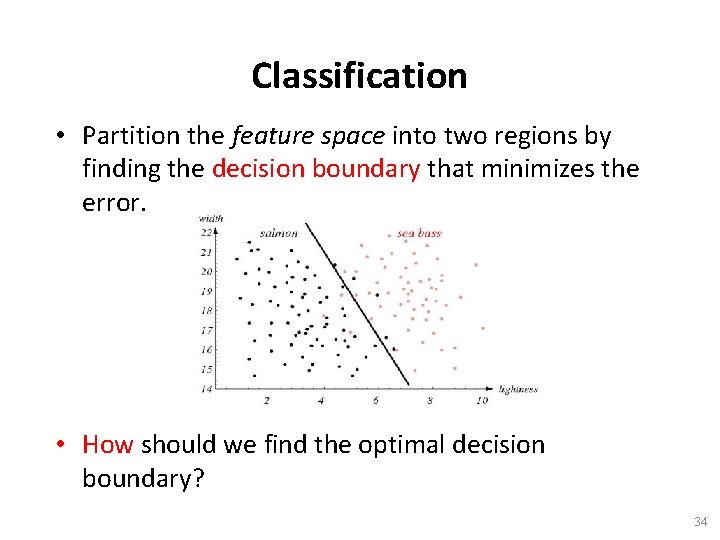

Classification

- Partition the feature space into two regions by finding the decision boundary that minimizes the error.
- How should we find the optimal decision boundary?
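The slides leave the form of the decision boundary open. As one illustrative case, the sketch below assumes a linear boundary w · x + b = 0 in a two-feature space with made-up weights. Points on one side are assigned to one class and points on the other side to the other class. Which side maps to which fish is also an assumption.

```python
from typing import Sequence

# Hypothetical weights and bias defining the boundary w . x + b = 0 in a 2-D feature space;
# in practice these would be learned from training data.
W = (1.0, 0.5)
B = -10.0

def decide(x: Sequence[float], w: Sequence[float] = W, b: float = B) -> str:
    """Return the class of the region (side of the linear boundary) in which the point x falls."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return "sea bass" if score > 0 else "salmon"

print(decide((8.0, 6.0)))   # score = 8 + 3 - 10 = 1  -> sea bass
print(decide((3.0, 10.0)))  # score = 3 + 5 - 10 = -2 -> salmon
```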

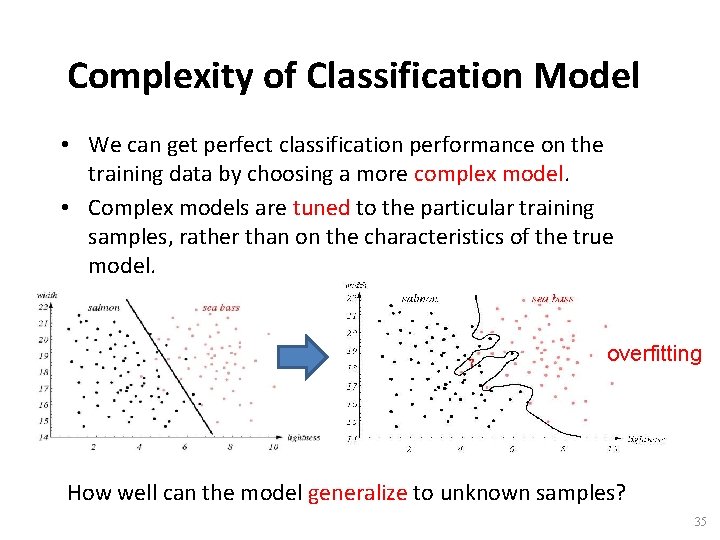

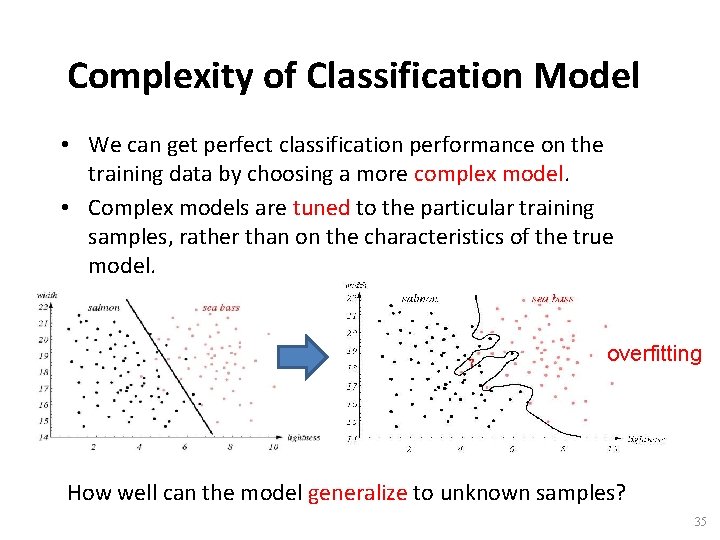

Complexity of Classification Model

- We can get perfect classification performance on the training data by choosing a more complex model.
- Complex models are tuned to the particular training samples rather than to the characteristics of the true model (overfitting).
- How well can the model generalize to unknown samples?

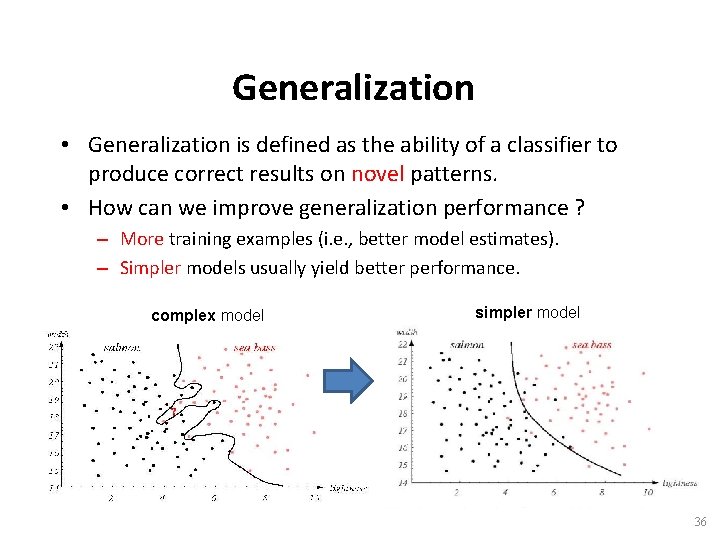

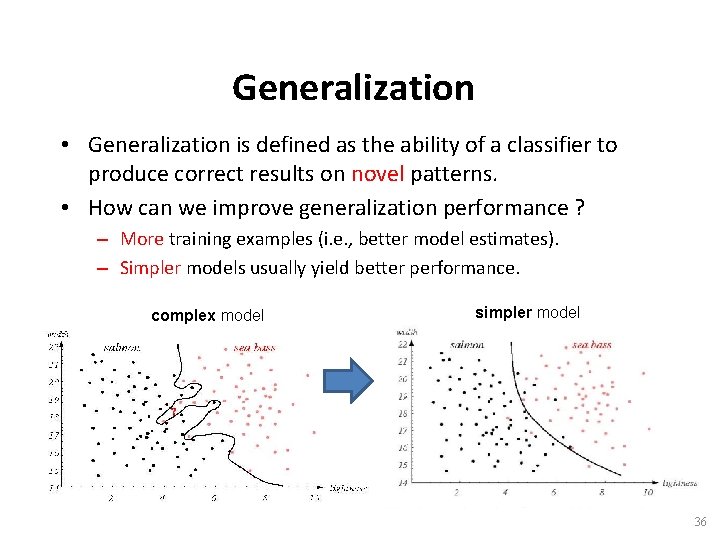

Generalization

- Generalization is the ability of a classifier to produce correct results on novel patterns.
- How can we improve generalization performance?
  - More training examples (i.e., better model estimates).
  - Simpler models, which usually yield better performance on novel patterns.

(Figure: complex model vs. simpler model.)

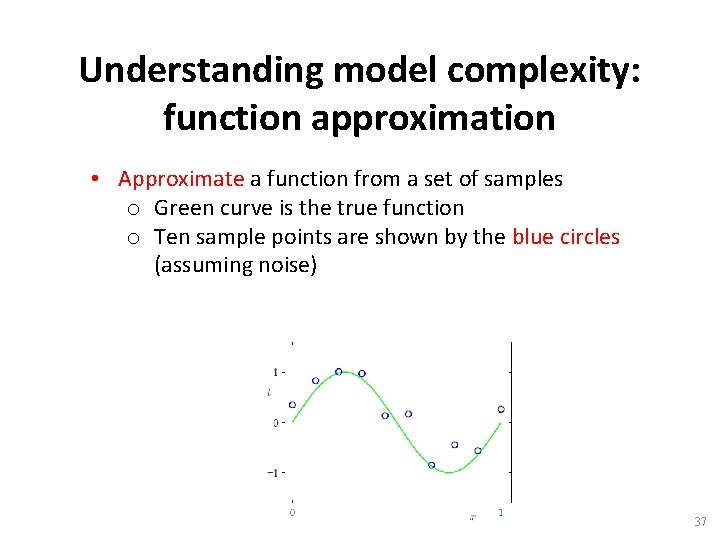

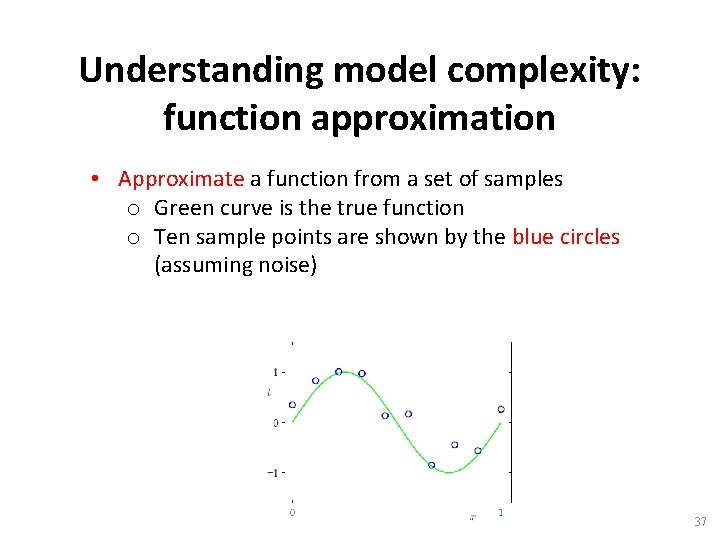

Understanding model complexity: function approximation

- Approximate a function from a set of samples:
  - The green curve is the true function.
  - Ten noisy sample points are shown as blue circles.

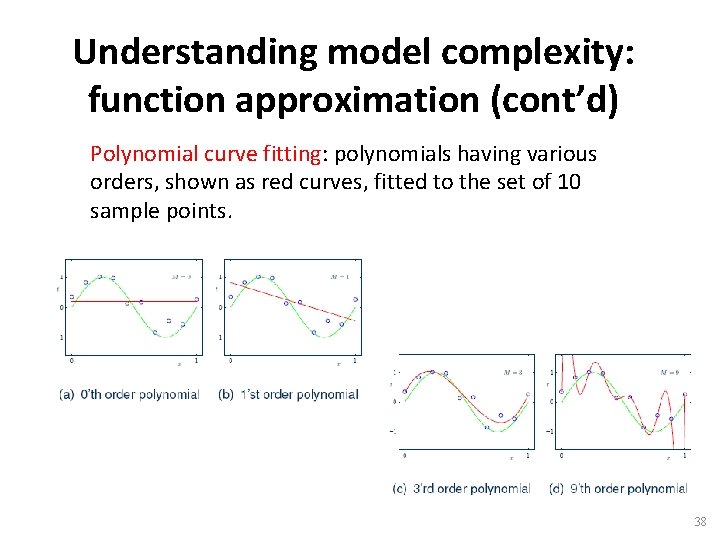

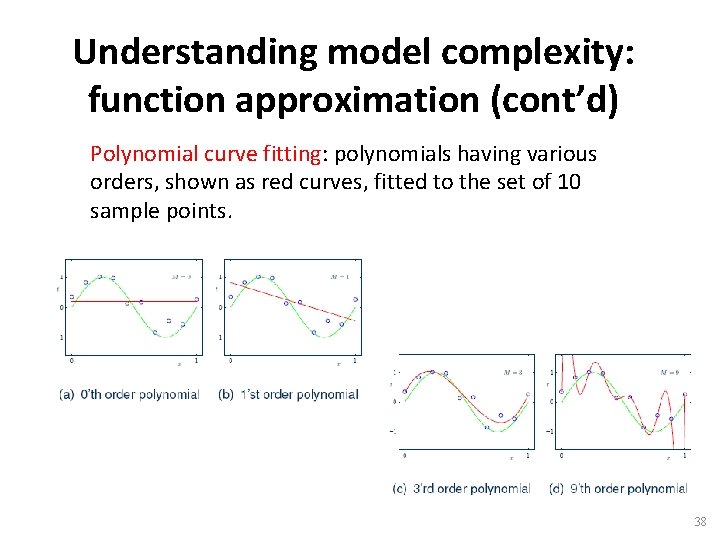

Understanding model complexity: function approximation (cont’d)

- Polynomial curve fitting: polynomials of various orders, shown as red curves, fitted to the set of 10 sample points.
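The curve-fitting experiment can be reproduced in a few lines. This sketch assumes that the true function is sin(2πx) with Gaussian noise of standard deviation 0.2. That is a common textbook choice, but the slides do not confirm it. The code uses NumPy's least-squares `Polynomial.fit` and reports two errors: on the 10 training points, and against the true function at 100 new inputs.

```python
import numpy as np
from numpy.polynomial import Polynomial

# Assumption: the true function is sin(2*pi*x) observed with Gaussian noise (sigma = 0.2);
# the slides do not state which function the green curve shows.
rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 10)                      # 10 training inputs
y = np.sin(2 * np.pi * x) + rng.normal(scale=0.2, size=x.size)
x_new = np.linspace(0.0, 1.0, 100)                 # novel inputs for checking generalization
y_true = np.sin(2 * np.pi * x_new)

def rms(a: np.ndarray, b: np.ndarray) -> float:
    return float(np.sqrt(np.mean((a - b) ** 2)))

def train_rms(order: int) -> float:
    """Least-squares polynomial fit of the given order; RMS error on the training points."""
    return rms(Polynomial.fit(x, y, deg=order)(x), y)

def new_rms(order: int) -> float:
    """RMS error of the same fit against the true function at novel inputs."""
    return rms(Polynomial.fit(x, y, deg=order)(x_new), y_true)

for order in (0, 1, 3, 9):
    print(f"order {order}: train RMS {train_rms(order):.3f}, new-data RMS {new_rms(order):.3f}")
```

The order-9 fit passes through all 10 training points, so its training error is essentially zero. Its error on new inputs is typically much larger than that of a moderate order such as 3. To see how more data constrains a complex model, increase the 10 in the first `np.linspace` call and refit order 9.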

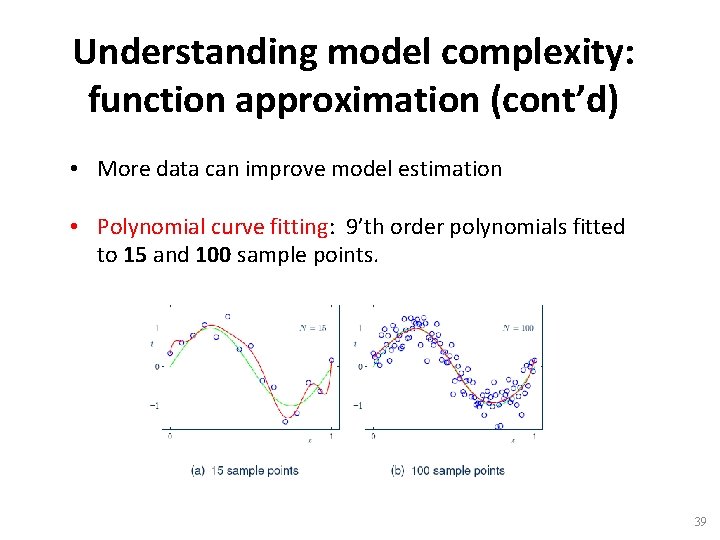

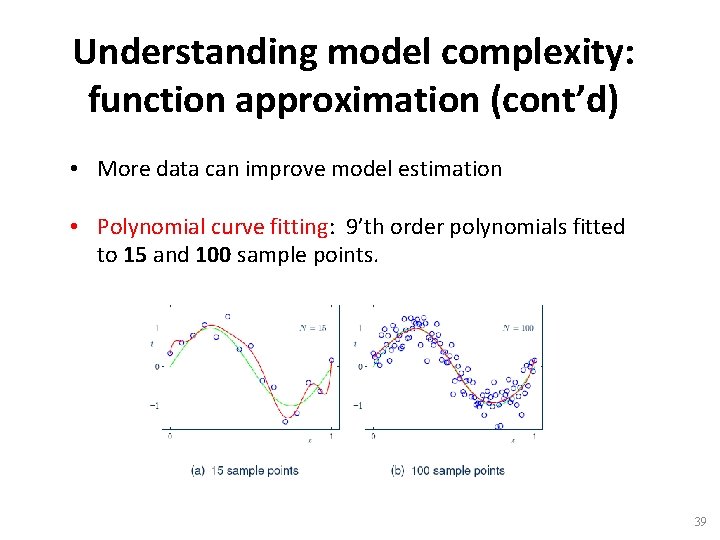

Understanding model complexity: function approximation (cont’d)

- More data can improve model estimation.
- Polynomial curve fitting: 9th-order polynomials fitted to 15 and 100 sample points.

Improve Classification Performance through Post-processing

- Consider the problem of character recognition.
- Exploit context to improve performance, e.g.: “How m ch info mation are y u mi sing?”
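At the word level, context can be exploited by matching a partially recognized word against a lexicon. This minimal sketch assumes that the recognizer marks unreadable characters with `?`, and it uses a tiny hypothetical lexicon. A real system would use a full dictionary or a language model. `complete_word` is an illustrative name.

```python
import re
from typing import List

# Hypothetical lexicon; "mesh" and "inflammation" are distractors.
LEXICON = ["much", "information", "are", "you", "missing", "how", "mesh", "inflammation"]

def complete_word(partial: str, lexicon: List[str] = LEXICON) -> List[str]:
    """Return lexicon words consistent with a partially recognized word,
    where each '?' marks a character the recognizer could not read."""
    pattern = re.compile("^" + "".join("." if c == "?" else re.escape(c) for c in partial.lower()) + "$")
    return [w for w in lexicon if pattern.match(w)]

print(complete_word("m?ch"))         # ['much']
print(complete_word("info?mation"))  # ['information']
print(complete_word("mi?sing"))      # ['missing']
```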

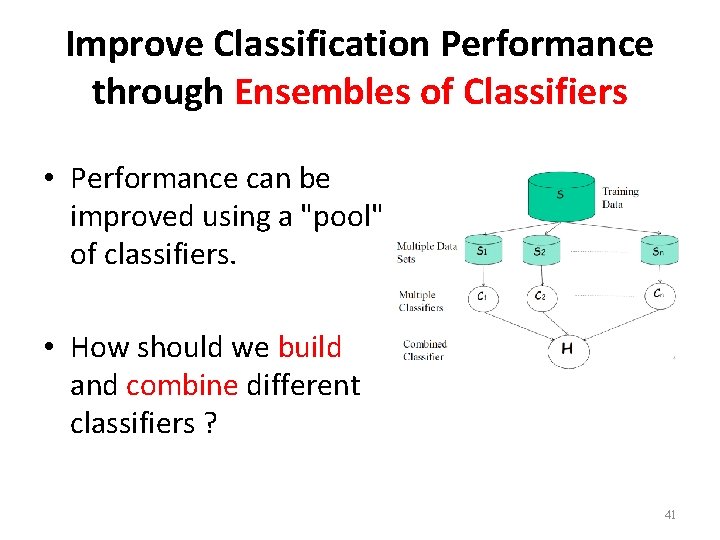

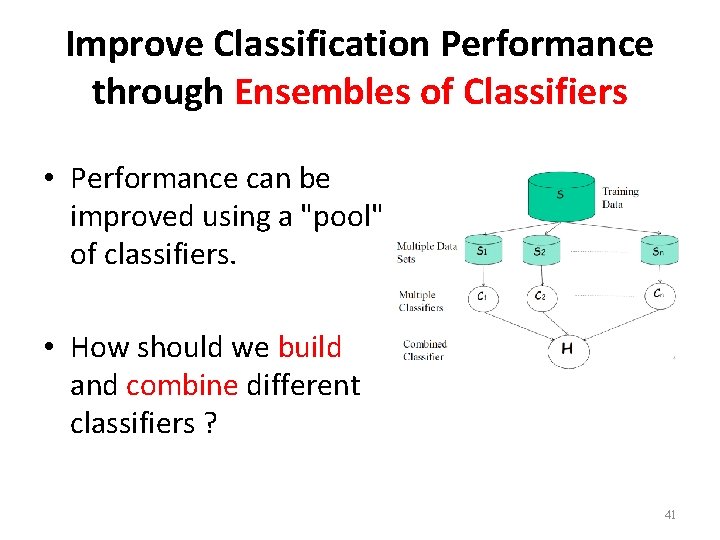

Improve Classification Performance through Ensembles of Classifiers

- Performance can be improved using a “pool” of classifiers.
- How should we build and combine different classifiers?
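Majority voting is one simple way to combine a pool of classifiers; the slides leave the combination method open. The three single-feature rules below are hypothetical, as are their thresholds and the feature order (length, lightness).

```python
from collections import Counter
from typing import Callable, List, Sequence

Classifier = Callable[[Sequence[float]], str]

def majority_vote(classifiers: List[Classifier], x: Sequence[float]) -> str:
    """Let each classifier vote and return the most common label.
    Ties go to the label encountered first (Counter.most_common ordering)."""
    votes = Counter(clf(x) for clf in classifiers)
    return votes.most_common(1)[0][0]

# Hypothetical pool of rules on x = (length, lightness).
pool: List[Classifier] = [
    lambda x: "sea bass" if x[0] > 14 else "salmon",         # length rule
    lambda x: "sea bass" if x[1] > 5 else "salmon",          # lightness rule
    lambda x: "sea bass" if x[0] + x[1] > 20 else "salmon",  # combined rule
]
print(majority_vote(pool, (16.0, 3.0)))  # votes: sea bass, salmon, salmon -> salmon
print(majority_vote(pool, (16.0, 6.0)))  # votes: sea bass x3 -> sea bass
```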

Cost of Misclassifications

- Consider the fish classification example.
- There are two possible classification errors:
  1. Deciding the fish was a sea bass when it was a salmon.
  2. Deciding the fish was a salmon when it was a sea bass.
- Are both errors equally important?

Cost of Misclassifications (cont’d)

- Suppose that:
  - Customers who buy salmon will object vigorously if they see sea bass in their cans.
  - Customers who buy sea bass will not be unhappy if they occasionally see some expensive salmon in their cans.
- How does this knowledge affect our decision?
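This knowledge can be encoded as a cost for each kind of error. The decision then minimizes the expected cost (risk) instead of picking the most probable class. The 10:1 cost ratio and the posterior values below are hypothetical, and `min_risk_decision` is an illustrative name.

```python
from typing import Dict, Tuple

# Hypothetical costs: COST[(decision, true_class)]. Correct decisions cost 0.
# Putting a sea bass in a salmon can is assumed to be 10x worse than the reverse.
COST: Dict[Tuple[str, str], float] = {
    ("salmon", "salmon"): 0.0, ("salmon", "sea bass"): 10.0,
    ("sea bass", "sea bass"): 0.0, ("sea bass", "salmon"): 1.0,
}

def min_risk_decision(posterior: Dict[str, float], cost: Dict[Tuple[str, str], float] = COST) -> str:
    """Choose the decision with the smallest expected cost:
    R(decision | x) = sum over true classes c of cost(decision, c) * p(c | x)."""
    risk = {d: sum(cost[(d, c)] * p for c, p in posterior.items()) for d in posterior}
    return min(risk, key=risk.get)

fish_post = {"salmon": 0.6, "sea bass": 0.4}
print(max(fish_post, key=fish_post.get))  # most probable class: salmon
print(min_risk_decision(fish_post))       # R(salmon) = 10 * 0.4 = 4.0, R(sea bass) = 1 * 0.6 = 0.6 -> sea bass
```

With these numbers the most probable class is salmon, but the minimum-risk decision is sea bass. The reason is the assumed cost: calling a sea bass “salmon” is ten times as costly as the reverse error.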

Computational Complexity

- How does an algorithm scale with the number of:
  - features
  - patterns
  - categories
- We need to consider tradeoffs between computational complexity and performance.

Would it be possible to build a “general purpose” PR system?

- It would be very difficult to design a system that is capable of performing a variety of classification tasks.
  - Different problems require different features.
  - Different features might yield different solutions.
  - Different tradeoffs exist for different problems.

Quiz #1

- Tuesday, February 4th
- Beginning of class (10 minutes)
- Covers Chapter 1 and these slides