Introduction to Parallel Computing: Architectures, Systems, and Programming

- Slides: 36

Introduction to Parallel Computing: Architectures, Systems, and Programming. Prof. Rajkumar Buyya, Cloud Computing and Distributed Systems (CLOUDS) Lab, The University of Melbourne, Australia. www.buyya.com

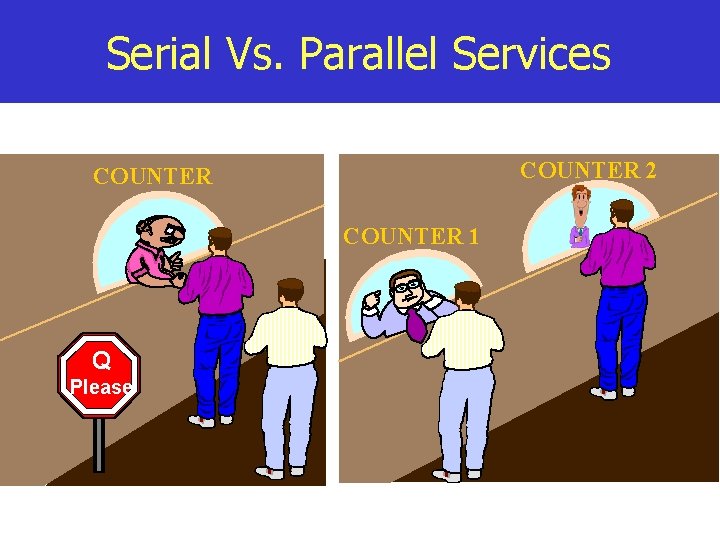

Serial vs. Parallel Services (figure: a "Q Please" queue served by Counter 1 and Counter 2)

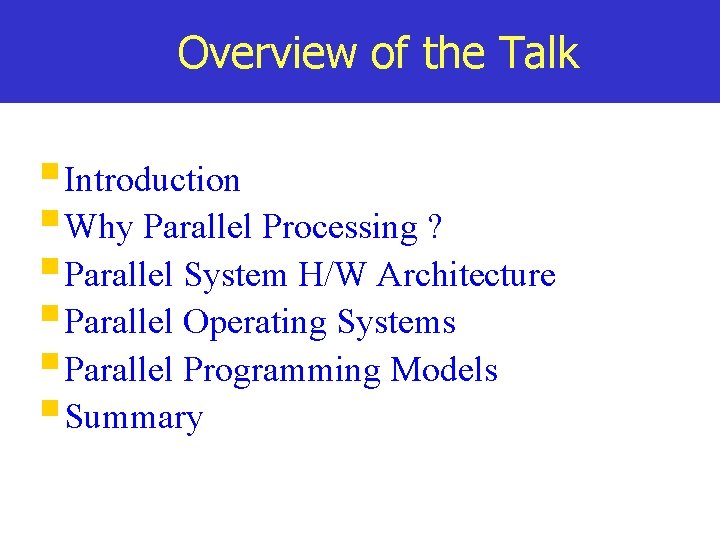

Overview of the Talk

- Introduction
- Why Parallel Processing?
- Parallel System H/W Architecture
- Parallel Operating Systems
- Parallel Programming Models
- Summary

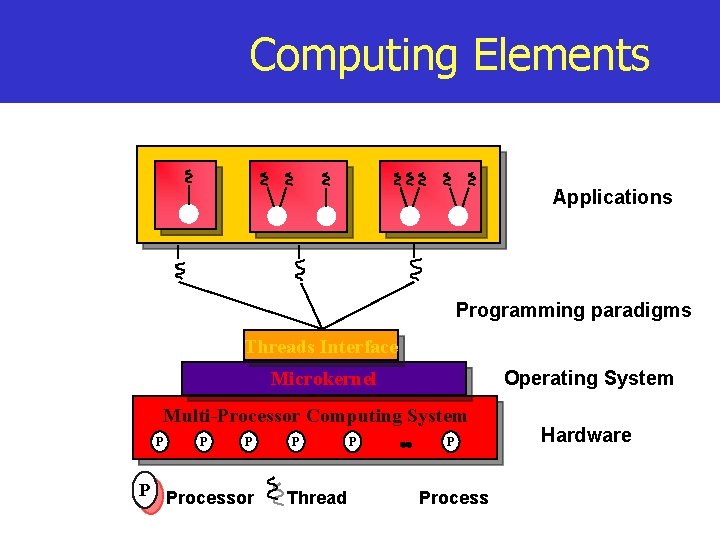

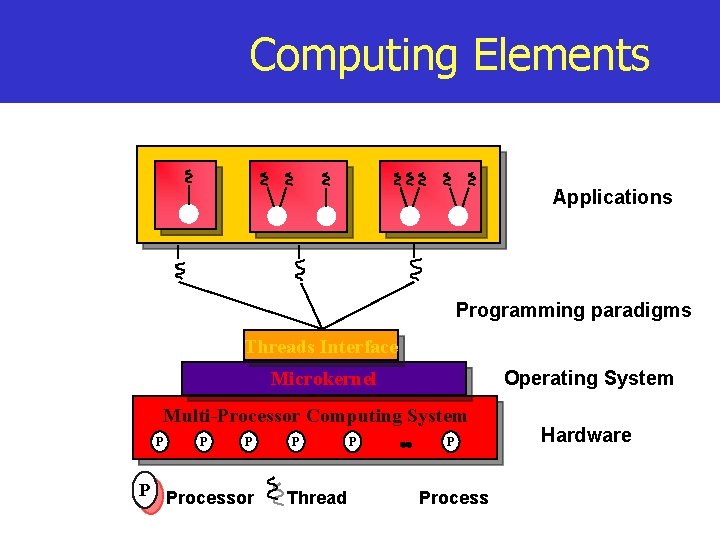

Computing Elements (figure: layered view of a multi-processor computing system. From top to bottom: Applications, Programming paradigms, Threads Interface, Operating System with Microkernel, Hardware. The legend distinguishes processors, threads, and processes.)

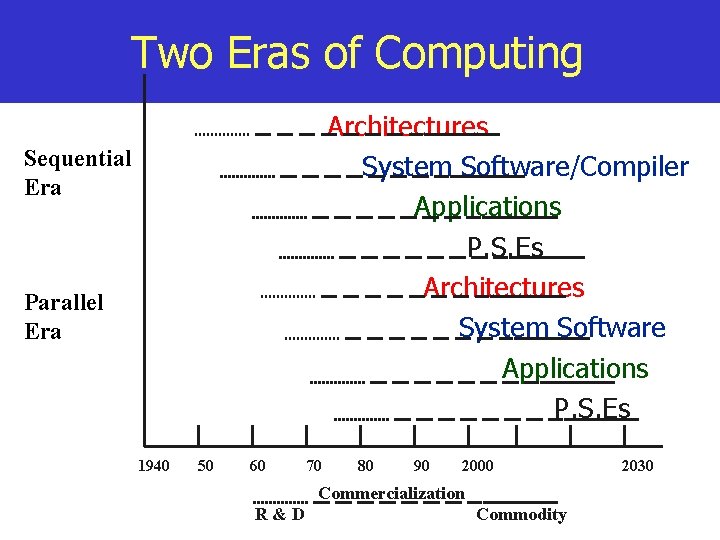

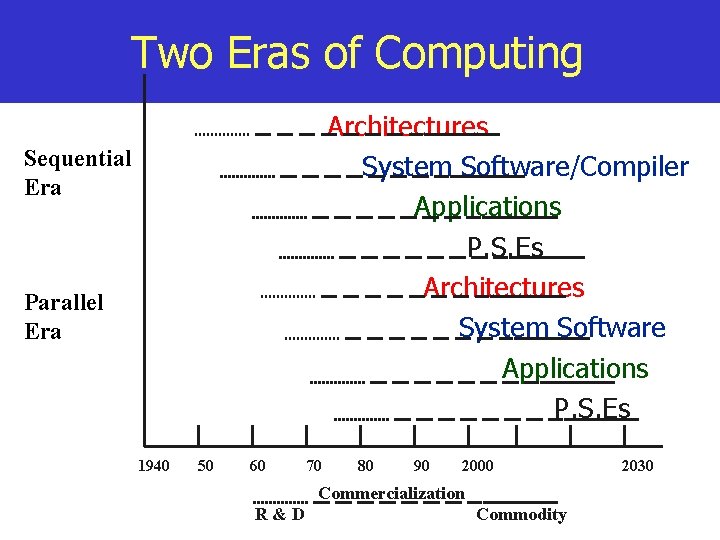

Two Eras of Computing (figure: timeline from 1940 to 2030 showing the Sequential Era followed by the Parallel Era. In each era, Architectures, System Software/Compilers, Applications, and P.S.Es (problem-solving environments) pass through the phases R&D, Commercialization, and Commodity.)

History of Parallel Processing

- The notion of parallel processing can be traced to a tablet dated around 100 BC.
  - The tablet has 3 calculating positions capable of operating simultaneously.
- From this we can infer that:
  - They were aimed at "speed" or "reliability".

Motivating Factor: Human Brain

- The human brain consists of a large number (more than a billion) of neural cells that process information. Each cell works like a simple processor, and only the massive interaction among all cells and their parallel processing makes the brain's abilities possible.
  - Individual neuron response speed is slow (on the order of milliseconds).
  - The aggregate speed with which billions of neurons carry out complex calculations demonstrates the feasibility of parallel processing.

Why Parallel Processing?

- Computation requirements are ever increasing:
  - simulations, scientific prediction (earthquakes), distributed databases, weather forecasting (will it rain tomorrow?), search engines, e-commerce, Internet service applications, data center applications, finance (investment risk analysis), oil exploration, mining, etc.
- Silicon-based (sequential) architectures are reaching their limits in processing capability (clock speed), as they are constrained by:
  - the speed of light and thermodynamics.

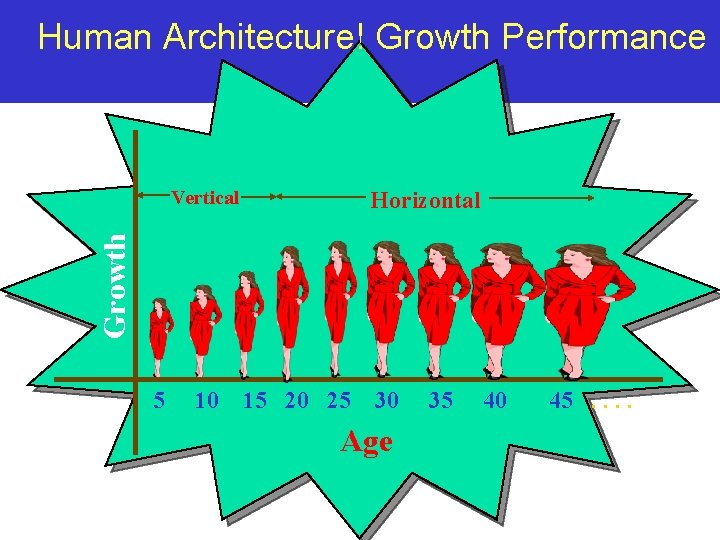

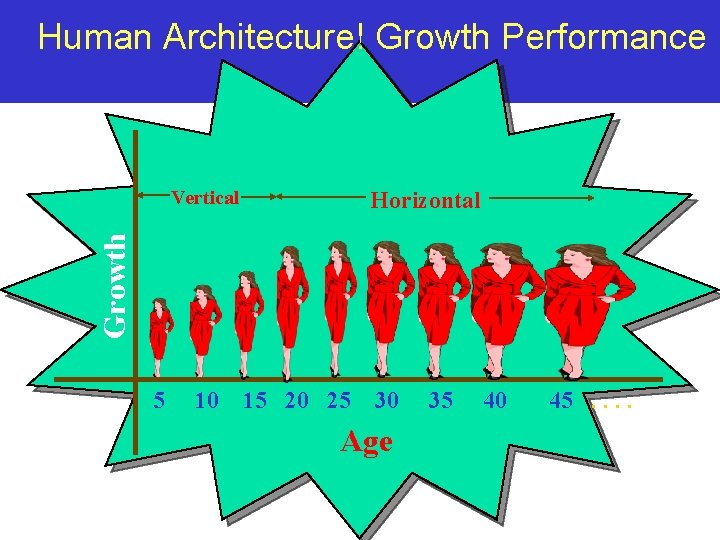

Human Architecture! (figure: growth and performance plotted against age, from 5 to 45 and beyond, with the labels Vertical and Horizontal)

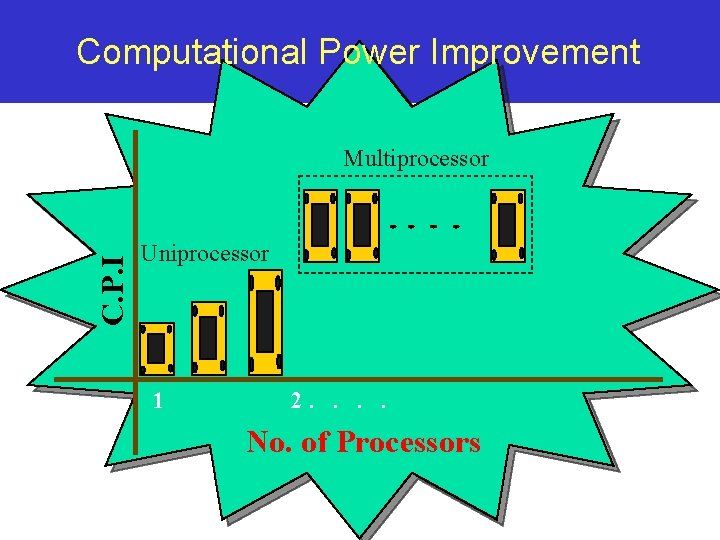

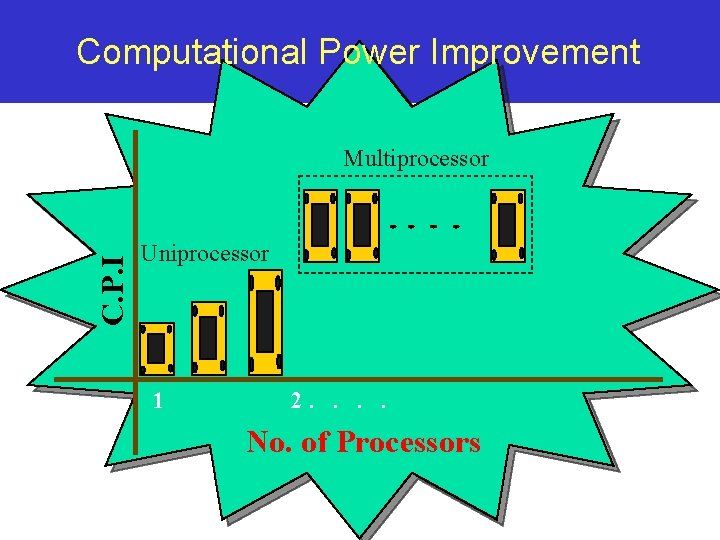

Computational Power Improvement (figure: C.P.I. plotted against the number of processors, comparing a multiprocessor with a uniprocessor)

Why Parallel Processing?

- Hardware improvements such as pipelining and superscalar execution are not scaling well and require sophisticated compiler technology to extract performance from them.
- Techniques such as vector processing work well for certain kinds of problems.

Why Parallel Processing?

- Significant developments in networking technology are paving the way for network-based, cost-effective parallel computing.
- Parallel processing technology is now mature and is being exploited commercially.
  - All computers (including desktops and laptops) are now based on parallel processing (e.g., multicore) architectures.

Processing Elements Architecture

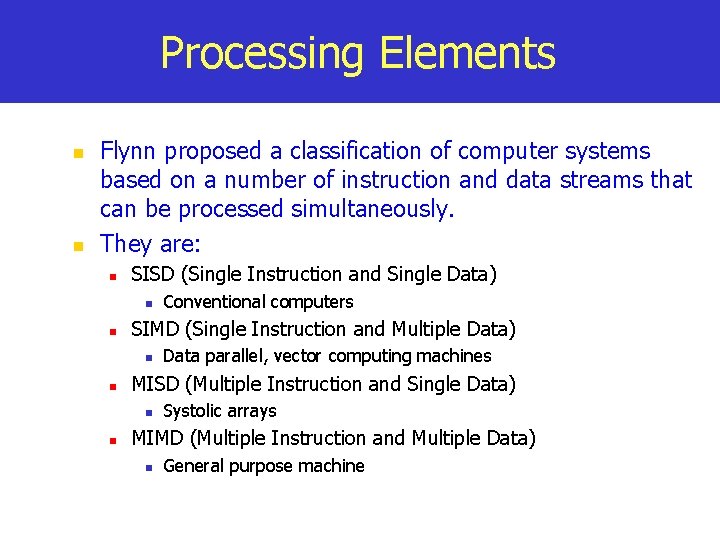

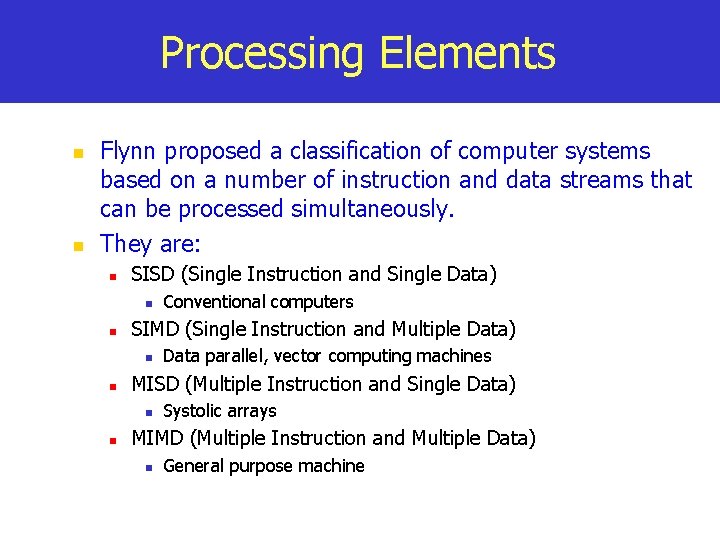

Processing Elements

- Flynn proposed a classification of computer systems based on the number of instruction and data streams that can be processed simultaneously.
- They are:
  - SISD (Single Instruction and Single Data)
    - Conventional computers
  - SIMD (Single Instruction and Multiple Data)
    - Data parallel, vector computing machines
  - MISD (Multiple Instruction and Single Data)
    - Systolic arrays
  - MIMD (Multiple Instruction and Multiple Data)
    - General purpose machine
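
As a small illustration of the classification above, the sketch below maps a pair of stream counts to one of the four class names. The function name `flynn_class` and its parameters are hypothetical, chosen only for this example; the only rule it encodes is "one stream is Single, more than one is Multiple".

```python
def flynn_class(instruction_streams: int, data_streams: int) -> str:
    """Return Flynn's class name for the given numbers of concurrent streams.

    Hypothetical helper for illustration: 1 stream -> "S" (Single),
    more than 1 stream -> "M" (Multiple).
    """
    if instruction_streams < 1 or data_streams < 1:
        raise ValueError("stream counts must be at least 1")
    instr = "S" if instruction_streams == 1 else "M"
    data = "S" if data_streams == 1 else "M"
    return f"{instr}I{data}D"


if __name__ == "__main__":
    # (instruction streams, data streams) for each of the four classes
    for streams in [(1, 1), (1, 4), (3, 1), (4, 4)]:
        print(streams, flynn_class(*streams))
```

For instance, a multicore machine running four independent programs on four data sets would fall under `flynn_class(4, 4)`, that is, MIMD.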

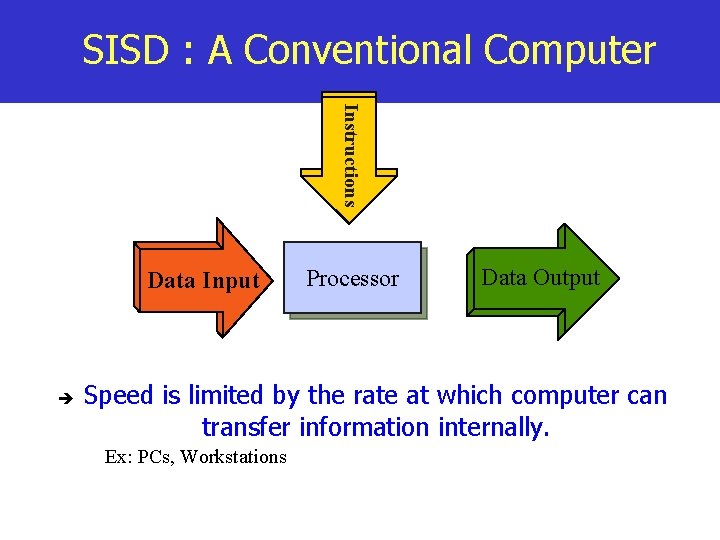

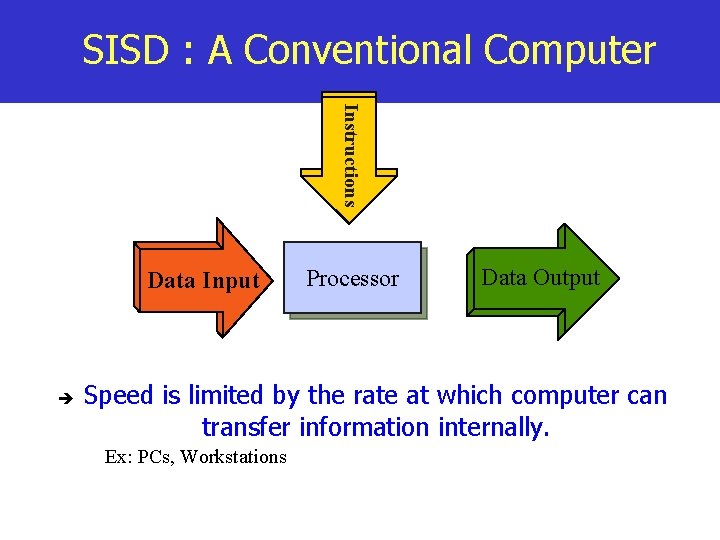

SISD: A Conventional Computer

(Figure: Instructions and Data Input flow into a single Processor, which produces Data Output.)

- Speed is limited by the rate at which the computer can transfer information internally.
- Ex: PCs, workstations

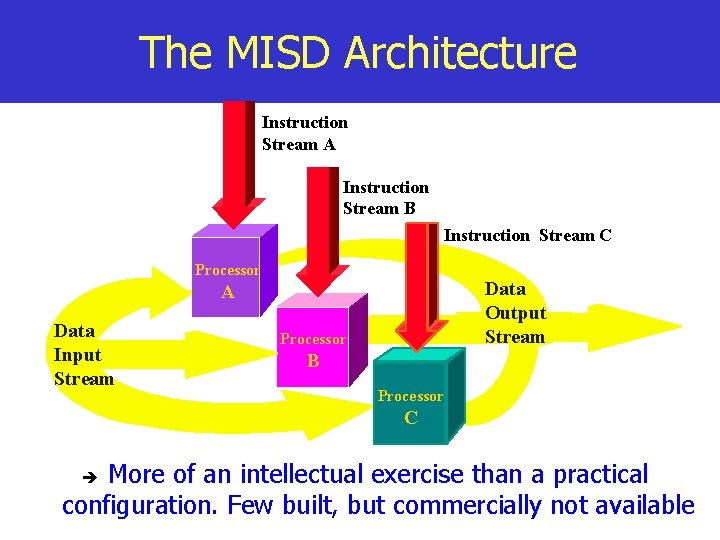

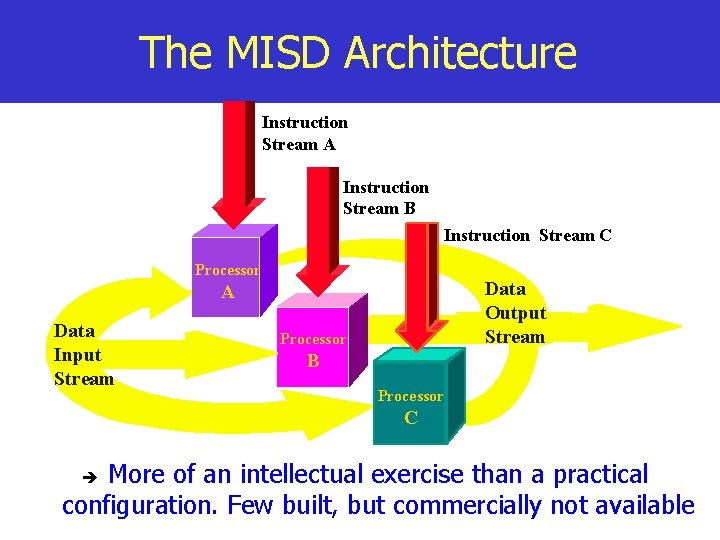

The MISD Architecture

(Figure: Instruction Streams A, B, and C drive Processors A, B, and C, which operate on a single Data Input Stream and produce a Data Output Stream.)

- More of an intellectual exercise than a practical configuration.
- A few have been built, but none are commercially available.

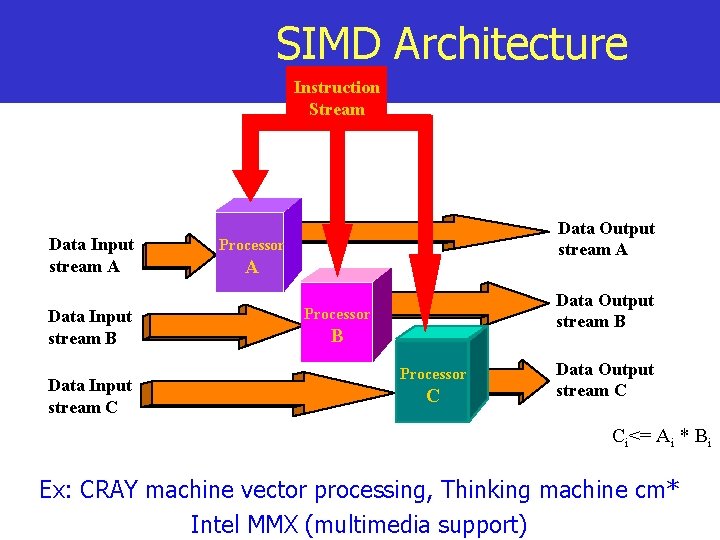

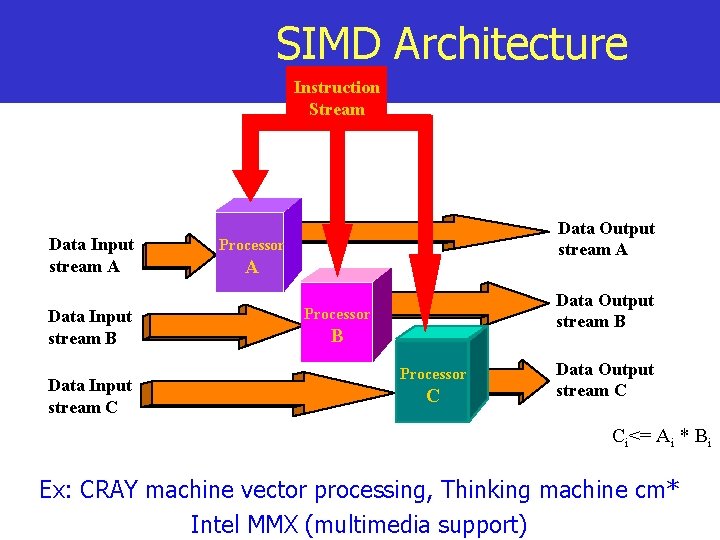

SIMD Architecture

(Figure: a single Instruction Stream drives Processors A, B, and C. Each processor reads its own Data Input stream, A, B, or C, and writes its own Data Output stream, A, B, or C.)

- $C_i \leftarrow A_i \times B_i$
- Ex: CRAY machine vector processing, Thinking Machines CM*, Intel MMX (multimedia support)
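
The element-wise product from the SIMD slide can be sketched in plain Python as follows. This is only a conceptual illustration: both functions still execute sequentially in the interpreter, and the names `scalar_multiply` and `simd_style_multiply` are made up for this example. Real SIMD hardware would apply the multiply to all lanes in one instruction.

```python
from operator import mul


def scalar_multiply(a, b):
    """SISD style: one multiply is issued per element, one after another."""
    c = []
    for i in range(len(a)):
        c.append(a[i] * b[i])
    return c


def simd_style_multiply(a, b):
    """SIMD style, conceptually: a single 'multiply' applied across all lanes.

    Python's map still runs element by element; the point is only that the
    program states one operation over whole vectors (C_i = A_i * B_i).
    """
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return list(map(mul, a, b))


if __name__ == "__main__":
    a = [1, 2, 3, 4]
    b = [10, 20, 30, 40]
    print(scalar_multiply(a, b))
    print(simd_style_multiply(a, b))
```

Both functions return the same result; the difference is in how the work is expressed, which is what lets vector hardware or a vectorizing library run the second form in parallel.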

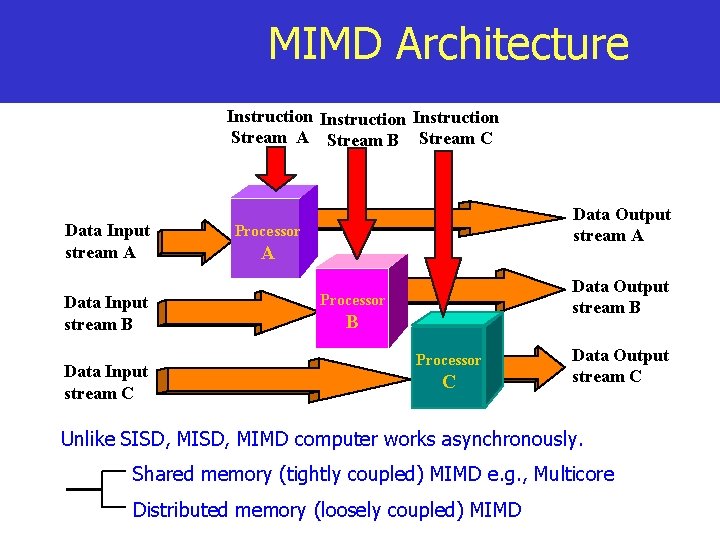

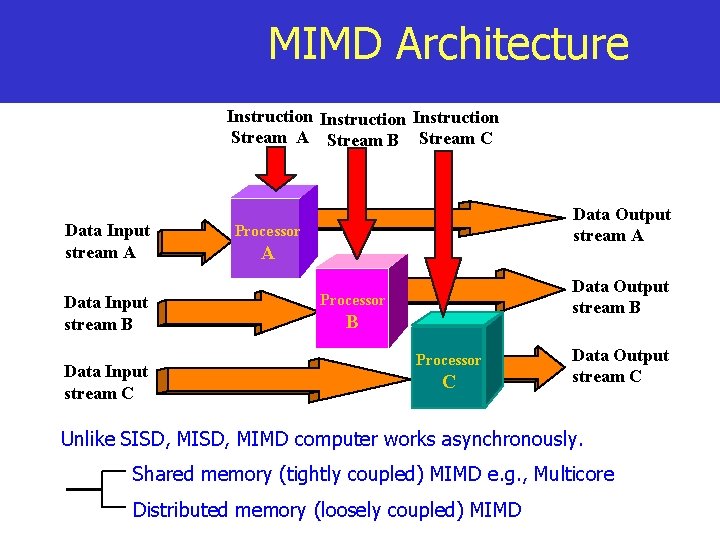

MIMD Architecture

(Figure: Instruction Streams A, B, and C drive Processors A, B, and C. Each processor reads its own Data Input stream and writes its own Data Output stream.)

- Unlike SISD, a MIMD computer works asynchronously.
- Shared memory (tightly coupled) MIMD, e.g., multicore
- Distributed memory (loosely coupled) MIMD

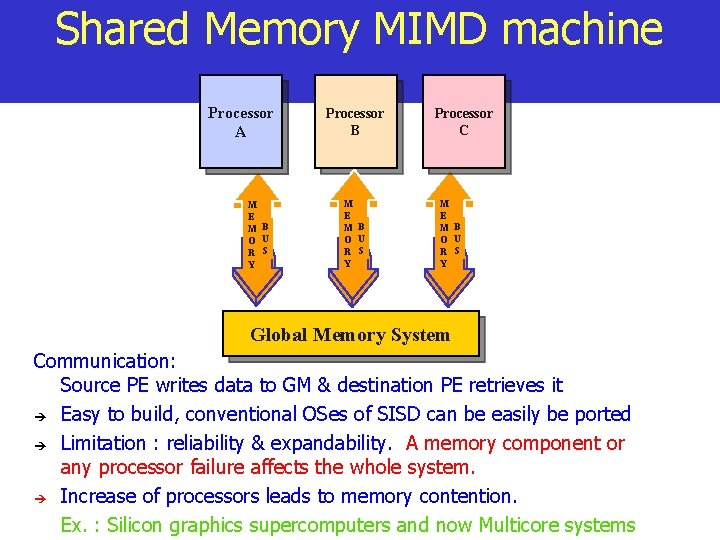

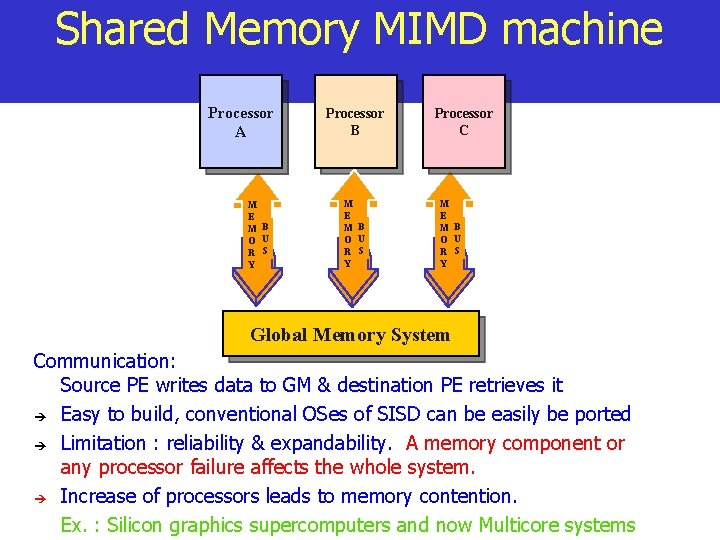

Shared Memory MIMD machine

(Figure: Processors A, B, and C are each connected through a memory bus to a single Global Memory System.)

- Communication: the source PE (processing element) writes data to global memory (GM) and the destination PE retrieves it.
- Easy to build; conventional OSes of SISD machines can easily be ported.
- Limitation: reliability and expandability. A failure of a memory component or of any processor affects the whole system.
- Increasing the number of processors leads to memory contention.
- Ex.: Silicon Graphics supercomputers and, now, multicore systems
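
The communication pattern on this slide (the source PE writes to global memory, the destination PE reads it back) might look roughly like the sketch below. It assumes Python threads stand in for processing elements and a dictionary stands in for global memory; all names are hypothetical. In CPython, threads illustrate the shared-memory model but do not by themselves provide CPU parallelism.

```python
import threading


def shared_memory_exchange(values):
    """Pass data from a source thread to a destination thread via shared state."""
    global_memory = {}            # stands in for the global memory system
    lock = threading.Lock()       # serializes access, since all PEs share one memory
    ready = threading.Event()     # signals that the data has been written
    received = []

    def source_pe():
        with lock:
            global_memory["data"] = list(values)   # write to global memory
        ready.set()

    def destination_pe():
        ready.wait()                               # wait until the write is done
        with lock:
            received.extend(global_memory["data"])  # read from global memory

    threads = [threading.Thread(target=destination_pe),
               threading.Thread(target=source_pe)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return received


if __name__ == "__main__":
    print(shared_memory_exchange([1, 2, 3]))
```

The lock is where memory contention shows up: every additional thread that needs the shared dictionary has to queue for the same lock.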

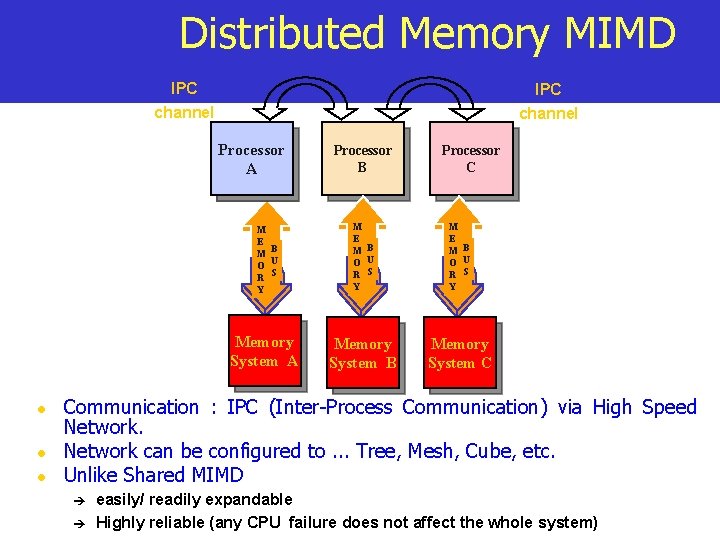

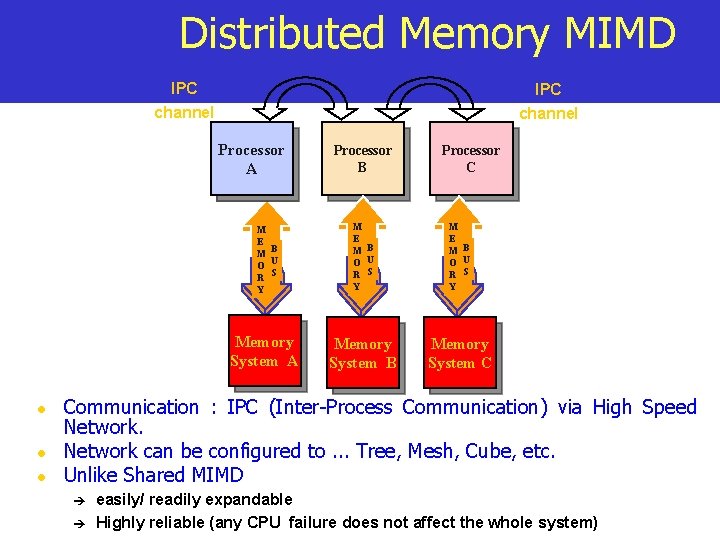

Distributed Memory MIMD

(Figure: Processors A, B, and C are each connected through a memory bus to their own Memory System, A, B, or C. The processors are linked to one another by IPC channels.)

- Communication: IPC (Inter-Process Communication) via a high-speed network.
- The network can be configured as a tree, mesh, cube, etc.
- Unlike shared-memory MIMD, it is:
  - easily/readily expandable
  - highly reliable (a CPU failure does not affect the whole system)
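
A minimal sketch of the distributed-memory style follows, under the assumption that threads simulate nodes and a `queue.Queue` simulates the IPC channel. Each simulated node works only on its own private data and communicates solely by sending a message. The function name `distributed_sum` is hypothetical; a real system would typically use a message-passing library over a network instead.

```python
import queue
import threading


def distributed_sum(chunks):
    """Sum data spread over several simulated nodes using message passing only."""
    channel = queue.Queue()   # stands in for the IPC channel / network link

    def node(rank, private_data):
        local_sum = sum(private_data)      # touches this node's private memory only
        channel.put((rank, local_sum))     # send the partial result as a message

    threads = [threading.Thread(target=node, args=(rank, chunk))
               for rank, chunk in enumerate(chunks)]
    for t in threads:
        t.start()

    # The collecting side receives exactly one message per node.
    partials = dict(channel.get() for _ in chunks)

    for t in threads:
        t.join()
    return sum(partials.values())


if __name__ == "__main__":
    print(distributed_sum([[1, 2], [3, 4], [5]]))
```

Adding a node here only means adding another chunk and another sender, which mirrors why distributed-memory machines are described as readily expandable.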

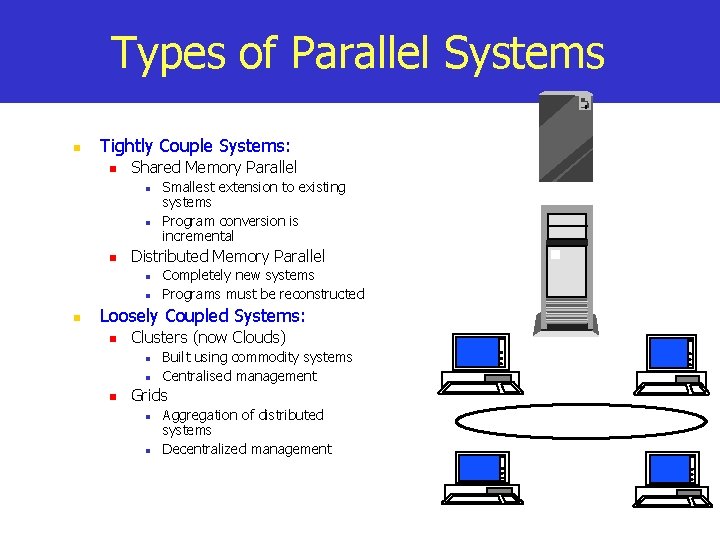

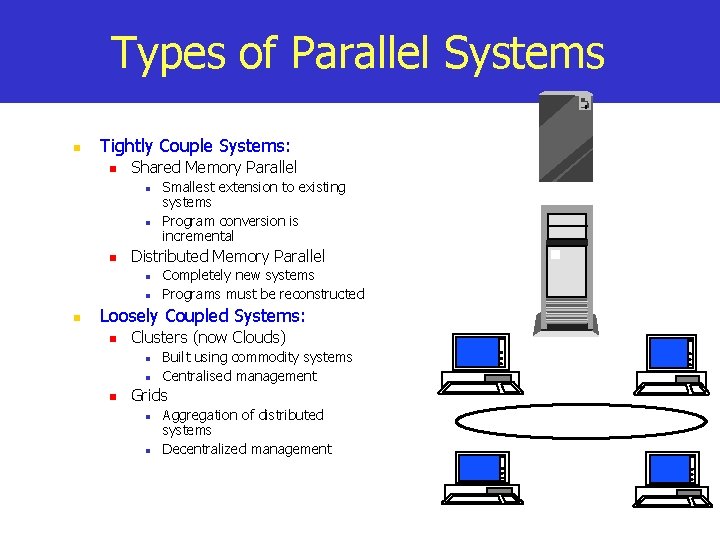

Types of Parallel Systems

- Tightly Coupled Systems:
  - Shared Memory Parallel
    - Smallest extension to existing systems
    - Program conversion is incremental
  - Distributed Memory Parallel
    - Completely new systems
    - Programs must be reconstructed
- Loosely Coupled Systems:
  - Clusters (now Clouds)
    - Built using commodity systems
    - Centralized management
  - Grids
    - Aggregation of distributed systems
    - Decentralized management

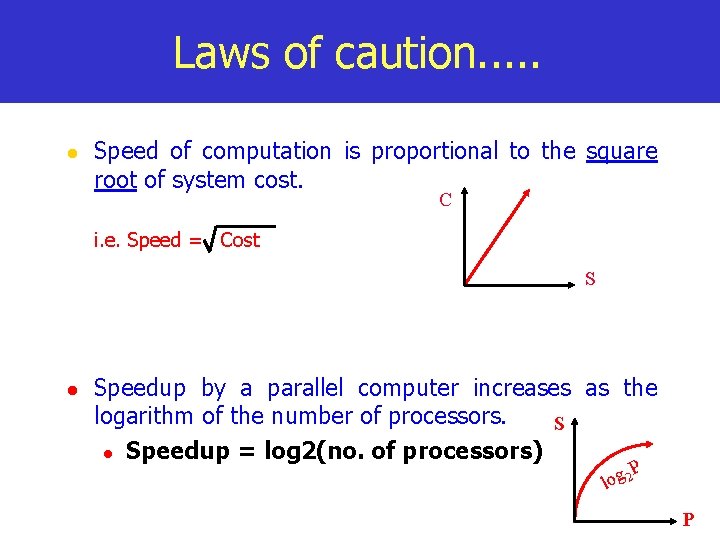

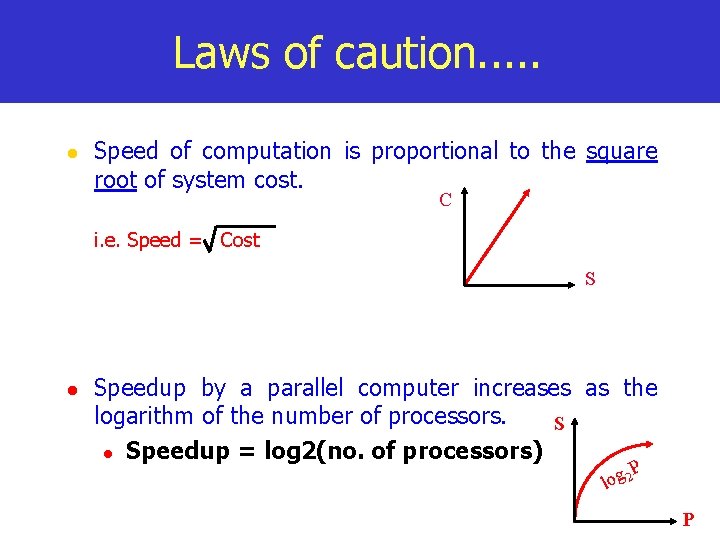

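Laws of caution...

- Speed of computation is proportional to the square root of system cost, i.e., $\text{Speed} = \sqrt{\text{Cost}}$. (Figure: speed S plotted against cost C.)
- Speedup by a parallel computer increases as the logarithm of the number of processors, i.e., $\text{Speedup} = \log_2(\text{no. of processors})$. (Figure: speedup S plotted against the number of processors P, following $\log_2 P$.)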
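
To get a feel for the two rules of thumb above (commonly known as Grosch's law and Minsky's conjecture), the sketch below simply evaluates them. The function names and the proportionality constant `k` are assumptions made for this example; the slide itself only states the square-root and logarithmic relationships.

```python
import math


def grosch_speed(cost: float, k: float = 1.0) -> float:
    """Speed = k * sqrt(cost); k is an assumed proportionality constant."""
    if cost < 0:
        raise ValueError("cost must be non-negative")
    return k * math.sqrt(cost)


def minsky_speedup(processors: int) -> float:
    """Speedup = log2(number of processors)."""
    if processors < 1:
        raise ValueError("need at least one processor")
    return math.log2(processors)


if __name__ == "__main__":
    for p in (1, 2, 8, 64, 1024):
        print(f"{p:5d} processors -> speedup {minsky_speedup(p):.1f}")
    for c in (1.0, 4.0, 16.0):
        print(f"cost {c:5.1f} -> speed {grosch_speed(c):.1f}")
```

Under these formulas, quadrupling the cost only doubles the speed, and going from 512 to 1024 processors adds a single unit of speedup, which is why the slide presents them as cautions.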

Caution...

- Very fast development in network computing and related areas has blurred concept boundaries, causing a lot of terminological confusion:
  - concurrent computing, parallel computing, multiprocessing, supercomputing, massively parallel processing, cluster computing, distributed computing, Internet computing, grid computing, cloud computing, etc.
- At the user level, even well-defined distinctions such as shared memory and distributed memory are disappearing due to new advances in technologies.
- Good tools for parallel application development and debugging are yet to emerge.

Caution...

- There are no strict delimiters for contributors to the area of parallel processing:
  - computer architecture, operating systems, high-level languages, algorithms, databases, computer networks, …
- All have a role to play.

Operating Systems for High Performance Computing

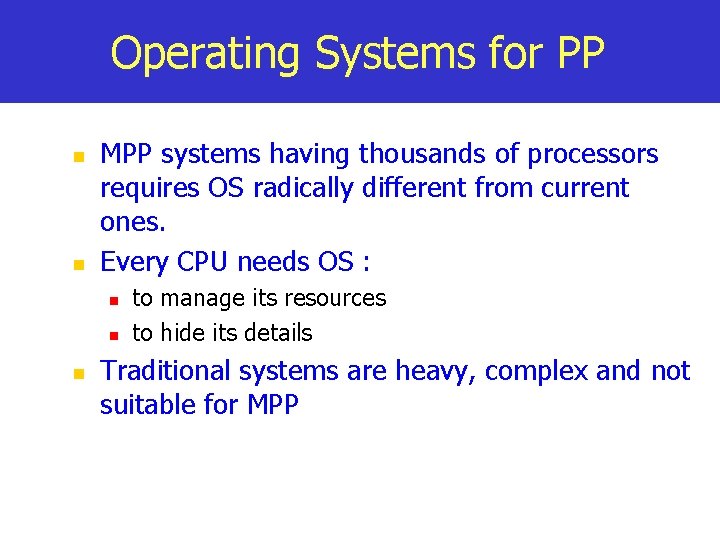

Operating Systems for PP

- MPP systems with thousands of processors require an OS radically different from current ones.
- Every CPU needs an OS:
  - to manage its resources
  - to hide its details
- Traditional systems are heavy and complex, and are not suitable for MPP.

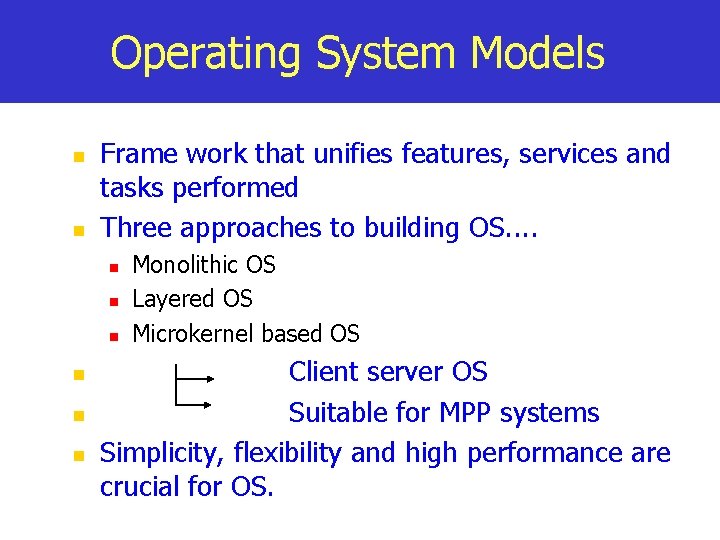

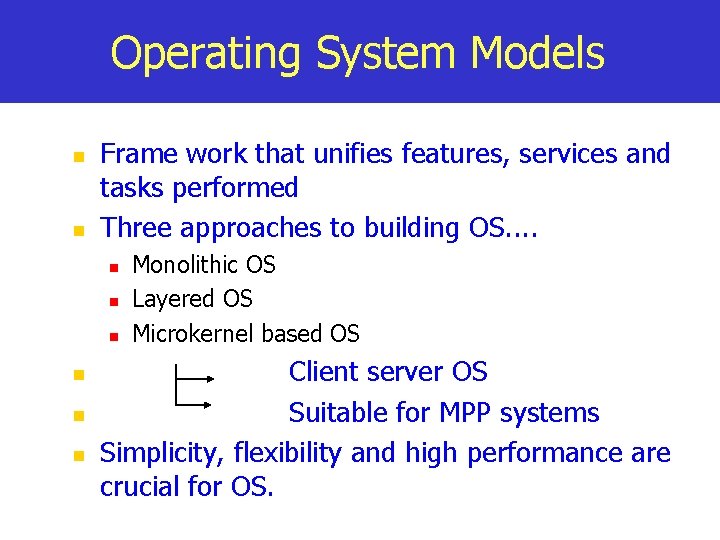

Operating System Models

- A framework that unifies the features, services, and tasks performed
- Three approaches to building an OS:
  - Monolithic OS
  - Layered OS
  - Microkernel-based OS (client-server OS), suitable for MPP systems
- Simplicity, flexibility, and high performance are crucial for an OS.

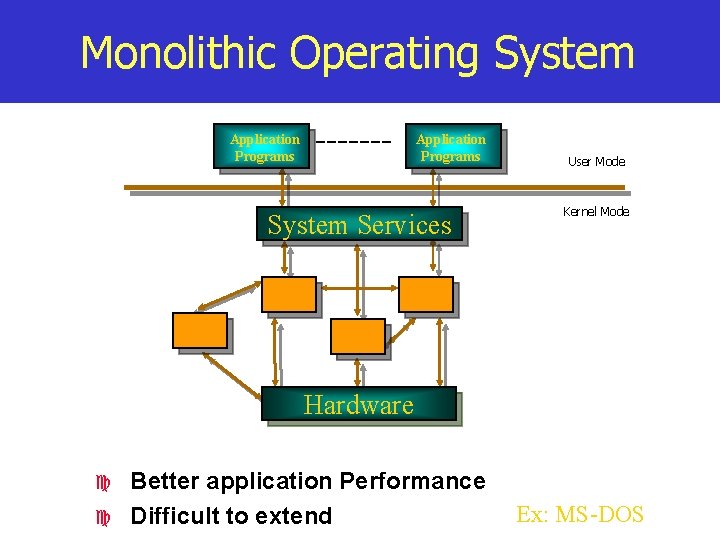

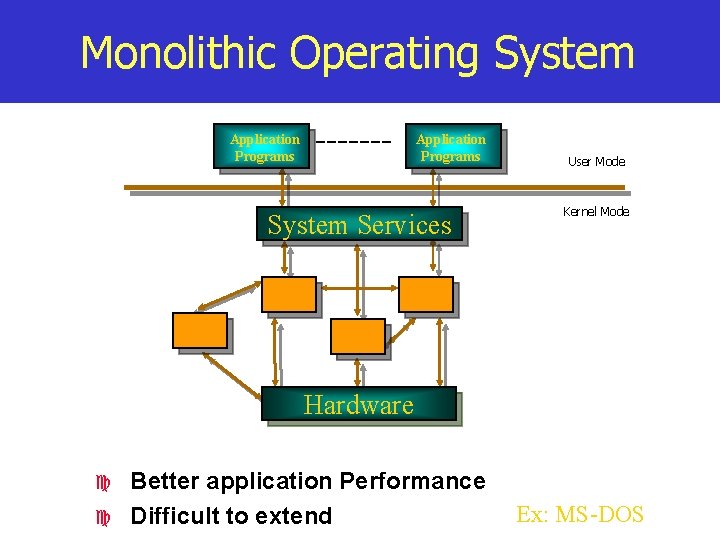

Monolithic Operating System

(Figure: layers from top to bottom are Application Programs, System Services, and Hardware, with a boundary between User Mode and Kernel Mode.)

- Better application performance
- Difficult to extend
- Ex: MS-DOS

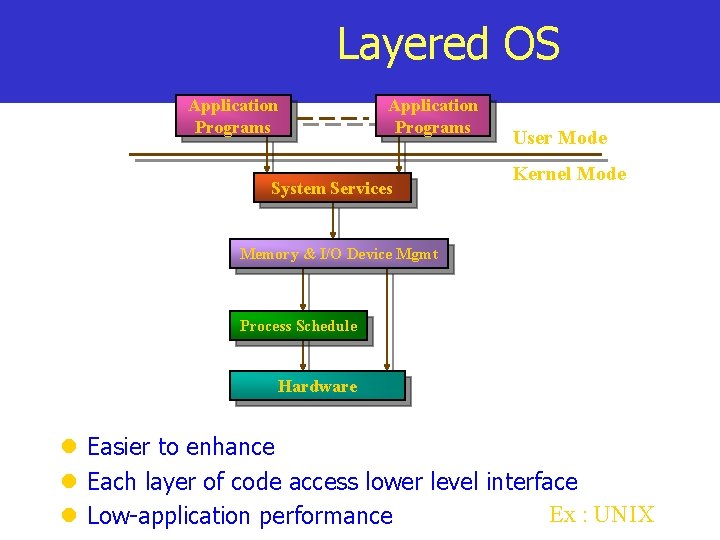

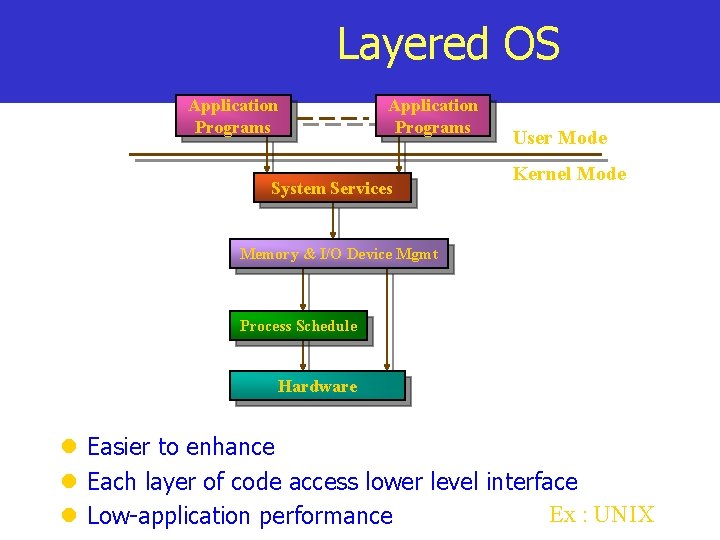

Layered OS

(Figure: layers from top to bottom are Application Programs, System Services, Memory & I/O Device Mgmt, Process Schedule, and Hardware, with a boundary between User Mode and Kernel Mode.)

- Easier to enhance
- Each layer of code accesses the lower-level interface
- Low application performance
- Ex: UNIX

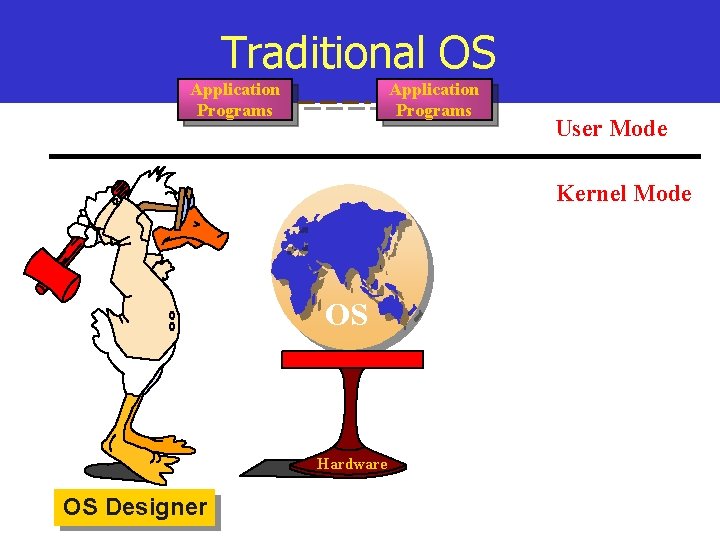

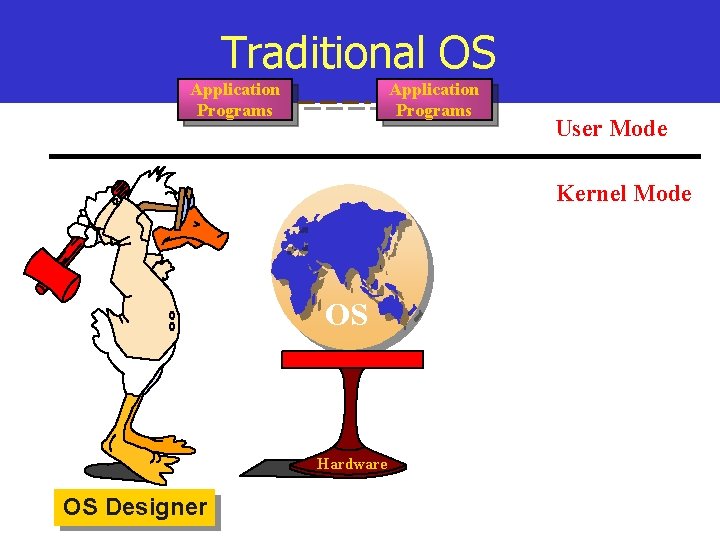

Traditional OS (figure: Application Programs in User Mode above the OS in Kernel Mode, which sits on the Hardware; the OS layer is labeled "OS Designer")

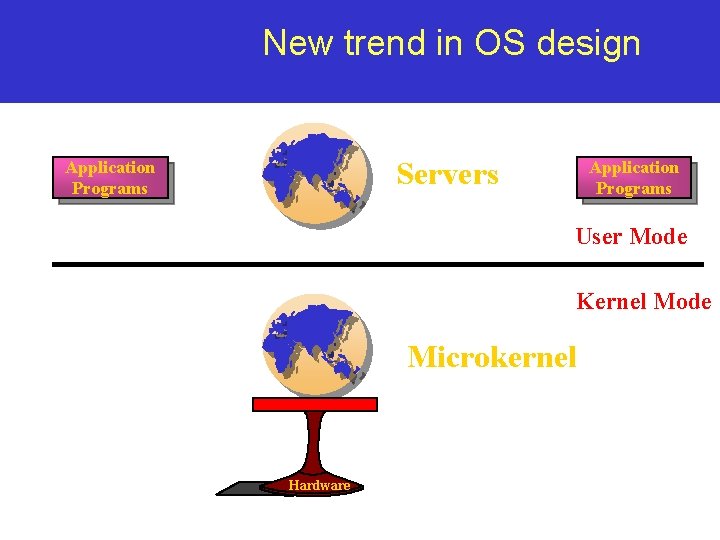

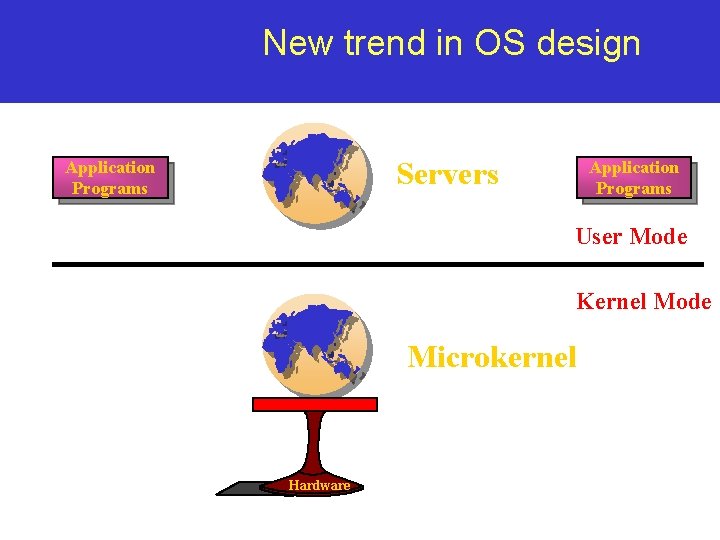

New trend in OS design (figure: Servers and Application Programs in User Mode above the Microkernel in Kernel Mode, which sits on the Hardware)

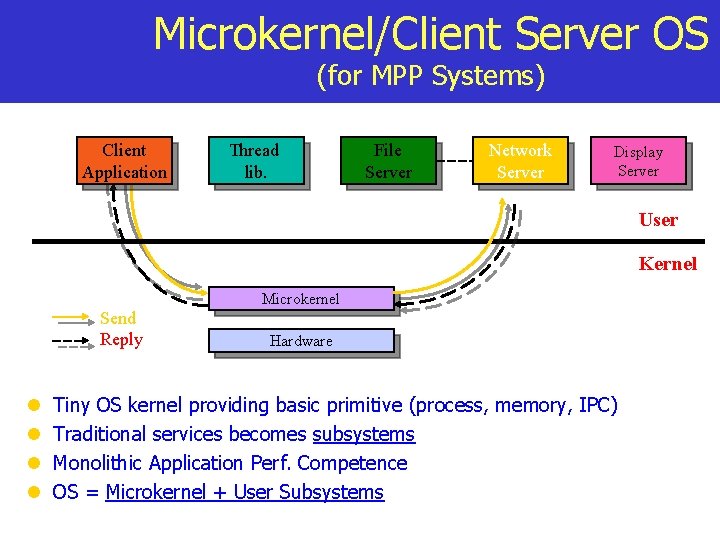

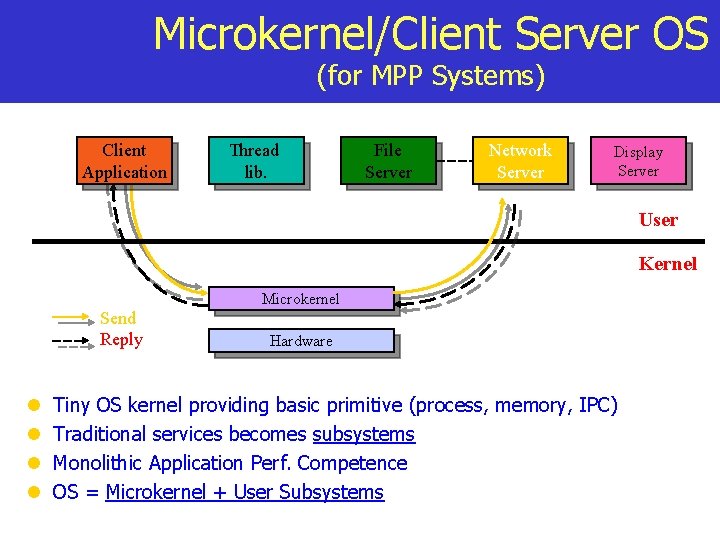

Microkernel/Client Server OS (for MPP Systems)

(Figure: a Client Application with a Thread lib., a File Server, a Network Server, and a Display Server run in user mode. They exchange Send and Reply messages through the Microkernel, which runs in kernel mode on the Hardware.)

- Tiny OS kernel providing basic primitives (process, memory, IPC)
- Traditional services become subsystems
- Application performance competitive with a monolithic OS
- OS = Microkernel + User Subsystems
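
The send/reply structure described on this slide can be sketched as a toy model in which the kernel does nothing except route messages, while a file service lives outside it as an ordinary user-level object. The classes `Microkernel` and `FileServer`, and the message fields `op`, `path`, and `data`, are hypothetical names for this illustration and do not reflect the interface of Mach, QNX, or any other real system.

```python
class Microkernel:
    """Toy kernel: its only job here is routing messages (IPC) to servers."""

    def __init__(self):
        self._servers = {}

    def register(self, name, handler):
        """Attach a user-level server under a name."""
        self._servers[name] = handler

    def send(self, server, request):
        """Deliver a request to a server and return its reply."""
        if server not in self._servers:
            raise KeyError(f"no such server: {server}")
        return self._servers[server](request)


class FileServer:
    """A traditional OS service implemented as a user-level subsystem."""

    def __init__(self):
        self._files = {}

    def handle(self, request):
        op = request.get("op")
        if op == "write":
            self._files[request["path"]] = request["data"]
            return {"status": "ok"}
        if op == "read":
            if request["path"] in self._files:
                return {"status": "ok", "data": self._files[request["path"]]}
            return {"status": "error", "reason": "not found"}
        return {"status": "error", "reason": "unknown op"}


if __name__ == "__main__":
    kernel = Microkernel()
    kernel.register("file", FileServer().handle)
    # The client never calls the file server directly; it only sends messages.
    print(kernel.send("file", {"op": "write", "path": "/tmp/a", "data": "hello"}))
    print(kernel.send("file", {"op": "read", "path": "/tmp/a"}))
```

Because services are registered rather than compiled into the kernel, a network or display server could be added the same way without touching `Microkernel`, which is the extensibility argument the slide makes.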

A Few Popular Microkernel Systems

- MACH, CMU
- PARAS, C-DAC
- Chorus
- QNX
- (Windows)

Parallel Programs

- Consist of multiple active "processes" simultaneously solving a given problem.
- The communication and synchronization between these parallel processes form the core of parallel programming efforts.
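
As a rough sketch of several workers solving one problem together, the example below splits a prime-counting task into ranges, hands each range to a worker, and combines the partial counts. It assumes a thread pool from Python's standard library stands in for the "processes"; the helper names are hypothetical. In CPython, threads show the program structure (decompose, communicate results, synchronize on completion) rather than a real speedup for CPU-bound work.

```python
from concurrent.futures import ThreadPoolExecutor


def is_prime(n: int) -> bool:
    """Trial division; adequate for small illustrative inputs."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


def count_primes(lo: int, hi: int) -> int:
    """Count primes in the half-open range [lo, hi)."""
    return sum(1 for n in range(lo, hi) if is_prime(n))


def parallel_count_primes(limit: int, workers: int = 4) -> int:
    """Count primes below limit by splitting the range across workers."""
    step = max(1, -(-limit // workers))   # ceiling division, at least 1
    ranges = [(lo, min(lo + step, limit)) for lo in range(0, limit, step)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Communication: each worker returns its partial count.
        # Synchronization: summing the results waits for every worker.
        partials = pool.map(lambda r: count_primes(*r), ranges)
        return sum(partials)


if __name__ == "__main__":
    print(parallel_count_primes(100))
```

The sequential call `count_primes(0, limit)` gives the same answer, which is a convenient check that the decomposition neither drops nor double-counts any part of the range.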

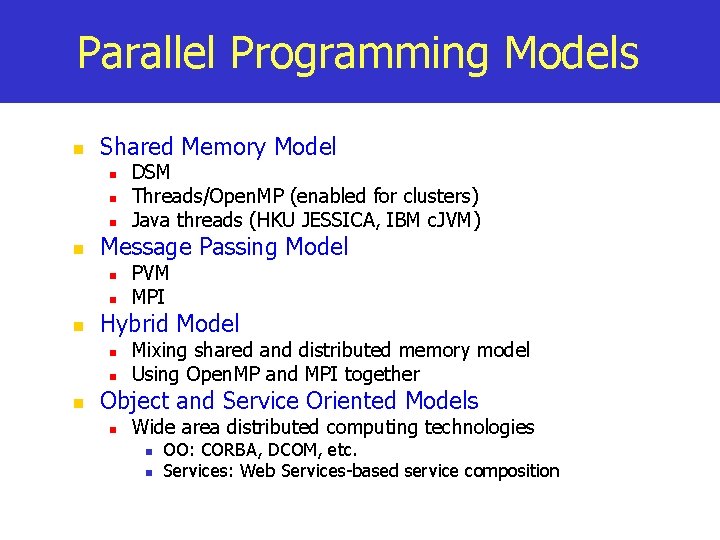

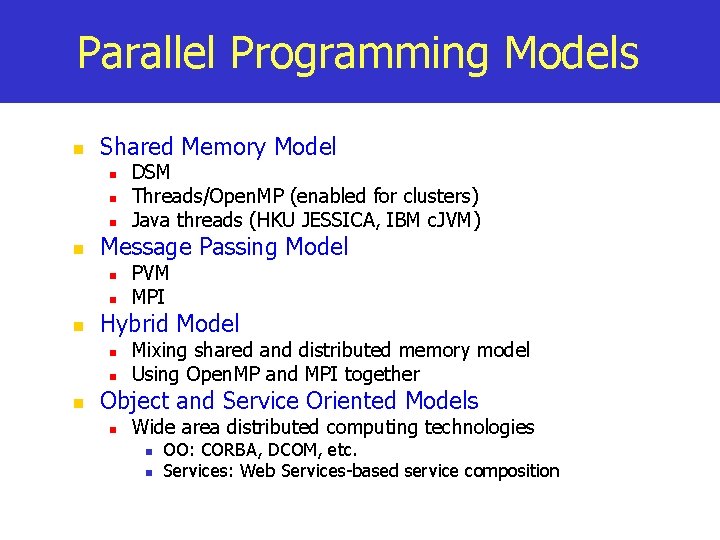

Parallel Programming Models

- Shared Memory Model
  - DSM
  - Threads/OpenMP (enabled for clusters)
  - Java threads (HKU JESSICA, IBM cJVM)
- Message Passing Model
  - PVM
  - MPI
- Hybrid Model
  - Mixing shared and distributed memory models
  - Using OpenMP and MPI together
- Object and Service Oriented Models
  - Wide area distributed computing technologies
    - OO: CORBA, DCOM, etc.
    - Services: Web Services-based service composition

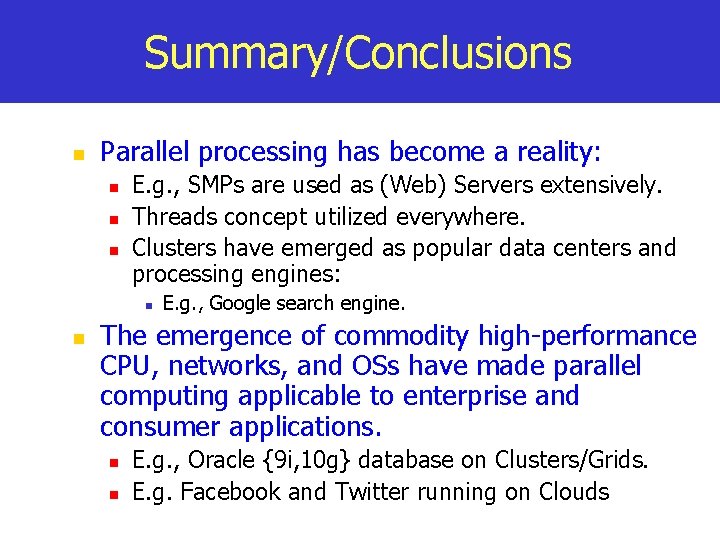

Summary/Conclusions

- Parallel processing has become a reality:
  - E.g., SMPs are used extensively as (Web) servers.
  - The threads concept is utilized everywhere.
- Clusters have emerged as popular data centers and processing engines:
  - E.g., Google search engine.
- The emergence of commodity high-performance CPUs, networks, and OSs has made parallel computing applicable to enterprise and consumer applications.
  - E.g., Oracle {9i, 10g} database on Clusters/Grids.
  - E.g., Facebook and Twitter running on Clouds.