Introduction to Parallel Computation FDI 2007 Track Q

- Slides: 14

Introduction to Parallel Computation FDI 2007 Track Q Day 1 – Morning Session

Track Q Overview

- Monday
  - Intro to Parallel Computation
  - Parallel Architectures and Parallel Programming Concepts
  - Message-Passing Paradigm, MPI Topics
- Tuesday
  - Data-Parallelism
  - Master/Worker and Asynchronous Communication
  - Parallelizing Sequential Codes
  - Performance: Evaluation, Tuning, Visualization
- Wednesday
  - Shared-Memory Parallel Computing, OpenMP
  - Practicum, BYOC

What is Parallel Computing?

- “Multiple CPUs cooperating to solve one problem.”
- Motivation:
  - Solve a given problem faster
  - Solve a larger problem in the same time
  - (Take advantage of multiple cores in an SMP)
- Distinguished from:
  - Distributed computing
  - Grid computing
  - Ensemble computing

Why is Parallel Computing Difficult?

- Existing codes are too valuable to discard.
- We don’t think in parallel.
- There are hard problems that must be solved without sacrificing performance, e.g., synchronization, communication, and load balancing.
- Parallel computing platforms are too diverse, and programming environments are too low-level.

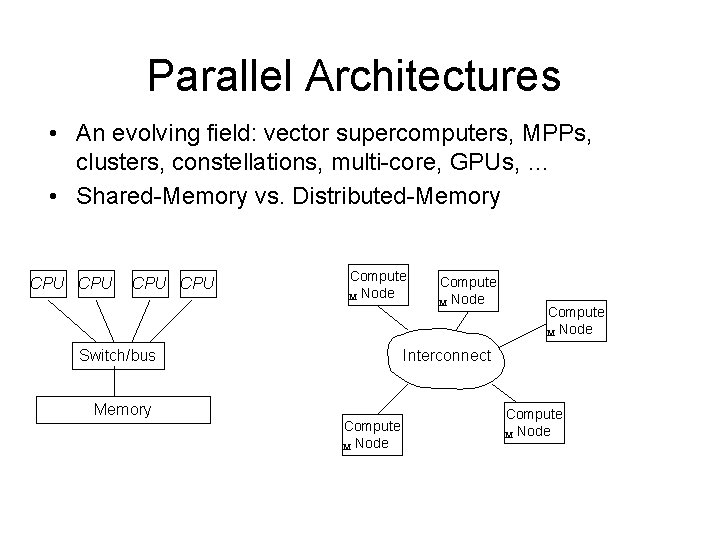

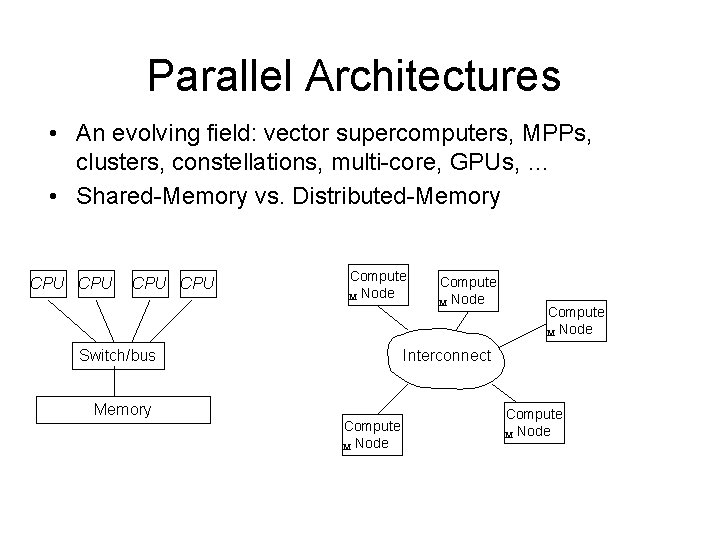

Parallel Architectures

- An evolving field: vector supercomputers, MPPs, clusters, constellations, multi-core, GPUs, …
- Shared-Memory vs. Distributed-Memory

[Figure: shared memory, with several CPUs attached to one memory through a switch/bus, vs. distributed memory, with compute nodes, each holding its own memory (M), linked by an interconnect]
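To make the shared-memory side of this comparison concrete, here is a minimal Python sketch (an illustration, not taken from the slides) in which several threads update one shared total. Because every thread sees the same memory, the shared update has to be synchronized with a lock. The helper name `shared_memory_sum` and the chunking scheme are assumptions chosen for the example; in CPython, threads show the programming model rather than real speedup for CPU-bound work.

```python
import threading


def shared_memory_sum(values, num_threads=4):
    """Sum `values` using threads that all update one shared total (hypothetical helper)."""
    total = [0]               # shared state: every thread sees this same list
    lock = threading.Lock()   # protects the shared update

    def worker(chunk):
        partial = sum(chunk)  # private work, no synchronization needed
        with lock:            # only the update of shared state is serialized
            total[0] += partial

    # Split the input into contiguous chunks, at most one per thread.
    size = max(1, -(-len(values) // num_threads))  # ceiling division, never 0
    chunks = [values[i:i + size] for i in range(0, len(values), size)]
    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total[0]


print(shared_memory_sum(list(range(1, 101))))  # 5050
```

On a distributed-memory machine there is no single `total` that all processors can see; each node would instead send its partial sum to another node as a message.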

Parallel Algorithms: Some Approaches

- Loop-based parallelism
- Functional vs. data parallelism
- Domain decomposition
- Pipelining
- Master/worker
- Embarrassingly parallel, ensembles, screen-saver science

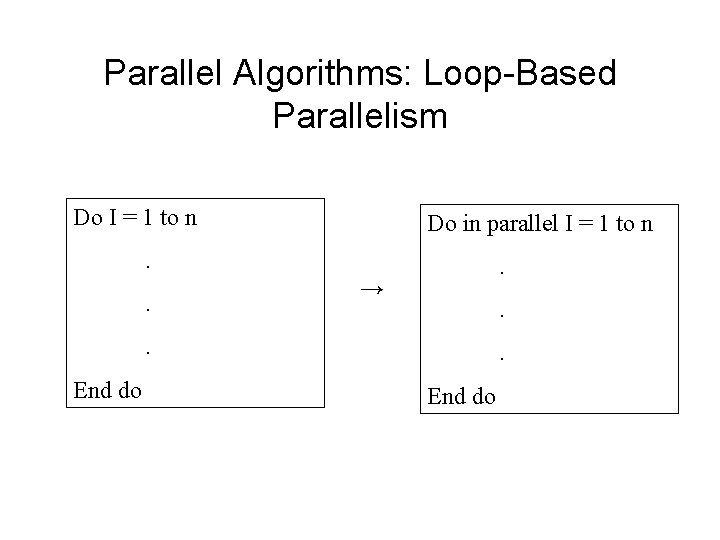

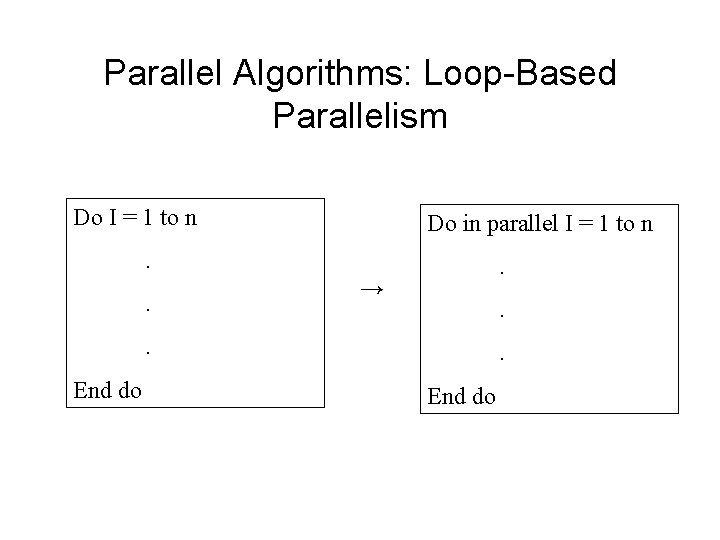

Parallel Algorithms: Loop-Based Parallelism

Sequential loop `Do I = 1 to n . . . End do` → parallel loop `Do in parallel I = 1 to n . . . End do`

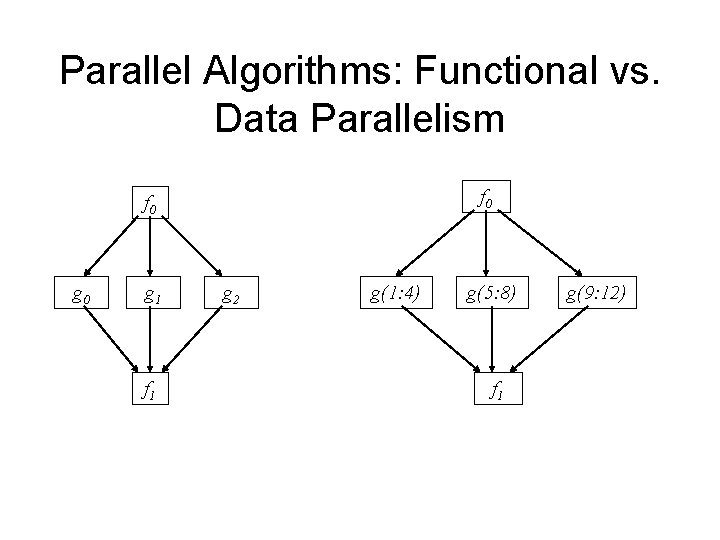

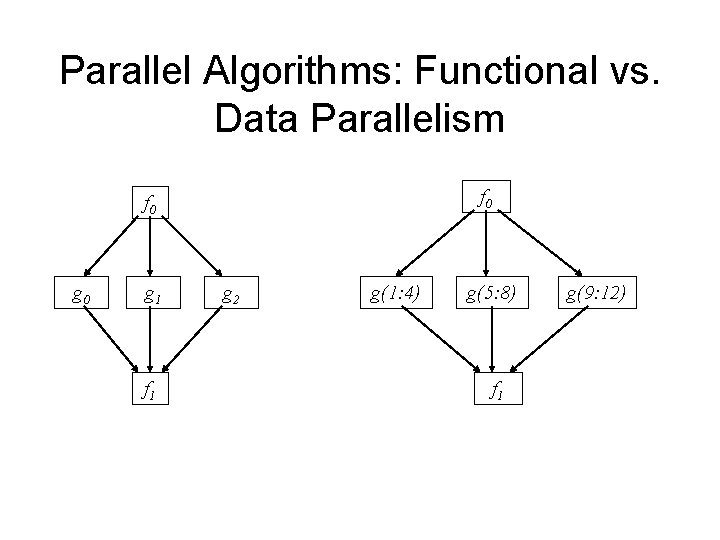
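The loop transformation on this slide is valid only when no iteration depends on another. A minimal Python sketch of the idea, assuming a hypothetical loop body `body(i)` and using `concurrent.futures.ThreadPoolExecutor` as a stand-in for a parallel loop construct; for CPU-bound Python code, `ProcessPoolExecutor` would be the usual choice to get actual speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def body(i):
    """Hypothetical loop body: iteration i depends only on i, never on other iterations."""
    return i * i


def sequential_loop(n):
    # Do I = 1 to n ... End do
    return [body(i) for i in range(1, n + 1)]


def parallel_loop(n, workers=4):
    # Do in parallel I = 1 to n ... End do
    # Executor.map distributes iterations over the pool and yields results in order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(body, range(1, n + 1)))


print(parallel_loop(5))  # [1, 4, 9, 16, 25]
```

If `body(i)` read a value written by iteration `i - 1`, the parallel version could produce different results, which is why the loop must be checked for such dependences first.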

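Parallel Algorithms: Functional vs. Data Parallelism

[Figure: functional parallelism, with different tasks (f0, g0, g1, f1, g2) running concurrently, vs. data parallelism, with one operation g applied to separate blocks g(1:4), g(5:8), g(9:12), plus task f1]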

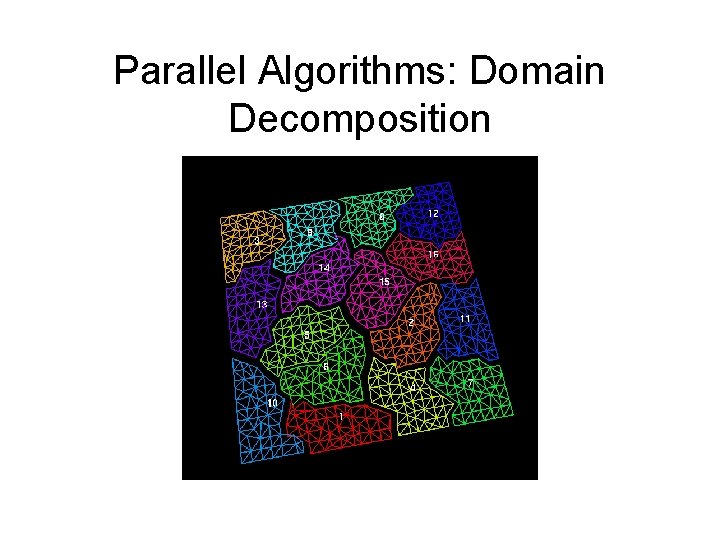

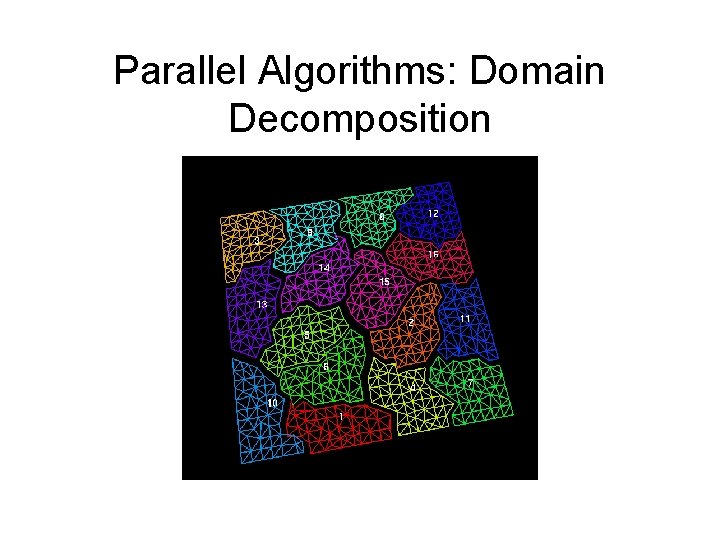
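The contrast on this slide can be sketched in Python as follows. This is a minimal illustration, assuming hypothetical functions `f` and `g` and using a thread pool in place of separate processors: functional parallelism runs *different* functions at once, while data parallelism runs the *same* function `g` on separate blocks of the data, as in g(1:4), g(5:8), g(9:12).

```python
from concurrent.futures import ThreadPoolExecutor


def f(x):
    """Hypothetical task f."""
    return x + 1


def g(block):
    """Hypothetical operation g, applied to one block of data."""
    return [2 * v for v in block]


def functional_parallel(x):
    """Functional parallelism: different functions (f and g) run at the same time."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        fut_f = pool.submit(f, x)
        fut_g = pool.submit(g, [x])
        return fut_f.result(), fut_g.result()


def data_parallel(data, nblocks=3):
    """Data parallelism: the same function g runs on separate blocks of the data."""
    size = max(1, -(-len(data) // nblocks))  # ceiling division, never 0
    blocks = [data[i:i + size] for i in range(0, len(data), size)]
    with ThreadPoolExecutor(max_workers=nblocks) as pool:
        results = list(pool.map(g, blocks))
    # Concatenate the per-block results back into one list, in order.
    return [v for block in results for v in block]


print(functional_parallel(3))  # (4, [6])
# Elements 1-4, 5-8 and 9-12 are processed by g concurrently.
print(data_parallel(list(range(1, 13))))
```

Data parallelism scales naturally with the size of the data, while functional parallelism is limited by the number of independent tasks.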

Parallel Algorithms: Domain Decomposition

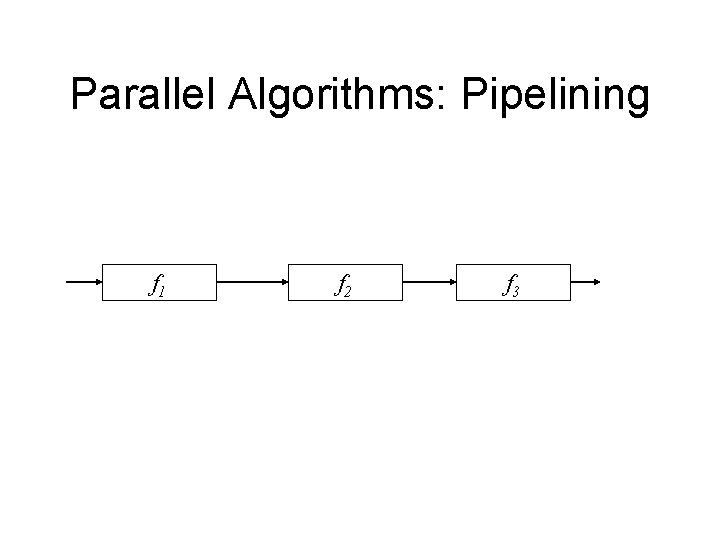

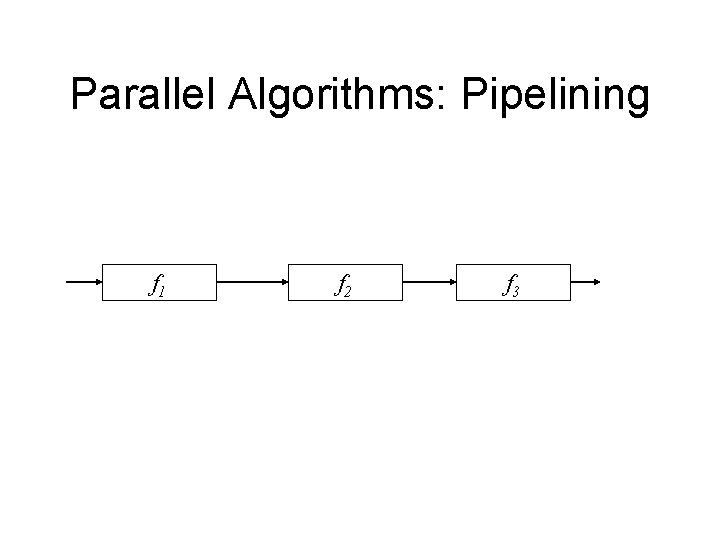
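Domain decomposition splits the problem's data domain into subdomains, each handled by its own processor. A minimal Python sketch, assuming a hypothetical 1-D grid and a simple averaging (Jacobi-style) update: each subdomain owns a contiguous run of points and also reads one "ghost" value from each neighbour. In a distributed-memory code those ghost values would arrive as messages from neighbouring nodes; here threads simply read them from a shared list.

```python
from concurrent.futures import ThreadPoolExecutor


def smooth_sequential(u):
    """One Jacobi-style smoothing step on a 1-D grid; the two end points stay fixed."""
    new = list(u)
    for i in range(1, len(u) - 1):
        new[i] = 0.5 * (u[i - 1] + u[i + 1])
    return new


def smooth_decomposed(u, nsub=3):
    """The same step, with the interior points split into `nsub` subdomains.

    Each subdomain owns a contiguous run of points and also reads one
    ghost (halo) value from each neighbouring subdomain.
    """
    interior = list(range(1, len(u) - 1))
    size = max(1, -(-len(interior) // nsub))  # ceiling division, never 0
    owned = [interior[k:k + size] for k in range(0, len(interior), size)]

    def update(indices):
        lo, hi = indices[0], indices[-1]
        local = u[lo - 1:hi + 2]  # owned points plus one ghost cell on each side
        values = [0.5 * (local[j - 1] + local[j + 1]) for j in range(1, len(local) - 1)]
        return lo, values

    new = list(u)
    with ThreadPoolExecutor(max_workers=max(1, len(owned))) as pool:
        for lo, values in pool.map(update, owned):
            new[lo:lo + len(values)] = values  # write back only the owned points
    return new


grid = [float(i * i) for i in range(11)]
print(smooth_decomposed(grid) == smooth_sequential(grid))  # True
```

The decomposed version gives the same result as the sequential one because each subdomain has exactly the neighbour values its stencil needs.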

Parallel Algorithms: Pipelining

[Figure: a pipeline of stages f1 → f2 → f3]

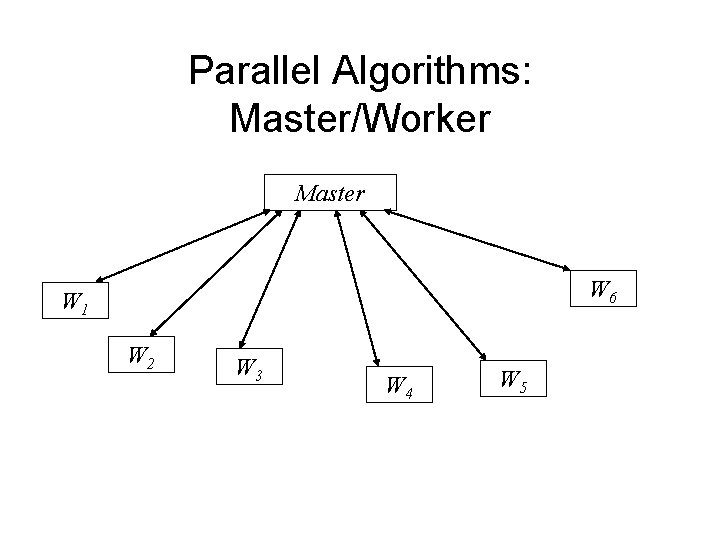

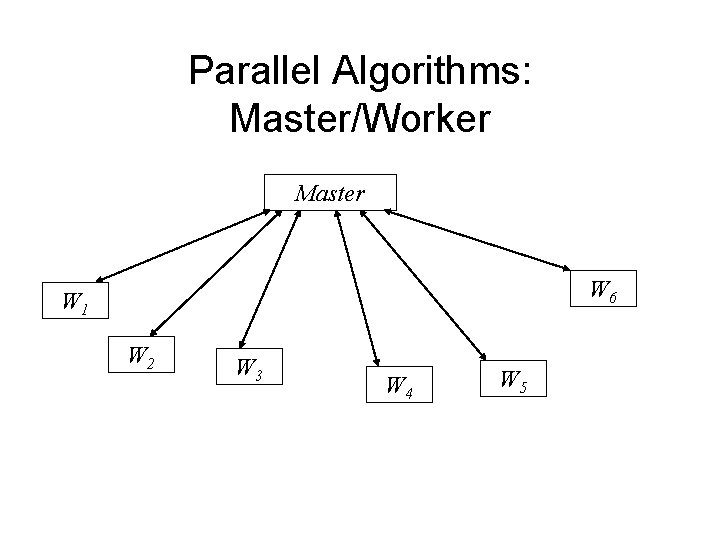
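In a pipeline, each stage (f1, f2, f3) works on a different item at the same time, and items flow from one stage to the next. A minimal Python sketch, assuming one thread per stage connected by FIFO queues and a sentinel object to signal the end of the stream; the stage functions are hypothetical stand-ins.

```python
import queue
import threading

_DONE = object()  # sentinel that marks the end of the input stream


def stage(func, inbox, outbox):
    """Apply func to every item from inbox and pass each result downstream."""
    while True:
        item = inbox.get()
        if item is _DONE:
            outbox.put(_DONE)  # tell the next stage to shut down too
            return
        outbox.put(func(item))


def run_pipeline(items, funcs):
    """Run one thread per stage, connected by queues: items -> funcs[0] -> ... -> results."""
    queues = [queue.Queue() for _ in range(len(funcs) + 1)]
    threads = [
        threading.Thread(target=stage, args=(fn, queues[k], queues[k + 1]))
        for k, fn in enumerate(funcs)
    ]
    for t in threads:
        t.start()
    # Stages run concurrently, so f2 can process one item while f1 processes the next.
    for item in items:
        queues[0].put(item)
    queues[0].put(_DONE)

    results = []
    while (out := queues[-1].get()) is not _DONE:
        results.append(out)
    for t in threads:
        t.join()
    return results


# Hypothetical stand-ins for the stages f1, f2, f3.
def f1(x):
    return x + 1


def f2(x):
    return x * 10


def f3(x):
    return x - 3


print(run_pipeline([1, 2, 3], [f1, f2, f3]))  # [17, 27, 37]
```

Once the pipeline is full, a new result emerges each time the slowest stage finishes an item, so balancing the stages matters for throughput.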

Parallel Algorithms: Master/Worker

[Figure: a master connected to six workers, W1 to W6]
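A minimal Python sketch of the master/worker pattern, assuming a shared task queue (one common design, not necessarily the one used in the course) and six worker threads to match W1 to W6. The master hands out tasks and collects results; each worker keeps taking the next task until none remain, so faster workers automatically do more of the work.

```python
import queue
import threading


def master_worker(tasks, work, num_workers=6):
    """Master enqueues all tasks; workers repeatedly take the next task until none remain.

    Results are returned in task order. Faster workers simply take more tasks,
    which balances the load dynamically.
    """
    todo = queue.Queue()
    done = queue.Queue()
    for index, task in enumerate(tasks):   # master: hand out work
        todo.put((index, task))

    def worker():
        while True:
            try:
                index, task = todo.get_nowait()
            except queue.Empty:
                return                     # no work left, worker exits
            done.put((index, work(task)))  # report the result to the master

    workers = [threading.Thread(target=worker) for _ in range(num_workers)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()

    results = [None] * len(tasks)          # master: collect results
    while not done.empty():
        index, value = done.get()
        results[index] = value
    return results


print(master_worker(list(range(1, 9)), lambda x: x * x))  # [1, 4, 9, 16, 25, 36, 49, 64]
```

All tasks are queued before the workers start, so an empty queue reliably means the work is finished.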

Evaluating and Predicting Performance

- Theoretical approaches: asymptotic analysis, complexity theory, analytic modeling of systems and algorithms.
- Empirical approaches: benchmarking, metrics, visualization.
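Two metrics commonly used on the empirical side (they are not defined on this slide) are speedup and efficiency, where T(1) is the run time on one processor and T(p) is the run time on p processors:

$$
S(p) = \frac{T(1)}{T(p)}, \qquad E(p) = \frac{S(p)}{p}
$$

For example, with hypothetical timings T(1) = 120 s and T(8) = 20 s, the speedup is S(8) = 120 / 20 = 6 and the efficiency is E(8) = 6 / 8 = 0.75.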

“How do I program a parallel computer?” Some possible answers:

1. As always.
   - Rely on the compiler, libraries, and run-time system.
   - Comment: a general solution is very unlikely.
2. New or extended programming language.
   - Re-write code, use new compilers & run-time.
   - Comment: lots of academic interest, small market share.

“How do I program a parallel computer?” (cont’d)

3. Existing language + compiler directives.
   - A compiler extension or preprocessor optionally handles explicitly parallel constructs.
   - Comment: OpenMP is widely used for shared-memory machines.
4. Existing language + library calls.
   - Explicitly (re-)code for threads or message passing.
   - Comment: the most common approach, especially for distributed-memory machines.
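To give a feel for the explicit message-passing style, here is a minimal Python sketch that computes a global sum around a ring of "ranks". It assumes threads stand in for processes and queues act as per-rank mailboxes; `send` and `recv` are hypothetical helpers, not the API of MPI or any other library. In a real distributed-memory program each rank would be a separate process, typically communicating through a message-passing library such as MPI.

```python
import queue
import threading


def ring_sum(values):
    """Global sum by explicit message passing around a ring of 'ranks'.

    Rank 0 sends its value to rank 1; every other rank receives a running
    total, adds its own value and sends the total on. Rank 0 finally receives
    the complete sum back from the last rank.
    """
    size = len(values)
    if size == 0:
        return 0
    mailboxes = [queue.Queue() for _ in range(size)]  # one inbox per rank
    result = {}

    def send(dest, msg):       # hypothetical stand-in for a message-passing send
        mailboxes[dest].put(msg)

    def recv(rank):            # hypothetical stand-in for a blocking receive
        return mailboxes[rank].get()

    def rank_main(rank):
        if rank == 0:
            send(1 % size, values[0])
            result["total"] = recv(0)
        else:
            send((rank + 1) % size, recv(rank) + values[rank])

    ranks = [threading.Thread(target=rank_main, args=(r,)) for r in range(size)]
    for t in ranks:
        t.start()
    for t in ranks:
        t.join()
    return result["total"]


print(ring_sum([3, 1, 4, 1, 5]))  # 14
```

No rank ever reads another rank's data directly; all sharing happens through explicit sends and receives, which is what distinguishes this style from the shared-memory approach.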