
Introduction to OpenMP

July 24, 2012 © copyright 2012, Clayton S. Ferner, UNC Wilmington

Introduction

Shared-Memory Systems

[Figure: processors, each with a bus interface, connected over a processor/memory bus to a memory controller and the shared memory.]

All processors can access all of the shared memory.

OpenMP

OpenMP uses compiler directives (similar to Paraguin) to parallelize a program. The programmer inserts #pragma statements into the sequential program to tell the compiler how to parallelize it. This is a higher level of abstraction than pthreads or Java threads. OpenMP was standardized in the late 1990s, and gcc supports it.

Getting Started

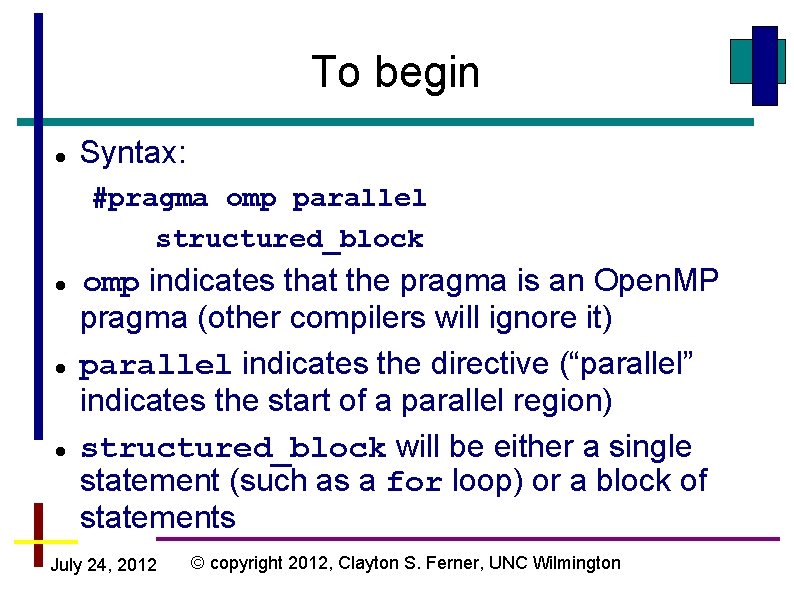

To Begin

Syntax: `#pragma omp parallel` followed by `structured_block`, where:

- `omp` indicates that the pragma is an OpenMP pragma (compilers without OpenMP support ignore it).
- `parallel` is the directive; it marks the start of a parallel region.
- `structured_block` is either a single statement (such as a for loop) or a block of statements.

Parallel Region

A parallel region marks a section of code that is executed by all threads. At the end of a parallel region, all threads synchronize as if there were a barrier. Code outside a parallel region is executed by the master thread only.

[Figure: the master thread forks into multiple threads for the parallel region; the threads synchronize at its end and the master thread continues alone.]

![Hello World slide](https://slidetodoc.com/presentation_image_h/30fc241359412221b9cefd07ec29d7e7/image-7.jpg)

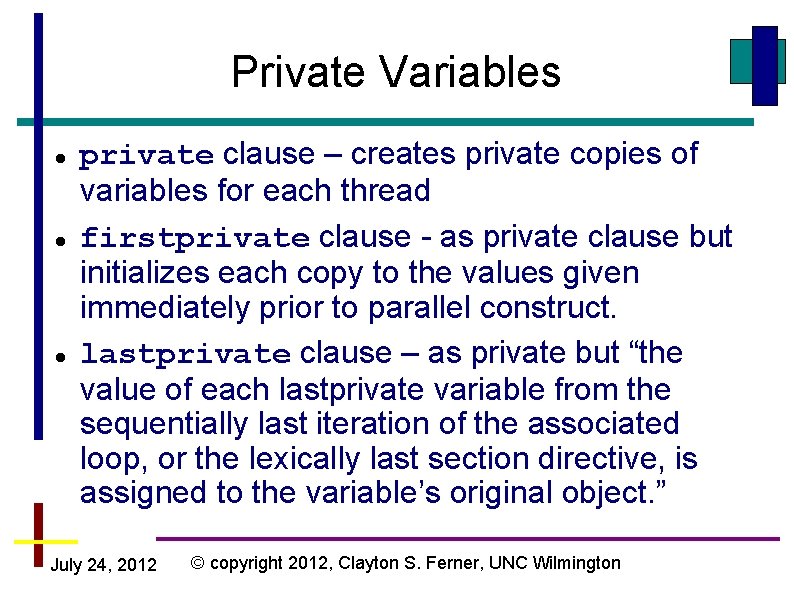

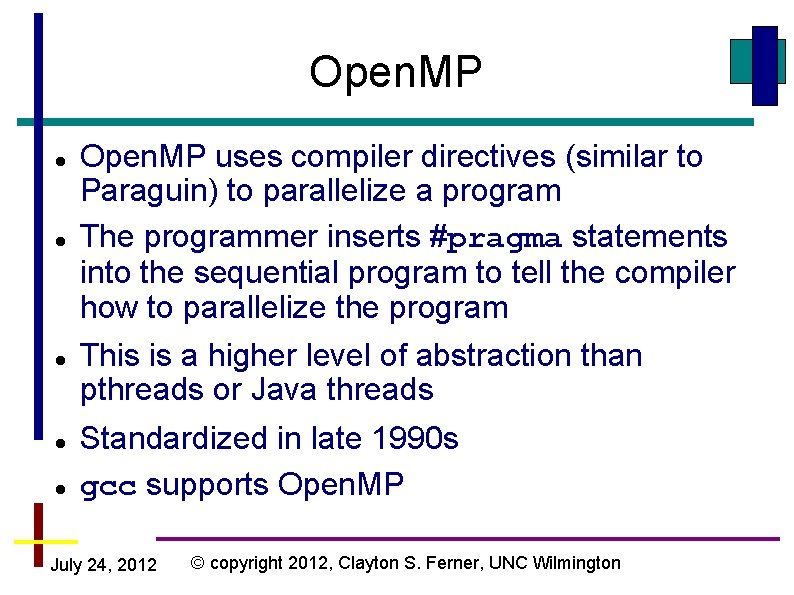

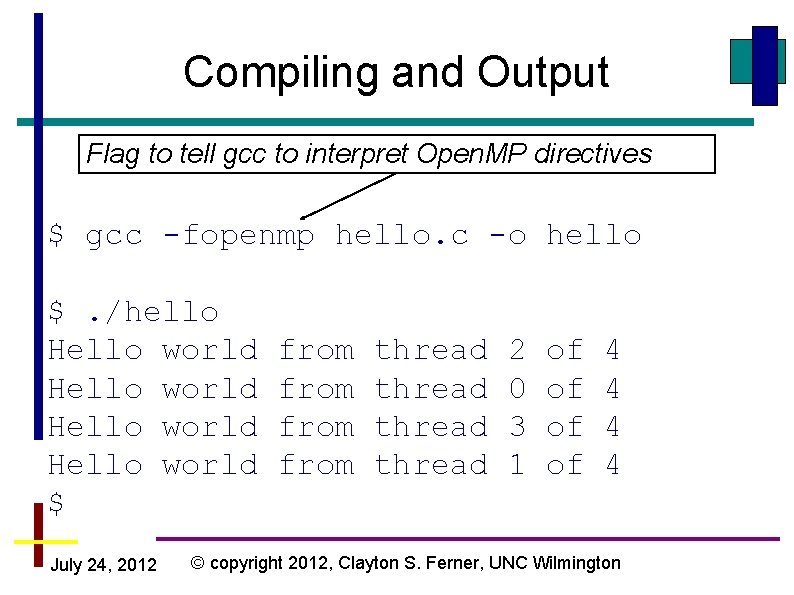

Hello World

```c
#include <stdio.h>
#include <omp.h>

int main(int argc, char *argv[])
{
#pragma omp parallel
    {
        /* every thread in the team executes this block */
        printf("Hello World from thread = %d of %d\n",
               omp_get_thread_num(), omp_get_num_threads());
    }
    return 0;
}
```

Very important: the opening brace of the parallel block must be on a new line after the `#pragma`, because a pragma extends to the end of its line.

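Compiling and Output

The `-fopenmp` flag tells gcc to interpret OpenMP directives:

```
$ gcc -fopenmp hello.c -o hello
$ ./hello
Hello World from thread = 2 of 4
Hello World from thread = 0 of 4
Hello World from thread = 3 of 4
Hello World from thread = 1 of 4
$
```

The threads print in no particular order, and the order can change from run to run.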

Execution

- `omp_get_thread_num()` returns the calling thread's number (0 for the master thread).
- `omp_get_num_threads()` returns the total number of threads in the current team.

The names of these two functions are similar and easy to confuse.
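To see the two functions side by side, here is a minimal sketch in which each thread records `omp_get_thread_num()` in a shared array and thread 0 records `omp_get_num_threads()`; afterwards the caller checks that the numbers 0 to n-1 each appear exactly once. The function `thread_ids_are_dense` and the `MAX_THREADS` limit are hypothetical. It assumes gcc with `-fopenmp`; the `#ifdef _OPENMP` fallback lets the same file also compile as a serial program with a team of one.

```c
#include <string.h>

#ifdef _OPENMP
#include <omp.h>
#else
#define omp_get_thread_num() 0    /* serial fallback: a team of one */
#define omp_get_num_threads() 1
#endif

#define MAX_THREADS 256   /* hypothetical upper bound on the team size */

/* Returns 1 if the thread numbers 0..n-1 of the team each appeared
   exactly once, otherwise 0. */
int thread_ids_are_dense(void)
{
    int seen[MAX_THREADS];              /* shared: declared outside the region */
    int n = 0;                          /* shared: written by thread 0 only */
    memset(seen, 0, sizeof seen);

#pragma omp parallel
    {
        int tid = omp_get_thread_num(); /* private: declared inside the region */
        if (tid == 0)
            n = omp_get_num_threads();
        if (tid < MAX_THREADS)
            seen[tid]++;                /* each thread touches only its own slot */
    }   /* implicit barrier: every write is finished before the checks below */

    if (n < 1 || n > MAX_THREADS)
        return 0;
    for (int i = 0; i < n; i++)
        if (seen[i] != 1)
            return 0;
    return 1;
}
```

A caller would simply check that `thread_ids_are_dense()` returns 1.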

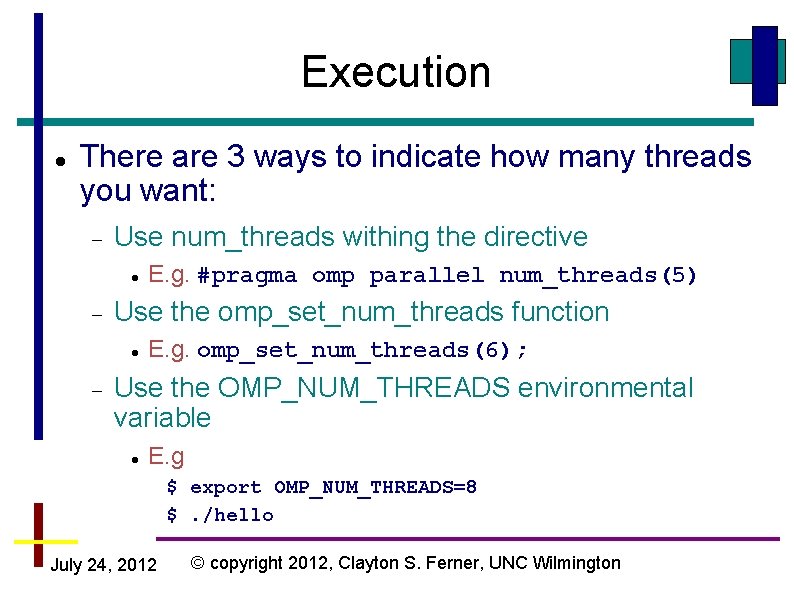

Execution

There are 3 ways to indicate how many threads you want:

- Use the num_threads clause within the directive, e.g. `#pragma omp parallel num_threads(5)`
- Use the omp_set_num_threads function, e.g. `omp_set_num_threads(6);`
- Use the OMP_NUM_THREADS environment variable, e.g. `$ export OMP_NUM_THREADS=8` followed by `$ ./hello`
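The sketch below, assuming an OpenMP 3.0 or later runtime, measures the team size produced by the first two methods; `team_size` and `team_size_after_set` are hypothetical helpers. When several methods are combined, the num_threads clause takes precedence over omp_set_num_threads(), which in turn overrides OMP_NUM_THREADS. The runtime may still supply fewer threads than requested (for example, when dynamic adjustment is enabled), so the helpers only report what they observe.

```c
#ifdef _OPENMP
#include <omp.h>
#endif

/* Hypothetical helper: runs one parallel region and returns its team size.
   requested > 0 uses the num_threads clause; otherwise the current default
   (from omp_set_num_threads or OMP_NUM_THREADS) applies. */
int team_size(int requested)
{
    int size = 1;                       /* stays 1 without OpenMP */
#ifdef _OPENMP
    int n = requested > 0 ? requested : omp_get_max_threads();
#pragma omp parallel num_threads(n)
    {
#pragma omp single
        size = omp_get_num_threads();   /* one thread records the size */
    }
#else
    (void)requested;
#endif
    return size;
}

/* Method 2: change the default for later regions, then measure it. */
int team_size_after_set(int n)
{
#ifdef _OPENMP
    omp_set_num_threads(n);
#else
    (void)n;
#endif
    return team_size(0);
}
```

Method 3 needs no code change: exporting OMP_NUM_THREADS before running the program changes what `team_size(0)` reports, unless omp_set_num_threads() has been called first.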

Shared versus Private Data

![Shared versus Private Data slide](https://slidetodoc.com/presentation_image_h/30fc241359412221b9cefd07ec29d7e7/image-12.jpg)

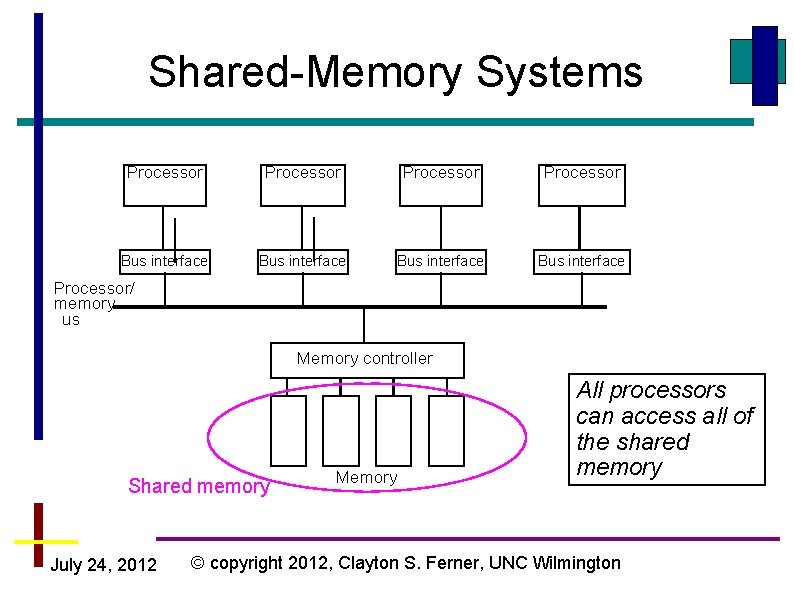

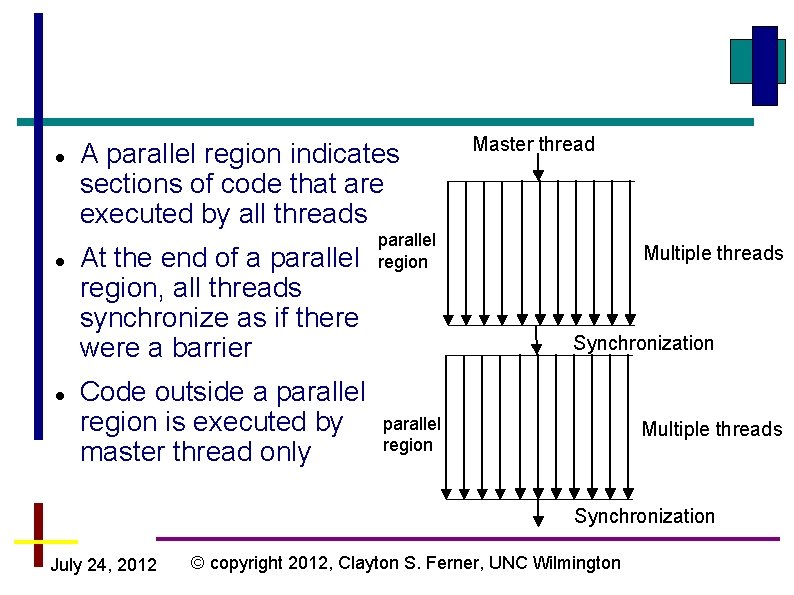

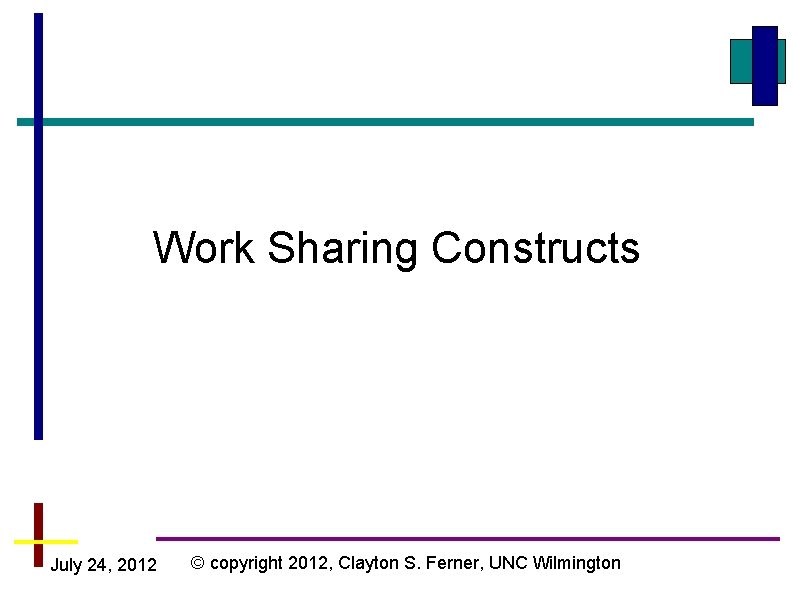

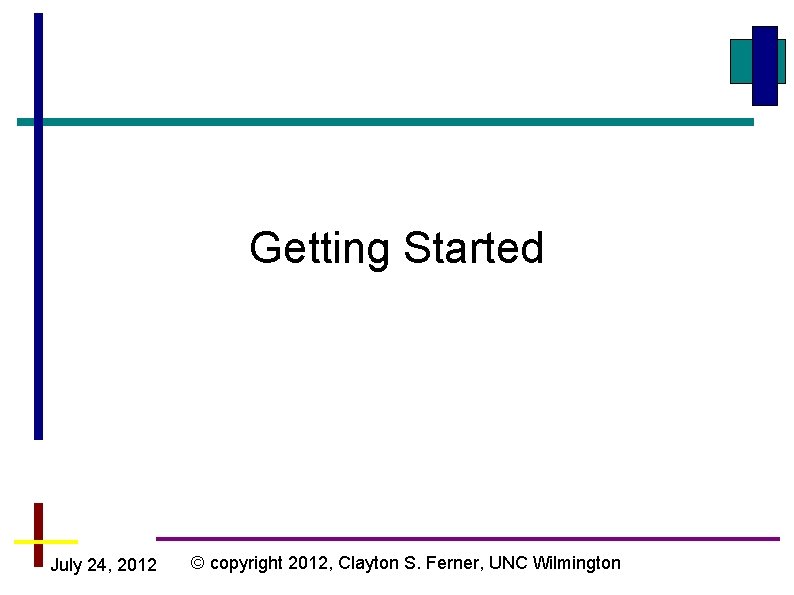

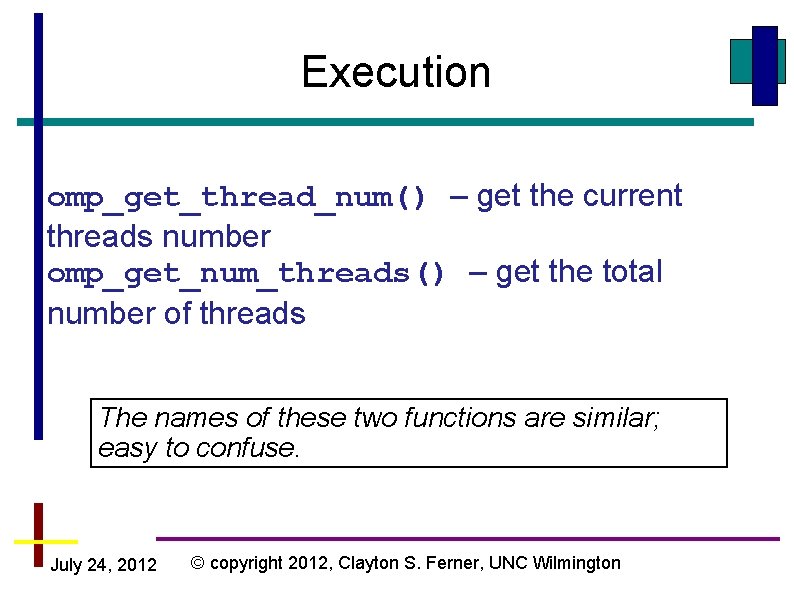

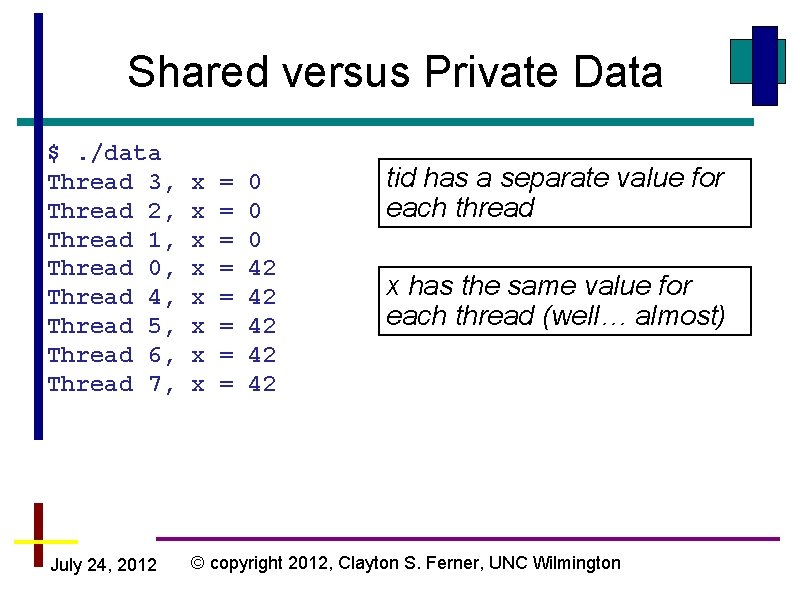

Shared versus Private Data

```c
#include <stdio.h>
#include <omp.h>

int main(int argc, char *argv[])
{
    int x = 0;   /* x is shared by all threads (initialized to 0) */
    int tid;     /* tid is private: each thread has its own copy */

#pragma omp parallel private(tid)
    {
        tid = omp_get_thread_num();
        if (tid == 0)
            x = 42;
        printf("Thread %d, x = %d\n", tid, x);
    }
    return 0;
}
```

Variables declared outside the parallel construct are shared unless otherwise specified.

Shared versus Private Data

```
$ ./data
Thread 3, x = 0
Thread 2, x = 0
Thread 1, x = 0
Thread 0, x = 42
Thread 4, x = 42
Thread 5, x = 42
Thread 6, x = 42
Thread 7, x = 42
```

tid has a separate value for each thread. x has the same value for each thread (well… almost): there is only one x, but threads 3, 2, and 1 printed it before thread 0 assigned 42, so they saw the earlier value. Nothing orders thread 0's write before the other threads' reads.
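One way to make x really have the same value in every thread is to put a barrier between thread 0's write and the other threads' reads. A minimal sketch, assuming the same shared x and private tid as in the program above; the function `read_after_barrier`, its output array, and `MAX_THREADS` are hypothetical, and the `#ifdef _OPENMP` fallback lets it compile serially too.

```c
#ifdef _OPENMP
#include <omp.h>
#else
#define omp_get_thread_num() 0    /* serial fallback: a team of one */
#define omp_get_num_threads() 1
#endif

#define MAX_THREADS 256   /* hypothetical upper bound on the team size */

/* Each thread stores the value of x it observes into seen[tid].
   Returns the team size, or 0 if the team is larger than MAX_THREADS. */
int read_after_barrier(int seen[MAX_THREADS])
{
    int x = 0;     /* shared: declared outside the parallel region */
    int n = 0;     /* shared: team size, written by thread 0 */
    int tid;       /* made private by the clause below */

#pragma omp parallel private(tid)
    {
        tid = omp_get_thread_num();
        if (tid == 0) {
            x = 42;
            n = omp_get_num_threads();
        }
#pragma omp barrier   /* no thread reads x until thread 0 has written it */
        if (tid < MAX_THREADS)
            seen[tid] = x;
    }
    return n <= MAX_THREADS ? n : 0;
}
```

With the barrier in place, every entry seen[0] to seen[n-1] is 42.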

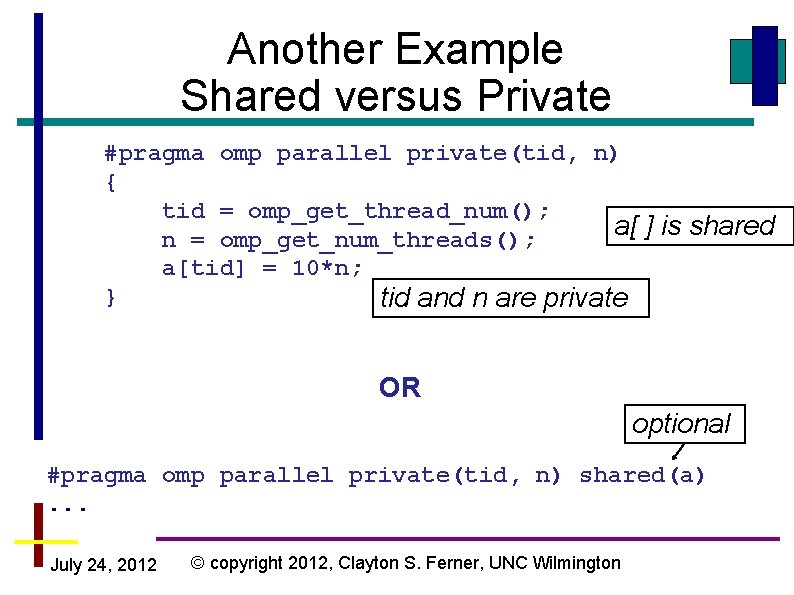

Another Example: Shared versus Private

```c
#pragma omp parallel private(tid, n)
{
    tid = omp_get_thread_num();
    n = omp_get_num_threads();
    a[tid] = 10 * n;
}
```

a[] is shared; tid and n are private. Optionally, the sharing of a can be stated explicitly: `#pragma omp parallel private(tid, n) shared(a)`

Private Variables

- private clause: creates a private copy of each listed variable for each thread. The copies are not initialized.
- firstprivate clause: like private, but initializes each copy to the value the variable had immediately before the parallel construct.
- lastprivate clause: like private, but "the value of each lastprivate variable from the sequentially last iteration of the associated loop, or the lexically last section directive, is assigned to the variable's original object."
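A small sketch of firstprivate and lastprivate on a parallel for; the function `first_and_last` and its values are hypothetical. With plain private, offset would start uninitialized in each thread. firstprivate copies in the value 10, and lastprivate copies out the value from iteration n - 1 no matter which thread ran it, so the result is always 10 + n - 1.

```c
/* Hypothetical example: offset is copied into every thread (firstprivate);
   last receives the value written by the sequentially last iteration
   (lastprivate), whichever thread executed it. Assumes n >= 1. */
int first_and_last(int n)
{
    int offset = 10;   /* initial value copied into each private copy */
    int last = -1;     /* overwritten from iteration n - 1 after the loop */
    int i;

#pragma omp parallel for firstprivate(offset) lastprivate(last)
    for (i = 0; i < n; i++)
        last = offset + i;

    return last;
}
```

For example, `first_and_last(5)` returns 14.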

Work Sharing Constructs

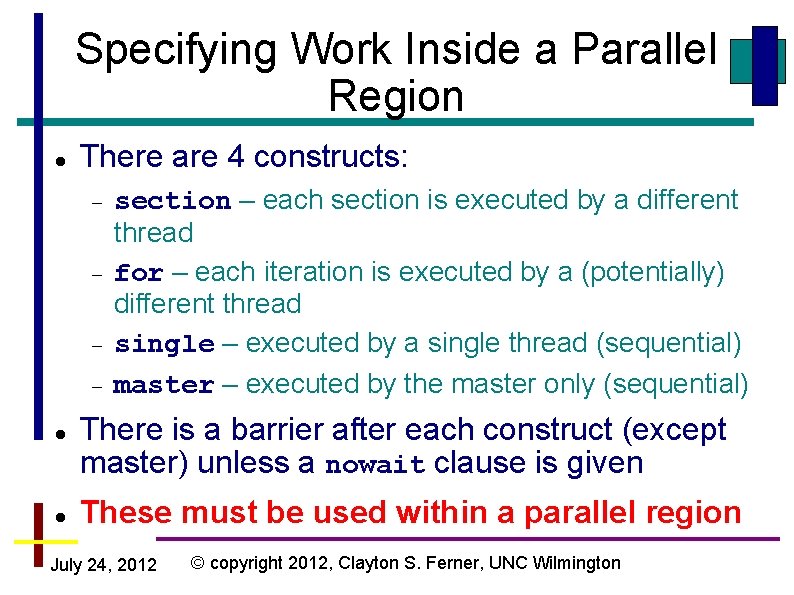

Specifying Work Inside a Parallel Region

There are 4 constructs:

- sections: each section is executed once, by one thread of the team.
- for: each iteration is executed by a (potentially) different thread.
- single: executed by a single thread (sequential).
- master: executed by the master thread only (sequential).

There is an implicit barrier after each construct (except master) unless a nowait clause is given. These constructs must be used within a parallel region.

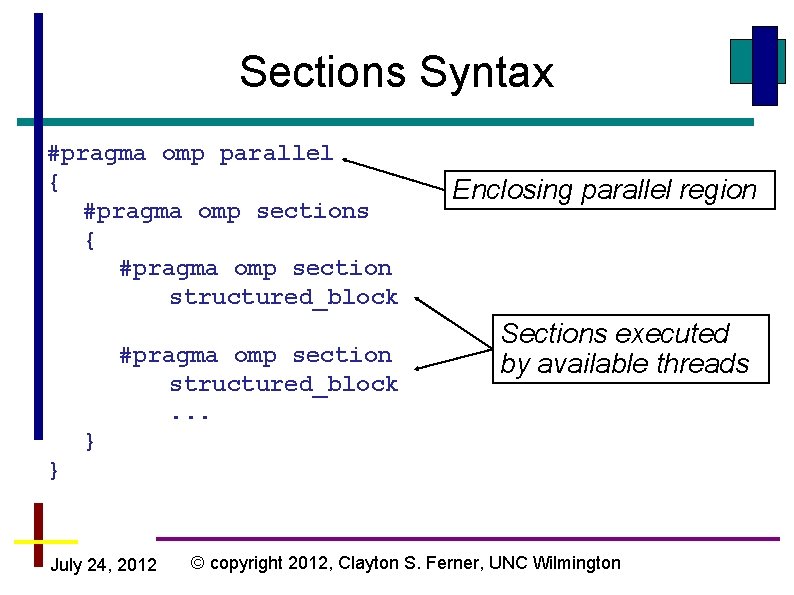

Sections Syntax

```c
#pragma omp parallel              /* enclosing parallel region */
{
#pragma omp sections
    {
#pragma omp section
        { /* structured_block */ }
#pragma omp section
        { /* structured_block */ }
        /* ... */
    }
}
```

The sections are executed by the available threads.

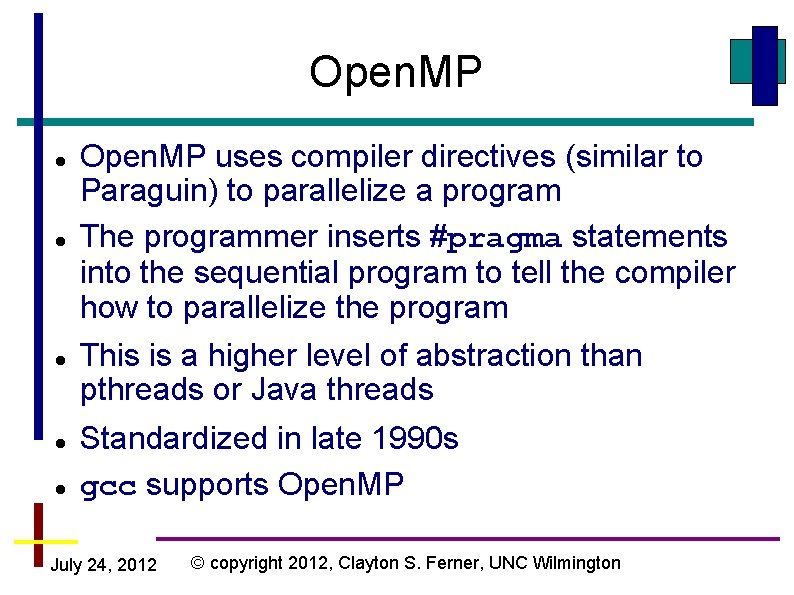

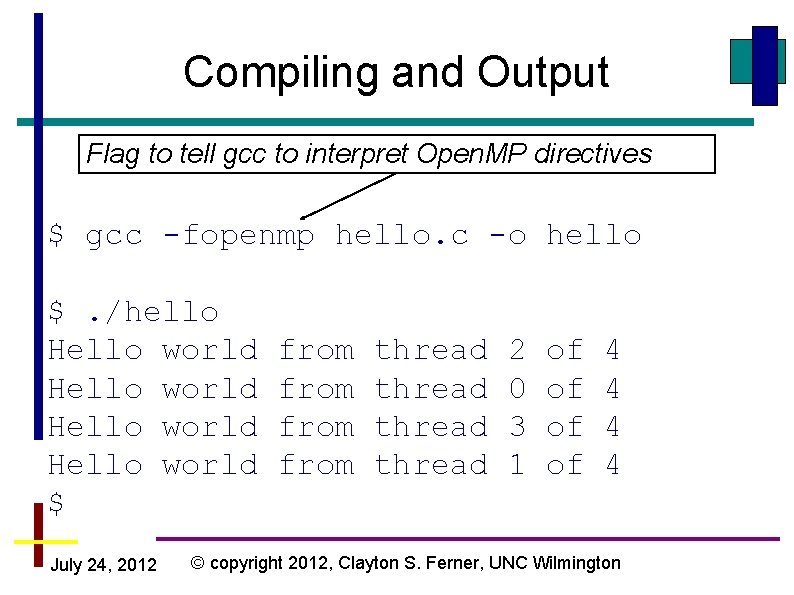

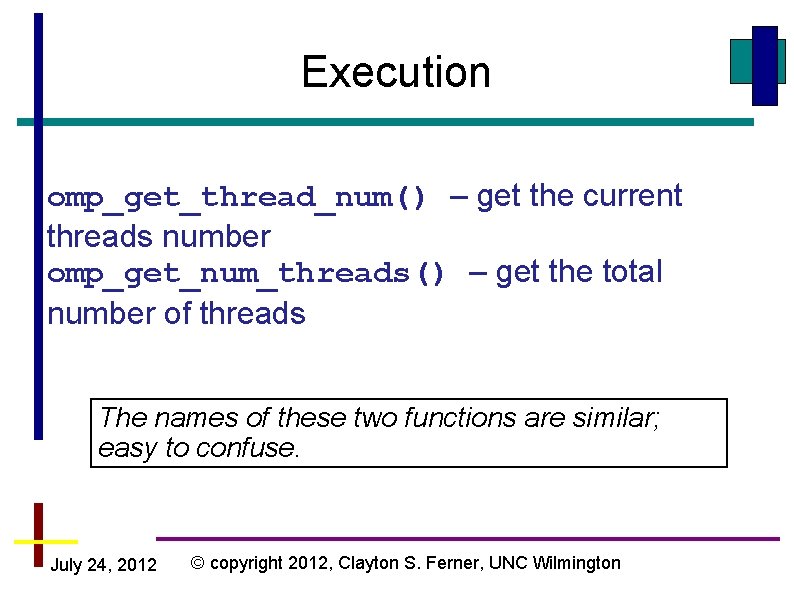

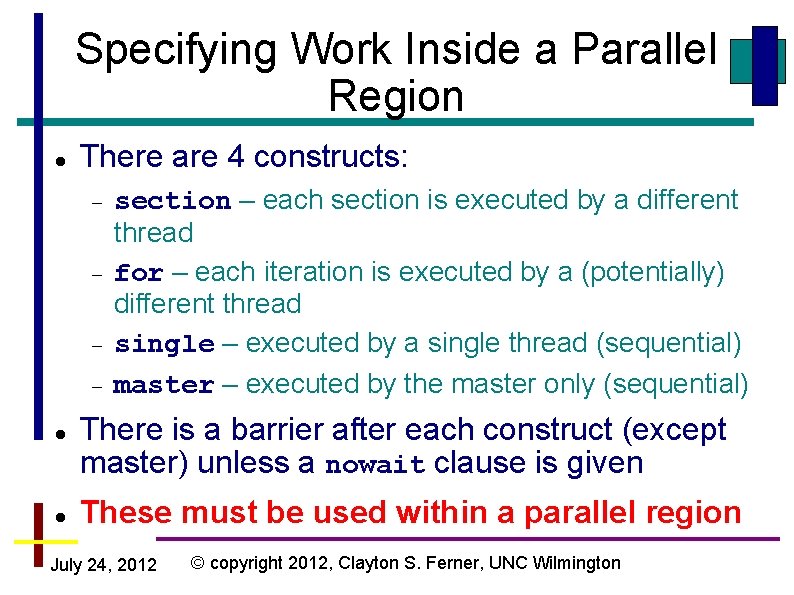

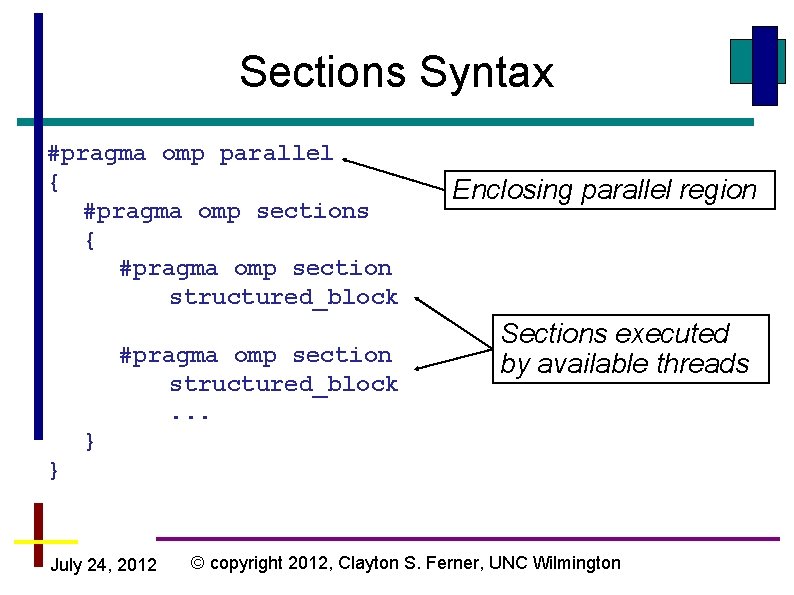

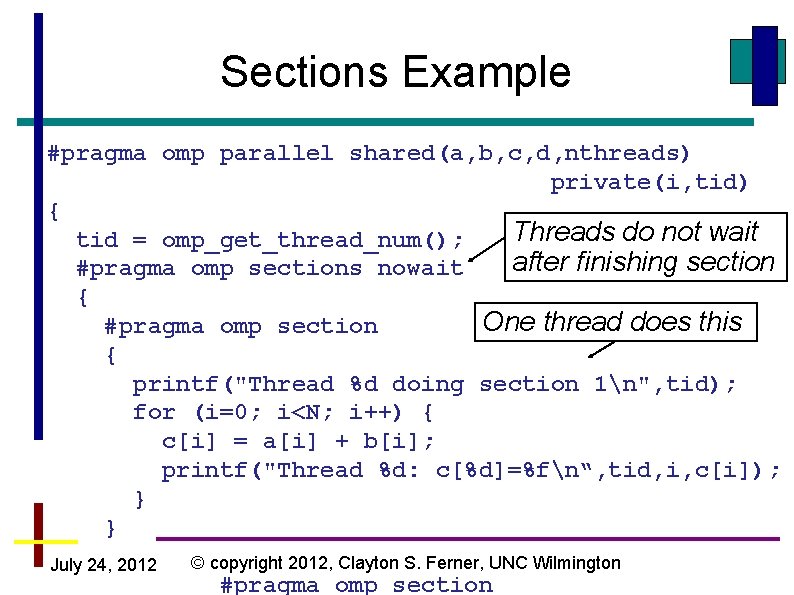

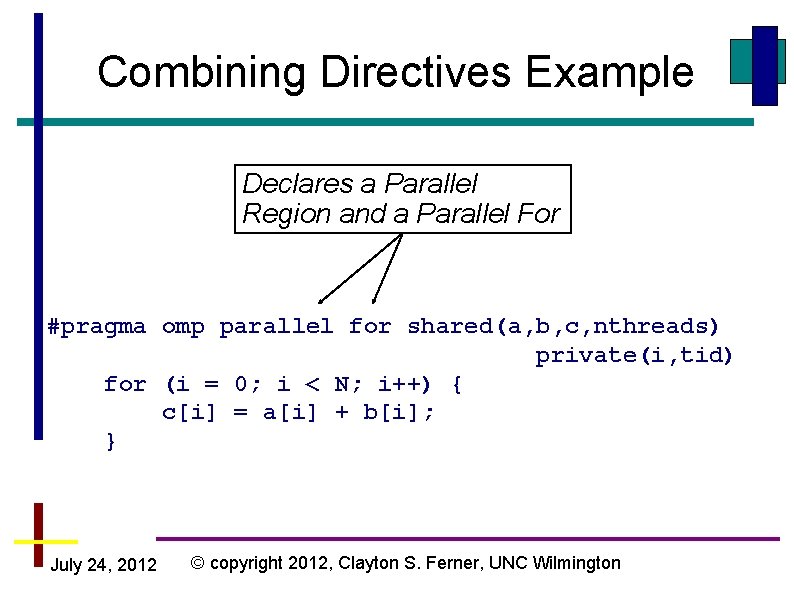

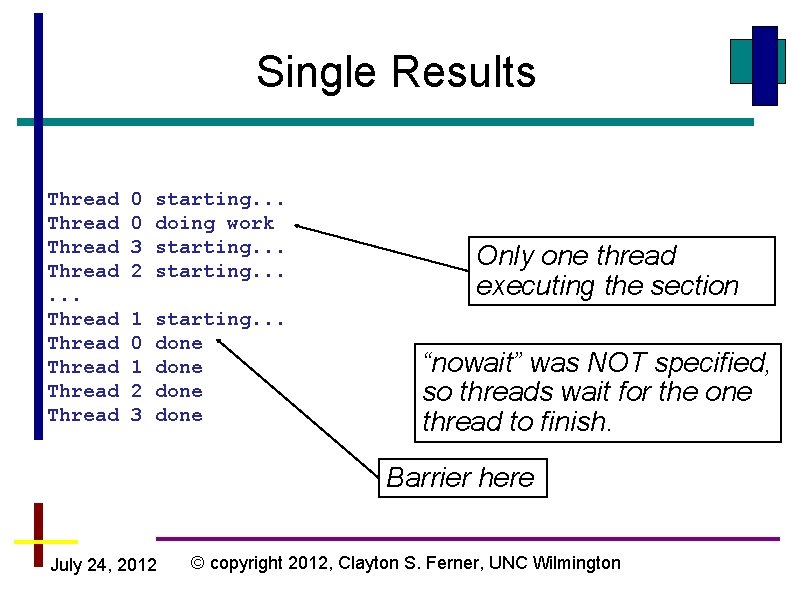

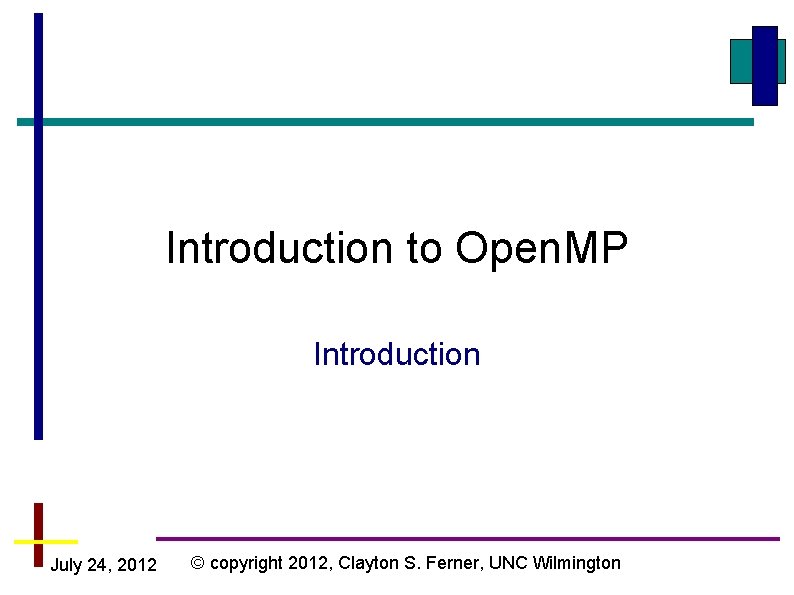

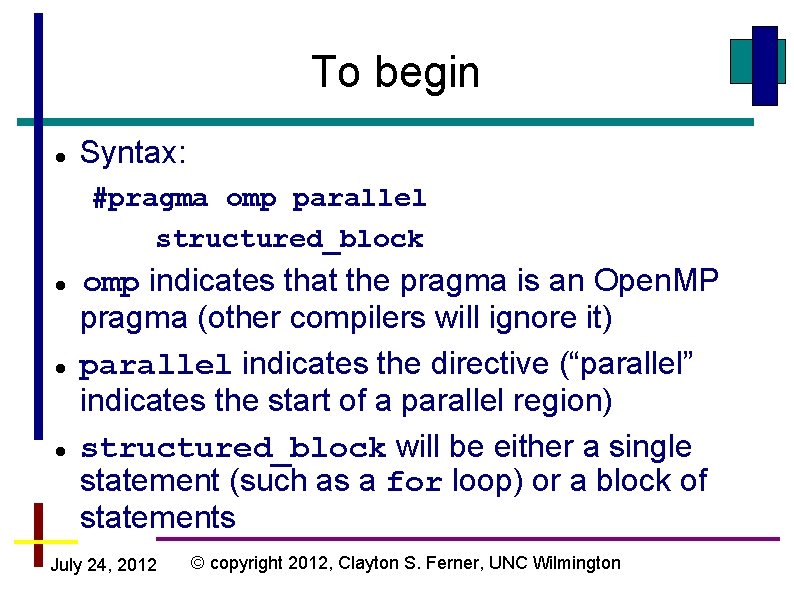

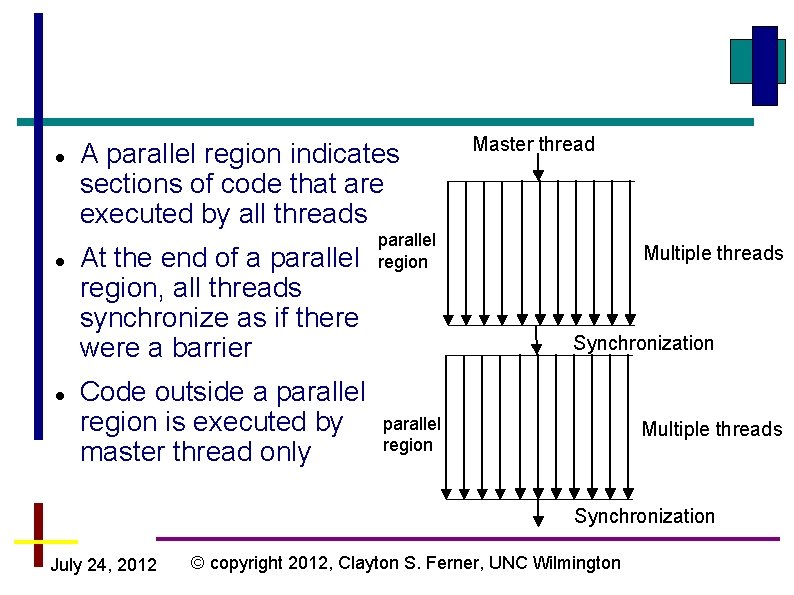

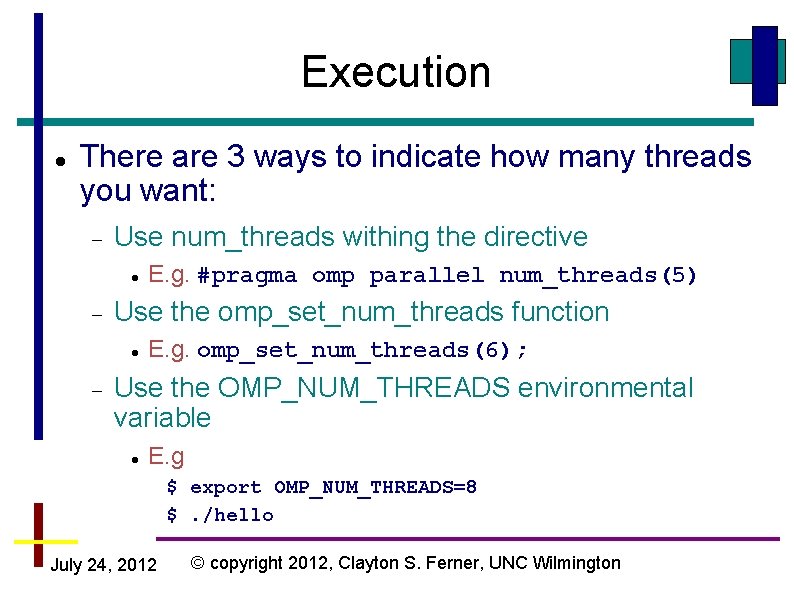

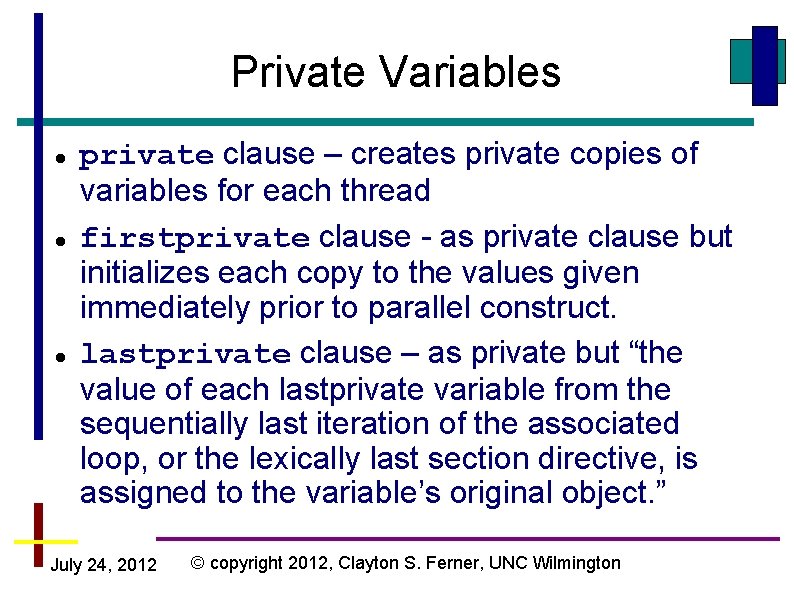

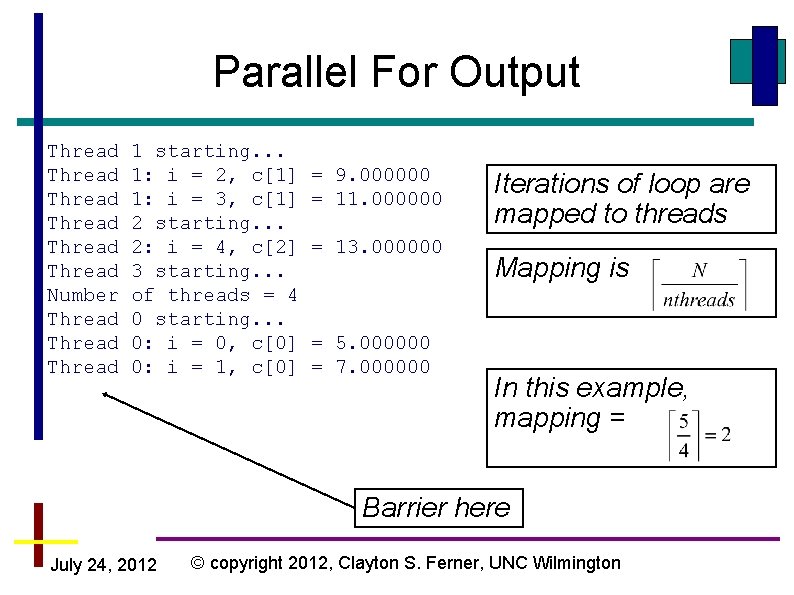

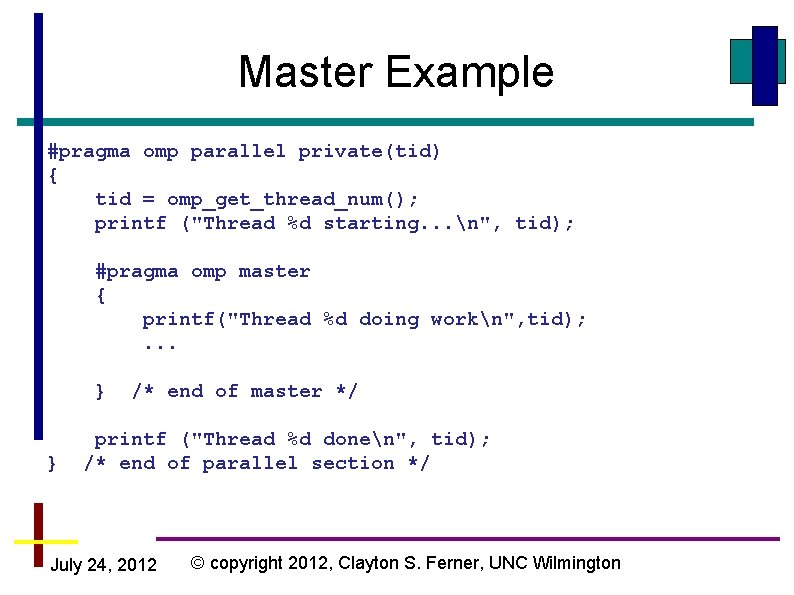

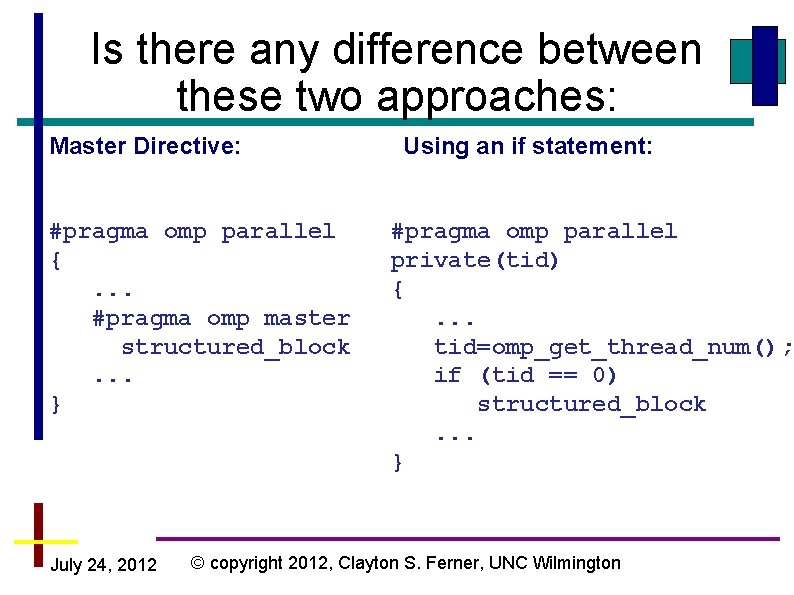

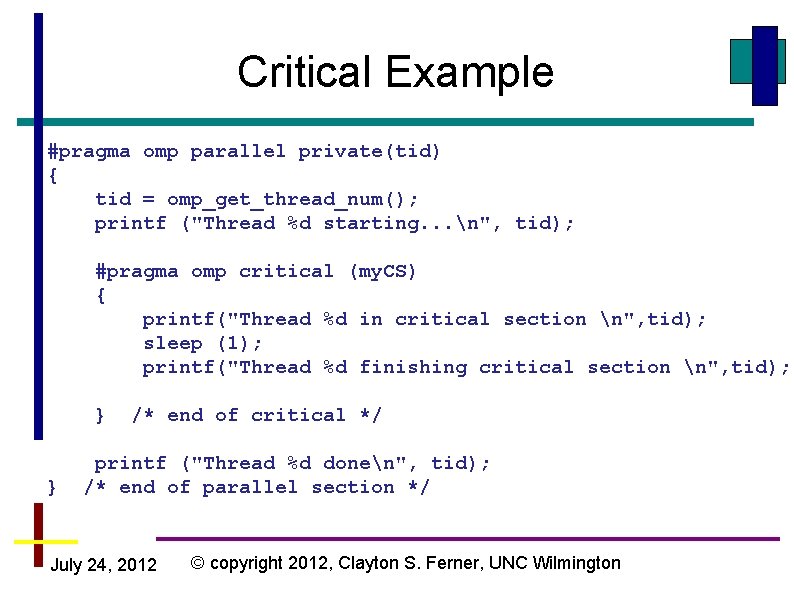

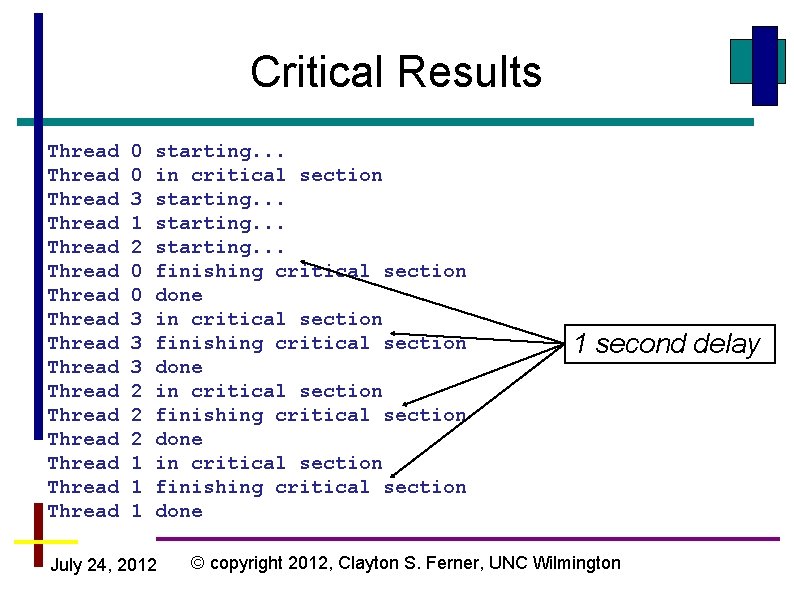

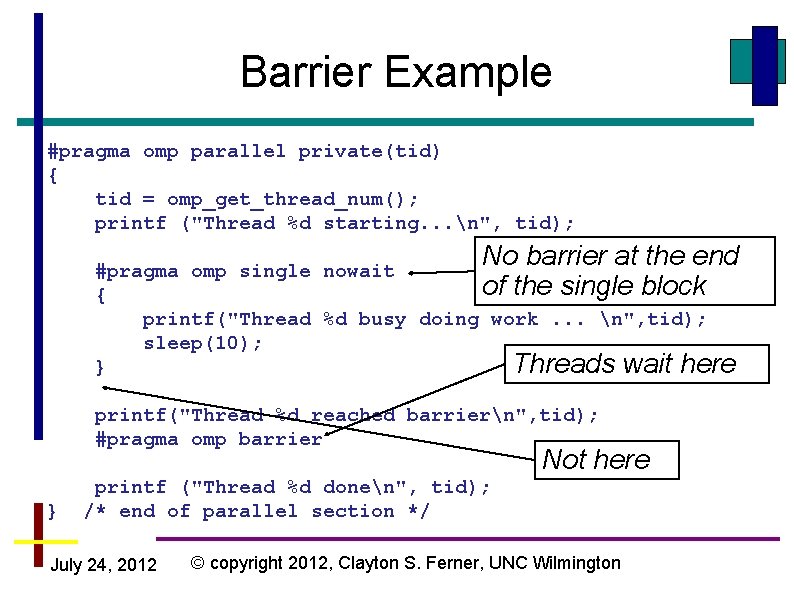

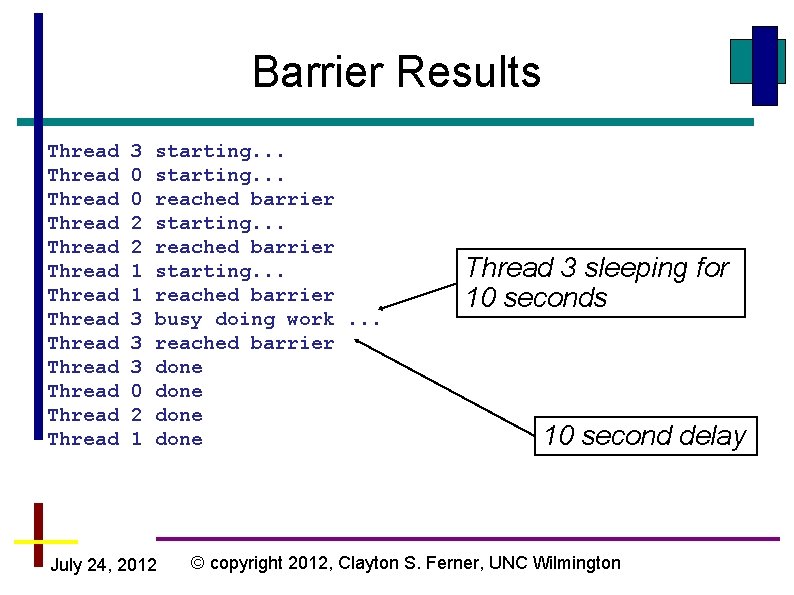

Sections Example #pragma omp parallel shared(a, b, c, d, nthreads) private(i, tid) { Threads do not wait tid = omp_get_thread_num(); after finishing section #pragma omp sections nowait { One thread does this #pragma omp section { printf("Thread %d doing section 1n", tid); for (i=0; i<N; i++) { c[i] = a[i] + b[i]; printf("Thread %d: c[%d]=%fn“, tid, i, c[i]); } } July 24, 2012 © copyright 2012, Clayton S. Ferner, UNC Wilmington #pragma omp section

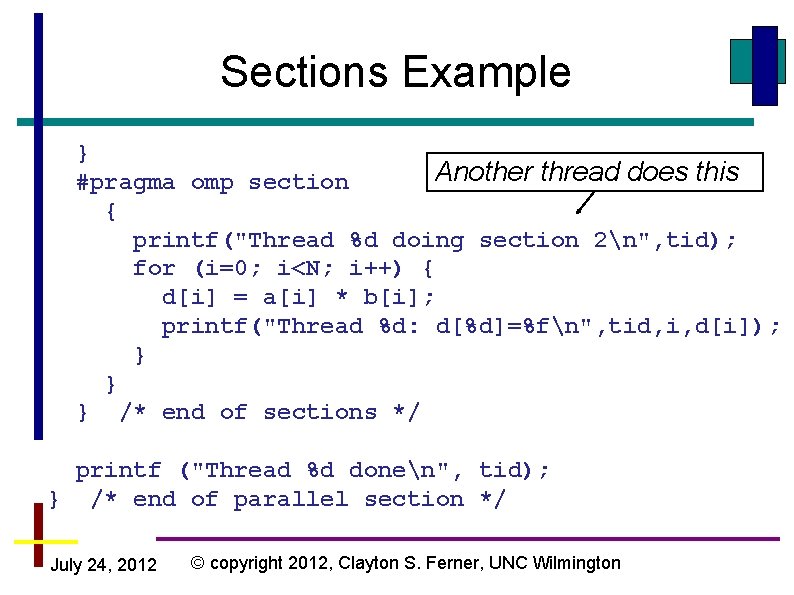

Sections Example } Another thread does this #pragma omp section { printf("Thread %d doing section 2n", tid); for (i=0; i<N; i++) { d[i] = a[i] * b[i]; printf("Thread %d: d[%d]=%fn", tid, i, d[i]); } } } /* end of sections */ printf ("Thread %d donen", tid); } /* end of parallel section */ July 24, 2012 © copyright 2012, Clayton S. Ferner, UNC Wilmington

![Sections output slide (with nowait)](https://slidetodoc.com/presentation_image_h/30fc241359412221b9cefd07ec29d7e7/image-21.jpg)

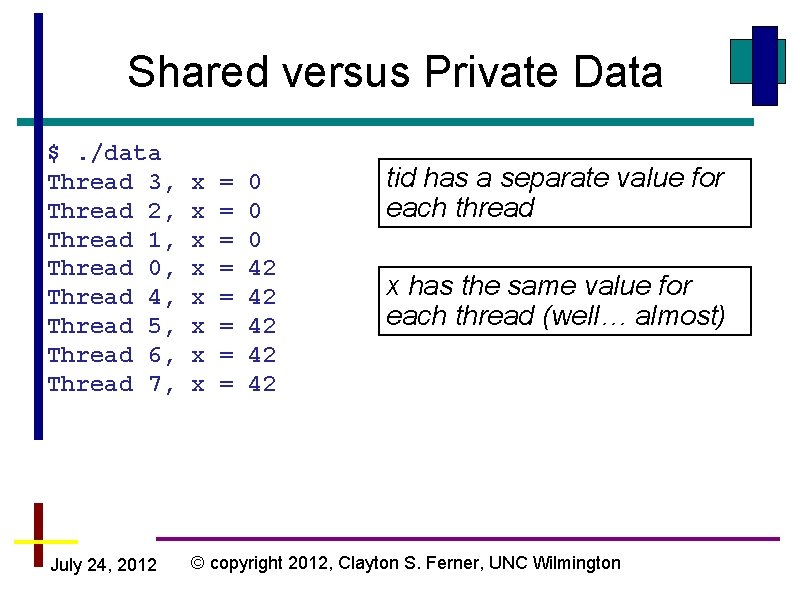

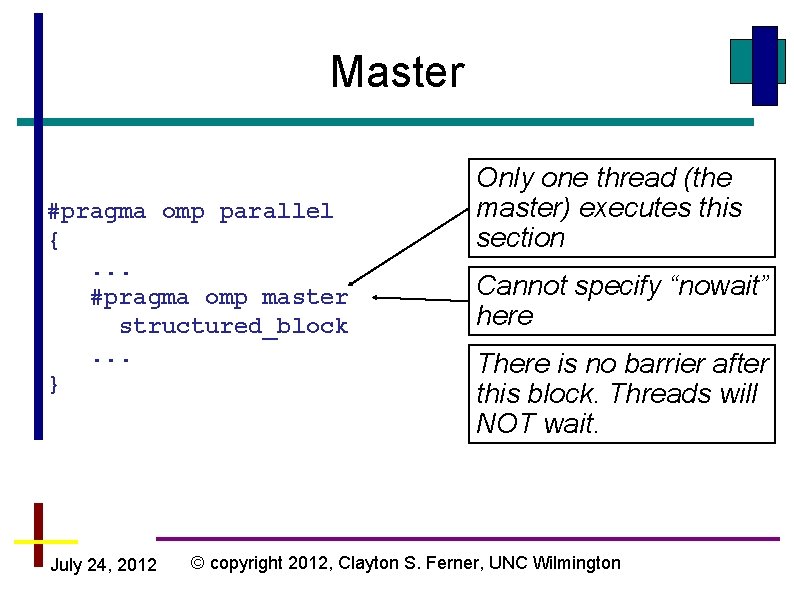

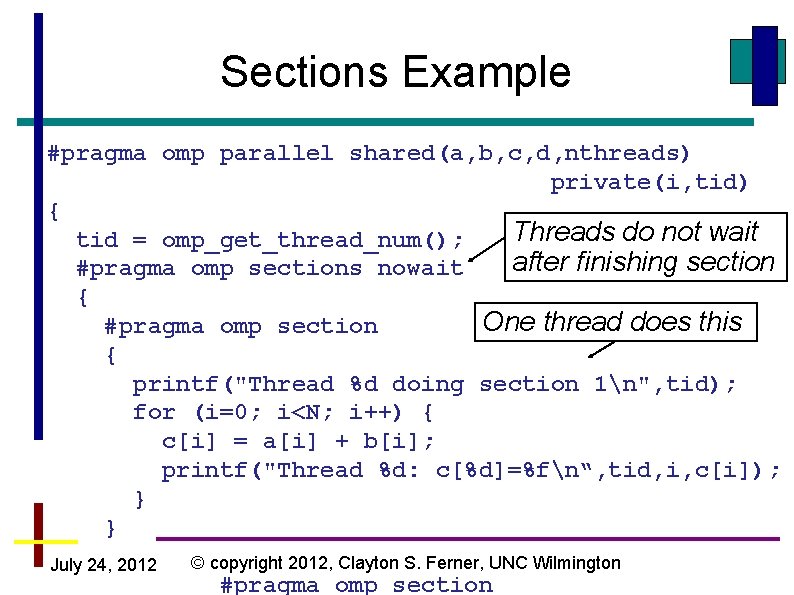

Sections Output

```
Thread 0 doing section 1
Thread 0: c[0]= 5.000000
Thread 0: c[1]= 7.000000
Thread 0: c[2]= 9.000000
Thread 0: c[3]= 11.000000
Thread 0: c[4]= 13.000000
Thread 3 done
Thread 2 done
Thread 1 doing section 2
Thread 1: d[0]= 0.000000
Thread 1: d[1]= 6.000000
Thread 1: d[2]= 14.000000
Thread 1: d[3]= 24.000000
Thread 0 done
Thread 1: d[4]= 36.000000
Thread 1 done
```

Threads do not wait (i.e., there is no barrier): threads 3 and 2 print "done" before section 2 has even started.

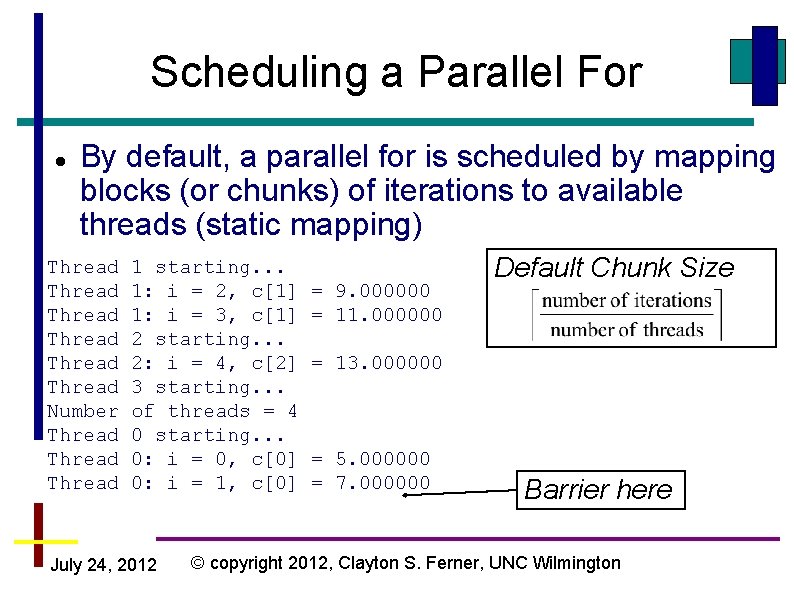

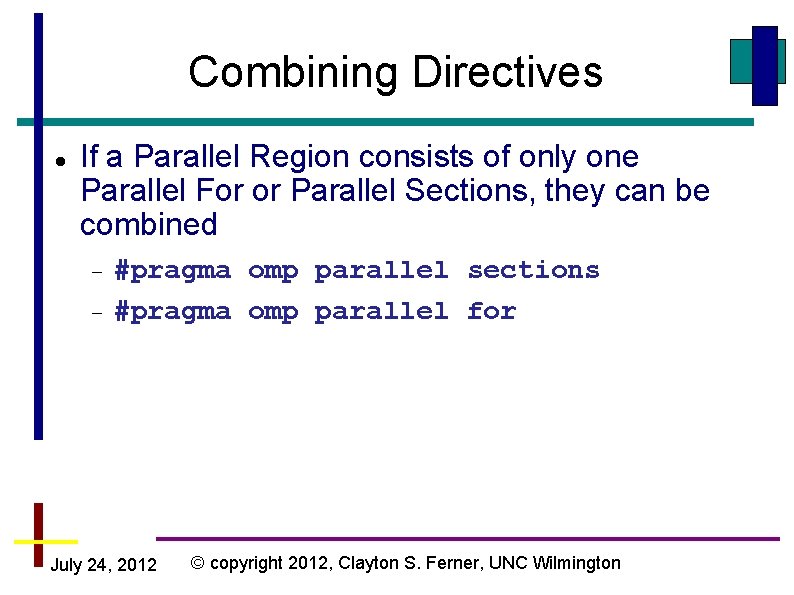

![Sections output slide (without nowait)](https://slidetodoc.com/presentation_image_h/30fc241359412221b9cefd07ec29d7e7/image-22.jpg)

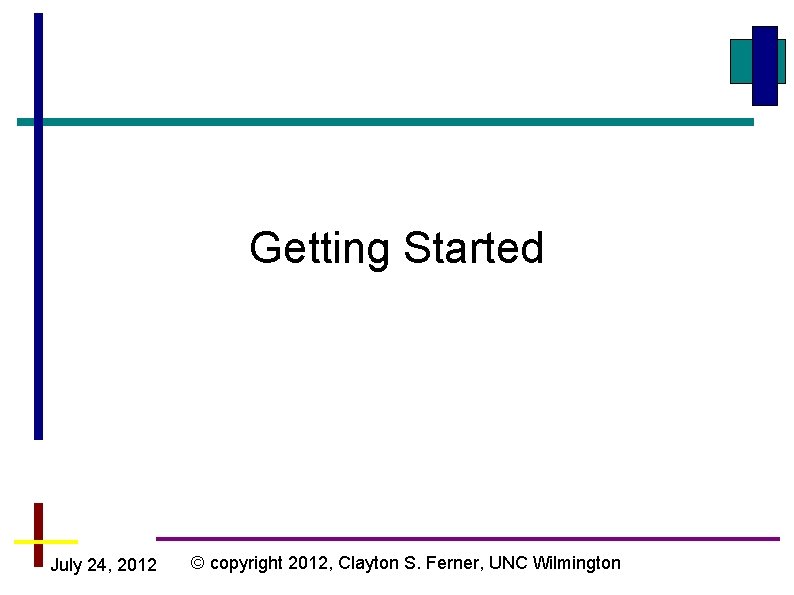

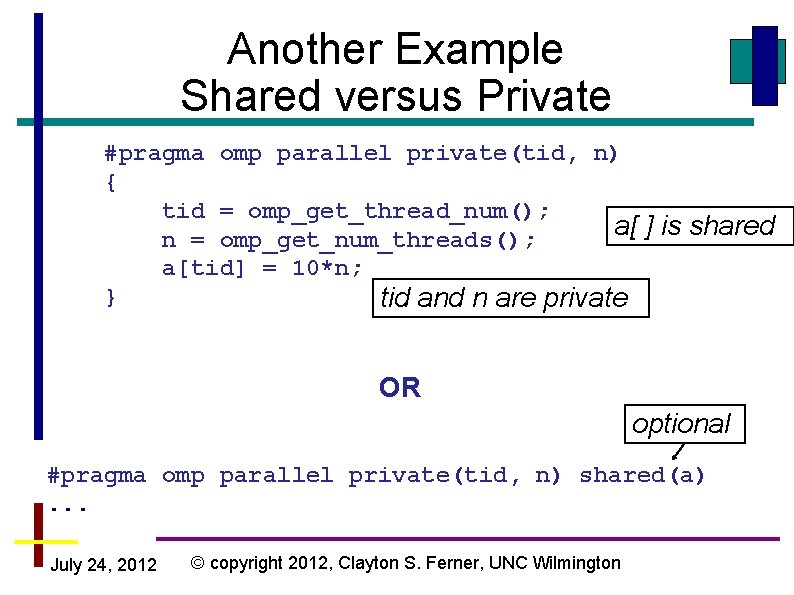

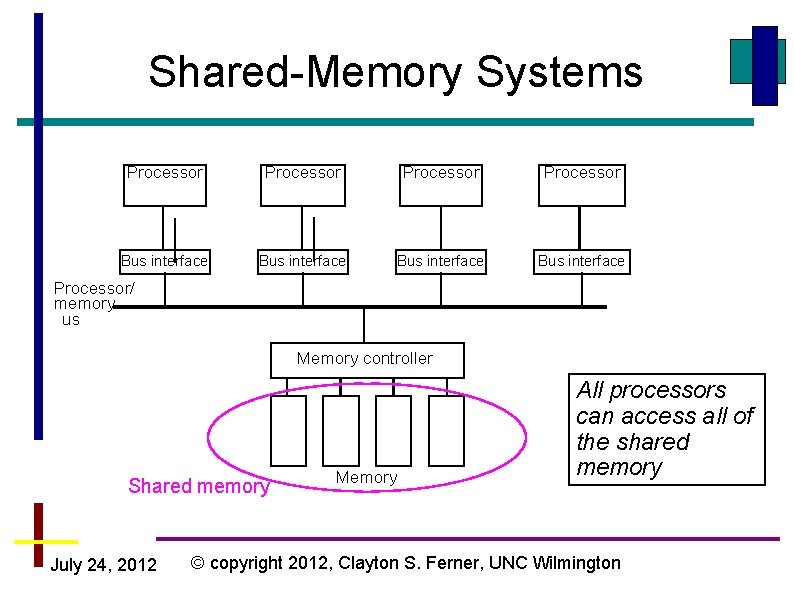

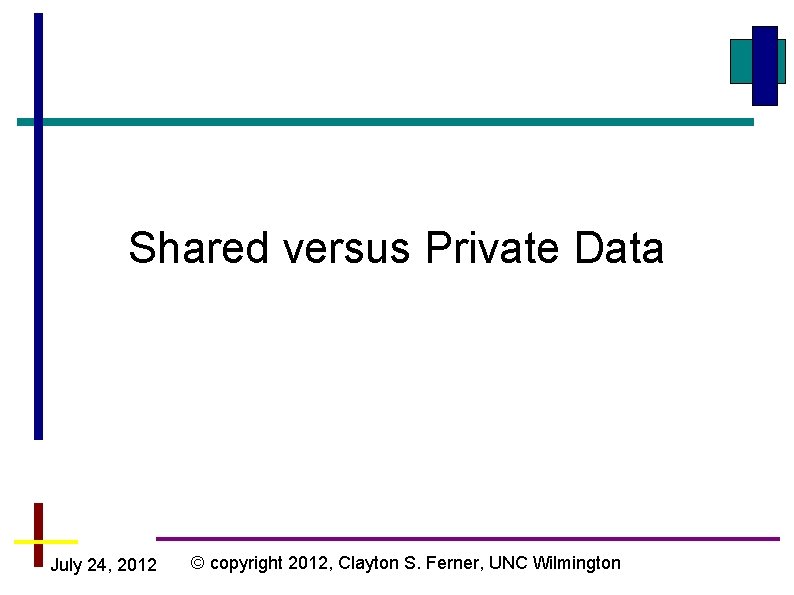

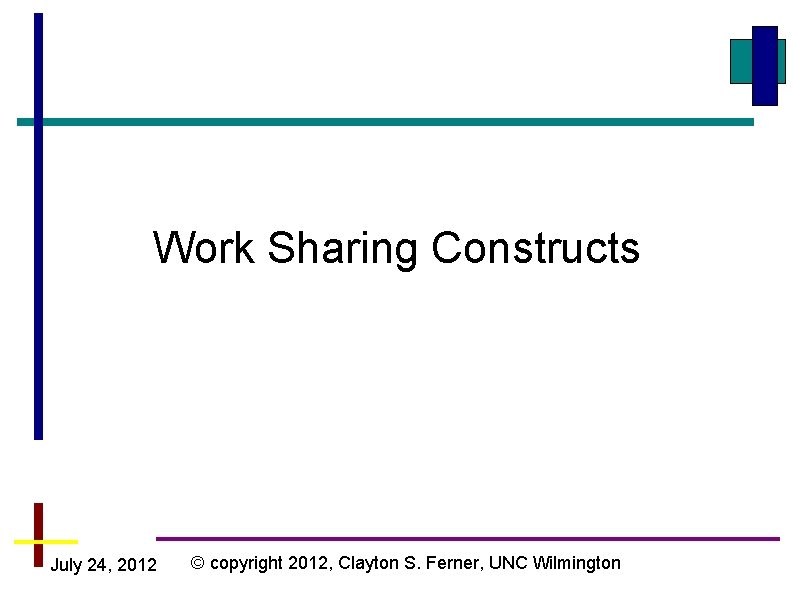

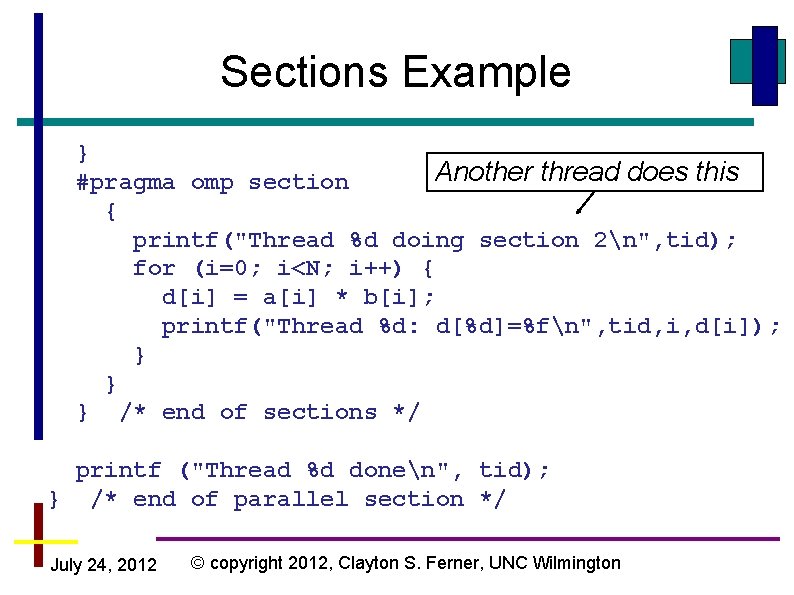

Sections Output

```
Thread 0 doing section 1
Thread 0: c[0]= 5.000000
Thread 0: c[1]= 7.000000
Thread 0: c[2]= 9.000000
Thread 0: c[3]= 11.000000
Thread 0: c[4]= 13.000000
Thread 3 doing section 2
Thread 3: d[0]= 0.000000
Thread 3: d[1]= 6.000000
Thread 3: d[2]= 14.000000
Thread 3: d[3]= 24.000000
Thread 3: d[4]= 36.000000
Thread 3 done
Thread 1 done
Thread 2 done
Thread 0 done
```

If we remove the nowait, there is a barrier at the end of the sections construct, between the last section output and the first "done" line. Threads wait until they are all done with the sections.
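A stripped-down, testable version of the same computation without the printing: two independent loops in two sections, with the implicit barrier at the end of the sections construct guaranteeing that both arrays are complete when the function returns. The function name `add_and_multiply` is hypothetical, and the inputs a[i] = i and b[i] = i + 5 used below are an assumption chosen because they reproduce the values printed in the sections outputs.

```c
#define N 5

/* Two independent loops placed in separate sections; each section runs
   once, on whichever thread of the team picks it up. */
void add_and_multiply(const float a[N], const float b[N], float c[N], float d[N])
{
    int i;
#pragma omp parallel private(i)
    {
#pragma omp sections
        {
#pragma omp section
            for (i = 0; i < N; i++)
                c[i] = a[i] + b[i];
#pragma omp section
            for (i = 0; i < N; i++)
                d[i] = a[i] * b[i];
        }   /* implicit barrier: both sections are finished here */
    }
}
```

With a = {0, 1, 2, 3, 4} and b = {5, 6, 7, 8, 9}, c becomes {5, 7, 9, 11, 13} and d becomes {0, 6, 14, 24, 36}.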

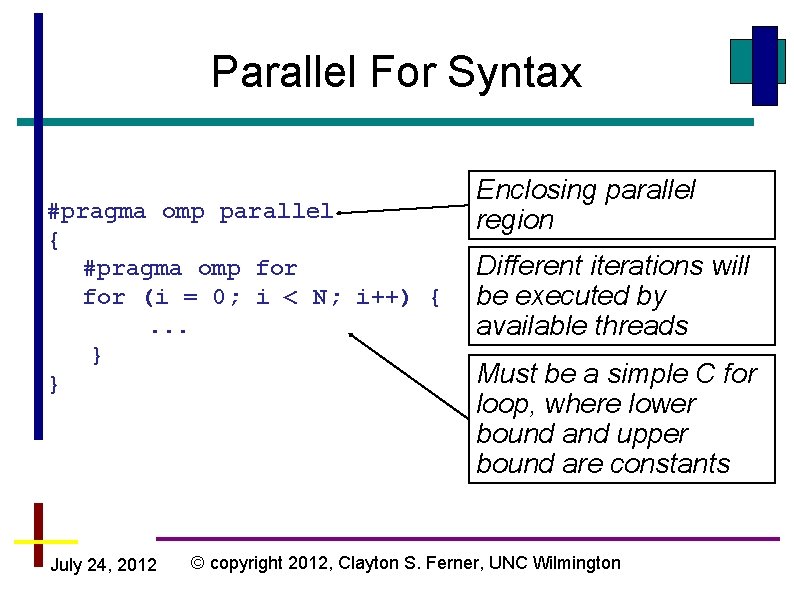

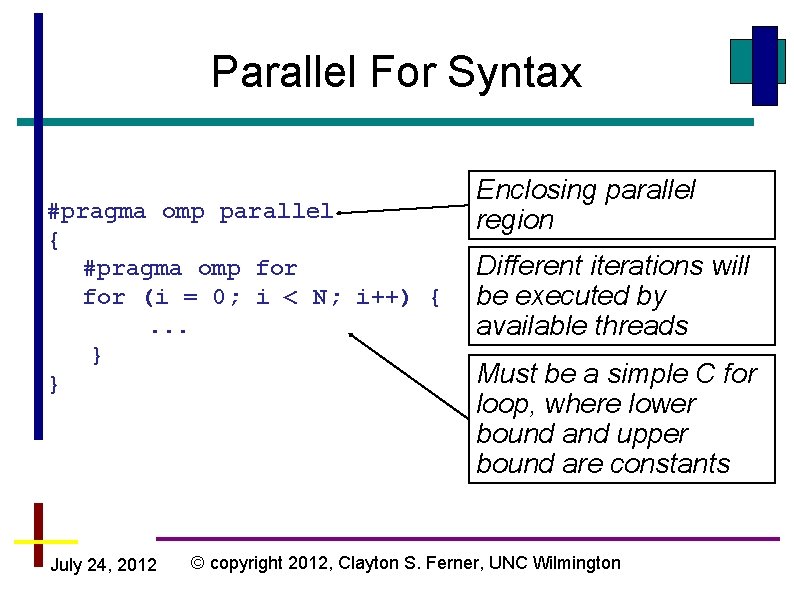

Parallel For Syntax

```c
#pragma omp parallel               /* enclosing parallel region */
{
#pragma omp for
    for (i = 0; i < N; i++) {
        /* ... */
    }
}
```

Different iterations are executed by the available threads. The loop must be a simple C for loop whose lower and upper bounds do not change during the loop (for example, constants).

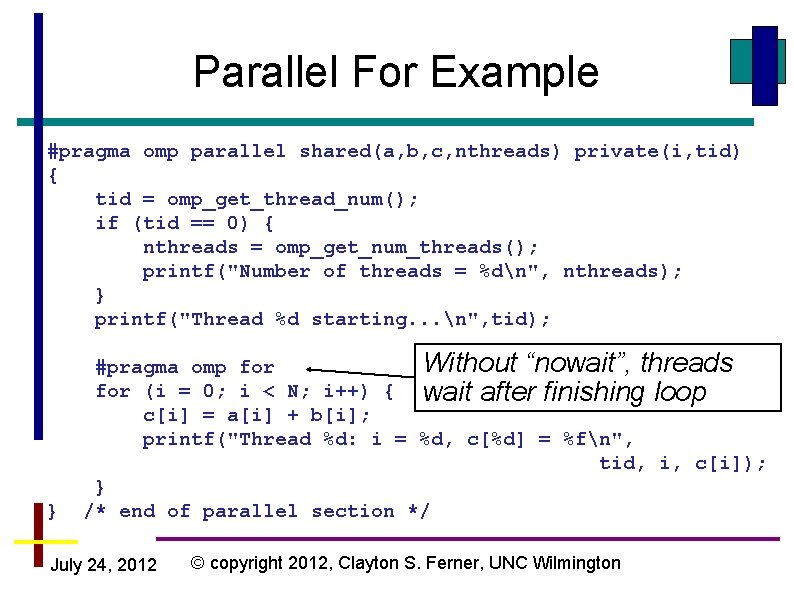

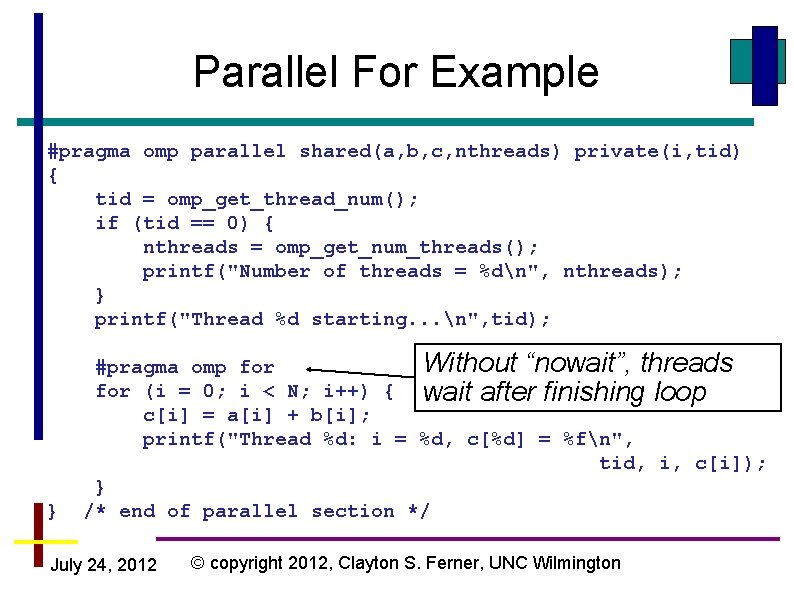

Parallel For Example

```c
#pragma omp parallel shared(a, b, c, nthreads) private(i, tid)
{
    tid = omp_get_thread_num();
    if (tid == 0) {
        nthreads = omp_get_num_threads();
        printf("Number of threads = %d\n", nthreads);
    }
    printf("Thread %d starting...\n", tid);

    /* without nowait, threads wait after finishing the loop */
#pragma omp for
    for (i = 0; i < N; i++) {
        c[i] = a[i] + b[i];
        printf("Thread %d: i = %d, c[%d] = %f\n", tid, i, i, c[i]);
    }
}   /* end of parallel section */
```

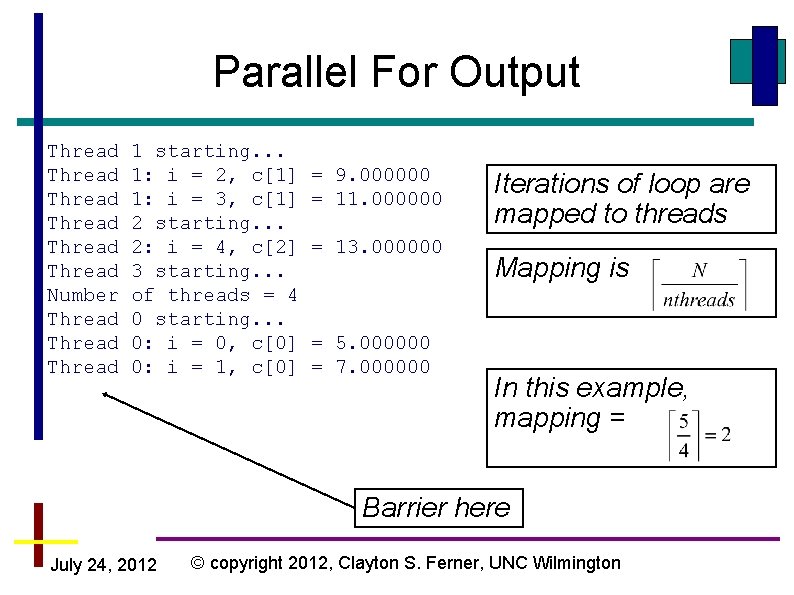

Parallel For Output

```
Thread 1 starting...
Thread 1: i = 2, c[2] = 9.000000
Thread 1: i = 3, c[3] = 11.000000
Thread 2 starting...
Thread 2: i = 4, c[4] = 13.000000
Thread 3 starting...
Number of threads = 4
Thread 0 starting...
Thread 0: i = 0, c[0] = 5.000000
Thread 0: i = 1, c[1] = 7.000000
```

Iterations of the loop are mapped to threads. In this example, thread 0 executes iterations 0 and 1, thread 1 executes iterations 2 and 3, thread 2 executes iteration 4, and thread 3 gets none. There is a barrier at the end of the loop.

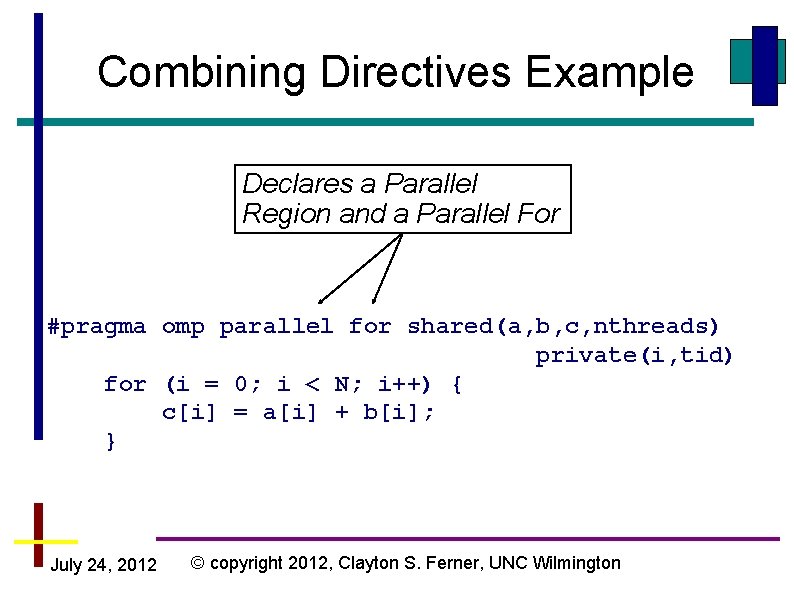

Combining Directives

If a parallel region consists of only one parallel for or parallel sections construct, the two directives can be combined:

- `#pragma omp parallel sections`
- `#pragma omp parallel for`

Combining Directives Example

The combined directive declares a parallel region and a parallel for in one line:

```c
#pragma omp parallel for shared(a, b, c, nthreads) private(i, tid)
for (i = 0; i < N; i++) {
    c[i] = a[i] + b[i];
}
```
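As a self-contained sketch of the combined directive (the function `vector_add` is hypothetical): the loop variable of a parallel for is private in any case, and declaring it inside the for statement makes that visible without a private clause.

```c
/* c = a + b, element by element. The combined directive creates the
   parallel region and splits the iterations among its threads. */
void vector_add(const double *a, const double *b, double *c, int n)
{
#pragma omp parallel for
    for (int i = 0; i < n; i++)   /* i is private: it is the loop variable */
        c[i] = a[i] + b[i];
}
```

Because each iteration writes a different element of c, no synchronization is needed inside the loop.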

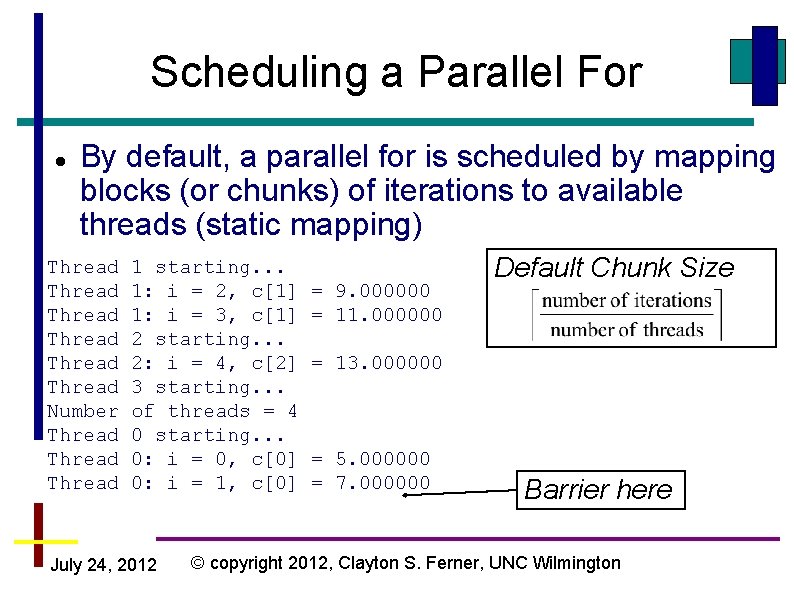

Scheduling a Parallel For

By default, a parallel for is scheduled by mapping blocks (or chunks) of iterations to the available threads (static mapping). This is gcc's default; the OpenMP standard leaves the default schedule implementation-defined. The Parallel For output shows it: each thread received one contiguous chunk of the default chunk size (two iterations each for threads 0 and 1, one for thread 2), followed by the barrier.

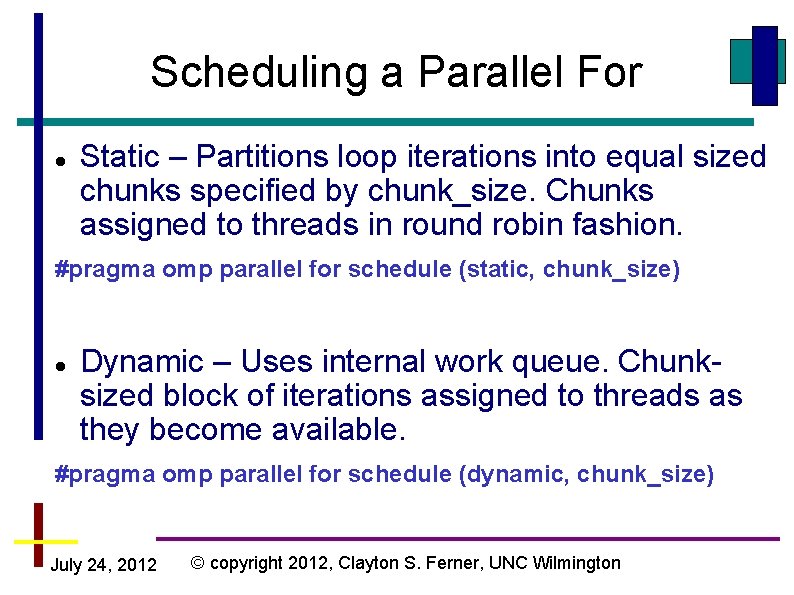

Scheduling a Parallel For

- Static: partitions the loop iterations into equal-sized chunks of chunk_size iterations. Chunks are assigned to threads in round-robin fashion. `#pragma omp parallel for schedule(static, chunk_size)`
- Dynamic: uses an internal work queue. A chunk_size block of iterations is assigned to each thread as it becomes available. `#pragma omp parallel for schedule(dynamic, chunk_size)`
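The round-robin rule is precise enough to check: with schedule(static, chunk_size), chunk k goes to thread k mod T in a team of T threads, so iteration i runs on thread (i / chunk_size) mod T. The sketch below (hypothetical `check_static_mapping`, assumed loop length `ITERS`) records which thread ran each iteration and compares it with that formula. Dynamic scheduling makes no such promise, which is why the check uses static.

```c
#ifdef _OPENMP
#include <omp.h>
#else
#define omp_get_thread_num() 0    /* serial fallback: a team of one */
#define omp_get_num_threads() 1
#endif

#define ITERS 40   /* hypothetical loop length */

/* Returns 1 if every iteration i ran on thread (i / chunk) % T, else 0.
   Assumes chunk >= 1. */
int check_static_mapping(int chunk)
{
    int owner[ITERS];   /* shared: owner[i] = thread that ran iteration i */
    int T = 1;

#pragma omp parallel
    {
#pragma omp single
        T = omp_get_num_threads();      /* implicit barrier after single */

#pragma omp for schedule(static, chunk)
        for (int i = 0; i < ITERS; i++)
            owner[i] = omp_get_thread_num();
    }

    for (int i = 0; i < ITERS; i++)
        if (owner[i] != (i / chunk) % T)
            return 0;
    return 1;
}
```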

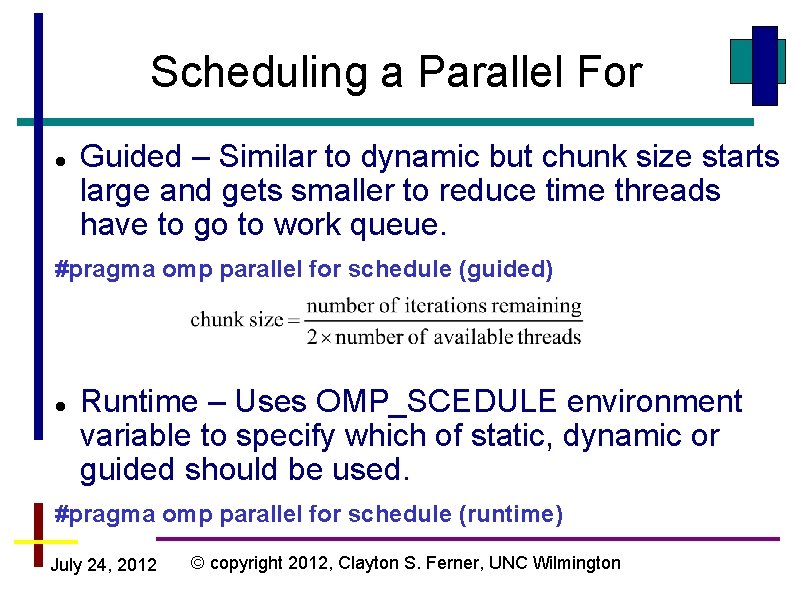

Scheduling a Parallel For

- Guided: similar to dynamic, but the chunk size starts large and gets smaller, to reduce the number of times threads have to go to the work queue. `#pragma omp parallel for schedule(guided)`
- Runtime: uses the OMP_SCHEDULE environment variable (e.g. `$ export OMP_SCHEDULE="dynamic,4"`) to specify which of static, dynamic, or guided should be used. `#pragma omp parallel for schedule(runtime)`
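A sketch of schedule(runtime), assuming an OpenMP 3.0 or later runtime: `omp_set_schedule()` sets the run-time schedule from inside the program (overriding OMP_SCHEDULE), and the loop picks it up. Iteration i does i units of work, the kind of uneven load for which dynamic or guided usually balances better than equal static chunks. The function `triangle_work` is hypothetical, and it uses a reduction clause to combine the per-thread totals.

```c
#ifdef _OPENMP
#include <omp.h>
#endif

/* Counts the inner iterations of a triangular loop nest: iteration i of
   the outer loop does i units of work. schedule(runtime) defers the
   choice of schedule to the run-time setting chosen here. */
long triangle_work(int n, int use_guided)
{
    long total = 0;
#ifdef _OPENMP
    /* dynamic with chunks of 4, or guided with a minimum chunk of 4 */
    omp_set_schedule(use_guided ? omp_sched_guided : omp_sched_dynamic, 4);
#else
    (void)use_guided;
#endif
#pragma omp parallel for schedule(runtime) reduction(+: total)
    for (int i = 0; i < n; i++)
        for (int j = 0; j < i; j++)
            total += 1;
    return total;
}
```

The schedule changes only how iterations are distributed, not the result: for n = 100 the total is 0 + 1 + ... + 99 = 4950 either way.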

Question

Guided scheduling is similar to Dynamic: chunks are handed out as threads become available, but the chunk sizes start large and get smaller. What is the advantage of using Guided versus Static?

Answer: Guided improves load balance. Threads that finish their chunks early take more work instead of sitting idle, and the small chunks at the end even out the finishing times.

Reduction

A reduction applies a commutative (and associative) operator to a collection of values to produce a single value (similar to MPI_Reduce).

```c
sum = 0;
#pragma omp parallel for reduction(+: sum)
for (k = 0; k < 100; k++) {
    sum = sum + funct(k);
}
```

In `reduction(+: sum)`, `+` is the operation and `sum` is the variable. The compiler creates a private copy of sum for each thread, and the private copies are added to sum at the end. This eliminates the need for a critical section here.
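A runnable version of the loop above, with a hypothetical `funct(k)` that returns k * k so the result can be checked: the sum of k * k for k from 0 to 99 is 328350, whatever the number of threads.

```c
/* Hypothetical funct(k): any function whose results are summed. */
static long funct(int k)
{
    return (long)k * k;
}

long sum_with_reduction(int n)
{
    long sum = 0;
    /* Each thread gets a private sum initialized to 0 (the identity of +);
       the private sums are added into the shared sum at the end. */
#pragma omp parallel for reduction(+: sum)
    for (int k = 0; k < n; k++)
        sum = sum + funct(k);
    return sum;
}
```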

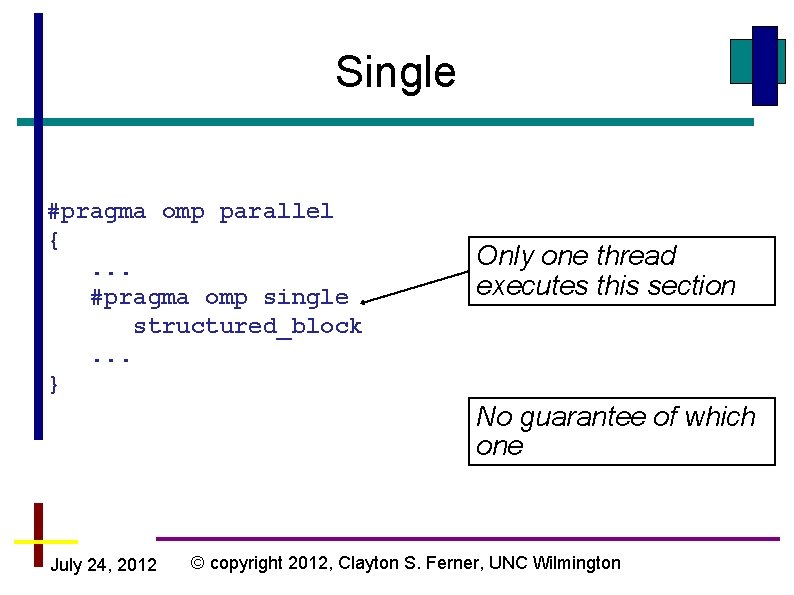

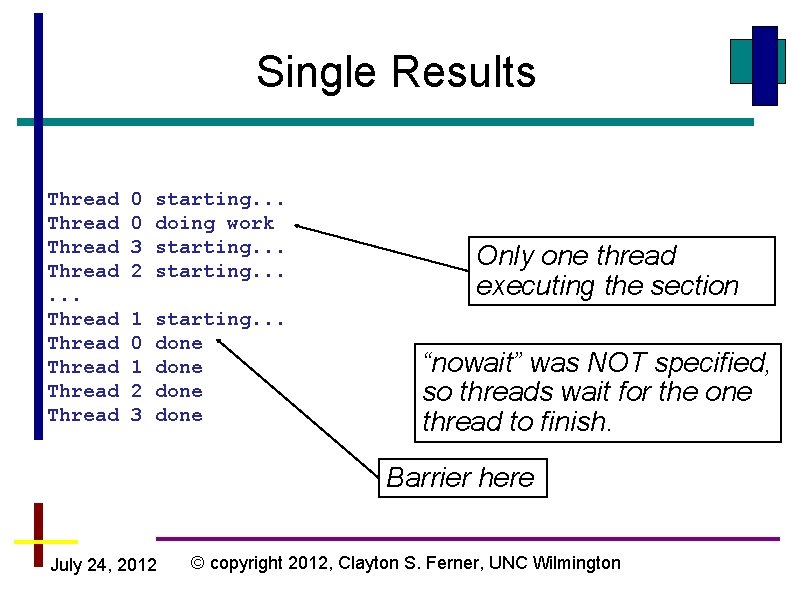

Single

```c
#pragma omp parallel
{
    /* ... */
#pragma omp single
    { /* structured_block */ }
    /* ... */
}
```

Only one thread executes this section. There is no guarantee of which one.

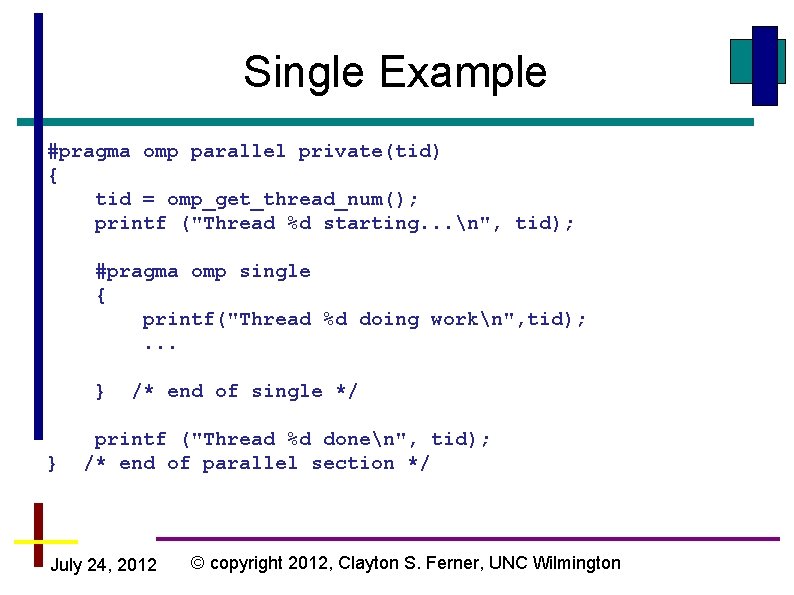

Single Example

```c
#pragma omp parallel private(tid)
{
    tid = omp_get_thread_num();
    printf("Thread %d starting...\n", tid);

#pragma omp single
    {
        printf("Thread %d doing work\n", tid);
        /* ... */
    }   /* end of single */

    printf("Thread %d done\n", tid);
}   /* end of parallel section */
```

Single Results

```
Thread 0 starting...
Thread 0 doing work
Thread 3 starting...
Thread 2 starting...
Thread 1 starting...
Thread 0 done
Thread 1 done
Thread 2 done
Thread 3 done
```

Only one thread executes the single section. Because nowait was NOT specified, the other threads wait at the barrier at the end of the single block until that thread finishes.
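To confirm that the single block runs exactly once, here is a sketch with a hypothetical `single_runs` that counts executions of the block and also reports the team size. The counter needs no atomic update, because only one thread ever enters the block.

```c
#ifdef _OPENMP
#include <omp.h>
#endif

/* Returns how many threads executed the single block (expected: 1)
   and stores the team size in *team. */
int single_runs(int *team)
{
    int runs = 0;   /* shared */
    int n = 1;      /* shared; stays 1 without OpenMP */
#pragma omp parallel
    {
#pragma omp single
        {
            runs++;                     /* only one thread gets here */
#ifdef _OPENMP
            n = omp_get_num_threads();
#endif
        }   /* implicit barrier: all threads wait for the single block */
    }
    *team = n;
    return runs;
}
```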

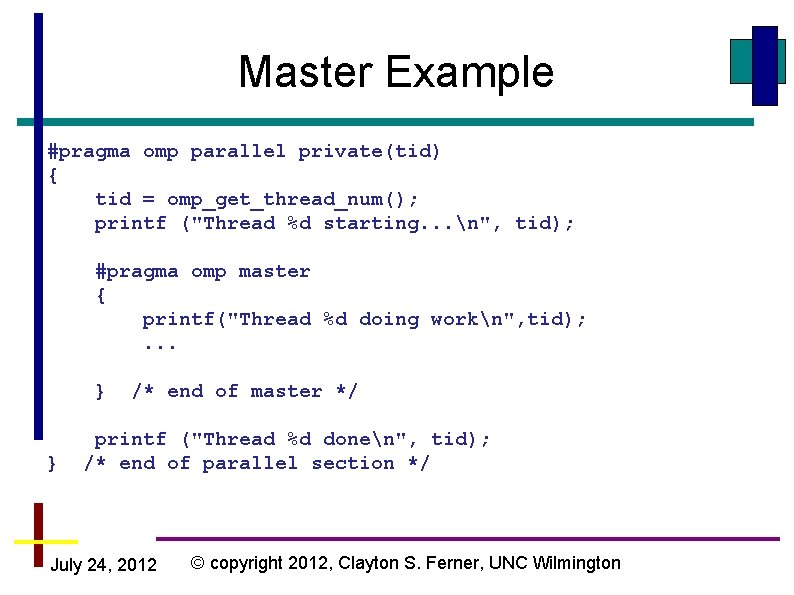

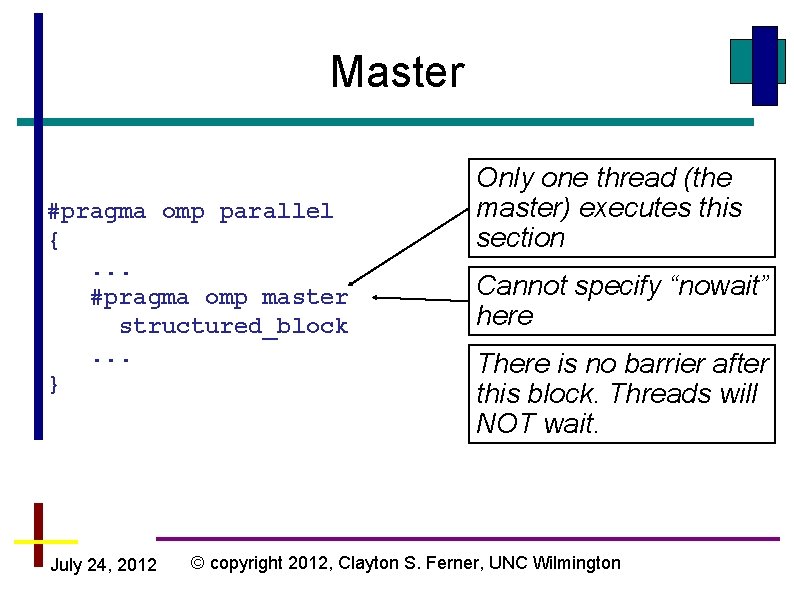

Master

```c
#pragma omp parallel
{
    /* ... */
#pragma omp master
    { /* structured_block */ }
    /* ... */
}
```

Only one thread (the master) executes this section. nowait cannot be specified here: there is no barrier after this block, so the other threads will NOT wait.

Master Example

```c
#pragma omp parallel private(tid)
{
    tid = omp_get_thread_num();
    printf("Thread %d starting...\n", tid);

#pragma omp master
    {
        printf("Thread %d doing work\n", tid);
        /* ... */
    }   /* end of master */

    printf("Thread %d done\n", tid);
}   /* end of parallel section */
```
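Similarly for master, a sketch (hypothetical `master_thread_id`) that records which thread ran the block: it should always be thread 0, and only once. Unlike single, master has no implied barrier, so the result is read only after the parallel region ends, where the threads do synchronize.

```c
#ifdef _OPENMP
#include <omp.h>
#else
#define omp_get_thread_num() 0    /* serial fallback: a team of one */
#endif

/* Returns the number of the thread that ran the master block,
   or -1 if the block did not run exactly once. */
int master_thread_id(void)
{
    int who = -1;   /* shared */
    int runs = 0;   /* shared */
#pragma omp parallel
    {
#pragma omp master
        {
            who = omp_get_thread_num();
            runs++;
        }
        /* no implied barrier here: other threads continue immediately */
    }   /* the end of the parallel region does synchronize */
    return runs == 1 ? who : -1;
}
```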

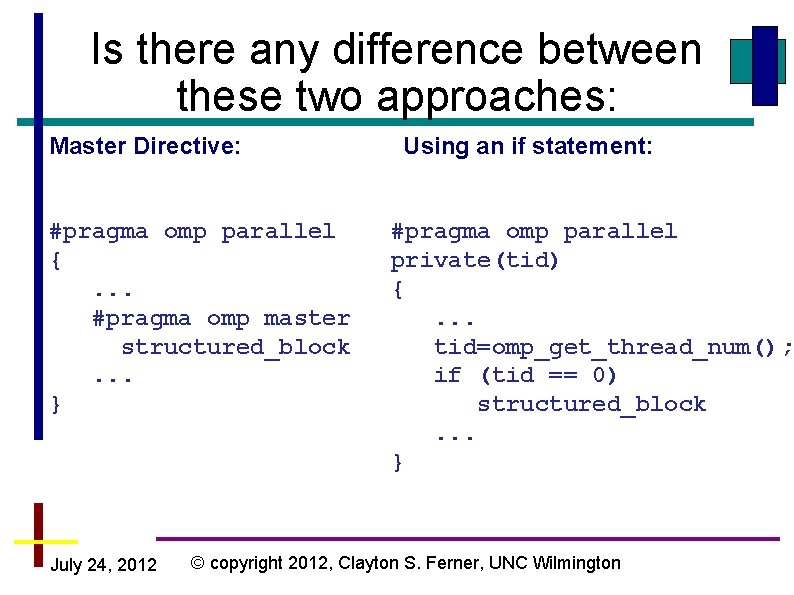

Is there any difference between these two approaches?

Master directive:

```c
#pragma omp parallel
{
    /* ... */
#pragma omp master
    { /* structured_block */ }
    /* ... */
}
```

Using an if statement:

```c
#pragma omp parallel private(tid)
{
    /* ... */
    tid = omp_get_thread_num();
    if (tid == 0)
    { /* structured_block */ }
    /* ... */
}
```

Synchronization

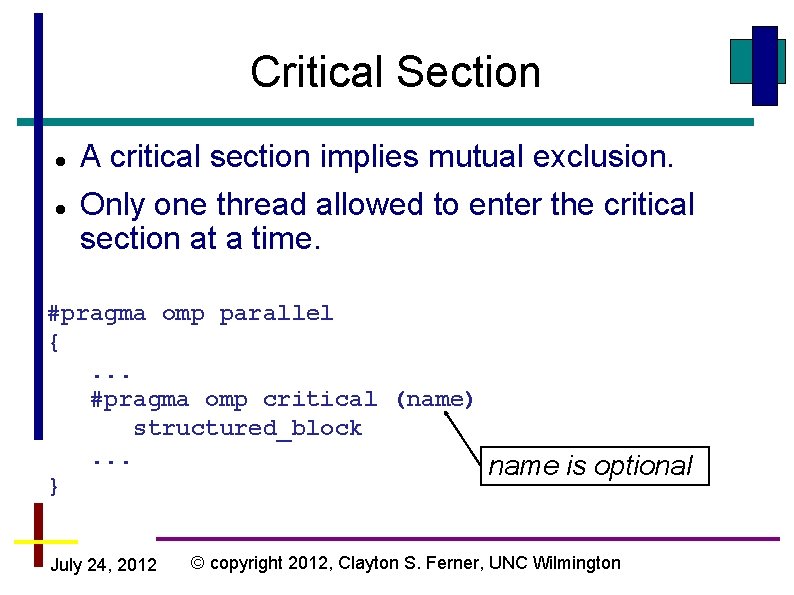

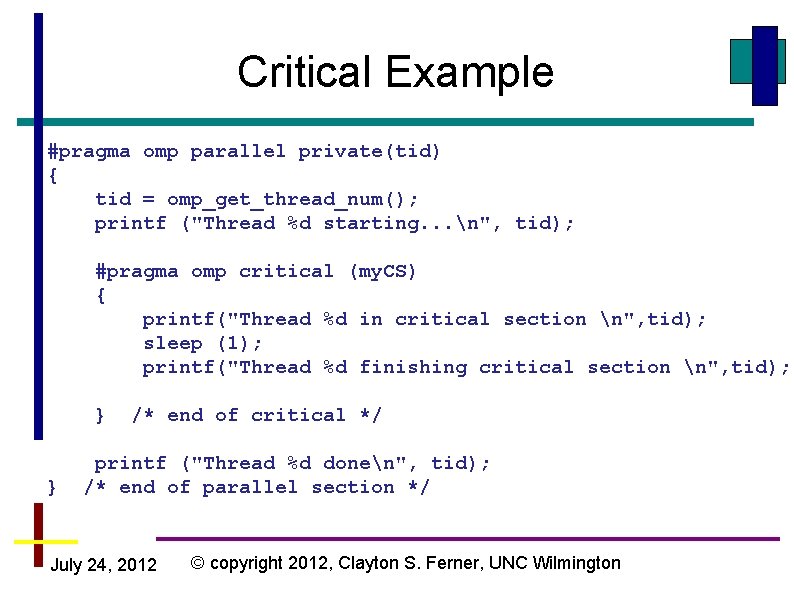

Critical Section

A critical section implies mutual exclusion: only one thread is allowed to enter the critical section at a time.

```c
#pragma omp parallel
{
    /* ... */
#pragma omp critical (name)     /* name is optional */
    { /* structured_block */ }
    /* ... */
}
```

Critical Example

```c
#pragma omp parallel private(tid)
{
    tid = omp_get_thread_num();
    printf("Thread %d starting...\n", tid);

#pragma omp critical (myCS)
    {
        printf("Thread %d in critical section\n", tid);
        sleep(1);                     /* sleep() is declared in <unistd.h> */
        printf("Thread %d finishing critical section\n", tid);
    }   /* end of critical */

    printf("Thread %d done\n", tid);
}   /* end of parallel section */
```

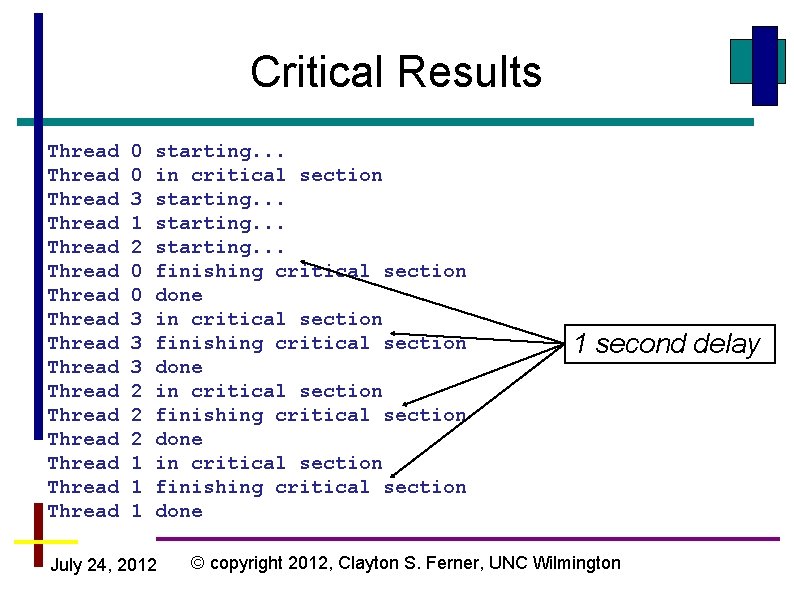

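Critical Results

```
Thread 0 starting...
Thread 0 in critical section
Thread 3 starting...
Thread 1 starting...
Thread 2 starting...
Thread 0 finishing critical section
Thread 0 done
Thread 3 in critical section
Thread 3 finishing critical section
Thread 3 done
Thread 2 in critical section
Thread 2 finishing critical section
Thread 2 done
Thread 1 in critical section
Thread 1 finishing critical section
Thread 1 done
```

There is a 1 second delay between each "in critical section" line and the matching "finishing critical section" line, and the threads enter the critical section one at a time.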
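A more typical use of a named critical section than printing: each thread finds the maximum of its share of an array, then merges it into a shared result one thread at a time. `parallel_max` is a hypothetical function; OpenMP 3.1 and later can also do this particular job with reduction(max: ...).

```c
/* Returns the maximum of v[0..n-1]; assumes n >= 1. */
int parallel_max(const int *v, int n)
{
    int global_max = v[0];              /* shared result */
#pragma omp parallel
    {
        int local_max = v[0];           /* private: declared inside the region */
#pragma omp for
        for (int i = 1; i < n; i++)
            if (v[i] > local_max)
                local_max = v[i];

        /* only one thread at a time compares and updates global_max */
#pragma omp critical (update_max)
        {
            if (local_max > global_max)
                global_max = local_max;
        }
    }
    return global_max;
}
```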

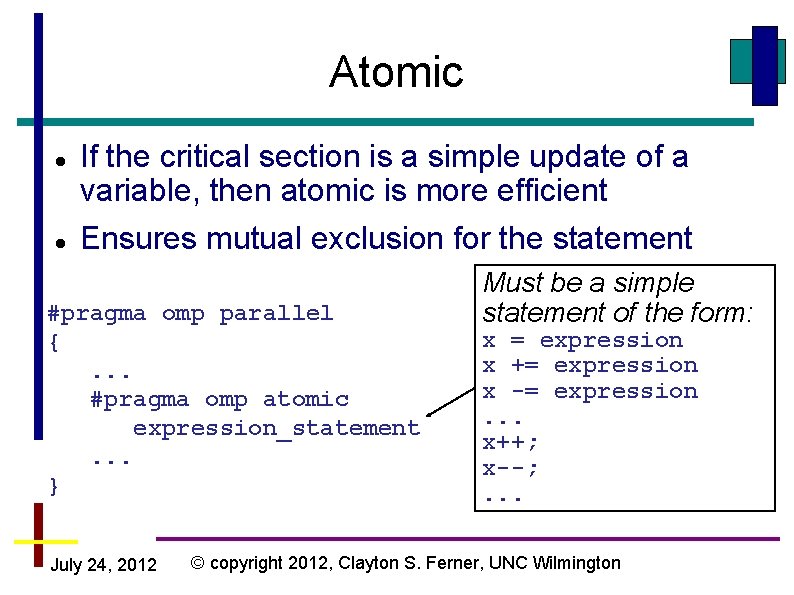

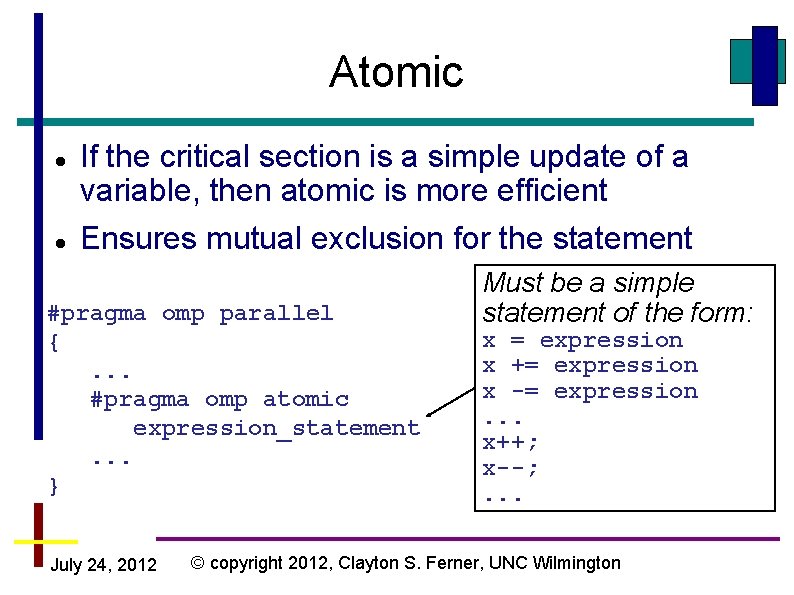

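Atomic

If the critical section is a simple update of a variable, then atomic is more efficient. It ensures mutual exclusion for that one statement.

```c
#pragma omp parallel
{
    /* ... */
#pragma omp atomic
    x += expr;     /* expression_statement: one simple update of x */
    /* ... */
}
```

The statement must be a simple update of the form `x binop= expression` (e.g. `x += expression`, `x -= expression`, ...), `x = x binop expression`, `x++`, `x--`, `++x`, or `--x`, where expression does not refer to x. (OpenMP 3.1 and later add `atomic read` and `atomic write` for plain loads and stores such as `x = expression`.)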
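A histogram is a natural fit for atomic: each update is a single `x++` on one array element, and two threads may hit the same element at the same time. A minimal sketch, with a hypothetical `histogram` function that assumes every value lies in 0 to nbins - 1.

```c
/* Counts how many values of v fall in each of nbins buckets.
   Assumes 0 <= v[i] < nbins for every i. */
void histogram(const int *v, int n, int *bins, int nbins)
{
    for (int b = 0; b < nbins; b++)
        bins[b] = 0;

#pragma omp parallel for
    for (int i = 0; i < n; i++) {
        /* different threads may increment the same bin */
#pragma omp atomic
        bins[v[i]]++;
    }
}
```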

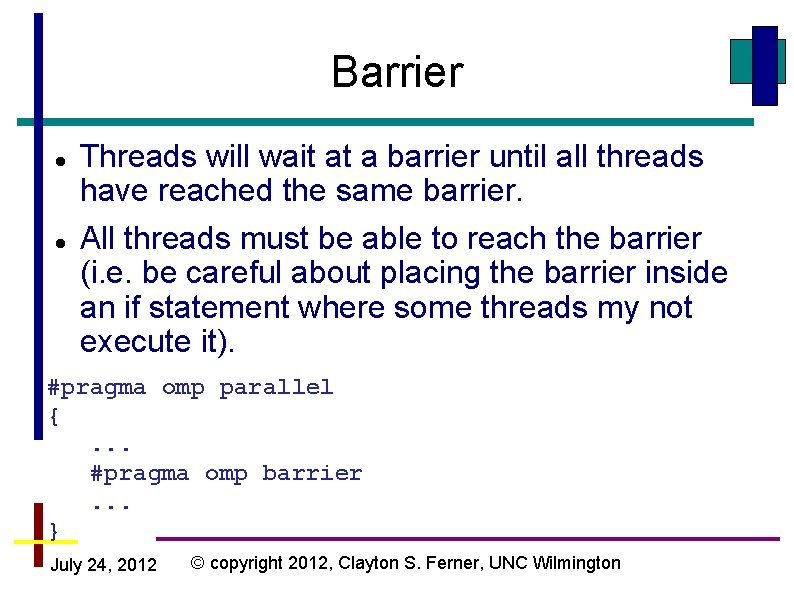

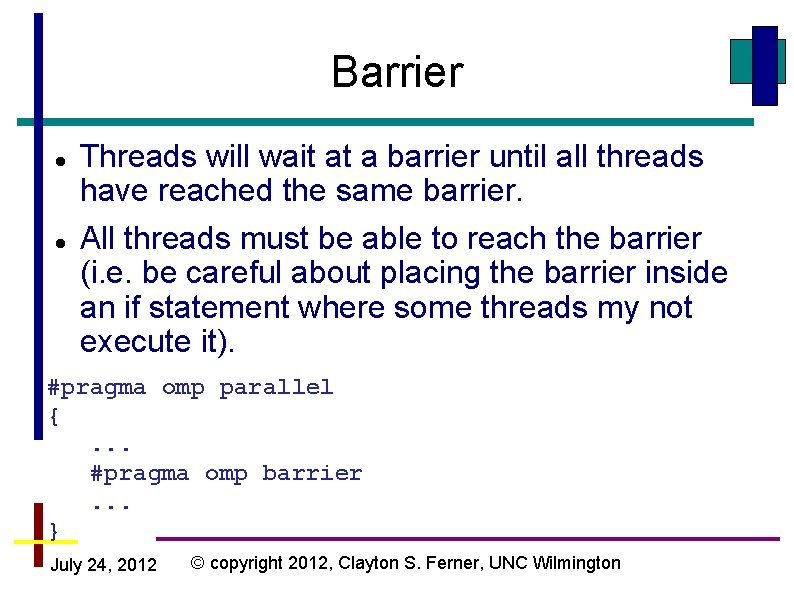

Barrier

Threads wait at a barrier until all threads have reached the same barrier. All threads must be able to reach the barrier (i.e., be careful about placing a barrier inside an if statement that some threads may not execute).

```c
#pragma omp parallel
{
    /* ... */
#pragma omp barrier
    /* ... */
}
```

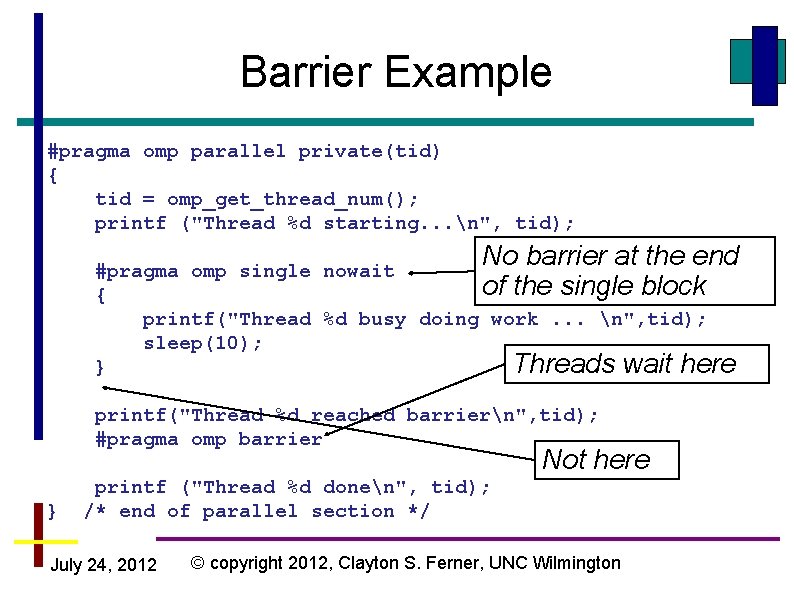

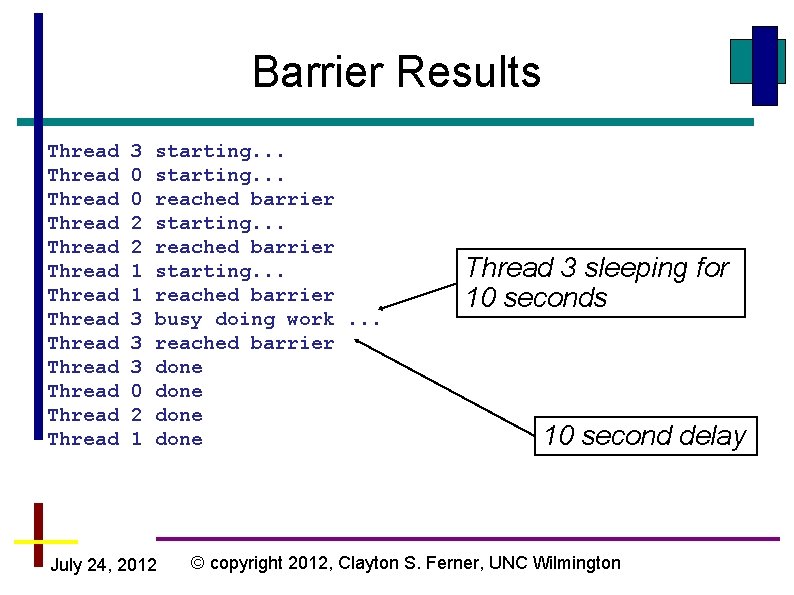

Barrier Example

```c
#pragma omp parallel private(tid)
{
    tid = omp_get_thread_num();
    printf("Thread %d starting...\n", tid);

    /* nowait: no barrier at the end of the single block (threads do not wait here) */
#pragma omp single nowait
    {
        printf("Thread %d busy doing work...\n", tid);
        sleep(10);
    }

    printf("Thread %d reached barrier\n", tid);
#pragma omp barrier     /* threads wait here */

    printf("Thread %d done\n", tid);
}   /* end of parallel section */
```

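Barrier Results

```
Thread 3 starting...
Thread 0 starting...
Thread 0 reached barrier
Thread 2 starting...
Thread 2 reached barrier
Thread 1 starting...
Thread 1 reached barrier
Thread 3 busy doing work...
Thread 3 reached barrier
Thread 3 done
Thread 0 done
Thread 2 done
Thread 1 done
```

Thread 3 executes the single block and sleeps for 10 seconds while the other threads wait at the barrier, so there is a 10 second delay before thread 3 reaches the barrier and all threads print "done".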
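A sketch of why the barrier matters (hypothetical `barrier_two_phase` and `MAX_THREADS`): in phase 1 each thread writes its own slot of a shared array, and in phase 2 it reads its neighbor's slot. Without the barrier, a thread could read its neighbor's slot before it is written. The barrier is placed where every thread reaches it, outside any if statement.

```c
#ifdef _OPENMP
#include <omp.h>
#else
#define omp_get_thread_num() 0    /* serial fallback: a team of one */
#define omp_get_num_threads() 1
#endif

#define MAX_THREADS 256   /* hypothetical upper bound on the team size */

/* Returns 1 if every thread saw its neighbor's phase-1 value, else 0. */
int barrier_two_phase(void)
{
    int value[MAX_THREADS];   /* shared: written in phase 1 */
    int ok[MAX_THREADS];      /* shared: result of each thread's check */
    int n = 1;

#pragma omp parallel
    {
        int tid = omp_get_thread_num();
        int nt = omp_get_num_threads();
        if (tid == 0)
            n = nt;
        if (nt <= MAX_THREADS)
            value[tid] = tid * 10;           /* phase 1: write own slot */

#pragma omp barrier                           /* every thread waits here */

        if (nt <= MAX_THREADS) {
            int right = (tid + 1) % nt;      /* phase 2: read neighbor */
            ok[tid] = (value[right] == right * 10);
        }
    }
    if (n > MAX_THREADS)
        return 0;
    for (int i = 0; i < n; i++)
        if (!ok[i])
            return 0;
    return 1;
}
```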

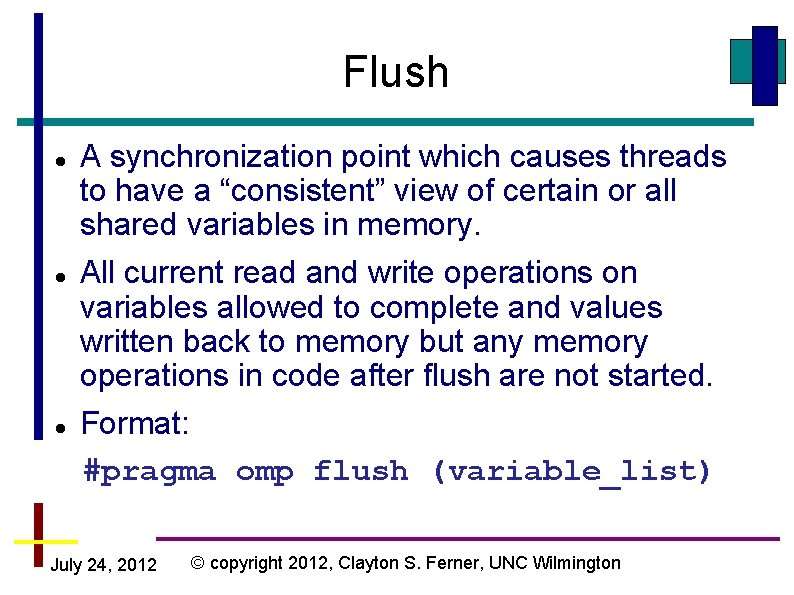

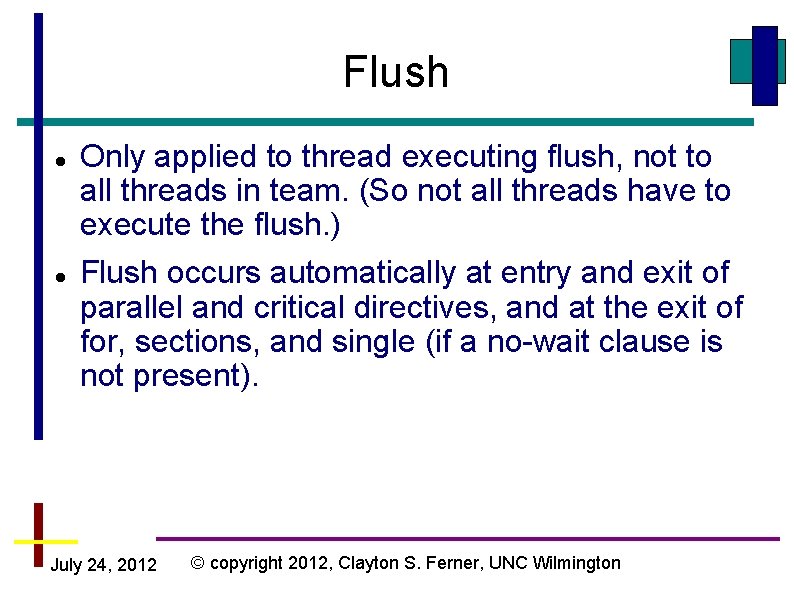

Flush

A flush is a synchronization point that gives the executing thread a "consistent" view of certain or all shared variables in memory. All current read and write operations on the variables are allowed to complete and the values are written back to memory, but memory operations in code after the flush are not started until then.

Format: `#pragma omp flush (variable_list)`

Flush

A flush applies only to the thread executing it, not to all threads in the team (so not all threads have to execute the flush). A flush occurs automatically at entry to and exit from parallel and critical constructs, at barriers, and at exit from for, sections, and single constructs (if a nowait clause is not present).

More information: http://openmp.org/wp/

Questions