Introduction to Numerical Methods Andreas Grtler Hans Maassen

![Root finding: bisection method • The bisection method finds a root in [a, b] Root finding: bisection method • The bisection method finds a root in [a, b]](https://slidetodoc.com/presentation_image/24ee6e514f92d93c63565cb6e2a8c306/image-20.jpg)

![convergence • Bisection always converges, but maybe slow • f C[a, b] and f(a)*f(b)<0 convergence • Bisection always converges, but maybe slow • f C[a, b] and f(a)*f(b)<0](https://slidetodoc.com/presentation_image/24ee6e514f92d93c63565cb6e2a8c306/image-22.jpg)

- Slides: 26

Introduction to Numerical Methods Andreas Gürtler Hans Maassen (maassen@math. ru. nl) tel. : 52991

Plan for today • Introduction • Errors • Representation of real numbers in a computer • When can we solve a problem? • A first numerical method: • Bisection method for root-finding (solving f(x)=0) Practical part: Implementation of the bisection method

What are numerical methods for? • Many problems can be formulated in simple • • mathematical equations This does not mean, that they are solved easily! For any application, you need numbers Numerical Methods! Needed for most basic things: exp(3), sqrt(7), sin(42°), log(5) , Often, modeling and numerical calculations can help in design, construction, safety Note: many everyday’s problems are so complicated that they cannot be solved yet Efficiency is crucial

Numerics is about numbers • Numerical methods: Numerical approximation of solutions to understood problems • Numerical representation of real numbers has far-reaching consequences • Two main objectives – quantify errors Approximation without error estimation is useless – increase efficiency Solutions which take years or need more resources that you have are useless • Nowadays, many fields depend on numerics

Errors

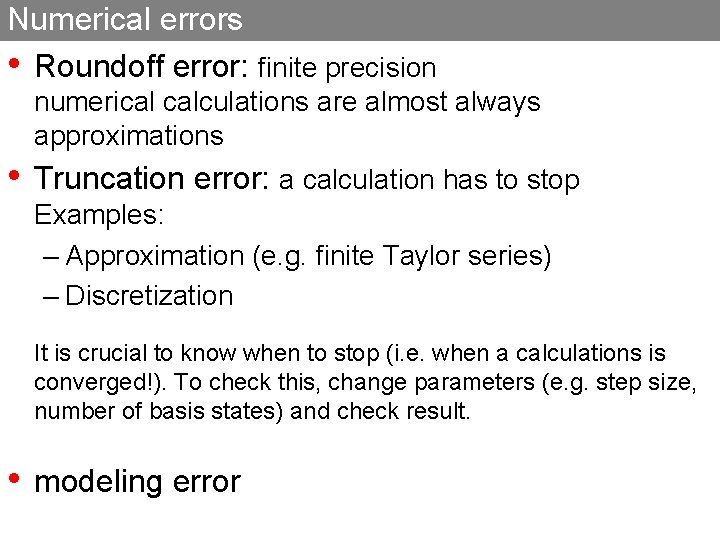

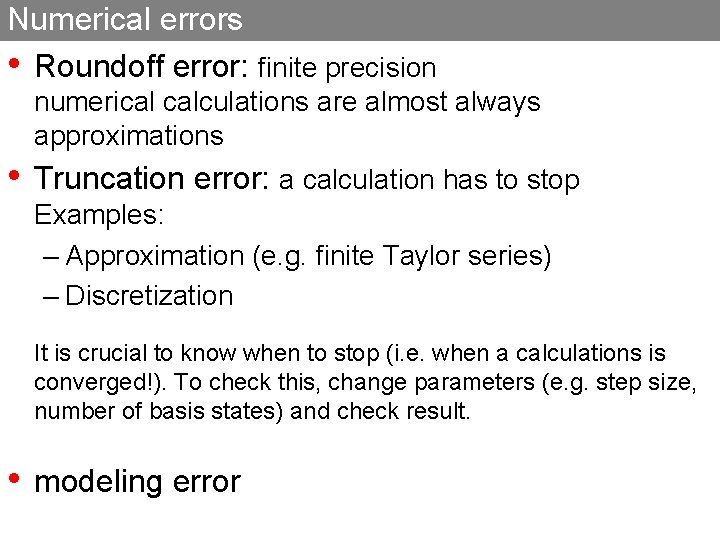

Numerical errors • Roundoff error: finite precision numerical calculations are almost always approximations • Truncation error: a calculation has to stop Examples: – Approximation (e. g. finite Taylor series) – Discretization It is crucial to know when to stop (i. e. when a calculations is converged!). To check this, change parameters (e. g. step size, number of basis states) and check result. • modeling error

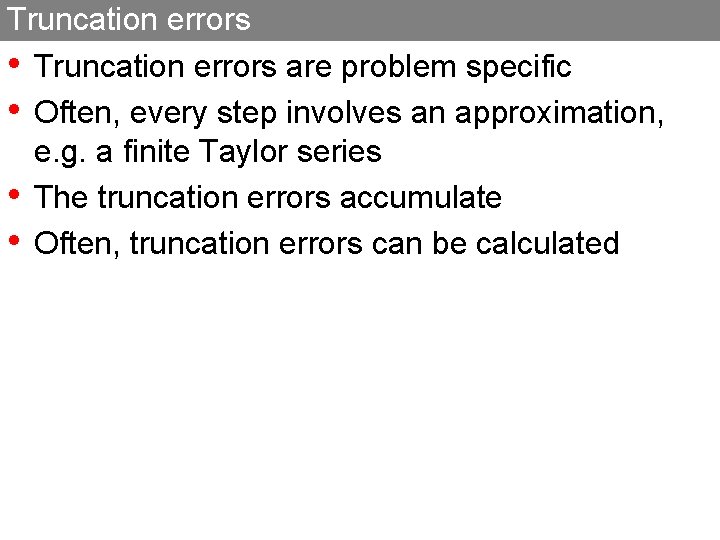

Truncation errors • Truncation errors are problem specific • Often, every step involves an approximation, e. g. a finite Taylor series • The truncation errors accumulate • Often, truncation errors can be calculated

Roundoff errors • Precision of representation of numbers is finite - errors accumulate • a real number x can be represented as fl(x) = x·(1+ ) : floating point computer representation |fl(x)-x| = x |fl(x)-x|/x = absolute error (often also x) relative error Example: Logistic Map xi+1=r * xi * (1 -xi) x 0= 0. 7 ; r=4 single and double precision

Computer representation of numbers

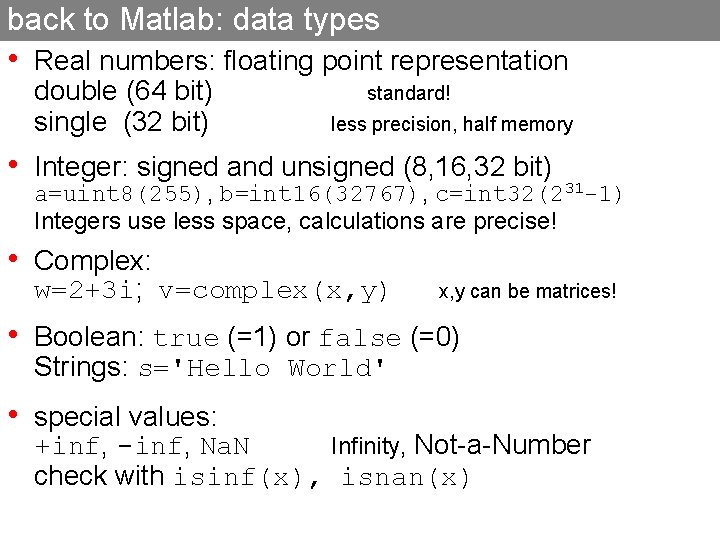

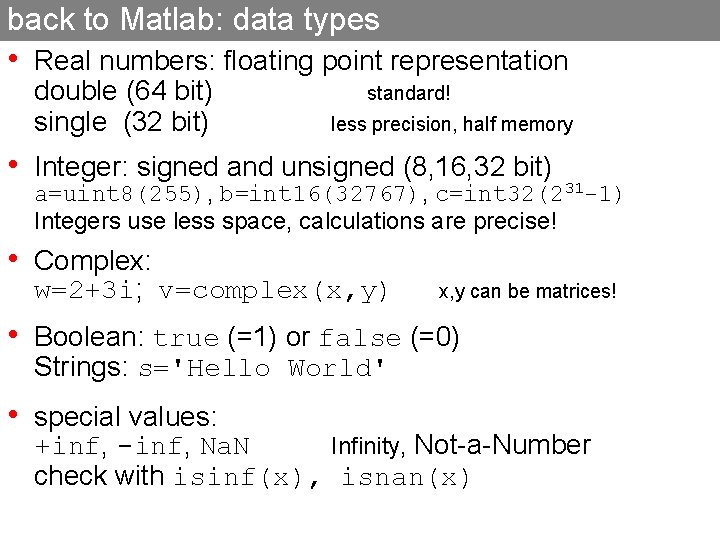

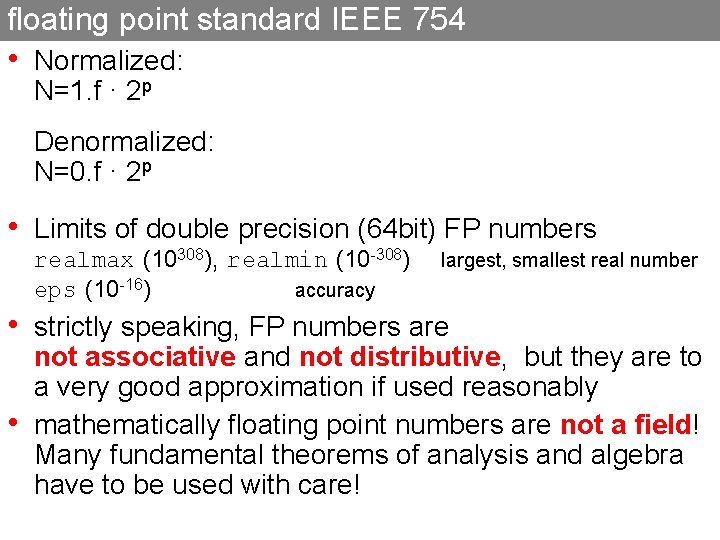

back to Matlab: data types • Real numbers: floating point representation double (64 bit) single (32 bit) standard! less precision, half memory • Integer: signed and unsigned (8, 16, 32 bit) a=uint 8(255), b=int 16(32767), c=int 32(231 -1) Integers use less space, calculations are precise! • Complex: w=2+3 i; v=complex(x, y) x, y can be matrices! • Boolean: true (=1) or false (=0) Strings: s='Hello World' • special values: +inf, -inf, Na. N Infinity, Not-a-Number check with isinf(x), isnan(x)

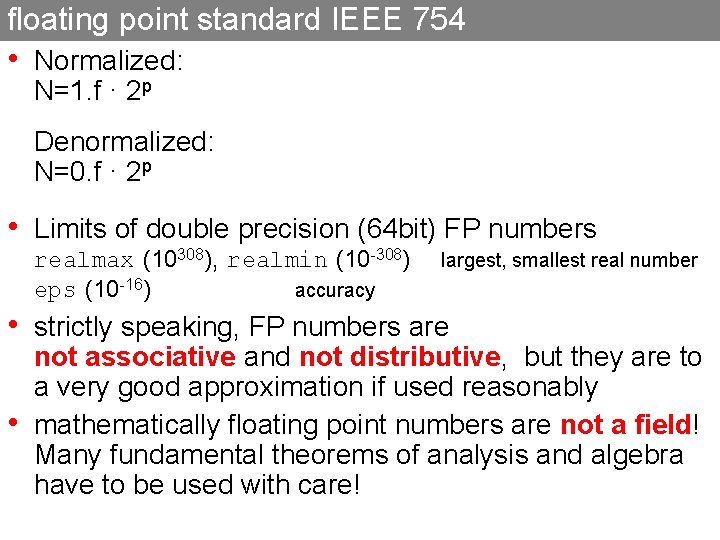

floating point standard IEEE 754 • Normalized: N=1. f · 2 p Denormalized: N=0. f · 2 p • Limits of double precision (64 bit) FP numbers realmax (10308), realmin (10 -308) eps (10 -16) accuracy largest, smallest real number • strictly speaking, FP numbers are • not associative and not distributive, but they are to a very good approximation if used reasonably mathematically floating point numbers are not a field! Many fundamental theorems of analysis and algebra have to be used with care!

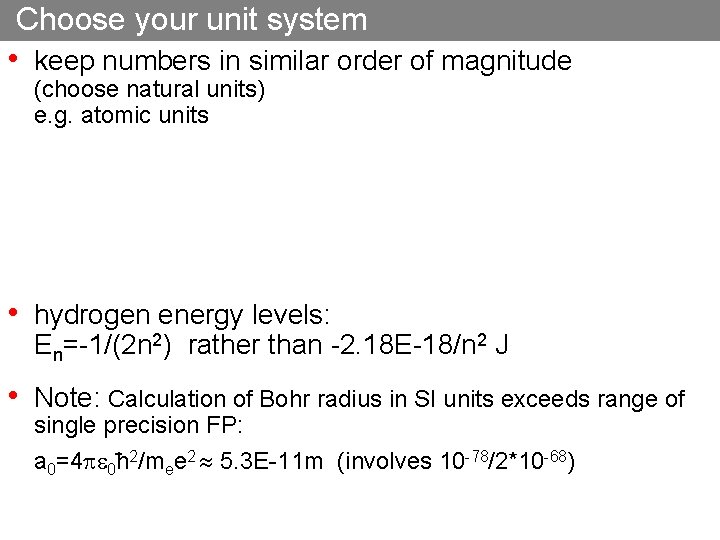

Choose your unit system • keep numbers in similar order of magnitude (choose natural units) e. g. atomic units • hydrogen energy levels: En=-1/(2 n 2) rather than -2. 18 E-18/n 2 J • Note: Calculation of Bohr radius in SI units exceeds range of single precision FP: a 0=4 0 -h 2/mee 2 5. 3 E-11 m (involves 10 -78/2*10 -68)

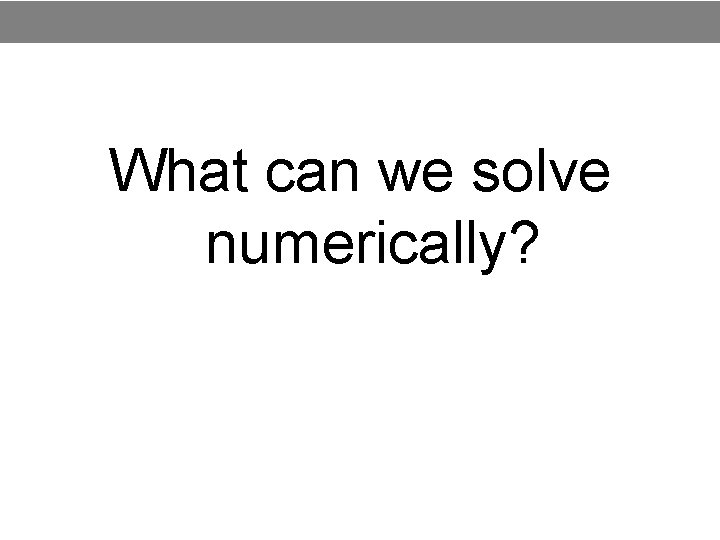

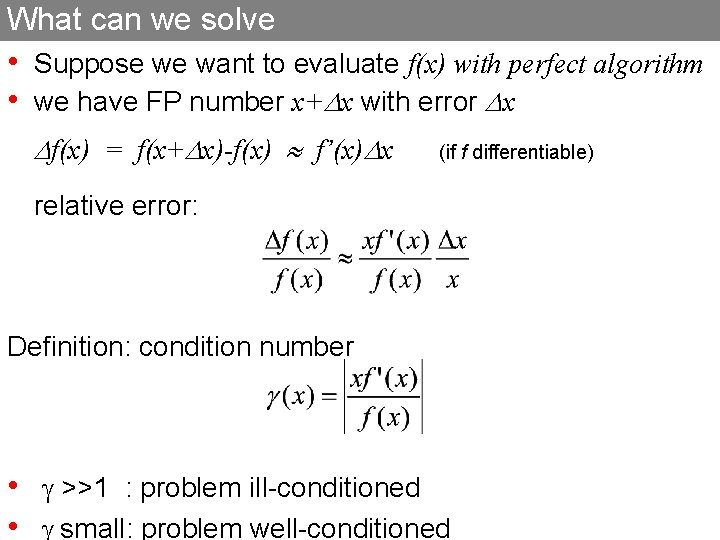

floating point caveats • not all real numbers can be represented as FP 0. 1+0. 1 -1 • Floating point calculations are not precise! sin(pi) is not 0, 1 E 20+1 -1 E 20 is not 1 • never compare two floats (a==b) directly try 100*(1/3)/100 == 1/3 use abs(a-b)<eps FALSE!!! (eps=2. 2 E-16) • be careful with mixing integer and FP i=int 32(1); i/2 produces 1 as i/2 stays integer! i/3 produces 0 ! This is much more dangerous in C and FORTRAN etc. Solution: explicit type conversion double(i)/2 produces 0. 5 -1 E-16

What can we solve numerically?

What can we solve • Suppose we want to evaluate f(x) with perfect algorithm • we have FP number x+ x with error x f(x) = f(x+ x)-f(x) f’(x) x (if f differentiable) relative error: Definition: condition number • >>1 : problem ill-conditioned • small: problem well-conditioned

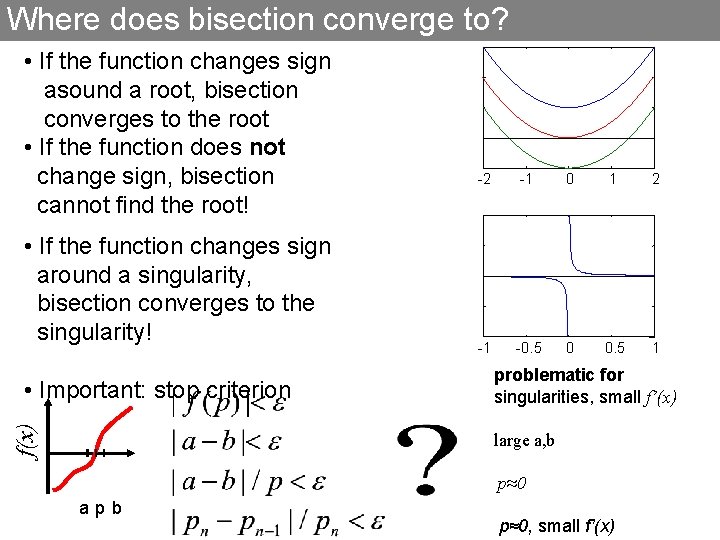

well- and ill-conditioned methods • Let’s try a simple calculation: 99 -70*sqrt(2) ( 0. 00505) • suppose we have 1. 4 as approximation for 2 • We have 2 mathematically equivalent methods: f 1: 99 -70* 2 f 2: 1/(99+70* 2) f 1(1. 4) = 1 f 2(1. 4) 0. 0051 Condition numbers: f 1(x)= 99 -70 x 2 20000 f 1(x)= 1/(99+70 x) 2 0. 5

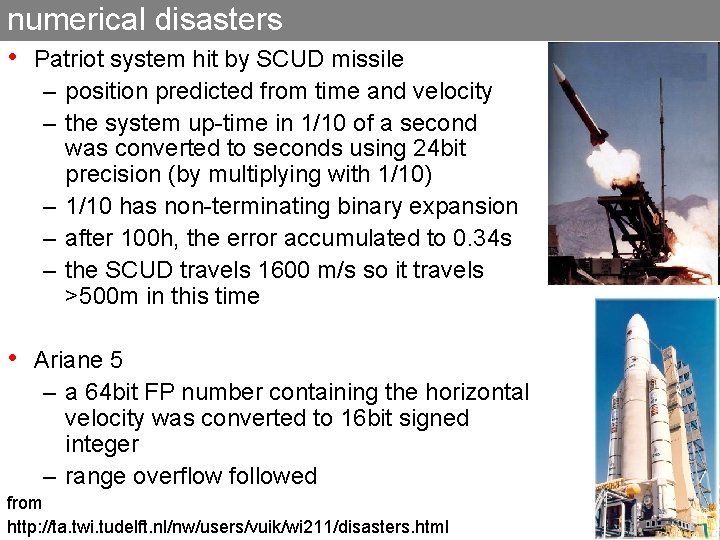

what happened? f 1: 99 -70* 2 f 2: 1/(99+70* 2) • Condition number of subtraction, addition: f(x)=x-a f(x)=x+a = |-x/(x-a)| = |x/(x+a)| ill-conditioned for x-a≈0 ill-conditioned for x+a≈0 • Condition number for multiplication, division: f(x)=ax f(x)=1/x = |xa/(ax)| =1 = |xx-2/(x-1)| =1 well-conditioned

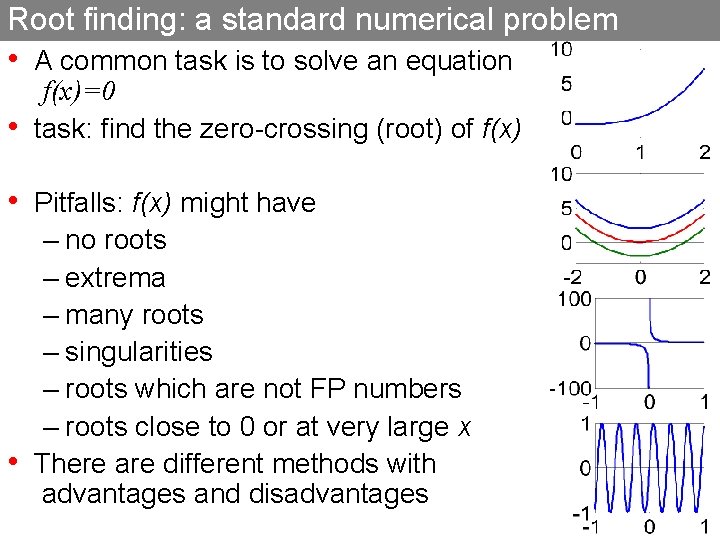

numerical disasters • Patriot system hit by SCUD missile – position predicted from time and velocity – the system up-time in 1/10 of a second was converted to seconds using 24 bit precision (by multiplying with 1/10) – 1/10 has non-terminating binary expansion – after 100 h, the error accumulated to 0. 34 s – the SCUD travels 1600 m/s so it travels >500 m in this time • Ariane 5 – a 64 bit FP number containing the horizontal velocity was converted to 16 bit signed integer – range overflow followed from http: //ta. twi. tudelft. nl/nw/users/vuik/wi 211/disasters. html

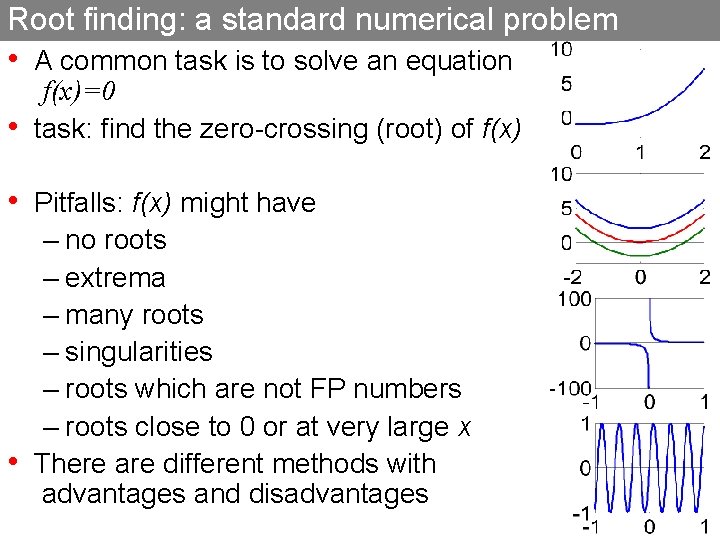

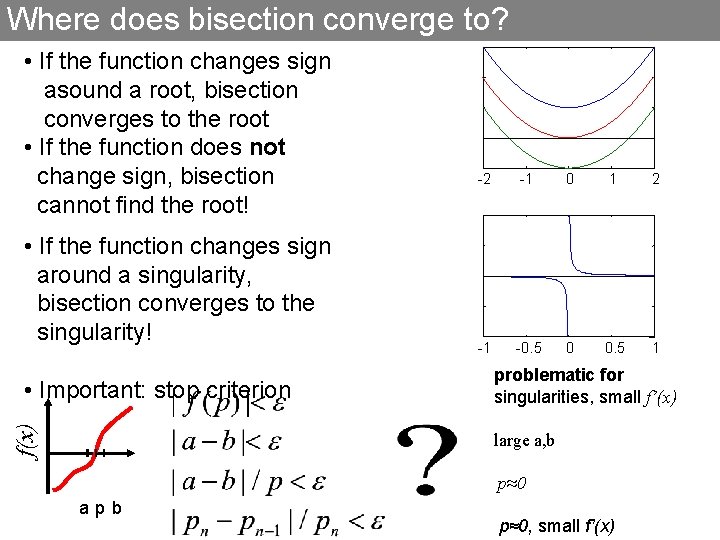

Root finding: a standard numerical problem • A common task is to solve an equation • f(x)=0 task: find the zero-crossing (root) of f(x) • Pitfalls: f(x) might have – no roots – extrema – many roots – singularities – roots which are not FP numbers – roots close to 0 or at very large x • There are different methods with advantages and disadvantages

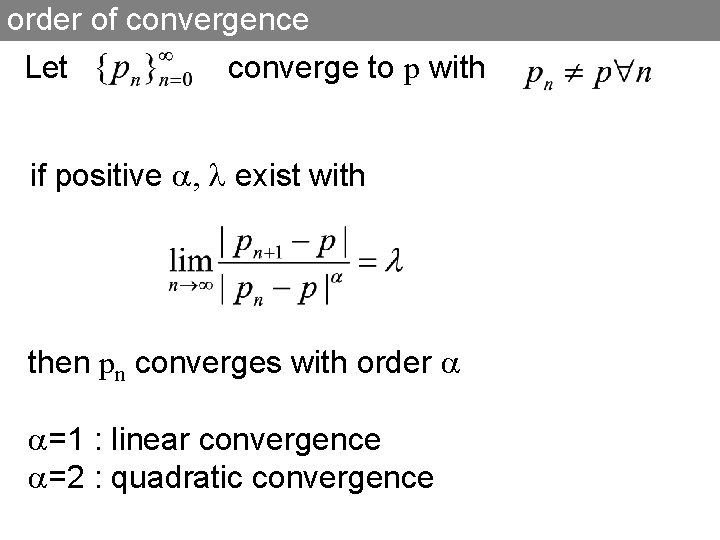

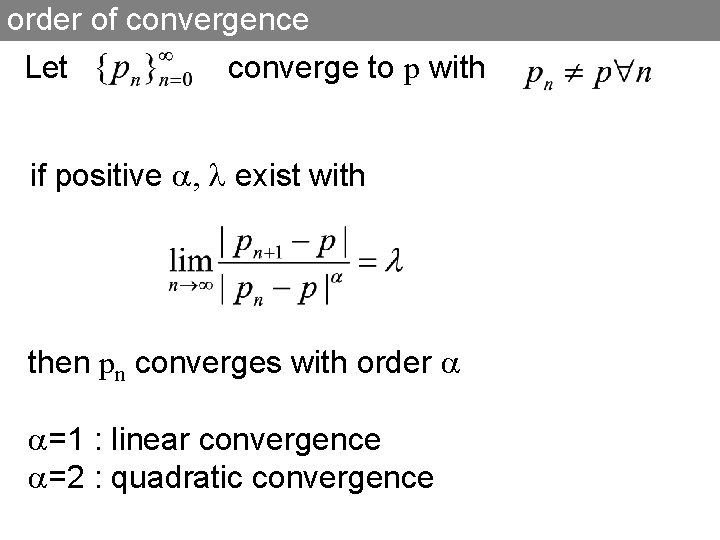

![Root finding bisection method The bisection method finds a root in a b Root finding: bisection method • The bisection method finds a root in [a, b]](https://slidetodoc.com/presentation_image/24ee6e514f92d93c63565cb6e2a8c306/image-20.jpg)

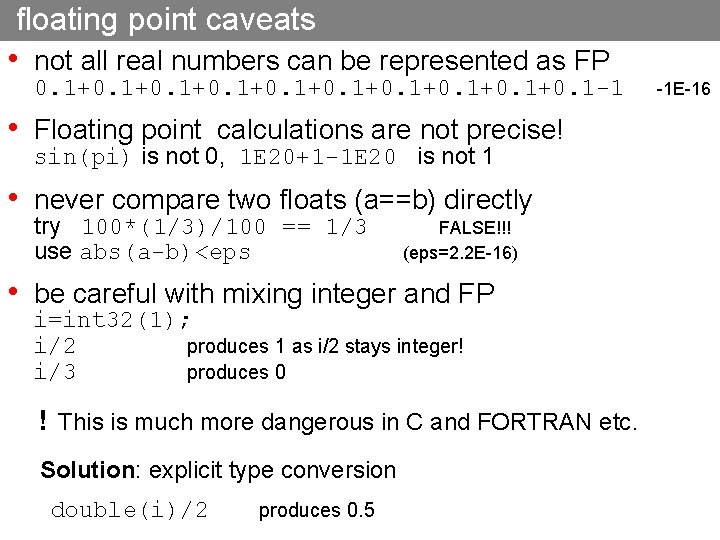

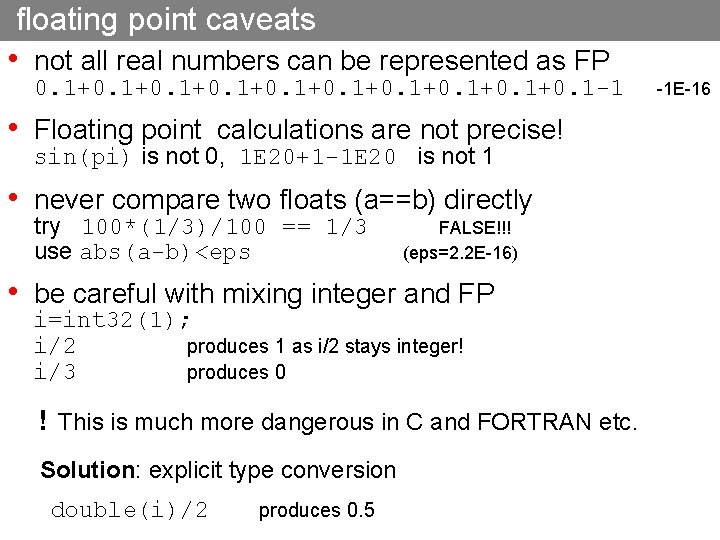

Root finding: bisection method • The bisection method finds a root in [a, b] if f(x) C(a, b), f(a)*f(b)<0 • wanted: x 0: f(x 0)=0 f(x) (There is a root because of the intermediate value theorem) • Bisection is slow, but always works!

Where does bisection converge to? • If the function changes sign asound a root, bisection converges to the root • If the function does not change sign, bisection cannot find the root! • If the function changes sign around a singularity, bisection converges to the singularity! f(x) • Important: stop criterion -2 -1 0 1 2 -1 -0. 5 0 0. 5 1 problematic for singularities, small f’(x) large a, b p≈0 apb p≈0, small f’(x)

![convergence Bisection always converges but maybe slow f Ca b and fafb0 convergence • Bisection always converges, but maybe slow • f C[a, b] and f(a)*f(b)<0](https://slidetodoc.com/presentation_image/24ee6e514f92d93c63565cb6e2a8c306/image-22.jpg)

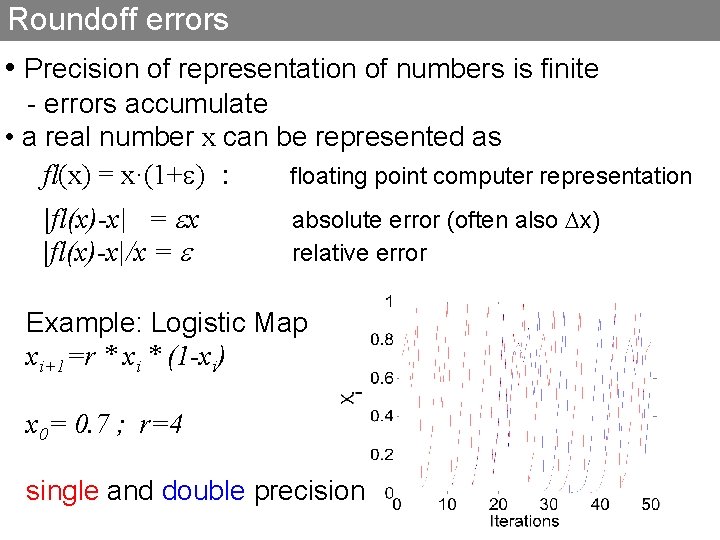

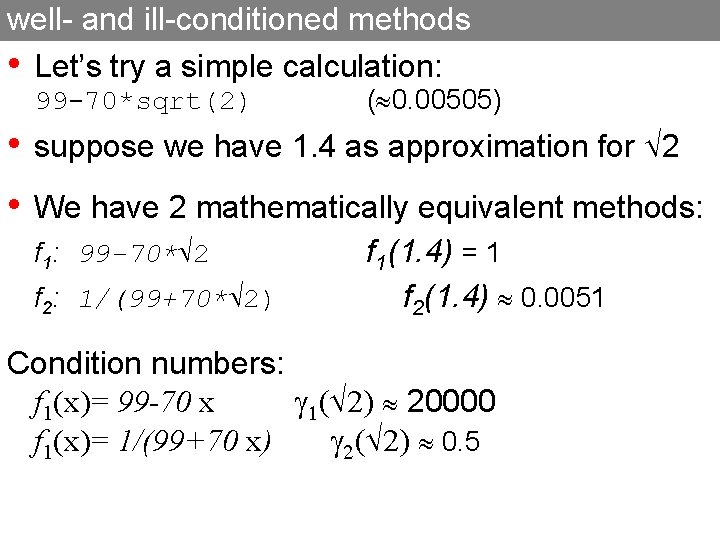

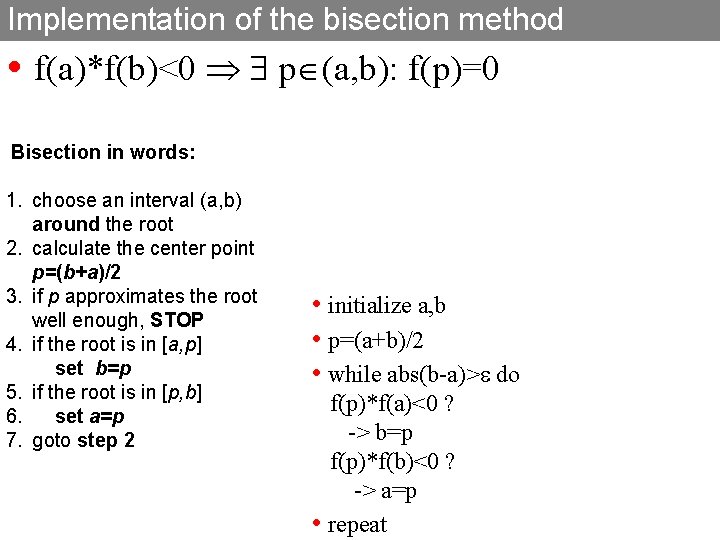

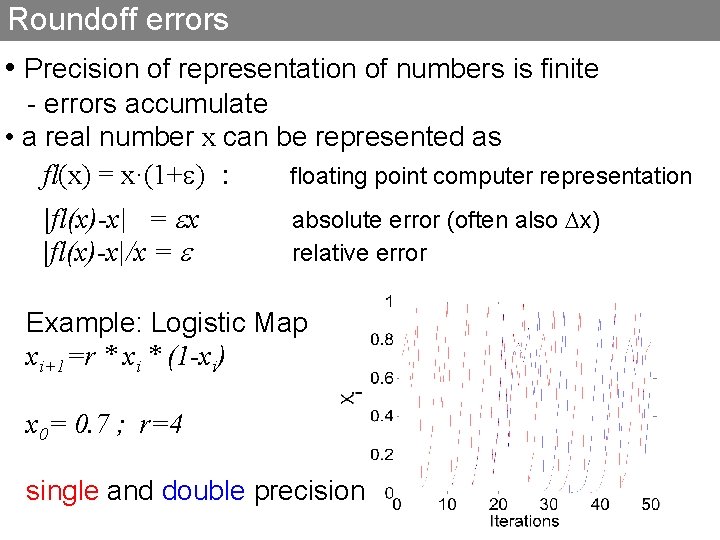

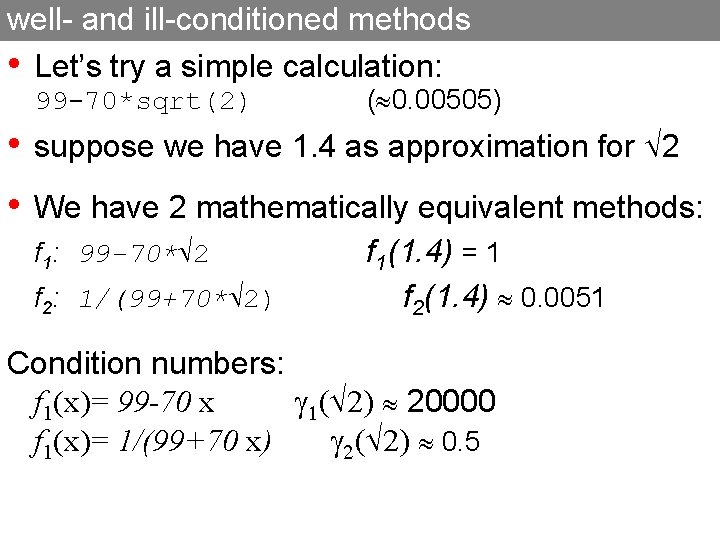

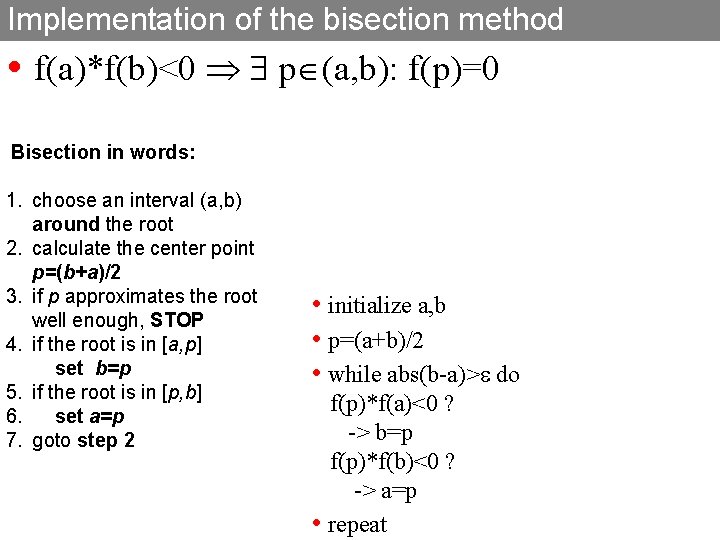

convergence • Bisection always converges, but maybe slow • f C[a, b] and f(a)*f(b)<0 then {pn} converges to a root p of f and |pn-p| (b-a)/2 n • Proof: n 1: bn-an = (b-a)/2 n-1 and p (an, bn) • convergence is linear

order of convergence Let converge to p with if positive exist with then pn converges with order =1 : linear convergence =2 : quadratic convergence

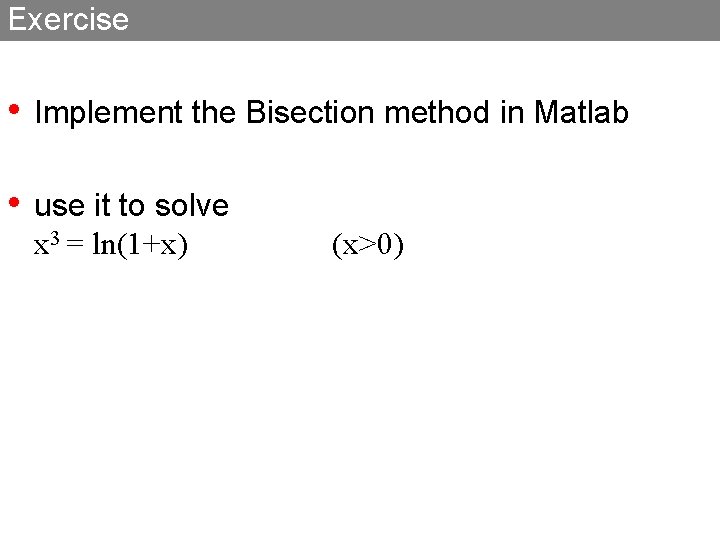

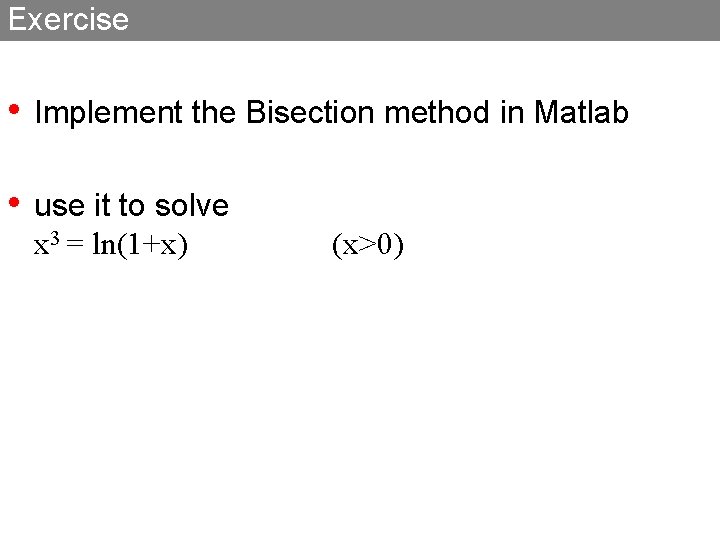

Implementation of the bisection method • f(a)*f(b)<0 p (a, b): f(p)=0 Bisection in words: 1. choose an interval (a, b) around the root 2. calculate the center point p=(b+a)/2 3. if p approximates the root well enough, STOP 4. if the root is in [a, p] set b=p 5. if the root is in [p, b] 6. set a=p 7. goto step 2 • initialize a, b • p=(a+b)/2 • while abs(b-a)> do f(p)*f(a)<0 ? -> b=p f(p)*f(b)<0 ? -> a=p • repeat

Exercise • Implement the Bisection method in Matlab • use it to solve x 3 = ln(1+x) (x>0)

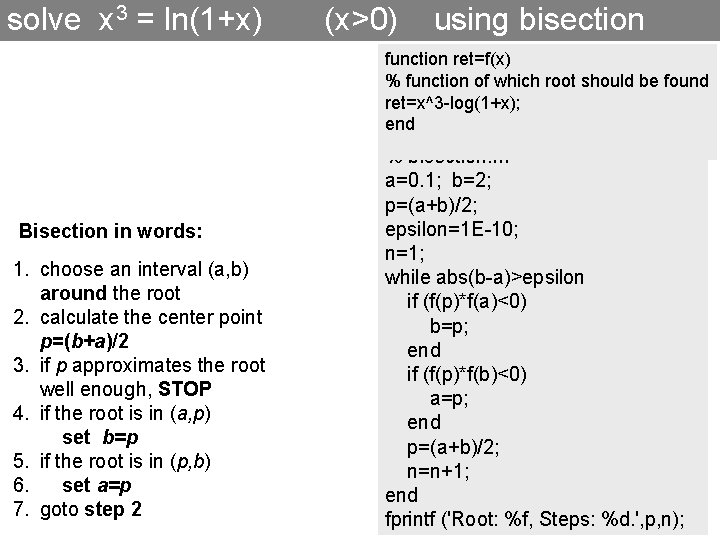

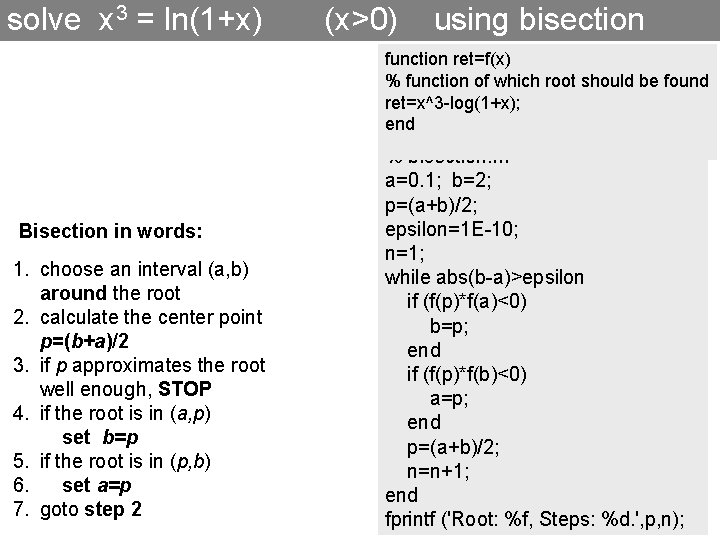

solve x 3 = ln(1+x) (x>0) using bisection function ret=f(x) % function of which root should be found ret=x^3 -log(1+x); end Bisection in words: 1. choose an interval (a, b) around the root 2. calculate the center point p=(b+a)/2 3. if p approximates the root well enough, STOP 4. if the root is in (a, p) set b=p 5. if the root is in (p, b) 6. set a=p 7. goto step 2 % bisection. m a=0. 1; b=2; p=(a+b)/2; epsilon=1 E-10; n=1; while abs(b-a)>epsilon if (f(p)*f(a)<0) b=p; end if (f(p)*f(b)<0) a=p; end p=(a+b)/2; n=n+1; end fprintf ('Root: %f, Steps: %d. ', p, n);