Introduction to Neural Networks

Neural Networks in the Brain
• The human brain “computes” in an entirely different way from conventional digital computers.
• The brain is highly complex, nonlinear, and parallel.
• The brain organizes its neurons so that it performs certain tasks, such as visual recognition, much faster than computers do (a typical visual recognition task takes 100–200 ms).
• Key feature of the biological brain: experience shapes its wiring through plasticity, so learning becomes the central issue in neural networks.

Neural Networks as an Adaptive Machine
• A neural network is a massively parallel distributed processor made up of simple processing units, which has a natural propensity for storing experiential knowledge and making it available for use.
• Neural networks resemble the brain in two respects:
  – Knowledge is acquired from the environment through a learning process.
  – Interneuron connection strengths, known as synaptic weights, are used to store the acquired knowledge.
• The procedure used to perform learning is called a learning algorithm. It adjusts the synaptic weights and can even modify the network topology.
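The slide does not name a particular learning algorithm. As a minimal sketch, assuming the error-correction (LMS, or delta) rule as one example, the Python below shows a learning algorithm storing knowledge in synaptic weights by adjusting them from examples. The helper `train_lms`, the learning rate `eta`, and the target mapping are hypothetical choices for illustration only.

```python
import numpy as np

def train_lms(X, d, eta=0.1, epochs=50):
    """Hypothetical helper: adjust the weights w and bias b of a single linear
    neuron with the error-correction (LMS / delta) rule, one example at a time."""
    w = np.zeros(X.shape[1])  # synaptic weights start at zero
    b = 0.0                   # bias
    for _ in range(epochs):
        for x, target in zip(X, d):
            y = w @ x + b       # neuron output (linear activation assumed)
            e = target - y      # error signal
            w += eta * e * x    # change each weight in proportion to error times input
            b += eta * e
    return w, b

# Illustrative data: learn the mapping d = 2*x1 - x2 + 0.5 from 100 examples
rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(100, 2))
d = 2.0 * X[:, 0] - X[:, 1] + 0.5
w, b = train_lms(X, d)
print("learned weights:", np.round(w, 3), "bias:", round(b, 3))
```

After training, the acquired knowledge lives entirely in `w` and `b`, which mirrors the second point of resemblance on the slide.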

Benefits of Neural Networks
• Nonlinearity: the network is built from nonlinear components, so its nonlinearity is distributed throughout the network.
• Input-output mapping: learned through supervised learning, a form of nonparametric statistical inference (model-free estimation with no prior assumptions about the data).
• Adaptivity: the network can either retain its synaptic weights or adapt them to changes in the environment, so it can deal with nonstationary environments. It must overcome the stability-plasticity dilemma.
• Evidential response: the network can provide a decision plus the confidence of that decision.
• Contextual information: every neuron in the network potentially influences every other neuron, so contextual information is dealt with naturally.

Benefits of Neural Networks
• Fault tolerance: performance degrades gracefully.
• VLSI implementability: the network is built from many simple components.
• Uniformity of analysis and design: common components (neurons), shareability of theories and learning algorithms, and seamless integration based on modularity.
• Neurobiological analogy: neural networks are motivated by neurobiology, and neurobiology is also turning to neural networks for insights and tools.

Human Brain
• Stimulus → Receptors ⇔ Neural Net ⇔ Effectors → Response (Arbib, 1987)
• Neurons are slow: about 10⁻³ s per operation, compared to 10⁻⁹ s for modern CPUs.
• Huge number of neurons and connections: about 10¹⁰ neurons and 6 × 10¹³ connections in the human brain.
• Highly energy efficient: about 10⁻¹⁶ J per operation per second in the brain vs. 10⁻⁶ J in modern computers.
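The arithmetic below uses only the slide's order-of-magnitude numbers to make the comparisons explicit: a neuron is about 10⁶ times slower per operation than a CPU, a computer uses about 10¹⁰ times more energy, and each neuron has on average about 6,000 connections. The variable names are illustrative.

```python
import math

# Order-of-magnitude figures quoted on the slide
neuron_op_time = 1e-3   # seconds per operation, biological neuron
cpu_op_time = 1e-9      # seconds per operation, modern CPU
neurons = 1e10
connections = 6e13
brain_energy = 1e-16    # joules per operation per second, brain
computer_energy = 1e-6  # joules per operation per second, computer

speed_gap = neuron_op_time / cpu_op_time      # how many times slower a neuron is
energy_gap = computer_energy / brain_energy   # how many times more energy a computer uses
fan_out = connections / neurons               # average connections per neuron

print(f"neuron vs CPU speed: about 10^{round(math.log10(speed_gap))} times slower")
print(f"computer vs brain energy: about 10^{round(math.log10(energy_gap))} times more")
print(f"average connections per neuron: about {fan_out:.0f}")
```

The large average fan-out is one way to see how massive parallelism can compensate for slow individual neurons.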

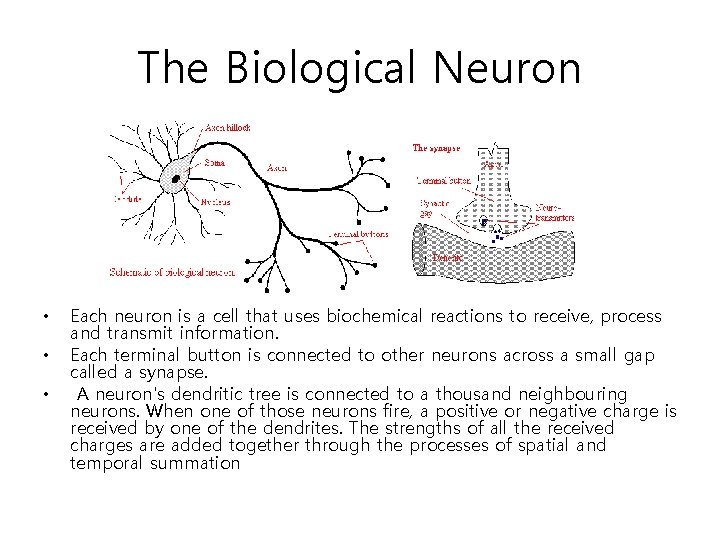

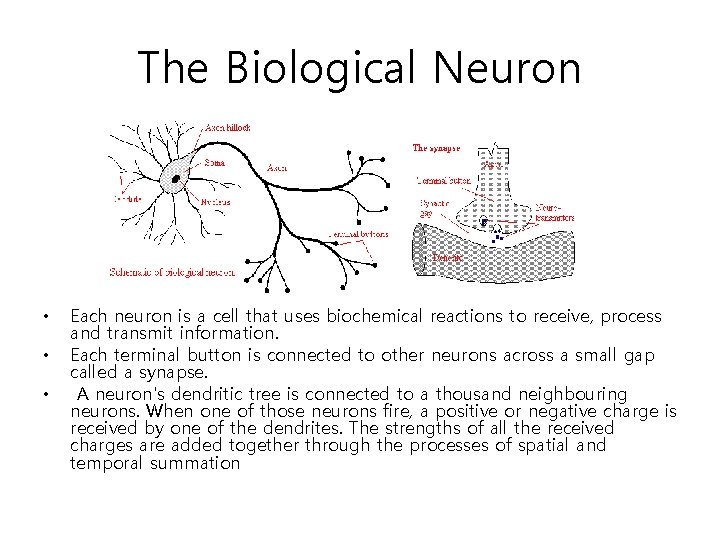

The Biological Neuron
• Each neuron is a cell that uses biochemical reactions to receive, process, and transmit information.
• Each terminal button is connected to other neurons across a small gap called a synapse.
• A neuron's dendritic tree is connected to about a thousand neighbouring neurons. When one of those neurons fires, a positive or negative charge is received by one of the dendrites.
• The strengths of all the received charges are added together through the processes of spatial and temporal summation.
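A minimal sketch of the two kinds of summation, under simplifying assumptions that are not on the slide: charges are plain signed numbers, and in temporal summation the accumulated potential decays by a constant factor (the hypothetical parameter `decay`) at each time step. The helper names are also hypothetical.

```python
import numpy as np

def spatial_sum(charges):
    """Spatial summation: add the signed charges arriving on different dendrites at the same moment."""
    return float(np.sum(charges))

def temporal_sum(charge_history, decay=0.5):
    """Temporal summation: accumulate charges over successive time steps.
    Assumption: the previously accumulated potential fades by `decay` each step."""
    potential = 0.0
    for charges in charge_history:
        potential = decay * potential + spatial_sum(charges)
    return potential

# Two time steps; each inner list holds the charges (positive or negative) received at that step
history = [[1.0, -0.5], [1.0, 1.0]]
print(spatial_sum(history[-1]))   # summation across dendrites at the last step only
print(temporal_sum(history))      # summation across both steps, with decay
```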

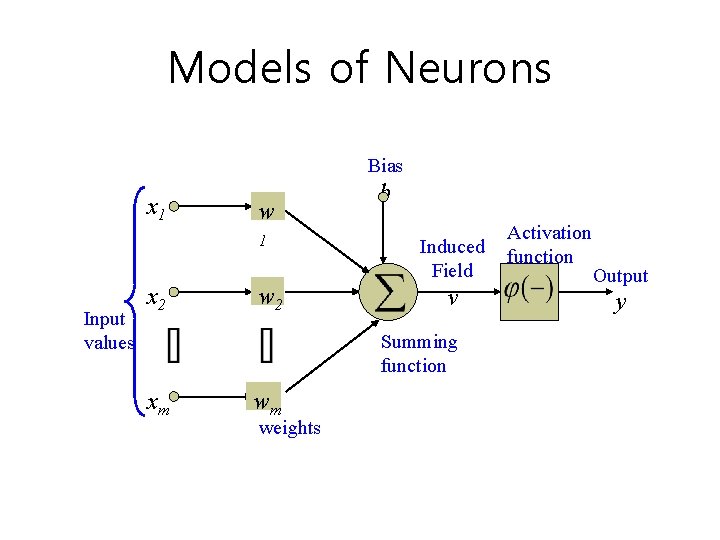

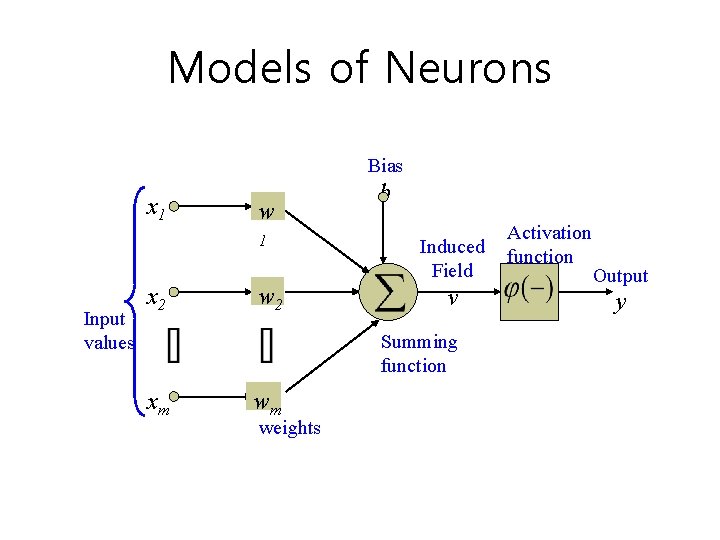

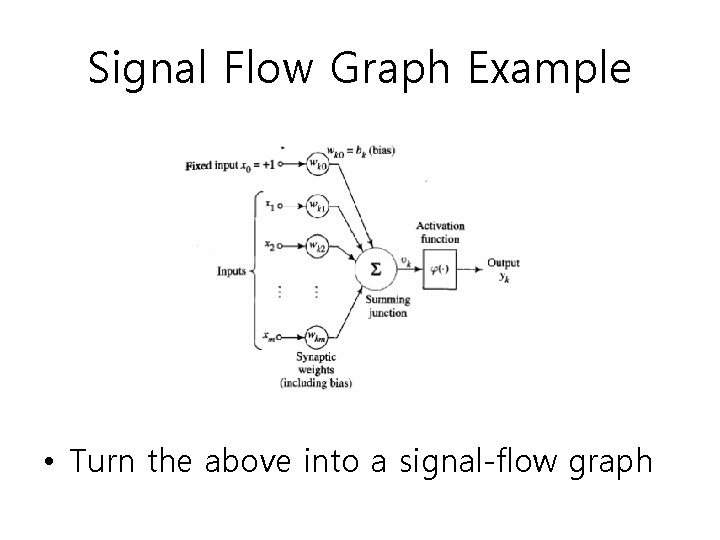

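Models of Neurons
[Figure: nonlinear model of a neuron. Input values x1, x2, …, xm are multiplied by weights w1, w2, …, wm; a summing function adds them together with a bias b to give the induced field v; an activation function maps v to the output y.]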
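The labels in this model correspond to the usual neuron equations: the induced field is v = w1·x1 + w2·x2 + … + wm·xm + b, and the output is y = φ(v), where φ is the activation function. A minimal Python sketch, with arbitrary example numbers and the logistic function assumed as φ:

```python
import numpy as np

def neuron(x, w, b, phi):
    """Single neuron: summing function followed by an activation function."""
    v = np.dot(w, x) + b   # induced field: weighted sum of the inputs plus the bias
    y = phi(v)             # output: activation function applied to the induced field
    return v, y

def logistic(v):
    return 1.0 / (1.0 + np.exp(-v))

x = np.array([1.0, 0.5, -1.0])   # input values x1..x3 (illustrative)
w = np.array([0.4, -0.2, 0.1])   # weights w1..w3 (illustrative)
b = 0.2                          # bias
v, y = neuron(x, w, b, logistic)
print(f"v = {v:.2f}, y = {y:.4f}")
```

Passing `phi` as a parameter keeps the summing stage separate from the activation stage, exactly as in the figure.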

Activation Functions

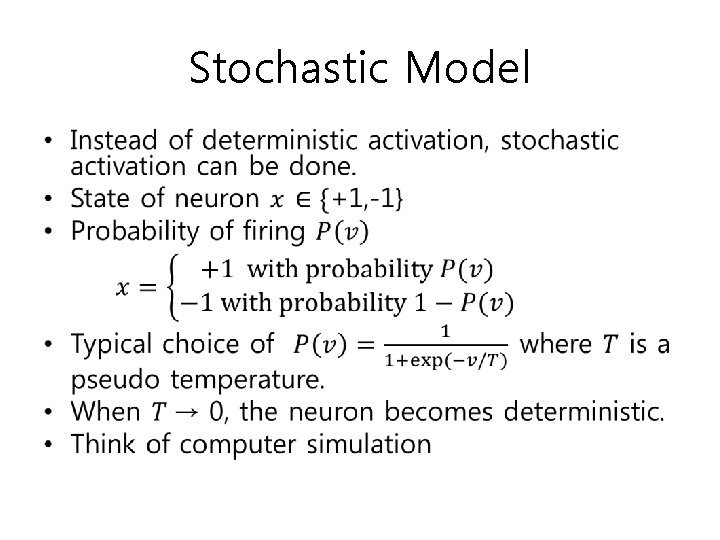
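The specific functions in the slide image are not recoverable from the text. As an assumption, the sketch below implements four commonly used activation functions: the threshold (Heaviside) function, a piecewise-linear function, the logistic sigmoid with a slope parameter `a`, and the hyperbolic tangent.

```python
import numpy as np

def threshold(v):
    """Threshold (Heaviside) function: 1 if v >= 0, else 0."""
    return np.where(v >= 0, 1.0, 0.0)

def piecewise_linear(v):
    """Unit slope for -1/2 < v < 1/2, saturating at 0 below and 1 above."""
    return np.clip(v + 0.5, 0.0, 1.0)

def logistic(v, a=1.0):
    """Logistic sigmoid with slope parameter a; approaches the threshold function as a grows."""
    return 1.0 / (1.0 + np.exp(-a * v))

def hyperbolic_tangent(v):
    """Odd sigmoid-shaped function with range (-1, 1)."""
    return np.tanh(v)

v = np.array([-2.0, -0.25, 0.0, 0.25, 2.0])
for name, phi in [("threshold", threshold), ("piecewise linear", piecewise_linear),
                  ("logistic", logistic), ("tanh", hyperbolic_tangent)]:
    print(f"{name:>16}: {np.round(phi(v), 3)}")
```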

Stochastic Model

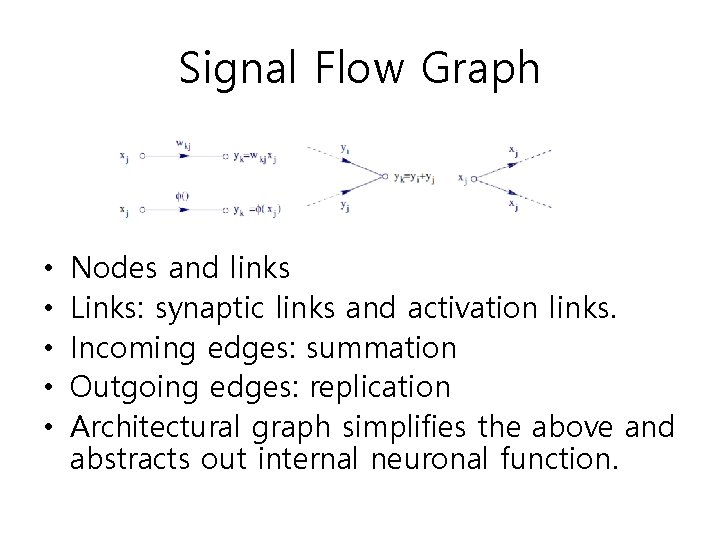

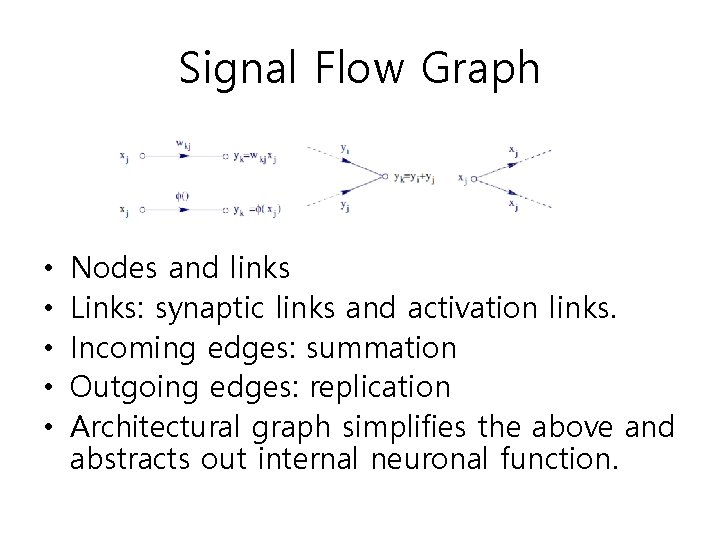
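The slide's body survives only as images. One common formulation of a stochastic neuron (as in Haykin's textbook; assumed here, not recovered from the slide) lets the neuron take state +1 with probability P(v) and state −1 otherwise, where P(v) = 1 / (1 + exp(−v/T)) and T is a pseudotemperature that controls the noise level. As T approaches 0 the neuron becomes deterministic. A Python sketch with hypothetical helper names:

```python
import numpy as np

def firing_probability(v, T):
    """P(v) = 1 / (1 + exp(-v / T)); T is the pseudotemperature (noise level)."""
    return 1.0 / (1.0 + np.exp(-v / T))

def stochastic_neuron(v, T, rng):
    """Return state +1 with probability P(v), otherwise -1."""
    return 1 if rng.random() < firing_probability(v, T) else -1

rng = np.random.default_rng(42)
v = 0.5  # induced field (illustrative)
for T in (0.01, 1.0, 10.0):
    states = [stochastic_neuron(v, T, rng) for _ in range(10_000)]
    frac = states.count(1) / len(states)
    print(f"T = {T:5}: P(v) = {firing_probability(v, T):.3f}, observed fraction of +1 = {frac:.3f}")
```

At low T the neuron almost always fires for positive v; at high T its state approaches a fair coin flip.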

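Signal Flow Graph
• A signal-flow graph consists of nodes and links.
• Links come in two kinds: synaptic links, which multiply their input signal by a synaptic weight, and activation links, which apply the activation function.
• Incoming edges: the signals entering a node are summed.
• Outgoing edges: a node's signal is replicated along every outgoing link.
• An architectural graph simplifies the signal-flow graph and abstracts out the internal function of each neuron.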
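The link and node rules can be illustrated by evaluating a single neuron as a signal-flow graph. In this hypothetical Python sketch, a synaptic link multiplies its input by a weight, an activation link applies φ (tanh is assumed here), a node sums its incoming signals, and a node's output is copied unchanged onto each outgoing link. The bias is treated as a synaptic link from a fixed input of +1, a common convention that is an assumption here.

```python
import math

def synaptic_link(w):
    """Synaptic link: linear relation, output = w * input."""
    return lambda x: w * x

def activation_link(phi):
    """Activation link: nonlinear relation, output = phi(input)."""
    return phi

def node(incoming_signals):
    """Node: its signal is the sum of the signals on all incoming links."""
    return sum(incoming_signals)

# One neuron: inputs -> synaptic links -> summing node v -> activation link -> y
inputs = [1.0, 2.0, -1.0, 1.0]    # last input is the fixed +1 feeding the bias link
weights = [0.5, 0.25, 1.0, 0.5]   # last weight plays the role of the bias
links = [synaptic_link(w) for w in weights]
v = node(link(x) for link, x in zip(links, inputs))
y = activation_link(math.tanh)(v)

# Replication: y is sent unchanged along every outgoing link (three here)
outgoing = [y for _ in range(3)]
print(v, y, outgoing)
```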

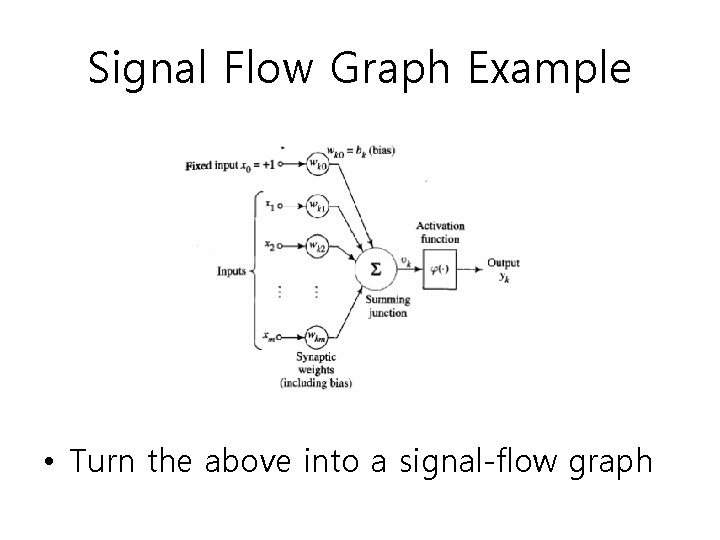

Signal Flow Graph Example
• Turn the above into a signal-flow graph.

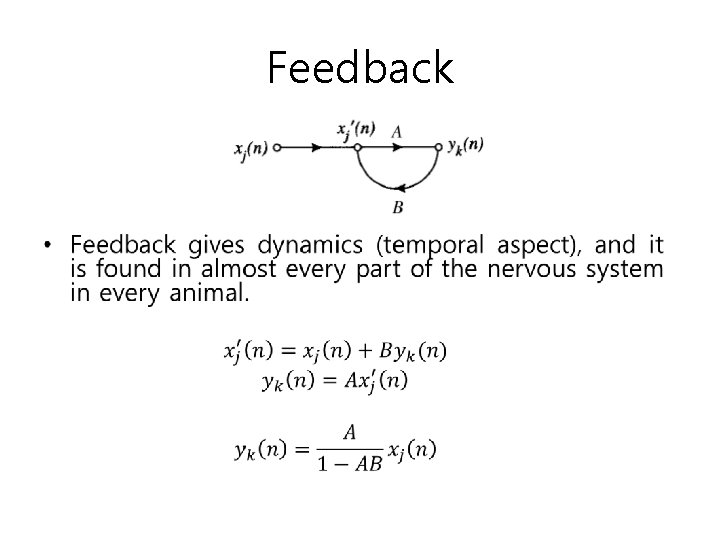

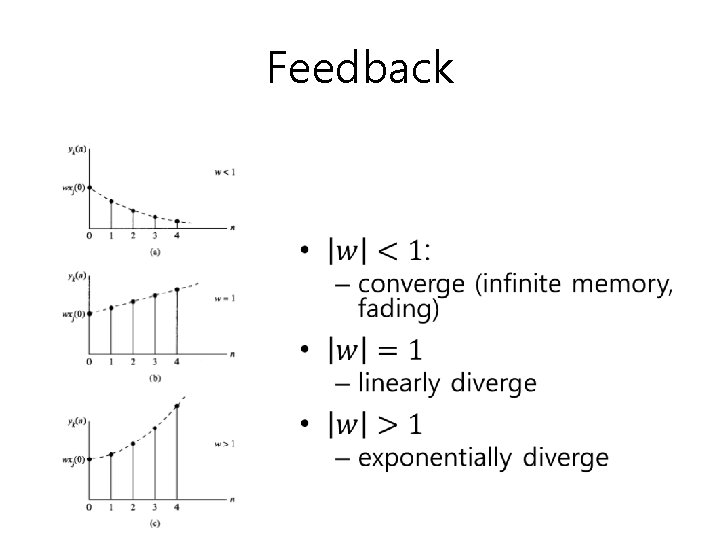

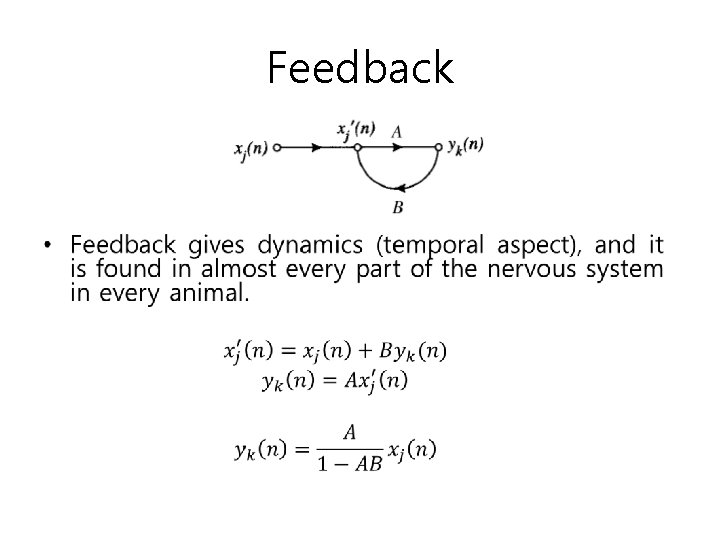

Feedback

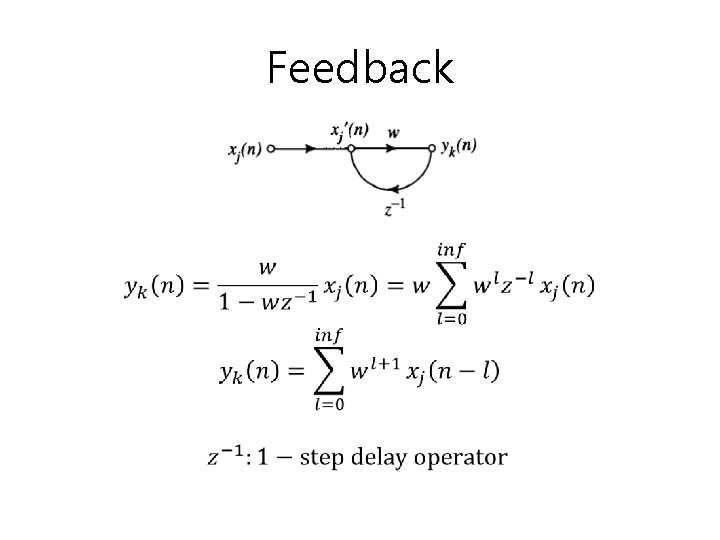

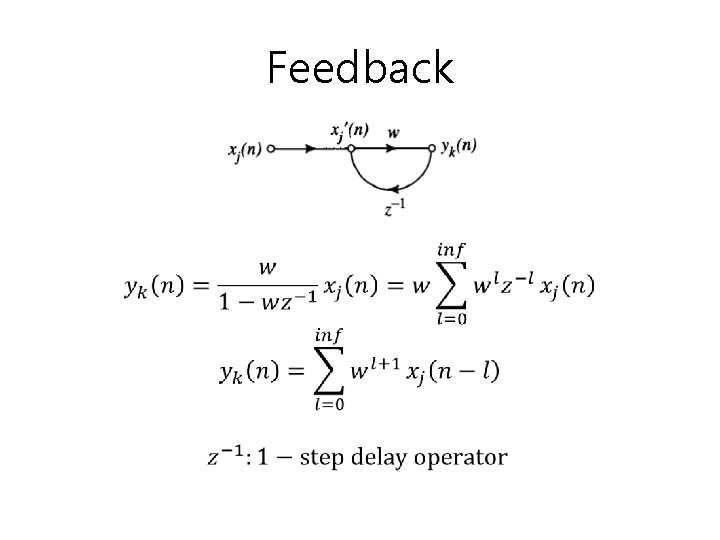

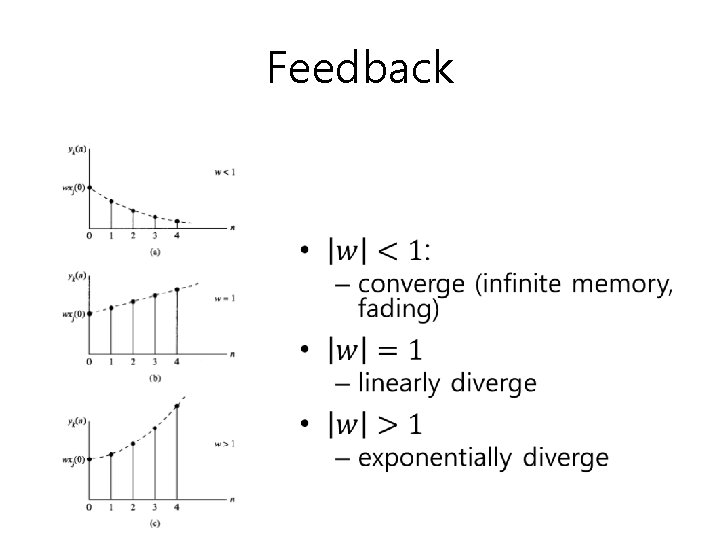
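The feedback slides survive only as images. As an assumed example (the single-loop system commonly used to introduce feedback, not recovered from the slide), take a forward path that is a fixed weight w and a feedback path that is a unit-time delay, so that y(n) = w·(x(n) + y(n−1)). Unrolling gives y(n) = Σ w^(l+1)·x(n−l) over l ≥ 0, so the loop is stable only when |w| < 1. A Python sketch of the three regimes, with a hypothetical helper name:

```python
def feedback_response(w, x):
    """Single-loop feedback: y(n) = w * (x(n) + y(n-1)), starting from y(-1) = 0."""
    y_prev = 0.0
    outputs = []
    for x_n in x:
        y_prev = w * (x_n + y_prev)   # forward weight applied to input plus delayed output
        outputs.append(y_prev)
    return outputs

step = [1.0] * 20  # constant input x(n) = 1 for n = 0..19
for w in (0.5, 1.0, 1.5):
    # |w| < 1 converges, |w| = 1 grows linearly, |w| > 1 grows exponentially
    last = feedback_response(w, step)[-1]
    print(f"w = {w}: y(19) = {last:.3f}")
```

Feeding in a single impulse instead of a step exposes the weights w, w², w³, … of the unrolled sum directly.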

Definition of Neural Networks
• An information processing system that has been developed as a generalization of mathematical models of human cognition or neurobiology, based on the assumptions that:
  – Information processing occurs at many simple elements called neurons.
  – Signals are passed between neurons over connection links.
  – Each connection link has an associated weight, which typically multiplies the signal transmitted.
  – Each neuron applies an activation function (usually nonlinear) to its net input (the sum of weighted input signals) to determine its output signal.

Network Architectures
• The connectivity of a neural network is intimately linked with the learning algorithm used to train it.
  – Single-layer feedforward networks: one input layer, one layer of computing units (the output layer), and acyclic connections.
  – Multilayer feedforward networks: one input layer, one or more hidden layers, and one output layer.
  – Recurrent networks: at least one feedback loop exists.
• Layers can be fully connected or partially connected.

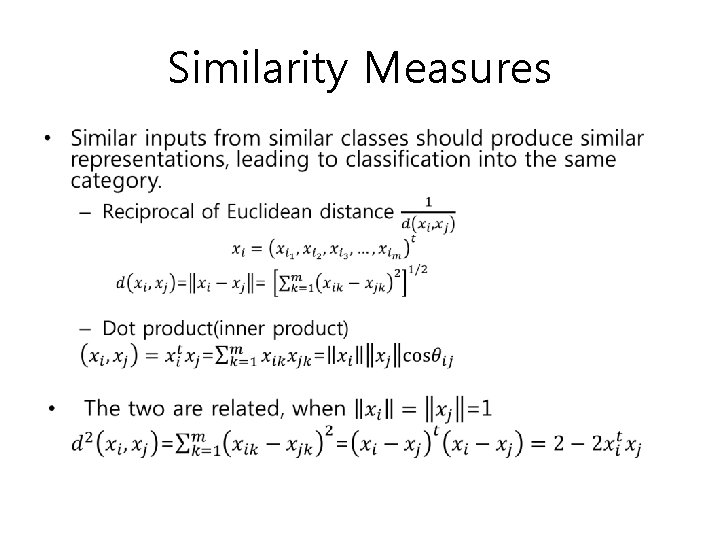

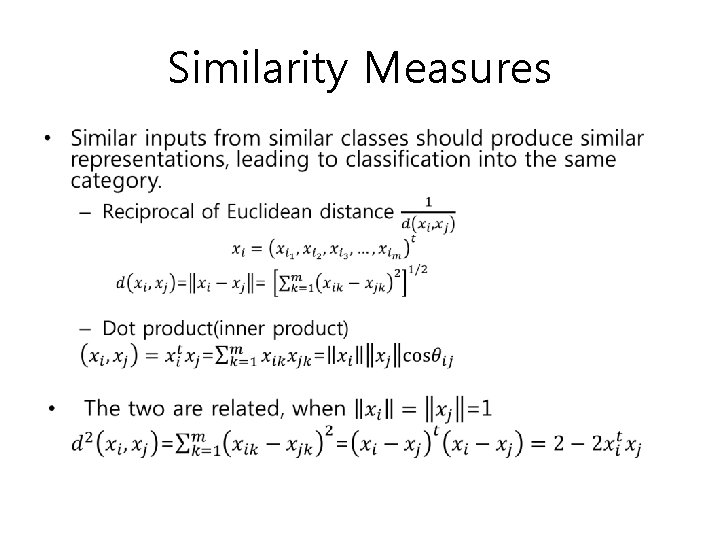
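A minimal NumPy sketch of the architectures listed above, with arbitrary layer sizes, random weights, and tanh activations as illustrative assumptions: a multilayer feedforward pass (acyclic, layer by layer), a recurrent update in which the previous output is fed back as an input, and partial connectivity expressed as a 0/1 mask on a weight matrix. All helper names are hypothetical.

```python
import numpy as np

def layer(x, W, b):
    """One layer of computing units: each row of W holds one neuron's weights."""
    return np.tanh(W @ x + b)

def feedforward(x, layers):
    """Multilayer feedforward: signals pass from input to hidden to output layers, no cycles."""
    for W, b in layers:
        x = layer(x, W, b)
    return x

def recurrent_step(x, h_prev, W_in, W_fb, b):
    """Recurrent: the previous output h_prev returns through a feedback loop as extra input."""
    return np.tanh(W_in @ x + W_fb @ h_prev + b)

rng = np.random.default_rng(0)
x = np.array([0.5, -1.0, 2.0])

# 3 inputs -> 4 hidden neurons -> 2 output neurons (fully connected)
layers = [(rng.normal(size=(4, 3)), np.zeros(4)),
          (rng.normal(size=(2, 4)), np.zeros(2))]
y = feedforward(x, layers)

# Recurrent network: run three time steps with the same input
W_in, W_fb = rng.normal(size=(2, 3)), rng.normal(size=(2, 2))
h = np.zeros(2)
for _ in range(3):
    h = recurrent_step(x, h, W_in, W_fb, np.zeros(2))

# Partial connectivity: a 0/1 mask removes selected connections of the hidden layer
mask = np.array([[1, 1, 0], [0, 1, 1], [1, 0, 1], [1, 1, 1]])
W_partial = layers[0][0] * mask
print(y, h, W_partial, sep="\n")
```

A single-layer feedforward network is the special case `feedforward(x, layers[:1])`, with one layer of computing units.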

Similarity Measures

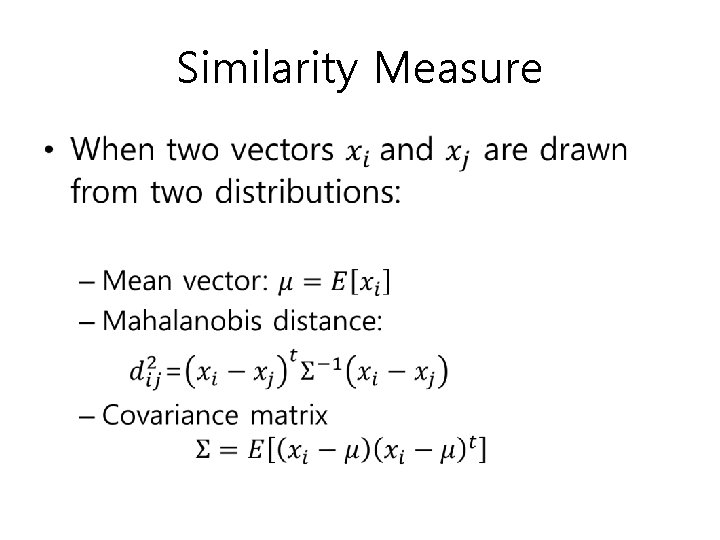

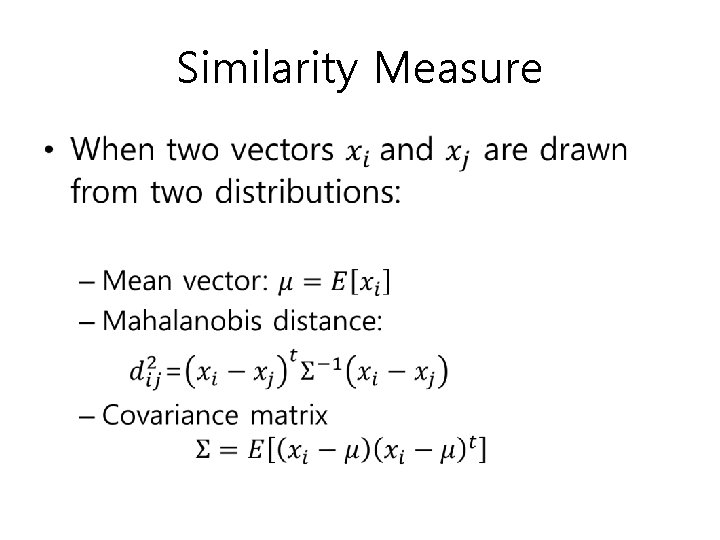
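The similarity-measure slides survive only as images. Two measures commonly used for this purpose (assumed here, not recovered from the slide) are the Euclidean distance between two vectors, where a smaller distance means more similar, and their inner product, where a larger value means more similar. For vectors normalized to unit length the two are tied by d² = 2 − 2·(xi · xj), so they rank pairs the same way. A NumPy sketch with hypothetical helper names:

```python
import numpy as np

def euclidean_distance(xi, xj):
    """Smaller distance means the vectors are more similar."""
    return float(np.linalg.norm(xi - xj))

def inner_product(xi, xj):
    """Larger inner product means the vectors are more similar."""
    return float(np.dot(xi, xj))

def unit(x):
    """Scale a vector to unit Euclidean length."""
    return x / np.linalg.norm(x)

xi = np.array([3.0, 4.0])
xj = np.array([4.0, 3.0])
d = euclidean_distance(unit(xi), unit(xj))
ip = inner_product(unit(xi), unit(xj))
print(f"distance = {d:.4f}, inner product = {ip:.4f}, 2 - 2*ip = {2 - 2 * ip:.4f}, d^2 = {d**2:.4f}")
```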
