Introduction to MPI programming

Morris Law, SCID, May 18/25, 2013

What is Message Passing Interface (MPI)?

- Portable standard for communication
- Processes communicate through messages
- Each process is a separate program
- All data is private

Multi-core programming

- Most current CPUs have multiple cores, which can be used fairly easily by compiling with OpenMP support.
- Programmers no longer need to rewrite sequential code; instead they add directives that instruct the compiler to parallelize the code with OpenMP.

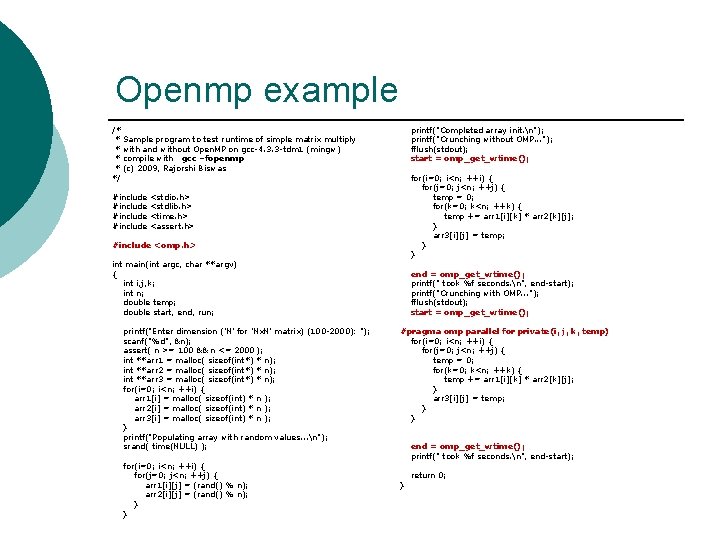

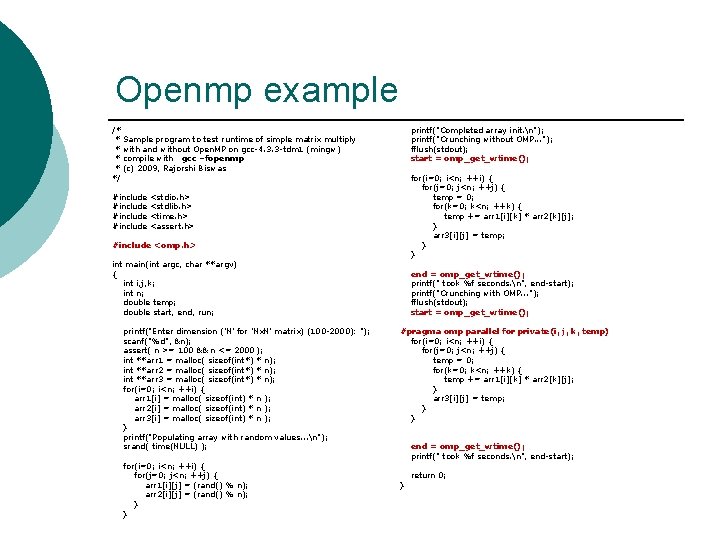

OpenMP example

    /*
     * Sample program to test runtime of simple matrix multiply
     * with and without OpenMP on gcc-4.3.3-tdm1 (mingw)
     * compile with gcc -fopenmp
     * (c) 2009, Rajorshi Biswas
     */
    #include <stdio.h>
    #include <stdlib.h>
    #include <time.h>
    #include <assert.h>
    #include <omp.h>

    int main(int argc, char **argv)
    {
        int i, j, k;
        int n;
        double temp;
        double start, end;

        printf("Enter dimension ('N' for 'NxN' matrix) (100-2000): ");
        if (scanf("%d", &n) != 1)
            return 1;
        assert(n >= 100 && n <= 2000);

        int **arr1 = malloc(sizeof(int *) * n);
        int **arr2 = malloc(sizeof(int *) * n);
        /* result kept as double: an int element can overflow for n near 2000 */
        double **arr3 = malloc(sizeof(double *) * n);
        for (i = 0; i < n; ++i) {
            arr1[i] = malloc(sizeof(int) * n);
            arr2[i] = malloc(sizeof(int) * n);
            arr3[i] = malloc(sizeof(double) * n);
        }

        printf("Populating array with random values...\n");
        srand(time(NULL));
        for (i = 0; i < n; ++i)
            for (j = 0; j < n; ++j) {
                arr1[i][j] = rand() % n;
                arr2[i][j] = rand() % n;
            }
        printf("Completed array init.\n");

        /* serial version */
        printf("Crunching without OMP...");
        fflush(stdout);
        start = omp_get_wtime();
        for (i = 0; i < n; ++i)
            for (j = 0; j < n; ++j) {
                temp = 0;
                for (k = 0; k < n; ++k)
                    temp += arr1[i][k] * arr2[k][j];
                arr3[i][j] = temp;
            }
        end = omp_get_wtime();
        printf(" took %f seconds.\n", end - start);

        /* same loop nest; iterations of the outer loop are shared among threads */
        printf("Crunching with OMP...");
        fflush(stdout);
        start = omp_get_wtime();
        #pragma omp parallel for private(i, j, k, temp)
        for (i = 0; i < n; ++i)
            for (j = 0; j < n; ++j) {
                temp = 0;
                for (k = 0; k < n; ++k)
                    temp += arr1[i][k] * arr2[k][j];
                arr3[i][j] = temp;
            }
        end = omp_get_wtime();
        printf(" took %f seconds.\n", end - start);

        return 0;
    }
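
In the matrix example, temp is private because each thread writes its own result element. When threads instead have to combine their work into one shared value, OpenMP's reduction clause is the usual tool. The sketch below uses a hypothetical dot() helper that is not part of the original program; compiled without -fopenmp the pragma is ignored and the loop simply runs serially.

```c
#include <assert.h>

/* Hypothetical helper: dot product of two length-n vectors.
 * With -fopenmp each thread accumulates a private copy of sum, and
 * reduction(+:sum) adds the copies together when the loop ends.
 * Without -fopenmp the pragma is ignored and the loop runs serially. */
double dot(const double *a, const double *b, int n)
{
    double sum = 0.0;
    int i;

    assert(n >= 0);
    #pragma omp parallel for reduction(+:sum)
    for (i = 0; i < n; ++i)
        sum += a[i] * b[i];
    return sum;
}
```

Declaring sum private instead would discard every thread's partial result, and leaving it shared would create a data race; the reduction clause avoids both.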

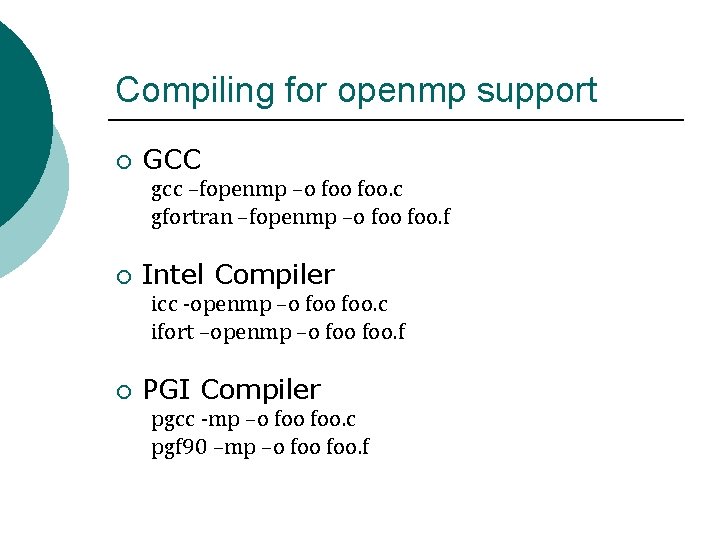

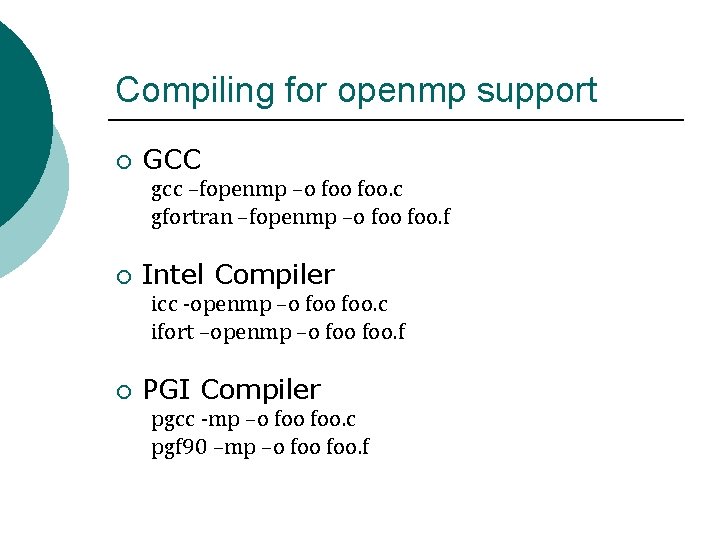

Compiling for OpenMP support

- GCC
  - gcc -fopenmp -o foo foo.c
  - gfortran -fopenmp -o foo foo.f
- Intel compiler
  - icc -openmp -o foo foo.c
  - ifort -openmp -o foo foo.f
- PGI compiler
  - pgcc -mp -o foo foo.c
  - pgf90 -mp -o foo foo.f
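
A program can check at compile time whether one of the flags above was actually used, because compilers define the macro _OPENMP when OpenMP is enabled. The two functions below are hypothetical names chosen for illustration; omp.h is only included when the macro is set, so the same file also builds without OpenMP.

```c
#include <assert.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Returns 1 if this file was compiled with OpenMP enabled (for example
 * gcc -fopenmp), 0 otherwise: _OPENMP is only defined in that case. */
int openmp_enabled(void)
{
#ifdef _OPENMP
    return 1;
#else
    return 0;
#endif
}

/* Number of threads a parallel region would use by default:
 * omp_get_max_threads() under OpenMP, otherwise 1 (serial build). */
int default_threads(void)
{
    int threads = 1;
#ifdef _OPENMP
    threads = omp_get_max_threads();
#endif
    assert(threads >= 1);
    return threads;
}
```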

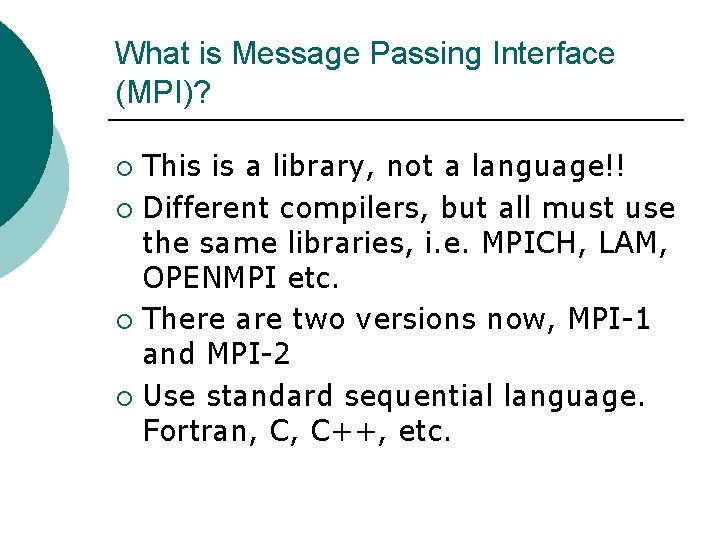

What is Message Passing Interface (MPI)?

- This is a library, not a language!!
- Different compilers can be used, but programs must be linked against an MPI library implementation, e.g. MPICH, LAM, Open MPI
- Two versions are in wide use, MPI-1 and MPI-2 (MPI-3.0 was released in September 2012)
- Programs are written in a standard sequential language: Fortran, C, C++, etc.

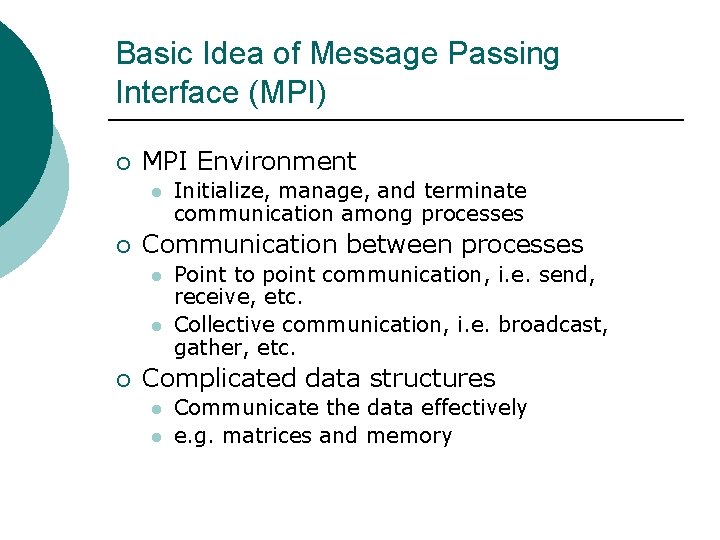

Basic Idea of Message Passing Interface (MPI)

- MPI environment
  - Initialize, manage, and terminate communication among processes
- Communication between processes
  - Point-to-point communication, e.g. send, receive
  - Collective communication, e.g. broadcast, gather
- Complicated data structures
  - Communicate data such as matrices and blocks of memory efficiently

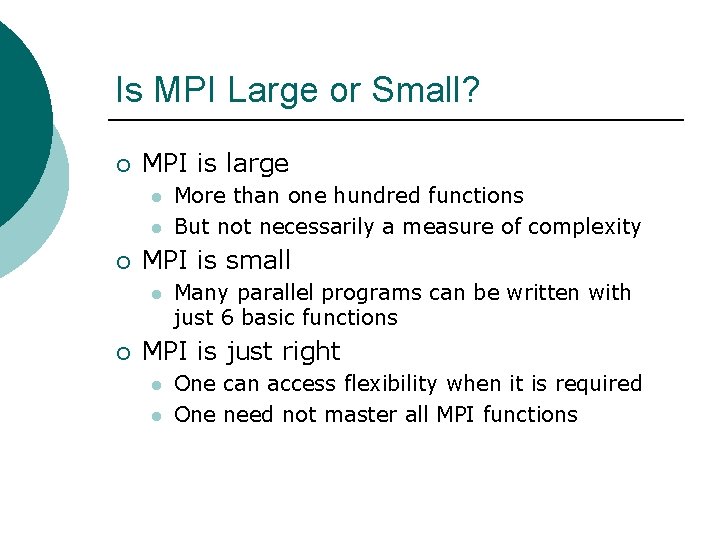

Is MPI Large or Small?

- MPI is large
  - More than one hundred functions
  - But that is not necessarily a measure of complexity
- MPI is small
  - Many parallel programs can be written with just six basic functions (MPI_Init, MPI_Finalize, MPI_Comm_size, MPI_Comm_rank, MPI_Send, MPI_Recv)
- MPI is just right
  - The extra flexibility is there when it is required
  - One need not master all MPI functions

When to use MPI?

- You need a portable parallel program
- You are writing a parallel library
- You care about performance
- You have a problem that can be solved in parallel

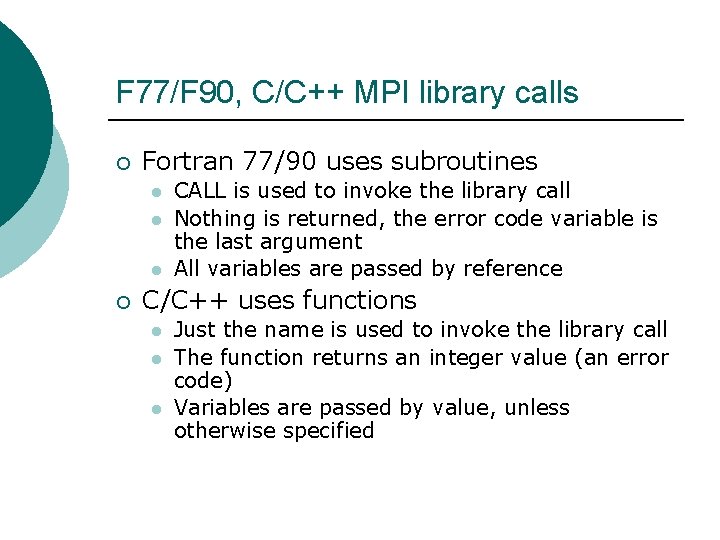

F77/F90, C/C++ MPI library calls

- Fortran 77/90 uses subroutines
  - CALL is used to invoke the library call
  - Nothing is returned; the error code variable is the last argument
  - All variables are passed by reference
- C/C++ uses functions
  - Just the name is used to invoke the library call
  - The function returns an integer value (an error code)
  - Variables are passed by value, unless otherwise specified (output arguments are passed as pointers)

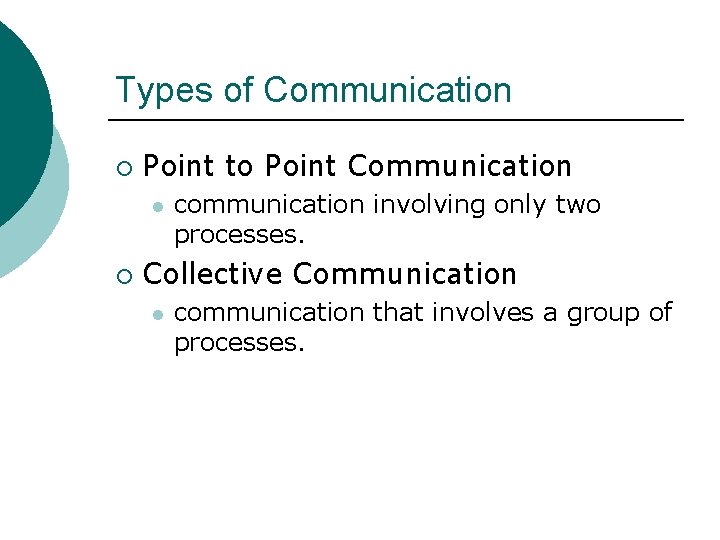

Types of Communication

- Point-to-point communication
  - communication involving only two processes
- Collective communication
  - communication that involves a group of processes

Implementation of MPI

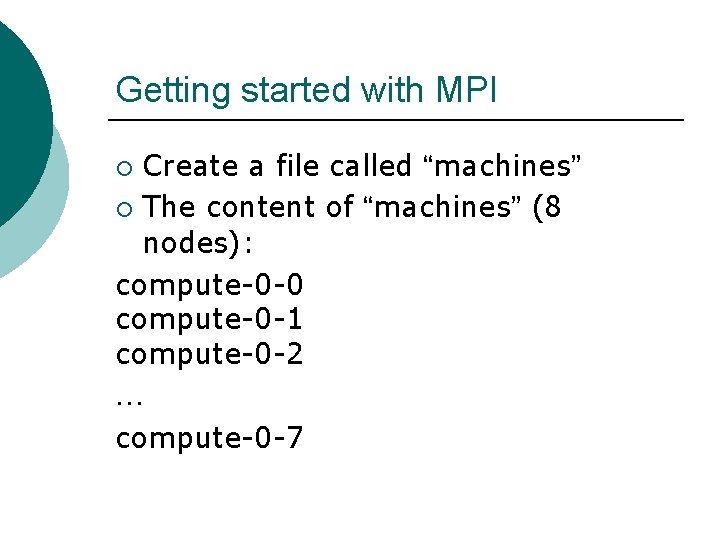

Getting started with MPI

- Create a file called "machines"
- The content of "machines" (8 nodes), one host name per line:

      compute-0-0
      compute-0-1
      compute-0-2
      …
      compute-0-7

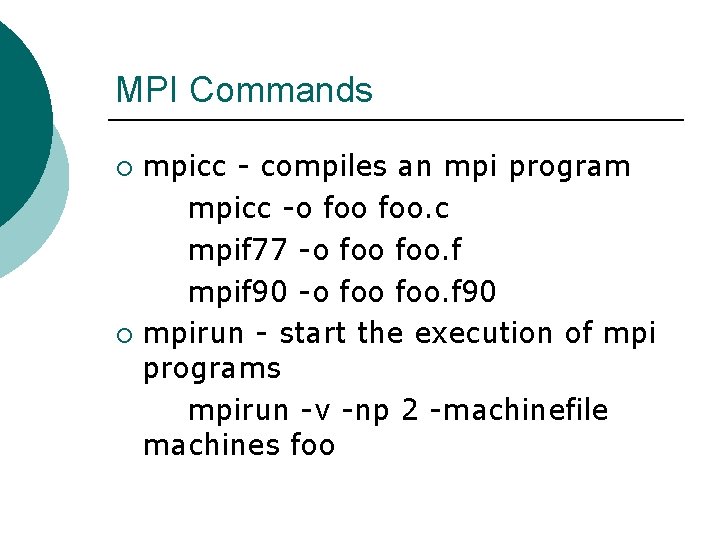

MPI Commands

- mpicc, mpif77, mpif90 - compile an MPI program (C, Fortran 77, Fortran 90)
  - mpicc -o foo foo.c
  - mpif77 -o foo foo.f
  - mpif90 -o foo foo.f90
- mpirun - starts the execution of MPI programs
  - mpirun -v -np 2 -machinefile machines foo

Basic MPI Functions

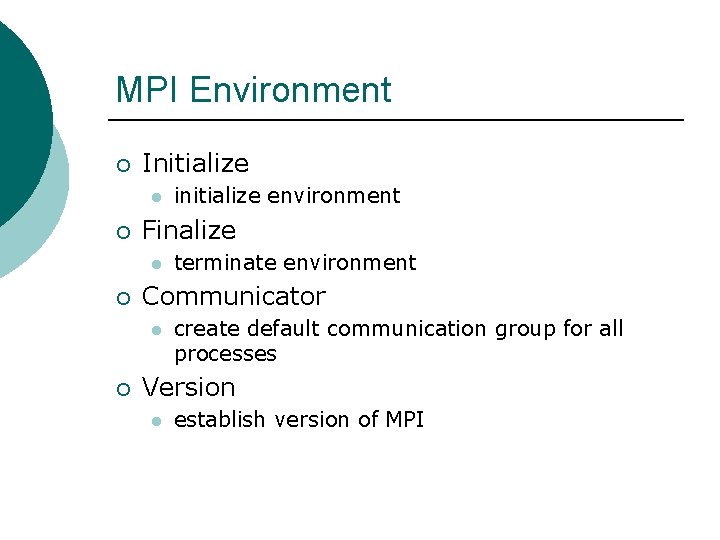

MPI Environment

- Initialize: initialize the environment
- Finalize: terminate the environment
- Communicator: create the default communication group for all processes
- Version: establish the version of MPI

MPI Environment

- Total processes: the number of processes spawned
- Rank/Process ID: an identifier assigned to each process
- Timing functions: MPI_Wtime, MPI_Wtick

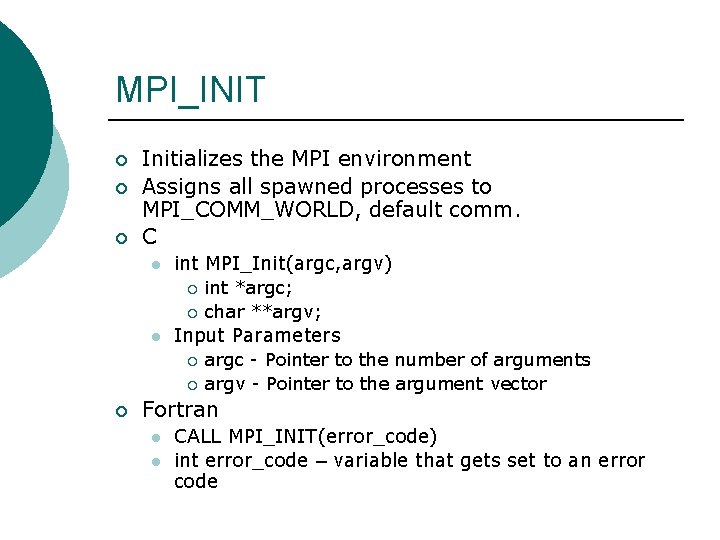

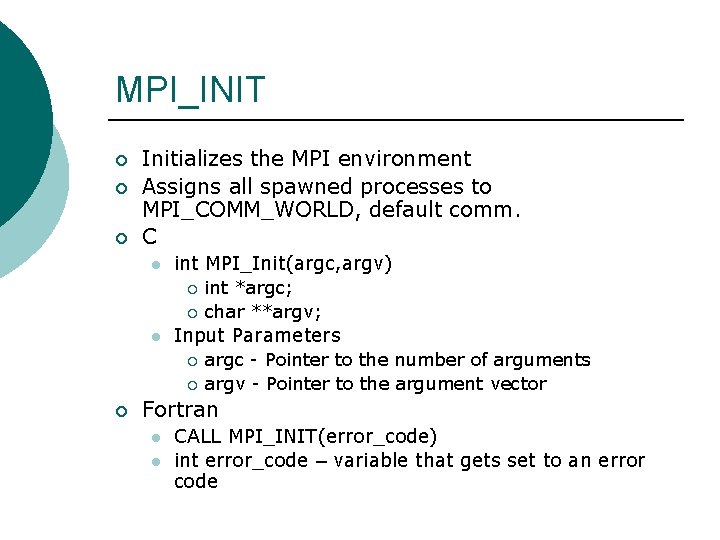

MPI_INIT

- Initializes the MPI environment
- Assigns all spawned processes to MPI_COMM_WORLD, the default communicator
- C
  - int MPI_Init(int *argc, char ***argv)
  - Input parameters
    - argc - pointer to the number of arguments
    - argv - pointer to the argument vector
- Fortran
  - CALL MPI_INIT(error_code)
  - INTEGER error_code - variable that gets set to an error code

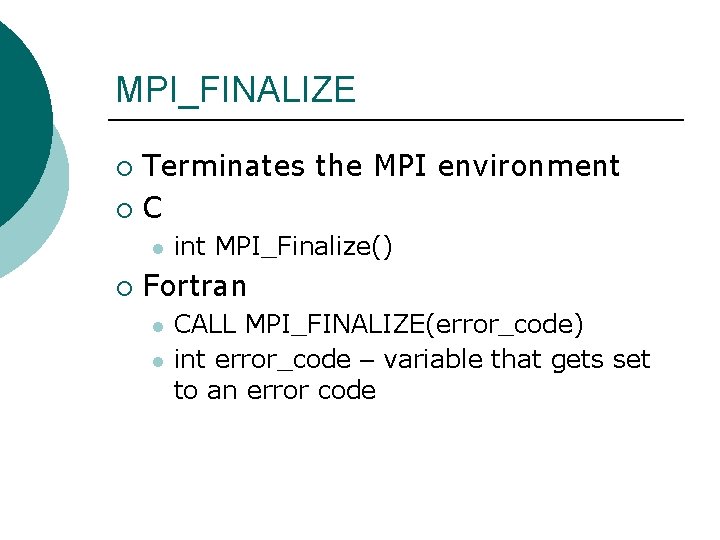

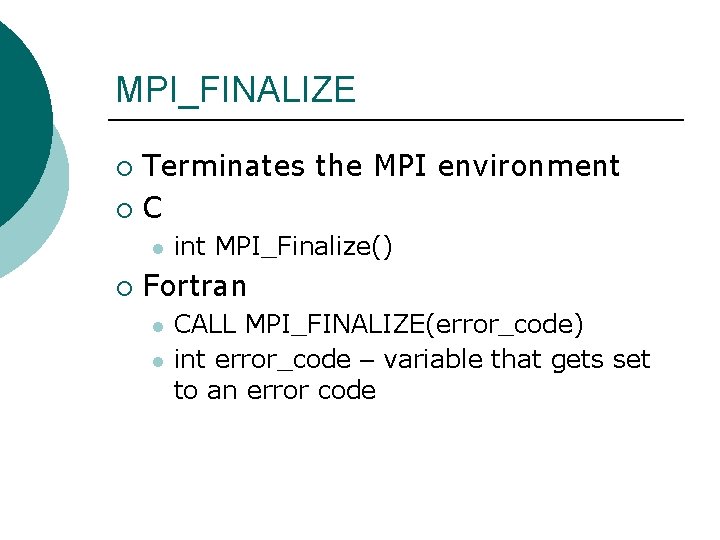

MPI_FINALIZE

- Terminates the MPI environment
- C
  - int MPI_Finalize(void)
- Fortran
  - CALL MPI_FINALIZE(error_code)
  - INTEGER error_code - variable that gets set to an error code

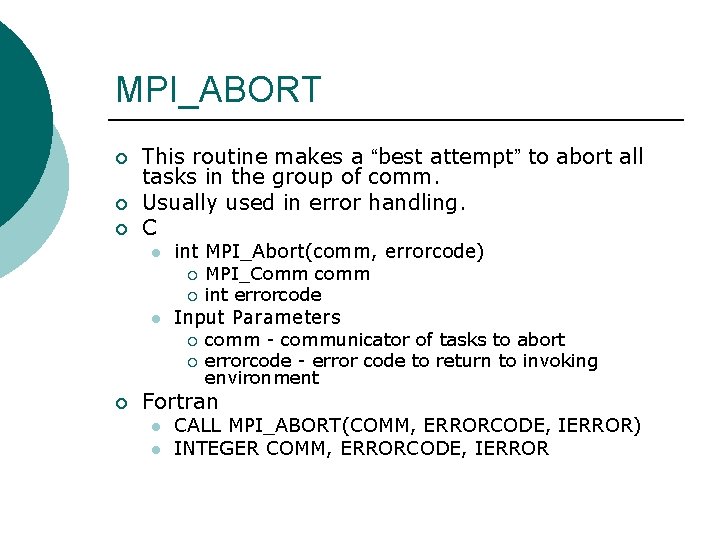

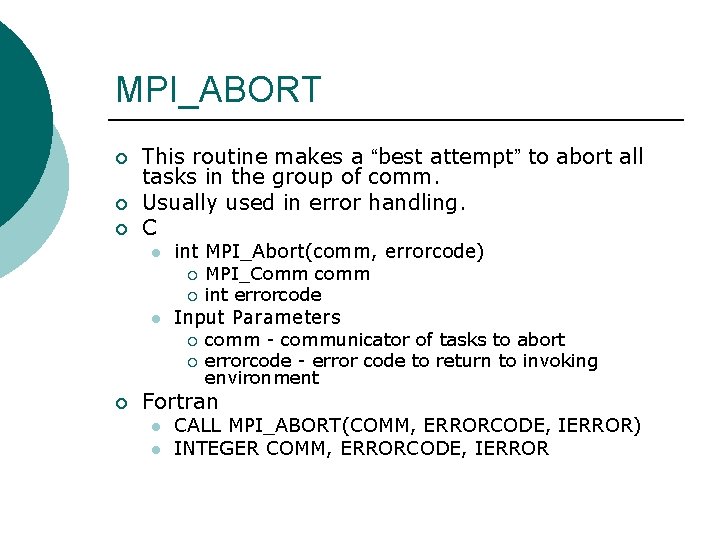

MPI_ABORT

- This routine makes a "best attempt" to abort all tasks in the group of comm.
- Usually used in error handling.
- C
  - int MPI_Abort(MPI_Comm comm, int errorcode)
  - Input parameters
    - comm - communicator of tasks to abort
    - errorcode - error code to return to the invoking environment
- Fortran
  - CALL MPI_ABORT(COMM, ERRORCODE, IERROR)
  - INTEGER COMM, ERRORCODE, IERROR

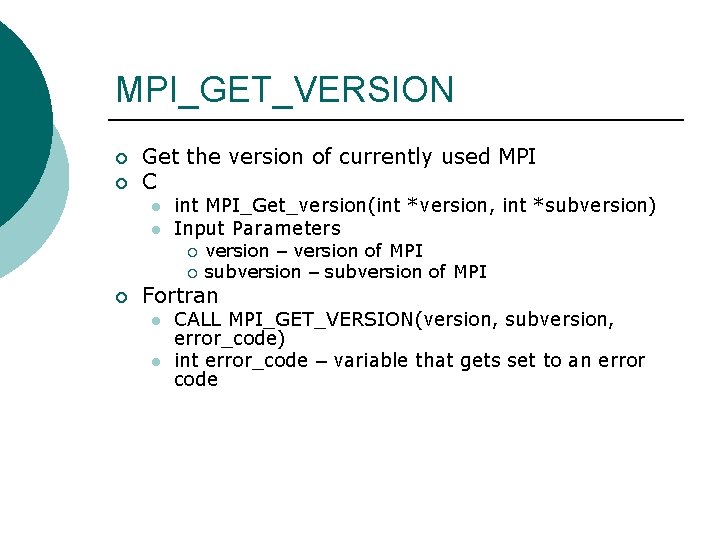

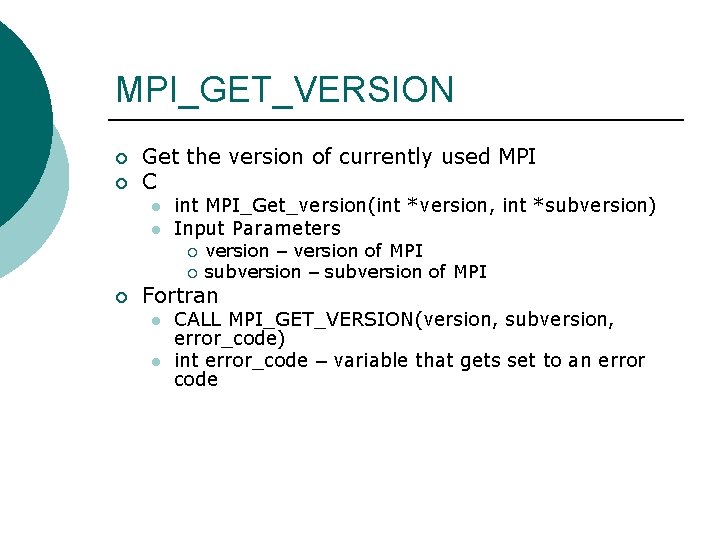

MPI_GET_VERSION

- Gets the version of the MPI standard supported by the library in use
- C
  - int MPI_Get_version(int *version, int *subversion)
  - Output parameters
    - version - version of MPI
    - subversion - subversion of MPI
- Fortran
  - CALL MPI_GET_VERSION(version, subversion, error_code)
  - INTEGER error_code - variable that gets set to an error code

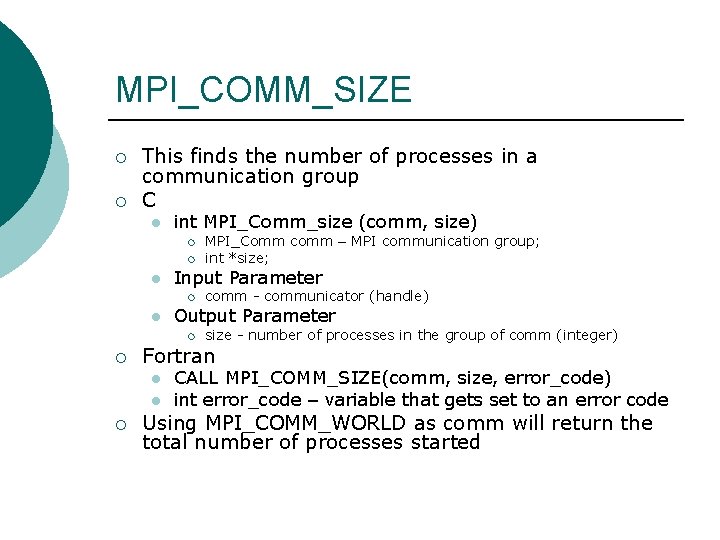

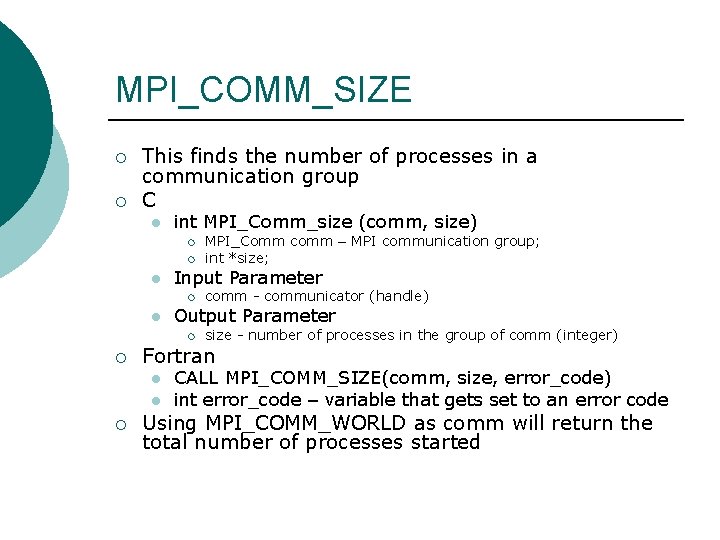

MPI_COMM_SIZE

- Finds the number of processes in a communication group
- C
  - int MPI_Comm_size(MPI_Comm comm, int *size)
  - Input parameter
    - comm - communicator (handle), i.e. the MPI communication group
  - Output parameter
    - size - number of processes in the group of comm (integer)
- Fortran
  - CALL MPI_COMM_SIZE(comm, size, error_code)
  - INTEGER error_code - variable that gets set to an error code
- Using MPI_COMM_WORLD as comm returns the total number of processes started

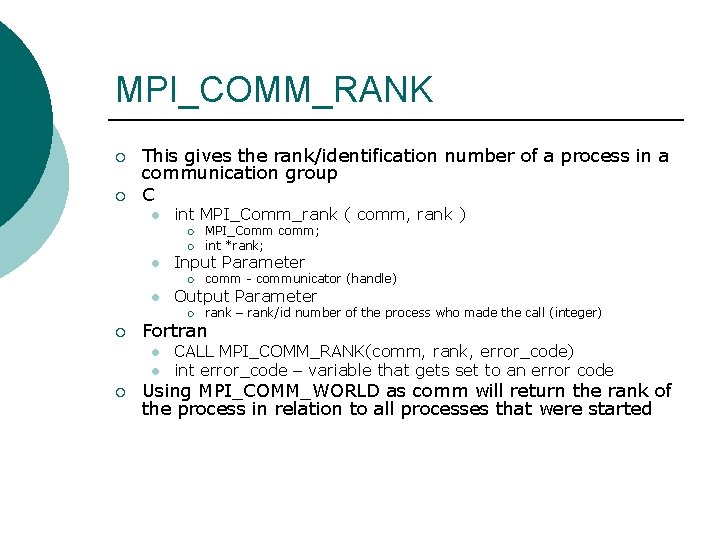

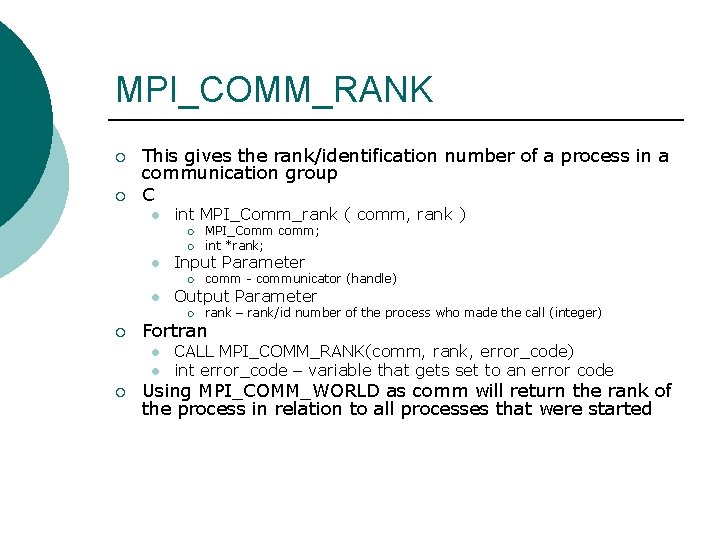

MPI_COMM_RANK

- Gives the rank (identification number) of a process in a communication group
- C
  - int MPI_Comm_rank(MPI_Comm comm, int *rank)
  - Input parameter
    - comm - communicator (handle)
  - Output parameter
    - rank - rank/ID number of the calling process (integer)
- Fortran
  - CALL MPI_COMM_RANK(comm, rank, error_code)
  - INTEGER error_code - variable that gets set to an error code
- Using MPI_COMM_WORLD as comm returns the rank of the process relative to all processes that were started; ranks are numbered from 0 to size - 1
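
Rank and size are what a program typically uses to decide which part of the work each process handles. One common scheme is a block distribution, where each process gets a single contiguous slice of the index range. The sketch below assumes a hypothetical helper block_range(); rank and size are passed in as plain integers (in an MPI program they would come from MPI_Comm_rank and MPI_Comm_size), so it can be tried without an MPI installation.

```c
#include <assert.h>

/* Hypothetical helper: splits the index range [0, n) into `size`
 * contiguous blocks and stores in *lo, *hi the half-open range [lo, hi)
 * owned by `rank`. The first n % size ranks receive one extra element,
 * so block lengths differ by at most one. */
void block_range(long n, int rank, int size, long *lo, long *hi)
{
    long base, extra;

    assert(size > 0 && rank >= 0 && rank < size && n >= 0);
    base = n / size;    /* elements every rank gets */
    extra = n % size;   /* leftover elements, one each for the low ranks */
    *lo = rank * base + (rank < extra ? rank : extra);
    *hi = *lo + base + (rank < extra ? 1 : 0);
}
```

For example, with n = 10 and 4 processes, ranks 0, 1, 2 and 3 get [0,3), [3,6), [6,8) and [8,10).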

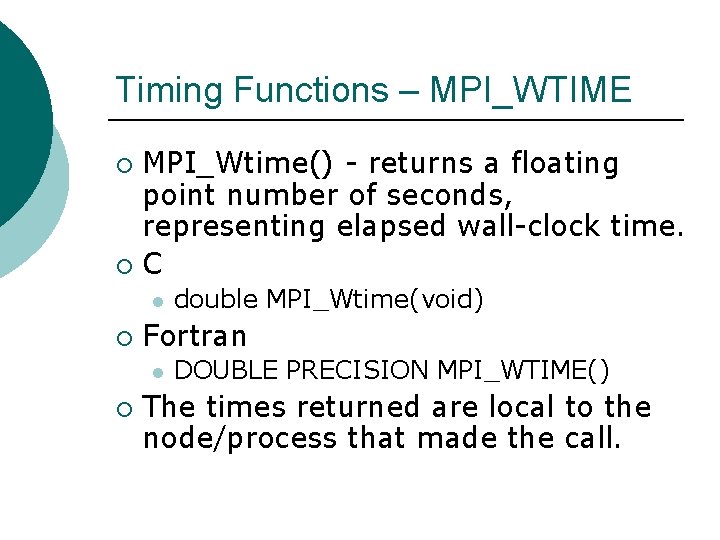

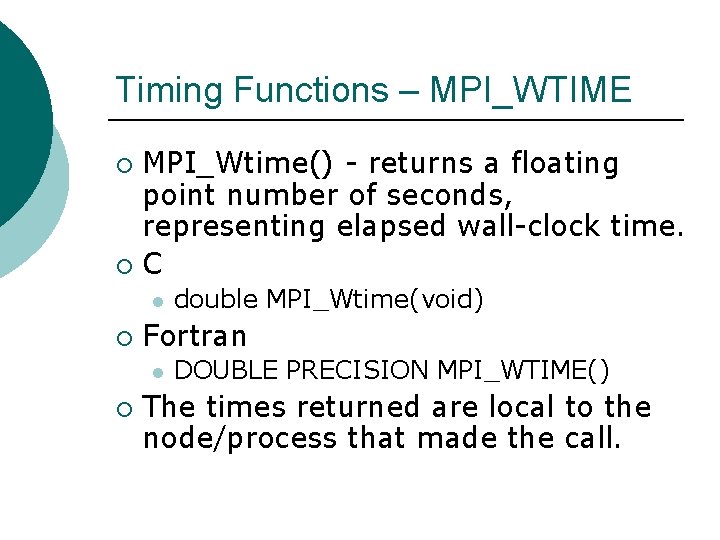

Timing Functions - MPI_WTIME

- MPI_Wtime() returns a floating-point number of seconds representing elapsed wall-clock time since some arbitrary point in the past, so only the difference between two calls is meaningful
- C
  - double MPI_Wtime(void)
- Fortran
  - DOUBLE PRECISION MPI_WTIME()
- The times returned are local to the node/process that made the call.

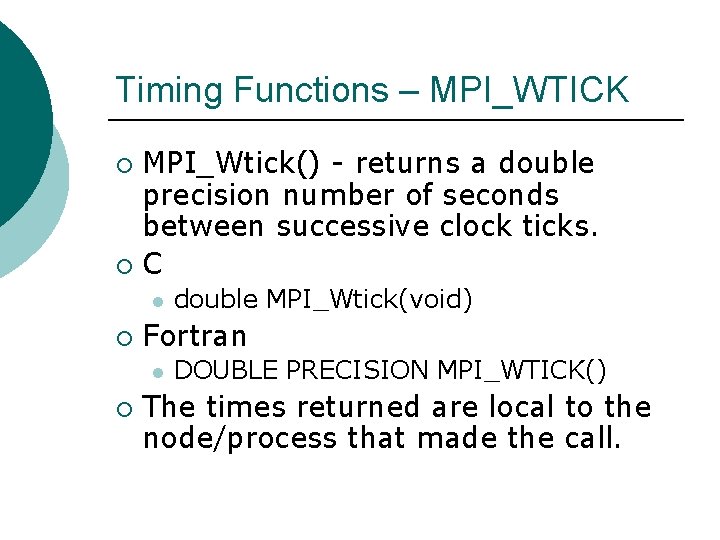

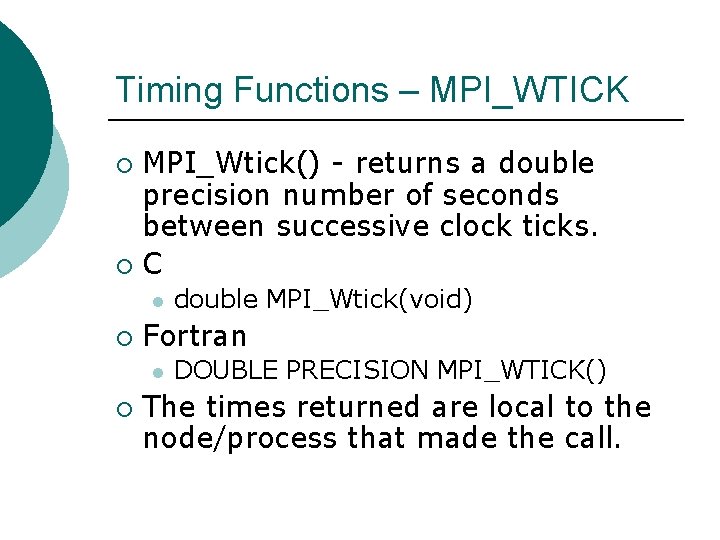

Timing Functions - MPI_WTICK

- MPI_Wtick() returns a double-precision number of seconds between successive clock ticks, i.e. the resolution of MPI_Wtime
- C
  - double MPI_Wtick(void)
- Fortran
  - DOUBLE PRECISION MPI_WTICK()
- The value returned is local to the node/process that made the call.

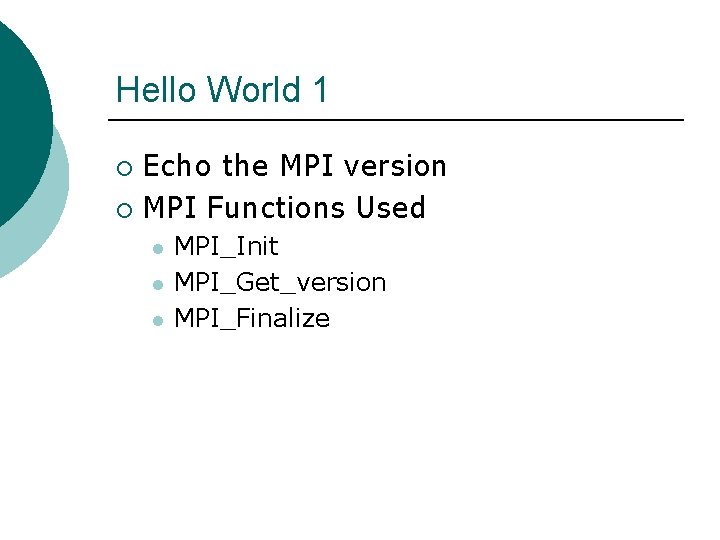

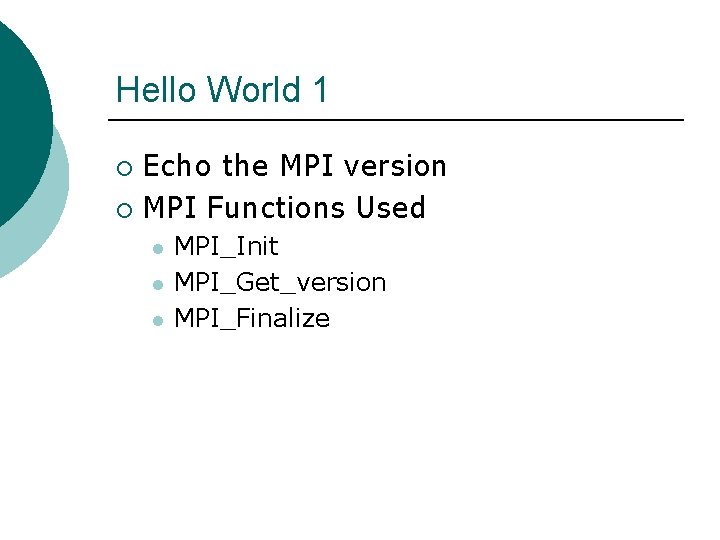

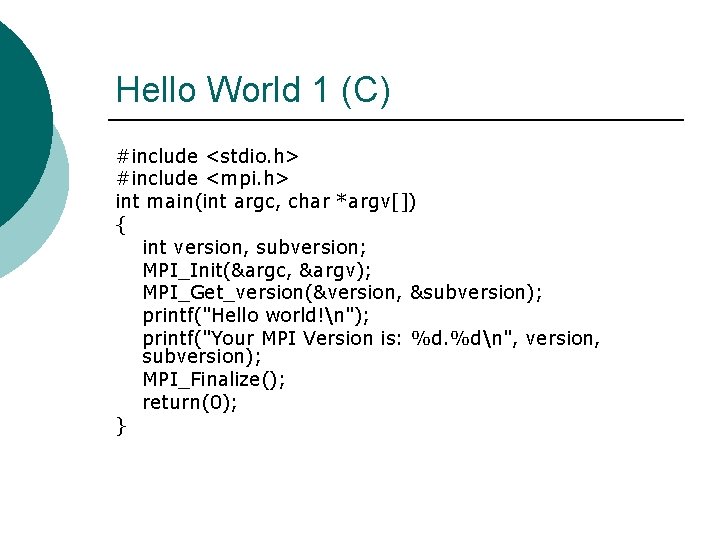

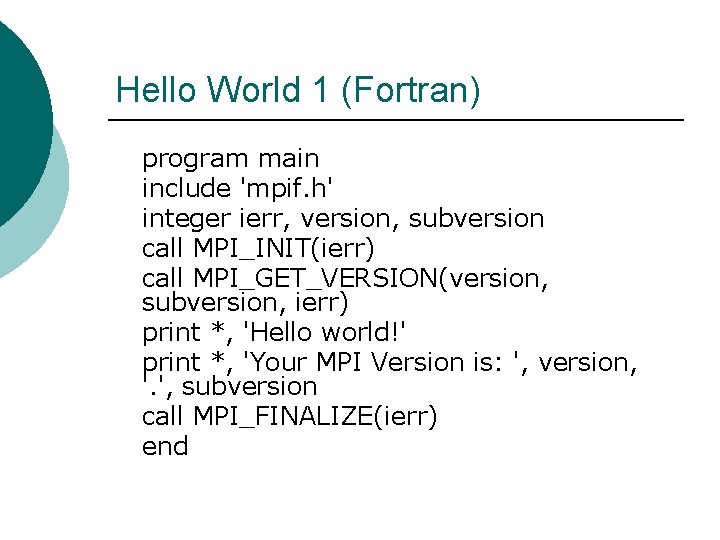

Hello World 1

- Echo the MPI version
- MPI functions used
  - MPI_Init
  - MPI_Get_version
  - MPI_Finalize

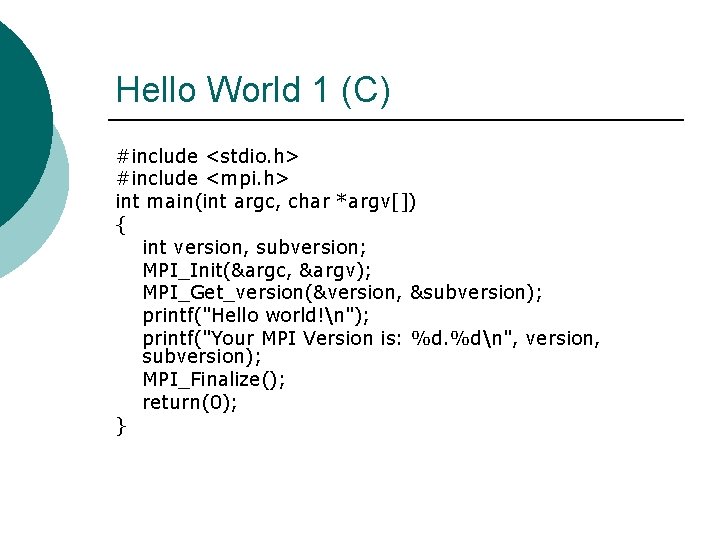

Hello World 1 (C)

    #include <stdio.h>
    #include <mpi.h>

    int main(int argc, char *argv[])
    {
        int version, subversion;

        MPI_Init(&argc, &argv);
        MPI_Get_version(&version, &subversion);
        printf("Hello world!\n");
        printf("Your MPI Version is: %d.%d\n", version, subversion);
        MPI_Finalize();
        return(0);
    }

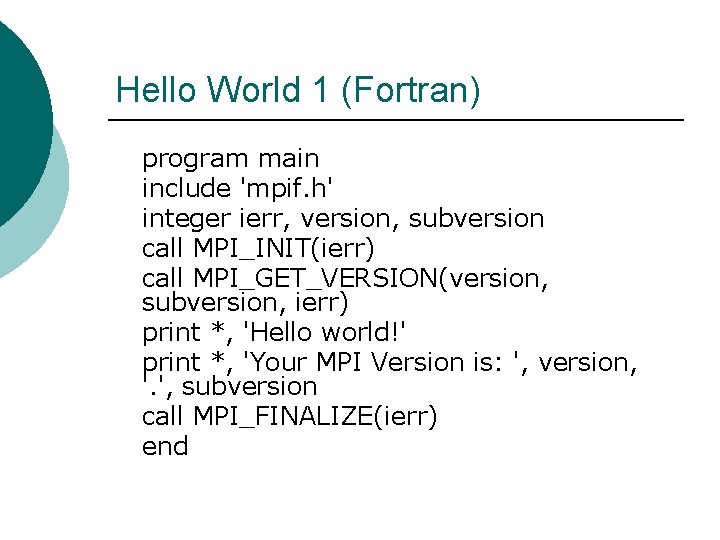

Hello World 1 (Fortran)

          program main
          include 'mpif.h'
          integer ierr, version, subversion
          call MPI_INIT(ierr)
          call MPI_GET_VERSION(version, subversion, ierr)
          print *, 'Hello world!'
          print *, 'Your MPI Version is: ', version, '.', subversion
          call MPI_FINALIZE(ierr)
          end

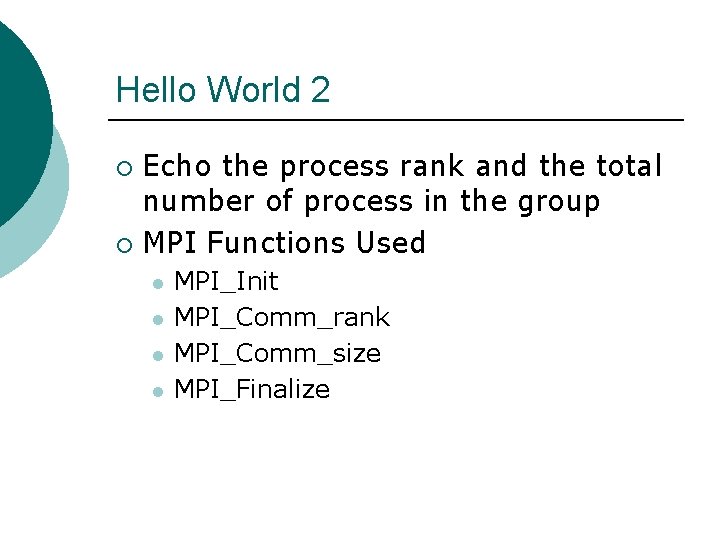

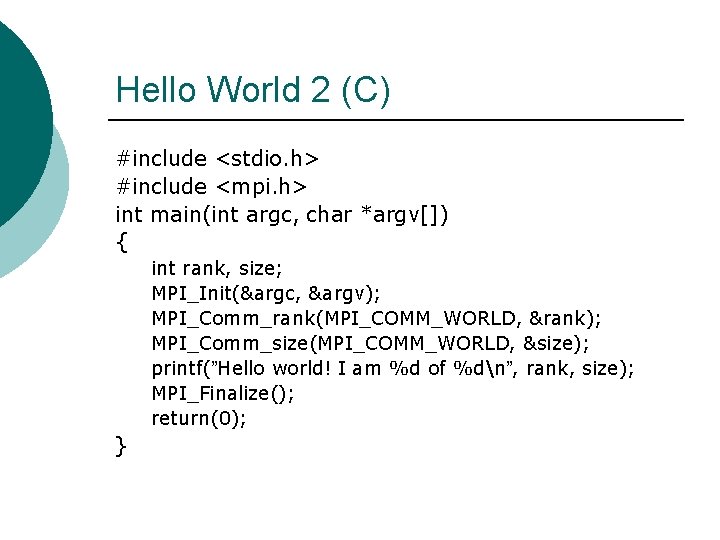

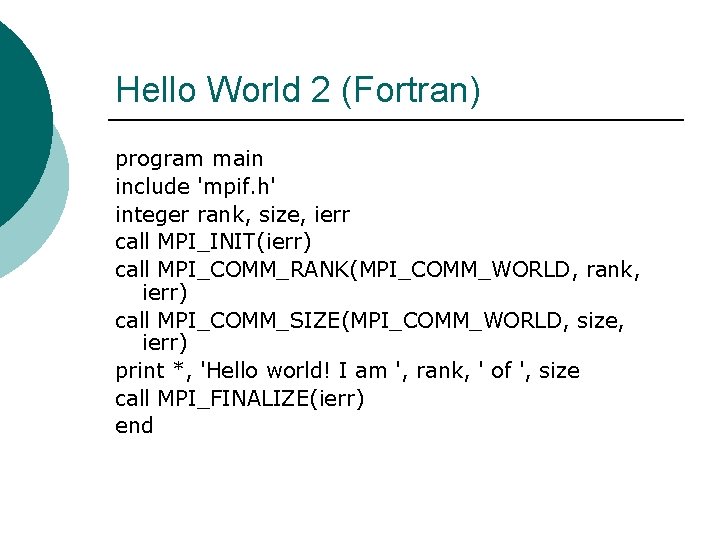

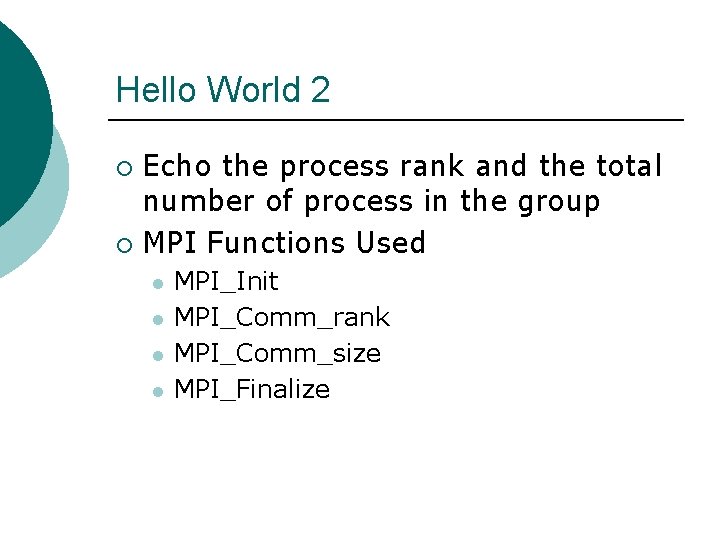

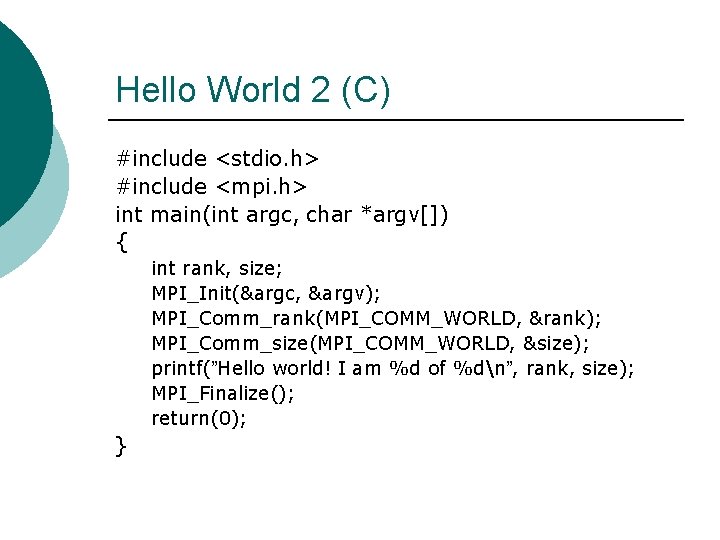

Hello World 2

- Echo the process rank and the total number of processes in the group
- MPI functions used
  - MPI_Init
  - MPI_Comm_rank
  - MPI_Comm_size
  - MPI_Finalize

Hello World 2 (C)

    #include <stdio.h>
    #include <mpi.h>

    int main(int argc, char *argv[])
    {
        int rank, size;

        MPI_Init(&argc, &argv);
        MPI_Comm_rank(MPI_COMM_WORLD, &rank);
        MPI_Comm_size(MPI_COMM_WORLD, &size);
        printf("Hello world! I am %d of %d\n", rank, size);
        MPI_Finalize();
        return(0);
    }

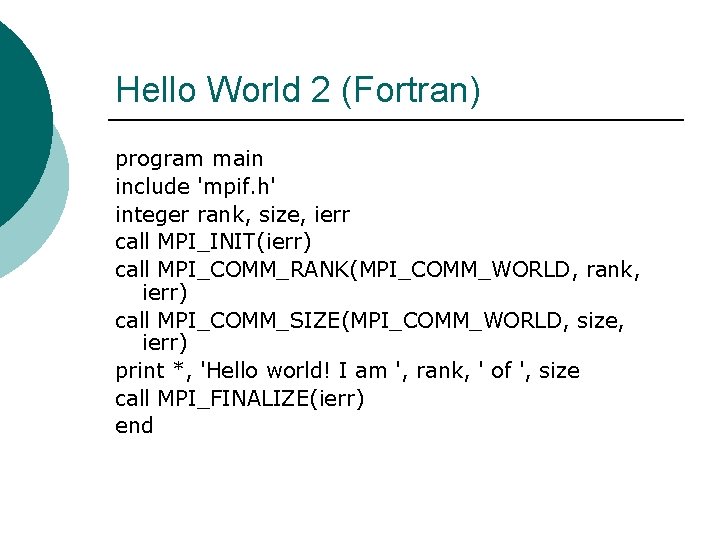

Hello World 2 (Fortran)

          program main
          include 'mpif.h'
          integer rank, size, ierr
          call MPI_INIT(ierr)
          call MPI_COMM_RANK(MPI_COMM_WORLD, rank, ierr)
          call MPI_COMM_SIZE(MPI_COMM_WORLD, size, ierr)
          print *, 'Hello world! I am ', rank, ' of ', size
          call MPI_FINALIZE(ierr)
          end

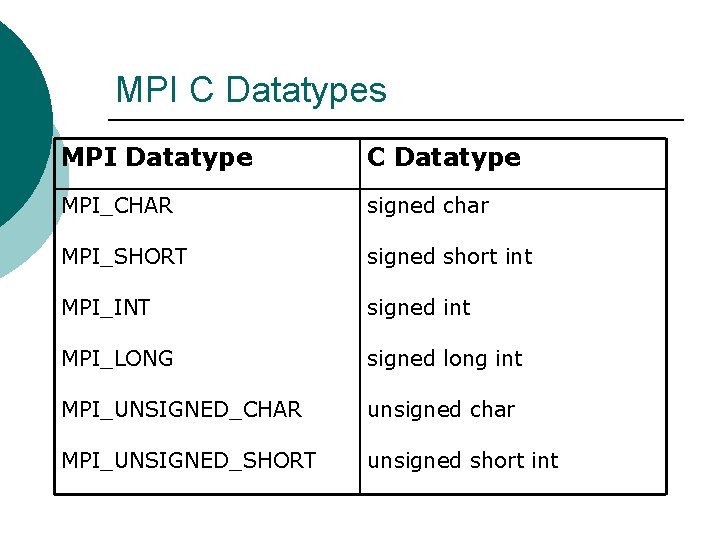

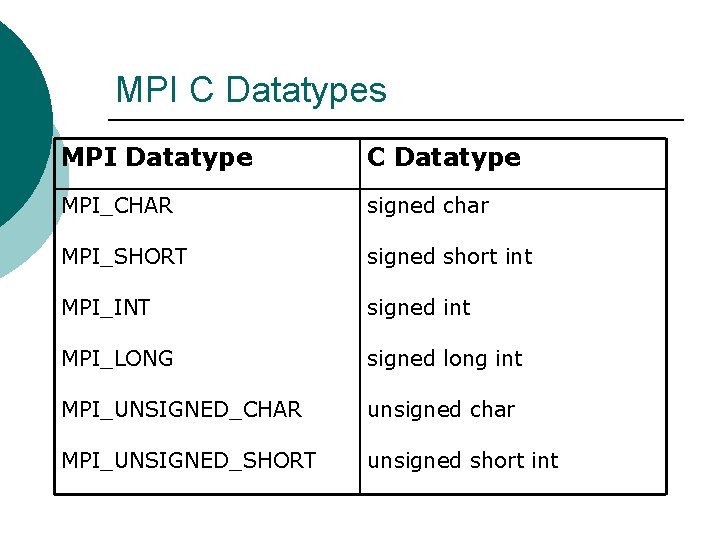

MPI C Datatypes

| MPI Datatype | C Datatype |
|---|---|
| MPI_CHAR | signed char |
| MPI_SHORT | signed short int |
| MPI_INT | signed int |
| MPI_LONG | signed long int |
| MPI_UNSIGNED_CHAR | unsigned char |
| MPI_UNSIGNED_SHORT | unsigned short int |

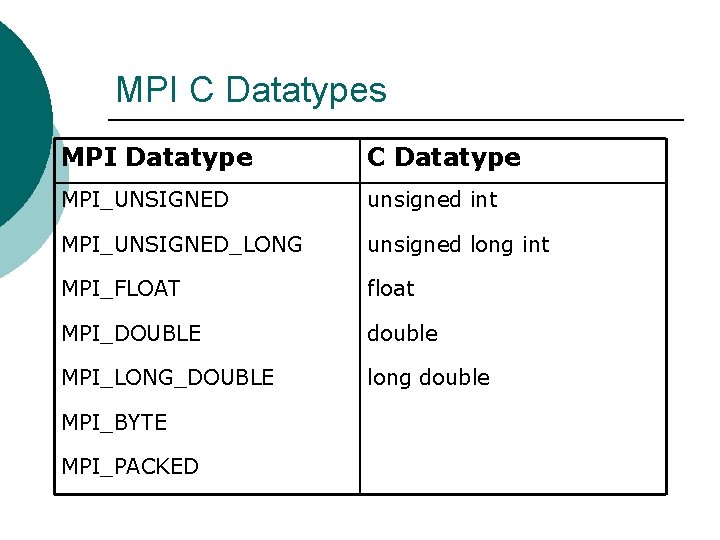

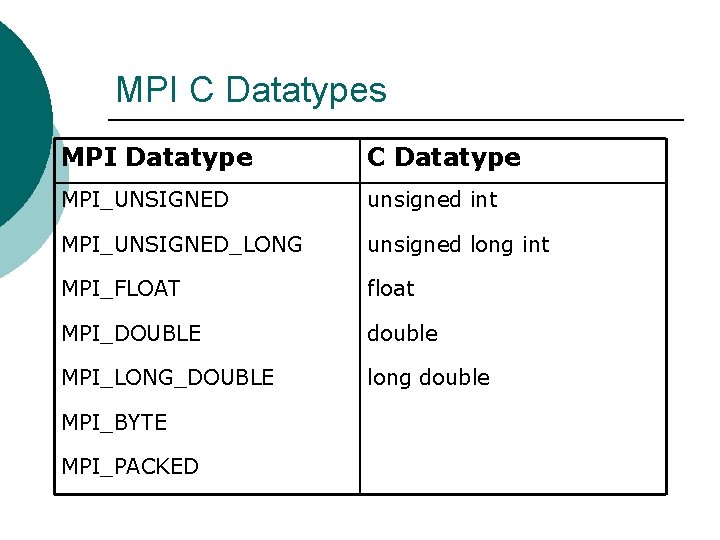

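MPI C Datatypes

| MPI Datatype | C Datatype |
|---|---|
| MPI_UNSIGNED | unsigned int |
| MPI_UNSIGNED_LONG | unsigned long int |
| MPI_FLOAT | float |
| MPI_DOUBLE | double |
| MPI_LONG_DOUBLE | long double |
| MPI_BYTE | (no corresponding C type) |
| MPI_PACKED | (no corresponding C type) |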

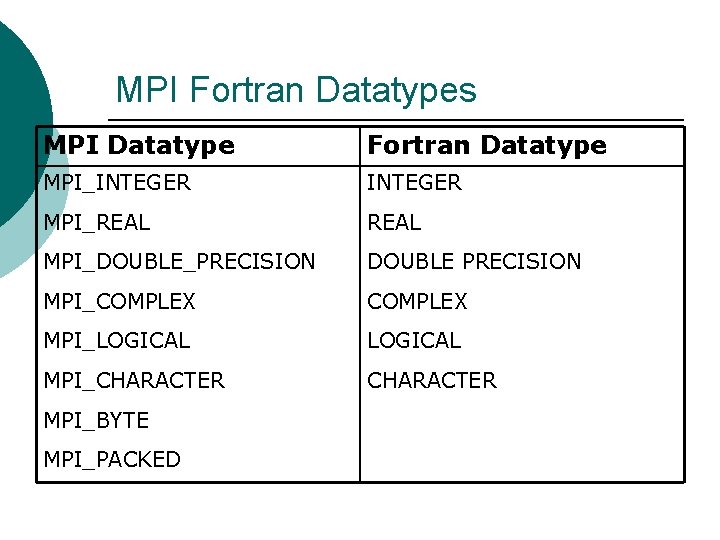

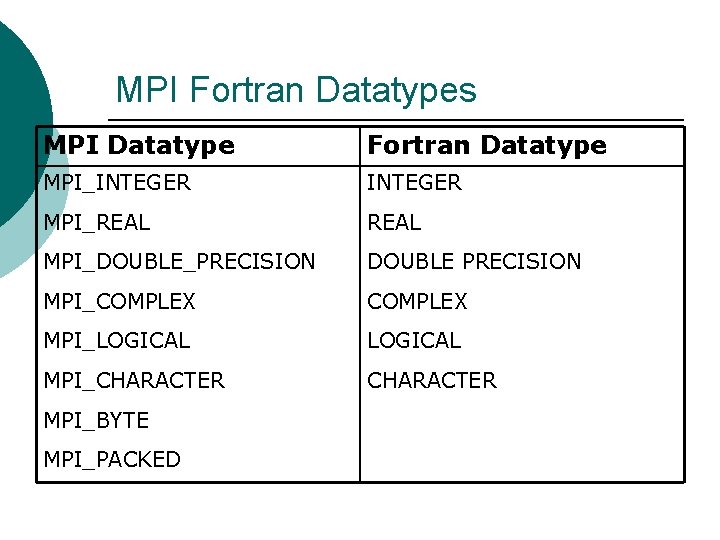

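MPI Fortran Datatypes

| MPI Datatype | Fortran Datatype |
|---|---|
| MPI_INTEGER | INTEGER |
| MPI_REAL | REAL |
| MPI_DOUBLE_PRECISION | DOUBLE PRECISION |
| MPI_COMPLEX | COMPLEX |
| MPI_LOGICAL | LOGICAL |
| MPI_CHARACTER | CHARACTER(1) |
| MPI_BYTE | (no corresponding Fortran type) |
| MPI_PACKED | (no corresponding Fortran type) |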

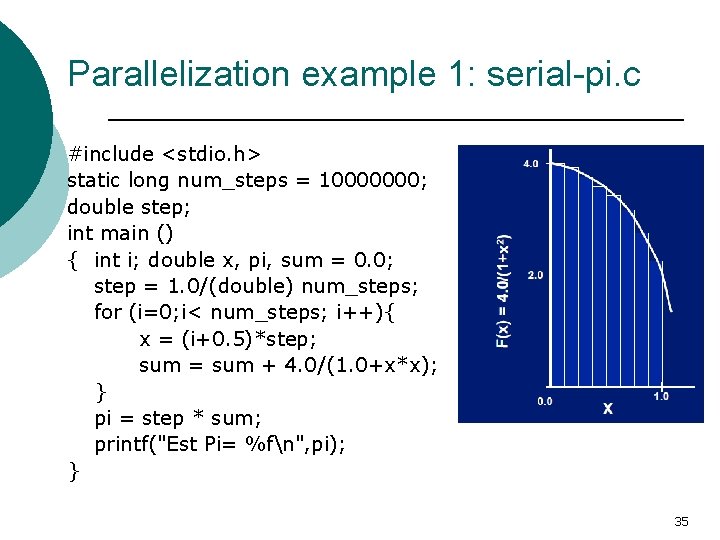

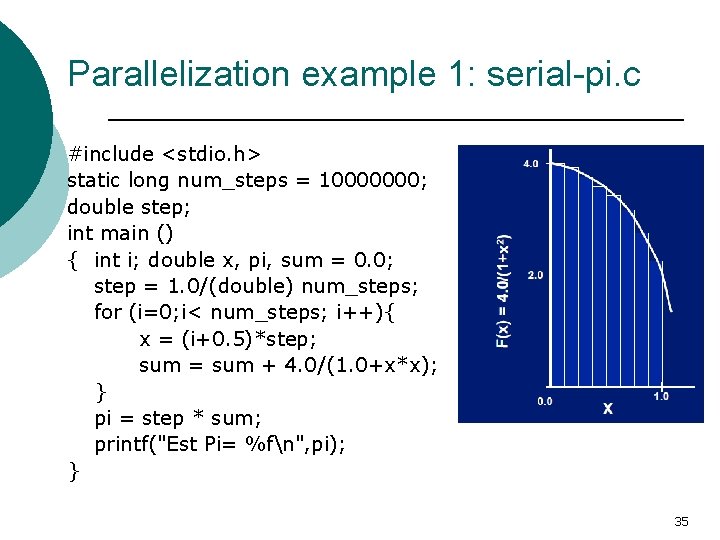

Parallelization example 1: serial-pi.c

    #include <stdio.h>

    static long num_steps = 10000000;
    double step;

    int main()
    {
        int i;
        double x, pi, sum = 0.0;

        step = 1.0 / (double) num_steps;
        for (i = 0; i < num_steps; i++) {
            x = (i + 0.5) * step;
            sum = sum + 4.0 / (1.0 + x * x);
        }
        pi = step * sum;
        printf("Est Pi= %f\n", pi);
        return 0;
    }
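
The loop in serial-pi.c is the midpoint rule applied to an integral whose exact value is π: the antiderivative of 4/(1+x²) is 4·arctan x, and arctan 1 = π/4. Writing N for num_steps and h for step, each iteration adds one term of the sum:

```latex
\pi = \int_0^1 \frac{4}{1+x^2}\,dx
    \approx h \sum_{i=0}^{N-1} \frac{4}{1+x_i^2},
\qquad x_i = \left(i + \tfrac{1}{2}\right) h, \quad h = \frac{1}{N}
```

The terms do not depend on each other, so they can be computed by different processes and added up afterwards.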

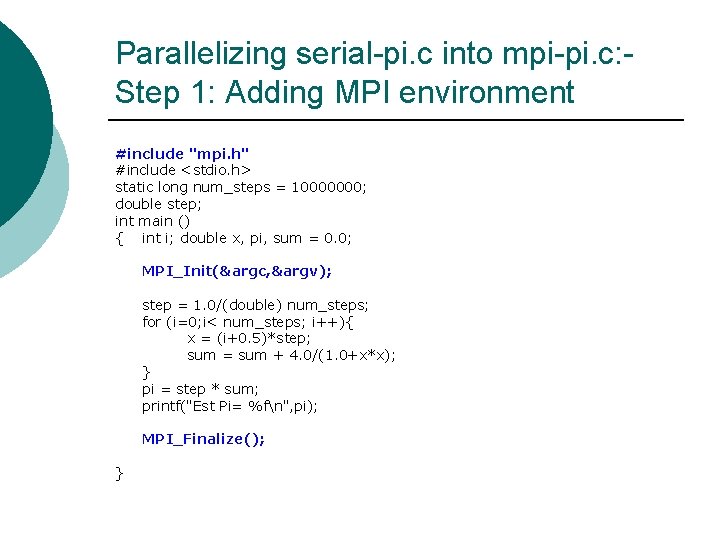

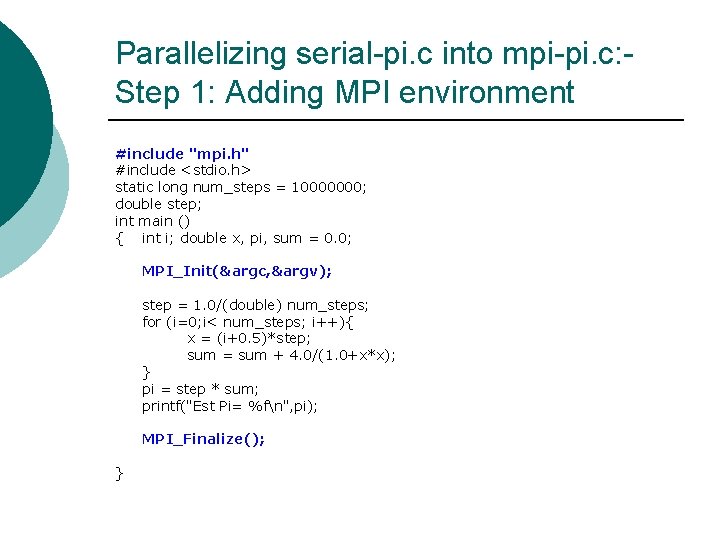

Parallelizing serial-pi.c into mpi-pi.c: Step 1: Adding MPI environment

    #include "mpi.h"
    #include <stdio.h>

    static long num_steps = 10000000;
    double step;

    int main(int argc, char *argv[])   /* argc/argv are needed by MPI_Init */
    {
        int i;
        double x, pi, sum = 0.0;

        MPI_Init(&argc, &argv);
        step = 1.0 / (double) num_steps;
        for (i = 0; i < num_steps; i++) {
            x = (i + 0.5) * step;
            sum = sum + 4.0 / (1.0 + x * x);
        }
        pi = step * sum;
        printf("Est Pi= %f\n", pi);   /* every process still computes the full sum */
        MPI_Finalize();
        return 0;
    }

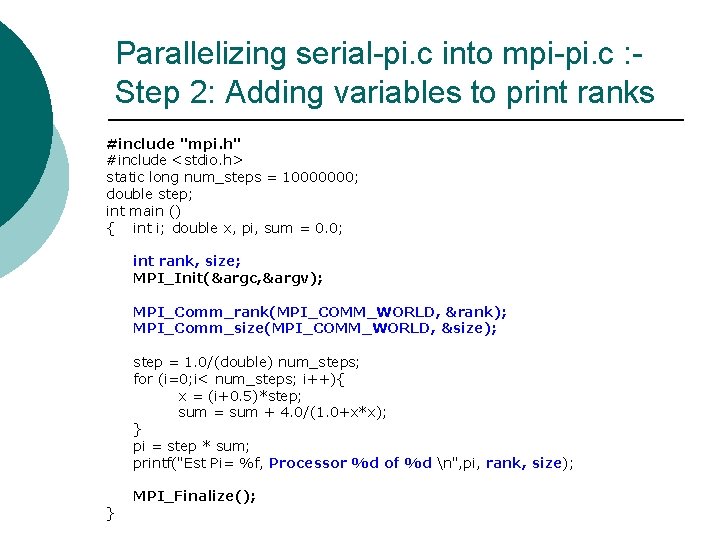

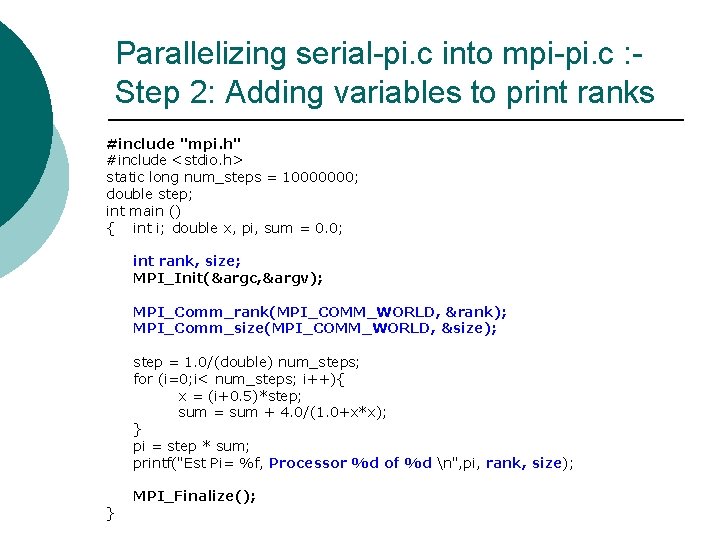

Parallelizing serial-pi.c into mpi-pi.c: Step 2: Adding variables to print ranks

    #include "mpi.h"
    #include <stdio.h>

    static long num_steps = 10000000;
    double step;

    int main(int argc, char *argv[])
    {
        int i;
        double x, pi, sum = 0.0;
        int rank, size;

        MPI_Init(&argc, &argv);
        MPI_Comm_rank(MPI_COMM_WORLD, &rank);
        MPI_Comm_size(MPI_COMM_WORLD, &size);
        step = 1.0 / (double) num_steps;
        for (i = 0; i < num_steps; i++) {
            x = (i + 0.5) * step;
            sum = sum + 4.0 / (1.0 + x * x);
        }
        pi = step * sum;
        printf("Est Pi= %f, Processor %d of %d\n", pi, rank, size);
        MPI_Finalize();
        return 0;
    }

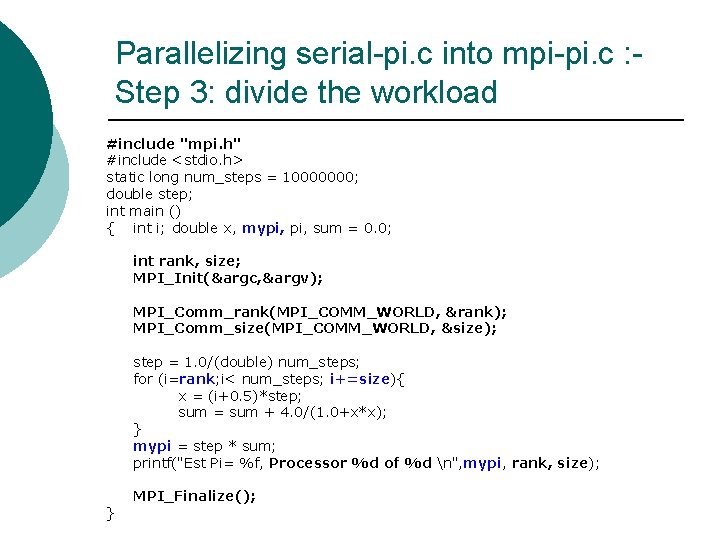

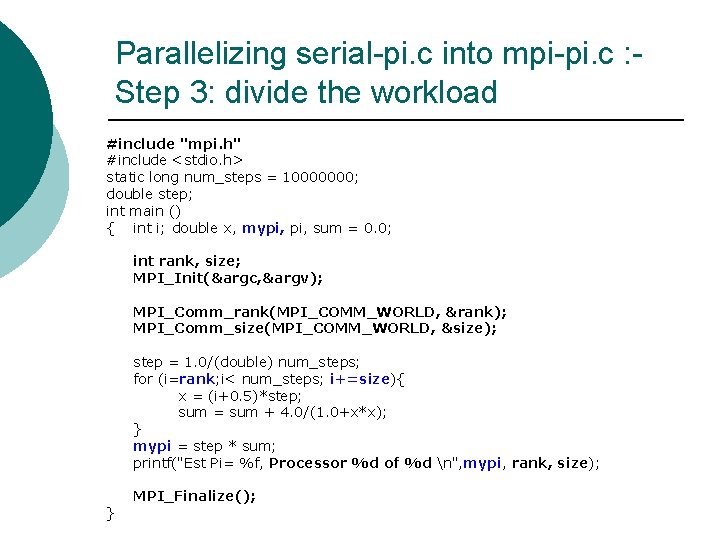

Parallelizing serial-pi.c into mpi-pi.c: Step 3: Divide the workload

    #include "mpi.h"
    #include <stdio.h>

    static long num_steps = 10000000;
    double step;

    int main(int argc, char *argv[])
    {
        int i;
        double x, mypi, sum = 0.0;
        int rank, size;

        MPI_Init(&argc, &argv);
        MPI_Comm_rank(MPI_COMM_WORLD, &rank);
        MPI_Comm_size(MPI_COMM_WORLD, &size);
        step = 1.0 / (double) num_steps;
        /* cyclic distribution: this process handles i = rank, rank+size, ... */
        for (i = rank; i < num_steps; i += size) {
            x = (i + 0.5) * step;
            sum = sum + 4.0 / (1.0 + x * x);
        }
        mypi = step * sum;   /* partial result of this process only */
        printf("Est Pi= %f, Processor %d of %d\n", mypi, rank, size);
        MPI_Finalize();
        return 0;
    }
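
Step 3 uses a cyclic distribution: a process handles iterations rank, rank+size, rank+2·size, and so on. One way to check that this balances the work is to count how many iterations each process runs. The helper below is hypothetical and runs without MPI, taking rank and size as ordinary arguments.

```c
#include <assert.h>

/* Hypothetical helper: number of iterations executed by `rank` in
 *     for (i = rank; i < num_steps; i += size)
 * i.e. how many i in [0, num_steps) satisfy i % size == rank. */
long cyclic_count(long num_steps, int rank, int size)
{
    assert(size > 0 && rank >= 0 && rank < size && num_steps >= 0);
    if (rank >= num_steps)
        return 0;           /* more processes than iterations */
    return (num_steps - rank + size - 1) / size;
}
```

With num_steps = 10 and size = 4, ranks 0 and 1 run 3 iterations while ranks 2 and 3 run 2, so the counts never differ by more than one.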

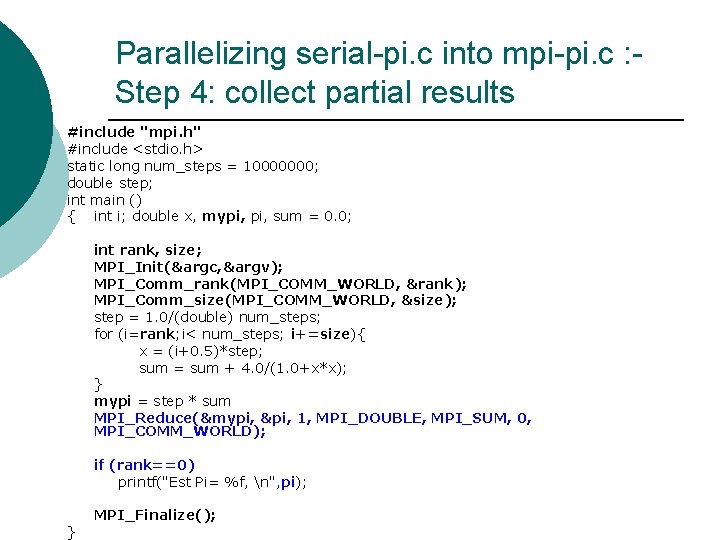

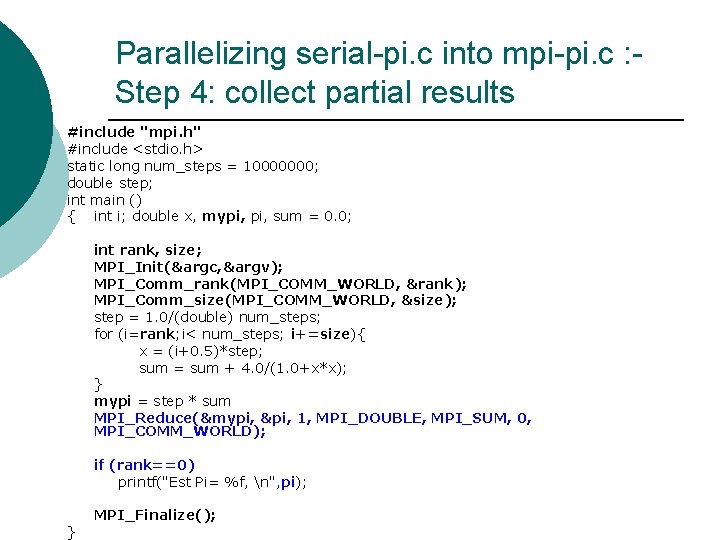

Parallelizing serial-pi.c into mpi-pi.c: Step 4: Collect partial results

    #include "mpi.h"
    #include <stdio.h>

    static long num_steps = 10000000;
    double step;

    int main(int argc, char *argv[])
    {
        int i;
        double x, mypi, pi, sum = 0.0;
        int rank, size;

        MPI_Init(&argc, &argv);
        MPI_Comm_rank(MPI_COMM_WORLD, &rank);
        MPI_Comm_size(MPI_COMM_WORLD, &size);
        step = 1.0 / (double) num_steps;
        for (i = rank; i < num_steps; i += size) {
            x = (i + 0.5) * step;
            sum = sum + 4.0 / (1.0 + x * x);
        }
        mypi = step * sum;
        /* add the mypi values of all processes into pi on rank 0 */
        MPI_Reduce(&mypi, &pi, 1, MPI_DOUBLE, MPI_SUM, 0, MPI_COMM_WORLD);
        if (rank == 0)
            printf("Est Pi= %f\n", pi);
        MPI_Finalize();
        return 0;
    }
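
MPI_Reduce with MPI_SUM adds the mypi values of all processes and delivers the total to rank 0. The serial sketch below imitates that without MPI: partial_pi() is what one rank computes in the loop, and simulate_ranks() visits the ranks one after another and adds their results as the reduction would. Both names are hypothetical; the point is that the total does not depend on how many processes share the work, apart from floating-point rounding.

```c
#include <assert.h>

/* Hypothetical helper: the mypi value one rank computes with the
 * cyclic loop of Steps 3 and 4. */
double partial_pi(long num_steps, int rank, int size)
{
    double step = 1.0 / (double) num_steps;
    double sum = 0.0;
    long i;

    assert(size > 0 && rank >= 0 && rank < size);
    for (i = rank; i < num_steps; i += size) {
        double x = (i + 0.5) * step;
        sum += 4.0 / (1.0 + x * x);
    }
    return step * sum;
}

/* Hypothetical serial stand-in for MPI_Reduce(..., MPI_SUM, 0, ...):
 * adds up every rank's partial result. */
double simulate_ranks(long num_steps, int size)
{
    double total = 0.0;
    int r;

    for (r = 0; r < size; ++r)
        total += partial_pi(num_steps, r, size);
    return total;
}
```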

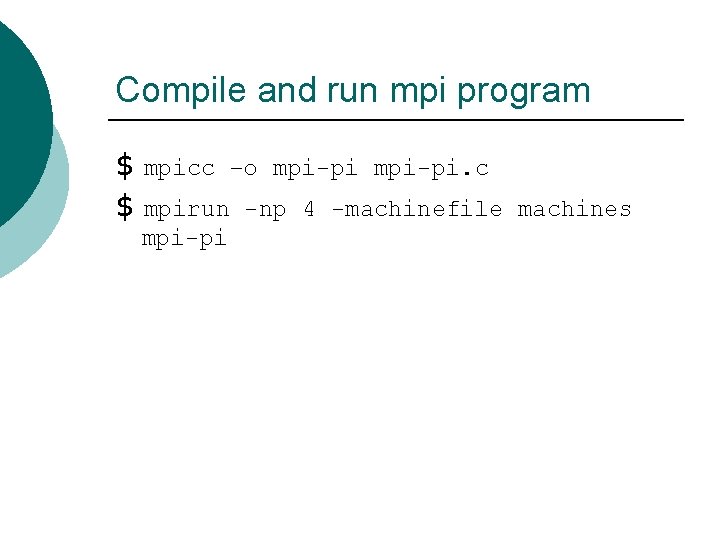

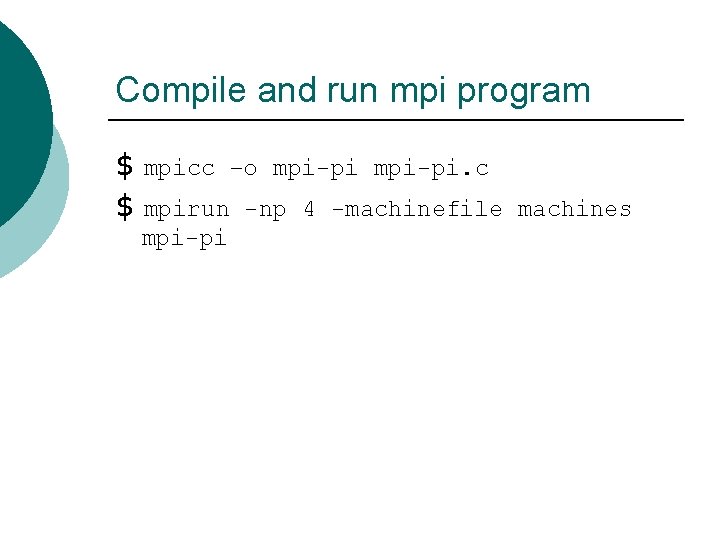

Compile and run mpi program

    $ mpicc -o mpi-pi mpi-pi.c
    $ mpirun -np 4 -machinefile machines mpi-pi

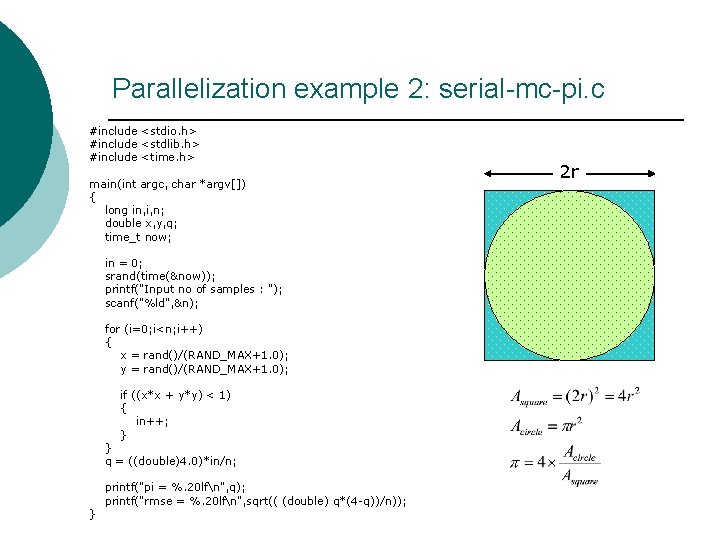

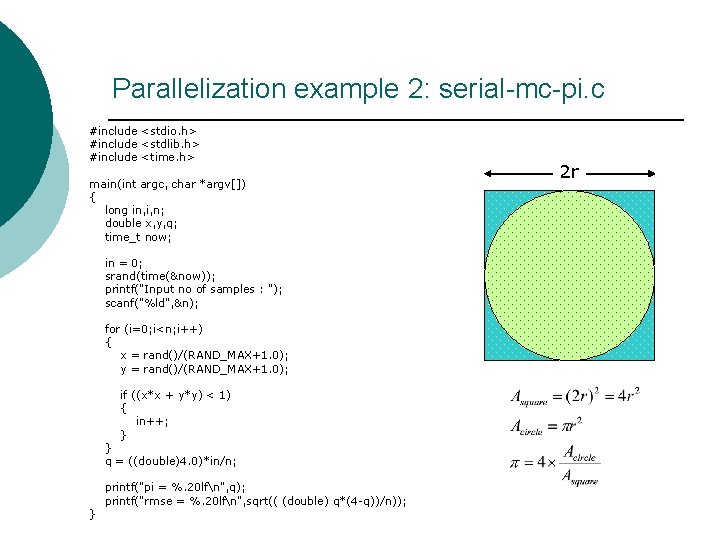

Parallelization example 2: serial-mc-pi.c

    #include <stdio.h>
    #include <stdlib.h>
    #include <time.h>
    #include <math.h>      /* sqrt; link with -lm */

    int main(int argc, char *argv[])
    {
        long in, i, n;
        double x, y, q;
        time_t now;

        in = 0;
        srand(time(&now));
        printf("Input no of samples : ");
        if (scanf("%ld", &n) != 1 || n <= 0)
            return 1;
        for (i = 0; i < n; i++) {
            x = rand() / (RAND_MAX + 1.0);
            y = rand() / (RAND_MAX + 1.0);
            if ((x * x + y * y) < 1) {   /* point falls inside the quarter circle */
                in++;
            }
        }
        q = ((double) 4.0) * in / n;
        printf("pi = %.20lf\n", q);
        printf("rmse = %.20lf\n", sqrt(((double) q * (4 - q)) / n));
        return 0;
    }
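
The rmse line follows from treating each sample as an independent trial that lands inside the quarter circle with probability π/4, the quarter circle's area divided by that of the unit square. The count in is then binomial with parameters n and π/4, and the variance of q = 4·in/n is:

```latex
\operatorname{Var}(q) = \frac{16}{n}\cdot\frac{\pi}{4}\left(1 - \frac{\pi}{4}\right)
  = \frac{\pi(4 - \pi)}{n}
  \approx \frac{q(4 - q)}{n},
\qquad \text{rmse} \approx \sqrt{\frac{q(4 - q)}{n}}
```

Because the error shrinks as 1/√n, quadrupling the number of samples only halves it.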

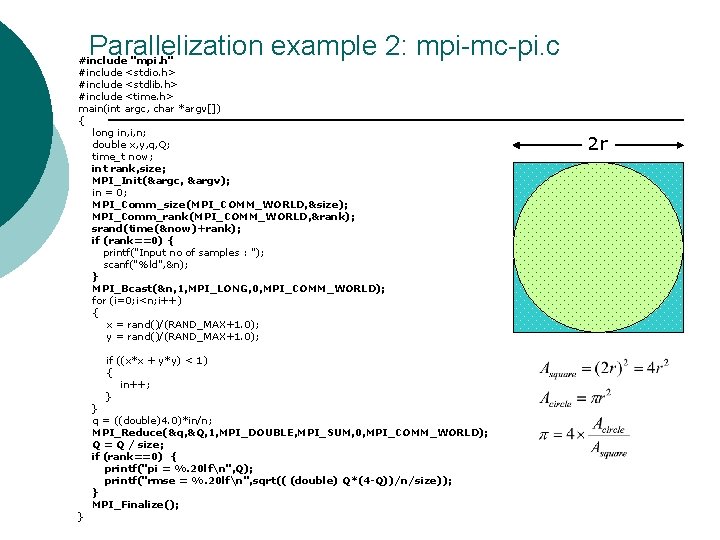

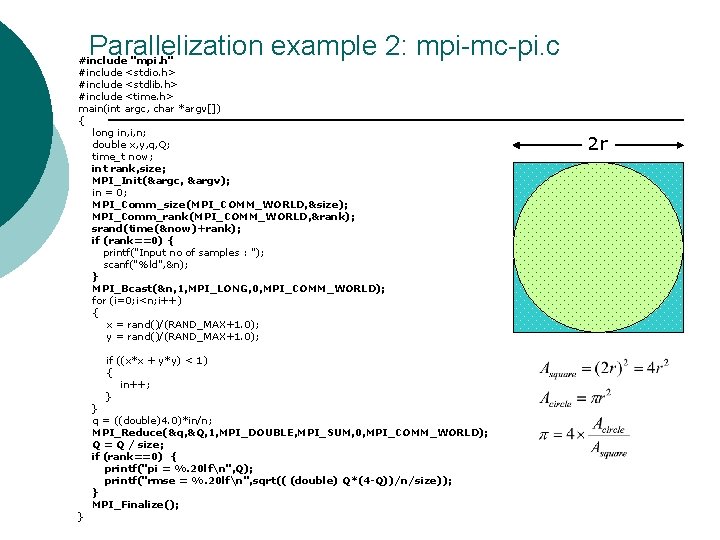

Parallelization example 2: mpi-mc-pi.c

    #include "mpi.h"
    #include <stdio.h>
    #include <stdlib.h>
    #include <time.h>
    #include <math.h>      /* sqrt; link with -lm */

    int main(int argc, char *argv[])
    {
        long in, i, n = 0;
        double x, y, q, Q;
        time_t now;
        int rank, size;

        MPI_Init(&argc, &argv);
        in = 0;
        MPI_Comm_size(MPI_COMM_WORLD, &size);
        MPI_Comm_rank(MPI_COMM_WORLD, &rank);
        srand(time(&now) + rank);          /* a different seed on each process */
        if (rank == 0) {
            printf("Input no of samples : ");
            fflush(stdout);
            if (scanf("%ld", &n) != 1 || n <= 0)
                MPI_Abort(MPI_COMM_WORLD, 1);
        }
        /* every process then draws n samples of its own */
        MPI_Bcast(&n, 1, MPI_LONG, 0, MPI_COMM_WORLD);
        for (i = 0; i < n; i++) {
            x = rand() / (RAND_MAX + 1.0);
            y = rand() / (RAND_MAX + 1.0);
            if ((x * x + y * y) < 1) {
                in++;
            }
        }
        q = ((double) 4.0) * in / n;
        /* sum the per-process estimates on rank 0, then average them there */
        MPI_Reduce(&q, &Q, 1, MPI_DOUBLE, MPI_SUM, 0, MPI_COMM_WORLD);
        if (rank == 0) {
            Q = Q / size;
            printf("pi = %.20lf\n", Q);
            printf("rmse = %.20lf\n", sqrt(((double) Q * (4 - Q)) / n / size));
        }
        MPI_Finalize();
        return 0;
    }
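
When every process draws the same n samples, averaging the per-process estimates as rank 0 does is the same as a single run with n × size samples, which is why the rmse line divides by both n and size. The sketch below reproduces that combination serially. It swaps rand() for a small hypothetical 64-bit linear congruential generator with explicit state, so each simulated rank owns its own stream, seeded with base_seed + rank much like srand(time(&now)+rank) in the program. It is adequate for this illustration but is not a statistically rigorous parallel random number generator.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical generator: 64-bit LCG (Knuth's MMIX constants) whose
 * state is an explicit variable, unlike rand()'s hidden global state.
 * Returns a double in [0, 1) built from the top 53 bits. */
static double next_uniform(uint64_t *state)
{
    *state = *state * 6364136223846793005ULL + 1442695040888963407ULL;
    return (double) (*state >> 11) / 9007199254740992.0;  /* divide by 2^53 */
}

/* One simulated rank: n samples, returns that rank's estimate q. */
double rank_estimate(long n, uint64_t seed)
{
    uint64_t state = seed;
    long in = 0, i;

    assert(n > 0);
    for (i = 0; i < n; ++i) {
        double x = next_uniform(&state);
        double y = next_uniform(&state);
        if (x * x + y * y < 1.0)
            in++;
    }
    return 4.0 * (double) in / (double) n;
}

/* Serial stand-in for MPI_Reduce(MPI_SUM) followed by Q = Q / size. */
double combined_estimate(long n, int size, uint64_t base_seed)
{
    double Q = 0.0;
    int rank;

    assert(size > 0);
    for (rank = 0; rank < size; ++rank)
        Q += rank_estimate(n, base_seed + (uint64_t) rank);
    return Q / size;
}
```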

Compile and run mpi-mc-pi

    $ mpicc -o mpi-mc-pi mpi-mc-pi.c -lm
    $ mpirun -np 4 -machinefile machines mpi-mc-pi

The End