Introduction to ManyCore Architectures Henk Corporaal www ics

![FIR using pthread_t fir_threads[N_THREAD]; fir_arg fa[N_THREAD]; tsize = M/N_THREAD; for(i = 0; i < FIR using pthread_t fir_threads[N_THREAD]; fir_arg fa[N_THREAD]; tsize = M/N_THREAD; for(i = 0; i <](https://slidetodoc.com/presentation_image_h2/d9de3b692d14856c617e033d3ae12fd4/image-105.jpg)

![SPE DMA double buffering float i. Buf[2][BUF_SIZE]; float o. Buf[2][BUF_SIZE]; int idx=0; int buffers=size/BUF_SIZE; SPE DMA double buffering float i. Buf[2][BUF_SIZE]; float o. Buf[2][BUF_SIZE]; int idx=0; int buffers=size/BUF_SIZE;](https://slidetodoc.com/presentation_image_h2/d9de3b692d14856c617e033d3ae12fd4/image-108.jpg)

- Slides: 124

Introduction to Many-Core Architectures Henk Corporaal www. ics. ele. tue. nl/~heco ASCI Winterschool on Embedded Systems Soesterberg, March 2010 ASCI Winterschool 2010

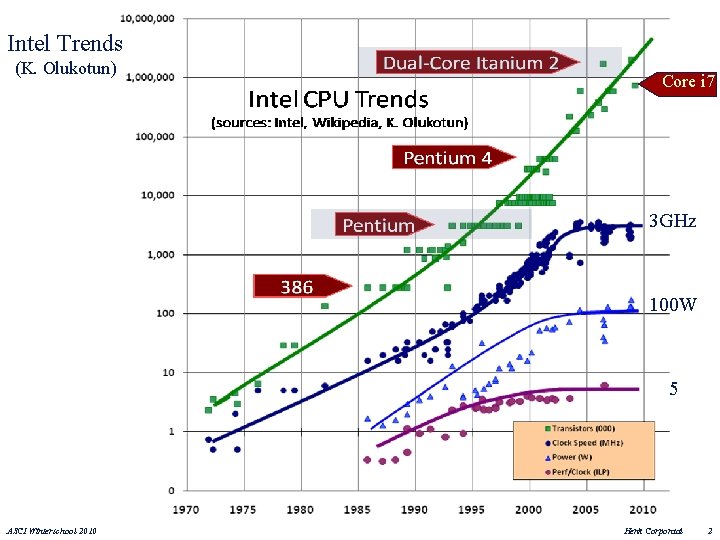

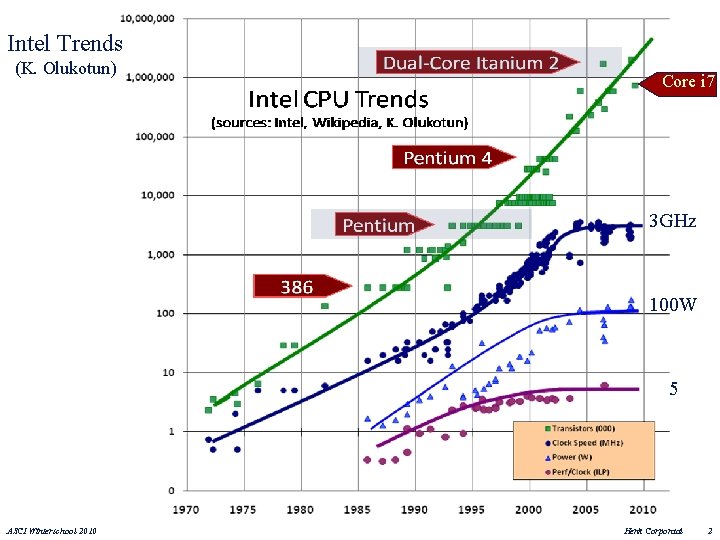

Intel Trends (K. Olukotun) Core i 7 3 GHz 100 W 5 ASCI Winterschool 2010 Henk Corporaal 2

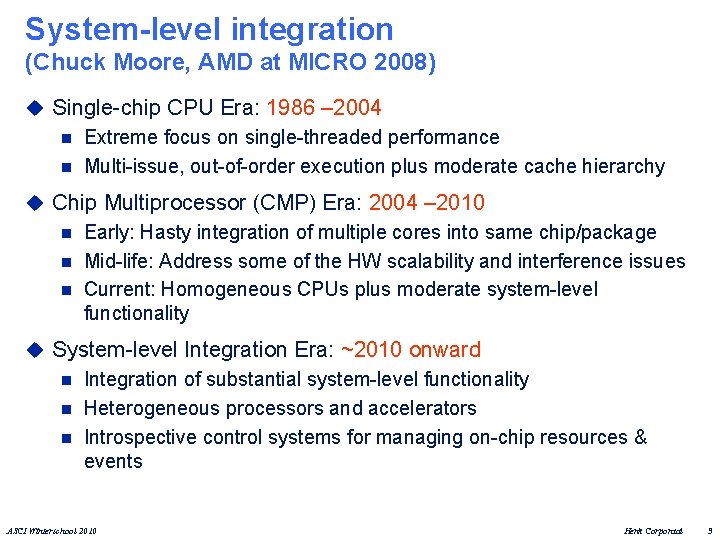

System-level integration (Chuck Moore, AMD at MICRO 2008) u Single-chip CPU Era: 1986 – 2004 n Extreme focus on single-threaded performance n Multi-issue, out-of-order execution plus moderate cache hierarchy u Chip Multiprocessor (CMP) Era: 2004 – 2010 n Early: Hasty integration of multiple cores into same chip/package n Mid-life: Address some of the HW scalability and interference issues n Current: Homogeneous CPUs plus moderate system-level functionality u System-level Integration Era: ~2010 onward n Integration of substantial system-level functionality n Heterogeneous processors and accelerators n Introspective control systems for managing on-chip resources & events ASCI Winterschool 2010 Henk Corporaal 3

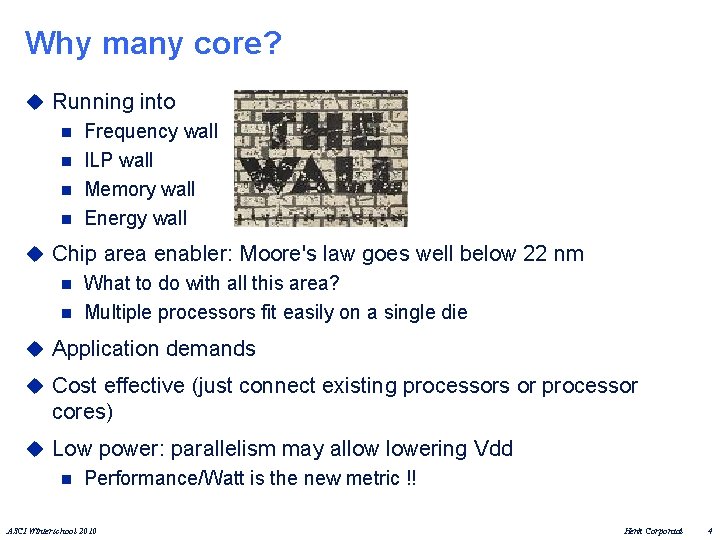

Why many core? u Running into n Frequency wall n ILP wall n Memory wall n Energy wall u Chip area enabler: Moore's law goes well below 22 nm n What to do with all this area? n Multiple processors fit easily on a single die u Application demands u Cost effective (just connect existing processors or processor cores) u Low power: parallelism may allow lowering Vdd n Performance/Watt is the new metric !! ASCI Winterschool 2010 Henk Corporaal 4

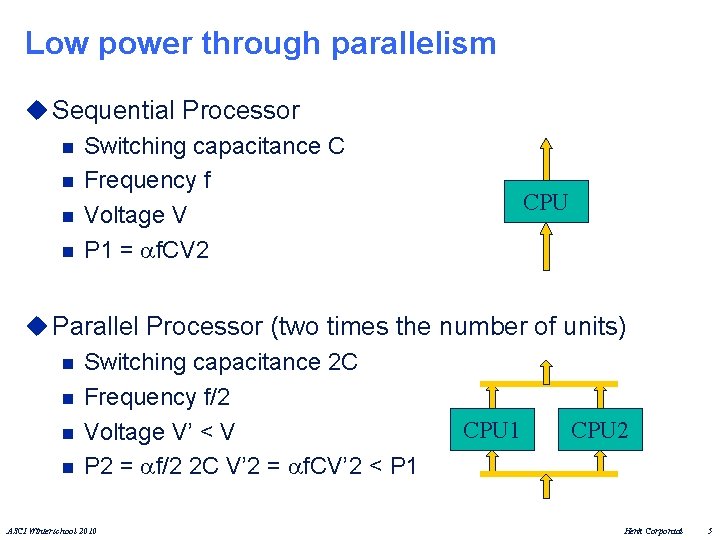

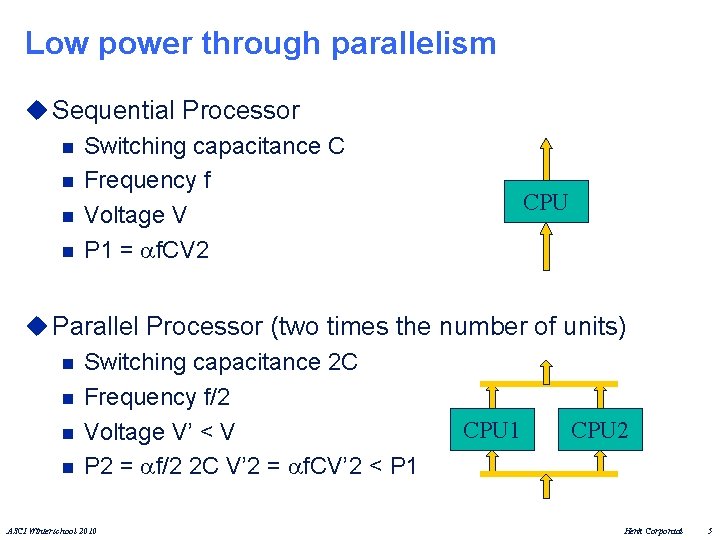

Low power through parallelism u Sequential Processor n Switching capacitance C n Frequency f n Voltage V n P 1 = f. CV 2 CPU u Parallel Processor (two times the number of units) n Switching capacitance 2 C n Frequency f/2 CPU 1 CPU 2 n Voltage V’ < V n P 2 = f/2 2 C V’ 2 = f. CV’ 2 < P 1 ASCI Winterschool 2010 Henk Corporaal 5

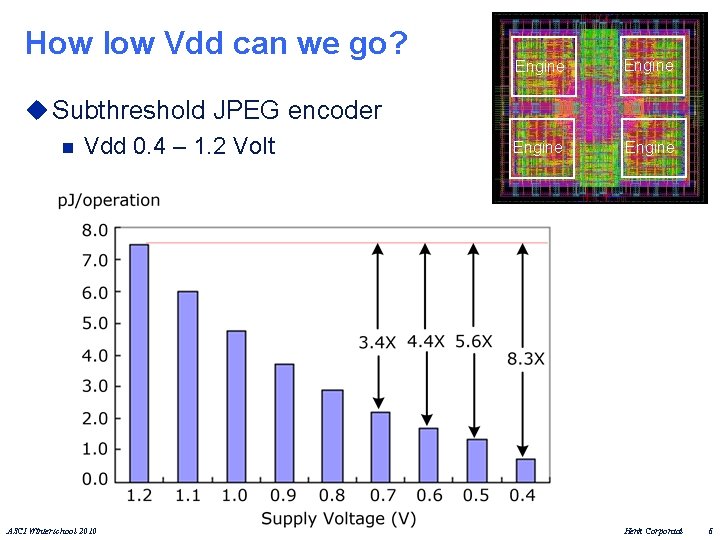

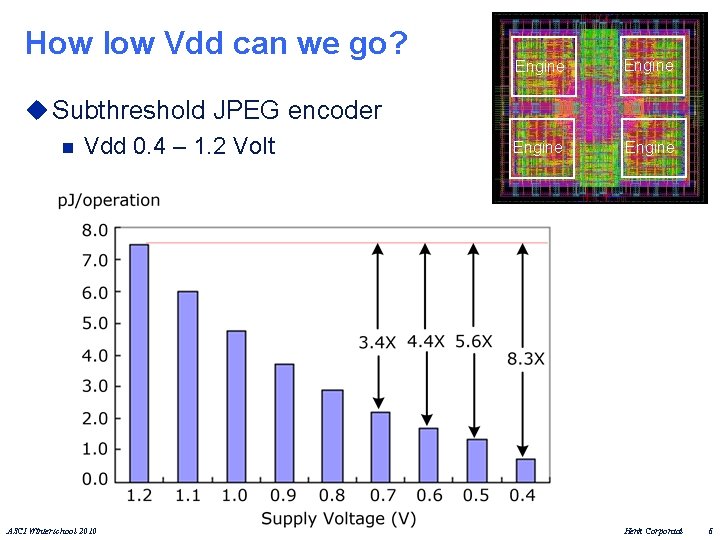

How low Vdd can we go? u Subthreshold JPEG encoder n Vdd 0. 4 – 1. 2 Volt ASCI Winterschool 2010 Engine Henk Corporaal 6

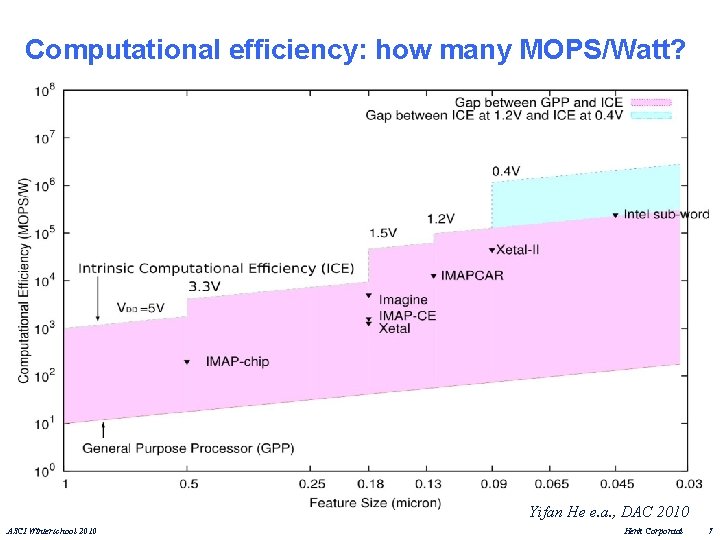

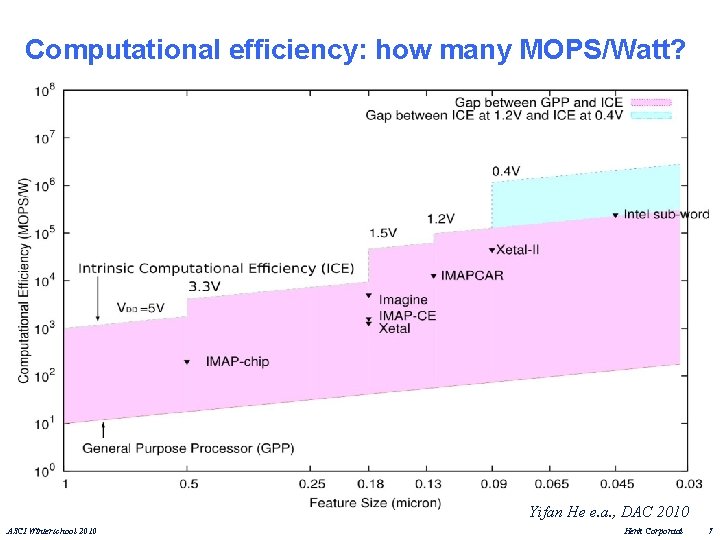

Computational efficiency: how many MOPS/Watt? Yifan He e. a. , DAC 2010 ASCI Winterschool 2010 Henk Corporaal 7

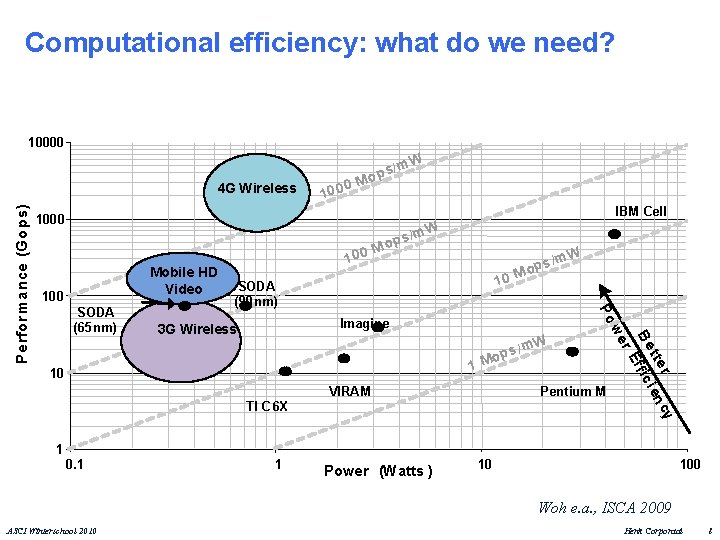

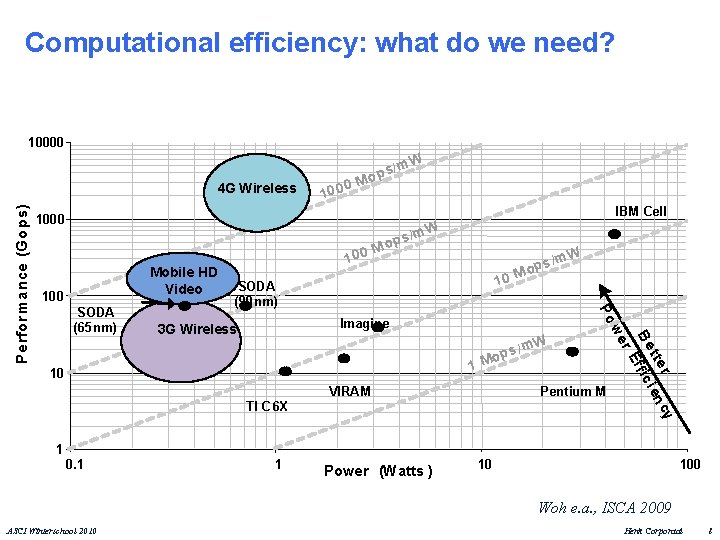

Computational efficiency: what do we need? 10000 10 0 0 M m. W IBM Cell 1000 Mobile HD Video 100 M m. W 10 SODA (90 nm) ps / Mo Imagine 1 M 10 VIRAM / ops m. W Pentium M TI C 6 X r cy t te i e n B e f f ic r. E 3 G Wireless m. W we SODA (65 nm) 1 00 / ops Po Pe rfor ma nce (Go ps) 4 G Wireless s/ op 1 0. 1 1 Power (Watts ) 10 100 Woh e. a. , ISCA 2009 ASCI Winterschool 2010 Henk Corporaal 8

Intel's opinion: 48 -core x 86 ASCI Winterschool 2010 Henk Corporaal 9

Outline u Classifications of Parallel Architectures u Examples n Various (research) architectures n GPUs n Cell n Intel multi-cores u How much performance do you really get? Roofline model u Trends & Conclusions ASCI Winterschool 2010 Henk Corporaal 10

Classifications u Performance / parallelism driven: n 4 -5 D n Flynn u Communication & Memory n Message passing / Shared memory n Shared memory issues: coherency, consistency, synchronization u Interconnect ASCI Winterschool 2010 Henk Corporaal 11

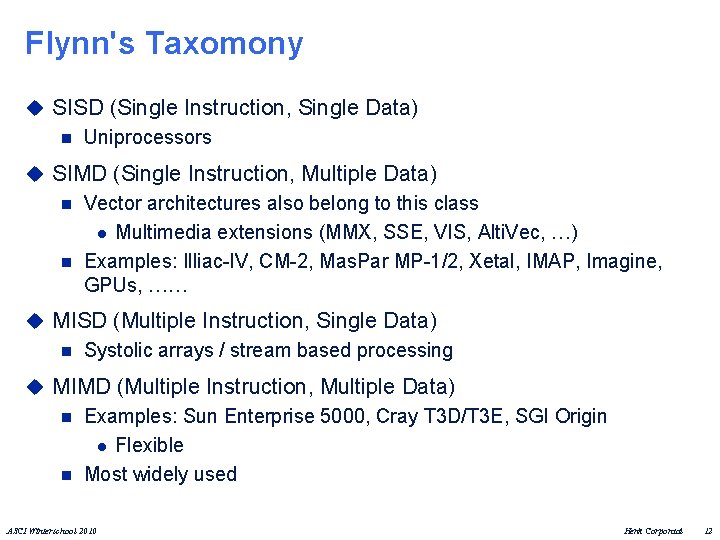

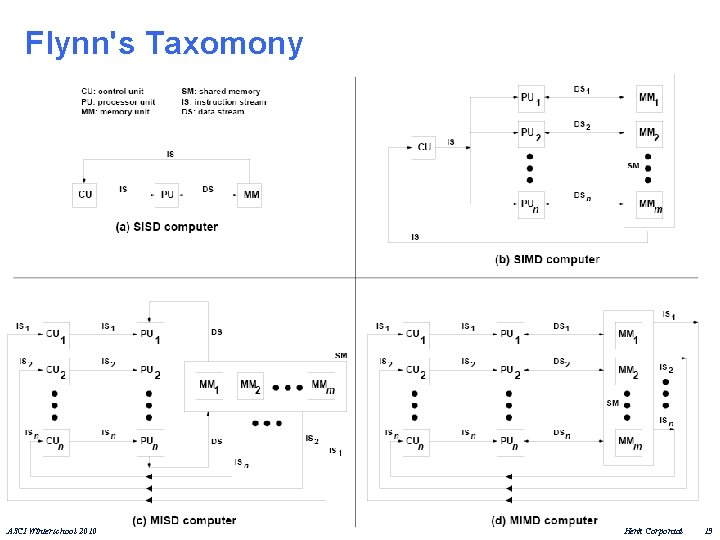

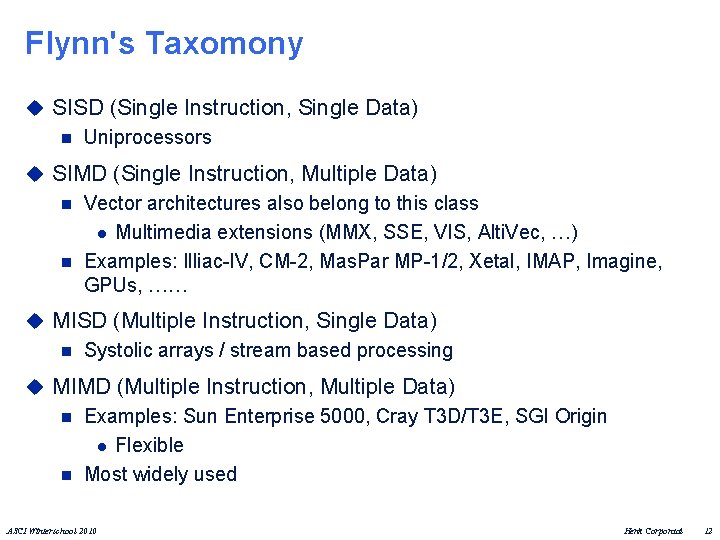

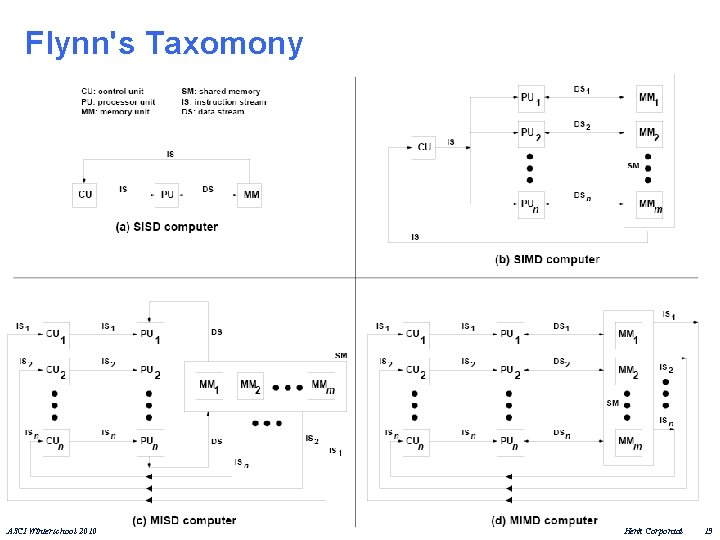

Flynn's Taxomony u SISD (Single Instruction, Single Data) n Uniprocessors u SIMD (Single Instruction, Multiple Data) n Vector architectures also belong to this class l Multimedia extensions (MMX, SSE, VIS, Alti. Vec, …) n Examples: Illiac-IV, CM-2, Mas. Par MP-1/2, Xetal, IMAP, Imagine, GPUs, …… u MISD (Multiple Instruction, Single Data) n Systolic arrays / stream based processing u MIMD (Multiple Instruction, Multiple Data) n Examples: Sun Enterprise 5000, Cray T 3 D/T 3 E, SGI Origin l Flexible n Most widely used ASCI Winterschool 2010 Henk Corporaal 12

Flynn's Taxomony ASCI Winterschool 2010 Henk Corporaal 13

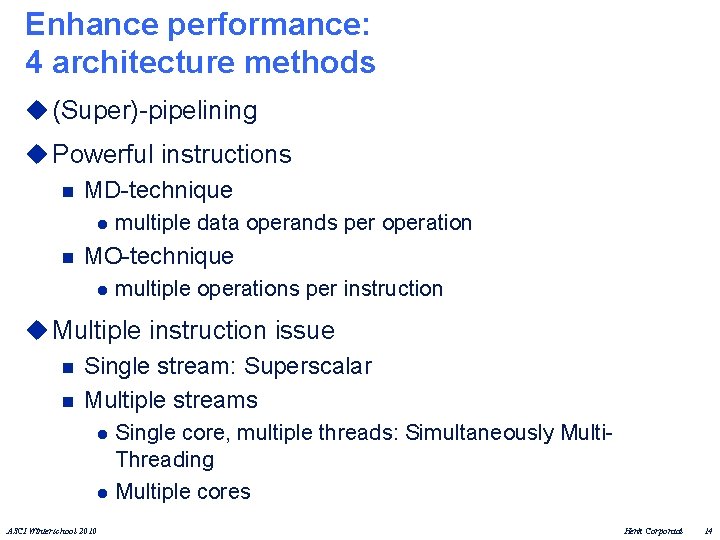

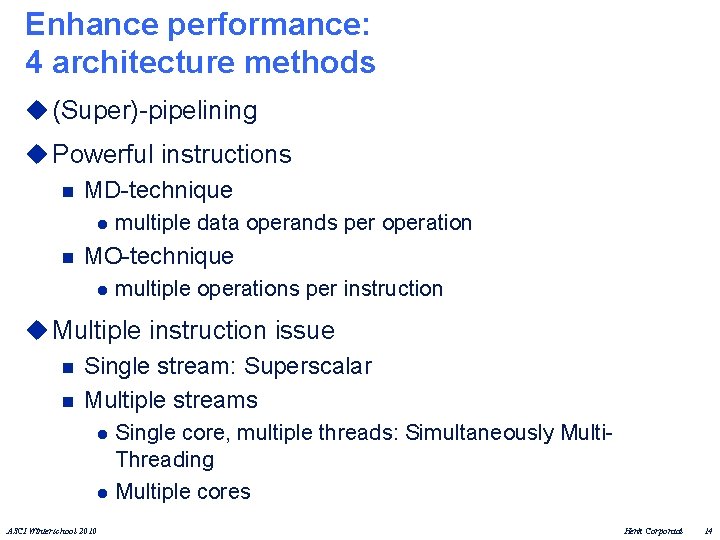

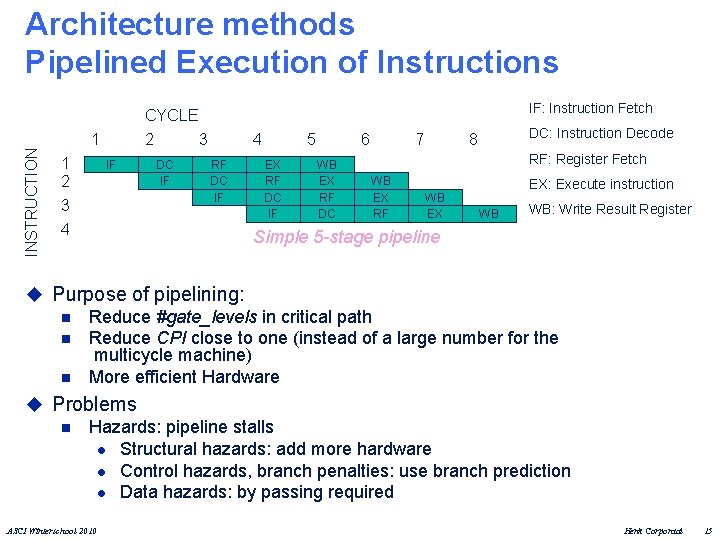

Enhance performance: 4 architecture methods u (Super)-pipelining u Powerful instructions n MD-technique l n multiple data operands per operation MO-technique l multiple operations per instruction u Multiple instruction issue n Single stream: Superscalar n Multiple streams l l ASCI Winterschool 2010 Single core, multiple threads: Simultaneously Multi. Threading Multiple cores Henk Corporaal 14

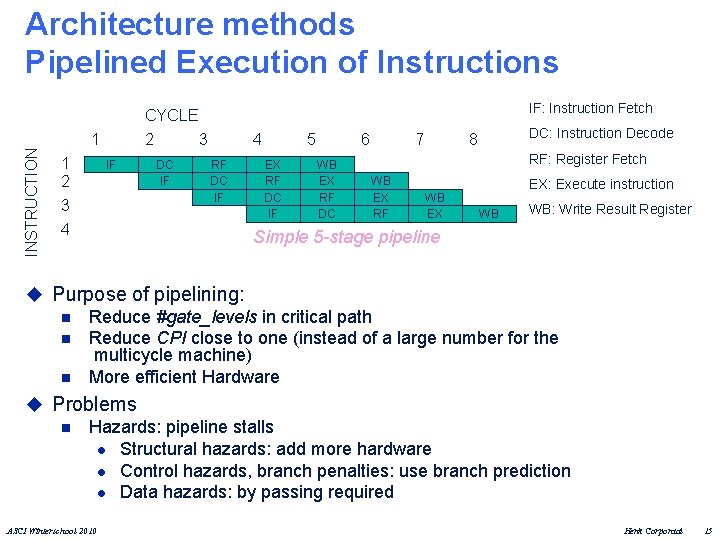

Architecture methods Pipelined Execution of Instructions IF: Instruction Fetch INSTRUCTION CYCLE 1 1 2 3 4 2 IF 3 DC IF 4 RF DC IF 5 EX RF DC IF 6 WB EX RF DC 7 DC: Instruction Decode 8 RF: Register Fetch WB EX RF WB EX EX: Execute instruction WB WB: Write Result Register Simple 5 -stage pipeline u Purpose of pipelining: n Reduce #gate_levels in critical path n Reduce CPI close to one (instead of a large number for the multicycle machine) n More efficient Hardware u Problems n Hazards: pipeline stalls l Structural hazards: add more hardware l Control hazards, branch penalties: use branch prediction l Data hazards: by passing required ASCI Winterschool 2010 Henk Corporaal 15

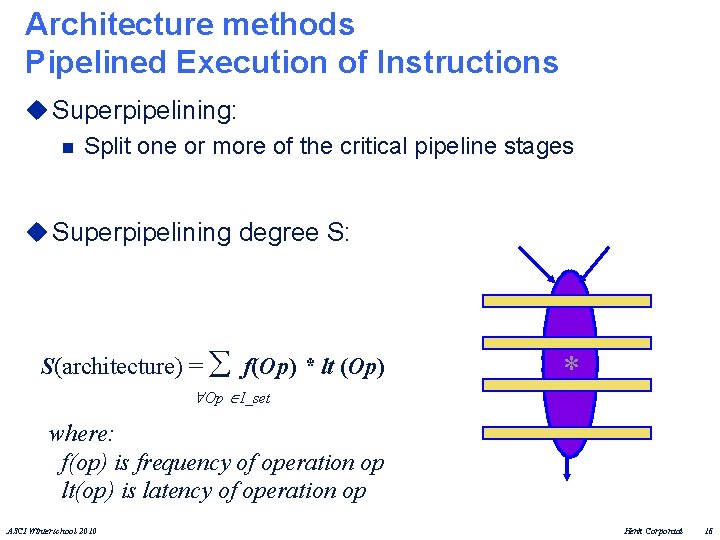

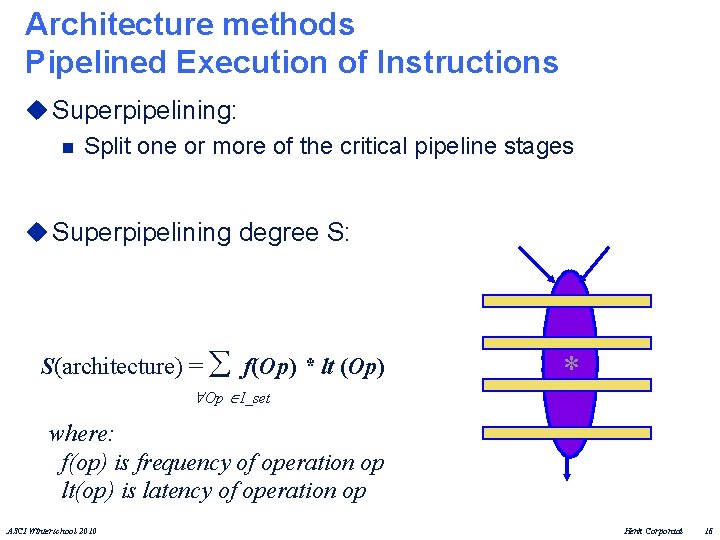

Architecture methods Pipelined Execution of Instructions u Superpipelining: n Split one or more of the critical pipeline stages u Superpipelining degree S: S(architecture) = f(Op) * lt (Op) Op I_set * where: f(op) is frequency of operation op lt(op) is latency of operation op ASCI Winterschool 2010 Henk Corporaal 16

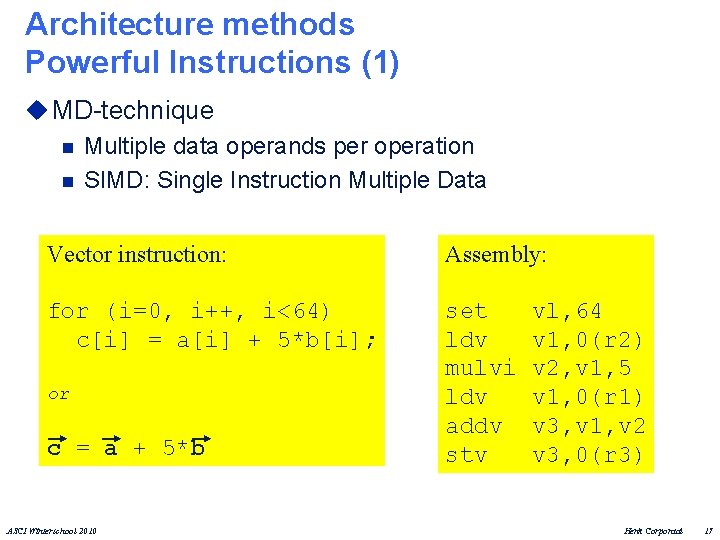

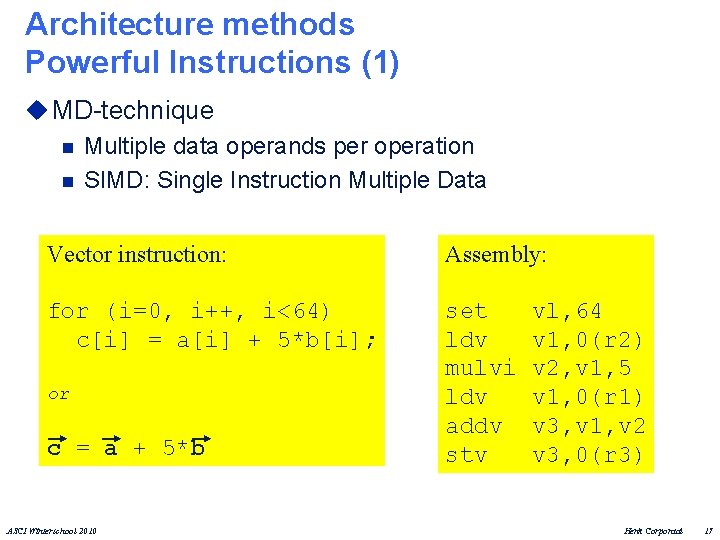

Architecture methods Powerful Instructions (1) u MD-technique n Multiple data operands per operation n SIMD: Single Instruction Multiple Data Vector instruction: Assembly: for (i=0, i++, i<64) c[i] = a[i] + 5*b[i]; set ldv mulvi ldv addv stv or c = a + 5*b ASCI Winterschool 2010 vl, 64 v 1, 0(r 2) v 2, v 1, 5 v 1, 0(r 1) v 3, v 1, v 2 v 3, 0(r 3) Henk Corporaal 17

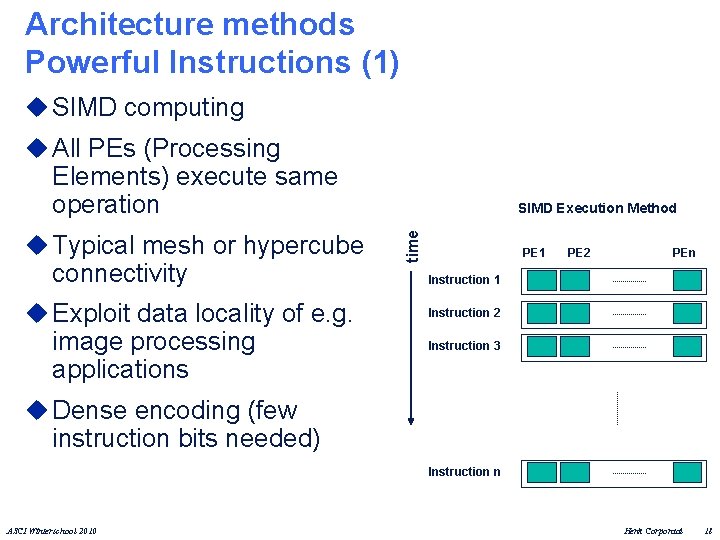

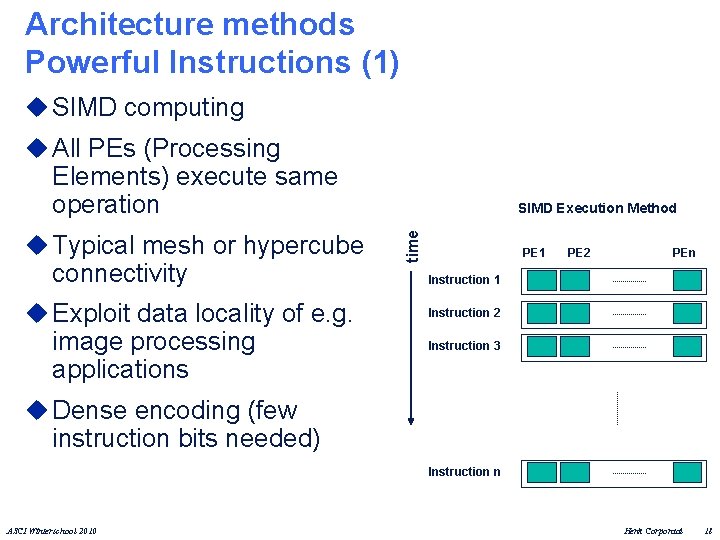

Architecture methods Powerful Instructions (1) u SIMD computing u All PEs (Processing Elements) execute same operation connectivity u Exploit data locality of e. g. image processing applications time u Typical mesh or hypercube SIMD Execution Method PE 1 PE 2 PEn Instruction 1 Instruction 2 Instruction 3 u Dense encoding (few instruction bits needed) Instruction n ASCI Winterschool 2010 Henk Corporaal 18

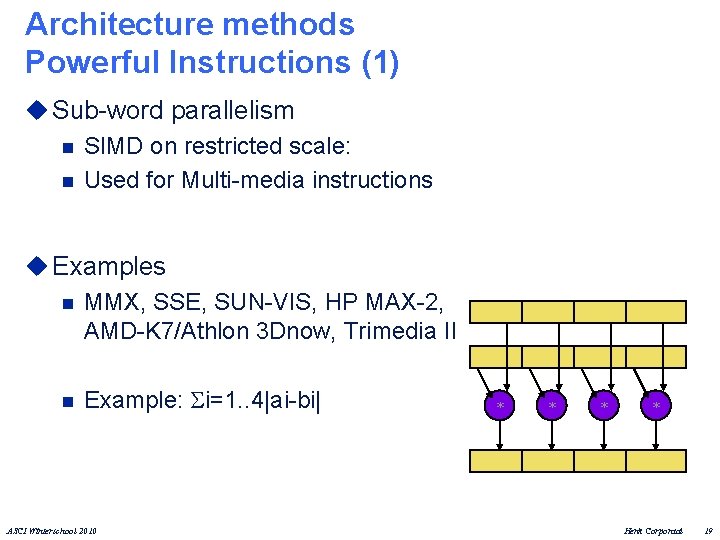

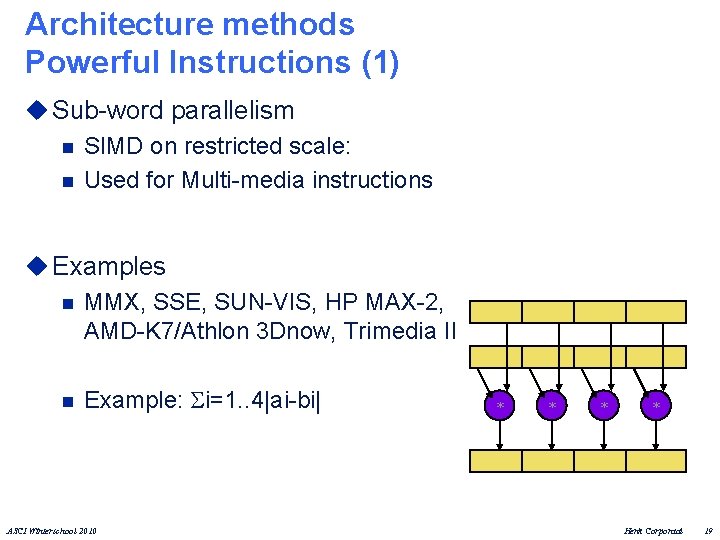

Architecture methods Powerful Instructions (1) u Sub-word parallelism n SIMD on restricted scale: n Used for Multi-media instructions u Examples n MMX, SSE, SUN-VIS, HP MAX-2, AMD-K 7/Athlon 3 Dnow, Trimedia II n Example: i=1. . 4|ai-bi| ASCI Winterschool 2010 * * Henk Corporaal 19

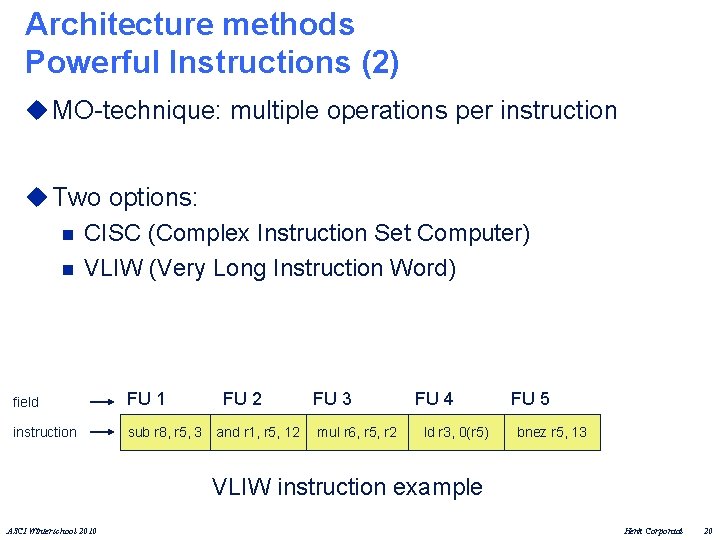

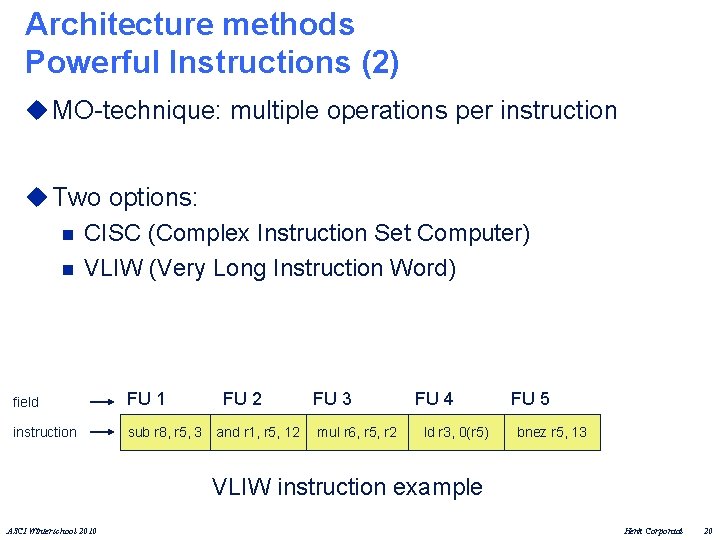

Architecture methods Powerful Instructions (2) u MO-technique: multiple operations per instruction u Two options: n CISC (Complex Instruction Set Computer) n VLIW (Very Long Instruction Word) field FU 1 instruction sub r 8, r 5, 3 FU 2 and r 1, r 5, 12 FU 3 mul r 6, r 5, r 2 FU 4 ld r 3, 0(r 5) FU 5 bnez r 5, 13 VLIW instruction example ASCI Winterschool 2010 Henk Corporaal 20

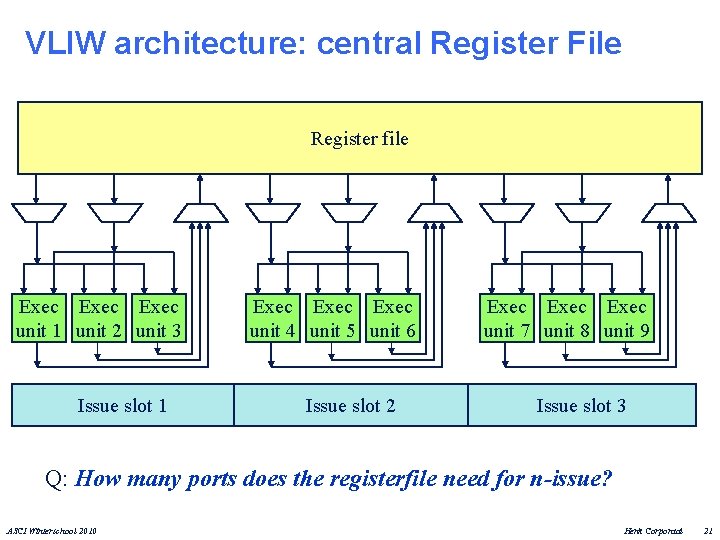

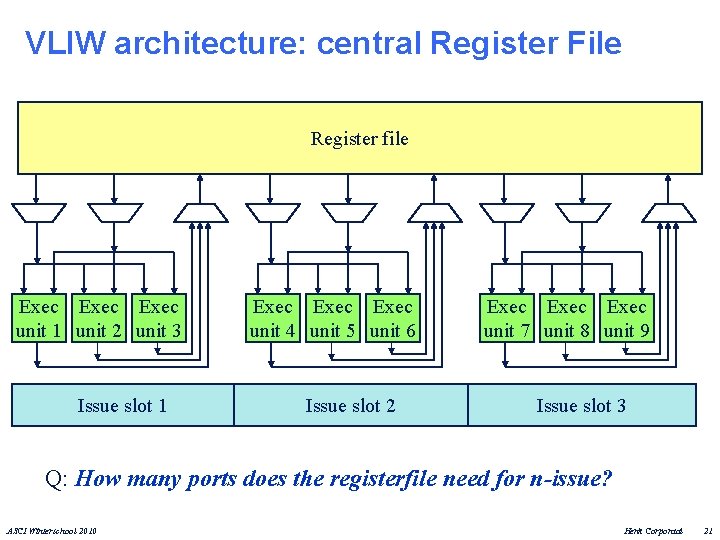

VLIW architecture: central Register File Register file Exec unit 1 unit 2 unit 3 Issue slot 1 Exec unit 4 unit 5 unit 6 Issue slot 2 Exec unit 7 unit 8 unit 9 Issue slot 3 Q: How many ports does the registerfile need for n-issue? ASCI Winterschool 2010 Henk Corporaal 21

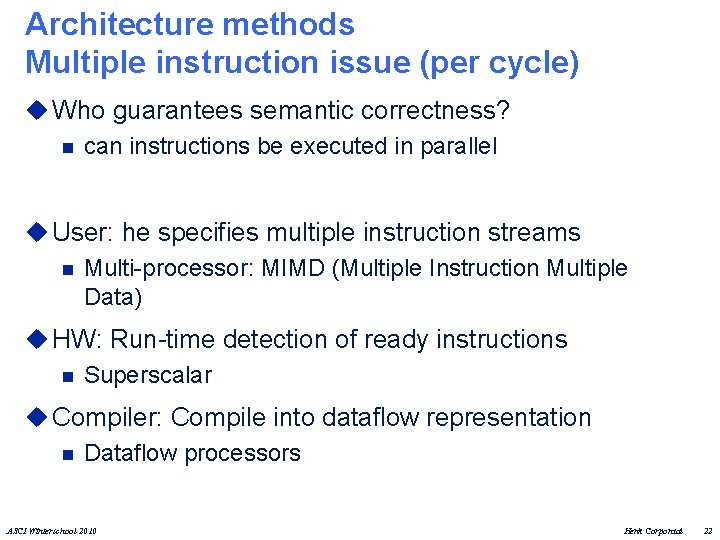

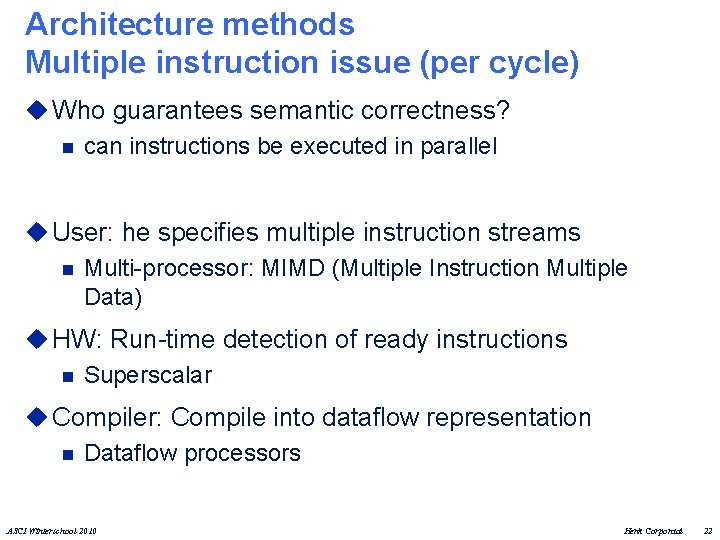

Architecture methods Multiple instruction issue (per cycle) u Who guarantees semantic correctness? n can instructions be executed in parallel u User: he specifies multiple instruction streams n Multi-processor: MIMD (Multiple Instruction Multiple Data) u HW: Run-time detection of ready instructions n Superscalar u Compiler: Compile into dataflow representation n Dataflow processors ASCI Winterschool 2010 Henk Corporaal 22

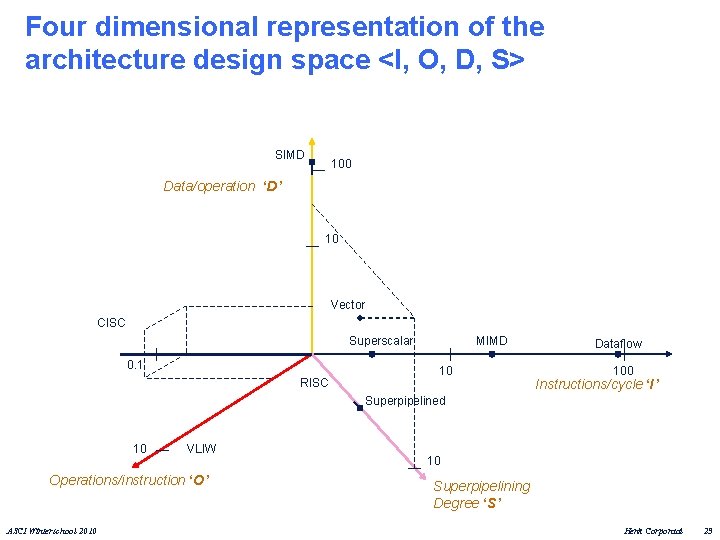

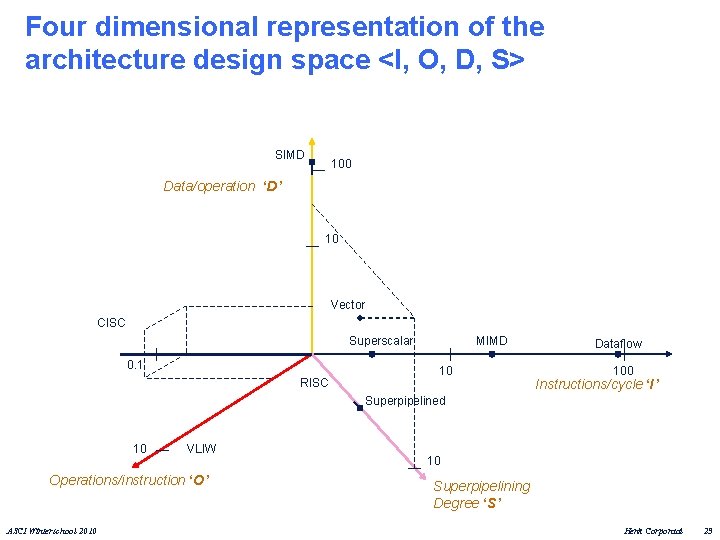

Four dimensional representation of the architecture design space <I, O, D, S> SIMD 100 Data/operation ‘D’ 10 Vector CISC Superscalar 0. 1 RISC MIMD 10 Dataflow 100 Instructions/cycle ‘I’ Superpipelined 10 VLIW Operations/instruction ‘O’ ASCI Winterschool 2010 10 Superpipelining Degree ‘S’ Henk Corporaal 23

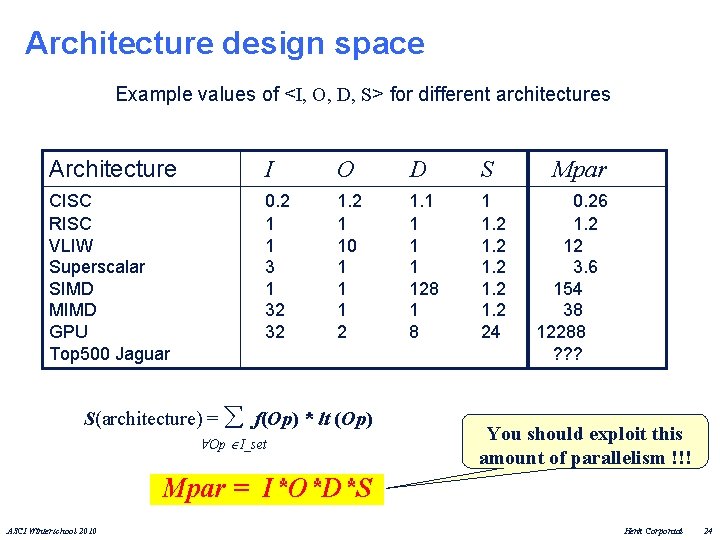

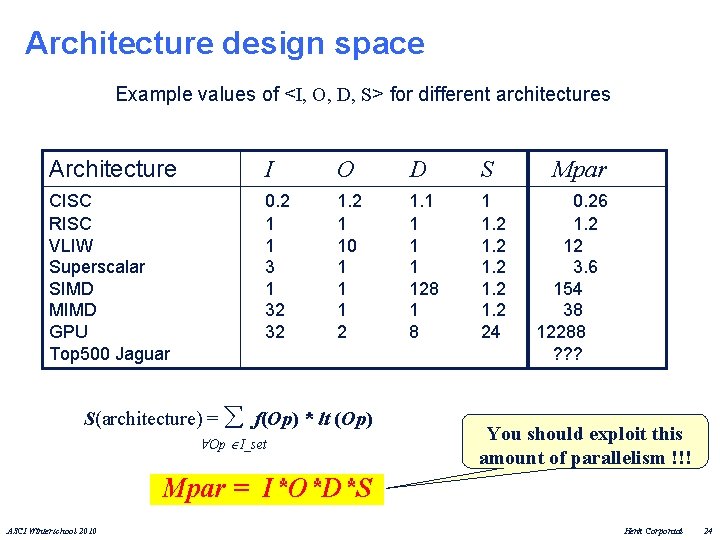

Architecture design space Example values of <I, O, D, S> for different architectures Architecture I O D S CISC RISC VLIW Superscalar SIMD MIMD GPU Top 500 Jaguar 0. 2 1 1 32 32 1. 2 1 10 1 1 1 2 1. 1 1 128 1 1. 2 1. 2 24 S(architecture) = f(Op) * lt (Op) Op I_set Mpar 0. 26 1. 2 12 3. 6 154 38 12288 ? ? ? You should exploit this amount of parallelism !!! Mpar = I*O*D*S ASCI Winterschool 2010 Henk Corporaal 24

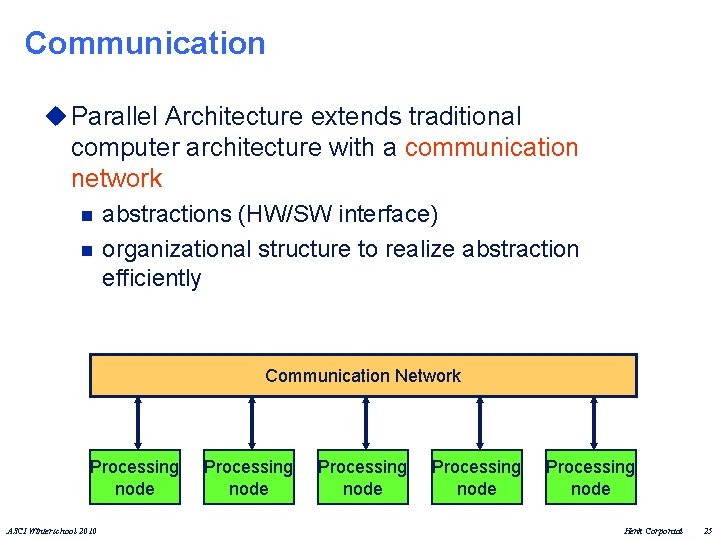

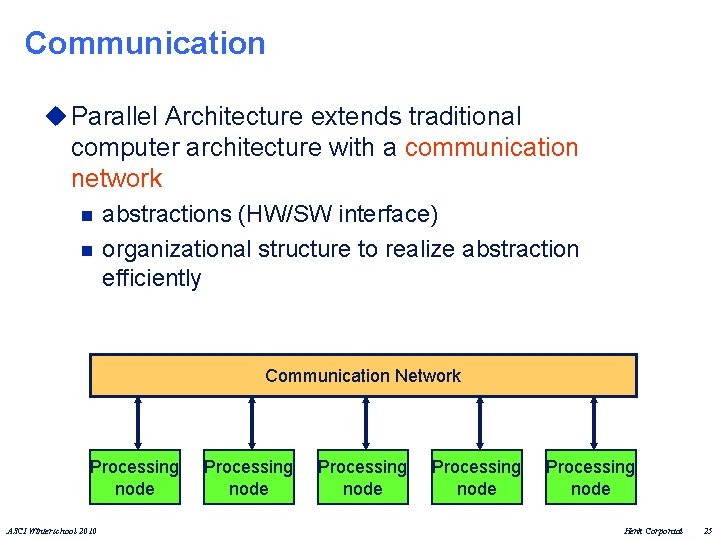

Communication u Parallel Architecture extends traditional computer architecture with a communication network n n abstractions (HW/SW interface) organizational structure to realize abstraction efficiently Communication Network Processing node ASCI Winterschool 2010 Processing node Henk Corporaal 25

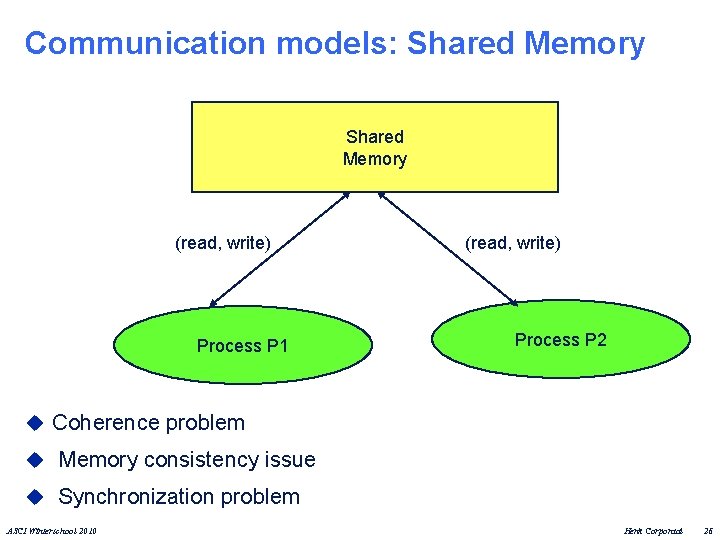

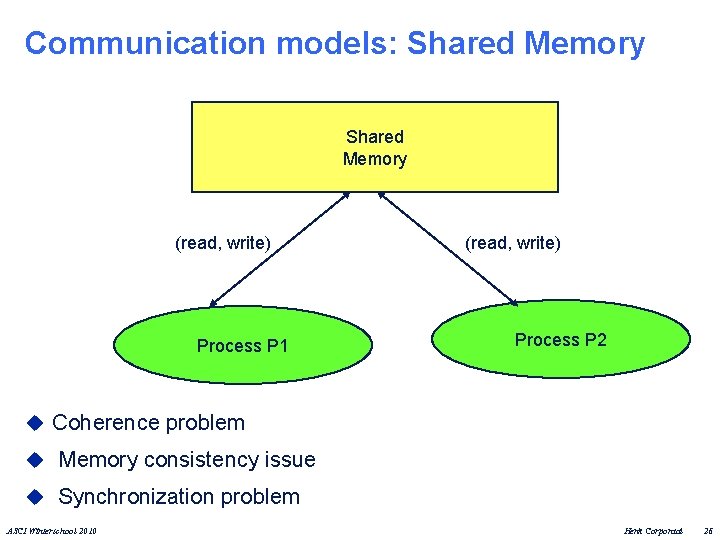

Communication models: Shared Memory (read, write) Process P 1 (read, write) Process P 2 u Coherence problem u Memory consistency issue u Synchronization problem ASCI Winterschool 2010 Henk Corporaal 26

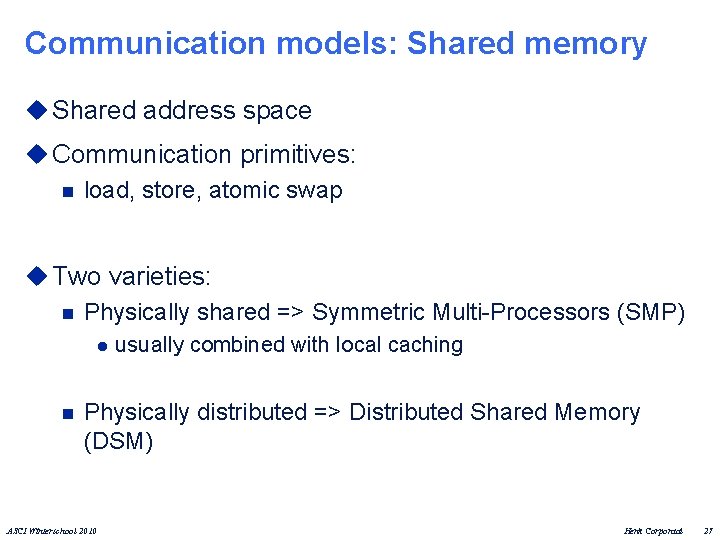

Communication models: Shared memory u Shared address space u Communication primitives: n load, store, atomic swap u Two varieties: n Physically shared => Symmetric Multi-Processors (SMP) l n usually combined with local caching Physically distributed => Distributed Shared Memory (DSM) ASCI Winterschool 2010 Henk Corporaal 27

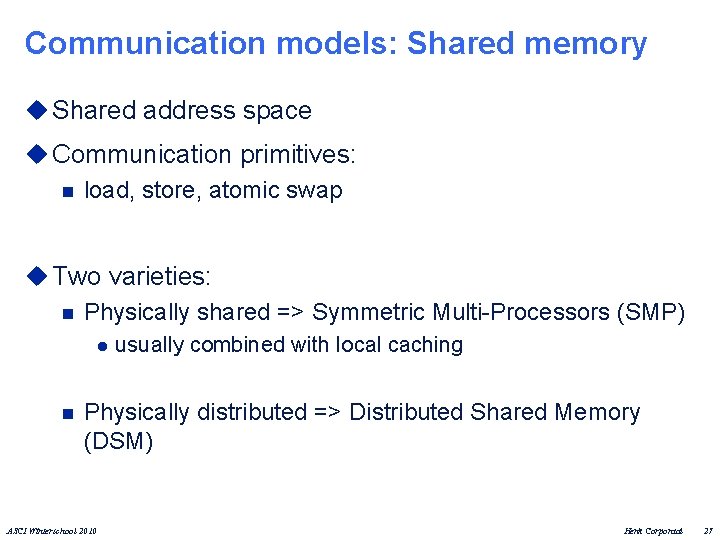

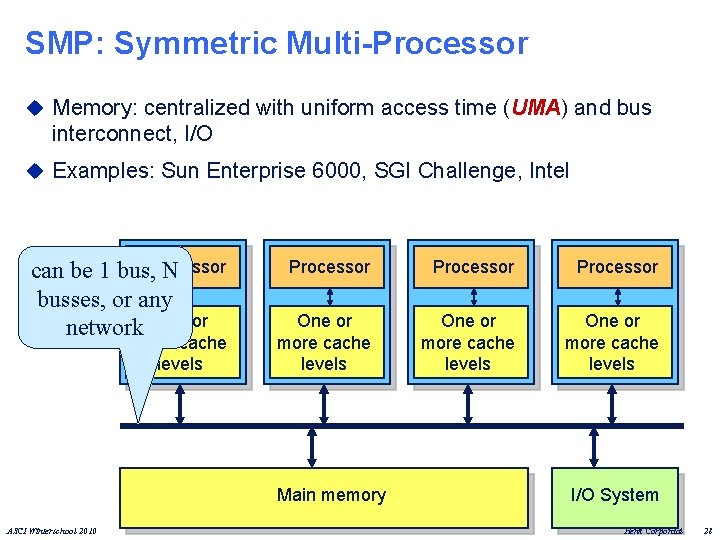

SMP: Symmetric Multi-Processor u Memory: centralized with uniform access time (UMA) and bus interconnect, I/O u Examples: Sun Enterprise 6000, SGI Challenge, Intel can be 1 bus, Processor N busses, or any network One or more cache levels Processor One or more cache levels Main memory ASCI Winterschool 2010 I/O System Henk Corporaal 28

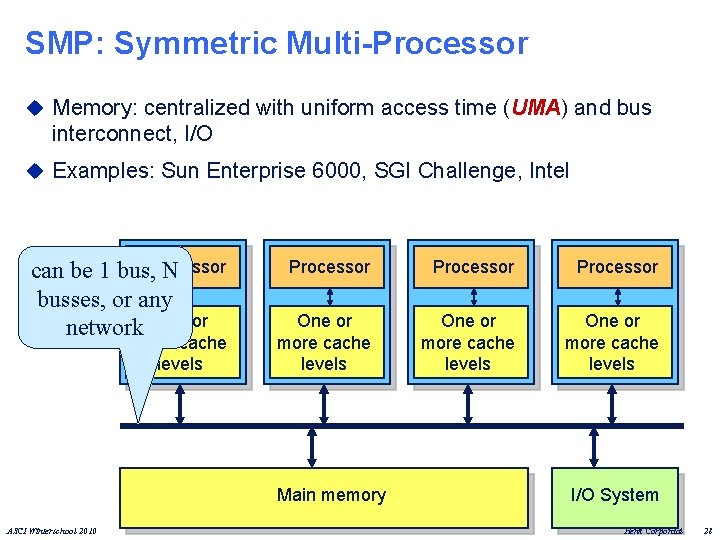

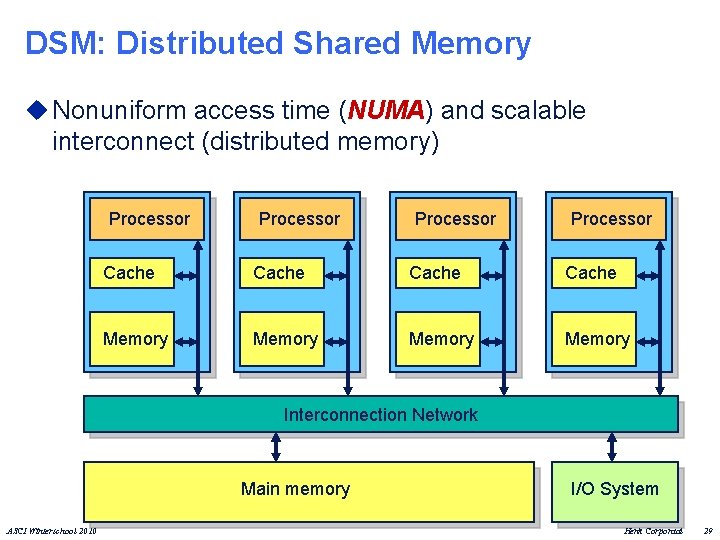

DSM: Distributed Shared Memory u Nonuniform access time (NUMA) and scalable interconnect (distributed memory) Processor Cache Memory Interconnection Network Main memory ASCI Winterschool 2010 I/O System Henk Corporaal 29

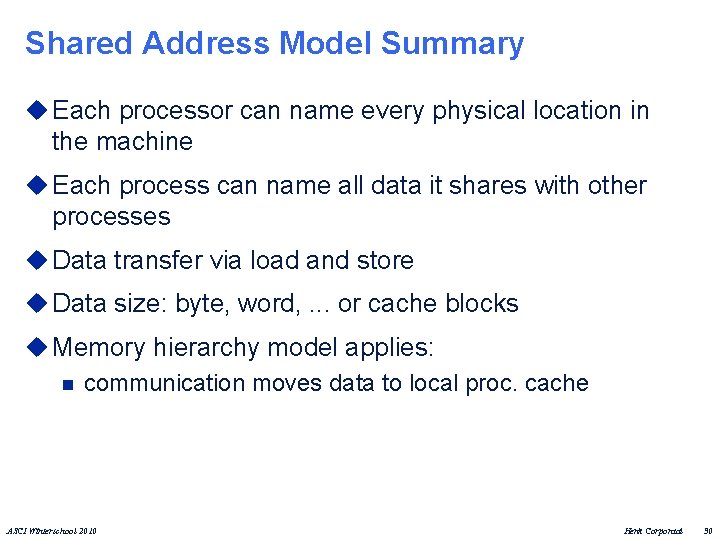

Shared Address Model Summary u Each processor can name every physical location in the machine u Each process can name all data it shares with other processes u Data transfer via load and store u Data size: byte, word, . . . or cache blocks u Memory hierarchy model applies: n communication moves data to local proc. cache ASCI Winterschool 2010 Henk Corporaal 30

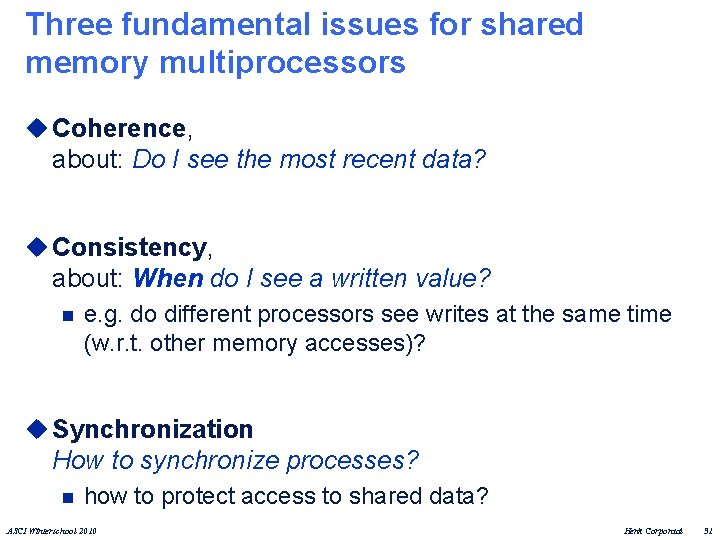

Three fundamental issues for shared memory multiprocessors u Coherence, about: Do I see the most recent data? u Consistency, about: When do I see a written value? n e. g. do different processors see writes at the same time (w. r. t. other memory accesses)? u Synchronization How to synchronize processes? n how to protect access to shared data? ASCI Winterschool 2010 Henk Corporaal 31

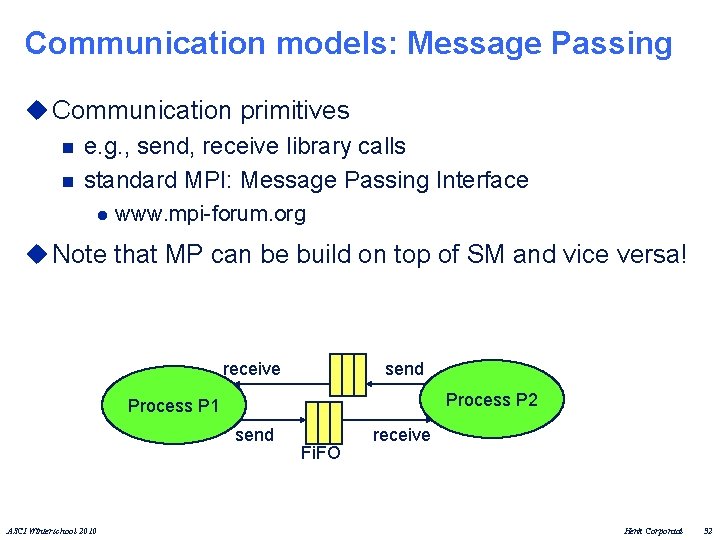

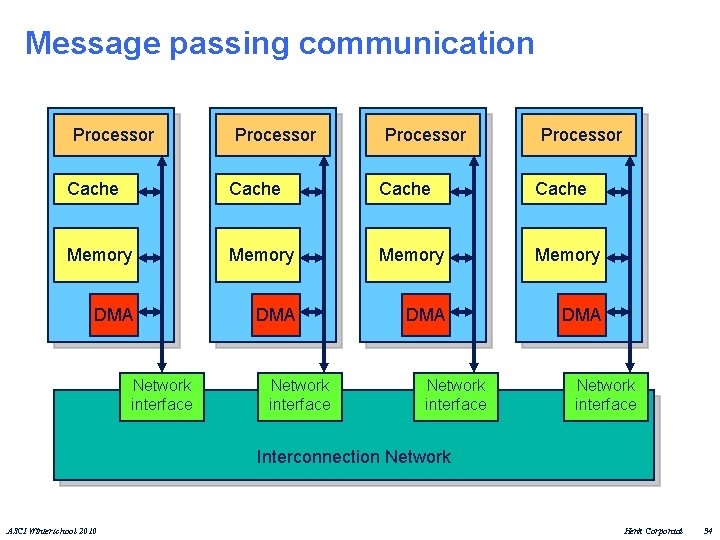

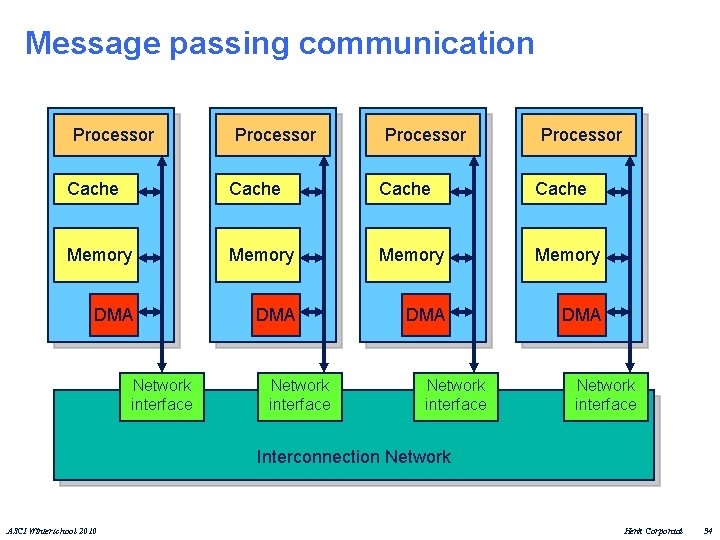

Communication models: Message Passing u Communication primitives n e. g. , send, receive library calls n standard MPI: Message Passing Interface l www. mpi-forum. org u Note that MP can be build on top of SM and vice versa! receive send Process P 2 Process P 1 send ASCI Winterschool 2010 Fi. FO receive Henk Corporaal 32

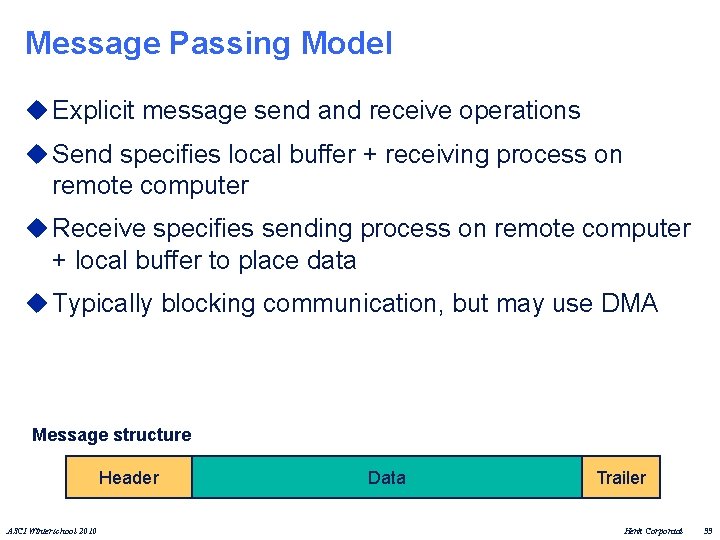

Message Passing Model u Explicit message send and receive operations u Send specifies local buffer + receiving process on remote computer u Receive specifies sending process on remote computer + local buffer to place data u Typically blocking communication, but may use DMA Message structure Header ASCI Winterschool 2010 Data Trailer Henk Corporaal 33

Message passing communication Processor Cache Memory DMA DMA Network interface Interconnection Network ASCI Winterschool 2010 Henk Corporaal 34

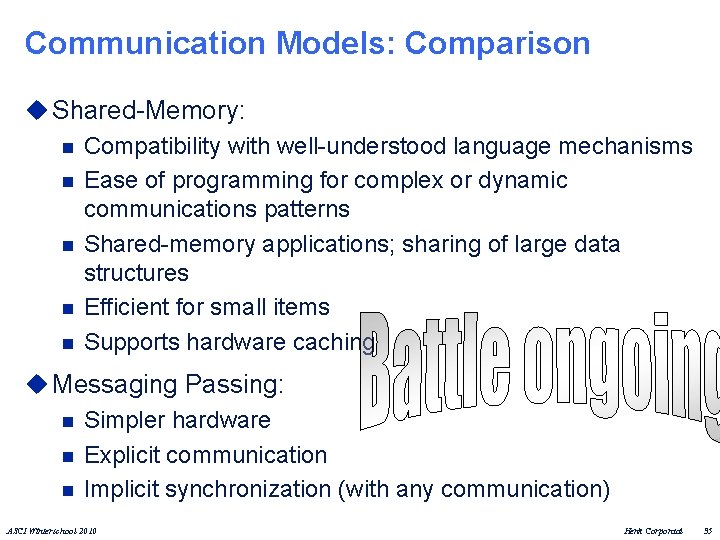

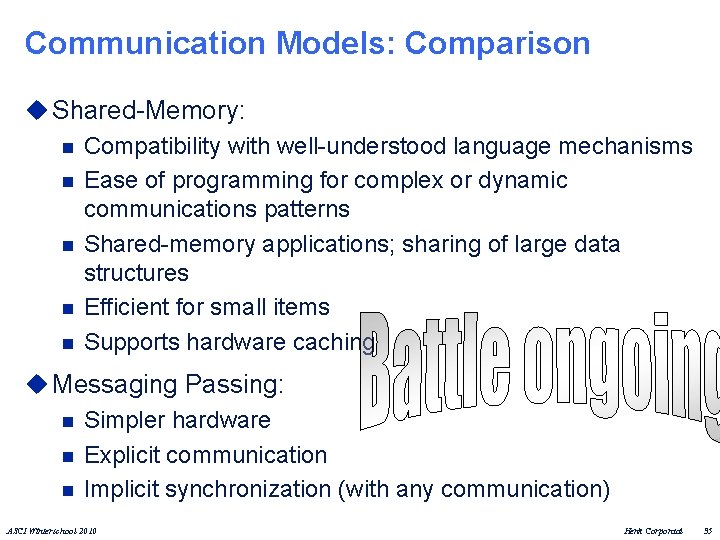

Communication Models: Comparison u Shared-Memory: n Compatibility with well-understood language mechanisms n Ease of programming for complex or dynamic communications patterns n Shared-memory applications; sharing of large data structures n Efficient for small items n Supports hardware caching u Messaging Passing: n Simpler hardware n Explicit communication n Implicit synchronization (with any communication) ASCI Winterschool 2010 Henk Corporaal 35

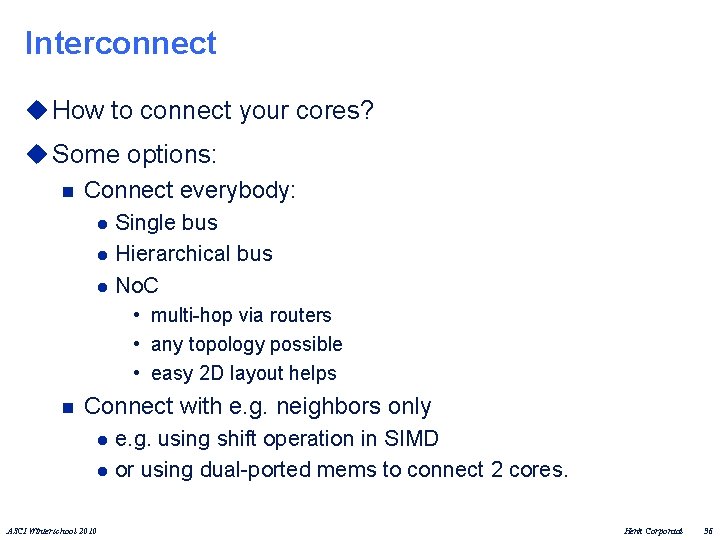

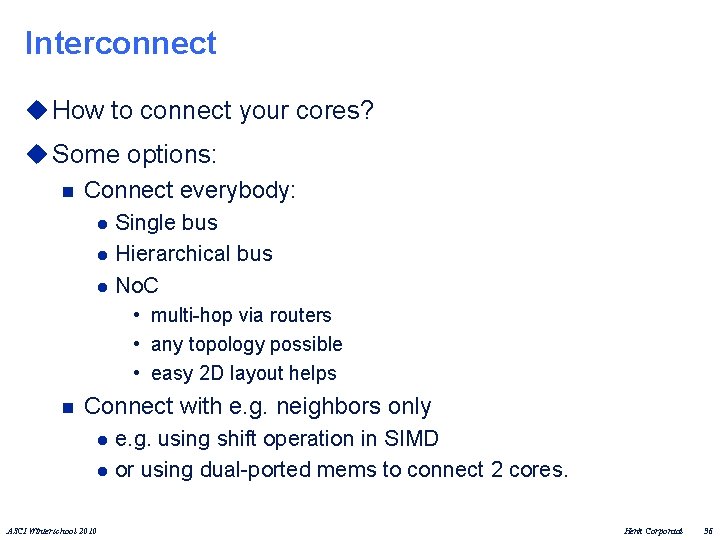

Interconnect u How to connect your cores? u Some options: n Connect everybody: l l l Single bus Hierarchical bus No. C • multi-hop via routers • any topology possible • easy 2 D layout helps n Connect with e. g. neighbors only l l ASCI Winterschool 2010 e. g. using shift operation in SIMD or using dual-ported mems to connect 2 cores. Henk Corporaal 36

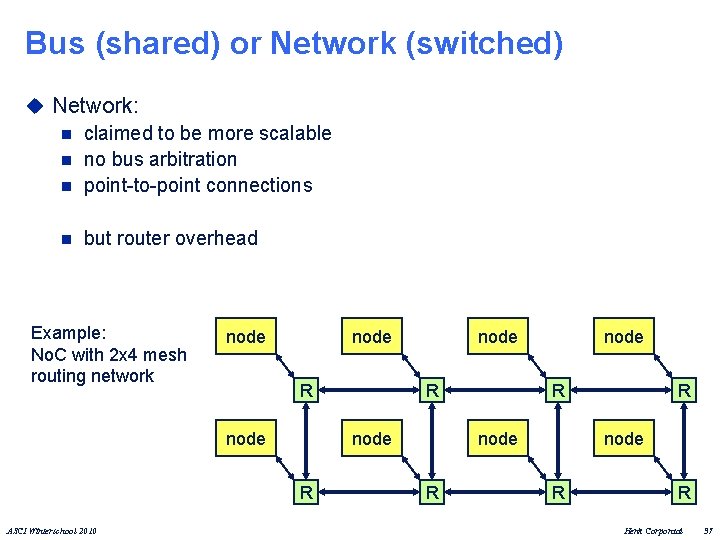

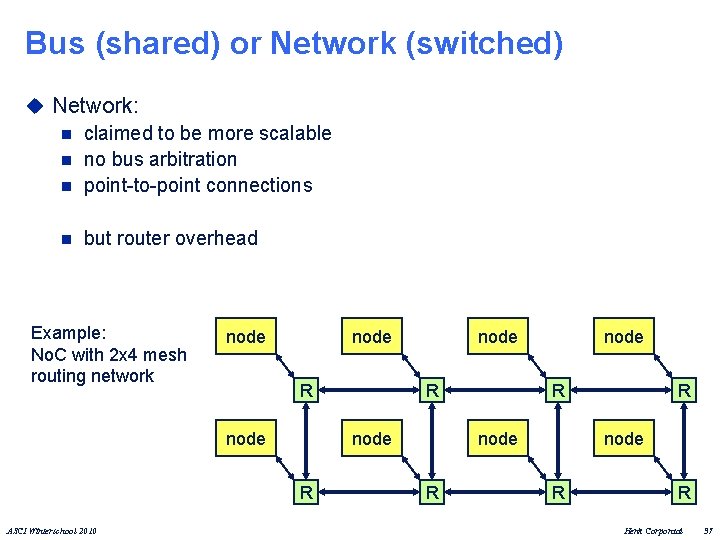

Bus (shared) or Network (switched) u Network: n claimed to be more scalable n no bus arbitration n point-to-point connections n but router overhead Example: No. C with 2 x 4 mesh routing network node R ASCI Winterschool 2010 node R R Henk Corporaal 37

Historical Perspective u Early machines were: n Collection of microprocessors. n Communication was performed using bi-directional queues between nearest neighbors. u Messages were forwarded by processors on path n “Store and forward” networking u There was a strong emphasis on topology in algorithms, in order to minimize the number of hops => minimize time ASCI Winterschool 2010 Henk Corporaal 38

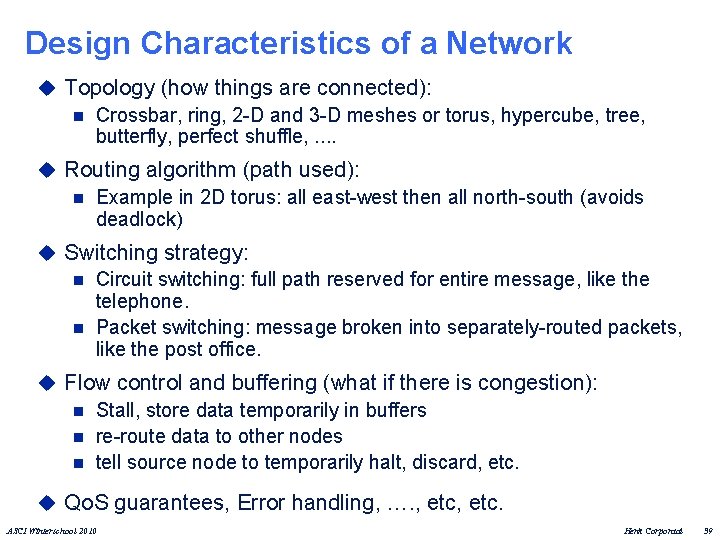

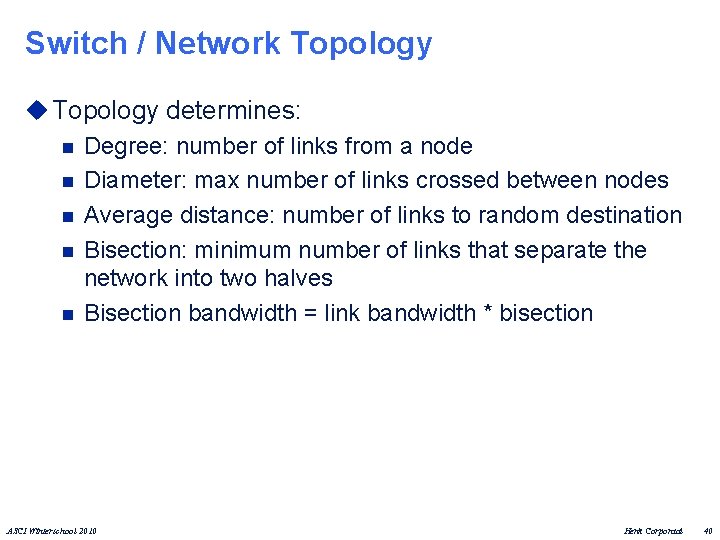

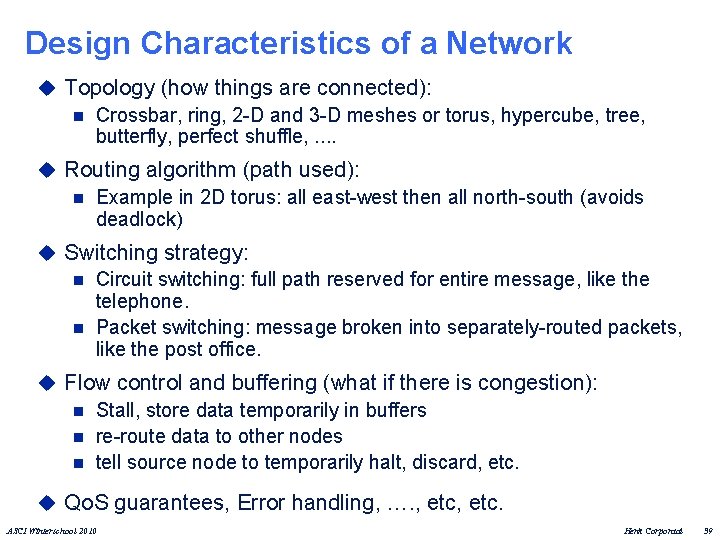

Design Characteristics of a Network u Topology (how things are connected): n Crossbar, ring, 2 -D and 3 -D meshes or torus, hypercube, tree, butterfly, perfect shuffle, . . u Routing algorithm (path used): n Example in 2 D torus: all east-west then all north-south (avoids deadlock) u Switching strategy: n Circuit switching: full path reserved for entire message, like the telephone. n Packet switching: message broken into separately-routed packets, like the post office. u Flow control and buffering (what if there is congestion): n Stall, store data temporarily in buffers n re-route data to other nodes n tell source node to temporarily halt, discard, etc. u Qo. S guarantees, Error handling, …. , etc. ASCI Winterschool 2010 Henk Corporaal 39

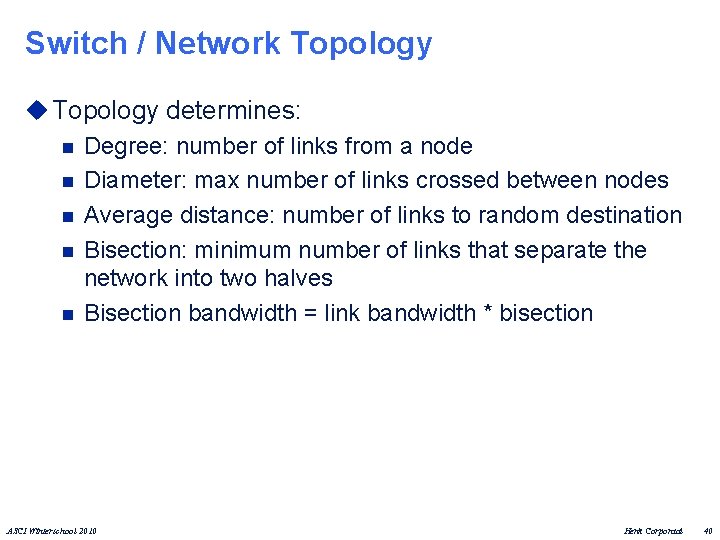

Switch / Network Topology u Topology determines: n Degree: number of links from a node n Diameter: max number of links crossed between nodes n Average distance: number of links to random destination n Bisection: minimum number of links that separate the network into two halves n Bisection bandwidth = link bandwidth * bisection ASCI Winterschool 2010 Henk Corporaal 40

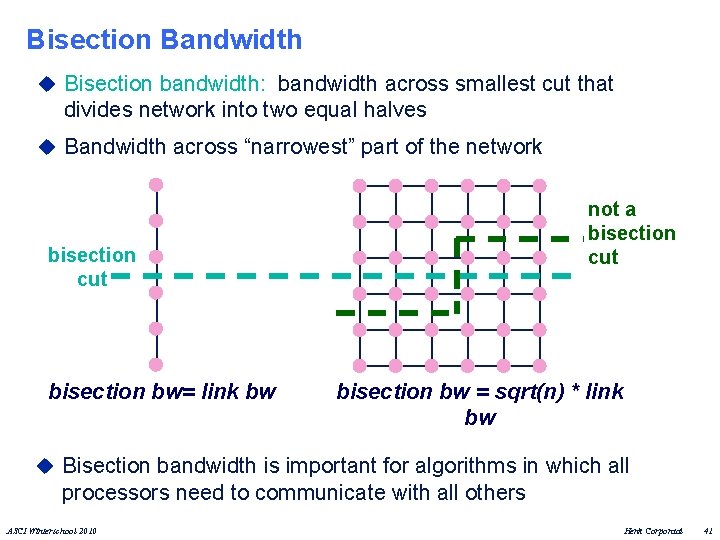

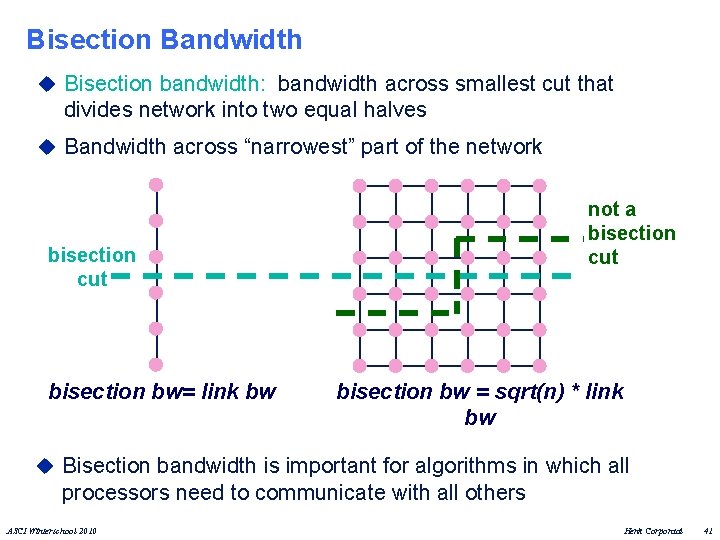

Bisection Bandwidth u Bisection bandwidth: bandwidth across smallest cut that divides network into two equal halves u Bandwidth across “narrowest” part of the network not a bisection cut bisection bw= link bw bisection bw = sqrt(n) * link bw u Bisection bandwidth is important for algorithms in which all processors need to communicate with all others ASCI Winterschool 2010 Henk Corporaal 41

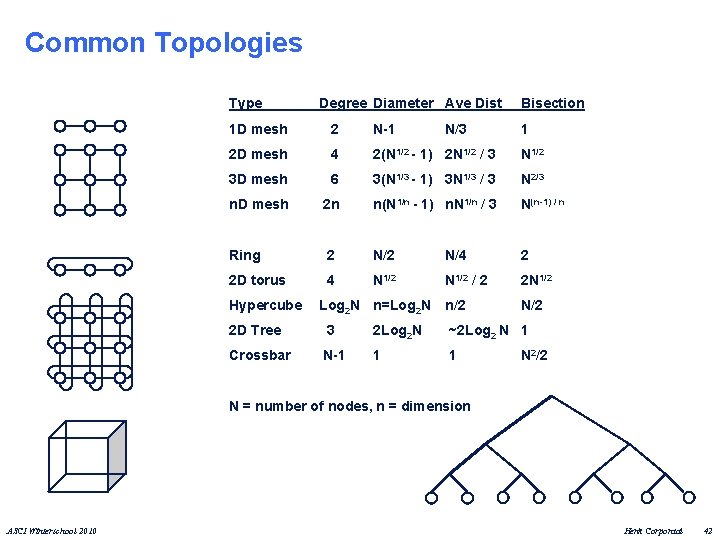

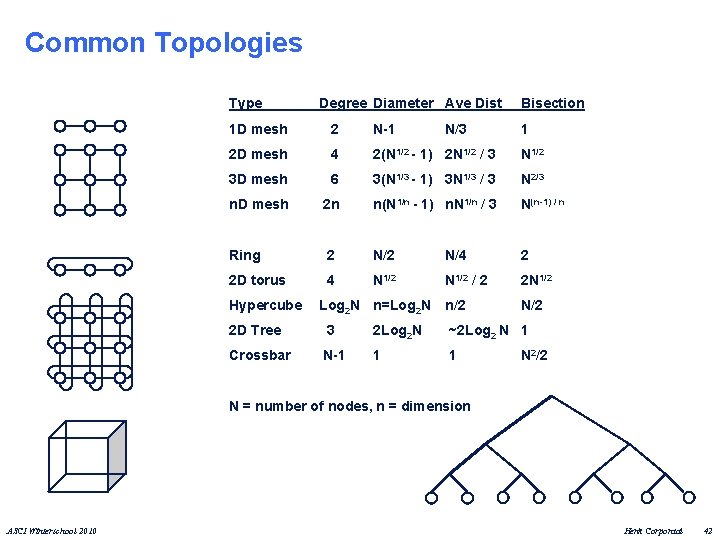

Common Topologies Type Degree Diameter Ave Dist 1 D mesh 2 N-1 2 D mesh 4 2(N 1/2 - 1) 2 N 1/2 / 3 N 1/2 3 D mesh 6 3(N 1/3 - 1) 3 N 1/3 / 3 N 2/3 n. D mesh 2 n n(N 1/n - 1) n. N 1/n / 3 N(n-1) / n Ring 2 N/4 2 2 D torus 4 N 1/2 / 2 2 N 1/2 n/2 N/2 Hypercube Log 2 N n=Log 2 N N/3 Bisection 1 2 D Tree 3 2 Log 2 N ~2 Log 2 N 1 Crossbar N-1 1 1 N 2/2 N = number of nodes, n = dimension ASCI Winterschool 2010 Henk Corporaal 42

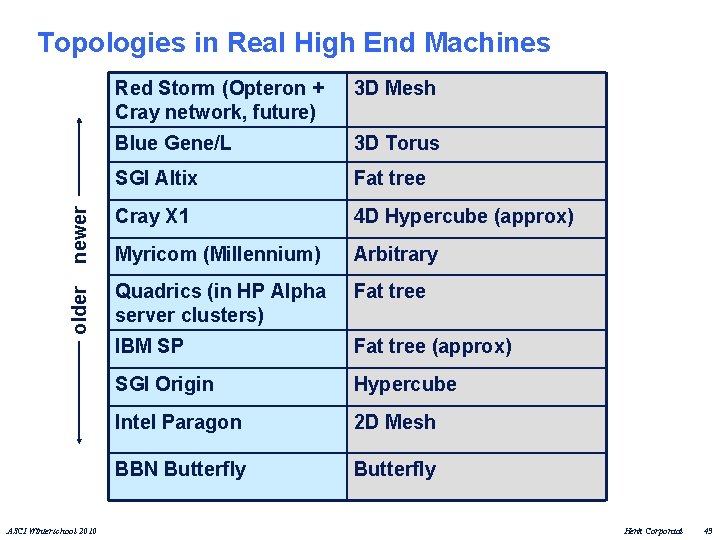

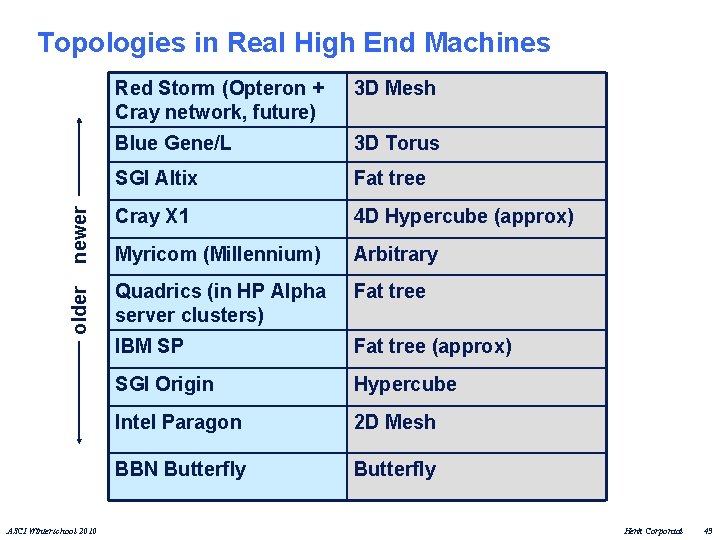

Red Storm (Opteron + Cray network, future) 3 D Mesh Blue Gene/L 3 D Torus SGI Altix Fat tree newer Cray X 1 4 D Hypercube (approx) Myricom (Millennium) Arbitrary older Topologies in Real High End Machines Quadrics (in HP Alpha server clusters) Fat tree IBM SP Fat tree (approx) SGI Origin Hypercube Intel Paragon 2 D Mesh BBN Butterfly ASCI Winterschool 2010 Henk Corporaal 43

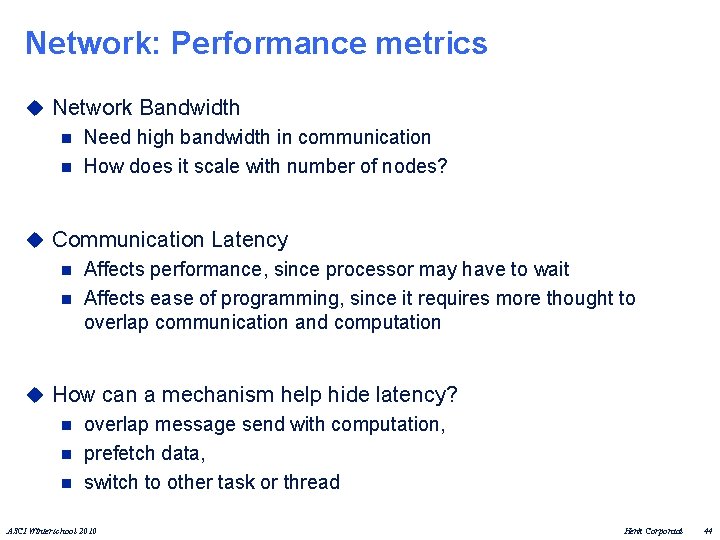

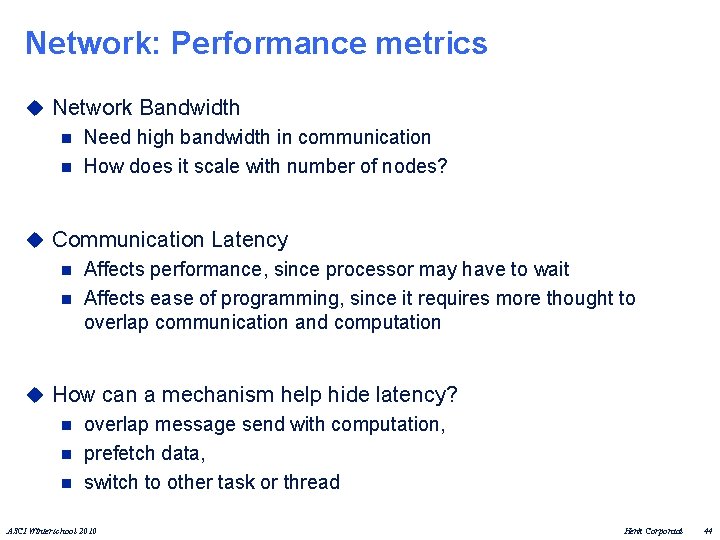

Network: Performance metrics u Network Bandwidth n Need high bandwidth in communication n How does it scale with number of nodes? u Communication Latency n Affects performance, since processor may have to wait n Affects ease of programming, since it requires more thought to overlap communication and computation u How can a mechanism help hide latency? n overlap message send with computation, n prefetch data, n switch to other task or thread ASCI Winterschool 2010 Henk Corporaal 44

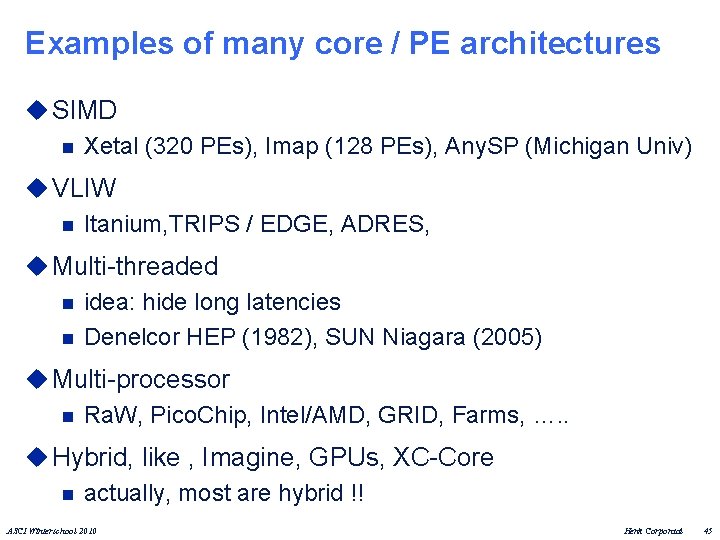

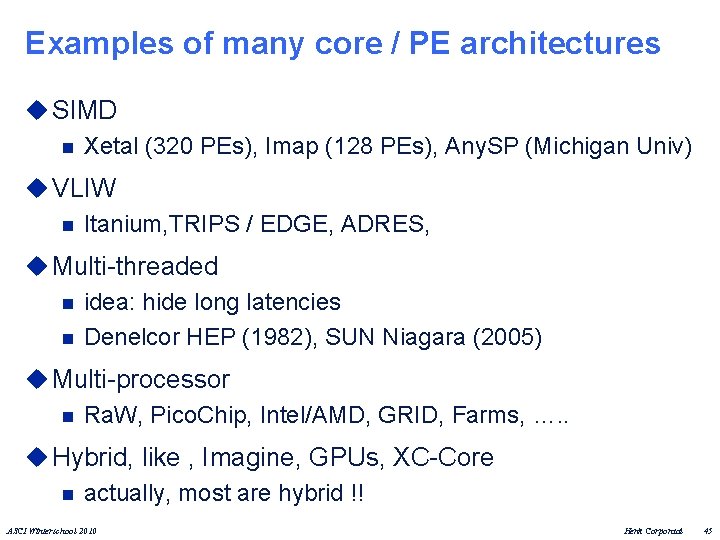

Examples of many core / PE architectures u SIMD n Xetal (320 PEs), Imap (128 PEs), Any. SP (Michigan Univ) u VLIW n Itanium, TRIPS / EDGE, ADRES, u Multi-threaded n idea: hide long latencies n Denelcor HEP (1982), SUN Niagara (2005) u Multi-processor n Ra. W, Pico. Chip, Intel/AMD, GRID, Farms, …. . u Hybrid, like , Imagine, GPUs, XC-Core n actually, most are hybrid !! ASCI Winterschool 2010 Henk Corporaal 45

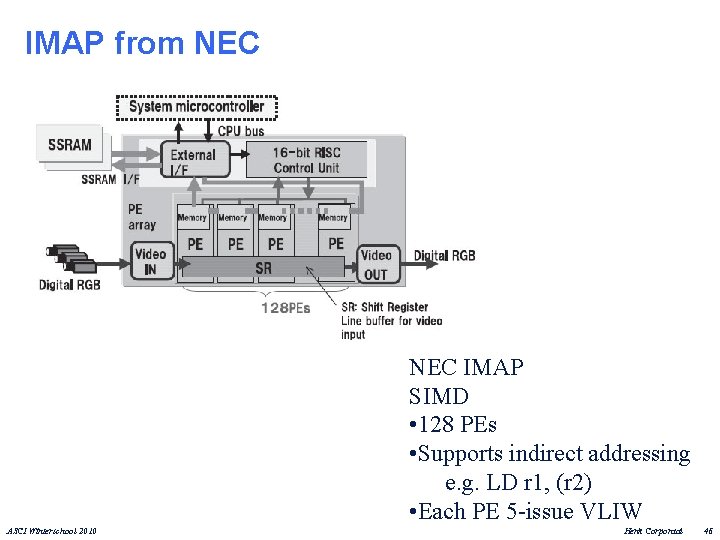

IMAP from NEC IMAP SIMD • 128 PEs • Supports indirect addressing e. g. LD r 1, (r 2) • Each PE 5 -issue VLIW ASCI Winterschool 2010 Henk Corporaal 46

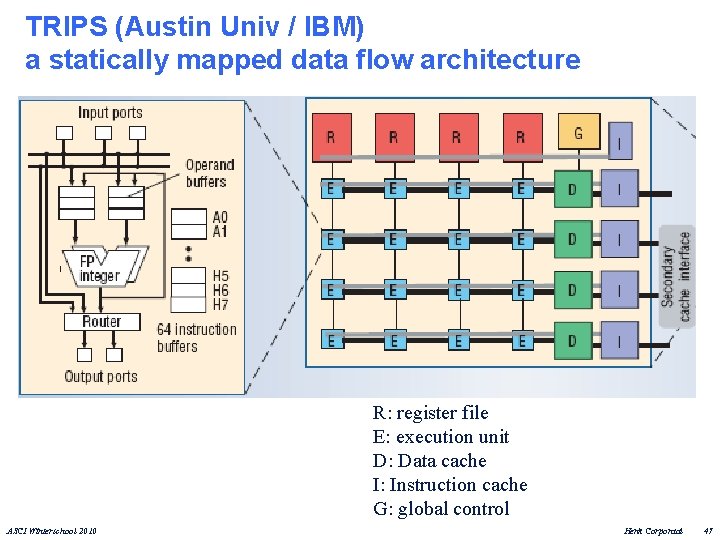

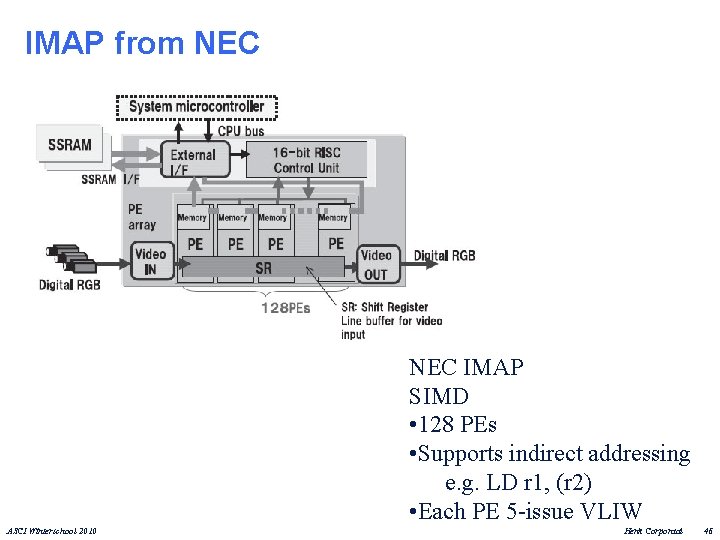

TRIPS (Austin Univ / IBM) a statically mapped data flow architecture R: register file E: execution unit D: Data cache I: Instruction cache G: global control ASCI Winterschool 2010 Henk Corporaal 47

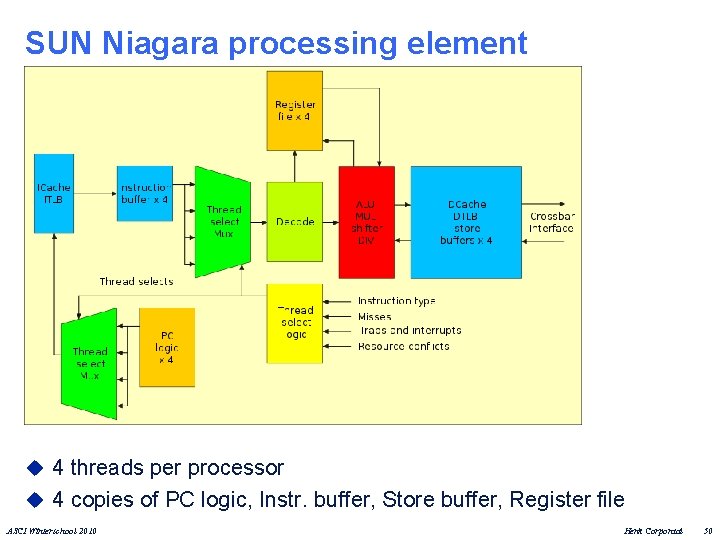

Compiling for TRIPS 1. 2. Form hyperblocks (use unrolling, predication, inlining to enlarge scope) Spatial map operations of each hyperblock u 3. registers are accessed at hyperblock boundaries Schedule hyperblocks ASCI Winterschool 2010 Henk Corporaal 48

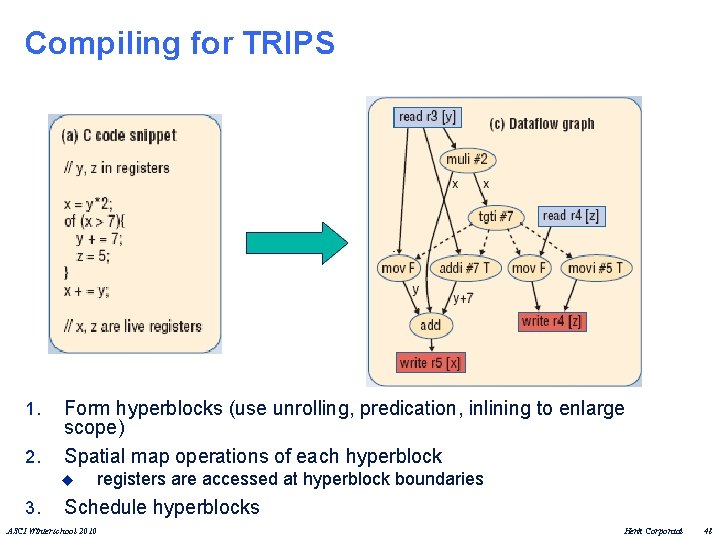

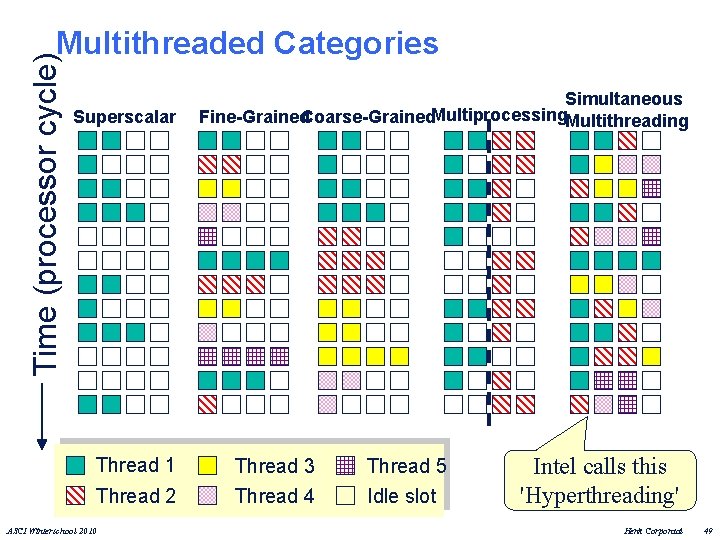

Time (processor cycle) Multithreaded Categories Superscalar Thread 1 Thread 2 ASCI Winterschool 2010 Simultaneous Fine-Grained. Coarse-Grained. Multiprocessing. Multithreading Thread 3 Thread 4 Thread 5 Idle slot Intel calls this 'Hyperthreading' Henk Corporaal 49

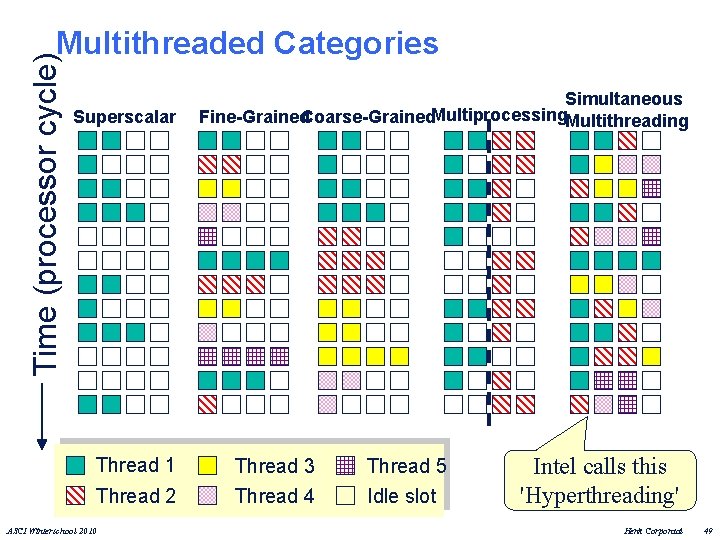

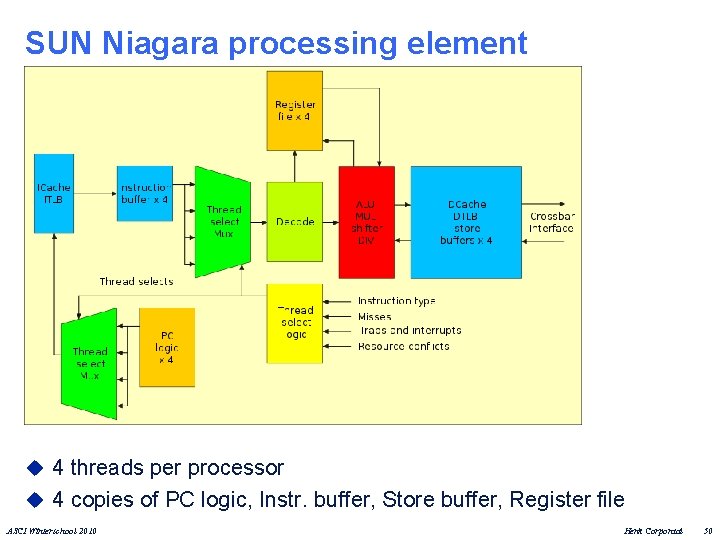

SUN Niagara processing element u 4 threads per processor u 4 copies of PC logic, Instr. buffer, Store buffer, Register file ASCI Winterschool 2010 Henk Corporaal 50

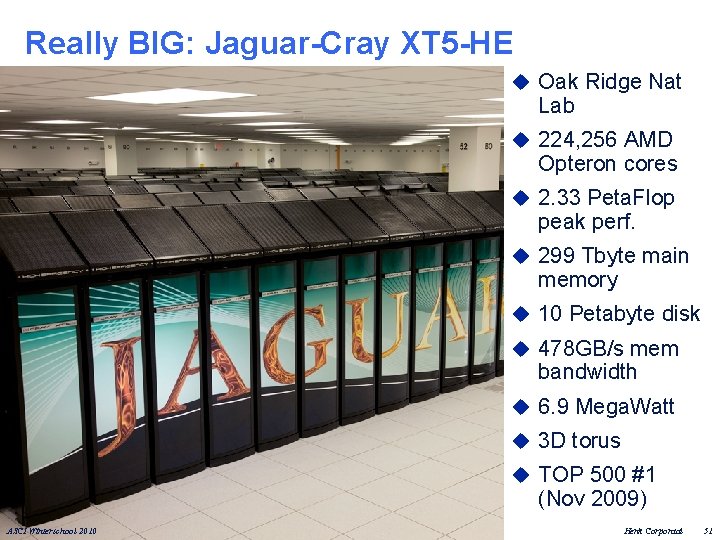

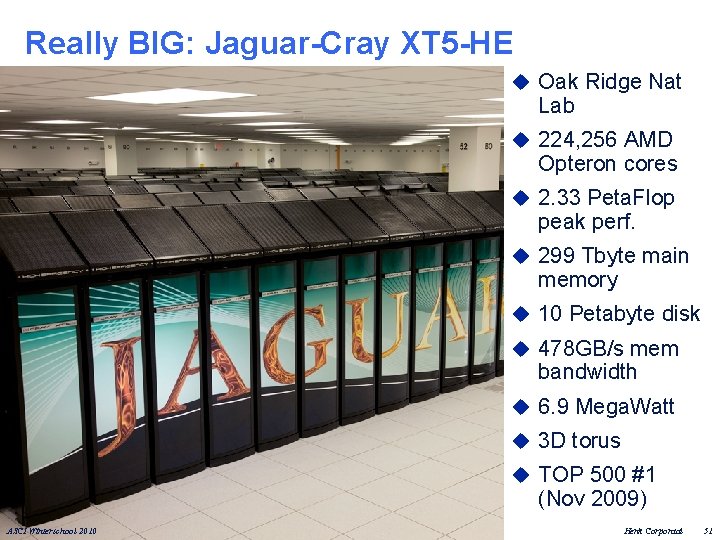

Really BIG: Jaguar-Cray XT 5 -HE u Oak Ridge Nat Lab u 224, 256 AMD Opteron cores u 2. 33 Peta. Flop peak perf. u 299 Tbyte main memory u 10 Petabyte disk u 478 GB/s mem bandwidth u 6. 9 Mega. Watt u 3 D torus u TOP 500 #1 (Nov 2009) ASCI Winterschool 2010 Henk Corporaal 51

Graphic Processing Units (GPUs) NVIDIA GT 340 (2010) ATI 5970 (2009) ASCI Winterschool 2010 Henk Corporaal 52

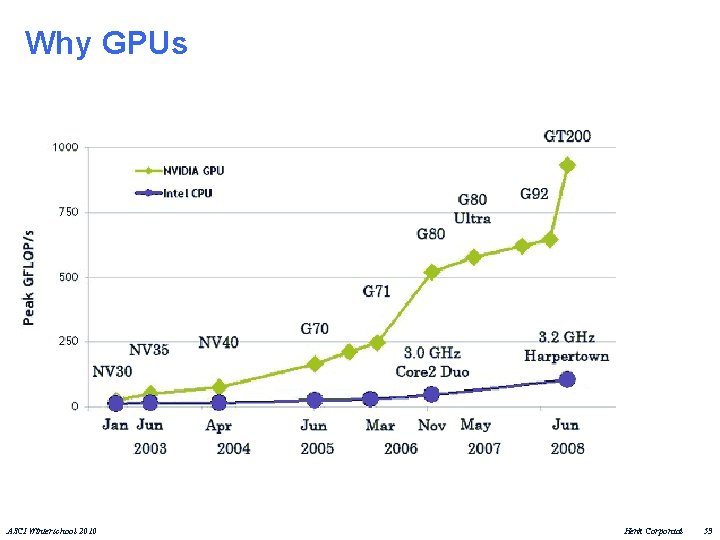

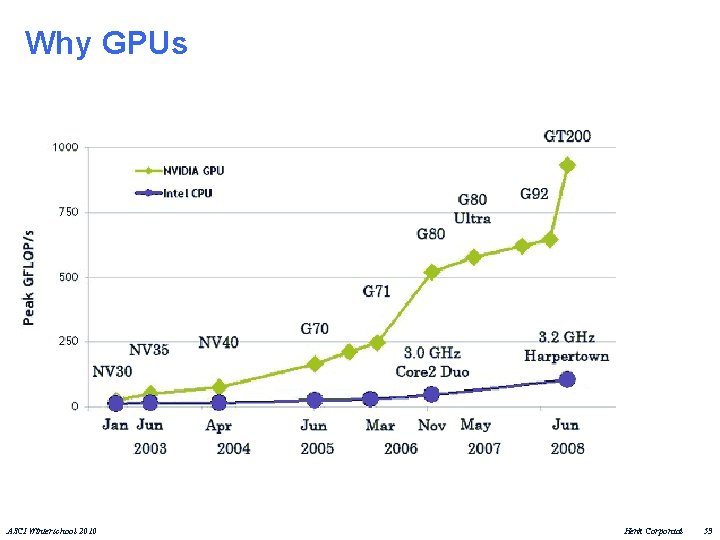

Why GPUs ASCI Winterschool 2010 Henk Corporaal 53

In Need of Tera. Flops? 3 * GTX 295 • 1440 PEs • 5. 3 Tera. Flop ASCI Winterschool 2010 Henk Corporaal 54

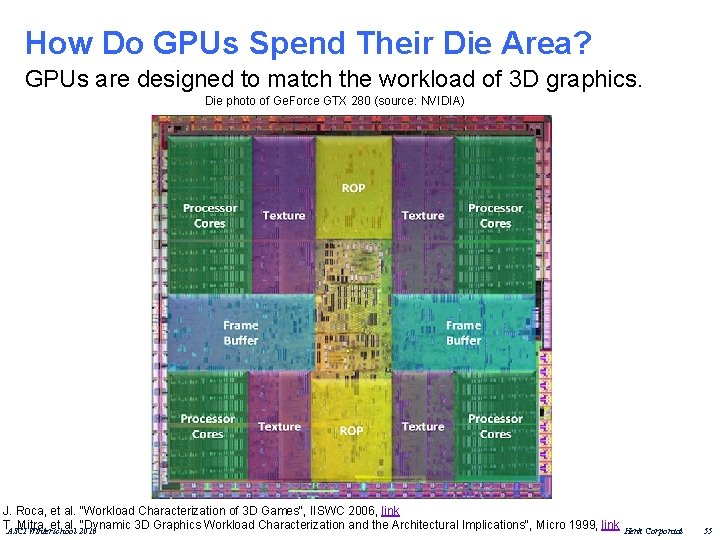

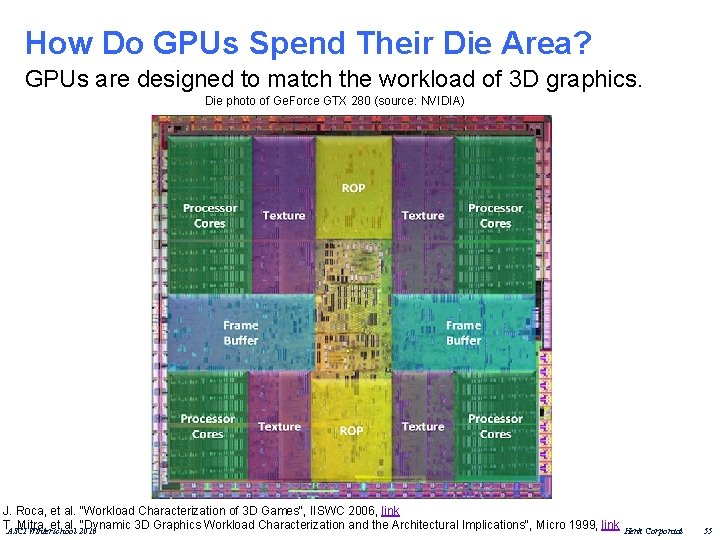

How Do GPUs Spend Their Die Area? GPUs are designed to match the workload of 3 D graphics. Die photo of Ge. Force GTX 280 (source: NVIDIA) J. Roca, et al. "Workload Characterization of 3 D Games", IISWC 2006, link T. Mitra, et al. "Dynamic 3 D Graphics Workload Characterization and the Architectural Implications", Micro 1999, link ASCI Winterschool 2010 Henk Corporaal 55

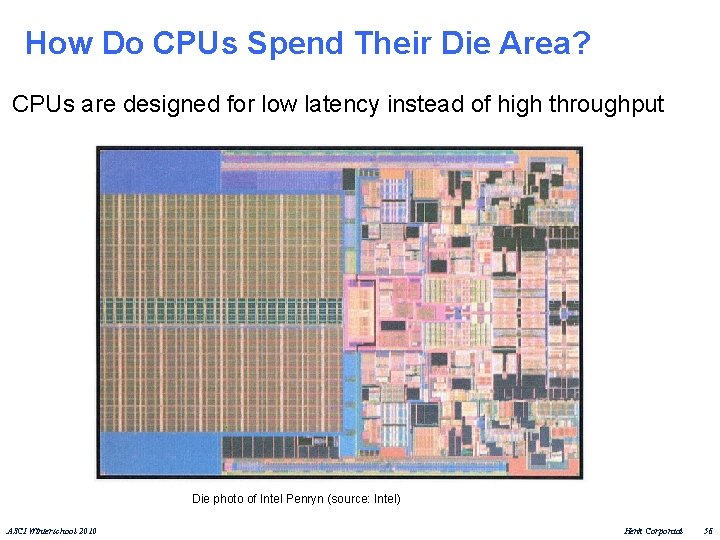

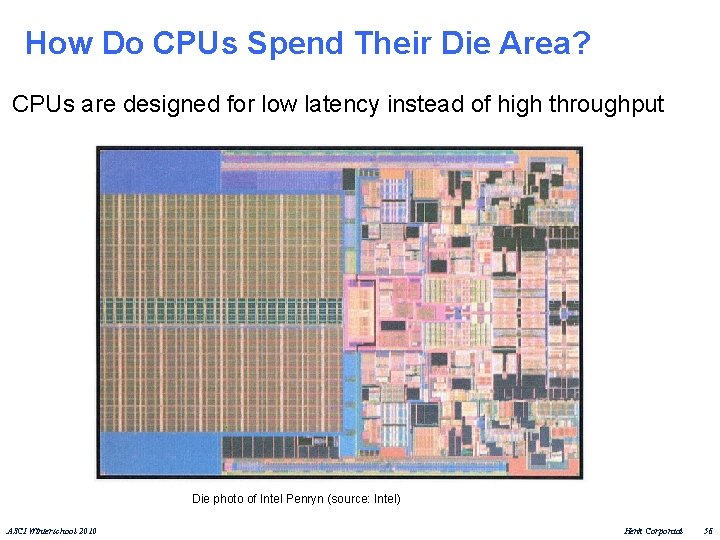

How Do CPUs Spend Their Die Area? CPUs are designed for low latency instead of high throughput Die photo of Intel Penryn (source: Intel) ASCI Winterschool 2010 Henk Corporaal 56

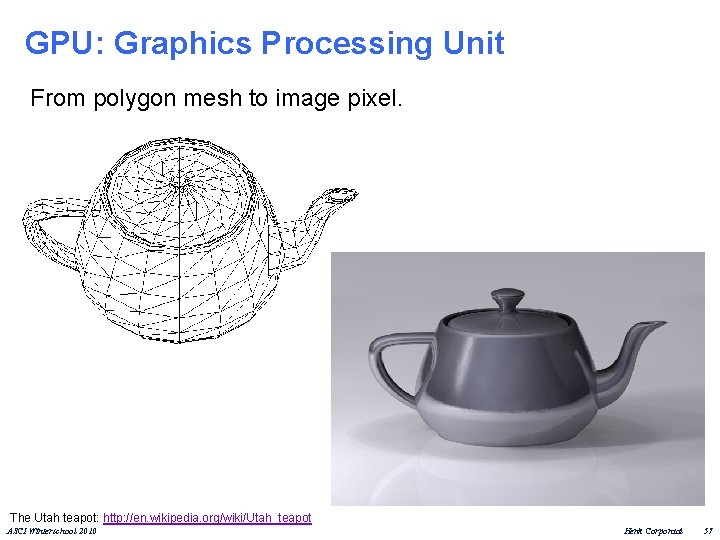

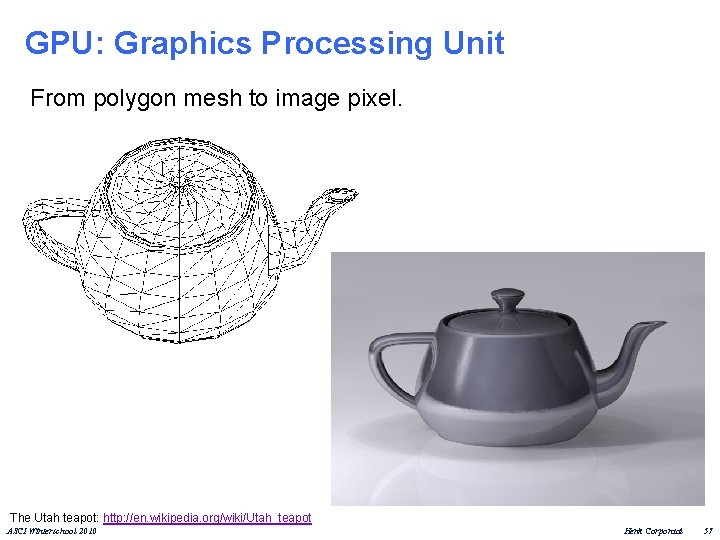

GPU: Graphics Processing Unit From polygon mesh to image pixel. The Utah teapot: http: //en. wikipedia. org/wiki/Utah_teapot ASCI Winterschool 2010 Henk Corporaal 57

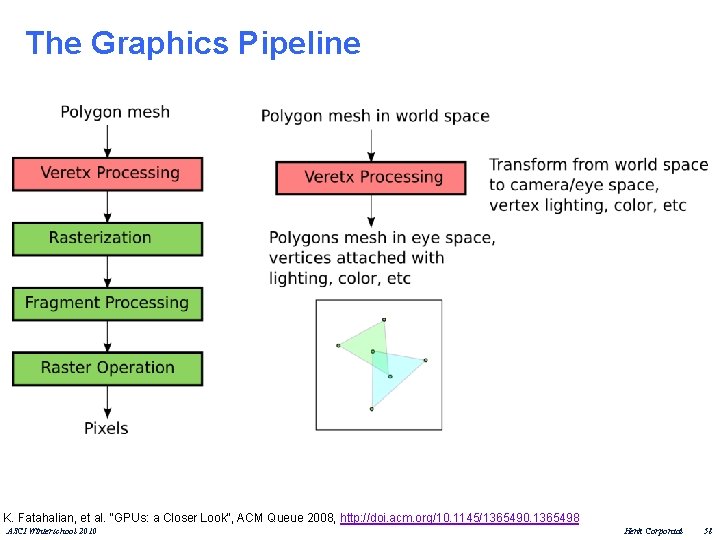

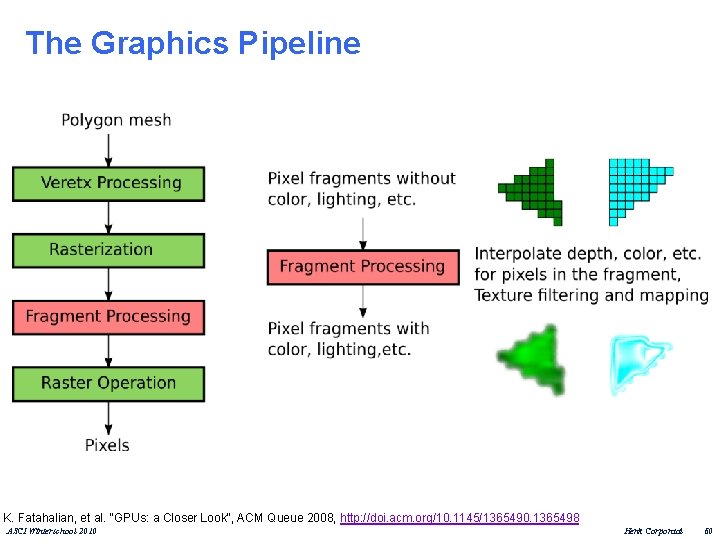

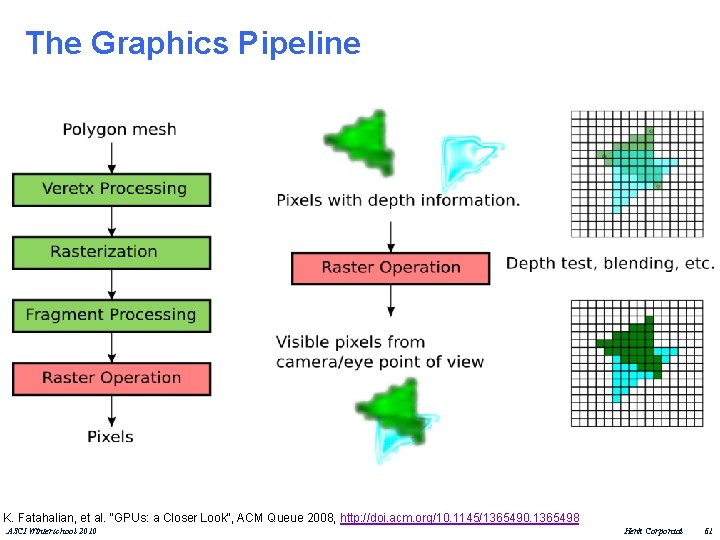

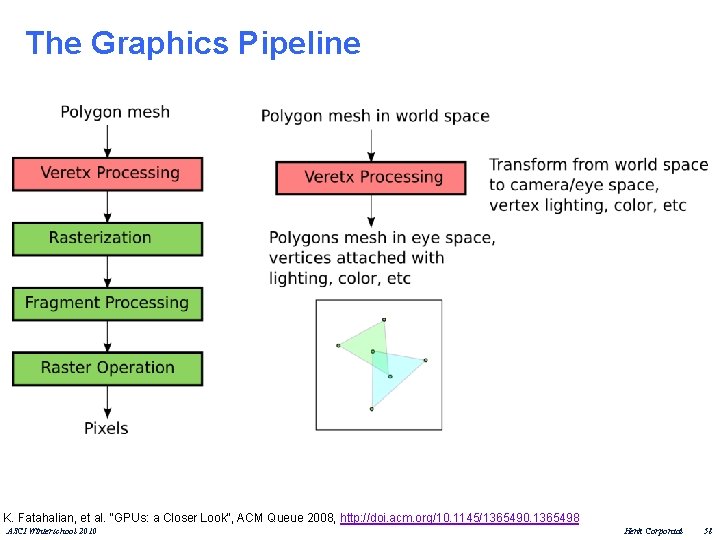

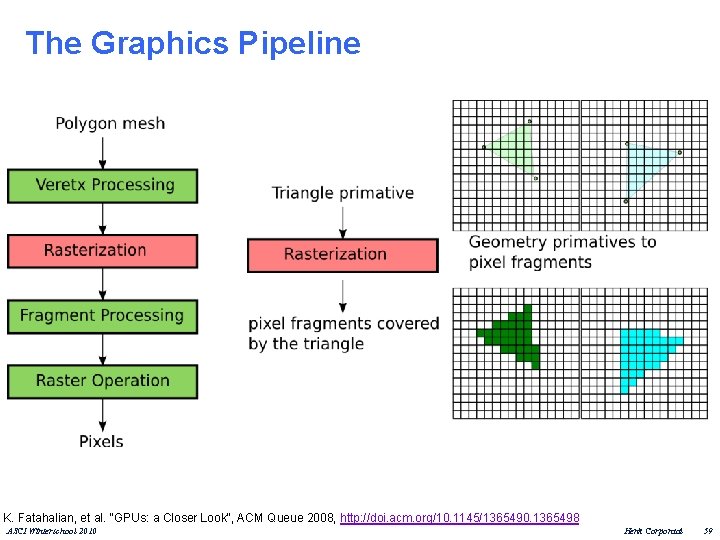

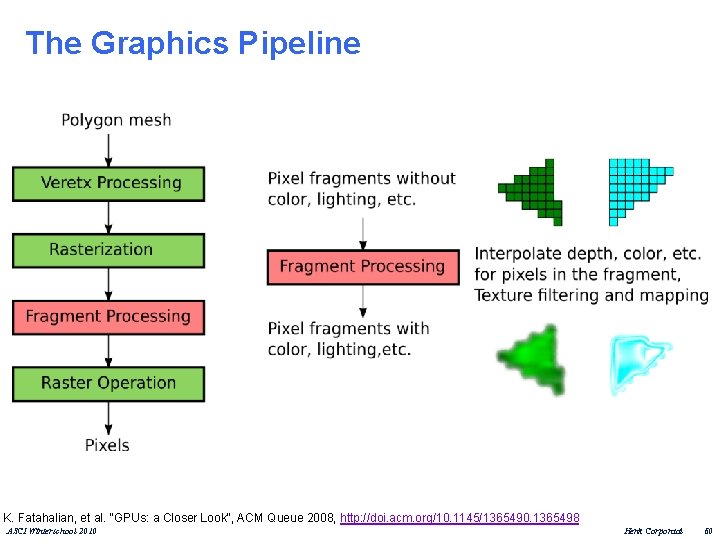

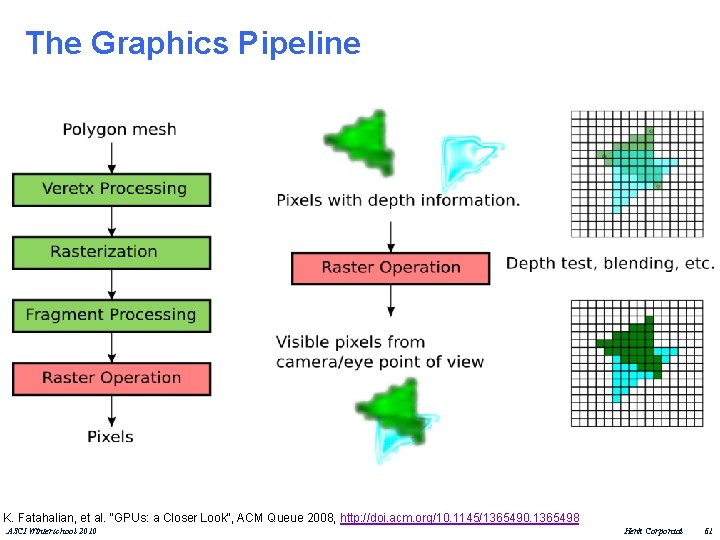

The Graphics Pipeline K. Fatahalian, et al. "GPUs: a Closer Look", ACM Queue 2008, http: //doi. acm. org/10. 1145/1365490. 1365498 ASCI Winterschool 2010 Henk Corporaal 58

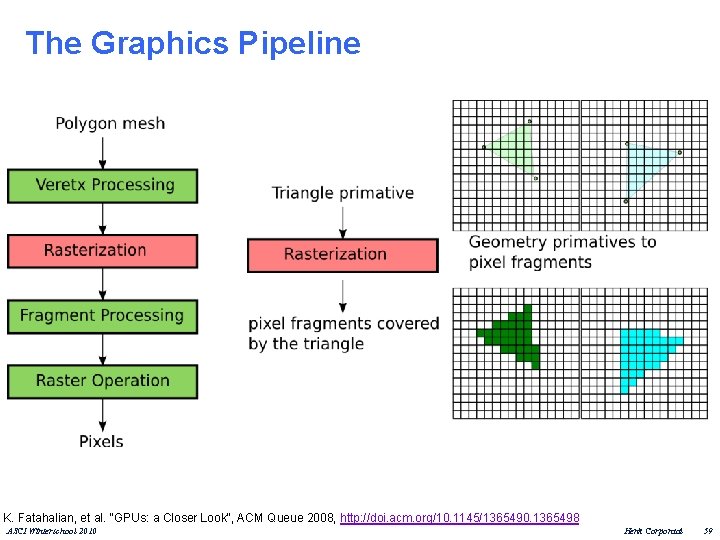

The Graphics Pipeline K. Fatahalian, et al. "GPUs: a Closer Look", ACM Queue 2008, http: //doi. acm. org/10. 1145/1365490. 1365498 ASCI Winterschool 2010 Henk Corporaal 59

The Graphics Pipeline K. Fatahalian, et al. "GPUs: a Closer Look", ACM Queue 2008, http: //doi. acm. org/10. 1145/1365490. 1365498 ASCI Winterschool 2010 Henk Corporaal 60

The Graphics Pipeline K. Fatahalian, et al. "GPUs: a Closer Look", ACM Queue 2008, http: //doi. acm. org/10. 1145/1365490. 1365498 ASCI Winterschool 2010 Henk Corporaal 61

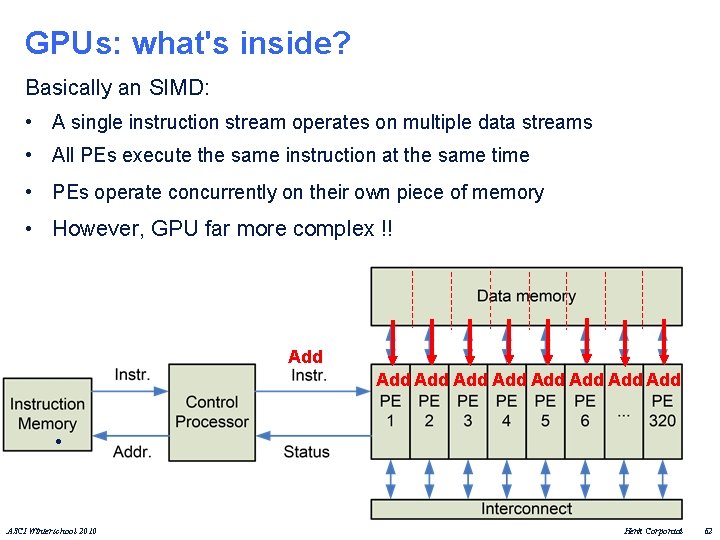

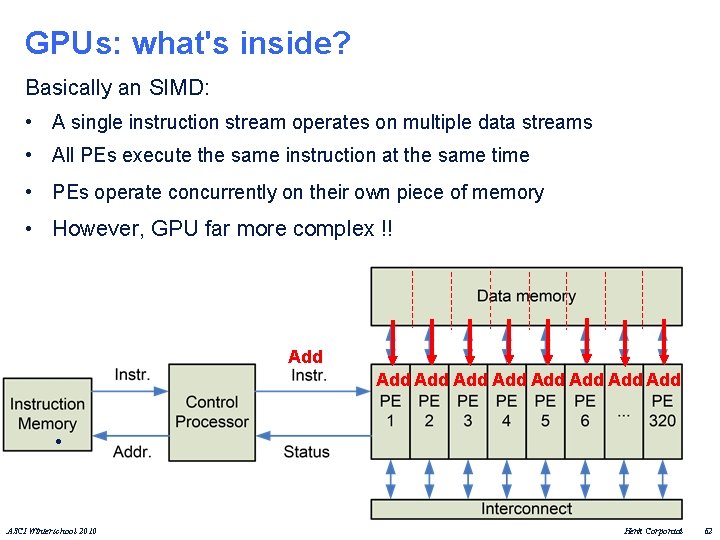

GPUs: what's inside? Basically an SIMD: • A single instruction stream operates on multiple data streams • All PEs execute the same instruction at the same time • PEs operate concurrently on their own piece of memory • However, GPU far more complex !! Add Add Add • • ASCI Winterschool 2010 Henk Corporaal 62

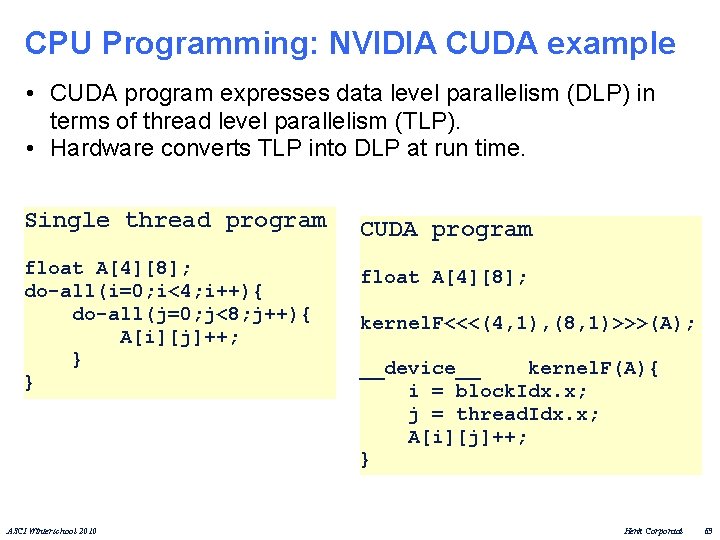

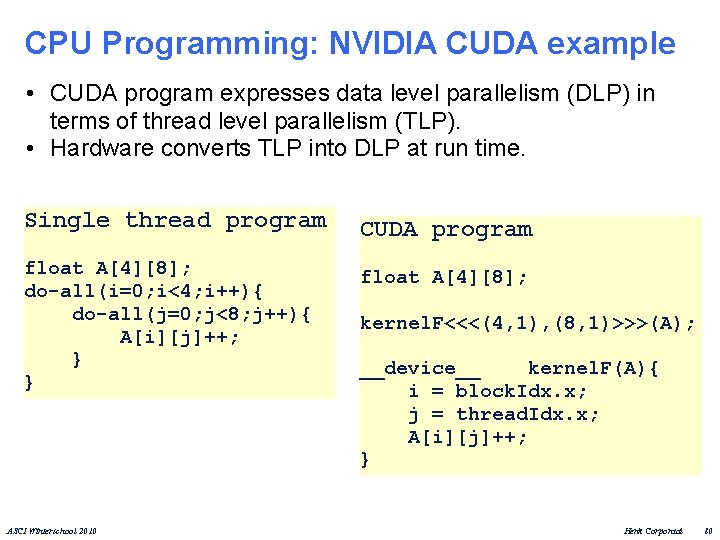

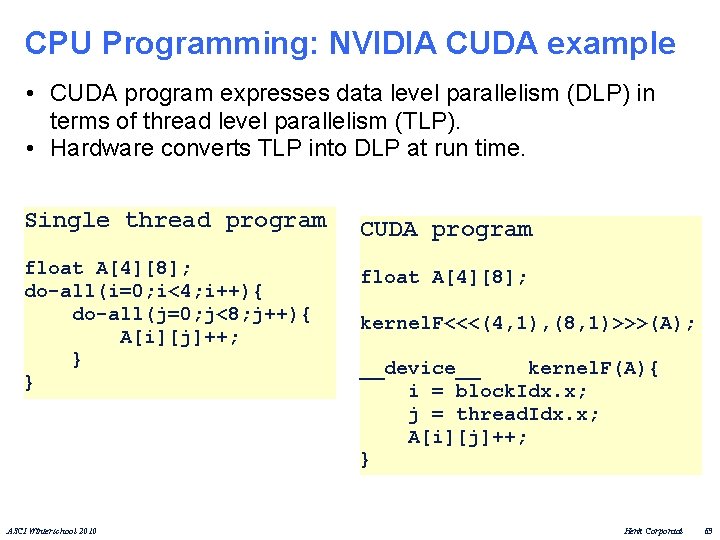

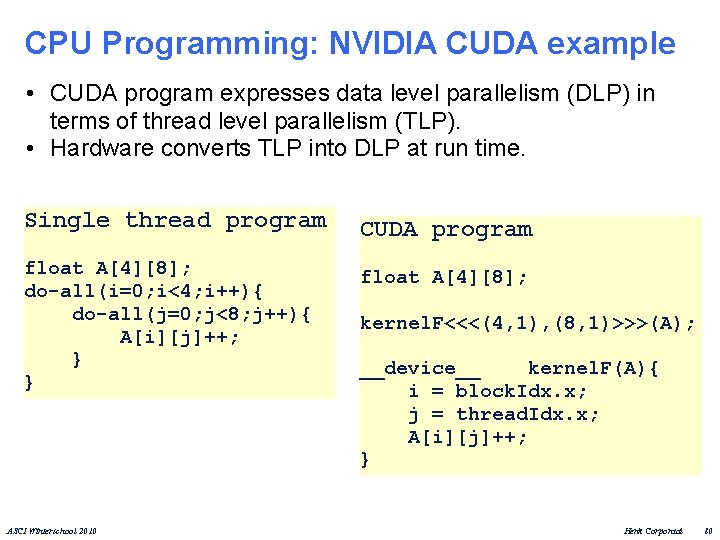

CPU Programming: NVIDIA CUDA example • CUDA program expresses data level parallelism (DLP) in terms of thread level parallelism (TLP). • Hardware converts TLP into DLP at run time. Single thread program CUDA program float A[4][8]; do-all(i=0; i<4; i++){ do-all(j=0; j<8; j++){ A[i][j]++; } } float A[4][8]; ASCI Winterschool 2010 kernel. F<<<(4, 1), (8, 1)>>>(A); __device__ kernel. F(A){ i = block. Idx. x; j = thread. Idx. x; A[i][j]++; } Henk Corporaal 63

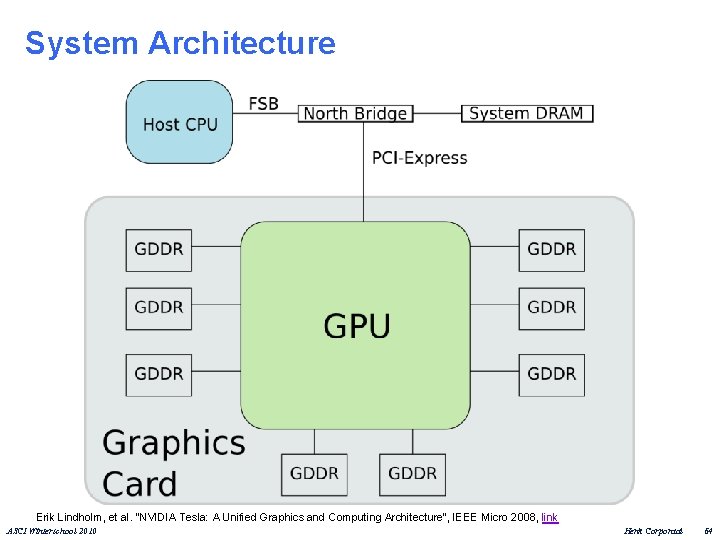

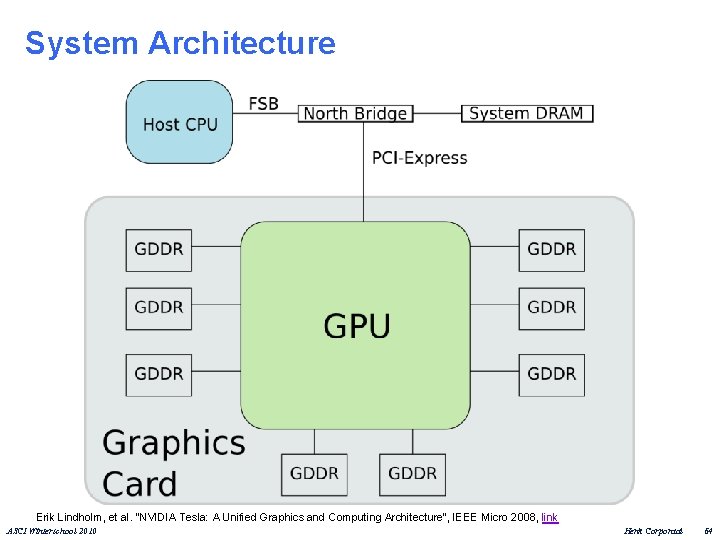

System Architecture Erik Lindholm, et al. "NVIDIA Tesla: A Unified Graphics and Computing Architecture", IEEE Micro 2008, link ASCI Winterschool 2010 Henk Corporaal 64

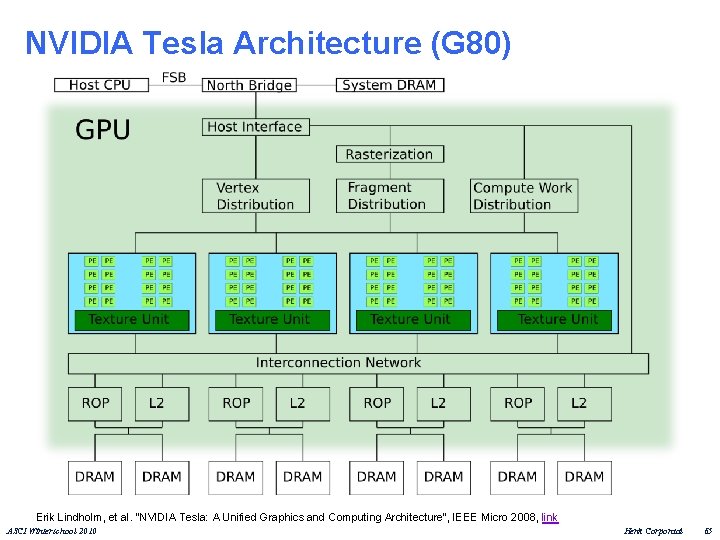

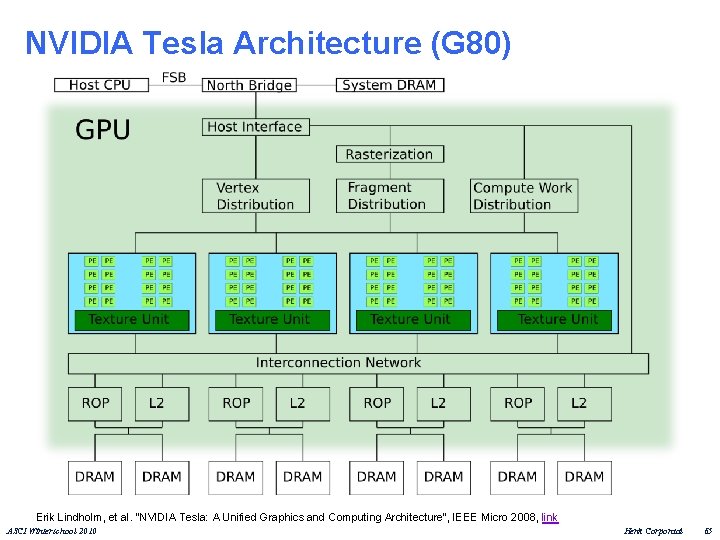

NVIDIA Tesla Architecture (G 80) Erik Lindholm, et al. "NVIDIA Tesla: A Unified Graphics and Computing Architecture", IEEE Micro 2008, link ASCI Winterschool 2010 Henk Corporaal 65

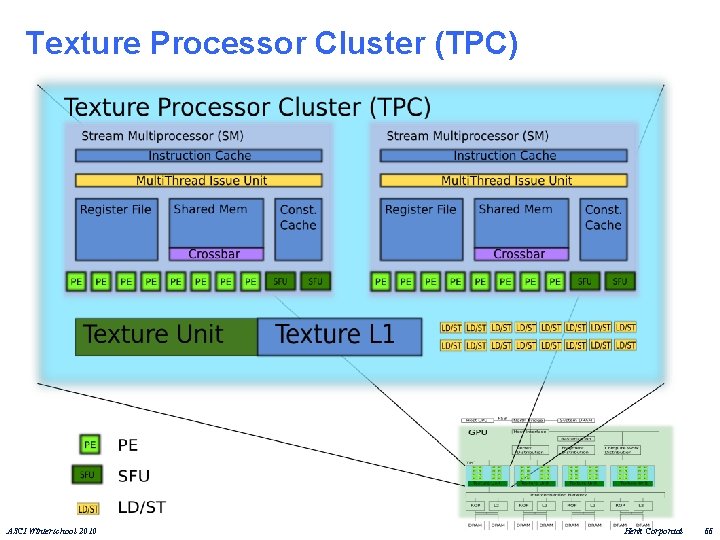

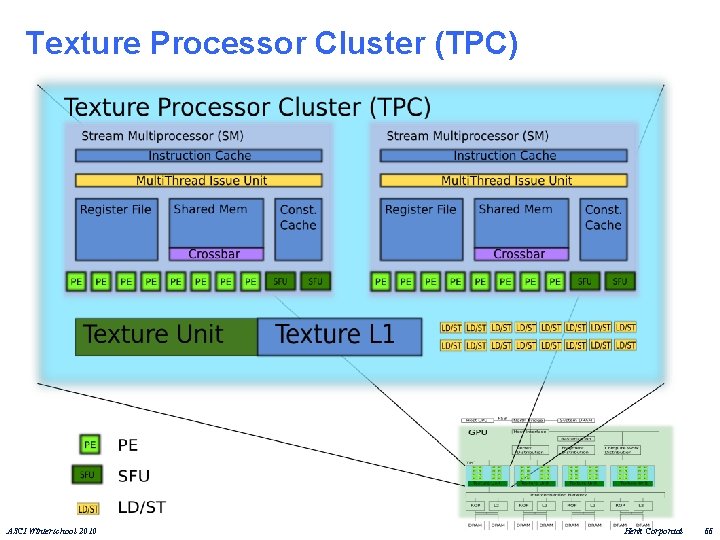

Texture Processor Cluster (TPC) ASCI Winterschool 2010 Henk Corporaal 66

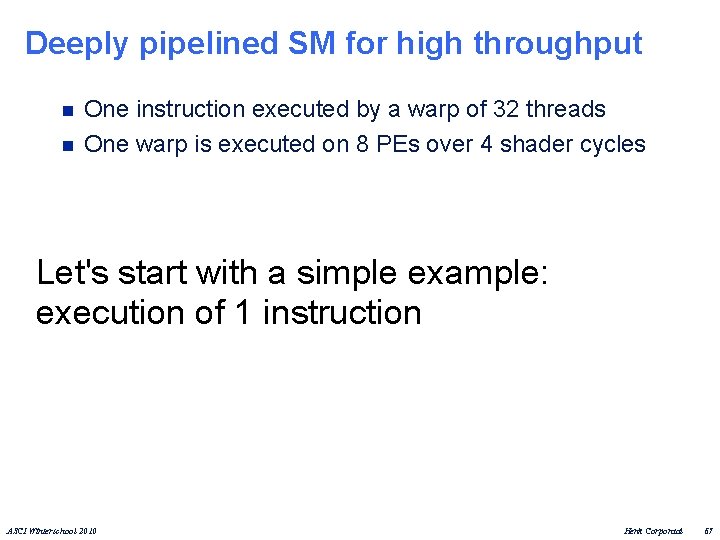

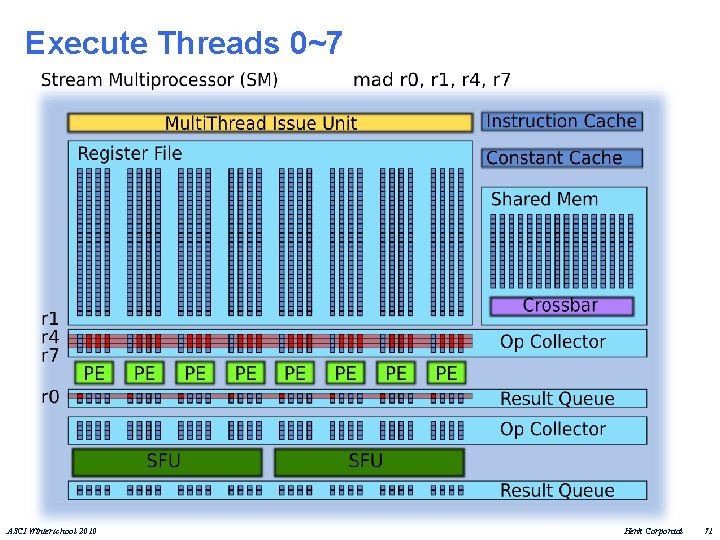

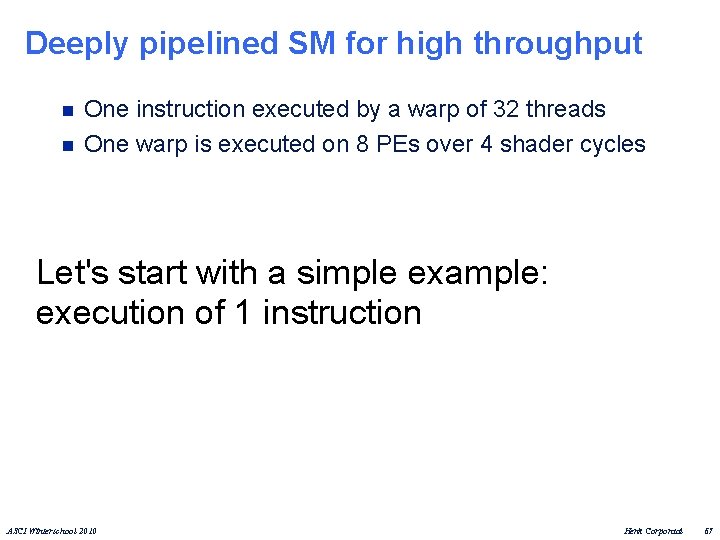

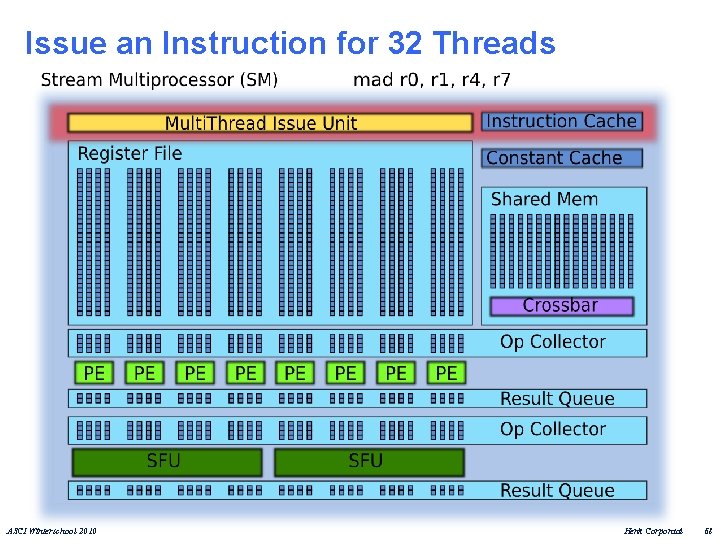

Deeply pipelined SM for high throughput n n One instruction executed by a warp of 32 threads One warp is executed on 8 PEs over 4 shader cycles Let's start with a simple example: execution of 1 instruction ASCI Winterschool 2010 Henk Corporaal 67

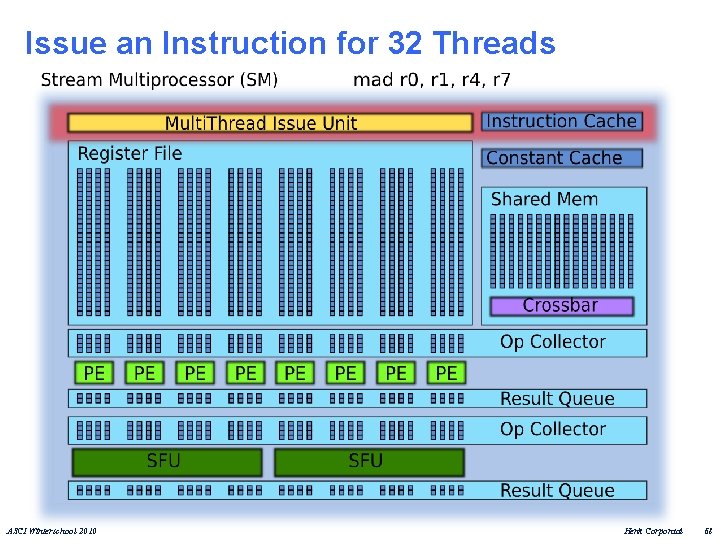

Issue an Instruction for 32 Threads ASCI Winterschool 2010 Henk Corporaal 68

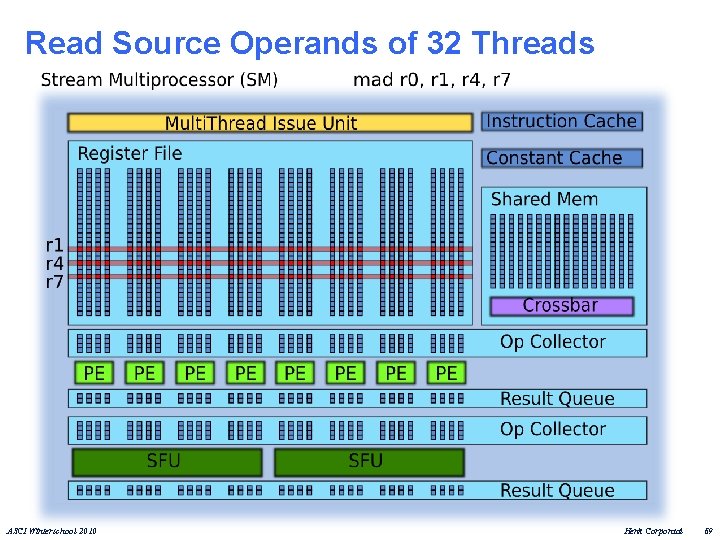

Read Source Operands of 32 Threads ASCI Winterschool 2010 Henk Corporaal 69

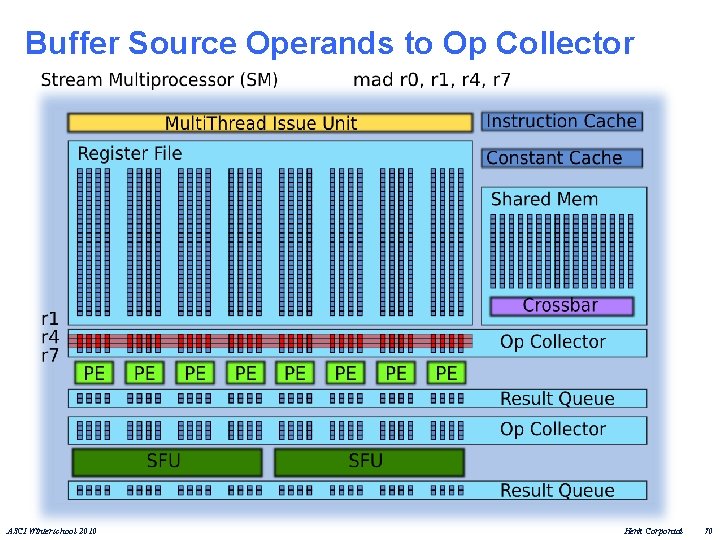

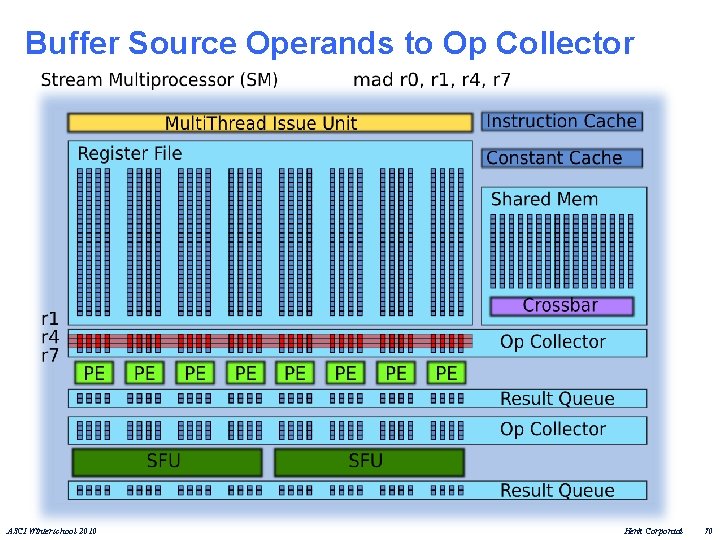

Buffer Source Operands to Op Collector ASCI Winterschool 2010 Henk Corporaal 70

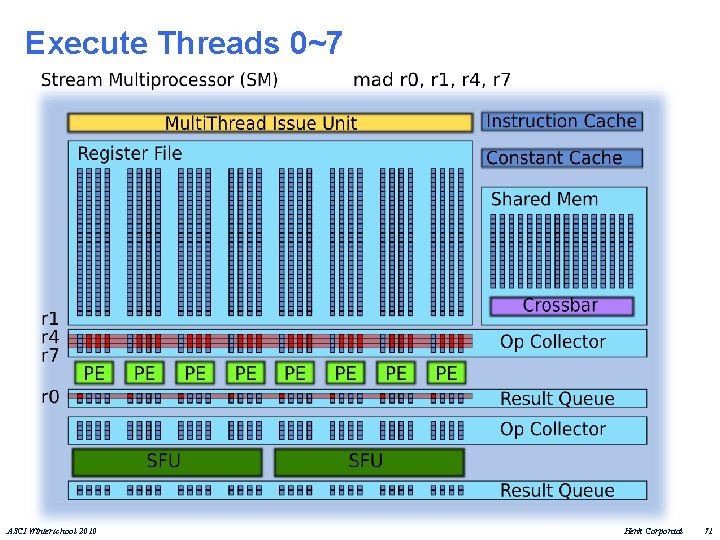

Execute Threads 0~7 ASCI Winterschool 2010 Henk Corporaal 71

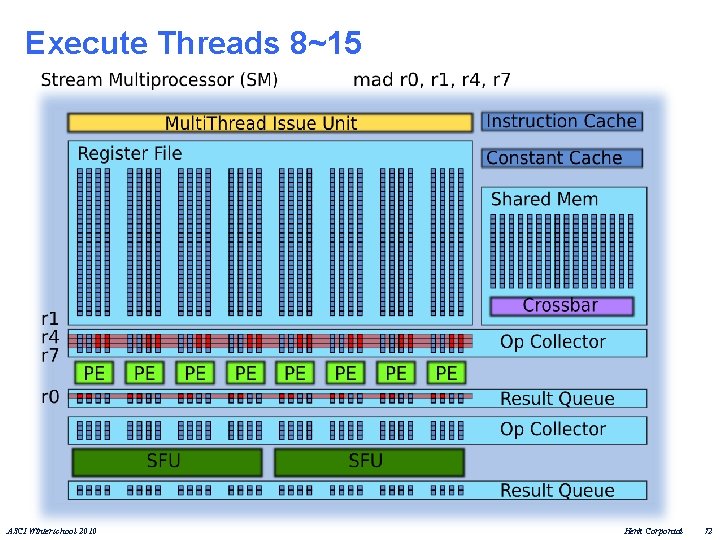

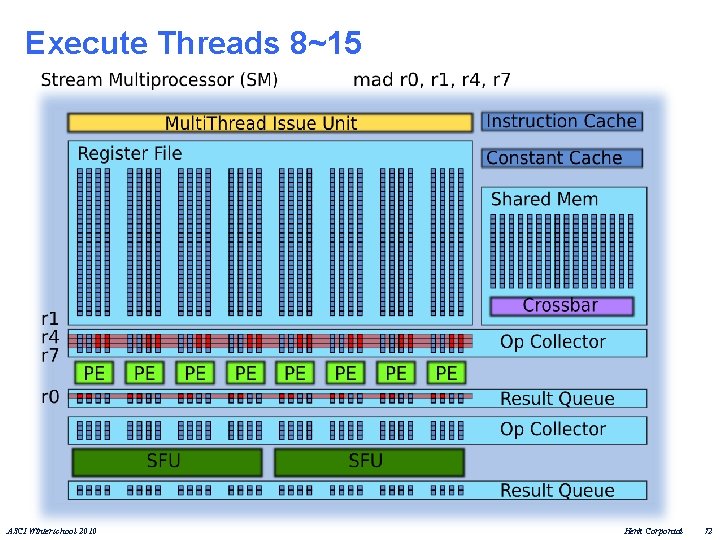

Execute Threads 8~15 ASCI Winterschool 2010 Henk Corporaal 72

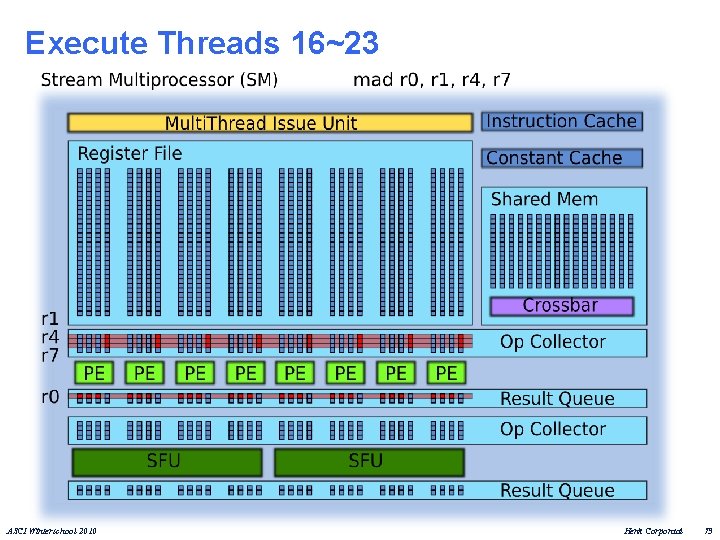

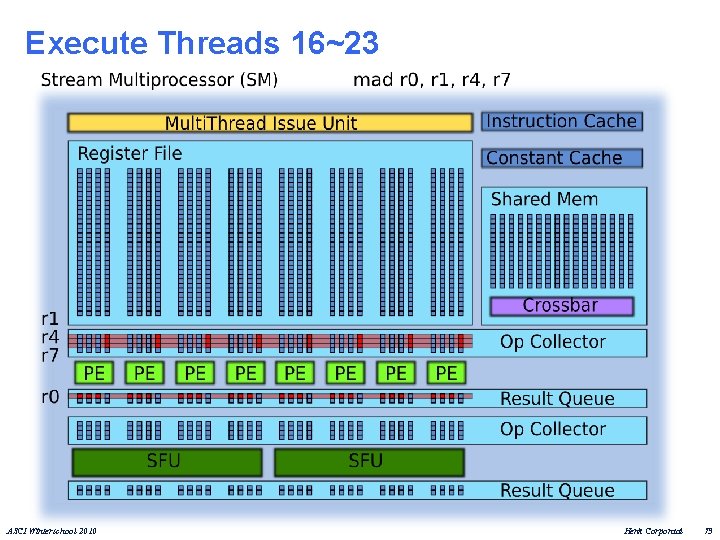

Execute Threads 16~23 ASCI Winterschool 2010 Henk Corporaal 73

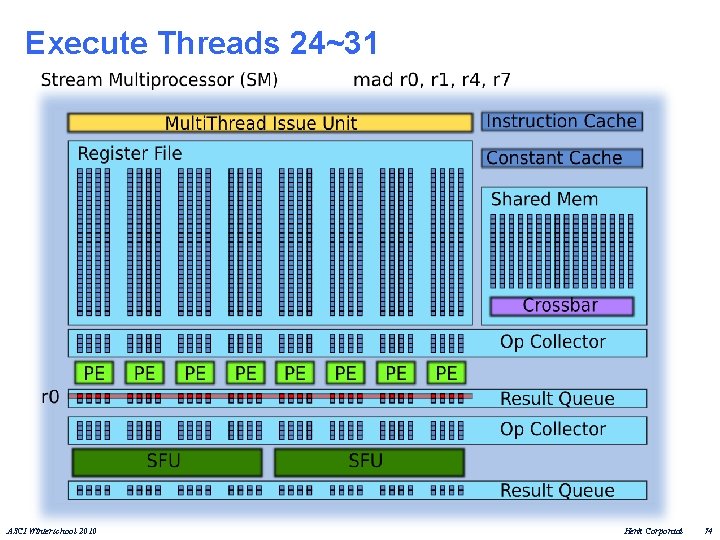

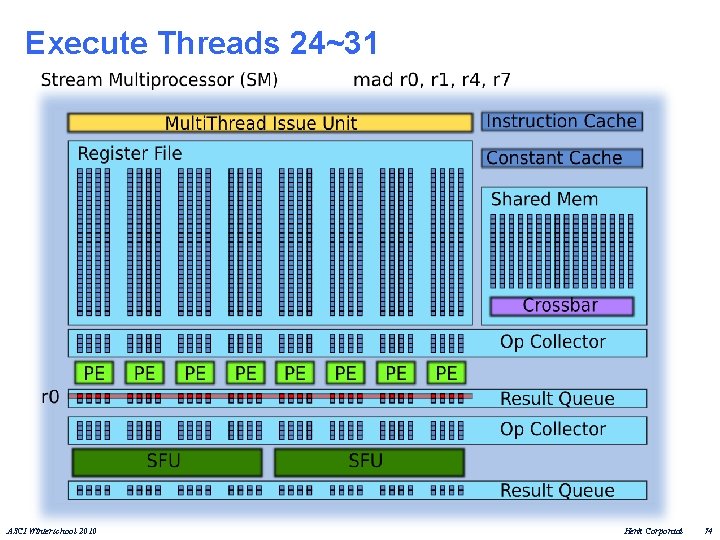

Execute Threads 24~31 ASCI Winterschool 2010 Henk Corporaal 74

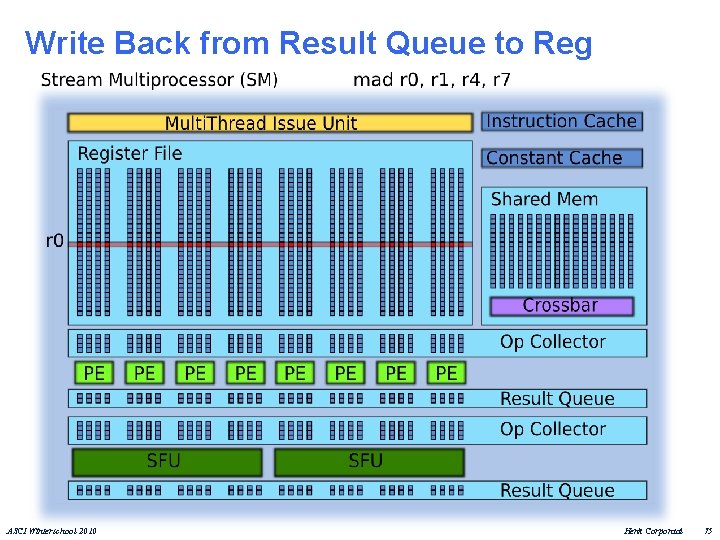

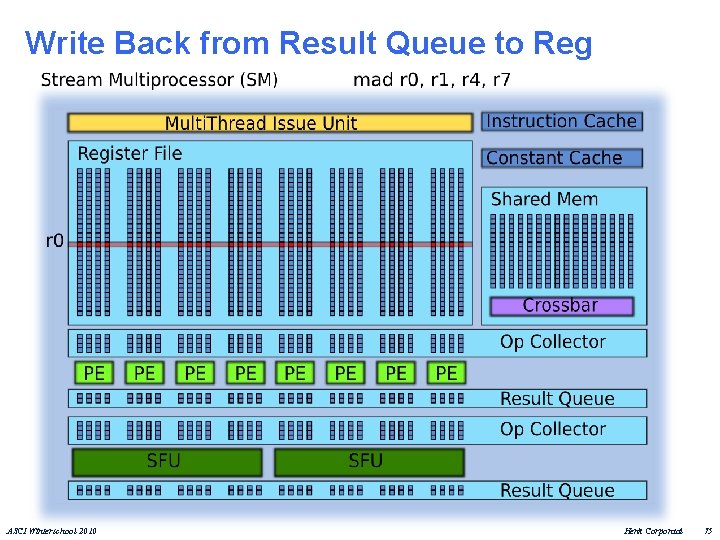

Write Back from Result Queue to Reg ASCI Winterschool 2010 Henk Corporaal 75

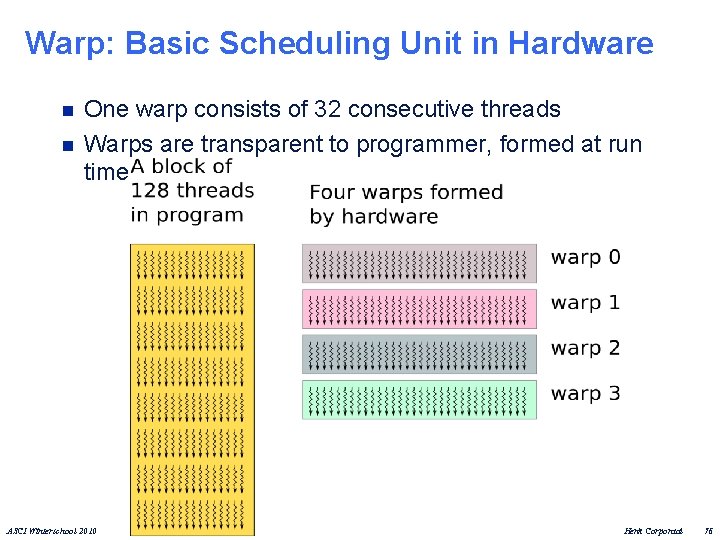

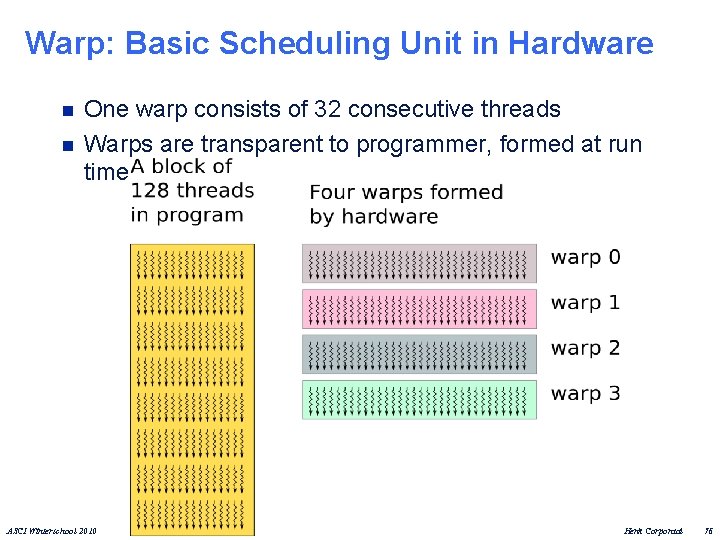

Warp: Basic Scheduling Unit in Hardware n n One warp consists of 32 consecutive threads Warps are transparent to programmer, formed at run time ASCI Winterschool 2010 Henk Corporaal 76

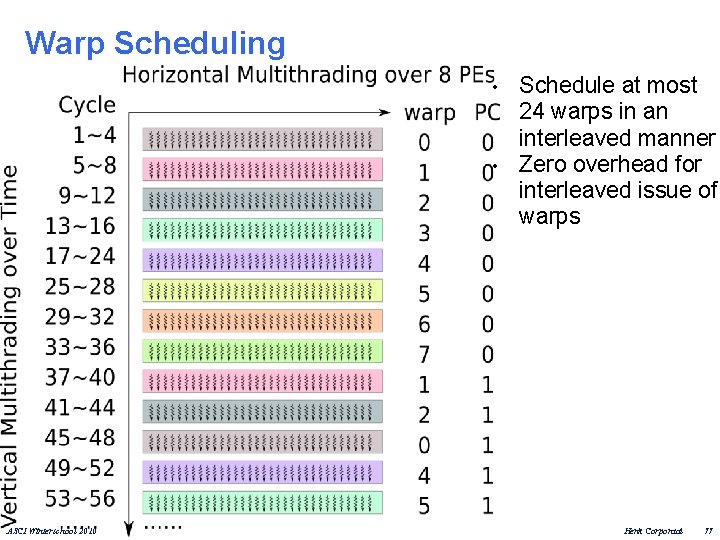

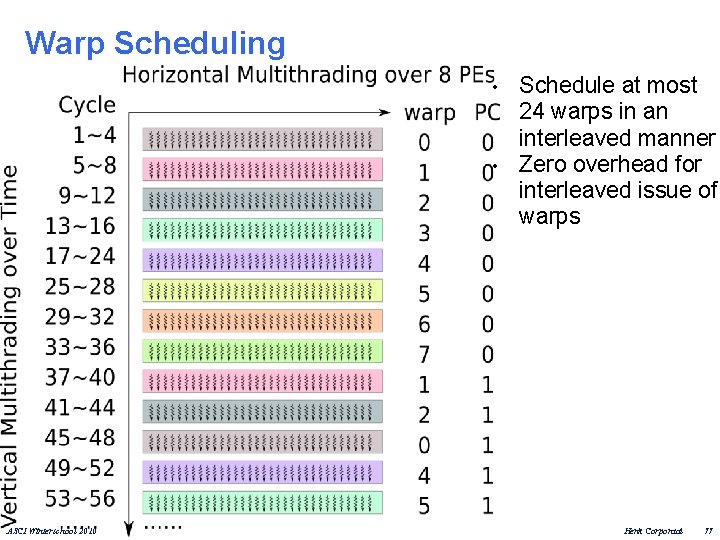

Warp Scheduling • • ASCI Winterschool 2010 Schedule at most 24 warps in an interleaved manner Zero overhead for interleaved issue of warps Henk Corporaal 77

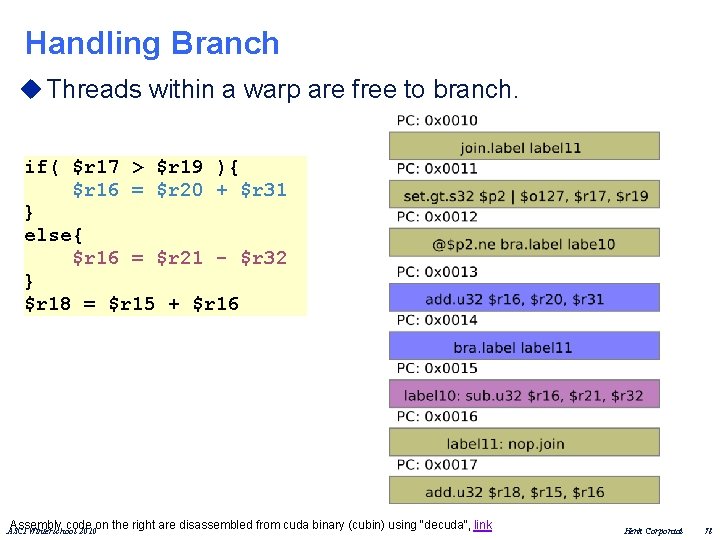

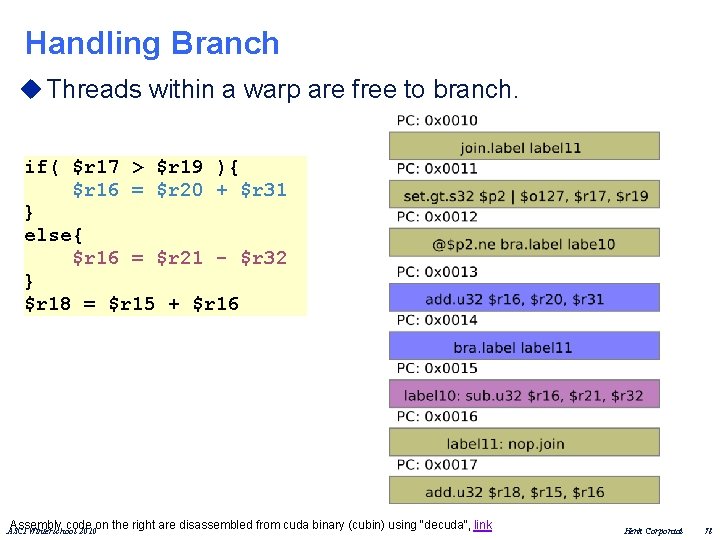

Handling Branch u Threads within a warp are free to branch. if( $r 17 > $r 19 ){ $r 16 = $r 20 + $r 31 } else{ $r 16 = $r 21 - $r 32 } $r 18 = $r 15 + $r 16 Assembly code on the right are disassembled from cuda binary (cubin) using "decuda", link ASCI Winterschool 2010 Henk Corporaal 78

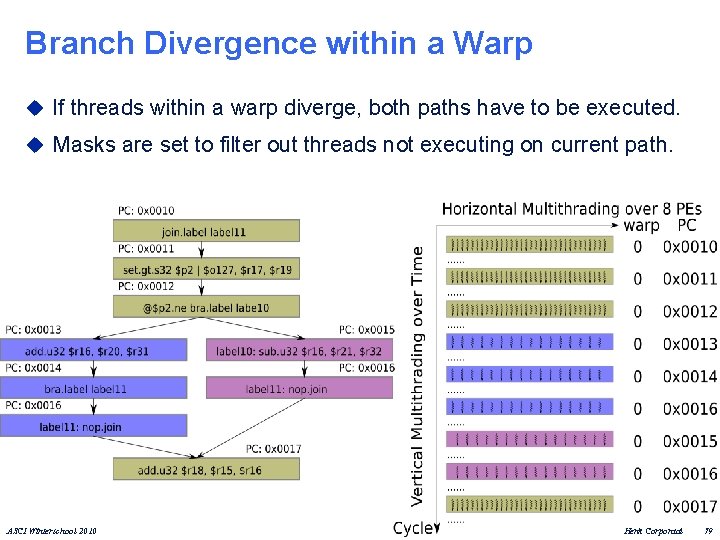

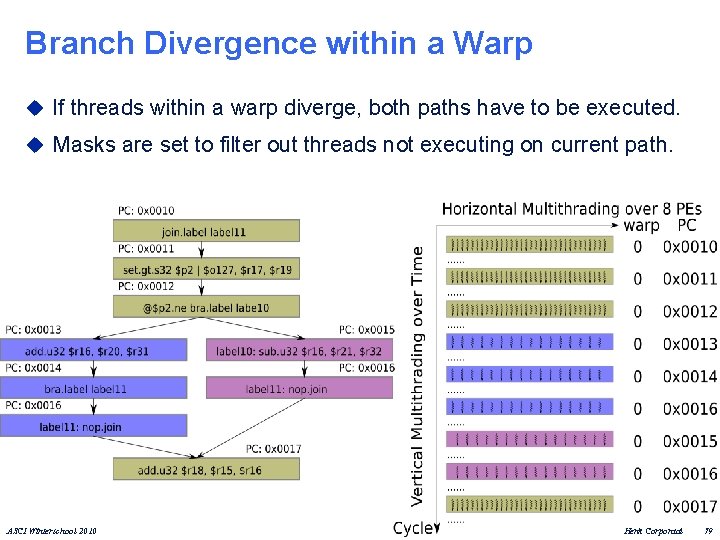

Branch Divergence within a Warp u If threads within a warp diverge, both paths have to be executed. u Masks are set to filter out threads not executing on current path. ASCI Winterschool 2010 Henk Corporaal 79

CPU Programming: NVIDIA CUDA example • CUDA program expresses data level parallelism (DLP) in terms of thread level parallelism (TLP). • Hardware converts TLP into DLP at run time. Single thread program CUDA program float A[4][8]; do-all(i=0; i<4; i++){ do-all(j=0; j<8; j++){ A[i][j]++; } } float A[4][8]; ASCI Winterschool 2010 kernel. F<<<(4, 1), (8, 1)>>>(A); __device__ kernel. F(A){ i = block. Idx. x; j = thread. Idx. x; A[i][j]++; } Henk Corporaal 80

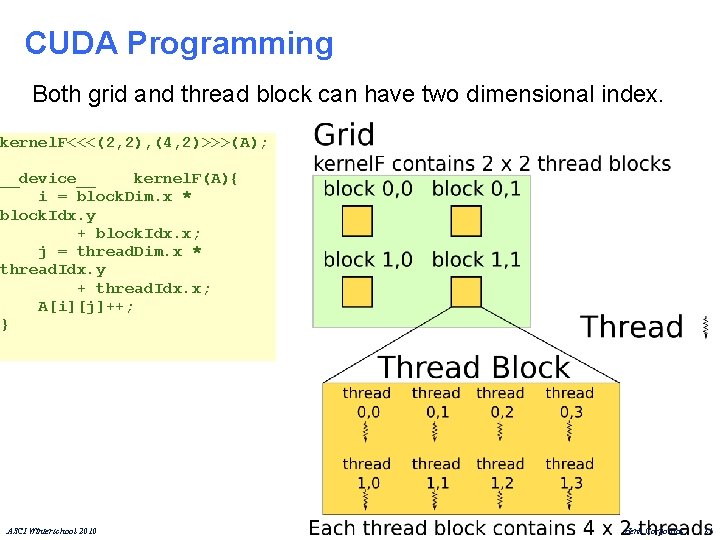

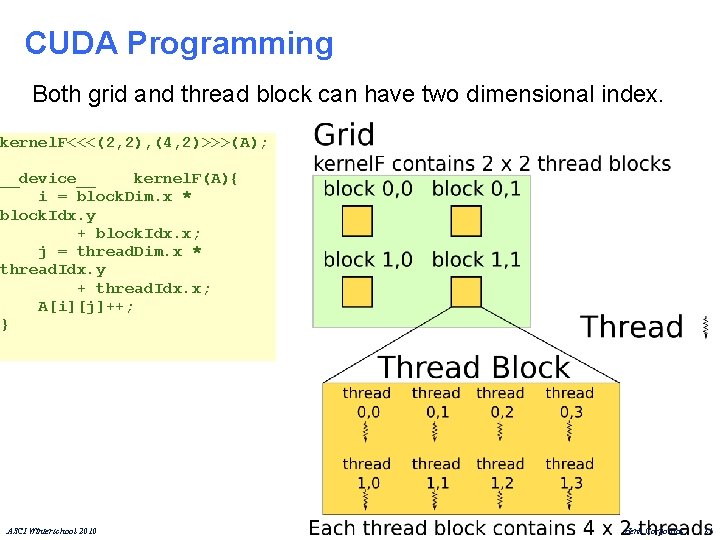

CUDA Programming Both grid and thread block can have two dimensional index. kernel. F<<<(2, 2), (4, 2)>>>(A); __device__ kernel. F(A){ i = block. Dim. x * block. Idx. y + block. Idx. x; j = thread. Dim. x * thread. Idx. y + thread. Idx. x; A[i][j]++; } ASCI Winterschool 2010 Henk Corporaal 81

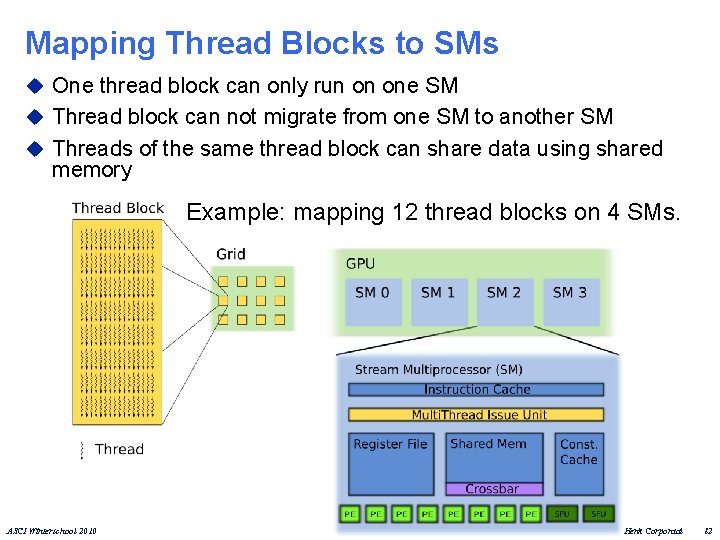

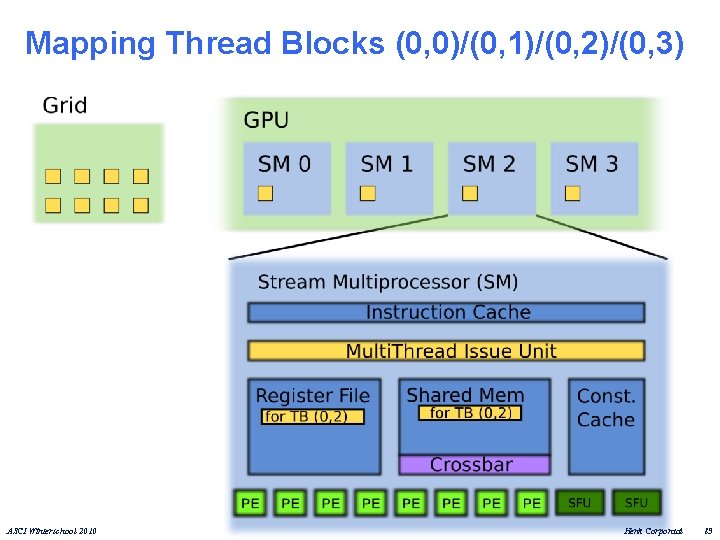

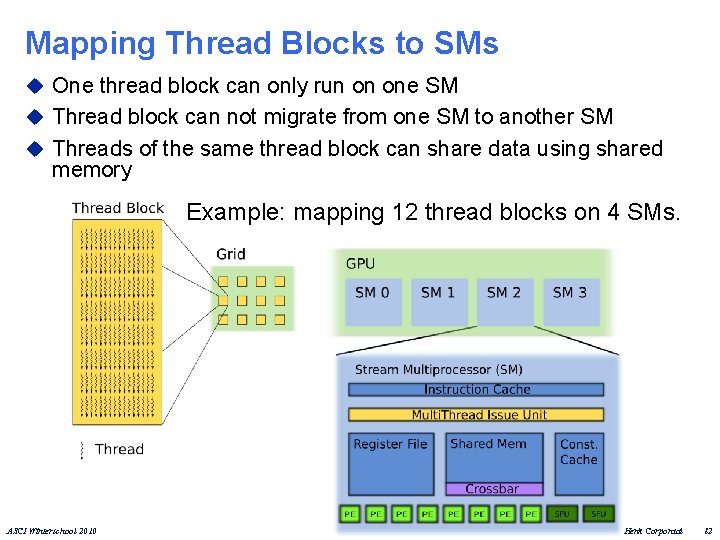

Mapping Thread Blocks to SMs u One thread block can only run on one SM u Thread block can not migrate from one SM to another SM u Threads of the same thread block can share data using shared memory Example: mapping 12 thread blocks on 4 SMs. ASCI Winterschool 2010 Henk Corporaal 82

Mapping Thread Blocks (0, 0)/(0, 1)/(0, 2)/(0, 3) ASCI Winterschool 2010 Henk Corporaal 83

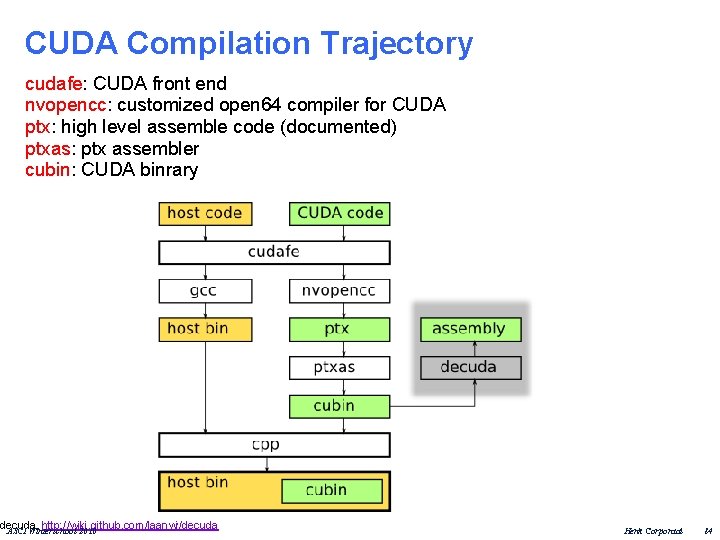

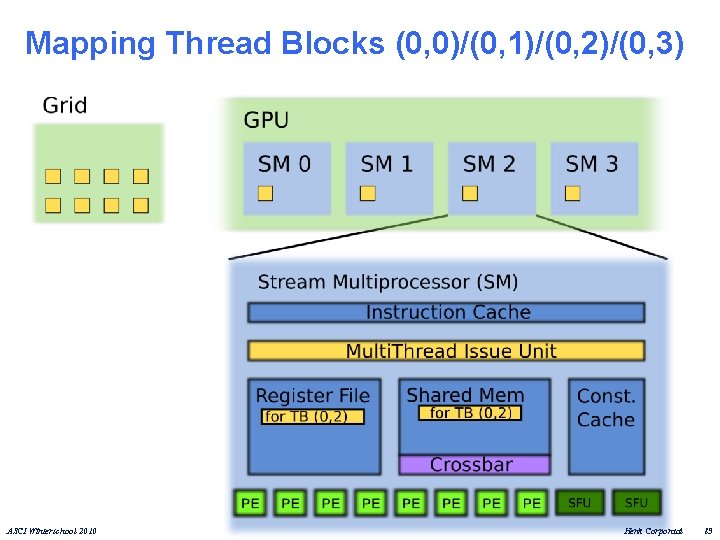

CUDA Compilation Trajectory cudafe: CUDA front end nvopencc: customized open 64 compiler for CUDA ptx: high level assemble code (documented) ptxas: ptx assembler cubin: CUDA binrary decuda, http: //wiki. github. com/laanwj/decuda ASCI Winterschool 2010 Henk Corporaal 84

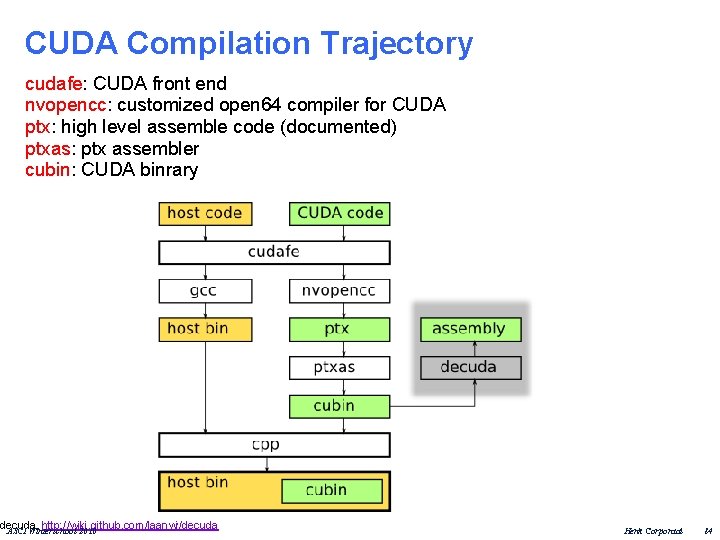

Optimization Guide u Optimizations on memory latency tolerance n Reduce register pressure n Reduce shared memory pressure u Optimizations on memory bandwidth n Global memory coalesce n Shared memory bank conflicts n Grouping byte access n Avoid Partition camping u Optimizations on computation efficiency n Mul/Add balancing n Increase floating point proportion u Optimizations on operational intensity n Use tiled algorithm n Tuning thread granularity ASCI Winterschool 2010 Henk Corporaal 85

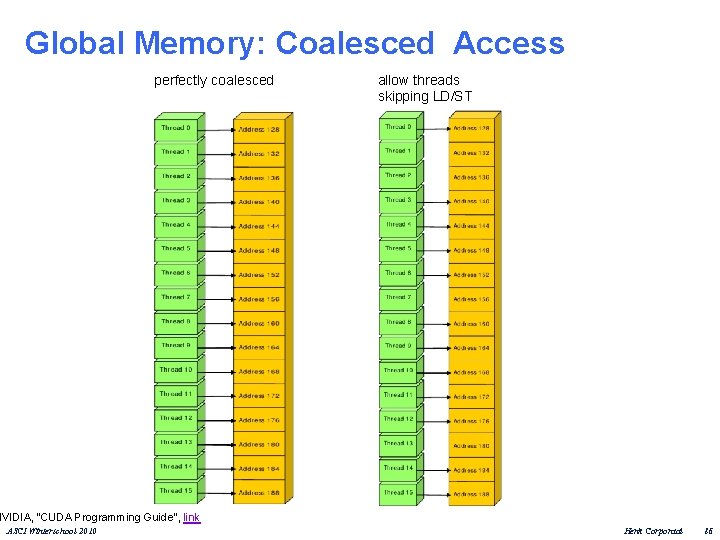

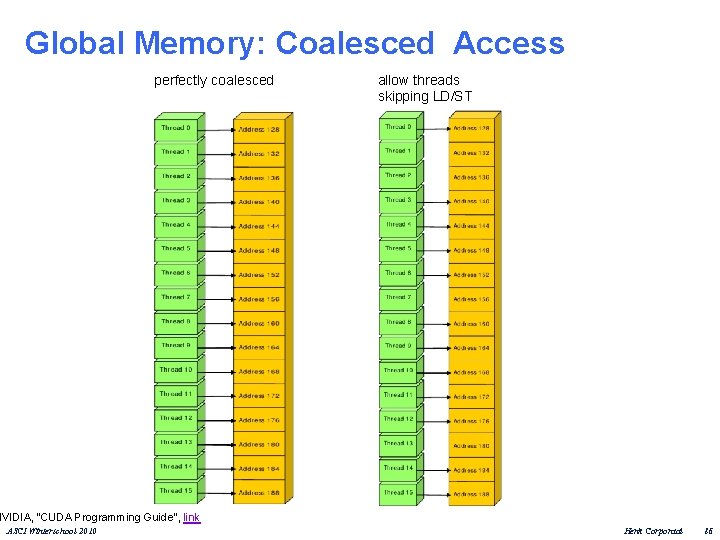

Global Memory: Coalesced Access perfectly coalesced allow threads skipping LD/ST NVIDIA, "CUDA Programming Guide", link ASCI Winterschool 2010 Henk Corporaal 86

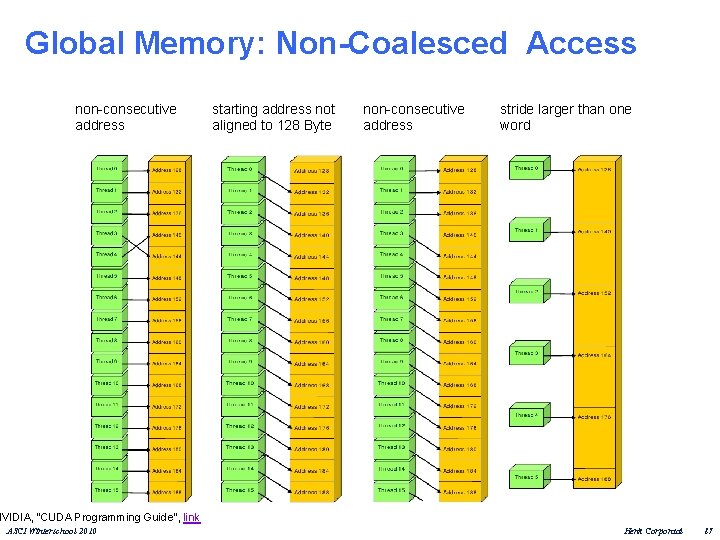

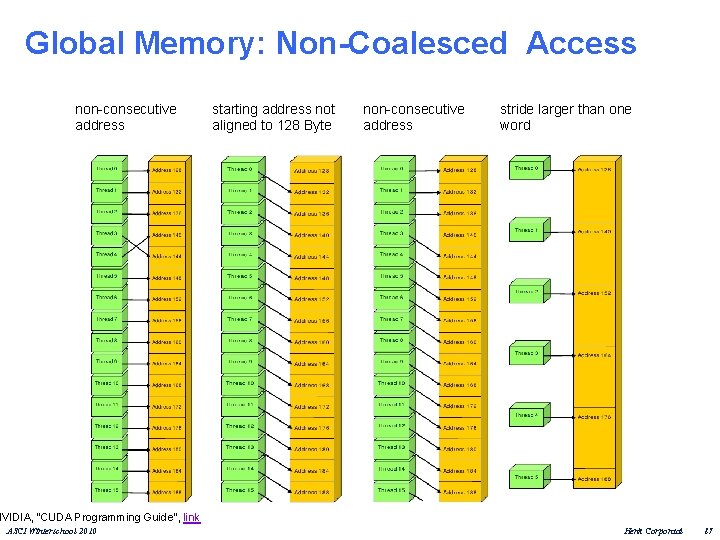

Global Memory: Non-Coalesced Access non-consecutive address starting address not aligned to 128 Byte non-consecutive address stride larger than one word NVIDIA, "CUDA Programming Guide", link ASCI Winterschool 2010 Henk Corporaal 87

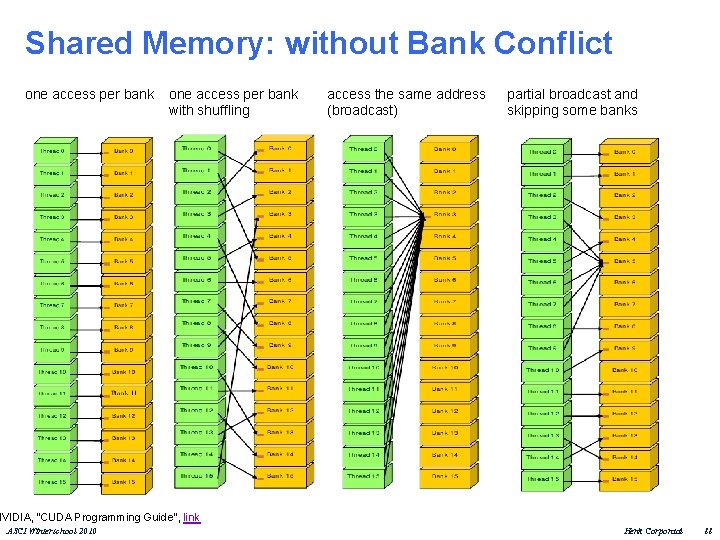

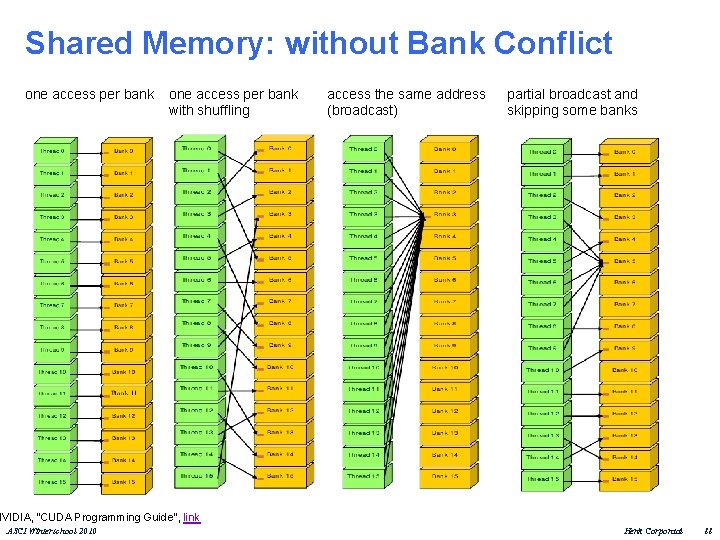

Shared Memory: without Bank Conflict one access per bank with shuffling access the same address (broadcast) partial broadcast and skipping some banks NVIDIA, "CUDA Programming Guide", link ASCI Winterschool 2010 Henk Corporaal 88

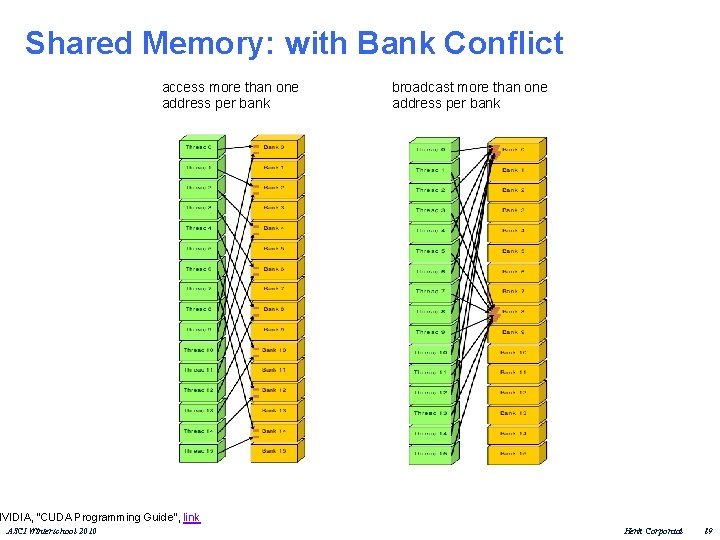

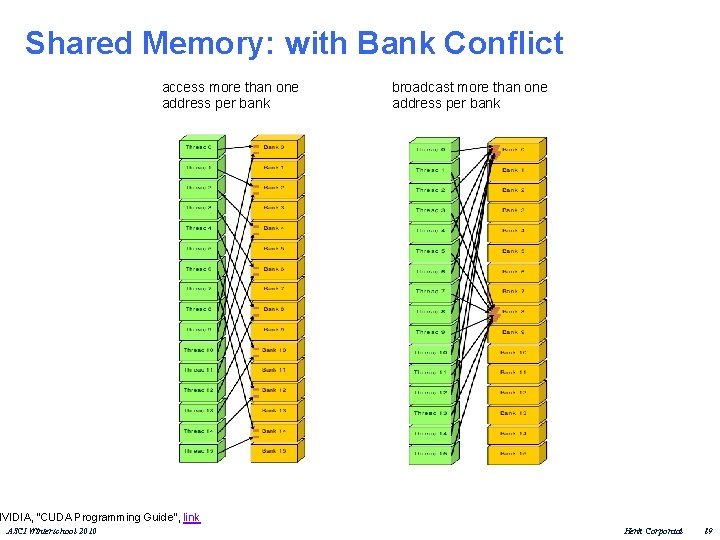

Shared Memory: with Bank Conflict access more than one address per bank broadcast more than one address per bank NVIDIA, "CUDA Programming Guide", link ASCI Winterschool 2010 Henk Corporaal 89

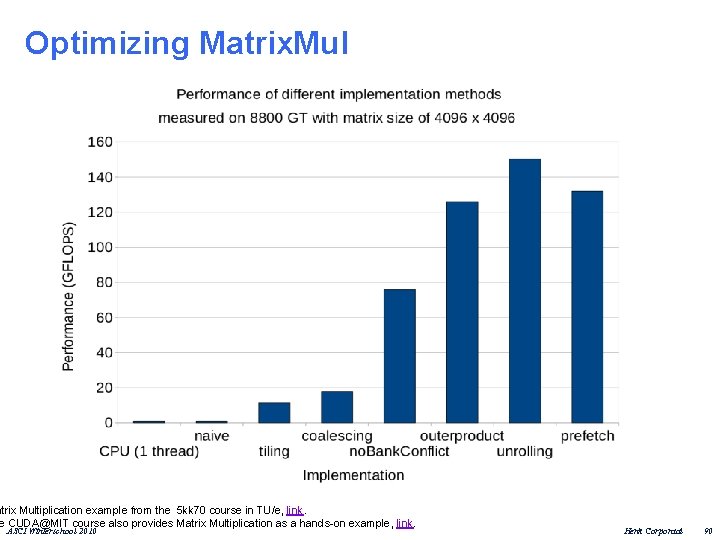

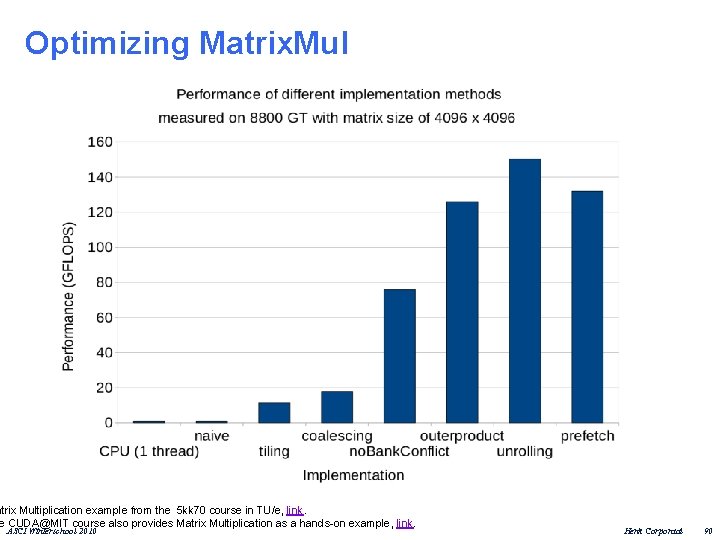

Optimizing Matrix. Mul atrix Multiplication example from the 5 kk 70 course in TU/e, link. e CUDA@MIT course also provides Matrix Multiplication as a hands-on example, link. ASCI Winterschool 2010 Henk Corporaal 90

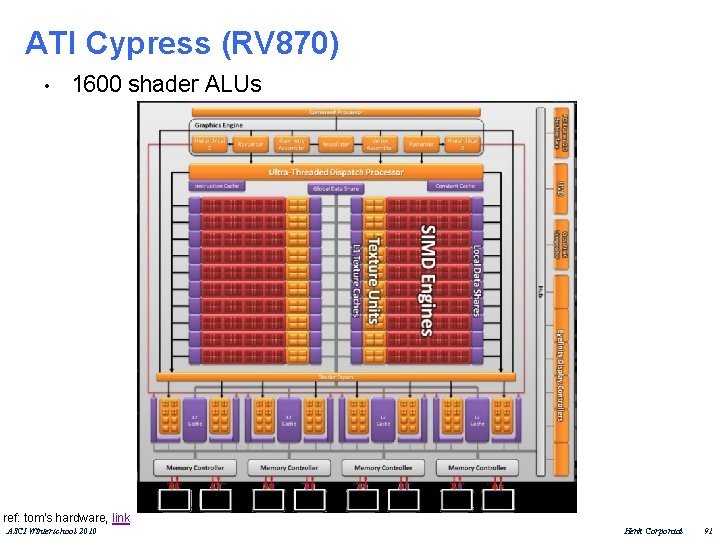

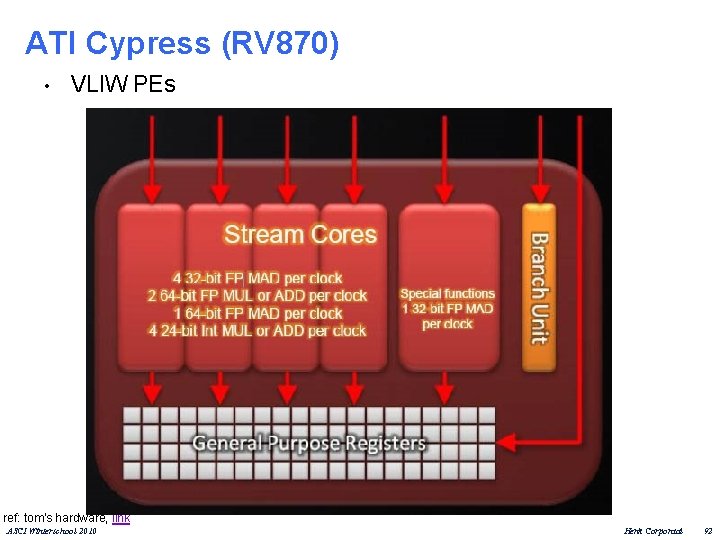

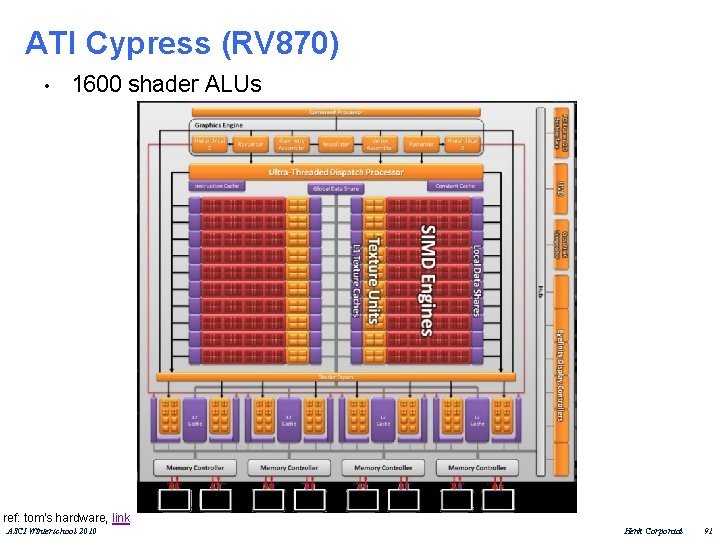

ATI Cypress (RV 870) • 1600 shader ALUs ref: tom's hardware, link ASCI Winterschool 2010 Henk Corporaal 91

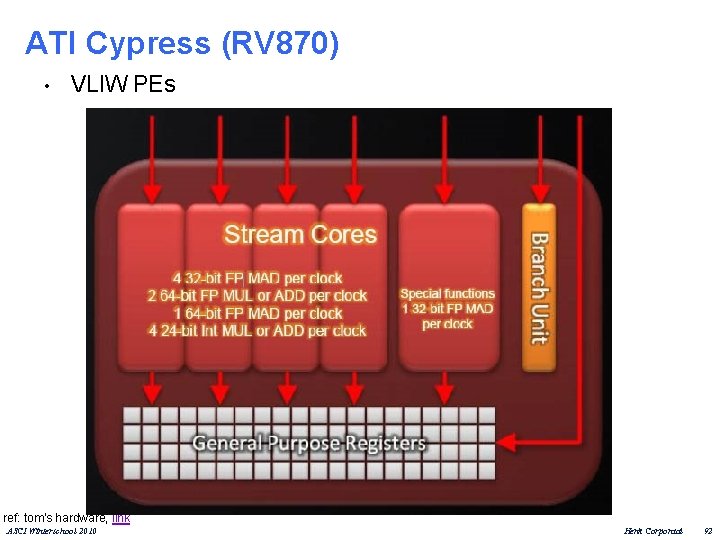

ATI Cypress (RV 870) • VLIW PEs ref: tom's hardware, link ASCI Winterschool 2010 Henk Corporaal 92

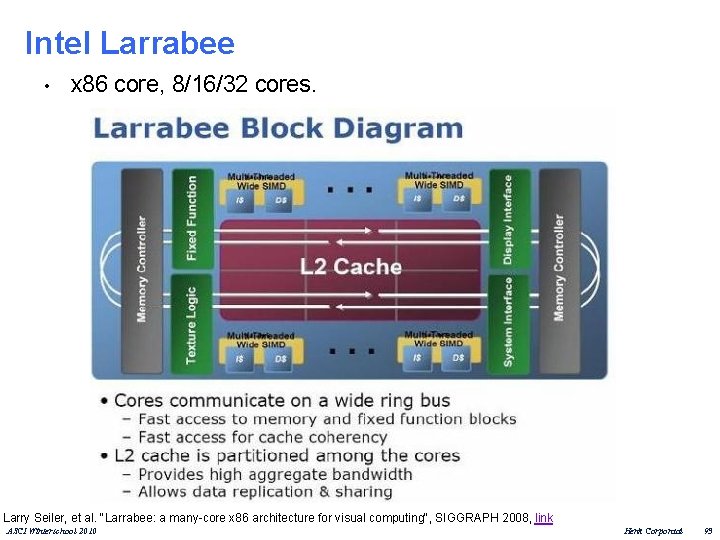

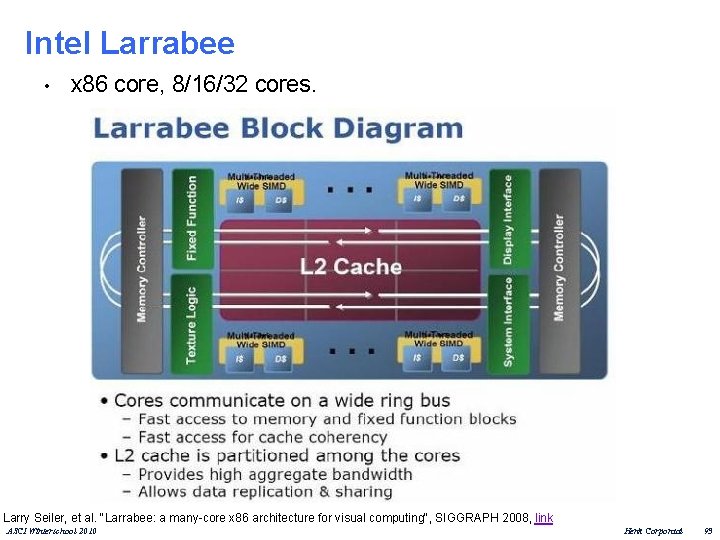

Intel Larrabee • x 86 core, 8/16/32 cores. Larry Seiler, et al. "Larrabee: a many-core x 86 architecture for visual computing", SIGGRAPH 2008, link ASCI Winterschool 2010 Henk Corporaal 93

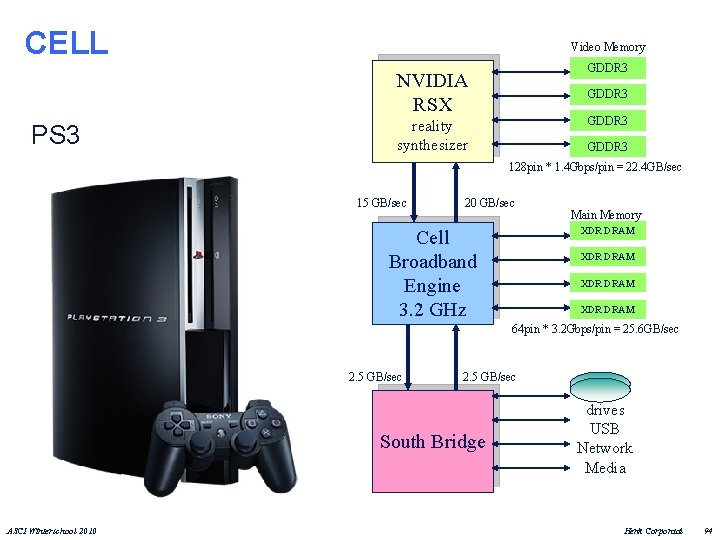

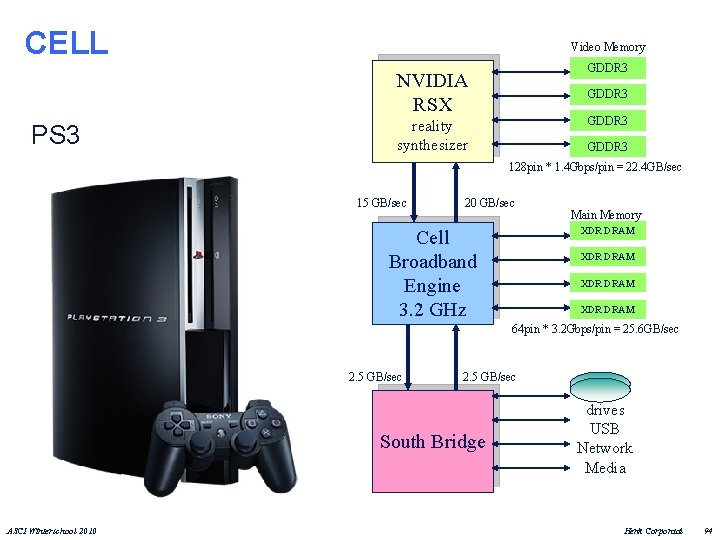

CELL Video Memory GDDR 3 NVIDIA RSX PS 3 GDDR 3 reality synthesizer GDDR 3 128 pin * 1. 4 Gbps/pin = 22. 4 GB/sec 15 GB/sec 20 GB/sec Main Memory XDR DRAM Cell Broadband Engine 3. 2 GHz XDR DRAM 64 pin * 3. 2 Gbps/pin = 25. 6 GB/sec 2. 5 GB/sec South Bridge ASCI Winterschool 2010 drives USB Network Media Henk Corporaal 94

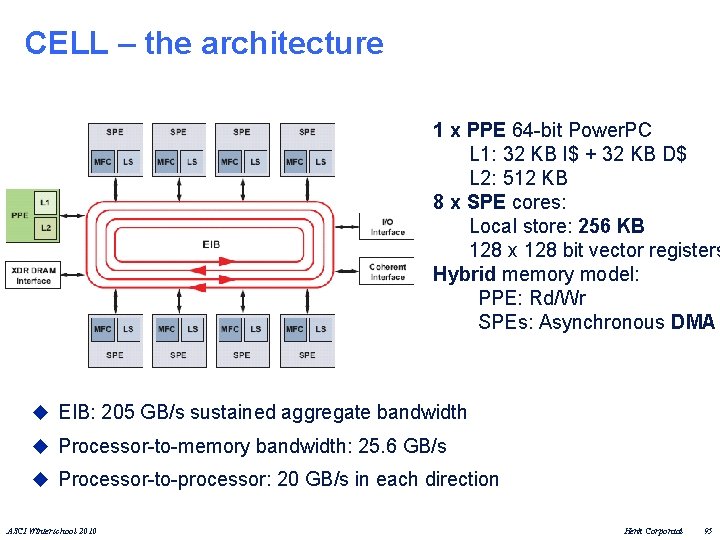

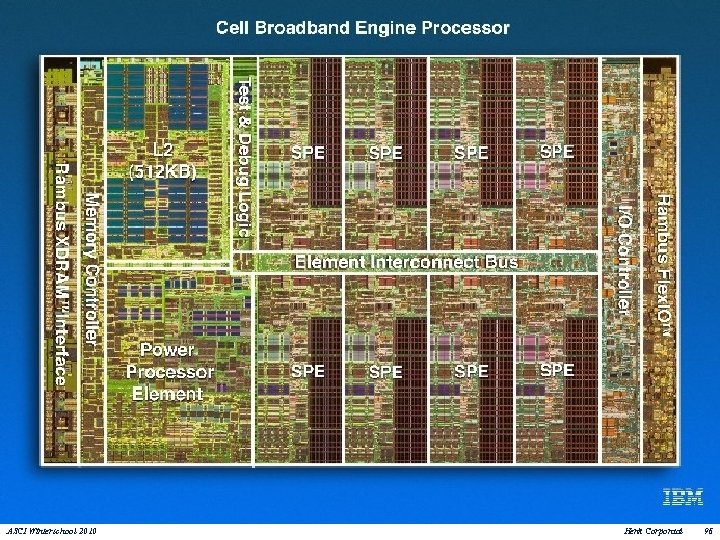

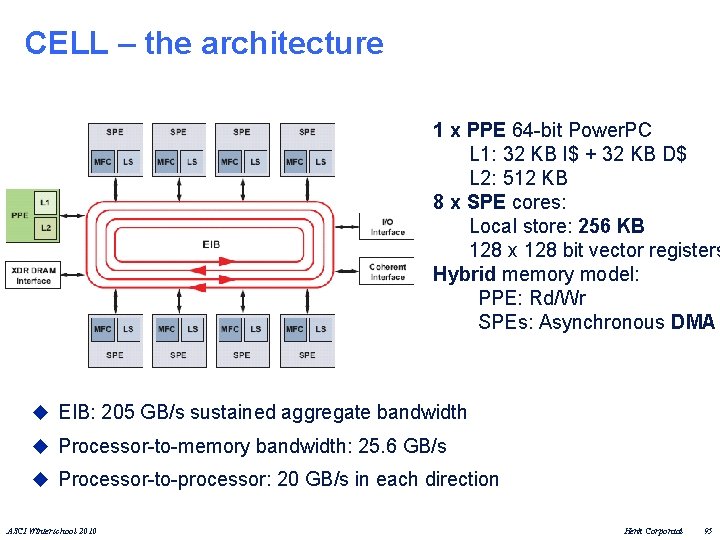

CELL – the architecture 1 x PPE 64 -bit Power. PC L 1: 32 KB I$ + 32 KB D$ L 2: 512 KB 8 x SPE cores: Local store: 256 KB 128 x 128 bit vector registers Hybrid memory model: PPE: Rd/Wr SPEs: Asynchronous DMA u EIB: 205 GB/s sustained aggregate bandwidth u Processor-to-memory bandwidth: 25. 6 GB/s u Processor-to-processor: 20 GB/s in each direction ASCI Winterschool 2010 Henk Corporaal 95

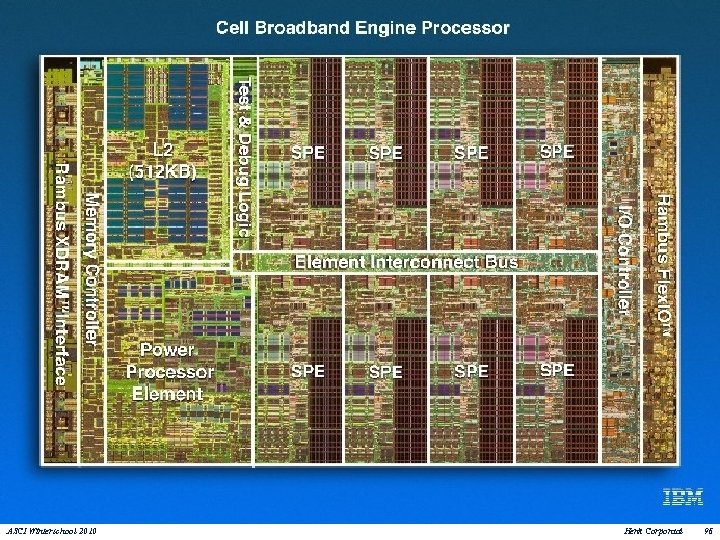

ASCI Winterschool 2010 Henk Corporaal 96

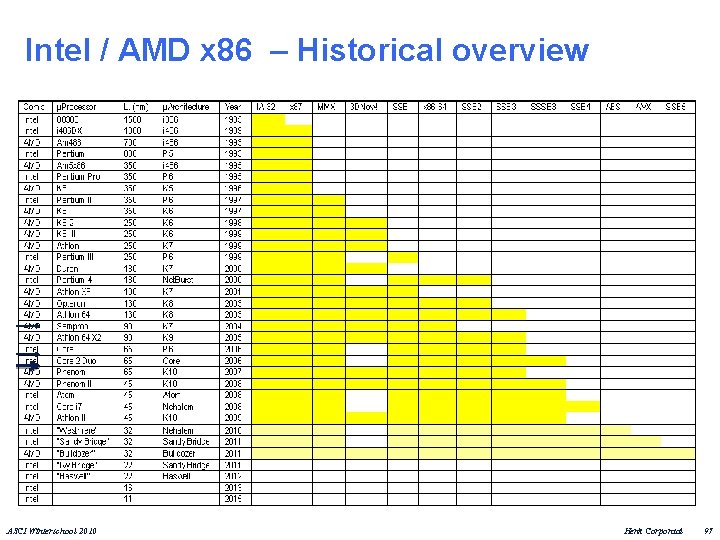

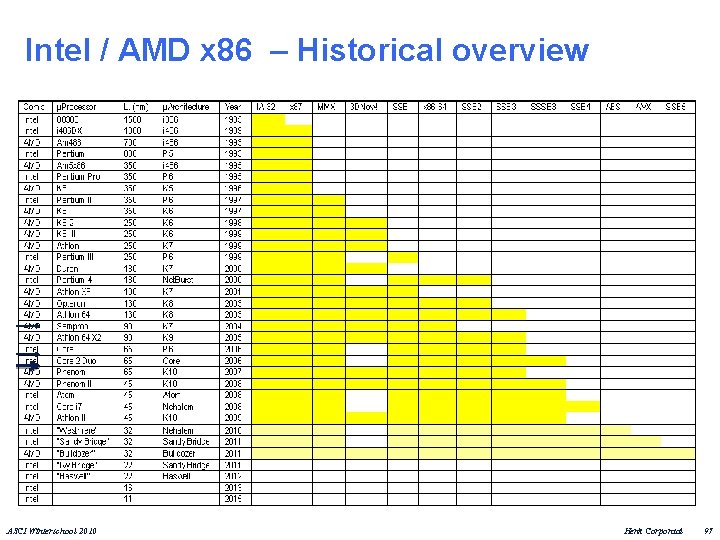

Intel / AMD x 86 – Historical overview ASCI Winterschool 2010 Henk Corporaal 97

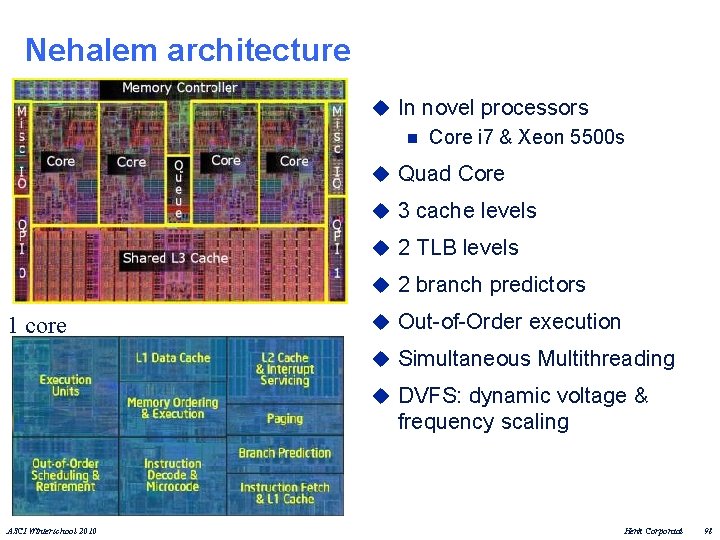

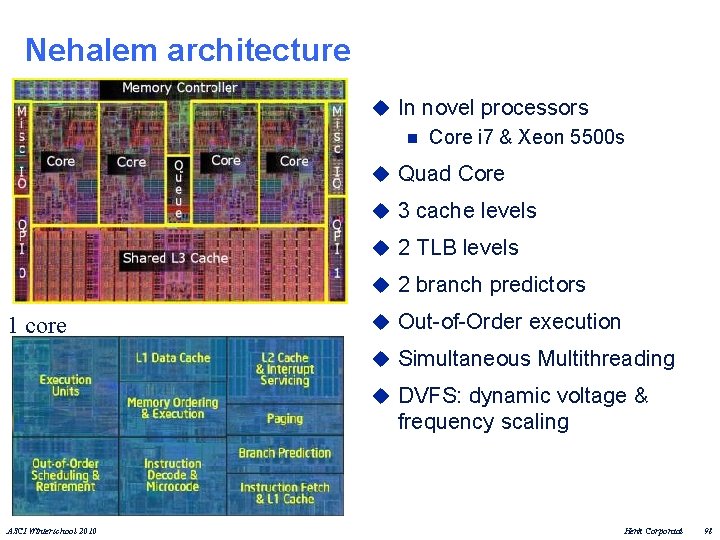

Nehalem architecture u In novel processors n Core i 7 & Xeon 5500 s u Quad Core u 3 cache levels u 2 TLB levels u 2 branch predictors 1 core u Out-of-Order execution u Simultaneous Multithreading u DVFS: dynamic voltage & frequency scaling ASCI Winterschool 2010 Henk Corporaal 98

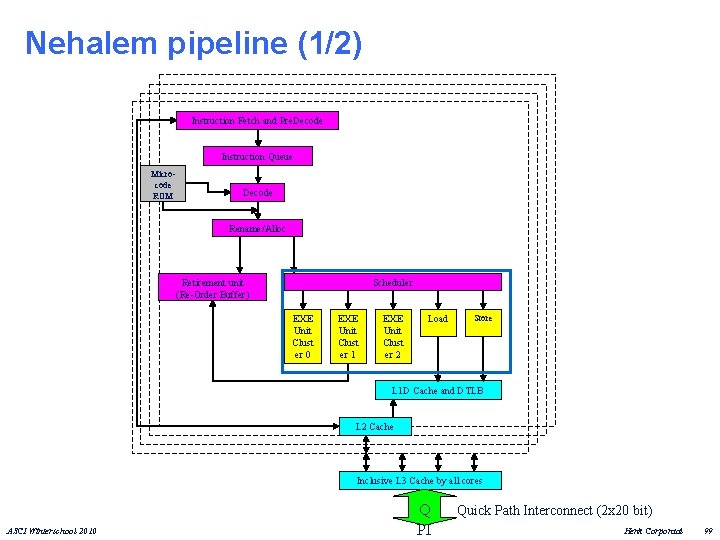

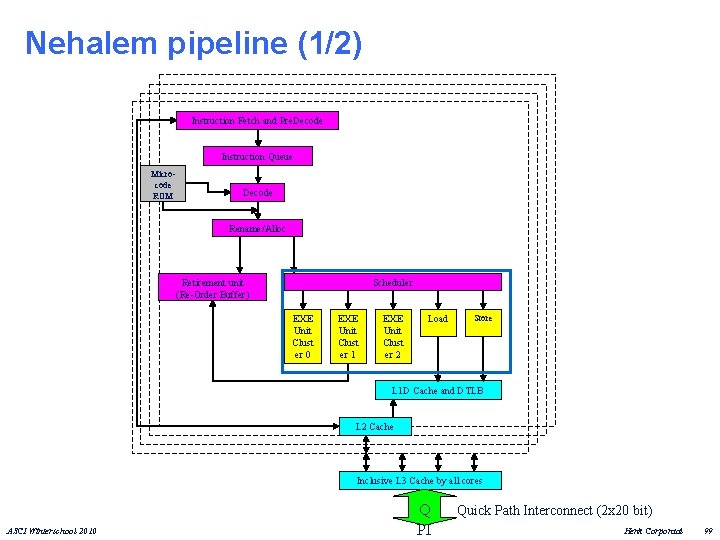

Nehalem pipeline (1/2) Instruction Fetch and Pre. Decode Instruction Queue Microcode ROM Decode Rename/Alloc Retirement unit (Re-Order Buffer) Scheduler EXE Unit Clust er 0 EXE Unit Clust er 1 EXE Unit Clust er 2 Load Store L 1 D Cache and DTLB L 2 Cache Inclusive L 3 Cache by all cores ASCI Winterschool 2010 Q PI Quick Path Interconnect (2 x 20 bit) Henk Corporaal 99

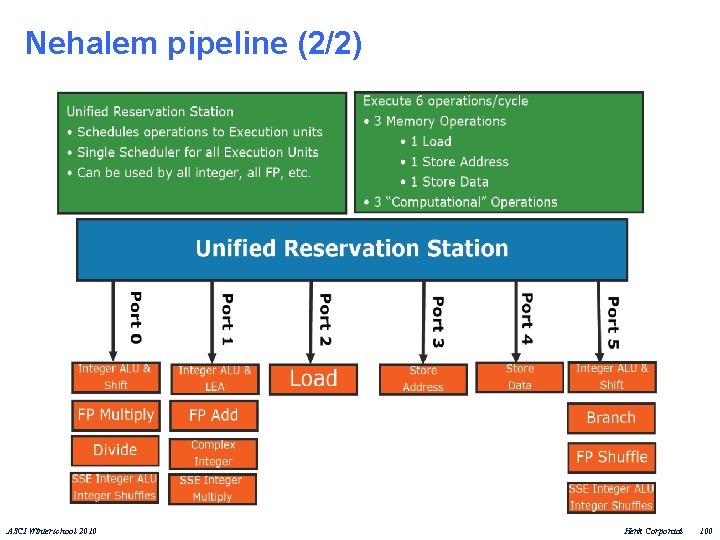

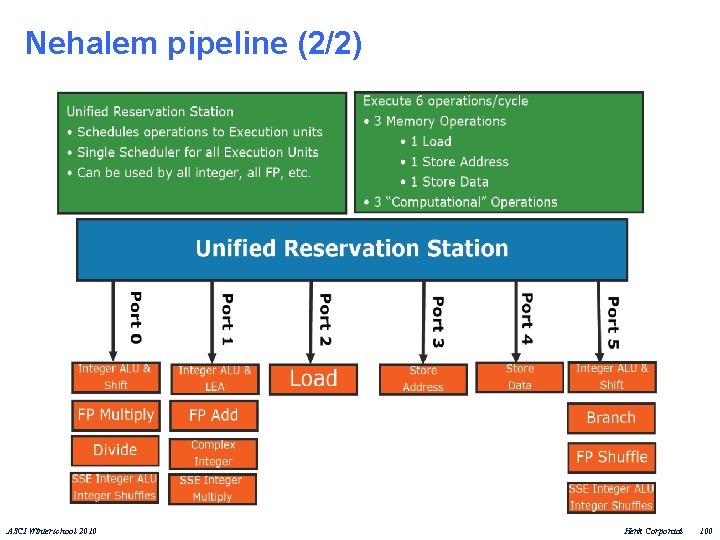

Nehalem pipeline (2/2) ASCI Winterschool 2010 Henk Corporaal 100

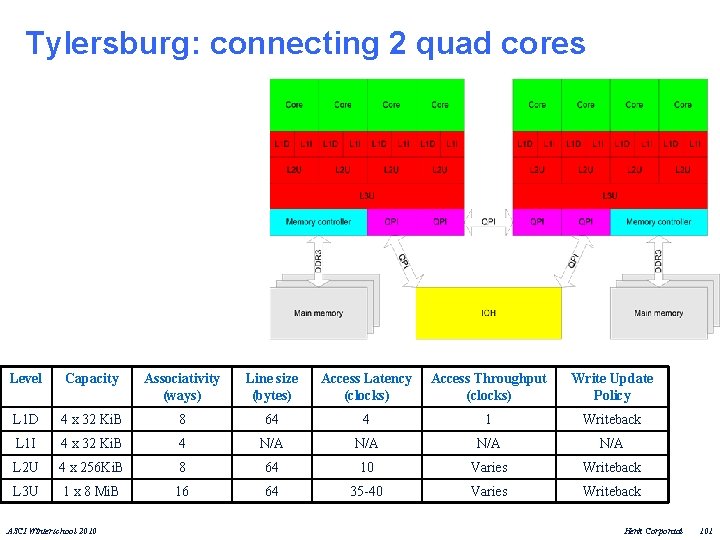

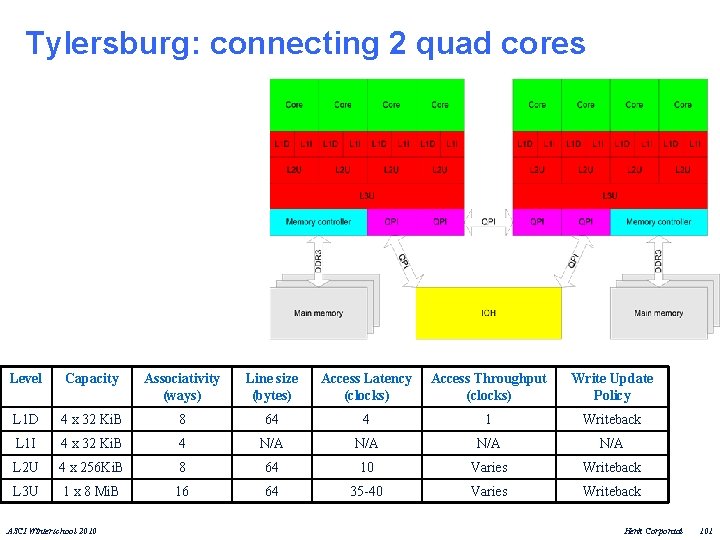

Tylersburg: connecting 2 quad cores Level Capacity Associativity (ways) Line size (bytes) Access Latency (clocks) Access Throughput (clocks) Write Update Policy L 1 D 4 x 32 Ki. B 8 64 4 1 Writeback L 1 I 4 x 32 Ki. B 4 N/A N/A L 2 U 4 x 256 Ki. B 8 64 10 Varies Writeback L 3 U 1 x 8 Mi. B 16 64 35 -40 Varies Writeback ASCI Winterschool 2010 Henk Corporaal 101

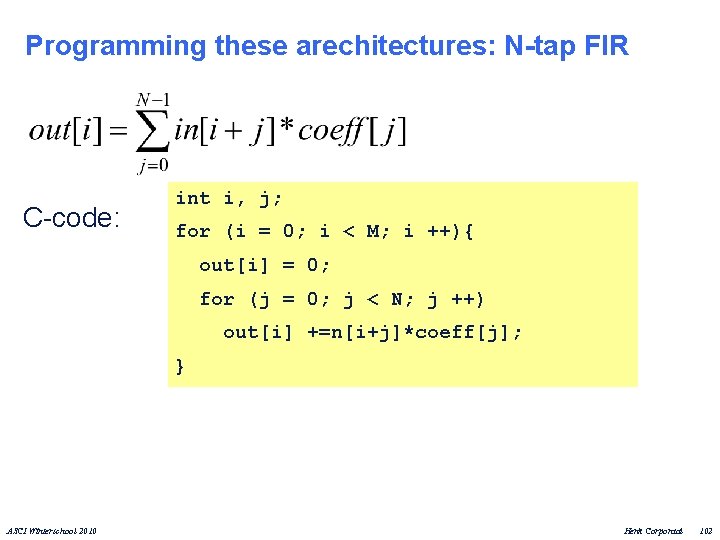

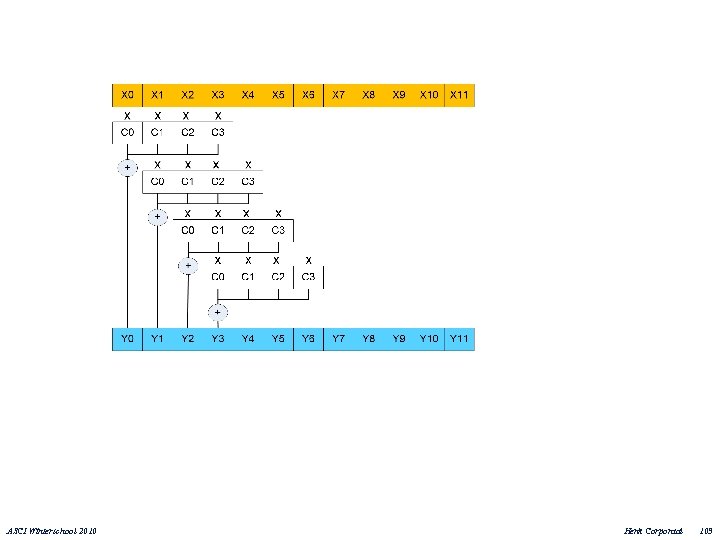

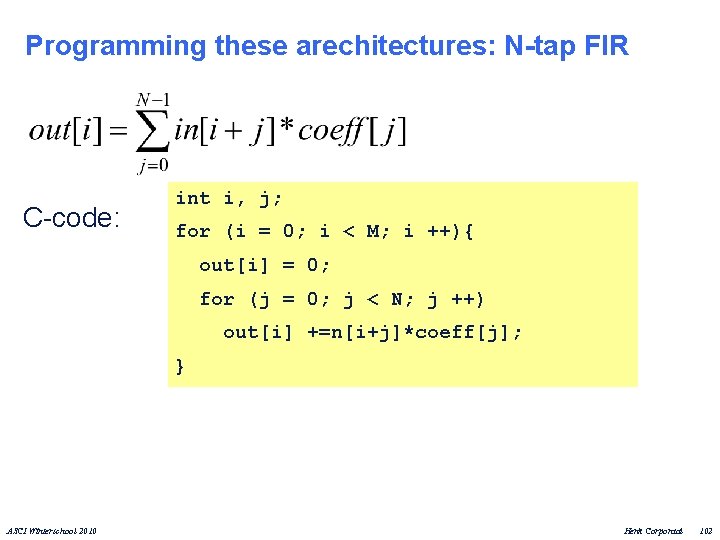

Programming these arechitectures: N-tap FIR C-code: int i, j; for (i = 0; i < M; i ++){ out[i] = 0; for (j = 0; j < N; j ++) out[i] +=n[i+j]*coeff[j]; } ASCI Winterschool 2010 Henk Corporaal 102

ASCI Winterschool 2010 Henk Corporaal 103

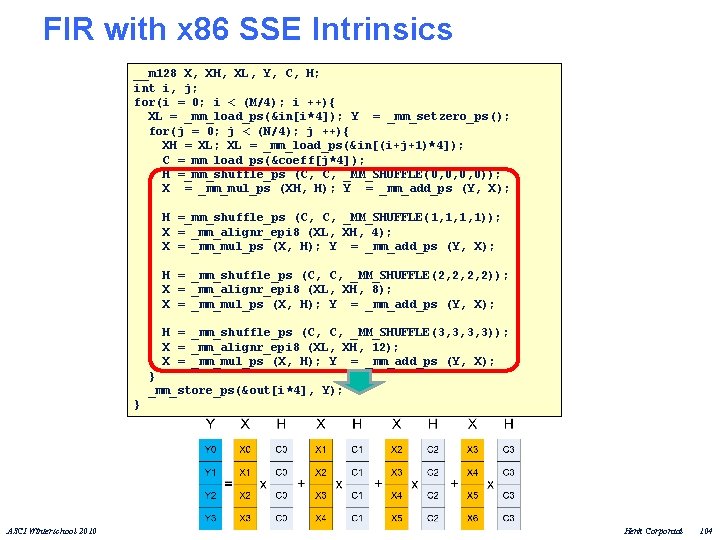

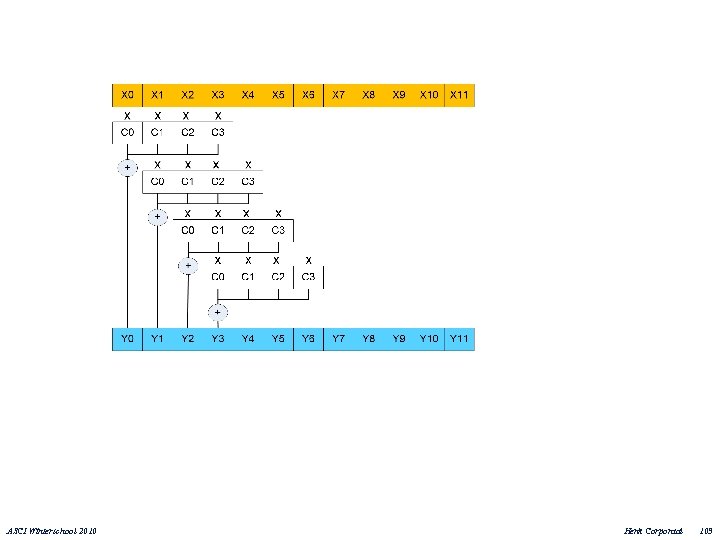

FIR with x 86 SSE Intrinsics __m 128 X, XH, XL, Y, C, H; int i, j; for(i = 0; i < (M/4); i ++){ XL = _mm_load_ps(&in[i*4]); Y = _mm_setzero_ps(); for(j = 0; j < (N/4); j ++){ XH = XL; XL = _mm_load_ps(&in[(i+j+1)*4]); C =_mm_load_ps(&coeff[j*4]); H =_mm_shuffle_ps (C, C, _MM_SHUFFLE(0, 0, 0, 0)); X = _mm_mul_ps (XH, H); Y = _mm_add_ps (Y, X); H =_mm_shuffle_ps (C, C, _MM_SHUFFLE(1, 1, 1, 1)); X = _mm_alignr_epi 8 (XL, XH, 4); X = _mm_mul_ps (X, H); Y = _mm_add_ps (Y, X); H = _mm_shuffle_ps (C, C, _MM_SHUFFLE(2, 2, 2, 2)); X = _mm_alignr_epi 8 (XL, XH, 8); X = _mm_mul_ps (X, H); Y = _mm_add_ps (Y, X); H = _mm_shuffle_ps (C, C, _MM_SHUFFLE(3, 3, 3, 3)); X = _mm_alignr_epi 8 (XL, XH, 12); X = _mm_mul_ps (X, H); Y = _mm_add_ps (Y, X); } _mm_store_ps(&out[i*4], Y); } ASCI Winterschool 2010 Henk Corporaal 104

![FIR using pthreadt firthreadsNTHREAD firarg faNTHREAD tsize MNTHREAD fori 0 i FIR using pthread_t fir_threads[N_THREAD]; fir_arg fa[N_THREAD]; tsize = M/N_THREAD; for(i = 0; i <](https://slidetodoc.com/presentation_image_h2/d9de3b692d14856c617e033d3ae12fd4/image-105.jpg)

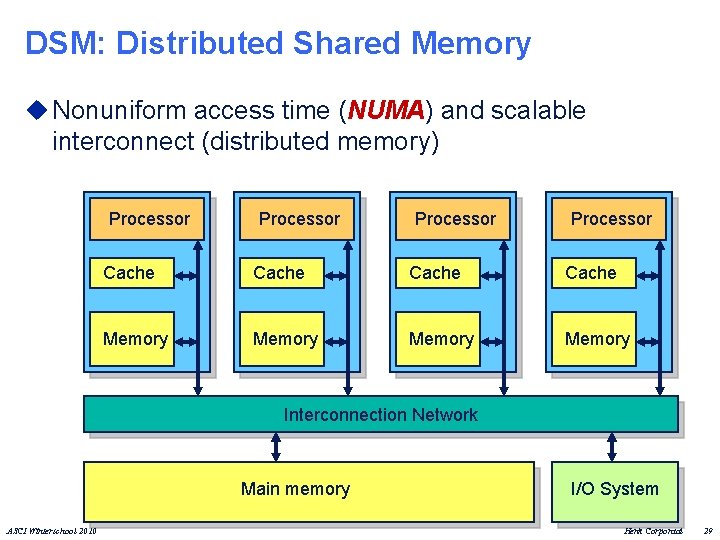

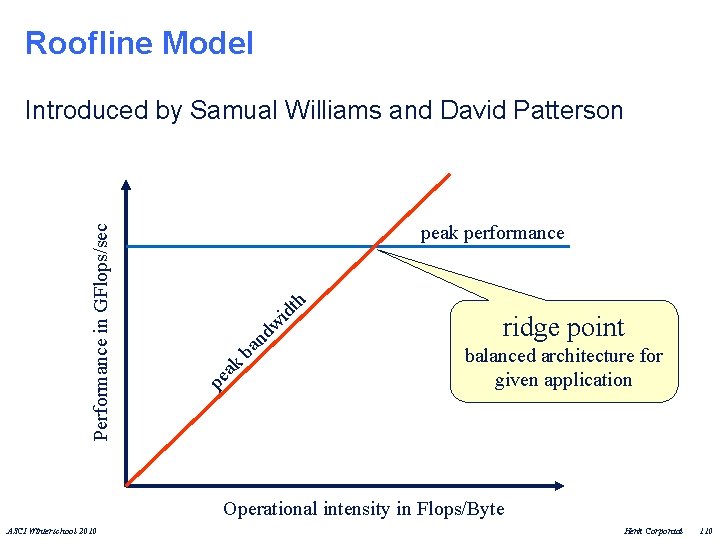

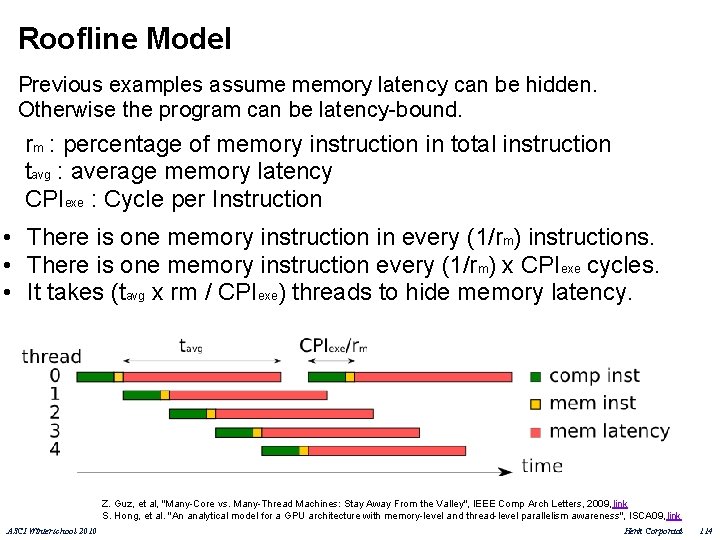

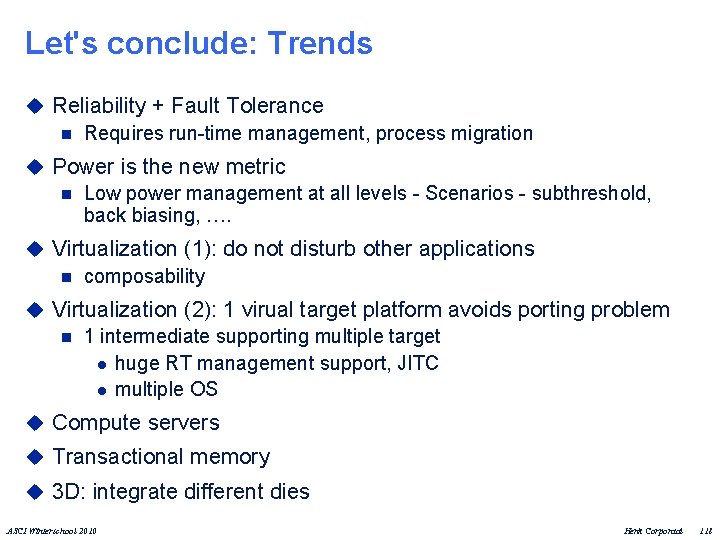

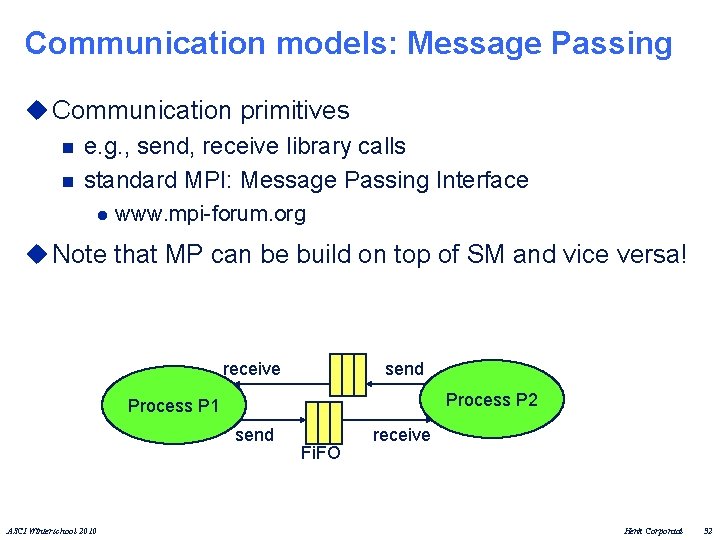

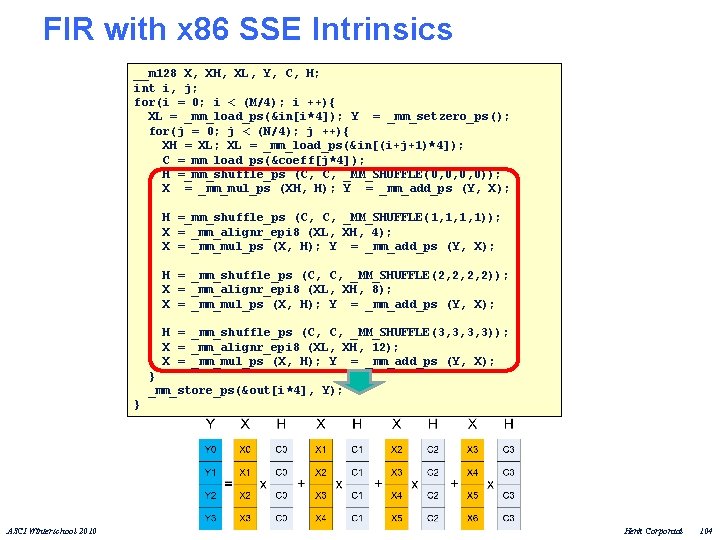

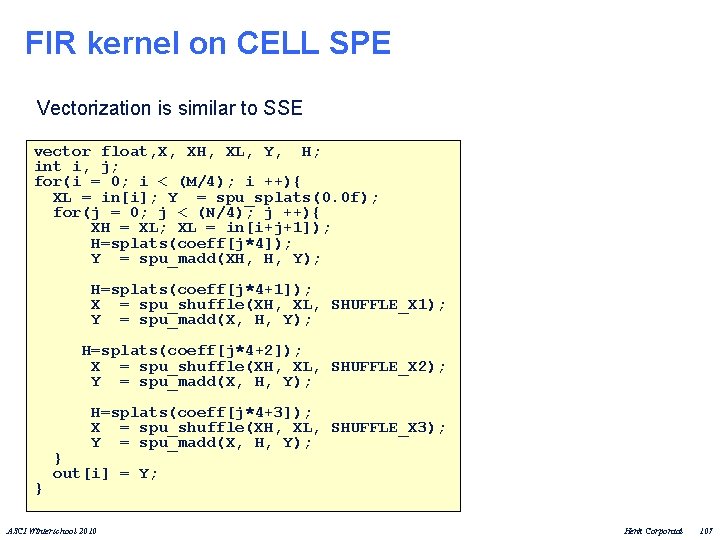

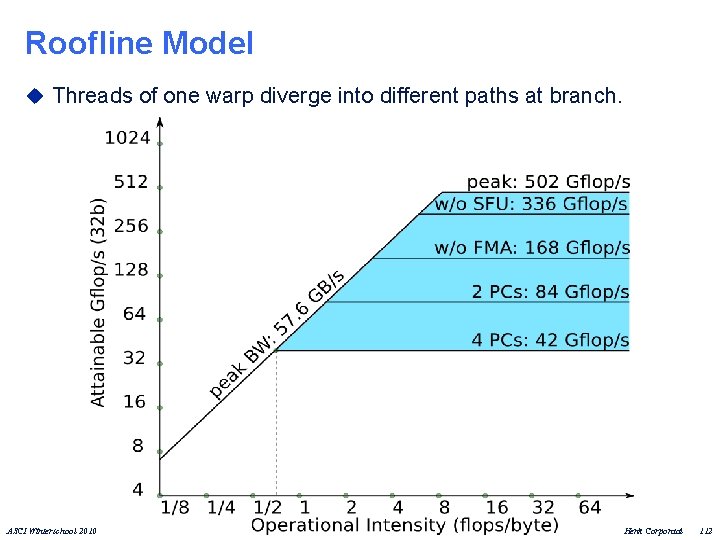

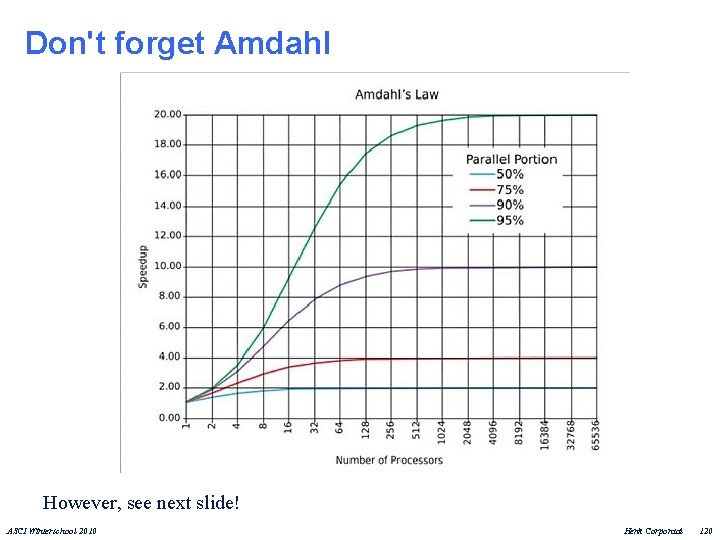

FIR using pthread_t fir_threads[N_THREAD]; fir_arg fa[N_THREAD]; tsize = M/N_THREAD; for(i = 0; i < N_THREAD; i ++){ /*… Initialize thread parameters fa[i] … */ rc = pthread_create(&fir_threads[i], NULL, fir_kernel, (void *)&fa[i]); } for(i=0; i<N_THREAD; i++) { rc = pthread_join(fir_threads[i], &status); } Sequential FIR kernel or Vectorized FIR kernel ASCI Winterschool 2010 Henk Corporaal 105

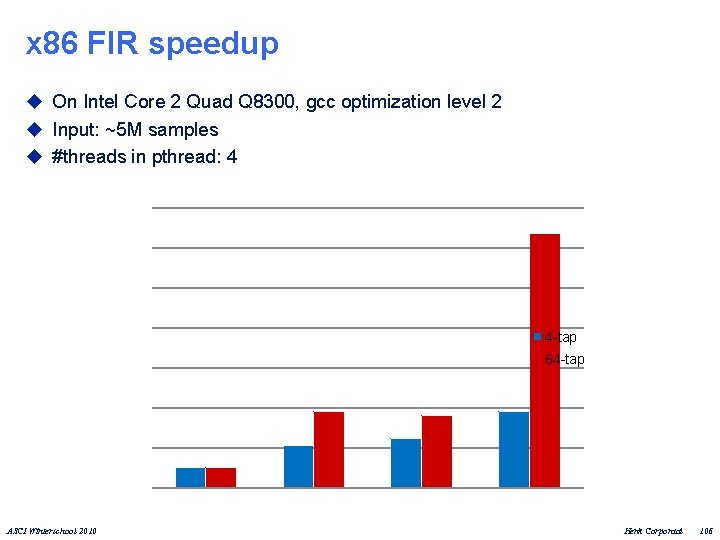

x 86 FIR speedup u On Intel Core 2 Quad Q 8300, gcc optimization level 2 u Input: ~5 M samples u #threads in pthread: 4 4 -tap 64 -tap ASCI Winterschool 2010 Henk Corporaal 106

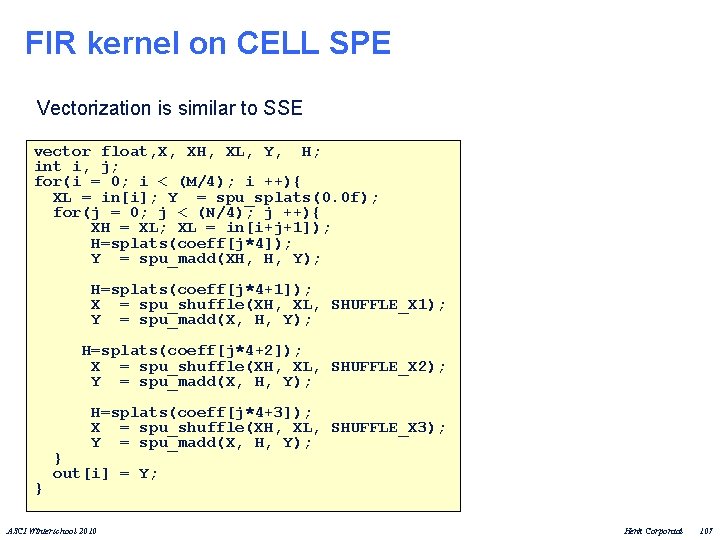

FIR kernel on CELL SPE Vectorization is similar to SSE vector float, X, XH, XL, Y, H; int i, j; for(i = 0; i < (M/4); i ++){ XL = in[i]; Y = spu_splats(0. 0 f); for(j = 0; j < (N/4); j ++){ XH = XL; XL = in[i+j+1]); H=splats(coeff[j*4]); Y = spu_madd(XH, H, Y); H=splats(coeff[j*4+1]); X = spu_shuffle(XH, XL, SHUFFLE_X 1); Y = spu_madd(X, H, Y); H=splats(coeff[j*4+2]); X = spu_shuffle(XH, XL, SHUFFLE_X 2); Y = spu_madd(X, H, Y); H=splats(coeff[j*4+3]); X = spu_shuffle(XH, XL, SHUFFLE_X 3); Y = spu_madd(X, H, Y); } } out[i] = Y; ASCI Winterschool 2010 Henk Corporaal 107

![SPE DMA double buffering float i Buf2BUFSIZE float o Buf2BUFSIZE int idx0 int bufferssizeBUFSIZE SPE DMA double buffering float i. Buf[2][BUF_SIZE]; float o. Buf[2][BUF_SIZE]; int idx=0; int buffers=size/BUF_SIZE;](https://slidetodoc.com/presentation_image_h2/d9de3b692d14856c617e033d3ae12fd4/image-108.jpg)

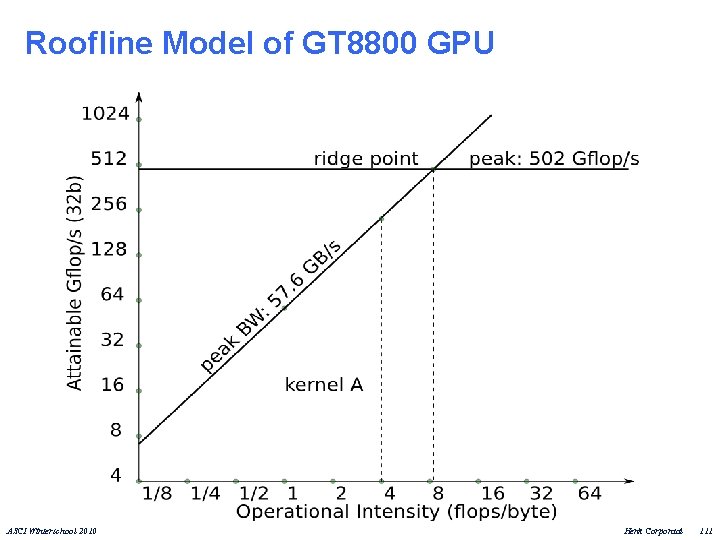

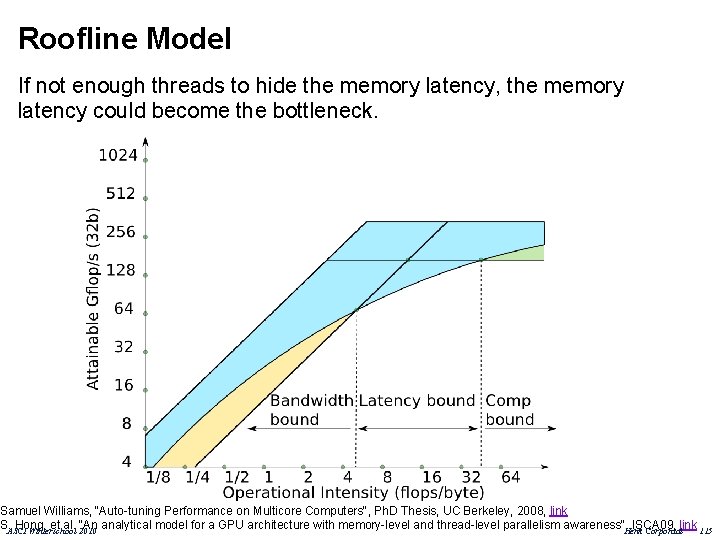

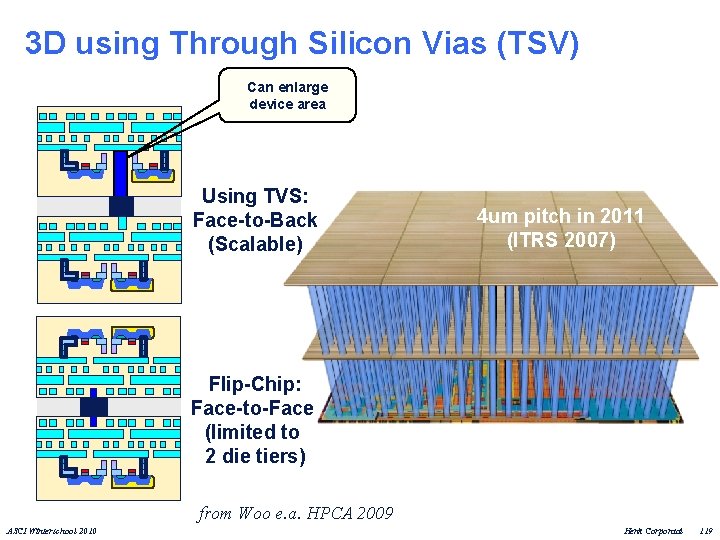

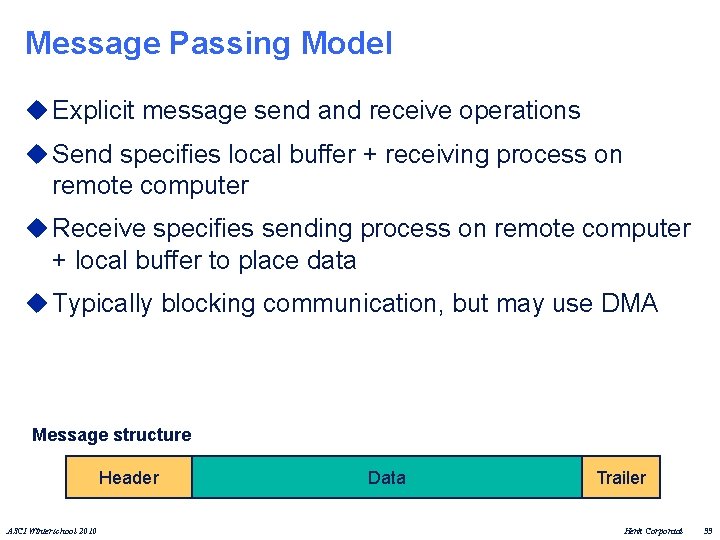

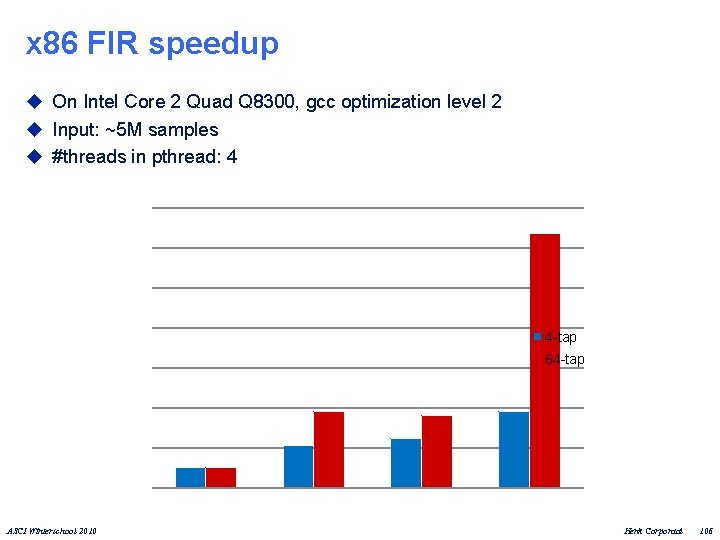

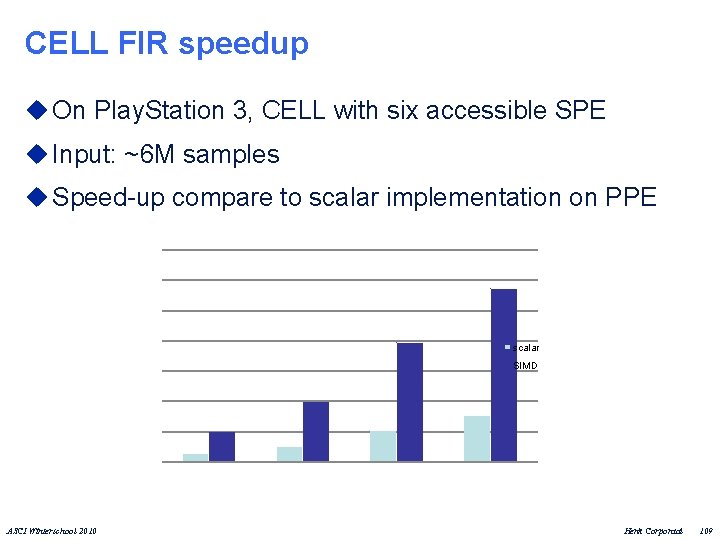

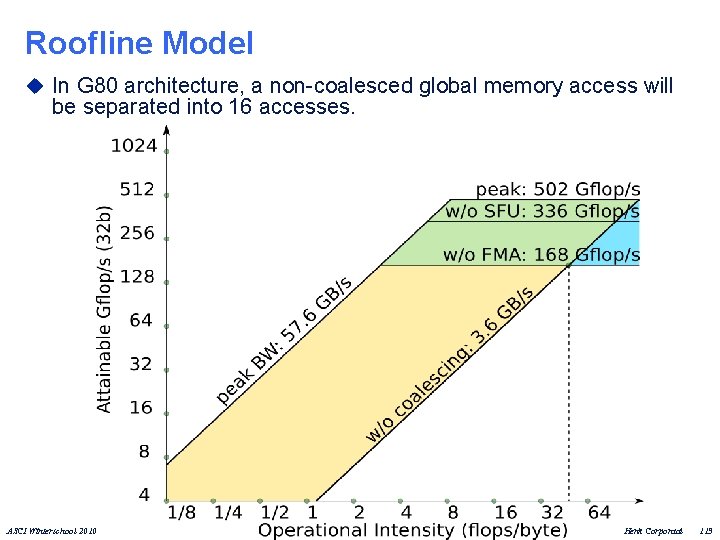

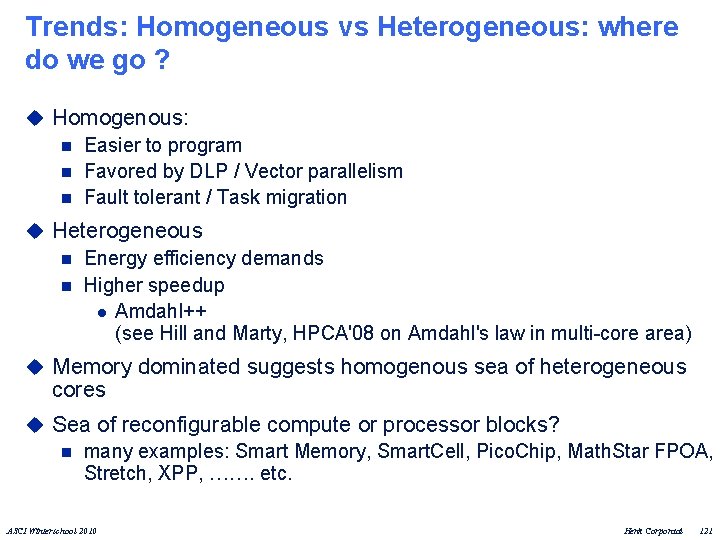

SPE DMA double buffering float i. Buf[2][BUF_SIZE]; float o. Buf[2][BUF_SIZE]; int idx=0; int buffers=size/BUF_SIZE; mfc_get(i. Buf[idx], argp, BUF_SIZE*sizeof(float), tag[idx], 0, 0); for(int i = 1; I < buffers; i++){ wait_for_dma(tag[idx]); next_idx = idx^1; mfc_get(i. Buf[next_idx], argp, BUF_SIZE*sizeof(float), 0, 0, 0); fir_kernel(o. Buf[idx], i. Buf[idx], coeff, BUF_SIZE, taps); mfc_put(o. Buf[idx], outbuf, BUF_SIZE*sizeof(float), tag[idx], 0, 0); idx = next_idx; } /* Finish up the last block. . . */ ASCI Winterschool 2010 Henk Corporaal 108

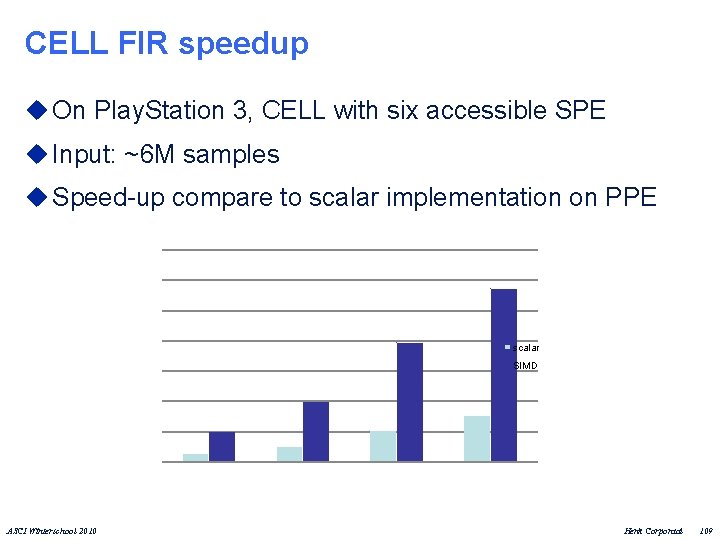

CELL FIR speedup u On Play. Station 3, CELL with six accessible SPE u Input: ~6 M samples u Speed-up compare to scalar implementation on PPE scalar SIMD ASCI Winterschool 2010 Henk Corporaal 109

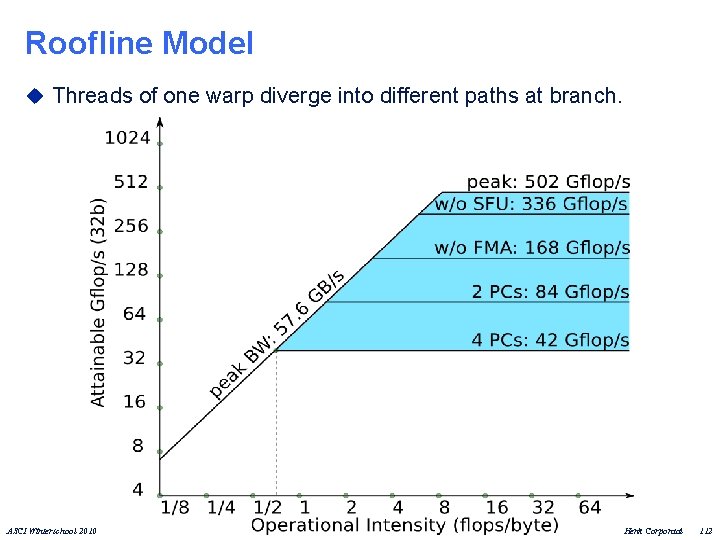

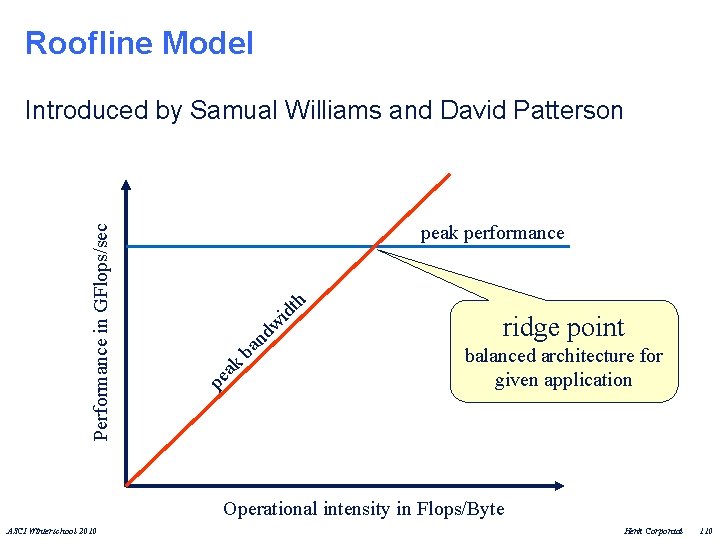

Roofline Model ak ba nd w id th peak performance pe Performance in GFlops/sec Introduced by Samual Williams and David Patterson ridge point balanced architecture for given application Operational intensity in Flops/Byte ASCI Winterschool 2010 Henk Corporaal 110

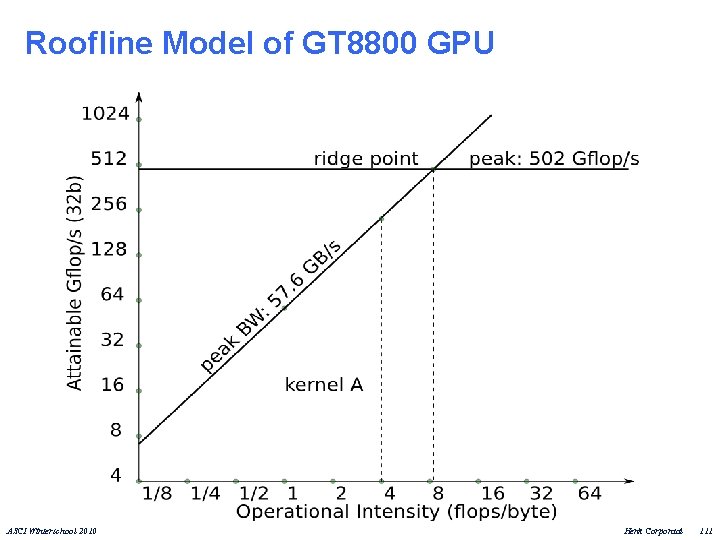

Roofline Model of GT 8800 GPU ASCI Winterschool 2010 Henk Corporaal 111

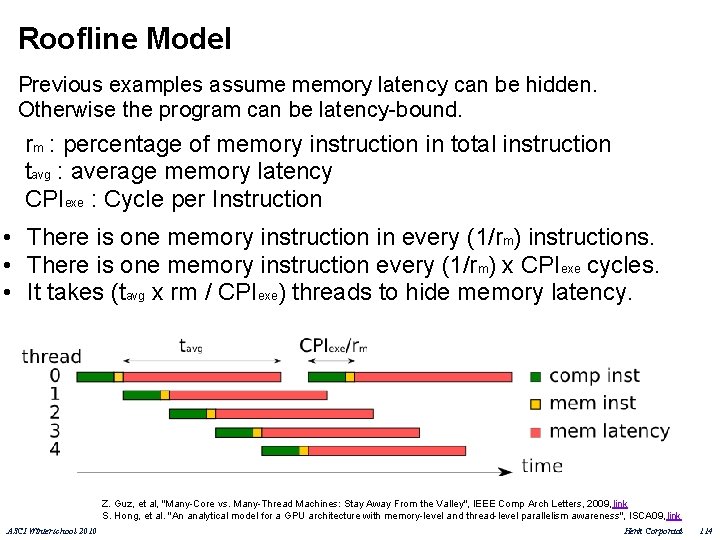

Roofline Model u Threads of one warp diverge into different paths at branch. ASCI Winterschool 2010 Henk Corporaal 112

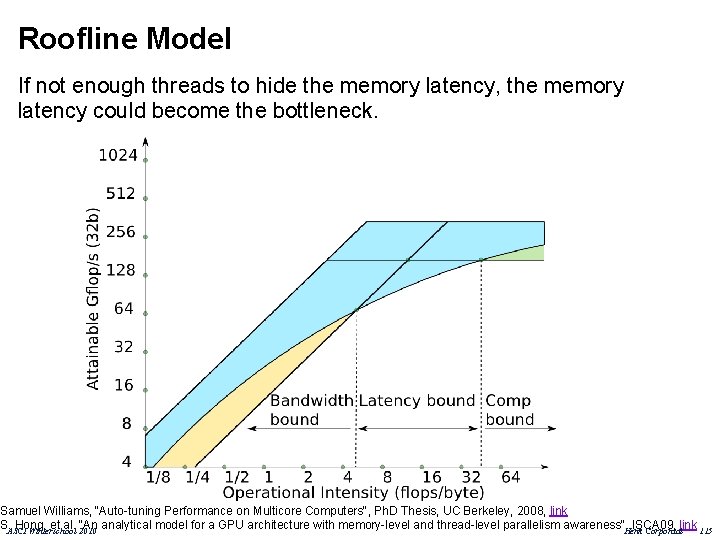

Roofline Model u In G 80 architecture, a non-coalesced global memory access will be separated into 16 accesses. ASCI Winterschool 2010 Henk Corporaal 113

Roofline Model Previous examples assume memory latency can be hidden. Otherwise the program can be latency-bound. rm : percentage of memory instruction in total instruction tavg : average memory latency CPIexe : Cycle per Instruction • There is one memory instruction in every (1/rm) instructions. • There is one memory instruction every (1/rm) x CPIexe cycles. • It takes (tavg x rm / CPIexe) threads to hide memory latency. Z. Guz, et al, "Many-Core vs. Many-Thread Machines: Stay Away From the Valley", IEEE Comp Arch Letters, 2009, link S. Hong, et al. "An analytical model for a GPU architecture with memory-level and thread-level parallelism awareness", ISCA 09, link ASCI Winterschool 2010 Henk Corporaal 114

Roofline Model If not enough threads to hide the memory latency, the memory latency could become the bottleneck. Samuel Williams, "Auto-tuning Performance on Multicore Computers", Ph. D Thesis, UC Berkeley, 2008, link S. ASCI Hong, et al. "An analytical model for a GPU architecture with memory-level and thread-level parallelism awareness", Henk ISCA 09, link 115 Winterschool 2010 Corporaal

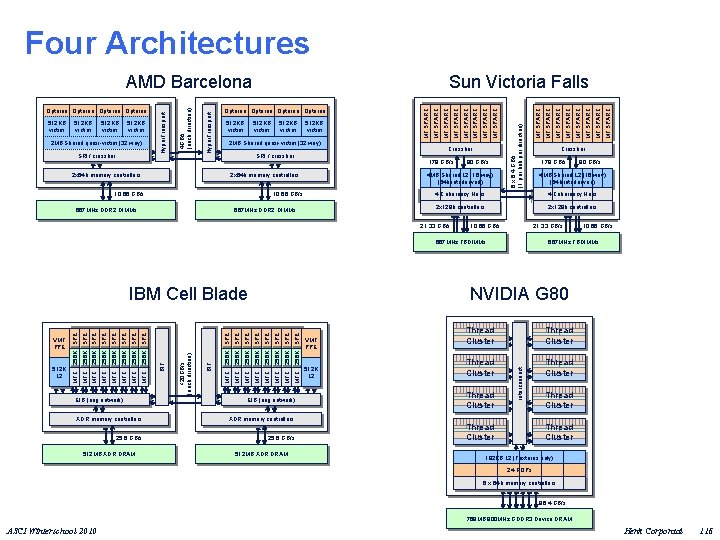

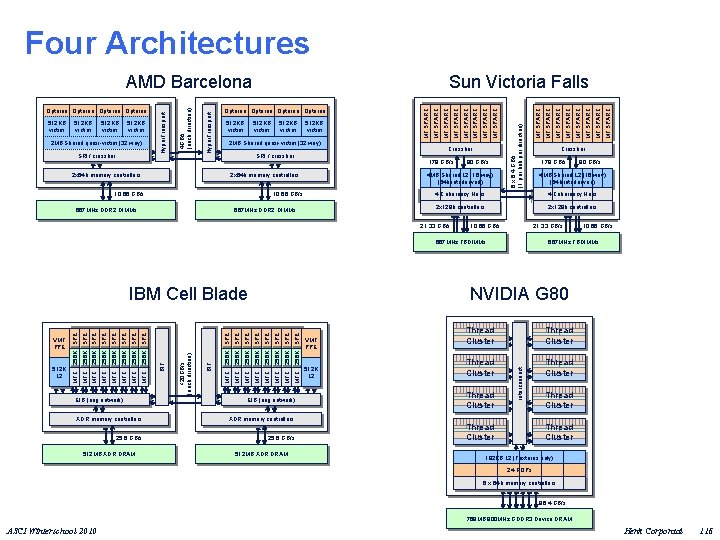

Four Architectures SRI / crossbar 2 x 64 b memory controllers 2 MB Shared quasi-victim (32 way) SRI / crossbar 179 GB/s 10. 66 GB/s 667 MHz DDR 2 DIMMs 90 GB/s 667 MHz DDR 2 DIMMs 2 x 128 b controllers 10. 66 GB/s MT SPARC MT SPARC 4 Coherency Hubs 21. 33 GB/s 10. 66 GB/s 667 MHz FBDIMMs SPE MFC 256 K XDR memory controllers 25. 6 GB/s 512 MB XDR DRAM VMT PPE 512 K L 2 Thread Cluster Thread Cluster interconnect SPE MFC 256 K SPE MFC 256 K SPE EIB (ring network) XDR memory controllers 512 MB XDR DRAM 90 GB/s NVIDIA G 80 MFC 256 K BIF <20 GB/s (each direction) SPE MFC 256 K EIB (ring network) BIF SPE SPE MFC 256 K MFC 256 K SPE MFC 256 K 512 K L 2 179 GB/s 4 MB Shared L 2 (16 way) (64 b interleaved) 2 x 128 b controllers 667 MHz FBDIMMs VMT PPE Crossbar 4 Coherency Hubs 21. 33 GB/s IBM Cell Blade MT SPARC Crossbar 4 MB Shared L 2 (16 way) (64 b interleaved) 2 x 64 b memory controllers 10. 66 GB/s MT SPARC 512 KB victim 8 x 6. 4 GB/s (1 per hub per direction) 2 MB Shared quasi-victim (32 way) Opteron MT SPARC 512 KB victim Sun Victoria Falls MT SPARC 512 KB victim Hyper. Transport 512 KB victim 4 GB/s (each direction) Opteron Hyper. Transport AMD Barcelona Thread Cluster 192 KB L 2 (Textures only) 24 ROPs 6 x 64 b memory controllers 86. 4 GB/s 768 MB 900 MHz GDDR 3 Device DRAM ASCI Winterschool 2010 Henk Corporaal 116

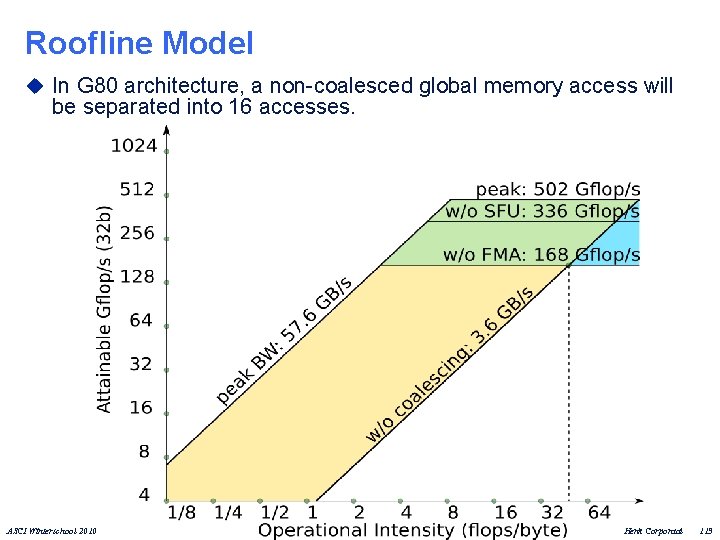

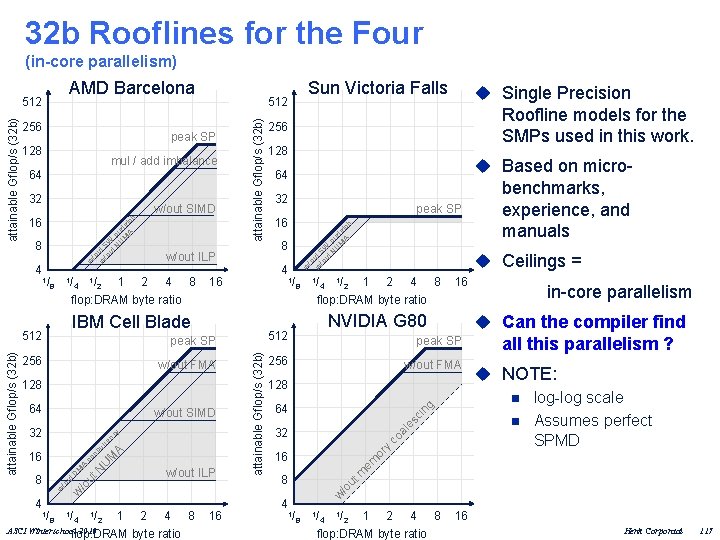

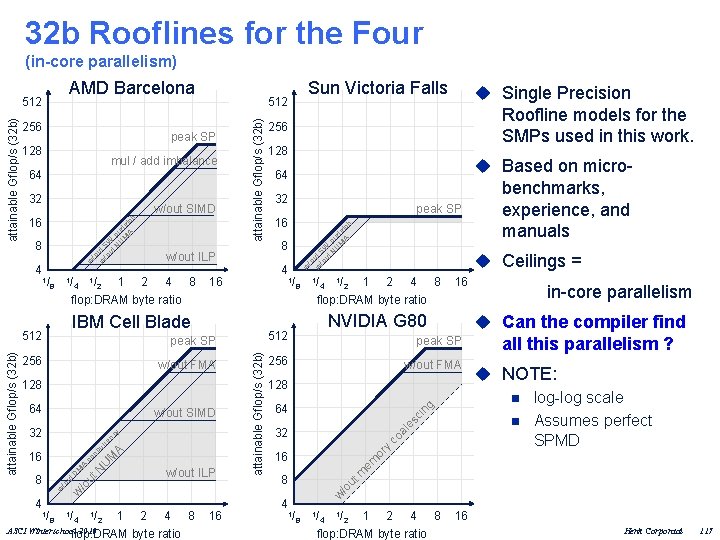

32 b Rooflines for the Four (in-core parallelism) AMD Barcelona 16 IBM Cell Blade w/out FMA 128 64 w/out SIMD w 4 M U N /o ut 8 /o ut D M A co nc 16 A ur re nc y 32 1/ w/out ILP 1 2 4 8 ASCI Winterschool 2010 flop: DRAM byte ratio 8 8 1/ 1 2 4 8 flop: DRAM byte ratio 4 1/ 2 16 NVIDIA G 80 512 peak SP 256 1/ peak SP 256 w/out FMA 128 64 32 16 8 4 in-core parallelism u Can the compiler find ng 2 ci 1 2 4 8 flop: DRAM byte ratio 512 attainable Gflop/s (32 b) 1/ 4 u Ceilings = al es 1/ 8 benchmarks, experience, and manuals all this parallelism ? u NOTE: n log-log scale n Assumes perfect SPMD w 1/ 8 4 attainable Gflop/s (32 b) 4 w/out ILP peak SP 16 co w /o w ut S /o W ut N pre U f M et A ch 8 32 or y w/out SIMD 16 u Based on micro- 64 em 32 128 m 64 Roofline models for the SMPs used in this work. 256 /o ut mul / add imbalance u Single Precision w /o w ut S /o W ut N pre U f M et A ch peak SP 128 attainable Gflop/s (32 b) 256 Sun Victoria Falls 512 w attainable Gflop/s (32 b) 512 1/ 8 1/ 1 2 4 8 flop: DRAM byte ratio 4 1/ 2 16 Henk Corporaal 117

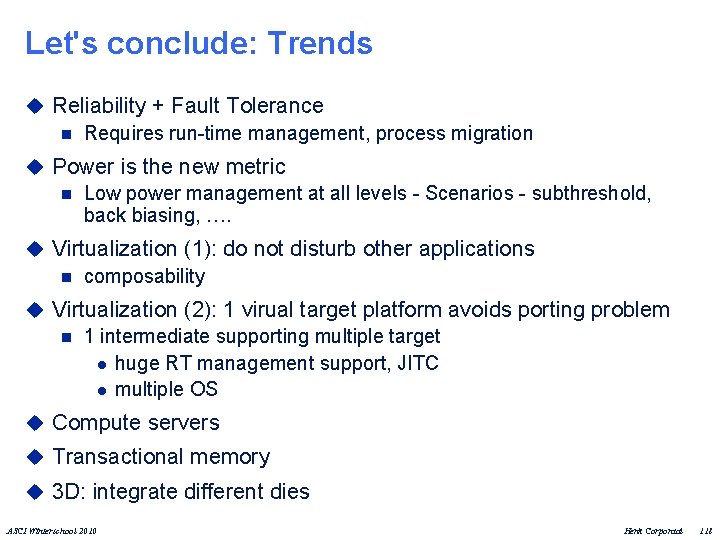

Let's conclude: Trends u Reliability + Fault Tolerance n Requires run-time management, process migration u Power is the new metric n Low power management at all levels - Scenarios - subthreshold, back biasing, …. u Virtualization (1): do not disturb other applications n composability u Virtualization (2): 1 virual target platform avoids porting problem n 1 intermediate supporting multiple target l huge RT management support, JITC l multiple OS u Compute servers u Transactional memory u 3 D: integrate different dies ASCI Winterschool 2010 Henk Corporaal 118

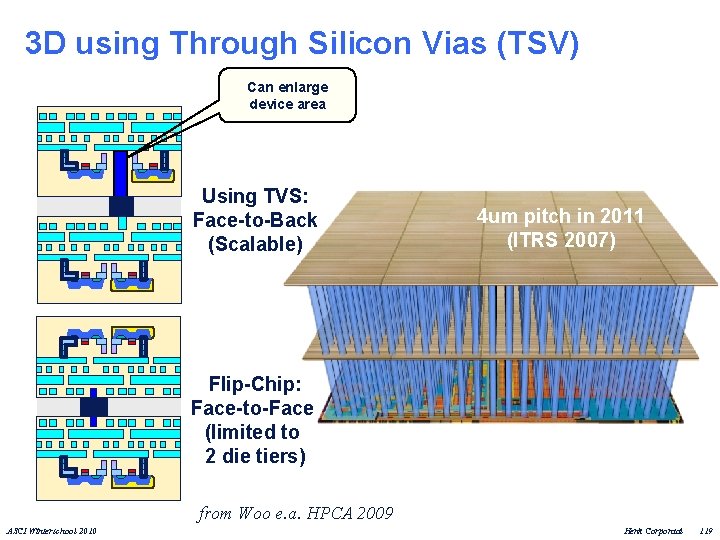

3 D using Through Silicon Vias (TSV) Can enlarge device area Using TVS: Face-to-Back (Scalable) 4 um pitch in 2011 (ITRS 2007) Flip-Chip: Face-to-Face (limited to 2 die tiers) from Woo e. a. HPCA 2009 ASCI Winterschool 2010 Henk Corporaal 119

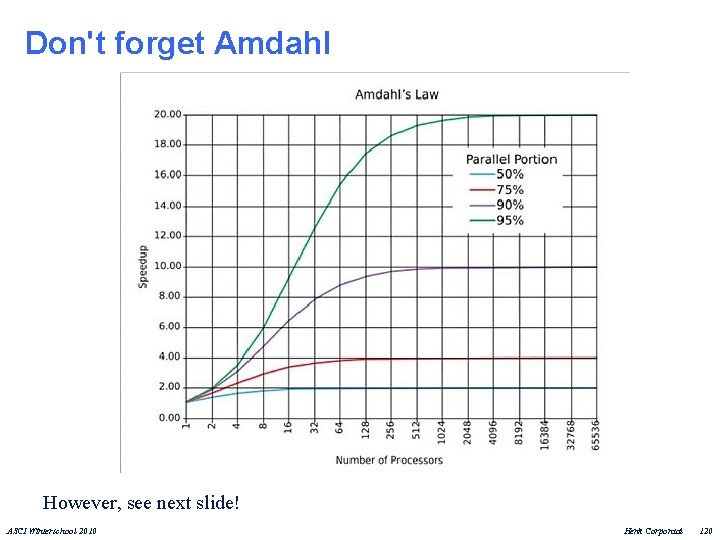

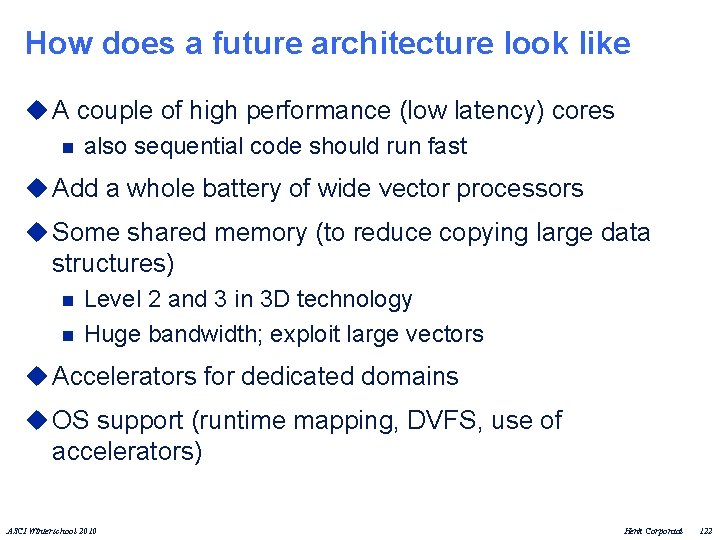

Don't forget Amdahl However, see next slide! ASCI Winterschool 2010 Henk Corporaal 120

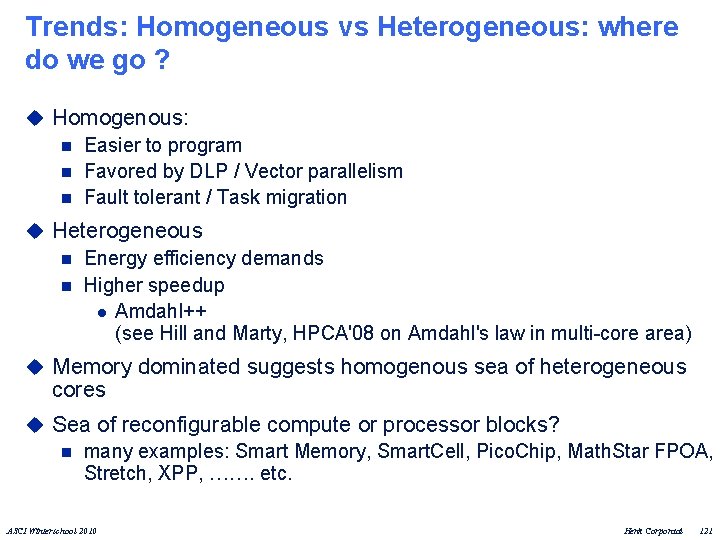

Trends: Homogeneous vs Heterogeneous: where do we go ? u Homogenous: n Easier to program n Favored by DLP / Vector parallelism n Fault tolerant / Task migration u Heterogeneous n Energy efficiency demands n Higher speedup l Amdahl++ (see Hill and Marty, HPCA'08 on Amdahl's law in multi-core area) u Memory dominated suggests homogenous sea of heterogeneous cores u Sea of reconfigurable compute or processor blocks? n many examples: Smart Memory, Smart. Cell, Pico. Chip, Math. Star FPOA, Stretch, XPP, ……. etc. ASCI Winterschool 2010 Henk Corporaal 121

How does a future architecture look like u A couple of high performance (low latency) cores n also sequential code should run fast u Add a whole battery of wide vector processors u Some shared memory (to reduce copying large data structures) n n Level 2 and 3 in 3 D technology Huge bandwidth; exploit large vectors u Accelerators for dedicated domains u OS support (runtime mapping, DVFS, use of accelerators) ASCI Winterschool 2010 Henk Corporaal 122

But the real problem is …. . u Programming parallel is the real bottleneck n new programming models like transaction based progr. u That's what we will talk about this week… ASCI Winterschool 2010 Henk Corporaal 123

ASCI Winterschool 2010 Henk Corporaal 124