Introduction to Machine Learning with Python
Bompotas Agorakis

pandas

What is pandas
Pandas is a fast, powerful, flexible, and easy-to-use open source data analysis and manipulation tool.
This tool is essentially your data’s home. Through pandas, you get acquainted with your data by cleaning, transforming, and analyzing it.
Examples:
Calculate statistics and answer questions about the data, such as the average, median, max, or min of each column; remove missing values; and so on.
Visualize the data with help from Matplotlib: plot bars, lines, histograms, bubbles, etc.
Store the cleaned, transformed data back into a CSV file, another file format, or a database.

What is pandas
Pandas is built on top of the NumPy package.
Data in pandas is often used to feed statistical analysis in SciPy, plotting functions from Matplotlib, and machine learning algorithms in scikit-learn.

Install and import
Install:
pip install pandas
conda install pandas
Import:
import pandas as pd

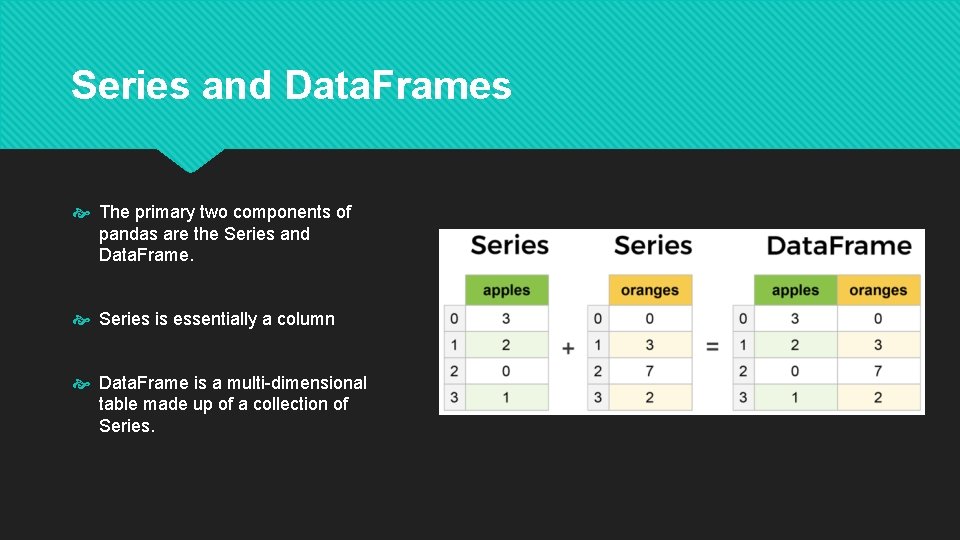

Series and DataFrames
The two primary components of pandas are the Series and the DataFrame.
A Series is essentially a column.
A DataFrame is a two-dimensional table made up of a collection of Series.

Creating DataFrames
From dictionaries:
data = {
    'apples': [3, 2, 0, 1],
    'oranges': [0, 3, 7, 2]
}
purchases = pd.DataFrame(data)
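Row labels make later lookups by name possible. A minimal sketch, assuming hypothetical customer names as the index (the names are illustrative and not part of the slides):

```python
import pandas as pd

data = {"apples": [3, 2, 0, 1], "oranges": [0, 3, 7, 2]}

# Hypothetical customer names used as row labels (an assumption for illustration).
purchases = pd.DataFrame(data, index=["June", "Robert", "Lily", "David"])

# Look up one customer's row by its label; the result is a Series.
june = purchases.loc["June"]
print(june["apples"], june["oranges"])
```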

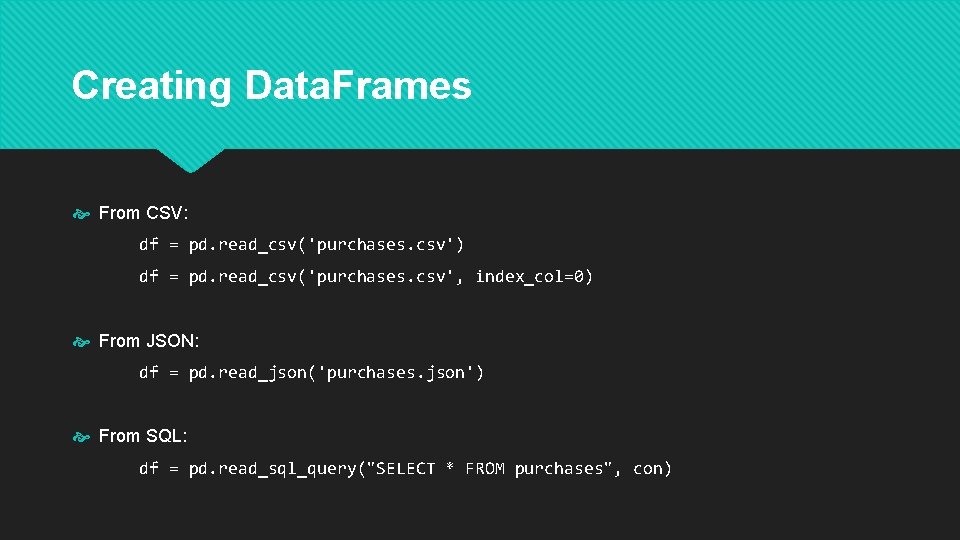

Creating DataFrames
From CSV:
df = pd.read_csv('purchases.csv')
df = pd.read_csv('purchases.csv', index_col=0)  # use the first column as the index
From JSON:
df = pd.read_json('purchases.json')
From SQL (con is an open database connection):
df = pd.read_sql_query("SELECT * FROM purchases", con)
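The SQL variant needs a connection object. One possible setup, sketched here with an in-memory SQLite database standing in for a real one (the database and its contents are assumptions made so the example is self-contained):

```python
import sqlite3

import pandas as pd

# An in-memory SQLite database stands in for a real database here.
con = sqlite3.connect(":memory:")

# Create a small 'purchases' table so there is something to query.
pd.DataFrame({"apples": [3, 2, 0, 1], "oranges": [0, 3, 7, 2]}).to_sql(
    "purchases", con, index=False
)

# Read the table back into a DataFrame.
df = pd.read_sql_query("SELECT * FROM purchases", con)
con.close()
```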

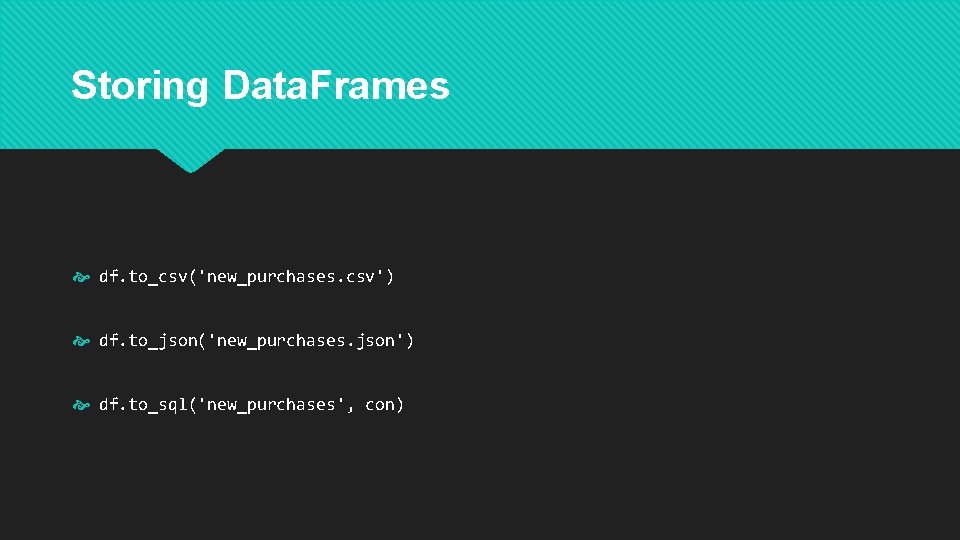

Storing DataFrames
df.to_csv('new_purchases.csv')
df.to_json('new_purchases.json')
df.to_sql('new_purchases', con)
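Writing and reading are meant to round-trip. A small sketch, assuming an in-memory buffer instead of a file on disk and hypothetical row labels:

```python
import io

import pandas as pd

purchases = pd.DataFrame(
    {"apples": [3, 2, 0, 1], "oranges": [0, 3, 7, 2]},
    index=["June", "Robert", "Lily", "David"],  # hypothetical labels
)

# Write to an in-memory text buffer rather than a real file.
buffer = io.StringIO()
purchases.to_csv(buffer)

# Read it back; index_col=0 restores the first column as the index.
buffer.seek(0)
restored = pd.read_csv(buffer, index_col=0)
```

Without `index_col=0`, the saved index would come back as an ordinary unnamed column.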

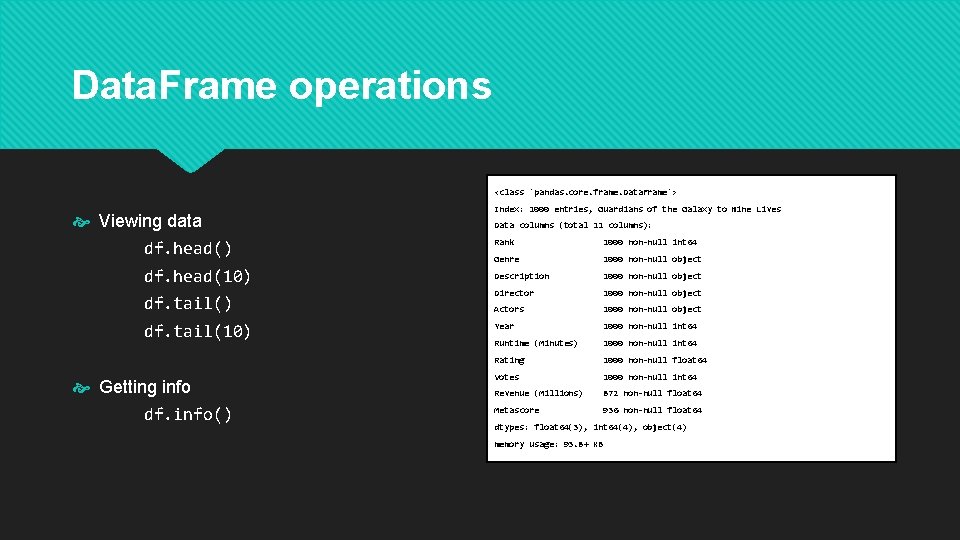

DataFrame operations
Viewing data:
df.head()
df.head(10)
df.tail(10)
Getting info:
df.info()
<class 'pandas.core.frame.DataFrame'>
Index: 1000 entries, Guardians of the Galaxy to Nine Lives
Data columns (total 11 columns):
Rank                  1000 non-null int64
Genre                 1000 non-null object
Description           1000 non-null object
Director              1000 non-null object
Actors                1000 non-null object
Year                  1000 non-null int64
Runtime (Minutes)     1000 non-null int64
Rating                1000 non-null float64
Votes                 1000 non-null int64
Revenue (Millions)    872 non-null float64
Metascore             936 non-null float64
dtypes: float64(3), int64(4), object(4)
memory usage: 93.8+ KB

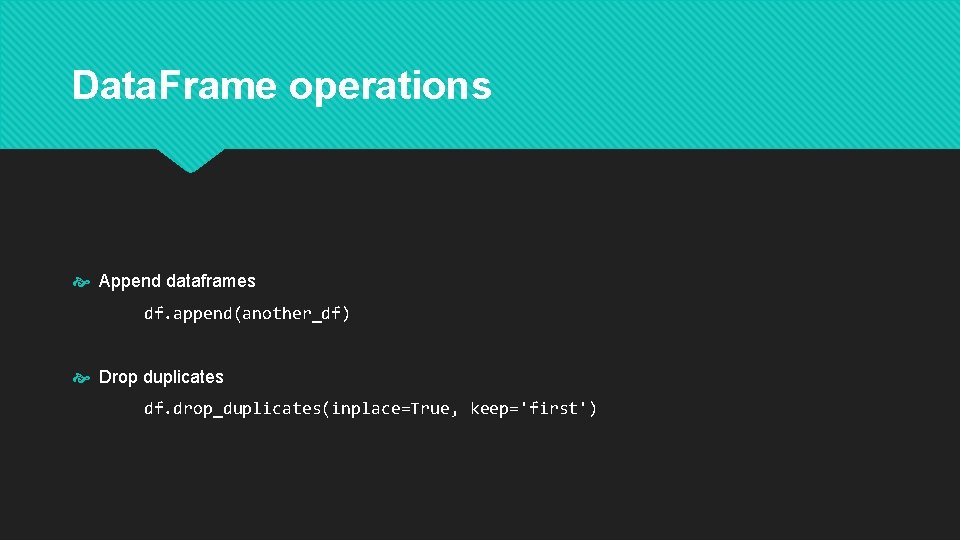

DataFrame operations
Append DataFrames (df.append was removed in pandas 2.0; pd.concat is the replacement):
pd.concat([df, another_df])
Drop duplicates:
df.drop_duplicates(inplace=True, keep='first')
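The two operations combine naturally. A short sketch with made-up rows, stacking a frame onto itself with `pd.concat` and then removing the repeated rows:

```python
import pandas as pd

# Hypothetical two-row frame used only for illustration.
df = pd.DataFrame({"title": ["A", "B"], "year": [2010, 2012]})

# Stack the frame on top of itself; ignore_index renumbers the rows 0..3.
doubled = pd.concat([df, df], ignore_index=True)

# Keep the first occurrence of each duplicated row.
deduplicated = doubled.drop_duplicates(keep="first")
print(doubled.shape, deduplicated.shape)
```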

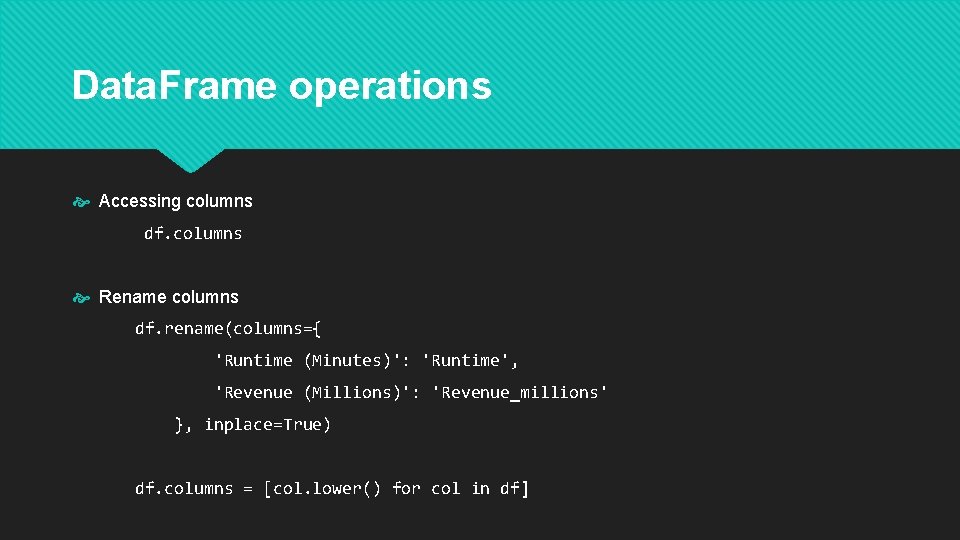

DataFrame operations
Accessing columns:
df.columns
Rename columns:
df.rename(columns={
    'Runtime (Minutes)': 'Runtime',
    'Revenue (Millions)': 'Revenue_millions'
}, inplace=True)
df.columns = [col.lower() for col in df]
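As a rough illustration of the renaming steps, the sketch below uses two hypothetical rows and the non-inplace form of `rename`, which returns a new DataFrame and leaves the original untouched:

```python
import pandas as pd

# Hypothetical values; only the column names mirror the slides.
df = pd.DataFrame(
    {"Runtime (Minutes)": [121, 124], "Revenue (Millions)": [333.13, 126.46]}
)

# rename returns a new frame unless inplace=True is passed.
renamed = df.rename(
    columns={
        "Runtime (Minutes)": "Runtime",
        "Revenue (Millions)": "Revenue_millions",
    }
)

# Lowercase every column name in one assignment.
renamed.columns = [col.lower() for col in renamed.columns]
print(list(renamed.columns))
```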

DataFrame operations
Select columns:
col = df['name']  # this is a Series
col = df[['name']]  # this is a DataFrame
col = df[['name', 'surname']]  # this is a DataFrame
Select rows:
row = df.loc['Tony']  # by index label
row = df.iloc[7]  # by integer position
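The difference between single and double brackets, and between `loc` and `iloc`, is easiest to see on a tiny frame. A sketch with hypothetical people as rows:

```python
import pandas as pd

# Hypothetical data; the index holds first names used as row labels.
df = pd.DataFrame(
    {"surname": ["Adams", "Baker", "Clark"], "age": [31, 45, 28]},
    index=["Tony", "Maria", "Lee"],
)

as_series = df["surname"]    # single brackets: a Series
as_frame = df[["surname"]]   # double brackets: a one-column DataFrame

by_label = df.loc["Tony"]    # row selected by index label
by_position = df.iloc[0]     # row selected by integer position

print(type(as_series).__name__, type(as_frame).__name__)
```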

DataFrame operations
Conditional selections:
condition = (movies_df['director'] == "Ridley Scott")
movies_df[movies_df['director'] == "Ridley Scott"]
movies_df[movies_df['rating'] >= 8.6]
movies_df[movies_df['director'].isin(['Christopher Nolan', 'Ridley Scott'])]
movies_df[
    ((movies_df['year'] >= 2005) & (movies_df['year'] <= 2010))
    & (movies_df['rating'] > 8.0)
    & (movies_df['revenue_millions'] < movies_df['revenue_millions'].quantile(0.25))
]
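A condition is a boolean Series, and indexing with it keeps only the rows marked True. A minimal sketch on a four-row frame whose values are invented for illustration:

```python
import pandas as pd

# Invented rows; not real ratings or release years.
movies_df = pd.DataFrame(
    {
        "director": ["Ridley Scott", "Christopher Nolan", "Ridley Scott", "James Gunn"],
        "year": [2012, 2008, 2015, 2014],
        "rating": [7.0, 9.0, 8.0, 8.1],
    }
)

# The comparison yields one True/False value per row.
condition = movies_df["director"] == "Ridley Scott"
by_scott = movies_df[condition]

# Combine conditions with & (and) or | (or); each needs its own parentheses.
combined = movies_df[
    (movies_df["year"] >= 2005)
    & (movies_df["year"] <= 2010)
    & (movies_df["rating"] > 8.0)
]
print(len(by_scott), len(combined))
```

The parentheses matter because `&` binds more tightly than the comparison operators in Python.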

DataFrame operations
Missing values:
df.isnull().sum()  # number of missing values in each column
df.dropna(axis=1)  # drop every column that contains a missing value
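The effect of `axis` in `dropna` can be sketched on a small frame with one missing value (the numbers are hypothetical):

```python
import numpy as np
import pandas as pd

# Hypothetical data with a single missing revenue value.
df = pd.DataFrame(
    {"rating": [8.1, 7.0, 8.5], "revenue": [333.1, np.nan, 126.5]}
)

# Count missing values per column.
missing_per_column = df.isnull().sum()

# axis=1 drops columns containing missing values; the default drops rows.
without_columns = df.dropna(axis=1)
without_rows = df.dropna()

print(missing_per_column["revenue"], without_columns.shape, without_rows.shape)
```

Neither call modifies `df`; both return new DataFrames.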

DataFrame operations
Important statistical functions:
df['column'].value_counts()
df['column'].sum()
df['column'].mean()
df['column'].median()
df.corr()
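A quick sketch of these functions on invented data. It passes `numeric_only=True` to `corr`, because in pandas 2.0 and later a non-numeric column such as `genre` otherwise causes an error:

```python
import pandas as pd

# Invented data: y is exactly twice x, so their correlation is 1.
df = pd.DataFrame(
    {
        "genre": ["Action", "Drama", "Action"],
        "x": [1, 2, 3],
        "y": [2, 4, 6],
    }
)

counts = df["genre"].value_counts()  # occurrences of each distinct value
total = df["x"].sum()
mean = df["x"].mean()
median = df["x"].median()

# Pairwise correlation between the numeric columns only.
corr = df.corr(numeric_only=True)
print(counts["Action"], total, mean, median)
```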

DataFrame operations
Applying functions:
def rating_function(x):
    if x >= 8.0:
        return "good"
    else:
        return "bad"
movies_df["rating"].apply(rating_function)
The same with a lambda:
movies_df["rating"].apply(lambda x: 'good' if x >= 8.0 else 'bad')
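`apply` returns a new Series, so the result is usually stored in a new column. A sketch with hypothetical ratings; the column name `rating_category` is an assumption:

```python
import pandas as pd

# Hypothetical ratings for illustration.
movies_df = pd.DataFrame({"rating": [8.1, 7.0, 8.0, 6.2]})


def rating_function(x):
    """Label a single rating value."""
    return "good" if x >= 8.0 else "bad"


# apply calls the function once per element and collects the results.
movies_df["rating_category"] = movies_df["rating"].apply(rating_function)

# An equivalent formulation without a Python-level function call per row.
vectorized = movies_df["rating"].ge(8.0).map({True: "good", False: "bad"})
print(movies_df["rating_category"].tolist())
```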

DataFrame operations
Plotting:
import matplotlib.pyplot as plt
movies_df['rating'].plot(kind='hist', title='Rating')
plt.show()

scikit-learn

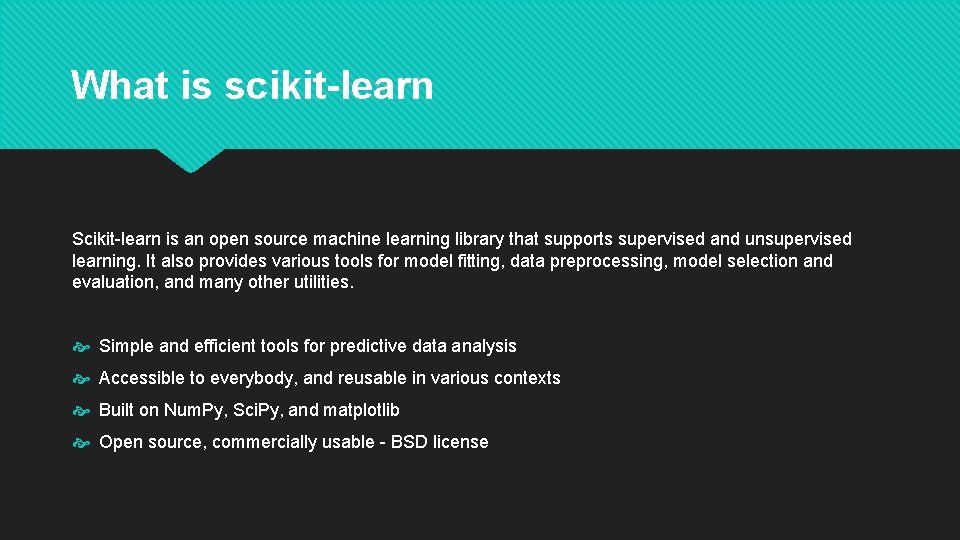

What is scikit-learn
Scikit-learn is an open source machine learning library that supports supervised and unsupervised learning. It also provides various tools for model fitting, data preprocessing, model selection and evaluation, and many other utilities.
Simple and efficient tools for predictive data analysis
Accessible to everybody, and reusable in various contexts
Built on NumPy, SciPy, and matplotlib
Open source, commercially usable (BSD license)

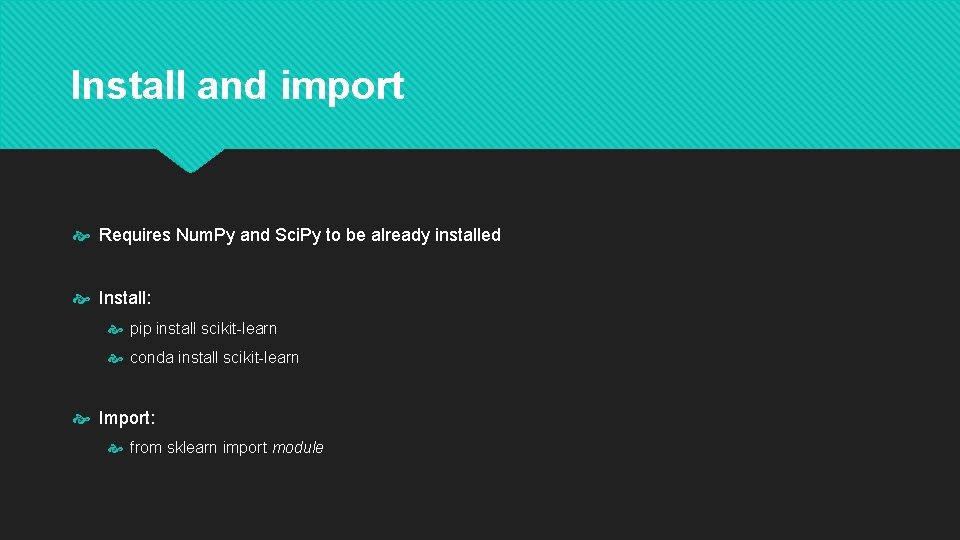

Install and import
Requires NumPy and SciPy to be already installed
Install:
pip install scikit-learn
conda install scikit-learn
Import:
from sklearn import module

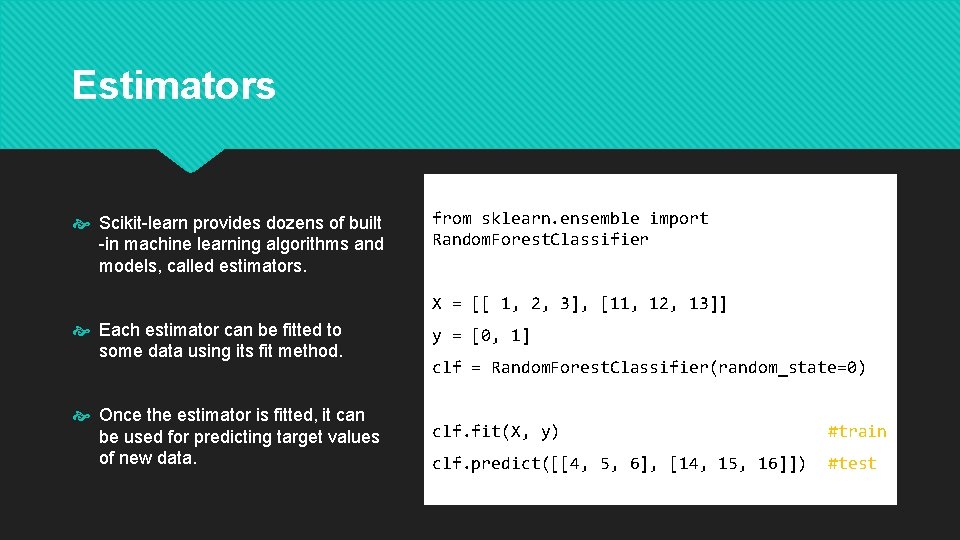

Estimators
Scikit-learn provides dozens of built-in machine learning algorithms and models, called estimators.
Each estimator can be fitted to some data using its fit method.
Once the estimator is fitted, it can be used for predicting target values of new data.
from sklearn.ensemble import RandomForestClassifier
clf = RandomForestClassifier(random_state=0)
X = [[1, 2, 3], [11, 12, 13]]
y = [0, 1]
clf.fit(X, y)  # train
clf.predict([[4, 5, 6], [14, 15, 16]])  # test

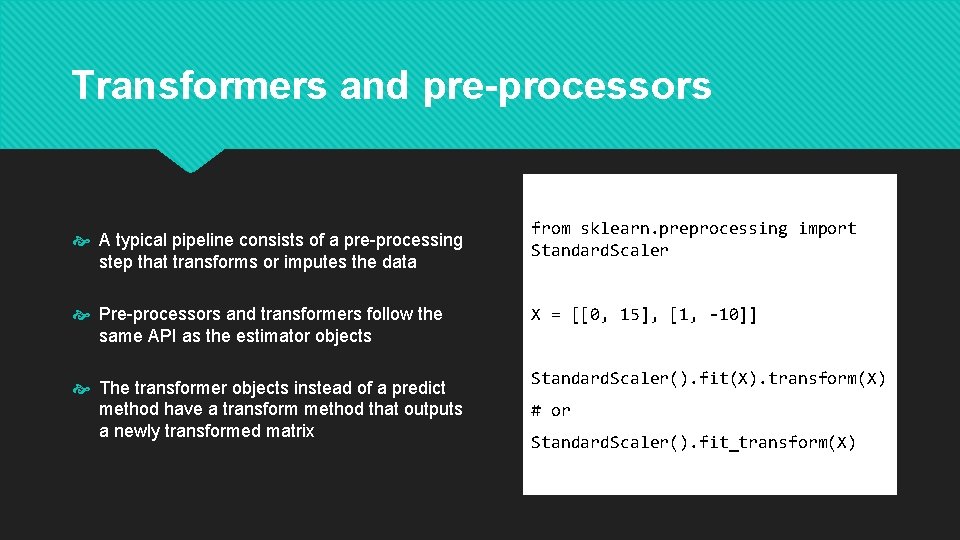

Transformers and pre-processors
A typical pipeline consists of a pre-processing step that transforms or imputes the data.
Pre-processors and transformers follow the same API as the estimator objects.
Instead of a predict method, transformer objects have a transform method that outputs a newly transformed matrix.
from sklearn.preprocessing import StandardScaler
X = [[0, 15], [1, -10]]
StandardScaler().fit(X).transform(X)
# or
StandardScaler().fit_transform(X)
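Keeping the fitted scaler around allows the same scaling to be applied to new data. A small sketch of that idea, using the slide's matrix plus one made-up new sample:

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

X = np.array([[0, 15], [1, -10]], dtype=float)

# fit learns the per-column mean and standard deviation.
scaler = StandardScaler().fit(X)
print(scaler.mean_, scaler.scale_)

# transform subtracts the mean and divides by the standard deviation.
X_scaled = scaler.transform(X)

# A made-up new sample is scaled with the statistics learned from X.
X_new_scaled = scaler.transform(np.array([[2.0, 2.5]]))
```

Fitting on the training data only, then reusing the fitted object for test data, avoids leaking test statistics into training.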

Loading data
scikit-learn comes with a few standard datasets, e.g.:
the iris dataset (for classification)
the digits dataset (for classification)
the Boston house prices dataset (for regression; removed in scikit-learn 1.2)
scikit-learn works on any numeric data stored as numpy arrays or scipy sparse matrices.
Other types that are convertible to numeric arrays, such as pandas DataFrame, are also acceptable.
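For instance, the iris dataset can be loaded either as numpy arrays or as a pandas DataFrame. A brief sketch using `load_iris`:

```python
from sklearn.datasets import load_iris

# As numpy arrays: a feature matrix X and a target vector y.
X, y = load_iris(return_X_y=True)
print(X.shape, y.shape)

# As pandas objects: .frame holds the features plus a 'target' column.
iris = load_iris(as_frame=True)
iris_df = iris.frame
print(iris_df.columns.tolist())
```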

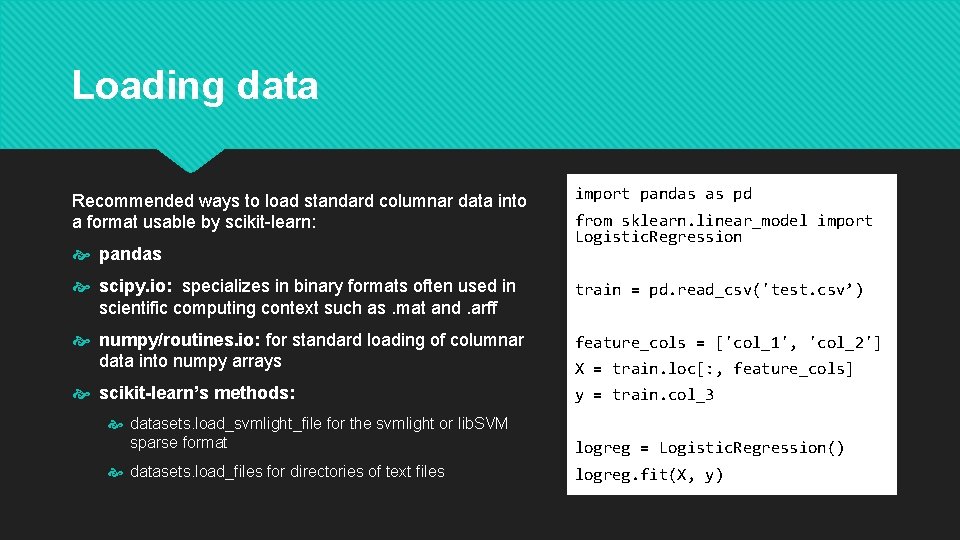

Loading data
Recommended ways to load standard columnar data into a format usable by scikit-learn:
pandas
scipy.io: specializes in binary formats often used in scientific computing contexts, such as .mat and .arff
numpy/routines.io: for standard loading of columnar data into numpy arrays
scikit-learn’s methods:
datasets.load_svmlight_file for the svmlight or libSVM sparse format
datasets.load_files for directories of text files
Example with pandas:
import pandas as pd
from sklearn.linear_model import LogisticRegression
train = pd.read_csv('test.csv')
feature_cols = ['col_1', 'col_2']
X = train.loc[:, feature_cols]
y = train.col_3
logreg = LogisticRegression()
logreg.fit(X, y)

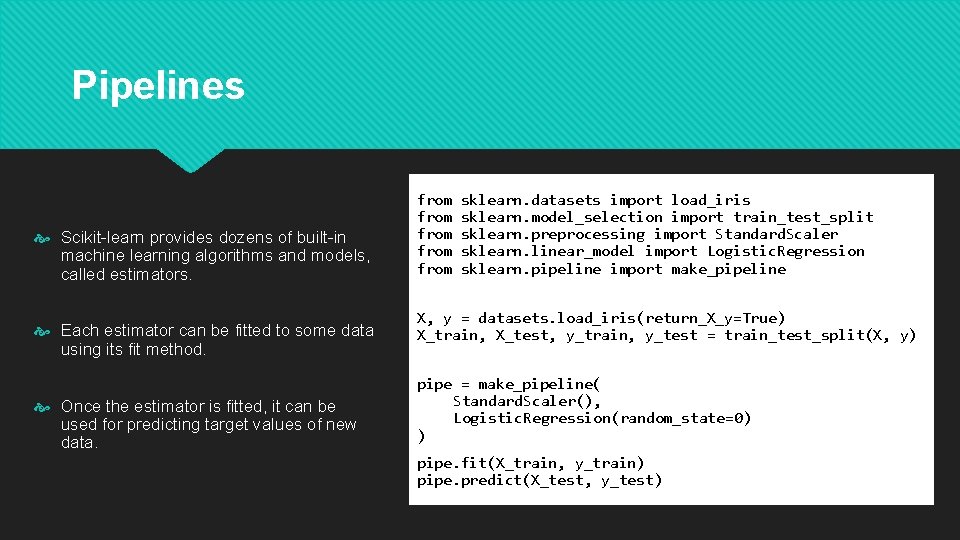

Pipelines
Scikit-learn provides dozens of built-in machine learning algorithms and models, called estimators.
Each estimator can be fitted to some data using its fit method.
Once the estimator is fitted, it can be used for predicting target values of new data.
A pipeline chains pre-processors and a final estimator into a single object with the same fit and predict methods.
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y)
pipe = make_pipeline(
    StandardScaler(),
    LogisticRegression(random_state=0)
)
pipe.fit(X_train, y_train)
pipe.predict(X_test)  # predict takes only the features
pipe.score(X_test, y_test)  # mean accuracy on the test set
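To compare predictions with the held-out labels, one option is `accuracy_score` from `sklearn.metrics`. A sketch of the full flow, with `random_state=0` added to the split as an assumption so the run is repeatable:

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)

# random_state fixes the shuffle so the split is reproducible (an assumption).
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Scaling and classification are fitted together as one object.
pipe = make_pipeline(StandardScaler(), LogisticRegression(random_state=0))
pipe.fit(X_train, y_train)

# predict takes features only; the labels are used afterwards for scoring.
predictions = pipe.predict(X_test)
accuracy = accuracy_score(y_test, predictions)
print(accuracy)
```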

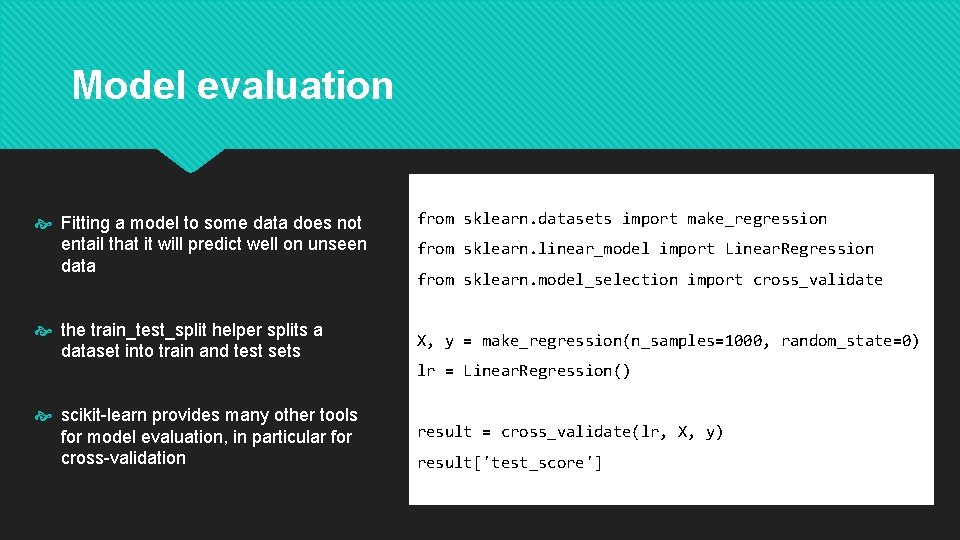

Model evaluation
Fitting a model to some data does not entail that it will predict well on unseen data.
The train_test_split helper splits a dataset into train and test sets.
scikit-learn provides many other tools for model evaluation, in particular for cross-validation.
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import cross_validate
X, y = make_regression(n_samples=1000, random_state=0)
lr = LinearRegression()
result = cross_validate(lr, X, y)  # defaults to 5-fold cross-validation
result['test_score']
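The simpler hold-out approach with `train_test_split` can be sketched as follows. The `noise=20.0` and `test_size=0.25` settings are assumptions chosen for illustration, so that the fit is not perfect:

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split

# Synthetic regression data; noise is added so the fit is not exact.
X, y = make_regression(n_samples=1000, noise=20.0, random_state=0)

# Hold out a quarter of the samples for testing.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

lr = LinearRegression().fit(X_train, y_train)

# score returns the R^2 coefficient of determination.
train_r2 = lr.score(X_train, y_train)
test_r2 = lr.score(X_test, y_test)
print(train_r2, test_r2)
```

The test score is the one that estimates performance on unseen data; the training score alone tends to be optimistic.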
