Introduction to Machine Learning

What is Machine Learning?
• Machine learning: using algorithms to build models and generate predictions from data
• Contrast with the methods from our stats classes, where the user specifies the model in advance

Why Use It?
• When accurate prediction matters more than causal inference
• To select variables when there are many candidates
• To learn about the structure of the data and discover new variables
• As a robustness check

The ML Family
• Machine learning is a field with many different methods
• We'll explore a few that have clear applications in the social sciences

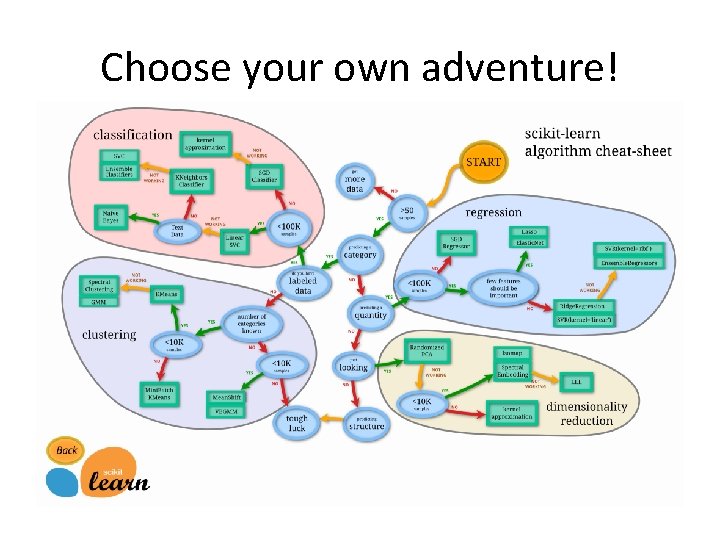

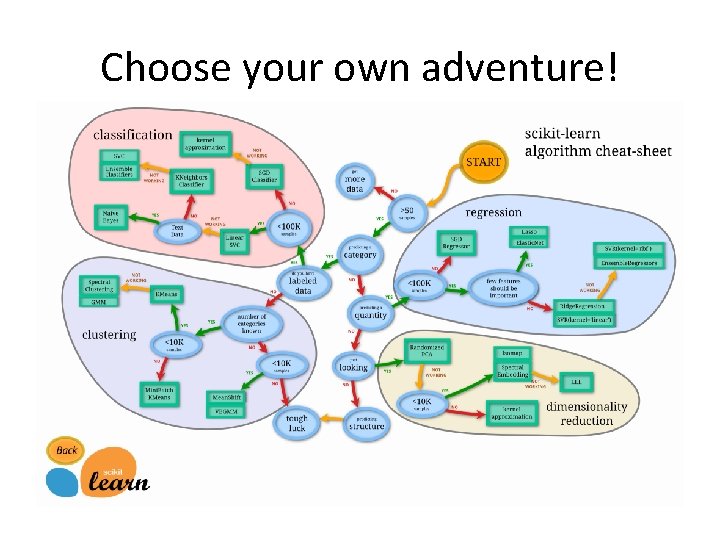

Choose your own adventure!

We'll look at:
• Cross-validation and parallelization (not strictly ML, just useful)
• CART
• Random forests
• Honorable mentions: lasso, KNN, and neural networks

Parallelization
• You can split repetitive processes into batches and parcel them out to your computer's cores
• This cuts run time and makes efficient use of your computing power
• There are many options for doing this in R, and some packages make it very easy
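The lab itself does this in R. The following is a minimal, language-neutral sketch of the same idea using Python's standard library. `concurrent.futures.ProcessPoolExecutor` sends batches of work to separate worker processes, with one worker per core by default. `simulate_one` is a hypothetical stand-in for a real repetitive job, such as one bootstrap replicate or one cross-validation fold.

```python
import math
import os
from concurrent.futures import ProcessPoolExecutor


def simulate_one(seed):
    """Hypothetical stand-in for one repetitive, CPU-bound job."""
    total = 0.0
    for i in range(1, 10_000):
        total += math.sin(seed * i) / i
    return total


def make_batches(items, n_batches):
    """Split items into at most n_batches contiguous chunks of near-equal size."""
    if not items:
        return []
    size = math.ceil(len(items) / n_batches)
    return [items[i:i + size] for i in range(0, len(items), size)]


def run_batch(batch):
    """Run every job in one batch; each worker process receives whole batches."""
    return [simulate_one(seed) for seed in batch]


def parallel_map(items, n_workers=None):
    """Parcel batches out to worker processes (one per core by default)."""
    n_workers = n_workers or os.cpu_count() or 1
    batches = make_batches(list(items), n_workers)
    with ProcessPoolExecutor(max_workers=n_workers) as pool:
        # pool.map yields results in batch order, so outputs line up with inputs.
        per_batch = pool.map(run_batch, batches)
        return [result for batch in per_batch for result in batch]


if __name__ == "__main__":  # required on platforms that spawn worker processes
    seeds = list(range(100))
    parallel = parallel_map(seeds)
    serial = [simulate_one(s) for s in seeds]
    print(parallel == serial)  # True: same answers, computed across the cores
```

Batching matters because starting a task in another process has overhead. Handing each core one large chunk, rather than 100 tiny jobs, keeps that overhead small.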

Cross-validation
• Partitioning and/or resampling your data so that you build a model on one subset and evaluate it on another
• Purpose: limits overfitting, because the model is judged on data it was not trained on

K-fold cross-validation
• We will use 10-fold cross-validation
• We randomly split the data into 10 subsets (folds)
• We train the model on 9 of those and evaluate its predictive strength on the 10th
• We repeat this 10 times, so each fold serves once as the test set
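The following is a minimal sketch of 10-fold cross-validation in plain Python, assuming rows of predictors `X` and labels `y`. The function names are illustrative. The model is a hypothetical placeholder that always predicts the most common training label. In practice you would pass in the fit and predict steps of a CART or random forest instead.

```python
import random
from collections import Counter


def k_fold_indices(n, k=10, seed=0):
    """Shuffle row indices 0..n-1, then deal them into k folds of near-equal size."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def cross_validate(X, y, fit, predict, k=10, seed=0):
    """Return the held-out accuracy on each of the k folds."""
    scores = []
    for test_idx in k_fold_indices(len(y), k, seed):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(y)) if i not in held_out]
        # Train on the other k - 1 folds ...
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        # ... then score predictions on the held-out fold only.
        correct = sum(predict(model, X[i]) == y[i] for i in test_idx)
        scores.append(correct / len(test_idx))
    return scores


# Hypothetical placeholder model: always predict the most common training label.
def fit_majority(X_train, y_train):
    return Counter(y_train).most_common(1)[0][0]


def predict_majority(model, x):
    return model


X = [[i] for i in range(100)]
y = [1 if i % 4 else 0 for i in range(100)]  # 75 ones, 25 zeros
scores = cross_validate(X, y, fit_majority, predict_majority, k=10)
print(f"mean held-out accuracy over 10 folds: {sum(scores) / len(scores):.2f}")  # 0.75
```

Every row is held out exactly once, and the 10 fold scores are usually averaged. A real model should beat this majority-class baseline before it is worth anything.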

Lab Section 1: Setting things up

Classifiers
• CART, KNN, and random forests are all classifiers
• They “learn” patterns from existing data, create rules or boundaries, and then predict which group a given data point belongs to
• They are all supervised learning: you tell the algorithm what you want to predict and give it labeled examples
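KNN is the easiest of the three classifiers to write out in full. Below is a minimal sketch in plain Python; the toy data and the choice of k = 3 are illustrative assumptions. Here "training" just means storing the labeled examples. A new point is assigned to whichever group is most common among its k nearest neighbors.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, x, k=3):
    """Classify point x by majority vote among its k nearest labeled examples."""
    # Rank the labeled examples by Euclidean distance to x; keep the k closest.
    neighbors = sorted(zip(train_X, train_y),
                       key=lambda pair: math.dist(pair[0], x))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Toy labeled data: two well-separated groups in two dimensions.
train_X = [(1, 1), (1, 2), (2, 1), (6, 6), (6, 7), (7, 6)]
train_y = ["A", "A", "A", "B", "B", "B"]

print(knn_predict(train_X, train_y, (1.5, 1.5)))  # A
print(knn_predict(train_X, train_y, (6.5, 6.5)))  # B
```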

CART: Decision Trees
• Classification And Regression Trees
• Partition the data into increasingly small segments in order to make a prediction
• Find optimal splits in the data
• The basis for random forests
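The "find optimal splits" step can be sketched in plain Python. CART's usual criterion for classification trees is Gini impurity. This sketch tries every variable and every observed threshold, then keeps the split whose two child nodes have the lowest weighted impurity. The toy data and function names are illustrative, not taken from the lab.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions (0 = pure)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(X, y):
    """Search every variable j and threshold t for the split 'X[j] <= t' with
    the lowest weighted Gini impurity. Returns (j, t, impurity)."""
    best = (None, None, gini(y))  # baseline: no split at all
    n = len(y)
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            left = [y[i] for i in range(n) if X[i][j] <= t]
            right = [y[i] for i in range(n) if X[i][j] > t]
            if not left or not right:
                continue  # a split must send rows down both branches
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if score < best[2]:
                best = (j, t, score)
    return best


X = [[1, 5], [2, 3], [3, 8], [7, 1], [8, 9], [9, 2]]
y = ["no", "no", "no", "yes", "yes", "yes"]
print(best_split(X, y))  # (0, 3, 0.0): "variable 0 <= 3" separates the classes
```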

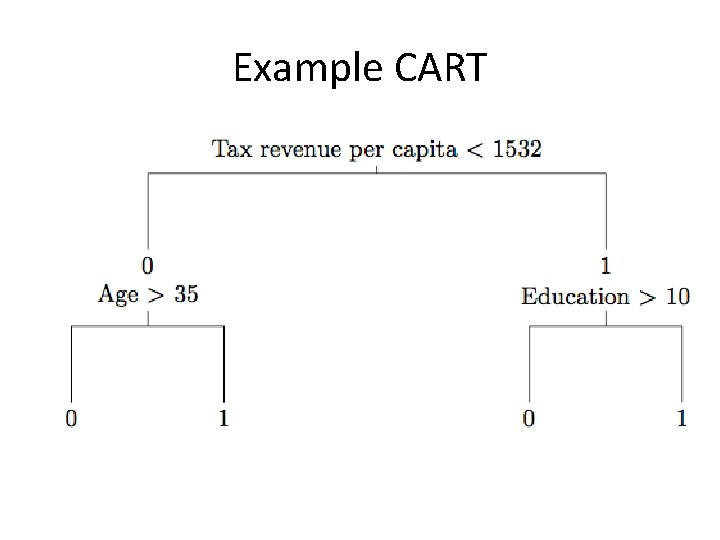

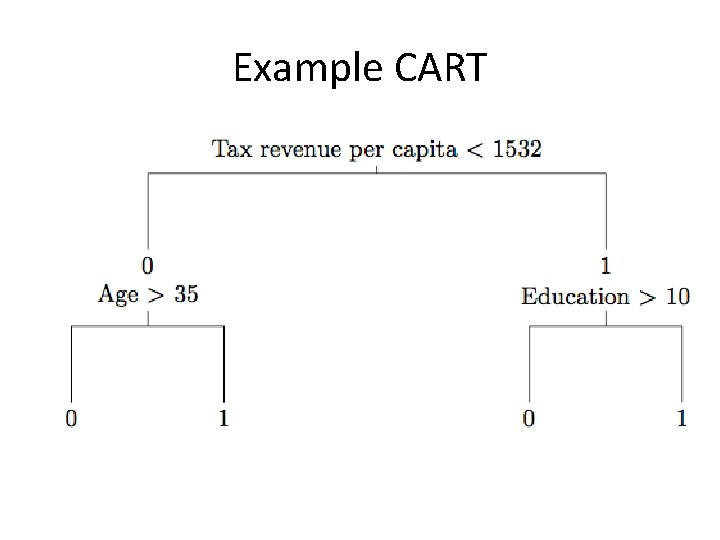

Example CART

Lab Section 2: Make a CART

Random Forests
• Supervised ensemble method
• Relies on bootstrap aggregating, or bagging
• Tree bagging: fitting each decision tree on a bootstrap sample (a random sample drawn with replacement) of the training data
• Random forests add a further step: at each node, only a random subset of the variables is evaluated
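The two sources of randomness can be sketched with Python's `random` module. `bootstrap_rows` draws the sample that one tree is trained on. `node_candidates` picks the variables that one node is allowed to split on. Using floor(√p) candidates out of p variables is a common default for classification. It is an assumption of this sketch, not something the slides specify.

```python
import math
import random


def bootstrap_rows(n_rows, rng):
    """Tree bagging: draw n_rows row indices *with replacement*.
    Each tree in the ensemble is trained on its own such sample."""
    return [rng.randrange(n_rows) for _ in range(n_rows)]


def node_candidates(n_features, rng, m=None):
    """Random forests' extra step: each node evaluates only m randomly chosen
    variables. m defaults to floor(sqrt(p)) here (an assumed default)."""
    if m is None:
        m = max(1, math.isqrt(n_features))
    return sorted(rng.sample(range(n_features), m))


rng = random.Random(1)  # fixed seed so the sketch is reproducible
sample = bootstrap_rows(10_000, rng)
in_bag = len(set(sample)) / 10_000
# On average about 1 - 1/e (roughly 63%) of distinct rows land in each sample;
# the remaining "out-of-bag" rows can be used to estimate the tree's error.
print(f"share of distinct rows in one bootstrap sample: {in_bag:.3f}")
print("variables tried at one node:", node_candidates(16, rng))  # 4 of 16
```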

Random Forests
• A random forest classifier grows a forest of classification trees
• The classifier randomly samples variables at each node, which keeps the trees less correlated with one another
• The classifier then combines the trees' predictions by majority vote
• Note: random forests can also be used for regression, where the trees' predictions are averaged
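How the forest combines its trees' predictions can be shown with stand-in trees. In this hypothetical sketch each "tree" is reduced to a one-split rule on a different variable, whereas a real forest grows many full trees. Classification takes a majority vote, and regression averages. The share of trees voting for a class is also commonly reported as a predicted probability.

```python
from collections import Counter
from statistics import mean


def forest_classify(trees, x):
    """Classification: each tree votes for a label; the most common label wins.
    (Ties go to the label counted first; real implementations may differ.)"""
    votes = Counter(tree(x) for tree in trees)
    return votes.most_common(1)[0][0]


def vote_share(trees, x, label):
    """Share of trees voting for `label`."""
    return sum(tree(x) == label for tree in trees) / len(trees)


def forest_regress(trees, x):
    """Regression: average the trees' numeric predictions."""
    return mean(tree(x) for tree in trees)


# Hypothetical, already-grown trees, each standing in for a full decision tree.
class_trees = [
    lambda x: "A" if x[0] < 5 else "B",
    lambda x: "A" if x[1] < 3 else "B",
    lambda x: "A" if x[2] > 0 else "B",
]
reg_trees = [
    lambda x: 0.5 * x[0],
    lambda x: x[1] - 2.0,
    lambda x: x[2] + 3.5,
]

x = (2, 4, 1)
print(forest_classify(class_trees, x))  # A (two of three trees vote "A")
print(vote_share(class_trees, x, "A"))  # 0.6666666666666666
print(forest_regress(reg_trees, x))     # 2.5
```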

Random Forests
• At each node in each tree, the classifier finds the optimal split that best separates the remaining data into homogeneous groups
• A split can be a threshold on a numeric variable, a linear combination of variables, or a grouping of categories
• This recursive partitioning process generates classification rules
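Applying the split search recursively, first to the whole sample and then within each child node, is what turns a tree into a set of rules. The following is a minimal, self-contained sketch that assumes several simplifications: Gini impurity, single-variable thresholds only (no linear combinations or category groupings), made-up toy data, and a depth limit of 3. Each leaf yields one rule, read from the root down.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a non-empty list of labels (0 = pure node)."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def grow(rows, depth=0, max_depth=3, path=()):
    """Recursively partition rows = [(features, label), ...] and return one
    rule per leaf: (conditions on the path from the root, predicted label)."""
    labels = [label for _, label in rows]
    prediction = Counter(labels).most_common(1)[0][0]
    if depth == max_depth or len(set(labels)) == 1:
        return [(path, prediction)]  # stop: depth limit reached or node is pure

    best = None  # (weighted impurity, variable j, threshold t, left, right)
    for j in range(len(rows[0][0])):
        for t in sorted({x[j] for x, _ in rows}):
            left = [r for r in rows if r[0][j] <= t]
            right = [r for r in rows if r[0][j] > t]
            if not left or not right:
                continue
            score = (len(left) * gini([lab for _, lab in left])
                     + len(right) * gini([lab for _, lab in right])) / len(rows)
            if best is None or score < best[0]:
                best = (score, j, t, left, right)

    if best is None or best[0] >= gini(labels):
        return [(path, prediction)]  # no split makes the children purer

    _, j, t, left, right = best
    return (grow(left, depth + 1, max_depth, path + (f"x{j} <= {t}",))
            + grow(right, depth + 1, max_depth, path + (f"x{j} > {t}",)))


rows = [((1, 1), "A"), ((2, 2), "A"), ((3, 1), "A"), ((2, 7), "B"),
        ((3, 9), "B"), ((7, 1), "B"), ((8, 2), "B"), ((9, 8), "B")]
for conditions, label in grow(rows):
    print(" and ".join(conditions), "->", label)
# x0 <= 3 and x1 <= 2 -> A
# x0 <= 3 and x1 > 2 -> B
# x0 > 3 -> B
```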

Lab Section 3: Random Forests and Post-Estimation

Advanced Topics
• Unsupervised learning and neural nets
• Feature selection
• Doing this in Python
• Causal inference with machine learning

Resources
• Muchlinski, David, David Siroky, Jingrui He, and Matthew Kocher. "Comparing Random Forest with Logistic Regression for Predicting Class-Imbalanced Civil War Onset Data." Political Analysis 24, no. 1 (2016): 87–103. (Excellent and helpful replication files.)
• Breiman, Leo. "Random Forests." Machine Learning 45, no. 1 (2001): 5–32.
• Breiman, Leo. "Statistical Modeling: The Two Cultures (with Comments and a Rejoinder by the Author)." Statistical Science 16, no. 3 (2001): 199–231.
• Grimmer, Justin. "We Are All Social Scientists Now: How Big Data, Machine Learning, and Causal Inference Work Together." PS: Political Science & Politics 48, no. 1 (2015): 80–83.
• Conway, Drew, and John White. Machine Learning for Hackers. O'Reilly Media, 2012.

Free Online Classes and Tutorials
• DataCamp Machine Learning for Beginners: https://www.datacamp.com/courses/machine-learning-toolbox
• Udacity machine learning class: https://www.udacity.com/course/intro-to-machine-learning--ud120
• kNN example in R: https://www.r-bloggers.com/using-knn-classifier-to-predict-whether-the-price-of-stock-will-increase/