Introduction to Machine Learning Comp 3710 Artificial Intelligence

- Slides: 36

Introduction to Machine Learning Comp 3710 Artificial Intelligence Computing Science Thompson Rivers University

Course Outline

- Part I – Introduction to Artificial Intelligence
- Part II – Classical Artificial Intelligence -> Searching and reasoning
- Part III – Machine Learning
  - Introduction to Machine Learning
  - Neural Networks
  - Probabilistic Reasoning and Bayesian Belief Networks
  - Artificial Life: Learning through Emergent Behavior
- Part IV – Advanced Topics
  - Genetic Algorithms
  - Fuzzy Reasoning, Fuzzy Expert Systems, Fuzzy Control Systems

TRU-COMP 3710 Intro to Machine Learning

Learning Objectives

- Define what classification is.
- Define what clustering is.
- List the three types of attributes with examples.
- Summarize what concept learning is.
- Compute the information gain for an attribute from a given training data set.
- Construct a decision tree from a given training data set, using information gains.

Chapter Outline

1. Introduction
2. Training
3. Rote Learning
4. Concepts
5. Inductive Bias
6. Decision-Tree Induction
7. The Problem of Overfitting
8. The Nearest Neighbor Algorithm
9. Learning Neural Networks
10. Supervised Learning
11. Reinforcement Learning
12. Unsupervised Learning

![1 Introduction n n Topics Q What is learning Learning and intelligence are intimately 1. Introduction n n Topics [Q] What is learning: Learning and intelligence are intimately](https://slidetodoc.com/presentation_image_h/221b29ae8d63653c218c8c35f91e6ebe/image-5.jpg)

1. Introduction

- [Q] What is learning?
- Learning and intelligence are intimately related to each other. It is usually agreed that a system capable of learning deserves to be called intelligent.
- [Q] Is it possible to make a machine learn?
- We will discuss the concept of learning methods.
- [Q] How are we going to make a machine learn?

![2 Training n n Q How do we learn How do you know which 2. Training n n [Q] How do we learn? How do you know which](https://slidetodoc.com/presentation_image_h/221b29ae8d63653c218c8c35f91e6ebe/image-6.jpg)

2. Training

- [Q] How do we learn? How do you know which one is an apple?
- Learning problems usually involve classifying inputs into a set of classifications.
  - Learning is only possible if there is a relationship between the data and the classifications.
  - [Q] What kind of relationship? How to classify?
- Example of the people in this class
  - [Q] How can we classify ourselves?
  - Based on similarity
- Training involves providing the system with data which has been manually classified.
- Learning systems use the training data to learn to classify unseen data.

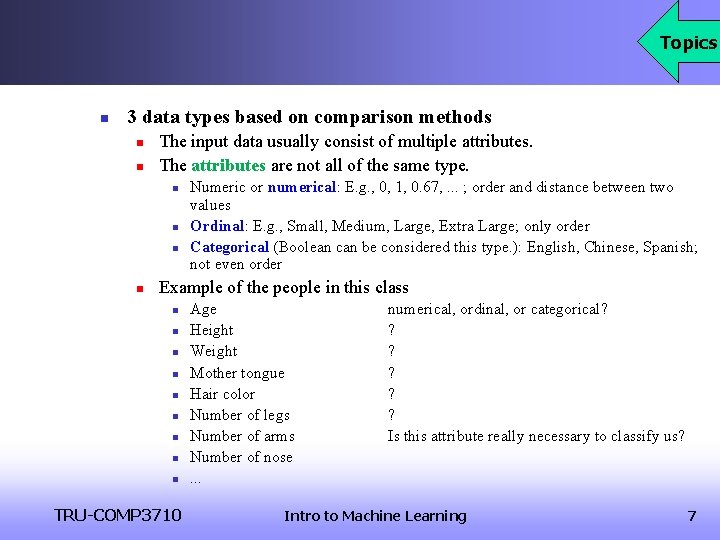

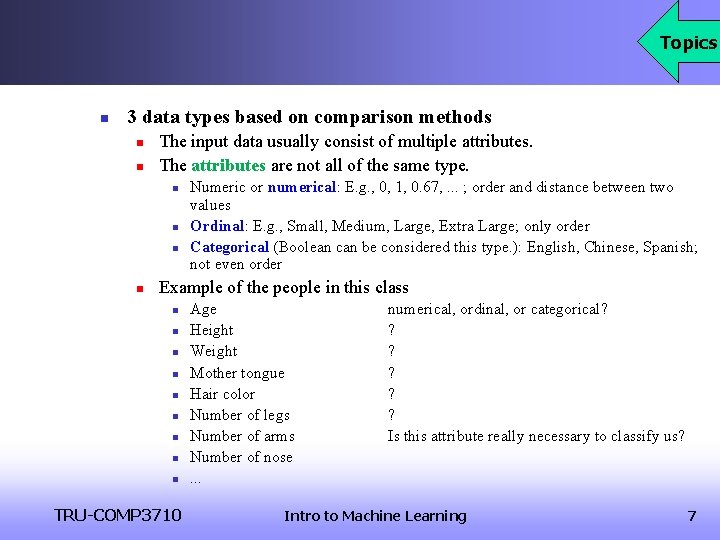

- Three data types, based on comparison methods
  - The input data usually consist of multiple attributes.
  - The attributes are not all of the same type.
    - Numeric or numerical: e.g., 0, 1, 0.67, ...; order and distance between two values
    - Ordinal: e.g., Small, Medium, Large, Extra Large; only order
    - Categorical (Boolean can be considered this type): e.g., English, Chinese, Spanish; not even order
- Example of the people in this class: numerical, ordinal, or categorical?
  - Age
  - Height
  - Weight
  - Mother tongue
  - Hair color
  - Number of legs
  - Number of arms
  - Number of noses
  - ...
- For each attribute: is this attribute really necessary to classify us?

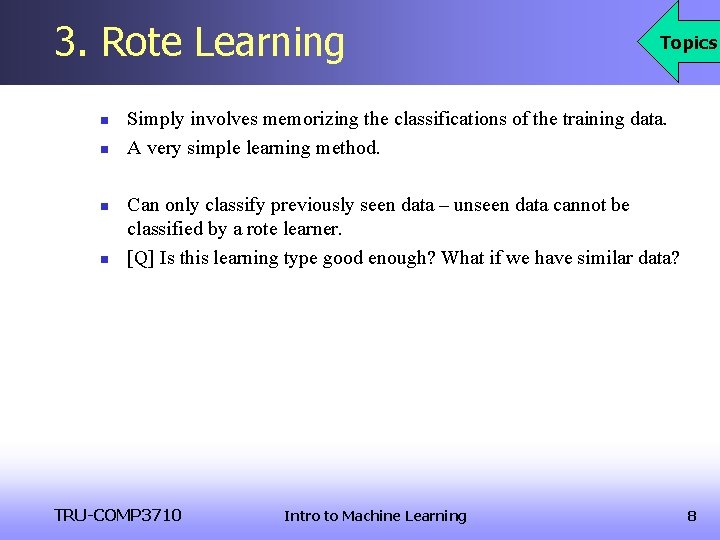

3. Rote Learning

- Simply involves memorizing the classifications of the training data.
- A very simple learning method.
- Can only classify previously seen data – unseen data cannot be classified by a rote learner.
- [Q] Is this learning type good enough? What if we have similar data?
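The idea of a rote learner can be sketched in a few lines of Python. This is only an illustration: the class name `RoteLearner` and the two sample films (borrowed from the box-office example used later in these slides) are assumptions made for the sketch.

```python
# Rote learner: memorizes the training data and nothing more.
class RoteLearner:
    def __init__(self):
        self.memory = {}  # maps an attribute tuple to its class

    def train(self, examples):
        """Store every (attributes, label) pair exactly as given."""
        for attributes, label in examples:
            self.memory[tuple(attributes)] = label

    def classify(self, attributes):
        """Return the memorized class, or None for unseen data."""
        return self.memory.get(tuple(attributes))


learner = RoteLearner()
learner.train([
    (("USA", "Yes", "SF"), True),
    (("USA", "No", "Comedy"), False),
])
seen = learner.classify(("USA", "Yes", "SF"))        # memorized example
unseen = learner.classify(("USA", "Yes", "Comedy"))  # similar, but never seen
```

Here `seen` is the memorized class, while `unseen` is `None`: the learner has no way to use the similarity between the two films.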

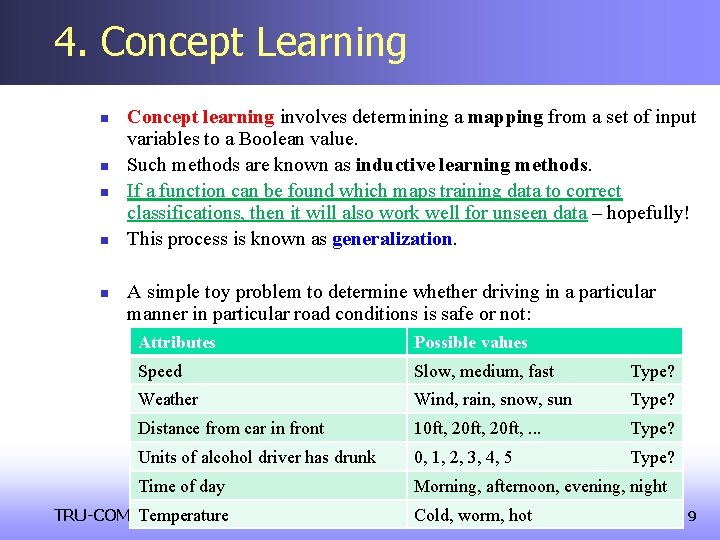

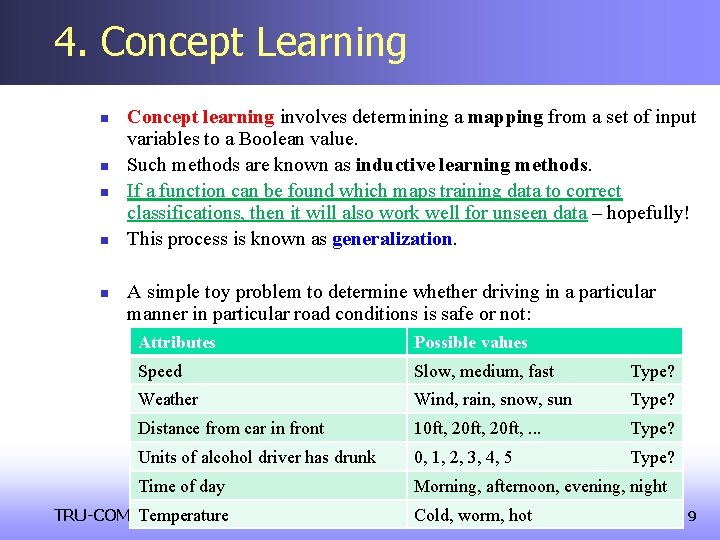

4. Concept Learning

- Concept learning involves determining a mapping from a set of input variables to a Boolean value.
- Such methods are known as inductive learning methods.
- If a function can be found which maps training data to correct classifications, then it will also work well for unseen data – hopefully! This process is known as generalization.
- A simple toy problem: determine whether driving in a particular manner in particular road conditions is safe or not.

| Attributes | Possible values | Type? |
|---|---|---|
| Speed | Slow, medium, fast | ? |
| Weather | Wind, rain, snow, sun | ? |
| Distance from car in front | 10 ft, 20 ft, ... | ? |
| Units of alcohol driver has drunk | 0, 1, 2, 3, 4, 5 | ? |
| Time of day | Morning, afternoon, evening, night | |
| Temperature | Cold, warm, hot | |

- A hypothesis (or object) is a vector (or list) of attributes:
  - h1 = <slow, wind, 30 ft, 0, evening, cold>
  - h2 = <fast, rain, 10 ft, 2, ?, ?>, where ? means “we do not care”, i.e., any value. This looks clearly untrue. -> Negative training example
  - h3 = <fast, rain, 10 ft, 2, ∅, ∅>, where ∅ means no value
- In concept learning, a training hypothesis is either positive or negative (true or false), or belongs to one of multiple classes.
- Concept learning can be thought of as a search through a search space that consists of hypotheses, where the goal is the hypothesis that most closely matches a given query.
- [Q] How to define “closely”?
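As a rough illustration of how a hypothesis can be compared with a concrete situation, the sketch below treats "?" as a wildcard and "no value" as something that can never match. The names `ANY`, `NO_VALUE` and `matches`, the six-attribute layout, and the sample situation `x` are assumptions made for this example.

```python
ANY = "?"        # "we do not care": any value is accepted
NO_VALUE = None  # "no value": nothing is accepted


def matches(hypothesis, instance):
    """True if every attribute of the hypothesis accepts the instance's value."""
    for wanted, actual in zip(hypothesis, instance):
        if wanted is NO_VALUE:
            return False          # an attribute with no value matches nothing
        if wanted != ANY and wanted != actual:
            return False          # a concrete value must be equal
    return True


# Attribute order: speed, weather, distance, alcohol units, time of day, temperature
h1 = ("slow", "wind", "30 ft", 0, "evening", "cold")
h2 = ("fast", "rain", "10 ft", 2, ANY, ANY)
h3 = ("fast", "rain", "10 ft", 2, NO_VALUE, NO_VALUE)

x = ("fast", "rain", "10 ft", 2, "night", "cold")  # a hypothetical situation
results = [matches(h, x) for h in (h1, h2, h3)]
```

With this exact-match rule, only `h2` covers `x`. Defining "closely" in a graded way would need a similarity measure instead of a yes/no test.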

5. Inductive Bias

- All learning methods have an inductive bias.
  - Inductive bias refers to the restrictions that are imposed by the assumptions made in the learning method.
  - E.g., the solution to the problem of road safety can be expressed as a conjunction of a set of six concepts (i.e., attributes).
  - This does not allow for more complex expressions that cannot be expressed as a conjunction.
  - Therefore there can be some potential solutions that we cannot explore.
- However, without inductive bias, a learning method could not learn to generalize.
- Occam’s razor is an example of an inductive bias: the best hypothesis to select is the simplest one. Which one is better?
  - h1 = <slow, wind, 30 ft, 0, evening, cold>
  - h2 = <slow, wind, 30 ft, ?, ?>

![6 Decision Tree Induction n Boxoffice success problem Q classification or clustering n 6. Decision Tree Induction n Box-office success problem – [Q] classification or clustering? n](https://slidetodoc.com/presentation_image_h/221b29ae8d63653c218c8c35f91e6ebe/image-12.jpg)

6. Decision Tree Induction

- Box-office success problem – [Q] classification or clustering?
- Training data set: [Q] How to obtain this kind of table?

| Film | Country | Big Star | Genre | Success (classes) |
|---|---|---|---|---|
| Film 1 | USA | Yes | SF | True |
| Film 2 | USA | No | Comedy | False |
| Film 3 | USA | Yes | Comedy | True |
| Film 4 | Europe | No | Comedy | True |
| Film 5 | Europe | Yes | SF | False |
| Film 6 | Europe | Yes | Romance | False |
| Film 7 | Other | Yes | Comedy | False |
| Film 8 | Other | No | SF | False |
| Film 9 | Europe | Yes | Comedy | True |
| Film 10 | USA | Yes | Comedy | True |

- Query: (China, Yes, Romance) -> Success?
  - [Q] What is your opinion?
  - [Q] How many comparisons when brute-force search is used? Let’s try.

![n n China Yes Romance Success Q In the boxoffice success problem what n n (China, Yes, Romance) -> Success? [Q] In the box-office success problem, what](https://slidetodoc.com/presentation_image_h/221b29ae8d63653c218c8c35f91e6ebe/image-13.jpg)

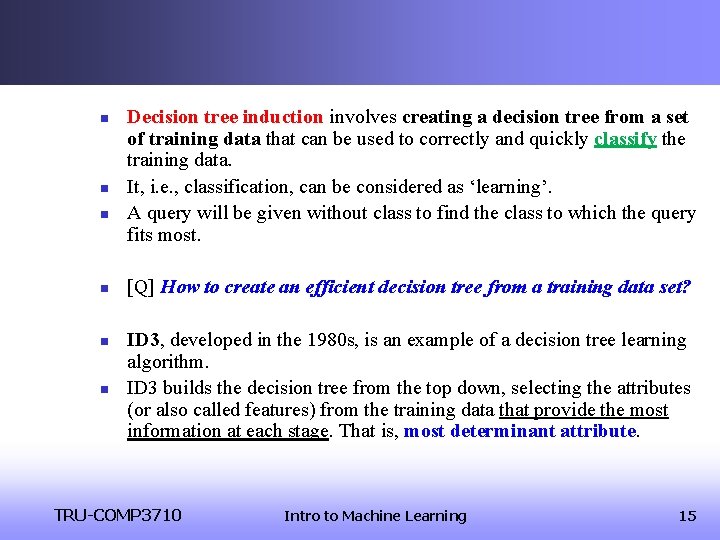

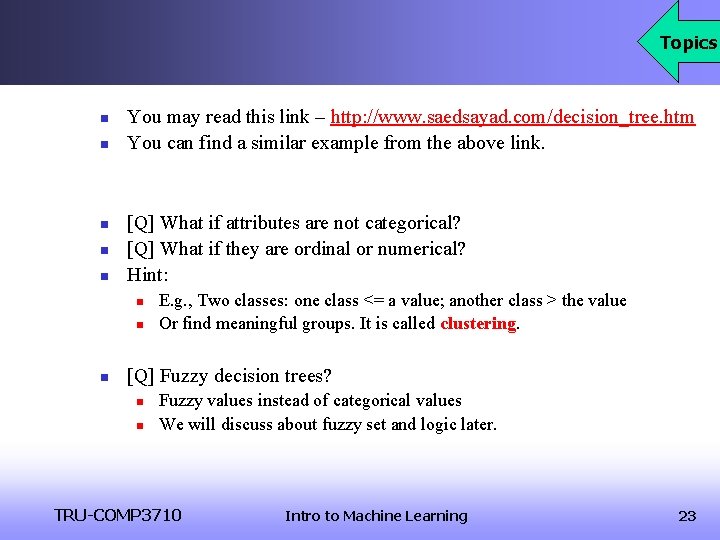

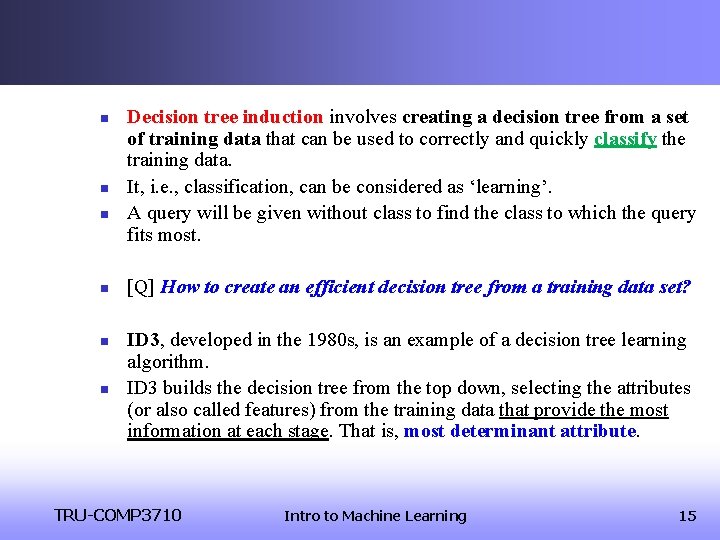

- (China, Yes, Romance) -> Success?
- [Q] In the box-office success problem, what must be the first question? Country, Big Star, or Genre?
  - Country is a significant determinant of whether a film will be a success or not.
  - Hence the first question is Country.
  - What is the next then? Using a tree?
- [Q] How to determine which attribute is the most significant determinant?

(Training data set: the same ten films as in the box-office success problem in Section 6.)

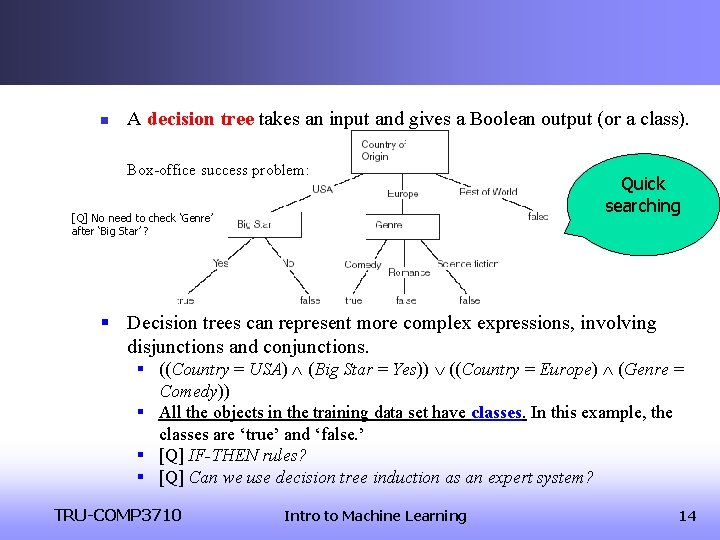

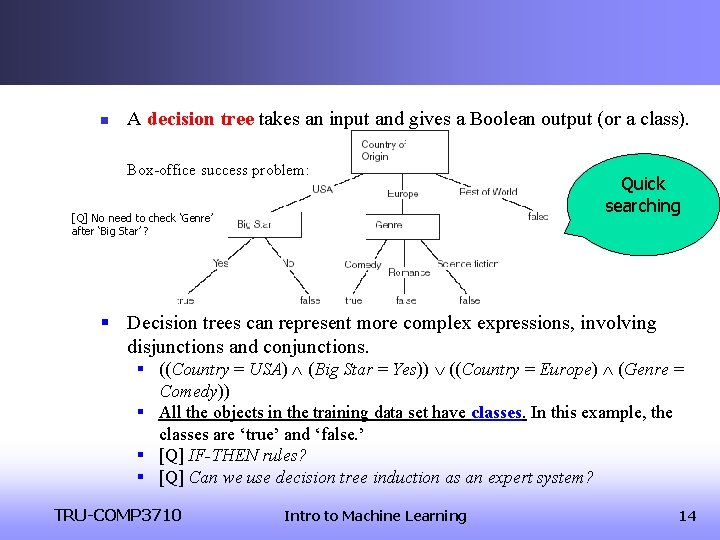

- A decision tree takes an input and gives a Boolean output (or a class).
- Box-office success problem: [Q] No need to check ‘Genre’ after ‘Big Star’? Quick searching
- Decision trees can represent more complex expressions, involving disjunctions and conjunctions.
  - ((Country = USA) ∧ (Big Star = Yes)) ∨ ((Country = Europe) ∧ (Genre = Comedy))
- All the objects in the training data set have classes. In this example, the classes are ‘true’ and ‘false’.
- [Q] IF-THEN rules?
- [Q] Can we use decision tree induction as an expert system?
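One way to read the tree as IF-THEN rules is sketched below. The function name `predict_success` is hypothetical; the rule it encodes is the expression "(Country = USA and Big Star = Yes) or (Country = Europe and Genre = Comedy)", and the rows are copied from the box-office training table.

```python
# (Country, Big Star, Genre, Success) rows from the box-office training table.
FILMS = [
    ("USA", "Yes", "SF", True),
    ("USA", "No", "Comedy", False),
    ("USA", "Yes", "Comedy", True),
    ("Europe", "No", "Comedy", True),
    ("Europe", "Yes", "SF", False),
    ("Europe", "Yes", "Romance", False),
    ("Other", "Yes", "Comedy", False),
    ("Other", "No", "SF", False),
    ("Europe", "Yes", "Comedy", True),
    ("USA", "Yes", "Comedy", True),
]


def predict_success(country, big_star, genre):
    """Decision tree written as nested IF-THEN rules."""
    if country == "USA":
        return big_star == "Yes"   # Genre is never consulted on this branch
    if country == "Europe":
        return genre == "Comedy"   # Big Star is never consulted on this branch
    return False                   # any other country: no successes were seen


correct = sum(predict_success(c, b, g) == s for c, b, g, s in FILMS)
training_accuracy = correct / len(FILMS)
query_prediction = predict_success("China", "Yes", "Romance")
```

The rules classify all ten training films correctly. The query (China, Yes, Romance) falls through to the final rule, which is a design choice of this sketch rather than something the training data can justify.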

- Decision tree induction involves creating a decision tree from a set of training data that can be used to correctly and quickly classify the training data.
- It, i.e., classification, can be considered as ‘learning’. A query is given without a class, and the tree is used to find the class that the query fits best.
- [Q] How to create an efficient decision tree from a training data set?
- ID3, developed in the 1980s, is an example of a decision tree learning algorithm.
- ID3 builds the decision tree from the top down, selecting at each stage the attribute (also called a feature) from the training data that provides the most information, that is, the most determinant attribute.

![n Q In the boxoffice success problem what must be the first question Country n [Q] In the box-office success problem, what must be the first question? Country,](https://slidetodoc.com/presentation_image_h/221b29ae8d63653c218c8c35f91e6ebe/image-16.jpg)

- [Q] In the box-office success problem, what must be the first question? Country, Big Star, or Genre?
  - Country is a significant determinant of whether a film will be a success or not.
  - Hence the first question is Country.
  - [Q] How do we know what the most significant determinant is?

(Training data set: the same ten films as in the box-office success problem in Section 6.)

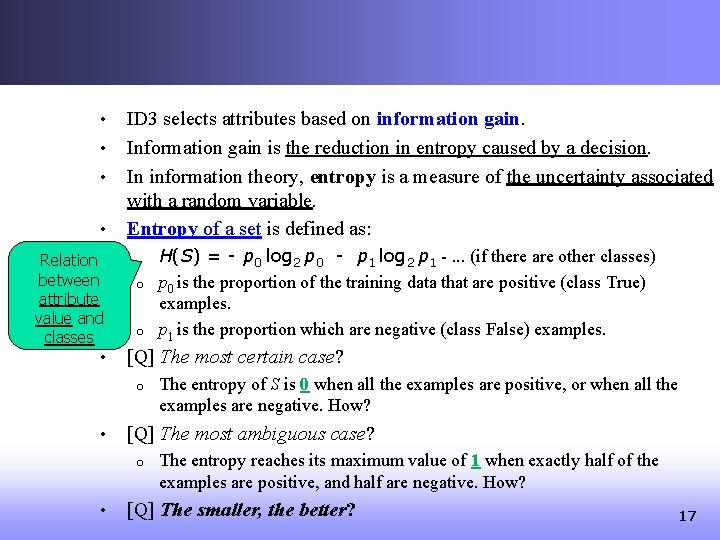

- Relation between attribute value and classes
- ID3 selects attributes based on information gain. Information gain is the reduction in entropy caused by a decision. In information theory, entropy is a measure of the uncertainty associated with a random variable.
- Entropy of a set is defined as:

$$H(S) = -p_0 \log_2 p_0 - p_1 \log_2 p_1 - \dots \quad \text{(further terms if there are other classes)}$$

  - $p_0$ is the proportion of the training data that are positive (class True) examples.
  - $p_1$ is the proportion which are negative (class False) examples.
- [Q] The most certain case?
  - The entropy of S is 0 when all the examples are positive, or when all the examples are negative. How?
- [Q] The most ambiguous case?
  - The entropy reaches its maximum value of 1 when exactly half of the examples are positive, and half are negative. How?
- [Q] The smaller, the better?
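The entropy formula can be checked numerically with a short sketch. The function name `entropy` and the convention that a term with p = 0 contributes 0 are assumptions of this example (the convention is the usual one, since p log2 p tends to 0 as p tends to 0).

```python
import math


def entropy(proportions):
    """H(S) = -sum(p * log2(p)) over the class proportions.

    Terms with p == 0 are skipped, i.e. 0 * log2(0) is taken to be 0.
    """
    return sum(-p * math.log2(p) for p in proportions if p > 0)


most_certain = entropy([1.0, 0.0])      # all examples in one class
most_ambiguous = entropy([0.5, 0.5])    # half positive, half negative
three_to_one = entropy([0.75, 0.25])    # e.g. 3 True and 1 False
```

The first value is 0 and the second is 1, matching the two extreme cases. The third comes out at about 0.811.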

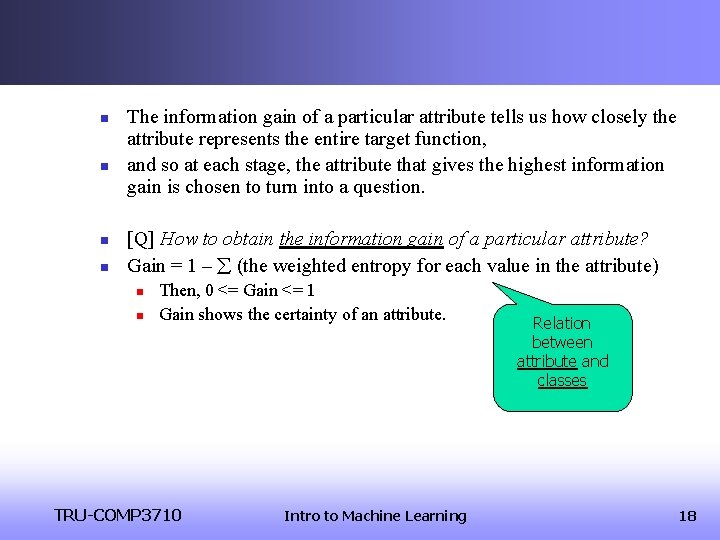

- The information gain of a particular attribute tells us how closely the attribute represents the entire target function, and so at each stage, the attribute that gives the highest information gain is chosen to turn into a question. (Relation between attribute and classes)
- [Q] How to obtain the information gain of a particular attribute?
  - Gain = 1 – (the sum of the weighted entropies of the values of the attribute)
  - The leading 1 is the entropy H(S) of the whole box-office training set, in which half of the films are True and half are False. In general, Gain = H(S) – (the sum of the weighted entropies).
  - Then, 0 <= Gain <= 1
  - Gain shows the certainty of an attribute.

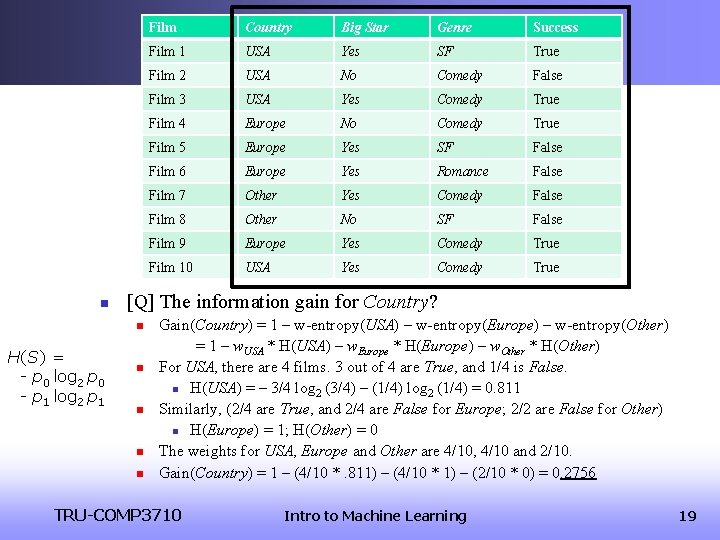

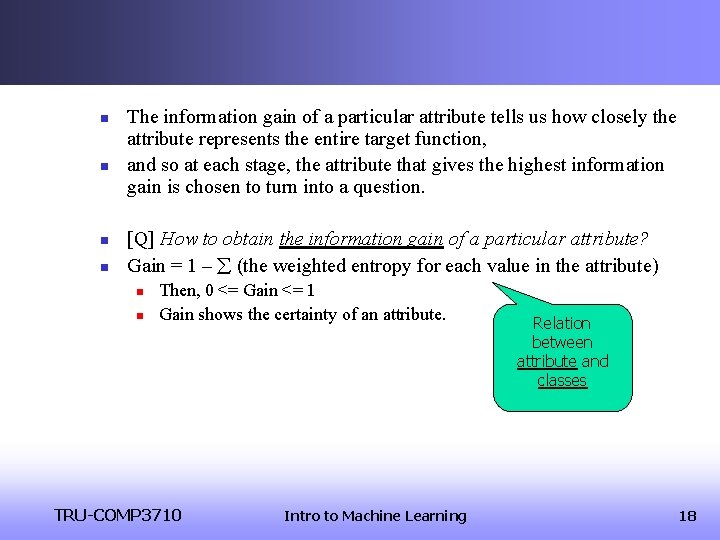

- [Q] The information gain for Country? (Training data set: the ten films of the box-office success problem in Section 6.)

$$H(S) = -p_0 \log_2 p_0 - p_1 \log_2 p_1$$

$$\text{Gain}(\text{Country}) = 1 - w_{\text{USA}} H(\text{USA}) - w_{\text{Europe}} H(\text{Europe}) - w_{\text{Other}} H(\text{Other})$$

- For USA, there are 4 films. 3 out of 4 are True, and 1 out of 4 is False.

$$H(\text{USA}) = -\tfrac{3}{4} \log_2 \tfrac{3}{4} - \tfrac{1}{4} \log_2 \tfrac{1}{4} = 0.811$$

- Similarly (2/4 are True and 2/4 are False for Europe; 2/2 are False for Other):

$$H(\text{Europe}) = 1; \quad H(\text{Other}) = 0$$

- The weights for USA, Europe and Other are 4/10, 4/10 and 2/10.

$$\text{Gain}(\text{Country}) = 1 - \left(\tfrac{4}{10} \times 0.811\right) - \left(\tfrac{4}{10} \times 1\right) - \left(\tfrac{2}{10} \times 0\right) = 0.2756$$

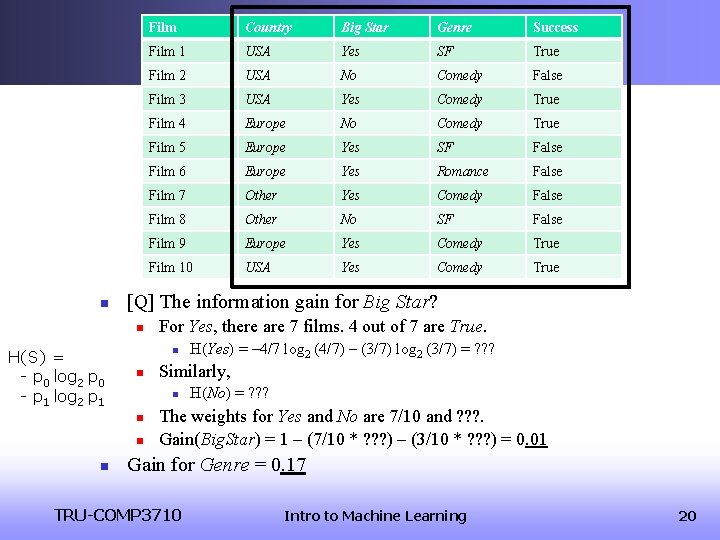

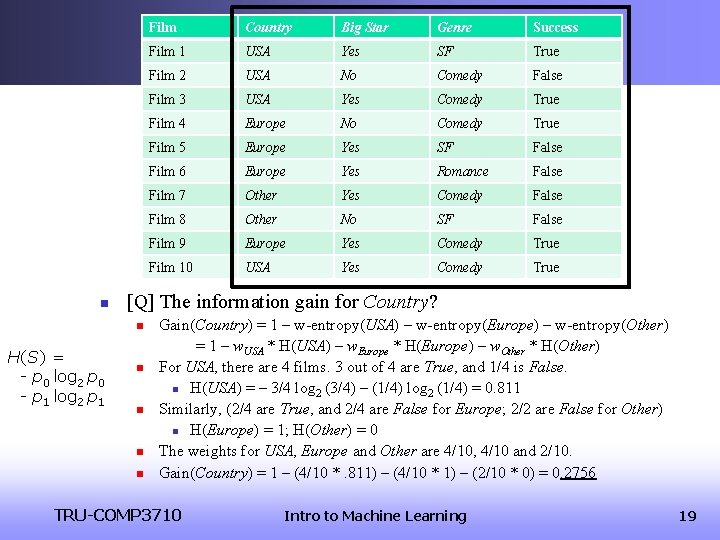

- [Q] The information gain for Big Star? (Training data set: the ten films of the box-office success problem in Section 6.)

$$H(S) = -p_0 \log_2 p_0 - p_1 \log_2 p_1$$

- For Yes, there are 7 films. 4 out of 7 are True.

$$H(\text{Yes}) = -\tfrac{4}{7} \log_2 \tfrac{4}{7} - \tfrac{3}{7} \log_2 \tfrac{3}{7} = \; ???$$

- Similarly, H(No) = ???
- The weights for Yes and No are 7/10 and ???.

$$\text{Gain}(\text{Big Star}) = 1 - \left(\tfrac{7}{10} \times \; ???\right) - \left(\tfrac{3}{10} \times \; ???\right) \approx 0.03$$

- Gain for Genre = 0.17

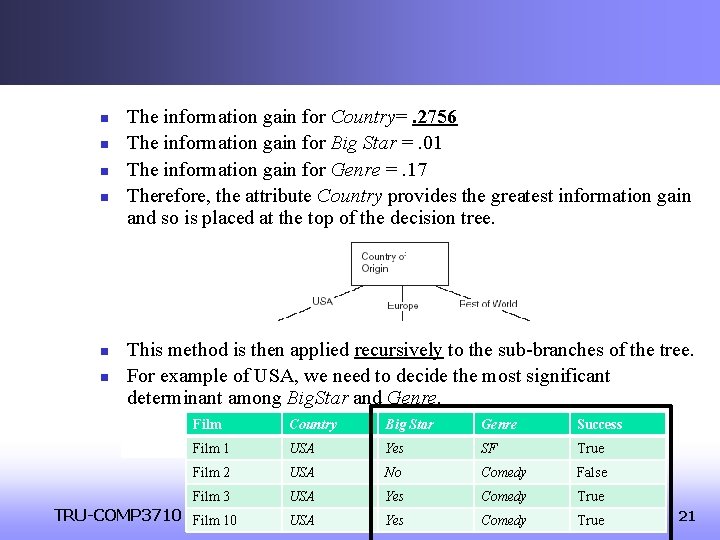

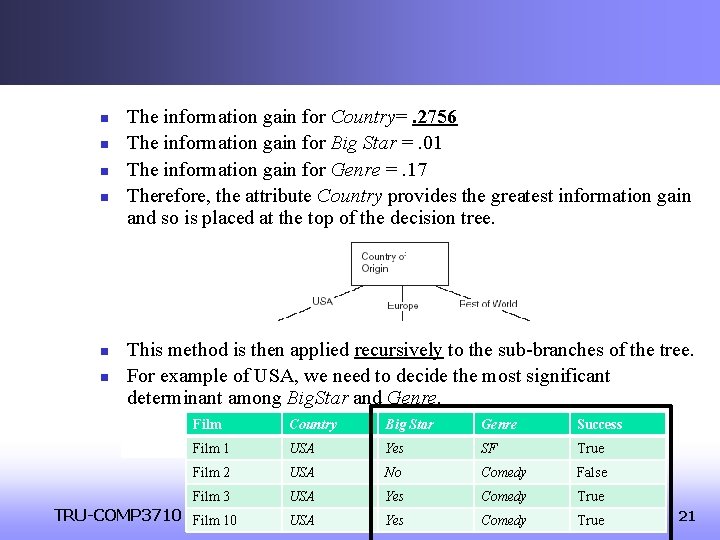

- The information gain for Country = 0.2756
- The information gain for Big Star ≈ 0.03
- The information gain for Genre = 0.17
- Therefore, the attribute Country provides the greatest information gain and so is placed at the top of the decision tree.
- This method is then applied recursively to the sub-branches of the tree.
- For example, for USA, we need to decide the most significant determinant among Big Star and Genre.

| Film | Country | Big Star | Genre | Success |
|---|---|---|---|---|
| Film 1 | USA | Yes | SF | True |
| Film 2 | USA | No | Comedy | False |
| Film 3 | USA | Yes | Comedy | True |
| Film 10 | USA | Yes | Comedy | True |
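The comparison of the three attributes can be reproduced with a small script. It is a sketch only: the names `FILMS`, `ATTRIBUTES`, `entropy` and `information_gain` are chosen for this example, and the gain is written in the general form H(S) minus the weighted entropies, which reduces to "1 minus ..." here because the ten films are split evenly between True and False.

```python
import math
from collections import Counter

# (Country, Big Star, Genre, Success) rows from the box-office training table.
FILMS = [
    ("USA", "Yes", "SF", True),
    ("USA", "No", "Comedy", False),
    ("USA", "Yes", "Comedy", True),
    ("Europe", "No", "Comedy", True),
    ("Europe", "Yes", "SF", False),
    ("Europe", "Yes", "Romance", False),
    ("Other", "Yes", "Comedy", False),
    ("Other", "No", "SF", False),
    ("Europe", "Yes", "Comedy", True),
    ("USA", "Yes", "Comedy", True),
]
ATTRIBUTES = {"Country": 0, "Big Star": 1, "Genre": 2}  # column positions


def entropy(labels):
    """Entropy of a list of class labels."""
    total = len(labels)
    return sum(-(n / total) * math.log2(n / total)
               for n in Counter(labels).values())


def information_gain(rows, column):
    """H(S) minus the weighted entropy of each value of the attribute."""
    gain = entropy([row[-1] for row in rows])
    for value in set(row[column] for row in rows):
        subset = [row[-1] for row in rows if row[column] == value]
        gain -= len(subset) / len(rows) * entropy(subset)
    return gain


gains = {name: information_gain(FILMS, col) for name, col in ATTRIBUTES.items()}
best = max(gains, key=gains.get)  # attribute to place at the top of the tree
for name, value in gains.items():
    print(f"{name}: {value:.4f}")
```

Calling `information_gain` on the four USA rows only gives the comparison needed for the next level of the tree.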

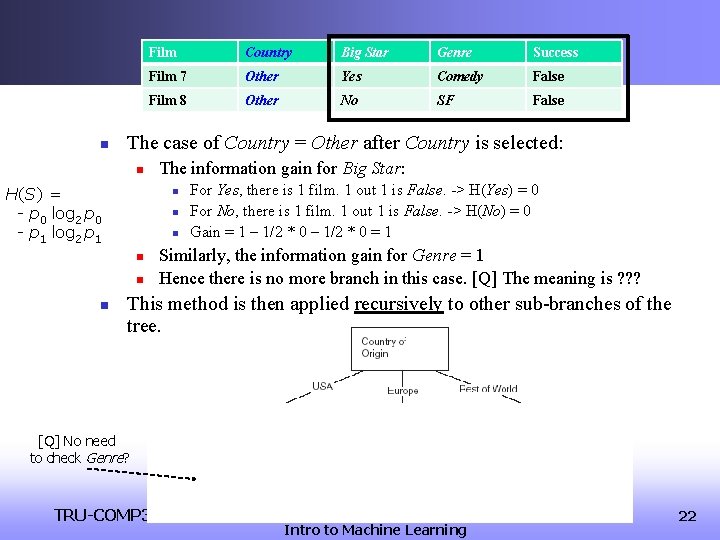

- The case of Country = Other after Country is selected:

| Film | Country | Big Star | Genre | Success |
|---|---|---|---|---|
| Film 7 | Other | Yes | Comedy | False |
| Film 8 | Other | No | SF | False |

$$H(S) = -p_0 \log_2 p_0 - p_1 \log_2 p_1$$

- The information gain for Big Star:
  - For Yes, there is 1 film. 1 out of 1 is False. -> H(Yes) = 0
  - For No, there is 1 film. 1 out of 1 is False. -> H(No) = 0
  - Gain = 1 – (1/2 * 0) – (1/2 * 0) = 1
- Similarly, the information gain for Genre = 1
- Strictly, the leading term should be the entropy of this subset, which is already 0 because both films are False, so the standard gain is 0. Either way, every weighted entropy is 0 and the subset is pure.
- Hence there is no more branch in this case. [Q] The meaning is ???
- This method is then applied recursively to other sub-branches of the tree.
  - [Q] No need to check Genre?
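The recursive procedure can be sketched as follows. This is a minimal ID3-style illustration under stated assumptions, not a full implementation: the names `ROWS`, `gain`, `id3` and `classify` are hypothetical, the gain uses the entropy of the current subset as its leading term, and unseen attribute values (such as Country = China) simply return a default.

```python
import math
from collections import Counter

COLUMNS = ("Country", "Big Star", "Genre", "Success")
ROWS = [dict(zip(COLUMNS, r)) for r in [
    ("USA", "Yes", "SF", True), ("USA", "No", "Comedy", False),
    ("USA", "Yes", "Comedy", True), ("Europe", "No", "Comedy", True),
    ("Europe", "Yes", "SF", False), ("Europe", "Yes", "Romance", False),
    ("Other", "Yes", "Comedy", False), ("Other", "No", "SF", False),
    ("Europe", "Yes", "Comedy", True), ("USA", "Yes", "Comedy", True),
]]


def entropy(labels):
    total = len(labels)
    return sum(-(n / total) * math.log2(n / total)
               for n in Counter(labels).values())


def gain(rows, attribute):
    """Entropy of the subset minus the weighted entropies after the split."""
    remainder = 0.0
    for value in set(r[attribute] for r in rows):
        subset = [r["Success"] for r in rows if r[attribute] == value]
        remainder += len(subset) / len(rows) * entropy(subset)
    return entropy([r["Success"] for r in rows]) - remainder


def id3(rows, attributes):
    """Return a class label (leaf) or a tuple (attribute, {value: subtree})."""
    labels = [r["Success"] for r in rows]
    if len(set(labels)) == 1:          # pure subset: no more branching
        return labels[0]
    if not attributes:                 # nothing left to ask: majority class
        return Counter(labels).most_common(1)[0][0]
    best = max(attributes, key=lambda a: gain(rows, a))
    rest = [a for a in attributes if a != best]
    branches = {}
    for value in sorted(set(r[best] for r in rows)):
        branches[value] = id3([r for r in rows if r[best] == value], rest)
    return (best, branches)


def classify(tree, item, default=None):
    """Follow the branches until a leaf is reached."""
    while isinstance(tree, tuple):
        attribute, branches = tree
        if item[attribute] not in branches:
            return default             # value never seen in training
        tree = branches[item[attribute]]
    return tree


tree = id3(ROWS, ["Country", "Big Star", "Genre"])
```

On the box-office data the resulting tree asks Country first, then Big Star for USA and Genre for Europe, and stops immediately for Other because that subset is already pure.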

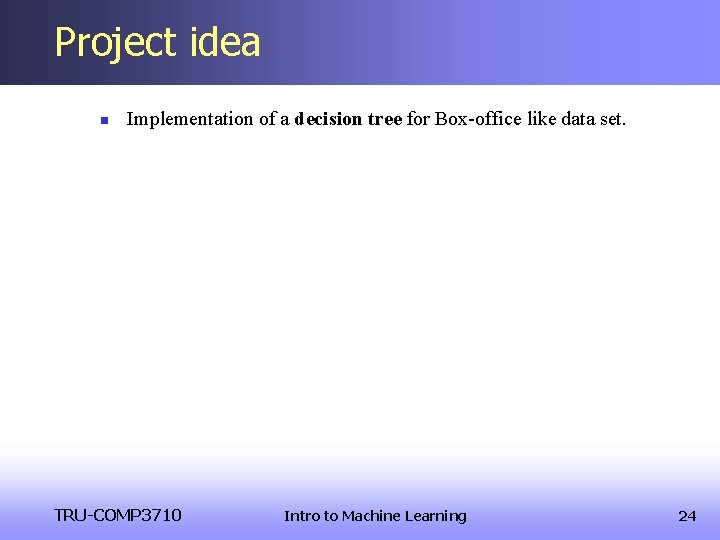

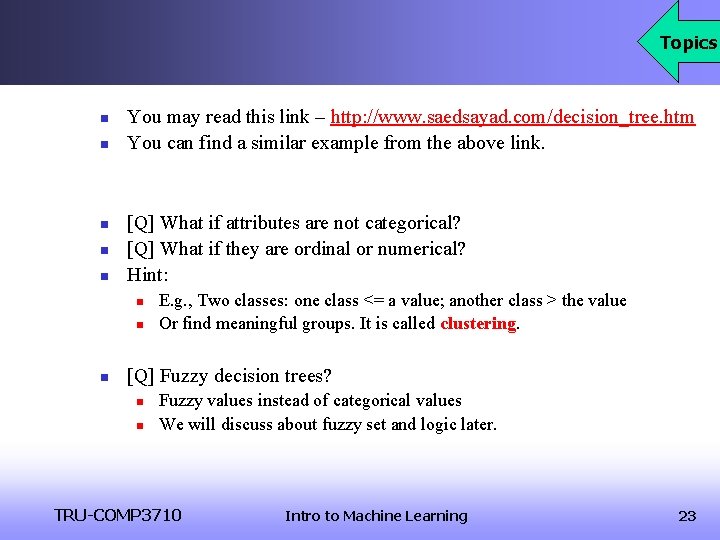

- You may read this link – http://www.saedsayad.com/decision_tree.htm
  - You can find a similar example at the above link.
- [Q] What if attributes are not categorical? [Q] What if they are ordinal or numerical?
  - Hint:
    - E.g., two classes: one class <= a value; another class > the value
    - Or find meaningful groups. It is called clustering.
- [Q] Fuzzy decision trees?
  - Fuzzy values instead of categorical values
  - We will discuss fuzzy sets and fuzzy logic later.
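The first hint, splitting a numerical attribute into "<= a value" and "> the value", might be sketched like this. Everything here is an assumption made for illustration: the function name `best_threshold`, the choice of midpoints between neighbouring values as candidate thresholds, and the made-up `budgets` data, which are not part of the box-office table.

```python
import math
from collections import Counter


def entropy(labels):
    total = len(labels)
    return sum(-(n / total) * math.log2(n / total)
               for n in Counter(labels).values())


def best_threshold(values, labels):
    """Try each midpoint between neighbouring distinct values and return
    the (threshold, gain) pair with the highest information gain."""
    pairs = list(zip(values, labels))
    base = entropy(labels)
    best = (None, 0.0)
    distinct = sorted(set(values))
    for low, high in zip(distinct, distinct[1:]):
        threshold = (low + high) / 2
        left = [label for v, label in pairs if v <= threshold]
        right = [label for v, label in pairs if v > threshold]
        gain = (base
                - len(left) / len(pairs) * entropy(left)
                - len(right) / len(pairs) * entropy(right))
        if gain > best[1]:
            best = (threshold, gain)
    return best


# Hypothetical numerical attribute (e.g. a budget) with a class for each item.
budgets = [5, 10, 20, 40, 80, 120]
success = [False, False, False, True, True, True]
threshold, gain = best_threshold(budgets, success)
```

Once a threshold is chosen, the numerical attribute behaves like a two-valued categorical attribute and can be used in the tree in the usual way.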

Project idea

- Implementation of a decision tree for a box-office-like data set.

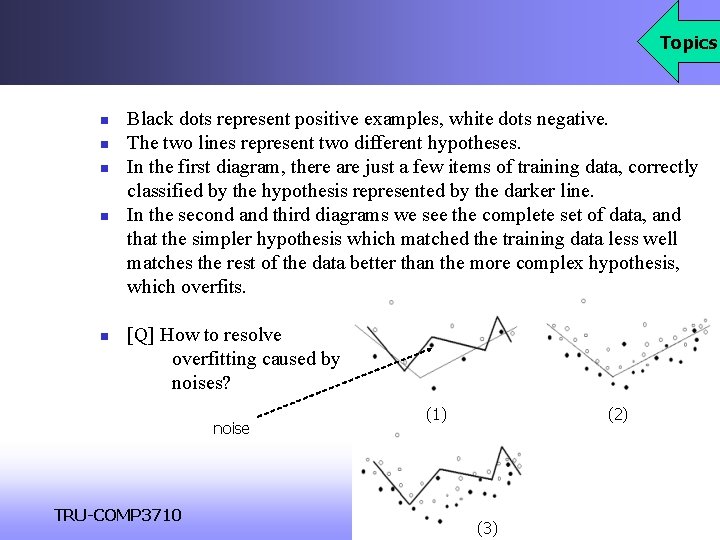

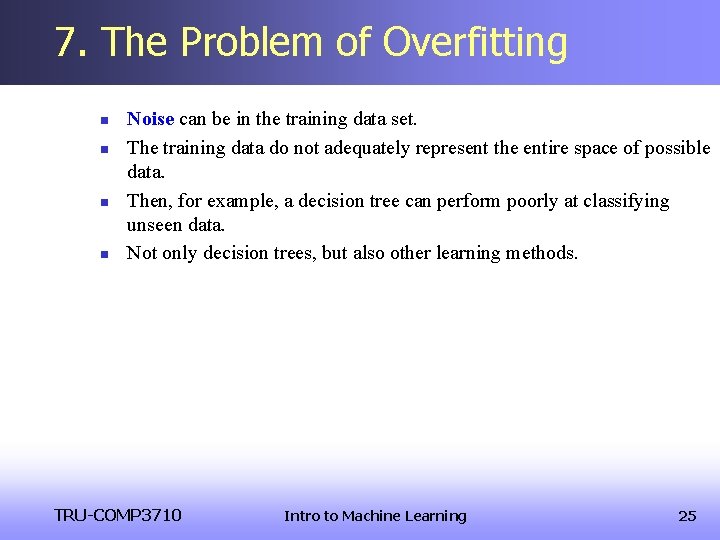

7. The Problem of Overfitting

- Noise can be in the training data set.
- The training data do not adequately represent the entire space of possible data.
- Then, for example, a decision tree can perform poorly at classifying unseen data.
- This applies not only to decision trees, but also to other learning methods.

- Black dots represent positive examples, white dots negative. The two lines represent two different hypotheses.
- In the first diagram, there are just a few items of training data, correctly classified by the hypothesis represented by the darker line.
- In the second and third diagrams we see the complete set of data, and that the simpler hypothesis which matched the training data less well matches the rest of the data better than the more complex hypothesis, which overfits.
- [Q] How to resolve overfitting caused by noise?

[Figure: three diagrams labelled (1), (2) and (3), with a label marking noise]

![8 The Nearest Neighbor Algorithm n Q Any other learning method Film Country Big 8. The Nearest Neighbor Algorithm n [Q] Any other learning method? Film Country Big](https://slidetodoc.com/presentation_image_h/221b29ae8d63653c218c8c35f91e6ebe/image-27.jpg)

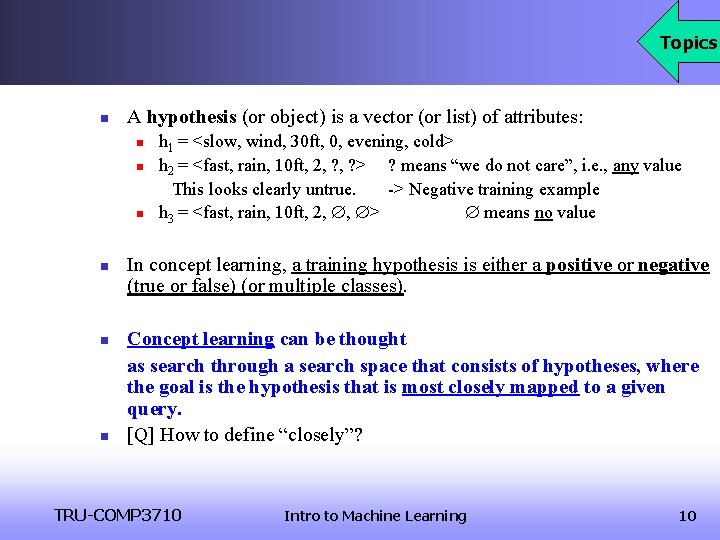

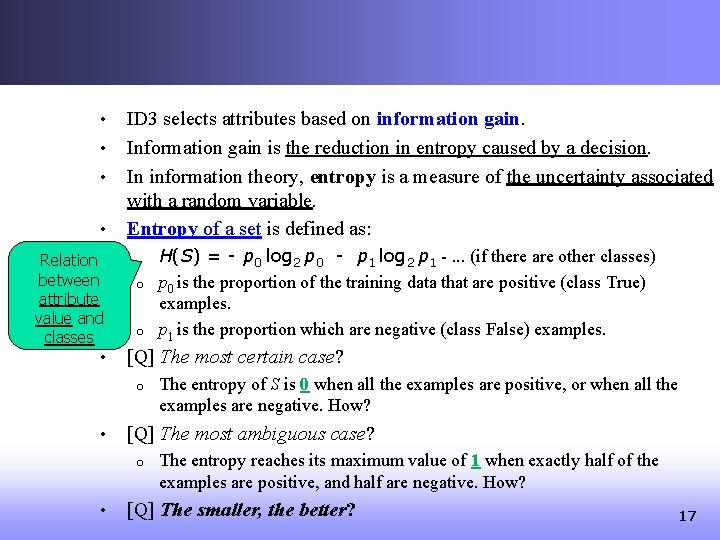

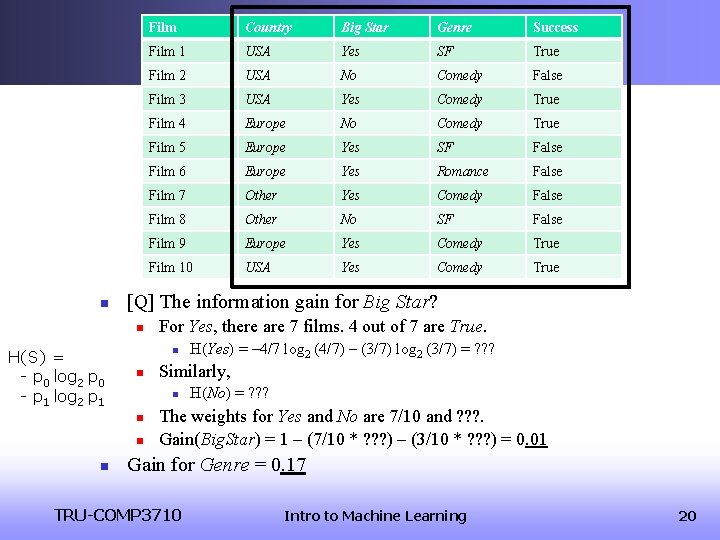

8. The Nearest Neighbor Algorithm

- [Q] Any other learning method?

| Film | Country | Big Star | Genre | Success |
|---|---|---|---|---|
| Film 1 | USA | Yes | SF | True |
| Film 2 | USA | No | Comedy | False |
| Film 3 | USA | Yes | Comedy | True |
| Film 4 | Europe | No | Comedy | True |
| Film 5 | Europe | Yes | SF | False |
| Film 6 | Europe | Yes | Romance | False |
| Film 7 | Other | Yes | Comedy | False |
| Film 8 | Other | No | SF | False |
| Film 9 | Europe | Yes | Comedy | True |
| Film 10 | USA | Yes | Comedy | True |
| Query | Thailand | Yes | Romance | ??? |

- The Nearest Neighbor algorithm is an example of instance-based learning.
- Instance-based learning involves storing training data and using it to attempt to classify new data as it arrives.

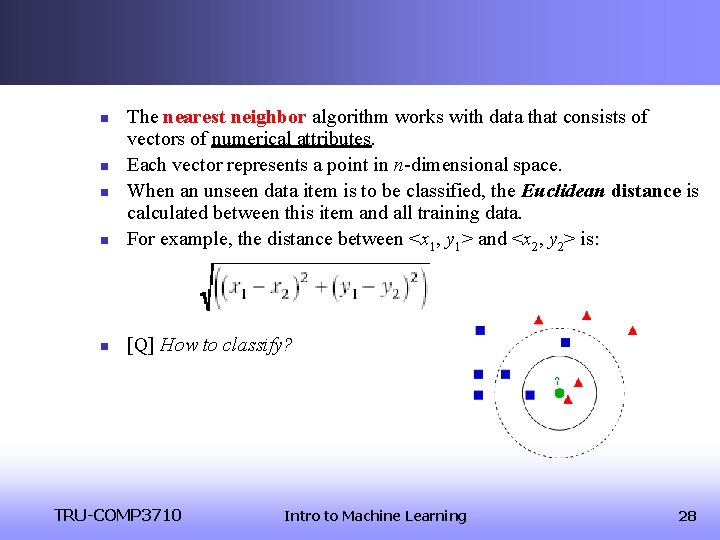

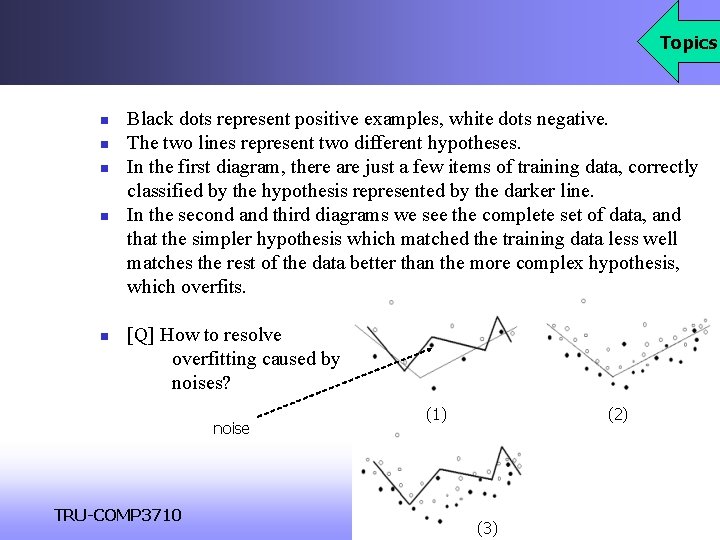

- The nearest neighbor algorithm works with data that consists of vectors of numerical attributes.
- Each vector represents a point in n-dimensional space.
- When an unseen data item is to be classified, the Euclidean distance is calculated between this item and all training data.
- For example, the distance between <x1, y1> and <x2, y2> is:

$$d = \sqrt{(x_1 - x_2)^2 + (y_1 - y_2)^2}$$

- [Q] How to classify?
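The Euclidean distance between two points generalizes directly to n dimensions. The helper below is a small sketch with a hypothetical name, `euclidean_distance`.

```python
import math


def euclidean_distance(a, b):
    """Distance between two points given as equal-length sequences of numbers."""
    if len(a) != len(b):
        raise ValueError("points must have the same number of dimensions")
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))


d2 = euclidean_distance((0, 0), (3, 4))        # the 3-4-5 triangle
d3 = euclidean_distance((1, 2, 3), (1, 2, 3))  # identical points
```

For the two-dimensional example the result is 5.0, and identical points are at distance 0.0.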

![Topics n n n Q How to classify when k 3 Q How Topics n n n [Q] How to classify, when k = 3? [Q] How](https://slidetodoc.com/presentation_image_h/221b29ae8d63653c218c8c35f91e6ebe/image-29.jpg)

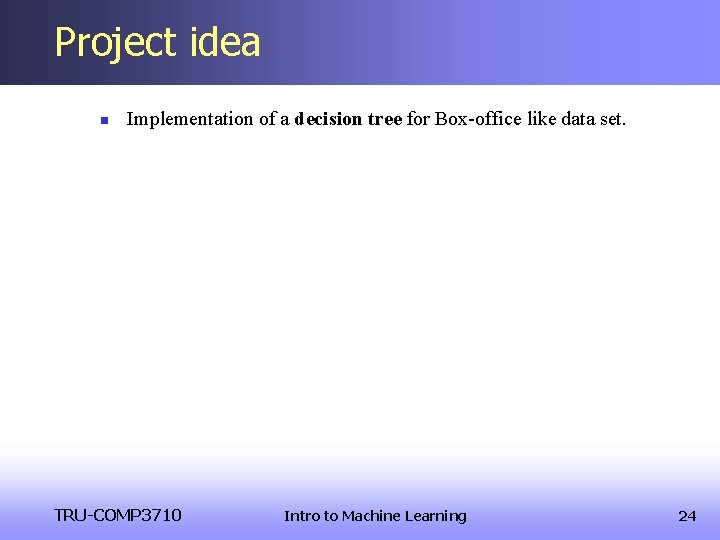

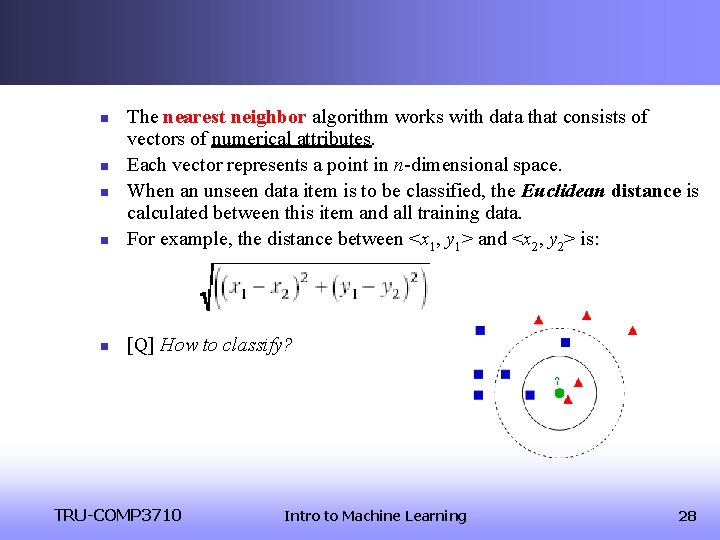

- [Q] How to classify, when k = 3? [Q] How to classify, when k = 5?
- The k-nearest neighbor algorithm:
  - The classification for the unseen data is usually selected as the one that is most common amongst the k-nearest neighbors.
- Shepard’s method:
  - This involves allowing all training data to contribute to the classification, with their contribution being inversely proportional to their distance from the data item to be classified.
  - For each class, 1/d_i ...
- Advantage: Unlike decision tree learning, the nearest neighbor algorithm performs very well with noisy input data.
- Disadvantage:
  - [Q] But, what if the training data set is huge? Any good idea?
- [Q] How to measure distance (or dissimilarity) for ordinal and categorical data?
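Both voting schemes can be sketched on a toy data set. The points, the class names "A" and "B", and the function names `knn_classify` and `shepard_classify` are made up for this illustration. The Shepard-style function sums 1/d_i per class, which is one simple reading of inverse-distance weighting rather than a definitive version of the method.

```python
import math
from collections import Counter, defaultdict


def distance(a, b):
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))


def knn_classify(training, query, k):
    """Majority class among the k training points closest to the query."""
    nearest = sorted(training, key=lambda item: distance(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


def shepard_classify(training, query):
    """Every training point votes for its class with weight 1/d_i."""
    scores = defaultdict(float)
    for point, label in training:
        d = distance(point, query)
        if d == 0:
            return label  # exact match: use its class directly
        scores[label] += 1.0 / d
    return max(scores, key=scores.get)


# Hypothetical 2-D training data: a cluster of A, a cluster of B,
# and one noisy B point sitting inside the A cluster.
training = [
    ((1, 1), "A"), ((1, 2), "A"), ((2, 1), "A"), ((2, 2), "A"),
    ((1.5, 2.0), "B"),  # noise
    ((5, 5), "B"), ((6, 5), "B"), ((5, 6), "B"),
]
query = (1.5, 1.5)

by_k = {k: knn_classify(training, query, k) for k in (1, 3, 5)}
by_shepard = shepard_classify(training, query)
```

With k = 1 the noisy point decides the class, while k = 3, k = 5 and the distance-weighted vote all outvote it. Every call still scans the whole training set, which is the cost raised in the question about huge data sets.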

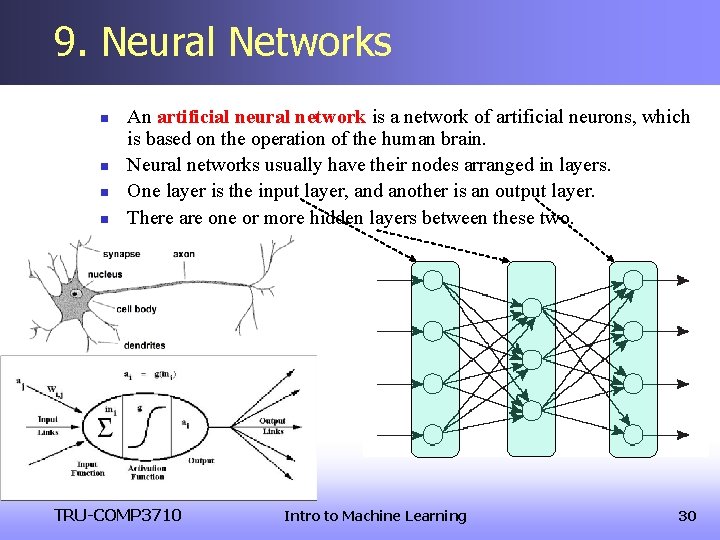

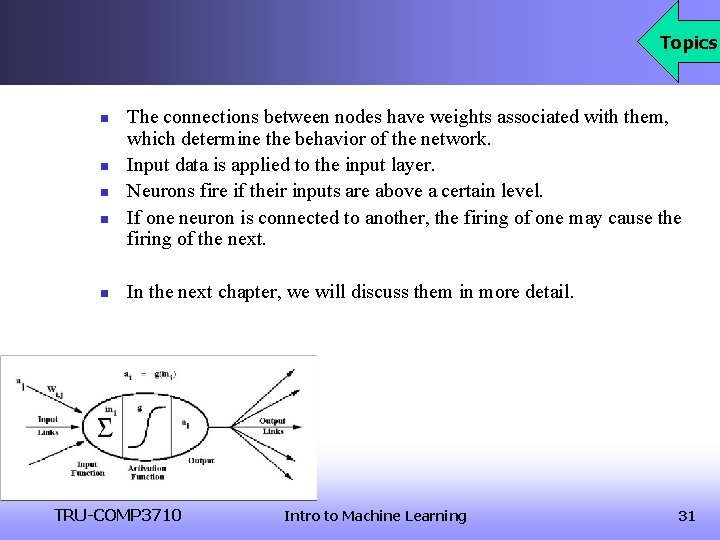

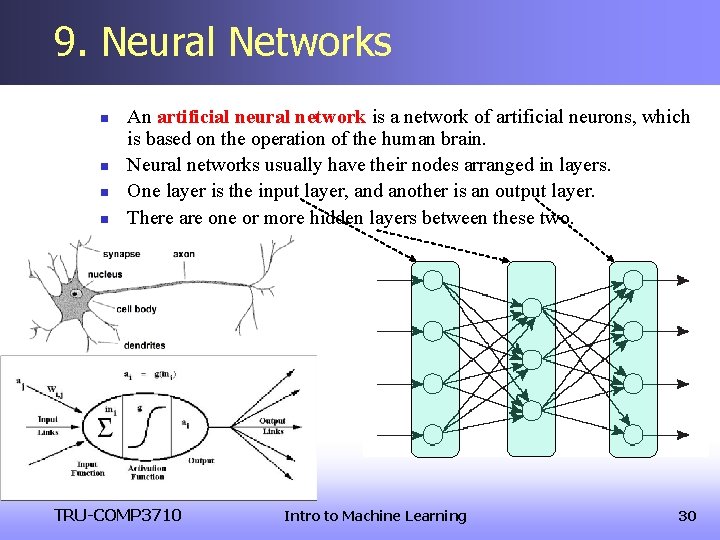

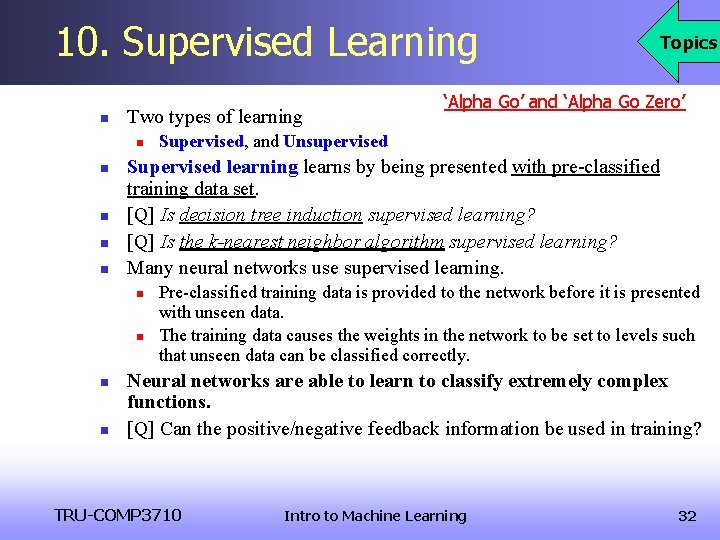

9. Neural Networks

- An artificial neural network is a network of artificial neurons, which is based on the operation of the human brain.
- Neural networks usually have their nodes arranged in layers.
- One layer is the input layer, and another is the output layer.
- There are one or more hidden layers between these two.

- The connections between nodes have weights associated with them, which determine the behavior of the network.
- Input data is applied to the input layer.
- Neurons fire if their inputs are above a certain level.
- If one neuron is connected to another, the firing of one may cause the firing of the next.
- In the next chapter, we will discuss them in more detail.
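The firing rule can be illustrated with a simple threshold unit. This is a sketch only: the function name `neuron_fires`, the weights and the threshold of 0.5 are arbitrary values picked for the example, and real networks are covered in the next chapter.

```python
def neuron_fires(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs exceeds the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0


def tiny_network(x1, x2):
    """Two inputs feed one hidden neuron, which feeds one output neuron."""
    hidden = neuron_fires([x1, x2], [0.6, 0.6], threshold=0.5)
    output = neuron_fires([hidden], [1.0], threshold=0.5)
    return hidden, output


active = tiny_network(1, 0)  # weighted sum 0.6 > 0.5, so both neurons fire
quiet = tiny_network(0, 0)   # weighted sum 0.0, so neither neuron fires
```

Changing the weights changes which inputs make the hidden neuron fire, which is the sense in which the weights determine the behavior of the network.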

10. Supervised Learning

- Two types of learning
  - Supervised, and Unsupervised
  - ‘AlphaGo’ and ‘AlphaGo Zero’
- Supervised learning learns by being presented with a pre-classified training data set.
  - [Q] Is decision tree induction supervised learning?
  - [Q] Is the k-nearest neighbor algorithm supervised learning?
- Many neural networks use supervised learning.
  - Pre-classified training data is provided to the network before it is presented with unseen data.
  - The training data causes the weights in the network to be set to levels such that unseen data can be classified correctly.
- Neural networks are able to learn to classify extremely complex functions.
- [Q] Can the positive/negative feedback information be used in training?

11. Reinforcement Learning

- Systems that learn using reinforcement learning are given positive feedback when they classify data correctly, and negative feedback when they classify data incorrectly.
- Credit assignment is needed to reward nodes in a network correctly.

12. Unsupervised Learning

- Unsupervised learning learns without any training data set (i.e., without pre-classified examples).
- Unsupervised learning networks learn without requiring human intervention. No training data is required.
- [Q] How is that possible?
- The system learns to cluster input data into a set of classifications that are not previously defined. This is called clustering.
- Clustering is a basic tool for data mining and pattern recognition.
- Examples: K-Means, Fuzzy C-Means, EM, Kohonen Maps.
- We will revisit this topic if time permits.
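As one concrete case of clustering, the sketch below runs a basic K-Means loop on made-up two-dimensional points. The function name `k_means`, the data, and the caller-supplied starting centroids are all assumptions of this example; practical implementations usually choose the starting centroids more carefully.

```python
import math


def distance(a, b):
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))


def k_means(points, centroids, iterations=10):
    """Alternate between assigning points to the nearest centroid and
    moving each centroid to the mean of its assigned points."""
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: distance(p, centroids[i]))
            clusters[nearest].append(p)
        new_centroids = []
        for cluster, old in zip(clusters, centroids):
            if cluster:
                mean = tuple(sum(axis) / len(cluster) for axis in zip(*cluster))
                new_centroids.append(mean)
            else:
                new_centroids.append(old)  # keep a centroid that attracted nothing
        if new_centroids == centroids:     # assignments have stabilized
            break
        centroids = new_centroids
    return centroids, clusters


# Hypothetical unlabeled data: no class information is given.
points = [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8)]
centroids, clusters = k_means(points, [(0, 0), (10, 10)])
```

No class labels are supplied. The two groups emerge only from the distances between the points.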

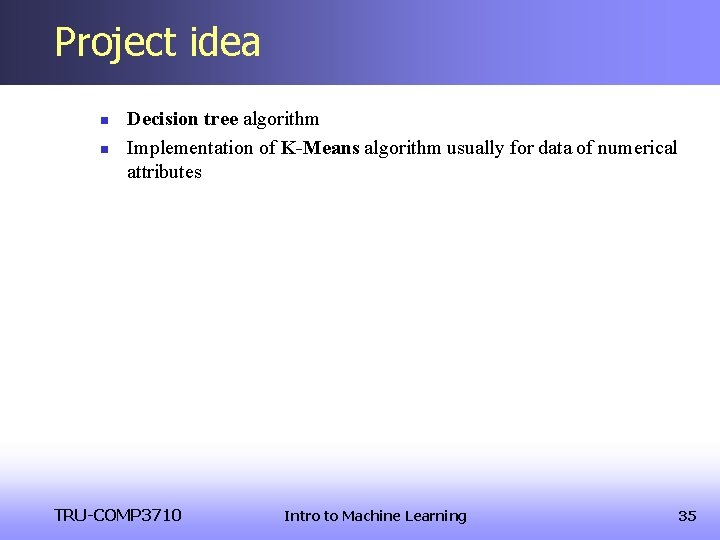

Project idea

- Decision tree algorithm
- Implementation of the K-Means algorithm, usually for data with numerical attributes

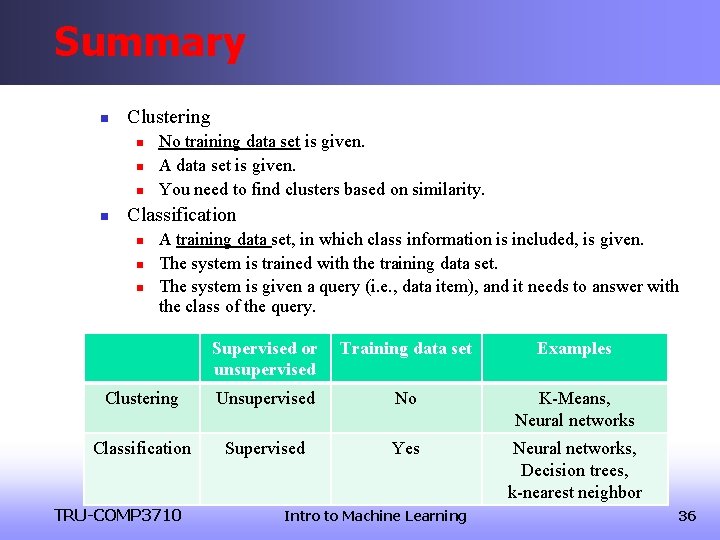

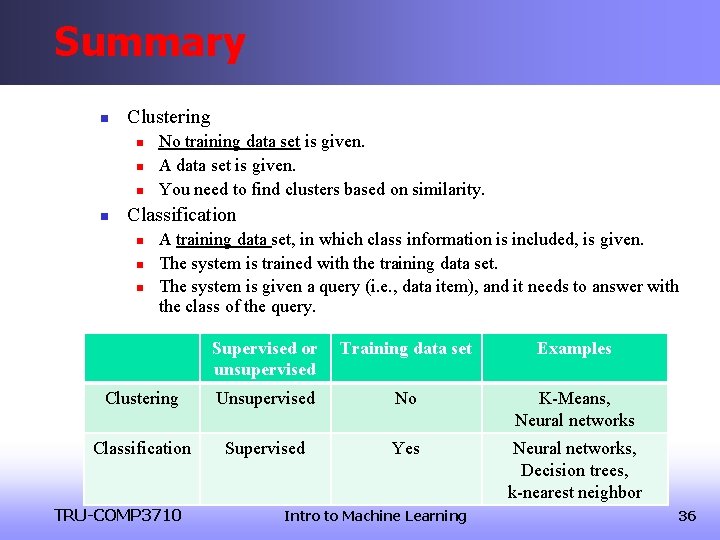

Summary

- Clustering
  - No training data set is given.
  - A data set is given. You need to find clusters based on similarity.
- Classification
  - A training data set, in which class information is included, is given.
  - The system is trained with the training data set.
  - The system is given a query (i.e., a data item), and it needs to answer with the class of the query.

| | Supervised or unsupervised | Training data set | Examples |
|---|---|---|---|
| Clustering | Unsupervised | No | K-Means, Neural networks |
| Classification | Supervised | Yes | Neural networks, Decision trees, k-nearest neighbor |