Introduction to Knowledge Representation and Conceptual Modeling

- Slides: 27

Introduction to Knowledge Representation and Conceptual Modeling

Martin Doerr
Institute of Computer Science, Foundation for Research and Technology – Hellas
Heraklion – Crete, Greece

ICS-FORTH, October 2, 2006

Knowledge Representation: Outline

- Introduction
- From tables to Knowledge Representation: Individual Concepts and Relationships
- Instances, classes and properties
- Generalization
- Multiple IsA and instantiation
- A simple data model and notation

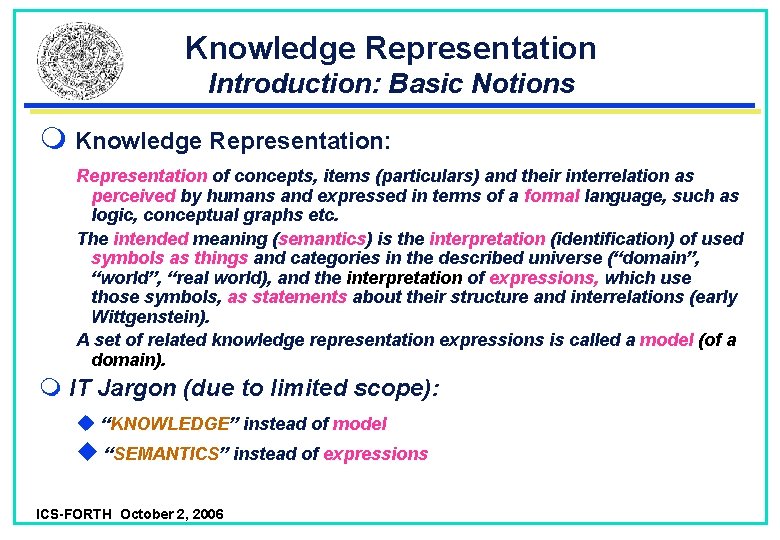

Knowledge Representation: Introduction: Basic Notions

Knowledge Representation: representation of concepts, items (particulars) and their interrelation as perceived by humans and expressed in terms of a formal language, such as logic, conceptual graphs etc.

The intended meaning (semantics) is the interpretation (identification) of the symbols used as things and categories in the described universe (“domain”, “world”, “real world”), and the interpretation of expressions that use those symbols as statements about their structure and interrelations (early Wittgenstein).

A set of related knowledge representation expressions is called a model (of a domain).

IT jargon (due to limited scope):

- “KNOWLEDGE” instead of model
- “SEMANTICS” instead of expressions

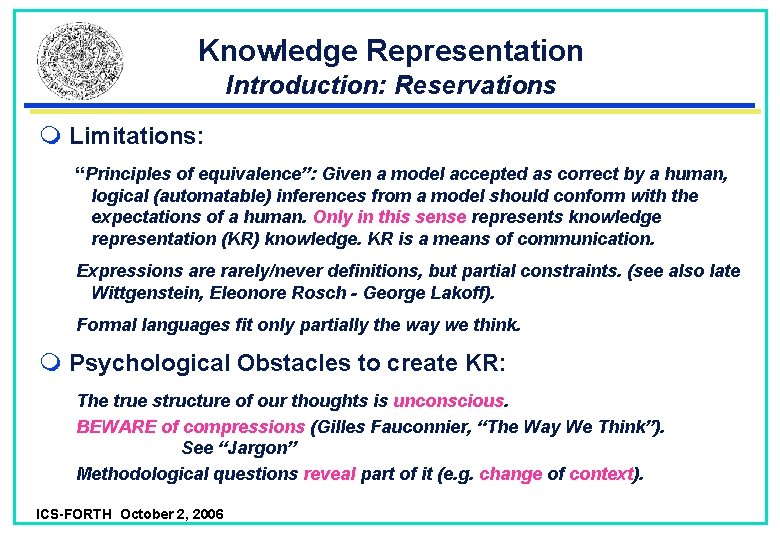

Knowledge Representation: Introduction: Reservations

Limitations:

- “Principle of equivalence”: given a model accepted as correct by a human, logical (automatable) inferences from the model should conform with the expectations of a human. Only in this sense does knowledge representation (KR) represent knowledge. KR is a means of communication.
- Expressions are rarely or never definitions, but partial constraints (see also late Wittgenstein, Eleanor Rosch, George Lakoff).
- Formal languages fit only partially the way we think.

Psychological obstacles to creating KR:

- The true structure of our thoughts is unconscious. BEWARE of compressions (Gilles Fauconnier, “The Way We Think”). See “Jargon”.
- Methodological questions reveal part of it (e.g. change of context).

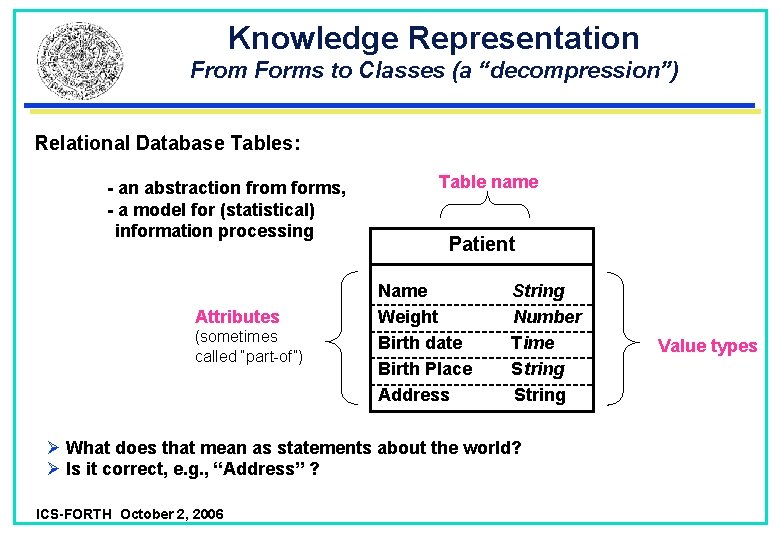

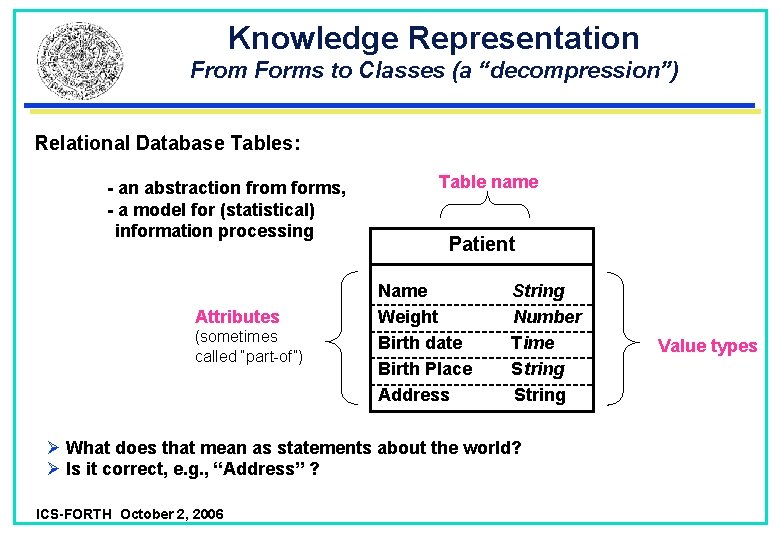

Knowledge Representation: From Forms to Classes (a “decompression”)

Relational database tables:

- an abstraction from forms,
- a model for (statistical) information processing.

(Figure: a table schema. Table name: Patient. Attributes, sometimes called “part-of”: Name, Weight, Birth Date, Birth Place, Address. Value types shown: String, Number, Time, String.)

- What does that mean as statements about the world?
- Is it correct, e.g., “Address”?

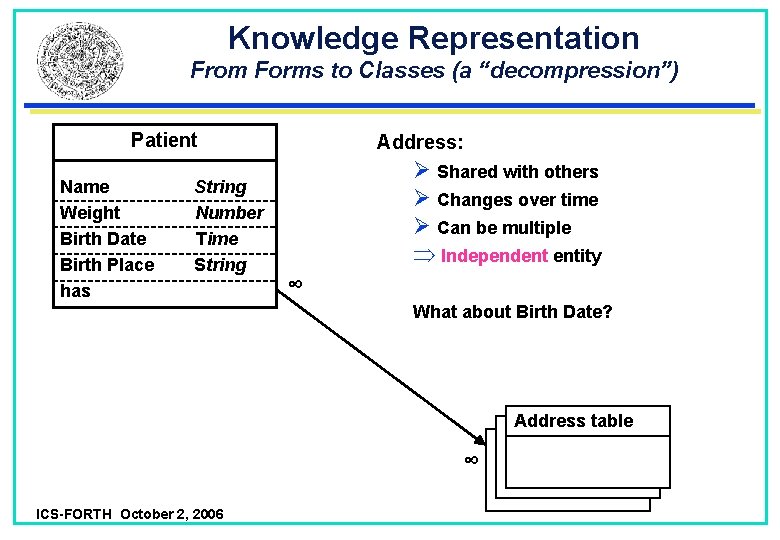

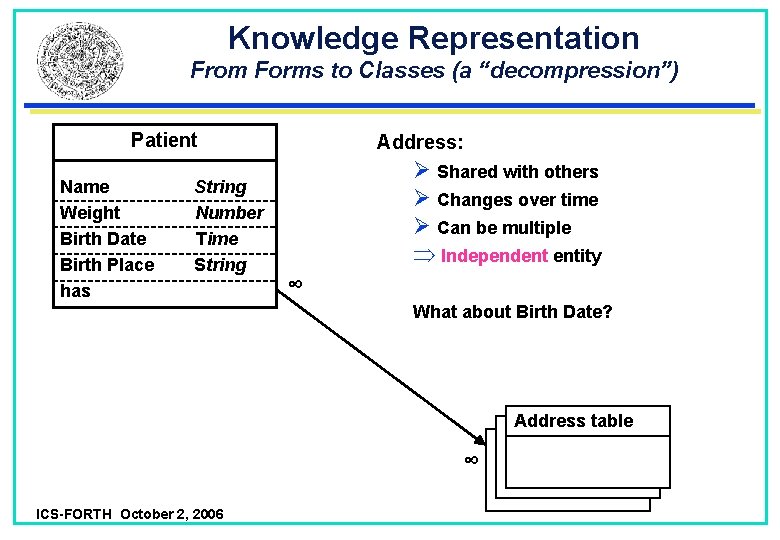

Knowledge Representation: From Forms to Classes (a “decompression”)

(Figure: table Patient with Name: String, Weight: Number, Birth Date: Time, Birth Place: String, linked by “has” to a separate Address table; both ends of the link are marked ∞.)

Address:

- Shared with others
- Changes over time
- Can be multiple

⇒ Independent entity

What about Birth Date?

Knowledge Representation: From Forms to Classes (a “decompression”)

(Figure: Patient with Name: String and Weight: Number, linked by “has” to Birth (Date: Time, Place: String) and by “has” to the Address table; the links carry 1 and ∞ cardinality marks.)

Birth Date, Birth Place:

- Shared with others
- Birth shared with others (twins)!

⇒ Independent entity

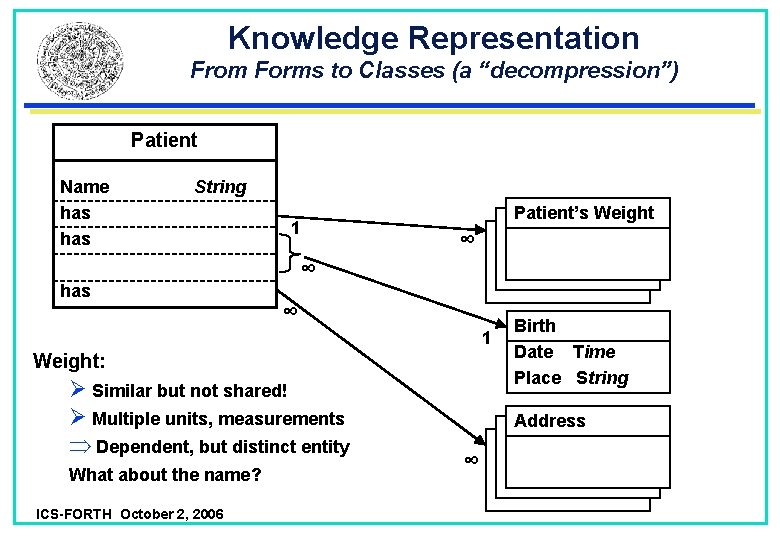

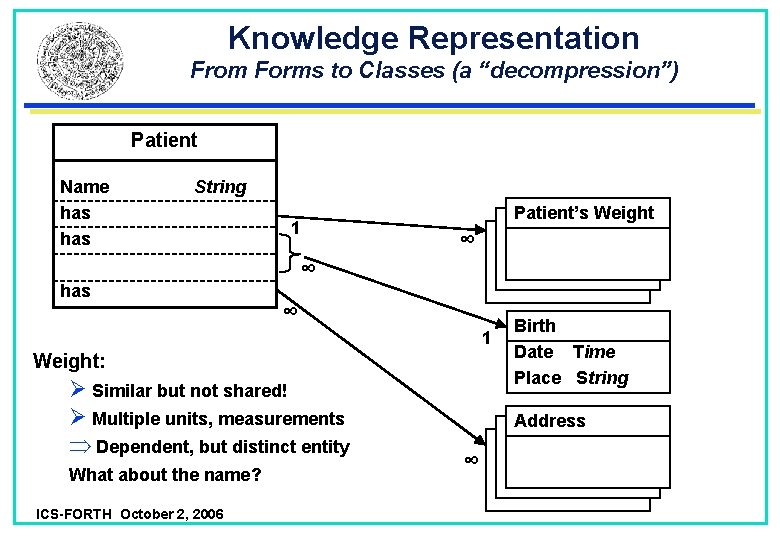

Knowledge Representation: From Forms to Classes (a “decompression”)

(Figure: Patient with Name: String, linked by “has” to Patient’s Weight, to Birth (Date: Time, Place: String) and to Address; the links carry 1 and ∞ cardinality marks.)

Weight:

- Similar but not shared!
- Multiple units, measurements

⇒ Dependent, but distinct entity

What about the name?

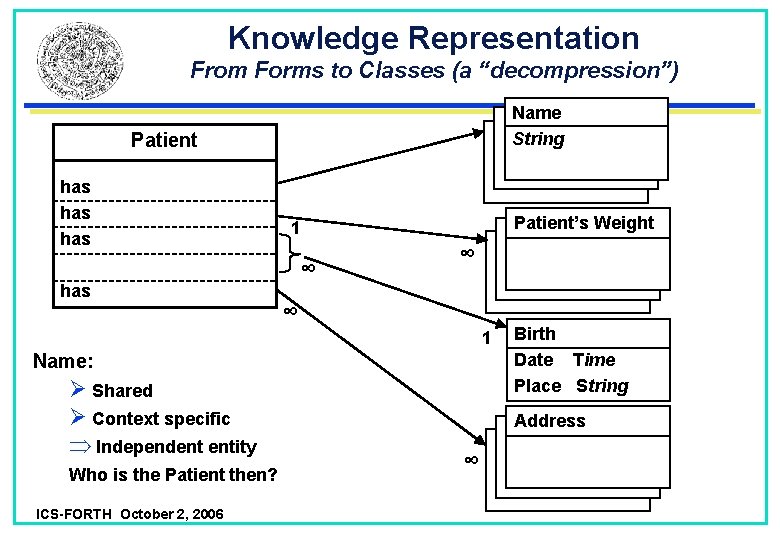

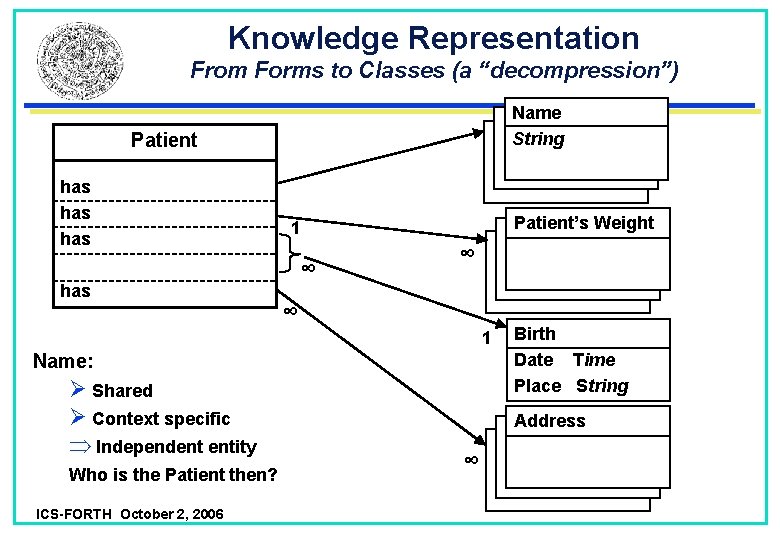

Knowledge Representation: From Forms to Classes (a “decompression”)

(Figure: Patient linked by “has” to Name (String), to Patient’s Weight, to Birth (Date: Time, Place: String) and to Address; the links carry 1 and ∞ cardinality marks.)

Name:

- Shared
- Context specific

⇒ Independent entity

Who is the Patient then?

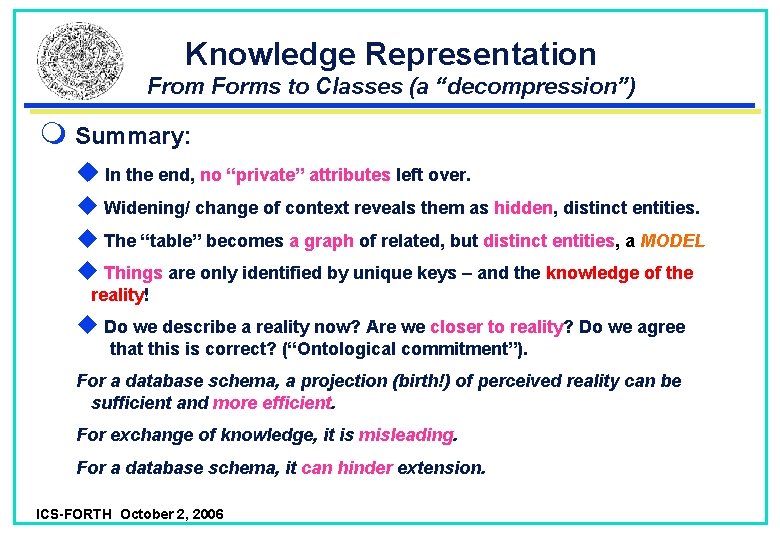

Knowledge Representation: From Forms to Classes (a “decompression”)

Summary:

- In the end, no “private” attributes are left over.
- Widening or changing the context reveals them as hidden, distinct entities.
- The “table” becomes a graph of related, but distinct entities: a MODEL.
- Things are only identified by unique keys, and by knowledge of the reality!
- Do we describe a reality now? Are we closer to reality? Do we agree that this is correct? (“Ontological commitment”.)

For a database schema, a projection (birth!) of perceived reality can be sufficient and more efficient. For exchange of knowledge, it is misleading. For a database schema, it can hinder extension.
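The “decompression” summarized above can be sketched in code. The following Python sketch is only an illustration under stated assumptions: the class names follow the slides, but the field names, keys and sample values (dates, addresses, weights) are hypothetical and not taken from the original deck.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PatientRow:
    """Flat 'form' view: every attribute looks private to the patient."""
    name: str
    weight: float
    birth_date: str
    birth_place: str
    address: str


@dataclass(frozen=True)
class Name:
    text: str


@dataclass(frozen=True)
class Address:
    text: str


@dataclass(frozen=True)
class Birth:
    date: str
    place: str


@dataclass(frozen=True)
class WeightMeasurement:
    value: float
    unit: str


@dataclass
class Patient:
    """Decompressed view: a node linked to distinct entities."""
    key: str
    names: List[Name] = field(default_factory=list)
    addresses: List[Address] = field(default_factory=list)
    birth: Optional[Birth] = None
    weights: List[WeightMeasurement] = field(default_factory=list)


# Hypothetical twins: one Birth and one Address shared by two patients.
shared_birth = Birth(date="1970-01-01", place="Heraklion")
shared_home = Address("Odos Evans 6, Heraklion")
costas = Patient("p1", [Name("Costas")], [shared_home], shared_birth,
                 [WeightMeasurement(85, "kg")])
george = Patient("p2", [Name("George")], [shared_home], shared_birth,
                 [WeightMeasurement(65, "kg")])

# The birth is one shared entity, not two copies of a value.
print(costas.birth is george.birth)
```

In the flat `PatientRow`, the shared birth and address could only be expressed by repeating values; in the decompressed form they are single entities that several patients point to, while each weight measurement stays distinct.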

Knowledge Representation: Classes and Instances

In KR we call these distinct entities classes:

- A class is a category of items that share one or more common traits serving as criteria to identify the items belonging to the class. These properties need not be explicitly formulated in logical terms, but may be described in a text (here called a scope note) that refers to a common conceptualisation of domain experts. The sum of these traits is called the intension of the class.
- A class may be the domain or range of none, one or more properties formally defined in a model. The formally defined properties need not be part of the intension of their domains or ranges: such properties are optional.
- An item that belongs to a class is called an instance of this class. A class is associated with an open set of real life instances, known as the extension of the class. Here “open” is used in the sense that it is generally beyond our capabilities to know all instances of a class in the world and indeed that the future may bring new instances about at any time.

(Related terms: universals, categories, sortal concepts.)

Knowledge Representation: Particulars

Distinguish particulars from universals as a perceived truth. Particulars do not have specializations. Universals have instances, which can be either particulars or universals.

- particulars: 34 N 26 E, me, “hello”, 2, WW II, the Mona Lisa, the text on the Rosetta Stone, 2-10-2006
- universals: patient, word, number, war, painting, text
- “ambiguous” particulars: numbers, saints, measurement units, geopolitical units
- “strange” universals: colors, materials, mythological beasts
- Dualisms:
  - Texts as equivalence classes of documents containing the same text.
  - Classes as objects of discourse, e.g. “chaffinch” and ‘Fringilla coelebs Linnaeus, 1758’ as Linné defined it.

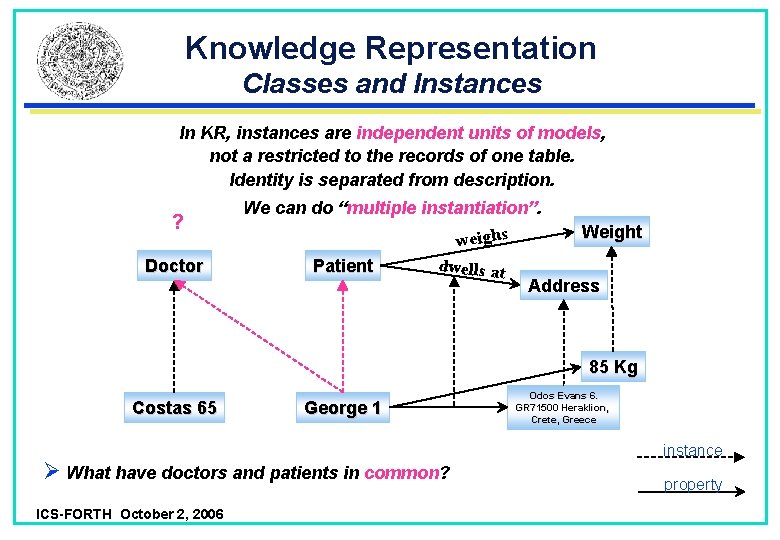

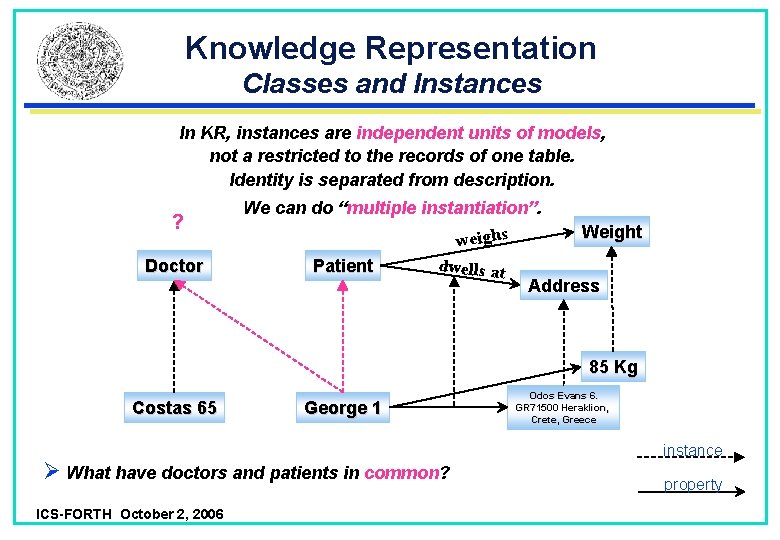

Knowledge Representation: Classes and Instances

In KR, instances are independent units of models, not restricted to the records of one table. Identity is separated from description. We can do “multiple instantiation”.

(Figure: classes Doctor, Patient, Weight and Address; properties “weighs” (to Weight) and “dwells at” (to Address); instances Costas, George 1, 85 Kg, 65, and the address “Odos Evans 6, GR 71500 Heraklion, Crete, Greece”. A question mark links an instance to Doctor. Legend: instance, property.)

- What do doctors and patients have in common?
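A minimal Python sketch of “identity is separated from description” and of multiple instantiation follows. The `Item` class, its method names, and the pairing of George with a particular weight are assumptions made for illustration; the slide figure does not fix them.

```python
class Item:
    """An identified item; its description is attached separately."""

    def __init__(self, identifier):
        self.identifier = identifier  # identity only
        self.classes = set()          # classes the item instantiates
        self.statements = []          # (property, value) pairs

    def instantiate(self, class_name):
        self.classes.add(class_name)

    def state(self, prop, value):
        self.statements.append((prop, value))


george = Item("George")
george.instantiate("Patient")
george.instantiate("Doctor")     # same identity, second class
george.state("weighs", "85 Kg")  # assumed pairing, for illustration

print(sorted(george.classes))
```

Because the item is not a row of one table, adding a second class does not require copying it or giving it a second key.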

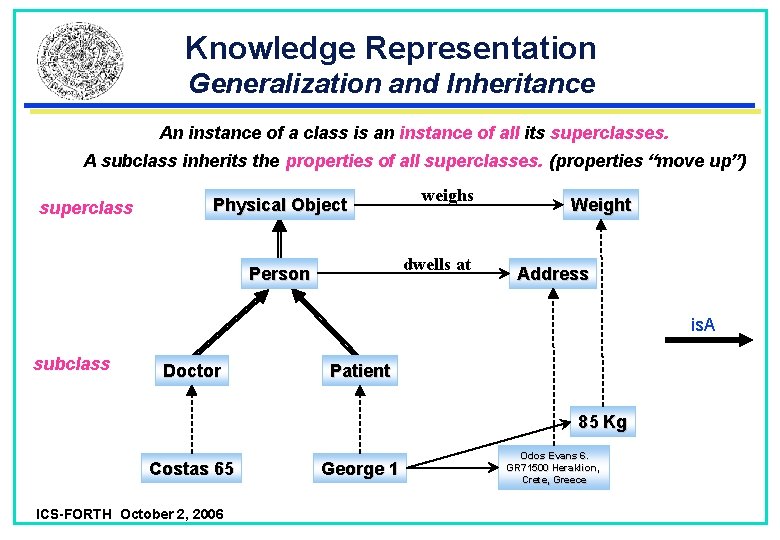

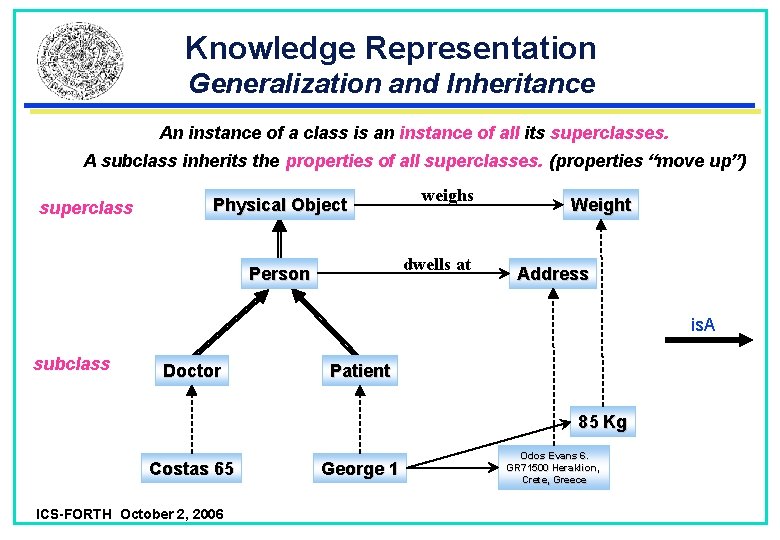

Knowledge Representation: Generalization and Inheritance

- An instance of a class is an instance of all its superclasses.
- A subclass inherits the properties of all superclasses (properties “move up”).

(Figure: superclass Physical Object with property “weighs” (to Weight); Person with property “dwells at” (to Address); subclasses Doctor and Patient connected by IsA; instances Costas, George 1, 85 Kg, 65, and the address “Odos Evans 6, GR 71500 Heraklion, Crete, Greece”.)
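The two rules on this slide can be sketched as a small Python example. The IsA links below are one reading of the figure (Doctor and Patient under Person, Person under Physical Object) and should be treated as an assumption; the function names are hypothetical.

```python
# Direct IsA links (assumed reading of the slide figure).
IS_A = {
    "Doctor": ["Person"],
    "Patient": ["Person"],
    "Person": ["Physical Object"],
    "Physical Object": [],
}

# Properties declared at the most general class they apply to.
DECLARED_PROPERTIES = {
    "Physical Object": {"weighs"},
    "Person": {"dwells at"},
}


def superclasses(cls):
    """Return all direct and indirect superclasses of cls."""
    found = set()
    stack = list(IS_A.get(cls, []))
    while stack:
        parent = stack.pop()
        if parent not in found:
            found.add(parent)
            stack.extend(IS_A.get(parent, []))
    return found


def properties_of(cls):
    """Properties declared on cls plus those inherited via IsA."""
    props = set(DECLARED_PROPERTIES.get(cls, set()))
    for parent in superclasses(cls):
        props |= DECLARED_PROPERTIES.get(parent, set())
    return props


def is_instance_of(direct_classes, cls):
    """An instance of a class is an instance of all its superclasses."""
    return any(c == cls or cls in superclasses(c) for c in direct_classes)


print(sorted(properties_of("Doctor")))
print(is_instance_of({"Patient"}, "Physical Object"))
```

Declaring “weighs” once on Physical Object, rather than on Doctor and Patient separately, is what the slide means by properties “moving up”.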

Knowledge Representation: Ontology and Information Systems

An ontology is a logical theory accounting for the intended meaning of a formal vocabulary, i.e. its ontological commitment to a particular conceptualization of the world. The intended models* of a logical language using such a vocabulary are constrained by its ontological commitment. An ontology indirectly reflects this commitment (and the underlying conceptualization) by approximating these intended models.
Nicola Guarino, Formal Ontology and Information Systems, 1998.

* “models” are meant as models of possible states of affairs.

- Ontologies pertain to a perceived truth: a model commits to a conceptualization, typically that of a group, of how we imagine things in the world are related.
- Any information system compromises perceived reality with what can be represented in a database (dates!), and with what is performant.
- An RDF Schema is no longer a “pure” ontology. Use of RDF does not make an ontology.

Knowledge Representation: Limitations

- Complex logical rules may become difficult to identify for the domain expert and difficult to handle for an information system or user community.
- Distinguish between modeling knowing (epistemology) and modeling being (ontology): necessary properties may nevertheless be unknown. Knowledge may be inconsistent or express alternatives.
- Human knowledge does not fit with First Order Logic: there are prototype effects (George Lakoff), counter-factual reasoning (Gilles Fauconnier), analogies, fuzzy concepts. KR is an approximation.
- Concepts only become discrete if restricted to a context and a function! Paul Feyerabend maintains they must not be fixed.

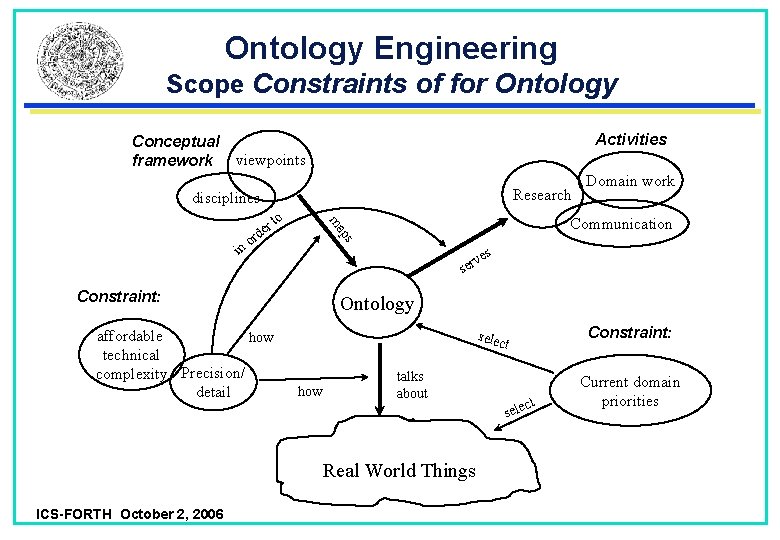

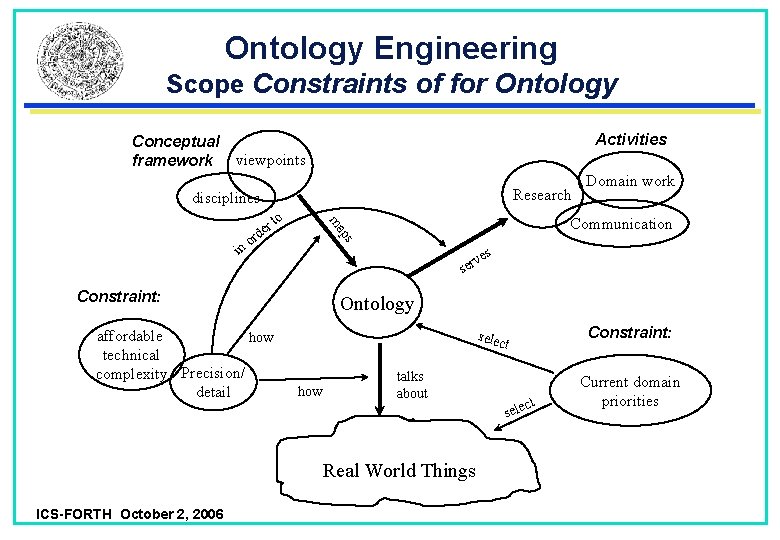

Ontology Engineering: Scope Constraints for Ontology

(Figure: a diagram relating Ontology to Conceptual framework, Activities, viewpoints, Research disciplines, Communication, Precision/detail, Domain work, Current domain priorities and Real World Things. Legible link labels include “talks about”, “select”, and “Constraint: affordable technical complexity”; the remaining labels did not survive extraction.)

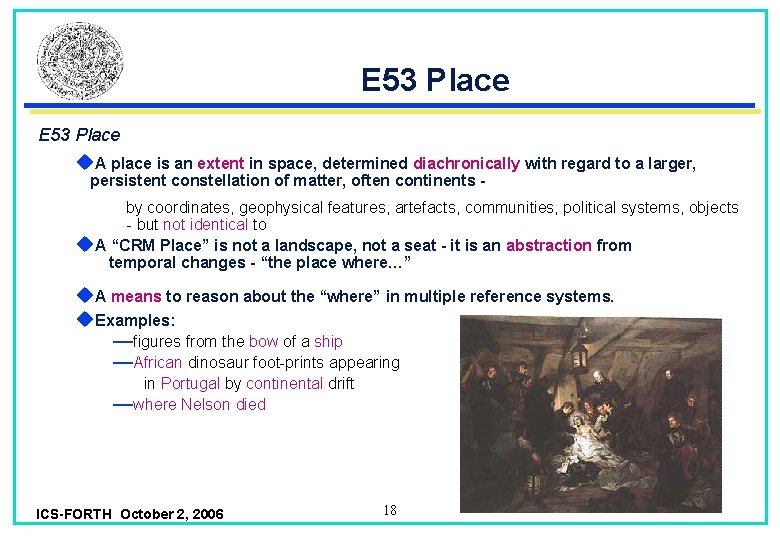

E53 Place

- A place is an extent in space, determined diachronically with regard to a larger, persistent constellation of matter, often continents:
  - by coordinates, geophysical features, artefacts, communities, political systems, objects
  - but not identical to these
- A “CRM Place” is not a landscape, not a seat:
  - it is an abstraction from temporal changes
  - “the place where…”
- A means to reason about the “where” in multiple reference systems.
- Examples:
  - figures from the bow of a ship
  - African dinosaur foot-prints appearing in Portugal by continental drift
  - where Nelson died

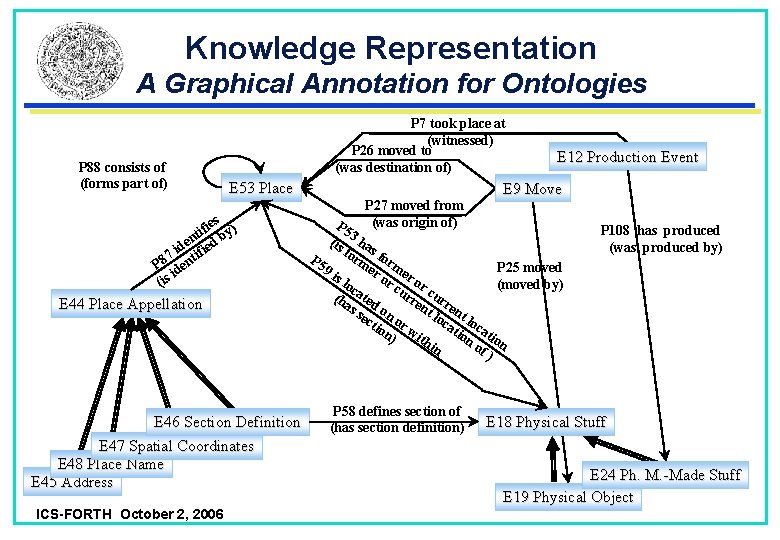

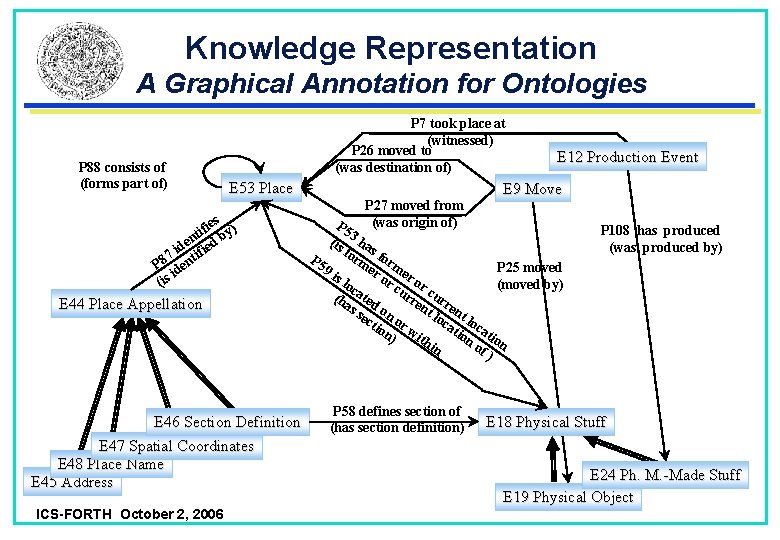

Knowledge Representation: A Graphical Annotation for Ontologies

(Figure: a class and property diagram centred on E53 Place. Only the labels are recoverable.)

- Classes: E53 Place, E44 Place Appellation, E45 Address, E46 Section Definition, E47 Spatial Coordinates, E48 Place Name, E9 Move, E12 Production Event, E18 Physical Stuff, E19 Physical Object, E24 Ph. M.-Made Stuff
- Properties (inverse names in parentheses):
  - P7 took place at (witnessed)
  - P25 moved (moved by)
  - P26 moved to (was destination of)
  - P27 moved from (was origin of)
  - P53 has former or current location (is former or current location of)
  - P58 defines section of (has section definition)
  - P59 has section (is located on or within)
  - P87 is identified by (identifies)
  - P88 consists of (forms part of)
  - P108 has produced (was produced by)

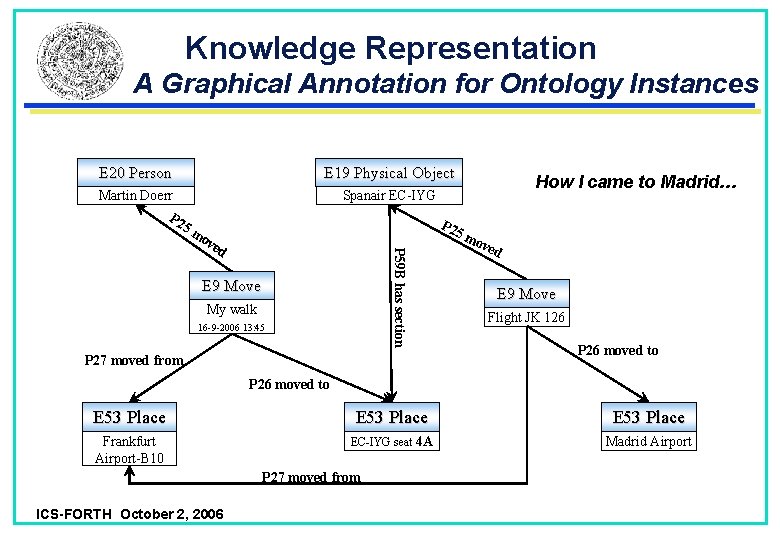

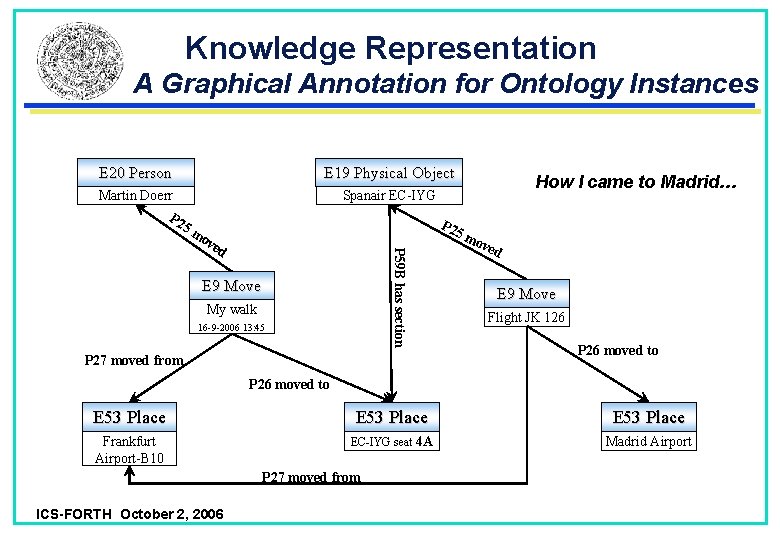

Knowledge Representation: A Graphical Annotation for Ontology Instances

How I came to Madrid…

(Figure: an instance diagram. Only the labels are recoverable.)

- E20 Person: Martin Doerr
- E19 Physical Object: Spanair EC-IYG
- E9 Move: “My walk 16-9-2006 13:45”, “Flight JK 126”
- E53 Place: Frankfurt Airport-B10, EC-IYG seat 4A, Madrid Airport
- Properties used: P25 moved, P26 moved to, P27 moved from, P59 has section
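The instance diagram on this slide can be approximated as a list of subject, property, object triples. The sketch below is in Python and rests on an assumption: the extracted figure preserves the node and property labels but not which arrow connects which nodes, so the links chosen here (the walk moves the person to the seat, the flight moves the aircraft to Madrid, the seat is a section of the aircraft) are one plausible reading. The helper function names are hypothetical.

```python
# Assumed reading of the figure; labels come from the slide, edges are guessed.
TRIPLES = [
    ("My walk 16-9-2006 13:45", "P25 moved", "Martin Doerr"),
    ("My walk 16-9-2006 13:45", "P27 moved from", "Frankfurt Airport-B10"),
    ("My walk 16-9-2006 13:45", "P26 moved to", "EC-IYG seat 4A"),
    ("Flight JK 126", "P25 moved", "Spanair EC-IYG"),
    ("Flight JK 126", "P27 moved from", "Frankfurt Airport-B10"),
    ("Flight JK 126", "P26 moved to", "Madrid Airport"),
    ("Spanair EC-IYG", "P59 has section", "EC-IYG seat 4A"),
]


def objects(subject, prop):
    """All objects o such that (subject, prop, o) is stated."""
    return [o for s, p, o in TRIPLES if s == subject and p == prop]


def subjects(prop, obj):
    """All subjects s such that (s, prop, obj) is stated."""
    return [s for s, p, o in TRIPLES if p == prop and o == obj]


def destinations_of(thing):
    """Places that some Move moved `thing` to."""
    result = []
    for move in subjects("P25 moved", thing):
        result.extend(objects(move, "P26 moved to"))
    return result


def reached_places(thing):
    """Destinations of `thing`, plus destinations of any object
    that has one of those destinations as a section."""
    places = destinations_of(thing)
    for place in list(places):
        for carrier in subjects("P59 has section", place):
            places.extend(destinations_of(carrier))
    return places


print(reached_places("Martin Doerr"))
```

Because the moves are entities in their own right, the question of how the person reached Madrid is answered by following links through the seat and the aircraft rather than by a dedicated “travelled to” attribute.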

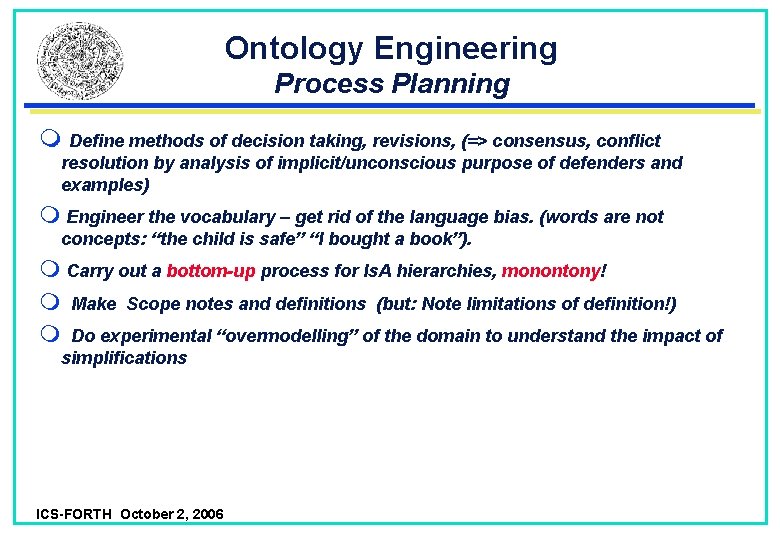

Ontology Engineering: Process Planning

- Define methods of decision taking and revisions (⇒ consensus, conflict resolution by analysis of the implicit or unconscious purpose of defenders, and of examples).
- Engineer the vocabulary: get rid of the language bias. (Words are not concepts: “the child is safe”, “I bought a book”.)
- Carry out a bottom-up process for IsA hierarchies. Monotonicity!
- Make scope notes and definitions (but note the limitations of definitions!).
- Do experimental “overmodelling” of the domain to understand the impact of simplifications.

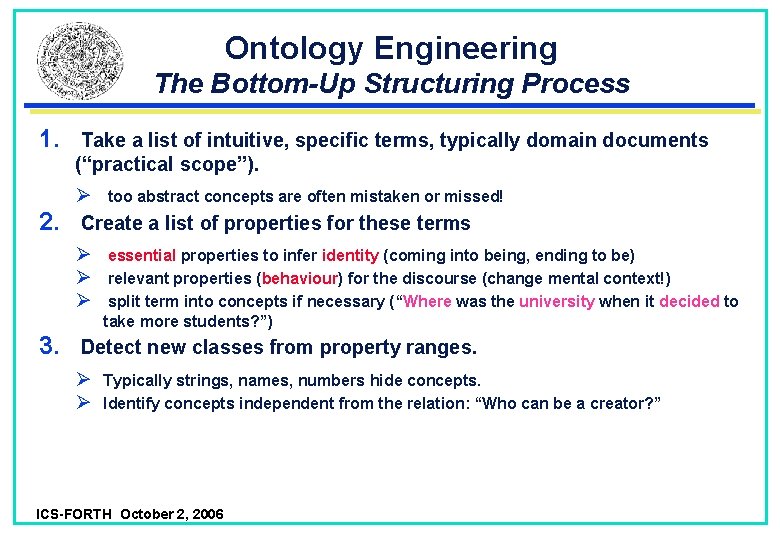

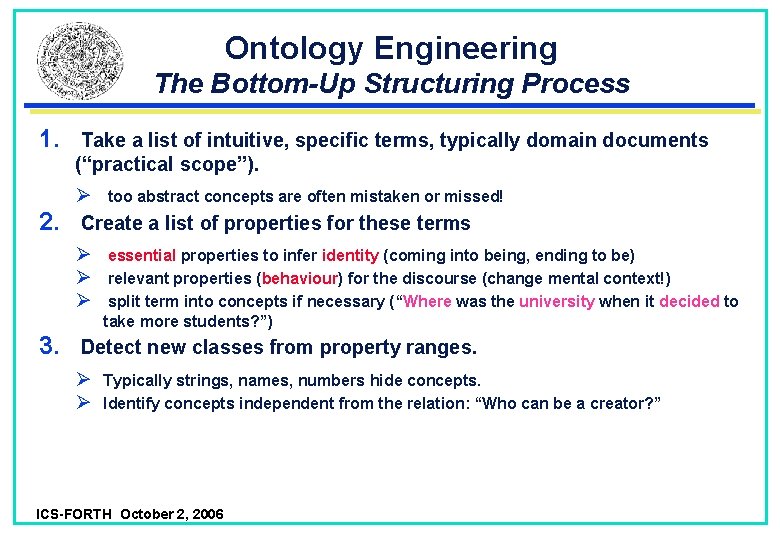

Ontology Engineering: The Bottom-Up Structuring Process

1. Take a list of intuitive, specific terms, typically from domain documents (“practical scope”).
   - Too abstract concepts are often mistaken or missed!
2. Create a list of properties for these terms:
   - essential properties to infer identity (coming into being, ceasing to be)
   - relevant properties (behaviour) for the discourse (change mental context!)
   - split the term into concepts if necessary (“Where was the university when it decided to take more students?”)
3. Detect new classes from property ranges.
   - Typically strings, names, numbers hide concepts.
   - Identify concepts independent from the relation: “Who can be a creator?”

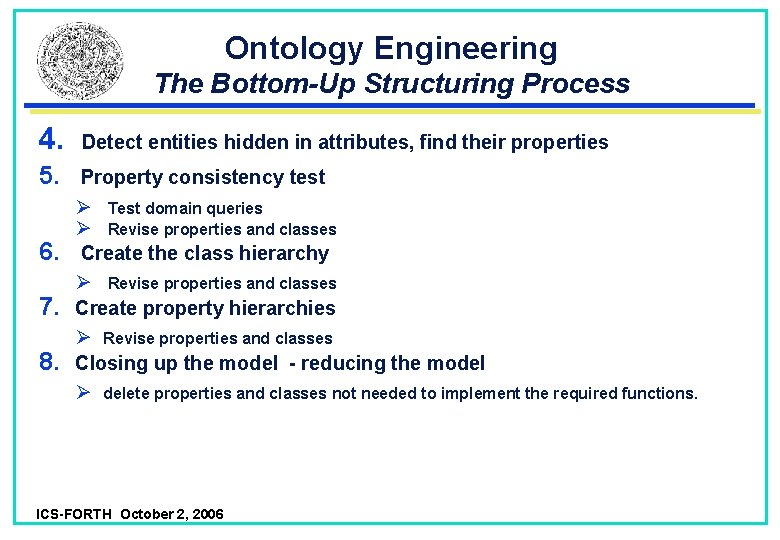

Ontology Engineering: The Bottom-Up Structuring Process

4. Detect entities hidden in attributes, find their properties.
5. Property consistency test:
   - Test domain queries
   - Revise properties and classes
6. Create the class hierarchy.
   - Revise properties and classes
7. Create property hierarchies.
   - Revise properties and classes
8. Closing up the model, reducing the model:
   - Delete properties and classes not needed to implement the required functions.

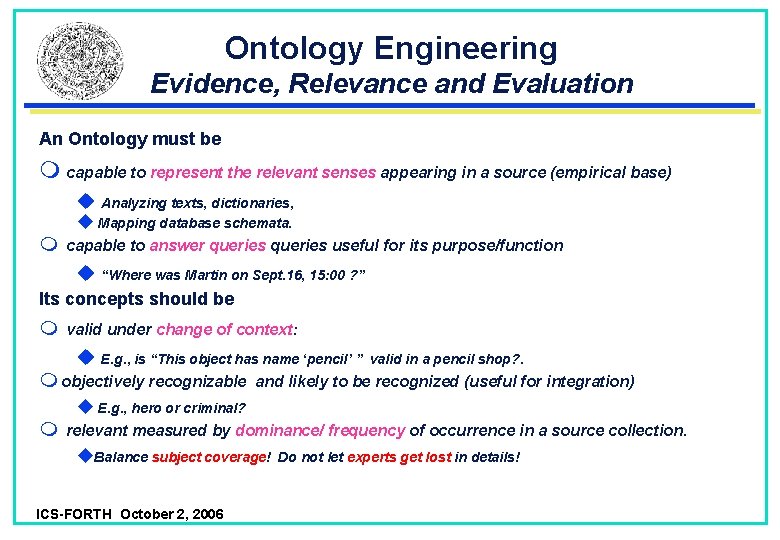

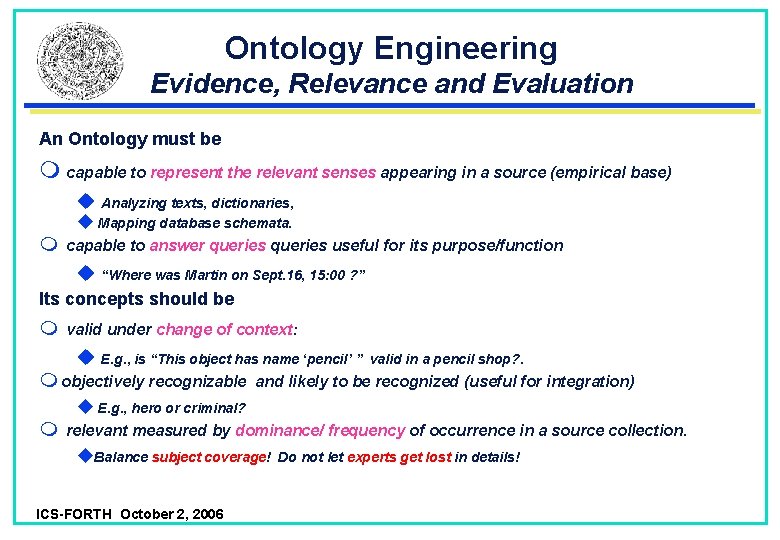

Ontology Engineering: Evidence, Relevance and Evaluation

An ontology must be:

- capable of representing the relevant senses appearing in a source (empirical base):
  - analyzing texts, dictionaries
  - mapping database schemata
- capable of answering queries useful for its purpose/function:
  - “Where was Martin on Sept. 16, 15:00?”

Its concepts should be:

- valid under change of context:
  - e.g., is “This object has name ‘pencil’” valid in a pencil shop?
- objectively recognizable and likely to be recognized (useful for integration):
  - e.g., hero or criminal?
- relevant, measured by dominance or frequency of occurrence in a source collection:
  - Balance subject coverage! Do not let experts get lost in details!

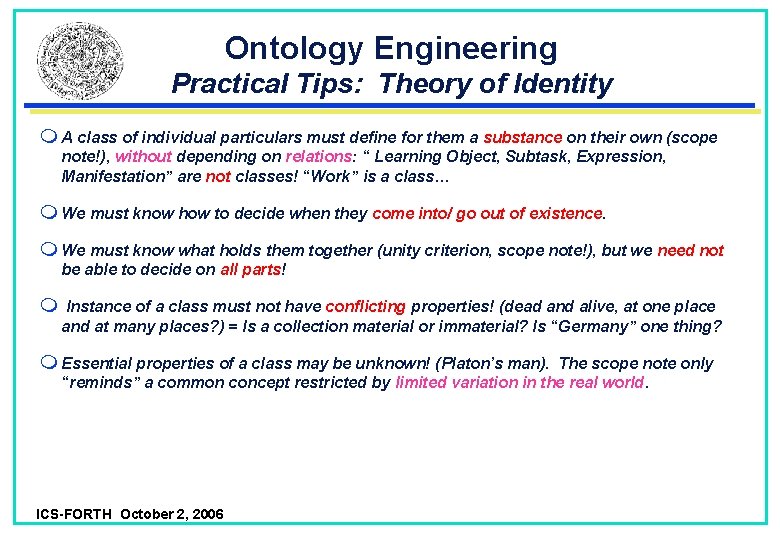

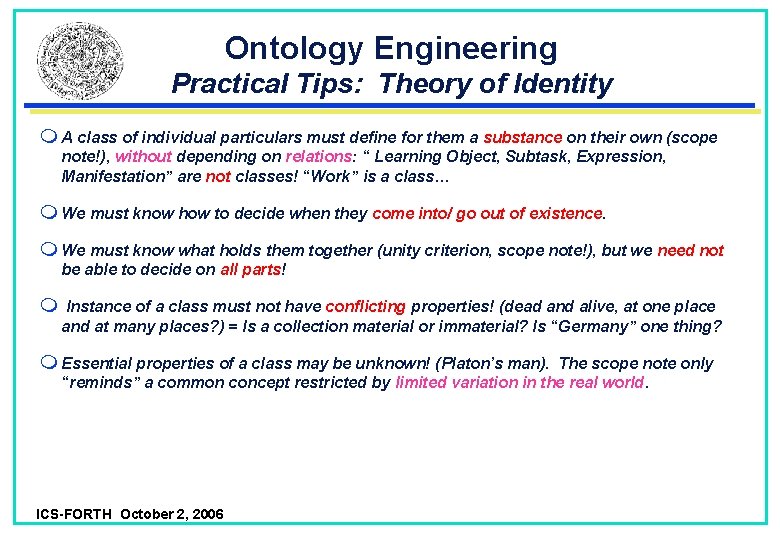

Ontology Engineering: Practical Tips: Theory of Identity

- A class of individual particulars must define for them a substance of their own (scope note!), without depending on relations: “Learning Object, Subtask, Expression, Manifestation” are not classes! “Work” is a class…
- We must know how to decide when they come into or go out of existence.
- We must know what holds them together (unity criterion, scope note!), but we need not be able to decide on all parts!
- Instances of a class must not have conflicting properties (dead and alive, at one place and at many places?). Is a collection material or immaterial? Is “Germany” one thing?
- Essential properties of a class may be unknown (Plato’s man)! The scope note only “reminds” us of a common concept restricted by limited variation in the real world.

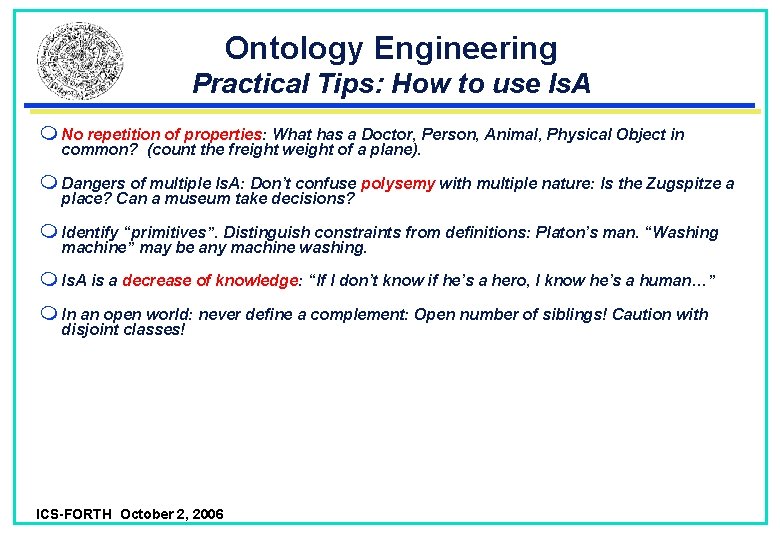

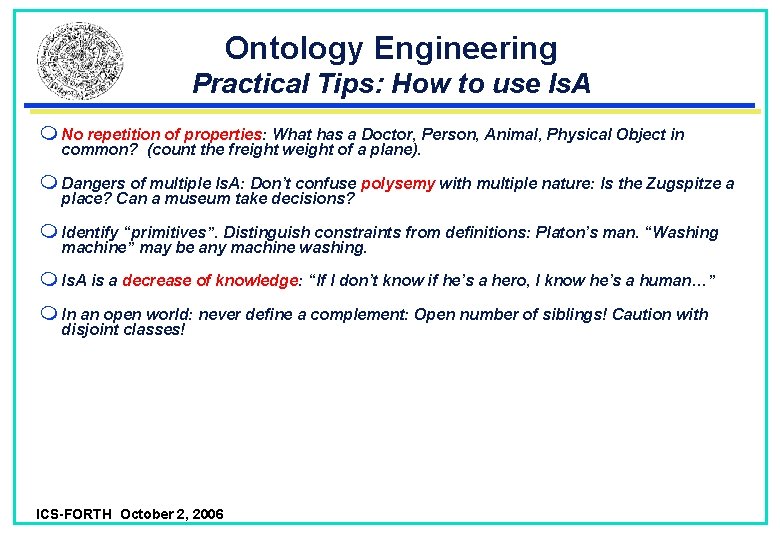

Ontology Engineering: Practical Tips: How to use IsA

- No repetition of properties: what do a Doctor, Person, Animal and Physical Object have in common? (Count the freight weight of a plane.)
- Dangers of multiple IsA: don’t confuse polysemy with multiple nature. Is the Zugspitze a place? Can a museum take decisions?
- Identify “primitives”. Distinguish constraints from definitions: Plato’s man. “Washing machine” may be any machine washing.
- IsA is a decrease of knowledge: “If I don’t know if he’s a hero, I know he’s a human…”
- In an open world, never define a complement: open number of siblings! Caution with disjoint classes!
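The open-world tip can be illustrated with a small Python sketch in which a query distinguishes “stated” from “not stated” instead of answering “false”. The data and the function name are hypothetical; the sketch only illustrates why a complement (“is not a sibling”) should not be derived from missing statements.

```python
from typing import Optional

# Hypothetical stated facts; the list of siblings is never assumed complete.
KNOWN_SIBLINGS = {("Costas", "George")}


def are_siblings(a: str, b: str) -> Optional[bool]:
    """Return True if stated, otherwise None (unknown), never False.

    Under an open-world reading, the absence of a statement does not
    mean that the statement is false.
    """
    if (a, b) in KNOWN_SIBLINGS or (b, a) in KNOWN_SIBLINGS:
        return True
    return None


print(are_siblings("George", "Costas"))
print(are_siblings("George", "Martin"))
```

A closed-world database query would return “no” for the second call; here the answer stays open, which is why a class defined as “everyone who is not a sibling of George” has no stable extension.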

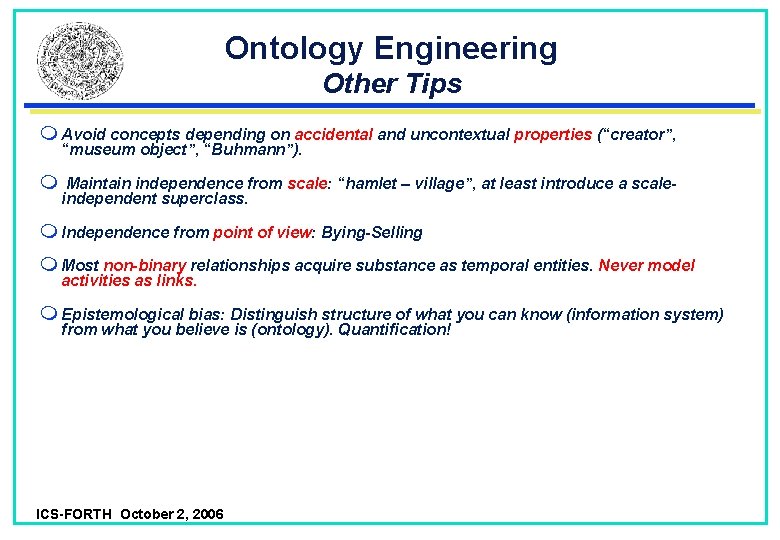

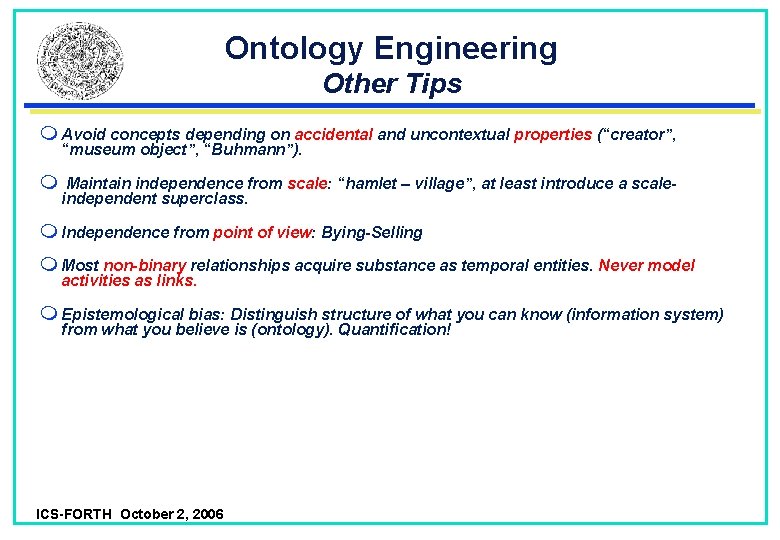

Ontology Engineering: Other Tips

- Avoid concepts depending on accidental and uncontextual properties (“creator”, “museum object”, “Buhmann”).
- Maintain independence from scale: “hamlet – village”; at least introduce a scale-independent superclass.
- Independence from point of view: Buying-Selling.
- Most non-binary relationships acquire substance as temporal entities. Never model activities as links.
- Epistemological bias: distinguish the structure of what you can know (information system) from what you believe is (ontology). Quantification!
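The tips on point of view and on activities can be sketched together in Python: instead of a “bought” link or a “sold” link between two parties, one event entity carries seller, buyer, item and date, and both views are derived from it. The class name `TransferOfOwnership`, its fields and the sample data are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class TransferOfOwnership:
    """One temporal entity instead of a 'bought' or 'sold' link."""
    seller: str
    buyer: str
    item: str
    date: str


def bought_by(events: List[TransferOfOwnership], who: str) -> List[str]:
    """The buyer's point of view, derived from the events."""
    return [e.item for e in events if e.buyer == who]


def sold_by(events: List[TransferOfOwnership], who: str) -> List[str]:
    """The seller's point of view, derived from the same events."""
    return [e.item for e in events if e.seller == who]


# Hypothetical sample data.
events = [TransferOfOwnership("Bookshop", "Martin", "a book", "2006-09-16")]

print(bought_by(events, "Martin"))
print(sold_by(events, "Bookshop"))
```

The non-binary relationship (who, from whom, what, when) fits naturally on the event, and neither the buying nor the selling perspective is privileged in the model.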