Introduction to Instruction Level Parallelism (ILP)

- Slides: 18

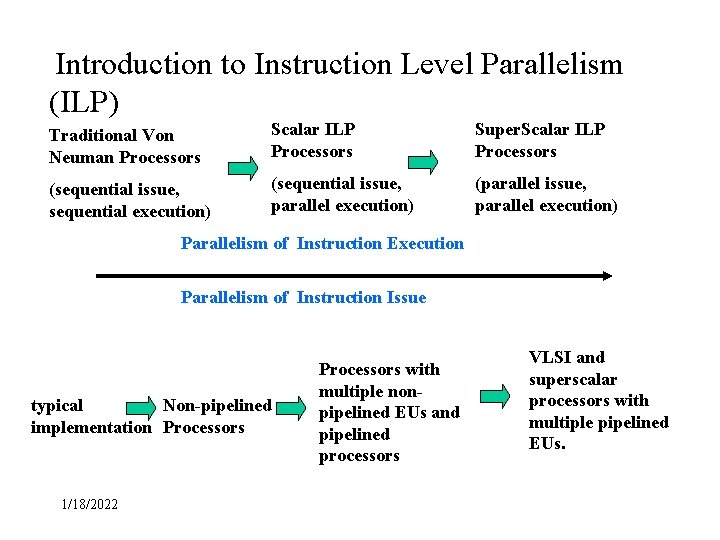

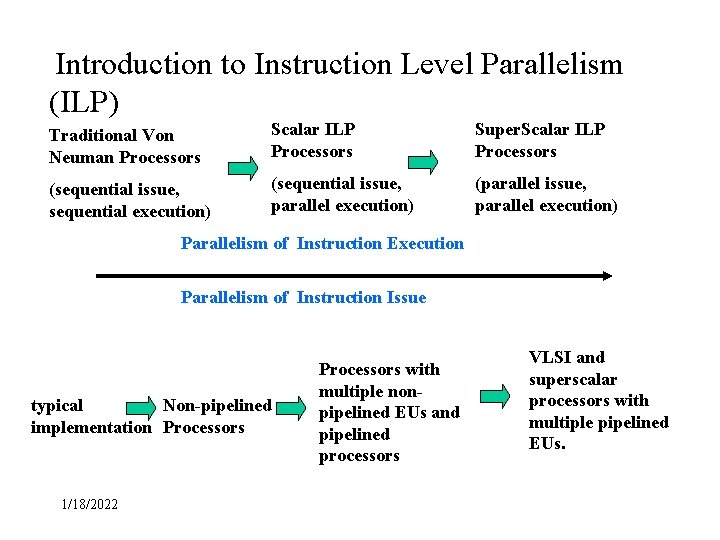

Introduction to Instruction Level Parallelism (ILP)

- Traditional Von Neumann processors (sequential issue, sequential execution)
  - Typical implementation: non-pipelined processors
- Scalar ILP processors (sequential issue, parallel execution)
  - Typical implementation: processors with multiple non-pipelined EUs, and pipelined processors
- Superscalar ILP processors (parallel issue, parallel execution)
  - Typical implementation: VLIW and superscalar processors with multiple pipelined EUs

Moving from the first class to the second adds parallelism of instruction execution; moving from the second to the third adds parallelism of instruction issue.

1/18/2022

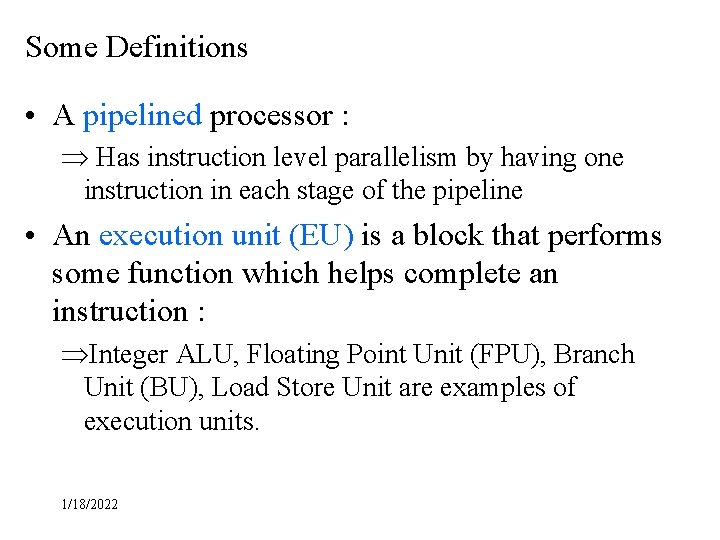

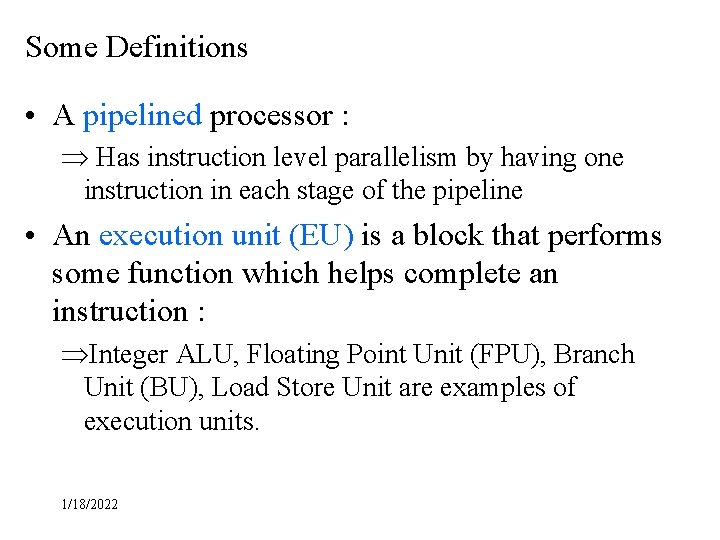

Some Definitions

- A pipelined processor:
  - Has instruction level parallelism by having one instruction in each stage of the pipeline
- An execution unit (EU) is a block that performs some function which helps complete an instruction:
  - The integer ALU, floating point unit (FPU), branch unit (BU), and load/store unit are examples of execution units.

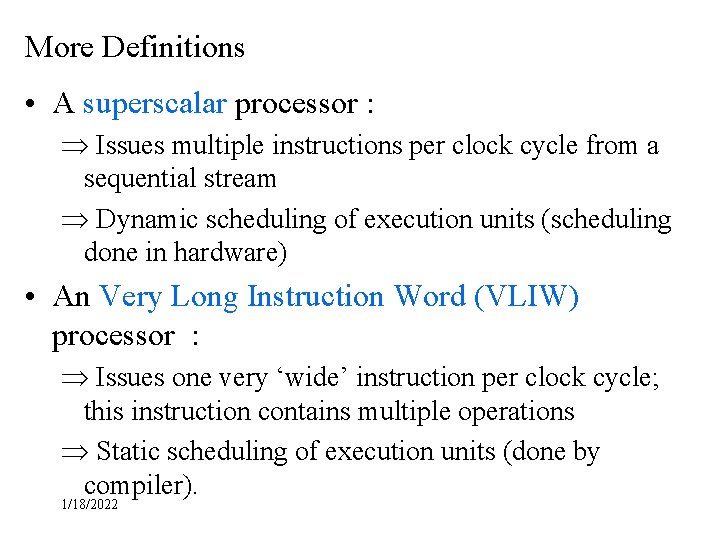

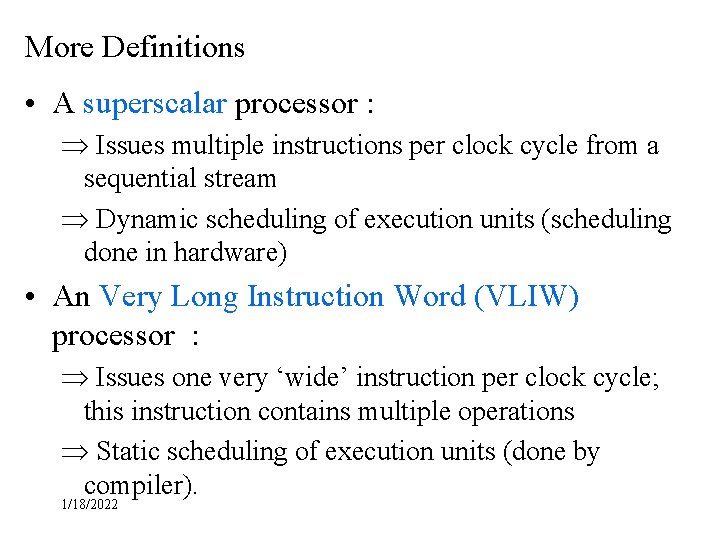

More Definitions

- A superscalar processor:
  - Issues multiple instructions per clock cycle from a sequential stream
  - Dynamic scheduling of execution units (scheduling done in hardware)
- A Very Long Instruction Word (VLIW) processor:
  - Issues one very "wide" instruction per clock cycle; this instruction contains multiple operations
  - Static scheduling of execution units (done by the compiler)

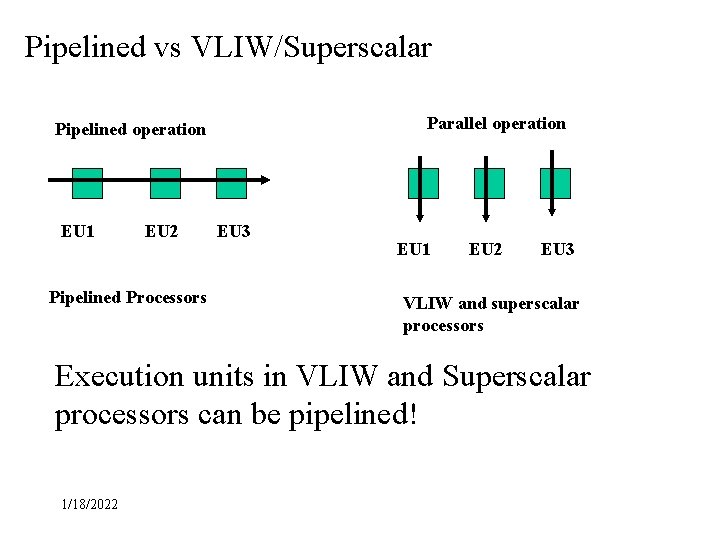

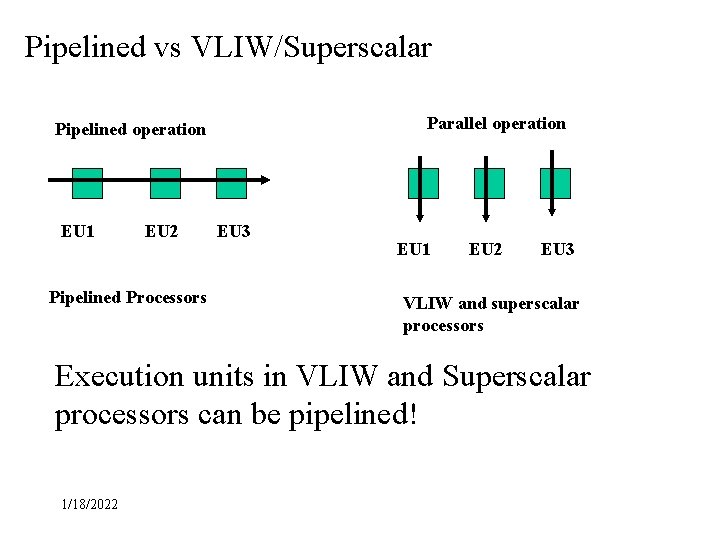

Pipelined vs VLIW/Superscalar

- Pipelined processors (pipelined operation): EU 1, EU 2, and EU 3 arranged in series
- VLIW and superscalar processors (parallel operation): EU 1, EU 2, and EU 3 arranged side by side
- Execution units in VLIW and superscalar processors can themselves be pipelined!

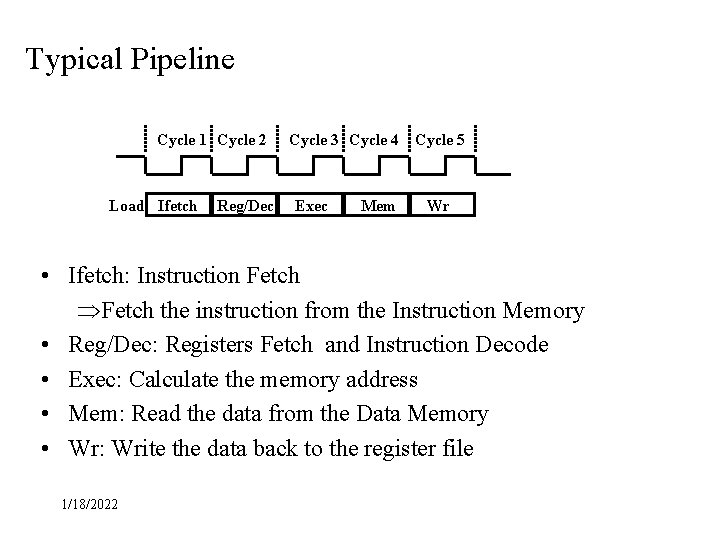

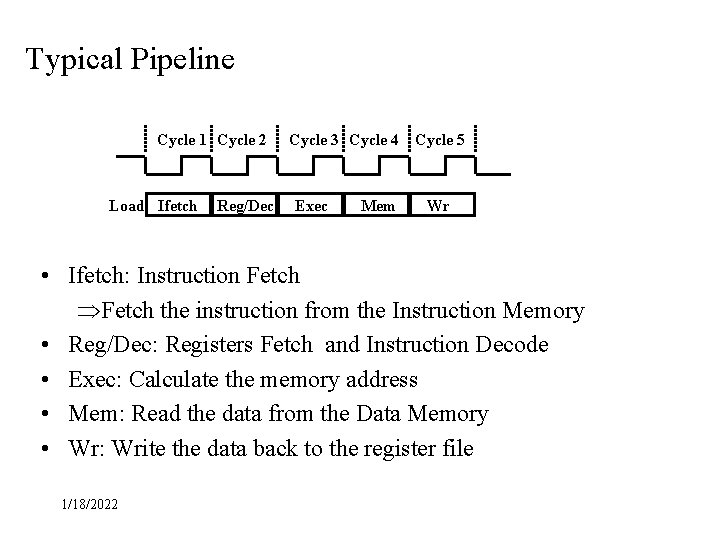

Typical Pipeline

Stages of a load instruction, one per clock cycle:

| Cycle 1 | Cycle 2 | Cycle 3 | Cycle 4 | Cycle 5 |
| --- | --- | --- | --- | --- |
| Ifetch | Reg/Dec | Exec | Mem | Wr |

- Ifetch: Instruction fetch
  - Fetch the instruction from the instruction memory
- Reg/Dec: Register fetch and instruction decode
- Exec: Calculate the memory address
- Mem: Read the data from the data memory
- Wr: Write the data back to the register file
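The overlap that this five-stage pipeline provides can be sketched in a few lines. This is a minimal illustration that assumes an ideal pipeline (one instruction enters per cycle, no stalls); the function names `stage_at` and `total_cycles` are made up for this example.

```python
# Stage names taken from the slide; an ideal, stall-free pipeline is assumed.
STAGES = ["Ifetch", "Reg/Dec", "Exec", "Mem", "Wr"]


def stage_at(instr_index, cycle):
    """Stage occupied by instruction `instr_index` (0-based) in `cycle` (1-based).

    Returns None when the instruction has not entered the pipeline yet
    or has already left it.
    """
    pos = cycle - 1 - instr_index
    return STAGES[pos] if 0 <= pos < len(STAGES) else None


def total_cycles(n_instructions):
    """Cycles needed to run n instructions through the ideal pipeline."""
    if n_instructions <= 0:
        return 0
    return n_instructions + len(STAGES) - 1


if __name__ == "__main__":
    # Print a small timing diagram for three back-to-back instructions.
    for i in range(3):
        row = [stage_at(i, c) or "-" for c in range(1, total_cycles(3) + 1)]
        print(f"instr {i}: " + " ".join(f"{s:8}" for s in row))
```

In every cycle after the pipeline fills, each stage holds a different instruction, which is the instruction level parallelism a pipelined processor provides.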

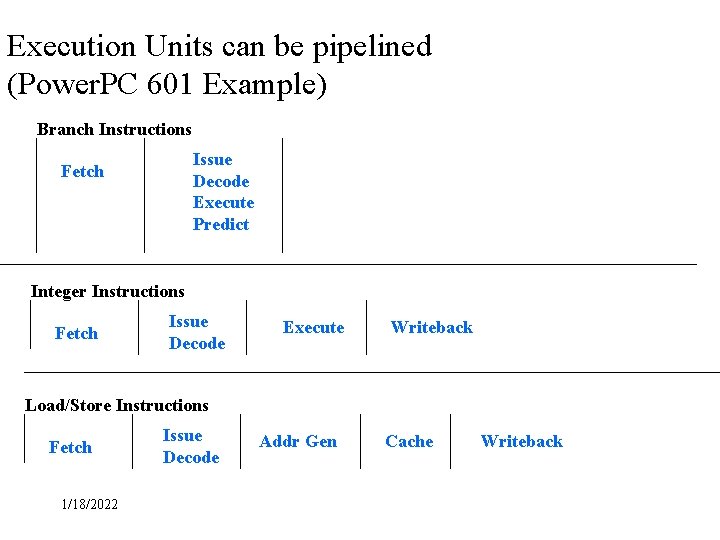

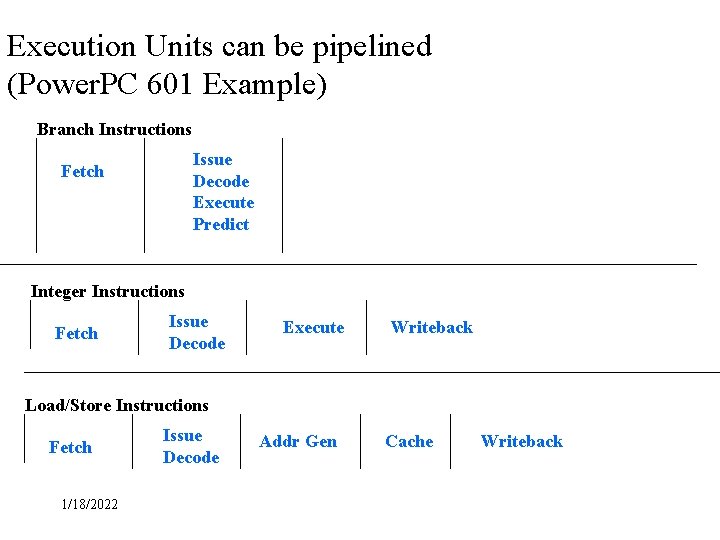

Execution Units can be pipelined (PowerPC 601 Example)

- Branch instructions: Fetch, Issue, Decode, Execute, Predict
- Integer instructions: Fetch, Issue, Decode, Execute, Writeback
- Load/store instructions: Fetch, Issue, Decode, Addr Gen, Cache, Writeback

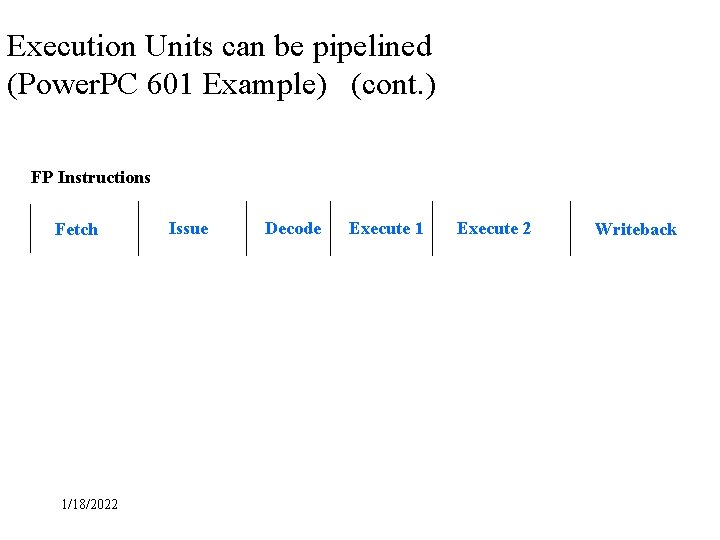

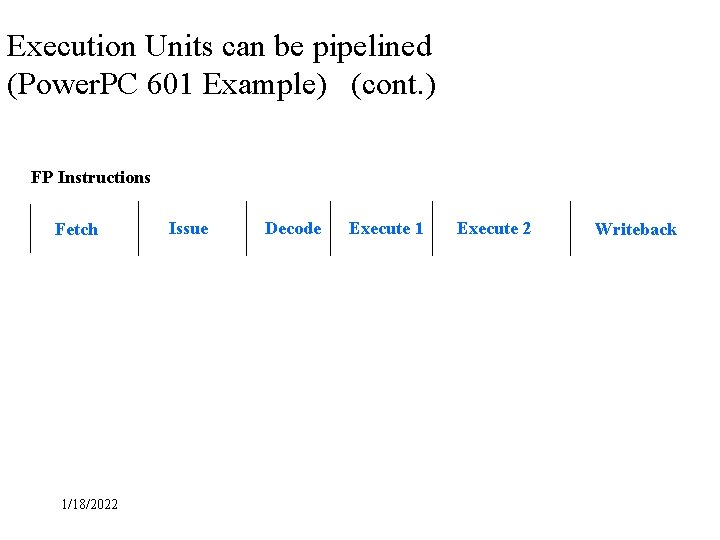

Execution Units can be pipelined (PowerPC 601 Example) (cont.)

- FP instructions: Fetch, Issue, Decode, Execute 1, Execute 2, Writeback

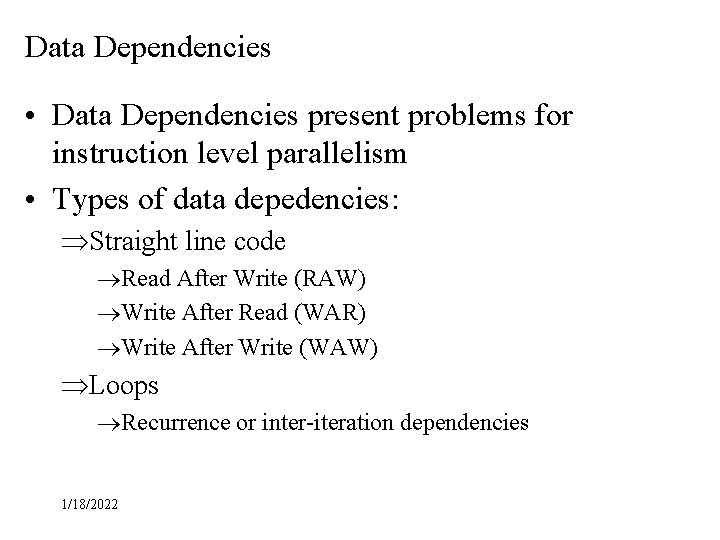

Data Dependencies

- Data dependencies present problems for instruction level parallelism
- Types of data dependencies:
  - Straight line code
    - Read After Write (RAW)
    - Write After Read (WAR)
    - Write After Write (WAW)
  - Loops
    - Recurrence or inter-iteration dependencies
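The three straight-line dependency types can be told apart by comparing the destination and source registers of two instructions. The sketch below is only an illustration: the `Instr` tuple and the `classify` function are hypothetical names, and memory operands such as `a` are treated as plain source names.

```python
from collections import namedtuple

# Hypothetical instruction record: opcode, destination register, source operands.
Instr = namedtuple("Instr", ["op", "dest", "srcs"])


def classify(first, second):
    """List the data dependencies of `second` on the earlier `first`."""
    kinds = []
    if first.dest in second.srcs:
        kinds.append("RAW")  # second reads what first writes
    if second.dest in first.srcs:
        kinds.append("WAR")  # second overwrites what first still has to read
    if first.dest == second.dest:
        kinds.append("WAW")  # both write the same register
    return kinds


if __name__ == "__main__":
    load = Instr("load", "r1", ("a",))
    add = Instr("add", "r2", ("r1", "r1"))
    print(classify(load, add))  # the add reads r1, which the load writes
```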

Straight Line Dependencies

Read After Write (RAW)

    i1: load r1, a;
    i2: add r2, r1, r1;

Assume a pipeline of Fetch/Decode/Execute/Mem/Writeback. When the add is in the Decode stage (which fetches r1), the load is in the Execute stage and the true value of r1 has not been fetched yet (r1 is fetched in the Mem stage).

Solve this by either stalling the add until the value of r1 is ready, or by forwarding the value of r1 from the Mem stage to the Execute stage.
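The cost of the two remedies can be estimated with a small timing model. This sketch rests on assumptions that are not stated on the slide: one stage per cycle, a value becomes usable in the cycle after its producing stage completes, and the register file cannot be written and read in the same cycle. The function name `raw_stall_cycles` is hypothetical.

```python
STAGES = ["Fetch", "Decode", "Execute", "Mem", "Writeback"]


def raw_stall_cycles(distance, produce_stage, consume_stage):
    """Stall cycles a consumer needs behind a producer it depends on.

    distance      -- how many issue slots after the producer the consumer
                     enters the pipeline (1 = immediately after)
    produce_stage -- stage at whose end the value becomes available
    consume_stage -- stage at whose start the consumer needs the value
    """
    # The producer enters Fetch in cycle 0, so it is in stage k during cycle k.
    ready = STAGES.index(produce_stage) + 1           # first cycle the value is usable
    needed = distance + STAGES.index(consume_stage)   # cycle the consumer wants it
    return max(0, ready - needed)


if __name__ == "__main__":
    # No forwarding: the add must read r1 in Decode after the load's Writeback.
    print(raw_stall_cycles(1, "Writeback", "Decode"))
    # Forwarding from Mem to Execute, as the slide suggests.
    print(raw_stall_cycles(1, "Mem", "Execute"))
```

Under these assumptions forwarding shortens the stall but does not remove it for a load followed immediately by its consumer; placing one independent instruction between them (distance 2) hides the remaining cycle.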

Straight Line Dependencies (cont.)

Write After Read (WAR)

    i1: mul r1, r2, r3;    r1 <= r2 * r3
    i2: add r2, r4, r5;    r2 <= r4 + r5

If instruction i2 (add) is executed before instruction i1 (mul) for some reason, then i1 (mul) could read the wrong value for r2. One reason for delaying i1 would be a stall while waiting for the r3 value being produced by a previous instruction. Instruction i2 could proceed because it has all its operands, thus causing the WAR hazard.

Use register renaming to eliminate the WAR dependency: replace r2 as the destination of i2 (and in later instructions that read its result) with some other register that has not been used yet.
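Register renaming can be sketched as a single pass that gives every destination a fresh register and rewrites later reads to match. This is a simplified illustration, not a description of any particular processor: the `rename` function, the tuple layout `(op, dest, srcs)`, and the register names `p1`, `p2`, ... are all assumptions made for the example.

```python
def rename(instrs, free_regs):
    """Rename destinations to unused registers, in program order.

    instrs    -- list of (op, dest, srcs) tuples
    free_regs -- registers not used anywhere in `instrs`
    """
    mapping = {}            # architectural register -> most recent new name
    free = list(free_regs)
    renamed = []
    for op, dest, srcs in instrs:
        # Sources read the newest name of each register (or the original one).
        new_srcs = tuple(mapping.get(s, s) for s in srcs)
        new_dest = free.pop(0)
        mapping[dest] = new_dest
        renamed.append((op, new_dest, new_srcs))
    return renamed


if __name__ == "__main__":
    program = [
        ("mul", "r1", ("r2", "r3")),
        ("add", "r2", ("r4", "r5")),   # WAR on r2 with the mul
        ("sub", "r6", ("r2", "r1")),   # reads both results
    ]
    for instr in rename(program, ["p1", "p2", "p3"]):
        print(instr)
```

After renaming, the add writes `p2` instead of `r2`, so it can run before the mul without disturbing the value the mul reads. The same pass also removes WAW dependencies, because two writes to the same register receive different names.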

Straight Line Dependencies (cont.)

Write After Write (WAW)

    i1: mul r1, r2, r3;    r1 <= r2 * r3
    i2: add r1, r4, r5;    r1 <= r4 + r5

If instruction i1 (mul) finishes AFTER instruction i2 (add), then register r1 would get the wrong value. Instruction i1 could finish after instruction i2 if separate execution units were used for instructions i1 and i2.

One way to solve this hazard is to simply let instruction i1 proceed normally, but disable its write stage.

Loop Dependencies

Recurrences:

    do I = 2, n
        X(I) = A * X(I-1) + B;
    enddo

One way to parallelize this loop would be to "unroll" it (create (N-2) copies of the loop). However, each X value depends on the X value computed in the previous iteration, so loop unrolling will not give us any more parallelism.

This type of data dependency cannot be solved at the implementation level, but must be addressed at the compiler level.
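The recurrence can be written out directly to see why unrolling does not help. The sketch below is illustrative only: the function names are made up, and lists are padded so that indices match the 1-based `X(I)` of the slide.

```python
def recurrence(x1, a, b, n):
    """Compute X(1..n) with X(I) = A * X(I-1) + B, one iteration at a time."""
    x = [None, x1]                      # index 0 unused, to mirror X(1..n)
    for i in range(2, n + 1):
        x.append(a * x[i - 1] + b)
    return x[1:]


def recurrence_unrolled_by_two(x1, a, b, n):
    """Same loop with the body duplicated (unrolled by a factor of two)."""
    x = [None, x1]
    i = 2
    while i + 1 <= n:
        x.append(a * x[i - 1] + b)      # X(i) needs X(i-1)
        x.append(a * x[i] + b)          # X(i+1) needs the X(i) just computed
        i += 2
    if i <= n:                          # leftover iteration when n - 1 is odd
        x.append(a * x[i - 1] + b)
    return x[1:]


if __name__ == "__main__":
    print(recurrence(1, 2, 1, 5))
    print(recurrence_unrolled_by_two(1, 2, 1, 5))
```

Both versions produce the same values, and in the unrolled body the second statement still has to wait for the first, so the two copies cannot execute in parallel.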

Control Dependencies

- Control dependencies (i.e. branches) are a major obstacle to instruction level parallelism
  - In a pipelined machine, the branch condition is normally computed as EARLY as possible in the pipeline in order to lessen the impact of an incorrect branch prediction (taken or not taken)
- Conditional branch instructions make up about 20% of instructions in general purpose code, and 5-10% in scientific code.
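A rough sense of why branches matter comes from a simple cycles-per-instruction estimate. The model below is an assumption for illustration, not something given on the slide: a base CPI of 1, and a fixed penalty in cycles for each mispredicted branch. Only the 20% branch frequency comes from the text; the misprediction rate and the penalty are made-up inputs.

```python
def effective_cpi(branch_fraction, mispredict_rate, penalty_cycles, base_cpi=1.0):
    """Average CPI when each mispredicted branch costs `penalty_cycles` extra cycles."""
    return base_cpi + branch_fraction * mispredict_rate * penalty_cycles


if __name__ == "__main__":
    # 20% branches (from the slide); 10% mispredicted and a 3-cycle penalty are assumed.
    print(effective_cpi(0.20, 0.10, 3))
    # Resolving the branch earlier shortens the penalty and lowers the CPI.
    print(effective_cpi(0.20, 0.10, 1))
```

The penalty term shrinks when the branch condition is computed earlier in the pipeline, which is the motivation given above.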

Branch Strategies

- Static
  - Always predict taken or not-taken
- Dynamic
  - Keep a history of code execution and modify predictions based on execution history
- Multi-way
  - Execute both branch paths and kill the incorrect path as soon as the branch condition is resolved.
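One common way to keep execution history for dynamic prediction is a two-bit saturating counter per branch. The slides do not say which dynamic scheme is intended, so the sketch below is just one possible instance; the class name, the initial counter value, and the `accuracy` helper are assumptions.

```python
class TwoBitPredictor:
    """Per-branch 2-bit saturating counter: 0-1 predict not taken, 2-3 predict taken."""

    def __init__(self):
        self.counters = {}  # branch address -> counter in 0..3

    def predict(self, pc):
        # Unseen branches start at 1 (weakly not taken); this choice is arbitrary.
        return self.counters.get(pc, 1) >= 2

    def update(self, pc, taken):
        c = self.counters.get(pc, 1)
        self.counters[pc] = min(3, c + 1) if taken else max(0, c - 1)


def accuracy(predictor, pc, outcomes):
    """Fraction of correct predictions over a sequence of actual outcomes."""
    correct = 0
    for taken in outcomes:
        if predictor.predict(pc) == taken:
            correct += 1
        predictor.update(pc, taken)
    return correct / len(outcomes)


if __name__ == "__main__":
    # A loop branch: taken nine times, then falls through once.
    loop_branch = [True] * 9 + [False]
    p = TwoBitPredictor()
    print(accuracy(p, 0x40, loop_branch))  # first pass through the loop
    print(accuracy(p, 0x40, loop_branch))  # second pass, with history warmed up
```

A static "always taken" strategy would get the same nine out of ten right on this pattern; the dynamic scheme pays off when the bias of a branch is not known in advance.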

Control Dependency Graph

    i0: r1 = op1;
    i1: r2 = op2;
    i2: r3 = op3;
    i3: if (r2 > r1) {
    i4:     if (r3 > r1)
    i5:         r4 = r3;
            else
    i6:         r4 = r1;
        } else
    i7:     r4 = r2;
    i8: r5 = r4 * r4;

(Figure: control dependency graph over the nodes i0 to i8.)
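The branching structure of this code can be explored by enumerating the execution paths through it. The successor map below is inferred from the code listing, since the figure itself is not reproduced here, so treat the edges as an assumption; it is a control-flow view of the example, and `SUCCESSORS` and `paths` are hypothetical names.

```python
# Edges inferred from the listing: i3 and i4 are the two conditional branches.
SUCCESSORS = {
    "i0": ["i1"],
    "i1": ["i2"],
    "i2": ["i3"],
    "i3": ["i4", "i7"],   # outer if: then-part starts at i4, else-part is i7
    "i4": ["i5", "i6"],   # inner if: then-part i5, else-part i6
    "i5": ["i8"],
    "i6": ["i8"],
    "i7": ["i8"],
    "i8": [],
}


def paths(node="i0"):
    """All execution paths from `node` to the end of the code."""
    successors = SUCCESSORS[node]
    if not successors:
        return [[node]]
    return [[node] + rest for nxt in successors for rest in paths(nxt)]


if __name__ == "__main__":
    for p in paths():
        print(" -> ".join(p))
```

Each path executes exactly one of i5, i6, and i7, and which one depends on the outcomes of i3 and i4; i8 runs on every path.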

Resource Dependencies

- A resource dependency occurs when an instruction requires a hardware resource that is being used by a previously issued instruction (also known as a structural hazard)
  - Execution units, busses (e.g., external address/data bus)
- A resource dependency can only be solved by resource duplication
  - The Harvard architecture has separate address/data busses for instructions and data
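A resource dependency check amounts to comparing how many instructions want a unit with how many copies of that unit exist. The sketch below is illustrative: the opcode-to-unit table and the function name `resource_conflicts` are assumptions, not a description of a specific machine.

```python
from collections import Counter

# Hypothetical mapping from opcode to the execution unit it needs.
UNIT_FOR_OP = {
    "add": "ALU", "mul": "ALU",
    "load": "LSU", "store": "LSU",
    "fadd": "FPU",
    "branch": "BU",
}


def resource_conflicts(ops, unit_counts):
    """Units that are oversubscribed if all `ops` are issued in the same cycle.

    Returns a dict mapping each oversubscribed unit to the number of
    instructions that requested it.
    """
    demand = Counter(UNIT_FOR_OP[op] for op in ops)
    return {unit: n for unit, n in demand.items() if n > unit_counts.get(unit, 0)}


if __name__ == "__main__":
    # One ALU: an add and a mul cannot both issue this cycle.
    print(resource_conflicts(["add", "mul"], {"ALU": 1, "LSU": 1}))
    # Duplicating the ALU removes the conflict.
    print(resource_conflicts(["add", "mul"], {"ALU": 2, "LSU": 1}))
```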

Instruction Scheduling

- Instruction scheduling is the assignment of instructions to hardware resources.
  - Hardware resources are busses, registers, and execution units
- Static scheduling is done by the compiler or by a human
  - Hardware assumes that ALL hazards have been eliminated.
  - Reduces the amount of control logic needed, which hopefully allows a higher maximum clock speed
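Static scheduling can be sketched as a compiler-style pass that packs instructions into fixed-width bundles, one bundle per cycle. This is a deliberately simple greedy scheme under stated assumptions: every operation takes one cycle, any execution slot can run any operation, and every RAW, WAR, or WAW dependency forces the later instruction into a strictly later bundle (conservative for WAR). The function name `schedule` and the tuple layout `(op, dest, srcs)` are made up for the example.

```python
def schedule(instrs, width):
    """Greedily assign instructions to bundles of at most `width` operations.

    instrs -- list of (op, dest, srcs) tuples in program order
    Returns a list of bundles; each bundle is a list of instruction indices.
    """
    cycle_of = []   # bundle index chosen for each instruction so far
    bundles = []
    for i, (_, dest, srcs) in enumerate(instrs):
        earliest = 0
        for j in range(i):
            _, prev_dest, prev_srcs = instrs[j]
            depends = (
                prev_dest in srcs        # RAW
                or dest in prev_srcs     # WAR
                or prev_dest == dest     # WAW
            )
            if depends:
                earliest = max(earliest, cycle_of[j] + 1)
        # Take the first bundle at or after `earliest` that still has room.
        c = earliest
        while c < len(bundles) and len(bundles[c]) >= width:
            c += 1
        while len(bundles) <= c:
            bundles.append([])
        bundles[c].append(i)
        cycle_of.append(c)
    return bundles


if __name__ == "__main__":
    program = [
        ("load", "r1", ("a",)),
        ("add", "r2", ("r1", "r1")),
        ("mul", "r3", ("r4", "r5")),
        ("sub", "r6", ("r2", "r3")),
    ]
    print(schedule(program, width=2))
```

With two slots per bundle the independent mul is hoisted next to the load, so the four instructions fit in three bundles instead of four. Because all of this is decided before the program runs, the hardware needs no hazard detection logic for it.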

Instruction Scheduling (cont.)

- Dynamic scheduling is implemented in hardware inside the processor.
  - All instruction streams are "legal"
  - The control logic and hardware resources needed for dynamic scheduling can be significant.
- If trying to execute legacy code streams, then dynamic scheduling may be the only option.