
Introduction to Hadoop
SAP Open Source Summit - October 2010
Lars George – lars@cloudera.com
Copyright © 2010 Cloudera. All rights reserved. Not to be reproduced without prior written consent. 1010.01

Traditional Large-Scale Computation
§ Traditionally, computation has been processor-bound
  – Relatively small amounts of data
  – Significant amount of complex processing performed on that data
§ For many years, the primary push was to increase the computing power of a single machine
  – Faster processor, more RAM

Distributed Systems
§ Moore’s Law: roughly stated, processing power doubles every two years
§ Even that hasn’t always proved adequate for very CPU-intensive jobs
§ Distributed systems evolved to allow developers to use multiple machines for a single job
  – MPI (Message Passing Interface)
  – PVM (Parallel Virtual Machine)
  – Condor

Distributed Systems: Problems
§ Programming for traditional distributed systems is complex
  – Data exchange requires synchronization
  – Finite bandwidth is available
  – Temporal dependencies are complicated
  – It is difficult to deal with partial failures of the system
§ Ken Arnold, CORBA (Common Object Request Broker Architecture) designer:
  – “Failure is the defining difference between distributed and local programming, so you have to design distributed systems with the expectation of failure”
  – Developers spend more time designing for failure than they do actually working on the problem itself

Distributed Systems: Data Storage
§ Typically, data for a distributed system is stored on a SAN (Storage Area Network)
§ At compute time, data is copied to the compute nodes
§ Fine for relatively limited amounts of data

The Data-Driven World
§ Modern systems have to deal with far more data than was the case in the past
  – Organizations are generating huge amounts of data
  – That data has inherent value, and cannot be discarded
§ Examples:
  – Facebook – over 15 PB of data
  – eBay – over 10 PB of data
§ Many organizations are generating data at a rate of terabytes (or more) per day

Data Becomes the Bottleneck
§ Getting the data to the processors becomes the bottleneck
§ Quick calculation
  – Typical disk data transfer rate: 75 MB/sec
  – Time taken to transfer 100 GB of data to the processor: approx 22 minutes!
    – Assuming sustained reads
    – Actual time will be worse, since most servers have less than 100 GB of RAM available
§ A new approach is needed
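The quick calculation above can be reproduced with a few lines of Python. This is only a sketch under stated assumptions: decimal units (1 GB = 1000 MB) and a sustained read rate. The function name `transfer_minutes` and the 100-disk comparison are illustrative and do not come from the slides.

```python
# Back-of-the-envelope transfer time for the figures on the slide.
# Assumptions: 1 GB = 1000 MB, and the disk sustains its read rate throughout.

def transfer_minutes(data_gb: float, rate_mb_per_sec: float) -> float:
    """Return the minutes needed to read data_gb gigabytes at rate_mb_per_sec."""
    seconds = (data_gb * 1000) / rate_mb_per_sec
    return seconds / 60


single_disk = transfer_minutes(100, 75)
print(f"One disk: {single_disk:.1f} minutes")  # prints "One disk: 22.2 minutes"

# Hypothetical comparison: the same 100 GB spread evenly over 100 disks
# that are all read at the same time, so each disk only serves 1 GB.
parallel = transfer_minutes(100 / 100, 75)
print(f"100 disks in parallel: {parallel * 60:.1f} seconds")  # prints "100 disks in parallel: 13.3 seconds"
```

The second figure hints at why spreading data over many machines, and reading it where it lives, changes the picture.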

Partial Failure Support
§ The system must support partial failure
  – Failure of a component should result in a graceful degradation of application performance
  – Not complete failure of the entire system

Data Recoverability
§ If a component of the system fails, its workload should be assumed by still-functioning units in the system
  – Failure should not result in the loss of any data

Component Recovery
§ If a component of the system fails and then recovers, it should be able to rejoin the system
  – Without requiring a full restart of the entire system

Consistency
§ Component failures during execution of a job should not affect the outcome of the job

Scalability
§ Adding load to the system should result in a graceful decline in performance of individual jobs
  – Not failure of the system
§ Increasing resources should support a proportional increase in load capacity

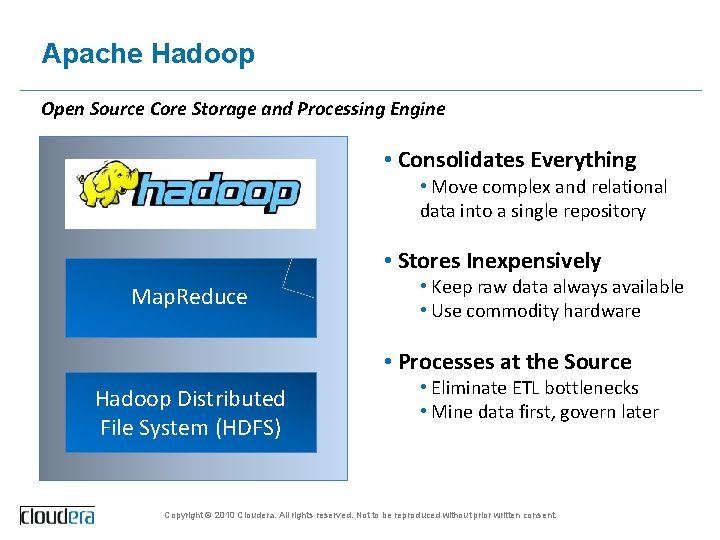

Apache Hadoop
Open Source Core Storage and Processing Engine
[Diagram labels: MapReduce, Hadoop Distributed File System (HDFS)]
• Consolidates Everything
  • Move complex and relational data into a single repository
• Stores Inexpensively
  • Keep raw data always available
  • Use commodity hardware
• Processes at the Source
  • Eliminate ETL bottlenecks
  • Mine data first, govern later

The Hadoop Project
§ Hadoop is an open-source project overseen by the Apache Software Foundation
§ Originally based on papers published by Google in 2003 and 2004
§ Hadoop committers work at several different organizations
  – Including Facebook, Yahoo!, Cloudera

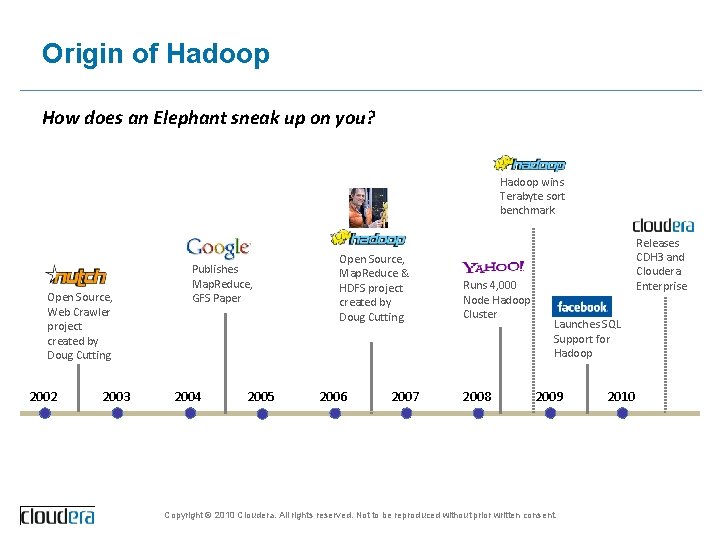

Origin of Hadoop
How does an Elephant sneak up on you?
[Timeline figure, 2002 to 2010; the year attached to each event is not recoverable from the extracted text]
§ Open source web crawler project created by Doug Cutting
§ Publishes MapReduce, GFS paper
§ Open source MapReduce & HDFS project created by Doug Cutting
§ Runs 4,000 node Hadoop cluster
§ Hadoop wins Terabyte sort benchmark
§ Launches SQL support for Hadoop
§ Releases CDH 3 and Cloudera Enterprise

What is Hadoop?
§ A scalable fault-tolerant distributed system for data storage and processing (open source under the Apache license)
§ Scalable data processing engine
  – Hadoop Distributed File System (HDFS): self-healing high-bandwidth clustered storage
  – MapReduce: fault-tolerant distributed processing
§ Key value
  – Flexible -> store data without a schema and add it later as needed
  – Affordable -> cost / TB at a fraction of traditional options
  – Broadly adopted -> a large and active ecosystem
  – Proven at scale -> dozens of petabyte+ implementations in production today

Apache Hadoop
The Importance of Being Open
No Lock-In - Investments in skills, services & hardware preserved regardless of vendor choice
Rich Ecosystem - Dozens of complementary software, hardware and services firms
Community Development - Hadoop & related projects are expanding at a rapid pace

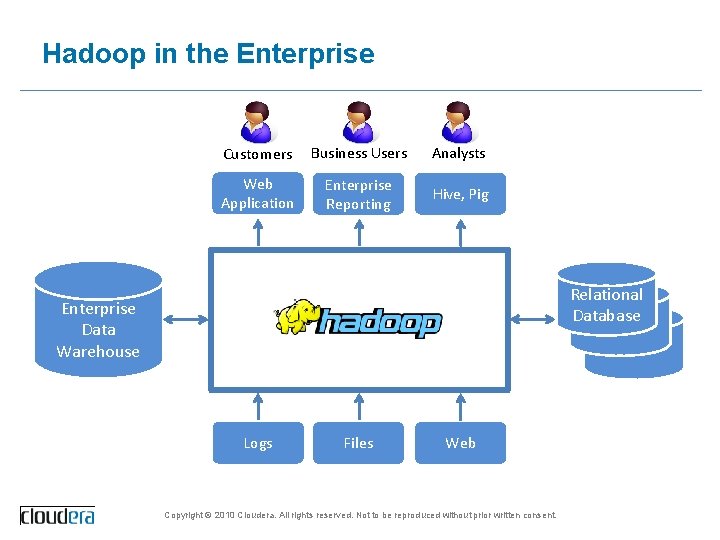

Hadoop in the Enterprise
[Diagram labels: Customers, Business Users, Analysts; Web Application, Enterprise Reporting, Hive, Pig; Relational Database, Enterprise Data Warehouse; Logs, Files, Web]

Apache Hadoop - Not Without Challenges
§ Ease of use – command line interface only; data import and access requires development skills
§ Complexity – more than a dozen different components, all with different versions, dependencies and patch requirements
§ Manageability – Hadoop is challenging to configure, upgrade, monitor and administer
§ Interoperability – limited support for popular databases and analytical tools

Hadoop Components
§ Hadoop consists of two core components
  – The Hadoop Distributed File System (HDFS)
  – MapReduce
§ There are many other projects based around core Hadoop
  – Often referred to as the ‘Hadoop Ecosystem’
  – Pig, Hive, HBase, Flume, Oozie, Sqoop, etc.
§ A set of machines running HDFS and MapReduce is known as a Hadoop Cluster
  – Individual machines are known as nodes
  – A cluster can have as few as one node, as many as several thousands
  – More nodes = better performance!

Hadoop Components: HDFS
§ HDFS, the Hadoop Distributed File System, is responsible for storing data on the cluster
§ Data is split into blocks and distributed across multiple nodes in the cluster
  – Each block is typically 64 MB or 128 MB in size
§ Each block is replicated multiple times
  – Default is to replicate each block three times
  – Replicas are stored on different nodes
  – This ensures both reliability and availability
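To make the block and replica arithmetic concrete, here is a minimal Python sketch. It is not how HDFS itself chooses replica locations; the round-robin placement, the node names, and the function `plan_blocks` are assumptions made purely for illustration. Only the block size (64 MB) and the replication factor (three) come from the slide.

```python
import math


def plan_blocks(file_size_mb, block_size_mb=64, replication=3,
                nodes=("node1", "node2", "node3", "node4")):
    """Split a file into blocks and assign each block to `replication` distinct nodes.

    The round-robin placement below is a hypothetical stand-in for the
    real placement policy; it only guarantees that replicas of one block
    land on different nodes.
    """
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    num_blocks = math.ceil(file_size_mb / block_size_mb)
    layout = []
    for block in range(num_blocks):
        holders = [nodes[(block + r) % len(nodes)] for r in range(replication)]
        layout.append((block, holders))
    return layout


layout = plan_blocks(200)  # a 200 MB file with 64 MB blocks
for block, holders in layout:
    print(f"block {block}: {', '.join(holders)}")

# Raw disk space consumed is the file size times the replication factor.
print(f"blocks: {len(layout)}, raw storage: {200 * 3} MB")
```

With these assumptions a 200 MB file occupies four blocks (the last one only partly filled) and 600 MB of raw disk, and losing any single node still leaves two copies of every block.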

Hadoop Components: MapReduce
§ MapReduce is the system used to process data in the Hadoop cluster
§ Consists of two phases: Map, and then Reduce
  – Between the two is a stage known as the sort and shuffle
§ Each Map task operates on a discrete portion of the overall dataset
  – Typically one HDFS block of data
§ After all Maps are complete, the MapReduce system distributes the intermediate data to nodes which perform the Reduce phase

HDFS Basic Concepts
§ HDFS is a filesystem written in Java
  – Based on Google’s GFS
§ Sits on top of a native filesystem
  – ext3, xfs, etc.
§ Provides redundant storage for massive amounts of data
  – Using cheap, unreliable computers

HDFS Basic Concepts (cont’d)
§ HDFS performs best with a ‘modest’ number of large files
  – Millions, rather than billions, of files
  – Each file typically 100 MB or more
§ Files in HDFS are ‘write once’
  – No random writes to files are allowed
  – Append support is available in Cloudera’s Distribution for Hadoop (CDH) and in Hadoop 0.21
§ HDFS is optimized for large, streaming reads of files
  – Rather than random reads

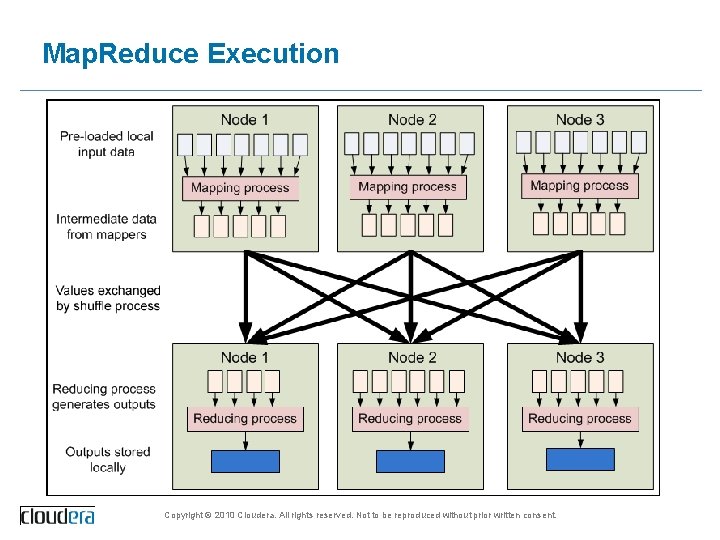

MapReduce Execution

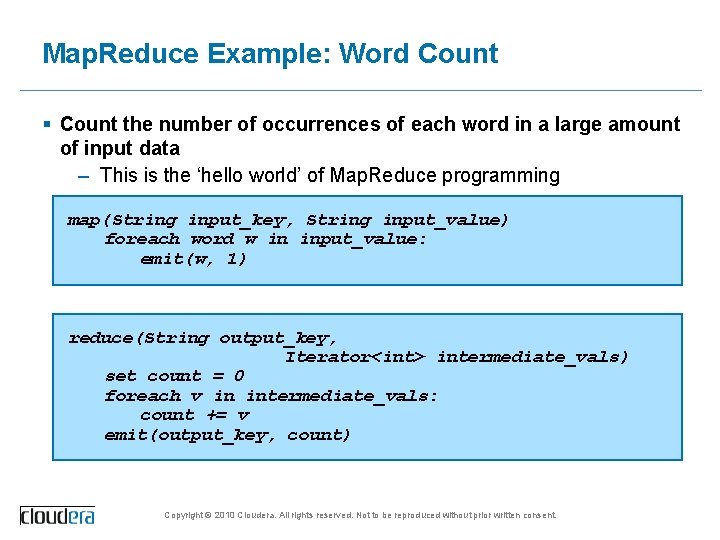

MapReduce Example: Word Count
§ Count the number of occurrences of each word in a large amount of input data
  – This is the ‘hello world’ of MapReduce programming

map(String input_key, String input_value)
  foreach word w in input_value:
    emit(w, 1)

reduce(String output_key, Iterator<int> intermediate_vals)
  set count = 0
  foreach v in intermediate_vals:
    count += v
  emit(output_key, count)

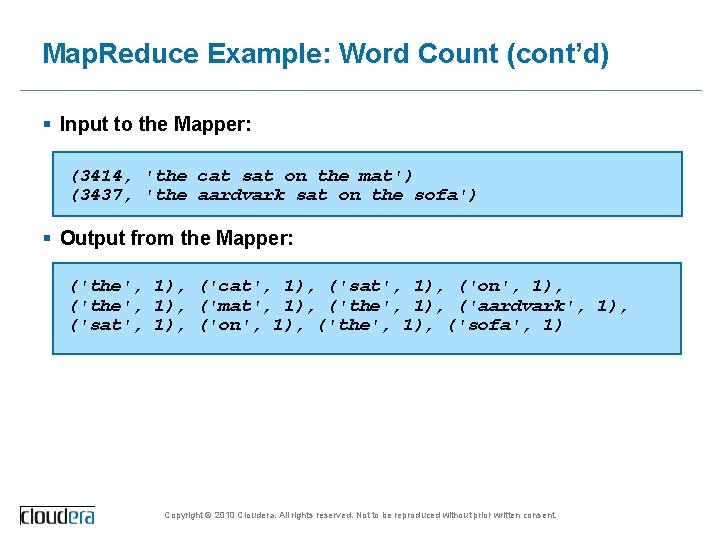

MapReduce Example: Word Count (cont’d)
§ Input to the Mapper:
  (3414, 'the cat sat on the mat')
  (3437, 'the aardvark sat on the sofa')
§ Output from the Mapper:
  ('the', 1), ('cat', 1), ('sat', 1), ('on', 1), ('the', 1), ('mat', 1),
  ('the', 1), ('aardvark', 1), ('sat', 1), ('on', 1), ('the', 1), ('sofa', 1)

MapReduce Example: Word Count (cont’d)
§ Intermediate data sent to the Reducer:
  ('aardvark', [1])
  ('cat', [1])
  ('mat', [1])
  ('on', [1, 1])
  ('sat', [1, 1])
  ('sofa', [1])
  ('the', [1, 1, 1, 1])
§ Final Reducer Output:
  ('aardvark', 1)
  ('cat', 1)
  ('mat', 1)
  ('on', 2)
  ('sat', 2)
  ('sofa', 1)
  ('the', 4)
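The word count walk-through can be simulated on a single machine in plain Python. This sketch does not use Hadoop's API at all; `map_fn`, `reduce_fn`, and `run_job` are hypothetical names, and the in-memory dictionary only stands in for the sort and shuffle stage that the framework performs across nodes.

```python
from collections import defaultdict


def map_fn(input_key, input_value):
    """Emit (word, 1) for every word in one input record."""
    for word in input_value.split():
        yield (word, 1)


def reduce_fn(output_key, intermediate_vals):
    """Sum the counts collected for one word."""
    count = 0
    for v in intermediate_vals:
        count += v
    yield (output_key, count)


def run_job(records):
    """Run map, then a simulated sort and shuffle, then reduce."""
    # Map phase: every record is processed independently.
    mapped = [pair for key, value in records for pair in map_fn(key, value)]

    # Sort and shuffle: group all values that share a key.
    grouped = defaultdict(list)
    for key, value in mapped:
        grouped[key].append(value)

    # Reduce phase: keys are visited in sorted order.
    results = []
    for key in sorted(grouped):
        results.extend(reduce_fn(key, grouped[key]))
    return results


records = [
    (3414, "the cat sat on the mat"),
    (3437, "the aardvark sat on the sofa"),
]
result = run_job(records)
for pair in result:
    print(pair)
```

Run on the two input records from the slides, this produces the same seven pairs as the final Reducer output, from ('aardvark', 1) through ('the', 4).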

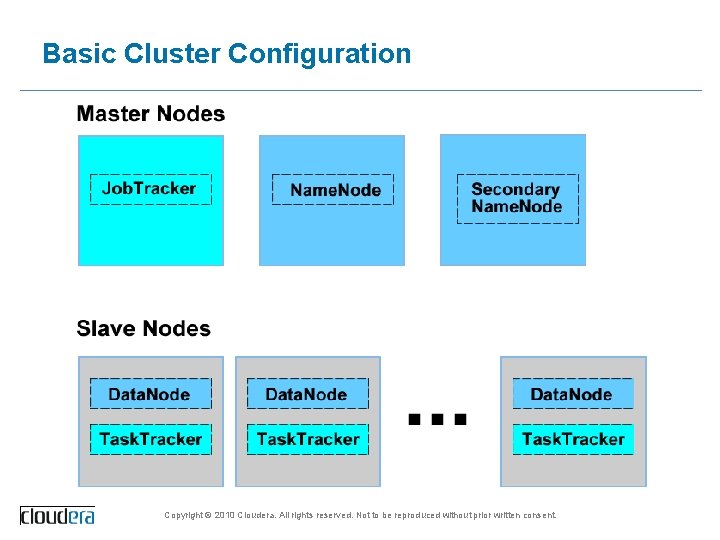

Basic Cluster Configuration

What is common across Hadoop-able problems?
Nature of the data
§ Complex data
§ Multiple data sources
§ Lots of it
Nature of the analysis
§ Batch processing
§ Parallel execution
§ Spread data over a cluster of servers and take the computation to the data

What Analysis is Possible With Hadoop?
§ Text mining
§ Collaborative filtering
§ Index building
§ Prediction models
§ Graph creation and analysis
§ Sentiment analysis
§ Pattern recognition
§ Risk assessment

Benefits of Analyzing With Hadoop
• Previously impossible/impractical to do this analysis
• Analysis conducted at lower cost
• Analysis conducted in less time
• Greater flexibility

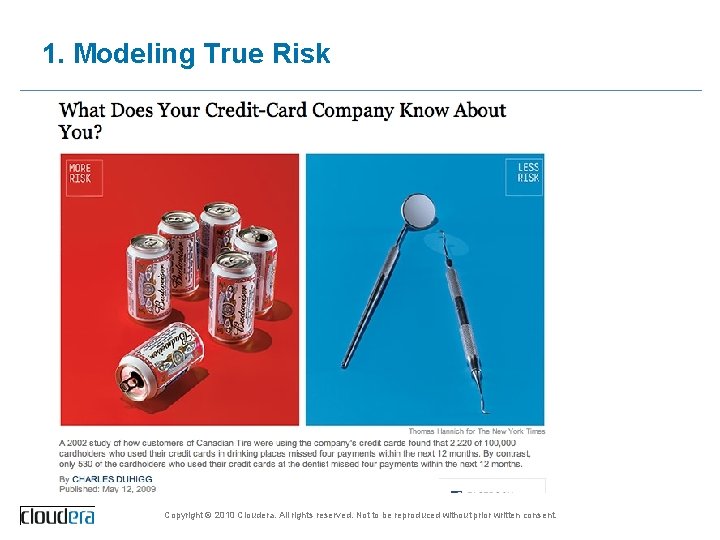

1. Modeling True Risk

1. Modeling True Risk
Solution with Hadoop
• Source, parse and aggregate disparate data sources to build comprehensive data picture
  • e.g. credit card records, call recordings, chat sessions, emails, banking activity
• Structure and analyze
  • Sentiment analysis, graph creation, pattern recognition
Typical Industry
• Financial Services (Banks, Insurance)

2. Customer Churn Analysis

2. Customer Churn Analysis
Solution with Hadoop
• Rapidly test and build behavioral model of customer from disparate data sources
• Structure and analyze with Hadoop
  • Traversing
  • Graph creation
  • Pattern recognition
Typical Industry
• Telecommunications, Financial Services

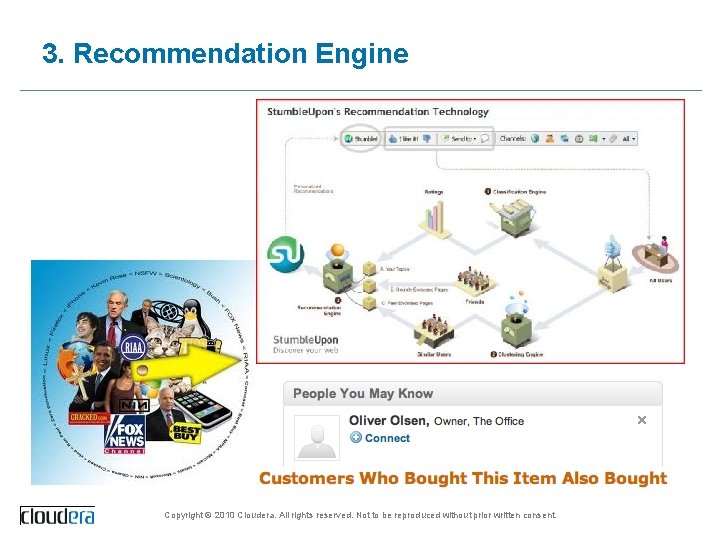

3. Recommendation Engine

3. Recommendation Engine
Solution with Hadoop
• Batch processing framework
  • Allow execution in parallel over large datasets
• Collaborative filtering
  • Collecting ‘taste’ information from many users
  • Utilizing information to predict what similar users like
Typical Industry
• Ecommerce, Manufacturing, Retail
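The collaborative filtering idea can be sketched in a few lines of Python. The slides do not name a similarity measure, so the Jaccard overlap used here is an assumption, as are the sample users, the item names, and the function names. It runs on one machine and only illustrates the logic, not a MapReduce implementation.

```python
def jaccard(a, b):
    """Similarity of two sets of liked items: shared items over all items."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(target, likes):
    """Rank items the target has not seen, weighted by how similar their fans are."""
    scores = {}
    for user, items in likes.items():
        if user == target:
            continue
        similarity = jaccard(likes[target], items)
        if similarity == 0:
            continue  # users with no taste in common contribute nothing
        for item in items - likes[target]:
            scores[item] = scores.get(item, 0.0) + similarity
    # Highest score first; ties broken alphabetically for a stable result.
    return sorted(scores, key=lambda item: (-scores[item], item))


# Hypothetical 'taste' data collected from three users.
likes = {
    "alice": {"book_a", "book_b"},
    "bob": {"book_a", "book_b", "book_c"},
    "carol": {"book_d"},
}
print(recommend("alice", likes))  # prints "['book_c']"
```

Bob shares two of three items with Alice, so his remaining item is recommended; Carol shares nothing, so hers is not.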

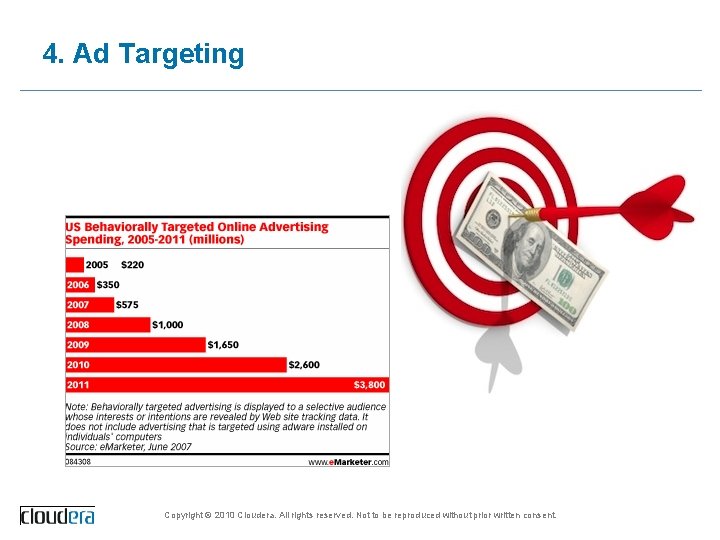

4. Ad Targeting

4. Ad Targeting
Solution with Hadoop
• Data analysis can be conducted in parallel, reducing processing times from days to hours
• With Hadoop, as data volumes grow the only expansion cost is hardware
• Add more nodes without a degradation in performance
Typical Industry
• Advertising

5. Point of Sale Transaction Analysis

5. Point of Sale Transaction Analysis
Solution with Hadoop
• Batch processing framework
  • Allow execution in parallel over large datasets
• Pattern recognition
  • Optimizing over multiple data sources
  • Utilizing information to predict demand
Typical Industry
• Retail

6. Analyzing Network Data to Predict Failure

6. Analyzing Network Data to Predict Failure
Solution with Hadoop
• Take the computation to the data
• Expand the range of indexing techniques from simple scans to more complex data mining
• Better understand how the network reacts to fluctuations
  • How previously thought discrete anomalies may, in fact, be interconnected
• Identify leading indicators of component failure
Typical Industry
• Utilities, Telecommunications, Data Centers

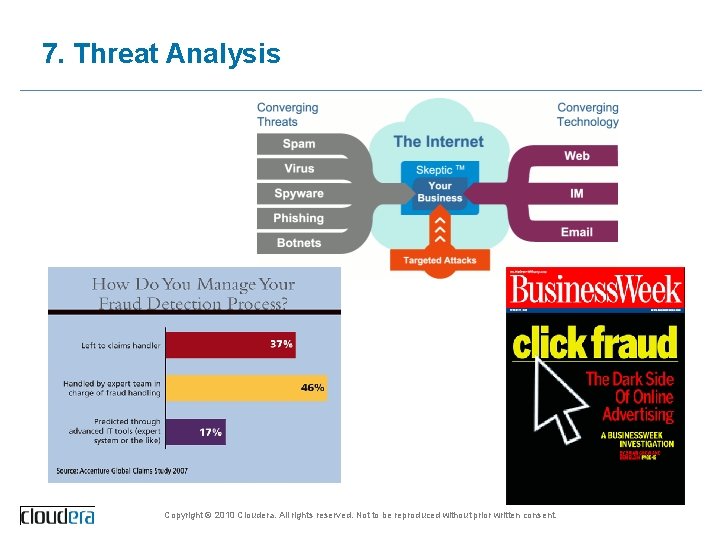

7. Threat Analysis

7. Threat Analysis
Solution with Hadoop
• Parallel processing over huge datasets
• Pattern recognition to identify anomalies, i.e. threats
Typical Industry
• Security
• Financial Services
• General: spam fighting, click fraud

8. Trade Surveillance

8. Trade Surveillance
Solution with Hadoop
• Batch processing framework
  • Allow execution in parallel over large datasets
• Pattern recognition
  • Detect trading anomalies and harmful behavior
Typical Industry
• Financial services
• Regulatory bodies

9. Search Quality

9. Search Quality
Solution with Hadoop
• Analyzing search attempts in conjunction with structured data
• Pattern recognition
  • Browsing pattern of users performing searches in different categories
Typical Industry
• Web
• Ecommerce

10. Data “Sandbox”

10. Data “Sandbox”
Solution with Hadoop
• With Hadoop an organization can “dump” all this data into an HDFS cluster
• Then use Hadoop to start trying out different analyses on the data
• See patterns or relationships that allow the organization to derive additional value from data
Typical Industry
• Common across all industries

The Hadoop Ecosystem
§ Started as stand-alone project
§ Turned into thriving ecosystem
§ Full stack of tools covers complete set of business problems
§ Covers
  – data aggregation and collection
  – data input and output to legacy systems
  – random access to data
  – high-level query languages
  – workflow system
  – UI framework to enable easy access
  – ...

Hive and Pig
§ Although MapReduce is very powerful, it can also be complex to master
§ Many organizations have business or data analysts who are skilled at writing SQL queries, but not at writing Java code
§ Many organizations have programmers who are skilled at writing code in scripting languages
§ Hive and Pig are two projects which evolved separately to help such people analyze huge amounts of data via MapReduce
  – Hive was initially developed at Facebook, Pig at Yahoo!
§ Cloudera offers a two-day course, Data Manipulation with Hive and Pig

HBase: ‘The Hadoop Database’
§ HBase is a column-store database layered on top of HDFS
  – Based on Google’s BigTable
  – Provides interactive access to data
§ Can store massive amounts of data
  – Multiple Gigabytes, up to Terabytes of data
§ High Write Throughput
  – Thousands per second (per node)
§ Copes well with sparse data
  – Tables can have many thousands of columns
  – Even if a given row only has data in a few of the columns
§ Has a constrained access model
  – Limited to lookup of a row by a single key
  – No transactions
  – Single row operations only
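The access model described above (sparse rows, lookup by a single row key, single-row operations) can be pictured with a toy in-memory structure. This is not the HBase API and says nothing about how HBase stores data on HDFS; the class `SparseTable` and its methods are hypothetical names chosen only for illustration.

```python
class SparseTable:
    """Toy model of a sparse, row-keyed table (not the HBase client API)."""

    def __init__(self):
        # row key -> {column name -> value}; absent columns take no space.
        self._rows = {}

    def put(self, row_key, column, value):
        """Write one cell; the operation touches a single row only."""
        self._rows.setdefault(row_key, {})[column] = value

    def get(self, row_key):
        """Look up a row by its key, the only access path offered."""
        return dict(self._rows.get(row_key, {}))


table = SparseTable()
table.put("user:1001", "info:name", "Ada")
table.put("user:1001", "info:email", "ada@example.com")
table.put("user:1002", "stats:logins", 7)

print(table.get("user:1001"))
print(table.get("user:1002"))  # this row carries only one of the columns
print(table.get("user:9999"))  # an unknown key returns an empty row
```

Each row stores only the columns it actually has, which is why a table with thousands of possible columns stays cheap when most rows fill in just a few.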

Flume
§ Flume is a distributed, reliable, available service for efficiently moving large amounts of data as it is produced
  – Ideally suited to gathering logs from multiple systems and inserting them into HDFS as they are generated
§ Developed in-house by Cloudera, and released as open-source software
§ Design goals:
  – Reliability
  – Scalability
  – Manageability
  – Extensibility

ZooKeeper: Distributed Consensus Engine
§ ZooKeeper is a ‘distributed consensus engine’
  – A quorum of ZooKeeper nodes exists
  – Clients can connect to any node and be assured that they will receive the correct, up-to-date information
  – Elegantly handles a node crashing
§ Used by many other Hadoop projects
  – HBase, for example
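The slide does not spell out what a quorum is. Assuming the usual majority rule (more than half of the nodes must agree), the arithmetic below shows how many crashed nodes an ensemble of a given size can survive. The function names are illustrative only.

```python
def quorum_size(ensemble_size: int) -> int:
    """Smallest number of nodes that forms a strict majority."""
    return ensemble_size // 2 + 1


def tolerated_failures(ensemble_size: int) -> int:
    """Nodes that may crash while a majority is still reachable."""
    return ensemble_size - quorum_size(ensemble_size)


for n in (3, 4, 5):
    print(f"{n} nodes: quorum {quorum_size(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

Under this assumption a fourth node adds no extra fault tolerance over three, which is one reason odd ensemble sizes are commonly chosen.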

Fuse-DFS
§ Fuse-DFS allows mounting HDFS volumes via the Linux FUSE (Filesystem in USEr space) filesystem
  – Enables applications which can only write to a ‘standard’ filesystem to write to HDFS with no application modification
§ Caution: Does not imply that HDFS can be used as a general-purpose filesystem
  – Still constrained by HDFS limitations
  – For example, no modifications to existing files
§ Useful for legacy applications which need to write to HDFS

Ganglia: Cluster Monitoring
§ Ganglia is an open-source, scalable, distributed monitoring product for high-performance computing systems
  – Specifically designed for clusters of machines
§ Not, strictly speaking, a Hadoop project
  – But very useful for monitoring Hadoop clusters
§ Collects, aggregates, and provides time-series views of metrics
§ Integrates with Hadoop’s metrics-collection system
§ Note: Ganglia doesn’t provide alerts
  – But is easily paired with alerting systems such as Nagios

Sqoop: Retrieving Data From RDBMSs
§ Sqoop: SQL to Hadoop
§ Extracts data from RDBMSs and inserts it into HDFS
  – Also works the other way around
§ Command-line tool which works with any RDBMS
  – Optimizations available for some specific RDBMSs
§ Generates Writable classes for use in MapReduce jobs
§ Developed at Cloudera, released as Open Source

Hue
§ Hue: The Hadoop User Experience
§ Graphical front-end to developer and administrator functionality
  – Uses a Web browser as its front-end
§ Developed by Cloudera, released as Open Source
§ Extensible
  – Publicly available API
§ Cloudera Enterprise includes extra functionality
  – Advanced user management
  – Integration with LDAP, Active Directory
  – Accounting

About Cloudera
§ Cloudera is “The commercial Hadoop company”
§ Founded by leading experts on Hadoop from Facebook, Google, Oracle and Yahoo
§ Provides consulting and training services for Hadoop users
§ Staff includes several committers to Hadoop projects

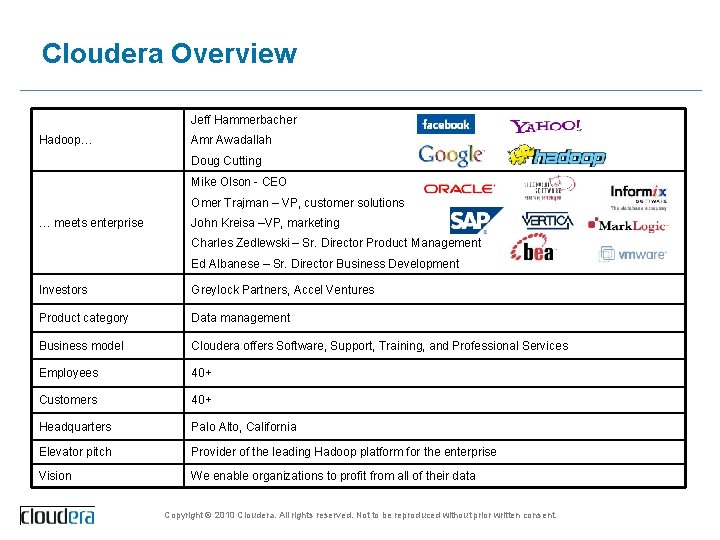

Cloudera Overview
Hadoop… meets enterprise
People:
  Jeff Hammerbacher
  Amr Awadallah
  Doug Cutting
  Mike Olson – CEO
  Omer Trajman – VP, customer solutions
  John Kreisa – VP, marketing
  Charles Zedlewski – Sr. Director Product Management
  Ed Albanese – Sr. Director Business Development
Investors: Greylock Partners, Accel Ventures
Product category: Data management
Business model: Cloudera offers Software, Support, Training, and Professional Services
Employees: 40+
Customers: 40+
Headquarters: Palo Alto, California
Elevator pitch: Provider of the leading Hadoop platform for the enterprise
Vision: We enable organizations to profit from all of their data

Recent News & Awards
§ News
  – Partnership with Netezza to integrate TwinFin with Hadoop
  – Partnership with Quest to connect Oracle and Hadoop (Ora-Oop)
  – Partnership with NTT DATA to accelerate Hadoop adoption in APAC region
  – Partnership with Teradata, Aster Data, EMC Greenplum and more
  – Launched Cloudera Enterprise and Cloudera’s Distribution for Hadoop v3
  – $25 Million in Series C Funding, Led by Meritech Capital Partners
§ Awards
  – Venture Capital Journal: #1 of 20 most promising startups
  – Best Young Tech Entrepreneurs 2010 – Jeff Hammerbacher
  – Selected as OnDemand 100

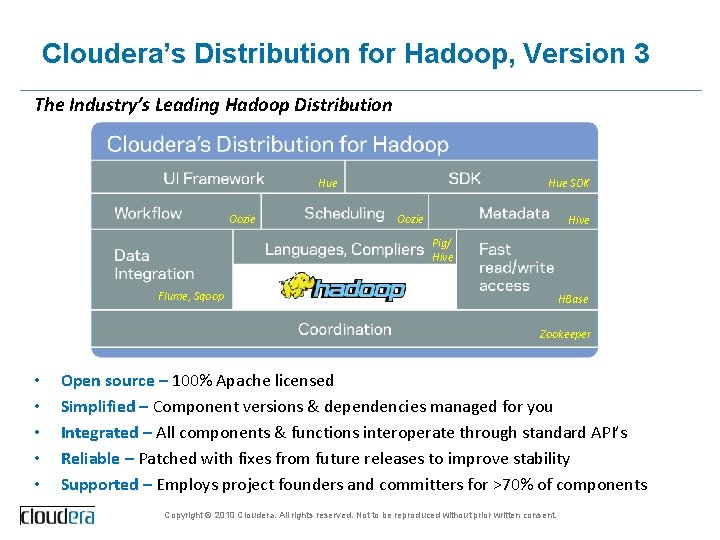

Cloudera’s Distribution for Hadoop, Version 3
The Industry’s Leading Hadoop Distribution
[Diagram labels: Hue, Hue SDK, Oozie, Hive, Pig/Hive, Flume, Sqoop, HBase, Zookeeper]
• Open source – 100% Apache licensed
• Simplified – Component versions & dependencies managed for you
• Integrated – All components & functions interoperate through standard APIs
• Reliable – Patched with fixes from future releases to improve stability
• Supported – Employs project founders and committers for >70% of components

Cloudera Software (All Open-Source)
§ Cloudera’s Distribution of Hadoop (CDH)
  – A single, easy-to-install package from the Apache Hadoop core repository
  – Includes a stable version of Hadoop, plus critical bug fixes and solid new features from the development version
§ Hue
  – Browser-based tool for cluster administration and job development
  – Supports managing internal clusters as well as those running on public clouds
  – Helps decrease development time

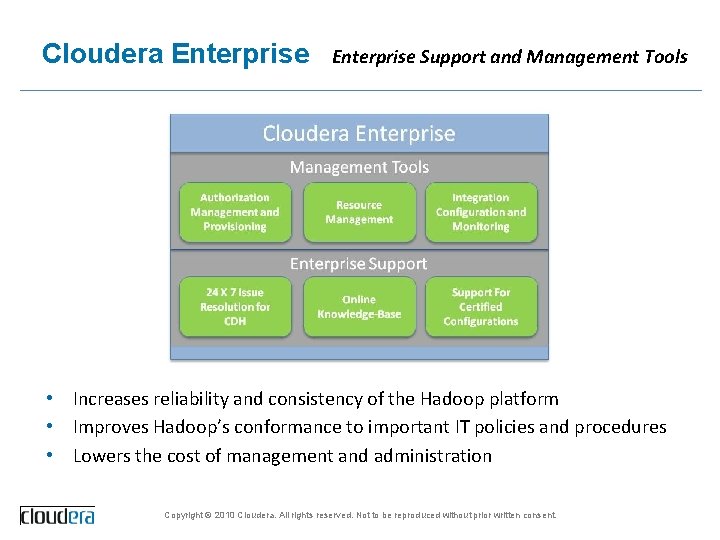

Cloudera Enterprise
Support and Management Tools
• Increases reliability and consistency of the Hadoop platform
• Improves Hadoop’s conformance to important IT policies and procedures
• Lowers the cost of management and administration

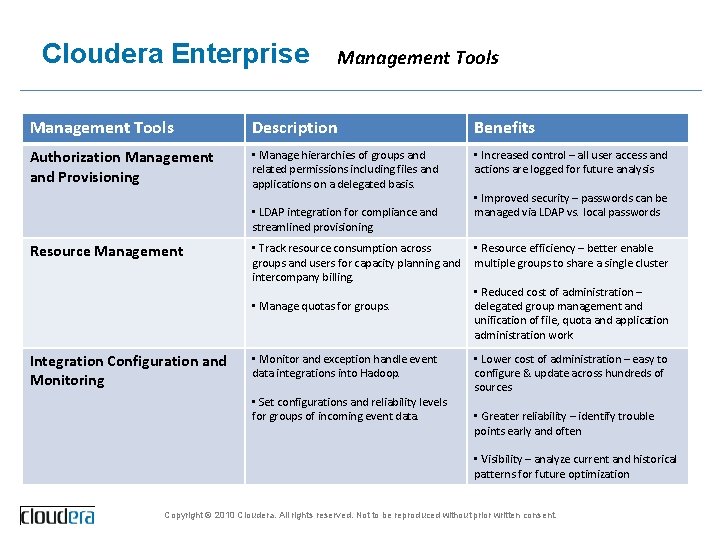

Cloudera Enterprise Management Tools
Authorization Management and Provisioning
Description:
• Manage hierarchies of groups and related permissions including files and applications on a delegated basis.
Resource Management
Description:
• Track resource consumption across groups and users for capacity planning and intercompany billing.
• Manage quotas for groups.
Integration Configuration and Monitoring
Description:
• Monitor and exception handle event data integrations into Hadoop.
• Set configurations and reliability levels for groups of incoming event data.
Benefits (in the order extracted; the row each belongs to is not fully recoverable):
• Increased control – all user access and actions are logged for future analysis
• LDAP integration for compliance and streamlined provisioning
• Improved security – passwords can be managed via LDAP vs. local passwords
• Resource efficiency – better enable multiple groups to share a single cluster
• Reduced cost of administration – delegated group management and unification of file, quota and application administration work
• Lower cost of administration – easy to configure & update across hundreds of sources
• Greater reliability – identify trouble points early and often
• Visibility – analyze current and historical patterns for future optimization

Cloudera Enterprise
§ Cloudera Enterprise
  – Complete package of software and support
  – Built on top of CDH
  – Includes tools for monitoring the Hadoop cluster
    – Resource consumption tracking
    – Quota management
    – Etc.
  – Includes management tools
    – Authorization management and provisioning
    – Integration with LDAP
    – Etc.
  – 24 x 7 support

Cloudera Training
Public and Private Classes
§ Current Curriculum
  – Developer Training (3 days) - Hands-on training for developers who want to analyze their data but are new to Hadoop
  – System Administrator Training (2 days) - Hands-on training for administrators who will be responsible for setting up, configuring, and monitoring a Hadoop cluster
  – HBase Training (2 days) - Developers who want to use HBase to store data because they need one or more of (1) TB+ data sets, (2) low latency, random reads & writes or (3) high throughput (e.g. 10,000s of writes/second)
  – Hive Training (2 days) - Analysts or developers who want to use an SQL-like language to analyze data in Hadoop
§ Public classes and curriculum can be found at www.cloudera.com/events

Cloudera Professional Services
Enable and Accelerate Hadoop Deployments
§ Cloudera Solution Architects (SAs) deliver expertise putting Hadoop in production
  – Function as tech leads, trainers
  – Can code and prototype, but goal is to enable customers to do the work
§ Services include
  – Architecture review
  – Good practices for developing and operationalizing Hadoop
  – Customer specific code and templates
  – Custom integrations and patches

Cloudera Professional Services
§ Provides consultancy services to many key users of Hadoop
  – Including Netflix, Samsung, Trulia, comScore, LinkedIn, University of Phoenix, …
§ Solutions Architects are experts in Hadoop and related technologies
  – Several are committers to the Apache Hadoop project
§ Provides training in key areas of Hadoop administration and development
  – Courses include Hadoop for System Administrators, Hadoop for Developers, Data Analysis with Hive and Pig, Learning HBase
  – Custom course development available
  – Both public and on-site training available

Questions?
