Introduction to Deep Learning (without trying to go too deep and getting lost!)

- Slides: 16

Introduction to Deep Learning (without trying to go too deep and getting lost!)

Sandeep Satheesan, National Center for Supercomputing Applications, University of Illinois at Urbana–Champaign

Outline

- Some Background
  - Artificial Neuron
  - Artificial Neural Networks
  - Activation Function
  - Gradient Descent
  - Different types of datasets
- What is Deep Learning?
  - Basic description
- Why is Deep Learning Trending Now?
- Hierarchical Representations in Deep Neural Networks
- Machine Learning Strategy in the Deep Learning Era

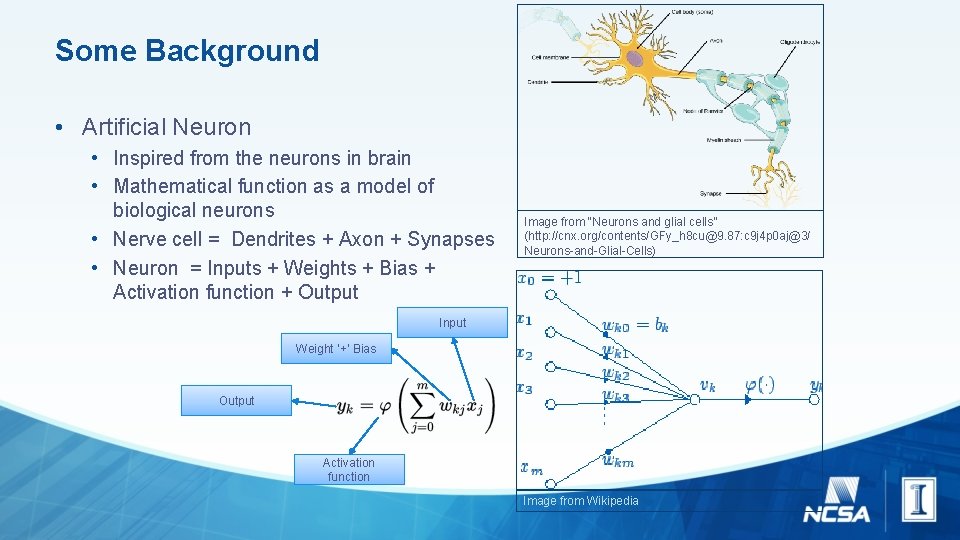

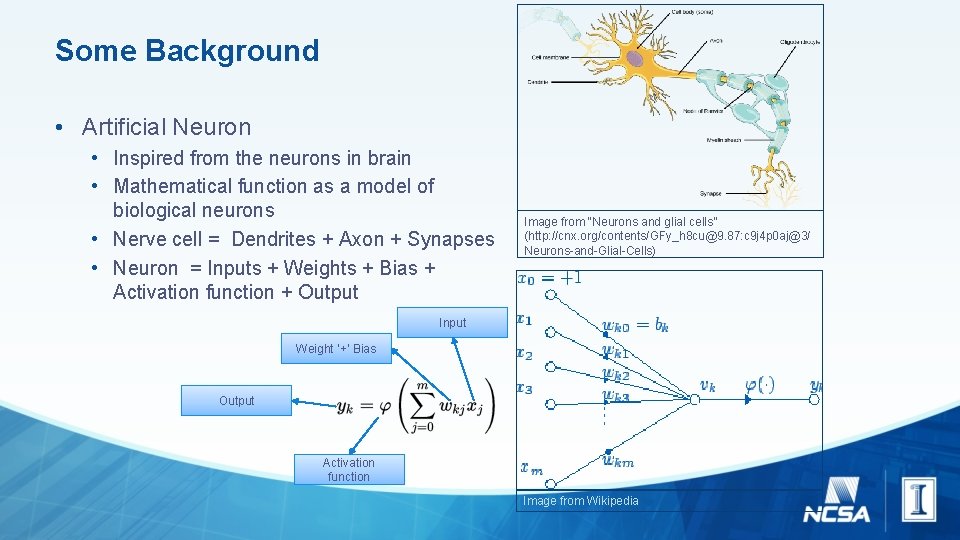

Some Background

- Artificial Neuron
  - Inspired by the neurons in the brain
  - A mathematical function that serves as a model of a biological neuron
  - Nerve cell = Dendrites + Axon + Synapses
  - Neuron = Inputs + Weights + Bias + Activation function + Output

Image from “Neurons and glial cells” (http://cnx.org/contents/GFy_h8cu@9.87:c9j4p0aj@3/Neurons-and-Glial-Cells)

Figure: artificial neuron diagram (Input, Weight, ‘+’, Bias, Activation function, Output). Image from Wikipedia
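The neuron model reduces to one formula: the output is the activation function applied to the weighted sum of the inputs plus the bias. A minimal Python sketch; the step (threshold) activation and the input, weight and bias values are hypothetical choices made only for illustration.

```python
from typing import Callable, Sequence


def neuron(inputs: Sequence[float], weights: Sequence[float], bias: float,
           activation: Callable[[float], float]) -> float:
    """One artificial neuron: activation(sum_i w_i * x_i + b)."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Weighted sum of the inputs (the '+' node in the diagram) plus the bias
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    # The activation function decides what the neuron outputs
    return activation(z)


def step(z: float) -> float:
    """Threshold activation (as in the classic perceptron); illustrative only."""
    return 1.0 if z >= 0 else 0.0


if __name__ == "__main__":
    # z = 0.5*1.0 + (-1.0)*2.0 + 1.0 = -0.5, so the step activation outputs 0.0
    print(neuron([1.0, 2.0], [0.5, -1.0], 1.0, step))
```

Any activation function can be passed in; swapping `step` for the logistic function or ReLU changes only the last line of the computation.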

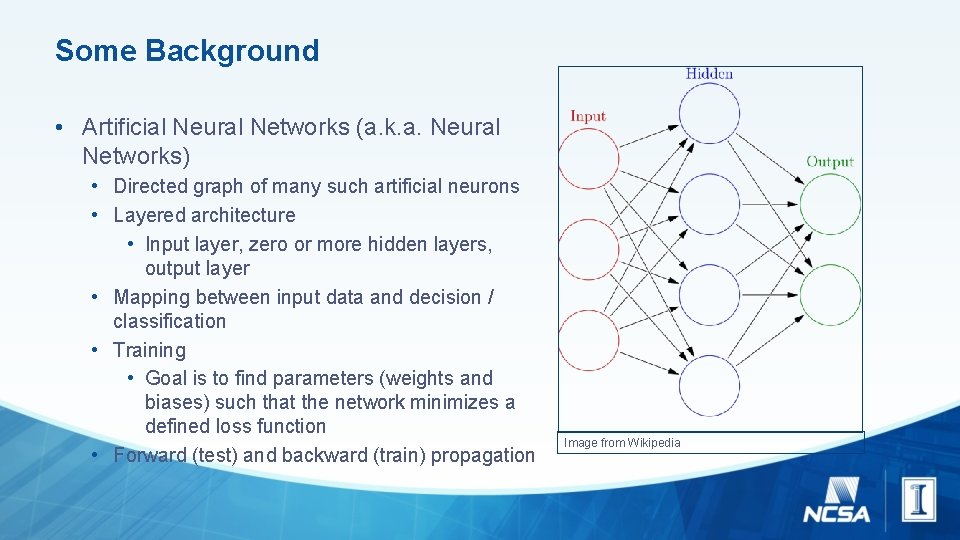

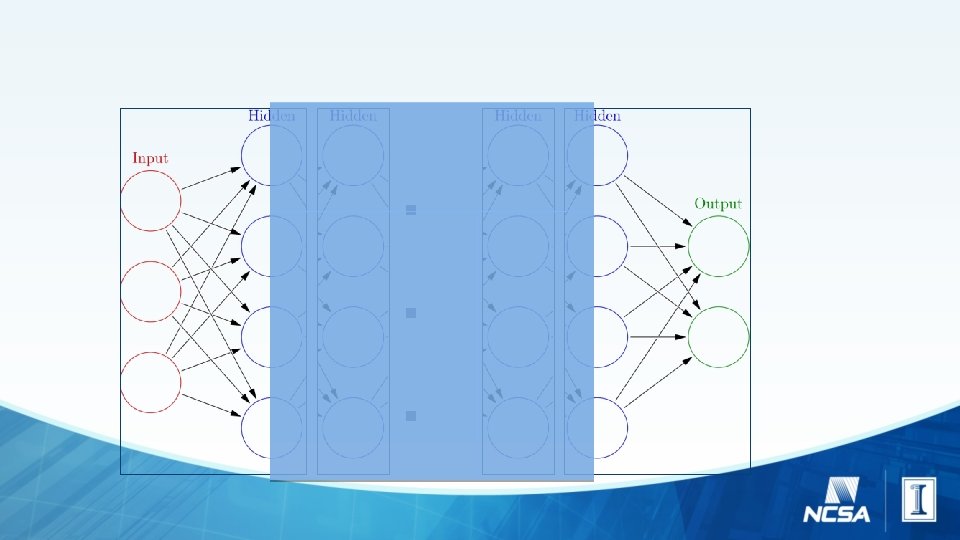

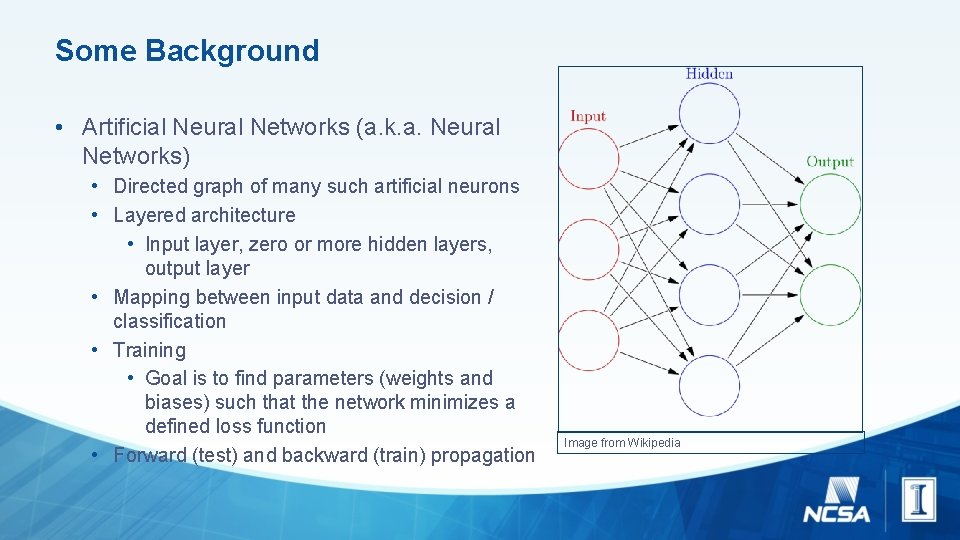

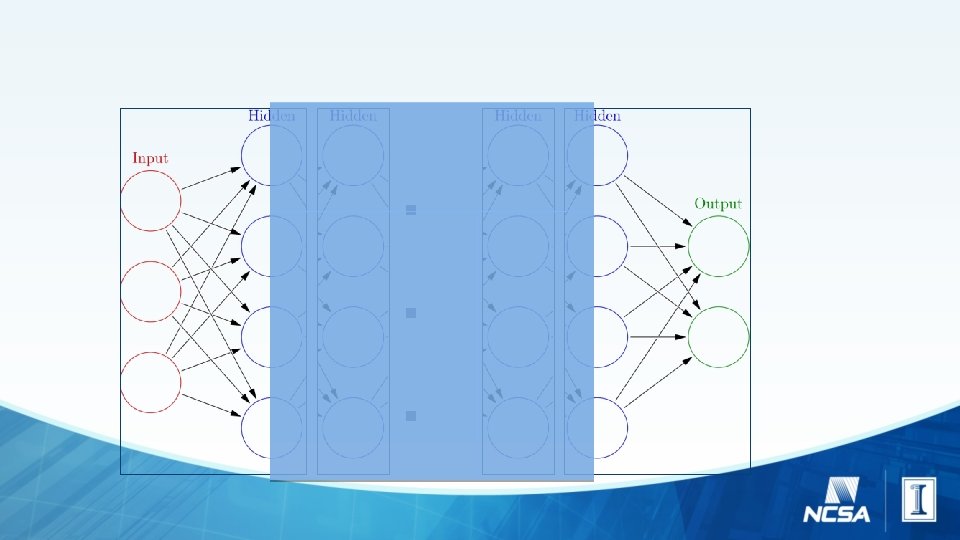

Some Background

- Artificial Neural Networks (a.k.a. Neural Networks)
  - A directed graph of many such artificial neurons
  - Layered architecture
    - Input layer, zero or more hidden layers, output layer
  - Map input data to a decision / classification
- Training
  - The goal is to find parameters (weights and biases) such that the network minimizes a defined loss function
  - Forward propagation computes the network's output (used in both training and testing); backward propagation computes the gradients used to update the parameters (used in training)

Image from Wikipedia
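A minimal sketch of forward propagation through a layered network in plain Python. The layer representation, the sigmoid activation and the mean squared error loss are illustrative assumptions rather than choices made in this deck; backward propagation (computing gradients of the loss) is not shown.

```python
import math
from typing import List, Sequence, Tuple

# A layer is (weights, biases): weights[j][i] connects input i to neuron j.
Layer = Tuple[List[List[float]], List[float]]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(x: Sequence[float], layer: Layer) -> List[float]:
    """Apply every neuron in one layer to the same input vector."""
    weights, biases = layer
    return [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]


def forward(x: Sequence[float], layers: List[Layer]) -> List[float]:
    """Forward propagation: feed the input through each layer in order."""
    activations = list(x)
    for layer in layers:
        activations = layer_forward(activations, layer)
    return activations


def mse_loss(prediction: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error: one possible loss for training to minimize."""
    return sum((p - t) ** 2 for p, t in zip(prediction, target)) / len(target)


if __name__ == "__main__":
    # Hypothetical 2-3-1 network: 2 inputs, one hidden layer of 3 units, 1 output
    hidden: Layer = ([[0.1, 0.2], [-0.3, 0.4], [0.5, -0.6]], [0.0, 0.1, -0.1])
    output: Layer = ([[0.7, -0.8, 0.9]], [0.05])
    y = forward([1.0, 0.5], [hidden, output])
    print(y, mse_loss(y, [1.0]))
```

Training would repeat this forward pass, measure the loss, and use backward propagation to adjust every weight and bias so the loss decreases.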

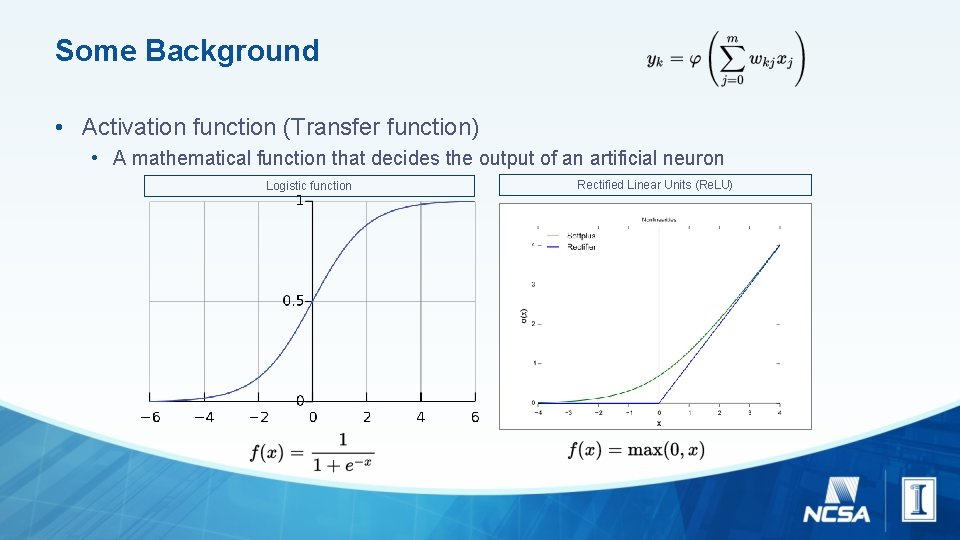

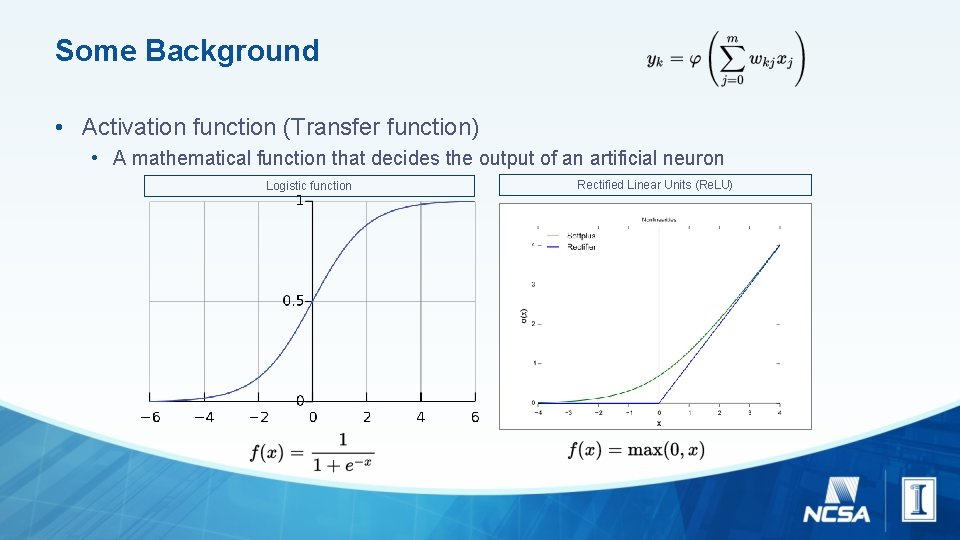

Some Background

- Activation function (Transfer function)
  - A mathematical function that decides the output of an artificial neuron

Figures: Logistic function; Rectified Linear Units (ReLU)
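The two activation functions named on this slide can be written directly. A Python sketch; the branch in `logistic` is one common way (an implementation choice, not something from the slide) to keep `math.exp` from overflowing for large negative inputs.

```python
import math


def logistic(z: float) -> float:
    """Logistic (sigmoid) function 1 / (1 + e^(-z)); mathematically in (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # For negative z, use the equivalent form e^z / (1 + e^z) so exp never overflows
    e = math.exp(z)
    return e / (1.0 + e)


def relu(z: float) -> float:
    """Rectified Linear Unit: max(0, z)."""
    return max(0.0, z)


if __name__ == "__main__":
    for z in (-2.0, 0.0, 2.0):
        print(f"z={z:+.1f}  logistic={logistic(z):.4f}  relu={relu(z)}")
```

The logistic function squashes any input into a probability-like range, while ReLU passes positive inputs through unchanged and zeroes out negative ones.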

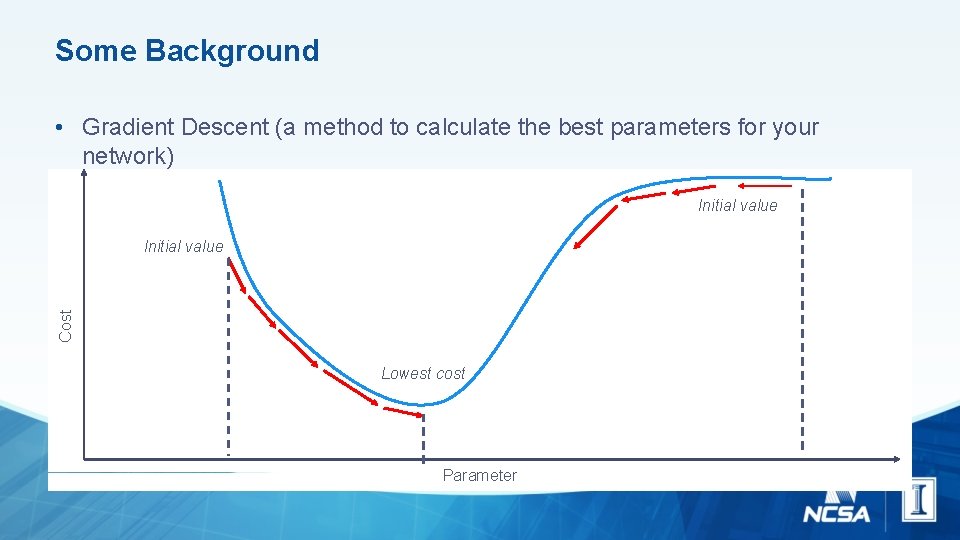

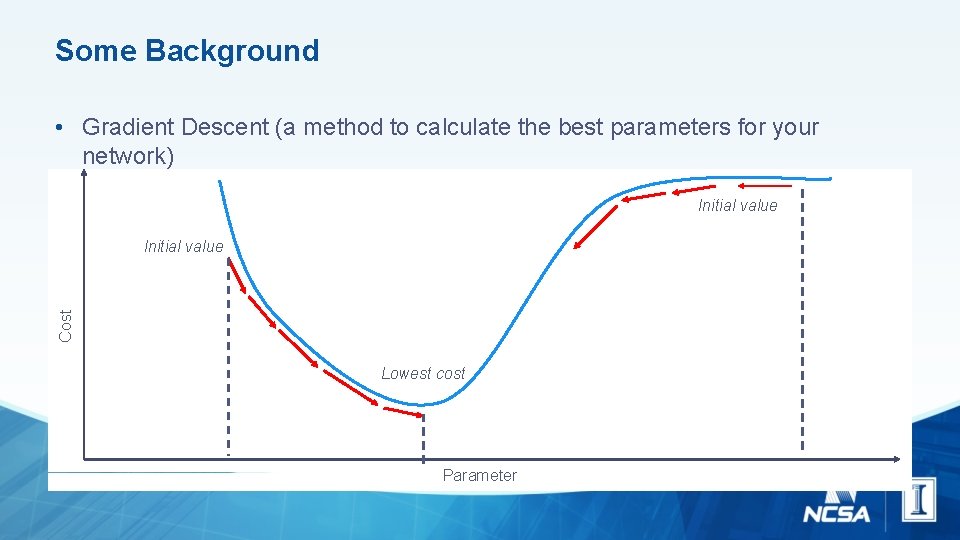

Some Background

- Gradient Descent (an iterative method for finding the parameters that minimize the cost of your network)

Figure: cost plotted against a parameter, with steps descending from initial values to the lowest cost.
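A minimal sketch of gradient descent on a single parameter, assuming a hypothetical cost J(θ) = (θ - 3)² whose lowest cost is at θ = 3. The learning rate and number of steps are illustrative defaults; real networks apply the same update to every weight and bias at once.

```python
from typing import Callable


def gradient_descent(grad: Callable[[float], float], initial: float,
                     learning_rate: float = 0.1, steps: int = 100) -> float:
    """Repeatedly step against the gradient: theta <- theta - lr * dJ/dtheta."""
    theta = initial
    for _ in range(steps):
        theta -= learning_rate * grad(theta)
    return theta


if __name__ == "__main__":
    # Hypothetical cost J(theta) = (theta - 3)^2 and its derivative 2 * (theta - 3)
    cost = lambda t: (t - 3.0) ** 2
    grad = lambda t: 2.0 * (t - 3.0)
    theta = gradient_descent(grad, initial=-5.0)
    print(round(theta, 4), cost(theta))
```

For this particular cost, each update multiplies the distance to the minimum by (1 - 2 × learning_rate), so a learning rate above 1.0 makes the steps overshoot and diverge, while a very small one converges slowly. This is why the learning rate is treated as a hyperparameter to tune.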

Some Background

- Different types of datasets
  - Training data
  - Development data (should resemble real-world data)
  - Test data (should resemble real-world data)
  - Real-world data
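One simple way to produce the training, development and test sets is to shuffle a single pool of examples once and carve off the dev and test sets, as in the Python sketch below. It assumes the pool already resembles real-world data; if it does not, dev and test data should instead be drawn from the real-world distribution. `split_dataset` is a hypothetical helper, not a library function.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_dataset(data: Sequence[T], dev_frac: float, test_frac: float,
                  seed: int = 0) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle once, then carve out dev and test sets; the rest is training data."""
    if dev_frac < 0 or test_frac < 0 or dev_frac + test_frac >= 1:
        raise ValueError("fractions must be non-negative and sum to less than 1")
    items = list(data)
    # Shuffling first means dev and test are drawn from the same distribution
    random.Random(seed).shuffle(items)
    n_dev = int(len(items) * dev_frac)
    n_test = int(len(items) * test_frac)
    dev = items[:n_dev]
    test = items[n_dev:n_dev + n_test]
    train = items[n_dev + n_test:]
    return train, dev, test


if __name__ == "__main__":
    train, dev, test = split_dataset(range(1_000_000), dev_frac=0.01, test_frac=0.01)
    print(len(train), len(dev), len(test))  # 980000 10000 10000
```

With 1 million examples, 1% each for dev and test is still 10,000 examples apiece, which is why large datasets can afford a 98% / 1% / 1% split.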

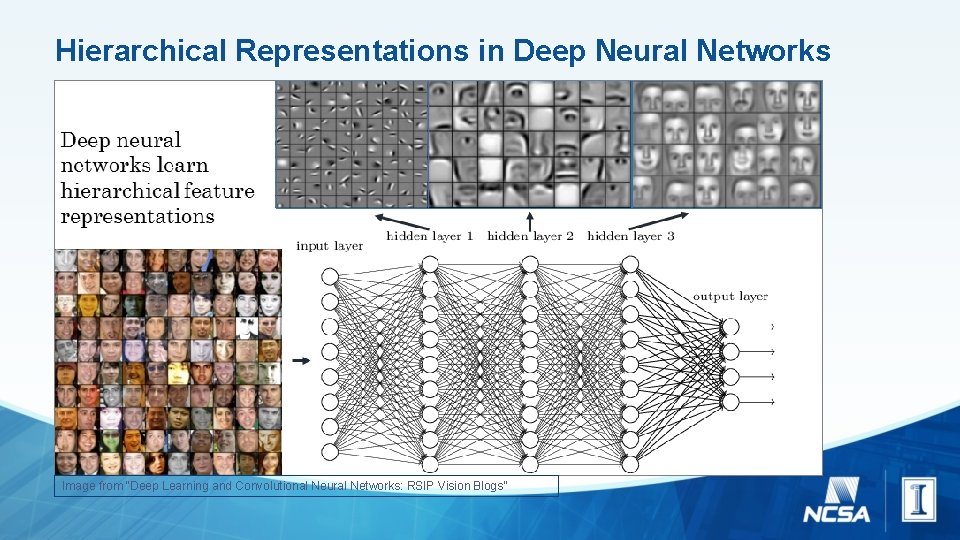

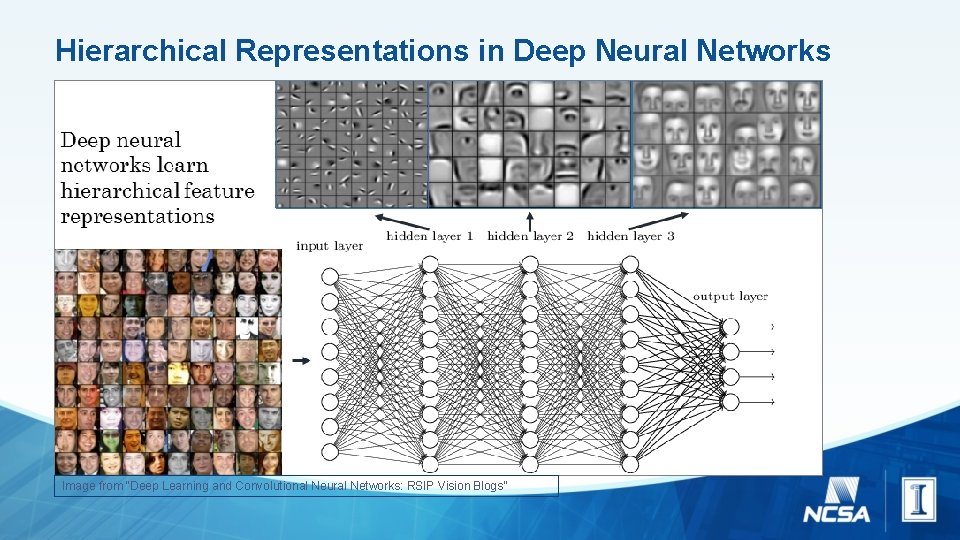

What is Deep Learning?

- A group of machine learning algorithms applied to artificial neural networks with a large number of layers and connections
- Uses (mostly) supervised or unsupervised learning techniques
- Multiple levels help in learning a hierarchy of concepts / features
  - Pixels -> Edges -> Partial Faces -> Faces
  - Letters -> Phonemes -> Words -> Sentences

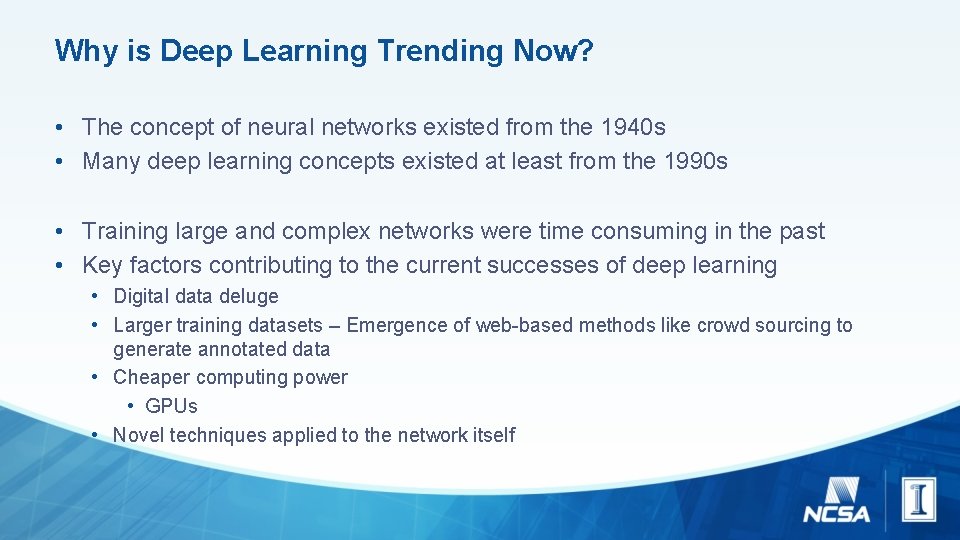

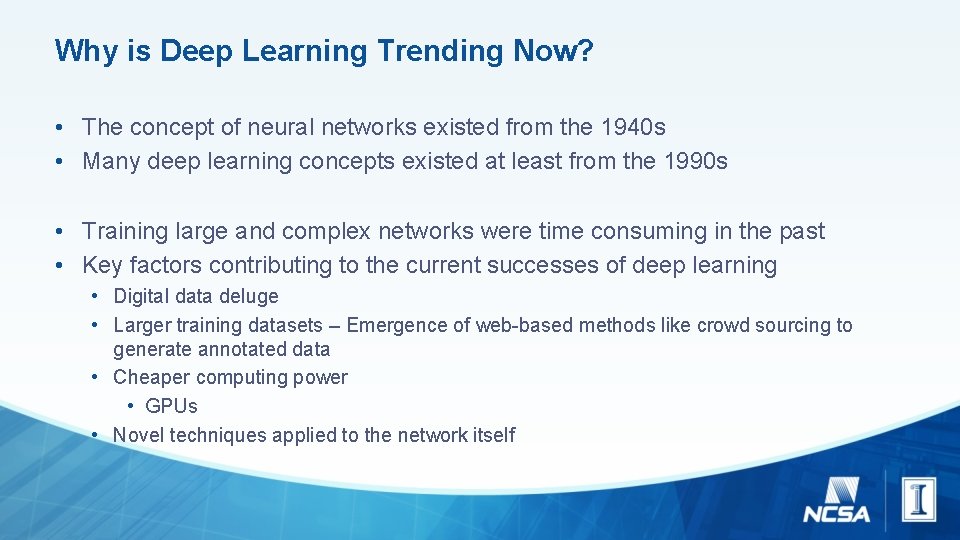

Why is Deep Learning Trending Now?

- The concept of neural networks has existed since the 1940s
- Many deep learning concepts have existed since at least the 1990s
- Training large and complex networks was time-consuming in the past
- Key factors contributing to the current successes of deep learning
  - Digital data deluge
    - Larger training datasets: emergence of web-based methods like crowdsourcing to generate annotated data
  - Cheaper computing power
    - GPUs
  - Novel techniques applied to the network itself

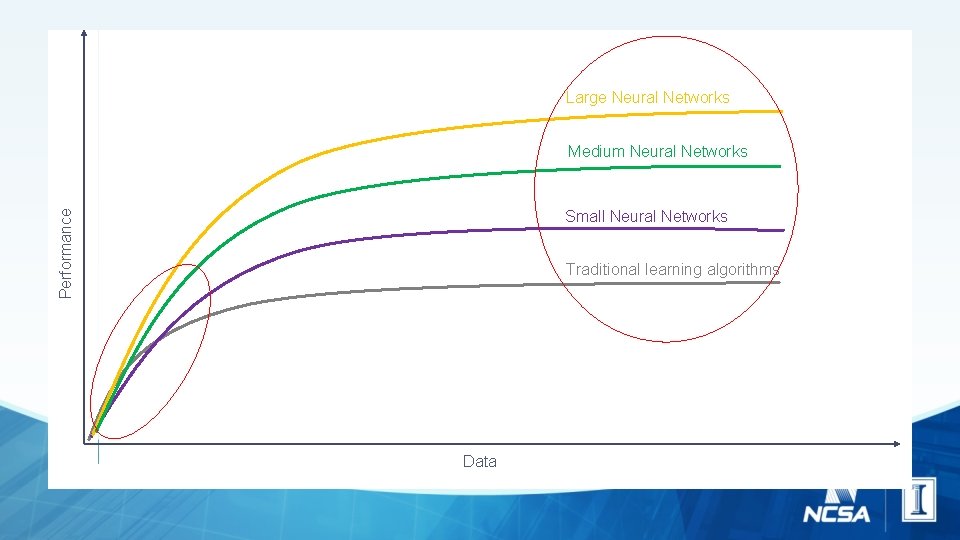

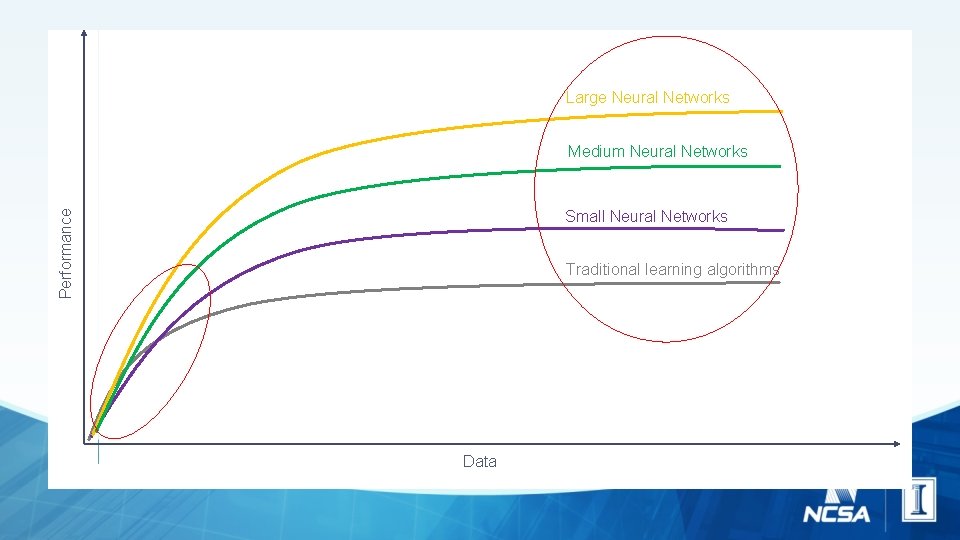

Figure: performance (y-axis) vs. amount of data (x-axis) for traditional learning algorithms and for small, medium, and large neural networks.

Hierarchical Representations in Deep Neural Networks

Image from “Deep Learning and Convolutional Neural Networks: RSIP Vision Blogs”

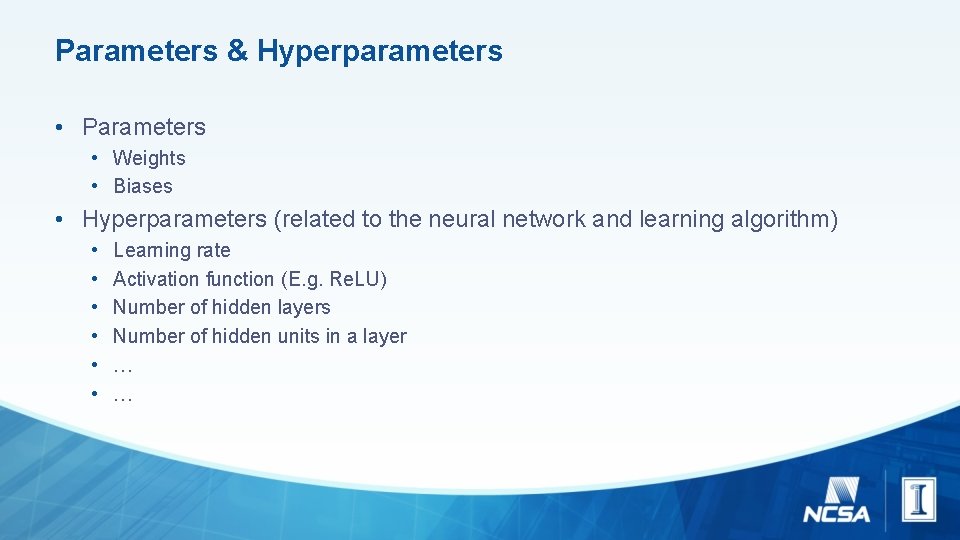

Parameters & Hyperparameters

- Parameters
  - Weights
  - Biases
- Hyperparameters (related to the neural network and the learning algorithm)
  - Learning rate
  - Activation function (e.g., ReLU)
  - Number of hidden layers
  - Number of hidden units in a layer
  - …
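To make the distinction concrete: hyperparameters are fixed before training, the parameters are what training learns, and some hyperparameters determine how many parameters exist. A minimal Python sketch; the `Hyperparameters` fields, their defaults, and the Gaussian initialization are illustrative assumptions. The learning rate and activation are carried along here but would only be used by a training loop and forward pass, which are not shown.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple

Layer = Tuple[List[List[float]], List[float]]


@dataclass
class Hyperparameters:
    """Choices made before training (field names are illustrative)."""
    learning_rate: float = 0.01
    activation: str = "relu"
    hidden_layers: int = 2
    hidden_units: int = 4


def init_parameters(hp: Hyperparameters, n_inputs: int, n_outputs: int,
                    seed: int = 0) -> List[Layer]:
    """Create the weights and biases that training will later adjust."""
    rng = random.Random(seed)
    sizes = [n_inputs] + [hp.hidden_units] * hp.hidden_layers + [n_outputs]
    params: List[Layer] = []
    for fan_in, fan_out in zip(sizes[:-1], sizes[1:]):
        # Small random weights break symmetry between units; biases start at zero
        weights = [[rng.gauss(0.0, 0.1) for _ in range(fan_in)] for _ in range(fan_out)]
        biases = [0.0] * fan_out
        params.append((weights, biases))
    return params


def count_parameters(params: List[Layer]) -> int:
    """Total number of learnable weights and biases."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in params)


if __name__ == "__main__":
    params = init_parameters(Hyperparameters(), n_inputs=3, n_outputs=1)
    print(count_parameters(params))  # 41
```

With the defaults (2 hidden layers of 4 units), 3 inputs and 1 output, the layer sizes are 3, 4, 4, 1, giving (3·4 + 4) + (4·4 + 4) + (4·1 + 1) = 41 parameters; changing a hyperparameter such as the number of hidden units changes that count.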

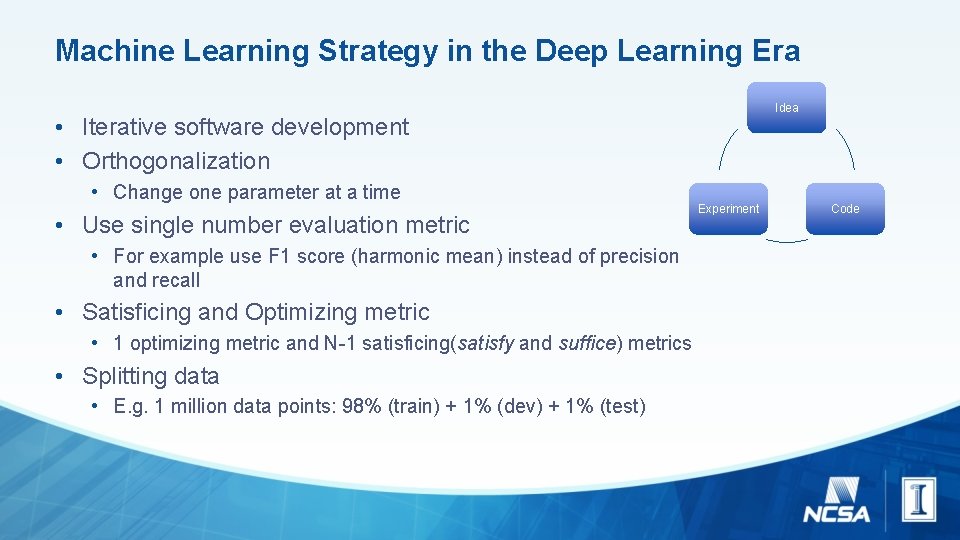

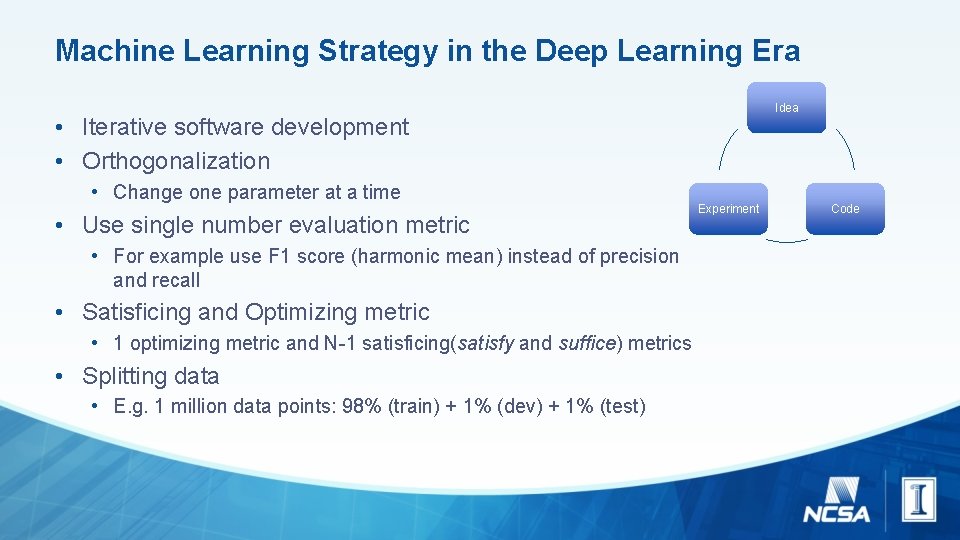

Machine Learning Strategy in the Deep Learning Era

Figure: the iterative cycle Idea -> Code -> Experiment

- Iterative software development
- Orthogonalization
  - Change one parameter at a time
- Use a single-number evaluation metric
  - For example, use the F1 score (the harmonic mean of precision and recall) instead of tracking precision and recall separately
- Satisficing and optimizing metrics
  - 1 optimizing metric and N-1 satisficing (satisfy + suffice) metrics
- Splitting data
  - E.g., 1 million data points: 98% (train) + 1% (dev) + 1% (test)
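The single-number metric and the optimizing/satisficing ideas can be sketched together in Python. The candidate models and their precision, recall and runtime figures are hypothetical, and the satisficing check assumes lower-is-better constraints (such as runtime) with a "must not exceed" threshold.

```python
from typing import Dict, Optional


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall: 2PR / (P + R)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def pick_model(candidates: Dict[str, Dict[str, float]], optimizing: str,
               satisficing: Dict[str, float]) -> Optional[str]:
    """Maximize one optimizing metric among candidates that meet every
    satisficing threshold (each satisficing metric must be <= its limit)."""
    feasible = {name: m for name, m in candidates.items()
                if all(m[k] <= limit for k, limit in satisficing.items())}
    return max(feasible, key=lambda name: feasible[name][optimizing], default=None)


if __name__ == "__main__":
    # Hypothetical classifiers: F1 is optimized, runtime only has to be good enough
    candidates = {
        "A": {"f1": f1_score(0.95, 0.90), "runtime_ms": 80.0},
        "B": {"f1": f1_score(0.98, 0.85), "runtime_ms": 60.0},
        "C": {"f1": f1_score(0.97, 0.96), "runtime_ms": 150.0},
    }
    print(pick_model(candidates, "f1", {"runtime_ms": 100.0}))  # A
```

Model C has the best F1 but fails the 100 ms runtime constraint, so among the models that satisfy it, A wins on the single optimizing metric.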

Key Takeaways

- Some information on artificial neurons and neural networks
- What is deep learning?
  - A group of machine learning algorithms applied to artificial neural networks with a large number of layers and connections
- Why is deep learning trending now?
  - Factors such as large datasets and cheaper computing power, which enable quick development cycles
- How are deep neural networks able to solve certain problems so successfully?
  - Hierarchical representation

References

- Coursera Deep Learning & Neural Network Courses
- Wikipedia