Introduction to Data Warehousing Randy Grenier Rev 11

- Slides: 68

Introduction to Data Warehousing Randy Grenier Rev. 11 November 2014

First of all what is data warehousing? The methods and architectures used to collect, integrate, transform and store operational data so that it can be used for analysis and reporting. Banking Data Integrate Transform Data Warehouse Analysis Manufacturing Data Integrate Transform Data Warehouse Analysis Healthcare Data Integrate Transform Data Warehouse Analysis

Contents • History • Paradigm Shift • Architecture • Emerging Technologies • Questions

History Some history of the following will facilitate a better understanding of how data warehousing came about: • How data is stored • How data is accessed • Transaction vs. analytical processing

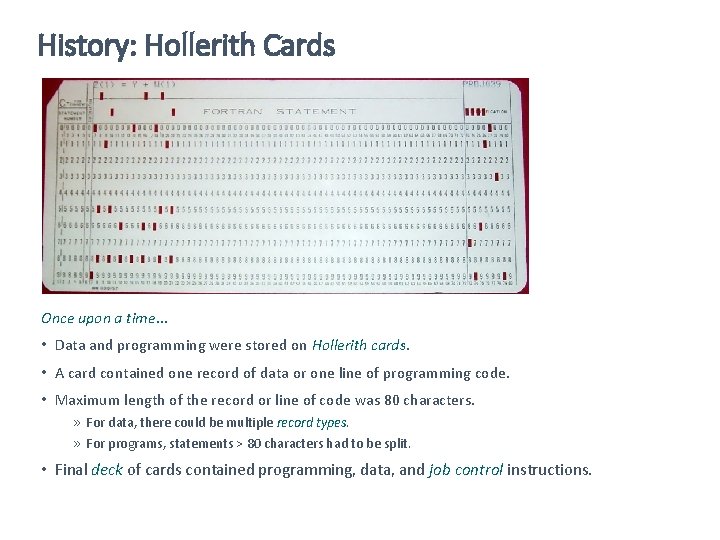

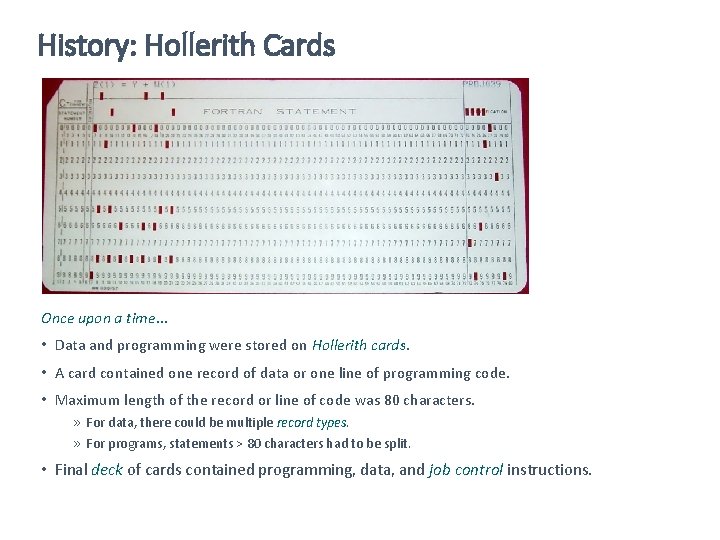

History: Hollerith Cards Once upon a time… • Data and programming were stored on Hollerith cards. • A card contained one record of data or one line of programming code. • Maximum length of the record or line of code was 80 characters. » For data, there could be multiple record types. » For programs, statements > 80 characters had to be split. • Final deck of cards contained programming, data, and job control instructions.

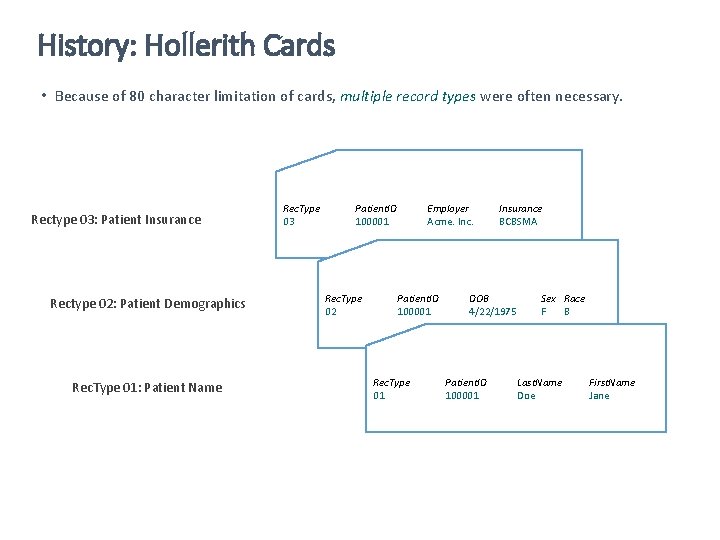

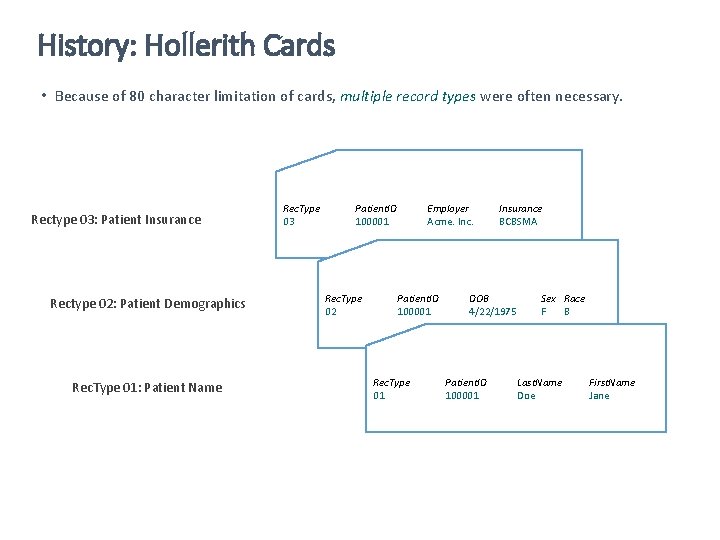

History: Hollerith Cards • Because of 80 character limitation of cards, multiple record types were often necessary. Rectype 03: Patient Insurance Rectype 02: Patient Demographics Rec. Type 01: Patient Name Rec. Type 03 Patient. ID 100001 Rec. Type 02 Employer Acme. Inc. Patient. ID 100001 Rec. Type 01 Insurance BCBSMA DOB 4/22/1975 Patient. ID 100001 Sex Race F B Last. Name Doe First. Name Jane

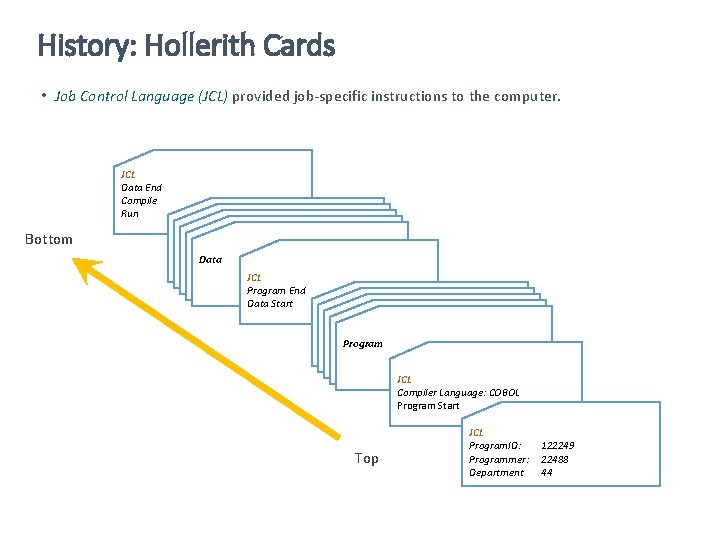

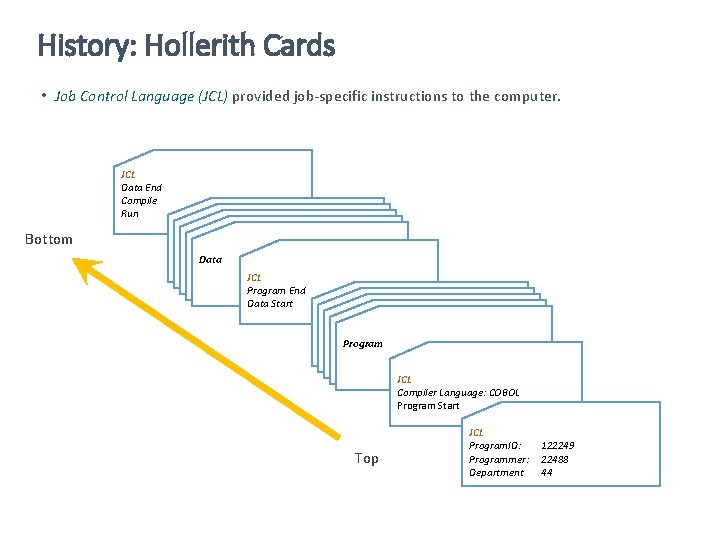

History: Hollerith Cards • Job Control Language (JCL) provided job-specific instructions to the computer. JCL Data End Compile Run Bottom Program Data Program JCL Program End Data Start Program Program JCL Compiler Language: COBOL Program Start Top JCL Program. ID: Programmer: Department 122249 22488 44

History: Hollerith Cards 1. Punch information onto cards using keypunch machines 2. Load with Card reader 3. Processed in computer memory 4. Create reports

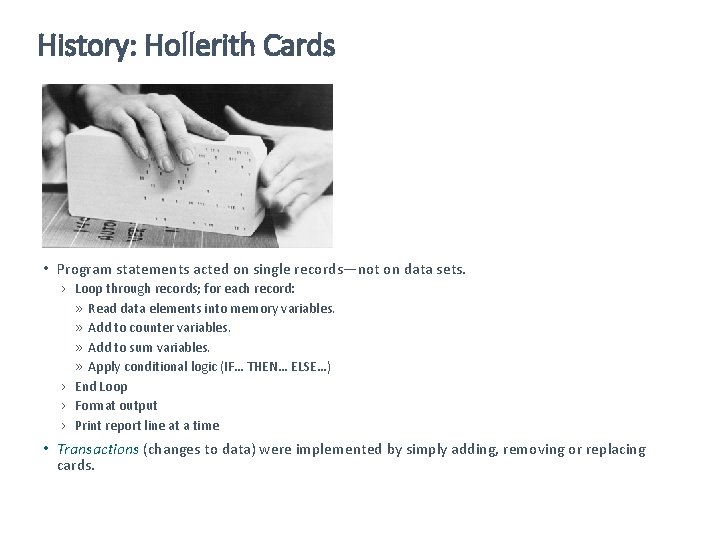

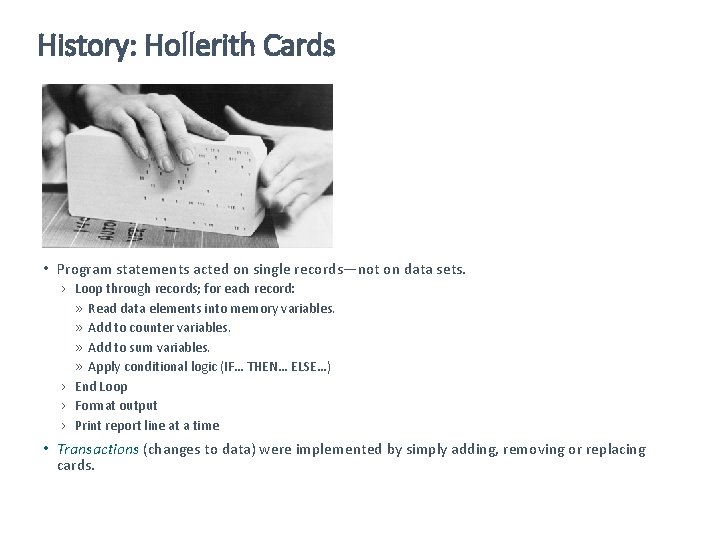

History: Hollerith Cards • Program statements acted on single records—not on data sets. › Loop through records; for each record: » Read data elements into memory variables. » Add to counter variables. » Add to sum variables. » Apply conditional logic (IF… THEN… ELSE…) › End Loop › Format output › Print report line at a time • Transactions (changes to data) were implemented by simply adding, removing or replacing cards.

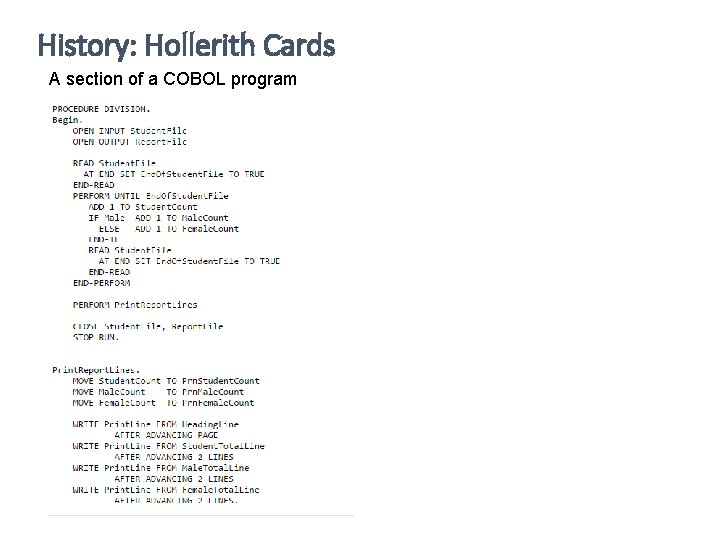

History: Hollerith Cards A section of a COBOL program

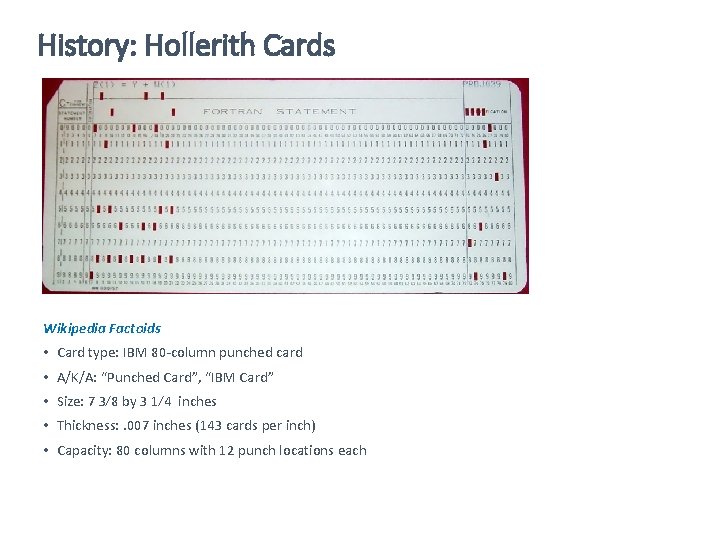

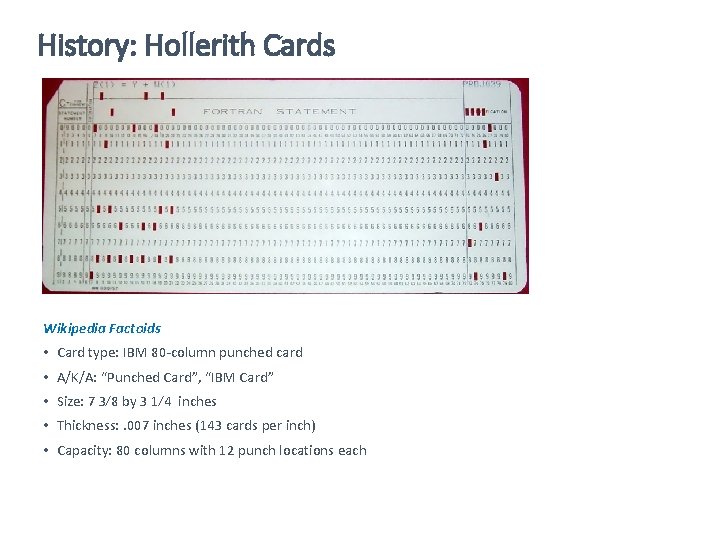

History: Hollerith Cards Wikipedia Factoids • Card type: IBM 80 -column punched card • A/K/A: “Punched Card”, “IBM Card” • Size: 7 3⁄8 by 3 1⁄4 inches • Thickness: . 007 inches (143 cards per inch) • Capacity: 80 columns with 12 punch locations each

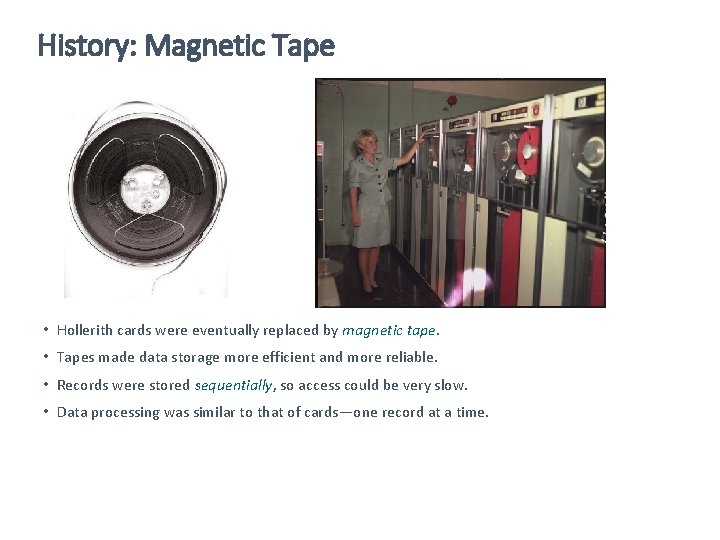

History: Magnetic Tape • Hollerith cards were eventually replaced by magnetic tape. • Tapes made data storage more efficient and more reliable. • Records were stored sequentially, so access could be very slow. • Data processing was similar to that of cards—one record at a time.

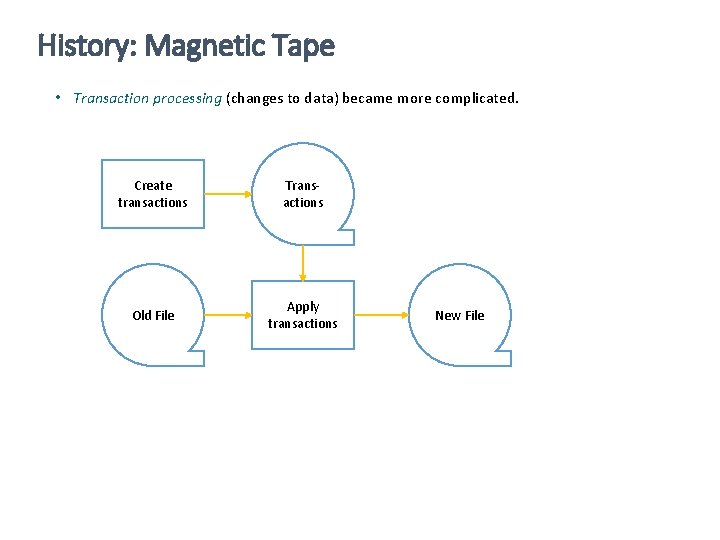

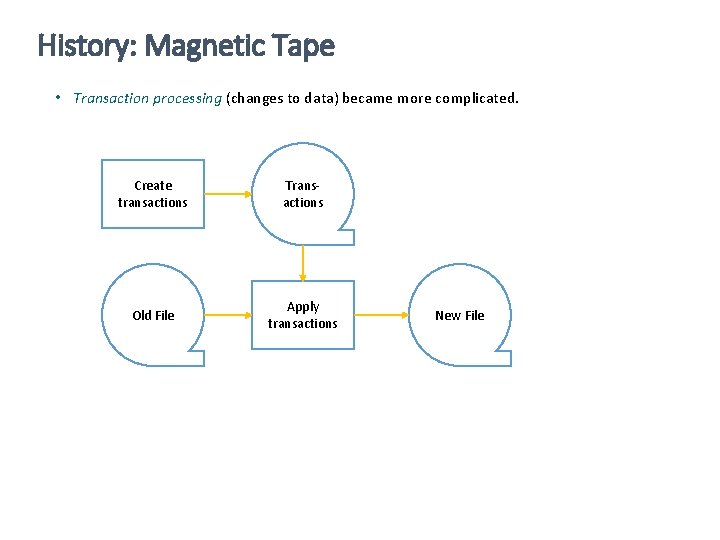

History: Magnetic Tape • Transaction processing (changes to data) became more complicated. Create transactions Transactions Old File Apply transactions New File

History: Disk Storage Flowchart symbol for disk storage • The arrival of disk storage revolutionized data storage and access. • Instead of having to load data for each process, data was always available online. • Data was available to multiple users at any given time. • This “home base” for data became known as a database. • Direct access replaced sequential access so data could be accessed more quickly.

History: Disk Storage Flowchart symbol for disk storage • Online storage required new methods for processing transactions. These became known as Online Transaction Processing (OLTP). • Reporting from online data became known as Online Analytical Processing (OLAP). • Programming reports was the same as before—one record at a time.

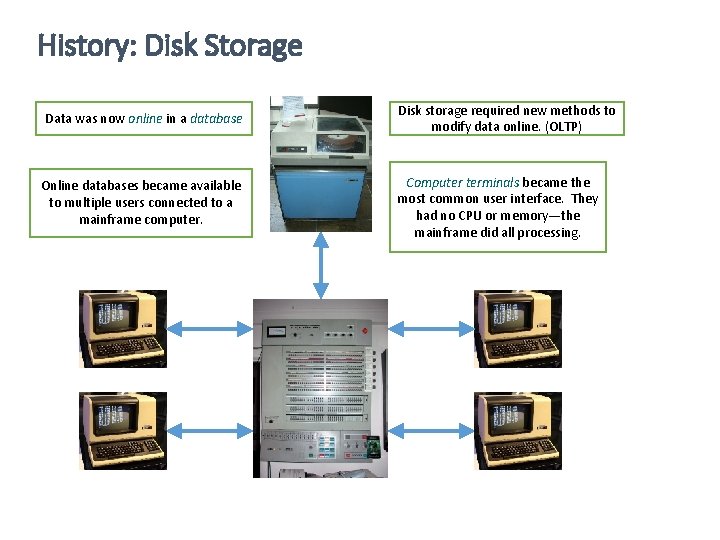

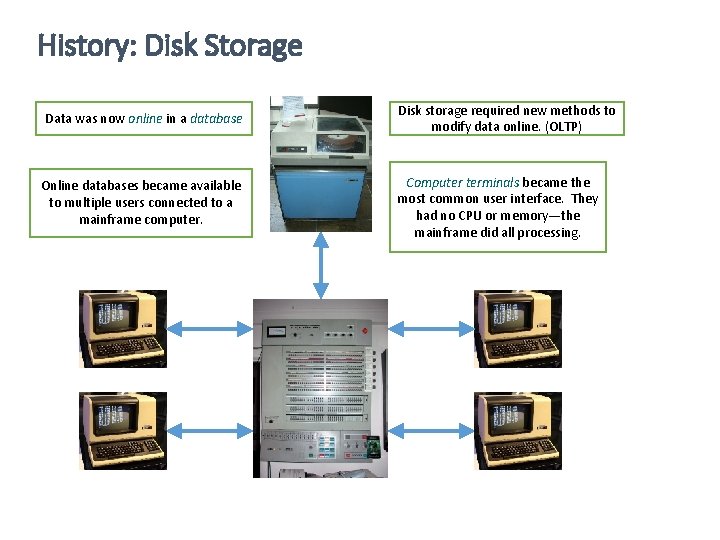

History: Disk Storage Data was now online in a database Disk storage required new methods to modify data online. (OLTP) Online databases became available to multiple users connected to a mainframe computer. Computer terminals became the most common user interface. They had no CPU or memory—the mainframe did all processing.

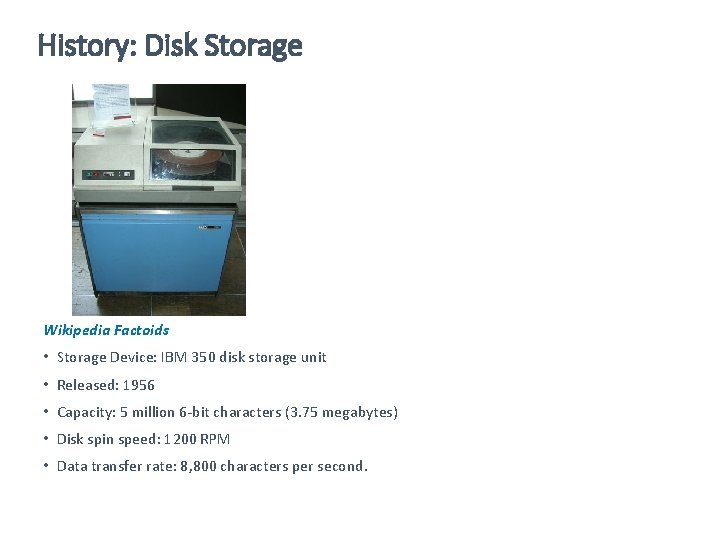

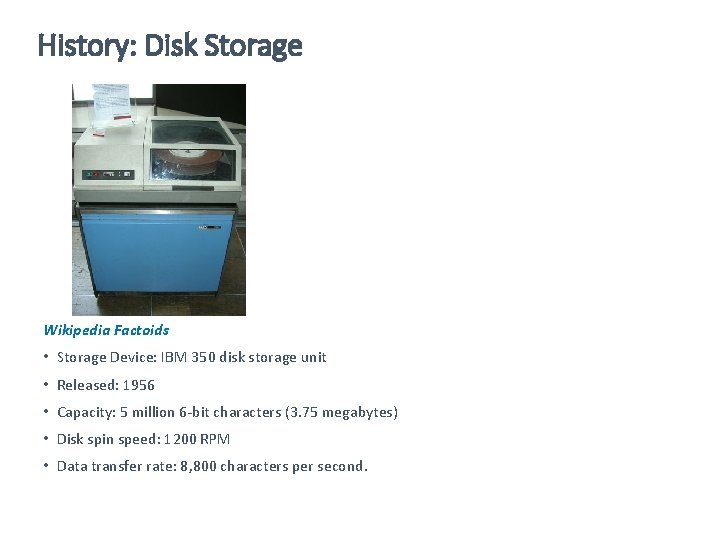

History: Disk Storage Wikipedia Factoids • Storage Device: IBM 350 disk storage unit • Released: 1956 • Capacity: 5 million 6 -bit characters (3. 75 megabytes) • Disk spin speed: 1200 RPM • Data transfer rate: 8, 800 characters per second.

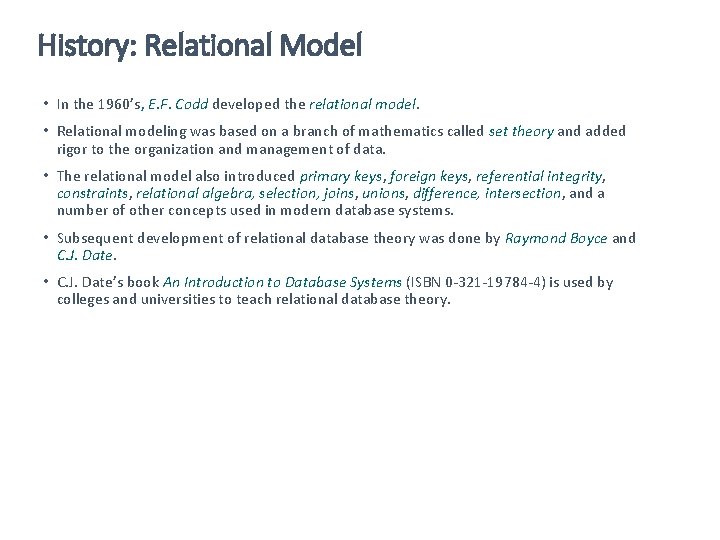

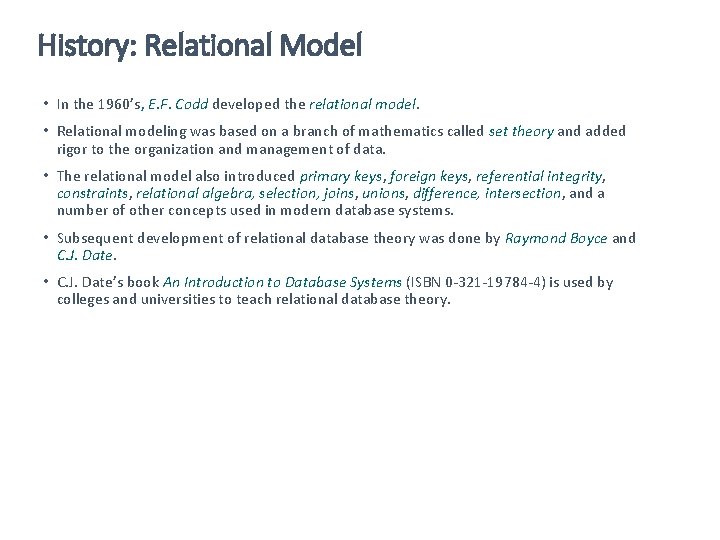

History: Relational Model • In the 1960’s, E. F. Codd developed the relational model. • Relational modeling was based on a branch of mathematics called set theory and added rigor to the organization and management of data. • The relational model also introduced primary keys, foreign keys, referential integrity, constraints, relational algebra, selection, joins, unions, difference, intersection, and a number of other concepts used in modern database systems. • Subsequent development of relational database theory was done by Raymond Boyce and C. J. Date. • C. J. Date’s book An Introduction to Database Systems (ISBN 0 -321 -19784 -4) is used by colleges and universities to teach relational database theory.

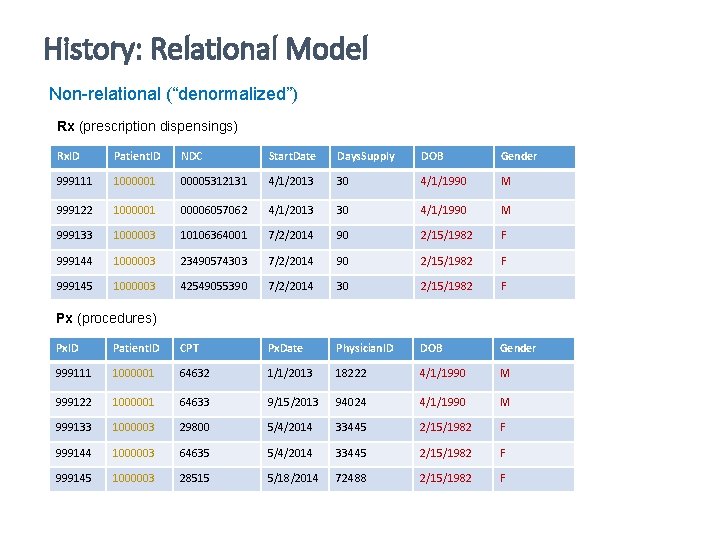

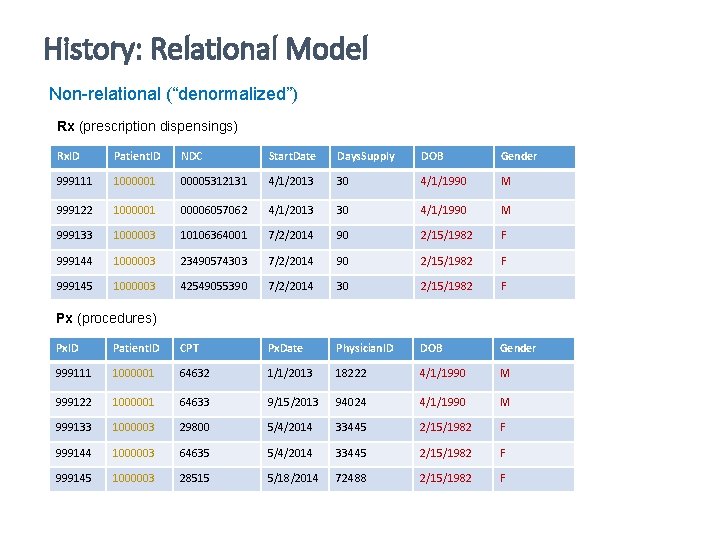

History: Relational Model Non-relational (“denormalized”) Rx (prescription dispensings) Rx. ID Patient. ID NDC Start. Date Days. Supply DOB Gender 999111 1000001 00005312131 4/1/2013 30 4/1/1990 M 999122 1000001 00006057062 4/1/2013 30 4/1/1990 M 999133 1000003 10106364001 7/2/2014 90 2/15/1982 F 999144 1000003 23490574303 7/2/2014 90 2/15/1982 F 999145 1000003 42549055390 7/2/2014 30 2/15/1982 F Px (procedures) Px. ID Patient. ID CPT Px. Date Physician. ID DOB Gender 999111 1000001 64632 1/1/2013 18222 4/1/1990 M 999122 1000001 64633 9/15/2013 94024 4/1/1990 M 999133 1000003 29800 5/4/2014 33445 2/15/1982 F 999144 1000003 64635 5/4/2014 33445 2/15/1982 F 999145 1000003 28515 5/18/2014 72488 2/15/1982 F

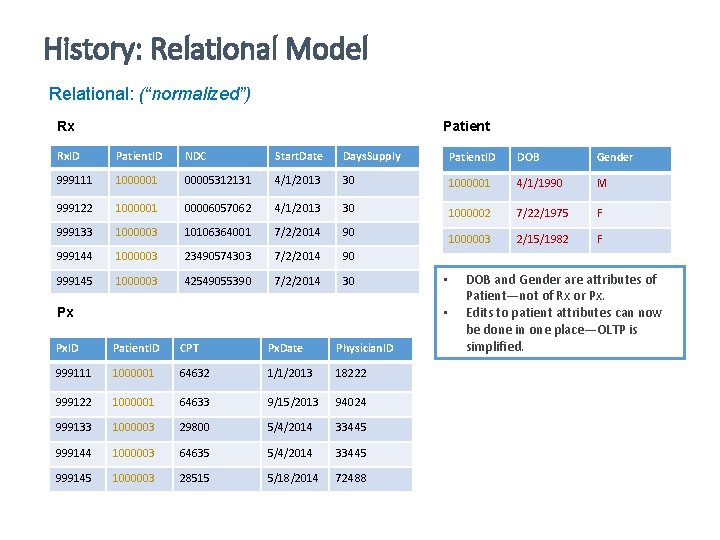

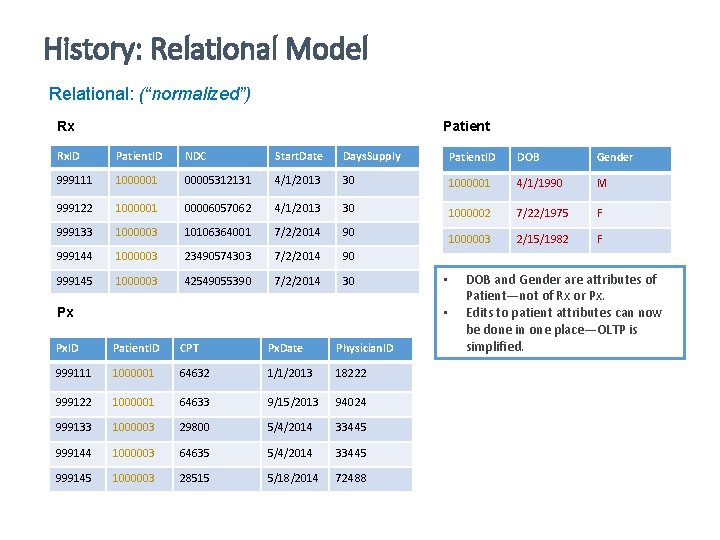

History: Relational Model Relational: (“normalized”) Rx Patient Rx. ID Patient. ID NDC Start. Date Days. Supply Patient. ID DOB Gender 999111 1000001 00005312131 4/1/2013 30 1000001 4/1/1990 M 999122 1000001 00006057062 4/1/2013 30 1000002 7/22/1975 F 999133 1000003 10106364001 7/2/2014 90 1000003 2/15/1982 F 999144 1000003 23490574303 7/2/2014 90 999145 1000003 42549055390 7/2/2014 30 • • Px Px. ID Patient. ID CPT Px. Date Physician. ID 999111 1000001 64632 1/1/2013 18222 999122 1000001 64633 9/15/2013 94024 999133 1000003 29800 5/4/2014 33445 999144 1000003 64635 5/4/2014 33445 999145 1000003 28515 5/18/2014 72488 DOB and Gender are attributes of Patient—not of Rx or Px. Edits to patient attributes can now be done in one place—OLTP is simplified.

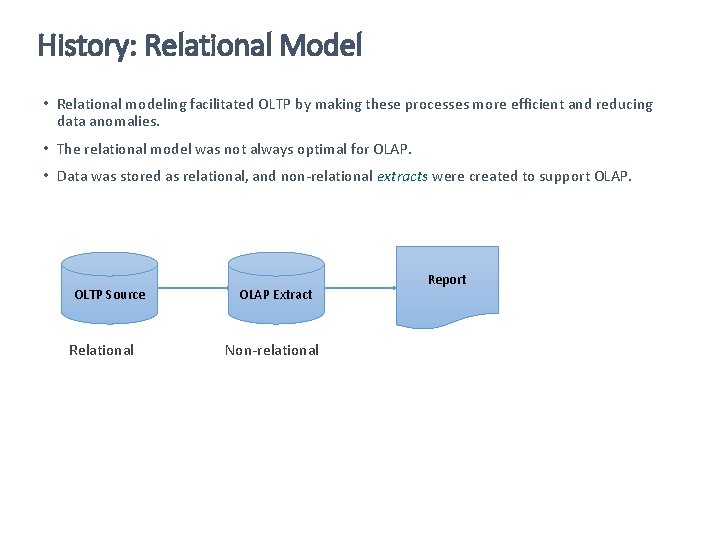

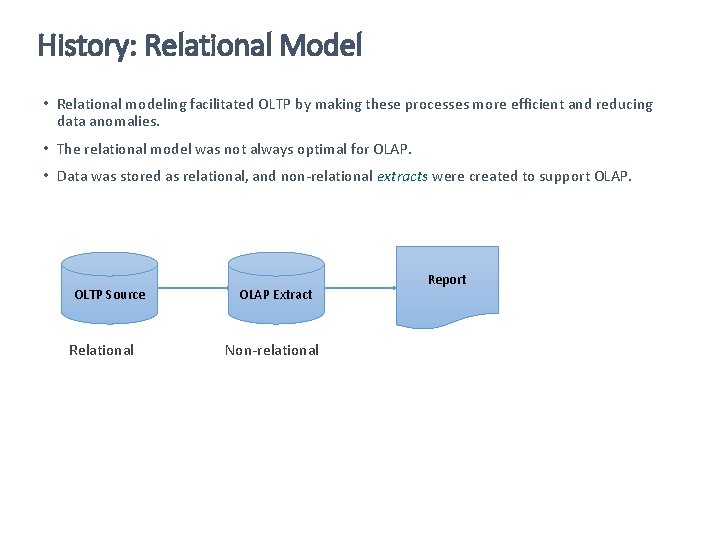

History: Relational Model • Relational modeling facilitated OLTP by making these processes more efficient and reducing data anomalies. • The relational model was not always optimal for OLAP. • Data was stored as relational, and non-relational extracts were created to support OLAP. OLTP Source Relational OLAP Extract Non-relational Report

History: Relational Model Wikipedia Factoids • E. F. Codd got his undergraduate degree in mathematics from Oxford. He received his doctorate in computer science from University of Michigan. • Angered by Mc. Carthyism in the U. S. during the 1950’s, Codd moved to Canada for several years. • Although E. F. Codd was employed by IBM when he created the relational model, IBM did not commercialize relational databases because it would have competed with another of their database products. • The first commercial implementation of relational database and SQL was from Relational Software, Inc. which is now Oracle Corporation.

History: SQL • Structured Query Language (SQL) was the first language created to support relational database operations for both OLAP and OLTP. • SQL could operate on sets of records instead of just one record at a time. • IBM originally called the language SEQUEL, but because that was a name proprietary to IBM it was renamed SQL. • SQL has been standardized by standards organizations American National Standards Institute (ANSI) and the International Standards Organization (ISO).

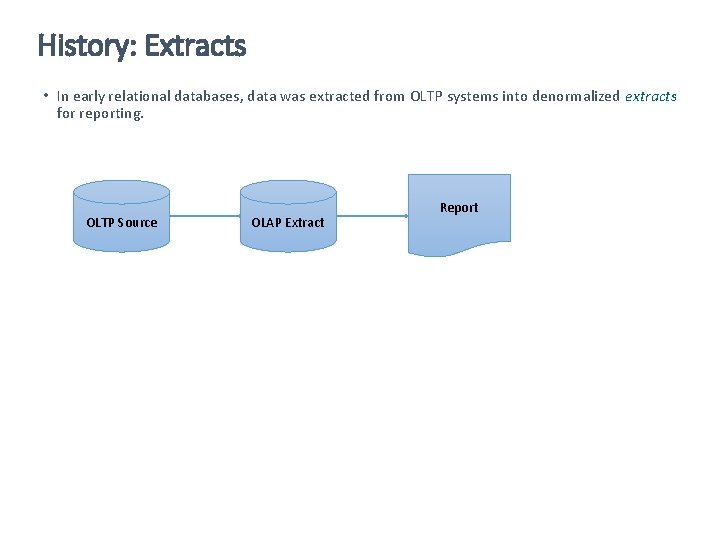

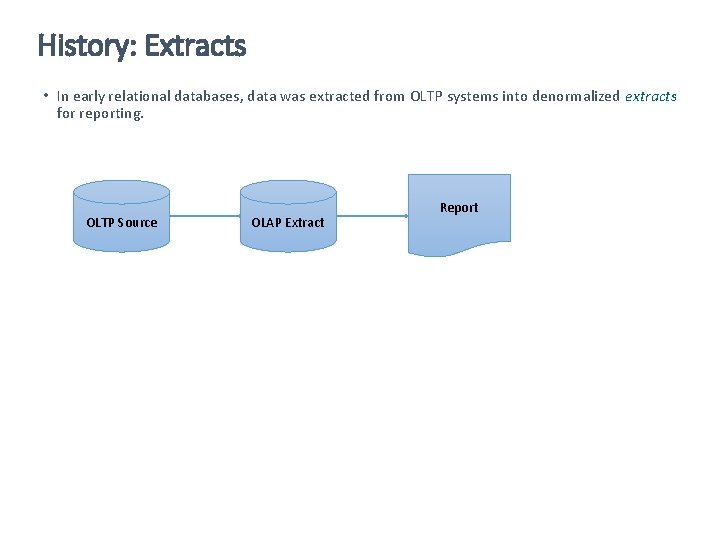

History: Extracts • In early relational databases, data was extracted from OLTP systems into denormalized extracts for reporting. OLTP Source OLAP Extract Report

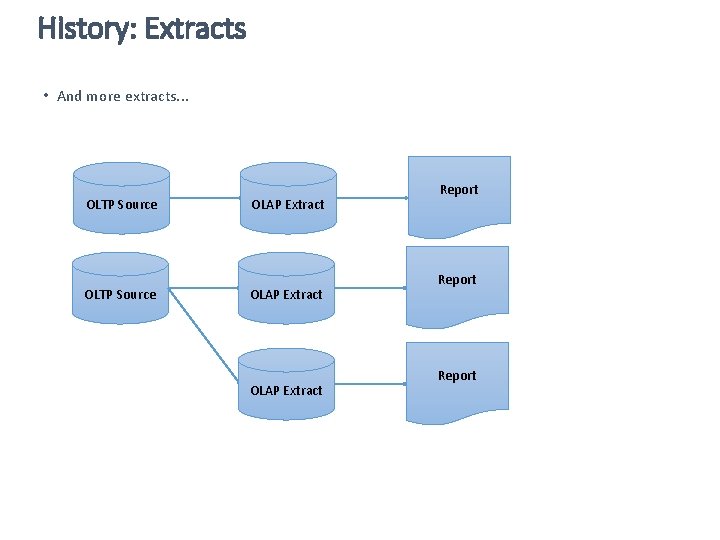

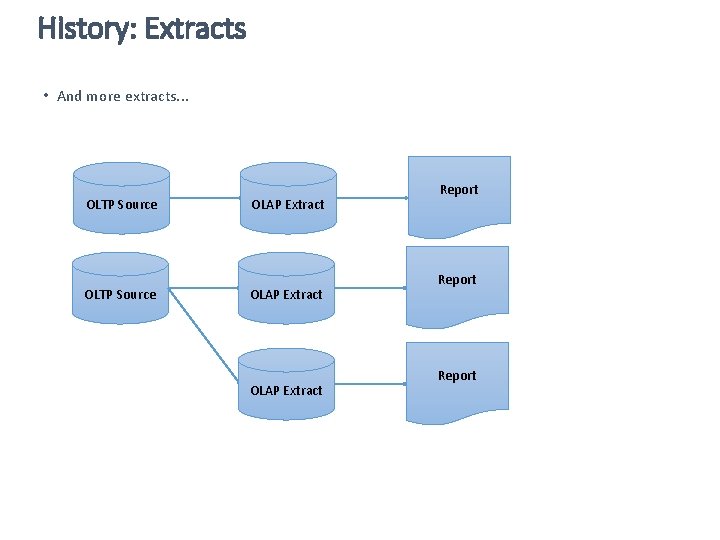

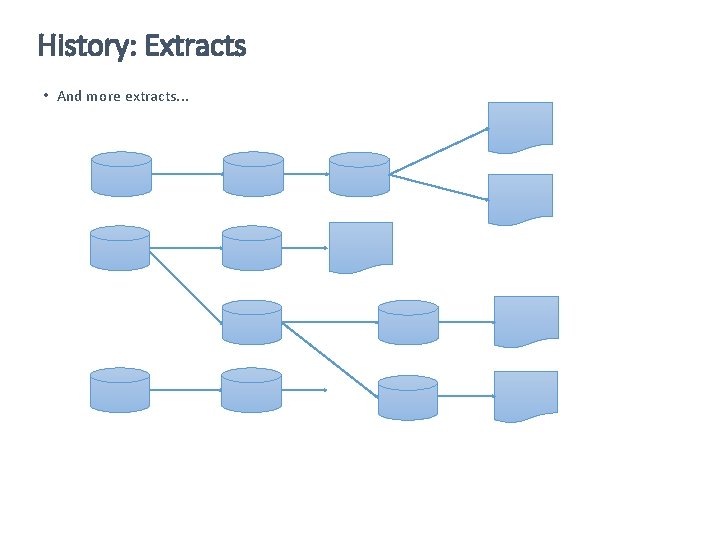

History: Extracts • And more extracts. . . OLTP Source OLAP Extract Report

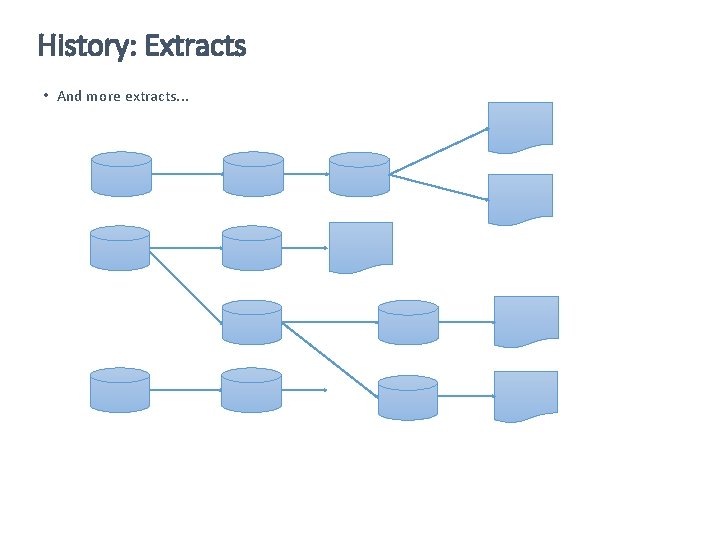

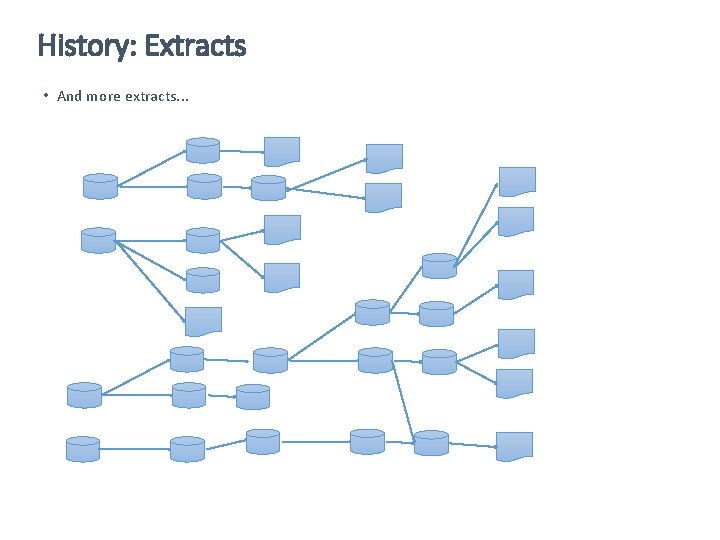

History: Extracts • And more extracts. . .

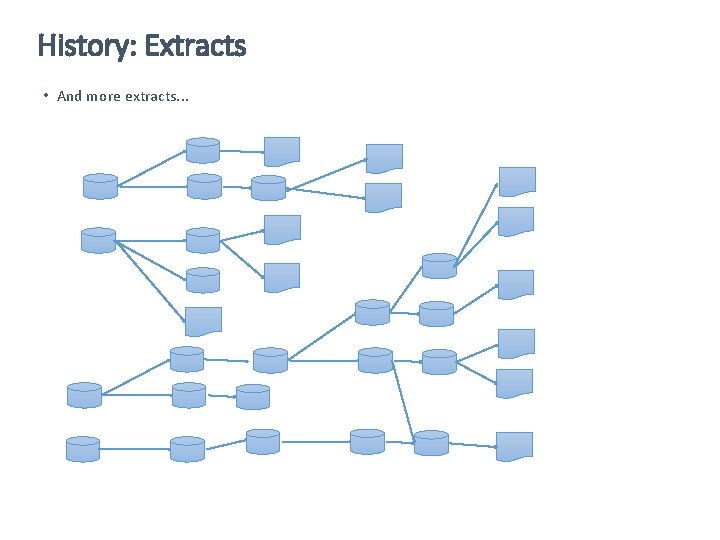

History: Extracts • And more extracts. . .

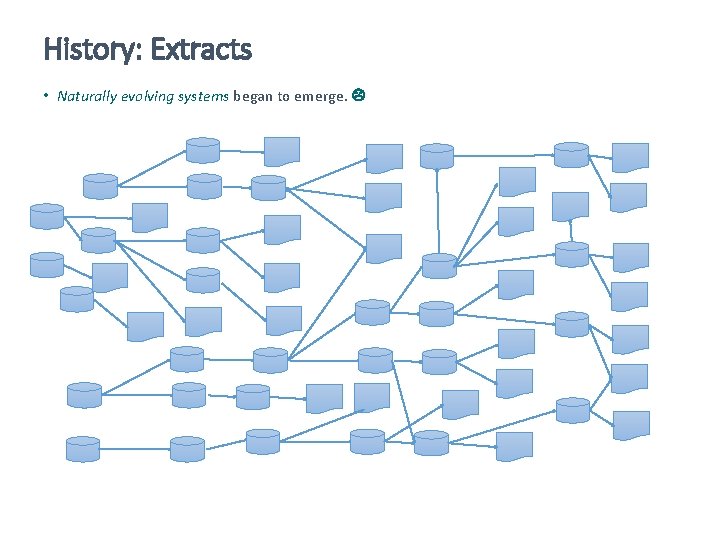

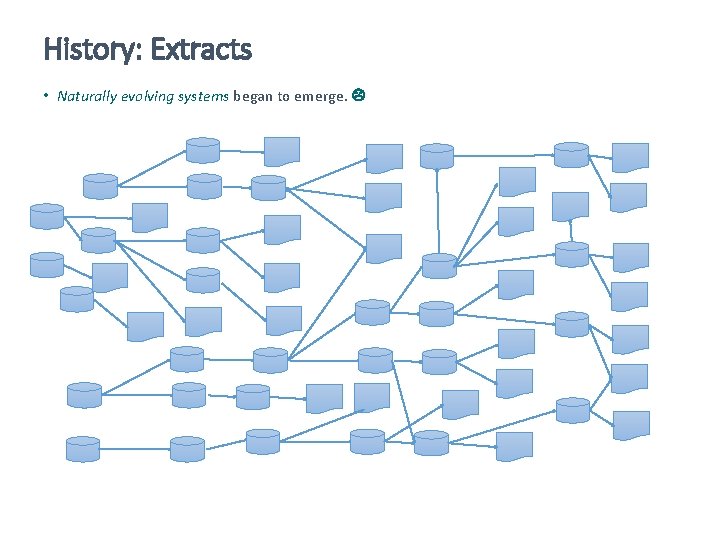

History: Extracts • Naturally evolving systems began to emerge.

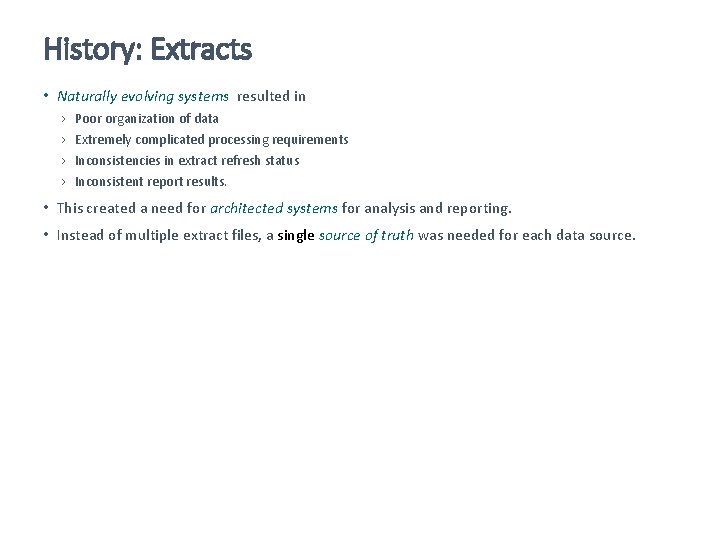

History: Extracts • Naturally evolving systems resulted in › › Poor organization of data Extremely complicated processing requirements Inconsistencies in extract refresh status Inconsistent report results. • This created a need for architected systems for analysis and reporting. • Instead of multiple extract files, a single source of truth was needed for each data source.

History: Architected Systems • Developers began to design architected systems for OLAP data. • Over time methods and techniques for architected systems began to evolve, and best practices began to emerge. • In the 1980’s organizations began to integrate data from all of their databases (. e. g accts. receivable, accts. payable, HR, inventory, etc. ). These integrated OLAP databases became known as Enterprise Data Warehouses (EDWs). • The term data warehousing came to be used for the methods and architectures used to build architected OLAP databases.

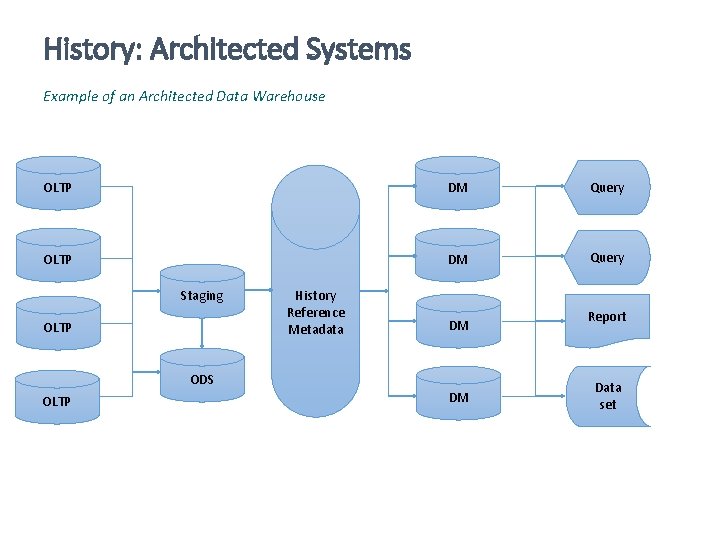

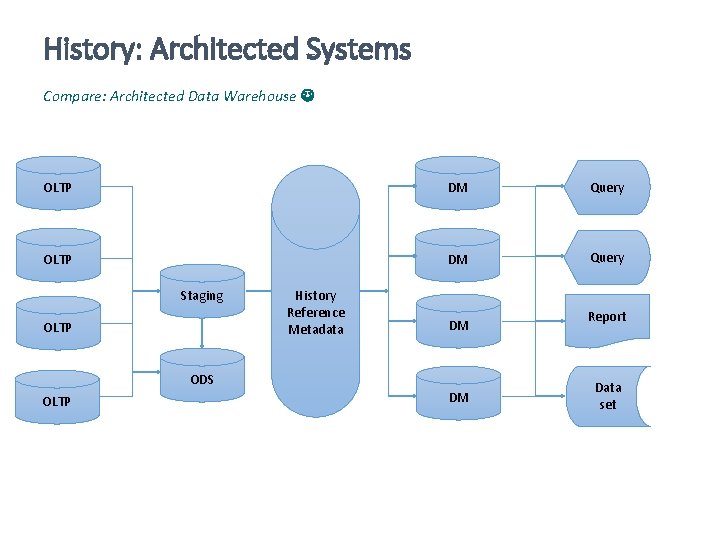

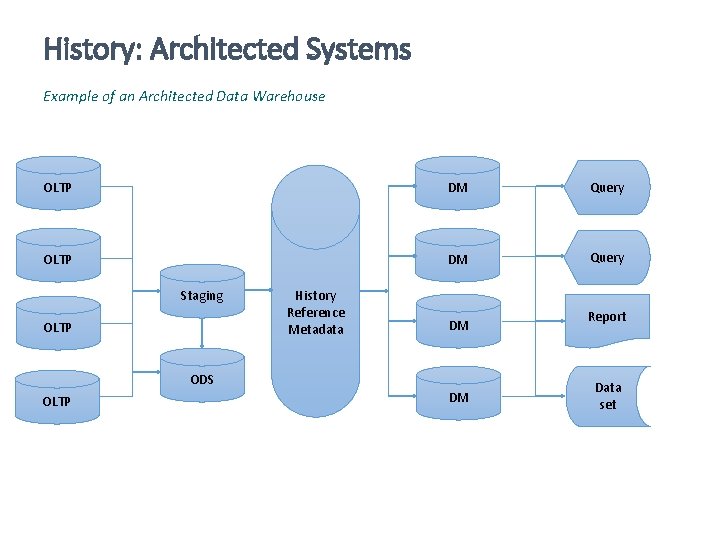

History: Architected Systems Example of an Architected Data Warehouse OLTP DM Query Staging OLTP History Reference Metadata DM ODS OLTP DM Report Data set

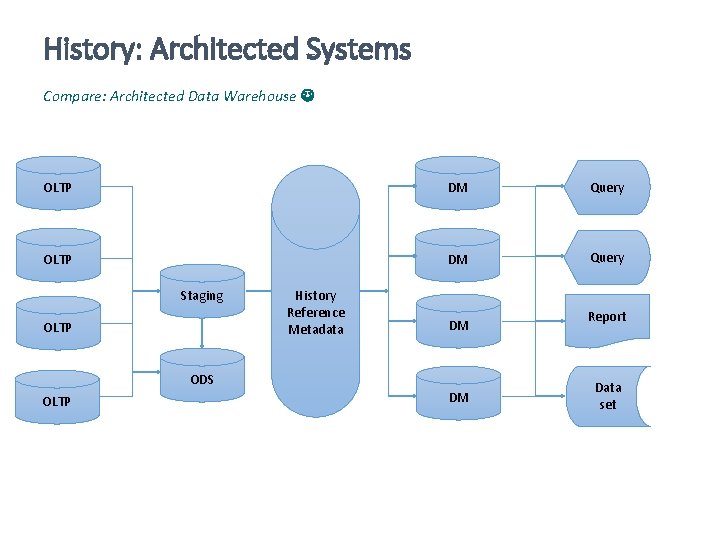

History: Architected Systems Compare: Naturally evolving system

History: Architected Systems Compare: Architected Data Warehouse OLTP DM Query Staging OLTP History Reference Metadata DM ODS OLTP DM Report Data set

History: Inmon • In the early 1990’s W. H. Inmon published Building the Data Warehouse (ISBN 10: 0471141615) • Inmon put together the quickly accumulating knowledge of data warehousing and popularized most of the terminology we use today. › Extract, Transform, and Load (ETL) › Transformation and Integration (T&I) › Operational data › History data › Snapshot › Source of Truth › Data Mart › Heuristic development • W. H. Inmon created the first and most commonly accepted definition of a data warehouse: A subject oriented, nonvolatile, integrated, time variant collection of data in support of management's decisions. • Inmon has subsequently published a more recent architecture called DW 2. 0, but it is not yet as widely accepted as his earlier ideas.

History: Inmon Wikipedia Factoids • W. H. Inmon coined the term data warehouse. • W. H. Inmon is recognized by many as the father of data warehousing. • Other firsts of W. H. Inman › Wrote the first book on data warehousing › Wrote the first magazine column on data warehousing › Taught the first classes on data warehousing

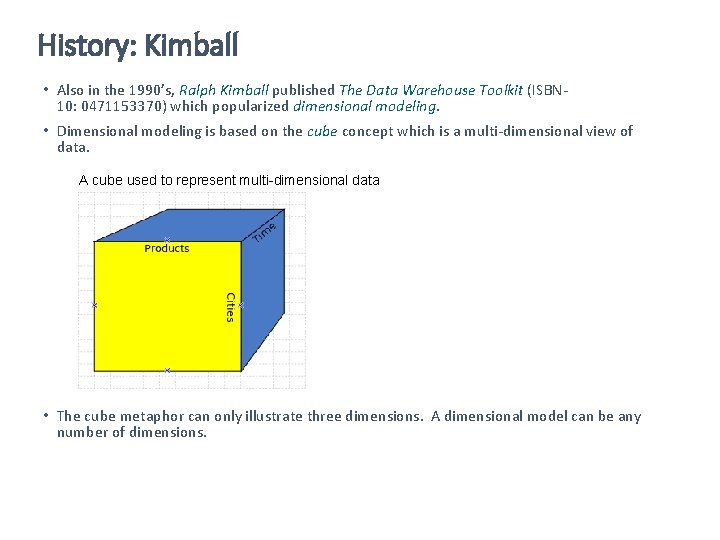

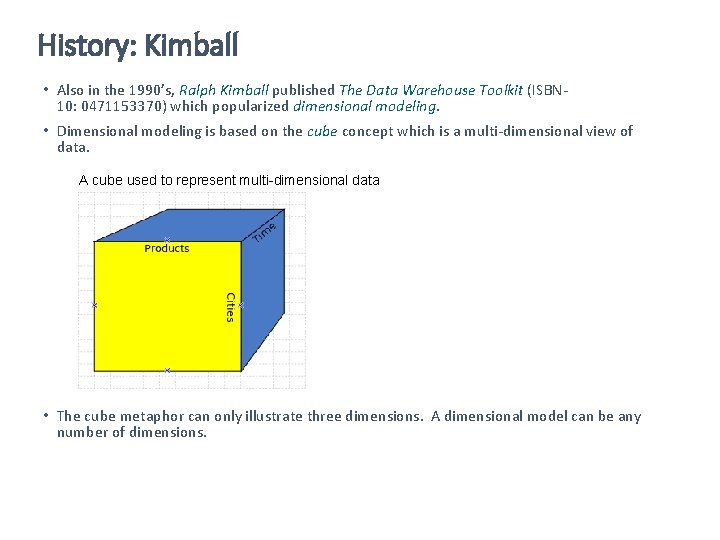

History: Kimball • Also in the 1990’s, Ralph Kimball published The Data Warehouse Toolkit (ISBN 10: 0471153370) which popularized dimensional modeling. • Dimensional modeling is based on the cube concept which is a multi-dimensional view of data. A cube used to represent multi-dimensional data • The cube metaphor can only illustrate three dimensions. A dimensional model can be any number of dimensions.

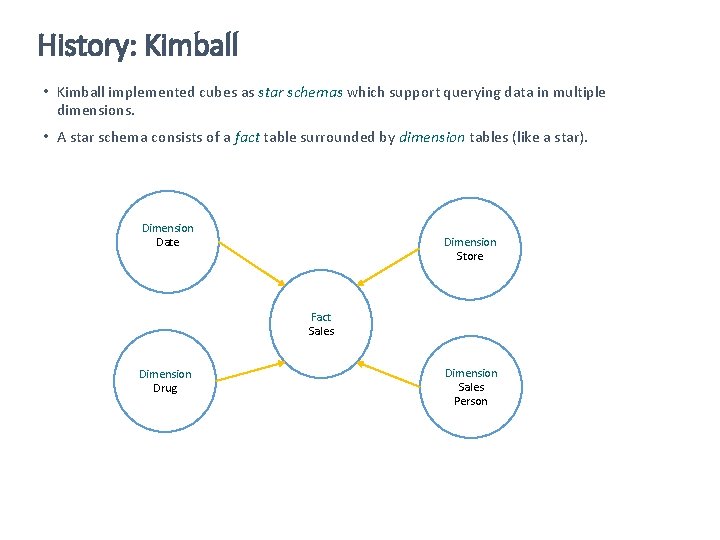

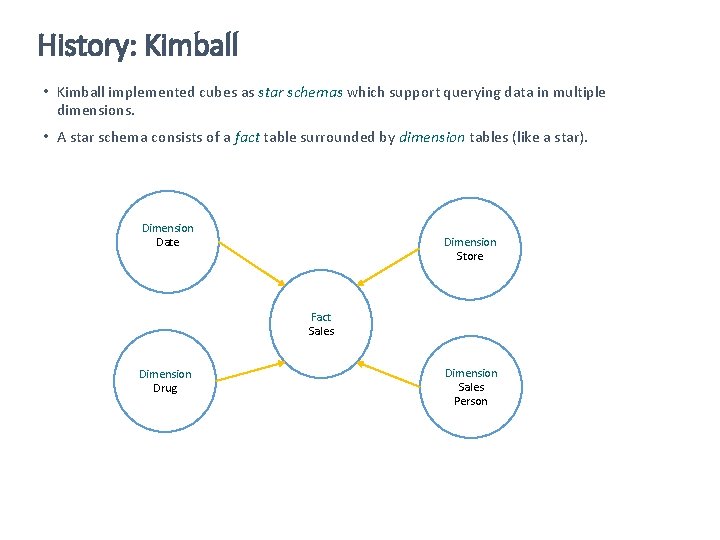

History: Kimball • Kimball implemented cubes as star schemas which support querying data in multiple dimensions. • A star schema consists of a fact table surrounded by dimension tables (like a star). Dimension Date Dimension Store Fact Sales Dimension Drug Dimension Sales Person

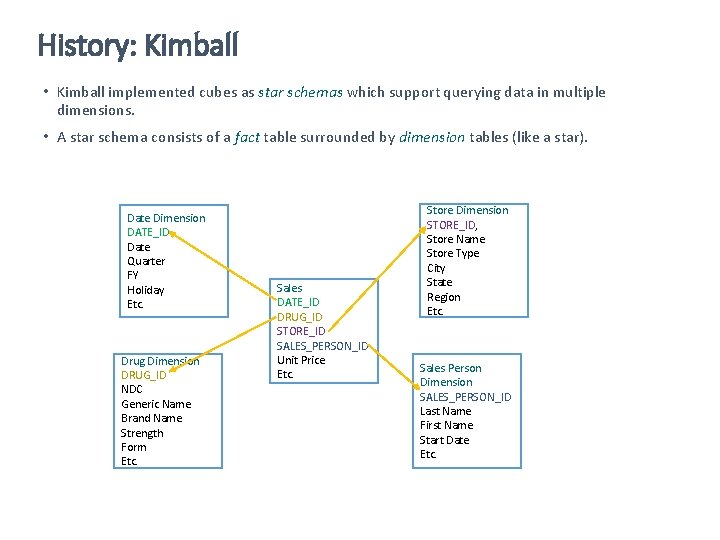

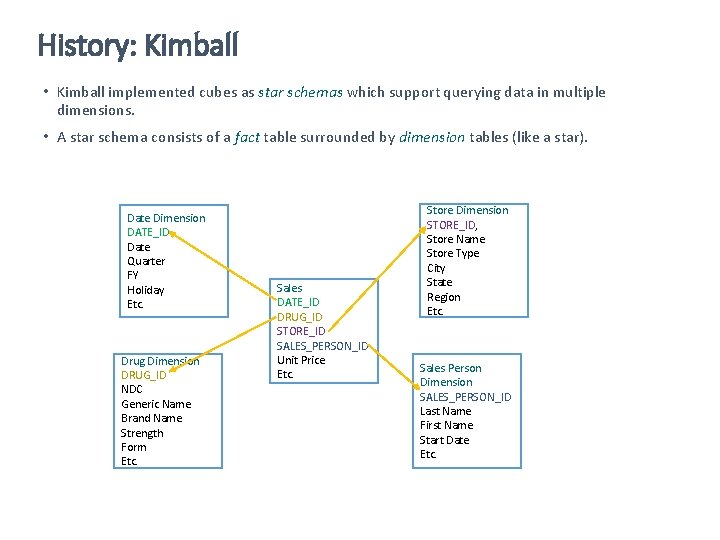

History: Kimball • Kimball implemented cubes as star schemas which support querying data in multiple dimensions. • A star schema consists of a fact table surrounded by dimension tables (like a star). Date Dimension DATE_ID Date Quarter FY Holiday Etc. Drug Dimension DRUG_ID NDC Generic Name Brand Name Strength Form Etc. Sales DATE_ID DRUG_ID STORE_ID SALES_PERSON_ID Unit Price Etc. Store Dimension STORE_ID, Store Name Store Type City State Region Etc. Sales Person Dimension SALES_PERSON_ID Last Name First Name Start Date Etc.

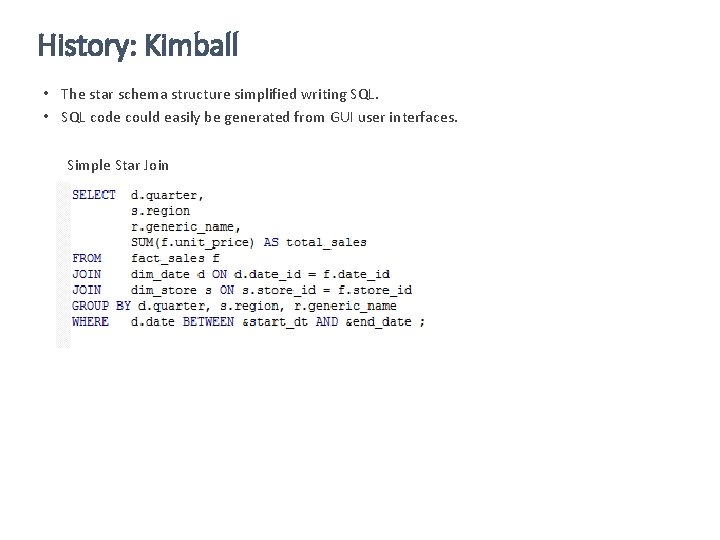

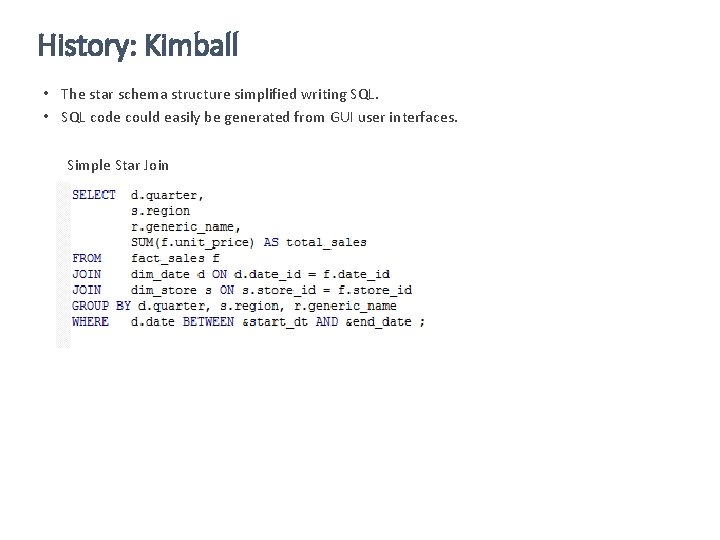

History: Kimball • The star schema structure simplified writing SQL. • SQL code could easily be generated from GUI user interfaces. Simple Star Join

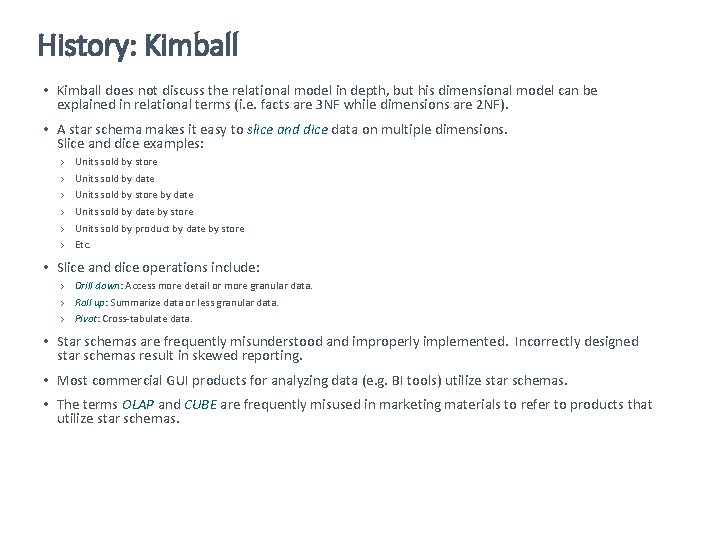

History: Kimball • Kimball does not discuss the relational model in depth, but his dimensional model can be explained in relational terms (i. e. facts are 3 NF while dimensions are 2 NF). • A star schema makes it easy to slice and dice data on multiple dimensions. Slice and dice examples: › › › Units sold by store Units sold by date Units sold by store by date Units sold by date by store Units sold by product by date by store Etc. • Slice and dice operations include: › Drill down: Access more detail or more granular data. › Roll up: Summarize data or less granular data. › Pivot: Cross-tabulate data. • Star schemas are frequently misunderstood and improperly implemented. Incorrectly designed star schemas result in skewed reporting. • Most commercial GUI products for analyzing data (e. g. BI tools) utilize star schemas. • The terms OLAP and CUBE are frequently misused in marketing materials to refer to products that utilize star schemas.

History: Kimball Wikipedia Factoids • Ralph Kimball had a Ph. D. in electrical engineering from Stanford University. • Kimball worked at the Xerox Palo Alto Research Center (PARC). PARC is where laser printing, Ethernet, object-oriented programming, and graphic user interfaces (GUIs) were invented. • Kimball was a principal designer of the Xerox Star Workstation which was the first personal computer to use a GUI, windows, icons, and mice.

History: Big Data • Rapidly increasing amounts of data in the 21 st Century are surpassing the capabilities of relational databases. • New methods of data storage and retrieval are rapidly emerging. • Unstructured databases which are sometimes referred to as No. SQL databases support vast amounts of text data and extremely fast text searches. • Unstructured databases utilize massively parallel processing (MPP) and extensive text indexing. • Open source software such as Hadoop from Apache is widely used to manage extremely large unstructured databases. • Unstructured databases are generally not useful for complicated transaction processing (OLTP) or complex informatics (OLAP). However, these databases are rapidly evolving to incorporate additional relational capabilities. • Oracle, Microsoft, and other RDBMS vendors sell hybrid database systems that combine unstructured data with relational database systems.

History: Big Data Wikipedia Factoids • Big data became an issue as early as 1880 with the U. S. Census which took several years to tabulate with then existing methods. • The term information explosion was first used in the Lawton Constitution, a small-town Oklahoma newspaper in 1941. • The first known use of the term big data was by NASA researchers Michael Cox and David Ellsworth discussing the inability of existing systems to handle increasing amounts of data. • 1 exabyte = 10006 bytes = 1018 bytes = 1000 petabytes = 1 billion gigabytes.

Paradigm Shift • OLTP vs. OLAP • Paradigm Shift for Management • Paradigm Shift for Database Administrators • Paradigm Shift for Architects and Developers • Paradigm Shift for Analysts and Data Users

Paradigm Shift OLTP vs. OLAP OLTP (i. e. operational data) OLAP (e. g. a data warehouse) Data is modelled specifically for the application. Data is taken from some other application. All data elements for application are present. Desired data elements may not be present. Each record is validated when submitted. Entire data files must be validated upon receipt. Data is almost always normalized (3 NF). Data may be normalized, denormalized, use dimensional model, cross-tabulated, or other models. Data is constantly updated. Historic data does not change. New date ranges are added. Typical operations are on small sets of records. (e. g. add a record, update a record) Typical operations are on large numbers of records. (e. g. load large data file, groupings and aggregations) All transactions are logged. Inserts may not be logged at record level. There normally are no updates or deletes. B-tree indexes used for performance. Partitioning and bitmap indexes used for performance. Traditional development life cycle Heuristic and agile development Date range is limited. Old records are archived. Date range can be many years. Development and production are in separate databases. All data is production. Code is version-controlled.

Paradigm Shift for Management • Traditional development life cycle doesn’t work well when building a data warehouse. There is a discovery process. Agile development works better. • Data warehouses are created from data that was designed for some other purpose. It is important to evaluate data content before planning applications. • Integrating data from multiple sources can be hampered by inconsistencies: › › › Different code values Different columns Different meaning of column names Variations in how well columns are populated Other inconsistencies • OLAP data tend to be much larger requiring more resources. • Storage, storage…

Paradigm Shift for Database Administrators • Different system configurations (in Oracle, different initialization parameters) • Backup frequency may be based on ETL scheduling rather than transaction volume. • Transaction log archiving may not be necessary since there are no transactions—just processes on large amounts of data. Methods for recovery from failure may be different. • Different tuning requirements: › Selects are high cardinality (large percentage of rows) › Massive sorting, grouping and aggregation › DML operations can involve thousands or millions of records. • Need much more temporary space for caching aggregations, sorts and temporary tables. • May be required to add new partitions and archive old partitions for rolling windows of history. • Storage, storage…

Paradigm Shift or Architects and Developers • Different logical modeling and schema design. • Extensive use of partitioning for history and other large tables • Use indexes differently (e. g. bitmap rather than b-tree) • Different tuning requirements › Selects are high cardinality (large percentage of rows) › Lots of sorting, grouping and aggregation › DML operations can involve thousands or millions of records. • ETL processes are different than typical DML processes › › Use different coding techniques Use packages, functions, and stored procedures but rarely use triggers or constraints Many steps to a process Integrate data from multiple sources • Iterative and incremental development process (agile development)

Paradigm Shift For Analysts and Data Users—All Good News • A custom schema (data mart) can be created for each application per the user requirements. • Data marts can be permanent, temporary, generalized or project-specific. • New data marts can be created quickly—typically in days instead of weeks or months. • Data marts can easily be refreshed when new data is added to the data warehouse. Data mart refreshes can be scheduled or on demand. • There may be additional query tools and dashboards available (e. g. Business Intelligence, Self. Service BI, data visualization, etc. ). • Several years of history can be maintained in a data warehouse—bigger samples. • There is a consistent single source of truth for any given data set.

Architecture: Main Components of a Data Warehouse Operational Data Warehouse ETL/T&I Analytic Data ETL/T&I OLTP DM Query Staging OLTP History Reference Metadata DM ODS OLTP DM Report Data set

Architecture: Staging and ODS Operational Data Warehouse • Data. Staging is the area OLAP where. Data operational data is initially loaded. ETL OLTP • OLTP Staging OLTP Query • Data sources may include Query data DM complete replacement files, but are usually new records only. • History reports should be run on staging data if it Validation Report DM REF originated from a source external to the organization. • An Operational Data Store (ODS) is an optional component that is used for Near-Real-Time. Data reporting. OLTP ODS DMdata at a given point in time Snapshots of operational are loaded into staging. • • DMand integration of data Limited transformation set Less history (typically only days)

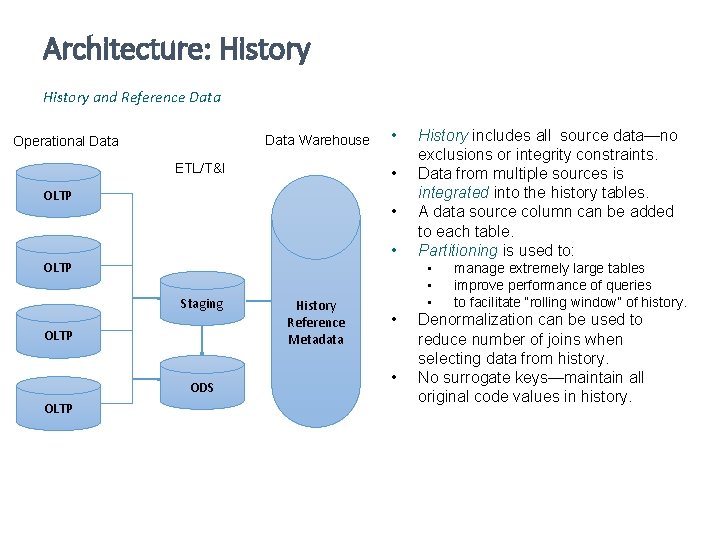

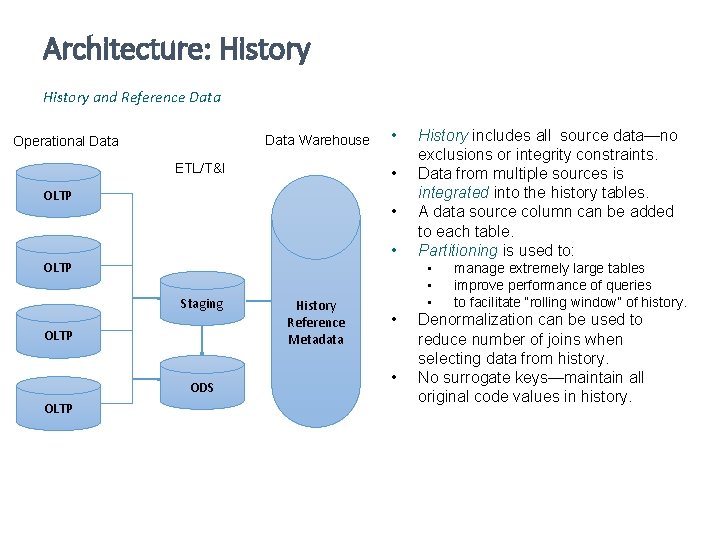

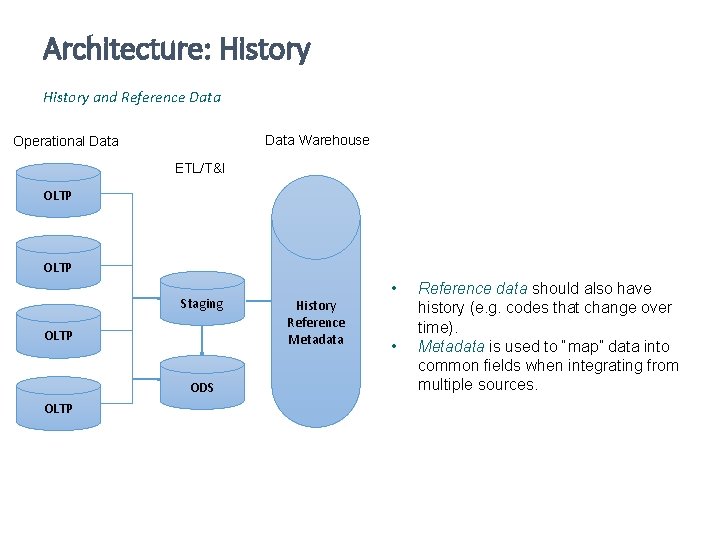

Architecture: History and Reference Data Warehouse Operational Data ETL/T&I • OLTP • • OLTP Staging OLTP ODS OLTP • History Reference Metadata History includes all source data—no OLAP Data exclusions or integrity constraints. Data from multiple sources is integrated tables. DM into the history. Query A data source column can be added to each table. Partitioning is used to: • • • Query DM manage extremely large tables improve performance of queries to facilitate “rolling window” of history. Denormalization can be Report used to DMnumber of joins when reduce selecting data from history. No surrogate keys—maintain all original code values in history. Data DM set

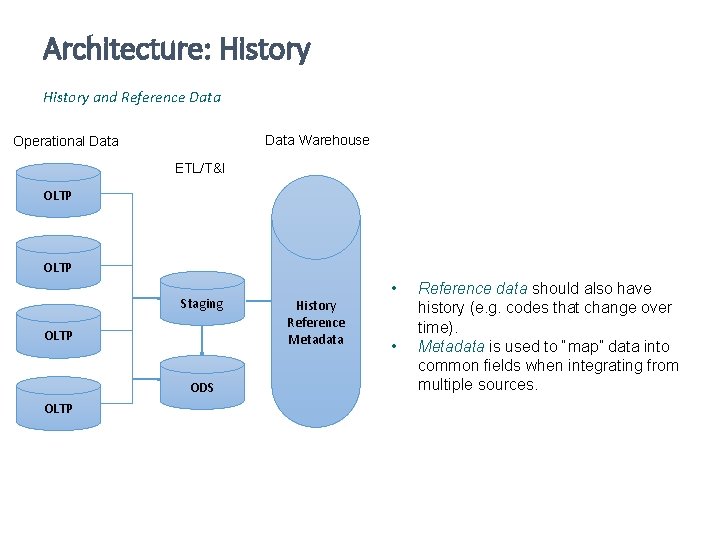

Architecture: History and Reference Data Warehouse Operational Data OLAP Data ETL/T&I OLTP DM Query Staging OLTP ODS OLTP History Reference Metadata • • Reference data should also have history (e. g. codes that change over Report time). DM Metadata is used to “map” data into common fields when integrating from multiple sources. DM Data set

Architecture: Data Marts • Data marts are per requirements of users and applications. ETL Operational Datacriteria (conditions • Selection in WHERE ETL/T&I clause) are applied when creating data OLTP marts. • Logical data modeling is applied here (e. g. denormalized, star schema, cross. OLTP tabulated, derived columns, etc. ). • Any surrogate keys can be applied at data Staging mart level (e. g. patient IDs). History • Data marts can be on different platforms REF (e. g. Oracle, SQL Server, text files, SAS data sets, etc. ) • Data marts can be permanent for ongoing ODS or temporary for one-time applications. • Data mart refreshes can be scheduled or on demand. OLAP Data DM Query DM DM Report Data set

Emerging Technologies Emerging technologies that are having an impact on data warehousing • Massively Parallel Processing (MPP) • In-Memory Databases (IMDB) • Column-Oriented Databases • Database Appliances • Advanced Access Tools • Cloud Database Services • Relational/Unstructured Hybrid Systems

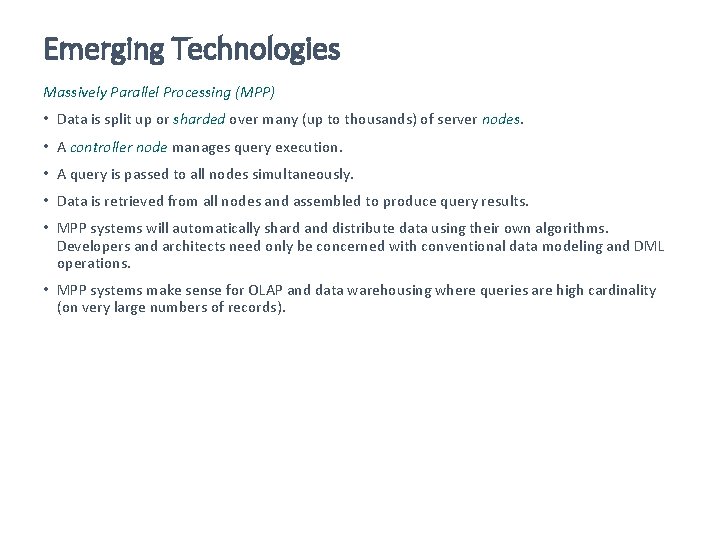

Emerging Technologies Massively Parallel Processing (MPP) • Data is split up or sharded over many (up to thousands) of server nodes. • A controller node manages query execution. • A query is passed to all nodes simultaneously. • Data is retrieved from all nodes and assembled to produce query results. • MPP systems will automatically shard and distribute data using their own algorithms. Developers and architects need only be concerned with conventional data modeling and DML operations. • MPP systems make sense for OLAP and data warehousing where queries are high cardinality (on very large numbers of records).

Emerging Technologies Massively Parallel Processing (MPP) Node 1 Node 2 Query Server Node Map Reduce Node 3 … Node n Result

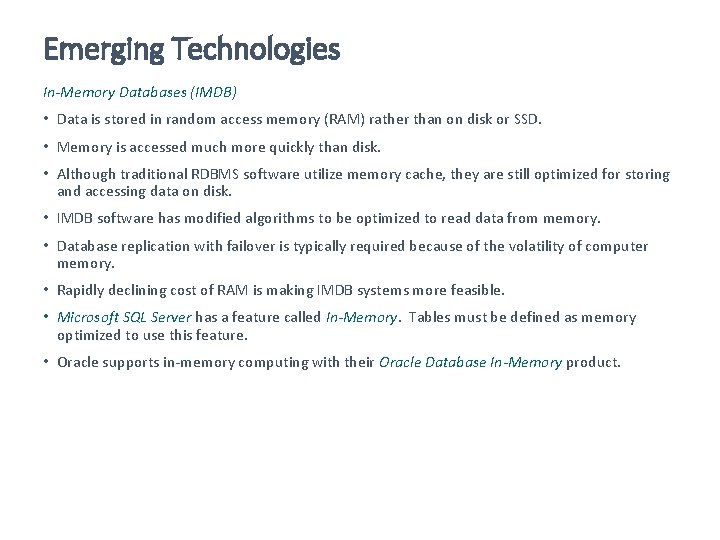

Emerging Technologies In-Memory Databases (IMDB) • Data is stored in random access memory (RAM) rather than on disk or SSD. • Memory is accessed much more quickly than disk. • Although traditional RDBMS software utilize memory cache, they are still optimized for storing and accessing data on disk. • IMDB software has modified algorithms to be optimized to read data from memory. • Database replication with failover is typically required because of the volatility of computer memory. • Rapidly declining cost of RAM is making IMDB systems more feasible. • Microsoft SQL Server has a feature called In-Memory. Tables must be defined as memory optimized to use this feature. • Oracle supports in-memory computing with their Oracle Database In-Memory product.

Emerging Technologies Column-Oriented Databases • Data in a typical relational database is organized by row. The row paradigm is used for physical storage as well as the logical organization of data. • Column-Oriented databases physically organize data by column while still able to present data within rows. • Since most queries select a subset of columns (rather than entire rows), column-oriented databases tend to perform much better for analytical processing. • Both Microsoft SQL Server and Oracle 12 c have support for column-based data storage. • See http: //nms. csail. mit. edu/~stavros/pubs/tutorial 2009 -column_stores. pdf.

Emerging Technologies Column-Oriented Databases Row-Oriented Storage PATIENT_ID STUDY_ID PROCSTEP_ID FORMDATA_SEQ DOB … HUNDREDS OF COLUMNS 10001 1040 10 0 9/14/1961 … HUNDREDS OF COLUMNS 10002 1040 10 0 4/27/1960 … HUNDREDS OF COLUMNS Column-Oriented Storage PROCSTEP_ID STUDY_ID 10001 1040 10 0 9/14/1961 … 1040 10 0 4/27/1960 … 10002 FORMDATA_SEQ DOB HUNDREDS OF COLUMNS PATIENT_ID … SELECT study_id, COUNT(*) FROM form_demog_data WHERE dob > ‘ 01/01/1960’ GROUP BY study_id ; • • In a row-oriented database, the entire row would be accessed. In a column-oriented database only STUDY_ID and DOB would have to be accessed.

Emerging Technologies Database Appliances • A database appliance is an integrated, preconfigured package of RDBMS software and hardware. • The most common type of database appliance is a data warehouse appliance. • Most major database vendors including Oracle and Microsoft and their hardware partners package and sell database appliances for data warehousing. • Data warehouse appliances utilize massively parallel processing (MPP). • Database appliances don’t always scale well outside of the purchased configuration. You generally don’t add storage to a database appliance. • The database appliance removes the burden of performance tuning. Conversely, database administrators have less flexibility. • A database appliance can be a cost-effective solution for data warehousing in many situations.

Emerging Technologies Advanced Access Tools • Business Intelligence (BI) tools allow users to view and access data, create aggregations and summaries, create reports, and view dashboards with current data. • BI tools are usually very good for slice and dice operations on star schemas. • BI tools typically sit on top of data marts created by the architects and developers. Data marts that support BI are typically star schema. • Newer Self-Service BI tools additional capabilities such as allowing users to integrate multiple data sources and do further analysis on result data sets from previous analyses. • Data visualization tools allow users to view data in various graphs. • Newer tools allow users to access and analyze data from multiple form factors including smart phones and tablets. • BI and data visualization tools do not always provide the capability to perform complex analyses or fulfill specific requirements of complex reports (e. g. complex statistical analyses or epidemiologic studies). Programming skills are frequently still required.

Emerging Technologies Cloud Database Services • A cloud database exists on remote servers and accessed securely over the Internet. • Oracle, Microsoft, and other database vendors offer cloud database services. • A cloud database platform provided by a vendor is called a Platform as a Service (Paa. S). • The cloud database service provider performs all database administrative tasks: › › Replicate data on multiple severs Make backups Scale growing databases Performance monitoring and tuning • Cloud services can be useful for prototyping and heuristic development. A large commitment to hardware purchases and administrative staff can be postponed for later assessment. • Cloud services could result in considerable cost savings for some organizations. • A cloud hybrid database is one that has database components both on the cloud and on local servers. • Cloud services may limit administrative options and flexibility vs. having your own DBAs and system administrators. • Cloud services may not meet regulatory requirements for security and storage for some applications (e. g. HIPAA, FDA regulations, etc. ).

Emerging Technologies Relational/Unstructured Hybrid Systems • Oracle, Microsoft, and other RDBMS vendors sell hybrid database systems that combine unstructured data with relational database systems. • Both Oracle and Microsoft incorporate Hadoop unstructured databases with their proprietary products. • Oracle product Big Data SQL allows standard Oracle SQL to be used against Hadoop data. › External tables are used on unstructured data so that Oracle can “see” the data as a relational table. › Data from Hadoop can be integrated with relational data (e. g. join operations). › Oracle’s Exadata technology is required to get high-performance. › Oracle security can be applied to the unstructured data. • Other Oracle products include Oracle Big Data Appliance, Oracle No. SQL Database, and Oracle Big Data Connectors. • Microsoft product HDInsight allows integration of Hadoop data with SQL Server. • Microsoft Azure with HDInsight integrates Hadoop and SQL Server data in the cloud. • Both Oracle and Microsoft market relational/unstructured hybrid database appliances.

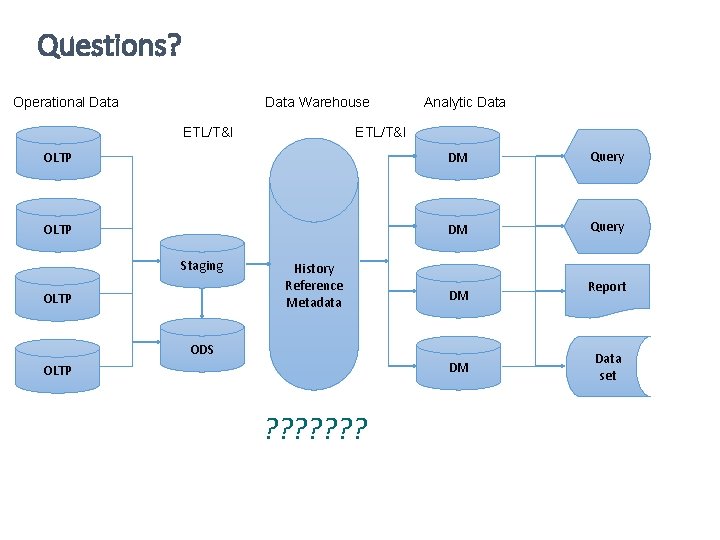

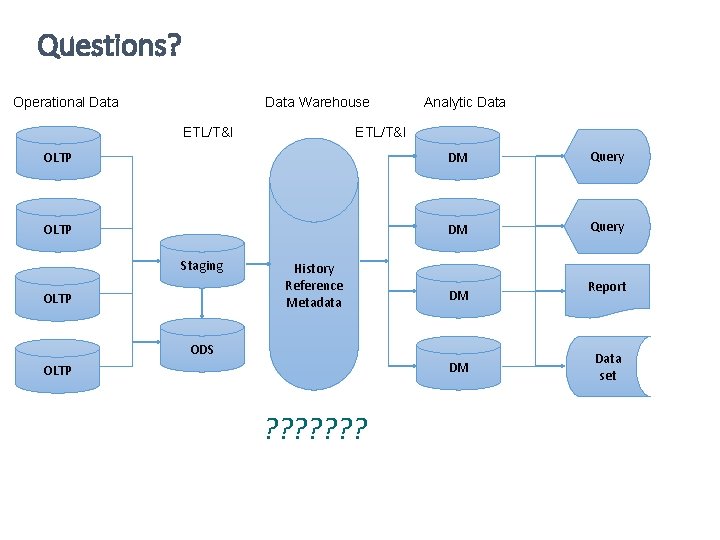

Questions? Operational Data Warehouse ETL/T&I Analytic Data ETL/T&I OLTP DM Query Staging OLTP History Reference Metadata DM ODS DM OLTP ? ? ? ? Report Data set

Image Author Attributions Slide #5 Image 1: "Fortran. Card. PROJ 039. agr" by Arnold Reinhold - I took this picture of an artifact in my possession. The card was created in the late 1960 s or early 1970 s and has no copyright notice. . Licensed under Creative Commons Attribution-Share Alike 2. 5 via Wikimedia Commons http: //commons. wikimedia. org/wiki/File: Fortran. Card. PROJ 039. agr. jpg#mediaviewer/File: Fortran. Card. PROJ 039. agr. jpg Slide #8 Image 1: "IBM Keypunch Machines in use" by born 1945 - Flickr: IBM Keypunch Machines. Licensed under Creative Commons Attribution-Share Alike 2. 0 via Wikimedia Commons - http: //commons. wikimedia. org/wiki/File: IBM_Keypunch_Machines_in_use. jpg#mediaviewer/File: IBM_Keypunch_Machines_in_use. jpg Image 2: “us__en_us__ibm 100__punched_card__hand_cards__620 x 350. jpg” from “IBM 100” web page http: //www 03. ibm. com/ibm/history/ibm 100/us/en/icons/punchcard/breakthroughs/ Image 3: "IBM 26" by Ben Franske - Own work. Licensed under Creative Commons Attribution-Share Alike 3. 0 -2. 5 -2. 0 -1. 0 via Wikimedia Commons http: //commons. wikimedia. org/wiki/File: IBM 26. jpg#mediaviewer/File: IBM 26. jpg Image 4: "IBM 1403 Printer opened" by Erik Pitti - originally posted to Flickr as IBM 1403 Printer. Licensed under Creative Commons Attribution 2. 0 via Wikimedia Commons - http: //commons. wikimedia. org/wiki/File: IBM_1403_Printer_opened. jpg#mediaviewer/File: IBM_1403_Printer_opened. jpg Slide #9 Image 1: “us__en_us__ibm 100__punched_card__hand_cards__620 x 350. jpg” from “IBM 100” web page http: //www 03. ibm. com/ibm/history/ibm 100/us/en/icons/punchcard/breakthroughs/ Slide #11 Image 1: "Fortran. Card. PROJ 039. agr" by Arnold Reinhold - I took this picture of an artifact in my possession. The card was created in the late 1960 s or early 1970 s and has no copyright notice. . Licensed under Creative Commons Attribution-Share Alike 2. 5 via Wikimedia Commons http: //commons. wikimedia. org/wiki/File: Fortran. Card. PROJ 039. agr. jpg#mediaviewer/File: Fortran. Card. PROJ 039. agr. jpg

Image Author Attributions Slide #12 Image 1: "Magtape 1" by Daniel P. B. Smith. . Original uploader was Dpbsmith at en. wikipedia. Later version(s) were uploaded by Boojit at en. wikipedia. - Image by Daniel P. B. Smith. ; Transferred from en. wikipedia. Licensed under Creative Commons Attribution-Share Alike 3. 0 via Wikimedia Commons http: //commons. wikimedia. org/wiki/File: Magtape 1. jpg#mediaviewer/File: Magtape 1. jpg Image 2: "Camp Smith, Hawaii. PFC Patricia Barbeau operates a tape-drive on the IBM 729 at Camp Smith. - NARA - 532417" by Unknown or not provided. Licensed under Public domain via Wikimedia Commons - http: //commons. wikimedia. org/wiki/File: Camp_Smith, _Hawaii. _PFC_Patricia_Barbeau_operates_a_tapedrive_on_the_IBM_729_at_Camp_Smith. _-_NARA_-_532417. tif#mediaviewer/File: Camp_Smith, _Hawaii. _PFC_Patricia_Barbeau_operates_a_tapedrive_on_the_IBM_729_at_Camp_Smith. _-_NARA_-_532417. tif Slide #14 and 15 Image 1: "IBM 2311 memory unit" by Deep silence (Mikaël Restoux) - 25 years of computers, La défense (Paris). Licensed under Creative Commons Attribution 2. 5 via Wikimedia Commons - http: //commons. wikimedia. org/wiki/File: IBM_2311_memory_unit. JPG#mediaviewer/File: IBM_2311_memory_unit. JPG Slide #16 Image 1: "IBM 2311 memory unit" by Deep silence (Mikaël Restoux) - 25 years of computers, La défense (Paris). Licensed under Creative Commons Attribution 2. 5 via Wikimedia Commons - http: //commons. wikimedia. org/wiki/File: IBM_2311_memory_unit. JPG#mediaviewer/File: IBM_2311_memory_unit. JPG Image 2: "DEC VT 100 terminal" by Jason Scott - Flickr: IMG_9976. Licensed under Creative Commons Attribution 2. 0 via Wikimedia Commons http: //commons. wikimedia. org/wiki/File: DEC_VT 100_terminal. jpg#mediaviewer/File: DEC_VT 100_terminal. jpg Image 3: "IBM 360 -65 -1. corestore" by Original uploader was Arnold. Reinhold at en. wikipedia - Originally from en. wikipedia; description page is/was here. . Licensed under Creative Commons Attribution-Share Alike 3. 0 via Wikimedia Commons - http: //commons. wikimedia. org/wiki/File: IBM 360 -651. corestore. jpg#mediaviewer/File: IBM 360 -65 -1. corestore. jpg Slide #17 Image 1: "IBM 2311 memory unit" by Deep silence (Mikaël Restoux) - 25 years of computers, La défense (Paris). Licensed under Creative Commons Attribution 2. 5 via Wikimedia Commons - http: //commons. wikimedia. org/wiki/File: IBM_2311_memory_unit. JPG#mediaviewer/File: IBM_2311_memory_unit. JPG

Image Author Attributions Slide #22 Image 1: "Edgar F Codd". Via Wikipedia - http: //en. wikipedia. org/wiki/File: Edgar_F_Codd. jpg#mediaviewer/File: Edgar_F_Codd. jpg Slide #35 Image 1: “inmon. gif” from “Bill Inmon: Date Warehouses and Decision Support Systems” http: //www. dssresources. com/interviews/inmon 05122005. html Slide #41 Image 1: "Ralph kimball" by Ralphfan 99 - Own work. Licensed under Creative Commons Attribution-Share Alike 3. 0 via Wikimedia Commons http: //commons. wikimedia. org/wiki/File: Ralph_kimball. jpg#mediaviewer/File: Ralph_kimball. jpg Slide #43 Image 1: "Big data cartoon t gregorius" by Thierry Gregorius - Cartoon: Big Data. Licensed under Creative Commons Attribution 2. 0 via Wikimedia Commons - http: //commons. wikimedia. org/wiki/File: Big_data_cartoon_t_gregorius. jpg#mediaviewer/File: Big_data_cartoon_t_gregorius. jpg All other images Copyright © 2014 Randy Grenier